
Related research and data

- Artificial intelligence has advanced despite having few resources dedicated to its development – now investments have increased substantially

- Affiliation of research teams building notable AI systems, by year of publication

- Annual attendance at major artificial intelligence conferences

- Annual global corporate investment in artificial intelligence, by type

- Annual granted patents related to artificial intelligence, by industry

- Annual industrial robots installed

- Annual patent applications related to AI per million people

- Annual patent applications related to AI, by status

- Annual patent applications related to artificial intelligence

- Annual private investment in artificial intelligence (NetBase Quid)

- Annual private investment in artificial intelligence (CSET)

- Annual private investment in artificial intelligence, by focus area (NetBase Quid)

- Annual professional service robots installed, by application area

- Annual reported artificial intelligence incidents and controversies

- Artificial intelligence: Performance on knowledge tests vs. dataset size

- Artificial intelligence: Performance on knowledge tests vs. number of parameters

- Artificial intelligence: Performance on knowledge tests vs. training computation

- Chess ability of the best computers

- Computation used to train notable AI systems, by affiliation of researchers

- Computation used to train notable artificial intelligence systems

- Countries with national artificial intelligence strategies

- Cumulative AI-related bills passed into law since 2016

- Cumulative number of notable AI systems by domain

- Datapoints used to train notable artificial intelligence systems

- Domain of notable artificial intelligence systems, by year of publication

- Employer of new AI PhDs in the United States and Canada

- GPU computational performance per dollar

- Global views about AI's impact on society in the next 20 years, by demographic group

- Global views about the safety of riding in a self-driving car, by demographic group

- How worried are Americans about their work being automated?

- ImageNet: Top-performing AI systems in labeling images

- Industrial robots: Annual installations and total in operation

- Market share for logic chip production, by manufacturing stage

- Newly funded artificial intelligence companies

- Parameters in notable artificial intelligence systems

- Parameters vs. training dataset size in notable AI systems, by researcher affiliation

- Protein folding prediction accuracy

- Scholarly publications on artificial intelligence per million people

- Share of artificial intelligence jobs among all job postings

- Share of companies using artificial intelligence technology

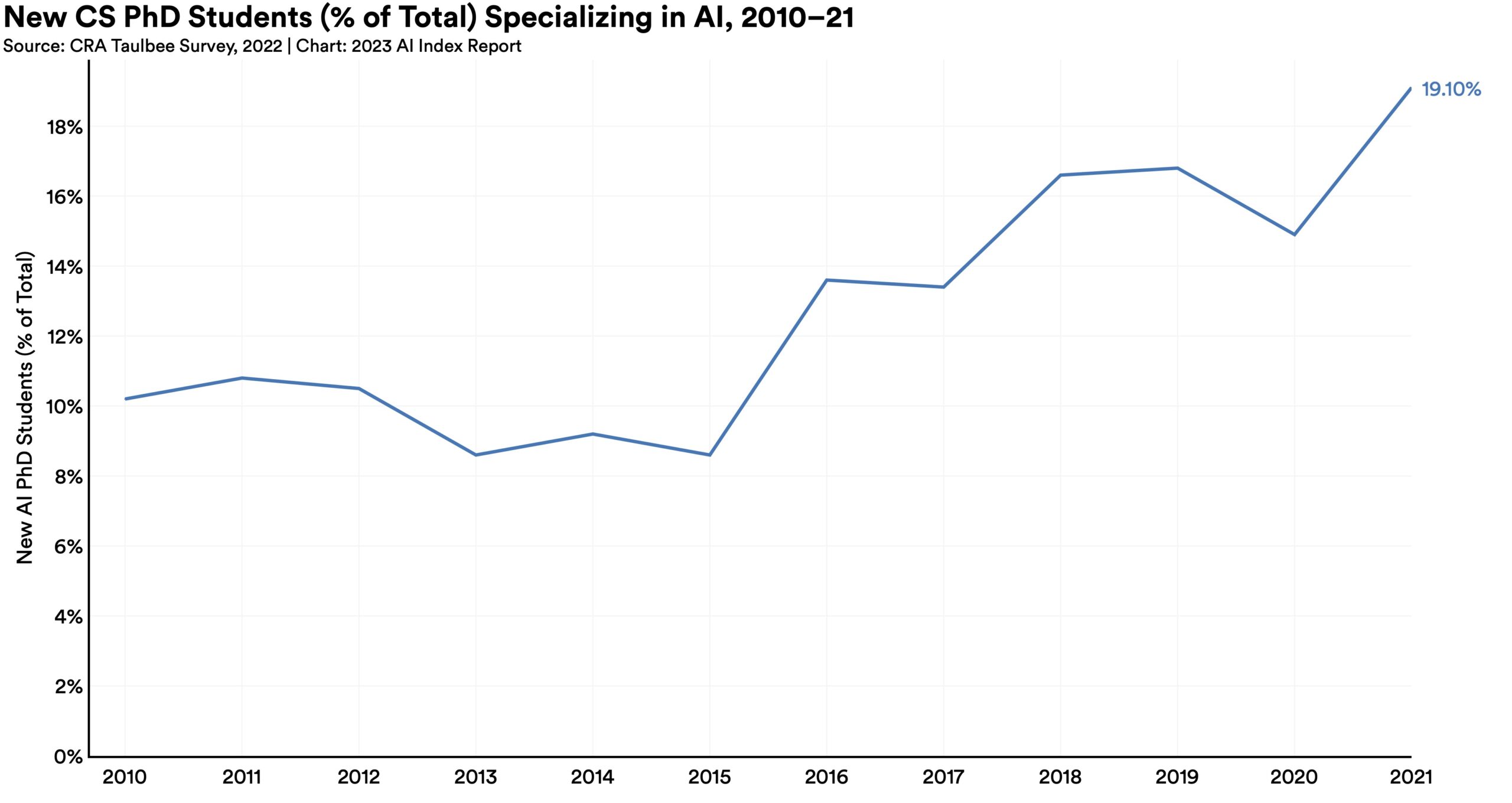

- Share of computer science PhDs specializing in artificial intelligence in the US and Canada

- Share of notable AI systems by domain

- Share of notable AI systems by researcher affiliation

- Share of women among new artificial intelligence and computer science PhDs, United States and Canada

- Top performing AI systems in coding, math, and language-based knowledge tests

- Training computation vs. dataset size in notable AI systems, by researcher affiliation

- Training computation vs. parameters in notable AI systems, by domain

- Training computation vs. parameters in notable AI systems, by researcher affiliation

- Views about AI's impact on society in the next 20 years

- Views about the safety of riding in a self-driving car

- Views of Americans about robot vs. human intelligence


THE AI INDEX REPORT

Measuring trends in Artificial Intelligence

AI Index Annual Report

Welcome to the 2023 AI Index Report

The AI Index is an independent initiative at the Stanford Institute for Human-Centered Artificial Intelligence (HAI), led by the AI Index Steering Committee, an interdisciplinary group of experts from across academia and industry. The annual report tracks, collates, distills, and visualizes data relating to artificial intelligence, enabling decision-makers to take meaningful action to advance AI responsibly and ethically with humans in mind. The AI Index collaborates with many different organizations to track progress in artificial intelligence. These organizations include the Center for Security and Emerging Technology at Georgetown University, LinkedIn, NetBase Quid, Lightcast, and McKinsey. The 2023 report also features more self-collected data and original analysis than ever before. This year’s report includes new analysis on foundation models, including their geopolitics and training costs, the environmental impact of AI systems, K–12 AI education, and public opinion trends in AI. The AI Index also broadened its tracking of global AI legislation from 25 countries in 2022 to 127 in 2023.

TOP TAKEAWAYS

- Industry races ahead of academia.

Until 2014, most significant machine learning models were released by academia. Since then, industry has taken over. In 2022, there were 32 significant industry-produced machine learning models compared to just three produced by academia. Building state-of-the-art AI systems increasingly requires large amounts of data, compute, and money, resources that industry actors inherently possess in greater amounts compared to nonprofits and academia.

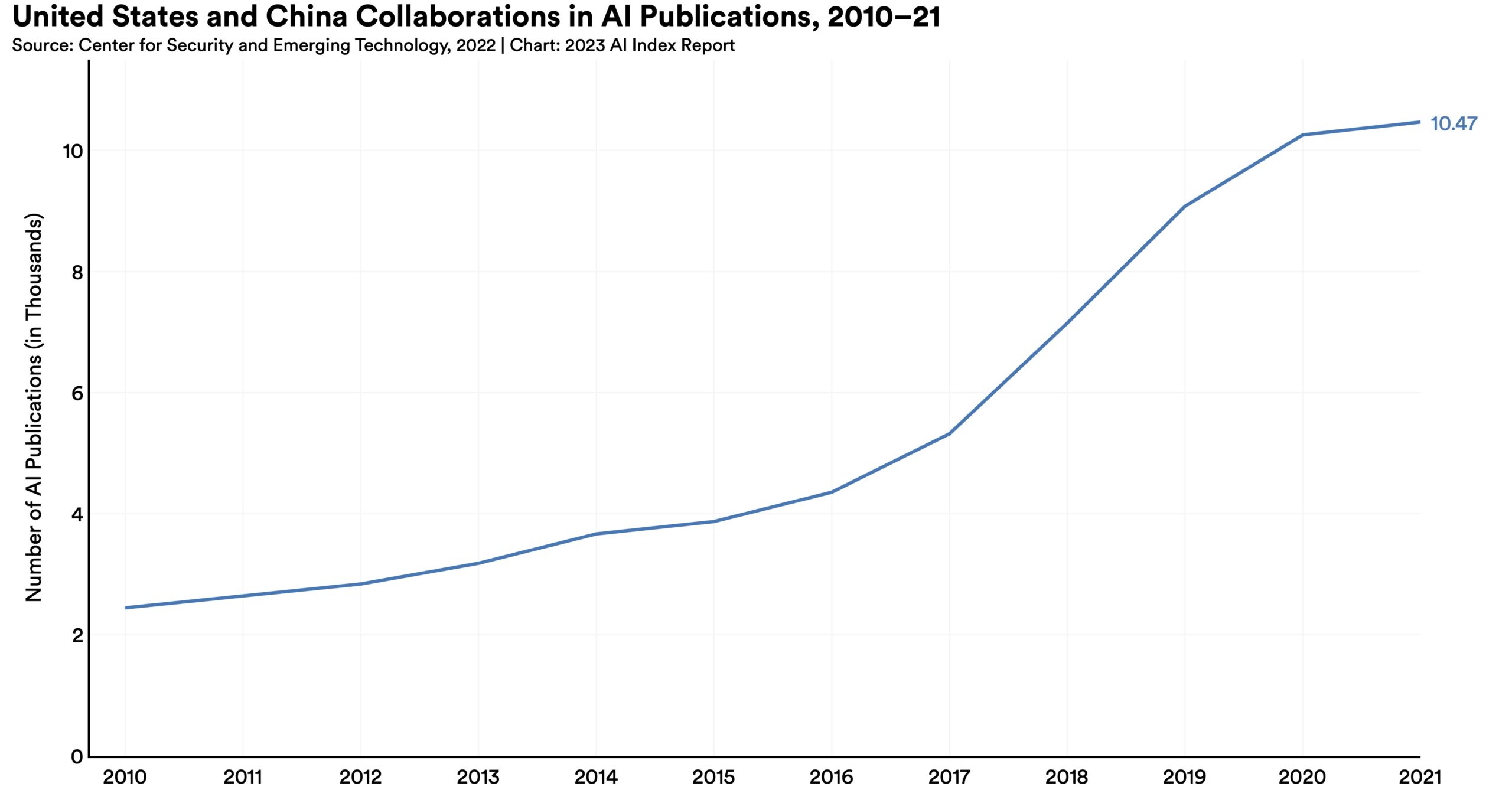

- Performance saturation on traditional benchmarks.

AI continued to post state-of-the-art results, but year-over-year improvement on many benchmarks continues to be marginal. Moreover, the speed at which benchmark saturation is being reached is increasing. However, new, more comprehensive benchmarking suites such as BIG-bench and HELM are being released.

- AI is both helping and harming the environment.

New research suggests that AI systems can have serious environmental impacts. According to Luccioni et al., 2022, BLOOM’s training run emitted 25 times more carbon than a single air traveler on a one-way trip from New York to San Francisco. Still, new reinforcement learning models like BCOOLER show that AI systems can be used to optimize energy usage.

- The world’s best new scientist … AI?

AI models are starting to rapidly accelerate scientific progress and in 2022 were used to aid hydrogen fusion, improve the efficiency of matrix manipulation, and generate new antibodies.

- The number of incidents concerning the misuse of AI is rapidly rising.

According to the AIAAIC database, which tracks incidents related to the ethical misuse of AI, the number of AI incidents and controversies has increased 26-fold since 2012. Notable incidents in 2022 included a deepfake video of Ukrainian President Volodymyr Zelenskyy surrendering and U.S. prisons using call-monitoring technology on their inmates. This growth reflects both greater use of AI technologies and greater awareness of the possibilities for misuse.

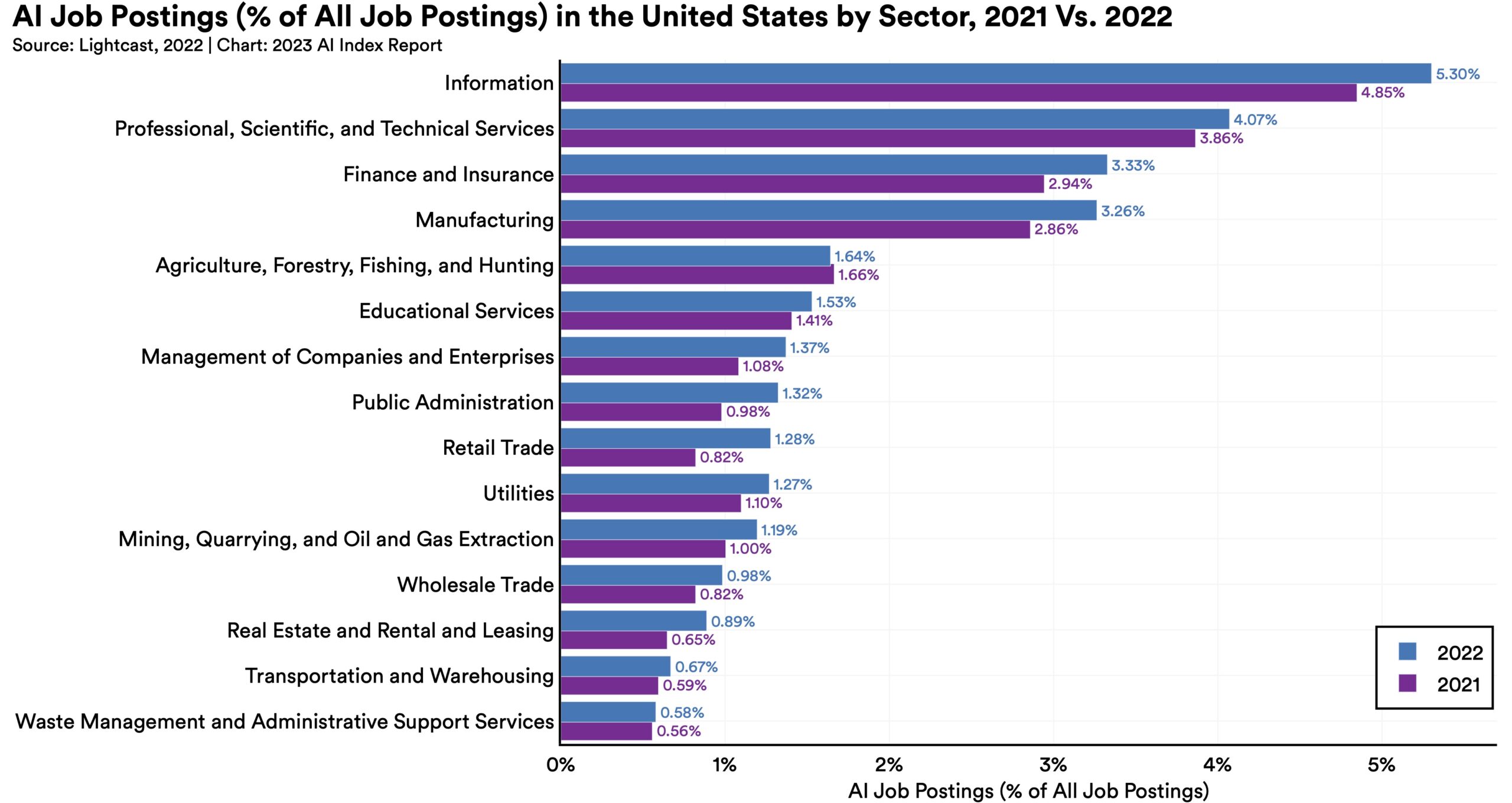

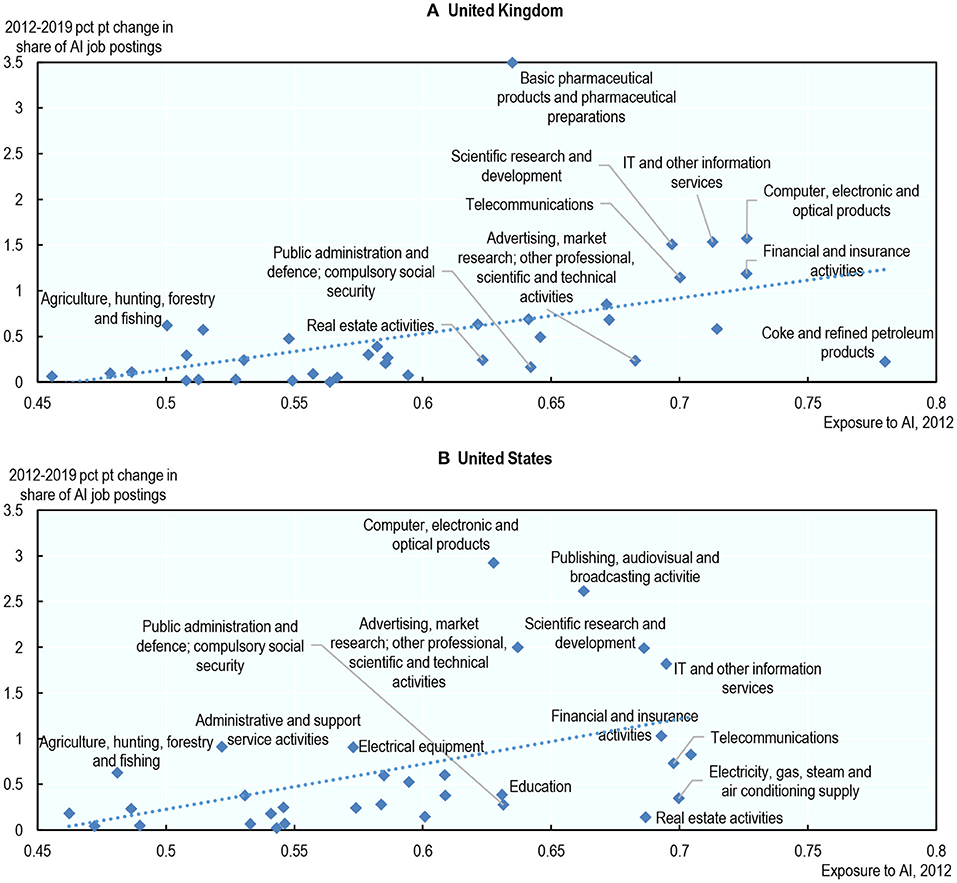

- The demand for AI-related professional skills is increasing across virtually every American industrial sector.

Across every sector in the United States for which there is data (with the exception of agriculture, forestry, fishing, and hunting), the share of AI-related job postings increased on average from 1.7% in 2021 to 1.9% in 2022. Employers in the United States are increasingly looking for workers with AI-related skills.

- For the first time in the last decade, year-over-year private investment in AI decreased.

Global AI private investment was $91.9 billion in 2022, a 26.7% decrease from 2021. The total number of AI-related funding events, as well as the number of newly funded AI companies, likewise decreased. Still, over the last decade as a whole, AI investment has increased significantly: in 2022 the amount of private investment in AI was 18 times greater than in 2013.

- While the proportion of companies adopting AI has plateaued, the companies that have adopted AI continue to pull ahead.

The proportion of companies adopting AI in 2022 has more than doubled since 2017, though it has plateaued in recent years between 50% and 60%, according to the results of McKinsey’s annual research survey. Organizations that have adopted AI report realizing meaningful cost decreases and revenue increases.

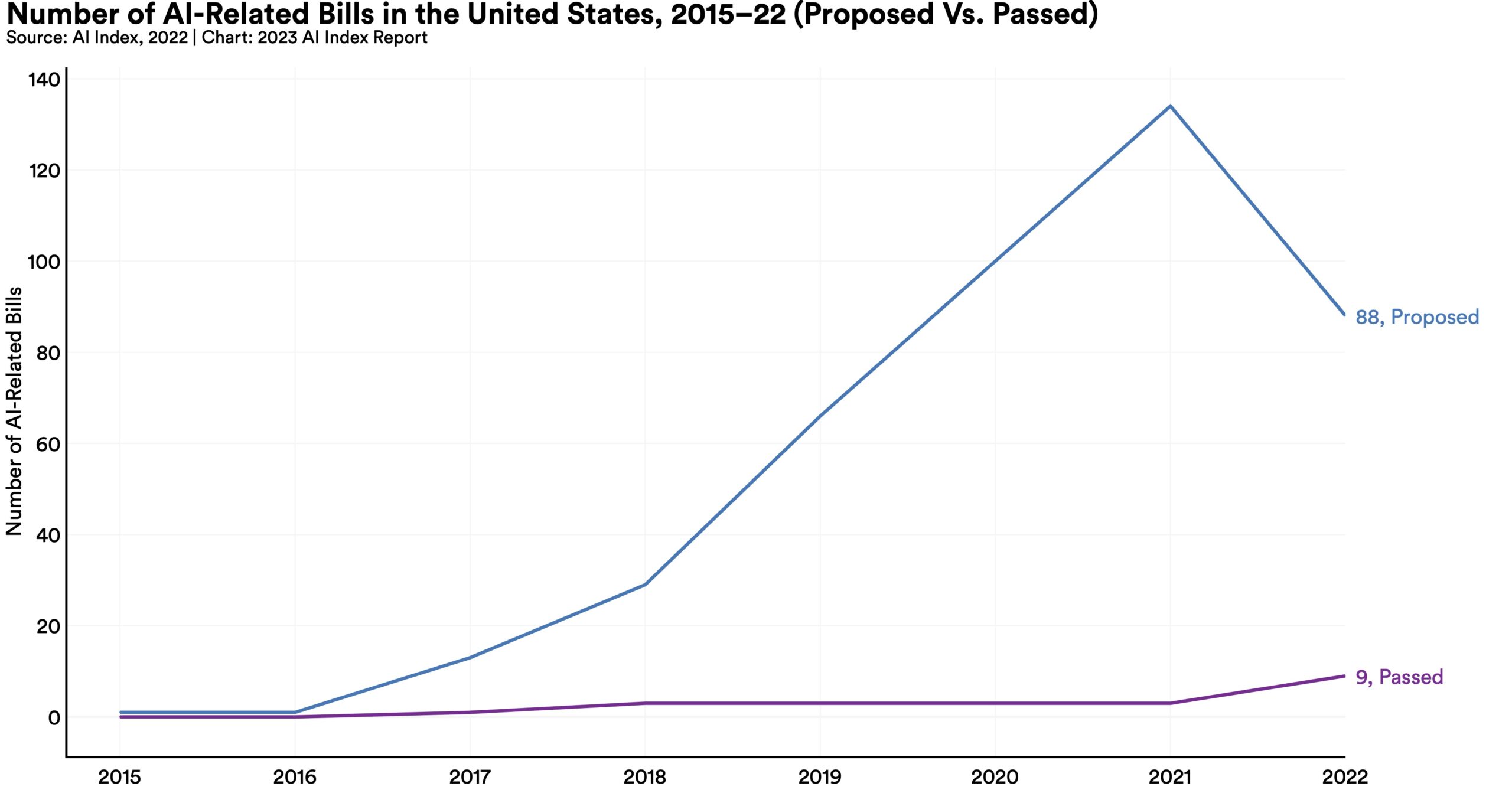

- Policymaker interest in AI is on the rise.

An AI Index analysis of the legislative records of 127 countries shows that the number of bills containing “artificial intelligence” that were passed into law grew from just 1 in 2016 to 37 in 2022. An analysis of the parliamentary records on AI in 81 countries likewise shows that mentions of AI in global legislative proceedings have increased nearly 6.5 times since 2016.

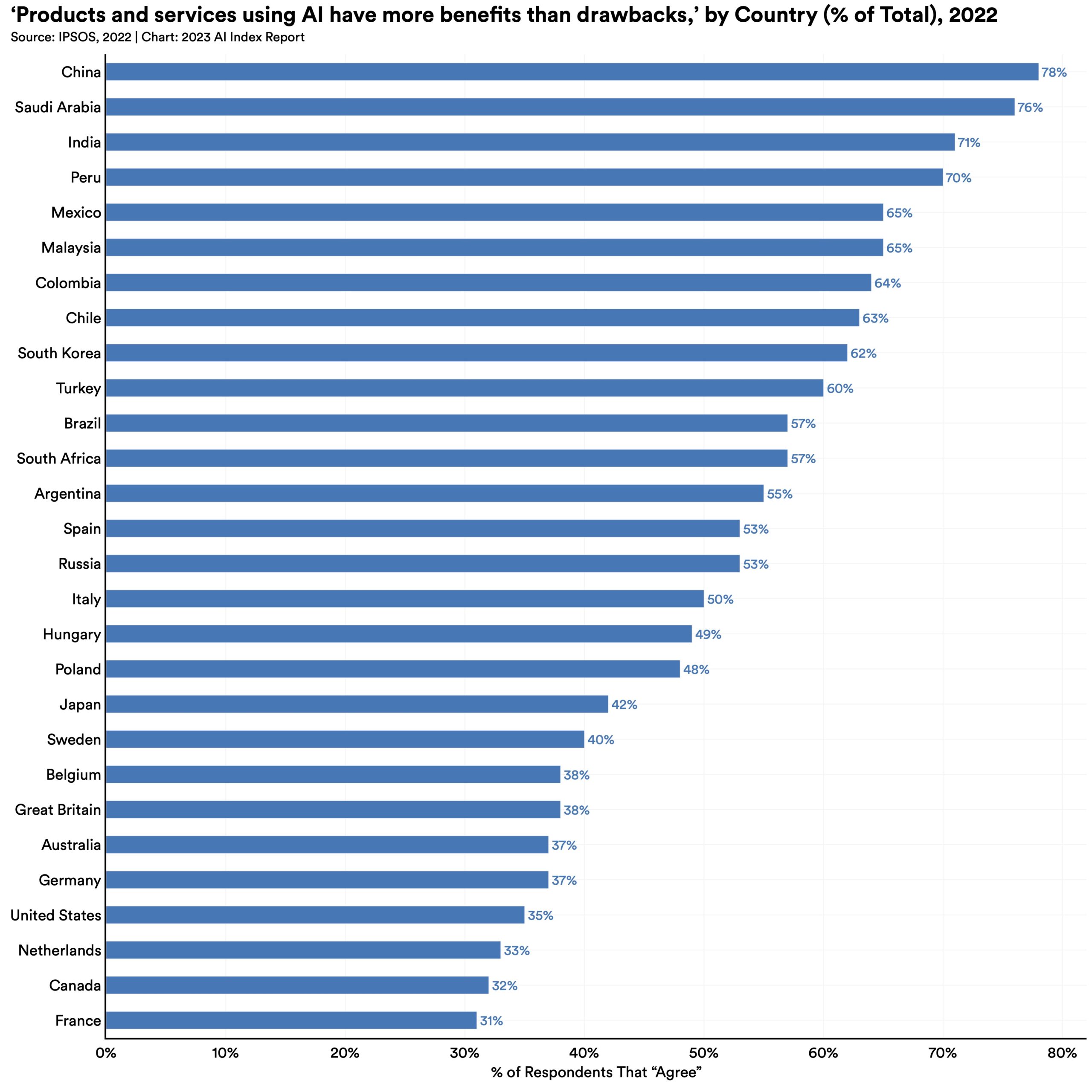

- Chinese citizens are among those who feel the most positively about AI products and services. Americans … not so much.

In a 2022 IPSOS survey, 78% of Chinese respondents (the highest proportion among surveyed countries) agreed with the statement that products and services using AI have more benefits than drawbacks. After Chinese respondents, those from Saudi Arabia (76%) and India (71%) felt the most positive about AI products. Only 35% of sampled Americans (among the lowest of surveyed countries) agreed that products and services using AI have more benefits than drawbacks.

Chapter 1: Research and Development

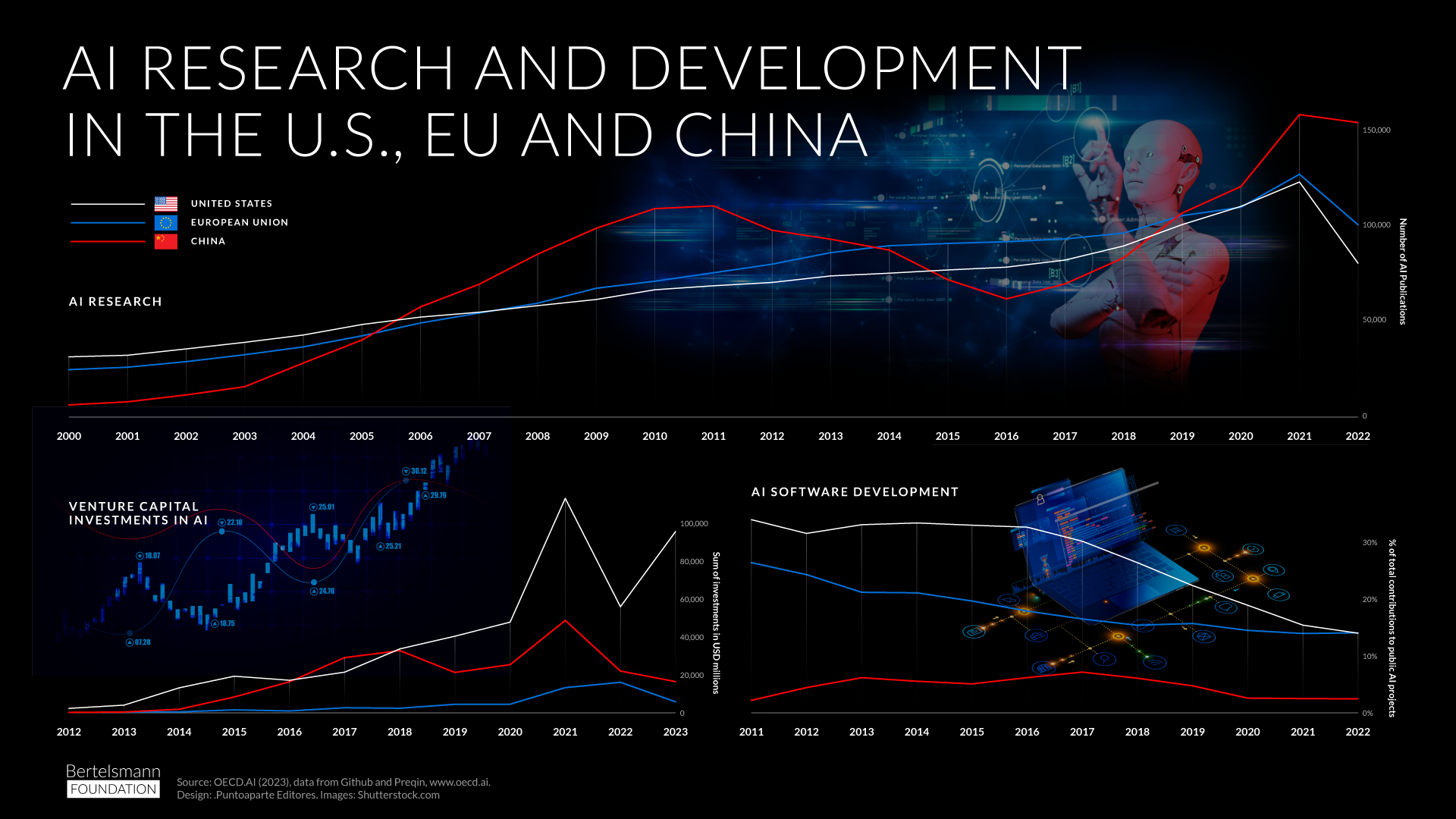

This chapter captures trends in AI R&D. It begins by examining AI publications, including journal articles, conference papers, and repositories. Next it considers data on significant machine learning systems, including large language and multimodal models. Finally, the chapter concludes by looking at AI conference attendance and open-source AI research. Although the United States and China continue to dominate AI R&D, research efforts are becoming increasingly geographically dispersed.

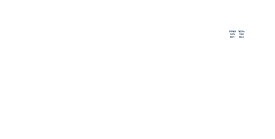

- The United States and China had the greatest number of cross-country collaborations in AI publications from 2010 to 2021, although the pace of collaboration has since slowed.

- AI research is on the rise, across the board.

- China continues to lead in total AI journal, conference, and repository publications.

- Large language models are getting bigger and more expensive.

Chapter 2: Technical Performance

This year’s technical performance chapter analyzes the technical progress in AI during 2022. Building on previous reports, this chapter chronicles advancements in computer vision, language, speech, reinforcement learning, and hardware. This year the chapter also features an analysis of the environmental impact of AI, a discussion of the ways in which AI has furthered scientific progress, and a timeline-style overview of some of the most significant recent AI developments.

- Generative AI breaks into the public consciousness.

- AI systems become more flexible.

- Capable language models still struggle with reasoning.

- AI starts to build better AI.

Chapter 3: Technical AI Ethics

Fairness, bias, and ethics in machine learning continue to be topics of interest among both researchers and practitioners. As the technical barrier to entry for creating and deploying generative AI systems has lowered dramatically, the ethical issues around AI have become more apparent to the general public. Startups and large companies find themselves in a race to deploy and release generative models, and the technology is no longer controlled by a small group of actors. In addition to building on analysis in last year’s report, this year the AI Index highlights tensions between raw model performance and ethical issues, as well as new metrics quantifying bias in multimodal models.

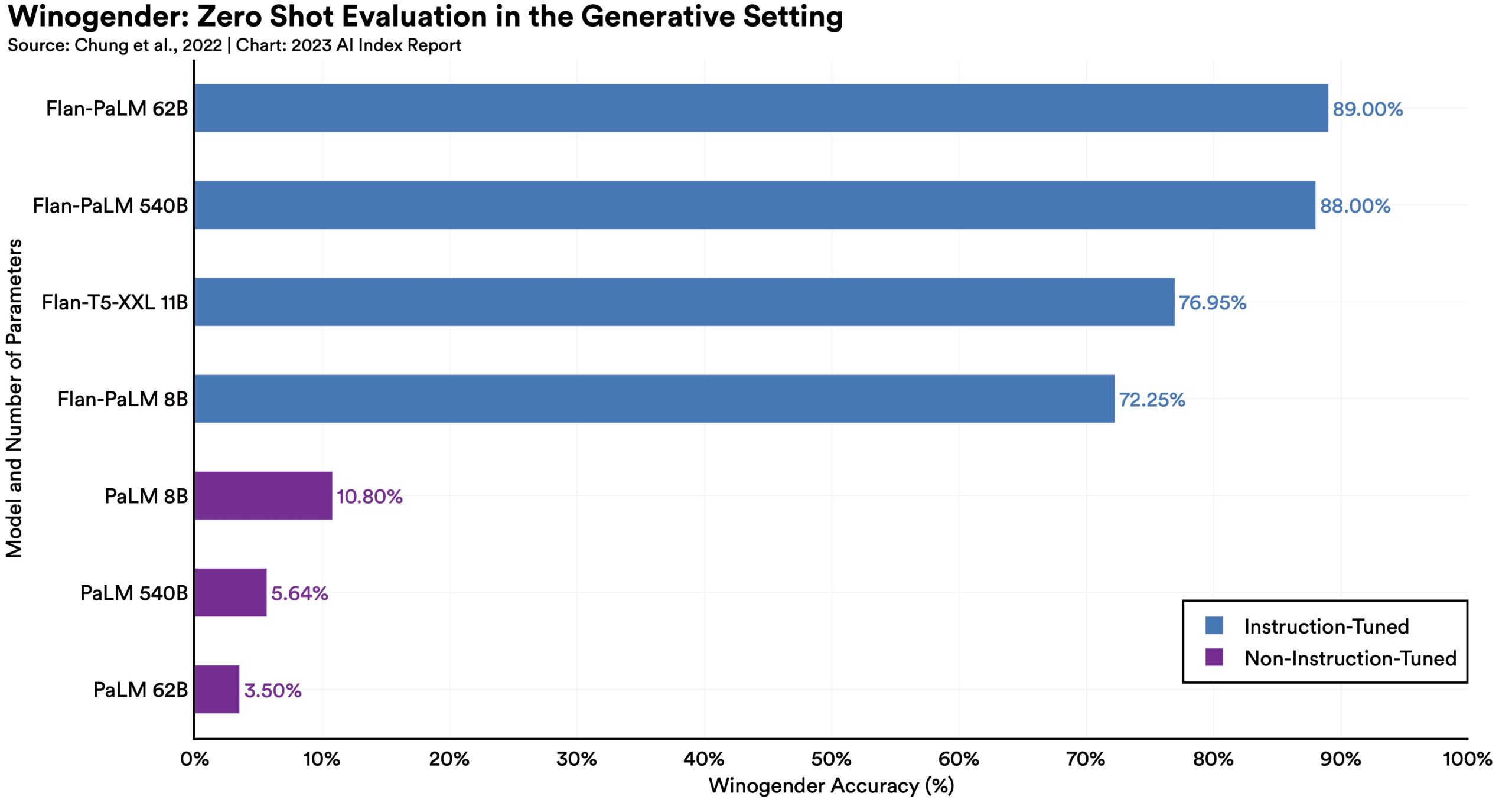

- The effects of model scale on bias and toxicity are confounded by training data and mitigation methods.

- Generative models have arrived and so have their ethical problems.

- Fairer models may not be less biased.

- Interest in AI ethics continues to skyrocket.

- Automated fact-checking with natural language processing isn’t so straightforward after all.

Chapter 4: The Economy

Increases in the technical capabilities of AI systems have led to greater rates of AI deployment in businesses, governments, and other organizations. The deepening integration of AI and the economy comes with both excitement and concern. Will AI increase productivity or be a dud? Will it boost wages or lead to the widespread replacement of workers? To what degree are businesses embracing new AI technologies and willing to hire AI-skilled workers? How has investment in AI changed over time, and what particular industries, regions, and fields of AI have attracted the greatest amount of investor interest? This chapter examines AI-related economic trends by using data from Lightcast, LinkedIn, McKinsey, Deloitte, and NetBase Quid, as well as the International Federation of Robotics (IFR). This chapter begins by looking at data on AI-related occupations and then moves on to analyses of AI investment, corporate adoption of AI, and robot installations.

- Once again, the United States leads in investment in AI.

- In 2022, the AI focus area with the most investment was medical and healthcare ($6.1 billion); followed by data management, processing, and cloud ($5.9 billion); and Fintech ($5.5 billion).

- AI is being deployed by businesses in multifaceted ways.

- AI tools like Copilot are tangibly helping workers.

- China dominates industrial robot installations.

Chapter 5: Education

Studying the state of AI education is important for gauging some of the ways in which the AI workforce might evolve over time. AI-related education has typically occurred at the postsecondary level; however, as AI technologies have become increasingly ubiquitous, this education is being embraced at the K–12 level. This chapter examines trends in AI education at the postsecondary and K–12 levels, in both the United States and the rest of the world. We analyze data from the Computing Research Association’s annual Taulbee Survey on the state of computer science and AI postsecondary education in North America, Code.org’s repository of data on K–12 computer science in the United States, and a recent UNESCO report on the international development of K–12 education curricula.

- More and more AI specialization.

- New AI PhDs increasingly head to industry.

- New North American CS, CE, and information faculty hires stayed flat.

- The gap in external research funding for private versus public American CS departments continues to widen.

- Interest in K–12 AI and computer science education grows in both the United States and the rest of the world.

Chapter 6: Policy and Governance

The growing popularity of AI has prompted intergovernmental, national, and regional organizations to craft strategies around AI governance. These actors are motivated by the realization that the societal and ethical concerns surrounding AI must be addressed to maximize its benefits. The governance of AI technologies has become essential for governments across the world. This chapter examines AI governance on a global scale. It begins by highlighting the countries leading the way in setting AI policies. Next, it considers how AI has been discussed in legislative records internationally and in the United States. The chapter concludes with an examination of trends in various national AI strategies, followed by a close review of U.S. public sector investment in AI.

- From talk to enactment—the U.S. passed more AI bills than ever before.

- When it comes to AI, policymakers have a lot of thoughts.

- The U.S. government continues to increase spending on AI.

- The legal world is waking up to AI.

Chapter 7: Diversity

AI systems are increasingly deployed in the real world. However, there often exists a disparity between the individuals who develop AI and those who use AI. North American AI researchers and practitioners in both industry and academia are predominantly white and male. This lack of diversity can lead to harms, among them the reinforcement of existing societal inequalities and bias. This chapter highlights data on diversity trends in AI, sourced primarily from academia. It draws on information from organizations such as Women in Machine Learning (WiML), whose mission is to improve the state of diversity in AI, as well as the Computing Research Association (CRA), which tracks the state of diversity in North American academic computer science. Finally, the chapter also makes use of Code.org data on diversity trends in secondary computer science education in the United States. Note that the data in this chapter is neither comprehensive nor conclusive. Publicly available demographic data on trends in AI diversity is sparse. As a result, this chapter does not cover other areas of diversity, such as sexual orientation. The AI Index hopes that as AI becomes more ubiquitous, the amount of data on diversity in the field will increase such that the topic can be covered more thoroughly in future reports.

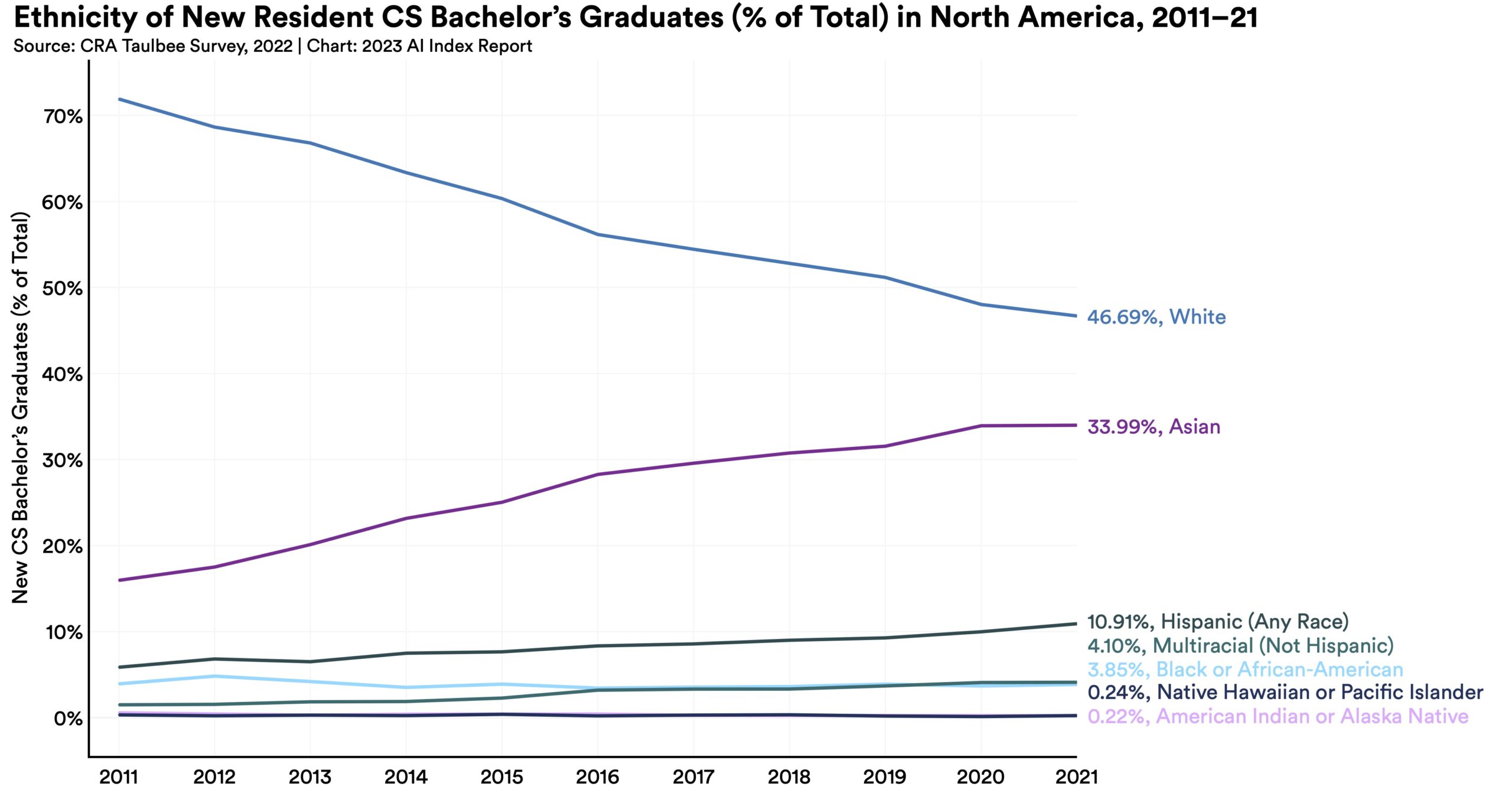

- North American bachelor’s, master’s, and PhD-level computer science students are becoming more ethnically diverse.

- New AI PhDs are still overwhelmingly male.

- Women make up an increasingly greater share of CS, CE, and information faculty hires.

- American K–12 computer science education has become more diverse, in terms of both gender and ethnicity.

Chapter 8: Public Opinion

AI has the potential to have a transformative impact on society. As such, it has become increasingly important to monitor public attitudes toward AI. Better understanding trends in public opinion is essential in informing decisions pertaining to AI’s development, regulation, and use. This chapter examines public opinion through global, national, demographic, and ethnic lenses. Moreover, we explore the opinions of AI researchers, and conclude with a look at the social media discussion that surrounded AI in 2022. We draw on data from two global surveys, one organized by IPSOS and another by Lloyd’s Register Foundation and Gallup, along with a U.S.-specific survey conducted by Pew Research. It is worth noting that there is a paucity of longitudinal survey data related to AI that asks the same questions of the same groups of people over extended periods of time. As AI becomes more and more ubiquitous, broader efforts at understanding AI public opinion will become increasingly important.

- Chinese citizens are among those who feel the most positively about AI products and services. Americans … not so much.

- Men tend to feel more positively about AI products and services than women. Men are also more likely than women to believe that AI will mostly help rather than harm.

- People across the world and especially America remain unconvinced by self-driving cars.

- Different causes for excitement and concern.

- NLP researchers … have some strong opinions as well.

AI Papers by Country

- By Olive Marshal

- Published November 23, 2019

- Updated December 14, 2023

Artificial Intelligence (AI) research has been rapidly growing over recent years, with numerous countries contributing to this development. In this article, we will examine the number of AI papers published by different countries and gain insights into their respective contributions to this field.

Key Takeaways

- AI research is a global phenomenon, with countries around the world actively participating.

- China and the United States lead in terms of AI paper production, followed by other major research hubs.

- Collaboration between countries is an important aspect of AI research, leading to increased knowledge sharing.

**China** has emerged as a frontrunner in AI research, producing a vast number of papers in recent years. With its strong governmental support and investment in technology, the country aims to become the global leader in AI by 2030. *The rapid growth of AI research in China is a testament to the nation’s commitment to technological advancement.*

The **United States** has long been a leader in AI research and development. It boasts a number of prestigious institutions, such as Stanford University and MIT, which contribute significantly to the field. *The United States continues to be at the forefront of groundbreaking AI research, pushing the boundaries of what is possible.*

Overview of AI Research Publications by Country

Other countries, such as **India** and **Canada**, have also made significant contributions to AI research. India, with its growing tech industry and skilled workforce, is steadily increasing its presence in the field. *India’s research and development efforts in AI are expected to further accelerate in the coming years.* Meanwhile, Canada is known for its expertise in deep learning and hosts leading research institutions like the Vector Institute. *Canada’s AI research has gained international recognition, attracting top talent from around the globe*.

Collaboration and Knowledge Sharing

International collaboration plays a crucial role in driving AI research forward. Researchers from different countries often collaborate on projects, combining their expertise and resources to tackle complex problems. Such collaboration leads to knowledge sharing and accelerates advancements in the field. Additionally, research conferences and journals provide platforms for researchers to present their findings and exchange ideas. *These platforms foster collaboration and facilitate the dissemination of knowledge in the AI community.*

Global AI Research Collaboration

- In 2019, the most common international collaboration in AI research was between the United States and China.

- Other prominent collaborations include China with the United Kingdom, Germany, and Australia.

- International collaboration strengthens the ecosystem of AI research, fostering innovation and global progress in the field.

AI research is a global effort, with countries around the world actively contributing to the field. China and the United States lead in terms of the number of AI papers published, but collaborations between different countries are also instrumental in advancing AI knowledge and driving innovation. As AI continues to evolve, so too will the contributions of different nations, shaping the future of this transformative field.

Common Misconceptions

1. AI Papers by Country

There are several common misconceptions surrounding the topic of AI papers by country. One misconception is that only developed countries produce high-quality AI research. This is not true, as countries with emerging economies, such as India and China, have also made significant contributions to the field. Another misconception is that the number of AI papers published by a country reflects its overall expertise in AI. While a high number of papers can indicate a strong research community, it does not necessarily reflect the quality or impact of the research. Furthermore, some may assume that AI research is dominated by academic institutions, but there is also a growing presence of research contributions from industry players and from collaborations between academia and industry.

- Emerging economies contribute to AI research

- Number of papers doesn’t guarantee expertise

- Industry players also contribute to AI research

2. AI Research Competition

Another misconception is that AI research is a competition among countries to establish dominance. While there might be some level of competitiveness, the AI research community operates on a collaborative basis. Researchers from different countries often collaborate and share knowledge to advance the field collectively. The global nature of AI research encourages the exchange of ideas and fosters innovation. Additionally, AI research benefits from diversity and different perspectives, making international collaboration crucial for its progress.

- AI research community is collaborative

- Countries often share knowledge and collaborate

- International collaboration fosters innovation

3. Research Output and AI Leadership

There is a misconception that the number of AI research papers produced by a country is directly proportional to its AI leadership. While research output can be an indicator of a country’s research activity, AI leadership involves various factors such as investment in AI technology, talent pool, infrastructure, and government policies. Some countries may prioritize the application of AI over research, leading to a higher implementation rate without necessarily publishing a large number of research papers. It is important to have a holistic view of a country’s AI ecosystem rather than solely relying on research output.

- Research output doesn’t determine AI leadership

- AI leadership involves multiple factors

- Prioritizing AI application may not reflect in research output

4. Bias and AI Research

There is a misconception that AI research is entirely unbiased and objective. However, AI systems are developed by humans and can inherit biases from the data they are trained on or the algorithms used. Researchers are increasingly aware of this issue and are actively working on mitigating biases in AI systems. AI research explores techniques for fair and ethical AI, but it is an ongoing challenge. Understanding and addressing bias in AI research is vital to ensure AI systems are inclusive and do not perpetuate discrimination or inequality.

- AI research can contain biases

- Researchers are working on mitigating biases in AI systems

- Fair and ethical AI is an ongoing challenge

5. AI’s Impact on Employment

One common misconception is the belief that AI will replace human workers entirely, leading to widespread unemployment. While AI technologies may automate certain tasks and job roles, they are unlikely to replace all human workers. AI has the potential to augment human capabilities and enable humans to focus on higher-value tasks that require creativity, empathy, and critical thinking. Moreover, as AI technologies advance, new job opportunities will emerge in sectors related to AI development, implementation, and maintenance. Preparing the workforce for these changes is important to ensure a smooth societal transition.

- AI augments human capabilities

- New job opportunities will arise in AI-related sectors

- Preparing the workforce is essential for a smooth transition

AI Research Papers by Country: A Global Perspective

In recent years, the field of artificial intelligence (AI) has witnessed significant growth, with research papers emerging from various countries around the world. This article presents a global perspective on AI research, highlighting the number of papers published by different countries. The tables below showcase the contributions of each country, shedding light on their role in shaping the advancements of AI technologies.

United States: Pioneers in AI Research

As a leader in technological advancements, the United States has played a pivotal role in AI research. The table below presents the top five states within the US that have produced a substantial number of AI research papers.

China: Rapid Growth in AI Research

China has rapidly emerged as a major contributor to the field of AI, with significant investments in research and development. The following table highlights the top five provinces within China that have made remarkable contributions to AI research.

United Kingdom: Solid Contributions to AI Research

The United Kingdom has a rich history of scientific research and has made notable contributions to AI. The table below showcases the top five universities in the UK that have contributed significantly to AI research.

Canada: Advancing AI Technologies

Canada has emerged as a leading country in AI research, fostering a collaborative and innovative environment. The following table highlights the top five cities within Canada that have made significant contributions to AI research.

Germany: Influential Contributions to AI Research

Germany has been instrumental in shaping AI research through its commitment to scientific excellence. The following table highlights the top five research institutions in Germany that have produced a significant number of AI research papers.

Australia: Thriving AI Research Scene

Australia has witnessed a thriving AI research scene, with universities and research institutions making significant contributions. The table below showcases the top five universities in Australia that have excelled in AI research.

India: Emerging Hub for AI Research

India has rapidly emerged as an important hub for AI research, with a growing number of research papers being published. The following table highlights the top five cities in India that have made significant contributions to AI research.

Japan: Contributions to Cutting-Edge AI Research

Japan has a rich history of technological innovation and has played a vital role in AI research. The table below showcases the top five research institutions in Japan that have contributed significantly to AI advancements.

This article sheds light on the global landscape of AI research, showcasing the contributions made by various countries and institutions. The United States, China, the United Kingdom, Canada, Germany, Australia, India, and Japan have emerged as key players in shaping the field of AI. Through substantial investments, collaborations, and scientific contributions, these countries have driven innovations and breakthroughs in AI technologies. As research in AI continues to expand, it is crucial for countries and institutions worldwide to foster cross-border collaborations and interdisciplinary approaches in order to further advance the potential of AI and its positive impact on society.

Frequently Asked Questions

What are the top AI research papers from the United States?

Some notable AI research papers from the United States include ‘A Few Useful Things to Know About Machine Learning’ by Pedro Domingos, ‘DeepFace: Closing the Gap to Human-Level Performance in Face Verification’ by Yaniv Taigman et al., ‘Playing Atari with Deep Reinforcement Learning’ by Volodymyr Mnih et al., and ‘Generative Adversarial Nets’ by Ian J. Goodfellow et al.

Artificial intelligence national strategy in a developing country

- Open access

- Published: 01 October 2023

- Mona Nabil Demaidi (ORCID: orcid.org/0000-0001-8161-4992)

Artificial intelligence (AI) national strategies provide countries with a framework for the development and implementation of AI technologies. Sixty countries worldwide have published national AI strategies, and more than 70% of these are developed countries. The approach of AI national strategies differs between developed and developing countries in several respects, including scientific research, education, talent development, and ethics. This paper examined AI readiness in a developing country (Palestine) to help develop and identify the main pillars of its AI national strategy. The AI readiness assessment was applied across the education, entrepreneurship, government, and research and development sectors in Palestine. In addition, the paper examined the legal framework and whether it is keeping pace with emerging technologies. The results revealed that Palestinians have low awareness of AI. Moreover, AI is barely used across several sectors, and the legal framework is not keeping pace with emerging technologies. The results helped develop and identify the following five main pillars that Palestine’s AI national strategy should focus on: AI for Government; AI for Development; AI for Capacity Building in the private, public, technical, and governmental sectors; AI and Legal Framework; and International Activities.


1 Introduction

Artificial intelligence (AI) is a cutting-edge technology (Chatterjee et al. 2022). Its applications can be found in many fields, including computer science, banking, agriculture, and healthcare (Pham et al. 2020; Zhang et al. 2020). AI is commonly divided into two domains: Weak AI and Strong AI. Weak AI is specialized for specific tasks, while Strong AI aims to create machines with human-like general intelligence. Developed countries lead these advancements, owing to their advanced technologies and ample resources (Tizhoosh and Pantanowitz 2018).

Nations have recognized the transformational potential of AI (Fatima et al. 2020). Consequently, more than 60 countries have published AI national strategies in the past five years, following Canada, which was the first to publish one in 2017 (Vats et al. 2022; Zhang et al. 2021). More than 70% of the countries that have launched AI national strategies are developed countries (Holon IQ 2020).

AI national strategies differ between developed and developing countries. Developed countries have advanced economies and strong technological infrastructure, and focus on leveraging AI to maintain their competitive advantage and drive economic growth. They are at the forefront of both Weak and Strong AI. In Weak AI, they deploy specialized systems in industries such as healthcare, finance, and transportation, yielding efficiency gains and economic advantages. In Strong AI, their substantial research infrastructure, financial resources, and access to top talent propel innovation. These countries prioritize the development of ethical AI frameworks, invest in education and workforce development, and foster global collaborations to sustain their AI leadership. This dedication to AI innovation places them at the vanguard of technology, shaping the future of AI-driven industries and applications.

Developing countries, on the other hand, are navigating the AI landscape with varying degrees of progress in Weak and Strong AI. In Weak AI, they often rely on cost-effective solutions, such as chatbots or data analytics, to address local challenges like healthcare access and agricultural optimization. However, limited resources hinder full adoption. The pursuit of Strong AI remains a challenge due to inadequate research infrastructure and funding constraints. Developing nations prioritize capacity building, fostering local talent, and seeking international collaborations to bridge the AI technology gap. While progress is gradual, their commitment to AI development is a crucial step toward unlocking future socio-economic benefits. This difference between developed and developing countries is expected, since developing countries are consumers of technologies produced by developed countries (Monasterio Astobiza et al. 2022). Moreover, developing countries have low awareness of AI applications across several fields, which widens the AI technology gap between developed and developing countries (Kahn et al. 2018).

Several developed and developing countries in the Middle East and North Africa (MENA) region are adopting AI and developing AI national strategies. According to a Google report, the potential economic impact of AI on the MENA region is estimated at USD 320 billion by 2030 (Economist Impact 2022). Currently, 7 of the 19 countries in the MENA region have launched AI national strategies: the United Arab Emirates, Qatar, Saudi Arabia, Egypt, Oman, Tunisia, and Jordan.

Following other countries in the MENA region, the Ministry of Telecom and Information Technology in Palestine announced the need for an AI national strategy in 2021. Palestine has good digital infrastructure: 92% of Palestinian households have home Internet access, and an optical fiber network was established in 2022. This paper therefore aims to identify the pillars of an AI national strategy for Palestine, as a case of a developing country in the MENA region. To achieve this, it assesses the status of AI across the education, entrepreneurship, government, and research and development sectors in Palestine. In addition, it examines the legal framework and whether it keeps pace with emerging technologies.

The paper is structured as follows. Section 2 briefly reviews AI national strategies in developed and developing countries. Section 3 explains the AI readiness assessment in Palestine as a case of a developing country. Section 4 presents the results, which are used to identify the main pillars of the Palestinian AI national strategy. Finally, Sect. 5 presents conclusions and future work.

2 Literature review

In recent years, many countries have developed national strategies for AI, which provide a framework for the development and implementation of the technology. These strategies have focused on different pillars, depending on the specific country and its needs (Jorge et al. 2022; Economist Impact 2022; Kazim et al. 2021; Escobar and Sciortino 2022).

The use of AI benefits both developed and developing countries (Makridakis 2017). However, the ways in which these countries approach AI can be quite different. In general, developed countries have the resources and infrastructure necessary to support the development and implementation of advanced AI technologies (Mhlanga 2021). As a result, their AI national strategies focus on using the technology to improve efficiency and productivity in industries such as healthcare, finance, and transportation (Ahmed et al. 2022; Wahl et al. 2018; Kshetri 2021; Abduljabbar et al. 2019). In contrast, developing countries have more limited resources and infrastructure, so their AI national strategies tend to focus on using the technology to address specific needs in their communities. For example, a developing country may prioritize using AI to improve access to education or healthcare, or to promote economic growth (Ahmed et al. 2022; Guo and Li 2018). Developing countries also aim to use AI to help bridge the gap between themselves and developed countries in technological advancement and economic growth (Goralski and Tan 2020). Overall, the AI national strategies of developed and developing countries tend to differ in their focus and priorities.

This section reviews existing AI national strategies and how they differ between developed and developing countries.

2.1 AI national strategies in developed countries

Many developed countries have recognized the potential of AI to drive economic growth, improve public services, and advance scientific research. As a result, they have developed national strategies to support the development and deployment of AI technologies in a way that is responsible, ethical, and beneficial to society (Zhang et al. 2021).

In 2019, the United States released a national AI strategy, the “American AI Initiative”, which focuses on promoting public–private partnerships, investing in AI research and development, and increasing AI researchers’ access to data and computing resources (Johnson 2019). The initiative is based on the following five key pillars:

Investing in research and development: The United States is investing in AI-focused research institutions and incubators, and is providing support for businesses that are developing AI-related products and services.

Fostering public–private partnerships: The United States is promoting collaboration between government agencies, academia, and the private sector to advance AI research and development.

Promoting the responsible and ethical use of AI: The United States is implementing policies and initiatives to promote the responsible and ethical use of AI by engaging with stakeholders and addressing potential negative impacts of AI.

Supporting the growth of the AI industry: The United States is providing support for businesses that are developing AI-related products and services, and is implementing policies to support the growth of the AI industry.

Building the technological infrastructure and capabilities needed to enable the use of AI: The United States is investing in the technological infrastructure and capabilities needed to enable the use of AI, and is implementing policies to support the growth of the AI industry.

Canada has implemented the Pan-Canadian Artificial Intelligence Strategy, which is focused on supporting the growth of the AI industry and on using AI to address challenges in areas such as healthcare and transportation (Escobar and Sciortino 2022). The strategy is based on the following four key pillars:

Investing in research and development: Canada is investing in AI-focused research institutions and incubators, and is providing support for businesses that are developing AI-related products and services.

Supporting the growth of the AI industry: Canada is providing support for businesses that are developing AI-related products and services, and is implementing policies to support the growth of the AI industry.

Using AI to address challenges: Canada is using AI to address challenges in areas such as healthcare and transportation, by implementing AI-powered solutions and initiatives.

Building the technological infrastructure and capabilities needed to enable the use of AI: Canada is investing in the technological infrastructure and capabilities needed to enable the use of AI, and is implementing policies to support the growth of the AI industry.

The United Kingdom launched its “AI Sector Deal” strategy in 2018. The strategy includes a number of initiatives to support the growth of the country’s AI industry, including investment in AI research and development, the establishment of an AI skills institute, and the creation of an AI advisory council to help develop ethical guidelines for the use of AI (Bourne 2019). Its key pillars are similar to those of the United States.

The European Union has also been working on a comprehensive AI strategy, the “EU AI Strategy”, which includes initiatives to support the development and deployment of AI technologies, as well as measures to ensure the responsible and ethical use of AI (European Commission 2020; Cohen et al. 2020). The EU AI Strategy is based on three key pillars:

Investing in research and development: The European Union is investing in AI-focused research institutions and incubators, and is providing support for businesses that are developing AI-related products and services.

Supporting the growth of the AI industry: The European Union is providing support for businesses that are developing AI-related products and services, and is implementing policies to support the growth of the AI industry.

Addressing ethical and societal concerns related to AI: The European Union is implementing policies and initiatives to address ethical and societal concerns related to AI, by engaging with stakeholders and promoting the responsible and ethical use of AI.

Other developed countries, such as Japan and South Korea, are also taking steps to develop national AI strategies. Japan has developed the Society 5.0 initiative, which aims to use AI and other emerging technologies to drive economic growth and social development (Fukuyama 2018; Shiroishi et al. 2018). The Society 5.0 initiative is based on four key pillars similar to those of Canada.

South Korea has adopted the AI National Development Plan, which is focused on investing in AI research and development, supporting the growth of the AI industry, and promoting the use of AI in various sectors (Chung 2020). The AI National Development Plan is based on three key pillars:

Investing in research and development: South Korea is investing in AI-focused research institutions and incubators, and is providing support for businesses that are developing AI-related products and services.

Supporting the growth of the AI industry: South Korea is providing support for businesses that are developing AI-related products and services, and is implementing policies to support the growth of the AI industry.

Promoting the use of AI in various sectors: South Korea is promoting the use of AI in various sectors, by implementing AI-powered solutions and initiatives in areas such as healthcare and transportation.

AI is also increasingly being adopted by a number of developed countries in the MENA region, including the United Arab Emirates (UAE), Saudi Arabia, and Qatar (Radu 2021; Malkawi 2022; Ghazwani et al. 2022; Alelyani et al. 2021). These countries have made significant investments in the development and use of AI technologies and have implemented a number of initiatives and policies to support the growth of the AI industry (Sharfi 2021). For example, the UAE has established partnerships with leading technology companies to develop AI-powered healthcare solutions and has launched initiatives to support the use of AI in education (Dumas et al. 2022; Bhattacharya and Nakhare 2019). Saudi Arabia has also invested heavily in AI research and development and has implemented policies to support the growth of the AI industry (Bugami 2022).

2.2 AI national strategies in developing countries

Many developing countries are still in the early stages of developing and implementing AI national strategies, as the technology is relatively new and can be expensive to implement (Radu 2021; Sharma et al. 2022). In addition, developing countries face challenges such as limited access to technology and funding, as well as a shortage of skilled workers with AI expertise (De-Arteaga et al. 2018; Sharma et al. 2022). As a result, AI adoption in developing countries is likely to be slower than in developed countries.

Despite limited resources and slow AI adoption, several developing countries have followed developed countries in launching AI national strategies, for several reasons. First and foremost, AI has the potential to benefit developing countries by providing innovative solutions to their challenges and needs (Strusani and Houngbonon 2019). For example, AI-powered healthcare systems can help improve the availability of medical services in underserved communities (Ilhan et al. 2021).

Additionally, developing countries aim to participate in the global AI ecosystem. As AI becomes more prevalent, there is an increasing demand for skilled AI professionals, and developing countries can play a significant role in meeting this demand (Su et al. 2021; Squicciarini and Nachtigall 2021; Millington 2017). By investing in AI education and training, developing countries can help develop a skilled workforce that is capable of participating in the global AI industry (Millington 2017; Sharma et al. 2022).

India, Brazil, Mexico, and South Africa have developed AI national strategies focused on using AI to address challenges in areas such as healthcare, agriculture, and education, and on building the technological infrastructure and capabilities needed to enable the use of AI (Chatterjee 2020; Malerbi and Melo 2022; Criado et al. 2021; Arakpogun et al. 2021). China has implemented the “Next Generation Artificial Intelligence Development Plan”, which focuses on investing in AI research and development, supporting the growth of the AI industry, and promoting the use of AI in various sectors.

Developing countries in the MENA region have also either launched AI national strategies or recognized the importance of AI and begun developing one. Three of the thirteen developing countries in the MENA region (Egypt, Tunisia, and Jordan) have launched AI national strategies (Ministry of Communications and Innovation Technology (Egypt) 2021). Their strategies focus on the following pillars:

Building human capacity and expertise, and spreading awareness of AI (developing the capabilities of senior government and private sector leaders in the field of AI).

Participating in international and regional AI conferences and seminars.

Promoting the use and adoption of AI and its applications in the public sector, and building the necessary partnerships with the private sector.

Integrating AI in entrepreneurship and business.

Upskilling employees working in the technology field.

Conducting training for government agencies.

Developing policies related to ethical guidelines, legislative reforms, and standardization.

Developing AI educational courses that can be taught at schools and universities.

As mentioned earlier, developing countries recognize the potential benefits of AI and are taking steps to incorporate it into their economies and societies. Palestine is in a similar situation: in 2021, the Ministry of Telecom and Information Technology announced the need for an AI national strategy. Developing the strategy requires an AI readiness assessment examining the status of AI in the educational sector (schools and universities), the entrepreneurship sector, research and development, the governmental sector, and privacy and data protection. However, no such data are available for Palestine. Section 3 therefore describes the research methodology, including the experimental setup used to identify the main pillars of the Palestinian AI national strategy.

3 Methodology

This paper presents an AI readiness assessment of Palestine to help develop and identify the main pillars of its AI national strategy. This section describes the research questions, the experimental setup, and the participants.

3.1 Research questions

This experiment aims to answer the following main questions:

Do Palestinians have awareness of artificial intelligence?

What is the status of AI across the education, entrepreneurship, government, and research and development sectors in Palestine?

What are the main pillars of the Palestinian AI national strategy?

3.2 Experimental setup

To address the research questions above, the AI readiness assessment covered the educational sector (schools and universities), the entrepreneurship sector, the research and development sector, the governmental sector, and privacy and data protection in Palestine. Because no data on this topic were available for Palestine, the following data collection methods were applied:

One-to-one interviews with experts from the private, public, government, and educational sectors inside and outside Palestine were conducted between 1 September 2021 and 30 August 2022. The experts were asked interview questions focusing on the current status of AI in their domain and on the opportunities and challenges of applying AI in Palestine.

Exploratory research to analyze BSc and MSc programs in higher education and identify AI courses across universities in Palestine. The data were retrieved from the Ministry of Higher Education in Palestine.

Exploratory research to analyze tech-based educational courses at schools in Palestine. The material taught to school students between fifth grade and twelfth grade was analyzed to assess their coverage of AI-related topics.

Focus groups to assess school students’ and teachers’ awareness of artificial intelligence and identify the existing gaps.

Focus group with MSc students enrolled in AI-related programs.

Questionnaire to assess the Palestinian community’s awareness of AI and identify the existing gaps. The questionnaire consisted of 25 questions assessing participants’ knowledge of AI and its applications, and the gaps in applying AI in Palestine. It focused on awareness of Weak AI.

3.3 Participants

Three groups of participants were involved in the study, and informed consent was obtained. The first group included 45 key experts (more than 45 interview hours) from the private, public, government, and educational sectors inside and outside Palestine. Experts included ministers and chief executive officers of private companies, banks, non-governmental organizations (NGOs), incubators, and accelerators in Palestine.

The second group included the following three focus groups:

Ten MSc students enrolled in AI-related programs.

Eight school teachers teaching the technology course.

Forty school students (42.8% females and 57.2% males).

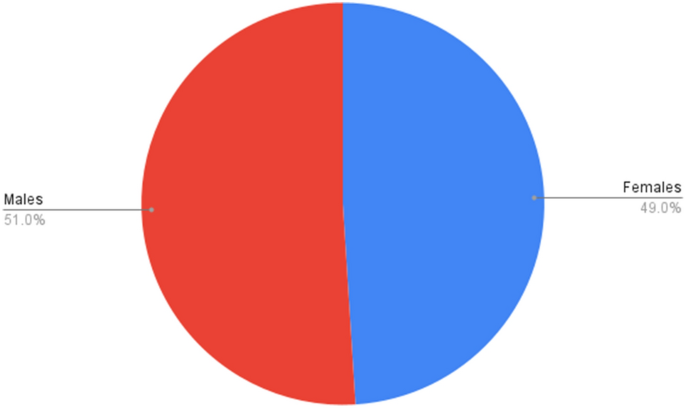

The third group consisted of a sample of 240 participants (44% male and 55.2% female) representing the Palestinian community, including representatives from the educational, governmental, and private sectors.

4 Results and discussion

This section addresses the research questions and presents the results.

4.1 Awareness of Palestinians about artificial intelligence

What is your assessment of the level of awareness of the following aspects of AI in Palestine?

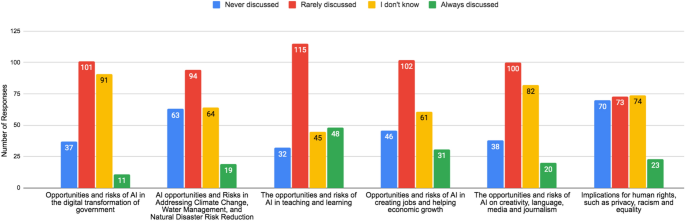

To assess the level of AI awareness among Palestinians, interviews were conducted with 45 experts, three focus groups were held, and a questionnaire was distributed to a sample of 240 people. The results revealed that Palestinians have low awareness of the concept and applications of AI in the public, private, educational, leadership, innovation, and research and development sectors. The questionnaire results also showed that the following AI-related topics have not been discussed in Palestine (Fig. 1):

Opportunities and risks of AI in government digital transformation.

Opportunities and risks of AI in addressing climate change, water management, and natural disaster risk reduction.

Opportunities and risks of AI in teaching and learning.

Opportunities and risks of AI in creating jobs and contributing to economic growth.

Opportunities and risks of AI for creativity, language, media, and journalism.

Implications for human rights, such as privacy, discrimination, and equality.

4.2 AI in education

This section aims to examine the integration of AI into the Palestinian educational curriculum at schools and universities.

4.2.1 AI in Palestinian schools

Palestinian schools have introduced a technology course taught to students from the fifth to the twelfth grade. The AI-related topics covered in each grade are summarized in Table 1.

To assess school students’ knowledge of AI and teachers’ views on the importance of adding AI-focused educational material to the Palestinian curriculum, the following two focus groups were carried out:

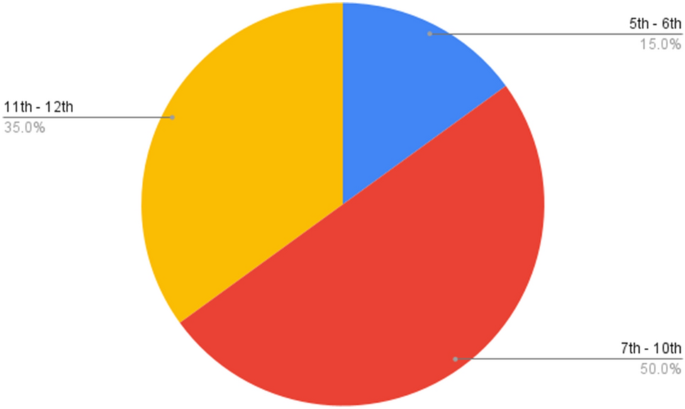

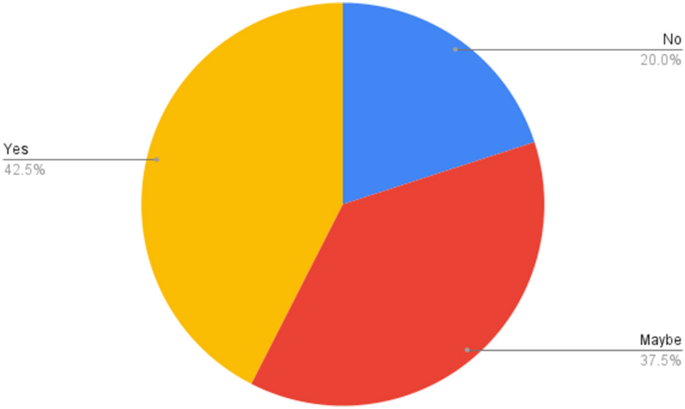

A focus group with 40 school students (57.2% male and 42.8% female) enrolled in grades 5 to 12 (Fig. 2).

A focus group with eight teachers who teach the technology course at schools.

Percentage of school students participating in focus groups

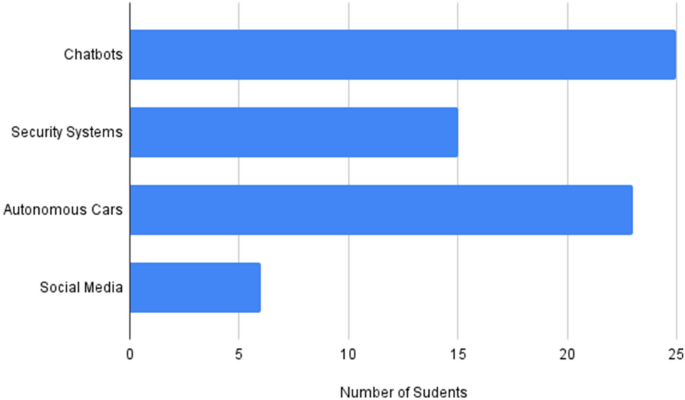

The results revealed that 42% of students stated that they know the definition of AI (Fig. 3). This is expected, since the definition is introduced in the curriculum. However, there is a gap in students’ understanding of the practical applications of AI: more than 50% of school students did not recognize them. Figure 4 shows that only 15% of school students knew that AI is used in social media applications such as TikTok (De Leyn et al. 2021). This indicates that students have low awareness of AI applications.

On the other hand, 90% of the participating students expressed interest in learning more about AI and its applications. Teachers held a similar view: they strongly agreed that adding AI-related topics to the Palestinian curriculum is necessary, since the current curriculum provides minimal information on AI.

School students’ knowledge of the definition of AI

School students’ knowledge of AI applications

4.2.2 AI in Palestinian universities

The AI Index 2021 annual report released by Stanford University reported a total of 1,032 AI programs across the 27 European Union countries (Zhang et al. 2021). The vast majority of AI-specialized academic programs in the European Union are taught at the master’s level and aim to provide students with strong competencies for the workforce. Germany offers the highest number of AI-specialized programs, followed by the Netherlands, France, and Sweden.

Palestine has 55 universities and colleges (Palestinian Ministry of Higher Education and Scientific Research 2022), of which only 9% offer academic programs specialized in AI. Palestine offers six programs specialized in AI, which is comparable to the number offered by some European Union countries. These programs constitute only 2.6% of the 224 technological academic programs offered at universities and colleges. The vast majority of them (83.3%) are master’s programs, and there is still no PhD program specialized in AI.
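The shares in the paragraph above can be cross-checked with simple arithmetic. The Python sketch below is an illustrative check, not part of the study’s method; it assumes the 9% and 83.3% figures are rounded shares of the 55 institutions and six programs, so the whole-number counts it prints are inferences rather than reported values. The helper names `implied_count` and `percent` are hypothetical.

```python
"""Cross-check of the AI program shares reported for Palestinian higher education.

Inputs are the figures stated in the text; the whole-number counts derived
from the percentages are inferences, not values reported by the study.
"""

TOTAL_INSTITUTIONS = 55      # universities and colleges in Palestine
AI_INSTITUTION_SHARE = 0.09  # reported share offering AI-specialized programs
AI_PROGRAMS = 6              # programs specialized in AI
TECH_PROGRAMS = 224          # technological academic programs in total
MASTERS_SHARE = 0.833        # reported share of AI programs at master's level


def implied_count(total: int, share: float) -> int:
    """Round total * share to the nearest whole count (an inference)."""
    return round(total * share)


def percent(part: int, whole: int, digits: int = 1) -> float:
    """Express part / whole as a percentage rounded to `digits` decimals."""
    return round(100 * part / whole, digits)


if __name__ == "__main__":
    print("institutions offering AI programs:",
          implied_count(TOTAL_INSTITUTIONS, AI_INSTITUTION_SHARE))
    print("AI share of technological programs (%):",
          percent(AI_PROGRAMS, TECH_PROGRAMS, digits=2))
    print("implied master's-level AI programs:",
          implied_count(AI_PROGRAMS, MASTERS_SHARE))
```

Six of 224 programs works out to roughly 2.68%, which the text reports as 2.6%; under the stated assumption, the shares correspond to about five institutions and five master’s-level programs.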

The results also revealed that very few students graduated from Palestinian colleges and universities with an AI specialization between 2016 and 2021. Table 2 shows that only 28 of 13,939 students specialized in AI. Of these, 60.7% are male and 39.3% female. This indicates low participation of women in AI, in contrast to their near-equal participation across technological disciplines (Fig. 5).
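A similar sketch recovers the head counts behind the gender split, assuming (as the paragraph implies but does not state outright) that the 60.7% and 39.3% shares are taken over the 28 AI graduates. `split_by_share` is a hypothetical helper introduced for illustration.

```python
"""Implied head counts behind the gender split of AI graduates (2016 to 2021).

Assumption: the 60.7% / 39.3% split is taken over the 28 AI graduates;
the counts are inferred by rounding, not reported directly.
"""

AI_GRADUATES = 28
ALL_GRADUATES = 13_939
MALE_SHARE = 0.607


def split_by_share(total: int, share: float) -> tuple[int, int]:
    """Return (count matching share, remainder) using nearest-integer rounding."""
    first = round(total * share)
    return first, total - first


male, female = split_by_share(AI_GRADUATES, MALE_SHARE)
female_pct = round(100 * female / AI_GRADUATES, 1)
ai_pct_of_all = round(100 * AI_GRADUATES / ALL_GRADUATES, 2)

print(f"male={male}, female={female} ({female_pct}% female)")
print(f"AI graduates as a share of all students in Table 2: {ai_pct_of_all}%")
```

Under that assumption the split corresponds to 17 male and 11 female graduates, and the 28 AI specialists make up about 0.2% of the 13,939 students.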

Percentage of male and female graduates in technological disciplines

In 2022, the number of graduates specializing in AI in Palestine increased nearly 2.7-fold (from 28 to 76). However, students specializing in AI still constitute only 0.1% of the 104,499 students enrolled in Palestinian universities and colleges from 2016 to 2021. This contributes to the mismatch between AI skills and industry needs, which is currently a pain point in many countries that have published AI national strategies (Vats et al. 2022).
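The growth and enrollment figures can be checked the same way. This sketch assumes the 0.1% share uses the 76 AI students as its numerator and the 104,499 enrolled students as its denominator; the paragraph does not say which numerator was used, so treat this as an illustrative reading rather than the study’s calculation.

```python
"""Growth factor and enrollment share for AI students, from figures in the text.

Assumption: the 0.1% share is computed from the 76 AI students in 2022 against
the 104,499 students enrolled from 2016 to 2021; the text does not say which
numerator it used.
"""

AI_BEFORE = 28            # AI graduates, 2016 to 2021
AI_2022 = 76              # AI graduates reported for 2022
TOTAL_ENROLLED = 104_499  # students enrolled, 2016 to 2021


def growth_factor(before: int, after: int) -> float:
    """Ratio after / before; 2.0 would mean the count exactly doubled."""
    return after / before


def share_percent(part: int, whole: int, digits: int = 2) -> float:
    """Express part / whole as a percentage rounded to `digits` decimals."""
    return round(100 * part / whole, digits)


factor = round(growth_factor(AI_BEFORE, AI_2022), 2)
share = share_percent(AI_2022, TOTAL_ENROLLED)

print(f"growth factor: {factor}")
print(f"share of enrolled students: {share}%")
```

The ratio 76/28 is about 2.71, so the count grew nearly 2.7-fold rather than simply doubling, and 76 of 104,499 is about 0.07%, which rounds to the reported 0.1%.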

The results revealed that Palestine is at a very early stage in terms of the availability of AI educational resources and trainers. This was confirmed by the interviews with 45 experts and by a survey of 240 participants designed to assess the Palestinian community’s awareness of AI and identify gaps: 53.3% of respondents stated that AI educational resources are not available, and 46% cited a lack of expertise in the field of AI (Table 3).
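To show what the survey percentages mean in respondent terms, the sketch below converts them back into approximate head counts. It assumes both shares are computed over all 240 respondents, which the text does not state explicitly; `implied_respondents` is a hypothetical helper.

```python
"""Implied respondent counts for the survey shares reported in Table 3.

Assumption: both shares are taken over all 240 respondents; the counts are
rounded inferences, not figures reported in the study.
"""

RESPONDENTS = 240
SHARES = {
    "AI educational resources not available": 0.533,
    "lack of AI expertise": 0.46,
}


def implied_respondents(share: float, n: int = RESPONDENTS) -> int:
    """Round share * n to the nearest whole respondent."""
    return round(share * n)


for statement, share in SHARES.items():
    count = implied_respondents(share)
    back = round(100 * count / RESPONDENTS, 1)  # percentage implied by the count
    print(f"{statement}: about {count} of {RESPONDENTS} ({back}%)")
```

Under that assumption, 53.3% corresponds to about 128 respondents and 46% to about 110 (45.8% exactly).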

Further analysis was carried out with a focus group of ten master’s students enrolled in AI programs at Palestinian universities. The group confirmed the low awareness of the importance of AI in the educational and technological sectors in Palestine. This is due to the lack of applied AI courses at Palestinian universities. The group also agreed that the Palestinian labor market has become more interested in AI.

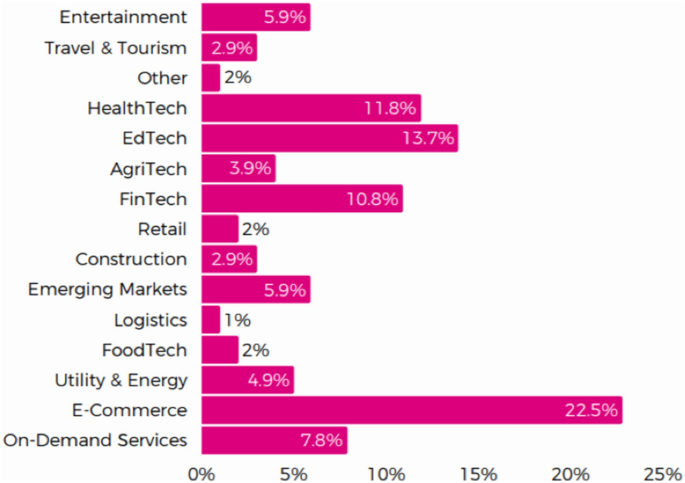

4.3 AI in entrepreneurial ecosystem

Palestine has 102 technology-based startups and 94 registered organizations that were active in 2021 and ran at least one program or project focused on empowering startups (Polaris 2021). The vast majority of startups are e-commerce companies, followed by startups in the education and health sectors (see Fig. 6).

Startups per sector in Palestine (Polaris 2021)

Further analyses examined the use of AI technology in existing startups. The results revealed that only a small percentage of startups use AI (0.09%). This was confirmed in interviews with experts leading technology incubators and accelerators, who emphasized that the number of AI startups is small and that Palestine lacks the expertise to evaluate or supervise startups during incubation and acceleration.

4.4 AI in research and development

According to EduRank, 1.51 million academic publications in the field of AI have been produced by 2,797 universities worldwide (EduRank 2022). Table 4 shows the top universities in the world ranked by research performance in AI. In the MENA region, the number of publications per university is much lower, and the region accounts for only 5.5% of peer-reviewed AI publications (Economist Impact 2022). Table 5 shows the universities in the MENA region with the highest number of publications.

In Palestine, the total number of AI publications across all universities is lower than that of the American University of Beirut alone (see Table 6).

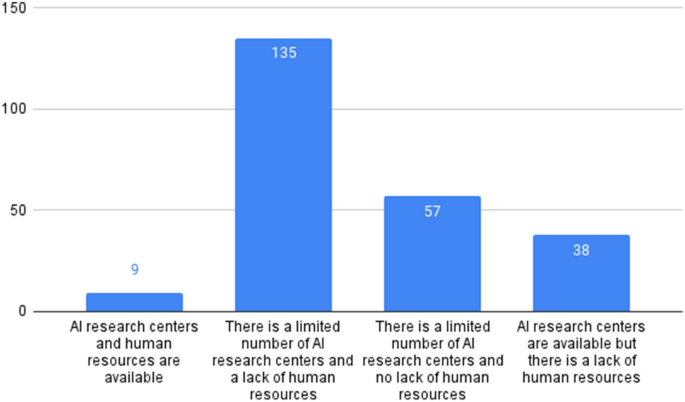

The results revealed that Palestine has minimal knowledge in the field of AI. This was also confirmed by the interviews with experts and by the results of the questionnaire distributed to 240 people. Figure 7 shows that 56.5% of the sample reported a limited number of research centers and a lack of human resources and expertise in the field of AI. The interviewed experts also emphasized that there are no links connecting national AI researchers with global AI expertise and researchers.

Status of research and development in Palestine

4.5 AI in governmental sector

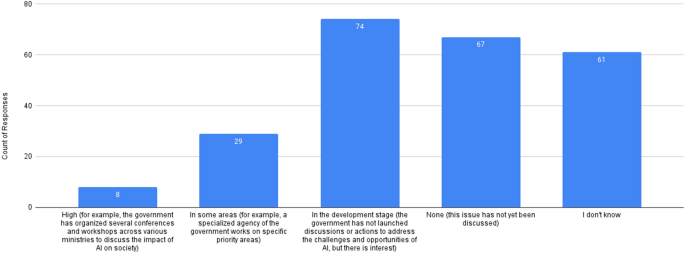

The results of the interviews and of the questionnaire distributed to 240 participants showed that 31% of the sample believed that governmental participation in AI-related topics is at an early stage of development (see Fig. 8).

Level of government participation in topics related to AI

4.6 AI, privacy, and data protection

According to the United Nations Conference on Trade and Development (UNCTAD), 128 of 194 countries had put in place legislation to secure the protection of data and privacy (UNCTAD 2021). Table 7 shows the status of data protection and privacy laws in the MENA region. In Palestine, privacy and data protection legislation is also at a very early stage. An exploratory study by 7amleh examined the status of privacy and digital data protection in Palestine (7amleh 2021). It found that Palestine has no laws or legislation that keep pace with emerging technologies, which leads to privacy and data protection violations.

The questionnaire, focus groups, and expert interviews conducted in this study likewise confirmed a gap in developing a legal framework that keeps pace with AI: 83.3% of the 240 participants stated that legal frameworks keeping pace with AI have not yet been developed in Palestine.

4.7 AI national strategy overview

This section translates the findings above into a strategic framework that aims to address weaknesses and minimize threats while building on strengths and opportunities. The government sector in Palestine is currently undergoing a significant digital transformation, which will inevitably need to proceed concurrently with the implementation of the AI strategy. Additionally, because the country has relatively few resources, it is critical to focus on areas where the greatest gains can be made in the short term in order to demonstrate the value of AI across various domains. The following sections therefore present the vision and mission statements of the AI national strategy, which spell out precisely what Palestine hopes to accomplish by implementing AI and where tradeoffs will be made. They also set out the objectives and the main pillars required to achieve them.

4.7.1 Vision

The AI national strategy vision is “A globally distinguished position in Artificial Intelligence, with sustainable productivity, economic gains, and creation of new areas of growth.”

4.7.2 Mission

The AI national strategy mission is to “Establish an Artificial Intelligence industry in Palestine that includes the development of skills, technology, and infrastructure to ensure its sustainability and competitiveness.”

4.7.3 Goals

To achieve the aforementioned vision and mission, Palestine will work on the following goals:

Support lifelong learning and reskilling programs to contribute to workforce development and sustainability.

Facilitate multi-stakeholder dialogue on the deployment of responsible AI for the benefit of society and encourage relevant policy discussions.

Encourage investment in AI research and entrepreneurship through partnerships between the public and private sectors, initiatives, universities, and research centers.

Make Palestine a regional center and a talent pool in the field of AI by meeting the needs of local and regional markets and attracting international experts and researchers specialized in AI.

Integrate AI technologies into government services to make them more efficient and transparent.

Use AI in the main development sectors to achieve an economic impact and find solutions to local problems in line with the goals of sustainable development.

Create a thriving environment for AI by encouraging and supporting companies, startups, and scientific research.

Promote a human-centered approach in which people’s well-being is a priority and facilitate multi-stakeholder dialogue on the deployment of responsible AI for the benefit of society.

Use AI as an opportunity to include marginalized people in initiatives that promote human advancement and self-development.

Facilitate cooperation at the local, regional, and international levels in the field of AI.

Contribute to global efforts and international forums on AI ethics, the future of work, responsible AI, and the social and economic impact of AI.

Support research bridges between Palestinian and international universities in the field of AI.

In addition to the goals above, the AI national strategy will help achieve the following numeric targets over the next five years: 300 graduates specialized in AI, 100 AI specialists in Palestine, systematic integration of AI into four educational sectors, AI technology used by 30% of technology startups in Palestine, AI-based solutions adopted by 10% of private companies in Palestine, 200 research papers on AI published in Palestine, 20 people specializing in privacy and digital data protection, and 50 datasets uploaded to Palestine's open data website.

4.7.4 AI national strategy pillars

To achieve the goals above, the strategy has been divided into the following five main pillars:

AI for Government: the rapid adoption of AI technology via the automation of governmental procedures and the integration of AI into the decision-making process to improve productivity and transparency.

AI for Development: apply AI to several industries using a staged strategy to realize efficiencies, achieve more economic growth, and improve competitiveness. This could be achieved through domestic and international partnerships.

AI for Capacity Building: spread awareness and provide personalized training to the private, public, and governmental sectors.

AI and Legal Framework: develop a legal framework that enables the use of AI across several sectors.

International Activities: play a key role in fostering cooperation on the regional and international levels by championing relevant initiatives, and actively participating in AI-related discussions and international projects.

These five pillars form a comprehensive approach to the AI national strategy, covering the government's role, industry-specific implementation, workforce development, legal considerations, and international collaboration. By addressing these dimensions, Palestine can establish a solid foundation for responsible, inclusive, and sustainable AI deployment (Chatterjee 2020; Nankervis et al. 2021; Barton et al. 2017).

4.8 AI national strategy governance

Governance of the AI national strategy is essential to ensure its implementation, as it guides the responsible and effective adoption, development, and use of AI in Palestine. Accordingly, on 6/9/2021, the Council of Ministers in Palestine approved the formation of an AI national team, headed by the Ministry of Telecommunications and Information Technology and comprising 16 representatives from several ministries, the private sector, and the educational sector (Telecommunication and Technology 2023). The national team is responsible for implementing and managing the AI national strategy in coordination with relevant experts and agencies. Its responsibilities can be summarized as follows:

Establishing a follow-up mechanism for implementing the AI national strategy that is consistent with international best practices in this field.

Setting national priorities in the field of AI applications.

Reviewing any form of cooperation at the regional and international levels, including the exchange of best practices and experiences.

Providing recommendations for national policies and plans related to technical, legal, and economic frameworks for AI applications.

Recommending programs to build capacity and support the AI industry in Palestine.

Reviewing international protocols and agreements in the field of AI.

In addition to the AI national team, an advisory committee drawn from the private and educational sectors in Palestine has been formed to support the AI national team and help it fulfill its responsibilities.

5 Conclusion

Sixty countries worldwide have published AI national strategies (Zhang et al. 2021). Approaches to AI national strategies differ between developed and developing countries, since developing countries are consumers of technologies produced by developed countries (Monasterio Astobiza et al. 2022). Moreover, developing countries have low awareness of applications of AI across several fields (Kahn et al. 2018). This widens the gap in AI technology development between developed and developing countries (Kahn et al. 2018).

This paper aims to identify the pillars of an AI national strategy for a developing country. To this end, it assessed the status of AI across the education, entrepreneurship, government, and research and development sectors in Palestine, taken as the case of a developing country. It also examined whether the legal framework is keeping pace with emerging technologies.

Three groups of participants were involved in the study. The first comprised 45 experts (45+ interview hours) from the private, public, government, and educational sectors inside and outside Palestine. The second comprised three focus groups of MSc students enrolled in AI-related programs, school teachers, and school students. The third was a sample of 240 participants representing the Palestinian community, including representatives of the educational, governmental, and private sectors.

The results revealed that Palestinians have low awareness of AI, that AI is barely used across several sectors, and that the legal framework is not keeping pace with emerging technologies. Based on these results, five main pillars were identified for Palestine's AI national strategy: AI for Government, AI for Development, AI for Capacity Building in the private, public, technical, and governmental sectors, AI and Legal Framework, and International Activities. Over the next five years, the pillars will help achieve the following: 300 graduates specialized in AI, 100 AI specialists in Palestine, systematic integration of AI into four educational sectors, AI techniques used by 30% of technology startups in Palestine, AI-based solutions adopted by 10% of private companies in Palestine, 200 research papers on AI published in Palestine, 20 people specializing in privacy and digital data protection, and 50 datasets uploaded to Palestine's open data website. The AI national strategy was approved by the Palestinian cabinet in June 2023.

Future work will assess Palestinians' awareness of weak and strong AI, as well as the progress and outcomes of the AI national strategy across the education, entrepreneurship, government, and research and development sectors.

Data availability

The data analyzed during the current study are available from the corresponding author on reasonable request.

7amleh (2021) The reality of privacy & digital data protection in Palestine–background and summary. https://privacy.7amleh.org/ . Accessed 29 Nov 2022

Abduljabbar R, Dia H, Liyanage S, Bagloee SA (2019) Applications of artificial intelligence in transport: an overview. Sustainability 11(1):189


Ahmed Z, Bhinder KK, Tariq A, Tahir MJ, Mehmood Q, Tabassum MS, Malik M, Aslam S, Asghar MS, Yousaf Z (2022) Knowledge, attitude, and practice of artificial intelligence among doctors and medical students in Pakistan: a cross-sectional online survey. Ann Med Surg 76:103493

Alelyani M, Alamri S, Alqahtani MS, Musa A, Almater H, Alqahtani N, Alshahrani F, Alelyani S (2021) Radiology community attitude in Saudi Arabia about the applications of artificial intelligence in radiology. In: Healthcare, vol 9. MDPI, p 834

Arakpogun EO, Elsahn Z, Olan F, Elsahn F (2021) Artificial intelligence in Africa: challenges and opportunities. The fourth industrial revolution: implementation of artificial intelligence for growing business success, pp 375–388

Barton D, Woetzel J, Seong J, Tian Q (2017) Artificial intelligence: implications for China. McKinsey Global Institute, San Francisco

Bhattacharya P, Nakhare S (2019) Exploring AI-enabled intelligent tutoring system in the vocational studies sector in UAE. In: 2019 Sixth HCT information technology trends (ITT). IEEE, pp 230–233

Bourne C (2019) AI cheerleaders: public relations, neoliberalism and artificial intelligence. Public Relat Inquiry 8(2):109–125

Bugami MA (2022) Saudi Arabia’s march towards sustainable development through innovation and technology. In: 2022 9th International conference on computing for sustainable global development (INDIACom). IEEE, pp 1–6

Chatterjee S (2020) AI strategy of India: policy framework, adoption challenges and actions for government. Transform Gov People Process Policy 14(5):757–775


Chatterjee S, Chaudhuri R, Kamble S, Gupta S, Sivarajah U (2022) Adoption of artificial intelligence and cutting-edge technologies for production system sustainability: a moderator-mediation analysis. Inf Syst Front. https://doi.org/10.1007/s10796-022-10317-x

Chung C-S (2020) Developing digital governance: South Korea as a global digital government leader. Routledge, Milton Park


Cohen IG, Evgeniou T, Gerke S, Minssen T (2020) The European artificial intelligence strategy: implications and challenges for digital health. Lancet Digit Health 2(7):376–379

Criado JI, Sandoval-Almazan R, Valle-Cruz D, Ruvalcaba-Gómez EA (2021) Chief information officers’ perceptions about artificial intelligence: a comparative study of implications and challenges for the public sector. First Monday

De Leyn T, De Wolf R, Vanden Abeele M, De Marez L (2021) In-between child’s play and teenage pop culture: Tweens, Tiktok & privacy. J Youth Stud 25:1108–1125

De-Arteaga M, Herlands W, Neill DB, Dubrawski A (2018) Machine learning for the developing world. ACM Trans Manag Inf Syst (TMIS) 9(2):1–14

Dumas S, Pedersen C, Smith S (2022) Hybrid healthcare will lead the way in empowering women across emerging markets: reimagine and revolutionise the future of female healthcare. Impact of women’s empowerment on SDGs in the digital era. IGI Global, Hershey, pp 251–275


Economist Impact (2022) Pushing forward: the future of AI in the Middle East and North Africa. https://impact.economist.com . Accessed 12 July 2022

EduRank (2022) World’s best artificial intelligence (AI) universities [Rankings]. https://edurank.org/cs/ai/ . Accessed 29 Oct 2022

Escobar S, Sciortino D (2022) Artificial Intelligence in Canada. In: Munoz J, Maurya A (eds) International Perspectives on Artificial Intelligence. Anthem Press, pp 13–22

European Commission (2020) On artificial intelligence—a European approach to excellence and trust. https://ec.europa.eu . Accessed 12 July 2022

Fatima S, Desouza KC, Dawson GS (2020) National strategic artificial intelligence plans: a multi-dimensional analysis. Econ Anal Policy 67:178–194

Fukuyama M (2018) Society 5.0: aiming for a new human-centered society. Jpn Spotlight 27(5):47–50

Ghazwani S, Esch P, Cui YG, Gala P (2022) Artificial intelligence, financial anxiety and cashier-less checkouts: a Saudi Arabian perspective. Int J Bank Market 40:1200–1216

Goralski MA, Tan TK (2020) Artificial intelligence and sustainable development. Int J Manag Educ 18(1):100330

Guo J, Li B (2018) The application of medical artificial intelligence technology in rural areas of developing countries. Health Equity 2(1):174–181


Holon IQ (2020) 50 National AI strategies—the 2020 AI strategy landscape. Holon IQ. Accessed 12 July 2022

Ilhan B, Guneri P, Wilder-Smith P (2021) The contribution of artificial intelligence to reducing the diagnostic delay in oral cancer. Oral Oncol 116:105254

Johnson J (2019) Artificial intelligence & future warfare: implications for international security. Defense Secur Anal 35(2):147–169