How to Write Excellent Expository Essays

WHAT IS AN EXPOSITORY ESSAY?

An expository essay ‘exposes’ information to the reader, describing or explaining a particular topic logically and concisely.

The purpose of expository writing is to educate or inform the reader first and foremost.

Though the term is sometimes used to include persuasive writing, which exposes us to new ways of thinking, a true expository text does not allow the writer’s personal opinion to intrude, and the two should not be confused.

Expository writing follows a structured format: an introduction, body paragraphs presenting information and examples, and a conclusion summarising key points and reinforcing the thesis. Common expository essays include process, comparison/contrast, cause and effect, and informative essays.

EXPOSITORY ESSAY STRUCTURE

TEXT ORGANIZATION: Organize your thoughts before writing.

CLARITY: Use clear and concise wording. There is no room for banter.

THESIS STATEMENT: State your position in direct terms.

TOPIC SENTENCE: Open each paragraph with a topic sentence.

SUPPORTING DETAIL: Support the topic sentence with further explanation and evidence.

LINK: End each body paragraph by linking to the next.

EXPOSITORY ESSAY TYPES

PROCESS: Tell your audience how to achieve something, such as how to bake a cake.

CAUSE & EFFECT: Explore the relationships between subjects, such as climate change and its impact.

PROBLEM & SOLUTION: Explain how to solve a problem, such as improving physical fitness.

COMPARE & CONTRAST: Compare and contrast two or more items, such as life in China versus life in the United States or Australia.

DEFINITION: Provide a detailed definition of a word or phrase, such as self-confidence.

CLASSIFICATION: Organize things into categories or groups, such as types of music.

STRUCTURE & FEATURES OF EXPOSITORY WRITING

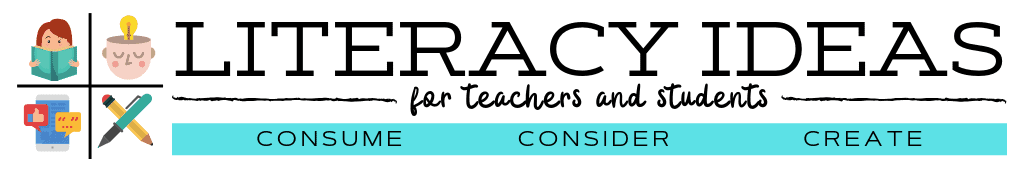

While there are many types of expository essays, the basic underlying structure is the same. The Hamburger or 5-Paragraph Essay structure is an excellent scaffold on which students can build their essays. Let’s explore the expository essay outline.

INTRODUCTION:

This is the top bun of the burger, and here the student introduces the topic of the exposition. The introduction usually consists of a general statement on the subject that provides an overview of the essay. It may also preview each significant section, indicating what aspects of the subject the text will cover. These sections will likely relate to the headings and subheadings identified at the planning stage.

If the introduction is the top bun of the burger, then each body paragraph is a beef patty. Self-contained in some regards, each patty forms an integral part of the whole.

EXPOSITORY PARAGRAPHS

Each body paragraph deals with one idea or piece of information. On more complex topics, related paragraphs may be grouped under a common heading, and the number of paragraphs will depend on the complexity of the topic. For example, an expository text on wolves may include a series of paragraphs under headings such as habitat, breeding habits, and diet.

Each paragraph should open with a topic sentence indicating to the reader what the paragraph is about. The following sentences should further illuminate this main idea through discussion and/or explanation. Encourage students to use evidence and examples here, whether statistical or anecdotal. Remind students to keep things factual – this is not an editorial piece for a newspaper!

Expository writing is usually not the place for flowery flourishes of figurative imagery! Students should be encouraged to choose straightforward language that is easy for the reader to understand. After all, the aim here is to inform and explain, and this is best achieved with plain, explicit language.

As we’ve seen, several variations of the expository essay exist, but the following are the most common features students must include.

The title should be functional. It should tell the reader at once what they will learn from the text. This is not the place for opaque poetry!

In longer essays, a table of contents helps the reader locate information quickly. The page numbers listed there usually correspond to the headings and subheadings in the text.

HEADINGS / SUBHEADINGS:

These help the reader find information by summarizing, in a few words, the content of each section.

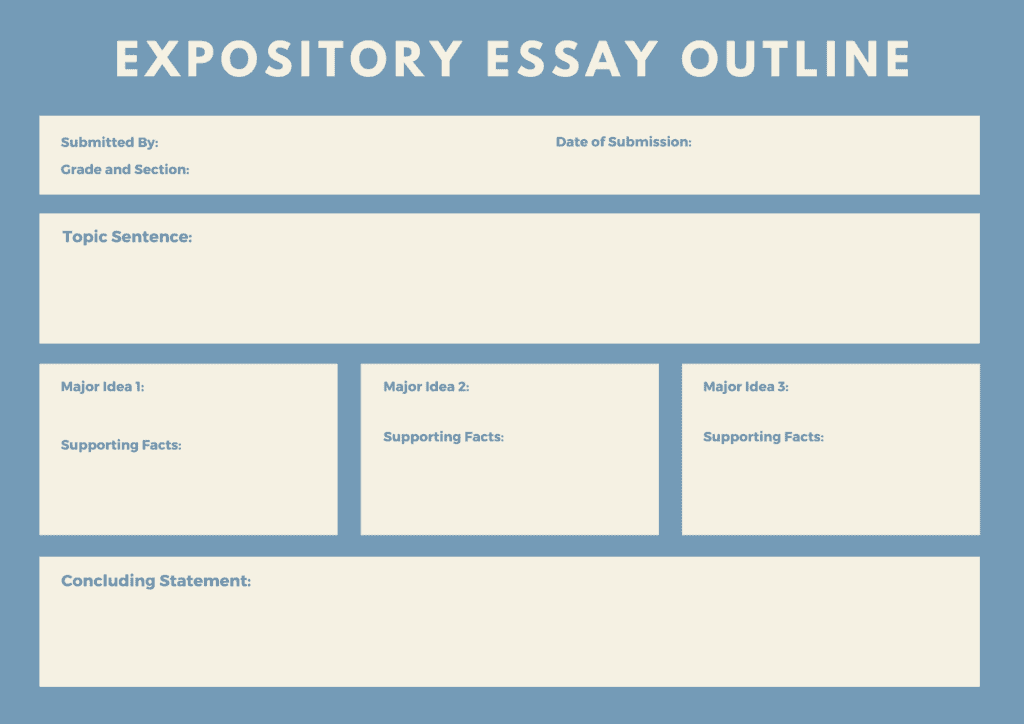

The glossary, usually arranged alphabetically, defines unusual or topic-specific vocabulary and is sometimes accompanied by pictures or illustrations.

The index lets the reader identify where to find specific information in longer texts. An index is much more detailed than a table of contents.

VISUAL FORMS OF INFORMATION

Expository essays sometimes support the text with visuals, such as:

- Pictures / Illustrations / Photographs:

These can be used to present a central idea or concept within the text and are often accompanied by a caption explaining what the image shows. Photographs can offer a broad overview or a close-up of essential details.

Diagrams are a great way to convey complex information quickly. They should be labelled clearly to ensure the reader knows what they are looking at.

- Charts and Graphs:

These are extremely useful for showing data and statistics in an easy-to-read manner. They should be labelled clearly and correspond to the information in the nearby text.

Maps may be used to explain where something is or was located.


Types of expository essay

There are many different types of expository text (e.g. encyclopaedias, travel guides, information reports), and there are also various types of expository essay. The most common are:

- Process Essays

- Cause and Effect Essays

- Problem and Solution Essays

- Compare and Contrast Essays

- Definition Essays

- Classification Essays

We will examine each of these in greater detail in the remainder of this article, as each has nuances that set it apart from the others. The graphic below explains the general structure for all text types from the expository writing family.

THE PROCESS ESSAY

This how-to essay often takes the form of a set of instructions. Also known as a procedural text, the process essay has very specific features that guide the reader on how to do or make something.


Features of a process essay

Some of the main features of the process essay include:

- ‘How to’ title

- Numbered or bullet points

- Time connectives

- Imperatives (bossy words)

- List of resources

Example Expository Process Essay:

THE CAUSE AND EFFECT ESSAY

The purpose of a cause-and-effect essay is to explore the causal relationships between things. Essays like this often trace a series of effects back to a single cause, and they frequently have a historical focus.

As this is an expository essay, the text should focus on facts rather than assumptions. However, cause-and-effect essays sometimes explore hypothetical situations too.

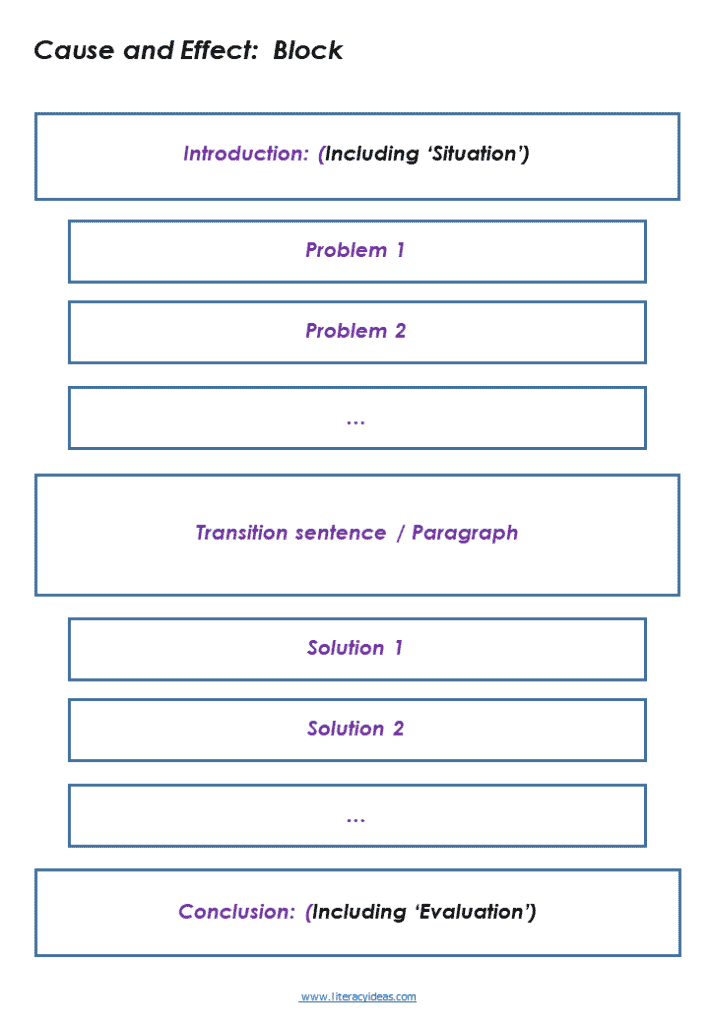

There are two main ways to structure a cause-and-effect essay.

The Block Structure presents all the causes first. The writer then focuses on the effects of these causes in the second half of the essay.

The Chain Structure presents each cause and then immediately follows with the effects it created.

Example Expository Cause and Effect Essay:

THE PROBLEM AND SOLUTION ESSAY

In this type of essay, the writer first identifies a problem and then explores the topic from various angles to ultimately propose a solution. It is similar to the cause-and-effect essay.

While the problem and solution essay can use the block and chain structures outlined above (replacing “cause” with “problem” and “effect” with “solution”), it will also usually work through the following elements:

- Identifies a problem

- Contains a clear thesis statement

- Each paragraph has a topic sentence

- Supports each point with facts, examples, and evidence

- The conclusion summarizes the main points

Suggested Title: What Can Be Done to Prevent Bullying in Schools?

Example Expository Problem and Solution Essay:

THE COMPARE AND CONTRAST ESSAY

In this type of essay, students evaluate the similarities and differences between two or more things, ideas, people, etc. Usually, the subjects will belong to the same category.

The compare-and-contrast expository essay can be organized in several different ways. Three of these are outlined below.

In the three structures outlined, it is assumed that two subjects are being compared and contrasted. Of course, the precise number of paragraphs required in the text will depend on the number of points the student wishes to make and the number of subjects being compared and contrasted.

Suggested Title: In-Class or Remote Learning: Which Is Best?

DEFINITION ESSAYS

This type of essay provides a detailed description and definition of a word or phrase. It can be a concrete term, such as car or glass, or a more abstract concept, such as love or fear.

A definition essay comprehensively explains a term’s purpose and meaning. It will frequently contain some or all of the following elements:

- A definition of the term

- An analysis of its meaning

- The etymology of the term

- A comparison to related terms

- Examples to illustrate the meaning

- A summary of the main points

Example Expository Definition Essay:

CLASSIFICATION ESSAYS

Like a definition essay, a classification essay explains its subject in detail, but it does so by sorting or organizing things into various groups or categories and explaining each one.

Classification essays focus on:

- Sorting things into functional categories

- Ensuring each category follows a common organizing principle

- Providing examples that illustrate each category

Example Expository Classification Essay:

One of the best ways to understand the different features of expository essays is to see them in action. The sample essay below is a definition essay but shares many features with other expository essays.

EXPOSITORY WRITING PROMPTS

Examples of Expository Essay Titles

Expository essay prompts are usually pretty easy to spot.

They typically contain keywords that ask the student to explain something, such as “define,” “outline,” “describe,” or, most directly of all, “explain.”

This article examines the purpose and structure of an expository essay, as well as the primary language and stylistic features of this vital text type.

After this, we’ll explore five practical tips to help your students get the most out of writing their expository essays.

Expository Essays vs Argumentative Essays

Expository essays are often confused with their close cousin, the argumentative essay. Still, it’s easy to help students distinguish between the two by quickly examining their similarities and differences.

In an expository essay, students attempt to write about a thing or a concept neutrally and objectively. In an argumentative essay, by contrast, the writer’s opinions permeate the text throughout. Simple as it sounds, writing objectively may take some doing for some students, as it requires the writer to refine their personal voice almost out of existence!

Luckily, choosing the correct viewpoint from which to write the essay can go a long way toward helping students achieve the desired objectivity. Generally, students should write their expository essays from the third-person perspective.

By contrast, argumentative essays are subjective in nature and, as a result, are usually written from the first-person perspective.

In an expository essay, the text’s prime focus is the topic rather than the writer’s feelings on that topic. Writers find it much easier to set aside their personal feelings when the third-person point of view keeps them a step removed from the narration than when they write in the first person.

Expository Essay Tips

Follow these top tips from the experts to craft an amazing expository essay.

Tip #1: Choose the Right Tool for the Job

Surprising as it may seem, not all expository essays are created equal.

In fact, there are several different types of expository essays, and our students must learn to recognize each and choose the correct one for their specific needs when producing their own expository essays.

To do this, students will need to know five common types of expository essay:

- The Cause and Effect Essay: This type of essay requires the writer to explain why something happened and what occurred as a result of that event and subsequent events. It explores the relationships between people, ideas, events, or things.

- The Compare and Contrast Essay: In a compare and contrast essay, the writer examines the similarities and differences between two subjects or ideas throughout the body of the piece and usually brings things together in an analysis at the end.

- The Descriptive Essay: This is a very straightforward expository essay that offers a detailed description or explanation of a topic. The topic may be an event, place, person, object, or experience. This essay’s direct style is balanced by the writer’s freedom to inject some of their creativity into the description.

- The Problem and Solution Essay: In this expository essay, the student works to find valid solutions to a specific problem or set of problems.

- The Process Essay: Also called a how-to essay, this essay type is similar to instruction writing, except in essay form. It provides a step-by-step breakdown of a procedure to teach the reader how to do something.

When choosing a specific topic to write about, students should consider several factors:

● Do they know the topic well enough to explain the ins and outs of the subject to an unfamiliar audience?

● Do they have enough interest in this topic to sustain thorough research and writing about it?

● Are enough relevant information and credible sources available to fuel the student’s writing on this topic?

Tip #2: Research the Topic Thoroughly

Regardless of which type of expository essay your students are working on, they must approach the research stage of the writing process with diligence and focus. The more thorough they are at the research stage, the smoother the remainder of the writing process will be.

A common problem for students is that they sometimes start researching without a clear understanding of its objective, so their efforts lack focus.

Research is not mindlessly scanning documents and scrawling occasional notes. As with any part of the writing process, it begins with determining clear objectives.

Often, students will start the research process with a broad focus, and as they continue researching, they will naturally narrow their focus as they learn more about the topic.

Take the time to help students understand that writing isn’t only about expressing what we think; it’s also about discovering what we think.

When researching, students should direct their efforts to the following:

- Gather Supporting Evidence: The research process is not only for uncovering the points the essay will make but also for finding the evidence to support those points. The aim here is to provide an objective description or analysis of the topic; therefore, the student will need to gather relevant supporting evidence, such as facts and statistics, to bolster their writing. Usually, each paragraph will open with a topic sentence, and subsequent sentences in the paragraph will provide a factual, statistical, and logical analysis of the paragraph’s main point.

- Cite Sources: Citing sources accurately is an essential academic skill. There are several accepted methods of doing this, and you must choose a citation style appropriate to your students’ age, abilities, and context. Whatever style you choose, however, students should get used to citing any sources they use in their essays, whether through embedded quotations, endnotes, a bibliography, or all three!

- Use Credible Sources: The Internet has transformed knowledge sharing as profoundly as the Gutenberg printing press did almost 600 years ago. It has provided unparalleled access to the sum total of human knowledge, with a dizzying number of sources at each student’s fingertips. However, we must ensure our students understand that not all sources are created equal. Encourage students to seek out credible sources in their research and filter out the more dubious ones. Some questions students can ask themselves to help determine a source’s credibility include:

● Have I searched thoroughly enough to find the most relevant sources for my topic?

● Has this source been published recently? Is it still relevant?

● Has the source been peer-reviewed? Have other sources confirmed this source?

● What is the publication’s reputation?

● Is the author an expert in their field?

● Is the source fact-based or opinion-based?

Tip #3: Sketch an Outline

Every kid knows you can’t find the pirate treasure without a map, and the same is true of essay writing. Using their knowledge of the essay’s structure, students start whipping their research notes into shape by creating an outline for their essay.

The 5-paragraph essay or ‘Hamburger’ essay provides a perfect template for this.

Students start by mapping out an appealing introduction built around the main idea of their essay. Then, from their mound of research, they’ll extract their most vital ideas to assign to the various body paragraphs of their text.

Finally, they’ll sketch out a conclusion that summarizes their essay’s main points and, where appropriate, makes a final statement on the topic.

Tip #4: Write a Draft

Title chosen? Check! Topic researched? Check! Outline sketched? Check!

Well, then, it’s time for the student to begin writing in earnest by completing the first draft of their essay.

They’ll already have a clear idea of the shape their essay will take from their research and outlining processes, but ensure your students allow themselves some leeway to adapt as the writing process throws up new ideas and problems.

That said, students will find it helpful to refer back to their thesis statement and outline to help ensure they stay on track as they work their way through the writing process towards their conclusions.

As students work through their drafts, encourage them to use transition words and phrases to help them move smoothly through the different sections of their essays.

Sometimes, students work through an outline as if ticking off a checklist. The result can be a finished essay that resembles Frankenstein’s monster: an incongruous series of disparate body parts crudely stitched together.

Learning to use transitions effectively will help students create an essay that is all of a piece, with the joins and seams sanded and smoothed from view.

Tip #5: Edit with a Fresh Pair of Eyes

Once the draft is complete, students enter the final crucial editing stage.

But not so fast! Students must pencil in some time to let their drafts ‘rest’. If they begin editing immediately after finishing their draft, they are likely to overlook a great deal.

Editing is best done when students have time to gain a fresh perspective on their work. Ideally, this means leaving the essay overnight or over a few nights. However, practically, this isn’t always possible. Usually, though, it will be possible for students to put aside their writing for a few hours.

With the perspective that only time gives, when returning to their work, students can identify areas for improvement that they may have missed. Some important areas for students to look at in the editing process include:

- Bias: Students need to remember that the purpose of this essay is to present a balanced and objective description of the topic. They need to ensure they haven’t let their own personal bias slip through during the writing process, which is an all too easy thing to do!

- Clarity: Clarity is as much a function of structure as of language. Students must ensure their paragraphs are well organized and express their ideas clearly. Where necessary, some restructuring and rewriting may be required.

- Proofread: With stylistic and structural matters taken care of, it’s now time for the student to shift their focus onto spelling, vocabulary choice, grammar, and punctuation. This final proofread is the last run-through of the editing process and the student’s final chance to catch mistakes that may bias the assessor (aka you!) against the piece of writing. Where the text has been word-processed, the student can enlist built-in spelling and grammar checkers to help. Still, they should also take the time to go through each line word by word. Automatic checkers are a helpful tool, but they are a long way from infallible, and the final call on a text should rest on the writer’s own judgement.

Expository essays are relatively straightforward pieces of writing. By following the guidelines mentioned above and practising them regularly, students can learn to produce well-written expository essays quickly and competently.

Explaining and describing events and processes objectively and clearly is a useful skill that students can add to their repertoire. Although it may seem challenging at first, with practice, it will become natural.

To write a good expository essay, students need a solid understanding of its basic features and a firm grasp of the hamburger essay structure. As with any writing genre, prewriting is essential, and especially so for expository writing.

Since expository writing is designed primarily to inform the reader, sound research and note-taking are essential for students to produce a well-written text. Expository writing gives students an excellent opportunity to develop these critical skills, which will be helpful to them as they continue their education.

Redrafting and editing are also crucial for producing a well-written expository essay. Students should double-check facts and statistics, and the language should be edited tightly for concision.

And, while grading their efforts, we might even learn a thing or two ourselves!
