Chapter 13: Inferential Statistics

Understanding Null Hypothesis Testing

Learning Objectives

- Explain the purpose of null hypothesis testing, including the role of sampling error.

- Describe the basic logic of null hypothesis testing.

- Describe the role of relationship strength and sample size in determining statistical significance and make reasonable judgments about statistical significance based on these two factors.

The Purpose of Null Hypothesis Testing

As we have seen, psychological research typically involves measuring one or more variables for a sample and computing descriptive statistics for that sample. In general, however, the researcher’s goal is not to draw conclusions about that sample but to draw conclusions about the population that the sample was selected from. Thus researchers must use sample statistics to draw conclusions about the corresponding values in the population. These corresponding values in the population are called parameters . Imagine, for example, that a researcher measures the number of depressive symptoms exhibited by each of 50 clinically depressed adults and computes the mean number of symptoms. The researcher probably wants to use this sample statistic (the mean number of symptoms for the sample) to draw conclusions about the corresponding population parameter (the mean number of symptoms for clinically depressed adults).

Unfortunately, sample statistics are not perfect estimates of their corresponding population parameters. This is because there is a certain amount of random variability in any statistic from sample to sample. The mean number of depressive symptoms might be 8.73 in one sample of clinically depressed adults, 6.45 in a second sample, and 9.44 in a third—even though these samples are selected randomly from the same population. Similarly, the correlation (Pearson’s r ) between two variables might be +.24 in one sample, −.04 in a second sample, and +.15 in a third—again, even though these samples are selected randomly from the same population. This random variability in a statistic from sample to sample is called sampling error . (Note that the term error here refers to random variability and does not imply that anyone has made a mistake. No one “commits a sampling error.”)
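Sampling error can be seen directly in a small simulation. The sketch below is illustrative only: the population of symptom counts is made up (uniformly random integers from 3 to 14), and the helper name `sample_means` is hypothetical. It draws three random samples of 50 from the same population and shows that their means differ even though nothing about the population has changed.

```python
import random
import statistics

def sample_means(population, sample_size, n_samples, seed=0):
    """Draw repeated random samples and return the mean of each one."""
    rng = random.Random(seed)
    return [
        statistics.mean(rng.sample(population, sample_size))
        for _ in range(n_samples)
    ]

# Hypothetical population: symptom counts for 10,000 adults (made-up values).
pop_rng = random.Random(42)
population = [pop_rng.randint(3, 14) for _ in range(10_000)]

# Three samples of 50 people each, all drawn from the same population.
means = sample_means(population, sample_size=50, n_samples=3)
for i, m in enumerate(means, start=1):
    print(f"Sample {i}: mean = {m:.2f}")
print(f"Population mean = {statistics.mean(population):.2f}")
```

The spread among the three sample means is the sampling error; no one made a mistake in drawing any of the samples.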

One implication of this is that when there is a statistical relationship in a sample, it is not always clear that there is a statistical relationship in the population. A small difference between two group means in a sample might indicate that there is a small difference between the two group means in the population. But it could also be that there is no difference between the means in the population and that the difference in the sample is just a matter of sampling error. Similarly, a Pearson’s r value of −.29 in a sample might mean that there is a negative relationship in the population. But it could also be that there is no relationship in the population and that the relationship in the sample is just a matter of sampling error.

In fact, any statistical relationship in a sample can be interpreted in two ways:

- There is a relationship in the population, and the relationship in the sample reflects this.

- There is no relationship in the population, and the relationship in the sample reflects only sampling error.

The purpose of null hypothesis testing is simply to help researchers decide between these two interpretations.

The Logic of Null Hypothesis Testing

Null hypothesis testing is a formal approach to deciding between two interpretations of a statistical relationship in a sample. One interpretation is called the null hypothesis (often symbolized H 0 and read as “H-naught”). This is the idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error. Informally, the null hypothesis is that the sample relationship “occurred by chance.” The other interpretation is called the alternative hypothesis (often symbolized as H 1 ). This is the idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Again, every statistical relationship in a sample can be interpreted in either of these two ways: It might have occurred by chance, or it might reflect a relationship in the population. So researchers need a way to decide between them. Although there are many specific null hypothesis testing techniques, they are all based on the same general logic. The steps are as follows:

- Assume for the moment that the null hypothesis is true. There is no relationship between the variables in the population.

- Determine how likely the sample relationship would be if the null hypothesis were true.

- If the sample relationship would be extremely unlikely, then reject the null hypothesis in favour of the alternative hypothesis. If it would not be extremely unlikely, then retain the null hypothesis .
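One way to make these three steps concrete is a permutation test, sketched below. This is a swapped-in illustration rather than the specific technique used in the studies discussed in this chapter, and the scores and the function name `permutation_p_value` are made up. The idea is that if the null hypothesis were true, group labels would be interchangeable, so shuffling them shows how large a difference chance alone tends to produce.

```python
import random
import statistics

def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p value for a difference in means by shuffling labels."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Step 1: assume the null hypothesis, so group labels are interchangeable.
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        # Step 2: count how often chance alone gives a difference this large.
        if diff >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_permutations

# Made-up scores, for illustration only.
group_a = [12, 15, 14, 10, 13, 16, 11, 14]
group_b = [9, 11, 10, 8, 12, 10, 9, 11]

p = permutation_p_value(group_a, group_b)
# Step 3: reject the null hypothesis only if the result would be very unlikely.
decision = "reject" if p < 0.05 else "retain"
print(f"p = {p:.4f}; {decision} the null hypothesis")
```

The cutoff of .05 used in the last step is the conventional criterion; any other threshold could be substituted in the comparison.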

Following this logic, we can begin to understand why Mehl and his colleagues concluded that there is no difference in talkativeness between women and men in the population. In essence, they asked the following question: “If there were no difference in the population, how likely is it that we would find a small difference of d = 0.06 in our sample?” Their answer to this question was that this sample relationship would be fairly likely if the null hypothesis were true. Therefore, they retained the null hypothesis—concluding that there is no evidence of a sex difference in the population. We can also see why Kanner and his colleagues concluded that there is a correlation between hassles and symptoms in the population. They asked, “If the null hypothesis were true, how likely is it that we would find a strong correlation of +.60 in our sample?” Their answer to this question was that this sample relationship would be fairly unlikely if the null hypothesis were true. Therefore, they rejected the null hypothesis in favour of the alternative hypothesis—concluding that there is a positive correlation between these variables in the population.

A crucial step in null hypothesis testing is finding the likelihood of the sample result (or one more extreme) if the null hypothesis were true. This probability is called the p value. A low p value means that the sample result would be unlikely if the null hypothesis were true and leads to the rejection of the null hypothesis. A high p value means that the sample result would be likely if the null hypothesis were true and leads to the retention of the null hypothesis. But how low must the p value be before the sample result is considered unlikely enough to reject the null hypothesis? In null hypothesis testing, this criterion is called α (alpha) and is almost always set to .05. If there is less than a 5% chance of a result as extreme as the sample result if the null hypothesis were true, then the null hypothesis is rejected. When this happens, the result is said to be statistically significant. If there is greater than a 5% chance of a result as extreme as the sample result when the null hypothesis is true, then the null hypothesis is retained. This does not necessarily mean that the researcher accepts the null hypothesis as true, only that there is not currently enough evidence to reject it. Researchers often use the expression “fail to reject the null hypothesis” rather than “retain the null hypothesis,” but they never use the expression “accept the null hypothesis.”

The Misunderstood p Value

The p value is one of the most misunderstood quantities in psychological research (Cohen, 1994) [1] . Even professional researchers misinterpret it, and it is not unusual for such misinterpretations to appear in statistics textbooks!

The most common misinterpretation is that the p value is the probability that the null hypothesis is true—that the sample result occurred by chance. For example, a misguided researcher might say that because the p value is .02, there is only a 2% chance that the result is due to chance and a 98% chance that it reflects a real relationship in the population. But this is incorrect . The p value is really the probability of a result at least as extreme as the sample result if the null hypothesis were true. So a p value of .02 means that if the null hypothesis were true, a sample result this extreme would occur only 2% of the time.

You can avoid this misunderstanding by remembering that the p value is not the probability that any particular hypothesis is true or false. Instead, it is the probability of obtaining a result at least as extreme as the sample result if the null hypothesis were true.
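A short calculation may help show why these two probabilities are different things. The sketch below uses Bayes' rule with entirely hypothetical inputs: the proportion of tested null hypotheses that are actually true (`prior_null`) and the chance of detecting a real relationship (`power`) are assumptions chosen for illustration, not values from this chapter. It shows that the probability that the null hypothesis is true after a significant result depends on those extra quantities, so it cannot simply be read off from α or the p value.

```python
def prob_null_given_significant(prior_null, alpha, power):
    """P(null hypothesis is true | result is significant), by Bayes' rule."""
    false_alarms = prior_null * alpha        # null true, yet result is significant
    true_hits = (1 - prior_null) * power     # null false, and the test detects it
    return false_alarms / (false_alarms + true_hits)

# Hypothetical scenarios: half, or nine in ten, of the tested nulls are true.
for prior_null in (0.5, 0.9):
    posterior = prob_null_given_significant(prior_null, alpha=0.05, power=0.5)
    print(f"P(null true) before = {prior_null:.2f}, after a significant result = {posterior:.3f}")
```

In both scenarios the criterion is the same .05, yet the probability that the null hypothesis is true differs greatly.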

Role of Sample Size and Relationship Strength

Recall that null hypothesis testing involves answering the question, “If the null hypothesis were true, what is the probability of a sample result as extreme as this one?” In other words, “What is the p value?” It can be helpful to see that the answer to this question depends on just two considerations: the strength of the relationship and the size of the sample. Specifically, the stronger the sample relationship and the larger the sample, the less likely the result would be if the null hypothesis were true. That is, the lower the p value. This should make sense. Imagine a study in which a sample of 500 women is compared with a sample of 500 men in terms of some psychological characteristic, and Cohen’s d is a strong 0.50. If there were really no sex difference in the population, then a result this strong based on such a large sample should seem highly unlikely. Now imagine a similar study in which a sample of three women is compared with a sample of three men, and Cohen’s d is a weak 0.10. If there were no sex difference in the population, then a relationship this weak based on such a small sample should seem likely. And this is precisely why the null hypothesis would be rejected in the first example and retained in the second.
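The two examples above can be checked with a rough calculation. The sketch below assumes two equal-sized groups and uses a normal approximation, in which the test statistic implied by Cohen's d is d × √(n / 2) with n participants per group. The function name `approx_p_value` is hypothetical, and for very small samples a t distribution would be more appropriate and would give an even larger p value.

```python
from math import sqrt
from statistics import NormalDist

def approx_p_value(d, n_per_group):
    """Two-sided p value for Cohen's d with equal group sizes (normal approximation)."""
    z = abs(d) * sqrt(n_per_group / 2)      # test statistic implied by d and n
    return 2 * (1 - NormalDist().cdf(z))    # probability of a result at least this extreme

strong_large = approx_p_value(0.50, 500)    # d = 0.50, 500 women and 500 men
weak_small = approx_p_value(0.10, 3)        # d = 0.10, 3 women and 3 men

print(f"d = 0.50, n = 500 per group: p = {strong_large:.3g}")
print(f"d = 0.10, n = 3 per group:   p = {weak_small:.3g}")
```

The first p value falls far below .05 and the second far above it, which matches the decisions described in the paragraph.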

Of course, sometimes the result can be weak and the sample large, or the result can be strong and the sample small. In these cases, the two considerations trade off against each other so that a weak result can be statistically significant if the sample is large enough and a strong relationship can be statistically significant even if the sample is small. Table 13.1 shows roughly how relationship strength and sample size combine to determine whether a sample result is statistically significant. The columns of the table represent the three levels of relationship strength: weak, medium, and strong. The rows represent four sample sizes that can be considered small, medium, large, and extra large in the context of psychological research. Thus each cell in the table represents a combination of relationship strength and sample size. If a cell contains the word Yes, then this combination would be statistically significant for both Cohen’s d and Pearson’s r. If it contains the word No, then it would not be statistically significant for either. There is one cell where the decision for d and r would be different and another where it might be different depending on some additional considerations, which are discussed in Section 13.2 “Some Basic Null Hypothesis Tests.”

Although Table 13.1 provides only a rough guideline, it shows very clearly that weak relationships based on medium or small samples are never statistically significant and that strong relationships based on medium or larger samples are always statistically significant. If you keep this lesson in mind, you will often know whether a result is statistically significant based on the descriptive statistics alone. It is extremely useful to be able to develop this kind of intuitive judgment. One reason is that it allows you to develop expectations about how your formal null hypothesis tests are going to come out, which in turn allows you to detect problems in your analyses. For example, if your sample relationship is strong and your sample is medium, then you would expect to reject the null hypothesis. If for some reason your formal null hypothesis test indicates otherwise, then you need to double-check your computations and interpretations. A second reason is that the ability to make this kind of intuitive judgment is an indication that you understand the basic logic of this approach in addition to being able to do the computations.
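To build this kind of intuition, it can help to ask how large a sample would be needed before a given effect size reaches significance. The sketch below is not a reproduction of Table 13.1. It assumes two equal-sized groups, a two-sided test, the normal approximation, and Cohen's conventional benchmarks of d = 0.2, 0.5, and 0.8 for weak, medium, and strong relationships. The function name `min_n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist

def min_n_per_group(d, alpha=0.05):
    """Smallest equal group size at which a sample Cohen's d is significant.

    Uses the normal approximation: significant when d * sqrt(n / 2) >= z_crit.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = .05
    return ceil(2 * (z_crit / abs(d)) ** 2)

# Assumed benchmarks for weak, medium, and strong relationships.
for label, d in (("weak", 0.2), ("medium", 0.5), ("strong", 0.8)):
    print(f"{label:>6} (d = {d}): about {min_n_per_group(d)} per group")
```

Weaker relationships need much larger samples, because the required group size grows with the inverse square of d.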

Statistical Significance Versus Practical Significance

Table 13.1 illustrates another extremely important point. A statistically significant result is not necessarily a strong one. Even a very weak result can be statistically significant if it is based on a large enough sample. This is closely related to Janet Shibley Hyde’s argument about sex differences (Hyde, 2007) [2] . The differences between women and men in mathematical problem solving and leadership ability are statistically significant. But the word significant can cause people to interpret these differences as strong and important—perhaps even important enough to influence the college courses they take or even who they vote for. As we have seen, however, these statistically significant differences are actually quite weak—perhaps even “trivial.”

This is why it is important to distinguish between the statistical significance of a result and the practical significance of that result. Practical significance refers to the importance or usefulness of the result in some real-world context. Many sex differences are statistically significant—and may even be interesting for purely scientific reasons—but they are not practically significant. In clinical practice, this same concept is often referred to as “clinical significance.” For example, a study on a new treatment for social phobia might show that it produces a statistically significant positive effect. Yet this effect still might not be strong enough to justify the time, effort, and other costs of putting it into practice—especially if easier and cheaper treatments that work almost as well already exist. Although statistically significant, this result would be said to lack practical or clinical significance.

Key Takeaways

- Null hypothesis testing is a formal approach to deciding whether a statistical relationship in a sample reflects a real relationship in the population or is just due to chance.

- The logic of null hypothesis testing involves assuming that the null hypothesis is true, finding how likely the sample result would be if this assumption were correct, and then making a decision. If the sample result would be unlikely if the null hypothesis were true, then it is rejected in favour of the alternative hypothesis. If it would not be unlikely, then the null hypothesis is retained.

- The probability of obtaining the sample result if the null hypothesis were true (the p value) is based on two considerations: relationship strength and sample size. Reasonable judgments about whether a sample relationship is statistically significant can often be made by quickly considering these two factors.

- Statistical significance is not the same as relationship strength or importance. Even weak relationships can be statistically significant if the sample size is large enough. It is important to consider relationship strength and the practical significance of a result in addition to its statistical significance.

- Discussion: Imagine a study showing that people who eat more broccoli tend to be happier. Explain for someone who knows nothing about statistics why the researchers would conduct a null hypothesis test.

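- Practice: Use Table 13.1 to decide whether each of the following results is statistically significant.

- The correlation between two variables is r = −.78 based on a sample size of 137.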

- The mean score on a psychological characteristic for women is 25 ( SD = 5) and the mean score for men is 24 ( SD = 5). There were 12 women and 10 men in this study.

- In a memory experiment, the mean number of items recalled by the 40 participants in Condition A was 0.50 standard deviations greater than the mean number recalled by the 40 participants in Condition B.

- In another memory experiment, the mean scores for participants in Condition A and Condition B came out exactly the same!

- A student finds a correlation of r = .04 between the number of units the students in his research methods class are taking and the students’ level of stress.

Long Descriptions

“Null Hypothesis” long description: A comic depicting a man and a woman talking in the foreground. In the background is a child working at a desk. The man says to the woman, “I can’t believe schools are still teaching kids about the null hypothesis. I remember reading a big study that conclusively disproved it years ago.”

“Conditional Risk” long description: A comic depicting two hikers beside a tree during a thunderstorm. A bolt of lightning goes “crack” in the dark sky as thunder booms. One of the hikers says, “Whoa! We should get inside!” The other hiker says, “It’s okay! Lightning only kills about 45 Americans a year, so the chances of dying are only one in 7,000,000. Let’s go on!” The comic’s caption says, “The annual death rate among people who know that statistic is one in six.”

Media Attributions

- Null Hypothesis by XKCD CC BY-NC (Attribution NonCommercial)

- Conditional Risk by XKCD CC BY-NC (Attribution NonCommercial)

- Cohen, J. (1994). The earth is round (p < .05). American Psychologist, 49, 997–1003.

- Hyde, J. S. (2007). New directions in the study of gender similarities and differences. Current Directions in Psychological Science, 16, 259–263.

Parameters: Values in a population that correspond to variables measured in a study.

Sampling error: The random variability in a statistic from sample to sample.

Null hypothesis testing: A formal approach to deciding between two interpretations of a statistical relationship in a sample.

Null hypothesis: The idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error.

Alternative hypothesis: The idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Reject the null hypothesis: When the relationship found in the sample would be extremely unlikely, the idea that the relationship occurred “by chance” is rejected.

Retain the null hypothesis: When the relationship found in the sample is likely to have occurred by chance, the null hypothesis is not rejected.

p value: The probability that, if the null hypothesis were true, a result at least as extreme as the one found in the sample would occur.

α (alpha): How low the p value must be before the sample result is considered unlikely in null hypothesis testing.

Statistically significant: When there is less than a 5% chance of a result as extreme as the sample result occurring if the null hypothesis were true, and the null hypothesis is rejected.

Research Methods in Psychology - 2nd Canadian Edition Copyright © 2015 by Paul C. Price, Rajiv Jhangiani, & I-Chant A. Chiang is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Hypothesis Testing | A Step-by-Step Guide with Easy Examples

Published on November 8, 2019 by Rebecca Bevans . Revised on June 22, 2023.

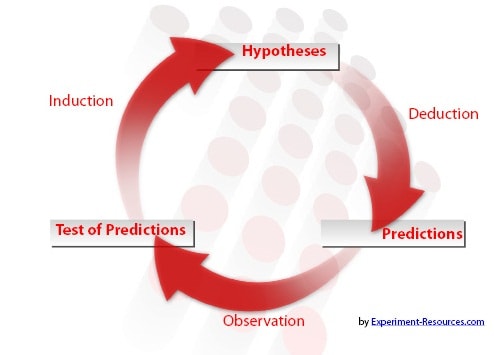

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics . It is most often used by scientists to test specific predictions, called hypotheses, that arise from theories.

There are 5 main steps in hypothesis testing:

- State your research hypothesis as a null hypothesis (H 0 ) and an alternate hypothesis (H a or H 1 ).

- Collect data in a way designed to test the hypothesis.

- Perform an appropriate statistical test .

- Decide whether to reject or fail to reject your null hypothesis.

- Present the findings in your results and discussion section.

Though the specific details might vary, the procedure you will use when testing a hypothesis will always follow some version of these steps.

Table of contents

- Step 1: State your null and alternate hypothesis

- Step 2: Collect data

- Step 3: Perform a statistical test

- Step 4: Decide whether to reject or fail to reject your null hypothesis

- Step 5: Present your findings

- Other interesting articles

- Frequently asked questions about hypothesis testing

After developing your initial research hypothesis (the prediction that you want to investigate), it is important to restate it as a null (H 0 ) and alternate (H a ) hypothesis so that you can test it mathematically.

The alternate hypothesis is usually your initial hypothesis that predicts a relationship between variables. The null hypothesis is a prediction of no relationship between the variables you are interested in.

- H 0 : Men are, on average, not taller than women.

- H a : Men are, on average, taller than women.

For a statistical test to be valid , it is important to perform sampling and collect data in a way that is designed to test your hypothesis. If your data are not representative, then you cannot make statistical inferences about the population you are interested in.

There are a variety of statistical tests available, but many of the most common ones are based on a comparison of within-group variance (how spread out the data are within a category) versus between-group variance (how different the categories are from one another).

If the between-group variance is large enough that there is little or no overlap between groups, then your statistical test will reflect that by showing a low p -value . This means that differences this large would be unlikely to arise by chance if the null hypothesis were true.

Alternatively, if there is high within-group variance and low between-group variance, then your statistical test will reflect that with a high p -value. This means that a difference like the one you measured could easily arise by chance even if the null hypothesis were true.
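The comparison of between-group and within-group variance can be sketched as a ratio, which is the F statistic of a one-way analysis of variance. The heights below are made up for illustration, and the function name `f_ratio` is hypothetical. A large ratio corresponds to a low p -value, and a ratio near or below 1 corresponds to a high one.

```python
import statistics

def f_ratio(groups):
    """Ratio of between-group to within-group variance (one-way ANOVA F statistic)."""
    all_values = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_values)
    k, n_total = len(groups), len(all_values)
    # Between-group: how far each group mean sits from the overall mean.
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group: how spread out the values are around their own group mean.
    ss_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within

# Made-up heights in cm, for illustration only.
well_separated = [[170, 171, 172], [180, 181, 182]]   # little overlap between groups
overlapping = [[160, 175, 190], [165, 180, 195]]      # heavy overlap between groups

print(f"Well-separated groups: F = {f_ratio(well_separated):.2f}")
print(f"Overlapping groups:    F = {f_ratio(overlapping):.2f}")
```

Converting the ratio into a p -value requires the F distribution, which is omitted here to keep the sketch free of third-party libraries.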

Your choice of statistical test will be based on the type of variables and the level of measurement of your collected data .

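For the height example, a statistical test comparing the two groups would give you:

- an estimate of the difference in average height between the two groups.

- a p -value showing how likely you are to see a difference at least this large if the null hypothesis of no difference is true.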

Based on the outcome of your statistical test, you will have to decide whether to reject or fail to reject your null hypothesis.

In most cases you will use the p -value generated by your statistical test to guide your decision. And in most cases, your predetermined level of significance for rejecting the null hypothesis will be 0.05 – that is, when there is a less than 5% chance that you would see these results if the null hypothesis were true.

In some cases, researchers choose a more conservative level of significance, such as 0.01 (1%). This reduces the risk of incorrectly rejecting the null hypothesis (Type I error).
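The link between the significance level and the Type I error rate can be illustrated with a simulation. In the sketch below, both groups are always drawn from the same population, so the null hypothesis is true in every simulated study and every rejection is a Type I error. The function name `type_i_error_rate`, the group size, and the use of a z test with a known population standard deviation of 1 are simplifying assumptions made for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist, mean

def type_i_error_rate(alpha, n_studies=2000, n_per_group=30, seed=0):
    """Fraction of simulated studies that reject a true null hypothesis."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_studies):
        # Both groups come from the same population, so the null hypothesis is true.
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        # z test with the population SD treated as known (1.0): an assumption of this sketch.
        z = (mean(a) - mean(b)) / sqrt(2 / n_per_group)
        p = 2 * (1 - NormalDist().cdf(abs(z)))
        if p < alpha:
            rejections += 1
    return rejections / n_studies

for alpha in (0.05, 0.01):
    rate = type_i_error_rate(alpha)
    print(f"alpha = {alpha}: rejected a true null in {rate:.1%} of studies")
```

The rejection rate tracks the chosen level of significance, so moving from 0.05 to 0.01 lowers the share of false rejections.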

The results of hypothesis testing will be presented in the results and discussion sections of your research paper , dissertation or thesis .

In the results section you should give a brief summary of the data and a summary of the results of your statistical test (for example, the estimated difference between group means and associated p -value). In the discussion , you can discuss whether your initial hypothesis was supported by your results or not.

In the formal language of hypothesis testing, we talk about rejecting or failing to reject the null hypothesis. You will probably be asked to do this in your statistics assignments.

However, when presenting research results in academic papers we rarely talk this way. Instead, we go back to our alternate hypothesis (in this case, the hypothesis that men are on average taller than women) and state whether the result of our test did or did not support the alternate hypothesis.

If your null hypothesis was rejected, this result is interpreted as “supported the alternate hypothesis.”

These are superficial differences; you can see that they mean the same thing.

You might notice that we don’t say that we reject or fail to reject the alternate hypothesis . This is because hypothesis testing is not designed to prove or disprove anything. It is only designed to test whether a pattern we measure could have arisen spuriously, or by chance.

If we reject the null hypothesis based on our research (i.e., we find that it is unlikely that the pattern arose by chance), then we can say our test lends support to our hypothesis . But if the pattern does not pass our decision rule, meaning that it could have arisen by chance, then we say the test is inconsistent with our hypothesis .

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses , by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess — it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Null and alternative hypotheses are used in statistical hypothesis testing . The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Bevans, R. (2023, June 22). Hypothesis Testing | A Step-by-Step Guide with Easy Examples. Scribbr. Retrieved April 1, 2024, from https://www.scribbr.com/statistics/hypothesis-testing/

9.1 Null and Alternative Hypotheses

The actual test begins by considering two hypotheses . They are called the null hypothesis and the alternative hypothesis . These hypotheses contain opposing viewpoints.

H 0 , the null hypothesis: a statement of no difference between sample means or proportions, or no difference between a sample mean or proportion and a population mean or proportion. In other words, the difference equals 0.

H a , the alternative hypothesis: a claim about the population that is contradictory to H 0 and what we conclude when we reject H 0 .

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options: reject H 0 if the sample information favors the alternative hypothesis, or do not reject H 0 (also phrased as decline to reject H 0 ) if the sample information is insufficient to reject the null hypothesis.

Mathematical Symbols Used in H 0 and H a :

H 0 always has a symbol with an equal in it. H a never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.
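The symbol rule can be written down as a small lookup. In the sketch below, the names `NULL_SYMBOL_FOR` and `state_hypotheses` are hypothetical. Each alternative-hypothesis symbol is paired with the null-hypothesis symbol that covers every remaining case, which is why the null symbol always includes equality.

```python
# Each alternative symbol maps to the null symbol covering all other cases.
NULL_SYMBOL_FOR = {"≠": "=", ">": "≤", "<": "≥"}

def state_hypotheses(parameter, alt_symbol, value):
    """Return the null and alternative hypotheses as a pair of strings."""
    null_symbol = NULL_SYMBOL_FOR[alt_symbol]
    return (
        f"H0: {parameter} {null_symbol} {value}",
        f"Ha: {parameter} {alt_symbol} {value}",
    )

# "Is the mean different from 2.0?" gives a two-sided alternative.
print(state_hypotheses("μ", "≠", 2.0))
# "Is the proportion greater than 0.30?" gives a one-sided alternative.
print(state_hypotheses("p", ">", 0.30))
```

Following the note above, some researchers would write = in every null hypothesis; that variation would replace the lookup with a constant.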

Example 9.1

H 0 : No more than 30 percent of the registered voters in Santa Clara County voted in the primary election. p ≤ 0.30

H a : More than 30 percent of the registered voters in Santa Clara County voted in the primary election. p > 0.30

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25 percent. State the null and alternative hypotheses.

Example 9.2

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are the following:

H 0 : μ = 2.0

H a : μ ≠ 2.0

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 66

- H a : μ __ 66

Example 9.3

We want to test if college students take fewer than five years to graduate from college, on average. The null and alternative hypotheses are the following:

H 0 : μ ≥ 5

H a : μ < 5

We want to test if it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol ( =, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 45

- H a : μ __ 45

Example 9.4

An article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third of the students pass. The same article stated that 6.6 percent of U.S. students take advanced placement exams and 4.4 percent pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6 percent. State the null and alternative hypotheses.

H 0 : p ≤ 0.066

H a : p > 0.066

On a state driver’s test, about 40 percent pass the test on the first try. We want to test if more than 40 percent pass on the first try. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : p __ 0.40

- H a : p __ 0.40

Collaborative Exercise

Bring to class a newspaper, some news magazines, and some internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/9-1-null-and-alternative-hypotheses

© Jan 23, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Why checking model assumptions using null hypothesis significance tests does not suffice: A plea for plausibility

Jesper Tijmstra

Department of Methodology and Statistics, Faculty of Social Sciences, Tilburg University, PO Box 90153, 5000 LE Tilburg, The Netherlands

This article explores whether the null hypothesis significance testing (NHST) framework provides a sufficient basis for the evaluation of statistical model assumptions. It is argued that while NHST-based tests can provide some degree of confirmation for the model assumption that is evaluated—formulated as the null hypothesis—these tests do not inform us of the degree of support that the data provide for the null hypothesis and to what extent the null hypothesis should be considered to be plausible after having taken the data into account. Addressing the prior plausibility of the model assumption is unavoidable if the goal is to determine how plausible it is that the model assumption holds. Without assessing the prior plausibility of the model assumptions, it remains fully uncertain whether the model of interest gives an adequate description of the data and thus whether it can be considered valid for the application at hand. Although addressing the prior plausibility is difficult, ignoring the prior plausibility is not an option if we want to claim that the inferences of our statistical model can be relied upon.

Introduction

One of the core objectives of the social sciences is to critically evaluate their theories on the basis of empirical observations. Bridging this gap between data and theory is achieved through statistical modeling: Only if a statistical model is specified can the data be brought to bear upon the scientific theory. Without making assumptions about the statistical model, no conclusions can be drawn about the hypotheses of substantive interest. While in practice these statistical model assumptions may often be glossed over, establishing these assumptions to be plausible is crucial for establishing the validity of the inferences: Only if the statistical model is specified (approximately) correctly can inferences about hypotheses of interest be relied upon. Hence, critically evaluating the statistical model assumptions is of crucial importance for scientific enquiry. These statistical model assumptions can themselves be investigated using statistical methods, many of which make use of null-hypothesis significance tests (NHST).

As the statement of the American Statistical Association exemplifies (Wasserstein & Lazar, 2016 ), recently much attention in psychology and related fields has been devoted to problems that arise when NHST is employed to evaluate substantive hypotheses. Criticisms of NHST are usually two-fold. Firstly, it is noted that in practice NHST is often abused to draw inferences that are not warranted by the procedure (Meehl, 1978 ; Cohen, 1994 ; Gigerenzer & Murray, 1987 ; Gigerenzer, 1993 ). For instance, it is noted that NHST is not actually used to critically test the substantive hypothesis of interest (Cohen, 1994 ), that practitioners often conclude that a significant result indicates that the null hypothesis is false and the alternative hypothesis is true or likely to be true (Gigerenzer, 2004 ), that a p value is taken to be the probability that the observed result was due to chance (rather than a probability conditional on the null hypothesis being true, Cohen, 1994 ; Wagenmakers, 2007 ), and that a significant result is taken to indicate that the finding is likely to replicate (Gigerenzer, 2000 ). The second criticism is that the types of inferences that one can draw on the basis of NHST are of limited use for the evaluation of scientific theories (Gigerenzer & Murray, 1987 ; Gigerenzer, 1993 , 2004 ), with alternatives such as using confidence intervals (Cumming & Finch, 2005 ; Fidler & Loftus, 2009 ; Cumming, 2014 ) or Bayesian approaches (Wagenmakers, 2007 ) being proposed as more informative and more appropriate tools for statistical inference. These criticisms have been unanimous in their rejection of the use of (solely) NHST-based methods for evaluating substantive hypotheses and have had an important impact on both recommended and actual statistical practices, although the uncritical and inappropriate use of NHST unfortunately still appears to be quite common in practice.

The attention in the discussion of the (in)adequacy of NHST has focused almost exclusively on the use of NHST for the evaluation of substantive hypotheses. Importantly, standard methods for assessing whether statistical model assumptions hold often rely on NHST as well (e.g., Tabachnick & Fidell, 2001 ; Field, 2009 ), and are even employed when the substantive analyses do not use NHST (e.g., item response theory; Lord, 1980 ). These NHST procedures formulate the model assumption as a statistical hypothesis, but—unlike the statistical hypothesis derived from the substantive hypothesis—treat this hypothesis as a null hypothesis to be falsified. It may also be noted here that despite users usually being interested in determining whether the model assumption at least approximately holds, the null hypothesis that is tested specifies that the model assumption holds exactly , something that often may not be plausible a priori. Given the differences in the way NHST deals with model assumptions compared to substantive hypotheses, given the different purpose of assessing model assumptions (i.e., evaluating the adequacy of the statistical model), and given the common usage of NHST for evaluating model assumptions, it is important to specifically consider the use of NHST for the evaluation of model assumptions and the unique issues that arise in that context.

This paper explores whether NHST-based approaches succeed in providing sufficient information to determine how plausible it is that a model assumption holds, and whether a model assumption is plausible enough to be accepted. The paper starts out with a short motivating example, aimed at clarifying the specific issues that arise when model assumptions are evaluated using null hypothesis tests. In “ The NHST framework ”, the background of the NHST framework is discussed, as well as the specific issues that arise when it is applied to evaluate model assumptions. “ Confirmation of model assumptions using NHST ” explores the extent to which a null hypothesis significance test can provide confirmation for the model assumption it evaluates. “ Revisiting the motivating example ” briefly returns to the motivating example, where it is illustrated how Bayesian methods that do not rely on NHST may provide a more informative and appropriate alternative for evaluating model assumptions.

A motivating example

Consider a researcher who wants to find out whether it makes sense to develop different types of educational material for boys and for girls, as (we assume) is predicted by a particular theory. Specifically, the researcher is interested in children’s speed of spatial reasoning. She constructs a set of ten items to measure this ability and for each respondent records the total response time.

Assume that the researcher plans on using Student’s independent samples t test to compare the average response speed of boys and girls. Before she can interpret the results of this t test, she has to make sure that the assumptions are met. In this case, she has to check whether both the response times of the boys and the response times of the girls are independently and identically distributed, whether the distributions are Gaussian, and whether the variances of these distributions are the same (Student, 1908 ; Field, 2009 ). The researcher is aware of the unequal variances t test (Welch, 1947 ) (i.e., a different statistical model), but because this procedure has lower power, she prefers to use Student’s.

For simplicity, consider only the assumption of equal variances. In this case, Levene’s test (Levene, 1960) can be used to evaluate the null hypothesis $H_0: \sigma^2_{\text{boys}} = \sigma^2_{\text{girls}}$. Assume that Levene’s test yields a p value of 0.060, which exceeds the level of significance (which for Levene’s test is usually set to 0.05 or 0.01; Field, 2009). 1 The researcher concludes that there is no reason to worry about a violation of the assumption of equal variances, and proceeds to apply Student’s t test (using α = 0.05). The t test shows that boys are significantly faster than girls in solving the spatial reasoning items ( p = 0.044). The researcher notes that her statistical software also produces the output for the t test for groups with unequal variances (Welch, 1947), which yields p = 0.095. However, since Levene’s test did not indicate a violation of the assumption of equal variances, the researcher feels justified in ignoring the results of the Welch test and sticks to her conclusion that there is a significant difference between the two groups.
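To make concrete why the two t tests in this example can disagree, the following sketch computes the Student (pooled-variance) and Welch (unequal-variance) statistics and their degrees of freedom using only the Python standard library. The response times are invented for illustration and are not the researcher's data; turning each statistic into a p value would additionally require a t distribution from a statistics library.

```python
from math import sqrt
from statistics import mean, variance

def student_t(x, y):
    """Pooled-variance t statistic and its degrees of freedom."""
    n1, n2 = len(x), len(y)
    pooled = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2)
    t = (mean(x) - mean(y)) / sqrt(pooled * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2

def welch_t(x, y):
    """Unequal-variance t statistic with Welch-Satterthwaite degrees of freedom."""
    n1, n2 = len(x), len(y)
    v1, v2 = variance(x) / n1, variance(y) / n2
    t = (mean(x) - mean(y)) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# Hypothetical total response times in seconds (illustrative values only).
boys = [41.0, 55.0, 38.0, 62.0, 47.0, 35.0, 58.0, 44.0]
girls = [52.0, 54.0, 51.0, 55.0, 53.0, 56.0]

t_student, df_student = student_t(boys, girls)
t_welch, df_welch = welch_t(boys, girls)
print(f"Student: t = {t_student:.2f}, df = {df_student}")
print(f"Welch:   t = {t_welch:.2f}, df = {df_welch:.1f}")
```

When the group sizes and sample variances differ, the two procedures use different standard errors and different degrees of freedom, so which one is reported can change the conclusion, as it does in the example.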

The present example is meant to exemplify how statistical model assumptions are commonly dealt with in practice (see also Gigerenzer, 2004 ). Important questions can be asked about the justification of the inferences made in this example, and in cases like this in general. Should the researcher be worried about her model assumption if she has background knowledge that boys commonly show a larger variance in cognitive processing speed than girls? Can she safely conclude that the variances are indeed equal in the population and that the model assumption holds, or that this is at least very likely? And if there remains uncertainty about the plausibility of this model assumption, how should this uncertainty influence her statistical inferences?

Questions like these are not restricted to the application of t tests, but apply equally strongly to all areas where statistical models are used to make inferences, including areas where the option of simply using a less restrictive model (e.g., using Welch’s t test rather than Student’s) is not readily available. Since statistical inferences based on these models often form the basis for updating our scientific beliefs as well as for taking action, determining whether we can rely on inferences that are made about model assumptions using NHST is of both theoretical and practical importance.

The NHST framework

Background of the NHST framework

The basis of the NHST framework goes back to the statistical paradigm founded by Fisher in the 1930s (Fisher, 1930 , 1955 , 1956 , 1960 ; Hacking, 1976 ; Gigerenzer, 1993 ), as well as the statistical paradigm founded by Neyman and Pearson in that same period (Neyman, 1937 , 1957 ; Pearson, 1955 ; Neyman & Pearson, 1967 ; Hacking, 1976 ; Gigerenzer, 1993 ). Whereas Neyman and Pearson proposed to contrast two hypotheses that are in principle on equal footing, Fisher’s approach focuses on evaluating the fit of a single hypothesis to the data, and has a strong focus on falsification. Starting from the 1950s, elements from both approaches were incorporated in the hybrid NHST framework as it exists today in the social and behavioral sciences (Gigerenzer & Murray, 1987 ; Gigerenzer, Swijtink, Porter, Daston, Beatty, & Krüger, 1989 ; Gigerenzer, 1993 ; Lehmann, 2006 ): While this framework proposes to evaluate a null hypothesis in contrast to an alternative hypothesis—in line with Neyman and Pearson—the focus lies on attempting to reject the null hypothesis, in line with Fisher’s methodology. Thus, despite the important differences that existed between the paradigms of Fisher and that of Neyman and Pearson (Gigerenzer, 1993 ), the current framework constitutes a hybrid form of the two paradigms (Gigerenzer et al., 1989 ; Gigerenzer, 1993 ; Hubbard & Bayarri, 2003 ).

In line with Fisher, in NHST, the evaluation of the null hypothesis is done solely based on the p value: the probability of observing an outcome under the null hypothesis that is at least as extreme as the outcome that was observed. If the p value falls below a preset level of significance α , it is concluded that the data are unlikely to be observed under the null hypothesis (in line with Fisher), in which case the null hypothesis is rejected and the alternative hypothesis is accepted (in line with Neyman and Pearson). If p ≥ α , the null hypothesis is retained, but no conclusions are drawn about the truth of the null hypothesis (Fisher, 1955 ).
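The decision rule described here can be sketched with an exact binomial test, where the p value is the probability, under the null hypothesis, of a count at least as large as the one observed. The coin-flip numbers and both function names are hypothetical, and the example is one-sided for simplicity.

```python
from math import comb

def binomial_upper_p_value(k, n, p0):
    """P(X >= k) for X ~ Binomial(n, p0): an upper-tailed exact p value."""
    return sum(comb(n, x) * p0**x * (1 - p0) ** (n - x) for x in range(k, n + 1))

def nhst_decision(p_value, alpha=0.05):
    """Reject H0 when p < alpha; otherwise retain it without accepting it."""
    return "reject H0" if p_value < alpha else "retain H0"

# Hypothetical data: number of heads in 10 flips, testing H0: p = 0.5.
for heads in (9, 7):
    p = binomial_upper_p_value(heads, 10, 0.5)
    print(f"{heads} heads: p = {p:.4f} -> {nhst_decision(p)}")
```

Note that the "retain H0" branch returns no statement about how plausible the null hypothesis is, which is exactly the gap the rest of the article examines.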

Because the NHST framework explicitly does not address the probability that the null hypothesis is true (Edwards, Lindman & Savage, 1963), NHST remains silent about the effect that a failure to reject the null hypothesis should have on our assessment of the plausibility of the null hypothesis, that is, the extent or degree to which we believe that it is credible or likely to be true. Similar to the way in which Popper suggests evaluating theories (Popper, 1959), null hypotheses are simply considered hypotheses that have not yet successfully been rejected, but should not be considered to be likely to be true.

Differences between evaluating substantive hypotheses and model assumptions

Many authors have criticized the use of NHST for the evaluation of substantive hypotheses (see for example Meehl, 1978 ; Cohen, 1994 ; Gigerenzer, 2004 ; Wagenmakers, 2007 ). However, the use of NHST for the evaluation of model assumptions differs in at least three important ways from the use of NHST for evaluating substantive theories, making it important to specifically consider the merits and demerits of employing NHST in the context of evaluating model assumptions.

One important difference lies in the type of inference that is desired. In the context of evaluating scientific theories, one can argue that conclusions about substantive hypotheses are always provisional , and that we should refrain from drawing any strong conclusions on the basis of a single hypothesis test, requiring instead that the results are to be consistently replicable. In this context, one can argue (Mayo, 1996 ) that the only probabilities that are relevant are the error probabilities of the procedure (in the case of NHST the Type I and Type II error), and one can in principle refrain from taking action on the basis of finite, and hence principally inconclusive, evidence. Unfortunately, this appeal to the long-run success of the procedure is not available when evaluating model assumptions, since in that context we always need to make a decision about whether in this particular instance we will proceed to apply the statistical model (i.e., acting as though its assumptions have been met).

A second important difference lies in the fact that when evaluating model assumptions using NHST, the null hypothesis is actually the hypothesis of interest, rather than a ‘no effect’ or ‘nil’ hypothesis (Cohen, 1994 ) that the researcher hopes to reject in favor of the proposed alternative. Thus, while substantive hypotheses are only evaluated indirectly by attempting to reject a null hypothesis, when model assumptions are concerned, researchers are testing the relevant hypothesis ‘directly’, sticking (at least at first glance) more closely to the idea that one should aim to falsify one’s hypotheses and expose them to a critical test (see e.g., Mayo, 1996 ). In this sense, the use of NHST for evaluating model assumptions may escape the criticism (Cohen, 1994 ) that the hypothesis of interest is not the hypothesis that is actually critically tested.

A third difference concerns the desired control over NHST’s two main error probabilities: the probability of incorrectly rejecting the null hypothesis when it is true (Type I error), and the probability of failing to reject it when it is false (Type II error). By testing the hypothesis of interest directly, the use of NHST for evaluating model assumptions implies an important shift in the relative importance of Type I and Type II errors compared to its use for the evaluation of substantive hypotheses. In this latter setting, the standard approach to using NHST is to fix the level of significance, ensuring control over the Type I error rate, and only then (if at all) consider the power of the procedure to detect meaningful deviations from this null hypothesis. However, when model assumptions are concerned, Type II errors are arguably much more harmful than Type I errors, as they result in the unwarranted use of statistical procedures and in incorrect inferences about the substantive hypotheses (e.g., due to inflated Type I error rates, overly small confidence intervals, or parameter bias). Thus, the standard practice of mainly focusing on controlling the Type I error rate by selecting an acceptably low level of significance α does not appear to translate well to the evaluation of model assumptions, since this leaves the more important Type II error rate uncontrolled and dependent on factors such as sample size that should arguably not affect the severity with which we critically evaluate our assumptions. While controlling the Type I error rate may often be straightforward, controlling the Type II error rate will be problematic for researchers who do not assess the plausibility of model assumptions and the size of possible violations, as will be shown in “ Assessing the plausibility of H 0 using a null hypothesis test ”.
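The dependence of the Type II error rate on sample size can be illustrated with a small Monte Carlo sketch. It uses a simple variance-ratio statistic with a simulated critical value rather than Levene's test, and the population standard-deviation ratio of 1.5, the group sizes, and all function names are assumptions chosen for illustration.

```python
import random

def sample_variance(values):
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / (len(values) - 1)

def variance_ratio(rng, n, sd_a, sd_b):
    """Larger-over-smaller sample variance ratio for two normal groups of size n."""
    va = sample_variance([rng.gauss(0.0, sd_a) for _ in range(n)])
    vb = sample_variance([rng.gauss(0.0, sd_b) for _ in range(n)])
    return max(va, vb) / min(va, vb)

def estimated_type2_rate(n, sd_ratio, alpha=0.05, reps=1000, seed=1):
    rng = random.Random(seed)
    # Critical value: simulated (1 - alpha) quantile of the statistic under H0.
    null_stats = sorted(variance_ratio(rng, n, 1.0, 1.0) for _ in range(reps))
    critical = null_stats[round((1 - alpha) * reps) - 1]
    # Type II error: the variances differ, but the test fails to reject H0.
    misses = sum(
        variance_ratio(rng, n, sd_ratio, 1.0) <= critical for _ in range(reps)
    )
    return misses / reps

rates = {n: estimated_type2_rate(n, sd_ratio=1.5) for n in (10, 40, 160)}
for n, rate in rates.items():
    print(f"n per group = {n:3d}: estimated Type II error rate = {rate:.2f}")
```

With α held fixed, the same violation is missed often in small samples and rarely in large ones, so fixing α alone says little about how severely the assumption was actually tested.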

Using null hypothesis tests to evaluate model assumptions

The standard way in which NHST is used to evaluate a model assumption is by formulating that assumption as a simple null hypothesis, stating that, for example, a set of parameters (e.g., group variances) are exactly the same. While less common, one could also consider using the NHST framework to assess whether a model assumption is approximately satisfied; that is, whether it falls within the bounds of what is considered acceptable for the model when we take the robustness of the model against violations of that assumption into account. Under such an approach, one would consider a composite null hypothesis that states that the differences between those parameters are not too large (e.g., that none of the group variances differ by more than a factor 10) for the model inferences to be seriously affected (see also Berger & Delampady, 1987 ; Hoijtink, 2012 ). While the statistical hypothesis that is tested would differ, the motivation for testing that hypothesis using NHST would be the same: to determine whether inferences based on the model can be trusted. Because of this similarity, the issues concerning the use of NHST for evaluating model assumptions discussed in this paper apply equally to the use of standard null hypothesis tests and approximate null hypothesis tests. 2 Since practically all standard use of NHST for evaluating model assumptions makes use of simple null hypotheses these shall be the focus of most of the article, but the relevance of using approximate null hypotheses is revisited at the end of “ Assessing the plausibility of H 0 using a null hypothesis test ”.

The difficulty lies in the fact that the NHST framework normally informs us that a nonrejection of the null hypothesis does not confirm the null hypothesis. This strict and Fisherian application of the NHST framework (here called ‘strict approach’) only informs us whether the model should be considered to be inappropriate and not whether we can assume that it is correct. While this strict position may be theoretically defensible, its implications are highly restrictive and do not match scientific practice. That is, using only NHST, no model assumption would ever receive any degree of confirmation from the data, and their plausibility would always remain completely uncertain. Since claiming that we never have any evidence in favor of statistical model assumptions would make all statistical inference arbitrary, it is assumed in the remainder of the paper that the application of the NHST framework to model assumptions only makes sense if it allows for some form of confirmation of the null hypothesis, and hence that the strict approach cannot be defended in practice.

One way to avoid the implication that we cannot put any faith in our statistical models is to change the implication of a nonrejection of H 0 : Instead of retaining it, we could decide to accept it. This ‘confirmatory approach’ seems to be implicitly embraced in practice (and in the motivating example), where researchers check their assumptions and in the absence of falsification proceed as though the assumption has been confirmed. It can also be thought to be in line with the decision-oriented methodology of Neyman and Pearson, where after performing a statistical test one of the two hypotheses is accepted. However, for this confirmatory approach to be defensible, it has to be argued that a nonrejection of H 0 should provide us with sufficient reason to accept H 0 , which will be explored in the next section.

Another possible response to the problems of the strict approach to NHST is to abandon the idea that a dichotomous decision needs to be made about the model assumption based on the outcome of the null hypothesis test. Instead, one could decide to always continue using the statistical model, while taking into consideration that a model assumption for which a significant result was obtained may be less plausible than one for which no significant result was obtained. This would mean treating the significance of the test statistic as a dichotomous measure that provides us with some degree of support in favor (i.e., nonsignificant) or against (i.e., significant) the model assumption, which is evidence that we should take into account when determining the extent to which we can rely on inferences based on the model. The feasibility of this ‘evidential approach’ to NHST is also considered in the next section.

Confirmation of model assumptions using NHST

The previous section concluded with two possible adaptations of the NHST framework that could potentially make NHST suitable for the evaluation of model assumptions. However, the possible success of both approaches hinges on whether NHST is able to provide the user with sufficient information to assess the plausibility of the model assumption. This section explores whether this is the case, for which the concept of plausibility will first need to be further defined.

Prior and posterior plausibility of the model assumption

Let us formalize the notion of plausibility by requiring it to take on a value that can range from 0 (completely implausible or certainly wrong) to 1 (completely plausible or certainly right) (see also Jaynes, 1968 , 2003 ). This value represents the degree of plausibility that is assigned to a proposition, for example the proposition ‘ H 0 is true’.

Since model assumptions are arguably either true or false, if we had complete information, there would be no uncertainty about the model assumptions and we would assign a value of either 0 or 1 to the plausibility of a model assumption being true. However, researchers are forced to assess the plausibility of the assumption using incomplete information, and their assessment of the plausibility depends on the limited information that they have and the way in which they evaluate this information. Thus, when we speak of the plausibility of a model assumption, it will always be conditional on the person that is doing the evaluation and the information that she has.

Denote the plausibility of a hypothesis H 0 as it is assessed by a rational and coherent person j by P j ( H 0 ) (see also Jaynes, 2003 ). Such rational and coherent persons may not actually exist, but can serve as idealizations for the way in which we should revise our beliefs in the face of new evidence. Thus, P j ( H 0 ) represents the degree to which person j believes in the proposition ‘ H 0 is true’, and it therefore informs us to what extent person j thinks that it is probable that H 0 is true. In line with the Bayesian literature on statistics and epistemology, this ‘degree of belief’ could also be called the ‘subjective probability’ or ‘personal probability’ that a person assigns to the truth of a proposition (see Savage, 1972 ; Howson & Urbach, 1989 ; Earman, 1992 ; Suppes, 2007 ). As a way of quantifying this degree of belief, we could imagine asking this person how many cents she would be willing to bet on the claim that H 0 is true if she will receive 1 dollar in the case that H 0 is indeed true (Ramsey, 1926 ; Gillies, 2000 ).

It is important to emphasize that this degree of belief need not be arbitrary, in the sense of depending on the subjective whims or preferences of the person (Jeffreys, 1961 ; Howson & Urbach, 1989 ; Lee & Wagenmakers, 2005 ). Rather, we can demand that the belief is rationally constructed on the basis of the set of information that is available to the person and a set of rules of reasoning (Jaynes, 1968 , 2003 ). The idea would be to use methods to elicit the relevant information that a person may have and to translate this information through a carefully designed and objective set of operations into an assessment of the prior plausibility (see also Kadane & Wolfson, 1998 ; O’Hagan, 1998 ; O’Hagan et al., 2006 ). This assessment would only be subjective in the sense that persons might differ in the information that is available to them, and we may require that persons with the same information available to them should reach the same assessment of prior plausibility, making the assessment itself objective.

Let us assume that person j has obtained a data set X —the data to which she hopes to apply the model—and that she wants to determine how plausible it is that H 0 holds after having taken the data into consideration. Let us call her prior assessment P j ( H 0 ) of the plausibility of H 0 before considering the data X the prior plausibility . Since person j wants to determine whether she should trust inferences based on the model, she wants to make use of the information in the data to potentially improve her assessment of the plausibility of H 0 . Thus, to make a better assessment of how plausible H 0 is, she wants to update her prior belief based on the information in the data. Let us call this assessment of the plausibility that has been updated based on the data X person j ’s posterior plausibility, which we denote by P j ( H 0 | X ).

Relevance of prior knowledge about the model assumption

Since both the confirmatory and the evidential approach to NHST posit that our evaluation of the plausibility of H 0 should be based only on the data (see also Trafimow, 2003 ), they tell us that our informed prior beliefs about the possible truth of H 0 should be completely overridden by the data. The idea is that this way the influence of possible subjective considerations is minimized (see also Mayo, 1996 ). Proponents of the NHST framework cannot allow the prior assessment of the plausibility of H 0 to influence the conclusions that are drawn about the plausibility of H 0 without abandoning the idea that the p value contains all the relevant information about the plausibility of H 0 . Hence, it will be assumed that if person j follows NHST-based guidelines in assessing the plausibility of H 0 , P j ( H 0 | X ) will not depend on P j ( H 0 ), but solely depends on the p value.

However, there are clear cases where our assessment of the plausibility of H 0 should depend on our prior knowledge if we are to be consistent. If for some reason we already know the truth or falsehood of H 0 , then basing our assessment of the plausibility purely on the result of a null hypothesis test—with the possibility of a Type I and Type II error, respectively—can only make our assessment of the plausibility of H 0 worse, not better. When we know in advance that a model assumption is wrong, failing to reject it should not in any way influence our assessment of the assumption.

More generally, one can conclude that the less plausible a null hypothesis is on the basis of the background information, the more hesitant we should be to consider it to be plausible if it fails to be rejected. In the context of the motivating example, the researcher may be aware of previous research indicating that boys generally show greater variance in cognitive processing speed than girls. This could give her strong reasons to suspect that boys will also show greater variance on her particular measure than girls. This background information would then be incorporated in her assessment of the plausibility of the assumption of equal variance before she considers the data. In this case, the researcher would assign low prior plausibility (e.g., P j ( H 0 ) = .1 or even P j ( H 0 ) = .01) to the assumption of equal variances. The data may subsequently provide us with relevant information about the model assumption, but this should influence our assessment of the plausibility of H 0 in a way that is consistent with our prior assessment of the plausibility of H 0 . That is, P j ( H 0 | X ) should depend on P j ( H 0 ).

Assessing the plausibility of H 0 using a null hypothesis test

To examine how NHST may help to evaluate the plausibility of a statistical model assumption, let us further examine the hypothetical case of researcher j who wants to apply a statistical model to a data set X , and who wants to evaluate one of the assumptions defining that model. In line with standard practice, let us assume that the model assumption that she evaluates is formulated as a simple null hypothesis, specifying that a parameter has a specific value. For example, this null hypothesis could correspond to the assumption of equal variances that was discussed in the motivating example, in which case $H_0: \delta = 1$, where $\delta = \sigma^2_{\text{boys}} / \sigma^2_{\text{girls}}$.

The researcher has some prior beliefs about the plausibility of this assumption, based on the background information that she has about the particular situation she is dealing with. Since research never takes place in complete isolation from all previous research or substantive theory, the researcher will always have some background knowledge that is relevant for the particular context that she is in. If the researcher assigns either a probability of 0 or 1 to the model assumption being true before observing the data, she will consider statistically testing this hypothesis to be redundant. Thus, if researcher j tests H 0 , we have to assume that

$$0 < P_j(H_0) < 1. \tag{1}$$

Let us also assume that the researcher applies a null hypothesis test to the data X , and that she contrasts H 0 with an alternative simple hypothesis H i (e.g., specifying a specific non-unity ratio for the variances). This procedure results either in a significant or a nonsignificant test statistic. For now, we will assume that the researcher follows the guidelines of the confirmatory approach to NHST. Thus, a significant test statistic results in the researcher rejecting H 0 —the event of which is denoted by R —and a nonsignificant value means that H 0 is accepted—denoted by ¬ R .

For the test statistic to provide some form of justification for accepting (or rejecting) H 0 over H i , it must also be the case that

$$P(R \mid H_0) < P(R \mid H_i). \tag{2}$$

From Eq. 2 it follows that

$$P(\neg R \mid H_0) > P(\neg R \mid H_i). \tag{3}$$

Thus, a failure to reject H 0 is more likely under H 0 than under H i .

For convenience, let us assume that Eq. 2 holds for all possible simple alternatives of H 0 (which are all mutually exclusive and which as a set together with H 0 are exhaustive),

$$P(R \mid H_0) < P(R \mid H_i) \quad \text{for all } i. \tag{4}$$

Equation 4 generally holds for NHST-based tests for model assumptions, such as Levene’s test for equality of variances (Levene, 1960 ). Let us denote the composite hypothesis that is the complement of H 0 by ¬ H 0 . Because the complement incorporates all possible alternatives to H 0 , ¬ H 0 is also known as the ‘catch-all’ hypothesis (Fitelson, 2006 , 2007 ). For the evaluation of model assumptions using NHST, the alternative hypothesis needs to correspond to the catch-all hypothesis, since the two hypotheses together should be exhaustive if we are to assess the plausibility of the assumption.

Our assessment of the probability of obtaining a nonsignificant test statistic under the catch-all hypothesis depends on how plausible we consider each of the possible alternatives to H 0 to be. That is,

$$P_j(\neg R \mid \neg H_0) = \frac{\sum_i P(\neg R \mid H_i)\, P_j(H_i)}{\sum_i P_j(H_i)} = \beta_j, \tag{5}$$

where β j denotes person j ’s assessment of the probability of a Type II error. Thus, β j depends on the person who evaluates it, since P ( R | H i ) will differ for different alternatives H i , and persons may differ with respect to their values for each P j ( H i ). The power to detect a violation of the model assumption under the catch-all hypothesis thus cannot be assessed without considering the prior plausibility of each of the possible alternatives to H 0 . 3 In the context of our motivating example, our assessment of the power of the test depends on what values for σ²_boys and σ²_girls we consider to be plausible. If we expect a large difference between the two variances, we would expect the testing procedure to have a higher power to detect this difference than if we expect a small difference.
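
The dependence of β j on the person can be made concrete with a small sketch. It assumes that the assessed Type II error rate is the prior-weighted average of P (¬ R | H i ) over the simple alternatives. The three alternatives, their nonrejection probabilities, and the two sets of prior weights below are hypothetical values chosen only for illustration.

```python
def assessed_type2_error(nonreject_probs, prior_weights):
    """Prior-weighted average of P(not R | H_i) over the simple alternatives.

    nonreject_probs: P(not R | H_i) for each alternative H_i
    prior_weights:   P_j(H_i) for the same alternatives (need not sum to 1;
                     they are renormalised so that they condition on not-H_0)
    """
    if len(nonreject_probs) != len(prior_weights):
        raise ValueError("need one prior weight per alternative")
    total = sum(prior_weights)
    if total <= 0:
        raise ValueError("prior weights must have a positive sum")
    weighted = sum(p * w for p, w in zip(nonreject_probs, prior_weights))
    return weighted / total


# Hypothetical alternatives: small, medium, and large variance ratios.
# The test rarely detects the small ratio and almost always the large one.
NONREJECT = [0.80, 0.40, 0.05]

# Person A expects small differences; person B expects large ones.
beta_a = assessed_type2_error(NONREJECT, [0.6, 0.2, 0.1])
beta_b = assessed_type2_error(NONREJECT, [0.1, 0.2, 0.6])

print(f"assessed power, person A: {1 - beta_a:.2f}")
print(f"assessed power, person B: {1 - beta_b:.2f}")
```

The same test applied to the same data thus has a different assessed power for the two persons, purely because they weight the alternatives differently.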

Equation 4 implies that

$$P_j(R \mid \neg H_0) = 1 - \beta_j > \alpha = P(R \mid H_0). \tag{6}$$

Equation 6 informs us that the power of the test to detect a violation of the model assumption is larger than the probability of a Type I error given the truth of H 0 . From Eq. 6 it also follows that

$$P(\neg R \mid H_0) = 1 - \alpha > \beta_j = P_j(\neg R \mid \neg H_0). \tag{7}$$

Equation 7 informs us that a nonrejection is more likely under H 0 than under ¬ H 0 . Thus, obtaining a nonsignificant test statistic should increase person j ’s assessment of the plausibility of H 0 ,

$$P_j(H_0 \mid \neg R) > P_j(H_0). \tag{8}$$

Hence, a null hypothesis test can indeed provide some degree of confirmation for the model assumption it evaluates. However, based on Eq. 8 alone, we do not know how plausible H 0 is after a failure to reject it, nor do we know how much more plausible it has become due to this nonrejection.

The degree to which H 0 has become more plausible after having obtained a nonsignificant test statistic can be determined (see also Kass & Raftery, 1995 ; Trafimow, 2003 ) by means of

$$\frac{P_j(H_0 \mid \neg R)}{P_j(\neg H_0 \mid \neg R)} = \frac{1 - \alpha}{\beta_j} \cdot \frac{P_j(H_0)}{P_j(\neg H_0)}. \tag{9}$$

That is, the odds of H 0 versus ¬ H 0 increase by a factor of (1 − α )/ β j after having obtained a nonsignificant test statistic, 4 which depends on our assessment of the power of the procedure. To determine how plausible H 0 is after having obtained a nonsignificant result, we thus cannot avoid relying on a subjective assessment of the power of the test based on what we consider to be plausible alternatives to H 0 .

By combining Eq. 9 with the fact that P j ( H 0 ) = 1 − P j (¬ H 0 ), we can obtain the plausibility of H 0 after having observed a nonsignificant result through

$$P_j(H_0 \mid \neg R) = \frac{(1 - \alpha)\, P_j(H_0)}{(1 - \alpha)\, P_j(H_0) + \beta_j \,\bigl(1 - P_j(H_0)\bigr)}. \tag{10}$$

Equation 10 shows that our conclusion about the plausibility of H 0 should depend on our prior assessment of its plausibility. It also shows that the degree to which H 0 has become more plausible depends on our assessment of the power of the test, which Eq. 5 shows depends on the prior plausibility of the different specific alternatives to H 0 . Thus, it is not possible to assess the degree to which the data support H 0 through NHST alone, and the evidential approach to NHST cannot succeed if it does not take the prior plausibility into account.

Equation 10 also illustrates why the confirmatory approach to NHST cannot provide us with defensible guidelines for accepting or rejecting H 0 . Since the confirmatory approach does not take the prior plausibility of H 0 into account, it has to make a decision about the plausibility of H 0 based on the p value alone (Trafimow, 2003 ). However, the p value only tells us whether the data are consistent with the assumption being true, not whether this assumption is actually likely to be true. That is, a p value only informs us of the probability of obtaining data at least as extreme as the data that were actually obtained conditional on the truth of the null hypothesis, and it does not represent the probability of that hypothesis being true given the data (Wagenmakers, 2007 ).

The fact that in practice the p value is often misinterpreted as the probability that the null hypothesis is true (see e.g., Guilford, 1978 ; Gigerenzer, 1993 ; Nickerson, 2000 ; Wagenmakers & Grunwald, 2005 ; Wagenmakers, 2007 ) already suggests that it is this probability that researchers are often interested in (Gigerenzer, 1993 ). However, because they do not address the prior plausibility of the assumption, both the confirmatory and the evidential approach to NHST are unable to inform the user how plausible it is that the assumption is true after having taken the data into consideration.

Proponents of NHST might argue that we still should avoid the subjective influence introduced by including the prior plausibility in our assessment of model assumptions, and that an objective decision rule based on the confirmatory approach is still acceptable. They might state that we simply have to accept uncertainty about our decision about the model assumption: If we repeatedly use NHST to evaluate model assumptions, we will be wrong a certain proportion of the time, and this is something that simply cannot be avoided. But the problem is that the proportion of times we can expect to be wrong if we simply accept H 0 when the test statistic is not significant also depends on the prior probability of H 0 , as Eq. 10 shows. If our model assumption cannot possibly be true, all failures to reject H 0 are Type II errors, and the decision to accept H 0 will be wrong 100% of the time. Thus, the proportion of times that an accepted null hypothesis is in fact wrong cannot be assessed at all without also assessing the prior plausibility. However, it seems plausible that in the context of evaluating model assumptions, it is exactly this error rate (rather than the Type I and Type II error rates) that is of most importance, as it determines the proportion of times that we end up using an inappropriate statistical model if we rely solely on NHST.

To illustrate the risks of adopting the confirmatory approach to NHST, we can consider the proportion of times that a person following this approach can expect a conclusion that H 0 is true to be incorrect. Adopting the confirmatory approach to NHST would mean accepting H 0 whenever p ≥ α , regardless of its posterior plausibility. If we accept a hypothesis for which we would assess the posterior plausibility to be .70, we should expect such a decision to be wrong 30% of the time (provided the prior plausibility fully matched our prior beliefs). Thus, Eq. 10 can be used to determine the proportion of acceptances of H 0 that one can expect to be incorrect, for a given assessment of the power and prior plausibility.

The impact of the prior plausibility on the proportion of incorrect acceptances of H 0 based on a null hypothesis test ( α = .05) is illustrated in Table 1 . These results show that even with an assessed power (1 − β j ) as high as .90, person j can determine that she should expect to incorrectly accept H 0 about 49% of the time for hypotheses that she a priori considers to be implausible ( P j ( H 0 ) = .1), or even 91% of the time for hypotheses for which P j ( H 0 ) = .01. Thus, while power analysis is important in evaluating the support that the model assumption receives, Table 1 illustrates that a high power (or a claim about severity, Mayo, 1996 ) alone is not sufficient to result in convincing claims that the assumption is plausible enough to be accepted (barring hypothetical cases where the power is 1). Without assessing the prior plausibility, the plausibility of the assumption after having observed the data can have any value between 0 and 1 regardless of the p value that is obtained.

Proportion of acceptances of H 0 based on a null hypothesis test that person j should expect to be wrong, for varying levels of power and prior plausibility ( α = .05)
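
The proportions quoted in the text can be recomputed with a short sketch. It assumes that Eq. 10 has the usual Bayes-rule form P j ( H 0 | ¬ R ) = (1 − α ) P j ( H 0 ) / [(1 − α ) P j ( H 0 ) + β j (1 − P j ( H 0 ))], and that the expected proportion of wrong acceptances is one minus this posterior. The function names are illustrative and do not come from the article.

```python
def posterior_h0_after_nonrejection(prior_h0, power, alpha=0.05):
    """Posterior plausibility of H0 after a nonsignificant test (assumed Eq. 10)."""
    if not 0.0 <= prior_h0 <= 1.0 or not 0.0 <= power <= 1.0:
        raise ValueError("prior_h0 and power must lie in [0, 1]")
    beta = 1.0 - power                      # assessed Type II error rate
    numerator = (1.0 - alpha) * prior_h0    # P(not R | H0) * P(H0)
    denominator = numerator + beta * (1.0 - prior_h0)
    if denominator == 0.0:
        raise ValueError("a nonrejection has probability 0 under these inputs")
    return numerator / denominator


def proportion_wrong_acceptances(prior_h0, power, alpha=0.05):
    """Expected share of acceptances of H0 that are incorrect."""
    return 1.0 - posterior_h0_after_nonrejection(prior_h0, power, alpha)


# Power fixed at .90 and alpha at .05, as in the example discussed in the text.
for prior in (0.5, 0.1, 0.01):
    wrong = proportion_wrong_acceptances(prior, power=0.90)
    print(f"P(H0) = {prior:<4}  expected wrong acceptances: {wrong:.0%}")
```

With a prior of 0 the function returns 1.0, matching the observation that every acceptance of an impossible assumption is a Type II error.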

It is important here to emphasize that model assumptions are often chosen not because they are deemed plausible, but because of their mathematical convenience or their usefulness for developing a statistical model. If there is no substantive theory that backs up these model assumptions with convincing arguments, there is little reason to assume that the model assumptions actually hold exactly, and assigning a potentially very low (possibly 0) prior plausibility to these assumptions may be the only reasonable response. Consequently, testing such a priori implausible exact null hypotheses in the hopes of finding enough support to accept them may often be hopeless, since “[e]ssentially, all models are wrong” (Box & Draper, 1987 , p. 424). Our models try to simplify reality with the goal of representing it in a convenient and useful way, but because of this simplification those models often cannot completely capture the vast complexity of the reality they try to represent. As such, the idea that they are completely correct may in many cases be highly implausible.

Luckily, a model does not need to be exactly correct for its inferences to be useful. Many models are robust to small or even large violations of their assumptions, meaning that inferences made using the model might still be (approximately) correct if the violations of the assumptions are not too severe. This suggests that what researchers should be after in evaluating model assumptions is not confirming an a priori dismissible exact null hypothesis, but rather an approximate null hypothesis (Berger & Sellke, 1987 ; Hoijtink, 2012 ) that specifies an admissible range of values rather than point values for the parameter(s) that relate to the model assumption. Such ‘robust’ approximate null hypotheses might have a much higher prior plausibility, making the effort to determine whether they should be accepted more reasonable. Such hypotheses are also more in line with what researchers are interested in: figuring out whether the model assumption is not violated beyond an acceptable limit. But regardless of whether we formulate our model assumptions in the form of an exact null hypothesis or an approximate null hypothesis, we want to assess the plausibility of the assumptions that we have to make. Hence, regardless of the precise specification of the null hypothesis, the prior plausibility of that assumption needs to be assessed.

Revisiting the motivating example

Since relying on NHST for the evaluation of the assumption of equal variances will not provide her with a sufficient assessment of the plausibility of that assumption, our researcher could decide to make use of statistical methods that do provide her with the information she needs. One useful and accessible method for evaluating this assumption has been proposed by Böing-Messing, van Assen, Hofman, Hoijtink, & Mulder ( 2017 ), who make use of a Bayesian statistical framework to evaluate hypotheses about group variances. Their method allows users to contrast any number of hypotheses about the relevant group variances. For each pair of hypotheses, their procedure calculates a Bayes factor, which captures the degree of support that the data provide in favor of one hypothesis over the other (Kass & Raftery, 1995 ). The Bayes factor can range from 0 to infinity, with values far removed from 1 indicating strong evidence in favor of one of the hypotheses, and values close to 1 indicating that the data do not strongly favor one hypothesis over the other. Such a continuous measure of support is exactly what is needed to be able to update one’s assessment of the plausibility of a hypothesis on the basis of new empirical evidence (Morey, Romeijn, & Rouder, 2016 ).

Before the procedure can be applied, the researcher has to decide on which hypotheses to consider. Since the procedure has not yet been extended to cover approximate null hypotheses, the focus will here be on evaluating the exact null hypothesis of equal variances, contrasting H 0 : σ²_boys = σ²_girls with its complement. She then needs to assess, based on her background knowledge, how plausible she takes both of these hypotheses to be. If she has strong background information indicating that the variances are likely not equal, while still not dismissing equality entirely, she could specify P j ( H 0 ) = .1 and P j (¬ H 0 ) = .9. If she instead believed that there was no specific reason to suspect the assumption to be violated, choosing P j ( H 0 ) = .5 and P j (¬ H 0 ) = .5 (the default option offered by the procedure) might make sense to her. The benefit of the procedure of Böing-Messing et al. is that regardless of the choice of prior probabilities for the hypotheses, the Bayes factor that is obtained remains the same and provides an objective summary of the degree to which the data support one hypothesis over the other.

In this case, a Bayes factor of 1.070 is obtained, indicating that the data are almost equally likely to be observed under H 0 as under ¬ H 0 , and hence the data do not provide any real evidence in favor of or against H 0 . Consequently, the posterior plausibility of H 0 hardly differs from the prior plausibility: P j ( H 0 | X ) = .106 if our researcher used P j ( H 0 ) = .1. 5 Thus, if she were skeptical about whether the model assumption held beforehand, she will remain skeptical about it after seeing the data. This is in stark contrast to the conclusion that she was led to using the confirmatory NHST approach, where the nonsignificant p value that was obtained led to an acceptance of H 0 . Thus, using this Bayesian approach in which she was able to take her background information into account, she has to conclude that there is little reason to accept that the model assumption holds, and has to question whether she should rely on inferences obtained based on models that assume equal variances. Developing more elaborate Bayesian procedures that allow for approximate hypotheses could be helpful here for assessing whether the assumption at least approximately holds. It may also be helpful for her to consider an estimation framework and attempt to assess the severity of the violation, as this could help her decide whether the model can still be relied upon to some degree.
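
The step from the Bayes factor to the posterior plausibility can be sketched as follows. The sketch assumes that the reported value of 1.070 is the Bayes factor of H 0 over ¬ H 0 (which is consistent with the posterior being slightly above the prior) and that the posterior odds equal the Bayes factor times the prior odds. The function name is illustrative.

```python
def posterior_from_bayes_factor(prior_h0, bf_01):
    """Posterior probability of H0 given its prior and the Bayes factor BF_01.

    bf_01 is assumed to express support for H0 over its complement.
    The prior must lie strictly between 0 and 1, so that testing is not redundant.
    """
    if not 0.0 < prior_h0 < 1.0:
        raise ValueError("prior_h0 must lie strictly between 0 and 1")
    if bf_01 < 0.0:
        raise ValueError("a Bayes factor cannot be negative")
    prior_odds = prior_h0 / (1.0 - prior_h0)
    posterior_odds = bf_01 * prior_odds          # Bayes' rule in odds form
    return posterior_odds / (1.0 + posterior_odds)


skeptical = posterior_from_bayes_factor(0.1, 1.070)   # strong prior doubt about H0
neutral = posterior_from_bayes_factor(0.5, 1.070)     # default equal prior probabilities

print(f"prior .1 -> posterior {skeptical:.3f}")
print(f"prior .5 -> posterior {neutral:.3f}")
```

Under either prior the posterior moves only slightly, which is the sense in which a Bayes factor close to 1 leaves the researcher about where she started.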

Conclusions