
Exploratory Research | Definition, Guide, & Examples

Published on December 6, 2021 by Tegan George. Revised on November 20, 2023.

Exploratory research is a methodological approach that investigates research questions that have not previously been studied in depth.

Exploratory research is often qualitative and primary in nature. However, a study with a large sample conducted in an exploratory manner can be quantitative as well. It is also often referred to as interpretive research or a grounded theory approach due to its flexible and open-ended nature.

Table of contents

- When to use exploratory research

- Exploratory research questions

- Exploratory research data collection

- Step-by-step example of exploratory research

- Exploratory vs. explanatory research

- Advantages and disadvantages of exploratory research

- Other interesting articles

- Frequently asked questions about exploratory research

When to use exploratory research

Exploratory research is often used when the issue you’re studying is new or when the data collection process is challenging for some reason.

You can use this type of research if you have a general idea or a specific question that you want to study, but there is no preexisting knowledge or paradigm with which to study it.


Exploratory research questions

Exploratory research questions are designed to help you understand more about a particular topic of interest. They can help you connect ideas to understand the groundwork of your analysis without adding any preconceived notions or assumptions yet.

Here are some examples:

- What effect does using a digital notebook have on the attention span of middle schoolers?

- What factors influence mental health in undergraduates?

- What outcomes are associated with an authoritative parenting style?

- In what ways does the presence of a non-native accent affect intelligibility?

- How can the use of a grocery delivery service reduce food waste in single-person households?

Exploratory research data collection

Collecting information on a previously unexplored topic can be challenging. Exploratory research can help you narrow down your topic and formulate a clear hypothesis and problem statement, as well as give you the “lay of the land” on your topic.

Data collection using exploratory research is often divided into primary and secondary research methods, with data analysis following the same model.

Primary research

In primary research, your data is collected directly from primary sources: your participants. There is a variety of ways to collect primary data.

Some examples, drawn from a study of whether students would eat vegan meals in the dining hall, include:

- Survey methodology: Sending a survey out to the student body asking them if they would eat vegan meals

- Focus groups: Compiling groups of 8–10 students and discussing what they think of vegan options for dining hall food

- Interviews: Interviewing students entering and exiting the dining hall, asking if they would eat vegan meals

Secondary research

In secondary research, your data is collected from preexisting primary research, such as experiments or surveys.

Some other examples include:

- Case studies: Health of an all-vegan diet

- Literature reviews: Preexisting research about students’ eating habits and how they have changed over time

- Online polls, surveys, blog posts, or interviews; social media: Have other schools done something similar?

For some subjects, it’s possible to use large-n government data, such as the decennial census or yearly American Community Survey (ACS) open-source data.

Step-by-step example of exploratory research

How you proceed with your exploratory research design depends on the research method you choose to collect your data. In most cases, you will follow five steps.

We’ll walk you through the steps using the following example.

Suppose you would like to focus on improving a language learner’s intelligibility instead of reducing their accent.

Step 1: Identify your problem

The first step in conducting exploratory research is identifying what the problem is and whether this type of research is the right avenue for you to pursue. Remember that exploratory research is most advantageous when you are investigating a previously unexplored problem.

Step 2: Hypothesize a solution

The next step is to come up with a solution to the problem you’re investigating. Formulate a hypothetical statement to guide your research.

Step 3: Design your methodology

Next, conceptualize your data collection and data analysis methods and write them up in a research design.

Step 4: Collect and analyze data

Next, you proceed with collecting and analyzing your data so you can determine whether your preliminary results are in line with your hypothesis.

In most types of research, you should formulate your hypotheses a priori and refrain from changing them due to the increased risk of Type I errors and data integrity issues. However, in exploratory research, you are allowed to change your hypothesis based on your findings, since you are exploring a previously unexplained phenomenon that could have many explanations.

Step 5: Avenues for future research

Decide if you would like to continue studying your topic. If so, it is likely that you will need to change to another type of research. As exploratory research is often qualitative in nature, you may need to conduct quantitative research with a larger sample size to achieve more generalizable results.

Prevent plagiarism. Run a free check.

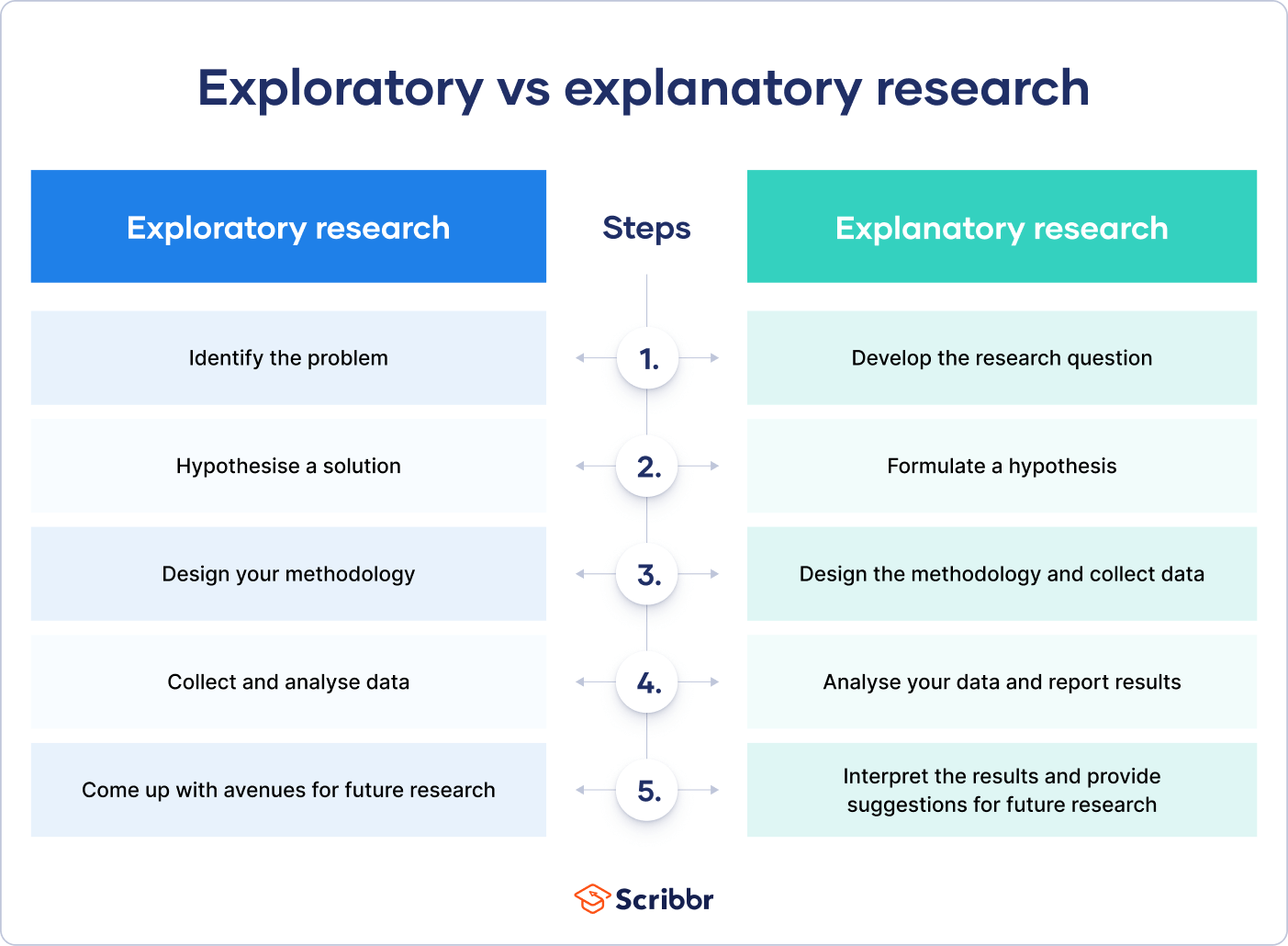

Exploratory vs. explanatory research

It can be easy to confuse exploratory research with explanatory research. To understand the relationship, it can help to remember that exploratory research lays the groundwork for later explanatory research.

Exploratory research investigates research questions that have not been studied in depth. The preliminary results often lay the groundwork for future analysis.

Explanatory research questions tend to start with “why” or “how”, and the goal is to explain why or how a previously studied phenomenon takes place.

Advantages and disadvantages of exploratory research

Like any other research design, exploratory studies have their trade-offs: they provide a unique set of benefits but also come with downsides.

Advantages

- It can be very helpful in narrowing down a challenging or nebulous problem that has not been previously studied.

- It can serve as a great guide for future research, whether your own or another researcher’s. With new and challenging research problems, adding to the body of research in the early stages can be very fulfilling.

- It is very flexible, cost-effective, and open-ended. You are free to proceed however you think is best.

Disadvantages

- It usually lacks conclusive results, and results can be biased or subjective due to a lack of preexisting knowledge on your topic.

- It’s typically not externally valid and generalizable, and it suffers from many of the challenges of qualitative research.

- Since you are not operating within an existing research paradigm, this type of research can be very labor-intensive.

Other interesting articles

If you want to know more about statistics, methodology, or research bias, make sure to check out some of our other articles with explanations and examples.

Statistics

- Normal distribution

- Degrees of freedom

- Null hypothesis

Methodology

- Discourse analysis

- Control groups

- Mixed methods research

- Non-probability sampling

- Quantitative research

- Ecological validity

Research bias

- Rosenthal effect

- Implicit bias

- Cognitive bias

- Selection bias

- Negativity bias

- Status quo bias

Frequently asked questions about exploratory research

Exploratory research is a methodological approach that explores research questions that have not previously been studied in depth. It is often used when the issue you’re studying is new, or the data collection process is challenging in some way.

Exploratory research aims to explore the main aspects of an under-researched problem, while explanatory research aims to explain the causes and consequences of a well-defined problem.

You can use exploratory research if you have a general idea or a specific question that you want to study, but there is no preexisting knowledge or paradigm with which to study it.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

Cite this Scribbr article


George, T. (2023, November 20). Exploratory Research | Definition, Guide, & Examples. Scribbr. Retrieved March 18, 2024, from https://www.scribbr.com/methodology/exploratory-research/


Exploratory analyses in aetiologic research and considerations for assessment of credibility: mini-review of literature


- Kim Luijken, doctoral student¹

- Olaf M Dekkers, professor¹

- Frits R Rosendaal, professor¹

- Rolf H H Groenwold, professor¹,²

- ¹ Department of Clinical Epidemiology, Leiden University Medical Centre, Leiden, Netherlands

- ² Department of Biomedical Data Sciences, Leiden University Medical Centre, Leiden, Netherlands

- Correspondence to: K Luijken k.luijken{at}umcutrecht.nl

- Accepted 22 March 2022

Objective To provide considerations for reporting and interpretation that can improve assessment of the credibility of exploratory analyses in aetiologic research.

Design Mini-review of the literature and account of exploratory research principles.

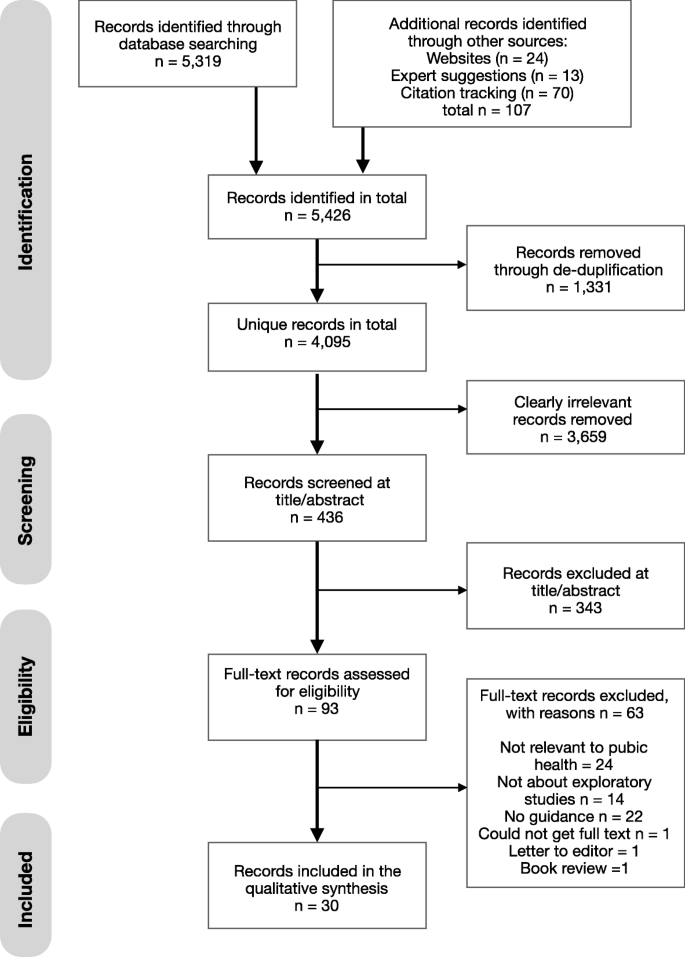

Setting This study focuses on a particular type of causal research, namely aetiologic studies, which investigate the causal effect of one or multiple risk factors on a particular health outcome or disease. The mini review included aetiologic research articles published in four epidemiology journals in the first issue of 2021: American Journal of Epidemiology , Epidemiology , European Journal of Epidemiology , and International Journal of Epidemiology , specifically focusing on observational studies of causal risk factors of diseases.

Main outcome measures Number of exposure-outcome associations reported, grouped by type of analysis (main, sensitivity, and additional).

Results The journal articles reported many exposure-outcome associations: a mean number of 33 (range 1-120) exposure-outcome associations for the primary analysis, 30 (0-336) for sensitivity analyses, and 163 (0-1467) for additional analyses. Six considerations were discussed that are important in assessing the credibility of exploratory analyses: research problem, protocol, statistical criteria, interpretation of findings, completeness of reporting, and effect of exploratory findings on future causal research.

Conclusions Based on this mini-review, exploratory analyses in aetiologic research were not always reported properly. Six considerations for reporting of exploratory analyses in aetiologic research were provided to stimulate a discussion about their preferred handling and reporting. Researchers should take responsibility for the results of exploratory analyses by clearly reporting their exploratory nature and specifying which findings should be investigated in future research and how.

Introduction

Reports of aetiologic studies often have results of multiple exploratory analyses, with the aim of identifying topics for future research. Although this form of reporting might seem reasonable, it is not without risk, because compared with the results of a confirmatory study, assessing the credibility of exploratory findings is generally more complicated.

The origin of exploratory data analysis can be traced back at least to Tukey in the 1960s and 1970s, 1 2 who encouraged statisticians to develop visualisation techniques for representing and capturing structures in datasets to establish new research questions. These new research questions should subsequently be answered with independent datasets (often termed confirmatory analysis). For example, when a new biomarker is thought to be part of a known causal pathway, performing a small preparatory exploratory study before conducting a full-blown large cohort study seems worthwhile, because the cohort study is expensive and requires a large investment of resources. Similarly, if a known exposure-outcome effect is thought to vary across subgroups of the population, exploring this idea first before embarking on confirmative analyses of effect heterogeneity seems appropriate.

Even when researchers consider an analysis to be exploratory, a hypothesis is easily promoted to a fact. For example, findings in journal articles can be exaggerated into more certain statements in press releases and news articles. 3 In medical science in particular, where results are sometimes quickly implemented in clinical practice, researchers should take responsibility for the results they report. The Hippocratic oath (“First, do no harm”) applies as well to medical research as it does to clinical practice.

In this paper, we discuss issues that complicate the interpretation of exploratory analyses in causal studies. Causal research can refer to different types of research, such as randomised studies or intervention studies. We do not address these studies in this paper; we focus on aetiologic research, in which causes of disease are investigated. Specifically, the causal effect of risk factors on a health outcome or disease is studied, typically in an observational setting. We provide practical pointers for researchers on how to report exploratory analyses in aetiologic research and how to clarify what the exploratory results imply for future research and implementation in practice. We hope to encourage a discussion about the preferred handling and reporting of these analyses.

Exploratory analyses in aetiologic research

The term exploratory analysis typically refers to analyses for which the hypothesis was not specified before the data analysis. 4 Considering exploratory analyses in a broader sense, however, is probably more relevant in aetiologic research, because of the observational data and clustering of analyses within cohorts. We use the term exploratory analyses here to indicate analyses that are initial and preliminary steps towards solving a research problem. Exploratory analyses are often conducted in addition to planned primary analyses of a study. We do not consider sensitivity analyses, where the main hypothesis is evaluated under different assumptions, to be exploratory in this paper. We also do not consider outcomes that are evaluated as a secondary objective but are correlated with the primary outcome to be exploratory, because these analyses contribute to the investigation of the primary research question. Genome-wide association studies, where the exploratory nature of analyses is commonly accounted for by looking at multiple testing, 5 are beyond the scope of this paper.

Mini-review and overview of existing reporting guidance

Before we discuss considerations about the reporting of exploratory aetiologic studies, we wanted to illustrate some of the aspects of exploratory studies that need explicit reporting. Hence we performed a small review of published aetiologic studies. We identified all articles on original research in four journals in their first issue of 2021: American Journal of Epidemiology , Epidemiology , European Journal of Epidemiology , and International Journal of Epidemiology . We excluded studies that did not look at an aetiologic research question, such as prediction studies, studies on therapeutic interventions, and randomised trials. For each article, we counted the number of primary analyses, sensitivity analyses, and additional analyses that were performed. The unit of counting was the association estimator, where we counted only one association if the association was reported on different scales (eg, absolute and relative scales for binary endpoints).

Also, we reviewed existing reporting guidance documents on aspects relevant to exploratory analyses, specifically the STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) statement, 6 RECORD (REporting of studies Conducted using Observational Routinely collected health Data) statement, 7 STROBE-MR (Strengthening the Reporting of Observational Studies in Epidemiology Using Mendelian Randomisation) for mendelian randomisation studies, 8 STREGA (Strengthening the Reporting of Genetic Association Studies) for genetic association studies, 9 and the CONSORT (Consolidated Standards of Reporting Trials) extension to randomised pilot and feasibility trials. 10

Patient and public involvement

Involving patients or the public in the design, conduct, reporting, or dissemination plans of our research was not appropriate or possible.

Mini-review

The mini-review included 25 original aetiologic articles. These articles reported a mean number of 33 (range 1-120) exposure-outcome associations for the primary analysis, 30 (0-336) for sensitivity analyses, and 163 (0-1467) for additional analyses, mainly concerning subgroup or interaction analyses (supplementary file). Most articles did not explicitly report which analyses were prespecified, and only one study referred to a publicly available protocol. 11 The methodological scrutiny of the subgroup analyses varied from thoughtful evaluations of exposure effect heterogeneity in well-established subgroups to evaluations of exposure effects across subgroups that seemed to have been formed exhaustively across many potential risk factors. Although our review included only a small sample of studies, the picture that emerges is that many results were presented and that insufficient information was reported to fully judge the validity and merits of the results.

Existing reporting guidance

The STROBE 6 and RECORD 7 statements provide checklists of items to report in observational studies that are relevant to exploratory analyses (table 1). Extensions of STROBE, such as STROBE-MR 8 and STREGA, 9 provide additional guidance for reporting of studies where many analyses are performed. Guidance for reporting randomised trials also provides helpful information for reporting exploratory analyses in aetiologic research, in particular the CONSORT extension to randomised pilot and feasibility trials. 10 Not all of these recommendations can be directly applied to observational aetiologic studies, however, because the procedures for generating and testing of hypotheses are more established in randomised studies than in observational settings.

Table 1: Considerations for reporting of exploratory aetiologic research

Exploratory research principles

Inspired by the existing recommendations for reporting, we list six considerations for reporting and interpretation that can improve the assessment of the credibility of exploratory analyses in aetiologic research (table 1). The list is not exhaustive, but we hope it will encourage further discussion on the reporting of exploratory research.

Consideration 1: explicitly state the objective of all analyses, including exploratory analyses

Stating the objective of an aetiologic study clarifies how to interpret the results. The objectives of confirmatory aetiologic research ideally contain a well-defined targeted effect of a specific aetiologic factor on a specific outcome in a specific population. 13 14 In early discovery research, objectives are not always rigorously defined but could be specified more generally (eg, understanding the origin of a particular outcome). An implication of stating the objective in general terms, however, is that the methodological handling of the analysis becomes less clear and the number of researcher degrees of freedom becomes large. 15 Consequently, interpreting results without deriving spurious (causal) conclusions requires thought and effort because the analysis does not necessarily provide information towards a causal effect (see consideration 4). 16 17 18 The more generally an objective is stated, the more provisional the analysis becomes. This caveat also applies to machine learning approaches in which no explicit causal modelling assumptions are made.
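Researcher degrees of freedom multiply: each analytical choice left open by a general objective combines with every other one. The sketch below enumerates the resulting grid for three hypothetical choices (outcome definition, adjustment set, exclusion rule); the options are placeholders for illustration, not taken from the article.

```python
from itertools import product

# Hypothetical analytical choices left open by a loosely stated objective
outcome_definitions = ["any event", "first event", "event within 5 years"]
adjustment_sets = ["age, sex", "age, sex, BMI", "age, sex, smoking", "age, sex, BMI, smoking"]
exclusion_rules = ["none", "exclude prevalent cases"]


def enumerate_specifications(*choice_sets):
    """Return every combination of analytical choices (one option per choice set)."""
    return list(product(*choice_sets))


specs = enumerate_specifications(outcome_definitions, adjustment_sets, exclusion_rules)
print(len(specs), "candidate analyses")
for spec in specs[:3]:
    print(spec)
```

Three modest choices already yield 3 × 4 × 2 = 24 defensible analyses of the same general question. A specific objective narrows this grid, and any specifications that are actually run belong in the report (see consideration 5).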

Because exploratory analyses in aetiologic research often aim to inform a future in-depth causal analysis, reporting both the objective of the provisional exploratory analysis and the (future) confirmatory analysis is important. This reporting is in line with the CONSORT reporting checklist for pilot randomised controlled trials which recommends that researchers state the objective of the eventual trial in the manuscript of a pilot study. 10 The rationale and need for the exploratory analysis in aetiologic research should be outlined together with uncertainties that need to be dealt with before performing an independent confirmative analysis of the causal mechanism. Reporting the position of provisional analyses relative to future research clarifies the level of credibility of the findings from exploratory analyses.

Consideration 2: establish a study protocol before data analysis and make the protocol available to readers

Preregistered protocols help distinguish which analyses were planned before observing the data and which analyses were performed post hoc, thereby avoiding hypothesising after the results are known. For randomised trials, preregistration of the study protocol is considered the norm. 19 Preregistration does not seem as widespread in observational aetiologic research, but is increasingly encouraged, 20 21 and explicitly recommended in the RECORD reporting checklist. 7 Because aetiologic research often uses existing cohort data that have been analysed for related research questions, preregistration of aetiologic studies does not ensure the same level of credibility of statistical evidence as preregistration before collecting the data.

Nosek and colleagues 22 have provided preliminary guidance on preregistration of analyses conducted with existing data. These authors suggest that what was known in advance about the dataset should be transparently reported so that the credibility of statistical findings can be assessed, taking into account analyses that have been performed previously. Implementing this advice is probably challenging in large epidemiological cohort studies because of the many analyses that might have been performed. But trying to clarify why and how an analysis is conducted before observing the data is a laudable practice that can be implemented directly in aetiologic studies. This practice is ideally accompanied by work on developing guidance for preregistration of aetiologic studies that use existing data.

Preregistration of analyses that are exploratory in nature is even less common, possibly because preregistering an exploration seems to contradict the very idea of exploring. We consider exploratory analysis, however, as discovery work that serves to motivate funding for larger studies that are, for example, better able to control confounding or to collect data rigorously. Given this important probing role, simply stating in a research protocol that certain relations will be explored is not enough; time and effort must be invested in designing the analysis appropriately. Not every detail can be specified in advance, but interpreting the results can be challenging and unintentionally overconfident when no question was clearly articulated before seeing the answer.

Consideration 3: do not base judgments on significance values only

Only reporting the results of analyses that provided a P value below the prespecified α level (eg, 0.05) is discouraged throughout all scientific disciplines (for example, as discussed in a 2019 supplementary issue of The American Statistician). 23 Avoiding selective reporting based on significance values is particularly relevant to exploratory findings because the statistical properties of exploratory tests are less well known than those of confirmatory tests. 24 For example, the expected number of false positives (that is, the type I error rate) is probably increased when the choice for a statistical test was based on patterns in the observed data. Although procedures have been developed for correction of multiple testing in confirmatory settings, consensus on how to prevent false positive findings in exploratory settings has not yet been established. 24 25 26
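A back-of-the-envelope calculation shows how quickly false positives accumulate when many tests are run and only "significant" ones are reported. This sketch assumes m independent tests of hypotheses that are all truly null, each at α = 0.05; real exploratory analyses are usually correlated and often chosen after looking at the data, so the numbers are illustrative only. The value m = 163 is borrowed from the mean number of additional analyses per article in the mini-review.

```python
def expected_false_positives(m, alpha=0.05):
    """Expected number of P values below alpha among m true-null tests."""
    return m * alpha


def prob_any_false_positive(m, alpha=0.05):
    """Probability of at least one P value below alpha among m independent
    true-null tests (the familywise error rate without correction)."""
    return 1 - (1 - alpha) ** m


for m in (1, 20, 163):
    print(f"m={m}: expected false positives {expected_false_positives(m):.2f}, "
          f"P(at least one) {prob_any_false_positive(m):.3f}")
```

With 20 tests the chance of at least one false positive is already about 64%, and with 163 tests about 8 false positives would be expected even if no association were real.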

Increasing the number of exploratory analyses, without correction for multiple testing, raises the risk of deriving false positive conclusions, but overly strict correction for multiple testing increases the probability of false negative findings (that is, the type II error rate). 27 A raised type II error rate could occur, for example, when an analysis of various positively correlated hypotheses is corrected for multiple testing as if all of the hypotheses were independent (eg, by applying a Bonferroni correction). The decision to statistically correct for multiple testing depends, among other issues, on the total number of tests performed in the same dataset, correlation between the hypotheses being tested, and sample size. Reporting each of these considerations clarifies the analytical context of findings and helps to assess the credibility of the results. This form of reporting is in line with the STROBE-MR 8 and STREGA 9 checklists, which recommend stating how multiple comparisons were managed, although recommendations for the handling of multiple testing seem more established in genome-wide association studies than in clinical aetiologic cohort studies. 5
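The effect of correlation on a Bonferroni correction can be checked with a Monte Carlo sketch. It assumes m = 10 z-statistics that are standard normal under the null and share a common pairwise correlation ρ (an equicorrelated model chosen for simplicity); the function name and settings are illustrative, not from the article.

```python
import math
import random
from statistics import NormalDist


def bonferroni_fwer(m, rho, alpha=0.05, n_sim=20000, seed=1):
    """Monte Carlo estimate of the familywise error rate of a two-sided
    Bonferroni correction when all m null hypotheses are true and the m
    z-statistics share a common pairwise correlation rho (0 <= rho <= 1)."""
    rng = random.Random(seed)
    # Two-sided critical value at the Bonferroni-adjusted level alpha / m
    z_crit = NormalDist().inv_cdf(1 - alpha / (2 * m))
    w_shared, w_own = math.sqrt(rho), math.sqrt(1 - rho)
    runs_with_false_positive = 0
    for _ in range(n_sim):
        common = rng.gauss(0, 1)  # component shared by all m statistics
        zs = (w_shared * common + w_own * rng.gauss(0, 1) for _ in range(m))
        if any(abs(z) > z_crit for z in zs):
            runs_with_false_positive += 1
    return runs_with_false_positive / n_sim


for rho in (0.0, 0.5, 0.9):
    print(f"rho={rho}: Bonferroni familywise error rate ~ {bonferroni_fwer(10, rho):.3f}")
```

Under independence the estimate should be close to 1 - (1 - 0.005)^10, or about 0.049. With strongly correlated statistics it drops well below the nominal 0.05, meaning the correction is stricter than needed and power is lost.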

Consideration 4: interpret findings in line with the nature of the analysis

Interpreting and communicating results in line with the exploratory nature of an analysis is challenging because an accurate representation of the degree of tentativeness of the results is required. Assessing this degree of tentativeness based on only the results of an analysis (that is, based on the numerical estimates) is complicated because seemingly convincing results can be misleading and a clinical explanation can be found that does not follow from the statistical evidence. 28 29 Cognitive biases, such as hindsight bias, can distort the interpretation of findings.

Reporting of findings from exploratory analyses starts with indicating whether the analysis was planned before or after observing the data, which is recommended in the CONSORT extension to randomised pilot and feasibility trials. 10 Results of exploratory analyses can be interpreted by focusing on what is reported about the objectives and applied methodology rather than overstepping the findings. The specificity with which findings are interpreted should match the generality with which the objective is stated (see consideration 1). 16 17 18 For example, when various subgroup analyses are performed with the general aim of identifying possible subgroups from the available data where an exposure effect was different, researchers should report that many subgroups were explored, including characterisation of the subgroups and description of the presence or absence of effect heterogeneity, rather than discussing only one or two specific subgroups where the effect size was extreme. Furthermore, exploratory analyses often fail to support strong conclusions. Recommendations for clinical practice or generalisations based on exploratory analyses should generally be avoided.
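Why discussing only the most extreme subgroups misleads can be shown with a simple simulation (an illustration, not the authors' analysis). It assumes a hypothetical exposure with the same true effect, 0.2 on an arbitrary scale, in every one of 10 subgroups, and subgroup estimates that scatter around that value with a standard error of 0.1.

```python
import random
import statistics


def extreme_subgroup_bias(true_effect=0.2, n_subgroups=10, se=0.1, n_sim=5000, seed=3):
    """Compare the average of all subgroup estimates with the average of the
    single largest subgroup estimate when the true effect is identical in
    every subgroup (no effect heterogeneity)."""
    rng = random.Random(seed)
    mean_of_all, largest = [], []
    for _ in range(n_sim):
        estimates = [rng.gauss(true_effect, se) for _ in range(n_subgroups)]
        mean_of_all.append(statistics.fmean(estimates))
        largest.append(max(estimates))
    return statistics.fmean(mean_of_all), statistics.fmean(largest)


avg_all, avg_largest = extreme_subgroup_bias()
print(f"average over all subgroups: {avg_all:.3f}; largest subgroup only: {avg_largest:.3f}")
```

The expected maximum of 10 standard normal draws is about 1.54, so the largest subgroup estimate should average roughly 0.2 + 1.54 × 0.1, or about 0.35, even though every subgroup's true effect is 0.2. Reporting all subgroups keeps this selection effect visible to readers.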

Consideration 5: report (summarised) results of all exploratory analyses that were performed

When findings are selectively reported, especially when reporting is guided by significant findings (see consideration 3), the credibility of reported findings is probably overstated. 30 Reporting the results of all of the exploratory analyses that were conducted (possibly in a supplementary file) provides a transparent and honest report of the analysis and facilitates better interpretation of the findings. This approach is in line with the STROBE extension in STREGA, which recommends that all results of analyses should be presented, even if numerous analyses were undertaken. 9

Reporting all analyses that have been conducted seems simple, but can be challenging in practice, mainly because the process of performing a study is typically iterative. A framework for initial data analysis by Huebner and colleagues could help keep track of all subanalyses that are conducted as part of a main analysis. 31 This framework distinguishes exploratory analyses that are part of a primary analysis from additional exploratory analyses that require separate reporting. Another helpful practice could be to have a reflection period after performing analyses to establish whether the analyses look at (slightly) different research questions and to report separate analyses for each research question.
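One lightweight way to keep a running inventory of every analysis is a structured log filled in as analyses are run. The sketch below is hypothetical and is not the Huebner framework; the field names and the three analysis types (mirroring the primary, sensitivity, and additional categories counted in the mini-review) are assumptions.

```python
from collections import Counter
from dataclasses import dataclass, field

ANALYSIS_TYPES = {"primary", "sensitivity", "additional"}


@dataclass(frozen=True)
class AnalysisRecord:
    label: str               # short identifier, e.g. a script or model name
    research_question: str   # the question this analysis addresses
    analysis_type: str       # one of ANALYSIS_TYPES
    prespecified: bool       # True if planned before the data were inspected

    def __post_init__(self):
        if self.analysis_type not in ANALYSIS_TYPES:
            raise ValueError(f"unknown analysis type: {self.analysis_type!r}")


@dataclass
class AnalysisLog:
    records: list = field(default_factory=list)

    def add(self, record: AnalysisRecord) -> None:
        self.records.append(record)

    def summary(self) -> Counter:
        """Count analyses by research question, type, and whether they were planned."""
        return Counter(
            (r.research_question, r.analysis_type,
             "prespecified" if r.prespecified else "post hoc")
            for r in self.records
        )


# Hypothetical usage
log = AnalysisLog()
log.add(AnalysisRecord("main_model", "Exposure X and outcome Y", "primary", True))
log.add(AnalysisRecord("age_subgroups", "Exposure X and outcome Y", "additional", False))
log.add(AnalysisRecord("sex_subgroups", "Exposure X and outcome Y", "additional", False))
for key, count in sorted(log.summary().items()):
    print(key, count)
```

Grouping by research question supports the reflection step described above: analyses that turn out to address a different question can be split off and reported separately, and the planned versus post hoc flag can be carried straight into the supplementary file.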

Consideration 6: accompany exploratory analyses by a proposed research agenda

The credibility of exploratory findings can be communicated through a research agenda prioritising future research and how this research should be set up. Reporting a research agenda is similar to the CONSORT extension to randomised pilot and feasibility trials that recommends reporting which and how future confirmative trials can be informed by the pilot study. 10

Formulating a research agenda allows researchers to take responsibility for the exploratory findings presented and future research that should be performed, avoiding the empty statement that “more research is needed”. In medical science in particular, where study results are sometimes quickly implemented into clinical practice, researchers are encouraged to take responsibility for the results they report by clearly explaining which exploratory findings should be investigated in future research and how.

Our mini-review showed that exploratory analyses in aetiologic research were not always reported optimally. The credibility of exploratory results is affected by a combination of the theoretical rationale for the analysis, clarity of the defined research problem, applied methodology, and degree to which analytical decisions are driven by the data. Choosing a particular analysis based on observed patterns in the data complicates statistical inferences. Moreover, the design and methods applied in an exploratory analysis might be less optimal than the primary analysis of the study, which further complicates interpretation of exploratory analyses. Therefore, information on these aspects should be clearly reported.

Exploration is essential to the progress of science. Strict confirmatory studies are a powerful mechanism for final evaluations before implementation in clinical practice, but will probably not stimulate new ideas. 32 33 Open-minded exploratory analyses can lead to unexpected discoveries and resourceful innovations of epidemiological science, but effort is required to accurately interpret the results. Because exploratory analyses are usually done to generate new research questions, quickly performing a statistical test (or multiple tests) to get the first answer to the problem is tempting. When quick test results are presented in a research article, however, their interpretation might be ad hoc and unintentionally overconfident.

To show their full value, exploratory analyses of aetiologic research need to be conducted and interpreted correctly. We have provided six considerations for reporting of exploratory analyses to encourage a discussion on exploratory analyses and how the credibility of these analyses is ideally assessed in aetiologic research. Continuation of this discussion will contribute to the understanding of inferences that can be made from exploratory analyses in aetiologic research and will help strike a balance between their opportunities and risks.

What is already known on this topic

Exploratory analyses in aetiologic research are initial steps towards solving a research problem and are often conducted in addition to planned primary analyses of a study

Exploratory analyses might lead to new discoveries in aetiologic research, but effort is needed to accurately interpret the results because these analyses are often conducted with few data resources and insufficient adjusting for confounding

Statistical properties of exploratory tests are less well known than those of confirmatory tests

What this study adds

This study focuses on a particular type of causal research, namely aetiologic studies, which investigate the causal effect of one or multiple risk factors on a particular health outcome or disease

Six considerations for reporting of exploratory analyses in aetiologic research were provided to stimulate a discussion about their preferred handling and reporting

Researchers should take responsibility for results of exploratory analyses by clearly reporting their exploratory nature and specifying which findings should be investigated in future research and how

Ethics statements

Ethical approval.

Not required.

Data availability statement

No additional data available.

Contributors: KL was involved in the conceptualisation, investigation, methodology, visualisation, and writing (original draft) of the article. OMD was involved in the conceptualisation, investigation, methodology, and writing (review and editing) of the article. FRR was involved in the conceptualisation, investigation, methodology, and writing (review and editing) of the article. RHHG was involved in the conceptualisation, investigation, methodology, supervision, and writing (review and editing) of the article. KL, OMD, FRR, RHHG gave final approval of the version to be published and are accountable for all aspects of the work. KL is the main guarantor of this study. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Funding: RHHG was supported by grants from the Netherlands Organisation for Scientific Research (ZonMW, project 917.16.430) and from Leiden University Medical Centre. The funders had no role in considering the study design or in the collection, analysis, interpretation of data, writing of the report, or decision to submit the article for publication.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/disclosure-of-interest/ and declare: support from the Netherlands Organisation for Scientific Research and Leiden University Medical Centre for the submitted work; no financial relationships with any organisations that might have an interest in the submitted work in the previous three years; no other relationships or activities that could appear to have influenced the submitted work.

The lead author (the manuscript’s guarantor) affirms that the manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies from the study as planned (and, if relevant, registered) have been explained.

Dissemination to participants and related patient and public communities: An abstract was submitted to the annual Dutch epidemiology conference (www.weon.nl). The authors aim to share their work with stakeholders at this conference and at institutional meetings, and to post a link with a plain language summary on their personal websites (www.rolfgroenwold.nl).

Provenance and peer review: not commissioned; externally peer reviewed.

This is an Open Access article distributed in accordance with the Creative Commons Attribution Non Commercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: http://creativecommons.org/licenses/by-nc/4.0/ .

- Vivian-Griffiths S ,

- Wagenmakers E-J ,

- Wetzels R ,

- Borsboom D ,

- van der Maas HL ,

- von Elm E ,

- Altman DG ,

- Pocock SJ ,

- Gøtzsche PC ,

- Vandenbroucke JP ,

- STROBE Initiative

- Benchimol EI ,

- Guttmann A ,

- RECORD Working Committee

- Skrivankova VW ,

- Richmond RC ,

- Woolf BAR ,

- Higgins JP ,

- Ioannidis JP ,

- Eldridge SM ,

- Campbell MJ ,

- PAFS consensus group

- Serra-Burriel M ,

- Martínez-Lizaga N ,

- Hopewell S ,

- Schulz KF ,

- Goetghebeur E ,

- le Cessie S ,

- De Stavola B ,

- Moodie EE ,

- Waernbaum I ,

- “on behalf of” the topic group Causal Inference (TG7) of the STRATOS initiative

- Simmons JP ,

- Nelson LD ,

- Simonsohn U

- Grimes DA ,

- Westreich D ,

- Greenland S

- Williams RJ ,

- Rajakannan T

- Hemingway H ,

- Ebersole CR ,

- DeHaven AC ,

- American Statistician

- Goeman JJ ,

- Westfall PH

- Groenwold RHH ,

- Cessie SL ,

- Goldacre B ,

- Drysdale H ,

- Marston C ,

- Greenland S ,

- Rothman KJ ,

- Ioannidis JP

- Huebner M ,

- Schmidt CO ,

- Vandenbroucke JP

Exploratory Research – Types, Methods and Examples

Exploratory Research

Definition:

Exploratory research is a type of research design that is used to investigate a research question when the researcher has limited knowledge or understanding of the topic or phenomenon under study.

The primary objective of exploratory research is to gain insights and gather preliminary information that can help the researcher better define the research problem and develop hypotheses or research questions for further investigation.

Exploratory Research Methods

There are several types of exploratory research, including:

Literature Review

This involves conducting a comprehensive review of existing published research, scholarly articles, and other relevant literature on the research topic or problem. It helps to identify the gaps in the existing knowledge and to develop new research questions or hypotheses.

Pilot Study

A pilot study is a small-scale preliminary study that helps the researcher to test research procedures, instruments, and data collection methods. This type of research can be useful in identifying any potential problems or issues with the research design and refining the research procedures for a larger-scale study.

Case Study

This involves an in-depth analysis of a particular case or situation to gain insights into the underlying causes, processes, and dynamics of the issue under investigation. It can be used to develop a more comprehensive understanding of a complex problem, and to identify potential research questions or hypotheses.

Focus Groups

Focus groups involve a group discussion that is conducted to gather opinions, attitudes, and perceptions from a small group of individuals about a particular topic. This type of research can be useful in exploring the range of opinions and attitudes towards a topic, identifying common themes or patterns, and generating ideas for further research.

Expert Opinion

This involves consulting with experts or professionals in the field to gain their insights, expertise, and opinions on the research topic. This type of research can be useful in identifying the key issues and concerns related to the topic, and in generating ideas for further research.

Observational Research

Observational research involves gathering data by observing people, events, or phenomena in their natural settings to gain insights into behavior and interactions. This type of research can be useful in identifying patterns of behavior and interactions, and in generating hypotheses or research questions for further investigation.

Open-ended Surveys

Open-ended surveys allow respondents to provide detailed and unrestricted responses to questions, providing valuable insights into their attitudes, opinions, and perceptions. This type of research can be useful in identifying common themes or patterns, and in generating ideas for further research.

Data Analysis Methods

Common data analysis methods in exploratory research include the following:

Content Analysis

This method involves analyzing text or other forms of data to identify common themes, patterns, and trends. It can be useful in identifying patterns in the data and developing hypotheses or research questions. For example, if the researcher is analyzing social media posts related to a particular topic, content analysis can help identify the most frequently used words, hashtags, and topics.
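A minimal sketch of the word and hashtag counting described above, in Python. The posts are invented for illustration, and the simple tokenizer and short stop-word list are assumptions; real content analysis usually also involves a coding scheme and human judgement about what the counts mean.

```python
import re
from collections import Counter

# A small, illustrative stop-word list (an assumption; real studies use larger lists).
STOP_WORDS = {"the", "a", "an", "and", "is", "are", "to", "of", "for", "in", "on", "it", "this"}


def top_terms(posts, n=3):
    """Return the n most common words and the n most common hashtags across posts."""
    words, hashtags = Counter(), Counter()
    for post in posts:
        # Lowercase, then keep runs of letters, digits and apostrophes,
        # with an optional leading '#' so hashtags stay intact.
        for token in re.findall(r"#?[a-z0-9']+", post.lower()):
            if token.startswith("#"):
                hashtags[token] += 1
            elif token not in STOP_WORDS:
                words[token] += 1
    return words.most_common(n), hashtags.most_common(n)


if __name__ == "__main__":
    # Invented example posts about a campus dining hall.
    posts = [
        "Loving the new vegan menu #vegan #campusfood",
        "Vegan options are too expensive #vegan",
        "More vegan options please! #campusfood",
    ]
    top_words, top_tags = top_terms(posts, n=2)
    print(top_words)  # [('vegan', 3), ('options', 2)]
    print(top_tags)
```

The counts only point to what people talk about most; deciding what those terms mean for the research question is still the researcher's job.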

Thematic Analysis

This method involves identifying and analyzing patterns or themes in qualitative data such as interview or focus group transcripts. The researcher codes the data, looks for recurring patterns across the codes, and groups related codes into broader themes. These themes can then inform hypotheses or research questions. For example, a thematic analysis of interviews with healthcare professionals about patient care may identify themes related to communication, patient satisfaction, and quality of care.
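The bookkeeping side of thematic analysis can be sketched in a few lines of Python. This assumes the researcher has already coded each excerpt by hand. The codebook, which maps codes to the example themes above, and the function name group_into_themes are hypothetical. The code only groups and counts; it does not do the interpretive work.

```python
from collections import defaultdict

# Hypothetical codebook: each code assigned by the researcher maps to a broader theme.
CODEBOOK = {
    "unclear instructions": "communication",
    "listening to patients": "communication",
    "long waiting times": "patient satisfaction",
    "follow-up calls": "patient satisfaction",
    "staff shortages": "quality of care",
}


def group_into_themes(coded_excerpts, codebook):
    """Group (excerpt, code) pairs under their themes.

    Codes missing from the codebook go to 'uncategorized' so the
    researcher can review them and possibly add a new theme.
    """
    themes = defaultdict(list)
    for excerpt, code in coded_excerpts:
        themes[codebook.get(code, "uncategorized")].append(excerpt)
    return dict(themes)


if __name__ == "__main__":
    # Invented interview excerpts, already coded by the researcher.
    excerpts = [
        ("Nobody explained the discharge plan to me.", "unclear instructions"),
        ("I waited four hours to be seen.", "long waiting times"),
        ("The doctor really listened to me.", "listening to patients"),
        ("There weren't enough nurses on the ward.", "staff shortages"),
    ]
    for theme, quotes in group_into_themes(excerpts, CODEBOOK).items():
        print(f"{theme}: {len(quotes)} excerpt(s)")
```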

Cluster Analysis

This method involves grouping data points into clusters so that points within a cluster are more similar to each other than to points in other clusters. It can be useful in identifying patterns in large datasets. For example, if the researcher is analyzing customer data to identify different customer segments, cluster analysis can be used to group similar customers together based on their demographics, purchasing behavior, or preferences.
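As an illustration, here is a minimal k-means sketch in Python. K-means is one common clustering algorithm; the text does not prescribe a specific one. The customer data (age, monthly spend) is invented, and initializing with the first k points is a simplifying assumption that keeps the example deterministic.

```python
import math


def kmeans(points, k, max_iter=100):
    """Cluster points into k groups with Lloyd's k-means algorithm.

    Returns (labels, centroids), where labels[i] is the cluster index of points[i].
    """
    centroids = [tuple(p) for p in points[:k]]  # naive initialization: first k points
    labels = None
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members
        # (an empty cluster keeps its previous centroid).
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


if __name__ == "__main__":
    # Invented customers: (age, monthly spend).
    customers = [(22, 40), (25, 35), (23, 45), (58, 200), (61, 220), (55, 210)]
    labels, centroids = kmeans(customers, k=2)
    print(labels)
    print(centroids)
```

In practice, features measured on different scales (such as age and spend) should usually be standardized first. A library implementation such as scikit-learn's KMeans, which offers better initialization, is the more common choice.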

Network Analysis

This method involves analyzing the relationships and connections between data points. It can be useful in identifying patterns in complex datasets with many interrelated variables. For example, if the researcher is analyzing social network data, network analysis can help identify the most influential users and their connections to other users.
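One simple way to find "influential" users is degree centrality: the share of all other users that each user is directly connected to. The Python sketch below assumes undirected connections (for example, "has replied to") and uses invented user names. Degree centrality is only one of several centrality measures, not the only definition of influence.

```python
from collections import defaultdict


def degree_centrality(edges):
    """Return each node's degree divided by (number of nodes - 1) in an undirected network."""
    neighbors = defaultdict(set)
    for a, b in edges:
        if a != b:  # ignore self-loops
            neighbors[a].add(b)
            neighbors[b].add(a)
    n = len(neighbors)
    if n < 2:
        return {node: 0.0 for node in neighbors}
    return {node: len(adj) / (n - 1) for node, adj in neighbors.items()}


if __name__ == "__main__":
    # Invented connections between social media users.
    edges = [("ana", "ben"), ("ana", "cho"), ("ana", "dev"), ("ben", "cho"), ("eli", "ana")]
    scores = degree_centrality(edges)
    print(max(scores, key=scores.get))  # ana
    print(scores)
```

The networkx library provides a degree_centrality function with the same normalization, along with other measures such as betweenness_centrality.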

Grounded Theory

This method involves developing a theory or explanation based on the data collected during the exploratory research process. The researcher develops a theory or explanation that is grounded in the data, rather than relying on pre-existing theories or assumptions. This can be helpful in developing new theories or explanations that are supported by the data.

Applications of Exploratory Research

Exploratory research has many practical applications across various fields. Here are a few examples:

- Marketing Research: In marketing research, exploratory research can be used to identify consumer needs, preferences, and behavior. It can also help businesses understand market trends and identify new market opportunities.

- Product Development: In product development, exploratory research can be used to identify customer needs and preferences, as well as potential design flaws or issues. This can help companies improve their product offerings and develop new products that better meet customer needs.

- Social Science Research: In social science research, exploratory research can be used to identify new areas of study, as well as develop new theories and hypotheses. It can also be used to identify potential research methods and approaches.

- Healthcare Research: In healthcare research, exploratory research can be used to identify new treatments, therapies, and interventions. It can also be used to identify potential risk factors or causes of health problems.

- Education Research: In education research, exploratory research can be used to identify new teaching methods and approaches, as well as identify potential areas of study for further research. It can also be used to identify potential barriers to learning or achievement.

Examples of Exploratory Research

Here are some more examples of exploratory research from different fields:

- Social Science: A researcher wants to study the experience of being a refugee, but there is limited existing research on this topic. The researcher conducts in-depth interviews with refugees to better understand their experiences, challenges, and needs.

- Healthcare: A medical researcher wants to identify potential risk factors for a rare disease, but there is limited information available. The researcher reviews medical records and interviews patients and their families to identify potential risk factors.

- Education: A teacher wants to develop a new teaching method to improve student engagement, but there is limited information on effective teaching methods. The teacher reviews existing literature and interviews other teachers to identify potential approaches.

- Technology: A software developer wants to develop a new app but is unsure which features users would find most useful. The developer runs surveys and focus groups to identify user preferences and needs.

- Environmental Science: An environmental scientist wants to study the impact of a new industrial plant on the surrounding environment, but there is limited existing research. The scientist collects and analyzes soil and water samples and interviews residents to better understand the plant's impact on the environment and the community.

How to Conduct Exploratory Research

Here are the general steps to conduct exploratory research:

- Define the research problem: Identify the research problem or question that you want to explore. Be clear about the objective and scope of the research.

- Review existing literature: Conduct a review of existing literature and research on the topic to identify what is already known and where gaps in knowledge exist.

- Determine the research design: Decide on the appropriate research design, which will depend on the nature of the research problem and the available resources. Common exploratory research designs include case studies, focus groups, interviews, and surveys.

- Collect data: Collect data using the chosen research design. This may involve conducting interviews, surveys, or observations, or collecting data from existing sources such as archives or databases.

- Analyze data: Analyze the data collected using appropriate qualitative or quantitative techniques. This may include coding and categorizing qualitative data, or running descriptive statistics on quantitative data.
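For the quantitative branch of this step, here is a minimal sketch using Python's standard statistics module. The ratings are hypothetical responses to a single 1–5 survey item.

```python
import statistics


def describe(values):
    """Basic descriptive statistics for a list of numbers (needs at least two values)."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "sd": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


if __name__ == "__main__":
    ratings = [4, 5, 3, 4, 2, 5, 4]  # hypothetical 1-5 ratings
    print(describe(ratings))
```

At the exploratory stage, summaries like these describe the sample you have. They do not support claims about a wider population.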

- Interpret and report findings: Interpret the findings of the analysis and report them in a way that is clear and understandable. The report should summarize the findings, discuss their implications, and make recommendations for further research or action.

- Iterate: If necessary, refine the research question and repeat the process of data collection and analysis to further explore the topic.

When to use Exploratory Research

Exploratory research is appropriate in situations where there is limited existing knowledge or understanding of a topic, and where the goal is to generate insights and ideas that can guide further research. Here are some specific situations where exploratory research may be particularly useful:

- New product development: When developing a new product, exploratory research can be used to identify consumer needs and preferences, as well as potential design flaws or issues.

- Emerging technologies: When exploring emerging technologies, exploratory research can be used to identify potential uses and applications, as well as potential challenges or limitations.

- Developing research hypotheses: When developing research hypotheses, exploratory research can be used to identify potential relationships or patterns that can be further explored through more rigorous research methods.

- Understanding complex phenomena: When trying to understand complex phenomena, such as human behavior or societal trends, exploratory research can be used to identify underlying patterns or factors that may be influencing the phenomenon.

- Developing research methods: When developing new research methods, exploratory research can be used to identify potential issues or limitations with existing methods, and to develop new methods that better capture the phenomena of interest.

Purpose of Exploratory Research

The purpose of exploratory research is to gain insights into and understanding of a research problem or question where there is limited existing knowledge. The objective is to explore and generate ideas that can guide further research, rather than to test specific hypotheses or draw definitive conclusions.

Exploratory research can be used to:

- Identify new research questions: Exploratory research can help to identify new research questions and areas of inquiry, by providing initial insights and understanding of a topic.

- Develop hypotheses: Exploratory research can help to develop hypotheses and testable propositions that can be further explored through more rigorous research methods.

- Identify patterns and trends: Exploratory research can help to identify patterns and trends in data, which can be used to guide further research or decision-making.

- Understand complex phenomena: Exploratory research can help to provide a deeper understanding of complex phenomena, such as human behavior or societal trends, by identifying underlying patterns or factors that may be influencing the phenomena.

- Generate ideas: Exploratory research can help to generate new ideas and insights that can be used to guide further research, innovation, or decision-making.

Characteristics of Exploratory Research

The following are the main characteristics of exploratory research:

- Flexible and open-ended: Exploratory research is characterized by its flexible and open-ended nature, which allows researchers to explore a wide range of ideas and perspectives without being constrained by specific research questions or hypotheses.

- Qualitative in nature: Exploratory research typically relies on qualitative methods, such as in-depth interviews, focus groups, or observation, to gather rich and detailed data on the research problem.

- Limited scope: Exploratory research is generally limited in scope, focusing on a specific research problem or question, rather than attempting to provide a comprehensive analysis of a broader phenomenon.

- Preliminary in nature: Exploratory research is preliminary in nature, providing initial insights into a research problem rather than testing specific hypotheses or drawing definitive conclusions.

- Iterative process: Exploratory research is often an iterative process, where the research design and methods may be refined and adjusted as new insights and understanding are gained.

- Inductive approach: Exploratory research typically takes an inductive approach to data analysis, seeking to identify patterns and relationships in the data that can guide further research or hypothesis development.

Advantages of Exploratory Research

The following are some advantages of exploratory research:

- Provides initial insights: Exploratory research is useful for providing initial insights and understanding of a research problem or question where there is limited existing knowledge or understanding. It can help to identify patterns, relationships, and potential hypotheses that can guide further research.

- Flexible and adaptable: Exploratory research is flexible and adaptable, allowing researchers to adjust their methods and approach as they gain new insights and understanding of the research problem.

- Qualitative methods: Exploratory research typically relies on qualitative methods, such as in-depth interviews, focus groups, and observation, which can provide rich and detailed data that is useful for gaining insights into complex phenomena.

- Cost-effective: Exploratory research is often less costly than other research methods, such as large-scale surveys or experiments. It is typically conducted on a smaller scale, using fewer resources and participants.

- Useful for hypothesis generation: Exploratory research can be useful for generating hypotheses and testable propositions that can be further explored through more rigorous research methods.

- Provides a foundation for further research: Exploratory research can provide a foundation for further research by identifying potential research questions and areas of inquiry, as well as providing initial insights and understanding of the research problem.

Limitations of Exploratory Research

The following are some limitations of exploratory research:

- Limited generalizability: Exploratory research is typically conducted on a small scale and uses non-random sampling techniques, which limits the generalizability of the findings to a broader population.

- Subjective nature: Exploratory research often relies on qualitative methods and is therefore subject to researcher bias and interpretation. The findings may be influenced by the researcher’s own perceptions, beliefs, and assumptions.

- Lack of rigor: Exploratory research is often less rigorous than other research methods, such as experimental research, which can limit the validity and reliability of the findings.

- Limited ability to test hypotheses: Exploratory research is not designed to test specific hypotheses, but rather to generate initial insights and understanding of a research problem. It may not be suitable for testing well-defined research questions or hypotheses.

- Time-consuming: Exploratory research can be time-consuming and resource-intensive, particularly if the researcher needs to gather data from multiple sources or conduct multiple rounds of data collection.

- Difficulty in interpretation: The open-ended nature of exploratory research can make it difficult to interpret the findings, particularly if the researcher is unable to identify clear patterns or relationships in the data.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Exploratory Research | Definition, Guide, & Examples

Published on 6 May 2022 by Tegan George . Revised on 20 January 2023.

Exploratory research is a methodological approach that investigates topics and research questions that have not previously been studied in depth.

Exploratory research is often qualitative in nature. However, a study with a large sample conducted in an exploratory manner can be quantitative as well. It is also often referred to as interpretive research or a grounded theory approach due to its flexible and open-ended nature.

Table of contents

When to use exploratory research, exploratory research questions, exploratory research data collection, step-by-step example of exploratory research, exploratory vs explanatory research, advantages and disadvantages of exploratory research, frequently asked questions about exploratory research.

Exploratory research is often used when the issue you’re studying is new or when the data collection process is challenging for some reason.

You can use this type of research if you have a general idea or a specific question that you want to study but there is no preexisting knowledge or paradigm with which to study it.

Exploratory research questions are designed to help you understand more about a particular topic of interest. They can help you connect ideas to understand the groundwork of your analysis without adding any preconceived notions or assumptions yet.

Here are some examples:

- What effect does using a digital notebook have on the attention span of primary schoolers?

- What factors influence mental health in undergraduates?

- What outcomes are associated with an authoritative parenting style?

- In what ways does the presence of a non-native accent affect intelligibility?

- How can the use of a grocery delivery service reduce food waste in single-person households?

Collecting information on a previously unexplored topic can be challenging. Exploratory research can help you narrow down your topic and formulate a clear hypothesis, and it gives you the ‘lay of the land’ on your topic.

Data collection using exploratory research is often divided into primary and secondary research methods, with data analysis following the same model.

Primary research

In primary research, your data is collected directly from primary sources: your participants. There is a variety of ways to collect primary data.

Some examples, using a study of whether students would eat vegan meals in a university dining hall, include:

- Survey methodology: Sending a survey out to the student body asking them if they would eat vegan meals

- Focus groups: Compiling groups of 8–10 students and discussing what they think of vegan options for dining hall food

- Interviews: Interviewing students entering and exiting the dining hall, asking if they would eat vegan meals

Secondary research

In secondary research, your data is collected from preexisting primary research, such as experiments or surveys.

Some examples include:

- Case studies: The health effects of an all-vegan diet

- Literature reviews: Preexisting research about students’ eating habits and how they have changed over time

- Online polls, surveys, blog posts, or interviews; social media: Have other universities done something similar?

For some subjects, it’s possible to use large-n government data, such as the decennial census or yearly American Community Survey (ACS) open-source data.

How you proceed with your exploratory research design depends on the research method you choose to collect your data. In most cases, you will follow five steps.

We’ll walk you through the steps using the following example. Suppose you are researching how a non-native accent affects intelligibility, and you would like to focus on improving learners’ intelligibility instead of reducing their accents.

Step 1: Identify your problem

The first step in conducting exploratory research is identifying what the problem is and whether this type of research is the right avenue for you to pursue. Remember that exploratory research is most advantageous when you are investigating a previously unexplored problem.

Step 2: Hypothesise a solution

The next step is to come up with a solution to the problem you’re investigating. Formulate a hypothetical statement to guide your research.

Step 3: Design your methodology

Next, conceptualise your data collection and data analysis methods and write them up in a research design.

Step 4: Collect and analyse data

Next, you proceed with collecting and analysing your data so you can determine whether your preliminary results are in line with your hypothesis.

In most types of research, you should formulate your hypotheses a priori and refrain from changing them due to the increased risk of Type I errors and data integrity issues. However, in exploratory research, you are allowed to change your hypothesis based on your findings, since you are exploring a previously unexplained phenomenon that could have many explanations.
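To see why changing hypotheses after looking at the data raises the risk of Type I errors, it helps to treat each new hypothesis as another test. Assume m independent tests, each at significance level alpha, and that every null hypothesis is true. The chance of at least one false positive is then 1 - (1 - alpha)^m. The Python sketch below computes this, along with the Bonferroni threshold alpha/m. Bonferroni is one common, conservative correction; the source does not prescribe a specific one.

```python
def familywise_error_rate(alpha, m):
    """P(at least one false positive) across m independent tests at level alpha,
    assuming every null hypothesis is true."""
    return 1 - (1 - alpha) ** m


def bonferroni_threshold(alpha, m):
    """Per-test significance level that keeps the family-wise error rate at or below alpha."""
    return alpha / m


if __name__ == "__main__":
    for m in (1, 5, 10, 20):
        print(m, round(familywise_error_rate(0.05, m), 3), bonferroni_threshold(0.05, m))
```

With alpha = 0.05 and 10 tests, the chance of at least one false positive is roughly 40%. This is why exploratory findings are best presented as hypotheses to be confirmed, not as conclusions.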

Step 5: Avenues for future research

Decide if you would like to continue studying your topic. If so, it is likely that you will need to change to another type of research. As exploratory research is often qualitative in nature, you may need to conduct quantitative research with a larger sample size to achieve more generalisable results.

It can be easy to confuse exploratory research with explanatory research. To understand the relationship, it can help to remember that exploratory research lays the groundwork for later explanatory research.

Exploratory research investigates research questions that have not been studied in depth. The preliminary results often lay the groundwork for future analysis.

Explanatory research questions tend to start with ‘why’ or ‘how’, and the goal is to explain why or how a previously studied phenomenon takes place.

Like any other research design, exploratory research has its trade-offs: it provides a unique set of benefits but also comes with downsides.

Advantages

- It can be very helpful in narrowing down a challenging or nebulous problem that has not been previously studied.

- It can serve as a great guide for future research, whether your own or another researcher’s. With new and challenging research problems, adding to the body of research in the early stages can be very fulfilling.

- It is very flexible, cost-effective, and open-ended. You are free to proceed however you think is best.

Disadvantages

- It usually lacks conclusive results, and results can be biased or subjective due to a lack of preexisting knowledge on your topic.

- It’s typically not externally valid and generalisable, and it suffers from many of the challenges of qualitative research.

- Since you are not operating within an existing research paradigm, this type of research can be very labour-intensive.

Exploratory research is a methodological approach that explores research questions that have not previously been studied in depth. It is often used when the issue you’re studying is new, or the data collection process is challenging in some way.

You can use exploratory research if you have a general idea or a specific question that you want to study but there is no preexisting knowledge or paradigm with which to study it.

Exploratory research explores the main aspects of a new or barely researched question.

Explanatory research explains the causes and effects of an already widely researched question.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to test a hypothesis by systematically collecting and analysing data, while qualitative methods allow you to explore ideas and experiences in depth.

Cite this Scribbr article

George, T. (2023, January 20). Exploratory Research | Definition, Guide, & Examples. Scribbr. Retrieved 18 March 2024, from https://www.scribbr.co.uk/research-methods/exploratory-research-design/

Tegan George

The potential of working hypotheses for deductive exploratory research

- Open access

- Published: 08 December 2020

- Volume 55, pages 1703–1725 (2021)

- Mattia Casula ORCID: orcid.org/0000-0002-7081-8153 1 ,

- Nandhini Rangarajan 2 &

- Patricia Shields ORCID: orcid.org/0000-0002-0960-4869 2

While hypotheses frame explanatory studies and provide guidance for measurement and statistical tests, deductive, exploratory research does not have a comparable framing device. To this end, this article examines the landscape of deductive, exploratory research and offers the working hypothesis as a flexible, useful framework that can guide and bring coherence across the steps in the research process. The working hypothesis conceptual framework is introduced, placed in a philosophical context, defined, and applied to public administration and comparative public policy. In doing so, this article explains: the philosophical underpinning of exploratory, deductive research; how the working hypothesis informs the methodologies and evidence collection of deductive, exploratory research; the nature of micro-conceptual frameworks for deductive exploratory research; and how the working hypothesis informs data analysis when exploratory research is deductive.


1 Introduction

Exploratory research is generally considered to be inductive and qualitative (Stebbins 2001). Exploratory qualitative studies that adopt an inductive approach do not lend themselves to a priori theorizing or to building upon prior bodies of knowledge (Reiter 2013; Bryman 2004 as cited in Pearse 2019). Compared with quantitative studies that employ deductive, confirmatory approaches, exploratory qualitative research is often criticized for a lack of methodological rigor and for the tentativeness of its results (Thomas and Magilvy 2011). This paper focuses on the neglected topic of deductive exploratory research and proposes the working hypothesis as a useful framework for such studies.

A significant body of work on deductive qualitative approaches has emerged to emphasize that certain types of applied research lend themselves more easily to deductive approaches, to address the downsides of exploratory qualitative research, and to ensure qualitative rigor in exploratory research (see, for example, Gilgun 2005, 2015; Hyde 2000; Pearse 2019). According to Gilgun (2015, p. 3), the use of conceptual frameworks derived from comprehensive literature reviews and a priori theorizing were common practices in qualitative research before the publication of Glaser and Strauss’s (1967) The Discovery of Grounded Theory. Gilgun (2015) coined the term Deductive Qualitative Analysis (DQA) to describe a “middle-ground” in which the benefits of a priori theorizing (structure) and of allowing room for new theory to emerge (flexibility) are reaped simultaneously. According to Gilgun (2015, p. 14), “in DQA, the initial conceptual framework and hypotheses are preliminary. The purpose of DQA is to come up with a better theory than researchers had constructed at the outset (Gilgun 2005, 2009). Indeed, the production of new, more useful hypotheses is the goal of DQA”.

DQA provides a greater level of structure for both experienced and novice qualitative researchers (see, for example, Pearse 2019; Gilgun 2005). According to Gilgun (2015, p. 4), “conceptual frameworks are the sources of hypotheses and sensitizing concepts”. Sensitizing concepts frame the exploratory research process and guide the researcher’s data collection and reporting efforts. Pearse (2019) discusses the usefulness of deductive thematic analysis and pattern matching for guiding DQA in business research, and Gilgun (2005) discusses the usefulness of DQA for family research.

Given these rationales for DQA in exploratory research, the overarching purpose of this paper is to contribute to the growing body of work on deductive qualitative research. The paper is specifically aimed at guiding novice researchers and student scholars toward the working hypothesis as a useful a priori framing tool. We discuss in detail how the working hypothesis can provide more structure during the design and implementation phases of exploratory research, and we provide examples of public administration research projects that use it as a framing tool for deductive exploratory research.

In the next section, we introduce the three types of research purposes. Second, we examine the nature of the exploratory research purpose. Third, we provide a definition of the working hypothesis. Fourth, we explore the philosophical roots of methodology to see where exploratory research fits. Fifth, we connect the discussion to the dominant research approaches (quantitative, qualitative and mixed methods) to see where deductive exploratory research fits. Sixth, we examine the nature of theory and the role of the hypothesis in theory, contrasting formal hypotheses with working hypotheses. Seventh, we provide examples of student and scholarly work that illustrate how working hypotheses are developed and operationalized. Finally, we synthesize the preceding discussion in concluding remarks.

2 Three types of research purposes

The literature identifies three basic types of research purposes: explanation, description and exploration (Babbie 2007; Adler and Clark 2008; Strydom 2013; Shields and Whetsell 2017). Research purposes are similar to research questions; however, they focus on project goals or aims rather than questions.

Explanatory research answers the “why” question (Babbie 2007, pp. 89–90) by explaining “why things are the way they are” and by looking “for causes and reasons” (Adler and Clark 2008, p. 14). Explanatory research is closely tied to hypothesis testing. Theory is tested using deductive reasoning, which goes from the general to the specific (Hyde 2000, p. 83). Hypotheses provide a frame for explanatory research, connecting the research purpose to other parts of the research process (variable construction, choice of data, statistical tests). They help provide alignment or coherence across stages of the research process and offer ways to critique the strengths and weaknesses of a study. For example, were the hypotheses grounded in the appropriate arguments and evidence in the literature? Are the concepts embedded in the hypotheses appropriately measured? Was the best statistical test used? When the analysis is complete (the hypothesis has been tested), the results generally answer the research question (the evidence supports or fails to support the hypothesis) (Shields and Rangarajan 2013).

Descriptive research addresses the “what” question and is not primarily concerned with causes (Strydom 2013; Shields and Tajalli 2006). It lies at the “midpoint of the knowledge continuum” (Grinnell 2001, p. 248) between exploration and explanation. Descriptive research is used in both quantitative and qualitative research. A field researcher might want to “have a more highly developed idea of social phenomena” (Strydom 2013, p. 154) and develop thick descriptions using inductive logic. In science, categorization and classification systems such as the periodic table in chemistry or the taxonomies of biology inform descriptive research. These baseline classification systems are a type of theorizing and allow researchers to answer questions such as “what kind” of plants and animals inhabit a forest. The answer to such a question would usually be displayed in graphs and frequency distributions, which is also the data presentation system used in the social sciences (Ritchie and Lewis 2003; Strydom 2013). For example, suppose a scholar asked, “What are the needs of homeless people?” A quantitative approach would include a survey that incorporated a “needs” classification system (preferably based on a literature review), and the data would be displayed as frequency distributions or charts. Description can also be guided by inductive reasoning, which draws “inferences from specific observable phenomena to general rules or knowledge expansion” (Worster 2013, p. 448). Theory and hypotheses are generated using inductive reasoning, which begins with data and the intention of making sense of it by theorizing. An inductive descriptive approach would use a qualitative, naturalistic design (for example, open-ended interview questions with the homeless population), and the data could provide a thick description of the homeless context. For deductive descriptive research, categories serve a purpose similar to that of hypotheses in explanatory research. If developed thoughtfully and with a connection to the literature, categories can serve as a framework that informs measurement and links to data collection mechanisms and data analysis. Like hypotheses, they can provide horizontal coherence across the steps in the research process.

Table 1 demonstrates these connections for deductive descriptive and explanatory research. The arrow at the top highlights the horizontal view, across the steps of the research process, that we emphasize. This article makes the case that, for deductive exploratory research, the working hypothesis can serve the same purpose that the hypothesis serves for deductive explanatory research and that categories serve for deductive descriptive research. The cells for exploratory research are filled in with question marks.

The remainder of this paper focuses on exploratory research and on answering the questions raised by the table:

- What is the philosophical underpinning of deductive exploratory research?

- What is the micro-conceptual framework for deductive exploratory research? [As is clear from the article title, we introduce the working hypothesis as the answer.]

- How does the working hypothesis inform the methodologies and evidence collection of deductive exploratory research?

- How does the working hypothesis inform data analysis of deductive exploratory research?

3 The nature of exploratory research purpose

Explorers enter the unknown to discover something new. The process can be fraught with struggle and surprises, and effective explorers creatively resolve unexpected problems. While we typically think of explorers as pioneers or mountain climbers, exploration is closely linked to the experience and intention of the explorer: babies explore as they take their first steps. The exploratory purpose resonates with these insights. Exploratory research, like reconnaissance, is a type of inquiry in the preliminary or early stages (Babbie 2007). It is associated with discovery, creativity and serendipity (Stebbins 2001). But the person doing the discovering also defines the activity, or claims the act of exploration. It “typically occurs when a researcher examines a new interest or when the subject of study itself is relatively new” (Babbie 2007, p. 88). Hence, exploration has an open character that emphasizes “flexibility, pragmatism, and the particular, biographically specific interests of an investigator” (Maanen et al. 2001, p. v). The three research purposes form a type of hierarchy. An area of inquiry is initially explored. This early work lays the groundwork for description, which in turn becomes the basis for explanation. Quantitative, explanatory studies dominate contemporary high-impact journals (Twining et al. 2017).

Stebbins (2001) makes the point that exploration is often seen as something like a poor stepsister to confirmatory or hypothesis-testing research. He objects to this view because we live in a changing world, and what is settled today will very likely be unsettled in the near future and in need of exploration. Further, exploratory research “generates initial insights into the nature of an issue and develops questions to be investigated by more extensive studies” (Marlow 2005, p. 334). Exploration is widely applicable because all research topics were once “new,” and all research topics retain the possibility of “innovation” or ongoing “newness.” Exploratory research may also be appropriate to establish whether a phenomenon exists (Strydom 2013). The point here, of course, is that the exploratory purpose is far from trivial.

Stebbins’s Exploratory Research in the Social Sciences (2001) is the only book devoted to the nature of exploratory research as a form of social science inquiry. He views it as a “broad-ranging, purposive, systematic prearranged undertaking designed to maximize the discovery of generalizations leading to description and understanding of an area of social or psychological life” (p. 3). It is science conducted in a way distinct from confirmation. According to Stebbins (2001, p. 6), the goal is the discovery of potential generalizations, which can become future hypotheses and eventually theories that emerge from the data. He focuses on inductive logic (which stimulates creativity) and qualitative methods, and he does not want exploratory research limited to the restrictive formulas and models he finds in confirmatory research. He links exploratory research to Glaser and Strauss’s (1967) flexible, immersive Grounded Theory. Strydom’s (2013) analysis of contemporary social work research methods books echoes Stebbins’s (2001) position. Stebbins’s book is an important contribution, but it limits the potential scope of this flexible and versatile research purpose. If we accepted his conclusion, we would delete the “Exploratory” row from Table 1.