
The top list of academic search engines


Academic search engines have become the number one resource to turn to in order to find research papers and other scholarly sources. While classic academic databases like Web of Science and Scopus are locked behind paywalls, Google Scholar and others can be accessed free of charge. In order to help you get your research done fast, we have compiled the top list of free academic search engines.

1. Google Scholar

Google Scholar is the clear number one when it comes to academic search engines. It's the power of Google searches applied to research papers and patents. It not only lets you find research papers for all academic disciplines for free but also often provides links to full-text PDF files.

- Coverage: approx. 200 million articles

- Abstracts: only a snippet of the abstract is available

- Related articles: ✔

- References: ✔

- Cited by: ✔

- Links to full text: ✔

- Export formats: APA, MLA, Chicago, Harvard, Vancouver, RIS, BibTeX
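
Several of the engines in this list export citations as BibTeX or RIS, two plain-text formats that reference managers can import. As a rough illustration of what those exports contain, here is a minimal Python sketch that renders one article record in both formats. The `Record` fields, the citation-key convention, and the sample DOI are hypothetical; real exports from Google Scholar, BASE, or CORE carry more fields and may use different tags.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """A hypothetical, minimal article record (real exports carry many more fields)."""
    authors: list[str]  # each author as "Last, First"
    title: str
    journal: str
    year: int
    doi: str


def to_bibtex(rec: Record) -> str:
    # Citation key: first author's last name + year (an illustrative convention only).
    key = rec.authors[0].split(",")[0].strip().lower() + str(rec.year)
    fields = {
        "author": " and ".join(rec.authors),  # BibTeX separates authors with "and"
        "title": rec.title,
        "journal": rec.journal,
        "year": str(rec.year),
        "doi": rec.doi,
    }
    # No escaping of LaTeX special characters (&, %, _); a real exporter would handle them.
    body = ",\n".join(f"  {name} = {{{value}}}" for name, value in fields.items())
    return f"@article{{{key},\n{body}\n}}"


def to_ris(rec: Record) -> str:
    # RIS: one "TAG  - value" line per field; TY opens the record and ER closes it.
    lines = ["TY  - JOUR"]
    lines += [f"AU  - {author}" for author in rec.authors]
    lines += [
        f"TI  - {rec.title}",
        f"T2  - {rec.journal}",  # secondary title: the journal name for JOUR records
        f"PY  - {rec.year}",
        f"DO  - {rec.doi}",
        "ER  - ",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    example = Record(
        authors=["Doe, Jane", "Roe, Richard"],
        title="An Example Title",
        journal="Journal of Examples",
        year=2020,
        doi="10.1234/example",  # placeholder DOI, not a real article
    )
    print(to_bibtex(example))
    print()
    print(to_ris(example))
```

Both strings describe the same article; the main difference is that BibTeX groups all fields inside one braced entry, while RIS writes one tagged line per field between `TY` and `ER`.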

2. BASE

BASE is hosted at Bielefeld University in Germany. That is also where its name stems from (Bielefeld Academic Search Engine).

- Coverage: approx. 136 million articles (contains duplicates)

- Abstracts: ✔

- Related articles: ✘

- References: ✘

- Cited by: ✘

- Export formats: RIS, BibTeX

3. CORE

CORE is an academic search engine dedicated to open-access research papers. For each search result, a link to the full-text PDF or full-text web page is provided.

- Coverage: approx. 136 million articles

- Links to full text: ✔ (all articles in CORE are open access)

- Export formats: BibTeX

4. Science.gov

Science.gov is a fantastic resource as it bundles and offers free access to search results from more than 15 U.S. federal agencies. There is no need anymore to query all those resources separately!

- Coverage: approx. 200 million articles and reports

- Links to full text: ✔ (available for some databases)

- Export formats: APA, MLA, RIS, BibTeX (available for some databases)

5. Semantic Scholar

Semantic Scholar is the new kid on the block. Its mission is to provide more relevant and impactful search results using AI-powered algorithms that find hidden connections and links between research topics.

- Coverage: approx. 40 million articles

- Export formats: APA, MLA, Chicago, BibTeX

6. Baidu Scholar

Although Baidu Scholar's interface is in Chinese, its index contains research papers in English as well as Chinese.

- Coverage: no detailed statistics available, approx. 100 million articles

- Abstracts: only snippets of the abstract are available

- Export formats: APA, MLA, RIS, BibTeX

7. RefSeek

RefSeek searches more than one billion documents from academic and organizational websites. Its clean interface makes it especially easy to use for students and new researchers.

- Coverage: no detailed statistics available, approx. 1 billion documents

- Abstracts: only snippets of the article are available

- Export formats: not available

Get the most out of academic search engines

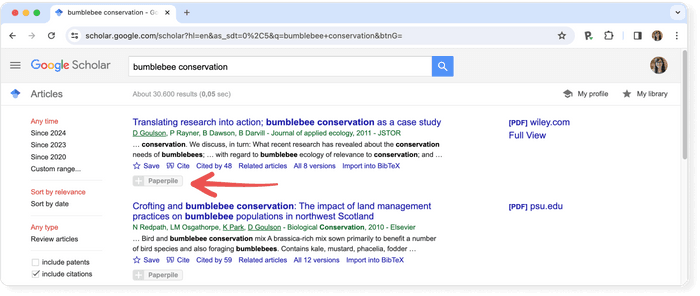

Consider using a reference manager like Paperpile to save, organize, and cite your references. Paperpile integrates with Google Scholar and many popular databases, so you can save references and PDFs directly to your library using the Paperpile buttons:


Semantic Scholar is a free, AI-powered research tool for scientific literature developed at the Allen Institute for AI. Semantic Scholar was publicly released in 2015 and uses advances in natural language processing to provide summaries of scholarly papers.


28 Best Academic Search Engines That make your research easier

This post may contain affiliate links that allow us to earn a commission at no expense to you.

If you’re a researcher or scholar, you know that conducting effective online research is a critical part of your job. And if you’re like most people, you’re always on the lookout for new and better ways to do it.

You are probably familiar with some research databases, but top researchers keep an open mind and are always looking for inspiration in unexpected places.

This article aims to give you an edge over researchers who rely mainly on Google for their entire research process.

Our list of 28 academic search engines starts with the more familiar ones and moves on to the less familiar.


#1. Google Scholar

Google Scholar is an academic search engine that indexes the full text or metadata of scholarly literature across an array of publishing formats and disciplines.

Google Scholar is well suited to academic research: you can use it to find articles from academic journals, conference proceedings, theses, and dissertations. Its results are typically more relevant and reliable than those from a general-purpose search engine like Google.

- Scholarly results are typically more relevant and reliable than those from regular search engines like Google.

- You can restrict your results to peer-reviewed articles only by clicking on the “Scholarly” tab.

- Coverage is extensive, with approx. 200 million articles indexed.

- Abstracts are available for most articles.

- Related articles are shown, as well as the number of times an article has been cited.

- Links to full text are available for many articles.

Cons:

- Abstracts are only a snippet of the full article, so you might need to do additional searching to get the full information you need.

- Not all articles are available in full text.

Google Scholar is completely free.

#2. ERIC (Education Resources Information Center)

ERIC is a great academic search engine that focuses on education-related literature. It is sponsored by the U.S. Department of Education and produced by the Institute of Education Sciences.

ERIC indexes over a million articles, reports, conference papers, and other resources on all aspects of education, from early childhood to higher education, so its search results are more focused on education than those of a general-purpose search engine.

- Extensive coverage: ERIC indexes over a million articles, reports, and other resources on all aspects of education from early childhood to higher education.

- You can limit your results to peer-reviewed journals by clicking on the “Peer-Reviewed” tab.

- Abstracts are available for most articles, which makes it a great search engine for educators.

ERIC is a free online database of education-related literature.


#3. Wolfram Alpha

Wolfram Alpha is a “computational knowledge engine” that can answer factual questions posed in natural language. It can be a useful search tool.

Type in a question like “What is the square root of 64?” or “What is the boiling point of water?” and Wolfram Alpha will give you an answer.

Wolfram Alpha can also be used to find academic articles. Just type in your keywords and Wolfram Alpha will generate a list of academic articles that match your query.

Tip: You can restrict your results to peer-reviewed journals by clicking on the “Scholarly” tab.

- Can answer factual questions posed in natural language.

- Can be used to find academic articles.

- Results are ranked by relevance.

Cons:

- Results can be overwhelming, so it’s important to narrow down your search criteria as much as possible.

- The experience feels more structured, but it can also be restrictive.

Wolfram Alpha offers a few pricing options, including a “Pro” subscription that gives you access to additional features, such as the ability to create custom reports. You can also purchase individual articles or download them for offline use.

Pro costs $5.49 and Pro Premium costs $9.99.

#4. iSEEK Education


iSEEK is a search engine for students, teachers, administrators, and caregivers. It’s designed to be safe, with editor-reviewed content.

iSEEK Education also includes a “Cited by” feature which shows you how often an article has been cited by other researchers.

- Editor-reviewed content.

- “Cited by” feature shows how often an article has been cited by other researchers.

Cons:

- Limited to academic content.

- Doesn’t have the breadth of coverage that some of the other academic search engines have.

iSEEK Education is free to use.

#5. BASE (Bielefeld Academic Search Engine)

BASE is hosted at Bielefeld University in Germany, and that’s where its name comes from (Bielefeld Academic Search Engine).

Known as “one of the most comprehensive academic web search engines,” it contains over 100 million documents from 4,000 different sources.

Users can narrow their search using the advanced search option, so regardless of whether you need a book, a review, a lecture, a video or a thesis, BASE has what you need.

BASE indexes academic articles from a variety of disciplines, including the arts, humanities, social sciences, and natural sciences.

- One of the world’s most voluminous academic search engines.

- Indexes academic web resources from a variety of disciplines.

- Includes an “Advanced Search” feature that lets you restrict your results to peer-reviewed journals.

Cons:

- Doesn’t include abstracts for most articles.

- Doesn’t offer related articles, references, or cited-by features.

BASE is free to use.


#6. CORE

CORE is an academic search engine dedicated to open access research papers. A link to the full-text PDF or full-text web page is supplied for each search result.

- Focused on open access research papers.

- Links to full text PDF or complete text web page are supplied for each search result.

- Export formats include BibTeX, Endnote, RefWorks, Zotero.

Cons:

- Coverage is limited to open access research papers.

- No abstracts are available for most articles.

- No related articles, references, or cited by features.

CORE is free to use.


#7. Science.gov

Science.gov is a search engine developed and managed by the United States government. It includes results from the databases of a variety of scientific agencies, including NASA, the EPA, USGS, and NIST.

US students are more likely to have early exposure to this tool for scholarly research.

- Coverage from a variety of scientific databases (200 million articles and reports).

- Links to full text are available for some articles.

Science.gov is free to use.


#8. Semantic Scholar

Semantic Scholar is a recent entrant to the field. Its goal is to provide more relevant and effective search results via artificial intelligence-powered methods that detect hidden relationships and connections between research topics.

- Powered by artificial intelligence, which enhances search results.

- Covers a large number of academic articles (approx. 40 million).

- Related articles, references, and cited by features are all included.

- Links to full text are available for most articles.

Semantic Scholar is free to use.


#9. RefSeek

RefSeek searches more than five billion documents, including web pages, books, encyclopedias, journals, and newspapers.

It feels like a free, Yahoo-style search engine with a massive directory. It can be useful when you are looking for research ideas from unexpected angles, and it may lead you to databases you didn't know about, such as the CIA's The World Factbook, which is a great reference tool.

- Searches more than five billion documents.

- The Documents tab is very focused on research papers and easy to use.

- Results can be filtered by date, type of document, and language.

- Good source for free academic articles, open access journals, and technical reports.

Cons:

- The navigation and user experience feel very dated, even to millennials.

- It takes more than three clicks to dig up interesting references (which is also how it can lead you to something beyond the first page of Google).

- The top of the results page is all ads (it is free to use, after all).

RefSeek is free to use.

#10. ResearchGate

ResearchGate is a mix of social networking site, forum, and content database where researchers can build their profiles, share research papers, and interact with one another.

Although it is not an academic search engine that searches beyond its own site, ResearchGate’s library of works is an excellent resource for any curious scholar.

There are more than 100 million publications available on the site from over 11 million researchers. It is possible to search by publication, data, and author, as well as to ask the researchers questions.

- A great place to find research papers and researchers.

- Can follow other researchers and get updates when they share new papers or make changes to their profile.

- The network effect can be helpful in finding people who have expertise in a particular topic.

Cons:

- The interface is not very user-friendly.

- Can be overwhelming when trying to find relevant papers.

- Some papers are behind a paywall.

ResearchGate is free to use.


#11. DataONE Search (formerly CiteULike)

A social networking site for academics who want to share and discover academic articles and papers.

- A great place to find academic papers that have been shared by other academics.

Cons:

- Some papers are behind a paywall.

CiteULike is free to use.

#12. DataElixir

DataElixir is designed to help you find, understand, and use data. It includes a curated list of the best open datasets, tools, and resources for data science.

- Dedicated resource for finding open data sets, tools, and resources for data science.

- The website is easy to navigate.

- The content is updated regularly

- The resources are grouped by category.

Cons:

- Not all of the resources are applicable to academic research.

- Some of the content is outdated.

DataElixir is free to use.

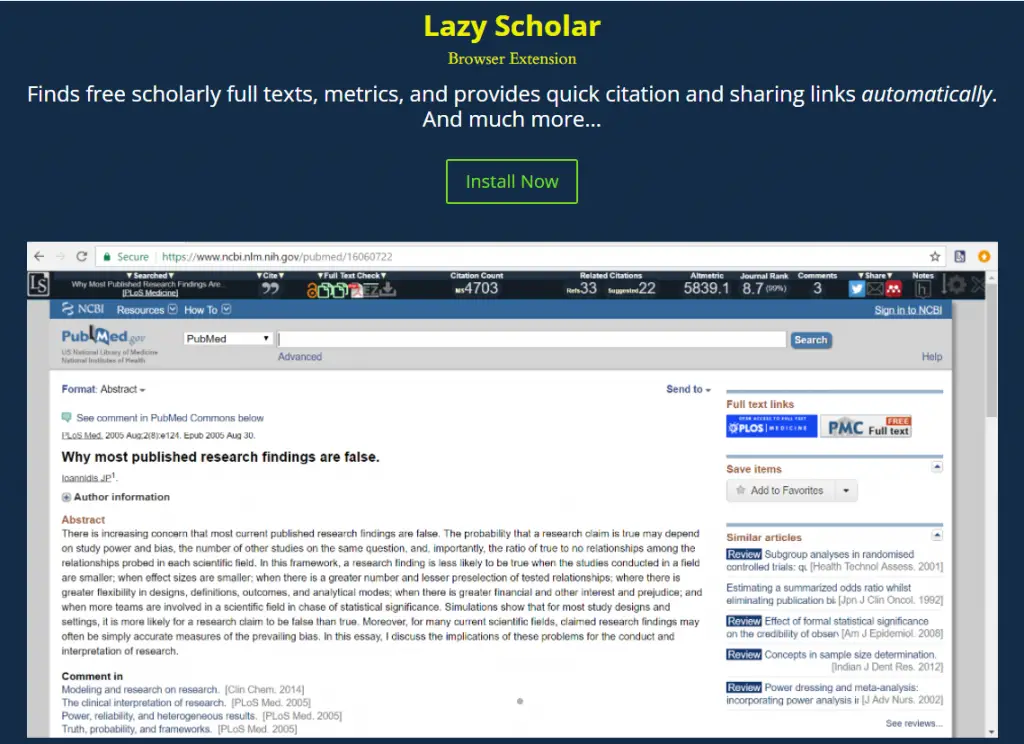

#13. LazyScholar – browser extension

LazyScholar is a free browser plugin that helps you discover free academic full texts, metrics, and instant citation and sharing links. LazyScholar was created by Colby Vorland, a postdoctoral fellow at Indiana University.

- It can integrate with your library to find full texts even when you’re off-campus.

- Saves your history and provides an interface to find it.

- A pre-formatted citation is available in over 900 citation styles.

- Can recommend topics and scans new PubMed listings to suggest new papers.

Cons:

- Results can be a bit hit or miss.

LazyScholar is free to use.

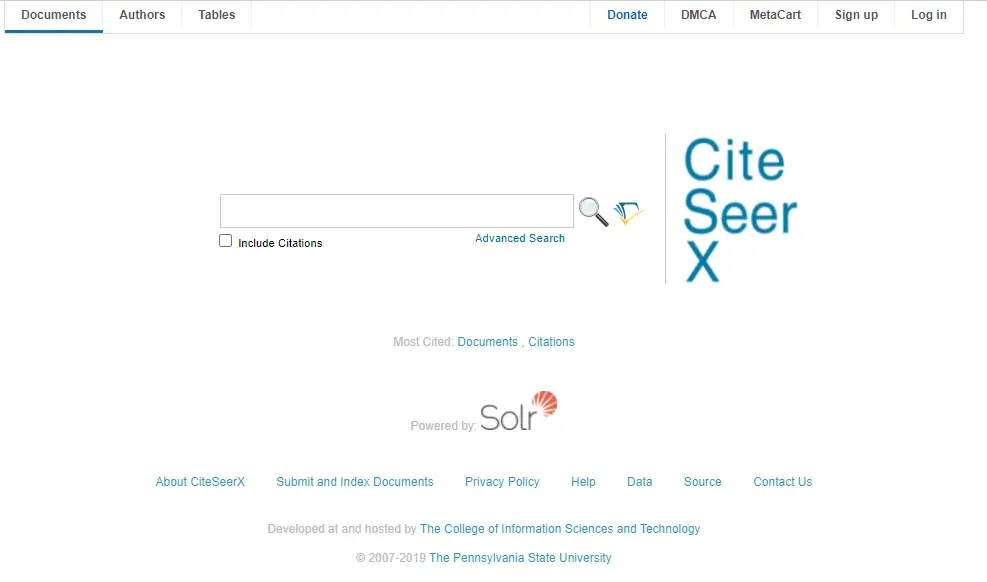

#14. CiteseerX – digital library from Penn State

CiteseerX is a digital library that stores and indexes research articles in computer science and related fields. The site has a robust search engine that allows you to filter results by date and author.

- Searches a large number of academic papers.

- Results can be filtered by date, author, and topic.

- The website is easy to use.

- You can create an account and save your searches for future reference.

CiteseerX is free to use.


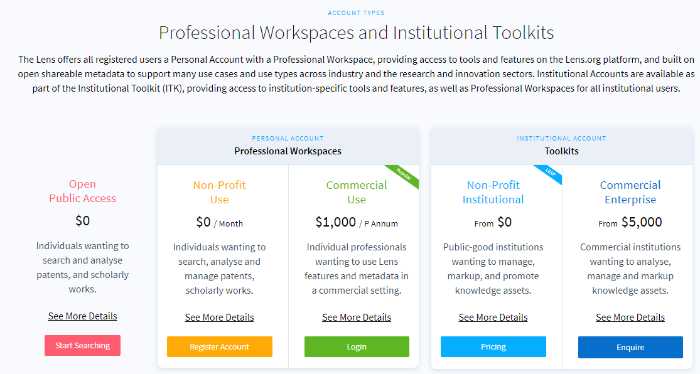

#15. The Lens – patents search

The Lens or the Patent Lens is an online patent and scholarly literature search facility, provided by Cambia, an Australia-based non-profit organization.

- Searches a large number of patents and academic papers.

Pricing ranges from free for non-profit use to $5,000 for commercial enterprises.

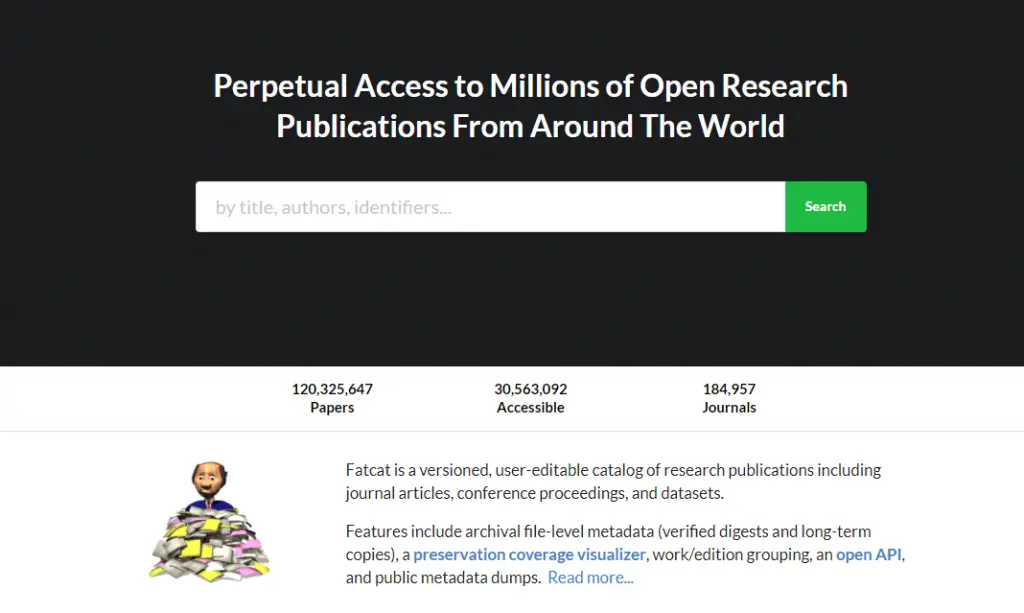

#16. Fatcat – wiki for bibliographic catalog

Fatcat is an open bibliographic catalog of written works. The scope of works is somewhat flexible, with a focus on published research outputs like journal articles, pre-prints, and conference proceedings. Records are collaboratively editable, versioned, available in bulk form, and include URL-agnostic file-level metadata.

- Open source and collaborative.

- You can join a community that is strongly focused on its mission.

- The archival file-level metadata (verified digests and long-term copies) is a great feature.

Cons:

- Could prove to be another rabbit hole.

- People either love or hate the text-only interface.
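
File-level digests are what make an archived copy verifiable: if the hash of the file you downloaded matches the one recorded in the catalog, the bytes are identical. Below is a minimal Python sketch, assuming you already have an expected SHA-1 hex digest copied from a catalog record such as Fatcat's. The function names and the SHA-1 default are illustrative assumptions; `hashlib.new` also accepts names like `"sha256"` or `"md5"` if the record lists those instead.

```python
import hashlib
from pathlib import Path


def file_digest_hex(path: Path, algorithm: str = "sha1", chunk_size: int = 1 << 16) -> str:
    """Hash a file in fixed-size chunks so large PDFs never need to fit in memory."""
    digest = hashlib.new(algorithm)
    with path.open("rb") as fh:
        # iter() with a sentinel keeps reading until read() returns b"" at end of file.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_archived_copy(path: Path, expected_hex: str, algorithm: str = "sha1") -> bool:
    """True if the local file's digest equals the expected hex digest (case-insensitive)."""
    return file_digest_hex(path, algorithm) == expected_hex.strip().lower()
```

Reading in chunks keeps memory use constant regardless of file size, which matters when checking large scanned PDFs or datasets.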

#17. Lexis Web – Legal database

Are you researching legal topics? You can turn to Lexis Web for any law-related questions you may have. The results are drawn from legal sites and can be filtered based on criteria such as news, blogs, government, and commercial. Additionally, users can filter results by jurisdiction, practice area, source and file format.

- Results are drawn from legal sites.

- Filters are available based on criteria such as news, blogs, government, and commercial.

- Users can filter results by jurisdiction, practice area, source and file format.

Cons:

- Not all law-related questions will be answered by this search engine.

- Coverage is limited to legal sites only.

Lexis Web is free for up to three searches per day. After that, a subscription is required.

#18. Infotopia – part of the VLRC family

Infotopia touts itself as an “alternative to Google safe search.” Scholarly book results are curated by librarians, teachers, and other educational workers. Users can select from a range of topics such as art, health, and science and technology, and then see a list of resources pertaining to the topic.

If you can’t find what you are looking for within Infotopia’s pages, you will probably find it on one of its many suggested websites.

#19. Virtual Learning Resources Center

Virtual Learning Resources Center (VLRC) is an academic search engine that features thousands of academic sites chosen by educators and librarians worldwide. Using an index built from research portals and from university and library internet subject guides, students and instructors can find current, authoritative information for school.

- Indexes thousands of academic information websites. Its custom Google search lets you refine results further, which speeds up your research.

- Many people consider VLRC as one of the best free search engines to start looking for research material.

- TeachThought rated the Virtual LRC #3 in its list of 100 Search Engines For Academic Research.

- More relevant to education

- More relevant to students

#20. Jurn

Powered by Google Custom Search Engine (CSE), Jurn is a free online search engine for accessing and downloading free full-text scholarly papers. David Haden created it and released it as a public open beta in February 2009, initially for locating open access electronic journal articles in the arts and humanities.

After the indexing process was completed, a website with additional public directories of web links to indexed publications was introduced in mid-2009. The Jurn search service and directory have been regularly modified and cleaned since then.

- A great resource for finding academic papers that are behind paywalls.

- The content is updated regularly.

Jurn is free to use.

#21. WorldWideScience

The Office of Scientific and Technical Information, a branch of the Office of Science within the U.S. Department of Energy, hosts the portal WorldWideScience, which has dubbed itself “The Global Science Gateway.”

The website draws on databases from over 70 countries. When a user enters a query, it searches databases from all across the world and shows results from both English-language and translated journals and academic resources.

- Results can be filtered by language and type of resource.

- The interface is easy to use.

- Contains both academic journal articles and translated academic resources.

Cons:

- The website can be difficult to navigate.

WorldWideScience is free to use.

#22. Google Books

A user can browse thousands of books on Google Books, from popular titles to old titles, to find pages that include their search terms. You can look through pages, read online reviews, and find out where to buy a hard copy once you find the book you are interested in.

#23. DOAJ (Directory of Open Access Journals)

DOAJ is a free search engine for scientific and scholarly materials. Its searchable database lists over 8,000 peer-reviewed, open access journals organized by subject, making it one of the most comprehensive libraries of scientific and scholarly resources.

#24. Baidu Scholar

Baidu Xueshu (Baidu Scholar) is the Chinese counterpart of Google Scholar. Baidu Scholar indexes academic papers from a variety of disciplines in both Chinese and English.

- Articles are available in full text PDF.

- Covers a variety of academic disciplines.

- No abstracts are available for most articles, but summaries are provided for some.

- A great portal that takes you to different specialized research platforms.

Cons:

- You need to be able to read Chinese to use the site.

- Since 2021, there has been a growing focus on China and the Chinese Communist Party.

Baidu Scholar is free to use.

#25. PubMed Central

PubMed is a free search engine that provides references and abstracts for medical, life sciences, and biomedical topics.

If you’re studying anything related to healthcare or science, this site is perfect. PubMed Central is operated by the National Center for Biotechnology Information, a division of the U.S. National Library of Medicine. It contains more than 3 million full-text journal articles.

It’s similar to PubMed Health, which focuses on health-related research and includes abstracts and citations to over 26 million articles.

#26. MEDLINE®

MEDLINE® is a paid subscription database for life sciences and biomedicine that includes more than 28 million citations to journal articles. For finding reliable, carefully chosen health information, MedlinePlus provides a powerful search tool and even a dictionary.

- A great database for life sciences and biomedicine.

- Contains more than 28 million references to journal articles.

- References can be filtered by date, type of document, and language.

Cons:

- The database is expensive to access.

- Some people find it difficult to navigate and find what they are looking for.

MEDLINE is not free to use.

Defunct Academic Search Engines

#27. Microsoft Academic

Microsoft Academic Search seemed to be a failure from the beginning. It ended in 2012, then relaunched in 2016 as Microsoft Academic, which let researchers search academic publications.

Microsoft Academic used to be the second-largest academic search engine after Google Scholar. It provided a wealth of data for free, but Microsoft shut it down at the end of 2021.

#28. Scizzle

Scizzle was designed to help researchers stay on top of the literature by setting up email alerts, based on key terms, for new papers.

Unfortunately, academic search engines come and go. These are two that are no longer available.

Final Thoughts

There are many academic search engines that can help researchers and scholars find the information they need. This list provides a variety of options, starting with more familiar engines and moving on to less well-known ones.

Keeping an open mind and exploring different sources is essential for conducting effective online research. With so much information at our fingertips, it’s important to make sure we’re using the best tools available to us.

Tell us in the comments below: which academic search engine had you not heard of? Which database do you think we should add? What databases do your professional societies use? What are the most useful academic websites for research, in your opinion?







2012-2024 © scijournal.org



Literature Search: Databases and Gray Literature

The literature search.

- A systematic review search includes a search of databases, gray literature, personal communications, and a handsearch of high impact journals in the related field. See our list of recommended databases and gray literature sources on this page.

- A comprehensive literature search cannot depend on a single database, nor on bibliographic databases alone.

- Including multiple databases helps avoid publication bias (geographic bias or bias against publication of negative results).

- The Cochrane Collaboration recommends PubMed, Embase and the Cochrane Central Register of Controlled Trials (CENTRAL) at a minimum.

- NOTE: The Cochrane Collaboration and the IOM recommend that the literature search be conducted by librarians or persons with extensive literature search experience. Please contact the NIH Librarians for assistance with the literature search component of your systematic review.
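
Because a comprehensive search draws on several databases, the same paper usually comes back more than once. A common first pass is to merge the result lists and drop repeats by DOI, falling back to a normalized title when a record has no DOI. The sketch below is a simplified illustration, not part of the NIH Library guidance: it assumes each result is a plain dict with optional `doi` and `title` keys (a hypothetical shape), and title matching can both miss variants and merge distinct papers that share a title, so dropped records deserve a manual check.

```python
import re
import unicodedata

# Common URL and scheme forms that may precede a bare DOI in exported results.
DOI_PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def normalize_doi(doi: str) -> str:
    """DOIs are case-insensitive, so compare a lowercased, prefix-free form."""
    doi = doi.strip().lower()
    for prefix in DOI_PREFIXES:
        if doi.startswith(prefix):
            return doi[len(prefix):].strip()
    return doi


def normalize_title(title: str) -> str:
    """Drop accents, case, punctuation, and extra whitespace so trivial variants match."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    # \W is Unicode-aware in Python 3, so non-Latin titles keep their letters.
    text = re.sub(r"[\W_]+", " ", text.casefold())
    return text.strip()


def deduplicate(*result_lists: list[dict]) -> list[dict]:
    """Merge result lists in order, keeping the first copy of each paper."""
    seen: set[str] = set()
    merged: list[dict] = []
    for results in result_lists:
        for record in results:
            keys = []
            if record.get("doi"):
                keys.append("doi:" + normalize_doi(record["doi"]))
            title_key = normalize_title(record.get("title") or "")
            if title_key:
                keys.append("title:" + title_key)
            if any(key in seen for key in keys):
                continue  # already kept from an earlier database
            seen.update(keys)
            merged.append(record)
    return merged
```

For example, passing a PubMed list and an Embase list that both contain the same DOI (one written as a `https://doi.org/` URL) keeps only the first copy. The order of the arguments decides which database's record survives.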

Cochrane Library

A collection of six databases that contain different types of high-quality, independent evidence to inform healthcare decision-making, including the Cochrane Central Register of Controlled Trials (CENTRAL).

Embase

European database of biomedical and pharmacologic literature.

PubMed comprises more than 21 million citations for biomedical literature from MEDLINE, life science journals, and online books.

Scopus

Largest abstract and citation database of peer-reviewed literature and quality web sources. Contains conference papers.

Web of Science

One of the world's leading citation databases. Covers over 12,000 of the highest-impact journals worldwide, including Open Access journals, and over 150,000 conference proceedings. Coverage spans the sciences, social sciences, arts, and humanities, going back to 1900.

Subject Specific Databases

APA PsycINFO

Over 4.5 million abstracts of peer-reviewed literature in the behavioral and social sciences. Includes conference papers, book chapters, psychological tests, scales and measurement tools.

CINAHL Plus

Comprehensive journal index to nursing and allied health literature, includes books, nursing dissertations, conference proceedings, practice standards and book chapters.

LILACS

Latin American and Caribbean health sciences literature database.

Gray Literature

- Gray literature is the term for information that falls outside the mainstream of published journal and monograph literature and is not controlled by commercial publishers.

- Hard-to-find studies, reports, or dissertations

- Conference abstracts or papers

- Governmental or private-sector research

- Clinical trials, ongoing or unpublished

- Experts and researchers in the field

- Library catalogs

- Professional association websites

- Google Scholar - Search scholarly literature across many disciplines and sources, including theses, books, abstracts and articles.

- Dissertation Abstracts - dissertation and theses database - NIH Library biomedical librarians can access and search for you.

- NTIS - central resource for government-funded scientific, technical, engineering, and business related information.

- AHRQ - Agency for Healthcare Research and Quality

- Open Grey - system for information on grey literature in Europe. Open access to 700,000 references to the grey literature.

- World Health Organization - providing leadership on global health matters, shaping the health research agenda, setting norms and standards, articulating evidence-based policy options, providing technical support to countries and monitoring and assessing health trends.

- New York Academy of Medicine Grey Literature Report - a bimonthly publication of The New York Academy of Medicine (NYAM) alerting readers to new gray literature publications in health services research and selected public health topics. NOTE: Discontinued as of Jan 2017, but resources are still accessible.

- Gray Source Index

- OpenDOAR - directory of academic repositories

- International Clinical Trials Registry Platform - from the World Health Organization

- Australian New Zealand Clinical Trials Registry

- Brazilian Clinical Trials Registry

- Chinese Clinical Trial Registry

- ClinicalTrials.gov - U.S. and international federally and privately supported clinical trials registry and results database

- Clinical Trials Registry - India

- EU Clinical Trials Register

- Japan Primary Registries Network

- Pan African Clinical Trials Registry


Literature searches: what databases are available?

Posted on 6th April 2021 by Izabel de Oliveira

Many types of research require a search of the medical literature as part of the process of understanding the current evidence or knowledge base. This can be done using one or more biomedical bibliographic databases. [1]

Bibliographic databases make the information contained in the papers more visible to the scientific community and facilitate locating the desired literature.

This blog describes some of the main bibliographic databases which index medical journals.

PubMed was launched in 1996 and, since June 1997, has provided free and unlimited access to all users through the internet. The PubMed database contains more than 30 million references to biomedical literature from approximately 7,000 journals. The largest share of records in PubMed (95%) comes from MEDLINE, which contains 25 million records from over 5,600 journals. Other records derive from sources such as in-process citations, ‘Ahead of Print’ citations, and the NCBI Bookshelf.

The second largest component of PubMed is PubMed Central (PMC). Launched in 2000, PMC is a permanent collection of full-text life sciences and biomedical journal articles. It includes articles deposited by journal publishers, as well as author manuscripts of published articles submitted in compliance with the public access policies of the National Institutes of Health (NIH) and other research funding agencies. PMC contains approximately 4.5 million articles.

Some National Library of Medicine (NLM) resources associated with PubMed are the NLM Catalog and MedlinePlus. The NLM Catalog contains bibliographic records for over 1.4 million journals, books, audiovisuals, electronic resources, and other materials. It also includes detailed indexing information for journals in PubMed and other NCBI databases, although not all materials in the NLM Catalog are part of NLM’s collection. MedlinePlus is a consumer health website providing information on various health topics, drugs, dietary supplements, and health tools.

MeSH (Medical Subject Headings) is the NLM controlled vocabulary used for indexing articles in PubMed. Indexers who analyze and maintain the PubMed database use it to reflect the subject content of journal articles as they are published. Indexers typically select 10–12 MeSH terms to describe every paper.

Embase is considered the second most popular database after MEDLINE. It is estimated to contain more than 32 million records from over 8,200 journals published in more than 95 countries, as well as ‘grey literature’ from over 2.4 million conference abstracts.

Embase covers health care subtopics such as complementary and alternative medicine, prognostic studies, telemedicine, psychiatry, and health technology. It is also widely used for research on drug-related topics, as it offers better coverage of pharmaceutical literature than MEDLINE.

In 2010, Embase began to include all MEDLINE citations. MEDLINE records are delivered to Elsevier daily and are incorporated into Embase after de-duplication with records already indexed by Elsevier to produce ‘MEDLINE-unique’ records. These MEDLINE-unique records are not re-indexed by Elsevier. However, their indexing is mapped to Emtree terms used in Embase to ensure that Emtree terminology can be used to search all Embase records, including those originally derived from MEDLINE.
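The de-duplication step can be pictured with a minimal Python sketch. Elsevier's actual matching rules are not described here, so the sketch assumes, hypothetically, that a MEDLINE record duplicates an Embase record when their DOIs match or when their normalised titles and publication years match. The `Record` class and the helper functions are illustrative names, not part of any Embase or MEDLINE interface.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass(frozen=True)
class Record:
    """Hypothetical bibliographic record holding only the fields used for matching."""
    title: str
    year: int
    doi: Optional[str] = None


def normalise_title(title: str) -> str:
    # Lowercase and collapse punctuation and whitespace so that trivial
    # formatting differences between databases do not block a match.
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def match_keys(rec: Record) -> Set[Tuple]:
    # Every record gets a title+year key; records with a DOI also get a DOI key.
    keys: Set[Tuple] = {("title", normalise_title(rec.title), rec.year)}
    if rec.doi:
        keys.add(("doi", rec.doi.strip().lower()))
    return keys


def medline_unique(embase: List[Record], medline: List[Record]) -> List[Record]:
    """Return the MEDLINE records that share no match key with any Embase record."""
    seen: Set[Tuple] = set()
    for rec in embase:
        seen |= match_keys(rec)
    return [rec for rec in medline if not (match_keys(rec) & seen)]


embase = [Record("Asthma in children: a cohort study", 2019, "10.1000/abc")]
medline = [
    Record("Asthma in Children - A Cohort Study", 2019),  # same paper, no DOI
    Record("Eczema treatments", 2020, "10.1000/xyz"),      # not in Embase
]
unique = medline_unique(embase, medline)
print([rec.title for rec in unique])  # ['Eczema treatments']
```

Exact title-plus-year matching is deliberately strict; practical de-duplication usually adds fuzzier comparisons (authors, journal, pagination), which this sketch leaves out.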

Since this coverage expansion—at least in theory and without taking into consideration the different indexing practices of the two databases—a search in Embase alone should cover every record in both Embase and MEDLINE, making Embase a possible “one-stop” search engine for medical research [1].

Emtree is a hierarchically structured, controlled vocabulary for biomedicine and the related life sciences. It includes a whole range of terms for drugs, diseases, medical devices, and essential life science concepts. Emtree is used to index all of the Embase content. This process includes full-text indexing of journal articles, which is done by experts.

LILACS, the most important index of technical-scientific literature in Latin America and the Caribbean, was created in 1985 to record scientific and technical production in health. It is maintained and updated by a network of more than 600 educational, government, and health research institutions, and coordinated by the Latin American and Caribbean Center on Health Sciences Information (BIREME), the Pan American Health Organization (PAHO), and the World Health Organization (WHO).

LILACS contains scientific and technical literature from over 908 journals from 26 countries in Latin America and the Caribbean, with free access. It holds about 900,000 records from peer-reviewed articles, theses and dissertations, government documents, conference proceedings, and books; more than 480,000 of them are available with a full-text link in open access.

The LILACS Methodology is a set of standards, manuals, guides, and applications in continuous development, intended for the collection, selection, description, indexing of documents, and generation of databases. This centralised methodology enables the cooperation between Latin American and Caribbean countries to create local and national databases, all feeding into the LILACS database. Currently, the databases LILACS, BBO, BDENF, MEDCARIB, and national databases of the countries of Latin America are part of the LILACS System.

Health Sciences Descriptors (DeCS) is the multilingual and structured vocabulary created by BIREME to serve as a unique language in indexing articles from scientific journals, books, congress proceedings, technical reports, and other types of materials, and also for searching and retrieving subjects from scientific literature from information sources available on the Virtual Health Library (VHL) such as LILACS, MEDLINE, and others. It was developed from the MeSH with the purpose of permitting the use of common terminology for searching in multiple languages, and providing a consistent and unique environment for the retrieval of information. DeCS vocabulary is dynamic and totals 34,118 descriptors and qualifiers, of which 29,716 come from MeSH, and 4,402 are exclusive.

Cochrane CENTRAL

The Cochrane Central Register of Controlled Trials (CENTRAL) is a database of reports of randomized and quasi-randomized controlled trials. Most records are obtained from the bibliographic databases PubMed and Embase, with additional records from the published and unpublished sources of CINAHL, ClinicalTrials.gov, and the WHO’s International Clinical Trials Registry Platform.

Although CENTRAL first began publication in 1996, records are included irrespective of their date of publication, and the language of publication is not a restriction on inclusion either. You won’t find the full text of the article on CENTRAL, but there is often a summary of the article in addition to the standard details of author, source, and year.

Within CENTRAL, there are ‘Specialized Registers’ which are collected and maintained by Cochrane Review Groups (plus a few Cochrane Fields), which include reports of controlled trials relevant to their area of interest. Some Cochrane Centres search the general healthcare literature of their countries or regions in order to contribute records to CENTRAL.

ScienceDirect

ScienceDirect is Elsevier’s most important peer-reviewed academic literature platform. It was launched in 1997 and contains 16 million records from over 2,500 journals, including over 250 Open Access publications such as Cell Reports and The Lancet Global Health, as well as 39,000 eBooks.

ScienceDirect topics include:

- health sciences;

- life sciences;

- physical sciences;

- engineering;

- social sciences; and

- humanities.

Web of Science

Web of Science (previously Web of Knowledge) is an online scientific citation indexing service created in 1997 by the Institute for Scientific Information (ISI), and currently maintained by Clarivate Analytics.

Web of Science covers several fields of the sciences, social sciences, and arts and humanities. Its main resource is the Web of Science Core Collection which includes over 1 billion cited references dating back to 1900, indexed from 21,100 peer-reviewed journals, including Open Access journals, books and proceedings.

Web of Science also offers regional databases which cover:

- Latin America (SciELO Citation Index);

- China (Chinese Science Citation Database);

- Korea (Korea Citation Index);

- Russia (Russian Science Citation Index).

Boolean operators

To make a search more precise, we can use Boolean operators between our keywords in databases.

We use Boolean operators to focus on a topic, particularly when this topic contains multiple search terms, and to connect various pieces of information in order to find exactly what we are looking for.

Boolean operators connect search words to either narrow or broaden the set of results. The three basic Boolean operators are AND, OR, and NOT.

- AND narrows a search by telling the database that all keywords used must be found in an article for it to appear in our results.

- OR broadens a search by telling the database that any of the words it connects are acceptable (this is useful when we are searching for synonyms).

- NOT narrows a search by telling the database to eliminate records containing the terms that follow it (this is helpful when we are interested in a specific aspect of a topic or when we want to exclude a type of article).
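The three operators behave like set operations on the records that match each keyword: AND is an intersection, OR is a union, and NOT is a set difference. The short Python sketch below shows this on a tiny, made-up index; the record IDs and keywords are hypothetical, and real databases add field tags, truncation, and operator-precedence rules that differ from platform to platform.

```python
from typing import Dict, Set

# Hypothetical toy index: record ID -> keywords indexed for that record.
INDEX: Dict[int, Set[str]] = {
    1: {"asthma", "children", "inhaler"},
    2: {"asthma", "adults", "inhaler"},
    3: {"asthma", "children", "review"},
    4: {"eczema", "children"},
}


def hits(term: str) -> Set[int]:
    """IDs of records indexed with the given keyword."""
    return {rid for rid, terms in INDEX.items() if term in terms}


# AND narrows: keep only records matching both keywords (intersection).
asthma_and_children = hits("asthma") & hits("children")
# OR broadens: keep records matching either keyword (union).
asthma_or_eczema = hits("asthma") | hits("eczema")
# NOT narrows: drop records matching the excluded keyword (difference).
asthma_not_review = hits("asthma") - hits("review")
# Combining operators: parentheses fix the grouping explicitly, because
# Python's own precedence would otherwise apply "-" before "&".
# (asthma OR eczema) AND children NOT review
combined = ((hits("asthma") | hits("eczema")) & hits("children")) - hits("review")

print(sorted(asthma_and_children))  # [1, 3]
print(sorted(asthma_or_eczema))     # [1, 2, 3, 4]
print(sorted(asthma_not_review))    # [1, 2]
print(sorted(combined))             # [1, 4]
```

The same point applies in real databases: when a query mixes operators, adding parentheses is the safest way to get the grouping you intend.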

References (pdf)

You may also be interested in the following blogs for further reading:

Conducting a systematic literature search

Reviewing the evidence: what method should I use?

Cochrane Crowd for students: what’s in it for you?


- Open access

- Published: 14 August 2018

Defining the process to literature searching in systematic reviews: a literature review of guidance and supporting studies

- Chris Cooper (ORCID: orcid.org/0000-0003-0864-5607)

- Andrew Booth

- Jo Varley-Campbell

- Nicky Britten

- Ruth Garside

BMC Medical Research Methodology, volume 18, Article number 85 (2018)


Systematic literature searching is recognised as a critical component of the systematic review process. It involves a systematic search for studies and aims for a transparent report of study identification, leaving readers clear about what was done to identify studies, and how the findings of the review are situated in the relevant evidence.

Information specialists and review teams appear to work from a shared and tacit model of the literature search process. How this tacit model has developed and evolved is unclear, and it has not been explicitly examined before.

The purpose of this review is to determine if a shared model of the literature searching process can be detected across systematic review guidance documents and, if so, how this process is reported in the guidance and supported by published studies.

A literature review.

Two types of literature were reviewed: guidance and published studies. Nine guidance documents were identified, including the Cochrane and Campbell Handbooks. Published studies were identified through ‘pearl growing’, citation chasing, a search of PubMed using the systematic review methods filter, and the authors’ topic knowledge.

The relevant sections within each guidance document were then read and re-read, with the aim of determining key methodological stages. Methodological stages were identified and defined. These data were reviewed to identify agreements and areas of unique guidance between guidance documents. Consensus across multiple guidance documents was used to inform the selection of ‘key stages’ in the process of literature searching.

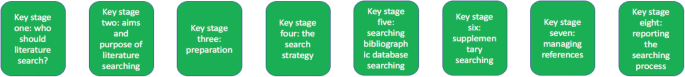

Eight key stages were determined relating specifically to literature searching in systematic reviews. They were: who should literature search, aims and purpose of literature searching, preparation, the search strategy, searching databases, supplementary searching, managing references and reporting the search process.

Conclusions

Eight key stages to the process of literature searching in systematic reviews were identified. These key stages are consistently reported in the nine guidance documents, suggesting consensus on the key stages of literature searching, and therefore the process of literature searching as a whole, in systematic reviews. Further research to determine the suitability of using the same process of literature searching for all types of systematic review is indicated.


Systematic literature searching is recognised as a critical component of the systematic review process. It involves a systematic search for studies and aims for a transparent report of study identification, leaving review stakeholders clear about what was done to identify studies, and how the findings of the review are situated in the relevant evidence.

Information specialists and review teams appear to work from a shared and tacit model of the literature search process. How this tacit model has developed and evolved is unclear, and it has not been explicitly examined before. This is in contrast to the information science literature, which has developed information processing models as an explicit basis for dialogue and empirical testing. Without an explicit model, research in the process of systematic literature searching will remain immature and potentially uneven, and the development of shared information models will be assumed but never articulated.

One way of developing such a conceptual model is by formally examining the implicit “programme theory” as embodied in key methodological texts. The aim of this review is therefore to determine if a shared model of the literature searching process in systematic reviews can be detected across guidance documents and, if so, how this process is reported and supported.

Identifying guidance

Key texts (henceforth referred to as “guidance”) were identified based upon their accessibility to, and prominence within, United Kingdom systematic reviewing practice. The United Kingdom occupies a prominent position in the science of health information retrieval, as quantified by such objective measures as the authorship of papers, the number of Cochrane groups based in the UK, membership and leadership of groups such as the Cochrane Information Retrieval Methods Group, the HTA-I Information Specialists’ Group and historic association with such centres as the UK Cochrane Centre, the NHS Centre for Reviews and Dissemination, the Centre for Evidence Based Medicine and the National Institute for Clinical Excellence (NICE). Coupled with the linguistic dominance of English within medical and health science and the science of systematic reviews more generally, this offers a justification for a purposive sample that favours UK, European and Australian guidance documents.

Nine guidance documents were identified. These documents provide guidance for different types of reviews, namely: reviews of interventions, reviews of health technologies, reviews of qualitative research studies, reviews of social science topics, and reviews to inform guidance.

Whilst these guidance documents occasionally offer additional guidance on other types of systematic reviews, we have focused on the core and stated aims of these documents as they relate to literature searching. Table 1 sets out: the guidance document, the version audited, their core stated focus, and a bibliographical pointer to the main guidance relating to literature searching.

Once a list of key guidance documents was determined, it was checked by six senior information professionals based in the UK for relevance to current literature searching in systematic reviews.

Identifying supporting studies

In addition to identifying guidance, the authors sought to populate an evidence base of supporting studies (henceforth referred to as “studies”) that contribute to existing search practice. Studies were first identified by the authors from their knowledge of this topic area and, subsequently, through systematic citation chasing of key studies (‘pearls’ [1]) located within each key stage of the search process. These studies are identified in Additional file 1: Appendix Table 1. Citation chasing was conducted by analysing the bibliography of references for each study (backwards citation chasing) and through Google Scholar (forward citation chasing). A search of PubMed using the systematic review methods filter was undertaken in August 2017 (see Additional file 1). The search terms used were: (literature search*[Title/Abstract]) AND sysrev_methods[sb], and 586 results were returned. CC sifted these results for relevance to the key stages in Fig. 1.
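For readers who want to rerun a query like this programmatically, the Python sketch below only builds a request URL for NCBI's E-utilities `esearch` endpoint; it does not send the request. It assumes the standard `db`, `term`, and `retmax` parameters. Whether the `sysrev_methods[sb]` subset is still supported by current PubMed should be checked before use, and any count returned today will differ from the 586 results of August 2017.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Base URL of the NCBI E-utilities search service.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_esearch_url(term: str, retmax: int = 20) -> str:
    """Build (but do not send) a PubMed esearch request for a search string."""
    params = {"db": "pubmed", "term": term, "retmax": retmax}
    # urlencode percent-escapes brackets, asterisks and slashes,
    # and encodes spaces as '+'.
    return f"{ESEARCH_URL}?{urlencode(params)}"


# The search string reported in the study.
query = "(literature search*[Title/Abstract]) AND sysrev_methods[sb]"
url = build_esearch_url(query, retmax=600)
print(url)

# Round-trip check: decoding the query string recovers the original search term.
decoded_term = parse_qs(urlparse(url).query)["term"][0]
```

Keeping the search string as a single variable also makes it easy to report verbatim, which is what the guidance on transparent, reproducible searching asks for.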

Fig. 1: The key stages of literature search guidance, as identified from nine key texts

Extracting the data

To reveal the implicit process of literature searching within each guidance document, the relevant sections (chapters) on literature searching were read and re-read, with the aim of determining key methodological stages. We defined a key methodological stage as a distinct step in the overall process for which specific guidance is reported, and action is taken, that collectively would result in a completed literature search.

The chapter or section sub-heading for each methodological stage was extracted into a table using the exact language as reported in each guidance document. The lead author (CC) then read and re-read these data, and the paragraphs of the document to which the headings referred, summarising section details. This table was then reviewed, using comparison and contrast to identify agreements and areas of unique guidance. Consensus across multiple guidelines was used to inform selection of ‘key stages’ in the process of literature searching.
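The consensus step can be thought of as a tally of how many guidance documents give specific guidance on each candidate stage. The Python sketch below is illustrative only: the document names, stage labels, and two-document threshold are hypothetical and do not reproduce the authors' extraction table (Table 2) or their decision rule, which relied on reading, comparison, and contrast rather than a fixed cut-off.

```python
from collections import Counter
from typing import Dict, Set, Tuple

# Hypothetical extraction: guidance document -> stages it gives specific guidance on.
EXTRACTED: Dict[str, Set[str]] = {
    "guidance_A": {"preparation", "search strategy", "searching databases"},
    "guidance_B": {"preparation", "searching databases", "when to stop searching"},
    "guidance_C": {"search strategy", "searching databases", "reporting"},
}


def tally_stages(
    extraction: Dict[str, Set[str]], min_documents: int = 2
) -> Tuple[Set[str], Set[str]]:
    """Split stages into consensus stages and unique guidance (reported by one document)."""
    # Count, for each stage, how many documents mention it.
    counts = Counter(stage for stages in extraction.values() for stage in stages)
    consensus = {stage for stage, n in counts.items() if n >= min_documents}
    unique = {stage for stage, n in counts.items() if n == 1}
    return consensus, unique


key_stages, unique_guidance = tally_stages(EXTRACTED)
print(sorted(key_stages))       # ['preparation', 'search strategy', 'searching databases']
print(sorted(unique_guidance))  # ['reporting', 'when to stop searching']
```

Separating "consensus" from "unique" mirrors the two outputs the review reports: the key stages, and areas of guidance found in only one document.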

Having determined the key stages to literature searching, we then read and re-read the sections relating to literature searching again, extracting specific detail relating to the methodological process of literature searching within each key stage. Again, the guidance was then read and re-read, first on a document-by-document basis and, secondly, across all the documents above, to identify both commonalities and areas of unique guidance.

Results and discussion

Our findings.

We were able to identify consensus across the guidance on literature searching for systematic reviews suggesting a shared implicit model within the information retrieval community. Whilst the structure of the guidance varies between documents, the same key stages are reported, even where the core focus of each document is different. We were able to identify specific areas of unique guidance, where a document reported guidance not summarised in other documents, together with areas of consensus across guidance.

Unique guidance

Only one document provided guidance on the topic of when to stop searching [2]. This guidance from 2005 anticipates a topic of increasing importance with the current interest in time-limited (i.e. “rapid”) reviews. Quality assurance (or peer review) of literature searches was only covered in two guidance documents [3, 4]. This topic has emerged as increasingly important, as indicated by the development of the PRESS instrument [5]. Text mining was discussed in four guidance documents [4, 6, 7, 8], where the automation of some manual review work may offer efficiencies in literature searching [8].

Agreement between guidance: Defining the key stages of literature searching

Where there was agreement on the process, we determined that this constituted a key stage in the process of literature searching to inform systematic reviews.

From the guidance, we determined eight key stages that relate specifically to literature searching in systematic reviews. These are summarised in Fig. 1. The data extraction table informing Fig. 1 is reported in Table 2. Table 2 reports the areas of common agreement and demonstrates that the language used to describe key stages and processes varies significantly between guidance documents.

For each key stage, we set out the specific guidance, followed by discussion on how this guidance is situated within the wider literature.

Key stage one: Deciding who should undertake the literature search

The guidance.

Eight documents provided guidance on who should undertake literature searching in systematic reviews [2, 4, 6, 7, 8, 9, 10, 11]. The guidance affirms that people with relevant expertise in literature searching should ‘ideally’ be included within the review team [6]. Information specialists (or information scientists), librarians or trial search co-ordinators (TSCs) are indicated as appropriate researchers in six guidance documents [2, 7, 8, 9, 10, 11].

How the guidance corresponds to the published studies

The guidance is consistent with studies that call for the involvement of information specialists and librarians in systematic reviews [12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26] and which demonstrate how their training as ‘expert searchers’ and ‘analysers and organisers of data’ can be put to good use [13] in a variety of roles [12, 16, 20, 21, 24, 25, 26]. These arguments make sense in the context of the aims and purposes of literature searching in systematic reviews, explored below. The need for ‘thorough’ and ‘replicable’ literature searches was fundamental to the guidance and recurs in key stage two. Studies have found poor reporting, and a lack of replicable literature searches, to be a weakness in systematic reviews [17, 18, 27, 28], and they argue that involving information specialists/librarians would be associated with better reporting and better quality literature searching. Indeed, Meert et al. [29] demonstrated that involving a librarian as a co-author of a systematic review correlated with a higher score in the literature searching component of the review. As ‘new styles’ of rapid and scoping reviews emerge, where decisions on how to search are more iterative and creative, information specialists and librarians have a clear role here too [30].

Knowing where to search for studies was noted as important in the guidance, but there was no agreement on the appropriate number of databases to be searched [2, 6]. Database selection (and resource selection more broadly) is acknowledged as a key skill of information specialists and librarians [12, 15, 16, 31].

Whilst arguments for including information specialists and librarians in the process of systematic review might be considered self-evident, Koffel and Rethlefsen [31] have questioned whether the necessary involvement is actually happening.

Key stage two: Determining the aim and purpose of a literature search

The aim: Five of the nine guidance documents use adjectives such as ‘thorough’, ‘comprehensive’, ‘transparent’ and ‘reproducible’ to define the aim of literature searching [6, 7, 8, 9, 10]. Analogous phrases were present in a further three guidance documents, namely: ‘to identify the best available evidence’ [4] or ‘the aim of the literature search is not to retrieve everything. It is to retrieve everything of relevance’ [2] or ‘A systematic literature search aims to identify all publications relevant to the particular research question’ [3]. The Joanna Briggs Institute reviewers’ manual was the only guidance document where a clear statement on the aim of literature searching could not be identified. The purpose of literature searching was defined in three guidance documents, namely to minimise bias in the resultant review [6, 8, 10]. Accordingly, eight of nine documents clearly asserted that thorough and comprehensive literature searches are required as a potential mechanism for minimising bias.

The need for thorough and comprehensive literature searches appears uniform within the eight guidance documents that describe approaches to literature searching in systematic reviews of effectiveness. Reviews of effectiveness (of intervention or cost), accuracy and prognosis require thorough and comprehensive literature searches to transparently produce a reliable estimate of intervention effect. The belief that all relevant studies have been ‘comprehensively’ identified, and that this process has been ‘transparently’ reported, increases confidence in the estimate of effect and the conclusions that can be drawn [32]. The supporting literature exploring the need for comprehensive literature searches focuses almost exclusively on reviews of intervention effectiveness and meta-analysis. Different ‘styles’ of review may, however, have different standards; the alternative offered by purposive sampling has been suggested in the specific context of qualitative evidence syntheses [33].

What is a comprehensive literature search?

Whilst the guidance calls for thorough and comprehensive literature searches, it lacks clarity on what constitutes a thorough and comprehensive literature search, beyond the implication that all of the literature search methods in Table 2 should be used to identify studies. Egger et al. [34], in an empirical study evaluating the importance of comprehensive literature searches for trials in systematic reviews, defined a comprehensive search for trials as:

- a search not restricted to the English language;

- where Cochrane CENTRAL or at least two other electronic databases had been searched (such as MEDLINE or EMBASE); and

- where at least one of the following search methods had been used to identify unpublished trials: searches for (i) conference abstracts, (ii) theses, (iii) trials registers; and (iv) contacts with experts in the field [34].
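Egger et al.'s three criteria can be read as a checklist that a reported search either meets or does not. The Python sketch below encodes them under stated assumptions: the `SearchReport` structure and the method labels are hypothetical, CENTRAL is identified simply by the string "CENTRAL", and "at least two other electronic databases" is read as two databases besides CENTRAL.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical labels for the four methods of finding unpublished trials.
UNPUBLISHED_TRIAL_METHODS = {
    "conference abstracts",
    "theses",
    "trials registers",
    "contact with experts",
}


@dataclass
class SearchReport:
    """Hypothetical summary of how a review's search was conducted."""
    english_only: bool
    databases: Set[str] = field(default_factory=set)
    supplementary_methods: Set[str] = field(default_factory=set)


def is_comprehensive(report: SearchReport) -> bool:
    """Apply the three criteria of Egger et al.; all of them must hold."""
    # 1. The search is not restricted to the English language.
    no_language_limit = not report.english_only
    # 2. CENTRAL, or at least two other electronic databases, were searched.
    enough_databases = (
        "CENTRAL" in report.databases
        or len(report.databases - {"CENTRAL"}) >= 2
    )
    # 3. At least one method aimed at unpublished trials was used.
    seeks_unpublished = bool(report.supplementary_methods & UNPUBLISHED_TRIAL_METHODS)
    return no_language_limit and enough_databases and seeks_unpublished


review = SearchReport(
    english_only=False,
    databases={"MEDLINE", "Embase"},
    supplementary_methods={"trials registers"},
)
print(is_comprehensive(review))  # True
```

Writing the criteria down this explicitly also exposes what they leave open, such as how many supplementary methods are enough, which matches the uncertainty about comprehensiveness discussed below.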

Tricco et al. (2008) used a similar threshold of bibliographic database searching AND a supplementary search method in a review when examining the risk of bias in systematic reviews. Their criteria were: one database (limited using the Cochrane Highly Sensitive Search Strategy (HSSS)) and handsearching [35].

Together with the guidance, this would suggest that comprehensive literature searching requires the use of BOTH bibliographic database searching AND supplementary search methods.

Comprehensiveness in literature searching, in the sense of how much searching should be undertaken, remains unclear. Egger et al. recommend that ‘investigators should consider the type of literature search and degree of comprehension that is appropriate for the review in question, taking into account budget and time constraints’ [34]. This view tallies with the Cochrane Handbook, which clearly stipulates that study identification should be undertaken ‘within resource limits’ [9]. This suggests that limits to comprehensiveness are recognised, but it raises questions about how these limits are decided and reported [36].

What is the point of comprehensive literature searching?

The purpose of thorough and comprehensive literature searches is to avoid missing key studies and to minimize bias [6, 8, 10, 34, 37, 38, 39], since a systematic review based only on published (or easily accessible) studies may have an exaggerated effect size [35]. Felson (1992) sets out potential biases that could affect the estimate of effect in a meta-analysis [40], and Tricco et al. summarize the evidence concerning bias and confounding in systematic reviews [35]. Egger et al. point to non-publication of studies, publication bias, language bias and MEDLINE bias as key biases [34, 35, 40, 41, 42, 43, 44, 45, 46]. Comprehensive searches are not the sole factor in mitigating these biases, but their contribution is thought to be significant [2, 32, 34]. Fehrmann (2011) suggests that describing the search process in detail, together with applying standard comprehensive search techniques, increases confidence in the search results [32].

Does comprehensive literature searching work?

Egger et al., and other study authors, have demonstrated a change in the estimate of intervention effectiveness where relevant studies were excluded from meta-analysis [ 34 , 47 ]. This suggests that studies missed in literature searching can alter the reliability of effectiveness estimates, which is an argument for comprehensive literature searching. Conversely, Egger et al. found that ‘comprehensive’ searches still missed studies and that comprehensive searches could, in fact, introduce bias into a review rather than prevent it, by identifying low-quality studies that are then included in the meta-analysis [ 34 ]. Other studies question whether identifying and including low-quality or grey literature studies changes the estimate of effect [ 43 , 48 ], and whether time is better invested in updating systematic reviews than in searching for unpublished studies [ 49 ], or in mapping studies for review rather than aiming for high sensitivity in literature searching [ 50 ].

Aim and purpose beyond reviews of effectiveness

The need for comprehensive literature searches is less certain in reviews of qualitative studies, and in reviews where comprehensive identification of studies is difficult to achieve (for example, in public health) [ 33 , 51 , 52 , 53 , 54 , 55 ]. Literature searching for qualitative studies, and in public health topics, typically generates a greater number of studies to sift than in reviews of effectiveness [ 39 ], and demonstrating the ‘value’ of studies identified or missed is harder [ 56 ], since the study data do not typically support meta-analysis. Nussbaumer-Streit et al. (2016) have registered a review protocol to assess whether abbreviated literature searches (as opposed to comprehensive literature searches) have an impact on conclusions across multiple bodies of evidence, not only on effect estimates [ 57 ]; this review may develop our understanding. It may be that decision makers and users of systematic reviews are willing to trade the certainty of a comprehensive literature search and systematic review in exchange for different approaches to evidence synthesis [ 58 ], and that comprehensive literature searches are not necessarily a marker of literature search quality, as previously thought [ 36 ]. Different approaches to literature searching [ 37 , 38 , 59 , 60 , 61 , 62 ] and the question of when to stop searching are important areas for further study [ 36 , 59 ].

The study by Nussbaumer-Streit et al. has been published since the submission of this literature review [ 63 ]. Nussbaumer-Streit et al. (2018) conclude that abbreviated literature searches are viable options for rapid evidence syntheses, if decision-makers are willing to trade the certainty from a comprehensive literature search and systematic review, but that decision-making which demands detailed scrutiny should still be based on comprehensive literature searches [ 63 ].

Key stage three: Preparing for the literature search

Six documents provided guidance on preparing for a literature search [ 2 , 3 , 6 , 7 , 9 , 10 ]. The Cochrane Handbook clearly stated that Cochrane authors (i.e. researchers) should seek advice from a trial search co-ordinator (i.e. a person with specific skills in literature searching) ‘before’ starting a literature search [ 9 ].

Two key tasks were identifiable in preparing for literature searching [ 2 , 6 , 7 , 10 , 11 ]: first, to determine whether there are any existing or ongoing reviews, or whether a new review is justified [ 6 , 11 ]; and secondly, to develop an initial literature search strategy to estimate the volume of relevant literature (and the quality of a small sample of relevant studies [ 10 ]) and to indicate the resources required for literature searching and for the review of the studies that follows [ 7 , 10 ].

Three documents summarised guidance on where to search to determine whether a new review was justified [ 2 , 6 , 11 ]. These focused on searching databases of systematic reviews (the Cochrane Database of Systematic Reviews (CDSR) and the Database of Abstracts of Reviews of Effects (DARE)), institutional registries (including PROSPERO), and MEDLINE [ 6 , 11 ]. It is worth noting, however, that as of 2015 DARE (and NHS EED) are no longer being updated, so the relevance of these resources will diminish over time [ 64 ]. One guidance document, ‘Systematic reviews in the Social Sciences’, also noted that databases are not the only source of information and that unpublished reports, conference proceedings and grey literature may also be required, depending on the nature of the review question [ 2 ].

Two documents reported clearly that this preparation (or ‘scoping’) exercise should be undertaken before the actual search strategy is developed [ 7 , 10 ].

The guidance offers the best available source on preparing the literature search, since published studies do not typically report how their scoping informed the development of their search strategies, nor how their search approaches were developed. Text mining has been proposed as a technique to develop search strategies in the scoping stages of a review, although this work is still exploratory [ 65 ]. ‘Clustering documents’ and word frequency analysis have also been tested to identify search terms and studies for review [ 66 , 67 ]. Preparing for literature searches and scoping constitutes an area for future research.
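To illustrate what word frequency analysis can contribute at the scoping stage, the following is a minimal sketch, not the method used in the studies cited [ 66 , 67 ]. It assumes the searcher already holds a small set of known relevant records (titles or abstracts) as plain text, and ranks words by how many of those records contain them, as a prompt for candidate search terms. The stopword list, the `min_docs` threshold and the function name `candidate_terms` are hypothetical choices made for illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately small stopword list (illustration only).
STOPWORDS = {
    "a", "an", "and", "the", "of", "in", "on", "for", "to", "with",
    "was", "were", "is", "are", "by", "at", "as", "from", "or", "we",
}


def tokenise(text: str) -> list[str]:
    """Lower-case the text and split it into alphabetic (optionally hyphenated) words."""
    return re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())


def candidate_terms(records: list[str], min_docs: int = 2, top_n: int = 10) -> list[tuple[str, int]]:
    """Rank words by document frequency across a set of known relevant records.

    Each word is counted at most once per record. Words found in at least
    `min_docs` records are returned, most frequent first, ties broken
    alphabetically, truncated to `top_n` entries.
    """
    doc_freq: Counter = Counter()
    for record in records:
        words = {w for w in tokenise(record) if w not in STOPWORDS and len(w) > 2}
        doc_freq.update(words)
    ranked = sorted(
        ((word, n) for word, n in doc_freq.items() if n >= min_docs),
        key=lambda item: (-item[1], item[0]),
    )
    return ranked[:top_n]
```

For example, given three hypothetical titles, "Exercise referral schemes for adults with depression: a randomised trial", "Effect of exercise on depression in older adults" and "Physical activity and depression: a cohort study of adults", `candidate_terms` returns `[("adults", 3), ("depression", 3), ("exercise", 2)]`. The output only suggests terms; the searcher still judges which ones, and which synonyms and indexing terms, belong in the strategy.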

Key stage four: Designing the search strategy

The Population, Intervention, Comparator, Outcome (PICO) structure was the most commonly reported structure promoted for designing a literature search strategy. Five documents suggested that the eligibility criteria or review question will determine which PICO concepts are populated to develop the search strategy [ 1 , 4 , 7 , 8 , 9 ]. The NICE handbook promoted multiple structures, namely PICO, SPICE (Setting, Perspective, Intervention, Comparison, Evaluation) and multi-stranded approaches [ 4 ].

With the exception of The Joanna Briggs Institute reviewers’ manual, the guidance documents offered detail on selecting key search terms and synonyms, using Boolean operators, selecting database indexing terms and combining search terms. The CEE handbook suggested that ‘search terms may be compiled with the help of the commissioning organisation and stakeholders’ [ 10 ].
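The conventional way of combining search terms, joining the synonyms for each concept with OR and then joining the concept blocks with AND, can be sketched as below. This is a minimal sketch under stated assumptions: each populated PICO concept is reduced to a list of free-text terms, multi-word phrases are simply wrapped in double quotes, and the function names and example terms are hypothetical. Real databases and hosts each have their own field tags, truncation symbols and indexing terms, none of which this models.

```python
def quote(term: str) -> str:
    """Wrap multi-word phrases in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def concept_block(terms: list[str]) -> str:
    """OR together the synonyms for one concept, bracketed as a single block."""
    if not terms:
        raise ValueError("each concept block needs at least one search term")
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def build_query(concepts: dict[str, list[str]]) -> str:
    """AND together the blocks for the concepts the review question populates.

    Concepts with no terms (e.g. a comparator that is not searched for)
    are skipped rather than added as empty blocks.
    """
    blocks = [concept_block(terms) for terms in concepts.values() if terms]
    return " AND ".join(blocks)
```

With the hypothetical concepts `{"population": ["older adults", "elderly"], "intervention": ["exercise", "physical activity"], "comparator": [], "outcome": ["depression"]}`, `build_query` returns `("older adults" OR elderly) AND (exercise OR "physical activity") AND (depression)`. Leaving the comparator empty mirrors the point that the review question determines which concepts are populated.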

The use of limits, such as language or date limits, was discussed in all documents [ 2 , 3 , 4 , 6 , 7 , 8 , 9 , 10 , 11 ].

Search strategy structure

The guidance typically relates to reviews of intervention effectiveness, so PICO (with its focus on intervention and comparator) is the dominant model used to structure literature search strategies [ 68 ]. PICOs (where the S denotes study design) is also commonly used in effectiveness reviews [ 6 , 68 ]. As the NICE handbook notes, alternative models to structure literature search strategies have been developed and tested. Booth provides an overview of formulating questions for evidence-based practice [ 69 ] and has developed a number of alternatives to the PICO structure, namely: BeHEMoTh (Behaviour of interest; Health context; Exclusions; Models or Theories) for use when systematically identifying theory [ 55 ]; SPICE (Setting, Perspective, Intervention, Comparison, Evaluation) for identification of social science and evaluation studies [ 69 ]; and, working with Cooke and colleagues, SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type) [ 70 ]. SPIDER has been compared to PICO and PICOs in a study by Methley et al. [ 68 ].

The NICE handbook also suggests the use of multi-stranded approaches to developing literature search strategies [ 4 ]. Glanville developed this idea in a study by Whiting et al. [ 71 ], and a worked example of this approach is included in the development of a search filter by Cooper et al. [ 72 ].

Writing search strategies: Conceptual and objective approaches

Hausner et al. [ 73 ] provide guidance on writing literature search strategies, distinguishing between conceptually and objectively derived approaches. The conceptual approach, advocated by and explained in the guidance documents, relies on the expertise of the literature searcher to identify key search terms and then to expand them with synonyms and controlled vocabulary. Hausner and colleagues set out the objective approach, in which search terms are derived from text analysis of a set of known relevant studies [ 73 ], and describe what may be done to validate it [ 74 ].

The use of limits

The guidance documents offer direction on the use of limits within a literature search. Limits can be used to restrict literature searching to specific study designs or by other markers (such as date), which reduces the number of studies returned by a literature search. The use of limits should be described and the implications explored [ 34 ], since limiting literature searching can introduce bias (explored above). Craven et al. have suggested the use of a supporting narrative to explain decisions made in the process of developing literature searches, and this advice would usefully capture decisions on the use of search limits [ 75 ].

Key stage five: Determining the process of literature searching and deciding where to search (bibliographic database searching)

Table 2 summarises the process of literature searching as reported in each guidance document. Searching bibliographic databases was consistently reported as the ‘first step’ to literature searching in all nine guidance documents.

Three documents reported specific guidance on where to search, stated as a minimum requirement and, in each case, specific to the type of review that their guidance informed [ 4 , 9 , 11 ]. Seven of the key guidance documents suggest that the selection of bibliographic databases depends on the topic of review [ 2 , 3 , 4 , 6 , 7 , 8 , 10 ], with two documents noting the absence of an agreed standard on what constitutes an acceptable number of databases searched [ 2 , 6 ].

The guidance documents summarise ‘how to’ search bibliographic databases in detail, and this guidance is further contextualised above in terms of developing the search strategy. The documents provide guidance on selecting bibliographic databases, in some cases stating acceptable minima (e.g. the Cochrane Handbook states Cochrane CENTRAL, MEDLINE and EMBASE) and in other cases simply listing bibliographic databases available to search. Studies have explored the value of searching specific bibliographic databases, with Wright et al. (2015) noting the contribution of CINAHL in identifying qualitative studies [ 76 ], Beckles et al. (2013) questioning the contribution of CINAHL to identifying clinical studies for guideline development [ 77 ], and Cooper et al. (2015) exploring the role of UK-focused bibliographic databases in identifying UK-relevant studies [ 78 ]. The host of a database (e.g. Ovid or ProQuest) has also been shown to alter the search returns: Younger and Boddy report differing search returns from the same database (AMED) when the ‘host’ was different [ 79 ].

The average number of bibliographic databases searched in systematic reviews rose from one to four over the period 1994–2014 [ 80 ], but there remains (as attested to by the guidance) no consensus on what constitutes an acceptable number of databases searched [ 48 ]. This is perhaps because the number of databases searched is the wrong question: researchers should instead focus on which databases were searched and why, and which databases were not searched and why. The discussion should re-orientate towards the differential value of sources, but researchers need to consider how to report this in studies so that findings can be generalised. Bethel (2017) has proposed ‘search summaries’, completed by the literature searcher, to record where included studies were identified, whether from databases (and which databases specifically) or from supplementary search methods [ 81 ]. Search summaries document both the yield and the accuracy of searches, which could prospectively inform resource use and decisions on whether to search specific databases in topic areas. The prospective use of such data presupposes, however, that past searches are a potential predictor of future search performance (i.e. that each topic can be considered representative rather than unique). In offering a body of practice, these data would be of greater practical use than current studies, which amount to little more than individual case studies [ 82 , 83 , 84 , 85 , 86 , 87 , 88 , 89 , 90 ].
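As an illustration of the kind of tally that a search summary could support, the sketch below computes, for each source, the number of included studies it found (yield), how many of those no other source found (unique yield), the share of all included studies it found (sensitivity) and the share of its retrieved records that were included (precision). This is a minimal sketch, not Bethel's template [ 81 ]: the `SourceResult` structure, its field names and the choice of these four measures are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SourceResult:
    """Hypothetical record of one source in a search summary."""
    name: str            # bibliographic database or supplementary search method
    retrieved: int       # number of records the search of this source returned
    included: set[str]   # identifiers of included studies this source found


def summarise(sources: list[SourceResult]) -> dict[str, dict[str, float]]:
    """Compute yield, unique yield, sensitivity and precision for each source."""
    all_included = set().union(*(s.included for s in sources))
    summary = {}
    for s in sources:
        # Included studies found by any other source.
        others = set().union(*(o.included for o in sources if o is not s))
        summary[s.name] = {
            "yield": len(s.included),
            "unique": len(s.included - others),
            "sensitivity": len(s.included) / len(all_included) if all_included else 0.0,
            "precision": len(s.included) / s.retrieved if s.retrieved else 0.0,
        }
    return summary
```

With hypothetical data in which MEDLINE retrieves 1,200 records containing included studies s1, s2 and s3, CINAHL retrieves 400 records containing s2 and s4, and handsearching covers 50 records containing s5, MEDLINE has a yield of 3, a unique yield of 2 and a sensitivity of 0.6, while handsearching, though small, is the only source of s5. Pooled across many reviews, tallies like these are what would allow the value of a source in a topic area to be judged rather than merely counted, subject to the caveat above that past performance may not predict future searches.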