Experimental Research Design — 6 mistakes you should never make!

Since their school days, students perform scientific experiments whose results define and prove the laws and theorems of science. These experiments rest on a strong foundation of experimental research design.

An experimental research design helps researchers execute their research objectives with more clarity and transparency.

In this article, we will discuss not only the key aspects of experimental research designs but also the issues to avoid and problems to resolve while designing your research study.


What Is Experimental Research Design?

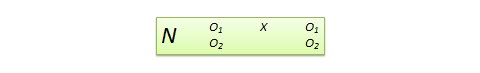

Experimental research design is a framework of protocols and procedures created to conduct experimental research with a scientific approach using two sets of variables. The first set of variables acts as a constant, used to measure the differences in the second set. Quantitative research is the best example of experimental research methods.

Experimental research helps a researcher gather the necessary data for making better research decisions and determining the facts of a research study.

When Can a Researcher Conduct Experimental Research?

A researcher can conduct experimental research in the following situations —

- When time is an important factor in establishing a relationship between the cause and effect.

- When there is an invariable or never-changing behavior between the cause and effect.

- Finally, when the researcher wishes to understand the importance of the cause and effect.

Importance of Experimental Research Design

To publish significant results, you need a quality research design as the foundation on which to build the research study. Moreover, an effective research design helps establish quality decision-making procedures, structures the research to make data analysis easier, and addresses the main research question. Therefore, it is essential to devote undivided attention and time to creating an experimental research design before beginning the practical experiment.

By creating a research design, researchers also give themselves time to organize the research, set up relevant boundaries for the study, and increase the reliability of the results. Through all these efforts, they can also avoid inconclusive results. If any part of the research design is flawed, it will reflect on the quality of the results derived.

Types of Experimental Research Designs

Based on the methods used to collect data in experimental studies, the experimental research designs are of three primary types:

1. Pre-experimental Research Design

A pre-experimental research design is used when one group or several groups are kept under observation after the factors presumed to cause change have been applied. It helps researchers understand whether further investigation of the groups under observation is necessary.

Pre-experimental research is of three types —

- One-shot Case Study Research Design

- One-group Pretest-posttest Research Design

- Static-group Comparison

2. True Experimental Research Design

A true experimental research design relies on statistical analysis to prove or disprove a researcher’s hypothesis. It is one of the most accurate forms of research because it provides specific scientific evidence. Furthermore, out of all the types of experimental designs, only a true experimental design can establish a cause-effect relationship within a group. However, in a true experiment, a researcher must satisfy these three factors —

- There is a control group that is not subjected to changes and an experimental group that will experience the changed variables

- A variable that can be manipulated by the researcher

- Random distribution, that is, random assignment of subjects to the groups

This type of experimental research is commonly observed in the physical sciences.

3. Quasi-experimental Research Design

The word “quasi” means “resembling.” A quasi-experimental design is similar to a true experimental design; the difference between the two lies in the assignment of the control group. In this research design, an independent variable is manipulated, but the participants of a group are not randomly assigned. This type of research design is used in field settings where random assignment is difficult or impossible.

The classification of the research subjects, conditions, or groups determines the type of research design to be used.

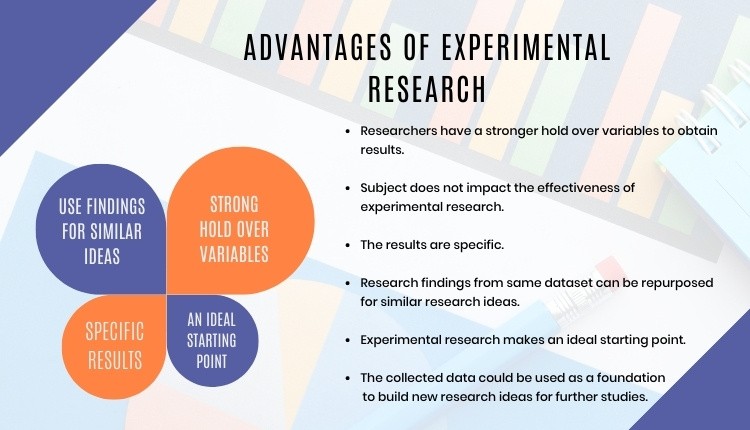

Advantages of Experimental Research

Experimental research allows you to test your idea in a controlled environment before taking the research to clinical trials. Moreover, it provides the best method to test your theory because of the following advantages:

- Researchers have firm control over variables to obtain results.

- The subject does not impact the effectiveness of experimental research. Anyone can implement it for research purposes.

- The results are specific.

- Post results analysis, research findings from the same dataset can be repurposed for similar research ideas.

- Researchers can identify the cause and effect of the hypothesis and further analyze this relationship to determine in-depth ideas.

- Experimental research makes an ideal starting point. The collected data could be used as a foundation to build new research ideas for further studies.

6 Mistakes to Avoid While Designing Your Research

There is no order to this list, and any one of these issues can seriously compromise the quality of your research. You could refer to the list as a checklist of what to avoid while designing your research.

1. Invalid Theoretical Framework

Researchers often fail to check whether their hypothesis can logically be tested. If your research design does not have basic assumptions or postulates, then it is fundamentally flawed and you need to rework your research framework.

2. Inadequate Literature Study

Without a comprehensive research literature review, it is difficult to identify and fill the knowledge and information gaps. Furthermore, you need to clearly state how your research will contribute to the research field, either by adding value to the pertinent literature or challenging previous findings and assumptions.

3. Insufficient or Incorrect Statistical Analysis

Statistical results are among the most trusted forms of scientific evidence. The ultimate goal of a research experiment is to gain valid and sustainable evidence. Therefore, incorrect statistical analysis could affect the quality of any quantitative research.

4. Undefined Research Problem

This is one of the most basic aspects of research design. The research problem statement must be clear; to achieve that, you must set the framework for developing research questions that address the core problems.

5. Research Limitations

Every study has some type of limitations. You should anticipate and incorporate those limitations into your conclusion, as well as the basic research design. Include a statement in your manuscript about any perceived limitations, and how you considered them while designing your experiment and drawing the conclusion.

6. Ethical Implications

The most important yet less talked about topic is the ethical issue. Your research design must include ways to minimize any risk for your participants and also address the research problem or question at hand. If you cannot manage the ethical norms along with your research study, your research objectives and validity could be questioned.

Experimental Research Design Example

In an experimental design, a researcher gathers plant samples and then randomly assigns half the samples to photosynthesize in sunlight and the other half to be kept in a dark box without sunlight, while controlling all the other variables (nutrients, water, soil, etc.).

By comparing their outcomes in biochemical tests, the researcher can confirm that the changes in the plants were due to the sunlight and not the other variables.
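The comparison in this example could be checked with a simple statistical test. The sketch below shows one possible approach, which the article itself does not prescribe: a permutation test on the difference in group means. The function name `permutation_test` and the measurement values are hypothetical and serve only as an illustration.

```python
import random
from statistics import mean

def permutation_test(group_a, group_b, n_permutations=5000, seed=None):
    """Return (observed mean difference, estimated p-value).

    The p-value is the share of random relabellings whose absolute mean
    difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # pretend the group labels were handed out at random
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    return observed, extreme / n_permutations

# Hypothetical biochemical readings, for illustration only
sunlight = [8.1, 7.9, 8.4, 8.0, 8.3]
dark = [3.2, 2.9, 3.5, 3.1, 3.0]
observed, p_value = permutation_test(sunlight, dark, seed=1)
```

A small p-value suggests that the difference between the two groups is unlikely to come from the random assignment alone.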

Experimental research is often the final form of study conducted in the research process and is considered to provide conclusive and specific results. But it is not suited to every research project. It requires a lot of resources, time, and money, and is not easy to conduct unless a foundation of research has been built. Yet it is widely used in research institutes and commercial industries because it gives the most conclusive results within the scientific approach.


Frequently Asked Questions

Randomization is important in experimental research because it ensures unbiased results. It also helps measure the cause-effect relationship in a particular group of interest.

Experimental research design lays the foundation of a research study and structures the research to establish a quality decision-making process.

There are 3 types of experimental research designs. These are pre-experimental research design, true experimental research design, and quasi-experimental research design.

The differences between an experimental and a quasi-experimental design are: 1. The assignment of the control group in quasi-experimental research is non-random, unlike in a true experimental design, where it is random. 2. Experimental research always has a control group; a control group may not always be present in quasi-experimental research.

Experimental research establishes a cause-effect relationship by testing a theory or hypothesis using experimental groups or control variables. In contrast, descriptive research describes a study or a topic by defining the variables under it and answering the questions related to the same.


Experimental Design: Types, Examples & Methods

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


Experimental design refers to how participants are allocated to different groups in an experiment. Types of design include repeated measures, independent groups, and matched pairs designs.

Probably the most common way to design an experiment in psychology is to divide the participants into two groups, the experimental group and the control group, and then introduce a change to the experimental group, not the control group.

The researcher must decide how they will allocate their sample to the different experimental groups. For example, if there are 10 participants, will all 10 participants participate in both groups (e.g., repeated measures), or will the participants be split in half and take part in only one group each?

Three types of experimental designs are commonly used:

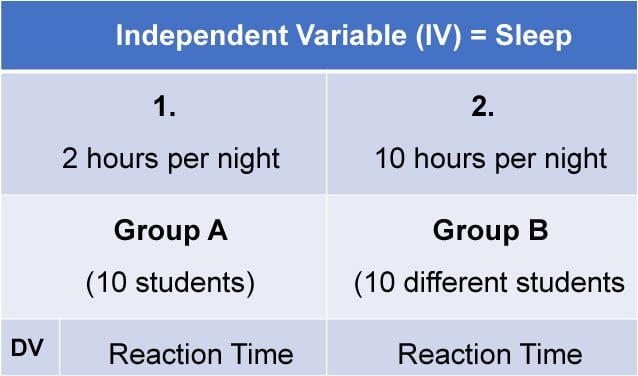

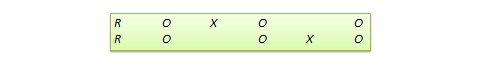

1. Independent Measures

Independent measures design, also known as between-groups, is an experimental design where different participants are used in each condition of the independent variable. This means that each condition of the experiment includes a different group of participants.

This should be done by random allocation, ensuring that each participant has an equal chance of being assigned to each group.

Independent measures involve using two separate groups of participants, one in each condition.

- Con: More people are needed than with the repeated measures design (i.e., more time-consuming).

- Pro: Avoids order effects (such as practice or fatigue) as people participate in one condition only. If a person is involved in several conditions, they may become bored, tired, and fed up by the time they come to the second condition or become wise to the requirements of the experiment!

- Con: Differences between participants in the groups may affect results, for example, variations in age, gender, or social background. These differences are known as participant variables (i.e., a type of extraneous variable).

- Control: After the participants have been recruited, they should be randomly assigned to their groups. This should ensure the groups are similar, on average (reducing participant variables).
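Random allocation for an independent measures design can be sketched in a few lines. This is a minimal illustration, assuming participants are identified by simple labels; the function name `randomly_allocate` and the participant IDs are hypothetical.

```python
import random

def randomly_allocate(participants, n_groups=2, seed=None):
    """Shuffle participants and deal them into n_groups of near-equal size."""
    rng = random.Random(seed)      # local generator, so a seed makes the split reproducible
    shuffled = list(participants)  # copy; the caller's list is left untouched
    rng.shuffle(shuffled)
    # Deal round-robin: position 0 -> group 0, position 1 -> group 1, and so on
    return [shuffled[i::n_groups] for i in range(n_groups)]

participants = [f"P{i}" for i in range(1, 11)]  # ten hypothetical participant IDs
experimental, control = randomly_allocate(participants, seed=42)
```

Because the list is shuffled before it is split, every participant has the same chance of ending up in either group.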

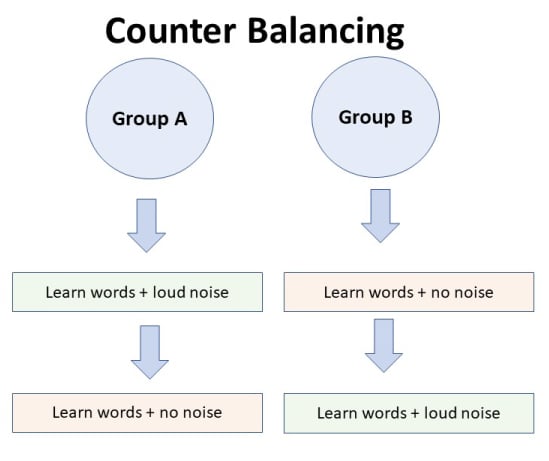

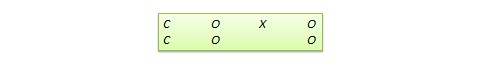

2. Repeated Measures Design

Repeated Measures design is an experimental design where the same participants participate in each independent variable condition. This means that each experiment condition includes the same group of participants.

Repeated Measures design is also known as within-groups or within-subjects design.

- Pro: As the same participants are used in each condition, participant variables (i.e., individual differences) are reduced.

- Con: There may be order effects. Order effects refer to the order of the conditions affecting the participants’ behavior. Performance in the second condition may be better because the participants know what to do (i.e., practice effect). Or their performance might be worse in the second condition because they are tired (i.e., fatigue effect). This limitation can be controlled using counterbalancing.

- Pro: Fewer people are needed as they participate in all conditions (i.e., saves time).

- Control: To combat order effects, the researcher counterbalances the order of the conditions for the participants, that is, alternates the order in which participants perform the different conditions of an experiment.

Counterbalancing

Suppose we used a repeated measures design in which all of the participants first learned words in “loud noise” and then learned them in “no noise.”

We expect the participants to learn better in “no noise” because of order effects, such as practice. However, a researcher can control for order effects using counterbalancing.

The sample would be split into two groups, and both groups would complete both conditions: experimental (A) and control (B). For example, group 1 does ‘A’ then ‘B,’ and group 2 does ‘B’ then ‘A.’ This balances out order effects across the two conditions.

Although order effects occur for each participant, they balance each other out in the results because they occur equally in both groups.
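The counterbalancing scheme described above can be sketched as follows. This is an illustrative example only, assuming two conditions labelled A and B; the function name `counterbalance` and the participant IDs are hypothetical.

```python
import random

def counterbalance(participants, conditions=("A", "B"), seed=None):
    """Return {participant: order of conditions}, half A-then-B and half B-then-A."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)  # random allocation to the two orders
    forward = tuple(conditions)
    reverse = tuple(reversed(conditions))
    # Alternate the two orders down the shuffled list so each is used equally often
    return {p: (forward if i % 2 == 0 else reverse) for i, p in enumerate(shuffled)}

orders = counterbalance([f"P{i}" for i in range(1, 9)], seed=0)
```

With an even number of participants, each order is used by exactly half of the sample, so any practice or fatigue effect falls equally on both conditions.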

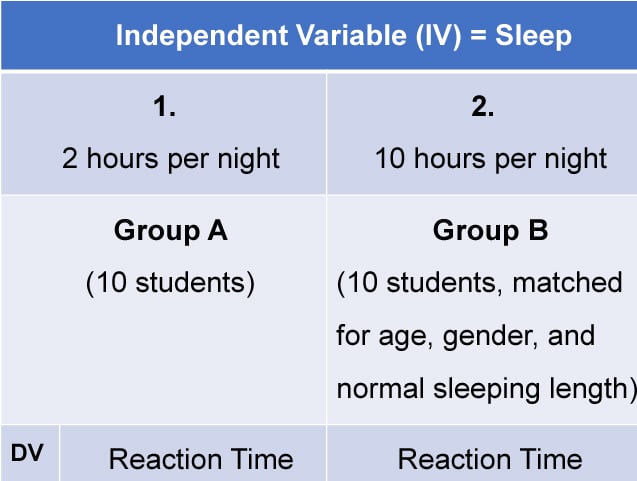

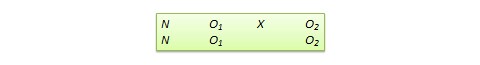

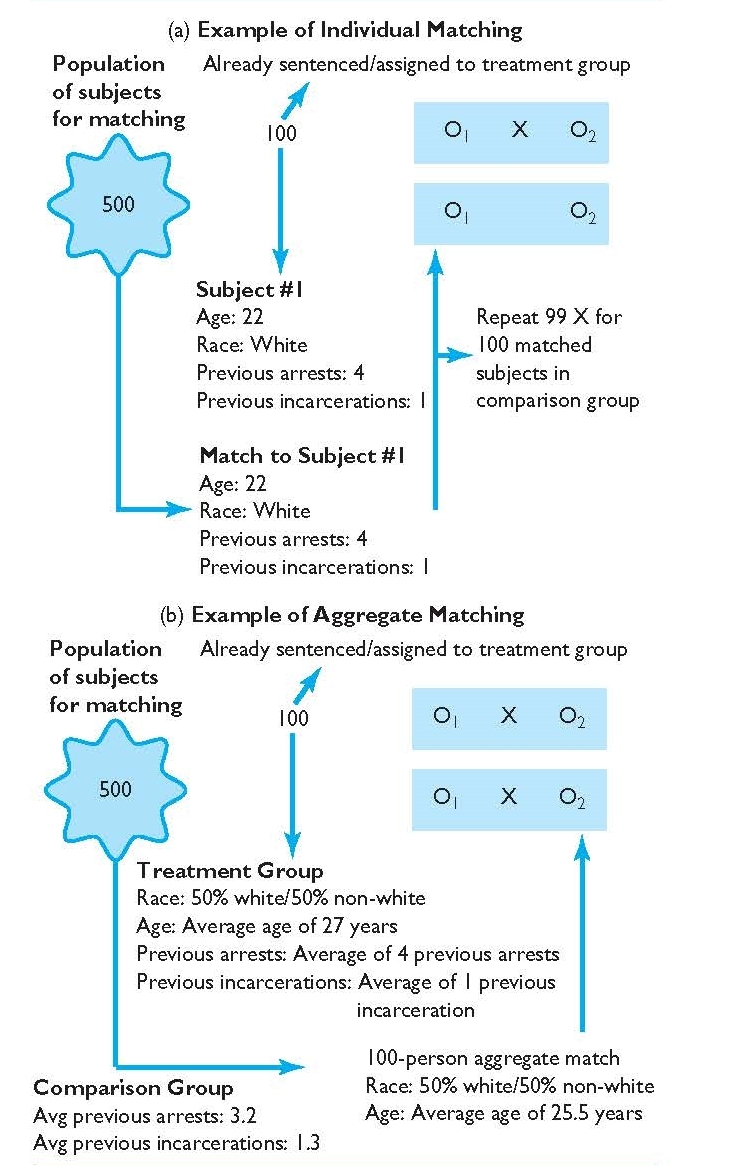

3. Matched Pairs Design

A matched pairs design is an experimental design where pairs of participants are matched in terms of key variables, such as age or socioeconomic status.

One member of each matched pair must be randomly assigned to the experimental group and the other to the control group.

- Con: If one participant drops out, you lose the data of both participants in the pair.

- Pro: Reduces participant variables because the researcher has tried to pair up the participants so that each condition has people with similar abilities and characteristics.

- Con: Very time-consuming trying to find closely matched pairs.

- Pro: It avoids order effects, so counterbalancing is not necessary.

- Con: Impossible to match people exactly unless they are identical twins!

- Control: Members of each pair should be randomly assigned to conditions. However, this does not solve all these problems.
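A matched pairs allocation can be sketched as below, assuming a single numeric matching variable (age here). The function name `matched_pairs` and the ages are hypothetical, and pairing neighbours in a ranked list is just one simple way to form matches.

```python
import random

def matched_pairs(scores, seed=None):
    """scores: {participant: value of the matching variable}.

    Returns a list of (experimental_member, control_member) pairs.
    """
    rng = random.Random(seed)
    # Rank participants on the matching variable so neighbours are the closest matches
    ranked = sorted(scores, key=scores.get)
    pairs = []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # random assignment within the pair
        pairs.append(tuple(pair))
    return pairs  # with an odd count, the last participant is left unpaired

ages = {"P1": 21, "P2": 34, "P3": 22, "P4": 35, "P5": 50, "P6": 49}  # hypothetical ages
pairs = matched_pairs(ages, seed=3)
```

Here the closest ages end up together (P1 with P3, P2 with P4, P5 with P6), and chance alone decides which member of each pair goes to the experimental group.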

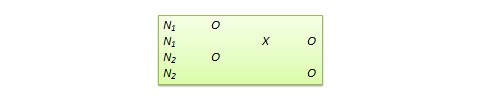

Experimental design refers to how participants are allocated to an experiment’s different conditions (or IV levels). There are three types:

1. Independent measures / between-groups: Different participants are used in each condition of the independent variable.

2. Repeated measures / within-groups: The same participants take part in each condition of the independent variable.

3. Matched pairs: Each condition uses different participants, but they are matched in terms of important characteristics, e.g., gender, age, intelligence, etc.

Learning Check

Read about each of the experiments below. For each experiment, identify (1) which experimental design was used; and (2) why the researcher might have used that design.

1. To compare the effectiveness of two different types of therapy for depression, depressed patients were assigned to receive either cognitive therapy or behavior therapy for a 12-week period.

The researchers attempted to ensure that the patients in the two groups had similar severity of depressed symptoms by administering a standardized test of depression to each participant, then pairing them according to the severity of their symptoms.

2. To assess the difference in reading comprehension between 7- and 9-year-olds, a researcher recruited each group from a local primary school. They were given the same passage of text to read and then asked a series of questions to assess their understanding.

3. To assess the effectiveness of two different ways of teaching reading, a group of 5-year-olds was recruited from a primary school. Their level of reading ability was assessed, and then they were taught using scheme one for 20 weeks.

At the end of this period, their reading was reassessed, and a reading improvement score was calculated. They were then taught using scheme two for a further 20 weeks, and another reading improvement score for this period was calculated. The reading improvement scores for each child were then compared.

4. To assess the effect of organization on recall, a researcher randomly assigned student volunteers to two conditions.

Condition one attempted to recall a list of words that were organized into meaningful categories; condition two attempted to recall the same words, randomly grouped on the page.

Experiment Terminology

Ecological validity

The degree to which an investigation represents real-life experiences.

Experimenter effects

These are the ways that the experimenter can accidentally influence the participant through their appearance or behavior.

Demand characteristics

The clues in an experiment that lead the participants to think they know what the researcher is looking for (e.g., the experimenter’s body language).

Independent variable (IV)

The variable the experimenter manipulates (i.e., changes), which is assumed to have a direct effect on the dependent variable.

Dependent variable (DV)

The variable the experimenter measures. This is the outcome (i.e., the result) of a study.

Extraneous variables (EV)

All variables which are not independent variables but could affect the results (DV) of the experiment. Extraneous variables should be controlled where possible.

Confounding variables

Variable(s) that have affected the results (DV), apart from the IV. A confounding variable could be an extraneous variable that has not been controlled.

Random Allocation

Randomly allocating participants to independent variable conditions means that all participants should have an equal chance of taking part in each condition.

The principle of random allocation is to avoid bias in how the experiment is carried out and limit the effects of participant variables.

Order effects

Changes in participants’ performance due to their repeating the same or similar test more than once. Examples of order effects include:

(i) practice effect: an improvement in performance on a task due to repetition, for example, because of familiarity with the task;

(ii) fatigue effect: a decrease in performance of a task due to repetition, for example, because of boredom or tiredness.

Experimental Research Designs: Types, Examples & Methods

Experimental research is the most familiar type of research design for individuals in the physical sciences and a host of other fields. This is mainly because experimental research is a classical scientific experiment, similar to those performed in high school science classes.

Imagine taking 2 samples of the same plant and exposing one of them to sunlight, while the other is kept away from sunlight. Let the plant exposed to sunlight be called sample A, and the other sample B.

If, at the end of the research, we find that sample A grows and sample B dies, even though both are regularly watered and given the same treatment, we can conclude that sunlight will aid growth in all similar plants.

What is Experimental Research?

Experimental research is a scientific approach to research, where one or more independent variables are manipulated and applied to one or more dependent variables to measure their effect on the latter. The effect of the independent variables on the dependent variables is usually observed and recorded over some time, to aid researchers in drawing a reasonable conclusion regarding the relationship between these 2 variable types.

The experimental research method is widely used in physical and social sciences, psychology, and education. It is based on the comparison between two or more groups with a straightforward logic, which may, however, be difficult to execute.

Mostly related to laboratory test procedures, experimental research designs involve collecting quantitative data and performing statistical analysis on them during research. This makes experimental research an example of a quantitative research method.

What are The Types of Experimental Research Design?

The types of experimental research design are determined by the way the researcher assigns subjects to different conditions and groups. There are 3 types: pre-experimental, quasi-experimental, and true experimental research.

Pre-experimental Research Design

In pre-experimental research design, either a group or various dependent groups are observed for the effect of the application of an independent variable which is presumed to cause change. It is the simplest form of experimental research design and uses no control group.

Although very practical, pre-experimental research falls short of the true experimental criteria in several areas. The pre-experimental research design is further divided into three types:

- One-shot Case Study Research Design

In this type of experimental study, only one dependent group or variable is considered. The study is carried out after some treatment which was presumed to cause change, making it a posttest study.

- One-group Pretest-posttest Research Design:

This research design combines both posttest and pretest study by carrying out a test on a single group before the treatment is administered and after the treatment is administered. The pretest is administered at the beginning of the treatment and the posttest at the end.

- Static-group Comparison:

In a static-group comparison study, 2 or more groups are placed under observation, where only one of the groups is subjected to some treatment while the other groups are held static. All the groups are post-tested, and the observed differences between the groups are assumed to be a result of the treatment.
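For the one-group pretest-posttest design, the change attributed to the treatment is often summarized as the average gain from pretest to posttest. The sketch below assumes that each subject has one score from each test; the function name `mean_gain` and the scores are hypothetical.

```python
from statistics import mean

def mean_gain(pretest, posttest):
    """Average change from pretest to posttest for the same subjects, in the same order."""
    if len(pretest) != len(posttest):
        raise ValueError("each subject needs both a pretest and a posttest score")
    return mean(after - before for before, after in zip(pretest, posttest))

# Hypothetical scores for five subjects, for illustration only
pretest = [52, 60, 47, 71, 65]
posttest = [58, 66, 50, 75, 71]
gain = mean_gain(pretest, posttest)
```

Since this design has no control group, the gain is only presumed to result from the treatment.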

Quasi-experimental Research Design

The word “quasi” means partial, half, or pseudo. Quasi-experimental research therefore bears a resemblance to true experimental research, but is not the same. In quasi-experiments, the participants are not randomly assigned, and as such, they are used in settings where randomization is difficult or impossible.

This is very common in educational research, where administrators are unwilling to allow the random selection of students for experimental samples.

Some examples of quasi-experimental research design include the time series, the nonequivalent control group design, and the counterbalanced design.

True Experimental Research Design

The true experimental research design relies on statistical analysis to prove or disprove a hypothesis. It is the most accurate type of experimental design and may be carried out with or without a pretest on at least 2 randomly assigned groups of subjects.

The true experimental research design must contain a control group and a variable that can be manipulated by the researcher, and the distribution must be random. True experimental designs are classified as follows:

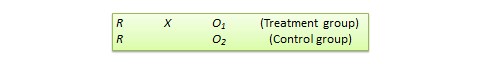

- The posttest-only Control Group Design: In this design, subjects are randomly selected and assigned to the 2 groups (control and experimental), and only the experimental group is treated. After close observation, both groups are post-tested, and a conclusion is drawn from the difference between these groups.

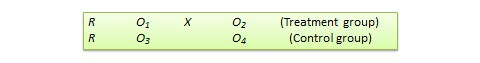

- The pretest-posttest Control Group Design: For this control group design, subjects are randomly assigned to the 2 groups, both are pretested, but only the experimental group is treated. After close observation, both groups are post-tested to measure the degree of change in each group.

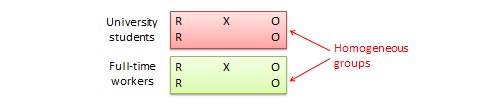

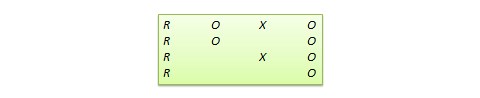

- Solomon four-group Design: This is the combination of the posttest-only and the pretest-posttest control group designs. In this case, the randomly selected subjects are placed into 4 groups.

The first two of these groups are tested using the posttest-only method, while the other two are tested using the pretest-posttest method.
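The structure of the Solomon four-group design can be laid out as a small allocation sketch. The group labels, the `solomon_assign` function, and the subject IDs are hypothetical; the group order follows the description above, with the two posttest-only groups first.

```python
import random

# Each group is described by (gets_pretest, gets_treatment); all four are post-tested
SOLOMON_GROUPS = {
    "group 1": (False, True),   # treatment, posttest
    "group 2": (False, False),  # posttest only (control)
    "group 3": (True, True),    # pretest, treatment, posttest
    "group 4": (True, False),   # pretest, posttest (control)
}

def solomon_assign(subjects, seed=None):
    """Randomly deal subjects into the four Solomon groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    names = list(SOLOMON_GROUPS)
    return {name: shuffled[i::len(names)] for i, name in enumerate(names)}

subjects = [f"S{i}" for i in range(1, 13)]  # twelve hypothetical subjects
groups = solomon_assign(subjects, seed=7)
```

Comparing the pretested groups with the groups that were not pretested shows whether taking the pretest itself influenced the posttest results.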

Examples of Experimental Research

Experimental research examples are different, depending on the type of experimental research design that is being considered. The most basic example of experimental research is laboratory experiments, which may differ in nature depending on the subject of research.

Administering Exams After The End of Semester

During the semester, students in a class are lectured on particular courses and an exam is administered at the end of the semester. In this case, the students are the subjects, their exam performance is the dependent variable, and the lectures are the independent variable applied to the subjects.

Only one group of carefully selected subjects is considered in this research, making it a pre-experimental research design example. We will also notice that tests are only carried out at the end of the semester, and not at the beginning.

This makes it easy for us to conclude that it is a one-shot case study research design.

Employee Skill Evaluation

Before employing a job seeker, organizations conduct tests that are used to screen out less qualified candidates from the pool of qualified applicants. This way, organizations can determine an employee’s skill set at the point of employment.

In the course of employment, organizations also carry out employee training to improve employee productivity and generally grow the organization. Further evaluation is carried out at the end of each training to test the impact of the training on employee skills, and test for improvement.

Here, the subject is the employee, while the treatment is the training conducted. Because the same employees are tested before and after the training and no control group is involved, this is a one-group pretest-posttest research example.

Evaluation of Teaching Method

Let us consider an academic institution that wants to evaluate the teaching methods of 2 teachers to determine which is best. Imagine a case whereby the students assigned to each teacher are carefully selected, perhaps because of personal requests by parents or on the basis of the students’ behaviour and ability.

This is a nonequivalent group design example because the samples are not equivalent. By evaluating the effectiveness of each teacher’s teaching method this way, we may draw a conclusion after a post-test has been carried out.

However, this may be influenced by factors like the natural ability of a student. For example, a very smart student will grasp the material more easily than his or her peers irrespective of the method of teaching.

What are the Characteristics of Experimental Research?

Experimental research contains dependent, independent and extraneous variables. The dependent variables are the outcomes measured on the subjects of the research, that is, on the people or things being treated.

The independent variables are the experimental treatments applied to the subjects. Extraneous variables, on the other hand, are other factors affecting the experiment that may also contribute to the change.

The setting is where the experiment is carried out. Many experiments are carried out in the laboratory, where control can be exerted on the extraneous variables, thereby eliminating them.

Other experiments are carried out in a less controllable setting. The choice of setting used in research depends on the nature of the experiment being carried out.

- Multivariable

Experimental research may include multiple independent variables, e.g. time, skills, test scores, etc.

Why Use Experimental Research Design?

Experimental research design is mainly used in physical sciences, social sciences, education, and psychology. It is used to make predictions and draw conclusions on a subject matter.

Some uses of experimental research design are highlighted below.

- Medicine: Experimental research is used to find the proper treatment for diseases. In most cases, rather than directly using patients as the research subjects, researchers take a sample of the bacteria from the patient’s body and treat it with the developed antibacterial.

The changes observed during this period are recorded and evaluated to determine its effectiveness. This process can be carried out using different experimental research methods.

- Education: Aside from science subjects like Chemistry and Physics, which involve teaching students how to perform experimental research, it can also be used to improve the standard of an academic institution. This includes testing students’ knowledge on different topics, coming up with better teaching methods, and the implementation of other programs that will aid student learning.

- Human Behavior: Social scientists mostly use experimental research to test human behaviour. For example, consider 2 people randomly chosen to be the subjects of social interaction research, where one person is placed in a room without human interaction for 1 year.

The other person is placed in a room with a few other people, enjoying human interaction. There will be a difference in their behaviour at the end of the experiment.

- UI/UX: During the product development phase, one of the major aims of the product team is to create a great user experience with the product. Therefore, before launching the final product design, potential users are brought in to interact with the product.

For example, when the team cannot decide where to position a button or feature on the app interface, a random sample of product testers tries the 2 versions, and the effect of the button position on user interaction is recorded.
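The button-position comparison above can be analyzed in many ways. One common option is a two-proportion z-test on click rates, sketched below with the Python standard library. The function name and all counts are hypothetical and used only for illustration; the original text does not prescribe a specific test.

```python
import math


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Return (z, two-sided p-value) for the difference in click rates."""
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that both layouts perform equally.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: layout A vs. layout B, 1000 testers each.
z, p = two_proportion_z_test(clicks_a=120, users_a=1000, clicks_b=150, users_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference in click rates is unlikely to be due to chance alone, assuming testers were assigned to the two layouts at random and each group is reasonably large.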

What are the Disadvantages of Experimental Research?

- It is highly prone to human error due to its dependency on variable control which may not be properly implemented. These errors could eliminate the validity of the experiment and the research being conducted.

- Exerting control of extraneous variables may create unrealistic situations. Eliminating real-life variables may result in inaccurate conclusions. This may also result in researchers controlling the variables to suit their personal preferences.

- It is a time-consuming process. Much time is spent testing dependent variables and waiting for the effect of manipulating the independent variables to manifest.

- It is expensive.

- It is very risky and may have ethical complications that cannot be ignored. This is common in medical research, where failed trials may lead to a patient’s death or a deteriorating health condition.

- Experimental research results are not descriptive.

- Response bias can also be introduced by the research subjects.

- Human responses in experimental research can be difficult to measure.

What are the Data Collection Methods in Experimental Research?

Data collection methods in experimental research are the different ways in which data can be collected for experimental research. They are used in different cases, depending on the type of research being carried out.

1. Observational Study

This type of study is carried out over a long period. It measures and observes the variables of interest without changing existing conditions.

When researching the effect of social interaction on human behavior, the subjects who are placed in 2 different environments are observed throughout the research. No matter the kind of absurd behavior that is exhibited by the subject during this period, its condition will not be changed.

This may be a very risky thing to do in medical cases because it may lead to death or worse medical conditions.

2. Simulations

This procedure uses mathematical, physical, or computer models to replicate a real-life process or situation. It is frequently used when the actual situation is too expensive, dangerous, or impractical to replicate in real life.

This method is commonly used in engineering and operational research for learning purposes and sometimes as a tool to estimate possible outcomes of real research. Some common simulation software packages are Simulink, MATLAB, and Simul8.

Not all kinds of experimental research can be carried out using simulation as a data collection tool. It is very impractical for a lot of laboratory-based research that involves chemical processes.
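As a rough illustration of using a computer model to estimate possible outcomes before running real research, the sketch below simulates a two-group experiment many times. The effect size, noise level, group size, and function names are all assumptions chosen for the example, not values from any real study.

```python
import random
import statistics


def simulate_experiment(rng, n_per_group, true_effect, noise_sd):
    """Simulate one two-group experiment; return the observed mean difference."""
    control = [rng.gauss(0.0, noise_sd) for _ in range(n_per_group)]
    treated = [rng.gauss(true_effect, noise_sd) for _ in range(n_per_group)]
    return statistics.mean(treated) - statistics.mean(control)


def simulate_many(n_runs, n_per_group, true_effect, noise_sd, seed=0):
    """Repeat the simulated experiment n_runs times with a fixed seed."""
    rng = random.Random(seed)
    return [
        simulate_experiment(rng, n_per_group, true_effect, noise_sd)
        for _ in range(n_runs)
    ]


# Hypothetical settings: 30 subjects per group, assumed true effect of 1.0.
diffs = simulate_many(n_runs=2000, n_per_group=30, true_effect=1.0, noise_sd=2.0)
wrong_sign = sum(d <= 0 for d in diffs) / len(diffs)
print(f"mean observed effect: {statistics.mean(diffs):.2f}")
print(f"spread of observed effects: {statistics.stdev(diffs):.2f}")
print(f"share of runs with the wrong sign: {wrong_sign:.1%}")
```

The spread of the simulated results gives a feel for how much a single real experiment of this size could deviate from the true effect.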

3. Surveys

A survey is a tool used to gather relevant data about the characteristics of a population and is one of the most common data collection tools. A survey consists of a group of questions prepared by the researcher, to be answered by the research subject.

Surveys can be shared with the respondents both physically and electronically. When collecting data through surveys, the kind of data collected depends on the respondent, and researchers have limited control over it.

Formplus is the best tool for collecting experimental data using surveys. It has relevant features that will aid the data collection process and can also be used in other aspects of experimental research.

Differences between Experimental and Non-Experimental Research

1. In experimental research, the researcher can control and manipulate the environment of the research, including the predictor variable which can be changed. On the other hand, non-experimental research cannot be controlled or manipulated by the researcher at will.

This is because it takes place in a real-life setting, where extraneous variables cannot be eliminated. Therefore, it is more difficult to draw conclusions from non-experimental studies, even though they are much more flexible and allow for a greater range of study fields.

2. The relationship between cause and effect cannot be established in non-experimental research, while it can be established in experimental research. This may be because many extraneous variables also influence the changes in the research subject, making it difficult to point at a particular variable as the cause of a particular change.

3. Independent variables are not introduced, withdrawn, or manipulated in non-experimental designs, but the same may not be said about experimental research.

Conclusion

Experimental research designs are often considered to be the standard in research designs. This is partly due to the common misconception that research is equivalent to scientific experiments—a component of experimental research design.

In this research design, one or more subjects are randomly assigned to different treatments (i.e. independent variables manipulated by the researcher), and the effects on the dependent variables are observed to draw conclusions. One of the distinctive strengths of experimental research is its ability to control the effect of extraneous variables.

Experimental research is suitable for research whose goal is to examine cause-effect relationships, e.g. explanatory research. It can be conducted in the laboratory or field settings, depending on the aim of the research that is being carried out.

- busayo.longe

Types of Research Designs Compared | Guide & Examples

Published on June 20, 2019 by Shona McCombes. Revised on June 22, 2023.

When you start planning a research project, developing research questions and creating a research design, you will have to make various decisions about the type of research you want to do.

There are many ways to categorize different types of research. The words you use to describe your research depend on your discipline and field. In general, though, the form your research design takes will be shaped by:

- The type of knowledge you aim to produce

- The type of data you will collect and analyze

- The sampling methods, timescale and location of the research

This article takes a look at some common distinctions made between different types of research and outlines the key differences between them.

Table of contents

- Types of research aims

- Types of research data

- Types of sampling, timescale, and location

- Other interesting articles

The first thing to consider is what kind of knowledge your research aims to contribute.

The next thing to consider is what type of data you will collect. Each kind of data is associated with a range of specific research methods and procedures.

Finally, you have to consider three closely related questions: how will you select the subjects or participants of the research? When and how often will you collect data from your subjects? And where will the research take place?

Keep in mind that the methods that you choose bring with them different risk factors and types of research bias. Biases aren’t completely avoidable, but can heavily impact the validity and reliability of your findings if left unchecked.

Choosing between all these different research types is part of the process of creating your research design, which determines exactly how your research will be conducted. But the type of research is only the first step: next, you have to make more concrete decisions about your research methods and the details of the study.

If you want to know more about statistics, methodology, or research bias, make sure to check out some of our other articles with explanations and examples.

- Normal distribution

- Degrees of freedom

- Null hypothesis

- Discourse analysis

- Control groups

- Mixed methods research

- Non-probability sampling

- Quantitative research

- Ecological validity

Research bias

- Rosenthal effect

- Implicit bias

- Cognitive bias

- Selection bias

- Negativity bias

- Status quo bias

Cite this Scribbr article

McCombes, S. (2023, June 22). Types of Research Designs Compared | Guide & Examples. Scribbr. Retrieved April 15, 2024, from https://www.scribbr.com/methodology/types-of-research/

Experimental Research: What it is + Types of designs

Any research conducted under scientifically acceptable conditions uses experimental methods. The success of experimental studies hinges on researchers confirming the change of a variable is based solely on the manipulation of the constant variable. The research should establish a notable cause and effect.

What is Experimental Research?

Experimental research is a study conducted with a scientific approach using two sets of variables. The first set acts as a constant, which you use to measure the differences of the second set. Quantitative research methods, for example, are experimental.

If you don’t have enough data to support your decisions, you must first determine the facts. This research gathers the data necessary to help you make better decisions.

You can conduct experimental research in the following situations:

- Time is a vital factor in establishing a relationship between cause and effect.

- Invariable behavior between cause and effect.

- You wish to understand the importance of cause and effect.

Experimental Research Design Types

The classic experimental design definition is: “The methods used to collect data in experimental studies.”

There are three primary types of experimental design:

- Pre-experimental research design

- True experimental research design

- Quasi-experimental research design

The way you classify research subjects based on conditions or groups determines the type of research design you should use.

1. Pre-Experimental Design

A group, or various groups, are kept under observation after implementing cause and effect factors. You’ll conduct this research to understand whether further investigation is necessary for these particular groups.

You can break down pre-experimental research further into three types:

- One-shot Case Study Research Design

- One-group Pretest-posttest Research Design

- Static-group Comparison

2. True Experimental Design

It relies on statistical analysis to prove or disprove a hypothesis, making it the most accurate form of research. Of the types of experimental design, only true design can establish a cause-effect relationship within a group. In a true experiment, three factors need to be satisfied:

- There is a Control Group, which won’t be subject to changes, and an Experimental Group, which will experience the changed variables.

- A variable that can be manipulated by the researcher

- Random distribution

This experimental research method commonly occurs in the physical sciences.
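The random distribution requirement above can be illustrated with a short sketch that shuffles a participant list and splits it into a control group and an experimental group. The participant labels, the seed, and the function name are hypothetical.

```python
import random


def assign_groups(participants, seed=None):
    """Randomly split participants into a control and an experimental group."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "experimental": shuffled[half:]}


# Hypothetical participant IDs P01..P20.
participants = [f"P{i:02d}" for i in range(1, 21)]
groups = assign_groups(participants, seed=42)
print(len(groups["control"]), len(groups["experimental"]))
```

Because chance alone decides who lands in which group, pre-existing differences between participants tend to balance out across the two groups as the sample grows.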

3. Quasi-Experimental Design

The word “Quasi” indicates similarity. A quasi-experimental design is similar to an experimental one, but it is not the same. The difference between the two lies in how participants are assigned to groups. In this research, an independent variable is manipulated, but the participants of a group are not randomly assigned. Quasi-research is used in field settings where random assignment is either irrelevant or not required.

Importance of Experimental Design

Experimental research is a powerful tool for understanding cause-and-effect relationships. It allows us to manipulate variables and observe the effects, which is crucial for understanding how different factors influence the outcome of a study.

But the importance of experimental research goes beyond that. It’s a critical method for many scientific and academic studies. It allows us to test theories, develop new products, and make groundbreaking discoveries.

For example, this research is essential for developing new drugs and medical treatments. Researchers can understand how a new drug works by manipulating dosage and administration variables and identifying potential side effects.

Similarly, experimental research is used in the field of psychology to test theories and understand human behavior. By manipulating variables such as stimuli, researchers can gain insights into how the brain works and identify new treatment options for mental health disorders.

It is also widely used in the field of education. It allows educators to test new teaching methods and identify what works best. By manipulating variables such as class size, teaching style, and curriculum, researchers can understand how students learn and identify new ways to improve educational outcomes.

In addition, experimental research is a powerful tool for businesses and organizations. By manipulating variables such as marketing strategies, product design, and customer service, companies can understand what works best and identify new opportunities for growth.

Advantages of Experimental Research

When talking about this research, we can think of human life. Babies do their own rudimentary experiments (such as putting objects in their mouths) to learn about the world around them, while older children and teens do experiments at school to learn more about science.

Early scientists used this research to prove that their hypotheses were correct. For example, Galileo Galilei and Antoine Lavoisier conducted various experiments to discover key concepts in physics and chemistry. The same is true of modern experts, who use this scientific method to see if new drugs are effective, discover treatments for diseases, and create new electronic devices (among others).

It’s vital to test new ideas or theories. Why put time, effort, and funding into something that may not work?

This research allows you to test your idea in a controlled environment before marketing. It also provides the best method to test your theory thanks to the following advantages:

- Researchers have a stronger hold over variables to obtain desired results.

- The subject or industry does not impact the effectiveness of experimental research. Any industry can implement it for research purposes.

- The results are specific.

- After analyzing the results, you can apply your findings to similar ideas or situations.

- You can identify the cause and effect of a hypothesis. Researchers can further analyze this relationship to determine more in-depth ideas.

- Experimental research makes an ideal starting point. The data you collect is a foundation for building more ideas and conducting more action research.

Whether you want to know how the public will react to a new product or if a certain food increases the chance of disease, experimental research is the best place to start. Begin your research by finding subjects using QuestionPro Audience and other tools today.

Experimental design: Guide, steps, examples

Last updated: 27 April 2023

Reviewed by: Miroslav Damyanov

Experimental research design is a scientific framework that allows you to manipulate one or more variables while controlling the test environment.

When testing a theory or new product, it can be helpful to have a certain level of control and manipulate variables to discover different outcomes. You can use these experiments to determine cause and effect or study variable associations.

This guide explores the types of experimental design, the steps in designing an experiment, and the advantages and limitations of experimental design.

- What is experimental research design?

You can determine the relationship between each of the variables by:

Manipulating one or more independent variables (i.e., stimuli or treatments)

Applying the changes to one or more dependent variables (i.e., test groups or outcomes)

With the ability to analyze the relationship between variables and using measurable data, you can increase the accuracy of the result.

What is a good experimental design?

A good experimental design requires:

Significant planning to ensure control over the testing environment

Sound experimental treatments

Properly assigning subjects to treatment groups

Without proper planning, unexpected external variables can alter an experiment's outcome.

To meet your research goals, your experimental design should include these characteristics:

Provide unbiased estimates of inputs and associated uncertainties

Enable the researcher to detect differences caused by independent variables

Include a plan for analysis and reporting of the results

Provide easily interpretable results with specific conclusions

What's the difference between experimental and quasi-experimental design?

The major difference between experimental and quasi-experimental design is the random assignment of subjects to groups.

A true experiment relies on certain controls. Typically, the researcher designs the treatment and randomly assigns subjects to control and treatment groups.

However, these conditions are unethical or impossible to achieve in some situations.

When it's unethical or impractical to assign participants randomly, that’s when a quasi-experimental design comes in.

This design allows researchers to conduct a similar experiment by assigning subjects to groups based on non-random criteria.

Another type of quasi-experimental design might occur when the researcher doesn't have control over the treatment but studies pre-existing groups after they receive different treatments.

When can a researcher conduct experimental research?

Various settings and professions can use experimental research to gather information and observe behavior in controlled settings.

Basically, a researcher can conduct experimental research any time they want to test a theory with independent and dependent variables.

Experimental research is an option when the project includes an independent variable and a desire to understand the relationship between cause and effect.

- The importance of experimental research design

Experimental research enables researchers to conduct studies that provide specific, definitive answers to questions and hypotheses.

Researchers can test independent variables in controlled settings to:

Test the effectiveness of a new medication

Design better products for consumers

Answer questions about human health and behavior

Developing a quality research plan means a researcher can accurately answer vital research questions with minimal error. As a result, definitive conclusions can influence the future of the independent variable.

Types of experimental research designs

There are three main types of experimental research design. The research type you use will depend on the criteria of your experiment, your research budget, and environmental limitations.

Pre-experimental research design

A pre-experimental research study is a basic observational study that monitors independent variables’ effects.

During research, you observe one or more groups after applying a treatment to test whether the treatment causes any change.

The three subtypes of pre-experimental research design are:

One-shot case study research design

This research method introduces a single test group to a single stimulus to study the results at the end of the application.

After researchers presume the stimulus or treatment has caused changes, they gather results to determine how it affects the test subjects.

One-group pretest-posttest design

This method uses a single test group but includes a pretest study as a benchmark. The researcher applies a test before and after the group’s exposure to a specific stimulus.

Static group comparison design

This method includes two or more groups, enabling the researcher to use one group as a control. They apply a stimulus to one group and leave the other group static.

A posttest study compares the results among groups.

True experimental research design

A true experiment is the most common research method. It involves statistical analysis to prove or disprove a specific hypothesis.

Under completely experimental conditions, researchers expose participants in two or more randomized groups to different stimuli.

Random assignment reduces the potential for selection bias, providing more reliable results.

These are the three main sub-groups of true experimental research design:

Posttest-only control group design

This structure requires the researcher to divide participants into two random groups. One group receives no stimuli and acts as a control while the other group experiences stimuli.

Researchers perform a test at the end of the experiment to observe the stimuli exposure results.

Pretest-posttest control group design

This test also requires two groups. It includes a pretest as a benchmark before introducing the stimulus.

The pretest introduces multiple ways to test subjects. For instance, if the control group also experiences a change, it reveals that taking the test twice changes the results.
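One way to read a pretest-posttest control group design is to compare the average gain in each group: any change in the control group (for example, from taking the test twice) is subtracted from the change in the treated group. The sketch below assumes this simple gain-score comparison; the scores and names are hypothetical.

```python
from statistics import mean


def mean_gain(pretest, posttest):
    """Average per-subject change from pretest to posttest."""
    return mean([post - pre for pre, post in zip(pretest, posttest)])


# Hypothetical test scores, listed in the same subject order for pre and post.
control_pre = [50, 52, 48, 51]
control_post = [52, 53, 50, 53]
treated_pre = [49, 51, 50, 52]
treated_post = [58, 59, 57, 62]

control_gain = mean_gain(control_pre, control_post)
treated_gain = mean_gain(treated_pre, treated_post)
# Gain attributable to the stimulus after removing the retest effect.
treatment_effect = treated_gain - control_gain
print(control_gain, treated_gain, treatment_effect)
```

This is only a descriptive comparison; a real analysis would also test whether the difference is statistically significant.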

Solomon four-group design

This structure divides subjects into four groups, with two as control groups. Researchers assign the first control group a posttest only and the second control group a pretest and a posttest.

The two variable groups mirror the control groups, but researchers expose them to stimuli. The ability to differentiate between groups in multiple ways provides researchers with more testing approaches for data-based conclusions.
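The four-group layout can be written down as a small table of which groups receive a pretest, the stimulus, and a posttest. The sketch below encodes that table and randomly assigns subjects to the four groups in equal numbers. The group names, subject labels, and seed are hypothetical.

```python
import random

# Which steps each group goes through in a Solomon four-group design.
SOLOMON_GROUPS = {
    "treatment_with_pretest": {"pretest": True, "treatment": True, "posttest": True},
    "control_with_pretest": {"pretest": True, "treatment": False, "posttest": True},
    "treatment_posttest_only": {"pretest": False, "treatment": True, "posttest": True},
    "control_posttest_only": {"pretest": False, "treatment": False, "posttest": True},
}


def assign_solomon(subjects, seed=None):
    """Shuffle subjects, then deal them round-robin into the four groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    names = list(SOLOMON_GROUPS)
    assignment = {name: [] for name in names}
    for index, subject in enumerate(shuffled):
        assignment[names[index % len(names)]].append(subject)
    return assignment


# Hypothetical subject IDs S01..S20.
subjects = [f"S{i:02d}" for i in range(1, 21)]
assignment = assign_solomon(subjects, seed=7)
for name, members in assignment.items():
    print(name, SOLOMON_GROUPS[name], len(members))
```

Comparing the groups with and without a pretest shows whether the pretest itself influenced the posttest results.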

Quasi-experimental research design

Although closely related to a true experiment, quasi-experimental research design differs in approach and scope.

Quasi-experimental research design doesn’t have randomly selected participants. Researchers typically divide the groups in this research by pre-existing differences.

Quasi-experimental research is more common in educational studies, nursing, or other research projects where it's not ethical or practical to use randomized subject groups.

- 5 steps for designing an experiment

Experimental research requires a clearly defined plan to outline the research parameters and expected goals.

Here are five key steps in designing a successful experiment:

Step 1: Define variables and their relationship

Your experiment should begin with a question: What are you hoping to learn through your experiment?

The relationship between variables in your study will determine your answer.

Define the independent variable (the intended stimuli) and the dependent variable (the expected effect of the stimuli). After identifying these variables, consider how you might control them in your experiment.

Could natural variations affect your research? If so, your experiment should include a pretest and posttest.

Step 2: Develop a specific, testable hypothesis

With a firm understanding of the system you intend to study, you can write a specific, testable hypothesis.

What is the expected outcome of your study?

Develop a prediction about how the independent variable will affect the dependent variable.

How will the stimuli in your experiment affect your test subjects?

Your hypothesis should provide a prediction of the answer to your research question.

Step 3: Design experimental treatments to manipulate your independent variable

Depending on your experiment, your variable may be a fixed stimulus (like a medical treatment) or a variable stimulus (like a period during which an activity occurs).

Determine which type of stimulus meets your experiment’s needs and how widely or finely to vary your stimuli.

Step 4: Assign subjects to groups

When you have a clear idea of how to carry out your experiment, you can determine how to assemble test groups for an accurate study.

When choosing your study groups, consider:

The size of your experiment

Whether you can select groups randomly

Your target audience for the outcome of the study

You should be able to create groups with an equal number of subjects and include subjects that match your target audience. Remember, you should assign one group as a control and use one or more groups to study the effects of variables.

Step 5: Plan how to measure your dependent variable

This step determines how you'll collect data to determine the study's outcome. You should seek reliable and valid measurements that minimize research bias or error.

You can measure some data with scientific tools, while you’ll need to operationalize other forms to turn them into measurable observations.

- Advantages of experimental research

Experimental research is an integral part of our world. It allows researchers to conduct experiments that answer specific questions.

While researchers use many methods to conduct different experiments, experimental research offers these distinct benefits:

Researchers can determine cause and effect by manipulating variables.

It gives researchers a high level of control.

Researchers can test multiple variables within a single experiment.

All industries and fields of knowledge can use it.

Researchers can duplicate results to promote the validity of the study.

Replicating natural settings rapidly means immediate research.

Researchers can combine it with other research methods.

It provides specific conclusions about the validity of a product, theory, or idea.

- Disadvantages (or limitations) of experimental research

Unfortunately, no research type yields ideal conditions or perfect results.

While experimental research might be the right choice for some studies, certain conditions could render experiments useless or even dangerous.

Before conducting experimental research, consider these disadvantages and limitations:

Required professional qualification

Only competent professionals with an academic degree and specific training are qualified to conduct rigorous experimental research. This ensures results are unbiased and valid.

Limited scope

Experimental research may not capture the complexity of some phenomena, such as social interactions or cultural norms. These are difficult to control in a laboratory setting.

Resource-intensive

Experimental research can be expensive, time-consuming, and require significant resources, such as specialized equipment or trained personnel.

Limited generalizability

The controlled nature means the research findings may not fully apply to real-world situations or people outside the experimental setting.

Practical or ethical concerns

Some experiments may involve manipulating variables that could harm participants or violate ethical guidelines.

Researchers must ensure their experiments do not cause harm or discomfort to participants.

Sometimes, recruiting a sample of people to randomly assign may be difficult.

- Experimental research design example

Experiments across all industries and research realms provide scientists, developers, and other researchers with definitive answers. These experiments can solve problems, create inventions, and heal illnesses.

Product design testing is an excellent example of experimental research.

A company in the product development phase creates multiple prototypes for testing. With a randomized selection, researchers introduce each test group to a different prototype.

When groups experience different product designs, the company can assess which option most appeals to potential customers.

Experimental research design provides researchers with a controlled environment to conduct experiments that evaluate cause and effect.

Using the five steps to develop a research plan ensures you anticipate and eliminate external variables while answering life’s crucial questions.

14.1 What is experimental design and when should you use it?

Learning objectives.

Learners will be able to…

- Describe the purpose of experimental design research

- Describe nomothetic causality and the logic of experimental design

- Identify the characteristics of a basic experiment

- Discuss the relationship between dependent and independent variables in experiments

- Identify the three major types of experimental designs

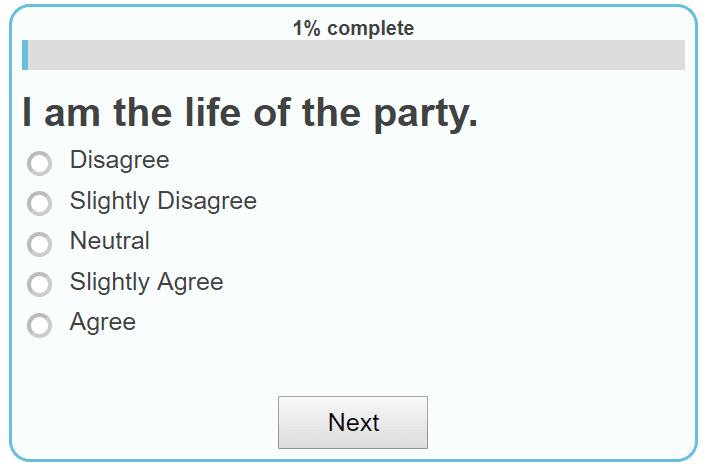

Pre-awareness check (Knowledge)

What are your thoughts on the term ‘experiment’ in the realm of social sciences? In an experiment, what is the independent variable?

The basics of experiments

In social work research, experimental design is used to test the effects of treatments, interventions, programs, or other conditions to which individuals, groups, organizations, or communities may be exposed. Social work researchers can use experiments to explore topics such as treatments for depression, impacts of school-based mental health on student outcomes, or prevention of abuse of people with disabilities. The American Psychological Association defines an experiment as:

a series of observations conducted under controlled conditions to study a relationship with the purpose of drawing causal inferences about that relationship. An experiment involves the manipulation of an independent variable, the measurement of a dependent variable, and the exposure of various participants to one or more of the conditions being studied. Random selection of participants and their random assignment to conditions also are necessary in experiments.

In experimental design, the independent variable is the intervention, treatment, or condition that is being investigated as a potential cause of change (i.e., the experimental condition). The effect, or outcome, of the experimental condition is the dependent variable. Trying out a new restaurant, dating a new person – we often call these things “experiments.” However, a true social science experiment would include recruitment of a large enough sample, random assignment to control and experimental groups, exposing those in the experimental group to an experimental condition, and collecting observations at the end of the experiment.

Social scientists use this level of rigor and control to maximize the internal validity of their research. Internal validity is the confidence researchers have about whether the independent variable (e.g., treatment) truly produces a change in the dependent, or outcome, variable. The logic and features of experimental design are intended to help establish causality and to reduce threats to internal validity, which we will discuss in Section 14.5.

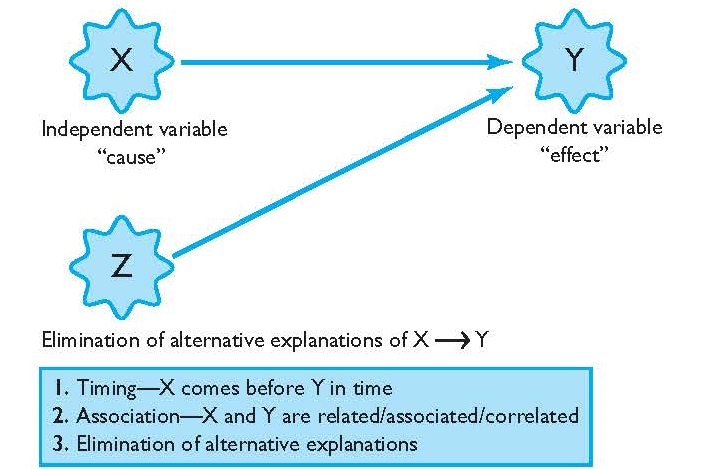

Experiments attempt to establish a nomothetic causal relationship between two variables: the treatment and its intended outcome. We discussed the four criteria for establishing nomothetic causality in Section 4.3:

- plausibility,

- covariation,

- temporality, and

- nonspuriousness.

Experimenters should establish plausibility: there must be a plausible reason why their intervention would cause changes in the dependent variable. Usually, a theoretical framework or previous empirical evidence will indicate the plausibility of a causal relationship.

Covariation can be established for causal explanations by showing that the “cause” and the “effect” change together. In experiments, the cause is an intervention, treatment, or other experimental condition. Whether or not a research participant is exposed to the experimental condition is the independent variable. The effect in an experiment is the outcome being assessed and is the dependent variable in the study. When the independent and dependent variables covary, they can have a positive association (e.g., those exposed to the intervention have increased self-esteem) or a negative association (e.g., those exposed to the intervention have reduced anxiety).
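A small worked example can make covariation concrete. The sketch below compares mean outcome scores between an experimental and a control group and labels the direction of the association. The scores are invented for illustration, and the helper names `mean_difference` and `describe_association` are hypothetical. A raw difference in means says nothing about statistical significance, which would require a proper test.

```python
from statistics import mean

def mean_difference(experimental, control):
    """Return mean(experimental) - mean(control) for one outcome measure."""
    return mean(experimental) - mean(control)

def describe_association(diff):
    """Label the direction in which exposure and outcome covary."""
    if diff > 0:
        return "positive"
    if diff < 0:
        return "negative"
    return "none"

# Hypothetical post-test scores (invented for illustration only).
self_esteem = {"experimental": [32, 35, 31, 36, 34], "control": [28, 30, 27, 31, 29]}
anxiety = {"experimental": [12, 10, 11, 9, 13], "control": [18, 17, 19, 16, 20]}

for outcome, scores in (("self-esteem", self_esteem), ("anxiety", anxiety)):
    diff = mean_difference(scores["experimental"], scores["control"])
    print(f"{outcome}: difference = {diff:+.1f} ({describe_association(diff)} association)")
```

In this made-up data, exposure goes with higher self-esteem (a positive association) and with lower anxiety (a negative association), mirroring the two cases described above.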

Since the researcher controls when the intervention is administered, they can be assured that changes in the independent variable (the treatment) happen before changes in the dependent variable (the outcome). In this way, experiments assure temporality.

Finally, one of the most important features of experiments is that they allow researchers to eliminate spurious variables to support the criterion of nonspuriousness. True experiments are usually conducted under strictly controlled conditions. The intervention is given in the same way to each person, with a minimal number of other variables that might cause their post-test scores to change.

The logic of experimental design

How do we know that one phenomenon causes another? The complexity of the social world in which we practice and conduct research means that causes of social problems are rarely cut and dried. Uncovering explanations for social problems is key to helping clients address them, and experimental research designs are one road to finding answers.

Just because two phenomena are related in some way doesn’t mean that one causes the other. Ice cream sales increase in the summer, and so does the rate of violent crime; does that mean that eating ice cream is going to make me violent? Obviously not, because ice cream is great. The reality of that association is far more complex—it could be that hot weather makes people more irritable and, at times, violent, while also making people want ice cream. More likely, though, there are other social factors not accounted for in the way we just described this association.

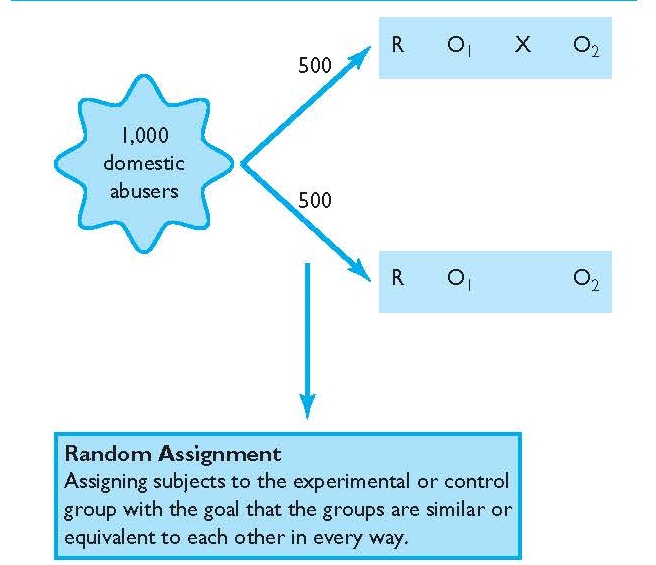

As we have discussed, experimental designs can help clear up at least some of this fog by allowing researchers to isolate the effect of interventions on dependent variables by controlling extraneous variables. In true experimental design (discussed in the next section) and quasi-experimental design, researchers accomplish this with a control group or comparison group and the experimental group. The experimental group is sometimes called the treatment group because people in the experimental group receive the treatment or are exposed to the experimental condition (but we will call it the experimental group in this chapter). The control/comparison group does not receive the treatment or intervention. Instead, they may receive what is known as “treatment as usual” or perhaps no treatment at all.

In a well-designed experiment, the control group should look almost identical to the experimental group in terms of demographics and other relevant factors. What if we want to know the effect of CBT on social anxiety, but we have learned in prior research that men tend to have a more difficult time overcoming social anxiety? We would want our control and experimental groups to have similar proportions of men, since ostensibly, both groups’ results would be affected by the men in the group. If your control group has 5 women, 6 men, and 4 non-binary people, then your experimental group should be made up of roughly the same gender balance to help control for the influence of gender on the outcome of your intervention. (In reality, the groups should be similar along other dimensions, as well, and your group will likely be much larger.) The researcher will use the same outcome measures for both groups and compare them, and assuming the experiment was designed correctly, get a pretty good answer about whether the intervention had an effect on social anxiety.
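One common way to get groups with the same gender balance, which this chapter does not itself prescribe, is stratified (blocked) random assignment: participants are shuffled within each gender and each gender is then split evenly between the two groups. The sketch below assumes a hypothetical sample of 10 women, 12 men, and 8 non-binary people, so that each group ends up with the 5/6/4 balance from the example above. The function name and participant IDs are illustrative only.

```python
import random
from collections import Counter

def stratified_assignment(participants, stratum_of, seed=None):
    """Randomly split each stratum evenly between two groups.

    participants: list of participant IDs
    stratum_of:   dict mapping participant ID -> stratum label (e.g., gender)
    If a stratum has an odd size, the experimental group gets the extra person.
    """
    rng = random.Random(seed)
    strata = {}
    for person in participants:
        strata.setdefault(stratum_of[person], []).append(person)

    control, experimental = [], []
    for members in strata.values():
        rng.shuffle(members)
        half = len(members) // 2
        control.extend(members[:half])
        experimental.extend(members[half:])
    return control, experimental

# Hypothetical sample: 10 women, 12 men, and 8 non-binary participants.
genders = ["woman"] * 10 + ["man"] * 12 + ["non-binary"] * 8
stratum_of = {f"p{i:02d}": g for i, g in enumerate(genders)}

control, experimental = stratified_assignment(list(stratum_of), stratum_of, seed=7)
print("control:     ", Counter(stratum_of[p] for p in control))
print("experimental:", Counter(stratum_of[p] for p in experimental))
```

Who lands in which group is still decided by chance. Only the gender composition is fixed in advance.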

Random assignment, also called randomization, entails using a random process to decide which participants are put into the control or experimental group (which participants receive an intervention and which do not). By randomly assigning participants to a group, you can reduce the effect of extraneous variables on your research because there won’t be a systematic difference between the groups.
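The claim that random assignment leaves no systematic difference between groups can be checked with a small simulation. The sketch below is an illustration under invented assumptions: 200 hypothetical participants each get a made-up baseline anxiety score, the sample is randomly split 1,000 times, and the baseline gap between the groups is recorded each time. The helper `randomly_assign` is hypothetical.

```python
import random
from statistics import mean

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into control and experimental halves."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical sample: 200 people with an invented baseline anxiety score.
score_rng = random.Random(0)
baseline = {f"p{i:03d}": score_rng.gauss(50, 10) for i in range(200)}

# Repeat the assignment many times and record the baseline gap between groups.
gaps = []
for trial in range(1000):
    control, experimental = randomly_assign(baseline, seed=trial)
    gap = mean(baseline[p] for p in experimental) - mean(baseline[p] for p in control)
    gaps.append(gap)

print(f"average gap across 1000 assignments:  {mean(gaps):+.3f}")
print(f"largest gap in any single assignment: {max(abs(g) for g in gaps):.2f}")
```

Any single assignment can leave the groups somewhat unequal by chance, but the gaps do not lean in one direction, so they average out close to zero. That is the sense in which the difference is not systematic.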

Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population and is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other related fields. Random sampling helps a great deal with external validity, or generalizability, whereas random assignment increases internal validity.
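The two procedures answer different questions, which a short sketch can show side by side. The population of 10,000 numbered people and the sample size of 100 are assumptions chosen only for illustration.

```python
import random

rng = random.Random(2024)

# Hypothetical population of 10,000 people, identified by number.
population = list(range(10_000))

# Random sampling: decide WHO takes part in the study.
sample = rng.sample(population, k=100)

# Random assignment: decide WHICH CONDITION each sampled person receives.
shuffled = sample[:]
rng.shuffle(shuffled)
control, experimental = shuffled[:50], shuffled[50:]

print(len(sample), len(control), len(experimental))  # prints: 100 50 50
```

A study can use either step without the other. An experiment run on a convenience sample skips the first step but still uses the second.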

Other Features of Experiments that Help Establish Causality

To control for spuriousness (as well as meeting the three other criteria for establishing causality), experiments try to control as many aspects of the research process as possible: using control groups, having large enough sample sizes, standardizing the treatment, etc. Researchers in large experiments often employ clinicians or other research staff to help them. Researchers train their staff members exhaustively, provide pre-scripted responses to common questions, and control the physical environment of the experiment so each person who participates receives the exact same treatment. Experimental researchers also document their procedures, so that others can review them and make changes in future research if they think it will improve on the ability to control for spurious variables.

An interesting example is Bruce Alexander’s (2010) Rat Park experiments. Much of the early research conducted on addictive drugs, like heroin and cocaine, was conducted on animals other than humans, usually mice or rats. The scientific consensus up until Alexander’s experiments was that cocaine and heroin were so addictive that rats, if offered the drugs, would consume them repeatedly until they perished. Researchers claimed this behavior explained how addiction worked in humans, but Alexander was not so sure. He knew rats were social animals and the experimental procedure from previous experiments did not allow them to socialize. Instead, rats were kept isolated in small cages with only food, water, and metal walls. To Alexander, social isolation was a spurious variable, causing changes in addictive behavior not due to the drug itself. Alexander created an experiment of his own, in which rats were allowed to run freely in an interesting environment, socialize and mate with other rats, and of course, drink from a solution that contained an addictive drug. In this environment, rats did not become hopelessly addicted to drugs. In fact, they had little interest in the substance. To Alexander, the results of his experiment demonstrated that social isolation was more of a causal factor for addiction than the drug itself.

One challenge with Alexander’s conclusions is that subsequent researchers have had mixed success replicating his findings (e.g., Petrie, 1996; Solinas, Thiriet, El Rawas, Lardeux, & Jaber, 2009). Replication involves conducting another researcher’s experiment in the same manner and seeing if it produces the same results. If the causal relationship is real, it should occur in all (or at least most) rigorous replications of the experiment.

Replicability


To allow for easier replication, researchers should describe their experimental methods diligently. Researchers with the Open Science Collaboration (2015) [1] conducted the Reproducibility Project, which caused a significant controversy regarding the validity of psychological studies. The researchers with the project attempted to reproduce the results of 100 experiments published in major psychology journals since 2008. What they found was shocking. Although 97% of the original studies reported significant results, only 36% of the replicated studies had significant findings. The average effect size in the replication studies was half that of the original studies. The implications of the Reproducibility Project are potentially staggering. They encourage social scientists to carefully consider the validity of their reported findings, and they encourage the scientific community to take steps to ensure researchers do not cherry-pick data or change their hypotheses simply to get published.

Generalizability

Let’s return to Alexander’s Rat Park study and consider the implications of his experiment for substance use professionals. The conclusions he drew from his experiments on rats were meant to be generalized to the population. If this could be done, the experiment would have a high degree of external validity, which is the degree to which conclusions generalize to larger populations and different situations. Alexander argues his conclusions about addiction and social isolation help us understand why people living in deprived, isolated environments may become addicted to drugs more often than those in more enriching environments. Similarly, earlier rat researchers argued their results showed these drugs were instantly addictive to humans, often to the point of death.