Case study: Facebook–Cambridge Analytica data breach scandal

Cambridge Analytica is a political data analytics, marketing, and consulting firm based in London, UK, that is accused of illegally obtaining Facebook data and using it to influence a variety of political campaigns. These campaigns include those of American Senator Ted Cruz and, to an extent, Donald Trump and the Leave-EU Brexit campaign, which resulted in the UK’s withdrawal from the EU. The Facebook–Cambridge Analytica data scandal erupted in 2018, when it emerged that Cambridge Analytica had collected the private data of millions of people’s Facebook profiles without their permission and used it for political advertising. It was described as a watershed moment in the public understanding of private data, prompting a seventeen (17) per cent drop in Facebook’s share price and calls for stricter laws governing tech companies’ use of private data.

Background Information

Fotis International Law Firm aims to provide our readers with a brief overview of the Facebook data breach. In 2014, a large number of people took a survey that collected not only each user’s personally identifiable information but also the data of the user’s Facebook friends, and that data was shared with the company that worked for President Trump’s 2016 campaign. This is where Cambridge Analytica (CA) entered the picture, partnering with Aleksandr Kogan, a UK research academic who was using Facebook for research purposes. Kogan’s survey, which appeared innocuous and included over 100 personality traits with which respondents could agree or disagree, was sent to about 300,000 (3 lakh) Americans.

But there was a catch: to take the survey, respondents had to log in to or sign up for Facebook, giving Kogan access to the user’s profile, birth date, and location. Kogan created a psychometric model, which is similar to a personality profile, by combining the survey results with the user’s Facebook data. Kogan then combined the data with voter records and sent it to CA. CA claimed that the results of this survey, combined with the personal traits of various users and models, were crucial in determining how it profiled a user’s neuroticism and other susceptible traits.

In only a few months, about 220,000 people took part in Kogan’s survey, and data from up to 87 million Facebook user profiles was harvested, accounting for nearly a quarter of all Facebook users in the United States. The goal was to use the data to target users with political messaging that would aid Trump’s campaign strategy, but the campaign objected. Even though Kogan’s work was presented as academic research, he shared the resulting data with CA, which is against Facebook’s policy. In response, Facebook CEO Mark Zuckerberg stated that it was not a data breach because no passwords were stolen and no systems were infiltrated, but rather a violation of the terms of service and a breach of trust between Facebook and its users. The US Federal Trade Commission took up the investigation after that.

Facebook Data Breach

CA’s illegitimate procurement of personally identifiable data was first revealed in December 2015 by Harry Davies, a Guardian journalist. According to Davies, CA was working for US Senator Ted Cruz and had obtained data from millions of Facebook accounts without the account holders’ permission. Facebook declined to comment on the story other than to say that it was looking into it. The scandal finally blew up in March 2018 when a whistleblower, Christopher Wylie, a former CA employee, came forward. Wylie had been an anonymous source for Cadwalladr’s 2017 article “The Great British Brexit Robbery”. That report was widely read, but it was met with scepticism in some quarters, including in publications such as The New York Times. In March 2018, several news organizations released their stories concurrently, causing a massive public outcry that wiped more than $100 billion off Facebook’s market capitalisation in a matter of days. Lawmakers in the US and the UK demanded answers from Facebook CEO Mark Zuckerberg. Following the scandal, Zuckerberg agreed to testify in front of the US Congress.

Summary of the Case

CA’s parent company, Strategic Communication Laboratories (SCL) Group, was a private British behavioural research and strategic communication company. SCL sparked public outrage in the US and other countries by obtaining user data through data mining and data analysis with the help of a university researcher, Aleksandr Kogan. Kogan was tasked with developing an app called “This is your digital life” and with creating a survey on the behavioural patterns of Facebook users. The data was to be used for electoral and political purposes without the approval of Facebook or its users, and it was detailed enough to build profiles indicating which type of advertisement would be most effective in influencing each person. Based on these findings, messaging would be carefully targeted at key audience groups to change behaviour in line with the objectives of SCL’s clients, resulting in a breach of trust between Facebook and its users.

Legal Implications

As a result, Facebook’s CEO was questioned, and the stock price dropped by seventeen (17) per cent. He was also asked to enforce strict rules on the protection of users’ data. Users were afterwards told that the access they had granted to various applications had been reviewed and withdrawn, that this could be checked in their settings, and that audit trails were being kept for breach investigations. Meanwhile, Facebook vowed to create a tool that would allow users to delete all of their Facebook web search data. According to CA, it has been the subject of multiple baseless allegations in recent years and, despite the firm’s efforts to correct the record, has been vilified for actions that are not only legal but also generally accepted as a routine component of online advertising in both the political and commercial sectors.

Julian Malins, a third-party auditor, was appointed by CA to look into the allegations of wrongdoing. According to the firm, the inquiry determined that the charges were not supported by the facts. Despite CA’s unwavering confidence that its employees acted ethically and lawfully, a view that the firm says is fully supported by Mr Malins’s report, virtually all of the company’s clients and suppliers were driven away as a result of the media coverage. As a result, in May 2018, it was decided that continuing to operate the firm was no longer viable, leaving CA with no realistic alternative to placing the firm into administration.

The General Data Protection Regulation (GDPR), which came into effect in May 2018, establishes consistent data protection rules across Europe. It applies to all companies that process personal data about EU citizens, regardless of where the companies are located. Processing is a broad term that covers everything done with personal data, such as collecting, storing, using, and destroying it.

While many of the GDPR’s requirements are based on earlier EU data protection rules, the GDPR has a greater reach, more precise standards, and substantial penalties. For example, it requires a higher standard of consent for the use of certain types of data and strengthens people’s rights to access and transfer their data. Failure to comply with the GDPR can result in significant penalties, including fines of up to 4% of worldwide annual revenue for certain violations. In terms of policy changes, data may only be accessed by others, including developers, if permissions are granted; data settings are stricter; and a research tool is used to scrutinize the search.
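As a rough illustration of the 4% ceiling mentioned above, the sketch below computes the maximum fine for two hypothetical companies. It assumes the upper tier set out in GDPR Article 83(5), where the cap is the higher of EUR 20 million or 4% of worldwide annual turnover; the function name and the turnover figures are invented for the example.

```python
def max_gdpr_fine(annual_turnover_eur: int) -> float:
    """Upper bound of a top-tier GDPR fine (assumed: Article 83(5)).

    The cap is the higher of a flat EUR 20 million or 4% of
    worldwide annual turnover.
    """
    flat_cap_eur = 20_000_000
    percent_cap_eur = annual_turnover_eur * 4 / 100
    return max(flat_cap_eur, percent_cap_eur)


# Hypothetical turnover figures, chosen only to exercise both branches.
small_firm_cap = max_gdpr_fine(50_000_000)        # 4% is 2M, so the flat cap applies
large_firm_cap = max_gdpr_fine(40_000_000_000)    # 4% of 40B exceeds the flat cap
print(small_firm_cap, large_firm_cap)
```

The figure returned is only a ceiling; the actual fine in any case is set by the supervisory authority and can be far lower.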

No matter how many changes or updates are made to specific applications, users of a platform should be aware of the types of personal data they share and of the apps to which they grant access. In addition, regular checks, such as reviewing account activity, revoking access for unauthorised applications, and monitoring settings at regular intervals, are critical to keeping their data safe and to understanding the repercussions of a breach. The case of CA sets a precedent. Countries should create a legal framework that will severely restrict the operations of firms like CA and prevent the uncontrolled global exploitation of personal data on social media. No one can guarantee that a government would resist the temptation to use such technology for its own ends. It is quite probable that this is happening right now.

Facebook data breach: what happened and why it’s hard to know if your data was leaked

Associate Dean (Computing and Security), Edith Cowan University

Disclosure statement

Paul Haskell-Dowland does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

Edith Cowan University provides funding as a member of The Conversation AU.


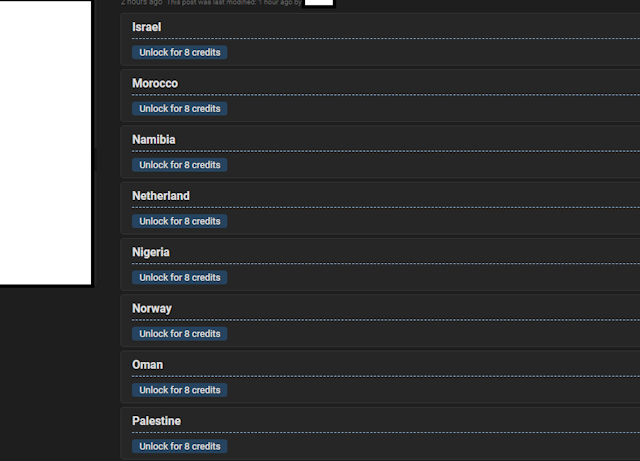

Over the long weekend reports emerged of an alleged data breach, impacting half a billion Facebook users from 106 countries.

And while this figure is staggering, there’s more to the story than 533 million sets of data. This breach once again highlights how many of the systems we use aren’t designed to adequately protect our information from cyber criminals.

Nor is it always straightforward to figure out whether your data have been compromised in a breach or not.

What happened?

More than 500 million Facebook users’ details were published online on an underground website used by cyber criminals.

It quickly became clear this was not a new data breach, but an older one which had come back to haunt Facebook and the millions of users whose data are now available to purchase online.

The data breach is believed to relate to a vulnerability which Facebook reportedly fixed in August of 2019. While the exact source of the data can’t be verified, it was likely acquired through the misuse of legitimate functions in the Facebook systems.

Such misuses can occur when a seemingly innocent feature of a website is used for an unexpected purpose by attackers, as was the case with a PayID attack in 2019.


In the case of Facebook, criminals can mine Facebook’s systems for users’ personal information by using techniques which automate the process of harvesting data.

This may sound familiar. In 2018 Facebook was reeling from the Cambridge Analytica scandal. This too was not a hacking incident, but a misuse of a perfectly legitimate function of the Facebook platform.

While the data were initially obtained legitimately (at least, as far as Facebook’s rules were concerned), they were then passed on to a third party without the appropriate consent from users.


Were you targeted?

There’s no easy way to determine if your details were breached in the recent leak. If the website concerned is acting in your best interest, you should at least receive a notification. But this isn’t guaranteed.

Even a tech-savvy user would be limited to hunting for the leaked data themselves on underground websites.

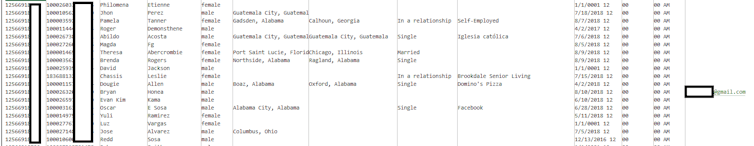

The data being sold online contain plenty of key information. According to haveibeenpwned.com, most of the records include names and genders, with many also including dates of birth, location, relationship status and employer.

However, it has been reported that only a small proportion of the stolen data contained a valid email address (about 2.5 million records).

This is important since a user’s data are less valuable without the corresponding email address. It’s the combination of date of birth, name, phone number and email which provides a useful starting point for identity theft and exploitation.

If you’re not sure why these details would be valuable to a criminal, think about how you confirm your identity over the phone with your bank, or how you last reset a password on a website.

Haveibeenpwned.com creator and web security expert Troy Hunt has said a secondary use for the data could be to enhance phishing and SMS-based spam attacks.

How to protect yourself

Given the nature of the leak, there is very little Facebook users could have done proactively to protect themselves from this breach. As the attack targeted Facebook’s systems, the responsibility for securing the data lies entirely with Facebook.

On an individual level, while you can opt to withdraw from the platform, for many this isn’t a simple option. That said, there are certain changes you can make to your social media behaviours to help reduce your risk from data breaches.

1) Ask yourself if you need to share all your information with Facebook

There are some bits of information we inevitably have to forfeit in exchange for using Facebook, including mobile numbers for new accounts (as a security measure, ironically). But there are plenty of details you can withhold to retain a modicum of control over your data.

2) Think about what you share

Apart from the leak being reported, there are plenty of other ways to harvest user data from Facebook. If you use a fake birth date on your account, you should also avoid posting birthday party photos on the real day. Even our seemingly innocent photos can reveal sensitive information.

3) Avoid using Facebook to sign in to other websites

Although the “sign-in with Facebook” feature is potentially time-saving (and reduces the number of accounts you have to maintain), it also increases potential risk to you — especially if the site you’re signing into isn’t a trusted one. If your Facebook account is compromised, the attacker will have automatic access to all the linked websites.

4) Use unique passwords

Always use a different password for each online account, even if it is a pain. Installing a password manager will help with this (and this is how I have more than 400 different passwords). While it won’t stop your data from ever being stolen, if your password for a site is leaked it will only work for that one site.
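A password manager does this for you, but as a minimal sketch of the idea, the snippet below generates an independent random password for each account using Python’s standard `secrets` module. The site names, the 20-character default and the 12-character minimum are assumptions made for illustration, not recommendations from any particular vendor.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password drawn from letters, digits and punctuation."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice uses a cryptographically secure random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


# One independent password per (hypothetical) site, so a leak at one
# site reveals nothing about the credentials used at the others.
sites = ["example-bank", "example-mail", "example-shop"]
passwords = {site: generate_password() for site in sites}
```

Because each password is generated independently, a leaked credential for one site only works for that one site.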

If you really want a scare, you can always download a copy of all the data Facebook has on you. This is useful if you’re considering leaving the platform and want a copy of your data before closing your account.



TechRepublic


Facebook data privacy scandal: A cheat sheet


A decade of apparent indifference for data privacy at Facebook has culminated in revelations that organizations harvested user data for targeted advertising, particularly political advertising, to apparent success. While the most well-known offender is Cambridge Analytica–the political consulting and strategic communication firm behind the pro-Brexit Leave EU campaign, as well as Donald Trump’s 2016 presidential campaign–other companies have likely used similar tactics to collect personal data of Facebook users.

TechRepublic’s cheat sheet about the Facebook data privacy scandal covers the ongoing controversy surrounding the illicit use of profile information. This article will be updated as more information about this developing story comes to the forefront.


What is the Facebook data privacy scandal?

The Facebook data privacy scandal centers around the collection of personally identifiable information of “up to 87 million people” by the political consulting and strategic communication firm Cambridge Analytica. That company–and others–were able to gain access to personal data of Facebook users due to the confluence of a variety of factors, broadly including inadequate safeguards against companies engaging in data harvesting, little to no oversight of developers by Facebook, developer abuse of the Facebook API, and users agreeing to overly broad terms and conditions.


In the case of Cambridge Analytica, the company was able to harvest personally identifiable information through a personality quiz app called thisisyourdigitallife, based on the OCEAN personality model. Information gathered via this app is useful in building a “psychographic” profile of users (the OCEAN acronym stands for openness, conscientiousness, extraversion, agreeableness, and neuroticism). Adding the app to your Facebook account to take the quiz gave the creator of the app access to profile information and user history for the user taking the quiz, as well as for all of the friends that user had on Facebook. This data included all of the items that users and their friends had liked on Facebook.

Researchers associated with Cambridge University claimed in a paper that Facebook likes “can be used to automatically and accurately predict a range of highly sensitive personal attributes including: sexual orientation, ethnicity, religious and political views, personality traits, intelligence, happiness, use of addictive substances, parental separation, age, and gender.” The model developed by the researchers uses a combination of dimensionality reduction and logistic/linear regression to infer this information about users.

The model–according to the researchers–is effective due to the relationship of likes to a given attribute. However, most likes are not explicitly indicative of their attributes. The researchers note that “less than 5% of users labeled as gay were connected with explicitly gay groups,” but that liking “Juicy Couture” and “Adam Lambert” are likes indicative of gay men, while “WWE” and “Being Confused After Waking Up From Naps” are likes indicative of straight men. Other such connections are peculiarly lateral, with “curly fries” being an indicator of high IQ, “sour candy” being an indicator of not smoking, and “Gene Wilder” being an indicator that the user’s parents had not separated by age 21.
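To make the two-step approach described above more concrete, here is a minimal sketch on entirely synthetic data. It assumes truncated SVD as the dimensionality-reduction step and a plain gradient-descent logistic regression as the classifier; the matrix sizes, like probabilities and variable names are invented for illustration and are not taken from the researchers’ paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (entirely synthetic): 200 users x 30 pages, 1.0 = user liked the page.
n_users, n_pages = 200, 30
trait = rng.integers(0, 2, size=n_users)      # hidden binary attribute to predict
like_prob = np.full((n_users, n_pages), 0.2)  # baseline probability of a like
like_prob[trait == 1, :10] = 0.7              # pages 0-9 skew towards trait = 1
like_prob[trait == 0, 10:20] = 0.7            # pages 10-19 skew towards trait = 0
likes = (rng.random((n_users, n_pages)) < like_prob).astype(float)

# Step 1: dimensionality reduction via truncated SVD of the centred matrix.
k = 5
centred = likes - likes.mean(axis=0)
_, _, vt = np.linalg.svd(centred, full_matrices=False)
components = centred @ vt[:k].T               # each user as k component scores

# Step 2: logistic regression on the components, fitted by gradient descent.
X = np.hstack([components, np.ones((n_users, 1))])  # append a bias column
w = np.zeros(X.shape[1])
for _ in range(2000):
    p = 1.0 / (1.0 + np.exp(-(X @ w)))
    w -= 0.1 * (X.T @ (p - trait)) / n_users

predicted = 1.0 / (1.0 + np.exp(-(X @ w))) > 0.5
accuracy = float((predicted == (trait == 1)).mean())
print(f"training accuracy on toy data: {accuracy:.2f}")
```

The accuracy printed here is measured on the same toy data used for fitting, so it says nothing about real-world performance; the point is only that no single page needs to be an explicit marker of the attribute for the combination of likes to be predictive.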



What is the timeline of the Facebook data privacy scandal?

Facebook has more than a decade-long track record of incidents highlighting inadequate and insufficient measures to protect data privacy. While the severity of these individual cases varies, the sequence of repeated failures paints a larger picture of systemic problems.


In 2005, researchers at MIT created a script that downloaded publicly posted information of more than 70,000 users from four schools. (Facebook only began to allow search engines to crawl profiles in September 2007.)

In 2007, activities that users engaged in on other websites were automatically added to Facebook user profiles as part of Beacon, one of Facebook’s first attempts to monetize user profiles. As an example, Beacon indicated on the Facebook News Feed the titles of videos that users rented from Blockbuster Video, which was a violation of the Video Privacy Protection Act. A class action suit was filed, for which Facebook paid $9.5 million to a fund for privacy and security as part of a settlement agreement.


In 2011, following an FTC investigation, the company entered into a consent decree, promising to address concerns about how user data was tracked and shared. That investigation was prompted by an incident in December 2009 in which information thought private by users was being shared publicly, according to contemporaneous reporting by The New York Times.

In 2013, Facebook disclosed details of a bug that exposed the personal details of six million accounts over approximately a year. When users downloaded their own Facebook history, they would obtain in the same action not just their own address book, but also the email addresses and phone numbers of their friends that other people had stored in their address books. The data that Facebook exposed had not been given to Facebook by users to begin with–it had been vacuumed from the contact lists of other Facebook users who happen to know that person. This phenomenon has since been described as “shadow profiles.”

The Cambridge Analytica portion of the data privacy scandal starts in February 2014. A spate of reviews on the Turkopticon website–a third-party review website for users of Amazon’s Mechanical Turk–detail a task requested by Aleksandr Kogan asking users to complete a survey in exchange for money. The survey required users to add the thisisyourdigitallife app to their Facebook account, which is in violation of Mechanical Turk’s terms of service. One review quotes the request as requiring users to “provide our app access to your Facebook so we can download some of your data–some demographic data, your likes, your friends list, whether your friends know one another, and some of your private messages.”

In December 2015, Facebook learned for the first time that the data set Kogan generated with the app was shared with Cambridge Analytica. Facebook founder and CEO Mark Zuckerberg claims “we immediately banned Kogan’s app from our platform, and demanded that Kogan and Cambridge Analytica formally certify that they had deleted all improperly acquired data. They provided these certifications.”

According to Cambridge Analytica, the company took legal action in August 2016 against GSR (Kogan) for licensing “illegally acquired data” to the company, with a settlement reached that November.

On March 17, 2018, an exposé was published by The Guardian and The New York Times, initially reporting that 50 million Facebook profiles were harvested by Cambridge Analytica; the figure was later revised to “up to 87 million” profiles. The exposé relies on information provided by Christopher Wylie, a former employee of SCL Elections and Global Science Research, the creator of the thisisyourdigitallife app. Wylie claimed that the data from that app was sold to Cambridge Analytica, which used the data to develop “psychographic” profiles of users, and target users with pro-Trump advertising, a claim that Cambridge Analytica denied.

On March 16, 2018, Facebook threatened to sue The Guardian over publication of the story, according to a tweet by Guardian reporter Carole Cadwalladr. Campbell Brown, a former CNN journalist who now works as head of news partnerships at Facebook, said it was “not our wisest move,” adding “If it were me I would have probably not threatened to sue The Guardian.” Similarly, Cambridge Analytica threatened to sue The Guardian for defamation.

On March 20, 2018, the FTC opened an investigation to determine if Facebook had violated the terms of the settlement from the 2011 investigation.

In April 2018, reports indicated that Facebook granted Zuckerberg and other high ranking executives powers to control personal information on the platform that are not available to normal users. Messages from Zuckerberg sent to other users were remotely deleted from users’ inboxes, which the company claimed was part of a corporate security measure following the 2014 Sony Pictures hack. Facebook subsequently announced plans to make available the “unsend” capability “to all users in several months,” and that Zuckerberg will be unable to unsend messages until such time that feature rolls out. Facebook added the feature 10 months later, on February 6, 2019. The public feature permits users to delete messages up to 10 minutes after the messages were sent. In the controversy prompting this feature to be added, Zuckerberg deleted messages months after they were sent.

On April 4, 2018, The Washington Post reported that Facebook announced “malicious actors” abused the search function to gather public profile information of “most of its 2 billion users worldwide.”

In a CBS News/YouGov poll published on April 10, 2018, 61% of Americans said Congress should do more to regulate social media and tech companies. This sentiment was echoed in a CBS News interview with Box CEO Aaron Levie and YML CEO Ashish Toshniwal who called on Congress to regulate Facebook. According to Levie, “There are so many examples where we don’t have modern ways of either regulating, controlling, or putting the right protections in place in the internet age. And this is a fundamental issue that, that we’re gonna have to grapple with as an industry for the next decade.”

On April 18, 2018, Facebook updated its privacy policy.

On May 2, 2018, SCL Group, which owns Cambridge Analytica, was dissolved. In a press release, the company indicated that “the siege of media coverage has driven away virtually all of the Company’s customers and suppliers.”

On May 15, 2018, The New York Times reported that Cambridge Analytica is being investigated by the FBI and the Justice Department. A source indicated to CBS News that prosecutors are focusing on potential financial crimes.

On May 16, 2018, Christopher Wylie testified before the Senate Judiciary Committee. Among other things, Wylie noted that Cambridge Analytica, under the direction of Steve Bannon, sought to “exploit certain vulnerabilities in certain segments to send them information that will remove them from the public forum, and feed them conspiracies and they’ll never see mainstream media.” Wylie also noted that the company targeted people with “characteristics that would lead them to vote for the Democratic party, particularly African American voters.”

On June 3, 2018, a report in The New York Times indicated that Facebook had maintained data-sharing partnerships with mobile device manufacturers, specifically naming Apple, Amazon, BlackBerry, Microsoft, and Samsung. Under the terms of this personal information sharing, device manufacturers were able to gather information about users in order to deliver “the Facebook experience,” the Times quotes a Facebook official as saying. Additionally, the report indicates that this access allowed device manufacturers to obtain data about a user’s Facebook friends, even if those friends had configured their privacy settings to deny information sharing with third parties.

The same day, Facebook issued a rebuttal to the Times report indicating that the partnerships were conceived because “the demand for Facebook outpaced our ability to build versions of the product that worked on every phone or operating system,” at a time when the smartphone market included BlackBerry’s BB10 and Windows Phone operating systems, among others. Facebook claimed that “contrary to claims by the New York Times, friends’ information, like photos, was only accessible on devices when people made a decision to share their information with those friends. We are not aware of any abuse by these companies.” The distinction being made is partially semantic, as Facebook does not consider these partnerships a third party in this case. Facebook noted that changes to the platform made in April began “winding down” access to these APIs, and that 22 of the partnerships had already been ended.

On June 5, 2018, The Washington Post and The New York Times reported that the Chinese device manufacturers Huawei, Lenovo, Oppo, and TCL were granted access to user data under this program. Huawei, along with ZTE, is facing scrutiny from the US government on unsubstantiated accusations that products from these companies pose a national security risk.

On July 2, 2018, The Washington Post reported that the US Securities and Exchange Commission, Federal Trade Commission, and Federal Bureau of Investigation have joined the Department of Justice inquiry into the Facebook/Cambridge Analytica data scandal. In a statement to CNET, Facebook indicated that “We’ve provided public testimony, answered questions, and pledged to continue our assistance as their work continues.” On July 11th, the Wall Street Journal reported that the SEC is separately investigating if Facebook adequately warned investors in a timely manner about the possible misuse and improper collection of user data. The same day, the UK assessed a £500,000 fine to Facebook, the maximum permitted by law, over its role in the data scandal. The UK’s Information Commissioner’s Office is also preparing to launch a criminal probe into SCL Elections over their involvement in the scandal.

On July 3, 2018, Facebook acknowledged that a “bug” had unblocked people that users had blocked between May 29 and June 5.

On July 12, 2018, a CNBC report indicated that a privacy loophole was discovered and closed. A Chrome plug-in intended for marketing research called Grouply.io allowed users to access the list of members for private Facebook groups. Congress sent a letter to Zuckerberg on February 19, 2019 demanding answers about the data leak, stating in part that “labeling these groups as closed or anonymous potentially misled Facebook users into joining these groups and revealing more personal information than they otherwise would have,” and “Facebook may have failed to properly notify group members that their personal health information may have been accessed by health insurance companies and online bullies, among others.”

Fallout from a confluence of factors in the Facebook data privacy scandal came to bear in the last week of July 2018. On July 25th, Facebook announced that daily active user counts have fallen in Europe, and growth has stagnated in the US and Canada. The following day, Facebook suffered the worst single-day market value decrease for a public company in the US, dropping $120 billion, or 19%. On July 28th, Reuters reported that shareholders are suing Facebook, Zuckerberg, and CFO David Wehner for “making misleading statements about or failing to disclose slowing revenue growth, falling operating margins, and declines in active users.”

On August 22, 2018, Facebook removed Facebook-owned security app Onavo from the App Store, for violating privacy rules. Data collected through the Onavo app is shared with Facebook.

In testimony before the Senate, on September 5, 2018, COO Sheryl Sandberg conceded that the company “[was] too slow to spot this and too slow to act” on privacy protections. Sandberg and Twitter CEO Jack Dorsey faced questions focusing on user privacy, election interference, and political censorship. Senator Mark Warner of Virginia even said, “The era of the wild west in social media is coming to an end,” which seems to indicate coming legislation.

On September 6, 2018, a spokesperson indicated that Joseph Chancellor was no longer employed by Facebook. Chancellor was a co-director of Global Science Research, the firm which improperly provided user data to Cambridge Analytica. An internal investigation was launched in March in part to determine his involvement. No statement was released indicating the result of that investigation.

On September 7, 2018, Zuckerberg stated in a post that fixing issues such as “defending against election interference by nation states, protecting our community from abuse and harm, or making sure people have control of their information and are comfortable with how it’s used,” is a process which “will extend through 2019.”

On September 26, 2018, WhatsApp co-founder Brian Acton stated in an interview with Forbes that “I sold my users’ privacy” as a result of the messaging app being sold to Facebook in 2014 for $22 billion.

On September 28, 2018, Facebook disclosed details of a security breach which affected 50 million users. The vulnerability originated from the “view as” feature, which lets users see what their profiles look like to other people. Attackers devised a way to export “access tokens,” which could be used to gain control of other users’ accounts.

A CNET report published on October 5, 2018, detailed the existence of an “Internet Bill of Rights” drafted by Rep. Ro Khanna (D-CA). The bill was considered likely to be introduced in the event the Democrats regained control of the House of Representatives in the 2018 elections. In a statement, Khanna noted that “As our lives and the economy are more tied to the internet, it is essential to provide Americans with basic protections online.”

On October 11, 2018, Facebook deleted over 800 pages and accounts in advance of the 2018 elections for violating rules against spam and “inauthentic behavior.” The same day, it disabled accounts for a Russian firm called “Social Data Hub,” which claimed to sell scraped user data. A Reuters report indicates that Facebook will ban false information about voting in the midterm elections.

On October 16, 2018, rules requiring public disclosure of who pays for political advertising on Facebook, as well as identity verification of users paying for political advertising, were extended to the UK. The rules were first rolled out in the US in May.

On October 25, 2018, Facebook was fined £500,000 by the UK’s Information Commissioner’s Office for its role in the Cambridge Analytica scandal. The fine is the maximum amount permitted by the Data Protection Act 1998. The ICO indicated that the fine was final. A Facebook spokesperson told ZDNet that the company “respectfully disagreed,” and has filed for appeal.

The same day, Vice published a report indicating that Facebook’s advertiser disclosure policy was trivial to abuse. Reporters from Vice submitted advertisements for approval attributed to Mike Pence, DNC Chairman Tom Perez, and Islamic State, which were approved by Facebook. Further, the contents of the advertisements were copied from Russian advertisements. A spokesperson for Facebook confirmed to Vice that the copied content does not violate rules, though the false attribution does. According to Vice, the only denied submission was attributed to Hillary Clinton.

On October 30, 2018, Vice published a second report in which it claimed that it successfully applied to purchase advertisements attributed to all 100 sitting US Senators, indicating that Facebook had yet to fix the problem reported in the previous week. According to Vice, the only denied submission in this test was attributed to Mark Zuckerberg.

On November 14, 2018, the New York Times published an exposé on the Facebook data privacy scandal, citing interviews of more than 50 people, including current and former Facebook executives and employees. In the exposé, the Times reports:

- In the Spring of 2016, a security expert employed by Facebook informed Chief Security Officer Alex Stamos of Russian hackers “probing Facebook accounts for people connected to the presidential campaigns.” Stamos, in turn, informed general counsel Colin Stretch.

- A group called “Project P” was assembled by Zuckerberg and Sandberg to study false news on Facebook. By January 2017, this group “pressed to issue a public paper” about their findings, but was stopped by board members and Facebook vice president of global public policy Joel Kaplan, who had worked in former US President George W. Bush’s administration.

- In Spring and Summer of 2017, Facebook was “publicly claiming there had been no Russian effort of any significance on Facebook,” despite an ongoing investigation into the extent of Russian involvement in the election.

- Sandberg “and deputies” insisted that the post drafted by Stamos to publicly acknowledge Russian involvement for the first time be made “less specific” before publication.

- In October 2017, Facebook expanded its engagement with Republican-linked firm Definers Public Affairs to discredit “activist protesters.” That firm worked to link people critical of Facebook to liberal philanthropist George Soros, and “[lobbied] a Jewish civil rights group to cast some criticism of the company as anti-Semitic.”

- Following comments critical of Facebook by Apple CEO Tim Cook, a spate of articles critical of Apple and Google began appearing on NTK Network, an organization which shares an office and staff with Definers. Other articles appeared on the website downplaying the Russians’ use of Facebook.

On November 15, 2018, Facebook announced it had terminated its relationship with Definers Public Affairs, though it disputed that either Zuckerberg or Sandberg was aware of the “specific work being done.” Further, a Facebook spokesperson indicated “It is wrong to suggest that we have ever asked Definers to pay for or write articles on Facebook’s behalf, or communicate anything untrue.”

On November 22, 2018, Sandberg acknowledged that work produced by Definers “was incorporated into materials presented to me and I received a small number of emails where Definers was referenced.”

On November 25, 2018, the founder of Six4Three, on a business trip to London, was compelled by Parliament to hand over documents relating to Facebook. Six4Three obtained these documents during the discovery process in its lawsuit against Facebook, which concerned an app developed by the startup that used image recognition to identify photos of women in bikinis shared on Facebook users’ friends’ pages. Reports indicate that Parliament sent an official to the founder’s hotel with a warning that noncompliance would result in possible fines or imprisonment. Despite the warning, the founder remained noncompliant and was escorted to Parliament, where he turned over the documents.

A report in the New York Times published on November 29, 2018, indicates that Sheryl Sandberg personally asked Facebook communications staff in January to “research George Soros’s financial interests in the wake of his high-profile attacks on tech companies.”

On December 5, 2018, documents obtained in the probe of Six4Three were released by Parliament. Damian Collins, the MP who issued the order compelling the handover of the documents in November, highlighted six key points from the documents:

- Facebook entered into whitelisting agreements with Lyft, Airbnb, Bumble, and Netflix, among others, allowing those groups full access to friends data after Graph API v1 was discontinued. Collins indicates “It is not clear that there was any user consent for this, nor how Facebook decided which companies should be whitelisted or not.”

- According to Collins, “increasing revenues from major app developers was one of the key drivers behind the Platform 3.0 changes at Facebook. The idea of linking access to friends data to the financial value of the developers’ relationship with Facebook is a recurring feature of the documents.”

- Data reciprocity between Facebook and app developers was a central focus for the release of Platform v3, with Zuckerberg discussing charging developers for API access to friend lists.

- Internal discussions of changes to the Facebook Android app acknowledge that requesting permissions to collect calls and texts sent by the user would be controversial, with one project manager stating it was “a pretty high-risk thing to do from a PR perspective.”

- Facebook used data collected through Onavo, a VPN service the company acquired in 2013, to survey the use of mobile apps on smartphones. According to Collins, this occurred “apparently without [users’] knowledge,” and was used by Facebook to determine “which companies to acquire, and which to treat as a threat.”

- Collins contends that “the files show evidence of Facebook taking aggressive positions against apps, with the consequence that denying them access to data led to the failure of that business.” Documents disclosed specifically indicate Facebook revoked API access to video sharing service Vine.

In a statement, Facebook claimed, “Six4Three… cherrypicked these documents from years ago.” Zuckerberg responded separately to the public disclosure on Facebook, acknowledging, “Like any organization, we had a lot of internal discussion and people raised different ideas.” He called the Facebook scrutiny “healthy given the vast number of people who use our services,” but said it shouldn’t “misrepresent our actions or motives.”

On December 14, 2018, a vulnerability was disclosed in the Facebook Photo API that existed between September 13-25, 2018, exposing private photos of 6.8 million users. The Photo API bug affected people who use Facebook to log in to third-party services.

On December 18, 2018, The New York Times reported on special data sharing agreements that “[exempted] business partners from its usual privacy rules,” naming Microsoft’s Bing search engine, Netflix, Spotify, Amazon, and Yahoo as partners in the report. Partners were capable of accessing data including friend lists and private messages, “despite public statements it had stopped that type of sharing years earlier.” Facebook claimed the data sharing was about “helping people,” and that this was not done without user consent.

On January 17, 2019, Facebook disclosed that it removed hundreds of pages and accounts controlled by Russian propaganda organization Sputnik, including accounts posing as politicians from primarily Eastern European countries.

On January 29, 2019, a TechCrunch report uncovered the “Facebook Research” program, which paid users aged 13 to 35 up to $20 per month to install a VPN application similar to Onavo that allowed Facebook to gather practically all information about how their phones were used. On iOS, the app was distributed using Apple’s Developer Enterprise Program; Apple briefly revoked Facebook’s certificate as a result of the controversy.

Facebook initially indicated that “less than 5% of the people who chose to participate in this market research program were teens,” and on March 1, 2019 amended the statement to “about 18 percent.”

On February 7, 2019, the German antitrust office ruled that Facebook must obtain consent before collecting data on non-Facebook members, following a three-year investigation.

On February 20, 2019, Facebook added new location controls to its Android app that allow users to limit background data collection when the app is not in use.

The same day, ZDNet reported that Microsoft’s Edge browser contained a secret whitelist allowing Facebook to run Adobe Flash, bypassing the click-to-play policy that other websites are subject to for Flash objects over 398×298 pixels. The whitelist was removed in the February 2019 Patch Tuesday update.

On March 6, 2019, Zuckerberg announced a plan to rebuild services around encryption and privacy “over the next few years.” As part of these changes, Facebook will make messages between Facebook, Instagram, and WhatsApp interoperable. Former Microsoft executive Steven Sinofsky, who was fired after the poor reception of Windows 8, called the move “fantastic,” comparing it to Microsoft’s Trustworthy Computing initiative in 2002.

CNET and CBS News Senior Producer Dan Patterson noted on CBSN that Facebook can benefit from this consolidation by making the messaging platforms cheaper to operate, as well as profiting from users sending money through the messaging platform, in a business model similar to Venmo.

On March 21, 2019, Facebook disclosed a lapse in security that resulted in hundreds of millions of passwords being stored in plain text, affecting users of Facebook, Facebook Lite, and Instagram. Facebook claimed that “these passwords were never visible to anyone outside of Facebook and we have found no evidence to date that anyone internally abused or improperly accessed them.”

Though Facebook’s post does not provide specifics, a report by veteran security reporter Brian Krebs claimed “between 200 million and 600 million” users were affected, and that “more than 20,000 Facebook employees” would have had access.

On March 22, 2019, a court filing by the attorney general of Washington DC alleged that Facebook knew about the Cambridge Analytica scandal months prior to the first public reports in December 2015. Facebook claimed that employees knew of rumors relating to Cambridge Analytica, but the claims relate to a “different incident” than the main scandal, and insisted that the company did not mislead anyone about the timeline of the scandal.

Facebook is seeking to have the case filed in Washington DC dismissed, as well as to seal a document filed in that case.

On March 31, 2019, The Washington Post published an op-ed by Zuckerberg calling for governments and regulators to take a “more active role” in regulating the internet. Shortly after, Facebook introduced a feature that explains why content is shown to users on their news feeds.

On April 3, 2019, over 540 million Facebook-related records were found on two improperly protected AWS servers. The data was collected by Cultura Colectiva, a Mexico-based online media platform, using Facebook APIs. Amazon deactivated the associated account at Facebook’s request.

On April 15, 2019, it was discovered that Oculus, a company owned by Facebook, shipped VR headsets with internal etchings including text such as “Big Brother is Watching.”

On April 18, 2019, Facebook disclosed the “unintentional” harvesting of email contacts belonging to approximately 1.5 million users over the course of three years. Affected users were asked to provide email address credentials to verify their identity.

On April 30, 2019, at Facebook’s F8 developer conference, the company unveiled plans to overhaul Messenger and re-orient Facebook to prioritize Groups instead of the timeline view, with Zuckerberg declaring “The future is private.”

On May 9, 2019, Facebook co-founder Chris Hughes called for Facebook to be broken up by government regulators, in an editorial in The New York Times. Hughes, who left the company in 2007, cited concerns that Zuckerberg has surrounded himself with people who do not challenge him. “We are a nation with a tradition of reining in monopolies, no matter how well-intentioned the leaders of these companies may be. Mark’s power is unprecedented and un-American,” Hughes said.

Proponents of a Facebook breakup typically point to unwinding the social network’s purchase of Instagram and WhatsApp.

Zuckerberg dismissed Hughes’ appeal for a breakup in comments to France 2, stating in part that “If what you care about is democracy and elections, then you want a company like us to invest billions of dollars a year, like we are, in building up really advanced tools to fight election interference.”

On May 24, 2019, a report from Motherboard claimed “multiple” staff members of Snapchat used internal tools to spy on users.

On July 8, 2019, Apple co-founder Steve Wozniak warned users to get off of Facebook.

On July 18, 2019, lawmakers in a House Committee on Financial Services hearing expressed mistrust of Facebook’s Libra cryptocurrency plan due to its “pattern of failing to keep consumer data private.” Lawmakers had previously issued a letter to Facebook requesting the company pause development of the project.

On July 24, 2019, the FTC announced a $5 billion settlement with Facebook over user privacy violations. Facebook agreed to conduct an overhaul of its consumer privacy practices as part of the settlement. Access to friend data by Sony and Microsoft was “immediately” restricted as part of this settlement, according to CNET. Separately, the FTC settled with Aleksandr Kogan and former Cambridge Analytica CEO Alexander Nix, “restricting how they conduct any business in the future, and requiring them to delete or destroy any personal information they collected.” The FTC announced a lawsuit against Cambridge Analytica the same day.

Also on July 24, 2019, Netflix released “The Great Hack,” a documentary about the Cambridge Analytica scandal.

In early July 2020, Facebook admitted to sharing user data with an estimated 5,000 third-party developers after access to that data was supposed to have expired.

Zuckerberg testified before Congress again on July 29, 2020, as part of an antitrust hearing that included Amazon’s Jeff Bezos, Apple’s Tim Cook, and Google’s Sundar Pichai. The hearing didn’t touch on Facebook’s data privacy scandal, and was instead focused on Facebook’s purchase of Instagram and WhatsApp, as well as its treatment of other competing services.

What are the key companies involved in the Facebook data privacy scandal?

In addition to Facebook, these are the companies connected to this data privacy story.

SCL Group (formerly Strategic Communication Laboratories) is at the center of the privacy scandal, though it has operated primarily through subsidiaries. Nominally, SCL was a behavioral research/strategic communication company based in the UK. The company was dissolved on May 1, 2018.

Cambridge Analytica and SCL USA are offshoots of SCL Group, primarily operating in the US. Registration documentation indicates the pair formally came into existence in 2013. As with SCL Group, the pair were dissolved on May 1, 2018.

Global Science Research was a market research firm based in the UK from 2014 to 2017. It was the originator of the thisisyourdigitallife app. The personal data derived from the app (if not the app itself) was sold to Cambridge Analytica for use in campaign messaging.

Emerdata is the functional successor to SCL and Cambridge Analytica. It was founded in August 2017, with registration documents listing several people associated with SCL and Cambridge Analytica, as well as the same address as that of SCL Group’s London headquarters.

AggregateIQ is a Canadian consulting and technology company founded in 2013. The company produced Ripon, the software platform for Cambridge Analytica’s political campaign work, which leaked publicly after being discovered in an unprotected GitLab instance.

Cubeyou is a US-based data analytics firm that also operated surveys on Facebook, and worked with Cambridge University from 2013 to 2015. It was suspended from Facebook in April 2018 following a CNBC report.

Six4Three was a US-based startup that created an app that used image recognition to identify photos of women in bikinis shared on Facebook users’ friends’ pages. The company sued Facebook in April 2015, after the app became inoperable when access to this data was revoked with the discontinuation of the original version of Facebook’s Graph API.

Onavo is an analytics company that develops mobile apps. It created Onavo Extend and Onavo Protect, which are VPN services for data protection and security, respectively. Facebook purchased the company in October 2013. Data from Onavo is used by Facebook to track usage of non-Facebook apps on smartphones.

The Internet Research Agency is a St. Petersburg-based organization with ties to Russian intelligence services. The organization engages in politically-charged manipulation across English-language social media, including Facebook.

Who are the key people involved in the Facebook data privacy scandal?

Nigel Oakes is the founder of SCL Group, the parent company of Cambridge Analytica. A report from Buzzfeed News unearthed a quote from 1992 in which Oakes stated, “We use the same techniques as Aristotle and Hitler. … We appeal to people on an emotional level to get them to agree on a functional level.”

Alexander Nix was the CEO of Cambridge Analytica and a director of SCL Group. He was suspended following reports detailing a video in which Nix claimed the company “offered bribes to smear opponents as corrupt,” and that it “campaigned secretly in elections… through front companies or using subcontractors.”

Robert Mercer is a conservative activist, computer scientist, and a co-founder of Cambridge Analytica. A New York Times report indicates that Mercer invested $15 million in the company. His daughters Jennifer Mercer and Rebekah Anne Mercer serve as directors of Emerdata.

Christopher Wylie is the former director of research at Cambridge Analytica. He provided information to The Guardian for its exposé of the Facebook data privacy scandal. He has since testified before committees in the US and UK about Cambridge Analytica’s involvement in this scandal.

Steve Bannon is a co-founder of Cambridge Analytica, as well as a founding member and former executive chairman of Breitbart News, an alt-right news outlet. Breitbart News has reportedly received funding from the Mercer family as far back as 2010. Bannon left Breitbart in January 2018. According to Christopher Wylie, Bannon is responsible for testing phrases such as “drain the swamp” at Cambridge Analytica, which were used extensively on Breitbart.

Aleksandr Kogan is a Senior Research Associate at Cambridge University and co-founder of Global Science Research, which created the data harvesting thisisyourdigitallife app. He worked as a researcher and consultant for Facebook in 2013 and 2015. Kogan also received Russian government grants and is an associate professor at St. Petersburg State University, though he claims this is an honorary role.

Joseph Chancellor was a co-director of Global Science Research, which created the data harvesting thisisyourdigitallife app. Around November 2015, he was hired by Facebook as a “quantitative social psychologist.” A spokesperson indicated on September 6, 2018, that he was no longer employed by Facebook.

Michal Kosinski, David Stillwell, and Thore Graepel are the researchers who proposed and developed the model to “psychometrically” analyze users based on their Facebook likes. At the time this model was published, Kosinski and Stillwell were affiliated with Cambridge University, while Graepel was affiliated with the Cambridge-based Microsoft Research. (None have an association with Cambridge Analytica, according to Cambridge University.)

Mark Zuckerberg is the founder and CEO of Facebook. He founded the website in 2004 from his dorm room at Harvard.

Sheryl Sandberg is the COO of Facebook. She left Google to join the company in March 2008. She became the eighth member of the company’s board of directors in 2012 and is the first woman in that role.

Damian Collins is a Conservative Party politician based in the United Kingdom. He currently serves as the Chair of the House of Commons Culture, Media and Sport Select Committee. Collins is responsible for issuing orders to seize documents from the American founder of Six4Three while he was traveling in London, and releasing those documents publicly.

Chris Hughes is one of four Facebook co-founders, who originally took on beta testing and feedback for the website, until leaving in 2007. Hughes is the first to call for Facebook to be broken up by regulators.

How have Facebook and Mark Zuckerberg responded to the data privacy scandal?

Each time Facebook finds itself embroiled in a privacy scandal, the general playbook seems to be the same: Mark Zuckerberg delivers an apology, with oft-recycled lines, such as “this was a big mistake,” or “I know we can do better.” Despite repeated controversies regarding Facebook’s handling of personal data, it has continued to gain new users. This is by design: founding president Sean Parker indicated at an Axios conference in November 2017 that the first step of building Facebook features was “How do we consume as much of your time and conscious attention as possible?” Parker also likened the design of Facebook to “exploiting a vulnerability in human psychology.”

On March 16, 2018, Facebook announced that SCL and Cambridge Analytica had been banned from the platform. The announcement indicated, correctly, that “Kogan gained access to this information in a legitimate way and through the proper channels that governed all developers on Facebook at that time,” and passing the information to a third party was against the platform policies.

The following day, the announcement was amended to state:

The claim that this is a data breach is completely false. Aleksandr Kogan requested and gained access to information from users who chose to sign up to his app, and everyone involved gave their consent. People knowingly provided their information, no systems were infiltrated, and no passwords or sensitive pieces of information were stolen or hacked.

On March 21, 2018, Mark Zuckerberg posted his first public statement about the issue, stating in part that:

“We have a responsibility to protect your data, and if we can’t then we don’t deserve to serve you. I’ve been working to understand exactly what happened and how to make sure this doesn’t happen again.”

On March 26, 2018, Facebook placed full-page ads stating, “This was a breach of trust, and I’m sorry we didn’t do more at the time. We’re now taking steps to ensure this doesn’t happen again,” in The New York Times, The Washington Post, and The Wall Street Journal, as well as The Observer, The Sunday Times, Mail on Sunday, Sunday Mirror, Sunday Express, and Sunday Telegraph in the UK.

In a blog post on April 4, 2018, Facebook announced a series of changes to data handling practices and API access capabilities. Foremost among these is a restriction on the Events API, which is no longer able to access the guest list or wall posts. Additionally, Facebook removed the ability to search for users by phone number or email address and made changes to the account recovery process to fight scraping.

On April 10, 2018, and April 11, 2018, Mark Zuckerberg testified before Congress. Details about his testimony are in the next section of this article.

On April 10, 2018, Facebook announced the launch of its data abuse bug bounty program. While Facebook has an existing security bug bounty program, this is targeted specifically to prevent malicious users from engaging in data harvesting. There is no limit to how much Facebook could potentially pay in a bounty, though to date the highest amount the company has paid is $40,000 for a security bug.

On May 14, 2018, “around 200” apps were banned from Facebook as part of an investigation into whether companies had abused APIs to harvest personal information. The company declined to provide a list of offending apps.

On May 22, 2018, Mark Zuckerberg testified, briefly, before the European Parliament about the data privacy scandal and Cambridge Analytica. The format of the testimony has been the subject of derision, as all of the questions were posed to Zuckerberg before he answered. Guy Verhofstadt, an EU Parliament member representing Belgium, said, “I asked you six ‘yes’ and ‘no’ questions, and I got not a single answer.”

What did Mark Zuckerberg say in his testimony to Congress?

In his Senate testimony on April 10, 2018, Zuckerberg reiterated his apology, stating that “We didn’t take a broad enough view of our responsibility, and that was a big mistake. And it was my mistake. And I’m sorry. I started Facebook, I run it, and I’m responsible for what happens here,” adding in a response to Sen. John Thune that “we try not to make the same mistake multiple times… in general, a lot of the mistakes are around how people connect to each other, just because of the nature of the service.”

Sen. Amy Klobuchar asked if Facebook had determined whether Cambridge Analytica and the Internet Research Agency were targeting the same users. Zuckerberg replied, “We’re investigating that now. We believe that it is entirely possible that there will be a connection there.” According to NBC News, this was the first suggestion of a link between the activities of Cambridge Analytica and the Russian disinformation campaign.

On June 11, 2018, nearly 500 pages of new testimony from Zuckerberg was released following promises of a follow-up to questions for which he did not have sufficient information to address during his Congressional testimony. The Washington Post notes that the release, “in some instances sidestepped lawmakers’ questions and concerns,” but that the questions being asked were not always relevant, particularly in the case of Sen. Ted Cruz, who attempted to bring attention to Facebook’s donations to political organizations, as well as how Facebook treats criticism of “Taylor Swift’s recent cover of an Earth, Wind and Fire song.”

What is the 2016 US presidential election connection to the Facebook data privacy scandal?

In December 2015, The Guardian broke the story of Cambridge Analytica being contracted by Ted Cruz’s campaign for the Republican Presidential Primary. Despite Cambridge Analytica CEO Alexander Nix’s claim in an interview with TechRepublic that the company is “fundamentally politically agnostic and an apolitical organization,” the primary financier of the Cruz campaign was Cambridge Analytica co-founder Robert Mercer, who donated $11 million to a pro-Cruz Super PAC. Following Cruz’s withdrawal from the campaign in May 2016, the Mercer family began supporting Donald Trump.

In January 2016, Facebook COO Sheryl Sandberg told investors that the election was “a big deal in terms of ad spend,” and that through “using Facebook and Instagram ads you can target by congressional district, you can target by interest, you can target by demographics or any combination of those.”

In October 2017, Facebook announced changes to its advertising platform, requiring identity and location verification and prior authorization in order to run electoral advertising. In the wake of the fallout from the data privacy scandal, further restrictions were added in April 2018, placing similar requirements on “issue ads” regarding topics of current interest.

In secretly recorded conversations by an undercover team from Channel 4 News, Cambridge Analytica’s Nix claimed the firm was behind the “defeat crooked Hillary” advertising campaign, adding, “We just put information into the bloodstream of the internet and then watch it grow, give it a little push every now and again over time to watch it take shape,” and that “this stuff infiltrates the online community, but with no branding, so it’s unattributable, untrackable.” The same exposé quotes Chief Data Officer Alex Tayler as saying, “When you think about the fact that Donald Trump lost the popular vote by 3 million votes but won the electoral college vote, that’s down to the data and the research.”

- How Cambridge Analytica used your Facebook data to help elect Trump (ZDNet)

- Facebook takes down fake accounts operated by ‘Roger Stone and his associates’ (ZDNet)

- Facebook, Cambridge Analytica and data mining: What you need to know (CNET)

- Civil rights auditors slam Facebook stance on Trump, voter suppression (ZDNet)

- The Trump campaign app is tapping a “gold mine” of data about Americans (CBS News)

What is the Brexit tie-in to the Facebook data privacy scandal?

AggregateIQ was retained by the Vote Leave organization in the Brexit campaign, and both The Guardian and BBC claim that the Canadian company is connected to Cambridge Analytica and its parent organization SCL Group. UpGuard, the organization that found a public GitLab instance with code from AggregateIQ, has extensively detailed its connection to Cambridge Analytica and its involvement in Brexit campaigning.

Additionally, The Guardian quotes Wylie as saying the company “was set up as a Canadian entity for people who wanted to work on SCL projects who didn’t want to move to London.”

- Brexit: A cheat sheet (TechRepublic)

- Facebook suspends another data analytics firm, AggregateIQ (CBS News)

- Lawmakers grill academic at heart of Facebook scandal (CBS News)

How is Facebook affected by the GDPR?

Like any organization providing services to users in European Union countries, Facebook is bound by the EU General Data Protection Regulation (GDPR). Because Facebook is already under scrutiny over the Cambridge Analytica scandal, and because the social media giant’s product is built on personal information, its strategy for GDPR compliance is receiving a great deal of attention from users and from other companies looking for a model of compliance.

While in theory the GDPR is only applicable to people residing in the EU, Facebook will require users to review their data privacy settings. According to a ZDNet article, Facebook users will be asked if they want to see advertising based on partner information (in practice, websites that feature Facebook’s “Like” buttons). Users globally will be asked if they wish to continue sharing political, religious, and relationship information, while users in Europe and Canada will be given the option of switching automatic facial recognition on again.

Facebook members outside the US and Canada have heretofore been governed by the company’s terms of service in Ireland. This has reportedly been changed prior to the start of GDPR enforcement, as this would seemingly make Facebook liable for damages for users internationally, due to Ireland’s status as an EU member.

- Google, Facebook hit with serious GDPR complaints: Others will be soon (ZDNet)

- Facebook rolls out changes to comply with new EU privacy law (CBS News)

- European court strikes down EU-US Privacy Shield user data exchange agreement as invalid (ZDNet)

- GDPR security pack: Policies to protect data and achieve compliance (TechRepublic Premium)

- IT pro’s guide to GDPR compliance (free PDF) (TechRepublic)

What are Facebook “shadow profiles”?

“Shadow profiles” are stores of information that Facebook has obtained about other people–who are not necessarily Facebook users. The existence of “shadow profiles” was discovered as a result of a bug in 2013. When a user downloaded their Facebook history, that user would obtain not just his or her address book, but also the email addresses and phone numbers of their friends that other people had stored in their address books.

Facebook described the issue in an email to the affected users. This is an excerpt of the email, according to security site Packet Storm:

“When people upload their contact lists or address books to Facebook, we try to match that data with the contact information of other people on Facebook in order to generate friend recommendations. Because of the bug, the email addresses and phone numbers used to make friend recommendations and reduce the number of invitations we send were inadvertently stored in their account on Facebook, along with their uploaded contacts. As a result, if a person went to download an archive of their Facebook account through our Download Your Information (DYI) tool, which included their uploaded contacts, they may have been provided with additional email addresses or telephone numbers.”

Because of the way that Facebook synthesizes data in order to attribute collected data to existing profiles, data about people who do not have Facebook accounts congeals into dossiers, which are popularly called “shadow profiles.” It is unclear what other sources of input are added to these “shadow profiles,” a term that Facebook does not use, according to Zuckerberg in his Senate testimony.
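The aggregation described above can be illustrated with a toy sketch. This is not Facebook's code or data model. The uploaders, contact names, and the `build_contact_index` helper are all hypothetical, and matching people by name alone is a deliberate simplification. It only shows how details uploaded by different people can pile up under someone who never created an account.

```python
from collections import defaultdict


def build_contact_index(uploads):
    """Group uploaded contact details by the person they describe.

    `uploads` maps an uploader to the (name, detail) pairs found in that
    uploader's address book. All names here are hypothetical.
    """
    index = defaultdict(set)
    for uploader, contacts in uploads.items():
        for contact_name, detail in contacts:
            index[contact_name].add(detail)
    return index


# Two account holders upload address books that both mention "Dana".
uploads = {
    "alice": [("Dana", "dana@example.com"), ("Bob", "bob@example.com")],
    "carol": [("Dana", "+1-555-0100")],
}
registered_users = {"alice", "bob", "carol"}

index = build_contact_index(uploads)

# Keep only records about people who hold no account of their own.
non_user_records = {
    name: sorted(details)
    for name, details in index.items()
    if name.lower() not in registered_users
}
print(non_user_records)
```

In this sketch, Dana has no account, yet her email address and phone number end up in a single record because two different users listed her as a contact.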

- Shadow profiles: Facebook has information you didn’t hand over (CNET)

- Finally, the world is getting concerned about data privacy (TechRepublic)

- Firm: Facebook’s shadow profiles are ‘frightening’ dossiers on everyone (ZDNet)

What are the possible implications for enterprises and business users?

Business users and business accounts should be aware that they are as vulnerable as consumers to data exposure, because Facebook harvests and shares metadata, including SMS and voice call records, between the company’s mobile applications. The stakes for businesses and employees could be higher, given that incidental or accidental data exposure could expose the company to liability, IP theft, extortion attempts, and cybercriminals.

Though deleting or deactivating Facebook applications won’t prevent the company from creating so-called advertising “shadow profiles,” it will prevent the company from capturing geolocation and other sensitive data. For actionable best practices, contact your company’s legal counsel.

- Social media policy (TechRepublic Premium)

- Want to attain and retain customers? Adopt data privacy policies (TechRepublic)

- Hiring kit: Digital campaign manager (TechRepublic Premium)

- Photos: All the tech celebrities and brands that have deleted Facebook (TechRepublic)

How can I change my Facebook privacy settings?

According to Facebook, in 2014 the company removed the ability for apps that friends use to collect information about an individual user. If you wish to disable third-party use of Facebook altogether–including Login With Facebook and apps that rely on Facebook profiles such as Tinder–this can be done in the Settings menu under Apps And Websites. The Apps, Websites And Games field has an Edit button–click that, and then click Turn Off.

Facebook has been proactively notifying users who had their data collected by Cambridge Analytica, though users can manually check to see if their data was shared by going to this Facebook Help page.

Facebook is also developing a Clear History button, which the company indicates will clear “their database record of you.” CNET and CBS News Senior Producer Dan Patterson noted on CBSN that “there aren’t a lot of specifics on what that clearing of the database will do, and of course, as soon as you log back in and start creating data again, you set a new cookie and you start the process again.”

To gain a better understanding of how Facebook handles user data, including what options can and cannot be modified by end users, it may be helpful to review Facebook’s Terms of Service, as well as its Data Policy and Cookies Policy.

- Ultimate guide to Facebook privacy and security (Download.com)

- Facebook’s new privacy tool lets you manage how you’re tracked across the web (CNET)

- Securing Facebook: Keep your data safe with these privacy settings (ZDNet)

- How to check if Facebook shared your data with Cambridge Analytica (CNET)

Note: This article was written and reported by James Sanders and Dan Patterson. It was updated by Brandon Vigliarolo.

Louise Matsakis Issie Lapowsky

Everything We Know About Facebook's Massive Security Breach

Facebook’s privacy problems severely escalated Friday when the social network disclosed that an unprecedented security issue, discovered September 25, impacted almost 50 million user accounts. Unlike the Cambridge Analytica scandal, in which a third-party company erroneously accessed data that a then-legitimate quiz app had siphoned up, this vulnerability allowed attackers to directly take over user accounts.

The bugs that enabled the attack have since been patched, according to Facebook. The company says that the attackers could see everything in a victim's profile, although it's still unclear if that includes private messages or if any of that data was misused. As part of that fix, Facebook automatically logged out 90 million Facebook users from their accounts Friday morning, accounting both for the 50 million that Facebook knows were affected, and an additional 40 million that potentially could have been. Later Friday, Facebook also confirmed that third-party sites that those users logged into with their Facebook accounts could also be affected.

Facebook says that affected users will see a message at the top of their News Feed about the issue when they log back into the social network. "Your privacy and security are important to us," the update reads. "We want to let you know about recent action we've taken to secure your account." The message is followed by a prompt to click and learn more details. If you were not logged out but want to take extra security precautions, you can check this page to see the places where your account is currently logged in, and log them out.

Facebook has yet to identify the hackers, or where they may have originated. “We may never know,” Guy Rosen, Facebook’s vice president of product, said on a call with reporters Friday. The company is now working with the Federal Bureau of Investigation to identify the attackers. A Taiwanese hacker named Chang Chi-yuan had earlier this week promised to live-stream the deletion of Mark Zuckerberg's Facebook account, but Rosen said Facebook was "not aware that that person was related to this attack."

“If the attacker exploited custom and isolated vulnerabilities, and the attack was a highly targeted one, there simply might be no suitable trace or intelligence allowing investigators to connect the dots,” says Lukasz Olejnik, a security and privacy researcher and member of the W3C Technical Architecture Group.

On the same call, Facebook CEO Mark Zuckerberg reiterated previous statements he has made about security being an “arms race.”

“This is a really serious security issue, and we’re taking it really seriously,” he said. “I’m glad that we found this, and we were able to fix the vulnerability and secure the accounts, but it definitely is an issue that it happened in the first place.”

The social network says its investigation into the breach began on September 16, when it saw an unusual spike in users accessing Facebook. On September 25, the company’s engineering team discovered that hackers appear to have exploited a series of bugs related to a Facebook feature that lets people see what their own profile looks like to someone else. The "View As" feature is designed to allow users to experience how their privacy settings look to another person.

The first bug prompted Facebook's video upload tool to mistakenly show up on the "View As" page. The second one caused the uploader to generate an access token (what allows you to remain logged into your Facebook account on a device, without having to sign in every time you visit) that had the same sign-in permissions as the Facebook mobile app. Finally, when the video uploader did appear in "View As" mode, it generated that access token not for the viewer but for whoever the hacker was searching for.
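How the three flaws compound can be seen in a toy model. This is a simplified sketch under stated assumptions, not Facebook's implementation. The `TOKENS` store, `issue_token`, and both `render_view_as_*` functions are hypothetical names invented for illustration.

```python
import secrets

TOKENS = {}  # hypothetical token store: token -> user the token logs in as


def issue_token(user):
    """Mint a random access token that authenticates as `user`."""
    token = secrets.token_hex(8)
    TOKENS[token] = user
    return token


def render_view_as_buggy(viewer, subject):
    """Toy "View As" page in which all three flaws are present."""
    page = {"profile_of": viewer, "seen_as": subject}
    # Flaw 1: an uploader widget appears on a page meant to be read-only.
    page["widgets"] = ["video_uploader"]
    # Flaw 2: the uploader mints a full-permission access token.
    # Flaw 3: the token is minted for the looked-up subject, not the viewer.
    page["uploader_token"] = issue_token(subject)
    return page


def render_view_as_fixed(viewer, subject):
    """Read-only preview: no widgets and no token on the page."""
    return {"profile_of": viewer, "seen_as": subject, "widgets": []}


page = render_view_as_buggy(viewer="attacker", subject="victim")
stolen = page["uploader_token"]
print(TOKENS[stolen])  # prints "victim": the token logs in as the victim
```

Each flaw is fairly harmless alone in this model. The account takeover only becomes possible when the widget appears, mints a token, and mints it for the wrong person.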

“This is a complex interaction of multiple bugs,” Rosen said, adding that the hackers likely required some level of sophistication.