Architectural Intelligence pp 117–127

ArchiGAN: Artificial Intelligence x Architecture

- Stanislas Chaillou

- First Online: 03 September 2020

AI will soon massively empower architects in their day-to-day practice. This article provides a proof of concept. The framework used here offers a springboard for discussion, inviting architects to start engaging with AI, and data scientists to consider Architecture as a field of investigation.

Author information

Authors and affiliations.

Spacemaker, Oslo, Norway

Stanislas Chaillou

Corresponding author

Correspondence to Stanislas Chaillou.

Editor information

Editors and affiliations.

College of Architecture and Urban Planning, Tongji University, Shanghai, China

Philip F. Yuan

School of Engineering Cluster, Civil and Infrastructure Engineering, Melbourne, VIC, Australia

Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter.

Chaillou, S. (2020). ArchiGAN: Artificial Intelligence x Architecture. In: Yuan, P.F., Xie, M., Leach, N., Yao, J., Wang, X. (eds) Architectural Intelligence. Springer, Singapore. https://doi.org/10.1007/978-981-15-6568-7_8

DOI: https://doi.org/10.1007/978-981-15-6568-7_8

Published: 03 September 2020

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-6567-0

Online ISBN: 978-981-15-6568-7

eBook Packages: Computer Science, Computer Science (R0)

Artificial Intelligence and Architecture

From research to practice.

- Stanislas Chaillou

- Language: English

- Publisher: Birkhäuser

- Copyright year: 2022

- Audience: Architects, Urban Planners, Designers, Students

- Main content: 208

- Illustrations: 34

- Coloured Illustrations: 40

- Keywords: Design ; Automation ; Digital Architecture

- Published: March 7, 2022

- ISBN: 9783035624045

- Published: April 4, 2022

- ISBN: 9783035624007

Data, Digital Media, and a Different Design Office

An image generated by artificial intelligence from the prompt: "An architectural model of complexity, on a white background." By Certain Measures.

In the summer of 2022, the nonprofit artificial intelligence group OpenAI introduced DALL-E, a groundbreaking text-to-image artificial intelligence software engine. With a prompt of a few simple words, this engine could produce dozens of photorealistic images that depicted the prompt. The popular press marveled at the striking new technology and a flood of AI-generated images, created as easily as one might make an errant comment, left some critics to ponder the future of the creative process. Architects were quick to seize on the complexities and contradictions of DALL-E with a surge of freshly generated and startlingly compelling architectural images on the one hand, and a moral panic around the implications of rapidly advancing artificial intelligence on the other. By autumn, it was clear that this technology, developed for purposes totally unrelated to architecture, was confronting architects with profound new questions around the future of design.

The advent of new generative AI methods seemed to resurface latent sympathies and hostilities about the role of technology, software, media, and data in architectural practice. Arguments about authorship and agency that emerged with the first application of computers to design around 50 years ago—and that seem to recur with each technological cycle—were exhumed for another ritual airing. Tacit questions about the hierarchy of design practice, such as who truly holds creative power, hovered at the margins as well. Design technology is often reflexively associated with the tooling of production labor, a means to control the execution or documentation of ideas rather than a locus of creation itself. Yet new AI systems erase the distinction between production and creation with a technology that accelerates both, a medium through which to imagine novel design ideas rather than merely a utilitarian tool to more efficiently produce drawings, images, or models. By destabilizing the typical media of representation and upending the hierarchy of creative labor, are these new technologies inimical to the foundational conventions of architectural practice?

Perhaps architectural practice needs some creative destruction. Young architecture offices have always faced challenges, from finding clients willing to trust large capital investments to untested designers with scant built work, to the risky up-front investment by architects themselves to keep a practice going until viable commissions can support the office as a business. Yet today, the classical model of a small design practice nurtured by will, pluck, and ineffable creative fervor is more precarious than ever. If the number of successful young firms is a barometer of how welcoming an industry is to innovation and generational opportunity, the architectural scene is a dismal picture. Rankings of the most financially successful architecture firms are dominated by offices with roots that date back decades. Even modest mid-sized firms—say, those with revenue in the $25–$50 million range—were founded more than 45 years ago on average, with some being twice as old.

Young designers have to think in terms of decades or generations to establish their practices, while venture-backed start-ups, in contrast, think of growing to the same scale in terms of months. And while the survival rate of firms varies widely by industry, a recent estimate from the US Bureau of Labor Statistics suggests that firms in the construction space—which architecture is ultimately a part of—have among the lowest 10-year survival rates, roughly half that of health-care businesses. Moreover, within architecture, engineering, and construction, a decade of mergers and acquisitions has created a much more hegemonic and consolidated business landscape. By 2018, about half of 2008’s largest architecture, engineering, and construction firms had consolidated with others. Starting any small business has also gotten more daunting. Between 2000 and 2010, the number of new jobs created by small businesses (defined as companies of fewer than 250 employees) of any type in the United States plummeted by nearly half. Ironically, by this measure, many of the largest architecture offices in the US are still small businesses. Regardless of talent and determination, the fortunes of small design offices are dominated by macroeconomic factors and by their ability to compete in a differentiated way, and by these metrics, competition is tough indeed.

The march of digital automation is likely to cloud the picture for new practices further. The striking products of AI are only one example of how technology may restructure, democratize, and upend architectural practice and labor. Beyond manual jobs most susceptible to automation, scholars have warned that the so-called knowledge professions, including architecture, must adapt or reinvent themselves. While the most dire disruptions hinted at by newer AI technologies—massive workforce redundancy or the wholesale replacement of architects by automated tools—will likely be avoided, architects must confront these developments free of any sentimental or antiquated image of what their discipline should be.

The future health of the discipline demands deeper experimentation with alternative models of practice that embrace the opportunities of a changing cultural, technological, and economic landscape without nostalgia. To pioneer new work, young offices must be more resourceful about developing transdisciplinary approaches grounded in architecture but claiming a broader design mandate. An integrated practice that fuses design, data, and technology more holistically is not only better aligned with seismic technological and economic shifts than classical models of practice, but it is also, arguably, better placed in the contemporary cultural conversation.

Data has become a common lingua franca among disparate disciplines and industries, and as such constitutes an indispensable mode of analysis, insight, and action around complex multidimensional problems. As vexing ecological, social, and economic issues call for systemic transformation, data can provide a common framework for understanding and action. Architects intuitively feel the need to respond to these challenges today, yet rudimentary data literacy—including topics like data sourcing, acquisition, and cleaning; data visualization; and elementary statistics—is virtually absent from architectural practice and training. If architects want to expand their impact in tackling systemic challenges and win allies as informed system designers, leveraging data is an essential tool.

Data is essential not only to inform how we deal with urgent societal and planetary challenges as a discipline, but today it is also fundamental to contemporary cultural life. In fact, by some measures, data has long surpassed architecture as a subject of popular fascination. Consider, for instance, how frequently “architecture” or “data” are mentioned in the popular press and published conversation. In the 19th century, during the initial professionalization of architecture, “architecture” and “data” appear with comparable frequency in published sources. Of course, data in the 19th century was not the electronically manipulable computational quanta we now know. When that usage became current in the 1940s, references exploded, so that by the mid-1980s, data was mentioned in published sources a staggering 60 times more frequently than architecture. Moreover, today data drives and shapes every digitally mediated interaction and is embedded in how we access and experience much of our world. There can be little doubt that data has captured the cultural imagination of the 21st century.

We hardly need statistics to corroborate the ubiquity of data and digital media in popular culture. Yet digital media is transforming the more elite spheres just as profoundly. One index of the burgeoning cultural influence of technology is the swelling number of institutions devoted to the intersection of design, art, and technology. Lisbon’s Museum of Art, Architecture and Technology, Basel’s HeK (Haus der Elektronischen Künste), Shanghai’s Aiiiii Art Center, Berlin’s Futurium, Dubai’s Museum of the Future, and Tokyo’s teamLab exhibition space, as well as older institutions including Karlsruhe’s ZKM and Tokyo’s InterCommunication Center and Miraikan, have all been founded in the last 20 years or so to champion the possibilities of integration of art, design, and technology. When compared to the number of new institutions devoted specifically to architecture founded in the same period, one might plausibly argue that data and digital media are more relevant as a cultural force than architecture itself.

Beyond its popular and social relevance, interweaving data more deeply with design practice offers creative possibilities to address the ever-expanding visual data that is an essential product of our collective society. Try as we may, human eyes and brains are finite. If one scrolled through Google Images endlessly (16 hours a day, for 80 years), one would have seen about 25 billion images, a minuscule proportion of the estimated 750 billion images on the internet, or the 1.72 trillion photographs taken every year. In training as an architect, one could not hope to see more than a few tens of thousands at an absolute limit. Data science techniques open the possibility of comparative analysis for vastly larger sets of visual data, effectively augmenting the intuition and capacity of an architect. In recent years, new tactics of data sensemaking such as machine learning and neural computation have made it possible to address qualitative dimensions of visual data that were once thought to be beyond the purview of calculation: dimensions like textual descriptions or comparisons that had been the exclusive domain of architectural critics. This blurs the boundaries between the humanistic and computational techniques of analysis and criticism, and further calls into question the sacrosanct distinction between design and technique.
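The scale of the image figures quoted above can be checked with a little arithmetic. The sketch below is only an illustration: it takes the numbers from the paragraph as given and assumes 365-day years, which the text does not specify. It works out how many images per second the scrolling scenario implies and what share of each total 25 billion images represents.

```python
# Figures quoted in the paragraph above (taken as given, not re-verified).
HOURS_PER_DAY = 16
YEARS = 80
IMAGES_SEEN = 25e9          # images seen in a lifetime of scrolling
IMAGES_ONLINE = 750e9       # estimated images on the internet
PHOTOS_PER_YEAR = 1.72e12   # photographs taken every year

# Assumption for illustration: 365-day years, leap days ignored.
DAYS_PER_YEAR = 365


def viewing_seconds(hours_per_day, years, days_per_year=DAYS_PER_YEAR):
    """Total seconds spent viewing over the whole period."""
    return hours_per_day * 3600 * days_per_year * years


seconds = viewing_seconds(HOURS_PER_DAY, YEARS)
implied_rate = IMAGES_SEEN / seconds           # images per second
share_online = IMAGES_SEEN / IMAGES_ONLINE     # fraction of online images
share_yearly = IMAGES_SEEN / PHOTOS_PER_YEAR   # fraction of one year's photos

print(f"Viewing time: {seconds:,} seconds")
print(f"Implied pace: {implied_rate:.1f} images per second")
print(f"Share of online images: {share_online:.1%}")
print(f"Share of one year's photographs: {share_yearly:.2%}")
```

Under these assumptions the scenario implies a pace of roughly 15 images per second, and even that lifetime of viewing covers only a few percent of the images estimated to be online.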

What, then, would a data- and media-fluent design practice that could respond to these evolutions look like? First, it would be multidisciplinary to its core, drawing people with versatile design, technical, and humanistic backgrounds capable of negotiating our world’s multidimensional design problems. Thanks to this broad disciplinary background, it could omnivorously imagine projects across scales and industries, engaging the relevant issues at a deeply strategic level. Second, it could imagine innovations in spatial design beyond the typical typologies by intersecting physical space with data-enabled ways of perceiving and understanding it. Third, it would be an office very much about making the future in tangible, physical, spatial detail, not merely visualizing possibilities digitally. It would understand the continuum between physical and digital experience as more fluid today than ever before, and that fluidity is a uniquely contemporary design opportunity. Fourth, it would embrace collaborations in many forms, engaging corporate, institutional, governmental, and cultural clients with equal relevance because it could speak the common data language of systemic challenges.

Some might protest that architecture is a generalist discipline, and that an expertise developed in media or data smacks of specialization. Yet ironically, one could argue that data science is the generalist discipline of our time, in demand and applicable in virtually every field and a veritable necessity to understand the complexities of contemporary life. By connecting spatial imagination, creative drive, and a technically synthetic approach, a young office could prototype a new kind of creative generalist.

The survival of any fledgling design venture depends on its ability to create a differentiated, valuable, and defensible expertise. While architectural methodologies and business models for young offices have remained complacently stagnant for decades, the context in which they operate has dramatically transformed. Beyond macroeconomic and technological changes, over the last 20 years, the very definition of design itself has mutated around architecture, with the advent of user-centered design, design for manufacturing, strategic design, and design futures, to name only a few. Yet architecture has remained largely oblivious to these developments, content to conform to outdated models of practice until the bitter end. To create a future for design, we must look to the challenges and opportunities of today. The data-enabled design office is no universal panacea for the ills afflicting architectural practice. Yet it might be a conduit for a richer and more vital way of practicing design—one attuned to our age and embracing the opportunities ahead.

Andrew Witt is an Associate Professor in Practice in Architecture at the GSD. Witt is also co-founder, with Tobias Nolte, of Certain Measures, a Boston/Berlin-based design and technology studio.

ARTIFICIAL INTELLIGENCE IN THE FIELD OF ARCHITECTURE IN THE INDIAN CONTEXT

- Perspective

- Published: 06 March 2024

Artificial intelligence and illusions of understanding in scientific research

- Lisa Messeri (ORCID: orcid.org/0000-0002-0964-123X) &

- M. J. Crockett (ORCID: orcid.org/0000-0001-8800-410X)

Nature volume 627, pages 49–58 (2024)

- Human behaviour

- Interdisciplinary studies

- Research management

- Social anthropology

Scientists are enthusiastically imagining ways in which artificial intelligence (AI) tools might improve research. Why are AI tools so attractive and what are the risks of implementing them across the research pipeline? Here we develop a taxonomy of scientists’ visions for AI, observing that their appeal comes from promises to improve productivity and objectivity by overcoming human shortcomings. But proposed AI solutions can also exploit our cognitive limitations, making us vulnerable to illusions of understanding in which we believe we understand more about the world than we actually do. Such illusions obscure the scientific community’s ability to see the formation of scientific monocultures, in which some types of methods, questions and viewpoints come to dominate alternative approaches, making science less innovative and more vulnerable to errors. The proliferation of AI tools in science risks introducing a phase of scientific enquiry in which we produce more but understand less. By analysing the appeal of these tools, we provide a framework for advancing discussions of responsible knowledge production in the age of AI.


Wilkenfeld, D. A., Plunkett, D. & Lombrozo, T. Depth and deference: when and why we attribute understanding. Philos. Stud. 173 , 373–393 (2016).

Sparrow, B., Liu, J. & Wegner, D. M. Google effects on memory: cognitive consequences of having information at our fingertips. Science 333 , 776–778 (2011).

Fisher, M., Goddu, M. K. & Keil, F. C. Searching for explanations: how the internet inflates estimates of internal knowledge. J. Exp. Psychol. Gen. 144 , 674–687 (2015).

De Freitas, J., Agarwal, S., Schmitt, B. & Haslam, N. Psychological factors underlying attitudes toward AI tools. Nat. Hum. Behav. 7 , 1845–1854 (2023).

Castelo, N., Bos, M. W. & Lehmann, D. R. Task-dependent algorithm aversion. J. Mark. Res. 56 , 809–825 (2019).

Cadario, R., Longoni, C. & Morewedge, C. K. Understanding, explaining, and utilizing medical artificial intelligence. Nat. Hum. Behav. 5 , 1636–1642 (2021).

Oktar, K. & Lombrozo, T. Deciding to be authentic: intuition is favored over deliberation when authenticity matters. Cognition 223 , 105021 (2022).

Bigman, Y. E., Yam, K. C., Marciano, D., Reynolds, S. J. & Gray, K. Threat of racial and economic inequality increases preference for algorithm decision-making. Comput. Hum. Behav. 122 , 106859 (2021).

Claudy, M. C., Aquino, K. & Graso, M. Artificial intelligence can’t be charmed: the effects of impartiality on laypeople’s algorithmic preferences. Front. Psychol. 13 , 898027 (2022).

Snyder, C., Keppler, S. & Leider, S. Algorithm reliance under pressure: the effect of customer load on service workers. Preprint at SSRN https://doi.org/10.2139/ssrn.4066823 (2022).

Bogert, E., Schecter, A. & Watson, R. T. Humans rely more on algorithms than social influence as a task becomes more difficult. Sci Rep. 11 , 8028 (2021).

Raviv, A., Bar‐Tal, D., Raviv, A. & Abin, R. Measuring epistemic authority: studies of politicians and professors. Eur. J. Personal. 7 , 119–138 (1993).

Cummings, L. The “trust” heuristic: arguments from authority in public health. Health Commun. 29 , 1043–1056 (2014).

Lee, M. K. Understanding perception of algorithmic decisions: fairness, trust, and emotion in response to algorithmic management. Big Data Soc. 5 , https://doi.org/10.1177/2053951718756684 (2018).

Kissinger, H. A., Schmidt, E. & Huttenlocher, D. The Age of A.I. And Our Human Future (Little, Brown, 2021).

Lombrozo, T. Explanatory preferences shape learning and inference. Trends Cogn. Sci. 20 , 748–759 (2016). This paper provides an overview of philosophical theories of explanatory virtues and reviews empirical evidence on the sorts of explanations people find satisfying .

Vrantsidis, T. H. & Lombrozo, T. Simplicity as a cue to probability: multiple roles for simplicity in evaluating explanations. Cogn. Sci. 46 , e13169 (2022).

Johnson, S. G. B., Johnston, A. M., Toig, A. E. & Keil, F. C. Explanatory scope informs causal strength inferences. In Proc. 36th Annual Meeting of the Cognitive Science Society 2453–2458 (Cognitive Science Society, 2014).

Khemlani, S. S., Sussman, A. B. & Oppenheimer, D. M. Harry Potter and the sorcerer’s scope: latent scope biases in explanatory reasoning. Mem. Cognit. 39 , 527–535 (2011).

Liquin, E. G. & Lombrozo, T. Motivated to learn: an account of explanatory satisfaction. Cogn. Psychol. 132 , 101453 (2022).

Hopkins, E. J., Weisberg, D. S. & Taylor, J. C. V. The seductive allure is a reductive allure: people prefer scientific explanations that contain logically irrelevant reductive information. Cognition 155 , 67–76 (2016).

Weisberg, D. S., Hopkins, E. J. & Taylor, J. C. V. People’s explanatory preferences for scientific phenomena. Cogn. Res. Princ. Implic. 3 , 44 (2018).

Jerez-Fernandez, A., Angulo, A. N. & Oppenheimer, D. M. Show me the numbers: precision as a cue to others’ confidence. Psychol. Sci. 25 , 633–635 (2014).

Kim, J., Giroux, M. & Lee, J. C. When do you trust AI? The effect of number presentation detail on consumer trust and acceptance of AI recommendations. Psychol. Mark. 38 , 1140–1155 (2021).

Nguyen, C. T. The seductions of clarity. R. Inst. Philos. Suppl. 89 , 227–255 (2021). This article describes how reductive and quantitative explanations can generate a sense of understanding that is not necessarily correlated with actual understanding .

Fisher, M., Smiley, A. H. & Grillo, T. L. H. Information without knowledge: the effects of internet search on learning. Memory 30 , 375–387 (2022).

Eliseev, E. D. & Marsh, E. J. Understanding why searching the internet inflates confidence in explanatory ability. Appl. Cogn. Psychol. 37 , 711–720 (2023).

Fisher, M. & Oppenheimer, D. M. Who knows what? Knowledge misattribution in the division of cognitive labor. J. Exp. Psychol. Appl. 27 , 292–306 (2021).

Chromik, M., Eiband, M., Buchner, F., Krüger, A. & Butz, A. I think I get your point, AI! The illusion of explanatory depth in explainable AI. In 26th International Conference on Intelligent User Interfaces (eds Hammond, T. et al.) 307–317 (Association for Computing Machinery, 2021).

Strevens, M. No understanding without explanation. Stud. Hist. Philos. Sci. A 44 , 510–515 (2013).

Ylikoski, P. in Scientific Understanding: Philosophical Perspectives (eds De Regt, H. et al.) 100–119 (Univ. Pittsburgh Press, 2009).

Giudice, M. D. The prediction–explanation fallacy: a pervasive problem in scientific applications of machine learning. Preprint at PsyArXiv https://doi.org/10.31234/osf.io/4vq8f (2021).

Hofman, J. M. et al. Integrating explanation and prediction in computational social science. Nature 595 , 181–188 (2021). This paper highlights the advantages and disadvantages of explanatory versus predictive approaches to modelling, with a focus on applications to computational social science .

Shmueli, G. To explain or to predict? Stat. Sci. 25 , 289–310 (2010).

Article MathSciNet Google Scholar

Hofman, J. M., Sharma, A. & Watts, D. J. Prediction and explanation in social systems. Science 355 , 486–488 (2017).

Logg, J. M., Minson, J. A. & Moore, D. A. Algorithm appreciation: people prefer algorithmic to human judgment. Organ. Behav. Hum. Decis. Process. 151 , 90–103 (2019).

Nguyen, C. T. Cognitive islands and runaway echo chambers: problems for epistemic dependence on experts. Synthese 197 , 2803–2821 (2020).

Breiman, L. Statistical modeling: the two cultures. Stat. Sci. 16 , 199–215 (2001).

Gao, J. & Wang, D. Quantifying the benefit of artificial intelligence for scientific research. Preprint at arxiv.org/abs/2304.10578 (2023).

Hanson, B. et al. Garbage in, garbage out: mitigating risks and maximizing benefits of AI in research. Nature 623 , 28–31 (2023).

Kleinberg, J. & Raghavan, M. Algorithmic monoculture and social welfare. Proc. Natl Acad. Sci. USA 118 , e2018340118 (2021). This paper uses formal modelling methods to demonstrate that when companies all rely on the same algorithm to make decisions (an algorithmic monoculture), the overall quality of those decisions is reduced because valuable options can slip through the cracks, even when the algorithm performs accurately for individual companies .

Article MathSciNet CAS PubMed PubMed Central Google Scholar

Hofstra, B. et al. The diversity–innovation paradox in science. Proc. Natl Acad. Sci. USA 117 , 9284–9291 (2020).

Hong, L. & Page, S. E. Groups of diverse problem solvers can outperform groups of high-ability problem solvers. Proc. Natl Acad. Sci. USA 101 , 16385–16389 (2004).

Page, S. E. Where diversity comes from and why it matters? Eur. J. Soc. Psychol. 44 , 267–279 (2014). This article reviews research demonstrating the benefits of cognitive diversity and diversity in methodological approaches for problem solving and innovation .

Clarke, A. E. & Fujimura, J. H. (eds) The Right Tools for the Job: At Work in Twentieth-Century Life Sciences (Princeton Univ. Press, 2014).

Silva, V. J., Bonacelli, M. B. M. & Pacheco, C. A. Framing the effects of machine learning on science. AI Soc. https://doi.org/10.1007/s00146-022-01515-x (2022).

Sassenberg, K. & Ditrich, L. Research in social psychology changed between 2011 and 2016: larger sample sizes, more self-report measures, and more online studies. Adv. Methods Pract. Psychol. Sci. 2 , 107–114 (2019).

Simon, A. F. & Wilder, D. Methods and measures in social and personality psychology: a comparison of JPSP publications in 1982 and 2016. J. Soc. Psychol. https://doi.org/10.1080/00224545.2022.2135088 (2022).

Anderson, C. A. et al. The MTurkification of social and personality psychology. Pers. Soc. Psychol. Bull. 45 , 842–850 (2019).

Latour, B. in The Social After Gabriel Tarde: Debates and Assessments (ed. Candea, M.) 145–162 (Routledge, 2010).

Porter, T. M. Trust in Numbers: The Pursuit of Objectivity in Science and Public Life (Princeton Univ. Press, 1996).

Lazer, D. et al. Meaningful measures of human society in the twenty-first century. Nature 595 , 189–196 (2021).

Knox, D., Lucas, C. & Cho, W. K. T. Testing causal theories with learned proxies. Annu. Rev. Polit. Sci. 25 , 419–441 (2022).

Barberá, P. Birds of the same feather tweet together: Bayesian ideal point estimation using Twitter data. Polit. Anal. 23 , 76–91 (2015).

Brady, W. J., McLoughlin, K., Doan, T. N. & Crockett, M. J. How social learning amplifies moral outrage expression in online social networks. Sci. Adv. 7 , eabe5641 (2021).

Article PubMed PubMed Central ADS Google Scholar

Barnes, J., Klinger, R. & im Walde, S. S. Assessing state-of-the-art sentiment models on state-of-the-art sentiment datasets. In Proc. 8th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis (eds Balahur, A. et al.) 2–12 (Association for Computational Linguistics, 2017).

Gitelman, L. (ed.) “Raw Data” is an Oxymoron (MIT Press, 2013).

Breznau, N. et al. Observing many researchers using the same data and hypothesis reveals a hidden universe of uncertainty. Proc. Natl Acad. Sci. USA 119 , e2203150119 (2022). This study demonstrates how 73 research teams analysing the same dataset reached different conclusions about the relationship between immigration and public support for social policies, highlighting the subjectivity and uncertainty involved in analysing complex datasets .

Gillespie, T. in Media Technologies: Essays on Communication, Materiality, and Society (eds Gillespie, T. et al.) 167–194 (MIT Press, 2014).

Leonelli, S. Data-Centric Biology: A Philosophical Study (Univ. Chicago Press, 2016).

Wang, A., Kapoor, S., Barocas, S. & Narayanan, A. Against predictive optimization: on the legitimacy of decision-making algorithms that optimize predictive accuracy. ACM J. Responsib. Comput. , https://doi.org/10.1145/3636509 (2023).

Athey, S. Beyond prediction: using big data for policy problems. Science 355 , 483–485 (2017).

del Rosario Martínez-Ordaz, R. Scientific understanding through big data: from ignorance to insights to understanding. Possibility Stud. Soc. 1 , 279–299 (2023).

Nussberger, A.-M., Luo, L., Celis, L. E. & Crockett, M. J. Public attitudes value interpretability but prioritize accuracy in artificial intelligence. Nat. Commun. 13 , 5821 (2022).

Zittrain, J. in The Cambridge Handbook of Responsible Artificial Intelligence: Interdisciplinary Perspectives (eds. Voeneky, S. et al.) 176–184 (Cambridge Univ. Press, 2022). This article articulates the epistemic risks of prioritizing predictive accuracy over explanatory understanding when AI tools are interacting in complex systems.

Shumailov, I. et al. The curse of recursion: training on generated data makes models forget. Preprint at arxiv.org/abs/2305.17493 (2023).

Latour, B. Science In Action: How to Follow Scientists and Engineers Through Society (Harvard Univ. Press, 1987). This book provides strategies and approaches for thinking about science as a social endeavour .

Franklin, S. Science as culture, cultures of science. Annu. Rev. Anthropol. 24 , 163–184 (1995).

Haraway, D. Situated knowledges: the science question in feminism and the privilege of partial perspective. Fem. Stud. 14 , 575–599 (1988). This article acknowledges that the objective ‘view from nowhere’ is unobtainable: knowledge, it argues, is always situated .

Harding, S. Objectivity and Diversity: Another Logic of Scientific Research (Univ. Chicago Press, 2015).

Longino, H. E. Science as Social Knowledge: Values and Objectivity in Scientific Inquiry (Princeton Univ. Press, 1990).

Daston, L. & Galison, P. Objectivity (Princeton Univ. Press, 2007). This book is a historical analysis of the shifting modes of ‘objectivity’ that scientists have pursued, arguing that objectivity is not a universal concept but that it shifts alongside scientific techniques and ambitions .

Prescod-Weinstein, C. Making Black women scientists under white empiricism: the racialization of epistemology in physics. Signs J. Women Cult. Soc. 45 , 421–447 (2020).

Mavhunga, C. What Do Science, Technology, and Innovation Mean From Africa? (MIT Press, 2017).

Schiebinger, L. The Mind Has No Sex? Women in the Origins of Modern Science (Harvard Univ. Press, 1991).

Martin, E. The egg and the sperm: how science has constructed a romance based on stereotypical male–female roles. Signs J. Women Cult. Soc. 16 , 485–501 (1991). This case study shows how assumptions about gender affect scientific theories, sometimes delaying the articulation of what might be considered to be more accurate descriptions of scientific phenomena .

Harding, S. Rethinking standpoint epistemology: What is “strong objectivity”? Centen. Rev. 36 , 437–470 (1992). In this article, Harding outlines her position on ‘strong objectivity’, by which clearly articulating one’s standpoint can lead to more robust knowledge claims .

Oreskes, N. Why Trust Science? (Princeton Univ. Press, 2019). This book introduces the reader to 20 years of scholarship in science and technology studies, arguing that the tools the discipline has for understanding science can help to reinstate public trust in the institution .

Rolin, K., Koskinen, I., Kuorikoski, J. & Reijula, S. Social and cognitive diversity in science: introduction. Synthese 202 , 36 (2023).

Hong, L. & Page, S. E. Problem solving by heterogeneous agents. J. Econ. Theory 97 , 123–163 (2001).

Sulik, J., Bahrami, B. & Deroy, O. The diversity gap: when diversity matters for knowledge. Perspect. Psychol. Sci. 17 , 752–767 (2022).

Lungeanu, A., Whalen, R., Wu, Y. J., DeChurch, L. A. & Contractor, N. S. Diversity, networks, and innovation: a text analytic approach to measuring expertise diversity. Netw. Sci. 11 , 36–64 (2023).

AlShebli, B. K., Rahwan, T. & Woon, W. L. The preeminence of ethnic diversity in scientific collaboration. Nat. Commun. 9 , 5163 (2018).

Campbell, L. G., Mehtani, S., Dozier, M. E. & Rinehart, J. Gender-heterogeneous working groups produce higher quality science. PLoS ONE 8 , e79147 (2013).

Nielsen, M. W., Bloch, C. W. & Schiebinger, L. Making gender diversity work for scientific discovery and innovation. Nat. Hum. Behav. 2 , 726–734 (2018).

Yang, Y., Tian, T. Y., Woodruff, T. K., Jones, B. F. & Uzzi, B. Gender-diverse teams produce more novel and higher-impact scientific ideas. Proc. Natl Acad. Sci. USA 119 , e2200841119 (2022).

Kozlowski, D., Larivière, V., Sugimoto, C. R. & Monroe-White, T. Intersectional inequalities in science. Proc. Natl Acad. Sci. USA 119 , e2113067119 (2022).

Fehr, C. & Jones, J. M. Culture, exploitation, and epistemic approaches to diversity. Synthese 200 , 465 (2022).

Nakadai, R., Nakawake, Y. & Shibasaki, S. AI language tools risk scientific diversity and innovation. Nat. Hum. Behav. 7 , 1804–1805 (2023).

National Academies of Sciences, Engineering, and Medicine et al. Advancing Antiracism, Diversity, Equity, and Inclusion in STEMM Organizations: Beyond Broadening Participation (National Academies Press, 2023).

Winner, L. Do artifacts have politics? Daedalus 109 , 121–136 (1980).

Eubanks, V. Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor (St. Martin’s Press, 2018).

Littmann, M. et al. Validity of machine learning in biology and medicine increased through collaborations across fields of expertise. Nat. Mach. Intell. 2 , 18–24 (2020).

Carusi, A. et al. Medical artificial intelligence is as much social as it is technological. Nat. Mach. Intell. 5 , 98–100 (2023).

Raghu, M. & Schmidt, E. A survey of deep learning for scientific discovery. Preprint at arxiv.org/abs/2003.11755 (2020).

Bishop, C. AI4Science to empower the fifth paradigm of scientific discovery. Microsoft Research Blog www.microsoft.com/en-us/research/blog/ai4science-to-empower-the-fifth-paradigm-of-scientific-discovery/ (2022).

Whittaker, M. The steep cost of capture. Interactions 28 , 50–55 (2021).

Liesenfeld, A., Lopez, A. & Dingemanse, M. Opening up ChatGPT: Tracking openness, transparency, and accountability in instruction-tuned text generators. In Proc. 5th International Conference on Conversational User Interfaces 1–6 (Association for Computing Machinery, 2023).

Chu, J. S. G. & Evans, J. A. Slowed canonical progress in large fields of science. Proc. Natl Acad. Sci. USA 118 , e2021636118 (2021).

Park, M., Leahey, E. & Funk, R. J. Papers and patents are becoming less disruptive over time. Nature 613 , 138–144 (2023).

Frith, U. Fast lane to slow science. Trends Cogn. Sci. 24 , 1–2 (2020). This article explains the epistemic risks of a hyperfocus on scientific productivity and explores possible avenues for incentivizing the production of higher-quality science on a slower timescale .

Stengers, I. Another Science is Possible: A Manifesto for Slow Science (Wiley, 2018).

Lake, B. M. & Baroni, M. Human-like systematic generalization through a meta-learning neural network. Nature 623 , 115–121 (2023).

Feinman, R. & Lake, B. M. Learning task-general representations with generative neuro-symbolic modeling. Preprint at arxiv.org/abs/2006.14448 (2021).

Schölkopf, B. et al. Toward causal representation learning. Proc. IEEE 109 , 612–634 (2021).

Mitchell, M. AI’s challenge of understanding the world. Science 382 , eadm8175 (2023).

Sartori, L. & Bocca, G. Minding the gap(s): public perceptions of AI and socio-technical imaginaries. AI Soc. 38 , 443–458 (2023).

Download references

Acknowledgements

We thank D. S. Bassett, W. J. Brady, S. Helmreich, S. Kapoor, T. Lombrozo, A. Narayanan, M. Salganik and A. J. te Velthuis for comments. We also thank C. Buckner and P. Winter for their feedback and suggestions.

Author information

These authors contributed equally: Lisa Messeri, M. J. Crockett

Authors and Affiliations

Department of Anthropology, Yale University, New Haven, CT, USA

Lisa Messeri

Department of Psychology, Princeton University, Princeton, NJ, USA

M. J. Crockett

University Center for Human Values, Princeton University, Princeton, NJ, USA


Contributions

The authors contributed equally to the research and writing of the paper.

Corresponding authors

Correspondence to Lisa Messeri or M. J. Crockett.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Peer review

Peer review information.

Nature thanks Cameron Buckner, Peter Winter and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.


About this article

Cite this article.

Messeri, L., Crockett, M.J. Artificial intelligence and illusions of understanding in scientific research. Nature 627, 49–58 (2024). https://doi.org/10.1038/s41586-024-07146-0


Received: 31 July 2023

Accepted: 31 January 2024

Published: 06 March 2024

Issue Date: 07 March 2024

DOI: https://doi.org/10.1038/s41586-024-07146-0


This article is cited by

AI is no substitute for having something to say

Nature Reviews Physics (2024)

Perché gli scienziati si fidano troppo dell'intelligenza artificiale - e come rimediare

Nature Italy (2024)

Why scientists trust AI too much — and what to do about it

Nature (2024)

By submitting a comment you agree to abide by our Terms and Community Guidelines . If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Sign up for the Nature Briefing newsletter — what matters in science, free to your inbox daily.

- Search for: Toggle Search

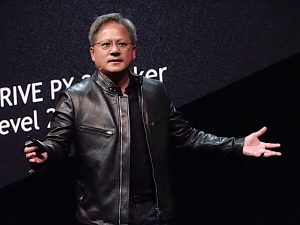

‘You Transformed the World,’ NVIDIA CEO Tells Researchers Behind Landmark AI Paper

Of GTC’s 900+ sessions, the most popular was a conversation hosted by NVIDIA founder and CEO Jensen Huang with seven of the authors of the legendary research paper that introduced the aptly named transformer, a neural network architecture that went on to change the deep learning landscape and enable today’s era of generative AI.

“Everything that we’re enjoying today can be traced back to that moment,” Huang said to a packed room with hundreds of attendees, who heard him speak with the authors of “Attention Is All You Need.”

Sharing the stage for the first time, the research luminaries reflected on the factors that led to their original paper, which has been cited more than 100,000 times since it was first published and presented at the NeurIPS AI conference. They also discussed their latest projects and offered insights into future directions for the field of generative AI.

While they started as Google researchers, the collaborators are now spread across the industry, most as founders of their own AI companies.

“We have a whole industry that is grateful for the work that you guys did,” Huang said.

Origins of the Transformer Model

The research team initially sought to overcome the limitations of recurrent neural networks, or RNNs, which were then the state of the art for processing language data.

Noam Shazeer, cofounder and CEO of Character.AI, compared RNNs to the steam engine and transformers to the improved efficiency of internal combustion.

“We could have done the industrial revolution on the steam engine, but it would just have been a pain,” he said. “Things went way, way better with internal combustion.”

“Now we’re just waiting for the fusion,” quipped Illia Polosukhin, cofounder of blockchain company NEAR Protocol.

The paper’s title came from a realization that attention mechanisms, the element of a neural network that lets it determine the relationships between different parts of its input data, were the most critical component of their model’s performance.

“We had very recently started throwing bits of the model away, just to see how much worse it would get. And to our surprise it started getting better,” said Llion Jones, cofounder and chief technology officer at Sakana AI.
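To make the idea of an attention mechanism concrete, here is a minimal sketch of scaled dot-product attention, the form used in “Attention Is All You Need.” The article itself gives no formula or code, so the function names, the pure-Python lists and the toy input below are illustrative assumptions rather than the authors’ implementation.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key, scaled by sqrt(dim).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Toy input (made-up numbers): three 2-dimensional token vectors
# attending to one another, i.e. self-attention.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
```

Each row of `result` is a blend of all three token vectors, weighted by how strongly that token's query matches each key. That blending is the sense in which attention relates different parts of the input to one another.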

Having a name as general as “transformers” spoke to the team’s ambitions to build AI models that could process and transform every data type — including text, images, audio, tensors and biological data.

“That North Star, it was there on day zero, and so it’s been really exciting and gratifying to watch that come to fruition,” said Aidan Gomez, cofounder and CEO of Cohere. “We’re actually seeing it happen now.”

Envisioning the Road Ahead

Adaptive computation, where a model adjusts how much computing power is used based on the complexity of a given problem, is a key factor the researchers see improving in future AI models.

“It’s really about spending the right amount of effort and ultimately energy on a given problem,” said Jakob Uszkoreit, cofounder and CEO of biological software company Inceptive. “You don’t want to spend too much on a problem that’s easy or too little on a problem that’s hard.”

A math problem like two plus two, for example, shouldn’t be run through a trillion-parameter transformer model — it should run on a basic calculator, the group agreed.
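The calculator example can be sketched as a simple router that sends trivial arithmetic to a cheap path and everything else to an expensive one. This is only an illustration of the "right amount of effort" idea at the system level; the researchers were discussing computation that adapts inside a model, and every name here (`answer`, `large_model`, the regular expression) is a hypothetical stand-in.

```python
import re

# Matches prompts such as "2 + 2" or "12*3": integer, operator, integer.
ARITHMETIC = re.compile(r"\s*(\d+)\s*([+\-*])\s*(\d+)\s*")

def calculator(a, op, b):
    """Cheap path: exact integer arithmetic."""
    if op == "+":
        return a + b
    if op == "-":
        return a - b
    return a * b

def large_model(prompt):
    """Hypothetical placeholder for an expensive model call."""
    return f"[large model answer to: {prompt}]"

def answer(prompt):
    """Return (answer, route), spending little effort on easy prompts."""
    match = ARITHMETIC.fullmatch(prompt)
    if match:
        a, op, b = match.groups()
        return str(calculator(int(a), op, int(b))), "calculator"
    return large_model(prompt), "large_model"

easy = answer("2 + 2")
hard = answer("Why is the sky blue?")
```

Here `easy` is routed to the calculator while `hard` falls through to the placeholder model. A real system would need a far more careful difficulty estimate than a regular expression.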

They’re also looking forward to the next generation of AI models.

“I think the world needs something better than the transformer,” said Gomez. “I think all of us here hope it gets succeeded by something that will carry us to a new plateau of performance.”

“You don’t want to miss these next 10 years,” Huang said. “Unbelievable new capabilities will be invented.”

The conversation concluded with Huang presenting each researcher with a framed cover plate of the NVIDIA DGX-1 AI supercomputer, signed with the message, “You transformed the world.”

There’s still time to catch the session replay by registering for a virtual GTC pass — it’s free.


Open-source AI models released by Tokyo lab Sakana founded by former Google researchers

By Anna Tong

SAN JOSE (Reuters) - Sakana AI, a Tokyo-based artificial intelligence startup founded by two prominent former Google researchers, released AI models on Wednesday it said were built using a novel method inspired by evolution, akin to breeding and natural selection.

Sakana AI employed a technique called "model merging", which combines existing AI models to yield a new model, and paired it with an approach inspired by evolution, leading to the creation of hundreds of model generations.

The most successful models from each generation were then identified, becoming the "parents" of the next generation.
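The merge-and-select loop described above can be sketched in a few lines. The report does not say how Sakana AI merges models or scores them, so this sketch assumes the simplest possible stand-ins: a "model" is a short list of weights, merging is linear interpolation between two parents, and `fitness` is a hypothetical score measuring closeness to a made-up target.

```python
import random

def merge(parent_a, parent_b, mix):
    """Blend two weight vectors; mix=0 gives parent_a, mix=1 gives parent_b."""
    return [(1 - mix) * a + mix * b for a, b in zip(parent_a, parent_b)]

def fitness(weights, target):
    """Hypothetical score: higher is better, 0 is a perfect match to target."""
    return -sum((w - t) ** 2 for w, t in zip(weights, target))

def evolve(population, target, generations=20, keep=2, children=8, seed=0):
    rng = random.Random(seed)
    for _ in range(generations):
        # The most successful models become the parents of the next generation.
        parents = sorted(population, key=lambda m: fitness(m, target),
                         reverse=True)[:keep]
        offspring = [merge(*rng.sample(parents, 2), rng.random())
                     for _ in range(children)]
        # Parents survive alongside their offspring, so the best score never drops.
        population = parents + offspring
    return max(population, key=lambda m: fitness(m, target))

# Made-up starting models and target, for illustration only.
start = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
target = [0.5, 0.5, 0.5]
best = evolve(start, target)
```

Because each generation keeps its parents, the best model found can only match or improve on the starting population. Merging real language models involves much larger parameter sets and task-based evaluation, which this toy does not attempt to represent.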

The company is releasing three Japanese-language models, two of which are being open-sourced, Sakana AI founder David Ha told Reuters in online remarks from Tokyo.

The company's founders are former Google researchers Ha and Llion Jones.

Jones is an author on Google's 2017 research paper "Attention Is All You Need", which introduced the "transformer" deep learning architecture that formed the basis for viral chatbot ChatGPT, leading to the race to develop products powered by generative AI.

Ha was previously the head of research at Stability AI and a Google Brain researcher.

All the authors of the ground-breaking Google paper have since left the organisation.

Venture investors have poured millions of dollars in funding into their new ventures, such as AI chatbot startup Character.AI run by Noam Shazeer, and the large language model startup Cohere founded by Aidan Gomez.

Sakana AI seeks to put the Japanese capital on the map as an AI hub, just as OpenAI did for San Francisco and the company DeepMind did for London earlier. In January Sakana AI said it had raised $30 million in seed financing led by Lux Capital.

(Reporting by Anna Tong in San Jose; Editing by Clarence Fernandez)


Title: Polaris: A Safety-focused LLM Constellation Architecture for Healthcare

Abstract: We develop Polaris, the first safety-focused LLM constellation for real-time patient-AI healthcare conversations. Unlike prior LLM works in healthcare focusing on tasks like question answering, our work specifically focuses on long multi-turn voice conversations. Our one-trillion parameter constellation system is composed of several multibillion parameter LLMs as co-operative agents: a stateful primary agent that focuses on driving an engaging conversation and several specialist support agents focused on healthcare tasks performed by nurses to increase safety and reduce hallucinations. We develop a sophisticated training protocol for iterative co-training of the agents that optimize for diverse objectives. We train our models on proprietary data, clinical care plans, healthcare regulatory documents, medical manuals, and other medical reasoning documents. We align our models to speak like medical professionals, using organic healthcare conversations and simulated ones between patient actors and experienced nurses. This allows our system to express unique capabilities such as rapport building, trust building, empathy and bedside manner. Finally, we present the first comprehensive clinician evaluation of an LLM system for healthcare. We recruited over 1100 U.S. licensed nurses and over 130 U.S. licensed physicians to perform end-to-end conversational evaluations of our system by posing as patients and rating the system on several measures. We demonstrate Polaris performs on par with human nurses on aggregate across dimensions such as medical safety, clinical readiness, conversational quality, and bedside manner. Additionally, we conduct a challenging task-based evaluation of the individual specialist support agents, where we demonstrate our LLM agents significantly outperform a much larger general-purpose LLM (GPT-4) as well as from its own medium-size class (LLaMA-2 70B).


UPDATED 18:04 EDT / MARCH 17 2024

Elon Musk’s xAI releases Grok-1 architecture, while Apple advances multimodal AI research

by Duncan Riley

The Elon Musk-run artificial intelligence startup xAI Corp. today released the weights and architecture of its Grok-1 large language model as open source code, shortly after Apple Inc. published a paper describing its own work on multimodal LLMs.

Musk first said that xAI would release Grok as open source on March 11, but the release today of the base model and weights, fundamental components of how the model works, makes this the company’s first open-source release.

What has been released includes part of the network architecture, that is, Grok’s structural design: how layers and nodes are arranged and interconnected to process data. Base model weights are the parameters within a given model’s architecture that have been adjusted during training, encoding the learned information and determining how input data is transformed into output.

Grok-1 is a 314 billion parameter “Mixture-of-Experts” model trained from scratch by xAI. A Mixture-of-Experts model is a machine learning approach that combines the outputs of multiple specialized sub-models, also known as experts, to make a final prediction, optimizing for diverse tasks or data subsets by leveraging the expertise of each individual model.
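The idea of combining expert outputs can be illustrated with a minimal sketch. It is not Grok-1's implementation: the function names, the linear gate, and the `top_k` routing parameter are all assumptions chosen for illustration, and the "experts" here are plain Python functions rather than neural sub-networks.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical type: an expert maps an input vector to a single number.
Expert = Callable[[Sequence[float]], float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw gate scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_predict(
    x: Sequence[float],
    experts: Sequence[Expert],
    gate_weights: Sequence[Sequence[float]],
    top_k: int = 2,
) -> float:
    """Weighted combination of the top_k experts chosen by a linear gate.

    gate_weights[i] is an assumed per-expert weight vector; the gate score
    for expert i is the dot product of gate_weights[i] and x.
    """
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Route the input to the top_k highest-scoring experts only.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in chosen])
    # The final prediction is the gate-weighted sum of the chosen experts' outputs.
    return sum(w * experts[i](x) for w, i in zip(weights, chosen))


# Toy usage: three constant "experts"; the gate favours the first two equally.
toy_experts = [lambda x: 1.0, lambda x: 3.0, lambda x: 100.0]
toy_gate = [[1.0, 0.0], [1.0, 0.0], [-5.0, 0.0]]
toy_output = moe_predict([1.0, 0.0], toy_experts, toy_gate, top_k=2)
```

Routing each input to only a few experts is one common design choice, since it keeps the compute per input well below what running every expert would cost; the article does not say how Grok-1 routes its inputs.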

The release is the raw base model checkpoint from the Grok-1 pre-training phase, which concluded in October 2023. According to the company, “this means that the model is not fine-tuned for any specific application, such as dialogue.” No further information was provided in what was only a brief blog post.

Musk revealed in July that he had founded xAI and that it will compete against AI services from companies such as Google LLC and OpenAI. The company’s first model, Grok, was claimed by xAI to have been modeled after Douglas Adams’ classic book “The Hitchhiker’s Guide to the Galaxy” and is “intended to answer almost anything and, far harder, even suggest what questions to ask!”

Meanwhile, Apple, the company Steve Jobs built, quietly published a paper Thursday describing its work on MM1, a set of multimodal LLMs for captioning images, answering visual questions, and natural language inference.

Thurrott reported today that the paper describes MM1 as a family of multimodal models that support up to 30 billion parameters and “achieve competitive performance after supervised fine-tuning on a range of established multimodal benchmarks.” The researchers also claim that multimodal large language models have emerged as “the next frontier in foundation models” after traditional LLMs and they “achieve superior capabilities.”

A multimodal LLM is an AI system capable of understanding and generating responses across multiple types of data, such as text, images and audio, integrating diverse forms of information to perform complex tasks. The Apple researchers believe that their model delivers a breakthrough that will help others scale these models into larger sets of data with better performance and reliability.

Apple’s previous work on multimodal LLMs includes Ferret, a model that was quietly open-sourced in October before being noticed in December.

Image: DALL-E 3

A message from john furrier, co-founder of siliconangle:, your vote of support is important to us and it helps us keep the content free., one click below supports our mission to provide free, deep, and relevant content. , join our community on youtube, join the community that includes more than 15,000 #cubealumni experts, including amazon.com ceo andy jassy, dell technologies founder and ceo michael dell, intel ceo pat gelsinger, and many more luminaries and experts..

Like Free Content? Subscribe to follow.

LATEST STORIES

Blackwell: Nvidia's GPU architecture to power new generation of trillion-parameter generative AI models

Figure Markets raises $60M to build crypto ‘everything marketplace’

Report: Apple in talks with Google to use Google Gemini AI model on iPhone

Deloitte introduces CyberSphere to enhance cyber operational efficiency with automation and AI

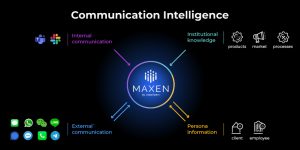

LeapXpert introduces 'Maxen' to transform client relationships with AI

Nvidia's GTC conference is on deck. What should you expect?

INFRA - BY MIKE WHEATLEY . 17 MINS AGO

BLOCKCHAIN - BY KYT DOTSON . 5 HOURS AGO

AI - BY KYT DOTSON . 6 HOURS AGO

SECURITY - BY DUNCAN RILEY . 8 HOURS AGO

AI - BY DUNCAN RILEY . 15 HOURS AGO

AI - BY ZEUS KERRAVALA . 22 HOURS AGO

NVIDIA DGX B200

The foundation for your AI center of excellence.


Revolutionary Performance Backed by Evolutionary Innovation

NVIDIA DGX™ B200 is a unified AI platform for develop-to-deploy pipelines for businesses of any size at any stage in their AI journey. Equipped with eight NVIDIA Blackwell GPUs interconnected with fifth-generation NVIDIA® NVLink®, DGX B200 delivers leading-edge performance, offering 3X the training performance and 15X the inference performance of previous generations. Leveraging the NVIDIA Blackwell GPU architecture, DGX B200 can handle diverse workloads—including large language models, recommender systems, and chatbots—making it ideal for businesses looking to accelerate their AI transformation.

AI Infrastructure for Any Workload

Learn how DGX B200 enables enterprises to accelerate any AI workload, from data prep to training to inference.

Examples of Successful Enterprise Deployments

Read how the NVIDIA DGX platform and NVIDIA NeMo™ have empowered leading enterprises.

A Unified AI Platform

One platform for develop-to-deploy pipelines.

Enterprises require massive amounts of compute power to handle complex AI datasets at every stage of the AI pipeline, from training to fine-tuning to inference. With NVIDIA DGX B200, enterprises can arm their developers with a single platform built to accelerate their workflows.

Powerhouse of AI Performance

Powered by the NVIDIA Blackwell architecture’s advancements in computing, DGX B200 delivers 3X the training performance and 15X the inference performance of DGX H100. As the foundation of NVIDIA DGX BasePOD™ and NVIDIA DGX SuperPOD™, DGX B200 delivers leading-edge performance for any workload.

Proven Infrastructure Standard

DGX B200 is a fully optimized hardware and software platform that includes the complete NVIDIA AI software stack, including NVIDIA Base Command and NVIDIA AI Enterprise software, a rich ecosystem of third-party support, and access to expert advice from NVIDIA professional services.

Next-Generation Performance Powered by DGX B200

Real time large language model inference.

Projected performance subject to change. Token-to-token latency (TTL) = 50ms real time, first token latency (FTL) = 5s, input sequence length = 32,768, output sequence length = 1,028, 8x eight-way DGX H100 GPUs air-cooled vs. 1x eight-way DGX B200 air-cooled, per GPU performance comparison.
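To make these benchmark parameters concrete, the sketch below estimates how long one full response would take for a single user under the stated settings. It assumes a simple timing model that the page does not spell out: the first token arrives after the first token latency, and every later token arrives one token-to-token latency after the previous one. The function name is hypothetical.

```python
def response_time_s(ftl_s: float, ttl_s: float, output_tokens: int) -> float:
    """Estimated seconds to stream a full response to one user.

    Assumed model: first token after ftl_s, then one token every ttl_s.
    """
    if output_tokens < 1:
        raise ValueError("output_tokens must be at least 1")
    return ftl_s + (output_tokens - 1) * ttl_s


# Settings quoted in the footnote: FTL = 5 s, TTL = 50 ms, 1,028 output tokens.
full_response = response_time_s(ftl_s=5.0, ttl_s=0.050, output_tokens=1028)

# Steady-state streaming rate seen by one user once generation has started.
tokens_per_second = 1.0 / 0.050
```

Under these assumptions a full response takes a little under a minute, streamed at about 20 tokens per second after the first token appears.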

Supercharged AI Training Performance

Projected performance subject to change. 32,768 GPU scale, 4,096x eight-way DGX H100 air-cooled cluster: 400G IB network, 4,096x 8-way DGX B200 air-cooled cluster: 400G IB network.

Accelerated Data Processing

Projected performance subject to change. Database join query with Snappy / Deflate compression derived from TPC-H Q4 query. 1x x86, 1x H100 GPU, and 1x Blackwell single GPU.

Specifications

NVIDIA DGX B200 Specifications

Delivering Supercomputing to Every Enterprise: NVIDIA DGX SuperPOD

NVIDIA DGX SuperPOD™ with DGX B200 systems enables leading enterprises to deploy large-scale, turnkey infrastructure backed by NVIDIA’s AI expertise.

Maximize the Value of the DGX Platform

NVIDIA Enterprise Services provide support, education, and professional services for your DGX infrastructure. With NVIDIA experts available at every step of your AI journey, Enterprise Services can help you get your projects up and running quickly and successfully.

Receive an Exclusive Training Offer as a DGX Customer

Learn how to achieve cutting-edge breakthroughs with AI faster through special technical training, offered expressly to DGX customers by the AI experts at the NVIDIA Deep Learning Institute (DLI).

Take the Next Steps

Get the NVIDIA DGX Platform

The DGX platform is made up of a wide variety of products and services to fit the needs of every AI enterprise.

Discover the Benefits of the NVIDIA DGX Platform

NVIDIA DGX is the proven standard on which enterprise AI is built.

Need Help Selecting the Right Product or Partner?

Talk to an NVIDIA product specialist about your professional needs.


Building Meta’s GenAI Infrastructure

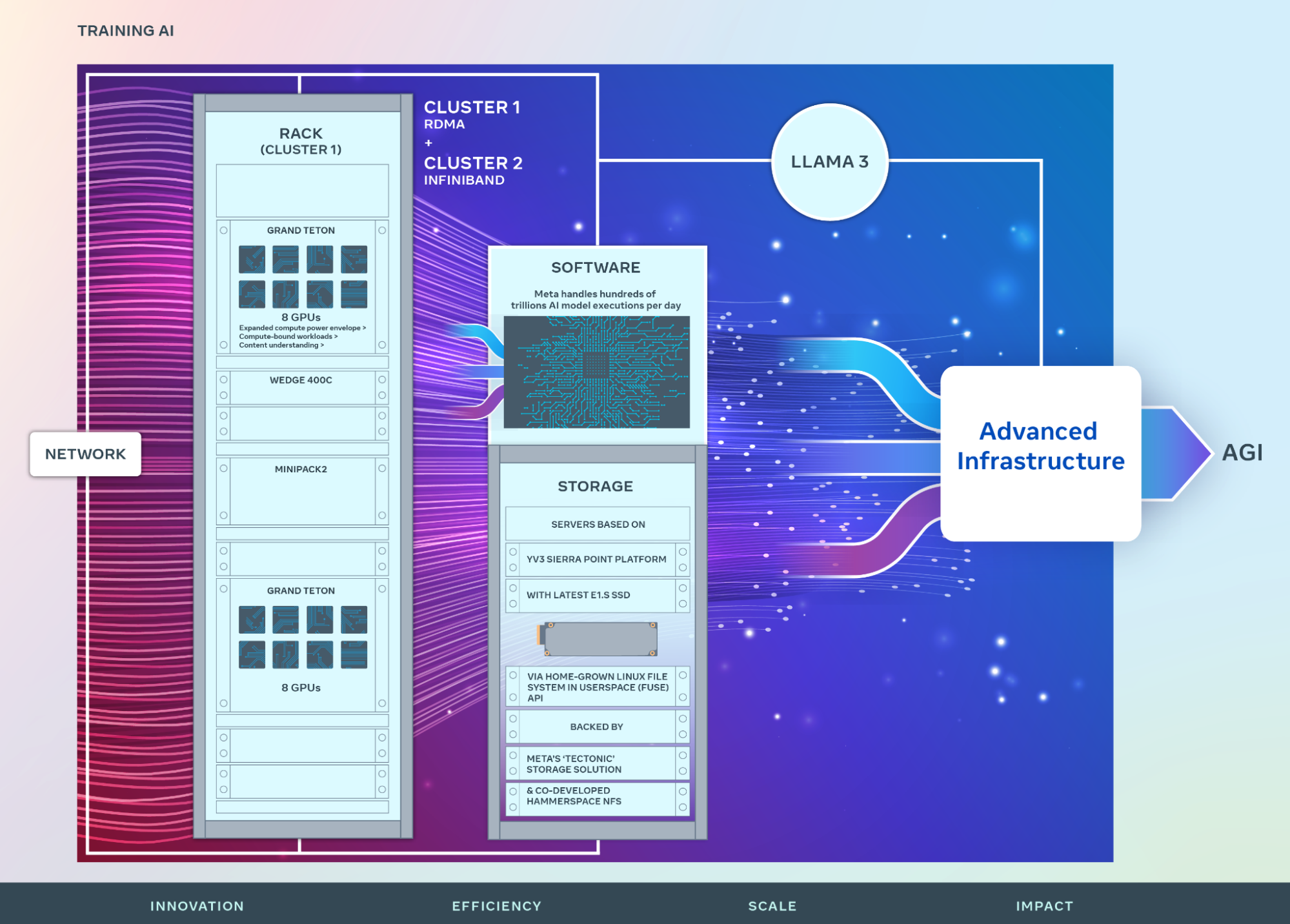

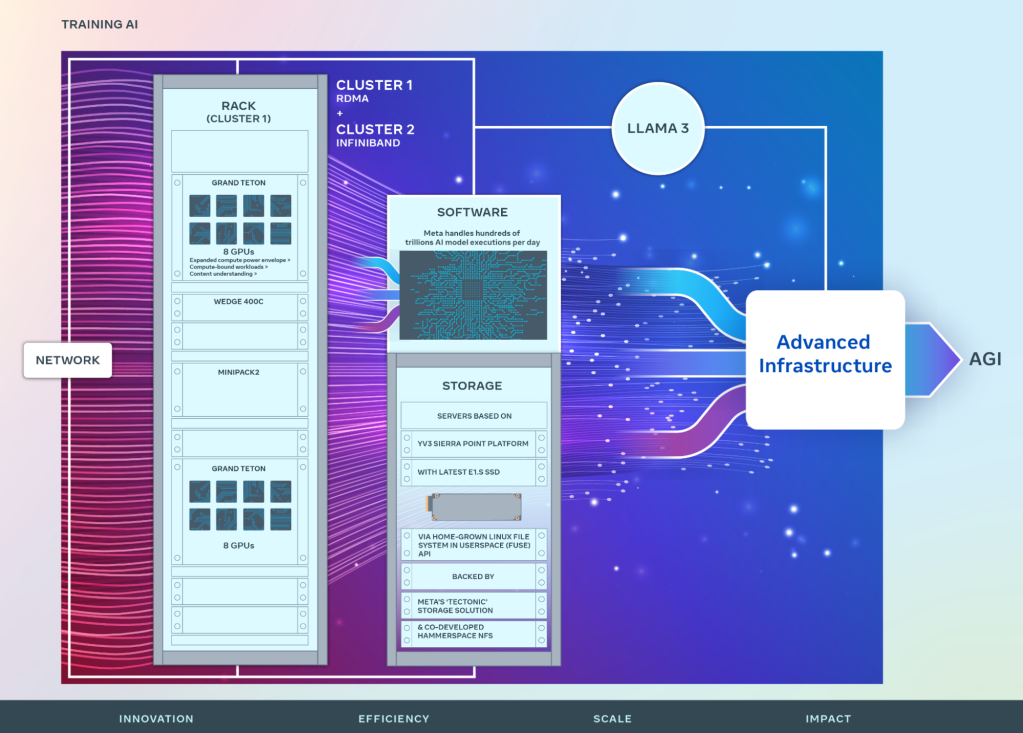

- Marking a major investment in Meta’s AI future, we are announcing two 24k GPU clusters. We are sharing details on the hardware, network, storage, design, performance, and software that help us extract high throughput and reliability for various AI workloads. We use this cluster design for Llama 3 training.

- We are strongly committed to open compute and open source. We built these clusters on top of Grand Teton, OpenRack, and PyTorch and continue to push open innovation across the industry.

- This announcement is one step in our ambitious infrastructure roadmap. By the end of 2024, we’re aiming to continue to grow our infrastructure build-out that will include 350,000 NVIDIA H100 GPUs as part of a portfolio that will feature compute power equivalent to nearly 600,000 H100s.

To lead in developing AI means leading investments in hardware infrastructure. Hardware infrastructure plays an important role in AI’s future. Today, we’re sharing details on two versions of our 24,576-GPU data center scale cluster at Meta. These clusters support our current and next generation AI models, including Llama 3, the successor to Llama 2, our publicly released LLM, as well as AI research and development across GenAI and other areas.

A peek into Meta’s large-scale AI clusters

Meta’s long-term vision is to build artificial general intelligence (AGI) that is open and built responsibly so that it can be widely available for everyone to benefit from. As we work towards AGI, we have also worked on scaling our clusters to power this ambition. The progress we make towards AGI creates new products, new AI features for our family of apps, and new AI-centric computing devices.

While we’ve had a long history of building AI infrastructure, we first shared details on our AI Research SuperCluster (RSC), featuring 16,000 NVIDIA A100 GPUs, in 2022. RSC has accelerated our open and responsible AI research by helping us build our first generation of advanced AI models. It played and continues to play an important role in the development of Llama and Llama 2, as well as advanced AI models for applications ranging from computer vision, NLP, and speech recognition, to image generation, and even coding.

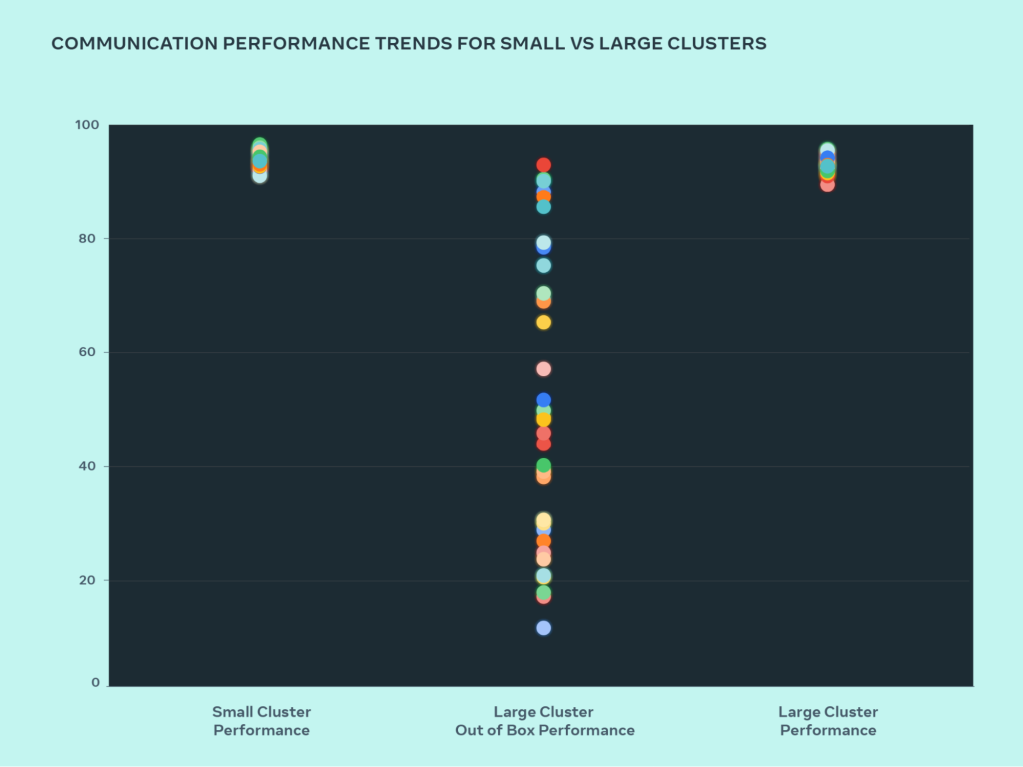

Under the hood