U.S. Food and Drug Administration

iMRMC: Software to do Multi-reader Multi-case Statistical Analysis of Reader Studies

Catalog of Regulatory Science Tools to Help Assess New Medical Devices

Technical Description

The primary objective of the iMRMC statistical software is to assist investigators with analyzing and sizing multi-reader multi-case (MRMC) reader studies that compare the difference in the area under Receiver Operating Characteristic curves (AUCs) from two modalities. The iMRMC application is a software package that includes simulation tools to characterize bias and variance of the MRMC variance estimates.

The core elements of this application include the ability to perform MRMC variance analysis and the ability to size an MRMC trial.

- The core iMRMC application is a stand-alone, precompiled, license-free Java application, distributed together with its source code. It can be used in GUI mode or on the command line.

- There is also an R package that utilizes the core Java application. Examples for using the programs can be found in the R help files.

- Additional functionality of the GitHub package includes an example to guide users on how to perform a noninferiority study using the iMRMC R package.

The software can analyze arbitrary study designs, including designs that are not "fully-crossed."

Intended Purpose

The iMRMC package analyzes data from Multiple Readers and Multiple Cases (MRMC) studies, which are often imaging studies where clinicians (readers) evaluate patient images (cases). The MRMC methods apply to any scenario in which clinicians interpret data to make clinical decisions. The iMRMC package calculates the reader-averaged area under the receiver operating characteristic curve: the AUC of the ROC curve. AUC is a diagnostic performance measure. Additional functions analyze other endpoints (binary performance and score differences). This package also estimates variances, confidence intervals and p-values. These uncertainty characteristics are needed for hypothesis tests to size and assess the efficacy of diagnostic imaging devices and computer aids (artificial intelligence).

The analysis is important because imaging studies are often designed so that every reader reads every case in all modalities (a fully-crossed study). In this case, the data are cross-correlated, and the readers and cases are treated as cross-correlated random effects. An MRMC analysis accounts for the variability and correlations from the readers and cases when estimating variances, confidence intervals, and p-values. The functions in this package can treat arbitrary study designs and studies with missing data, not just fully-crossed study designs.
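As a rough illustration of the reader-averaged AUC described above, the following Python sketch computes an empirical (Mann-Whitney) AUC for each reader and averages the results. It is not the iMRMC implementation or its API: the function names, the data layout, and the toy scores are all hypothetical, and the sketch does not attempt the MRMC variance estimation that is the package's main purpose.

```python
from statistics import mean


def empirical_auc(neg_scores, pos_scores):
    """Mann-Whitney AUC estimate: P(pos > neg) + 0.5 * P(pos == neg)."""
    total = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                total += 1.0
            elif p == n:
                total += 0.5
    return total / (len(pos_scores) * len(neg_scores))


def reader_averaged_auc(scores_by_reader, truth):
    """Average the per-reader empirical AUCs.

    scores_by_reader: {reader: {case: score}} (hypothetical layout)
    truth: {case: 0 or 1}, where 1 means disease present
    Each reader's AUC uses only the cases that reader scored.
    """
    per_reader = {}
    for reader, scores in scores_by_reader.items():
        neg = [s for case, s in scores.items() if truth[case] == 0]
        pos = [s for case, s in scores.items() if truth[case] == 1]
        per_reader[reader] = empirical_auc(neg, pos)
    return mean(list(per_reader.values())), per_reader


# Hypothetical toy data: two readers, two non-diseased and two diseased cases.
truth = {"c1": 0, "c2": 0, "c3": 1, "c4": 1}
scores_by_reader = {
    "r1": {"c1": 1, "c2": 2, "c3": 3, "c4": 4},
    "r2": {"c1": 1, "c2": 3, "c3": 2, "c4": 4},
}
avg_auc, per_reader = reader_averaged_auc(scores_by_reader, truth)
print(per_reader, avg_auc)
```

The point estimate is the easy part. The uncertainty of this average depends on correlations across both readers and cases, which is why a dedicated MRMC analysis is needed.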

The methods in the iMRMC package are not standard. The package permits industry statisticians to use a validated statistical analysis method without having to develop and validate it themselves.

Related FDA Product Codes

The FDA product codes this tool is applicable to include, but are not limited to:

- KPS: System, Tomography, Computed, Emission

- LLZ: System, Image Processing, Radiological

- PAA: Automated Breast Ultrasound

- POK: Computer-Assisted Diagnostic Software For Lesions Suspicious For Cancer

- QDQ: Radiological Computer Assisted Detection/Diagnosis Software For Lesions Suspicious For Cancer

- QPN: Software Algorithm Device To Assist Users In Digital Pathology

- QNP: Gastrointestinal lesion software detection system

The tool has been characterized through simulations (bias and variance of the estimates) and has been compared with other methods as appropriate for the task.

The following peer-reviewed research includes the detailed verification methods and results:

- Study that uses the software and related research methods and study designs in a large study. Supplementary materials include data and scripts to reproduce study results.

- Original description of method and validation with simulations. Results comparable to jackknife resampling technique.

- Generalize method to binary performance measures.

- Provide framework for understanding method and comparing to other methods analytically and with simulations.

- Gallas, B. D., & Brown, D. G. (2008). Reader studies for validation of CAD systems. Neural Networks Special Conference Issue, 21 (2), 387–397. https://doi.org/10.1016/j.neunet.2007.12.013

Limitations

Currently, the tool can produce negative variance estimates if the relevant dataset is small.
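One general way a variance estimate can turn negative is when an unbiased estimator is built as a difference of terms, so that sampling noise in a small dataset pushes the difference below zero. The Python sketch below shows this with a deliberately simpler analogue: the method-of-moments estimate of the between-group variance in a balanced one-way random-effects layout. It is not the iMRMC estimator, and the function name and data are hypothetical.

```python
from statistics import mean


def between_group_variance_estimate(groups):
    """Unbiased ANOVA estimate of between-group variance: (MSB - MSW) / n.

    groups: list of equally sized lists of observations (balanced design).
    The estimate is a difference of two mean squares, so it can be negative.
    """
    k = len(groups)
    n = len(groups[0])
    if any(len(g) != n for g in groups):
        raise ValueError("this sketch assumes a balanced design")
    group_means = [mean(g) for g in groups]
    grand_mean = mean(group_means)
    # Mean square between groups.
    msb = n * sum((m - grand_mean) ** 2 for m in group_means) / (k - 1)
    # Mean square within groups.
    msw = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    ) / (k * (n - 1))
    return (msb - msw) / n


# Hypothetical small sample: identical group means, nonzero within-group spread.
small_sample = [[1.0, 5.0], [2.0, 4.0], [3.0, 3.0]]
estimate = between_group_variance_estimate(small_sample)
print(estimate)  # negative, although a true variance cannot be
```

With more groups and more observations per group, the two mean squares are estimated more precisely and a negative difference becomes less likely.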

Supporting Documentation

Tool websites:

- Primary: https://github.com/DIDSR/iMRMC

- Secondary: https://cran.r-project.org/web/packages/iMRMC/index.html

User manual for Java app

- http://didsr.github.io/iMRMC/000_iMRMC/userManualPDF/iMRMCuserManual.pdf

User manual for R package

- https://cran.r-project.org/web/packages/iMRMC/iMRMC.pdf

- https://github.com/DIDSR/iMRMC/wiki/iMRMC-FAQ

Supplementary materials

- Data and scripts to reproduce results for manuscripts that use iMRMC

- https://github.com/DIDSR/iMRMC/wiki/iMRMC-Datasets

Related Work

- Chen, W., Gong, Q., Gallas, B.D. (2018). Paired split-plot designs of multireader multicase studies. Journal of Medical Imaging 5, 031410. https://doi.org/10.1117/1.JMI.5.3.031410

- Obuchowski, N.A., Gallas, B.D., Hillis, S.L. (2012). Multi-Reader ROC studies with Split-Plot Designs: A Comparison of Statistical Methods. Acad Radiol 19, 1508–1517. https://doi.org/10.1016/j.acra.2012.09.012

- Gallas, B.D., Chan, H.-P., D’Orsi, C.J., Dodd, L.E., Giger, M.L., Gur, D., Krupinski, E.A., Metz, C.E., Myers, K.J., Obuchowski, N.A., Sahiner, B., Toledano, A.Y., Zuley, M.L. (2012). Evaluating imaging and computer-aided detection and diagnosis devices at the FDA. Acad Radiol 19, 463–477. https://doi.org/10.1016/j.acra.2011.12.016

- Gallas, B. D., & Hillis, S. L. (2014). Generalized Roe and Metz ROC model: Analytic link between simulated decision scores and empirical AUC variances and covariances. J Med Img, 1 (3), 031006. https://doi.org/doi:10.1117/1.JMI.1.3.031006

Tool Reference

In addition to citing relevant publications please reference the use of this tool using DOI: 10.5281/zenodo.8383591

For more information

- Catalog of Regulatory Science Tools to Help Assess New Medical Devices

Open Access

Peer-reviewed

Research Article

Multi-Reader Multi-Case Studies Using the Area under the Receiver Operator Characteristic Curve as a Measure of Diagnostic Accuracy: Systematic Review with a Focus on Quality of Data Reporting

Affiliation Department of Radiology, Prince of Songkla University, Hat Yai, Thailand

Affiliation Centre for Medical Imaging, University College London, London, United Kingdom

Affiliation Nuffield Department of Primary Care Health Sciences, Oxford University, Oxford, United Kingdom

Affiliation Centre for Statistics in Medicine, Wolfson College, Oxford University, Oxford, United Kingdom

- Thaworn Dendumrongsup,

- Andrew A. Plumb,

- Steve Halligan,

- Thomas R. Fanshawe,

- Douglas G. Altman,

- Susan Mallett

- Published: December 26, 2014

- https://doi.org/10.1371/journal.pone.0116018

Introduction

We examined the design, analysis and reporting in multi-reader multi-case (MRMC) research studies using the area under the receiver-operating curve (ROC AUC) as a measure of diagnostic performance.

We performed a systematic literature review from 2005 to 2013 inclusive to identify a minimum of 50 studies. Articles of diagnostic test accuracy in humans were identified via their citation of key methodological articles dealing with MRMC ROC AUC. Two researchers in consensus then extracted information from primary articles relating to study characteristics and design, methods for reporting study outcomes, model fitting, model assumptions, presentation of results, and interpretation of findings. Results were summarized and presented with a descriptive analysis.

Sixty-four full papers were retrieved from 475 identified citations and ultimately 49 articles describing 51 studies were reviewed and extracted. Radiological imaging was the index test in all. Most studies focused on lesion detection rather than characterization and used fewer than 10 readers. Only 6 (12%) studies trained readers in advance to use the confidence scale used to build the ROC curve. Overall, description of confidence scores, the ROC curve and its analysis was often incomplete. For example, 21 (41%) studies presented no ROC curve and only 3 (6%) described the distribution of confidence scores. Of 30 studies presenting curves, only 4 (13%) presented the data points underlying the curve, thereby allowing assessment of extrapolation. The mean change in AUC was 0.05 (−0.05 to 0.28). Non-significant change in AUC was attributed to underpowering rather than the diagnostic test failing to improve diagnostic accuracy.

Conclusions

Data reporting in MRMC studies using ROC AUC as an outcome measure is frequently incomplete, hampering understanding of methods and the reliability of results and study conclusions. Authors using this analysis should be encouraged to provide a full description of their methods and results.

Citation: Dendumrongsup T, Plumb AA, Halligan S, Fanshawe TR, Altman DG, Mallett S (2014) Multi-Reader Multi-Case Studies Using the Area under the Receiver Operator Characteristic Curve as a Measure of Diagnostic Accuracy: Systematic Review with a Focus on Quality of Data Reporting. PLoS ONE 9(12): e116018. https://doi.org/10.1371/journal.pone.0116018

Editor: Delphine Sophie Courvoisier, University of Geneva, Switzerland

Received: September 23, 2014; Accepted: December 2, 2014; Published: December 26, 2014

Copyright: © 2014 Dendumrongsup et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: The authors confirm that all data underlying the findings are fully available without restriction. All relevant data are within the paper and its Supporting Information files.

Funding: This work was supported by the UK National Institute for Health Research (NIHR) under its Programme Grants for Applied Research funding scheme (RP-PG-0407-10338). The funder had no role in the design, execution, analysis, reporting, or decision to submit for publication.

Competing interests: The authors have declared that no competing interests exist.

The receiver operator characteristic (ROC) curve describes a plot of sensitivity versus 1-specificity for a diagnostic test, across the whole range of possible diagnostic thresholds [1] . The area under the ROC curve (ROC AUC) is a well-recognised single measure that is often used to combine elements of both sensitivity and specificity, sometimes replacing these two measures. ROC AUC is often used to describe the diagnostic performance of radiological tests, either to compare the performance of different tests or the same test under different circumstances [2] , [3] . Radiological tests must be interpreted by human observers and a common study design uses multiple readers to interpret multiple image cases; the multi-reader multi-case (MRMC) design [4] . The MRMC design is popular because once a radiologist has viewed 20 cases there is less information to be gained by asking him to view a further 20 than by asking a different radiologist to view the same 20. This procedure enhances the generalisability of study results and having multiple readers interpret multiple cases enhances statistical power. Because multiple radiologists view the same cases, “clustering” occurs. For example, small lesions are generally seen less frequently than larger lesions, i.e. reader observations are clustered within cases. Similarly, more experienced readers are likely to perform better across a series of cases than less experienced readers, i.e. results are correlated within readers. Bootstrap resampling and multilevel modeling can account for clustering, linking results from the same observers and cases, so that 95% confidence intervals are not too narrow. MRMC studies using ROC AUC as the primary outcome are often required by regulatory bodies for the licensing of new radiological devices [5] .
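To make the clustering point concrete, the Python sketch below bootstraps readers and cases together, so that all scores belonging to a resampled reader or case move as a unit. It is a minimal illustration under stated assumptions, not the procedure of any study or software cited here: the data layout, the function names, the separate resampling of diseased and non-diseased cases, and the simple percentile interval are all choices made for this example.

```python
import random
from statistics import mean


def empirical_auc(neg_scores, pos_scores):
    """Mann-Whitney AUC estimate with ties counted as one half."""
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos_scores
        for n in neg_scores
    )
    return wins / (len(pos_scores) * len(neg_scores))


def reader_averaged_auc(scores, readers, neg_cases, pos_cases):
    """scores[reader][case] holds that reader's confidence score for the case."""
    return mean(
        empirical_auc(
            [scores[r][c] for c in neg_cases],
            [scores[r][c] for c in pos_cases],
        )
        for r in readers
    )


def bootstrap_ci(scores, neg_cases, pos_cases, n_boot=500, alpha=0.05, seed=0):
    """Percentile interval from resampling readers and cases with replacement."""
    rng = random.Random(seed)
    readers = list(scores)
    stats = []
    for _ in range(n_boot):
        r_star = rng.choices(readers, k=len(readers))
        n_star = rng.choices(neg_cases, k=len(neg_cases))
        p_star = rng.choices(pos_cases, k=len(pos_cases))
        stats.append(reader_averaged_auc(scores, r_star, n_star, p_star))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lower, upper


# Hypothetical fully-crossed toy data: 3 readers, 4 non-diseased, 4 diseased cases.
neg_cases = ["n1", "n2", "n3", "n4"]
pos_cases = ["p1", "p2", "p3", "p4"]
scores = {
    "r1": {"n1": 1, "n2": 2, "n3": 2, "n4": 3, "p1": 3, "p2": 4, "p3": 5, "p4": 2},
    "r2": {"n1": 2, "n2": 1, "n3": 3, "n4": 3, "p1": 4, "p2": 4, "p3": 5, "p4": 3},
    "r3": {"n1": 1, "n2": 1, "n3": 2, "n4": 4, "p1": 2, "p2": 5, "p3": 4, "p4": 3},
}
point = reader_averaged_auc(scores, list(scores), neg_cases, pos_cases)
ci = bootstrap_ci(scores, neg_cases, pos_cases)
print(point, ci)
```

Resampling only individual scores, as if every reading were independent, would ignore these links and tend to produce intervals that are too narrow.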

We attempted to use ROC AUC as the primary outcome measure in a prior MRMC study of computer-assisted detection (CAD) for CT colonography [6] . However, we encountered several difficulties when trying to implement this approach, described in detail elsewhere [7] . Many of these difficulties were related to issues implementing confidence scores in a transparent and reliable fashion, which led ultimately to a flawed analysis. We considered, therefore, that for ROC AUC to be a valid measure there are methodological components that need addressing in study design, data collection and analysis, and interpretation. Based on our attempts to implement the MRMC ROC AUC analysis, we were interested in whether other researchers have encountered similar hurdles and, if so, how these issues were tackled.

In order to investigate how often other studies have addressed and reported on methodological issues with implementing ROC AUC, we performed a systematic review of MRMC studies using ROC AUC as an outcome measure. We searched and investigated the available literature with the objective of describing the statistical methods used and the completeness of data presentation, and of investigating whether any problems with analysis were encountered and reported.

Ethics statement

Ethical approval is not required by our institutions for research studies of published data.

Search strategy, inclusion and exclusion criteria

This systematic review was performed guided by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses [8] . We developed an extraction sheet for the systematic review, broken down into different sections (used as subheadings for the Results section of this report), with notes relating to each individual item extracted ( S1 File ). In consensus we considered approximately 50 articles would provide a sufficiently representative overview of current reporting practice. Based on our prior experience of performing systematic reviews we believed that searching for additional articles beyond 50 would be unlikely to yield valuable additional data (i.e. we believed we would reach “saturation” by 50 articles) yet would present a very considerable extraction burden.

In order to achieve this, potentially eligible primary articles published between 2005 and February 2013 inclusive were identified by a radiologist researcher (TD) using PUBMED via their citation of one or more of 8 key methodological articles relating to MRMC ROC AUC analysis [9] – [16] . To achieve this the Authors' names (combined using “AND”) were entered in the PUBMED search field and the specific article identified and clicked in the results list. The abstract was then accessed and the “Cited By # PubMed Central Articles” link and “Related Citations” link used to identify those articles in the PubMed Central database that have cited the original article. There was no language restriction. Online abstracts were examined in reverse chronological order, the full text of potentially eligible papers then retrieved, and selection stopped once the threshold of 50 studies fulfilling inclusion criteria had been passed.

To be eligible, primary studies had to be diagnostic test accuracy studies of human observers interpreting medical image data from real patients, and attempting to use an MRMC ROC AUC analysis as a study outcome based on the following methodological approaches [9]–[16]. Reviews, solely methodological papers, and those using simulated imaging data were excluded.

Data extraction

An initial pilot sample of 5 full-paper articles was extracted and the data checked by a subgroup of investigators in consensus, both to confirm the process was feasible and to identify potential problems. These papers were extracted by TD using the search strategy described in the previous section. A further 10 full papers were extracted by two radiologist researchers (TD, AP), again using the same search strategy and working independently, to check agreement further. The remaining articles included in the review were extracted predominantly by TD, who discussed any concerns/uncertainty with AP. Any disagreement following their discussion was arbitrated by SH and/or SM where necessary. These discussions took place during two meetings when the authors met to discuss progress of the review; multiple papers and issues were discussed on each occasion.

The extraction covered the following broad topics: Study characteristics, methods to record study outcomes, model assumptions, model fitting, data presentation ( S1 File ).

We extracted data relating to the organ and disease studied, the nature of the diagnostic task (e.g. characterization vs. localization vs. presence/absence), test methods, patient source and characteristics, study design (e.g. prospective/retrospective, secondary analysis, single/multicenter) and reference standard. We extracted the number of readers, their prior experience, specific interpretation training for the study (e.g. use of CAD software), blinding to clinical data and/or reference results, the number of times they read each case and the presence of any washout period to diminish recall bias, case ordering, and whether all readers read all cases (i.e. a fully-crossed design). We extracted the unit of analysis (e.g. patient vs. organ vs. segment), and sample size for patients with and without pathology.

We noted whether study imaging reflected normal daily clinical practice or was modified for study purposes (e.g. restricted to limited images). We noted the confidence scores used for the ROC curve and their scale, and whether training was provided for scoring. We noted if there were multiple lesions per unit of analysis. We noted if scoring differed for positive and negative patient cases, whether score distribution was reported, and whether transformation to a normal distribution was performed.

We extracted whether ROC curves were presented in the published article and, if so, whether for individual readers, whether the curve was smoothed, and whether underlying data points were shown. We defined unreasonable extrapolation as an absence of data in the right-hand 25% of the plot space. We noted the method for curve fitting and whether any problems with fitting were reported, and the method used to compare AUC or pAUC. We extracted the primary outcome, the accuracy measures reported, and whether these were overall or for individual readers. We noted the size of any change in AUC, whether this was significant, and made a subjective assessment of whether significance could be attributed to a single reader or case. We noted how the study authors interpreted change in AUC, if any, and whether any change was reported in terms of effect on individual patients. We also noted if a ROC researcher was named as an author or acknowledged, defined as an individual who had published indexed research papers dealing with ROC methodology.
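The extrapolation criterion can be written as a simple check on the empirical operating points of a curve. The Python sketch below is one possible reading, assuming that "the right-hand 25% of the plot space" means a false-positive fraction (1-specificity) of 0.75 or more and that the trivial corner points (0, 0) and (1, 1) do not count as data. The function name and example points are hypothetical.

```python
def has_unreasonable_extrapolation(operating_points, fpr_cutoff=0.75):
    """Return True when no empirical point falls at or beyond fpr_cutoff.

    operating_points: iterable of (fpr, tpr) pairs, where fpr is
    1 - specificity and tpr is sensitivity.
    """
    corners = {(0.0, 0.0), (1.0, 1.0)}
    # Ignore the corners, which every ROC curve passes through by construction.
    data_points = [(f, t) for f, t in operating_points if (f, t) not in corners]
    return not any(f >= fpr_cutoff for f, _ in data_points)


# Hypothetical curves: the first has all its data bunched on the left.
bunched_left = [(0.0, 0.0), (0.05, 0.60), (0.20, 0.85), (0.40, 0.95), (1.0, 1.0)]
well_spread = [(0.0, 0.0), (0.10, 0.50), (0.80, 0.98), (1.0, 1.0)]
print(has_unreasonable_extrapolation(bunched_left))  # True
print(has_unreasonable_extrapolation(well_spread))   # False
```

A check like this is only possible when a paper reports the underlying data points rather than a smoothed curve alone.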

Data were summarized in an Excel worksheet (Excel For Mac 14.3.9, Microsoft Corporation) with additional cells for explanatory free text. A radiologist researcher (SH) then compiled the data and extracted frequencies, consulting the two radiologists who performed the extraction for clarification when necessary. The investigator group discussed the implication of the data subsequently, to guide interpretation.

Four hundred and seventy five citations of the 8 key methodological papers were identified and 64 full papers retrieved subsequently. Fifteen [17] – [31] of these were rejected after reading the full text (the papers and reason for rejection are shown in Table 1 ) leaving 49 [32] – [80] for extraction and analysis that were published between 2010 and 2012 inclusive; these are detailed in Table 1 . Two papers [61] , [75] contributed two separate studies each, meaning that 51 studies were extracted in total. The PRISMA checklist [8] is detailed in Fig. 1 . The raw extracted data are available in S2 File .

Fig. 1: https://doi.org/10.1371/journal.pone.0116018.g001

Table 1: https://doi.org/10.1371/journal.pone.0116018.t001

Study characteristics

The index test was imaging in all studies. Breast was the commonest organ studied (20 studies), followed by lung (11 studies) and brain (7 studies). Mammography (15 studies) was the commonest individual modality investigated, followed by plain film (12 studies), CT and MRI (11 studies each), tomosynthesis (six studies), ultrasound (two studies) and PET (one study); 9 studies investigated multiple modalities. In most studies (28 studies) the prime interpretation task was lesion detection. Eleven studies focused on lesion characterization and 12 combined detection and characterization. Forty-one studies compared 2 tests/conditions (i.e. a single test but used in different ways) to a reference standard, while 2 studies compared 1 test/condition, 7 studies compared 3 tests/conditions, and 1 study compared 4 tests/conditions. Twenty-five studies combined data to create a reference standard while the reference was a single finding in 24 (14 imaging, 5 histology, 5 other, e.g. endoscopy). The reference method was unclear in 2 studies [54], [55].

Twenty-four studies were single center, 12 multicenter, with the number of centers unclear in 15 (29%) studies. Nine studies recruited symptomatic patients, 8 asymptomatic, and 7 a combination, but the majority (53%; 27 studies) did not state whether patients were symptomatic or not. 42 (82%) studies described the origin of patients with half of these stating a precise geographical region or hospital name. However, 9 (18%) studies did not sufficiently describe the source of patients and 21 (41%) did not describe patients' age and/or gender distribution.

Study design

Extracted data relating to study design and readers are presented graphically in Fig. 2 . Most studies (29; 57%) used patient data collected retrospectively. Fourteen (28%) were prospective while 2 used an existing database. Whether prospective/retrospective data was used was unstated/unclear in a further 6 (12%). While 13 studies (26%) used cases unselected other than for the disease in question, the majority (34; 67%) applied further criteria, for example to preselect “difficult” cases (11 studies), or to enrich disease prevalence (4 studies). How this selection bias was applied was stated explicitly in 18 (53%) of these 34. Whether selection bias was used was unclear in 4 studies.

Fig. 2: https://doi.org/10.1371/journal.pone.0116018.g002

The number of readers per study ranged from 2 [56] to 258 [76]. The mean number was 13, median 6. The large majority of studies (35; 69%) used fewer than 10 readers. Reader experience was described in 40 (78%) studies but not in 11. Specific reader training for image interpretation was described in 31 (61%) studies. Readers were not trained specifically in 14 studies and in 6 it was unclear whether readers were trained specifically or not. Readers were blind to clinical information for individual patients in 37 (73%) studies, unblind in 3, and this information was unrecorded or uncertain in 11 (22%). Readers were blind to prevalence in the dataset in 21 (41%) studies, unblind in 2, but this information was unrecorded or uncertain in the majority (28, 55%).

Observers read the same patient case on more than one occasion in 50 studies; this information was unclear in the single further study [70] . A fully crossed design (i.e. all readers read all patients with all modalities) was used in 47 (92%) studies, but not stated explicitly in 23 of these. A single study [72] did not use a fully crossed design and the design was unclear or unrecorded in 3 [34] , [70] , [76] . Case ordering was randomised (either a different random order across all readers or a different random order for each individual reader) between consecutive readings in 31 (61%) studies, unchanged in 6, and unclear/unrecorded in 14 (27%). The ordering of the index test being compared varied between consecutive readings in 20 (39%) studies, was unchanged in 17 (33%), and was unclear/unrecorded in 14 (27%). 26 (51%) studies employed a time interval between readings that ranged from 3 hours [50] to 2 months [63] , with a median of 4 weeks. There was no interval (i.e. reading of cases in all conditions occurred at the same sitting) in 17 (33%) studies, and time interval was unclear/unrecorded in 8 (16%).

Methods of reporting study outcomes

The unit of analysis for the ROC AUC analysis was the patient in 23 (45%) studies, an organ in 5, an organ segment in 5, a lesion in 11 (22%), other in 2, and unclear or unrecorded in 6 (12%); one study [34] examined both organ and lesion so there were 52 extractions for this item. Analysis was based on multiple images in 33 (65%) studies, a single image in 16 (31%), multiple modalities in a single study [40] , and unclear in a single study [57] ; no study used videos.

The number of disease positive patients per study ranged between 10 [79] and 100 [53] (mean 42, median 48) in 46 studies, and was unclear/unrecorded in 5 studies. The number of disease positive units of outcome for the primary ROC AUC analysis ranged between 10 [79] and 240 [41] (mean 59, median 50) in 43 studies, and was unclear/unrecorded in 8 studies. The number of disease negative patients per study ranged between 3 [69] and 352 [34] (mean 66, median 38) in 44 studies, was zero in 1 study [80] , and was unclear/unrecorded in 6 studies. The number of disease negative units of analysis for the primary outcome for the ROC AUC analysis ranged between 10 [51] and 535 [39] (mean 99, median 68) in 42 studies, and was unclear/unrecorded in the remaining 9 studies. The large majority of studies (41, 80%) presented readers with an image or set of images reflecting normal clinical practice whereas 10 presented specific lesions or regions of interest to readers.

Calculation of ROC AUC requires the use of confidence scores, where readers rate their confidence in the presence of a lesion or its characterization. In our previous study [6] we identified the assignment of confidence scores to be potentially on separate scales for disease positive and negative cases [7] . For rating scores used to calculate ROC AUC, 25 (49%) studies used a relatively small number of categories (defined as up to 10) and 25 (49%) used larger scales or a continuous measurement (e.g. visual analogue scale). One study did not specify the scale used [76] . Only 6 (12%) studies stated explicitly that readers were trained in advance to use the scoring system, for example being encouraged to use the full range available. In 15 (29%) studies there was the potential for multiple abnormalities in each unit of analysis (stated explicitly by 12 of these). This situation was dealt with by asking readers to assess the most advanced or largest lesion (e.g. [43] ), by an analysis using the highest score attributed (e.g. [42] ), or by adopting a per-lesion analysis (e.g. [52] ). For 23 studies only a single abnormality per unit of analysis was possible, whereas this issue was unclear in 13 studies.
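One of the strategies mentioned above, analysing the highest score attributed within each unit of analysis, amounts to a simple aggregation step before the ROC analysis. The Python sketch below illustrates it. The function name, the (unit, score) layout, and the example ratings are hypothetical and are not taken from any of the reviewed studies.

```python
def highest_score_per_unit(lesion_ratings):
    """Collapse several lesion-level scores into one score per unit of analysis.

    lesion_ratings: iterable of (unit_id, confidence_score) pairs, where a
    unit (for example a patient) may appear more than once.
    """
    best = {}
    for unit, score in lesion_ratings:
        # Keep the largest confidence score seen so far for this unit.
        if unit not in best or score > best[unit]:
            best[unit] = score
    return best


# Hypothetical ratings on a 0-100 scale: patient p1 has two marked lesions.
ratings = [("p1", 30), ("p1", 80), ("p2", 10)]
per_patient = highest_score_per_unit(ratings)
print(per_patient)  # {'p1': 80, 'p2': 10}
```

This keeps one observation per unit, but it discards the location of each lesion, so a high score on the wrong lesion is indistinguishable from a correct detection.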

Model assumptions

The majority of studies (41, 80%) asked readers to ascribe the same scoring system to both disease-positive and disease-negative patients. Another 9 studies asked that different scoring systems be used, depending on whether the case was perceived as positive or negative (e.g. [61] ), or depending on the nature of the lesion perceived (e.g. [66] ). Scoring was unclear in a single study [76] . No study stated that two types of true-negative classifications were possible (i.e. where a lesion was seen but misclassified vs. not being seen at all), a situation that potentially applied to 22 (43%) of the 51 studies. Another concern occurs when more than one observation for each patient is included in the analysis, violating the assumption that data are independent. This could occur if multiple diseased segments were analysed for each patient without using a statistical method that treats these as clustered data. An even more flawed approach occurs when analysis includes one segment for patients without disease but multiple segments for patients with disease.

When publicly available DBM MRMC software [81] is used for ROC AUC modeling, assumptions of normality for confidence scores or their transformations are required if the standard parametric ROC curve fitting methods are used. When scores are not normally distributed, even if non-parametric approaches are used to estimate ROC AUC, this lack of normality may indicate additional problems with obtaining reliable estimates of ROC AUC [82]–[86]. While 17 studies stated explicitly that the data fulfilled the assumptions necessary for modeling, none described whether confidence scores were transformed to a normal distribution for analysis. Indeed, only 3 studies [54], [73], [76] described the distribution of confidence scores, which was non-normal in each case.

Model fitting

Thirty (59%) studies presented ROC curves based on confidence scores; i.e. 21 (41%) studies showed no ROC curve. Of the 30 with curves, only 5 presented a curve for each reader whereas 24 presented curves averaged over all readers; a further study presented both. Of the 30 studies presenting ROC curves, 26 (87%) showed only smoothed curves, with the data points underlying the ROC curve presented in only 4 (13%) [43] , [51] , [63] , [78] . Thus, a ROC curve with underlying data points was presented in only 4 of 51 (8%) studies overall. The degree of extrapolation is critical in understanding the reliability of the ROC AUC result [7] . However, extrapolation could only be assessed in these four articles, with unreasonable extrapolation, by our definition, occurring in two [43] , [63] .
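The "data points underlying the ROC curve" are the empirical operating points obtained by thresholding the confidence scores at each observed value. The Python sketch below derives those points from ordinal scores and computes the non-parametric (trapezoidal) area under them. The function names and the toy scores are hypothetical, and the sketch assumes that a higher score means greater confidence that disease is present.

```python
def roc_points(neg_scores, pos_scores):
    """Empirical (FPR, TPR) points, one per observed threshold, starting at (0, 0)."""
    thresholds = sorted(set(neg_scores) | set(pos_scores), reverse=True)
    points = [(0.0, 0.0)]
    for t in thresholds:
        # A case is called positive when its score is at or above the threshold.
        fpr = sum(s >= t for s in neg_scores) / len(neg_scores)
        tpr = sum(s >= t for s in pos_scores) / len(pos_scores)
        points.append((fpr, tpr))
    return points


def trapezoidal_auc(points):
    """Area under straight-line segments joining consecutive ROC points."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )


# Hypothetical 4-point confidence scale, four cases in each class.
neg = [1, 1, 2, 3]
pos = [2, 3, 4, 4]
points = roc_points(neg, pos)
auc = trapezoidal_auc(points)
print(points)
print(auc)  # 0.875
```

With only a handful of rating categories there are only a handful of operating points, which is why a smoothed curve shown without them can hide how much of the curve is interpolated or extrapolated.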

The majority of studies (31, 61%) did not specify the method used for curve fitting. Of the 20 that did, 7 used non-parametric methods (Trapezoidal/Wilcoxon), 8 used parametric methods (7 of which used Proproc), 3 used other methods, and 2 used a combination. Previous research [7], [84] has demonstrated considerable problems fitting ROC curves due to degenerate data where the fitted ROC curve corresponds to vertical and horizontal lines, e.g. when there are no false-positive (FP) data. Only 2 articles described problems with curve fitting [55], [61]. Two studies stated that data were degenerate: Subhas and co-workers [66] stated that, “data were not well dispersed over the five confidence level scores”. Moin and co-workers [53] stated that, “If we were to recode categories 1 and 2, and discard BI-RADS 0 in the ROC analysis, it would yield degenerative results because the total number of cases collected would not be adequate”. While all studies used MRMC AUC methods to compare AUC outcomes, 5 studies also used other methods (e.g. t-testing) [37], [52], [60], [67], [77]. Only 3 studies described using a partial AUC [42], [55], [77]. Forty-four studies additionally reported non-AUC outcomes (e.g. McNemar's test to compare test performance at a specified diagnostic threshold [58], Wilcoxon signed rank test to compare changes in patient management decisions [64]). Eight (16%) of the studies included a ROC researcher as an author [39], [47], [48], [54], [60], [65], [66], [72].
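Degenerate data of the kind described above can be screened for before curve fitting. The Python sketch below uses a simplified criterion chosen for this example: the data are flagged when every empirical operating point lies on the left edge (no false positives) or the top edge (no false negatives) of the ROC plot, so that the points are consistent with one vertical and one horizontal line. Formal definitions in the ROC literature may differ, and the function name and scores are hypothetical.

```python
def is_degenerate(neg_scores, pos_scores):
    """True when no operating point lies strictly inside the ROC plot edges.

    A point is 'interior' here when it has at least one false positive
    (FPR > 0) and at least one false negative (TPR < 1).
    """
    for t in set(neg_scores) | set(pos_scores):
        fpr = sum(s >= t for s in neg_scores) / len(neg_scores)
        tpr = sum(s >= t for s in pos_scores) / len(pos_scores)
        if fpr > 0 and tpr < 1:
            return False
    return True


# Hypothetical scores on a 5-point scale.
# Every non-diseased case received the lowest score, so there are no FP data.
no_false_positives = is_degenerate([1, 1, 1, 1], [1, 2, 3, 5])
# Scores from both classes overlap across the scale.
well_dispersed = is_degenerate([1, 1, 2, 3], [2, 3, 4, 4])
print(no_false_positives, well_dispersed)  # True False
```

A screen like this only detects the problem. It does not repair it, and recoding or pooling categories after seeing the data changes the analysis.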

Presentation of results

Extracted data relating to the presentation of individual study results is presented graphically in Fig. 3 . All studies presented ROC AUC as an accuracy measure with 49 (96%) presenting the change in AUC for the conditions tested. Thirty-five (69%) studies presented additional measures such as change in sensitivity/specificity (24 studies), positive/negative predictive values (5 studies), or other measures (e.g. changes in clinical management decisions [64] , intraobserver agreement [36] ). Change in AUC was the primary outcome in 45 (88%) studies. Others used sensitivity [34] , [40] , accuracy [35] , [69] , the absolute AUC [44] or JAFROC figure of merit [68] . All studies presented an average of the primary outcome over all readers, with individual reader results presented in 38 (75%) studies but not in 13 (25%). The mean change/difference in AUC was 0.051 (range −0.052 to 0.280) across the extracted studies and was stated as “significant” in 31 and “non-significant” in the remaining 20. No study failed to comment on significance of the stated change/difference in AUC. In 22 studies we considered that a significant change in AUC was unlikely to be due to results from a single reader/patient but we could not determine whether this was possible in 11 studies, and judged this not-applicable in a further 18 studies. One study appeared to report an advantage for a test when the AUC increased, but not significantly [65] . There were 5 (10%) studies where there appeared to be discrepancies between the data presented in the abstract/text/ROC curve [36] , [38] , [69] , [77] , [80] .

https://doi.org/10.1371/journal.pone.0116018.g003

While the majority of studies (42, 82%) did not present an interpretation of their data framed in terms of changes to individual patient diagnoses, 9 (18%) did so, using outcomes in addition to ROC AUC: For example, as a false-positive to true-positive ratio [35] or the proportion of additional biopsies precipitated and disease detected [64] , or effect on callback rate [43] . The change in AUC was non-significant in 22 studies and in 12 of these the authors speculated why, for example stating that the number of cases was likely to be inadequate [65] , [70] , that the observer task was insufficiently taxing [36] , or that the difference was too subtle to be resolved [45] . For studies where a non-significant change in AUC was observed, authors sometimes framed this as demonstrating equivalence (16 studies, e.g. [55] , [74] ), stated that there were other benefits (3 studies), or adopted other interpretations. For example, one study stated that there were “beneficial” effects on many cases despite a non-significant change in AUC [54] and one study stated that the intervention “improved visibility” of microcalcifications noting that the lack of any statistically significant difference warranted further investigation [65] .

While many studies have used ROC AUC as an outcome measure, very little research has investigated how these studies are conducted, analysed and presented. We could find only a single existing systematic review that has investigated this question [87] . The authors stated in their Introduction, “we are not aware of any attempt to provide an overview of the kinds of ROC analyses that have been most commonly published in radiologic research.” They investigated articles published in the journal “Radiology” between 1997 and 2006, identifying 295 studies [87] . The authors concluded that “ROC analysis is widely used in radiologic research, confirming its fundamental role in assessing diagnostic performance”. For the present review, we wished to focus on MRMC studies specifically, since these are most complex and are often used as the basis for technology licensing. We also wished to broaden our search criteria beyond a single journal. Our systematic review found that data reporting in MRMC studies using ROC AUC as an outcome measure was frequently incomplete, and we would therefore agree with the conclusions of Shiraishi et al., who stated that studies “were not always adequate to support clear and clinically relevant conclusions” [87] .

Many omissions we identified were those related to general study design and execution, and are well-covered by the STARD initiative [88] as factors that should be reported in studies of diagnostic test accuracy in general. For example, we found that the number of participating research centres was unclear in approximately one-third of studies, that most studies did not describe whether patients were symptomatic or asymptomatic, that criteria applied to case selection were sometimes unclear, and that observer blinding was not mentioned in one-fifth of studies. Regarding statistical methods, STARD states that studies should, “describe methods for calculating or comparing measures of diagnostic accuracy” [88] ; this systematic review aimed to focus on description of methods for MRMC studies using ROC AUC as an outcome measure.

The large majority of studies used fewer than 10 observers, some did not describe reader experience, and the majority did not mention whether observers were aware of prevalence of abnormality, a factor that may influence diagnostic vigilance. Most studies required readers to detect lesions while a minority asked for characterization, and others were a combination of the two. We believe it is important for readers to understand the precise nature of the interpretative task since this will influence the rating scale used to build the ROC curve. A variety of units of analysis were adopted, with just under half being the patient case. We were surprised that some studies failed to record the number of disease-positive and disease-negative patients in their dataset. Concerning the confidence scales used to construct the ROC curve, only a small minority (12%) of studies stated that readers were trained to use these in advance of scoring. We believe such training is important so that readers can appreciate exactly how the interpretative task relates to the scale; there is evidence that radiologists score in different ways when asked to perform the same scoring task because of differences in how they interpret the task [89] . For example, readers should appreciate how the scale reflects lesion detection and/or characterization, especially if both are required, and how multiple abnormalities per unit of analysis are handled. Encouragement to use the full range of the scale is required for normal rating distributions. Whether readers must use the same scale for patients with and without pathology is also important to know.

Despite their importance for understanding the validity of study results, we found that description of the confidence scores, the ROC curve and its analysis was often incomplete. Strikingly, only three studies described the distribution of confidence scores and none stated whether transformation to a normal distribution was needed. When publicly available DBM MRMC software (ref DBM) is used for ROC AUC modeling, this requires assumptions of normality for confidence scores or their transformations when ROC curve fitting methods are used. Where confidence scores are not normally distributed, these software methods are not recommended [84] – [86] , [90] . Although Hanley shows that ROC curves can be reasonable under some distributions of non-normal data [91] , concerns have been raised, particularly in imaging detection studies measuring clinically useful tests with good performance to distinguish well-defined abnormalities. In tests with good performance, two factors make estimation of ROC AUC unreliable. Firstly, readers' scores are by definition often at the ends of the confidence scale, so that the confidence score distributions for normal and abnormal cases have very little overlap [82] – [86] . Secondly, tests with good performance also have few false positives, making ROC AUC estimation highly dependent on confidence scores assigned to possibly fewer than 5% or 10% of cases in the study [86] .

Most studies did not describe the method used for curve fitting. Over 40% of studies presented no ROC curve in the published article. When present, the large majority were smoothed and averaged over all readers. Only four articles presented the data points underlying the curve, meaning that the degree of any extrapolation could not be assessed in the remainder, despite this being an important factor regarding interpretation of results [92] . While, by definition, all studies used MRMC AUC methods, most reported additional non-AUC outcomes. Approximately one-quarter of studies did not present AUC data for individual readers. Because of this, variability between readers and/or the effect of individual readers on the ultimate statistical analysis could not be assessed.

Interpretation of study results was variable. Notably, when no significant change in AUC was demonstrated, authors stated that the number of cases was either insufficient or that the difference could not be resolved by the study, appearing to claim that their studies were underpowered rather than that the intervention was ineffective when required to improve diagnostic accuracy. Indeed some studies claimed an advantage for a new test in the face of a non-significant increase in AUC, or turned to other outcomes as proof of benefit. Some interpreted no significant difference in AUC as implying equivalence.

Our review does have limitations. Indexing of the statistical methods used to analyse studies is not common, so we used a proxy to identify studies: their citation of “key” references related to MRMC ROC methodology. While it is possible we missed some studies, our aim was not to identify all studies using such analyses. Rather, we aimed to gather a representative sample that would provide a generalizable picture of how such studies are reported. It is also possible that by their citation of methodological papers (and on occasion including a ROC researcher as an author), our review was biased towards papers likely to be of higher methodological quality than average. This systematic review was cross-disciplinary and two radiological researchers performed the bulk of the extraction rather than statisticians. This proved challenging since the depth of statistical knowledge required was demanding, especially when details of the analysis were being considered. We anticipated this and piloted extraction on a sample of five papers to determine if the process was feasible, deciding that it was. Advice from experienced statisticians was also available when uncertainty arose.

In summary, via systematic review we found that MRMC studies using ROC AUC as the primary outcome measure often omit important information from both the study design and analysis, and presentation of results is frequently not comprehensive. Authors using MRMC ROC analyses should be encouraged to provide a full description of their methods and results so as to increase interpretability.

Supporting Information

Extraction sheet used for the systematic review.

https://doi.org/10.1371/journal.pone.0116018.s001

Raw data extracted for the systematic review.

https://doi.org/10.1371/journal.pone.0116018.s002

S1 PRISMA Checklist.

https://doi.org/10.1371/journal.pone.0116018.s003

Author Contributions

Conceived and designed the experiments: TD AAP SH TRF DGA SM. Performed the experiments: TD AAP SH TRF DGA SM. Analyzed the data: TD AAP SH TRF DGA SM. Contributed reagents/materials/analysis tools: TD AAP SH TRF DGA SM. Wrote the paper: TD AAP SH TRF DGA SM.

- 7. Mallett S, Halligan S, Collins GS, Altman DG (2014) Exploration of analysis methods for diagnostic imaging tests: Problems with ROC AUC and confidence scores in CT colonography. PLoS One (in press).

- 81. v2.1 DMs. Available: http://www-radiology.uchicago.edu/krl/KRL_ROC/software_index6.htm .

- 85. Zhou XH, Obuchowski N, McClish DK (2002) Statistical methods in diagnostic medicine. New York NY: Wiley.

iMRMC Multi-Reader, Multi-Case Analysis Methods (ROC, Agreement, and Other Metrics)

- convertDF: Convert MRMC data frames

- convertDFtoDesignMatrix: Convert an MRMC data frame to a design matrix

- convertDFtoScoreMatrix: Convert an MRMC data frame to a score matrix

- createGroups: Assign a group label to items in a vector

- createIMRMCdf: Convert a data frame with all needed factors to doIMRMC...

- doIMRMC: MRMC analysis of the area under the ROC curve

- extractPairedComparisonsBRBM: Extract between-reader between-modality pairs of scores

- extractPairedComparisonsWRBM: Extract within-reader between-modality pairs of scores

- getBRBM: Get between-reader, between-modality paired data from an MRMC...

- getMRMCscore: Get a score from an MRMC data frame

- getWRBM: Get within-reader, between-modality paired data from an MRMC...

- init.lecuyerRNG: Initialize the l'Ecuyer random number generator

- laBRBM: MRMC analysis of between-reader between-modality limits of...

- laWRBM: MRMC analysis of within-reader between-modality limits of...

- renameCol: Rename a data frame column name or a list object name

- roc2binary: Convert ROC data formatted for doIMRMC to TPF and FPF data...

- roeMetzConfigs: roeMetzConfigs

- sim.gRoeMetz: Simulate an MRMC data set of an ROC experiment comparing two...

- sim.gRoeMetz.config: Create a configuration object for the sim.gRoeMetz program

- simMRMC: Simulate an MRMC data set

- simRoeMetz.example: Simulates a sample MRMC ROC experiment

- successDFtoROCdf: Convert an MRMC data frame of successes to one formatted for...

- undoIMRMCdf: Convert a doIMRMC formatted data frame to a standard data...

- uStat11: Analysis of U-statistics degree 1,1

- uStat11.diff: Create the kernel and design matrices for uStat11

- uStat11.identity: Create the kernel and design matrices for uStat11

iMRMC: Multi-Reader, Multi-Case Analysis Methods (ROC, Agreement, and Other Metrics)

Do Multi-Reader, Multi-Case (MRMC) analyses of data from imaging studies where clinicians (readers) evaluate patient images (cases). What does this mean? ... Many imaging studies are designed so that every reader reads every case in all modalities, a fully-crossed study. In this case, the data is cross-correlated, and we consider the readers and cases to be cross-correlated random effects. An MRMC analysis accounts for the variability and correlations from the readers and cases when estimating variances, confidence intervals, and p-values. The functions in this package can treat arbitrary study designs and studies with missing data, not just fully-crossed study designs. The initial package analyzes the reader-average area under the receiver operating characteristic (ROC) curve with U-statistics according to Gallas, Bandos, Samuelson, and Wagner 2009 <doi:10.1080/03610920802610084>. Additional functions analyze other endpoints with U-statistics (binary performance and score differences) following the work by Gallas, Pennello, and Myers 2007 <doi:10.1364/JOSAA.24.000B70>. Package development and documentation is at <https://github.com/DIDSR/iMRMC/tree/master>.
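To make the "reader-average AUC with U-statistics" idea concrete, here is a minimal Python sketch. It is not the iMRMC implementation and does not call the package: the record layout `(reader, case, truth, score)`, the function name, and the choice of a simple unweighted mean over readers are assumptions made for illustration. It only produces a point estimate; the variance, confidence interval, and p-value estimation that the package provides is not attempted here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, int, int, float]  # (reader id, case id, truth 0/1, score)


def reader_averaged_auc(records: Iterable[Record]) -> Tuple[float, Dict[str, float]]:
    """Per-reader Mann-Whitney AUC over the cases each reader actually read, then averaged."""
    scores = defaultdict(lambda: {0: [], 1: []})
    for reader, _case, truth, score in records:
        scores[reader][truth].append(score)

    per_reader = {}
    for reader, groups in scores.items():
        neg, pos = groups[0], groups[1]
        if not neg or not pos:
            continue  # AUC is undefined without both truth states
        # U-statistic kernel: 1 if the diseased case scores higher, 0.5 for a tie, else 0.
        kernel_sum = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
        per_reader[reader] = kernel_sum / (len(pos) * len(neg))

    mean_auc = sum(per_reader.values()) / len(per_reader)
    return mean_auc, per_reader


# Hypothetical study that is not fully crossed: reader A skips case 5, reader B skips case 4.
records = [
    ("A", 1, 0, 1), ("A", 2, 0, 2), ("A", 3, 1, 3), ("A", 4, 1, 2),
    ("B", 1, 0, 2), ("B", 2, 0, 1), ("B", 3, 1, 4), ("B", 5, 1, 3),
]
mean_auc, per_reader_auc = reader_averaged_auc(records)
```

Because readers share cases, the per-reader AUCs are correlated, so the spread of `per_reader_auc` alone understates or misstates the uncertainty; that is the problem an MRMC variance analysis addresses.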

Chest radiograph classification and severity of suspected COVID-19 by different radiologist groups and attending clinicians: multi-reader, multi-case study

- Open access

- Published: 25 October 2022

- Volume 33 , pages 2096–2104, ( 2023 )

- Arjun Nair ORCID: orcid.org/0000-0001-9270-3771 1 ,

- Alexander Procter 1 ,

- Steve Halligan 2 ,

- Thomas Parry 2 ,

- Asia Ahmed 1 ,

- Mark Duncan 1 ,

- Magali Taylor 1 ,

- Manil Chouhan 1 ,

- Trevor Gaunt 1 ,

- James Roberts 1 ,

- Niels van Vucht 1 ,

- Alan Campbell 1 ,

- Laura May Davis 1 ,

- Joseph Jacob 3 ,

- Rachel Hubbard 1 ,

- Shankar Kumar 1 ,

- Ammaarah Said 1 ,

- Xinhui Chan 4 ,

- Tim Cutfield 4 ,

- Akish Luintel 4 ,

- Michael Marks 4 ,

- Neil Stone 4 &

- Sue Mallett 2

To quantify reader agreement for the British Society of Thoracic Imaging (BSTI) diagnostic and severity classification for COVID-19 on chest radiographs (CXR), in particular agreement for an indeterminate CXR that could instigate CT imaging, from single and paired images.

Twenty readers (four groups of five individuals)—consultant chest (CCR), general consultant (GCR), and specialist registrar (RSR) radiologists, and infectious diseases clinicians (IDR)—assigned BSTI categories and severity in addition to modified Covid-Radiographic Assessment of Lung Edema Score (Covid-RALES), to 305 CXRs (129 paired; 2 time points) from 176 guideline-defined COVID-19 patients. Percentage agreement with a consensus of two chest radiologists was calculated for (1) categorisation to those needing CT (indeterminate) versus those that did not (classic/probable, non-COVID-19); (2) severity; and (3) severity change on paired CXRs using the two scoring systems.

Agreement with consensus for the indeterminate category was low across all groups (28–37%). Agreement for other BSTI categories was highest for classic/probable for the other three reader groups (66–76%) compared to GCR (49%). Agreement for normal was similar across all radiologists (54–61%) but lower for IDR (31%). Agreement for a severe CXR was lower for GCR (65%), compared to the other three reader groups (84–95%). For all groups, agreement for changes across paired CXRs was modest.

Agreement for the indeterminate BSTI COVID-19 CXR category is low, and generally moderate for the other BSTI categories and for severity change, suggesting that the test, rather than readers, is limited in utility for both deciding disposition and serial monitoring.

• Across different reader groups, agreement for COVID-19 diagnostic categorisation on CXR varies widely.

• Agreement varies to a degree that may render CXR alone ineffective for triage, especially for indeterminate cases.

• Agreement for serial CXR change is moderate, limiting utility in guiding management.

Introduction

Coronavirus disease 2019 (COVID-19), caused by the novel severe acute respiratory syndrome coronavirus 2 virus (SARS-CoV-2), became a global pandemic. In the UK, the pandemic caused record deaths and exerted unprecedented strain on the National Health Service (NHS). Facing such overwhelming demand, clinicians must rapidly and accurately categorise patients with suspected COVID-19 into high and low probability and severity. In March 2020, the British Society of Thoracic Imaging (BSTI) and NHS England produced a decision support algorithm to triage suspected COVID-19 patients [ 1 ]. This assumed that laboratory diagnosis might not be rapidly or widely available, emphasising clinical assessment and chest radiography (CXR).

CXR therefore assumes a pivotal role, not only in diagnosis but also in the classification and monitoring of severity, which directs clinical decision-making. This includes whether intensive treatment is required (those with “classic severe” disease), along with subsequent chest computed tomography (CT) in those with uncertain diagnosis [ 2 , 3 , 4 ] or whose CXR is deteriorating.

Clearly, this requires that CXR interpretation reflects both diagnosis and severity accurately. While immediate interpretation by specialist chest radiologists is desirable, this is unrealistic given demands, and interpretation falls frequently to non-chest radiologists, radiologists in training, or attending clinicians. However, we are unaware of any study that compares agreement and variation between these groups for CXR diagnosis and severity of COVID-19. We aimed to rectify this by performing a multi-case, multi-reader study comparing the interpretation of radiologists (including specialists, non-specialists, and trainees) and non-radiologists to a consensus reference standard, for the CXR diagnosis, severity, and temporal change of COVID-19.

Due to the continued admission of patients to hospital for COVID-19 as the virus becomes another seasonal coronavirus infection, this study has important ongoing relevance to clinical practice.

Materials and methods

Study design and ethical approval.

We used a multi-reader, multi-case design in this single-centre study. Our institution granted ethical approval for COVID-19-related imaging studies (Integrated Research Application Service reference IRAS 282063). Informed consent was waived as part of the approval.

Study population and image acquisition

A list of patients aged ≥ 18 years consecutively presenting to our emergency department with suspected COVID-19 infection, as per contemporary national and international definitions [ 5 ], between 25th February 2020 and 22nd April 2020, who had undergone at least one CXR, was supplied by our infectious diseases clinical team. All CXRs were acquired as computed or digital radiographs, in the anteroposterior (AP) projection using portable X-ray units as per institutional protocol.

We recruited four groups of readers (each consisting of five individuals), required to interpret suspected COVID-19 CXR in daily practice, as follows:

Group 1: Consultant chest radiologists (CCR) (with 7 to 19 years of radiology experience)

Group 2: Consultant radiologists not specialising in chest radiology (GCR) (with 8–30 years of radiology experience)

Group 3: Radiology specialist residents in training (RSR) (with 2–5 years of radiology experience)

Group 4: Infectious diseases consultants and senior trainees (IDR) (with no prior radiology experience)

ID clinicians were chosen as a non-radiologist group because, at our institution and others, their daily practice necessitated both triage and subsequent management of COVID-19 patients via their own interpretation of CXR without radiological assistance.

Case identification, allocation, and consensus standard

Two subspecialist chest radiologists (with 16 and seven years of experience, respectively) first independently assigned BSTI classifications (Table 1 ) to the CXRs of 266 consecutive eligible patients, unaware of the ultimate diagnosis and all clinical information. Of these, 129 had paired CXRs; that is, they had a second CXR at least 24 h after their presentation CXR. The remaining 137 patients had a single presentation CXR. We included patients with unpaired CXRs as well as paired CXRs to enable us to enrich the study cohort with potential CVCX2 cases, because a high institutional prevalence of COVID-19 during the study period meant that few consecutive cases would be designated “indeterminate” or “normal”. However, evaluating this category is central to understanding downstream management implications for patients. There were 47/137 unpaired CXRs where at least one of the two subspecialist chest radiologists classified the CXR as CVCX2 (indeterminate), and so we used these 47 CXRs to enrich the cohort with CVCX2 cases. The final study cohort comprised 176 patients with 305 CXRs: 129 paired and 47 unpaired.

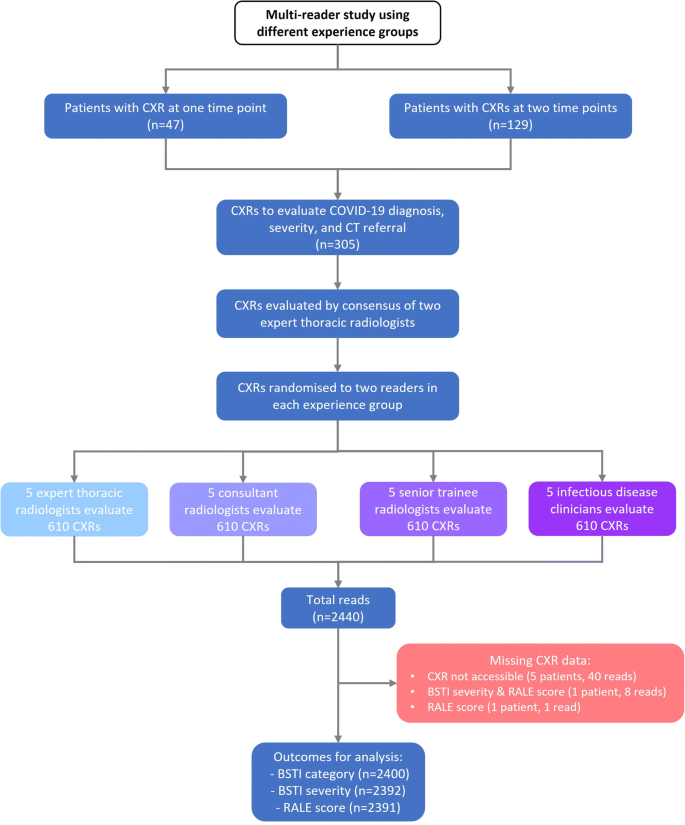

From this cohort of 305 CXRs, five random reading sets were generated, each containing approximately equal numbers of paired and unpaired CXRs (Table 2 ); each CXR was interpreted by 2 readers from each group. The same reader interpreted both time points for paired CXRs. Minor number variations were due to randomisation. Accordingly, individuals designated Reader 1 (CCR1, GCR1, RSR1, and IDR1) in each group would read the same cases, Reader 2 would read the same, and so on. In this way, 610 reads were generated for each reader group, resulting in 2440 reads overall (Fig. 1 ). The distribution of the total number of these cases paralleled cumulative COVID-19 referrals to London hospitals over the period under study (Fig. S1 ).
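The cohort and read counts quoted above follow from simple arithmetic, reproduced in the short Python check below. The variable names are illustrative, and the per-reader figure is only an average implied by the totals (the text notes minor variations due to randomisation).

```python
# Cohort composition as stated in the text.
paired_patients = 129        # each contributes two CXRs (two time points)
unpaired_patients = 47       # each contributes one CXR

patients = paired_patients + unpaired_patients
cxrs = 2 * paired_patients + unpaired_patients

# Reading design as stated in the text.
reader_groups = ["CCR", "GCR", "RSR", "IDR"]
readers_per_group = 5
reads_per_cxr_per_group = 2  # each CXR interpreted by 2 readers from each group

reads_per_group = cxrs * reads_per_cxr_per_group
total_reads = reads_per_group * len(reader_groups)

# Average workload per reader implied by the totals (an inference, not a stated figure).
mean_reads_per_reader = reads_per_group / readers_per_group

print(patients, cxrs, reads_per_group, total_reads, mean_reads_per_reader)
```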

STARD flowchart showing the derivation of CXR reading dataset per reading group

The same two subspecialist chest radiologists assigned an “expert consensus” score to all 305 CXRs at a separate sitting, two months following their original reading to avoid recall bias, blinded to any reader interpretation (including their own).

Image interpretation

Readers were provided with a refresher presentation explaining BSTI categorisation and severity scoring, with examples. Readers were asked to assume they were reading in a high prevalence “pandemic” clinical scenario, with high pre-test probability, and to categorise incidental findings (e.g. cardiomegaly or minor atelectasis) as CVCX0, and any non-COVID-19 process (e.g. cardiac failure) as CVCX3.

Irrespective of the diagnostic category, we asked readers to classify severity using two scoring systems: the subjective BSTI severity scale (normal, mild, moderate, or severe), and a semiquantitative score (“Covid-RALES”) modified by Wong et al for COVID-19 CXR interpretation from the Radiographic Assessment of Lung Edema (RALE) score [ 3 ]. This score quantifies the extent of airspace opacification in each lung (0 = no involvement; 1 = < 25%; 2 = 25–50%; 3 = 50–75%; 4 = > 75% involvement). Thus, the minimum possible score is 0 and the maximum 8. We evaluated this score because it has been assessed by others and is used to assess the severity for clinical trials at our institution.
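The per-lung banding and the 0 to 8 total can be written down as a small Python sketch. The function names are hypothetical, and one boundary choice is an assumption: the text lists 25–50% and 50–75% as adjacent bands without saying where exactly 50% falls, so the sketch places exactly 50% in band 2 and exactly 75% in band 3.

```python
def lung_extent_score(percent_opacified: float) -> int:
    """Map the percentage of one lung showing airspace opacification to a 0-4 band."""
    if not 0 <= percent_opacified <= 100:
        raise ValueError("percentage must lie between 0 and 100")
    if percent_opacified == 0:
        return 0  # no involvement
    if percent_opacified < 25:
        return 1  # < 25%
    if percent_opacified <= 50:
        return 2  # 25-50% (assumption: exactly 50% is scored here)
    if percent_opacified <= 75:
        return 3  # 50-75% (assumption: exactly 75% is scored here)
    return 4      # > 75%


def covid_rales(right_lung_percent: float, left_lung_percent: float) -> int:
    """Sum of the two per-lung bands, giving a total between 0 and 8."""
    return lung_extent_score(right_lung_percent) + lung_extent_score(left_lung_percent)


example_total = covid_rales(10, 60)  # band 1 + band 3
```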

All cases were assigned a unique anonymised identifier on our institutional Picture Archiving and Communications System (PACS). Readers viewed each CXR unaware of clinical information and any prior or subsequent imaging. Any paired CXRs were therefore read as individual studies, without direct comparison between pairs. Observers evaluated CXRs on displays replicating their normal practice. Thus, radiologists used displays conforming to standards set by the Royal College of Radiologists while ID clinicians used high-definition flat panel liquid crystal display (LCD) monitors used for ward-based clinical image review at our institution.

Sample size and power calculation

The study was powered to detect a 10% difference between experts and other reader groups in correctly identifying CXRs for CT referral based on indeterminate CXR findings (defined as CVCX2). It was estimated that the most experienced group (CCR) would correctly refer 90% of patients to CT. At 80% power, 86 indeterminates would be required to detect a 10% difference in referral to CT using paired proportions, requiring 305 CXRs (176 patients) based on the prevalence of uncertain findings in pre-study reads of CXRs by 2 expert readers more than 1 month prior to study reads.

Statistical analysis

The primary outcome was reader group agreement with expert consensus for an indeterminate CXR which, under the BSTI classification, is the surrogate for CT referral. Indeterminate COVID-19 (CVCX2) is the potential surrogate for triage for CT, but an alternative clinical triage categorisation for CT referral would be to combine “indeterminate” and “normal” BSTI categories (CVCX0 and CVCX2). Therefore, we first calculated the percentage agreement between each reader and the consensus reading for each BSTI diagnostic categorisation. We then also assessed this percentage agreement when the BSTI categorisation was dichotomised to (1) CVCX0 and CVCX2 (i.e. the categories that might still warrant CT if there was sufficiently high clinical suspicion), versus (2) CVCX1 and CVCX3 (i.e. the categories that would probably not warrant CT). We assessed agreement for BSTI severity scoring. All percentage agreements are described with their means and 95% confidence intervals per reader group.
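The agreement calculation described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' analysis code: agreement for a category is taken as the share of consensus-labelled reads in that category to which the reader assigned the same category, the Wilson score interval is swapped in because the text does not name its confidence interval method, and the reads are hypothetical. The Wilson interval also treats reads as independent, whereas reads in the study are clustered within readers.

```python
import math
from typing import Callable, Sequence, Tuple

MAY_WARRANT_CT = {"CVCX0", "CVCX2"}  # dichotomy (1) in the text; CVCX1 and CVCX3 form (2)


def dichotomise(category: str) -> str:
    """Collapse a BSTI category into the two-level CT triage grouping."""
    return "may warrant CT" if category in MAY_WARRANT_CT else "probably no CT"


def agreement_counts(
    reads: Sequence[Tuple[str, str]],
    target: str,
    mapping: Callable[[str], str] = lambda c: c,
) -> Tuple[int, int]:
    """Among reads whose (mapped) consensus equals target, count those where the reader agrees.

    Each read is a (reader_category, consensus_category) pair.
    """
    relevant = [(r, c) for r, c in reads if mapping(c) == target]
    agreed = sum(1 for r, _c in relevant if mapping(r) == target)
    return agreed, len(relevant)


def wilson_interval(k: int, n: int, z: float = 1.959964) -> Tuple[float, float]:
    """Wilson score interval for a proportion k/n (assumed method, ignores clustering)."""
    p = k / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


# Hypothetical reads for one reader: (reader category, consensus category).
reads = [
    ("CVCX2", "CVCX2"), ("CVCX1", "CVCX2"), ("CVCX0", "CVCX2"),
    ("CVCX3", "CVCX2"), ("CVCX0", "CVCX0"), ("CVCX1", "CVCX1"),
]
exact_k, exact_n = agreement_counts(reads, "CVCX2")
dich_k, dich_n = agreement_counts(reads, "may warrant CT", dichotomise)
low, high = wilson_interval(8, 10)
```

In the hypothetical data, a reader who calls a consensus-indeterminate film "normal" disagrees on the exact category but agrees on the dichotomised triage decision, which is why the combined grouping yields higher agreement.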

Finally, for paired CXR reads we calculated the number and percentage agreement between each group and the consensus standard for no change, decrease, or increase in (1), the BSTI severity classification and (2), the COVID-RALES.
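A minimal Python sketch of this paired-change comparison is given below. The function names and the example scores are hypothetical, and it assumes agreement is tallied within each consensus change category; the same functions apply to BSTI severity once its four levels are mapped to ordered integers.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple

# Ordinal ranks for the BSTI severity scale, so the same comparison works for both scores.
BSTI_SEVERITY_RANK = {"normal": 0, "mild": 1, "moderate": 2, "severe": 3}


def change_direction(first: int, second: int) -> str:
    """Classify the change between the first and second time point."""
    if second > first:
        return "increase"
    if second < first:
        return "decrease"
    return "no change"


def change_agreement(
    reader_pairs: Sequence[Tuple[int, int]],
    consensus_pairs: Sequence[Tuple[int, int]],
) -> Dict[str, Tuple[int, int]]:
    """For each consensus change category, return (reads agreeing, reads in category)."""
    agreed, totals = Counter(), Counter()
    for (r1, r2), (c1, c2) in zip(reader_pairs, consensus_pairs):
        consensus_change = change_direction(c1, c2)
        totals[consensus_change] += 1
        if change_direction(r1, r2) == consensus_change:
            agreed[consensus_change] += 1
    return {change: (agreed[change], totals[change]) for change in totals}


# Hypothetical Covid-RALES totals (0-8) at the two time points for four paired CXRs.
reader_pairs = [(2, 4), (3, 3), (5, 4), (1, 2)]
consensus_pairs = [(2, 3), (3, 4), (6, 4), (2, 2)]
result = change_agreement(reader_pairs, consensus_pairs)
```

Note that a nine-level score registers a change whenever the total moves by a single point, whereas a four-level scale often stays in the same band.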

Baseline characteristics

The 176 patients had a median age of 70 years (range 18–99 years); 118 (67%) were male. Due to image processing errors, a CXR was unreadable in one patient without paired imaging and three with, leaving 301 CXRs.

The expert consensus assigned the following BSTI categories: CVCX0 in 97 (32%), CVCX1 in 119 (40%), CVCX2 in 58 (19%), and CVCX3 in 27 (9.0%). Consensus BSTI severity was normal, mild, moderate, or severe in 97 (32%), 93 (31%), 68 (23%), and 27 (14%) respectively. The median consensus COVID-RALES was 2 (IQR 0 to 4, range 0 to 8).

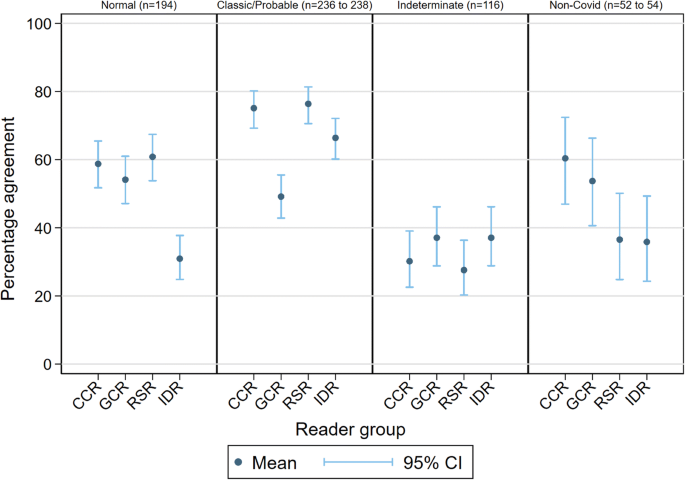

Agreement for indeterminate category (Fig. 2 )

Our primary outcome was reader group agreement with expert consensus for indeterminate COVID-19 (CVCX2), reflecting potential triage to CT. The mean agreement for CVCX2 was generally low (28 to 37%). For all reader groups, the main alternative classification for CVCX2 was CVCX1 (“classic” COVID-19), followed by CVCX3 (not COVID-19) (Fig. S2 ). Even CCR1 and CCR2, who were the two subspecialist readers composing the expert consensus, demonstrated low agreement with their own consensus for CVCX2 (Fig. S3 ). These data suggest that basing CT referral on CXR interpretation is unreliable, even when interpreted by chest subspecialist radiologists.

Percentage agreement with consensus for individual BSTI categories for reader groups

An alternative clinical triage categorisation for CT referral would be to combine “indeterminate” and “normal” BSTI categories (CVCX0 and CVCX2), which resulted in higher agreement (CCR 73% (95% CI 68%, 77%), RSR 75% (71%, 79%), GCR 58% (53%, 62%), and IDR 61% (56%, 65%)).

Agreement for BSTI categorisation (Table 3 and Fig. 2 )

Agreement was highest for CVCX1 (“classic/probable”) for the CCR (75% (69%, 80%)), RSR (76% (71%, 81%)), and IDR (66% (60%, 72%)) groups, but interestingly not for GCR (49% (43%, 55%)), where agreement was comparable to their agreement for CVCX0 and CVCX3 (“non-COVID-19”) (although still higher than their agreement for CVCX2 (“indeterminate”)). When disagreeing with the consensus CVCX1, GCR were most likely to assign CVCX2 (Fig. S1 ).

Agreement with consensus for CVCX0 (“normal”) was similar for radiologists of all types (mean agreement for CCR, GCR, and RSR of 59%, 54%, and 61% respectively), but lower for IDR (31%). For CVCX3 (not COVID-19), CCR and GCR were generally more likely than RSR and IDR readers to agree with the consensus.

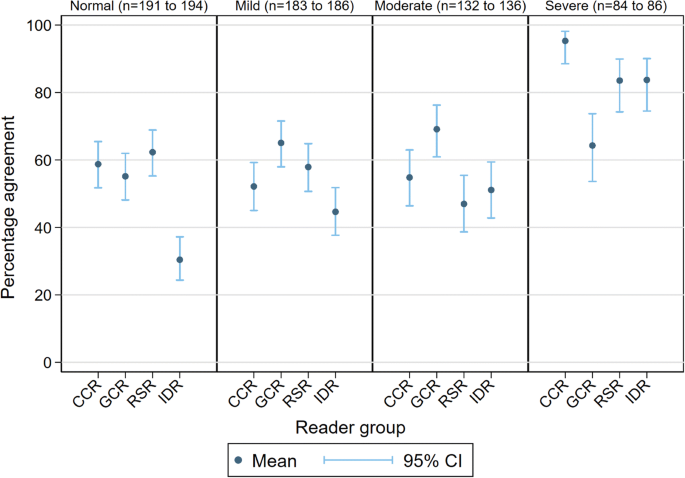

Agreement for BSTI severity classification (Table 4 and Fig. 3 )

Agreement that classification was “severe” was highest for all groups, but lower for GCR (65% (54%, 74%)) than other groups (means of 95% (89%, 98%), 84% (74%, 90%), and 84% (75%, 90%) for CCR, RSR, and IDR respectively). The majority of consensus-graded normal cases were likely to be designated “mild” (Fig. S4 ).

Percentage agreement with consensus for BSTI severity classification for reader groups

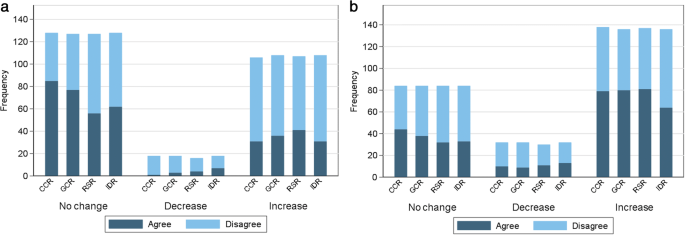

Agreement for change on CXRs (Table 5 and Fig. 4 )

The expert consensus reference found that the majority of BSTI severity scores did not change where paired CXR examinations were separated by just one or two days. Using the BSTI severity classification, the highest agreement with consensus across all groups was for “no change”, with percentage agreement of 66%, 61%, 44%, and 48% for CCR, GCR, RSR, and IDR respectively.

Frequency charts showing agreement with consensus for score change using the BSTI severity classification (a) and the Covid-RALES (b) for reader groups

In contrast, when using Covid-RALES, the highest agreement with consensus across all groups was for an “increased score”, with percentage agreement of 57%, 59%, 59%, and 47% for CCR, GCR, RSR, and IDR respectively. This most likely reflects the larger number of individual categories assigned by Covid-RALES.

Thus far, studies of CXR for COVID-19 have reported its diagnostic accuracy [6, 7], the implications of CXR severity assessment using various scores [4, 8, 9, 10], or quantification using computer vision techniques [11, 12, 13]. Inter-observer agreement for categorisation of COVID-19 CXRs, including for the BSTI classification (but not BSTI severity), has been assessed amongst consultant radiologists [14], while inter-observer differences according to radiologist experience have been described [15, 16]. Notably, in a case-control study, Hare et al compared the agreement for the BSTI classification amongst seven consultant radiologists, including two fellowship-trained chest radiologists (with the latter providing the reference standard). They found that only fair agreement was obtained for the CVCX2 (κ = 0.23) and “non-COVID-19” (κ = 0.37) categories, but that combining the CVCX2 and CVCX3 categories improved inter-observer agreement (κ = 0.58) [14]. A recent study compared the sensitivity and specificity (but not agreement) of using the “classic/probable” BSTI category for COVID-19 diagnosis between Emergency Department clinicians and radiologists (both of various grades), based on a retrospective review of their classifications [17].
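
To make concrete why merging two frequently confused categories can raise a kappa statistic, the following minimal Python sketch computes Cohen's kappa for two raters before and after merging CVCX3 into CVCX2. The choice of Cohen's two-rater kappa is an assumption made for illustration (the kappa variant used by Hare et al is not stated here), and the ratings, the merged label, and the function names are hypothetical.

```python
from collections import Counter


def cohen_kappa(a, b):
    """Cohen's kappa for two raters labelling the same cases."""
    if len(a) != len(b) or not a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement from each rater's marginal category frequencies.
    expected = sum(count_a[k] * count_b[k] for k in count_a.keys() | count_b.keys()) / n ** 2
    if expected == 1:
        return 1.0  # degenerate case: both raters used one identical label
    return (observed - expected) / (1 - expected)


def merge(labels, mapping):
    """Relabel categories according to `mapping`, leaving others unchanged."""
    return [mapping.get(x, x) for x in labels]


# Hypothetical ratings for eight cases (not study data).
rater_a = ["CVCX1", "CVCX1", "CVCX2", "CVCX3", "CVCX2", "CVCX3", "CVCX0", "CVCX0"]
rater_b = ["CVCX1", "CVCX1", "CVCX3", "CVCX2", "CVCX2", "CVCX3", "CVCX0", "CVCX0"]

kappa_separate = cohen_kappa(rater_a, rater_b)

combine = {"CVCX2": "CVCX2/3", "CVCX3": "CVCX2/3"}
kappa_merged = cohen_kappa(merge(rater_a, combine), merge(rater_b, combine))

print(round(kappa_separate, 3), round(kappa_merged, 3))
```

Here the only disagreements are swaps between CVCX2 and CVCX3, so kappa rises from about 0.67 to 1.0 after merging. In real data the gain is smaller, because merging also increases the agreement expected by chance.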

Our study differs in that it pivots around three potential clinical scenarios that use the CXR to manage suspected COVID-19. Using a prospective multi-reader, multi-case design, we determined reader agreement for four clinical groups who are tasked with CXR interpretation in daily practice and compared these to a consensus reference standard. Firstly, we evaluated reader agreement when using CXR to triage patients for CT when CXR imaging is insufficient to diagnose COVID-19. Secondly, we examined agreement for disease severity using two scores (BSTI and RALES). Thirdly, we investigated whether paired CXRs could monitor any change in severity.

When CXR was used to identify which patients need CT, agreement with our consensus was low (28 to 37%) when based on our pre-specified BSTI category of an indeterminate interpretation alone, and moderate (58 to 75%) when the indeterminate and normal categories were combined. All four reader groups had similar agreement with the consensus for identifying indeterminates, indicating that the level of specialism or radiologist expertise did not enhance agreement. When combining indeterminates with normal, GCR and IDR groups had lower agreement because the GCR group assigned more indeterminates as non-COVID-19, whereas the IDR group assigned more to classic/probable COVID-19.

Similar (albeit modest) agreement for the “normal” category amongst radiologists of all grades and types suggests that these factors are not influential when assigning this category. Radiologists seemed willing to consider many CXRs normal despite assuming a high prevalence setting. Reassuringly, this suggests that patient disposition, if based on normal CXR interpretation, is unlikely to vary much depending on the category of radiologist. Conversely, the lower agreement of ID clinicians for a normal CXR suggests an inclination to overcall abnormality, since they classified normal CXRs mostly as “indeterminate” but also as “classic/probable” COVID-19. We speculate that the contemporary pandemic clinical experience of ID clinicians made it difficult for them to consider a CXR normal, even when deprived of supporting clinical information.

In contrast, general consultant radiologists were less inclined to assign the “classic/probable” category, predominantly favouring the indeterminate category. Our results are somewhat at odds with Hare et al [14], who found substantial agreement for the CVCX1 category amongst seven consultant radiologists. Reasons underpinning the reticence to assign this category (even in a high prevalence setting) are difficult to intuit but may be partly attributable to a desire to adhere to strict definitions for this category, and thus maintain specificity.

Severity scores can quantify disease fluctuations that influence patient management, have prognostic implications [8, 9, 10], and may also be employed in clinical trials. However, this is only possible if scores are reliable, which is reflected by reader agreement regarding both value and change. For our second and third clinical scenarios for CXR, we also found that assessment of severity and change, and therefore of CXR severity itself, varied between reader groups and between readers using either severity scoring system, but in different ways. It is probably unsurprising that agreement for no change in BSTI severity was highest for all reader groups, given that the four-grade nature of that classification is less likely to detect subtle change. In contrast, the finer gradation of Covid-RALES allows smaller severity increments to be captured more readily. A higher number of categories also encourages disagreement; despite this, agreement was modest.
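
The link between the number of grades and the ability to register change can be sketched in a few lines of Python. The sketch assumes a hypothetical latent severity between 0 and 1 that is binned into equal-width grades. The four-level scale mirrors the four-grade BSTI severity classification, while the 24-level scale is purely illustrative and is not the actual Covid-RALES range. All names and values are assumptions.

```python
def grade(severity, n_levels):
    """Bin a latent severity in [0, 1] into an integer grade 0..n_levels-1."""
    if not 0.0 <= severity <= 1.0:
        raise ValueError("severity must lie in [0, 1]")
    return min(int(severity * n_levels), n_levels - 1)


def change(before, after, n_levels):
    """Direction of change between two readings on an n_levels scale."""
    diff = grade(after, n_levels) - grade(before, n_levels)
    if diff > 0:
        return "increased"
    if diff < 0:
        return "decreased"
    return "no change"


COARSE_LEVELS = 4   # four-grade scale, as for BSTI severity
FINE_LEVELS = 24    # hypothetical finer scale, chosen only for illustration

# Hypothetical paired severities (first CXR, second CXR); not study data.
pairs = [(0.30, 0.36), (0.60, 0.55), (0.20, 0.70)]

coarse = [change(b, a, COARSE_LEVELS) for b, a in pairs]
fine = [change(b, a, FINE_LEVELS) for b, a in pairs]

for pair, c, f in zip(pairs, coarse, fine):
    print(pair, "coarse:", c, "| fine:", f)
```

The two small shifts stay within one coarse grade and register as “no change” on the four-level scale, but cross a boundary on the finer scale. Only the large shift is seen as a change on both scales.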

We wished to examine CXR utility in a real-world clinical setting using consecutive patients presenting to our emergency department with suspected COVID-19 infection. Our findings are important because they examine clear clinical roles for CXR beyond a purely binary diagnosis of COVID-19 versus non-COVID-19. Rather, we examined the CXR as an aid to clinical decision-making and as an adjunct to clinical and molecular testing. CXR has moderate pooled sensitivity and specificity for COVID-19 (81% and 72% respectively) [18] and, in the context of other clinical and diagnostic tests [19], such diagnostic accuracy could be considered favourable. Although thoracic CT has a higher sensitivity for diagnosing COVID-19 [18], CXR has been used and investigated in this triage role both in the UK and internationally [20]. However, our results do have important implications when using CXR for diagnosis because interpretation appears susceptible to substantial inter-reader variation. Investigating reader variability will also be crucial for the development, training, and evaluation of artificial intelligence algorithms to diagnose COVID-19, such as that now underway using the National COVID-19 Chest Imaging Database (NCCID) [21].

Our study has limitations. ID clinicians, as the first clinicians to assess potential COVID-19 cases, were the only group of non-radiologist clinicians evaluated. While we would have wished to evaluate emergency department and general medical colleagues also, this proved impractical. However, we have no a priori expectation that these would perform any differently. Our reference standard interpretation used two subspecialist chest radiologists; like any subjective standard, ours is imperfect, but with precedent [14]. We point out that our data around variability of reader classifications are robust regardless of the reference standard (see data in supplementary Figs. S2 and S4). Arguably, we disadvantaged ID clinicians by requiring them to interpret CXRs using LCD monitors, but this reflects normal clinical practice. It is possible that readers may have focussed on BSTI diagnostic categories in isolation, rather than considering the implications of how their categorisation would be used to decide patient management but, again, this reflects normal practice (since radiologists do not determine management). Readers did not compare serial CXRs directly, but read them in isolation. We note a potential role for monitoring disease progression when serial CXRs are viewed simultaneously, but this outcome would require assessment by other studies.

In conclusion, across a diverse group of clinicians, agreement for BSTI diagnostic categorisation for COVID-19 CXR classification varies widely for many categories, and to such a degree that it may render CXR ineffective for triage using such categories. Agreement for serial change over time was also moderate, underscoring the need for cautious interpretation of changes in severity scores if using these to guide management and predict outcome, when these scores have been assigned to serial CXRs read in isolation.

Abbreviations

BSTI: British Society of Thoracic Imaging

CCR: Consultant chest radiologists

Covid-RALES: Radiographic Assessment of Lung Edema (RALE) score, modified for COVID-19 interpretation

CVCX: BSTI COVID-19 chest radiograph category

CXR: Chest radiograph

GCR: General consultant radiologists

IDR: Infectious diseases consultants and senior trainees

NHS: National Health Service

RSR: Radiology specialist residents in training

References

Nair A, Rodrigues JCL, Hare S et al (2020) A British Society of Thoracic Imaging statement: considerations in designing local imaging diagnostic algorithms for the COVID-19 pandemic. Clin Radiol 75(5):329–334

Guan WJ, Zhong NS (2020) Clinical characteristics of Covid-19 in China. Reply. N Engl J Med 382(19):1861–1862

Wong HYF, Lam HYS, Fong AH et al (2020) Frequency and distribution of chest radiographic findings in patients positive for COVID-19. Radiology 296(2):E72–E78

Liang W, Liang H, Ou L et al (2020) Development and validation of a clinical risk score to predict the occurrence of critical illness in hospitalized patients with COVID-19. JAMA Intern Med 180(8):1081–1089

Public Health England (2020) COVID-19: investigation and initial clinical management of possible cases. Updated 14 Dec 2020. Available from: https://www.gov.uk/government/publications/wuhan-novel-coronavirus-initial-investigation-of-possible-cases/investigation-and-initial-clinical-management-of-possible-cases-of-wuhan-novel-coronavirus-wn-cov-infection. Accessed 23 Dec 2021

Schiaffino S, Tritella S, Cozzi A et al (2020) Diagnostic performance of chest X-ray for COVID-19 pneumonia during the SARS-CoV-2 pandemic in Lombardy, Italy. J Thorac Imaging 35(4):W105–W106

Gatti M, Calandri M, Barba M et al (2020) Baseline chest X-ray in coronavirus disease 19 (COVID-19) patients: association with clinical and laboratory data. Radiol Med 125(12):1271–1279

Orsi MA, Oliva G, Toluian T, Valenti PC, Panzeri M, Cellina M (2020) Feasibility, reproducibility, and clinical validity of a quantitative Chest X-ray assessment for COVID-19. Am J Trop Med Hyg 103(2):822–827

Toussie D, Voutsinas N, Finkelstein M et al (2020) Clinical and chest radiography features determine patient outcomes in young and middle-aged adults with COVID-19. Radiology 297(1):E197–E206

Balbi M, Caroli A, Corsi A et al (2021) Chest X-ray for predicting mortality and the need for ventilatory support in COVID-19 patients presenting to the emergency department. Eur Radiol 31(4):1999–2012

Murphy K, Smits H, Knoops AJG et al (2020) COVID-19 on chest radiographs: a multireader evaluation of an artificial intelligence system. Radiology 296(3):E166–E172

Ebrahimian S, Homayounieh F, Rockenbach MABC et al (2021) Artificial intelligence matches subjective severity assessment of pneumonia for prediction of patient outcome and need for mechanical ventilation: a cohort study. Sci Rep 11(1):858

Jang SB, Lee SH, Lee DE et al (2020) Deep-learning algorithms for the interpretation of chest radiographs to aid in the triage of COVID-19 patients: A multicenter retrospective study. PLoS One 15(11):e0242759

Hare SS, Tavare AN, Dattani V et al (2020) Validation of the British Society of Thoracic Imaging guidelines for COVID-19 chest radiograph reporting. Clin Radiol 75(9):710.e9–710.e14

Cozzi A, Schiaffino S, Arpaia F et al (2020) Chest x-ray in the COVID-19 pandemic: radiologists' real-world reader performance. Eur J Radiol 132:109272

Reeves RA, Pomeranz C, Gomella AA et al (2021) Performance of a severity score on admission chest radiography in predicting clinical outcomes in hospitalized patients with coronavirus disease (COVID-19). Am J Roentgenol 217(3):623–632. https://doi.org/10.2214/AJR.20.24801

Kemp OJ, Watson DJ, Swanson-Low CL, Cameron JA, Von Vopelius-Feldt J (2020) Comparison of chest X-ray interpretation by Emergency Department clinicians and radiologists in suspected COVID-19 infection: a retrospective cohort study. BJR Open 2(1):20200020

Islam N, Ebrahimzadeh S, Salameh J-P et al (2021) Thoracic imaging tests for the diagnosis of COVID‐19. Cochrane Database Syst Rev 3(3):CD013639. https://doi.org/10.1002/14651858.CD013639.pub4

Mallett S, Allen AJ, Graziadio S et al (2020) At what times during infection is SARS-CoV-2 detectable and no longer detectable using RT-PCR-based tests? A systematic review of individual participant data. BMC Med 18(1):346

Çinkooğlu A, Bayraktaroğlu S, Ceylan N, Savaş R (2021) Efficacy of chest X-ray in the diagnosis of COVID-19 pneumonia: comparison with computed tomography through a simplified scoring system designed for triage. Egypt J Radiol Nucl Med 52(1):1–9

Jacob J, Alexander D, Baillie JK et al (2020) Using imaging to combat a pandemic: rationale for developing the UK National COVID-19 Chest Imaging Database. Eur Respir J 56(2):2001809

The authors state that this work has not received any funding.

Author information

Arjun Nair and Alexander Procter contributed equally to this work.

Authors and Affiliations