
A Primer on Critical Thinking and Business Ethics

ISBN: 978-1-83753-309-1, eISBN: 978-1-83753-308-4

Publication date: 27 July 2023

Executive Summary

All of us seek truth via objective inquiry into various human and nonhuman phenomena that nature presents to us on a daily basis. We are empirical (or nonempirical) decision makers who hold that uncertainty is our discipline, and that understanding how to act under conditions of incomplete information is the highest and most urgent human pursuit (Karl Popper, as cited in Taleb, 2010, p. 57). We verify (prove something as right) or falsify (prove something as wrong), and this asymmetry of knowledge enables us to distinguish between science and nonscience. According to Karl Popper (1971), we should be an “open society,” one that relies on skepticism as a modus operandi, refusing and resisting definitive (dogmatic) truths. An open society, maintained Popper, is one in which no permanent truth is held to exist; this would allow counter-ideas to emerge. Hence, any idea of Utopia is necessarily closed since it chokes its own refutations. A good model for society that cannot be left open for falsification is totalitarian and epistemologically arrogant. The difference between an open and a closed society is that between an open and a closed mind (Taleb, 2004, p. 129). Popper accused Plato of closing our minds. Popper's idea was that science has problems of fallibility or falsifiability. In this chapter, we deal with fallibility and falsifiability of human thinking, reasoning, and inferencing as argued by various scholars, as well as the falsifiability of our knowledge and cherished cultures and traditions. Critical thinking helps us cope with both vulnerabilities. In general, we argue for supporting the theory of “open mind and open society” in order to pursue objective truth.

Mascarenhas, O.A.J., Thakur, M. and Kumar, P. (2023), "Critical Thinking for Understanding Fallibility and Falsifiability of Our Knowledge", A Primer on Critical Thinking and Business Ethics, Emerald Publishing Limited, Leeds, pp. 187-216. https://doi.org/10.1108/978-1-83753-308-420231007

Emerald Publishing Limited

Copyright © 2023 Oswald A. J. Mascarenhas, Munish Thakur and Payal Kumar. Published under exclusive licence by Emerald Publishing Limited


Law of Falsifiability

The Law of Falsifiability is a rule that a famous thinker named Karl Popper came up with. In simple terms, for something to be called scientific, there must be a way to show it could be incorrect. Imagine you’re saying you have an invisible, noiseless pet dragon in your room that no one can touch or see. If no test could ever show whether the dragon is really there, then the claim isn’t scientific. But if you claim that water boils at 100 degrees Celsius at sea level, we can test this. If it turned out that water does not boil at this temperature under these conditions, the claim would be proven false. That’s what Karl Popper was getting at – science is about making claims that can be tested and possibly shown to be false, and that’s what keeps it trustworthy and moving forward.
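The dragon and boiling-water examples can be restated as a small check: a claim is falsifiable if at least one conceivable observation would contradict it. The Python sketch below is only an illustration under simplifying assumptions. It treats an observation as a single measured boiling point. The names `is_falsifiable`, `water_claim` and `dragon_claim`, as well as the 0.5 °C tolerance, are hypothetical choices and are not part of Popper’s account.

```python
from typing import Callable, Iterable

# Hypothetical model: an "observation" is a measured boiling point of
# water at sea level, in degrees Celsius.
Observation = float

# A claim is represented by which observations it permits (True = consistent).
Claim = Callable[[Observation], bool]

def is_falsifiable(claim: Claim, conceivable: Iterable[Observation]) -> bool:
    """True if at least one conceivable observation would contradict the claim."""
    return any(not claim(obs) for obs in conceivable)

def water_claim(obs: Observation) -> bool:
    # "Water boils at 100 °C at sea level" (assumed 0.5 °C measurement tolerance).
    return abs(obs - 100.0) <= 0.5

def dragon_claim(obs: Observation) -> bool:
    # "An invisible, noiseless dragon no one can touch or see is in the room":
    # by construction it is consistent with every possible measurement.
    return True

conceivable = [90.0, 99.8, 100.0, 100.3, 105.0]
print(is_falsifiable(water_claim, conceivable))   # True
print(is_falsifiable(dragon_claim, conceivable))  # False
```

Note that `water_claim(100.0)` returns `True`. Being falsifiable does not make a claim false. It only means that some possible result could have refuted it.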

Examples of Law of Falsifiability

- Astrology – Astrology is like saying certain traits or events will happen to you based on star patterns. But because its predictions are too general and can’t be checked in a clear way, it doesn’t pass the test of falsifiability. This means astrology cannot be considered a scientific theory since you can’t show when it’s wrong with specific tests.

- The Theory of Evolution – In contrast, the theory of evolution is something we can test. It says that the many kinds of living things developed gradually from earlier forms over a very long time. If someone were to find an animal’s remains in a rock layer where it should not be, such as a rabbit in rock that’s 500 million years old, that would challenge the theory. Since we can test it by looking for evidence like this, evolution is considered falsifiable.

Why is it Important?

The Law of Falsifiability matters a lot because it separates what’s considered scientific from what’s not. When an idea can’t be tested or shown to be wrong, it can lead people down the wrong path. By focusing on theories we can test, science gets stronger and we learn more about the world for real. For everyday people, this is key because it means we can rely on science for things like medicine, technology, and understanding our environment. If scientists didn’t use this rule, we might believe in things that aren’t true, like magic potions or the idea that some stars can predict your future.

Implications and Applications

The rule of being able to test if something is false is basic in the world of science and is used in all sorts of subjects. For example, in an experiment, scientists try really hard to see if their guess about something can be shown wrong. If their guess survives all the tests, it’s a good sign; if not, they need to think again or throw it out. This is how science gets better and better.

Comparison with Related Axioms

- Verifiability : This means checking if a statement or idea is true. Both verifiability and falsifiability have to do with testing, but Popper saw falsifiability as more important: no number of successful checks can prove a general claim true for every case, while a single reliable counterexample can show that it is false.

- Empiricism : This is the belief that knowledge comes from what we can sense – like seeing, hearing, or touching. Falsifiability and empiricism go hand in hand because both involve using real evidence to test out ideas.

- Reproducibility : This idea says that doing the same experiment in the same way should give you the same result. To show something is falsifiable, you should be able to repeat a test over and over, with the chance that it might fail.

Karl Popper introduced the Law of Falsifiability in the 1930s. He didn’t like theories that seemed to answer everything because, to him, they actually explained nothing. By making this law, he aimed to draw a clear line between what could be taken seriously in science and what could not. It was his way of making sure scientific thinking stayed sharp and clear.

Controversies

Not everyone agrees that falsifiability is the only way to tell if something is scientific. Some experts point out areas in science, like string theory from physics, which are really hard to test and so are hard to apply this law to. Also, in science fields that look at history, like how the universe began or how life changed over time, it’s not always about predictions that can be tested, but more about understanding special events. These differences in opinion show that while it’s a strong part of scientific thinking, falsifiability might not work for every situation or be the only thing that counts for scientific ideas.

Related Topics

- Scientific Method : This is the process scientists use to study things. It involves asking questions, making a hypothesis, running experiments, and seeing if the results support the hypothesis. Falsifiability is part of this process because scientists have to be able to test their hypotheses.

- Peer Review : When scientists finish their work, other experts check it to make sure it was done right. This involves reviewing if the experiments and tests were set up in a way that they could have shown the work was false if it wasn’t true.

- Logic and Critical Thinking : These are skills that help us make good arguments and decisions. Understanding falsifiability helps people develop these skills because it teaches them to always look for ways to test ideas.

In conclusion, the Law of Falsifiability, as proposed by Karl Popper, is a key part of a scientist’s toolbox. It requires that ideas can be tested and possibly shown to be false. By using this rule, we avoid believing in things without good evidence, and we make what we learn about the world through science stronger and more reliable.


Falsifiability

Karl Popper's basic scientific principle

Falsifiability, according to the philosopher Karl Popper, defines the inherent testability of any scientific hypothesis.


Science and philosophy have always worked together to try to uncover truths about the universe we live in. Indeed, ancient philosophy can be understood as the originator of many of the separate fields of study we have today, including psychology, medicine, law, astronomy, art and even theology.

Scientists design experiments and try to obtain results verifying or disproving a hypothesis, but philosophers are interested in understanding what factors determine the validity of scientific endeavors in the first place.

Whilst most scientists work within established paradigms, philosophers question the paradigms themselves and try to explore our underlying assumptions and definitions behind the logic of how we seek knowledge. Thus there is a feedback relationship between science and philosophy - and sometimes plenty of tension!

One of the tenets behind the scientific method is that any scientific hypothesis and resultant experimental design must be inherently falsifiable. Although falsifiability is not universally accepted, it is still the foundation of the majority of scientific experiments. Most scientists accept and work with this tenet, but it has its roots in philosophy and the deeper questions of truth and our access to it.

What is Falsifiability?

Falsifiability is the assertion that for any hypothesis to have credence, it must be inherently disprovable before it can become accepted as a scientific hypothesis or theory.

For example, someone might claim “the earth is younger than many scientists state, and in fact was created to appear as though it was older through deceptive fossils etc.” This claim is unfalsifiable because it can never be shown to be false. If you were to present such a person with fossils, geological data or arguments about the nature of compounds in the ozone, they could dismiss the argument by saying that your evidence was fabricated to appear that way, and isn’t valid.

Importantly, falsifiability doesn’t mean that there are currently arguments against a theory, only that it is possible to imagine some kind of evidence or argument which would invalidate it. Falsifiability says nothing about an argument's inherent validity or correctness. It is only the minimum trait required of a claim that allows it to be engaged with in a scientific manner – a dividing line between what is considered science and what isn’t. Another important point is that falsifiability is not the same as being unproven. A conjecture that hasn’t been proven yet is simply a hypothesis, and whether it is falsifiable depends on whether some possible observation could contradict it.

The idea is that no theory can be shown to be completely correct, but if it is falsifiable and also supported by evidence, it can be provisionally accepted as true.

For example, Newton's Theory of Gravity was accepted as true for centuries. It fit the data obtained by experimentation and research, such as the fact that objects do not randomly float away from the earth, but it was always subject to testing.

However, Einstein's theory makes falsifiable predictions that differ from those made by Newton's theory, for example concerning the precession of the orbit of Mercury and the gravitational lensing of light. In non-extreme situations, Einstein's and Newton's theories make practically the same predictions, so both work well there. But Einstein's theory holds true in a superset of the conditions in which Newton's theory holds, so Einstein's theory is preferred as the more general account. On the other hand, Newtonian calculations are simpler, so Newton's theory is useful for almost any engineering project, including some space projects. For GPS, however, we need Einstein's theory. Scientists would not have arrived at either of these theories, or learned where each one applies, without the use of testable, falsifiable experiments.

Popper saw falsifiability as a black and white definition: if a theory is falsifiable, it is scientific, and if not, then it is unscientific. Whilst some "pure" sciences do adhere to this strict criterion, many fall somewhere between the two extremes, with pseudo-sciences falling at the extreme end of being unfalsifiable.

Pseudoscience

According to Popper, many branches of applied science, especially social science, are not truly scientific because they have no potential for falsification.

Anthropology and sociology, for example, often use case studies to observe people in their natural environment without actually testing any specific hypotheses or theories.

While such studies and ideas are not falsifiable, most would agree that they are scientific because they significantly advance human knowledge.

Popper had and still has his fair share of critics, and the question of how to demarcate legitimate scientific enquiry can get very convoluted. Some statements are logically falsifiable but not practically falsifiable – consider the famous example of “it will rain at this location in a million years' time.” You could absolutely conceive of a way to test this claim, but carrying it out is a different story.

Thus, falsifiability is not a simple black and white matter. The Raven Paradox, which concerns how individual observations bear on general claims, highlights a related danger: very few scientific experiments can measure all of the data, so they necessarily rely upon generalization. Technologies change along with our aims and comprehension of the phenomena we study, and so the falsifiability criterion for good science is subject to shifting.

For many sciences, the idea of falsifiability is a useful tool for generating theories that are testable and realistic. Testability is a crucial starting point around which to design solid experiments that have a chance of telling us something useful about the phenomena in question. If a falsifiable theory is tested and the results are significant, then it can be provisionally accepted as a scientific truth.

The advantage of Popper's idea is that such truths can be falsified when more knowledge and resources become available. Even long-accepted theories such as Gravity, Relativity and Evolution continue to be tested, challenged and adapted.

The major disadvantage of falsifiability is that it is very strict in its definitions and does not take into account the contributions of sciences that are observational and descriptive.


Martyn Shuttleworth, Lyndsay T Wilson (Sep 21, 2008). Falsifiability. Retrieved Apr 09, 2024 from Explorable.com: https://explorable.com/falsifiability

You Are Allowed To Copy The Text

The text in this article is licensed under the Creative Commons-License Attribution 4.0 International (CC BY 4.0).

This means you're free to copy, share and adapt any parts (or all) of the text in the article, as long as you give appropriate credit and provide a link/reference to this page.

That is it. You don't need our permission to copy the article; just include a link/reference back to this page. You can use it freely (with some kind of link), and we're also okay with people reprinting in publications like books, blogs, newsletters, course-material, papers, wikipedia and presentations (with clear attribution).


5 Falsifiability

Textbook chapters (or similar texts).

- Deductive Logic

- Persuasive Reasoning and Fallacies

- The Falsifiability Criterion of Science

- Understanding Science

Journal articles

- Why a Confirmation Strategy Dominates Psychological Science

*******************************************************

Inquiry-based Activity: Popular media and falsifiability

Introduction : Falsifiability, or the ability for a statement/theory to be shown to be false, was noted by Karl Popper to be the clearest way to distinguish science from pseudoscience. While incredibly important to scientific inquiry, it is also important for students to understand how this criterion can be applied to the news and information they interact with in their day-to-day lives. In this activity, students will apply the logic of falsifiability to rumors and news they have heard of in the popular media, demonstrating the applicability of scientific thinking to the world beyond the classroom.

Question to pose to students : Think about the latest celebrity rumor you have heard about in the news or through social media. If you cannot think of one, some examples might include, “the CIA killed Marilyn Monroe” and “Tupac is alive.” Have students get into groups, discuss their rumors, and select one to work with.

Note to instructors: Please modify/update these examples if needed to work for the students in your course. Snopes is a good source for recent examples.

Students form a hypothesis : Thinking about that rumor, decide what evidence would be necessary to prove that it was correct. That is, imagine you were a skeptic and automatically did not believe the rumor – what would someone need to tell or show you to convince you that it was true?

Students test their hypotheses : Each group (A) should then pair up with one other group (B) and try to convince them their rumor is true, providing them with the evidence from above. Members of group B should then come up with any reasons they can think of why the rumor may still be false. For example – if “Tupac is alive” is the rumor and “show the death certificate” is a piece of evidence provided by group A, group B could posit that the death certificate was forged by whoever kidnapped Tupac. Once group B has evaluated all of group A’s evidence, have the groups switch such that group B is now trying to convince group A about their rumor.

Do the students’ hypotheses hold up? : Together, have the groups work out whether the rumors they discussed are falsifiable. That is, could any evidence ever show them to be false? Remember, a claim is non-falsifiable if there can always be an explanation for the absence of evidence and/or an exhaustive search for evidence would be required. Depending on the length of your class, students can repeat the previous step with multiple groups.


Karl Popper: Theory of Falsification

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Karl Popper’s theory of falsification contends that scientific inquiry should aim not to verify hypotheses but to rigorously test them and identify conditions under which they are false. For a theory to count as scientific according to falsification, it must produce hypotheses that have the potential to be proven incorrect by observable evidence or experimental results. Unlike verification, falsification focuses on attempting to disprove theoretical predictions rather than confirming them.

- Karl Popper believed that scientific knowledge is provisional – the best we can do at the moment.

- Popper is known for his attempt to refute the classical positivist account of the scientific method by replacing induction with the falsification principle.

- The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory to be considered scientific, it must be able to be tested and conceivably proven false.

- For example, the hypothesis that “all swans are white” can be falsified by observing a black swan.

- For Popper, science should attempt to disprove a theory rather than attempt to continually support theoretical hypotheses.

Theory of Falsification

Karl Popper is prescriptive and describes what science should do (not how it actually behaves). Popper is a rationalist and contended that the central question in the philosophy of science was distinguishing science from non-science.

Karl Popper, in ‘The Logic of Scientific Discovery’, emerged as a major critic of inductivism, which he saw as an essentially old-fashioned strategy.

Popper replaced the classical observationalist-inductivist account of the scientific method with falsification (i.e., deductive logic) as the criterion for distinguishing scientific theory from non-science.

All inductive evidence is limited: we do not observe the universe at all times and in all places. We are not justified, therefore, in making a general rule from this observation of particulars.

According to Popper, scientific theory should make predictions that can be tested, and the theory should be rejected if these predictions are shown not to be correct.

He argued that science would best progress with deductive reasoning as its primary emphasis, an approach known as critical rationalism.
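Popper’s preference for deduction rests on a logical asymmetry. If hypothesis H predicts observation P, then a failed prediction (not P) deductively rules out H. This argument form is known as modus tollens. A successful prediction (P), by contrast, does not establish H, because inferring H from P is the fallacy of affirming the consequent. The Python sketch below is an illustrative brute-force truth-table check, not a method taken from the source. The helper names `implies` and `is_valid` are hypothetical. It confirms which of the two argument forms is valid.

```python
from itertools import product
from typing import Callable, List

# A proposition is modelled as a function of two truth values:
# h = "the hypothesis H is true", p = "the predicted observation P occurs".
Prop = Callable[[bool, bool], bool]

def implies(a: bool, b: bool) -> bool:
    """Material conditional: 'a -> b' is false only when a is true and b is false."""
    return (not a) or b

def is_valid(premises: List[Prop], conclusion: Prop) -> bool:
    """An argument form is valid if no truth assignment makes every premise
    true while the conclusion is false."""
    for h, p in product([True, False], repeat=2):
        if all(premise(h, p) for premise in premises) and not conclusion(h, p):
            return False  # found an assignment that breaks the argument
    return True

# Falsification (modus tollens): H -> P, not P, therefore not H.
falsification_valid = is_valid(
    [lambda h, p: implies(h, p), lambda h, p: not p],
    lambda h, p: not h,
)

# Confirmation (affirming the consequent): H -> P, P, therefore H.
confirmation_valid = is_valid(
    [lambda h, p: implies(h, p), lambda h, p: p],
    lambda h, p: h,
)

print(falsification_valid)  # True
print(confirmation_valid)   # False
```

The single counterexample that defeats the second form is H false and P true. In that case the prediction comes true for some other reason, even though the hypothesis is wrong.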

Popper gives the following example:

For thousands of years, Europeans had observed millions of white swans. Using inductive evidence, we could come up with the theory that all swans are white.

However, exploration of Australasia introduced Europeans to black swans. Popper’s point is this: no matter how many observations are made which confirm a theory, there is always the possibility that a future observation could refute it. Induction cannot yield certainty.
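The swan example can be sketched as a check of a universal claim against a finite run of observations. In this hypothetical Python illustration, the function names and the run of 10,000 white swans are invented for the example. However many white swans are recorded, the verdict never gets past "not yet falsified", whereas a single black swan settles the matter.

```python
from typing import List, Optional

def first_counterexample(colors: List[str], claimed: str = "white") -> Optional[int]:
    """Return the index of the first swan contradicting 'all swans are <claimed>', or None."""
    for i, color in enumerate(colors):
        if color != claimed:
            return i
    return None

def verdict(colors: List[str], claimed: str = "white") -> str:
    idx = first_counterexample(colors, claimed)
    if idx is None:
        # Confirming cases never prove the universal claim; they only fail to refute it.
        return f"not yet falsified after {len(colors)} confirming observations"
    return f"falsified by observation #{idx + 1}"

white_run = ["white"] * 10_000         # hypothetical long run of white swans
print(verdict(white_run))              # not yet falsified after 10000 confirming observations
print(verdict(white_run + ["black"]))  # falsified by observation #10001
```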

Karl Popper was also critical of the naive empiricist view that we objectively observe the world. Popper argued that all observation is from a point of view, and indeed that all observation is colored by our understanding. The world appears to us in the context of theories we already hold: it is ‘theory-laden.’

Popper proposed an alternative scientific method based on falsification. However many confirming instances exist for a theory, it only takes one counter-observation to falsify it. Science progresses when a theory is shown to be wrong and a new theory is introduced that better explains the phenomena.

For Popper, the scientist should attempt to disprove his/her theory rather than attempt to prove it continually. Popper does think that science can help us progressively approach the truth, but we can never be certain that we have the final explanation.

Critical Evaluation

Popper’s first major contribution to philosophy was his novel solution to the problem of the demarcation of science. According to the time-honored view, science, properly so-called, is distinguished by its inductive method – by its characteristic use of observation and experiment, as opposed to purely logical analysis, to establish its results.

The great difficulty was that no run of favorable observational data, however long and unbroken, is logically sufficient to establish the truth of an unrestricted generalization.

Popper’s astute formulations of logical procedure helped to rein in the excessive use of inductive speculation upon inductive speculation, and also helped to strengthen the conceptual foundation for today’s peer review procedures.

However, the history of science gives little indication of having followed anything like a methodological falsificationist approach.

Indeed, and as many studies have shown, scientists of the past (and still today) tended to be reluctant to give up theories that we would have to call falsified in the methodological sense, and very often, it turned out that they were correct to do so (seen from our later perspective).

The history of science shows that sometimes it is best to ‘stick to one’s guns’. For example, “In the early years of its life, Newton’s gravitational theory was falsified by observations of the moon’s orbit.”

Also, one observation does not falsify a theory. The experiment may have been badly designed; data could be incorrect.

Quine states that a theory is not a single statement; it is a complex network (a collection of statements). You might falsify one statement (e.g., all swans are white) in the network, but this should not mean you should reject the whole complex theory.
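Quine’s point, often discussed as the Duhem-Quine thesis, can be made concrete with a toy propositional model. Assume, purely for illustration, that a prediction follows only from a core hypothesis together with two auxiliary assumptions. The statements in the sketch below are hypothetical. When the prediction fails, logic tells us only that at least one statement in the network is false. The Python code enumerates the truth assignments that remain and shows that several of them keep the core hypothesis.

```python
from itertools import product
from typing import List, Tuple

# Hypothetical network: a core hypothesis plus two auxiliary assumptions.
# In this toy model the prediction "the observed bird is white" follows
# only when all three statements are true.
network = [
    "all swans are white",                 # core hypothesis
    "the observed bird is a swan",         # auxiliary assumption
    "the lighting showed its true color",  # auxiliary assumption
]

def entails_prediction(values: Tuple[bool, ...]) -> bool:
    return all(values)

# The prediction failed, so keep only assignments that do NOT entail it.
survivors: List[Tuple[bool, ...]] = [
    values
    for values in product([True, False], repeat=len(network))
    if not entails_prediction(values)
]
keep_core = [values for values in survivors if values[0]]

print(len(survivors), "of", 2 ** len(network), "assignments survive")  # 7 of 8 assignments survive
for values in keep_core:
    rejected = [s for s, holds in zip(network, values) if not holds]
    print("keep core, reject:", "; ".join(rejected))
```

In this toy model, 3 of the 7 surviving assignments keep “all swans are white” and give up an auxiliary assumption instead. This is why a single failed prediction does not by itself dictate rejecting the core theory.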

Critics of Karl Popper, chiefly Thomas Kuhn, Paul Feyerabend, and Imre Lakatos, rejected the idea that there exists a single method that applies to all science and could account for its progress.

Popper, K. R. (1959). The logic of scientific discovery. University Press.

Further Information

- Thomas Kuhn – Paradigm Shift

- Is Psychology a Science?

- Steps of the Scientific Method

- Positivism in Sociology: Definition, Theory & Examples

- The Scientific Revolutions of Thomas Kuhn: Paradigm Shifts Explained

The Concept of Falsifiability in Psychology: Importance and Applications

In the field of psychology, the concept of falsifiability plays a crucial role in promoting scientific rigor, testability, and critical thinking. By allowing hypotheses to be tested and findings to be reproducible, falsifiability aids in the advancement of psychological research.

This article explores how falsifiability is applied in psychological research, with examples from theories such as Freud’s Psychoanalytic Theory and Skinner’s Behaviorism. We also delve into the limitations of falsifiability in psychology and provide suggestions for improving its application, including the incorporation of qualitative data and promoting open science practices.

- Falsifiability is an essential concept in psychology that promotes scientific rigor and critical thinking.

- It allows for testability and reproducibility of research findings, leading to more reliable and valid conclusions.

- While it has limitations, incorporating qualitative data, utilizing multiple methods of data collection, and promoting open science practices can improve the application of falsifiability in psychological research.


What Is Falsifiability in Psychology?

Falsifiability in psychology refers to the principle proposed by Karl Popper that scientific theories must be capable of being tested and disproven through empirical observation and experimentation.

Karl Popper’s concept of falsifiability has played a significant role in distinguishing scientific theories from pseudoscience in psychology. By requiring that theories be testable and subject to potential refutation, falsifiability sets a high standard for scientific validity.

For example, in psychological research, this principle is applied when researchers design experiments to specifically test hypotheses that can be proven wrong. This ensures that the conclusions drawn are based on solid empirical evidence rather than unfalsifiable claims.

One famous application of falsifiability in psychology is in behaviorism, where theories like those of B.F. Skinner were subject to rigorous experimental testing, leading to the development of evidence-based practices.

Why Is Falsifiability Important in Psychology?

Falsifiability holds paramount importance in psychology as it ensures that theories and hypotheses are grounded in empirical evidence, fostering critical thinking and advancing towards scientific truth as advocated by Karl Popper.

Falsifiability not only serves as a cornerstone of scientific inquiry in psychology but also acts as a safeguard against pseudoscience and unsubstantiated claims.

By requiring that hypotheses be formulated in a way that they can be potentially proven wrong through observation or experimentation, falsifiability encourages researchers to test and refine their ideas rigorously.

This rigorous testing process helps differentiate between valid scientific theories and mere speculation, contributing to the credibility and reliability of psychological research.

Promotes Scientific Rigor

The principle of falsifiability promotes scientific rigor by establishing stringent criteria for theories to be substantiated through empirical methods and logical criteria, thereby fostering genuine scientific discovery.

One of the key standards that falsifiability sets for empirical validation is the requirement for theories to make predictions that can be tested and potentially proven false. This process ensures that scientific claims are not merely based on speculation but on concrete evidence that can either support or refute the proposed theory.

Falsifiability also emphasizes the importance of logical coherence in scientific theories. By demanding that theories are internally consistent and free from logical contradictions, this principle safeguards the integrity of scientific reasoning and prevents unfounded conclusions.

The application of falsifiability encourages scientists to constantly strive for genuine scientific breakthroughs. The willingness to subject theories to rigorous testing and potential falsification pushes researchers to explore new ideas, refine existing concepts, and ultimately advance the frontiers of knowledge in their respective fields.

Allows for Testability and Reproducibility

Falsifiability allows for the testability and reproducibility of scientific claims through rigorous experimental design and empirical tests, ensuring the reliability and validity of scientific experiments.

One of the key aspects of using falsifiability in scientific research is the ability to formulate testable hypotheses, which can be empirically examined and potentially proven wrong. By setting up experiments with clear parameters and measurable outcomes, researchers can systematically test their hypotheses and gather data to support or refute their claims. This process not only promotes transparency in the scientific method but also encourages replication studies by other scientists to validate the initial findings.

- Experimental methodologies such as randomized controlled trials, observational studies, and laboratory experiments play a crucial role in gathering empirical evidence to support scientific claims. These methodologies involve manipulating variables, collecting data, and analyzing results to draw meaningful conclusions.

- Validating hypotheses requires a rigorous approach to data collection and analysis. Utilizing statistical tools and peer review processes, researchers can ensure the credibility of their findings and mitigate biases that may affect the outcomes of their experiments.

- The peer review system serves as a quality control mechanism in the scientific community, where experts evaluate the methodology, results, and interpretations of a study before it gets published. This critical assessment helps uphold the standards of scientific research and enhances the overall reliability of scientific claims.

Encourages Critical Thinking

Falsifiability encourages critical thinking by requiring an explicit logical link between a theory and the observations that would count against it. This invites the critique and scrutiny that refine scientific understanding in psychology.

This fundamental concept, introduced by philosopher Karl Popper, underlines the significance of empirical evidence in testing the validity of hypotheses.

Psychologists leverage falsifiability to ensure their theories are subject to rigorous examination, advocating for testable assertions grounded in observable phenomena.

Through the lens of falsifiability, researchers navigate the complexities of psychological phenomena with a commitment to evidence-based reasoning and logical coherence.

How Is Falsifiability Applied in Psychological Research?

Falsifiability is applied in psychological research through rigorous hypothesis testing, meticulous experimental design, and the systematic analysis of empirical data to validate or invalidate theoretical propositions.

By adhering to the principle of falsifiability, researchers aim to formulate hypotheses that are precise, testable, and potentially refutable.

Experiment design plays a crucial role in this process, as it involves creating conditions that allow for the systematic manipulation of variables to observe their effects on the outcomes.

The collection and analysis of data are then conducted with meticulous attention to detail, utilizing statistical techniques to draw meaningful conclusions about the validity of the underlying psychological theories.

Hypothesis Testing

Hypothesis testing in psychology subjects theoretical propositions to empirical scrutiny and logical analysis. In line with the principle of falsifiability, a claim gains credibility by surviving tests that could have refuted it.

When formulating hypotheses in psychological research, researchers first identify the variables they are interested in studying and propose a relationship between them. These hypotheses are often based on existing theories or empirical observations. The hypotheses are then tested through carefully designed experiments or observational studies. Data is collected, analyzed, and interpreted to determine whether the results support or refute the hypothesis.

Through this process, researchers aim to make inferences about the population from which the sample was drawn. Statistical tests assess how likely results at least as extreme as those observed would be if chance alone were at work (the null hypothesis). This helps researchers judge whether the data reflect a real relationship. Validating hypotheses in psychology requires rigorous methodological approaches and adherence to ethical standards to ensure the reliability and validity of the findings.
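The text does not name a particular statistical test. One assumption-light illustration is a permutation test, sketched below in plain Python. The choice of technique, the function name `permutation_test` and the example scores are our own hypothetical choices. The test estimates how often randomly relabeling participants yields a group difference at least as large as the observed one. A small proportion counts against the null hypothesis of no difference.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns an estimated p-value: the share of random relabelings whose
    absolute mean difference is at least as large as the observed one,
    with the usual +1 correction so the estimate is never exactly zero.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # relabel participants at random, as the null hypothesis allows
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    return (at_least_as_extreme + 1) / (n_permutations + 1)


control = [12, 15, 11, 14, 13, 12]    # hypothetical scores
treatment = [18, 17, 20, 16, 19, 18]  # hypothetical scores
p = permutation_test(control, treatment)
print(f"estimated p-value: {p:.4f}")
```

A small estimate means chance relabeling rarely reproduces the observed difference. A large one would mean the prediction of a difference had failed this particular test.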

Experimental Design

Experimental design in psychological research adheres to the principles of falsifiability by following the scientific method, gathering empirical evidence, and structuring experiments to test specific hypotheses.

By carefully constructing experiments, researchers aim to isolate the effects of certain variables on the outcome, ensuring that any observed changes can be attributed to the manipulated factor. Controlled variables play a crucial role in this process, as they help to eliminate extraneous influences that could confound the results. Through systematic manipulation and control of variables, researchers can establish cause-and-effect relationships, providing valuable insights into the intricate workings of the human mind.
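Random assignment is one standard way to keep extraneous influences from lining up with the manipulated factor, although the text does not name it. The sketch below is minimal. The function name `randomly_assign` and the participant IDs are hypothetical.

```python
import random


def randomly_assign(participants, conditions=("control", "treatment"), seed=None):
    """Randomly allocate participants to conditions with near-equal group sizes.

    Shuffling first and then dealing participants round-robin means each
    person's placement depends only on chance, not on any of their traits.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, person in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(person)
    return groups


participant_ids = [f"P{n:02d}" for n in range(1, 11)]  # hypothetical IDs
groups = randomly_assign(participant_ids, seed=7)
for condition, members in groups.items():
    print(condition, members)
```

Passing a fixed `seed` makes the allocation itself reproducible, so other researchers can audit it.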

Statistical Analysis

Statistical analysis plays a crucial role in psychological research by evaluating empirical data, testing theoretical predictions, and applying Bayesian inductive logic to draw valid conclusions in alignment with the principles of falsifiability.

Statistical analysis supports falsifiability in psychology through rigorous hypothesis testing. By establishing clear hypotheses and systematically collecting data, researchers can objectively evaluate and validate their theories. Statistical modeling allows for the assessment of complex relationships among variables, aiding in the thorough evaluation of psychological phenomena. The use of inferential statistics enables researchers to make generalizations about populations based on sample data, providing a basis for confident conclusions. By embracing statistical analysis in psychological research, scholars uphold the standards of scientific inquiry and contribute to the advancement of the field.
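The passage mentions Bayesian inference without spelling it out. Below is a minimal sketch of Bayes' theorem for a hypothesis H against its negation. The likelihood values are hypothetical, and `posterior` is a name of our own. Note that this is a Bayesian, not a Popperian, account of evidence.

```python
def posterior(prior_h, p_e_given_h, p_e_given_not_h):
    """Return P(H | E) by Bayes' theorem, treating H and not-H as the only options."""
    if not 0.0 <= prior_h <= 1.0:
        raise ValueError("prior must be a probability")
    joint_h = p_e_given_h * prior_h
    total = joint_h + p_e_given_not_h * (1.0 - prior_h)
    if total == 0.0:
        raise ValueError("evidence has zero probability under both hypotheses")
    return joint_h / total


# Hypothetical numbers: H predicts the observation E strongly, not-H weakly.
belief = 0.5
for _ in range(3):  # three independent observations of E
    belief = posterior(belief, 0.8, 0.2)
    print(round(belief, 3))  # 0.8, then 0.941, then 0.985

# If H says E is impossible (likelihood 0), observing E drives P(H) to zero.
print(posterior(0.9, 0.0, 0.3))  # 0.0
```

When H assigns zero probability to an observation that then occurs, the posterior drops to zero in one step. That is the probabilistic counterpart of a decisive refutation.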

What Are Some Examples of Falsifiability in Psychology?

Examples of falsifiability in psychology include Freud’s Psychoanalytic Theory, Maslow’s Hierarchy of Needs, and Skinner’s Behaviorism, where empirical observations and experimental tests are used to validate or refute these theoretical frameworks.

Freud’s Psychoanalytic Theory posited that unconscious processes significantly influence human behavior and personality development, involving concepts like the id, ego, and superego. Through empirical testing, researchers have been able to explore the applicability of these constructs and their impact on individuals’ mental lives.

In Maslow’s Hierarchy of Needs, the framework suggests that human motivation progresses through distinct stages based on fulfilling fundamental needs before advancing to higher-level aspirations. This model has been subjected to empirical research, analyzing whether individuals indeed prioritize basic needs before pursuing self-actualization.

Skinner’s Behaviorism focuses on the impact of reinforcement and punishment on shaping behavior. Through experimental studies, researchers have investigated the effectiveness of operant conditioning in altering behavior patterns and determining its generalizability to various contexts.

Freud’s Psychoanalytic Theory

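Freud’s Psychoanalytic Theory is a contested case. Popper himself cited psychoanalysis as an example of a theory that resists falsification, because it can accommodate almost any behavior after the fact. Specific claims derived from it, such as those about the Oedipus complex and unconscious motivation, can nonetheless be scrutinized for scientific rigor through empirical observations and experimental tests.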

By applying the principle of falsifiability, researchers can design studies to test the predictions and hypotheses derived from Freud’s theory. For example, some researchers have interpreted neuroimaging studies of brain activity related to unconscious processing as consistent with aspects of Freud’s ideas.

Behavioral experiments and clinical observations have allowed psychologists to explore manifestations of defense mechanisms, offering an avenue to examine the inherent conflicts in the human psyche, as posited by Freud. This rigorous scientific approach distinguishes psychology as a discipline that continually seeks to refine its understanding of complex human behavior.

Maslow’s Hierarchy of Needs

Maslow’s Hierarchy of Needs presents an example of falsifiability in psychology, where the hierarchical structure of human needs can be empirically tested and validated, aligning with the principles of scientific inquiry.

Researchers have utilized various methodologies to scrutinize the concept of Maslow’s Hierarchy, conducting surveys, experiments, and case studies.

Through empirical testing, psychologists can analyze whether the hierarchy accurately reflects human behavior across different cultures and contexts. By observing individuals’ responses to different levels of needs fulfillment, researchers can assess the model’s reliability and predictive power.

This process highlights the importance of empirical evidence in shaping psychological theories and understanding human motivation.

Skinner’s Behaviorism

Skinner’s Behaviorism exemplifies falsifiability in psychology by emphasizing observable behaviors, experimental analysis, and the application of scientific methods to test and validate behavioral principles through empirical evidence.

Skinner believed that the key to understanding human behavior lay in studying observable actions rather than delving into unobservable mental processes. This focus on external behaviors transformed psychology by introducing a systematic approach that involved setting up controlled experiments to observe and record behaviors in a scientific manner. By using rigorous experimental designs, such as operant conditioning chambers, Skinner demonstrated how behavior could be shaped through reinforcement and punishment, providing empirical support for his theories. This empirical methodology allowed behaviorism to develop as a verifiable and testable framework within psychology.

What Are the Limitations of Falsifiability in Psychology?

While essential, falsifiability in psychology has limitations including an overemphasis on quantitative data, challenges in measuring complex psychological phenomena, and the potential for bias and misinterpretation in empirical studies.

Quantitative data, although valuable, may not always capture the depth and nuances of human behavior and emotions. In the realm of psychology, where intricacies abound, relying solely on numbers can restrict the understanding of subjective experiences. Complex concepts such as self-esteem or motivation defy simple quantification, posing a challenge for researchers aiming to validate or invalidate hypotheses. The inherent subjectivity in psychological assessments introduces the risk of confirmation bias, where researchers might unconsciously seek data that supports their preconceived notions, jeopardizing the objectivity of their findings.

Overemphasis on Quantitative Data

One limitation of falsifiability in psychology is the potential overemphasis on quantitative data, which may prioritize statistical analysis over qualitative insights, potentially diminishing the pursuit of scientific truth.

While quantitative data plays a crucial role in evidence-based research, solely relying on numerical figures can sometimes overshadow the rich qualitative nuances that characterize human behavior and experiences. Psychology, as a field deeply rooted in understanding the complexities of the mind, requires a balanced integration of both quantitative and qualitative approaches to capture the multidimensional nature of psychological phenomena.

Difficulty in Measuring Complex Psychological Phenomena

Measuring complex psychological phenomena poses a significant challenge to falsifiability in psychology, as intricate concepts, subjective experiences, and multifaceted behaviors may defy straightforward empirical assessment.

When attempting to quantify attributes such as emotions, consciousness, or personality traits, researchers encounter methodological hurdles that necessitate innovative approaches. The very nature of these phenomena often eludes simplistic measurement techniques, requiring a nuanced understanding of cognitive processes and human behavior.

Employing standardized assessments for subjective constructs risks oversimplifying them and obscuring the individual differences present within human psychology. This highlights the importance of utilizing a diverse array of methodologies, from qualitative interviews to experimental paradigms, to capture the multidimensionality of psychological phenomena.

Potential for Bias and Misinterpretation

The potential for bias and misinterpretation in empirical tests poses a limitation to falsifiability in psychology, as subjective influences, preconceptions, or methodological errors may distort the interpretation of research findings.

These biases can arise from various sources, such as the researchers’ personal beliefs, societal norms, or even the funding sources behind the study.

Methodological flaws like small sample sizes, lack of control groups, or inadequate statistical analyses can further exacerbate the risk of misinterpretation.

Misinterpretations can occur when researchers draw unwarranted conclusions from the data or overlook alternative explanations that could provide a more accurate understanding of the phenomena under investigation.

How Can Falsifiability Be Improved in Psychological Research?

Enhancing falsifiability in psychological research involves integrating qualitative data alongside quantitative measures, utilizing diverse methods of data collection, and promoting open science practices to augment the empirical foundation of psychological inquiry.

Integrating qualitative data enriches research outcomes by providing depth and context to statistical findings. This approach allows researchers to capture nuances, individual experiences, and subjective perspectives that quantitative measures alone may miss. Moreover, diverse data collection methodologies such as surveys, interviews, and observational studies offer a comprehensive view of the phenomenon under investigation, reducing potential biases inherent in singular data sources.

Adopting transparent and reproducible scientific practices is paramount in fostering credibility and trust within the scientific community. It involves sharing raw data, analysis scripts, and methodological details to ensure research findings can be verified by others. By advocating for open science practices, researchers contribute to a culture of accountability and rigor, enhancing the quality and reliability of psychological research outcomes.

Incorporating Qualitative Data

Enhancing falsifiability in psychology can be achieved by incorporating qualitative data, which enriches scientific discovery, supports empirical methods, and broadens the scope of psychological inquiry beyond quantitative measures.

Qualitative data in psychology serves as a valuable tool in enriching research methodologies by providing a deeper understanding of human behavior and experiences. By capturing the nuances, complexities, and unique perspectives that quantitative data may overlook, qualitative insights enable researchers to delve into the intricacies of the human mind and emotions. This not only enhances the credibility and rigor of psychological studies but also fosters a more holistic approach to investigating psychological phenomena.

Utilizing Multiple Methods of Data Collection

Expanding the repertoire of data collection methods is instrumental in enhancing falsifiability in psychological research, as employing diverse approaches enables comprehensive empirical testing and strengthens the scientific foundation of psychological inquiry.

By incorporating various data collection techniques such as surveys, experiments, observations, and interviews, researchers can cross-validate findings across multiple methodologies, thus reinforcing the validity and reliability of their results. The integration of quantitative and qualitative data gathering methods not only enriches the depth of analysis but also fosters a more nuanced understanding of complex psychological phenomena. The use of mixed-method approaches can help researchers triangulate findings, enhancing the overall credibility and robustness of their research outcomes.

Promoting Open Science Practices

Promoting open science practices is crucial for advancing falsifiability in psychological research, as transparency, replicability, and collaborative scrutiny foster scientific discovery, validate empirical experiments, and uphold the principles of rigorous inquiry.

Falsifiability in psychological research refers to the capability of a hypothesis or theory to be empirically disproven through observation or experimentation. By embracing open science practices, researchers can enhance the credibility and reliability of their findings. Transparency ensures that all aspects of a study are openly shared, allowing for scrutiny and replication by other researchers. Reproducibility is key in demonstrating the robustness of results and findings, enabling the scientific community to build upon existing knowledge collectively.

Open science encourages collaborative engagement among researchers, facilitating peer review processes that help weed out errors and biases while also promoting a culture of constructive criticism and continuous improvement. This collaborative scrutiny not only enhances the quality of psychological research but also contributes towards building a stronger foundation for future scientific inquiries.

Frequently Asked Questions

What is the concept of falsifiability in psychology, and why is it important?

Falsifiability refers to the idea that scientific theories and hypotheses should be capable of being shown false through empirical evidence. In psychology, this means that theories and claims must be testable and open to being disproven. This is important because it ensures that research and findings are based on solid evidence rather than speculation or personal bias.

How does falsifiability impact the validity of psychological research?

Falsifiability plays a crucial role in determining the validity of psychological research. If no possible observation could count against a theory or hypothesis, so that it could not be disproven even in principle, it cannot be considered a valid scientific claim. Conclusions drawn from research built on such a theory may not be reliable or accurate.

Can you provide an example of falsifiability in psychology?

One example of falsifiability in psychology is the theory of cognitive dissonance, which suggests that individuals experience discomfort when they hold contradictory beliefs. This theory is falsifiable because it can be tested and potentially proven false through empirical research.

How does the concept of falsifiability impact the practice of psychology?

The concept of falsifiability is integral to the scientific method, which is the foundation of psychological research. It ensures that psychologists use rigorous and empirical methods to test their theories and claims, leading to more reliable and valid findings. Without falsifiability, psychology would not be considered a legitimate science.

In what ways is falsifiability applied in psychological research?

Falsifiability is applied in psychological research through the use of experiments, surveys, and other empirical methods. These methods allow researchers to test their theories and hypotheses in a controlled environment, making it possible to either support or disprove them.

How does the concept of falsifiability differ from the concept of verification?

Falsifiability and verification are two opposing concepts in the field of science. While falsifiability focuses on testing theories and hypotheses to prove them false, verification is the process of confirming them. In psychology, the emphasis is on falsifiability as it promotes a critical and skeptical approach to research rather than simply seeking evidence to support existing beliefs.

Vanessa Patel is an expert in positive psychology, dedicated to studying happiness, resilience, and the factors that contribute to a fulfilling life. Her writing explores techniques for enhancing well-being, overcoming adversity, and building positive relationships and communities. Vanessa’s articles are a resource for anyone looking to find more joy and meaning in their daily lives, backed by the latest research in the field.


Being Scientific: Falsifiability, Verifiability, Empirical Tests, and Reproducibility

If you ask a scientist what makes a good experiment, you’ll get very specific answers about reproducibility and controls and methods of teasing out causal relationships between variables and observables. If human observations are involved, you may get detailed descriptions of blind and double-blind experimental designs. In contrast, if you ask the very same scientists what makes a theory or explanation scientific, you’ll often get a vague statement about falsifiability. Scientists are usually very good at designing experiments to test theories. We invent theoretical entities and explanations all the time, but very rarely are they stated in ways that are falsifiable. It is also quite rare for anything in science to be stated in the form of a deductive argument. Experiments often aren’t done to falsify theories, but to provide the weight of repeated and varied observations in support of those same theories. Sometimes we’ll even use the words verify or confirm when talking about the results of an experiment. What’s going on? Is falsifiability the standard? Or something else?

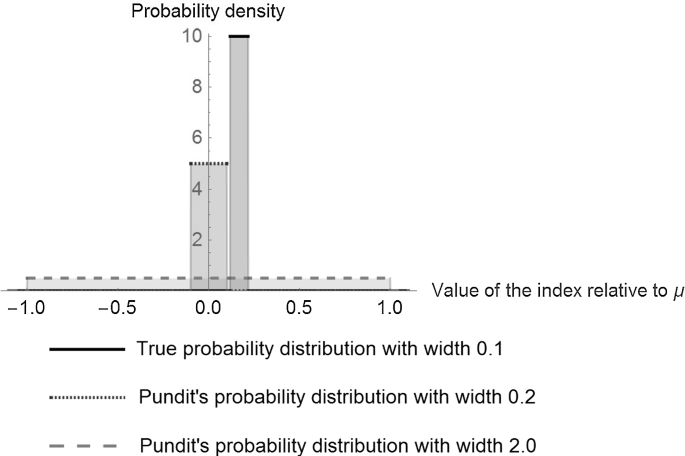

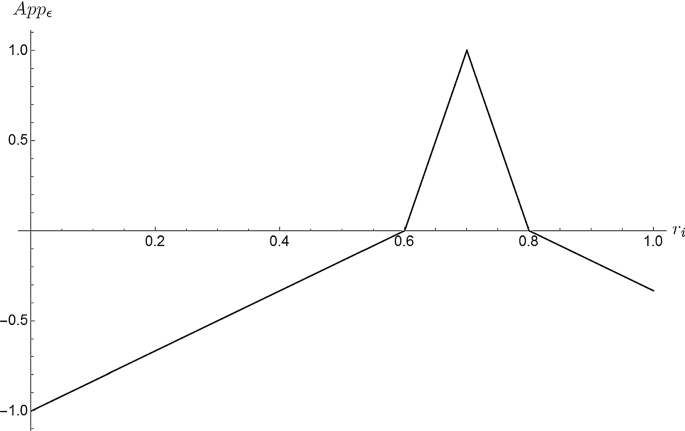

The difference between falsifiability and verifiability in science deserves a bit of elaboration. It is not always obvious (even to scientists) what principles they are using to evaluate scientific theories,[1] so we’ll start a discussion of this difference by thinking about Popper’s asymmetry.[2] Consider a scientific theory (T) that predicts an observation (O). There are two ways we could use experiment to add weight to a particular theory: we could check the predicted observation in an attempt to falsify the theory, or in an attempt to verify it. Only one of these approaches (falsification) is deductively valid:
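The argument boxes from the original post did not survive in this copy. Below is a reconstruction in standard textbook form, not necessarily the author’s own layout. Falsification is an instance of modus tollens, which is valid. Verification affirms the consequent, which is invalid.

```latex
\begin{array}{ll}
\textbf{Falsification (valid)} & \quad \textbf{Verification (invalid)} \\
\text{1. If } T \text{, then } O. & \quad \text{1. If } T \text{, then } O. \\
\text{2. Not } O. & \quad \text{2. } O. \\
\hline
\therefore\ \text{Not } T. & \quad \therefore\ T.
\end{array}
```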

Popper concluded that it is impossible to know that a theory is true based on observations (O); science can tell us only that the theory is false (or that it has yet to be refuted). He therefore held that meaningful scientific statements are falsifiable.
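Popper’s asymmetry is a claim about deductive validity, and validity can be checked mechanically. An argument form is valid if no assignment of truth values makes all premises true and the conclusion false. The small truth-table checker below is written in Python; the helper names `implies` and `is_valid` are our own. It confirms that falsification is valid and verification is not.

```python
from itertools import product


def implies(p, q):
    """Material conditional: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion, variables):
    """Return True if no truth assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=len(variables)):
        env = dict(zip(variables, values))
        if all(premise(env) for premise in premises) and not conclusion(env):
            return False  # found a counterexample
    return True


# Falsification (modus tollens): If T then O; not O; therefore not T.
falsification = is_valid(
    premises=[lambda e: implies(e["T"], e["O"]), lambda e: not e["O"]],
    conclusion=lambda e: not e["T"],
    variables=["T", "O"],
)

# Verification (affirming the consequent): If T then O; O; therefore T.
verification = is_valid(
    premises=[lambda e: implies(e["T"], e["O"]), lambda e: e["O"]],
    conclusion=lambda e: e["T"],
    variables=["T", "O"],
)

print(falsification, verification)  # True False
```

The verification form fails on the assignment T = false and O = true. In that case the prediction comes true for some other reason than the theory.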

Scientific theories may not be this simple. We often base our theories on a set of auxiliary assumptions, which we take as postulates. For example, a theory of liquid dynamics might take the whole of classical mechanics as a postulate, or a theory of viral genetics might depend on the Hardy-Weinberg equilibrium. In these cases, classical mechanics (or the Hardy-Weinberg equilibrium) is the auxiliary assumption for our specific theory.

These auxiliary assumptions can help show that science is often not a deductively valid exercise. The Quine-Duhem thesis[3] recovers the symmetry between falsification and verification when we take into account the role of the auxiliary assumptions (AA) of the theory (T):
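Here is a reconstruction in standard form. The original box is missing from this copy, so the layout is an assumption. The argument with auxiliary assumptions is still valid, but its conclusion reaches only the conjunction:

```latex
\begin{array}{l}
\text{1. If } (T \text{ and } AA) \text{, then } O. \\
\text{2. Not } O. \\
\hline
\therefore\ \text{Not } (T \text{ and } AA).
\end{array}
```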

That is, if the predicted observation (O) turns out to be false, we can deduce only that something is wrong with the conjunction (T and AA); we cannot determine from the premises that it is T rather than AA that is false. In order to recover the asymmetry, we would need our assumptions (AA) to be independently verifiable:
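Below is a reconstruction of the argument that recovers the asymmetry. The layout is again assumed, since the original box is missing. It adds AA as an independently verified premise:

```latex
\begin{array}{l}
\text{1. If } (T \text{ and } AA) \text{, then } O. \\
\text{2. } AA. \\
\text{3. Not } O. \\
\hline
\therefore\ \text{Not } T.
\end{array}
```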

Falsifying a theory requires that auxiliary assumption (AA) be demonstrably true. Auxiliary assumptions are often highly theoretical; remember, auxiliary assumptions might be statements like “the entirety of classical mechanics is correct” or “the Hardy-Weinberg equilibrium is valid”! It is important to note that if we can’t verify AA, we will not be able to falsify T by using the valid argument above. Contrary to Popper, there really is no asymmetry between falsification and verification. If we cannot verify theoretical statements, then we cannot falsify them either.
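The same truth-table check makes the Quine-Duhem point concrete. The helpers are repeated here so the snippet runs on its own, and their names are our own. The check settles only logical validity. Whether AA can actually be verified in practice is the philosophical question, and code cannot settle it.

```python
from itertools import product


def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion, variables):
    """True if no truth assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=len(variables)):
        env = dict(zip(variables, values))
        if all(premise(env) for premise in premises) and not conclusion(env):
            return False
    return True


VARIABLES = ["T", "AA", "O"]


def prediction(e):
    """Premise: if T and AA are both true, then O is observed."""
    return implies(e["T"] and e["AA"], e["O"])


def failed_prediction(e):
    """Premise: O was not observed."""
    return not e["O"]


# Without AA as a premise, only the conjunction (T and AA) is refuted.
refutes_conjunction = is_valid(
    [prediction, failed_prediction], lambda e: not (e["T"] and e["AA"]), VARIABLES
)
refutes_t_alone = is_valid([prediction, failed_prediction], lambda e: not e["T"], VARIABLES)

# Granting AA as true lets the refutation reach T itself.
refutes_t_given_aa = is_valid(
    [prediction, failed_prediction, lambda e: e["AA"]], lambda e: not e["T"], VARIABLES
)

print(refutes_conjunction, refutes_t_alone, refutes_t_given_aa)  # True False True
```

The counterexample that blocks the middle inference is T = true, AA = false and O = false. In that case the failed prediction is blamed entirely on the auxiliary assumption.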

Since verifying a theoretical statement is nearly impossible, and falsification often requires verification of assumptions, where does that leave scientific theories? What is required of a statement to make it scientific?

Carl Hempel came up with one of the more useful statements about the properties of scientific theories:[4] “The statements constituting a scientific explanation must be capable of empirical test.” And this statement about what exactly it means to be scientific brings us right back to things that scientists are very good at: experimentation and experimental design. If I propose a scientific explanation for a phenomenon, it should be possible to subject that theory to an empirical test or experiment. We should also have a reasonable expectation of universality of empirical tests. That is, multiple independent (skeptical) scientists should be able to subject these theories to similar tests in different locations, on different equipment, and at different times and get similar answers. Reproducibility of scientific experiments is therefore going to be required for universality.

So to answer some of the questions we might have about reproducibility:

- Reproducible by whom? By independent (skeptical) scientists, working elsewhere and on different equipment, not just by the original researcher.

- Reproducible to what degree? This would depend on how closely that independent scientist can reproduce the controllable variables, but we should have a reasonable expectation of similar results under similar conditions.

- Wouldn’t the expense of a particular apparatus make reproducibility very difficult? Good scientific experiments must be reproducible in both a conceptual and an operational sense.[5] If a scientist publishes the results of an experiment, there should be enough of the methodology published with the results that a similarly equipped, independent, and skeptical scientist could reproduce the results of the experiment in their own lab.

Computational science and reproducibility

If theory and experiment are the two traditional legs of science, simulation is fast becoming the “third leg”. Modern science has come to rely on computer simulations, computational models, and computational analysis of very large data sets. These methods for doing science are all reproducible in principle. For very simple systems and small data sets, this is nearly the same as reproducible in practice. As systems become more complex and the data sets become large, calculations that are reproducible in principle are no longer reproducible in practice without public access to the code (or data). If a scientist makes a claim that a skeptic can only reproduce by spending three decades writing and debugging a complex computer program that exactly replicates the workings of a commercial code, the original claim is really only reproducible in principle. If we really want to allow skeptics to test our claims, we must allow them to see the workings of the computer code that was used. It is therefore imperative for skeptical scientific inquiry that software for simulating complex systems be available in source-code form and that real access to raw data be made available to skeptics.
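Here is a minimal Python sketch of what “reproducible in practice” can look like for a simulation. The random-walk model and the helper names `simulate` and `fingerprint` are illustrative assumptions, not anything from the post. The seed is fixed and published together with the code. A skeptic can then rerun the calculation and compare a SHA-256 hash of the parameters and output. Python’s documentation guarantees only that `random()` reproduces the same sequence for the same seed across versions; helpers such as `choice()` carry no such guarantee. That is one more reason the exact code and environment must be shared.

```python
import hashlib
import json
import random


def simulate(n_steps, seed):
    """Toy 1-D random walk standing in for any stochastic simulation."""
    rng = random.Random(seed)  # private generator, fully determined by the seed
    position = 0
    path = []
    for _ in range(n_steps):
        position += rng.choice((-1, 1))
        path.append(position)
    return path


def fingerprint(params, result):
    """Hash parameters and output together so independent reruns can be compared exactly."""
    record = json.dumps({"params": params, "result": result}, sort_keys=True)
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


params = {"n_steps": 1000, "seed": 42}
original = fingerprint(params, simulate(**params))
rerun = fingerprint(params, simulate(**params))  # what a skeptic would do with the published code
print(original == rerun)  # True
```

Publishing the parameters, the code and the expected fingerprint lets anyone confirm a rerun without trusting the original authors’ description of it.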

Our position on open source and open data in science was arrived at when an increasing number of papers that could not be subjected to reproducibility tests in any meaningful way began crossing our desks for review. Paper A might have used a commercial package that comes with a license that forbids people at university X from viewing the code![6]

Paper B might use a code that requires parameter sets that are “trade secrets” and have never been published in the scientific literature. Our view is that it is not healthy for scientific papers to be supported by computations that cannot be reproduced except by a few employees at a commercial software developer. Should this kind of work even be considered Science? It may be research, and it may be important, but unless enough details of the experimental methodology are made available so that it can be subjected to true reproducibility tests by skeptics, it isn’t Science.

1. This discussion closely follows a treatment of Popper’s asymmetry in: Sober, Elliott. Philosophy of Biology (Boulder: Westview Press, 2000), pp. 50-51.

2. Popper, Karl R. The Logic of Scientific Discovery, 5th ed. (London: Hutchinson, 1959), pp. 40-41, 46.

3. Gillies, Donald. “The Duhem Thesis and the Quine Thesis,” in Martin Curd and J.A. Cover, eds., Philosophy of Science: The Central Issues (New York: Norton, 1998), pp. 302-319.

4. Hempel, Carl. Philosophy of Natural Science (1966), p. 49.

5. Lett, James. Science, Reason and Anthropology: The Principles of Rational Inquiry (Oxford: Rowman & Littlefield, 1997), p. 47.

6. See, for example, www.bannedbygaussian.org.

5 Responses to Being Scientific: Falsifiability, Verifiability, Empirical Tests, and Reproducibility


“If we cannot verify theoretical statements, then we cannot falsify them either.

Since verifying a theoretical statement is nearly impossible, and falsification often requires verification of assumptions…”

An invalid argument is invalid regardless of the truth of the premises. I would suggest that an hypothesis based on unverifiable assumptions could be ‘falsified’ the same way an argument with unverifiable premises could be shown to be invalid. Would you not agree?

“Falsifying a theory requires that auxiliary assumption (AA) be demonstrably true.”

No, it only requires them to be true.

In the falsificationist method, you can change the AA so long as that increases the theory’s testability (the theory includes AA and the universal statement, btw). In your second box you misrepresent the first derivation: in the conclusion it would be ¬(T and AA). After that you can either modify the AA (as long as it increases the theory’s falsifiability) or abandon the theory. Therefore you do not need the third box; it explains something that does not need explaining, or that could be explained more concisely and without error by reconstructing the process better. This process is always tentative and open to re-evaluation (that is the risky and critical nature of conjectures and refutations). Falsificationism does not pretend conclusiveness; it abandoned that to the scrap heap along with the hopelessly defective interpretation of science called inductivism.

“Contrary to Popper, there really is no asymmetry between falsification and verification. If we cannot verify theoretical statements, then we cannot falsify them either.” There is an asymmetry. You cannot refute the asymmetry by showing that falsification is not conclusive. Because the asymmetry is a logical relationship between statements. What you would have shown, if your argument was valid or accurate, would be that falsification is not possible in practice. Not that the asymmetry is false.

Popper wanted to replace induction and verification with deduction and falsification.

He held that a theory that was once accepted but which, thanks to a novel experiment or observation, turns out to be false, confronts us with a new problem, to which new solutions are needed. In his view, this process is the hallmark of scientific progress.

Surprisingly, Popper failed to note that, despite his efforts to present it as deductive, this process is at bottom inductive, since it assumes that a theory falsified today will remain falsified tomorrow.

Accepting that swans are either white or black because a black one has been spotted rests on the assumption that there are other black swans around and that the newly discovered black one will not turn white at a later stage. This is obvious, but it is also inductive thinking: it projects the past into the future, extrapolating particulars into a universal.

In other words, induction, the process that Popper was determined to avoid, lies at the heart of his philosophy of science as he defined it.

Despite positivism’s limitations, science is positive or it is not science: positive science’s theories may be incapable of demonstration (as Hume wrote of causation), but there are no others available.

If it is impossible to demonstrate that fire burns, putting one’s hand in it is just too painful.


The Falsification Principle – Towards Empirical Testing

The Falsification Principle is a pivotal concept in the philosophy of science. It was proposed by the philosopher Karl Popper, who first set it out in the 1930s. The principle serves as a criterion for demarcating scientific theories from non-scientific ones. It also emphasizes the importance of empirical testing and the potential for falsification in scientific inquiry.

This principle challenges the traditional notion of verification. It argues that scientific theories should be formulated in a way that allows for the possibility of being proven false through observation and experimentation.

Here, we delve into the theoretical background of the Falsification Principle and explore its key concepts and assumptions. We also examine the criticisms it has faced and analyze its applications and implications in various fields.

Further, we compare it with rival principles, discuss its contemporary relevance, and contemplate its future directions. By exploring the Falsification Principle, we aim to gain a deeper understanding of the nature and methodology of scientific inquiry.

1. Introduction to the Falsification Principle

Definition of the Falsification Principle

Imagine you have a theory that claims something is true. According to the Falsification Principle, that theory counts as scientific only if it is possible, at least in principle, to prove it false. In other words, a scientific theory should make specific predictions that would lead us to reject the theory if empirical observation or experimentation showed them to be false.

The Falsification Principle, proposed by the philosopher Karl Popper, revolutionized the way we understand scientific knowledge. It challenged the prevailing idea that science progresses by confirming theories through empirical evidence. Instead, it emphasized the importance of potential falsifiability.

Historical Context and Origins

The Falsification Principle emerged in the 1930s as a response to Logical Positivism, a movement that aimed to define science solely by its empirical verifiability. Popper was dissatisfied with the idea that science proceeds by confirming theories. He sought to establish a demarcation criterion that would separate science from non-science.

Popper was inspired by the philosopher David Hume, who argued that induction, the foundation of empirical verification, cannot be logically justified. In response, Popper proposed that science should focus on deductive reasoning and on testing hypotheses through attempts to falsify them.

2. Theoretical Background of the Falsification Principle

Influential Thinkers and Philosophical Movements

Popper’s Falsification Principle drew inspiration from philosophers such as Hume and Immanuel Kant. Hume’s skepticism towards induction highlighted the problem of confirming theories solely based on past observations. Kant’s emphasis on deductive reasoning and the existence of synthetic a priori knowledge influenced Popper’s thoughts on scientific methodology.

In terms of philosophical movements, the Falsification Principle emerged as a critique of, and an alternative to, Logical Positivism, which dominated the Vienna Circle during the early 20th century. Popper’s ideas gained momentum and went on to shape the philosophy of science.

Development of the Falsification Principle

Popper developed the Falsification Principle in his groundbreaking book “Logik der Forschung,” published in German in 1934 and in English as “The Logic of Scientific Discovery” in 1959. Through subsequent revisions and editions, Popper refined and expanded his ideas. He established the Falsification Principle as a key component of his overall philosophy of science.

Popper’s discussions on falsifiability and the demarcation between science and pseudo-science attracted both praise and criticism from the scientific community. Nevertheless, his insights have had a lasting impact, influencing scientific methodology and shaping debates surrounding the nature of scientific knowledge.

3. Key Concepts and Assumptions of the Falsification Principle

Empirical Observation and Testability

At the core of the Falsification Principle is the belief that scientific theories must be empirically testable. This means that theories should make predictions that can be subjected to observation or experimentation. The ability to test and potentially falsify theories distinguishes them from unfalsifiable claims that fall outside the realm of science.

Falsifiability and Refutability

Falsifiability refers to the potential for a theory to be proven false through empirical evidence. According to the Falsification Principle, a theory gains scientific legitimacy when it puts itself at risk of being refuted by specific observations or experiments. The more a theory withstands attempts to falsify it, the more confidence we can have in its validity.

It is crucial to note that the absence of falsification does not prove a theory to be true or certain. The principle merely highlights the importance of subjecting theories to rigorous testing and of actively seeking specific evidence that could refute them.

Language and Meaning in Science

The Falsification Principle also bears on the role of language and meaning in scientific discourse. Popper argued that scientific statements should be formulated in precise, testable terms, so that it is clear which observations would falsify them. He emphasized the importance of avoiding vague or ambiguous statements that could hinder empirical scrutiny.

By emphasizing the need for precise language and clear meaning, Popper aimed to create a framework for scientific discourse that promotes objective evaluation and critical scrutiny.

4. Critiques and Challenges to the Falsification Principle

Objections from Popper’s Contemporaries

While the Falsification Principle made remarkable contributions to the philosophy of science, it faced notable criticisms from Popper’s contemporaries. Some argued that the principle was overly simplistic and failed to account for the complex nature of scientific practice. Others contended that it was difficult to draw a clear line between falsifiability and confirmation, which makes the demarcation between science and non-science less straightforward.

Revisions and Modifications to the Principle

Over time, various philosophers and scientists have proposed revisions and modifications to the Falsification Principle to address its limitations. These adjustments often aim to strike a balance between the importance of empirical testing and the recognition that scientific knowledge is open to revision and refinement.

Some argue for a more nuanced understanding of falsifiability, considering degrees of confidence rather than binary outcomes. Others advocate for the incorporation of Bayesian inference, which allows for the updating of probabilities based on new evidence.
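As a rough illustration of the Bayesian alternative, the sketch below applies Bayes’ theorem, P(H | E) = P(E | H) P(H) / P(E), to a single hypothesis H. The function name `bayes_update` is hypothetical. All probabilities are invented values chosen for illustration, not data from any study. The second call shows how a refutation appears as a limiting case: if H forbids the evidence outright, observing it drives the posterior to zero.

```python
def bayes_update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Return P(H | E) from P(H), P(E | H) and P(E | not H) using Bayes' theorem."""
    # Total probability of the evidence, summed over H and not-H.
    p_e = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    if p_e == 0.0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return p_e_given_h * prior / p_e


# Hypothetical numbers: H predicts E strongly, and rivals rarely produce E.
print(round(bayes_update(0.5, p_e_given_h=0.9, p_e_given_not_h=0.2), 3))  # 0.818

# Hypothetical limiting case: H forbids E (P(E | H) = 0), yet E is observed.
print(bayes_update(0.5, p_e_given_h=0.0, p_e_given_not_h=0.2))  # 0.0
```

Here confirming evidence raises the posterior by degrees instead of settling the question. This matches the “degrees of confidence rather than binary outcomes” view described above.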

The Falsification Principle may not provide a definitive answer to the nature of scientific knowledge, but its lasting influence on scientific methodology cannot be denied. It continues to shape discussions and debates, reminding us of the importance of critical thinking, and it remains an inspiration for rigorous testing in the pursuit of scientific understanding.

5. Applications and Implications of the Falsification Principle

Scientific Methodology and Research Design

The falsification principle has had a profound impact on scientific methodology and research design. By emphasizing the importance of testing hypotheses through empirical observation, it has helped scientists develop rigorous and reliable methods for conducting experiments. Researchers now strive to design experiments that can potentially falsify their hypotheses, as this strengthens the validity of their findings.

Furthermore, the falsification principle has led to the development of stringent criteria for evaluating scientific theories. Instead of simply accepting theories based on supporting evidence, scientists now actively seek to challenge and disprove them. This approach ensures that scientific knowledge is constantly refined and updated. The theories that withstand rigorous testing are more likely to accurately explain the phenomena they seek to describe.

Impact on Philosophy of Science

The falsification principle has had a significant impact on the philosophy of science. It challenged the previously held notion that scientific theories can be verified or proven true, asserting instead that they can only be disproven or falsified. This shift in perspective has led to a more refined understanding of the nature of scientific inquiry.

Philosophers of science now recognize that the falsifiability criterion plays a crucial role in distinguishing scientific theories from non-scientific claims. By requiring empirical evidence that could potentially disprove a theory, the falsification principle helps maintain the integrity and credibility of scientific knowledge.

6. Comparison and Contrast with Other Philosophical Principles

Verificationism vs. Falsificationism

Verificationism, an opposing philosophical principle, asserts that a statement is meaningful only if it can be empirically verified. In contrast, the falsification principle holds that a statement must be potentially falsifiable to count as scientific. Popper proposed falsifiability as a criterion of demarcation, not of meaning. While verificationism seeks to confirm statements, falsificationism focuses on refutation.