
Null & Alternative Hypotheses | Definitions, Templates & Examples

Published on May 6, 2022 by Shaun Turney. Revised on June 22, 2023.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test:

- Null hypothesis (H₀): There’s no effect in the population.

- Alternative hypothesis (Hₐ or H₁): There’s an effect in the population.


The null and alternative hypotheses offer competing answers to your research question. When the research question asks “Does the independent variable affect the dependent variable?”:

- The null hypothesis (H₀) answers “No, there’s no effect in the population.”

- The alternative hypothesis (Hₐ) answers “Yes, there is an effect in the population.”

The null and alternative are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample. Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample. It’s critical for your research to write strong hypotheses.

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypotheses. However, the hypotheses can also be phrased in a general way that applies to any test.


The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population (p ≤ α), then we can reject the null hypothesis. Otherwise, we fail to reject the null hypothesis.

Although “fail to reject” may sound awkward, it’s the standard wording, because a nonsignificant result only means that the evidence was insufficient, not that the null hypothesis is true. Be careful not to say you “prove” or “accept” the null hypothesis.
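The decision rule above fits in a few lines of code. The sketch below is a minimal illustration in Python; the function name `hypothesis_decision` and the default α = 0.05 are assumptions for this example, not part of any particular statistics package. Note that it only ever returns “reject” or “fail to reject,” never “accept.”

```python
def hypothesis_decision(p_value: float, alpha: float = 0.05) -> str:
    """Return the conventional wording for a hypothesis test decision.

    H0 is rejected when p <= alpha; otherwise we "fail to reject" it.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be strictly between 0 and 1")
    return "reject H0" if p_value <= alpha else "fail to reject H0"


print(hypothesis_decision(0.03))  # reject H0
print(hypothesis_decision(0.20))  # fail to reject H0
```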

Null hypotheses often include phrases such as “no effect,” “no difference,” or “no relationship.” When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

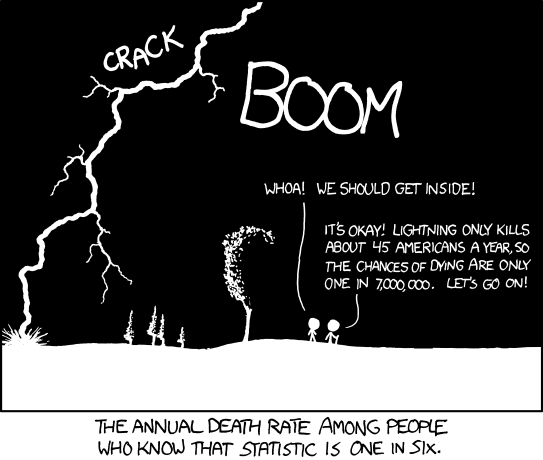

You can never know with complete certainty whether there is an effect in the population. Some percentage of the time, your inference about the population will be incorrect. When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s a type II error.
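A small simulation makes the two error types concrete. This sketch assumes a one-sample z-test with a known population standard deviation (σ = 1) and normally distributed data; the sample size, true means, and number of simulated studies are arbitrary choices for illustration. When H₀ is true, the share of studies that reject it estimates the type I error rate, which should land near α. When H₀ is false, the share that fail to reject it estimates the type II error rate.

```python
import random
from statistics import NormalDist, fmean


def z_test_p_value(sample, mu0, sigma):
    """Two-sided one-sample z-test p value, assuming sigma is known."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def rejection_rate(true_mu, mu0=0.0, sigma=1.0, n=30, alpha=0.05, trials=5000, seed=1):
    """Share of simulated studies that reject H0: mu = mu0 at level alpha."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mu, sigma) for _ in range(n)]
        if z_test_p_value(sample, mu0, sigma) <= alpha:
            rejections += 1
    return rejections / trials


# H0 true (true mean equals mu0): every rejection is a type I error.
type_i_rate = rejection_rate(true_mu=0.0)
# H0 false (true mean is 0.3): every failure to reject is a type II error.
type_ii_rate = 1 - rejection_rate(true_mu=0.3)
print(f"estimated type I error rate:  {type_i_rate:.3f}")
print(f"estimated type II error rate: {type_ii_rate:.3f}")
```

Raising n or the true effect size in the second call lowers the type II error rate, while the type I error rate stays near α regardless of n.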

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

*Note that some researchers prefer to always write the null hypothesis in terms of “no effect” and “=”. It would be fine to say that daily meditation has no effect on the incidence of depression and p₁ = p₂.

The alternative hypothesis ( H a ) is the other answer to your research question . It claims that there’s an effect in the population.

Often, your alternative hypothesis is the same as your research hypothesis. In other words, it’s the claim that you expect or hope will be true.

The alternative hypothesis is the complement to the null hypothesis. Null and alternative hypotheses are exhaustive, meaning that together they cover every possible outcome. They are also mutually exclusive, meaning that only one can be true at a time.

Alternative hypotheses often include phrases such as “an effect,” “a difference,” or “a relationship.” When alternative hypotheses are written in mathematical terms, they always include an inequality (usually ≠, but sometimes < or >). As with null hypotheses, there are many acceptable ways to phrase an alternative hypothesis.

Examples of alternative hypotheses

The table below gives examples of research questions and alternative hypotheses to help you get started with formulating your own.

Null and alternative hypotheses are similar in some ways:

- They’re both answers to the research question.

- They both make claims about the population.

- They’re both evaluated by statistical tests.

However, there are important differences between the two types of hypotheses, summarized in the following table.


To help you write your hypotheses, you can use the template sentences below. If you know which statistical test you’re going to use, you can use the test-specific template sentences. Otherwise, you can use the general template sentences.

General template sentences

The only things you need to know to use these general template sentences are your independent and dependent variables. To write your research question, null hypothesis, and alternative hypothesis, fill in the following sentences with your variables:

- Research question: Does independent variable affect dependent variable?

- Null hypothesis (H₀): Independent variable does not affect dependent variable.

- Alternative hypothesis (Hₐ): Independent variable affects dependent variable.
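If you are drafting hypotheses for several pairs of variables, the general templates can be filled in mechanically. This hypothetical helper (`hypothesis_templates` is an illustrative name, not a standard tool) simply substitutes your variables into the three sentences; the example variables are taken from the meditation example above.

```python
def hypothesis_templates(independent: str, dependent: str) -> dict:
    """Fill the general template sentences with the study's variables."""
    # Capitalize only the first letter so acronyms such as "GPA" survive.
    subject = independent[:1].upper() + independent[1:]
    return {
        "research question": f"Does {independent} affect {dependent}?",
        "H0": f"{subject} does not affect {dependent}.",
        "Ha": f"{subject} affects {dependent}.",
    }


for label, sentence in hypothesis_templates(
    "daily meditation", "the incidence of depression"
).items():
    print(f"{label}: {sentence}")
```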

Test-specific template sentences

Once you know the statistical test you’ll be using, you can write your hypotheses in a more precise and mathematical way specific to the test you chose. The table below provides template sentences for common statistical tests.

Note: The template sentences above assume that you’re performing two-tailed tests. Two-tailed tests are appropriate for most studies.


Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses , by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

The null hypothesis is often abbreviated as H₀. When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The alternative hypothesis is often abbreviated as Hₐ or H₁. When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (“x affects y because …”).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article


Turney, S. (2023, June 22). Null & Alternative Hypotheses | Definitions, Templates & Examples. Scribbr. Retrieved April 16, 2024, from https://www.scribbr.com/statistics/null-and-alternative-hypotheses/


9.1 Null and Alternative Hypotheses

The actual test begins by considering two hypotheses, called the null hypothesis and the alternative hypothesis. These hypotheses contain opposing viewpoints.

H₀, the null hypothesis: a statement of no difference between population means or proportions, or of no difference between a population mean or proportion and a specified value. In other words, the difference equals 0.

Hₐ, the alternative hypothesis: a claim about the population that contradicts H₀ and that we conclude when we reject H₀.

Because the null and alternative hypotheses are contradictory, you must examine evidence to decide whether it is strong enough to reject the null hypothesis. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options: reject H₀ if the sample information favors the alternative hypothesis, or do not reject (decline to reject) H₀ if the sample information is insufficient to reject the null hypothesis.

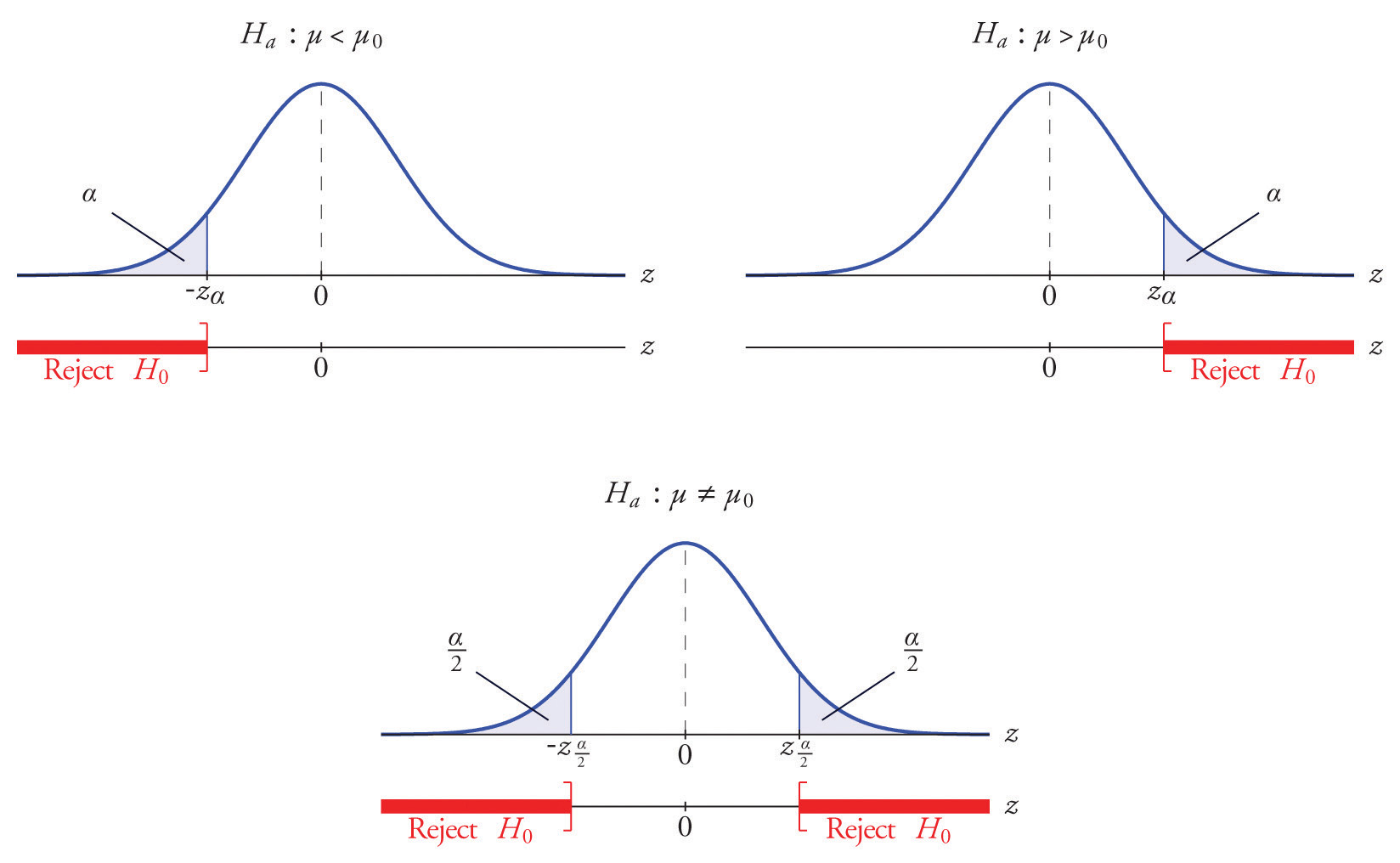

Mathematical Symbols Used in H₀ and Hₐ:

H₀ always has a symbol with an equal in it. Hₐ never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.
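The symbol rule can be expressed as a lookup from the alternative hypothesis’s symbol to its complement in the null hypothesis. In this sketch, `hypothesis_pair` and `NULL_FOR_ALTERNATIVE` are hypothetical names. As noted above, writing = in H₀ for a one-sided test would also be acceptable; this version simply uses the strict complement so that H₀ and Hₐ together cover every possible value.

```python
# For each symbol allowed in Ha, the complementary symbol for H0.
# H0 always contains the "equal" part; Ha never does.
NULL_FOR_ALTERNATIVE = {"≠": "=", ">": "≤", "<": "≥"}


def hypothesis_pair(parameter: str, value, alternative_symbol: str) -> tuple:
    """Return (H0, Ha) strings for a parameter, hypothesized value, and Ha symbol."""
    if alternative_symbol not in NULL_FOR_ALTERNATIVE:
        raise ValueError("Ha must use ≠, >, or <")
    null_symbol = NULL_FOR_ALTERNATIVE[alternative_symbol]
    return (
        f"H0: {parameter} {null_symbol} {value}",
        f"Ha: {parameter} {alternative_symbol} {value}",
    )


# Example 9.3: Ha says college students take fewer than five years on average.
h0, ha = hypothesis_pair("μ", 5, "<")
print(h0)  # H0: μ ≥ 5
print(ha)  # Ha: μ < 5
```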

Example 9.1

- H₀: No more than 30 percent of the registered voters in Santa Clara County voted in the primary election. p ≤ 0.30

- Hₐ: More than 30 percent of the registered voters in Santa Clara County voted in the primary election. p > 0.30

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25 percent. State the null and alternative hypotheses.

Example 9.2

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are the following:

- H₀: μ = 2.0

- Hₐ: μ ≠ 2.0
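To see how the form of the alternative hypothesis changes the calculation, here is a minimal one-sample z-test in Python. It assumes the population standard deviation σ is known and that the sampling distribution of the mean is approximately normal; in practice a t-test is more common when σ must be estimated. The `alternative` argument maps to the three forms of Hₐ used in these examples (≠, >, <). The sample mean, σ, and n below are hypothetical numbers, not data from the text.

```python
from statistics import NormalDist


def one_sample_z_test(sample_mean, mu0, sigma, n, alternative="two-sided"):
    """p value for a null hypothesis about a mean, with sigma assumed known.

    alternative: "two-sided" (Ha: mu ≠ mu0), "greater" (Ha: mu > mu0),
    or "less" (Ha: mu < mu0).
    """
    z = (sample_mean - mu0) / (sigma / n ** 0.5)
    std_normal = NormalDist()
    if alternative == "two-sided":
        return 2 * (1 - std_normal.cdf(abs(z)))
    if alternative == "greater":
        return 1 - std_normal.cdf(z)
    if alternative == "less":
        return std_normal.cdf(z)
    raise ValueError("alternative must be 'two-sided', 'greater', or 'less'")


# Hypothetical inputs for Example 9.2 (H0: μ = 2.0, Ha: μ ≠ 2.0).
p = one_sample_z_test(sample_mean=2.1, mu0=2.0, sigma=0.5, n=100)
print(round(p, 4))  # 0.0455
```

For a one-sided alternative such as Hₐ: μ < 5 in Example 9.3, the same function would be called with `alternative="less"`.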

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H₀: μ __ 66

- Hₐ: μ __ 66

Example 9.3

We want to test whether college students take fewer than five years, on average, to graduate from college. The null and alternative hypotheses are the following:

- H₀: μ ≥ 5

- Hₐ: μ < 5

We want to test whether it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H₀: μ __ 45

- Hₐ: μ __ 45

Example 9.4

An article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third of the students pass. The same article stated that 6.6 percent of U.S. students take advanced placement exams and 4.4 percent pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6 percent. State the null and alternative hypotheses.

- H₀: p ≤ 0.066

- Hₐ: p > 0.066
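For a hypothesis about a proportion, such as Hₐ: p > 0.066 in Example 9.4, a common large-sample approach is the one-proportion z-test. The sketch below assumes a simple random sample and that n·p₀ and n·(1 − p₀) are both reasonably large (a frequent rule of thumb is at least 10 each). The count of 80 students out of 1,000 is invented purely for illustration.

```python
from math import sqrt
from statistics import NormalDist


def one_proportion_z_test(successes: int, n: int, p0: float, alternative: str = "greater") -> float:
    """Large-sample z-test p value for a null hypothesis about one proportion.

    The standard error uses the hypothesized value p0, as is usual for this test.
    """
    p_hat = successes / n
    z = (p_hat - p0) / sqrt(p0 * (1 - p0) / n)
    std_normal = NormalDist()
    if alternative == "greater":    # Ha: p > p0
        return 1 - std_normal.cdf(z)
    if alternative == "less":       # Ha: p < p0
        return std_normal.cdf(z)
    if alternative == "two-sided":  # Ha: p ≠ p0
        return 2 * (1 - std_normal.cdf(abs(z)))
    raise ValueError("alternative must be 'greater', 'less', or 'two-sided'")


# Hypothetical sample: 80 of 1,000 U.S. students took an advanced placement exam.
p_value = one_proportion_z_test(successes=80, n=1000, p0=0.066)
print(f"p value = {p_value:.4f}")
```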

On a state driver’s test, about 40 percent pass the test on the first try. We want to test if more than 40 percent pass on the first try. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H₀: p __ 0.40

- Hₐ: p __ 0.40

Collaborative Exercise

Bring to class a newspaper, some news magazines, and some internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/9-1-null-and-alternative-hypotheses

© Jan 23, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


10.2: Understanding Null Hypothesis Testing


- Rajiv S. Jhangiani, I-Chant A. Chiang, Carrie Cuttler, & Dana C. Leighton

- Kwantlen Polytechnic U., Washington State U., & Texas A&M U.—Texarkana

- Explain the purpose of null hypothesis testing, including the role of sampling error.

- Describe the basic logic of null hypothesis testing.

- Describe the role of relationship strength and sample size in determining statistical significance and make reasonable judgments about statistical significance based on these two factors.

The Purpose of Null Hypothesis Testing

As we have seen, psychological research typically involves measuring one or more variables in a sample and computing descriptive summary data (e.g., means, correlation coefficients) for those variables. These descriptive data for the sample are called statistics. In general, however, the researcher’s goal is not to draw conclusions about that sample but to draw conclusions about the population that the sample was selected from. Thus researchers must use sample statistics to draw conclusions about the corresponding values in the population. These corresponding values in the population are called parameters. Imagine, for example, that a researcher measures the number of depressive symptoms exhibited by each of 50 adults with clinical depression and computes the mean number of symptoms. The researcher probably wants to use this sample statistic (the mean number of symptoms for the sample) to draw conclusions about the corresponding population parameter (the mean number of symptoms for adults with clinical depression).

Unfortunately, sample statistics are not perfect estimates of their corresponding population parameters. This is because there is a certain amount of random variability in any statistic from sample to sample. The mean number of depressive symptoms might be 8.73 in one sample of adults with clinical depression, 6.45 in a second sample, and 9.44 in a third, even though these samples are selected randomly from the same population. Similarly, the correlation (Pearson’s r) between two variables might be +.24 in one sample, −.04 in a second sample, and +.15 in a third, again even though these samples are selected randomly from the same population. This random variability in a statistic from sample to sample is called sampling error. (Note that the term error here refers to random variability and does not imply that anyone has made a mistake. No one “commits a sampling error.”)
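Sampling error is easy to demonstrate by drawing repeated random samples from one population. In this sketch the population of 100,000 symptom counts is simulated (uniform integers from 0 to 15), not real data; the seed, the population, and the sample size of 50 are assumptions chosen to mirror the example above. The sample means will almost always differ from one another and from the population mean, even though every sample comes from the same population.

```python
import random
from statistics import fmean


def sample_means(population, sample_size, n_samples, rng):
    """Means of several independent random samples, each drawn without replacement."""
    return [fmean(rng.sample(population, sample_size)) for _ in range(n_samples)]


rng = random.Random(42)
# Simulated population of symptom counts (hypothetical, uniform on 0..15).
population = [rng.randint(0, 15) for _ in range(100_000)]

means = sample_means(population, sample_size=50, n_samples=3, rng=rng)
for i, m in enumerate(means, start=1):
    print(f"sample {i}: mean = {m:.2f}")
print(f"population mean = {fmean(population):.2f}")
```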

One implication of this is that when there is a statistical relationship in a sample, it is not always clear that there is a statistical relationship in the population. A small difference between two group means in a sample might indicate that there is a small difference between the two group means in the population. But it could also be that there is no difference between the means in the population and that the difference in the sample is just a matter of sampling error. Similarly, a Pearson’s r value of −.29 in a sample might mean that there is a negative relationship in the population. But it could also be that there is no relationship in the population and that the relationship in the sample is just a matter of sampling error.

In fact, any statistical relationship in a sample can be interpreted in two ways:

- There is a relationship in the population, and the relationship in the sample reflects this.

- There is no relationship in the population, and the relationship in the sample reflects only sampling error.

The purpose of null hypothesis testing is simply to help researchers decide between these two interpretations.

The Logic of Null Hypothesis Testing

Null hypothesis testing (often called null hypothesis significance testing or NHST) is a formal approach to deciding between two interpretations of a statistical relationship in a sample. One interpretation is called the null hypothesis (often symbolized H₀ and read as “H-zero”). This is the idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error. Informally, the null hypothesis is that the sample relationship “occurred by chance.” The other interpretation is called the alternative hypothesis (often symbolized as H₁). This is the idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Again, every statistical relationship in a sample can be interpreted in either of these two ways: It might have occurred by chance, or it might reflect a relationship in the population. So researchers need a way to decide between them. Although there are many specific null hypothesis testing techniques, they are all based on the same general logic. The steps are as follows:

- Assume for the moment that the null hypothesis is true. There is no relationship between the variables in the population.

- Determine how likely the sample relationship would be if the null hypothesis were true.

- If the sample relationship would be extremely unlikely, then reject the null hypothesis in favor of the alternative hypothesis. If it would not be extremely unlikely, then retain the null hypothesis.
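The three steps above can be sketched as a permutation test, which is one of many specific techniques that follow this logic. Under H₀ the group labels are interchangeable, so reshuffling them shows how often a difference at least as large as the observed one would arise from chance alone. The function name, the number of shuffles, and the two small groups of scores are hypothetical, chosen only to illustrate the procedure.

```python
import random
from statistics import fmean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference between two group means."""
    rng = random.Random(seed)
    # Step 1: assume H0, so the group labels carry no information.
    observed = abs(fmean(group_a) - fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    # Step 2: how often do shuffled labels give a difference at least this large?
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:n_a]) - fmean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Counting the observed labeling as one permutation keeps p above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical scores for two groups.
group_a = [12, 15, 14, 16, 13, 17]
group_b = [9, 11, 10, 12, 8, 10]
alpha = 0.05
p = permutation_test(group_a, group_b)
# Step 3: reject H0 only if the sample result would be very unlikely under H0.
decision = "reject H0" if p <= alpha else "retain H0"
print(f"p = {p:.4f} -> {decision}")
```

A permutation test is used here because it mirrors the steps directly without distributional formulas; a t-test would be the more familiar choice for these data.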

Following this logic, we can begin to understand why Mehl and his colleagues concluded that there is no evidence of a difference in talkativeness between women and men in the population. In essence, they asked the following question: “If there were no difference in the population, how likely is it that we would find a small difference of d = 0.06 in our sample?” Their answer to this question was that this sample relationship would be fairly likely if the null hypothesis were true. Therefore, they retained the null hypothesis, concluding that there is no evidence of a sex difference in the population. We can also see why Kanner and his colleagues concluded that there is a correlation between hassles and symptoms in the population. They asked, “If the null hypothesis were true, how likely is it that we would find a strong correlation of +.60 in our sample?” Their answer to this question was that this sample relationship would be fairly unlikely if the null hypothesis were true. Therefore, they rejected the null hypothesis in favor of the alternative hypothesis, concluding that there is a positive correlation between these variables in the population.

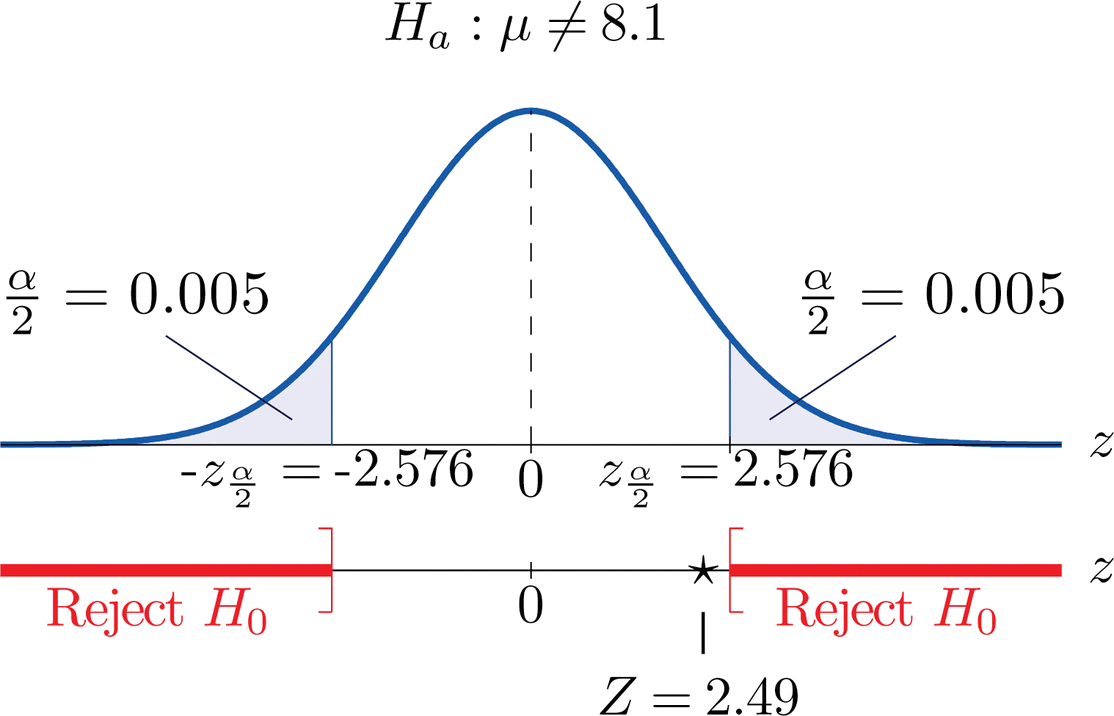

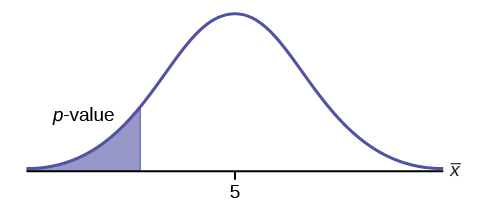

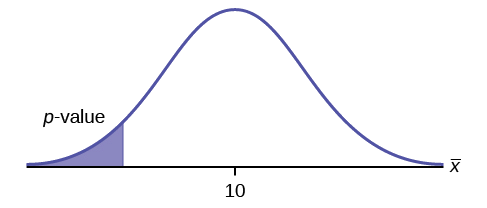

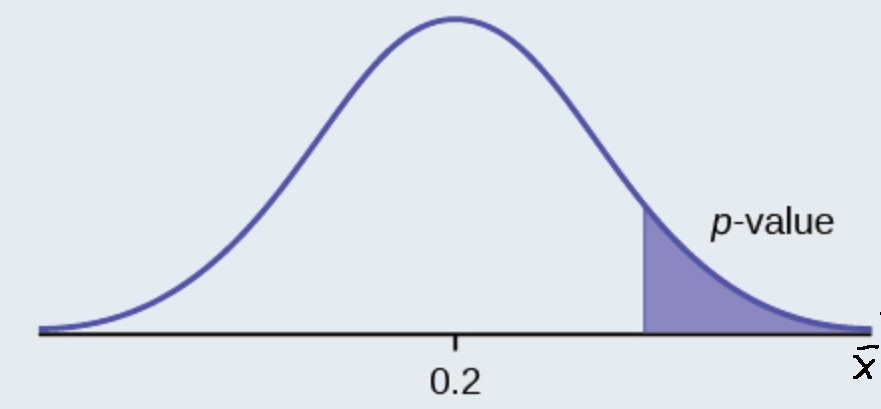

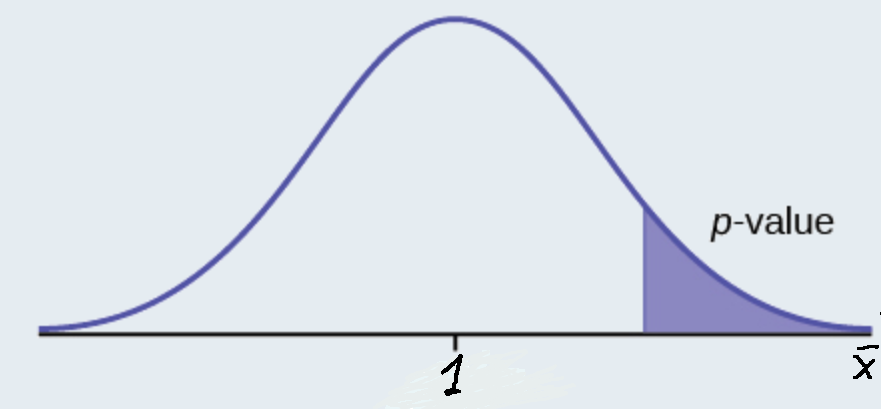

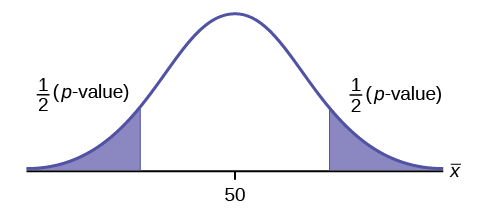

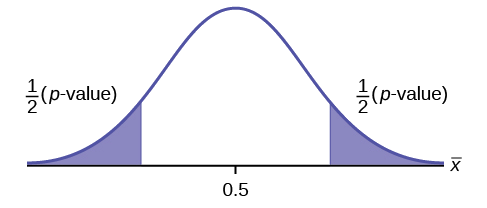

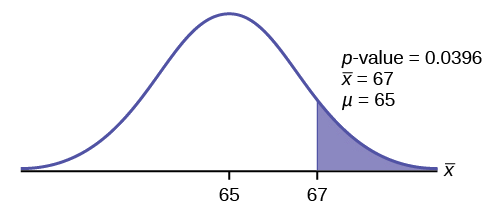

A crucial step in null hypothesis testing is finding the probability of the sample result or a more extreme result if the null hypothesis were true (Lakens, 2017). This probability is called the p value. A low p value means that the sample or more extreme result would be unlikely if the null hypothesis were true and leads to the rejection of the null hypothesis. A p value that is not low means that the sample or more extreme result would be likely if the null hypothesis were true and leads to the retention of the null hypothesis. But how low must the p value be before the sample result is considered unlikely enough to reject the null hypothesis? In null hypothesis testing, this criterion is called α (alpha) and is almost always set to .05. If there is a 5% chance or less of a result at least as extreme as the sample result if the null hypothesis were true, then the null hypothesis is rejected. When this happens, the result is said to be statistically significant. If there is greater than a 5% chance of a result at least as extreme as the sample result when the null hypothesis is true, then the null hypothesis is retained. This does not necessarily mean that the researcher accepts the null hypothesis as true, only that there is not currently enough evidence to reject it. Researchers often use the expression “fail to reject the null hypothesis” rather than “retain the null hypothesis,” but they never use the expression “accept the null hypothesis.”

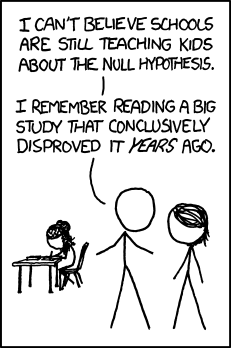

The p value is one of the most misunderstood quantities in psychological research (Cohen, 1994). Even professional researchers misinterpret it, and it is not unusual for such misinterpretations to appear in statistics textbooks!

The most common misinterpretation is that the p value is the probability that the null hypothesis is true, that is, that the sample result occurred by chance. For example, a misguided researcher might say that because the p value is .02, there is only a 2% chance that the result is due to chance and a 98% chance that it reflects a real relationship in the population. But this is incorrect. The p value is really the probability of a result at least as extreme as the sample result if the null hypothesis were true. So a p value of .02 means that if the null hypothesis were true, a sample result this extreme would occur only 2% of the time.

You can avoid this misunderstanding by remembering that the p value is not the probability that any particular hypothesis is true or false. Instead, it is the probability of obtaining a result at least as extreme as the sample result if the null hypothesis were true.
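A quick simulation can make the correct interpretation concrete. The sketch below assumes a two-sided z-test, so that under H₀ the test statistic follows a standard normal distribution. It finds the statistic whose p value is .02, then generates many statistics from a world where H₀ is true and counts how often they are at least that extreme. The share should come out close to .02, which is exactly what the p value describes; the simulation says nothing about the probability that H₀ itself is true. The function name and the number of draws are illustrative assumptions.

```python
import random
from statistics import NormalDist


def simulated_tail_share(p_value, trials=100_000, seed=7):
    """Share of z statistics generated under H0 that are at least as extreme
    as the statistic whose two-sided p value is p_value."""
    # |z| cutoff corresponding to the given two-sided p value.
    z_cutoff = NormalDist().inv_cdf(1 - p_value / 2)
    rng = random.Random(seed)
    # Each draw is a test statistic from a world where H0 is true.
    extreme = sum(abs(rng.gauss(0.0, 1.0)) >= z_cutoff for _ in range(trials))
    return extreme / trials


share = simulated_tail_share(0.02)
print(f"share of null results at least this extreme: {share:.4f}")
```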

Role of Sample Size and Relationship Strength

Recall that null hypothesis testing involves answering the question, “If the null hypothesis were true, what is the probability of a sample result as extreme as this one?” In other words, “What is the p value?” It can be helpful to see that the answer to this question depends on just two considerations: the strength of the relationship and the size of the sample. Specifically, the stronger the sample relationship and the larger the sample, the less likely the result would be if the null hypothesis were true. That is, the lower the p value. This should make sense. Imagine a study in which a sample of 500 women is compared with a sample of 500 men in terms of some psychological characteristic, and Cohen’s d is a strong 0.50. If there were really no sex difference in the population, then a result this strong based on such a large sample should seem highly unlikely. Now imagine a similar study in which a sample of three women is compared with a sample of three men, and Cohen’s d is a weak 0.10. If there were no sex difference in the population, then a relationship this weak based on such a small sample should seem likely. And this is precisely why the null hypothesis would be rejected in the first example and retained in the second.
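The joint role of relationship strength and sample size can be checked with a rough calculation. For two groups of equal size n, the independent-samples t statistic equals d·√(n/2) when Cohen’s d is computed from the pooled standard deviation. The sketch below turns that statistic into a two-sided p value using a standard normal reference distribution. This normal approximation is an assumption made for simplicity: it is reasonable for large samples but understates p for very small ones, where a t distribution with 2n − 2 degrees of freedom would be more accurate. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approx_p_from_d(d: float, n_per_group: int) -> float:
    """Approximate two-sided p value for a two-group comparison.

    For equal group sizes, t = d * sqrt(n / 2). Comparing that statistic
    with a standard normal distribution is a large-sample approximation.
    """
    z = abs(d) * sqrt(n_per_group / 2)
    return 2 * (1 - NormalDist().cdf(z))


for d, n in [(0.50, 500), (0.10, 3), (0.10, 2000)]:
    print(f"d = {d:.2f}, n = {n} per group: p ≈ {approx_p_from_d(d, n):.3g}")
```

The first two cases correspond to the two imagined studies above. The third shows a weak relationship (d = 0.10) reaching statistical significance purely because each group is large.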

Of course, sometimes the result can be weak and the sample large, or the result can be strong and the sample small. In these cases, the two considerations trade off against each other so that a weak result can be statistically significant if the sample is large enough and a strong relationship can be statistically significant even if the sample is small. Table 10.2.1 shows roughly how relationship strength and sample size combine to determine whether a sample result is statistically significant. The columns of the table represent the three levels of relationship strength: weak, medium, and strong. The rows represent four sample sizes that can be considered small, medium, large, and extra large in the context of psychological research. Thus each cell in the table represents a combination of relationship strength and sample size. If a cell contains the word Yes, then this combination would be statistically significant for both Cohen’s d and Pearson’s r. If it contains the word No, then it would not be statistically significant for either. There is one cell where the decision for d and r would be different and another where it might be different depending on some additional considerations, which are discussed in Section 13.2.

Although Table 10.2.1 provides only a rough guideline, it shows very clearly that weak relationships based on medium or small samples are never statistically significant and that strong relationships based on medium or larger samples are always statistically significant. If you keep this lesson in mind, you will often know whether a result is statistically significant based on the descriptive statistics alone. It is extremely useful to be able to develop this kind of intuitive judgment. One reason is that it allows you to develop expectations about how your formal null hypothesis tests are going to come out, which in turn allows you to detect problems in your analyses. For example, if your sample relationship is strong and your sample is medium, then you would expect to reject the null hypothesis. If for some reason your formal null hypothesis test indicates otherwise, then you need to double-check your computations and interpretations. A second reason is that the ability to make this kind of intuitive judgment is an indication that you understand the basic logic of this approach in addition to being able to do the computations.

Statistical Significance Versus Practical Significance

Table 10.2.1 illustrates another extremely important point. A statistically significant result is not necessarily a strong one. Even a very weak result can be statistically significant if it is based on a large enough sample. This is closely related to Janet Shibley Hyde’s argument about sex differences (Hyde, 2007). The differences between women and men in mathematical problem solving and leadership ability are statistically significant. But the word significant can cause people to interpret these differences as strong and important, perhaps even important enough to influence the college courses they take or even who they vote for. As we have seen, however, these statistically significant differences are actually quite weak, perhaps even “trivial.”

This is why it is important to distinguish between the statistical significance of a result and the practical significance of that result. Practical significance refers to the importance or usefulness of the result in some real-world context. Many sex differences are statistically significant—and may even be interesting for purely scientific reasons—but they are not practically significant. In clinical practice, this same concept is often referred to as “clinical significance.” For example, a study on a new treatment for social phobia might show that it produces a statistically significant positive effect. Yet this effect still might not be strong enough to justify the time, effort, and other costs of putting it into practice—especially if easier and cheaper treatments that work almost as well already exist. Although statistically significant, this result would be said to lack practical or clinical significance.

- Lakens, D. (2017, December 25). About p-values: Understanding common misconceptions [Blog post]. Retrieved from https://correlaid.org/en/blog/understand-p-values/

- Cohen, J. (1994). The earth is round (p < .05). American Psychologist, 49, 997–1003.

- Hyde, J. S. (2007). New directions in the study of gender similarities and differences. Current Directions in Psychological Science, 16, 259–263.


13.1 Understanding Null Hypothesis Testing

Learning objectives.

- Explain the purpose of null hypothesis testing, including the role of sampling error.

- Describe the basic logic of null hypothesis testing.

- Describe the role of relationship strength and sample size in determining statistical significance and make reasonable judgments about statistical significance based on these two factors.

The Purpose of Null Hypothesis Testing

As we have seen, psychological research typically involves measuring one or more variables for a sample and computing descriptive statistics for that sample. In general, however, the researcher’s goal is not to draw conclusions about that sample but to draw conclusions about the population that the sample was selected from. Thus researchers must use sample statistics to draw conclusions about the corresponding values in the population. These corresponding values in the population are called parameters . Imagine, for example, that a researcher measures the number of depressive symptoms exhibited by each of 50 clinically depressed adults and computes the mean number of symptoms. The researcher probably wants to use this sample statistic (the mean number of symptoms for the sample) to draw conclusions about the corresponding population parameter (the mean number of symptoms for clinically depressed adults).

Unfortunately, sample statistics are not perfect estimates of their corresponding population parameters. This is because there is a certain amount of random variability in any statistic from sample to sample. The mean number of depressive symptoms might be 8.73 in one sample of clinically depressed adults, 6.45 in a second sample, and 9.44 in a third—even though these samples are selected randomly from the same population. Similarly, the correlation (Pearson’s r ) between two variables might be +.24 in one sample, −.04 in a second sample, and +.15 in a third—again, even though these samples are selected randomly from the same population. This random variability in a statistic from sample to sample is called sampling error . (Note that the term error here refers to random variability and does not imply that anyone has made a mistake. No one “commits a sampling error.”)

One implication of this is that when there is a statistical relationship in a sample, it is not always clear that there is a statistical relationship in the population. A small difference between two group means in a sample might indicate that there is a small difference between the two group means in the population. But it could also be that there is no difference between the means in the population and that the difference in the sample is just a matter of sampling error. Similarly, a Pearson’s r value of −.29 in a sample might mean that there is a negative relationship in the population. But it could also be that there is no relationship in the population and that the relationship in the sample is just a matter of sampling error.

In fact, any statistical relationship in a sample can be interpreted in two ways:

- There is a relationship in the population, and the relationship in the sample reflects this.

- There is no relationship in the population, and the relationship in the sample reflects only sampling error.

The purpose of null hypothesis testing is simply to help researchers decide between these two interpretations.

The Logic of Null Hypothesis Testing

Null hypothesis testing is a formal approach to deciding between two interpretations of a statistical relationship in a sample. One interpretation is called the null hypothesis (often symbolized H0 and read as “H-naught”). This is the idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error. Informally, the null hypothesis is that the sample relationship “occurred by chance.” The other interpretation is called the alternative hypothesis (often symbolized as H1). This is the idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Again, every statistical relationship in a sample can be interpreted in either of these two ways: It might have occurred by chance, or it might reflect a relationship in the population. So researchers need a way to decide between them. Although there are many specific null hypothesis testing techniques, they are all based on the same general logic. The steps are as follows:

- Assume for the moment that the null hypothesis is true. There is no relationship between the variables in the population.

- Determine how likely the sample relationship would be if the null hypothesis were true.

- If the sample relationship would be extremely unlikely, then reject the null hypothesis in favor of the alternative hypothesis. If it would not be extremely unlikely, then retain the null hypothesis.
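The three steps can be sketched in Python as a permutation test. This is one specific technique, chosen here only because it needs no distribution tables; it is not the only way the logic is implemented. Assuming the null hypothesis is true means the group labels are interchangeable, so shuffling them shows how often a difference at least as large as the observed one arises by chance. The scores and the .05 cutoff in the example are invented for illustration.

```python
import random
import statistics

def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Two-sided permutation test for a difference between two group means."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_shuffles):
        # Step 1: assume H0, so any assignment of scores to groups is equally likely.
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        # Step 2: count shuffles at least as extreme as the observed difference.
        if diff >= observed:
            extreme += 1
    return extreme / n_shuffles

def decide(p, alpha=0.05):
    """Step 3: reject H0 only if the sample result would be unlikely under H0."""
    return "reject H0" if p < alpha else "retain H0"

# Invented scores for two small groups (illustration only).
a = [12, 15, 14, 16, 13, 17, 15, 14]
b = [10, 11, 13, 9, 12, 10, 11, 12]
p = permutation_p_value(a, b)
print(f"p = {p:.4f} -> {decide(p)}")
```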

Following this logic, we can begin to understand why Mehl and his colleagues concluded that there is no difference in talkativeness between women and men in the population. In essence, they asked the following question: “If there were no difference in the population, how likely is it that we would find a small difference of d = 0.06 in our sample?” Their answer to this question was that this sample relationship would be fairly likely if the null hypothesis were true. Therefore, they retained the null hypothesis—concluding that there is no evidence of a sex difference in the population. We can also see why Kanner and his colleagues concluded that there is a correlation between hassles and symptoms in the population. They asked, “If the null hypothesis were true, how likely is it that we would find a strong correlation of +.60 in our sample?” Their answer to this question was that this sample relationship would be fairly unlikely if the null hypothesis were true. Therefore, they rejected the null hypothesis in favor of the alternative hypothesis—concluding that there is a positive correlation between these variables in the population.

A crucial step in null hypothesis testing is finding the likelihood of the sample result if the null hypothesis were true. This probability is called the p value. A low p value means that the sample result would be unlikely if the null hypothesis were true and leads to the rejection of the null hypothesis. A high p value means that the sample result would be likely if the null hypothesis were true and leads to the retention of the null hypothesis. But how low must the p value be before the sample result is considered unlikely enough to reject the null hypothesis? In null hypothesis testing, this criterion is called α (alpha) and is almost always set to .05. If there is less than a 5% chance of a result as extreme as the sample result if the null hypothesis were true, then the null hypothesis is rejected. When this happens, the result is said to be statistically significant. If there is a 5% or greater chance of a result as extreme as the sample result when the null hypothesis is true, then the null hypothesis is retained. This does not necessarily mean that the researcher accepts the null hypothesis as true, only that there is not currently enough evidence to reject it. Researchers often use the expression “fail to reject the null hypothesis” rather than “retain the null hypothesis,” but they never use the expression “accept the null hypothesis.”
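Once a test statistic is in hand, comparing p with α is mechanical. The sketch below assumes, for illustration only, a z test (a statistic that is standard normal when H0 is true). It converts z to a two-sided p value and applies the α = .05 rule, reporting “fail to reject” rather than “accept.” The z values are invented.

```python
import math

ALPHA = 0.05

def two_sided_p_from_z(z: float) -> float:
    """P(|Z| >= |z|) for a standard normal Z, i.e. the two-sided p value."""
    return math.erfc(abs(z) / math.sqrt(2))

def decision(z: float, alpha: float = ALPHA) -> str:
    p = two_sided_p_from_z(z)
    verdict = "reject H0 (statistically significant)" if p < alpha else "fail to reject H0"
    return f"z = {z:.2f}, p = {p:.3f}: {verdict}"

print(decision(2.50))  # p is about .012
print(decision(1.20))  # p is about .230
```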

The Misunderstood p Value

The p value is one of the most misunderstood quantities in psychological research (Cohen, 1994). Even professional researchers misinterpret it, and it is not unusual for such misinterpretations to appear in statistics textbooks!

The most common misinterpretation is that the p value is the probability that the null hypothesis is true, that is, that the sample result occurred by chance. For example, a misguided researcher might say that because the p value is .02, there is only a 2% chance that the result is due to chance and a 98% chance that it reflects a real relationship in the population. But this is incorrect. The p value is really the probability of a result at least as extreme as the sample result if the null hypothesis were true. So a p value of .02 means that if the null hypothesis were true, a sample result this extreme would occur only 2% of the time.

You can avoid this misunderstanding by remembering that the p value is not the probability that any particular hypothesis is true or false. Instead, it is the probability of obtaining a result at least as extreme as the sample result if the null hypothesis were true.
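The frequency reading of a p value can be checked by simulation. This sketch again assumes a z test, so that under H0 the statistic is standard normal. It generates many statistics with H0 true and counts how often one is at least as extreme as an observed z of 2.326, whose two-sided p value is about .02. The share comes out near 2%: a statement about data given H0, not about the probability that H0 is true.

```python
import random

def share_as_extreme(observed_z: float, n_sims: int = 200_000, seed: int = 1) -> float:
    """Fraction of simulated null-true studies whose |z| is at least |observed_z|."""
    rng = random.Random(seed)
    hits = sum(abs(rng.gauss(0.0, 1.0)) >= abs(observed_z) for _ in range(n_sims))
    return hits / n_sims

share = share_as_extreme(2.326)
print(f"Share of null-true studies at least this extreme: {share:.3f}")
```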

Role of Sample Size and Relationship Strength

Recall that null hypothesis testing involves answering the question, “If the null hypothesis were true, what is the probability of a sample result as extreme as this one?” In other words, “What is the p value?” It can be helpful to see that the answer to this question depends on just two considerations: the strength of the relationship and the size of the sample. Specifically, the stronger the sample relationship and the larger the sample, the less likely the result would be if the null hypothesis were true. That is, the lower the p value. This should make sense. Imagine a study in which a sample of 500 women is compared with a sample of 500 men in terms of some psychological characteristic, and Cohen’s d is a strong 0.50. If there were really no sex difference in the population, then a result this strong based on such a large sample should seem highly unlikely. Now imagine a similar study in which a sample of three women is compared with a sample of three men, and Cohen’s d is a weak 0.10. If there were no sex difference in the population, then a relationship this weak based on such a small sample should seem likely. And this is precisely why the null hypothesis would be rejected in the first example and retained in the second.
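The two imagined studies can be compared numerically. This sketch uses a large-sample normal approximation for a two-group comparison, z = d × sqrt(n1 × n2 / (n1 + n2)), an assumption chosen for simplicity. With only three people per group, a t test would give an even larger p value, so the conclusion would not change.

```python
import math

def approx_p_two_groups(d: float, n1: int, n2: int) -> float:
    """Two-sided p value for Cohen's d between two groups (normal approximation)."""
    z = d * math.sqrt(n1 * n2 / (n1 + n2))
    return math.erfc(abs(z) / math.sqrt(2))

big = approx_p_two_groups(0.50, 500, 500)  # strong effect, large samples
small = approx_p_two_groups(0.10, 3, 3)    # weak effect, tiny samples
print(f"d = 0.50, 500 vs 500: p = {big:.2g}")
print(f"d = 0.10, 3 vs 3:     p = {small:.2f}")
```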

Of course, sometimes the result can be weak and the sample large, or the result can be strong and the sample small. In these cases, the two considerations trade off against each other so that a weak result can be statistically significant if the sample is large enough and a strong relationship can be statistically significant even if the sample is small. Table 13.1 “How Relationship Strength and Sample Size Combine to Determine Whether a Result Is Statistically Significant” shows roughly how relationship strength and sample size combine to determine whether a sample result is statistically significant. The columns of the table represent the three levels of relationship strength: weak, medium, and strong. The rows represent four sample sizes that can be considered small, medium, large, and extra large in the context of psychological research. Thus each cell in the table represents a combination of relationship strength and sample size. If a cell contains the word Yes, then this combination would be statistically significant for both Cohen’s d and Pearson’s r. If it contains the word No, then it would not be statistically significant for either. There is one cell where the decision for d and r would be different and another where it might be different depending on some additional considerations, which are discussed in Section 13.2 “Some Basic Null Hypothesis Tests.”

Table 13.1 How Relationship Strength and Sample Size Combine to Determine Whether a Result Is Statistically Significant

Although Table 13.1 “How Relationship Strength and Sample Size Combine to Determine Whether a Result Is Statistically Significant” provides only a rough guideline, it shows very clearly that weak relationships based on medium or small samples are never statistically significant and that strong relationships based on medium or larger samples are always statistically significant. If you keep this in mind, you will often know whether a result is statistically significant based on the descriptive statistics alone. It is extremely useful to be able to develop this kind of intuitive judgment. One reason is that it allows you to develop expectations about how your formal null hypothesis tests are going to come out, which in turn allows you to detect problems in your analyses. For example, if your sample relationship is strong and your sample is medium, then you would expect to reject the null hypothesis. If for some reason your formal null hypothesis test indicates otherwise, then you need to double-check your computations and interpretations. A second reason is that the ability to make this kind of intuitive judgment is an indication that you understand the basic logic of this approach in addition to being able to do the computations.
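One way to build this intuition is to compute, for each sample size, the smallest correlation that would reach p < .05. The sketch uses the Fisher z transformation, a standard large-sample approximation (an assumption here, not the textbook's method) whose thresholds are close to exact critical values for samples of these sizes. The sample sizes 20, 50, 100, and 500 are illustrative choices spanning small to extra large.

```python
import math

Z_CRIT = 1.959964  # two-sided critical z for alpha = .05

def critical_r(n: int, z_crit: float = Z_CRIT) -> float:
    """Smallest |r| significant at alpha = .05, via the Fisher z approximation."""
    # Under H0, atanh(r) * sqrt(n - 3) is approximately standard normal.
    return math.tanh(z_crit / math.sqrt(n - 3))

for n in (20, 50, 100, 500):
    print(f"N = {n:>3}: |r| must exceed about {critical_r(n):.2f}")
```

For instance, by this approximation a weak r of .10 does not reach significance until N is several hundred.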

Statistical Significance Versus Practical Significance

Table 13.1 “How Relationship Strength and Sample Size Combine to Determine Whether a Result Is Statistically Significant” illustrates another extremely important point. A statistically significant result is not necessarily a strong one. Even a very weak result can be statistically significant if it is based on a large enough sample. This is closely related to Janet Shibley Hyde’s argument about sex differences (Hyde, 2007). The differences between women and men in mathematical problem solving and leadership ability are statistically significant. But the word significant can cause people to interpret these differences as strong and important—perhaps even important enough to influence the college courses they take or even who they vote for. As we have seen, however, these statistically significant differences are actually quite weak—perhaps even “trivial.”

This is why it is important to distinguish between the statistical significance of a result and the practical significance of that result. Practical significance refers to the importance or usefulness of the result in some real-world context. Many sex differences are statistically significant—and may even be interesting for purely scientific reasons—but they are not practically significant. In clinical practice, this same concept is often referred to as “clinical significance.” For example, a study on a new treatment for social phobia might show that it produces a statistically significant positive effect. Yet this effect still might not be strong enough to justify the time, effort, and other costs of putting it into practice—especially if easier and cheaper treatments that work almost as well already exist. Although statistically significant, this result would be said to lack practical or clinical significance.
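Because a p value alone hides how large an effect is, a common safeguard is to report the effect size next to it. This hypothetical sketch does that for two invented scenarios. It computes p with a large-sample normal approximation (an assumption) and labels d with Cohen's conventional benchmarks of .2, .5, and .8; the label “negligible” below .2 is this sketch's own choice. Whether an effect matters in practice still has to be judged in its real-world context.

```python
import math

def summarize(d: float, n_per_group: int) -> str:
    """Report a two-group result with both its p value and its effect size."""
    # Normal approximation to the two-sided p value for equal group sizes.
    z = d * math.sqrt(n_per_group / 2)
    p = math.erfc(abs(z) / math.sqrt(2))
    size = abs(d)
    if size < 0.2:
        label = "negligible"
    elif size < 0.5:
        label = "small"
    elif size < 0.8:
        label = "medium"
    else:
        label = "large"
    return f"d = {d:.2f} ({label}), n = {n_per_group} per group, p = {p:.2g}"

# Hypothetical: a tiny difference in a huge sample is "significant" but weak.
print(summarize(0.05, 20_000))
# Hypothetical: a large difference in a modest sample.
print(summarize(0.90, 25))
```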

Key Takeaways

- Null hypothesis testing is a formal approach to deciding whether a statistical relationship in a sample reflects a real relationship in the population or is just due to chance.

- The logic of null hypothesis testing involves assuming that the null hypothesis is true, finding how likely the sample result would be if this assumption were correct, and then making a decision. If the sample result would be unlikely if the null hypothesis were true, then it is rejected in favor of the alternative hypothesis. If it would not be unlikely, then the null hypothesis is retained.

- The probability of obtaining the sample result if the null hypothesis were true (the p value) is based on two considerations: relationship strength and sample size. Reasonable judgments about whether a sample relationship is statistically significant can often be made by quickly considering these two factors.

- Statistical significance is not the same as relationship strength or importance. Even weak relationships can be statistically significant if the sample size is large enough. It is important to consider relationship strength and the practical significance of a result in addition to its statistical significance.

- Discussion: Imagine a study showing that people who eat more broccoli tend to be happier. Explain for someone who knows nothing about statistics why the researchers would conduct a null hypothesis test.

Practice: Use Table 13.1 “How Relationship Strength and Sample Size Combine to Determine Whether a Result Is Statistically Significant” to decide whether each of the following results is statistically significant.

- The correlation between two variables is r = −.78 based on a sample size of 137.

- The mean score on a psychological characteristic for women is 25 (SD = 5) and the mean score for men is 24 (SD = 5). There were 12 women and 10 men in this study.

- In a memory experiment, the mean number of items recalled by the 40 participants in Condition A was 0.50 standard deviations greater than the mean number recalled by the 40 participants in Condition B.

- In another memory experiment, the mean scores for participants in Condition A and Condition B came out exactly the same!

- A student finds a correlation of r = .04 between the number of units the students in his research methods class are taking and the students’ level of stress.

Cohen, J. (1994). The world is round: p < .05. American Psychologist, 49, 997–1003.

Hyde, J. S. (2007). New directions in the study of gender similarities and differences. Current Directions in Psychological Science, 16, 259–263.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Biochem Med (Zagreb), v.31(1); 2021 Feb 15

Sample size, power and effect size revisited: simplified and practical approaches in pre-clinical, clinical and laboratory studies

Ceyhan Ceran Serdar

1 Medical Biology and Genetics, Faculty of Medicine, Ankara Medipol University, Ankara, Turkey

Murat Cihan

2 Ordu University Training and Research Hospital, Ordu, Turkey

Doğan Yücel

3 Department of Medical Biochemistry, Lokman Hekim University School of Medicine, Ankara, Turkey

Muhittin A Serdar

4 Department of Medical Biochemistry, Acibadem Mehmet Ali Aydinlar University, Istanbul, Turkey

Calculating the sample size is one of the critical issues determining the scientific contribution of a study. The sample size critically affects the hypothesis and the study design, and there is no straightforward way of calculating the effective sample size for reaching an accurate conclusion. Using a statistically incorrect sample size may lead to inadequate results in both clinical and laboratory studies, as well as to lost time, added cost, and ethical problems. This review has two main aims. The first is to explain the importance of sample size and its relationship to effect size (ES) and statistical significance. The second is to assist researchers planning sample size estimations by suggesting and explaining available software, guidelines and references that serve different scientific purposes.

Introduction

Statistical analysis is a crucial part of research, and a scientific study should incorporate statistical tools from the planning stage onward. Over the last 20-30 years, information technology, together with evidence-based medicine, has increased the spread and applicability of statistical science. Although scientists recognize the importance of statistical analysis, a substantial number of researchers admit that they lack adequate knowledge of statistical concepts and principles (1). In a study by West and Ficalora, more than two-thirds of the clinicians stated that “the level of biostatistics education that is provided to the medical students is not sufficient” (2). Accordingly, it has been suggested that statistical concepts are either poorly understood or not understood at all (3, 4). In addition, whether intentionally or not, researchers tend to draw conclusions that the actual study data cannot support, often because statistical tools are misused (5). As a result, many statistical errors occur that affect research results.

Although many kinds of statistical error can occur in any type of scientific research, the sources of error have shifted in recent years as dedicated statistical software has come into widespread use. The main statistical errors frequently encountered in scientific studies are summarized below (6-13):

- Flawed and inadequate hypothesis;

- Improper study design;

- Lack of adequate control condition/group;

- Spectrum bias;

- Overstatement of the analysis results;

- Spurious correlations;

- Inadequate sample size;

- Circular analysis (creating bias by selecting the properties of the data retrospectively);

- Use of inappropriate statistical tests and improper manipulation of the analyses;

- p-hacking (e.g. adding new covariates post hoc to make P values significant);

- Excessive interpretation of limited or insignificant results (subjectivism);

- Confusing correlation, association, and causation (intentionally or not);

- Faulty multiple regression models;

- Confusion between P value and clinical significance; and

- Inappropriate presentation of the results and effects (erroneous tables, graphics, and figures).

Relationship among sample size, power, P value and effect size

In this review, we concentrate on the problems associated with the relationships among sample size, power, P value, and effect size (ES), and offer practical suggestions wherever possible. To understand and interpret sample size, power analysis, effect size, and P value, it is necessary to know how the study hypothesis was formed. A study is best evaluated for Type I and Type II errors (Figure 1) by considering its results in the context of its hypotheses (14-16).

Figure 1. Illustration of Type I and Type II errors.

A statistical hypothesis is the researcher’s best guess as to what the result of the experiment will show. It states, in testable form, the proposition that the researcher plans to examine in a sample in order to find out whether the proposition holds in the relevant population. Two types of hypotheses are commonly used in statistics: the null hypothesis (H0) and the alternative hypothesis (H1). Essentially, H1 is the researcher’s prediction of the state of the experimental group after the experimental treatment is applied, whereas H0 expresses the notion that the treatment will have no effect.

Before the study, in addition to stating the hypothesis, the researcher must also select the alpha (α) level at which the hypothesis will be declared “supported”. α represents how much risk the researcher is willing to take that the study will conclude H1 is correct when, in the full population, it is not (and the null hypothesis is therefore true). In other words, alpha is the probability of rejecting H0 when it is actually true. (The researcher would then have made an error by reporting that the experimental treatment makes a difference when, in the full population, the treatment has no effect.)

The most commonly chosen α level is 0.05, meaning that the researcher accepts a 5% risk of rejecting H0 when H0 is actually true in the full population. However, other alpha levels may be appropriate in some circumstances. For pilot studies, α is often set at 0.10 or 0.20. In studies where it is especially important to avoid concluding that a treatment is effective when it is not, alpha may be set much lower, at 0.001 or even lower. Drug studies are examples of studies that often set alpha at 0.001 or lower, because releasing an ineffective drug can have extremely dangerous consequences for patients.

Another probability value is the P value: the probability, calculated on the assumption that H0 is true, of obtaining a result at least as extreme as the one observed. (It is not the probability that H1 has been accepted wrongly.) The P value is compared with alpha to determine whether the result is “statistically significant”. If the P value is at or below alpha, H0 is rejected and H1 is accepted. If it is above alpha, H0 is not rejected; this indicates insufficient evidence against H0 rather than proof that H0 is true.

There are two types of error. The first is accepting H1 when it is not true in the population; this is called a Type I error, or a false positive, and alpha defines its probability. Type I errors can arise for many reasons, from poor sampling that yields an experimental sample quite different from the population to mistakes in the design or implementation of the research procedures. It is also possible to make an erroneous decision in the opposite direction, by incorrectly rejecting H1 and thus wrongly retaining H0. This is called a Type II error (or false negative), and β defines its probability. The most common cause of this type of error is a small sample size, especially when combined with moderate or low effect sizes. Both small sample sizes and low effect sizes reduce the power of a study.

Power, the probability of rejecting a false null hypothesis, is calculated as 1 - β (also expressed as “1 - Type II error probability”). For a Type II error probability of 0.15, the power is 0.85. For a fixed sample size, reducing the probability of a Type II error increases the risk of a Type I error (and vice versa), so a careful balance must be struck between the acceptable levels of the two errors. The ideal power of a study is considered to be 0.8 (80%) (17). The sample size should be large enough to keep the Type I error rate as low as 0.05 or 0.01 while achieving power as high as 0.8 or 0.9.
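The α/β trade-off can be made concrete with the usual normal-approximation power formula for comparing two equal groups, power ≈ Φ(d × sqrt(n/2) - z(1 - α/2)), which ignores the negligible chance of rejecting in the wrong direction. The formula is an approximation chosen for simplicity, and the design (d = 0.5, 30 per group) is hypothetical. Holding effect size and sample size fixed, tightening α lowers power, that is, raises β.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, SD 1

def approx_power(d: float, n_per_group: int, alpha: float) -> float:
    """Approximate power of a two-sided, two-sample z test with equal group sizes."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = d * (n_per_group / 2) ** 0.5  # expected z statistic when H1 is true
    return STD_NORMAL.cdf(shift - z_crit)

# Hypothetical design: d = 0.5 with 30 participants per group.
for alpha in (0.10, 0.05, 0.01):
    power = approx_power(0.5, 30, alpha)
    print(f"alpha = {alpha:.2f}: power = {power:.2f}, beta = {1 - power:.2f}")
```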

However, when power falls below 0.8, one cannot immediately conclude that the study is worthless. In line with this, the concept of “cost-effective sample size” has gained importance in recent years (18).

In addition, the traditionally chosen alpha and beta error limits are generally arbitrary: they are used by convention rather than on the basis of any scientific justification. Another key issue for a study is the determination, presentation and discussion of its effect size, as discussed in detail below.

Although increasing the sample size reduces Type II errors, it also increases the cost of the project and may prevent the research from being completed within the planned time. Moreover, it should not be forgotten that unnecessarily large samples may cause ethical problems (19, 20).

Determining the effective sample size is therefore crucial for an efficient, informative study and increases the impact of the outcome. Unfortunately, clinical investigators do not report sample size calculations in most diagnostic studies (21, 22).

Calculation of the sample size

Different methods can be used before the onset of a study to calculate the most suitable sample size for the specific research. Besides manual calculation, various nomograms or software can be used. Figure 2 illustrates one of the most commonly used nomograms for sample size estimation from effect size and power (23).

Figure 2. Nomogram for sample size and power, for comparing two groups of equal size. Gaussian distributions are assumed. The standardized difference (effect size) and the desired power are first selected on the nomogram. The line connecting these values crosses the significance-level region of the nomogram, and its intercept at the appropriate significance level gives the required sample size for the study. In the example shown, for effect size = 1, power = 0.8 and alpha = 0.05, the sample size is found to be 30. (Adapted from reference 16.)

Although experts in the subject prefer manual calculation, it is complicated and difficult for researchers who are not statistics experts. In addition, given the variety of research types and characteristics, a great number of calculations involving many variables may be required (Table 1) (16, 24-30).

In recent years, numerous software packages and websites have been developed that can calculate sample size for various study types. Some of the important ones are listed in Table 2 and are evaluated, based both on the literature and on our own experience, with respect to content, ease of use, and cost (31, 32). G-Power, R, and Piface stand out among the listed software for being free to use. G-Power is a free tool that can calculate statistical power for many different t-tests, F-tests, χ2 tests, z-tests and some exact tests. R is an open-source programming language that can be tailored to individual statistical needs by adding specific program modules, called packages, to the base program. Piface is a Java application specifically designed for sample size estimation and post-hoc power analysis. The most fully featured package is the commercial PASS (Power Analysis and Sample Size), with which sample size and power can be analysed for approximately 200 different study types. In addition, many websites provide substantial help in calculating power and sample size, basing their methodology on the scientific literature.

The sample size, or the power of a study, is directly related to its ES. What, then, is the ES? It indicates how well the independent variable or variables predict the dependent variable. A low ES means that the independent variables predict poorly because they are only weakly related to the dependent variable; a strong ES means that they are very good predictors of it. ES is therefore clinically important for evaluating how efficiently clinicians can predict outcomes from the independent variables.

The scales of ES values for the statistical tests used in different study types are presented in Table 3.

To evaluate the effect found in a study and indicate its clinical significance, it is very important to assess the effect size along with statistical significance. The P value is important in the statistical evaluation of research: it provides information on the presence or absence of an effect, but it does not account for the size of that effect. For comprehensive presentation and interpretation of a study, both the effect size and the statistical significance (P value) should be reported and considered.

ES is easiest to understand through an example. Assume that an independent-samples t-test is used to compare total cholesterol levels in two normally distributed groups, where X, SD and N stand for mean, standard deviation and sample size, respectively. Cohen’s d ES can then be calculated from the following data:

| | Mean (X), mmol/L | Standard deviation (SD) | Sample size (N) |
|---|---|---|---|
| Group 1 | 6.5 | 0.5 | 30 |
| Group 2 | 5.2 | 0.8 | 30 |

Cohen’s d values are conventionally interpreted as small (0.2), medium (0.5) and large (0.8) effects. The result of 1.94 indicates a very large effect: the means of the two groups are markedly different.

In this example, the means of the two groups differ substantially and significantly. Yet the clinical importance of the effect (whether it matters for the patient, clinical condition, therapy type, outcome, etc.) needs to be evaluated specifically by experts in the topic.

Power, alpha, sample size and ES are closely related to one another. Let us explain this relationship through several scenarios that we created using G-Power (33, 34).

Figure 3 shows how the sample size changes with the ES (0.2, 1 and 2.5, respectively) when power is held constant at 0.8. Case 3 is arguably particularly common in pre-clinical, cell culture and animal studies (usually 5-10 samples in animal studies or 3-12 samples in cell culture studies), while case 2 is more common in clinical studies. In clinical, epidemiological or meta-analysis studies, where the sample size is very large, case 1, which emphasizes the importance of smaller effects, is more commonly observed (33).
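The calculation can be reproduced in a few lines. The article does not print its formula, so this sketch assumes the common pooled-SD form of Cohen's d: d = (X1 - X2) / SD_pooled, with SD_pooled = sqrt(((N1 - 1) × SD1² + (N2 - 1) × SD2²) / (N1 + N2 - 2)). With the table's values it gives about 1.95, in line with the reported 1.94.

```python
import math

def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d using the pooled standard deviation of two independent groups."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return (mean1 - mean2) / math.sqrt(pooled_var)

# Values from the cholesterol example (mmol/L).
d = cohens_d(6.5, 0.5, 30, 5.2, 0.8, 30)
print(f"Cohen's d = {d:.2f}")  # about 1.95
```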

Figure 3. Relationship between effect size and sample size. P, power; ES, effect size; SS, sample size. The required sample size increases as the effect size decreases. In all cases, power is set to 0.8. The sample sizes (SS) when ES is 0.2, 1, or 2.5 are 788, 34 and 8, respectively. The graphs at the bottom show how changing the sample size affects power.
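The pattern in Figure 3 follows from the textbook normal-approximation formula for the sample size per group when comparing two means, n = 2 × (z(1 - α/2) + z(1 - β))² / d². The sketch below implements that approximation, not G-Power's exact t-test calculation, so it lands slightly below the figure's totals (786, 32 and 6 rather than 788, 34 and 8). Treat it as a quick check, not a replacement for dedicated software.

```python
import math
from statistics import NormalDist

def n_per_group(d: float, power: float = 0.8, alpha: float = 0.05) -> int:
    """Approximate sample size per group for a two-sided, two-sample comparison."""
    z = NormalDist().inv_cdf
    n = 2 * (z(1 - alpha / 2) + z(power)) ** 2 / d**2
    return math.ceil(n)  # round up to whole participants

for d in (0.2, 1.0, 2.5):
    n = n_per_group(d)
    print(f"ES = {d}: {n} per group, {2 * n} in total")
```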

In Figure 4, case 4 shows how power changes with ES when the sample size is kept constant (i.e. as low as 8). As can be seen, in studies with low ES, working with few samples means wasted time, redundant processing, or unnecessary use of laboratory animals.

Figure 4. Relationship between effect size and power. Two cases are shown in which the sample size is held constant at either 8 or 30. When the sample size is constant, the power of the study decreases as the effect size decreases. When the effect size is 2.5, even 8 samples are sufficient to obtain power of about 0.8. When the effect size is 1, increasing the sample size from 8 to 30 substantially increases the power of the study. Yet even 30 samples are not sufficient to reach adequate power if the effect size is as low as 0.2.

Likewise, case 5 shows the situation where the sample size is kept constant at 30. Here, it is important to note that when ES is 1, the power of the study will be around 0.8. Some statisticians arbitrarily regard 30 as a critical sample size; however, case 5 clearly demonstrates that the importance of ES must not be underestimated when deciding on the sample size.

Especially in recent years, as the clinical significance or effectiveness of results has come to outweigh statistical significance, understanding effect size and power has gained tremendous importance (35-38).

Preliminary information about the hypothesis is essential for calculating the sample size at the intended power. This is usually obtained by determining the effect size from the results of a previous or preliminary study. Software is available that can calculate the sample size from the effect size.
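Power can also be estimated by brute force: simulate many studies in which the effect truly exists and count how often H0 is rejected. This sketch assumes normally distributed data with SD 1, a pooled-variance Student t test at α = .05, and total sample sizes of 8 and 30 split equally between two groups. The two critical t values (df = 6 and 28) are hard-coded from standard tables and cover only these two designs. Estimates should land near the values Figure 4 describes, with some Monte Carlo noise.

```python
import math
import random

# Two-sided critical t values for alpha = .05, keyed by degrees of freedom.
T_CRIT = {6: 2.4469, 28: 2.0484}

def t_statistic(a, b):
    """Student's two-sample t statistic with pooled variance."""
    na, nb = len(a), len(b)
    ma, mb = sum(a) / na, sum(b) / nb
    ss = sum((x - ma) ** 2 for x in a) + sum((x - mb) ** 2 for x in b)
    pooled_var = ss / (na + nb - 2)
    return (ma - mb) / math.sqrt(pooled_var * (1 / na + 1 / nb))

def simulated_power(d, total_n, n_sims=4000, seed=7):
    """Share of simulated studies (true effect d, SD 1) that reject H0."""
    rng = random.Random(seed)
    n = total_n // 2
    t_crit = T_CRIT[total_n - 2]
    rejections = 0
    for _ in range(n_sims):
        treated = [rng.gauss(d, 1.0) for _ in range(n)]
        control = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if abs(t_statistic(treated, control)) > t_crit:
            rejections += 1
    return rejections / n_sims

for total_n in (8, 30):
    for d in (0.2, 1.0, 2.5):
        print(f"N = {total_n:>2}, ES = {d}: power ~ {simulated_power(d, total_n):.2f}")
```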

We now want to focus on sample size and power analysis in some of the most common research areas.

Determination of sample size in pre-clinical studies

Animal studies are the most critical studies in terms of sample size. Especially because of ethical concerns, it is vital to keep the sample size at the lowest sufficient level. It should be noted that animal studies are radically different from human studies, because many animal studies use inbred animals with extremely similar genetic backgrounds. Thus, far fewer animals are needed, because genetic differences that could affect the study results are kept to a minimum ( 39 , 40 ).

Consequently, alternative sample size estimation methodologies were suggested for each study type ( 41 - 44 ). If the effect size is to be determined using the results from previous or preliminary studies, sample size estimation may be performed using G-Power. In addition, Table 4 may also be used for easy estimation of the sample size ( 40 ).

In addition to sample size estimations computed according to Table 4 , the formulas in Table 1 and the websites listed in Table 2 may also be used to estimate sample size in animal studies. Relying on previous studies poses certain limitations, since it may not always be possible to obtain reliable “pooled standard deviation” and “group mean” values.

Arifin et al. proposed simpler formulas ( Table 5 ) to calculate sample size in animal studies ( 45 ). In group comparison studies, the sample size can be calculated as N = (DF/k)+1 (Eq. 4), where N is the number of animals per group, k is the number of groups and DF is the degrees of freedom.

Based on the acceptable range of the degrees of freedom (DF), DF in the formula is replaced with the minimum (10) and the maximum (20). For example, consider an experimental animal study testing 3 investigational drugs. The minimum number of animals required is N = (10/3)+1 = 4.3, rounded up to 5 animals per group, for a total sample size of 5 x 3 = 15 animals. The maximum number of animals required is N = (20/3)+1 = 7.7, rounded down to 7 animals per group, for a total sample size of 7 x 3 = 21 animals.

In conclusion, for the recommended study, 5 to 7 animals per group will be required. In other words, a total of 15 to 21 animals will be required to keep the DF within the range of 10 to 20.
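The resource-equation arithmetic above can be sketched in a few lines. The function name is illustrative.

The minimum is rounded up and the maximum rounded down, as in the worked example. This keeps the error degrees of freedom (total animals minus groups) between 10 and 20.

```python
import math


def resource_equation_range(k: int, df_min: int = 10, df_max: int = 20) -> tuple[int, int]:
    """Per-group animal numbers from N = DF/k + 1 (Eq. 4).

    Rounding the minimum up and the maximum down keeps the error degrees of
    freedom (k * N - k) within [df_min, df_max].
    """
    n_min = math.ceil(df_min / k + 1)
    n_max = math.floor(df_max / k + 1)
    return n_min, n_max


k = 3  # three investigational drugs, as in the worked example
n_min, n_max = resource_equation_range(k)
print(f"{n_min}-{n_max} animals per group, {n_min * k}-{n_max * k} in total")
```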

In a review of 15 studies involving animal models, Ricci et al. noted that the average sample size was 10 (range 6 to 18). However, none of the groups reported a formal power analysis. Strikingly, all of the reviewed studies used parametric analysis without prior normality testing (e.g. Shapiro-Wilk) to justify their statistical methodology ( 46 ).

Notably, unnecessary animal use could be prevented by keeping the power at 0.8 and choosing a one-tailed rather than a two-tailed analysis, with an accepted 5% risk of type I error. This was done in some pharmacological studies and reduced the number of required animals by 14% ( 47 ).

Neumann et al. proposed a group-sequential design to minimize animal use without decreasing statistical power. The strategy works as follows:
- Researchers start the experiments with only 30% of the animals initially planned for the study.
- After an interim analysis of the results from this first 30%, another 30% are included if sufficient power has not been reached.
- If the results from this initial 60% provide sufficient statistical power, the remaining animals are excused from the study. If not, the remaining animals are also included.

This approach was reported to save 20% of the animals on average without decreasing statistical power ( 48 ).
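The staged inclusion described above can be sketched as a simple loop. The interim analysis itself is not specified here.

`enough_at` is a hypothetical placeholder for whatever interim decision rule a study uses. The 30%, 60% and 100% stages follow the text. Rounding each stage up to whole animals is an assumption.

```python
from typing import Callable


def staged_inclusion(planned: int, enough_at: Callable[[int], bool],
                     stages_percent: tuple[int, ...] = (30, 60, 100)) -> int:
    """Number of animals actually used under a staged-inclusion scheme.

    `enough_at(n)` is a hypothetical stand-in for the interim analysis run
    after n animals; it returns True when the results are judged sufficient.
    """
    used = 0
    for pct in stages_percent:
        used = (planned * pct + 99) // 100  # ceiling of planned * pct / 100
        if pct < 100 and enough_at(used):
            return used  # stop early: the remaining animals are excused
    return used  # all planned animals were needed


# Hypothetical rule: the interim analysis is judged sufficient once 30 animals are in.
print(staged_inclusion(50, lambda n: n >= 30))
```

Because the decision rule is passed in as a function, the same loop can be reused with any interim criterion.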

Alternative sample size estimation strategies are used for animal testing in different countries. For example, a local authority in southwestern Germany recommended that, in the absence of a formal sample size estimation, fewer than 7 animals per experimental group should be included in pilot studies. It also recommended that the total number of experimental animals should not exceed 100 ( 48 ).

On the other hand, for a sample size of 8 to 10 animals per group, statistical significance will not be achieved unless a large or very large ES (> 2) is expected ( 45 , 46 ). This remains an important limitation of animal studies. Software such as G-Power can be used for sample size estimation; in this case, results from a previous or preliminary study must be used in the calculations. However, even when a previous study is available in the literature, using its data for sample size estimation still carries a risk of uncertainty unless the publication provides a clearly detailed study design and data. Researchers have suggested that reliability analyses could be performed by methods such as Markov Chain Monte Carlo, but further research is needed in this regard ( 49 ).

The “Principles and Guidelines for Reporting Preclinical Research” were the output of a joint workshop held by the National Institutes of Health (NIH), Nature Publishing Group and Science, and were published in 2014. They have since been acknowledged by many organizations and journals. This guide has shed significant light on studies that use biological materials, involve animals or handle image-based data ( 50 ).

Another important point regarding animal studies is the use of technical repetition (pseudo replication) instead of biological repetition. Technical repetition is a specific type of repetition in which the same sample is measured multiple times, aiming to probe the noise associated with the measurement method or device. No matter how many times the same sample is measured, the actual sample size remains the same.

Suppose a research group is investigating the effect of a therapeutic drug on blood glucose level:
- If the researchers measure the blood glucose level of 3 mice receiving the actual treatment and 3 mice receiving placebo, this is biological repetition.
- If, instead, they measure the blood glucose level of a single treated mouse and a single placebo mouse 3 times each, this is technical repetition.

Both designs provide 6 data points for calculating a P value. Yet the P value from the second design is meaningless, since each treatment group has only one member ( Figure 5 ). Multiple measurements on a single mouse are pseudo replication and therefore do not contribute to N. No matter how ingenious, no statistical analysis method can fix incorrectly selected replicates after the experiment. Replicate types must be selected correctly at the design stage.

This problem is a critical limitation, especially in pre-clinical studies that involve cell culture experiments, and it matters greatly for the critical assessment and evaluation of published research results ( 51 ). The issue is mostly underestimated, concealed or ignored. Strikingly, in some publications the actual sample size turns out to be as low as one. Experiments comparing drug treatments in a patient-derived stem cell line are a specific example. Such experiments may include many technical replicates and may be repeated several times, but the original patient is still a single biological entity. Similarly, when six metatarsals are harvested from the front paws of a single mouse and cultured as six individual cultures, this is again pseudo replication: the sample size is actually 1, not 6 ( 52 ). Lazic et al. reported that almost half of the studies (46%) had mistaken pseudo replication (technical repeats) for genuine replication. A further 32% did not provide enough information to evaluate whether the sample size was appropriate ( 53 , 54 ).
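One way to make the biological-versus-technical distinction explicit during analysis is shown in the sketch below. Technical repeats are collapsed to one value per animal before N is counted.

The `(group, animal_id, value)` record layout and the function name are hypothetical. The glucose values are illustrative, not data from the cited studies. Averaging repeats per animal is one common choice.

```python
from collections import defaultdict
from statistics import fmean


def biological_replicates(measurements):
    """Collapse technical repeats into one value per animal.

    `measurements` is a list of (group, animal_id, value) tuples (a
    hypothetical layout). Repeated measurements of the same animal are
    averaged, so each animal contributes exactly one data point to N.
    """
    per_animal = defaultdict(list)
    for group, animal, value in measurements:
        per_animal[(group, animal)].append(value)

    per_group = defaultdict(list)
    for (group, _animal), values in per_animal.items():
        per_group[group].append(fmean(values))
    return dict(per_group)


# Technical repetition: one mouse per group, each measured 3 times (illustrative values).
technical = [("drug", "m1", 5.1), ("drug", "m1", 5.3), ("drug", "m1", 5.2),
             ("placebo", "m2", 7.0), ("placebo", "m2", 6.8), ("placebo", "m2", 7.1)]
collapsed = biological_replicates(technical)
print({group: len(values) for group, values in collapsed.items()})  # N per group
```

Six data points reduce to N = 1 per group, which is why a P value computed on the raw six points would be meaningless.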

Technical vs biological repeat.

In studies providing qualitative data (such as electrophoresis, histology, chromatography, electron microscopy), the number of replications (“number of repeats” or “sample size”) should explicitly be stated.

Especially in pre-clinical studies, the standard error of the mean (SEM) is frequently used instead of the SD in some situations and by certain journals. The SEM is calculated by dividing the SD by the square root of the sample size (N). The SEM indicates how much the mean would vary if the whole study were repeated many times. The SD, by contrast, measures how scattered the values within a data set are. Because the SD is always larger than the SEM when N > 1, researchers tend to report the SEM. Although the SEM does not describe the distribution of the data, it is related to the 95% confidence interval (CI). For example, when N = 3 the 95% CI is almost equal to mean ± 4 SEM, but when N ≥ 10 it is approximately mean ± 2 SEM. The SD and the 95% CI can be used to report results such as variation and precision on the same plot, to demonstrate the differences between test groups ( 52 , 55 ).
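The SD, SEM and 95% CI relationships above can be sketched with the standard library. The CI half-width is t(0.975, N - 1) × SEM, which explains the "± 4 SEM" at N = 3 and "± about 2 SEM" at N = 10.

The t critical values are standard two-sided 95% table values, hard-coded for df = 1 to 10 only. The function name and example values are illustrative.

```python
import math
import statistics

# Two-sided 95% critical values of Student's t for df = 1..10 (standard table values).
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
         6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def summarize(values):
    """Return mean, SD, SEM and the 95% CI half-width for a small sample."""
    n = len(values)
    if not 2 <= n <= 11:
        raise ValueError("this sketch only tabulates t for N = 2..11")
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)      # sample SD (N - 1 denominator)
    sem = sd / math.sqrt(n)            # SEM = SD / sqrt(N)
    half_width = T_975[n - 1] * sem    # 95% CI = mean ± t(0.975, N - 1) * SEM
    return mean, sd, sem, half_width


mean, sd, sem, half = summarize([4.0, 5.0, 6.0])
print(f"mean {mean:.2f}, SD {sd:.2f}, SEM {sem:.2f}, 95% CI {mean:.2f} ± {half:.2f}")
print(f"N = 3: CI ~ mean ± {T_975[2]} SEM; N = 10: CI ~ mean ± {T_975[9]} SEM")
```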

Given the risk of attrition and unexpected death of laboratory animals during the study, researchers are generally advised to increase the sample size by 10% ( 56 ).
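Applying the 10% allowance is simple arithmetic, but rounding matters because animals come in whole numbers. The sketch below rounds up using integer arithmetic, which avoids floating-point rounding surprises in the ceiling.

The function name and the default percentage parameter are illustrative.

```python
def with_attrition(n: int, extra_percent: int = 10) -> int:
    """Inflate a planned sample size by a fixed percentage, rounding up.

    Integer arithmetic computes ceil(n * (100 + extra_percent) / 100)
    exactly, without floating-point error.
    """
    return (n * (100 + extra_percent) + 99) // 100


for planned in (15, 20, 21):
    print(planned, "->", with_attrition(planned))
```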

Sample size calculation for some genetic studies

Sample size is important for genetic studies as well. In genetic studies, probability and statistical models are used for several analyses ( 57 - 62 ), including:
- allele frequencies;
- homozygote and heterozygote frequencies based on the Hardy-Weinberg principle;
- natural selection, mutation and genetic drift;
- association, linkage, segregation and haplotype analysis.

While G-Power is useful for basic statistics, a substantial number of analyses can be conducted using the genetic power calculator ( http://zzz.bwh.harvard.edu/gpc/ ) ( 61 , 62 ). This calculator provides automated power analysis for variance components (VC) quantitative trait locus (QTL) linkage and association tests in sibships, as well as other common tests. It is particularly useful for genetic studies of complex diseases.
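The Hardy-Weinberg principle mentioned above gives the expected genotype frequencies directly from the allele frequency. The sketch below computes them.

Labelling the genotypes "AA", "Aa" and "aa" and using a 5% minor allele frequency are illustrative choices.

```python
def hardy_weinberg(q: float) -> dict[str, float]:
    """Expected genotype frequencies under Hardy-Weinberg equilibrium.

    With minor allele frequency q and major allele frequency p = 1 - q,
    the genotype frequencies are p^2 (major homozygote), 2pq (heterozygote)
    and q^2 (minor homozygote).
    """
    if not 0.0 <= q <= 1.0:
        raise ValueError("allele frequency must lie in [0, 1]")
    p = 1.0 - q
    return {"AA": p * p, "Aa": 2 * p * q, "aa": q * q}


freqs = hardy_weinberg(0.05)  # illustrative 5% minor allele frequency
print({genotype: round(f, 4) for genotype, f in freqs.items()})
```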

Case-control association studies for single nucleotide polymorphisms (SNPs) may be facilitated by the OSSE web site ( http://osse.bii.a-star.edu.sg/ ). As an example, assume that the minor allele frequencies of an SNP in cases and controls are approximately 15% and 7%, respectively. To achieve a power of 0.8 at a 0.05 significance level, the study must include 239 samples each for cases and controls, adding up to 478 samples in total ( Figure 6 ).
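The OSSE example above can be checked against a textbook two-proportion sample size formula. The sketch uses the normal approximation with a pooled variance term under the null and no continuity correction.

For 15% versus 7% at α = 0.05 and power = 0.8, this formula gives 239 per group. That matches the figure quoted above. It is an assumption that OSSE uses exactly this formula. The function name is illustrative.

```python
import math
from statistics import NormalDist


def two_proportion_n(p1: float, p2: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Per-group n to detect p1 vs p2 with a two-sided test (normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    p_bar = (p1 + p2) / 2  # pooled proportion under the null hypothesis
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


n = two_proportion_n(0.15, 0.07)
print(f"{n} per group, {2 * n} in total")
```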

Interface of Online Sample Size Estimator (OSSE) Tool. (Available at: http://osse.bii.a-star.edu.sg/ ).

Hong and Park proposed tables and graphics in their article to facilitate sample size estimation ( 57 ). Their calculation assumes:
- 5% disease prevalence, 5% minor allele frequency and complete linkage disequilibrium (D’ = 1);
- a case-control study with a single SNP marker and a 1:1 case-to-control ratio;
- 0.8 statistical power and a 5% type I error rate.

Under these assumptions, the sample size can be calculated according to the genetic model of inheritance (allelic, additive, dominant, recessive or co-dominant) and the odds ratios of heterozygotes/rare homozygotes ( Table 6 ). As Hong and Park demonstrated, dominant inheritance requires the lowest sample size of all inheritance models to achieve 0.8 statistical power. In contrast, testing a single SNP under a recessive inheritance model requires a very large sample size even with a high homozygote ratio, which is practically challenging on a limited budget ( 57 ). Table 6 illustrates the difficulty of detecting a disease allele that follows a recessive mode of inheritance with a moderate sample size.

Sample size and power analyses in clinical studies

In clinical research, sample size is calculated in line with the hypothesis and study design. Cross-over and parallel study designs use different approaches to sample size estimation. Unlike in pre-clinical research, a significant number of clinical journals require sample size estimation for clinical studies.

The basic rules for sample size estimation in clinical trials are as follows ( 63 , 64 ):

- Error level (alpha): It is generally set at 0.05.

- Effect size: The sample size should be increased to compensate for a decrease in the effect size.

- Power must be > 0.8: The sample size should be increased to increase the power of the study. The higher the power, the lower the risk of missing an actual effect.

The relationship among clinical significance, statistical significance, power and effect size. In the example above, to be clinically significant, a treatment must decrease cholesterol levels by at least 0.5 mmol/L. Four different scenarios are given for a candidate treatment, each with a different mean change in total cholesterol and 95% confidence interval. ES - effect size. N - number of participants. Adapted from reference 65 .

- Similarity and equivalence: The sample size required to demonstrate similarity and equivalence is very low.

Sample size estimation can be performed manually using the formulas in Table 1 , or with the software and websites in Table 2 (especially G-Power). However, all of these calculations require preliminary results or previous study outputs regarding the hypothesis of interest. Sample size estimation is difficult in complex or mixed study designs. In addition, the following may need to be taken into account:

- a) unplanned interim analyses;
- b) planned interim analyses;
- c) adjustments for common variables (covariates).

In addition, post-hoc power analysis after the study (possible with G-Power and PASS) greatly facilitates the evaluation of results in clinical studies.

A number of high-quality journals emphasize that statistical significance is not sufficient on its own. They require results to be evaluated in terms of effect size and clinical effect as well as statistical significance.