
What’s the point of personal statements when ChatGPT can say it so much better?

Thanks in part to AI, students will no longer have to wax lyrical about struggles and achievements to secure a university place

The decision to scrap personal statements from university applications is overdue. Not only for the stated reason – that the practice of writing a 4,000-character essay about yourself is seen to favour middle-class kids (and the genetically smug) – but also because the temptations of help from artificial intelligence are increasingly hard to resist. Who is going to labour for days over a side of cringeworthy A4 about the formative influence of a Saturday job when ChatGPT and its rivals can do the job in five seconds?

“Overcoming difficulties has been a defining aspect of my life,” the app, when prompted, suggests of its struggles. “I grew up in a low-income household and my family struggled to make ends meet. This made it difficult for me to afford the necessary resources to excel academically. However, I refused to let my circumstances define me. I volunteered at local schools and tutored my peers.” Or, prompted differently: “Growing up in a middle-class background, I have had opportunities to help me excel academically and personally. As I have grown older, I have come to realise the importance of giving back to those who are less fortunate. I have been actively involved in community service both locally and internationally. I am now ready to take the next step in my education and personal development at university.”

Give that bot a scholarship.

In the clouds

If software might sometimes promise us more time, it will no doubt also be employed to check up on what we are doing with it. There was a chilling aspect to the case of Canadian woman Karlee Besse who, in suing her employer for wrongful dismissal, was ordered instead last week to compensate her bosses with a payment equivalent to £1,600. Her company had been using tracking software called TimeCamp, which spied on the hours Besse claimed to have been working from home on her laptop. According to the data, Besse had charged for 50 hours that “did not appear to have been spent on work-related tasks”. Reading Besse’s story prompted me to look up something the polymathic physicist Carlo Rovelli once said to me, when I interviewed him about that famous loafer Albert Einstein: “You don’t get anywhere by not wasting time.” Or how, as Billy Liar might have idly wondered to himself, do you put a price on daydreaming?

First Noele

The advance publicity for Russell T Davies’s miniseries Nolly, in which Helena Bonham Carter plays Crossroads star and 1970s “Queen of the Midlands” Noele Gordon, prompted a strange recovered memory. As a kid, I once went up to our local VG convenience store and encountered Gordon, then pulling in 12 million viewers a night, in full Meg Richardson makeup and fur coat buying something for her tea. At the time, this was about as close as suburban Birmingham came to Sunset Boulevard. Gordon, the first woman ever to appear on colour television, had been such a fixture on the ATV schedules of my childhood that it was disturbing to discover her in daylight. She seemed slightly unsure of the possibility herself. Crossroads ran for 18 years before it engineered its star’s drugged-up demise in a motel fire. If you were ever in doubt that the past was another country, look back at the Guardian’s front page of 5 November 1981, the night after Gordon’s departure. There, the story of television switchboards being jammed with calls from weeping viewers distraught about the end of an era competes for space with Mrs Thatcher promising far better times ahead in her Queen’s speech.


Using ChatGPT to Write a Statement of Purpose (Personal Statement)

Writing a compelling Statement of Purpose (SOP) or Personal Statement is a crucial part of the graduate school application process. It requires careful thought, self-reflection, and effective storytelling. ChatGPT and other AI writing tools can help you create a standout SOP. Here’s how:

1. Generating Ideas and Inspiration:

One of the initial challenges when writing an SOP is brainstorming ideas and finding inspiration. ChatGPT can serve as a creative partner by generating prompts and asking thought-provoking questions. Try asking questions like:

“What are some topics for a personal statement of a student applying to a Master’s in Biotechnology?”

“Suggest themes for an SOP for an MS in Data Science.”

2. Structuring and Organizing your SOP:

ChatGPT can save you tons of time by making a base draft of the essay. To get started, ask something like:

“Write an SOP for a student applying to an MS in Chemical Engineering who is passionate about developing eco-friendly alternative fuels.”

3. Refining Language and Style:

A well-written story makes all the difference. ChatGPT can help refine your writing style by offering suggestions for sentence structure, word choice, and phrasing. Prompts like this one are useful here:

“Improve the style of writing of the following paragraph: …”

4. Maintaining Authenticity:

Do NOT, and we can’t emphasize this enough: do not copy and paste essays directly from ChatGPT. Remember that the final piece should reflect your personal experiences, goals, and aspirations. Use ChatGPT for a first draft, but always rework it with your own story. Copy-pasted essays are often easy to spot and can cost you an admission offer.

5. Final review:

Fix the grammar and get feedback on your essay from friends or professors. Another useful way to use ChatGPT is to ask it to suggest improvements to your essay, but apply your own judgment when weighing its feedback.

Conclusion:

The use of AI can greatly enhance the process of writing a standout Statement of Purpose. It offers assistance in generating ideas, organizing your thoughts, refining language, and identifying errors. However, it's crucial to remember that ChatGPT is a tool, and the final product should reflect your own voice and experiences. With a thoughtful approach and the support of GradGPT, you can craft an impactful SOP that effectively communicates your passion, qualifications, and aspirations to the admissions committee.


Should I Use ChatGPT to Write My Essays?

Everything high school and college students need to know about using — and not using — ChatGPT for writing essays.

Jessica A. Kent

ChatGPT is one of the most buzzworthy technologies today.

Along with other generative artificial intelligence (AI) models, it is expected to change the world. In academia, students and professors are preparing for the ways that ChatGPT will shape education, and especially how it will affect a fundamental element of any course: the academic essay.

Students can use ChatGPT to generate full essays based on a few simple prompts. But can AI actually produce high quality work, or is the technology just not there yet to deliver on its promise? Students may also be asking themselves if they should use AI to write their essays for them and what they might be losing out on if they did.

AI is here to stay, and it can either be a help or a hindrance depending on how you use it. Read on to become better informed about what ChatGPT can and can’t do, how to use it responsibly to support your academic assignments, and the benefits of writing your own essays.

What is Generative AI?

Artificial intelligence isn’t a twenty-first-century invention. Beginning in the 1950s, data scientists started programming computers to solve problems and understand spoken language. AI’s capabilities grew as computer speeds increased, and today we use AI to analyze data, find patterns, and provide insights from the data it collects.

So why are recent applications like ChatGPT suddenly so popular? This new generation of AI goes further than data analysis: generative AI creates new content. It does this by analyzing large amounts of data (GPT-3 was trained on 45 terabytes of data, or a quarter of the Library of Congress) and then generating new content based on the patterns it sees in the original data.

It’s like the predictive text feature on your phone; as you start typing a new message, predictive text makes suggestions of what should come next based on data from past conversations. Similarly, ChatGPT creates new text based on past data. With the right prompts, ChatGPT can write marketing content, code, business forecasts, and even entire academic essays on any subject within seconds.
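To make the predictive-text analogy concrete, here is a toy sketch in Python: a bigram model that counts which word most often follows another in a few hypothetical past messages, then suggests the most frequent followers. It only illustrates the idea of "predicting what comes next from patterns in past data"; ChatGPT itself uses a large neural network trained on vastly more text, not a simple count of word pairs, and the function names here are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(messages):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for message in messages:
        words = message.lower().split()
        # Pair every word with the word that comes right after it.
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def suggest(follows, word, k=3):
    """Return up to k next-word suggestions, most frequent first."""
    counts = follows.get(word.lower(), Counter())
    return [w for w, _ in counts.most_common(k)]


# Hypothetical message history standing in for "past conversations".
history = [
    "see you soon",
    "see you tomorrow",
    "see you soon then",
    "thank you so much",
]
model = train_bigrams(history)
print(suggest(model, "you"))  # ['soon', 'tomorrow', 'so']
```

"soon" comes first because it followed "you" twice; "tomorrow" and "so" tie at one occurrence each and are listed in the order they were first seen, which is how `Counter.most_common` orders equal counts.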

But is generative AI as revolutionary as people think it is, or is it lacking in real intelligence?

The Drawbacks of Generative AI

It seems simple. You’ve been assigned an essay to write for class. You go to ChatGPT and ask it to write a five-paragraph academic essay on the topic you’ve been assigned. You wait a few seconds and it generates the essay for you!

But ChatGPT is still in its early stages of development, and that essay is likely not as accurate or well-written as you’d expect it to be. Be aware of the drawbacks of having ChatGPT complete your assignments.

It’s not intelligence, it’s statistics

One of the misconceptions about AI is that it has a degree of human intelligence. However, its intelligence is actually statistical analysis, as it can only generate “original” content based on the patterns it sees in already existing data and work.

It “hallucinates”

Generative AI models often provide false information — so much so that there’s a term for it: “AI hallucination.” OpenAI even has a warning on its home screen, saying that “ChatGPT may produce inaccurate information about people, places, or facts.” This may be due to gaps in its data, or because it lacks the ability to verify what it’s generating.

It doesn’t do research

If you ask ChatGPT to find and cite sources for you, it will do so, but they could be inaccurate or even made up.

This is because AI doesn’t know how to look for relevant research that can be applied to your thesis. Instead, it generates content based on past content, so if a number of papers cite certain sources, it will generate new content that sounds like a credible source, even when it isn’t one.

There are data privacy concerns

When you input your data into a public generative AI model like ChatGPT, where does that data go and who has access to it?

Prompting ChatGPT with original research should be a cause for concern — especially if you’re inputting study participants’ personal information into the third-party, public application.

JPMorgan has restricted use of ChatGPT due to privacy concerns, Italy temporarily blocked ChatGPT in March 2023 after a data breach, and Security Intelligence advises that “if [a user’s] notes include sensitive data … it enters the chatbot library. The user no longer has control over the information.”

It is important to be aware of these issues and take steps to ensure that you’re using the technology responsibly and ethically.

It skirts the plagiarism issue

AI creates content by drawing on a large library of information that’s already been created, but is it plagiarizing? Could there be instances where ChatGPT “borrows” from previous work and places it into your work without citing it? Schools and universities today are wrestling with this question of what’s plagiarism and what’s not when it comes to AI-generated work.

To demonstrate this, one Elon University professor gave his class an assignment: Ask ChatGPT to write an essay for you, and then grade it yourself.

“Many students expressed shock and dismay upon learning the AI could fabricate bogus information,” he writes, adding that he expected some essays to contain errors, but all of them did.

His students were disappointed that “major tech companies had pushed out AI technology without ensuring that the general population understands its drawbacks” and were concerned about how many embraced such a flawed tool.


How to Use AI as a Tool to Support Your Work

As more students are discovering, generative AI models like ChatGPT just aren’t as advanced or intelligent as they may believe. While AI may be a poor option for writing your essay, it can be a great tool to support your work.

Generate ideas for essays

Have ChatGPT help you come up with ideas for essays. For example, input specific prompts, such as, “Please give me five ideas for essays I can write on topics related to WWII,” or “Please give me five ideas for essays I can write comparing characters in twentieth century novels.” Then, use what it provides as a starting point for your original research.

Generate outlines

You can also use ChatGPT to help you create an outline for an essay. Ask it, “Can you create an outline for a five-paragraph essay based on the following topic?” and it will create an outline with an introduction, body paragraphs, a conclusion, and a suggested thesis statement. Then, you can expand on the outline with your own research and original thought.

Generate titles for your essays

Titles should draw a reader into your essay, yet they’re often hard to get right. Have ChatGPT help you by prompting it with, “Can you suggest five titles that would be good for a college essay about [topic]?”

The Benefits of Writing Your Essays Yourself

Asking a robot to write your essays for you may seem like an easy way to get ahead in your studies or save some time on assignments. But outsourcing your work to ChatGPT can hurt not just your grades but also your ability to communicate and think critically. It’s always best to write your essays yourself.

Create your own ideas

Writing an essay yourself means that you’re developing your own thoughts, opinions, and questions about the subject matter, then testing, proving, and defending those thoughts.

When you complete school and start your career, projects aren’t simply about getting a good grade or checking a box, but can instead affect the company you’re working for — or even impact society. Being able to think for yourself is necessary to create change and not just cross work off your to-do list.

Building a foundation of original thinking and ideas now will help you carve your unique career path in the future.

Develop your critical thinking and analysis skills

In order to test or examine your opinions or questions about a subject matter, you need to analyze a problem or text, and then use your critical thinking skills to determine the argument you want to make to support your thesis. Critical thinking and analysis skills aren’t just necessary in school — they’re skills you’ll apply throughout your career and your life.

Improve your research skills

Writing your own essays will train you in how to conduct research, including where to find sources, how to determine if they’re credible, and their relevance in supporting or refuting your argument. Knowing how to do research is another key skill required throughout a wide variety of professional fields.

Learn to be a great communicator

Writing an essay involves communicating an idea clearly to your audience, structuring an argument that a reader can follow, and making a conclusion that challenges them to think differently about a subject. Effective and clear communication is necessary in every industry.

Be impacted by what you’re learning about

Engaging with the topic, conducting your own research, and developing original arguments allows you to really learn about a subject you may not have encountered before. Maybe a simple essay assignment around a work of literature, historical time period, or scientific study will spark a passion that can lead you to a new major or career.

Resources to Improve Your Essay Writing Skills

While there are many rewards to writing your essays yourself, the act of writing an essay can still be challenging, and the process may come easier for some students than others. But essay writing is a skill that you can hone, and students at Harvard Summer School have access to a number of on-campus and online resources to assist them.

Students can start with the Harvard Summer School Writing Center, where writing tutors can offer you help and guidance on any writing assignment in one-on-one meetings. Tutors can help you strengthen your argument, clarify your ideas, improve the essay’s structure, and lead you through revisions.

The Harvard libraries are a great place to conduct your research, and their librarians can help you define your essay topic, plan and execute a research strategy, and locate sources.

Finally, review “The Harvard Guide to Using Sources,” which can guide you on what to cite in your essay and how to do it. Be sure to review the “Tips For Avoiding Plagiarism” on the “Resources to Support Academic Integrity” webpage as well to help ensure your success.


The Future of AI in the Classroom

ChatGPT and other generative AI models are here to stay, so it’s worthwhile to learn how you can leverage the technology responsibly and wisely so that it can be a tool to support your academic pursuits. However, nothing can replace the experience and achievement gained from communicating your own ideas and research in your own academic essays.

About the Author

Jessica A. Kent is a freelance writer based in Boston, Mass. and a Harvard Extension School alum. Her digital marketing content has been featured on Fast Company, Forbes, Nasdaq, and other industry websites; her essays and short stories have been featured in North American Review, Emerson Review, Writer’s Bone, and others.


Harvard Division of Continuing Education

The Division of Continuing Education (DCE) at Harvard University is dedicated to bringing rigorous academics and innovative teaching capabilities to those seeking to improve their lives through education. We make Harvard education accessible to lifelong learners from high school to retirement.

I asked ChatGPT to write college entrance essays. Admissions professionals said they passed for essays written by students but I wouldn't have a chance at any top colleges.

- I asked OpenAI's ChatGPT to write some college admissions essays and sent them to experts to review.

- Both of the experts said the essays seemed like they had been written by a real student.

- However, they said the essays wouldn't have had a shot at highly selective colleges.

ChatGPT can be used for many things: school work, cover letters, and, apparently, college admissions essays.

College essays, sometimes known as personal statements, are a time-consuming but important part of the application process. They are not required for all institutions, but experts say they can make or break a candidate's chances when they are.

The essays are often based on prompts that require students to write about a personal experience, such as:

Describe a topic, idea, or concept you find so engaging that it makes you lose all track of time. Why does it captivate you? What or who do you turn to when you want to learn more?

I asked ChatGPT to whip up a few based on some old questions from the Common App, a widely used application process across the US. In about 10 minutes I had three entrance essays that were ready to use.

At first, the chatbot refused to write a college application essay for me, telling me it was important I wrote from my personal experience. However, after prompting it to write me a "specific example answer" to an essay question with vivid language to illustrate the points, it generated some pretty good text based on made-up personal experiences.

I sent the results to two admissions professionals to see what they thought.

The essays seemed like they had been written by real students, experts say

Both of the experts I asked said the essays would pass for a real student.

Adam Nguyen, founder of tutoring company Ivy Link, previously worked as an admissions reader and interviewer in Columbia's Office of Undergraduate Admission and as an academic advisor at Harvard University. He told Insider: "Having read thousands of essays over the years, I can confidently say that it would be extremely unlikely to ascertain with the naked eye that these essays were AI-generated."

Kevin Wong, Princeton University alumnus and cofounder of tutoring service PrepMaven, which specializes in college admissions, agreed.


"Without additional tools, I don't think it would be easy to conclude that these essays were AI-generated," he said. "The essays do seem to follow a predictable pattern, but it isn't plainly obvious that they weren't written by a human."

"Plenty of high school writers struggle with basic prose, grammar, and structure, and the AI essays do not seem to have any difficulty with these basic but important areas," he added.

Nguyen also praised the grammar and structure of the essays, and said that they also directly addressed the questions.

"There were some attempts to provide examples and evidence to support the writer's thesis or position. The essays are in the first-person narrative format, which is how these essays should be written," he said.

Wong thought the essays may even have been successful at some colleges. "Assuming these essays weren't flagged as AI-generated, I think they could pass muster at some colleges. I know that students have been admitted to colleges after submitting essays lower in quality than these," he said.

OpenAI did not immediately respond to Insider's request for comment.

They weren't good enough for top colleges

Nguyen said I wouldn't be able to apply to any of the top 50 colleges in the US using the AI-generated essays.

"These essays are exemplary of what a very mediocre, perhaps even a middle school, student would produce," Nguyen said. "If I were to assign a grade, the essays would get a grade of B or lower."

Wong also said the essays wouldn't stack up at "highly selective" colleges. "Admissions officers are looking for genuine emotion, careful introspection, and personal growth," he said. "The ChatGPT essays express insight and reflection mostly through superficial and cliched statements that anyone could write."

Nguyen said the writing in the essays was fluffy, trite, lacked specific details, and was overly predictable.

"There's no element of surprise, and the reader knows how the essay is going to end. These essays shouldn't end on a neat note, as if the student has it all figured out, and life is perfect," he said.

"With all three, I would scrap 80-90% and start over," he said.

Axel Springer, Business Insider's parent company, has a global deal to allow OpenAI to train its models on its media brands' reporting.


- Published: 06 May 2024

The consequences of generative AI for online knowledge communities

Gordon Burtch, Dokyun Lee & Zhichen Chen

Scientific Reports volume 14, Article number: 10413 (2024)



Generative artificial intelligence technologies, especially large language models (LLMs) like ChatGPT, are revolutionizing information acquisition and content production across a variety of domains. These technologies have a significant potential to impact participation and content production in online knowledge communities. We provide initial evidence of this, analyzing data from Stack Overflow and Reddit developer communities between October 2021 and March 2023, documenting ChatGPT’s influence on user activity in the former. We observe significant declines in both website visits and question volumes at Stack Overflow, particularly around topics where ChatGPT excels. By contrast, activity in Reddit communities shows no evidence of decline, suggesting the importance of social fabric as a buffer against the community-degrading effects of LLMs. Finally, the decline in participation on Stack Overflow is found to be concentrated among newer users, indicating that more junior, less socially embedded users are particularly likely to exit.


Introduction

Recent advancements in generative artificial intelligence (Gen AI) technologies, especially large language models (LLMs) such as ChatGPT, have been significant. LLMs demonstrate remarkable proficiency in tasks that involve information retrieval and content creation 1,2,3. Given these capabilities, it is important to consider their potential to drive seismic shifts in the way knowledge is developed and exchanged within online knowledge communities 4,5.

LLMs may drive both positive and negative impacts on participation and activity at online knowledge communities. On the positive side, LLMs can enhance knowledge sharing by providing immediate, relevant responses to user queries, potentially bolstering community engagement by helping users to efficiently address a wider range of peer questions. Viewed from this perspective, Gen AI tools may complement and enhance existing activities in a community, enabling a greater supply of information. On the negative side, LLMs may replace online knowledge communities altogether.

If the displacement effect dominates, it would give rise to several serious concerns. First, while LLMs offer innovative solutions for information retrieval and content creation and have been shown to significantly enhance individual productivity in a variety of writing and coding tasks, they have also been found to hallucinate, i.e., to provide ‘confidently incorrect’ responses to user queries 6, and to undermine worker performance on certain types of tasks 3. Second, if individual participation in online communities were to decline, this would imply a decline in opportunities for all manner of interpersonal interaction, upon which many important activities depend, e.g., collaboration, mentorship, and job search. Further, to the extent a similar dynamic may emerge within formal organizations and work contexts, it would raise the prospect of analogous declines in organizational attachment, peer learning, career advancement and innovation 7,8,9,10,11,12.

With the above in mind, we address two questions in this work. First, we examine the effects that generative artificial intelligence (AI), particularly large language models (LLMs), have on individual engagement in online knowledge communities. Specifically, we assess how LLMs influence user participation and content creation in online knowledge communities. Second, we explore factors that moderate (amplify or attenuate) the effects of LLMs on participation and content creation at online knowledge communities. By addressing these relationships, we aim to advance our understanding of the role LLMs may play in shaping the future of knowledge sharing and collaboration online. Further, we seek to provide insights into approaches and strategies that can encourage a sustainable knowledge sharing dynamic between human users and AI technologies.

We evaluate our questions in the context of ChatGPT’s release, in late November of 2022. We start by examining how the release of ChatGPT impacted Stack Overflow. We show that ChatGPT’s release led to a marked decline in web traffic to Stack Overflow, and a commensurate decline in question posting volumes. We then consider how declines in participation may vary across community contexts. Leveraging data on posting activity in Reddit developer communities over the same period, we highlight a notable contrast: no detectable declines in participation. We attribute this difference to social fabric; whereas Stack Overflow focuses on pure information exchange, Reddit developer communities are characterized by stronger social bonds. Further, considering heterogeneity across topic domains within Stack Overflow, we show that declines in participation varied greatly depending on the availability of historical community data, a likely proxy for LLMs’ ability to address questions in a domain, given that such data would likely have been used in training. Finally, we explore which users were most affected by ChatGPT’s release, and the impact ChatGPT has had on the characteristics of content being posted. We show that newer users were most likely to exit the community after ChatGPT was released. Further, and relatedly, we show that the questions posted to Stack Overflow became systematically more complex and sophisticated after ChatGPT’s release.

To address these questions, we leverage a combination of data sources and methods (additional details are provided in the supplement). First, we employ a proprietary dataset capturing daily aggregate counts of visitors to stackoverflow.com, and a large set of other popular websites. This data covers the period from September 2022 through March 2023. Additionally, we employ data on the questions and answers posted to Stack Overflow, along with characteristics of the posting users, from two calendar periods that cover the same span of the calendar year. The two samples cover October 2021 through mid-March of 2022, and October 2022 through mid-March of 2023. These data sets were obtained via the Stack Exchange Data Explorer, which provides downloadable, anonymized data on activity in different Stack Exchange communities. Further, we employ data from subredditstats.com, which tracks aggregate daily counts of posting volumes to each sub-Reddit. Our data sources do not include any personal user information, and none of our analyses make use of any personal user information.

We first examined the effect that ChatGPT’s release on November 30th of 2022 had on web traffic arriving at Stack Overflow, leveraging the daily web traffic dataset. The sample, sourced from SimilarWeb, includes daily traffic to the top 1000 websites. We employ a variant of the synthetic control method 13 , namely Synthetic Control Using LASSO, or SCUL 14 . Taking the time series of web visits to stackoverflow.com as treated, the method identifies, via LASSO 15 , a linear, weighted combination of candidate control series (websites) that yields an accurate prediction of traffic to stackoverflow.com prior to ChatGPT’s release. The resulting linear combination is then used to impute a counterfactual estimate of traffic at stackoverflow.com in the period following ChatGPT’s release, reflecting predictions of web traffic volumes that would have been observed in the absence of ChatGPT.
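The core of the synthetic-control step can be sketched in plain Python. The sketch below fits a LASSO (by coordinate descent) of the treated series on a set of donor series over the pre-release window, then uses the fitted weights to project a counterfactual for the post-release window; the gap between observed and counterfactual values is the estimated effect. This is a minimal illustration under stated assumptions, not the authors' SCUL implementation: the penalty `lam` is fixed by hand rather than chosen by cross-validation, the function names are hypothetical, and the series are made-up numbers rather than SimilarWeb data.

```python
def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma (the LASSO proximal step)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def lasso_fit(X, y, lam, n_sweeps=500):
    """Coordinate-descent LASSO without intercept.

    Minimizes (1 / 2n) * ||y - Xw||^2 + lam * ||w||_1, where X is a list of rows.
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    col_sq = [sum(row[j] ** 2 for row in X) / n for j in range(p)]
    resid = list(y)  # y - Xw, starting from w = 0
    for _ in range(n_sweeps):
        for j in range(p):
            if col_sq[j] == 0:
                continue
            # Correlation of column j with the residual that excludes w[j].
            rho = sum(X[i][j] * (resid[i] + X[i][j] * w[j]) for i in range(n)) / n
            new_wj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_wj - w[j]
            if delta:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                w[j] = new_wj
    return w


def synthetic_control(treated, donors, t0, lam):
    """Fit donor weights on periods [0, t0) and project periods [t0, T)."""
    T, p = len(treated), len(donors)
    rows = [[d[t] for d in donors] for t in range(T)]
    pre_rows, pre_y = rows[:t0], treated[:t0]
    # Center the pre-period data so the intercept can be recovered afterwards.
    x_mean = [sum(r[j] for r in pre_rows) / t0 for j in range(p)]
    y_mean = sum(pre_y) / t0
    Xc = [[r[j] - x_mean[j] for j in range(p)] for r in pre_rows]
    yc = [v - y_mean for v in pre_y]
    w = lasso_fit(Xc, yc, lam)
    intercept = y_mean - sum(w[j] * x_mean[j] for j in range(p))
    counterfactual = [intercept + sum(w[j] * r[j] for j in range(p)) for r in rows]
    gaps = [treated[t] - counterfactual[t] for t in range(t0, T)]
    return w, gaps


# Made-up daily visit series: two informative donors and one unrelated one.
T, t0 = 20, 14
donor_a = [100 + 3 * t for t in range(T)]
donor_b = [50 + 5 * (t % 4) for t in range(T)]
donor_c = [80 + (7 * t) % 5 for t in range(T)]
treated = [0.6 * a + 0.4 * b for a, b in zip(donor_a, donor_b)]
treated = [v - 10 if t >= t0 else v for t, v in enumerate(treated)]  # simulated drop

weights, gaps = synthetic_control(treated, [donor_a, donor_b, donor_c], t0, lam=0.01)
print([round(w, 2) for w in weights])
print(round(sum(gaps) / len(gaps), 1))
```

Because the simulated treated series is built from the first two donors, the fitted weights should land near 0.6 and 0.4 with the unrelated donor shrunk to roughly zero, and the average post-period gap should recover the simulated drop of about 10. The L1 penalty is what lets the method pick a sparse set of comparison websites out of a large candidate pool.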

Second, we examined ChatGPT’s effects on the volume of questions being posted to Stack Overflow. We identified the top 50 most popular topic tags associated with questions on Stack Overflow during our period of study, calculating the daily count of questions including each tag over a time window bracketing the date of ChatGPT’s release. We then followed the approach of Refs. 16,17, constructing the same set of topic panels for the same calendar period, one year prior, to serve as our control within a difference-in-differences design, to estimate an average treatment effect, and to enable evaluation both of the parallel trends assumption (which is supported by the absence of significant pre-treatment differences) and treatment effect dynamics 18. Figure S1 in the supplement provides a visual explanation of our research design.
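The year-over-year difference-in-differences comparison can be illustrated with a minimal Python sketch. It assumes, for illustration only, that each topic's daily question counts for the release year ("treated") and the prior year ("control") are aligned by calendar day; it takes log(1 + count) so effects read roughly as proportional changes, and computes the 2x2 contrast (change in the treated year minus change in the control year) separately for each topic. The log transform, function names, and counts are assumptions; the paper's design also examines pre-treatment differences and treatment-effect dynamics, which this sketch omits.

```python
import math
from statistics import mean


def log_mean(counts):
    """Average of log(1 + count), so zero-count days stay defined."""
    return mean(math.log1p(c) for c in counts)


def did_2x2(treated, control, split):
    """(post - pre) in the treated year minus (post - pre) in the control year."""
    treated_change = log_mean(treated[split:]) - log_mean(treated[:split])
    control_change = log_mean(control[split:]) - log_mean(control[:split])
    return treated_change - control_change


def did_by_topic(panel, split):
    """Apply the 2x2 estimate to every topic; panel maps tag -> series pair."""
    return {tag: did_2x2(s["treated"], s["control"], split) for tag, s in panel.items()}


# Hypothetical daily counts: 5 days before and 5 days after the release date.
panel = {
    "python": {"control": [100] * 5 + [90] * 5, "treated": [110] * 5 + [80] * 5},
    "javascript": {"control": [60] * 10, "treated": [62] * 5 + [50] * 5},
}
effects = did_by_topic(panel, split=5)
average_effect = mean(effects.values())
for tag, effect in effects.items():
    print(f"{tag}: {effect:+.3f} log points ({math.expm1(effect):+.1%})")
print(f"average: {average_effect:+.3f}")
```

Subtracting the prior year's change nets out seasonal movement that would have happened anyway (here, the control-year dip for "python"). Running the same per-topic function on matching sub-Reddit series would give the kind of per-topic contrast with Stack Overflow that the next paragraph describes.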

Third, we considered whether the effects might differ across online knowledge communities, depending on the degree to which a community is focused strictly on information exchange. That is, we considered the potential mitigating effect of social fabric, i.e. social bonds and connections, as a buffer against LLMs' negative effects on connection with human peers. The logic for this test is that LLMs, despite being capable of high-quality information provision on many topics, are of less clear value as a pure substitute for human social connections 19 . We thus contrasted our average effect estimates from Stack Overflow with effect estimates obtained using panels of daily posting volumes from analogous sub-communities on Reddit (sub-Reddits) focused on the same set of topics. Reddit is a useful point of comparison because it is well documented that Reddit developer communities are more social and communal than Stack Overflow 20 , 21 . We also explored heterogeneity in the Stack Overflow effects across topics, repeating our difference-in-differences regression for each Stack Overflow topic and its associated sub-reddit.

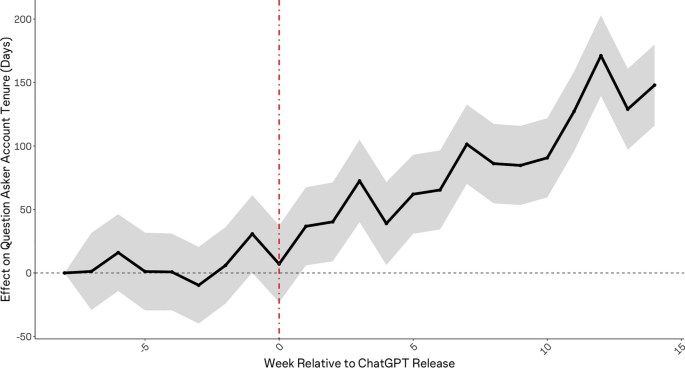

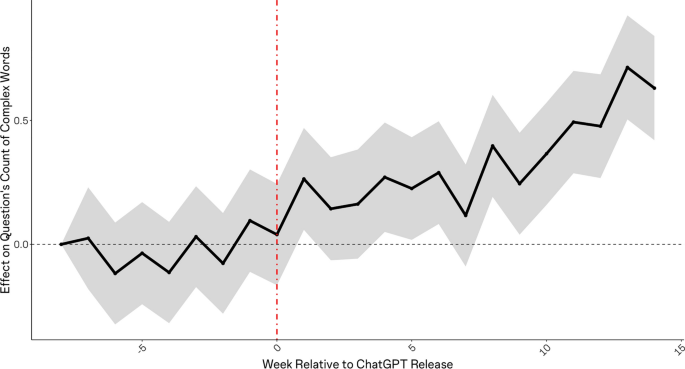

Lastly, we explored shifts in the average characteristics of users and questions on Stack Overflow following ChatGPT’s release, specifically the posting users’ account tenure (in days) and, relatedly, the average complexity of posted questions. It is reasonable to expect that the individuals most likely to rely on ChatGPT are junior, newer members of the community, as these individuals likely have less social attachment to the community and are likely to ask relatively simple questions, which ChatGPT is better able to address. In turn, the questions that fail to be posted are likely to be those that would have been relatively simple. We tested these possibilities in two ways using question-level data from Stack Overflow. We began by estimating the effect of ChatGPT’s release on the average tenure (in days) of posting users’ accounts. Next, we estimated a similar model for the average frequency of ‘long’ words (words with 6 or more characters) within posted questions, as a proxy for complexity.
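
The two question-level measures can be computed roughly as follows. This sketch rests on several assumptions. The paper does not specify its tokenization, so treating a 'word' as a run of ASCII letters (`WORD_RE`) is assumed. Tenure is taken as whole days between account creation and posting. The `Question` record and the function names are hypothetical.

```python
"""Question-level proxies: long-word share (complexity) and account tenure (sketch)."""
import re
from collections import defaultdict
from datetime import date
from statistics import mean
from typing import Dict, Iterable, NamedTuple, Tuple

WORD_RE = re.compile(r"[A-Za-z]+")  # assumption: a word is a run of ASCII letters


class Question(NamedTuple):
    """Hypothetical record for one posted question."""
    body: str
    posted: date
    account_created: date


def long_word_share(text: str, min_len: int = 6) -> float:
    """Fraction of words with at least `min_len` characters (0.0 if there are no words)."""
    words = WORD_RE.findall(text)
    if not words:
        return 0.0
    return sum(len(w) >= min_len for w in words) / len(words)


def tenure_days(q: Question) -> int:
    """Account age in whole days at the time the question was posted."""
    return (q.posted - q.account_created).days


def daily_averages(questions: Iterable[Question]) -> Dict[date, Tuple[float, float]]:
    """Map each posting day to (mean tenure in days, mean long-word share)."""
    by_day = defaultdict(list)
    for q in questions:
        by_day[q.posted].append(q)
    return {
        day: (mean(tenure_days(q) for q in qs), mean(long_word_share(q.body) for q in qs))
        for day, qs in sorted(by_day.items())
    }
```

Daily averages like these could then serve as outcome series in a difference-in-differences design like the one used for posting volumes.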

Overall impact of LLMs on community engagement

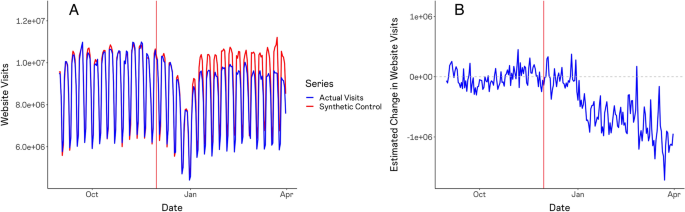

Figure 1 A depicts the actual daily web traffic to Stack Overflow (blue) alongside our estimates of the traffic Stack Overflow would have experienced in the absence of ChatGPT’s release (red). The synthetic control estimates closely mirror the true time series prior to ChatGPT’s release, supporting their validity as a counterfactual for what would have occurred afterward. Figure 1 B presents the difference between these time series. We estimate that Stack Overflow’s daily web traffic declined by approximately 1 million visitors per day, equivalent to approximately 12% of the site’s daily web traffic just prior to ChatGPT’s release.

Synthetic control estimates of the decline in daily web traffic to Stack Overflow. Estimates are obtained via synthetic control using LASSO (SCUL), based on SimilarWeb daily web traffic estimates for the 1000 most popular websites on the internet. Panel (A) depicts the actual web traffic volumes recorded by SimilarWeb (in blue) alongside the synthetic control (in red). Panel (B) depicts the difference between the two series, reflecting the estimated causal effect of ChatGPT.
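
As a rough consistency check on the Figure 1 results, the two rounded figures reported in the text together imply the approximate size of Stack Overflow's pre-release daily traffic. The result inherits the rounding of both inputs.

$$
\text{pre-release daily traffic} \approx \frac{1\ \text{million visitors/day}}{0.12} \approx 8.3\ \text{million visitors/day}
$$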

LLMs' effect on user content production

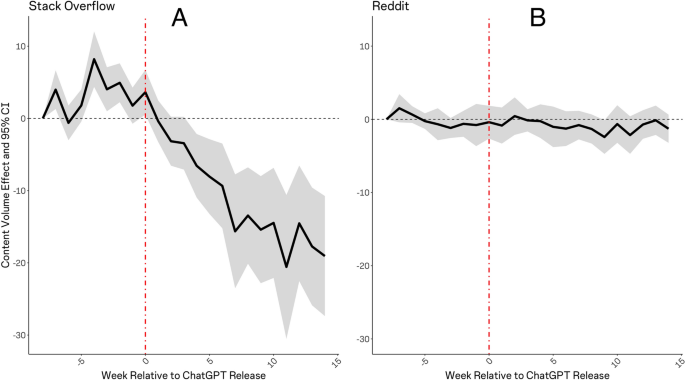

Our difference-in-differences estimates using posting activity on Stack Overflow reveal that per-topic question volumes on Stack Overflow have declined markedly since ChatGPT’s release (Fig. 2 A). This result reinforces the idea that LLMs are replacing online communities as a source of knowledge for many users. Repeating the same analysis using Reddit data, we observed no evidence that ChatGPT has had any effect on user engagement at Reddit (Fig. 2 B). We replicate these results in Fig. S2 of the supplement using the matrix completion estimator of Ref. 22 .

Estimated effects of ChatGPT on user activity at Stack Overflow and Reddit. Estimates are obtained via difference-in-differences regression, comparing content posting volumes over a period bracketing the release of ChatGPT (on November 30th, 2022) with a window of equal length observed one calendar year prior. Panel (A) depicts effects over time (by week) on Stack Overflow question volumes per topic. Panel (B) depicts effects on Reddit posting volumes, per sub-reddit, for sub-reddits dealing with an overlapping set of topics. The shaded areas represent 95% confidence intervals.

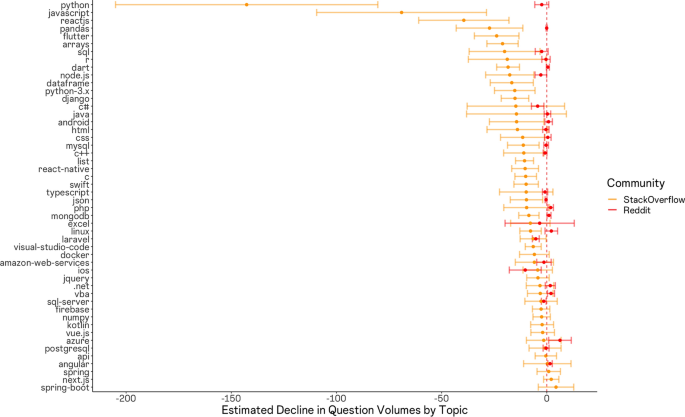

Heterogeneity in ChatGPT’s effect on Stack Overflow posting volumes by topic

We observed a great deal of heterogeneity across Stack Overflow topics, yet consistently null results across sub-reddits (Fig. 3 ). Our estimates thus indicate, again, that Reddit developer communities have been largely unaffected by ChatGPT’s release. Our Stack Overflow results further indicate that the most substantially affected topics are those most heavily tied to concrete, self-contained software coding activities. That is, the most heavily affected topics are also those where we might anticipate that ChatGPT would perform quite well, due to the prevalence of accessible training data.

Topic-specific effects of ChatGPT on Stack Overflow and Reddit. Estimates are obtained via difference-in-differences regression, per topic. The figure depicts effect estimates for each Stack Overflow topic (in orange) with 95% confidence intervals, and estimates for each sub-reddit (in red), where available. Note that data on sub-reddit posting volumes were not available for three sub-reddit communities: javascript, jQuery, and Django. Other Reddit estimates are omitted because no clearly analogous sub-reddit addresses that topic.

For example, Python, CSS, Flutter, ReactJS, Django, SQL, Arrays, and Pandas all refer to programming languages, specific programming libraries, or data types and structures that one might encounter while working with a programming language. In contrast, relatively unaffected tags appear more likely to relate to complex tasks that require not only appropriate syntax but also contextual information that would often have been outside the scope of ChatGPT's training data. For example, Spring and Spring-boot are Java-based frameworks for enterprise solutions, often involving back-end (server-side) programming logic with private enterprise knowledge bases and software infrastructures. Intuitively, these are questions for which an automated (i.e. cut-and-paste) solution would be less straightforward, and less likely to appear in the textual data available for training the LLM. Additional examples include the tags related to Amazon Web Services, Firebase, Docker, SQL Server, and Microsoft Azure.

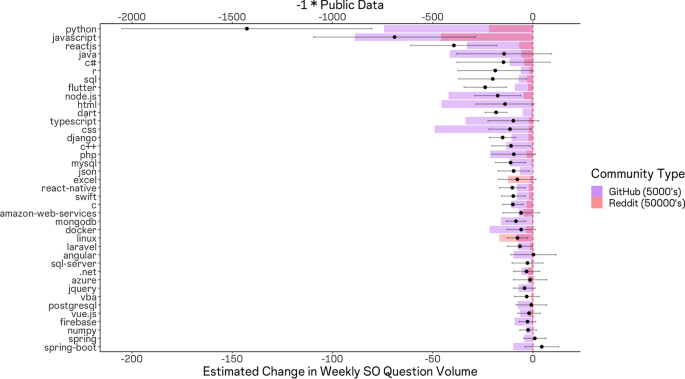

To evaluate this possible explanation more directly, we collected data on the number of active GitHub repositories using each language or framework, as well as the number of individuals subscribed to sub-reddits focused on each language or framework. We then overlaid a scaled measure of each quantity on the observed effect sizes (Fig. 4 ). The figure indicates a rough correlation between the available public sources of training data and our effect sizes.

Topic-specific effects of ChatGPT on Stack Overflow (black points with 95% confidence intervals), with the number of GitHub repositories (purple) and sub-reddit subscribers (red) overlaid. We observe a rough correlation between the number of GitHub repositories using a given language or framework, the level of activity in the associated sub-reddit communities, and the magnitude of the effect sizes. This association suggests that effects are larger for topics where more public data was available to train the LLM.
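
The paper does not state how the overlaid measures in Fig. 4 were scaled. One hypothetical way to quantify the comparison is sketched below. It min-max scales a proxy (e.g. GitHub repository counts per topic) to [0, 1] and computes a Pearson correlation between the scaled proxy and the magnitude of the per-topic effect estimates. Min-max scaling, the use of absolute effect sizes, and the function names are all assumptions.

```python
"""Min-max scaling and Pearson correlation for comparing proxies with effect sizes (sketch)."""
from math import sqrt
from typing import List, Sequence


def min_max_scale(values: Sequence[float]) -> List[float]:
    """Rescale values linearly to [0, 1]; a constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


def proxy_effect_correlation(proxy: Sequence[float], effects: Sequence[float]) -> float:
    """Correlate a scaled data-availability proxy with effect magnitudes |effect|."""
    return pearson(min_max_scale(proxy), [abs(e) for e in effects])
```

Pearson correlation is unaffected by positive linear rescaling, so the scaling step matters only for overlaying series with very different units on a common axis, not for the correlation itself.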

ChatGPT’s effect on average user account age and question complexity

Figure 5 depicts the change in the average account tenure of posting users, showing that a systematic rise began upon ChatGPT’s release, such that posting users were increasingly likely to hold more established, older accounts. The implication of this result is that newer user accounts became systematically less likely to participate in the Stack Overflow community after ChatGPT became available. Figure 6 depicts the corresponding effect on question complexity, indicating that questions exhibited a systematic rise in complexity following the release of ChatGPT.

Effect of ChatGPT’s release on the average tenure (in days) of user accounts posting questions to Stack Overflow. Shortly after ChatGPT’s release, the average age (in days) of accounts posting questions to Stack Overflow begins to rise systematically, consistent with newer accounts systematically reducing their participation and exiting the community.

Effect of ChatGPT's release on the average complexity of questions posted to Stack Overflow, reflected by the average frequency of ‘long’ words (words with 6 or more characters). Shortly after ChatGPT’s release, we see a systematic rise in the average complexity of questions. This result is again consistent with the idea that newer accounts systematically reduced their participation and exited the community.

These findings, consistent with the idea that more junior and less experienced users began to exit, might be cause for concern if a similar dynamic is playing out in more formal organizational and work contexts. This is because junior individuals may stand to lose the most from declines in peer interaction: these individuals are typically more marginal members of organizations, have less robust networks, and have the most to lose in terms of opportunities for career advancement 23 . Further, these individuals may be least capable of recognizing mistakes in the output of LLMs, which are well known to hallucinate, providing ‘confidently wrong’ answers to user queries 6 . Indeed, recent work observes that non-experts face the greatest difficulty determining whether the information they have obtained from an LLM is correct 24 .

We have shown that ChatGPT’s release was associated with a discontinuous decline in web traffic and question posting volumes at Stack Overflow. This result is consistent with the idea that many individuals now rely on LLMs for knowledge acquisition in lieu of human peers in online knowledge communities. Our results demonstrate that these effects manifested for Stack Overflow, yet not for Reddit developer communities.

Further, we have shown that these effects were more pronounced for very popular topics than for less popular topics, and evidence suggests that this heterogeneity derived from the volume of data available for LLM training prior to ChatGPT’s release. Finally, our results demonstrate that ChatGPT’s release was associated with a significant, discontinuous increase in the average tenure of accounts participating on Stack Overflow, and in the complexity of questions posted (as reflected by the prevalence of long words within questions). These results are consistent with the idea that newer, less expert users were more likely to begin relying on ChatGPT in lieu of the online knowledge community.

Our findings bear several important implications for the management of online knowledge communities. First, they highlight the importance of social fabric as a means of ensuring the sustainability and success of online communities in the age of generative AI: managers of online knowledge communities can combat the eroding influence of LLMs by enabling socialization as a complement to pure information exchange. Our findings also highlight how content characteristics and community membership can shift because of LLMs, observations that can inform community managers’ content moderation strategies and their activities centered on community growth and churn prevention.

Beyond the potential concerns about what the observed dynamics may imply for online communities and their members, our findings also raise important concerns about the future of content production in online communities, which by all accounts have served as a key source of training data for many of the most popular LLMs, including OpenAI’s GPT. To the extent content production declines in these open communities, it will reinforce concerns that have been raised in the literature about limitations on the volume of data available for model training 25 . Our findings suggest that long-term content licensing agreements that have recently been signed between LLM creators and online community operators may be undermined. If these issues are left unaddressed, the continued advancement of generative AI models may necessitate that their creators identify alternative data sources.

Our work is not without limitations, some of which present opportunities for future research. First, for our research design to yield causal interpretations, we must assume the absence of confounding treatments. For example, had another large online community emerged around the same time, it might explain the decline in participation at Stack Overflow. Second, our study lacks a nuanced analysis of changes in content characteristics. Although we study changes in answer quality using net vote scores (see the supplement), these scores may also reflect changes in aspects unrelated to information quality. Similarly, although we study changes in question complexity, our measure of complexity is based solely on word length. Future work can thus revisit these questions using a variety of other measures of quality and complexity.

Third, although we have shown a decline in participation at Stack Overflow, we are unable to speak to whether the same dynamic is playing out in other organizational settings, e.g. workplaces. It is also important to recognize that the context of our analyses may be unique. To the extent that Stack Overflow and Reddit developer communities are not representative of developer communities more broadly, the generalizability of these results would be constrained. Relatedly, the results we observe may be unique to knowledge communities that focus on software development and information technology; the dynamics of content production may differ markedly in other knowledge domains. Finally, our work demonstrates effects over a relatively short period of time (several months), and the longer-run dynamics of the observed effects may differ. Given these points, future work should explore the generalizability of our findings to other communities and examine the longer-run effects of generative AI technologies on community participation and knowledge sharing.

We anticipate that our study will inspire more sophisticated analyses of the effects that generative AI technologies (including LLMs, but also generative image, audio, and video models) may have on patterns of knowledge sharing and collaboration within organizations and society more broadly. Such work is crucially needed to better understand the nuances of where and when individuals may rely on human peers versus generative AI tools, and the desirable and undesirable consequences for organizations and society, so that we can begin to plan for and manage this new dynamic.

Data availability

Data on Stack Overflow users, questions, and answers were obtained via the Stack Exchange Data Explorer at https://data.stackexchange.com/stackoverflow/query/new . Data on sub-reddit posting volumes were obtained from https://subredditstats.com . SimilarWeb daily web traffic data are not available for public dissemination, though they are available for purchase from https://deweydata.io . Stack Overflow data, Reddit data and analysis scripts are available in a public repository at the OSF: https://osf.io/qs6b3/ .

Noy, S. & Zhang, W. Experimental evidence on the productivity effects of generative artificial intelligence. Science https://doi.org/10.2139/ssrn.4375283 (2023).

Peng, S., Kalliamvakou, E., Cihon, P. & Demirer, M. The impact of AI on developer productivity: Evidence from GitHub Copilot. Preprint at https://arXiv.org/2302.06590 (2023).

Dell’Acqua, F. et al. Navigating the jagged technological frontier: Field experimental evidence of the effects of AI on knowledge worker productivity and quality. Harvard Business School Working Paper , no. 24-013 (2023).

Hwang, E. H., Singh, P. V. & Argote, L. Knowledge sharing in online communities: Learning to cross geographic and hierarchical boundaries. Organ. Sci. 26 (6), 1593–1611 (2015).

Hwang, E. H. & Krackhardt, D. Online knowledge communities: Breaking or sustaining knowledge silos?. Prod. Oper. Manag. 29 (1), 138–155 (2020).

Bang, Y. et al. A multitask, multilingual, multimodal evaluation of ChatGPT on reasoning, hallucination, and interactivity. In Proc. of the 13th International Joint Conference on Natural Language Processing and the 3rd Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics (Volume 1: Long Papers) , pp. 675–718 (2023).

Saxenian, A. Regional Advantage: Culture and Competition in Silicon Valley and Route 128 (Harvard University Press, 1996). https://doi.org/10.4159/9780674418042 .

Atkin, D., Chen, M. K. & Popov, A. The returns to face-to-face interactions: Knowledge spillovers in Silicon Valley. National Bureau of Economic Research , no. w30147 (2022).

Roche, M. P., Oettl, A. & Catalini, C. (Co-)working in close proximity: Knowledge spillovers and social interactions. National Bureau of Economic Research , no. w30120 (2022).

Tubiana, M., Miguelez, E. & Moreno, R. In knowledge we trust: Learning-by-interacting and the productivity of inventors. Res. Policy 51 (1), 104388 (2022).

Hooijberg, R. & Watkins, M. When do we really need face-to-face interactions? https://hbr.org/2021/01/when-do-we-really-need-face-to-face-interactions (Harvard Business Publishing, 2021).

Allen, T. J. Managing the Flow of Technology: Technology Transfer and the Dissemination of Technological Information within the R&D Organization (MIT Press Books, 1984).

Abadie, A. Using synthetic controls: Feasibility, data requirements, and methodological aspects. J. Econ. Lit. 59 (2), 391–425 (2021).

Hollingsworth, A. & Wing, C. Tactics for design and inference in synthetic control studies: An applied example using high-dimensional data. Available at SSRN, Paper no. 3592088 (2020).

Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 58 (1), 267–288 (1996).

Goldberg, S., Johnson, G. & Shriver, S. Regulating privacy online: An economic evaluation of the GDPR. Am. Econ. J. Econ. Policy 16 (1), 325–358 (2024).

Eichenbaum, M., Godinho de Matos, M., Lima, F., Rebelo, S. & Trabandt, M. Expectations, infections, and economic activity. J. Polit. Econ. https://doi.org/10.1086/729449 (2023).

Angrist, J. D. & Pischke, J. S. Mostly Harmless Econometrics: An Empiricist’s Companion (Princeton University Press, 2009).

Peters, J. Reddit thinks AI chatbots will ‘complement’ human connection, not replace it. The Verge . https://www.theverge.com/2023/2/10/23594786/reddit-bing-chatgpt-ai-google-search-bard (Accessed 17 September 2023) (2023).

Antelmi, A., Cordasco, G., De Vinco, D. & Spagnuolo, C. The age of snippet programming: Toward understanding developer communities in Stack Overflow and Reddit. In Companion Proceedings of the ACM Web Conference , pp. 1218–1224 (2023).

Sengupta, S. ‘Learning to code in a virtual world’: A preliminary comparative analysis of discourse and learning in two online programming communities. In Conference Companion Publication of the 2020 on Computer Supported Cooperative Work and Social Computing , pp. 389–394 (2020).

Athey, S., Bayati, M., Doudchenko, N., Imbens, G. & Khosravi, K. Matrix completion methods for causal panel data models. J. Am. Stat. Assoc. 116 (536), 1716–1730 (2021).

Wu, L. & Kane, G. C. Network-biased technical change: How modern digital collaboration tools overcome some biases but exacerbate others. Organ. Sci. 32 (2), 273–292 (2021).

Kabir, S., Udo-Imeh, D. N., Kou, B. & Zhang, T. Who answers it better? An in-depth analysis of ChatGPT and Stack Overflow answers to software engineering questions. Preprint at https://arXiv.org/2308.02312 (2023).

Villalobos, P., Sevilla, J., Heim, L., Besiroglu, T., Hobbhahn, M. & Ho, A. Will we run out of data? An analysis of the limits of scaling datasets in machine learning. Preprint at https://arXiv.org/2211.04325 (2022).

Acknowledgements

We thank participants at Boston University, the Wharton Business and Generative AI workshop, the BU Platforms Symposium, and the INFORMS Conference on Information Systems and Technology for useful comments. We also thank Michael Kümmer and Chris Forman for valuable feedback. All user data that we analyzed is publicly available, except data on daily website traffic which was purchased from Dewey Data.

Author information

Authors and affiliations

Questrom School of Business, Boston University, Boston, MA, 02215, USA

Gordon Burtch, Dokyun Lee & Zhichen Chen

Contributions

Conceptualization: GB, DL. Methodology: GB, ZC. Investigation: GB, ZC. Visualization: GB. Project administration: GB, DL. Supervision: GB, DL. Writing—original draft: GB, DL, ZC. Writing—review & editing: GB, DL, ZC.

Corresponding author

Correspondence to Gordon Burtch.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article

Burtch, G., Lee, D. & Chen, Z. The consequences of generative AI for online knowledge communities. Sci Rep 14 , 10413 (2024). https://doi.org/10.1038/s41598-024-61221-0

Received : 23 October 2023

Accepted : 02 May 2024

Published : 06 May 2024

DOI : https://doi.org/10.1038/s41598-024-61221-0

7 Best ChatGPT Prompts To Help You Write Your Resume

Looking for a job? You’re going to want to perfect your resume. The hard thing is that writing a resume is time-consuming, and it seems like tips for writing a resume are always changing. This is where ChatGPT can be of service.

Experts told GOBankingRates that there are certain prompts that can help craft your resume into something that will catch recruiters’ eyes. Here are the top prompts for ChatGPT to help you write your resume.

Ask ChatGPT To Create a Custom Resume for a Specific Job

The more specific a resume is, the better. Recruiters are looking to see that you have the exact skills that fulfill the job they’re hiring for.

Career expert Michael Gardon says there’s a simple method to create a specified resume using ChatGPT. “Here is the best prompt: ask ChatGPT to ‘please personalize my resume for [this job] at [this company]. Here is the job description [paste it]. Here is my resume [paste resume],'” Gardon advised.

Get Keywords Based on the Job Description

The truth is that most recruiters, or the systems they use, look for keywords to separate qualified resumes from those that aren’t. ChatGPT can help you find those keywords.

“You can share a job posting and ask [ChatGPT] to provide you with a list of keywords or skills from the job description,” said Laci Baker, a career advisor at University of Phoenix. “You can then share your resume with [ChatGPT] and ask if all those keywords and skills are present on your resume, which can help with ensuring you are tailoring your resume to the job description.”

Ask ChatGPT To Follow a Resume Template

Found a resume template you like online? Phil Siegel, the founder of AI nonprofit CAPTRS, says you can submit that to ChatGPT and ask for a similar resume based on your experience.

“Start from a template you like (they are all over the web) and give ChatGPT some detailed information about you. Ask it to create a resume using the format and your data. The advantage is it will give a more detailed resume and it can be done fairly quickly,” Siegel said.

Use Your Experience To Create a Professional Summary

Writing the professional summary at the top of your resume can be challenging. It’s hard to know how to speak about your personal experience in a way that hiring managers will gravitate toward.

Baker suggests typing elements of your past job experience into ChatGPT and asking it to write a professional summary. “You can share your past experiences and a target role you are hoping to apply to, and [ChatGPT] can give you a career summary that you can then edit and make your own,” Baker said.

Use ChatGPT as a Career Expert

Siegel says there is a way you can “chat” with ChatGPT that can help it create a resume for you. “‘Pretend’ ChatGPT is a friendly expert and hold a conversation with it about creating your resume. Tell it you’re creating a resume for a job role and ask it to ask you about the most important information to build a strong resume.”

Siegel notes this will take more time than other prompts but will help create a stronger and more focused resume that’s tailored to your strengths.

Change the Tone of Your Resume

Sometimes you know what you want to say, but you’re not sure how to say it in a professional tone. ChatGPT can help tailor your wording to fit what a recruiter is looking for. “[ChatGPT] can rewrite resume elements to be more focused and more formal if those are elements you need assistance with,” Baker said.

You can even prompt ChatGPT to make your resume sound more impressive with this prompt from Juliet Dreamhunter, the founder of Juliety: “Here is the experience section of my resume. Rewrite it in bullet points and use powerful action verbs to highlight my expertise and achievements. Make it sound more impressive and professional.”

Reduce the Length of Your Resume

Generally speaking, you want your resume to be 1-2 pages. If your resume is longer, ChatGPT can help reduce it. “You can ask [ChatGPT] to take the content you already have and rewrite it to reduce the length to 2 pages,” Baker said.

This article originally appeared on GOBankingRates.com: 7 Best ChatGPT Prompts To Help You Write Your Resume

California is testing new generative AI tools. Here’s what to know

FILE - California Gov. Gavin Newsom during a news conference, March 21, 2024, in Los Angeles. California announced Thursday, May 9, 2024, it is partnering with five companies to develop generative AI tools to help deliver public services. (AP Photo/Damian Dovarganes, File)

SACRAMENTO, Calif. (AP) — Generative artificial intelligence tools will soon be used by California’s government.

Democratic Gov. Gavin Newsom’s administration announced Thursday the state will partner with five companies to develop and test generative AI tools that could improve public service.

California is among the first states to roll out guidelines on when and how state agencies can buy AI tools as lawmakers across the country grapple with how to regulate the emerging technology.

Here’s a closer look at the details:

WHAT IS GENERATIVE AI?

Generative AI is a branch of artificial intelligence that can create new content such as text, audio and photos in response to prompts. It’s the technology behind ChatGPT, the controversial writing tool launched by Microsoft-backed OpenAI. The San Francisco-based company Anthropic, with backing from Google and Amazon, is also in the generative AI game.

HOW MIGHT CALIFORNIA USE IT?

California envisions using this type of technology to help cut down on customer call wait times at state agencies, and to improve traffic and road safety, among other things.

Initially, four state departments will test generative AI tools: The Department of Tax and Fee Administration, the California Department of Transportation, the Department of Public Health, and the Health and Human Services Department.

The tax and fee agency administers more than 40 programs and took more than 660,000 calls from businesses last year, director Nick Maduros said. The state hopes to deploy AI to listen in on those calls and pull up key information on state tax codes in real time, allowing the workers to more quickly answer questions because they don’t have to look up the information themselves.

In another example, the state wants to use the technology to provide people with information about health and social service benefits in languages other than English.

WHO WILL USE THESE AI TOOLS?

The public doesn’t have access to these tools quite yet, but possibly will in the future. The state will start a six-month trial, during which the tools will be tested by state workers internally. In the tax example, the state plans to have the technology analyze recordings of calls from businesses and see how the AI handles them afterward — rather than have it run in real-time, Maduros said.

Not all the tools are designed to interact with the public, though. For instance, the tools meant to help ease highway congestion and improve road safety would be used only by state officials to analyze traffic data and brainstorm potential solutions.

State workers will test the tools and evaluate their effectiveness and risks. If the tests go well, the state will consider deploying the technology more broadly.

HOW MUCH DOES IT COST?

The ultimate cost is unclear. For now, the state will pay each of the five companies $1 to start a six-month internal trial. Then, the state can assess whether to sign new contracts for long-term use of the tools.

“If it turns out it doesn’t serve the public better, then we’re out a dollar,” Maduros said. “And I think that’s a pretty good deal for the citizens of California.”

The state currently has a massive budget deficit, which could make it harder for Gov. Gavin Newsom to make the case that such technology is worth deploying.

Administration officials said they didn’t have an estimate of what such tools would eventually cost the state, and they did not immediately release copies of the agreements with the five companies that will test the technology on a trial basis. Those companies are Deloitte Consulting LLP; INRIX, Inc.; Accenture LLP; Ignyte Group, LLC; and SymSoft Solutions LLC.

WHAT COULD GO WRONG?

The rapidly growing technology has also raised concerns about job loss, misinformation, privacy and automation bias.

State officials and academic experts say generative AI has significant potential to help government agencies become more efficient, but there’s also an urgent need for safeguards and oversight.

Testing the tools on a limited basis is one way to limit potential risks, said Meredith Lee, chief technical adviser for UC Berkeley’s College of Computing, Data Science, and Society.

But, she added, the testing can’t stop after six months. The state must have a consistent process for testing and learning about the tools’ potential risks if it decides to deploy them on a wider scale.

COMMENTS

Using ChatGPT to generate a personal statement is suboptimal, as the AI only knows as much as you give it. The hard part of the PS is finding a good story, something that is going to grab attention, hold it and make a good impression. For this purpose, ChatGPT and other AI-like software are just an advanced version of a thesaurus.

I used chat gpt to help join a lot of paragraphs or ideas I wrote, so it wasn't purely "write me a personal statement", then copy paste. Also, as for the cheating part, if you check my marks, community service, voluntary work, extra projects, etc. (without bragging), these completely outweigh a personal statement.

That's like being in the NFL or MLB and asking if it's cool to use some Gear during your off season workouts. They all be doing it. Edit: Here's what you do. Word vomit EVERYTHING you're thinking of onto a google doc. Don't stop writing. Don't edit. Don't give a shit about grammar etc. then when you're done, give chat GPT ...

chat gpt can pepper in buzzwords and write good sentences. but it can't possibly know the stories in YOUR life that make you want to go into medicine. it will never be able to replace that. showing and not telling will just become even more important in the era of chat gpt. Y'all have to be honest answering this because if you aren't you ...

ChatGPT can be a great efficiency tool if you train it properly. If you use ChatGPT right off the bat, it gives clunky, generic samples that don't provide depth/nuance to your writing. I am a nontraditional student that has YEARS worth of different personal statements, personal journal entries, academic assignments, small grant proposals, and a ...

For sure. Use chatgpt only as copy editor/proofreader. Do not use it to generate your personal statement. I think it's good for helping phrase certain things. If you ask it to write a LoR and you just copy and paste it, it'll be shit because it won't be personal to you and will be super generic.

It's important to remember that while ChatGPT can generate text, it's not a substitute for your own thoughts and experiences. It's called a personal statement for a reason and universities want to hear from you, not an AI bot. You could use ChatGPT as a tool to help inspire, clarify and articulate your own ideas, rather than asking it to write ...

ChatGPT prompt: "Write a short story about an admissions committee debate on admitting a cat into medical school." The admissions committee sat around the large conference table, each member flipping through the thick stack of applications in front of them. ... For example, I plugged in my personal statement (first draft + final draft), my ...

Chat gpt for personal statement: My friend used chat gpt to write his personal statement, and apparently he's actually willing to submit it later next week. Although everything he's said he's done is true, he used it to hone some phrasing, structure it well and make it flow nicely.

There may be some ways to use ChatGPT effectively and ethically when working on your applications for graduate school. The key is to use AI tools for discrete activities with work you have already produced yourself, such as: Re-writing a sentence in your essay using other words and phrasing. Reducing the word count from a paragraph of your ...

Your post cleverly brings attention to the irony of using an AI like ChatGPT to enhance communication while applying for positions, as the committee might easily recognize it as non-human communication, potentially harming your application.

You can use ChatGPT to brainstorm potential research questions or to narrow down your thesis statement. Begin by inputting a description of the research topic or assigned question. Then include a prompt like "Write 3 possible research questions on this topic." You can make the prompt as specific as you like.
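The prompt pattern described above (topic description first, then the request) can be captured in a small template function, so the number of questions and any narrowing constraints are easy to vary. This is a minimal sketch: `research_question_prompt` and its `constraints` parameter are hypothetical, and the function only builds the text you would paste into ChatGPT; it does not call any API.

```python
from typing import Sequence


def research_question_prompt(
    topic_description: str, n: int = 3, constraints: Sequence[str] = ()
) -> str:
    """Build a brainstorming prompt: topic description, then the request.

    Hypothetical helper; it only assembles text and makes no API call.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    lines = [
        topic_description.strip(),
        "",  # blank line separating the topic from the request
        f"Write {n} possible research questions on this topic.",
    ]
    # Optional constraints make the request more specific, one per line.
    lines.extend(f"- {c}" for c in constraints)
    return "\n".join(lines)


if __name__ == "__main__":
    print(
        research_question_prompt(
            "How remote work affects productivity in small software teams.",
            constraints=["Focus on studies published after 2020."],
        )
    )
```

Keeping the request wording fixed and varying only the topic and constraints makes it easier to compare the answers you get back across several attempts.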

It requires careful thought, self-reflection, and effective storytelling. With ChatGPT and several AI writing tools out there, you can create a standout SOP. Here's how: 1. Generating Ideas and Inspiration: One of the initial challenges when writing an SOP is brainstorming ideas and finding inspiration. ChatGPT can serve as a creative partner ...

Alternatively, write your own first draft, based on the answers to the questions or any other brainstorming that you do, and the structure ChatGPT suggests. After that, ask ChatGPT for feedback on the statement, and ask it for help on how to improve it. This really is no different from asking a friend or adviser.

In academia, students and professors are preparing for the ways that ChatGPT will shape education, and especially how it will impact a fundamental element of any course: the academic essay. Students can use ChatGPT to generate full essays based on a few simple prompts. But can AI actually produce high quality work, or is the technology just not ...

This intel will help you tailor your statement and demonstrate a genuine interest. So, let's dive into the essential tips that will help you create a compelling personal statement. 1. Be Authentic. Your personal statement is your chance to showcase who you are beyond test scores and grades. Be genuine and let your true voice shine through.

For the article, there are two ways to have ChatGPT summarize it. The first requires you to type in the words 'TLDR:' and then paste the article's URL next to it. The second method is a bit ...

How to review your statement of purpose, personal statements, and other written docs with AI tools such as ChatGPT. A sample prompt in ChatGPT for requesting...

Examples: Using ChatGPT to generate an essay outline. Provide a very short outline for a college admission essay. The essay will be about my experience working at an animal shelter. The essay will be 500 words long. Introduction. Hook: Share a brief and engaging anecdote about your experience at the animal shelter.

Feb 25, 2023, 3:00 AM PST. Experts gave their views on the college admissions essays that were written by ChatGPT. I asked OpenAI's ChatGPT to write ...

Feed the bot some details on the size and scope of your role, and provide a prompt like this: "Using the data and numbers provided, create three to five concise bullet points for my roles at ...

Effectiveness and uses: In a blinded test, ChatGPT was judged to have passed graduate-level exams at the University of Minnesota at the level of a C+ student and at Wharton with a B to B− grade. The performance of ChatGPT for computer programming of numerical methods was assessed by a Stanford University student and faculty in March 2023 through a variety of computational mathematics examples.

[Figure: Topic-specific effects of ChatGPT on Stack Overflow (black points with 95% confidence intervals), with number of GitHub repositories (purple) and subreddit subscribers (red) overlaid.]

Here are the top prompts for ChatGPT to help you write your resume. Ask ChatGPT to create a custom resume for a specific job: the more specific a resume is, the better.
