Research: Overview & Approaches

Introduction to Empirical Research

Databases for finding empirical research, guided search, Google Scholar, examples of empirical research, sources and further reading.

- Introductory Video: This video covers what empirical research is, what kinds of questions and methods empirical researchers use, and some tips for finding empirical research articles in your discipline.

- Guided Search: Finding Empirical Research Articles. This is a hands-on tutorial that will allow you to use your own search terms to find resources.

- Study on radiation transfer in human skin for cosmetics

- Long-Term Mobile Phone Use and the Risk of Vestibular Schwannoma: A Danish Nationwide Cohort Study

- Emissions Impacts and Benefits of Plug-In Hybrid Electric Vehicles and Vehicle-to-Grid Services

- Review of design considerations and technological challenges for successful development and deployment of plug-in hybrid electric vehicles

- Endocrine disrupters and human health: could oestrogenic chemicals in body care cosmetics adversely affect breast cancer incidence in women?

- Last Updated: May 23, 2024 11:51 AM

- URL: https://guides.lib.purdue.edu/research_approaches

Module 2 Chapter 3: What is Empirical Literature & Where can it be Found?

In Module 1, you read about the problem of pseudoscience. Here, we revisit the issue in addressing how to locate and assess scientific or empirical literature. In this chapter you will read about:

- distinguishing between what IS and IS NOT empirical literature

- how and where to locate empirical literature for understanding diverse populations, social work problems, and social phenomena.

Probably the most important take-home lesson from this chapter is that one source is not sufficient for being well informed on a topic. It is important to locate multiple sources of information and to critically appraise the points of convergence and divergence in the information acquired from different sources. This is especially true in emerging and poorly understood topics, as well as in answering complex questions.

What Is Empirical Literature

Social workers often need to locate valid, reliable information concerning the dimensions of a population group or subgroup, a social work problem, or social phenomenon. They might also seek information about the way specific problems or resources are distributed among the populations encountered in professional practice. Or, social workers might be interested in finding out about the way that certain people experience an event or phenomenon. Empirical literature resources may provide answers to many of these types of social work questions. In addition, resources containing data regarding social indicators may also prove helpful. Social indicators are the “facts and figures” statistics that describe the social, economic, and psychological factors that have an impact on the well-being of a community or other population group. The United Nations (UN) and the World Health Organization (WHO) are examples of organizations that monitor social indicators at a global level: dimensions of population trends (size, composition, growth/loss), health status (physical, mental, behavioral, life expectancy, maternal and infant mortality, fertility/child-bearing, and diseases like HIV/AIDS), housing and quality of sanitation (water supply, waste disposal), education and literacy, and work/income/unemployment/economics, for example.

Three characteristics stand out in empirical literature compared to other types of information available on a topic of interest: systematic observation and methodology, objectivity, and transparency/replicability/reproducibility. Let’s look a little more closely at these three features.

Systematic Observation and Methodology. The hallmark of empiricism is “repeated or reinforced observation of the facts or phenomena” (Holosko, 2006, p. 6). In empirical literature, established research methodologies and procedures are systematically applied to answer the questions of interest.

Objectivity. Gathering “facts,” whatever they may be, drives the search for empirical evidence (Holosko, 2006). Authors of empirical literature are expected to report the facts as observed, whether or not these facts support the investigators’ original hypotheses. Research integrity demands that the information be provided in an objective manner, reducing sources of investigator bias to the greatest possible extent.

Transparency and Replicability/Reproducibility. Empirical literature is reported in such a manner that other investigators understand precisely what was done and what was found in a particular research study—to the extent that they could replicate the study to determine whether the findings are reproduced when repeated. The outcomes of an original and replication study may differ, but a reader could easily interpret the methods and procedures leading to each study’s findings.

What is NOT Empirical Literature

By now, it is probably obvious to you that literature based on “evidence” that is not developed in a systematic, objective, transparent manner is not empirical literature. On one hand, non-empirical types of professional literature may have great significance to social workers. For example, social work scholars may produce articles that are clearly identified as describing a new intervention or program without evaluative evidence, critiquing a policy or practice, or offering a tentative, untested theory about a phenomenon. These resources are useful in educating ourselves about possible issues or concerns. But, even if they are informed by evidence, they are not empirical literature. Here is a list of several sources of information that do not meet the standard of being called empirical literature:

- your course instructor’s lectures

- political statements

- advertisements

- newspapers & magazines (journalism)

- television news reports & analyses (journalism)

- many websites, Facebook postings, Twitter tweets, and blog postings

- the introductory literature review in an empirical article

You may be surprised to see the last two included in this list. Like the other sources of information listed, these sources also might lead you to look for evidence. But, they are not themselves sources of evidence. They may summarize existing evidence, but in the process of summarizing (like your instructor’s lectures), information is transformed, modified, reduced, condensed, and otherwise manipulated in such a manner that you may not see the entire, objective story. These are called secondary sources, as opposed to the original, primary source of evidence. In relying solely on secondary sources, you sacrifice your own critical appraisal and thinking about the original work—you are “buying” someone else’s interpretation and opinion about the original work, rather than developing your own interpretation and opinion. What if they got it wrong? How would you know if you did not examine the primary source for yourself? Consider the following as an example of “getting it wrong” being perpetuated.

Example: Bullying and School Shootings. One result of the heavily publicized April 1999 school shooting incident at Columbine High School (Colorado) was a heavy emphasis placed on bullying as a causal factor in these incidents (Mears, Moon, & Thielo, 2017), “creating a powerful master narrative about school shootings” (Raitanen, Sandberg, & Oksanen, 2017, p. 3). Naturally, with an identified cause, a great deal of effort was devoted to anti-bullying campaigns and interventions for enhancing resilience among youth who experience bullying. However important these strategies might be for promoting positive mental health, preventing poor mental health, and possibly preventing suicide among school-aged children and youth, it is a mistaken belief that they can prevent school shootings (Mears, Moon, & Thielo, 2017). Many times the accounts of the perpetrators having been bullied come from potentially inaccurate third-party accounts, rather than the perpetrators themselves; bullying was not involved in all instances of school shooting; a perpetrator’s perception of being bullied/persecuted is not necessarily accurate; many who experience severe bullying do not perpetrate these incidents; bullies are the least targeted shooting victims; perpetrators of the shooting incidents were often bullying others; and bullying is only one of many important factors associated with perpetrating such an incident (Ioannou, Hammond, & Simpson, 2015; Mears, Moon, & Thielo, 2017; Newman & Fox, 2009; Raitanen, Sandberg, & Oksanen, 2017). While mass media reports deliver bullying as a means of explaining the inexplicable, the reality is not so simple: “The connection between bullying and school shootings is elusive” (Langman, 2014), and “the relationship between bullying and school shooting is, at best, tenuous” (Mears, Moon, & Thielo, 2017, p. 940).
The point is, when a narrative becomes this publicly accepted, it is difficult to sort out truth and reality without going back to original sources of information and evidence.

What May or May Not Be Empirical Literature: Literature Reviews

Investigators typically engage in a review of existing literature as they develop their own research studies. The review informs them about where knowledge gaps exist, methods previously employed by other scholars, limitations of prior work, and previous scholars’ recommendations for directing future research. These reviews may appear as a published article, without new study data being reported (see Fields, Anderson, & Dabelko-Schoeny, 2014 for example). Or, the literature review may appear in the introduction to their own empirical study report. These literature reviews are not considered to be empirical evidence sources themselves, although they may be based on empirical evidence sources. One reason is that the authors of a literature review may or may not have engaged in a systematic search process, identifying a full, rich, multi-sided pool of evidence reports.

There is, however, a type of review that applies systematic methods and is, therefore, considered to be more strongly rooted in evidence: the systematic review.

Systematic review of literature. A systematic review is a type of literature report where established methods have been systematically and objectively applied in locating and synthesizing a body of literature. The systematic review report is characterized by a great deal of transparency about the methods used and the decisions made in the review process, and it is replicable. Thus, it meets the criteria for empirical literature: systematic observation and methodology, objectivity, and transparency/reproducibility. We will work a great deal more with systematic reviews in the second course, SWK 3402, since they are important tools for understanding interventions. They are somewhat less common, but not unheard of, in helping us understand diverse populations, social work problems, and social phenomena.

Locating Empirical Evidence

Social workers have available a wide array of tools and resources for locating empirical evidence in the literature. These can be organized into four general categories.

Journal Articles. A number of professional journals publish articles where investigators report on the results of their empirical studies. However, it is important to know how to distinguish between empirical and non-empirical manuscripts in these journals. A key indicator, though not the only one, involves a peer review process. Many professional journals require that manuscripts undergo a process of peer review before they are accepted for publication. This means that the authors’ work is shared with scholars who provide feedback to the journal editor as to the quality of the submitted manuscript. The editor then makes a decision based on the reviewers’ feedback:

- Accept as is

- Accept with minor revisions

- Request that a revision be resubmitted (no assurance of acceptance)

When a “revise and resubmit” decision is made, the piece will go back through the review process to determine whether it is now acceptable for publication and whether all of the reviewers’ concerns have been adequately addressed. Editors may also reject a manuscript without sending it for review because it is a poor fit for the journal, based on its mission and audience.

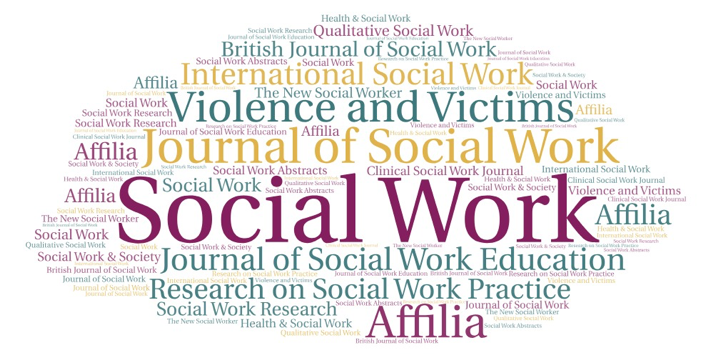

Indicators of journal relevance. Various journals are not equally relevant to every type of question being asked of the literature. Journals may overlap to a great extent in terms of the topics they might cover; in other words, a topic might appear in multiple different journals, depending on how the topic was being addressed. For example, articles that might help answer a question about the relationship between community poverty and violence exposure might appear in several different journals, some with a focus on poverty, others with a focus on violence, and still others on community development or public health. Journal titles are sometimes a good starting point but may not give a broad enough picture of what they cover in their contents.

In focusing a literature search, it also helps to review a journal’s mission and target audience. For example, at least four different journals focus specifically on poverty:

- Journal of Children & Poverty

- Journal of Poverty

- Journal of Poverty and Social Justice

- Poverty & Public Policy

Let’s look at an example using the Journal of Poverty and Social Justice. Information about this journal is located on the journal’s webpage: http://policy.bristoluniversitypress.co.uk/journals/journal-of-poverty-and-social-justice . In the section headed “About the Journal” you can see that it is an internationally focused research journal, and that it addresses social justice issues in addition to poverty alone. The research articles are peer-reviewed (there appear to be non-empirical discussions published, as well). These descriptions about a journal are almost always available, sometimes listed as “scope” or “mission.” These descriptions also indicate the sponsorship of the journal. Sponsorship may be institutional (a particular university or agency, such as Smith College Studies in Social Work), a professional organization, such as the Council on Social Work Education (CSWE) or the National Association of Social Workers (NASW), or a publishing company (e.g., Taylor & Francis, Wiley, or Sage).

Indicators of journal caliber. Despite engaging in a peer review process, not all journals are equally rigorous. Some journals have very high rejection rates, meaning that many submitted manuscripts are rejected; others have fairly high acceptance rates, meaning that relatively few manuscripts are rejected. This is not necessarily the best indicator of quality, however, since newer journals may not be sufficiently familiar to authors with high quality manuscripts and some journals are very specific in terms of what they publish. Another index that is sometimes used is the journal’s impact factor. The impact factor is a number indicating how often articles published in the journal are cited in the reference lists of other journal articles. It is calculated as the total number of times the articles published in a particular period were cited, divided by the number of articles published in that period (the number that could be cited); in other words, it is the average number of citations per article. For example, the impact factor for the Journal of Poverty and Social Justice in our list above was 0.70 in 2017, and for the Journal of Poverty it was 0.30. These are relatively low figures compared to a journal like the New England Journal of Medicine, with an impact factor of 59.56! This means that articles published in that journal were, on average, cited more than 59 times in the next year or two.
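The averaging behind an impact factor can be sketched in a few lines of Python. This is only a minimal illustration of the arithmetic described above, not the official formula of any indexing service. The function name `impact_factor` and the citation and article counts are hypothetical, chosen so that the results match the figures quoted in the text.

```python
def impact_factor(citations: int, citable_articles: int) -> float:
    """Average citations per article: total citations / articles published."""
    if citable_articles <= 0:
        raise ValueError("citable_articles must be positive")
    return citations / citable_articles


# Hypothetical counts, for illustration only (not real journal data).
low = impact_factor(citations=35, citable_articles=50)
high = impact_factor(citations=5956, citable_articles=100)

print(f"{low:.2f}")   # 0.70
print(f"{high:.2f}")  # 59.56
```

The same small ratio can come from very different journals (35 citations across 50 articles, or 350 across 500), which is one reason the number alone says little about a journal's readership or practical influence.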

Impact factors are not necessarily the best indicator of caliber, however, since many strong journals are geared toward practitioners rather than scholars, so they are less likely to be cited by other scholars but may have a large impact on a large readership. This may be the case for a journal like the one titled Social Work, the official journal of the National Association of Social Workers. It is distributed free to all members: over 120,000 practitioners, educators, and students of social work world-wide. The journal has a recent impact factor of 0.790. The journals with social work relevant content have impact factors in the range of 1.0 to 3.0 according to Scimago Journal & Country Rank (SJR), particularly when they are interdisciplinary journals (for example, Child Development, Journal of Marriage and Family, Child Abuse and Neglect, Child Maltreatment, Social Service Review, and British Journal of Social Work). Once upon a time, a reader could locate different indexes comparing the “quality” of social work-related journals. However, the concept of “quality” is difficult to systematically define. These indexes have mostly been replaced by impact ratings, which are not necessarily the best, most robust indicators on which to rely in assessing journal quality. For example, new journals addressing cutting edge topics have not been around long enough to have been evaluated using this particular tool, and it takes a few years for articles to begin to be cited in other, later publications.

Beware of pseudo-, illegitimate, misleading, deceptive, and suspicious journals. Another side effect of living in the Age of Information is that almost anyone can circulate almost anything and call it whatever they wish. This goes for “journal” publications, as well. With the advent of open-access publishing in recent years (electronic resources available without subscription), we have seen an explosion of what are called predatory or junk journals. These are publications calling themselves journals, often with titles very similar to legitimate publications and often with fake editorial boards. These “publications” lack the integrity of legitimate journals. This caution is reminiscent of the discussions earlier in the course about pseudoscience and “snake oil” sales. The predatory nature of many apparent information dissemination outlets has to do with how scientists and scholars may be fooled into submitting their work, often paying to have their work peer-reviewed and published. There exists a “thriving black-market economy of publishing scams,” and at least two “journal blacklists” exist to help identify and avoid these scam journals (Anderson, 2017).

This issue is important to information consumers, because it creates a challenge in terms of identifying legitimate sources and publications. The challenge is particularly important to address when information from on-line, open-access journals is being considered. Open-access is not necessarily a poor choice: legitimate scientists may pay sizeable fees to legitimate publishers to make their work freely available and accessible as open-access resources. On-line access is also not necessarily a poor choice: legitimate publishers often make articles available on-line to provide timely access to the content, especially when publishing the article in hard copy will be delayed by months or even a year or more. On the other hand, stating that a journal engages in a peer-review process is no guarantee of quality; this claim may or may not be truthful. Pseudo- and junk journals may engage in some quality control practices but neglect important ones, such as managing conflict of interest or reviewing content for objectivity and quality of the research conducted, or they may otherwise fail to adhere to industry standards (Laine & Winker, 2017).

One resource designed to assist with the process of deciphering legitimacy is the Directory of Open Access Journals (DOAJ). The DOAJ is not a comprehensive listing of all possible legitimate open-access journals, and does not guarantee quality, but it does help identify legitimate sources of information that are openly accessible and meet basic legitimacy criteria. It also covers only open-access journals, not the many journals published in hard copy.

An additional caution: Search for article corrections. Despite all of the careful manuscript review and editing, sometimes an error appears in a published article. Most journals have a practice of publishing corrections in future issues. When you locate an article, it is helpful to also search for updates. Here is an example where data presented in an article’s original tables were erroneous, and a correction appeared in a later issue.

- Marchant, A., Hawton, K., Stewart, A., Montgomery, P., Singaravelu, V., Lloyd, K., Purdy, N., Daine, K., & John, A. (2017). A systematic review of the relationship between internet use, self-harm and suicidal behaviour in young people: The good, the bad and the unknown. PLoS One, 12(8): e0181722. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5558917/

- Marchant, A., Hawton, K., Stewart, A., Montgomery, P., Singaravelu, V., Lloyd, K., Purdy, N., Daine, K., & John, A. (2018). Correction: A systematic review of the relationship between internet use, self-harm and suicidal behaviour in young people: The good, the bad and the unknown. PLoS One, 13(3): e0193937. http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0193937

Search Tools. In this age of information, it is all too easy to find items; the problem lies in sifting, sorting, and managing the vast numbers of items that can be found. For example, a simple Google® search for the topic “community poverty and violence” returned about 15,600,000 results! As a means of simplifying the process of searching for journal articles on a specific topic, a variety of helpful tools have emerged. One type of search tool has previously applied a filtering process for you: abstracting and indexing databases. These resources provide the user with the results of a search in which records have already passed through one or more filters. For example, PsycINFO is managed by the American Psychological Association and is devoted to peer-reviewed literature in behavioral science. It contains almost 4.5 million records and is growing every month. However, it may not be available to users who are not affiliated with a university library. Conducting a basic search for our topic of “community poverty and violence” in PsycINFO returned 1,119 articles. That is still a large number, but far more manageable. Additional filters can be applied, such as limiting the range in publication dates, selecting only peer-reviewed items, limiting the language of the published piece (English only, for example), and specifying types of documents (either chapters, dissertations, or journal articles only, for example). Adding the filters for English, peer-reviewed journal articles published between 2010 and 2017 resulted in 346 documents being identified.
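The way stacked filters shrink a result list can be illustrated with a small Python sketch. It does not use any real database interface: the `Record` fields, the `apply_filters` function, and the five records are all hypothetical stand-ins for what a tool like PsycINFO does behind its search form.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One hypothetical database record; the field names are illustrative."""
    title: str
    year: int
    language: str
    peer_reviewed: bool
    doc_type: str


def apply_filters(records, language, doc_type, year_from, year_to):
    """Return only peer-reviewed records that pass every other filter too."""
    return [
        r for r in records
        if r.peer_reviewed
        and r.language == language
        and r.doc_type == doc_type
        and year_from <= r.year <= year_to
    ]


# Made-up records, for illustration only.
records = [
    Record("Study A", 2012, "English", True, "journal article"),
    Record("Study B", 2008, "English", True, "journal article"),   # too old
    Record("Study C", 2015, "Spanish", True, "journal article"),   # wrong language
    Record("Study D", 2016, "English", False, "dissertation"),     # not peer reviewed
    Record("Study E", 2017, "English", True, "journal article"),
]

kept = apply_filters(records, "English", "journal article", 2010, 2017)
print([r.title for r in kept])  # ['Study A', 'Study E']
```

Each filter can only remove records, never add them, so a narrower list is more manageable but may also exclude relevant work (for example, a study published in another language).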

Just as was the case with journals, not all abstracting and indexing databases are equivalent. There may be overlap between them, but none is guaranteed to identify all relevant pieces of literature. Here are some examples to consider, depending on the nature of the questions asked of the literature:

- Academic Search Complete—multidisciplinary index of 9,300 peer-reviewed journals

- AgeLine—multidisciplinary index of aging-related content for over 600 journals

- Campbell Collaboration—systematic reviews in education, crime and justice, social welfare, international development

- Google Scholar—broad search tool for scholarly literature across many disciplines

- MEDLINE/PubMed—National Library of Medicine, access to over 15 million citations

- Oxford Bibliographies—annotated bibliographies, each is discipline specific (e.g., psychology, childhood studies, criminology, social work, sociology)

- PsycINFO/PsycLIT—international literature on material relevant to psychology and related disciplines

- SocINDEX—publications in sociology

- Social Sciences Abstracts—multiple disciplines

- Social Work Abstracts—many areas of social work are covered

- Web of Science—a “meta” search tool that searches other search tools, multiple disciplines

Placing our search for information about “community violence and poverty” into the Social Work Abstracts tool with no additional filters resulted in a manageable 54-item list. Finally, abstracting and indexing databases are another way to determine journal legitimacy: if a journal is indexed in one of these systems, it is likely a legitimate journal. However, the converse is not necessarily true: the fact that a journal is not indexed does not mean it is an illegitimate or pseudo-journal.

Government Sources. A great deal of information is gathered, analyzed, and disseminated by various governmental branches at the international, national, state, regional, county, and city level. Searching websites that end in .gov is one way to identify this type of information, often presented in articles, news briefs, and statistical reports. These government sources gather information in two ways: they fund external investigations through grants and contracts and they conduct research internally, through their own investigators. Here are some examples to consider, depending on the nature of the topic for which information is sought:

- Agency for Healthcare Research and Quality (AHRQ) at https://www.ahrq.gov/

- Bureau of Justice Statistics (BJS) at https://www.bjs.gov/

- Census Bureau at https://www.census.gov

- Morbidity and Mortality Weekly Report of the CDC (MMWR-CDC) at https://www.cdc.gov/mmwr/index.html

- Child Welfare Information Gateway at https://www.childwelfare.gov

- Children’s Bureau/Administration for Children & Families at https://www.acf.hhs.gov

- Forum on Child and Family Statistics at https://www.childstats.gov

- National Institutes of Health (NIH) at https://www.nih.gov, including (not limited to):

  - National Institute on Aging (NIA) at https://www.nia.nih.gov

  - National Institute on Alcohol Abuse and Alcoholism (NIAAA) at https://www.niaaa.nih.gov

  - National Institute of Child Health and Human Development (NICHD) at https://www.nichd.nih.gov

  - National Institute on Drug Abuse (NIDA) at https://www.nida.nih.gov

  - National Institute of Environmental Health Sciences at https://www.niehs.nih.gov

  - National Institute of Mental Health (NIMH) at https://www.nimh.nih.gov

  - National Institute on Minority Health and Health Disparities at https://www.nimhd.nih.gov

- National Institute of Justice (NIJ) at https://www.nij.gov

- Substance Abuse and Mental Health Services Administration (SAMHSA) at https://www.samhsa.gov/

- United States Agency for International Development at https://usaid.gov

Each state and many counties or cities have similar data sources and analysis reports available, such as Ohio Department of Health at https://www.odh.ohio.gov/healthstats/dataandstats.aspx and Franklin County at https://statisticalatlas.com/county/Ohio/Franklin-County/Overview . Data are available from international/global resources (e.g., United Nations and World Health Organization), as well.
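The tip about looking for addresses that end in .gov can be checked mechanically. The short Python sketch below uses the standard library's `urllib.parse.urlparse` to pull the host name out of a URL; the helper name `is_gov_site` is hypothetical, and the test only inspects the address, so it says nothing about the quality of what a site publishes.

```python
from urllib.parse import urlparse


def is_gov_site(url: str) -> bool:
    """True when the URL's host name ends in .gov (a U.S. government domain)."""
    host = urlparse(url).hostname or ""
    return host.lower().endswith(".gov")


# URLs taken from the lists in this section.
for url in [
    "https://www.census.gov",
    "https://usaid.gov",
    "https://www.odh.ohio.gov/healthstats/dataandstats.aspx",
    "https://statisticalatlas.com/county/Ohio/Franklin-County/Overview",
]:
    print(url, is_gov_site(url))
```

Note that the Franklin County link above is hosted on a .com site, so a .gov check alone would miss it; the domain is a convenient first clue rather than a complete test of where data originate.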

Other Sources. The Health and Medicine Division (HMD) of the National Academies—previously the Institute of Medicine (IOM)—is a nonprofit institution that aims to provide government and private sector policy and other decision makers with objective analysis and advice for making informed health decisions. For example, in 2018 they produced reports on topics in substance use and mental health concerning the intersection of opioid use disorder and infectious disease, the legal implications of emerging neurotechnologies, and a global agenda concerning the identification and prevention of violence (see http://www.nationalacademies.org/hmd/Global/Topics/Substance-Abuse-Mental-Health.aspx ). The exciting aspect of this resource is that it addresses many topics that are current concerns because they are hoping to help inform emerging policy. The caution to consider with this resource is the evidence is often still emerging, as well.

Numerous “think tank” organizations exist, each with a specific mission. For example, the RAND Corporation is a nonprofit organization that has offered research and analysis to address global issues since 1948. The institution’s mission is to help improve policy and decision making “to help individuals, families, and communities throughout the world be safer and more secure, healthier and more prosperous,” addressing issues of energy, education, health care, justice, the environment, international affairs, and national security (https://www.rand.org/about/history.html). As another example, the Robert Wood Johnson Foundation is a philanthropic organization supporting research and research dissemination concerning health issues facing the United States. The foundation works to build a culture of health across systems of care (not only medical care) and communities (https://www.rwjf.org).

While many of these have a great deal of helpful evidence to share, they also may have a strong political bias. Objectivity is often lacking in what information these organizations provide: they provide evidence to support certain points of view. That is their purpose—to provide ideas on specific problems, many of which have a political component. Think tanks “are constantly researching solutions to a variety of the world’s problems, and arguing, advocating, and lobbying for policy changes at local, state, and federal levels” (quoted from https://thebestschools.org/features/most-influential-think-tanks/ ). Helpful information about what this one source identified as the 50 most influential U.S. think tanks includes identifying each think tank’s political orientation. For example, The Heritage Foundation is identified as conservative, whereas Human Rights Watch is identified as liberal.

While not the same as think tanks, many mission-driven organizations also sponsor or report on research. For example, the National Association for Children of Alcoholics (NACOA) in the United States is a registered nonprofit organization. Its mission, along with other partnering organizations, private-sector groups, and federal agencies, is to promote policy and program development in research, prevention and treatment to provide information to, for, and about children of alcoholics (of all ages). Based on this mission, the organization supports knowledge development and information gathering on the topic and disseminates information that serves the needs of this population. While this is a worthwhile mission, there is no guarantee that the information meets the criteria for evidence with which we have been working. Evidence reported by think tank and mission-driven sources must be utilized with a great deal of caution and critical analysis!

In many instances an empirical report has not appeared in the published literature, but in the form of a technical or final report to the agency or program providing the funding for the research that was conducted. One such example is presented by a team of investigators funded by the National Institute of Justice to evaluate a program for training professionals to collect strong forensic evidence in instances of sexual assault (Patterson, Resko, Pierce-Weeks, & Campbell, 2014): https://www.ncjrs.gov/pdffiles1/nij/grants/247081.pdf . Investigators may serve in the capacity of consultant to agencies, programs, or institutions, and provide empirical evidence to inform activities and planning. One such example is presented by Maguire-Jack (2014) as a report to a state’s child maltreatment prevention board: https://preventionboard.wi.gov/Documents/InvestmentInPreventionPrograming_Final.pdf .

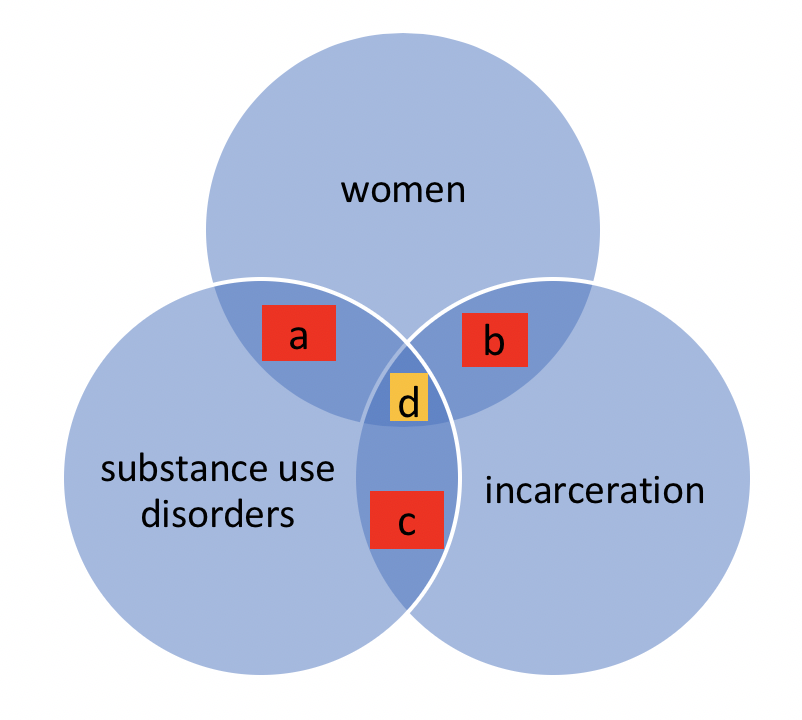

When Direct Answers to Questions Cannot Be Found. Sometimes social workers are interested in finding answers to complex questions or questions related to an emerging, not-yet-understood topic. This does not mean giving up on empirical literature. Instead, it requires a bit of creativity in approaching the literature. A Venn diagram might help explain this process. Consider a scenario where a social worker wishes to locate literature to answer a question concerning issues of intersectionality. Intersectionality is a social justice term applied to situations where multiple categorizations or classifications come together to create overlapping, interconnected, or multiplied disadvantage. For example, women with a substance use disorder and who have been incarcerated face a triple threat in terms of successful treatment for a substance use disorder: intersectionality exists between being a woman, having a substance use disorder, and having been in jail or prison. After searching the literature, little or no empirical evidence might have been located on this specific triple-threat topic. Instead, the social worker will need to seek literature on each of the threats individually, and possibly will find literature on pairs of topics (see Figure 3-1). There exists some literature about women’s outcomes for treatment of a substance use disorder (a), some literature about women during and following incarceration (b), and some literature about substance use disorders and incarceration (c). Despite not having a direct line on the center of the intersecting spheres of literature (d), the social worker can develop at least a partial picture based on the overlapping literatures.

Figure 3-1. Venn diagram of intersecting literature sets.
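The overlapping-literature idea can be sketched with ordinary set operations. This is only an illustration: the article IDs and the three search results below are hypothetical, not drawn from any real database.

```python
# Hypothetical article IDs returned by three separate literature searches.
# The IDs and groupings are invented purely for illustration.
women_and_sud = {"A1", "A2", "A3", "A4"}        # (a) women + substance use disorder treatment
women_and_incarceration = {"A3", "A5", "A6"}    # (b) women + incarceration
sud_and_incarceration = {"A4", "A6", "A7"}      # (c) substance use disorder + incarceration

# (d) Articles addressing all three topics at once; this may well be empty.
center = women_and_sud & women_and_incarceration & sud_and_incarceration

# Fallback: every article that covers at least one pair of topics.
partial_picture = women_and_sud | women_and_incarceration | sud_and_incarceration

print("Direct hits on all three topics:", sorted(center))
print("Articles covering at least one pair:", sorted(partial_picture))
```

When the center set is empty, the union of the pairwise sets is the body of literature from which a partial picture can be assembled.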


Social Work 3401 Coursebook Copyright © by Dr. Audrey Begun is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License , except where otherwise noted.


Empirical Research: Definition, Methods, Types and Examples

Empirical research: Definition

Empirical research is defined as any research in which the conclusions of the study are drawn strictly from concrete empirical evidence, that is, evidence that is verifiable.

This empirical evidence can be gathered using quantitative market research and qualitative market research methods.

For example: A study is conducted to find out whether listening to happy music while working promotes creativity. An experiment is run using a music website survey with one group of participants who are exposed to happy music and another group who listen to no music at all, and the participants are then observed. The results of such a study provide empirical evidence of whether happy music promotes creativity or not.


You must have heard the quote “I will not believe it unless I see it”. This idea came from the ancient empiricists, a fundamental understanding that powered the emergence of science during the Renaissance period and laid the foundation of modern science as we know it today. The word itself has its roots in Greek. It is derived from the Greek word empeirikos, which means “experienced”.

In today’s world, the word empirical refers to the collection of data using evidence that is gathered through observation or experience, or by using calibrated scientific instruments. All of the above origins have one thing in common: dependence on observation and experiments to collect data and test them to reach conclusions.


Types and methodologies of empirical research

Empirical research can be conducted and analysed using qualitative or quantitative methods.

- Quantitative research: Quantitative research methods are used to gather information through numerical data. They are used to quantify opinions, behaviors, or other defined variables. These variables are predetermined and the methods follow a more structured format. Some of the commonly used methods are surveys, longitudinal studies, polls, etc.

- Qualitative research: Qualitative research methods are used to gather non-numerical data. They are used to find meanings, opinions, or the underlying reasons from the subjects. These methods are unstructured or semi-structured. The sample size for such research is usually small, and the method is conversational in order to provide more insight or in-depth information about the problem. Some of the most popular methods are focus groups, interviews, and observation.

Data collected from these methods will need to be analysed. Empirical evidence can be analysed either quantitatively or qualitatively. Using this analysis, the researcher can answer empirical questions, which have to be clearly defined and answerable with the findings obtained. The type of research design used will vary depending on the field in which it is going to be used. Many researchers choose to combine quantitative and qualitative methods to better answer questions that cannot be studied in a laboratory setting.


Quantitative research methods aid in analyzing the empirical evidence gathered. By using these methods, a researcher can find out whether a hypothesis is supported or not.

- Survey research: Survey research generally involves a large audience in order to collect a large amount of data. It is a quantitative method with a predetermined set of closed questions that are easy to answer. Because of the simplicity of the method, high response rates are achieved. It is one of the most commonly used methods for all kinds of research in today’s world.

Previously, surveys were conducted only face to face, perhaps with a recorder. However, with advances in technology and for ease of use, new mediums such as email and social media have emerged.

For example: Depletion of energy resources is a growing concern, and hence there is a need for awareness about renewable energy. According to recent studies, fossil fuels still account for around 80% of energy consumption in the United States. Even though the use of green energy rises every year, certain factors still keep the general population from opting for green energy. In order to understand why, a survey can be conducted to gather the opinions of the general population about green energy and the factors that influence their choice of switching to renewable energy. Such a survey can help institutions or governing bodies promote appropriate awareness and incentive schemes to push the use of greener energy.


- Experimental research: In experimental research, an experiment is set up and a hypothesis is tested by creating a situation in which one of the variables is manipulated. This method is also used to check cause and effect: the researcher observes what happens to the dependent variable when the independent variable is removed or altered. The process usually consists of proposing a hypothesis, experimenting on it, analyzing the findings, and reporting the findings to understand whether they support the theory or not.

For example: A product company is trying to find out why it is unable to capture the market. The organisation makes changes in each of its processes, such as manufacturing, marketing, sales, and operations. Through the experiment, it learns that sales training directly impacts the market coverage of its product: if the salesperson is trained well, the product will have better coverage.

- Correlational research: Correlational research is used to find the relationship between two sets of variables. Regression analysis is generally used to predict outcomes with such a method. The correlation can be positive, negative, or zero (no relationship).


For example: More highly educated individuals tend to get higher-paying jobs. This means that higher education is associated with higher-paying jobs and less education with lower-paying jobs. A correlation alone does not establish that one causes the other.
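As a rough illustration of how the strength and direction of such a relationship might be quantified, the sketch below computes a Pearson correlation coefficient by hand. The education and income figures are invented for the example, and Pearson's r is only one of several possible measures.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical data: years of education and annual income (in thousands).
years_of_education = [10, 12, 12, 14, 16, 16, 18, 20]
annual_income_k = [28, 35, 33, 42, 55, 50, 68, 75]

r = pearson_r(years_of_education, annual_income_k)
print(f"Pearson r = {r:.2f}")  # positive r: higher values tend to go together
```

A value near +1 indicates a strong positive relationship, a value near -1 a strong negative one, and a value near 0 little or no linear relationship.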

- Longitudinal study: A longitudinal study is used to understand the traits or behavior of a subject by repeatedly testing the subject over a period of time. Data collected with this method can be qualitative or quantitative in nature.

For example: A study is conducted to find out the benefits of exercise. The participants are asked to exercise every day for a particular period of time, and the results show higher endurance, stamina, and muscle growth. This supports the hypothesis that exercise benefits the body.

- Cross sectional: A cross-sectional study is an observational method in which a group of people is observed at a single point in time. The people are chosen so that they are similar in all variables except the one being researched. This type of study does not enable the researcher to establish a cause-and-effect relationship, as the group is not observed over a continuous time period. It is mainly used in the healthcare sector and the retail industry.

For example: A medical study is conducted to find the prevalence of under-nutrition disorders in children of a given population. This involves looking at a wide range of parameters such as age, ethnicity, location, income, and social background. If a significant number of children from poor families show under-nutrition disorders, the researcher can investigate further. Usually a cross-sectional study is followed by a longitudinal study to find out the exact reason.

- Causal-comparative research: This method is based on comparison. It is mainly used to find a cause-and-effect relationship between two or more variables.

For example: A researcher measures the productivity of employees in a company that gives its employees breaks during work and compares it with the productivity of employees in a company that gives no breaks at all.
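A comparison of this kind could start with something as simple as the difference between the two group averages. The sketch below assumes hypothetical productivity scores (units completed per day) for six employees at each company; a real study would also need a significance test and controls for other differences between the companies.

```python
from statistics import mean

# Hypothetical daily productivity scores, invented for illustration.
with_breaks = [52, 55, 49, 58, 61, 54]
without_breaks = [47, 50, 45, 52, 48, 46]

# Difference between the group means: positive values favor the
# company that gives breaks.
difference = mean(with_breaks) - mean(without_breaks)

print(f"Mean with breaks:    {mean(with_breaks):.1f}")
print(f"Mean without breaks: {mean(without_breaks):.1f}")
print(f"Difference:          {difference:.1f}")
```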


Some research questions need to be analysed qualitatively, as quantitative methods are not applicable. In many cases, in-depth information is needed or a researcher needs to observe the behavior of a target audience, so the results are needed in descriptive form. Qualitative research results are descriptive rather than predictive. They enable the researcher to build or support theories for potential future quantitative research. In such situations, qualitative research methods are used to derive a conclusion that supports the theory or hypothesis being studied.


- Case study: The case study method is used to find more information by carefully analyzing existing cases. It is very often used in business research or to gather empirical evidence for investigative purposes. It is a method for investigating a problem within its real-life context through existing cases. The researcher has to analyse carefully, making sure that the parameters and variables in the existing case are the same as in the case being investigated. Using the findings from the case study, conclusions can be drawn about the topic being studied.

For example: A report describes the solution a company provided to its client, including the challenges faced during initiation and deployment, the findings of the case, and the solutions offered for the problems. Such case studies are used by most companies, as they form empirical evidence that the company can promote in order to get more business.

- Observational method: The observational method is a process of observing and gathering data from a target. Because it is usually a qualitative method, it is time consuming and very personal. Observational research can be considered a part of ethnographic research, which is also used to gather empirical evidence. In some cases it can be quantitative as well, depending on what is being studied.

For example: A study is set up to observe a particular animal in the rainforests of the Amazon. Such research usually takes a lot of time, as observation has to be done for a set amount of time to study the patterns or behavior of the subject. Another example widely used nowadays is observing people shopping in a mall to figure out the buying behavior of consumers.

- One-on-one interview: This method is purely qualitative and one of the most widely used, because it enables a researcher to get precise, meaningful data if the right questions are asked. It is a conversational method in which in-depth data can be gathered depending on where the conversation leads.

For example: A one-on-one interview with the finance minister to gather data on financial policies of the country and its implications on the public.

- Focus groups: Focus groups are used when a researcher wants to find answers to why, what and how questions. A small group is generally chosen for such a method and it is not necessary to interact with the group in person. A moderator is generally needed in case the group is being addressed in person. This is widely used by product companies to collect data about their brands and the product.

For example: A mobile phone manufacturer wants feedback on the dimensions of one of its models that is yet to be launched. Such studies help the company meet customer demand and position the model appropriately in the market.

- Text analysis: The text analysis method is relatively new compared to the other types. It is used to analyse social life by examining the images or words used by individuals. In today’s world, with social media playing a major part in everyone’s life, such a method enables the researcher to follow patterns that relate to the study.

For example: Many companies ask customers for detailed feedback on how satisfied they are with the customer support team. Such data enables the researcher to make appropriate decisions to improve the support team.

Sometimes a combination of methods is needed for questions that cannot be answered using only one type of method, especially when a researcher needs to gain a complete understanding of complex subject matter.


Since empirical research is based on observation and capturing experiences, it is important to plan the steps for conducting the experiment and for analysing it. This enables the researcher to resolve problems or obstacles that can occur during the experiment.

Step #1: Define the purpose of the research

This is the step where the researcher has to answer questions such as: What exactly do I want to find out? What is the problem statement? Are there any issues in terms of the availability of knowledge, data, time, or resources? Will this research be more beneficial than what it will cost?

Before going ahead, a researcher has to clearly define the purpose of the research and set up a plan to carry out further tasks.

Step #2: Supporting theories and relevant literature

The researcher needs to find out whether there are theories that can be linked to the research problem and whether any theory can help support the findings. All kinds of relevant literature will help the researcher find out whether others have researched this before and what problems they faced during their research. The researcher will also have to set up assumptions and find out whether there is any history regarding the research problem.

Step #3: Creation of Hypothesis and measurement

Before beginning the actual research, the researcher needs a working hypothesis, or a guess at what the probable result will be. The researcher has to set up variables, decide on the environment for the research, and find out how the variables relate to one another.

The researcher will also need to define the units of measurement and the tolerable degree of error, and find out whether the chosen measurement will be acceptable to others.

Step #4: Methodology, research design and data collection

In this step, the researcher has to define a strategy for conducting the research and set up experiments to collect data that will make it possible to test the hypothesis. The researcher will decide whether an experimental or non-experimental method is needed. The type of research design will vary depending on the field in which the research is being conducted. Last but not least, the researcher will have to identify the parameters that will affect the validity of the research design. Data collection will need to be done by choosing appropriate samples depending on the research question, using one of the many sampling techniques. Once data collection is complete, the researcher will have empirical data that needs to be analysed.


Step #5: Data Analysis and result

Data analysis can be done in two ways: qualitatively and quantitatively. The researcher will need to find out which qualitative or quantitative method is needed, or whether a combination of both is required. Depending on the results of the analysis, the researcher will know whether the hypothesis is supported or rejected. Analyzing this data is the most important part of evaluating the hypothesis.

Step #6: Conclusion

A report will need to be made with the findings of the research. The researcher can cite the theories and literature that support the research and can make suggestions or recommendations for further research on the topic.

A.D. de Groot, a famous Dutch psychologist and chess expert, conducted some of the most notable experiments using chess in the 1940s. During his study, he came up with a cycle that is consistent and now widely used to conduct empirical research. It consists of 5 phases, each as important as the next. The empirical cycle captures the process of coming up with hypotheses about how certain subjects work or behave and then testing these hypotheses against empirical data in a systematic and rigorous way. It can be said that it characterizes the deductive approach to science. The empirical cycle is as follows.

- Observation: In this phase an idea is sparked for proposing a hypothesis, and empirical data is gathered through observation. For example: a particular species of flower blooms in a different color only during a specific season.

- Induction: Inductive reasoning is then carried out to form a general conclusion from the data gathered through observation. For example: As stated above, it is observed that the species of flower blooms in a different color during a specific season. A researcher may ask, “Does the temperature in the season cause the color change in the flower?” The researcher can assume that this is the case, but it is a mere conjecture, so an experiment needs to be set up to test the hypothesis. The researcher tags a few sets of flowers kept at a different temperature and observes whether they still change color.

- Deduction: This phase helps the researcher deduce a conclusion from the experiment. The conclusion has to be based on logic and rationality in order to reach specific, unbiased results. For example: In the experiment, if the tagged flowers in a different temperature environment do not change color, then it can be concluded that temperature plays a role in changing the color of the bloom.

- Testing: In this phase the researcher returns to empirical methods to put the hypothesis to the test. The researcher now needs to make sense of the data and hence needs to use statistical analysis plans to determine the relationship between temperature and bloom color. If the researcher finds that most flowers bloom in a different color when exposed to a certain temperature and the others do not when the temperature is different, this supports the hypothesis. Please note that this is not proof, only support for the hypothesis.

- Evaluation: This phase is generally forgotten by most but is an important one for continuing to gain knowledge. During this phase the researcher puts forth the data collected, the supporting argument, and the conclusion. The researcher also states the limitations of the experiment and the hypothesis, and suggests how others can pick it up and continue with more in-depth research in the future.
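For the testing phase of the flower example, one possible statistical analysis is a chi-square test of independence on a 2x2 table of temperature condition against color change. The sketch below is an assumption about how such a test might be set up, not part of de Groot's cycle itself; the flower counts are invented, and 3.841 is the standard chi-square critical value for 1 degree of freedom at the 5% level.

```python
def chi_square_2x2(table):
    """Return the chi-square statistic for a 2x2 table of observed counts."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if temperature and color change were independent.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2

# Hypothetical counts. Rows: seasonal temperature, altered temperature.
# Columns: bloom changed color, bloom did not change color.
observed = [[18, 2],
            [4, 16]]

chi2 = chi_square_2x2(observed)
critical_value_5pct = 3.841  # chi-square critical value, 1 degree of freedom

print(f"chi-square = {chi2:.2f}")
if chi2 > critical_value_5pct:
    print("The data support a relationship between temperature and color change.")
else:
    print("The data do not show a clear relationship.")
```

As the testing phase notes, a result above the critical value is support for the hypothesis, not proof of it.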


There is a reason why empirical research is one of the most widely used methods. There are a few advantages associated with it. Following are a few of them.

- It is used to authenticate traditional research through various experiments and observations.

- This research methodology makes the research being conducted more competent and authentic.

- It enables a researcher to understand the dynamic changes that can happen and to change strategy accordingly.

- The level of control in such research is high, so the researcher can control multiple variables.

- It plays a vital role in increasing internal validity.

Even though empirical research makes the research more competent and authentic, it does have a few disadvantages. Following are a few of them.

- Such research needs patience, as it can be very time consuming. The researcher has to collect data from multiple sources and quite a few parameters are involved, which makes the research time consuming.

- Most of the time, a researcher will need to conduct research at different locations or in different environments, which can be expensive.

- There are rules governing how experiments can be performed, and hence permissions are needed. It is often very difficult to get the permissions required to carry out certain methods of this research.

- Collecting data can sometimes be a problem, as it has to be collected from a variety of sources through different methods.


Empirical research is important in today’s world because most people believe only in what they can see, hear, or experience. It is used to validate multiple hypotheses, to increase human knowledge, and to keep advancing in various fields.

For example: Pharmaceutical companies use empirical research to try out a specific drug on controlled groups or random groups to study cause and effect. In this way, they gather evidence for the theories they have proposed for the specific drug. Such research is very important, as it can sometimes lead to finding a cure for a disease that has existed for many years. It is useful in science and in many other fields such as history, social sciences, and business.


With the advancement of today’s world, empirical research has become critical and a norm in many fields for supporting hypotheses and gaining more knowledge. The methods mentioned above are very useful for carrying out such research. However, new methods will keep emerging as the nature of investigative questions keeps changing.


Penn State University Libraries

Empirical research in the social sciences and education.


Introduction: What is Empirical Research?

Empirical research is based on observed and measured phenomena and derives knowledge from actual experience rather than from theory or belief.

How do you know if a study is empirical? Read the subheadings within the article, book, or report and look for a description of the research "methodology." Ask yourself: Could I recreate this study and test these results?

Key characteristics to look for:

- Specific research questions to be answered

- Definition of the population, behavior, or phenomena being studied

- Description of the process used to study this population or phenomena, including selection criteria, controls, and testing instruments (such as surveys)

Another hint: some scholarly journals use a specific layout, called the "IMRaD" format, to communicate empirical research findings. Such articles typically have 4 components:

- Introduction : sometimes called "literature review" -- what is currently known about the topic -- usually includes a theoretical framework and/or discussion of previous studies

- Methodology: sometimes called "research design" -- how to recreate the study -- usually describes the population, research process, and analytical tools used in the present study

- Results : sometimes called "findings" -- what was learned through the study -- usually appears as statistical data or as substantial quotations from research participants

- Discussion : sometimes called "conclusion" or "implications" -- why the study is important -- usually describes how the research results influence professional practices or future studies

Reading and Evaluating Scholarly Materials

Reading research can be a challenge. However, the tutorials and videos below can help. They explain what scholarly articles look like, how to read them, and how to evaluate them:

- CRAAP Checklist A frequently-used checklist that helps you examine the currency, relevance, authority, accuracy, and purpose of an information source.

- IF I APPLY A newer model of evaluating sources which encourages you to think about your own biases as a reader, as well as concerns about the item you are reading.

- Credo Video: How to Read Scholarly Materials (4 min.)

- Credo Tutorial: How to Read Scholarly Materials

- Credo Tutorial: Evaluating Information

- Credo Video: Evaluating Statistics (4 min.)


- Last Updated: Feb 18, 2024 8:33 PM

- URL: https://guides.libraries.psu.edu/emp

Empirical Research


Portions of this guide were built using suggestions from other libraries, including Penn State and Utah State University libraries.


- Last Updated: Jan 10, 2023 8:31 AM

- URL: https://enmu.libguides.com/EmpiricalResearch


Empirical Research: Quantitative & Qualitative

Introduction: What is Empirical Research?

Empirical research is based on phenomena that can be observed and measured. Empirical research derives knowledge from actual experience rather than from theory or belief.

Key characteristics of empirical research include:

- Specific research questions to be answered;

- Definitions of the population, behavior, or phenomena being studied;

- Description of the methodology or research design used to study this population or phenomena, including selection criteria, controls, and testing instruments (such as surveys);

- Two basic research processes or methods in empirical research: quantitative methods and qualitative methods (see the rest of the guide for more about these methods).

(based on the original from the Connelly Library of LaSalle University)

Empirical Research: Qualitative vs. Quantitative


Quantitative Research

A quantitative research project is characterized by having a population about which the researcher wants to draw conclusions, but it is not possible to collect data on the entire population.

- For an observational study, it is necessary to select a proper, statistical random sample and to use methods of statistical inference to draw conclusions about the population.

- For an experimental study, it is necessary to have a random assignment of subjects to experimental and control groups in order to use methods of statistical inference.

Statistical methods are used in all three stages of a quantitative research project.

For observational studies, the data are collected using statistical sampling theory. Then, the sample data are analyzed using descriptive statistical analysis. Finally, generalizations are made from the sample data to the entire population using statistical inference.
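The three stages for an observational study can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the population is simulated, the function names are hypothetical, and the inference step uses a normal-approximation confidence interval for the mean, which is one common choice rather than a method named in the text above.

```python
import math
import random
import statistics


def simple_random_sample(population, n, seed=0):
    """Stage 1: draw a simple random sample without replacement."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def describe(sample):
    """Stage 2: summarize the sample with descriptive statistics."""
    return {
        "n": len(sample),
        "mean": statistics.mean(sample),
        "stdev": statistics.stdev(sample),
    }


def mean_confidence_interval(sample, z=1.96):
    """Stage 3: approximate 95% interval for the population mean.

    Uses the normal approximation (z = 1.96), an assumption that is
    reasonable only for fairly large samples.
    """
    summary = describe(sample)
    margin = z * summary["stdev"] / math.sqrt(summary["n"])
    return summary["mean"] - margin, summary["mean"] + margin


# Hypothetical population of 10,000 simulated scores (not real data).
_rng = random.Random(42)
population = [_rng.gauss(70, 10) for _ in range(10_000)]

sample = simple_random_sample(population, 200, seed=1)
low, high = mean_confidence_interval(sample)
print(describe(sample))
print(f"Approximate 95% interval for the population mean: ({low:.1f}, {high:.1f})")
```

The interval is a statement about the whole population made from 200 observations, which is the generalization step the passage describes.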

For experimental studies, the subjects are allocated to experimental and control groups using randomizing methods. Then, the experimental data are analyzed using descriptive statistical analysis. Finally, just as for observational data, generalizations are made to a larger population.

Iversen, G. (2004). Quantitative research. In M. Lewis-Beck, A. Bryman, & T. Liao (Eds.), Encyclopedia of social science research methods (pp. 897-898). Thousand Oaks, CA: SAGE Publications, Inc.
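The experimental workflow described above (random assignment, then description, then inference) might look like the following minimal Python sketch. The subject IDs and outcome values are simulated, the function names are hypothetical, and the permutation test is one possible inference method chosen for illustration; the guide does not prescribe a specific test.

```python
import random
import statistics


def randomly_assign(subjects, seed=0):
    """Shuffle the subjects and split them into experimental and control groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(treated, control):
    """Descriptive comparison of the two groups' outcomes."""
    return statistics.mean(treated) - statistics.mean(control)


def permutation_p_value(treated, control, n_permutations=2000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(difference_in_means(treated, control))
    pooled = list(treated) + list(control)
    k = len(treated)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if abs(difference_in_means(pooled[:k], pooled[k:])) >= observed:
            extreme += 1
    return extreme / n_permutations


# Hypothetical study: 40 subject IDs and simulated outcomes (not real data).
subjects = list(range(40))
experimental_ids, control_ids = randomly_assign(subjects, seed=7)

outcome_rng = random.Random(11)
experimental_scores = [outcome_rng.gauss(55, 5) for _ in experimental_ids]
control_scores = [outcome_rng.gauss(50, 5) for _ in control_ids]

print("Difference in means:", difference_in_means(experimental_scores, control_scores))
print("Permutation p-value:", permutation_p_value(experimental_scores, control_scores))
```

Random assignment is what justifies the permutation logic here: if the treatment had no effect, any relabeling of subjects would be equally likely to produce the observed difference.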

Qualitative Research

What makes a work deserving of the label qualitative research is the demonstrable effort to produce richly and relevantly detailed descriptions and particularized interpretations of people and the social, linguistic, material, and other practices and events that shape and are shaped by them.

Qualitative research typically includes, but is not limited to, discerning the perspectives of these people, or what is often referred to as the actor’s point of view. Although both philosophically and methodologically a highly diverse entity, qualitative research is marked by certain defining imperatives that include its case (as opposed to its variable) orientation, sensitivity to cultural and historical context, and reflexivity.

In its many guises, qualitative research is a form of empirical inquiry that typically entails some form of purposive sampling for information-rich cases; in-depth interviews and open-ended interviews, lengthy participant/field observations, and/or document or artifact study; and techniques for analysis and interpretation of data that move beyond the data generated and their surface appearances.

Sandelowski, M. (2004). Qualitative research. In M. Lewis-Beck, A. Bryman, & T. Liao (Eds.), Encyclopedia of social science research methods (pp. 893-894). Thousand Oaks, CA: SAGE Publications, Inc.

- Last Updated: Mar 22, 2024 10:47 AM

- URL: https://library.piedmont.edu/empirical-research

Arrendale Library Piedmont University 706-776-0111

Reviewing the research methods literature: principles and strategies illustrated by a systematic overview of sampling in qualitative research

Stephen J. Gentles

1 Department of Clinical Epidemiology and Biostatistics, McMaster University, Hamilton, Ontario Canada

4 CanChild Centre for Childhood Disability Research, McMaster University, 1400 Main Street West, IAHS 408, Hamilton, ON L8S 1C7 Canada

Cathy Charles

David B. Nicholas

2 Faculty of Social Work, University of Calgary, Alberta, Canada

Jenny Ploeg

3 School of Nursing, McMaster University, Hamilton, Ontario Canada

K. Ann McKibbon

Associated data

The systematic methods overview used as a worked example in this article (Gentles SJ, Charles C, Ploeg J, McKibbon KA: Sampling in qualitative research: insights from an overview of the methods literature. The Qual Rep 2015, 20(11):1772-1789) is available from http://nsuworks.nova.edu/tqr/vol20/iss11/5.

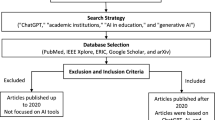

Overviews of methods are potentially useful means to increase clarity and enhance collective understanding of specific methods topics that may be characterized by ambiguity, inconsistency, or a lack of comprehensiveness. This type of review represents a distinct literature synthesis method, although to date, its methodology remains relatively undeveloped despite several aspects that demand unique review procedures. The purpose of this paper is to initiate discussion about what a rigorous systematic approach to reviews of methods, referred to here as systematic methods overviews, might look like by providing tentative suggestions for approaching specific challenges likely to be encountered. The guidance offered here was derived from experience conducting a systematic methods overview on the topic of sampling in qualitative research.

The guidance is organized into several principles that highlight specific objectives for this type of review given the common challenges that must be overcome to achieve them. Optional strategies for achieving each principle are also proposed, along with discussion of how they were successfully implemented in the overview on sampling. We describe seven paired principles and strategies that address the following aspects: delimiting the initial set of publications to consider, searching beyond standard bibliographic databases, searching without the availability of relevant metadata, selecting publications on purposeful conceptual grounds, defining concepts and other information to abstract iteratively, accounting for inconsistent terminology used to describe specific methods topics, and generating rigorous verifiable analytic interpretations. Since a broad aim in systematic methods overviews is to describe and interpret the relevant literature in qualitative terms, we suggest that iterative decision making at various stages of the review process, and a rigorous qualitative approach to analysis are necessary features of this review type.

Conclusions

We believe that the principles and strategies provided here will be useful to anyone choosing to undertake a systematic methods overview. This paper represents an initial effort to promote high quality critical evaluations of the literature regarding problematic methods topics, which have the potential to promote clearer, shared understandings, and accelerate advances in research methods. Further work is warranted to develop more definitive guidance.

Electronic supplementary material

The online version of this article (doi:10.1186/s13643-016-0343-0) contains supplementary material, which is available to authorized users.

While reviews of methods are not new, they represent a distinct review type whose methodology remains relatively under-addressed in the literature despite the clear implications for unique review procedures. One of the few examples to describe it is a chapter containing reflections of two contributing authors in a book of 21 reviews on methodological topics compiled for the British National Health Service, Health Technology Assessment Program [1]. Notable is their observation of how the differences between the methods reviews and conventional quantitative systematic reviews, specifically attributable to their varying content and purpose, have implications for defining what qualifies as systematic. While the authors describe general aspects of “systematicity” (including rigorous application of a methodical search, abstraction, and analysis), they also describe a high degree of variation within the category of methods reviews itself and so offer little in the way of concrete guidance. In this paper, we present tentative concrete guidance, in the form of a preliminary set of proposed principles and optional strategies, for a rigorous systematic approach to reviewing and evaluating the literature on quantitative or qualitative methods topics. For purposes of this article, we have used the term systematic methods overview to emphasize the notion of a systematic approach to such reviews.

The conventional focus of rigorous literature reviews (i.e., review types for which systematic methods have been codified, including the various approaches to quantitative systematic reviews [2–4], and the numerous forms of qualitative and mixed methods literature synthesis [5–10]) is to synthesize empirical research findings from multiple studies. By contrast, the focus of overviews of methods, including the systematic approach we advocate, is to synthesize guidance on methods topics. The literature consulted for such reviews may include the methods literature, methods-relevant sections of empirical research reports, or both. Thus, this paper adds to previous work published in this journal (namely, recent preliminary guidance for conducting reviews of theory [11]) that has extended the application of systematic review methods to novel review types that are concerned with subject matter other than empirical research findings.

Published examples of methods overviews illustrate the varying objectives they can have. One objective is to establish methodological standards for appraisal purposes. For example, reviews of existing quality appraisal standards have been used to propose universal standards for appraising the quality of primary qualitative research [12] or evaluating qualitative research reports [13]. A second objective is to survey the methods-relevant sections of empirical research reports to establish current practices on methods use and reporting practices, which Moher and colleagues [14] recommend as a means for establishing the needs to be addressed in reporting guidelines (see, for example [15, 16]). A third objective for a methods review is to offer clarity and enhance collective understanding regarding a specific methods topic that may be characterized by ambiguity, inconsistency, or a lack of comprehensiveness within the available methods literature. An example of this is an overview whose objective was to review the inconsistent definitions of intention-to-treat analysis (the methodologically preferred approach to analyze randomized controlled trial data) that have been offered in the methods literature and propose a solution for improving conceptual clarity [17]. Such reviews are warranted because students and researchers who must learn or apply research methods typically lack the time to systematically search, retrieve, review, and compare the available literature to develop a thorough and critical sense of the varied approaches regarding certain controversial or ambiguous methods topics.

While systematic methods overviews, as a review type, include both reviews of the methods literature and reviews of methods-relevant sections from empirical study reports, the guidance provided here is primarily applicable to reviews of the methods literature since it was derived from the experience of conducting such a review [18], described below. To our knowledge, there are no well-developed proposals on how to rigorously conduct such reviews. Such guidance would have the potential to improve the thoroughness and credibility of critical evaluations of the methods literature, which could increase their utility as a tool for generating understandings that advance research methods, both qualitative and quantitative. Our aim in this paper is thus to initiate discussion about what might constitute a rigorous approach to systematic methods overviews. While we hope to promote rigor in the conduct of systematic methods overviews wherever possible, we do not wish to suggest that all methods overviews need be conducted to the same standard. Rather, we believe that the level of rigor may need to be tailored pragmatically to the specific review objectives, which may not always justify the resource requirements of an intensive review process.

The example systematic methods overview on sampling in qualitative research

The principles and strategies we propose in this paper are derived from experience conducting a systematic methods overview on the topic of sampling in qualitative research [18]. The main objective of that methods overview was to bring clarity and deeper understanding of the prominent concepts related to sampling in qualitative research (purposeful sampling strategies, saturation, etc.). Specifically, we interpreted the available guidance, commenting on areas lacking clarity, consistency, or comprehensiveness (without proposing any recommendations on how to do sampling). This was achieved by a comparative and critical analysis of publications representing the most influential (i.e., highly cited) guidance across several methodological traditions in qualitative research.