
Research Methods (Quantitative, Qualitative, and More): Overview


About Research Methods

This guide provides an overview of research methods, how to choose and use them, and supports and resources at UC Berkeley.

As Patten and Newhart note in the book Understanding Research Methods, "Research methods are the building blocks of the scientific enterprise. They are the 'how' for building systematic knowledge. The accumulation of knowledge through research is by its nature a collective endeavor. Each well-designed study provides evidence that may support, amend, refute, or deepen the understanding of existing knowledge... Decisions are important throughout the practice of research and are designed to help researchers collect evidence that includes the full spectrum of the phenomenon under study, to maintain logical rules, and to mitigate or account for possible sources of bias. In many ways, learning research methods is learning how to see and make these decisions."

The choice of methods varies by discipline, by the kind of phenomenon being studied and the data used to study it, by the technology available, and more. This guide is an introduction; if you don't see what you need here, contact your subject librarian and/or check whether a library research guide answers your question.

Suggestions for changes and additions to this guide are welcome!

START HERE: SAGE Research Methods

Without question, the most comprehensive resource available from the library is SAGE Research Methods. See the online guide to this one-stop collection; some helpful links are below:

- SAGE Research Methods

- Little Green Books (Quantitative Methods)

- Little Blue Books (Qualitative Methods)

- Dictionaries and Encyclopedias

- Case studies of real research projects

- Sample datasets for hands-on practice

- Streaming video: see methods come to life

- Methodspace: a community for researchers

- SAGE Research Methods Course Mapping

Library Data Services at UC Berkeley

Library Data Services Program and Digital Scholarship Services

The LDSP offers a variety of services and tools! From the LDSP site, check out the pages on each of the following topics: discovering data, managing data, collecting data, GIS data, text data mining, publishing data, digital scholarship, open science, and the Research Data Management Program.

Be sure also to check out the visual guide to where to seek assistance on campus with any research question you may have!

Library GIS Services

Other Data Services at Berkeley

- D-Lab: Supports Berkeley faculty, staff, and graduate students with research in data-intensive social science, including a wide range of training and workshop offerings.

- Dryad: A simple self-service tool for researchers to use in publishing their datasets. It provides tools for the effective publication of and access to research data.

- Geospatial Innovation Facility (GIF): Provides leadership and training across a broad array of integrated mapping technologies on campus.

- Research Data Management: A UC Berkeley guide and consulting service for research data management issues.

General Research Methods Resources

Here are some general resources for assistance:

- Assistance from ICPSR (you must create an account to access): Getting Help with Data and Resources for Students

- Wiley StatsRef for background information on statistics topics

- Survey Documentation and Analysis (SDA): a program for easy web-based analysis of survey data.

Consultants

- D-Lab/Data Science Discovery Consultants: Request help with your research project from peer consultants.

- Research data management (RDM) consulting: Meet with RDM consultants before designing the data security, storage, and sharing aspects of your qualitative project.

- Statistics Department Consulting Services: A service in which advanced graduate students, under faculty supervision, are available to consult during specified hours in the Fall and Spring semesters.

Related Resources

- IRB / CPHS: Qualitative research projects with human subjects often require that you go through an ethics review.

- OURS (Office of Undergraduate Research and Scholarships): OURS supports undergraduates who want to embark on research projects and assistantships. In particular, check out their "Getting Started in Research" workshops.

- Sponsored Projects: Works with researchers applying for major external grants.


- Last Updated: Apr 3, 2023 3:14 PM

- URL: https://guides.lib.berkeley.edu/researchmethods


As of Spring 2021, the National Library of Medicine (NLM) PubMed® Special Query on this page is no longer curated by NLM. If you have questions, please contact NLM Customer Support at https://support.nlm.nih.gov/

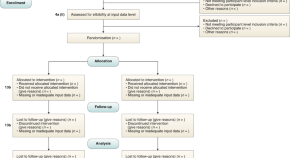

This chart lists the major biomedical research reporting guidelines that provide advice for reporting research methods and findings. They usually "specify a minimum set of items required for a clear and transparent account of what was done and what was found in a research study, reflecting, in particular, issues that might introduce bias into the research" (adapted from the EQUATOR Network Resource Centre). The chart also includes editorial style guides for writing research reports or other publications.

See the details of the search strategy. More research reporting guidelines are available at the EQUATOR Network Resource Centre.

Last Reviewed: April 14, 2023

When you choose to publish with PLOS, your research makes an impact. Make your work accessible to all, without restrictions, and accelerate scientific discovery with options like preprints and published peer review that make your work more Open.

- PLOS Biology

- PLOS Climate

- PLOS Complex Systems

- PLOS Computational Biology

- PLOS Digital Health

- PLOS Genetics

- PLOS Global Public Health

- PLOS Medicine

- PLOS Mental Health

- PLOS Neglected Tropical Diseases

- PLOS Pathogens

- PLOS Sustainability and Transformation

- PLOS Collections

- Collections Home

- About Collections

- Browse Collections

- Calls for Papers

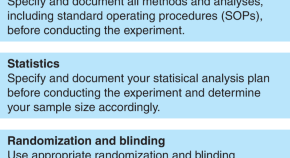

Reporting Guidelines

Reporting guidelines are statements intended to advise authors reporting research methods and findings. They can be presented as a checklist, flow diagram or text, and describe what is required to give a clear and transparent account of a study's research and results. These guidelines are prepared through consideration of specific issues that may introduce bias, and are supported by the latest evidence in the field. The Reporting Guidelines Collection highlights articles published across PLOS journals and includes guidelines and guidance, commentary, and related research on guidelines. This collection features some of the many resources available to facilitate the rigorous reporting of scientific studies, and to improve the presentation and evaluation of published studies.


The collection is organized into the following sections:

- From the Blogs

- Observational & Epidemiological Research

- Randomized Controlled Trials

- Systematic Reviews & Meta-Analyses

- Diagnostic & Prognostic Research

- Animal & Cell Models

- General Guidance

- Maximizing the Impact of Research: New Reporting Guidelines Collection from PLOS. Speaking of Medicine, September 3, 2013. Amy Ross, Laureen Connell.

- The REporting of studies Conducted using Observational Routinely-collected health Data (RECORD) Statement. PLOS Medicine, October 6, 2015. Eric I. Benchimol, Liam Smeeth, Astrid Guttmann, Katie Harron, David Moher, Irene Petersen, Henrik T. Sørensen, Erik von Elm, Sinéad M. Langan, RECORD Working Committee. https://doi.org/10.1371/journal.pmed.1001885

- Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): Explanation and Elaboration. PLOS Medicine, October 16, 2007. Jan P Vandenbroucke, Erik von Elm, Douglas G Altman, Peter C Gøtzsche, Cynthia D Mulrow, Stuart J Pocock, Charles Poole, James J Schlesselman, Matthias Egger, for the STROBE Initiative. https://doi.org/10.1371/journal.pmed.0040297

- The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) Statement: Guidelines for Reporting Observational Studies. PLOS Medicine, October 16, 2007. Erik von Elm, Douglas G Altman, Matthias Egger, Stuart J Pocock, Peter C Gøtzsche, Jan P Vandenbroucke, for the STROBE Initiative. https://doi.org/10.1371/journal.pmed.0040296

- Reporting Guidelines for Survey Research: An Analysis of Published Guidance and Reporting Practices. PLOS Medicine, August 2, 2011. Carol Bennett, Sara Khangura, Jamie Brehaut, Ian Graham, David Moher, Beth Potter, Jeremy Grimshaw.

- Impact of STROBE Statement Publication on Quality of Observational Study Reporting: Interrupted Time Series versus Before-After Analysis. PLOS ONE, August 26, 2013. Sylvie Bastuji-Garin, Emilie Sbidian, Caroline Gaudy-Marqueste, Emilie Ferrat, Jean-Claude Roujeau, Marie-Aleth Richard, Florence Canoui-Poitrine. https://doi.org/10.1371/journal.pone.0064733

- The Reporting of Observational Clinical Functional Magnetic Resonance Imaging Studies: A Systematic Review. PLOS ONE, April 22, 2014. Qing Guo, Melissa Parlar, Wanda Truong, Geoffrey Hall, Lehana Thabane, Margaret McKinnon, Ron Goeree, Eleanor Pullenayegum. https://doi.org/10.1371/journal.pone.0094412

- A Review of Published Analyses of Case-Cohort Studies and Recommendations for Future Reporting. PLOS ONE, June 27, 2014. Stephen Sharp, Manon Poulaliou, Simon Thompson, Ian White, Angela Wood. https://doi.org/10.1371/journal.pone.0101176

- Outlier Removal and the Relation with Reporting Errors and Quality of Psychological Research. PLOS ONE, July 29, 2014. Marjan Bakker, Jelte Wicherts. https://doi.org/10.1371/journal.pone.0103360

- STrengthening the REporting of Genetic Association Studies (STREGA): An Extension of the STROBE Statement. PLOS Medicine, February 3, 2009. Julian Little, Julian P.T Higgins, John P.A Ioannidis, David Moher, France Gagnon, Erik von Elm, Muin J Khoury, Barbara Cohen, George Davey-Smith, Jeremy Grimshaw, Paul Scheet, Marta Gwinn, Robin E Williamson, Guang Yong Zou, Kim Hutchings, Candice Y Johnson, Valerie Tait, Miriam Wiens, Jean Golding, Cornelia van Duijn, John McLaughlin, Andrew Paterson, George Wells, Isabel Fortier, Matthew Freedman, Maja Zecevic, Richard King, Claire Infante-Rivard, Alex Stewart, Nick Birkett. https://doi.org/10.1371/journal.pmed.1000022

- STrengthening the Reporting of OBservational studies in Epidemiology – Molecular Epidemiology (STROBE-ME): An Extension of the STROBE Statement. PLOS Medicine, October 25, 2011. Valentina Gallo, Matthias Egger, Valerie McCormack, Peter Farmer, John Ioannidis, Micheline Kirsch-Volders, Giuseppe Matullo, David Phillips, Bernadette Schoket, Ulf Strömberg, Roel Vermeulen, Christopher Wild, Miquel Porta, Paolo Vineis. https://doi.org/10.1371/journal.pmed.1001117

- Observational Studies: Getting Clear about Transparency. PLOS Medicine, August 26, 2014. The PLoS Medicine Editors.

- CONSORT for Reporting Randomized Controlled Trials in Journal and Conference Abstracts: Explanation and Elaboration. PLOS Medicine, January 22, 2008. Sally Hopewell, Mike Clarke, David Moher, Elizabeth Wager, Philippa Middleton, Douglas G Altman, Kenneth F Schulz, and the CONSORT Group. https://doi.org/10.1371/journal.pmed.0050020

- Endorsement of the CONSORT Statement by High-Impact Medical Journals in China: A Survey of Instructions for Authors and Published Papers. PLOS ONE, February 13, 2012. Xiao-qian Li, Kun-ming Tao, Qinghui Zhou, David Moher, Hong-yun Chen, Fu-zhe Wang, Chang-quan Ling.

- Assessing the Quality of Reports about Randomized Controlled Trials of Acupuncture Treatment on Diabetic Peripheral Neuropathy. PLOS ONE, July 2, 2012. Chen Bo, Zhao Xue, Guo Yi, Chen Zelin, Bai Yang, Wang Zixu, Wang Yajun.

- Reporting Quality of Social and Psychological Intervention Trials: A Systematic Review of Reporting Guidelines and Trial Publications. PLOS ONE, May 29, 2013. Sean Grant, Evan Mayo-Wilson, G. J. Melendez-Torres, Paul Montgomery. https://doi.org/10.1371/journal.pone.0065442

- Are Reports of Randomized Controlled Trials Improving over Time? A Systematic Review of 284 Articles Published in High-Impact General and Specialized Medical Journals. PLOS ONE, December 31, 2013. Matthew To, Jennifer Jones, Mohamed Emara, Alejandro Jadad. https://doi.org/10.1371/journal.pone.0084779

- Assessment of the Reporting Quality of Randomized Controlled Trials on Treatment of Coronary Heart Disease with Traditional Chinese Medicine from the Chinese Journal of Integrated Traditional and Western Medicine: A Systematic Review. PLOS ONE, January 28, 2014. Fan Fang, Xu Qin, Sun Qi, Zhao Jun, Wang Ping, Guo Rui. https://doi.org/10.1371/journal.pone.0086360

- Evidence for the Selective Reporting of Analyses and Discrepancies in Clinical Trials: A Systematic Review of Cohort Studies of Clinical Trials. PLOS Medicine, June 24, 2014. Kerry Dwan, Douglas Altman, Mike Clarke, Carrol Gamble, Julian Higgins, Jonathan Sterne, Paula Williamson, Jamie Kirkham. https://doi.org/10.1371/journal.pmed.1001666

- Systematic Evaluation of the Patient-Reported Outcome (PRO) Content of Clinical Trial Protocols. PLOS ONE, October 15, 2014. Derek Kyte, Helen Duffy, Benjamin Fletcher, Adrian Gheorghe, Rebecca Mercieca-Bebber, Madeleine King, Heather Draper, Jonathan Ives, Michael Brundage, Jane Blazeby, Melanie Calvert. https://doi.org/10.1371/journal.pone.0110229

- Patient-Reported Outcome (PRO) Assessment in Clinical Trials: A Systematic Review of Guidance for Trial Protocol Writers. PLOS ONE, October 15, 2014. Melanie Calvert, Derek Kyte, Helen Duffy, Adrian Gheorghe, Rebecca Mercieca-Bebber, Jonathan Ives, Heather Draper, Michael Brundage, Jane Blazeby, Madeleine King. https://doi.org/10.1371/journal.pone.0110216

- Revised STandards for Reporting Interventions in Clinical Trials of Acupuncture (STRICTA): Extending the CONSORT Statement. PLOS Medicine, June 8, 2010. Hugh MacPherson, Douglas G. Altman, Richard Hammerschlag, Li Youping, Wu Taixiang, Adrian White, David Moher, on behalf of the STRICTA Revision Group. https://doi.org/10.1371/journal.pmed.1000261

- Comparative Effectiveness Research: Challenges for Medical Journals. PLOS Medicine, April 27, 2010. Harold C. Sox, Mark Helfand, Jeremy Grimshaw, Kay Dickersin, the PLoS Medicine Editors, David Tovey, J. André Knottnerus, Peter Tugwell.

- Reporting of Systematic Reviews: The Challenge of Genetic Association Studies. PLOS Medicine, June 26, 2007. Muin J Khoury, Julian Little, Julian Higgins, John P. A Ioannidis, Marta Gwinn.

- Epidemiology and Reporting Characteristics of Systematic Reviews. PLOS Medicine, March 27, 2007. David Moher, Jennifer Tetzlaff, Andrea C Tricco, Margaret Sampson, Douglas G Altman. https://doi.org/10.1371/journal.pmed.0040078

- From QUOROM to PRISMA: A Survey of High-Impact Medical Journals’ Instructions to Authors and a Review of Systematic Reviews in Anesthesia Literature. PLOS ONE, November 16, 2011. Kun-ming Tao, Xiao-qian Li, Qing-hui Zhou, David Moher, Chang-quan Ling, Weifeng Yu. https://doi.org/10.1371/journal.pone.0027611

- Testing the PRISMA-Equity 2012 Reporting Guideline: the Perspectives of Systematic Review Authors. PLOS ONE, October 10, 2013. Belinda Burford, Vivian Welch, Elizabeth Waters, Peter Tugwell, David Moher, Jennifer O'Neill, Tracey Koehlmoos, Mark Petticrew. https://doi.org/10.1371/journal.pone.0075122

- The Quality of Reporting Methods and Results in Network Meta-Analyses: An Overview of Reviews and Suggestions for Improvement. PLOS ONE, March 26, 2014. Brian Hutton, Georgia Salanti, Anna Chaimani, Deborah Caldwell, Chris Schmid, Kristian Thorlund, Edward Mills, Lucy Turner, Ferran Catala-Lopez, Doug Altman, David Moher. https://doi.org/10.1371/journal.pone.0092508

- Blinded by PRISMA: Are Systematic Reviewers Focusing on PRISMA and Ignoring Other Guidelines? PLOS ONE, May 1, 2014. Padhraig Fleming, Despina Koletsi, Nikolaos Pandis. https://doi.org/10.1371/journal.pone.0096407

- Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLOS Medicine, July 21, 2009. David Moher, Alessandro Liberati, Jennifer Tetzlaff, Douglas G. Altman, The PRISMA Group. https://doi.org/10.1371/journal.pmed.1000097

- PRISMA-Equity 2012 Extension: Reporting Guidelines for Systematic Reviews with a Focus on Health Equity. PLOS Medicine, October 30, 2012. Vivian Welch, Mark Petticrew, Peter Tugwell, David Moher, Jennifer O'Neill, Elizabeth Waters, Howard White. https://doi.org/10.1371/journal.pmed.1001333

- PRISMA for Abstracts: Reporting Systematic Reviews in Journal and Conference Abstracts. PLOS Medicine, April 9, 2013. Elaine Beller, Paul Glasziou, Douglas Altman, Sally Hopewell, Hilda Bastian, Iain Chalmers, Peter Gotzsche, Toby Lasserson, David Tovey. https://doi.org/10.1371/journal.pmed.1001419

- Many Reviews Are Systematic but Some Are More Transparent and Completely Reported than Others. PLOS Medicine, March 27, 2007. The PLoS Medicine Editors.

- The PRISMA Statement for Reporting Systematic Reviews and Meta-Analyses of Studies That Evaluate Health Care Interventions: Explanation and Elaboration. PLOS Medicine, July 21, 2009. Alessandro Liberati, Douglas G. Altman, Jennifer Tetzlaff, Cynthia Mulrow, Peter C. Gøtzsche, John P. A. Ioannidis, Mike Clarke, P. J. Devereaux, Jos Kleijnen, David Moher. https://doi.org/10.1371/journal.pmed.1000100

- Quality and Reporting of Diagnostic Accuracy Studies in TB, HIV and Malaria: Evaluation Using QUADAS and STARD Standards. PLOS ONE, November 13, 2009. Patricia Scolari Fontela, Nitika Pant Pai, Ian Schiller, Nandini Dendukuri, Andrew Ramsay, Madhukar Pai. https://doi.org/10.1371/journal.pone.0007753

- Use of Expert Panels to Define the Reference Standard in Diagnostic Research: A Systematic Review of Published Methods and Reporting. PLOS Medicine, October 15, 2013. Loes Bertens, Berna Broekhuizen, Christiana Naaktgeboren, Frans Rutten, Arno Hoes, Yvonne van Mourik, Karel Moons, Johannes Reitsma. https://doi.org/10.1371/journal.pmed.1001531

- The Assessment of the Quality of Reporting of Systematic Reviews/Meta-Analyses in Diagnostic Tests Published by Authors in China. PLOS ONE, January 21, 2014. Long Ge, Jian-cheng Wang, Jin-long Li, Li Liang, Ni An, Xin-tong Shi, Yin-chun Liu, JH Tian. https://doi.org/10.1371/journal.pone.0085908

- Strengthening the Reporting of Genetic Risk Prediction Studies: The GRIPS Statement. PLOS Medicine, March 15, 2011. A. Cecile J. W. Janssens, John P. A. Ioannidis, Cornelia M. van Duijn, Julian Little, Muin J. Khoury, for the GRIPS Group. https://doi.org/10.1371/journal.pmed.1000420

- Reporting Recommendations for Tumor Marker Prognostic Studies (REMARK): Explanation and Elaboration. PLOS Medicine, May 29, 2012. Doug Altman, Lisa McShane, Willi Sauerbrei, Sheila Taube. https://doi.org/10.1371/journal.pmed.1001216

- Prognosis Research Strategy (PROGRESS) 3: Prognostic Model Research. PLOS Medicine, February 5, 2013. Ewout Steyerberg, Karel Moons, Danielle van der Windt, Jill Hayden, Pablo Perel, Sara Schroter, Richard Riley, Harry Hemingway, Douglas Altman. https://doi.org/10.1371/journal.pmed.1001381

- Prognosis Research Strategy (PROGRESS) 2: Prognostic Factor Research. PLOS Medicine, February 5, 2013. Richard Riley, Jill Hayden, Ewout Steyerberg, Karel Moons, Keith Abrams, Panayiotis Kyzas, Nuria Malats, Andrew Briggs, Sara Schroter, Douglas Altman, Harry Hemingway. https://doi.org/10.1371/journal.pmed.1001380

- Improving the Transparency of Prognosis Research: The Role of Reporting, Data Sharing, Registration, and Protocols. PLOS Medicine, July 8, 2014. George Peat, Richard Riley, Peter Croft, Katherine Morley, Panayiotis Kyzas, Karel Moons, Pablo Perel, Ewout Steyerberg, Sara Schroter, Douglas Altman, Harry Hemingway. https://doi.org/10.1371/journal.pmed.1001671

- Survey of the Quality of Experimental Design, Statistical Analysis and Reporting of Research Using Animals. PLOS ONE, November 30, 2009. Carol Kilkenny, Nick Parsons, Ed Kadyszewski, Michael F. W. Festing, Innes C. Cuthill, Derek Fry, Jane Hutton, Douglas G. Altman. https://doi.org/10.1371/journal.pone.0007824

- Threats to Validity in the Design and Conduct of Preclinical Efficacy Studies: A Systematic Review of Guidelines for In Vivo Animal Experiments. PLOS Medicine, July 23, 2013. Valerie Henderson, Jonathan Kimmelman, Dean Fergusson, Jeremy Grimshaw, Dan Hackam. https://doi.org/10.1371/journal.pmed.1001489

- Five Years MIQE Guidelines: The Case of the Arabian Countries. PLOS ONE, February 4, 2014. Afif Abdel Nour, Esam Azhar, Ghazi Damanhouri, Stephen Bustin. https://doi.org/10.1371/journal.pone.0088266

- The Quality of Methods Reporting in Parasitology Experiments. PLOS ONE, July 30, 2014. Oscar Flórez-Vargas, Michael Bramhall, Harry Noyes, Sheena Cruickshank, Robert Stevens, Andy Brass. https://doi.org/10.1371/journal.pone.0101131

- Improving Bioscience Research Reporting: The ARRIVE Guidelines for Reporting Animal Research. PLOS Biology, June 29, 2010. Carol Kilkenny, William J. Browne, Innes C. Cuthill, Michael Emerson, Douglas G. Altman. https://doi.org/10.1371/journal.pbio.1000412

- Whole Animal Experiments Should Be More Like Human Randomized Controlled Trials. PLOS Biology, February 12, 2013. Beverly Muhlhausler, Frank Bloomfield, Matthew Gillman. https://doi.org/10.1371/journal.pbio.1001481

- Two Years Later: Journals Are Not Yet Enforcing the ARRIVE Guidelines on Reporting Standards for Pre-Clinical Animal Studies. PLOS Biology, January 7, 2014. David Baker, Katie Lidster, Ana Sottomayor, Sandra Amor. https://doi.org/10.1371/journal.pbio.1001756

- Reporting Animal Studies: Good Science and a Duty of Care. PLOS Biology, June 29, 2010. Catriona J. MacCallum.

- Open Science and Reporting Animal Studies: Who’s Accountable? PLOS Biology, January 7, 2014. Catriona MacCallum, Jonathan Eisen, Emma Ganley.

- Minimum Information About a Simulation Experiment (MIASE). PLOS Computational Biology, April 28, 2011. Dagmar Waltemath, Richard Adams, Daniel A. Beard, Frank T. Bergmann, Upinder S. Bhalla, Randall Britten, Vijayalakshmi Chelliah, Michael T. Cooling, Jonathan Cooper, Edmund J. Crampin, Alan Garny, Stefan Hoops, Michael Hucka, Peter Hunter, Edda Klipp, Camille Laibe, Andrew K. Miller, Ion Moraru, David Nickerson, Poul Nielsen, Macha Nikolski, Sven Sahle, Herbert M. Sauro, Henning Schmidt, Jacky L. Snoep, Dominic Tolle, Olaf Wolkenhauer, Nicolas Le Novère.

- Guidelines for Reporting Health Research: The EQUATOR Network’s Survey of Guideline Authors. PLOS Medicine, June 24, 2008. Iveta Simera, Douglas G Altman, David Moher, Kenneth F Schulz, John Hoey. https://doi.org/10.1371/journal.pmed.0050139

- Guidance for Developers of Health Research Reporting Guidelines. PLOS Medicine, February 16, 2010. David Moher, Kenneth F. Schulz, Iveta Simera, Douglas G. Altman. https://doi.org/10.1371/journal.pmed.1000217

- Are Peer Reviewers Encouraged to Use Reporting Guidelines? A Survey of 116 Health Research Journals. PLOS ONE, April 27, 2012. Allison Hirst, Douglas Altman. https://doi.org/10.1371/journal.pone.0035621

- Research Ethics and Reporting Standards at PLoS Neglected Tropical Diseases. PLOS Neglected Tropical Diseases, October 31, 2007. Gavin Yamey.

- Better Reporting, Better Research: Guidelines and Guidance in PLoS Medicine. PLOS Medicine, April 29, 2008. The PLoS Medicine Editors.

- Better Reporting of Scientific Studies: Why It Matters. PLOS Medicine, August 27, 2013. The PLoS Medicine Editors.

- Do Editorial Policies Support Ethical Research? A Thematic Text Analysis of Author Instructions in Psychiatry Journals. PLOS ONE, June 5, 2014. Daniel Strech, Courtney Metz, Hannes Knüppel. https://doi.org/10.1371/journal.pone.0097492

Research methods & reporting

- Quantifying possible bias in clinical and epidemiological studies with quantitative bias analysis: common approaches and limitations

- Assessing robustness to worst case publication bias using a simple subset meta-analysis

- Regression discontinuity design studies: a guide for health researchers

- Process guide for inferential studies using healthcare data from routine clinical practice to evaluate causal effects of drugs

- Updated recommendations for the Cochrane rapid review methods guidance for rapid reviews of effectiveness

- Avoiding conflicts of interest and reputational risks associated with population research on food and nutrition

- The estimands framework: a primer on the ICH E9(R1) addendum

- Evaluation of clinical prediction models (part 3): calculating the sample size required for an external validation study

- Evaluation of clinical prediction models (part 2): how to undertake an external validation study

- Evaluation of clinical prediction models (part 1): from development to external validation

- Emulation of a target trial using electronic health records and a nested case-control design

- RoB-ME: a tool for assessing risk of bias due to missing evidence in systematic reviews with meta-analysis

- Enhancing reporting quality and impact of early phase dose-finding clinical trials: CONSORT dose-finding extension (CONSORT-DEFINE) guidance

- Enhancing quality and impact of early phase dose-finding clinical trial protocols: SPIRIT dose-finding extension (SPIRIT-DEFINE) guidance

- Understanding how health interventions or exposures produce their effects using mediation analysis

- A guide and pragmatic considerations for applying GRADE to network meta-analysis

- A framework for assessing selection and misclassification bias in Mendelian randomisation studies: an illustrative example between BMI and COVID-19

- Practical thematic analysis: a guide for multidisciplinary health services research teams engaging in qualitative analysis

- Selection bias due to conditioning on a collider

- The imprinting effect of COVID-19 vaccines: an expected selection bias in observational studies

- A step-by-step approach for selecting an optimal minimal important difference

- Recommendations for the development, implementation, and reporting of control interventions in trials of self-management therapies

- Methods for deriving risk difference (absolute risk reduction) from a meta-analysis

- Transparent reporting of multivariable prediction models for individual prognosis or diagnosis: checklist for systematic reviews and meta-analyses

- CONSORT Harms 2022 statement, explanation, and elaboration: updated guideline for the reporting of harms in randomised trials

- Transparent reporting of multivariable prediction models: explanation and elaboration

- Transparent reporting of multivariable prediction models: TRIPOD-Cluster checklist

- Bias by censoring for competing events in survival analysis

- CODE-EHR best practice framework for the use of structured electronic healthcare records in clinical research

- Validation of prediction models in the presence of competing risks

- Reporting guideline for the early stage clinical evaluation of decision support systems driven by artificial intelligence

- Searching clinical trials registers: guide for systematic reviewers

- How to design high quality acupuncture trials: a consensus informed by evidence

- Early phase clinical trials extension to guidelines for the content of statistical analysis plans

- Incorporating dose effects in network meta-analysis

- Consolidated Health Economic Evaluation Reporting Standards 2022 statement

- Strengthening the reporting of observational studies in epidemiology using Mendelian randomisation (STROBE-MR): explanation and elaboration

- A new framework for developing and evaluating complex interventions

- Adapting interventions to new contexts: the ADAPT guidance

- Recommendations for including or reviewing patient reported outcome endpoints in grant applications

- CONSORT extension for the reporting of randomised controlled trials conducted using cohorts and routinely collected data (CONSORT-ROUTINE): checklist with explanation and elaboration

- CONSORT extension for the reporting of randomised controlled trials conducted using cohorts and routinely collected data

- Guidance for the design and reporting of studies evaluating the clinical performance of tests for present or past SARS-CoV-2 infection

- The PRISMA 2020 statement: an updated guideline for reporting systematic reviews

- PRISMA 2020 explanation and elaboration: updated guidance and exemplars for reporting systematic reviews

- Preferred reporting items for journal and conference abstracts of systematic reviews and meta-analyses of diagnostic test accuracy studies (PRISMA-DTA for Abstracts): checklist, explanation, and elaboration

- Designing and undertaking randomised implementation trials: guide for researchers

- START-RWE: structured template for planning and reporting on the implementation of real world evidence studies

- Methodological standards for qualitative and mixed methods patient centered outcomes research

- GRADE approach to drawing conclusions from a network meta-analysis using a minimally contextualised framework

- GRADE approach to drawing conclusions from a network meta-analysis using a partially contextualised framework

- Use of multiple period, cluster randomised, crossover trial designs for comparative effectiveness research

- When to replicate systematic reviews of interventions: consensus checklist

- Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension

- Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI extension

- Preferred reporting items for systematic review and meta-analysis of diagnostic test accuracy studies (PRISMA-DTA): explanation, elaboration, and checklist

- Non-adherence in non-inferiority trials: pitfalls and recommendations

- The adaptive designs CONSORT extension (ACE) statement: a checklist with explanation and elaboration guideline for reporting randomised trials that use an adaptive design

- Machine learning and artificial intelligence research for patient benefit: 20 critical questions on transparency, replicability, ethics, and effectiveness

- Calculating the sample size required for developing a clinical prediction model

- SPIRIT extension and elaboration for n-of-1 trials: SPENT 2019 checklist

- Synthesis without meta-analysis (SWiM) in systematic reviews: reporting guideline

- Alternative approaches for confounding adjustment in observational studies using weighting based on the propensity score: a primer for practitioners

- A guide to prospective meta-analysis

- RoB 2: a revised tool for assessing risk of bias in randomised trials

- CONSORT 2010 statement: extension to randomised crossover trials

- When and how to use data from randomised trials to develop or validate prognostic models

- Guide to presenting clinical prediction models for use in clinical settings

- A guide to systematic review and meta-analysis of prognostic factor studies

- When continuous outcomes are measured using different scales: guide for meta-analysis and interpretation

- The reporting of studies conducted using observational routinely collected health data statement for pharmacoepidemiology (RECORD-PE)

- Reporting of stepped wedge cluster randomised trials: extension of the CONSORT 2010 statement with explanation and elaboration

- DELTA2 guidance on choosing the target difference and undertaking and reporting the sample size calculation for a randomised controlled trial

- Outcome reporting bias in trials: a methodological approach for assessment and adjustment in systematic reviews

- Reading Mendelian randomisation studies: a guide, glossary, and checklist for clinicians

- How to use FDA drug approval documents for evidence syntheses

- How to avoid common problems when using ClinicalTrials.gov in research: 10 issues to consider

- TIDieR-PHP: a reporting guideline for population health and policy interventions

- Analysis of cluster randomised trials with an assessment of outcome at baseline

- Key design considerations for adaptive clinical trials: a primer for clinicians

- Population attributable fraction

- How to estimate the effect of treatment duration on survival outcomes using observational data

- Concerns about composite reference standards in diagnostic research

- Statistical methods to compare functional outcomes in randomized controlled trials with high mortality

- CONSORT-Equity 2017 extension and elaboration for better reporting of health equity in randomised trials

- Handling time varying confounding in observational research

- Four study design principles for genetic investigations using next generation sequencing

- AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both

- Multivariate and network meta-analysis of multiple outcomes and multiple treatments: rationale, concepts, and examples

- STARD for Abstracts: essential items for reporting diagnostic accuracy studies in journal or conference abstracts

- Statistics notes: percentage differences, symmetry, and natural logarithms

- Statistics notes: what is a percentage difference

- GRIPP2 reporting checklists: tools to improve reporting of patient and public involvement in research

- Enhancing the usability of systematic reviews by improving the consideration and description of interventions

- How to design efficient cluster randomised trials

- CONSORT 2010 statement: extension checklist for reporting within person randomised trials

- Life expectancy difference and life expectancy ratio: two measures of treatment effects in randomised trials with non-proportional hazards

- Standards for Reporting Implementation Studies (StaRI) statement

- Meta-analytical methods to identify who benefits most from treatments: daft, deluded, or deft approach


Collection 18 December 2023

Best Practices in Method Reporting

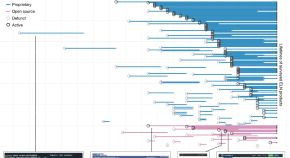

For many years, Springer Nature has raised awareness of the challenges in replicating scientific experiments and has promoted the publication of detailed methods and protocols. Following a workshop organised by the European Commission's Joint Research Centre, we contributed to a document of recommendations for improving methodological clarity in life sciences publications. That document inspired this Collection, which highlights content across a spectrum of journals and subject areas.

Other collections of similar articles can be found in the “Related Collections” tab. The “Resources” tab includes a list of journals dedicated to the publication of methods or protocols as well as links to suitable preprint servers related to method and data sharing, and recorded webinars.

Bronwen Dekker

Nature Protocols


Editorials and Features

Ensuring accurate resource identification

Nature Protocols is pleased to be a part of the Resource Identification Initiative, a project aimed at improving the reproducibility of research by clearly identifying key biological resources. Stable unique digital identifiers, called Research Resource Identifiers (RRIDs), are assigned to individual resources, allowing users to accurately identify and source them, track their history, identify known problems (such as cell line contamination and misidentification) and find relevant research papers. Following a successful 6-month trial, we will now require authors to provide RRIDs for all antibodies and cell lines used in their protocols. We will also be encouraging them to add RRIDs for other tools (such as plasmids and organisms) where they think this is helpful.
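Because RRIDs follow a fixed textual pattern, a journal or author can check a methods section for them automatically. The sketch below is a hypothetical illustration, not a tool used by Nature Protocols: it assumes RRIDs are written as `RRID:` plus a registry prefix and accession, covers only the two required resource types (`AB_` for antibodies, `CVCL_` for Cellosaurus cell lines), and uses placeholder accession numbers rather than real resources. A real check would also resolve each identifier against the registry.

```python
import re

# Assumed RRID syntax: "RRID:" + registry prefix + "_" + accession.
# Only the prefixes for the two required resource types are covered here.
RRID_PATTERN = re.compile(r"RRID:\s?(AB|CVCL)_([A-Za-z0-9]+)")
RESOURCE_TYPES = {"AB": "antibody", "CVCL": "cell line"}


def find_rrids(text: str) -> dict[str, list[str]]:
    """Group the RRIDs found in free text by resource type (hypothetical helper)."""
    found: dict[str, list[str]] = {}
    for prefix, accession in RRID_PATTERN.findall(text):
        # Normalise "RRID: AB_..." and "RRID:AB_..." to the same form.
        found.setdefault(RESOURCE_TYPES[prefix], []).append(f"RRID:{prefix}_{accession}")
    return found


def missing_rrids(text: str) -> list[str]:
    """List the required resource types for which no RRID appears in the text."""
    found = find_rrids(text)
    return [label for label in RESOURCE_TYPES.values() if label not in found]


# Placeholder accessions, not real resources.
methods = "Cells (RRID:CVCL_0000) were stained with a primary antibody (RRID:AB_0000000)."
print(find_rrids(methods))  # {'cell line': ['RRID:CVCL_0000'], 'antibody': ['RRID:AB_0000000']}
print(missing_rrids("Cells were stained with a primary antibody."))  # ['antibody', 'cell line']
```

Other RRID registries (for example, software tools or plasmids) could be supported by extending the prefix group and the `RESOURCE_TYPES` mapping.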

Five keys to writing a reproducible lab protocol

Effective sharing of experimental methods is crucial to ensuring that others can repeat results. An abundance of tools is available to help.

- Monya Baker

Share methods through visual and digital protocols

Documenting experimental methods ensures reproducibility and accountability, and there are innovative ways to disseminate and update them.

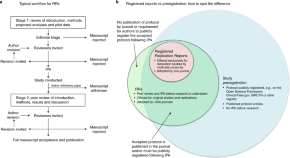

Registered Reports at Nature Methods

Nature Methods is introducing a new article format: Registered Reports. We encourage all authors interested in submitting comparative analyses of the performance of established, related tools or methods to familiarize themselves with this alternative approach to peer review.

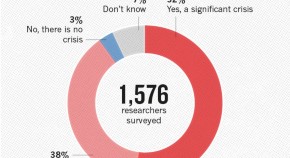

1,500 scientists lift the lid on reproducibility

Survey sheds light on the ‘crisis’ rocking research.

Consensus Statements

Minimum Information for Reporting on the Comet Assay (MIRCA): recommendations for describing comet assay procedures and results

Here, members of the hCOMET COST Action program provide a consensus statement on the Minimum Information for Reporting Comet Assays (MIRCA).

- Peter Møller

- Amaya Azqueta

- Sabine A. S. Langie

Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension

The CONSORT-AI and SPIRIT-AI extensions improve the transparency of clinical trial design and trial protocol reporting for artificial intelligence interventions.

- Xiaoxuan Liu

- Samantha Cruz Rivera

- The SPIRIT-AI and CONSORT-AI Working Group

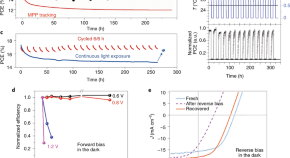

Consensus statement for stability assessment and reporting for perovskite photovoltaics based on ISOS procedures

Reliability of stability data for perovskite solar cells is undermined by a lack of consistency in test conditions and reporting. This Consensus Statement outlines practices for testing and reporting stability, tailoring ISOS protocols to perovskite devices.

- Mark V. Khenkin

- Eugene A. Katz

- Monica Lira-Cantu

Reviews and Perspectives

Considerations for implementing electronic laboratory notebooks in an academic research environment

This review explores factors to consider when introducing electronic laboratory notebooks, including discussion of integration with research data management and the functionalities to compare when evaluating specific software packages.

- Stuart G. Higgins

- Akemi A. Nogiwa-Valdez

- Molly M. Stevens

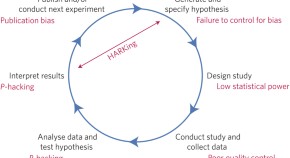

A manifesto for reproducible science

Leading voices in the reproducibility landscape call for the adoption of measures to optimize key elements of the scientific process.

- Marcus R. Munafò

- Brian A. Nosek

- John P. A. Ioannidis

A practical guide for the analysis, standardization and interpretation of oxygen consumption measurements

Measurements of oxygen consumption rates have been central to the resurgent interest in studying cellular metabolism. To enhance the overall reproducibility and reliability of these measurements, Divakaruni and Jastroch provide a guide advising on the selection of experimental models and instrumentation as well as the analysis and interpretation of data.

- Ajit S. Divakaruni

- Martin Jastroch

Best practices for analysing microbiomes

Complex microbial communities shape the dynamics of various environments. In this Review, Knight and colleagues discuss the best practices for performing a microbiome study, including experimental design, choice of molecular analysis technology, methods for data analysis and the integration of multiple omics data sets.

- Alison Vrbanac

- Pieter C. Dorrestein

The past, present and future of Registered Reports

Registered Reports were introduced a decade ago as a means of improving the rigour and credibility of confirmatory research. Chambers and Tzavella review the format’s past, its current status and future developments.

- Christopher D. Chambers

- Loukia Tzavella

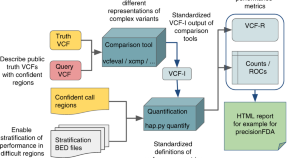

Best practices for benchmarking germline small-variant calls in human genomes

A new standard allows the accuracy of variant calls to be assessed and compared across different technologies, variant types and genomic regions.

- Peter Krusche

- the Global Alliance for Genomics and Health Benchmarking Team

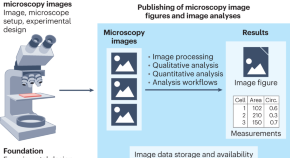

Community-developed checklists for publishing images and image analyses

Community-developed checklists offer best-practice guidance for biologists preparing light microscopy images and describing image analyses for publications.

- Christopher Schmied

- Michael S. Nelson

- Helena Klara Jambor

Initial recommendations for performing, benchmarking and reporting single-cell proteomics experiments

A community of researchers working in the emerging field of single-cell proteomics propose best-practice experimental and computational recommendations and reporting guidelines for studies analyzing proteins from single cells by mass spectrometry.

- Laurent Gatto

- Ruedi Aebersold

- Nikolai Slavov

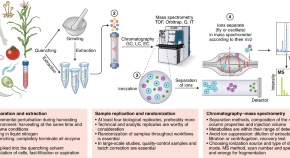

Mass spectrometry-based metabolomics: a guide for annotation, quantification and best reporting practices

This Perspective, from a large group of metabolomics experts, provides best practices and simplified reporting guidelines for practitioners of liquid chromatography– and gas chromatography–mass spectrometry-based metabolomics.

- Saleh Alseekh

- Asaph Aharoni

- Alisdair R. Fernie

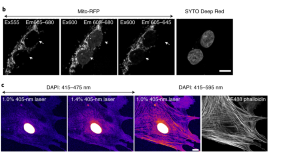

Best practices and tools for reporting reproducible fluorescence microscopy methods

Comprehensive guidelines and resources for accurately reporting the most common fluorescence light microscopy modalities are presented, with the goal of improving microscopy reporting, rigor and reproducibility.

- Paula Montero Llopis

- Rebecca A. Senft

- Michelle S. Itano

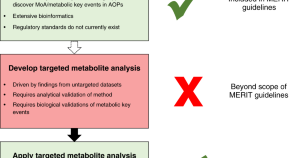

Use cases, best practice and reporting standards for metabolomics in regulatory toxicology

Lack of best practice guidelines currently limits the application of metabolomics in the regulatory sciences. Here, the MEtabolomics standaRds Initiative in Toxicology (MERIT) proposes methods and reporting standards for several important applications of metabolomics in regulatory toxicology.

- Mark R. Viant

- Timothy M. D. Ebbels

- Ralf J. M. Weber

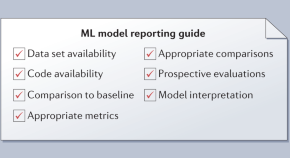

Evaluation guidelines for machine learning tools in the chemical sciences

Studies employing machine-learning (ML) tools in the chemical sciences often report their evaluations in a heterogeneous way. The evaluation guidelines provided in this Perspective should enable more rigorous ML reporting.

- Andreas Bender

- Nadine Schneider

- Tiago Rodrigues

Best practices for single-cell analysis across modalities

Practitioners in the field of single-cell omics are now faced with diverse options for analytical tools to process and integrate data from various molecular modalities. In an Expert Recommendation article, the authors provide guidance on robust single-cell data analysis, including choices of best-performing tools from benchmarking studies.

- Lukas Heumos

- Anna C. Schaar

- Fabian J. Theis

Method Reporting with Initials for Transparency (MeRIT) promotes more granularity and accountability for author contributions

Lack of information on authors’ contribution to specific aspects of a study hampers reproducibility and replicability. Here, the authors propose a new, easily implemented reporting system to clarify contributor roles in the Methods section of an article.

- Shinichi Nakagawa

- Edward R. Ivimey-Cook

- Malgorzata Lagisz

Towards a Rosetta stone for metabolomics: recommendations to overcome inconsistent metabolite nomenclature

The metabolomics literature suffers from ambiguity in the nomenclature for individual metabolites, which introduces a disconnect between publications and leads to misinterpretations. This Comment proposes recommendations for metabolite annotation that aim to encourage the scientific community and publishers to adopt a more consistent approach to metabolite nomenclature.

- Ville Koistinen

- Olli Kärkkäinen

- Kati Hanhineva

An update to SPIRIT and CONSORT reporting guidelines to enhance transparency in randomized trials

Results from clinical trials can be deemed trustworthy only if they are properly conducted and their methods are fully reported. The SPIRIT and CONSORT checklists, which have improved clinical trial design, conduct and reporting, are being updated to reflect recent advances and improve the assessment of healthcare interventions.

- Sally Hopewell

- Isabelle Boutron

- David Moher

The EQIPD framework for rigor in the design, conduct, analysis and documentation of animal experiments

The EQIPD framework for rigor in animal experiments aims to unify current recommendations based on evidence behind their rationale and was prospectively tested for feasibility in multicenter animal experiments.

- Jan Vollert

- Malcolm Macleod

- Andrew S. C. Rice

Best practices in machine learning for chemistry

Statistical tools based on machine learning are becoming integrated into chemistry research workflows. We discuss the elements necessary to train reliable, repeatable and reproducible models, and recommend a set of guidelines for machine learning reports.

- Nongnuch Artrith

- Keith T. Butler

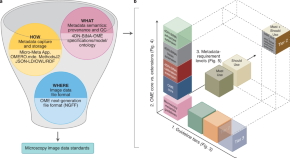

Towards community-driven metadata standards for light microscopy: tiered specifications extending the OME model

Rigorous record-keeping and quality control are required to ensure the quality, reproducibility and value of imaging data. The 4DN Initiative and BINA here propose light Microscopy Metadata Specifications that extend the OME Data Model, scale with experimental intent and complexity, and make it possible for scientists to create comprehensive records of imaging experiments.

- Mathias Hammer

- Maximiliaan Huisman

- Caterina Strambio-De-Castillia

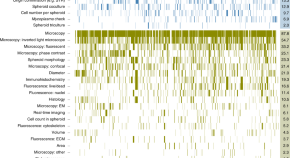

MISpheroID: a knowledgebase and transparency tool for minimum information in spheroid identity

A knowledgebase developed to increase the transparency of reporting in spheroid research.

- Arne Peirsman

- Eva Blondeel

- Olivier De Wever

Reproducibility standards for machine learning in the life sciences

To make machine-learning analyses in the life sciences more computationally reproducible, we propose standards based on data, model and code publication, programming best practices and workflow automation. By meeting these standards, the community of researchers applying machine-learning methods in the life sciences can ensure that their analyses are worthy of trust.

- Benjamin J. Heil

- Michael M. Hoffman

- Stephanie C. Hicks
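Two practices in the spirit of these standards, driving every random step from an explicit seed and publishing provenance alongside results, can be sketched with the Python standard library. This is a minimal illustration under our own assumptions (the function names are hypothetical, and a real pipeline would also pin package versions and seed every library that draws random numbers), not the standard the authors propose.

```python
import hashlib
import platform
import random


def provenance_record(seed: int, data: bytes) -> dict:
    """Metadata worth publishing with a model: seed, interpreter version, data checksum."""
    return {
        "seed": seed,
        "python_version": platform.python_version(),
        "data_sha256": hashlib.sha256(data).hexdigest(),  # detects silent changes to the data
    }


def seeded_split(items: list, test_fraction: float, seed: int) -> tuple[list, list]:
    """Shuffle with a local, explicitly seeded generator and split into train/test."""
    rng = random.Random(seed)  # independent of the global random state
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


record = provenance_record(seed=42, data=b"example dataset bytes")
train, test = seeded_split(list(range(10)), test_fraction=0.2, seed=42)
print(record["seed"], len(train), len(test))  # 42 8 2
```

Using a local `random.Random` instance rather than the global generator means that other code drawing random numbers cannot silently change the split between runs.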

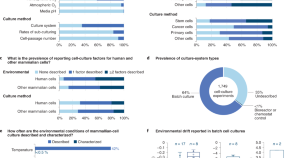

A prevalent neglect of environmental control in mammalian cell culture calls for best practices

In biomedical studies, the environmental conditions used in mammalian cell culture are often underreported, and are seldom monitored or controlled. Best-practice standards are urgently needed.

- Shannon G. Klein

- Samhan M. Alsolami

- Carlos M. Duarte

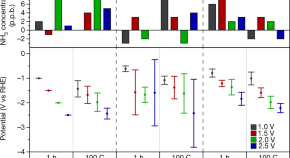

A rigorous electrochemical ammonia synthesis protocol with quantitative isotope measurements

A protocol for the electrochemical reduction of nitrogen to ammonia enables isotope-sensitive quantification of the ammonia produced and the identification and removal of contaminants.

- Suzanne Z. Andersen

- Viktor Čolić

- Ib Chorkendorff

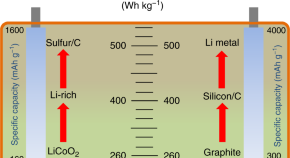

Aligning academia and industry for unified battery performance metrics

- Tiefeng Liu

- Chengdu Liang

Best practices in data analysis and sharing in neuroimaging using MRI

Responding to widespread concerns about reproducibility, the Organization for Human Brain Mapping created a working group to identify best practices in data analysis, results reporting and data sharing to promote open and reproducible research in neuroimaging. We describe the challenges of open research and the barriers the field faces.

- Thomas E Nichols

- B T Thomas Yeo

Reproducibility: changing the policies and culture of cell line authentication

Quality control of cell lines used in biomedical research is essential to ensure reproducibility. Although cell line authentication has been widely recommended for many years, misidentification, including cross-contamination, remains a serious problem. We outline a multi-stakeholder, incremental approach and policy-related recommendations to facilitate change in the culture of cell line authentication.

- Leonard P Freedman

- Mark C Gibson

- Yvonne A Reid

Improved reproducibility by assuring confidence in measurements in biomedical research

'Irreproducibility' is symptomatic of a broader challenge in measurement in biomedical research. From the US National Institute of Standards and Technology (NIST) perspective of rigorous metrology, reproducibility is only one aspect of establishing confidence in measurements. Appropriate controls, reference materials, statistics and informatics are required for a robust measurement process. Research is required to establish these tools for biological measurements, which will lead to greater confidence in research results.

- Anne L Plant

- Laurie E Locascio

- Patrick D Gallagher

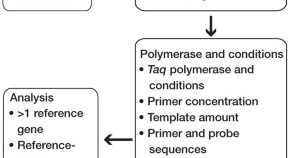

The need for transparency and good practices in the qPCR literature

Two surveys of over 1,700 publications whose authors use quantitative real-time PCR (qPCR) reveal a lack of transparent and comprehensive reporting of essential technical information. Reporting standards are significantly improved in publications that cite the Minimum Information for Publication of Quantitative Real-Time PCR Experiments (MIQE) guidelines, although such publications are still vastly outnumbered by those that do not.

- Stephen A Bustin

- Vladimir Benes

- Jo Vandesompele

Ethical reproducibility: towards transparent reporting in biomedical research

Optimism about biomedicine is challenged by the increasingly complex ethical, legal and social issues it raises. Reporting of scientific methods is no longer sufficient to address the complex relationship between science and society. To promote 'ethical reproducibility', we call for transparent reporting of research ethics methods used in biomedical research.

- James A Anderson

- Marleen Eijkholt


Research Methodology – Types, Examples and Writing Guide


Research Methodology

Definition:

Research methodology refers to the systematic and scientific approach used to conduct research, investigate problems, and gather data and information for a specific purpose. It involves the techniques and procedures used to identify, collect, analyze, and interpret data to answer research questions or solve research problems. It also encompasses the philosophical and theoretical frameworks that guide the research process.

Structure of Research Methodology

Research methodology formats can vary depending on the specific requirements of the research project, but the following is a basic example of a structure for a research methodology section:

I. Introduction

- Provide an overview of the research problem and the need for a research methodology section

- Outline the main research questions and objectives

II. Research Design

- Explain the research design chosen and why it is appropriate for the research question(s) and objectives

- Discuss any alternative research designs considered and why they were not chosen

- Describe the research setting and participants (if applicable)

III. Data Collection Methods

- Describe the methods used to collect data (e.g., surveys, interviews, observations)

- Explain how the data collection methods were chosen and why they are appropriate for the research question(s) and objectives

- Detail any procedures or instruments used for data collection

IV. Data Analysis Methods

- Describe the methods used to analyze the data (e.g., statistical analysis, content analysis)

- Explain how the data analysis methods were chosen and why they are appropriate for the research question(s) and objectives

- Detail any procedures or software used for data analysis

V. Ethical Considerations

- Discuss any ethical issues that may arise from the research and how they were addressed

- Explain how informed consent was obtained (if applicable)

- Detail any measures taken to ensure confidentiality and anonymity

VI. Limitations

- Identify any potential limitations of the research methodology and how they may impact the results and conclusions

VII. Conclusion

- Summarize the key aspects of the research methodology section

- Explain how the research methodology addresses the research question(s) and objectives

Research Methodology Types

The main types of research methodology are as follows:

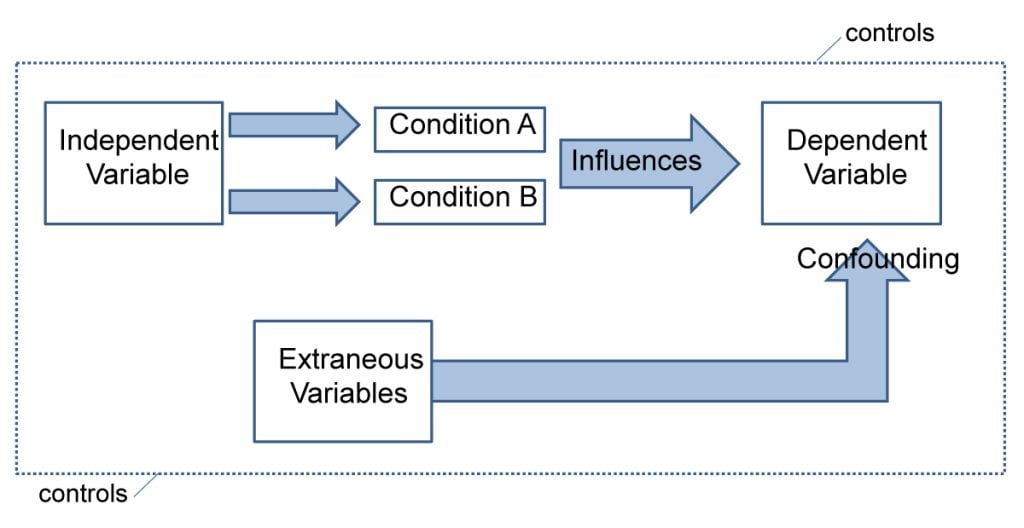

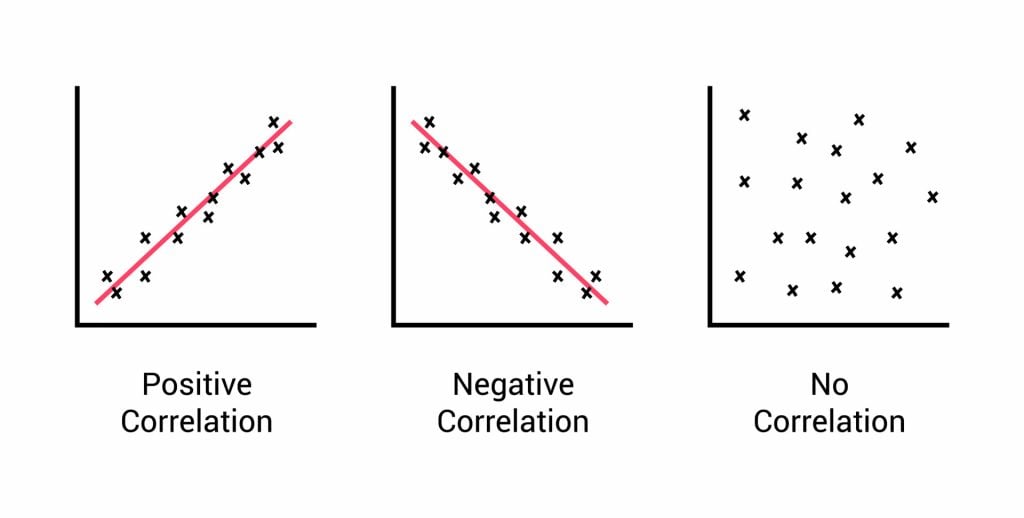

Quantitative Research Methodology

This is a research methodology that involves the collection and analysis of numerical data using statistical methods. This type of research is often used to study cause-and-effect relationships and to make predictions.

Qualitative Research Methodology

This is a research methodology that involves the collection and analysis of non-numerical data such as words, images, and observations. This type of research is often used to explore complex phenomena, to gain an in-depth understanding of a particular topic, and to generate hypotheses.

Mixed-Methods Research Methodology

This is a research methodology that combines elements of both quantitative and qualitative research. This approach can be particularly useful for studies that aim to explore complex phenomena and to provide a more comprehensive understanding of a particular topic.

Case Study Research Methodology

This is a research methodology that involves in-depth examination of a single case or a small number of cases. Case studies are often used in psychology, sociology, and anthropology to gain a detailed understanding of a particular individual or group.

Action Research Methodology

This is a research methodology that involves a collaborative process between researchers and practitioners to identify and solve real-world problems. Action research is often used in education, healthcare, and social work.

Experimental Research Methodology

This is a research methodology that involves the manipulation of one or more independent variables to observe their effects on a dependent variable. Experimental research is often used to study cause-and-effect relationships and to make predictions.

Survey Research Methodology

This is a research methodology that involves the collection of data from a sample of individuals using questionnaires or interviews. Survey research is often used to study attitudes, opinions, and behaviors.

Grounded Theory Research Methodology

This is a research methodology that involves the development of theories based on the data collected during the research process. Grounded theory is often used in sociology and anthropology to generate theories about social phenomena.

Research Methodology Example

An example of a research methodology might look like the following:

Research Methodology for Investigating the Effectiveness of Cognitive Behavioral Therapy in Reducing Symptoms of Depression in Adults

Introduction:

The aim of this research is to investigate the effectiveness of cognitive-behavioral therapy (CBT) in reducing symptoms of depression in adults. To achieve this objective, a randomized controlled trial (RCT) will be conducted using a mixed-methods approach.

Research Design:

The study will follow a pre-test and post-test design with two groups: an experimental group receiving CBT and a control group receiving no intervention. The study will also include a qualitative component, in which semi-structured interviews will be conducted with a subset of participants to explore their experiences of receiving CBT.

Participants:

Participants will be recruited from community mental health clinics in the local area. The sample will consist of 100 adults aged 18 to 65 years who meet the diagnostic criteria for major depressive disorder. Participants will be randomly assigned to either the experimental group or the control group.
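The example fixes the sample at 100 but does not say how that number was chosen, and it says participants will be "randomly assigned" without naming a method. A minimal sketch of both steps follows, under assumptions that are ours rather than the example's: the planning input is a standardized effect size (Cohen's d), the per-arm sample size uses the normal approximation n = 2(z_{1-α/2} + z_{1-β})² / d², and allocation uses permuted blocks of four. The function names are hypothetical.

```python
import math
import random
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-arm sample size for a two-sided comparison of two means (normal approximation)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_beta = std_normal.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size_d) ** 2)


def block_randomize(n_participants: int, block_size: int = 4, seed: int = 2024) -> list[str]:
    """1:1 permuted-block allocation to 'CBT' or 'control'."""
    if block_size % 2:
        raise ValueError("block_size must be even for 1:1 allocation")
    rng = random.Random(seed)
    allocation: list[str] = []
    while len(allocation) < n_participants:
        # Each block holds equal numbers of both arms, shuffled into a random order.
        block = ["CBT", "control"] * (block_size // 2)
        rng.shuffle(block)
        allocation.extend(block)
    return allocation[:n_participants]


print(n_per_group(0.5))  # 63 per arm, before allowing for dropout
schedule = block_randomize(100)
print(schedule.count("CBT"), schedule.count("control"))  # 50 50
```

With d = 0.5 the formula asks for 63 per arm, more than the 50 per arm the example plans, so the assumed effect size (and expected attrition) should be stated and justified. In practice the allocation sequence is usually generated and concealed by someone not involved in recruitment.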

Intervention:

The experimental group will receive 12 weekly sessions of CBT, each lasting 60 minutes. The intervention will be delivered by licensed mental health professionals who have been trained in CBT. The control group will receive no intervention during the study period.

Data Collection:

Quantitative data will be collected through the use of standardized measures such as the Beck Depression Inventory-II (BDI-II) and the Generalized Anxiety Disorder-7 (GAD-7). Data will be collected at baseline, immediately after the intervention, and at a 3-month follow-up. Qualitative data will be collected through semi-structured interviews with a subset of participants from the experimental group. The interviews will be conducted at the end of the intervention period, and will explore participants’ experiences of receiving CBT.

Data Analysis:

Quantitative data will be analyzed using descriptive statistics, t-tests, and mixed-model analyses of variance (ANOVA) to assess the effectiveness of the intervention. Qualitative data will be analyzed using thematic analysis to identify common themes and patterns in participants’ experiences of receiving CBT.
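As a simplified illustration of the quantitative comparison, the sketch below computes Welch's two-sample t statistic and its Welch-Satterthwaite degrees of freedom on hypothetical pre-to-post changes in BDI-II scores. Comparing change scores with Welch's test is our assumption for illustration; the example plans t-tests together with mixed-model ANOVA across all three time points, which this sketch does not reproduce.

```python
import math
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent samples."""
    se2_a = variance(a) / len(a)  # squared standard error of mean(a); variance() divides by n - 1
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df


# Hypothetical post-minus-baseline BDI-II changes (negative = fewer symptoms).
cbt_change = [-10.0, -8.0, -12.0, -6.0, -9.0]
control_change = [-3.0, -1.0, -4.0, -2.0, 0.0]
t, df = welch_t(cbt_change, control_change)
print(round(t, 2), round(df, 1))  # -5.72 7.2
```

A p-value requires the t distribution; SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same Welch test and returns one.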

Ethical Considerations:

This study will comply with ethical guidelines for research involving human subjects. Participants will provide informed consent before participating in the study, and their privacy and confidentiality will be protected throughout the study. Any adverse events or reactions will be reported and managed appropriately.

Data Management:

All data collected will be kept confidential and stored securely using password-protected databases. Identifying information will be removed from qualitative data transcripts to ensure participants’ anonymity.
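One step in removing identifying information can be automated: replacing known participant names in transcripts with stable codes. The sketch below is a hypothetical helper that assumes the researcher supplies the list of names; it does not catch unlisted names, places, or other indirect identifiers, so manual review is still needed, and the returned linking key should be stored separately from the transcripts (for example, in the password-protected database the example mentions).

```python
import re


def pseudonymize(transcript: str, names: list[str]) -> tuple[str, dict[str, str]]:
    """Replace each listed name with a code (P01, P02, ...) and return the linking key."""
    key = {name: f"P{i:02d}" for i, name in enumerate(names, start=1)}
    # Replace longer names first so "Ann Lee" is handled before "Ann".
    for name in sorted(key, key=len, reverse=True):
        # \b prevents partial matches, e.g. "Tom" inside "Tomas".
        transcript = re.sub(rf"\b{re.escape(name)}\b", key[name], transcript)
    return transcript, key


clean, key = pseudonymize("Maria said Tom drove her to sessions. Maria agreed.", ["Maria", "Tom"])
print(clean)  # P01 said P02 drove her to sessions. P01 agreed.
```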

Limitations:

One potential limitation of this study is that it only focuses on one type of psychotherapy, CBT, and may not generalize to other types of therapy or interventions. Another limitation is that the study will only include participants from community mental health clinics, which may not be representative of the general population.

Conclusion:

This research aims to investigate the effectiveness of CBT in reducing symptoms of depression in adults. By combining a randomized controlled trial with qualitative interviews, the study will provide evidence on whether CBT reduces depressive symptoms compared with no intervention, along with insight into how participants experience the therapy. The results may inform the development of effective treatments for depression in clinical settings.

How to Write Research Methodology

Writing a research methodology involves explaining the methods and techniques you used to conduct research, collect data, and analyze results. It’s an essential section of any research paper or thesis, as it helps readers understand the validity and reliability of your findings. Here are the steps to write a research methodology:

- Start by explaining your research question: Begin the methodology section by restating your research question and explaining why it’s important. This helps readers understand the purpose of your research and the rationale behind your methods.

- Describe your research design: Explain the overall approach you used to conduct research. This could be a qualitative or quantitative research design, experimental or non-experimental, case study or survey, etc. Discuss the advantages and limitations of the chosen design.

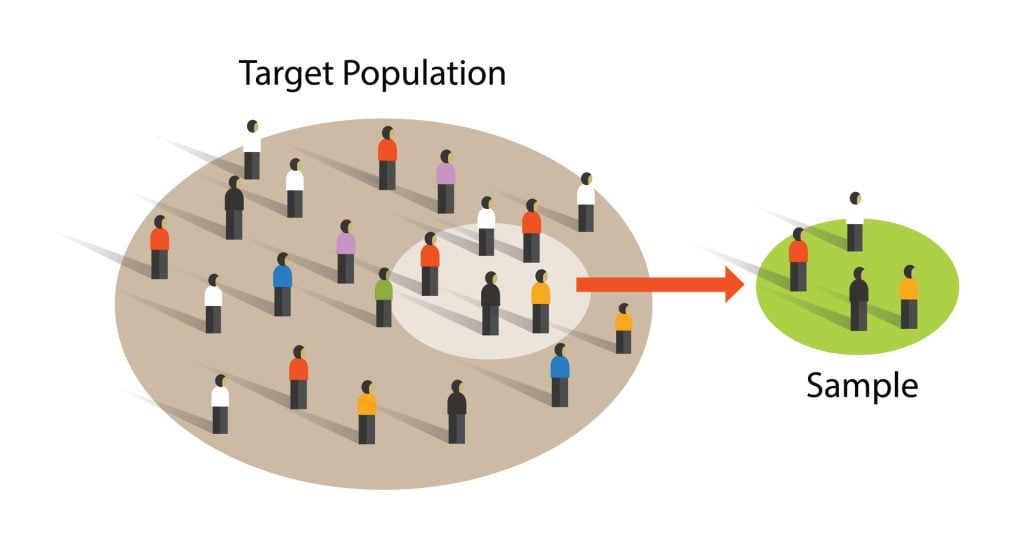

- Discuss your sample: Describe the participants or subjects you included in your study. Include details such as their demographics, sampling method, sample size, and any exclusion criteria used.

- Describe your data collection methods: Explain how you collected data from your participants. This could include surveys, interviews, observations, questionnaires, or experiments. Include details on how you obtained informed consent, how you administered the tools, and how you minimized the risk of bias.

- Explain your data analysis techniques: Describe the methods you used to analyze the data you collected. This could include statistical analysis, content analysis, thematic analysis, or discourse analysis. Explain how you dealt with missing data, outliers, and any other issues that arose during the analysis.

- Discuss the validity and reliability of your research: Explain how you ensured the validity and reliability of your study. This could include measures such as triangulation, member checking, peer review, or inter-coder reliability.

- Acknowledge any limitations of your research: Discuss any limitations of your study, including any potential threats to validity or generalizability. This helps readers understand the scope of your findings and how they might apply to other contexts.

- Provide a summary: End the methodology section by summarizing the methods and techniques you used to conduct your research. This provides a clear overview of your research methodology and helps readers understand the process you followed to arrive at your findings.
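Inter-coder reliability, one of the checks listed above, is often summarised with Cohen's kappa, which corrects the raw agreement between two coders for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below assumes two coders who each assign exactly one category to every excerpt; the function name and the theme labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder1: list[str], coder2: list[str]) -> float:
    """Cohen's kappa for two coders who each assign one category per item."""
    if len(coder1) != len(coder2) or not coder1:
        raise ValueError("both coders must rate the same, non-empty set of items")
    n = len(coder1)
    p_observed = sum(a == b for a, b in zip(coder1, coder2)) / n
    counts1, counts2 = Counter(coder1), Counter(coder2)
    # Chance agreement: product of the coders' marginal proportions, summed over categories.
    p_expected = sum(counts1[c] * counts2[c] for c in counts1.keys() | counts2.keys()) / n ** 2
    if p_expected == 1:
        raise ValueError("kappa is undefined when both coders use a single identical category")
    return (p_observed - p_expected) / (1 - p_expected)


coder_1 = ["support", "support", "stigma", "stigma", "support",
           "stigma", "support", "support", "stigma", "support"]
coder_2 = ["support", "stigma", "stigma", "stigma", "support",
           "stigma", "support", "support", "support", "support"]
print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.583
```

Here the coders agree on 8 of 10 excerpts (p_o = 0.80), but because both use "support" 60% of the time, chance agreement is p_e = 0.52, so kappa is noticeably lower than raw agreement.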

When to Write Research Methodology

Research methodology is typically written after the research proposal has been approved and before the actual research is conducted. It should be written prior to data collection and analysis, as it provides a clear roadmap for the research project.

The research methodology is an important section of any research paper or thesis, as it describes the methods and procedures that will be used to conduct the research. It should include details about the research design, data collection methods, data analysis techniques, and any ethical considerations.

The methodology should be written in a clear and concise manner, and it should be based on established research practices and standards. It is important to provide enough detail so that the reader can understand how the research was conducted and evaluate the validity of the results.

Applications of Research Methodology

Here are some of the applications of research methodology:

- To identify the research problem: Research methodology is used to identify the research problem, which is the first step in conducting any research.

- To design the research: Research methodology helps in designing the research by selecting the appropriate research method, research design, and sampling technique.

- To collect data: Research methodology provides a systematic approach to collect data from primary and secondary sources.

- To analyze data: Research methodology helps in analyzing the collected data using various statistical and non-statistical techniques.

- To test hypotheses: Research methodology provides a framework for testing hypotheses and drawing conclusions based on the analysis of data.

- To generalize findings: Research methodology helps in generalizing the findings of the research to the target population.

- To develop theories: Research methodology is used to develop new theories and modify existing theories based on the findings of the research.

- To evaluate programs and policies: Research methodology is used to evaluate the effectiveness of programs and policies by collecting and analyzing data.

- To improve decision-making: Research methodology helps in making informed decisions by providing reliable and valid data.
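To make the hypothesis-testing and data-analysis applications above more concrete, here is a minimal Python sketch of one common statistical technique, a two-sided permutation test for a difference in group means. The function name `permutation_test`, the sample scores, and the default of 10,000 shuffles are illustrative assumptions, not part of any particular study's method.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=42):
    """Two-sided permutation test for a difference in means.

    Returns the observed difference (mean of group_a minus mean of group_b)
    and an approximate p-value.
    """
    rng = random.Random(seed)  # fixed seed so the result can be reproduced
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are exchangeable,
        # so reshuffle them and recompute the difference in means.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Hypothetical test scores for two groups (illustrative data only).
control = [72, 75, 68, 70, 74, 71]
treatment = [78, 80, 76, 79, 82, 77]

difference, p = permutation_test(treatment, control)
print(f"difference in means: {difference:.2f}, p = {p:.4f}")
```

The fixed seed makes the p-value reproducible, which also supports replication by other researchers. A permutation test is only one option; depending on the data, a researcher might instead choose a t-test or a rank-based test.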

Purpose of Research Methodology

Research methodology serves several important purposes, including:

- To guide the research process: Research methodology provides a systematic framework for conducting research. It helps researchers to plan their research, define their research questions, and select appropriate methods and techniques for collecting and analyzing data.

- To ensure research quality: Research methodology helps researchers to ensure that their research is rigorous, reliable, and valid. It provides guidelines for minimizing bias and error in data collection and analysis, and for ensuring that research findings are accurate and trustworthy.

- To replicate research: Research methodology provides a clear and detailed account of the research process, making it possible for other researchers to replicate the study and verify its findings.

- To advance knowledge: Research methodology enables researchers to generate new knowledge and to contribute to the body of knowledge in their field. It provides a means for testing hypotheses, exploring new ideas, and discovering new insights.

- To inform decision-making: Research methodology provides evidence-based information that can inform policy and decision-making in a variety of fields, including medicine, public health, education, and business.

Advantages of Research Methodology

Research methodology has several advantages that make it a valuable tool for conducting research in various fields. Here are some of the key advantages of research methodology:

- Systematic and structured approach: Research methodology provides a systematic and structured approach to conducting research, which helps ensure that the research is carried out rigorously and comprehensively.

- Objectivity: Research methodology aims to ensure objectivity in the research process, meaning that the findings rest on evidence rather than on personal bias or subjective opinion.

- Replicability: Research methodology documents the research process in enough detail that other researchers can replicate the study, which is essential for validating research findings and checking their accuracy.

- Reliability: Research methodology aims to ensure that the research findings are reliable, meaning that they are consistent and can be depended upon.

- Validity: Research methodology aims to ensure that the research findings are valid, meaning that the study measures what it claims to measure and that its conclusions about the research question or hypothesis are justified.

- Efficiency: Research methodology provides a structured and efficient way of conducting research, which helps to save time and resources.

- Flexibility: Research methodology allows researchers to choose the most appropriate research methods and techniques based on the research question, data availability, and other relevant factors.

- Scope for innovation: Research methodology provides scope for innovation and creativity in designing research studies and developing new research techniques.
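Reliability can be quantified in several ways. As one illustration, here is a minimal Python sketch of Cronbach's alpha, a widely used measure of the internal consistency of a multi-item questionnaire. The function name `cronbach_alpha` and the five-respondent dataset are hypothetical, and alpha captures only one kind of reliability (internal consistency), not stability over repeated measurements.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])  # number of questionnaire items
    if k < 2:
        raise ValueError("need at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_var_sum = sum(pvariance(item) for item in items)
    total_var = pvariance([sum(r) for r in responses])
    if total_var == 0:
        raise ValueError("total scores have no variance")
    # Population variance is used throughout; using sample variance for
    # both terms would give the same ratio.
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers from five respondents to three related 1-5 items.
survey = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
print(f"Cronbach's alpha: {cronbach_alpha(survey):.3f}")
```

Values closer to 1 indicate that the items vary together; commonly cited cut-offs such as 0.7 are rules of thumb rather than fixed standards.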

Research Methodology Vs Research Methods

About the author.

Muhammad Hassan

Researcher, Academic Writer, Web developer


Research report guide: Definition, types, and tips

Last updated

5 March 2024


From successful product launches or software releases to planning major business decisions, research reports serve many vital functions. They can summarize evidence and deliver insights and recommendations to save companies time and resources. They can reveal the most value-adding actions a company should take.

However, poorly constructed reports can have the opposite effect! Taking the time to learn established research-reporting rules and approaches will equip you with in-demand skills. You’ll be able to capture and communicate information applicable to numerous situations and industries, adding another string to your bow.

- What are research reports?

A research report is a collection of contextual data, gathered through organized research, that provides new insights into a particular challenge (which, for this article, is business-related). Research reports are a time-tested method for distilling large amounts of data into a narrow band of focus.

Their effectiveness often hinges on whether the report provides:

Strong, well-researched evidence

Comprehensive analysis

Well-considered conclusions and recommendations

Though the topic possibilities are endless, an effective research report keeps a laser-like focus on the specific questions or objectives the researcher believes are key to achieving success. Many research reports begin as research proposals, which usually include the need for a report to capture the findings of the study and recommend a course of action.

A description of the research method used, e.g., qualitative, quantitative, or other

Statistical analysis

Causal (or explanatory) research (i.e., research identifying cause-and-effect relationships between variables)

Inductive research, also known as ‘theory-building’

Deductive research, such as that used to test theories

Action research, where the research is actively used to drive change

- Importance of a research report

Research reports can unify and direct a company's focus toward the most appropriate strategic action. Of course, producing a report consumes some of the company's human and financial resources, so deciding when a report is called for is a matter of judgment and experience.

Some development models used heavily in the engineering world, such as Waterfall development, are notorious for over-relying on research reports. With Waterfall development, there is a linear progression through each step of a project, and each stage is precisely documented and reported on before moving to the next.

The business world often moves faster than your authors can produce and disseminate reports. So how do companies strike the right balance between creating and acting on research reports?

The answer lies, again, in the report's defined objectives. When you pare your interests and those of your stakeholders down to the most pressing ones, your research and reporting skills become the lens that keeps your company's priorities in constant focus.

Honing your company's primary objectives can save significant amounts of time and align research and reporting efforts with ever-greater precision.

Some examples of well-designed research objectives are:

Proving whether or not a product or service meets customer expectations

Demonstrating the value of a service, product, or business process to your stakeholders and investors

Improving business decision-making when faced with a lack of time or other constraints

Clarifying the relationship between a critical cause and effect for problematic business processes

Prioritizing the development of a backlog of products or product features

Comparing business or production strategies

Evaluating past decisions and predicting future outcomes

- Features of a research report

Research reports generally require a research design phase, where the report author(s) determine the most important elements the report must contain.

Just as there are various kinds of research, there are many types of reports.

Here are the standard elements of almost any research-reporting format:

Report summary. A broad but comprehensive overview of what readers will learn in the full report. Summaries are usually no more than one or two paragraphs and address all key elements of the report. Think of the key takeaways your primary stakeholders will want to know if they don’t have time to read the full document.

Introduction. Include a brief background of the topic, the type of research, and the research sample. Consider the primary goal of the report, who is most affected, and how far along the company is in meeting its objectives.

Methods. A description of how the researcher carried out data collection, analysis, and final interpretations of the data. Include the reasons for choosing a particular method. The methods section should strike a balance between clearly presenting the approach taken to gather data and discussing how it is designed to achieve the report's objectives.

Data analysis. This section contains interpretations that lead readers through the results relevant to the report's thesis. If there were unexpected results, discuss possible reasons for them here. Charts, calculations, statistics, and other supporting information also belong here (or, if lengthy, in an appendix). This should be the most detailed section of the research report, with references for further study. Present the information in a logical order, whether chronologically or in order of importance to the report's objectives.

Conclusion. This should be written with sound reasoning, often containing useful recommendations. The conclusion must be backed by a continuous thread of logic throughout the report.
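Statistics in the data analysis section are often reported with a measure of uncertainty. Here is a minimal Python sketch, assuming a hypothetical customer survey in which 312 of 400 respondents said they were satisfied, that computes a 95% normal-approximation (Wald) confidence interval for that proportion. The function name `proportion_ci` and the figures are illustrative; for small samples or proportions near 0 or 1, an interval such as the Wilson score interval is usually more appropriate.

```python
import math


def proportion_ci(successes, n, z=1.96):
    """Normal-approximation (Wald) confidence interval for a proportion.

    z = 1.96 corresponds to roughly 95% coverage.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p_hat = successes / n
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)
    # Clamp to [0, 1], since a proportion cannot fall outside that range.
    return p_hat, max(0.0, p_hat - margin), min(1.0, p_hat + margin)


# Hypothetical survey result: 312 of 400 customers reported being satisfied.
p_hat, low, high = proportion_ci(312, 400)
print(f"{p_hat:.1%} satisfied (95% CI {low:.1%} to {high:.1%})")
```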

- How to write a research paper

With a clear outline and a robust pool of research, a research paper can almost write itself. But what's a good way to start a research report?

Research report examples are often the quickest way to gain inspiration for your report. Look for the types of research reports most relevant to your industry and consider which makes the most sense for your data and goals.

The research report outline will help you organize the elements of your report. One of the most time-tested report outlines is the IMRaD structure:

Introduction

Methods

Results

Discussion

Pay close attention to the most well-established research reporting format in your industry, and consider your tone and language from your audience's perspective. Learn the key terms inside and out; incorrect jargon could easily harm the perceived authority of your research paper.

Along with a foundation in high-quality research and razor-sharp analysis, the most effective research reports will also demonstrate well-developed:

Internal logic

Narrative flow

Conclusions and recommendations

Readability, striking a balance between simple phrasing and technical insight

How to gather research data for your report

The validity of research data is critical. Because the research phase usually occurs well before the writing phase, you normally have plenty of time to vet your data.

However, some research reports involve ongoing research, with report authors (sometimes the researchers themselves) writing portions of the report while the work is still in progress.

One such research-report example would be an R&D department that knows its primary stakeholders are eager to learn about a lengthy work in progress and any potentially important outcomes.

However you choose to manage the research and reporting, your data must meet robust quality standards before you can rely on it. Vet any research with the following questions in mind:

Does it use statistically valid analysis methods?

Do the researchers clearly explain their research, analysis, and sampling methods?

Did the researchers provide any caveats or advice on how to interpret their data?

Have you gathered the data yourself or were you in close contact with those who did?

Is the source biased?

Usually, flawed research methods become more apparent the further you get through a research report.

It's perfectly natural for good research to raise new questions, but the reader should have no uncertainty about what the data represents. There should be no doubt about matters such as:

Whether the sampling or analysis methods were based on sound and consistent logic

What the research samples are and where they came from

The accuracy of any statistical functions or equations

Validation of testing and measuring processes
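Documenting what the samples are and where they came from is easier when the sampling step itself is recorded. Here is a minimal Python sketch, assuming a hypothetical sampling frame of customer IDs, that draws a simple random sample without replacement and returns a small record of the method, frame size, sample size, and seed. The function name `draw_sample` and the ID format are illustrative; with the same frame, seed, and Python version, another analyst can redraw the identical sample.

```python
import random


def draw_sample(frame, n, seed):
    """Draw a simple random sample without replacement and describe how it was drawn."""
    if not 0 < n <= len(frame):
        raise ValueError("sample size must be between 1 and the frame size")
    rng = random.Random(seed)  # a recorded seed lets others redraw the same sample
    sample = rng.sample(frame, n)
    record = {
        "method": "simple random sample without replacement",
        "frame_size": len(frame),
        "sample_size": n,
        "seed": seed,
    }
    return sample, record


# Hypothetical sampling frame of 1,000 customer IDs.
frame = [f"CUST-{i:04d}" for i in range(1000)]
sample, record = draw_sample(frame, 50, seed=2024)
print(record)
print(sample[:5])
```

Publishing a record like this alongside the report lets readers check that the sampling logic was sound and consistent.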

When does a report require design validation?

A robust design validation process is often a gold standard in highly technical research reports. Design validation ensures the objects of a study are measured accurately, which lends more weight to your report and makes it valuable to more specialized industries.

Product development and engineering projects are the most common research-report examples that typically involve a design validation process. Depending on the scope and complexity of your research, you might face additional steps to validate your data and research procedures.

If you’re including design validation in the report (or report proposal), explain and justify your data-collection processes. Good design validation builds greater trust in a research report and lends more weight to its conclusions.

Choosing the right analysis method