

USC study reveals the key reason why fake news spreads on social media

The USC-led study of more than 2,400 Facebook users suggests that platforms, more than individual users, have the larger role to play in stopping the spread of misinformation online.

USC researchers may have found the biggest influencer in the spread of fake news: social platforms’ structure of rewarding users for habitually sharing information.

The team’s findings, published Monday in the Proceedings of the National Academy of Sciences, upend popular misconceptions that misinformation spreads because users lack the critical thinking skills needed to tell truth from falsehood or because strong political beliefs skew their judgment.

The most habitual 15% of news sharers in the study were responsible for spreading about 30% to 40% of the fake news.
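The concentration is easier to picture as a per-person rate. The back-of-the-envelope sketch below is simple arithmetic, not a figure reported in the study; it assumes the remaining 85% of sharers account for all of the rest of the fake news shared, and compares per-person output between the two groups. The function name `per_capita_ratio` is hypothetical.

```python
def per_capita_ratio(group_share: float, output_share: float) -> float:
    """Per-person output of a subgroup relative to per-person output of everyone else.

    group_share:  fraction of users in the subgroup (0.15 here)
    output_share: fraction of all fake-news shares the subgroup accounts for
    """
    subgroup_rate = output_share / group_share
    # Assumption: everyone outside the subgroup produces the remaining shares.
    rest_rate = (1.0 - output_share) / (1.0 - group_share)
    return subgroup_rate / rest_rate


for output_share in (0.30, 0.40):
    ratio = per_capita_ratio(0.15, output_share)
    print(f"{output_share:.0%} of fake news -> {ratio:.1f}x the per-person rate of the rest")
```

Under that assumption, the most habitual sharers passed on roughly 2.4 to 3.8 times as much fake news per person as everyone else.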

The research team from the USC Marshall School of Business and the USC Dornsife College of Letters, Arts and Sciences wondered: What motivates these users? As it turns out, much like any video game, social media has a rewards system that encourages users to stay on their accounts and keep posting and sharing. Users who post and share frequently, especially sensational, eye-catching information, are likely to attract attention.

“Due to the reward-based learning systems on social media, users form habits of sharing information that gets recognition from others,” the researchers wrote. “Once habits form, information sharing is automatically activated by cues on the platform without users considering critical response outcomes, such as spreading misinformation.”

Posting, sharing and engaging with others on social media can, therefore, become a habit.
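The habit-formation mechanism the researchers describe can be illustrated with a toy reinforcement-learning loop. This is a hypothetical illustration, not the study's model: `simulate_habit`, its parameters, and the delta-rule value update are assumptions chosen for clarity. Rewards (likes, comments) raise the learned value of sharing, more sharing means more repetitions, and once habit strength crosses a threshold the platform cue alone triggers sharing, with no check on whether the content is true.

```python
import random


def simulate_habit(n_cues: int, reward_prob: float, alpha: float = 0.1,
                   habit_threshold: float = 0.8, seed: int = 0) -> tuple[float, int]:
    """Toy model of reward-driven habit formation (hypothetical, for illustration).

    Returns the final habit strength and the first cue index at which sharing
    became automatic, or -1 if it never did.
    """
    rng = random.Random(seed)
    value = 0.0          # learned estimate of how rewarding sharing is
    habit = 0.0          # habit strength, built up by repetition
    automatic_at = -1
    for t in range(n_cues):
        automatic = habit >= habit_threshold
        if automatic and automatic_at < 0:
            automatic_at = t
        # Before the habit forms, sharing depends on learned value (with a small
        # baseline rate); afterwards the cue alone triggers it.
        if automatic or rng.random() < max(value, 0.1):
            # Nothing here checks whether the shared item is true.
            reward = 1.0 if rng.random() < reward_prob else 0.0
            value += alpha * (reward - value)   # delta-rule value update
            habit += alpha * (1.0 - habit)      # each repetition strengthens the habit
    return habit, automatic_at


print(simulate_habit(500, reward_prob=0.7))
print(simulate_habit(500, reward_prob=0.1))
```

With a higher `reward_prob`, sharing typically becomes automatic after fewer cues, because rewarded shares raise the sharing rate and every share adds to habit strength.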


“Our findings show that misinformation isn’t spread through a deficit of users. It’s really a function of the structure of the social media sites themselves,” said Wendy Wood, an expert on habits and USC emerita Provost Professor of psychology and business.

“The habits of social media users are a bigger driver of misinformation spread than individual attributes. We know from prior research that some people don’t process information critically, and others form opinions based on political biases, which also affects their ability to recognize false stories online,” said Gizem Ceylan, who led the study during her doctorate at USC Marshall and is now a postdoctoral researcher at the Yale School of Management. “However, we show that the reward structure of social media platforms plays a bigger role when it comes to misinformation spread.”

In a novel approach, Ceylan and her co-authors sought to understand how the reward structure of social media sites drives users to develop habits of posting misinformation.

Why fake news spreads: behind the social network

Overall, the study involved 2,476 active Facebook users, ranging in age from 18 to 89, who volunteered to participate in response to online advertising. They were paid to complete a “decision-making” survey lasting approximately seven minutes.

Surprisingly, the researchers found that users’ social media habits doubled and, in some cases, tripled the amount of fake news they shared. Their habits were more influential in sharing fake news than other factors, including political beliefs and lack of critical reasoning.

Frequent, habitual users forwarded six times more fake news than occasional or new users.

“This type of behavior has been rewarded in the past by algorithms that prioritize engagement when selecting which posts users see in their news feed, and by the structure and design of the sites themselves,” said second author Ian A. Anderson, a behavioral scientist and doctoral candidate at USC Dornsife. “Understanding the dynamics behind misinformation spread is important given its political, health and social consequences.”

Experimenting with different scenarios to see why fake news spreads

In the first experiment, the researchers found that habitual users of social media share both true and fake news.

In another experiment, the researchers found that habitual sharing of misinformation is part of a broader pattern of insensitivity to the information being shared. In fact, habitual users shared politically discordant news — news that challenged their political beliefs — as much as concordant news that they endorsed.

Lastly, the team tested whether social media reward structures could be devised to promote sharing of true over false information. They showed that incentives for accuracy, rather than for popularity (the current norm on social media sites), doubled the amount of accurate news that users shared on social platforms.
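The contrast between popularity and accuracy incentives can be sketched as a two-option learning problem. In this hypothetical example, the engagement rates in `ENGAGEMENT` are invented for illustration (they simply assume false items draw attention more often), and the epsilon-greedy learner is a stand-in, not the researchers' experimental design. Under a popularity reward the learner drifts toward whatever draws attention; under an accuracy reward it drifts toward true items.

```python
import random

# Hypothetical engagement rates, for illustration only: assume sensational
# false items attract attention more often than true ones.
ENGAGEMENT = {"true": 0.3, "false": 0.6}


def reward(kind: str, scheme: str, rng: random.Random) -> float:
    """Reward for sharing an item of `kind` under a given incentive scheme."""
    if scheme == "popularity":
        return 1.0 if rng.random() < ENGAGEMENT[kind] else 0.0
    if scheme == "accuracy":
        return 1.0 if kind == "true" else 0.0
    raise ValueError(f"unknown scheme: {scheme}")


def fraction_true_shared(scheme: str, steps: int = 2000, alpha: float = 0.1,
                         epsilon: float = 0.1, seed: int = 0) -> float:
    """Epsilon-greedy learner choosing which kind of item to share."""
    rng = random.Random(seed)
    value = {"true": 0.0, "false": 0.0}
    true_shares = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            kind = rng.choice(["true", "false"])   # occasional exploration
        else:
            kind = max(value, key=value.get)       # share what has paid off so far
        value[kind] += alpha * (reward(kind, scheme, rng) - value[kind])
        if kind == "true":
            true_shares += 1
    return true_shares / steps


for scheme in ("popularity", "accuracy"):
    print(scheme, round(fraction_true_shared(scheme), 2))
```

The printed fractions depend entirely on the assumed rates; the sketch shows only the direction of the effect, not the doubling reported in the study.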

The study’s conclusions:

- Habitual sharing of misinformation is not inevitable.

- Users could be incentivized to build sharing habits that make them more sensitive to sharing truthful content.

- Effectively reducing misinformation would require restructuring the online environments that promote and support its sharing.

These findings suggest that social media platforms can go beyond moderating what information is posted and instead pursue changes to their reward structures to limit the spread of misinformation.

About the study: The research was supported and funded by the USC Dornsife College of Letters, Arts and Sciences Department of Psychology, the USC Marshall School of Business and the Yale University School of Management.



MIT News | Massachusetts Institute of Technology


Systems scientists find clues to why false news snowballs on social media


The spread of misinformation on social media is a pressing societal problem that tech companies and policymakers continue to grapple with, yet those who study this issue still don’t have a deep understanding of why and how false news spreads.

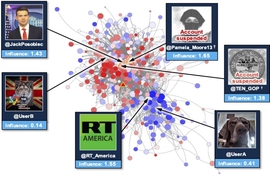

To shed some light on this murky topic, researchers at MIT developed a theoretical model of a Twitter-like social network to study how news is shared and explore situations where a non-credible news item will spread more widely than the truth. Agents in the model are driven by a desire to persuade others to take on their point of view: The key assumption in the model is that people bother to share something with their followers if they think it is persuasive and likely to move others closer to their mindset. Otherwise they won’t share.

The researchers found that in such a setting, when a network is highly connected or the views of its members are sharply polarized, news that is likely to be false will spread more widely and travel deeper into the network than news with higher credibility.

This theoretical work could inform empirical studies of the relationship between news credibility and the size of its spread, which might help social media companies adapt networks to limit the spread of false information.

“We show that, even if people are rational in how they decide to share the news, this could still lead to the amplification of information with low credibility. With this persuasion motive, no matter how extreme my beliefs are — given that the more extreme they are the more I gain by moving others’ opinions — there is always someone who would amplify [the information],” says senior author Ali Jadbabaie, professor and head of the Department of Civil and Environmental Engineering and a core faculty member of the Institute for Data, Systems, and Society (IDSS) and a principal investigator in the Laboratory for Information and Decision Systems (LIDS).

Joining Jadbabaie on the paper are first author Chin-Chia Hsu, a graduate student in the Social and Engineering Systems program in IDSS, and Amir Ajorlou, a LIDS research scientist. The research will be presented this week at the IEEE Conference on Decision and Control.

Pondering persuasion

This research draws on a 2018 study by Sinan Aral, the David Austin Professor of Management at the MIT Sloan School of Management; Deb Roy, a professor of media arts and sciences at the Media Lab; and former postdoc Soroush Vosoughi (now an assistant professor of computer science at Dartmouth College). Their empirical study of data from Twitter found that false news spreads wider, faster, and deeper than real news.

Jadbabaie and his collaborators wanted to drill down on why this occurs.

They hypothesized that persuasion might be a strong motive for sharing news — perhaps agents in the network want to persuade others to take on their point of view — and decided to build a theoretical model that would let them explore this possibility.

In their model, agents have some prior belief about a policy, and their goal is to persuade followers to move their beliefs closer to the agent’s side of the spectrum.

A news item is initially released to a small, random subgroup of agents, which must decide whether to share this news with their followers. An agent weighs the newsworthiness of the item and its credibility, and updates its belief based on how surprising or convincing the news is.

“They will make a cost-benefit analysis to see if, on average, this piece of news will move people closer to what they think or move them away. And we include a nominal cost for sharing. For instance, taking some action, if you are scrolling on social media, you have to stop to do that. Think of that as a cost. Or a reputation cost might come if I share something that is embarrassing. Everyone has this cost, so the more extreme and the more interesting the news is, the more you want to share it,” Jadbabaie says.

If the news affirms the agent’s perspective and has persuasive power that outweighs the nominal cost, the agent will always share the news. But if an agent thinks the news item is something others may have already seen, the agent is disincentivized to share it.

Since an agent’s willingness to share news is a product of its perspective and how persuasive the news is, the more extreme an agent’s perspective or the more surprising the news, the more likely the agent will share it.
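The sharing rule described above can be sketched in a few lines. This is a simplified stand-in, not the model in the paper: the -1 to 1 belief spectrum, the `credibility * surprise` update weight, and the `p_already_seen` discount are hypothetical choices meant only to mirror the ingredients Jadbabaie describes (a persuasive gain, a nominal cost, and less value in sharing items followers have likely seen).

```python
from dataclasses import dataclass


@dataclass
class NewsItem:
    position: float      # where the item pushes beliefs on a -1..1 policy spectrum
    credibility: float   # 0..1, how much readers trust it
    surprise: float      # 0..1, how unexpected or newsworthy it is


def persuasion_gain(agent_belief: float, follower_belief: float,
                    item: NewsItem, p_already_seen: float = 0.0) -> float:
    """How much closer to the agent a follower would move after seeing the item."""
    # Hypothetical update rule: followers move toward the item's position in
    # proportion to its credibility times its surprise.
    weight = item.credibility * item.surprise
    updated = follower_belief + weight * (item.position - follower_belief)
    gain = abs(agent_belief - follower_belief) - abs(agent_belief - updated)
    # Followers who probably saw the item already will not be moved again.
    return (1.0 - p_already_seen) * gain


def should_share(agent_belief: float, follower_belief: float, item: NewsItem,
                 cost: float = 0.05, p_already_seen: float = 0.0) -> bool:
    """Share only if the expected persuasive gain outweighs the nominal cost."""
    return persuasion_gain(agent_belief, follower_belief, item, p_already_seen) > cost


item = NewsItem(position=1.0, credibility=0.5, surprise=0.6)
print(should_share(0.8, 0.0, item))                      # True: extreme agent
print(should_share(0.1, 0.0, item))                      # False: moderate agent
print(should_share(0.8, 0.0, item, p_already_seen=0.9))  # False: stale news
```

In this sketch the moderate agent declines because the item would push the follower past the agent's own position, which is one way such a rule ends up favoring agents with more extreme views.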

The researchers used this model to study how information spreads during a news cascade, which is an unbroken sharing chain that rapidly permeates the network.

Connectivity and polarization

The team found that when a network has high connectivity and the news is surprising, the credibility threshold for starting a news cascade is lower. High connectivity means that there are multiple connections between many users in the network.

Likewise, when the network is highly polarized, there are plenty of agents with extreme views who want to share the news item, starting a news cascade. In both cases, news with low credibility creates the largest cascades.
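A cascade can be simulated by combining a cost-benefit sharing rule like the one described earlier with a random follower network. The sketch below is hypothetical throughout: the random follower lists, the belief distribution controlled by `polarization`, and the averaged persuasion gain are assumptions, and it is not expected to reproduce the paper's credibility-threshold results. It only shows the mechanics: an item is released to a few random agents, each exposed agent decides once whether to share, and sharing exposes that agent's followers.

```python
import random
from collections import deque


def cascade_size(n: int, followers_per_agent: int, polarization: float,
                 credibility: float, surprise: float, cost: float = 0.05,
                 n_seeds: int = 5, seed: int = 0) -> int:
    """Count how many agents share an item in a toy persuasion cascade.

    All names and parameters are illustrative assumptions, not the
    MIT model's actual specification.
    """
    rng = random.Random(seed)
    # Beliefs on a -1..1 policy spectrum; larger `polarization` pushes agents
    # toward the two poles.
    beliefs = [
        max(-1.0, min(1.0, rng.choice((-1, 1)) * polarization + rng.gauss(0, 0.3)))
        for _ in range(n)
    ]
    # Random follower lists: more followers per agent means higher connectivity.
    followers = [rng.sample(range(n), followers_per_agent) for _ in range(n)]
    position = 1.0                      # the item pushes beliefs toward +1
    weight = credibility * surprise     # hypothetical persuasive weight

    def wants_to_share(i: int) -> bool:
        if not followers[i]:
            return False
        gain = 0.0
        for j in followers[i]:
            f = beliefs[j]
            updated = f + weight * (position - f)
            gain += abs(beliefs[i] - f) - abs(beliefs[i] - updated)
        return gain / len(followers[i]) > cost   # average gain vs. nominal cost

    # Release the item to a small random subgroup, then follow the sharing chain.
    exposed = set(rng.sample(range(n), n_seeds))
    queue = deque(exposed)
    sharers = 0
    while queue:
        i = queue.popleft()
        if not wants_to_share(i):
            continue                    # each agent decides once, on first exposure
        sharers += 1
        for j in followers[i]:
            if j not in exposed:
                exposed.add(j)
                queue.append(j)
    return sharers


for k in (2, 5, 20):
    print(k, cascade_size(n=1000, followers_per_agent=k, polarization=0.8,
                          credibility=0.4, surprise=0.8))
```

Varying `followers_per_agent` (connectivity), `polarization`, and `credibility` lets one explore, qualitatively, the regimes the researchers describe.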

“For any piece of news, there is a natural network speed limit, a range of connectivity, that facilitates good transmission of information where the size of the cascade is maximized by true news. But if you exceed that speed limit, you will get into situations where inaccurate news or news with low credibility has a larger cascade size,” Jadbabaie says.

If the views of users in the network become more diverse, it is less likely that a poorly credible piece of news will spread more widely than the truth.

Jadbabaie and his colleagues designed the agents in the network to behave rationally, so the model would better capture actions real humans might take if they want to persuade others.

“Someone might say that is not why people share, and that is valid. Why people do certain things is a subject of intense debate in cognitive science, social psychology, neuroscience, economics, and political science,” he says. “Depending on your assumptions, you end up getting different results. But I feel like this assumption of persuasion being the motive is a natural assumption.”

Their model also shows how costs can be manipulated to reduce the spread of false information. Agents make a cost-benefit analysis and won’t share news if the cost to do so outweighs the benefit of sharing.

“We don’t make any policy prescriptions, but one thing this work suggests is that, perhaps, having some cost associated with sharing news is not a bad idea. The reason you get lots of these cascades is because the cost of sharing the news is actually very low,” he says.
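The effect of a sharing cost can be illustrated with a simple sweep. Under a simplified persuasion rule (a hypothetical stand-in for the paper's model, with one shared follower belief, a fixed item, and a fixed update weight, all assumptions), raising the cost shrinks the set of agents willing to share to those further toward the item's side of the spectrum, and a high enough cost stops sharing altogether.

```python
def share_fraction(cost: float, n_agents: int = 201, follower_belief: float = 0.0,
                   position: float = 1.0, weight: float = 0.3) -> float:
    """Fraction of agents whose persuasion gain from sharing exceeds `cost`.

    Agents' beliefs are spread evenly over -1..1; every agent faces the same
    hypothetical follower and the same item.
    """
    beliefs = [-1.0 + 2.0 * i / (n_agents - 1) for i in range(n_agents)]
    updated = follower_belief + weight * (position - follower_belief)
    willing = sum(
        1 for b in beliefs
        if abs(b - follower_belief) - abs(b - updated) > cost
    )
    return willing / n_agents


for cost in (0.0, 0.1, 0.2, 0.4):
    print(f"cost={cost:.1f}: {share_fraction(cost):.0%} of agents share")
```

In this sketch no agent can gain more than the follower's total shift (0.3 with the default weight), so any cost above that value halts sharing entirely.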

“The role of social networks in shaping opinions and affecting behavior has been widely noted. Empirical research by Sinan Aral and his collaborators at MIT shows that false news is passed on more widely than true news,” says Sanjeev Goyal, professor of economics at Cambridge University, who was not involved with this research. “In their new paper, Ali Jadbabaie and his collaborators offer us an explanation for this puzzle with the help of an elegant model.”

This work was supported by an Army Research Office Multidisciplinary University Research Initiative grant and a Vannevar Bush Fellowship from the Office of the Secretary of Defense.

Share this news article on:

Related links.

- Ali Jadbabaie

- Chin-Chia Hsu

- Amir Ajorlou

- Institute for Data, Systems, and Society

- Laboratory for Information Decision Systems

- Schwarzman College of Computing

Related Topics

- Social media

- Social networks

- Technology and society

- Laboratory for Information and Decision Systems (LIDS)

Related Articles

Study: Crowds can wise up to fake news

Artificial intelligence system could help counter the spread of disinformation

A remedy for the spread of false news.

Study: On Twitter, false news travels faster than true stories

Previous item Next item

More MIT News

Programming functional fabrics

Read full story →

Does technology help or hurt employment?

Most work is new work, long-term study of U.S. census data shows

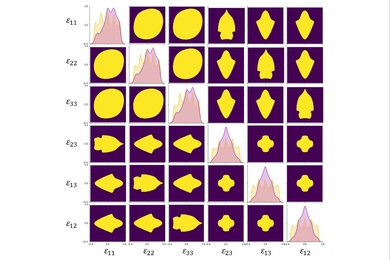

A first-ever complete map for elastic strain engineering

“Life is short, so aim high”

Shining a light on oil fields to make them more sustainable

- More news on MIT News homepage →

Massachusetts Institute of Technology 77 Massachusetts Avenue, Cambridge, MA, USA

- Map (opens in new window)

- Events (opens in new window)

- People (opens in new window)

- Careers (opens in new window)

- Accessibility

- Social Media Hub

- MIT on Facebook

- MIT on YouTube

- MIT on Instagram

Frontiers in Psychology

The Psychology of Fake News on Social Media: Who falls for it, who shares it, why, and can we help users detect it?


About this Research Topic

The proliferation of fake news on social media has become a major societal concern that has been shown to impact U.S. elections, referenda, and, most recently, effective public health messaging for the COVID-19 pandemic. While some advances on the use of automated systems to detect and highlight fake news have ...

Keywords : Fake news, misinformation, social media, election, referendum, politics, democracy, communication, Facebook, Twitter, psychology, individual differences, intervention


Expert Commentary

Fake news and the spread of misinformation: A research roundup

This collection of research offers insights into the impacts of fake news and other forms of misinformation, including fake Twitter images, and how people use the internet to spread rumors and misinformation.


This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.

by Denise-Marie Ordway, The Journalist's Resource September 1, 2017


It’s too soon to say whether Google’s and Facebook’s attempts to clamp down on fake news will have a significant impact. But fabricated stories posing as serious journalism are not likely to go away as they have become a means for some writers to make money and potentially influence public opinion. Even as Americans recognize that fake news causes confusion about current issues and events, they continue to circulate it. A December 2016 survey by the Pew Research Center suggests that 23 percent of U.S. adults have shared fake news, knowingly or unknowingly, with friends and others.

“Fake news” is a term that can mean different things, depending on the context. News satire is often called fake news as are parodies such as the “Saturday Night Live” mock newscast Weekend Update. Much of the fake news that flooded the internet during the 2016 election season consisted of written pieces and recorded segments promoting false information or perpetuating conspiracy theories. Some news organizations published reports spotlighting examples of hoaxes, fake news and misinformation on Election Day 2016.

The news media has written a lot about fake news and other forms of misinformation, but scholars are still trying to understand it: for example, how it travels and why some people believe it and even seek it out. Below, Journalist’s Resource has pulled together academic studies to help newsrooms better understand the problem and its impacts. Two other resources that may be helpful are the Poynter Institute’s tips on debunking fake news stories and the First Draft Partner Network, a global collaboration of newsrooms, social media platforms and fact-checking organizations that was launched in September 2016 to battle fake news. In mid-2018, JR’s managing editor, Denise-Marie Ordway, wrote an article for Harvard Business Review explaining what researchers know to date about the amount of misinformation people consume, why they believe it and the best ways to fight it.

—————————

“The Science of Fake News” Lazer, David M. J.; et al. Science, March 2018. DOI: 10.1126/science.aao2998.

Summary: “The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. Concern over the problem is global. However, much remains unknown regarding the vulnerabilities of individuals, institutions, and society to manipulations by malicious actors. A new system of safeguards is needed. Below, we discuss extant social and computer science research regarding belief in fake news and the mechanisms by which it spreads. Fake news has a long history, but we focus on unanswered scientific questions raised by the proliferation of its most recent, politically oriented incarnation. Beyond selected references in the text, suggested further reading can be found in the supplementary materials.”

“Who Falls for Fake News? The Roles of Bullshit Receptivity, Overclaiming, Familiarity, and Analytical Thinking” Pennycook, Gordon; Rand, David G. May 2018. Available at SSRN. DOI: 10.2139/ssrn.3023545.

Abstract: “Inaccurate beliefs pose a threat to democracy and fake news represents a particularly egregious and direct avenue by which inaccurate beliefs have been propagated via social media. Here we present three studies (MTurk, N = 1,606) investigating the cognitive psychological profile of individuals who fall prey to fake news. We find consistent evidence that the tendency to ascribe profundity to randomly generated sentences — pseudo-profound bullshit receptivity — correlates positively with perceptions of fake news accuracy, and negatively with the ability to differentiate between fake and real news (media truth discernment). Relatedly, individuals who overclaim regarding their level of knowledge (i.e. who produce bullshit) also perceive fake news as more accurate. Conversely, the tendency to ascribe profundity to prototypically profound (non-bullshit) quotations is not associated with media truth discernment; and both profundity measures are positively correlated with willingness to share both fake and real news on social media. We also replicate prior results regarding analytic thinking — which correlates negatively with perceived accuracy of fake news and positively with media truth discernment — and shed further light on this relationship by showing that it is not moderated by the presence versus absence of information about the new headline’s source (which has no effect on perceived accuracy), or by prior familiarity with the news headlines (which correlates positively with perceived accuracy of fake and real news). Our results suggest that belief in fake news has similar cognitive properties to other forms of bullshit receptivity, and reinforce the important role that analytic thinking plays in the recognition of misinformation.”

“Social Media and Fake News in the 2016 Election” Allcott, Hunt; Gentzkow, Matthew. Working paper for the National Bureau of Economic Research, No. 23089, 2017.

Abstract: “We present new evidence on the role of false stories circulated on social media prior to the 2016 U.S. presidential election. Drawing on audience data, archives of fact-checking websites, and results from a new online survey, we find: (i) social media was an important but not dominant source of news in the run-up to the election, with 14 percent of Americans calling social media their “most important” source of election news; (ii) of the known false news stories that appeared in the three months before the election, those favoring Trump were shared a total of 30 million times on Facebook, while those favoring Clinton were shared eight million times; (iii) the average American saw and remembered 0.92 pro-Trump fake news stories and 0.23 pro-Clinton fake news stories, with just over half of those who recalled seeing fake news stories believing them; (iv) for fake news to have changed the outcome of the election, a single fake article would need to have had the same persuasive effect as 36 television campaign ads.”

“Debunking: A Meta-Analysis of the Psychological Efficacy of Messages Countering Misinformation” Chan, Man-pui Sally; Jones, Christopher R.; Jamieson, Kathleen Hall; Albarracín, Dolores. Psychological Science, September 2017. DOI: 10.1177/0956797617714579.

Abstract: “This meta-analysis investigated the factors underlying effective messages to counter attitudes and beliefs based on misinformation. Because misinformation can lead to poor decisions about consequential matters and is persistent and difficult to correct, debunking it is an important scientific and public-policy goal. This meta-analysis (k = 52, N = 6,878) revealed large effects for presenting misinformation (ds = 2.41–3.08), debunking (ds = 1.14–1.33), and the persistence of misinformation in the face of debunking (ds = 0.75–1.06). Persistence was stronger and the debunking effect was weaker when audiences generated reasons in support of the initial misinformation. A detailed debunking message correlated positively with the debunking effect. Surprisingly, however, a detailed debunking message also correlated positively with the misinformation-persistence effect.”
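The effect sizes in this abstract are reported as d, a standardized mean difference. Assuming they follow the usual Cohen's d convention (the meta-analysis may use a bias-corrected or other variant, which the abstract does not specify), d expresses the gap between two group means in units of their pooled standard deviation, so a d of 1.14 means the groups differ by a little more than one standard deviation:

```latex
d = \frac{\bar{X}_1 - \bar{X}_2}{s_p},
\qquad
s_p = \sqrt{\frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}}
```

Here \(\bar{X}_i\), \(s_i\) and \(n_i\) are the mean, standard deviation and size of group \(i\).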

“Displacing Misinformation about Events: An Experimental Test of Causal Corrections” Nyhan, Brendan; Reifler, Jason. Journal of Experimental Political Science, 2015. DOI: 10.1017/XPS.2014.22.

Abstract: “Misinformation can be very difficult to correct and may have lasting effects even after it is discredited. One reason for this persistence is the manner in which people make causal inferences based on available information about a given event or outcome. As a result, false information may continue to influence beliefs and attitudes even after being debunked if it is not replaced by an alternate causal explanation. We test this hypothesis using an experimental paradigm adapted from the psychology literature on the continued influence effect and find that a causal explanation for an unexplained event is significantly more effective than a denial even when the denial is backed by unusually strong evidence. This result has significant implications for how to most effectively counter misinformation about controversial political events and outcomes.”

“Rumors and Health Care Reform: Experiments in Political Misinformation” Berinsky, Adam J. British Journal of Political Science, 2015. DOI: 10.1017/S0007123415000186.

Abstract: “This article explores belief in political rumors surrounding the health care reforms enacted by Congress in 2010. Refuting rumors with statements from unlikely sources can, under certain circumstances, increase the willingness of citizens to reject rumors regardless of their own political predilections. Such source credibility effects, while well known in the political persuasion literature, have not been applied to the study of rumor. Though source credibility appears to be an effective tool for debunking political rumors, risks remain. Drawing upon research from psychology on ‘fluency’ — the ease of information recall — this article argues that rumors acquire power through familiarity. Attempting to quash rumors through direct refutation may facilitate their diffusion by increasing fluency. The empirical results find that merely repeating a rumor increases its power.”

“Rumors and Factitious Informational Blends: The Role of the Web in Speculative Politics” Rojecki, Andrew; Meraz, Sharon. New Media & Society, 2016. DOI: 10.1177/1461444814535724.

Abstract: “The World Wide Web has changed the dynamics of information transmission and agenda-setting. Facts mingle with half-truths and untruths to create factitious informational blends (FIBs) that drive speculative politics. We specify an information environment that mirrors and contributes to a polarized political system and develop a methodology that measures the interaction of the two. We do so by examining the evolution of two comparable claims during the 2004 presidential campaign in three streams of data: (1) web pages, (2) Google searches, and (3) media coverage. We find that the web is not sufficient alone for spreading misinformation, but it leads the agenda for traditional media. We find no evidence for equality of influence in network actors.”

“Analyzing How People Orient to and Spread Rumors in Social Media by Looking at Conversational Threads” Zubiaga, Arkaitz; et al. PLOS ONE, 2016. DOI: 10.1371/journal.pone.0150989.

Abstract: “As breaking news unfolds people increasingly rely on social media to stay abreast of the latest updates. The use of social media in such situations comes with the caveat that new information being released piecemeal may encourage rumors, many of which remain unverified long after their point of release. Little is known, however, about the dynamics of the life cycle of a social media rumor. In this paper we present a methodology that has enabled us to collect, identify and annotate a dataset of 330 rumor threads (4,842 tweets) associated with 9 newsworthy events. We analyze this dataset to understand how users spread, support, or deny rumors that are later proven true or false, by distinguishing two levels of status in a rumor life cycle i.e., before and after its veracity status is resolved. The identification of rumors associated with each event, as well as the tweet that resolved each rumor as true or false, was performed by journalist members of the research team who tracked the events in real time. Our study shows that rumors that are ultimately proven true tend to be resolved faster than those that turn out to be false. Whilst one can readily see users denying rumors once they have been debunked, users appear to be less capable of distinguishing true from false rumors when their veracity remains in question. In fact, we show that the prevalent tendency for users is to support every unverified rumor. We also analyze the role of different types of users, finding that highly reputable users such as news organizations endeavor to post well-grounded statements, which appear to be certain and accompanied by evidence. Nevertheless, these often prove to be unverified pieces of information that give rise to false rumors. Our study reinforces the need for developing robust machine learning techniques that can provide assistance in real time for assessing the veracity of rumors. The findings of our study provide useful insights for achieving this aim.”

“Miley, CNN and The Onion” Berkowitz, Dan; Schwartz, David Asa. Journalism Practice, 2016. DOI: 10.1080/17512786.2015.1006933.

Abstract: “Following a twerk-heavy performance by Miley Cyrus on the Video Music Awards program, CNN featured the story on the top of its website. The Onion — a fake-news organization — then ran a satirical column purporting to be by CNN’s Web editor explaining this decision. Through textual analysis, this paper demonstrates how a Fifth Estate comprised of bloggers, columnists and fake news organizations worked to relocate mainstream journalism back to within its professional boundaries.”

“Emotions, Partisanship, and Misperceptions: How Anger and Anxiety Moderate the Effect of Partisan Bias on Susceptibility to Political Misinformation” Weeks, Brian E. Journal of Communication, 2015. DOI: 10.1111/jcom.12164.

Abstract: “Citizens are frequently misinformed about political issues and candidates but the circumstances under which inaccurate beliefs emerge are not fully understood. This experimental study demonstrates that the independent experience of two emotions, anger and anxiety, in part determines whether citizens consider misinformation in a partisan or open-minded fashion. Anger encourages partisan, motivated evaluation of uncorrected misinformation that results in beliefs consistent with the supported political party, while anxiety at times promotes initial beliefs based less on partisanship and more on the information environment. However, exposure to corrections improves belief accuracy, regardless of emotion or partisanship. The results indicate that the unique experience of anger and anxiety can affect the accuracy of political beliefs by strengthening or attenuating the influence of partisanship.”

“Deception Detection for News: Three Types of Fakes” Rubin, Victoria L.; Chen, Yimin; Conroy, Niall J. Proceedings of the Association for Information Science and Technology, 2015, Vol. 52. doi: 10.1002/pra2.2015.145052010083.

Abstract: “A fake news detection system aims to assist users in detecting and filtering out varieties of potentially deceptive news. The prediction of the chances that a particular news item is intentionally deceptive is based on the analysis of previously seen truthful and deceptive news. A scarcity of deceptive news, available as corpora for predictive modeling, is a major stumbling block in this field of natural language processing (NLP) and deception detection. This paper discusses three types of fake news, each in contrast to genuine serious reporting, and weighs their pros and cons as a corpus for text analytics and predictive modeling. Filtering, vetting, and verifying online information continues to be essential in library and information science (LIS), as the lines between traditional news and online information are blurring.”
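The abstract describes predicting whether a news item is deceptive from previously seen truthful and deceptive examples. As a rough illustration only, the sketch below uses a multinomial Naive Bayes text classifier, a technique swapped in here for concreteness rather than one the paper is claimed to use. The class name and the four-item training set are hypothetical; a usable system would need a large labeled corpus, which the authors identify as the main stumbling block.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase whitespace tokenization; a real system would need a proper tokenizer.
    return text.lower().split()


class NaiveBayesDeceptionClassifier:
    """Hypothetical multinomial Naive Bayes classifier with add-one smoothing."""

    def fit(self, docs: list[str], labels: list[str]) -> "NaiveBayesDeceptionClassifier":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)  # documents per class, used for the priors
        self.word_counts = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_scores(self, doc: str) -> dict[str, float]:
        n_docs = sum(self.doc_counts.values())
        v = len(self.vocab)
        scores = {}
        for c in self.classes:
            score = math.log(self.doc_counts[c] / n_docs)  # log prior
            for word in tokenize(doc):
                # Add-one smoothing keeps unseen words from zeroing out a class.
                score += math.log((self.word_counts[c][word] + 1) / (self.totals[c] + v))
            scores[c] = score
        return scores

    def predict(self, doc: str) -> str:
        scores = self.log_scores(doc)
        return max(scores, key=scores.get)


# Toy, made-up training data for illustration only.
train_docs = [
    "shocking secret cure doctors hate",
    "you won't believe this shocking miracle",
    "city council approves budget for new library",
    "report finds unemployment rate steady in march",
]
train_labels = ["deceptive", "deceptive", "truthful", "truthful"]
clf = NaiveBayesDeceptionClassifier().fit(train_docs, train_labels)
print(clf.predict("shocking miracle cure"))  # deceptive
```

Working in log space avoids numerical underflow when many small word probabilities are multiplied together.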

“When Fake News Becomes Real: Combined Exposure to Multiple News Sources and Political Attitudes of Inefficacy, Alienation, and Cynicism” Balmas, Meital. Communication Research, 2014, Vol. 41. doi: 10.1177/0093650212453600.

Abstract: “This research assesses possible associations between viewing fake news (i.e., political satire) and attitudes of inefficacy, alienation, and cynicism toward political candidates. Using survey data collected during the 2006 Israeli election campaign, the study provides evidence for an indirect positive effect of fake news viewing in fostering the feelings of inefficacy, alienation, and cynicism, through the mediator variable of perceived realism of fake news. Within this process, hard news viewing serves as a moderator of the association between viewing fake news and their perceived realism. It was also demonstrated that perceived realism of fake news is stronger among individuals with high exposure to fake news and low exposure to hard news than among those with high exposure to both fake and hard news. Overall, this study contributes to the scientific knowledge regarding the influence of the interaction between various types of media use on political effects.”

“Faking Sandy: Characterizing and Identifying Fake Images on Twitter During Hurricane Sandy” Gupta, Aditi; Lamba, Hemank; Kumaraguru, Ponnurangam; Joshi, Anupam. Proceedings of the 22nd International Conference on World Wide Web, 2013. doi: 10.1145/2487788.2488033.

Abstract: “In today’s world, online social media plays a vital role during real world events, especially crisis events. There are both positive and negative effects of social media coverage of events. It can be used by authorities for effective disaster management or by malicious entities to spread rumors and fake news. The aim of this paper is to highlight the role of Twitter during Hurricane Sandy (2012) to spread fake images about the disaster. We identified 10,350 unique tweets containing fake images that were circulated on Twitter during Hurricane Sandy. We performed a characterization analysis, to understand the temporal, social reputation and influence patterns for the spread of fake images. Eighty-six percent of tweets spreading the fake images were retweets, hence very few were original tweets. Our results showed that the top 30 users out of 10,215 users (0.3 percent) resulted in 90 percent of the retweets of fake images; also network links such as follower relationships of Twitter, contributed very little (only 11 percent) to the spread of these fake photos URLs. Next, we used classification models, to distinguish fake images from real images of Hurricane Sandy. Best results were obtained from Decision Tree classifier, we got 97 percent accuracy in predicting fake images from real. Also, tweet-based features were very effective in distinguishing fake images tweets from real, while the performance of user-based features was very poor. Our results showed that automated techniques can be used in identifying real images from fake images posted on Twitter.”
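The concentration figure in this abstract (the top 30 of 10,215 users, roughly 0.3 percent, accounting for 90 percent of fake-image retweets) is a top-k share. A minimal sketch of how such a share could be computed, assuming a hypothetical list in which each element is the account ID behind one retweet; this is not the authors' code.

```python
from collections import Counter


def top_k_share(retweeters: list[str], k: int) -> float:
    """Fraction of all retweets made by the k most active retweeting accounts."""
    if not retweeters:
        return 0.0
    counts = Counter(retweeters)  # retweets per account
    top = counts.most_common(k)   # [(account, n_retweets), ...] sorted by count
    return sum(n for _, n in top) / len(retweeters)


# Hypothetical data: each entry is the account that made one retweet.
retweeters = ["a"] * 9 + ["b"] * 6 + ["c", "d", "e", "f", "g"]
print(top_k_share(retweeters, 2))  # 0.75 (15 of 20 retweets)
```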

“The Impact of Real News about ‘Fake News’: Intertextual Processes and Political Satire” Brewer, Paul R.; Young, Dannagal Goldthwaite; Morreale, Michelle. International Journal of Public Opinion Research, 2013. doi: 10.1093/ijpor/edt015.

Abstract: “This study builds on research about political humor, press meta-coverage, and intertextuality to examine the effects of news coverage about political satire on audience members. The analysis uses experimental data to test whether news coverage of Stephen Colbert’s Super PAC influenced knowledge and opinion regarding Citizens United, as well as political trust and internal political efficacy. It also tests whether such effects depended on previous exposure to The Colbert Report (Colbert’s satirical television show) and traditional news. Results indicate that exposure to news coverage of satire can influence knowledge, opinion, and political trust. Additionally, regular satire viewers may experience stronger effects on opinion, as well as increased internal efficacy, when consuming news coverage about issues previously highlighted in satire programming.”

“With Facebook, Blogs, and Fake News, Teens Reject Journalistic ‘Objectivity’” Marchi, Regina. Journal of Communication Inquiry, 2012. doi: 10.1177/0196859912458700.

Abstract: “This article examines the news behaviors and attitudes of teenagers, an understudied demographic in the research on youth and news media. Based on interviews with 61 racially diverse high school students, it discusses how adolescents become informed about current events and why they prefer certain news formats to others. The results reveal changing ways news information is being accessed, new attitudes about what it means to be informed, and a youth preference for opinionated rather than objective news. This does not indicate that young people disregard the basic ideals of professional journalism but, rather, that they desire more authentic renderings of them.”

Keywords: alt-right, credibility, truth discovery, post-truth era, fact checking, news sharing, news literacy, misinformation, disinformation


About The Author

Denise-Marie Ordway


Why do people around the world share fake news? New research finds commonalities in global behavior

MIT Sloan Office of Communications

Jun 29, 2023

MIT Sloan study finds similar solutions to slow the spread of online misinformation can be effective in countries worldwide.

CAMBRIDGE, Mass., June 29, 2023 – Since the 2016 U.S. Presidential election and British “Brexit” referendum — and then COVID-19 — opened the floodgates on fake news, academic research has delved into the psychology behind online misinformation and suggested interventions that might help curb the phenomenon.

Most studies have focused on misinformation in the West, even though misinformation is very much a dire global problem. In 2017, false information on Facebook was implicated in genocide against the Rohingya minority group in Myanmar, and in 2020 at least two dozen people were killed in mob lynchings after rumors spread on WhatsApp in India.

In a new paper published today in Nature Human Behaviour, “Understanding and Combating Misinformation Across 16 Countries on Six Continents,” MIT Sloan School of Management professor David Rand found that the traits shared by misinformation spreaders are surprisingly similar worldwide. With a dozen colleagues from universities across the globe, including lead author Professor Antonio A. Arechar of the Center for Research and Teaching in Economics in Aguascalientes, Mexico, and joint senior author Professor Gordon Pennycook of Cornell University, the researchers also found common strategies and solutions to slow the spread of fake news that can be effective across countries.

“There’s a general psychology of misinformation that transcends cultural differences,” Rand said. “And broadly across many countries, we found that getting people to think about accuracy when deciding what to share, as well as showing them digital literacy tips, made them less likely to share false claims.”

The study, conducted in early 2021, found large variations across countries in how likely participants were to believe false statements about COVID-19 — for example, those in India believed false claims more than twice as much as those in the U.K. Overall, people from individualistic countries with open political systems were better at discerning true statements from falsehoods, the researchers found. However, in every country, they found that reflective analytic thinkers were less susceptible to believing false statements than people who relied on their gut.

“Pretty much everywhere we looked, people who were better critical thinkers and who cared more about accuracy were less likely to believe the false claims,” Rand said. In the study, people who got all three questions wrong on a critical thinking quiz were twice as likely to believe false claims compared to people who got all three questions correct. Valuing democracy was also consistently associated with being better at identifying truth.

On the other side, endorsing individual responsibility over government support and belief in God were linked to more difficulty in discerning true from false statements. People who said they would definitely not get vaccinated against COVID-19 when the vaccine became available were 52.9% more likely to believe false claims versus those who said they would.

The researchers also found that at the end of the study, while 79% of participants said it's very or extremely important to only share accurate news, 77% of them had nonetheless shared at least one false statement as part of the experiment.

What explains this striking disconnect? “A lot of it is people just not paying attention to accuracy,” Rand said. “In a social media environment, there’s so much to focus on around the social aspects of sharing — how many likes will I get, who else shared this — and we have limited cognitive bandwidth. So people often simply forget to even think about whether claims are true before they share them.”

This observation suggests ways to combat the spread of misinformation. Participants across countries who were nudged to focus on accuracy by evaluating the truth of an initial non-COVID-related question were nearly 10% less likely to share a false statement. Very simple digital literacy tips help focus attention on accuracy too, said Rand, and those who read them were 8.4% less likely to share a false statement. The researchers also discovered that when averaging as few as 20 participant ratings, it was possible to identify false headlines with very high accuracy, leveraging the “wisdom of crowds” to help platforms spot misinformation at scale.
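The crowd-rating idea can be illustrated with a few lines of code. This is a minimal sketch, not the study's analysis: it assumes ratings on a 1 to 6 accuracy scale (with 1 meaning definitely false), keeps only the first 20 ratings per headline, and flags a headline when the crowd's mean falls below a hypothetical midpoint threshold of 3.5. The function name, threshold, and ratings are all made up for illustration.

```python
from statistics import mean


def crowd_flags(
    ratings_by_headline: dict[str, list[int]],
    n_raters: int = 20,
    threshold: float = 3.5,
) -> dict[str, bool]:
    """Flag a headline as likely false when its average crowd rating is below threshold.

    Assumes ratings on a 1-6 scale (1 = definitely false, 6 = definitely true) and
    uses only the first n_raters ratings, to mimic a small crowd.
    """
    flags = {}
    for headline, ratings in ratings_by_headline.items():
        crowd = ratings[:n_raters]
        if not crowd:
            raise ValueError(f"no ratings for {headline!r}")
        flags[headline] = mean(crowd) < threshold
    return flags


# Made-up ratings, 20 per headline, for the two example statements from the study.
ratings = {
    "Masks, Gloves, Vaccines, And Synthetic Hand Soaps Suppress Your Immune System": [1, 2, 2, 3] * 5,
    "The likelihood of shoes spreading COVID-19 is very low.": [5, 6, 4, 5] * 5,
}
print(crowd_flags(ratings))
```

With these made-up numbers the first statement averages 2.0 and is flagged, while the second averages 5.0 and is not. In practice the threshold would have to be calibrated against fact-checker judgments.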

Methodology

In their study, Rand and colleagues recruited social media users from 16 countries across South America, Asia, the Middle East, and Africa. Australia and the United States were also among the countries, and nine languages were represented. Of the final sample of 34,286 participants, 45% were female, and the mean age was 38.7 years.

The researchers randomly assigned study participants to one of four groups: Accuracy, Sharing, Prompt, and Tips. All participants were shown 10 false and 10 true COVID-19-related statements taken from the websites of health and news organizations including the World Health Organization and the BBC. For example, one false statement read, “Masks, Gloves, Vaccines, And Synthetic Hand Soaps Suppress Your Immune System,” while a true one said, “The likelihood of shoes spreading COVID-19 is very low.”

Accuracy group participants were asked to assess statements’ accuracy on a six-point scale, while the Sharing group rated how likely they would be to share statements. Study participants in the Prompt group were first asked to evaluate the accuracy of a non-COVID-related statement — “Flight attendant slaps crying baby during flight” — before deciding whether or not to share COVID statements. Finally, the Tips group read four simple digital literacy tips before they chose whether or not to share.
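Random assignment to the four groups is what lets the researchers attribute differences in sharing to the Prompt and Tips interventions. The article does not describe the exact randomization procedure, so the following is only a hypothetical sketch of one common approach: shuffle the participant list with a seeded generator, then deal participants round-robin into the four conditions so group sizes differ by at most one. Participant IDs are assumed to be unique.

```python
import random
from collections import Counter

CONDITIONS = ["Accuracy", "Sharing", "Prompt", "Tips"]


def assign_conditions(participant_ids: list[str], seed: int = 0) -> dict[str, str]:
    """Shuffle participants, then deal them round-robin into the four conditions.

    This keeps group sizes within one of each other; the seed makes the draw reproducible.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(shuffled)}


participants = [f"p{i}" for i in range(10)]  # hypothetical participant IDs
groups = assign_conditions(participants)
print(Counter(groups.values()))  # group sizes: two groups of 3, two of 2
```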

Implications for policy

The research has implications for policymakers and organizations like the United Nations looking to combat misinformation around the globe, as well as for social media companies trying to cut down on fake news sharing. “Really for anyone doing anti-disinformation work,” Rand said. “This research helps us better understand who is susceptible to misinformation, and it shows us there are globally relevant interventions to help.”

Social media companies could regularly ask users to rate the accuracy of headlines as a way to prompt them to focus on accuracy and share more thoughtfully, while also gathering useful data to help actually identify misinformation. Future research should test these types of interventions in field experiments on social media platforms around the world, Rand said. “If you focus people back on accuracy, they are less likely to share bad content,” he concluded.


How Social Media Amplifies Misinformation More Than Information

A new analysis found that algorithms and some features of social media sites help false posts go viral.

By Steven Lee Myers

Oct. 13, 2022

It is well known that social media amplifies misinformation and other harmful content. The Integrity Institute, an advocacy group, is now trying to measure exactly how much — and on Thursday it began publishing results that it plans to update each week through the midterm elections on Nov. 8.

The institute’s initial report, posted online , found that a “well-crafted lie” will get more engagements than typical, truthful content and that some features of social media sites and their algorithms contribute to the spread of misinformation.

Twitter, the analysis showed, has what the institute called the greatest misinformation amplification factor, in large part because of its feature allowing people to share, or “retweet,” posts easily. It was followed by TikTok, the Chinese-owned video site, which uses machine-learning models to predict engagement and make recommendations to users.

“We see a difference for each platform because each platform has different mechanisms for virality on it,” said Jeff Allen, a former integrity officer at Facebook and a founder and the chief research officer at the Integrity Institute. “The more mechanisms there are for virality on the platform, the more we see misinformation getting additional distribution.”

The institute calculated its findings by comparing posts that members of the International Fact-Checking Network have identified as false with the engagement of previous posts that were not flagged from the same accounts. It analyzed nearly 600 fact-checked posts in September on a variety of subjects, including the Covid-19 pandemic, the war in Ukraine and the upcoming elections.
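The article says only that the institute compared engagement on fact-checked false posts with engagement on earlier, unflagged posts from the same accounts; it does not give the formula. One plausible reading, sketched below under that assumption, is a per-post ratio aggregated across the sample. The median aggregation, function names, and engagement numbers are hypothetical choices, not the Integrity Institute's published method. A factor above 1 means the false post drew more engagement than the account's usual content.

```python
from statistics import mean, median


def amplification_factor(flagged_engagement: float, baseline_engagements: list[float]) -> float:
    """Engagement on a fact-checked false post divided by the same account's
    average engagement on earlier, unflagged posts."""
    baseline = mean(baseline_engagements)
    if baseline <= 0:
        raise ValueError("baseline engagement must be positive")
    return flagged_engagement / baseline


def platform_amplification(posts: list[tuple[float, list[float]]]) -> float:
    """Median per-post amplification across a sample of fact-checked posts."""
    return median([amplification_factor(e, base) for e, base in posts])


# Hypothetical sample: (engagement on false post, engagements on prior unflagged posts).
posts = [(900, [100, 200, 300]), (150, [50, 50, 50]), (40, [20, 20])]
print(platform_amplification(posts))  # 3.0
```

Normalizing by each account's own baseline is what separates a platform's amplification from the fact that some accounts are simply more popular than others.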

Facebook, according to the sample that the institute has studied so far, had the most instances of misinformation but amplified such claims to a lesser degree, in part because sharing posts requires more steps. But some of its newer features are more prone to amplify misinformation, the institute found.

Facebook’s amplification factor of video content alone is closer to TikTok’s, the institute found. That’s because the platform’s Reels and Facebook Watch, which are video features, “both rely heavily on algorithmic content recommendations” based on engagements, according to the institute’s calculations.

Instagram, which like Facebook is owned by Meta, had the lowest amplification rate. There was not yet sufficient data to make a statistically significant estimate for YouTube, according to the institute.

The institute plans to update its findings to track how the amplification fluctuates, especially as the midterm elections near. Misinformation, the institute’s report said, is much more likely to be shared than merely factual content.

“Amplification of misinformation can rise around critical events if misinformation narratives take hold,” the report said. “It can also fall, if platforms implement design changes around the event that reduce the spread of misinformation.”

Steven Lee Myers covers misinformation for The Times. He has worked in Washington, Moscow, Baghdad and Beijing, where he contributed to the articles that won the Pulitzer Prize for public service in 2021. He is also the author of “The New Tsar: The Rise and Reign of Vladimir Putin.” More about Steven Lee Myers


Waidner-Spahr Library


Social Media: Fake News


What is "Fake News?"

"Fake news" refers to news articles or other items that are deliberately false and intended to manipulate the viewer. While the concept of fake news stretches back to antiquity, it has become a large problem in recent years due to the ease with which it can be spread on social media and other online platforms, as people are often less likely to critically evaluate news shared by their friends or that confirms their existing beliefs. Fake news is alleged to have contributed to important political and economic outcomes in recent years.

This page presents strategies for detecting fake news and researching the topic through reliable sources so you can draw your own conclusions. The most important thing you can do is to recognize fake news and halt its dissemination by not spreading it to your social circles.

What are Social Media Platforms Doing?

Several social media platforms have responded to the rise in fake news by adjusting their news feeds, labeling news stories as false or contested, or through other approaches. Google has also made changes to address the problem. The websites below explain some of the steps these platforms are taking. These steps can only go so far, however; it's always the responsibility of the reader to question and verify information found online.

- Working to Stop Misinformation and False News--Facebook

- Facebook Tweaks Its 'Trending Topics' Algorithm To Better Reflect Real News--Facebook

- Facebook says it will act against 'information operations' using false accounts--Facebook

- Fact Check now available in Google Search and News around the world--Google

- Google has banned 200 publishers since it passed a new policy against fake news

- Solutions that can stop fake news spreading--BBC

Recognizing Fake News

You've all seen fake news before, and it's easy to recognize the worst examples.

Fake news is often too good to be true, too extreme, or too out of line with what you know to be true and what other news sources are telling you. The following suggestions for recognizing fake news are taken from the NPR story “Fake News Or Real? How To Self-Check The News And Get The Facts.”

- Pay attention to the domain and URL: Reliable websites have familiar names and standard URLs, like .com or .edu.

- Read the "About Us" section: Insufficient or overblown language can signal an unreliable source.

- Look at the quotes in a story: Good stories quote multiple experts to get a range of perspectives on an issue.

- Look at who said them: Can you verify that the quotes are correct? What kind of authority do the sources possess?

- Check the comments: Comments on social media platforms can alert you when the story doesn't support the headline.

- Reverse image search: If an image used in a story appears on other websites about different topics, it's a good sign the image isn't actually what the story claims it is.
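The domain tip is a judgment call for a human reader, but one narrow part of it, spotting a familiar outlet's name wrapped in an unfamiliar domain, can be mechanized. The sketch below assumes a small, hypothetical allow-list of domains and uses Python's standard `urllib.parse.urlparse`; the function name and categories are illustrative. An "unknown" result does not mean a story is false, and a "familiar" one does not guarantee accuracy.

```python
from urllib.parse import urlparse

# Hypothetical allow-list; a real checker would need a large, curated list.
KNOWN_DOMAINS = {"bbc.co.uk", "nytimes.com", "npr.org"}


def check_url(url: str) -> str:
    """Classify a URL's host as 'familiar', 'lookalike', or 'unknown'."""
    host = urlparse(url).hostname or ""
    # Exact match, or a subdomain such as www.npr.org.
    if any(host == d or host.endswith("." + d) for d in KNOWN_DOMAINS):
        return "familiar"
    # A familiar name embedded in a different host, e.g. with an extra suffix appended.
    if any(d in host for d in KNOWN_DOMAINS):
        return "lookalike"
    return "unknown"


for url in ["https://www.npr.org/sections/news/",
            "http://nytimes.com.co/story",
            "https://example-blog.net/post"]:
    print(url, "->", check_url(url))
```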

If in doubt, contact a librarian for help evaluating the claim and the source of the news using reliable sources.

Strategies for Combatting Fake News

- Be aware of the problem: Many popular sources, particularly online news sources and social media, compete for your attention through outlandish claims, sometimes with the intent of manipulating the viewer.

- Think critically: Critically evaluate the news you encounter. If it sounds too good (or sometimes too bad) to be true, it probably is. Most fake news preys on our desire to have our beliefs confirmed, whether those beliefs are positive or negative.

- Check facts against reliable sources: When you encounter a claim in the news, particularly one that sets off alarm bells, take the time to evaluate it using reputable sources, including library databases, fact-checking websites like Snopes.com or PolitiFact, and authoritative news sources like the New York Times and the BBC. While established news sources can also be wrong from time to time, they take care to do extensive fact-checking to validate their articles.

- Stop the spread of fake news: You can do your part in halting the spread of fake news by not passing it on through social media, email, or conversation.

Real or Fake?


Are these news items real or fake? Try evaluating them using the tips presented on this page.

Story 1 (November 2011): SHOCK - Brain surgeon confirms ObamaCare rations care, has death panels!

Story 2 (January 2017): The State Department’s entire senior administrative team just resigned

How Social Media Spreads Fake News

Social media is one of the main ways that fake news is spread online. Platforms like Facebook and Twitter make it easy to share trending news without taking the time to critically evaluate it.

People are also less likely to critically evaluate news shared by their friends, so misleading news stories end up getting spread throughout social networks with a lot of momentum.

Read the articles below to get a better understanding of how social media can reinforce our preexisting beliefs and make us more likely to believe fake news.

- Stanford study examines fake news and the 2016 presidential election

- The reason your feed became an echo chamber--and what to do about it

- How fake news goes viral: a case study

- Is Facebook keeping you in a political bubble?

- 2016 Lie of the Year: Fake news

- Fake News Expert On How False Stories Spread and Why People Believe Them

- BBC News--Filter Bubbles

- NPR--Researchers Examine When People Are More Susceptible To Fake News


- Last Updated: Jul 18, 2023 4:14 PM

- URL: https://libguides.dickinson.edu/socialmedia


Misinformation

Most Americans Favor Restrictions on False Information, Violent Content Online

Most Americans say the U.S. government and technology companies should each take steps to restrict false information and extremely violent content online.

As AI Spreads, Experts Predict the Best and Worst Changes in Digital Life by 2035

Social Media Seen as Mostly Good for Democracy Across Many Nations, but U.S. Is a Major Outlier

The Role of Alternative Social Media in the News and Information Environment

All Misinformation Publications

Majorities in most countries surveyed say social media is good for democracy.

Across 27 countries surveyed, people generally see social media as more of a good thing than a bad thing for democracy.

As they watch the splashy emergence of generative artificial intelligence and an array of other AI applications, experts participating in a new Pew Research Center canvassing say they have deep concerns about people's and society's overall well-being. At the same time, they expect to see great benefits in health care, scientific advances and education.

Most think social media has made it easier to manipulate and divide people, but they also say it informs and raises awareness.

In recent years, several new options have emerged in the social media universe, many of which explicitly present themselves as alternatives to more established social media platforms. Free speech ideals and heated political themes prevail on these sites, which draw praise from their users and skepticism from other Americans.

What do journalists think the news industry does best and worst?

In a pair of open-ended questions, nearly 12,000 U.S.-based journalists were asked to write down the one thing the news industry does best these days and the one thing it does worst.

Journalists Sense Turmoil in Their Industry Amid Continued Passion for Their Work

A survey of U.S.-based journalists finds 77% would choose their career all over again, though 57% are highly concerned about future restrictions on press freedom.

AI and Human Enhancement: Americans’ Openness Is Tempered by a Range of Concerns

Public views are tied to how these technologies would be used and what constraints would be in place.

The Future of Digital Spaces and Their Role in Democracy

Many experts say public online spaces will significantly improve by 2035 if reformers, big technology firms, governments and activists tackle the problems created by misinformation, disinformation and toxic discourse. Others expect continuing troubles as digital tools and forums are used to exploit people’s frailties, stoke their rage and drive them apart.

The Behaviors and Attitudes of U.S. Adults on Twitter

A minority of Twitter users produce a majority of tweets from U.S. adults, and the most active tweeters are less likely to view the tone or civility of discussions as a major problem on the site.


An Effective Hybrid Model for Fake News Detection in Social Media Using Deep Learning Approach

Original Research

Published: 27 March 2024

Volume 5, article number 346 (2024)


R. Raghavendra (ORCID: 0000-0003-3538-2339) and M. Niranjanamurthy

Explore all metrics

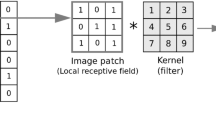

Social media is a strong Internet platform that allows people to voice their thoughts about numerous events happening in real time at multiple locations. People comment and express their thoughts on any social media post. Meanwhile, fake news or misleading information is disseminated about striking events as they unfold. A large number of Internet users read and distribute such false material without knowing the nature or legitimacy of the news, which has a detrimental influence on people’s perceptions of the event. Classical techniques are used to determine the type of news distributed on Twitter, Instagram, YouTube, and Facebook. However, these techniques fail to take into account characteristics such as the location where the news was generated, its consistency, its timing, and its novelty. This eventually leads to a scenario in which individuals form incorrect ideas and misleading perceptions about startling news, and the spread of misleading thoughts and remarks has a significant impact on real-world outcomes. This research article addresses these difficulties by developing a system for detecting fake news using deep learning techniques and models. To determine the originality of the news, its place of generation, and its longevity, two deep learning models are developed: an artificial neural network and a hybrid classifier combining convolutional neural networks and long short-term memory. This aids in detecting bogus news and removing it from the server where it is stored. Tests using these coupled models improve the detection of false information on social media. Furthermore, a geo-map is used in this research to aid in regulating fake news flowing on social media about events occurring all over the world.


Data availability.

Not applicable.


Author information

Authors and affiliations

Department of MCA, BMS Institute of Technology and Management (Affiliated to Visvesvaraya Technological University), Jnana Sangama, Avalahalli, Belgavi, Bangalore, Karnataka, 560064, India

R. Raghavendra

Department of AI and ML, BMS Institute of Technology and Management (Affiliated to Visvesvaraya Technological University), Jnana Sangama, Avalahalli, Belgavi, Bangalore, Karnataka, 560064, India

M. Niranjanamurthy


Corresponding author

Correspondence to R. Raghavendra.

Ethics declarations


Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the topical collection “Diverse Applications in Computing, Analytics and Networks” guest edited by Archana Mantri and Sagar Juneja.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.


About this article

Raghavendra, R., Niranjanamurthy, M. An Effective Hybrid Model for Fake News Detection in Social Media Using Deep Learning Approach. SN COMPUT. SCI. 5, 346 (2024). https://doi.org/10.1007/s42979-024-02698-4


Received: 15 June 2023

Accepted: 12 February 2024

Published: 27 March 2024

DOI: https://doi.org/10.1007/s42979-024-02698-4


- Artificial neural network (ANN)

- Convolutional neural network (CNN)

- Long short-term memory (LSTM)

- False/fake news detection (FND)

- Natural language processing (NLP)


The impact of fake news on social media and its influence on health during the COVID-19 pandemic: a systematic review

Yasmim Mendes Rocha

1 Post-graduate Program in Pharmaceutical Sciences, Federal University of Ceará (UFC), Campus Porangabussu, Fortaleza, CE 60.430-370 Brazil

Gabriel Acácio de Moura

2 Post-graduate Program in Veterinary Sciences, State University of Ceará (UECE), Campus do Itaperi, Fortaleza, CE 60.714-903 Brazil

Gabriel Alves Desidério

3 Health Sciences Institute, University of International Integration of the Afro-Brazilian Lusophony, CE 060 – Km51, Redenção, CE 62785-000 Brazil

Carlos Henrique de Oliveira

Francisco Dantas Lourenço, Larissa Deadame de Figueiredo Nicolete

As the new coronavirus disease propagated around the world, the rapid dissemination of news caused uncertainty in the population. False news took over social media and became part of daily life for many people. This study therefore aimed to evaluate, through a systematic review, the impact of social media on the dissemination of infodemic knowledge and its effects on health.

A systematic search was performed in the MedLine, Virtual Health Library (VHL), and Scielo databases from January 1, 2020, to May 11, 2021. Studies that addressed the impact of fake news on patients and healthcare professionals around the world were included. The methodological quality of the selected studies was assessed using the Loney and Newcastle–Ottawa scales.

Fourteen studies were eligible for inclusion: six cross-sectional and eight descriptive observational studies. Using questionnaires, five studies measured anxiety or psychological distress caused by misinformation. Another seven assessed feelings of fear, uncertainty, and panic, as well as attacks on health professionals and people of Asian origin.

An analysis of the fake news phenomenon in health showed that infodemic knowledge can cause psychological disorders, panic, fear, depression, and fatigue.

Introduction

Coronavirus disease 2019 (COVID-19), caused by the SARS-CoV-2 virus, led to a pandemic that brought economic shifts, disruption in education, and various home-confinement rules (Munster et al. 2020). In this context of uncertainty, there was a need for new information about the virus, its clinical manifestations, its transmission, and the prevention of the disease (Eysenbach 2020).

The rapid implementation of these measures, together with the significant number of deaths caused by the virus, created uncertainty in the population (Tangcharoensathien et al. 2020). This uncertainty was combined with generalized panic and constant concern about COVID-19. Together, these factors led to physical and psychological disorders, as well as reduced immunity in the general population (Lima et al. 2020).

Previous studies indicate that the emergence of the pandemic and social confinement measures increased the number of patients and health professionals with anxiety, sleep disorders, and depression. Suicide rates were also considered high (Choi et al. 2020; Okechukwu et al. 2020). Meanwhile, people have increasingly used social media and search queries to obtain information about the course of the disease. These sources include Twitter, Facebook, and Instagram; search services such as Google Trends, Bing, and Yahoo; and other popular sources such as blogs, forums, and Wikipedia (Depoux et al. 2020).

Information overload has thus been accompanied by fabricated and fraudulent news, also called fake news (FN). The term emerged in the twentieth century to designate false news produced and published by mass communication vehicles such as social media. FN now dominates traditional and social platforms and is increasingly part of many people's daily lives. FN multiplies rapidly and acts as narratives that omit information from facts or add information to them (Naeem et al. 2020).

Much of the potential effect of FN stems from conspiracy theories and false claims. Examples include the claim that the virus was a biological weapon produced in China, that water with lemon or coconut oil could kill the virus, and that drugs approved for other indications could be effective in preventing or treating COVID-19. The impact of this massive dissemination of disease-related information is known as "infodemic knowledge" (Hua and Shaw 2020). Other worrisome examples of infodemic knowledge include cases of hydroxychloroquine overdose in Nigeria, drug shortages, changes in the treatment of patients with rheumatic and autoimmune diseases, and panic over supplies and fuel (CNN 2020; Tentolouris et al. 2021).

The World Health Organization (WHO 2020) has worked to track and respond to the most prevalent myths and rumors that could harm public health. In this context, the objective of this study was to evaluate, through a systematic review, the impact of the media and social media during the pandemic caused by the new coronavirus. The study also sought to determine how the spread of the infodemic affects people's health.

This is a systematic literature review that used explicit and systematic methods to minimize the risk of bias (Donato and Donato 2019). The study design followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (PRISMA 2021). The review protocol was registered in PROSPERO under CRD42021256508 (PROSPERO 2021).

Search strategy

Search strategies were developed by identifying relevant articles through Medical Subject Headings (MeSH) combined with Boolean operators. The search string was as follows: "Covid-19" OR "SARS-CoV-2" AND "fake news" AND "health" OR "Covid-19" AND "fake news" OR "misinformation" AND "health". The search was performed in the MedLine, Virtual Health Library (VHL), and Scielo databases, and duplicate studies were removed from the results. The retrieved articles were then screened for relevance step by step, as illustrated in Fig. 1. The results are reported following the PRISMA process (PRISMA 2021).

Fig. 1 Search strategy flowchart
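The published search string has no parentheses, so its grouping is ambiguous. The sketch below applies one assumed grouping to article titles. This grouping is inferred from the string's two repeated blocks and is not confirmed by the authors. For comparison, the sketch also shows a strict reading in which AND binds more tightly than OR.

The function names and sample titles are hypothetical. Plain phrase matching stands in for MeSH-indexed database search, and search engines do not all apply the same operator precedence.

```python
import re


def has_term(text: str, term: str) -> bool:
    """Case-insensitive phrase match that does not fire inside longer words."""
    pattern = r"(?<!\w)" + re.escape(term) + r"(?!\w)"
    return re.search(pattern, text, re.IGNORECASE) is not None


def matches_assumed_grouping(text: str) -> bool:
    """Assumed reading (hypothetical):
    ("Covid-19" OR "SARS-CoV-2") AND "fake news" AND "health"
    OR "Covid-19" AND ("fake news" OR "misinformation") AND "health"
    """
    def t(term: str) -> bool:
        return has_term(text, term)

    branch_a = (t("Covid-19") or t("SARS-CoV-2")) and t("fake news") and t("health")
    branch_b = t("Covid-19") and (t("fake news") or t("misinformation")) and t("health")
    return branch_a or branch_b


def matches_strict_precedence(text: str) -> bool:
    """Literal reading with AND evaluated before OR:
    "Covid-19" OR ("SARS-CoV-2" AND "fake news" AND "health")
    OR ("Covid-19" AND "fake news") OR ("misinformation" AND "health")
    """
    def t(term: str) -> bool:
        return has_term(text, term)

    return (
        t("Covid-19")
        or (t("SARS-CoV-2") and t("fake news") and t("health"))
        or (t("Covid-19") and t("fake news"))
        or (t("misinformation") and t("health"))
    )


# Hypothetical titles used only to show how the two readings differ.
titles = [
    "COVID-19 misinformation and mental health among nurses",
    "SARS-CoV-2 fake news and public health",
    "Hospital capacity during Covid-19",
]

for title in titles:
    print(f"{matches_assumed_grouping(title)!s:5} {matches_strict_precedence(title)!s:5} {title}")
```

Under the strict reading, the lone `"Covid-19"` term admits any COVID-19 title. In this example, that includes the hospital-capacity title, which mentions neither misinformation nor health. This is why making the intended parentheses explicit matters for reproducing the search.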

Inclusion and exclusion criteria