Chapter 10: Single-Subject Research

Single-Subject Research Designs

Learning Objectives

- Describe the basic elements of a single-subject research design.

- Design simple single-subject studies using reversal and multiple-baseline designs.

- Explain how single-subject research designs address the issue of internal validity.

- Interpret the results of simple single-subject studies based on the visual inspection of graphed data.

General Features of Single-Subject Designs

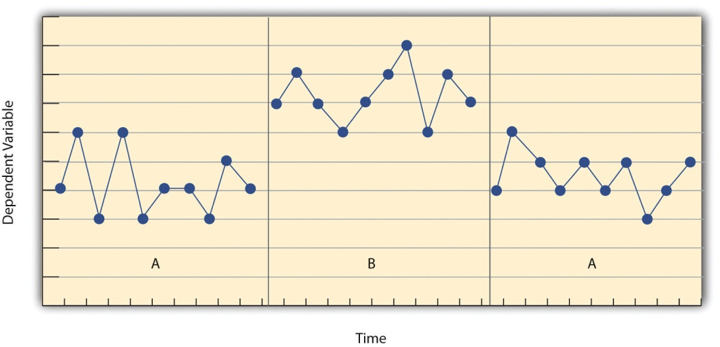

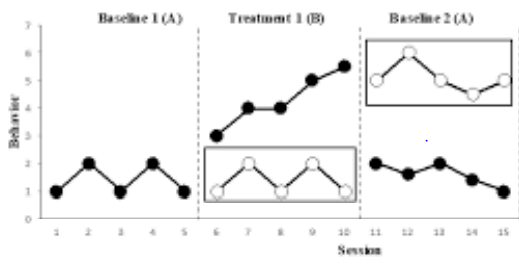

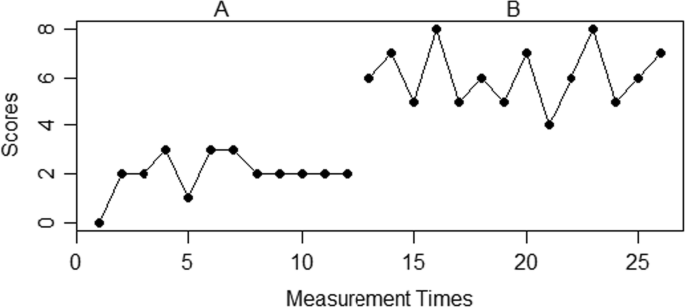

Before looking at any specific single-subject research designs, it will be helpful to consider some features that are common to most of them. Many of these features are illustrated in Figure 10.2, which shows the results of a generic single-subject study. First, the dependent variable (represented on the y-axis of the graph) is measured repeatedly over time (represented by the x-axis) at regular intervals. Second, the study is divided into distinct phases, and the participant is tested under one condition per phase. The conditions are often designated by capital letters: A, B, C, and so on. Thus Figure 10.2 represents a design in which the participant was tested first in one condition (A), then tested in another condition (B), and finally retested in the original condition (A). (This is called a reversal design and will be discussed in more detail shortly.)

Another important aspect of single-subject research is that the change from one condition to the next does not usually occur after a fixed amount of time or number of observations. Instead, it depends on the participant’s behaviour. Specifically, the researcher waits until the participant’s behaviour in one condition becomes fairly consistent from observation to observation before changing conditions. This is sometimes referred to as the steady state strategy (Sidman, 1960) [1]. The idea is that when the dependent variable has reached a steady state, then any change across conditions will be relatively easy to detect. Recall that we encountered this same principle when discussing experimental research more generally: the effect of an independent variable is easier to detect when the “noise” in the data is minimized.
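The steady state strategy can be made concrete with a simple stability check. The sketch below is only one possible rule and is not taken from Sidman: it treats responding as steady when the most recent observations all fall within a chosen band around their own mean. The function name `is_steady`, the window of five observations, and the 10% tolerance are illustrative assumptions, and the data are invented.

```python
from statistics import mean

def is_steady(observations, window=5, tolerance=0.10):
    """Return True if the last `window` observations all lie within
    `tolerance` (as a proportion) of their own mean.

    The window size and tolerance are illustrative assumptions; researchers
    choose their own stability criteria.
    """
    if len(observations) < window:
        return False  # not enough data to judge stability yet
    recent = observations[-window:]
    centre = mean(recent)
    if centre == 0:
        return all(value == 0 for value in recent)
    return all(abs(value - centre) <= tolerance * abs(centre) for value in recent)

# Hypothetical baseline: variable at first, then settling near 20.
baseline = [12, 25, 17, 22, 20, 21, 19, 20, 20]
print(is_steady(baseline[:5]))  # False: early observations are still variable
print(is_steady(baseline))      # True: the last five observations are consistent
```

In practice the decision to change conditions is usually a judgment made from the graph; a rule like this only makes that judgment explicit.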

Reversal Designs

The most basic single-subject research design is the reversal design, also called the ABA design. During the first phase, A, a baseline is established for the dependent variable. This is the level of responding before any treatment is introduced, and therefore the baseline phase is a kind of control condition. When steady state responding is reached, phase B begins as the researcher introduces the treatment. There may be a period of adjustment to the treatment during which the behaviour of interest becomes more variable and begins to increase or decrease. Again, the researcher waits until the dependent variable reaches a steady state so that it is clear whether and how much it has changed. Finally, the researcher removes the treatment and again waits until the dependent variable reaches a steady state. This basic reversal design can also be extended with the reintroduction of the treatment (ABAB), another return to baseline (ABABA), and so on.

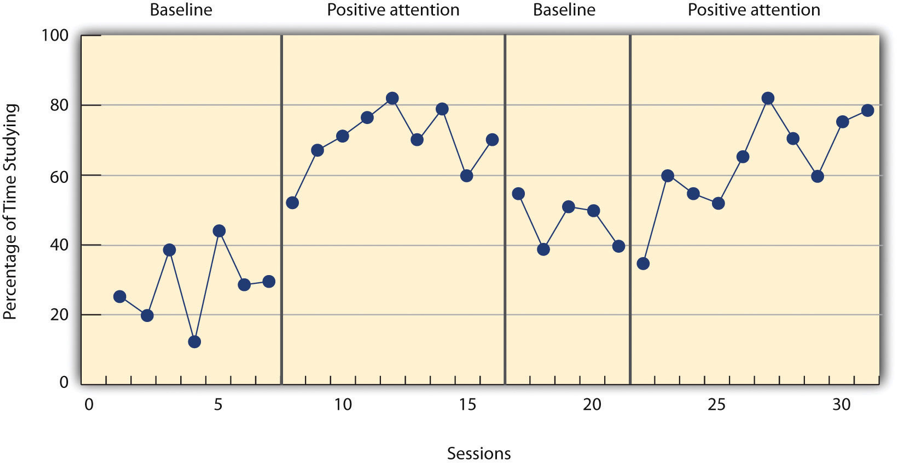

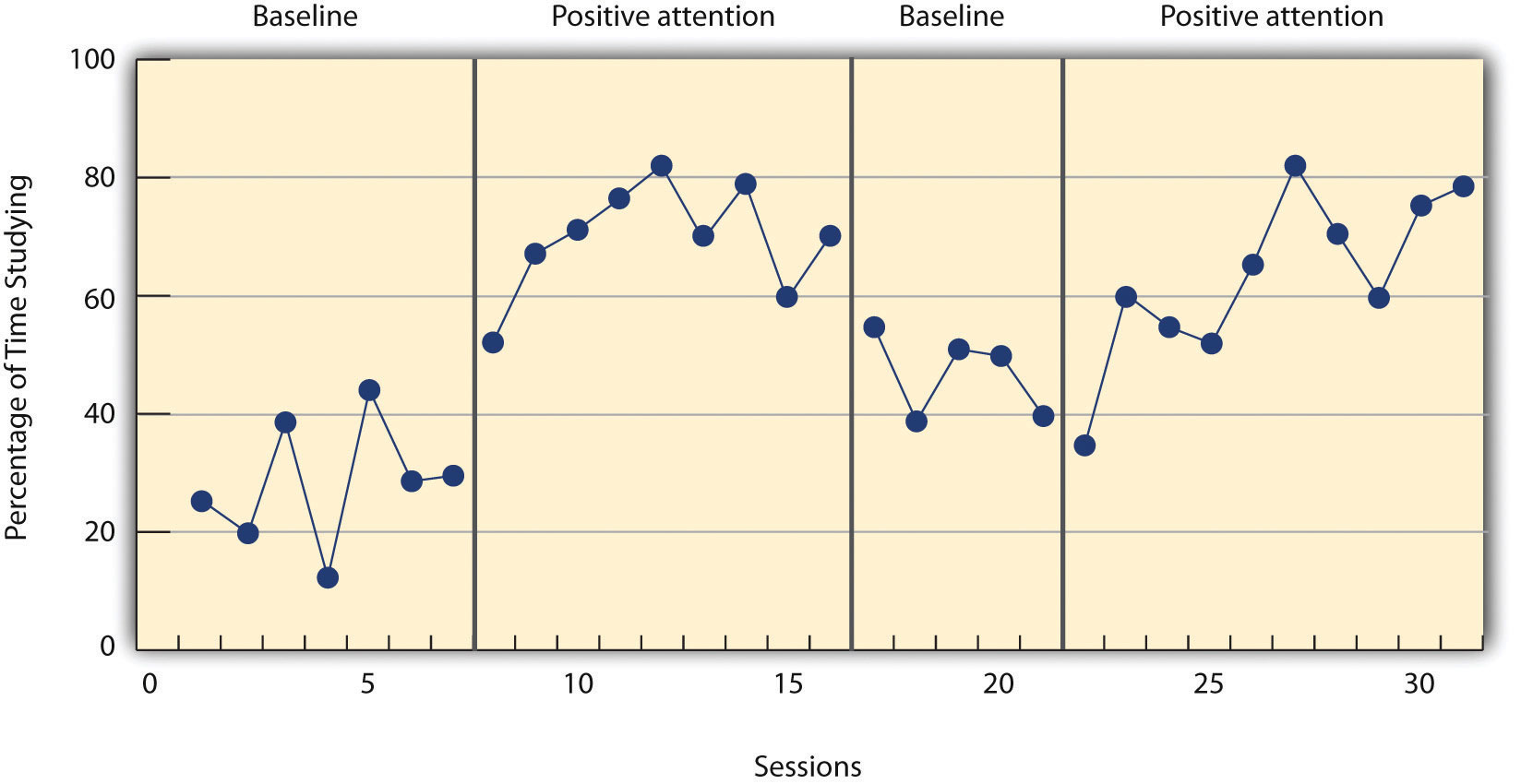

The study by Hall and his colleagues was an ABAB reversal design. Figure 10.3 approximates the data for Robbie. The percentage of time he spent studying (the dependent variable) was low during the first baseline phase, increased during the first treatment phase until it leveled off, decreased during the second baseline phase, and again increased during the second treatment phase.

Why is the reversal—the removal of the treatment—considered to be necessary in this type of design? Why use an ABA design, for example, rather than a simpler AB design? Notice that an AB design is essentially an interrupted time-series design applied to an individual participant. Recall that one problem with that design is that if the dependent variable changes after the treatment is introduced, it is not always clear that the treatment was responsible for the change. It is possible that something else changed at around the same time and that this extraneous variable is responsible for the change in the dependent variable. But if the dependent variable changes with the introduction of the treatment and then changes back with the removal of the treatment (assuming that the treatment does not create a permanent effect), it is much clearer that the treatment (and removal of the treatment) is the cause. In other words, the reversal greatly increases the internal validity of the study.
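The logic of the reversal can be sketched in a few lines of code. The example below assumes a treatment that is expected to increase the behaviour and checks whether the phase means rise each time the treatment is introduced and fall each time it is removed. The phase labels, the function names, and all of the numbers are hypothetical; they are not Robbie's data.

```python
from statistics import mean

# Hypothetical percentages of time spent studying, grouped by phase.
phases = [
    ("A1", [25, 30, 20, 25]),
    ("B1", [60, 70, 75, 75]),
    ("A2", [50, 40, 35, 35]),
    ("B2", [70, 80, 80, 85]),
]

def phase_means(phases):
    """Return a list of (label, mean) pairs, one per phase."""
    return [(label, mean(values)) for label, values in phases]

def shows_reversal(phases):
    """True if the mean rises on every entry into a treatment (B) phase and
    falls on every return to a baseline (A) phase. Assumes the treatment is
    expected to increase the behaviour."""
    means = [m for _, m in phase_means(phases)]
    labels = [label for label, _ in phases]
    for i in range(1, len(means)):
        if labels[i].startswith("B"):
            if not means[i] > means[i - 1]:
                return False
        else:
            if not means[i] < means[i - 1]:
                return False
    return True

for label, m in phase_means(phases):
    print(label, m)
print(shows_reversal(phases))  # True for these invented data
```

A simple AB series would pass only the first comparison, which is why it leaves more room for an extraneous variable to explain the change.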

There are close relatives of the basic reversal design that allow for the evaluation of more than one treatment. In a multiple-treatment reversal design, a baseline phase is followed by separate phases in which different treatments are introduced. For example, a researcher might establish a baseline of studying behaviour for a disruptive student (A), then introduce a treatment involving positive attention from the teacher (B), and then switch to a treatment involving mild punishment for not studying (C). The participant could then be returned to a baseline phase before reintroducing each treatment, perhaps in the reverse order as a way of controlling for carryover effects. This particular multiple-treatment reversal design could also be referred to as an ABCACB design.

In an alternating treatments design, two or more treatments are alternated relatively quickly on a regular schedule. For example, positive attention for studying could be used one day and mild punishment for not studying the next, and so on. Or one treatment could be implemented in the morning and another in the afternoon. The alternating treatments design can be a quick and effective way of comparing treatments, but only when the treatments are fast acting.

Multiple-Baseline Designs

There are two potential problems with the reversal design—both of which have to do with the removal of the treatment. One is that if a treatment is working, it may be unethical to remove it. For example, if a treatment seemed to reduce the incidence of self-injury in a developmentally disabled child, it would be unethical to remove that treatment just to show that the incidence of self-injury increases. The second problem is that the dependent variable may not return to baseline when the treatment is removed. For example, when positive attention for studying is removed, a student might continue to study at an increased rate. This could mean that the positive attention had a lasting effect on the student’s studying, which of course would be good. But it could also mean that the positive attention was not really the cause of the increased studying in the first place. Perhaps something else happened at about the same time as the treatment—for example, the student’s parents might have started rewarding him for good grades.

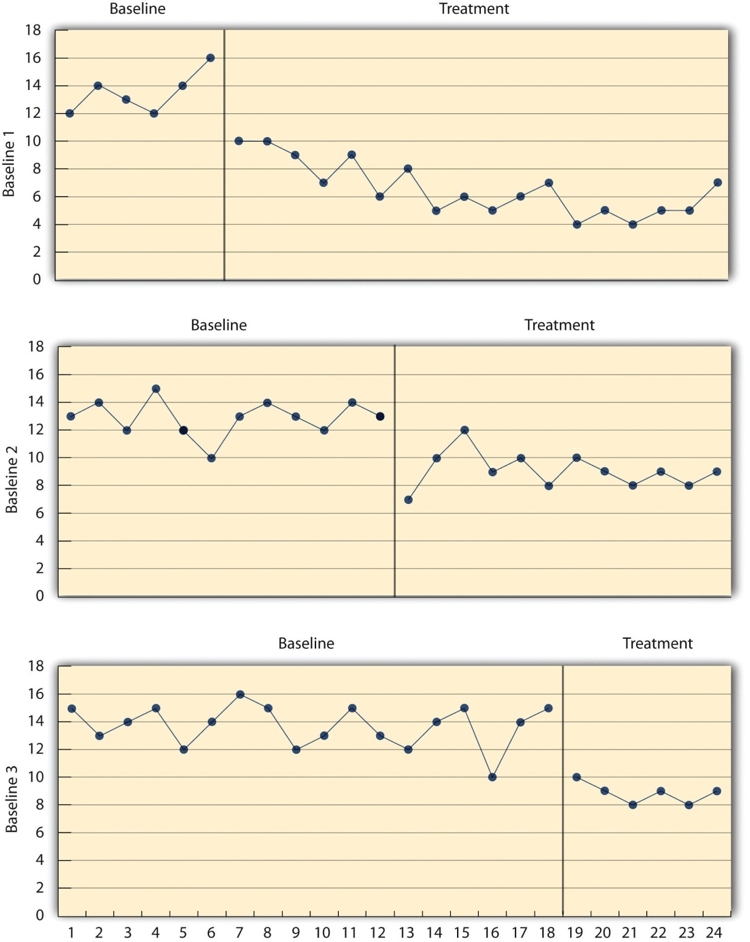

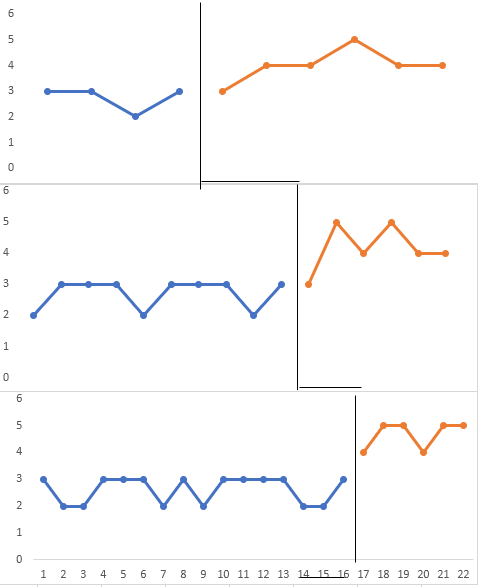

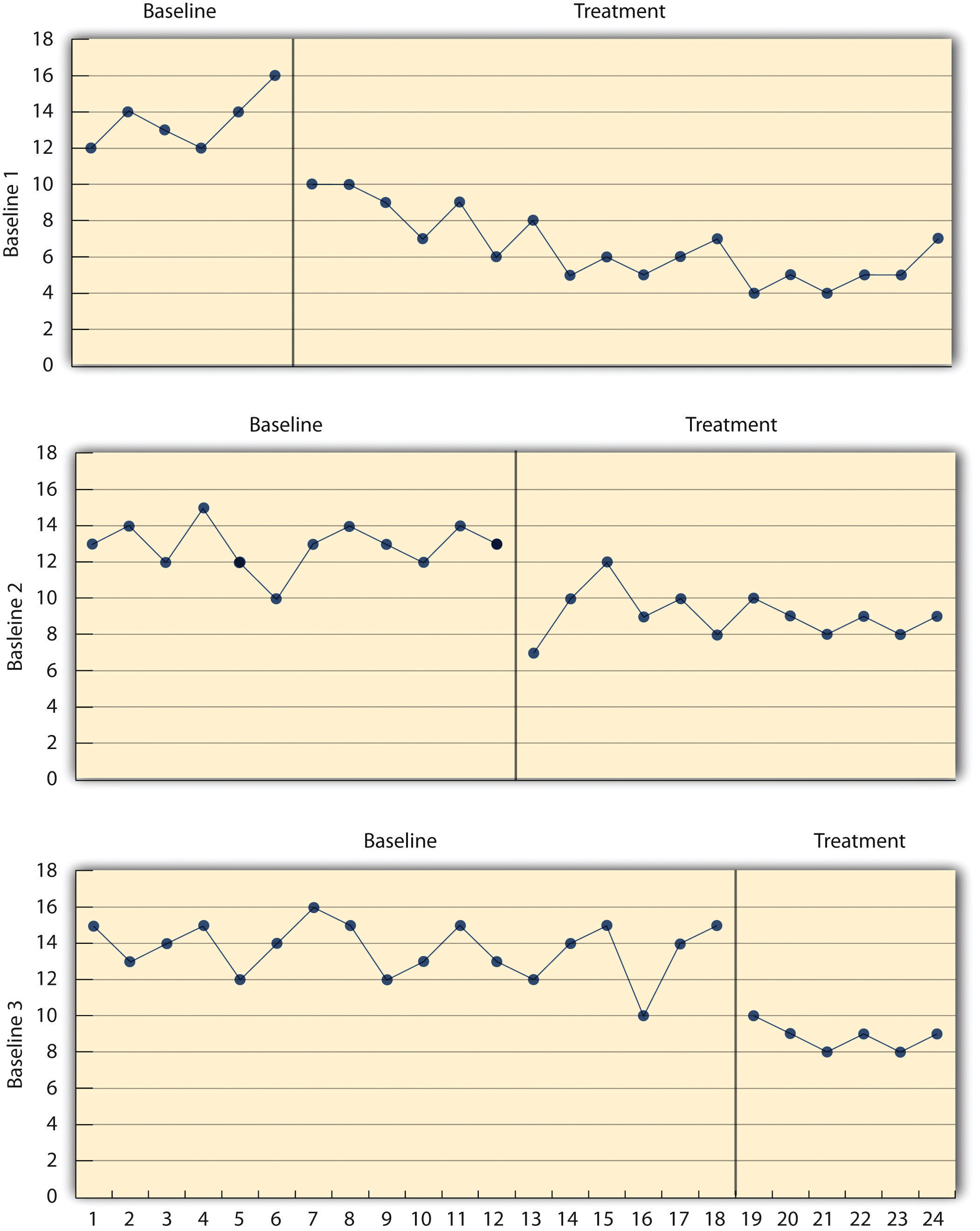

One solution to these problems is to use a multiple-baseline design, which is represented in Figure 10.4. In one version of the design, a baseline is established for each of several participants, and the treatment is then introduced for each one. In essence, each participant is tested in an AB design. The key to this design is that the treatment is introduced at a different time for each participant. The idea is that if the dependent variable changes when the treatment is introduced for one participant, it might be a coincidence. But if the dependent variable changes when the treatment is introduced for multiple participants, especially when the treatment is introduced at different times for the different participants, then it is extremely unlikely to be a coincidence.

As an example, consider a study by Scott Ross and Robert Horner (Ross & Horner, 2009) [2]. They were interested in how a school-wide bullying prevention program affected the bullying behaviour of particular problem students. At each of three different schools, the researchers studied two students who had regularly engaged in bullying. During the baseline phase, they observed the students for 10-minute periods each day during lunch recess and counted the number of aggressive behaviours they exhibited toward their peers. (The researchers used handheld computers to help record the data.) After 2 weeks, they implemented the program at one school. After 2 more weeks, they implemented it at the second school. And after 2 more weeks, they implemented it at the third school. They found that the number of aggressive behaviours exhibited by each student dropped shortly after the program was implemented at his or her school. Notice that if the researchers had only studied one school or if they had introduced the treatment at the same time at all three schools, then it would be unclear whether the reduction in aggressive behaviours was due to the bullying program or something else that happened at about the same time it was introduced (e.g., a holiday, a television program, a change in the weather). But with their multiple-baseline design, this kind of coincidence would have to happen three separate times, a very unlikely occurrence, to explain their results.
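The staggered logic of a multiple-baseline design can be illustrated with a small sketch. It assumes three AB series whose treatment starts at a different observation on each baseline, and it checks that the starts really are staggered and that the mean drops after the treatment on every baseline. All names and numbers are invented for illustration; they are not Ross and Horner's data.

```python
from statistics import mean

# Hypothetical daily counts of aggressive behaviours for one student at each
# of three schools. `start` is the index of the first treatment observation.
baselines = {
    "School 1": {"start": 3, "data": [6, 7, 6, 3, 2, 2, 1, 2, 1]},
    "School 2": {"start": 5, "data": [5, 6, 6, 5, 6, 2, 2, 1, 1]},
    "School 3": {"start": 7, "data": [7, 6, 7, 7, 6, 7, 6, 3, 2]},
}

def change_at_treatment(series, start):
    """Return (baseline mean, treatment mean) for one AB series."""
    return mean(series[:start]), mean(series[start:])

def is_staggered(baselines):
    """True if the treatment starts at a different point on every baseline."""
    starts = [b["start"] for b in baselines.values()]
    return len(set(starts)) == len(starts)

def consistent_drop(baselines):
    """True if the treatment mean is lower than the baseline mean on every baseline."""
    return all(
        after < before
        for before, after in (
            change_at_treatment(b["data"], b["start"]) for b in baselines.values()
        )
    )

for name, b in baselines.items():
    before, after = change_at_treatment(b["data"], b["start"])
    print(f"{name}: baseline {before:.1f}, treatment {after:.1f}")
print(is_staggered(baselines), consistent_drop(baselines))
```

If `is_staggered` were false, a single outside event could account for every drop at once, which is exactly the ambiguity the design is meant to remove.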

In another version of the multiple-baseline design, multiple baselines are established for the same participant but for different dependent variables, and the treatment is introduced at a different time for each dependent variable. Imagine, for example, a study on the effect of setting clear goals on the productivity of an office worker who has two primary tasks: making sales calls and writing reports. Baselines for both tasks could be established. For example, the researcher could measure the number of sales calls made and reports written by the worker each week for several weeks. Then the goal-setting treatment could be introduced for one of these tasks, and at a later time the same treatment could be introduced for the other task. The logic is the same as before. If productivity increases on one task after the treatment is introduced, it is unclear whether the treatment caused the increase. But if productivity increases on both tasks after the treatment is introduced—especially when the treatment is introduced at two different times—then it seems much clearer that the treatment was responsible.

In yet a third version of the multiple-baseline design, multiple baselines are established for the same participant but in different settings. For example, a baseline might be established for the amount of time a child spends reading during his free time at school and during his free time at home. Then a treatment such as positive attention might be introduced first at school and later at home. Again, if the dependent variable changes after the treatment is introduced in each setting, then this gives the researcher confidence that the treatment is, in fact, responsible for the change.

Data Analysis in Single-Subject Research

In addition to its focus on individual participants, single-subject research differs from group research in the way the data are typically analyzed. As we have seen throughout the book, group research involves combining data across participants. Group data are described using statistics such as means, standard deviations, Pearson’s r, and so on to detect general patterns. Finally, inferential statistics are used to help decide whether the result for the sample is likely to generalize to the population. Single-subject research, by contrast, relies heavily on a very different approach called visual inspection. This means plotting individual participants’ data as shown throughout this chapter, looking carefully at those data, and making judgments about whether and to what extent the independent variable had an effect on the dependent variable. Inferential statistics are typically not used.

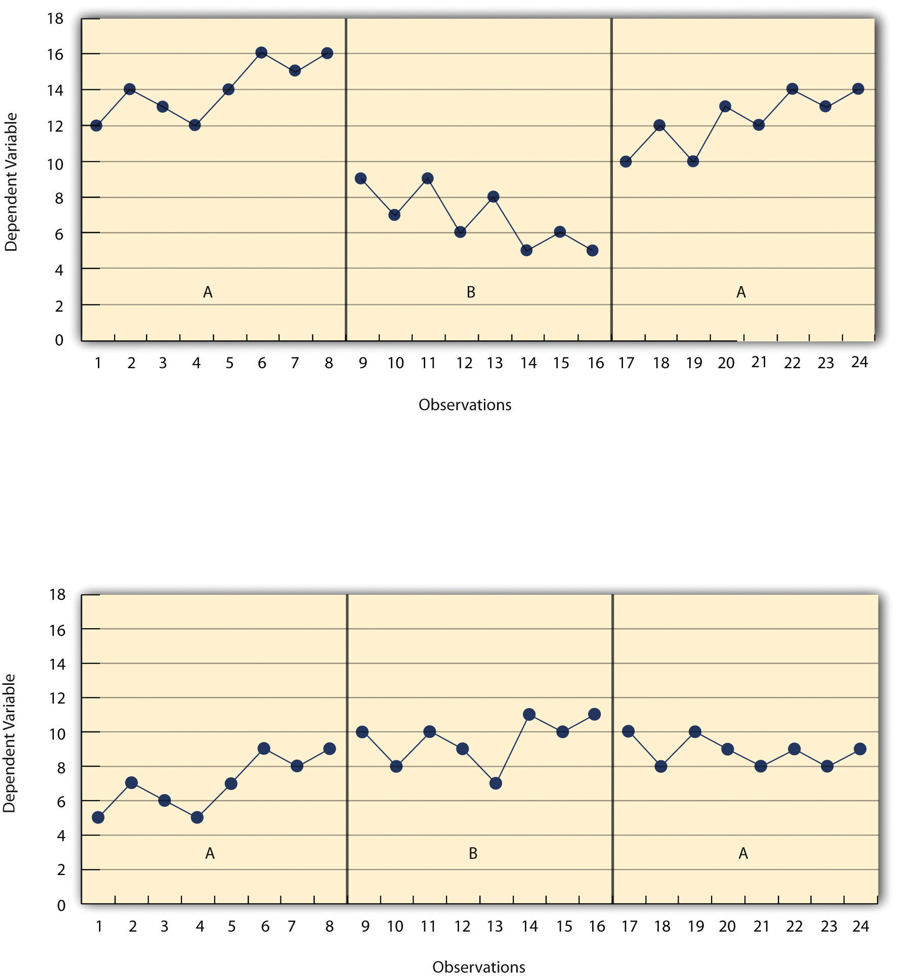

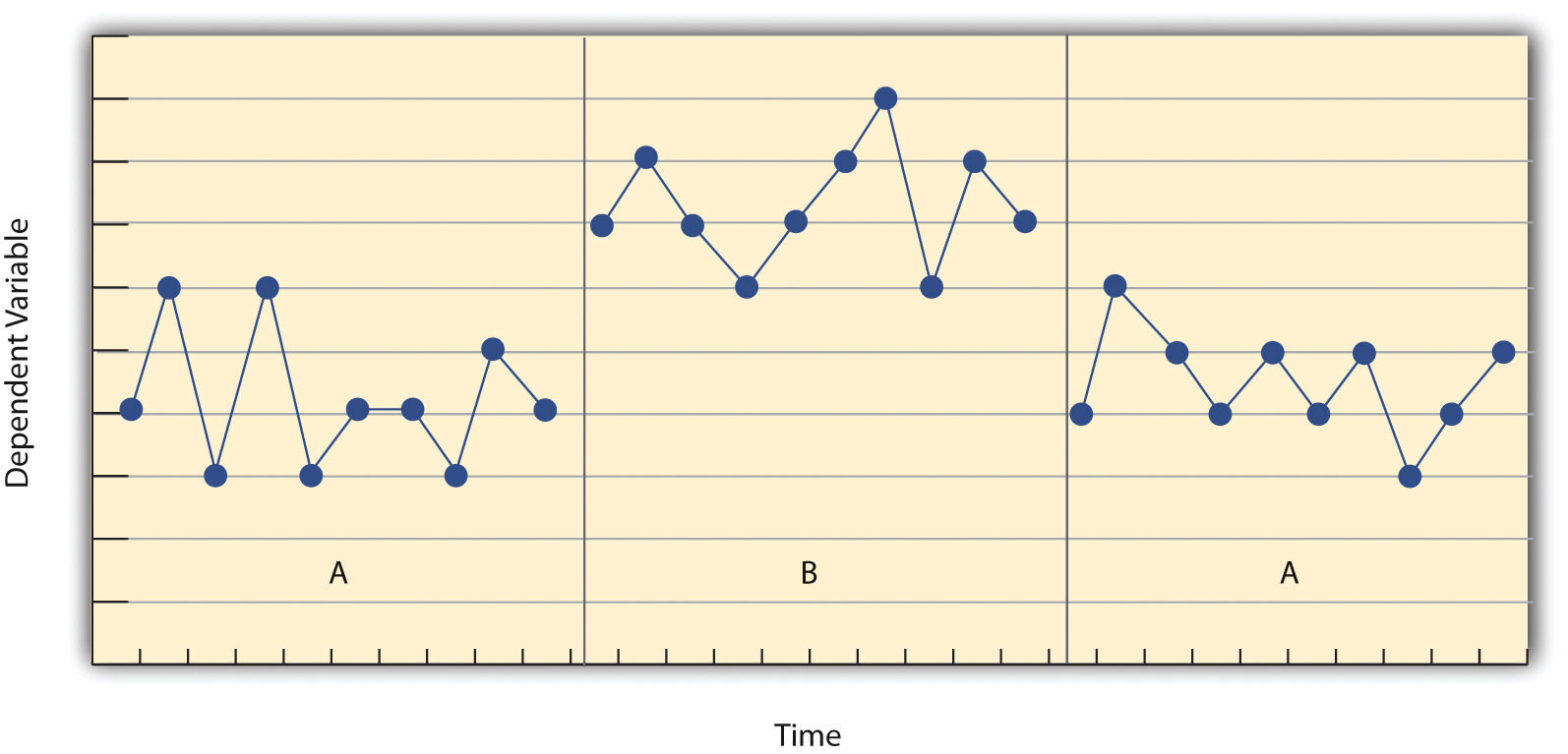

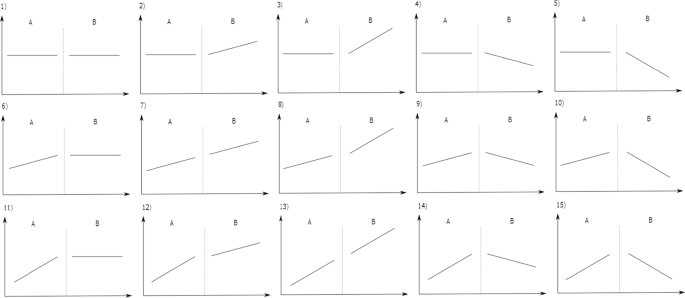

In visually inspecting their data, single-subject researchers take several factors into account. One of them is changes in the level of the dependent variable from condition to condition. If the dependent variable is much higher or much lower in one condition than another, this suggests that the treatment had an effect. A second factor is trend, which refers to gradual increases or decreases in the dependent variable across observations. If the dependent variable begins increasing or decreasing with a change in conditions, then again this suggests that the treatment had an effect. It can be especially telling when a trend changes direction, for example, when an unwanted behaviour is increasing during baseline but then begins to decrease with the introduction of the treatment. A third factor is latency, which is the time it takes for the dependent variable to begin changing after a change in conditions. In general, if a change in the dependent variable begins shortly after a change in conditions, this suggests that the treatment was responsible.

In the top panel of Figure 10.5, there are fairly obvious changes in the level and trend of the dependent variable from condition to condition. Furthermore, the latencies of these changes are short; the change happens immediately. This pattern of results strongly suggests that the treatment was responsible for the changes in the dependent variable. In the bottom panel of Figure 10.5, however, the changes in level are fairly small. And although there appears to be an increasing trend in the treatment condition, it looks as though it might be a continuation of a trend that had already begun during baseline. This pattern of results strongly suggests that the treatment was not responsible for any changes in the dependent variable—at least not to the extent that single-subject researchers typically hope to see.
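Level, trend, and latency are judged by eye, but rough numerical counterparts can help when practising with graphed data. The sketch below is an assumption-laden illustration rather than a standard procedure: level is taken as the phase mean, trend as the least-squares slope across observations, and latency as the number of observations in the new condition before the first value falls outside the range seen in the previous condition. The function names and data are hypothetical.

```python
from statistics import mean

def level(values):
    """Level: the average of the dependent variable within one condition."""
    return mean(values)

def trend(values):
    """Trend: least-squares slope across observations (units per observation).
    Needs at least two observations."""
    n = len(values)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    denominator = sum((x - x_bar) ** 2 for x in range(n))
    return numerator / denominator

def latency(previous, current):
    """Latency: index of the first observation in the new condition that falls
    outside the range of the previous condition (0 means an immediate change).
    Returns None if no observation ever leaves that range."""
    low, high = min(previous), max(previous)
    for index, value in enumerate(current):
        if value < low or value > high:
            return index
    return None

# Hypothetical data: an unwanted behaviour rising in baseline, falling in treatment.
baseline = [14, 15, 16, 15, 17]
treatment = [9, 8, 7, 7, 6]

print(level(baseline), level(treatment))   # clear drop in level
print(trend(baseline), trend(treatment))   # slope changes from positive to negative
print(latency(baseline, treatment))        # 0: the change is immediate
```

These invented data resemble the top panel pattern described above: a large change in level, a reversal of trend, and a short latency.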

The results of single-subject research can also be analyzed using statistical procedures, and this is becoming more common. There are many different approaches, and single-subject researchers continue to debate which are the most useful. One approach parallels what is typically done in group research. The mean and standard deviation of each participant’s responses under each condition are computed and compared, and inferential statistical tests such as the t test or analysis of variance are applied (Fisch, 2001) [3]. (Note that averaging across participants is less common.) Another approach is to compute the percentage of nonoverlapping data (PND) for each participant (Scruggs & Mastropieri, 2001) [4]. This is the percentage of responses in the treatment condition that are more extreme than the most extreme response in a relevant control condition. In the study of Hall and his colleagues, for example, all measures of Robbie’s study time in the first treatment condition were greater than the highest measure in the first baseline, for a PND of 100%. The greater the percentage of nonoverlapping data, the stronger the treatment effect. Still, formal statistical approaches to data analysis in single-subject research are generally considered a supplement to visual inspection, not a replacement for it.
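The PND definition above translates directly into a short calculation. The sketch below follows that definition; the function name `pnd`, the `expect_increase` flag for choosing the direction of the expected effect, and the example numbers are assumptions made for illustration and are not Robbie's actual data.

```python
def pnd(baseline, treatment, expect_increase=True):
    """Percentage of treatment observations that are more extreme than the
    most extreme baseline observation, in the expected direction."""
    if not baseline or not treatment:
        raise ValueError("both phases need at least one observation")
    if expect_increase:
        threshold = max(baseline)
        nonoverlapping = sum(1 for value in treatment if value > threshold)
    else:
        threshold = min(baseline)
        nonoverlapping = sum(1 for value in treatment if value < threshold)
    return 100 * nonoverlapping / len(treatment)

# Hypothetical study-time percentages.
print(pnd([20, 30, 25, 25], [60, 70, 75, 75]))  # 100.0: no overlap at all
print(pnd([20, 30, 25, 25], [28, 35, 40, 45]))  # 75.0: one treatment point overlaps
```

Because the threshold is a single extreme baseline value, one unusual baseline observation can lower the PND considerably, which is one reason it is treated as a supplement to visual inspection.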

Key Takeaways

- Single-subject research designs typically involve measuring the dependent variable repeatedly over time and changing conditions (e.g., from baseline to treatment) when the dependent variable has reached a steady state. This approach allows the researcher to see whether changes in the independent variable are causing changes in the dependent variable.

- In a reversal design, the participant is tested in a baseline condition, then tested in a treatment condition, and then returned to baseline. If the dependent variable changes with the introduction of the treatment and then changes back with the return to baseline, this provides strong evidence of a treatment effect.

- In a multiple-baseline design, baselines are established for different participants, different dependent variables, or different settings—and the treatment is introduced at a different time on each baseline. If the introduction of the treatment is followed by a change in the dependent variable on each baseline, this provides strong evidence of a treatment effect.

- Single-subject researchers typically analyze their data by graphing them and making judgments about whether the independent variable is affecting the dependent variable based on level, trend, and latency.

Exercises

- Practice: Design a simple single-subject study (using either a reversal or multiple-baseline design) to answer each of the following questions.

- Does positive attention from a parent increase a child’s toothbrushing behaviour?

- Does self-testing while studying improve a student’s performance on weekly spelling tests?

- Does regular exercise help relieve depression?

- Practice: Create a graph that displays the hypothetical results for the study you designed in Exercise 1. Write a paragraph in which you describe what the results show. Be sure to comment on level, trend, and latency.

Long Descriptions

Figure 10.3 long description: Line graph showing the results of a study with an ABAB reversal design. The dependent variable was low during the first baseline phase; increased during the first treatment phase; decreased during the second baseline phase, but was still higher than during the first baseline phase; and was highest during the second treatment phase. [Return to Figure 10.3]

Figure 10.4 long description: Three line graphs showing the results of a generic multiple-baseline study, in which different baselines are established and treatment is introduced to participants at different times.

For Baseline 1, treatment is introduced one-quarter of the way into the study. The dependent variable ranges between 12 and 16 units during the baseline, but drops down to 10 units with treatment and mostly decreases until the end of the study, ranging between 4 and 10 units.

For Baseline 2, treatment is introduced halfway through the study. The dependent variable ranges between 10 and 15 units during the baseline, then has a sharp decrease to 7 units when treatment is introduced. However, the dependent variable increases to 12 units soon after the drop and ranges between 8 and 10 units until the end of the study.

For Baseline 3, treatment is introduced three-quarters of the way into the study. The dependent variable ranges between 12 and 16 units for the most part during the baseline, with one drop down to 10 units. When treatment is introduced, the dependent variable drops down to 10 units and then ranges between 8 and 9 units until the end of the study. [Return to Figure 10.4]

Figure 10.5 long description: Two graphs showing the results of a generic single-subject study with an ABA design. In the first graph, under condition A, level is high and the trend is increasing. Under condition B, level is much lower than under condition A and the trend is decreasing. Under condition A again, level is about as high as the first time and the trend is increasing. For each change, latency is short, suggesting that the treatment is the reason for the change.

In the second graph, under condition A, level is relatively low and the trend is increasing. Under condition B, level is a little higher than during condition A and the trend is increasing slightly. Under condition A again, level is a little lower than during condition B and the trend is decreasing slightly. It is difficult to determine the latency of these changes, since each change is rather minute, which suggests that the treatment is ineffective. [Return to Figure 10.5]

1. Sidman, M. (1960). Tactics of scientific research: Evaluating experimental data in psychology. Boston, MA: Authors Cooperative.

2. Ross, S. W., & Horner, R. H. (2009). Bully prevention in positive behavior support. Journal of Applied Behavior Analysis, 42, 747–759.

3. Fisch, G. S. (2001). Evaluating data from behavioral analysis: Visual inspection or statistical models? Behavioural Processes, 54, 137–154.

4. Scruggs, T. E., & Mastropieri, M. A. (2001). How to summarize single-participant research: Ideas and applications. Exceptionality, 9, 227–244.

Steady state strategy: The researcher waits until the participant’s behaviour in one condition becomes fairly consistent from observation to observation before changing conditions. This way, any change across conditions will be easy to detect.

Reversal design (ABA design): A study method in which the researcher gathers data on a baseline state, introduces the treatment and continues observation until a steady state is reached, and finally removes the treatment and observes the participant until they return to a steady state.

Baseline: The level of responding before any treatment is introduced, which therefore acts as a kind of control condition.

Multiple-treatment reversal design: A baseline phase is followed by separate phases in which different treatments are introduced.

Alternating treatments design: Two or more treatments are alternated relatively quickly on a regular schedule.

Multiple-baseline design: A baseline is established for several participants and the treatment is then introduced to each participant at a different time.

Visual inspection: The plotting of individual participants’ data, examining the data, and making judgments about whether and to what extent the independent variable had an effect on the dependent variable.

Level: How high or low the dependent variable is within a condition; a change in level from one condition to the next suggests that the treatment had an effect.

Trend: The gradual increases or decreases in the dependent variable across observations.

Latency: The time it takes for the dependent variable to begin changing after a change in conditions.

Percentage of nonoverlapping data (PND): The percentage of responses in the treatment condition that are more extreme than the most extreme response in a relevant control condition.

Research Methods in Psychology - 2nd Canadian Edition Copyright © 2015 by Paul C. Price, Rajiv Jhangiani, & I-Chant A. Chiang is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.

Using Single Subject Experimental Designs

What are the Characteristics of Single Subject Experimental Designs?

Single-subject designs are the staple of applied behavior analysis research. Those preparing for the BCBA exam or the BCaBA exam must know single subject terms and definitions. When choosing a single-subject experimental design, ABA researchers are looking for certain characteristics that fit their study. First, individuals serve as their own control in single subject research. In other words, the results of each condition are compared to the participant’s own data. If 3 people participate in the study, each will act as their own control. Second, researchers are trying to predict, verify, and replicate the outcomes of their intervention. Prediction, verification, and replication are essential to single-subject design research and help demonstrate experimental control.

- Prediction: the hypothesis about what the outcome will be when measured

- Verification: showing that baseline data would remain consistent if the independent variable were not manipulated

- Replication: repeating the independent variable manipulation to show similar results across multiple phases

Some experimental designs, such as withdrawal designs, are better suited for demonstrating experimental control than others, but each design has its place. We will now look at the different types of single subject experimental designs and the core features of each.

Reversal Design/Withdrawal Design/A-B-A

Arguably the simplest single subject design, the reversal/withdrawal design is excellent at identifying experimental control. First, baseline data is recorded. Then, an intervention is introduced and the effects are recorded. Finally, the intervention is withdrawn and the experiment returns to baseline. The researcher or researchers then visually analyze the changes from baseline to intervention and determine whether or not experimental control was established. Prediction, verification, and replication are also clearly demonstrated in the withdrawal design. Below is a simple example of this A-B-A design.

Advantages: Demonstrates experimental control

Disadvantages: Ethical concerns; some behaviors cannot be reversed; not well suited to high-risk or dangerous behaviors

Multiple Baseline Design/Multiple Probe Design

Multiple baseline designs are used when researchers need to measure across participants, behaviors, or settings. For instance, if you wanted to examine the effects of an independent variable in a classroom, in a home setting, and in a clinical setting, you might use a multiple baseline across settings design. Multiple baseline designs typically involve 3-5 subjects, settings, or behaviors. An intervention is introduced into each segment one at a time while baseline continues in the other conditions. Below is a rough example of what a multiple baseline design typically looks like:

Multiple probe designs are identical to multiple baseline designs except that baseline is not continuous. Instead, data is taken only sporadically during the baseline condition. You may use this if time and resources are limited, or if you do not anticipate baseline changing.

Advantages: No withdrawal needed; multiple dependent variables can be examined at a time

Disadvantages: Sometimes difficult to demonstrate experimental control

Alternating Treatment Design

The alternating treatment design involves rapid/semirandom alternating conditions taking place all in the same phase. There are equal opportunities for conditions to be present during measurement. Conditions are alternated rapidly and randomly to test multiple conditions at once.

Advantages: No withdrawal; multiple independent variables can be tried rapidly

Disadvantages: The multiple treatment effect can impact measurement
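One way to produce a rapid, semirandom schedule with equal opportunities for each condition is block randomization, sketched below. This is an illustrative choice rather than a prescribed procedure: every condition appears once per block, in a freshly shuffled order, so no condition can occur more than twice in a row. The function name, the condition labels, and the seed are assumptions.

```python
import random

def alternating_schedule(conditions, blocks, seed=None):
    """Return a session order in which every condition appears exactly once
    per block, shuffled independently within each block."""
    rng = random.Random(seed)  # a seed makes the schedule reproducible
    schedule = []
    for _ in range(blocks):
        block = list(conditions)
        rng.shuffle(block)
        schedule.extend(block)
    return schedule

# Hypothetical example: two treatments alternated over ten sessions.
schedule = alternating_schedule(["Treatment 1", "Treatment 2"], blocks=5, seed=1)
print(schedule)
print({c: schedule.count(c) for c in set(schedule)})  # each condition appears 5 times
```

A fully random order would also work, but it can produce long runs of one condition, which makes the conditions harder to compare within the same phase.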

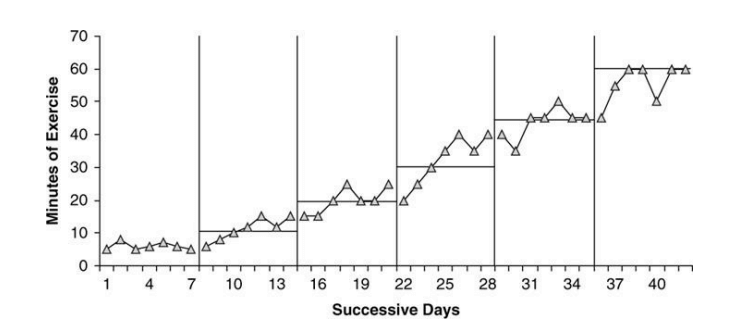

Changing Criterion Design

The changing criterion design is great for reducing or increasing behaviors. The behavior should already be in the subject’s repertoire when using changing criterion designs. Reducing smoking or increasing exercise are two common examples of the changing criterion design. With the changing criterion design, treatment is delivered in a series of ascending or descending phases. The criterion that the subject is expected to meet is changed for each phase. You can reverse a phase of a changing criterion design in an attempt to demonstrate experimental control.
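The stepwise structure of a changing criterion design can be sketched as a list of criteria, one per phase, plus a check of whether the behavior met each one. Evenly spaced steps are an assumption made here for simplicity, since criteria are often set individually, and the function names and smoking data are hypothetical.

```python
def criterion_steps(start, goal, phases):
    """Return one criterion per phase, evenly spaced from `start` to `goal`."""
    if phases < 1:
        raise ValueError("phases must be at least 1")
    step = (goal - start) / phases
    return [start + step * i for i in range(1, phases + 1)]

def phase_met(observations, criterion, reducing):
    """True if every observation in the phase satisfies the criterion:
    at or below it when reducing a behavior, at or above it when increasing."""
    if reducing:
        return all(value <= criterion for value in observations)
    return all(value >= criterion for value in observations)

# Hypothetical example: cut smoking from 20 cigarettes a day to 0 in 4 phases.
criteria = criterion_steps(start=20, goal=0, phases=4)
print(criteria)  # [15.0, 10.0, 5.0, 0.0]

# Invented daily counts for each phase.
observed = [[15, 14, 15], [10, 9, 10], [6, 5, 5], [0, 0, 0]]
for criterion, days in zip(criteria, observed):
    print(criterion, phase_met(days, criterion, reducing=True))
```

Experimental control is suggested when the behavior tracks each new criterion closely; in the invented data the third phase misses its criterion on one day.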

Summary of Single Subject Experimental Designs

Single subject designs are popular in both social sciences and in applied behavior analysis. As always, your research question and purpose should dictate your design choice. You will need to know experimental design and the details behind single subject design for the BCBA exam and the BCaBA exam.

A Meta-Analysis of Single-Case Research on Applied Behavior Analytic Interventions for People With Down Syndrome

Affiliation

- Nicole Neil, Ashley Amicarelli, Brianna M. Anderson, and Kailee Liesemer, Western University, Canada.

- PMID: 33651891

- DOI: 10.1352/1944-7558-126.2.114

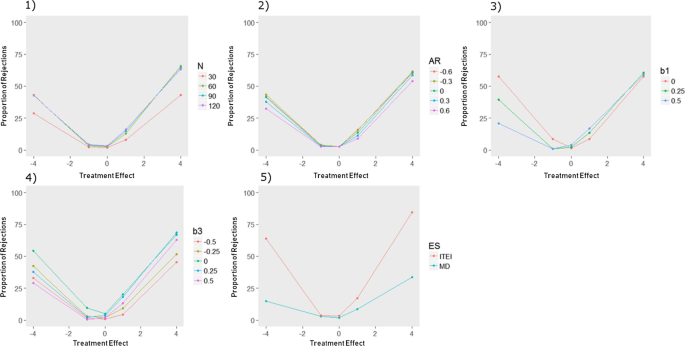

This systematic review evaluates single-case research design studies investigating applied behavior analytic (ABA) interventions for people with Down syndrome (DS). One hundred twenty-five studies examining the efficacy of ABA interventions on increasing skills and/or decreasing challenging behaviors met inclusion criteria. The What Works Clearinghouse standards and Risk of Bias in N-of-1 Trials scale were used to analyze methodological characteristics, and Tau-U effect sizes were calculated. Results suggest the use of ABA-based interventions are promising for behavior change in people with DS. Thirty-six high-quality studies were identified and demonstrated a medium overall effect. A range of outcomes was targeted, primarily involving communication and challenging behavior. These outcomes will guide future research on ABA interventions and DS.

Keywords: Down syndrome; Tau-U; applied behavior analysis; single-case research.

Publication types

- Meta-Analysis

- Systematic Review

MeSH terms

- Behavior Therapy

- Communication

- Down Syndrome* / therapy

Applied Behavior Analysis: Single Subject Research Design

Terms to Use for Articles

"reversal design" OR "withdrawal design" OR "ABAB design" OR "A-B-A-B design" OR "ABC design" OR "A-B-C design" OR "ABA design" OR "A-B-A design" OR "multiple baseline" OR "alternating treatments design" OR "multi-element design" OR "multielement design" OR "changing criterion design" OR "single case design" OR "single subject design" OR "single case series" OR "single subject" OR "single case"

Go To Databases

- ProQuest Education Database: Indexes, abstracts, and provides full text of leading scholarly and trade publications as well as reports in the field of education. Content includes primary, secondary, higher education, special education, home schooling, adult education, and more.

- PsycINFO: PsycINFO, from the American Psychological Association (APA), is a resource for abstracts of scholarly journal articles, book chapters, books, and dissertations across a range of disciplines in psychology. PsycINFO is indexed using APA's Thesaurus of Psychological Index Terms. Subscription ends 6/30/24.

Research Hints

Stimming – or self-stimulatory behaviour – is repetitive or unusual body movement or noises. Stimming might include:

- hand and finger mannerisms – for example, finger-flicking and hand-flapping

- unusual body movements – for example, rocking back and forth while sitting or standing

- posturing – for example, holding hands or fingers out at an angle or arching the back while sitting

- visual stimulation – for example, looking at something sideways, watching an object spin or fluttering fingers near the eyes

- repetitive behaviour – for example, opening and closing doors or flicking switches

- chewing or mouthing objects

- listening to the same song or noise over and over.

How to Search for a Specific Research Methodology in JABA

Single Case Design (Research Articles)

- Single Case Design (APA Dictionary of Psychology) an approach to the empirical study of a process that tracks a single unit (e.g., person, family, class, school, company) in depth over time. Specific types include the alternating treatments design, the multiple baseline design, the reversal design, and the withdrawal design. In other words, it is a within-subjects design with just one unit of analysis. For example, a researcher may use a single-case design for a small group of patients with a tic. After observing the patients and establishing the number of tics per hour, the researcher would then conduct an intervention and watch what happens over time, thus revealing the richness of any change. Such studies are useful for generating ideas for broader studies and for focusing on the microlevel concerns associated with a particular unit. However, data from these studies need to be evaluated carefully given the many potential threats to internal validity; there are also issues relating to the sampling of both the one unit and the process it undergoes. Also called N-of-1 design; N=1 design; single-participant design; single-subject (case) design.

- Anatomy of a Primary Research Article Document that goes through a research article highlighting evaluative criteria for every section. Document from Mohawk Valley Community College. Permission to use sought and given.

- Single Case Design (Explanation) Single case design (SCD), often referred to as single subject design, is an evaluation method that can be used to rigorously test the success of an intervention or treatment on a particular case (i.e., a person, school, community) and to also provide evidence about the general effectiveness of an intervention using a relatively small sample size. The material presented in this document is intended to provide introductory information about SCD in relation to home visiting programs and is not a comprehensive review of the application of SCD to other types of interventions.

- Single-Case Design, Analysis, and Quality Assessment for Intervention Research The purpose of this article is to describe single-case studies and contrast them with case studies and randomized clinical trials. Lobo, M. A., Moeyaert, M., Baraldi Cunha, A., & Babik, I. (2017). Single-case design, analysis, and quality assessment for intervention research. Journal of Neurologic Physical Therapy, 41(3), 187–197. https://doi.org/10.1097/NPT.0000000000000187

- The difference between a case study and single case designs There is a big difference between case studies and single case designs, despite them superficially sounding similar. (This is from a Blog posting)

- Single Case Design (Amanda N. Kelly, PhD, BCBA-D, LBA, aka Behaviorbabe) Despite the aka Behaviorbabe, Amanda N. Kelly, PhD, BCBA-D, LBA, provides a tutorial and explanation of single case design in simple terms.

- Lobo (2018). Single-Case Design, Analysis, and Quality Assessment for Intervention Research Lobo, M. A., Moeyaert, M., Cunha, A. B., & Babik, I. (2017). Single-case design, analysis, and quality assessment for intervention research. Journal of Neurologic Physical Therapy, 41(3), 187–197. https://doi.org/10.1097/NPT.0000000000000187

- Last Updated: Apr 6, 2024 2:39 PM

- URL: https://mville.libguides.com/appliedbehavioranalysis

Applied Behavior Analysis

Two Ways to Find Single Subject Research Design (SSRD) Articles

Types of Single Subject Research Design

Types of SSRDs to look for as you skim abstracts:

- reversal design

- withdrawal design

- ABAB design

- A-B-A-B design

- A-B-C design

- A-B-A design

- multiple baseline

- alternating treatments design

- multi-element design

- changing criterion design

- single case design

- single subject design

- single case series

Behavior analysts recognize the advantages of single-subject design for establishing intervention efficacy. Much of the research performed by behavior analysts will use SSRD methods.

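When you need to find SSRD articles, there are two methods you can use: the browsing method (the steps immediately below) and the searching method (the APA PsycInfo steps that follow).

- Click on a title from the list of ABA Journal Titles.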

- Scroll down on the resulting page to the View Online section.

- Choose a link which includes the date range you're interested in.

- Click on a link to an issue (date) you want to explore.

- From the resulting Table of Contents, explore titles of interest, reading the abstract carefully for signs that the research was carried out using an SSRD. (To help, look for the box on this page with a list of SSRD types.)

- APA PsycInfo: When you search in APA PsycInfo, you are searching through abstracts and descriptions of articles published in these ABA Journals in addition to thousands of other psychology-related journals. Description: PsycInfo is a key database in the field of psychology. It includes information of use to psychologists, students, and professionals in related fields such as psychiatry, management, business, education, social science, neuroscience, law, medicine, and social work. Time Period: 1887 to present. Sources: Indexes more than 2,500 journals. Subject Headings: Education, Mobile, Psychology, Social Sciences (Psychology). Scholarly or Popular: Scholarly. Primary Materials: Journal Articles. Information Included: Abstracts, Citations, Linked Full Text. FindIt@BALL STATE: Yes. Print Equivalent: None. Publisher: American Psychological Association. Updates: Monthly. Number of Simultaneous Users: Unlimited.

First, go to APA PsycInfo.

Second, copy and paste this set of terms describing different types of SSRDs into an APA PsycInfo search box, and choose "Abstract" in the drop-down menu.

Third, copy and paste this list of ABA journals into another search box in APA PsycInfo, and choose "SO Publication Name" in the drop-down menu.

Fourth, type in some keywords in another APA PsycInfo search box (or two) describing what you're researching. Use OR and add synonyms or related words for the best results.

Hit SEARCH, and see what kind of results you get!

Here's an example of a search for SSRDs in ABA journals on the topic of fitness:

Note that the long list of terms in the top two boxes gets cut off in the screenshot, but they're all there!

The reason this works:

- To find SSRD articles, we can't just search on the phrase "single subject research" because many studies which use SSRD do not include that phrase anywhere in the text of the article; instead such articles typically specify in the abstract (and "Methods" section) what type of SSRD method was used (ex. withdrawal design, multiple baseline, or ABA design). That's why we string together all the possible descriptions of SSRD types with the word OR in between -- it enables us to search for any sort of SSRD, regardless of how it's described. Choosing "Abstract" in the drop-down menu ensures that we're focusing on these terms being used in the abstract field (not just popping up in discussion in the full-text).

- To search specifically for studies carried out in the field of Applied Behavior Analysis, we enter in the titles of the ABA journals, strung together, with OR in between. The quotation marks ensure each title is searched as a phrase. Choosing "SO Publication Name" in the drop-down menu ensures that results will be from articles published in those journals (not just references to those journals).

- To limit the search to a topic we're interested in, we type in some keywords in another search box. The more synonyms you can think of, the better; that ensures you'll have a decent pool of records to look through, including authors who may have described your topic differently.
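The reasoning above can be sketched in a few lines of Python. This is only an illustration of how the OR-joined strings are assembled, not the guide's own tool: the function names are hypothetical, the SSRD terms come from the "Types of Single Subject Research Design" list on this page, the journal titles come from the shorter list given under "Search ideas", and the extra topic synonyms ("exercise", "physical activity") are assumed for the example. Quoting the SSRD terms as phrases is also an assumption; the guide only states that the journal titles are quoted.

```python
# SSRD design names from the guide's "Types of Single Subject Research Design" list.
SSRD_TERMS = [
    "reversal design", "withdrawal design", "ABAB design", "A-B-A-B design",
    "A-B-C design", "A-B-A design", "multiple baseline",
    "alternating treatments design", "multi-element design",
    "changing criterion design", "single case design",
    "single subject design", "single case series",
]

# The guide's shorter list of top ABA journals.
TOP_ABA_JOURNALS = [
    "Behavior Analysis in Practice",
    "Journal of Applied Behavior Analysis",
    "Journal of Behavioral Education",
    "Journal of Developmental and Physical Disabilities",
    "Journal of the Experimental Analysis of Behavior",
]


def or_phrases(terms):
    """Quote each term so it is searched as a phrase, then join with OR."""
    return " OR ".join(f'"{term}"' for term in terms)


def build_search_boxes(topic_keywords, journals=TOP_ABA_JOURNALS):
    """Return (drop-down choice, box text) pairs, one per search box.

    Passing journals=None drops the journal box, which broadens the
    search beyond ABA journals.
    """
    boxes = [("Abstract", or_phrases(SSRD_TERMS))]
    if journals:
        boxes.append(("SO Publication Name", or_phrases(journals)))
    # Hypothetical label: the guide does not name a field for the topic box.
    boxes.append(("(default field)", or_phrases(topic_keywords)))
    return boxes


# Topic synonyms here are illustrative assumptions, not taken from the guide.
for field, text in build_search_boxes(["fitness", "exercise", "physical activity"]):
    print(f"[{field}] {text}")
```

Each printed line corresponds to one search box: paste the text into the box and pick the matching drop-down choice.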

Search ideas:

To limit your search to just the top ABA journals, you can use this shorter list in place of the long one above:

"Behavior Analysis in Practice" OR "Journal of Applied Behavior Analysis" OR "Journal of Behavioral Education" OR "Journal of Developmental and Physical Disabilities" OR "Journal of the Experimental Analysis of Behavior"

To get more specific, topic-wise, add another search box with another term (or set of terms), like in this example:

To search more broadly and include other psychology studies outside of ABA journals, simply remove the list of journal titles from the search, as shown here:

- Last Updated: Mar 1, 2024 3:30 PM

- URL: https://bsu.libguides.com/appliedbehavioranalysis

A Meta-Analysis of Single-Case Research on Applied Behavior Analytic Interventions for People With Down Syndrome

Nicole Neil , Ashley Amicarelli , Brianna M. Anderson , Kailee Liesemer; A Meta-Analysis of Single-Case Research on Applied Behavior Analytic Interventions for People With Down Syndrome. Am J Intellect Dev Disabil 1 March 2021; 126 (2): 114–141. doi: https://doi.org/10.1352/1944-7558-126.2.114

This systematic review evaluates single-case research design studies investigating applied behavior analytic (ABA) interventions for people with Down syndrome (DS). One hundred twenty-five studies examining the efficacy of ABA interventions on increasing skills and/or decreasing challenging behaviors met inclusion criteria. The What Works Clearinghouse standards and Risk of Bias in N-of-1 Trials scale were used to analyze methodological characteristics, and Tau-U effect sizes were calculated. Results suggest the use of ABA-based interventions are promising for behavior change in people with DS. Thirty-six high-quality studies were identified and demonstrated a medium overall effect. A range of outcomes was targeted, primarily involving communication and challenging behavior. These outcomes will guide future research on ABA interventions and DS.
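To give a feel for the kind of nonoverlap effect size mentioned in the abstract, here is a minimal sketch of a basic Tau comparison between a baseline (A) phase and a treatment (B) phase. It is not the authors' procedure: the full Tau-U also adjusts for trend in the baseline, which this sketch omits, and the function name and data are hypothetical.

```python
def tau_nonoverlap(baseline, treatment, increase_expected=True):
    """Basic A-versus-B Tau for one participant.

    Every baseline observation is paired with every treatment observation.
    Tau = (improving pairs - deteriorating pairs) / (all pairs); ties count
    as neither. No baseline-trend correction is applied.
    """
    if not baseline or not treatment:
        raise ValueError("both phases need at least one observation")
    improving = sum(1 for a in baseline for b in treatment if b > a)
    deteriorating = sum(1 for a in baseline for b in treatment if b < a)
    if not increase_expected:
        # For behaviours the intervention should reduce, lower values are the improvement.
        improving, deteriorating = deteriorating, improving
    return (improving - deteriorating) / (len(baseline) * len(treatment))


# Hypothetical session counts of a target skill (not data from any study).
baseline_phase = [2, 3, 2, 4]
treatment_phase = [5, 6, 4, 7, 8]
print(tau_nonoverlap(baseline_phase, treatment_phase))
```

Values near 1 mean almost every treatment observation improves on almost every baseline observation; values near 0 mean the two phases overlap heavily.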

- American Association on Intellectual and Developmental Disabilities

- eISSN 1944-7558

- ISSN 1944-7515

- v.37(1); 2014 May

The Evidence-Based Practice of Applied Behavior Analysis

Timothy A. Slocum

Utah State University, Logan, UT USA

Ronnie Detrich

Wing Institute, Oakland, CA USA

Susan M. Wilczynski

Ball State University, Muncie, IN USA

Trina D. Spencer

Northern Arizona University, Flagstaff, AZ USA

Oregon State University, Corvallis, OR USA

Katie Wolfe

University of South Carolina, Columbia, SC USA

Evidence-based practice (EBP) is a model of professional decision-making in which practitioners integrate the best available evidence with client values/context and clinical expertise in order to provide services for their clients. This framework provides behavior analysts with a structure for pervasive use of the best available evidence in the complex settings in which they work. This structure recognizes the need for clear and explicit understanding of the strength of evidence supporting intervention options, the important contextual factors including client values that contribute to decision making, and the key role of clinical expertise in the conceptualization, intervention, and evaluation of cases. Opening the discussion of EBP in this journal, Smith (The Behavior Analyst, 36, 7–33, 2013) raised several key issues related to EBP and applied behavior analysis (ABA). The purpose of this paper is to respond to Smith’s arguments and extend the discussion of the relevant issues. Although we support many of Smith’s (The Behavior Analyst, 36, 7–33, 2013) points, we contend that Smith’s definition of EBP is significantly narrower than definitions that are used in professions with long histories of EBP and that this narrowness conflicts with the principles that drive applied behavior analytic practice. We offer a definition and framework for EBP that aligns with the foundations of ABA and is consistent with well-established definitions of EBP in medicine, psychology, and other professions. In addition to supporting the systematic use of research evidence in behavior analytic decision making, this definition can promote clear communication about treatment decisions across disciplines and with important outside institutions such as insurance companies and granting agencies.

Almost 45 years ago, Baer et al. (1968) described a new discipline: applied behavior analysis (ABA). This discipline was distinguished from the experimental analysis of behavior by its focus on social impact (i.e., solving socially important problems in socially important settings). ABA has produced remarkably powerful interventions in fields such as education, developmental disabilities and autism, clinical psychology, behavioral medicine, organizational behavior management, and a host of other fields and populations. Behavior analysts have long recognized that developing interventions capable of improving client behavior solves only one part of the problem. The problem of broad social impact must be solved by having interventions implemented effectively in socially important settings and at scales of social importance (Baer et al. 1987; Horner et al. 2005b; McIntosh et al. 2010). This latter set of challenges has proved to be more difficult. In many cases, demonstrations of effectiveness are not sufficient to produce broad adoption and careful implementation of these procedures. Key decision makers may be more influenced by variables other than the increases and decreases in the behaviors of our clients. In addition, even when client behavior is a very powerful factor in decision making, it does not guarantee that empirical data will be the basis for treatment selection; anecdotes, appeals to philosophy, or marketing have been given priority over evidence of outcomes (Carnine 1992; Polsgrove 2003).

Across settings in which behavior analysts work, there has been a persistent gap between what is known from research and what is actually implemented in practice. Behavior analysts have been concerned with the failed adoption of research-based practices for years (Baer et al. 1987 ). Even in the fields in which behavior analysts have produced powerful interventions, the vast majority of current practice fails to take advantage of them.

Behavior analysts have not been alone in recognizing serious problems with the quality of interventions employed in practice settings. In the 1960s, many within the medical field recognized a serious research-to-practice gap. Studies suggested that a relatively small percentage (estimates range from 10 to 25%) of medical treatment decisions were based on high-quality evidence (Goodman 2003). This raised the troubling question of what basis was used for the remaining decisions if it was not high-quality evidence. These concerns led to the development of evidence-based practice (EBP) of medicine (Goodman 2003; Sackett et al. 1996).

The research-to-practice gap appears to be universal across professions. For example, Kazdin (2000) has reported that fewer than 10% of the child and adolescent mental health treatments reported in the professional literature have been systematically evaluated and found to be effective, and that those that have not been evaluated are more likely to be adopted in practice settings. In recognition of their own research-to-practice gaps, numerous professions have adopted an EBP framework. Nursing and other areas of health care, social work, clinical and educational psychology, speech and language pathology, and many others have adopted this framework and adapted it to the specific needs of their discipline to help guide decision-making. Not only have EBP frameworks been helping to structure professional practice, but they have also been used to guide federal policy. With the passage of No Child Left Behind (2002) and the reauthorization of the Individuals with Disabilities Education Improvement Act (2005), the federal Department of Education has aligned itself with the EBP movement. A recent memorandum from the federal Office of Management and Budget instructed agencies to consider evidence of effectiveness when awarding funds, to increase the use of evidence in competitions, and to encourage widespread program evaluation (Zients 2012). The memo, which used the term evidence-based practice extensively, stated: “Where evidence is strong, we should act on it. Where evidence is suggestive, we should consider it. Where evidence is weak, we should build the knowledge to support better decisions in the future” (Zients 2012, p. 1).

EBP is more broadly an effort to improve decision-making in applied settings by explicitly articulating the central role of evidence in these decisions and thereby improving outcomes. It addresses one of the long-standing challenges for ABA: the need to effectively support and disseminate interventions in the larger social systems in which our work is embedded. In particular, EBP addresses the fact that many decision-makers are not sufficiently influenced by the best evidence that is relevant to important decisions. EBP is an explicit statement of one of ABA’s core tenets: a commitment to evidence-based decision-making. Given that the EBP framework is well established in many disciplines closely related to ABA and in the larger institutional contexts in which we operate (e.g., federal policy and funding agencies), aligning ABA with EBP offers an opportunity for behavior analysts to work more effectively within broader social systems.

Discussion of issues related to EBP in ABA has taken place across several years. Researchers have extensively discussed methods for identifying well-supported treatments (e.g., Horner et al. 2005a; Kratochwill et al. 2010), and systematically reviewed the evidence to identify these treatments (e.g., Maggin et al. 2011; National Autism Center 2009). However, until recently, discussion of an explicit definition of EBP in ABA has been limited to conference papers (e.g., Detrich 2009). Smith (2013) opened a discussion of the definition and critical features of EBP of ABA in the pages of The Behavior Analyst. In his thought-provoking article, Smith raised many important points that deserve serious discussion as the field moves toward a clear vision of EBP of ABA. Most importantly, Smith (2013) argued that behavior analysts must carefully consider how EBP is to be defined and understood by researchers and practitioners of behavior analysis.

Definitions Matter

We find much to agree with in Smith’s paper, and we will describe these points of agreement below. However, we have a core disagreement with Smith concerning the vision of what EBP is and how it might enhance and expand the effective practice of ABA. As behavior analysts know, definitions matter. A well-conceived definition can promote conceptual understanding and set the context for effective action. Conversely, a poor definition or confusion about definitions hinders clear understanding, communication, and action.

In providing a basis for his definition of EBP, Smith refers to definitions in professions that have well-developed conceptions of EBP. He quotes the American Psychological Association (APA) (2005) definition (which we quote here more extensively than he did):

Evidence-based practice in psychology (EBPP) is the integration of the best available research with clinical expertise in the context of patient characteristics, culture, and preferences. This definition of EBPP closely parallels the definition of evidence-based practice adopted by the Institute of Medicine (2001, p. 147) as adapted from Sackett et al. (2000): “Evidence-based practice is the integration of best research evidence with clinical expertise and patient values.” The purpose of EBPP is to promote effective psychological practice and enhance public health by applying empirically supported principles of psychological assessment, case formulation, therapeutic relationship, and intervention.

The key to understanding this definition is to note how APA and the Institute of Medicine use the word practice. Clearly, practice does not refer to an intervention; instead, it references one’s professional behavior. This is the sense in which one might speak of the professional practice of behavior analysis. The American Psychological Association Presidential Task Force on Evidence-Based Practice (2006) further elaborates this point:

It is important to clarify the relation between EBPP and empirically supported treatments (ESTs)…. ESTs are specific psychological treatments that have been shown to be efficacious in controlled clinical trials, whereas EBPP encompasses a broader range of clinical activities (e.g., psychological assessment, case formulation, therapy relationships). As such, EBPP articulates a decision-making process for integrating multiple streams of research evidence—including but not limited to RCTs—into the intervention process. (p. 273)

In contrast, Smith defined EBP not as a decision-making process but as a set of interventions that have been shown to be efficacious through rigorous research. He stated:

An evidence-based practice is a service that helps solve a consumer’s problem. Thus it is likely to be an integrated package of procedures, operationalized in a manual, and validated in studies of socially meaningful outcomes, usually with group designs. (p. 27).

Smith’s EBP is what APA has clearly labeled an empirically supported treatment. Equating the two is a common misconception found in conversation and in published articles (e.g., Cook and Cook 2013), but it is at odds with the formal definitions provided by many professional organizations, definitions that result from extensive consideration and debate by representative leaders of each professional field (e.g., APA 2005; American Occupational Therapy Association 2008; American Speech-Language Hearing Association 2005; Institute of Medicine 2001).

Before entering into the discussion of a useful definition of EBP of ABA, we should clarify the functions that we believe a useful definition of EBP should perform. First, a useful definition should align with the philosophical tenets of ABA, support the most effective current practice of ABA, and contribute to further improvement of ABA practice. A definition that is in conflict with the foundations of ABA or detracts from effective practice clearly would be counterproductive. Second, a useful definition of EBP of ABA should enhance social support for ABA practice by describing its empirical basis and decision-making processes in a way that is understandable to professions that already have well-established definitions of EBP. A definition that corresponds with the fundamental components of EBP in other fields would promote ABA practice by improving communication with external audiences. This improved communication is critical in the interdisciplinary contexts in which behavior analysts often practice and for legitimacy among those familiar with EBP who often control local contingencies (e.g., policy makers and funding agencies).

Based on these functions, we propose the following definition: Evidence-based practice of applied behavior analysis is a decision-making process that integrates (a) the best available evidence with (b) clinical expertise and (c) client values and context. This definition positions EBP as a pervasive feature of all professional decision-making by a behavior analyst with respect to client services; it is not limited to a narrowly restricted set of situations or decisions. The definition asserts that the best available evidence should be a primary influence on all decision-making related to services for clients (e.g., intervention selection, progress monitoring, etc.). It also recognizes that evidence cannot be the sole basis for a decision; effective decision-making in a discipline as complex as ABA requires clinical expertise in identifying, defining, and analyzing problems, determining what evidence is relevant, and deciding how it should be applied. In the absence of this decision-making framework, practitioners of ABA would be conceptualized as behavioral technicians rather than analysts. Further, the definition of EBP of ABA includes client values and context. Decision-making is necessarily based on a set of values that determine the goals that are to be pursued and the means that are appropriate to achieve them. Context is included in recognition of the fact that the effectiveness of an intervention is highly dependent upon the context in which it is implemented. The definition asserts that effective decision-making must be informed by important contextual factors. We elaborate on each component of the definition below, but first we contrast our definition with that offered by Smith ( 2013 ).

Although Smith (2013) made brief reference to the other critical components of EBP, he framed EBP as a list of multicomponent interventions that can claim a sufficient level of research support. We agree with his argument that such lists are valuable resources for practitioners and therefore developing them should be a goal of researchers. However, such lists are not, by themselves, a powerful means of improving the effectiveness of behavior analytic practice. The vast majority of decisions faced in the practice of behavior analysis cannot be made by implementing the kind of manualized, multicomponent treatment packages described by Smith.

There are a number of reasons a list of interventions is not an adequate basis for EBP of ABA. First, there are few interventions that qualify as “practices” under Smith’s definition. For example, when discussing the importance of manuals for operationalizing treatments, Smith stated that the requirement that a “practice” be based on a manual, “sharply reduces the number of ABA approaches that can be regarded as evidence based. Of the 11 interventions for ASD identified in the NAC (2009) report, only the three that have been standardized in manuals might be considered to be practices, and even these may be incomplete” (p. 18). Thus, although the example referenced the autism treatment literature, it seems apparent that even a loose interpretation of this particular criterion would leave all practitioners with a highly restricted number of intervention options.

Second, even if more “practices” were developed and validated, many consumers cannot be well served with existing multicomponent packages. In order to meet their clients’ needs, behavior analysts must be able to selectively implement focused interventions alone or in combination. This flexibility is necessary to meet the diverse needs of their clients and to minimize the response demands on direct care providers or staff, who are less likely to implement a complicated intervention with fidelity (Riley-Tillman and Chafouleas 2003).

Third, the strategy of assembling a list of treatments and describing these as “practices” severely limits the ways in which research findings are used by practitioners. With the list approach to defining EBP, research only impacts practice by placing an intervention on a list when specific criteria have been met. Thus, any research on an intervention that is not sufficiently broad or manualized to qualify as a “practice” has no influence on EBP. Similarly, a research study that shows clear results but is not part of a sufficient body of support for an intervention would also have no influence. A study that provides suggestive results but is not methodologically strong enough to be definitive would have no influence, even if it were the only study that is relevant to a given problem.

The primary problem with a list approach is that it does not provide a strong framework that directs practitioners to include the best available evidence in all of their professional decision-making. Too often, practitioners who consult such lists find that no interventions relevant to their specific case have been validated as “evidence-based” and therefore EBP is irrelevant. In contrast, definitions of EBP as a decision-making process can provide a robust framework for including research evidence along with clinical expertise and client values and context in the practice of behavior analysis. In the next sections, we explore the components of this definition in more detail.

Best Available Evidence

The term “best available evidence” occupies a critical and central place in the definition and concept of EBP; this aligns with the fundamental reliance on scientific research that is one of the core tenets of ABA. The Behavior Analyst Certification Board (2010) Guidelines for Responsible Conduct for Behavior Analysts repeatedly affirm ways in which behavior analysts should base their professional conduct on the best available evidence. For example:

Behavior analysts rely on scientifically and professionally derived knowledge when making scientific or professional judgments in human service provision, or when engaging in scholarly or professional endeavors.

- The behavior analyst always has the responsibility to recommend scientifically supported most effective treatment procedures. Effective treatment procedures have been validated as having both long-term and short-term benefits to clients and society.

- Clients have a right to effective treatment (i.e., based on the research literature and adapted to the individual client).

A Continuum of Evidence Quality

The term best implies that evidence can be of varying quality, and that better quality evidence is preferred over lower quality evidence. Quality of evidence for informing a specific practical question involves two dimensions: (a) relevance of the evidence and (b) certainty of the evidence.

The dimension of relevance recognizes that some evidence is more germane to a particular decision than is other evidence. This idea is similar to the concept of external validity. External validity refers to the degree to which research results apply to a range of applied situations whereas relevance refers to the degree to which research results apply to a specific applied situation. In general, evidence is more relevant when it matches the particular situation in terms of (a) important characteristics of the clients, (b) specific treatments or interventions under consideration, (c) outcomes or target behaviors including their functions, and (d) contextual variables such as the physical and social environment, staff skills, and the capacity of the organization. Unless all conditions match perfectly, behavior analysts are necessarily required to use their expertise to determine the applicability of the scientific evidence to each unique clinical situation. Evidence based on functionally similar situations is preferred over evidence based on situations that share fewer important characteristics with the specific practice situation. However, functional similarity between a study or set of studies and a particular applied problem is not always obvious.

The dimension of certainty of evidence recognizes that some evidence provides stronger support for claims that a particular intervention produced a specific result. Any instance of evidence can be evaluated for its methodological rigor or internal validity (i.e., the degree to which it provides strong support for the claim of effectiveness and rules out alternative explanations). Anecdotes are clearly weaker than more systematic observations, and well-controlled experiments provide the strongest evidence. Methodological rigor extends to the quality of the dependent measure, treatment fidelity, and other variables of interest (e.g., maintenance of skill acquisition), all of which influence the certainty of evidence. But the internal validity of any particular study is not the only variable influencing the certainty of evidence; the quantity of evidence supporting a claim is also critical to its certainty. Both systematic and direct replication are vital for strengthening claims of effectiveness (Johnston and Pennypacker 1993 ; Sidman 1960 ). Certainty of evidence is based on both the rigor of each bit of evidence and the degree to which the findings have been consistently replicated. Although these issues are simple in principle, operationalizing and measuring rigor of research is extremely complex. Numerous quality appraisal systems for both group and single-subject research have been proposed and used in systematic reviews (see below for more detail).

Under ideal circumstances, consistently high-quality evidence that closely matches the specifics of the practice situation is available; unfortunately, this is not always the case, and evidence-based practitioners of ABA must proceed despite an imperfect evidence base. The mandate to use the best available evidence specifies that the practitioner make decisions based on the best evidence that is available. Although this statement may seem rather obvious, the point is worth underscoring because the implications are highly relevant to behavior analysts. In an area with considerable high-quality relevant research, the standards for evidence should be quite high. But in an area with more limited research, the practitioner should take advantage of the best evidence that is available. This may require tentative reliance on research that is somewhat weaker or is only indirectly relevant to the specific situation at hand. For example, ideally, evidence-based practitioners of ABA would rely on well-controlled experimental results that have been replicated with the precise population with whom they are working. However, if this kind of evidence is not available, they might have to make decisions based on a single study that involves a similar but not identical population.

This idea of using the best of the available evidence is very different from one of using only extremely high-quality evidence (i.e., empirically supported treatments). If we limit EBP to considering only the highest quality evidence, we leave the practitioner with no guidance in the numerous situations in which high-quality and directly relevant evidence (i.e., precise matching of setting, function, behavior, motivating operations and precise procedures) simply does not exist. This approach would lead to a form of EBP that is irrelevant to the majority of decisions that a behavior analyst must make on a daily basis. Instead, our proposed definition of EBP asserts that the practitioner should be informed by the best evidence that is available.

Expanding Research on Utility of Treatments

Smith (2013) argued that the research methods used by behavior analysts to evaluate these treatments should be expanded to more comprehensively describe the utility of interventions. He suggested that too much ABA research is conducted in settings that do not approximate typical service settings, optimizing experimental control at the expense of external validity. Along this same line of reasoning, he noted that it is important to test the generality of effects across clients and identify variables that predict differential effectiveness. He suggested that systematically reporting results from all research participants (e.g., the intent-to-treat model) and purposively selecting participants would provide a more complete account of the situations in which treatments are successful and those in which they are unsuccessful. Smith argued that researchers should include more distal and socially important outcomes because with a narrow target “behavior may change, but remain a problem for the individual or may be only a small component of a much larger cluster of problems such as addiction or delinquency.” He pointed out that in order to best support effective practice, research must demonstrate that an intervention produces or contributes to producing the socially important outcomes that would cause a consumer to say that the problem is solved.

Further, Smith argued that many of the questions most relevant to EBP (questions about the likely outcomes of a treatment when applied in a particular type of situation) are well suited to group research designs. He argued that RCTs are likely to be necessary within a program of research because:

most problems pose important actuarial questions (e.g., determining whether an intervention package is more effective than community treatment as usual; deciding whether to invest in one intervention package or another, both, or neither; and determining whether the long-term benefits justify the resources devoted to the intervention)…. A particularly important actuarial issue centers on the identification of the conditions under which the intervention is most likely to be effective. (p. 23)

We agree that selection of research methods should be driven by the kinds of questions being asked and that group research designs are the methods of choice for some types of questions that are central to EBP. Therefore, we support Smith’s call for increased use of group research designs within ABA. If practice decisions are to be informed by the best available evidence, we must take advantage of both group and single-subject designs. However, we disagree with Smith’s statement that EBP should be limited to treatments that are validated “usually with group designs” (Smith, p. 27). Practitioners should be supported by reviews of research that draw from all of the available evidence and provide the best recommendations possible given the state of knowledge on the particular question. In most areas of behavior analytic practice, single-subject research makes up a large portion of the best available evidence. The Institute of Education Sciences (IES) has recognized the contribution single-case designs can make toward identifying effective practices and has recently established standards for evaluating the quality of single-case design studies (Institute of Educational Sciences, n.d.; Kratochwill et al. 2013).

Classes of Evidence

Identifying the best available evidence to inform specific practice decisions is extremely complex, and no single currently available source of evidence can adequately inform all aspects of practice. Therefore, we outline a number of strategies for identifying and summarizing evidence in ways that can support the EBP of ABA. We do not intend to cover all sources of evidence comprehensively, but merely outline some of the options available to behavior analysts.

Empirically Supported Treatment Reviews

Empirically supported treatments (ESTs) are identified through a particular form of systematic literature review. Systematic reviews bring a rigorous methodology to the process of reviewing research. The development and use of these methods are, in part, a response to the recognition that the process of reviewing the literature is subject to threats to validity. The systematic review process is characterized by explicitly stated and replicable methods for (a) searching for studies, (b) screening studies for relevance to the review question, (c) appraising the methodological quality of studies, (d) describing outcomes from each study, and (e) determining the degree to which the treatment (or treatments) is supported by the research. When the evidence in support of a treatment is plentiful and of high quality, the treatment generally earns the status of an EST. Many systematic reviews, however, find that no intervention for a particular problem has sufficient evidence to qualify as an EST.

Well-known organizations in medicine (e.g., Cochrane Collaboration), education (e.g., What Works Clearinghouse), and mental health (e.g., National Registry of Evidence-based Programs and Practices) conduct EST reviews. Until recently, systematic reviews have focused nearly exclusively on group research; however, systematic reviews of single-subject research are quickly becoming more common and more sophisticated (e.g., Carr 2009; NAC 2009; Maggin et al. 2012).

Systematic review for EST status is one important way to summarize the best available evidence because it can give a relatively objective evaluation of the strength of the research literature supporting a particular intervention. But systematic reviews are not infallible; as with all other research and evaluation methods, they require skillful application and are subject to threats to validity. The results of reviews can change dramatically based on seemingly minor changes in operational definitions and procedures for locating articles, screening for relevance, describing treatments, appraising methodological quality, describing outcomes, summarizing outcomes for the body of research as a whole, and rating the degree to which an intervention is sufficiently supported (Slocum et al. 2012a; Wilczynski 2012). Systematic reviews and claims based upon them must be examined critically with full recognition of their limitations, just as one examines primary research reports.

Behavior analysts encounter many situations in which no ESTs have been established for the particular combination of client characteristics, target behaviors, functions, contexts, and other parameters for decision-making. This dearth may exist because no systematic review has addressed the particular problem or because a systematic review has been conducted but failed to find any well-supported treatments for the particular problem. For example, in a recent review of all of the recommendations in the empirically supported practice guides published by the IES, 45% of the recommendations had minimal support (Slocum et al. 2012b). As Smith (2013) noted, only 3 of the 11 interventions that the NAC identified as meeting quality standards might be considered practices in the sense that they are manualized. In these common situations, a behavior analyst cannot respond by simply selecting an intervention from a list of ESTs. A comprehensive EBP of ABA requires additional strategies for reviewing research evidence and drawing practice recommendations from existing evidence: strategies that can glean the best available evidence from an imperfect research base and formulate practice recommendations that are most likely to lead to favorable outcomes under conditions of uncertainty.

Other Methods for Reviewing Research Literature

The three strategies outlined below may complement systematic reviews in guiding behavior analysts toward effective decision-making.

Narrative Reviews of the Literature

There has been a long tradition across disciplines of relying on narrative reviews to summarize what is known with respect to treatments for a class of problems (e.g., aggression) or what is known about a particular treatment (e.g., token economy). The author of the review, presumably an expert, selects the theme and synthesizes the research literature that he or she considers most relevant. Narrative reviews allow the author to consider a wide range of research including studies that are indirectly relevant (e.g., those studying a given problem with a different population or demonstrating general principles) and studies that may not qualify for systematic reviews because of methodological limitations but which illustrate important points nonetheless. Narrative reviews can consider a broader array of evidence and have greater interpretive flexibility than most systematic reviews.

As with all sources of evidence, there are difficulties with narrative reviews. The selection of the literature is left up to the author’s discretion; there are no methodological guidelines and little transparency about how the author decided which literature to include and which to exclude. There is always the risk of confirmation bias: the author may emphasize literature that is consistent with his or her preconceived opinions. Even with a peer-review process, it is always possible that the author neglected or misinterpreted research relevant to the discussion. These concerns notwithstanding, narrative reviews may provide the best available evidence when no systematic reviews exist or when substantial generalizations from the systematic review to the practice context are needed. Many textbooks (e.g., Cooper et al. 2007) and handbooks (e.g., Fisher et al. 2011; Madden et al. 2013) provide excellent examples of narrative reviews that can provide important guidance for evidence-based practitioners of ABA.

Best Practice Guides

Best practice guides are another source of evidence that can inform decisions in the absence of available and relevant systematic reviews. Best practice guides provide recommendations that reflect the collective wisdom of an expert panel. It is presumed that the recommendations reflect what is known from the research literature, but the validity of recommendations is largely derived from the panel’s expertise rather than from the rigor of their methodology. Recommendations from best practice panels are usually much broader than the recommendations from systematic reviews. The recommendations from these guides can provide important information about how to implement a treatment, how to adapt the treatment for specific circumstances, and what is necessary for broad scale or system-wide implementation.

The limitations of best practice guides are similar to those of narrative reviews; specifically, potential bias and lack of transparency are significant concerns. Panel members are typically not selected using a specific set of operationalized criteria. Bias is possible if the panel is drawn too narrowly. If the panel is drawn too broadly, however, the panel may have difficulty reaching a consensus (Wilczynski 2012).

Empirically Supported Practice Guides