Systematic reviews vs meta-analysis: what’s the difference?

Posted on 24th July 2023 by Verónica Tanco Tellechea

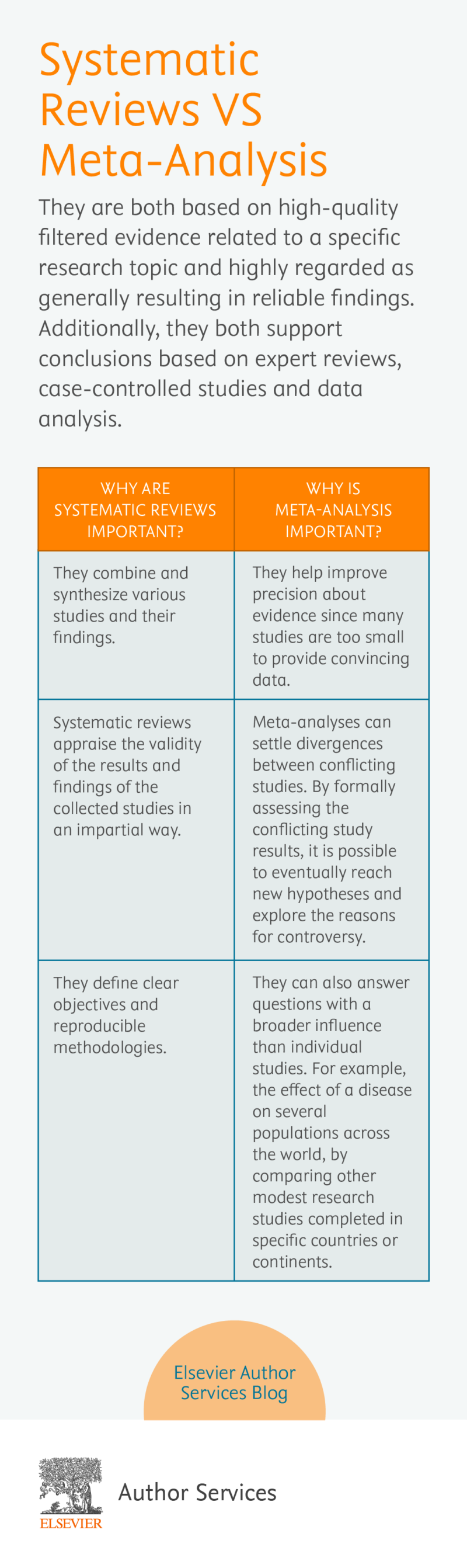

You may hear the terms ‘systematic review’ and ‘meta-analysis’ being used interchangeably. Although they are related, they are distinctly different. Learn more in this blog for beginners.

What is a systematic review?

According to Cochrane (1), a systematic review attempts to identify, appraise and synthesize all the empirical evidence to answer a specific research question. Thus, a systematic review is where you might find the most relevant, adequate, and current information regarding a specific topic. In the levels of evidence pyramid, systematic reviews are only surpassed by meta-analyses.

To conduct a systematic review, you will need, among other things:

- A specific research question, usually in the form of a PICO question.

- Pre-specified eligibility criteria, to decide which articles will be included or discarded from the review.

- To follow a systematic method that will minimize bias.

You can find protocols that will guide you from both Cochrane and the Equator Network, among other places. If you are a beginner to the topic, start by reading an overview of systematic reviews.

What is a meta-analysis?

A meta-analysis is a quantitative, epidemiological study design used to systematically assess the results of previous research (2). Meta-analyses are usually, though not always, based on randomized controlled trials. In practice, a meta-analysis is a statistical tool that allows researchers to combine outcomes from multiple studies mathematically.

When can a meta-analysis be implemented?

A meta-analysis can, in principle, always be attempted. However, it yields the most reliable results when the studies included in the systematic review are of good quality, have similar designs, and use similar outcome measures.

Why are meta-analyses important?

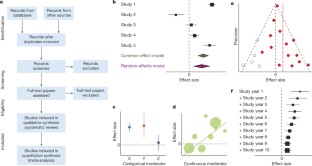

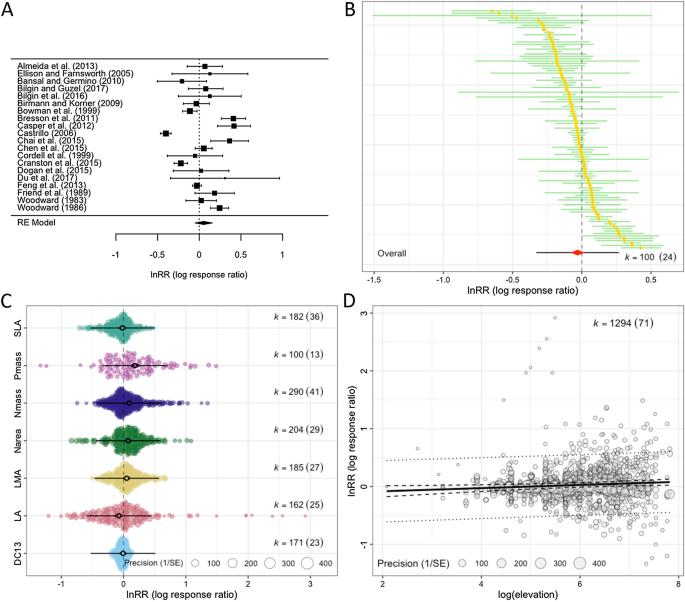

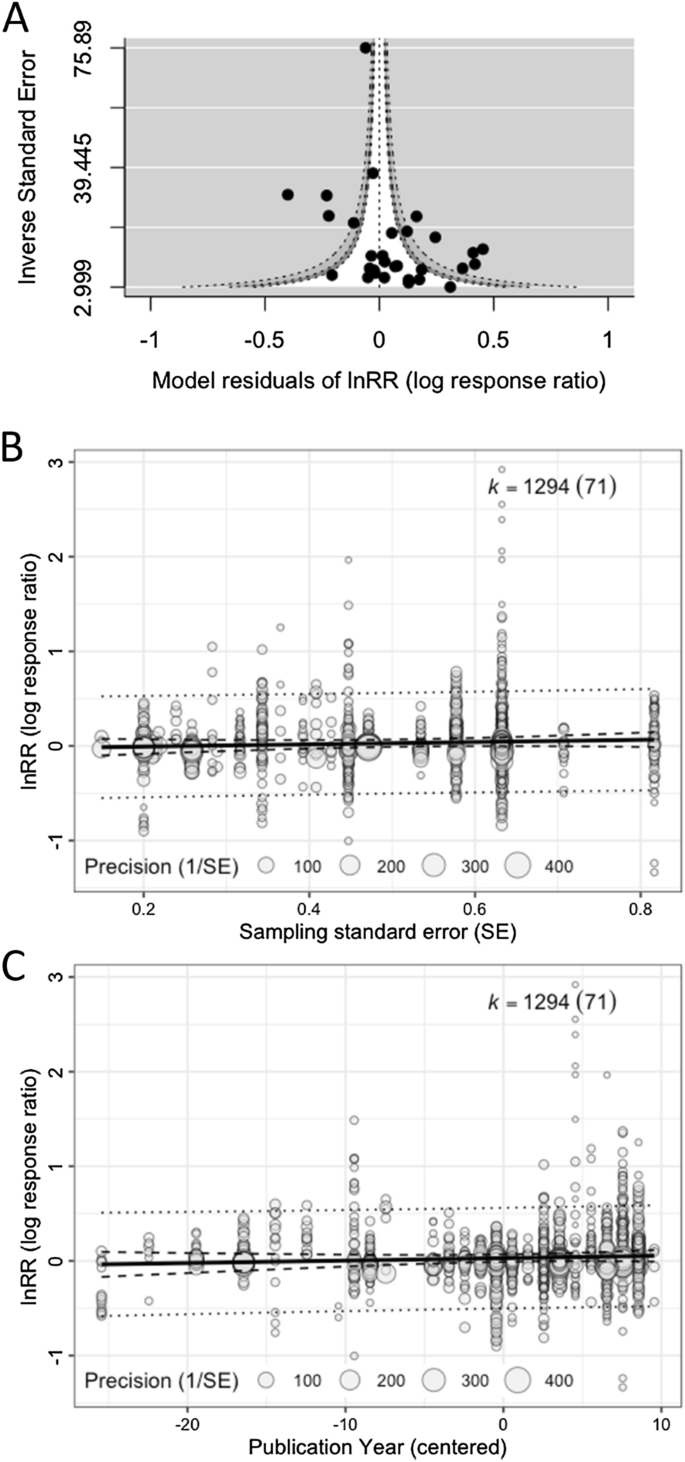

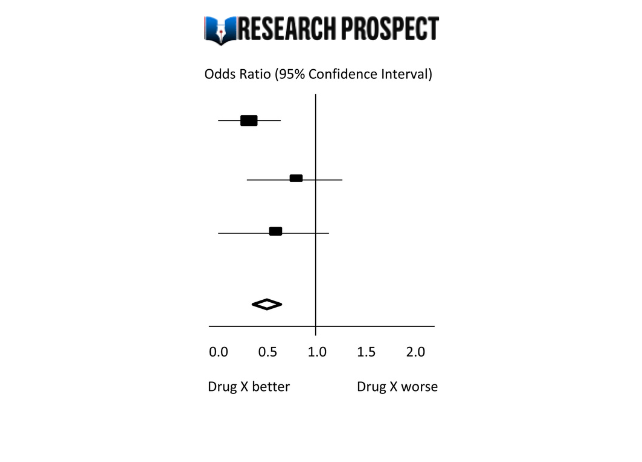

Outcomes from a meta-analysis may provide a more precise estimate of the effect being studied because it merges outcomes from multiple studies. In a meta-analysis, data from various trials are combined to generate an average result (1), which is portrayed in a forest plot. Moreover, meta-analyses often include a funnel plot to visually detect publication bias.
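As a rough illustration of what "combining" means, the sketch below pools three study results with inverse-variance weights, which is one common fixed-effect approach and not necessarily the method used in any particular review. The function name and the trial numbers are hypothetical and chosen only for illustration.

```python
import math

def pool_fixed_effect(effects, std_errors):
    """Inverse-variance weighted average of study effect estimates."""
    # More precise studies (smaller standard error) receive larger weights.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    # Approximate 95% confidence interval using the normal distribution.
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci

# Hypothetical log risk ratios and standard errors from three trials.
effects = [-0.30, -0.10, -0.20]
std_errors = [0.20, 0.10, 0.20]

pooled, pooled_se, ci = pool_fixed_effect(effects, std_errors)
print(f"pooled = {pooled:.3f}, SE = {pooled_se:.3f}, "
      f"95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

Notice that the pooled standard error is smaller than that of any single trial, which is the sense in which the combined estimate is more precise. A forest plot would draw each trial's estimate and interval on one axis, with the pooled result at the bottom.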

Conclusions

A systematic review is an article that synthesizes the available evidence on a certain topic using a specific research question, pre-specified eligibility criteria for including articles, and a systematic method for its production. A meta-analysis, by contrast, is a quantitative, epidemiological study design used to assess the results of the articles included in a systematic review.

Remember: All meta-analyses involve a systematic review, but not all systematic reviews involve a meta-analysis.

If you would like some further reading on this topic, we suggest the following:

The systematic review – a S4BE blog article

Meta-analysis: what, why, and how – a S4BE blog article

The difference between a systematic review and a meta-analysis – a blog article via Covidence

Systematic review vs meta-analysis: what’s the difference? A 5-minute video from Research Masterminds:

1. About Cochrane reviews [Internet]. Cochranelibrary.com. [cited 2023 Apr 30]. Available from: https://www.cochranelibrary.com/about/about-cochrane-reviews

2. Haidich AB. Meta-analysis in medical research. Hippokratia. 2010;14(Suppl 1):29–37.



30 Meta-Analysis and Quantitative Research Synthesis

Noel A. Card, Family Studies and Human Development, University of Arizona, Tucson, AZ

Deborah M. Casper, Family Studies and Human Development, University of Arizona, Tucson, AZ

- Published: 01 October 2013


Meta-analysis is an increasingly common method of quantitatively synthesizing research results, with substantial advantages over traditional (i.e., qualitative or narrative) methods of literature review. This chapter is an overview of meta-analysis that provides the foundational knowledge necessary to understand the goals of meta-analysis and the process of conducting a meta-analysis, from the initial formulation of research questions through the interpretation of results. The chapter provides insights into the types of research questions that can and cannot be answered through meta-analysis as well as more practical information on the practices of meta-analysis. Finally, the chapter concludes with some advanced topics intended to alert readers to further possibilities available through meta-analysis.

Introduction to Meta-analysis

Meta-analysis, also referred to as quantitative research synthesis, is a systematic approach to quantitatively synthesizing empirical literature. By combining and comparing research results, meta-analysis is used to advance theory, resolve conflicts within a discipline, and identify directions for future research (Cooper & Hedges, 2009). We begin by describing what meta-analysis is and what it is not.

Basic Terminology

It is important to provide a foundation of basic terminology on which to build a more technical and advanced understanding of meta-analysis. First, we draw the distinction between meta-analysis and primary and secondary analysis. The second distinction we draw is between quantitative research synthesis and qualitative literature review.

Glass (1976) defined primary-, secondary-, and meta-analysis as the analysis of data in an original study, the re-analysis of data previously explored in an effort to answer new questions or existing questions in a new way, and the quantitative analysis of results from multiple studies, respectively. A notable distinction between meta-analysis as compared to primary and secondary analysis involves the unit of analysis. In primary and secondary analyses, the units of analysis are most often the individual participants. In contrast, the units of analysis in a meta-analysis are the studies themselves or, more accurately, the effect sizes (defined below) of these studies.
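To make the idea of an effect size as the unit of analysis concrete, the following minimal sketch computes one common index, the standardized mean difference (Cohen's d), together with a widely used large-sample approximation of its sampling variance, from a single study's summary statistics. The choice of d, the function name, and the numbers are illustrative assumptions; a given meta-analysis may rely on other indices such as correlations or odds ratios.

```python
import math

def standardized_mean_difference(m1, s1, n1, m2, s2, n2):
    """Return Cohen's d and its approximate sampling variance.

    m, s, n are the mean, standard deviation, and sample size of each group.
    """
    # Pool the two group standard deviations, weighting by degrees of freedom.
    pooled_sd = math.sqrt(
        ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)
    )
    d = (m1 - m2) / pooled_sd
    # Large-sample approximation to the variance of d.
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return d, var_d

# Hypothetical study: treatment group versus control group.
d, var_d = standardized_mean_difference(10.0, 2.0, 50, 9.0, 2.0, 50)
print(f"d = {d:.3f}, variance = {var_d:.5f}")
```

Each study contributes one such (effect size, variance) pair, and these pairs, rather than individual participants, form the rows of the meta-analytic data set.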

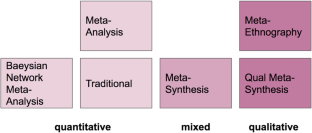

A second foundational feature to consider is the distinction between quantitative research synthesis and qualitative literature review. Although both approaches are valuable to the advancement of knowledge, they differ with regard to focus and methodology. The focus of meta-analysis is on the integration of research outcomes, specifically in terms of effect sizes. In contrast, the focus of a qualitative literature review can be on research outcomes (although typically not focusing on effect sizes) but can also be on theoretical perspectives or typical practices in research. In terms of methods, scientists utilizing meta-analytic methodologies quantitatively synthesize findings to draw conclusions based on statistical principles. In contrast, scholars who conduct a qualitative literature review subjectively interpret and integrate research. Not considered in this chapter are other methodologies that fall between these two approaches on the taxonomy of literature review (for a more comprehensive review, see Card, 2012; Cooper, 1988).

As previously acknowledged, both quantitative research synthesis and qualitative literature review merit recognition for their respective contributions to the advancement of scientific knowledge. Quantitative literature reviews were developed to overcome many of the limitations of qualitative literature reviews, and we will highlight the advantages of quantitative literature reviews below. However, it is worth noting that quantitative research synthesis has also faced criticisms (Chalmers, Hedges, & Cooper, 2002). Following are some highlights in the history of meta-analysis (for a more thorough historical account, see Chalmers, Hedges, & Cooper, 2002; Hedges, 1992; Hunt, 1997; Olkin, 1990).

A Brief History

Research synthesis methodology can be traced as far back as 1904, when Karl Pearson integrated five studies looking at the association between inoculation for typhoid fever and mortality (see Olkin, 1990). By the 1970s, at least three independent groups had started to combine results from multiple studies (Glass, 1976; Rosenthal & Rubin, 1978; Schmidt & Hunter, 1977), but the most influential work was Mary Smith and Gene Glass’s (1977) “meta-analysis” of psychotherapy, which was both ground-breaking and controversial. Smith and Glass’s (1977) meta-analysis sparked considerable controversy and debate as to the legitimacy of not only the findings but of the methodology itself (Eysenck, 1978). It is worth noting, however, that some have suggested the controversy surrounding Smith and Glass’s (1977) meta-analysis had much more to do with the results than the methodology (Card, 2012).

Following the somewhat turbulent introduction of meta-analysis into the social sciences, the 1980s offered significant contributions. These contributions came from both the advancement and dissemination of knowledge of meta-analytic techniques by way of published books describing the approach, as well as through the publication of research utilizing the methods (Glass, McGaw, & Smith, 1981; Hedges & Olkin, 1985; Hunter, Schmidt, & Jackson, 1982; Rosenthal, 1984). Since its introduction into the social sciences in the 1970s, meta-analysis has become increasingly visible and has made considerable contributions to numerous bodies of scholarly research (see Cochran, 1937; Hunter, Schmidt, & Hunter, 1979; Pearson, 1904; Rosenthal & Rubin, 1978; Glass & Smith, 1979; Smith & Glass, 1977).

Research Synthesis in the Social Sciences

Glass (1976) brought the need for meta-analysis to the forefront in a presidential address. It is not uncommon to observe conflicting findings across studies (Cooper & Hedges, 2009). These inconsistencies lead to confusion and impede progress in social science (as well as in the so-called hard sciences; Hedges, 1987). Quantitative research synthesis is a powerful approach that addresses this problem through the systematic integration of results from multiple studies that often individually report conflicting results.

Chapter Overview

The following chapter is an overview of meta-analysis that provides the foundational knowledge necessary to understand the goals of meta-analysis and the process of conducting a meta-analysis, from the initial formulation of research questions through the interpretation of results. The chapter provides insights into the types of research questions that can and cannot be answered through meta-analysis as well as more practical information on the practices of meta-analysis. Finally, we conclude the chapter with some advanced topics intended to alert readers to further possibilities available through meta-analysis. To begin, we consider the types of questions that can and cannot be answered through meta-analysis.

Problem Formulation

Questions that can and cannot be answered through meta-analysis.

One of the first things to consider when conducting scientific research is the question for which you seek an answer; meta-analysis is no exception. A primary purpose for conducting a meta-analytic review is to integrate findings across multiple studies; however, not all questions are suitable for this type of synthesis. Hundreds, or sometimes thousands, of individual research reports potentially exist on any given topic; therefore, after an initial search of the literature, it is important to narrow the focus, identify goals, and articulate concise research questions that can be answered by conducting a tractable meta-analysis. A common misconception by those unfamiliar with meta-analysis is that an entire discipline or phenomenon can be “meta-analyzed” (Card, 2012). Because of the infinite number of questions that could be asked (many of which could be answered using meta-analysis), this sort of goal is too nonspecific. Rather, a more appropriate approach to quantitative research synthesis is to identify a narrowly focused goal or set of goals and corresponding research questions.

Identifying Goals and Research Questions

Cooper’s (1988) taxonomy of literature reviews identified multiple goals for meta-analysis. These include integration, theory development, and the identification of central issues within a discipline. We consider each of these goals in turn.

Integration. There are two general approaches to integrating research findings in meta-analysis: combining and comparing studies. The approach of combining studies is used to integrate effect sizes from multiple primary studies in an effort to estimate an overall, typical effect size. Inferences about this mean effect size can then be made by way of significance testing and/or confidence intervals. A second approach commonly used to integrate findings involves comparing studies. Also known as moderator analyses (addressed in more detail below), comparisons can be made across studies when a particular effect size is hypothesized to systematically vary on one or more of the coded study characteristics. Analyses to address each of these two approaches to integration will be described below.

Theory Development . A second goal of meta-analysis involves the development of theory. Meta-analysis can be used quite effectively and efficiently toward this end. If associations between variables that have been meta-analytically combined are weak, then this might indicate that a theory positing stronger relations of the constructs in question should be abandoned or modified ( Schmidt, 1992 ). If, on the other hand, associations are strong, then this may be an indication that the phenomenon under investigation is moving toward a more integrated theory. Ideally, meta-analyses can be used to evaluate competing theories that make different predictions about the associations studied. Either way, meta-analysis is a powerful tool that can be used toward the advancement of theory within the social sciences.

Identification of Central Issues. A final goal has to do with identifying central issues within a discipline or phenomenon. The exhaustive review of empirical findings can aid in the process of identifying key issues within a discipline, such as whether there is inadequate study of certain types of samples or methodologies. The statistical techniques of meta-analysis can address inconsistencies in the findings, attempting to predict these inconsistencies with coded study characteristics (i.e., moderator analyses). Both of these contributions are important to the process of identifying directions for future research and the advancement of knowledge.

Critiques of Meta-Analysis

Earlier, we described how the controversial nature of one of the earliest meta-analyses ( Smith & Glass, 1977 ) drew criticism not only of their findings but also of the technique of meta-analysis itself. Although these critiques have largely been rebuffed, they are still occasionally applied. Among the most common criticisms of meta-analysis are: (1) the “file drawer” problem; (2) the apples and oranges problem; (3) garbage in and garbage out; (4) the level of expertise required of the meta-analyst; and (5) the potential lack of qualitative finesse.

The “file drawer” problem . The “file drawer” problem, also known as the threat of publication bias, is based on the notion that significant results get published and nonsignificant findings get relegated to the “file drawer,” resulting in the potential for a publication bias in meta-analysis ( Rosenthal, 1979 ). To answer this criticism, however, meta-analysts typically employ both systematic and exhaustive search strategies to obtain published and unpublished reports in an effort to minimize this threat. In addition, there is an extensive collection of statistical procedures in meta-analysis that can be used to probe the existence, extent, and likely impact of publication bias ( Rothstein, Sutton, & Borenstein, 2005 ).

The apples and oranges problem. The apples and oranges problem describes the potential process of combining such a diverse range of studies that the aggregated results are meaningless. For example, if a meta-analyst attempted to investigate the predictors of childhood internalizing problems by including studies focusing on depression, anxiety, and social withdrawal, then it could be argued that the aggregation of results across this diverse range of problems is meaningless. This critique, in our opinion, is conceptual rather than methodological: Did the scientist using meta-analytic techniques define a sampling frame of studies within which it is useful to combine results? Fortunately, meta-analytic reviews can use both (1) combination to estimate mean results and (2) comparison to evaluate whether studies with certain features differ. Put differently, meta-analysis allows for both general and specific results. Returning to the example of a meta-analyst investigating the predictors of childhood internalizing problems, it might be useful to present results of both (1) predictors of general internalizing problems, and (2) comparisons of the distinct predictors of depression, anxiety, and social withdrawal.

Garbage in and garbage out. Garbage in, garbage out describes the practice of including poor-quality research reports in a meta-analysis, which results in only poor-quality conclusions. Although this critique is valid in some situations, we believe a more nuanced consideration of “garbage” is needed before it is used as a critique of a particular meta-analysis. In the next section, we will provide this consideration by discussing how the limits of primary research place limits on the conclusions that can be drawn from meta-analysis of that research.

The level of expertise required of the meta-analyst . A common misconception is that meta-analysis requires advanced statistical expertise. We would argue that with basic methodological and quantitative training, such as usually obtained in the first year of graduate school, many scientists could readily learn the basic techniques (through an introductory course or book on meta-analysis) to conduct a sound meta-analytic review.

The potential lack of qualitative finesse . A final criticism that has been raised is that meta-analysis lacks the “qualitative finesse” of a qualitative review. Perhaps tellingly, a definition of qualitative finesse is generally lacking when this critique is made, but it seems that this critique implies that a meta-analyst has not thought carefully and critically about the nuances of the studies and collection of studies. There certainly exist meta-analyses where this critique seems relevant—just as there exist primary quantitative studies in which careful thought seems lacking. The solution to this critique is not to abandon meta-analytic techniques, however, just as the solution to thoughtless primary studies is not to abandon statistical analyses of these data. Rather, this critique makes clear that meta-analysis—like any other methodological approach—is a tool to aid careful thinking, rather than a replacement for it.

Limits of Primary Research and Meta-Analysis

It is also important to recognize that the conclusions of a meta-analytic review must be tempered by the quality of the empirical research comprising this review. Many of the threats to drawing conclusions in primary research are likely to translate to meta-analysis as well. Perhaps the most salient threats involve flaws in the study design, sampling procedures, methodological artifacts, and statistical power.

Study design . The design of primary studies guides the types of conclusions that can be drawn from them; similarly, the design of studies included in a meta-analysis guides the types of conclusions that can be drawn. Experimental designs, although powerful in their ability to permit inferences of causality, often do not share the same ecological validity as correlational designs. Conversely, correlational designs cannot make inferences of causality. It would follow that any limitation existing within primary studies also exists within the meta-analyses that encompass these studies.

Sampling . Another limitation of primary studies is that it is difficult to support inferences generalizable beyond the sampling frame. When a sample is drawn from a homogeneous population, inferences can be made only for a limited set of individuals. Similarly, findings from a meta-analysis can only be generalized to populations within the sampling frame of the included studies; however, the collection of primary studies within a meta-analysis is likely to be more heterogeneous than one single primary study if it includes studies that are collectively diverse in their samples, even if each study sample is homogeneous.

Methodological artifacts . Both primary research and meta-analysis involve methodological shortcomings. Although it is difficult to describe all of the characteristics that make up a high-quality study, it is possible to identify those artifacts that likely lower the quality of the design. In primary studies, methodological issues need to be addressed prior to data collection. In contrast, meta-analysis can address these methodological artifacts in either one of two ways. The first way is to compare (through moderator analyses) whether studies with different methodological features actually yield different findings. Second, for some artifacts (e.g., measurement unreliability) described near the end of this chapter, corrections can be made that allow for the analysis of effect sizes free of these artifacts. Artifact correction is rarely performed in primary research (with the exception of latent variable modeling to correct for unreliability) but more commonly considered in meta-analyses.

Statistical power . Another limitation of much primary research is low statistical power ( Maxwell, 2004 ). Statistical power is the probability of detecting an effect that truly does exist but is often unacceptably low in many primary research studies. This low power results in incorrect conclusions in primary studies that an effect does not exist (despite cautions against “accepting” the null hypothesis). Fortunately, meta-analysis is usually less affected by inadequate power of primary studies because it combines a potentially large number of studies, thus resulting in greater statistical power.
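The power gain from combining studies can be illustrated with a rough calculation. The sketch below is only an approximation under stated assumptions: it uses a normal approximation on Fisher's Z r scale (introduced later in the chapter), assumes a fixed-effect combination with inverse-variance weights, and uses made-up values (a population correlation of .20, ten studies of 50 participants each). The function name `power_for_correlation` is hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist


def power_for_correlation(rho, se, alpha=0.05):
    """Approximate two-sided power to detect a population correlation rho,
    given the standard error of the Fisher-transformed estimate."""
    norm = NormalDist()
    z_crit = norm.inv_cdf(1 - alpha / 2)
    shift = atanh(rho) / se  # expected value of the test statistic
    return norm.cdf(shift - z_crit) + norm.cdf(-shift - z_crit)


# Illustrative (made-up) scenario
n_per_study, k_studies, rho = 50, 10, 0.20

se_single = 1 / sqrt(n_per_study - 3)
# Assumption: fixed-effect pooling with inverse-variance weights, so the
# pooled variance is 1 / sum(1 / se_i**2). With k equally sized studies
# the standard error shrinks by a factor of sqrt(k).
se_pooled = sqrt(1 / (k_studies * (1 / se_single**2)))

power_single = power_for_correlation(rho, se_single)
power_pooled = power_for_correlation(rho, se_pooled)
print(f"single study: {power_single:.2f}, pooled: {power_pooled:.2f}")
```

Under these assumptions a single study has well under a 50% chance of detecting the effect, whereas the pooled estimate detects it almost always. Heterogeneity across studies, which a random-effects model would account for, would reduce this gain.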

Strengths of Meta-Analysis

As outlined above, there are limits to meta-analysis; however, meta-analysis should be recognized for its considerable strengths. We next briefly describe three of the most important of these: (1) a systematic and disciplined review process; (2) sophisticated reporting of findings; and (3) a way of combining and comparing large amounts of data (Lipsey & Wilson, 2001).

Systematic and disciplined review process. First, systematic procedures must be followed to conduct a comprehensive literature search, to code comparable characteristics and effect sizes from studies consistently, and to ensure the accuracy of combining results from multiple reports into one effect size. The processes of searching the literature, identifying studies, coding, and analyzing results have received tremendous attention in the literature on meta-analysis methodology, in contrast to most other forms of literature review. Although this work requires discipline, diligent attention to detail, and meticulous documentation on the part of the meta-analyst, when these procedures are followed, a large amount of data can be combined and compared and the outcome is likely to be a significant contribution to the field.

Combining and comparing large amounts of data . Perhaps one of the greatest strengths of meta-analytic techniques is the ability to combine and compare large amounts of data that would otherwise be impossible to integrate in a meaningful way. It would assuredly exceed the capacity of almost any scholar to combine the large amounts of data and draw meaningful conclusions without quantitative literature review techniques. Following the strength of combining and comparing large amounts of data is the strength in the way in which the findings are reported.

Sophisticated reporting of findings . Meta-analysis offers a level of sophistication in the way in which the findings are reported. Unlike qualitative literature reviews that derive and report conclusions and interpretations in a narrative format, meta-analysis uses statistical techniques to yield quantified conclusions. Meta-analysts commonly take advantage of visual tools such as stem-and-leaf plots, funnel plots, and tables of effect sizes to add a level of sophistication to the reporting of findings.

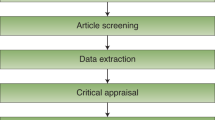

Searching the Literature

Defining a sampling frame.

Similarly to primary research, a sampling frame must be considered in meta-analysis. However, the unit of analysis in a meta-analysis is the study itself, as compared to the individuals in most primary studies. If we are to make inferences about the population of studies of interest, it is necessary to define the population a priori by articulating a set of criteria of the type of studies included versus excluded from this sampling frame.

Identifying Inclusion and Exclusion Criteria

As mentioned, the inclusion and exclusion criteria define the sampling frame of a meta-analysis. Establishing clear and explicit criteria will help guide the search process, a consideration particularly important if multiple individuals are working on the project. A second reason for identifying clear criteria is that it will help define the population of interest to which generalizations can be made. A final reason that clear criteria are necessary has to do with the ideas of transparency and replication. As with the sampling in well-conducted and well-reported primary studies, each decision and subsequent procedure utilized in the literature search of a meta-analysis must be transparent and replicable. Some of the more common search techniques and sources of information are described next.

Search Techniques and Identifying Resources

Many techniques have been used quite successfully toward the goal of searching the literature and identifying relevant resources. Two important concepts related to the literature search are recall and precision (see White, 2009). Recall is the percentage of all existing studies meeting your inclusion criteria that your search actually retrieves. Precision is the percentage of the retrieved studies that meet the inclusion criteria for the meta-analysis. The ideal literature search strategy provides both high recall and high precision, although in reality decisions that improve recall often lower precision and vice versa.
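The trade-off between recall and precision can be made concrete with a small sketch. The study identifiers and the two searches below are invented for illustration, and in practice the full set of relevant studies is unknown, so recall can only be estimated.

```python
def recall_and_precision(retrieved, relevant):
    """Return (recall, precision) for a search, given sets of study IDs."""
    hits = retrieved & relevant
    recall = len(hits) / len(relevant)      # share of relevant studies found
    precision = len(hits) / len(retrieved)  # share of retrieved studies that are relevant
    return recall, precision


# Hypothetical data: five studies meet the inclusion criteria.
relevant = {"s01", "s02", "s03", "s04", "s05"}

# A narrow search retrieves few reports, most of them relevant.
narrow = {"s01", "s02", "s90"}
# A broad search finds more relevant reports but also many irrelevant ones.
broad = {"s01", "s02", "s03", "s04", "s90", "s91", "s92", "s93", "s94", "s95"}

for name, retrieved in [("narrow", narrow), ("broad", broad)]:
    recall, precision = recall_and_precision(retrieved, relevant)
    print(f"{name}: recall = {recall:.2f}, precision = {precision:.2f}")
```

In this made-up case the broad search doubles recall (from .40 to .80) while precision falls (from .67 to .40), which is the pattern described above.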

By using multiple methods of searching for literature, meta-analysts strive to maximize recall without imposing impractical detriments on precision. The use of multiple search techniques helps this effort. The techniques most commonly used include searching: electronic databases using keywords, bibliographical reference volumes, unpublished works and other outlets (described below), conference presentations, funding agency lists, research registries, backward searches, forward searches, and personal communications with colleagues.

Electronic databases. Electronic databases are probably one of the most helpful tools for conducting literature searches developed in the past decades. Electronic database searches can now identify in a matter of hours or days as much of the relevant literature as would have taken weeks or months a few decades earlier (not to mention that these searches can be done from the comfort of one’s office rather than within the confines of a library). Most disciplines have electronic databases that serve primarily that particular discipline (e.g., PsycINFO for psychology, Medline for medicine, ERIC for education). With these and similar databases, the meta-analyst identifies the most relevant combination of keywords, wildcard marks (e.g., *), and logical statements (e.g., and, or, not), and voluminous amounts of literature are quickly searched for matches. The electronic database is perhaps the most fruitful place to begin and is currently the primary tool used to search the literature.
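To show how keywords, wildcards, and logical statements combine, the following toy sketch filters a list of invented report titles. It is not the query syntax of any real database (each has its own); the helper names `term_to_regex` and `matches` and the titles are hypothetical.

```python
import re


def term_to_regex(term):
    """Turn a keyword into a regex; a trailing * acts as a truncation wildcard."""
    if term.endswith("*"):
        return re.compile(r"\b" + re.escape(term[:-1]) + r"\w*", re.IGNORECASE)
    return re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)


def matches(title, any_of, all_of=(), none_of=()):
    """Evaluate (t1 OR t2 ...) AND (all of all_of) AND NOT (any of none_of)."""
    def has(term):
        return bool(term_to_regex(term).search(title))

    return (
        any(has(t) for t in any_of)
        and all(has(t) for t in all_of)
        and not any(has(t) for t in none_of)
    )


# Hypothetical titles, for illustration only
titles = [
    "Peer victimization and depressive symptoms in adolescents",
    "Bullying among adult employees",
    "Aggression in preschool classrooms",
]

# Query: (bully* OR victim*) NOT adult*
selected = [t for t in titles if matches(t, ("bully*", "victim*"), none_of=("adult*",))]
print(selected)
```

Only the first title is selected: the second is dropped by the NOT clause and the third contains neither keyword, which also illustrates how a relevant report using different terminology can be silently excluded.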

Despite their advantages, it is worth mentioning a few cautions regarding electronic databases. First, an electronic search must not be used exclusively because of that which is not included in these databases. For example, many unpublished works might not be retrieved through electronic databases. Second, as mentioned previously, each discipline relies on one primary electronic database; therefore, multiple databases must be considered in your search. Third, electronic databases produce studies that match the keyword searches, but it is not possible to know what has been excluded. Using other search strategies and investigating why studies found by these strategies were not identified in the electronic database search is necessary to avoid unnecessary (and potentially embarrassing) omission of studies from a meta-analysis.

Bibliographical reference volumes. A method of locating relevant literature that was common as little as a decade ago is to search bibliographical reference volumes. These volumes are printed collections containing essentially the same information as electronic databases. Although these reference volumes are being phased out of circulation, you may find them useful if relevant literature was published some time ago (especially if the electronic databases have not yet incorporated this older literature).

Unpublished works . One of the challenges of meta-analysis has to do with publication bias ( see Rothstein et al., 2005 ). If there is a tendency for significant findings to be more likely published than nonsignificant (presumably with smaller effect sizes) studies, then the exclusion of unpublished studies in a meta-analysis can be problematic. To balance this potential problem, the meta-analyst should make deliberate efforts to find and obtain unpublished studies. Some possible places to find such studies include conference program books, funding agency lists, and research registries.

Backward searches . Another technique commonly used in meta-analysis is the backward search. Once relevant reports are retrieved, it is recommended that the researcher thoroughly read each report and identify additional articles cited within these reports. This strategy is called a “backward” search because it proceeds backward in time from obtained studies toward previous studies.

Forward searches. A complementary procedure, known as the forward search, involves searching for additional studies that have cited the relevant studies included in your meta-analysis (“forward” because the search proceeds from older studies to newer studies citing these previous works). To conduct this type of search, special databases (e.g., Social Science Citation Index) are used.

Personal communication with researchers in the field . A final search technique involves personal communication with the researchers in the field. It will be especially helpful to communicate with researchers in your field (those who will likely read your work) in an effort to locate resources that somehow escaped your comprehensive search efforts. An effective yet efficient way to do this is to simply email researchers in your field, let them know what type of meta-analysis you are conducting, and ask if they would be willing to peruse your reference list to see if there are any glaring oversights.

Coding Study Characteristics

In a meta-analysis, study characteristics are systematically coded for two reasons. First, this coded information is presented to describe the collective field being reviewed. For example, do studies primarily rely on White college students, or are the samples more diverse (either within or across studies)? Do studies rely on the same measures or types of measures, or has the phenomenon been studied using multiple measures?

A second reason for systematically coding study characteristics is for use as potential predictors of variation in effect sizes across studies (i.e., moderators, as described below in the section titled Moderator Analyses). In other words, does variation across studies in the coded study characteristics co-occur with differences in results (i.e., effect sizes) from these studies? Ultimately, the decision of what study characteristics should be coded derives from the meta-analyst’s substantive understanding of the field. There are at least three general types of study features that are commonly considered: characteristics of the sample, the methodology, and the source.

Coding Sample Characteristics

Sample characteristics include any descriptions of the study samples that might systematically covary with study results (i.e., effect sizes). Some meta-analyses will include codes for the sampling procedures, such as whether the study used a representative sample or a convenience sample (e.g., college students), or whether the sample was selected from some specific setting, such as clinical treatment settings, schools, or prisons. Nearly all meta-analyses code various demographic features of the sample, such as the ethnic composition, proportion of the sample that is male or female, and the average age of participants in the sample.

Coding Methodological Characteristics

Potential methodological characteristics for coding include both design and measurement features. At a broad level, a meta-analyst might code broad types of designs, such as experimental, quasi-experimental, and single-subject ABAB studies. It might also be useful to code at narrower levels, such as the type of control group used within experimental treatment studies (e.g., no contact, attention only, treatment as usual). Similarly, the types of measures used could be coded as either broad (e.g., parent vs. child reports) or narrow (e.g., CBCL vs. BASC parent reports). In practice, most meta-analysts will code methodological features at both broad and narrow levels, first considering broad-level features as predictors of variability in effect sizes, and then using narrower-level features if there exists unexplained variation in results within these broad features.

Coding Source Characteristics

Source characteristics include features of the report or author that might plausibly be related to study findings. The most commonly coded source characteristic is whether the study was published, which is often used to evaluate potential publication bias. The year of publication (or presentation, for unpublished works) is often used as a proxy for the historic time in which the study was conducted. If the year predicts differences in effect sizes, then this may be evidence for historic change in the phenomenon over time. Other source characteristics, such as characteristics of the researcher (e.g., gender, ethnicity, discipline), are less commonly coded but are possibilities. For example, some meta-analyses of gender differences have coded the gender of the first author to evaluate the possibility that the researchers’ presumed biases may somehow impact the results found (e.g., Card, Stucky, Sawalani, & Little, 2008).

Coding Effect Sizes

As mentioned, study results in meta-analysis are represented as effect sizes. To be useful in meta-analysis, a potential effect size needs to meet four criteria. First, it needs to quantify the direction and magnitude of a phenomenon of interest. Second, it needs to be comparable across studies that use different sample sizes and scales of measurement. Third, it needs to be either consistently reported in studies included in the meta-analysis or computable from commonly reported results. Fourth, the meta-analyst must be able to compute its standard error, which is used for weighting of studies in subsequent meta-analytic combination and comparison.

The three effect sizes most commonly used in meta-analyses all index associations between two variables. The correlation coefficient (typically denoted as r) quantifies associations between two continuous variables. The standardized mean differences are a family of effect sizes (we will focus on Hedges’ g) that quantify associations between a dichotomous (group) variable and a continuous variable. The odds ratio (denoted as either o or OR) is a useful and commonly used index for associations between two dichotomous variables (Fleiss, 1994). We next describe these three indexes of effect size: the correlation coefficient, the standardized mean difference, and the OR. After describing each of these effect size indexes, we will describe how they are computed from results commonly reported in empirical reports.

Correlation Coefficient

Correlation coefficients represent associations between two variables on a standardized scale from −1 to +1. Correlations near 0 denote the absence of association between two variables, whereas positive values indicate that scores on one variable tend to be similar to scores on another (relatively high scores on one variable tend to occur with relatively high scores on the other, and low scores with low scores), and negative values indicate the opposite (high scores with low scores). The correlation coefficient has the advantage of being widely recognized by scientists in diverse fields. A commonly applied suggestion is that r ≈ ±0.10 is considered small, r ≈ ±0.30 is considered medium, and r ≈ ±0.50 is considered large; however, disciplines and fields differ in their evaluations of what constitutes small or large correlations, and researchers should not be dogmatic in applying these guidelines.

Although r has many advantages as an effect size, it has the undesirable property for meta-analysis of having sample estimates that are skewed around the population mean. For this reason, meta-analysts should transform r to Fisher’s $Z_r$ prior to analysis using the following equation:

$$Z_r = \frac{1}{2}\ln\left(\frac{1 + r}{1 - r}\right) \tag{1}$$

Although $Z_r$ has desirable properties for meta-analytic combination and comparison, it is not very interpretable by most readers. Therefore, meta-analysts back-transform results in the $Z_r$ metric (e.g., mean effect size) to r for reporting using the following equation:

$$r = \frac{e^{2Z_r} - 1}{e^{2Z_r} + 1} \tag{2}$$

As mentioned earlier, and as will be described in greater detail below, it is necessary to compute the standard error of the estimate of the effect size ($Z_r$) for use in weighting studies in meta-analysis. The equation for the standard error of $Z_r$ ($SE_{Z_r}$) is a simple function of the study sample size, N:

$$SE_{Z_r} = \frac{1}{\sqrt{N - 3}} \tag{3}$$
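As a minimal sketch of these three computations, the Python functions below implement the standard Fisher transformation, its inverse, and its standard error. The function names and the example study (r = .30, N = 103) are invented for illustration, and the 95% confidence interval uses the usual normal approximation.

```python
from math import atanh, sqrt, tanh


def r_to_z(r):
    """Fisher's transformation: 0.5 * ln((1 + r) / (1 - r)), i.e. atanh(r)."""
    return atanh(r)


def z_to_r(z):
    """Back-transformation: (e^(2z) - 1) / (e^(2z) + 1), i.e. tanh(z)."""
    return tanh(z)


def se_z(n):
    """Standard error of Fisher's Z for a study with sample size n."""
    return 1 / sqrt(n - 3)


# Hypothetical study: r = .30 from N = 103 participants
r, n = 0.30, 103
z = r_to_z(r)
se = se_z(n)

# 95% confidence interval computed on the Z scale, then back-transformed to r
lower, upper = z_to_r(z - 1.96 * se), z_to_r(z + 1.96 * se)
print(f"Z = {z:.3f}, SE = {se:.3f}, 95% CI for r = [{lower:.3f}, {upper:.3f}]")
```

Note that the back-transformed interval is not symmetric around r, which reflects the skew that motivates the transformation in the first place.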

Standardized Mean Differences

There exist several standardized mean differences, which index associations between a dichotomous “group” variable and a continuous variable. Each of these standardized mean differences indexes the direction and magnitude of differences between two groups in standard deviation units. We begin with one of the more common of these indices, Hedges’ g, which is defined as:

$$g = \frac{M_1 - M_2}{s_{pooled}} \tag{4}$$

The numerator of this equation contains the difference between the means of two groups (groups 1 and 2) and will yield a positive value if group 1 has a higher mean than group 2 or a negative value if group 2 has a higher mean than group 1. Although it is arbitrary which group is designated 1 or 2, this designation must be consistent across all studies coded for a meta-analysis.

If all studies in a meta-analysis use the same measure, or else different measures with the same scale, then the numerator of this equation alone would suffice as an effect size for meta-analysis (this is the unstandardized mean difference). However, the more common situation is that different scales are used across different studies, and in this situation it would make no sense to attempt to combine these unstandardized mean differences across studies. To illustrate, if one study comparing treatment to control groups measured an outcome on a 1 to 100 scale and found a 10-point difference, whereas another study measured the outcome on a 0 to 5 scale and found a 2-point difference, then there would be no way of knowing which—if either—study had a larger effect. To make these differences comparable across studies, it is necessary to standardize them in some way, typically by dividing the mean difference by a standard deviation.

As seen in equation (4) above, the standard deviation in the divisor for g is the pooled (i.e., combined across the two groups) estimate of the population standard deviation. Other variants within the standardized mean difference family of effect sizes use different divisors. For example, the index d uses the pooled sample standard deviation, and a less commonly used index, $g_{Glass}$ (also denoted as Glass’ Δ), uses the estimated population standard deviation for one group (the group that you believe is a more accurate estimate of population standard deviation, such as the control group if you believe that treatment impacts the standard deviation). The latter index ($g_{Glass}$) is less preferred because it cannot be computed from some commonly reported statistics (e.g., t tests), and it is a poorer estimate if the standard deviations are, in fact, comparable across groups (Hedges & Olkin, 1985).

In this chapter, we focus our attention primarily on g , and we will describe the computation of g from commonly reported results below. Like other standardized mean differences, g has a value of 0 when the groups do not differ (i.e., no association between the dichotomous group variable and the continuous variable), and positive or negative values depending on which group has a higher mean. Unlike r, g is not bounded at 1, but can have values greater than ±1.0 if the groups differ by more than one standard deviation.

Although g is a preferred index of standardized mean differences, it exhibits a slight bias when estimated from small samples (e.g., sample sizes less than 20). To correct for this bias, it is common to apply the following correction:

$$g_{adjusted} = g - \frac{3g}{4(n_1 + n_2) - 9} \tag{5}$$

As with any effect size used in meta-analysis, it is necessary to compute the standard error of estimates of g for weighting during meta-analytic combination. The standard error of g is more precisely estimated using the sample sizes from both groups under consideration (i.e., $n_1$ and $n_2$ for groups 1 and 2, respectively) using the left portion of Equation 6 but can be reasonably estimated using overall sample size ($N_{Total}$; right portion of Equation 6) when exact group sizes are unknown but approximately equal (no more than a 3-to-1 discrepancy in group sizes; Card, 2012; Rosenthal, 1991):

$$SE_g = \sqrt{\frac{n_1 + n_2}{n_1 n_2} + \frac{g^2}{2(n_1 + n_2)}} \approx \sqrt{\frac{4}{N_{Total}} + \frac{g^2}{2N_{Total}}} \tag{6}$$
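The computations for g can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than a definitive implementation: the function names and the example values are hypothetical, the standard deviations passed in are assumed to be the usual sample estimates with n − 1 in the denominator, and the small-sample correction and standard error follow the commonly used approximate forms.

```python
from math import sqrt


def hedges_g(m1, m2, sd1, sd2, n1, n2):
    """Mean difference divided by the pooled estimate of the population SD."""
    pooled_sd = sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled_sd


def small_sample_adjust(g, n1, n2):
    """Approximate correction for the slight upward bias of g in small samples."""
    return g - 3 * g / (4 * (n1 + n2) - 9)


def se_g(g, n1, n2):
    """Standard error of g when both group sizes are known."""
    return sqrt((n1 + n2) / (n1 * n2) + g**2 / (2 * (n1 + n2)))


def se_g_approx(g, n_total):
    """Approximation when only the total N is known and groups are roughly equal."""
    return sqrt(4 / n_total + g**2 / (2 * n_total))


# Hypothetical study: treatment (group 1) vs. control (group 2)
g = hedges_g(m1=10.5, m2=9.0, sd1=3.0, sd2=3.0, n1=20, n2=20)
g_adj = small_sample_adjust(g, 20, 20)
print(f"g = {g:.3f}, adjusted g = {g_adj:.3f}")
print(f"SE (exact) = {se_g(g, 20, 20):.3f}, SE (approx) = {se_g_approx(g, 40):.3f}")
```

With equal group sizes, as in this example, the exact and approximate standard errors coincide; they diverge as the group sizes become more unequal.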

Odds Ratios

The odds ratio, denoted as either o or OR, is a useful index of associations between two dichotomous variables. Although readers might be familiar with other indices of two-variable associations, such as the rate (also known as risk) ratio or the phi coefficient, the OR is advantageous because it is not affected by differences in the base rates of dichotomous variables across studies and can be computed from a wider range of study designs (see Fleiss, 1994). The OR is estimated from 2 × 2 contingency tables by dividing the product of cell frequencies in the major diagonal (i.e., frequencies in the cells where values of the two variables are both 0, $n_{00}$, or both 1, $n_{11}$) by the product of cell frequencies off the diagonal (i.e., frequencies in the cells where the two variables have different values, $n_{10}$ and $n_{01}$):

$$o = \frac{n_{00}\, n_{11}}{n_{01}\, n_{10}} \tag{7}$$

The OR has a rather different scale than either r or g. Values of 1.0 represent no association between the dichotomous variables, values from 1 down to 0 represent negative associations, and values from 1 to infinity represent positive associations. Given this scale, o is clearly skewed; therefore, a log transformation is applied to o when it is included in a meta-analysis: ln(o). The standard error of this log-transformed odds ratio is a function of the number of participants in each cell of the 2 × 2 contingency table:
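As a sketch, assuming the standard formula in which the standard error of ln(o) is the square root of the summed reciprocals of the four cell frequencies. Names are illustrative, and tables with empty cells are rejected rather than adjusted.

```python
from math import log, sqrt


def log_odds_ratio_and_se(n00: int, n01: int, n10: int, n11: int) -> tuple[float, float]:
    """Return (ln(o), SE of ln(o)) for a 2 x 2 table with no empty cells."""
    cells = (n00, n01, n10, n11)
    if min(cells) <= 0:
        raise ValueError("all four cells must be positive")
    ln_o = log((n00 * n11) / (n01 * n10))
    se = sqrt(sum(1.0 / n for n in cells))
    return ln_o, se


ln_o, se = log_odds_ratio_and_se(30, 10, 15, 20)
print(ln_o, se)
```

Because the standard error depends on every cell, a single sparse cell can dominate it even when the total sample is large.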

Computing Effect Sizes From Commonly Reported Data

Ideally, all studies that you want to include in a meta-analysis will have effect sizes reported, and it is a fairly straightforward matter to simply record these. Unfortunately, many studies do not report effect sizes (despite many calls for this reporting; e.g., Wilkinson et al., 1999), and it is necessary to compute effect sizes from a wide variety of information reported in studies. Although it is not possible to consider all possibilities here, we next describe a few of the more common situations. Table 30.1 summarizes equations for computing r and g in these situations (note that it is typically necessary to reconstruct contingency tables from reported data to compute the odds ratio; see Fleiss, 1994).

It is common for studies to report group comparisons in the form of either the (independent samples) t-test or the results of a two-group (i.e., 1 df) analysis of variance (ANOVA). This occurs either because the study focused on a truly dichotomous grouping variable (in which case, the desired effect size is a standardized mean difference such as g) or because the study authors artificially dichotomized one of the continuous variables (in which case the desired effect size is r). In these cases, either r or g can be computed from the t statistic or F ratio in Table 30.1. For the F ratio, it is critical that the result is from a two-group (i.e., 1 df) comparison (for discussion of computing effect sizes from > 1 df F ratios, see Rosenthal, Rosnow, & Rubin, 2000). When computing g (but not r), a more precise estimate can be made if the two group sizes are known; otherwise, it is necessary to use the approximations shown to the right of Table 30.1 (e.g., in the first row for g, the exact formula is on the left and the approximation is on the right).
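The independent-samples case can be sketched with the standard conversions below. These are assumed to correspond to the entries of Table 30.1 but may differ from it in detail, and the function names are illustrative. A 1 df F ratio is first converted to t by taking its square root, with the sign supplied from the direction of the group difference.

```python
from math import sqrt


def t_from_f(f_ratio: float, positive: bool = True) -> float:
    """Convert a 1 df F ratio to t; the sign must come from the reported means."""
    t = sqrt(f_ratio)
    return t if positive else -t


def r_from_t(t: float, df: int) -> float:
    """r from an independent-samples t with df = n1 + n2 - 2."""
    return t / sqrt(t ** 2 + df)


def g_from_t(t: float, n1: int, n2: int) -> float:
    """g from t when both group sizes are known."""
    return t * sqrt((n1 + n2) / (n1 * n2))


def g_from_t_approx(t: float, n_total: int) -> float:
    """g from t when only total N is known (assumes similar group sizes)."""
    return 2.0 * t / sqrt(n_total)


print(r_from_t(2.0, 38), g_from_t(2.0, 20, 20), g_from_t_approx(2.0, 40))
```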

An alternate situation is that the study has performed repeated-measures comparisons (e.g., pretreatment vs. posttreatment) and reported results of dependent, or repeated-measures, t-tests or F ratios. The equations for computing r from these results are identical to those for computing r from independent-samples tests; however, for g, the equations differ for independent versus dependent sample statistics, as seen in Table 30.1.

A third possibility is that the study authors represent both variables that constitute your effect size of interest as dichotomous variables. The study might report the 1 df χ² of this contingency table or the data that can be used to construct the contingency table and compute the χ² value. In this situation, r and g are computed from this χ² value and sample size (N). As with the F ratio, it is important to keep in mind that this equation applies only to 1 df χ² values (i.e., 2 × 2 contingency tables).
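A sketch of this case, assuming the standard relation r = √(χ²/N) and the usual conversion from r to a standardized mean difference under roughly equal group sizes. These are assumed to match Table 30.1; function names are illustrative, and the sign of the effect must be set from the direction of the association.

```python
from math import sqrt


def r_from_chi2(chi2: float, n_total: int) -> float:
    """Magnitude of r from a 1 df chi-square; sign is assigned by the coder."""
    return sqrt(chi2 / n_total)


def g_from_chi2(chi2: float, n_total: int) -> float:
    """Magnitude of g from a 1 df chi-square (assumes similar group sizes)."""
    return 2.0 * sqrt(chi2 / (n_total - chi2))


print(r_from_chi2(4.0, 100), g_from_chi2(4.0, 100))
```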

The last situation we will discuss is when the authors report none of the above statistics but do report a significance level (i.e., p ). Here, you can compute the one-tail standard normal deviate, Z , associated with this significance level (e.g., Z = 1.645 for p = 0.05) and then use the equations of Table 30.1 to compute r or g . These formulas are used when an exact significance level is reported (e.g., p = 0.027); if they are applied to ranges (e.g., p < 0.05), then they provide only a lower-bound estimate of the actual effect size.
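A sketch of this procedure using Python's standard library, assuming the common conversions r = Z/√N and g = 2Z/√N (the latter for roughly equal group sizes). These are assumed to match Table 30.1, and function names are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def z_from_one_tailed_p(p: float) -> float:
    """Standard normal deviate whose upper-tail area equals p."""
    return NormalDist().inv_cdf(1.0 - p)


def r_from_z(z: float, n_total: int) -> float:
    return z / sqrt(n_total)


def g_from_z(z: float, n_total: int) -> float:
    """Assumes approximately equal group sizes."""
    return 2.0 * z / sqrt(n_total)


z = z_from_one_tailed_p(0.05)  # close to the 1.645 quoted in the text
print(z, r_from_z(z, 100), g_from_z(z, 100))
```

If a study reports a two-tailed p, halve it before calling `z_from_one_tailed_p`.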

Although we have certainly not covered all possible situations, these represent some of the most common situations you are likely to encounter when coding effect sizes for a meta-analysis. For details of these and other situations in which you might code effect sizes, see Card (2012) or Lipsey and Wilson (2001).

Analysis of Mean Effect Sizes and Heterogeneity

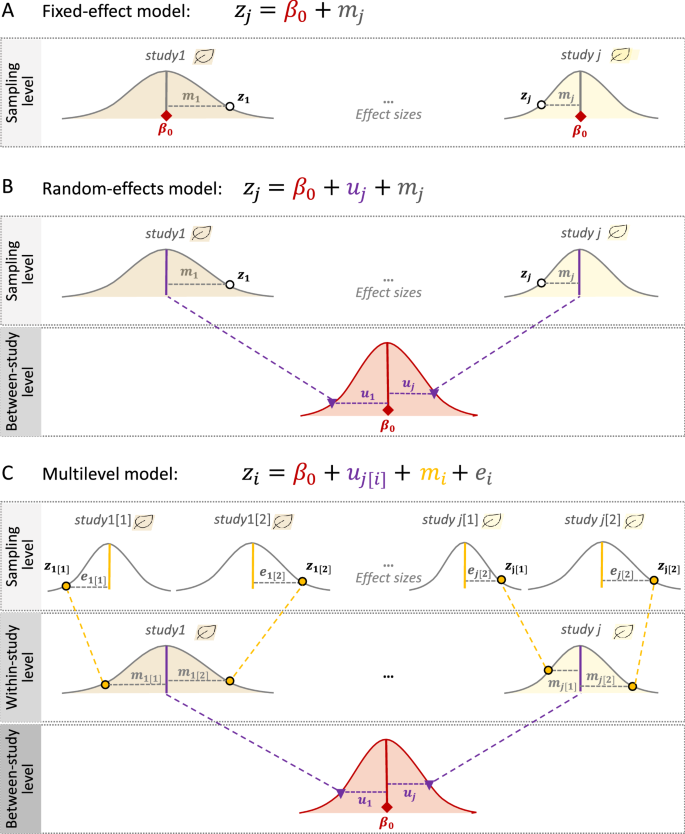

After coding study characteristics and effect sizes from all studies included in a meta-analysis, it is possible to statistically combine and compare results across studies. In this section, we describe a method (fixed effects) of computing a mean effect size and making inferences about this mean. We then describe a test of heterogeneity that informs whether the between-study variability in effect sizes is greater than expectable by sampling fluctuation alone. Finally, we describe an alternative approach to computing mean effect sizes (random effects) that accounts for between-study variability.

Fixed-Effects Means

One of the primary goals of meta-analytic combination of effect sizes from multiple studies is to estimate an average effect size that exists in the literature and then to make inferences about this average effect size in the form of statistical significance and/or confidence intervals. Before describing how to estimate and make inferences about a mean effect size, we briefly describe the concept of weighting.

Weighting in Meta-Analysis. Nearly all analyses of effect sizes in meta-analysis (and all that we describe here) apply weights to studies. These weights are meant to index the degree of precision in each study’s estimate of the population effect size, such that studies with more precise estimates receive greater weight in the analyses than studies with less precise estimates. The most straightforward weight is the inverse of the variance of a study’s estimate of the population effect size. In other words, the weight of study i is the inverse of the squared standard error from that study:
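In code, the weight described here is simply 1 / SE². The sketch below uses an illustrative function name and invented standard errors.

```python
def inverse_variance_weights(standard_errors: list[float]) -> list[float]:
    """Weight for each study: the inverse of its squared standard error."""
    return [1.0 / se ** 2 for se in standard_errors]


# Halving the standard error quadruples the weight.
print(inverse_variance_weights([0.1, 0.2]))
```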

As described above, the standard error of a study largely depends on the sample size (and for g , the effect size itself), such that studies with large samples have smaller standard errors than studies with small samples. Therefore, studies with large samples have larger weights than studies with smaller samples.

Fixed-Effects Mean Effect Sizes. After computing weights for each study using the equation above, estimating the mean effect size ($\overline{ES}$) across studies is a relatively simple matter of computing the weighted mean of effect sizes across all studies:

This value represents the estimate of a single effect size in the population based on information combined from all studies included in the meta-analysis. Because it is often useful to draw inferential conclusions, the standard error of this estimate is computed using the equation:
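The weighted mean and its standard error can be sketched together, assuming the usual fixed-effects formulas: the mean is Σ(wᵢ·ESᵢ) / Σwᵢ and its standard error is √(1 / Σwᵢ). Function names and the example values are illustrative.

```python
from math import sqrt


def fixed_effects_mean(effect_sizes: list[float], weights: list[float]) -> float:
    """Weighted mean effect size across studies."""
    return sum(w * es for w, es in zip(weights, effect_sizes)) / sum(weights)


def fixed_effects_se(weights: list[float]) -> float:
    """Standard error of the fixed-effects mean."""
    return sqrt(1.0 / sum(weights))


effect_sizes = [0.2, 0.4]   # hypothetical study effect sizes
weights = [100.0, 25.0]     # inverse-variance weights (SE = 0.1 and 0.2)
print(fixed_effects_mean(effect_sizes, weights), fixed_effects_se(weights))
```

The mean sits closer to 0.2 than to 0.4 because the more precise study carries four times the weight.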

This standard error can then be used to compute either statistical significance or confidence intervals. For determining statistical significance, the mean effect size is divided by the standard error, and the resulting ratio is evaluated as a standard normal deviate (i.e., Z -test, with, e.g., values larger than ±1.96 having p < 0.05). For computing confidence intervals, the standard error is multiplied by the standard normal deviate associated with the desired confidence interval (e.g., Z = 1.96 for a 95% confidence interval), and this product is then subtracted from and added to the mean effect size to identify the lower- and upper-bounds of the confidence interval.
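Both inferential steps can be sketched with the standard library's normal distribution. The function name is illustrative, and the inputs are a hypothetical mean effect size and standard error.

```python
from statistics import NormalDist


def z_test_and_ci(mean_es: float, se: float, level: float = 0.95):
    """Return (Z, two-tailed p, (lower, upper)) for a mean effect size."""
    z = mean_es / se
    p_two_tailed = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    # Critical value, e.g. about 1.96 for a 95% interval.
    z_crit = NormalDist().inv_cdf(1.0 - (1.0 - level) / 2.0)
    return z, p_two_tailed, (mean_es - z_crit * se, mean_es + z_crit * se)


z, p, (lower, upper) = z_test_and_ci(0.24, 0.0894)
print(z, p, lower, upper)
```

An interval that excludes 0 corresponds to a Z-test that is significant at the matching alpha level.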

If the effect size chosen for the meta-analysis (i.e., r, g, or o) was transformed prior to analyses (e.g., r to Zr), then the mean effect size and boundaries of its confidence interval will be in this transformed metric. It is usually more meaningful to back-transform these values to their original metrics for reporting.
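A sketch of the two back-transformations relevant here, assuming Fisher's transformation for r (inverted with the hyperbolic tangent) and the natural log for o (inverted with the exponential). Function names and the interval values are illustrative.

```python
from math import atanh, exp, log, tanh


def zr_to_r(z_r: float) -> float:
    """Back-transform Fisher's Zr to r."""
    return tanh(z_r)


def ln_o_to_o(ln_o: float) -> float:
    """Back-transform a log odds ratio to an odds ratio."""
    return exp(ln_o)


# Transform each bound separately; the interval becomes asymmetric around the mean.
mean_zr, lower_zr, upper_zr = 0.31, 0.21, 0.41  # hypothetical values in the Zr metric
print(zr_to_r(mean_zr), zr_to_r(lower_zr), zr_to_r(upper_zr))
```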

Heterogeneity

In addition to estimating a mean effect size, meta-analysts evaluate the variability of effect sizes across studies. Some degree of variability in effect sizes across studies is always expectable; the fact that different studies relied on different samples results in somewhat different estimates of effect sizes because of sampling variability. In situations where effect sizes differ by an amount expectable due to sampling variability, the studies are considered homogeneous with respect to their population effect sizes. However, if effect sizes vary across studies more than expected by sampling fluctuation alone, then they are considered heterogeneous (or varying) with respect to their population effect sizes.

It is common to perform a statistical test to evaluate heterogeneity. In this test, the null hypothesis is of homogeneity, or no variability, in population effect sizes across studies (i.e., any variability in sample effect sizes is caused by sampling variability), whereas the alternative hypothesis is of heterogeneity, or variability, in population effect sizes across studies (i.e., variability in sample effect sizes that is not accounted for by sampling variability alone). The result of this test is denoted by Q :

The statistical significance of this Q is evaluated against a χ² distribution with df = number of studies − 1. You will note that this equation has two forms. The left portion of Equation 12 is the definitional equation, which makes clear that the squared deviation of the effect size from each study i from the overall mean effect size is being weighted and summed across studies. Therefore, small deviations from the mean will contribute to small values of Q (homogeneity), whereas large deviations from the mean will contribute to large values of Q (heterogeneity). The right portion of Equation 12 is an algebraic rearrangement that simplifies computation (i.e., a computational formula).
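Both forms can be sketched as follows, assuming the standard expressions: the definitional form Σwᵢ(ESᵢ − mean)² and the computational form Σ(wᵢ·ESᵢ²) − (Σwᵢ·ESᵢ)² / Σwᵢ. Function names and data are illustrative.

```python
def q_definitional(effect_sizes: list[float], weights: list[float]) -> float:
    """Q as the weighted sum of squared deviations from the weighted mean."""
    mean_es = sum(w * es for w, es in zip(weights, effect_sizes)) / sum(weights)
    return sum(w * (es - mean_es) ** 2 for w, es in zip(weights, effect_sizes))


def q_computational(effect_sizes: list[float], weights: list[float]) -> float:
    """Algebraically equivalent form that avoids computing the mean first."""
    sum_w = sum(weights)
    sum_w_es = sum(w * es for w, es in zip(weights, effect_sizes))
    sum_w_es2 = sum(w * es ** 2 for w, es in zip(weights, effect_sizes))
    return sum_w_es2 - sum_w_es ** 2 / sum_w


effect_sizes = [0.2, 0.4]   # hypothetical study effect sizes
weights = [100.0, 25.0]     # inverse-variance weights
df = len(effect_sizes) - 1
print(q_definitional(effect_sizes, weights), q_computational(effect_sizes, weights), df)
```

The resulting Q is then compared with the χ² critical value for the stated df (3.84 for df = 1 at α = 0.05).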

Results of this test have implications for subsequent analyses. Specifically, a conclusion of homogeneity (more properly, failure to conclude heterogeneity) suggests that the fixed-effects mean described above is an acceptable way to summarize effect sizes, and this conclusion may contraindicate moderator analyses (described below). In contrast, a conclusion of heterogeneity implies that the fixed-effects mean is not an appropriate way to summarize effect sizes and that a random-effects model (described in the next section) should be used instead. Further, a conclusion of heterogeneity indicates that moderator analyses (described below) may help explain this between-study variance (i.e., heterogeneity). It is worth noting that the result of this heterogeneity test is not the sole basis for deciding to use random-effects models or to conduct moderator analyses, and meta-analysts often base these decisions on conceptual rather than empirical grounds (see Card, 2012; Hedges & Vevea, 1998).

Random-Effects Means