Understanding Speech to Text in Depth

Have you ever transcribed an interview before? Or seen an individual with disabilities use voice recognition software to control their devices and create text using their voice commands?

If yes, then you have directly experienced the impact of speech to text technology . Better known as STT, these tools help convert audio into written text. It works with a combination of artificial intelligence, deep learning, and computational linguistics.

To give you another real-life example of speech to text, YouTube features a ‘Closed Captions’ option that enables the live transcription of the dialogue happening on the video in real-time.

There are several use cases where voice to text comes in handy, including the dictation processes during meetings, transcribing important interviews, and much more.

In this blog, we’ll go through the evolution of speech to text, benefits, applications, and what the future of the technology looks like.

Table of Contents

Need for speech to text, 1. enhanced accessibility through speech recognition, 2. improved productivity, 3. hands-free operation through spoken words, 4. multitasking through voice commands, 5. language support through google speech recognition, 1. multilingual and cross-language capabilities, 2. enhanced customization and personalization, 2. integration with virtual and augmented reality, 3. expanded use in healthcare, 4. incorporation into smart assistants and iot devices, does murf have a speech to text, evolution of speech to text.

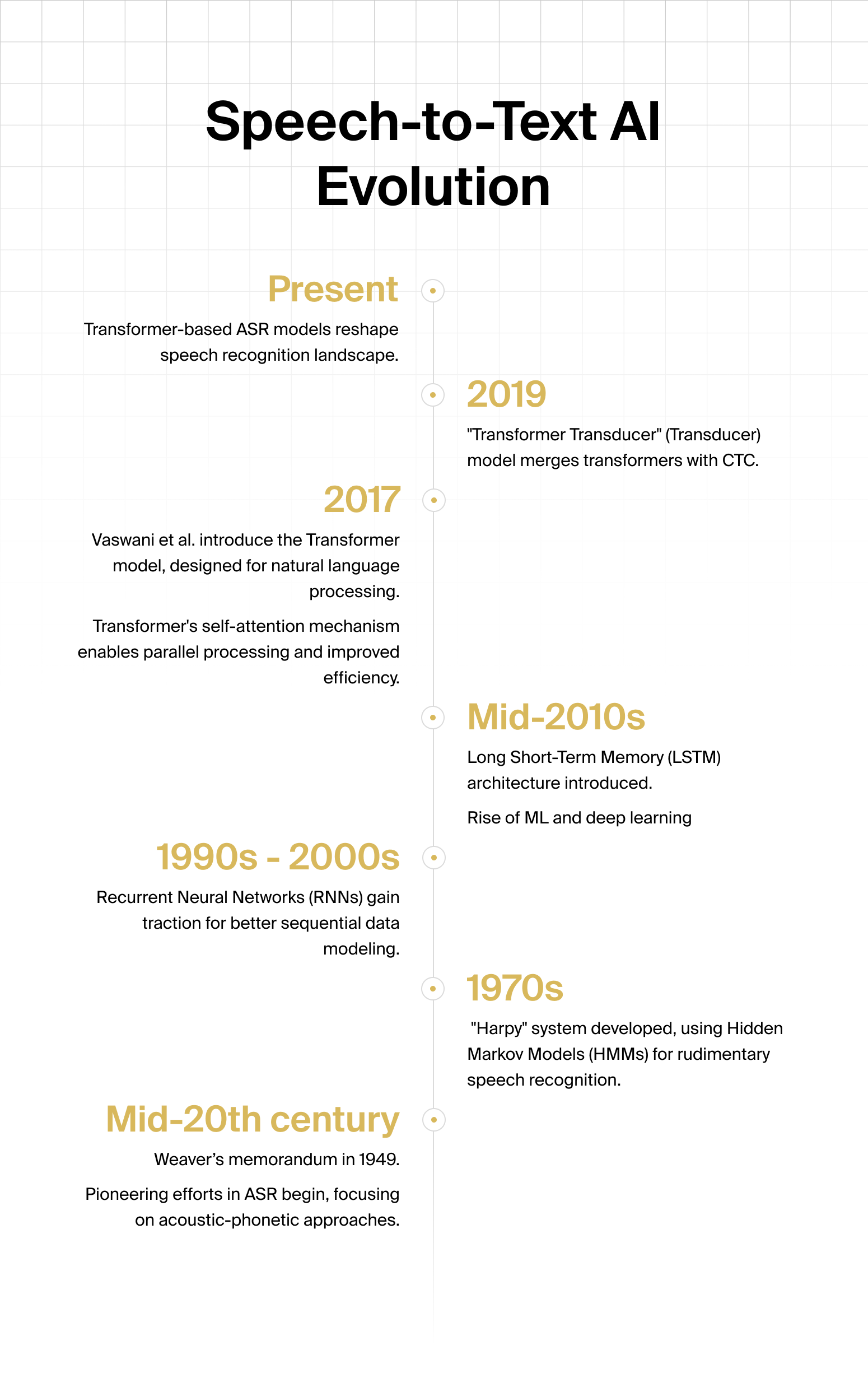

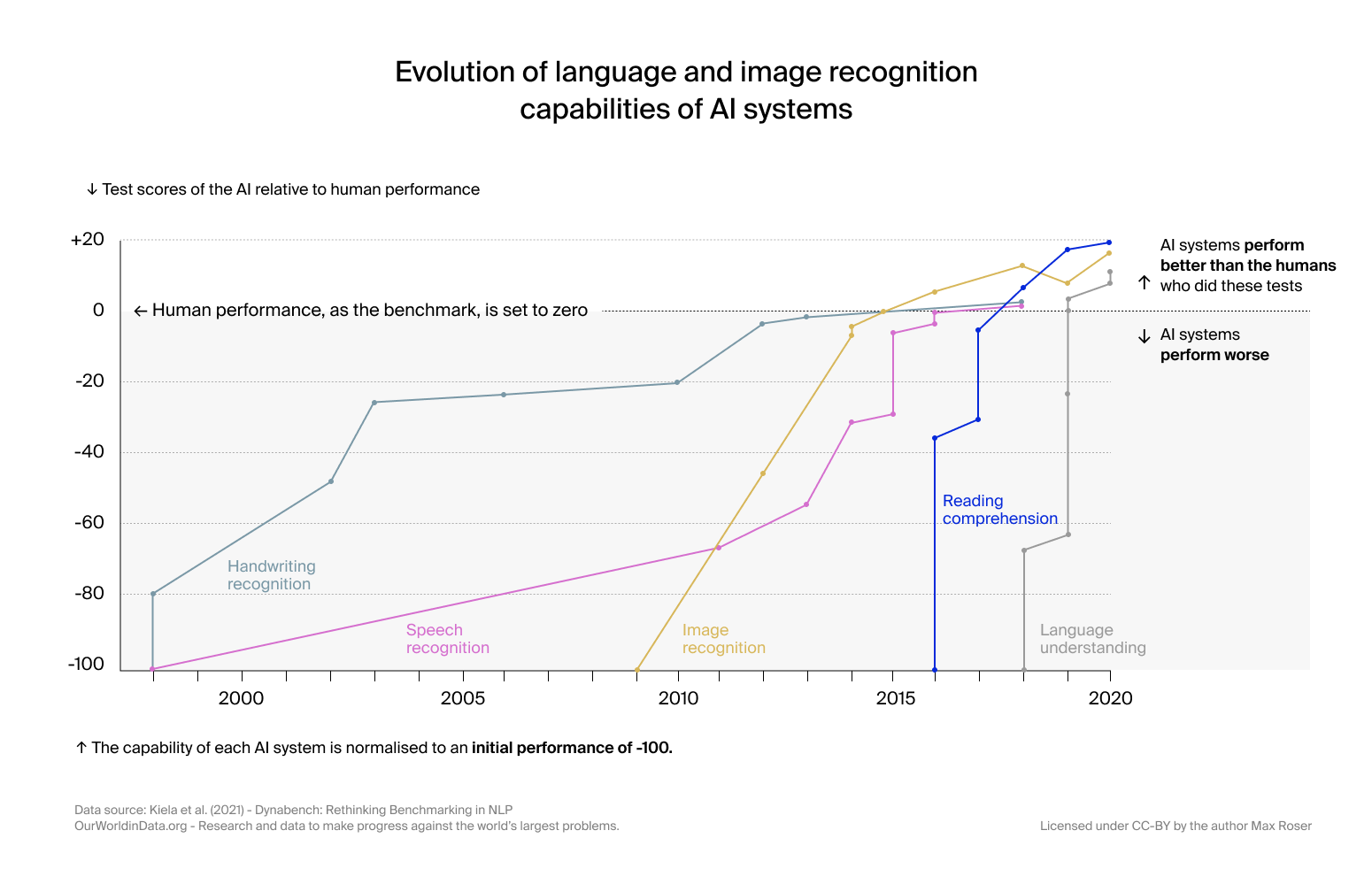

Speech recognition has always been under constant improvement since the 1950s. In fact, Bell Laboratories pioneered the world’s first speech recognition setup called AUDREY, which could recognize spoken numbers with almost 99% accuracy. However, the system was too bulky and consumed copious amounts of power.

In 1962, IBM innovated the niche with Shoebox, a speech recognition system that was able to recognize both numbers and simple mathematical terms. On a parallel timeline, the Japanese scientists were hard at work creating phoneme -based speech recognition technologies and speech segmenters.

This was when Kyoto University achieved a breakthrough in speech segmentation, allowing computers to ‘Segment' one sentence into a new line of speech for the subsequent tech to work on sound identification.

It wasn’t until HARPY from Carnegie Mellon came around in the 1970s that computers could recognize sentences from just over a 1,000-word vocabulary. The system was the first to use Hidden Markov Models, a probabilistic method that laid the foundation for the modern-day ASR.

The 1980s saw the first speech to text tool that leveraged IBM’s transcription system, Tangora. These tools were viable and usable and would then be polished to become the modern-day speech recognition software.

The fact that people around the world needed to generate transcripts at scale and fast led to the development of speech to text software.

Today, their use has expanded into other utilities as well, serving to provide live translations of language and aiding people with disabilities to participate in the online world equitably.

The speech to text process can be explained in five simple steps:

Vibration analysis: When a person speaks, the voice vibrations are first analyzed by STT software.

Phoneme identification: The software then identifies the phonemes in the input sound.

Phoneme-sentence correlation: The identified phonemes are then run through a mathematical algorithm to create sentences.

Linguistic algorithmic conversions: The phonemes are put together to form words and put into coherent sentences.

Output in the form of Unicode characters: The words are now displayed as Unicode characters.

Benefits of Speech to Text

Speech to text provides tremendous advantages to users:

Speech to text is an exemplary accessibility tool for people with mobility or visual disabilities to express themselves. Spoken language can be converted into text automatically, allowing them to take part in threads and discussions on, say, social media platforms.

Speech to text is also an excellent tool to use for enhancing productivity at work that involves exhaustive transcribing processes. The entire workflow can be automated to convert audio to text, clean the text, and then push it further for translation or proofreading.

Hands-free keyboard operation is another productivity enhancement that speech to text provides to users. Professionals can leave their desks and dictate meeting notes or instructions or type a letter using speech to text on popular software like MS Word.

Speech to text allows users to tackle multiple tasks at the same time. For example, while using STT tools for dictating onboarding instructions for a new hire, a professional can continue to read through the files that have been closed or need to be handed over.

Speech to text enables professionals to type in another language using speech. There are tools that take input speech recognition in one language and output the text in a different language selected by the user. It helps prevent errors in sensitive documents for international businesses.

Future of Speech to Text

In the near future, innovations in speech to text would unravel the improved potential of the technology across a variety of use cases:

Polyglot capabilities are set to emerge with speech to text tools promptly converting one language into written text in a second language. In the next step, the typed text in L2 can be converted into spoken audio again, achieving cross-language capabilities.

Currently, speech to text technologies feature a wide range of voice and language selections. In the future, there is potential to offer better voice modulation, auto punctuation, and customization capabilities to users for enhanced branding and user experience.

Speech to text can be extensively employed in VR and AR modules for simulating conversations with AI assistants or agents. It can prove to be a highly effective tool for corporate training , skill-building, and scenario simulations.

Speech to text has the potential to provide enhanced functionality to administrative tasking in the healthcare sector. It can help doctors quickly and efficiently provide prescriptions to patients and also help medical researchers take notes on a subject as they continue to study.

Speech to text is already finding expanded utility in voice assistants that work by recognizing speech and following through with voice commands. This capability can be further expanded into IoT beyond domestic use into specialized operations as well (like industrial operations).

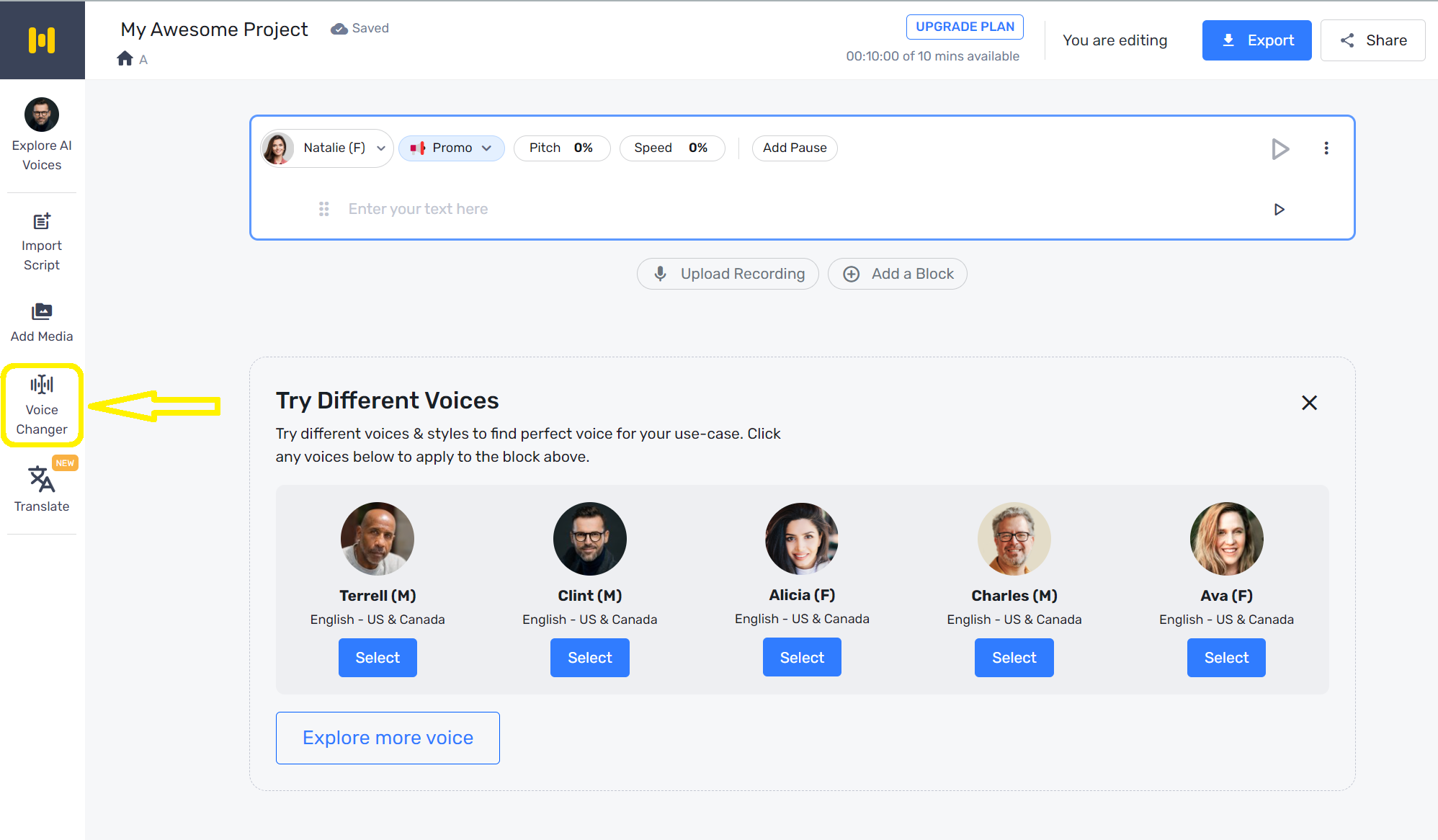

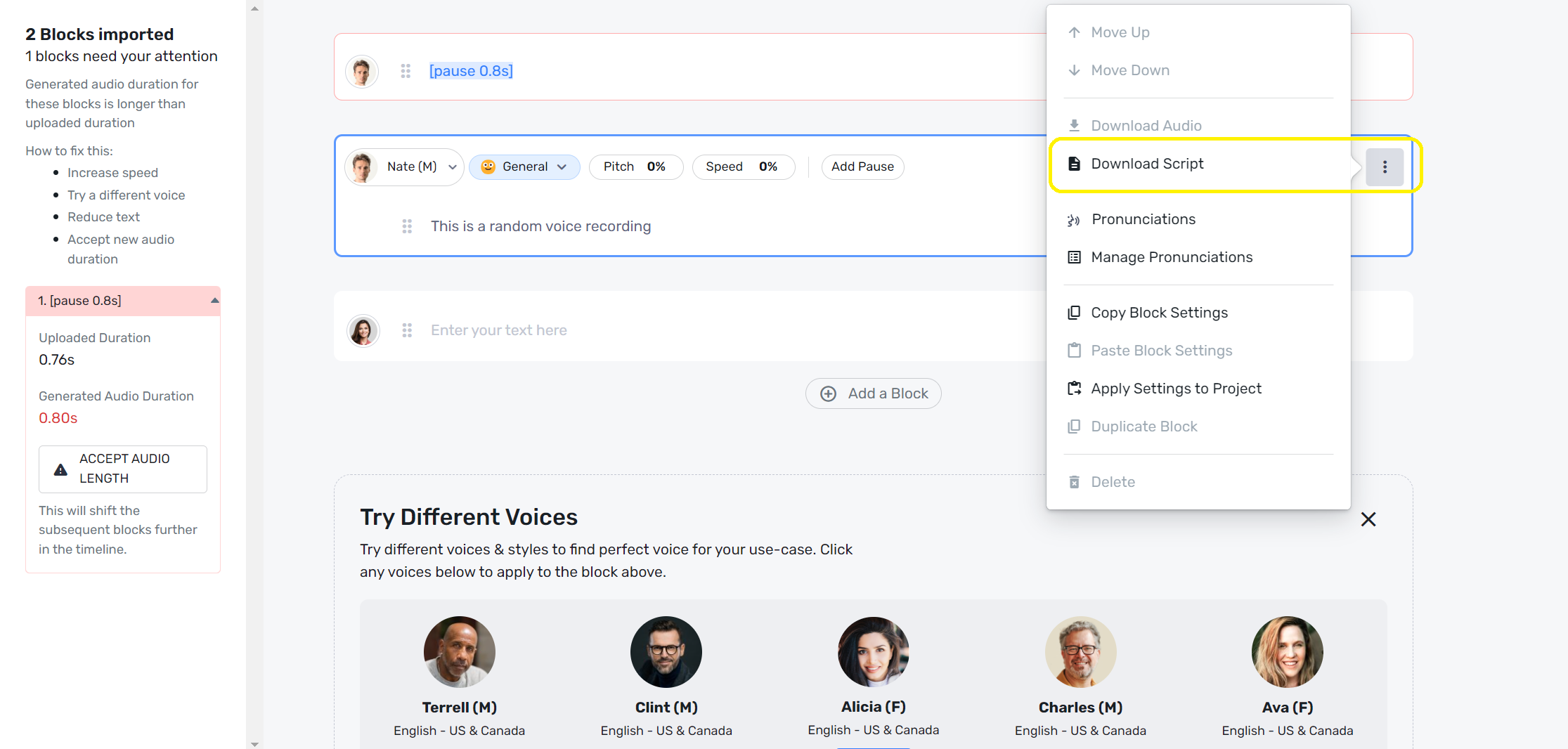

Murf Studio is primarily a versatile platform that provides high-quality AI voices for text to speech conversions. While the platform doesn’t offer a standalone speech to text module, users can still convert audio to script using Murf’s AI voice changer feature through the following steps:

Login to the Murf Studio dashboard and select AI voice changer from the left sidebar.

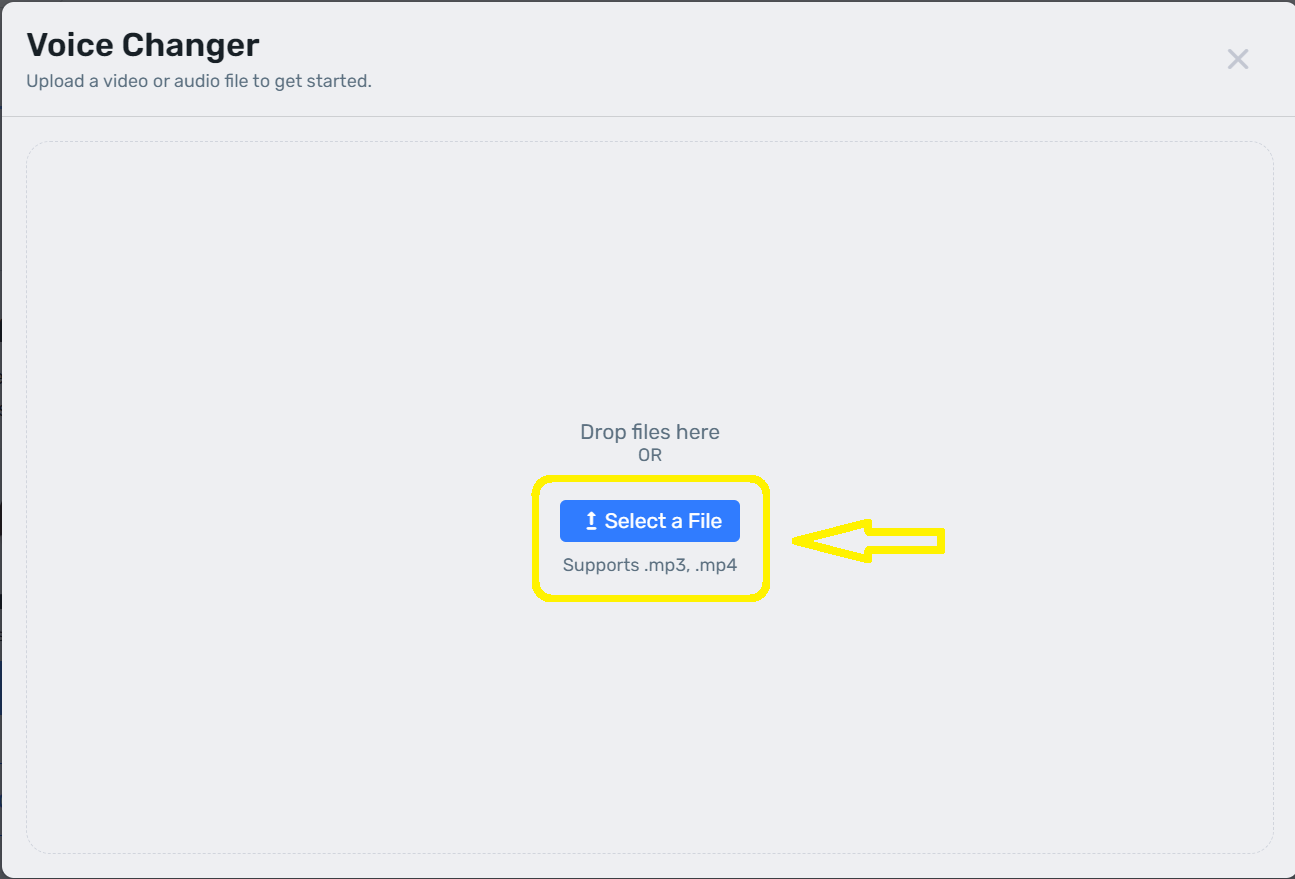

Select a recorded audio or video to upload to the platform.

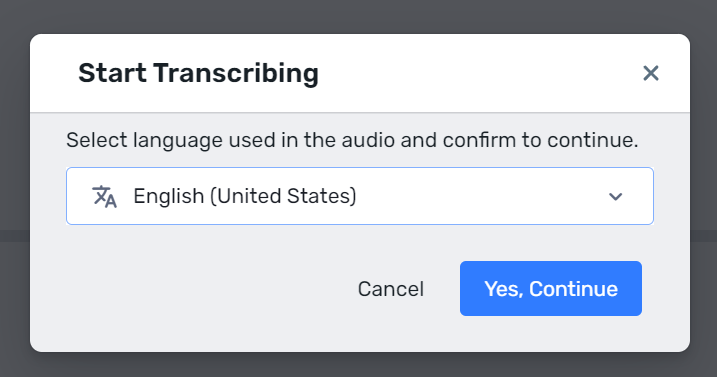

Select the language that your audio file is recorded in.

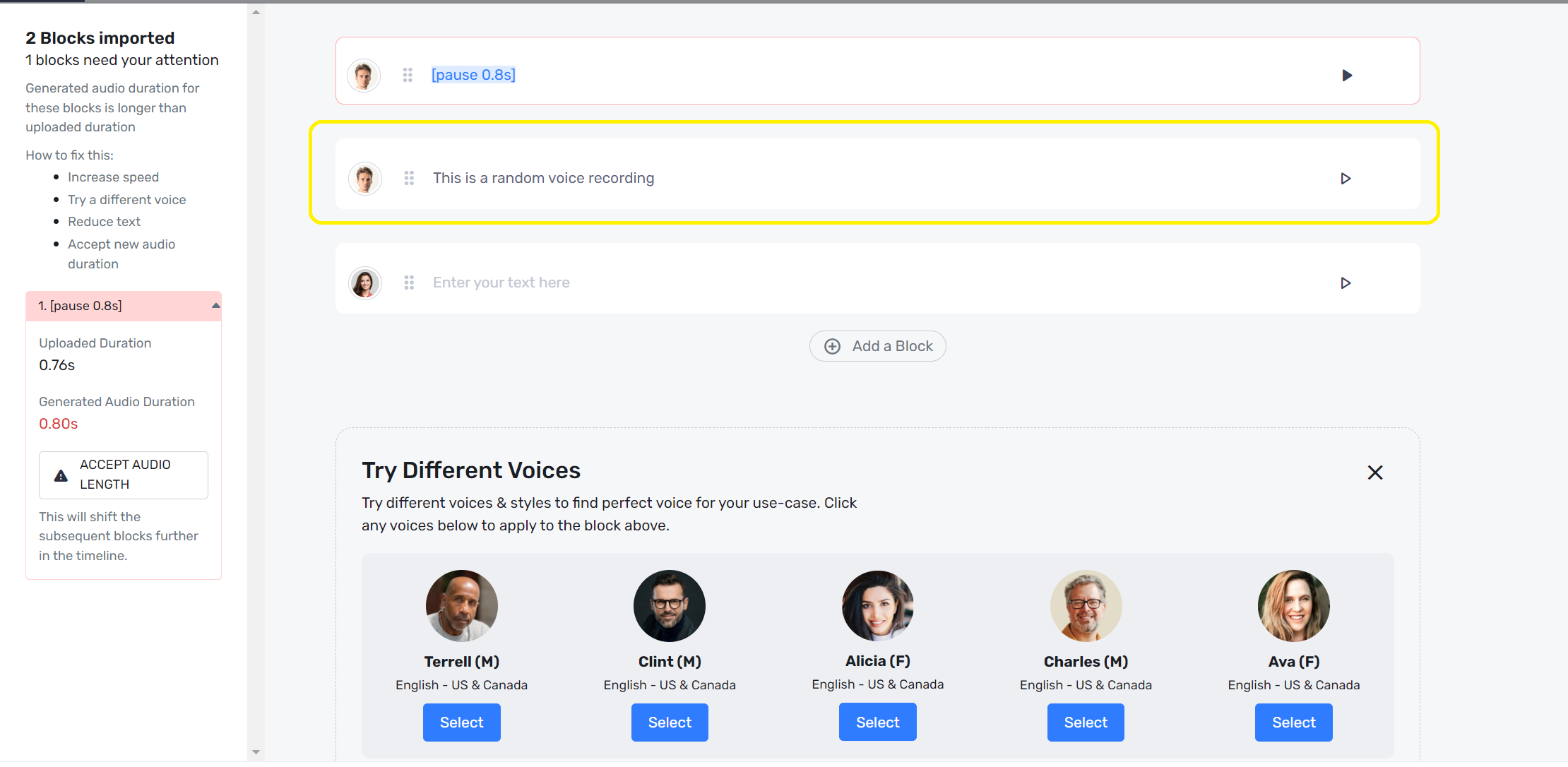

Once you see the transcribed text appear on the dashboard from your audio, you can proceed to download the text script from the interface. If required, you can apply customizations to the text here as well.

Click on the context menu option beside the text script and select “Download Script.”

Murf Studio allows you to download the text script in a variety of formats. You can also translate the script into 20+ languages available on the platform.

Speech to Text: More Than Just an Accessibility Enhancer

Speech to text tools are a boon for people who require tasking assistance. However, these tools can do more than just assistive tasks. Professionals actively employ STT to achieve higher levels of productivity at work; people also use it in their daily lives to interact with voice assistants.

Speech to text tools have become extremely accessible today, with advanced online platforms available aplenty. The simplicity in ease of use and quick transcriptions they provide have made it more inclusive for the populace.

What is STT technology, and how does it work?

Speech to text tools convert spoken words into text. They work by identifying sounds in a recording and converting them into corresponding text.

How accurate is speech to text?

Modern-day speech to text tools are extremely accurate as they work with expanded voice databases that allow for accurate transcriptions.

What are the objectives of speech to text?

Speech to text is purposed to convert spoken words and phrases into typed text with a view to enhance accessibility and productivity.

How is AI used in speech to text?

AI enables predictive and voice typing when using dictation methods on software like MS Word.

What applications use speech to text technology?

Daily-use electronics like Amazon’s Alexa or the voice assistants on your phone use speech to text technology.

Can speech to text handle multiple languages?

Yes, speech to text software can convert between languages once a text transcript is available.

How secure is speech to text technology?

Depending on the software you select, the degree of security varies in STT.

Can speech to text technology be used for real-time transcription?

Yes, YouTube and other video platforms leverage STT for real-time caption generation.

You should also read:

Top 10 Speech to Text Software in 2024

How Speech Recognition is Changing Language Learning

Future of AI in Speech Recognition

- Bahasa Indonesia

- Sign out of AWS Builder ID

- AWS Management Console

- Account Settings

- Billing & Cost Management

- Security Credentials

- AWS Personal Health Dashboard

- Support Center

- Expert Help

- Knowledge Center

- AWS Support Overview

- AWS re:Post

- What is Cloud Computing?

- Cloud Computing Concepts Hub

- Machine Learning & AI

What is Speech To Text?

What is speech to text?

Speech to text is a speech recognition software that enables the recognition and translation of spoken language into text through computational linguistics. It is also known as speech recognition or computer speech recognition. Specific applications, tools, and devices can transcribe audio streams in real-time to display text and act on it.

How does speech to text work?

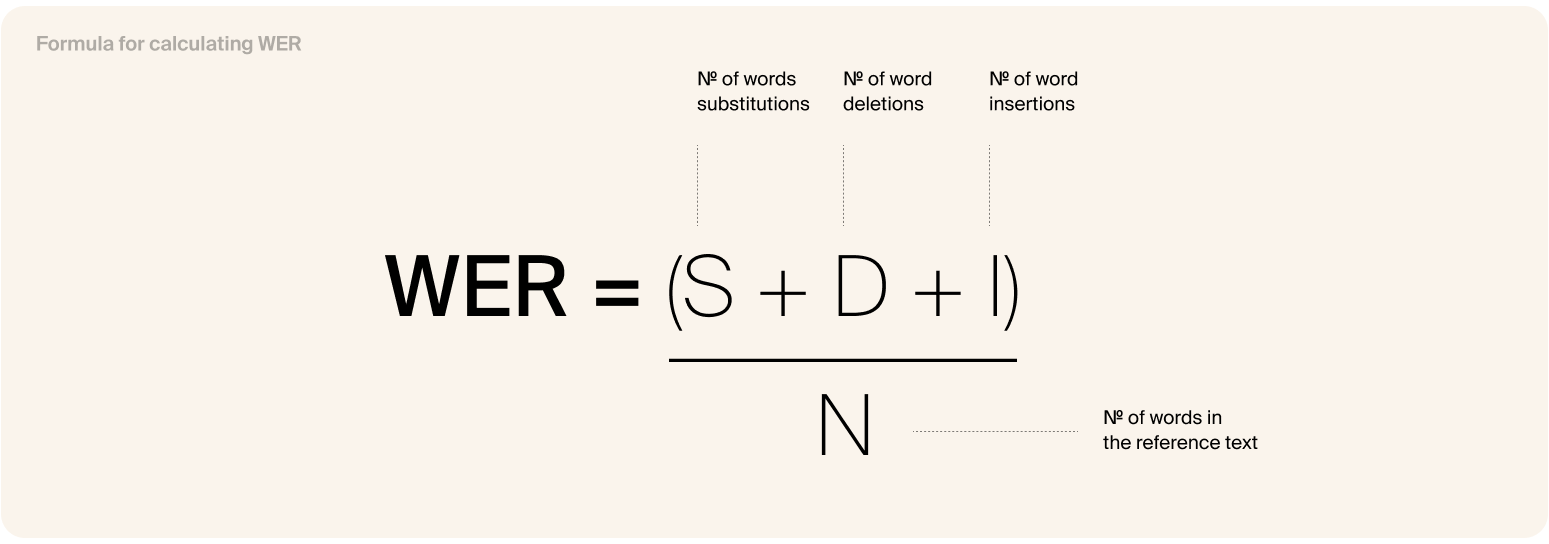

Speech to text is software that works by listening to audio and delivering an editable, verbatim transcript on a given device. The software does this through voice recognition. A computer program draws on linguistic algorithms to sort auditory signals from spoken words and transfer those signals into text using characters called Unicode. Converting speech to text works through a complex machine learning model that involves several steps. Let's take a closer look at how this works:

- When sounds come out of someone's mouth to create words, it also makes a series of vibrations. Speech to text technology works by picking up on these vibrations and translating them into a digital language through an analog to digital converter.

- The analog-to-digital-converter takes sounds from an audio file, measures the waves in great detail, and filters them to distinguish the relevant sounds.

- The sounds are then segmented into hundredths or thousandths of seconds and are then matched to phonemes. A phoneme is a unit of sound that distinguishes one word from another in any given language. For example, there are approximately 40 phonemes in the English language.

- The phonemes are then run through a network via a mathematical model that compares them to well-known sentences, words, and phrases.

- The text is then presented as text or a computer-based demand based on the audio’s most likely version.

What are the types of speech to text technology?

There are two main types of speech to text technology:

- Speaker-dependent : Mainly used for dictation software.

- Speaker-independent : Often used for phone applications.

These two speech recognition systems rely on software and services to function adequately, with the main type being built-in dictation technology. Many devices now have built-in dictation tools, such as laptops, smartphones, and tablets

What are the applications of speech to text?

Speech to text has quickly transcended from everyday use on phones in homes to applications in industries like marketing, banking, and medical. Speech recognition applications reveal how voice to text technology can increase the efficiency of simple tasks and extend to tasks that humans have traditionally performed.

Call analytics and agent assist

Using a tool like Transcribe Call Analytics allows you to extract actionable insights from customer conversations quickly, enabling improvements in customer engagement and increasing agent productivity.

Media content search

Amazon transcribe converts audio and video assets into searchable archives. It also allows users to improve the reach and accessibility of content by generating localized subtitles in combination with Amazon Translate .

Marketing is one of the leading industries to draw on speech to text through media content search. The introduction of voice-search allows for information about trends in data and consumer behavior for marketers.

For example, speech recognition provides information on people's accents and vocabulary, interpreting age, location, and other important demographics. Speaking is also a much more conversational search mode, allowing marketers to incorporate conversational keywords to stay ahead of trends.

Media subtitling

Amazon transcribe can also capture meetings and conversations through the digital scribe function, improving productivity, accessibility, and streamlining important notes.

Clinical documentation

Amazon Transcribe Medical is a tool for medical professionals to quickly and efficiently record clinical conversations into electronic health record systems for analysis. For example, in banking, speech to text is used through voice-activated customer service. In the healthcare sector, speech to text helps improve efficiency by providing immediate access to information and inputting data.

Why should you use speech to text?

Like all forms of technology, speech to text has many benefits that help us improve daily processes. These are some of the main advantages of using speech to text:

- Save time : Automatic speech recognition technology saves time by delivering accurate transcripts in real-time.

- Cost-efficient : Most speech to text software has a subscription fee, and a few services are free. However, the cost of the subscription is far more cost-efficient than hiring human transcription services.

- Enhance audio and video content : Speech to text capabilities mean that audio and video data can be converted in real-time for subtitling and fast video transcription.

- Streamline the customer experience : By drawing on natural language processing, the customer experience is transformed through ease, accessibility, and seamlessness.

What are the limitations of speech to text?

New technologies like speech to text don't come without imperfection, and these are some of the main limitations of speech to text:

- It isn't perfect : While dictation technology is a powerful tool, it is still in its early days,which means there are some gaps in its overall performance. Because it produces verbatim text only, you can end up with an inaccurate or awkward transcript or missing specific quotations.

- Requires human input : Because speech to text lacks complete accuracy, some human edits to the speech data are required for optimal usage.

- Requires clean recordings : To get a quality transcript from voice recognition software, you need to ensure the recorded audio is clear and intelligible. This means there needs to be no background noise, adequate pronunciation, no accents, and one person speaking at a time. You also need to provide voice commands for punctuation.

How to choose free speech to text software vs. paid?

Free speech to text software is helpful if you are on a limited budget. However, if you want to transcribe a large volume of audio to text you will need more robust software. Paid speech to text software is often more accurate, faster, and has added features and support.

Most free speech to text software:

- Do not offer quality technical support.

- Do not offer the greatest speed or accuracy.

- Have a limited capacity.

- Require a lot of extra editing on your part.

How to choose the best speech to text software?

With so many options available, choosing the best speech to text software can be challenging. Use the checklist below to assess the different speech to text software and make the best choice for you:

- No additional software is required - The most accessible speech to text software relies on an internet connection rather than additional software.

- Accuracy level is guaranteed - All speech to text services offer a degree of certainty. Some services have a greater focus on transcription, which ensures extra accuracy.

- Multi-language support - If you need multi-language support, you will need to choose a speech to text software that meets your language needs.

- App compatibility - Some speech to text services can be added to apps, which is important if you wish to use the software across multiple platforms.

How to use Amazon Transcribe for speech to text?

Using automatic speech recognition (ASR), Amazon Transcribe converts speech to text quickly and accurately. Amazon Transcribe offers a range of accessible tools for various uses including call analytics, medical transcriptions, subtitling, and generating metadata for media assets. To get started, simply sign up for a free AWS account and start transcribing with the free speech to text option today.

.ac6d31570dd8ee05e169f1568582927b56fd727c.png)

Ending Support for Internet Explorer

How to use speech-to-text on a Windows computer to quickly dictate text without typing

- You can use the speech-to-text feature on Windows to dictate text in any window, document, or field that you could ordinarily type in.

- To get started with speech-to-text, you need to enable your microphone and turn on speech recognition in "Settings."

- Once configured, you can press Win + H to open the speech recognition control and start dictating.

- Visit Business Insider's Tech Reference library for more stories.

One of the lesser known major features in Windows 10 is the ability to use speech-to-text technology to dictate text rather than type. If you have a microphone connected to your computer, you can have your speech quickly converted into text, which is handy if you suffer from repetitive strain injuries or are simply an inefficient typist.

Check out the products mentioned in this article:

Windows 10 (from $139.99 at best buy), acer chromebook 15 (from $179.99 at walmart), how to turn on the speech-to-text feature on windows.

It's likely that speech-to-text is not turned on by default, so you need to enable it before you start dictating to Windows.

1. Click the "Start" button and then click "Settings," designated by a gear icon.

2. Click "Time & Language."

3. In the navigation pane on the left, click "Speech."

4. If you've never set up your microphone, do it now by clicking "Get started" in the Microphone section. Follow the instructions to speak into the microphone, which calibrates it for dictation.

5. Scroll down and click "Speech, inking, & typing privacy settings" in the "Related settings" section. Then slide the switch to "On" in the "Online speech recognition" section. If you don't have the sliding switch, this may appear as a button called "Turn on speech services and typing suggestions."

How to use speech-to-text on Windows

Once you've turned speech-to-text on, you can start using it to dictate into any window or field that accepts text. You can dictate into word processing apps, Notepad, search boxes, and more.

1. Open the app or window you want to dictate into.

2. Press Win + H. This keyboard shortcut opens the speech recognition control at the top of the screen.

3. Now just start speaking normally, and you should see text appear.

If you pause for more than a few moments, Windows will pause speech recognition. It will also pause if you use the mouse to click in a different window. To start again, click the microphone in the control at the top of the screen. You can stop voice recognition for now by closing the control at the top of the screen.

Common commands you should know for speech-to-text on Windows

In general, Windows will convert anything you say into text and place it in the selected window. But there are many commands that, rather than being translated into text, will tell Windows to take a specific action. Most of these commands are related to editing text, and you can discover many of them on your own – in fact, there are dozens of these commands. Here are the most important ones to get you started:

- Punctuation . You can speak punctuation out loud during dictation. For example, you can say "Dear Steve comma how are you question mark."

- New line . Saying "new line" has the same effect as pressing the Enter key on the keyboard.

- Stop dictation . At any time, you can say "stop dictation," which has the same effect as pausing or clicking another window.

- Go to the [start/end] of [document/paragraph] . Windows can move the cursor to various places in your document based on a voice command. You can say "go to the start of the document," or "go to the end of the paragraph," for example, to quickly start dictating text from there.

- Undo that . This is the same as clicking "Undo" and undoes the last thing you dictated.

- Select [word/paragraph] . You can give commands to select a word or paragraph. It's actually a lot more powerful than that – you can say things like "select the previous three paragraphs."

Related coverage from Tech Reference :

How to use your ipad as a second monitor for your windows computer, you can use text-to-speech in the kindle app on an ipad using an accessibility feature— here's how to turn it on, how to use text-to-speech on discord, and have the desktop app read your messages aloud, how to use google text-to-speech on your android phone to hear text instead of reading it, 2 ways to lock a windows computer from your keyboard and quickly secure your data.

Insider Inc. receives a commission when you buy through our links.

Watch: A diehard Mac user switches to PC

- Main content

- Contact Sales Log In

What is speech-to-text?

Speech-to-text, or automatic speech recognition (ASR), technology has been around for a while, but it is only recently that it has gained widespread adoption. ASR allows users to speak commands and control their devices using their voice, making it a popular choice for virtual assistants, captioning and transcription, customer service, education, medical documentation, and legal documentation. According to Forrester's survey , many information workers in North America and Europe use voice commands on their smartphones at least occasionally, with the most common use being texting (56%), searching (46%), and navigation/directions (40%). However, there are still challenges that need to be addressed in order for this technology to reach its full potential.

In this article, we will explore the different methods of speech-to-text and how it is used in various applications, including transcription services, voice recognition software, and accessibility tools. We'll also take a look at the future of speech-to-text and see how this technology is likely to continue to improve and expand in the coming years. So, let's dive in and see what makes speech-to-text such a powerful tool for businesses and individuals alike.

How speech-to-text technology works

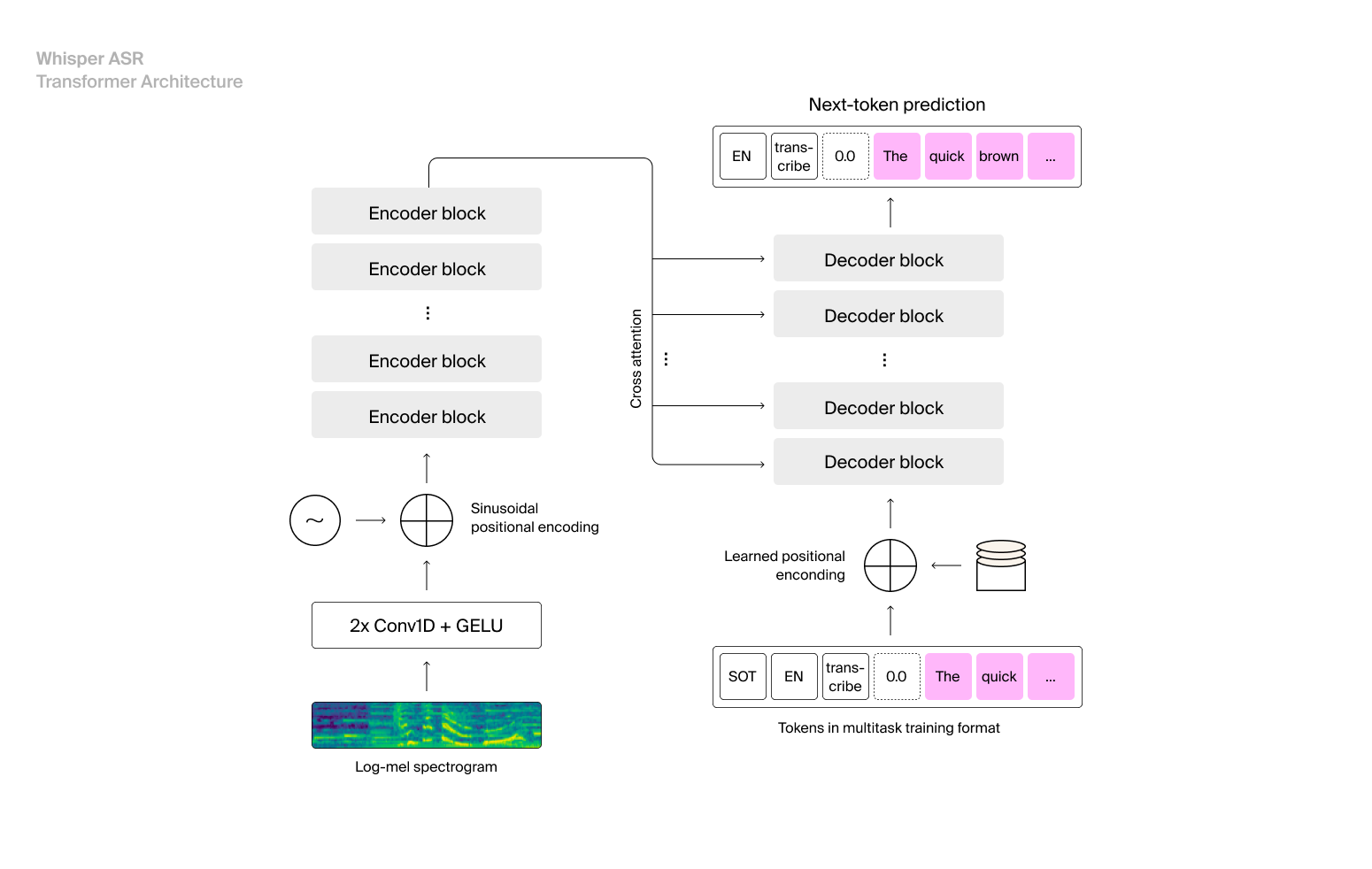

Speech-to-text technology is a type of natural language processing (NLP) that converts spoken words into written text. It is used in a variety of applications, including voice assistants, transcription services, and accessibility tools. Here is a more detailed explanation of how speech-to-text technology works:

Sound conversion

The first challenge in speech-to-text technology is that sound is analog, while computers can only understand digital inputs. To convert sound into a digital format that computers can understand, a microphone is used. The microphone converts sound waves into an electrical current, which is then converted into voltage and read by a computer.

Frequency isolation

The next step in the process is to isolate individual frequencies from the sound input. This is done using a technique called Fast Fourier Transform (FFT), which converts the sound input into a spectrogram. A spectrogram is a visual representation of sound, with time on the X-axis, frequencies on the Y-axis, and intensity represented by brightness.

Phoneme recognition

It’s the process of identifying the basic building blocks of speech, known as phonemes. This is a crucial step in speech-to-text technology because phonemes are the foundation upon which words are built. There are several different approaches to phoneme recognition, including statistical models like the hidden Markov model and machine learning systems like neural networks.

Neural networks are a type of machine learning system that is made up of interconnected nodes that can adjust their weights based on feedback. A neural network consists of layers of nodes that are organized into an input layer, an output layer, and one or more hidden layers. The input layer receives data, the hidden layers perform transformations on the data, and the output layer produces the final result. Every time the neural network receives feedback, it adjusts the weights of the connections between the nodes to improve its performance.

One advantage of neural networks is that they can adapt to large variations in speech, such as different accents and mispronunciations. However, they do require a large amount of data to be set up and trained, which may be a limitation for some applications. In contrast, statistical models like the hidden Markov model are less data-hungry, but they are unable to adapt to large variations in speech. As a result, it is common to use both types of models in speech-to-text technology, with the hidden Markov model being used to handle basic phoneme recognition and the neural network handling more complex tasks.

Word analysis

It’s the process of analyzing the sequence of phonemes that make up a word in order to identify the intended meaning. This is done using either a language or an acoustic model.

The language model takes into account the context of the word, as well as the frequency of different phoneme combinations in the language being used. For example, in English, the phoneme "m" is never followed by an "s." Therefore, if the language model encounters the sequence "ms," it will consider it to be an error and attempt to correct it based on the context and the likelihood of different phoneme combinations.

The language model is an important part of speech-to-text technology because it allows the system to understand the meaning of words and sentences. By analyzing the sequence of phonemes and taking into account the context, the language model can determine the intended meaning of spoken words and produce the corresponding written text.

The acoustic model is a statistical model that maps the acoustic features of speech to the corresponding words or phonemes. The acoustic model is trained on a large dataset of audio recordings and the corresponding transcriptions, and it uses this data to learn the patterns and features that are characteristic of the language being used.

During the STT process, the audio input is analyzed by the acoustic model, which produces a sequence of probability scores for each possible word or phoneme. The sequence of scores is then fed into a language model, which takes into account the context and the likelihood of different word combinations to produce the final transcription.

There are several different types of acoustic models, including hidden Markov models (HMMs) and deep neural networks (DNNs). HMMs are statistical model that consists of states and corresponding evidence, and they are commonly used for speech recognition because they are computationally efficient and relatively easy to train. DNNs are a type of machine learning model that consists of layers of interconnected nodes, and they are able to adapt to large variations in speech. DNNs are more data-hungry and require more computational resources to train, but they tend to perform better than HMMs on many speech recognition tasks.

Which model is better or more common for a given language depends on a variety of factors, including the complexity of the language, the amount of data available for training, and the resources available for training and running the model. In general, DNNs tend to perform better on a wide range of tasks, but they may not be the best choice for all languages or situations.

Final transcript

Text output is the final step in converting spoken words or text from one language to another using speech-to-text technology. It involves displaying the translated text on a screen or saving it to a file.

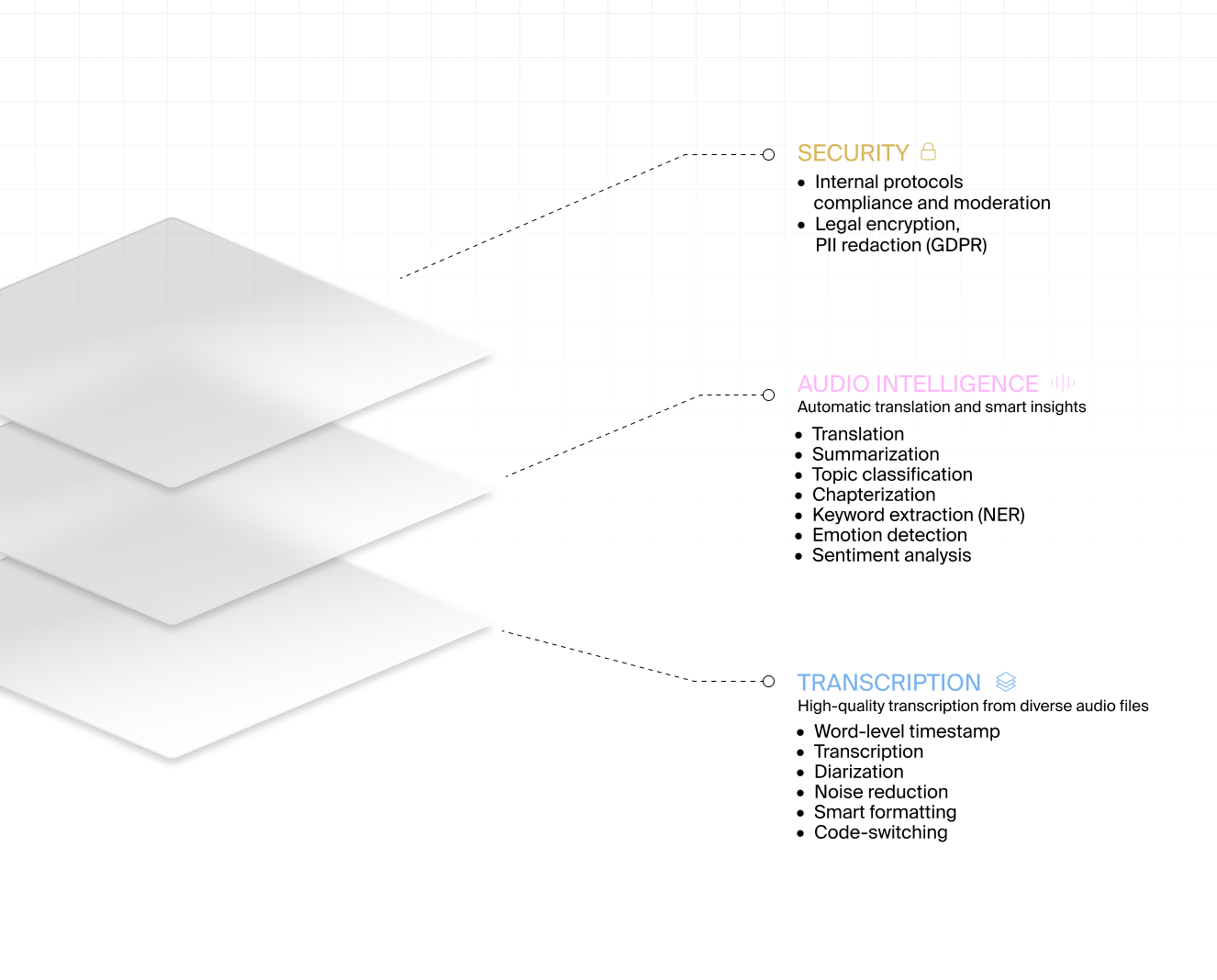

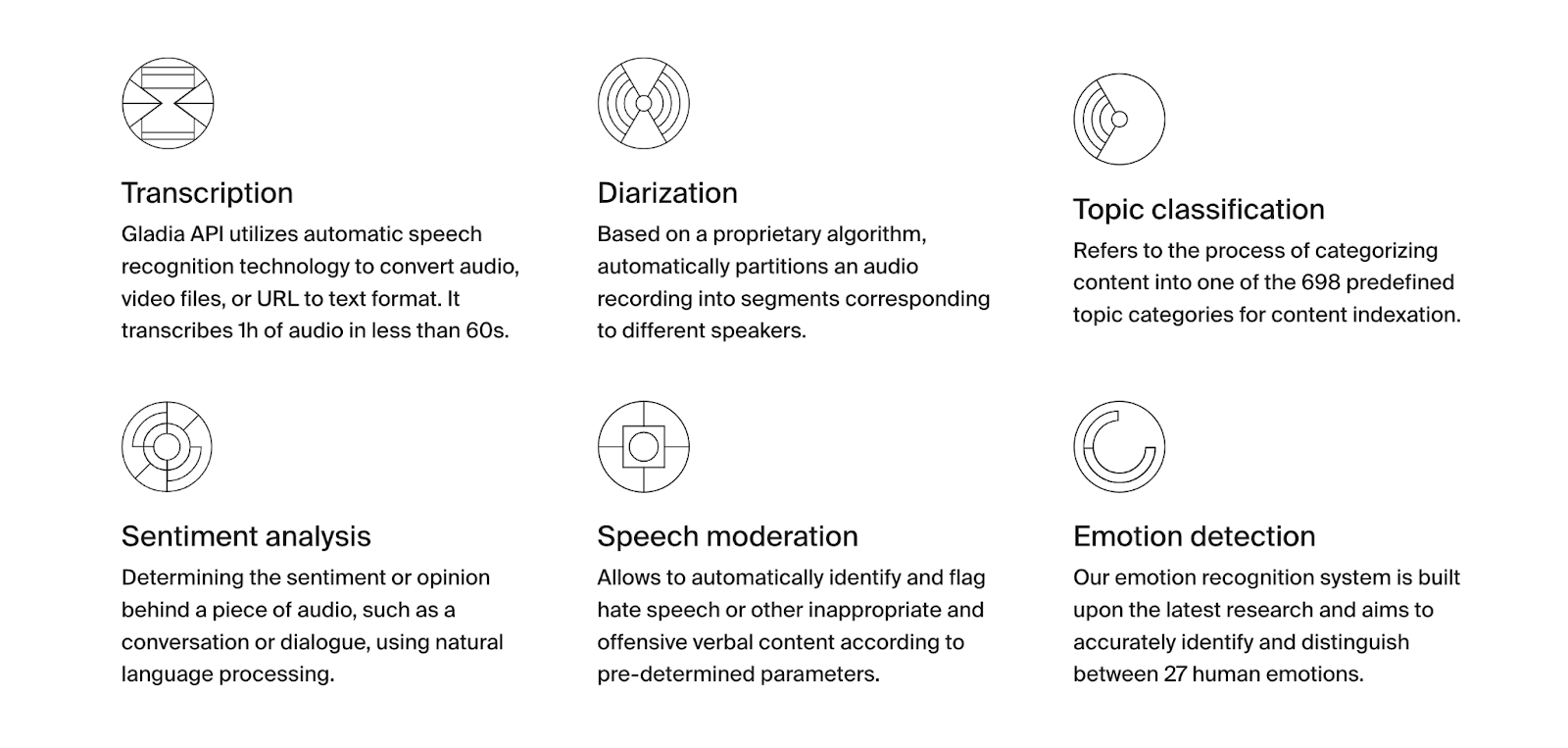

What are STT APIs and their advantages?

API (Application Programming Interface) is a set of rules and protocols that allows different software systems to communicate with each other. In the context of speech-to-text applications, an API is a set of programming instructions that allows developers to access and use the STT capabilities of a service or platform in their own applications.

There are several different types of voice recognition APIs available, including cloud-based APIs and on-premises APIs. Cloud-based APIs are hosted by a third-party provider and accessed over the internet, while on-premises APIs are installed on a local server and accessed within an organization's network.

Speech-to-text APIs offer plenty of advantages for individuals and businesses:

Increased productivity : Allows users to input text quickly and efficiently using their voice, rather than typing on a keyboard or touchpad. This can save time and increase productivity, especially for tasks that involve a lot of text input.

Improved accessibility : Can be used to provide accessibility features such as live captions and subtitles, which can be helpful for individuals with hearing impairments or learning disabilities.

Enhanced customer experience : Speech-to-text applications can provide various manipulations with recognized and transcribed text, for example, summarization . By getting a quick summary of customer feedback businesses can identify common issues, for example.

Greater flexibility : STT APIs can be accessed from any device with an internet connection, allowing users to input text using their voice from anywhere.

Cost savings : One of the major benefits for businesses is cost savings. By automating text input tasks, businesses can reduce or eliminate the need for manual transcription services, which can be costly and time-consuming. Additionally, it can help businesses streamline their processes and increase efficiency.

Improved accuracy : Advanced natural language processing algorithms have a high level of accuracy in transcribing spoken words, which can help reduce errors and improve the quality of the resulting text.

Best speech-to-text API applications

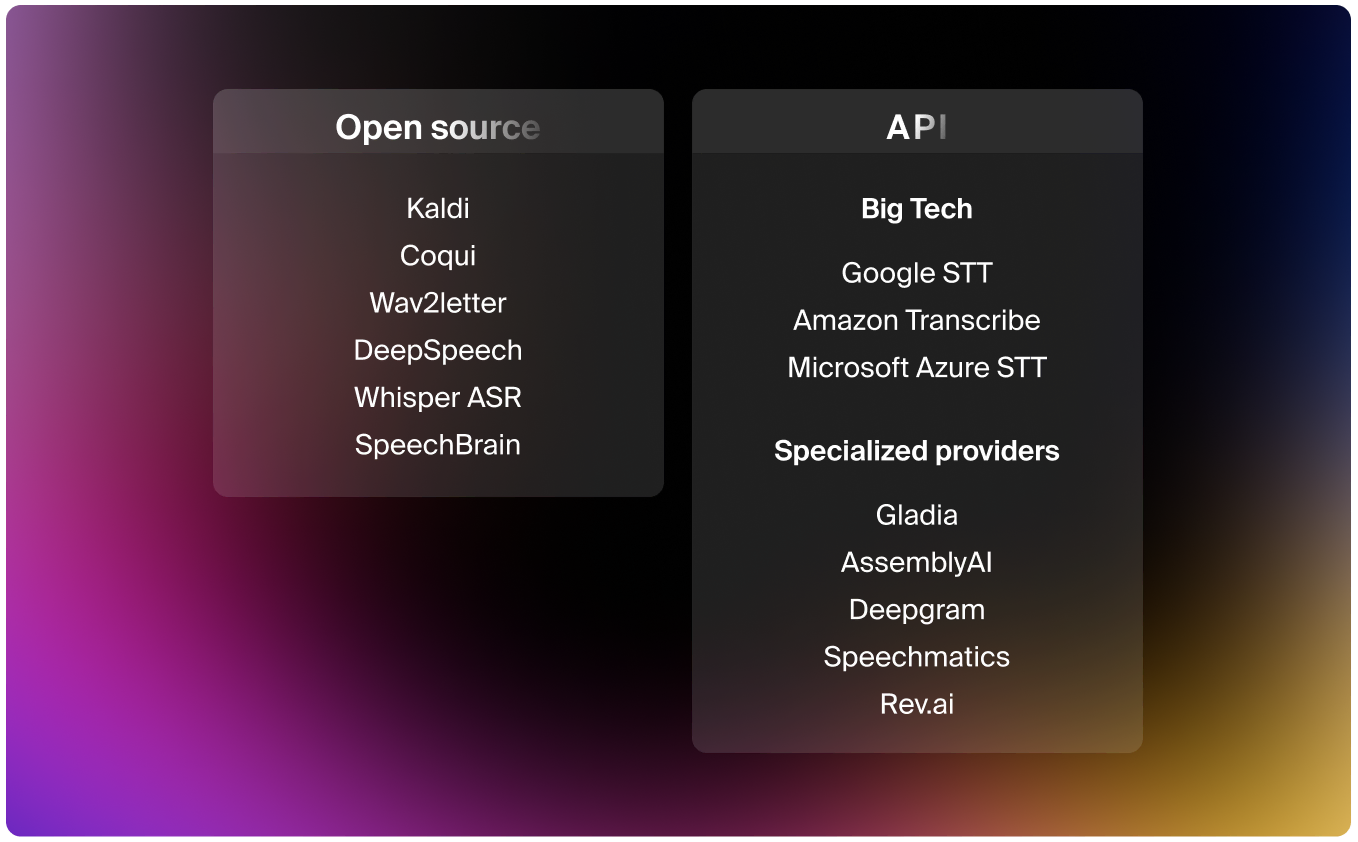

There are many speech-to-text (STT) application programming interfaces (APIs) available on the market, and the best one for you will depend on your specific needs and preferences. Here are some popular STT APIs that are widely used and well-regarded by experts:

- Google Cloud Speech-to-Text API : Use a powerful API to convert speeches into texts accurately with the help of Google Cloud’s Speech-to-Text solution known for its high accuracy and wide range of customization options. It offers an excellent user experience by transcribing your speech with accurate captions.

- IBM Watson Speech to Text API : IBM Watson Speech to Text offers AI-powered transcription and speech recognition solutions. It enables accurate and fast speech recognition in different languages for various use cases, such as customer self-service, speech analytics, agent assistance, and more.

- Microsoft Azure Speech Services : Use a powerful API to convert speeches into texts accurately with the help of Google Cloud’s Speech-to-Text solution. It offers an excellent user experience by transcribing your speech with accurate captions. It also helps improve your services through the insights taken and transcribed from your customer interactions.

- Amazon Transcribe : Amazon Transcribe is a big cloud-based automatic speech recognition platform developed specifically to convert audio to text for apps. It is available for use on a variety of platforms, including Windows, Mac, and mobile devices.

- OneAI is a language AI service that offers product-ready APIs and pre-trained models for developers. It allows developers to access speech-to-text and audio-intelligence capabilities in a single API call, enabling them to process audio and video into structured data for various purposes such as generating summaries and transcripts, and detecting sentiments and topics.

Use cases of speech-to-text applications

There are many potential use cases for speech-to-text technology. Some of the most common use cases include:

Automated dictation

If you're a content creator, writer, or anyone who needs to type long-form text, STT APIs can be a huge help. You can dictate your words and produce written text, saving time and effort.

Voice control

Speech-to-text can be used to enable voice control of various applications, such as virtual assistants or smart home devices. By issuing voice commands, users can easily interact with these devices and perform a wide range of tasks without having to type or use other input methods.

Medical transcription

In the medical field, this technology can be used to transcribe medical reports, notes, and other documents. This can help to reduce the workload for medical professionals and improve the accuracy of patient records

Translation

You can translate spoken words into different languages, which can be particularly useful for people who are traveling or working with people who speak different languages.

Voice biometrics

It’s the process of verifying the identity of a user based on their voice and also can be a task for voice recognition applications. This can be used to enable secure authentication for applications such as banking or online services.

Students with learning disabilities or language barriers can use the benefits of STT applications by getting real-time transcriptions of lectures or other educational materials. This can make learning more accessible and inclusive for all students.

Emotion recognition

Speech-to-text can also be used to analyze certain vocal characteristics to determine what emotion the speaker is feeling. Paired with sentiment analysis, this can reveal how someone feels about a product or service.

Limitations and future of speech-to-text

Like all technology, speech-to-text technology has its limitations. Some of the main limitations include:

Accurate transcription relies on clear speech : voice recognition systems are more likely to produce accurate transcriptions when the spoken words are clear and easily understood. If the speech is distorted or difficult to understand, the accuracy of the transcription may suffer.

Accents and dialects : Voice recognition systems are typically trained on a particular accent or dialect of a language. If the speaker has a different accent or dialect, the accuracy of the transcription may be lower.

Problems with context understanding : STT systems may struggle to understand the context in which words are being used, which can lead to incorrect transcriptions or translations.

Significant computing resources are required : Developing and maintaining voice recognition systems can be resource-intensive, as they require large amounts of data and computing power to train and operate.

Despite these limitations, the future of this technology looks bright. The speech-to-text industry has seen significant growth in recent years, with the global market value expected to reach $28.1 billion by 2027. The increased demand for this technology has led to the development of advanced capabilities such as punctuation, speaker diarization, global language packs, and entity formatting. One major breakthrough in the industry is the introduction of self-supervised learning, which allows STT engines to learn from unstructured data on the internet, giving them access to a wider range of voices and dialects and reducing the need for human supervision.

Universal availability will make ASR accessible to everyone, while the collaboration between humans and machines will allow for the organic learning of new words and speech styles. Finally, responsible AI principles will ensure that ASR operates without bias.

Speech-to-text technology has come a long way in recent years, and its capabilities continue to expand with the development of self-supervised learning and the integration of natural language understanding (NLU) . These advancements have enabled speech-to-text systems to learn from a wide range of unstructured data and improve their accuracy in a variety of languages and accents. As a result, STT technology is being utilized in an increasingly diverse range of industries, from healthcare and finance to communications and customer service.

OneAI creates 93% accurate speech-to-text transcriptions and suggests a wide range of Language Skills (use-case ready, vertically pre-trained models) like summarization , proofreading , sentiment analysis , and many more. Just check our Language Studio and pick those which will increase the efficiency of your business.

TURN YOUR C o NTENT INTO A GPT AGENT

Understanding Transcription: The Meaning of Dictating for Text Conversion

Understanding Transcribe Dictation in the Medical Field

In today’s fast-paced medical environment, transcribe dictation has emerged as a critical component of healthcare documentation. This process involves the conversion of spoken language into a written text by a healthcare provider. Traditionally, doctors would dictate their findings into a recorder after a patient visit, which would subsequently be transcribed either by an in-house transcriptionist or an outsourced service. However, the integration of AI-powered digital scribe services like ScribeMD is revolutionizing this practice by providing real-time transcription with a high degree of accuracy and efficiency.

One of the fundamental aspects of understanding transcribe dictation in the medical field is recognizing its impact on workflow optimization . The practice of dictation itself allows healthcare professionals to verbalize patient encounters and treatment plans without the need for typing or writing, saving valuable time. The subsequent transcription is then incorporated into patient records, which is essential for continuity of care, insurance processes, and legal documentation. Leveraging high-tech solutions to automate this process reduces the turnaround time from spoken word to electronic health records and minimizes potential for human error.

Welcome to the medical revolution, where words become your most powerful ally

Here at ScribeMD.AI, we’ve unlocked the secret to freeing medical professionals to focus on what truly matters: their patients. Can you imagine a world where the mountain of paperwork is reduced to a whisper in the wind? That’s ScribeMD.AI. An AI-powered digital assistant, meticulously designed to liberate you from the chains of the tedious medical note-taking process. It’s like having a second pair of eyes and ears but with the precision of a surgeon and the speed of lightning. Our service isn’t just a software program; it’s an intelligent companion that listens, understands, and transcribes your medical consultations with astounding accuracy. Think of it as a transcription maestro, a virtuoso of spoken words, trained to capture every crucial detail with expert precision. With ScribeMD.AI, say goodbye to endless hours of reviewing and correcting notes. Our advanced AI technology and language learning models ensure an accuracy rate that makes errors seem like a thing of the past. And best of all, it responds faster than you can blink. The true beauty of ScribeMD.AI lies in its ability to lighten your administrative burden, allowing you to return to the essence of your calling: caring for your patients. It’s more than a service; it’s a statement that in the world of medicine, patient care should always come first. So, are you ready to make the leap and join the healthcare revolution? ScribeMD.AI isn’t just a change; it’s the future. A future where doctors can be doctors, and patients receive all the attention they deserve.

- Conversion of spoken language into text

- Traditionally performed by human transcriptionists

- AI-powered services enhance speed and accuracy

- Dictation saves practitioners’ time during patient visits

- Essential for thorough patient records and documentation

Medical dictation isn’t just about capturing words; it’s a complex system that must recognize and adapt to various medical terminologies, accents, and dictation styles. Healthcare providers often use highly specialized language, and an effective transcription service must be equipped with medical-specific linguistic databases to accurately interpret and document these terms. The advent of advanced AI language learning models, as implemented by ScribeMD, means that modern dictation systems can continually learn and improve, offering increasingly precise transcriptions over time.

Furthermore, the value of transcribe dictation extends into the realm of medical billing and coding. Detailed and accurate documentation is paramount for correct billing codes, which not only ensures proper reimbursement but also aids in maintaining compliance with healthcare regulations. Here, the introduction of AI digital scribe technology simplifies this intricate process, reducing the likelihood of coding errors and enhancing the financial integrity of healthcare practices.

- Necessity for understanding specialized medical terms

- AI models provide contextual understanding

- Continuous improvement in speech recognition accuracy

- Accurate documentation aids in medical billing and compliance

The Role of AI in Enhancing Dictation Transcription

In the swiftly evolving medical industry, the integration of Artificial Intelligence (AI) in dictation transcription is transforming the landscape of patient record-keeping. AI-driven platforms, such as those provided by ScribeMD , are revolutionizing the way medical professionals document consultations and procedures. By harnessing the power of sophisticated algorithms and natural language processing (NLP) techniques, these systems are able to interpret and transcribe spoken language with remarkable accuracy . Such technology not only captures the nuances of medical terminology but also adapts to the distinct speech patterns of individual clinicians.

Hospitals and clinics embracing AI transcription tools are observing a significant uptick in efficiency. Traditional methods of transcribing dictated notes often involve a time-consuming process that can lead to delays in updating patient records. With AI, the transition from oral dictation to textual documentation occurs almost instantaneously, implying that health records are kept current with very little lag. The benefits of this advancement are multifold:

- Reduced Turnaround Time : Automated transcription delivers documentation expeditiously, thus streamlining patient care.

- Enhanced Accuracy : AI programs continually learn and improve, reducing the likelihood of errors associated with human transcription.

- Decreased Administrative Burden : By automating the transcription process, medical staff can redirect their focus toward patient-centric tasks, enhancing the overall quality of care.

Moreover, the role of AI extends beyond just the transcription of words. It entails an understanding of context and intent, distinguishing it from rudimentary voice recognition tools of the past. AI transcription services deploy highly advanced NLP engines that recognize jargon, abbreviations, and even slang pertinent to the medical field. Furthermore, these systems are capable of discerning relevant information for different sections of medical notes, including symptoms, diagnoses, and treatment plans. Thus, the adoption of an AI-powered digital scribe promises a more cohesive and reliable method for maintaining patient narratives, which are instrumental in delivering high-quality healthcare.

What Does Transcribe Dictation Mean for Patient Care?

Transcribing dictation in the medical field means converting the spoken word of healthcare professionals into accurate, accessible written records. At its core, this process is designed to capture the intricate details of patient encounters, ensuring that every symptom, diagnosis, and treatment plan is meticulously documented. The precision of medical transcription holds immense significance for patient care—it ensures continuity by creating a reliable, comprehensive narrative that can be referenced by practitioners now and in the future. This written account allows doctors to revisit patient information quickly, aiding in recalling specific case particulars, which is essential for ongoing care and treatment adjustments when necessary.

Moreover, the integration of an AI-powered digital scribe, such as ScribeMD , enhances the quality and efficiency of transcribing dictation. By leveraging advanced language models and speech recognition, the service minimizes the risk of human error often found in manual transcription. The outcomes are noteworthy:

– Higher accuracy rate : Ensuring that records reflect the correct terminology and details of the patient’s visit. – Quicker turnaround time : Rapid documentation means that patient records are updated almost in real-time, promoting better follow-up care. – Increased availability for patient interaction : When medical professionals are freed from the burdens of note-taking, they can refocus their attention on direct patient care, fostering a more personal and comprehensive healthcare experience.

These innovations fundamentally elevate the standard of care provided to patients by streamlining the processes behind the scenes.

Importantly, accurate transcribed notes act as a legal safeguard for both patients and healthcare providers. Quality documentation is a critical component of patient care, serving as evidence of the treatments provided and the healthcare professional’s adherence to the standard of care. Inaccurate or incomplete documentation can lead to misdiagnoses, inappropriate treatments, and even legal ramifications. Thus, the significance of transcribe dictation extends beyond administrative utility—it embodies a commitment to excellence in patient care. The adoption of technology like ScribeMD in the process optimizes this commitment by offering:

– Compliance with healthcare regulations : Automated transcriptions made to meet the stringent standards of medical documentation. – Enhanced security and confidentiality : Digitally securing patient information to comply with privacy laws such as the Health Insurance Portability and Accountability Act (HIPAA). – Interoperability : Seamless integration with Electronic Health Record (EHR) systems, ensuring that transcribed data enhances the healthcare ecosystem.

By encapsulating and preserving the integrity of the patient’s medical journey, transcribe dictation, when executed with technological finesse, becomes a cornerstone of exceptional patient-centered healthcare.

How Transcribe Dictation Saves Time for Healthcare Professionals

In today’s high-paced healthcare environment, time is a critical resource, and finding ways to save it is at the forefront of operational efficiency. Transcribe dictation has emerged as a transformative solution for healthcare professionals by streamlining one of the most time-consuming tasks— medical documentation . By enabling clinicians to speak their notes, observations, and patient interactions directly into a digital repository, the need for manual typing or writing is notably reduced. This not only accelerates the process of note-taking but also captures the nuances of verbal communication that might be lost in translation to written text.

Another facet where transcribe dictation shines is in its ability to allow healthcare professionals to multi-task effectively. As physicians engage with patients, they can dictate the relevant medical information in real-time. This immediacy greatly decreases the chances of omitting important patient data, which might be the case when notes are transcribed well after the patient encounter. Furthermore, when utilizing an AI-powered digital scribe, like ScribeMD , the integration of advanced language processing models ensures that the transcribed notes are not just fast, but also accurate and contextually coherent.

- Reduction in manual typing : Decreases the physical task of note-taking, saving time.

- Immediate documentation : Captures patient data in real-time, avoiding data loss.

- Advanced language processing : Ensures accuracy and contextual integrity of notes.

Moreover, the time saved by transcribing dictations translates into more direct patient care and improved clinical outcomes. Healthcare providers can allocate the hours gained from reduced administrative burdens to patient consultations, crafting more personalized care plans, or simply to increase patient throughput. This optimization of time is not only synonymous with financial prudence for healthcare establishments, but it also elevates patient satisfaction by fostering a healthcare experience where the focus visibly shifts from paperwork to patient interaction.

Lastly, the long-term benefits of transcribe dictation on time management echo through the overall healthcare system. With digital transcriptions readily available, information sharing between departments, specialists, and even across different healthcare facilities becomes significantly swifter and more efficient. The accumulation of these incremental time savings has the potential to make a substantial impact on healthcare delivery, setting the groundwork for a more agile and responsive medical practice.

- Enhanced patient care : Providers spend less time on documentation and more time with patients.

- Increased patient throughput : More efficient processes can lead to seeing more patients daily.

- Improved information sharing : Easier access to digital records streamlines communication between healthcare entities.

The Future of Dictation Transcription in Healthcare

As the landscape of healthcare continuously evolves, so does the necessity to streamline the documentation process. Dictation transcription, long a staple in the medical field, is poised for a transformative leap thanks to advancements in artificial intelligence (AI) and natural language processing (NLP). In the future, healthcare professionals can expect a seamless integration of AI-powered systems like ScribeMD.ai into their workflow, offering unprecedented accuracy and efficiency in transcribing medical dictations. This digital scribe technology not only understands the nuances of medical jargon but also contextualizes patient encounters, improving the quality of clinical documentation significantly.

Key drivers for this revolutionary change stem from the need to reduce administrative burdens and the growing emphasis on patient-centered care. With AI-enhanced dictation services, the speed at which spoken words are translated into written text vastly outstrips that of traditional methods. Healthcare providers can dictate medical notes in real-time, and the AI system promptly captures and converts this data with remarkable precision. This means less time spent on paperwork and more on direct patient interaction, aligning with modern healthcare’s ethos of personalized care.

- Improved accuracy in capturing complex medical terminology

- Reduced time spent on documentation

- Faster turnaround times for medical record completion

Moving beyond mere voice-to-text conversion, the future of dictation transcription will incorporate context-aware AI that can adapt to different accents, dialects, and the unique speech patterns of individual providers. The technology is expected to enhance with continuous learning capabilities, meaning it gets better over time with increased exposure to diverse linguistic data. Furthermore, AI-driven transcription services can be integrated with Electronic Health Record (EHR) systems, streamlining data entry and minimizing errors. This integration point allows healthcare practitioners to maintain comprehensive and accurate patient records without the tedium of manual input.

When considering data security and patient privacy, future dictation transcription tools will feature state-of-the-art encryption and compliance protocols aligned with Health Insurance Portability and Accountability Act (HIPAA) guidelines. Developers and healthcare institutions are keenly aware of the sensitivity of medical information; thus, the security aspect of transcription tools is a top priority. These hardened measures ensure that the confidential patient data physicians dictate is safeguarded throughout the documentation process.

Related Posts

Medical Transcriptionist: The Essential Guide to Launching Your Career

Master Medical Abbreviations: The Ultimate Guide for Healthcare Professionals

Become a Pro at Charting: The Ultimate Guide to Medical Scribe Mastery

Leave a comment cancel reply.

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

How-To Geek

Voice dictation works great, but should you use it.

Look who's (not) talking now.

Quick Links

We don't write the way we speak, formatting and editing is still a pain, voice dictation doesn't work in shared spaces, talking too much can be bad for you, it's a great hands-free and mobile typing technology, transcription is the real star.

For years we were promised voice dictation that was accurate, real-time, and easy. Today that promise has (largely) come true, but should you write your next work report, dissertation, or novel using your voice? Maybe not, as it turns out.

The biggest problem with dictating any serious writing is that, unlike reading, writing isn't a linear process. We don't think out whole paragraphs and sentences on the fly, so speaking the written word is rarely efficient.

Instead, writing goes back and forth. We stop and think. Then type out a torrent of words once those thoughts are in order. With the way current dictation systems work, it's hard to have this natural writing cadence work smoothly. The alternative is to adapt how we write to dictation. This author has certainly tried, but it doesn't seems conducive to the writing process, regardless of what you write.

Related: How to Use Voice Dictation on Windows 10

A significant amount of writing is simply formatting and editing text. No dictation system has really nailed perfect punctuation and formatting. Some of them infer where commas and periods should go and often do a great job. However, the most reliable method is still explicitly telling the system verbally where to put punctuation or when to, for example, create bold or italicized text.

Using your voice to format text is practically a no-go from the outset. It's simply faster and more efficient to use tactile controls. Even touch controls work better than formatting by voice. So inevitably, you'll have to go back and do manual typing no matter how well your initial voice draft came out.

While lots of people are working from home , open-plan offices and other communal workspaces are still common. This makes it a problem to produce text in a way that makes noise. Mechanical keyboards are already annoying when someone's mashing out an article on one, but could you imagine a room full of people talking at their computers?

It also makes it impossible to, for example, listen to music or other audio content while writing, unless you're willing to wear headphones . Overall, the noise pollution caused by voice dictation narrows down the types of environments and situations where you can use it comfortably.

Another potential reason voice dictation hasn't become the mainstream writing mode, is that talking for hours on end isn't great for anyone's voice. That's not to say that excessive typing isn't going to put some strain on your hands, but we've had decades to figure out better typing ergonomics . We don't have "ergonomic" microphones, after all.

Where voice dictation really shines is in writing small sections of text hands-free. Such as dictating a text message for use with your favorite app while driving. Even when you're not working hands-free, voice typing is generally less frustrating than typing on a tiny touch-screen keyboard. At least for anyone with human-sized thumbs.

So if you haven't tried voice typing on your smartphone , it's actually one of the best use cases for the technology, if you tend to mistype things on your phone regularly, voice typing is definitely worth a try.

So far, it may seem like voice dictation has turned out to be less useful than it seems, but that's only when you try to use this technology in real-time. What's far more practical is taking voice recordings and then transcribing them to editable text .

Voice dictation and transcription are essentially the same technology, except in the case of transcription the software has more time to get it right, has the context of the whole recording to work with, and does have to be interrupted for editing.

Related: Are Online Transcription Services Safe and Private?

By using a dedicated voice recorder, or a recording app on your phone, or even a smart watch , you can put down your thoughts over a period of time and then feed all of that audio into your transcription software. Then it's a matter of editing the end result, which is must faster than the stop-and-start nature of dictation.

So voice recognition technology is definitely something you should use, but perhaps live dictation isn't the best way to benefit from it.

Dictate your documents in Word

Dictation lets you use speech-to-text to author content in Microsoft 365 with a microphone and reliable internet connection. It's a quick and easy way to get your thoughts out, create drafts or outlines, and capture notes.

Start speaking to see text appear on the screen.

How to use dictation

Tip: You can also start dictation with the keyboard shortcut: ⌥ (Option) + F1.

Learn more about using dictation in Word on the web and mobile

Dictate your documents in Word for the web

Dictate your documents in Word Mobile

What can I say?

In addition to dictating your content, you can speak commands to add punctuation, navigate around the page, and enter special characters.

You can see the commands in any supported language by going to Available languages . These are the commands for English.

Punctuation

Navigation and selection, creating lists, adding comments, dictation commands, mathematics, emoji/faces, available languages.

Select from the list below to see commands available in each of the supported languages.

- Select your language

Arabic (Bahrain)

Arabic (Egypt)

Arabic (Saudi Arabia)

Croatian (Croatia)

Gujarati (India)

- Hebrew (Israel)

- Hungarian (Hungary)

- Irish (Ireland)

Marathi (India)

- Polish (Poland)

- Romanian (Romania)

- Russian (Russia)

- Slovenian (Slovenia)

Tamil (India)

Telugu (India)

- Thai (Thailand)

- Vietnamese (Vietnam)

More Information

Spoken languages supported.

By default, Dictation is set to your document language in Microsoft 365.

We are actively working to improve these languages and add more locales and languages.

Supported Languages

Chinese (China)

English (Australia)

English (Canada)

English (India)

English (United Kingdom)

English (United States)

French (Canada)

French (France)

German (Germany)

Italian (Italy)

Portuguese (Brazil)

Spanish (Spain)

Spanish (Mexico)

Preview languages *

Chinese (Traditional, Hong Kong)

Chinese (Taiwan)

Dutch (Netherlands)

English (New Zealand)

Norwegian (Bokmål)

Portuguese (Portugal)

Swedish (Sweden)

Turkish (Turkey)

* Preview Languages may have lower accuracy or limited punctuation support.

Dictation settings

Click on the gear icon to see the available settings.

Spoken Language: View and change languages in the drop-down

Microphone: View and change your microphone

Auto Punctuation: Toggle the checkmark on or off, if it's available for the language chosen

Profanity filter: Mask potentially sensitive phrases with ***

Tips for using Dictation

Saying “ delete ” by itself removes the last word or punctuation before the cursor.

Saying “ delete that ” removes the last spoken utterance.

You can bold, italicize, underline, or strikethrough a word or phrase. An example would be dictating “review by tomorrow at 5PM”, then saying “ bold tomorrow ” which would leave you with "review by tomorrow at 5PM"

Try phrases like “ bold last word ” or “ underline last sentence .”

Saying “ add comment look at this tomorrow ” will insert a new comment with the text “Look at this tomorrow” inside it.

Saying “ add comment ” by itself will create a blank comment box you where you can type a comment.

To resume dictation, please use the keyboard shortcut ALT + ` or press the Mic icon in the floating dictation menu.

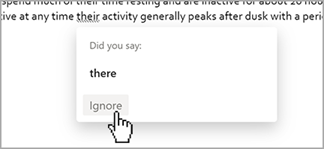

Markings may appear under words with alternates we may have misheard.

If the marked word is already correct, you can select Ignore .

This service does not store your audio data or transcribed text.

Your speech utterances will be sent to Microsoft and used only to provide you with text results.

For more information about experiences that analyze your content, see Connected Experiences in Microsoft 365 .

Troubleshooting

Can't find the dictate button.

If you can't see the button to start dictation:

Make sure you're signed in with an active Microsoft 365 subscription

Dictate is not available in Office 2016 or 2019 for Windows without Microsoft 365

Make sure you have Windows 10 or above

Dictate button is grayed out

If you see the dictate button is grayed out

Make sure the note is not in a Read-Only state.

Microphone doesn't have access

If you see "We don’t have access to your microphone":

Make sure no other application or web page is using the microphone and try again

Refresh, click on Dictate, and give permission for the browser to access the microphone

Microphone isn't working

If you see "There is a problem with your microphone" or "We can’t detect your microphone":

Make sure the microphone is plugged in

Test the microphone to make sure it's working

Check the microphone settings in Control Panel

Also see How to set up and test microphones in Windows

On a Surface running Windows 10: Adjust microphone settings

Dictation can't hear you

If you see "Dictation can't hear you" or if nothing appears on the screen as you dictate:

Make sure your microphone is not muted

Adjust the input level of your microphone

Move to a quieter location

If using a built-in mic, consider trying again with a headset or external mic

Accuracy issues or missed words

If you see a lot of incorrect words being output or missed words:

Make sure you're on a fast and reliable internet connection

Avoid or eliminate background noise that may interfere with your voice

Try speaking more deliberately

Check to see if the microphone you are using needs to be upgraded

Need more help?

Want more options.

Explore subscription benefits, browse training courses, learn how to secure your device, and more.

Microsoft 365 subscription benefits

Microsoft 365 training

Microsoft security

Accessibility center

Communities help you ask and answer questions, give feedback, and hear from experts with rich knowledge.

Ask the Microsoft Community

Microsoft Tech Community

Windows Insiders

Microsoft 365 Insiders

Was this information helpful?

Thank you for your feedback.

- Law Enforcement

- Ditto Difference/About

- Case Studies

- Work for Us

- Upload A File

Dictation Speech-to-Text Apps Vs. Transcription

By Ben Walker

Dictation and transcription are commonly mistaken for the same thing. However, that is far from the truth. Dictation is the process of speaking or dictating information, often to a device. Transcription involves listening to an audio recording and accurately transcribing the spoken words into a written document. Dictation and transcription services have different purposes in professional settings, and knowing which to use when can be greatly beneficial for businesses.

In this article, you’ll learn how:

- Transcription means converting speech to text through either automated or manual means. Dictation is speaking into a recording device or app to be listened to or transcribed in the future.

- Speech recognition software is often incorporated into dictation apps for real-time transcription.

- Professional, human-powered transcription is the best choice for transcribing audio files and other recordings into immediately usable transcripts.

Difference Between Dictation And Transcription

Dictation is the process of speaking or dictating information, while transcription is the process of converting spoken language into written form. To illustrate the point, here are their key factors and differences.

Key differences:

- Timing: Dictation happens in real-time, while transcription occurs after a recording.

- Accuracy: Transcription often requires greater accuracy and attention to detail due to its potential use as a legal or medical record.

- Formatting: Transcription often involves adding timestamps, speaker labels, and formatting for readability and referencing.

- Software: The best dictation software often has voice recognition and editing features, while transcription software focuses on playback control and accuracy.

Disadvantages of Dictation Apps

Dictation and transcription software that use speech recognition have their uses. However, to be sure they are right for your application, you must be aware of their limitations.

Transcription Vs. Dictation Software: When To Use And Why

Here’s a quick summary to figure out if dictation or transcription is appropriate for your needs:

Transcription is preferable when looking for accurate, permanent records accessible to everyone who needs them. Dictation is excellent for note-taking and reminders to be transcribed later.

For example, doctors can save time dictating their patients’ notes instead of taking them in real-time. Real estate agents can dictate as they survey a house instead of scribbling notes. However, voice dictations must still be converted into physical or digital medical documentation.

So, let’s talk about your options for getting transcription work done.

Can I Assign Transcription Work To My Employees?

You can assign transcription tasks to your clerks, admins, or clinic assistants for different businesses. Everyone can use a keyboard—it’s practically a life skill these days. Many people can even be called typists. You can transcribe your own dictations, and your employees can do the work you originally hired them to do.

Here’s the question, though: is transcription the real reason why you hired them in the first place?

You remove key human resources from your core business functions by assigning dictated recordings to your employees for transcription. Transcription is essential—there is no question about that. However, assigning your people to that task might not be the most cost-efficient way.

How About Hiring In-House To Transcribe Dictations And Recordings?

Another option is to hire a transcriptionist or a whole team to work on your audio recordings. However, there might be better options.

In-house transcription is time-consuming and costly. The business needs to spend money finding and hiring competent transcriptionists (which can take up to 24 days and cost upwards of $10,000 for the search), provide a decent salary and benefits package (which can cost up to $60,000 a year for salary alone), supply them with the necessary equipment (which may cost up to $2,000 each, depending on the quality of the equipment), and provide continuous training and development so that their skills do not stagnate.

How About Voice Recognition Software or Speech-To-Text Transcription Software?

Artificial intelligence can transcribe audio files; the best speech-to-text dictation apps often include the feature. With some apps, it’s often as simple as pressing the microphone icon and watching the words get written on your screen. So why don’t the best transcription services use them?

The issue lies with accuracy. Transcription services that use AI software suffer from transcription errors because automated transcription can only reach 86% accuracy without human intervention.

Applications that transform spoken words into documents are popular dictation transcription options. They’re pretty good, but you’ll quickly realize how frustrating they can be once you’ve dictated a critical letter into a dictation transcription application. While some software might recognize most of your words, the app can still surprise you with punctuation in odd places, butchered professional jargon, and unusable formats.

Simply put, you cannot use AI to transcribe everything.

Medical transcription services must offer near-perfect transcripts every time. Medical terminology can make it hard for automated transcription to produce accurate transcripts. That’s why certified medical transcriptionists are preferred.

Legal transcription is often used in court cases. Walking up to the judge and presenting error-filled transcripts and legal documents is a great way to lose cases and potentially send your client to jail.

Why Outsource To Human Transcription Services

Professional dictation transcription services that employ human transcriptionists are your best option when the correspondence and documents you produce are crucial to your reputation. Your documents are often your first line of interaction with the public. They tell people who and what you are and what you do. You want them to be accurate, attractive, and just as professional as you are.

A human transcriber might not recognize your words the first time you say them, but unlike a program or application, they can replay them and ensure they get them right. You can spell out complicated words; a transcriber will spell them exactly as you ask.

You can tell a professional dictation transcription service provider the punctuation and formatting you need—period, quotation marks, paragraph breaks, and everything in between.

Stop Yelling At Your Phone And Get Ditto’s Best Transcription Services Instead

Transcription services rely on accuracy, making automated typing software not the best choice.

Dictation apps are free, easy to use, and they’re everywhere. And sure, convenience is important— but it’s no substitute for quality and accuracy.

Get accurate, quality, and professional transcription services when you work with Ditto Transcripts. We’ll take dictations, audio recordings, and video content and create accurate, crystal-clear transcripts that can be used in any professional or business setting. Additionally, we offer flexible and affordable pricing options, customizable formats, fast turnaround times, and excellent customer service.

So what are you waiting for? Call us, or sign up for our free trial and experience the Ditto difference.

Ditto Transcripts is a HIPAA-compliant and CJIS-compliant Denver, Colorado-based transcription services company that provides fast, accurate, and affordable transcripts for individuals and companies of all sizes. Call (720) 287-3710 today for a free quote, and ask about our free five-day trial.

Looking For A Transcription Service?

Ditto Transcripts is a U.S.-based HIPAA and CJIS compliant company with experienced U.S. transcriptionists. Learn how we can help with your next project!

The SpeakWrite Blog

Transcription vs dictation: learn the difference.

- January 31, 2023

No, they’re not the same. But they’re both great time-saving options that allow you to get more done in less time—here’s what you should know.

Are you using the terms dictation and transcription interchangeably? Is there a discernible difference between dictation vs transcription?

The short answer is yes.

Whether it’s transcribing audio or notes from meetings or simply speeding up document creation efforts all around, understanding these terms is essential for any busy professional.

In this article, we’ll break down the difference between transcription vs dictation so you can optimize your workflow with the best document creation tools around, including the best transcription services and dictation software.

What is the difference between transcription vs dictation?

Dictation is the process of speaking aloud to produce a document or other type of output, while transcription is the process of converting a wav file into written text. It’s common to write a transcription from a dictation, and many modern pieces of software are capable of transcribing a dictation in real time.

No matter what your use case, dictation and transcription can be a great time-saving option that allows you to get more done in less time. Transcription services are usually provided by professional typists, while dictation services rely on AI software and speech recognition technology to transcribe audio into text.

What is Dictation?

Simply put, dictation is when you record your speaking, usually by speaking into a recording device. From there, you can either replay your dictation out loud and type it or have it professionally transcribed into written notes.

Dictation is useful because you can dictate in real-time, a nifty ability when:

- Typing is difficult or impossible due to injury or disability

- You need to take meeting or lecture notes

- You need to record patient information

- Recording thoughts or ideas while walking or driving

- Looking for ways to spend more time on billable hours

- You need documents transcribed into a different language

Types of Dictation Software

With smartphones, dictation has never been easier. Here are a few of the best dictation apps for recording raw audio with your phone.

Voice Memos (Apple)

Apple users can use their Voice Memos app for dictation. Simply hit the “record” button and begin speaking. Voice Memos also allows you to trim, re-record, and improve recording’s quality with ease.

Sound Recording (Android)

Android users can dictate using the Sound Recording app. Hit the “record” button and boom—you’re started. You can trim, re-record, and improve the audio quality within the app.

AudioNote is a fantastic dictation app that allows you to record audio while taking notes at the same time. It’s an excellent option for those who use dictation in an academic or commercial setting.

What Is Transcription?

Transcription is the process of converting spoken words into written text. Transcription can be achieved by using a human typist (still the most accurate form of transcription) or transcription software.

Transcription software—also called speech-to-text—uses artificial intelligence (AI) to automatically convert speech into written text. If you’ve ever used your phone’s voice-to-text feature, you’ve used transcription.

However, AI transcription isn’t perfect . It often has trouble transcribing language spoken quickly or with an accent.

For that reason, many people still prefer manual dictation. With manual transcription, you can simply upload an audio file and–voila!—a few hours later, you’ll have a super-accurate transcription of your dictation.

Transcription is used in a variety of settings, including:

- Sending texts on-the-go

- Note-taking during meetings or lectures

- Converting recorded interviews to text documents

- Making audio more accessible for those with disabilities

Types of Transcription

Different use cases require different types of transcription. Police interviews, for example, typically include every utterance spoken. Meeting notes, on the other hand, may not require the same level of detail. There are three types of transcription:

Verbatim transcription includes all features of speech, including laughing, sighing, and filler words like “um” and

Intelligent verbatim

Intelligent verbatim transcription is a type of transcription that accurately captures all spoken words, including filler words and false starts, while also providing context and meaning through the use of punctuation, formatting, and other techniques.