- Harvard Division of Continuing Education

Machine Learning for Financial Market Forecasting


Collections.

- DCE Theses and Dissertations [1189]


Open Access

Peer-reviewed

Research Article

A performance comparison of machine learning models for stock market prediction with novel investment strategy

Roles Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Writing – original draft

Affiliation Department of Electrical Engineering and Computer Science, Jalozai Campus, University of Engineering and Technology, Peshawar, Pakistan

Roles Data curation, Formal analysis, Investigation, Methodology, Software, Writing – original draft

Roles Investigation, Methodology, Resources, Supervision, Validation, Writing – review & editing

Roles Investigation, Methodology, Project administration, Resources, Supervision, Visualization, Writing – review & editing

Roles Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing – review & editing

Roles Investigation, Project administration, Resources, Supervision, Validation, Writing – review & editing

Affiliation Department of Electrical Engineering, Main Campus, University of Engineering and Technology, Peshawar, Pakistan

Roles Funding acquisition, Methodology, Project administration, Resources, Writing – review & editing

* E-mail: [email protected]

Affiliation Cardiff School of Technologies, Cardiff Metropolitan University, Cardiff, United Kingdom

- Azaz Hassan Khan,

- Abdullah Shah,

- Abbas Ali,

- Rabia Shahid,

- Zaka Ullah Zahid,

- Malik Umar Sharif,

- Tariqullah Jan,

- Mohammad Haseeb Zafar

- Published: September 21, 2023

- https://doi.org/10.1371/journal.pone.0286362


Stock market forecasting is one of the most challenging problems in today’s financial markets. According to the efficient market hypothesis, it is almost impossible to predict the stock market with 100% accuracy. However, Machine Learning (ML) methods can improve stock market predictions to some extent. In this paper, a novel strategy is proposed to improve the prediction efficiency of ML models for financial markets. Nine ML models are used to predict the direction of the stock market. First, these models are trained and validated using the traditional methodology on historical data captured over a 1-day time frame. Then, the models are trained using the proposed methodology. Following the traditional methodology, Logistic Regression achieved the highest accuracy of 85.51%, followed by XG Boost and Random Forest. With the proposed strategy, the Random Forest model achieved the highest accuracy of 91.27%, followed by XG Boost, ADA Boost and ANN. In the latter part of the paper, it is shown that a classification report alone is not sufficient to validate the performance of an ML model for stock market prediction. A simulation model of the financial market is used to evaluate the risk, maximum drawdown and returns associated with each ML model. The overall results demonstrate that the proposed strategy not only improves stock market returns but also reduces the risks associated with each ML model.

Citation: Khan AH, Shah A, Ali A, Shahid R, Zahid ZU, Sharif MU, et al. (2023) A performance comparison of machine learning models for stock market prediction with novel investment strategy. PLoS ONE 18(9): e0286362. https://doi.org/10.1371/journal.pone.0286362

Editor: Furqan Rustam, University College Dublin, IRELAND

Received: January 3, 2023; Accepted: May 15, 2023; Published: September 21, 2023

Copyright: © 2023 Khan et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: Data for this study is publicly available from the GitHub repository ( https://github.com/AzazHassankhan/Machine-Learning-based-Trading-Techniques ).

Funding: The authors received no specific funding for this work.

Competing interests: The authors have declared that no competing interests exist.

Introduction

Stock markets, as one of the essential pillars of the economy, have been extensively studied [ 1 ]. Forecasting stock prices is a central objective in the stock market, since better predictions can lead to higher expected returns for investors [ 2 ]. Prices and uncertainty in the stock market are predicted by exploiting patterns found in past data [ 3 ]. The nature of the stock market has always been opaque to investors because its performance is very difficult to predict. Various factors, such as political disturbances, natural catastrophes and international events, must be considered when predicting the stock market [ 4 ]. The challenge is so great that even a small improvement in stock market prediction can lead to large returns.

In terms of direction, the stock market can move in one of two ways: upwards (when stock prices rise) or downwards (when stock prices fall) [ 5 ]. Generally, there are four ways to analyze the stock market direction [ 6 ]. The most basic is fundamental analysis, which examines a company’s economic conditions, reports and future projects [ 7 ]. The second and most common technique is technical analysis [ 8 ], in which the direction of the market is anticipated by studying price charts and comparing current prices with previous prices [ 9 ]. The third and most advanced technique is Machine Learning (ML) based analysis, which analyzes the market with less human intervention [ 10 ]. ML models find patterns in historical data and use them to forecast future stock prices. The fourth technique, sentiment-based analysis, analyzes stock prices using the sentiments of other individuals, such as activity on social media or financial news websites [ 11 ].

The difficulty of stock market prediction has drawn the attention of numerous researchers worldwide, and many papers have proposed ML models for predicting stock prices. These models include Artificial Neural Network (ANN) [ 12 ], Decision Tree (DT) [ 13 ], Support Vector Machine (SVM) [ 14 ], K-Nearest Neighbors (KNN) [ 15 ], Random Forest (RF) [ 16 ] and Long Short-Term Memory networks (LSTM) [ 17 ]. The proposed systems either used a single ML model optimized for specific stocks [ 18 – 20 ] or multiple ML models to analyze their performance on different stocks [ 21 – 24 ]. More advanced techniques, such as hybrid models, have also been employed to improve prediction accuracy [ 25 – 27 ].

Different ML models, such as RF and stochastic gradient boosting, were used to predict the prices of gold and silver with an accuracy of more than 85% [ 18 ]. A novel model combining SVM with a Genetic Algorithm, called Genetic Algorithm Support Vector Machine (GASVM), was proposed to forecast the direction of the Ghana Stock Exchange [ 19 ]. The proposed model achieved an accuracy of 93.7% for a 10-day stock price movement, outperforming other traditional ML models. The Artificial Neural Network GARCH (ANNG) model was used to forecast the uncertainty in oil prices [ 20 ]. In this model, the GARCH model is first used to predict the oil price; this prediction is then used as an input to an ANN, which improved the overall commodity price forecast by 30%.

Different ML models perform differently on the same historical data; their performance depends on the type of data and the length of the available history. In many recent papers, multiple ML models were applied to the same financial time series to predict future stock prices and compare the performance of each model [ 21 – 24 ]. A comparative analysis of nine ML and two Deep Learning (DL) models was performed on the Tehran stock market [ 21 ]. Its main purpose was to compare the accuracy of different models on continuous and binary datasets, and the binary dataset was found to increase the accuracy of the models. In [ 22 ], four ML models (ANN, SVM, Subsequent Artificial Neural Network (SANN) and LSTM) were used to predict Bitcoin prices over different time frames. The results show that SANN predicted Bitcoin prices with an accuracy of 65%, whereas LSTM achieved only 53%. In another comparative study [ 23 ], ML models including Multi-Layer Perceptron (MLP), SVM and RF were used to forecast the prices of crypto-currencies such as Bitcoin, Ethereum, Ripple and Litecoin from their historical prices. MLP outperformed the other models, with an accuracy ranging from 64 to 72%. A similar study in [ 24 ] compared the performance of different ML models on the same data.

In some recent studies, hybrid models (combinations of different ML models) are used to forecast stock prices. A hybrid model combining SVM with a sentiment-based technique was proposed for Shanghai Stock Exchange prediction [ 25 ] and achieved an accuracy of 89.93%. A system combining k-means clustering with an ensemble learning technique was developed to predict the Chinese stock market [ 26 ]; this hybrid model obtained the best forecasting accuracy of stock prices on the Chinese stock market. Another hybrid framework was developed for the Indian stock market [ 27 ], using SVM with different kernel functions together with KNN to predict profit or loss and the future stock value. Although hybrid systems achieve much higher accuracy, they are too complex to implement in real life. Table 1 compares the prior studies with the proposed study.


https://doi.org/10.1371/journal.pone.0286362.t001

In almost all of the proposed ML-based systems, a primary limitation is observed in the empirical results: the performance of the ML models was gauged only by their classification ability. Although classification ability is an important criterion for evaluating an ML model, it is insufficient to determine how well the model performs for stock market prediction. Classification metrics do not take into account important factors such as returns, maximum drawdown, risk-to-reward ratio, transaction costs and the risks associated with each ML model. These factors must be considered when evaluating ML models for stock market prediction.

Research contributions

The following are the major contributions of this paper:

- A performance comparison of nine ML models trained using the traditional methodology for stock market prediction using both performance metrics and financial system simulations.

- Proposing a novel strategy for training ML models for financial markets that performs much better than the traditional methodology.

- Proposing a novel financial system simulation that provides financial performance metrics like returns, maximum drawdown and risk-to-reward ratio for each ML model.

Paper organization

The rest of the paper is organized as follows. The next section explains the proposed methodology used to train nine ML models for stock market prediction. Section III analyses the outcomes of the simulations in detail, covering both the ML model simulations and the financial model simulations. The conclusions and future directions are discussed in Sections IV and V, respectively.

Methodology

In this paper, a software approach is used to apply different ML algorithms to predict the direction of Tesla Inc. stock [ 28 ]. The prediction system is implemented in Python using Scikit-learn [ 29 ], Pandas [ 30 ], NumPy [ 31 ] and Plotly [ 33 ], with market data obtained through the Alpaca broker [ 32 ].

The flowchart of the methodology is illustrated in Fig 1 . The first step is to import the stock market data from the Alpaca broker and preprocess it. The imported data contains information that the proposed system does not need, such as trade counts and volume-weighted average price; these fields are removed in the preprocessing stage. Preprocessing also involves handling missing stock prices and removing noise from the data. Missing values can be estimated using interpolation techniques or simply by taking the mean of the values immediately before and after the missing point.
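A minimal pandas sketch of the two gap-filling options just described, assuming the closing prices are held in a `pandas.Series`. The helper name `fill_missing_prices` and the toy prices are hypothetical, and the authors' actual preprocessing code may differ.

```python
import numpy as np
import pandas as pd


def fill_missing_prices(prices: pd.Series, method: str = "linear") -> pd.Series:
    """Fill gaps in a price series (hypothetical helper).

    "linear": linear interpolation between the surrounding known prices.
    "neighbour_mean": mean of the nearest known price before and after each gap.
    """
    if method == "linear":
        return prices.interpolate(method="linear")
    if method == "neighbour_mean":
        before = prices.ffill()  # last known price before each gap
        after = prices.bfill()   # next known price after each gap
        return prices.fillna((before + after) / 2)
    raise ValueError(f"unknown method: {method!r}")


closes = pd.Series([100.0, np.nan, 104.0, 103.0, np.nan, np.nan, 109.0])
print(fill_missing_prices(closes).tolist())
# [100.0, 102.0, 104.0, 103.0, 105.0, 107.0, 109.0]
print(fill_missing_prices(closes, "neighbour_mean").tolist())
# [100.0, 102.0, 104.0, 103.0, 106.0, 106.0, 109.0]
```

The two options differ only on gaps longer than one point: interpolation spreads the change evenly across the gap, while the neighbour mean assigns every missing point the same midpoint value.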

https://doi.org/10.1371/journal.pone.0286362.g001

Traditionally, the stock price at the end of the day (EOD) is used in ML-based systems. However, price variation is usually greatest in the first hour after the market opens, and the direction of the market for the day is largely set by the trading done in this hour. Prices from this hour are therefore considered more informative than the EOD price. Accordingly, this paper also extracts the stock price 15 minutes after the market opens, and compares the results obtained with the EOD price against those of the proposed 15-min strategy.
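Extracting the 15-minute price could look like the sketch below. It assumes minute bars in a DataFrame with a tz-aware US/Eastern `DatetimeIndex` and a `close` column, a 09:30 market open, and takes the last close at or before 09:45 as the "15 minutes after open" price. These conventions and the helper name `price_15min_after_open` are assumptions, not details given in the paper.

```python
import pandas as pd


def price_15min_after_open(bars: pd.DataFrame, open_time: str = "09:30",
                           cutoff: str = "09:45") -> pd.Series:
    """Return, per trading day, the last close between the open and the cutoff.

    Assumes `bars` has a tz-aware DatetimeIndex in exchange time and a
    "close" column (hypothetical layout).
    """
    window = bars.between_time(open_time, cutoff)  # both ends inclusive by default
    return window["close"].groupby(window.index.date).last()


# Toy minute bars for two trading days, 09:30-09:59 Eastern time.
eastern = "America/New_York"
index = pd.date_range("2021-01-04 09:30", periods=30, freq="min", tz=eastern).append(
    pd.date_range("2021-01-05 09:30", periods=30, freq="min", tz=eastern)
)
bars = pd.DataFrame({"close": [float(i) for i in range(60)]}, index=index)
print(price_15min_after_open(bars).tolist())  # [15.0, 45.0]
```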

Once the stock price data has been extracted, the next stage computes various input features from technical indicators and statistical formulas. Nine input features, listed in Table 2 , are selected for prediction. These features are then subjected to overfitting tests, which are essential because features that cause a model to overfit the training data can reduce its accuracy on unseen data [ 34 ].
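Because Table 2 is not reproduced here, the sketch below uses a few common indicators (one-day return, distance from a simple moving average, rolling volatility and a simple RSI) purely as hypothetical stand-ins for the paper's nine features, together with a next-day direction label. The window length and the helper name are also illustrative assumptions.

```python
import numpy as np
import pandas as pd


def make_features(close: pd.Series, window: int = 5) -> pd.DataFrame:
    """Build illustrative indicator features and a next-day direction label."""
    df = pd.DataFrame({"close": close})
    df["return_1d"] = close / close.shift(1) - 1               # one-day return
    sma = close.rolling(window).mean()
    df["close_to_sma"] = close / sma - 1                       # distance from moving average
    df["volatility"] = df["return_1d"].rolling(window).std()   # rolling std of returns
    # Simple RSI from rolling mean gains and losses (not Wilder's smoothing).
    delta = close.diff()
    gain = delta.clip(lower=0).rolling(window).mean()
    loss = (-delta.clip(upper=0)).rolling(window).mean()
    df["rsi"] = 100 - 100 / (1 + gain / loss)
    # Label: 1 = "Move Up" if the next close is higher, else 0 = "Move Down".
    df["target"] = (close.shift(-1) > close).astype(int)
    # The last row has no next close, so its label is unknown; drop it,
    # then drop the warm-up rows where rolling windows are incomplete.
    return df.iloc[:-1].dropna()


prices = pd.Series(100 + 5 * np.sin(np.arange(30)))
features = make_features(prices)
print(features.shape)  # (24, 6)
```

Dropping the final row matters: without it, the last day would silently receive a "Move Down" label even though its next close is unknown.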

https://doi.org/10.1371/journal.pone.0286362.t002

The input features and output variables are provided to the ML models so that they can detect patterns in the training data. Table 3 lists the nine ML models selected in this paper to predict the direction of the stock market. The optimal parameters for each ML model are selected through GridSearchCV [ 35 ], a scikit-learn utility that evaluates every combination of candidate parameter values with cross-validation and selects the best-performing combination for a given model. After the optimal parameters are chosen, the ML models are trained and tested.
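A hedged example of the tuning step with scikit-learn's `GridSearchCV`, using a Random Forest and a small hypothetical grid (Table 7 gives the authors' actual settings). Using `TimeSeriesSplit` for the cross-validation folds is an assumption made here so that each validation fold lies after its training data; the paper does not state which splitter was used. The data is random placeholder data.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit


def tune_random_forest(X, y):
    """Grid-search a Random Forest over a small hypothetical parameter grid."""
    param_grid = {"n_estimators": [50, 100], "max_depth": [3, 5, None]}
    search = GridSearchCV(
        RandomForestClassifier(random_state=0),
        param_grid,
        cv=TimeSeriesSplit(n_splits=5),  # folds respect time order
        scoring="accuracy",
    )
    search.fit(X, y)  # refits the best model on all of X, y by default
    return search


# Placeholder data: 300 samples with nine features, rows in time order.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 9))
y = (X[:, 0] + 0.5 * rng.normal(size=300) > 0).astype(int)
search = tune_random_forest(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

After fitting, `search.best_estimator_` holds the refitted model with the selected parameters and can be used directly for prediction on the test set.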

https://doi.org/10.1371/journal.pone.0286362.t003

Additionally, a confusion matrix is used to summarize the performance of each ML model. It provides detailed insight into the predictions by counting False Positives (FP), True Positives (TP), False Negatives (FN) and True Negatives (TN) [ 49 ]. A False Positive occurs when the model predicts the positive class but the actual sample is negative; a True Positive when both the prediction and the actual sample are positive; a False Negative when the model predicts the negative class but the actual sample is positive; and a True Negative when both the prediction and the actual sample are negative.
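The four counts can be read off scikit-learn's `confusion_matrix`, which for binary labels `[0, 1]` returns `[[TN, FP], [FN, TP]]` (rows are true labels, columns are predictions). The sketch assumes 1 encodes 'Move Up' (the positive class) and 0 encodes 'Move Down'; the helper name and the labels are toy examples.

```python
from sklearn.metrics import confusion_matrix


def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN), treating 1 ("Move Up") as the positive class."""
    # With labels=[0, 1], scikit-learn returns [[TN, FP], [FN, TP]].
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return int(tp), int(fp), int(fn), int(tn)


y_true = [1, 1, 0, 0, 1, 0, 1, 0]  # actual directions (toy data)
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]  # model predictions (toy data)
print(confusion_counts(y_true, y_pred))  # (3, 1, 1, 3)
```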

In the next step, a novel financial model is developed and simulated to analyze the performance of the trained ML models using financial performance metrics such as the Sharpe ratio, maximum drawdown, cumulative return and annual return [ 50 ].
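One common way to compute these four metrics from a daily portfolio value series is sketched below. The conventions are assumptions, since the paper does not give its formulas: 252 trading days per year, a Sharpe ratio from daily returns annualised by sqrt(252) with a zero risk-free rate by default, annual return as the compound annual growth rate, and maximum drawdown as the largest fall from a running peak. The toy equity curve is hypothetical.

```python
import numpy as np
import pandas as pd

TRADING_DAYS = 252  # assumed trading days per year


def performance_metrics(equity: pd.Series, risk_free_rate: float = 0.0) -> dict:
    """Compute common portfolio metrics from daily portfolio values."""
    daily = equity.pct_change().dropna()
    years = len(daily) / TRADING_DAYS
    cumulative = equity.iloc[-1] / equity.iloc[0] - 1
    annual = (1 + cumulative) ** (1 / years) - 1              # compound annual growth rate
    excess = daily - risk_free_rate / TRADING_DAYS
    sharpe = np.sqrt(TRADING_DAYS) * excess.mean() / excess.std()
    drawdown = equity / equity.cummax() - 1                   # fall from running peak
    return {
        "cumulative_return": cumulative,
        "annual_return": annual,
        "sharpe_ratio": sharpe,
        "max_drawdown": drawdown.min(),
    }


equity = pd.Series([10_000.0, 12_000.0, 9_000.0, 11_000.0, 13_000.0])
metrics = performance_metrics(equity)
print(round(metrics["cumulative_return"], 4), round(metrics["max_drawdown"], 4))  # 0.3 -0.25
```

On a real backtest the equity series would span years, so the annualised figures become meaningful; on this five-point toy series only the cumulative return and drawdown are worth reading.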

Experimental results

Dataset description and project specifications.

Tesla Inc. is a major American automobile company producing technologically advanced electric vehicles. The company has recently attracted considerable attention because of its stock price. A drastic increase in revenue in 2021 made Tesla stock very appealing to investors around the world, as shown in Table 4 [ 52 ].

https://doi.org/10.1371/journal.pone.0286362.t004

Table 4 shows the annual growth of Tesla from 2016 to 2021, including an increase of 70.67% in 2021. Given the stock’s volatility in previous years and its recent growth, Tesla Inc. is an ideal candidate for this study.

The stock prices of Tesla Inc. from 2016 to 2021 are considered for the experimental evaluation in this paper. The data is split into training and test sets, whose date ranges are shown in Table 5 . The stock market data for Tesla Inc. from 2016 to 2021, downloaded from the Alpaca broker, is shown in Fig 2 , and the project specifications are given in Table 6 .

https://doi.org/10.1371/journal.pone.0286362.g002

https://doi.org/10.1371/journal.pone.0286362.t005

https://doi.org/10.1371/journal.pone.0286362.t006

Machine learning models simulation

First, the optimal parameter settings for the nine ML models are selected through GridSearchCV. The selected settings for each model are shown in Table 7 .

https://doi.org/10.1371/journal.pone.0286362.t007

The simulations for stock market prediction are performed in Python in a Jupyter notebook. The ML models were evaluated on Tesla Inc. stock prices using both the 1-day time frame and the 15-min time interval strategy. The models were first trained on data from Jan 01, 2016 to Nov 15, 2020, and then validated on test data from Nov 16, 2020 to Dec 31, 2021, as shown in Table 5 .
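A date-based split matching the ranges above can be written with pandas label slicing on a sorted `DatetimeIndex`; the helper name `split_by_date` and the toy daily index are illustrative. Splitting chronologically, rather than shuffling, keeps the test period strictly after the training period, so no future information leaks into training.

```python
import pandas as pd


def split_by_date(df: pd.DataFrame,
                  train_range=("2016-01-01", "2020-11-15"),
                  test_range=("2020-11-16", "2021-12-31")):
    """Chronological train/test split using label slicing on a sorted DatetimeIndex."""
    df = df.sort_index()
    train = df.loc[train_range[0]:train_range[1]]  # end date is inclusive
    test = df.loc[test_range[0]:test_range[1]]
    return train, test


daily = pd.DataFrame(
    {"close": 1.0},
    index=pd.date_range("2016-01-01", "2021-12-31", freq="D"),
)
train, test = split_by_date(daily)
print(train.index.max().date(), test.index.min().date())  # 2020-11-15 2020-11-16
```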

Tables 8 – 10 show the classification reports for the nine ML models. Tables 8 and 9 show the performance metrics for the 1-day time frame and the 15-min time interval strategy, respectively, listing the accuracy, F1 score, ROC AUC, precision and recall (in percent) for each model. Table 10 shows the confusion matrices, listing the number of correct and wrong predictions made by each ML model.

https://doi.org/10.1371/journal.pone.0286362.t008

https://doi.org/10.1371/journal.pone.0286362.t009

https://doi.org/10.1371/journal.pone.0286362.t010

ML models simulation results for 1-day time frame.

Table 8 shows the performance metrics of the nine ML models optimized for the 1-day time frame. As shown in the table, Logistic Regression achieved the highest accuracy of 85.51%, while Naive Bayes was the least accurate model with an accuracy of 73.49%. The other classification metrics in Table 8 show a similar trend, with Logistic Regression performing best, followed by XG Boost and Random Forest.

The confusion matrix in Table 10 shows a similar trend. For Logistic Regression, the True Positives are 132 and the False Positives are 26 for the ‘Move Up’ class. The True Negatives are 110 and the False Negatives are 15 for the ‘Move Down’ class.

Based on this discussion, the Logistic Regression model performs better than the other models for the 1-day time frame, even though its accuracy, the highest among the nine ML models, is only 85.51%.

The predictions made by the Logistic Regression model for the 1-day time frame are illustrated in Fig 3 . The trained model generates more profit than loss. However, it sometimes makes wrong predictions on consecutive trades, which results in larger drawdowns. For example, between days 180 and 230, a total of 6 trades are executed, of which 4 are losses and 2 are profitable.

https://doi.org/10.1371/journal.pone.0286362.g003

ML model simulation results for the proposed 15-min strategy.

In this paper, a novel 15-min time interval strategy is proposed. In this strategy, the first 15-min interval of each trading day is extracted from the 1-day time frame data. This 15-min data is then used to train and validate the ML models to make predictions for the 1-day time frame.

Table 9 shows the performance metrics of the ML models optimized for the 15-min time interval strategy. As shown in the table, Random Forest achieved the highest accuracy of 91.27%, followed by XG Boost and ADA Boost, while KNN was the least accurate model with an accuracy of 80.53%. The other classification metrics in Table 9 show a similar trend, with Random Forest performing best.

The confusion matrix in Table 10 shows a similar trend. For Random Forest, the True Positives are 130 and the False Positives are 15 for the ‘Move Up’ class. The True Negatives are 142 and the False Negatives are 11 for the ‘Move Down’ class. When the results in Tables 8 and 9 are compared, it can be observed that by employing the proposed methodology, the performance of all the ML models has been greatly improved.
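The reported confusion-matrix counts can be used to check the accuracies, since accuracy is (TP + TN) / (TP + FP + FN + TN). For Logistic Regression on the 1-day time frame this gives 242 / 283, about 85.51%, and for Random Forest with the 15-min strategy 272 / 298, about 91.28%, which agrees with the reported 91.27% up to the final digit. The precision, recall and F1 values below treat 'Move Up' as the positive class; that convention is an assumption, and they may not match the (possibly averaged) values in Tables 8 and 9.

```python
def metrics_from_counts(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Classification metrics from confusion-matrix counts (positive = "Move Up")."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


lr_1day = metrics_from_counts(tp=132, fp=26, fn=15, tn=110)    # Logistic Regression, 1-day
rf_15min = metrics_from_counts(tp=130, fp=15, fn=11, tn=142)   # Random Forest, 15-min
print(round(100 * lr_1day["accuracy"], 2))   # 85.51
print(round(100 * rf_15min["accuracy"], 2))  # 91.28
```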

The predictions made by the Random Forest model are illustrated in Fig 4 , which shows the profitable and losing trades. The proposed strategy also reduces the number of consecutive losses: as shown in Fig 4(b) , there are only 2 consecutive losses, which occurred between days 150 and 200. Thus, the proposed methodology has not only improved the performance metrics of the ML models but has also reduced the number of consecutive losses.
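Runs of consecutive losses can be counted from a list of per-trade profits and losses, as in the small helper below. The helper name and the trade list are hypothetical, since the paper reports these streaks only graphically in Figs 3 and 4.

```python
def longest_losing_streak(trade_pnl) -> int:
    """Length of the longest run of consecutive losing trades (pnl < 0)."""
    longest = current = 0
    for pnl in trade_pnl:
        if pnl < 0:
            current += 1
            longest = max(longest, current)
        else:
            current = 0  # a profitable (or flat) trade ends the streak
    return longest


trades = [5.0, -2.0, -3.0, 4.0, -1.0, -1.5, -0.5, 2.0]  # hypothetical per-trade P&L
print(longest_losing_streak(trades))  # 3
```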

https://doi.org/10.1371/journal.pone.0286362.g004

Financial models simulation

In this section, a novel financial simulation model is built that makes investments based on the decisions of the ML models. Each ML model is evaluated using financial parameters to validate its performance and suitability for real-time stock market trading. The performance of each ML model is gauged using cumulative return, annual return, maximum drawdown, Sharpe ratio and the capital in hand at the end of the investment period.

An initial capital of USD 10,000 is invested, and a commission fee of 0.1% (the Alpaca standard commission fee) is charged on each buy or sell trade. Based on the ML model’s prediction, a decision is taken to buy, hold or short a share. A single share is bought or sold on each trade to validate the performance of the ML models.
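A simplified sketch of such a simulation: it starts from USD 10,000, charges 0.1% of the traded value as commission, and holds one share long when the model predicts 'Move Up' and one share short otherwise; holding means keeping the current position, which incurs no commission. The position-flipping rule, marking to market at daily closes, and the helper name `simulate_trading` are assumptions for illustration; the paper does not specify these details.

```python
import numpy as np


def simulate_trading(closes, predictions, initial_capital=10_000.0,
                     commission_rate=0.001, shares=1):
    """Simplified long/short simulation driven by up/down predictions.

    predictions[t] is the model's call at close t for the move from t to t+1
    (1 = "Move Up" -> hold `shares` long, 0 = "Move Down" -> hold `shares` short).
    Returns the portfolio value after each day.
    """
    closes = np.asarray(closes, dtype=float)
    value = initial_capital
    position = 0  # shares held; negative means short
    history = [value]
    for t in range(len(closes) - 1):
        target = shares if predictions[t] == 1 else -shares
        traded = abs(target - position)                  # 0 when simply holding
        value -= traded * closes[t] * commission_rate    # 0.1% of traded value
        position = target
        value += position * (closes[t + 1] - closes[t])  # mark to market
        history.append(value)
    return np.array(history)


values = simulate_trading([100.0, 102.0, 101.0, 105.0], [1, 0, 1])
print(round(values[-1], 3))  # 10006.494
```

The returned value series can be fed directly into the metrics sketched earlier in the Methodology to obtain cumulative return, drawdown and Sharpe ratio for a given model's predictions.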

Figs 5 and 6 show the portfolio performance of the ML models on Tesla Inc. stock for the 1-day time frame and the 15-min time interval strategy, respectively. These figures show how the initial capital is used to buy and sell shares based on the decisions made by the ML models. Each box in the figures represents one full year, from Jan 01 to Dec 31. The portfolio of each ML model is compared with a benchmark that serves as a common reference for all models; this benchmark is obtained using the positive gains of stock prices.

https://doi.org/10.1371/journal.pone.0286362.g005

https://doi.org/10.1371/journal.pone.0286362.g006

Financial simulation results for 1-Day time frame data.

Table 11 shows the simulated outcomes of the ML models forecasting the stock price of Tesla Inc. for the 1-day time frame. In the previous section, Logistic Regression had the highest accuracy among the ML models, so one might expect it to generate the highest revenue. However, the financial simulations show a different result. As Table 11 shows, Random Forest is the best ML model, with an ending capital of USD 28,966, a cumulative return of 189.66%, an annual return of 19.48% and the highest Sharpe ratio of 0.68. Random Forest performed poorly at first, but after the 2019 financial market crisis it outperformed all other ML models. Its maximum drawdown of -37.21% occurred during the 2019 financial crisis, as shown in Fig 7 ; this is the smallest drawdown of any ML model.

https://doi.org/10.1371/journal.pone.0286362.g007

https://doi.org/10.1371/journal.pone.0286362.t011

Random Forest generates more revenue because of the quality of each of its True Positive and True Negative outcomes: even though its accuracy is lower than that of Logistic Regression, each of its correct predictions resulted in more profit. The annual growth of Tesla Inc. from 2020 to 2021 is more than 70%, as shown in Table 4 , so any correct prediction during this period results in greater revenue, and Random Forest outperformed all other models during this time, as shown in Fig 5 . Among the ML models, Naive Bayes shows the worst performance: Fig 5 shows that its portfolio return is negative most of the time during the simulation. It is the only model with a negative cumulative return (-19.16%), and it has the worst Sharpe ratio of 0.1.

Financial simulation results for the proposed 15-min strategy.

Fig 6 shows the portfolio performance of the ML models using the proposed 15-min time interval strategy. Compared with the 1-day time frame, the performance of some models has improved significantly. The models also maintained their stability throughout the 2019 financial crisis, which indicates a significant improvement in their real-time performance.

Table 12 shows the outcome of the financial simulation of the ML models trained and validated on Tesla Inc. stock using the 15-min time interval strategy. As expected, Random Forest is the best-performing model, with an ending capital of USD 25,300, a cumulative return of 153%, an annual return of 16.80% and a Sharpe ratio of 0.79. Its maximum drawdown is -35.09%, as shown in Fig 8 , but it is still able to generate the highest ending capital.

https://doi.org/10.1371/journal.pone.0286362.g008

https://doi.org/10.1371/journal.pone.0286362.t012

Under the proposed strategy, KNN is the worst-performing model. Although Random Forest is the best model in terms of portfolio returns, ANN is the most rewarding model in risk-adjusted terms, with a Sharpe ratio of 0.91 on the proposed 15-min time interval strategy.

In this paper, nine ML models are used to predict the direction of Tesla Inc. stock prices. Their performance is first assessed on a 1-day time frame and then with the proposed 15-min time interval strategy. Following the traditional methodology, Logistic Regression achieved the highest accuracy of 85.51%, while Naive Bayes was the least accurate model with an accuracy of 73.49%. The proposed strategy significantly improved the classification performance of the ML models: Random Forest achieved the highest accuracy of 91.27%, followed by XG Boost and ADA Boost, whereas KNN was the least accurate model with an accuracy of 80.53%.

This paper has also shown that classification metrics alone are not enough to justify the performance of ML models in the stock market, because they do not consider important factors such as the risk, maximum drawdown and returns associated with each ML model. A simulation model of the financial market is used to simulate the trained ML models so that their performance is gauged with actual investment strategies. The results reveal that, although some models perform well in terms of portfolio returns under the traditional methodology, models using the proposed 15-min time interval strategy are significantly better in terms of risk-to-reward ratio and maximum drawdown. Random Forest outperformed the other models in terms of returns under both the 1-day time frame and the 15-min time interval strategy.

Comparing the classification and financial results reveals some other interesting observations. The Logistic Regression model had the highest accuracy on the 1-day time frame data, so it was expected to generate the highest revenue; however, the financial simulations showed otherwise. Similarly, the accuracy of the Random Forest model was much higher with the 15-min time interval strategy than with the 1-day time frame, yet it generated higher revenue on the 1-day time frame. This shows that, although the accuracy of an ML model is an important factor, the quality of each true positive and true negative outcome is equally important when evaluating ML models for stock market prediction.

Overall, the results show that the proposed strategy not only improves the classification metrics but also enhances the returns, risks and risk-to-reward ratio of each ML model. The results also reveal how important it is to consider both classification and financial analyses when evaluating the performance of an ML model on the stock market.

Supporting information

S1 File. GitHub file.

The data and scripts have been uploaded to GitHub and can be accessed at: https://github.com/AzazHassankhan/Machine-Learning-based-Trading-Techniques/ .

https://doi.org/10.1371/journal.pone.0286362.s001

- 1. Ghysels E. and Osborn D. R., “The Econometric Analysis of Seasonal Time Series,” Cambridge University Press, Cambridge, 2001.

- 2. Karpe M., “An overall view of key problems in algorithmic trading and recent progress,” arXiv, Jun. 9, 2020, Available online: 10.48550/arXiv.2006.05515


- 4. Khositkulporn P., “The Factors Affecting Stock Market Volatility and Contagion: Thailand and South-East Asia Evidence,” Ph.D. dissertation, Dept. Business Administration, Victoria University, Melbourne, Australia, Feb. 2013.

- 7. Segal T., “Fundamental Analysis,” Investopedia, Aug. 25, 2022, Available online: www.investopedia.com, Accessed on: 01-04-2022.

- 9. Oğuz R. F., Uygun Y., Aktaş M. S. and Aykurt İ., “On the Use of Technical Analysis Indicators for Stock Market Price Movement Direction Prediction,” in Signal Processing and Communications Applications Conference, Sivas, Turkey, 2019.

- 10. Vijh M., Chandola D., Tikkiwal V. A. and Kumar A., “Stock Closing Price Prediction using Machine Learning Techniques,” International Conference on Computational Intelligence and Data Science, vol. 167, pp. 599-606, 2020.

- 11. Jariwala G., Agarwal H. and Jadhav V., “Sentimental Analysis of News Headlines for Stock Market,” IEEE International Conference for Innovation in Technology, Bengaluru, India, pp. 1-5, 2020.

- 28. Tesla Inc., Available online: www.tesla.com, Accessed on: Feb. 1, 2022.

- 29. Scikit-Learn, Available online: www.scikit-learn.org, Accessed on: Feb. 15, 2022.

- 30. Pandas, Available online: www.pandas.org, Accessed on: Feb. 16, 2022.

- 31. NumPy, Available online: www.numpy.org, Accessed on: Feb. 3, 2022.

- 32. Alpaca, Available online: alpaca.markets, Accessed on: Jan. 1, 2022.

- 33. Plotly, Available online: www.plotly.com, Accessed on: Mar. 1, 2022.

- 34. Frankle J. and Carbin M., “The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks,” International Conference on Learning Representations (ICLR), 2019.

- 35. Ranjan G. S. K., Verma A. K. and Sudha R., “K-Nearest Neighbors and Grid Search CV Based Real Time Fault Monitoring System for Industries,” International Conference for Convergence in Technology, pp. 1-5, 2019.

- 45. Choudhary R. and Gianey H., “Comprehensive Review On Supervised Machine Learning Algorithms,” International Conference on Machine learning and Data Science, pp. 37-43, 2017.

- 48. George A. and Ravindran A., “Distributed Middleware for Edge Vision Systems,” 2019 IEEE 16th International Conference on Smart Cities: Improving Quality of Life Using ICT & IoT and AI (HONET-ICT), Charlotte, NC, USA, pp. 193-194, 2019.

- 52. Csi Market, Available online: www.csimarket.com, Accessed on: Apr. 1, 2022.


Title: Stock Market Prediction via Deep Learning Techniques: A Survey

Abstract: Existing surveys on stock market prediction often focus on traditional machine learning methods instead of deep learning methods. This motivates us to provide a structured and comprehensive overview of the research on stock market prediction. We present four elaborated subtasks of stock market prediction and propose a novel taxonomy to summarize the state-of-the-art models based on deep neural networks. In addition, we also provide detailed statistics on the datasets and evaluation metrics commonly used in the stock market. Finally, we point out several future directions by sharing some new perspectives on stock market prediction.


- Open access

- Published: 28 August 2020

Short-term stock market price trend prediction using a comprehensive deep learning system

- Jingyi Shen and M. Omair Shafiq (ORCID: orcid.org/0000-0002-1859-8296)

Journal of Big Data, volume 7, Article number: 66 (2020)


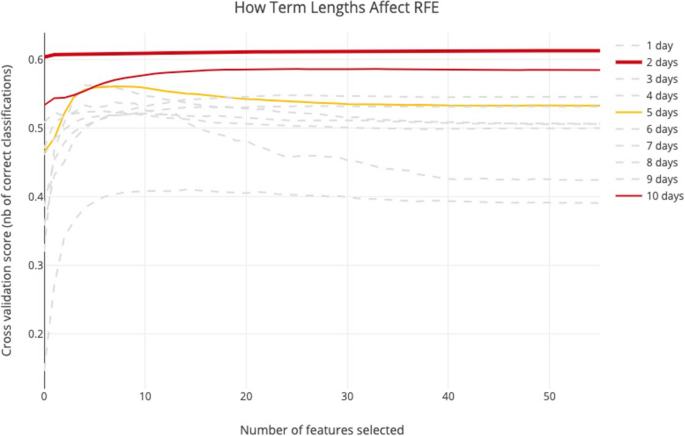

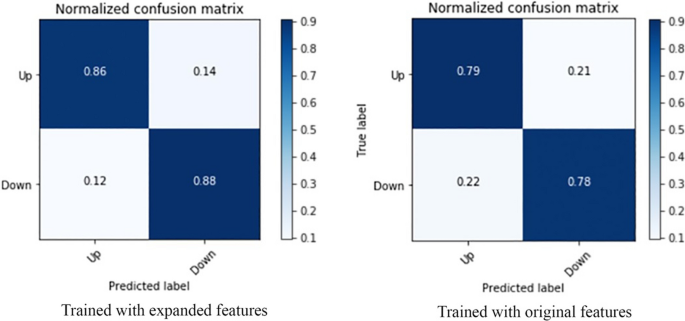

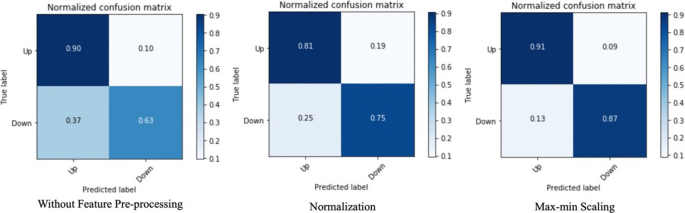

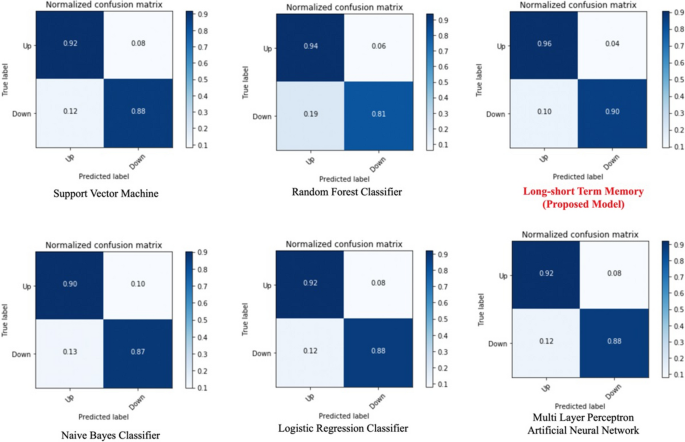

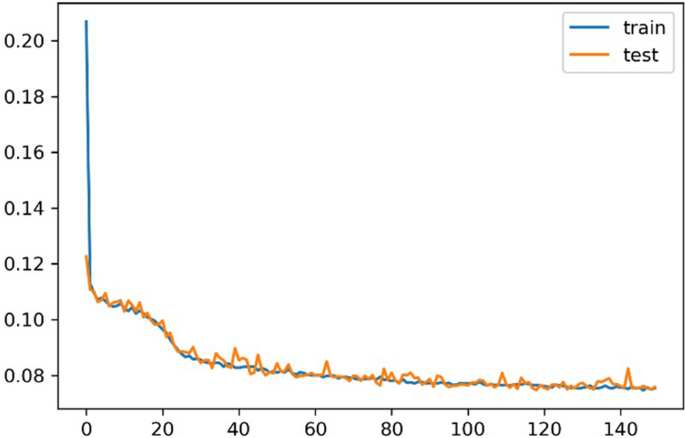

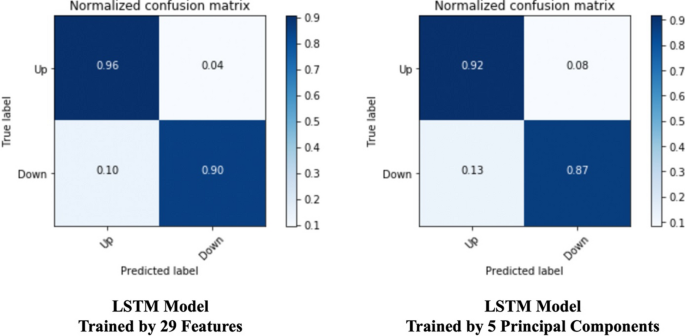

In the era of big data, deep learning for predicting stock market prices and trends has become even more popular than before. We collected 2 years of data from the Chinese stock market and proposed a comprehensive customization of feature engineering and a deep learning-based model for predicting stock market price trends. The proposed solution is comprehensive because it includes pre-processing of the stock market dataset and multiple feature engineering techniques, combined with a customized deep learning-based system for stock market price trend prediction. We conducted comprehensive evaluations of frequently used machine learning models and conclude that our proposed solution outperforms them owing to the comprehensive feature engineering that we built. The system achieves high overall accuracy for stock market trend prediction. With the detailed design and evaluation of prediction term lengths, feature engineering, and data pre-processing methods, this work contributes to the stock analysis research community in both the financial and technical domains.

Introduction

The stock market is one of the major fields to which investors are dedicated, so stock market price trend prediction is always a hot topic for researchers in both the financial and technical domains. In this research, our objective is to build a state-of-the-art model for price trend prediction that focuses on short-term price trends.

As concluded by Fama in [26], financial time series prediction is a notoriously difficult task because of the generally accepted semi-strong form of market efficiency and the high level of noise. Back in 2003, Wang et al. in [44] already applied artificial neural networks to stock market price prediction, focusing on volume as a specific feature of the stock market. One of their key findings was that volume did not improve forecasting performance on the datasets they used, namely the S&P 500 and the DJI. Ince and Trafalis in [15] targeted short-term forecasting and applied a support vector machine (SVM) model to stock price prediction. Their main contribution was a comparison between the multi-layer perceptron (MLP) and SVM, which found that SVM outperformed MLP in most scenarios, although the results were also affected by the trading strategy. Meanwhile, researchers in the financial domain were applying conventional statistical methods and signal processing techniques to analyze stock market data.

Optimization techniques such as principal component analysis (PCA) have also been applied to short-term stock price prediction [22]. Over the years, researchers have not only focused on stock price-related analysis but have also analyzed stock market transactions, such as volume burst risks, which broadens the stock market analysis research domain and indicates that it still has high potential [39]. As artificial intelligence techniques have evolved in recent years, many proposed solutions have combined machine learning and deep learning techniques with previous approaches and proposed new metrics that serve as training features, as in Liu and Wang [23]. Such works belong to the feature engineering domain and inspired the feature extension ideas in our research. Liu et al. in [24] proposed a model based on a convolutional neural network (CNN) and a long short-term memory (LSTM) neural network to analyze different quantitative strategies in stock markets. The CNN serves the stock selection strategy by automatically extracting features from quantitative data, and an LSTM then preserves the time-series features to improve profits.
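The PCA step mentioned above can be illustrated with a small, dependency-free sketch. It is not the procedure of [22]: it assumes features are given as rows of numbers and recovers only the first principal component by power iteration on the sample covariance matrix. In practice a library routine such as scikit-learn's `PCA` class would normally be used instead, and the example data below are hypothetical.

```python
import math


def column_means(rows):
    """Mean of each feature column."""
    n = len(rows)
    return [sum(row[j] for row in rows) / n for j in range(len(rows[0]))]


def covariance_matrix(rows):
    """Sample covariance matrix of equal-length feature rows."""
    n, d = len(rows), len(rows[0])
    means = column_means(rows)
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def first_principal_component(rows, iterations=200):
    """Leading eigenvector of the covariance matrix, found by power iteration."""
    cov = covariance_matrix(rows)
    d = len(cov)
    vec = [1.0] * d  # starting guess; assumed not orthogonal to the answer
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in nxt))
        if norm == 0:  # every feature is constant: no direction of variance
            break
        vec = [x / norm for x in nxt]
    return vec


def project(rows, component):
    """Score of each mean-centered row on the given component."""
    means = column_means(rows)
    return [sum((row[j] - means[j]) * component[j] for j in range(len(component)))
            for row in rows]


# Hypothetical example: two perfectly correlated features collapse onto one axis.
rows = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
pc = first_principal_component(rows)
scores = project(rows, pc)
```

Here the two correlated columns are summarized by a single score per row, which is the dimensionality reduction that PCA contributes before a prediction model is trained.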

A more recent work also proposes a similar hybrid neural network architecture, integrating a convolutional neural network with a bidirectional long short-term memory network to predict the stock market index [4]. As researchers have frequently proposed different neural network architectures, this has raised the question of whether the high cost of training such models is worth the results.

Our work makes three key contributions: (1) a newly extracted and cleansed dataset, (2) comprehensive feature engineering, and (3) a customized long short-term memory (LSTM) based deep learning model.

We built the dataset ourselves, using the open-source data API Tushare [43] as the data source. The novelty of our solution is that it combines feature engineering with a fine-tuned system rather than relying on an LSTM model alone. After reviewing previous works and identifying their gaps, we proposed a solution architecture with a comprehensive feature engineering procedure before training the prediction model. The success of combining the feature extension method with recursive feature elimination opens the door for many other machine learning algorithms to achieve high accuracy scores for short-term price trend prediction, which demonstrates the effectiveness of our proposed feature extension as feature engineering. We then introduced our customized LSTM model, which further improved the prediction scores on all evaluation metrics. The proposed solution outperformed the machine learning and deep learning-based models in similar previous works.
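The combination of feature extension and recursive feature elimination (RFE) can be sketched without external libraries. This is a hypothetical illustration rather than the authors' pipeline: it assumes an ordinary least-squares model as the estimator, ranks standardized features by absolute coefficient, and removes the weakest one per round. scikit-learn's `RFE` class implements the same loop for general estimators. The feature names `a`, `b`, and `c` are placeholders.

```python
def standardize(column):
    """Rescale a column to zero mean and unit (population) variance."""
    n = len(column)
    mean = sum(column) / n
    std = (sum((x - mean) ** 2 for x in column) / n) ** 0.5
    return [(x - mean) / std if std > 0 else 0.0 for x in column]


def least_squares(columns, y):
    """Solve the normal equations (X^T X) b = X^T y by Gauss-Jordan elimination.

    Assumes the columns are linearly independent, so X^T X is invertible.
    """
    k = len(columns)
    aug = [[sum(a * b for a, b in zip(columns[i], columns[j])) for j in range(k)]
           + [sum(a * b for a, b in zip(columns[i], y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def recursive_feature_elimination(features, target, n_keep):
    """Refit the linear model and drop the feature with the smallest
    |coefficient| until n_keep remain. `features` maps name -> column."""
    names = list(features)
    cols = {name: standardize(col) for name, col in features.items()}
    mean_t = sum(target) / len(target)
    centered_t = [v - mean_t for v in target]  # centering replaces an intercept
    while len(names) > n_keep:
        coefs = least_squares([cols[name] for name in names], centered_t)
        weakest = min(range(len(names)), key=lambda i: abs(coefs[i]))
        names.pop(weakest)
    return names


# Hypothetical features: the target depends on "a" and "b" but not on "c".
features = {
    "a": [1, 2, 3, 4, 5, 6],
    "b": [2, 1, 4, 3, 6, 5],
    "c": [0, 0, 1, 1, 0, 1],
}
target = [3 * a + b for a, b in zip(features["a"], features["b"])]
```

Because the target is built from `a` and `b` only, the first elimination round is expected to drop `c`, and a second round keeps the stronger feature `a`.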

The remainder of this paper is organized as follows. The “Survey of related works” section describes the survey of related works. The “The dataset” section provides details on the data that we extracted from public data sources and the dataset we prepared. The “Methods” section presents the research problems, methods, and design of the proposed solution, including the detailed technical design with algorithms and how the model was implemented. The “Results” section presents comprehensive results and an evaluation of our proposed model, comparing it with the models used in most of the related works. The “Discussion” section discusses and compares the results. The “Conclusion” section presents the conclusion. This research paper builds on Shen [36].

Survey of related works

In this section, we discuss related works, which we reviewed in two domains: technical and financial.

Kim and Han in [19] built a model combining artificial neural networks (ANN) and genetic algorithms (GAs) with discretization of features for predicting a stock price index. The data used in their study include technical indicators as well as the direction of change in the daily Korea stock price index (KOSPI). They used data covering 2928 trading days, from January 1989 to December 1998, and reported their selected features and formulas. They also applied optimization of feature discretization, a technique similar to dimensionality reduction. The strength of their work is that they introduced GA to optimize the ANN. Their work also has limitations. First, the numbers of input features and processing elements in the hidden layer are fixed at 12 and are not adjustable. Second, in the learning process of the ANN, the authors focused on only two factors in optimization. Nevertheless, they still believed that GA has great potential for feature discretization optimization. Our initial feature pool refers to their selected features. Qiu and Song in [34] also presented a solution to predict the direction of the Japanese stock market based on an optimized artificial neural network model. In this work, the authors combine genetic algorithms with artificial neural network based models and call the result a hybrid GA-ANN model.

Piramuthu in [33] conducted a thorough evaluation of different feature selection methods for data mining applications. He used four datasets (credit approval data, loan default data, web traffic data, and the Tam and Kiang data) and compared how different feature selection methods optimized decision tree performance. The probabilistic distance measures he compared were the Bhattacharyya measure, the Matusita measure, the divergence measure, the Mahalanobis distance measure, and the Patrick-Fisher measure. The inter-class distance measures were the Minkowski distance measure, the city block distance measure, the Euclidean distance measure, the Chebychev distance measure, and the nonlinear (Parzen and hyper-spherical kernel) distance measure. The strength of this paper is that the author evaluated both probabilistic distance-based and several inter-class feature selection methods. In addition, the evaluation was performed on different datasets, which reinforces the paper's findings. However, the only evaluation algorithm was a decision tree, so we cannot conclude whether the feature selection methods would perform the same on a larger dataset or with a more complex model.
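To make the compared measures concrete, the sketch below implements the Minkowski family (city block, Euclidean, Chebychev) and the Bhattacharyya distance between two univariate Gaussian class densities, plus a helper that scores a single feature by how well it separates two classes. The Gaussian assumption and the `class_separability` helper are illustrative choices, not Piramuthu's experimental setup.

```python
import math
from statistics import mean, pvariance


def minkowski(u, v, p):
    """Minkowski distance of order p (p=1: city block, p=2: Euclidean)."""
    return sum(abs(a - b) ** p for a, b in zip(u, v)) ** (1.0 / p)


def city_block(u, v):
    return sum(abs(a - b) for a, b in zip(u, v))


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def chebyshev(u, v):
    """Largest coordinate-wise difference (the limit of Minkowski as p grows)."""
    return max(abs(a - b) for a, b in zip(u, v))


def bhattacharyya_gaussian(mu1, var1, mu2, var2):
    """Bhattacharyya distance between two univariate normal densities."""
    avg = (var1 + var2) / 2
    return (mu1 - mu2) ** 2 / (8 * avg) + 0.5 * math.log(avg / math.sqrt(var1 * var2))


def class_separability(values, labels):
    """Score one feature by the Bhattacharyya distance between its values in
    class 0 and class 1, assuming each class is roughly Gaussian."""
    g0 = [v for v, lab in zip(values, labels) if lab == 0]
    g1 = [v for v, lab in zip(values, labels) if lab == 1]
    return bhattacharyya_gaussian(mean(g0), pvariance(g0), mean(g1), pvariance(g1))
```

A filter-style selector would compute such a score for every candidate feature and keep the highest-scoring ones before training, which is the role these measures play in the comparison.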

Hassan and Nath in [9] applied the Hidden Markov Model (HMM) to stock market forecasting on the stock prices of four different airlines. They reduced the states of the model to four: the opening price, the closing price, the highest price, and the lowest price. The strong point of this paper is that the approach does not need expert knowledge to build a prediction model. However, because this work is limited to the airline industry and was evaluated on a very small dataset, it may not yield a prediction model that generalizes. It is one of the approaches from the related stock market prediction works that could be used for comparison. The authors selected a maximum of 2 years as the date range of the training and testing datasets, which gave us a date range reference for our evaluation.
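As a reminder of how an HMM scores a price sequence, the forward algorithm below computes the likelihood of an observation sequence. It is a sketch under simplifying assumptions: observations are discretized symbols (here 0 for a down day and 1 for an up day) rather than the four raw price series used in [9], and all model probabilities are hypothetical.

```python
def forward_likelihood(observations, start, trans, emit):
    """Probability of an observation sequence under a discrete HMM.

    start[i]    : probability of starting in hidden state i
    trans[i][j] : probability of moving from state i to state j
    emit[i][o]  : probability that state i emits observation symbol o
    """
    n = len(start)
    # alpha[i] = P(observations seen so far, current hidden state = i)
    alpha = [start[i] * emit[i][observations[0]] for i in range(n)]
    for obs in observations[1:]:
        alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][obs]
                 for j in range(n)]
    return sum(alpha)


# Hypothetical two-state model; symbol 0 = "down day", 1 = "up day".
start = [0.6, 0.4]
trans = [[0.7, 0.3], [0.4, 0.6]]
emit = [[0.9, 0.1], [0.2, 0.8]]
likelihood = forward_likelihood([1, 1, 0], start, trans, emit)
```

The forward recursion costs O(T·N²) for T observations and N states, instead of enumerating all N^T hidden state paths.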

Lei in [21] used a Wavelet Neural Network (WNN) to predict stock price trends. The author also applied Rough Set (RS) for attribute reduction as an optimization: Rough Set was used to reduce the dimensions of the stock price trend features and to determine the structure of the Wavelet Neural Network. The dataset of this work consists of five well-known stock market indices: (1) SSE Composite Index (China), (2) CSI 300 Index (China), (3) All Ordinaries Index (Australia), (4) Nikkei 225 Index (Japan), and (5) Dow Jones Index (USA). The model was evaluated on different stock market indices, and the results were convincing and general. Using Rough Set to optimize the feature dimension before processing reduces the computational complexity. However, the author only stressed parameter adjustment in the discussion and did not specify the weaknesses of the model itself. We also found that the evaluations were performed on indices; the same model may not perform as well if applied to a specific stock.

Lee in [20] used a support vector machine (SVM) along with a hybrid feature selection method to predict stock trends. The dataset in this research is a subset of the NASDAQ Index data in the Taiwan Economic Journal Database (TEJD) in 2008. Feature selection used a hybrid method in which supported sequential forward search (SSFS) played the role of the wrapper. An advantage of this work is its detailed parameter adjustment procedure, with performance reported under different parameter values. The clear structure of the feature selection model is also instructive for the early stage of model construction. One limitation is that the performance of SVM was compared only with a back-propagation neural network (BPNN) and not with other machine learning algorithms.
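A wrapper method builds its feature search around a model's validation score. The sketch below shows plain greedy sequential forward selection, a simplified stand-in rather than the SSFS procedure of [20]. The `toy_score` function and its weights are hypothetical; in a real wrapper the scoring function would train and validate a model such as an SVM on each candidate subset.

```python
def sequential_forward_selection(candidates, score, max_features):
    """Greedy wrapper selection: start empty, repeatedly add the candidate that
    most improves score(subset), and stop when no candidate helps."""
    selected, best_score = [], float("-inf")
    remaining = list(candidates)
    while remaining and len(selected) < max_features:
        trial = {f: score(selected + [f]) for f in remaining}
        best = max(remaining, key=trial.get)
        if trial[best] <= best_score:
            break  # no remaining feature improves the subset
        selected.append(best)
        remaining.remove(best)
        best_score = trial[best]
    return selected, best_score


# Hypothetical stand-in for "validation accuracy of a model trained on subset".
WEIGHTS = {"rsi": 0.30, "macd": 0.25, "ma5": 0.28, "volume": 0.05}


def toy_score(subset):
    total = sum(WEIGHTS[f] for f in subset)
    if "macd" in subset and "ma5" in subset:
        total -= 0.30  # the two trend indicators are assumed to be redundant
    return total


selected, best = sequential_forward_selection(WEIGHTS, toy_score, max_features=4)
```

Because the score is re-evaluated on whole subsets, the search skips `macd` once the redundant `ma5` is already selected, which a univariate filter ranking would not detect.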

Sirignano and Cont in [40] used a deep learning solution trained on a universal feature set of financial markets. The dataset included buy and sell records of all transactions, as well as order cancellations, for approximately 1000 NASDAQ stocks, taken from the order book of the stock exchange. The network consists of three layers with LSTM units followed by a final feed-forward layer with rectified linear units (ReLUs), and it is optimized with the stochastic gradient descent (SGD) algorithm. Their universal model was able to generalize to stocks other than those in the training data. Although they mentioned the advantages of a universal model, the training cost was still high. Moreover, because deep learning models are not explicitly programmed, it is unclear whether useless features contaminate the data fed into the model. The authors found that it would have been better to perform feature selection before training the model, as it is an effective way to reduce computational complexity.

Ni et al. in [30] predicted stock price trends using SVM with fractal feature selection for optimization. The dataset they used is the Shanghai Stock Exchange Composite Index (SSECI), with 19 technical indicators as features. Before processing the data, they optimized the input by performing feature selection. To find the best parameter combination, they used a grid search with k-fold cross-validation when testing hyper-parameter combinations. Their evaluation of different feature selection methods is also comprehensive. As the authors mentioned in their conclusion, they considered only technical indicators, not macro and micro factors from the financial domain. The source of the datasets they used is similar to ours, which makes their evaluation results useful to our research.
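Grid search and k-fold cross-validation are distinct ideas that are usually combined: every hyper-parameter combination in the grid is scored by its accuracy over k held-out folds. The sketch below assumes a hypothetical one-feature k-nearest-neighbour classifier to stay dependency-free, whereas [30] tuned SVM parameters. Contiguous folds let later data inform predictions on earlier data, so for time series a forward-only split (for example scikit-learn's `TimeSeriesSplit`) is often preferred.

```python
import itertools


def k_fold_indices(n, k):
    """Yield (train, test) index lists for k contiguous, non-overlapping folds."""
    start = 0
    for fold in range(k):
        size = n // k + (1 if fold < n % k else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


def expand_grid(grid):
    """Every combination of the listed hyper-parameter values, as dicts."""
    keys = sorted(grid)
    return [dict(zip(keys, values))
            for values in itertools.product(*(grid[key] for key in keys))]


def grid_search_cv(X, y, grid, fit_predict, k=5):
    """Return the parameter combination with the best k-fold CV accuracy."""
    best_params, best_acc = None, -1.0
    for params in expand_grid(grid):
        correct = 0
        for train, test in k_fold_indices(len(X), k):
            preds = fit_predict([X[i] for i in train], [y[i] for i in train],
                                [X[i] for i in test], params)
            correct += sum(p == y[i] for p, i in zip(preds, test))
        acc = correct / len(X)
        if acc > best_acc:
            best_params, best_acc = params, acc
    return best_params, best_acc


def knn_fit_predict(X_train, y_train, X_test, params):
    """Hypothetical 1-D k-nearest-neighbour classifier with 0/1 labels."""
    k = params["n_neighbors"]
    preds = []
    for x in X_test:
        nearest = sorted(range(len(X_train)), key=lambda i: abs(X_train[i] - x))[:k]
        votes = sum(y_train[i] for i in nearest)
        preds.append(1 if 2 * votes > k else 0)
    return preds


X = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
best_params, best_acc = grid_search_cv(X, y, {"n_neighbors": [1, 3, 5]}, knn_fit_predict, k=5)
```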

McNally et al. in [27] used RNN and LSTM models to predict the price of Bitcoin, with the Boruta algorithm, which works similarly to a random forest classifier, for the feature engineering part. Besides feature selection, they also used Bayesian optimization to select LSTM parameters. The Bitcoin dataset ranges from 19 August 2013 to 19 July 2016. They used multiple optimization methods to improve the performance of the deep learning methods. The primary problem of their work is overfitting. The research problem of predicting Bitcoin price trends has some similarities with stock market price prediction. Hidden features and noise embedded in the price data are threats to this work. The authors treated the research question as a time sequence problem. The best part of this paper is the feature engineering and optimization, and we could replicate the methods they used in our data pre-processing.

Weng et al. in [45] focused on short-term stock price prediction using ensemble methods of four well-known machine learning models. The research used five datasets, obtained from three open-source APIs and an R package named TTR. The machine learning models they used are (1) a neural network regression ensemble (NNRE), (2) a Random Forest with unpruned regression trees as base learners (RFR), (3) AdaBoost with unpruned regression trees as base learners (BRT), and (4) a support vector regression ensemble (SVRE). The work is a thorough study of ensemble methods for short-term stock price prediction. Using background knowledge, the authors selected eight technical indicators and then performed a careful evaluation on five datasets. The primary contribution of this paper is a platform for investors built in R, which does not require users to input their own data but instead calls APIs to fetch data directly from online sources. From the research perspective, they evaluated price predictions only from 1 to 10 days ahead and did not evaluate horizons longer than two trading weeks or shorter than 1 day. The primary limitation of their research is that they analyzed only 20 U.S.-based stocks, so the model might not generalize to other stock markets, or it might need further validation to check whether it suffered from overfitting.

Kara et al. in [17] also used ANN and SVM to predict the movement of a stock price index. Their dataset covers the period from January 2, 1997, to December 31, 2007, on the Istanbul Stock Exchange. The primary strength of this work is its detailed record of parameter adjustment procedures. Its weaknesses are that neither the technical indicators nor the model structure are novel, and the authors did not explain how their model performed better than models in previous works, so further validation on other datasets would help. They explained how ANN and SVM work with stock market features and recorded the parameter adjustment, so the implementation part of our research could benefit from this previous work.

Jeon et al. in [16] performed research on a millisecond interval-based big dataset, using pattern graph tracking to complete stock price prediction tasks. The dataset is a millisecond interval-based big dataset of historical stock data from KOSCOM, from August 2014 to October 2014, with a capacity of 10G–15G. The authors applied Euclidean distance and Dynamic Time Warping (DTW) for pattern recognition. For feature selection, they used stepwise regression. The authors completed the prediction task using an ANN, with Hadoop and RHive for big data processing. Their “Results” section is based on the results produced by a combination of SAX and Jaro–Winkler distance. Before processing the data, they generated aggregated data at 5-min intervals from the discrete data. The primary strength of this work is the explicit structure of the whole implementation procedure. One weakness is that they used a relatively old model; another is that the overall time span of the training dataset is extremely short. Moreover, millisecond interval-based data are difficult to access in practice, so the model is less practical than one based on daily data.
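The difference between the two pattern-matching distances mentioned above shows up on a shifted pattern: Euclidean distance compares points at the same time index, while Dynamic Time Warping allows elements to be repeated so that shifted shapes can align. This is a textbook DTW sketch, not the implementation of [16], and the two price patterns are hypothetical.

```python
import math


def dtw_distance(s, t):
    """Dynamic Time Warping distance with absolute difference as local cost."""
    n, m = len(s), len(t)
    inf = float("inf")
    # cost[i][j] = cheapest alignment of s[:i] with t[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(s[i - 1] - t[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # t[j-1] reused
                                     cost[i][j - 1],      # s[i-1] reused
                                     cost[i - 1][j - 1])  # both advance
    return cost[n][m]


def euclidean(s, t):
    """Point-by-point distance; assumes equal lengths and no time shifting."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(s, t)))


# The same hypothetical price pattern, shifted by one step.
pattern = [0, 1, 2, 1, 0]
shifted = [0, 0, 1, 2, 1]
```

For these two sequences DTW returns 1.0 while the Euclidean distance is 2.0, because warping absorbs the one-step shift. DTW also accepts sequences of different lengths, at the cost of O(n·m) time.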

Huang et al. in [12] applied a fuzzy-GA model to the stock selection task. They used the 200 largest stocks by market capitalization listed on the Taiwan Stock Exchange as the investment universe. The yearly financial statement data and stock returns were taken from the Taiwan Economic Journal (TEJ) database at www.tej.com.tw/ for the period from 1995 to 2009. They constructed fuzzy membership functions with model parameters optimized by GA and extracted features for optimizing stock scoring. The authors proposed an optimized model for the selection and scoring of stocks. Unlike prediction models, their work focused more on stock ranking, selection, and performance evaluation, and its structure is more practical for investors. However, in the model validation part, they compared the model only with benchmark statistics rather than with existing algorithms, which makes it difficult to determine whether GA would outperform other algorithms.

Fischer and Krauss in [5] applied long short-term memory (LSTM) to financial market prediction. The dataset they used consists of S&P 500 index constituents from Thomson Reuters. They obtained all month-end constituent lists for the S&P 500 from Dec 1989 to Sep 2015, then consolidated the lists into a binary matrix to eliminate survivor bias. The authors also used RMSprop, a mini-batch version of rprop, as the optimizer. The primary strength of this work is that the authors used the latest deep learning technique to perform predictions. However, they relied on the LSTM technique alone, with little background knowledge from the financial domain. Although the LSTM outperformed the standard DNN and logistic regression algorithms, the authors did not discuss the effort required to train an LSTM with long-time dependencies.
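The consolidation of month-end constituent lists into a binary matrix can be sketched as follows, assuming the lists are available as a mapping from month to tickers (the months and tickers are hypothetical). A stock that later leaves the index, such as `BBB` here, stays marked as a member for the months in which it belonged, so a backtest at that date still includes it; this is what removes survivor bias.

```python
def membership_matrix(constituents_by_month):
    """Consolidate month-end constituent lists into a binary matrix.

    Returns (months, tickers, matrix), where matrix[m][k] is 1 if tickers[k]
    was an index member at month-end months[m], else 0.
    """
    months = sorted(constituents_by_month)
    tickers = sorted(set().union(*constituents_by_month.values()))
    matrix = []
    for month in months:
        members = set(constituents_by_month[month])
        matrix.append([1 if ticker in members else 0 for ticker in tickers])
    return months, tickers, matrix


# Hypothetical lists: BBB leaves the index and CCC joins it.
constituents = {
    "2015-08": ["AAA", "BBB"],
    "2015-09": ["AAA", "CCC"],
}
months, tickers, matrix = membership_matrix(constituents)
```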

Tsai and Hsiao in [42] proposed a solution that combines different feature selection methods for stock prediction. They used the Taiwan Economic Journal (TEJ) database as the data source, with data from 2000 to 2007. Their prediction model combines a sliding window method with multilayer perceptron (MLP) based artificial neural networks trained with back propagation. They also applied principal component analysis (PCA) for dimensionality reduction, and genetic algorithms (GA) and classification and regression trees (CART) to select important features. They did not rely on technical indices alone; they also included fundamental and macroeconomic indices in their analysis. The authors also reported a comparison of feature selection methods. Validation was performed by combining the model performance statistics with statistical analysis.
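The sliding window method turns a single price series into supervised training pairs for a model such as an MLP. In this minimal sketch the window length and forecast horizon are hypothetical parameters; the values used in [42] are not given in this summary.

```python
def sliding_windows(series, window, horizon=1):
    """Split a series into (inputs, target) pairs.

    Each input is `window` consecutive values; its target is the value
    `horizon` steps after the last value in the window.
    """
    inputs, targets = [], []
    for start in range(len(series) - window - horizon + 1):
        inputs.append(series[start:start + window])
        targets.append(series[start + window + horizon - 1])
    return inputs, targets


closes = [10.0, 10.5, 10.2, 10.8, 11.0, 10.9]  # hypothetical closing prices
X, y = sliding_windows(closes, window=3)
```

Each row of `X` becomes one MLP input vector and the matching entry of `y` its target; sliding the window by one step reuses overlapping history.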

Pimenta et al. in [32] developed an automated investing method using multi-objective genetic programming and applied it to the stock market. The dataset was obtained from the Brazilian stock exchange (BOVESPA), and the primary techniques were a combination of multi-objective optimization, genetic programming, and technical trading rules. For optimization, they used genetic programming (GP) to optimize decision rules. The novelty of this paper lies in the evaluation: when performing validation, they included a historical period that was a critical moment for Brazilian politics and economics. This approach reinforced the generalization strength of their proposed model. When selecting the sub-dataset for evaluation, they also set criteria to ensure greater asset liquidity. However, the baseline of the comparison was too basic, and the authors did not compare their model with other existing models.

Huang and Tsai in [13] combined filter-based feature selection with a hybrid self-organizing feature map (SOFM) and support vector regression (SVR) model to forecast the trend of Taiwan index futures (FITX). They divided the training samples into clusters to marginally improve training efficiency. The authors proposed a comprehensive model that combines two novel machine learning techniques in stock market analysis. In addition, feature selection was applied before data processing to improve prediction accuracy and reduce the computational complexity of processing daily stock index data. Even with optimized feature selection and the sample data split into small clusters, training this model on daily stock index data was already demanding. It would be difficult for this model to predict trading activities at shorter time intervals, since the data volume would increase drastically. Moreover, the evaluation is not strong enough: they used a single SVR model as the baseline and did not compare performance with other previous works, which makes it difficult for future researchers to identify why the SOFM-SVR model outperforms other algorithms.

Thakur and Kumar in [41] also developed a hybrid financial trading support system using multi-category classifiers and random forest (RAF). They conducted their research on stock indices from NASDAQ, DOW JONES, S&P 500, NIFTY 50, and NIFTY BANK. The authors proposed a hybrid model that combines random forest (RF) algorithms with a weighted multicategory generalized eigenvalue support vector machine (WMGEPSVM) to generate “Buy/Hold/Sell” signals. Before processing the data, they used Random Forest (RF) for feature pruning. The authors proposed a practical model designed for real-life investment activities, which generates three basic signals for investors to refer to. They also performed a thorough comparison of related algorithms. However, they did not discuss the time and computational complexity of their work. A more serious issue is the lack of financial domain knowledge: investors regard index data as one of many attributes, but cannot directly use a signal derived from an index to trade a specific stock.

Hsu in [11] combined feature selection with a back propagation neural network (BNN) and genetic programming to predict stock/futures prices. The dataset in this research was obtained from the Taiwan Stock Exchange Corporation (TWSE). The work describes the background knowledge in detail. However, it lacks a description of the dataset, and it combines models proposed in other previous works. Although we did not see novelty in this work, we can still conclude that the genetic programming (GP) algorithm is accepted in the stock market research domain. To strengthen validation, it would be good to consider adding GP models to the evaluation if the model predicts a specific price.

Hafezi et al. in [7] built a bat-neural network multi-agent system (BN-NMAS) to predict stock prices. The dataset was obtained from the Deutsche Bundesbank. They also applied the Bat algorithm (BA) to optimize neural network weights. The authors illustrated the overall structure and logic of their system design in clear flowcharts. Because very few previous works have used DAX data, it is difficult to tell whether their model would still generalize to other datasets. The system design and feature selection logic are interesting and worth referring to. Their findings on optimization algorithms are also valuable for the stock market price prediction research domain. It is worth trying the Bat algorithm (BA) when constructing neural network models.

Long et al. in [25] applied a deep learning approach to predict stock price movement. The dataset they used is the Chinese stock market index CSI 300. To predict stock price movement, they constructed a multi-filter neural network (MFNN) with stochastic gradient descent (SGD) and a back propagation optimizer for learning the NN parameters. The strength of this paper is that the authors built a novel hybrid model from different kinds of neural networks, which provides inspiration for constructing hybrid neural network structures.

Atsalakis and Valavanis in [1] proposed a neuro-fuzzy system composed of a controller named the Adaptive Neuro Fuzzy Inference System (ANFIS) to achieve short-term stock price trend prediction. The noticeable strength of this work is the evaluation: they not only compared their proposed system with popular data models, but also compared it with investment strategies. The weakness we found in their solution is that the architecture lacks an optimization component, which might limit model performance. Since our proposed solution also focuses on short-term stock price trend prediction, this work is instructive for our system design. Moreover, by comparing with popular trading strategies used by investors, their work inspired us to compare the strategies used by investors with the techniques used by researchers.

Nekoeiqachkanloo et al. in [29] proposed a system with two different approaches to stock investment. The strengths of their proposed solution are clear. First, it is a comprehensive system that consists of data pre-processing and two different algorithms to suggest the best investment portions. Second, the system also embeds a forecasting component that retains the features of the time series. Last but not least, their input features are a mix of fundamental features and technical indices, aiming to fill the gap between the financial and technical domains. However, their evaluation has a weakness. Instead of evaluating the proposed system on a large dataset, they chose 25 well-known stocks, and it is quite possible that well-known stocks share some common hidden features.

In another recent related work, Idrees et al. [14] published a time series-based approach to predicting stock market volatility using ARIMA. ARIMA is not a new approach in the time series prediction research domain; their work focuses more on feature engineering. Before feeding the features into ARIMA models, they designed three feature engineering steps: analyzing the time series, identifying whether it is stationary, and performing estimation by plotting ACF and PACF charts to look for parameters. The only weakness of their solution is that the authors did not customize the existing ARIMA model, which might limit further improvement of system performance.
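The stationarity check and ACF inspection described for [14] can be sketched as follows. This is an illustration under assumptions, not the authors' code: it computes the sample autocorrelation function and applies differencing (the "I" in ARIMA). A slowly decaying ACF is a common informal sign of non-stationarity; in practice formal tests (for example augmented Dickey-Fuller) and library functions such as statsmodels' `acf`, `pacf`, and `ARIMA` would normally be used.

```python
def difference(series, lag=1):
    """Lag differences x[t] - x[t - lag]; lag 1 is ARIMA's d = 1 step."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


def acf(series, max_lag):
    """Sample autocorrelations r_0..r_max_lag (r_0 is always 1)."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    if denom == 0:
        raise ValueError("autocorrelation is undefined for a constant series")
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / denom
            for k in range(max_lag + 1)]


trend = [float(t) for t in range(1, 11)]  # hypothetical trending series
r = acf(trend, 3)
```

The trending series has a large lag-1 autocorrelation (0.7), which is the kind of slow decay that suggests differencing before fitting an ARMA component.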

One of the main weaknesses of the related works is their limited data pre-processing. Technical works mostly focus on building prediction models. When selecting features, they list all the features mentioned in previous works, run them through a feature selection algorithm, and then select the best-voted features. Related works in the investment domain have shown more interest in behavior analysis, such as how herding behavior affects stock performance, or how the percentage of a firm’s common stock held by inside directors affects the performance of that stock. Recognizing these behaviors often requires pre-processing of standard technical indices together with investment experience.

In the related works, a thorough statistical analysis is often performed on a particular dataset to derive new features, rather than performing feature selection. Some data, such as the percentage fluctuation of a certain index, have been shown to be effective indicators of stock performance. We believe that extracting new features from the data and then combining them with existing common technical indices will significantly benefit existing, well-tested prediction models.
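Deriving a new feature and placing it next to a standard technical index can be as simple as the sketch below. The percentage-change feature, the moving-average window, and the field names are hypothetical examples, not the features this paper ultimately uses.

```python
def pct_change(series, periods=1):
    """Percentage change versus `periods` steps earlier (None where undefined)."""
    return [None] * periods + [
        100.0 * (series[t] - series[t - periods]) / series[t - periods]
        for t in range(periods, len(series))
    ]


def moving_average(series, window):
    """Simple moving average (None until a full window is available)."""
    return [None] * (window - 1) + [
        sum(series[t - window + 1:t + 1]) / window
        for t in range(window - 1, len(series))
    ]


def extend_features(closes, ma_window=5):
    """Hypothetical feature rows: close, its moving average, and % change."""
    ma = moving_average(closes, ma_window)
    pct = pct_change(closes)
    return [{"close": c, "ma": m, "pct_change": p}
            for c, m, p in zip(closes, ma, pct)]
```

Rows with `None` would typically be dropped before training, since the first few days lack enough history for the derived features.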

The dataset

This section details the data that was extracted from public data sources and the final dataset that was prepared. Stock market-related data are diverse, so we first compared the related works from our survey of financial research on stock market data analysis to determine the data collection directions. After collecting the data, we defined a data structure for the dataset. Below, we describe the dataset in detail, including the data structure and the data tables in each category of data, with segment definitions.

Description of our dataset

This dataset consists of 3558 stocks from the Chinese stock market. Besides the daily price data and daily fundamental data for each stock ID, we also collected the trading suspension and resumption history, the top 10 shareholders, etc. We chose 2 years as the time span of this dataset for two reasons: (1) most investors perform stock market price trend analysis using data from the latest 2 years, and (2) using more recent data should benefit the analysis results. We collected data through the open-source API Tushare [43], and we also used web scraping to collect data from Sina Finance web pages and the SWS Research website.

Data structure

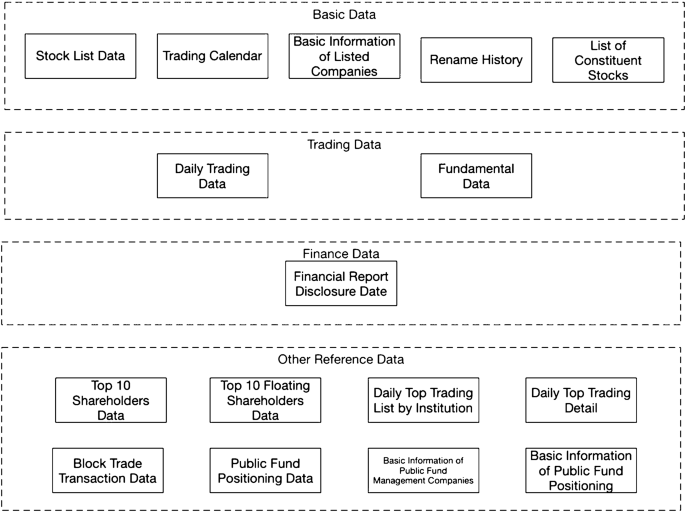

Figure 1 illustrates all the data tables in the dataset. We collected four categories of data: (1) basic data, (2) trading data, (3) finance data, and (4) other reference data. All the data tables can be linked to each other by a common field called “Stock ID”, a unique stock identifier registered in the Chinese stock market. Table 1 shows an overview of the dataset.
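Because every table shares the “Stock ID” field, combining categories reduces to a key join. The sketch below assumes rows are plain dictionaries; apart from “Stock ID”, the field names, IDs, and values are made up for illustration and do not reflect the actual schema in Table 1.

```python
from typing import Dict, List

# Made-up rows for illustration; only the "Stock ID" key comes from the text.
basic = [
    {"Stock ID": "100001", "name": "Stock A", "industry": "Banking"},
    {"Stock ID": "100002", "name": "Stock B", "industry": "Energy"},
]
trading = [
    {"Stock ID": "100001", "date": "2019-01-02", "close": 10.0},
    {"Stock ID": "100001", "date": "2019-01-03", "close": 10.5},
    {"Stock ID": "100002", "date": "2019-01-02", "close": 20.0},
    {"Stock ID": "100003", "date": "2019-01-02", "close": 5.0},  # no basic row
]


def join_on_stock_id(left: List[Dict], right: List[Dict]) -> List[Dict]:
    """Inner-join two tables on "Stock ID"; assumes one row per ID in `left`."""
    index: Dict[str, Dict] = {row["Stock ID"]: row for row in left}
    joined = []
    for row in right:
        match = index.get(row["Stock ID"])
        if match is not None:  # rows without a matching ID are dropped
            joined.append({**match, **row})
    return joined


rows = join_on_stock_id(basic, trading)
```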

Data structure for the extracted dataset

Table 1 lists the fields of each data table as well as the category each table belongs to.

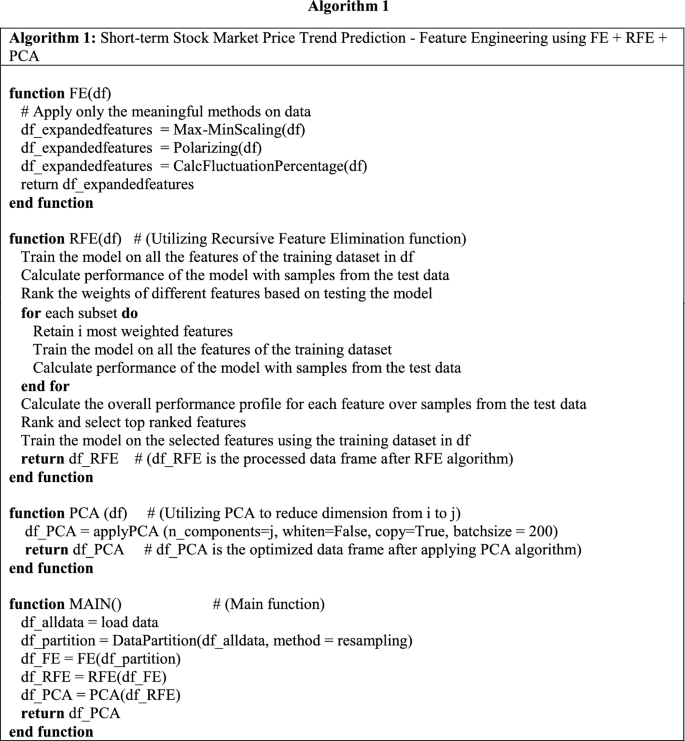

In this section, we present the proposed methods and the design of the proposed solution. We also introduce the architecture design as well as the algorithmic and implementation details.

Problem statement

We analyzed the best possible approach for predicting short-term price trends from three aspects: feature engineering, financial domain knowledge, and prediction algorithms. We then addressed one research question for each aspect, respectively: How can feature engineering benefit model prediction accuracy? How do findings from the financial domain benefit prediction model design? And what is the best algorithm for predicting short-term price trends?

The first research question is about feature engineering. We would like to know how the feature selection method benefits the performance of prediction models. From the abundant previous work, we can conclude that stock price data are embedded with a high level of noise and that features are correlated with each other, which makes price prediction notoriously difficult. This is also the primary reason most previous works introduced feature engineering as an optimization module.

The second research question concerns the effectiveness of the findings we extracted from the financial domain. Unlike previous works, besides the common evaluation of data models, such as training costs and scores, our evaluation emphasizes the effectiveness of the newly added features extracted from the financial domain. We introduce some features from the financial domain; however, previous works provide only specific findings, and the related raw data must be processed into usable features. After extracting these features, we combine them with other common technical indices and select, by voting, the features with a higher impact. Numerous features from the financial domain are said to be effective, and it would be impossible for us to cover all of them. Thus, how to appropriately convert findings from the financial domain into a data processing module of our system design is an implicit research question that we attempt to answer.

The third research question is which algorithm to use for modeling our data. In previous works, researchers have focused on predicting the exact price. We decompose the problem into first predicting the trend and then predicting the exact value. This paper focuses on the first step. Hence, the objective becomes solving a binary classification problem while finding an effective way to eliminate the negative effects of the high level of noise. Our approach is to decompose the complex problem into sub-problems with fewer dependencies, solve them one by one, and then combine the solutions into an ensemble model that serves as a reference system to support investment decisions.

Previous works have used a variety of models for predicting stock price trends, and most of the best-performing models are based on machine learning techniques. In this work, we compare our approach with these best-performing machine learning models in the evaluation and use the comparison to answer this research question.

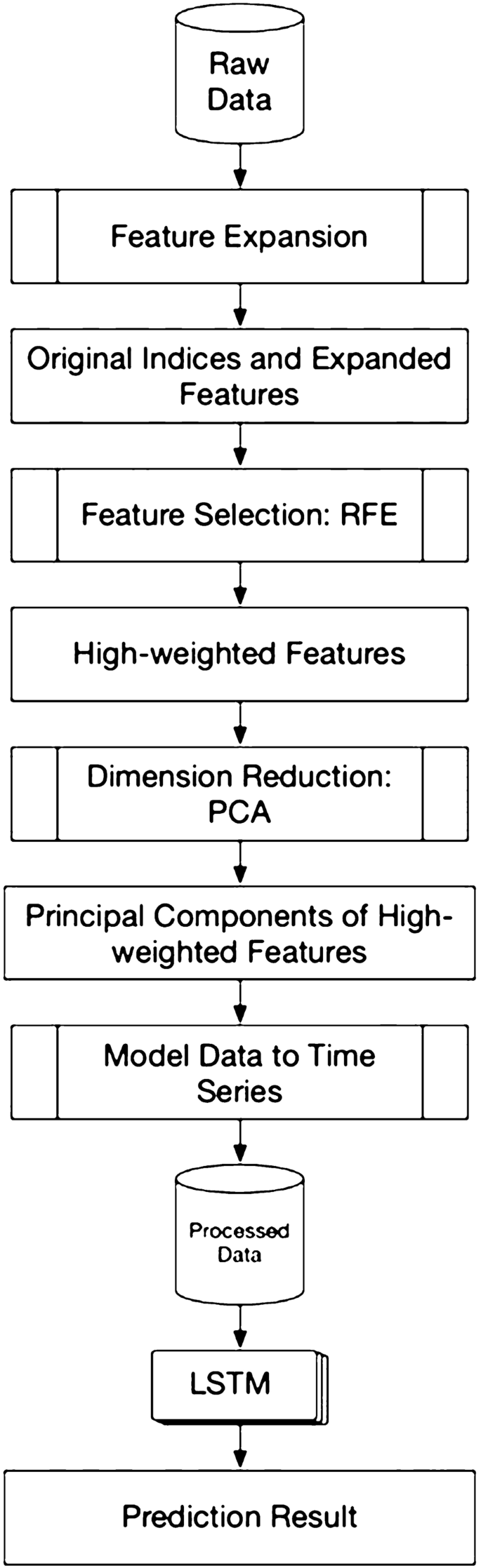

Proposed solution

The high-level architecture of our proposed solution can be separated into three parts. The first is feature selection, which ensures that the selected features are highly effective. In the second, we examine the data and perform dimensionality reduction. The last part, which is the main contribution of our work, builds a prediction model for target stocks. Figure 2 depicts the high-level architecture of the proposed solution.

High-level architecture of the proposed solution

There are several ways to classify stocks into categories. Some investors prefer long-term investments, while others are more interested in short-term investments. It is common for stock-related reports to show average performance while the stock price is increasing drastically; this is one of the phenomena indicating that stock price prediction follows no fixed rules, so finding effective features before training a model is necessary.

In this research, we focus on short-term price trend prediction. Because the raw data have no labels, the very first step is to label the data. We mark the price trend by comparing the current closing price with the closing price n trading days earlier, where n ranges from 1 to 10 because our research focuses on the short term. If the price goes up, we label it 1; otherwise, we label it 0. More specifically, we use the indices of the (n − 1)th day to predict the price trend of the nth day.
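The labeling rule can be written as a short function. This sketch assumes a list of daily closing prices in trading-day order and treats an unchanged price as 0 (not up); the helper names `label_trend` and `align_features_with_labels` are hypothetical. The second helper pairs each day's label with the indices of the previous day, following the one-day offset described above.

```python
from typing import List, Optional, Sequence, Tuple, TypeVar

F = TypeVar("F")


def label_trend(closes: List[float], n: int) -> List[Optional[int]]:
    """Label day t as 1 if close[t] > close[t - n], else 0; the first n days get None."""
    if not 1 <= n <= 10:
        raise ValueError("n is restricted to the short-term range 1..10")
    labels: List[Optional[int]] = [None] * min(n, len(closes))
    for t in range(n, len(closes)):
        labels.append(1 if closes[t] > closes[t - n] else 0)
    return labels


def align_features_with_labels(
    features: Sequence[F], labels: List[Optional[int]]
) -> List[Tuple[F, int]]:
    """Pair the indices of day t-1 with the label of day t, skipping unlabeled days."""
    pairs = []
    for t in range(1, len(labels)):
        if labels[t] is not None:
            pairs.append((features[t - 1], labels[t]))
    return pairs
```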

In previous works, some researchers who applied both financial domain knowledge and technical methods to stock data used rules to filter for high-quality stocks. We drew on their work and adapted their rules in our feature extension design.

However, to ensure the best performance of the prediction model, we examine the data first. The raw data contain a large number of features; including all of them would not only drastically increase computational complexity but also cause side effects if we perform unsupervised learning in future research. We therefore use recursive feature elimination (RFE) to ensure that all selected features are effective.

We found that most previous works in the technical domain analyzed all stocks, while researchers in the financial domain prefer to analyze specific investment scenarios. To fill the gap between the two domains, we apply a feature extension based on the findings gathered from the financial domain before starting the RFE procedure.

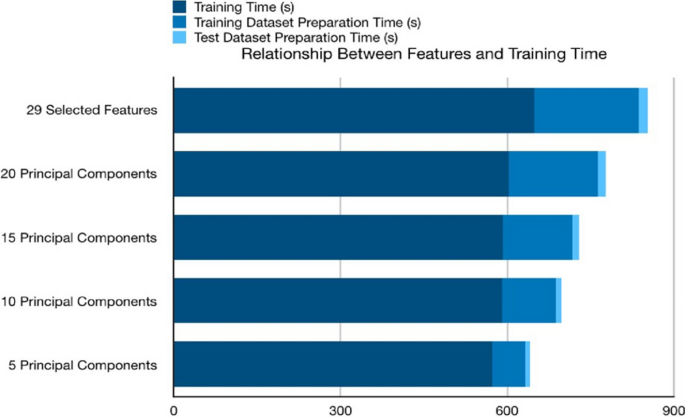

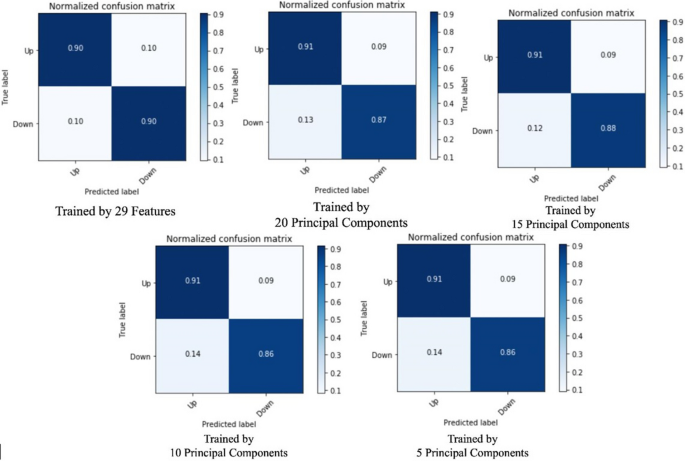

Since we plan to model the data as time series, the more features there are, the more complex the training procedure will be. So, we apply dimensionality reduction using randomized PCA before the data reach the prediction model.

Detailed technical design elaboration

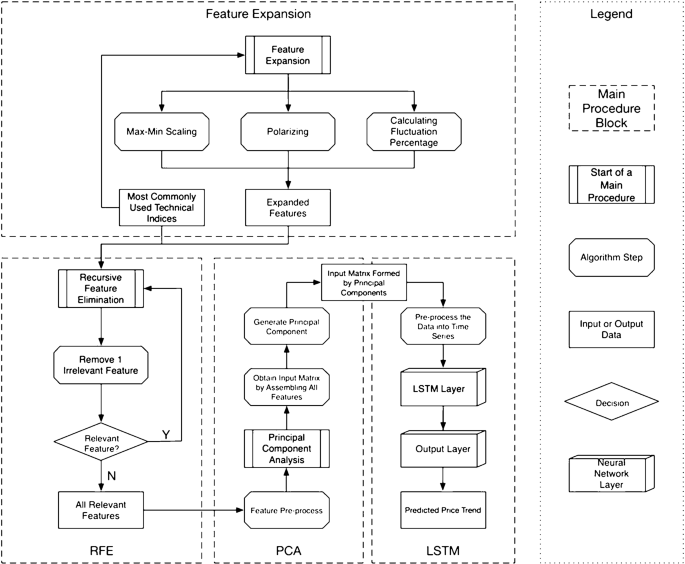

This section elaborates on the detailed technical design, a comprehensive solution that uses, combines, and customizes several existing data pre-processing, feature engineering, and deep learning techniques. Figure 3 shows the detailed technical design from data processing to prediction, including data exploration. We split the content by main procedure, and each procedure contains algorithmic steps. Algorithmic details are elaborated in the next section; this section focuses on illustrating the data workflow.

Detailed technical design of the proposed solution

Based on the literature review, we select the most commonly used technical indices and feed them into the feature extension procedure to obtain the expanded feature set. We then select the most effective i features from the expanded feature set and feed the data with these i features into the PCA algorithm to reduce the dimension to j features. After finding the best combination of i and j, we process the data into the finalized feature set and feed it into the LSTM [10] model to obtain the price trend prediction.
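The search for the best combination of i and j can be sketched as a small grid search. The `evaluate` callable is a hypothetical stand-in for running RFE with i features, PCA with j components, and the LSTM, and returning a validation accuracy together with a cost such as training time; weighing the two with `cost_weight` is an assumption, since the text only states that both accuracy and computational cost are considered.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple


def search_i_j(
    i_values: Iterable[int],
    j_values: Iterable[int],
    evaluate: Callable[[int, int], Tuple[float, float]],  # hypothetical: (accuracy, cost)
    cost_weight: float = 0.0,  # assumed trade-off weight between accuracy and cost
) -> Tuple[int, int]:
    """Return the (i, j) pair maximizing accuracy - cost_weight * cost, with j <= i."""
    best: Optional[Tuple[int, int]] = None
    best_score = float("-inf")
    for i, j in product(i_values, j_values):
        if j > i:  # PCA cannot return more components than input features
            continue
        accuracy, cost = evaluate(i, j)
        score = accuracy - cost_weight * cost
        if score > best_score:
            best, best_score = (i, j), score
    if best is None:
        raise ValueError("no valid (i, j) pair")
    return best
```

Setting `cost_weight` to 0 ranks by accuracy alone; larger values favor smaller i and j.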

The novelty of our proposed solution is that we not only apply technical methods to raw data but also carry out feature extensions used by stock market investors. Details on feature extension are given in the next subsection. Experience gained from applying and optimizing deep-learning-based solutions in [37, 38] was taken into account while designing and customizing the feature engineering and deep learning solution in this work.

Applying feature extension

The first main procedure in Fig. 3 is feature extension. The input to this block is the set of most commonly used technical indices identified in related works. The three feature extension methods are max–min scaling, polarizing, and calculating the fluctuation percentage. Not every technical index is suited to all three methods, so this procedure applies only the meaningful extension methods to each index, chosen by examining how the index is calculated. The technical indices and their corresponding feature extension methods are listed in Table 2.
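The three extension methods can be sketched as small functions. Max–min scaling and the fluctuation percentage follow their usual definitions; the exact definition of polarizing is not given here, so the sketch assumes it maps a value to 1 when it is above a threshold (0 by default) and to 0 otherwise. Which method applies to which index comes from Table 2; the `plan` mapping format and the index name `idx_a` are hypothetical.

```python
from typing import Callable, Dict, List, Optional


def max_min_scale(values: List[float]) -> List[float]:
    """Rescale to [0, 1] via (v - min) / (max - min); a constant series maps to 0.0."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


def polarize(values: List[float], threshold: float = 0.0) -> List[int]:
    """ASSUMED definition: 1 if the value is above the threshold, else 0."""
    return [1 if v > threshold else 0 for v in values]


def fluctuation_pct(values: List[float]) -> List[Optional[float]]:
    """Percent change from the previous day; None for the first day or a zero base."""
    out: List[Optional[float]] = [None]
    for prev, cur in zip(values[:-1], values[1:]):
        out.append(None if prev == 0 else (cur - prev) * 100.0 / prev)
    return out


METHODS: Dict[str, Callable] = {
    "max_min": max_min_scale,
    "polarize": polarize,
    "fluctuation": fluctuation_pct,
}


def extend_features(indices: Dict[str, List[float]],
                    plan: Dict[str, List[str]]) -> Dict[str, list]:
    """Apply only the methods listed for each index (hypothetical plan format)."""
    extended: Dict[str, list] = {}
    for name, methods in plan.items():
        for method in methods:
            extended[f"{name}_{method}"] = METHODS[method](indices[name])
    return extended


ext = extend_features({"idx_a": [2.0, 4.0, 6.0]}, {"idx_a": ["max_min", "polarize"]})
```

When the data are later split into training and testing sets, taking the minimum and maximum from the training period only avoids leaking future information into the scaled values.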

After the feature extension procedure, the expanded features (the block's output) are combined with the most commonly used technical indices (the block's input) and fed into the RFE block in the next step.

Applying recursive feature elimination

After feature extension, we identify the most effective i features using the recursive feature elimination (RFE) algorithm [6]. We rank all the features by two attributes: coefficient and feature importance. We also limit the number of features removed from the pool to one per step, which means we remove one feature at each step and retain all the relevant features. The output of the RFE block is then the input of the next step, PCA.
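The RFE loop with one feature removed per step can be sketched with NumPy. The sketch assumes an ordinary least-squares fit (a linear probability model on the 0/1 labels) as the estimator and ranks features by absolute coefficient, which is only meaningful when features are on comparable scales; the feature importance mentioned above would come from a tree-based estimator instead. scikit-learn's `sklearn.feature_selection.RFE` with `step=1` implements the same idea for any estimator exposing `coef_` or `feature_importances_`.

```python
from typing import List

import numpy as np


def rfe_linear(X: np.ndarray, y: np.ndarray, n_keep: int) -> List[int]:
    """Recursive feature elimination with step=1, ranking by |OLS coefficient|.

    Assumes the columns of X are on comparable scales (e.g. standardized).
    Returns the column indices that survive, in their original order.
    """
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_keep:
        # Refit on the surviving features plus an intercept column.
        A = np.column_stack([X[:, remaining], np.ones(len(X))])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        weakest = int(np.argmin(np.abs(coef[:-1])))  # ignore the intercept
        remaining.pop(weakest)  # remove exactly one feature per step
    return remaining
```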

Applying principal component analysis (PCA)

The first step before applying PCA is feature pre-processing. Some of the features output by RFE are percentages, while others are very large numbers; that is, the RFE outputs are in different units, which would distort the extracted principal components. Thus, feature pre-processing is necessary before feeding the data into the PCA algorithm [8]. We compare the pre-processing methods and illustrate their effectiveness in the “Results” section.
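One common choice for this pre-processing is z-score standardization, which puts percentages and large raw values on the same scale. The sketch assumes standardization as the method, fits the mean and standard deviation on the training set only so that test data do not leak into the transform, and leaves constant columns at zero.

```python
from typing import Tuple

import numpy as np


def standardize(X_train: np.ndarray, X_test: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Z-score each column using statistics from the training set only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # constant columns would otherwise divide by zero
    return (X_train - mean) / std, (X_test - mean) / std
```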

After feature pre-processing, the next step is to feed the processed data with the i selected features into the PCA algorithm to reduce the feature matrix to j features. This step retains as much effective information as possible while reducing the computational complexity of training the model. This research also evaluates which combination of i and j gives relatively better prediction accuracy while reducing computational cost; the results are also given in the “Results” section. After the PCA step, the system obtains a reshaped matrix with j columns.
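The randomized PCA step can be sketched with a randomized SVD in the style of Halko, Martinsson, and Tropp: project the centered data onto a few random directions, orthonormalize, refine with power iterations, and take an exact SVD of the much smaller projected matrix. The oversampling and iteration counts below are assumed defaults; scikit-learn exposes the same technique as `PCA(n_components=j, svd_solver="randomized")`.

```python
from typing import Tuple

import numpy as np


def randomized_pca(X: np.ndarray, n_components: int, n_oversamples: int = 10,
                   n_iter: int = 4, seed: int = 0) -> Tuple[np.ndarray, np.ndarray]:
    """Reduce an (n x i) matrix to (n x j) principal-component scores.

    Returns the projected data and the top singular values.
    """
    rng = np.random.default_rng(seed)
    Xc = X - X.mean(axis=0)  # PCA operates on centered data
    k = min(n_components + n_oversamples, min(Xc.shape))
    omega = rng.standard_normal((Xc.shape[1], k))
    Q, _ = np.linalg.qr(Xc @ omega)  # orthonormal basis for the sampled range
    for _ in range(n_iter):  # power iterations sharpen the spectral decay
        Q, _ = np.linalg.qr(Xc.T @ Q)
        Q, _ = np.linalg.qr(Xc @ Q)
    B = Q.T @ Xc  # small (k x i) matrix
    _, s, Vt = np.linalg.svd(B, full_matrices=False)
    components = Vt[:n_components]  # (j x i) principal axes
    return Xc @ components.T, s[:n_components]
```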

Fitting long short-term memory (LSTM) model

Although PCA has reduced the dimensions of the input data, further data pre-processing is required before feeding the data into the LSTM layer. The reason is that the input matrix formed by the principal components has no time steps, whereas the number of time steps is one of the most important parameters for training an LSTM. Hence, we reshape the matrix into the corresponding time steps for both the training and testing datasets.
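Reshaping the principal-component matrix into time steps amounts to sliding a window over consecutive days. This sketch assumes the rows are in chronological order and that `labels` holds one label per day; each window of `time_steps` rows is paired with the label of the following day. It should be applied to the training and testing sets separately so that no window spans the split.

```python
from typing import Tuple

import numpy as np


def make_time_steps(Z: np.ndarray, labels: np.ndarray,
                    time_steps: int) -> Tuple[np.ndarray, np.ndarray]:
    """Turn a (days x j) matrix into windows of shape (samples, time_steps, j).

    The window covering days [end - time_steps, end) is paired with labels[end].
    """
    X, y = [], []
    for end in range(time_steps, len(Z)):
        X.append(Z[end - time_steps:end])
        y.append(labels[end])
    return np.asarray(X), np.asarray(y)
```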

After this pre-processing, the last step is to feed the training data into the LSTM and evaluate its performance on the testing data. As a variant of the recurrent neural network (RNN), the network is still a deep neural network even with a single LSTM layer, because it processes sequential data and memorizes its hidden states through time. An LSTM layer is composed of one or more LSTM units, and each LSTM unit consists of a memory cell and gates that enable classification and prediction on time series data.

The LSTM structure consists of two layers, and its input dimension is j, the number of components produced by the PCA algorithm. The first layer is the input LSTM layer, and the second is the output layer. The final output is 0 or 1, indicating whether the predicted stock price trend is going down or going up, and serves as a supporting suggestion for investors' next investment decision.
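The two-layer structure can be illustrated with a NumPy forward pass: an LSTM layer whose input size is j, followed by a single sigmoid output unit thresholded at 0.5. This is a sketch under stated assumptions, not the trained model: the hidden size, the random initialization, and the 0.5 threshold are assumptions, and training is left to a deep learning framework (for example, a Keras `LSTM` layer followed by `Dense(1, activation="sigmoid")`).

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class TwoLayerLSTMClassifier:
    """Forward pass only: one LSTM layer over (time_steps, j) windows, then a
    dense sigmoid output unit. Weights are random; training is out of scope."""

    def __init__(self, j: int, hidden: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(hidden)
        # Stacked weights for the input, forget, candidate, and output gates.
        self.W = rng.uniform(-scale, scale, (4 * hidden, j))
        self.U = rng.uniform(-scale, scale, (4 * hidden, hidden))
        self.b = np.zeros(4 * hidden)
        self.w_out = rng.uniform(-scale, scale, hidden)  # output layer weights
        self.b_out = 0.0
        self.hidden = hidden

    def predict_proba(self, window: np.ndarray) -> float:
        """window has shape (time_steps, j); returns P(trend goes up)."""
        H = self.hidden
        h = np.zeros(H)
        c = np.zeros(H)
        for x_t in window:
            z = self.W @ x_t + self.U @ h + self.b
            i = sigmoid(z[:H])            # input gate
            f = sigmoid(z[H:2 * H])       # forget gate
            g = np.tanh(z[2 * H:3 * H])   # candidate cell state
            o = sigmoid(z[3 * H:])        # output gate
            c = f * c + i * g             # memory cell carries state across steps
            h = o * np.tanh(c)            # hidden state fed to the next step
        return float(sigmoid(self.w_out @ h + self.b_out))

    def predict(self, window: np.ndarray) -> int:
        """1 = trend predicted to go up, 0 = down (0.5 threshold assumed)."""
        return int(self.predict_proba(window) >= 0.5)
```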

Design discussion

Feature extension is one of the novelties of our proposed price trend prediction system. In the feature extension procedure, we combine technical indices with heuristic processing methods learned from investors, which bridges the gap between the financial and technical research areas.

In a price trend prediction system such as ours, feature engineering is extremely important to the final prediction result. The feature extension method helps ensure that we do not miss potentially correlated features, and the feature selection method is necessary for pooling the effective features: the more irrelevant features are fed into the model, the more noise is introduced. Each main procedure has been carefully considered for its contribution to the overall system design.

Besides feature engineering, we also use LSTM, a state-of-the-art deep learning method for time-series prediction, which enables the prediction model to capture both complex hidden patterns and time-series-related patterns.