
Quantitative Data Analysis

5 Hypothesis Testing in Quantitative Research

Mikaila Mariel Lemonik Arthur

Statistical reasoning is built on the assumption that data are normally distributed, meaning that they will be distributed in the shape of a bell curve as discussed in the chapter on Univariate Analysis. While real life often (perhaps even usually) does not resemble a bell curve, basic statistical analysis assumes that if all possible random samples from a population were drawn and the mean taken from each sample, the distribution of sample means, when plotted on a graph, would be normally distributed (this assumption is called the Central Limit Theorem). Given this assumption, we can use the mathematical techniques developed for the study of probability to determine the likelihood that the relationships or patterns we observe in our data occurred due to random chance rather than due to some actual real-world connection, which we call statistical significance.

Statistical significance is not the same as practical significance. The fact that we have determined that a given result is unlikely to have occurred due to random chance does not mean that this given result is important, that it matters, or that it is useful. Similarly, we might observe a relationship or result that is very important in practical terms, but that we cannot claim is statistically significant, perhaps because our sample size is too small, for instance. Such a result might have occurred by chance, but ignoring it might still be a mistake. Let’s consider some examples to make this a bit clearer. Assume we were interested in the impacts of diet on health outcomes and found the statistically significant result that people who eat a lot of citrus fruit end up having pinky fingernails that are, on average, 1.5 millimeters longer than those who tend not to eat any citrus fruit. Should anyone change their diet due to this finding? Probably not, even though it is statistically significant. On the other hand, if we found that the people who ate the diets highest in processed sugar died on average five years sooner than those who ate the least processed sugar, even in the absence of a statistically significant result we might want to advise that people consider limiting sugar in their diet. This latter result has more practical significance (lifespan matters more than the length of your pinky fingernail) as well as a larger effect size or association (5 years of life as opposed to 1.5 millimeters of length), a factor that will be discussed in the chapter on association.

While people generally use the shorthand of “the likelihood that the results occurred by chance” when talking about statistical significance, it is actually a bit more complicated than that. What statistical significance is really telling us is the likelihood (or probability) that a result equal to or more “extreme” [1] is true in the real world, rather than our results having occurred due to random chance or sampling error. Testing for statistical significance, then, requires us to understand something about probability.

A Brief Review of Probability

You might remember having studied probability in a math class, with questions about coin flips or drawing marbles out of a jar. Such exercises can make probability seem very abstract. But in reality, computations of probability are deeply important for a wide variety of activities, ranging from gambling and stock trading to weather forecasts and, yes, statistical significance.

Probability is represented as a proportion (or decimal number) somewhere between 0 and 1. At 0, there is absolutely no likelihood that the event or pattern of interest would occur; at 1, it is absolutely certain that the event or pattern of interest will occur. We indicate that we are talking about probability by using the symbol [latex]p[/latex]. For example, if something has a 50% chance of occurring, we would write [latex]p=0.5[/latex] or [latex]\frac {1}{2}[/latex]. If we want to represent the likelihood of something not occurring, we can write [latex]1-p[/latex].

Check your thinking: Assume you were flipping coins, and you called heads. The probability of getting heads on a coin flip using a fair coin (in other words, a normal coin that has not been weighted to bias the result) is 0.5. Thus, in 50% of coin flips you should get heads. Consider the following probability questions and write down your answers so you can check them against the discussion below.

- Imagine you have flipped the coin 29 times and you have gotten heads each time. What is the probability you will get heads on flip 30?

- What is the probability that you will get heads on all of the first five coin flips?

- What is the probability that you will get heads on at least one of the first five coin flips?

There are a few basic concepts from the mathematical study of probability that are important for beginner data analysts to know, and we will review them here.

Probability over Repeated Trials: The probability of the outcome of interest is the same in each trial or test, regardless of the results of the prior test. So, if we flip a coin 29 times and get heads each time, what happens when we flip it the 30th time? The probability of heads is still 0.5! The belief that “this time it must be tails because it has been heads so many times” or “this coin just wants to come up heads” is simply superstition, and, assuming a fair coin, the results of prior trials do not influence the results of this one.

Probability of Multiple Events: The probability that the outcome of interest will occur repeatedly across multiple trials is the product [2] of the probability of the outcome on each individual trial. This is called the multiplication theorem. Thinking about the multiplication theorem requires that we keep in mind the fact that when we multiply numbers between 0 and 1 together, the result is smaller than any of the numbers we started with. Thus, the probability that a series of outcomes will occur is smaller than the probability of any one of those outcomes occurring on its own. So, what is the probability that we will get heads on all five of our coin flips? Well, to figure that out, we need to multiply the probability of getting heads on each of our coin flips together. The math looks like this (and produces a very small probability indeed):

[latex]\frac {1}{2} \cdot \frac {1}{2} \cdot \frac {1}{2} \cdot \frac {1}{2} \cdot \frac {1}{2} = 0.03125[/latex]

Probability of One of Many Events : Determining the probability that the outcome of interest will occur on at least one out of a series of events or repeated trials is a little bit more complicated. Mathematicians use the addition theorem to refer to this, because the basic way to calculate it is to calculate the probability of each sequence of events (say, heads-heads-heads, heads-heads-tails, heads-tails-heads, and so on) and add them together. But the greater the number of repeated trials, the more complicated that gets, so there is a simpler way to do it. Consider that the probability of getting no heads is the same as the probability of getting all tails (which would be the same as the probability of getting all heads that we calculated above). And the only circumstance in which we would not have at least one flip resulting in heads would be a circumstance in which all flips had resulted in tails. Therefore, what we need to do in order to calculate the probability that we get at least one heads is to subtract the probability that we get no heads from 1—and as you can imagine, this procedure shows us that the probability of the outcome of interest occurring at least once over repeated trials is higher than the probability of the occurrence on any given trial. The math would look like this:

[latex]1- (\frac{1}{2})^5=0.9688[/latex]
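The two calculations above can be checked with a short script. This is only an illustrative sketch: the variable names are made up for the example, and the coin is assumed to be fair. It computes the all-heads probability with the multiplication theorem, then computes the at-least-one-heads probability twice, once with the subtraction shortcut and once the long way, by adding up every qualifying sequence of flips.

```python
from itertools import product

P_HEADS = 0.5  # fair coin, as in the text
N_FLIPS = 5

# Multiplication theorem: probability of heads on every one of the flips.
p_all_heads = P_HEADS ** N_FLIPS

# Shortcut: P(at least one heads) = 1 - P(no heads at all).
p_at_least_one = 1 - (1 - P_HEADS) ** N_FLIPS

# Addition theorem, the long way: list every possible sequence of flips and
# add up the probabilities of the sequences containing at least one heads.
p_by_enumeration = sum(
    P_HEADS ** seq.count("H") * (1 - P_HEADS) ** seq.count("T")
    for seq in product("HT", repeat=N_FLIPS)
    if "H" in seq
)

print(p_all_heads)       # 0.03125
print(p_at_least_one)    # 0.96875
print(p_by_enumeration)  # 0.96875
```

The enumeration adds up 31 of the 32 possible sequences, which is why the shortcut of subtracting the single all-tails sequence from 1 is so much less work.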

So why is this digression into the math of probability important? Well, when we test for statistical significance, what we are really doing is determining the probability that the outcome we observed—or one that is more extreme than that which we observed—occurred by chance. We perform this analysis via a procedure called Null Hypothesis Significance Testing.

Null Hypothesis Significance Testing

Null hypothesis significance testing, or NHST, is a method of testing for statistical significance by comparing observed data to the data we would expect to see if there were no relationship between the variables or phenomena in question. NHST can take a little while to wrap one’s head around, especially because it relies on a logic of double negatives: first, we state a hypothesis we believe not to be true (there is no relationship between the variables in question) and then, we look for evidence that disconfirms this hypothesis. In other words, we are assuming that there is no relationship between the variables (even though our research hypothesis states that we think there is a relationship) and then looking to see if there is any evidence to suggest there is not no relationship. Confusing, right?

So why do we use the null hypothesis significance testing approach?

- The null hypothesis—that there is no relationship between the variables we are exploring—would be what we would generally accept as true in the absence of other information,

- It means we are assuming that differences or patterns occur due to chance unless there is strong evidence to suggest otherwise,

- It provides a benchmark for comparing observed outcomes, and

- It means we are searching for evidence that disconfirms our hypothesis, making it less likely that we will accept a conclusion that turns out to be untrue.

Thus, NHST helps us avoid making errors in our interpretation of the result. In particular, it helps us avoid Type 1 error, as discussed in the chapter on Bivariate Analyses. As a reminder, Type 1 error is the error of concluding that a relationship exists when in fact it does not (a false positive, in which we reject the null hypothesis when it was true), while Type 2 error is the error of concluding that no relationship exists when in fact one does (a false negative, in which we fail to reject the null hypothesis when it was false). For example, you are making a Type 2 error if you decide not to study for a test because you assume you are so bad at the subject that studying simply cannot help you, when in fact we know from research that studying does lead to higher grades. And you are making a Type 1 error if your boss tells you that she is going to promote you if you do enough overtime and you then work lots of overtime in response, when actually your boss is just trying to make you work more hours and already had someone else in mind to promote.

We can never remove all sources of error from our analyses, though larger sample sizes help reduce error. Looking at the formula for computing standard error, we can see that the standard error ([latex]SE[/latex]) would get smaller as the sample size ([latex]N[/latex]) gets larger. Note: [latex]\sigma[/latex] is the symbol we use to represent standard deviation.

[latex]SE = \frac{\sigma}{\sqrt N}[/latex]
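To see how quickly the standard error shrinks, the formula can be evaluated for a few sample sizes. This is a small sketch with a made-up standard deviation of 10; `standard_error` is a helper written for this example, not a library function.

```python
import math

def standard_error(sigma: float, n: int) -> float:
    """SE = sigma / sqrt(N), the formula given in the text."""
    return sigma / math.sqrt(n)

SIGMA = 10.0  # hypothetical standard deviation, chosen for illustration

for n in (25, 100, 400):
    print(n, standard_error(SIGMA, n))
# 25 2.0
# 100 1.0
# 400 0.5
```

Because the sample size sits under a square root, quadrupling the sample only halves the standard error.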

Besides making our samples larger, we can also choose whether we are more willing to accept Type 1 error or Type 2 error and adjust our strategies accordingly. In most research, we would prefer to accept more Type 2 error (false negatives), because we are more willing to miss out on a finding than we are to make a finding that turns out later to be inaccurate (a Type 1 error, or false positive), though, of course, lots of research does eventually turn out to be inaccurate.

Performing NHST

Performing NHST requires that our data meet several assumptions:

- Our sample must be a random sample—statistical significance testing and other inferential and explanatory statistical methods are generally not appropriate for non-random samples [3] —as well as representative and of a sufficient size (see the Central Limit Theorem above).

- Observations must be independent of other observations, or else additional statistical manipulation must be performed. For instance, a dataset of data about siblings would need to be handled differently due to the fact that siblings affect one another, so data on each person in the dataset is not truly independent.

- You must determine the rules for your significance test, including the level of uncertainty you are willing to accept (significance level) and whether or not you are interested in the direction of the result (one-tailed versus two-tailed tests, to be discussed below), in advance of performing any analysis.

- The number of significance tests you run should be limited, because the more tests you run, the greater the likelihood that one of your tests will result in an error. To make this more clear, if you are willing to accept a 5% probability that you will make the error of accepting a hypothesis as true when it is really false, and you run 20 tests, one of those tests (5% of them!) is pretty likely to have produced an incorrect result.
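The last assumption can be made concrete with the probability rules reviewed earlier. The sketch below assumes the 20 tests are independent and that every null hypothesis is actually true, which is a simplification; the variable names are illustrative only.

```python
ALPHA = 0.05   # accepted probability of an erroneous result on any one test
N_TESTS = 20

# Probability that none of the tests produces an erroneous result,
# assuming independent tests (multiplication theorem).
p_no_errors = (1 - ALPHA) ** N_TESTS

# Probability that at least one test does (subtract from 1).
p_at_least_one_error = 1 - p_no_errors

# Average number of erroneous results we would expect across the tests.
expected_errors = ALPHA * N_TESTS

print(round(p_at_least_one_error, 3))  # 0.642
print(round(expected_errors, 2))       # 1.0
```

Under these assumptions, running 20 tests at the 5% level gives roughly a 64% chance that at least one of them produces an erroneous result, and on average one of the 20 will.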

If our data has met these assumptions, we can move forward with the process of conducting an NHST. This requires us to make three decisions: determining our null hypothesis, our confidence level (or acceptable significance level), and whether we will conduct a one-tailed or a two-tailed test. In keeping with Assumption 3 above, we must make these decisions before performing our analysis. The null hypothesis is the hypothesis that there is no relationship between the variables in question. So, for example, if our research hypothesis was that people who spend more time with their friends are happier, our null hypothesis would be that there is no relationship between how much time people spend with their friends and their happiness.

Our significance level is the level of risk we are willing to accept that our results could have occurred by chance. (Strictly speaking, the confidence level is 1 minus the significance level, so a significance level of 0.05 corresponds to 95% confidence.) Typically, in social science research, researchers use p<0.05 (we are willing to accept up to a 5% risk that our results occurred by chance), p<0.01 (we are willing to accept up to a 1% risk that our results occurred by chance), and/or p<0.001 (we are willing to accept up to a 0.1% risk that our results occurred by chance). P, as was noted above, is the mathematical notation for probability, and that’s why we use a p-value to indicate the probability that our results may have occurred by chance. A higher threshold increases the likelihood that we will accept as accurate a result that really occurred by chance; a lower threshold increases the likelihood that we will assume a result occurred by chance when actually it was real. Remember, what the p-value tells us is not the probability that our own research hypothesis is true, but rather this: assuming that the null hypothesis is correct, what is the probability that the data we observed (or data more extreme than the data we observed) would have occurred by chance?

Whether we choose a one-tailed or a two-tailed test tells us what we mean when we say “data more extreme than.” Remember that normal curve? A two-tailed test is agnostic as to the direction of our results—and many of the most common tests for statistical significance that we perform, like the Chi square, are two-tailed by default. However, if you are only interested in a result that occurs in a particular direction, you might choose a one-tailed test. For instance, if you were testing a new blood pressure medication, you might only care if the blood pressure of those taking the medication is significantly lower than those not taking the medication—having blood pressure significantly higher would not be a good or helpful result, so you might not want to test for that.
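The difference between the two kinds of test can be illustrated with a test statistic that follows the standard normal curve. This is only a sketch: the z value of -1.8 is invented for the blood pressure example, and `p_values` is a helper written for this illustration using Python's `statistics.NormalDist`.

```python
from statistics import NormalDist

def p_values(z: float) -> dict:
    """Return one-tailed and two-tailed p-values for a z statistic."""
    std_normal = NormalDist()              # mean 0, standard deviation 1
    lower_tail = std_normal.cdf(z)         # P(Z <= z): "significantly lower"
    upper_tail = 1 - std_normal.cdf(z)     # P(Z >= z): "significantly higher"
    two_tailed = 2 * min(lower_tail, upper_tail)  # extreme in either direction
    return {"lower": lower_tail, "upper": upper_tail, "two_tailed": two_tailed}

# Hypothetical result: mean blood pressure in the medication group is
# 1.8 standard errors below that of the comparison group.
result = p_values(-1.8)
print({name: round(p, 4) for name, p in result.items()})
```

With these made-up numbers, the one-tailed p-value for "lower" is about 0.036 and the two-tailed p-value is about 0.072, so the same data would pass a 0.05 threshold on the one-tailed test but not on the two-tailed test. That is why the choice has to be made before looking at the results.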

Having determined the parameters for our analysis, we then compute our test of statistical significance. There are different tests of statistical significance for different variables (for example, the Chi square discussed in the chapter on bivariate analyses), as you will see in other chapters of this text, but all of them produce results in a similar format. We then compare the p value produced by our analysis to the significance level we already selected. If the p value is lower than the significance level we selected, we can reject the null hypothesis, as the probability that our result occurred by chance is very low. If, on the other hand, the p value is higher than the significance level we selected, we fail to reject the null hypothesis, as the probability that our result occurred by chance is too high to accept. Keep in mind this is what we do even when the p value produced by our analysis is quite close to the threshold we have selected. So, for instance, if we have selected the significance level of p<0.05 and the p value produced by our analysis is p=0.0501, we still fail to reject the null hypothesis and proceed as if there is not any support for our research hypothesis.

Thus, the process of null hypothesis significance testing proceeds according to the following steps:

1. Determine the null hypothesis

2. Set the significance level and whether this will be a one-tailed or two-tailed test

3. Compute the test value for the appropriate significance test

4. Compare the test value to the critical value of that test statistic for the significance level you selected

5. Determine whether or not to reject the null hypothesis

Your statistical analysis software will perform steps 3 and 4 for you (before there was computer software to do this, researchers had to do the calculations by hand and compare their results to figures on published tables of critical values). But you as the researcher must perform steps 1, 2, and 5 yourself.

Confidence Intervals & Margins of Error

When talking about statistical significance, some researchers also use the terms confidence intervals and margins of error. Confidence intervals are ranges of values within which we can assume the true population parameter lies. Most typically, analysts aim for 95% confidence intervals, meaning that in 95 out of 100 cases, the population parameter will lie within the upper and lower levels specified by your confidence interval. These are calculated by your statistics software as well. The margin of error, then, is the distance from the sample estimate to either end of the confidence interval. So, for instance, a 2021 survey of Americans conducted by the Robert Wood Johnson Foundation and the Harvard T.H. Chan School of Public Health found that 71% of respondents favor substantially increasing federal spending on public health programs. This poll had a 95% confidence interval with a +/- 3.6 percentage point margin of error. What this tells us is that there is a 95% probability (19 in 20) that between 67.4% (71-3.6) and 74.6% (71+3.6) of Americans favored increasing federal public health spending at the time the poll was conducted. When a figure reflects an overwhelming majority, such as this one, the margin of error may seem of little relevance. But consider a similar poll with the same margin of error that sought to predict support for a political candidate and found that 51.5% of people said they would vote for that candidate. In that case, we would have found that there was a 95% probability that between 47.9% and 55.1% of people intended to vote for the candidate, which means the race is a total tossup and we really would have no idea what to expect. For some people, thinking in terms of confidence intervals and margins of error is easier to understand than thinking in terms of p values; confidence intervals and margins of error are more frequently used in analyses of polls while p values are found more often in academic research. But basically, both approaches are doing the same fundamental analysis: they are determining the likelihood that the results we observed or a similarly-meaningful result would have occurred by chance.
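The arithmetic in the two polling examples can be sketched in a few lines. Both functions below are hypothetical helpers written for this illustration; the 50% threshold for calling a race a tossup is an assumption made for the example.

```python
def poll_interval(estimate: float, margin: float) -> tuple:
    """Lower and upper bounds of estimate +/- margin, in percentage points."""
    return (round(estimate - margin, 1), round(estimate + margin, 1))

def is_tossup(estimate: float, margin: float, threshold: float = 50.0) -> bool:
    """True when the interval includes the threshold, so either side could lead."""
    low, high = poll_interval(estimate, margin)
    return low <= threshold <= high

print(poll_interval(71.0, 3.6))   # (67.4, 74.6)
print(poll_interval(51.5, 3.6))   # (47.9, 55.1)
print(is_tossup(71.0, 3.6))       # False
print(is_tossup(51.5, 3.6))       # True
```

The same margin of error is decisive in one case and not the other purely because of where the estimate sits relative to 50%.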

What Does Significance Testing Tell Us?

One of the most important things to remember about significance testing is that, while the word “significance” is used in ordinary speech to mean importance, significance testing does not tell us whether our results are important—or even whether they are interesting. A full understanding of the relationship between a given set of variables requires looking at statistical significance as well as association and the theoretical importance of the findings. Table 1 provides a perspective on using the combination of significance and association to determine how important the results of statistical analysis are—but even using Table 1 as a guide, evaluating findings based on theoretical importance remains key. So: make sure that when you are conducting analyses, you avoid being misled into assuming that significant results are sufficient for making broad claims about the importance and meaning of results. And remember as well that significance only tells us the likelihood that the pattern of relationships we observe occurred by chance—not whether that pattern is causal. For, after all, quantitative research can never eliminate all plausible alternative explanations for the phenomenon in question (one of the three elements of causation, along with association and temporal order).

- Getting 7 heads on 7 coin flips

- Getting 5 heads on 7 coin flips

- Getting 1 head on 10 coin flips

Then check your work using the Coin Flip Probability Calculator.
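If you prefer to check coin flip answers in code, one possible approach is the binomial formula, which counts how many sequences contain exactly the desired number of heads and multiplies by the probability of each sequence. The function name below is made up for this sketch, and the example deliberately uses a case that is not one of the exercises.

```python
from math import comb

def prob_exact_heads(k: int, n: int, p: float = 0.5) -> float:
    """Probability of exactly k heads in n independent flips of a coin
    that lands heads with probability p (0.5 for a fair coin)."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)

# Illustrative case: exactly 3 heads on 5 flips of a fair coin.
print(prob_exact_heads(3, 5))   # 0.3125
```

Here `comb(5, 3)` counts the 10 different orderings of 3 heads and 2 tails, and each ordering has probability 0.03125.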

- As the advertised hourly pay for a job goes up, the number of job applicants increases.

- Teenagers who watch more hours of makeup tutorial videos on TikTok have, on average, lower self-esteem.

- Couples who share hobbies in common are less likely to get divorced.

- Assume a researcher conducted a study that found that people wearing green socks type on average one word per minute faster than people who are not wearing green socks, and that this study found a p value of p<0.01. Is this result statistically significant? Is this result practically significant? Explain your answers.

- If we conduct a political poll and have a 95% confidence interval and a margin of error of +/- 2.3%, what can we conclude about support for Candidate X if 49.3% of respondents tell us they will vote for Candidate X? If 24.7% do? If 52.1% do? If 83.7% do?

[1] One way to think about this is to imagine that your result has been plotted on a bell curve. Statistical significance tells us the probability that the "real" result (the thing that is true in the real world and not due to random chance) is at the same point as or further along the skinny tails of the bell curve than the result we have plotted.

[2] In other words, what you get when you multiply.

[3] They also are not appropriate for censuses, but you do not need inferential statistics in a census because you are looking at the entire population rather than a sample, so you can simply describe the relationships that do exist.

normally distributed: A distribution of values that is symmetrical and bell-shaped.

bell curve: A graph showing a normal distribution, one that is symmetrical with a rounded top that then falls away towards the extremes in the shape of a bell.

mean: The sum of all the values in a list divided by the number of such values.

Central Limit Theorem: The theorem that states that if you take a series of sufficiently large random samples from the population (replacing people back into the population so they can be reselected each time you draw a new sample), the distribution of the sample means will be approximately normally distributed.

statistical significance: A statistical measure that suggests that sample results can be generalized to the larger population, based on a low probability of having made a Type 1 error.

probability: How likely something is to happen; also, a branch of mathematics concerned with investigating the likelihood of occurrences.

sampling error: Measurement error created due to the fact that even properly-constructed random samples do not have precisely the same characteristics as the larger population from which they were drawn.

multiplication theorem: The theorem in probability about the likelihood of a given outcome occurring repeatedly over multiple trials; this is determined by multiplying the probabilities together.

addition theorem: The theorem addressing the determination of the probability of a given outcome occurring at least once across a series of trials; it is determined by adding the probability of each possible series of outcomes together.

null hypothesis significance testing: A method of testing for statistical significance in which an observed relationship, pattern, or figure is tested against a hypothesis that there is no relationship or pattern among the variables being tested.

NHST: Null hypothesis significance testing.

Type 2 error: The error you make when you do not infer a relationship exists in the larger population when it actually does exist; in other words, a false negative conclusion.

Type 1 error: The error made if one infers that a relationship exists in a larger population when it does not really exist; in other words, a false positive error.

standard error: A measure of accuracy of sample statistics computed using the standard deviation of the sampling distribution.

null hypothesis: The hypothesis that there is no relationship between the variables in question.

confidence level: The probability that the sample statistics we observe hold true for the larger population.

Chi square: A measure of statistical significance used in crosstabulation to determine the generalizability of results.

confidence intervals: A range of estimates into which it is highly probable that an unknown population parameter falls.

margins of error: A suggestion of how far away from the actual population parameter a sample statistic is likely to be.

Social Data Analysis Copyright © 2021 by Mikaila Mariel Lemonik Arthur is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Quantitative data collection and analysis

- Testing hypotheses

- Quantitative data collection

- Averages and percentiles

- Measures of Spread or Dispersion

- Samples and population

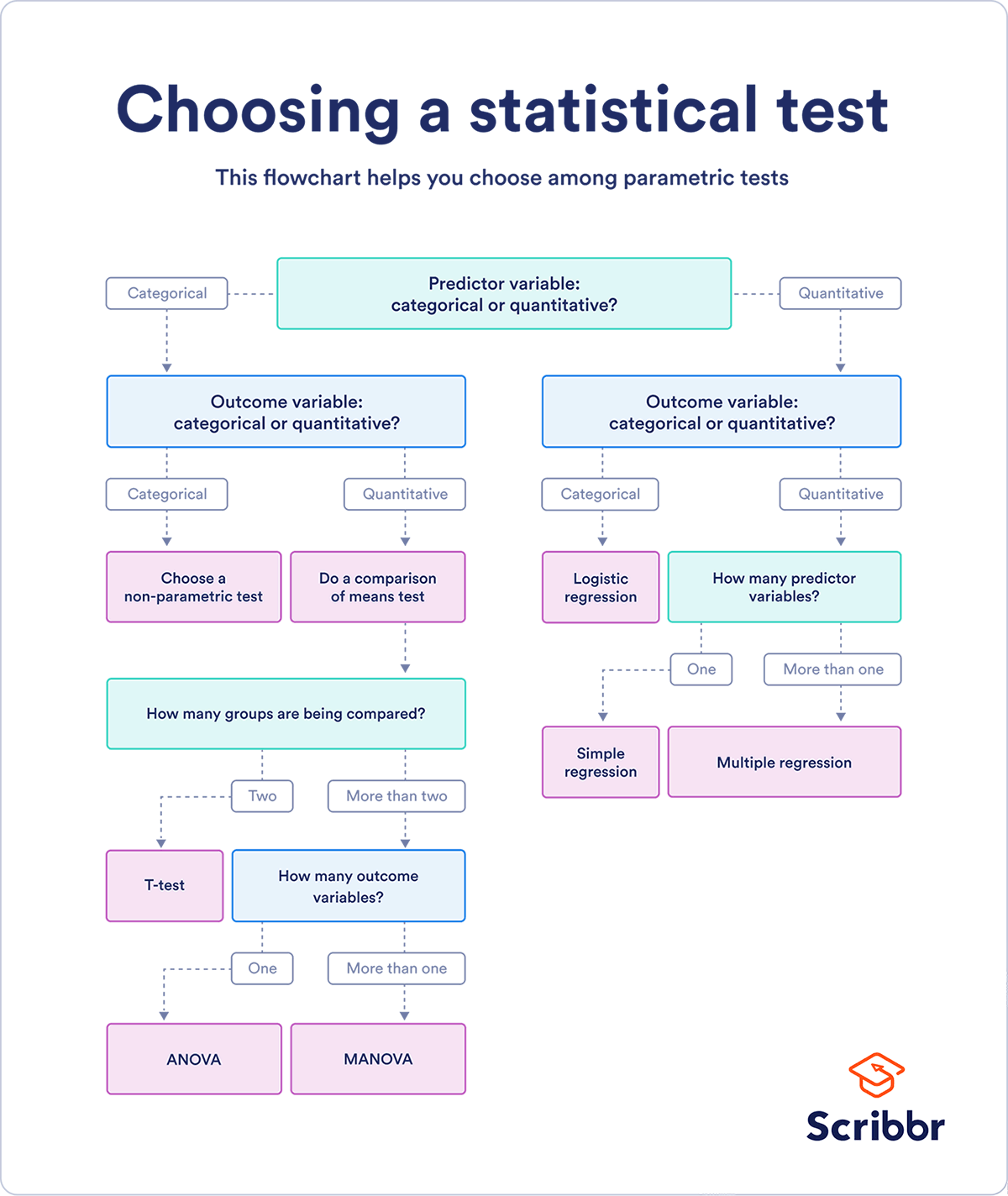

- Statistical tests - parametric

- Statistical tests - non-parametric

- Probability

- Reliability and Validity

- Analysing relationships

- Useful Books

Testing Hypotheses

- What is a hypothesis?

- Significance testing

- One-tailed or two-tailed?

- Degrees of freedom

A hypothesis is a statement that we are trying to prove or disprove. It is used to express the relationship between variables and whether this relationship is significant. It is specific and offers a prediction on the results of your research question.

Your research question will lead you to developing a hypothesis; this is why your research question needs to be specific and clear.

The hypothesis will then guide you to the most appropriate techniques you should use to answer the question. Hypotheses reflect the literature and theories on which you are basing them. They need to be testable (i.e. measurable and practical).

The null hypothesis (H0) is the proposition that there will not be a relationship between the variables you are looking at (i.e. any differences are due to chance). It always refers to the population. (Usually we don't believe this to be true.)

e.g. There is no difference in instances of illegal drug use by teenagers who are members of a gang and those who are not.

The alternative hypothesis (HA or H1) is sometimes called the research hypothesis or experimental hypothesis. It is the proposition that there will be a relationship. It is a statement of inequality between the variables you are interested in. It always refers to the sample. It is usually a declaration rather than a question and is clear, to the point and specific.

e.g. The instances of illegal drug use of teenagers who are members of a gang is different than the instances of illegal drug use of teenagers who are not gang members.

A non-directional research hypothesis - reflects an expected difference between groups but does not specify the direction of this difference (see two-tailed test).

A directional research hypothesis - reflects an expected difference between groups but does specify the direction of this difference. (see one-tailed test)

e.g. The instances of illegal drug use by teenagers who are members of a gang will be higher than the instances of illegal drug use of teenagers who are not gang members.

Then the process of testing is to ascertain which hypothesis to believe.

It is usually easier to prove something as untrue rather than true, so looking at the null hypothesis is the usual starting point.

The process of examining the null hypothesis in light of evidence from the sample is called significance testing. It is a way of establishing a range of values within which we can decide whether or not to reject the null hypothesis.

The debate over hypothesis testing

There has been discussion over whether the scientific method employed in traditional hypothesis testing is appropriate.

See below for some articles that discuss this:

- Gill, J. (1999) 'The insignificance of null hypothesis significance testing', Political Research Quarterly, 52(3), pp. 647-674.

- Wainer, H. and Robinson, D.H. (2003) 'Shaping up the practice of null hypothesis significance testing', Educational Researcher, 32(7), pp. 22-30.

- Ferguson, C.J. and Heene, M. (2012) 'A vast graveyard of undead theories: publication bias and psychological science's aversion to the null', Perspectives on Psychological Science, 7(6), pp. 555-561.

Taken from: Salkind, N.J. (2017) Statistics for people who (think they) hate statistics. 6th edn. London: SAGE pp. 144-145.

- Null hypothesis - a simple introduction (SPSS)

A significance level defines the point at which your sample evidence contradicts your null hypothesis strongly enough that you can reject it. It is the probability of rejecting the null hypothesis when it is really true.

e.g. a significance level of 0.05 indicates that there is a 5% (or 1 in 20) risk of deciding that there is an effect when in fact there is none.

The lower the significance level you set, the stronger the evidence from the sample has to be in order to reject the null hypothesis.

N.B. - it is important that you set the significance level before you carry out your study and analysis.

Using Confidence Intervals

It is possible to test the significance of your null hypothesis using a confidence interval (see under the Samples and population tab).

- If the value predicted by the null hypothesis lies outside the confidence interval, we can reject the null hypothesis and accept the alternative hypothesis.

The test statistic

Another commonly used approach is to calculate a test statistic:

- Write down your null and alternative hypotheses.

- Find the sample statistic (e.g. the mean of your sample).

- Calculate the test statistic Z score (see under Measures of spread or dispersion and Statistical tests - parametric). In this case the sample mean is compared to the population mean (assumed from the null hypothesis) and the standard error (see under Samples and population) is used rather than the standard deviation.

- Compare the test statistic with the critical values (e.g. plus or minus 1.96 for 5% significance).

- Draw a conclusion about the hypotheses: does the calculated z value lie in the critical range, i.e. above 1.96 or below -1.96? If it does, we can reject the null hypothesis. This would indicate that the results are significant (or an effect has been detected), which means that if there were no difference in the population, getting the result you have observed would be highly unlikely.
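The steps above can be sketched as a short function. This is an illustration under stated assumptions rather than a complete statistical routine: it assumes the population standard deviation is known, uses a two-tailed test, and the function name and all of the numbers are invented for the example.

```python
import math
from statistics import NormalDist

def one_sample_z_test(sample_mean, pop_mean, pop_sd, n, alpha=0.05):
    """Two-tailed one-sample z-test following the steps listed above."""
    standard_error = pop_sd / math.sqrt(n)
    z = (sample_mean - pop_mean) / standard_error       # test statistic
    critical = NormalDist().inv_cdf(1 - alpha / 2)      # about 1.96 for alpha = 0.05
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"z": z, "critical": critical, "p_value": p_value,
            "reject_null": abs(z) > critical}

# Hypothetical data: the null hypothesis says the population mean is 100
# (standard deviation 15); a sample of 36 people has a mean of 106.
result = one_sample_z_test(sample_mean=106, pop_mean=100, pop_sd=15, n=36)
print(round(result["z"], 2), round(result["critical"], 2), result["reject_null"])
# 2.4 1.96 True
```

Here the standard error is 15 / 6 = 2.5, so the sample mean sits 2.4 standard errors above the null value, beyond the 1.96 cut-off, and the null hypothesis is rejected at the 5% level.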

Type I error - this is the chance of wrongly rejecting the null hypothesis even though it is actually true, e.g. by using a 5% p level you would expect the null hypothesis to be rejected about 5% of the time when the null hypothesis is true. You could set a more stringent p level such as 1% (or 1 in 100) to be more certain of not seeing a Type I error. This, however, makes another type of error (Type II) more likely.

Type II error - this is where there is an effect, but the p value you obtain is non-significant, hence you don’t detect this effect.

- Statistical significance - what does it really mean?

- Statistical tables

One-tailed tests - where we know in which direction (e.g. larger or smaller) the difference between sample and population will be. It is a directional hypothesis.

Two-tailed tests - where we are looking at whether there is a difference between sample and population. This difference could be larger or smaller. This is a non-directional hypothesis.

If the difference is in the direction you have predicted (i.e. a one-tailed test), it is easier to get a significant result, although there are arguments against using a one-tailed test (Wright and London, 2009, pp. 98-99).*

*Wright, D. B. & London, K. (2009) First (and second) steps in statistics. 2nd edn. London: SAGE.

N.B. - think of the ‘tails’ as the regions at the far ends of a normal distribution. For a two-tailed test with a significance level of 0.05 (5%), 0.025 (2.5%) of the values would be at one end of the distribution and the other 0.025 (2.5%) would be at the other end. It is for values in these ‘critical’ extreme regions that we can reject the null hypothesis and claim that there has been an effect.
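The difference between the two kinds of test shows up in the critical values. The short sketch below (the function name is hypothetical) splits the significance level across both tails for a two-tailed test and puts all of it in one tail for a one-tailed test, using the standard normal distribution.

```python
from statistics import NormalDist

def critical_z(alpha=0.05, two_tailed=True):
    """Critical z value for a given significance level."""
    tail_area = alpha / 2 if two_tailed else alpha  # area in each rejection tail
    return NormalDist().inv_cdf(1 - tail_area)

two_tailed_cutoff = critical_z(0.05, two_tailed=True)   # about 1.96
one_tailed_cutoff = critical_z(0.05, two_tailed=False)  # about 1.645
print(f"Two-tailed: +/-{two_tailed_cutoff:.3f}")
print(f"One-tailed: {one_tailed_cutoff:.3f}")
```

The one-tailed cutoff is lower, which is why a difference in the predicted direction reaches significance more easily.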

Degrees of freedom (df) is a rather difficult mathematical concept, but it is needed to calculate the significance of certain statistical tests, such as the t-test, ANOVA and chi-squared test.

It is broadly defined as the number of "observations" (pieces of information) in the data that are free to vary when estimating statistical parameters. (Taken from Minitab Blog).

The higher the degrees of freedom, the more powerful and precise your estimates of the population parameter will be.

Typically, for a one-sample t-test the degrees of freedom are the number of values in your sample minus 1.

For chi-squared tests with a table of rows and columns the rule is:

(Number of rows minus 1) times (number of columns minus 1)
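The two rules above can be written as small helper functions. The function names and the example sizes are hypothetical.

```python
def one_sample_t_df(sample_size):
    """Degrees of freedom for a one-sample t-test: n - 1."""
    return sample_size - 1

def chi_squared_df(rows, columns):
    """Degrees of freedom for a chi-squared test on a rows x columns table."""
    return (rows - 1) * (columns - 1)

print(one_sample_t_df(25))    # a sample of 25 values gives 24
print(chi_squared_df(3, 4))   # a 3 x 4 table gives 2 * 3 = 6
```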

An accessible example to illustrate the principle of degrees of freedom uses chocolates:

- You have seven chocolates in a box, each being a different type, e.g. truffle, coffee cream, caramel cluster, fudge, strawberry dream, hazelnut whirl, toffee.

- You are being good and intend to eat only one chocolate each day of the week.

- On the first day, you can choose to eat any one of the 7 chocolate types - you have a choice from all 7.

- On the second day, you can choose from the 6 remaining chocolates, on day 3 you can choose from 5 chocolates, and so on.

- On the sixth day you have a choice of the remaining 2 chocolates you haven't eaten that week.

- However, on the seventh day you haven't really got any choice of chocolate: it has to be the one you have left in your box.

- You had 7-1 = 6 days of “chocolate” freedom—in which the chocolate you ate could vary!
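The same idea applies to numbers. As a rough sketch with made-up values: if seven values must have a mean of 6, you can pick the first six freely, but the seventh is then forced, just like the last chocolate.

```python
def last_value_given_mean(free_values, required_mean):
    """Return the one remaining value that makes the overall mean come out right."""
    n = len(free_values) + 1              # total count, including the forced value
    return required_mean * n - sum(free_values)

# Six freely chosen (hypothetical) values; the seventh has no freedom left.
free_values = [4, 8, 6, 5, 7, 9]
forced_value = last_value_given_mean(free_values, required_mean=6)
print(forced_value)  # 7 * 6 - 39 = 3
```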


- Last Updated: Jan 9, 2024 11:01 AM

- URL: https://libguides.tees.ac.uk/quantitative


Quantitative Research – Methods, Types and Analysis


Quantitative Research

Quantitative research is a type of research that collects and analyzes numerical data to test hypotheses and answer research questions. This research typically involves a large sample size and uses statistical analysis to make inferences about a population based on the data collected. It often involves the use of surveys, experiments, or other structured data collection methods to gather quantitative data.

Quantitative Research Methods

Quantitative Research Methods are as follows:

Descriptive Research Design

Descriptive research design is used to describe the characteristics of a population or phenomenon being studied. This research method is used to answer the questions of what, where, when, and how. Descriptive research designs use a variety of methods such as observation, case studies, and surveys to collect data. The data is then analyzed using statistical tools to identify patterns and relationships.

Correlational Research Design

Correlational research design is used to investigate the relationship between two or more variables. Researchers use correlational research to determine whether a relationship exists between variables and to what extent they are related. This research method involves collecting data from a sample and analyzing it using statistical tools such as correlation coefficients.
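As a minimal sketch of what a correlation coefficient measures, the Pearson coefficient can be computed by hand as below. The variable names and data (study hours and test scores) are invented for illustration.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations, and sums of squared deviations.
    co_deviation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    squares_x = sum((x - mean_x) ** 2 for x in xs)
    squares_y = sum((y - mean_y) ** 2 for y in ys)
    return co_deviation / sqrt(squares_x * squares_y)

# Hypothetical data: weekly study hours and test scores for five students.
study_hours = [1, 2, 3, 4, 5]
test_scores = [52, 55, 61, 64, 70]
r = pearson_r(study_hours, test_scores)
print(f"r = {r:.3f}")
```

Values near +1 or -1 indicate a strong linear relationship and values near 0 a weak one. A strong correlation does not by itself show that one variable causes the other.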

Quasi-experimental Research Design

Quasi-experimental research design is used to investigate cause-and-effect relationships between variables. This research method is similar to experimental research design, but it lacks full control over the independent variable, typically because participants cannot be randomly assigned to conditions. Researchers use quasi-experimental research designs when it is not feasible or ethical to fully manipulate the independent variable or to assign participants at random.

Experimental Research Design

Experimental research design is used to investigate cause-and-effect relationships between variables. This research method involves manipulating the independent variable and observing the effects on the dependent variable. Researchers use experimental research designs to test hypotheses and establish cause-and-effect relationships.

Survey Research

Survey research involves collecting data from a sample of individuals using a standardized questionnaire. This research method is used to gather information on attitudes, beliefs, and behaviors of individuals. Researchers use survey research to collect data quickly and efficiently from a large sample size. Survey research can be conducted through various methods such as online, phone, mail, or in-person interviews.

Quantitative Research Analysis Methods

Here are some commonly used quantitative research analysis methods:

Statistical Analysis

Statistical analysis is the most common quantitative research analysis method. It involves using statistical tools and techniques to analyze the numerical data collected during the research process. Statistical analysis can be used to identify patterns, trends, and relationships between variables, and to test hypotheses and theories.

Regression Analysis

Regression analysis is a statistical technique used to analyze the relationship between one dependent variable and one or more independent variables. Researchers use regression analysis to identify and quantify the impact of independent variables on the dependent variable.
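For the simplest case, one independent variable, the regression line can be fitted by ordinary least squares as sketched below. The function name and data are hypothetical, and real analyses would normally use a statistics package.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: weekly study hours (independent) and test scores (dependent).
hours = [1, 2, 3, 4, 5]
marks = [52, 55, 61, 64, 70]
slope, intercept = fit_line(hours, marks)
print(f"marks = {intercept:.1f} + {slope:.1f} * hours")
```

With these numbers the slope is 4.5, which would be read as roughly 4.5 extra marks for each additional hour of study.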

Factor Analysis

Factor analysis is a statistical technique used to identify underlying factors that explain the correlations among a set of variables. Researchers use factor analysis to reduce a large number of variables to a smaller set of factors that capture the most important information.

Structural Equation Modeling

Structural equation modeling is a statistical technique used to test complex relationships between variables. It involves specifying a model that includes both observed and unobserved variables, and then using statistical methods to test the fit of the model to the data.

Time Series Analysis

Time series analysis is a statistical technique used to analyze data that is collected over time. It involves identifying patterns and trends in the data, as well as any seasonal or cyclical variations.
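One simple way to bring out a trend is a moving average, which smooths short-term fluctuations. This is only a sketch of one basic technique among many, with invented monthly figures.

```python
def moving_average(values, window):
    """Average of each run of `window` consecutive values."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]

# Hypothetical monthly sales figures.
monthly_sales = [10, 12, 14, 13, 15, 18, 20]
trend = moving_average(monthly_sales, window=3)
print([round(value, 2) for value in trend])
```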

Multilevel Modeling

Multilevel modeling is a statistical technique used to analyze data that is nested within multiple levels. For example, researchers might use multilevel modeling to analyze data that is collected from individuals who are nested within groups, such as students nested within schools.

Applications of Quantitative Research

Quantitative research has many applications across a wide range of fields. Here are some common examples:

- Market Research: Quantitative research is used extensively in market research to understand consumer behavior, preferences, and trends. Researchers use surveys, experiments, and other quantitative methods to collect data that can inform marketing strategies, product development, and pricing decisions.

- Health Research: Quantitative research is used in health research to study the effectiveness of medical treatments, identify risk factors for diseases, and track health outcomes over time. Researchers use statistical methods to analyze data from clinical trials, surveys, and other sources to inform medical practice and policy.

- Social Science Research: Quantitative research is used in social science research to study human behavior, attitudes, and social structures. Researchers use surveys, experiments, and other quantitative methods to collect data that can inform social policies, educational programs, and community interventions.

- Education Research: Quantitative research is used in education research to study the effectiveness of teaching methods, assess student learning outcomes, and identify factors that influence student success. Researchers use experimental and quasi-experimental designs, as well as surveys and other quantitative methods, to collect and analyze data.

- Environmental Research: Quantitative research is used in environmental research to study the impact of human activities on the environment, assess the effectiveness of conservation strategies, and identify ways to reduce environmental risks. Researchers use statistical methods to analyze data from field studies, experiments, and other sources.

Characteristics of Quantitative Research

Here are some key characteristics of quantitative research:

- Numerical data: Quantitative research involves collecting numerical data through standardized methods such as surveys, experiments, and observational studies. This data is analyzed using statistical methods to identify patterns and relationships.

- Large sample size: Quantitative research often involves collecting data from a large sample of individuals or groups in order to increase the reliability and generalizability of the findings.

- Objective approach: Quantitative research aims to be objective and impartial in its approach, focusing on the collection and analysis of data rather than personal beliefs, opinions, or experiences.

- Control over variables: Quantitative research often involves manipulating variables to test hypotheses and establish cause-and-effect relationships. Researchers aim to control for extraneous variables that may impact the results.

- Replicable: Quantitative research aims to be replicable, meaning that other researchers should be able to conduct similar studies and obtain similar results using the same methods.

- Statistical analysis: Quantitative research involves using statistical tools and techniques to analyze the numerical data collected during the research process. Statistical analysis allows researchers to identify patterns, trends, and relationships between variables, and to test hypotheses and theories.

- Generalizability: Quantitative research aims to produce findings that can be generalized to larger populations beyond the specific sample studied. This is achieved through the use of random sampling methods and statistical inference.

Examples of Quantitative Research

Here are some examples of quantitative research in different fields:

- Market Research: A company conducts a survey of 1000 consumers to determine their brand awareness and preferences. The data is analyzed using statistical methods to identify trends and patterns that can inform marketing strategies.

- Health Research: A researcher conducts a randomized controlled trial to test the effectiveness of a new drug for treating a particular medical condition. The study involves collecting data from a large sample of patients and analyzing the results using statistical methods.

- Social Science Research: A sociologist conducts a survey of 500 people to study attitudes toward immigration in a particular country. The data is analyzed using statistical methods to identify factors that influence these attitudes.

- Education Research: A researcher conducts an experiment to compare the effectiveness of two different teaching methods for improving student learning outcomes. The study involves randomly assigning students to different groups and collecting data on their performance on standardized tests.

- Environmental Research: A team of researchers conducts a study to investigate the impact of climate change on the distribution and abundance of a particular species of plant or animal. The study involves collecting data on environmental factors and population sizes over time and analyzing the results using statistical methods.

- Psychology: A researcher conducts a survey of 500 college students to investigate the relationship between social media use and mental health. The data is analyzed using statistical methods to identify correlations and potential causal relationships.

- Political Science: A team of researchers conducts a study to investigate voter behavior during an election. They use survey methods to collect data on voting patterns, demographics, and political attitudes, and analyze the results using statistical methods.

How to Conduct Quantitative Research

Here is a general overview of how to conduct quantitative research:

- Develop a research question: The first step in conducting quantitative research is to develop a clear and specific research question. This question should be based on a gap in existing knowledge, and should be answerable using quantitative methods.

- Develop a research design: Once you have a research question, you will need to develop a research design. This involves deciding on the appropriate methods to collect data, such as surveys, experiments, or observational studies. You will also need to determine the appropriate sample size, data collection instruments, and data analysis techniques.

- Collect data: The next step is to collect data. This may involve administering surveys or questionnaires, conducting experiments, or gathering data from existing sources. It is important to use standardized methods to ensure that the data is reliable and valid.

- Analyze data: Once the data has been collected, it is time to analyze it. This involves using statistical methods to identify patterns, trends, and relationships between variables. Common statistical techniques include correlation analysis, regression analysis, and hypothesis testing.

- Interpret results: After analyzing the data, you will need to interpret the results. This involves identifying the key findings, determining their significance, and drawing conclusions based on the data.

- Communicate findings: Finally, you will need to communicate your findings. This may involve writing a research report, presenting at a conference, or publishing in a peer-reviewed journal. It is important to clearly communicate the research question, methods, results, and conclusions to ensure that others can understand and replicate your research.

When to use Quantitative Research

Here are some situations when quantitative research can be appropriate:

- To test a hypothesis: Quantitative research is often used to test a hypothesis or a theory. It involves collecting numerical data and using statistical analysis to determine if the data supports or refutes the hypothesis.

- To generalize findings: If you want to generalize the findings of your study to a larger population, quantitative research can be useful. This is because it allows you to collect numerical data from a representative sample of the population and use statistical analysis to make inferences about the population as a whole.

- To measure relationships between variables: If you want to measure the relationship between two or more variables, such as the relationship between age and income, or between education level and job satisfaction, quantitative research can be useful. It allows you to collect numerical data on both variables and use statistical analysis to determine the strength and direction of the relationship.

- To identify patterns or trends: Quantitative research can be useful for identifying patterns or trends in data. For example, you can use quantitative research to identify trends in consumer behavior or to identify patterns in stock market data.

- To quantify attitudes or opinions: If you want to measure attitudes or opinions on a particular topic, quantitative research can be useful. It allows you to collect numerical data using surveys or questionnaires and analyze the data using statistical methods to determine the prevalence of certain attitudes or opinions.

Purpose of Quantitative Research

The purpose of quantitative research is to systematically investigate and measure the relationships between variables or phenomena using numerical data and statistical analysis. The main objectives of quantitative research include:

- Description: To provide a detailed and accurate description of a particular phenomenon or population.

- Explanation: To explain the reasons for the occurrence of a particular phenomenon, such as identifying the factors that influence a behavior or attitude.

- Prediction: To predict future trends or behaviors based on past patterns and relationships between variables.

- Control: To identify the best strategies for controlling or influencing a particular outcome or behavior.

Quantitative research is used in many different fields, including social sciences, business, engineering, and health sciences. It can be used to investigate a wide range of phenomena, from human behavior and attitudes to physical and biological processes. The purpose of quantitative research is to provide reliable and valid data that can be used to inform decision-making and improve understanding of the world around us.

Advantages of Quantitative Research

There are several advantages of quantitative research, including:

- Objectivity: Quantitative research is based on objective data and statistical analysis, which reduces the potential for bias or subjectivity in the research process.

- Reproducibility: Because quantitative research involves standardized methods and measurements, it is more likely to be reproducible and reliable.

- Generalizability: Quantitative research allows for generalizations to be made about a population based on a representative sample, which can inform decision-making and policy development.

- Precision: Quantitative research allows for precise measurement and analysis of data, which can provide a more accurate understanding of phenomena and relationships between variables.

- Efficiency: Quantitative research can be conducted relatively quickly and efficiently, especially when compared to qualitative research, which may involve lengthy data collection and analysis.

- Large sample sizes: Quantitative research can accommodate large sample sizes, which can increase the representativeness and generalizability of the results.

Limitations of Quantitative Research

There are several limitations of quantitative research, including:

- Limited understanding of context: Quantitative research typically focuses on numerical data and statistical analysis, which may not provide a comprehensive understanding of the context or underlying factors that influence a phenomenon.

- Simplification of complex phenomena: Quantitative research often involves simplifying complex phenomena into measurable variables, which may not capture the full complexity of the phenomenon being studied.

- Potential for researcher bias: Although quantitative research aims to be objective, there is still the potential for researcher bias in areas such as sampling, data collection, and data analysis.

- Limited ability to explore new ideas: Quantitative research is often based on pre-determined research questions and hypotheses, which may limit the ability to explore new ideas or unexpected findings.

- Limited ability to capture subjective experiences: Quantitative research is typically focused on objective data and may not capture the subjective experiences of individuals or groups being studied.

- Ethical concerns: Quantitative research may raise ethical concerns, such as invasion of privacy or the potential for harm to participants.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Hypothesis Testing

When you conduct a piece of quantitative research, you are inevitably attempting to answer a research question or hypothesis that you have set. One method of evaluating this research question is via a process called hypothesis testing, which is sometimes also referred to as significance testing. Since there are many facets to hypothesis testing, we start with the example we refer to throughout this guide.

An example of a lecturer's dilemma

Two statistics lecturers, Sarah and Mike, think that they use the best method to teach their students. Each lecturer has 50 statistics students who are studying a graduate degree in management. In Sarah's class, students have to attend one lecture and one seminar class every week, whilst in Mike's class students only have to attend one lecture. Sarah thinks that seminars, in addition to lectures, are an important teaching method in statistics, whilst Mike believes that lectures are sufficient by themselves and thinks that students are better off solving problems by themselves in their own time. This is the first year that Sarah has given seminars, but since they take up a lot of her time, she wants to make sure that she is not wasting her time and that seminars improve her students' performance.

The research hypothesis

The first step in hypothesis testing is to set a research hypothesis. In Sarah and Mike's study, the aim is to examine the effect that two different teaching methods – providing both lectures and seminar classes (Sarah), and providing lectures by themselves (Mike) – had on the performance of Sarah's 50 students and Mike's 50 students. More specifically, they want to determine whether performance is different between the two different teaching methods. Whilst Mike is skeptical about the effectiveness of seminars, Sarah clearly believes that giving seminars in addition to lectures helps her students do better than those in Mike's class. This leads to the following research hypothesis:

Before moving onto the second step of the hypothesis testing process, we need to take you on a brief detour to explain why you need to run hypothesis testing at all. This is explained next.

Sample to population

If you have measured individuals (or any other type of "object") in a study and want to understand differences (or any other type of effect), you can simply summarize the data you have collected. For example, if Sarah and Mike wanted to know which teaching method was the best, they could simply compare the performance achieved by the two groups of students – the group of students that took lectures and seminar classes, and the group of students that took lectures by themselves – and conclude that the best method was the teaching method which resulted in the highest performance. However, this is generally of only limited appeal because the conclusions could only apply to students in this study. If those students were representative of all statistics students on a graduate management degree, the study would have wider appeal.

In statistics terminology, the students in the study are the sample and the larger group they represent (i.e., all statistics students on a graduate management degree) is called the population. Given that the sample of statistics students in the study is representative of a larger population of statistics students, you can use hypothesis testing to understand whether any differences or effects discovered in the study exist in the population. In layman's terms, hypothesis testing is used to establish whether a research hypothesis extends beyond those individuals examined in a single study.

Another example could be taking a sample of 200 breast cancer sufferers in order to test a new drug that is designed to eradicate this type of cancer. As much as you are interested in helping these specific 200 cancer sufferers, your real goal is to establish that the drug works in the population (i.e., all breast cancer sufferers).

As such, by taking a hypothesis testing approach, Sarah and Mike want to generalize their results to a population rather than just the students in their sample. However, in order to use hypothesis testing, you need to re-state your research hypothesis as a null and alternative hypothesis. Before you can do this, it is best to consider the process/structure involved in hypothesis testing and what you are measuring. This structure is presented on the next page.
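To give a feel for what such a test looks like in practice, here is a rough sketch comparing two class means. It is not the procedure this guide goes on to describe: the exam means and standard deviations are invented, the function name is hypothetical, and with 50 students per class a normal approximation is assumed to be adequate.

```python
from math import sqrt
from statistics import NormalDist

def two_sample_z(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Return (z, two-sided p value) for a difference between two sample means."""
    standard_error = sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    z = (mean_a - mean_b) / standard_error
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_two_sided

# Hypothetical exam results: Sarah's class (lectures plus seminars)
# against Mike's class (lectures only), 50 students each.
z, p_value = two_sample_z(68.0, 10.0, 50, 63.0, 10.0, 50)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

With these made-up figures the standard error is 2, so z = 2.5 and the two-sided p value is about 0.012. A difference this large would be unlikely if the two teaching methods performed equally in the population.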


The Ohio State University


Research Questions & Hypotheses

Generally, in quantitative studies, reviewers expect hypotheses rather than research questions. However, research questions and hypotheses serve different purposes and can be beneficial when used together.

Research Questions

Clarify the research’s aim (Farrugia et al., 2010).

- Research often begins with an interest in a topic, but a deep understanding of the subject is crucial to formulate an appropriate research question.

- Descriptive: “What factors most influence the academic achievement of senior high school students?”

- Comparative: “What is the performance difference between teaching methods A and B?”

- Relationship-based: “What is the relationship between self-efficacy and academic achievement?”

- Increasing knowledge about a subject can be achieved through systematic literature reviews, in-depth interviews with patients (and proxies), focus groups, and consultations with field experts.

- Some funding bodies, like the Canadian Institute for Health Research, recommend conducting a systematic review or a pilot study before seeking grants for full trials.

- The presence of multiple research questions in a study can complicate the design, statistical analysis, and feasibility.

- It’s advisable to focus on a single primary research question for the study.

- The primary question, clearly stated at the end of a grant proposal’s introduction, usually specifies the study population, intervention, and other relevant factors.

- The FINER criteria underscore aspects that can enhance the chances of a successful research project, including specifying the population of interest, aligning with scientific and public interest, clinical relevance, and contribution to the field, while complying with ethical and national research standards.

- The PICOT approach is crucial in developing the study’s framework and protocol, influencing inclusion and exclusion criteria and identifying patient groups for inclusion.

- Defining the specific population, intervention, comparator, and outcome helps in selecting the right outcome measurement tool.

- The more precise the population definition and stricter the inclusion and exclusion criteria, the more significant the impact on the interpretation, applicability, and generalizability of the research findings.

- A restricted study population enhances internal validity but may limit the study’s external validity and generalizability to clinical practice.

- A broadly defined study population may better reflect clinical practice but could increase bias and reduce internal validity.

- An inadequately formulated research question can negatively impact study design, potentially leading to ineffective outcomes and affecting publication prospects.

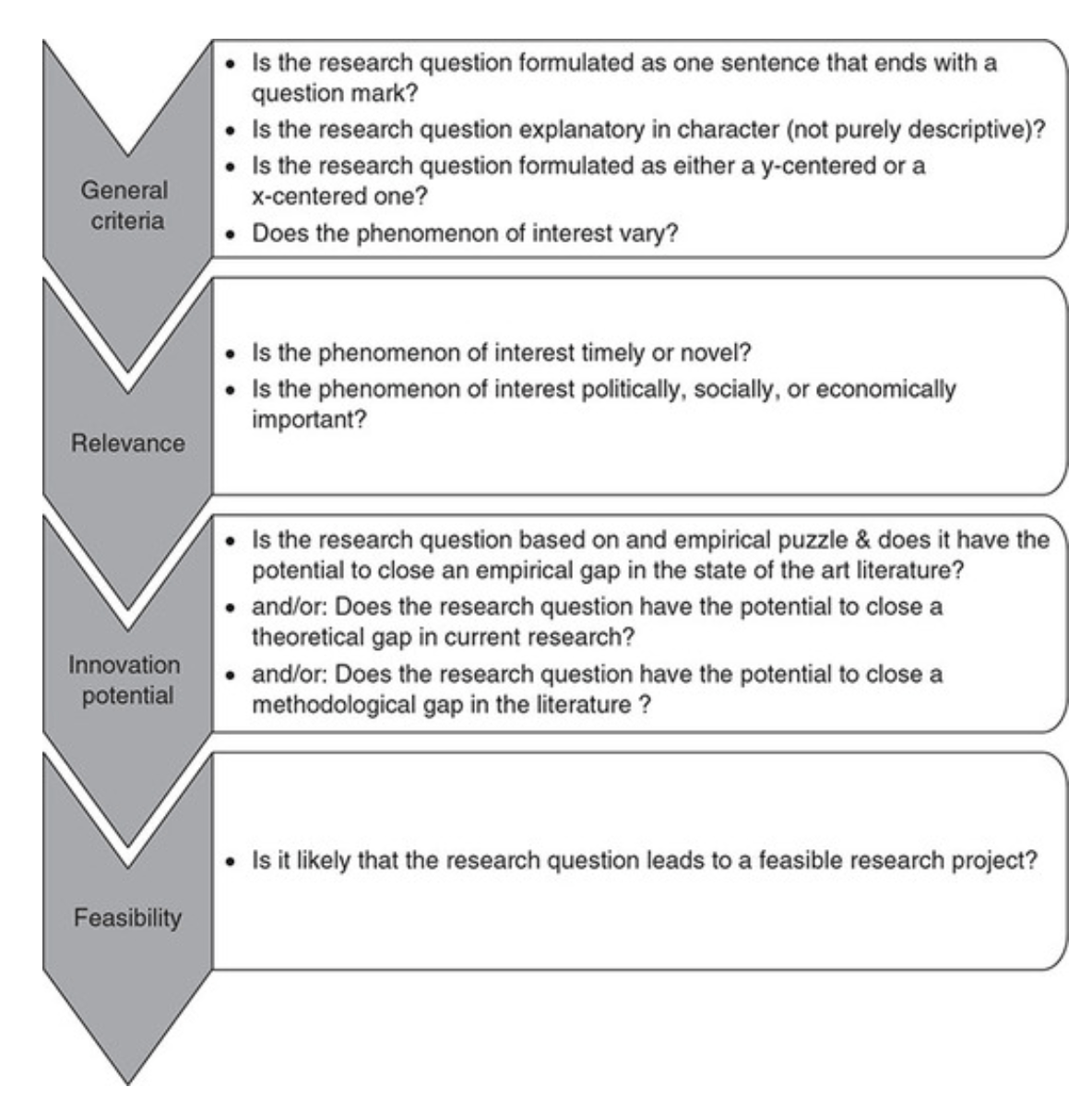

Checklist: Good research questions for social science projects (Panke, 2018)

Research Hypotheses

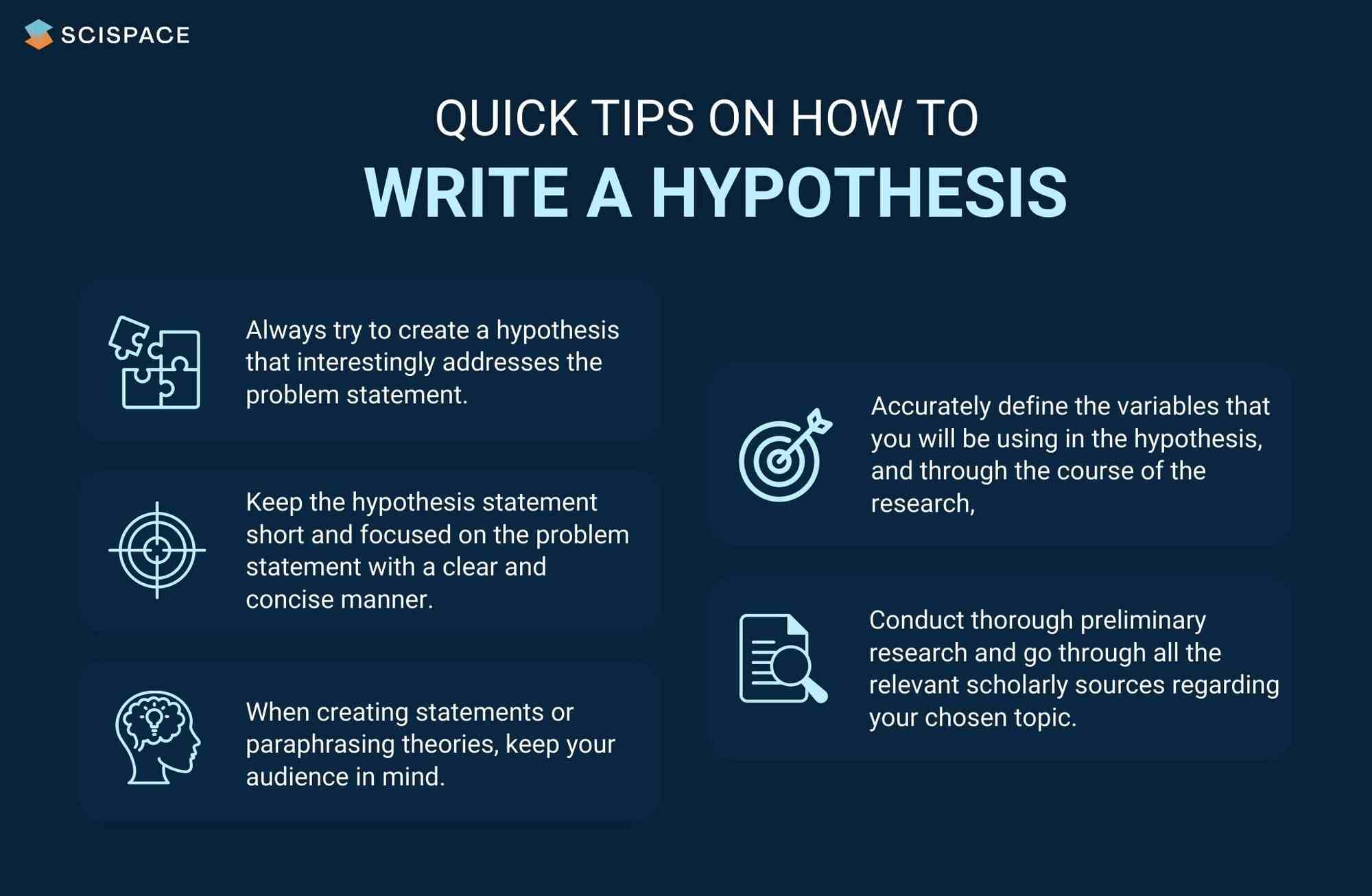

Present the researcher’s predictions based on specific statements.

- These statements define the research problem or issue and indicate the direction of the researcher’s predictions.

- Formulating the research question and hypothesis from existing data (e.g., a database) can lead to multiple statistical comparisons and potentially spurious findings due to chance.

- The research or clinical hypothesis, derived from the research question, shapes the study’s key elements: sampling strategy, intervention, comparison, and outcome variables.

- Hypotheses can express a single outcome or multiple outcomes.

- After statistical testing, the null hypothesis is either rejected or not rejected based on whether the study’s findings are statistically significant.

- Hypothesis testing helps determine if observed findings are due to true differences and not chance.

- Hypotheses can be 1-sided (specific direction of difference) or 2-sided (presence of a difference without specifying direction).

- 2-sided hypotheses are generally preferred unless there’s a strong justification for a 1-sided hypothesis.

- A solid research hypothesis, informed by a good research question, influences the research design and paves the way for defining clear research objectives.
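The point above about multiple statistical comparisons can be illustrated with a short calculation. Assuming the tests are independent and each uses the same significance level, the chance of at least one false positive grows quickly with the number of comparisons. The function name is hypothetical.

```python
def familywise_error_rate(alpha, comparisons):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** comparisons

for k in (1, 5, 10, 20):
    rate = familywise_error_rate(0.05, k)
    print(f"{k:>2} comparisons: {rate:.1%} chance of a spurious finding")
```

At a 5% level, ten independent comparisons already give roughly a 40% chance of at least one spurious "significant" result, which is why hypotheses are best fixed before looking at the data.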

Types of Research Hypothesis

- In a Y-centered research design, the focus is on the dependent variable (DV) which is specified in the research question. Theories are then used to identify independent variables (IV) and explain their causal relationship with the DV.

- Example: “An increase in teacher-led instructional time (IV) is likely to improve student reading comprehension scores (DV), because extensive guided practice under expert supervision enhances learning retention and skill mastery.”

- Hypothesis Explanation: The dependent variable (student reading comprehension scores) is the focus, and the hypothesis explores how changes in the independent variable (teacher-led instructional time) affect it.

- In X-centered research designs, the independent variable is specified in the research question. Theories are used to determine potential dependent variables and the causal mechanisms at play.

- Example: “Implementing technology-based learning tools (IV) is likely to enhance student engagement in the classroom (DV), because interactive and multimedia content increases student interest and participation.”

- Hypothesis Explanation: The independent variable (technology-based learning tools) is the focus, with the hypothesis exploring its impact on a potential dependent variable (student engagement).

- Probabilistic hypotheses suggest that changes in the independent variable are likely to lead to changes in the dependent variable in a predictable manner, but not with absolute certainty.

- Example: “The more teachers engage in professional development programs (IV), the more their teaching effectiveness (DV) is likely to improve, because continuous training updates pedagogical skills and knowledge.”

- Hypothesis Explanation: This hypothesis implies a probable relationship between the extent of professional development (IV) and teaching effectiveness (DV).

- Deterministic hypotheses state that a specific change in the independent variable will lead to a specific change in the dependent variable, implying a more direct and certain relationship.

- Example: “If the school curriculum changes from traditional lecture-based methods to project-based learning (IV), then student collaboration skills (DV) are expected to improve because project-based learning inherently requires teamwork and peer interaction.”

- Hypothesis Explanation: This hypothesis presumes a direct and definite outcome (improvement in collaboration skills) resulting from a specific change in the teaching method.

- Example (descriptive hypothesis): “Students who identify as visual learners will score higher on tests that are presented in a visually rich format compared to tests presented in a text-only format.”

- Explanation: This hypothesis aims to describe the potential difference in test scores between visual learners taking visually rich tests and text-only tests, without implying a direct cause-and-effect relationship.

- Example (comparative hypothesis): “Teaching method A will improve student performance more than method B.”

- Explanation: This hypothesis compares the effectiveness of two different teaching methods, suggesting that one will lead to better student performance than the other. It implies a direct comparison but does not necessarily establish a causal mechanism.

- Example (relationship-based hypothesis): “Students with higher self-efficacy will show higher levels of academic achievement.”

- Explanation: This hypothesis predicts a relationship between the variable of self-efficacy and academic achievement. Unlike a causal hypothesis, it does not necessarily suggest that one variable causes changes in the other, but rather that they are related in some way.

Tips for developing research questions and hypotheses for research studies

- Perform a systematic literature review (if one has not been done) to increase knowledge and familiarity with the topic and to assist with research development.

- Learn about current trends and technological advances on the topic.

- Seek input from experts, mentors, colleagues, and collaborators to refine your research question and guide the research study.

- Use the FINER criteria in the development of the research question.

- Ensure that the research question follows PICOT format.

- Develop a research hypothesis from the research question.

- Ensure that the research question and objectives are answerable, feasible, and clinically relevant.

If your research hypotheses are derived from your research questions, particularly when multiple hypotheses address a single question, it’s recommended to use both research questions and hypotheses. However, if this isn’t the case, using hypotheses over research questions is advised. It’s important to note these are general guidelines, not strict rules. If you opt not to use hypotheses, consult with your supervisor for the best approach.

Farrugia, P., Petrisor, B. A., Farrokhyar, F., & Bhandari, M. (2010). Practical tips for surgical research: Research questions, hypotheses and objectives. Canadian Journal of Surgery. Journal canadien de chirurgie, 53(4), 278–281.

Hulley, S. B., Cummings, S. R., Browner, W. S., Grady, D., & Newman, T. B. (2007). Designing clinical research. Philadelphia.

Panke, D. (2018). Research design & method selection: Making good choices in the social sciences. Research Design & Method Selection, 1-368.

Quantitative Research Methods

Hypothesis Tests

A hypothesis test is exactly what it sounds like: You make a hypothesis about the parameters of a population, and the test determines whether your hypothesis is consistent with your sample data.

- Hypothesis Testing Penn State University tutorial

- Hypothesis Testing Wolfram MathWorld overview

- Hypothesis Testing Minitab Blog entry

- List of Statistical Tests A list of commonly used hypothesis tests and the circumstances under which they're used.

The p-value of a hypothesis test is the probability of obtaining sample data at least as extreme as yours if your hypothesis were not correct (that is, if the null hypothesis were true). Traditionally, researchers have used a p-value of 0.05 (a 5% probability of seeing such data when your hypothesis is wrong) as the threshold for declaring a result statistically significant, which is not the same as proving that the hypothesis is true. But there is a long history of debate and controversy over p-values and significance levels.
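To make the definition concrete, here is a minimal sketch of a one-sided exact binomial test. The scenario (15 heads in 20 coin flips), the function name `binomial_p_value`, and the constant `ALPHA` are illustrative assumptions, not part of any library or of the guide itself.

```python
from math import comb

def binomial_p_value(successes: int, trials: int, null_prob: float = 0.5) -> float:
    """One-sided p-value: probability of at least `successes` successes
    in `trials` attempts if the null hypothesis (null_prob) is true."""
    return sum(
        comb(trials, k) * null_prob**k * (1 - null_prob) ** (trials - k)
        for k in range(successes, trials + 1)
    )

ALPHA = 0.05  # conventional significance threshold (hypothetical choice)

# Hypothetical data: 15 heads in 20 flips; null hypothesis: the coin is fair.
p_value = binomial_p_value(successes=15, trials=20)
reject_null = p_value < ALPHA

print(f"p-value = {p_value:.4f}; reject null at {ALPHA}: {reject_null}")
```

A small p-value here says only that data this extreme would be unusual for a fair coin. It does not give the probability that the coin is biased.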

Nonparametric Tests

Many of the most commonly used hypothesis tests rely on assumptions about your sample data, for instance that it is continuous and that it is drawn from a normally distributed population. Nonparametric hypothesis tests make far fewer assumptions about the distribution of the data, and many can be used on categorical data.

- Nonparametric Tests at Boston University A lesson covering four common nonparametric tests.

- Nonparametric Tests at Penn State Tutorial covering the theory behind nonparametric tests as well as several commonly used tests.
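As one illustration of a test that does not assume normality, the sketch below runs an exact two-sided permutation test on the difference in group means. The permutation test is chosen here only as an example of the nonparametric idea; it is not necessarily one of the tests covered in the linked lessons. The data and the function name `permutation_p_value` are hypothetical.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test for a difference in means.

    Enumerates every way of splitting the pooled data into groups of the
    original sizes and counts the splits whose absolute mean difference is
    at least as large as the observed one."""
    pooled = list(group_a) + list(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = total = 0
    for idx in combinations(range(len(pooled)), len(group_a)):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        # Small tolerance guards against floating-point rounding.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total

# Hypothetical scores for two small groups.
treatment = [12, 15, 14, 16]
control = [8, 9, 11, 10]
p = permutation_p_value(treatment, control)
print(f"permutation p-value = {p:.4f}")
```

Full enumeration is only practical for small samples. With larger groups, a random sample of permutations is the usual substitute.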

- Last Updated: Aug 18, 2023 11:55 AM

- URL: https://guides.library.duq.edu/quant-methods

Qualitative vs. Quantitative Research — Here’s What You Need to Know

Will Mellor, Director of Surveys, GLG

Qualitative vs. Quantitative — you’ve heard the terms before, but what do they mean? Here’s what you need to know on when to use them and how to apply them in your research projects.

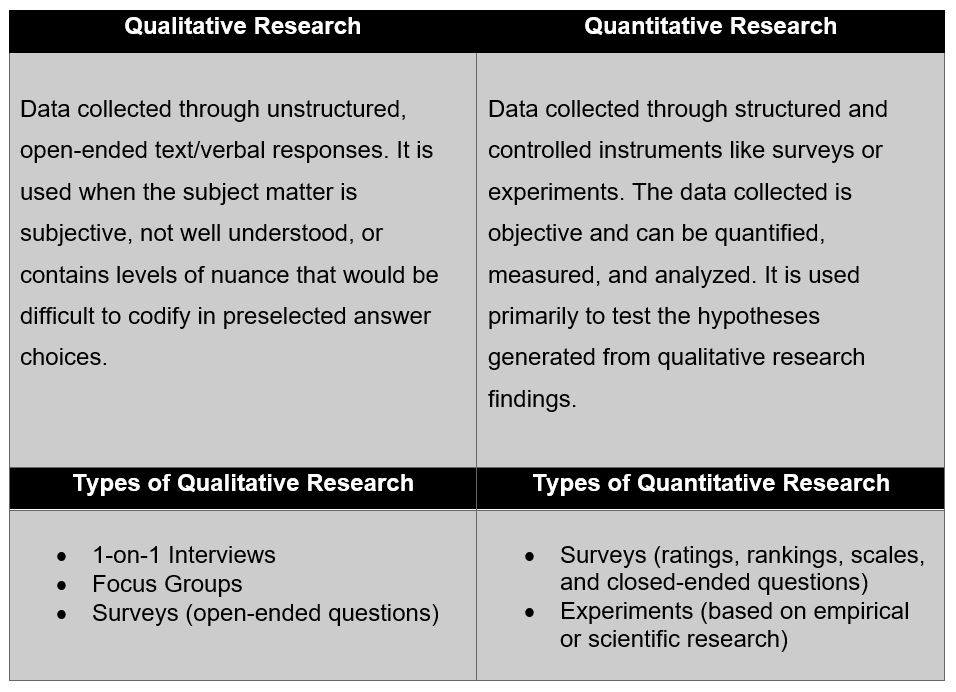

Most research projects you undertake will likely require some combination of qualitative and quantitative data. The magnitude of each will depend on what you need to accomplish. They are opposite in their approach, which makes them balanced in their outcomes.

When Are They Applied?

Qualitative

Qualitative research is used to formulate a hypothesis. If you need deeper information about a topic you know little about, qualitative research can help you uncover themes. For this reason, qualitative research often comes prior to quantitative. It allows you to get a baseline understanding of the topic and start to formulate hypotheses around correlation and causation.

Quantitative

Quantitative research is used to test or confirm a hypothesis. Qualitative research usually informs quantitative. You need to have enough understanding about a topic in order to develop a hypothesis you can test. Since quantitative research is highly structured, you first need to understand what the parameters are and how variable they are in practice. This allows you to create a research outline that is controlled in all the ways that will produce high-quality data.

In practice, the parameters are the factors you want to test against your hypothesis. If your hypothesis is that COVID is going to transform the way companies think about office space, some of your parameters might include the percent of your workforce working from home pre- and post-COVID, total square footage of office space held, and/or real-estate spend expectations by executive leadership. You would also want to know the variability of those parameters. In the COVID example, you will need to know standard ranges of square footage and real-estate expenditures so that you can create answer options that will capture relevant, high-quality, and easily actionable data.

Methods of Research

Often, qualitative research is conducted with a small sample size and includes many open-ended questions. The goal is to understand “Why?” and the thinking behind the decisions. The best way to facilitate this type of research is through one-on-one interviews, focus groups, and sometimes surveys. A major benefit of the interview and focus group formats is the ability to ask follow-up questions and dig deeper on answers that are particularly insightful.

Conversely, quantitative research is designed for larger sample sizes, which can garner perspectives across a wide spectrum of respondents. While not always necessary, sample sizes can sometimes be large enough to yield statistically significant results. The best way to facilitate this type of research is through surveys or large-scale experiments.

Unsurprisingly, the two different approaches will generate different types of data that will need to be analyzed differently.

For qualitative data, you’ll end up with data that will be highly textual in nature. You’ll be reading through the data and looking for key themes that emerge over and over. This type of research is also great at producing quotes that can be used in presentations or reports. Quotes are a powerful tool for conveying sentiment and making a poignant point.

For quantitative data, you’ll end up with a data set that can be analyzed, often with statistical software such as Excel, R, or SPSS. You can ask many different types of questions that produce this quantitative data, including rating/ranking questions, single-select, multiselect, and matrix table questions. These question types will produce data that can be analyzed to find averages, ranges, growth rates, percentage changes, minimums/maximums, and even time-series data for longer-term trend analysis.
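As a rough sketch of the kinds of summaries mentioned above, the snippet below computes an average, minimum, maximum, range, and year-over-year percentage change using only Python's standard library. The ratings, spend figures, and the helper name `pct_change` are made up for illustration.

```python
from statistics import mean

# Hypothetical responses to a 1-5 rating question.
ratings = [4, 5, 3, 4, 2, 5, 4]

# Hypothetical yearly spend (in thousands) reported by respondents.
yearly_spend = {2021: 120.0, 2022: 150.0, 2023: 180.0}

summary = {
    "average": mean(ratings),
    "minimum": min(ratings),
    "maximum": max(ratings),
    "range": max(ratings) - min(ratings),
}

def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100

# Year-over-year percentage change, keyed by the later year.
years = sorted(yearly_spend)
changes = {
    later: pct_change(yearly_spend[earlier], yearly_spend[later])
    for earlier, later in zip(years, years[1:])
}

print(summary)
print({year: round(change, 1) for year, change in changes.items()})
```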

Mixed Methods Approach

You aren’t limited to just one approach. If you need both quantitative and qualitative data, then collect both. You can even collect both quantitative and qualitative data within one type of research instrument. In a survey, you can ask both open-ended questions about “Why?” as well as closed-ended, data-related questions. Even in an unstructured format, like an interview or focus group, you can ask numerical questions to capture analyzable data.

Just be careful. While qualitative themes can be generalized, it can be dangerous to generalize on such a small sample size of quantitative data. For instance, why companies like a certain software platform may fall into three to five key themes. How much they spend on that platform can be highly variable.

The Takeaway

If you are unfamiliar with the topic you are researching, qualitative research is the best first approach. As you get deeper in your research, certain themes will emerge, and you’ll start to form hypotheses. From there, quantitative research can provide larger-scale data sets that can be analyzed to either support or refute the hypotheses you formulated earlier in your research. Most importantly, the two approaches are not mutually exclusive. You can have an eye for both themes and data throughout the research process. You’ll just be leaning more heavily to one or the other depending on where you are in your understanding of the topic.

About Will Mellor

Will Mellor leads a team of accomplished project managers who serve financial service firms across North America. His team manages end-to-end survey delivery from first draft to final deliverable. Will is an expert on GLG’s internal membership and consumer populations, as well as survey design and research. Before coming to GLG, he was the vice president of an economic consulting group, where he was responsible for designing economic impact models for clients in both the public sector and the private sector. Will has bachelor’s degrees in international business and finance and a master’s degree in applied economics.

- J Korean Med Sci

- v.36(50); 2021 Dec 27

Formulating Hypotheses for Different Study Designs

Durga Prasanna Misra

1 Department of Clinical Immunology and Rheumatology, Sanjay Gandhi Postgraduate Institute of Medical Sciences, Lucknow, India.

Armen Yuri Gasparyan

2 Departments of Rheumatology and Research and Development, Dudley Group NHS Foundation Trust (Teaching Trust of the University of Birmingham, UK), Russells Hall Hospital, Dudley, UK.

Olena Zimba

3 Department of Internal Medicine #2, Danylo Halytsky Lviv National Medical University, Lviv, Ukraine.

Marlen Yessirkepov

4 Department of Biology and Biochemistry, South Kazakhstan Medical Academy, Shymkent, Kazakhstan.

Vikas Agarwal

George D. Kitas

5 Centre for Epidemiology versus Arthritis, University of Manchester, Manchester, UK.