
Synthesising the data

Synthesis is a stage in the systematic review process where extracted data, that is the findings of individual studies, are combined and evaluated.

The general purpose of extracting and synthesising data is to show the outcomes and effects of various studies, and to identify issues with methodology and quality. This means that your synthesis might reveal several elements, including:

- overall level of evidence

- the degree of consistency in the findings

- what the positive effects of a drug or treatment are, and what these effects are based on

- how many studies found a relationship or association between two components, e.g. the impact of disability-assistance animals on the psychological health of workplaces

There are two commonly accepted methods of synthesis in systematic reviews:

- Qualitative data synthesis

- Quantitative data synthesis (i.e. meta-analysis)

The way the data is extracted from your studies, then synthesised and presented, depends on the type of data being handled.

Qualitative data synthesis

In a qualitative systematic review, data can be presented in a number of different ways. A typical procedure in the health sciences is thematic analysis, which in the context of a review is often called thematic synthesis.

Thematic synthesis has three stages:

- the coding of text ‘line-by-line’

- the development of ‘descriptive themes’

- and the generation of ‘analytical themes’
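The three stages above can be sketched as a small data structure. This is only an illustration: the excerpts, code labels and theme names are invented, the function name `build_theme_tree` is hypothetical, and in a real review the mappings come from reviewers' judgement rather than from a lookup table.

```python
from collections import defaultdict

# Stage 1: line-by-line coding. Each extracted line of text gets one or more codes.
# The excerpts and code labels are invented purely for illustration.
coded_lines = [
    ("I never knew who to call after discharge", ["unclear contact point"]),
    ("The nurse explained every test result", ["clear explanation"]),
    ("Nobody told me what the scan was for", ["missing information"]),
    ("I could ring the clinic whenever I worried", ["easy access to staff"]),
]

# Stage 2: codes are grouped into descriptive themes (hard-coded here).
code_to_descriptive_theme = {
    "unclear contact point": "access to care team",
    "easy access to staff": "access to care team",
    "clear explanation": "information sharing",
    "missing information": "information sharing",
}

# Stage 3: descriptive themes are interpreted into analytical themes.
descriptive_to_analytical = {
    "access to care team": "continuity supports confidence",
    "information sharing": "knowledge supports confidence",
}


def build_theme_tree(lines, code_map, theme_map):
    """Return {analytical theme: {descriptive theme: [excerpts]}}."""
    tree = defaultdict(lambda: defaultdict(list))
    for excerpt, codes in lines:
        for code in codes:
            descriptive = code_map[code]
            analytical = theme_map[descriptive]
            tree[analytical][descriptive].append(excerpt)
    # Convert the nested defaultdicts to plain dicts for easier inspection.
    return {a: dict(d) for a, d in tree.items()}


theme_tree = build_theme_tree(
    coded_lines, code_to_descriptive_theme, descriptive_to_analytical
)
```

Keeping the excerpts attached to each theme makes it easy to trace an analytical theme back to the text that supports it.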

If you have qualitative information, some of the more common tools used to summarise data include:

- textual descriptions, i.e. written words

- thematic or content analysis

Example qualitative systematic review

A good example of how to conduct a thematic analysis in a systematic review is the following journal article on cancer patients. In it, the authors go through the process of:

- identifying and coding information about the selected studies’ methodologies and findings on patient care

- organising these codes into subheadings and descriptive categories

- developing these categories into analytical themes

What Facilitates “Patient Empowerment” in Cancer Patients During Follow-Up: A Qualitative Systematic Review of the Literature

Quantitative data synthesis

In a quantitative systematic review, data is presented statistically. Typically, this is referred to as a meta-analysis.

The usual method is to combine and evaluate data from multiple studies. This is normally done in order to draw conclusions about outcomes, effects, shortcomings of studies and/or applicability of findings.

Remember, the data you synthesise should relate to your research question and protocol (plan). In the case of quantitative analysis, the data extracted and synthesised will relate to whatever method was used to generate the research question (e.g. PICO method), and whatever quality appraisals were undertaken in the analysis stage.

If you have quantitative information, some of the more common tools used to summarise data include:

- grouping of similar data, i.e. presenting the results in tables

- charts, e.g. pie charts

- graphical displays, e.g. forest plots

Example of a quantitative systematic review

The following article combines a systematic review with a statistical synthesis of the included studies, i.e. a meta-analysis.

Effectiveness of Acupuncturing at the Sphenopalatine Ganglion Acupoint Alone for Treatment of Allergic Rhinitis: A Systematic Review and Meta-Analysis

About meta-analyses

A systematic review may include a meta-analysis, although this is not a requirement. A meta-analysis, by contrast, is normally carried out as part of a systematic review.

A meta-analysis is a statistical analysis that combines data from previous studies to calculate an overall result.
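One common way to calculate such an overall result is inverse-variance weighting under a fixed-effect model. The sketch below assumes each study reports an effect estimate and its standard error; the function name `pooled_fixed_effect` and the numbers are illustrative, and a real meta-analysis would normally use dedicated software and consider a random-effects model as well.

```python
import math


def pooled_fixed_effect(estimates, standard_errors):
    """Inverse-variance weighted average of study estimates (fixed-effect model).

    Returns the pooled estimate and its standard error.
    """
    # More precise studies (smaller standard error) receive larger weights.
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Two hypothetical studies with equal precision: the pooled value is their average.
pooled, pooled_se = pooled_fixed_effect([0.2, 0.4], [0.1, 0.1])
```

Because the weights add up, the pooled standard error is smaller than that of either study alone, which is the statistical benefit of combining studies.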

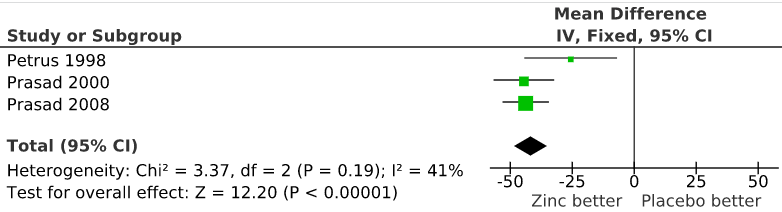

One way of accurately representing all the data is in the form of a forest plot. A forest plot displays the results of multiple studies side by side in order to show the point estimates arising from different studies of the same condition or treatment.

A forest plot comprises a graphical display and often also a table. The graphical display shows the point estimate (for example, the mean difference) for each study, usually with a confidence interval (the horizontal bars). Each estimate is plotted relative to the vertical line of no difference.

The following is an example of the graphical representation of a forest plot.

“File:The effect of zinc acetate lozenges on the duration of the common cold.svg” by Harri Hemilä is licensed under CC BY 3.0
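To make the parts of a forest plot concrete, here is a minimal text-only sketch. It assumes each study supplies an estimate and a standard error, uses a normal-approximation 95% confidence interval (z = 1.96), and treats 0 as the line of no difference. The function names and study values are hypothetical.

```python
def forest_rows(studies, no_effect=0.0, z=1.96):
    """studies: list of (label, estimate, standard_error) tuples."""
    rows = []
    for label, estimate, se in studies:
        ci_low, ci_high = estimate - z * se, estimate + z * se
        rows.append({
            "study": label,
            "estimate": estimate,
            "ci_low": ci_low,
            "ci_high": ci_high,
            # An interval that contains the no-effect value crosses the vertical line.
            "crosses_no_effect": ci_low <= no_effect <= ci_high,
        })
    return rows


def render_text_forest(rows, lo=-1.0, hi=1.0, width=41, no_effect=0.0):
    """Draw each study as a bar of '-' with 'o' at the estimate and '|' at no effect."""
    def col(x):
        x = min(max(x, lo), hi)  # clip values that fall outside the axis
        return round((x - lo) / (hi - lo) * (width - 1))

    lines = []
    for r in rows:
        cells = [" "] * width
        for i in range(col(r["ci_low"]), col(r["ci_high"]) + 1):
            cells[i] = "-"
        cells[col(no_effect)] = "|"
        cells[col(r["estimate"])] = "o"
        lines.append(f'{r["study"]:<10}' + "".join(cells))
    return lines


rows = forest_rows([("Study A", 0.5, 0.1), ("Study B", 0.1, 0.2)])
for line in render_text_forest(rows):
    print(line)
```

In this example the bar for Study A sits entirely to one side of the vertical line, while the bar for Study B crosses it, so Study B on its own is compatible with no difference.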

Watch the following short video where a social health example is used to explain how to construct a forest plot graphic.


Forest Plots – Understanding a Meta-Analysis in 5 Minutes or Less (5:38 min) by The NCCMT (YouTube)


Research and Writing Skills for Academic and Graduate Researchers Copyright © 2022 by RMIT University is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, except where otherwise noted.



AIMS Public Health, v.3(1); 2016

What Synthesis Methodology Should I Use? A Review and Analysis of Approaches to Research Synthesis

Kara Schick-Makaroff

1 Faculty of Nursing, University of Alberta, Edmonton, AB, Canada

Marjorie MacDonald

2 School of Nursing, University of Victoria, Victoria, BC, Canada

Marilyn Plummer

3 College of Nursing, Camosun College, Victoria, BC, Canada

Judy Burgess

4 Student Services, University Health Services, Victoria, BC, Canada

Wendy Neander

Associated data, additional file 1.

When we began this process, we were doctoral students and a faculty member in a research methods course. As students, we were facing a review of the literature for our dissertations. We encountered several different ways of conducting a review but were unable to locate any resources that synthesized all of the various synthesis methodologies. Our purpose is to present a comprehensive overview and assessment of the main approaches to research synthesis. We use ‘research synthesis’ as a broad overarching term to describe various approaches to combining, integrating, and synthesizing research findings.

We conducted an integrative review of the literature to explore the historical, contextual, and evolving nature of research synthesis. We searched five databases, reviewed websites of key organizations, hand-searched several journals, and examined relevant texts from the reference lists of the documents we had already obtained.

We identified four broad categories of research synthesis methodology including conventional, quantitative, qualitative, and emerging syntheses. Each of the broad categories was compared to the others on the following: key characteristics, purpose, method, product, context, underlying assumptions, unit of analysis, strengths and limitations, and when to use each approach.

Conclusions

The current state of research synthesis reflects significant advancements in emerging synthesis studies that integrate diverse data types and sources. New approaches to research synthesis provide a much broader range of review alternatives available to health and social science students and researchers.

1. Introduction

Since the turn of the century, public health emergencies have been identified worldwide, particularly related to infectious diseases. For example, the Severe Acute Respiratory Syndrome (SARS) epidemic in Canada in 2002-2003, the recent Ebola epidemic in Africa, and the ongoing HIV/AIDS pandemic are global health concerns. There have also been dramatic increases in the prevalence of chronic diseases around the world [1]–[3]. These epidemiological challenges have raised concerns about the ability of health systems worldwide to address these crises. As a result, public health systems reform has been initiated in a number of countries. In Canada, as in other countries, the role of evidence to support public health reform and improve population health has been given high priority. Yet, there continues to be a significant gap between the production of evidence through research and its application in practice [4]–[5]. One strategy to address this gap has been the development of new research synthesis methodologies to deal with the time-sensitive and wide-ranging evidence needs of policy makers and practitioners in all areas of health care, including public health.

As doctoral nursing students facing a review of the literature for our dissertations, and as a faculty member teaching a research methods course, we encountered several ways of conducting a research synthesis but found no comprehensive resources that discussed, compared, and contrasted various synthesis methodologies on their purposes, processes, strengths and limitations. To complicate matters, writers use terms interchangeably or use different terms to mean the same thing, and the literature is often contradictory about various approaches. Some texts [6] , [7] – [9] did provide a preliminary understanding about how research synthesis had been taken up in nursing, but these did not meet our requirements. Thus, in this article we address the need for a comprehensive overview of research synthesis methodologies to guide public health, health care, and social science researchers and practitioners.

Research synthesis is relatively new in public health but has a long history in other fields dating back to the late 1800s. Research synthesis, a research process in its own right [10] , has become more prominent in the wake of the evidence-based movement of the 1990s. Research syntheses have found their advocates and detractors in all disciplines, with challenges to the processes of systematic review and meta-analysis, in particular, being raised by critics of evidence-based healthcare [11] – [13] .

Our purpose was to conduct an integrative review of the literature to explore the historical, contextual, and evolving nature of research synthesis [14] – [15] . We synthesize and critique the main approaches to research synthesis that are relevant for public health, health care, and social scientists. Research synthesis is the overarching term we use to describe approaches to combining, aggregating, integrating, and synthesizing primary research findings. Each synthesis methodology draws on different types of findings depending on the purpose and product of the chosen synthesis (see Additional File 1 ).

3. Method of Review

Based on our current knowledge of the literature, we identified these approaches to include in our review: systematic review, meta-analysis, qualitative meta-synthesis, meta-narrative synthesis, scoping review, rapid review, realist synthesis, concept analysis, literature review, and integrative review. Our first step was to divide the synthesis types among the research team. Each member did a preliminary search to identify key texts. The team then met to develop search terms and a framework to guide the review.

Over the period of 2008 to 2012 we extensively searched the literature, updating our search at several time points and not restricting our search by date. The dates of texts reviewed range from 1967 to 2015. We used the terms above combined with the term “method*” (e.g., “realist synthesis” and “method*”) in the database Health Source: Academic Edition (includes Medline and CINAHL). This search yielded very few texts on some methodologies and many on others. We realized that many documents on research synthesis had not been picked up in the search. Therefore, we also searched Google Scholar, PubMed, ERIC, and Social Science Index, as well as the websites of key organizations such as the Joanna Briggs Institute, the University of York Centre for Evidence-Based Nursing, and the Cochrane Collaboration database. We hand-searched several nursing, social science, public health and health policy journals. Finally, we traced relevant documents from the references in obtained texts.
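The term-combination step described above can be sketched as follows. The list of synthesis types is taken from the first paragraph of this section; the Boolean `AND` syntax, the unquoted truncation term and the function name `build_query` are assumptions for illustration, since the exact query syntax depends on the database and is not given in the text.

```python
# Synthesis approaches named at the start of the Method of Review section.
SYNTHESIS_TERMS = [
    "systematic review",
    "meta-analysis",
    "qualitative meta-synthesis",
    "meta-narrative synthesis",
    "scoping review",
    "rapid review",
    "realist synthesis",
    "concept analysis",
    "literature review",
    "integrative review",
]


def build_query(term, modifier="method*"):
    """Combine a quoted phrase with a truncated keyword (assumed database syntax)."""
    return f'"{term}" AND {modifier}'


queries = [build_query(term) for term in SYNTHESIS_TERMS]
```

Generating the queries from one list keeps the search reproducible when it is rerun at later time points.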

We included works that met the following inclusion criteria: (1) published in English; (2) discussed the history of research synthesis; (3) explicitly described the approach and specific methods; or (4) identified issues, challenges, strengths and limitations of the particular methodology. We excluded research reports that resulted from the use of particular synthesis methodologies unless they also included criteria 2, 3, or 4 above.
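One possible reading of these criteria is that a text had to be in English and meet at least one of criteria 2 to 4. The sketch below encodes that reading as an assumption; the class `CandidateText` and its field names are hypothetical. Under this reading, the exclusion of research reports that meet none of criteria 2 to 4 needs no separate rule.

```python
from dataclasses import dataclass


@dataclass
class CandidateText:
    in_english: bool          # criterion 1
    discusses_history: bool   # criterion 2
    describes_methods: bool   # criterion 3
    identifies_issues: bool   # criterion 4


def is_included(text):
    """Assumed rule: criterion 1 AND at least one of criteria 2, 3 or 4."""
    methodological = (
        text.discusses_history
        or text.describes_methods
        or text.identifies_issues
    )
    return text.in_english and methodological


# A research report that merely applies a synthesis method meets none of 2 to 4.
plain_report = CandidateText(True, False, False, False)
methods_paper = CandidateText(True, False, True, False)
```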

Based on our search, we identified additional types of research synthesis (e.g., meta-interpretation, best evidence synthesis, critical interpretive synthesis, meta-summary, grounded formal theory). Still, we missed some important developments (in meta-analysis, for example) that were identified by the journal's reviewers and are now discussed briefly in the paper. The final set of 197 texts included in our review comprised theoretical, empirical, and conceptual papers, books, editorials and commentaries, and policy documents.

In our preliminary review of key texts, the team inductively developed a framework of the important elements of each method for comparison. In the next phase, each text was read carefully, and data for these elements were extracted into a table for comparison on the points of key characteristics, purpose, methods, and product (see Additional File 1). Once the data were grouped and extracted, we synthesized across categories based on the following additional points of comparison: complexity of the process, degree of systematization, consideration of context, underlying assumptions, unit of analysis, and when to use each approach. In our results, we discuss our comparison of the various synthesis approaches on the elements above. Because the review drew only on published documents, ethics approval was not required.

We identified four broad categories of research synthesis methodology: conventional, quantitative, qualitative, and emerging syntheses. From our dataset of 197 texts, we had 14 texts on conventional synthesis, 64 on quantitative synthesis, 78 on qualitative synthesis, and 41 on emerging syntheses. Table 1 provides an overview of the four types of research synthesis, definitions, types of data used, products, and examples of the methodology.

Although we group these types of synthesis into four broad categories on the basis of similarities, each type within a category has unique characteristics, which may differ from the overall group similarities. Each could be explored in greater depth to tease out their unique characteristics, but detailed comparison is beyond the scope of this article.

Additional File 1 presents one or more selected types of synthesis that represent the broad category but is not an exhaustive presentation of all types within each category. It provides more depth for specific examples from each category of synthesis on the characteristics, purpose, methods, and products than is found in Table 1 .

4.1. Key Characteristics

4.1.1. What is it?

Here we draw on two types of categorization. First, we utilize Dixon-Woods et al.'s [49] classification of research syntheses as being either integrative or interpretive. (Please note that integrative syntheses are not the same as an integrative review as defined in Additional File 1.) Second, we use Popay's [80] enhancement and epistemological models.

The defining characteristic of integrative syntheses is that they summarize data, which is achieved by pooling [49]. Integrative syntheses include systematic reviews, meta-analyses, as well as scoping and rapid reviews because each of these focuses on summarizing data. They also define concepts from the outset (although this may not always be true in scoping or rapid reviews) and deal with a well-specified phenomenon of interest.

Interpretive syntheses are primarily concerned with the development of concepts and theories that integrate concepts [49]. They involve induction and interpretation, and the analysis is conceptual both in process and outcome: “the product is not aggregations of data, but theory” [49, p.12]. Examples include integrative reviews, some systematic reviews, all of the qualitative syntheses, meta-narrative, realist and critical interpretive syntheses. Of note, both quantitative and qualitative studies can be either integrative or interpretive.

The second categorization, enhancement versus epistemological, applies to those approaches that use multiple data types and sources [80]. Popay's [80] classification reflects the ways that qualitative data are valued in relation to quantitative data.

In the enhancement model, qualitative data add something to quantitative analysis. The enhancement model is reflected in systematic reviews and meta-analyses that use some qualitative data to enhance interpretation and explanation. It may also be reflected in some rapid reviews that draw on quantitative data but use some qualitative data.

The epistemological model assumes that quantitative and qualitative data are equal and each has something unique to contribute. All of the other review approaches, except pure quantitative or qualitative syntheses, reflect the epistemological model because they value all data types equally but see them as contributing different understandings.

4.1.2. Data type

By and large, the quantitative approaches (quantitative systematic review and meta-analysis) have typically used purely quantitative data (i.e., expressed in numeric form). More recently, both the Cochrane [81] and Campbell [82] collaborations have been grappling with the need to integrate qualitative research into a systematic review, and with the process for doing so. The qualitative approaches use qualitative data (i.e., expressed in words). All of the emerging synthesis types, as well as the conventional integrative review, incorporate qualitative and quantitative study designs and data.

4.1.3. Research question

Four types of research questions direct inquiry across the different types of syntheses. The first is a well-developed research question that gives direction to the synthesis (e.g., meta-analysis, systematic review, meta-study, concept analysis, rapid review, realist synthesis). The second begins as a broad general question that evolves and becomes more refined over the course of the synthesis (e.g., meta-ethnography, scoping review, meta-narrative, critical interpretive synthesis). In the third type, the synthesis begins with a phenomenon of interest and the question emerges in the analytic process (e.g., grounded formal theory). In the fourth, there is no clear question, but rather a general review purpose (e.g., integrative review). Thus, the requirement for a well-defined question cuts across at least three of the synthesis categories (quantitative, qualitative, and emerging).

4.1.4. Quality appraisal

This is a contested issue within and between the four synthesis categories. There are strong proponents of quality appraisal in the quantitative traditions of systematic review and meta-analysis based on the need for strong studies that will not jeopardize validity of the overall findings. Nonetheless, there is no consensus on pre-defined criteria; many scales exist that vary dramatically in composition. This has methodological implications for the credibility of findings [83] .

Specific methodologies from the conventional, qualitative, and emerging categories support quality appraisal but do so with caveats. In conventional integrative reviews appraisal is recommended, but depends on the sampling frame used in the study [18]. In meta-study, appraisal criteria are explicit but quality criteria are used in different ways depending on the specific requirements of the inquiry [54]. Among the emerging syntheses, meta-narrative review developers support appraisal of a study based on criteria from the research tradition of the primary study [67], [84]–[85]. Realist synthesis similarly supports the use of high quality evidence, but appraisal checklists are viewed with scepticism and evidence is judged based on relevance to the research question and whether a credible inference may be drawn [69]. Like realist syntheses, critical interpretive syntheses do not judge quality using standardized appraisal instruments. They will exclude fatally flawed studies, but there is no consensus on what ‘fatally flawed’ means [49], [71]. Appraisal is based on relevance to the inquiry, not rigor of the study.

There is no agreement on quality appraisal among qualitative meta-ethnographers, with some supporting and others refuting the need for appraisal [60], [62]. Opponents of quality appraisal are found among authors of qualitative (grounded formal theory and concept analysis) and emerging syntheses (scoping and rapid reviews) because quality is not deemed relevant to the intention of the synthesis; the studies being reviewed are not effectiveness studies where quality is extremely important. These qualitative syntheses are often reviews of theoretical developments where the concept itself is what is important, or reviews that provide quotations from the raw data so readers can make their own judgements about the relevance and utility of the data. For example, in formal grounded theory, where the purpose is theory generation, the authenticity of the data used to generate the theory is not as important as the conceptual category. Inaccuracies may be corrected in other ways, such as using the constant comparative method, which facilitates development of theoretical concepts that are repeatedly found in the data [86]–[87]. For pragmatic reasons, evidence is not assessed in rapid and scoping reviews, in part to produce a timely product. The issue of quality appraisal is unresolved across the terrain of research synthesis and we consider this further in our discussion.

4.2. Purpose

All research syntheses share a common purpose: to summarize, synthesize, or integrate research findings from diverse studies. This helps readers stay abreast of the burgeoning literature in a field. Our discussion here is at the level of the four categories of synthesis. Beginning with conventional literature syntheses, the overall purpose is to attend to mature topics for the purpose of re-conceptualization or to new topics requiring preliminary conceptualization [14]. Such syntheses may be helpful to consider contradictory evidence, map shifting trends in the study of a phenomenon, and describe the emergence of research in diverse fields [14]. The purpose here is to set the stage for a study by identifying what has been done, gaps in the literature, and important research questions, or to develop a conceptual framework to guide data collection and analysis.

The purpose of quantitative systematic reviews is to combine, aggregate, or integrate empirical research to be able to generalize from a group of studies and determine the limits of generalization [27] . The focus of quantitative systematic reviews has been primarily on aggregating the results of studies evaluating the effectiveness of interventions using experimental, quasi-experimental, and more recently, observational designs. Systematic reviews can be done with or without quantitative meta-analysis but a meta-analysis always takes place within the context of a systematic review. Researchers must consider the review's purpose and the nature of their data in undertaking a quantitative synthesis; this will assist in determining the approach.

The purpose of qualitative syntheses is broadly to synthesize complex health experiences, practices, or concepts arising in healthcare environments. There may be various purposes depending on the qualitative methodology. For example, in hermeneutic studies the aim may be holistic explanation or understanding of a phenomenon [42] , which is deepened by integrating the findings from multiple studies. In grounded formal theory, the aim is to produce a conceptual framework or theory expected to be applicable beyond the original study. Although not able to generalize from qualitative research in the statistical sense [88] , qualitative researchers usually do want to say something about the applicability of their synthesis to other settings or phenomena. This notion of ‘theoretical generalization’ has been referred to as ‘transferability’ [89] – [90] and is an important criterion of rigour in qualitative research. It applies equally to the products of a qualitative synthesis in which the synthesis of multiple studies on the same phenomenon strengthens the ability to draw transferable conclusions.

The overarching purpose of emerging syntheses is to challenge the more traditional types of syntheses, in part by using data from both quantitative and qualitative studies with diverse designs for analysis. Beyond this, however, each emerging synthesis methodology has a unique purpose. In meta-narrative review, the purpose is to identify different research traditions in the area and to synthesize a complex and diverse body of research. Critical interpretive synthesis shares this characteristic. Although a distinctive approach, critical interpretive synthesis utilizes a modification of the analytic strategies of meta-ethnography [61] (e.g., reciprocal translational analysis, refutational synthesis, and lines of argument synthesis) but goes beyond the use of these to bring a critical perspective to bear in challenging the normative or epistemological assumptions in the primary literature [72]–[73]. The unique purpose of a realist synthesis is to amalgamate complex empirical evidence and theoretical understandings within a diverse body of literature to uncover the operative mechanisms and contexts that affect the outcomes of social interventions. In a scoping review, the intention is to find key concepts, examine the range of research in an area, and identify gaps in the literature. The purpose of a rapid review is comparable to that of a scoping review, but done quickly to meet the time-sensitive information needs of policy makers.

4.3. Method

4.3.1. Degree of systematization

There are varying degrees of systematization across the categories of research synthesis. The most systematized are quantitative systematic reviews and meta-analyses. There are clear processes in each with judgments to be made at each step, although there are no agreed upon guidelines for this. The process is inherently subjective despite attempts to develop objective and systematic processes [91] – [92] . Mullen and Ramirez [27] suggest that there is often a false sense of rigour implied by the terms ‘systematic review’ and ‘meta-analysis’ because of their clearly defined procedures.

In comparison with some types of qualitative synthesis, concept analysis is quite procedural. Qualitative meta-synthesis also has defined procedures and is systematic, yet perhaps less so than concept analysis. Qualitative meta-synthesis starts in an unsystematic way but becomes more systematic as it unfolds. Procedures and frameworks exist for some of the emerging types of synthesis [e.g., [50] , [63] , [71] , [93] ] but are not linear, have considerable flexibility, and are often messy with emergent processes [85] . Conventional literature reviews tend not to be as systematic as the other three types. In fact, the lack of systematization in conventional literature synthesis was the reason for the development of more systematic quantitative [17] , [20] and qualitative [45] – [46] , [61] approaches. Some authors in the field [18] have clarified processes for integrative reviews making them more systematic and rigorous, but most conventional syntheses remain relatively unsystematic in comparison with other types.

4.3.2. Complexity of the process

Some synthesis processes are considerably more complex than others. Methodologies with clearly defined steps are arguably less complex than the more flexible and emergent ones. We know that any study encounters challenges and it is rare that a pre-determined research protocol can be followed exactly as intended. Not even the rigorous methods associated with Cochrane [81] systematic reviews and meta-analyses are always implemented exactly as intended. Even when dealing with numbers rather than words, interpretation is always part of the process. Our collective experience suggests that new methodologies (e.g., meta-narrative synthesis and realist synthesis) that integrate different data types and methods are more complex than conventional reviews or the rapid and scoping reviews.

4.4. Product

The products of research syntheses usually take three distinct formats (see Table 1 and Additional File 1 for further details). The first representation is in tables, charts, graphical displays, diagrams and maps as seen in integrative, scoping and rapid reviews, meta-analyses, and critical interpretive syntheses. The second type of synthesis product is the use of mathematical scores. Summary statements of effectiveness are mathematically displayed in meta-analyses (as an effect size), systematic reviews, and rapid reviews (statistical significance).

The third synthesis product may be a theory or theoretical framework. A mid-range theory can be produced from formal grounded theory, meta-study, meta-ethnography, and realist synthesis. Theoretical/conceptual frameworks or conceptual maps may be created in meta-narrative and critical interpretive syntheses, and integrative reviews. Concepts for use within theories are produced in concept analysis. While these three product types span the categories of research synthesis, narrative description and summary is used to present the products resulting from all methodologies.
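As an illustration of the effect size mentioned above as a mathematical product of meta-analysis, the sketch below computes one widely used measure, the standardized mean difference (Cohen's d with a pooled standard deviation). The choice of this particular measure, the function name and the numbers are assumptions for illustration; the text does not specify which effect size a given synthesis reports.

```python
import math


def cohens_d(mean_treat, mean_ctrl, sd_treat, sd_ctrl, n_treat, n_ctrl):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_variance = (
        (n_treat - 1) * sd_treat ** 2 + (n_ctrl - 1) * sd_ctrl ** 2
    ) / (n_treat + n_ctrl - 2)
    return (mean_treat - mean_ctrl) / math.sqrt(pooled_variance)


# Hypothetical study: group means 10 and 8, both SDs 4, 50 participants per group.
d = cohens_d(10.0, 8.0, 4.0, 4.0, 50, 50)
```

Expressing each study's result in standard deviation units is what allows studies that used different measurement scales to be combined.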

4.5. Consideration of context

There are diverse ways that context is considered in the four broad categories of synthesis. Context may be considered to the extent that it features within primary studies for the purpose of the review. Context may also be understood as an integral aspect of both the phenomenon under study and the synthesis methodology (e.g., realist synthesis). Quantitative systematic reviews and meta-analyses have typically been conducted on studies using experimental and quasi-experimental designs and more recently observational studies, which control for contextual features to allow for understanding of the ‘true’ effect of the intervention [94] .

More recently, systematic reviews have included covariates or mediating variables (i.e., contextual factors) to help explain variability in the results across studies [27] . Context, however, is usually handled in the narrative discussion of findings rather than in the synthesis itself. This lack of attention to context has been one criticism leveled against systematic reviews and meta-analyses, which restrict the types of research designs that are considered [e.g., [95] ].
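Before covariates are used to explain variability across studies, that variability is usually quantified. A minimal sketch, assuming inverse-variance weights and using Cochran's Q and the I² statistic (two standard heterogeneity measures that the text itself does not name), could look like this. The function name and inputs are hypothetical.

```python
def heterogeneity(estimates, standard_errors):
    """Return Cochran's Q, its degrees of freedom, and I-squared (as a percentage)."""
    weights = [1.0 / se ** 2 for se in standard_errors]
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    # Q sums the weighted squared deviations of each study from the pooled estimate.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, estimates))
    df = len(estimates) - 1
    # I-squared: share of total variation attributed to between-study differences.
    i_squared = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return q, df, i_squared


q, df, i_squared = heterogeneity([0.2, 0.4], [0.1, 0.1])
```

A high I² suggests that the studies differ by more than chance alone would explain, which is the situation in which contextual covariates become worth examining.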

When conventional literature reviews incorporate studies that deal with context, there is a place for considering contextual influences on the intervention or phenomenon. Reviews of quantitative experimental studies tend to be devoid of contextual considerations since the original studies are similarly devoid, but context might figure prominently in a literature review that incorporates both quantitative and qualitative studies.

Qualitative syntheses have been conducted on the contextual features of a particular phenomenon [33] . Paterson et al. [54] advise researchers to attend to how context may have influenced the findings of particular primary studies. In qualitative analysis, contextual features may form categories by which the data can be compared and contrasted to facilitate interpretation. Because qualitative research is often conducted to understand a phenomenon as a whole, context may be a focus, although this varies with the qualitative methodology. At the same time, the findings in a qualitative synthesis are abstracted from the original reports and taken to a higher level of conceptualization, thus removing them from the original context.

Meta-narrative synthesis [67] , [84] , because it draws on diverse research traditions and methodologies, may incorporate context into the analysis and findings. There is not, however, an explicit step in the process that directs the analyst to consider context. Generally, the research question guiding the synthesis is an important factor in whether context will be a focus.

More recent iterations of concept analysis [47] , [96] – [97] explicitly consider context reflecting the assumption that a concept's meaning is determined by its context. Morse [47] points out, however, that Wilson's [98] approach to concept analysis, and those based on Wilson [e.g., [45] ], identify attributes that are devoid of context, while Rodgers' [96] , [99] evolutionary method considers context (e.g., antecedents, consequences, and relationships to other concepts) in concept development.

Realist synthesis [69] considers context as integral to the study. It draws on a critical realist logic of inquiry grounded in the work of Bhaskar [100] , who argues that empirical co-occurrence of events is insufficient for inferring causation. One must identify generative mechanisms whose properties are causal and, depending on the situation, may or may not be activated [94] . Context interacts with program/intervention elements and thus cannot be differentiated from the phenomenon [69] . This approach synthesizes evidence on generative mechanisms and analyzes contextual features that activate them; the result feeds back into the context. The focus is on what works, for whom, under what conditions, why and how [68] .

4.6. Underlying Philosophical and Theoretical Assumptions

When we began our review, we ‘assumed’ that the assumptions underlying synthesis methodologies would be a distinguishing characteristic of synthesis types, and that we could compare the various types on their assumptions, explicit or implicit. We found, however, that many authors did not explicate the underlying assumptions of their methodologies, and it was difficult to infer them. Kirkevold [101] has argued that integrative reviews need to be carried out from an explicit philosophical or theoretical perspective. We argue this should be true for all types of synthesis.

Authors of some emerging synthesis approaches have been very explicit about their assumptions and philosophical underpinnings. An implicit assumption of most emerging synthesis methodologies is that quantitative systematic reviews and meta-analyses have limited utility in some fields [e.g., in public health – [13] , [102] ] and for some kinds of review questions like those about feasibility and appropriateness versus effectiveness [103] – [104] . They also assume that ontologically and epistemologically, both kinds of data can be combined. This is a significant debate in the literature because it is about the commensurability of overarching paradigms [105] but this is beyond the scope of this review.

Realist synthesis is philosophically grounded in critical realism or, as noted above, a realist logic of inquiry [93] , [99] , [106] – [107] . Key assumptions regarding the nature of interventions that inform critical realism have been described above in the section on context. See Pawson et al. [106] for more information on critical realism, the philosophical basis of realist synthesis.

Meta-narrative synthesis is explicitly rooted in a constructivist philosophy of science [108] in which knowledge is socially constructed rather than discovered, and what we take to be ‘truth’ is a matter of perspective. Reality has a pluralistic and plastic character, and there is no pre-existing ‘real world’ independent of human construction and language [109] . See Greenhalgh et al. [67] , [85] and Greenhalgh & Wong [97] for more discussion of the constructivist basis of meta-narrative synthesis.

In the case of purely quantitative or qualitative syntheses, it may be an easier matter to uncover unstated assumptions because they are likely to be shared with those of the primary studies in the genre. For example, grounded formal theory shares the philosophical and theoretical underpinnings of grounded theory, rooted in the theoretical perspective of symbolic interactionism [110] – [111] and the philosophy of pragmatism [87] , [112] – [114] .

As with meta-narrative synthesis, meta-study developers identify constructivism as their interpretive philosophical foundation [54] , [88] . Epistemologically, constructivism focuses on how people construct and re-construct knowledge about a specific phenomenon, and has three main assumptions: (1) reality is seen as multiple, at times even incompatible with the phenomenon under consideration; (2) just as primary researchers construct interpretations from participants' data, meta-study researchers also construct understandings about the primary researchers' original findings. Thus, meta-synthesis is a construction of a construction, or a meta-construction; and (3) all constructions are shaped by the historical, social and ideological context in which they originated [54] . The key message here is that reports of any synthesis would benefit from an explicit identification of the underlying philosophical perspectives to facilitate a better understanding of the results, how they were derived, and how they are being interpreted.

4.7. Unit of Analysis

The unit of analysis for each category of review is generally distinct. For the emerging synthesis approaches, the unit of analysis is specific to the intention. In meta-narrative synthesis it is the storyline in diverse research traditions; in rapid review or scoping review, it depends on the focus but could be a concept; and in realist synthesis, it is the theories rather than programs that are the units of analysis. The elements of theory that are important in the analysis are mechanisms of action, the context, and the outcome [107] .

For qualitative synthesis, the units of analysis are generally themes, concepts or theories, although in meta-study, the units of analysis can be research findings (“meta-data-analysis”), research methods (“meta-method”) or philosophical/theoretical perspectives (“meta-theory”) [54] . In quantitative synthesis, the units of analysis range from specific statistics for systematic reviews to effect size of the intervention for meta-analysis. More recently, some systematic reviews have focused on theories [115] – [116] , so the unit of analysis depends on the research question. Similarly, within conventional literature synthesis the units of analysis also depend on the research purpose, focus and question as well as on the type of research methods incorporated into the review. What is important in all research syntheses, however, is that the unit of analysis be made explicit. Unfortunately, this is not always the case.
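To make the idea of an effect size as the unit of analysis concrete, the following is a minimal sketch of how a meta-analysis might pool study-level effect sizes with inverse-variance weights and summarize how much the studies disagree. It assumes a fixed-effect model; the function names and the three-study data set are hypothetical illustrations, not taken from the review or from any cited method.

```python
import math


def pooled_effect(effects, variances):
    """Fixed-effect (inverse-variance) pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total_weight
    return estimate, math.sqrt(1.0 / total_weight)


def heterogeneity(effects, variances):
    """Cochran's Q and the I^2 statistic (as a proportion between 0 and 1)."""
    estimate, _ = pooled_effect(effects, variances)
    q = sum((y - estimate) ** 2 / v for y, v in zip(effects, variances))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i2


# Hypothetical effect sizes and sampling variances from three studies.
effects = [0.20, 0.40, 0.30]
variances = [0.01, 0.01, 0.02]

estimate, se = pooled_effect(effects, variances)
q, i2 = heterogeneity(effects, variances)
print(f"pooled effect = {estimate:.3f} (SE {se:.3f}), Q = {q:.2f}, I^2 = {i2:.0%}")
```

Each study contributes one effect size and one variance, which is what makes the unit of analysis explicit. A random-effects model, which many reviews would prefer when studies differ substantially, would add a between-study variance term to each weight.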

4.8. Strengths and Limitations

In this section, we discuss the overarching strengths and limitations of synthesis methodologies as a whole and then highlight strengths and weaknesses across each of our four categories of synthesis.

4.8.1. Strengths of Research Syntheses in General

With the vast proliferation of research reports and the increased ease of retrieval, research synthesis has become more accessible providing a way of looking broadly at the current state of research. The availability of syntheses helps researchers, practitioners, and policy makers keep up with the burgeoning literature in their fields without which evidence-informed policy or practice would be difficult. Syntheses explain variation and difference in the data helping us identify the relevance for our own situations; they identify gaps in the literature leading to new research questions and study designs. They help us to know when to replicate a study and when to avoid excessively duplicating research. Syntheses can inform policy and practice in a way that well-designed single studies cannot; they provide building blocks for theory that helps us to understand and explain our phenomena of interest.

4.8.2. Limitations of Research Syntheses in General

The process of selecting, combining, integrating, and synthesizing across diverse study designs and data types can be complex and potentially rife with bias, even with those methodologies that have clearly defined steps. Just because a rigorous and standardized approach has been used does not mean that implicit judgements will not influence the interpretations and choices made at different stages.

In all types of synthesis, the quantity of data can be considerable, requiring difficult decisions about scope, which may affect relevance. The quantity of available data also has implications for the size of the research team. Few reviews these days can be done independently, in particular because decisions about inclusion and exclusion may require the involvement of more than one person to ensure reliability.

For all types of synthesis, it is likely that in areas with large, amorphous, and diverse bodies of literature, even the most sophisticated search strategies will not turn up all the relevant and important texts. This may be more important in some synthesis methodologies than in others, but the omission of key documents can influence the results of all syntheses. This issue can be addressed, at least in part, by including a library scientist on the research team as required by some funding agencies. Even then, it is possible to miss key texts. In this review, for example, because none of us are trained in or conduct meta-analyses, we were not even aware that we had missed some new developments in this field such as meta-regression [117] – [118] , network meta-analysis [119] – [121] , and the use of individual patient data in meta-analyses [122] – [123] .

One limitation of systematic reviews and meta-analyses is that they rapidly go out of date. We thought this might be true for all types of synthesis, although we wondered if those that produce theory might not be somewhat more enduring. We have not answered this question but it is open for debate. For all types of synthesis, the analytic skills and the time required are considerable so it is clear that training is important before embarking on a review, and some types of review may not be appropriate for students or busy practitioners.

Finally, the quality of reporting in primary studies of all genres is variable so it is sometimes difficult to identify aspects of the study essential for the synthesis, or to determine whether the study meets quality criteria. There may be flaws in the original study, or journal page limitations may necessitate omitting important details. Reporting standards have been developed for some types of reviews (e.g., systematic review, meta-analysis, meta-narrative synthesis, realist synthesis); but there are no agreed upon standards for qualitative reviews. This is an important area for development in advancing the science of research synthesis.

4.8.3. Strengths and Limitations of the Four Synthesis Types

The conventional literature review and now the increasingly common integrative review remain important and accessible approaches for students, practitioners, and experienced researchers who want to summarize literature in an area but do not have the expertise to use one of the more complex methodologies. Carefully executed, such reviews are very useful for synthesizing literature in preparation for research grants and practice projects. They can determine the state of knowledge in an area and identify important gaps in the literature to provide a clear rationale or theoretical framework for a study [14] , [18] . There is a demand, however, for more rigour, with more attention to developing comprehensive search strategies and more systematic approaches to combining, integrating, and synthesizing the findings.

Generally, conventional reviews include diverse study designs and data types that facilitate comprehensiveness, which may be a strength on the one hand, but can also present challenges on the other. The complexity inherent in combining results from studies with diverse methodologies can result in bias and inaccuracies. The absence of clear guidelines about how to synthesize across diverse study types and data [18] has been a challenge for novice reviewers.

Quantitative systematic reviews and meta-analyses have been important in launching the field of evidence-based healthcare. They provide a systematic, orderly and auditable process for conducting a review and drawing conclusions [25] . They are arguably the most powerful approaches to understanding the effectiveness of healthcare interventions, especially when intervention studies on the same topic show very different results. When areas of research are dogged by controversy [25] or when study results go against strongly held beliefs, such approaches can reduce the uncertainty and bring strong evidence to bear on the controversy.

Despite their strengths, they also have limitations. Systematic reviews and meta-analyses do not provide a way of including complex literature comprising various types of evidence including qualitative studies, theoretical work, and epidemiological studies. Only certain types of design are considered and qualitative data are used in a limited way. This exclusion limits what can be learned in a topic area.

Meta-analyses are often not possible because of wide variability in study design, population, and interventions so they may have a narrow range of utility. New developments in meta-analysis, however, can be used to address some of these limitations. Network meta-analysis is used to explore relative efficacy of multiple interventions, even those that have never been compared in more conventional pairwise meta-analyses [121] , allowing for improved clinical decision making [120] . The limitation is that network meta-analysis has only been used in medical/clinical applications [119] and not in public health. It has not yet been widely accepted and many methodological challenges remain [120] – [121] . Meta-regression is another development that combines meta-analytic and linear regression principles to address the fact that heterogeneity of results may compromise a meta-analysis [117] – [118] . The disadvantage is that many clinicians are unfamiliar with it and may incorrectly interpret results [117] .
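As an illustration of how meta-regression combines meta-analytic weighting with linear regression, the sketch below fits study effect sizes against a single study-level covariate using inverse-variance weighted least squares. It is a simplified fixed-effect version under stated assumptions: the function name, the covariate (mean participant age), and the data are hypothetical, and published meta-regressions typically also model between-study variance.

```python
def meta_regression(effects, variances, covariate):
    """Weighted least-squares fit of effect size on one study-level covariate.

    Weights are inverse sampling variances (a fixed-effect simplification).
    Returns (intercept, slope).
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    # Weighted means of the covariate and of the effect sizes.
    x_bar = sum(w * x for w, x in zip(weights, covariate)) / total
    y_bar = sum(w * y for w, y in zip(weights, effects)) / total
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, covariate))
    if sxx == 0:
        raise ValueError("covariate must vary across studies")
    sxy = sum(
        w * (x - x_bar) * (y - y_bar)
        for w, x, y in zip(weights, covariate, effects)
    )
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


# Hypothetical data: effect sizes, their variances, and mean participant age.
effects = [0.10, 0.25, 0.45, 0.50]
variances = [0.02, 0.01, 0.03, 0.02]
mean_age = [30.0, 40.0, 50.0, 60.0]

intercept, slope = meta_regression(effects, variances, mean_age)
print(f"effect = {intercept:.3f} + {slope:.4f} * mean_age")
```

A slope clearly different from zero would suggest that the covariate accounts for some of the heterogeneity across studies. Because the covariate is measured at the study level, such a result should not be read as a relationship that holds for individual participants.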

Some have accused meta-analysis of combining apples and oranges [124] , raising questions in the field about its meaningfulness [25] , [28] . More recently, the use of individual rather than aggregate data has been useful in facilitating greater comparability among studies [122] . In fact, Tomas et al. [123] argue that meta-analysis using individual data is now the gold standard, although obtaining access to the raw data from other studies may be a challenge.

The usefulness of systematic reviews in synthesizing complex health and social interventions has also been challenged [102] . It is often difficult to synthesize their findings because such studies are “epistemologically diverse and methodologically complex” [ [69] , p.21]. Rigid inclusion/exclusion criteria may allow only experimental or quasi-experimental designs into consideration resulting in lost information that may well be useful to policy makers for tailoring an intervention to the context or understanding its acceptance by recipients.

Qualitative syntheses may be the type of review most fraught with controversy and challenge, while also bringing distinct strengths to the enterprise. Although these methodologies provide a comprehensive and systematic review approach, they do not generally provide definitive statements about intervention effectiveness. They do, however, address important questions about the development of theoretical concepts, patient experiences, acceptability of interventions, and an understanding about why interventions might work.

Most qualitative syntheses aim to produce a theoretically generalizable mid-range theory that explains variation across studies. This makes them more useful than single primary studies, which may not be applicable beyond the immediate setting or population. All provide a contextual richness that enhances relevance and understanding. Another benefit of some types of qualitative synthesis (e.g., grounded formal theory) is that the concept of saturation provides a sound rationale for limiting the number of texts to be included thus making reviews potentially more manageable. This contrasts with the requirements of systematic reviews and meta-analyses that require an exhaustive search.

Qualitative researchers debate whether the findings of ontologically and epistemologically diverse qualitative studies can actually be combined or synthesized [125] because methodological diversity raises many challenges for synthesizing findings. The products of different types of qualitative syntheses range from theory and conceptual frameworks, to themes and rich descriptive narratives. Can one combine the findings from a phenomenological study with the theory produced in a grounded theory study? Many argue yes, but many also argue no.

Emerging synthesis methodologies were developed to address some limitations inherent in other types of synthesis but also have their own issues. Because each type is so unique, it is difficult to identify overarching strengths of the entire category. An important strength, however, is that these newer forms of synthesis provide a systematic and rigorous approach to synthesizing a diverse literature base in a topic area that includes a range of data types such as: both quantitative and qualitative studies, theoretical work, case studies, evaluations, epidemiological studies, trials, and policy documents. More than conventional literature reviews and systematic reviews, these approaches provide explicit guidance on analytic methods for integrating different types of data. The assumption is that all forms of data have something to contribute to knowledge and theory in a topic area. All have a defined but flexible process in recognition that the methods may need to shift as knowledge develops through the process.

Many emerging synthesis types are helpful to policy makers and practitioners because they are usually involved as team members in the process to define the research questions, and interpret and disseminate the findings. In fact, engagement of stakeholders is built into the procedures of the methods. This is true for rapid reviews, meta-narrative syntheses, and realist syntheses. It is less likely to be the case for critical interpretive syntheses.

Another strength of some approaches (realist and meta-narrative syntheses) is that quality and publication standards have been developed to guide researchers, reviewers, and funders in judging the quality of the products [108] , [126] – [127] . Training materials and online communities of practice have also been developed to guide users of realist and meta-narrative review methods [107] , [128] . A unique strength of critical interpretive synthesis is that it takes a critical perspective on the process that may help reconceptualize the data in a way not considered by the primary researchers [72] .

There are also challenges of these new approaches. The methods are new and there may be few published applications by researchers other than the developers of the methods, so new users often struggle with the application. The newness of the approaches means that there may not be mentors available to guide those unfamiliar with the methods. This is changing, however, and the number of applications in the literature is growing with publications by new users helping to develop the science of synthesis [e.g., [129] ]. However, the evolving nature of the approaches and their developmental stage present challenges for novice researchers.

4.9. When to Use Each Approach

Choosing an appropriate approach to synthesis will depend on the question you are asking, the purpose of the review, and the outcome or product you want to achieve. In Additional File 1 , we discuss each of these to provide guidance to readers on making a choice about review type. If researchers want to know whether a particular type of intervention is effective in achieving its intended outcomes, then they might choose a quantitative systematic review with or without meta-analysis, possibly buttressed with qualitative studies to provide depth and explanation of the results. Alternatively, if the concern is about whether an intervention is effective with different populations under diverse conditions in varying contexts, then a realist synthesis might be the most appropriate.

If researchers' concern is to develop theory, they might consider qualitative syntheses or some of the emerging syntheses that produce theory (e.g., critical interpretive synthesis, realist review, grounded formal theory, qualitative meta-synthesis). If the aim is to track the development and evolution of concepts, theories or ideas, or to determine how an issue or question is addressed across diverse research traditions, then meta-narrative synthesis would be most appropriate.

When the purpose is to review the literature in advance of undertaking a new project, particularly by graduate students, then perhaps an integrative review would be appropriate. Such efforts contribute towards the expansion of theory, identify gaps in the research, establish the rationale for studying particular phenomena, and provide a framework for interpreting results in ways that might be useful for influencing policy and practice.

For researchers keen to bring new insights, interpretations, and critical re-conceptualizations to a body of research, qualitative or critical interpretive syntheses will provide an inductive product that may offer new understandings or challenges to the status quo. These can inform future theory development, or provide guidance for policy and practice.

5. Discussion

What is the current state of science regarding research synthesis? Public health, health care, and social science researchers or clinicians have previously used all four categories of research synthesis, and all offer a suitable array of approaches for inquiries. New developments in systematic reviews and meta-analysis are providing ways of addressing methodological challenges [117] – [123] . There has also been significant advancement in emerging synthesis methodologies and they are quickly gaining popularity. Qualitative meta-synthesis is still evolving, particularly given how new it is within the terrain of research synthesis. In the midst of this evolution, outstanding issues persist such as grappling with: the quantity of data, quality appraisal, and integration with knowledge translation. These topics have not been thoroughly addressed and need further debate.

5.1. Quantity of Data

We raise the question of whether it is possible or desirable to find all available studies for a synthesis that has this requirement (e.g., meta-analysis, systematic review, scoping, meta-narrative synthesis [25] , [27] , [63] , [67] , [84] – [85] ). Is the synthesis of all available studies a realistic goal in light of the burgeoning literature? And how can this be sustained in the future, particularly as the emerging methodologies continue to develop and as the internet facilitates endless access? There has been surprisingly little discussion on this topic and the answers will have far-reaching implications for searching, sampling, and team formation.

Researchers and graduate students can no longer rely on their own independent literature search. They will likely need to ask librarians for assistance as they navigate multiple sources of literature and learn new search strategies. Although teams now collaborate with library scientists, syntheses are limited in that researchers must make decisions on the boundaries of the review, in turn influencing the study's significance. The size of a team may also be pragmatically determined to manage the search, extraction, and synthesis of the burgeoning data. There is no single answer to our question about the possibility or necessity of finding all available articles for a review. Multiple strategies that are situation specific are likely to be needed.

5.2. Quality Appraisal

While the issue of quality appraisal has received much attention in the synthesis literature, scholars are far from resolution. There may be no agreement about appraisal criteria in a given tradition. For example, the debate rages over the appropriateness of quality appraisal in qualitative synthesis where there are over 100 different sets of criteria and many do not overlap [49] . These differences may reflect disciplinary and methodological orientations, but diverse quality appraisal criteria may privilege particular types of research [49] . The decision to appraise is often grounded in ontological and epistemological assumptions. Nonetheless, diversity within and between categories of synthesis is likely to continue unless debate on the topic of quality appraisal continues and evolves toward consensus.

5.3. Integration with Knowledge Translation

If research syntheses are to make a difference to practice and ultimately to improve health outcomes, then we need to do a better job of knowledge translation. In the Canadian Institutes of Health Research (CIHR) definition of knowledge translation (KT), research or knowledge synthesis is an integral component [130] . Yet, with few exceptions [131] – [132] , very little of the research synthesis literature even mentions the relationship of synthesis to KT nor does it discuss strategies to facilitate the integration of synthesis findings into policy and practice. The exception is in the emerging synthesis methodologies, some of which (e.g., realist and meta-narrative syntheses, scoping reviews) explicitly involve stakeholders or knowledge users. The argument is that engaging them in this way increases the likelihood that the knowledge generated will be translated into policy and practice. We suggest that a more explicit engagement with knowledge users in all types of synthesis would benefit the uptake of the research findings.

Research synthesis neither makes research more applicable to practice nor ensures implementation. Focus must now turn seriously towards translation of synthesis findings into knowledge products that are useful for health care practitioners in multiple areas of practice and develop appropriate strategies to facilitate their use. The burgeoning field of knowledge translation has, to some extent, taken up this challenge; however, the research-practice gap continues to plague us [133] – [134] . It is a particular problem for qualitative syntheses [131] . Although such syntheses have an important place in evidence-informed practice, little effort has gone into the challenge of translating the findings into useful products to guide practice [131] .

5.4. Limitations

Our study took longer than would normally be expected for an integrative review. Each of us was primarily involved in our own dissertations or teaching/research positions, and so this study was conducted ‘off the sides of our desks.’ A limitation was that we searched the literature over the course of 4 years (from 2008–2012), necessitating multiple search updates. Further, we did not do a comprehensive search of the literature after 2012, thus the more recent synthesis literature was not systematically explored. We did, however, perform limited database searches from 2012–2015 to keep abreast of the latest methodological developments. Although we missed some new approaches to meta-analysis in our search, we did not find any new features of the synthesis methodologies covered in our review that would change the analysis or findings of this article. Lastly, we struggled with the labels used for the broad categories of research synthesis methodology because of our hesitancy to reinforce the divide between quantitative and qualitative approaches. However, it was very difficult to find alternative language that represented the types of data used in these methodologies. Despite our hesitancy in creating such an obvious divide, we were left with the challenge of trying to find a way of characterizing these broad types of syntheses.

6. Conclusion

Our findings offer methodological clarity for those wishing to learn about the broad terrain of research synthesis. We believe that our review makes transparent the issues and considerations in choosing from among the four broad categories of research synthesis. In summary, research synthesis has taken its place as a form of research in its own right. The methodological terrain has deep historical roots reaching back over the past 200 years, yet research synthesis remains relatively new to public health, health care, and social sciences in general. This is rapidly changing. New developments in systematic reviews and meta-analysis, and the emergence of new synthesis methodologies provide a vast array of options to review the literature for diverse purposes. New approaches to research synthesis and new analytic methods within existing approaches provide a much broader range of review alternatives for public health, health care, and social science students and researchers.

Acknowledgments

KSM is an assistant professor in the Faculty of Nursing at the University of Alberta. Her work on this article was largely conducted as a Postdoctoral Fellow, funded by KRESCENT (Kidney Research Scientist Core Education and National Training Program, reference #KRES110011R1) and the Faculty of Nursing at the University of Alberta.

MM's work on this study over the period of 2008-2014 was supported by a Canadian Institutes of Health Research Applied Public Health Research Chair Award (grant #92365).

We thank Rachel Spanier who provided support with reference formatting.

List of Abbreviations (in Additional File 1 )

Conflict of interest: The authors declare that they have no conflicts of interest in this article.

Authors' contributions: KSM co-designed the study, collected data, analyzed the data, drafted/revised the manuscript, and managed the project.

MP contributed to searching the literature, developing the analytic framework, and extracting data for the Additional File.

JB contributed to searching the literature, developing the analytic framework, and extracting data for the Additional File.

WN contributed to searching the literature, developing the analytic framework, and extracting data for the Additional File.

All authors read and approved the final manuscript.

Additional Files: Additional File 1 – Selected Types of Research Synthesis

This Additional File is our dataset created to organize, analyze and critique the literature that we synthesized in our integrative review. Our results were created based on analysis of this Additional File.

The Behavioral and Social Sciences: Achievements and Opportunities (1988)

Chapter: 5. Methods of Data Collection, Representation, and Analysis


Methods of Data Collection, Representation, and Analysis

This chapter concerns research on collecting, representing, and analyzing the data that underlie behavioral and social sciences knowledge. Such research, methodological in character, includes ethnographic and historical approaches, scaling, axiomatic measurement, and statistics, with its important relatives, econometrics and psychometrics. The field can be described as including the self-conscious study of how scientists draw inferences and reach conclusions from observations. Since statistics is the largest and most prominent of methodological approaches and is used by researchers in virtually every discipline, statistical work draws the lion's share of this chapter's attention.

Problems of interpreting data arise whenever inherent variation or measurement fluctuations create challenges to understand data or to judge whether observed relationships are significant, durable, or general. Some examples: Is a sharp monthly (or yearly) increase in the rate of juvenile delinquency (or unemployment) in a particular area a matter for alarm, an ordinary periodic or random fluctuation, or the result of a change or quirk in reporting method? Do the temporal patterns seen in such repeated observations reflect a direct causal mechanism, a complex of indirect ones, or just imperfections in the data? Is a decrease in auto injuries an effect of a new seat-belt law? Are the disagreements among people describing some aspect of a subculture too great to draw valid inferences about that aspect of the culture?

Such issues of inference are often closely connected to substantive theory and specific data, and to some extent it is difficult and perhaps misleading to treat methods of data collection, representation, and analysis separately. This report does so, as do all sciences to some extent, because the methods developed often are far more general than the specific problems that originally gave rise to them. There is much transfer of new ideas from one substantive field to another, and to and from fields outside the behavioral and social sciences. Some of the classical methods of statistics arose in studies of astronomical observations, biological variability, and human diversity. The major growth of the classical methods occurred in the twentieth century, greatly stimulated by problems in agriculture and genetics. Some methods for uncovering geometric structures in data, such as multidimensional scaling and factor analysis, originated in research on psychological problems, but have been applied in many other sciences. Some time-series methods were developed originally to deal with economic data, but they are equally applicable to many other kinds of data.

Within the behavioral and social sciences, statistical methods have been developed in and have contributed to an enormous variety of research, including:

- In economics: large-scale models of the U.S. economy; effects of taxation, money supply, and other government fiscal and monetary policies; theories of duopoly, oligopoly, and rational expectations; economic effects of slavery.

- In psychology: test calibration; the formation of subjective probabilities, their revision in the light of new information, and their use in decision making; psychiatric epidemiology and mental health program evaluation.

- In sociology and other fields: victimization and crime rates; effects of incarceration and sentencing policies; deployment of police and fire-fighting forces; discrimination, antitrust, and regulatory court cases; social networks; population growth and forecasting; and voting behavior.

Even such an abridged listing makes clear that improvements in methodology are valuable across the spectrum of empirical research in the behavioral and social sciences as well as in application to policy questions. Clearly, methodological research serves many different purposes, and there is a need to develop different approaches to serve those different purposes, including exploratory data analysis, scientific inference about hypotheses and population parameters, individual decision making, forecasting what will happen in the event or absence of intervention, and assessing causality from both randomized experiments and observational data.
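One of the questions raised above, whether a sharp monthly increase is a matter for alarm or an ordinary random fluctuation, can be given a rough first look with a standardized score. The sketch below is only an illustration under stated assumptions: the monthly rates are invented, the function name `z_score_of_latest` is hypothetical, and the threshold of two standard deviations is a common rule of thumb rather than anything the chapter prescribes. It also ignores seasonality, serial dependence, and changes in reporting method, all of which the text flags as possible explanations.

```python
from statistics import mean, stdev


def z_score_of_latest(history, latest):
    """Return how many sample standard deviations `latest` lies from the mean of `history`."""
    center = mean(history)
    spread = stdev(history)  # sample standard deviation (n - 1 in the denominator)
    return (latest - center) / spread


# Hypothetical monthly unemployment rates in percent, invented for illustration only.
past_rates = [6.1, 5.9, 6.0, 6.2, 5.8, 6.0, 6.1, 5.9, 6.0, 6.0, 6.2, 5.8]
new_rate = 6.6

z = z_score_of_latest(past_rates, new_rate)

# Rule-of-thumb flag (an assumption): more than 2 standard deviations from the past mean.
unusual = abs(z) > 2.0
```

A large score only says that the new value is far from past values relative to their spread. Deciding whether that reflects a real change, a periodic pattern, or a reporting quirk still requires the substantive knowledge the chapter emphasizes.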

This discussion of methodological research is divided into three areas: design, representation, and analysis.

The efficient design of investigations must take place before data are collected because it involves how much, what kind of, and how data are to be collected. What type of study is feasible: experimental, sample survey, field observation, or other? What variables should be measured, controlled, and randomized? How extensive a subject pool or observational period is appropriate? How can study resources be allocated most effectively among various sites, instruments, and subsamples?

The construction of useful representations of the data involves deciding what kind of formal structure best expresses the underlying qualitative and quantitative concepts that are being used in a given study. For example, cost of living is a simple concept to quantify if it applies to a single individual with unchanging tastes in stable markets (that is, markets offering the same array of goods from year to year at varying prices), but as a national aggregate for millions of households and constantly changing consumer product markets, the cost of living is not easy to specify clearly or measure reliably. Statisticians, economists, sociologists, and other experts have long struggled to make the cost of living a precise yet practicable concept that is also efficient to measure, and they must continually modify it to reflect changing circumstances.

Data analysis covers the final step of characterizing and interpreting research findings: Can estimates of the relations between variables be made? Can some conclusion be drawn about correlation, cause and effect, or trends over time? How uncertain are the estimates and conclusions and can that uncertainty be reduced by analyzing the data in a different way?
Can computers be used to display complex results graphically for quicker or better understanding or to suggest different ways of proceeding?

Advances in analysis, data representation, and research design feed into and reinforce one another in the course of actual scientific work. The intersections between methodological improvements and empirical advances are an important aspect of the multidisciplinary thrust of progress in the behavioral and social sciences.

DESIGNS FOR DATA COLLECTION

Four broad kinds of research designs are used in the behavioral and social sciences: experimental, survey, comparative, and ethnographic.

Experimental designs, in either the laboratory or field settings, systematically manipulate a few variables while others that may affect the outcome are held constant, randomized, or otherwise controlled. The purpose of randomized experiments is to ensure that only one or a few variables can systematically affect the results, so that causes can be attributed. Survey designs include the collection and analysis of data from censuses, sample surveys, and longitudinal studies and the examination of various relationships among the observed phenomena. Randomization plays a different role here than in experimental designs: it is used to select members of a sample so that the sample is as representative of the whole population as possible. Comparative designs involve the retrieval of evidence that is recorded in the flow of current or past events in different times or places and the interpretation and analysis of this evidence. Ethnographic designs, also known as participant-observation designs, involve a researcher in intensive and direct contact with a group, community, or population being studied, through participation, observation, and extended interviewing.

Experimental Designs

Laboratory Experiments

Laboratory experiments underlie most of the work reported in Chapter 1, significant parts of Chapter 2, and some of the newest lines of research in Chapter 3. Laboratory experiments extend and adapt classical methods of design first developed, for the most part, in the physical and life sciences and agricultural research. Their main feature is the systematic and independent manipulation of a few variables and the strict control or randomization of all other variables that might affect the phenomenon under study. For example, some studies of animal motivation involve the systematic manipulation of amounts of food and feeding schedules while other factors that may also affect motivation, such as body weight, deprivation, and so on, are held constant.

New designs are currently coming into play largely because of new analytic and computational methods (discussed below, in "Advances in Statistical Inference and Analysis"). Two examples of empirically important issues that demonstrate the need for broadening classical experimental approaches are open-ended responses and lack of independence of successive experimental trials.
The first concerns the design of research protocols that do not require the strict segregation of the events of an experiment into well-defined trials, but permit a subject to respond at will. These methods are needed when what is of interest is how the respondent chooses to allocate behavior in real time and across continuously available alternatives. Such empirical methods have long been used, but they can generate very subtle and difficult problems in experimental design and subsequent analysis. As theories of allocative behavior of all sorts become more sophisticated and precise, the experimental requirements become more demanding, so the need to better understand and solve this range of design issues is an outstanding challenge to methodological ingenuity.

The second issue arises in repeated-trial designs when the behavior on successive trials, even if it does not exhibit a secular trend (such as a learning curve), is markedly influenced by what has happened in the preceding trial or trials. The more naturalistic the experiment and the more sensitive the measurements taken, the more likely it is that such effects will occur. But such sequential dependencies in observations cause a number of important conceptual and technical problems in summarizing the data and in testing analytical models, which are not yet completely understood. In the absence of clear solutions, such effects are sometimes ignored by investigators, simplifying the data analysis but leaving residues of skepticism about the reliability and significance of the experimental results. With continuing development of sensitive measures in repeated-trial designs, there is a growing need for more advanced concepts and methods for dealing with experimental results that may be influenced by sequential dependencies.

Randomized Field Experiments

The state of the art in randomized field experiments, in which different policies or procedures are tested in controlled trials under real conditions, has advanced dramatically over the past two decades. Problems that were once considered major methodological obstacles, such as implementing randomized field assignment to treatment and control groups and protecting the randomization procedure from corruption, have been largely overcome. While state-of-the-art standards are not achieved in every field experiment, the commitment to reaching them is rising steadily, not only among researchers but also among customer agencies and sponsors.

The health insurance experiment described in Chapter 2 is an example of a major randomized field experiment that has had and will continue to have important policy reverberations in the design of health care financing.
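The randomized assignment to treatment and control groups mentioned above can be sketched in a few lines. This is a minimal illustration, not the procedure used in any of the experiments the chapter describes: the participant identifiers, the function name `assign_to_groups`, and the seed value are all hypothetical. Recording the seed is one simple way to make an assignment reproducible and auditable, which speaks to the concern about protecting the randomization procedure from corruption.

```python
import random


def assign_to_groups(participant_ids, seed):
    """Randomly split participants into treatment and control groups of (nearly) equal size."""
    rng = random.Random(seed)  # dedicated generator; the recorded seed makes the split reproducible
    shuffled = list(participant_ids)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}


# Hypothetical participant identifiers, invented for illustration only.
participants = [f"P{i:03d}" for i in range(1, 21)]

groups = assign_to_groups(participants, seed=1988)
```

Because every ordering of the shuffled list is equally likely, no characteristic of a participant can systematically influence which group that participant lands in. Real field experiments usually add further structure, such as assigning separately within demographic strata.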
Field experiments with the negative income tax (guaranteed minimum income) conducted in the 1970s were significant in policy debates, even before their completion, and provided the most solid evidence available on how tax-based income support programs and marginal tax rates can affect the work incentives and family structures of the poor. Important field experiments have also been carried out on alternative strategies for the prevention of delinquency and other criminal behavior, reform of court procedures, rehabilitative programs in mental health, family planning, and special educational programs, among other areas.

In planning field experiments, much hinges on the definition and design of the experimental cells, the particular combinations needed of treatment and control conditions for each set of demographic or other client sample characteristics, including specification of the minimum number of cases needed in each cell to test for the presence of effects. Considerations of statistical power, client availability, and the theoretical structure of the inquiry enter into such specifications. Current important methodological thresholds are to find better ways of predicting recruitment and attrition patterns in the sample, of designing experiments that will be statistically robust in the face of problematic sample