
Top 10 Real-World Data Science Case Studies

Aditya Sharma

Aditya is a content writer with 5+ years of experience writing for various industries including Marketing, SaaS, B2B, IT, and Edtech among others. You can find him watching anime or playing games when he’s not writing.

Frequently Asked Questions

Real-world data science case studies differ significantly from academic examples. While academic exercises often feature clean, well-structured data and simplified scenarios, real-world projects tackle messy, diverse data sources with practical constraints and genuine business objectives. These case studies reflect the complexities data scientists face when translating data into actionable insights in the corporate world.

Real-world data science projects come with common challenges. Data quality issues, including missing or inaccurate data, can hinder analysis. Domain expertise gaps may result in misinterpretation of results. Resource constraints might limit project scope or access to necessary tools and talent. Ethical considerations, like privacy and bias, demand careful handling.

Lastly, as data and business needs evolve, data science projects must adapt and stay relevant, posing an ongoing challenge.

Real-world data science case studies play a crucial role in helping companies make informed decisions. By analyzing their own data, businesses gain valuable insights into customer behavior, market trends, and operational efficiencies.

These insights empower data-driven strategies, aiding in more effective resource allocation, product development, and marketing efforts. Ultimately, case studies bridge the gap between data science and business decision-making, enhancing a company's ability to thrive in a competitive landscape.

Key takeaways from these case studies for organizations include the importance of cultivating a data-driven culture that values evidence-based decision-making. Investing in robust data infrastructure is essential to support data initiatives. Collaborating closely between data scientists and domain experts ensures that insights align with business goals.

Finally, continuous monitoring and refinement of data solutions are critical for maintaining relevance and effectiveness in a dynamic business environment. Embracing these principles can lead to tangible benefits and sustainable success in real-world data science endeavors.

Data science is a powerful driver of innovation and problem-solving across diverse industries. By harnessing data, organizations can uncover hidden patterns, automate repetitive tasks, optimize operations, and make informed decisions.

In healthcare, for example, data-driven diagnostics and treatment plans improve patient outcomes. In finance, predictive analytics enhances risk management. In transportation, route optimization reduces costs and emissions. Data science empowers industries to innovate and solve complex challenges in ways that were previously unimaginable.



Top 12 Data Science Case Studies: Across Various Industries


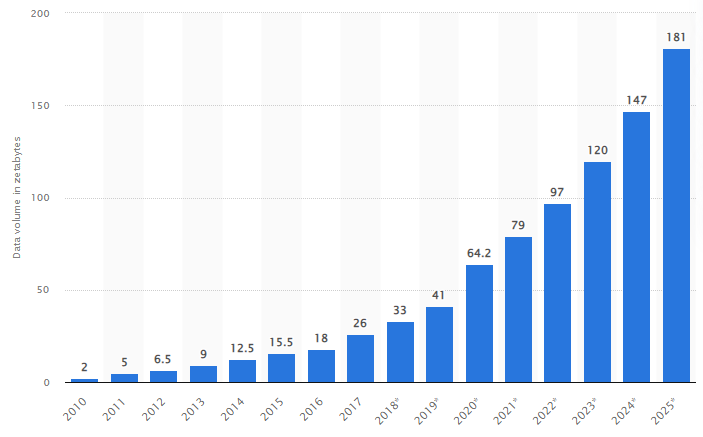

Data science has become popular in the last few years because of its successful application in business decision-making. Data scientists use its techniques to solve challenging real-world problems in healthcare, agriculture, manufacturing, automotive, and many other sectors. To do this well, a data enthusiast needs to stay updated with the latest technological advancements in AI, and an excellent way to do so is to read industry data science case studies. I recommend checking out the Data Science With Python course syllabus to start your data science journey.

In this discussion, I will present some case studies that contain detailed and systematic data analysis of people, objects, or entities, focusing on multiple factors present in the dataset. Aspiring and practising data scientists can use them to learn more about a sector, an alternative way of thinking, or methods to improve their organization based on comparable experiences. You can learn more about data science fundamentals in this data science course content.

Almost every industry uses data science in some way. From my standpoint, insurance data scientists may use it to spot fraudulent conduct in claims, automotive data scientists may use it to improve self-driving cars, and e-commerce data scientists can use it to add more personalization for their consumers. The possibilities are vast and largely unexplored. Let’s look at the top twelve data science case studies in this article so you can understand how businesses from many sectors have benefitted from data science to boost productivity, revenues, and more. Read on to explore more or use the following links to go straight to the case study of your choice.

Examples of Data Science Case Studies

- Hospitality: Airbnb focuses on growth by analyzing customer voice using data science. Qantas uses predictive analytics to mitigate losses

- Healthcare: Novo Nordisk is driving innovation with NLP. AstraZeneca harnesses data for innovation in medicine

- COVID-19: Johnson and Johnson uses data science to fight the Pandemic

- E-commerce: Amazon uses data science to personalize shopping experiences and improve customer satisfaction

- Supply chain management: UPS optimizes supply chain with big data analytics

- Meteorology: IMD leveraged data science to achieve a record 1.2m evacuation before cyclone "Fani"

- Entertainment Industry: Netflix uses data science to personalize the content and improve recommendations. Spotify uses big data to deliver a rich user experience for online music streaming

- Banking and Finance: HDFC utilizes Big Data Analytics to increase income and enhance the banking experience

Top 12 Data Science Case Studies [For Various Industries]

1. Data Science in the Hospitality Industry

In the hospitality sector, data analytics helps hotels with pricing strategies, customer analysis, brand marketing, tracking market trends, and more.

Airbnb focuses on growth by analyzing customer voice using data science. A famous example in this sector is the unicorn "Airbnb", a startup that focused on data science early to grow and adapt to the market faster. The company witnessed 43000 percent hypergrowth in as little as five years using data science. It used data science techniques to process its data, translate that data into a better understanding of the voice of the customer, and use the insights for decision making. It also scaled the approach to cover all aspects of the organization. Airbnb uses statistics to analyze and aggregate individual experiences to establish trends throughout the community. These trends inform its business choices and help it grow further.

Travel industry and data science

Predictive analytics benefits the travel industry in many ways. Travel companies can use recommendation engines to achieve higher personalization and improved user interactions, and they can cross-sell by recommending relevant products to drive sales and increase revenue. Data science is also employed in sentiment analysis of social media posts, which yields invaluable travel-related insights. Knowing whether these views are positive, negative, or neutral helps agencies understand user demographics, the experiences their target audiences expect, and so on. These insights are essential for developing competitive pricing strategies to draw customers and for better customizing travel packages and allied services. Travel agencies like Expedia and Booking.com use predictive analytics for personalized recommendations, product development, and effective marketing of their products.

Not just travel agencies but airlines also benefit from the same approach. Airlines frequently face losses due to flight cancellations, disruptions, and delays. Data science helps them identify patterns and predict possible bottlenecks, thereby mitigating losses and improving the overall customer traveling experience.
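To make the sentiment analysis idea concrete, here is a minimal sketch of a lexicon-based classifier that labels a post as positive, negative, or neutral. The word lists and sample posts are invented for illustration, and this is not how any particular travel company does it; production systems typically rely on trained language models rather than hand-picked words.

```python
import re

# Hypothetical word lists for illustration only; real systems use trained
# models or much larger curated lexicons.
POSITIVE = {"great", "amazing", "friendly", "clean", "comfortable", "loved"}
NEGATIVE = {"delayed", "dirty", "rude", "cancelled", "terrible", "lost"}

def classify_sentiment(post: str) -> str:
    """Label a post positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", post.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

# Made-up example posts.
posts = [
    "Loved the hotel, staff were friendly and the room was clean",
    "Flight delayed for hours and the crew was rude",
    "Checked in at noon",
]
labels = [classify_sentiment(p) for p in posts]
print(labels)
```

Aggregating such labels by destination or customer segment is one simple way to turn raw posts into the kind of trend an agency could act on.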

How Qantas uses predictive analytics to mitigate losses

Qantas, one of Australia's largest airlines, leverages data science to reduce losses caused by flight delays, disruptions, and cancellations. It also uses data science to provide a better traveling experience for its customers by reducing the number and length of delays caused by heavy air traffic, weather conditions, or operational difficulties. Back in 2016, when heavy storms struck Australia's east coast, Qantas cancelled only 15 of its 436 flights thanks to its predictive analytics-based system, whereas its competitor Virgin Australia cancelled 70 of its 320 flights.
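The raw counts are easier to compare as rates. The short calculation below uses only the figures quoted above; the function name is just for illustration.

```python
def cancellation_rate(cancelled: int, scheduled: int) -> float:
    """Return the share of scheduled flights that were cancelled, in percent."""
    return 100 * cancelled / scheduled

qantas = cancellation_rate(15, 436)   # figures from the 2016 storm example
virgin = cancellation_rate(70, 320)
print(f"Qantas: {qantas:.1f}%  Virgin Australia: {virgin:.1f}%")
```

This works out to roughly 3.4% of flights cancelled for Qantas against roughly 21.9% for Virgin Australia.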

2. Data Science in Healthcare

The healthcare sector is benefiting immensely from advancements in AI. Data science, especially in medical imaging, has been helping healthcare professionals come up with better diagnoses and effective treatments for patients. Similarly, several advanced healthcare analytics tools have been developed to generate clinical insights for improving patient care. These tools also assist in defining personalized medications for patients, reducing operating costs for clinics and hospitals. Apart from medical imaging or computer vision, Natural Language Processing (NLP) is frequently used in the healthcare domain to study published textual research data.

A. Pharmaceutical

Driving innovation with NLP: Novo Nordisk. Novo Nordisk uses the Linguamatics NLP platform to mine text from internal and external data sources, including scientific abstracts, patents, grants, news, tech transfer offices from universities worldwide, and more. These NLP queries run across sources for the key therapeutic areas of interest to the Novo Nordisk R&D community. Several NLP algorithms have been developed for the topics of safety, efficacy, randomized controlled trials, patient populations, dosing, and devices. Novo Nordisk employs a data pipeline to capitalize on the tools' success with real-world data, and uses interactive dashboards and cloud services to visualize the standardized, structured information from the queries for exploring commercial effectiveness, market situations, potential, and gaps in the product documentation. Through data science, they are able to automate the process of generating insights, save time, and provide better insights for evidence-based decision making.

How AstraZeneca harnesses data for innovation in medicine. AstraZeneca is a globally known biotech company that leverages data using AI technology to discover and deliver newer effective medicines faster. Within their R&D teams, they are using AI to decode big data and better understand diseases like cancer, respiratory disease, and heart, kidney, and metabolic diseases so that these can be treated effectively. Using data science, they can identify new targets for innovative medications. In 2021, they selected the first two AI-generated drug targets, in Chronic Kidney Disease and Idiopathic Pulmonary Fibrosis, in collaboration with BenevolentAI.

Data science is also helping AstraZeneca design better clinical trials, achieve personalized medication strategies, and innovate the process of developing new medicines. Their Center for Genomics Research uses data science and AI and aims to analyze around two million genomes by 2026. Apart from this, for imaging purposes, they are training their AI systems to check images for disease and for biomarkers of effective medicines. This approach helps them analyze samples accurately and with less effort. Moreover, it can cut the analysis time by around 30%.

AstraZeneca also utilizes AI and machine learning to optimize the process at different stages and minimize the overall time for the clinical trials by analyzing the clinical trial data. Summing up, they use data science to design smarter clinical trials, develop innovative medicines, improve drug development and patient care strategies, and many more.

C. Wearable Technology

Wearable technology is a multi-billion-dollar industry. With an increasing awareness about fitness and nutrition, more individuals now prefer using fitness wearables to track their routines and lifestyle choices.

Fitness wearables are convenient to use, assist users in tracking their health, and encourage them to lead a healthier lifestyle. The medical devices in this domain are beneficial since they help monitor the patient's condition and communicate in an emergency situation. The regularly used fitness trackers and smartwatches from renowned companies like Garmin, Apple, FitBit, etc., continuously collect physiological data of the individuals wearing them. These wearable providers offer user-friendly dashboards to their customers for analyzing and tracking progress in their fitness journey.

3. COVID-19 and Data Science

In the past two years of the Pandemic, the power of data science has been more evident than ever. Pharmaceutical companies across the globe were able to develop COVID-19 vaccines by analyzing data to understand the trends and patterns of the outbreak. Data science made it possible to track the virus in real time, predict patterns, devise effective strategies to fight the Pandemic, and more.

How Johnson and Johnson uses data science to fight the Pandemic

The data science team at Johnson and Johnson leverages real-time data to track the spread of the virus. They built a global surveillance dashboard (granular to the county level) that helps them track the Pandemic's progress, predict potential hotspots of the virus, and narrow down the likely places where the company should test its investigational COVID-19 vaccine candidate. To populate this dashboard, the team works with in-country experts to determine whether official numbers are accurate and to find the most valid information about case numbers, hospitalizations, mortality and testing rates, social compliance, and local policies. The team also studies the data to build models that help the company identify groups of individuals at risk of being affected by the virus and explore effective treatments to improve patient outcomes.

4. Data Science in E-commerce

In the e-commerce sector, big data analytics can assist in customer analysis, reduce operational costs, forecast trends for better sales, provide personalized shopping experiences to customers, and more.

Amazon uses data science to personalize shopping experiences and improve customer satisfaction. Amazon is a globally leading eCommerce platform that offers a wide range of online shopping services. As a result, Amazon generates a massive amount of data that can be leveraged to understand consumer behavior and generate insights on competitors' strategies. Amazon uses its data to recommend products and services to its users, which persuades consumers to make additional purchases. This approach works well for Amazon, as it earns 35% of its yearly revenue with this technique. Additionally, Amazon collects consumer data for faster order tracking and better deliveries.
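One simple way to picture product recommendations is "bought together" counting. The sketch below is not Amazon's actual system; it is a toy co-occurrence recommender over invented order baskets, shown only to illustrate how purchase data can be turned into suggestions.

```python
from collections import Counter, defaultdict
from itertools import permutations

# Hypothetical order baskets, invented for illustration.
orders = [
    {"laptop", "mouse", "laptop bag"},
    {"laptop", "mouse"},
    {"phone", "phone case"},
    {"laptop", "laptop bag"},
    {"phone", "phone case", "charger"},
]

# Count how often each ordered pair of items appears in the same basket.
co_counts = defaultdict(Counter)
for basket in orders:
    for a, b in permutations(basket, 2):
        co_counts[a][b] += 1

def recommend(item, k=2):
    """Return up to k items most often bought together with `item`."""
    # Sort by descending count, then alphabetically so ties are deterministic.
    ranked = sorted(co_counts[item].items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:k]]

print(recommend("laptop"))
print(recommend("phone"))
```

Real recommenders add many more signals (browsing history, ratings, similarity between users), but the underlying idea of learning associations from past behavior is the same.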

Similarly, Amazon's virtual assistant, Alexa, can converse in different languages and uses speakers and a camera to interact with users. Amazon utilizes the audio commands from users to improve Alexa and deliver a better user experience.

5. Data Science in Supply Chain Management

Predictive analytics and big data are driving innovation in the supply chain domain. They offer greater visibility into company operations, reduce costs and overheads, and support demand forecasting, predictive maintenance, product pricing, route optimization, and fleet management, while minimizing supply chain interruptions and driving better performance.

Optimizing supply chain with big data analytics: UPS

UPS is a renowned package delivery and supply chain management company. It delivers thousands of packages every day, and on average a UPS driver makes about 100 deliveries each business day. On-time and safe package delivery is crucial to UPS's success. Hence, UPS provides its drivers with an optimized navigation tool, "ORION" (On-Road Integrated Optimization and Navigation), which uses highly advanced big data processing algorithms to optimize routes for fuel, distance, and time. UPS utilizes supply chain data analysis in all aspects of its shipping process. Data about packages and deliveries is captured through radars and sensors, and the deliveries and routes are optimized using big data systems. Overall, this approach has helped UPS save 1.6 million gallons of gasoline in transportation every year, significantly reducing delivery costs.
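Route optimization can be illustrated with a very small example. The sketch below is not ORION, whose algorithms are far more sophisticated; it is a plain nearest-neighbor heuristic over hypothetical stop coordinates, shown only to give a feel for how reordering stops shortens a route.

```python
import math

# Hypothetical stop coordinates (x, y) in kilometres relative to the depot.
DEPOT = (0.0, 0.0)
stops = [(2.0, 3.0), (5.0, 1.0), (1.0, 1.0), (6.0, 4.0)]

def route_length(route):
    """Total distance of depot -> stops in the given order -> depot."""
    points = [DEPOT, *route, DEPOT]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def nearest_neighbor_route(stops):
    """Greedy heuristic: always drive to the closest unvisited stop."""
    remaining = list(stops)
    route, current = [], DEPOT
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    return route

planned = nearest_neighbor_route(stops)
print(f"original order: {route_length(stops):.1f} km")
print(f"planned order:  {route_length(planned):.1f} km")
```

The greedy heuristic is not guaranteed to find the shortest possible route, but on this toy data it already cuts the distance noticeably compared with visiting stops in the order they were listed.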

6. Data Science in Meteorology

Weather prediction is an interesting application of data science. Businesses like aviation, agriculture and farming, construction, consumer goods, sporting events, and many more are dependent on climatic conditions. The success of these businesses is closely tied to the weather, as decisions are made after considering the weather predictions from the meteorological department.

Besides, weather forecasts are extremely helpful for individuals to manage their allergic conditions. One crucial application of weather forecasting is natural disaster prediction and risk management.

Weather forecasts begin with the collection of a large amount of data on current environmental conditions (wind speed, temperature, humidity, and cloud cover captured at a specific location and time) using sensors on IoT (Internet of Things) devices and satellite imagery. This data is then analyzed using an understanding of atmospheric processes, and machine learning models are built to predict upcoming weather conditions such as rainfall or snow. Although data science cannot prevent natural calamities like floods, hurricanes, or forest fires, tracking these natural phenomena well ahead of their arrival is beneficial. Such predictions give governments sufficient time to take the steps and measures necessary to ensure the safety of the population.

IMD leveraged data science to achieve a record 1.2m evacuation before cyclone "Fani"

Much of a data scientist's work in this field relies on satellite images to make short-term forecasts, decide whether a forecast is correct, and validate models. Machine learning is also used for pattern matching in this case: if a model recognizes a past pattern, it can forecast future weather conditions. When dependable equipment is used, sensor data also helps produce local forecasts. The India Meteorological Department (IMD) used satellite pictures to study the low-pressure zones forming off the Odisha coast (India). In April 2019, thirteen days before cyclone "Fani" reached the area, IMD warned that a massive storm was underway, and the authorities began preparing safety measures.

It was one of the most powerful cyclones to strike India in the last 20 years, and a record 1.2 million people were evacuated in less than 48 hours, thanks to the power of data science.

7. Data Science in the Entertainment Industry

Due to the Pandemic, demand for OTT (Over-the-top) media platforms has grown significantly. People prefer watching movies and web series or listening to the music of their choice at leisure in the convenience of their homes. This sudden growth in demand has given rise to stiff competition. Every platform now uses data analytics in different capacities to provide better personalized recommendations to its subscribers and improve user experience.

How Netflix uses data science to personalize the content and improve recommendations

Netflix is an extremely popular internet television platform that offers streamable content in several languages and caters to various audiences. In 2006, when Netflix entered this media streaming market, it wanted to improve the accuracy of its existing "Cinematch" recommendation platform by 10% and offered a prize of $1 million to the team that could achieve this. The approach was successful: at the end of the competition, a solution developed by the BellKor team increased prediction accuracy by 10.06%. Over 200 work hours and an ensemble of 107 algorithms produced this result. These winning algorithms are now a part of the Netflix recommendation system.
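The Netflix Prize scored entries by root-mean-square error (RMSE) between predicted and actual ratings, so a "10% improvement" means a 10% lower RMSE than the baseline. The sketch below shows that calculation on a handful of invented ratings; the numbers are hypothetical and are not the competition data.

```python
import math

def rmse(predicted, actual):
    """Root-mean-square error between predicted and actual ratings."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))

def improvement_pct(baseline_rmse, new_rmse):
    """Percentage reduction in RMSE relative to a baseline."""
    return 100 * (baseline_rmse - new_rmse) / baseline_rmse

# Hypothetical star ratings, invented for illustration.
actual = [4, 3, 5, 2, 4]
baseline = [3, 3, 4, 3, 5]   # stand-in for an existing system's predictions
improved = [4, 3, 4, 2, 5]   # stand-in for a challenger's predictions

gain = improvement_pct(rmse(baseline, actual), rmse(improved, actual))
print(f"RMSE reduced by {gain:.1f}%")
```

On real data, gains are far smaller than on this toy example, which is why crossing the 10% threshold took years of work and a large ensemble of models.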

Netflix also employs Ranking Algorithms to generate personalized recommendations of movies and TV Shows appealing to its users.

Spotify uses big data to deliver a rich user experience for online music streaming

Personalized online music streaming is another area where data science is being used. Spotify is a well-known on-demand music service provider, launched in 2008, that has effectively leveraged big data to create personalized experiences for each user. It is a huge platform with more than 24 million subscribers and hosts a database of nearly 20 million songs. Spotify uses this big data and various algorithms to train machine learning models that provide personalized content. Its "Discover Weekly" feature generates a personalized playlist of fresh, unheard songs matching the user's taste every week. With the "Wrapped" feature, users get an overview in December of their favorite or most frequently played songs of the entire year. Spotify also leverages the data to run targeted ads to grow its business. Thus, Spotify combines its user data, which is big data, with some external data to deliver a high-quality user experience.

8. Data Science in Banking and Finance

Data science is extremely valuable in the banking and finance industry. It supports several high-priority functions: credit risk modeling (estimating the likelihood that a loan will be repaid), fraud detection (using machine learning to detect malicious activity or irregularities in transactional patterns), customer lifetime value estimation (prediction of bank performance based on existing and potential customers), and customer segmentation (customer profiling based on behavior and characteristics for personalization of offers and services). Finally, data science is also used in real-time predictive analytics (computational techniques to predict future events).

How HDFC utilizes Big Data Analytics to increase revenues and enhance the banking experience

One of the major private banks in India, HDFC Bank, was an early adopter of AI. It started with Big Data analytics in 2004, intending to grow its revenue and understand its customers and markets better than its competitors. Back then, it was a trendsetter, setting up an enterprise data warehouse to track how to differentiate its service to customers based on their relationship value with HDFC Bank. Data science and analytics have been crucial in helping HDFC Bank segment its customers and offer customized personal or commercial banking services. The analytics engine and SaaS tools have been helping HDFC Bank cross-sell relevant offers to its customers. Apart from regular fraud prevention, analytics helps keep track of customer credit histories and has also been the reason for the speedy loan approvals offered by the bank.

9. Data Science in Urban Planning and Smart Cities

Data science can help the dream of smart cities come true! Everything from traffic flow to energy usage can be optimized using data science techniques. Data gathered from multiple sources can be used to understand trends and plan urban living in an organized manner.

A significant data science case study is traffic management in Pune city. The city controls and modifies its traffic signals dynamically by tracking the traffic flow. Real-time data is collected from cameras or sensors installed at the signals, and traffic is managed based on this information. With this proactive approach, congestion in the city is kept under control and traffic flows more smoothly. A similar case study comes from Bhubaneswar, where the municipality has platforms for people to give suggestions and actively participate in decision-making. The government goes through all the inputs provided before making decisions or rules, so that it arranges the things its residents actually need.

10. Data Science in Agricultural Yield Prediction

Have you ever wondered how helpful it would be to predict your agricultural yield? That is exactly what data science is helping farmers with. They can get information about how much of a crop they can produce in a given area based on different environmental factors and soil types. Using this information, farmers can make informed decisions about their yield and benefit the buyers and themselves in multiple ways.

Farmers across the globe use various data science techniques to understand multiple aspects of their farms and crops. A famous example of data science in the agricultural industry is the work done by Farmers Edge, a company in Canada that takes real-time images of farms across the globe and combines them with related data. Farmers use this data to make decisions relevant to their yield and improve their produce. Similarly, farmers in countries like Ireland use satellite-based information to move away from traditional methods and multiply their yield strategically.

11. Data Science in the Transportation Industry

Transportation keeps the world moving. People and goods travel from one place to another for various purposes, and it is fair to say that the world would come to a standstill without efficient transportation. That is why it is crucial to keep the transportation industry running smoothly, and data science helps a lot with this. Technological progress has brought various devices such as traffic sensors, monitoring display systems, mobility management devices, and numerous others.

Many cities have already adopted multi-modal transportation systems. They use GPS trackers, geo-locations, and CCTV cameras to monitor and manage their transportation systems. Uber is the perfect case study to understand the use of data science in the transportation industry. They optimize their ride-sharing feature and track delivery routes through data analysis. Their data science approach enabled them to serve more than 100 million users, making transportation easy and convenient. Moreover, they use the data they collect from users daily to offer cost-effective and quickly available rides.

12. Data Science in the Environmental Industry

Increasing pollution, global warming, climate change, and other harmful environmental impacts have forced the world to pay attention to the environmental industry. Multiple initiatives are being taken across the globe to preserve the environment and make the world a better place. Though recognition of the industry and these efforts are in their initial stages, the impact is significant and the growth is fast.

A popular use of data science in the environmental industry is by NASA and other research organizations worldwide. NASA collects data on current climate conditions, and this data is used to create remedial policies that can make a difference. Data science also helps researchers predict natural disasters well ahead of time and prevent, or at least considerably reduce, the potential damage. A similar case study comes from the World Wildlife Fund, which uses data science to track data related to deforestation and help reduce the illegal cutting of trees, thereby helping preserve the environment.

Where to Find Full Data Science Case Studies?

Data science is a highly evolving domain with many practical applications and a huge open community. Hence, the best way to keep updated with the latest trends in this domain is by reading case studies and technical articles. Usually, companies share their success stories of how data science helped them achieve their goals to showcase their potential and benefit the greater good. Such case studies are available online on the respective company websites and dedicated technology forums like Towards Data Science or Medium.

Additionally, we can get some practical examples in recently published research papers and textbooks in data science.

What Are the Skills Required for Data Scientists?

Data scientists play an important role in the data science process, as they are the ones who work on the data end to end. Working on a data science case study requires several skills: a good grasp of the fundamentals of data science, deep knowledge of statistics, excellent programming skills in Python or R, exposure to data manipulation and data analysis, the ability to generate creative and compelling data visualizations, and good knowledge of big data, machine learning, and deep learning concepts for model building and deployment. Apart from these technical skills, data scientists also need to be good storytellers and should have an analytical mind with strong communication skills.


Conclusion

These were some interesting data science case studies across different industries. There are many more domains where data science has exciting applications, like in the Education domain, where data can be utilized to monitor student and instructor performance, develop an innovative curriculum that is in sync with the industry expectations, etc.

Almost all companies looking to leverage the power of big data begin with a SWOT analysis to narrow down the problems they intend to solve with data science. Further, they need to assess their competitors to develop relevant data science tools and strategies to address the challenging issue. This approach allows them to differentiate themselves from their competitors and offer something unique to their customers.

With data science, companies have become smarter and more data-driven, bringing about tremendous growth. Moreover, data science has made these organizations more sustainable. The utility of data science in several sectors is clearly visible, yet a lot is left to be explored and more is yet to come. Data science will continue to boost the performance of organizations in this age of big data.

Frequently Asked Questions (FAQs)

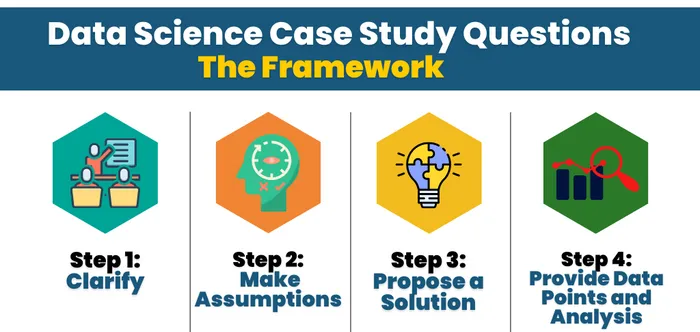

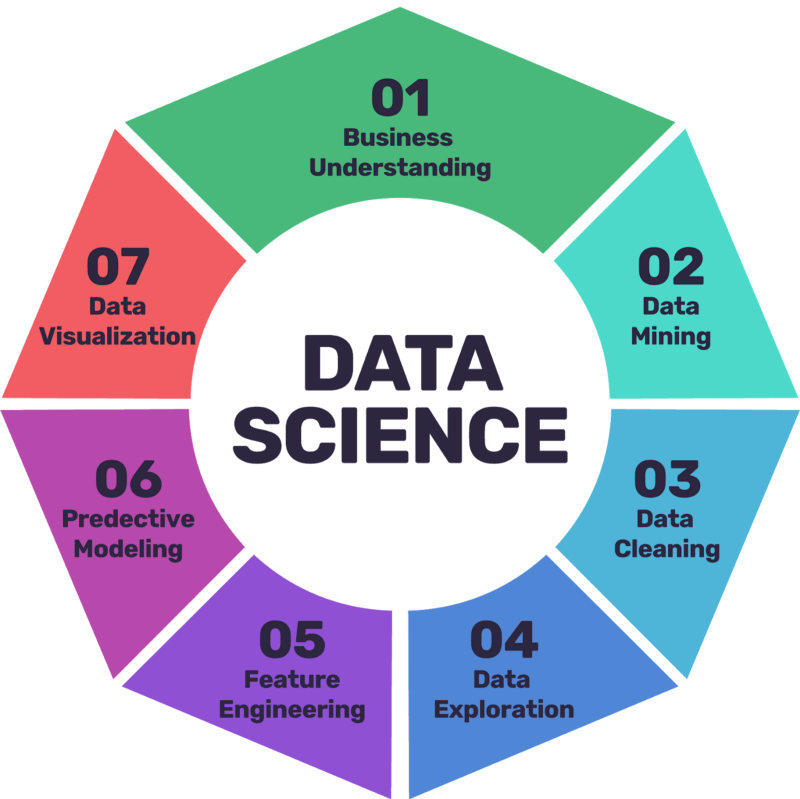

A case study in data science requires a systematic and organized approach for solving the problem. Generally, four main steps are needed to tackle every data science case study:

- Define the problem statement and a strategy to solve it

- Gather and pre-process the data, making relevant assumptions

- Select the tools and appropriate algorithms to build machine learning or deep learning models

- Make predictions, accept the solutions based on evaluation metrics, and improve the model if necessary
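The four steps above can be sketched end to end in a few lines. The example below is a toy, assuming a hypothetical problem (predicting nightly bookings from price) and invented data; it uses a hand-written least-squares fit so that it runs with the standard library alone, whereas a real project would normally reach for a library such as scikit-learn.

```python
# Step 1: problem statement (hypothetical): predict nightly bookings from price.

# Step 2: gather and pre-process data; rows with missing values are dropped.
raw = [(100, 52), (120, 47), (None, 40), (140, 41), (160, 38), (180, None), (200, 29)]
data = [(x, y) for x, y in raw if x is not None and y is not None]
train, test = data[:3], data[3:]

# Step 3: choose an algorithm; here, ordinary least squares with one feature.
def fit_line(points):
    """Return (slope, intercept) of the least-squares line through points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

slope, intercept = fit_line(train)

# Step 4: predict on held-out data and evaluate with mean absolute error.
predictions = [slope * x + intercept for x, _ in test]
mae = sum(abs(p - y) for p, (_, y) in zip(predictions, test)) / len(test)
print(f"slope={slope:.3f}, intercept={intercept:.1f}, MAE={mae:.2f}")
```

If the error on the held-out rows is too high for the business need, the last step loops back: collect more data, add features, or try a different model.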

Getting data for a case study starts with a reasonable understanding of the problem. This gives us clarity about what we expect the dataset to include. Finding relevant data for a case study requires some effort. Although it is possible to collect relevant data using traditional techniques like surveys and questionnaires, we can also find good quality data sets online on different platforms like Kaggle, UCI Machine Learning repository, Azure open data sets, Government open datasets, Google Public Datasets, Data World and so on.

Data science projects involve multiple steps to process the data and produce valuable insights: defining the problem statement, gathering the data required to solve the problem, data pre-processing, data exploration and analysis, algorithm selection, model building, model prediction, model optimization, and communicating the results through dashboards and reports.

Devashree Madhugiri

Devashree holds an M.Eng degree in Information Technology from Germany and a background in Data Science. She likes working with statistics and discovering hidden insights in varied datasets to create stunning dashboards. She enjoys sharing her knowledge in AI by writing technical articles on various technological platforms. She loves traveling, reading fiction, solving Sudoku puzzles, and participating in coding competitions in her leisure time.


6 of my favorite case studies in Data Science!

Data scientists are numbers people. They have a deep understanding of statistics and algorithms, programming and hacking, and communication skills. Data science is about applying these three skill sets in a disciplined and systematic manner, with the goal of improving an aspect of the business. That’s the data science process.

In order to stay abreast of industry trends, data scientists often turn to case studies. Reviewing these is a helpful way for both aspiring and working data scientists to challenge themselves and learn more about a particular field, a different way of thinking, or ways to better their own company based on similar experiences. If you’re not familiar with case studies, they’ve been described as “an intensive, systematic investigation of a single individual, group, community or some other unit in which the researcher examines in-depth data relating to several variables.”

Data science is used by pretty much every industry out there. Insurance claims analysts can use data science to identify fraudulent behavior, e-commerce data scientists can build personalized experiences for their customers, and music streaming companies can use it to create different genres of playlists. The possibilities are endless.

Allow us to share a few of our favorite data science case studies with you so you can see firsthand how companies across a variety of industries leveraged big data to drive productivity, profits, and more.

6 case studies in Data Science

- How Airbnb characterizes data science

- How data science is involved in decision-making at Airbnb

- How Airbnb has scaled its data science efforts across all aspects of the company

Airbnb says that “we’re at a point where our infrastructure is stable, our tools are sophisticated, and our warehouse is clean and reliable. We’re ready to take on exciting new problems.”

3. Spotify’s “This Is” Playlists: The Ultimate Song Analysis For 50 Mainstream Artists

If you’re a music lover, you’ve probably used Spotify at least once. If you’re a regular user, you’ve likely taken note of their personalized playlists and been impressed at how well the songs catered to your music preferences. But have you ever thought about how Spotify categorizes their music? You can thank their data science teams for that.

The goal of the “This Is” case study is to analyze the music of various Spotify artists, segment the styles, and categorize them by loudness, danceability, energy, and more. To start, a data scientist looked at Spotify’s API, which collects and provides data from Spotify’s music catalog. Once the data researcher accessed the data from Spotify’s API, he:

- Processed the data to extract audio features for each artist

- Visualized the data using D3.js.

- Applied k-means clustering to separate the artists into different groups

- Analyzed each feature for all the artists
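To make the clustering step above more concrete, here is a minimal sketch of k-means in plain Python. The artist names and feature values are invented for illustration and are not the study’s data. The farthest-point initialization is a simplification chosen to keep the example deterministic; a real analysis would more likely rely on a library implementation.

```python
import math

def kmeans(points, k, iterations=20):
    """Cluster points (lists of floats) into k groups; returns (labels, centroids)."""
    # Deterministic farthest-point initialization (an assumption for this sketch):
    # start from the first point, then keep adding the point that is farthest
    # from every centroid chosen so far.
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(farthest))

    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return labels, centroids

# Hypothetical (danceability, energy) scores per artist.
artists = {
    "artist_a": [0.80, 0.85],
    "artist_b": [0.78, 0.90],
    "artist_c": [0.30, 0.25],
    "artist_d": [0.35, 0.20],
}
labels, centroids = kmeans(list(artists.values()), k=2)
clusters = dict(zip(artists, labels))
```

Artists that end up with the same label in `clusters` have similar audio profiles, which is the kind of grouping the case study reports.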

Want a sneak peek at the results? James Arthur and Post Malone are in the same cluster, Kendrick Lamar is the “fastest” artist, and Marshmello beat Martin Garrix in the energy category.

4. A Leading Online Travel Agency Increases Revenues by 16 Percent with Actionable Analytics

One of the largest online travel agencies in the world generated the majority of its revenue through its website and directed most of its resources there, but its clients were still using offline channels such as faxes and phone calls to ask questions. The agency brought in WNS, a travel-focused business process management company, to help it determine how to rethink and redesign its roadmap to capture missed revenue opportunities. WNS determined that the agency lacked an adequate offline strategy, which resulted in a dip in revenue and market share. After a deep dive into customer segments, the performance of offline sales agents, ideal hours for sales agents, and more, WNS was able to help the agency increase offline revenue by 16 percent and increase conversion rates by 21 percent.

5. How Mint.com Grew from Zero to 1 Million Users

Mint.com is a free personal finance management service that asks users to input their personal spending data to generate insights about where their money goes. When Noah Kagan joined Mint.com as its marketing director, his goal was to find 100,000 new members in just six months. He didn’t just meet that goal. He destroyed it, generating one million members. How did he do it? Kagan says his success was two-fold. The first part was having a product he believed in. The second he attributes to “reverse engineering marketing.”

“The key focal point to this strategy is to work backward,” Kagan explained. “Instead of starting with an intimidating zero playing on your mind, start at the solution and map your plan back from there.” He went on: “Think of it as a road trip. You start with a set destination in mind and then plan your route there. You don’t get in your car and start driving in the hope that you magically end up where you wanted to be.”

6. Netflix: Using Big Data to Drive Big Engagement

One of the best ways to explain the benefits of data science to people who don’t quite grasp the industry is by using Netflix-focused examples. Yes, Netflix is the largest internet-television network in the world. But what most people don’t realize is that, at its core, Netflix is a customer-focused, data-driven business. Founded in 1997 as a mail-order DVD company, it now boasts more than 53 million members in approximately 50 countries.

If you watch The Fast and The Furious on Friday night, Netflix will likely serve up a Mark Wahlberg movie among your personalized recommendations for Saturday night. This is due to data science. But did you know that the company also uses its data insights to inform the way it buys, licenses, and creates new content? House of Cards and Orange is the New Black are two examples of how the company leveraged big data to understand its subscribers and cater to their needs. The company’s most-watched shows are generated from recommendations, which in turn foster consumer engagement and loyalty. This is why the company is constantly working on its recommendation engines. The Netflix story is a perfect case study for those who require engaged audiences in order to survive.

In summary, data scientists are companies’ secret weapons when it comes to understanding customer behavior and leveraging it to drive conversion, loyalty, and profits. These six data science case studies show you how a variety of organizations (from a nature conservation group to a finance company to a media company) leveraged their big data to not only survive but to beat out the competition.


Data Science Interview Case Studies: How to Prepare and Excel

In the realm of data science interviews, case studies play a crucial role in assessing a candidate's problem-solving skills and analytical mindset. To stand out and excel in these scenarios, thorough preparation is key. Here's a comprehensive guide on how to prepare and shine in data science interview case studies.

Understanding the Basics

Before delving into case studies, it's essential to have a solid grasp of fundamental data science concepts. Review key topics such as statistical analysis, machine learning algorithms, data manipulation, and data visualization. This foundational knowledge will form the basis of your approach to solving case study problems.

Deconstructing the Case Study

When presented with a case study during the interview, take a structured approach to deconstructing the problem. Begin by defining the business problem or question at hand. Break down the problem into manageable components and identify the key variables involved. This analytical framework will guide your problem-solving process.


Utilizing Data Science Techniques

Apply your data science skills to analyze the provided data and derive meaningful insights. Utilize statistical methods, predictive modeling, and data visualization techniques to explore patterns and trends within the dataset. Clearly communicate your methodology and reasoning to demonstrate your analytical capabilities.

Problem-Solving Strategy

Develop a systematic problem-solving strategy to tackle case study challenges effectively. Start by outlining your approach and assumptions before proceeding to data analysis and interpretation. Implement a logical and structured process to arrive at well-supported conclusions.

Practice Makes Perfect

Engage in regular practice sessions with mock case studies to hone your problem-solving skills. Participate in data science forums and communities to discuss case studies with peers and gain diverse perspectives. The more you practice, the more confident and proficient you will become in tackling complex data science challenges.

Communicating Your Findings

Effectively communicating your findings and insights is crucial in a data science interview case study. Present your analysis in a clear and concise manner, highlighting key takeaways and recommendations. Demonstrate your storytelling ability by structuring your presentation in a logical and engaging manner.


Excelling in data science interview case studies requires a combination of technical proficiency, analytical thinking, and effective communication. By mastering the art of case study preparation and problem-solving, you can showcase your data science skills and secure coveted job opportunities in the field.


Data Science Case Study Interview: Your Guide to Success

by Enterprise DNA Experts | Careers

Ready to crush your next data science interview? Well, you’re in the right place.

This type of interview is designed to assess your problem-solving skills, technical knowledge, and ability to apply data-driven solutions to real-world challenges.

So, how can you master these interviews and secure your next job?

To master your data science case study interview:

Practice Case Studies: Engage in mock scenarios to sharpen problem-solving skills.

Review Core Concepts: Brush up on algorithms, statistical analysis, and key programming languages.

Contextualize Solutions: Connect findings to business objectives for meaningful insights.

Clear Communication: Present results logically and effectively using visuals and simple language.

Adaptability and Clarity: Stay flexible and articulate your thought process during problem-solving.

This article will delve into each of these points and give you additional tips and practice questions to get you ready to crush your upcoming interview!

After you’ve read this article, you can enter the interview ready to showcase your expertise and win your dream role.

Let’s dive in!


What to Expect in the Interview?

Data science case study interviews are an essential part of the hiring process. They give interviewers a glimpse of how you approach real-world business problems and demonstrate your analytical thinking, problem-solving, and technical skills.

Furthermore, case study interviews are typically open-ended, which means you’ll be presented with a problem that doesn’t have a right or wrong answer.

Instead, you are expected to demonstrate your ability to:

Break down complex problems

Make assumptions

Gather context

Provide data points and analysis

This type of interview allows your potential employer to evaluate your creativity, technical knowledge, and attention to detail.

But what topics will the interview touch on?

Topics Covered in Data Science Case Study Interviews

In a case study interview, you can expect inquiries that cover a spectrum of topics crucial to evaluating your skill set:

Topic 1: Problem-Solving Scenarios

In these interviews, your ability to resolve genuine business dilemmas using data-driven methods is essential.

These scenarios reflect authentic challenges, demanding analytical insight, decision-making, and problem-solving skills.

Real-world Challenges: Expect scenarios like optimizing marketing strategies, predicting customer behavior, or enhancing operational efficiency through data-driven solutions.

Analytical Thinking: Demonstrate your capacity to break down complex problems systematically, extracting actionable insights from intricate issues.

Decision-making Skills: Showcase your ability to make informed decisions, emphasizing instances where your data-driven choices optimized processes or led to strategic recommendations.

Your adeptness at leveraging data for insights, analytical thinking, and informed decision-making defines your capability to provide practical solutions in real-world business contexts.

Topic 2: Data Handling and Analysis

Data science case studies assess your proficiency in data preprocessing, cleaning, and deriving insights from raw data.

Data Collection and Manipulation: Prepare for data engineering questions involving data collection, handling missing values, cleaning inaccuracies, and transforming data for analysis.

Handling Missing Values and Cleaning Data: Showcase your skills in managing missing values and ensuring data quality through cleaning techniques.

Data Transformation and Feature Engineering: Highlight your expertise in transforming raw data into usable formats and creating meaningful features for analysis.

Mastering data preprocessing—managing, cleaning, and transforming raw data—is fundamental. Your proficiency in these techniques showcases your ability to derive valuable insights essential for data-driven solutions.

Topic 3: Modeling and Feature Selection

Data science case interviews prioritize your understanding of modeling and feature selection strategies.

Model Selection and Application: Highlight your prowess in choosing appropriate models, explaining your rationale, and showcasing implementation skills.

Feature Selection Techniques: Understand the importance of selecting relevant variables and methods, such as correlation coefficients, to enhance model accuracy.

Ensuring Robustness through Random Sampling: Consider techniques like random sampling to bolster model robustness and generalization abilities.

Excel in modeling and feature selection by understanding contexts, optimizing model performance, and employing robust evaluation strategies.
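As a rough illustration of correlation-based feature selection, the sketch below ranks candidate features by the absolute value of their Pearson correlation with the target. The feature names and numbers are made up for the example. Keep in mind that Pearson correlation only captures linear relationships, so it is a first-pass filter and not a complete selection method.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical features and target (for illustration only).
features = {
    "ad_spend": [1.0, 2.0, 3.0, 4.0, 5.0],
    "store_id": [3.0, 1.0, 4.0, 1.0, 5.0],
}
target = [2.1, 3.9, 6.2, 8.1, 9.8]

# Rank features from most to least linearly related to the target.
ranking = sorted(
    features,
    key=lambda name: abs(pearson(features[name], target)),
    reverse=True,
)
```

In an interview, it helps to say out loud why you chose this filter and what it can miss, such as non-linear effects or interactions between features.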


Topic 4: Statistical and Machine Learning Approach

These interviews require proficiency in statistical and machine learning methods for diverse problem-solving. This topic is significant for anyone applying for a machine learning engineer position.

Using Statistical Models: Utilize logistic and linear regression models for effective classification and prediction tasks.

Leveraging Machine Learning Algorithms: Employ models such as support vector machines (SVM), k-nearest neighbors (k-NN), and decision trees for complex pattern recognition and classification.

Exploring Deep Learning Techniques: Consider neural networks, convolutional neural networks (CNN), and recurrent neural networks (RNN) for intricate data patterns.

Experimentation and Model Selection: Experiment with various algorithms to identify the most suitable approach for specific contexts.

Combining statistical and machine learning expertise equips you to systematically tackle varied data challenges, ensuring readiness for case studies and beyond.

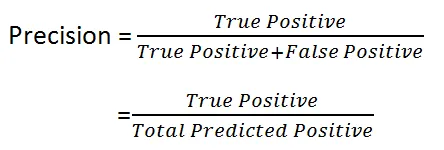

Topic 5: Evaluation Metrics and Validation

In data science interviews, understanding evaluation metrics and validation techniques is critical to measuring how well machine learning models perform.

Choosing the Right Metrics: Select metrics like precision, recall (for classification), or R² (for regression) based on the problem type. Picking the right metric defines how you interpret your model’s performance.

Validating Model Accuracy: Use methods like cross-validation and holdout validation to test your model on data it was not trained on. These methods help you detect overfitting and give a more reliable estimate of how the model will perform on new data.

Importance of Statistical Significance: Evaluate if your model’s performance is due to actual prediction or random chance. Techniques like hypothesis testing and confidence intervals help determine this probability accurately.

Interpreting Results: Be ready to explain model outcomes, spot patterns, and suggest actions based on your analysis. Translating data insights into actionable strategies showcases your skill.

Finally, focusing on suitable metrics, using validation methods, understanding statistical significance, and deriving actionable insights from data underline your ability to evaluate model performance.
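To ground the metrics mentioned above, here is a small sketch that computes precision, recall, and F1 for a binary classifier, and R² for a regression model, using only the standard library. The labels and predictions are invented for the example.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Return (precision, recall, f1) for the given positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares)."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Hypothetical classification labels and predictions.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
preds = [1, 0, 0, 1, 0, 1, 1, 0]
precision, recall, f1 = precision_recall_f1(labels, preds)

# Hypothetical regression targets and predictions.
r2 = r_squared([3.0, 5.0, 7.0, 9.0], [2.5, 5.5, 7.0, 9.0])
```

Precision answers "of the cases I flagged, how many were right?", while recall answers "of the real positives, how many did I catch?". Which one matters more depends on the business problem.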

Also, being well-versed in these topics and having hands-on experience through practice scenarios can significantly enhance your performance in these case study interviews.

Prepare to demonstrate technical expertise and adaptability, problem-solving, and communication skills to excel in these assessments.

Now, let’s talk about how to navigate the interview.

Here is a step-by-step guide to get you through the process.

Step-by-Step Guide Through the Interview

This section discusses what you can expect during the interview process and how to approach case study questions.

Step 1: Problem Statement: You’ll be presented with a problem or scenario—either a hypothetical situation or a real-world challenge—emphasizing the need for data-driven solutions within data science.

Step 2: Clarification and Context: Seek clarity by actively engaging with the interviewer. Ask pertinent questions to thoroughly understand the objectives, constraints, and nuanced aspects of the problem statement.

Step 3: State your Assumptions: When crucial information is lacking, make reasonable assumptions to proceed with your final solution. Explain these assumptions to your interviewer to ensure transparency in your decision-making process.

Step 4: Gather Context: Consider the broader business landscape surrounding the problem. Factor in external influences such as market trends, customer behaviors, or competitor actions that might impact your solution.

Step 5: Data Exploration: Delve into the provided datasets meticulously. Cleanse, visualize, and analyze the data to derive meaningful and actionable insights crucial for problem-solving.

Step 6: Modeling and Analysis: Leverage statistical or machine learning techniques to address the problem effectively. Implement suitable models to derive insights and solutions aligning with the identified objectives.

Step 7: Results Interpretation: Interpret your findings thoughtfully. Identify patterns, trends, or correlations within the data and present clear, data-backed recommendations relevant to the problem statement.

Step 8: Results Presentation: Articulate your approach, methodologies, and choices clearly and coherently. This step is vital, especially when conveying complex technical concepts to non-technical stakeholders.

Remember to stay flexible throughout the process and be prepared to adapt your approach to each situation.

Now that you have a guide on navigating the interview, let us give you some tips to help you stand out from the crowd.

Top 3 Tips to Master Your Data Science Case Study Interview

Approaching case study interviews in data science requires a blend of technical proficiency and a holistic understanding of business implications.

Here are practical strategies and structured approaches to prepare effectively for these interviews:

1. Comprehensive Preparation Tips

To excel in case study interviews, a blend of technical competence and strategic preparation is key.

Here are concise yet powerful tips to equip yourself for success:

Practice with Mock Case Studies: Familiarize yourself with the process through practice. Online resources offer example questions and solutions, enhancing familiarity and boosting confidence.

Review Your Data Science Toolbox: Ensure a strong foundation in fundamentals like data wrangling, visualization, and machine learning algorithms. Comfort with relevant programming languages is essential.

Simplicity in Problem-solving: Opt for clear and straightforward problem-solving approaches. While advanced techniques can be impressive, interviewers value efficiency and clarity.

Interviewers also highly value someone with great communication skills. Here are some tips to highlight your skills in this area.

2. Communication and Presentation of Results

In case study interviews, communication is vital. Present your findings in a clear, engaging way that connects with the business context. Tips include:

Contextualize results: Relate findings to the initial problem, highlighting key insights for business strategy.

Use visuals: Charts, graphs, or diagrams help convey findings more effectively.

Logical sequence: Structure your presentation for easy understanding, starting with an overview and progressing to specifics.

Simplify ideas: Break down complex concepts into simpler segments using examples or analogies.

Mastering these techniques helps you communicate insights clearly and confidently, setting you apart in interviews.

Lastly, here are some preparation strategies to employ before you walk into the interview room.

3. Structured Preparation Strategy

Prepare meticulously for data science case study interviews by following a structured strategy.

Here’s how:

Practice Regularly: Engage in mock interviews and case studies to enhance critical thinking and familiarity with the interview process. This builds confidence and sharpens problem-solving skills under pressure.

Thorough Review of Concepts: Revisit essential data science concepts and tools, focusing on machine learning algorithms, statistical analysis, and relevant programming languages (Python, R, SQL) for confident handling of technical questions.

Strategic Planning: Develop a structured framework for approaching case study problems. Outline the steps and tools/techniques to deploy, ensuring an organized and systematic interview approach.

Understanding the Context: Analyze business scenarios to identify objectives, variables, and data sources essential for insightful analysis.

Ask for Clarification: Engage with interviewers to clarify any unclear aspects of the case study questions. For example, you may ask ‘What is the business objective?’ This exhibits thoughtfulness and aids in better understanding the problem.

Transparent Problem-solving: Clearly communicate your thought process and reasoning during problem-solving. This showcases analytical skills and approaches to data-driven solutions.

Blend technical skills with business context, communicate clearly, and prepare systematically to ace your case study interviews.

Now, let’s really make this specific.

Each company is different and may need slightly different skills and specializations from data scientists.

However, here is some of what you can expect in a case study interview with some industry giants.

Case Interviews at Top Tech Companies

As you prepare for data science interviews, it’s essential to be aware of the case study interview format utilized by top tech companies.

In this section, we’ll explore case interviews at Facebook, Twitter, and Amazon, and provide insight into what they expect from their data scientists.

Facebook predominantly looks for candidates with strong analytical and problem-solving skills. The case study interviews here usually revolve around assessing the impact of a new feature, analyzing monthly active users, or measuring the effectiveness of a product change.

To excel during a Facebook case interview, you should break down complex problems, formulate a structured approach, and communicate your thought process clearly.

Twitter, similar to Facebook, evaluates your ability to analyze and interpret large datasets to solve business problems. During a Twitter case study interview, you might be asked to analyze user engagement, develop recommendations for increasing ad revenue, or identify trends in user growth.

Be prepared to work with different analytics tools and showcase your knowledge of relevant statistical concepts.

Amazon is known for its customer-centric approach and data-driven decision-making. In Amazon’s case interviews, you may be tasked with optimizing customer experience, analyzing sales trends, or improving the efficiency of a certain process.

Keep in mind Amazon’s leadership principles, especially “Customer Obsession” and “Dive Deep,” as you navigate through the case study.

Remember, practice is key. Familiarize yourself with various case study scenarios and hone your data science skills.

With all this knowledge, it’s time to practice with the following practice questions.

Mock Case Studies and Practice Questions

To better prepare for your data science case study interviews, it’s important to practice with some mock case studies and questions.

One way to practice is by finding typical case study questions.

Here are a few examples to help you get started:

Customer Segmentation: You have access to a dataset containing customer information, such as demographics and purchase behavior. Your task is to segment the customers into groups that share similar characteristics. How would you approach this problem, and what machine-learning techniques would you consider?

Fraud Detection: Imagine your company processes online transactions. You are asked to develop a model that can identify potentially fraudulent activities. How would you approach the problem and which features would you consider using to build your model? What are the trade-offs between false positives and false negatives?

Demand Forecasting: Your company needs to predict future demand for a particular product. What factors should be taken into account, and how would you build a model to forecast demand? How can you ensure that your model remains up-to-date and accurate as new data becomes available?
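For the fraud detection question above, one way to discuss the trade-off between false positives and false negatives is to attach a cost to each and compare decision thresholds. The sketch below does this with invented model scores, labels, and costs; in practice these numbers would come from your model and from the business.

```python
def confusion_at(scores, labels, threshold):
    """Count (false_positives, false_negatives) when flagging scores >= threshold."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return fp, fn

# Hypothetical fraud scores (higher = more suspicious) and true labels (1 = fraud).
scores = [0.05, 0.20, 0.35, 0.55, 0.60, 0.80, 0.95]
labels = [0, 0, 1, 0, 1, 1, 1]

# Assumed costs: a false alarm annoys a customer, a missed fraud loses money.
COST_FALSE_POSITIVE = 5.0
COST_FALSE_NEGATIVE = 100.0

def total_cost(threshold):
    fp, fn = confusion_at(scores, labels, threshold)
    return fp * COST_FALSE_POSITIVE + fn * COST_FALSE_NEGATIVE

candidate_thresholds = [0.1, 0.3, 0.5, 0.7, 0.9]
best_threshold = min(candidate_thresholds, key=total_cost)
```

Because a missed fraud is assumed to be far more expensive than a false alarm here, the cheapest threshold is a low one that flags aggressively. Changing the cost ratio shifts the answer, which is exactly the point to make in the interview.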

By practicing case study interview questions, you can sharpen your problem-solving skills and walk into future data science interviews more confidently.

Remember to practice consistently and stay up-to-date with relevant industry trends and techniques.

Final Thoughts

Data science case study interviews are more than just technical assessments; they’re opportunities to showcase your problem-solving skills and practical knowledge.

Furthermore, these interviews demand a blend of technical expertise, clear communication, and adaptability.

Remember, understanding the problem, exploring insights, and presenting coherent potential solutions are key.

By honing these skills, you can demonstrate your capability to solve real-world challenges using data-driven approaches. Good luck on your data science journey!

Frequently Asked Questions

How would you approach identifying and solving a specific business problem using data?

To identify and solve a business problem using data, you should start by clearly defining the problem and identifying the key metrics that will be used to evaluate success.

Next, gather relevant data from various sources and clean, preprocess, and transform it for analysis. Explore the data using descriptive statistics, visualizations, and exploratory data analysis.

Based on your understanding, build appropriate models or algorithms to address the problem, and then evaluate their performance using appropriate metrics. Iterate and refine your models as necessary, and finally, communicate your findings effectively to stakeholders.

Can you describe a time when you used data to make recommendations for optimization or improvement?

Recall a specific data-driven project you have worked on that led to optimization or improvement recommendations. Explain the problem you were trying to solve, the data you used for analysis, the methods and techniques you employed, and the conclusions you drew.

Share the results and how your recommendations were implemented, describing the impact it had on the targeted area of the business.

How would you deal with missing or inconsistent data during a case study?

When dealing with missing or inconsistent data, start by assessing the extent and nature of the problem. Consider applying imputation methods, such as mean, median, or mode imputation, or more advanced techniques like k-NN imputation or regression-based imputation, depending on the type of data and the pattern of missingness.

For inconsistent data, diagnose the issues by checking for typos, duplicates, or erroneous entries, and take appropriate corrective measures. Document your handling process so that stakeholders can understand your approach and the limitations it might impose on the analysis.
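Here is a minimal sketch of the simple techniques mentioned above: mean, median, or mode imputation for missing values, and duplicate removal for inconsistent rows. The sample values are made up, and `None` stands in for a missing entry.

```python
import statistics

def impute(values, strategy="median"):
    """Replace None entries with the mean, median, or mode of the observed values."""
    observed = [v for v in values if v is not None]
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    elif strategy == "mode":
        fill = statistics.mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]

def drop_duplicates(rows):
    """Remove exact duplicate rows while preserving the original order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique

# Hypothetical column with two missing ages.
ages = [34, None, 29, 41, None, 29]
ages_median = impute(ages, "median")
ages_mode = impute(ages, "mode")

# Hypothetical rows containing one exact duplicate.
rows = [["alice", 34], ["bob", 29], ["alice", 34]]
clean_rows = drop_duplicates(rows)
```

The median is less sensitive to outliers than the mean, which is one reason to prefer it for skewed columns. Whichever strategy you pick, state it as an assumption.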

What techniques would you use to validate the results and accuracy of your analysis?

To validate the results and accuracy of your analysis, use techniques like cross-validation or bootstrapping, which can help gauge model performance on unseen data. Employ metrics relevant to your specific problem, such as accuracy, precision, recall, F1-score, or RMSE, to measure performance.

Additionally, validate your findings by conducting sensitivity analyses, sanity checks, and comparing results with existing benchmarks or domain knowledge.
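To make the metrics and bootstrapping ideas concrete, here is a small sketch that computes accuracy, precision, recall, and F1 for binary predictions, then uses bootstrap resampling to put a rough interval around one of them. The function names, the labels, and the 95% percentile interval are illustrative assumptions, not a prescribed method.

```python
import random


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def bootstrap_interval(y_true, y_pred, metric, n_resamples=1000, seed=0):
    """Approximate 95% percentile interval for `metric`.

    Rows are resampled with replacement, and the metric is recomputed
    on each resample to see how much it varies.
    """
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        m = classification_metrics([y_true[i] for i in idx], [y_pred[i] for i in idx])
        scores.append(m[metric])
    scores.sort()
    return scores[int(0.025 * n_resamples)], scores[int(0.975 * n_resamples) - 1]


# Made-up labels and predictions for illustration.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
metrics = classification_metrics(y_true, y_pred)
low, high = bootstrap_interval(y_true, y_pred, "f1")
print(metrics, (low, high))
```

With only six rows the interval will be very wide, which is itself a useful sanity check: a point estimate on a small sample should not be reported without its uncertainty.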

How would you communicate your findings to both technical and non-technical stakeholders?

To effectively communicate your findings to technical stakeholders, focus on the methodology, algorithms, performance metrics, and potential improvements. For non-technical stakeholders, simplify complex concepts and explain the relevance of your findings, the impact on the business, and actionable insights in plain language.

Use visual aids, like charts and graphs, to illustrate your results and highlight key takeaways. Tailor your communication style to the audience, and be prepared to answer questions and address concerns that may arise.

How do you choose between different machine learning models to solve a particular problem?

When choosing between different machine learning models, first assess the nature of the problem and the data available to identify suitable candidate models. Evaluate models based on their performance, interpretability, complexity, and scalability, using relevant metrics and techniques such as cross-validation, AIC, BIC, or learning curves.

Consider the trade-offs between model accuracy, interpretability, and computation time, and choose a model that best aligns with the problem requirements, project constraints, and stakeholders’ expectations.

Keep in mind that it’s often beneficial to try several models and ensemble methods to see which one performs best for the specific problem at hand.
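The idea of trying several candidates and comparing them on held-out data can be sketched with k-fold cross-validation. The example below compares a mean-only baseline against a simple least-squares line on synthetic data. The data, the two candidate models, and the helper names are assumptions made up for this illustration.

```python
import random
from statistics import mean


def fit_mean(xs, ys):
    """Baseline model: always predict the training mean."""
    mu = mean(ys)
    return lambda x: mu


def fit_line(xs, ys):
    """Simple least-squares line y = a + b * x."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    a = my - b * mx
    return lambda x: a + b * x


def cv_rmse(fit, xs, ys, k=5, seed=0):
    """Average RMSE over k held-out folds."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    scores = []
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        mse = mean((ys[i] - model(xs[i])) ** 2 for i in fold)
        scores.append(mse ** 0.5)
    return mean(scores)


# Synthetic data with a linear trend plus noise (invented for illustration).
rng = random.Random(42)
xs = [float(i) for i in range(50)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 3) for x in xs]

results = {
    "mean baseline": cv_rmse(fit_mean, xs, ys),
    "linear": cv_rmse(fit_line, xs, ys),
}
best = min(results, key=results.get)
print(results, best)
```

Including a trivial baseline is a deliberate choice here: it shows how much of a model's score comes from actually learning structure in the data.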

Data science case interviews (what to expect & how to prepare)

Data science case studies are tough to crack: they’re open-ended, technical, and specific to the company. Interviewers use them to test your ability to break down complex problems and your use of analytical thinking to address business concerns.

So we’ve put together this guide to help you familiarize yourself with case studies at companies like Amazon, Google, and Meta (Facebook), as well as how to prepare for them, using practice questions and a repeatable answer framework.

Here’s the first thing you need to know about tackling data science case studies: always start by asking clarifying questions before jumping into your plan.

Let’s get started.

- What to expect in data science case study interviews

- How to approach data science case studies

- Sample cases from FAANG data science interviews

- How to prepare for data science case interviews

1. What to expect in data science case study interviews

Before we get into an answer method and practice questions for data science case studies, let’s take a look at what you can expect in this type of interview.

Of course, the exact interview process for data scientist candidates will depend on the company you’re applying to, but case studies generally appear in both the pre-onsite phone screens and during the final onsite or virtual loop.

These questions may take anywhere from 10 to 40 minutes to answer, depending on the depth and complexity that the interviewer is looking for. During the initial phone screens, the case studies are typically shorter and interspersed with other technical and/or behavioral questions. During the final rounds, they will likely take longer to answer and require a more detailed analysis.

While some candidates may have the opportunity to prepare in advance and present their conclusions during an interview round, most candidates work with the information the interviewer offers on the spot.

1.1 The types of data science case studies

Generally, there are two types of case studies:

- Analysis cases, which focus on how you translate user behavior into ideas and insights using data. These typically center around a product, feature, or business concern that’s unique to the company you’re interviewing with.

- Modeling cases, which are more overtly technical and focus on how you build and use machine learning and statistical models to address business problems.

The number of case studies that you’ll receive in each category will depend on the company and the position that you’ve applied for. Facebook, for instance, typically doesn’t give many machine learning modeling cases, whereas Amazon does.

Also, some companies break these larger groups into smaller subcategories. For example, Facebook divides its analysis cases into two types: product interpretation and applied data.

You may also receive in-depth questions similar to case studies that test your technical capabilities (e.g. coding, SQL).

We’ll give you a step-by-step method that can be used to answer analysis and modeling cases in section 2. But first, let’s look at how interviewers will assess your answers.

1.2 What interviewers are looking for

We’ve researched accounts from ex-interviewers and data scientists to pinpoint the main criteria that interviewers look for in your answers. While the exact grading rubric will vary per company, this list from an ex-Google data scientist is a good overview of the biggest assessment areas:

- Structure: candidate can break down an ambiguous problem into clear steps

- Completeness: candidate is able to fully answer the question

- Soundness: candidate’s solution is feasible and logical

- Clarity: candidate’s explanations and methodology are easy to understand

- Speed: candidate manages time well and is able to come up with solutions quickly

You’ll be able to improve your skills in each of these categories by practicing data science case studies on your own, and by working with an answer framework. We’ll get into that next.

2. How to approach data science case studies

Approaching data science cases with a repeatable framework will not only add structure to your answer, but also help you manage your time and think clearly under the stress of interview conditions.

Let’s go over a framework that you can use in your interviews, then break it down with an example answer.

2.1 Data science case framework: CAPER

We've researched popular frameworks used by real data scientists, and consolidated them to be as memorable and useful in an interview setting as possible.

Try using the framework below to structure your thinking during the interview.

- Clarify: Start by asking questions. Case questions are ambiguous, so you’ll need to gather more information from the interviewer, while eliminating irrelevant data. The types of questions you’ll ask will depend on the case, but consider: What is the business objective? What data can I access? Should I focus on all customers or only those in a specific region?

- Assume: Narrow the problem down by making assumptions and stating them to the interviewer for confirmation. (E.g. the statistical significance is X%, users are segmented based on XYZ, etc.) By the end of this step you should have constrained the problem into a clear goal.

- Plan: Now, begin to craft your solution. Take time to outline a plan, breaking it into manageable tasks. Once you’ve made your plan, explain each step that you will take to the interviewer, and ask if it sounds good to them.

- Execute: Carry out your plan, walking through each step with the interviewer. Depending on the type of case, you may have to prepare and engineer data, code, apply statistical algorithms, build a model, etc. In the majority of cases, you will need to end with business analysis.

- Review: Finally, tie your final solution back to the business objectives you and the interviewer had initially identified. Evaluate your solution, and whether there are any steps you could have added or removed to improve it.

Now that you’ve seen the framework, let’s take a look at how to implement it.

2.2 Sample answer using the CAPER framework

Below you’ll find an answer to a Facebook data science interview question from the Applied Data loop. This example comes from Facebook’s data science interview prep materials.

Try this question:

Imagine that Facebook is building a product around high schools, starting with about 300 million users who have filled out a field with the name of their current high school. How would you find out how much of this data is real?

1. Clarify

First, we need to clarify the question, eliminating irrelevant data and pinpointing what is most important. For example:

- What exactly does “real” mean in this context?

- Should we focus on whether the high school itself is real, or whether the user actually attended the high school they’ve named?

After discussing with the interviewer, we’ve decided to focus on whether the high school itself is real first, followed by whether the user actually attended the high school they’ve named.

2. Assume

Next, we’ll narrow the problem down and state our assumptions to the interviewer for confirmation. Here are some assumptions we could make in the context of this problem:

- The 300 million users are likely teenagers, given that they’re listing their current high school

- We can assume that a high school that is listed too few times is likely fake

- We can assume that a high school that is listed too many times (e.g. 10,000+ students) is likely fake

The interviewer has agreed with each of these assumptions, so we can now move on to the plan.

3. Plan

Next, it’s time to make a list of actionable steps and lay them out for the interviewer before moving on.

First, there are two approaches that we can identify:

- A high precision approach, which provides a list of people who definitely went to a confirmed high school

- A high recall approach, more similar to market sizing, which would provide a ballpark figure of people who went to a confirmed high school

As this is for a product that Facebook is currently building, the product use case likely calls for an estimate that is as accurate as possible. So we can go for the first approach, which will provide a more precise estimate of confirmed users listing a real high school.

Now, we list the steps that make up this approach:

- To find whether a high school is real: draw a distribution with the number of students on the X axis and the number of high schools on the Y axis, in order to find the lower and upper bounds and eliminate the schools that fall outside them

- To find whether a student really went to a high school: use a user’s friend graph and location to determine the plausibility of the high school they’ve named

The interviewer has approved the plan, which means that it’s time to execute.

4. Execute

Step 1: Determining whether a high school is real

Following our plan, we’ll start with the distribution.

We can use x1 to denote the lower bound, below which the number of times a high school is listed would be too small for a plausible school. x2 then denotes the upper bound, above which the high school has been listed too many times for a plausible school.

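Here is what that would look like: a distribution with the number of students per high school on the X axis and the number of high schools on the Y axis, with x1 and x2 marking the lower and upper cutoffs.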

Be prepared to answer follow up questions. In this case, the interviewer may ask, “looking at this graph, what do you think x1 and x2 would be?”
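The bounds-based filter from Step 1 can be sketched in a few lines. The school names, the toy counts, and the cutoff values `x1 = 50` and `x2 = 10000` below are assumptions chosen purely for illustration; in an interview you would justify the cutoffs from the shape of the actual distribution.

```python
from collections import Counter


def size_distribution(listed_schools):
    """Map 'number of users listing a school' -> 'number of such schools'.

    This is the data behind the plot: X axis = students per school,
    Y axis = how many schools have that count.
    """
    counts = Counter(listed_schools)
    return Counter(counts.values())


def plausible_schools(listed_schools, x1, x2):
    """Keep schools whose listing count falls within [x1, x2]."""
    counts = Counter(listed_schools)
    return {school: n for school, n in counts.items() if x1 <= n <= x2}


# Toy data, invented for illustration: one entry per user.
listed = (
    ["Lincoln High"] * 800
    + ["Roosevelt High"] * 1200
    + ["Hogwarts"] * 20000   # listed far too often to be one real school
    + ["asdf"] * 2           # listed too rarely
    + ["my house"]
)

distribution = size_distribution(listed)
kept = plausible_schools(listed, x1=50, x2=10000)
print(sorted(kept))
```

In this toy data the filter keeps the two mid-sized schools and drops both the rarely listed entries and the implausibly popular one, which mirrors the two assumptions agreed with the interviewer.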