Guidelines for Reporting of Figures and Tables for Clinical Research in Urology

Affiliations.

- 1 Memorial Sloan Kettering Cancer Center, New York, New York.

- 2 Janssen Research & Development, Raritan, New Jersey.

- 3 Vanderbilt University School of Medicine, Nashville, Tennessee.

- 4 Southern Illinois University School of Medicine, Springfield, Illinois.

- 5 University of Chicago, Chicago, Illinois.

- 6 MD Anderson Cancer Center, University of Texas, Houston, Texas.

- 7 Cleveland Clinic, Cleveland, Ohio.

- PMID: 32441187

- DOI: 10.1097/JU.0000000000001096

In an effort to improve the presentation of and information within tables and figures in clinical urology research, we propose a set of appropriate guidelines. We introduce six principles: (1) include graphs only if they improve the reader's ability to understand the study findings; (2) think through how a graph might best convey information rather than simply selecting one from the preset options in statistical software; (3) do not use graphs to replace reporting key numbers in the text of a paper; (4) graphs should give an immediate visual impression of the data; (5) make it beautiful; and (6) make the labels and legend clear and complete. We present a list of quick "dos and don'ts" for both tables and figures. Investigators should feel free to break any of the guidelines if it would result in a beautiful figure or a clear table that communicates data effectively. That said, we believe that the quality of tables and figures in the medical literature would improve if these guidelines were to be followed. Patient summary: A set of guidelines was developed for presenting figures and tables in urology research. The guidelines were developed by a broad group of statistical experts with special interest in urology.

Keywords: figures; guidelines; reporting guidelines; tables.

- Biomedical Research / standards*

- Computer Graphics / standards*

- Publishing / standards*

- Statistics as Topic / standards*


- Open access

- Published: 11 April 2024

Reporting guidelines in medical artificial intelligence: a systematic review and meta-analysis

- Fiona R. Kolbinger (ORCID: orcid.org/0000-0003-2265-4809) 1,2,3,4,5,6

- Gregory P. Veldhuizen 1

- Jiefu Zhu 1

- Daniel Truhn (ORCID: orcid.org/0000-0002-9605-0728) 7

- Jakob Nikolas Kather (ORCID: orcid.org/0000-0002-3730-5348) 1,8,9,10

Communications Medicine volume 4, Article number: 71 (2024)


- Diagnostic markers

- Medical research

- Predictive markers

- Prognostic markers

Background

The field of Artificial Intelligence (AI) holds transformative potential in medicine. However, the lack of universal reporting guidelines poses challenges in ensuring the validity and reproducibility of published research studies in this field.

Methods

Based on a systematic review of academic publications and reporting standards demanded by both international consortia and regulatory stakeholders as well as leading journals in the fields of medicine and medical informatics, 26 reporting guidelines published between 2009 and 2023 were included in this analysis. Guidelines were stratified by breadth (general or specific to medical fields), underlying consensus quality, and target research phase (preclinical, translational, clinical) and subsequently analyzed regarding the overlap and variations in guideline items.

Results

AI reporting guidelines for medical research vary with respect to the quality of the underlying consensus process, breadth, and target research phase. Some guideline items such as reporting of study design and model performance recur across guidelines, whereas other items are specific to particular fields and research stages.

Conclusions

Our analysis highlights the importance of reporting guidelines in clinical AI research and underscores the need for common standards that address the identified variations and gaps in current guidelines. Overall, this comprehensive overview could help researchers and public stakeholders reinforce quality standards for increased reliability, reproducibility, clinical validity, and public trust in AI research in healthcare. This could facilitate the safe, effective, and ethical translation of AI methods into clinical applications that will ultimately improve patient outcomes.

Plain Language Summary

Artificial Intelligence (AI) refers to computer systems that can perform tasks that normally require human intelligence, like recognizing patterns or making decisions. AI has the potential to transform healthcare, but research on AI in medicine needs clear rules so caregivers and patients can trust it. This study reviews and compares 26 existing guidelines for reporting on AI in medicine. The key differences between these guidelines are their target areas (medicine in general or specific medical fields), the ways they were created, and the research stages they address. While some key items like describing the AI model recurred across guidelines, others were specific to the research area. The analysis shows gaps and variations in current guidelines. Overall, transparent reporting is important, so AI research is reliable, reproducible, trustworthy, and safe for patients. This systematic review of guidelines aims to increase the transparency of AI research, supporting an ethical and safe progression of AI from research into clinical practice.


Introduction

The field of Artificial Intelligence (AI) is rapidly growing and its applications in the medical field have the potential to revolutionize the way diseases are diagnosed and treated. Despite the field still being in its relative infancy, deep learning algorithms have already proven to perform at parity with or better than current gold standards for a variety of tasks related to patient care. For example, deep learning models perform on par with human experts in classification of skin cancer 1 , aid in both the timely identification of patients with sepsis 2 and respective adaptation of the treatment strategy 3 , and can identify genetic alterations from histopathological imaging across different cancer types 4 . Due to the black box nature of many AI-based investigations, it is critical that the methodology and results of the findings are reported in a thorough, transparent and reproducible manner. However, despite this need, such measures are often omitted 5 . High reporting standards are vital in ensuring that public trust, medical efficacy and scientific integrity are not compromised by erroneous, often overly positive performance metrics due to flaws such as skewed data selection or methodological errors such as data leakage.

To address these challenges, numerous reporting guidelines have been developed to regulate AI-related research in preclinical, translational, and clinical settings. A reporting guideline is a set of criteria and recommendations designed to standardize the reporting of research methodologies and findings. These guidelines aim to ensure the inclusion of minimum essential information within research studies and thereby enhance transparency, reproducibility, and the overall quality of research reporting 6 , 7 . While clinical treatment guidelines typically describe a summary of standards of care based on existing medical evidence, there is no universal standard approach for the development of reporting guidelines regarding what information should be provided when attempting to publish findings from a scientific investigation. Consequently, the quality of reporting guidelines can vary depending on the methods used to reach consensus as well as the individuals involved in the process. The Delphi method, when employed by a panel of authoritative experts in the relevant field, is generally considered to be the most appropriate means of obtaining high-quality agreement 8 . This method describes a structured technique in which experts cycle through several rounds of questionnaires, with each round resulting in an updated questionnaire that is provided to participants along with a summary of responses in the subsequent iteration. This pattern is repeated until consensus is reached.

Another factor to consider when developing reporting guidelines for medical AI is their scope. Reporting guidelines may be specific to the unique needs of a single clinical specialty or intended to be more general in nature. In addition, due to the highly dynamic nature of AI research, these guidelines require frequent reassessment to safeguard against obsolescence. Because a broad range of stakeholders is involved in the development and regulation of medical AI, including government organizations, academic institutions, publishers and corporations, a multitude of reporting guidelines have arisen. As a result, researchers often do not know which guidelines to follow, whether guidelines exist for their specific domain of research, or whether publishers of mainstream academic journals can be expected to enforce reporting standards. Consequently, despite the abundance of reporting guidelines for healthcare, only a fraction of research items adhere to them 9 , 10 , 11 . This reflects a deficiency on the part of researchers and scholarly publishers alike.

This systematic review provides an overview of existing reporting guidelines for AI-related research in medicine that have been published by research consortia, federal institutions, or adopted by medical and medical informatics publishers. It summarizes the key elements that are near-universally considered necessary when reporting findings to ensure maximum reproducibility and clinical validity. These key elements include descriptions of the clinical rationale, the data that reported models are based on, and of the training and validation process. By highlighting guideline items that are widely agreed upon, our work aims to provide orientation to researchers, policymakers, and stakeholders in the field of medical AI and form a basis for the development of future reporting guidelines with the goal of ensuring maximum reproducibility and clinical translatability of AI-related medical research. In addition, our summary of key reporting items may provide guidance for researchers in situations where no high-quality reporting guideline currently exists for the topic of their research.

Search strategy

We report the results of this systematic review following the PRISMA 2020 statement for reporting systematic reviews 12 . To cover the breadth of published AI-related reporting guidelines in medicine, our search strategies included three sources: (i) Guidelines published as scholarly research publications listed in the database PubMed and in the EQUATOR Network’s library of reporting guidelines ( https://www.equator-network.org/library/ ), (ii) AI-related statements and requirements of international federal health agencies, and (iii) relevant journals in Medicine and Medical Informatics. The search strategy was developed by three authors with experience in medical AI research (FRK, GPV, JNK), and no preprint servers were included in the search.

PubMed was searched on June 26, 2022, without language restrictions, for literature published since database inception, on AI guidelines in the fields of preclinical, translational, and clinical medicine, using the keywords (“Artificial Intelligence” OR “Machine Learning” OR “Deep Learning”) AND (“consensus statement” OR “guideline” OR “checklist”). The EQUATOR Network’s library of reporting guidelines was searched on November 14, 2023, using the keywords “Artificial Intelligence”, “Machine Learning” and “Deep Learning”. Additionally, statements and requirements of the federal health agencies of the United States (Food and Drug Administration, FDA), the European Union (European Medicines Agency, EMA), the United Kingdom (Medicines and Healthcare Products Regulatory Agency), China (National Medical Products Administration), and Japan (Pharmaceuticals and Medical Devices Agency) were reviewed with respect to further guidelines and requirements. Finally, the ten journals in Medicine and Medical Informatics with the highest journal impact factors in 2021 according to the Clarivate Journal Citation Reports were screened for specific AI/ML checklist requirements for submitted articles. Studies identified as incidental findings were added independent of the aforementioned search process, thereby including studies published after the initial search on June 26, 2022.
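The Boolean structure of the PubMed keyword search can be written out programmatically. The following is a minimal sketch, not the authors' actual tooling: the helper names `or_group` and `build_query` are hypothetical, and straight quotes are used in place of the typographic quotes in the text. It only assembles the query string and does not contact PubMed.

```python
# Keyword groups as quoted in the search description.
AI_TERMS = ["Artificial Intelligence", "Machine Learning", "Deep Learning"]
GUIDELINE_TERMS = ["consensus statement", "guideline", "checklist"]


def or_group(terms):
    """Quote each term, join with OR, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups):
    """Join the OR-groups with AND, mirroring the structure described in the text."""
    return " AND ".join(or_group(group) for group in groups)


query = build_query(AI_TERMS, GUIDELINE_TERMS)
print(query)
```

Keeping the term lists separate from the joining logic makes it easy to reuse the first group alone, as was done for the EQUATOR library search.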

Study selection

Duplicate studies were removed. All search results were independently screened by two physicians with experience in clinical AI research (FRK and GPV) using Rayyan 13 . Screening results were blinded until completion of each reviewer’s individual screening. The inclusion criteria were (1) the topic of the publication being AI in medicine and (2) the guideline recommendations being specific to the application of AI methods for either preclinical, translational, or clinical scenarios. Publications were excluded on the basis of (1) not providing actionable reporting guidance, (2) collecting or reassembling guideline items from existing guidelines rather than providing new guideline items or (3) reporting the intention to develop a new, as yet unpublished guideline rather than the guideline itself. Disagreements regarding guideline selection were resolved by judgment of a third reviewer (JNK).

Data extraction and analysis

Two physicians with experience in clinical AI research (FRK, GPV) reviewed all selected guidelines and extracted the year of publication, the target research phase (preclinical, translational and/or clinical research), the breadth of the guideline (general or specific to a medical subspecialty) and the consensus process as a way to assess the risk of bias. The target research phase was considered preclinical if the guideline regulates theoretical studies not involving clinical outcome data but potentially retrospectively involving patient data, translational if the guideline targets retrospective or prospective observational trials involving patient data with a potential clinical implication, and clinical if the guideline regulates interventional trials in a clinical setting. The breadth of a guideline was considered general or subject-specific depending on target research areas mentioned in the guideline. Additionally, reporting guidelines were independently graded by FRK and GPV (with arbitration by a third rater, JNK, in case of disagreement) as being either “comprehensive”, “collaborative” or “expert-led” in their consensus process. The consensus process of a guideline was classified as expert-led if the method by which it was developed did not appear to be through a consensus-based procedure, if the guideline did not involve relevant stakeholders, or if the described development procedure was not clearly outlined. Guidelines were classified as collaborative if the authors (presumably) used a formal consensus procedure involving multiple experts, but provided no details on the exact protocol or methodological structure. Comprehensive guidelines outlined a structured, consensus-based, methodical development approach involving multiple experts and relevant stakeholders with details on the exact protocol (e.g., using the Delphi procedure).
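The three-level grading of the consensus process can be read as a simple decision rule. The sketch below is one possible reading of the criteria, not the raters' actual procedure: the class `DevelopmentProcess` and its field names are hypothetical, and in the study the grading was a judgment made independently by two raters with arbitration by a third.

```python
from dataclasses import dataclass


@dataclass
class DevelopmentProcess:
    """Hypothetical summary of how a guideline was developed."""
    consensus_based: bool        # a formal consensus procedure appears to have been used
    stakeholders_involved: bool  # relevant stakeholders took part
    procedure_outlined: bool     # the development procedure is clearly outlined
    protocol_detailed: bool      # exact protocol reported (e.g., Delphi rounds)


def classify_consensus(p: DevelopmentProcess) -> str:
    """Return 'expert-led', 'collaborative' or 'comprehensive'."""
    # Expert-led: any one of the three shortcomings named in the text suffices.
    if not (p.consensus_based and p.stakeholders_involved and p.procedure_outlined):
        return "expert-led"
    # Comprehensive: consensus-based and the exact protocol is documented.
    if p.protocol_detailed:
        return "comprehensive"
    # Collaborative: consensus-based, but no details on the exact protocol.
    return "collaborative"


print(classify_consensus(DevelopmentProcess(True, True, True, True)))
print(classify_consensus(DevelopmentProcess(True, True, True, False)))
print(classify_consensus(DevelopmentProcess(False, True, True, False)))
```

The boundary between "procedure not clearly outlined" (expert-led) and "no details on the exact protocol" (collaborative) is a matter of degree in the text; the sketch models it with two separate flags as a simplifying assumption.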

FRK and GPV extracted each guideline’s recommended items for the purpose of creating an omnibus list of all as-yet published guideline items (Supplementary Table 1 ). FRK and GPV independently evaluated each guideline for the purpose of determining which items from the omnibus list were either fully, partially, or not covered by each publication individually. Aspects that were directly described in a guideline including some details or examples were considered “fully” covered, aspects mentioned implicitly using general terms were considered “partially” covered. Disagreements were resolved by judgment of a third reviewer (JNK). Overlap of guideline content was visualized using pyCirclize 14 . Items recommended by at least 50% of all reporting guidelines or 50% of reporting guidelines with a specified systematic development process (i.e., comprehensive consensus) were considered universal recommendations for clinical AI research reporting.
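The 50% rule for universal recommendations can be illustrated with a small sketch. Everything here is hypothetical: the guideline labels, item names and coverage values are toy data, and the function names are made up for illustration. Whether "partially" covered items counted as recommended is not stated in the text, so it is exposed as the `count_partial` parameter.

```python
def share_recommending(item, guidelines, coverage, count_partial=True):
    """Fraction of the given guidelines that cover the item."""
    accepted = {"full", "partial"} if count_partial else {"full"}
    if not guidelines:
        return 0.0
    hits = sum(1 for g in guidelines if coverage[g].get(item, "none") in accepted)
    return hits / len(guidelines)


def universal_items(coverage, consensus, threshold=0.5, count_partial=True):
    """Items covered by >= threshold of all guidelines OR of comprehensive ones."""
    all_guidelines = list(coverage)
    comprehensive = [g for g in all_guidelines if consensus[g] == "comprehensive"]
    items = sorted({item for cov in coverage.values() for item in cov})
    return [
        item
        for item in items
        if share_recommending(item, all_guidelines, coverage, count_partial) >= threshold
        or share_recommending(item, comprehensive, coverage, count_partial) >= threshold
    ]


# Toy data (not taken from Supplementary Table 1).
coverage = {
    "G1": {"study design": "full", "model performance": "full", "funding statement": "partial"},
    "G2": {"study design": "partial"},
    "G3": {"code availability": "full"},
    "G4": {"model performance": "full"},
}
consensus = {"G1": "expert-led", "G2": "collaborative", "G3": "comprehensive", "G4": "expert-led"}

print(universal_items(coverage, consensus))
```

In the toy data, "code availability" is covered by only one of four guidelines overall but qualifies through the second criterion, because that guideline is the only comprehensive one.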

Study registration

This systematic review was registered at OSF https://doi.org/10.17605/OSF.IO/YZE6J on August 25, 2023. The protocol was not amended or changed.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Search results

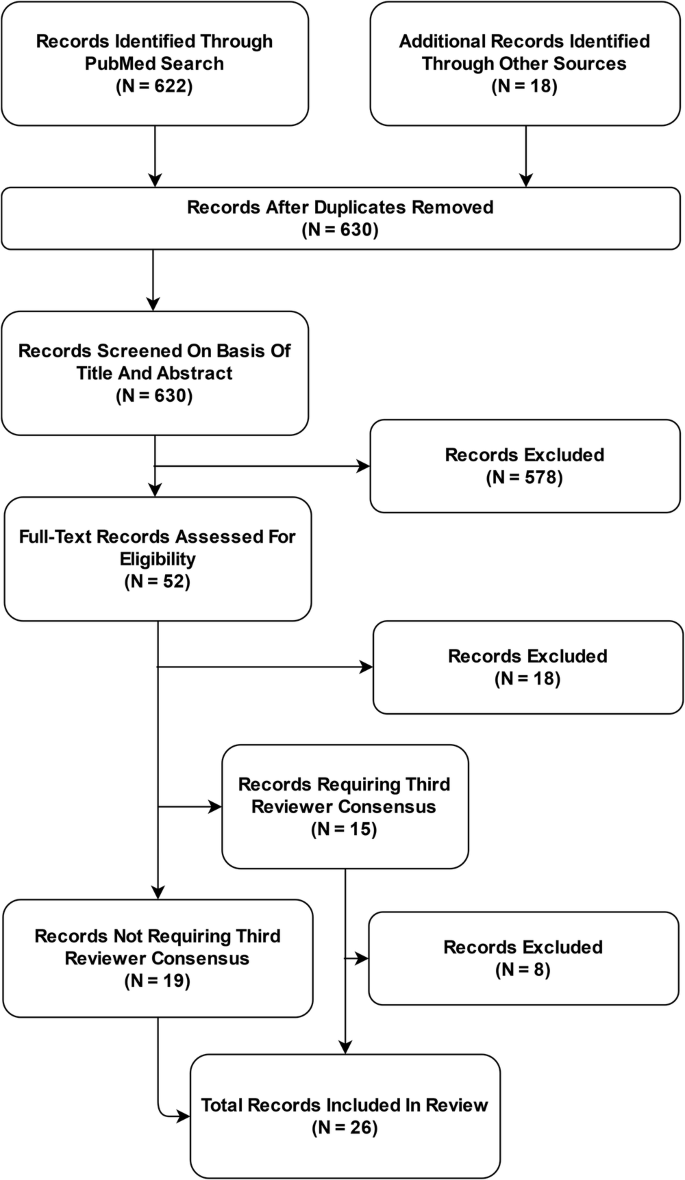

The PubMed database search yielded 622 unique publications; another 18 guidelines were identified through other sources: eight through a search of the EQUATOR Network’s library of reporting guidelines, two through review of recommendations of federal agencies, and one through review of journal recommendations. A further seven guidelines were added as incidental findings.

After removal of duplicates, 630 publications were subjected to the screening process. Out of these, 578 records were excluded based on title and abstract. Of the remaining 52 full-text articles assessed for eligibility, 26 records were excluded and 26 reporting guidelines were included in the systematic review and meta-analysis (Fig. 1 ). Interrater agreement for study selection on the basis of full-text records was 71% (a third reviewer was required for n = 15 of n = 52 records).
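The record counts and the agreement figure can be checked with simple arithmetic. The sketch below uses only the numbers reported in the text; the variable names are illustrative, and the number of duplicates removed is derived here rather than stated in the source.

```python
# Records identified, as reported in the text.
pubmed = 622
other_sources = {
    "EQUATOR library": 8,
    "federal agencies": 2,
    "journal recommendations": 1,
    "incidental findings": 7,
}
identified = pubmed + sum(other_sources.values())

# Screening flow.
screened = 630
duplicates_removed = identified - screened  # derived, not stated in the text
excluded_title_abstract = 578
full_text = screened - excluded_title_abstract
excluded_full_text = 26
included = full_text - excluded_full_text

# Percent agreement: share of full-text records not needing the third reviewer.
disagreements = 15
agreement = 1 - disagreements / full_text

print(identified, duplicates_removed, full_text, included)
print(f"{agreement:.0%}")
```

This is raw percent agreement; a chance-corrected statistic such as Cohen's kappa would need the full two-by-two table of reviewer decisions, which the text does not report.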

Based on a systematic review of academic publications and reporting standards demanded by international federal health institutions and leading journals in the fields of medicine and medical informatics, 26 reporting guidelines published between 2009 and 2023 were included in this analysis.

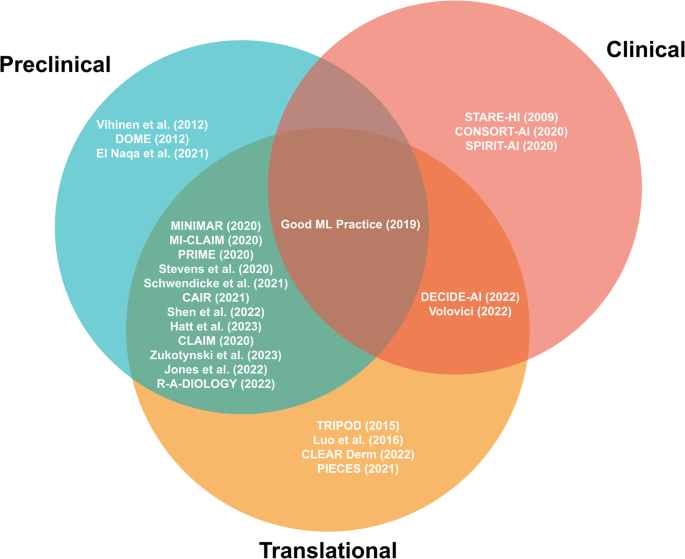

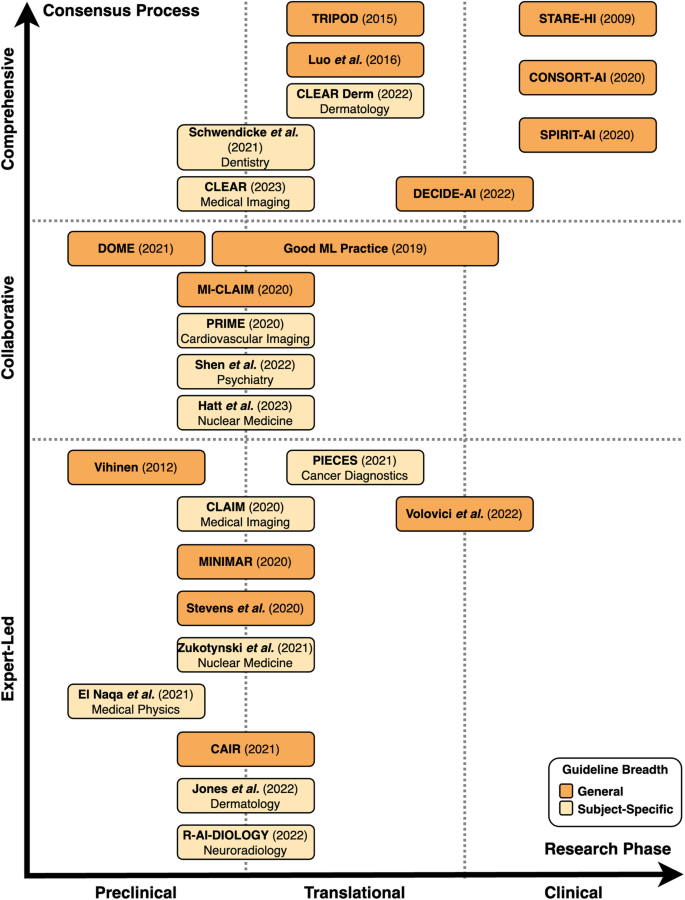

The landscape of reporting guidelines in clinical AI

A total of 26 reporting guidelines was included in this systematic review. We identified nine comprehensive, six collaborative and eleven expert-led reporting guidelines. Approximately half of all reporting guidelines ( n = 14, 54%) provided general guidelines for AI-related research in medicine. The remaining publications ( n = 12, 46%) were developed to regulate the reporting of AI-related research within a specific field of medicine. These included medical physics, dermatology, cancer diagnostics, nuclear medicine, medical imaging, cardiovascular imaging, neuroradiology, psychiatry, and dental research (Table 1 , Figs. 2 and 3 ).

Preclinical guidelines regulate theoretical studies not involving clinical outcome data but potentially retrospectively involving patient data. Translational guidelines target retrospective or prospective observational trials involving patient data with a potential clinical implication. Clinical guidelines regulate interventional trials in a clinical setting. Reporting guidelines that cater to a specific research phase can be more specific in their items, while those covering overlapping research phases tend to require more general reporting items.

Preclinical guidelines regulate theoretical studies not involving clinical outcome data but potentially retrospectively involving patient data. Translational guidelines target retrospective or prospective observational trials involving patient data with a potential clinical implication. Clinical guidelines regulate interventional trials in a clinical setting. The breadth of guidelines is classified as general or subject-specific depending on target research areas mentioned in the guideline. In terms of the consensus process, comprehensive guidelines are based on a structured, consensus-based, methodical development approach involving multiple experts and relevant stakeholders with details on the exact protocol. Collaborative guidelines are (presumably) developed using a formal consensus procedure involving multiple experts, but provide no details on the exact protocol or methodological structure. Expert-led guidelines are not developed through a consensus-based procedure, do not involve relevant stakeholders, or do not clearly describe the development procedure.

We systematically categorized the reporting guidelines by the research phase that they were aimed at as well as the level of consensus used in their development (Fig. 2 , Fig. 3 ). The majority of guidelines ( n = 20, 77%) concern AI applications for preclinical and translational research rather than clinical trials. Of these preclinical and translational reporting guidelines, many ( n = 12) are specific to individual fields of medicine such as cardiovascular imaging, psychiatry or dermatology rather than generally applicable recommendations. In addition, these guidelines are more often expert-led or collaborative ( n = 15) than comprehensive ( n = 5). This is in contrast to the considerably fewer clinical reporting guidelines ( n = 6), all of which are general in nature and most of which are comprehensive in their consensus process ( n = 4). There has been a notable increase in the publication of reporting guidelines in recent years, with 81% ( n = 21) of included guidelines having been published in or after 2020.

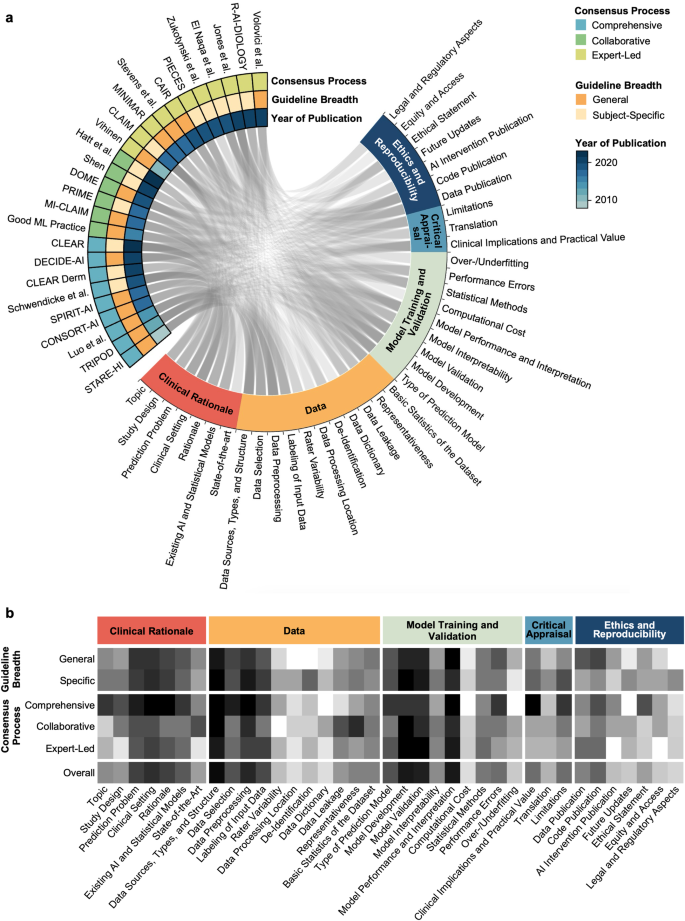

Consensus in guideline items

The identified guidelines were analyzed with respect to their overlap in individual guideline recommendations (Supplementary Table 1 , Fig. 4a, b ). A total of 37 unique guideline items were identified. These concerned Clinical Rationale (7 items), Data (11 items), Model Training and Validation (9 items), Critical Appraisal (3 items), and Ethics and Reproducibility (7 items). We were unable to identify a clear weighting towards certain items over others within our primary means of clustering reporting guidelines, namely the consensus procedure and whether the guideline is directed at specific research fields or provides general guidance (Fig. 4b ).

The Circos plot ( a ) displays represented content as a connecting line between guideline and guideline items. The heatmap ( b ) displays the differential representation of specific guideline aspects depending on guideline quality and breadth. Darker color represents a higher proportion of representation of the respective guideline aspect in the respective group of reporting guidelines for medical AI.

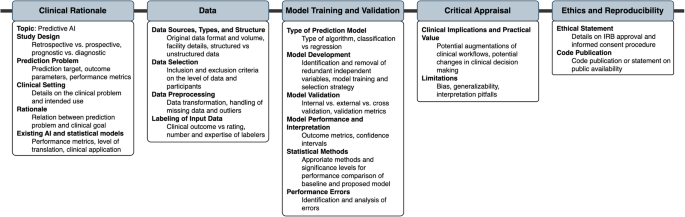

Figure 5 summarizes items that were recommended by at least 50% of all guidelines or 50% of guidelines with a specified systematic development process (comprehensive guidelines). These items are considered universal components of studies on predictive clinical AI models.

Items recommended by at least 50% of all guidelines or 50% of guidelines with a specified systematic development process were considered universal components of studies on predictive clinical AI models.

Discussion

With the increasing availability of computational resources and methodological advances, the field of AI-based medical applications has experienced significant growth over the last decade. To ensure reproducibility, responsible use and clinical validity of such applications, numerous guidelines have been published, with varying development strategies, structures, application targets, content and support from research communities. We conducted a systematic review of existing guidelines for AI applications in medicine, with a focus on assessing their quality, application areas, and content.

Our analysis suggests that the majority of AI-related reporting guidelines have been conceived by individual stakeholders or groups of stakeholders without a formal consensus process and that most reporting guidelines address preclinical and translational research rather than the clinical validation of AI-based applications. Guidelines targeting specific medical fields often result from less rigorous consensus processes than broader guidelines targeting medical AI in general. As a result, several high-evidence guidelines are available for some use cases (e.g., dermatology, medical imaging), whereas no specialty-independent guideline developed in a formal consensus process is currently available for preclinical research.

Differences in data types and tasks that AI can address in different medical specialties represent a key challenge for the development of guidelines for AI applications in medicine. Many predominantly diagnostics-based specialties such as pathology or radiology rely heavily on different types of imaging with distinct peculiarities and challenges. The need to account for such differences is stronger in preclinical and translational steps of development as compared to clinical evaluation, where AI applications are tested for validity.

Most specialty-specific guidelines address preclinical phases, and these guidelines have predominantly been conceived in less rigorous consensus processes. While individual peculiarities of specific use cases may be clearer in specific guidelines than in more general guidelines, it is conceivable that subject-specific guidelines could result in many guidelines on the same topic when use cases and guideline requirements are similar across fields. To address this issue, stratification by data type could be a potential solution to ensure that guidelines are universal yet specific enough to regulate.

Incorporation of innovations in guidelines represents another challenge, as guidelines have traditionally been distributed in the form of academic publications. In this context, the fact that AI represents a major methodological innovation has been acknowledged by regulating institutions such as the EQUATOR network, which has issued AI-specific counterparts for existing guidelines, including CONSORT(-AI) regulating randomized controlled clinical trials and SPIRIT(-AI) regulating interventional clinical trial protocols. Several other comprehensive high-quality AI-specific guideline extensions are expected to become publicly available in the near future including STARD-AI 15 , TRIPOD-AI 16 , and PRISMA-AI 17 . Ideally, guidelines should be adaptive and interactive to dynamically integrate new innovations as they emerge. Two quality assessment tools, PROBAST-AI 16 (for risk of bias and applicability assessment of prediction model studies) and QUADAS-AI 18 (for quality assessment of AI-centered diagnostic accuracy studies), will be developed alongside the anticipated AI-specific reporting guidelines.

To prevent the previously mentioned creation of multiple guidelines on the same topic, guidelines could be continuously updated. However, this requires careful management to ensure that guidelines remain relevant and up-to-date without becoming overwhelming or contradictory. Along similar lines, it may be worth considering whether AI-specific guidelines should repeat non-AI-specific items, such as ethics statements or Institutional Review Board (IRB) requirements. It may be useful to compare these needs with good scientific practice, to refer to existing resources, and to consider how best to balance comprehensiveness with clarity and ease of use. Whenever new guidelines are being developed, it is advisable to follow available guidance to ensure high guideline quality through methods like a structured literature review and a multi-stage Delphi process 19 , 20 .

Before entering clinical practice, medical innovations must undergo a rigorous evaluation process, and regulatory needs play a crucial role in this process. However, this can make processes inflexible, so that a large body of preclinical research never progresses towards clinical translation. Therefore, future guidelines should include items relevant to translational processes, such as regulatory sciences, access, updates, and assessment of feasibility for implementation into clinical practice. Fewer than half of the guidelines included in this review mentioned such items. Including such items could improve the selection of disruptive and clinically impactful research.

Despite various available guidelines, some use cases including preclinical research remain poorly regulated, and it is necessary to address gaps in existing guidelines. For such cases, it is advisable to identify the most relevant general guideline and adhere to key guideline items that are universally accepted and should be part of any AI research in the medical field. As a consequence, researchers can be guided on what to include in their research, and regulatory bodies can be more stringent in demanding adherence to guidelines. In this context, our review found that many high-impact medical and medical informatics journals do not demand adherence to any guidelines. While peer reviewers can encourage respective additions, more stringency in adherence to guidelines would help ensure the responsible use of AI-based medical applications.

While the content of reporting guidelines in medical AI has been critically reviewed previously 21 , 22 , this is, to our knowledge, the first systematic review on reporting guidelines used in various stages of AI-related medical research. Importantly, this review focuses on guidelines for AI applications in healthcare and intentionally does not consider guidelines for prediction models in general; this has been done elsewhere 10 .

The limitations of this systematic review relate primarily to its methodology. First, our search strategy was developed by three of the authors (FRK, GPV, JNK), without external review of the search strategy [23] and without input from a librarian. Similarly, our systematic search was limited to the publication database PubMed, the EQUATOR Network’s library of reporting guidelines (https://www.equator-network.org/library), journal guidelines and guidelines of major federal institutions. Involving internal peer reviewers with experience in journalology in the development of the search strategy, and including preprint servers in the search, might have revealed additional guidelines to include in this systematic review. Second, our systematic review included only a basic assessment of the risk of bias, differentiating between expert-led, collaborative and comprehensive guidelines by analyzing the rigor of the consensus process. While risk of bias assessment tools developed for systematic reviews of observational or interventional trials [24, 25] would not be appropriate for a methodological review, an in-depth analysis with a custom, methods-centered tool [26] could have provided more insight into the specific shortcomings of the included guidelines. Third, we acknowledge the potential limitation of the context-agnostic nature of our summary of consensus items. While we intentionally adopted a generalized approach to create broadly applicable findings, we recognize that this lack of nuance may make our findings more applicable to some subject domains than to others. Fourth, this systematic review has limitations related to guideline selection and classification, and limited generalizability. To allow for focused comparison of guideline content, only those reporting guidelines offering actionable items were included. Three high-quality reporting guidelines were excluded because they do not specifically address AI in medicine: STARD [27], STROBE [28], and SPIRIT [29, 30]. These guidelines are clearly out of the scope of this systematic review, and some of them have dedicated AI-specific versions in development (e.g. STARD-AI), indicating that their creators may themselves have seen deficiencies regarding computational medical research. Even so, they could still have provided valuable insights. Similarly, some publications were considered out of scope because they review very specific areas of AI, such as surrogate metrics [31], without demanding actionable items. In addition, future guideline updates could change the landscape of AI reporting guidelines in ways that this systematic review cannot represent. Nevertheless, this review contributes to the scientific landscape in two ways: First, it provides a resource for scientists as to which guideline to adhere to. Second, it highlights potential areas for improvement that policymakers, scientific institutions and journal editors can reinforce.

In conclusion, this systematic review provides a comprehensive overview of existing guidelines for AI applications in medicine. While the guidelines reviewed vary in quality and scope, they generally provide valuable guidance for developing and evaluating AI-based models. However, the lack of standardization across guidelines, particularly regarding the ethical, legal, and social implications of AI in healthcare, highlights the need for further research and collaboration in this area. Furthermore, as AI-based models become more prevalent in clinical practice, it will be essential to update guidelines regularly to reflect the latest developments in the field and ensure their continued relevance. Good scientific practice needs to be reinforced by every individual scientist and every scientific institution. It is the same with reporting guidelines. No guideline in itself can guarantee quality and reproducibility of research. A guideline only unfolds its power when interpreted by responsible scientists.

Data availability

All included guidelines are publicly available. The list of guideline items included in published guidelines regulating medical AI research that was generated in this systematic review is published along with this work (Supplementary Table 1).

References

1. Esteva, A. et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 542, 115–118 (2017).

2. Lauritsen, S. M. et al. Early detection of sepsis utilizing deep learning on electronic health record event sequences. Artif. Intell. Med. 104, 101820 (2020).

3. Wu, X., Li, R., He, Z., Yu, T. & Cheng, C. A value-based deep reinforcement learning model with human expertise in optimal treatment of sepsis. NPJ Digit. Med. 6, 15 (2023).

4. Kather, J. N. et al. Pan-cancer image-based detection of clinically actionable genetic alterations. Nat. Cancer. 1, 789–799 (2020).

5. Jayakumar, S. et al. Quality assessment standards in artificial intelligence diagnostic accuracy systematic reviews: a meta-research study. NPJ Digit. Med. 5, 11 (2022).

6. Simera, I., Moher, D., Hoey, J., Schulz, K. F. & Altman, D. G. The EQUATOR Network and reporting guidelines: Helping to achieve high standards in reporting health research studies. Maturitas. 63, 4–6 (2009).

7. Simera, I. et al. Transparent and accurate reporting increases reliability, utility, and impact of your research: reporting guidelines and the EQUATOR Network. BMC Med. 8, 24 (2010).

8. Rayens, M. K. & Hahn, E. J. Building Consensus Using the Policy Delphi Method. Policy Polit. Nurs. Pract. 1, 308–315 (2000).

9. Samaan, Z. et al. A systematic scoping review of adherence to reporting guidelines in health care literature. J. Multidiscip. Healthc. 6, 169–188 (2013).

10. Lu, J. H. et al. Assessment of Adherence to Reporting Guidelines by Commonly Used Clinical Prediction Models From a Single Vendor: A Systematic Review. JAMA Netw. Open. 5, e2227779 (2022).

11. Yusuf, M. et al. Reporting quality of studies using machine learning models for medical diagnosis: a systematic review. BMJ Open. 10, e034568 (2020).

12. Page, M. J. et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. J. Clin. Epidemiol. 134, 178–189 (2021).

13. Ouzzani, M., Hammady, H., Fedorowicz, Z. & Elmagarmid, A. Rayyan-a web and mobile app for systematic reviews. Syst. Rev. 5, 210 (2016).

14. Shimoyama, Y. Circular visualization in Python (Circos Plot, Chord Diagram) - pyCirclize. GitHub. Available: https://github.com/moshi4/pyCirclize (accessed: April 1, 2024).

15. Sounderajah, V. et al. Developing a reporting guideline for artificial intelligence-centred diagnostic test accuracy studies: the STARD-AI protocol. BMJ Open. 11, e047709 (2021).

16. Collins, G. S. et al. Protocol for development of a reporting guideline (TRIPOD-AI) and risk of bias tool (PROBAST-AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open. 11, e048008 (2021).

17. Cacciamani, G. E. et al. PRISMA AI reporting guidelines for systematic reviews and meta-analyses on AI in healthcare. Nat. Med. 29, 14–15 (2023).

18. Sounderajah, V. et al. A quality assessment tool for artificial intelligence-centered diagnostic test accuracy studies: QUADAS-AI. Nat. Med. 27, 1663–1665 (2021).

19. Moher, D., Schulz, K. F., Simera, I. & Altman, D. G. Guidance for developers of health research reporting guidelines. PLoS Med. 7, e1000217 (2010).

20. Schlussel, M. M. et al. Reporting guidelines used varying methodology to develop recommendations. J. Clin. Epidemiol. 159, 246–256 (2023).

21. Ibrahim, H., Liu, X. & Denniston, A. K. Reporting guidelines for artificial intelligence in healthcare research. Clin. Experiment. Ophthalmol. 49, 470–476 (2021).

22. Shelmerdine, S. C., Arthurs, O. J., Denniston, A. & Sebire, N. J. Review of study reporting guidelines for clinical studies using artificial intelligence in healthcare. BMJ Health Care Inform. 28, https://doi.org/10.1136/bmjhci-2021-100385 (2021).

23. McGowan, J. et al. PRESS Peer Review of Electronic Search Strategies: 2015 Guideline Statement. J. Clin. Epidemiol. 75, 40–46 (2016).

24. Sterne, J. A. C. et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 366, l4898 (2019).

25. Higgins, J. P. T. et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 343, d5928 (2011).

26. Cukier, S. et al. Checklists to detect potential predatory biomedical journals: a systematic review. BMC Med. 18, 104 (2020).

27. Bossuyt, P. M. et al. Towards complete and accurate reporting of studies of diagnostic accuracy: The STARD Initiative. Radiology. 226, 24–28 (2003).

28. von Elm, E. et al. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. BMJ. 335, 806–808 (2007).

29. Chan, A.-W. et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann. Intern. Med. 158, 200–207 (2013).

30. Chan, A.-W. et al. SPIRIT 2013 explanation and elaboration: guidance for protocols of clinical trials. BMJ. 346, e7586 (2013).

31. Reinke, A. et al. Common Limitations of Image Processing Metrics: A Picture Story. arXiv. https://doi.org/10.48550/arxiv.2104.05642 (2021).

32. Talmon, J. et al. STARE-HI-Statement on reporting of evaluation studies in Health Informatics. Int. J. Med. Inform. 78, 1–9 (2009).

33. Vihinen, M. How to evaluate performance of prediction methods? Measures and their interpretation in variation effect analysis. BMC Genomics. 13, S2 (2012).

34. Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. M. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD statement. BMJ. 350, https://doi.org/10.1136/bmj.g7594 (2015).

35. Luo, W. et al. Guidelines for Developing and Reporting Machine Learning Predictive Models in Biomedical Research: A Multidisciplinary View. J. Med. Internet Res. 18, e323 (2016).

36. Center for Devices, Radiological Health. Good Machine Learning Practice for Medical Device Development: Guiding Principles. U.S. Food and Drug Administration, FDA, 2023. Available: https://www.fda.gov/medical-devices/software-medical-device-samd/good-machine-learning-practice-medical-device-development-guiding-principles

37. Mongan, J., Moy, L. & Kahn, C. E. Jr. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): A Guide for Authors and Reviewers. Radiol. Artif. Intell. 2, e200029 (2020).

38. Liu, X., Rivera, S. C., Moher, D., Calvert, M. J. & Denniston, A. K. SPIRIT-AI and CONSORT-AI Working Group. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI Extension. BMJ. 370, m3164 (2020).

39. Norgeot, B. et al. Minimum information about clinical artificial intelligence modeling: the MI-CLAIM checklist. Nat. Med. 26, 1320–1324 (2020).

40. Sengupta, P. P. et al. Proposed requirements for cardiovascular imaging-related machine learning evaluation (PRIME): A checklist. JACC Cardiovasc. Imaging. 13, 2017–2035 (2020).

41. Cruz Rivera, S., Liu, X., Chan, A.-W., Denniston, A. K. & Calvert, M. J. SPIRIT-AI and CONSORT-AI Working Group. Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI extension. Lancet Digit Health. 2, e549–e560 (2020).

42. Hernandez-Boussard, T., Bozkurt, S., Ioannidis, J. P. A. & Shah, N. H. MINIMAR (MINimum Information for Medical AI Reporting): Developing reporting standards for artificial intelligence in health care. J. Am. Med. Inform. Assoc. 27, 2011–2015 (2020).

43. Stevens, L. M., Mortazavi, B. J., Deo, R. C., Curtis, L. & Kao, D. P. Recommendations for Reporting Machine Learning Analyses in Clinical Research. Circ. Cardiovasc. Qual. Outcomes. 13, e006556 (2020).

44. Walsh, I., Fishman, D., Garcia-Gasulla, D., Titma, T. & Pollastri, G. ELIXIR Machine Learning Focus Group, et al. DOME: recommendations for supervised machine learning validation in biology. Nat. Methods. 18, 1122–1127 (2021).

45. Olczak, J. et al. Presenting artificial intelligence, deep learning, and machine learning studies to clinicians and healthcare stakeholders: an introductory reference with a guideline and a Clinical AI Research (CAIR) checklist proposal. Acta Orthop. 92, 513–525 (2021).

46. Kleppe, A. et al. Designing deep learning studies in cancer diagnostics. Nat. Rev. Cancer. 21, 199–211 (2021).

47. El Naqa, I. et al. AI in medical physics: guidelines for publication. Med. Phys. 48, 4711–4714 (2021).

48. Zukotynski, K. et al. Machine Learning in Nuclear Medicine: Part 2—Neural Networks and Clinical Aspects. J. Nucl. Med. 62, 22–29 (2021).

49. Schwendicke, F. et al. Artificial intelligence in dental research: Checklist for authors, reviewers, readers. J. Dent. 107, 103610 (2021).

50. Daneshjou, R. et al. Checklist for Evaluation of Image-Based Artificial Intelligence Reports in Dermatology: CLEAR Derm Consensus Guidelines From the International Skin Imaging Collaboration Artificial Intelligence Working Group. JAMA Dermatol. 158, 90–96 (2022).

51. Vasey, B. et al. Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. Nat. Med. 28, 924–933 (2022).

52. Jones, O. T. et al. Artificial intelligence and machine learning algorithms for early detection of skin cancer in community and primary care settings: a systematic review. Lancet Digit Health. 4, e466–e476 (2022).

53. Haller, S., Van Cauter, S., Federau, C., Hedderich, D. M. & Edjlali, M. The R-AI-DIOLOGY checklist: a practical checklist for evaluation of artificial intelligence tools in clinical neuroradiology. Neuroradiology. 64, 851–864 (2022).

54. Shen, F. X. et al. An Ethics Checklist for Digital Health Research in psychiatry: Viewpoint. J. Med. Internet Res. 24, e31146 (2022).

55. Volovici, V., Syn, N. L., Ercole, A., Zhao, J. J. & Liu, N. Steps to avoid overuse and misuse of machine learning in clinical research. Nat. Med. 28, 1996–1999 (2022).

56. Hatt, M. et al. Joint EANM/SNMMI guideline on radiomics in nuclear medicine: Jointly supported by the EANM Physics Committee and the SNMMI Physics, Instrumentation and Data Sciences Council. Eur. J. Nucl. Med. Mol. Imaging. 50, 352–375 (2023).

57. Kocak, B. et al. CheckList for EvaluAtion of Radiomics research (CLEAR): a step-by-step reporting guideline for authors and reviewers endorsed by ESR and EuSoMII. Insights Imaging. 14, 75 (2023).

Acknowledgements

F.R.K. is supported by the German Cancer Research Center (CoBot 2.0), the Joachim Herz Foundation (Add-On Fellowship for Interdisciplinary Life Science) and the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) as part of Germany’s Excellence Strategy (EXC 2050/1, Project ID 390696704) within the Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI) of Dresden University of Technology. Furthermore, F.R.K. receives support from the Indiana Clinical and Translational Sciences Institute funded, in part, by Grant Number UM1TR004402 from the National Institutes of Health, National Center for Advancing Translational Sciences, Clinical and Translational Sciences Award. G.P.V. is partly supported by BMBF (Federal Ministry of Education and Research) in DAAD project 57616814 (SECAI, School of Embedded Composite AI, https://secai.org/) as part of the program Konrad Zuse Schools of Excellence in Artificial Intelligence. J.N.K. is supported by the German Federal Ministry of Health (DEEP LIVER, ZMVI1-2520DAT111) and the Max-Eder-Programme of the German Cancer Aid (grant #70113864), the German Federal Ministry of Education and Research (PEARL, 01KD2104C; CAMINO, 01EO2101; SWAG, 01KD2215A; TRANSFORM LIVER, 031L0312A), the German Academic Exchange Service (SECAI, 57616814), the German Federal Joint Committee (Transplant.KI, 01VSF21048), the European Union (ODELIA, 101057091; GENIAL, 101096312) and the National Institute for Health and Care Research (NIHR, NIHR213331) Leeds Biomedical Research Centre. The views expressed are those of the author(s) and not necessarily those of the National Institutes of Health, the NHS, the NIHR or the Department of Health and Social Care.

Open Access funding enabled and organized by Projekt DEAL.

Author information

These authors contributed equally: Fiona R. Kolbinger, Gregory P. Veldhuizen.

Authors and Affiliations

Else Kroener Fresenius Center for Digital Health, TUD Dresden University of Technology, Dresden, Germany

Fiona R. Kolbinger, Gregory P. Veldhuizen, Jiefu Zhu & Jakob Nikolas Kather

Department of Visceral, Thoracic and Vascular Surgery, University Hospital and Faculty of Medicine Carl Gustav Carus, TUD Dresden University of Technology, Dresden, Germany

Fiona R. Kolbinger

Weldon School of Biomedical Engineering, Purdue University, West Lafayette, IN, USA

Regenstrief Center for Healthcare Engineering, Purdue University, West Lafayette, IN, USA

Department of Biostatistics and Health Data Science, Richard M. Fairbanks School of Public Health, Indiana University, Indianapolis, IN, USA

Indiana University Simon Comprehensive Cancer Center, Indiana University School of Medicine, Indianapolis, IN, USA

Department of Diagnostic and Interventional Radiology, University Hospital Aachen, Aachen, Germany

Daniel Truhn

Department of Medicine III, University Hospital RWTH Aachen, Aachen, Germany

Jakob Nikolas Kather

Department of Medicine I, University Hospital Dresden, Dresden, Germany

Medical Oncology, National Center for Tumor Diseases (NCT), University Hospital Heidelberg, Heidelberg, Germany

Contributions

F.R.K., G.P.V. and J.N.K. conceptualized the study, developed the search strategy, conducted the review, curated, analyzed, and interpreted the data. F.R.K., G.P.V. and J.Z. prepared visualizations. D.T. and J.N.K. provided oversight, mentorship, and funding. F.R.K. and G.P.V. wrote the original draft of the manuscript. All authors reviewed and approved the final version of the manuscript.

Corresponding author

Correspondence to Jakob Nikolas Kather.

Ethics declarations

Competing interests

D.T. holds shares in StratifAI GmbH and has received honoraria for lectures from Bayer. J.N.K. declares consulting services for Owkin, France, DoMore Diagnostics, Norway, Panakeia, UK, Scailyte, Switzerland, Cancilico, Germany, Mindpeak, Germany, MultiplexDx, Slovakia, and Histofy, UK; furthermore, he holds shares in StratifAI GmbH, Germany, has received a research grant by GSK, and has received honoraria by AstraZeneca, Bayer, Eisai, Janssen, MSD, BMS, Roche, Pfizer and Fresenius. All other authors declare no conflicts of interest.

Peer review

Peer review information

Communications Medicine thanks Weijie Chen, David Moher and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article

Kolbinger, F.R., Veldhuizen, G.P., Zhu, J. et al. Reporting guidelines in medical artificial intelligence: a systematic review and meta-analysis. Commun Med 4, 71 (2024). https://doi.org/10.1038/s43856-024-00492-0

Received: 18 August 2023

Accepted: 27 March 2024

Published: 11 April 2024

DOI: https://doi.org/10.1038/s43856-024-00492-0


Guidelines for Reporting of Statistics for Clinical Research in Urology

Melissa Assel

a Memorial Sloan Kettering Cancer Center, New York, NY, USA

Daniel Sjoberg

Andrew Elders

b Glasgow Caledonian University, Glasgow, UK

Xuemei Wang

c The University of Texas, MD Anderson Cancer Center, Houston, TX, USA

Dezheng Huo

d The University of Chicago, Chicago, IL, USA

Albert Botchway

e Southern Illinois University School of Medicine, Springfield, IL, USA

Kristin Delfino

f University of Minnesota, Minneapolis, MN, USA

Zhiguo Zhao

g Cleveland Clinic, Cleveland, OH, USA

Tatsuki Koyama

h Vanderbilt University Medical Center, Nashville, TN, USA

Brent Hollenbeck

i University of Michigan, Ann Arbor, MI, USA

j Janssen Research & Development, NJ, USA

Whitney Zahnd

k University of South Carolina, Columbia, SC, USA

Emily C. Zabor

Michael W. Kattan, Andrew J. Vickers

Author contributions: Andrew J. Vickers had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Acquisition of data: None.

Analysis and interpretation of data: None.

Drafting of the manuscript: Vickers, Assel, Sjoberg, Kattan.

Critical revision of the manuscript for important intellectual content: All authors.

Statistical analysis: None.

Obtaining funding: None.

Administrative, technical, or material support: None.

Supervision: None.

Other: None.

In an effort to improve the quality of statistics in the clinical urology literature, statisticians at European Urology, The Journal of Urology, Urology, and BJUI came together to develop a set of guidelines to address common errors of statistical analysis, reporting, and interpretation. Authors should “break any of the guidelines if it makes scientific sense to do so” but would need to provide a clear justification. Adoption of the guidelines will, in our view, not only increase the quality of published papers in our journals, but also improve statistical knowledge in our field in general.

It is widely acknowledged that the quality of statistics in the clinical research literature is poor. This is true for urology just as it is for other medical specialties. In 2005, Scales et al [1] published a systematic evaluation of the statistics in papers appearing in a single month in one of the four leading urology medical journals: European Urology, The Journal of Urology, Urology, and BJUI. They reported widespread errors, including 71% of papers with comparative statistics having at least one statistical flaw. These findings mirror many others in the literature; see, for instance, the review given by Lang and Altman [2]. The quality of statistical reporting in urology journals has no doubt improved since 2005, but remains unsatisfactory.

The four urology journals in the review by Scales et al [1] have come together to publish a shared set of statistical guidelines, adapted from those in use at one of the journals, European Urology, since 2014 [3]. The guidelines will also be adopted by European Urology Focus and European Urology Oncology. Statistical reviewers at the four journals will systematically assess submitted manuscripts using the guidelines to improve statistical analysis, reporting, and interpretation. Adoption of the guidelines will, in our view, not only increase the quality of published papers in our journals, but also improve statistical knowledge in our field in general. Asking an author to follow a guideline about, say, the fallacy of accepting the null hypothesis would no doubt result in a better paper, but we hope that it would also enhance the author’s understanding of hypothesis tests.

The guidelines are didactic, based on the consensus of the statistical consultants to the journals. We avoided, where possible, making specific analytic recommendations and focused instead on analyses or methods of reporting statistics that should be avoided. We intend to update the guidelines over time and hence encourage readers who question the value or rationale of a guideline to write to the authors.

1. The golden rule

1.1. Break any of the guidelines if it makes scientific sense to do so

Science varies too much to allow methodologic or reporting guidelines to apply universally.

2. Reporting of design and statistical analysis

2.1. Follow existing reporting guidelines for the type of study you are reporting, such as CONSORT for randomized trials, REMARK for marker studies, TRIPOD for prediction models, STROBE for observational studies, or AMSTAR for systematic reviews

Statisticians and methodologists have contributed extensively to a large number of reporting guidelines. The first is widely recognized to be the Consolidated Standards of Reporting Trials (CONSORT) statement on reporting of randomized trials, but there are now many other guidelines, covering a wide range of different types of study. Reporting guidelines can be downloaded from the Equator website ( http://www.equator-network.org ).

2.2. Describe cohort selection fully

It is insufficient to state, for instance, that “the study cohort consisted of 1144 patients treated for benign prostatic hyperplasia at our institution.” The cohort needs to be defined in terms of dates (eg, “presenting March 2013 to December 2017”), inclusion criteria (eg, “IPSS > 12”), and whether patients were selected to be included (eg, for a research study) versus being a consecutive series. Exclusions should be described one by one, with the number of patients omitted for each exclusion criterion to give the final cohort size (eg, “patients with prior surgery [n = 43], allergies to 5-ARIs [n = 12], and missing data on baseline prostate volume [n = 86] were excluded to give a final cohort for analysis of 1003 patients”). Note that the inclusion criteria can be omitted if obvious from the context (eg, no need to state “undergoing radical prostatectomy for histologically proven prostate cancer”); conversely, dates may need to be explained if their rationale could be questioned (eg, “March 2013, when our specialist voiding clinic was established, to December 2017”).
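As a minimal sketch of the bookkeeping behind such a sentence, the snippet below tallies exclusions one by one and checks that they sum to the reported final cohort. The function name `describe_exclusions` is a hypothetical helper introduced for illustration; the counts are the ones quoted in the example above.

```python
def describe_exclusions(initial_n, exclusions):
    """Apply exclusions in order; return text fragments and the final cohort size.

    `exclusions` is a list of (reason, number excluded) pairs.
    """
    remaining = initial_n
    parts = []
    for reason, n_excluded in exclusions:
        if n_excluded < 0 or n_excluded > remaining:
            raise ValueError(f"implausible count for exclusion: {reason}")
        remaining -= n_excluded
        parts.append(f"{reason} [n = {n_excluded}]")
    return parts, remaining


# Counts taken from the worked example in the text.
exclusions = [
    ("prior surgery", 43),
    ("allergies to 5-ARIs", 12),
    ("missing data on baseline prostate volume", 86),
]
parts, final_n = describe_exclusions(1144, exclusions)
print("Excluded: " + "; ".join(parts))
print(f"Final cohort for analysis: {final_n} patients")
```

Generating the sentence from the same counts used in the analysis is one way to keep the reported numbers and the analyzed cohort from drifting apart.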

2.3. Describe the practical steps of randomization in randomized trials

Although this reporting guideline is part of the CONSORT statement, it is so critical and so widely misunderstood that it bears repeating. The purpose of randomization is to prevent selection bias. This can be achieved only if the consenting patients cannot guess their treatment allocation before registration in the trial or change it afterward. This safeguard is known as allocation concealment. Stating merely that “a randomization list was created by a statistician” or that “envelope randomization was used” does not ensure allocation concealment: a list could have been posted in the nurse’s station for all to see; envelopes can be opened and resealed. Investigators need to specify the exact logistic steps taken to ensure allocation concealment. The best method is to use a password-protected computer database.

2.4. The statistical methods should describe the study questions and the statistical approaches used to address each question

Many statistical methods sections state only something like “Mann-Whitney was used for comparisons of continuous variables and Fisher’s exact for comparisons of binary variables.” This says little more than “the inference tests used were not grossly erroneous for the type of data.” Instead, statistical methods sections should lay out each primary study question separately: carefully detail the analysis associated with each and describe the rationale for the analytic approach, if this is not obvious or there are reasonable alternatives. Special attention and description should be provided for rarely used statistical techniques.

2.5. The statistical methods should be described in sufficient detail to allow replication by an independent statistician given the same data set

Vague reference to “adjusting for confounders” or “nonlinear approaches” is insufficiently specific to allow replication, a cornerstone of the scientific method. All statistical analyses should be specified in the Methods section, including details such as the covariates included in a multivariable model. All variables should be clearly defined where there is room for ambiguity. For instance, avoid saying that “Gleason grade was included in the model”; state instead “Gleason grade group was included in four categories 1, 2, 3, and 4 or 5.”

3. Inference and p values

3.1. Do not accept the null hypothesis

In a court case, defendants are declared guilty or not guilty; there is no verdict of “innocent.” Similarly, in a statistical test, the null hypothesis is rejected or not rejected. If the p value is 0.05 or higher, investigators should avoid conclusions such as “the drug was ineffective,” “there was no difference between groups,” or “response rates were unaffected.” Instead, authors should use phrases such as “we did not see evidence of a drug effect,” “we were unable to demonstrate a difference between groups,” or simply “there was no statistically significant difference in response rates.”

3.2. P values just above 5% are not a trend, and they are not moving

Avoid saying that a p value such as 0.07 shows a “trend” (which is meaningless) or “approaches statistical significance” (because the p value is not moving). Alternative language might be that “although we saw some evidence of improved response rates in patients receiving the novel procedure, differences between groups did not meet conventional levels of statistical significance.”

3.3. The p values and 95% confidence intervals do not quantify the probability of a hypothesis

A p value of, say, 0.03 does not mean that there is 3% probability that the findings are due to chance. Additionally, a 95% confidence interval (CI) should not be interpreted as a 95% certainty that the true parameter value is in the range of the 95% CI. The correct interpretation of a p value is the probability of finding the observed or more extreme results when the null hypothesis is true; the 95% CI will contain the true parameter value 95% of the time were a study to be repeated many times using different samples.
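The repeated-sampling interpretation of a 95% CI can be illustrated with a small simulation. The sketch below is an assumption-laden toy: it draws normally distributed samples with a known standard deviation (so a simple z interval applies), and the true mean, sample size, number of repeats, and seed are arbitrary illustrative choices, not values from the guidelines.

```python
import random
from statistics import NormalDist, mean


def ci_coverage(true_mean=10.0, sd=2.0, n=30, repeats=2000, seed=0):
    """Fraction of simulated studies whose 95% CI contains the true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.975)
    half_width = z * sd / n ** 0.5  # sd is treated as known in this toy setup
    hits = 0
    for _ in range(repeats):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        sample_mean = mean(sample)
        if sample_mean - half_width <= true_mean <= sample_mean + half_width:
            hits += 1
    return hits / repeats


coverage = ci_coverage()
print(f"Share of intervals containing the true mean: {coverage:.3f}")
```

The printed share should be close to 0.95. The statement concerns the long-run behavior of the procedure across repeated studies, not the probability that any single computed interval contains the parameter.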

3.4. Do not use confidence intervals to test hypotheses

Investigators often interpret confidence intervals in terms of hypotheses. For instance, investigators might claim that there is a statistically significant difference between groups because the 95% CI for the odds ratio excludes 1. Such claims are problematic because confidence intervals are concerned with estimation, and not inference. Moreover, the mathematical method used to calculate confidence intervals may be different from that used to calculate p values. It is perfectly possible to have a 95% CI that includes no difference between groups even though the p value is <0.05 or vice versa. For instance, in a study of 100 patients in two equal groups, with event rates of 70% and 50%, the p value from Fisher’s exact test is 0.066 but the 95% CI for the odds ratio is 1.03–5.26. The 95% CI for the risk difference and risk ratio also exclude no difference between groups.
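The example above can be checked with a short sketch using only the Python standard library. Two assumptions are worth flagging: the two-sided Fisher p value is computed by summing the probabilities of all tables no more likely than the observed one, and the interval is a Wald interval on the log odds ratio, which may not be the method behind the figures quoted in the text, so the upper limit can differ slightly. The function names are illustrative.

```python
from math import comb, exp, log, sqrt
from statistics import NormalDist


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denominator = comb(row1 + row2, col1)

    def prob(k):
        # Hypergeometric probability of k events in the first row.
        return comb(row1, k) * comb(row2, col1 - k) / denominator

    k_min, k_max = max(0, col1 - row2), min(row1, col1)
    p_observed = prob(a)
    # Sum over tables that are no more likely than the observed table.
    return sum(
        prob(k) for k in range(k_min, k_max + 1)
        if prob(k) <= p_observed * (1 + 1e-9)
    )


def wald_odds_ratio_ci(a, b, c, d, level=0.95):
    """Wald confidence interval for the odds ratio, computed on the log scale."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    log_or = log((a * d) / (b * c))
    se = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return exp(log_or - z * se), exp(log_or + z * se)


# 50 patients per group: 35 events (70%) versus 25 events (50%).
p_value = fisher_exact_two_sided(35, 15, 25, 25)
lower, upper = wald_odds_ratio_ci(35, 15, 25, 25)
print(f"Fisher exact p = {p_value:.3f}")
print(f"Wald 95% CI for the odds ratio: {lower:.2f} to {upper:.2f}")
```

Under these assumptions the p value is above 0.05 while the lower confidence limit is above 1, which is the mismatch the guideline warns about.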

3.5. Take care to interpret results when reporting multiple p values

The more questions you ask, the more likely you are to get a spurious answer to at least one of them. For example, if you report p values for five independent true null hypotheses, the probability that you will falsely reject at least one is not 5%, but >20%. Although formal adjustment of p values is appropriate in some specific cases, such as genomic studies, a more common approach is to simply interpret p values in the context of multiple testing. For instance, if an investigator examines the association of 10 variables with three different endpoints, thereby testing 30 separate hypotheses, a p value of 0.04 should not be interpreted in the same way as if the study tested only a single hypothesis with a p value of 0.04.
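The ">20%" figure follows from the complement rule: with k independent tests of true null hypotheses at level α, the chance of at least one false rejection is 1 − (1 − α)^k. A minimal sketch, with a hypothetical function name, is:

```python
def familywise_error(n_tests, alpha=0.05):
    """Probability of at least one false rejection among independent true nulls."""
    return 1 - (1 - alpha) ** n_tests


for n_tests in (1, 5, 30):
    print(f"{n_tests:>2} tests: {familywise_error(n_tests):.3f}")
```

For five tests this gives about 0.226. The value for 30 tests (roughly 0.79) applies only if the tests are independent, which the 30 hypotheses in the example above (10 variables, three endpoints) are unlikely to be, so it is best read as a rough indication of scale.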

3.6. Do not report separate p values for each of two different groups in order to address the question of whether there is a difference between groups

One scientific question means one statistical hypothesis tested by one p value. To illustrate the error of using two p values to address one question, take the case of a randomized trial of drug versus placebo to reduce voiding symptoms, with 30 patients in each group. The authors might report that symptom scores improved by 6 (standard deviation 14) points in the drug group ( p = 0.03 by one-sample t test) and by 5 (standard deviation 15) points in the placebo group ( p = 0.08). However, the study hypothesis concerns the difference between drug and placebo. To test a single hypothesis, a single p value is needed. A two-sample t test for these data gives a p value of 0.8—unsurprising, given that the scores in each group were virtually the same—confirming that it would be unsound to conclude that the drug was effective based on the finding that the change was significant in the drug group but not in placebo controls.
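The three p values in this example can be reproduced from the summary statistics alone. The sketch below is illustrative rather than a recommended implementation: it avoids third-party libraries by integrating the Student t density numerically with Simpson's rule, and it assumes a pooled-variance two-sample t test. All function names are hypothetical.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t_stat, df, steps=2000):
    """Two-sided p value via Simpson's rule on the t density (steps must be even)."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # P(0 < T < |t|)
    return 1 - 2 * central_area


def one_sample_p(mean_change, sd, n):
    """One-sample t test of mean change against zero."""
    t_stat = mean_change / (sd / math.sqrt(n))
    return two_sided_p(t_stat, n - 1)


def two_sample_p(mean1, sd1, n1, mean2, sd2, n2):
    """Pooled-variance two-sample t test from summary statistics."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return two_sided_p((mean1 - mean2) / se, n1 + n2 - 2)


p_drug = one_sample_p(6, 14, 30)        # within-group change, drug
p_placebo = one_sample_p(5, 15, 30)     # within-group change, placebo
p_between = two_sample_p(6, 14, 30, 5, 15, 30)  # the comparison of interest
print(f"drug: {p_drug:.2f}, placebo: {p_placebo:.2f}, between groups: {p_between:.1f}")
```

Only the last p value addresses the study hypothesis; the two within-group values fall on either side of 0.05 even though the groups changed by nearly the same amount.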

3.7. Use interaction terms in place of subgroup analyses

A similar error to the use of separate tests for a single hypothesis is to claim that an intervention works differently in two groups of patients because it has a statistically significant effect in one group but not in the other. A more appropriate approach is to use what is known as an interaction term in a statistical model. For instance, to determine whether a drug reduced pain scores more in women than in men, the model might be as follows:
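
A minimal sketch of such a model, with assumed variable names and coefficient labels (the coding of drug and sex as 0/1 indicators and the adjustment for baseline pain are also assumptions):

```latex
\text{final pain} = \beta_1\,\text{baseline pain} + \beta_2\,\text{drug} + \beta_3\,\text{sex} + \beta_4\,(\text{drug} \times \text{sex}) + c
```

In a model of this form, the coefficient $\beta_4$ on the product term estimates how much the drug effect differs between women and men, and the single p value for $\beta_4$ tests the hypothesis of interest.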

It is sometimes appropriate to report estimates and confidence intervals within subgroups of interest, but p values should be avoided.

3.8. Tests for change over time are generally uninteresting

A common analysis is to conduct a paired t test comparing, say, erectile function in older men at baseline with erectile function after 5 yr of follow-up. The null hypothesis here is that “erectile function does not change over time,” which is known to be false. Investigators are encouraged to focus on estimation rather than on inference, reporting, for example, the mean change over time along with a 95% CI.

3.9. Avoid using statistical tests to determine the type of analysis to be conducted

Numerous statistical tests are available that can be used to determine how a hypothesis test should be conducted. For instance, investigators might conduct a Shapiro-Wilk test for normality to determine whether to use a t test or a Mann-Whitney test, use Cochran’s Q to decide whether to use a fixed-effect or a random-effect approach in a meta-analysis, or use a t test for between-group differences in a covariate to determine whether that covariate should be included in a multivariable model. The problem with these sorts of approaches is that they are often testing a null hypothesis that is known to be false. For instance, no data set perfectly follows a normal distribution. Moreover, it is often questionable whether changing the statistical approach in the light of the test is actually of benefit. Statisticians are far from unanimous as to whether Mann-Whitney is always superior to the t test when data are nonnormal, whether fixed effects are invalid under study heterogeneity, or whether the criterion for adjusting for a variable should be whether it is significantly different between groups. Investigators should generally follow a prespecified analytic plan, only altering the analysis if the data unambiguously point to a better alternative.

3.10. When reporting p values, be clear about the hypothesis tested and ensure that the hypothesis is a sensible one

P values test very specific hypotheses. When reporting a p value in the Results section, state the hypothesis being tested unless this is completely clear. Take, for instance, the statement “pain scores were higher in group 1 and similar in groups 2 and 3 (p = 0.02).” It is ambiguous whether the p value of 0.02 is testing group 1 versus groups 2 and 3 combined or the hypothesis that pain score is the same in all three groups. Clarity about the hypotheses being tested can help avoid the testing of inappropriate hypotheses. For instance, a p value for differences between groups at baseline in a randomized trial tests a null hypothesis that is known to be true (informally, that any observed differences between groups are due to chance).

4. Reporting of study estimates

4.1. Use appropriate levels of precision

Reporting a p value of 0.7345 suggests that there is an appreciable difference between p values of 0.7344 and 0.7346. Reporting that 16.9% of 83 patients responded entails a precision (to the nearest 0.1%) that is nearly 200 times finer than the width of the confidence interval (10–27%). Reporting in a clinical study that the mean calorie consumption was 2069.9 suggests that calorie consumption can be measured extremely precisely by a food questionnaire. Some might argue that being overly precise is irrelevant, because the extra numbers can always be ignored. The counterargument is that investigators should think very hard about every number they report, rather than just carelessly cutting and pasting numbers from the statistical software printout. The specific guidelines for precision are as follows:

- Report p values to a single significant figure unless the p value is close to 0.05, in which case, report two significant figures. Do not report “not significant” for p values of 0.05 or higher. Very low p values can be reported as p < 0.001 or similar. A p value can indeed be 1, although some investigators prefer to report this as >0.9. For instance, the following p values are reported to appropriate precision: <0.001, 0.004, 0.045, 0.13, 0.3, 1.

- Report percentages, rates, and probabilities to two significant figures, for example, 75%, 3.4%, 0.13%.

- Do not report p values of 0, as any experimental result has a nonzero probability.

- Do not give decimal places if a probability or proportion is 1 (eg, a p value of 1.00 or a percentage of 100.00%). The decimal places suggest that it is possible to have, say, a p value of 1.05. There is a similar consideration for data that can take only integer values. It makes sense to state that, for instance, the mean number of pregnancies was 2.4, but not that 29% of women reported 1.0 pregnancy.

- There is generally no need to report estimates to more than three significant figures.

- Hazard and odds ratios are normally reported to two decimal places, although this can be avoided for high odds ratios (eg, 18.2 rather than 18.17).
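
The p value rules in the list above can be sketched as a small formatting helper. The guideline does not define "close to 0.05", so the window of 0.01 to 0.2 used here is an assumption chosen to match the example values given (0.045 and 0.13 to two significant figures, 0.004 and 0.3 to one). The function name is illustrative.

```python
from math import floor, log10

def format_p(p, near_low=0.01, near_high=0.2):
    """Format a p value: one significant figure, two when near 0.05."""
    if not 0 <= p <= 1:
        raise ValueError("a p value must lie between 0 and 1")
    if p < 0.001:
        return "<0.001"  # also covers software output of exactly 0
    # Assumed window for "close to 0.05"; not specified by the guideline.
    sig = 2 if near_low <= p < near_high else 1
    rounded = round(p, sig - 1 - floor(log10(p)))
    decimals = max(0, sig - 1 - floor(log10(rounded)))
    return f"{rounded:.{decimals}f}"

raw = [0.0004, 0.004, 0.0448, 0.1302, 0.3147, 1.0]
print([format_p(p) for p in raw])
```

A helper like this is only a starting point; the authors' wider advice is to consider each reported number rather than paste software output.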

4.2. Avoid redundant statistics in cohort descriptions

Authors should be selective about the descriptive statistics reported, and ensure that each and every number provides unique information. Authors should avoid reporting descriptive statistics that can readily be derived from the data that have already been provided. For instance, there is no need to state that in a cohort, 40% were men and 60% were women; choose one or the other. Another common error is to include a column of descriptive statistics for each of two groups separately and then another for the whole cohort combined. If, say, the median age is 60 in group 1 and 62 in group 2, we do not need to be told that the median age in the cohort as a whole is close to 61.

4.3. For descriptive statistics, median and quartiles are preferred over means and standard deviations (or standard errors); range should be avoided

The median and quartiles provide all sorts of useful information; for instance, 50% of patients had values above the median or between the quartiles. The range gives the values of just two patients and so is generally uninformative of the data distribution.
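
A brief illustration with made-up data: the values below are hypothetical, right-skewed measurements invented for this sketch, and the quartile method is the default of Python's `statistics.quantiles`, which is one of several conventions.

```python
from statistics import mean, quantiles

# Hypothetical, right-skewed laboratory values for 11 patients.
values = [2.1, 3.4, 4.0, 4.6, 5.2, 5.9, 6.8, 8.3, 11.5, 19.0, 64.0]

q1, med, q3 = quantiles(values, n=4)  # default "exclusive" method
print(f"median {med} (quartiles {q1}, {q3})")
print(f"mean {mean(values):.1f}; range {min(values)} to {max(values)}")
```

In this sketch a single extreme value pulls the mean above the upper quartile and sets the top of the range, whereas the median and quartiles still describe where most patients lie.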

4.4. Report estimates for the main study questions

A clinical study typically focuses on a limited number of scientific questions. Authors should generally provide an estimate for each of these questions. In a study comparing two groups, for instance, authors should give an estimate of the difference between groups, and avoid giving only data on each group separately or simply saying that the difference was or was not significant. In a study of a prognostic factor, authors should give an estimate of the strength of the prognostic factor, such as an odds ratio or a hazard ratio, as well as reporting a p value testing the null hypothesis of no association between the prognostic factor and outcome.

4.5. Report confidence intervals for the main estimates of interest

Authors should generally report a 95% CI around the estimates relating to the key research questions, but not other estimates given in a paper. For instance, in a study comparing two surgical techniques, the authors might report adverse event rates of 10% and 15%; however, the key estimate in this case is the difference between groups, so this estimate, 5%, should be reported along with a 95% CI (eg, 1–9%). Confidence intervals should not be reported for the estimates within each group (eg, adverse event rate in group A of 10%, 95% CI 7–13%). Similarly, confidence intervals should not be given for statistics such as mean age or gender ratio.
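
As a sketch of the key estimate in this example, the difference in adverse event rates and its 95% CI can be computed as follows. The sample size of 500 patients per technique is hypothetical (the text gives none), and the simple Wald interval is an assumed method.

```python
from math import sqrt

def risk_difference_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Wald 95% CI for the difference in proportions (group B minus group A)."""
    p_a, p_b = events_a / n_a, events_b / n_b
    diff = p_b - p_a
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff, diff - z * se, diff + z * se

# Hypothetical sample sizes: 500 patients per technique, 10% versus 15% adverse events.
diff, low, high = risk_difference_ci(50, 500, 75, 500)
print(f"difference = {diff:.0%}, 95% CI {low:.0%} to {high:.0%}")
```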

4.6. Do not treat categorical variables as continuous

Variables such as Gleason grade groups are scored 1–5, but it is not true that the difference between groups 3 and 4 is half as great as the difference between groups 2 and 4. Variables such as Gleason grade groups should be reported as categories (eg, 40% grade group 1, 20% group 2, 20% group 3, 20% groups 4 and 5) rather than as a continuous variable (eg, mean Gleason grade group of 2.4). Similarly, categorical variables such as Gleason grade group should be entered into regression models not as a single variable (eg, a hazard ratio of 1.5 per 1-point increase in Gleason grade group) but as multiple categories (eg, a hazard ratio of 1.6 comparing Gleason grade group 2 with group 1 and a hazard ratio of 3.9 comparing group 3 with group 1).

4.7. Avoid categorization of continuous variables unless there is a convincing rationale

A common approach to a variable such as age is to define patients as either old (aged ≥60 yr) or young (aged <60 yr) and then enter age into analyses as a categorical variable, reporting, for example, that “patients aged 60 and over had twice the risk of an operative complication compared with patients aged less than 60”. In epidemiologic and marker studies, a common approach is to divide a variable into quartiles and report a statistic such as a hazard ratio for each quartile compared with the lowest (“reference”) quartile. This is problematic because it assumes that all values of a variable within a category are the same. For instance, it is likely not the case that a patient aged 65 yr has the same risk as a patient aged 90 yr, but a very different risk from that of a patient aged 64 yr. It is generally preferable to leave variables in a continuous form, reporting, for instance, how risk changes with a 10-yr increase in age. Nonlinear terms can also be used, to avoid the assumption that the association between age and risk follows a straight line.

4.8. Do not use statistical methods to obtain cut-points for clinical practice

Various statistical methods are available to dichotomize a continuous variable. For instance, outcomes can be compared on either side of several different cut-points and the optimal cut-point chosen as the one associated with the smallest p value. Alternatively, investigators might choose a cut-point that leads to the highest value of sensitivity + specificity, that is, the point closest to the top left-hand corner of a receiver operating characteristic (ROC) curve. Such methods are inappropriate for determining clinical cut-points because they do not consider clinical consequences. The ROC approach, for instance, assumes that sensitivity and specificity are of equal value, whereas it is generally worse to miss disease than to treat unnecessarily. The smallest p value approach tests strength of evidence against the null hypothesis, which has little to do with the relative benefits and harms of a treatment or further diagnostic workup.

4.9. The association between a continuous predictor and outcome can be demonstrated graphically, particularly by using nonlinear modeling

In high-school mathematics, we often thought about the relationship between y and x by plotting a line on a graph, with a scatterplot added in some cases. This also holds true for many scientific studies. In the case of a study of age and complication rates, for instance, an investigator could plot age on the x axis against the risk of a complication on the y axis and show a regression line, perhaps with a 95% CI. Nonlinear modeling is often useful because it avoids assuming a linear relationship and allows the investigator to determine questions such as whether risk starts to increase disproportionately beyond a given age.

4.10. Do not ignore significant heterogeneity in meta-analyses

Informally speaking, heterogeneity statistics test whether variations between the results of different studies in a meta-analysis are consistent with chance or whether such variation reflects, at least in part, true differences between studies. If heterogeneity is present, authors need to do more than merely report the p value and focus on the random-effect estimate. Authors should investigate the sources of heterogeneity and try to determine the factors that lead to differences in study results, for example, by identifying common features of studies with similar findings or idiosyncratic aspects of studies with outlying results.
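
For readers who want to see what a heterogeneity statistic measures, the sketch below computes Cochran's Q and the I² statistic from inverse-variance weights and then repeats the calculation with each study left out. The five study estimates are hypothetical, and the leave-one-out check is only one simple, assumed way to flag a study that deserves closer inspection; it does not replace investigating why results differ.

```python
def cochran_q_i2(estimates, std_errors):
    """Return (pooled fixed-effect estimate, Cochran's Q, I-squared)."""
    weights = [1 / se**2 for se in std_errors]
    pooled = sum(w * est for w, est in zip(weights, estimates)) / sum(weights)
    q = sum(w * (est - pooled) ** 2 for w, est in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, q, i2

# Hypothetical log hazard ratios and standard errors from five studies.
log_hr = [-0.35, -0.30, -0.40, -0.28, 0.25]
se = [0.12, 0.15, 0.20, 0.10, 0.14]

pooled, q, i2 = cochran_q_i2(log_hr, se)
print(f"all studies: Q = {q:.1f}, I2 = {i2:.0%}")

# Leave each study out in turn to see which one drives the heterogeneity.
for k in range(len(log_hr)):
    rest_est = log_hr[:k] + log_hr[k + 1:]
    rest_se = se[:k] + se[k + 1:]
    _, q_k, i2_k = cochran_q_i2(rest_est, rest_se)
    print(f"without study {k + 1}: Q = {q_k:.1f}, I2 = {i2_k:.0%}")
```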

4.11. For time-to-event variables, report the number of events but not the proportion

Take the case of a study that reported the following: “of 60 patients accrued, 10 (17%) died.” Although it is important to report the number of events, patients entered the study at different times and were followed for different periods; hence, the reported proportion of 17% is meaningless. The standard statistical approach to time-to-event variables is to calculate probabilities, such as the risk of death being 60% by 5 yr or the median survival—the time at which the probability of survival first drops below 50%—being 52 mo.
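
The gap between a crude proportion and a time-to-event probability can be seen in a small sketch of the Kaplan-Meier estimator. The follow-up data are hypothetical and the function is a bare-bones illustration, not a substitute for a statistical package.

```python
def kaplan_meier(times, events):
    """Return [(time, survival probability)] at each distinct event time."""
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(set(times)):
        deaths = sum(1 for ti, ei in zip(times, events) if ti == t and ei)
        leaving = sum(1 for ti in times if ti == t)
        if deaths:
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= leaving  # deaths and censored patients leave the risk set
    return curve

# Hypothetical follow-up in months for 10 patients; 1 = died, 0 = censored.
months = [3, 5, 8, 12, 12, 15, 20, 24, 30, 36]
died = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]

curve = kaplan_meier(months, died)
crude = sum(died) / len(died)
last_time, last_survival = curve[-1]
print(f"crude proportion died: {crude:.0%}")
print(f"Kaplan-Meier risk of death by {last_time} mo: {1 - last_survival:.0%}")
```

In these made-up data, 3 of 10 patients died, yet the estimated risk of death by 15 mo is higher than 30% because several patients were censored before they could contribute much follow-up.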