InVisionApp, Inc.

Inside Design

5 steps to a hypothesis-driven design process

Mar 22, 2018

Say you’re starting a greenfield project, or you’re redesigning a legacy app. The product owner gives you some high-level goals. Lots of ideas and questions are in your mind, and you’re not sure where to start.

Hypothesis-driven design will help you navigate through an unknown space so you can come out at the end of the process with actionable next steps.

Ready? Let’s dive in.

Step 1: Start with questions and assumptions

On the first day of the project, you’re curious about all the different aspects of your product. “How could we increase the engagement on the homepage?” “What features are important for our users?”

To reduce risk, I like to take some time to write down all the unanswered questions and assumptions. So grab some sticky notes and write all your questions down on the notes (one question per note).

I recommend that you use the How Might We technique from IDEO to phrase the questions and turn your assumptions into questions. It’ll help you frame the questions in a more open-ended way and avoid building the solution into the statement prematurely. For example, say you have an idea to make riders feel more comfortable by showing them how many rides the driver has completed. You can rephrase it as the question “How might we ensure riders feel comfortable when taking a ride?” and leave the solution for a later step.

“It’s easy to come up with design ideas, but it’s hard to solve the right problem.”

It’s even more valuable to have your team members participate in the question brainstorming session. Having diverse disciplines in the room always brings fresh perspectives and leads to a more productive conversation.

Step 2: Prioritize the questions and assumptions

Now that you have all the questions on sticky notes, organize them into groups to make it easier to review them. It’s especially helpful if you can do the activity with your team so you can have more input from everybody.

When it comes to choosing which question to tackle first, think about what would impact your product the most or what would bring the most value to your users.

If you have a big group, you can Dot Vote to prioritize the questions. Here’s how it works: Everyone gets three dots and places them on the questions they think are most important to answer in order to build a successful product. It’s a common prioritization technique that’s also used in the Sprint book by Jake Knapp, who writes, “The prioritization process isn’t perfect, but it leads to pretty good decisions and it happens fast.”
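As a rough illustration, the tally behind a dot vote can be sketched in a few lines of Python. The function name `tally_dot_votes`, the sample ballots, and the rule that a person may stack several dots on one question are all assumptions made for this sketch, not part of the technique as described above.

```python
from collections import Counter


def tally_dot_votes(ballots, dots_per_person=3):
    """Count dots per question; each person may place at most `dots_per_person` dots."""
    totals = Counter()
    for person, dots in ballots.items():
        if len(dots) > dots_per_person:
            raise ValueError(f"{person} placed {len(dots)} dots, limit is {dots_per_person}")
        totals.update(dots)
    # Highest dot count first; ties are broken alphabetically so the order is stable.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical ballots: each list holds the questions one person put a dot on.
ballots = {
    "designer": ["rider safety", "rider safety", "homepage engagement"],
    "engineer": ["rider safety", "key features", "key features"],
    "product owner": ["homepage engagement", "key features", "rider safety"],
}
ranking = tally_dot_votes(ballots)
for question, dots in ranking:
    print(f"{dots} dots: {question}")
```

The question at the top of `ranking` is the one the group would tackle first.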

Step 3: Turn them into hypotheses

After the prioritization, you now have a clear question in mind. It’s time to turn the question into a hypothesis. Think about how you would answer the question.

Let’s continue the previous ride-hailing service example. The question you have is “How might we make people feel safe and comfortable when using the service?”

Based on this question, the solutions can be:

- Sharing the rider’s location with friends and family automatically

- Displaying more information about the driver

- Showing feedback from previous riders

Now you can combine the solution and question, and turn them into a hypothesis. A hypothesis is a framework that helps you clearly define the question and solution, and eliminate assumptions.

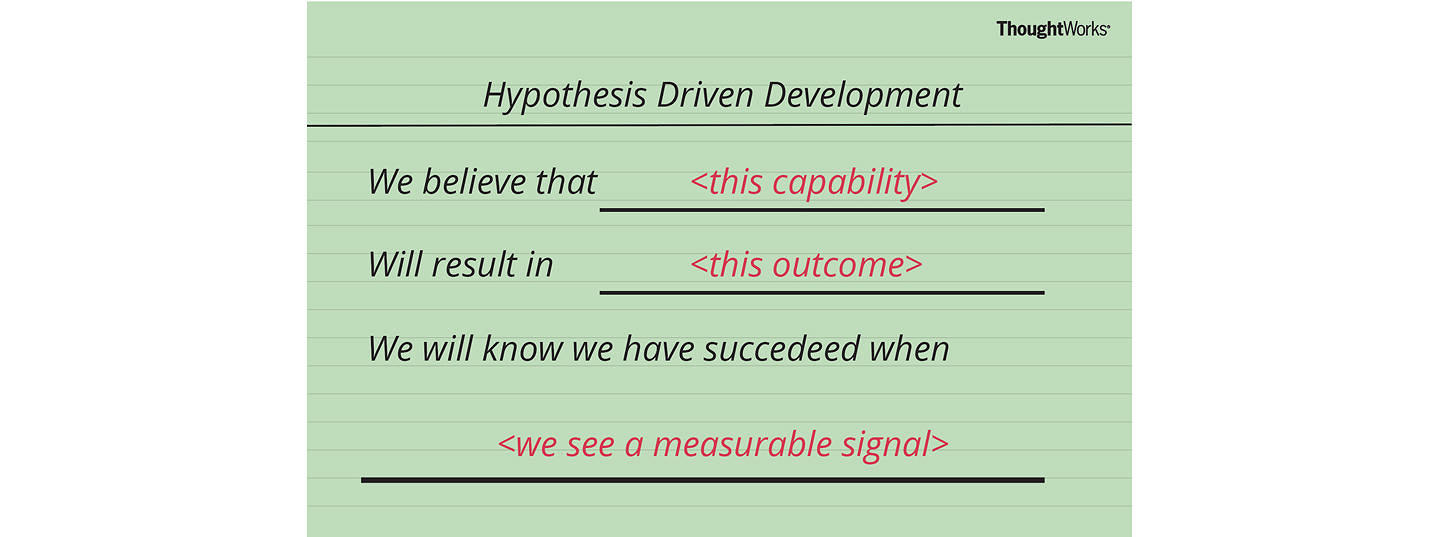

From Lean UX

We believe that [sharing more information about the driver’s experience and stories]

For [the riders]

Will [make riders feel more comfortable and connected throughout the ride]
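If your team keeps hypotheses in a shared document or tracker, it can help to store the three parts separately and render the sentence from them. The following is a minimal sketch; the class name `Hypothesis` and its field names are hypothetical and only mirror the "We believe that / For / Will" template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    solution: str  # what we believe will help
    audience: str  # who it is for
    outcome: str   # the change we expect to see

    def statement(self) -> str:
        """Render the three parts as one sentence."""
        return f"We believe that {self.solution} for {self.audience} will {self.outcome}."


h = Hypothesis(
    solution="sharing more information about the driver's experience and stories",
    audience="the riders",
    outcome="make riders feel more comfortable and connected throughout the ride",
)
print(h.statement())
```

Keeping the parts separate makes it easy to spot a hypothesis that is missing its audience or its expected outcome.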

Step 4: Develop an experiment and test the hypothesis

Develop an experiment so you can test your hypothesis. Our test will follow the scientific method, so it relies on collecting empirical and measurable evidence in order to obtain new knowledge. In other words, it’s crucial to have a measurable outcome for the hypothesis so we can determine whether it has succeeded or failed.

There are different ways you can create an experiment, such as interviews, surveys, landing page validation, usability testing, etc. It could also be something that’s built into the software to get quantitative data from users. Write down what the experiment will be, and define the outcomes that determine whether the hypothesis is valid. A well-defined experiment can validate or invalidate the hypothesis.

In our example, we could define the experiment as “We will run X studies to show more information about a driver (number of rides, years of experience), and ask follow-up questions to identify the rider’s emotion associated with this ride (safe, fun, interesting, etc.). We will know the hypothesis is valid when more than 70% of participants identify the ride as safe or comfortable.”
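The success criterion in this example can be checked mechanically once the responses are collected. Here is a small sketch, assuming each participant reports a single emotion label; the function name `hypothesis_supported` and the sample responses are invented for illustration.

```python
def hypothesis_supported(responses, target_emotions=("safe", "comfortable"), threshold=0.70):
    """Return (share, supported): the share of target responses and whether it exceeds the threshold."""
    if not responses:
        raise ValueError("no responses collected")
    hits = sum(1 for r in responses if r in target_emotions)
    share = hits / len(responses)
    # "More than 70%" is read as a strict comparison.
    return share, share > threshold


# Hypothetical answers from ten study participants.
responses = ["safe", "fun", "safe", "comfortable", "interesting",
             "safe", "comfortable", "safe", "safe", "safe"]
share, supported = hypothesis_supported(responses)
print(f"{share:.0%} felt safe or comfortable; supported: {supported}")
```

Writing the threshold down as a parameter before the study runs keeps the team from moving the goalposts after seeing the results.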

After defining the experiment, it’s time to get the design done. You don’t need to have every design detail thought through. You can focus on designing what is needed to be tested.

When the design is ready, you’re ready to run the test. Recruit the users you want to target, set a time frame, and put the design in front of the users.

Step 5: Learn and build

You just learned that the result was positive and you’re excited to roll out the feature. That’s great! If the hypothesis failed, don’t worry—you’ll be able to gain some insights from that experiment. Now you have some new evidence that you can use to run your next experiment. In each experiment, you’ll learn something new about your product and your customers.

“Design is a never-ending process.”

What other information can you show to make riders feel safe and comfortable? That can be your next hypothesis. You now have a feature that’s ready to be built, and a new hypothesis to be tested.

Principles from The Lean Startup

We often assume that we understand our users and know what they want. It’s important to slow down and take a moment to understand the questions and assumptions we have about our product.

After testing each hypothesis, you’ll get a clearer path of what’s most important to the users and where you need to dig deeper. You’ll have a clear direction for what to do next.

by Sylvia Lai

Sylvia Lai helps startups and enterprises solve complex problems through design thinking and user-centered design methodologies at Pivotal Labs. She is the biggest advocate for the users, and making sure their voices are heard is her number one priority. Outside of work, she loves mentoring other designers through one-on-one conversations. Connect with her through LinkedIn or Twitter.

How to Implement Hypothesis-Driven Development

Remember back to the time when we were in high school science class. Our teachers had a framework for helping us learn – an experimental approach based on the best available evidence at hand. We were asked to make observations about the world around us, then attempt to form an explanation or hypothesis to explain what we had observed. We then tested this hypothesis by predicting an outcome based on our theory that would be achieved in a controlled experiment – if the outcome was achieved, we had proven our theory to be correct.

We could then apply this learning to inform and test other hypotheses by constructing more sophisticated experiments, and tuning, evolving or abandoning any hypothesis as we made further observations from the results we achieved.

Experimentation is the foundation of the scientific method, which is a systematic means of exploring the world around us. Although some experiments take place in laboratories, it is possible to perform an experiment anywhere, at any time, even in software development.

Practicing Hypothesis-Driven Development is thinking about the development of new ideas, products and services – even organizational change – as a series of experiments to determine whether an expected outcome will be achieved. The process is iterated upon until a desirable outcome is obtained or the idea is determined to be not viable.

We need to change our mindset to view our proposed solution to a problem statement as a hypothesis, especially in new product or service development – the market we are targeting, how a business model will work, how code will execute and even how the customer will use it.

We do not do projects anymore, only experiments. Customer discovery and Lean Startup strategies are designed to test assumptions about customers. Quality Assurance is testing system behavior against defined specifications. The experimental principle also applies in Test-Driven Development – we write the test first, then use the test to validate that our code is correct, and succeed if the code passes the test. Ultimately, product or service development is a process to test a hypothesis about system behaviour in the environment or market it is developed for.

The key outcome of an experimental approach is measurable evidence and learning.

Learning is the information we have gained from conducting the experiment. Did what we expect to occur actually happen? If not, what did and how does that inform what we should do next?

In order to learn we need to use the scientific method for investigating phenomena, acquiring new knowledge, and correcting and integrating previous knowledge back into our thinking.

As the software development industry continues to mature, we now have an opportunity to leverage improved capabilities such as Continuous Design and Delivery to maximize our potential to learn quickly what works and what does not. By taking an experimental approach to information discovery, we can more rapidly test our solutions against the problems we have identified in the products or services we are attempting to build. The goal is to optimize our effectiveness at solving the right problems, rather than simply becoming a feature factory that continually builds solutions.

The steps of the scientific method are to:

- Make observations

- Formulate a hypothesis

- Design an experiment to test the hypothesis

- State the indicators to evaluate if the experiment has succeeded

- Conduct the experiment

- Evaluate the results of the experiment

- Accept or reject the hypothesis

- If necessary, make and test a new hypothesis

Using an experimentation approach to software development

We need to challenge the concept of having fixed requirements for a product or service. Requirements are valuable when teams execute a well known or understood phase of an initiative, and can leverage well understood practices to achieve the outcome. However, when you are in an exploratory, complex and uncertain phase you need hypotheses.

Handing teams a set of business requirements reinforces an order-taking approach and mindset that is flawed.

Business does the thinking and ‘knows’ what is right. The purpose of the development team is to implement what they are told. But when operating in an area of uncertainty and complexity, all the members of the development team should be encouraged to think and share insights on the problem and potential solutions. A team simply taking orders from a business owner is not utilizing the full potential, experience and competency that a cross-functional multi-disciplined team offers.

Framing hypotheses

The traditional user story framework is focused on capturing requirements for what we want to build and for whom, to enable the user to receive a specific benefit from the system.

As A… <role>

I Want… <goal/desire>

So That… <receive benefit>

Behaviour Driven Development (BDD) and Feature Injection aim to improve the original framework by supporting communication and collaboration between developers, testers and non-technical participants in a software project.

In Order To… <receive benefit>

As A… <role>

I Want… <goal/desire>

When viewing work as an experiment, the traditional story framework is insufficient. As in our high school science experiment, we need to define the steps we will take to achieve the desired outcome. We then need to state the specific indicators (or signals) we expect to observe that provide evidence that our hypothesis is valid. These need to be stated before conducting the test to reduce biased interpretations of the results.

If we observe signals that indicate our hypothesis is correct, we can be more confident that we are on the right path and can alter the user story framework to reflect this.

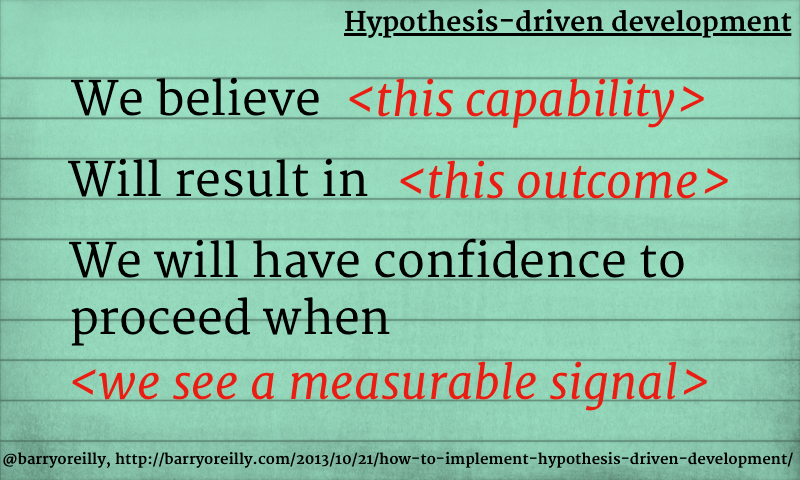

Therefore, a user story structure to support Hypothesis-Driven Development would be:

We believe < this capability >

What functionality will we develop to test our hypothesis? By defining a ‘test’ capability of the product or service that we are attempting to build, we identify the functionality and hypothesis we want to test.

Will result in < this outcome >

What is the expected outcome of our experiment? What is the specific result we expect to achieve by building the ‘test’ capability?

We will know we have succeeded when < we see a measurable signal >

What signals will indicate that the capability we have built is effective? What key metrics (qualitative or quantitative) will we measure to provide evidence that our experiment has succeeded and give us enough confidence to move to the next stage?

The threshold you use for statistical significance will depend on your understanding of the business and context you are operating within. Not every company has the user sample size of Amazon or Google to run statistically significant experiments in a short period of time. Limits and controls need to be defined by your organization to determine acceptable evidence thresholds that will allow the team to advance to the next step.

For example, if you are building a rocket ship, you may want your experiments to have a high threshold for statistical significance. If you are deciding between two different flows intended to help increase user sign-up, you may be happy to tolerate a lower significance threshold.
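To make the idea of a context-dependent threshold concrete, here is a sketch of one common way to compare two sign-up flows: a pooled two-proportion z-test, written with only the Python standard library. The choice of test, the function name `two_proportion_z_test`, the sample counts, and both alpha values are assumptions for illustration; your organization may prefer a different method or different limits.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: flow A converts 120 of 1000 visitors, flow B 160 of 1000.
z, p_value = two_proportion_z_test(120, 1000, 160, 1000)

LENIENT_ALPHA = 0.05   # e.g. choosing between two sign-up flows
STRICT_ALPHA = 0.001   # e.g. a safety-critical decision
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("passes lenient threshold:", p_value < LENIENT_ALPHA)
print("passes strict threshold:", p_value < STRICT_ALPHA)
```

With these made-up numbers, the same evidence clears the lenient threshold but not the strict one, which is why the threshold should be agreed on before the experiment runs.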

The final step is to clearly and visibly state any assumptions made about our hypothesis, to create a feedback loop for the team to provide further input, debate and understanding of the circumstances under which we are performing the test. Are they valid, and do they make sense from a technical and business perspective?

Hypotheses, when aligned to your MVP, can provide a testing mechanism for your product or service vision. They can test the most uncertain areas of your product or service, in order to gain information and improve confidence.

Examples of Hypothesis-Driven Development user stories are:

Business story

We Believe That increasing the size of hotel images on the booking page

Will Result In improved customer engagement and conversion

We Will Know We Have Succeeded When we see a 5% increase in customers who review hotel images who then proceed to book within 48 hours.
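The business story above can be captured as data so that the success signal is evaluated the same way every time. This sketch reads the "5% increase" as a relative lift over a baseline booking rate, which is an assumption; the class name `HypothesisStory`, its fields, and the measured rates are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HypothesisStory:
    capability: str           # "We believe that ..."
    outcome: str              # "Will result in ..."
    signal: str               # "We will know we have succeeded when ..."
    min_relative_lift: float  # success threshold, fixed before the test runs

    def succeeded(self, baseline_rate: float, observed_rate: float) -> bool:
        """Compare the observed relative lift against the pre-agreed threshold."""
        if baseline_rate <= 0:
            raise ValueError("baseline_rate must be positive")
        lift = (observed_rate - baseline_rate) / baseline_rate
        return lift >= self.min_relative_lift


story = HypothesisStory(
    capability="increasing the size of hotel images on the booking page",
    outcome="improved customer engagement and conversion",
    signal="more image viewers proceed to book within 48 hours",
    min_relative_lift=0.05,
)

# Hypothetical measurements: 20.0% of image viewers booked before the change.
met = story.succeeded(baseline_rate=0.200, observed_rate=0.212)     # 6% relative lift
missed = story.succeeded(baseline_rate=0.200, observed_rate=0.205)  # 2.5% relative lift
print(met, missed)
```

If your team means an absolute increase of five percentage points instead, the comparison in `succeeded` would need to change accordingly.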

It is imperative to have effective monitoring and evaluation tools in place when using an experimental approach to software development in order to measure the impact of our efforts and provide a feedback loop to the team. Otherwise we are essentially blind to the outcomes of our efforts.

In agile software development we define working software as the primary measure of progress.

By combining Continuous Delivery and Hypothesis-Driven Development we can now define working software and validated learning as the primary measures of progress.

Ideally we should not say we are done until we have measured the value of what is being delivered – in other words, gathered data to validate our hypothesis.

Examples of how to gather data include performing A/B testing to test a hypothesis and measure the change in customer behaviour. Alternative testing options include customer surveys, paper prototypes, and user and/or guerrilla testing.

One example of a company we have worked with that uses Hypothesis-Driven Development is lastminute.com. The team formulated a hypothesis that customers are only willing to pay a max price for a hotel based on the time of day they book. Tom Klein, CEO and President of Sabre Holdings, shared the story of how they improved conversion by 400% within a week.

Combining practices such as Hypothesis-Driven Development and Continuous Delivery accelerates experimentation and amplifies validated learning. This gives us the opportunity to accelerate the rate at which we innovate while relentlessly reducing cost, leaving our competitors in the dust. Ideally, we can achieve one-piece flow: atomic changes that enable us to identify causal relationships between the changes we make to our products and services, and their impact on key metrics.

As Kent Beck said, “Test-Driven Development is a great excuse to think about the problem before you think about the solution”. Hypothesis-Driven Development is a great opportunity to test what you think the problem is, before you work on the solution.

The 6 Steps that We Use for Hypothesis-Driven Development

One of the greatest fears of product managers is creating an app that flops because it's based on untested assumptions. After successfully launching more than 20 products, we're convinced that we've found the right approach for hypothesis-driven development.

In this guide, I'll show you how we validated the hypotheses to ensure that the apps met the users' expectations and needs.

What is hypothesis-driven development?

Hypothesis-driven development is a prototype methodology that allows product designers to develop, test, and rebuild a product until it’s accepted by the users. It is an iterative approach that explores the assumptions defined during the project and attempts to validate them with user feedback.

What you have assumed during the initial stage of development may not be valid for the users. Even if your assumptions are backed by historical data, user behavior can be affected by the specific audience and other factors. Hypothesis-driven development removes these uncertainties as the project progresses.

Why we use hypothesis-driven development

For us, the hypothesis-driven approach provides a structured way to consolidate ideas and build hypotheses based on objective criteria. It’s also less costly to test the prototype before production.

Using this approach has reliably allowed us to identify what should be tested, how, and in which order. It gives us a deep understanding of how we prioritise the features and how they’re connected to the business goals and desired user outcomes.

We’re also able to track and compare the desired and real outcomes of developing the features.

The process of Prototype Development that we use

Our success in building apps that are well-accepted by users is based on the Lean UX definition of hypothesis. We believe that the business outcome will be achieved if the user’s outcome is fulfilled for the particular feature.

Here’s the process flow:

How Might We technique → Dot voting (based on estimated/assumptive impact) → converting into a hypothesis → define testing methodology (research method + success/fail criteria) → impact effort scale for prioritizing → test, learn, repeat.

Once the hypothesis is proven right, the feature is escalated into the development track for UI design and development.

Step 1: List Down Questions And Assumptions

Whether it’s the initial stage of the project or after the launch, there are always uncertainties or ideas to further improve the existing product. In order to move forward, you’ll need to turn the ideas into structured hypotheses where they can be tested prior to production.

To start with, jot the ideas or assumptions down on paper or a sticky note.

Then, you’ll want to widen the scope of the questions and assumptions into possible solutions. The How Might We (HMW) technique is handy in rephrasing the statements into questions that facilitate brainstorming.

For example, if you have a social media app with a low number of users, asking, “How might we increase the number of users for the app?” makes brainstorming easier.

Step 2: Dot Vote to Prioritize Questions and Assumptions

Once you’ve got a list of questions, it’s time to decide which are potentially more impactful for the product. The Dot Vote method, where team members are given dots to place on the questions, helps prioritize the questions and assumptions.

Our team uses this method when we’re faced with many ideas and need to eliminate some of them. We start by grouping similar ideas and then use 3-5 dots to vote. At the end of the process, we have preliminary data on the possible impact and our team’s interest in developing certain features.

This method allows us to prioritize the statements derived from the HMW technique, and we convert only the top ones into hypotheses.

Step 3: Develop Hypotheses from Questions

The questions lead to a brainstorming session where the answers become hypotheses for the product. The hypothesis is meant to create a framework that allows the questions and solutions to be defined clearly for validation.

Our team follows a specific format in forming hypotheses. We structure the statement as follows:

We believe we will achieve [business outcome],

If [the persona],

Solves their need in [user outcome] using [feature].

Here’s a hypothesis we’ve created:

We believe we will achieve DAU=100 if Mike (our proto persona) solves their need in recording and sharing videos instantaneously using our camera and cloud storage.

Step 4: Test the Hypothesis with an Experiment

It’s crucial to validate each of the assumptions made on the product features. Based on the hypotheses, experiments in the form of interviews, surveys, usability testing, and so forth are created to determine if the assumptions are aligned with reality.

Each of the methods provides some level of confidence. Therefore, you don’t want to be 100% reliant on a particular method as it’s based on a sample of users.

It’s important to choose a research method that allows validation to be done with minimal effort. Even though hypotheses validation provides a degree of confidence, not all assumptions can be tested and there could be a margin of error in data obtained as the test is conducted on a sample of people.

The experiments are designed in such a way that feedback can be compared with the predicted outcome. Only validated hypotheses are brought forward for development.

Testing all the hypotheses can be tedious. To be more efficient, you can use the impact effort scale. This method allows you to focus on hypotheses that are potentially high value and easy to validate.

You can also work on hypotheses that deliver high impact but require high effort. Ignore those that require high effort but deliver low impact, and keep hypotheses with low impact and low effort in the backlog.
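The impact effort scale can be sketched as a simple quadrant lookup. This reads the four quadrants as: high impact and low effort first, high impact and high effort later, low impact and high effort ignored, low impact and low effort kept in the backlog. The 1 to 10 scoring, the cutoff of 5, the labels, and the sample hypotheses are all assumptions for this sketch.

```python
def triage(impact, effort, cutoff=5):
    """Place a hypothesis in an impact-effort quadrant (scores assumed to run from 1 to 10)."""
    high_impact = impact > cutoff
    high_effort = effort > cutoff
    if high_impact and not high_effort:
        return "test first"
    if high_impact and high_effort:
        return "test later"
    if high_effort:
        return "ignore"   # low impact, high effort
    return "backlog"      # low impact, low effort


# Hypothetical hypotheses scored by the team as (impact, effort).
scores = {
    "instant video sharing": (9, 3),
    "bank account linking": (8, 9),
    "custom app themes": (2, 8),
    "profile badges": (3, 2),
}
plan = {name: triage(impact, effort) for name, (impact, effort) in scores.items()}
for name, decision in plan.items():
    print(f"{name}: {decision}")
```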

At Uptech, we assign each hypothesis clear testing criteria. We rate each hypothesis with a binary ‘task success’ and a subjective ‘effort on task’, where the latter is scored from 1 to 10.

While we’re conducting the test, we also collect qualitative data such as the users' feedback. We have a habit of segregating the feedback into pros, cons, and neutral with color-coded stickers (red for cons, green for pros, blue for neutral).

The best practice is to test each hypothesis on at least 5 users.
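One possible way to summarize such test sessions is shown below. It assumes one record per user holding the binary task success and the 1 to 10 effort score; the function name `summarize_sessions`, the result keys, and the sample data are hypothetical, and how to interpret the effort scale is left to the team.

```python
from statistics import mean


def summarize_sessions(sessions, min_users=5):
    """Summarize (task_success, effort_on_task) records, one per tested user."""
    if not sessions:
        raise ValueError("no sessions recorded")
    for _, effort in sessions:
        if not 1 <= effort <= 10:
            raise ValueError(f"effort score {effort} is outside 1-10")
    successes = sum(1 for ok, _ in sessions if ok)
    return {
        "users": len(sessions),
        "enough_users": len(sessions) >= min_users,
        "success_rate": successes / len(sessions),
        "mean_effort": mean(effort for _, effort in sessions),
    }


# Hypothetical results from five usability sessions.
sessions = [(True, 3), (True, 4), (False, 8), (True, 2), (True, 3)]
summary = summarize_sessions(sessions)
print(summary)
```

The summary can then be compared against the success and fail criteria that were written down for the hypothesis before testing.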

Step 5: Learn, Build (and Repeat)

The hypothesis-driven approach is not a one-off process. Often, you’ll find that some of the hypotheses are proven to be false. Rather than being disheartened, you should use the data gathered to fine-tune the hypothesis and design a better experiment in the next phase.

Treat the entire cycle as a learning process where you’ll better understand the product and the customers.

We’ve found the process helpful when developing an MVP for Carbon Club, an environmental startup in the UK. The app allows users to donate to charity based on the carbon-footprint produced.

To calculate the carbon footprint, we weighed two options:

- Connecting the app to the users’ bank account to monitor the carbon footprint based on purchases made.

- Allowing users to take quizzes on their lifestyles.

Upon validation, we found that all of the users opted for the second option, as they were concerned about linking an unknown app to their bank account.

The result made us shelve the first assumption we’d made during pre-Sprint research. It also saved our client $50,000 and a few months of work, as connecting the app to the bank account requires a huge effort.

Step 6: Implement Product and Maintain

Once you’ve got the confidence that the remaining hypotheses are validated, it’s time to develop the product. However, testing must be continued even after the product is launched.

You should be on your toes as customers’ demands, market trends, local economics, and other conditions may require some features to evolve.

Our takeaways for hypothesis-driven development

If there’s anything that you could pick from our experience, it’s these 5 points.

1. Should every idea go straight into the backlog? No, not unless it is validated with substantial evidence.

2. While it’s hard to define business outcomes with specific metrics and desired values, you should do it anyway. Try to be as specific as possible, and avoid general terms. Give your best effort and adjust as you receive new data.

3. Get all product teams involved as the best ideas are born from collaboration.

4. Start with a plan that consists of 2 main parameters, i.e., criteria of success and research methods. Besides qualitative insights, you need to set objective criteria to determine if a test is successful. Use the Test Card to validate the assumptions strategically.

5. The methodology that we’ve recommended in this article works not only for products. We applied it at the end of 2019 to set the strategic goals of the company and ended up with robust results and an engaged and aligned team.

You'll have a better idea of which features would lead to a successful product with hypothesis-driven development. Rather than vague assumptions, the consolidated data from users will provide a clear direction for your development team.

As for the hypotheses that don't make the cut, improvise, re-test, and leverage for future upgrades.

How to Implement Hypothesis-Driven Development

- Facebook__x28_alt_x29_ Copy

Remember back to the time when we were in high school science class. Our teachers had a framework for helping us learn – an experimental approach based on the best available evidence at hand. We were asked to make observations about the world around us, then attempt to form an explanation or hypothesis to explain what we had observed. We then tested this hypothesis by predicting an outcome based on our theory that would be achieved in a controlled experiment – if the outcome was achieved, we had proven our theory to be correct.

We could then apply this learning to inform and test other hypotheses by constructing more sophisticated experiments, and tuning, evolving, or abandoning any hypothesis as we made further observations from the results we achieved.

Experimentation is the foundation of the scientific method, which is a systematic means of exploring the world around us. Although some experiments take place in laboratories, it is possible to perform an experiment anywhere, at any time, even in software development.

Practicing Hypothesis-Driven Development [1] is thinking about the development of new ideas, products, and services – even organizational change – as a series of experiments to determine whether an expected outcome will be achieved. The process is iterated upon until a desirable outcome is obtained or the idea is determined to be not viable.

We need to change our mindset to view our proposed solution to a problem statement as a hypothesis, especially in new product or service development – the market we are targeting, how a business model will work, how code will execute and even how the customer will use it.

We do not do projects anymore, only experiments. Customer discovery and Lean Startup strategies are designed to test assumptions about customers. Quality Assurance is testing system behavior against defined specifications. The experimental principle also applies in Test-Driven Development – we write the test first, then use the test to validate that our code is correct, and succeed if the code passes the test. Ultimately, product or service development is a process to test a hypothesis about system behavior in the environment or market it is developed for.

The key outcome of an experimental approach is measurable evidence and learning. Learning is the information we have gained from conducting the experiment. Did what we expect to occur actually happen? If not, what did and how does that inform what we should do next?

In order to learn we need to use the scientific method for investigating phenomena, acquiring new knowledge, and correcting and integrating previous knowledge back into our thinking.

As the software development industry continues to mature, we now have an opportunity to leverage improved capabilities such as Continuous Design and Delivery to maximize our potential to learn quickly what works and what does not. By taking an experimental approach to information discovery, we can more rapidly test our solutions against the problems we have identified in the products or services we are attempting to build. With the goal to optimize our effectiveness of solving the right problems, over simply becoming a feature factory by continually building solutions.

The steps of the scientific method are to:

- Make observations

- Formulate a hypothesis

- Design an experiment to test the hypothesis

- State the indicators to evaluate if the experiment has succeeded

- Conduct the experiment

- Evaluate the results of the experiment

- Accept or reject the hypothesis

- If necessary, make and test a new hypothesis

Using an experimentation approach to software development

We need to challenge the concept of having fixed requirements for a product or service. Requirements are valuable when teams execute a well known or understood phase of an initiative and can leverage well-understood practices to achieve the outcome. However, when you are in an exploratory, complex and uncertain phase you need hypotheses. Handing teams a set of business requirements reinforces an order-taking approach and mindset that is flawed. Business does the thinking and ‘knows’ what is right. The purpose of the development team is to implement what they are told. But when operating in an area of uncertainty and complexity, all the members of the development team should be encouraged to think and share insights on the problem and potential solutions. A team simply taking orders from a business owner is not utilizing the full potential, experience and competency that a cross-functional multi-disciplined team offers.

Framing Hypotheses

The traditional user story framework is focused on capturing requirements for what we want to build and for whom, to enable the user to receive a specific benefit from the system.

As A…. <role>

I Want… <goal/desire>

So That… <receive benefit>

Behaviour Driven Development (BDD) and Feature Injection aim to improve the original framework by supporting communication and collaboration between developers, testers and non-technical participants in a software project.

In Order To… <receive benefit>

As A… <role>

I Want… <goal/desire>

When viewing work as an experiment, the traditional story framework is insufficient. As in our high school science experiment, we need to define the steps we will take to achieve the desired outcome. We then need to state the specific indicators (or signals) we expect to observe that provide evidence that our hypothesis is valid. These need to be stated before conducting the test to reduce the bias of interpretation of results.

If we observe signals that indicate our hypothesis is correct, we can be more confident that we are on the right path and can alter the user story framework to reflect this.

Therefore, a user story structure to support Hypothesis-Driven Development would be:

We believe < this capability >

What functionality will we develop to test our hypothesis? By defining a ‘test’ capability of the product or service that we are attempting to build, we identify the functionality and hypothesis we want to test.

Will result in < this outcome >

What is the expected outcome of our experiment? What is the specific result we expect to achieve by building the ‘test’ capability?

We will have confidence to proceed when < we see a measurable signal >

What signals will indicate that the capability we have built is effective? What key metrics (qualitative or quantitative) will we measure to provide evidence that our experiment has succeeded and to give us enough confidence to move to the next stage?

The threshold you use for statistical significance will depend on your understanding of the business and context you are operating within. Not every company has the user sample size of Amazon or Google to run statistically significant experiments in a short period of time. Limits and controls need to be defined by your organization to determine acceptable evidence thresholds that will allow the team to advance to the next step.

For example, if you are building a rocket ship you may want your experiments to have a high threshold for statistical significance. If you are deciding between two different flows intended to help increase user sign up you may be happy to tolerate a lower significance threshold.
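To make the idea of a context-dependent threshold concrete, here is a minimal sketch of a pooled two-proportion z-test for comparing two sign-up flows. The function name, the sample counts, and the two thresholds are assumptions made for illustration; they are not taken from any real experiment, and a team may well prefer a different test or an established statistics library.

```python
import math


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical counts: sign-ups out of visitors for flow A and flow B.
p_value = two_proportion_p_value(conv_a=200, n_a=2000, conv_b=240, n_b=2000)

# The same evidence can clear a lenient threshold and fail a strict one.
lenient_alpha = 0.10  # assumed threshold for a low-risk sign-up experiment
strict_alpha = 0.01   # assumed threshold for a high-risk decision
print(p_value < lenient_alpha, p_value < strict_alpha)
```

The point of the sketch is that the data do not change, only the evidence threshold the organization has agreed on in advance.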

The final step is to clearly and visibly state any assumptions made about our hypothesis, to create a feedback loop for the team to provide further input, debate, and understanding of the circumstances under which we are performing the test. Are the assumptions valid, and do they make sense from a technical and business perspective?

Hypotheses, when aligned to your MVP, can provide a testing mechanism for your product or service vision. They can test the most uncertain areas of your product or service, in order to gain information and improve confidence.

Examples of Hypothesis-Driven Development user stories are:

Business story.

We Believe That increasing the size of hotel images on the booking page

Will Result In improved customer engagement and conversion

We Will Have Confidence To Proceed When we see a 5% increase in customers who review hotel images and then proceed to book within 48 hours.
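The story structure can also be captured as data, so the signal is written down before the test runs. The following is a minimal sketch: the `Hypothesis` class, its field names, and the booking rates are hypothetical, and reading "5% increase" as a relative lift over a baseline rate is an assumption rather than something the story itself specifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """One hypothesis-driven story: capability, expected outcome, and signal."""

    capability: str           # "We believe <this capability>"
    outcome: str              # "Will result in <this outcome>"
    signal: str               # "We will have confidence to proceed when <signal>"
    min_relative_lift: float  # threshold agreed before the test, e.g. 0.05 for 5%

    def confident_to_proceed(self, baseline_rate: float, observed_rate: float) -> bool:
        """True when the observed rate beats the baseline by the agreed lift."""
        if baseline_rate <= 0:
            raise ValueError("baseline_rate must be positive")
        relative_lift = (observed_rate - baseline_rate) / baseline_rate
        return relative_lift >= self.min_relative_lift


hotel_images = Hypothesis(
    capability="increasing the size of hotel images on the booking page",
    outcome="improved customer engagement and conversion",
    signal="a 5% increase in customers who review hotel images and then book within 48 hours",
    min_relative_lift=0.05,
)

# Hypothetical booking rates, invented for illustration only.
print(hotel_images.confident_to_proceed(baseline_rate=0.200, observed_rate=0.212))
print(hotel_images.confident_to_proceed(baseline_rate=0.200, observed_rate=0.204))
```

Fixing `min_relative_lift` when the story is written, rather than after the results arrive, mirrors the advice to state indicators before conducting the test.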

It is imperative to have effective monitoring and evaluation tools in place when using an experimental approach to software development in order to measure the impact of our efforts and provide a feedback loop to the team. Otherwise, we are essentially blind to the outcomes of our efforts.

In agile software development, we define working software as the primary measure of progress. By combining Continuous Delivery and Hypothesis-Driven Development we can now define working software and validated learning as the primary measures of progress.

Ideally, we should not say we are done until we have measured the value of what is being delivered – in other words, gathered data to validate our hypothesis.

One way to gather data is to run A/B tests that test a hypothesis and measure the change in customer behavior. Alternative testing options include customer surveys, paper prototypes, and user and/or guerrilla testing.

One example of a company we have worked with that uses Hypothesis-Driven Development is lastminute.com. The team formulated a hypothesis that customers are only willing to pay a max price for a hotel based on the time of day they book. Tom Klein, CEO and President of Sabre Holdings, shared the story of how they improved conversion by 400% within a week.

Combining practices such as Hypothesis-Driven Development and Continuous Delivery accelerates experimentation and amplifies validated learning. This gives us the opportunity to accelerate the rate at which we innovate while relentlessly reducing costs, leaving our competitors in the dust. Ideally, we can achieve one-piece flow: atomic changes that enable us to identify causal relationships between the changes we make to our products and services, and their impact on key metrics.

As Kent Beck said, “Test-Driven Development is a great excuse to think about the problem before you think about the solution”. Hypothesis-Driven Development is a great opportunity to test what you think the problem is before you work on the solution.

Building and Using Theoretical Frameworks

- Open Access

- First Online: 03 December 2022

- James Hiebert,

- Jinfa Cai,

- Stephen Hwang,

- Anne K Morris &

- Charles Hohensee

Part of the book series: Research in Mathematics Education (RME)

Theoretical frameworks can be confounding. They are supposed to be very important, but it is not always clear what they are or why you need them. Using ideas from Chaps. 1 and 2, we describe them as local theories that are custom-designed for your study. Although they might use parts of larger well-known theories, they are created by individual researchers for particular studies. They are developed through the cyclic process of creating more precise and meaningful hypotheses. Building directly on constructs from the previous chapters, you can think of theoretical frameworks as equivalent to the most compelling, complete rationales you can develop for the predictions you make. Theoretical frameworks are important because they do lots of work for you. They incorporate the literature into your rationale, they explain why your study matters, they suggest how you can best test your predictions, and they help you interpret what you find. Your theoretical framework creates an essential coherence for your study and for the paper you are writing to report the study.

Part I. What Are Theoretical Frameworks?

As the name implies, a theoretical framework is a type of theory. We will define it as the custom-made theory that focuses specifically on the hypotheses you want to test and the research questions you want to answer. It is custom-made for your study because it explains why your predictions are plausible. It does no more and no less. Building directly on Chap. 2, as you develop more complete rationales for your predictions, you are actually building a theory to support your predictions. Our goal in this chapter is for you to become comfortable with what theoretical frameworks are, with how they relate to the general concept of theory, with what role they play in scientific inquiry, and with why and how to create one for your study.

As you read this chapter, it will be helpful to remember that our definitions of terms in this book, such as theoretical framework, are based on our view of scientific inquiry as formulating, testing, and revising hypotheses. We define theoretical framework in ways that continue the coherent story we lay out across all phases of scientific inquiry and all the chapters of this book. You are likely to find descriptions of theoretical frameworks in other sources that differ in some ways from our description. In addition, you are likely to see other terms that we would include as synonyms for theoretical framework, including conceptual framework. We suggest that when you encounter these special terms, you make sure you understand how the authors are defining them.

Definitions of Theories

We begin by stepping back and considering how theoretical frameworks fit within the concept of theory, as usually defined. There are many definitions of theory; you can find a huge number simply by googling “theory.” Educational researchers and theorists often propose their own definitions but many of these are quite similar. Praetorius and Charalambous (2022) reviewed a number of definitions to set the stage for examining theories of teaching. Here are a few, beginning with a dictionary definition:

Lexico.com Dictionary (Oxford University Press, 2021): “A supposition or a system of ideas intended to explain something, especially one based on general principles independent of the thing to be explained.”

Biddle and Anderson (1986): “By scientific theory we mean the system of concepts and propositions that is used to represent, think about, and predict observable events. Within a mature science that theory is also explanatory and formalized. It does not represent ultimate ‘truth,’ however; indeed, it will be superseded by other theories presently. Instead, it represents the best explanation we have, at present, for those events we have so far observed” (p. 241).

Kerlinger (1964): “A theory is a set of interrelated constructs (concepts), definitions and propositions which presents a systematic view of phenomena by specifying relations among variables, with the purpose of explaining and predicting phenomena” (p. 11).

Colquitt and Zapata-Phelan (2007): The authors say that theories allow researchers to understand and predict outcomes of interest, describe and explain a process or sequence of events, raise consciousness about a specific set of concepts as well as prevent scholars from “being dazzled by the complexity of the empirical world by providing a linguistic tool for organizing it” (p. 1281).

For our purposes, it is important to notice two things that most definitions of theories share: They are descriptions of a connected set of facts and concepts, and they are created to predict and/or explain observed events. You can connect these ideas to Chaps. 1 and 2 by noticing that the language of the descriptors of scientific inquiry we suggested in Chap. 1 is reflected in the definitions of theories. In particular, notice in the definitions two of the descriptors: “Observing something and trying to explain why it is the way it is” and “Updating everyone’s thinking in response to more and better information.” Notice also in the definitions the emphasis on the elements of a theory similar to the elements of a rationale described in Chap. 2: definitions, variables, and mechanisms that explain relationships.

Exercise 3.1

Before you continue reading, in your own words, write down a definition for “theoretical framework.”

Theoretical Frameworks Are Local Theories

There are strong similarities between building theories and doing scientific inquiry (formulating, testing, and revising hypotheses). In both cases, the researcher (or theorist) develops explanations for phenomena of interest. Building theories involves describing the concepts and conjectures that predict and later explain the events, and specifying the predictions by identifying the variables that will be measured. Doing scientific inquiry involves many of the same activities: formulating predictions for answers to questions about the research problem and building rationales to explain why the predictions are appropriate and reasonable.

As you move through the cycles described in Chap. 2 (cycles of asking questions, making predictions, writing out the reasons for these predictions, imagining how you would test the predictions, reading more about what scholars know and have hypothesized, revising your predictions and maybe your questions, and so on), your theoretical rationales will become both more complete and more precise. They will become more complete as you find new arguments and new data in the literature and through talking with others, and they will become sharper as you remove parts of the rationales that originally seemed relevant but now create mostly distractions and noise. They will become increasingly customized local theories that support your predictions.

In the end, your framework should be as clean and frugal as possible without missing arguments or data that are directly relevant. In the language of mathematics, you should use an idea if and only if it makes your framework stronger, more convincing. On the one hand, including more than you need becomes a distraction and can confuse both you, as you try to conceptualize and conduct your research, and others, as they read your reports of your research. On the other hand, including less than you need means your rationale is not yet as convincing as it could be.

The set of rationales, blended together, constitute a precisely targeted custom-made theory that supports your predictions. Custom designing your rationales for your specific predictions means you probably will be drawing ideas from lots of sources and combining them in new ways. You are likely to end up with a unique local theory, a theoretical framework that has not been proposed in exactly the same way before.

A common misconception among beginning researchers is that they should borrow a theoretical framework from somewhere else, especially from well-known scholars who have theories named after them or well-known general theories of learning or teaching. You are likely to use ideas from these theories (e.g., Vygotsky’s theory of learning, Maslow’s theory of motivation, constructivist theories of learning), but you will combine specific ideas from multiple sources to create your own framework. When someone asks, “What theoretical framework are you using?” you would not say, “A Vygotskian framework.” Rather, you would say something like, “I created my framework by combining ideas from different sources so it explains why I am making these predictions.”

You should think of your theoretical framework as a potential contribution to the field, all on its own. Although it is unique to your study, there are elements of your framework that other researchers could draw from to construct theoretical frameworks for their studies, just as you drew from others’ frameworks. In rare cases, other researchers could use your framework as is. This might happen if they want to replicate your study or extend it in very specific ways. Usually, however, researchers borrow parts of frameworks or modify them in ways that better fit their own studies. And, just as you are doing with your own theoretical framework, those researchers will need to justify why borrowing or modifying parts of your framework will help them explain the predictions they are making.

Considering your theoretical framework as a contribution to the field means you should treat it as a central part of scientific inquiry, not just as a required step that must be completed before moving to the next phase. To be useful, the theoretical framework should be constructed as a critical part of conceptualizing and carrying out the research (Cai et al., 2019c). This also means you should write out your framework as you are developing it. This will be a key part of your evolving research paper. Because your framework will be adjusted multiple times, your written document will go through many drafts.

If you are a graduate student, do not think of the potential audience for your written framework as only your advisor and committee members. Rather, consider your audience to be the larger community of education researchers. You will need to be sure all the key terms are defined and each part of your argument is clear, even to those who are not familiar with your study. This is one place where writing out your framework can benefit your study—it is easy to assume key terms are clear, but then you find out they are not so clear, even to you, when trying to communicate them. Failing to notice this lack of clarity can create lots of problems down the road.

Exercise 3.2

Researchers have used a number of different metaphors to describe theoretical frameworks. Maxwell (2005) referred to a theoretical framework as a “coat closet” that provides “places to ‘hang’ data, showing their relationship to other data,” although he cautioned that “a theory that neatly organizes some data will leave other data disheveled and lying on the floor, with no place to put them” (p. 49). Lester (2005) referred to a framework as a “scaffold” (p. 458), and others have called it a “blueprint” (Grant & Osanloo, 2014). Eisenhart (1991) described the framework as a “skeletal structure of justification” (p. 209). Spangler and Williams (2019) drew an analogy to the role that a house frame provides in preventing the house from collapsing in on itself. What aspects of a theoretical framework does each of these metaphors capture? What aspects does each fail to capture? Which metaphor do you find best fits your definition of a theoretical framework? Why? Can you think of another metaphor to describe a theoretical framework?

Part II. Why Do You Need Theoretical Frameworks?

Theoretical frameworks do lots of work for you. They have four primary purposes. They ensure (1) you have sound reasons to expect your predictions will be accurate, (2) you will craft appropriate methods to test your predictions, (3) you can interpret appropriately what you find, and (4) your interpretations will contribute to the accumulation of a knowledge base that can improve education. How do they do this?

Supporting Your Predictions

In previous chapters and earlier in this chapter, we described how theoretical frameworks are built along with your predictions. In fact, the rationales you develop for convincing others (and yourself) that your predictions are accurate are used to refine your predictions, and vice versa. So, it is not surprising that your refined framework provides a rationale that is fully aligned with your predictions. In fact, you could think of your theoretical framework as your best explanation, at any given moment during scientific inquiry, for why you will find what you think you will find.

Throughout this book, we are using “explanation” in a broad sense. As we noted earlier, an explanation for why your predictions are accurate includes all the concepts and definitions about mechanisms (Kerlinger’s 1964 definition of “theory”) that help you describe why you think the predictions you are making are the best predictions possible. The explanation also identifies and describes all the variables that make up your predictions, variables that will be measured to test your predictions.

Crafting Appropriate Methods

Critical decisions you make to test your hypotheses form the methods for your scientific inquiry. As we have noted, imagining how you will test your hypotheses helps you decide whether the empirical observations you make can be compared with your predictions or whether you need to revise the methods (or your predictions). Remember, the theoretical framework is the coherent argument built from the rationales you develop as part of each hypothesis you formulate. Because each rationale explains why you make that prediction, it contains helpful cues for which methods would provide the fairest and most complete test of that prediction. In fact, your theoretical framework provides a logic against which you can check every aspect of the methods you imagine using.

You might find it helpful to ask yourself two questions as you think about which methods are best aligned with your theoretical framework. One is, “After reading my theoretical framework, will anyone be surprised by the methods I use?” If so, you should look back at your framework and make sure the predictions are clear and the rationales include all the reasons for your predictions. Your framework should telegraph the methods that make the most sense. The other question is, “Are there some predictions for which I can’t imagine appropriate methods?” If so, we recommend you return to your hypotheses—to your predictions and rationales (theoretical framework)—to make sure the predictions are phrased as precisely as possible and your framework is fully developed. In most cases, this will help you imagine methods that could be used. If not, you might need to revise your hypotheses.

Exercise 3.3

Kerlinger (1964) stated, “A theory is a set of interrelated constructs (concepts), definitions and propositions which presents a systematic view of phenomena by specifying relations among variables, with the purpose of explaining and predicting phenomena” (p. 11). What role do definitions play in a theoretical framework and how do they help in crafting appropriate methods?

Exercise 3.4

Sarah is in the beginning stages of developing a study. Her initial prediction is: There is a relationship between pedagogical content knowledge and ambitious teaching. She realizes that in order to craft appropriate measures, she needs to develop definitions of these constructs. Sarah’s original definitions are: Pedagogical content knowledge is knowledge about subject matter that is relevant to teaching. Ambitious teaching is teaching that is responsive to students’ thinking and develops a deep knowledge of content. Sarah recognizes that her prediction and her definitions are too broad and too general to work with. She wants to refine the definitions so they can guide the refinement of her prediction and the design of the study. Develop definitions of these two constructs that have clearer implications for the design and that would help Sarah to refine her prediction. (tip: Sarah may need to reduce the scope of her prediction by choosing to focus only on one aspect of pedagogical content knowledge and one aspect of ambitious teaching. Then, she can more precisely define those aspects.)

Guiding Interpretations of the Data

By providing rationales for your predictions, your theoretical framework explains why you think your predictions will be accurate. In education, researchers almost always find that if they make specific predictions (which they should), the predictions are not entirely accurate. This is a consequence of the fact that theoretical frameworks are never complete. Recall the definition of theories from Biddle and Anderson (1986): A theory “does not represent ultimate ‘truth,’ however; indeed, it will be superseded by other theories presently. Instead, it represents the best explanation we have, at present, for those events we have so far observed” (p. 241). If you have created your best developed and clearly stated theoretical framework that explains why you expected certain results, you can focus your interpretation on the ways in which your theoretical framework should be revised.

Focusing on realigning your theoretical framework with the data you collected produces the richest interpretation of your results. And it prevents you from making one of the most common errors of beginning researchers (and veteran researchers, as well): claiming that your results say more than they really do. Without this anchor to ground your interpretation of the data, it is easy to overgeneralize and make claims that go beyond the evidence.

In one of the definitions of theory presented earlier, Colquitt and Zapata-Phelan (2007) say that theories prevent scholars from “being dazzled by the complexity of the empirical world” (p. 1281). Theoretical frameworks keep researchers grounded by setting parameters within which the empirical world can be interpreted.

Exercise 3.5

Find two published articles that explicitly present theoretical frameworks (not all articles do). Where do you see evidence of the researchers using their theoretical frameworks to inform, shape, and connect other parts of their articles?

Showing the Contribution of Your Study

Theoretical frameworks contain the arguments that define the contribution of research studies. They do this in two ways, by showing how your study extends what is known and by setting the parameters for your contribution.

Showing How Your Study Extends What Is Known

Because your theoretical framework is built from what is already known or has been proposed, it situates your study in work that has occurred before. A clearly written framework shows readers how your study will take advantage of what is known to extend it further. It reveals what is new about what you are studying. The predictions that are generated from your framework are predictions that have never been made in quite the same way. They predict you will find something that has not been found previously in exactly this way. Your theoretical framework allows others to see the contributions that your study is likely to make even before the study has been conducted.

Setting the Parameters for Your Contribution

Earlier we noted that theoretical frameworks keep researchers grounded by setting parameters within which they should interpret their data. They do this by providing an initial explanation for why researchers expect to find particular results. The explanation is custom-built for each study. This means it uniquely explains the expected results. The results will almost surely turn out somewhat differently than predicted. Interpreting the data includes revising the initial explanation. So, you will end up with two versions of your theoretical framework, one that explains what you expected to find plus a second, updated framework that explains what you actually found.

The two frameworks—the initial version and the updated version—define the parameters of your study’s contribution. The difference between the two frameworks is what can be learned from your study. The first framework is a claim about what is known before you conduct your study about the phenomenon you are studying; the updated framework is a claim about how what is known has changed based on your results. It is the new aspects of the updated framework that capture the important contribution of your work.

Here is a brief example. Suppose you study the errors fourth graders make after receiving ordinary instruction on adding and subtracting decimal fractions. Based on empirical findings from past research, on theories of student learning, and on your own experience, you develop a rationale which predicts that a common error on “ragged” addition problems will be adding the wrong numerals. One of the reasons for this prediction is that students are likely to ignore the values of the digit positions and “line up” the numerals as they do with whole numbers. For instance, if they are asked to add 53.2 + .16, they are likely to answer either 5.48 or 54.8.
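The arithmetic behind the two predicted wrong answers can be checked with a short sketch. It assumes one particular model of the error: the student drops the decimal points, right-aligns and adds the digits as whole numbers, and then re-inserts the point using the number of decimal places in one of the addends. The function names are hypothetical, and other routes to the same wrong answers are possible.

```python
from decimal import Decimal


def correct_sum(a: str, b: str) -> Decimal:
    """Add two decimal numerals exactly, respecting place value."""
    return Decimal(a) + Decimal(b)


def misaligned_answers(a: str, b: str) -> list[str]:
    """Answers produced by right-aligning digits as if both were whole numbers.

    The decimal points are dropped, the digit strings are added as integers,
    and the point is re-inserted using the decimal places of each addend in turn.
    """
    total = str(int(a.replace(".", "")) + int(b.replace(".", "")))
    answers = []
    for addend in (a, b):
        places = len(addend.split(".")[1]) if "." in addend else 0
        if places == 0:
            candidate = total
        else:
            padded = total.zfill(places + 1)  # keep at least one digit before the point
            candidate = padded[:-places] + "." + padded[-places:]
        if candidate not in answers:
            answers.append(candidate)
    return answers


print(correct_sum("53.2", ".16"))         # place-value-aware sum
print(misaligned_answers("53.2", ".16"))  # the two predicted wrong answers
```

Under this model, 532 + 16 = 548, and placing the point with one or two decimal places gives 54.8 or 5.48, whereas the place-value-aware sum is 53.36.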

When you conduct your study, you present problems, handwritten, in both horizontal and vertical form. The horizontal form presents the numbers using the format shown above. The vertical form places one numeral over the other but not carefully aligned:

You find the predicted error occurs, but only for problems written in vertical form. To interpret these data, you look back at your theoretical framework and realize that students might ignore the value of the digits if the format reminded them of the way they lined up digits for whole number addition but might consider the value of the digits if they are forced to align the digits themselves, either by rewriting the problem or by just adding in their heads. A measure of what you (and others) learned from this study is the change in possible explanations (your theoretical frameworks). This does not mean your updated theoretical framework is “correct” or will make perfectly accurate predictions next time. But, it does mean that you are very likely moving toward more accurate predictions and toward a deeper understanding of how students think about adding decimal fractions.

Anchoring the Coherence of Your Study (and Your Evolving Research Paper)

Your theoretical framework serves as the anchor or center point around which all other aspects of your study should be aligned. This does not mean it is created first or that all other aspects are changed to align with the framework after it is created. The framework also changes as other aspects are considered. However, it is useful to always check alignment by beginning with the framework and asking whether other aspects are aligned and, if not, adjusting one or the other. This process of checking alignment is equally true when writing your evolving research paper as when planning and conducting your study.

Part III. How Do You Construct a Theoretical Framework for Your Study?

How do you start the process? Because constructing a theoretical framework is a natural extension of constructing rationales for your predictions, you already started as soon as you began formulating hypotheses: making predictions for what you will find and writing down reasons for why you are making these predictions. In Chap. 2, we talked about beginning this process. In this section, we will explore how you can continue building out your rationales into a full-fledged theoretical framework.

Building a Theoretical Framework in Phases

Building your framework will occur in phases and proceed through cycles of clarifying your questions, making more precise and explicit your predictions, articulating reasons for making these predictions, and imagining ways of testing the predictions. The major source for ideas that will shape the framework is the research literature. That said, conversations with colleagues and other experts can help clarify your predictions and the rationales you develop to justify the predictions.

As you read relevant literature, you can ask: What have researchers found that helps me predict what I will find? How have they explained their findings, and how might those explanations help me develop reasons for my predictions? Are there new ways to interpret past results so they better inform my predictions? Are there ways to look across previous results (and claims) and see new patterns that I can use to refine my predictions and enrich my rationales? How can theories that have credibility in the research community help me understand what I might find and help me explain why this is the case? As we have said, this process will go back and forth between clarifying your predictions, adjusting your rationales, reading, clarifying more, adjusting, reading, and so on.

One Researcher’s Experience Constructing a Theoretical Framework: The Continuing Case of Martha

In Chap. 2, we followed Martha, a doctoral student in mathematics education, as she was working out the topic for her study, asking questions she wanted to answer, predicting the answers, and developing rationales for these predictions. Our story concluded with a research question, a sample set of predictions, and some reasons for Martha’s predictions. The question was: “Under what conditions do middle school teachers who lack conceptual knowledge of linear functions benefit from five 2-hour learning opportunity (LO) sessions that engage them in conceptual learning of linear functions as assessed by changes in their teaching toward a more conceptual emphasis of linear functions?” Her predictions focused on particular conditions that would affect the outcomes in particular ways. She was beginning to build rationales for these predictions by returning to the literature and identifying previous research and theory that were relevant. We continue the story here.

Imagine Martha continuing to read as she develops her theoretical framework—the rationales for her predictions. She tweaks some of her predictions based on what other researchers have already found. As she continues reading, she comes across some related literature on learning opportunities for teachers. A number of articles describe the potential of another form of LOs that might help teachers teach mathematics more conceptually—analyzing videos of mathematics lessons.

The data suggested that teachers can improve their teaching by analyzing videos of other teachers’ lessons as well as their own. However, the results were mixed, so researchers did not seem to know exactly what makes the difference. Martha also read about teachers who can already analyze videos of lessons by spontaneously describing the mathematics that students are struggling with and offering useful suggestions for how to improve learning opportunities for students. These teachers teach toward more conceptual learning goals, and their students learn more (Kersting et al., 2010, 2012). These findings caught Martha’s attention because it is unusual to find correlates of conceptual teaching and better achievement. What is not known, realized Martha, is whether teachers who learn to analyze videos in this way, through specially designed LOs, would look like the teachers who already could analyze them. Would teachers who learned to analyze videos teach more conceptually?

It occurred to Martha she could bring these lines of research together by extending what is known along both lines. Recall our earlier suggestion of looking across the literature and noticing new patterns that can inform your work. Martha thought about studying how, exactly, these two skills are related: analyzing videos in particular ways and teaching conceptually. Would the relationships reported in the literature hold up for teachers who learn to describe the mathematics students are struggling with and make useful suggestions for improving students’ LOs?

Martha was now conflicted. She was well on her way to developing a testable hypothesis about the effects of learning about linear functions, but she was really intrigued by the work on analyzing videos of teaching. In addition, she saw several advantages of switching to this new topic:

The research question could be formulated quite easily. It would be something like: “What are the relationships between learning to analyze videos of mathematics teaching in particular ways (specified from prior research) and teaching for conceptual understanding?”

She could imagine predicting the answers to this question based directly on previous research. She would predict connections between particular kinds of analysis skills and levels of conceptual teaching of mathematics in ways that employed these skills.

The level of conceptual teaching, a challenging construct to define with her previous topic (the effects of professional development on the teaching of linear functions), was already defined in the work on analyzing videos of mathematics teaching, so that would solve a big problem. The definition foregrounded particular sets of behaviors and skills such as identifying key learning moments in a lesson and focusing on students’ thinking about the key mathematical idea during these moments. In other words, Martha saw ways to adapt a definition that had already been used and tested.

The issue of transfer—another challenging issue in her original hypothesis—was addressed more directly in this setting because the learning environment—analyzing videos of classroom teaching—is quite close to the classroom environment in which participants’ conceptual teaching would be observed.

Finally, the nature of learning opportunities, an aspect of her original idea she still needed to work through, had been explored in previous studies on this new topic, and connections were found between studying videos and changes in teaching.

Given all these advantages, Martha decided to change her topic and her research question. We applaud this decision for two major reasons. First, Martha’s interest grew as she explored this new topic. She became excited about conducting a study that might answer the research question she posed. It is always good to be passionate about what you study. Second, Martha was more likely to contribute important new insights if she could extend what is already known rather than explore a new area. Exploring something quite new requires lots of effort defining terms, creating measures, making new predictions, developing reasons for the predictions, and so on. Sometimes, exploring a new area has payoffs. But if you are a beginning researcher, we suggest you take advantage of work that has already been done and extend it in creative ways.

Although Martha’s idea of extending previous work came with real advantages, she still faced a number of challenges. A first major challenge was to decide whether she could build a rationale that would predict that learning to analyze videos causes more conceptual teaching. Or could she only build a rationale that would predict a relationship between changes in analyzing videos and level of conceptual teaching? Perhaps a cause-effect relationship existed but in the opposite direction: If teachers learned to teach more conceptually, their analysis of teaching videos would improve. Although most of the literature described learning to analyze videos as the potential cause of teaching conceptually, Martha did not believe there was sufficient evidence to build a rationale for this prediction. Instead, she decided to first determine if a relationship existed and, if so, to understand the relationship. Then, if warranted, she could develop and test a hypothesis of causation in a future study. In fact, the direction of the causation might become clearer when she understood the relationship more clearly.

A second major challenge was whether to study the relationship as it existed or as one (or both) of the constructs was changing. Past research had explored the relationship as it existed, without inducing changes in either analyzing videos or teaching conceptually. So, Martha decided she could learn more about the relationship if one of the constructs was changing in a planned way. Because researchers had argued that teachers’ analysis of video could be changed with appropriate LOs, and because changing teachers’ teaching practices has resisted simple interventions, Martha decided to study the relationship as she facilitated changes in teachers’ analysis of videos. This would require gathering data on the relationship at more than one point in time.

Even after resolving these thorny issues, Martha faced many additional challenges. Should she predict a closer relationship between learning to analyze video and teaching for conceptual understanding before teachers began learning to analyze videos or after? Perhaps the relationship increases over time because conceptual teaching often changes slowly. Should she predict a closer relationship if the content of the videos teachers analyzed was the same as the content they would be teaching? Should she predict the relationship will be similar across pairs of similar topics? Should she predict that some analysis skills will show closer relationships to levels of conceptual teaching than others? These questions and others occurred to Martha as she was formulating her predictions, developing justifications for her predictions, and considering how she would test the predictions.

Based on her reading and discussions with colleagues, Martha phrased her initial predictions as follows:

1. There will be a significant positive correlation between teachers’ performance on analysis of videos and the extent to which they create conceptual learning opportunities for their students both before and after proposed learning experiences.

2. The relationship will be stronger:

(a) Before the proposed opportunities to learn to analyze videos of teaching;

(b) When the videos and the instruction are about similar mathematical topics; and,

(c) When the videos analyzed display conceptual misunderstandings among students.

3. Of the video analysis skills that will be assessed, the two that will show the strongest relationship are spontaneously describing (1) the mathematics that students are struggling with and (2) useful suggestions for how to improve the conceptual learning opportunities for students.
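To make the first two predictions concrete, here is a minimal sketch in Python of how the relationship might be checked at two points in time. Everything in it is an assumption made for illustration: the scores are invented, the variable names are hypothetical, and the Pearson correlation stands in for whatever measure of relationship Martha might actually choose. The sketch also does not test statistical significance, which the first prediction would require.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length (at least 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for six teachers, invented for illustration only.
# "video" = performance on analyzing videos; "teaching" = level of
# conceptual teaching. Each pair of lists is one point in time.
video_before = [2, 3, 4, 5, 6, 7]
teaching_before = [1, 3, 3, 5, 6, 8]
video_after = [5, 6, 6, 7, 8, 8]
teaching_after = [2, 5, 3, 6, 5, 8]

r_before = pearson_r(video_before, teaching_before)
r_after = pearson_r(video_after, teaching_after)

# Prediction 1 (direction only): a positive relationship at both time points.
positive_both = r_before > 0 and r_after > 0
# Prediction 2(a): a stronger relationship before the learning opportunities.
stronger_before = r_before > r_after

print(f"r before = {r_before:.2f}, r after = {r_after:.2f}")
print(f"positive at both times: {positive_both}")
print(f"stronger before than after: {stronger_before}")
```

Writing the predictions this way shows why data are needed at more than one point in time: the comparison in prediction 2(a) cannot be made from a single set of scores.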

Martha’s rationales for these predictions (her theoretical framework) evolved along with her predictions. We will not detail the framework here, but we will note that the rationale for the first prediction was based on findings from past research. In particular, the prediction is generated by reasoning that if there has been no special intervention, the tendencies to analyze videos in particular ways and to teach conceptually develop together. This might explain the findings of Kersting et al. described earlier. The second and third predictions were based on the literature on teachers’ learning, especially their learning from analyzing videos of teaching.

Before leaving Martha at this point in her journey, we want to make an important point about the change she made to her research topic. Changes like this occur quite often as researchers do the hard intellectual work of developing testable hypotheses that guide research studies. When this happens to you, it can feel like you have lost ground. You might feel like you wasted your time on the original topic. In Chap. 1, we described inevitable “failure” when engaged in scientific inquiry. Failure is often associated with realizing the data you collected do not come close to supporting your predictions. But a common kind of failure occurs when researchers realize the direction they have been pursuing should change before they collect data. This happened in Martha’s case because she came across a topic that was more intriguing to her and because it helped solve some problems she was facing with the previous topic. This is an example of “failing productively” (see Chap. 1). Martha did not succeed in pursuing her original idea, but while she was recognizing the problems, she was also seeing new possibilities.

Constantly Improving Your Framework

We will use Martha’s experience to be more specific about the back-and-forth process in which you will engage as you flesh out your framework. We mentioned earlier your review of the literature as a major source of ideas and evidence that will affect your framework.

Reviewing Published Empirical Evidence

Studies that have been conducted on related topics are among the best sources for helping you specify your predictions. The closer a study is to your topic, the more helpful its evidence will be for anticipating what you will find. Many beginning researchers worry they will locate a study just like the one they are planning. This (almost) never happens. Your study will be different in some ways, and a study that is very similar to yours can be extraordinarily helpful in specifying your predictions. Be excited instead of terrified when you come across a study with a title similar to yours.

Try to locate all the published research that has been conducted on your topic. What does “on your topic” mean? How widely should you cast your net? There are no rules here; you will need to use your professional judgment. However, here is a general guide: If the study does not help you clarify your predictions, change your confidence in them, or strengthen your rationale, then it falls outside your net.

In addition to helping specify your predictions, prior research studies can be a goldmine for developing and strengthening your theoretical framework. How did researchers justify their predictions or explain why they found what they did? How can you use these ideas to support (or change) your own predictions?

By reading research on similar topics, you might also imagine ways of testing your predictions. Maybe you learn of ways you could design your study, measures you could use to collect data, or strategies you could use to analyze your data. As you find helpful ideas, you will want to keep track of where you found these ideas so you can cite the appropriate sources as you write drafts of your evolving research paper.

Examining Theories

You will read a wide range of theories that provide insights into why things might work like they do. When the phenomena addressed by the theory are similar to those you will study, the associated theories can help you think through your own predictions and why you are making them. Returning to Martha’s situation, she could benefit from reading theories on adult learning, especially teacher learning, on transferring knowledge from one setting to another, on professional development for teachers, on the role of videos in learning, on the knowledge needed to teach conceptually, and so on.

Focusing on Variables and Mechanisms

As you review the literature and search for evidence and ideas that could strengthen your predictions and rationales, it is useful to keep your eyes on two components: the variables you will attend to and the mechanisms that might explain the relationships between the variables. Predictions could be considered statements about expected behaviors of the variables. The theoretical framework could be thought of as a description of all the variables that will be deliberately attended to plus the mechanisms conjectured to account for the relationships among them.