
10 Quantitative Data Analysis Software Tools for Every Data Scientist

Are you curious about digging into data but not sure where to start? Don’t worry; we’ve got you covered! As a data scientist, you know that the right tools can make all the difference. When it comes to quantitative data, the right analysis software can help you extract insights faster and more efficiently.

From spotting trends to making smart decisions, quantitative analysis helps us unlock the secrets hidden within our data and chart a course for success.

In this blog post, we’ll introduce you to 10 quantitative data analysis tools that every data scientist should know about.

What is Quantitative Data Analysis?

Quantitative data analysis refers to the process of systematically examining numerical data to uncover patterns, trends, relationships, and insights.

Unlike qualitative data analysis, which deals with non-numeric data such as text or images, quantitative analysis focuses on data that can be quantified, measured, and analyzed using statistical techniques.

What is Quantitative Data Analysis Software?

Quantitative data analysis software refers to specialized computer programs or tools designed to assist researchers, analysts, and professionals in analyzing numerical data.

These software applications are tailored to handle quantitative data, which consists of measurable quantities, counts, or numerical values. Quantitative data analysis software provides a range of features and functionalities to manage, analyze, visualize, and interpret numerical data effectively.

Key features commonly found in quantitative data analysis software include:

- Data Import and Management: Capability to import data from various sources such as spreadsheets, databases, text files, or online repositories.

- Descriptive Statistics: Tools for computing basic descriptive statistics such as measures of central tendency (e.g., mean, median, mode) and measures of dispersion (e.g., standard deviation, variance).

- Data Visualization: Functionality to create visual representations of data through charts, graphs, histograms, scatter plots, or heatmaps.

- Statistical Analysis: Support for conducting a wide range of statistical tests and analyses to explore relationships, test hypotheses, make predictions, or infer population characteristics from sample data.

- Advanced Analytics: Advanced analytical techniques for more complex data exploration and modeling, such as cluster analysis, principal component analysis (PCA), time series analysis, survival analysis, and structural equation modeling (SEM).

- Automation and Reproducibility: Features for automating analysis workflows, scripting repetitive tasks, and ensuring the reproducibility of results.

- Reporting and Collaboration: Tools for generating customizable reports, summaries, or presentations to communicate analysis results effectively to stakeholders.
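To make the descriptive-statistics feature concrete, here is a minimal sketch using Python's standard-library `statistics` module. The ratings are hypothetical, and dedicated packages compute the same quantities through their own menus or commands.

```python
import statistics

# Hypothetical 1-5 satisfaction ratings from ten survey respondents
ratings = [4, 5, 3, 4, 4, 2, 5, 4, 3, 5]

summary = {
    # Measures of central tendency
    "mean": statistics.mean(ratings),
    "median": statistics.median(ratings),
    "mode": statistics.mode(ratings),
    # Measures of dispersion (sample versions, dividing by n - 1)
    "variance": statistics.variance(ratings),
    "std_dev": statistics.stdev(ratings),
}

for name, value in summary.items():
    print(f"{name:>8}: {value:.2f}")
```

The sample variance and standard deviation divide by n - 1. Use `statistics.pvariance` and `statistics.pstdev` if the data covers the whole population.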

Benefits of Quantitative Data Analysis

Quantitative data analysis offers numerous benefits across various fields and disciplines. Here are some of the key advantages:

Making Confident Decisions

Quantitative data analysis provides solid, evidence-based insights that support decision-making. By relying on data rather than intuition, you can reduce the risk of making incorrect decisions. This not only increases confidence in your choices but also fosters buy-in from stakeholders and team members.

Cost Reduction

Analyzing quantitative data helps identify areas where costs can be reduced or optimized. For instance, if certain marketing campaigns yield lower-than-average results, reallocating resources to more effective channels can lead to cost savings and improved ROI.
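The reallocation logic in this example can be sketched as a simple ROI comparison. This is a sketch under stated assumptions: the campaign names and figures are hypothetical, ROI is taken as (revenue - spend) / spend, and the benchmark is the unweighted average across campaigns, which is only one reasonable choice.

```python
# Hypothetical campaign figures: spend and attributed revenue in the same currency
campaigns = {
    "email": {"spend": 2_000, "revenue": 9_000},
    "search": {"spend": 5_000, "revenue": 15_000},
    "display": {"spend": 4_000, "revenue": 4_400},
    "social": {"spend": 3_000, "revenue": 6_000},
}


def roi(spend, revenue):
    """Return on investment: net gain per unit of spend."""
    return (revenue - spend) / spend


rois = {name: roi(**figures) for name, figures in campaigns.items()}
average_roi = sum(rois.values()) / len(rois)  # unweighted mean across campaigns

# Campaigns below the average are candidates for moving budget elsewhere
below_average = sorted(name for name, value in rois.items() if value < average_roi)

print(f"average ROI: {average_roi:.2f}")
print("candidates for reallocation:", below_average)
```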

Personalizing User Experience

Quantitative analysis allows for the mapping of customer journeys and the identification of preferences and behaviors. By understanding these patterns, businesses can tailor their offerings, content, and communication to specific user segments, leading to enhanced user satisfaction and engagement.

Improving User Satisfaction and Delight

Quantitative data analysis highlights areas of success and areas for improvement in products or services. For instance, if a webpage shows high engagement but low conversion rates, further investigation can uncover user pain points or friction in the conversion process. Addressing these issues can lead to improved user satisfaction and increased conversion rates.
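One way to surface the "high engagement, low conversion" pattern is to compare each page against the median of both metrics. This sketch makes several assumptions: the page paths and numbers are hypothetical, average time on page stands in for engagement, and conversion rate is conversions divided by visits.

```python
import statistics

# Hypothetical per-page metrics: average seconds on page, visits, and conversions
pages = [
    {"page": "/pricing", "avg_time_s": 95, "visits": 4_000, "conversions": 40},
    {"page": "/features", "avg_time_s": 120, "visits": 3_000, "conversions": 150},
    {"page": "/blog", "avg_time_s": 40, "visits": 8_000, "conversions": 80},
    {"page": "/signup", "avg_time_s": 30, "visits": 2_000, "conversions": 300},
]

for p in pages:
    p["conversion_rate"] = p["conversions"] / p["visits"]

median_time = statistics.median(p["avg_time_s"] for p in pages)
median_rate = statistics.median(p["conversion_rate"] for p in pages)

# Above-median engagement combined with a below-median conversion rate
# flags pages worth investigating for friction in the conversion process
flagged = [
    p["page"]
    for p in pages
    if p["avg_time_s"] > median_time and p["conversion_rate"] < median_rate
]
print(flagged)
```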

10 Best Quantitative Data Analysis Software Tools

1. QuestionPro

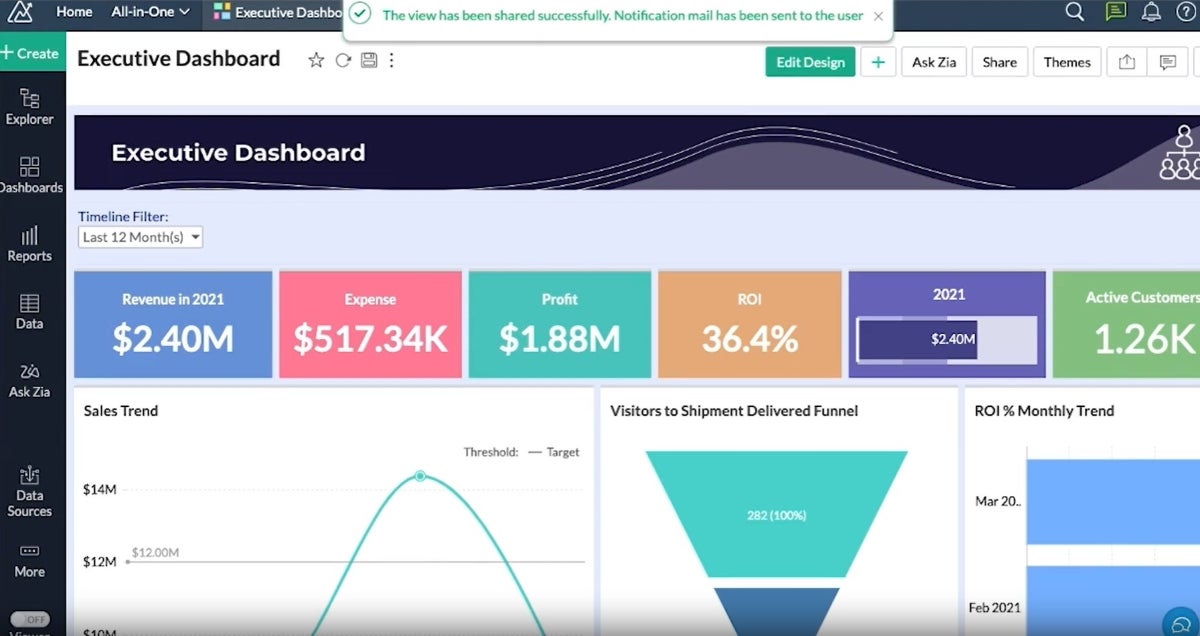

Known for its robust survey and research capabilities, QuestionPro is a versatile platform that offers powerful data analysis tools tailored for market research, customer feedback, and academic studies. With features like advanced survey logic, data segmentation, and customizable reports, QuestionPro empowers users to derive actionable insights from their quantitative data.

Features of QuestionPro

- Customizable Surveys

- Advanced Question Types

- Survey Logic and Branching

- Data Segmentation

- Real-Time Reporting

- Mobile Optimization

- Integration Options

- Multi-Language Support

- Data Export

Pros:

- User-friendly interface.

- Extensive question types.

- Seamless data export capabilities.

Cons:

- Limited free version.

Pricing:

Starts at $99 per month per user.

2. SPSS (Statistical Package for the Social Sciences)

SPSS is a venerable software package widely used in the social sciences for statistical analysis. Its intuitive interface and comprehensive range of statistical techniques make it a favorite among researchers and analysts for hypothesis testing, regression analysis, and data visualization tasks.

Features of SPSS

- Advanced statistical analysis capabilities.

- Data management and manipulation tools.

- Customizable graphs and charts.

- Syntax-based programming for automation.

Pros:

- Extensive statistical procedures.

- Flexible data handling.

- Integration with other statistical software packages.

Cons:

- High cost for the full version.

- Steep learning curve for beginners.

Pricing:

- Starts at $99 per month.

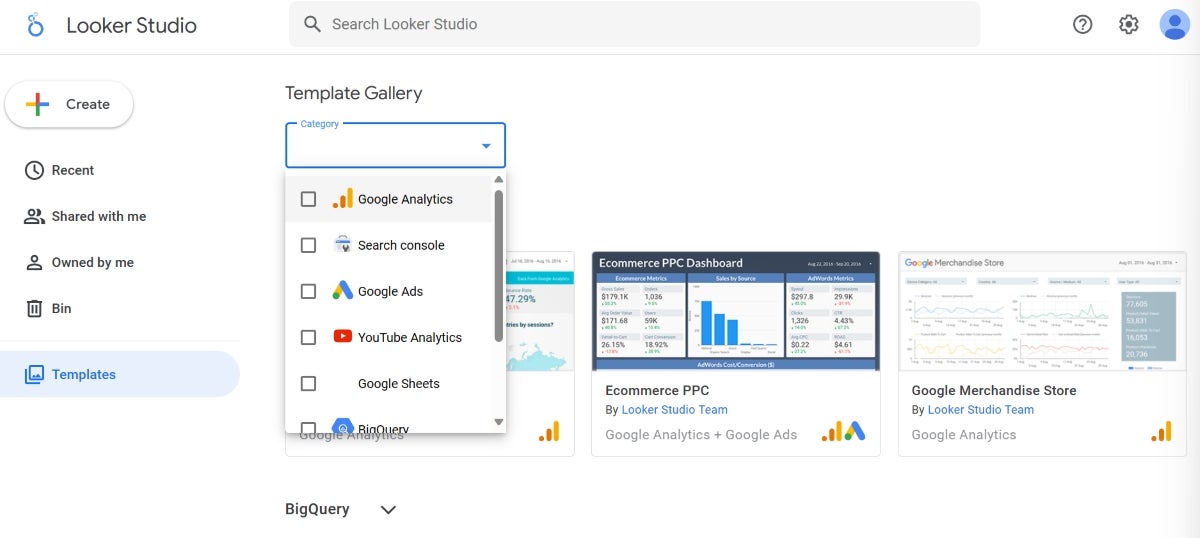

3. Google Analytics

Primarily used for web analytics, Google Analytics provides invaluable insights into website traffic, user behavior, and conversion metrics. By tracking key performance indicators such as page views, bounce rates, and traffic sources, Google Analytics helps businesses optimize their online presence and maximize their digital marketing efforts.

Features of Google Analytics

- Real-time tracking of website visitors.

- Conversion tracking and goal setting.

- Customizable reports and dashboards.

- Integration with Google Ads and other Google products.

Pros:

- Free version available.

- Easy to set up and use.

- Comprehensive insights into website performance.

Cons:

- Limited customization options in the free version.

Pricing:

- Free for basic features.

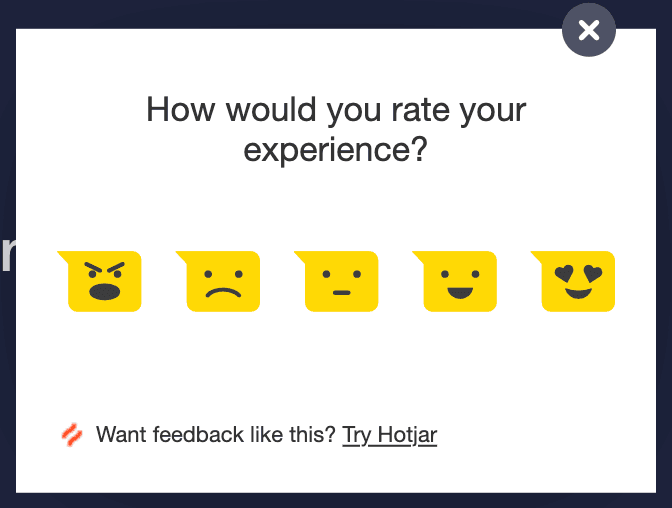

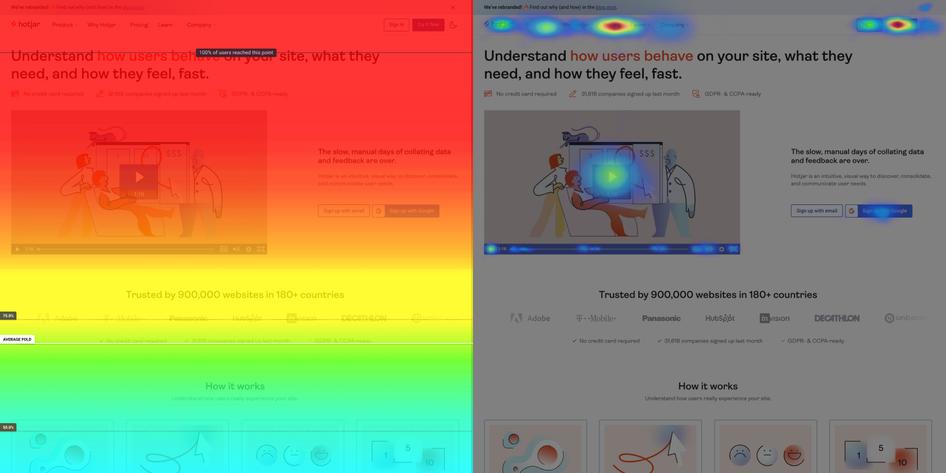

4. Hotjar

Hotjar is a powerful tool for understanding user behavior on websites and digital platforms. Through features like heatmaps, session recordings, and on-site surveys, it enables businesses to visualize how users interact with their websites, identify pain points, and optimize the user experience for better conversion rates and customer satisfaction.

Features of Hotjar

- Heatmaps to visualize user clicks, taps, and scrolling behavior.

- Session recordings for in-depth user interaction analysis.

- Feedback polls and surveys.

- Funnel and form analysis.

Pros:

- Easy to install and set up.

- Comprehensive insights into user behavior.

- Affordable pricing plans.

Cons:

- Limited customization options for surveys.

Pricing:

- Starts at $39 per month.

5. Python

Although it is not dedicated data analysis software, Python is a versatile programming language widely used for data analysis, machine learning, and scientific computing. With libraries such as NumPy, pandas, and matplotlib, Python provides a comprehensive ecosystem for data manipulation, visualization, and statistical analysis, making it a favorite among data scientists and analysts.

Features of Python

- Rich ecosystem of data analysis libraries.

- Flexible and scalable for large datasets.

- Integration with other tools and platforms.

- Open-source with a supportive community.

Pros:

- Free and open-source.

- High performance and scalability.

- Great for automation and customization.

Cons:

- Requires programming knowledge.

Pricing:

- Free to use.

6. SAS (Statistical Analysis System)

SAS is a comprehensive software suite renowned for its advanced analytics, business intelligence, and data management capabilities. With a wide range of statistical techniques, predictive modeling tools, and data visualization options, SAS is trusted by organizations across industries for complex data analysis tasks and decision support.

Features of SAS

- Wide range of statistical procedures.

- Data integration and cleansing tools.

- Advanced analytics and machine learning capabilities.

- Scalable for enterprise-level data analysis.

Pros:

- Powerful statistical modeling capabilities.

- Excellent support for large datasets.

- Trusted across industries for decades.

Cons:

- Expensive licensing fees.

- Steep learning curve.

Pricing:

- Contact sales for pricing details.

7. Microsoft Excel

Despite its simplicity compared to specialized data analysis software, Excel remains popular for basic quantitative analysis and data visualization. With features like pivot tables, functions, and charting tools, Excel provides a familiar and accessible platform for users to perform tasks such as data cleaning, summarization, and exploratory analysis.

Features of Excel

- Formulas and functions for calculations.

- Pivot tables and charts for data visualization.

- Data sorting and filtering capabilities.

- Integration with other Microsoft Office applications.

Pros:

- Widely available and familiar interface.

- Affordable for basic analysis tasks.

- Versatile for various data formats.

Cons:

- Limited statistical functions compared to specialized software.

- Not suitable for handling large datasets.

Pricing:

- Included in Microsoft 365 subscription plans, starts at $6.99 per month.

8. IBM SPSS Statistics

IBM SPSS Statistics is the current name of SPSS since IBM acquired the company in 2009. This entry focuses on its advanced statistical analysis and predictive modeling capabilities. With modules for data preparation, regression analysis, and survival analysis, IBM SPSS Statistics is well-suited for researchers and analysts tackling complex data analysis challenges.

Features of IBM SPSS Statistics

- Advanced statistical procedures.

- Data preparation and transformation tools.

- Automated model building and deployment.

- Integration with other IBM products.

Pros:

- Extensive statistical capabilities.

- User-friendly interface for beginners.

- Enterprise-grade security and scalability.

Cons:

- Limited support for open-source integration.

9. Minitab

Minitab is a specialized software package designed for quality improvement and statistical analysis in the manufacturing, engineering, and healthcare industries. With tools for experiment design, statistical process control, and reliability analysis, Minitab empowers users to optimize processes, reduce defects, and improve product quality.

Features of Minitab

- Basic and advanced statistical analysis.

- Graphical analysis tools for data visualization.

- Statistical methods for process improvement.

- DOE (Design of Experiments) capabilities.

Pros:

- Streamlined interface for statistical analysis.

- Comprehensive quality improvement tools.

- Excellent customer support.

Cons:

- Limited flexibility for customization.

Pricing:

- Starts at $29 per month.

10. JMP

JMP is a dynamic data visualization and statistical analysis tool developed by SAS Institute. Known for its interactive graphics and exploratory data analysis capabilities, JMP enables users to uncover patterns, trends, and relationships in their data, facilitating deeper insights and informed decision-making.

Features of JMP

- Interactive data visualization.

- Statistical modeling and analysis.

- Predictive analytics and machine learning.

- Integration with SAS and other data sources.

Pros:

- Intuitive interface for exploratory data analysis.

- Dynamic graphics for better insights.

- Integration with SAS for advanced analytics.

Cons:

- Limited scripting capabilities.

- Less customizable compared to other SAS products.

Is QuestionPro the Right Quantitative Data Analysis Software for You?

QuestionPro offers a range of features specifically designed for quantitative data analysis, making it a suitable choice for various research, survey, and data-driven decision-making needs. Here’s why it might be the right fit for you:

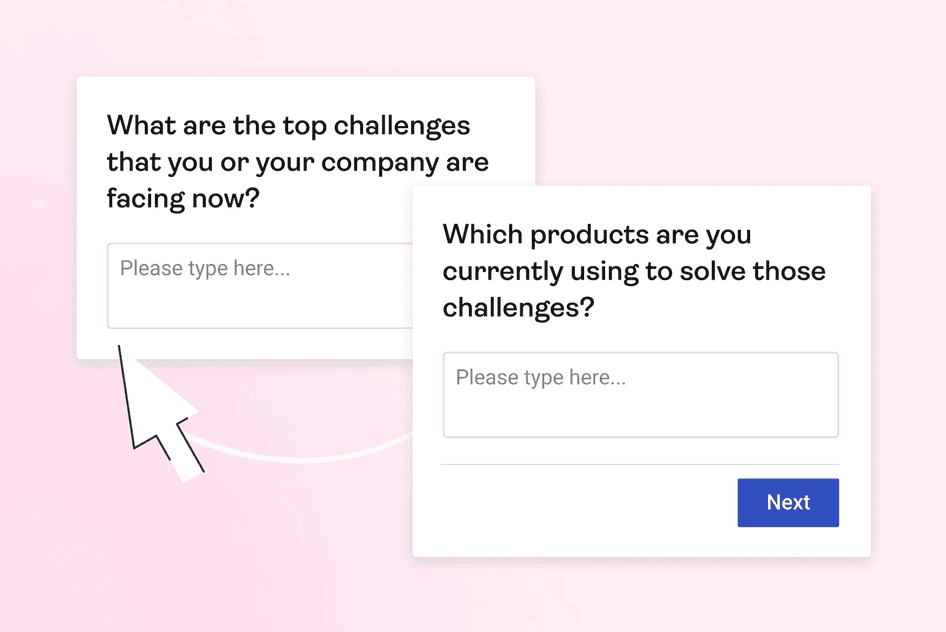

Comprehensive Survey Capabilities

QuestionPro provides extensive tools for creating surveys with quantitative questions, allowing you to gather structured data from respondents. Whether you need Likert scale questions, multiple-choice questions, or numerical input fields, QuestionPro offers the flexibility to design surveys tailored to your research objectives.

Real-Time Data Analysis

With QuestionPro’s real-time data collection and analysis features, you can access and analyze survey responses as soon as they are submitted. This enables you to quickly identify trends, patterns, and insights without delay, facilitating agile decision-making based on up-to-date information.
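Analyzing responses as they arrive means updating summary statistics incrementally instead of recomputing them from scratch. A common technique for this is Welford's online algorithm, sketched below. The sketch only illustrates the idea and is not a description of how QuestionPro implements its real-time reports. The class name `RunningStats` and the scores are hypothetical.

```python
import math


class RunningStats:
    """Welford's online algorithm: update mean and variance one value at a time."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def add(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)  # uses the already-updated mean

    @property
    def variance(self):
        """Sample variance (n - 1 denominator); NaN until two values arrive."""
        return self._m2 / (self.n - 1) if self.n > 1 else math.nan


stats = RunningStats()
for response in [2, 4, 4, 4, 5, 5, 7, 9]:  # hypothetical scores, in arrival order
    stats.add(response)
    print(f"n={stats.n} mean={stats.mean:.2f}")
```

Each update costs constant time and memory, so the summary stays current no matter how many responses have come in.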

Advanced Statistical Analysis

QuestionPro includes advanced statistical analysis tools that allow you to perform in-depth quantitative analysis of survey data. Whether you need to calculate means, medians, standard deviations, correlations, or conduct regression analysis, QuestionPro offers the functionality to derive meaningful insights from your data.

Data Visualization

Visualizing quantitative data is crucial for understanding trends and communicating findings effectively. QuestionPro offers a variety of visualization options, including charts, graphs, and dashboards, to help you visually represent your survey data and make it easier to interpret and share with stakeholders.

Segmentation and Filtering

QuestionPro enables you to segment and filter survey data based on various criteria, such as demographics, responses to specific questions, or custom variables. This segmentation capability allows you to analyze different subgroups within your dataset separately, gaining deeper insights into specific audience segments or patterns.
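Segmenting and filtering boil down to grouping responses by a criterion and summarizing each group separately. The sketch below shows that pattern in plain Python. The age-group labels, scores, and the 7-or-higher threshold are hypothetical and do not reflect QuestionPro's API or defaults.

```python
import statistics
from collections import defaultdict

# Hypothetical responses: (age group, 0-10 likelihood-to-recommend score)
responses = [
    ("18-34", 9), ("18-34", 7), ("35-54", 6),
    ("35-54", 8), ("55+", 4), ("55+", 6), ("18-34", 8),
]

# Segmentation: group scores by age group, then summarize each group on its own
scores_by_group = defaultdict(list)
for group, score in responses:
    scores_by_group[group].append(score)

group_means = {
    group: statistics.mean(scores)
    for group, scores in sorted(scores_by_group.items())
}

# Filtering: keep only respondents who scored 7 or higher
high_scorers = [(group, score) for group, score in responses if score >= 7]

print(group_means)
print(f"{len(high_scorers)} of {len(responses)} respondents scored 7 or higher")
```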

Cost-Effective Solutions

QuestionPro offers pricing plans tailored to different user needs and budgets, including options for individuals, businesses, and enterprise-level organizations. Whether conducting a one-time survey or needing ongoing access to advanced features, QuestionPro provides cost-effective solutions to meet your requirements.

Choosing the right quantitative data analysis software depends on your specific needs, budget, and level of expertise. Whether you’re a researcher, marketer, or business analyst, these top 10 software options offer diverse features and capabilities to help you unlock valuable insights from your data.

If you’re looking for a comprehensive, user-friendly, and cost-effective solution for quantitative data analysis, QuestionPro could be the right choice for your research, survey, or data-driven decision-making needs. With its powerful features, intuitive interface, and flexible pricing options, QuestionPro empowers users to derive valuable insights from their survey data efficiently and effectively.

So go ahead, explore QuestionPro, and empower yourself to unlock valuable insights from your data!


A Review of Software Tools for Quantitative Data Analysis

How to get started with statistical analysis


If you're a sociology student or budding social scientist and have started to work with quantitative (statistical) data, analytic software will be very useful.

These programs force researchers to organize and clean their data, and they offer pre-programmed commands that support everything from very basic to quite advanced forms of statistical analysis.

They also offer visualizations that help you interpret data and that you may wish to use when presenting it to others.

There are many programs on the market that are quite expensive. The good news for students and faculty is that most universities have licenses for at least one program students and professors can use.

Also, most programs offer a free, pared-down version of the full software package which will often suffice.

Here's a review of the three main programs that quantitative social scientists use.

Statistical Package for Social Science (SPSS)

SPSS is the most popular quantitative analysis software program used by social scientists.

Made and sold by IBM, it is comprehensive, flexible, and can be used with almost any type of data file. It is especially useful for analyzing large-scale survey data.

It can be used to generate tabulated reports, charts, and plots of distributions and trends, as well as generate descriptive statistics such as means, medians, modes and frequencies in addition to more complex statistical analyses like regression models.

SPSS provides a user interface that makes it easy and intuitive for users at all levels. With menus and dialog boxes, you can perform analyses without writing command syntax, which some other programs require.

It is also simple and easy to enter and edit data directly into the program.

There are a few drawbacks, however, which might not make it the best program for some researchers. For example, there is a limit on the number of cases you can analyze. It is also difficult to account for weights, strata and group effects with SPSS.

Stata

Stata is an interactive data analysis program that runs on a variety of platforms. It can be used for both simple and complex statistical analyses.

Stata offers a point-and-click interface as well as command syntax, which makes it easy to use. Stata also makes it simple to generate graphs and plots of data and results.

Analysis in Stata is centered around four windows:

- Command window

- Review window

- Results window

- Variables window

Analysis commands are entered into the Command window, and the Review window records those commands. The Variables window lists the variables available in the current dataset along with their labels, and the results appear in the Results window.

SAS

SAS, short for Statistical Analysis System, is also used by many businesses.

In addition to statistical analysis, it also allows programmers to perform report writing, graphics, business planning, forecasting, quality improvement, project management and more.

SAS is a great program for the intermediate and advanced user because it is very powerful; it can be used with extremely large datasets and can perform complex and advanced analyses.

SAS is good for analyses that require you to take into account weights, strata, or groups.

Unlike SPSS and Stata, SAS is run largely by programming syntax rather than point-and-click menus, so some knowledge of the programming language is required.
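A weighted mean shows why weights matter: each response counts in proportion to its sampling weight. The minimal sketch below uses hypothetical answers and weights. It computes only a point estimate. Correct standard errors for weighted, stratified, or clustered samples require design-based procedures, such as SAS's PROC SURVEYMEANS or Stata's `svy` prefix.

```python
# Hypothetical survey answers and sampling weights; a weight of 2.0 means that
# respondent stands in for twice as many people in the population
answers = [10, 20, 30]
weights = [1.0, 1.0, 2.0]

unweighted_mean = sum(answers) / len(answers)
weighted_mean = sum(w * x for w, x in zip(weights, answers)) / sum(weights)

print(f"unweighted: {unweighted_mean:.1f}, weighted: {weighted_mean:.1f}")
```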

Other Programs

Other programs popular with sociologists include:

- R: Free to download and use. You can add your own programs to it if you are familiar with statistics and programming.

- NVivo: "It helps researchers organize and analyze complex non-numerical or unstructured data, both text and multimedia," according to UCLA Library.

- MATLAB: Provides "Simulations, Multidimensional Data, Image and Signal Processing," according to NYU Libraries.


Quantitative Analysis Guide: Which Statistical Software to Use?


Statistical Software Comparison


SPSS

- The first version of SPSS was developed by Norman H. Nie, Dale H. Bent, and C. Hadlai Hull and released in 1968 as the Statistical Package for the Social Sciences.

- In July 2009, IBM acquired SPSS.

Industries

- Social sciences

- Health sciences

Data Format and Compatibility

- .sav file to save data

- Optional syntax files (.sps)

- Easily export .sav file from Qualtrics

- Import Excel files (.xls, .xlsx), Text files (.csv, .txt, .dat), SAS (.sas7bdat), Stata (.dta)

- Export Excel files (.xls, .xlsx), Text files (.csv, .dat), SAS (.sas7bdat), Stata (.dta)

Graphics

- SPSS Chart Types

- Chart Builder: Drag and drop graphics

Features

- Easy and intuitive user interface; menus and dialog boxes

- Similar feel to Excel

- SEMs through SPSS Amos

- Easily exclude data and handle missing data

Limitations

- Absence of robust methods (e.g., least absolute deviation regression, quantile regression)

- Unable to perform complex many-to-many merges

JMP

- Developed by SAS

- Created in the 1980s by John Sall to take advantage of the graphical user interface introduced by Macintosh

- Originally stood for 'John's Macintosh Program'

- Five products: JMP, JMP Pro, JMP Clinical, JMP Genomics, JMP Graph Builder App

Industries

- Engineering: Six Sigma, Quality Control, Scientific Research, Design of Experiments

- Healthcare/Pharmaceutical

Data Format and Compatibility

- .jmp file to save data

- Optional syntax files (.jsl)

- Import Excel files (.xls, .xlsx), Text files (.csv, .txt, .dat), SAS (.sas7bdat), Stata (.dta), SPSS (.sav)

- Export Excel files (.xls, .xlsx), Text files (.csv, .dat), SAS (.sas7bdat)

Graphics

- Gallery of JMP Graphs

- Drag and Drop Graph Editor will try to guess what chart is correct for your data

- Dynamic interface can be used to zoom and change view

- Ability to lasso outliers on a graph and regraph without the outliers

Features

- Interactive Graphics

- Scripting Language (JSL)

- SAS, R, and MATLAB can be executed using JSL

- Interface for using R from within JMP, and an add-in for Excel

- Great interface for easily managing output

- Graphs and data tables are dynamically linked

- Great set of online resources!

Limitations

- Absence of some robust methods (regression: 2SLS, LAD, Quantile)

Stata

- Stata was first released in January 1985 as a regression and data management package with 44 commands, written by Bill Gould and Sean Becketti.

- The name Stata is a syllabic abbreviation of the words statistics and data.

- The graphical user interface (menus and dialog boxes) was released in 2003.

Industries

- Political Science

- Public Health

- Data Science

- Who uses Stata?

Data Format and Compatibility

- .dta file to save dataset

- .do syntax file, where commands can be written and saved

- Import Excel files (.xls, .xlsx), Text files (.txt, .csv, .dat), SAS (.XPT), Other (.XML), and various ODBC data sources

- Export Excel files (.xls, .xlsx), Text files (.txt, .csv, .dat), SAS (.XPT), Other (.XML), and various ODBC data sources

- Newer versions of Stata can read datasets, commands, graphs, etc., from older versions, and in doing so, reproduce results

- Older versions of Stata cannot read newer versions of Stata datasets, but newer versions can save in the format of older versions

Graphics

- Stata Graph Gallery

- UCLA - Stata Graph Gallery

Features

- Syntax mainly used, but menus are an option as well

- Some user-written programs are available to install

- Offers matrix programming in Mata

- Works well with panel, survey, and time-series data

- Data management

Limitations

- Can only hold one dataset in memory at a time

- The specific Stata package (Stata/IC, Stata/SE, and Stata/MP) limits the size of usable datasets. One may have to sacrifice the number of variables for the number of observations, or vice versa, depending on the package.

- Overall, graphs have limited flexibility. Stata schemes, however, provide some flexibility in changing the style of the graphs.

Sample Syntax

```stata
* First enter the data manually
input str10 sex test1 test2
"Male"   86 83
"Male"   93 79
"Male"   85 81
"Male"   83 80
"Male"   91 76
"Female" 94 79
"Female" 91 94
"Female" 83 84
"Female" 96 81
"Female" 95 75
end

* Next run a paired t-test
ttest test1 == test2

* Create a scatterplot
twoway (scatter test2 test1 if sex == "Male") (scatter test2 test1 if sex == "Female"), legend(lab(1 "Male") lab(2 "Female"))
```

SAS

- The development of SAS (Statistical Analysis System) began in 1966 under Anthony Barr of North Carolina State University, who was later joined by James Goodnight.

- The National Institutes of Health funded this project with a goal of analyzing agricultural data to improve crop yields.

- The first release of SAS was in 1972. In 2012, SAS held 36.2% of the market, making it the largest market-share holder in 'advanced analytics.'

Industries

- Financial Services

- Manufacturing

- Health and Life Sciences

Data Format and Compatibility

- Available for Windows only

- Import Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), SPSS (.sav), Stata (.dta), JMP (.jmp), Other (.xml)

- Export Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), SPSS (.sav), Stata (.dta), JMP (.jmp), Other (.xml)

Graphics

- SAS Graphics Samples Output Gallery

- Can be cumbersome at times to create perfect graphics with syntax

- ODS Graphics Designer provides a more interactive interface

Features

- BASE SAS contains the data management facility, programming language, data analysis and reporting tools

- SAS Libraries collect the SAS datasets you create

- A multitude of additional components are available to complement Base SAS, including SAS/GRAPH, SAS/PH (Clinical Trial Analysis), SAS/ETS (Econometrics and Time Series), SAS/Insight (Data Mining), etc.

- SAS Certification exams

- Handles extremely large datasets

- Predominantly used for data management and statistical procedures

- SAS has two main types of code: DATA steps and PROC steps

- With one procedure, test results, post estimation and plots can be produced

- Size of datasets analyzed is only limited by the machine

Limitations

- Graphics can be cumbersome to manipulate

- Since SAS is a proprietary software, there may be an extensive lag time for the implementation of new methods

- Documentation and books tend to be very technical and not necessarily new user friendly

Sample Syntax

```sas
* First enter the data manually;
data example;
  input sex $ test1 test2;
  datalines;
M 86 83
M 93 79
M 85 81
M 83 80
M 91 76
F 94 79
F 91 94
F 83 84
F 96 81
F 95 75
;
run;

* Next run a paired t-test;
proc ttest data=example;
  paired test1*test2;
run;

* Create a scatterplot;
proc sgplot data=example;
  scatter y=test1 x=test2 / group=sex;
run;
```

R

- R first appeared in 1993 and was created by Ross Ihaka and Robert Gentleman at the University of Auckland, New Zealand.

- R is an implementation of the S programming language which was developed at Bell Labs.

- It is named partly after its first authors and partly as a play on the name of S.

- R is currently developed by the R Development Core Team.

- RStudio, an integrated development environment (IDE), was first released in 2011.

Industries

- Companies Using R

- Finance and Economics

- Bioinformatics

Data Format and Compatibility

- Import Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), SPSS (.sav), Stata (.dta), SAS (.sas7bdat), Other (.xml, .json)

- Export Excel files (.xlsx), Text files (.txt, .csv), SPSS (.sav), Stata (.dta), Other (.json)

Graphics

- ggplot2 package, grammar of graphics

- Graphs available through ggplot2

- The R Graph Gallery

- Network analysis (igraph)

- Flexible esthetics and options

- Interactive graphics with Shiny

- Many available packages to create field specific graphics

Features

- R is free and open source

- Over 6000 user-contributed packages available through CRAN

- Large online community

- Network Analysis, Text Analysis, Data Mining, Web Scraping

- Interacts with other software such as Python, Bioconductor, WinBUGS, JAGS, etc.

- Broad scope of functions; flexible and versatile

Limitations

- Large online help community but no 'formal' tech support

- Requires a good understanding of different data types before it becomes easy to use

- The many user-written packages can be hard to sift through

Sample Syntax

```r
# Manually enter the data into a data frame
dataset <- data.frame(
  sex = c("Male", "Male", "Male", "Male", "Male",
          "Female", "Female", "Female", "Female", "Female"),
  test1 = c(86, 93, 85, 83, 91, 94, 91, 83, 96, 95),
  test2 = c(83, 79, 81, 80, 76, 79, 94, 84, 81, 75)
)

# Now we will run a paired t-test
t.test(dataset$test1, dataset$test2, paired = TRUE)

# Last, let's simply plot these two test variables.
# factor() is needed because R 4.0+ no longer converts strings to factors by default;
# its levels sort alphabetically, so Female -> red and Male -> blue.
plot(dataset$test1, dataset$test2, col = c("red", "blue")[factor(dataset$sex)])
legend("topright", fill = c("blue", "red"), c("Male", "Female"))

# Making the same graph using ggplot2
install.packages("ggplot2")  # only needed the first time
library(ggplot2)
mygraph <- ggplot(data = dataset, aes(x = test1, y = test2, color = sex))
mygraph + geom_point(size = 5) + ggtitle("Test1 versus Test2 Scores")
```

MATLAB

- Cleve Moler of the University of New Mexico began developing MATLAB in the late 1970s.

- Together with Jack Little, he cofounded MathWorks, which released MATLAB (matrix laboratory) in 1984.

Industries

- Education (linear algebra and numerical analysis)

- Popular among scientists involved in image processing

- Engineering

Data Format and Compatibility

- .m Syntax file

- Import Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), Other (.xml, .json)

- Export Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), Other (.xml, .json)

- MATLAB Plot Gallery

- Customizable but not point-and-click visualization

- Optimized for data analysis, matrix manipulation in particular

- Basic unit is a matrix

- Vectorized operations are quick

- Diverse set of available toolboxes (apps) [Statistics, Optimization, Image Processing, Signal Processing, Parallel Computing, etc.]

- Large online community (MATLAB Central File Exchange)

- Image processing

- Vast number of pre-defined functions and implemented algorithms

- Lacks implementation of some advanced statistical methods

- Integrates easily with some languages such as C, but not others, such as Python

- Limited GIS capabilities

```matlab
sex = {'Male','Male','Male','Male','Male','Female','Female','Female','Female','Female'};
t1 = [86,93,85,83,91,94,91,83,96,95];
t2 = [83,79,81,80,76,79,94,84,81,75];

% paired t-test (ttest and ttest2 need the Statistics and Machine Learning Toolbox)
[h,p,ci,stats] = ttest(t1,t2)

% independent samples t-test
sex = categorical(sex);
[h,p,ci,stats] = ttest2(t1(sex=='Male'),t1(sex=='Female'))

% scatter plot of all scores
figure; plot(t1,t2,'o')

% same scatter plot with the two groups shown separately
figure; g = sex=='Male';
plot(t1(g),t2(g),'bx'); hold on; plot(t1(~g),t2(~g),'ro'); hold off
legend('Male','Female')
```

Software Features and Capabilities

*Where multiple interface types are available, the primary interface is bolded.

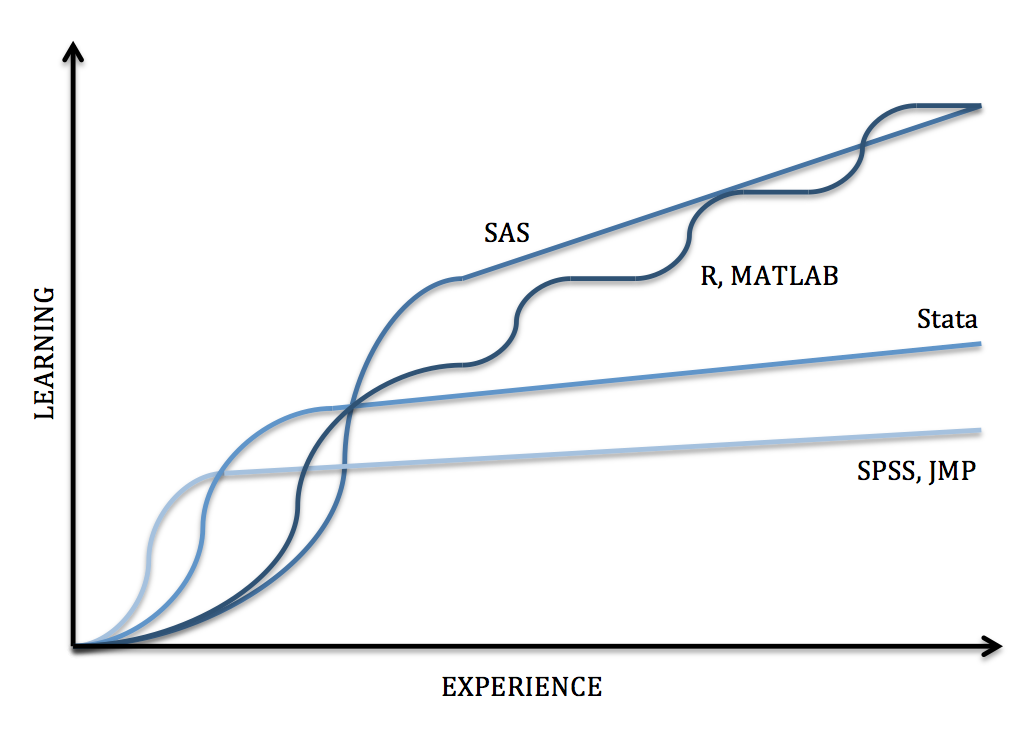

Learning Curve

Further Reading

- The Popularity of Data Analysis Software

- Statistical Software Capability Table

- The SAS versus R Debate in Industry and Academia

- Why R has a Steep Learning Curve

- Comparison of Data Analysis Packages

- Comparison of Statistical Packages

- MATLAB commands in Python and R

- MATLAB and R Side by Side

- Stata and R Side by Side


- Last Updated: Apr 18, 2024 4:36 AM

- URL: https://guides.nyu.edu/quant

Quantitative Data Analysis: A Comprehensive Guide

By: Ofem Eteng Published: May 18, 2022


A healthcare giant successfully introduces the most effective drug dosage through rigorous statistical modeling, saving countless lives. A marketing team predicts consumer trends with uncanny accuracy, tailoring campaigns for maximum impact.


These trends and dosages are not just any numbers but are a result of meticulous quantitative data analysis. Quantitative data analysis offers a robust framework for understanding complex phenomena, evaluating hypotheses, and predicting future outcomes.

In this blog, we’ll walk through the concept of quantitative data analysis, the steps required, its advantages, and the methods and techniques that are used in this analysis. Read on!

What is Quantitative Data Analysis?

Quantitative data analysis is a systematic process of examining, interpreting, and drawing meaningful conclusions from numerical data. It involves the application of statistical methods, mathematical models, and computational techniques to understand patterns, relationships, and trends within datasets.

Quantitative data analysis methods typically work with algorithms, mathematical analysis tools, and software to gain insights from the data, answering questions such as how many, how often, and how much. Data for quantitative data analysis is usually collected from closed-ended surveys, questionnaires, polls, etc. The data can also be obtained from sales figures, email click-through rates, number of website visitors, and percentage revenue increase.

Quantitative Data Analysis vs Qualitative Data Analysis

When we talk about data, we usually think about patterns, relationships, and connections within and between datasets; in short, about analyzing the data. Broadly, there are two types of data analysis: Quantitative Data Analysis and Qualitative Data Analysis.

Quantitative data analysis revolves around numerical data and statistics, which suit things that can be counted or measured. In contrast, qualitative data analysis deals with descriptions and subjective information, for things that can be observed but not measured.

Let us differentiate between Quantitative Data Analysis and Qualitative Data Analysis for a better understanding.

Data Preparation Steps for Quantitative Data Analysis

Quantitative data has to be gathered and cleaned before it can be analyzed. Below are the steps to prepare data for quantitative analysis:

- Step 1: Data Collection

Before beginning the analysis process, you need data. Data can be collected through rigorous quantitative research, which includes methods such as interviews, focus groups, surveys, and questionnaires.

- Step 2: Data Cleaning

Once the data is collected, begin the data cleaning process by scanning through the entire dataset for duplicates, errors, and omissions. Keep a close eye out for outliers (data points that are significantly different from the majority of the dataset), because they can skew your analysis results if they are not identified and handled appropriately.

This data-cleaning process ensures data accuracy, consistency and relevancy before analysis.

- Step 3: Data Analysis and Interpretation

Now that you have collected and cleaned your data, it is time to carry out the quantitative analysis. There are two broad methods of quantitative data analysis, which we will discuss in the next section.
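To make the cleaning step (Step 2) concrete, here is a minimal Python sketch, using only the standard library, of the checks it describes: dropping exact duplicates, dropping rows with a missing value, and flagging outliers. The `clean` function, the survey rows, and the choice of the common 1.5 × IQR rule for outliers are illustrative assumptions, not part of any particular tool. Flagged rows are kept so you can decide case by case whether they are errors or genuine extreme values.

```python
import statistics


def clean(records, key):
    """Return de-duplicated records that have a value for `key`,
    each copied with an 'outlier' flag based on the 1.5 * IQR rule."""
    # 1. Drop exact duplicate rows (same fields, same values).
    seen, unique = set(), []
    for rec in records:
        fingerprint = tuple(sorted(rec.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(rec)

    # 2. Drop rows where the variable of interest is missing.
    complete = [rec for rec in unique if rec.get(key) is not None]

    # 3. Flag (rather than silently delete) values outside 1.5 * IQR.
    q1, _, q3 = statistics.quantiles([rec[key] for rec in complete], n=4)
    low, high = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
    return [{**rec, "outlier": not low <= rec[key] <= high} for rec in complete]


# Hypothetical survey rows, invented for illustration only
raw = [
    {"id": 1, "age": 34},
    {"id": 2, "age": 29},
    {"id": 2, "age": 29},    # exact duplicate
    {"id": 3, "age": None},  # missing answer
    {"id": 4, "age": 41},
    {"id": 5, "age": 38},
    {"id": 6, "age": 31},
    {"id": 7, "age": 230},   # probable data-entry error
]

for row in clean(raw, "age"):
    print(row)
```

Returning copies instead of editing rows in place keeps the raw data untouched, so the cleaning can be rerun or audited later.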

However, if you have data from multiple sources, collecting and cleaning it can be a cumbersome task. This is where Hevo Data steps in. With Hevo, extracting, transforming, and loading data from source to destination becomes a seamless task, eliminating the need for manual coding. This not only saves valuable time but also enhances the overall efficiency of data analysis and visualization, empowering users to derive insights quickly and with precision.

Hevo is the only real-time ELT No-code Data Pipeline platform that cost-effectively automates data pipelines that are flexible to your needs. With integration with 150+ Data Sources (40+ free sources), we help you not only export data from sources & load data to the destinations but also transform & enrich your data, & make it analysis-ready.


Now that you are familiar with what quantitative data analysis is and how to prepare your data for analysis, the focus will shift to the purpose of this article, which is to describe the methods and techniques of quantitative data analysis.

Methods and Techniques of Quantitative Data Analysis

Broadly, quantitative data analysis employs two techniques to extract meaningful insights from datasets. The first method is descriptive statistics, which summarizes and portrays essential features of a dataset, such as its mean, median, and standard deviation.

Inferential statistics, the second method, extrapolates insights and predictions from a sample dataset to make broader inferences about an entire population, using techniques such as hypothesis testing and regression analysis.

An in-depth explanation of both the methods is provided below:

- Descriptive Statistics

- Inferential Statistics

1) Descriptive Statistics

Descriptive statistics, as the name implies, is used to describe a dataset. It helps you understand the details of your data by summarizing it and finding patterns in the specific data sample. Descriptive statistics provide absolute numbers obtained from a sample but do not necessarily explain the rationale behind those numbers, and they are mostly used for analyzing single variables. The methods used in descriptive statistics include:

- Mean: This calculates the numerical average of a set of values.

- Median: This is used to get the midpoint of a set of values when the numbers are arranged in numerical order.

- Mode: This is used to find the most commonly occurring value in a dataset.

- Percentage: This is used to express how a value or group of respondents within the data relates to a larger group of respondents.

- Frequency: This indicates the number of times a value is found.

- Range: This is the difference between the highest and lowest values in a dataset.

- Standard Deviation: This indicates how dispersed a set of numbers is, that is, how close the numbers typically are to the mean.

- Skewness: This indicates how symmetrical a set of numbers is, showing whether they cluster into a smooth bell curve shape in the middle of the graph or skew towards the left or right.
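As a rough illustration, the measures listed above can be computed with Python's built-in `statistics` module. This sketch reuses the ten `test1` scores from the R and MATLAB examples above; the `describe` function and the 90-point threshold used for the percentage are hypothetical choices for the example, and skewness is computed with the Fisher-Pearson coefficient, one of several common definitions.

```python
import statistics
from collections import Counter


def describe(values, threshold):
    """Summarize a list of numbers with common descriptive statistics."""
    n = len(values)
    mean = statistics.fmean(values)
    # Second and third central moments, used for Fisher-Pearson skewness (g1)
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    return {
        "mean": mean,
        "median": statistics.median(values),
        "modes": statistics.multimode(values),  # every most-common value
        "frequency": Counter(values),           # value -> count
        "pct_at_or_above": 100 * sum(x >= threshold for x in values) / n,
        "range": max(values) - min(values),
        "stdev": statistics.stdev(values),      # sample standard deviation
        "skewness": m3 / m2 ** 1.5,             # < 0 means a longer left tail
    }


test1 = [86, 93, 85, 83, 91, 94, 91, 83, 96, 95]
summary = describe(test1, threshold=90)
for name, value in summary.items():
    print(f"{name}: {value}")
```

For these scores the mean (89.7) sits below the median (91), consistent with the slightly negative skewness, and there are two modes (83 and 91), which is why `multimode` is used instead of `mode`.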

2) Inferential Statistics

In quantitative analysis, the goal is to turn raw numbers into meaningful insight. Descriptive statistics explain the details of a specific dataset using numbers, but they do not explain the motives behind the numbers; hence the need for further analysis using inferential statistics.

Inferential statistics aim to make predictions or highlight possible outcomes from the data summarized with descriptive statistics. They are used to generalize results from a sample to a population, make comparisons between groups, show relationships between multiple variables, and test hypotheses about changes or differences.

There are various statistical analysis methods used within inferential statistics; a few are discussed below.

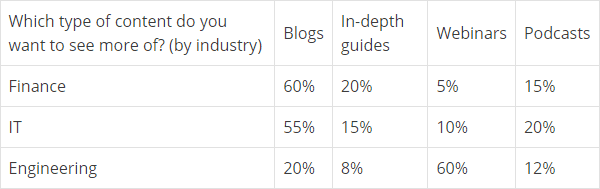

- Cross Tabulations: Cross tabulation, or crosstab, is used to show the relationship between two variables and is often used to compare results across demographic groups. It uses a basic tabular form, with mutually exclusive categories in its rows and columns, to draw inferences between different parts of a dataset. Crosstabs help you understand the nuances of a dataset and the factors that may influence a data point.

- Regression Analysis: Regression analysis estimates the relationship between a set of variables. It models how a dependent variable (the variable or outcome you want to measure or predict) relates to any number of independent variables (factors that may affect the dependent variable). The purpose of regression analysis is therefore to estimate how one or more variables might affect a dependent variable, in order to identify trends and patterns, make predictions, and forecast possible future trends. There are many types of regression analysis, and the model you choose will be determined by the type of data you have for the dependent variable. The types of regression analysis include linear regression, non-linear regression, binary logistic regression, etc.

- Monte Carlo Simulation: Monte Carlo simulation, also known as the Monte Carlo method, is a computerized technique for generating models of possible outcomes and their probability distributions. It repeatedly draws random values for the uncertain inputs and records the resulting outcomes, which shows how likely each outcome is to occur. Data analysts use it to perform advanced risk analyses that help forecast future events and make decisions accordingly.

- Analysis of Variance (ANOVA): This is used to test the extent to which two or more groups differ from each other. It compares the means of the groups, which allows several groups to be analyzed at once.

- Factor Analysis: The factor analysis technique reduces a large number of variables to a smaller number of factors. It works on the principle that several separate observable variables correlate with each other because they are all associated with an underlying construct. This makes large datasets easier to manage and interpret.

- Cohort Analysis: Cohort analysis can be defined as a subset of behavioral analytics that operates from data taken from a given dataset. Rather than looking at all users as one unit, cohort analysis breaks down data into related groups for analysis, where these groups or cohorts usually have common characteristics or similarities within a defined period.

- MaxDiff Analysis: This is a quantitative data analysis method used to gauge customers’ purchase preferences and which attributes rank higher than others in the purchase decision.

- Cluster Analysis: Cluster analysis is a technique used to identify structures within a dataset. Cluster analysis aims to be able to sort different data points into groups that are internally similar and externally different; that is, data points within a cluster will look like each other and different from data points in other clusters.

- Time Series Analysis: This is a statistical analytic technique used to identify trends and cycles over time. It is simply the measurement of the same variables at different times, like weekly and monthly email sign-ups, to uncover trends, seasonality, and cyclic patterns. By doing this, the data analyst can forecast how variables of interest may fluctuate in the future.

- SWOT analysis: This is a quantitative data analysis method that assigns numerical values to the strengths, weaknesses, opportunities, and threats of an organization, product, or service, giving a clearer picture of the competition to foster better business strategies.
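To show the idea behind Monte Carlo simulation, here is a minimal Python sketch of a schedule-risk simulation. The three tasks, their (low, most likely, high) durations, the 14-day deadline, and the use of triangular distributions are all invented assumptions for illustration; what matters is the method: draw random inputs many times, record each outcome, and read probabilities off the resulting distribution.

```python
import random
import statistics

# Hypothetical task durations in days: (low, most likely, high)
TASKS = [(2, 4, 8), (3, 5, 10), (1, 2, 4)]
DEADLINE = 14  # hypothetical deadline in days


def one_trial(rng):
    """Draw one possible project duration by sampling every task."""
    return sum(rng.triangular(low, high, mode) for low, mode, high in TASKS)


def monte_carlo(trials=100_000, seed=42):
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    totals = [one_trial(rng) for _ in range(trials)]
    return {
        "mean_days": statistics.fmean(totals),
        "p_miss_deadline": sum(t > DEADLINE for t in totals) / trials,
        "p95_days": statistics.quantiles(totals, n=20)[-1],  # 95th percentile
    }


print(monte_carlo())
```

Because a triangular distribution has mean (low + mode + high) / 3, the simulated mean should land close to 13 days; what the simulation adds is the spread around it, such as the chance of missing the deadline and a 95th-percentile duration.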

How to Choose the Right Method for your Analysis?

Choosing between Descriptive Statistics and Inferential Statistics can often be confusing. Consider the following factors before choosing the right method for your quantitative data analysis:

1. Type of Data

The first consideration in data analysis is understanding the type of data you have. Different statistical methods have specific requirements depending on the data type (for example, whether a variable is categorical or numerical), and using the wrong method can render results meaningless. The choice of statistical method should align with the nature and distribution of your data to ensure meaningful and accurate analysis.

2. Your Research Questions

When deciding on statistical methods, it’s crucial to align them with your specific research questions and hypotheses. The nature of your questions will influence whether descriptive statistics alone, which reveal sample attributes, are sufficient or if you need both descriptive and inferential statistics to understand group differences or relationships between variables and make population inferences.

Pros and Cons of Quantitative Data Analysis

Pros

1. Objectivity and Generalizability:

- Quantitative data analysis offers objective, numerical measurements, minimizing bias and personal interpretation.

- Results can often be generalized to larger populations, making them applicable to broader contexts.

Example: A study using quantitative data analysis to measure student test scores can objectively compare performance across different schools and demographics, leading to generalizable insights about educational strategies.

2. Precision and Efficiency:

- Statistical methods provide precise numerical results, allowing for accurate comparisons and predictions.

- Large datasets can be analyzed efficiently with the help of computer software, saving time and resources.

Example: A marketing team can use quantitative data analysis to precisely track click-through rates and conversion rates on different ad campaigns, quickly identifying the most effective strategies for maximizing customer engagement.

3. Identification of Patterns and Relationships:

- Statistical techniques reveal hidden patterns and relationships between variables that might not be apparent through observation alone.

- This can lead to new insights and understanding of complex phenomena.

Example: A medical researcher can use quantitative analysis to pinpoint correlations between lifestyle factors and disease risk, aiding in the development of prevention strategies.

Cons

1. Limited Scope:

- Quantitative analysis focuses on quantifiable aspects of a phenomenon, potentially overlooking important qualitative nuances, such as emotions, motivations, or cultural contexts.

Example: A survey measuring customer satisfaction with numerical ratings might miss key insights about the underlying reasons for their satisfaction or dissatisfaction, which could be better captured through open-ended feedback.

2. Oversimplification:

- Reducing complex phenomena to numerical data can lead to oversimplification and a loss of richness in understanding.

Example: Analyzing employee productivity solely through quantitative metrics like hours worked or tasks completed might not account for factors like creativity, collaboration, or problem-solving skills, which are crucial for overall performance.

3. Potential for Misinterpretation:

- Statistical results can be misinterpreted if not analyzed carefully and with appropriate expertise.

- The choice of statistical methods and assumptions can significantly influence results.

This blog discusses the steps, methods, and techniques of quantitative data analysis. It also gives insights into the methods of data collection, the type of data one should work with, and the pros and cons of such analysis.


Carrying out successful data analysis requires prepping the data and making it analysis-ready. That is where Hevo steps in.


Ofem is a freelance writer specializing in data-related topics, with expertise in translating complex concepts and a focus on data science, analytics, and emerging technologies.


Best Software for Quantitative Data Analysis: The Definitive Guide

Data can be a scary thing to navigate. Even with the explosion of information that we have at our fingertips, it can still be challenging to make sense of it all. There are multiple ways in which quantitative data can be analyzed and interpreted, but there are also many pitfalls to avoid along the way. It’s not just about analyzing raw numbers and figures; data analysis should be strategic, smart, and actionable. In this blog post, we’ll discuss the best software for quantitative data analysis so you can find the most suitable option for your needs. Since there are several options available on the market, we’ve outlined some key considerations before making recommendations based on specific use cases and price points. Read on to learn more!

What Is Quantitative Data Analysis?

Quantitative data analysis is the process of collecting and interpreting numerical or statistical data to make decisions or solve problems. It’s an important component of data science, as it includes the collection, cleaning and organizing of data as well as basic visualizations and analyses. It’s crucial to effectively analyze large amounts of data because it allows you to understand what’s happening in your business and use it to inform your decision-making. The analysis can be anything from a few simple calculations to running complex algorithms on a large dataset. The goal is to gain insights that can help you to make better decisions based on the data you have.

Why is Quantitative Data Analysis Important?

Quantitative data analysis is important for a variety of reasons, but its primary value is that it allows you to make more accurate and informed decisions. When you’re using data to inform your decision-making, you’re being more strategic and less reactive. You’re able to base your decisions on facts rather than gut feelings or emotions. This is critical for all areas of business, but it’s especially useful in the following areas:

– Marketing: This is one of the most popular areas where quantitative analysis is used. Data allows you to make better decisions about your marketing campaigns, such as what kind of ads to run, which audience to target, etc.

– Sales: If you’re analyzing sales data, you can better understand what’s happening with your sales funnel. This allows you to make changes to increase conversion rates and close more sales.

– Product and operations: If you’re analyzing product or operational data, you can make better decisions about what to prioritize and where to focus your efforts.

– Finance: This is one of the oldest uses for quantitative analysis, but it’s still incredibly important. Data analysis allows you to make better decisions about managing your finances, such as what interest rate to offer on a loan.

Best Tools for Basic Data Analysis

Basic data analysis is the simplest form of data analysis: it covers the bare minimum of what data analysis is and does, giving you a high-level overview of the data and letting you know if there’s anything wrong with it. If you’re dealing with small amounts of data, basic data analysis can be done in Excel; for large amounts of data, however, you’re better off using a dedicated data analysis tool. There are many great tools for basic data analysis, including these top picks:

– Google Sheets: Google Sheets is an excellent option for basic data analysis. It’s a free tool that allows you to analyze small to medium-sized datasets. It’s ideal for businesses that are still growing, as it allows you to make sense of your data without having to invest in more expensive tools.

– Microsoft Excel: While Excel isn’t the best option for large datasets, it’s a great tool for basic analysis of smaller datasets. It allows you to perform calculations, create visualizations, and more.

Best Tools for Advanced Data Analysis

Advanced data analysis goes beyond basic data analysis and lets you dig deeper into your data, uncovering insights that weren’t visible during the basic analysis process. There are many tools for advanced data analysis, but many of them are only useful if you have a large amount of data; with a small dataset they can be challenging to use, so one of the tools for basic data analysis may serve you better. If you do have a large amount of data, some of the best software for advanced quantitative data analysis is available here in cmnty, with all the available modules.

Quantitative data analysis can be challenging, but it’s also incredibly important. It allows you to make better decisions and understand your data more clearly. It also helps you to identify any issues with your data so they can be corrected. The best quantitative data analysis software depends on a variety of factors, such as the type of data you’re analyzing, the volume of data, the complexity of the data, etc. From basic to advanced data analysis, the best tools are designed to make the process as easy as possible.


The IBM® SPSS® software platform offers advanced statistical analysis, a vast library of machine learning algorithms, text analysis, open-source extensibility, integration with big data and seamless deployment into applications.

Its ease of use, flexibility and scalability make SPSS accessible to users of all skill levels. What’s more, it’s suitable for projects of all sizes and levels of complexity, and can help you find new opportunities, improve efficiency and minimize risk.

Within the SPSS software family of products, IBM SPSS Statistics supports a top-down, hypothesis testing approach to your data, while IBM SPSS Modeler exposes patterns and models hidden in data through a bottom-up, hypothesis generation approach.

The AI studio that brings together traditional machine learning along with the new generative AI capabilities powered by foundation models.

SPSS Statistics for Students

Prepare and analyze data with an easy-to-use interface without having to write code.

Choose from purchase options including subscription and traditional licenses.

Empower coders, noncoders and analysts with visual data science tools.

IBM SPSS Modeler helps you tap into data assets and modern applications, with algorithms and models that are ready for immediate use.

IBM SPSS Modeler is available on IBM Cloud Pak for Data. Take advantage of IBM SPSS Modeler on the public cloud.

Manage analytical assets, automate processes and share results more efficiently and securely.

Get descriptive and predictive analytics, data preparation and real-time scoring.

Use structural equation modeling (SEM) to test hypotheses and gain new insights from data.

Create a platform that can make predictive analytics easier for big data.


Learn how to use linear regression analysis to predict the value of a variable based on the value of another variable.

Learn how logistic regression estimates the probability of an event occurring, based on a dataset of independent variables.

Learn about new statistical procedures, data visualization tools and other improvements in SPSS Statistics 29.

Discover how you can uncover data insights that solve business and research problems.

Quantitative research

Objectives and applications


Quantitative and qualitative research are commonly considered to differ fundamentally. Yet their objectives, as well as their applications, overlap in numerous ways. The main purpose of quantitative research is considered to be the quantification of data. This allows results from a sample to be generalized to an entire population of interest, and the incidence of various views and opinions in a given sample to be measured.

Yet quantitative research is often followed by qualitative research, which aims to explore selected findings further. Qualitative research is considered particularly suitable for gaining an in-depth understanding of underlying reasons and motivations. It provides insights into the setting of a problem. At the same time, it frequently generates ideas and hypotheses for later quantitative research.

Quantitative research measures the frequency, intensity, or distribution of a phenomenon; it allows hypotheses to be tested and insights to be inferred. At the beginning of the research process, theories about the facts under investigation have already been proposed, and hypotheses are derived from them. The actual data are then collected using quantitative methods; in the social sciences, these are often questionnaire-based surveys or experiments. Statistical methods are used to dissect and evaluate the data, often using control groups. The results of the research process are then related back to the previously established theories and interpreted.

The advantages of quantitative research are high reliability, fast processing of large amounts of data, and high comparability. There are several methods of quantitative research:

- standardized surveys

- standardized observations

- experiments and trials

- quantitative content analysis

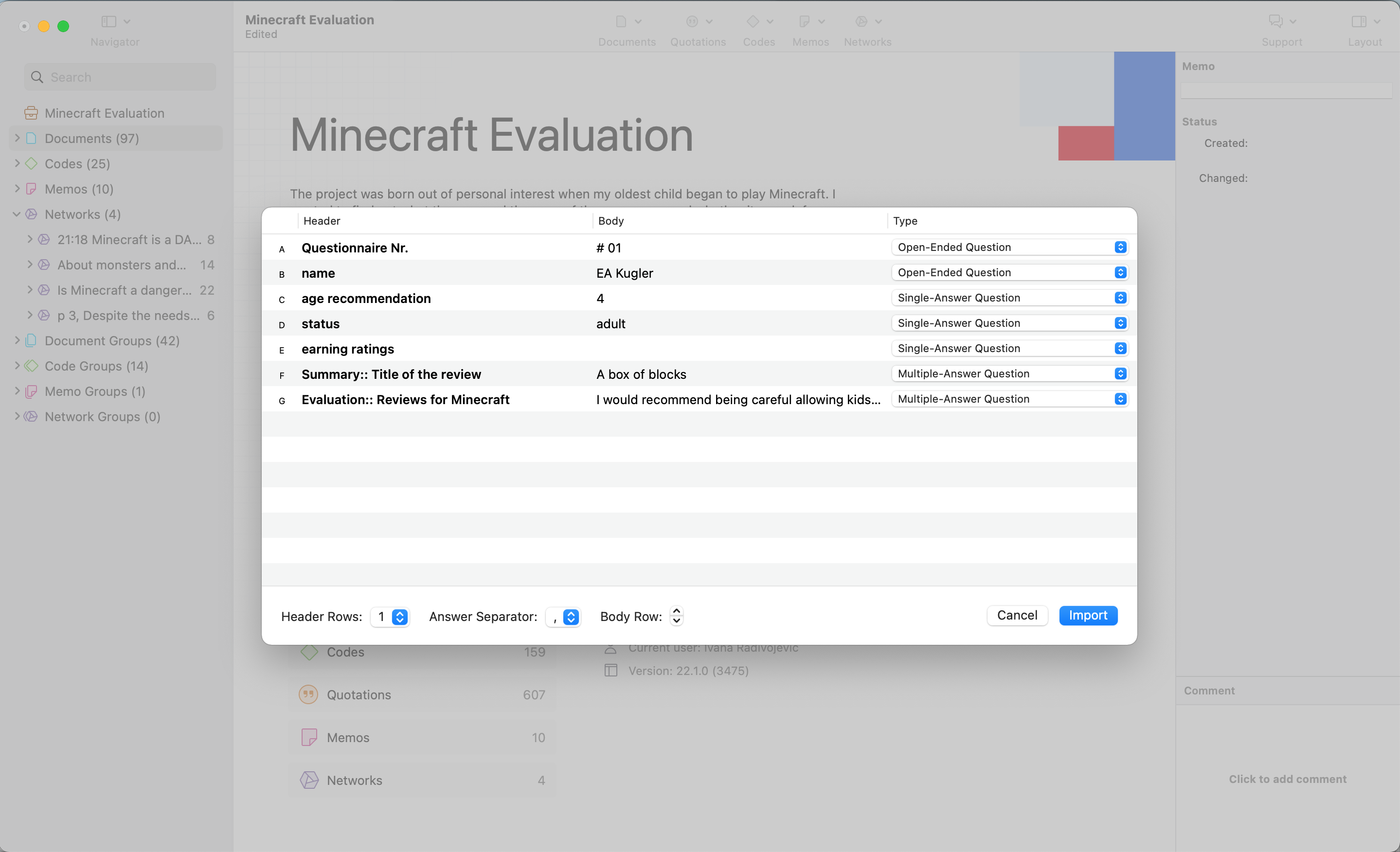

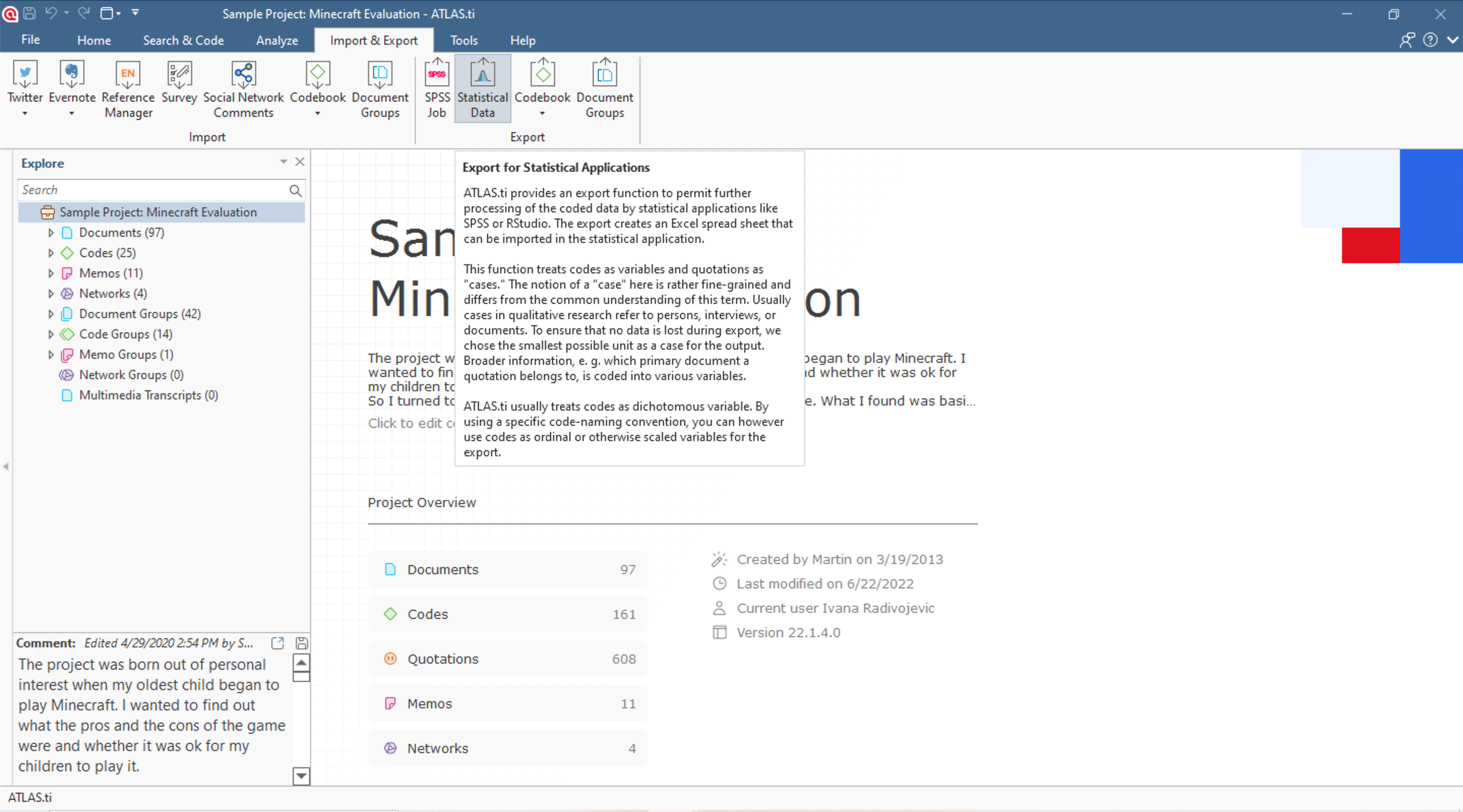


The research design is composed of:

- Type of research

- Data collection

- Data description

- Method of analysis

Which method of data collection and analysis is suitable depends on the research questions.

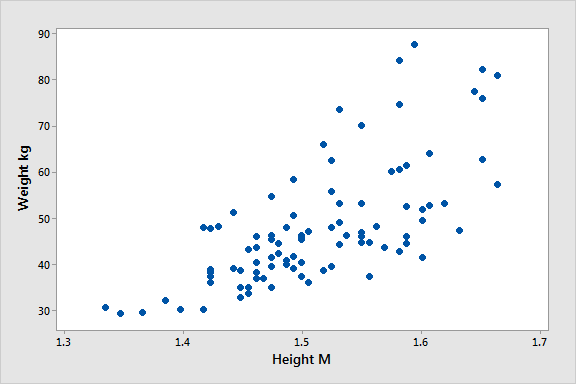

A distinction can be made between dependent and independent variables in quantitative research. Independent variables are considered to have an effect on other variables in the research context. They influence the dependent variable(s). Regression analysis can be run to determine whether an independent variable has an effect. For example, one can examine the bathing time (dependent variable) of swimming pool guests as a function of the water temperature (independent variable).
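A minimal sketch of such a regression for the swimming-pool example, written in Python with ordinary least squares computed by hand. The data below are simulated under an assumed true effect of 1.5 extra minutes per degree; they are not real measurements, and `fit_line` is a hypothetical helper rather than a library function.

```python
import random


def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x, plus R-squared."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return intercept, slope, 1 - ss_res / ss_tot


# Simulated, not real, data: we assume each extra degree C adds 1.5 minutes
rng = random.Random(7)
water_temp = [rng.uniform(22, 30) for _ in range(200)]               # independent
bathing_min = [10 + 1.5 * t + rng.gauss(0, 3) for t in water_temp]  # dependent

intercept, slope, r_squared = fit_line(water_temp, bathing_min)
print(f"minutes ~ {intercept:.1f} + {slope:.2f} * temperature (R^2 = {r_squared:.2f})")
```

Because the data were generated with a known slope, the estimate should come out near 1.5. With real data the slope is unknown, and you would also want a standard error or confidence interval before concluding that the effect is real.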

Correlational analysis can be used to determine whether two variables are related, but it cannot establish a cause-and-effect relationship. For example, it has been observed that more children are born in places where many storks live. This, however, does not mean that storks deliver babies. The simple explanation for this observation is that birth rates are higher in the countryside, and storks also prefer to live in this environment.
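The stork example can be reproduced with a small simulation. In this Python sketch (hypothetical data, invented for illustration), a hidden third variable, whether a region is rural, drives both stork numbers and birth rates, while storks and births never influence each other. The `pearson` and `within` helpers are written out by hand for the example.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


rng = random.Random(1)
# Hypothetical regions: True = countryside, False = city
rural = [rng.random() < 0.5 for _ in range(1000)]
# Storks and births both depend on `rural`, never on each other
storks = [5 * r + rng.gauss(0, 1) for r in rural]
births = [10 + 3 * r + rng.gauss(0, 1) for r in rural]

overall = pearson(storks, births)


def within(group):
    """Correlation computed only among regions of one type."""
    idx = [i for i, r in enumerate(rural) if r == group]
    return pearson([storks[i] for i in idx], [births[i] for i in idx])


print(f"all regions: r = {overall:.2f}")
print(f"countryside only: r = {within(True):.2f}, city only: r = {within(False):.2f}")
```

Pooled across all regions the two variables appear strongly correlated, but within the countryside or within cities alone the correlation is close to zero, which is the confounding pattern the paragraph describes.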

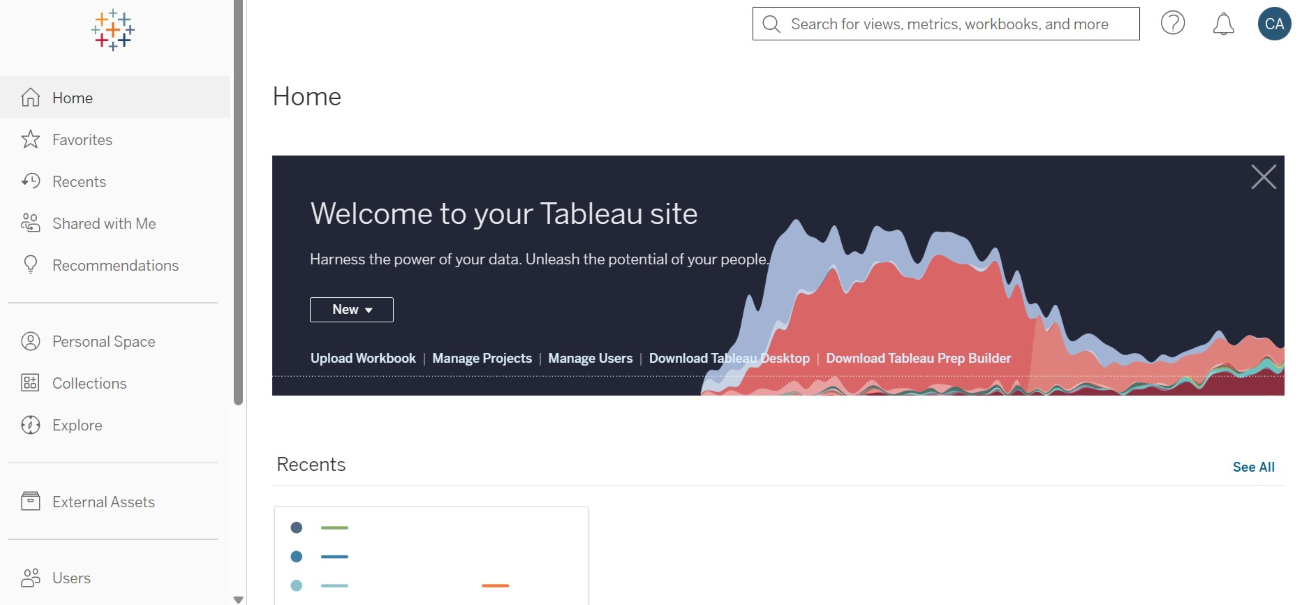

Quantitative research, predominantly statistical analysis, is common in the social sciences, and many software programs designed for use with quantitative data are available today. The main requirements for such packages are that they be comprehensive and flexible. A useful statistical software tool can generate tabulated reports, charts, and plots of distributions and trends, as well as descriptive statistics and more complex statistical analyses. Lastly, a user interface that is easy and intuitive for users of all levels is a must.

Examples of statistical analysis software are SPSS, Excel, SAS, or R. The presentation of results of studies usually takes place in the form of tables or graphs.

Suppose you have used ATLAS.ti to analyze qualitative data. If your sample is sufficiently large and you want to confirm results via statistical procedures, you can export your ATLAS.ti data for use in SPSS, Excel, SAS, or R. ATLAS.ti offers two output options: an SPSS syntax file or a generic Excel file for input into any statistical software. Each coded data segment becomes a case, and each code and code group becomes a variable.
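The case-by-variable layout described above can be sketched as follows. This is not ATLAS.ti's export code or its exact file format; it is a hypothetical Python illustration in which each coded segment becomes a row (case) and each code becomes a 0/1 column (variable). The segment IDs and code names are invented, and code groups are left out for brevity.

```python
import csv
import io

# Hypothetical coded segments: an ID plus the set of codes applied to each one
segments = [
    {"segment": "1:1", "codes": {"price", "satisfaction"}},
    {"segment": "1:2", "codes": {"service"}},
    {"segment": "2:1", "codes": {"price"}},
]


def to_case_table(segments):
    """One row (case) per segment, one 0/1 column (variable) per code."""
    codes = sorted(set().union(*(s["codes"] for s in segments)))
    header = ["segment", *codes]
    rows = [[s["segment"], *(int(c in s["codes"]) for c in codes)] for s in segments]
    return header, rows


header, rows = to_case_table(segments)

# Write CSV text that a statistics package could import
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(header)
writer.writerows(rows)
print(buffer.getvalue())
```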


Software for data analysis.


Statistical Software Guides and Tutorials

- Sage Research Methods Core Collection: A collection of e-books and other resources covering research methods in the social and behavioral sciences. It contains the popular Little Green Book series as well as other titles on quantitative analysis.

- NUIT Research Data Services (Training and Learning): NUIT offers data analysis training through workshops and online learning.

- LinkedIn Learning: Northwestern provides faculty, staff, and students with access to this suite of online courses.

Online Courses

- IBM's SPSS User Guide

- SPSS Tutorials

Online Tutorials

- Stata Documentation: The official user guide, along with manuals and examples for using specific statistical methods in Stata.

- Stata Learning Modules: Beginner-friendly guide to Stata from UCLA's Advanced Research Computing.

- SAS Learning Modules: Beginner-friendly guide to SAS from UCLA's Advanced Research Computing.

- Google's Python Class: Unlike R, Python is a general-purpose programming language. This site offers a more general introduction to Python, which you may want for background knowledge before moving on to using Python for data analysis.

Accessing Software

Open-Source Software

Both R and Python are free and open source. NUIT's Research Data Services offers installation guidelines:

- Installing R and RStudio

- Installing Python and Jupyter

Proprietary Software

Northwestern provides access to licensed software in the library computer labs and on NUWorkspace, a virtual desktop. NUIT also makes free or discounted software licenses available. In addition to these campus-wide resources, your department may have software licenses you can access.

Sarah Thorngate


- Last Updated: Feb 23, 2024 11:33 AM

- URL: https://libguides.northwestern.edu/data2

Quantitative Data Analysis 101

The lingo, methods and techniques, explained simply.

By: Derek Jansen (MBA) and Kerryn Warren (PhD) | December 2020

Quantitative data analysis is one of those things that often strikes fear in students. It’s totally understandable – quantitative analysis is a complex topic, full of daunting lingo like medians, modes, correlation and regression. Suddenly we’re all wishing we’d paid a little more attention in math class…

The good news is that while quantitative data analysis is a mammoth topic, gaining a working understanding of the basics isn’t that hard, even for those of us who avoid numbers and math. In this post, we’ll break quantitative analysis down into simple, bite-sized chunks so you can approach your research with confidence.

Overview: Quantitative Data Analysis 101

- What (exactly) is quantitative data analysis?

- When to use quantitative analysis

- How quantitative analysis works

- The two “branches” of quantitative analysis

  - Descriptive statistics 101

  - Inferential statistics 101

- How to choose the right quantitative methods

- Recap & summary

What is quantitative data analysis?

Despite being a mouthful, quantitative data analysis simply means analysing data that is numbers-based – or data that can be easily “converted” into numbers without losing any meaning.

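For example, category-based variables like gender, ethnicity, or native language could all be “converted” into numbers without losing meaning: English could equal 1, French 2, and so on. Keep in mind that these numbers act as labels rather than quantities, so you can count them, but it makes no sense to average them.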
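Here’s a minimal Python sketch of that kind of coding. The coding scheme (including the extra `Spanish` category) is purely illustrative. It shows that the mapping can be reversed without losing information, and that the sensible operation on coded categories is counting, not arithmetic.

```python
from collections import Counter

# Illustrative coding scheme: the numbers are labels, not quantities.
LANGUAGE_CODES = {"English": 1, "French": 2, "Spanish": 3}


def encode(responses: list[str], codes: dict[str, int]) -> list[int]:
    """Replace each category label with its numeric code."""
    return [codes[r] for r in responses]


def decode(values: list[int], codes: dict[str, int]) -> list[str]:
    """Map numeric codes back to labels, showing no meaning was lost."""
    reverse = {v: k for k, v in codes.items()}
    return [reverse[v] for v in values]


responses = ["French", "English", "English", "Spanish"]
encoded = encode(responses, LANGUAGE_CODES)
print(encoded)                                       # [2, 1, 1, 3]
print(decode(encoded, LANGUAGE_CODES) == responses)  # True
# Counting coded categories is meaningful; averaging the codes is not.
print(Counter(encoded))                              # Counter({1: 2, 2: 1, 3: 1})
```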

This contrasts with qualitative data analysis, where the focus is on words, phrases and expressions that can’t be reduced to numbers. If you’re interested in learning about qualitative analysis, check out our post and video here.

What is quantitative analysis used for?

Quantitative analysis is generally used for three purposes.

- Firstly, it’s used to measure differences between groups. For example, the popularity of different clothing colours or brands.

- Secondly, it’s used to assess relationships between variables. For example, the relationship between weather temperature and voter turnout.

- Thirdly, it’s used to test hypotheses in a scientifically rigorous way. For example, a hypothesis about the impact of a certain vaccine.

Again, this contrasts with qualitative analysis, which can be used to analyse people’s perceptions and feelings about an event or situation. In other words, things that can’t be reduced to numbers.

How does quantitative analysis work?

Well, since quantitative data analysis is all about analysing numbers, it’s no surprise that it involves statistics. Statistical analysis methods form the engine that powers quantitative analysis, and these methods can vary from pretty basic calculations (for example, averages and medians) to more sophisticated analyses (for example, correlations and regressions).

Sounds like gibberish? Don’t worry; we’ll break down all the technical mumbo jumbo in this post. Importantly, you don’t need to be a statistician or math whiz to pull off a good quantitative analysis.


As I mentioned, quantitative analysis is powered by statistical analysis methods. There are two main “branches” of statistical methods that are used: descriptive statistics and inferential statistics. In your research, you might only use descriptive statistics, or you might use a mix of both, depending on what you’re trying to figure out. In other words, depending on your research questions, aims and objectives. I’ll explain how to choose your methods later.

So, what are descriptive and inferential statistics?

Well, before I can explain that, we need to take a quick detour to explain some lingo. To understand the difference between these two branches of statistics, you need to understand two important words. These words are population and sample.

First up, population. In statistics, the population is the entire group of people (or animals or organisations or whatever) that you’re interested in researching. For example, if you were interested in researching Tesla owners in the US, then the population would be all Tesla owners in the US.

However, it’s extremely unlikely that you’re going to be able to interview or survey every single Tesla owner in the US. Realistically, you’ll likely only get access to a few hundred, or maybe a few thousand owners using an online survey. This smaller group of accessible people whose data you actually collect is called your sample.

So, to recap – the population is the entire group of people you’re interested in, and the sample is the subset of the population that you can actually get access to. In other words, the population is the full chocolate cake, whereas the sample is a slice of that cake.
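The cake analogy can be simulated in a few lines of Python. This sketch invents a population of 10,000 body weights (random numbers generated with a fixed seed, not real data), draws a random sample of 200 from it, and compares the two means. In real research you only ever see the sample; the population mean is the unknown quantity that inferential statistics try to estimate.

```python
import random
import statistics

# Hypothetical "population": 10,000 simulated body weights in kg.
# Generated with a fixed seed purely for illustration, not real data.
rng = random.Random(42)
population = [rng.gauss(72, 10) for _ in range(10_000)]

# The "sample": 200 people drawn at random, without replacement.
sample = rng.sample(population, 200)

pop_mean = statistics.mean(population)  # usually unknown in practice
sample_mean = statistics.mean(sample)   # what you can actually compute

print(f"population mean: {pop_mean:.1f} kg")
print(f"sample mean:     {sample_mean:.1f} kg")
```

The two means will typically be close but not identical; how close depends on the sample size and on how the sample was drawn.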

So, why is this sample-population thing important?

Well, descriptive statistics focus on describing the sample, while inferential statistics aim to make predictions about the population, based on the findings within the sample. In other words, we use one group of statistical methods – descriptive statistics – to investigate the slice of cake, and another group of methods – inferential statistics – to draw conclusions about the entire cake. There I go with the cake analogy again…

With that out of the way, let’s take a closer look at each of these branches.

Branch 1: Descriptive Statistics

Descriptive statistics serve a simple but critically important role in your research – to describe your data set – hence the name. In other words, they help you understand the details of your sample. Unlike inferential statistics (which we’ll get to soon), descriptive statistics don’t aim to make inferences or predictions about the entire population – they’re purely interested in the details of your specific sample.

When you’re writing up your analysis, descriptive statistics are the first set of stats you’ll cover, before moving on to inferential statistics. That said, depending on your research objectives and research questions, they may be the only type of statistics you use. We’ll explore that a little later.

So, what kind of statistics are usually covered in this section?

Some common statistics used in this branch include the following:

- Mean – this is simply the mathematical average of a range of numbers.

- Median – this is the midpoint in a range of numbers when the numbers are arranged in numerical order. If the data set contains an odd number of values, the median is the value right in the middle of the set. If it contains an even number of values, the median is the midpoint between the two middle values.

- Mode – this is simply the most commonly occurring number in the data set.

- Standard deviation – this indicates how dispersed a range of numbers is. In other words, how close the numbers are to the mean (the average).

  - In cases where most of the numbers are quite close to the average, the standard deviation will be relatively low.

  - Conversely, in cases where the numbers are scattered all over the place, the standard deviation will be relatively high.

- Skewness – as the name suggests, skewness indicates how symmetrical a range of numbers is. In other words, do the numbers tend to cluster into a smooth bell curve shape in the middle of the graph, or do they skew to the left or right?

Feeling a bit confused? Let’s look at a practical example using a small data set.

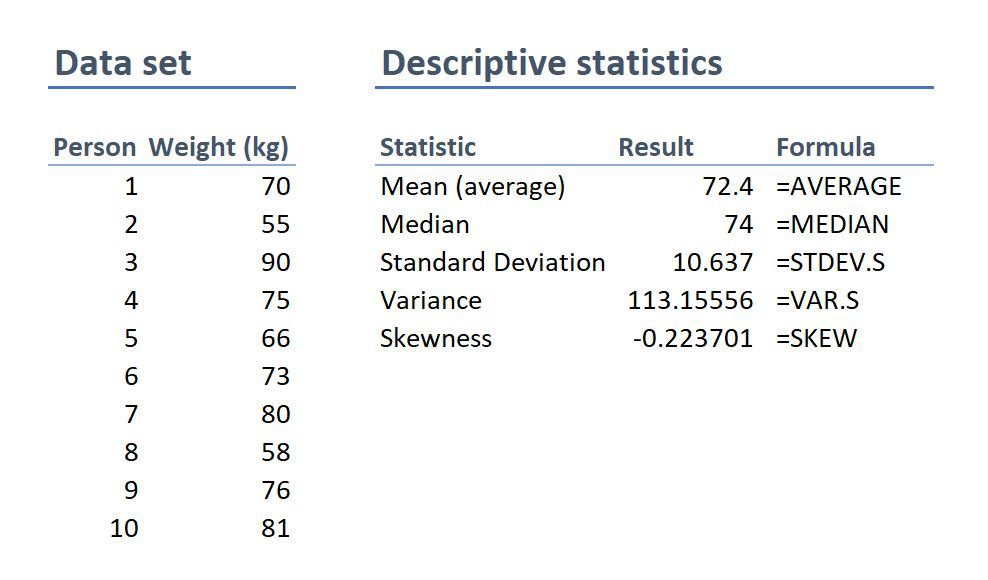

On the left-hand side is the data set. This details the bodyweight of a sample of 10 people. On the right-hand side, we have the descriptive statistics. Let’s take a look at each of them.

First, we can see that the mean weight is 72.4 kilograms. In other words, the average weight across the sample is 72.4 kilograms. Straightforward.

Next, we can see that the median is very similar to the mean (the average). This suggests that this data set has a reasonably symmetrical distribution (in other words, a relatively smooth distribution of weights, clustered towards the centre).

In terms of the mode, there is no mode in this data set. This is because each number is present only once and so there cannot be a “most common number”. If there were two people who were both 65 kilograms, for example, then the mode would be 65.

Next up is the standard deviation. A value of 10.6 kilograms indicates that there’s quite a wide spread of numbers. We can see this quite easily by looking at the numbers themselves, which range from 55 to 90, quite a stretch from the mean of 72.4.

And lastly, the skewness of -0.2 tells us that the data is very slightly negatively skewed (the left-hand tail is slightly longer than the right). This is consistent with the mean and the median being close but not identical.
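For readers who want to see what sits behind the standard deviation and skewness figures, the usual sample formulas are shown below, with $x_1, \dots, x_n$ as the observations and $\bar{x}$ as their mean. The post doesn’t say which variant its software used, so treat these as common choices rather than the exact calculation behind 10.6 and -0.2. $G_1$ is the adjusted Fisher-Pearson skewness estimator, which is what Excel’s `SKEW` function computes.

```latex
$$
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i,
\qquad
s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}
$$

$$
G_1 = \frac{\sqrt{n(n-1)}}{n-2}\cdot
\frac{\frac{1}{n}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^3}
     {\left(\frac{1}{n}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2\right)^{3/2}}
$$
```

Dividing by $n-1$ rather than $n$ in $s$ is the usual convention for a sample. Because the cubed deviations keep their sign, a negative $G_1$ means the values below the mean pull further out than those above it, and a value near zero indicates a roughly symmetrical spread.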

As you can see, these descriptive statistics give us some useful insight into the data set. Of course, this is a very small data set (only 10 records), so we can’t read into these statistics too much. Also, keep in mind that this is not a list of all possible descriptive statistics – just the most common ones.
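If you’d like to produce this kind of summary yourself, here’s a minimal sketch using Python’s built-in `statistics` module. The weights are hypothetical values, not the data set from the table, so the outputs aren’t expected to match 72.4, 10.6 or -0.2. The standard library has no skewness function, so the sketch implements the adjusted Fisher-Pearson formula (G1); other packages may use a slightly different skewness formula.

```python
import math
import statistics


def skewness(data: list[float]) -> float:
    """Adjusted Fisher-Pearson sample skewness (G1).

    Other tools may use a different skewness formula, so small
    differences between packages are expected.
    """
    n = len(data)
    if n < 3:
        raise ValueError("skewness needs at least 3 values")
    mean = statistics.fmean(data)
    m2 = sum((x - mean) ** 2 for x in data) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in data) / n  # third central moment
    if m2 == 0:
        raise ValueError("skewness is undefined when all values are equal")
    g1 = m3 / m2 ** 1.5
    return math.sqrt(n * (n - 1)) / (n - 2) * g1


def describe(data: list[float]) -> dict[str, object]:
    """Return the common descriptive statistics for one variable."""
    return {
        "mean": statistics.fmean(data),
        "median": statistics.median(data),
        # multimode returns every most frequent value; if nothing repeats,
        # every value comes back, i.e. there is no single mode.
        "modes": statistics.multimode(data),
        "stdev": statistics.stdev(data),  # sample SD (divides by n - 1)
        "skewness": skewness(data),
    }


# Hypothetical body weights in kg (not the data set from the table)
weights = [58, 62, 65, 68, 70, 73, 75, 79, 84, 88]
for name, value in describe(weights).items():
    print(f"{name}: {value}")
```

Because no weight repeats in this made-up list, `multimode` returns all ten values, which is the “no mode” situation described above.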

But why do all of these numbers matter?

While these descriptive statistics are all fairly basic, they’re important for a few reasons:

- Firstly, they help you get both a macro- and micro-level view of your data. In other words, they help you understand both the big picture and the finer details.

- Secondly, they help you spot potential errors in the data – for example, if an average is way higher than you’d expect, or responses to a question are highly varied, this can act as a warning sign that you need to double-check the data.

- And lastly, these descriptive statistics help inform which inferential statistical techniques you can use, as those techniques depend on the skewness (in other words, the symmetry and normality) of the data.
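One simple way to act on the second point is to flag suspicious values before going any further. The sketch below uses Tukey’s fences (values more than 1.5 interquartile ranges beyond the lower or upper quartile), a common rule of thumb rather than anything prescribed in this post; the weights, including the `720` typo, are hypothetical.

```python
import statistics


def tukey_outliers(data: list[float], k: float = 1.5) -> list[float]:
    """Return values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR].

    Flagged values are candidates for a manual double-check,
    not automatically errors.
    """
    q1, _, q3 = statistics.quantiles(data, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < low or x > high]


# Hypothetical weights (kg) with a likely data-entry slip: 720 instead of 72
weights = [58, 62, 65, 68, 70, 720, 75, 79, 84, 88]
print(tukey_outliers(weights))  # [720]
```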

Simply put, descriptive statistics are really important, even though the statistical techniques used are fairly basic. All too often at Grad Coach, we see students skimming over the descriptives in their eagerness to get to the more exciting inferential methods, and then ending up with some very flawed results.

Don’t be a sucker – give your descriptive statistics the love and attention they deserve!

Branch 2: Inferential Statistics

As I mentioned, while descriptive statistics are all about the details of your specific data set – your sample – inferential statistics aim to make inferences about the population. In other words, you’ll use inferential statistics to make predictions about what you’d expect to find in the full population.

What kind of predictions, you ask? Well, there are two common types of predictions that researchers try to make using inferential stats:

- Firstly, predictions about differences between groups – for example, height differences between children grouped by their favourite meal or gender.

- And secondly, relationships between variables – for example, the relationship between body weight and the number of hours a week a person does yoga.
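For the second type of prediction, a common starting point is a correlation coefficient calculated on the sample. This Python sketch computes Pearson’s r for made-up yoga-and-body-weight data. On its own, r only describes the sample; inferential statistics then ask whether a relationship that strong is likely to exist in the population too.

```python
import math
import statistics


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation: +1 perfect positive, -1 perfect negative,
    0 no linear relationship. (Python 3.10+ also offers
    statistics.correlation for the same calculation.)"""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical paired observations, one pair per person (not real data)
yoga_hours = [0, 1, 2, 3, 4, 5, 6]
body_weight = [82, 80, 79, 75, 74, 72, 70]

r = pearson_r(yoga_hours, body_weight)
print(round(r, 3))
```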

In other words, inferential statistics (when done correctly) allow you to connect the dots and make predictions about what you expect to see in the real world population, based on what you observe in your sample data. For this reason, inferential statistics are used for hypothesis testing – in other words, to test hypotheses that predict changes or differences.
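As an illustration of hypothesis testing, here’s a sketch of a permutation test for a difference in group means. It’s one of several possible techniques (a t-test is the more familiar choice). The logic: if group membership made no real difference, randomly shuffling the group labels should often produce a gap as large as the one observed. The heights are hypothetical, and the number of shuffles and the seed are arbitrary choices.

```python
import random
import statistics


def permutation_test(a: list[float], b: list[float],
                     n_resamples: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test for a difference in means.

    Returns an approximate p-value: the share of random relabellings
    whose mean difference is at least as extreme as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(statistics.fmean(a) - statistics.fmean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign group labels
        diff = abs(statistics.fmean(pooled[:len(a)])
                   - statistics.fmean(pooled[len(a):]))
        if diff >= observed - 1e-9:  # small tolerance for float rounding
            hits += 1
    # Adding 1 to both counts includes the observed labelling itself.
    return (hits + 1) / (n_resamples + 1)


# Hypothetical heights (cm) of children in two groups; illustrative only
group_a = [120, 124, 119, 130, 127, 122, 125]
group_b = [131, 135, 128, 138, 133, 136, 130]
p = permutation_test(group_a, group_b)
print(f"p ≈ {p:.4f}")
```

A small p-value suggests the observed gap would be unusual if there were no real difference in the population, but that conclusion is only as good as the sample it rests on.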

Of course, when you’re working with inferential statistics, the composition of your sample is really important. In other words, if your sample doesn’t accurately represent the population you’re researching, then your findings won’t necessarily be very useful.