Random Assignment in Psychology (Definition + 40 Examples)

Have you ever wondered how researchers discover new ways to help people learn, make decisions, or overcome challenges? A hidden hero in this adventure of discovery is a method called random assignment, a cornerstone in psychological research that helps scientists uncover the truths about the human mind and behavior.

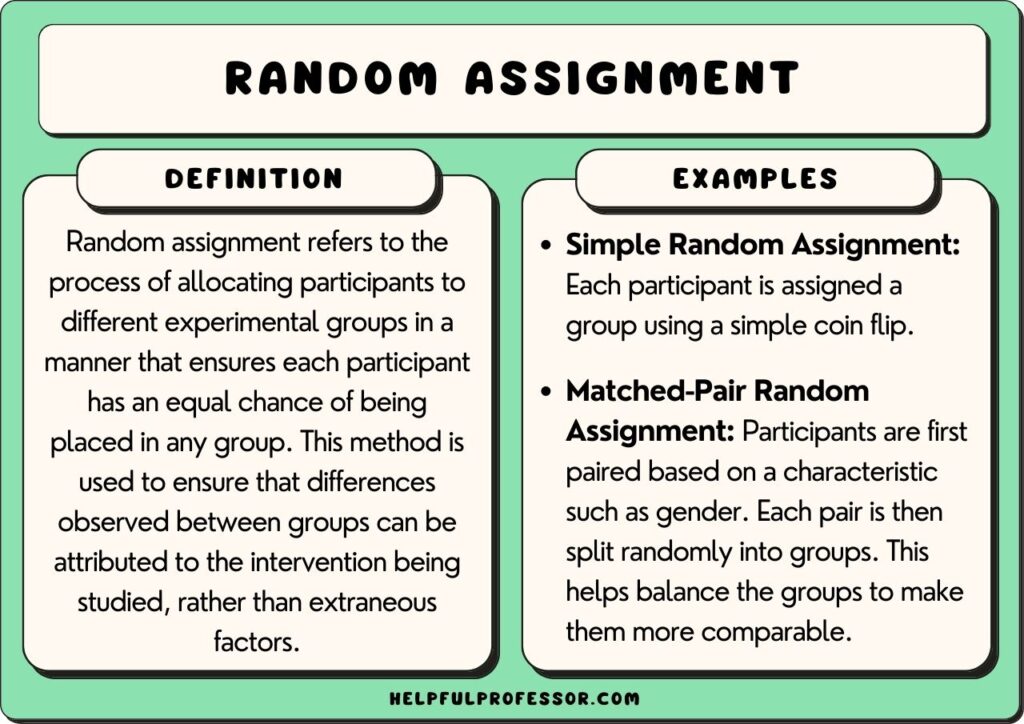

Random assignment is a process used in research where each participant has an equal chance of being placed in any group within the study. This technique is essential in experiments because it reduces bias, making it likely that the groups being compared are similar, on average, in all important respects.

By doing so, researchers can be confident that any differences observed are likely due to the variable being tested, rather than other factors.

In this article, we’ll explore the intriguing world of random assignment, diving into its history, principles, real-world examples, and the impact it has had on the field of psychology.

History of Random Assignment

Stepping back in time, we delve into the origins of random assignment, which finds its roots in the early 20th century.

The pioneering mind behind this innovative technique was Sir Ronald A. Fisher, a British statistician and biologist. Fisher introduced the concept of random assignment in the 1920s, aiming to improve the quality and reliability of experimental research.

His contributions laid the groundwork for the method's evolution and its widespread adoption in various fields, particularly in psychology.

Fisher’s groundbreaking work on random assignment was motivated by his desire to control for confounding variables – those pesky factors that could muddy the waters of research findings.

By assigning participants to different groups purely by chance, he realized that the influence of these confounding variables could be minimized, paving the way for more accurate and trustworthy results.

Early Studies Utilizing Random Assignment

Following Fisher's initial development, random assignment started to gain traction in the research community. Early studies adopting this methodology focused on a variety of topics, from agriculture (which was Fisher’s primary field of interest) to medicine and psychology.

The approach allowed researchers to draw stronger conclusions from their experiments, bolstering the development of new theories and practices.

One notable early study utilizing random assignment was conducted in the field of educational psychology. Researchers were keen to understand the impact of different teaching methods on student outcomes.

By randomly assigning students to various instructional approaches, they were able to isolate the effects of the teaching methods, leading to valuable insights and recommendations for educators.

Evolution of the Methodology

As the decades rolled on, random assignment continued to evolve and adapt to the changing landscape of research.

Advances in technology introduced new tools and techniques for implementing randomization, such as computerized random number generators, which offered greater precision and ease of use.

The application of random assignment expanded beyond the confines of the laboratory, finding its way into field studies and large-scale surveys.

Researchers across diverse disciplines embraced the methodology, recognizing its potential to enhance the validity of their findings and contribute to the advancement of knowledge.

From its humble beginnings in the early 20th century to its widespread use today, random assignment has proven to be a cornerstone of scientific inquiry.

Its development and evolution have played a pivotal role in shaping the landscape of psychological research, driving discoveries that have improved lives and deepened our understanding of the human experience.

Principles of Random Assignment

Delving into the heart of random assignment, we uncover the theories and principles that form its foundation.

The method is steeped in the basics of probability theory and statistical inference, ensuring that each participant has an equal chance of being placed in any group, thus fostering fair and unbiased results.

Basic Principles of Random Assignment

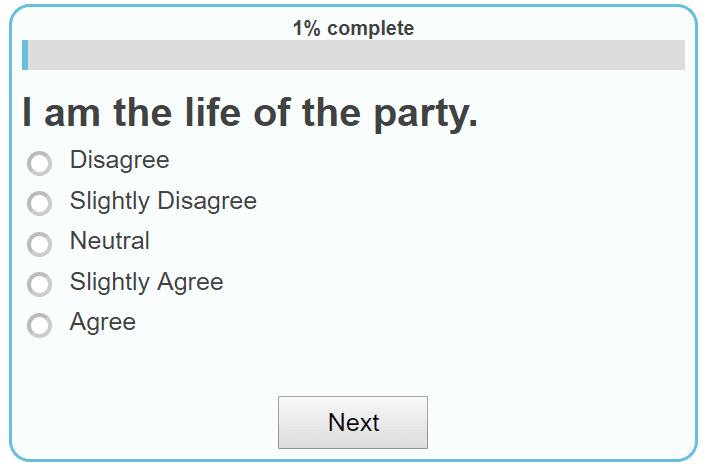

Understanding the core principles of random assignment is key to grasping its significance in research. There are three principles: equal probability of selection, reduction of bias, and ensuring representativeness.

The first principle, equal probability of selection, ensures that every participant has an identical chance of being assigned to any group in the study. This randomness is crucial as it mitigates the risk of bias and establishes a level playing field.

The second principle focuses on the reduction of bias. Random assignment acts as a safeguard, making it likely that the groups being compared are alike, on average, in all essential aspects before the experiment begins.

This similarity between groups allows researchers to attribute any differences observed in the outcomes directly to the independent variable being studied.
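A small simulation can make this idea concrete. The sketch below assumes a hypothetical pool of 1,000 people with a made-up pre-existing trait (`prior_scores`), splits the pool at random, and compares the group averages. The names, numbers, and seed are illustrative assumptions, not data from any study.

```python
import random
import statistics

def random_split(pool, rng):
    """Shuffle a copy of the pool and cut it into two equal-sized groups."""
    shuffled = pool[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Hypothetical pre-existing trait (for example, a prior test score).
prior_scores = [rng.gauss(100, 15) for _ in range(1000)]

group_a, group_b = random_split(prior_scores, rng)

mean_a = statistics.mean(group_a)
mean_b = statistics.mean(group_b)
print(f"Group A mean: {mean_a:.1f}")
print(f"Group B mean: {mean_b:.1f}")
print(f"Difference:   {abs(mean_a - mean_b):.1f}")
```

With groups this large, the two averages should land close together even though nobody tried to match the groups by hand. Smaller groups will usually show larger chance differences.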

Lastly, ensuring representativeness is a vital principle. When participants are assigned randomly, each group is likely to mirror the participant pool as a whole, rather than an unusual slice of it.

This characteristic matters for the generalizability of the study’s findings, although how far the results extend to the larger population also depends on how the participants were recruited in the first place.

Theoretical Foundation

The theoretical foundation of random assignment lies in probability theory and statistical inference.

Probability theory deals with the likelihood of different outcomes, providing a mathematical framework for analyzing random phenomena. In the context of random assignment, it helps in ensuring that each participant has an equal chance of being placed in any group.

Statistical inference, on the other hand, allows researchers to draw conclusions about a population based on a sample of data drawn from that population. It is the mechanism through which the results of a study can be generalized to a broader context.

Random assignment enhances the reliability of statistical inferences by reducing bias and making the groups comparable before the treatment begins.

Differentiating Random Assignment from Random Selection

It’s essential to distinguish between random assignment and random selection, as the two terms, while related, have distinct meanings in the realm of research.

Random assignment refers to how participants are placed into different groups in an experiment, aiming to control for confounding variables and help determine causes.

In contrast, random selection pertains to how individuals are chosen to participate in a study. This method is used to ensure that the sample of participants is representative of the larger population, which is vital for the external validity of the research.

While both methods are rooted in randomness and probability, they serve different purposes in the research process.
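The difference is easy to see when the two steps are written out. This is a minimal sketch that assumes a hypothetical population of 10,000 ID numbers, a sample of 60, and two equal-sized groups; none of these figures come from a real study.

```python
import random

rng = random.Random(7)  # arbitrary seed for repeatability

# Hypothetical population of 10,000 people, identified by ID only.
population = list(range(10_000))

# Random selection: decide WHO takes part in the study.
sample = rng.sample(population, k=60)

# Random assignment: decide WHICH GROUP each selected person joins.
rng.shuffle(sample)
treatment_group = sample[:30]
control_group = sample[30:]

print(len(treatment_group), len(control_group))
```

A study can use either step without the other. Many psychology experiments recruit a convenience sample (no random selection) and still assign those volunteers to groups at random.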

Understanding the theories, principles, and distinctions of random assignment illuminates its pivotal role in psychological research.

This method, anchored in probability theory and statistical inference, serves as a beacon of reliability, guiding researchers in their quest for knowledge and ensuring that their findings stand the test of validity and applicability.

Methodology of Random Assignment

Implementing random assignment in a study is a meticulous process that involves several crucial steps.

The initial step is participant selection, where individuals are chosen to partake in the study. This stage is critical to ensure that the pool of participants is diverse and representative of the population the study aims to generalize to.

Once the pool of participants has been established, the actual assignment process begins. In this step, each participant is allocated randomly to one of the groups in the study.

Researchers use various tools, such as random number generators or computerized methods, to ensure that this assignment is genuinely random and free from biases.
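As a rough illustration of the assignment step, the sketch below shuffles a list of participants and deals them into groups one at a time. The function name `assign_to_groups`, the participant IDs, and the group labels are all hypothetical, chosen only for this example.

```python
import random

def assign_to_groups(participants, group_names, seed=None):
    """Randomly assign participants to groups of (nearly) equal size."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    # Deal the shuffled participants out like cards, one group at a time.
    for index, person in enumerate(shuffled):
        name = group_names[index % len(group_names)]
        groups[name].append(person)
    return groups

participants = [f"P{n:02d}" for n in range(1, 13)]  # hypothetical IDs
groups = assign_to_groups(
    participants, ["control", "method_a", "method_b"], seed=2024
)
for name, members in groups.items():
    print(name, members)
```

Recording the seed is one way to let someone else reproduce or audit the allocation later. Leaving `seed=None` gives a different allocation on each run.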

Monitoring and adjusting form the final step in the implementation of random assignment. Researchers need to continuously observe the groups to ensure that they remain comparable in all essential aspects throughout the study.

If any significant discrepancies arise, adjustments might be necessary to maintain the study’s integrity and validity.

Tools and Techniques Used

The evolution of technology has introduced a variety of tools and techniques to facilitate random assignment.

Random number generators, both manual and computerized, are commonly used to assign participants to different groups. These generators ensure that each individual has an equal chance of being placed in any group, upholding the principle of equal probability of selection.

In addition to random number generators, researchers often use specialized computer software designed for statistical analysis and experimental design.

These software programs offer advanced features that allow for precise and efficient random assignment, minimizing the risk of human error and enhancing the study’s reliability.

Ethical Considerations

The implementation of random assignment is not devoid of ethical considerations. Informed consent is a fundamental ethical principle that researchers must uphold.

Informed consent means that every participant should be fully informed about the nature of the study, the procedures involved, and any potential risks or benefits, ensuring that they voluntarily agree to participate.

Beyond informed consent, researchers must conduct a thorough risk and benefit analysis. The potential benefits of the study should outweigh any risks or harms to the participants.

Safeguarding the well-being of participants is paramount, and any study employing random assignment must adhere to established ethical guidelines and standards.

Conclusion of Methodology

The methodology of random assignment, while seemingly straightforward, is a multifaceted process that demands precision, fairness, and ethical integrity. From participant selection to assignment and monitoring, each step is crucial to ensure the validity of the study’s findings.

The tools and techniques employed, coupled with a steadfast commitment to ethical principles, underscore the significance of random assignment as a cornerstone of robust psychological research.

Benefits of Random Assignment in Psychological Research

The impact and importance of random assignment in psychological research cannot be overstated. It is fundamental for keeping a study accurate, for letting researchers determine whether the variable they changed actually caused the results they saw, and for supporting findings that can be applied to the real world.

Facilitating Causal Inferences

When participants are randomly assigned to different groups, researchers can be more confident that the observed effects are due to the independent variable being changed, and not other factors.

This ability to determine the cause is called causal inference.

This confidence allows for the drawing of causal relationships, which are foundational for theory development and application in psychology.

Ensuring Internal Validity

One of the foremost impacts of random assignment is its ability to enhance the internal validity of an experiment.

Internal validity refers to the extent to which a researcher can assert that changes in the dependent variable are solely due to manipulations of the independent variable, and not due to confounding variables.

By ensuring that each participant has an equal chance of being in any condition of the experiment, random assignment helps control for participant characteristics that could otherwise complicate the results.
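To see why this matters, consider a simulated study in which the treatment does nothing at all. The sketch below is built on stated assumptions: a made-up "motivation" trait raises the outcome, the program has zero real effect, and all numbers are invented for illustration. It compares what happens when people choose their own group with what happens under random assignment.

```python
import random
import statistics

rng = random.Random(1)  # arbitrary seed for repeatability

# Hypothetical participants: motivation is a pre-existing trait that
# raises the outcome. The "program" itself has NO real effect here.
motivation = [rng.gauss(0, 1) for _ in range(2000)]

def outcome(m):
    """Outcome depends on motivation plus noise, never on the program."""
    return 50 + 5 * m + rng.gauss(0, 5)

def mean_difference(in_program):
    """Mean outcome of program members minus mean outcome of the rest."""
    treated = [outcome(m) for m, flag in zip(motivation, in_program) if flag]
    untreated = [outcome(m) for m, flag in zip(motivation, in_program) if not flag]
    return statistics.mean(treated) - statistics.mean(untreated)

# Self-selection: motivated people sign up, so motivation is a confound.
self_selected = [m > 0 for m in motivation]

# Random assignment: a coin flip decides, regardless of motivation.
randomized = [rng.random() < 0.5 for _ in motivation]

diff_self = mean_difference(self_selected)
diff_random = mean_difference(randomized)
print(f"Self-selected groups differ by {diff_self:.1f} points")
print(f"Randomized groups differ by    {diff_random:.1f} points")
```

In the self-selected comparison the program appears to work, even though the gap comes entirely from motivation. Under random assignment the gap should shrink toward zero, which is the correct answer in this simulation.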

Enhancing Generalizability

Beyond internal validity, random assignment also plays a crucial role in enhancing the generalizability of research findings.

When done correctly, it ensures that each group reflects the full participant pool. When that pool is itself representative of the larger population, researchers can apply their findings more broadly.

This representative nature is essential for the practical application of research, impacting policy, interventions, and psychological therapies.

Limitations of Random Assignment

Potential for Implementation Issues

While the principles of random assignment are robust, the method can face implementation issues.

One of the most common problems is logistical constraints. Some studies, due to their nature or the specific population being studied, find it challenging to implement random assignment effectively.

For instance, in educational settings, logistical issues such as class schedules and school policies might prevent the random allocation of students to different teaching methods.

Ethical Dilemmas

Random assignment, while methodologically sound, can also present ethical dilemmas.

In some cases, withholding a potentially beneficial treatment from one of the groups of participants can raise serious ethical questions, especially in medical or clinical research where participants' well-being might be directly affected.

Researchers must navigate these ethical waters carefully, balancing the pursuit of knowledge with the well-being of participants.

Generalizability Concerns

Even when implemented correctly, random assignment does not always guarantee generalizable results.

The types of people in the participant pool, the specific context of the study, and the nature of the variables being studied can all influence the extent to which the findings can be applied to the broader population.

Researchers must be cautious in making broad generalizations from studies, even those employing strict random assignment.

Practical and Real-World Limitations

In the real world, many variables cannot be manipulated for ethical or practical reasons, limiting the applicability of random assignment.

For instance, researchers cannot randomly assign individuals to different levels of intelligence, socioeconomic status, or cultural backgrounds.

This limitation necessitates the use of other research designs, such as correlational or observational studies, when exploring relationships involving such variables.

Response to Critiques

In response to these critiques, people in favor of random assignment argue that the method, despite its limitations, remains one of the most reliable ways to establish cause and effect in experimental research.

They acknowledge the challenges and ethical considerations but emphasize the rigorous frameworks in place to address them.

The ongoing discussion around the limitations and critiques of random assignment contributes to the evolution of the method, making sure it is continuously relevant and applicable in psychological research.

While random assignment is a powerful tool in experimental research, it is not without its critiques and limitations. Implementation issues, ethical dilemmas, generalizability concerns, and real-world limitations can pose significant challenges.

However, the continued discourse and refinement around these issues underline the method's enduring significance in the pursuit of knowledge in psychology.

When researchers apply it carefully and ethically, random assignment stays a really important part of studying how people act and think.

Real-World Applications and Examples

Random assignment has been employed in many studies across various fields of psychology, leading to significant discoveries and advancements.

Here are some real-world applications and examples illustrating the diversity and impact of this method:

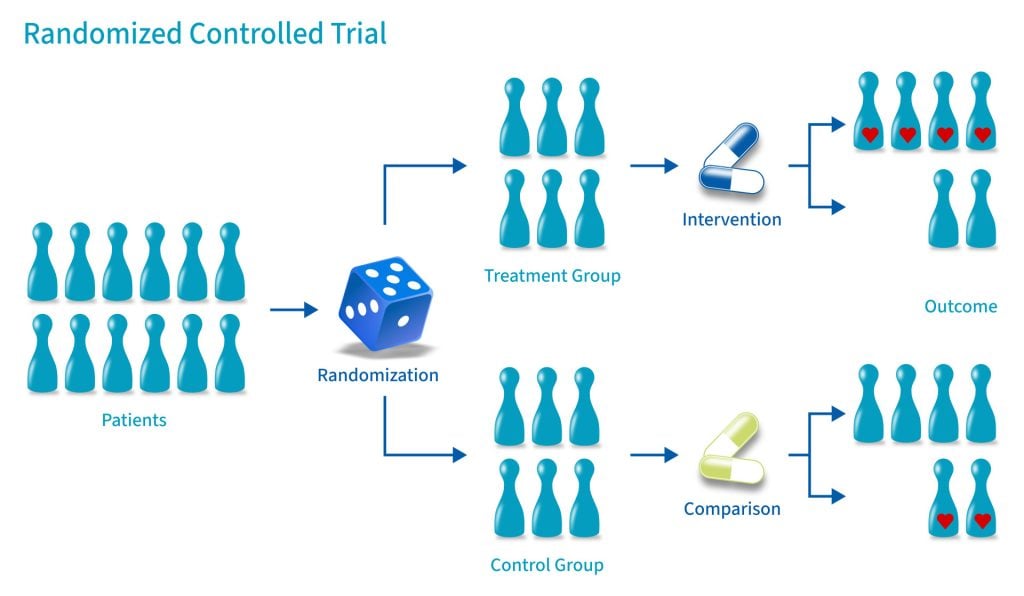

- Medicine and Health Psychology: Randomized Controlled Trials (RCTs) are the gold standard in medical research. In these studies, participants are randomly assigned to either the treatment or control group to test the efficacy of new medications or interventions.

- Educational Psychology: Studies in this field have used random assignment to explore the effects of different teaching methods, classroom environments, and educational technologies on student learning and outcomes.

- Cognitive Psychology: Researchers have employed random assignment to investigate various aspects of human cognition, including memory, attention, and problem-solving, leading to a deeper understanding of how the mind works.

- Social Psychology: Random assignment has been instrumental in studying social phenomena, such as conformity, aggression, and prosocial behavior, shedding light on the intricate dynamics of human interaction.

Let's get into some specific examples. You'll need to know one term though, and that is "control group." A control group is a set of participants in a study who do not receive the treatment or intervention being tested, serving as a baseline to compare with the group that does, in order to assess the effectiveness of the treatment.

- Smoking Cessation Study: Researchers used random assignment to put participants into two groups. One group received a new anti-smoking program, while the other did not. This helped determine if the program was effective in helping people quit smoking.

- Math Tutoring Program: A study on students used random assignment to place them into two groups. One group received additional math tutoring, while the other continued with regular classes, to see if the extra help improved their grades.

- Exercise and Mental Health: Adults were randomly assigned to either an exercise group or a control group to study the impact of physical activity on mental health and mood.

- Diet and Weight Loss: A study randomly assigned participants to different diet plans to compare their effectiveness in promoting weight loss and improving health markers.

- Sleep and Learning: Researchers randomly assigned students to either a sleep extension group or a regular sleep group to study the impact of sleep on learning and memory.

- Classroom Seating Arrangement: Teachers used random assignment to place students in different seating arrangements to examine the effect on focus and academic performance.

- Music and Productivity: Employees were randomly assigned to listen to music or work in silence to investigate the effect of music on workplace productivity.

- Medication for ADHD: Children with ADHD were randomly assigned to receive medication, behavioral therapy, or a placebo to compare treatment effectiveness.

- Mindfulness Meditation for Stress: Adults were randomly assigned to a mindfulness meditation group or a waitlist control group to study the impact on stress levels.

- Video Games and Aggression: A study randomly assigned participants to play either violent or non-violent video games and then measured their aggression levels.

- Online Learning Platforms: Students were randomly assigned to use different online learning platforms to evaluate their effectiveness in enhancing learning outcomes.

- Hand Sanitizers in Schools: Schools were randomly assigned to use hand sanitizers or not to study the impact on student illness and absenteeism.

- Caffeine and Alertness: Participants were randomly assigned to consume caffeinated or decaffeinated beverages to measure the effects on alertness and cognitive performance.

- Green Spaces and Well-being: Neighborhoods were randomly assigned either to receive green space interventions or to receive none, to study the impact on residents’ well-being and community connections.

- Pet Therapy for Hospital Patients: Patients were randomly assigned to receive pet therapy or standard care to assess the impact on recovery and mood.

- Yoga for Chronic Pain: Individuals with chronic pain were randomly assigned to a yoga intervention group or a control group to study the effect on pain levels and quality of life.

- Flu Vaccines Effectiveness: Different groups of people were randomly assigned to receive either the flu vaccine or a placebo to determine the vaccine’s effectiveness.

- Reading Strategies for Dyslexia: Children with dyslexia were randomly assigned to different reading intervention strategies to compare their effectiveness.

- Physical Environment and Creativity: Participants were randomly assigned to different room setups to study the impact of physical environment on creative thinking.

- Laughter Therapy for Depression: Individuals with depression were randomly assigned to laughter therapy sessions or control groups to assess the impact on mood.

- Financial Incentives for Exercise: Participants were randomly assigned either to receive financial incentives for exercising or to receive none, to study the impact on physical activity levels.

- Art Therapy for Anxiety: Individuals with anxiety were randomly assigned to art therapy sessions or a waitlist control group to measure the effect on anxiety levels.

- Natural Light in Offices: Employees were randomly assigned to workspaces with natural or artificial light to study the impact on productivity and job satisfaction.

- School Start Times and Academic Performance: Schools were randomly assigned different start times to study the effect on student academic performance and well-being.

- Horticulture Therapy for Seniors: Older adults were randomly assigned to participate in horticulture therapy or traditional activities to study the impact on cognitive function and life satisfaction.

- Hydration and Cognitive Function: Participants were randomly assigned to different hydration levels to measure the impact on cognitive function and alertness.

- Intergenerational Programs: Seniors and young people were randomly assigned to intergenerational programs or to a control group to study the effects on well-being and cross-generational understanding.

- Therapeutic Horseback Riding for Autism: Children with autism were randomly assigned to therapeutic horseback riding or traditional therapy to study the impact on social communication skills.

- Active Commuting and Health: Employees were randomly assigned to active commuting (cycling, walking) or passive commuting to study the effect on physical health.

- Mindful Eating for Weight Management: Individuals were randomly assigned to mindful eating workshops or control groups to study the impact on weight management and eating habits.

- Noise Levels and Learning: Students were randomly assigned to classrooms with different noise levels to study the effect on learning and concentration.

- Bilingual Education Methods: Schools were randomly assigned different bilingual education methods to compare their effectiveness in language acquisition.

- Outdoor Play and Child Development: Children were randomly assigned to different amounts of outdoor playtime to study the impact on physical and cognitive development.

- Social Media Detox: Participants were randomly assigned to a social media detox or regular usage to study the impact on mental health and well-being.

- Therapeutic Writing for Trauma Survivors: Individuals who experienced trauma were randomly assigned to therapeutic writing sessions or control groups to study the impact on psychological well-being.

- Mentoring Programs for At-risk Youth: At-risk youth were randomly assigned to mentoring programs or control groups to assess the impact on academic achievement and behavior.

- Dance Therapy for Parkinson’s Disease: Individuals with Parkinson’s disease were randomly assigned to dance therapy or traditional exercise to study the effect on motor function and quality of life.

- Aquaponics in Schools: Schools were randomly assigned either to implement aquaponics programs or to continue without them, to study the impact on student engagement and environmental awareness.

- Virtual Reality for Phobia Treatment: Individuals with phobias were randomly assigned to virtual reality exposure therapy or traditional therapy to compare effectiveness.

- Gardening and Mental Health: Participants were randomly assigned to engage in gardening or other leisure activities to study the impact on mental health and stress reduction.

Each of these studies exemplifies how random assignment is utilized in various fields and settings, shedding light on the multitude of ways it can be applied to glean valuable insights and knowledge.

Real-world Impact of Random Assignment

Random assignment is a key tool for learning about people's minds and behaviors. It’s super important and helps in many different areas of our everyday lives. It helps make better rules, creates new ways to help people, and is used in lots of different fields.

Health and Medicine

In health and medicine, random assignment has helped doctors and scientists make lots of discoveries. It’s a big part of tests that help create new medicines and treatments.

By putting people into different groups by chance, scientists can really see if a medicine works.

This has led to new ways to help people with all sorts of health problems, like diabetes, heart disease, and mental health issues like depression and anxiety.

Education

Schools and education have also learned a lot from random assignment. Researchers have used it to look at different ways of teaching, what kind of classrooms are best, and how technology can help learning.

This knowledge has helped make better school rules, develop what we learn in school, and find the best ways to teach students of all ages and backgrounds.

Workplace and Organizational Behavior

Random assignment helps us understand how people act at work and what makes a workplace good or bad.

Studies have looked at different kinds of workplaces, how bosses should act, and how teams should be put together. This has helped companies make better rules and create places to work that are helpful and make people happy.

Environmental and Social Changes

Random assignment is also used to see how changes in the community and environment affect people. Studies have looked at community projects, changes to the environment, and social programs to see how they help or hurt people’s well-being.

This has led to better community projects, efforts to protect the environment, and programs to help people in society.

Technology and Human Interaction

In our world where technology is always changing, studies with random assignment help us see how tech like social media, virtual reality, and online stuff affect how we act and feel.

This has helped make better and safer technology and rules about using it so that everyone can benefit.

The effects of random assignment go far and wide, way beyond just a science lab. It helps us understand lots of different things, leads to new and improved ways to do things, and really makes a difference in the world around us.

From making healthcare and schools better to creating positive changes in communities and the environment, the real-world impact of random assignment shows just how important it is in helping us learn and make the world a better place.

So, what have we learned? Random assignment is like a super tool in learning about how people think and act. It's like a detective helping us find clues and solve mysteries in many parts of our lives.

From creating new medicines to helping kids learn better in school, and from making workplaces happier to protecting the environment, it’s got a big job!

This method isn’t just something scientists use in labs; it reaches out and touches our everyday lives. It helps make positive changes and teaches us valuable lessons.

Whether we are talking about technology, health, education, or the environment, random assignment is there, working behind the scenes, making things better and safer for all of us.

In the end, the simple act of putting people into groups by chance helps us make big discoveries and improvements. It’s like throwing a small stone into a pond and watching the ripples spread out far and wide.

Thanks to random assignment, we are always learning, growing, and finding new ways to make our world a happier and healthier place for everyone!


© PracticalPsychology. All rights reserved


What Is Random Assignment in Psychology?


Random assignment means that every participant has the same chance of being placed in the experimental or control group. It relies on chance-based procedures, rather than the researcher's judgment, to decide which group each participant joins.

For example, in a psychology experiment, participants might be assigned to either a control or experimental group. Some experiments might only have one experimental group, while others may have several treatment variations.

Using random assignment means that each participant has the same chance of being assigned to any of these groups.


How to Use Random Assignment

So what type of procedures might psychologists utilize for random assignment? Strategies can include:

- Flipping a coin

- Assigning random numbers

- Rolling dice

- Drawing names out of a hat
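Two of these strategies behave slightly differently, which a short sketch can show. The example below assumes eight hypothetical participant names. It imitates a coin flip with `random.choice` and a hat draw with `random.sample`. It is an illustration, not a prescribed procedure.

```python
import random

rng = random.Random(11)  # arbitrary seed for repeatability
names = ["Ana", "Ben", "Chen", "Dee", "Eli", "Fay", "Gus", "Hana"]  # hypothetical

# Coin flip: each person is assigned independently, so group sizes can differ.
coin_flip = {name: rng.choice(["control", "experimental"]) for name in names}

# Names out of a hat: draw half the names for one group; the rest form the other.
experimental = rng.sample(names, k=len(names) // 2)
hat_draw = {
    name: "experimental" if name in experimental else "control"
    for name in names
}

print(coin_flip)
print(hat_draw)
```

Both approaches give every person the same chance of landing in either group. The hat draw also guarantees equal group sizes, which the coin flip does not.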

How Does Random Assignment Work?

A psychology experiment aims to determine if changes in one variable lead to changes in another variable. Researchers will first begin by coming up with a hypothesis. Once researchers have an idea of what they think they might find in a population, they will come up with an experimental design and then recruit participants for their study.

Once they have a pool of participants representative of the population they are interested in looking at, they will randomly assign the participants to their groups.

- Control group: Some participants will end up in the control group, which serves as a baseline and does not receive the treatment being tested.

- Experimental group: Other participants will end up in the experimental groups, which receive some form of the independent variable.

By using random assignment, the researchers make it more likely that the groups are equal at the start of the experiment. Since the groups should then be similar, on average, on other variables, any changes that occur can more reasonably be attributed to varying the independent variables.

After a treatment has been administered, the researchers will then collect data in order to determine if the independent variable had any impact on the dependent variable.

Random Assignment vs. Random Selection

It is important to remember that random assignment is not the same thing as random selection, also known as random sampling.

Random selection instead involves how people are chosen to be in a study. Using random selection, every member of a population stands an equal chance of being chosen for a study or experiment.

So random sampling affects how participants are chosen for a study, while random assignment affects how participants are then assigned to groups.

Examples of Random Assignment

Imagine that a psychology researcher is conducting an experiment to determine if getting adequate sleep the night before an exam results in better test scores.

Forming a Hypothesis

They hypothesize that participants who get 8 hours of sleep will do better on a math exam than participants who only get 4 hours of sleep.

Obtaining Participants

The researcher starts by obtaining a pool of participants. They find 100 participants from a local university. Half of the participants are female, and half are male.

Randomly Assign Participants to Groups

The researcher then assigns random numbers to each participant and uses a random number generator to randomly assign each number to either the 4-hour or 8-hour sleep groups.
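
As a rough sketch of this step, the Python below shuffles 100 hypothetical participants and splits them into the two sleep groups, then counts how many women and men landed in each. The ID numbering, the labelling of IDs 1 to 50 as female, the equal group sizes, and the seed are assumptions made for illustration; the example above does not specify them.

```python
import random
from collections import Counter

rng = random.Random(7)  # hypothetical seed, used only for reproducibility

# 100 hypothetical participants: IDs 1-50 labelled female, 51-100 male.
participants = [(pid, "female" if pid <= 50 else "male") for pid in range(1, 101)]

# Shuffle the pool, then split it in half (equal group sizes are an assumption).
rng.shuffle(participants)
groups = {"4-hour": participants[:50], "8-hour": participants[50:]}

# Check how a known characteristic ended up distributed across the groups.
for name, members in groups.items():
    counts = Counter(sex for _, sex in members)
    print(name, dict(counts))
```

The counts will usually be close to, but not exactly, 25 and 25 in each group. Random assignment makes systematic imbalance unlikely; it does not guarantee a perfectly even split on every characteristic.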

Conduct the Experiment

Those in the 8-hour sleep group agree to sleep for 8 hours that night, while those in the 4-hour group agree to wake up after only 4 hours. The following day, all of the participants meet in a classroom.

Collect and Analyze Data

Everyone takes the same math test. The test scores are then compared to see if the amount of sleep the night before had any impact on test scores.

Why Is Random Assignment Important in Psychology Research?

Random assignment is important in psychology research because it helps improve a study’s internal validity. This means that the researchers can be more confident that the study demonstrates a cause-and-effect relationship between an independent and dependent variable.

Random assignment improves the internal validity by minimizing the risk that there are systematic differences in the participants who are in each group.

Key Points to Remember About Random Assignment

- Random assignment in psychology involves each participant having an equal chance of being chosen for any of the groups, including the control and experimental groups.

- It helps control for potential confounding variables, reducing the likelihood of pre-existing differences between groups.

- This method enhances the internal validity of experiments, allowing researchers to draw more reliable conclusions about cause-and-effect relationships.

- Random assignment is crucial for creating comparable groups and increasing the scientific rigor of psychological studies.

What is a Randomized Control Trial (RCT)?

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

A randomized control trial (RCT) is a type of study design that involves randomly assigning participants to either an experimental group or a control group to measure the effectiveness of an intervention or treatment.

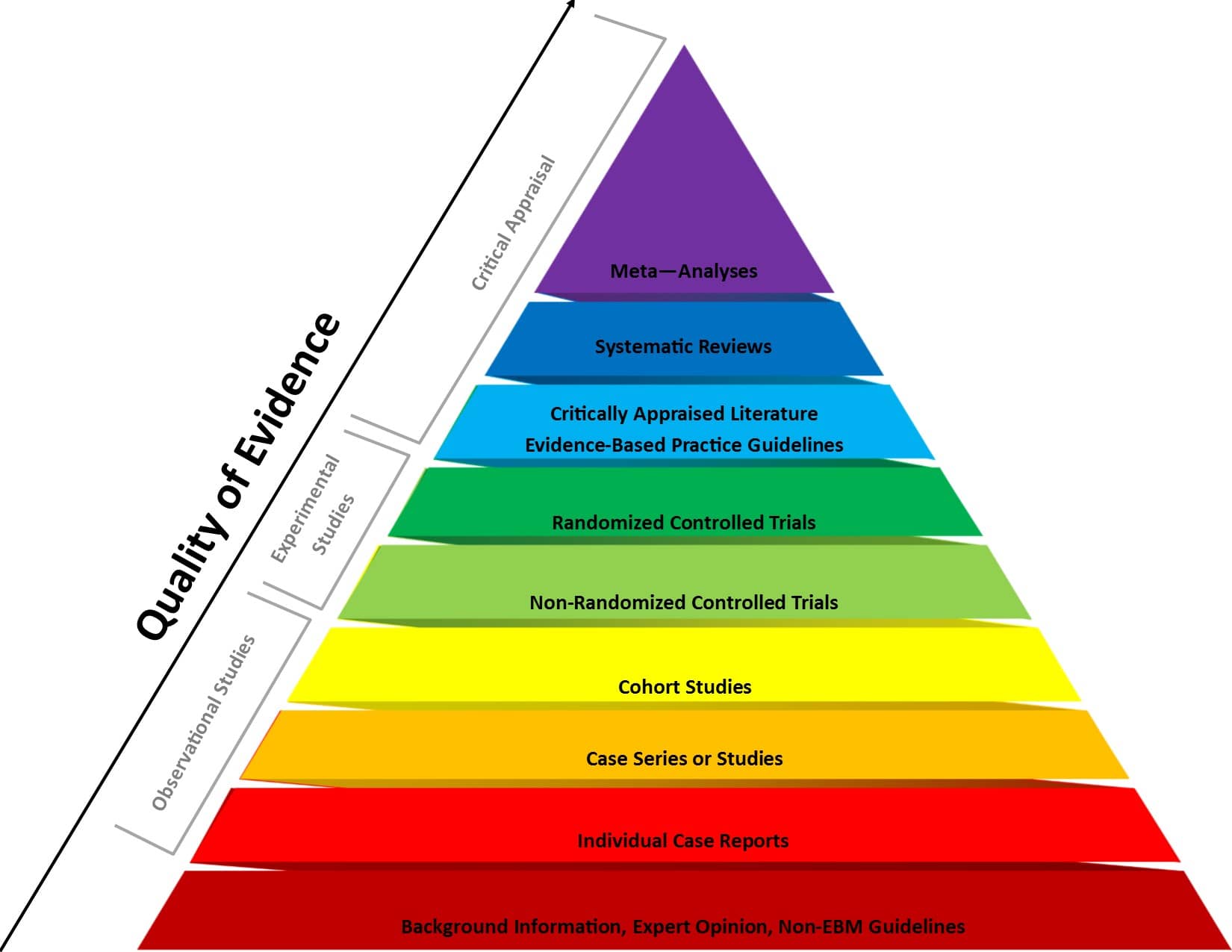

Randomized Controlled Trials (RCTs) are considered the “gold standard” in medical and health research due to their rigorous design.

Control Group

A control group consists of participants who do not receive the treatment or intervention being studied and instead receive a placebo or reference treatment. The control participants serve as a comparison group.

The control group is matched as closely as possible to the experimental group, including age, gender, social class, ethnicity, etc.

Because the participants are randomly assigned, the characteristics between the two groups should be balanced, enabling researchers to attribute any differences in outcome to the study intervention.

Since researchers can be confident that any differences between the control and treatment groups are due solely to the effects of the treatments, scientists view RCTs as the gold standard for clinical trials.

Random Allocation

Random allocation and random assignment are terms used interchangeably in the context of a randomized controlled trial (RCT).

Both refer to assigning participants to different groups in a study (such as a treatment group or a control group) in a way that is completely determined by chance.

The process of random assignment controls for confounding variables, ensuring that any pre-existing differences between groups are due to chance alone.

Without randomization, researchers might consciously or subconsciously assign patients to a particular group for various reasons.

Several methods can be used for randomization in a Randomized Control Trial (RCT). Here are a few examples:

- Simple Randomization: This is the simplest method, like flipping a coin. Each participant has an equal chance of being assigned to any group. This can be achieved using random number tables, computerized random number generators, or drawing lots or envelopes.

- Block Randomization: In this method, participants are randomized within blocks, ensuring that each block has an equal number of participants in each group. This helps to balance the number of participants in each group at any given time during the study.

- Stratified Randomization: This method is used when researchers want to ensure that certain subgroups of participants are equally represented in each group. Participants are divided into strata, or subgroups, based on characteristics like age or disease severity, and then randomized within these strata.

- Cluster Randomization: In this method, groups of participants (like families or entire communities), rather than individuals, are randomized.

- Adaptive Randomization: In this method, the probability of being assigned to each group changes based on the participants already assigned to each group. For example, if more participants have been assigned to the control group, new participants will have a higher probability of being assigned to the experimental group.

Computer software can generate random numbers or sequences that can be used to assign participants to groups in a simple randomization process.

For more complex methods like block, stratified, or adaptive randomization, computer algorithms can be used to consider the additional parameters and ensure that participants are assigned to groups appropriately.
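
To make the idea concrete, here is a minimal Python sketch of stratified randomization. It is a simplified illustration, not a trial-grade procedure: the function name `stratified_assignment`, the arm labels, the age bands, and the seed are all hypothetical, and real trials typically combine stratification with block randomization inside each stratum.

```python
import random
from collections import defaultdict

def stratified_assignment(participants, stratum_of, arms=("treatment", "control"), seed=None):
    """Randomize within each stratum so every arm gets a similar share of it."""
    rng = random.Random(seed)

    # Group participants by stratum (e.g., age band or disease severity).
    strata = defaultdict(list)
    for person in participants:
        strata[stratum_of(person)].append(person)

    allocation = {}
    for members in strata.values():
        rng.shuffle(members)
        # Random starting arm, so odd-sized strata do not always favour the first arm.
        offset = rng.randrange(len(arms))
        for i, person in enumerate(members):
            allocation[person] = arms[(i + offset) % len(arms)]
    return allocation

# Hypothetical participants: (id, age band). Every third person is "40 and over".
people = [(i, "under 40" if i % 3 else "40 and over") for i in range(1, 25)]
allocation = stratified_assignment(people, stratum_of=lambda p: p[1], seed=1)

for band in ("under 40", "40 and over"):
    in_treatment = sum(1 for p, arm in allocation.items() if p[1] == band and arm == "treatment")
    in_control = sum(1 for p, arm in allocation.items() if p[1] == band and arm == "control")
    print(band, "treatment:", in_treatment, "control:", in_control)
```

Because the shuffle happens inside each stratum, both age bands are split evenly between the arms, which simple randomization alone would not guarantee.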

Using a computerized system can also help to maintain the integrity of the randomization process by preventing researchers from knowing in advance which group a participant will be assigned to (a principle known as allocation concealment). This can help to prevent selection bias and ensure the validity of the study results.

Allocation Concealment

Allocation concealment is a technique to ensure the random allocation process is truly random and unbiased.

In RCTs, allocation concealment keeps hidden which patients will get the real medicine and which will get a placebo (a fake medicine) until each patient has been assigned.

It involves keeping the sequence of group assignments (i.e., who gets assigned to the treatment group and who gets assigned to the control group next) hidden from the researchers before a participant has enrolled in the study.

This helps to prevent the researchers from consciously or unconsciously selecting certain participants for one group or the other based on their knowledge of which group is next in the sequence.

Allocation concealment ensures that the investigator does not know in advance which treatment the next person will get, thus maintaining the integrity of the randomization process.

Blinding (Masking)

Blinding, or masking, refers to withholding information regarding the group assignments (who is in the treatment group and who is in the control group) from the participants, the researchers, or both during the study.

A blinded study prevents the participants from knowing about their treatment to avoid bias in the research. Any information that can influence the subjects is withheld until the completion of the research.

Blinding can be imposed on any participant in an experiment, including researchers, data collectors, evaluators, technicians, and data analysts.

Good blinding can eliminate experimental biases arising from the subjects’ expectations, observer bias, confirmation bias, researcher bias, observer’s effect on the participants, and other biases that may occur in a research test.

In a double-blind study, neither the participants nor the researchers know who is receiving the drug or the placebo. When a participant is enrolled, they are randomly assigned to one of the two groups. The medication they receive looks identical whether it’s the drug or the placebo.

Figure 1 . Evidence-based medicine pyramid. The levels of evidence are appropriately represented by a pyramid as each level, from bottom to top, reflects the quality of research designs (increasing) and quantity (decreasing) of each study design in the body of published literature. For example, randomized control trials are higher quality and more labor intensive to conduct, so there is a lower quantity published.

Prevents bias

In randomized control trials, participants must be randomly assigned to either the intervention group or the control group, such that each individual has an equal chance of being placed in either group.

This is meant to prevent selection bias and allocation bias and achieve control over any confounding variables to provide an accurate comparison of the treatment being studied.

Because the distribution of characteristics of patients that could influence the outcome is randomly assigned between groups, any differences in outcome can be explained only by the treatment.

High statistical power

Because the participants are randomized and the characteristics between the two groups are balanced, researchers can assume that if there are significant differences in the primary outcome between the two groups, the differences are likely to be due to the intervention.

This gives researchers confidence that randomized control trials will have high statistical power compared to other types of study designs.

Since the focus of conducting a randomized control trial is eliminating bias, blinded RCTs can help minimize any unconscious information bias.

In a blinded RCT, the participants do not know which group they are assigned to or which intervention is received. This blinding procedure should also apply to researchers, health care professionals, assessors, and investigators when possible.

“Single-blind” refers to an RCT where participants do not know the details of the treatment, but the researchers do.

“Double-blind” refers to an RCT where both participants and data collectors are masked to the assigned treatment.

Limitations

Costly and time-consuming

Some interventions require years or even decades to evaluate, rendering them expensive and time-consuming.

It might take an extended period of time before researchers can identify a drug’s effects or discover significant results.

Requires large sample size

There must be enough participants in each group of a randomized control trial so researchers can detect any true differences or effects in outcomes between the groups.

Researchers cannot detect clinically important results if the sample size is too small.
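
A back-of-the-envelope calculation shows why. The sketch below uses the standard normal-approximation formula for comparing two group means, n per group = 2 × ((z for the significance level + z for the desired power) / effect size)². The function name `participants_per_group`, the two-sided 5% significance level, the 80% power target, and the example effect sizes are assumptions for illustration, not values from any particular trial.

```python
import math
from statistics import NormalDist

def participants_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided comparison of two means.

    effect_size is the standardized mean difference (Cohen's d).
    Uses the normal approximation, so exact t-test answers are slightly larger.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)

for d in (0.8, 0.5, 0.2):
    print(f"effect size {d}: about {participants_per_group(d)} participants per group")
```

Halving the effect size roughly quadruples the required sample, which is why trials looking for small effects need so many participants.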

Change in population over time

Because randomized control trials are longitudinal in nature, it is almost inevitable that some participants will not complete the study, whether due to death, migration, non-compliance, or loss of interest in the study.

This tendency is known as selective attrition and can threaten the statistical power of an experiment.

Randomized control trials are not always practical or ethical, and such limitations can prevent researchers from conducting their studies.

For example, a treatment could be too invasive, or administering a placebo instead of an actual drug during a trial for treating a serious illness could deny a participant their normal course of treatment. Without ethical approval, a randomized control trial cannot proceed.

Fictitious Example

An example of an RCT would be a clinical trial comparing a drug’s effect or a new treatment on a select population.

The researchers would randomly assign participants to either the experimental group or the control group and compare the differences in outcomes between those who receive the drug or treatment and those who do not.

Real-life Examples

- Preventing illicit drug use in adolescents: Long-term follow-up data from a randomized control trial of a school population (Botvin et al., 2000).

- A prospective randomized control trial comparing medical and surgical treatment for early pregnancy failure (Demetroulis et al., 2001).

- A randomized control trial to evaluate a paging system for people with traumatic brain injury (Wilson et al., 2005).

- Prehabilitation versus Rehabilitation: A Randomized Control Trial in Patients Undergoing Colorectal Resection for Cancer (Gillis et al., 2014).

- A Randomized Control Trial of Right-Heart Catheterization in Critically Ill Patients (Guyatt, 1991).

- Berry, R. B., Kryger, M. H., & Massie, C. A. (2011). A novel nasal excitatory positive airway pressure (EPAP) device for the treatment of obstructive sleep apnea: A randomized controlled trial. Sleep, 34, 479–485.

- Gloy, V. L., Briel, M., Bhatt, D. L., Kashyap, S. R., Schauer, P. R., Mingrone, G., . . . Nordmann, A. J. (2013, October 22). Bariatric surgery versus non-surgical treatment for obesity: A systematic review and meta-analysis of randomized controlled trials. BMJ, 347.

- Streeton, C., & Whelan, G. (2001). Naltrexone, a relapse prevention maintenance treatment of alcohol dependence: A meta-analysis of randomized controlled trials. Alcohol and Alcoholism, 36 (6), 544–552.

How Should an RCT be Reported?

Reporting of a Randomized Controlled Trial (RCT) should be done in a clear, transparent, and comprehensive manner to allow readers to understand the design, conduct, analysis, and interpretation of the trial.

The Consolidated Standards of Reporting Trials (CONSORT) statement is a widely accepted guideline for reporting RCTs.

Further Information

- Cocks, K., & Torgerson, D. J. (2013). Sample size calculations for pilot randomized trials: a confidence interval approach. Journal of clinical epidemiology, 66(2), 197-201.

- Kendall, J. (2003). Designing a research project: randomised controlled trials and their principles. Emergency medicine journal: EMJ, 20(2), 164.

Akobeng, A. K. (2005). Understanding randomized controlled trials. Archives of Disease in Childhood, 90, 840-844.

Bell, C. C., Gibbons, R., & McKay, M. M. (2008). Building protective factors to offset sexually risky behaviors among black youths: a randomized control trial. Journal of the National Medical Association, 100 (8), 936-944.

Bhide, A., Shah, P. S., & Acharya, G. (2018). A simplified guide to randomized controlled trials. Acta obstetricia et gynecologica Scandinavica, 97 (4), 380-387.

Botvin, G. J., Griffin, K. W., Diaz, T., Scheier, L. M., Williams, C., & Epstein, J. A. (2000). Preventing illicit drug use in adolescents: Long-term follow-up data from a randomized control trial of a school population. Addictive Behaviors, 25 (5), 769-774.

Demetroulis, C., Saridogan, E., Kunde, D., & Naftalin, A. A. (2001). A prospective randomized control trial comparing medical and surgical treatment for early pregnancy failure. Human Reproduction, 16 (2), 365-369.

Gillis, C., Li, C., Lee, L., Awasthi, R., Augustin, B., Gamsa, A., … & Carli, F. (2014). Prehabilitation versus rehabilitation: a randomized control trial in patients undergoing colorectal resection for cancer. Anesthesiology, 121 (5), 937-947.

Globas, C., Becker, C., Cerny, J., Lam, J. M., Lindemann, U., Forrester, L. W., … & Luft, A. R. (2012). Chronic stroke survivors benefit from high-intensity aerobic treadmill exercise: a randomized control trial. Neurorehabilitation and Neural Repair, 26 (1), 85-95.

Guyatt, G. (1991). A randomized control trial of right-heart catheterization in critically ill patients. Journal of Intensive Care Medicine, 6 (2), 91-95.

MediLexicon International. (n.d.). Randomized controlled trials: Overview, benefits, and limitations. Medical News Today. Retrieved from https://www.medicalnewstoday.com/articles/280574#what-is-a-randomized-controlled-trial

Wilson, B. A., Emslie, H., Quirk, K., Evans, J., & Watson, P. (2005). A randomized control trial to evaluate a paging system for people with traumatic brain injury. Brain Injury, 19 (11), 891-894.

Random Assignment in Psychology (Intro for Students)

Random assignment is a research procedure used to randomly assign participants to different experimental conditions (or ‘groups’). This introduces the element of chance, ensuring that each participant has an equal likelihood of being placed in any condition group for the study.

It is absolutely essential that the treatment condition and the control condition are the same in all ways except for the variable being manipulated.

Using random assignment to place participants in different conditions helps to achieve this.

It helps ensure that those conditions are, on average, the same with regard to all potential confounding variables and extraneous factors.

Why Researchers Use Random Assignment

Researchers use random assignment to control for confounds in research.

Confounds refer to unwanted and often unaccounted-for variables that might affect the outcome of a study. These confounding variables can skew the results, rendering the experiment unreliable.

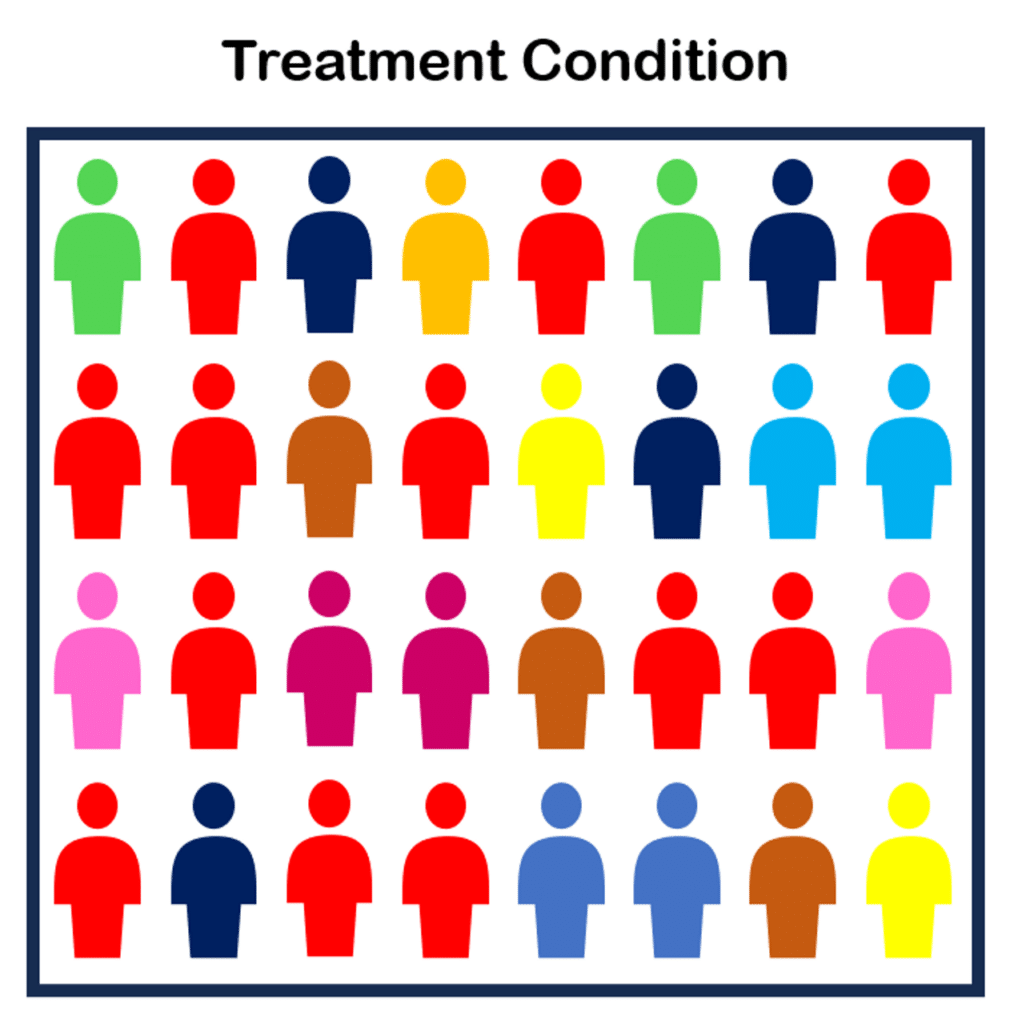

For example, below is a study with two groups. Note how there are more ‘red’ individuals in the first group than the second:

There is likely a confounding variable in this experiment explaining why more red people ended up in the treatment condition and fewer in the control condition. The red people might have self-selected, for example, leading to a skew of them in one group over the other.

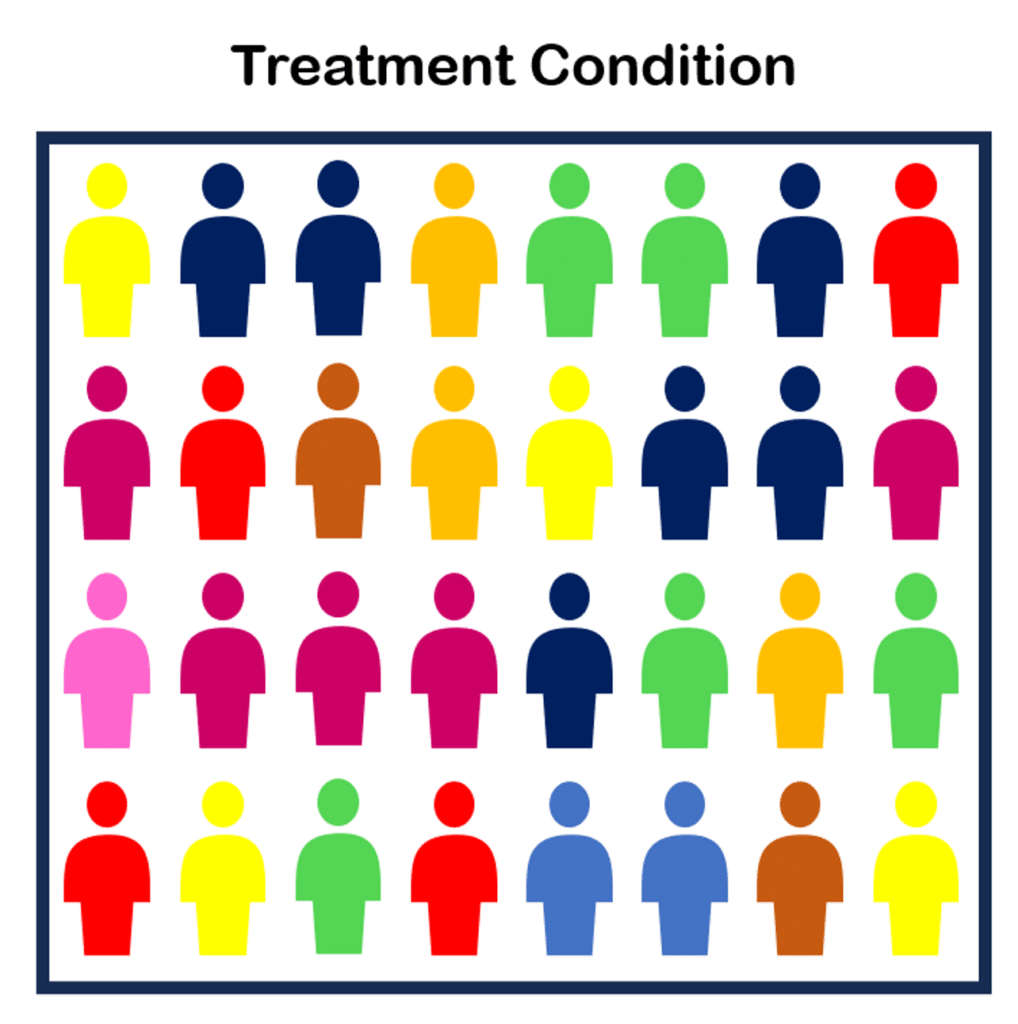

Ideally, we’d want a more even distribution, like below:

To achieve better balance in our two conditions, we use random assignment.

Fact File: Experiments 101

Random assignment is used in the type of research called the experiment.

An experiment involves manipulating the level of one variable and examining how it affects another variable. These are the independent and dependent variables:

- Independent Variable: The variable manipulated is called the independent variable (IV).

- Dependent Variable: The variable that it is expected to affect is called the dependent variable (DV).

The most basic form of the experiment involves two conditions: the treatment and the control.

- The Treatment Condition: The treatment condition involves the participants being exposed to the IV.

- The Control Condition: The control condition involves the absence of the IV. Therefore, the IV has two levels: zero and some quantity.

Researchers utilize random assignment to determine which participants go into which conditions.

Methods of Random Assignment

There are several procedures that researchers can use to randomly assign participants to different conditions.

1. Random number generator

There are several websites that offer computer-generated random numbers. Simply indicate how many conditions are in the experiment and then click. If there are 4 conditions, the program will randomly generate a number between 1 and 4 each time it is clicked.

2. Flipping a coin

If there are two conditions in an experiment, then the simplest way to implement random assignment is to flip a coin for each participant. Heads means being assigned to the treatment and tails means being assigned to the control (or vice versa).

3. Rolling a die

Rolling a single die is another way to randomly assign participants. If the experiment has three conditions, then numbers 1 and 2 mean being assigned to the control; numbers 3 and 4 mean treatment condition one; and numbers 5 and 6 mean treatment condition two.

4. Condition names in a hat

In some studies, the researcher will write the name of the treatment condition(s) or control on slips of paper and place them in a hat. If there are 4 conditions and 1 control, then there are 5 slips of paper.

The researcher closes their eyes and selects one slip for each participant. That person is then assigned to one of the conditions in the study and that slip of paper is placed back in the hat. Repeat as necessary.
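
Each of these physical procedures has a simple software equivalent. The Python sketch below mirrors the coin flip, the die-to-condition mapping for three conditions, and the hat with slips that are returned after each draw. The function names and condition labels are hypothetical, chosen only for this illustration.

```python
import random

rng = random.Random()  # unseeded, so assignments differ from run to run

def assign_by_coin(rng):
    """Two conditions: heads means treatment, tails means control."""
    return "treatment" if rng.random() < 0.5 else "control"

def assign_by_die(rng):
    """Three conditions: 1-2 control, 3-4 treatment one, 5-6 treatment two."""
    roll = rng.randint(1, 6)  # randint includes both endpoints
    if roll <= 2:
        return "control"
    if roll <= 4:
        return "treatment one"
    return "treatment two"

def draw_from_hat(rng, slips):
    """Pick one slip; the list is unchanged, so the slip goes back in the hat."""
    return rng.choice(slips)

hat = ["control", "condition 1", "condition 2", "condition 3", "condition 4"]
for participant in range(1, 7):
    print(participant, assign_by_coin(rng), "|", assign_by_die(rng), "|", draw_from_hat(rng, hat))
```

Because every participant is assigned independently, these methods can leave the conditions with unequal numbers of participants, especially in small studies.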

There are other ways of trying to ensure that the groups of participants are equal in all ways with the exception of the IV. However, random assignment is the most often used because it is so effective at reducing confounds.

Potential Confounding Effects

Random assignment is all about minimizing confounding effects.

Here are six types of confounds that can be controlled for using random assignment:

- Individual Differences: Participants in a study will naturally vary in terms of personality, intelligence, mood, prior knowledge, and many other characteristics. If one group happens to have more people with a particular characteristic, this could affect the results. Random assignment ensures that these individual differences are spread out equally among the experimental groups, making it less likely that they will unduly influence the outcome.

- Temporal or Time-Related Confounds: Events or situations that occur at a particular time can influence the outcome of an experiment. For example, a participant might be tested after a stressful event, while another might be tested after a relaxing weekend. Random assignment ensures that such effects are equally distributed among groups, thus controlling for their potential influence.

- Order Effects: If participants are exposed to multiple treatments or tests, the order in which they experience them can influence their responses. Randomly assigning the order of treatments for different participants helps control for this.

- Location or Environmental Confounds: The environment in which the study is conducted can influence the results. One group might be tested in a noisy room, while another might be in a quiet room. Randomly assigning participants to different locations can control for these effects.

- Instrumentation Confounds: These occur when there are variations in the calibration or functioning of measurement instruments across conditions. If one group’s responses are being measured using a slightly different tool or scale, it can introduce a confound. Random assignment can ensure that any such potential inconsistencies in instrumentation are equally distributed among groups.

- Experimenter Effects: Sometimes, the behavior or expectations of the person administering the experiment can unintentionally influence the participants’ behavior or responses. For instance, if an experimenter believes one treatment is superior, they might unconsciously communicate this belief to participants. Randomly assigning experimenters or using a double-blind procedure (where neither the participant nor the experimenter knows the treatment being given) can help control for this.

Random assignment helps balance out these and other potential confounds across groups, ensuring that any observed differences are more likely due to the manipulated independent variable rather than some extraneous factor.

Limitations of the Random Assignment Procedure

Although random assignment is extremely effective at eliminating the presence of participant-related confounds, there are several scenarios in which it cannot be used.

- Ethics: The most obvious scenario is when it would be unethical. For example, if wanting to investigate the effects of emotional abuse on children, it would be unethical to randomly assign children to either receive abuse or not. Even if a researcher were to propose such a study, it would not receive approval from the Institutional Review Board (IRB) which oversees research by university faculty.

- Practicality: Other scenarios involve matters of practicality. For example, randomly assigning people to specific types of diet over a 10-year period would be interesting, but it would be highly unlikely that participants would be diligent enough to make the study valid. This is why examining these types of subjects has to be carried out through observational studies . The data is correlational, which is informative, but falls short of the scientist’s ultimate goal of identifying causality.

- Small Sample Size: The smaller the sample size being assigned to conditions, the more likely it is that the two groups will be unequal. For example, if you flip a coin many times in a row then you will notice that sometimes there will be a string of heads or tails that come up consecutively. This means that one condition may have a build-up of participants that share the same characteristics. However, if you continue flipping the coin, over the long-term, there will be a balance of heads and tails. Unfortunately, how large a sample size is necessary has been the subject of considerable debate (Bloom, 2006; Shadish et al., 2002).

“It is well known that larger sample sizes reduce the probability that random assignment will result in conditions that are unequal” (Goldberg, 2019, p. 2).
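
The coin-flip intuition can be made exact with the binomial distribution. The sketch below computes the probability that fair coin-flip assignment to two conditions produces a split at least as lopsided as 60/40. The function name `prob_uneven_split` and the 60% threshold are hypothetical choices for illustration; the same arithmetic describes how any group of participants sharing a characteristic might divide between two conditions.

```python
from math import comb

def prob_uneven_split(n, threshold_pct=60):
    """Probability that the larger condition holds at least threshold_pct% of n
    participants when each one is assigned by an independent fair coin flip."""
    cutoff = -(-threshold_pct * n // 100)  # ceiling of threshold_pct% of n
    uneven = sum(comb(n, k) for k in range(n + 1) if max(k, n - k) >= cutoff)
    return uneven / 2 ** n

for n in (10, 100, 1000):
    print(f"n = {n}: P(split of 60/40 or worse) = {prob_uneven_split(n):.4f}")
```

With 10 participants a lopsided split is the norm (a probability of about 0.75), with 100 it is uncommon (roughly 0.06), and with 1,000 it is vanishingly rare.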

Applications of Random Assignment

The importance of random assignment has been recognized in a wide range of scientific and applied disciplines (Bloom, 2006).

Random assignment began as a tool in agricultural research by Fisher (1925, 1935). After WWII, it became extensively used in medical research to test the effectiveness of new treatments and pharmaceuticals (Marks, 1997).

Today it is widely used in industrial engineering (Box, Hunter, and Hunter, 2005), educational research (Lindquist, 1953; Ong-Dean et al., 2011), psychology (Myers, 1972), and social policy studies (Boruch, 1998; Orr, 1999).

One of the biggest obstacles to the validity of an experiment is the confound. If the group of participants in the treatment condition is substantially different from the group in the control condition, then it is impossible to determine if the IV has an effect or if the confound has an effect.

Thankfully, random assignment is highly effective at reducing confounds, both known and unknown. Because each participant has an equal chance of being placed in each condition, participant characteristics tend to be evenly distributed across conditions.

There are several ways of implementing random assignment, including flipping a coin or using a random number generator.

Random assignment has become an essential procedure in research in a wide range of subjects such as psychology, education, and social policy.

Alferes, V. R. (2012). Methods of randomization in experimental design. Sage Publications.

Bloom, H. S. (2008). The core analytics of randomized experiments for social research. The SAGE Handbook of Social Research Methods, 115-133.

Boruch, R. F. (1998). Randomized controlled experiments for evaluation and planning. Handbook of applied social research methods, 161-191.

Box, G. E., Hunter, W. G., & Hunter, J. S. (2005). Design of experiments: Statistics for Experimenters: Design, Innovation and Discovery.

Dehue, T. (1997). Deception, efficiency, and random groups: Psychology and the gradual origination of the random group design. Isis, 88(4), 653-673.

Fisher, R. A. (1925). Statistical methods for research workers (11th ed. rev.). Oliver and Boyd: Edinburgh.

Fisher, R. A. (1935). The Design of Experiments. Edinburgh: Oliver and Boyd.

Goldberg, M. H. (2019). How often does random assignment fail? Estimates and recommendations. Journal of Environmental Psychology, 66, 101351.

Jamison, J. C. (2019). The entry of randomized assignment into the social sciences. Journal of Causal Inference, 7(1), 20170025.

Lindquist, E. F. (1953). Design and analysis of experiments in psychology and education. Boston: Houghton Mifflin Company.

Marks, H. M. (1997). The progress of experiment: Science and therapeutic reform in the United States, 1900-1990. Cambridge University Press.

Myers, J. L. (1972). Fundamentals of experimental design (2nd ed.). Allyn & Bacon.

Ong-Dean, C., Huie Hofstetter, C., & Strick, B. R. (2011). Challenges and dilemmas in implementing random assignment in educational research. American Journal of Evaluation, 32(1), 29-49.

Orr, L. L. (1999). Social experiments: Evaluating public programs with experimental methods. Sage.

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Quasi-experiments: interrupted time-series designs. Experimental and quasi-experimental designs for generalized causal inference, 171-205.

Stigler, S. M. (1992). A historical view of statistical concepts in psychology and educational research. American Journal of Education, 101(1), 60-70.

Dave Cornell (PhD)

Dr. Cornell has worked in education for more than 20 years. His work has involved designing teacher certification for Trinity College in London and in-service training for state governments in the United States. He has trained kindergarten teachers in 8 countries and helped businessmen and women open baby centers and kindergartens in 3 countries.

Chris Drew (PhD)

This article was peer-reviewed and edited by Chris Drew (PhD). The review process on Helpful Professor involves having a PhD level expert fact check, edit, and contribute to articles. Reviewers ensure all content reflects expert academic consensus and is backed up with reference to academic studies. Dr. Drew has published over 20 academic articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education and holds a PhD in Education from ACU.

6.2 Experimental Design

Learning objectives.

- Explain the difference between between-subjects and within-subjects experiments, list some of the pros and cons of each approach, and decide which approach to use to answer a particular research question.

- Define random assignment, distinguish it from random sampling, explain its purpose in experimental research, and use some simple strategies to implement it.

- Define what a control condition is, explain its purpose in research on treatment effectiveness, and describe some alternative types of control conditions.

- Define several types of carryover effect, give examples of each, and explain how counterbalancing helps to deal with them.

In this section, we look at some different ways to design an experiment. The primary distinction we will make is between approaches in which each participant experiences one level of the independent variable and approaches in which each participant experiences all levels of the independent variable. The former are called between-subjects experiments and the latter are called within-subjects experiments.

Between-Subjects Experiments

In a between-subjects experiment , each participant is tested in only one condition. For example, a researcher with a sample of 100 college students might assign half of them to write about a traumatic event and the other half write about a neutral event. Or a researcher with a sample of 60 people with severe agoraphobia (fear of open spaces) might assign 20 of them to receive each of three different treatments for that disorder. It is essential in a between-subjects experiment that the researcher assign participants to conditions so that the different groups are, on average, highly similar to each other. Those in a trauma condition and a neutral condition, for example, should include a similar proportion of men and women, and they should have similar average intelligence quotients (IQs), similar average levels of motivation, similar average numbers of health problems, and so on. This is a matter of controlling these extraneous participant variables across conditions so that they do not become confounding variables.

Random Assignment

The primary way that researchers accomplish this kind of control of extraneous variables across conditions is called random assignment, which means using a random process to decide which participants are tested in which conditions. Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population, and it is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other fields too.

In its strictest sense, random assignment should meet two criteria. One is that each participant has an equal chance of being assigned to each condition (e.g., a 50% chance of being assigned to each of two conditions). The second is that each participant is assigned to a condition independently of other participants. Thus one way to assign participants to two conditions would be to flip a coin for each one. If the coin lands heads, the participant is assigned to Condition A, and if it lands tails, the participant is assigned to Condition B. For three conditions, one could use a computer to generate a random integer from 1 to 3 for each participant. If the integer is 1, the participant is assigned to Condition A; if it is 2, the participant is assigned to Condition B; and if it is 3, the participant is assigned to Condition C. In practice, a full sequence of conditions—one for each participant expected to be in the experiment—is usually created ahead of time, and each new participant is assigned to the next condition in the sequence as he or she is tested. When the procedure is computerized, the computer program often handles the random assignment.
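The strict procedure described above can be sketched in a few lines of Python. This is only an illustration: the function name `simple_random_assignment`, the condition labels, and the fixed seed are assumptions made for the example, not part of any standard tool. Each participant's condition is drawn independently and with equal probability, and the whole sequence is created before anyone is tested.

```python
import random

def simple_random_assignment(n_participants, conditions, seed=None):
    """Draw one condition per participant, independently and with equal probability."""
    rng = random.Random(seed)  # a seed makes the sequence reproducible
    return [rng.choice(conditions) for _ in range(n_participants)]

conditions = ["A", "B", "C"]
# Build the full sequence ahead of time; participant 1 gets sequence[0], and so on.
sequence = simple_random_assignment(12, conditions, seed=1)
counts = {c: sequence.count(c) for c in conditions}

print(sequence)
print(counts)  # group sizes are not forced to be equal
```

Because every draw is independent, the counts printed at the end will often differ from one condition to the next.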

One problem with coin flipping and other strict procedures for random assignment is that they are likely to result in unequal sample sizes in the different conditions. Unequal sample sizes are generally not a serious problem, and you should never throw away data you have already collected to achieve equal sample sizes. However, for a fixed number of participants, it is statistically most efficient to divide them into equal-sized groups. It is standard practice, therefore, to use a kind of modified random assignment that keeps the number of participants in each group as similar as possible. One approach is block randomization. In block randomization, all the conditions occur once in the sequence before any of them is repeated. Then they all occur again before any of them is repeated again. Within each of these “blocks,” the conditions occur in a random order. Again, the sequence of conditions is usually generated before any participants are tested, and each new participant is assigned to the next condition in the sequence. Table 6.2 “Block Randomization Sequence for Assigning Nine Participants to Three Conditions” shows such a sequence for assigning nine participants to three conditions. The Research Randomizer website (http://www.randomizer.org) will generate block randomization sequences for any number of participants and conditions. Again, when the procedure is computerized, the computer program often handles the block randomization.
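As a minimal sketch of what such a program might do, the following Python function builds a block randomization sequence. The function name `block_randomization` and the seed are hypothetical choices for this example, and the sequence it produces is not the one in Table 6.2. Every block contains each condition exactly once, in a freshly shuffled order.

```python
import random

def block_randomization(n_participants, conditions, seed=None):
    """Return a sequence in which every block contains each condition once, in random order."""
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_participants:
        block = list(conditions)  # one copy of every condition
        rng.shuffle(block)        # random order within the block
        sequence.extend(block)
    # If n_participants is not a multiple of the block size, the last block is cut short.
    return sequence[:n_participants]

conditions = ["A", "B", "C"]
sequence = block_randomization(9, conditions, seed=1)

for participant, condition in enumerate(sequence, start=1):
    print(participant, condition)
```

With nine participants and three conditions, each condition appears exactly three times, so the groups end up equal in size while the order within each block stays unpredictable.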

Table 6.2 Block Randomization Sequence for Assigning Nine Participants to Three Conditions

Random assignment is not guaranteed to control all extraneous variables across conditions. It is always possible that just by chance, the participants in one condition might turn out to be substantially older, less tired, more motivated, or less depressed on average than the participants in another condition. However, there are some reasons that this is not a major concern. One is that random assignment works better than one might expect, especially for large samples. Another is that the inferential statistics that researchers use to decide whether a difference between groups reflects a difference in the population take the “fallibility” of random assignment into account. Yet another reason is that even if random assignment does result in a confounding variable and therefore produces misleading results, this is likely to be detected when the experiment is replicated. The upshot is that random assignment to conditions, although not infallible in terms of controlling extraneous variables, is always considered a strength of a research design.
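The claim that random assignment works better with large samples can be illustrated with a small simulation. This is a sketch under stated assumptions: the extraneous variable is an IQ-like score drawn from a normal distribution with mean 100 and standard deviation 15, participants are randomly split into two equal groups, and the function name `average_imbalance` is made up for the example. The simulation repeats the random split many times and reports the typical gap between the two group means.

```python
import random

def average_imbalance(n_participants, n_replications=500, seed=0):
    """Average absolute gap between two group means after a random equal split."""
    rng = random.Random(seed)
    half = n_participants // 2
    gaps = []
    for _ in range(n_replications):
        # Simulated extraneous variable (assumed IQ-like: mean 100, SD 15).
        scores = [rng.gauss(100, 15) for _ in range(n_participants)]
        rng.shuffle(scores)  # random assignment to two equal-sized groups
        group_a, group_b = scores[:half], scores[half:]
        mean_a = sum(group_a) / len(group_a)
        mean_b = sum(group_b) / len(group_b)
        gaps.append(abs(mean_a - mean_b))
    return sum(gaps) / len(gaps)

small = average_imbalance(20)
large = average_imbalance(1000)
print(f"Typical gap with 20 participants:   {small:.2f}")
print(f"Typical gap with 1000 participants: {large:.2f}")
```

Under these assumptions, the typical chance difference between groups is several times smaller with 1,000 participants than with 20, which is the sense in which larger samples make random assignment more dependable.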

Treatment and Control Conditions

Between-subjects experiments are often used to determine whether a treatment works. In psychological research, a treatment is any intervention meant to change people’s behavior for the better. This includes psychotherapies and medical treatments for psychological disorders but also interventions designed to improve learning, promote conservation, reduce prejudice, and so on. To determine whether a treatment works, participants are randomly assigned to either a treatment condition, in which they receive the treatment, or a control condition, in which they do not receive the treatment. If participants in the treatment condition end up better off than participants in the control condition (for example, they are less depressed, learn faster, conserve more, or express less prejudice), then the researcher can conclude that the treatment works. In research on the effectiveness of psychotherapies and medical treatments, this type of experiment is often called a randomized clinical trial.

There are different types of control conditions. In a no-treatment control condition, participants receive no treatment whatsoever. One problem with this approach, however, is the existence of placebo effects. A placebo is a simulated treatment that lacks any active ingredient or element that should make it effective, and a placebo effect is a positive effect of such a treatment. Many folk remedies that seem to work, such as eating chicken soup for a cold or placing soap under the bedsheets to stop nighttime leg cramps, are probably nothing more than placebos. Although placebo effects are not well understood, they are probably driven primarily by people’s expectations that they will improve. Having the expectation to improve can result in reduced stress, anxiety, and depression, which can alter perceptions and even improve immune system functioning (Price, Finniss, & Benedetti, 2008).

Placebo effects are interesting in their own right (see Note 6.28 “The Powerful Placebo”), but they also pose a serious problem for researchers who want to determine whether a treatment works. Figure 6.2 “Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions” shows some hypothetical results in which participants in a treatment condition improved more on average than participants in a no-treatment control condition. If these conditions (the two leftmost bars in Figure 6.2 “Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions”) were the only conditions in this experiment, however, one could not conclude that the treatment worked. It could be instead that participants in the treatment group improved more because they expected to improve, while those in the no-treatment control condition did not.

Figure 6.2 Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions

Fortunately, there are several solutions to this problem. One is to include a placebo control condition, in which participants receive a placebo that looks much like the treatment but lacks the active ingredient or element thought to be responsible for the treatment’s effectiveness. If participants in a treatment condition take a pill, for example, then those in a placebo control condition would take an identical-looking pill that lacks the active ingredient in the treatment (a “sugar pill”). In research on psychotherapy effectiveness, the placebo might involve going to a psychotherapist and talking in an unstructured way about one’s problems. The idea is that if participants in both the treatment and the placebo control groups expect to improve, then any improvement in the treatment group over and above that in the placebo control group must have been caused by the treatment and not by participants’ expectations. This is what is shown by a comparison of the two outer bars in Figure 6.2 “Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions”.

Of course, the principle of informed consent requires that participants be told that they will be assigned to either a treatment or a placebo control condition, even though they cannot be told which until the experiment ends. In many cases the participants who had been in the control condition are then offered an opportunity to have the real treatment. An alternative approach is to use a waitlist control condition, in which participants are told that they will receive the treatment but must wait until the participants in the treatment condition have already received it. This allows researchers to compare participants who have received the treatment with participants who are not currently receiving it but who still expect to improve (eventually). A final solution to the problem of placebo effects is to leave out the control condition completely and compare any new treatment with the best available alternative treatment. For example, a new treatment for simple phobia could be compared with standard exposure therapy. Because participants in both conditions receive a treatment, their expectations about improvement should be similar. This approach also makes sense because once there is an effective treatment, the interesting question about a new treatment is not simply “Does it work?” but “Does it work better than what is already available?”

The Powerful Placebo

Many people are not surprised that placebos can have a positive effect on disorders that seem fundamentally psychological, including depression, anxiety, and insomnia. However, placebos can also have a positive effect on disorders that most people think of as fundamentally physiological. These include asthma, ulcers, and warts (Shapiro & Shapiro, 1999). There is even evidence that placebo surgery—also called “sham surgery”—can be as effective as actual surgery.

Medical researcher J. Bruce Moseley and his colleagues conducted a study on the effectiveness of two arthroscopic surgery procedures for osteoarthritis of the knee (Moseley et al., 2002). The control participants in this study were prepped for surgery, received a tranquilizer, and even received three small incisions in their knees. But they did not receive the actual arthroscopic surgical procedure. The surprising result was that all participants improved in terms of both knee pain and function, and the sham surgery group improved just as much as the treatment groups. According to the researchers, “This study provides strong evidence that arthroscopic lavage with or without débridement [the surgical procedures used] is not better than and appears to be equivalent to a placebo procedure in improving knee pain and self-reported function” (p. 85).

Research has shown that patients with osteoarthritis of the knee who receive a “sham surgery” experience reductions in pain and improvement in knee function similar to those of patients who receive a real surgery.

Army Medicine – Surgery – CC BY 2.0.

Within-Subjects Experiments

In a within-subjects experiment, each participant is tested under all conditions. Consider an experiment on the effect of a defendant’s physical attractiveness on judgments of his guilt. Again, in a between-subjects experiment, one group of participants would be shown an attractive defendant and asked to judge his guilt, and another group of participants would be shown an unattractive defendant and asked to judge his guilt. In a within-subjects experiment, however, the same group of participants would judge the guilt of both an attractive and an unattractive defendant.

The primary advantage of this approach is that it provides maximum control of extraneous participant variables. Participants in all conditions have the same mean IQ, same socioeconomic status, same number of siblings, and so on—because they are the very same people. Within-subjects experiments also make it possible to use statistical procedures that remove the effect of these extraneous participant variables on the dependent variable and therefore make the data less “noisy” and the effect of the independent variable easier to detect. We will look more closely at this idea later in the book.
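One way to see why within-subjects data are less “noisy” is to simulate them. The sketch below is an illustration only, with every number assumed for the example: each simulated participant has a stable personal baseline (standard deviation 10), the second condition adds a true effect of 2 points, and each measurement carries a little random error (standard deviation 1). Subtracting each participant's score in one condition from his or her score in the other cancels the baseline, which is one simple form of the statistical procedures mentioned above.

```python
import random
import statistics

def simulate_within_subjects(n_participants=200, true_effect=2.0, seed=0):
    """Simulate two conditions measured on the same participants (all values assumed)."""
    rng = random.Random(seed)
    # Stable differences between participants (an extraneous participant variable).
    baselines = [rng.gauss(50, 10) for _ in range(n_participants)]
    condition_a = [b + rng.gauss(0, 1) for b in baselines]
    condition_b = [b + true_effect + rng.gauss(0, 1) for b in baselines]
    # Difference scores: each participant serves as his or her own control.
    differences = [b - a for a, b in zip(condition_a, condition_b)]
    return condition_a, condition_b, differences

condition_a, condition_b, differences = simulate_within_subjects()
sd_raw = statistics.stdev(condition_a)
sd_diff = statistics.stdev(differences)
mean_diff = statistics.mean(differences)

print(f"SD of raw scores in one condition: {sd_raw:.2f}")
print(f"SD of difference scores:           {sd_diff:.2f}")
print(f"Mean difference (estimated effect): {mean_diff:.2f}")
```

In this simulation the raw scores vary widely because participants differ from one another, while the difference scores vary much less, so the same small effect stands out far more clearly.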

Carryover Effects and Counterbalancing