What Is Statistical Analysis?

Statistical analysis is a technique we use to find patterns in data and make inferences about those patterns to describe variability in the results of a data set or an experiment.

In its simplest form, statistical analysis answers questions about:

- Quantification — how big/small/tall/wide is it?

- Variability — growth, increase, decline

- The confidence level of these variabilities

What Are the 2 Types of Statistical Analysis?

- Descriptive Statistics: Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

- Inferential Statistics: Inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

What’s the Purpose of Statistical Analysis?

Using statistical analysis, you can determine trends in the data by calculating your data set’s mean or median. You can also analyze how far individual data points vary from the mean by calculating the standard deviation. Furthermore, to test the validity of your conclusions, you can use hypothesis testing techniques, such as calculating a p-value, to determine the likelihood that the observed variability could have occurred by chance.

Statistical Analysis Methods

There are two major types of statistical data analysis: descriptive and inferential.

Descriptive Statistical Analysis

Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

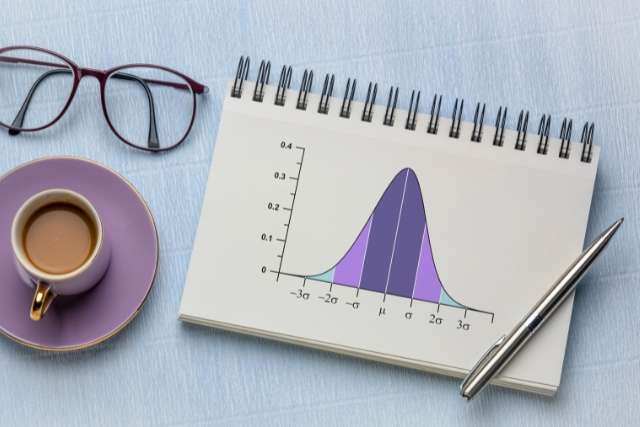

Within the descriptive analysis branch, there are two main types: measures of central tendency (i.e. mean, median and mode) and measures of dispersion or variation (i.e. variance, standard deviation and range).

For example, you can calculate the average exam results in a class using central tendency or, in particular, the mean. In that case, you’d sum all student results and divide by the number of tests. You can also calculate the data set’s spread by calculating the variance. To calculate the variance, subtract each exam result in the data set from the mean, square the answer, add everything together and divide by the number of tests.
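The exam example above can be sketched in a few lines of Python. This is a minimal illustration using the standard library's `statistics` module. The exam results are made-up values, and the variance shown is the population variance, which matches the "divide by the number of tests" description.

```python
from statistics import mean, pvariance

# Hypothetical exam results for a small class (illustrative values only).
exam_results = [70, 80, 90, 60, 100]

# Mean: sum all results and divide by the number of results.
average = mean(exam_results)

# Variance as described above: the squared distance of each result from
# the mean, summed and divided by the number of results.
variance = pvariance(exam_results)

# The same variance computed step by step, to mirror the prose.
manual_variance = sum((x - average) ** 2 for x in exam_results) / len(exam_results)

print(average, variance, manual_variance)
```

If the results were a sample from a larger group rather than the whole class, `statistics.variance`, which divides by one less than the number of results, would usually be the better choice.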

Inferential Statistics

On the other hand, inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

There are two main types of inferential statistical analysis: hypothesis testing and regression analysis. We use hypothesis testing to test and validate assumptions in order to draw conclusions about a population from the sample data. Popular tests include the Z-test, F-test and ANOVA test, and confidence intervals are often reported alongside them. On the other hand, regression analysis primarily estimates the relationship between a dependent variable and one or more independent variables. There are numerous types of regression analysis, but the most popular ones include linear and logistic regression.
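To make hypothesis testing concrete, here is a minimal sketch of a one-sample Z-test using only Python's standard library. The function name `one_sample_z_test` and all the numbers are assumptions chosen for illustration. The test also assumes the population standard deviation is known, which is rarely true in practice.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_z_test(sample_mean, population_mean, population_sd, n):
    """Return the z statistic and two-sided p-value for a one-sample Z-test."""
    # Standard error of the mean for a sample of size n.
    standard_error = population_sd / sqrt(n)
    # How many standard errors the sample mean lies from the hypothesized mean.
    z = (sample_mean - population_mean) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical scenario: 36 students average 78 on a test whose
# long-run mean is 75 with a standard deviation of 10.
z, p = one_sample_z_test(sample_mean=78, population_mean=75, population_sd=10, n=36)
print(round(z, 2), round(p, 3))
```

Here z is about 1.8 and the p-value is roughly 0.07, so at the conventional 0.05 threshold this hypothetical sample would not be strong enough evidence to reject the assumption that the true mean is 75.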

Statistical Analysis Steps

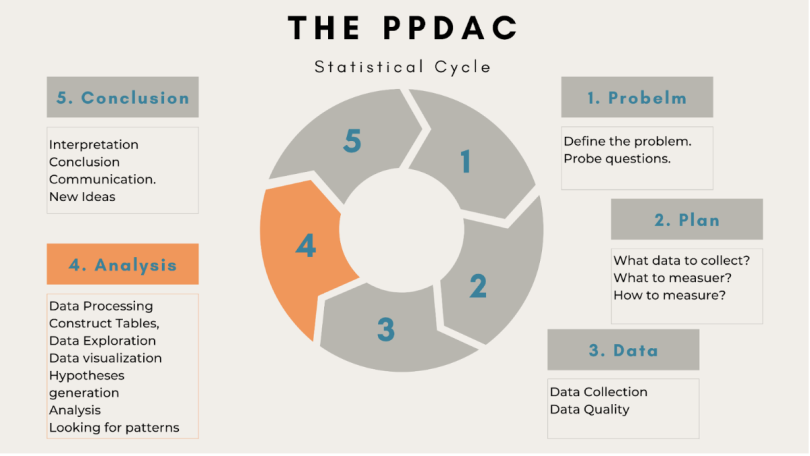

In the era of big data and data science, there is a rising demand for a more problem-driven approach. As a result, we must approach statistical analysis holistically. We may divide the entire process into five different and significant stages by using the well-known PPDAC model of statistics: Problem, Plan, Data, Analysis and Conclusion.

1. Problem

In the first stage, you define the problem you want to tackle and explore questions about the problem.

2. Plan

Next is the planning phase. You can check whether data is available or if you need to collect data for your problem. You also determine what to measure and how to measure it.

3. Data

The third stage involves data collection, understanding the data and checking its quality.

4. Analysis

Statistical data analysis is the fourth stage. Here you process and explore the data with the help of tables, graphs and other data visualizations. You also develop and scrutinize your hypothesis in this stage of analysis.

5. Conclusion

The final step involves interpretations and conclusions from your analysis. It also covers generating new ideas for the next iteration. Thus, statistical analysis is not a one-time event but an iterative process.

Statistical Analysis Uses

Statistical analysis is useful for research and decision making because it allows us to understand the world around us and draw conclusions by testing our assumptions. Statistical analysis is important for various applications, including:

- Statistical quality control and analysis in product development

- Clinical trials

- Customer satisfaction surveys and customer experience research

- Marketing operations management

- Process improvement and optimization

- Training needs

Benefits of Statistical Analysis

Here are some of the reasons why statistical analysis is widespread in many applications and why it’s necessary:

Understand Data

Statistical analysis gives you a better understanding of the data and what they mean. These types of analyses provide information that would otherwise be difficult to obtain by merely looking at the numbers without considering their relationship.

Find Causal Relationships

Statistical analysis can help you investigate causation or establish the precise meaning of an experiment, like when you’re looking for a relationship between two variables.

Make Data-Informed Decisions

Businesses are constantly looking to find ways to improve their services and products. Statistical analysis allows you to make data-informed decisions about your business or future actions by helping you identify trends in your data, whether positive or negative.

Determine Probability

Statistical analysis is an approach to understanding how the probability of certain events affects the outcome of an experiment. It helps scientists and engineers decide how much confidence they can have in the results of their research, how to interpret their data and what questions they can feasibly answer.

What Are the Risks of Statistical Analysis?

Statistical analysis can be valuable and effective, but it’s an imperfect approach. Even if the analyst or researcher performs a thorough statistical analysis, there may still be known or unknown problems that can affect the results. Statistical analysis is also not a one-size-fits-all process. If you want to get good results, you need to know what you’re doing, and it can take a lot of time to figure out which type of statistical analysis will work best for your situation.

Thus, you should remember that conclusions drawn from statistical analysis don’t always guarantee correct results. This can be dangerous when making business decisions. In marketing, for example, we may come to the wrong conclusion about a product. The conclusions we draw from statistical data analysis are often approximations, because testing for all factors affecting an observation is impossible.

What Is Statistical Analysis? Types, Methods and Examples

Statistical analysis is the process of collecting and analyzing data in order to discern patterns and trends. It reduces bias in evaluating data by relying on numerical analysis. This technique is useful for interpreting research results, developing statistical models, and planning surveys and studies.

Statistical analysis is a scientific tool used in AI and ML that helps collect and analyze large amounts of data to identify common patterns and trends and convert them into meaningful information. In simple words, statistical analysis is a data analysis tool that helps draw meaningful conclusions from raw and unstructured data.

The conclusions drawn using statistical analysis facilitate decision-making and help businesses make predictions on the basis of past trends. Statistical analysis can be defined as the science of collecting and analyzing data to identify trends and patterns and presenting them. It involves working with numbers and is used by businesses and other institutions to derive meaningful information from data.

Types of Statistical Analysis

Given below are the 6 types of statistical analysis:

Descriptive Analysis

Descriptive statistical analysis involves collecting, interpreting, analyzing, and summarizing data to present them in the form of charts, graphs, and tables. Rather than drawing conclusions, it simply makes the complex data easy to read and understand.

Inferential Analysis

The inferential statistical analysis focuses on drawing meaningful conclusions on the basis of the data analyzed. It studies the relationship between different variables or makes predictions for the whole population.

Predictive Analysis

Predictive statistical analysis is a type of statistical analysis that analyzes data to derive past trends and predict future events on the basis of them. It uses machine learning algorithms, data mining, data modelling, and artificial intelligence to conduct the statistical analysis of data.

Prescriptive Analysis

The prescriptive analysis conducts the analysis of data and prescribes the best course of action based on the results. It is a type of statistical analysis that helps you make an informed decision.

Exploratory Data Analysis

Exploratory analysis is similar to inferential analysis, but the difference is that it involves exploring the unknown data associations. It analyzes the potential relationships within the data.

Causal Analysis

The causal statistical analysis focuses on determining the cause and effect relationship between different variables within the raw data. In simple words, it determines why something happens and its effect on other variables. This methodology can be used by businesses to determine the reason for failure.

Importance of Statistical Analysis

Statistical analysis eliminates unnecessary information and catalogs important data in an uncomplicated manner, making the monumental work of organizing inputs far more manageable. Once the data has been collected, statistical analysis may be utilized for a variety of purposes. Some of them are listed below:

- Statistical analysis aids in summarizing enormous amounts of data into clearly digestible chunks.

- Statistical analysis aids in the effective design of laboratory, field, and survey investigations.

- Statistical analysis may help with solid and efficient planning in any subject of study.

- Statistical analysis aids in establishing broad generalizations and forecasting how much of something will occur under particular conditions.

- Statistical methods, which are effective tools for interpreting numerical data, are applied in practically every field of study. Statistical approaches have been created and are increasingly applied in physical and biological sciences, such as genetics.

- Statistical approaches are used in the work of business people, manufacturers and researchers. Statistics departments can be found in banks, insurance businesses, and government agencies.

- A modern administrator, whether in the public or commercial sector, relies on statistical data to make correct decisions.

- Politicians can utilize statistics to support and validate their claims while also explaining the issues they address.

Benefits of Statistical Analysis

Statistical analysis has many benefits for both individuals and organizations. Given below are some of the reasons why you should consider investing in statistical analysis:

- It can help you determine the monthly, quarterly and yearly figures for sales, profits and costs, making it easier to make decisions.

- It can help you make informed and correct decisions.

- It can help you identify the problem or cause of the failure and make corrections. For example, it can identify the reason for an increase in total costs and help you cut the wasteful expenses.

- It can help you conduct market analysis and make an effective marketing and sales strategy.

- It helps improve the efficiency of different processes.

Statistical Analysis Process

Given below are the 5 steps you should follow to conduct a statistical analysis:

- Step 1: Identify and describe the nature of the data that you are supposed to analyze.

- Step 2: The next step is to establish a relation between the data analyzed and the sample population to which the data belongs.

- Step 3: The third step is to create a model that clearly presents and summarizes the relationship between the population and the data.

- Step 4: Prove if the model is valid or not.

- Step 5: Use predictive analysis to predict future trends and events likely to happen.

Statistical Analysis Methods

Although there are various methods used to perform data analysis, given below are the 5 most used and popular methods of statistical analysis:

Mean

The mean, or average, is one of the most popular methods of statistical analysis. It indicates the overall trend of the data and is very simple to calculate: sum the numbers in the data set and divide by the number of data points. Despite its ease of calculation, it is not advisable to rely on the mean as the only statistical indicator, because outliers can distort it and lead to inaccurate decision making.
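A short sketch shows why the mean alone can mislead. The order counts below are made-up values, with one unusually large number included on purpose.

```python
from statistics import mean, median

# Hypothetical daily order counts; the last value is an unusual spike.
orders = [30, 32, 35, 31, 400]

average = mean(orders)   # pulled upward by the single spike
middle = median(orders)  # unaffected by how large the spike is

print(average, middle)
```

The mean comes out at 105.6 even though four of the five days saw between 30 and 35 orders, while the median of 32 stays close to a typical day. Reporting both, together with a measure of spread, gives a fairer picture.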

Standard Deviation

Standard deviation is another very widely used statistical tool or method. It analyzes the deviation of different data points from the mean of the entire data set. It determines how data of the data set is spread around the mean. You can use it to decide whether the research outcomes can be generalized or not.

Regression

Regression is a statistical tool that helps model the relationship between variables. It estimates how a dependent variable changes with one or more independent variables, although a fitted relationship does not by itself prove cause and effect. It is generally used to predict future trends and events.
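As a rough illustration, simple linear regression with one independent variable can be fitted by ordinary least squares in plain Python. The helper name `fit_line` and the advertising and sales figures are assumptions made up for this sketch.

```python
from statistics import mean


def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Sum of products of deviations (numerator of the slope).
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    # Sum of squared deviations of x (denominator of the slope).
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical data: advertising spend (independent) and sales (dependent).
ad_spend = [1, 2, 3, 4, 5]
sales = [3, 5, 7, 9, 11]

slope, intercept = fit_line(ad_spend, sales)

# Use the fitted line to predict sales for a spend value not in the data.
predicted = slope * 6 + intercept
print(slope, intercept, predicted)
```

Because the made-up data lie exactly on a straight line, the fit recovers a slope of 2 and an intercept of 1. Real data would scatter around the line, and the quality of the fit would need to be checked before trusting any prediction.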

Hypothesis Testing

Hypothesis testing can be used to test the validity of a conclusion or argument against a data set. The hypothesis is an assumption made at the beginning of the research and can hold true or prove false based on the analysis results.

Sample Size Determination

Sample size determination, together with data sampling, is used to draw a sample from the population that is large enough and representative enough to stand in for it. This method is used when the population is too large to measure in full. You can choose from among various data sampling techniques, such as snowball sampling, convenience sampling, and random sampling, though only random sampling gives every member of the population an equal chance of selection.
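Of the sampling techniques mentioned, simple random sampling is the easiest to sketch in code. The population of customer IDs, the sample size of 50 and the seed are all assumptions chosen for illustration.

```python
import random

# Hypothetical population: customer IDs 1 through 1000.
population = list(range(1, 1001))

# A fixed seed is used here only so the sketch is repeatable.
rng = random.Random(42)

# Simple random sampling without replacement: every member has the same
# chance of selection and no member is picked twice.
sample = rng.sample(population, k=50)

print(len(sample), sample[:5])
```

Choosing the sample size itself is a separate question that depends on the precision you need and how variable the population is.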

Statistical Analysis Software

Not everyone can perform very complex statistical calculations accurately, which makes statistical analysis a time-consuming and costly process. Statistical software has therefore become a very important tool for companies to perform their data analysis. Such software can use Artificial Intelligence and Machine Learning to perform complex calculations, identify trends and patterns, and create charts, graphs, and tables accurately within minutes.

Statistical Analysis Examples

Look at the standard deviation calculation given below to understand more about statistical analysis.

The measurements of 5 pizza bases in cm are as follows: 9, 2, 5, 4 and 12.

Calculation of mean = (9+2+5+4+12)/5 = 32/5 = 6.4

Calculation of mean of squared deviations from the mean = (6.76+19.36+1.96+5.76+31.36)/5 = 65.2/5 = 13.04

Variance = 13.04 (dividing by 5 gives the population variance; dividing by 4 would give the sample variance, 16.3)

Standard deviation = √13.04 = 3.611
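The arithmetic above can be checked with Python's `statistics` module. This is a small sketch using the five values from the mean calculation. Note that dividing by 5, as the calculation does, corresponds to the population variance (`pvariance`), whereas the sample variance (`variance`) divides by 4.

```python
from statistics import mean, pstdev, pvariance, variance

# The five values taken from the mean calculation above.
values = [9, 2, 5, 4, 12]

avg = mean(values)

# Squared deviation of each value from the mean.
squared_devs = [(x - avg) ** 2 for x in values]

pop_var = pvariance(values)  # divides by n, as in the worked example
pop_sd = pstdev(values)      # square root of the population variance
samp_var = variance(values)  # divides by n - 1 instead

print(avg, [round(d, 2) for d in squared_devs])
print(round(pop_var, 2), round(pop_sd, 3), round(samp_var, 2))
```

The population figures reproduce the 13.04 and 3.611 shown above, while the sample variance works out to 16.3.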

Career in Statistical Analysis

A Statistical Analyst's career path is determined by the industry in which they work. Anyone interested in becoming a Data Analyst can usually qualify for entry-level positions right out of high school or a certificate program, or with a Bachelor's degree in statistics, computer science, or mathematics. Some people go into data analysis from a related field such as business, economics, or even the social sciences, usually by updating their skills mid-career with a statistical analytics course.

Working as a Statistical Analyst is also a great way to get started in the normally more complex area of data science. A Data Scientist is generally a more senior role than a Data Analyst since it is more strategic in nature and necessitates a more highly developed set of technical abilities, such as knowledge of multiple statistical tools, programming languages, and predictive analytics models.

Aspiring Data Scientists and Statistical Analysts generally begin their careers by learning a programming language such as R or SQL. Following that, they must learn how to create databases, do basic analysis, and make visuals using applications such as Tableau. Not every Statistical Analyst will need to know how to do all of these things, but if you want to advance in your profession, you should be able to do them all.

Based on your industry and the sort of work you do, you may opt to study Python or R, become an expert at data cleaning, or focus on developing complicated statistical models.

You could also learn a little bit of everything, which might help you take on a leadership role and advance to the position of Senior Data Analyst. A Senior Statistical Analyst with vast and deep knowledge might take on a leadership role leading a team of other Statistical Analysts. Statistical Analysts with extra skill training may be able to advance to Data Scientists or other more senior data analytics positions.

Become Proficient in Statistics Today

We hope this article helped you understand the importance of statistical analysis in every sphere of life. Artificial Intelligence (AI) can help you perform statistical analysis and data analysis effectively and efficiently.

Statistical Analysis in Research: Meaning, Methods and Types

The scientific method is an empirical approach to acquiring new knowledge by making skeptical observations and analyses to develop a meaningful interpretation. It is the basis of research and the primary pillar of modern science. Researchers seek to understand the relationships between factors associated with the phenomena of interest. In some cases, research works with vast chunks of data, making it difficult to observe or manipulate each data point. As a result, statistical analysis in research becomes a means of evaluating relationships and interconnections between variables with tools and analytical techniques for working with large data. Since researchers use statistical power analysis to assess the probability of finding an effect in such an investigation, the method is relatively accurate. Hence, statistical analysis in research eases analytical methods by focusing on the quantifiable aspects of phenomena.

What is Statistical Analysis in Research? A Simplified Definition

Statistical analysis uses quantitative data to investigate trends, relationships, and patterns to understand real-life and simulated phenomena. The approach is a key analytical tool in various fields, including academia, business, government, and science in general. This definition implies that the primary focus of the scientific method is quantitative research. Notably, the investigator targets constructs developed from general concepts so that researchers can quantify their hypotheses and present their findings in simple statistics.

When a business needs to learn how to improve its product, it collects statistical data about the production line and customer satisfaction. Qualitative data is valuable and often identifies the most common themes in the stakeholders’ responses. On the other hand, quantitative data establishes a level of importance, comparing the themes based on their criticality to the affected persons. For instance, descriptive statistics highlight tendency, frequency, variation, and position information. While the mean shows the average number of respondents who value a certain aspect, the variance indicates how widely the responses are spread around that average. In any case, statistical analysis creates simplified concepts used to understand the phenomenon under investigation. It is also a key component in academia as the primary approach to data representation, especially in research projects, term papers and dissertations.

Most Useful Statistical Analysis Methods in Research

Statistical analysis methods appear in most research work, including academic assignments, projects, and term papers. Consulting your professor or another expert before you start an academic project can improve your understanding of research methods and help you select the most suitable statistical analysis method for your thesis, which in turn influences the choice of data and type of study.

Descriptive Statistics

Descriptive statistics is a statistical method summarizing quantitative figures to understand critical details about the sample and population. A descriptive statistic is a figure that quantifies a specific aspect of the data. For instance, instead of analyzing the behavior of a thousand students, research can identify the most common actions among them. By doing this, the person utilizes statistical analysis in research, particularly descriptive statistics.

- Measures of central tendency. Central tendency measures are the mean, mode, and median, the averages denoting specific data points. They assess the centrality of the probability distribution, hence the name. These measures describe the data in relation to the center.

- Measures of frequency. These statistics document the number of times an event happens. They include frequency, count, ratios, rates, and proportions. Measures of frequency can also show how often a score occurs.

- Measures of dispersion/variation. These descriptive statistics assess the intervals between the data points. The objective is to view the spread or disparity between the specific inputs. Measures of variation include the standard deviation, variance, and range. They indicate how the spread may affect other statistics, such as the mean.

- Measures of position. Sometimes researchers can investigate relationships between scores. Measures of position, such as percentiles, quartiles, and ranks, demonstrate this association. They are often useful when comparing the data to normalized information.
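The four families of descriptive measures listed above can each be illustrated with one standard-library call. This is a minimal sketch. The scores are made-up values, and the quartiles use the default "exclusive" method of `statistics.quantiles`, which is only one of several common conventions.

```python
from collections import Counter
from statistics import mode, quantiles

# Hypothetical test scores for seven students (illustrative values only).
scores = [55, 60, 65, 70, 70, 85, 90]

# Measure of frequency: how often each score occurs.
frequency = Counter(scores)

# Measure of central tendency: the most common score.
most_common_score = mode(scores)

# Measure of dispersion: the range between the highest and lowest score.
score_range = max(scores) - min(scores)

# Measure of position: the three cut points that split the data into quarters.
q1, q2, q3 = quantiles(scores, n=4)

print(frequency[70], most_common_score, score_range, (q1, q2, q3))
```

For these scores the quartile cut points are 60, 70 and 85, so a student scoring 85 sits at the boundary of the top quarter of the class.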

Inferential Statistics

Inferential statistics is critical to statistical analysis in quantitative research. This approach uses statistical tests to draw conclusions about the population. Examples of inferential statistics include t-tests, F-tests, ANOVA, p-values, the Mann-Whitney U test, and the Wilcoxon W test.

Common Statistical Analysis in Research Types

Although inferential and descriptive statistics can be classified as types of statistical analysis in research, they are mostly considered analytical methods. Types of research are distinguishable by the differences in the methodology employed in analyzing, assembling, classifying, manipulating, and interpreting data. The categories may also depend on the type of data used.

Predictive Analysis

Predictive research analyzes past and present data to assess trends and predict future events. An excellent example of predictive analysis is a market survey that seeks to understand customers’ spending habits to weigh the possibility of a repeat or future purchase. Such studies assess the likelihood of an action based on trends.

Prescriptive Analysis

On the other hand, a prescriptive analysis targets likely courses of action. It’s decision-making research designed to identify optimal solutions to a problem. Its primary objective is to test or assess alternative measures.

Causal Analysis

Causal research investigates the explanation behind the events. It explores the relationship between factors for causation. Thus, researchers use causal analyses to analyze root causes, possible problems, and unknown outcomes.

Mechanistic Analysis

This type of research investigates the mechanism of action. Instead of focusing only on the causes or possible outcomes, researchers may seek an understanding of the processes involved. In such cases, they use mechanistic analyses to document, observe, or learn the mechanisms involved.

Exploratory Data Analysis

Similarly, an exploratory study is extensive with a wider scope and minimal limitations. This type of research seeks insight into the topic of interest. An exploratory researcher does not try to generalize or predict relationships. Instead, they look for information about the subject before conducting an in-depth analysis.

The Importance of Statistical Analysis in Research

Statistical analysis provides critical information for decision-making. Decision-makers require past trends and predictive assumptions to inform their actions. In most cases, the raw data is too complex to yield meaningful inferences on its own. Statistical tools for analyzing such details help save time and money by deriving only the valuable information for assessment. An excellent example of statistical analysis in research is a randomized controlled trial (RCT) for the Covid-19 vaccine. A vaccine RCT assesses the effectiveness, side effects, duration of protection, and other benefits, and published reports of such trials show the significance these analyses have for stakeholders. Hence, statistical analysis in research is a helpful tool for understanding data.

Copyright © 2022 Statistics and Data

Statistical Analysis

Look around you. Statistics are everywhere.

The field of statistics touches our lives in many ways. From the daily routines in our homes to the business of making the greatest cities run, the effects of statistics are everywhere.

Statistical Analysis Defined

What is statistical analysis? It’s the science of collecting, exploring and presenting large amounts of data to discover underlying patterns and trends. Statistics are applied every day – in research, industry and government – to become more scientific about decisions that need to be made. For example:

- Manufacturers use statistics to weave quality into beautiful fabrics, to bring lift to the airline industry and to help guitarists make beautiful music.

- Researchers keep children healthy by using statistics to analyze data from the production of viral vaccines, which ensures consistency and safety.

- Communication companies use statistics to optimize network resources, improve service and reduce customer churn by gaining greater insight into subscriber requirements.

- Government agencies around the world rely on statistics for a clear understanding of their countries, their businesses and their people.

Look around you. From the tube of toothpaste in your bathroom to the planes flying overhead, you see hundreds of products and processes every day that have been improved through the use of statistics.

Statistics is so unique because it can go from health outcomes research to marketing analysis to the longevity of a light bulb. It’s a fun field because you really can do so many different things with it.

Besa Smith President and Senior Scientist Analydata

Statistical Computing

Traditional methods for statistical analysis – from sampling data to interpreting results – have been used by scientists for thousands of years. But today’s data volumes make statistics ever more valuable and powerful. Affordable storage, powerful computers and advanced algorithms have all led to an increased use of computational statistics.

Whether you are working with large data volumes or running multiple permutations of your calculations, statistical computing has become essential for today’s statistician. Popular statistical computing practices include:

- Statistical programming – From traditional analysis of variance and linear regression to exact methods and statistical visualization techniques, statistical programming is essential for making data-based decisions in every field.

- Econometrics – Modeling, forecasting and simulating business processes for improved strategic and tactical planning. This method applies statistics to economics to forecast future trends.

- Operations research – Identify the actions that will produce the best results – based on many possible options and outcomes. Scheduling, simulation, and related modeling processes are used to optimize business processes and management challenges.

- Matrix programming – Powerful computer techniques for implementing your own statistical methods and exploratory data analysis using row operation algorithms.

- Statistical quality improvement – A mathematical approach to reviewing the quality and safety characteristics for all aspects of production.

Careers in Statistical Analysis

With everyone from The New York Times to Google’s Chief Economist Hal Varian proclaiming statistics to be the latest hot career field, who are we to argue? But why is there so much talk about careers in statistical analysis and data science? It could be the shortage of trained analytical thinkers. Or it could be the demand for managing the latest big data strains. Or maybe it’s the excitement of applying mathematical concepts to make a difference in the world.

If you talk to statisticians about what first interested them in statistical analysis, you’ll hear a lot of stories about collecting baseball cards as a child. Or applying statistics to win more games of Axis and Allies. It is often these early passions that lead statisticians into the field. As adults, those passions can carry over into the workforce as a love of analysis and reasoning, where their passions are applied to everything from the influence of friends on purchase decisions to the study of endangered species around the world.

Learn more about current and historical statisticians:

- Ask a statistician videos cover current uses and future trends in statistics.

- SAS loves stats profiles statisticians working at SAS.

- Celebrating statisticians commemorates statistics practitioners from history.

Introduction to Statistical Analysis: A Beginner’s Guide.

Statistical analysis is a crucial component of research work across various disciplines, helping researchers derive meaningful insights from data. Whether you’re conducting scientific studies, social research, or data-driven investigations, having a solid understanding of statistical analysis is essential. In this beginner’s guide, we will explore the fundamental concepts and techniques of statistical analysis specifically tailored for research work, providing you with a strong foundation to enhance the quality and credibility of your research findings.

1. Importance of Statistical Analysis in Research:

Research aims to uncover knowledge and make informed conclusions. Statistical analysis plays a pivotal role in achieving this by providing tools and methods to analyze and interpret data accurately. It helps researchers identify patterns, test hypotheses, draw inferences, and quantify the strength of relationships between variables. Understanding the significance of statistical analysis empowers researchers to make evidence-based decisions.

2. Data Collection and Organization:

Before diving into statistical analysis, researchers must collect and organize their data effectively. We will discuss the importance of proper sampling techniques, data quality assurance, and data preprocessing. Additionally, we will explore methods to handle missing data and outliers, ensuring that your dataset is reliable and suitable for analysis.

3. Exploratory Data Analysis (EDA):

Exploratory Data Analysis is a preliminary step that involves visually exploring and summarizing the main characteristics of the data. We will cover techniques such as data visualization, descriptive statistics, and data transformations to gain insights into the distribution, central tendencies, and variability of the variables in your dataset. EDA helps researchers understand the underlying structure of the data and identify potential relationships for further investigation.

4. Statistical Inference and Hypothesis Testing:

Statistical inference allows researchers to make generalizations about a population based on a sample. We will delve into hypothesis testing, covering concepts such as null and alternative hypotheses, p-values, and significance levels. By understanding these concepts, you will be able to test your research hypotheses and determine if the observed results are statistically significant.

5. Parametric and Non-parametric Tests:

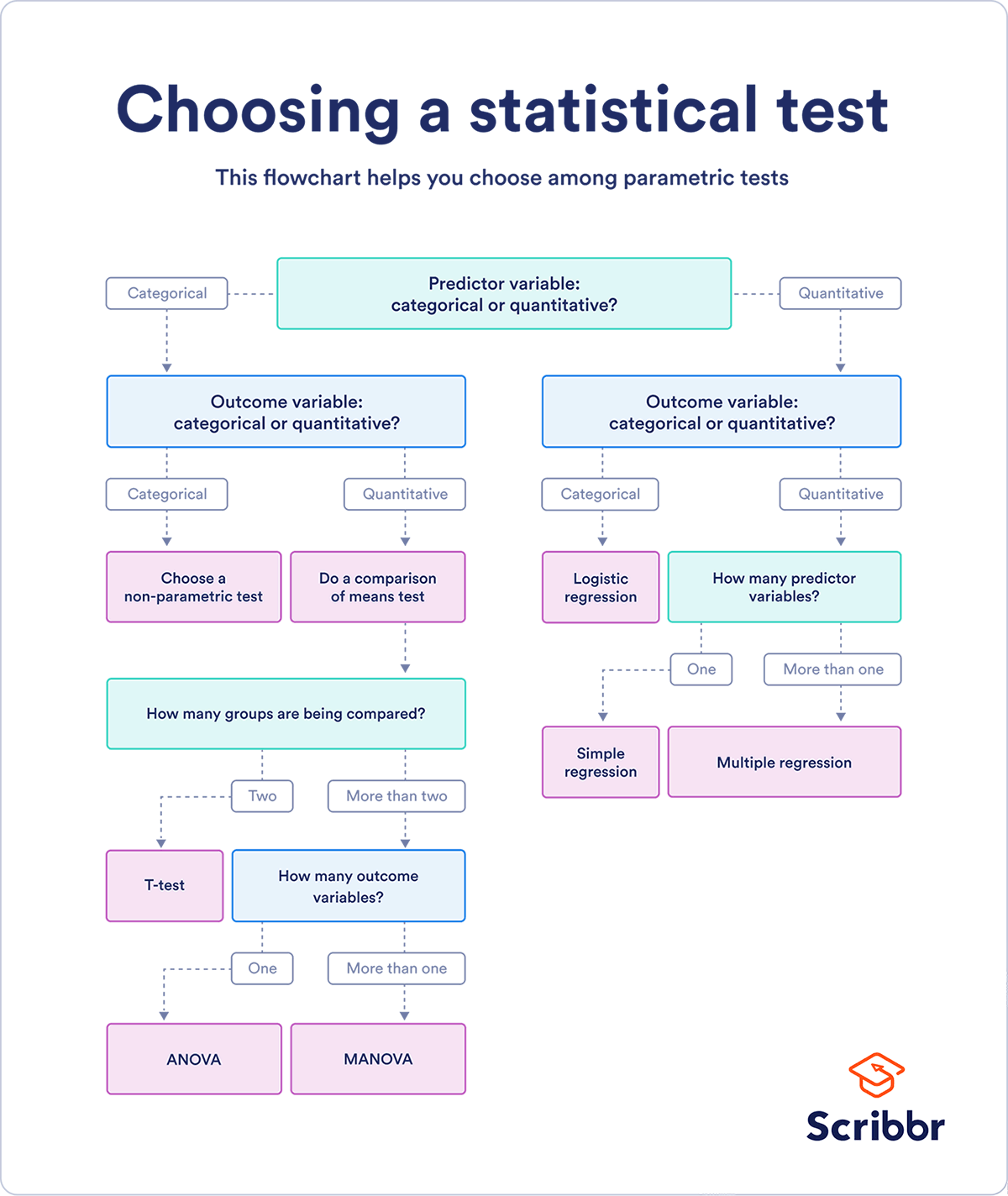

Parametric and non-parametric tests are statistical techniques used to analyze data based on different assumptions about the underlying population distribution. We will explore commonly used parametric tests, such as t-tests and analysis of variance (ANOVA), as well as non-parametric tests like the Mann-Whitney U test and Kruskal-Wallis test. Understanding when to use each type of test is crucial for selecting the appropriate analysis method for your research questions.

6. Correlation and Regression Analysis:

Correlation and regression analysis allow researchers to explore relationships between variables and make predictions. We will cover Pearson correlation coefficients, multiple regression analysis, and logistic regression. These techniques enable researchers to quantify the strength and direction of associations and identify predictive factors in their research.
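As a minimal sketch of the first technique named above, the Pearson correlation coefficient can be computed directly from its definition. The data set (hours studied and exam scores) and the function name `pearson_r` are hypothetical and chosen only for illustration.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products of deviations, and sums of squared deviations.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    # Note: this divides by zero if either sequence is constant.
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: hours studied and exam scores for five students.
hours = [1, 2, 3, 4, 5]
scores = [52, 58, 61, 70, 74]
r = pearson_r(hours, scores)
print(f"r = {r:.3f}")
```

A value of r close to 1 indicates a strong positive linear association, a value close to -1 a strong negative one, and a value near 0 little linear association. Correlation alone does not show that one variable causes the other.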

7. Sample Size Determination and Power Analysis:

Sample size determination is a critical aspect of research design, as it affects the validity and reliability of your findings. We will discuss methods for estimating sample size based on statistical power analysis, ensuring that your study has sufficient statistical power to detect meaningful effects. Understanding sample size determination is essential for planning robust research studies.

Conclusion:

Statistical analysis is an indispensable tool for conducting high-quality research. This beginner’s guide has provided an overview of key concepts and techniques specifically tailored for research work, enabling you to enhance the credibility and reliability of your findings. By understanding the importance of statistical analysis, collecting and organizing data effectively, performing exploratory data analysis, conducting hypothesis testing, utilizing parametric and non-parametric tests, and considering sample size determination, you will be well-equipped to carry out rigorous research and contribute valuable insights to your field. Remember, continuous learning, practice, and seeking guidance from statistical experts will further enhance your skills in statistical analysis for research.

Statistical analysis is a systematic method of gathering, analyzing, interpreting, presenting, and deriving conclusions from data. It employs statistical tools to find patterns, trends, and links within datasets to facilitate informed decision-making. Data collection, description, exploratory data analysis (EDA), inferential statistics, statistical modeling, data visualization, and interpretation are all important aspects of statistical analysis.

Used in quantitative research to gather and analyze data, statistical data analysis provides a more comprehensive view of operational landscapes and gives organizations the insights they need to make strategic, evidence-based decisions. Here’s what you need to know.

How Does Statistical Analysis Work?

The strategic use of statistical analysis procedures helps organizations get insights from data to make educated decisions. Statistical analytic approaches, which include everything from data extraction to the creation of actionable recommendations, provide a systematic approach to comprehending, interpreting, and using large datasets. By navigating these complex processes, businesses uncover hidden patterns in their data and extract important insights that can be used as a compass for strategic decision-making.

Extracting and Organizing Raw Data

Extracting and organizing raw data entails gathering information from a variety of sources, combining datasets, and assuring data quality through rigorous cleaning. In healthcare, for example, this method may comprise combining patient information from several systems to assess patterns in illness prevalence and treatment outcomes.

Identifying Essential Data

Identifying key data—and excluding irrelevant data—necessitates a thorough analysis of the dataset. Analysts use variable selection strategies to filter datasets to emphasize characteristics most relevant to the objectives, resulting in more focused and meaningful analysis.

Developing Innovative Collection Strategies

Innovative data collection procedures include everything from creating successful surveys and organizing experiments to data mining to extract data from a wide range of sources. Researchers in environmental studies might use remote sensing technology to obtain data on how plants and land cover change over time. Modern approaches such as satellite photography and machine learning algorithms help scientists improve the depth and precision of data collecting, opening the way for more nuanced analyses and informed decision-making.

Collaborating With Experts

Collaborating with clients and specialists to review data analysis tactics can align analytical approaches with organizational objectives. In finance, for example, engaging with investment professionals ensures that data analysis tactics analyze market trends and make educated investment decisions. Analysts may modify their tactics by incorporating comments from domain experts, making the ensuing study more relevant and applicable to the given sector or subject.

Creating Reports and Visualizations

Creating data reports and visualizations entails generating extensive summaries and graphical representations for clarity. In e-commerce, reports might indicate user purchase trends using visualizations like heatmaps to highlight popular goods. Businesses that display data in a visually accessible format can rapidly analyze patterns and make data-driven choices that optimize product offers and improve the entire consumer experience.

Analyzing Data Findings

This step entails using statistical tools to discover patterns, correlations, and insights in the dataset. In manufacturing, data analysis can identify connections between production factors and product faults, leading process improvement efforts. Engineers may discover and resolve fundamental causes using statistical tools and methodologies, resulting in higher product quality and operational efficiency.

Acting on the Data

Synthesizing findings from data analysis leads to the development of organizational recommendations. In the hospitality business, for example, data analysis might indicate trends in client preferences, resulting in strategic suggestions for tailored services and marketing efforts. Continuous improvement ideas based on analytical results help the firm adapt to a changing market scenario and compete more effectively.

The Importance of Statistical Analysis

The importance of statistical analysis goes far beyond data processing; it is the cornerstone in giving vital insights required for strategic decision-making, especially in the dynamic area of presenting new items to the market. Statistical analysis, which meticulously examines data, not only reveals trends and patterns but also provides a full insight into customer behavior, preferences, and market dynamics.

This abundance of information is a guiding force for enterprises, allowing them to make data-driven decisions that optimize product launches, improve market positioning, and ultimately drive success in an ever-changing business landscape.

2 Types of Statistical Analysis

There are two forms of statistical analysis, descriptive statistics and statistical inference, both of which play an important role in ensuring that data is correct and can be communicated clearly.

By combining the capabilities of descriptive statistics with statistical inference, analysts can completely characterize the data and draw relevant insights that extend beyond the observed sample, guaranteeing conclusions that are resilient, trustworthy, and applicable to a larger context. This dual method improves the overall dependability of statistical analysis, making it an effective tool for obtaining important information from a variety of datasets.

Descriptive Statistics

This type of statistical analysis is about summarizing data so people can understand it. Raw data doesn’t mean much on its own, and the sheer quantity can be overwhelming to digest. Descriptive statistical analysis condenses the data into summary measures and into graphs, charts, and other visuals that help people understand the meaning of the values in the data set. Descriptive analysis isn’t about explaining or drawing conclusions, though. It is only the practice of digesting and summarizing raw data to better understand it.

Statistical Inference

Inferential statistics involves more upfront hypotheses and follow-up explanation than descriptive statistics. In this type of statistical analysis, you focus less on the entire collection of raw data and instead take a sample and test your hypothesis or initial estimate. From this sample and the results of your experiment, you can use inferential statistics to draw conclusions about the larger population the sample came from.

6 Benefits of Statistical Analysis

Statistical analysis enables a methodical and data-driven approach to decision-making and helps organizations maximize the value of their data, resulting in increased efficiency, informed decision-making, and innovation. Here are six of the most important benefits:

- Competitive Analysis: Statistical analysis illuminates your company’s objective value—knowing common metrics like sales revenue and net profit margin allows you to compare your performance to competitors.

- True Sales Visibility: The sales team says it is having a good week, and the numbers look good, but how can you accurately measure the impact on sales numbers? Statistical data analysis measures sales data and associates it with specific timeframes, products, and individual salespeople, which gives better visibility of marketing and sales successes.

- Predictive Analytics: Predictive analytics allows you to use past numerical data to predict future outcomes and areas where your team should make adjustments to improve performance.

- Risk Assessment and Management: Statistical tools help organizations analyze and manage risks more efficiently. Organizations may use historical data to identify possible hazards, anticipate future outcomes, and apply risk mitigation methods, lowering uncertainty and improving overall risk management.

- Resource Optimization: Statistical analysis identifies areas of inefficiency or underutilization, improving personnel management, budget allocation, and resource deployment and leading to increased operational efficiency and cost savings.

- Informed Decision Making: Statistical analysis allows businesses to base judgments on factual data rather than intuition. Data analysis allows firms to uncover patterns, trends, and correlations, resulting in better informed and strategic decision-making processes.

5-Step Statistical Analysis Process

Here are five essential steps for executing a thorough statistical analysis. By carefully following these stages, analysts may undertake a complete and rigorous statistical analysis, creating the framework for informed decision-making and providing actionable insights for both individuals and businesses.

Step 1: Data Identification and Description

Identify and clarify the features of the data to be analyzed. Understanding the nature of the dataset is critical in building the framework for a thorough statistical analysis.

Step 2: Establishing the Population Connection

Establish a meaningful relationship between the studied data and the larger population from which the sample is drawn. This stage entails contextualizing the data within the greater framework of the population it represents, increasing the analysis’s relevance and application.

Step 3: Model Construction and Synthesis

Create a model that accurately captures and synthesizes the complex relationship between the population under study and the unique dataset. Creating a well-defined model is essential for analyzing data and generating useful insights.

Step 4: Model Validity Verification

Subject the model to thorough testing and inspection to ensure its validity. This stage checks that the model properly represents the population’s underlying dynamics, which improves the trustworthiness of future analysis and results.

Step 5: Predictive Analysis of Future Trends

Using predictive analytics tools, you can take your analysis to the next level. This final stage forecasts future trends and occurrences based on the developed model, providing significant insights into probable developments and assisting with proactive decision-making.

5 Statistical Analysis Methods

There are five common statistical analysis methods, each adapted to distinct data goals and guiding rapid decision-making. The approach you choose is determined by the nature of your dataset and the goals you want to achieve.

Mean

The mean (the average, or center point, of the dataset) is computed by adding all the values and dividing by the number of observations. In real-world situations, the mean is used to calculate a representative value that captures the usual magnitude of a group of observations. For example, in educational evaluations, the mean score of a class provides educators with a concise measure of overall performance, allowing them to determine the general level of comprehension.

Standard Deviation

The standard deviation measures the degree of variance or dispersion within a dataset. By demonstrating how far individual values differ from the mean, it provides information about the dataset’s general dispersion. In practice, the standard deviation is used in financial analysis to analyze the volatility of stock prices. A higher standard deviation indicates greater price volatility, which helps investors evaluate and manage risks associated with various investment opportunities.
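To make the link between the mean and the standard deviation concrete, here is a small sketch using Python's built-in `statistics` module. The two series of daily returns are hypothetical. They were chosen to share the same mean while differing sharply in spread.

```python
import statistics

# Hypothetical daily returns (in percent) for two stocks with the same mean.
stable = [0.9, 1.1, 1.0, 0.8, 1.2]
volatile = [-3.0, 5.0, 1.0, -2.0, 4.0]

mean_stable = statistics.mean(stable)
mean_volatile = statistics.mean(volatile)

# statistics.stdev is the sample standard deviation (divides by n - 1).
sd_stable = statistics.stdev(stable)
sd_volatile = statistics.stdev(volatile)

print(f"stable:   mean={mean_stable:.2f}, sd={sd_stable:.2f}")
print(f"volatile: mean={mean_volatile:.2f}, sd={sd_volatile:.2f}")
```

Both series average the same return, so the mean alone cannot tell them apart. The larger standard deviation of the second series is what signals the higher volatility.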

Regression

Regression analysis seeks to understand and predict connections between variables. This statistical approach is used in a variety of disciplines, including marketing, where it helps anticipate sales based on advertising spend. For example, a corporation may use regression analysis to assess how changes in advertising spending affect product sales, allowing for more efficient resource allocation for future marketing efforts.
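The advertising example can be sketched as a simple linear regression fitted by ordinary least squares. This is only one of many regression techniques. The figures and the helper name `fit_line` are hypothetical and serve purely as illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the ratio of the co-variation of x and y to the variation of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: advertising spend and sales, both in thousands of dollars.
ad_spend = [10, 20, 30, 40, 50]
sales = [25, 45, 62, 85, 103]

slope, intercept = fit_line(ad_spend, sales)

# Predict sales for a spend level that was not observed.
predicted_sales = intercept + slope * 60
print(f"sales = {intercept:.2f} + {slope:.2f} * ad_spend")
print(f"predicted sales at spend 60: {predicted_sales:.1f}")
```

The slope estimates how much sales change for each additional unit of advertising spend. Predictions far outside the observed range of spend should be treated with caution, since the linear pattern may not hold there.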

Hypothesis Testing

Hypothesis testing is used to determine the validity of a claim or hypothesis regarding a population parameter. In medical research, hypothesis testing may be used to compare the efficacy of a novel medicine against a traditional treatment. Researchers develop a null hypothesis, implying that there is no difference between treatments, and then use statistical tests to assess if there is sufficient evidence to reject the null hypothesis in favor of the alternative.
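One way to illustrate this logic without special libraries is an exact permutation test, sketched below. It is a swapped-in technique chosen for simplicity, not necessarily what a medical study would use. The null hypothesis is that treatment labels do not matter, so every relabelling of the pooled data is equally likely. The recovery times and the function name `permutation_p_value` are hypothetical.

```python
from itertools import combinations

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test for a difference in means."""
    pooled = group_a + group_b
    n_a = len(group_a)
    n_b = len(group_b)
    total = sum(pooled)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)
    extreme = 0
    count = 0
    # Enumerate every way of relabelling n_a of the pooled values as group A.
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        # Small tolerance guards against floating-point rounding.
        if diff >= observed - 1e-12:
            extreme += 1
        count += 1
    return extreme / count

# Hypothetical recovery times in days under each treatment.
new_drug = [5, 6, 7, 6, 5]
standard = [9, 8, 10, 9, 11]

p = permutation_p_value(new_drug, standard)
print(f"p-value = {p:.4f}")
```

If the p-value falls below the chosen significance level (0.05 is a common convention), the null hypothesis of no difference is rejected. Full enumeration is only practical for small samples, because the number of relabellings grows very quickly.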

Sample Size Determination

Choosing an adequate sample size is critical for producing trustworthy and relevant results in a study. In clinical studies, for example, researchers determine the sample size to ensure that the study has the statistical power to detect differences in treatment results. A well-determined sample size strikes a compromise between the requirement for precision and practical factors, thereby strengthening the study’s results and helping evidence-based decision-making.
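As a rough sketch of how such a calculation can work, the function below uses a common normal-approximation formula for comparing two group means: n per group = 2 * ((z for alpha/2 + z for power) * sd / effect)^2. The formula choice, the function name `sample_size_per_group`, and the example numbers are assumptions made for illustration. Real studies often use more refined methods.

```python
import math
from statistics import NormalDist

def sample_size_per_group(effect, sd, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided, two-sample comparison of means.

    effect: smallest difference in means worth detecting (assumed)
    sd:     standard deviation of the outcome, assumed equal in both groups
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    n = 2 * ((z_alpha + z_power) * sd / effect) ** 2
    return math.ceil(n)  # round up to a whole participant

# Hypothetical trial: detect a 5-point difference when the outcome sd is 10.
n = sample_size_per_group(effect=5.0, sd=10.0)
print(f"participants needed per group: {n}")
```

Halving the effect size to be detected roughly quadruples the required sample, which is why the smallest meaningful difference has to be decided before data collection starts.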

Bottom Line: Identify Patterns and Trends With Statistical Analysis

Statistical analysis can provide organizations with insights into customer behavior, market dynamics, and operational efficiency. This information simplifies decision-making and prepares organizations to adapt and prosper in changing situations. Organizations that use top-tier statistical analysis tools can leverage the power of data, uncover trends, and stay at the forefront of innovation, assuring a competitive advantage in today’s ever-changing technological world.

Ever wondered how we make sense of vast amounts of data to make informed decisions? Statistical analysis is the answer. In our data-driven world, statistical analysis serves as a powerful tool to uncover patterns, trends, and relationships hidden within data. From predicting sales trends to assessing the effectiveness of new treatments, statistical analysis empowers us to derive meaningful insights and drive evidence-based decision-making across various fields and industries. In this guide, we'll explore the fundamentals of statistical analysis, popular methods, software tools, practical examples, and best practices to help you harness the power of statistics effectively. Whether you're a novice or an experienced analyst, this guide will equip you with the knowledge and skills to navigate the world of statistical analysis with confidence.

What is Statistical Analysis?

Statistical analysis is a methodical process of collecting, analyzing, interpreting, and presenting data to uncover patterns, trends, and relationships. It involves applying statistical techniques and methodologies to make sense of complex data sets and draw meaningful conclusions.

Importance of Statistical Analysis

Statistical analysis plays a crucial role in various fields and industries due to its numerous benefits and applications:

- Informed Decision Making: Statistical analysis provides valuable insights that inform decision-making processes in business, healthcare, government, and academia. By analyzing data, organizations can identify trends, assess risks, and optimize strategies for better outcomes.

- Evidence-Based Research: Statistical analysis is fundamental to scientific research, enabling researchers to test hypotheses, draw conclusions, and validate theories using empirical evidence. It helps researchers quantify relationships, assess the significance of findings, and advance knowledge in their respective fields.

- Quality Improvement: In manufacturing and quality management, statistical analysis helps identify defects, improve processes, and enhance product quality. Techniques such as Six Sigma and Statistical Process Control (SPC) are used to monitor performance, reduce variation, and achieve quality objectives.

- Risk Assessment: In finance, insurance, and investment, statistical analysis is used for risk assessment and portfolio management. By analyzing historical data and market trends, analysts can quantify risks, forecast outcomes, and make informed decisions to mitigate financial risks.

- Predictive Modeling: Statistical analysis enables predictive modeling and forecasting in various domains, including sales forecasting, demand planning, and weather prediction. By analyzing historical data patterns, predictive models can anticipate future trends and outcomes with reasonable accuracy.

- Healthcare Decision Support: In healthcare, statistical analysis is integral to clinical research, epidemiology, and healthcare management. It helps healthcare professionals assess treatment effectiveness, analyze patient outcomes, and optimize resource allocation for improved patient care.

Statistical Analysis Applications

Statistical analysis finds applications across diverse domains and disciplines, including:

- Business and Economics: Market research, financial analysis, econometrics, and business intelligence.

- Healthcare and Medicine: Clinical trials, epidemiological studies, healthcare outcomes research, and disease surveillance.

- Social Sciences: Survey research, demographic analysis, psychology experiments, and public opinion polls.

- Engineering: Reliability analysis, quality control, process optimization, and product design.

- Environmental Science: Environmental monitoring, climate modeling, and ecological research.

- Education: Educational research, assessment, program evaluation, and learning analytics.

- Government and Public Policy: Policy analysis, program evaluation, census data analysis, and public administration.

- Technology and Data Science: Machine learning, artificial intelligence, data mining, and predictive analytics.

These applications demonstrate the versatility and significance of statistical analysis in addressing complex problems and informing decision-making across various sectors and disciplines.

Fundamentals of Statistics

Understanding the fundamentals of statistics is crucial for conducting meaningful analyses. Let's delve into some essential concepts that form the foundation of statistical analysis.

Basic Concepts

Statistics is the science of collecting, organizing, analyzing, and interpreting data to make informed decisions or conclusions. To embark on your statistical journey, familiarize yourself with these fundamental concepts:

- Population vs. Sample: A population comprises all the individuals or objects of interest in a study, while a sample is a subset of the population selected for analysis. Understanding the distinction between these two entities is vital, as statistical analyses often rely on samples to draw conclusions about populations.

- Independent Variables: Variables that are manipulated or controlled in an experiment.

- Dependent Variables: Variables that are observed or measured in response to changes in independent variables.

- Parameters vs. Statistics: Parameters are numerical measures that describe a population, whereas statistics are numerical measures that describe a sample. For instance, the population mean is denoted by μ (mu), while the sample mean is denoted by x̄ (x-bar).
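The distinction between a parameter and a statistic can be sketched with simulated data. Everything here is hypothetical: the population is generated artificially, whereas in practice the full population is rarely available, which is exactly why samples are used.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is reproducible

# Hypothetical population: heights in cm of 10,000 individuals.
population = [random.gauss(170, 10) for _ in range(10_000)]
mu = statistics.mean(population)      # parameter: the population mean

# Draw a simple random sample of 100 individuals without replacement.
sample = random.sample(population, 100)
x_bar = statistics.mean(sample)       # statistic: the sample mean

print(f"population mean (mu):  {mu:.2f}")
print(f"sample mean (x-bar):   {x_bar:.2f}")
```

The sample mean will generally be close to, but not exactly equal to, the population mean. Drawing a different sample would give a slightly different x̄, while μ stays fixed.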

Descriptive Statistics

Descriptive statistics involve methods for summarizing and describing the features of a dataset. These statistics provide insights into the central tendency, variability, and distribution of the data. Standard measures of descriptive statistics include:

- Mean : The arithmetic average of a set of values, calculated by summing all values and dividing by the number of observations.

- Median : The middle value in a sorted list of observations.

- Mode : The value that appears most frequently in a dataset.

- Range : The difference between the maximum and minimum values in a dataset.

- Variance : The average of the squared differences from the mean.

- Standard Deviation : The square root of the variance, providing a measure of the average distance of data points from the mean.

- Graphical Techniques : Graphical representations, including histograms, box plots, and scatter plots, offer visual insights into the distribution and relationships within a dataset. These visualizations aid in identifying patterns, outliers, and trends.
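As a minimal sketch, the numerical measures listed above can be computed with Python's standard `statistics` module. The exam scores are made up for illustration.

```python
import statistics

# Hypothetical exam scores, invented for illustration.
scores = [70, 80, 80, 90, 100]

mean = statistics.mean(scores)                 # sum of values / number of values
median = statistics.median(scores)             # middle value of the sorted list
mode = statistics.mode(scores)                 # most frequent value
value_range = max(scores) - min(scores)        # maximum minus minimum
pop_variance = statistics.pvariance(scores)    # squared deviations divided by n
sample_variance = statistics.variance(scores)  # squared deviations divided by n - 1
pop_std = statistics.pstdev(scores)            # square root of pop_variance

print(mean, median, mode, value_range)
print(pop_variance, sample_variance, round(pop_std, 3))
```

Which variance to report depends on whether the data are treated as the whole population (`pvariance`) or as a sample from a larger one (`variance`).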

Inferential Statistics

Inferential statistics enable researchers to draw conclusions or make predictions about populations based on sample data. These methods allow for generalizations beyond the observed data. Fundamental techniques in inferential statistics include:

- Null Hypothesis (H0) : The hypothesis that there is no difference or relationship in the population. It is the default position that a hypothesis test tries to find evidence against.

- Alternative Hypothesis (H1) : The hypothesis that there is a difference or relationship in the population.

- Confidence Intervals : Confidence intervals provide a range of plausible values for a population parameter. They offer insights into the precision of sample estimates and the uncertainty associated with those estimates.

- Regression Analysis : Regression analysis examines the relationship between one or more independent variables and a dependent variable. It allows for the prediction of the dependent variable based on the values of the independent variables.

- Sampling Methods : Sampling methods, such as simple random sampling, stratified sampling, and cluster sampling, are employed to ensure that sample data are representative of the population of interest. These methods help mitigate biases and improve the generalizability of results.
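To illustrate the confidence interval idea, here is a rough sketch of an interval for a population mean. It uses a normal approximation (a z critical value) as a simplifying assumption; for small samples a t critical value would give a somewhat wider interval. The function name and the measurements are hypothetical.

```python
import math
import statistics

def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for the mean (normal approximation)."""
    n = len(sample)
    x_bar = statistics.mean(sample)
    # Standard error of the mean: sample standard deviation / sqrt(n).
    standard_error = statistics.stdev(sample) / math.sqrt(n)
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return x_bar - z * standard_error, x_bar + z * standard_error

# Hypothetical measurements, invented for illustration.
sample = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0, 5.1, 4.9]
low, high = mean_confidence_interval(sample)
print(f"approximate 95% CI for the mean: ({low:.3f}, {high:.3f})")
```

A narrower interval indicates a more precise estimate; increasing the sample size shrinks the standard error and therefore the interval.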

Probability Distributions

Probability distributions describe the likelihood of different outcomes in a statistical experiment. Understanding these distributions is essential for modeling and analyzing random phenomena. Some common probability distributions include:

- Normal Distribution : The normal distribution, also known as the Gaussian distribution, is characterized by a symmetric, bell-shaped curve. Many natural phenomena follow this distribution, making it widely applicable in statistical analysis.

- Binomial Distribution : The binomial distribution describes the number of successes in a fixed number of independent Bernoulli trials. It is commonly used to model binary outcomes, such as success or failure, heads or tails.

- Poisson Distribution : The Poisson distribution models the number of events occurring in a fixed interval of time or space, assuming events happen independently at a constant average rate. It is often used to analyze counts of relatively rare events, such as the number of customer arrivals in a queue within a given time period.
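The three distributions above can be evaluated directly with the standard library. This is a small sketch; the helper names `binomial_pmf` and `poisson_pmf` and the example numbers are chosen here for illustration.

```python
import math
from statistics import NormalDist

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """Probability of exactly k events when the average count is lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)

# Normal: probability of falling within one standard deviation of the mean.
standard_normal = NormalDist(mu=0, sigma=1)
within_one_sd = standard_normal.cdf(1) - standard_normal.cdf(-1)

# Binomial: exactly 5 heads in 10 fair coin tosses.
p_five_heads = binomial_pmf(5, 10, 0.5)

# Poisson: no arrivals in a period that averages 3 arrivals.
p_no_arrivals = poisson_pmf(0, 3)

print(round(within_one_sd, 4), round(p_five_heads, 4), round(p_no_arrivals, 4))
```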

Types of Statistical Analysis

Statistical analysis encompasses a diverse range of methods and approaches, each suited to different types of data and research questions. Understanding the various types of statistical analysis is essential for selecting the most appropriate technique for your analysis. Let's explore some common distinctions in statistical analysis methods.

Parametric vs. Non-parametric Analysis

Parametric and non-parametric analyses represent two broad categories of statistical methods, each with its own assumptions and applications.

- Parametric Analysis : Parametric methods assume that the data follow a specific probability distribution, often the normal distribution. These methods rely on estimating parameters (e.g., means, variances) from the data. When their assumptions hold, parametric tests typically provide more statistical power than non-parametric alternatives; when the assumptions are violated, their results can be misleading. Examples of parametric tests include t-tests, ANOVA, and linear regression.

- Non-parametric Analysis : Non-parametric methods make fewer assumptions about the underlying distribution of the data. Instead of estimating parameters, non-parametric tests rely on ranks or other distribution-free techniques. Non-parametric tests are often used when data do not meet the assumptions of parametric tests or when dealing with ordinal or non-normal data. Examples of non-parametric tests include the Wilcoxon rank-sum test, Kruskal-Wallis test, and Spearman correlation.
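One way to see the difference is to compare Pearson correlation (which measures linear association on the raw values) with Spearman correlation (which applies the same formula to ranks). The sketch below is hand-rolled for illustration; the simple `ranks` helper assumes there are no tied values, and the data are invented.

```python
import math

def pearson(x, y):
    """Pearson correlation: linear association between raw values."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)

def ranks(values):
    """Rank values from 1 (smallest) upward. Assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical data with a monotonic but non-linear relationship (y = x^3).
x = [1, 2, 3, 4, 5]
y = [1, 8, 27, 64, 125]

r_pearson = pearson(x, y)
r_spearman = spearman(x, y)
print(f"Pearson:  {r_pearson:.3f}")
print(f"Spearman: {r_spearman:.3f}")
```

Because the rank-based measure only uses the ordering of the values, it reports a perfect monotonic relationship here, while the Pearson coefficient is pulled below 1 by the curvature.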

Descriptive vs. Inferential Analysis

Descriptive and inferential analyses serve distinct purposes in statistical analysis, focusing on summarizing data and making inferences about populations, respectively.

- Descriptive Analysis : Descriptive statistics aim to describe and summarize the features of a dataset. These statistics provide insights into the central tendency, variability, and distribution of the data. Descriptive analysis techniques include measures of central tendency (e.g., mean, median, mode), measures of dispersion (e.g., variance, standard deviation), and graphical representations (e.g., histograms, box plots).

- Inferential Analysis : Inferential statistics involve making inferences or predictions about populations based on sample data. These methods allow researchers to generalize findings from the sample to the larger population. Inferential analysis techniques include hypothesis testing, confidence intervals, regression analysis, and sampling methods. These methods help researchers draw conclusions about population parameters, such as means, proportions, or correlations, based on sample data.

Exploratory vs. Confirmatory Analysis

Exploratory and confirmatory analyses represent two different approaches to data analysis, each serving distinct purposes in the research process.

- Exploratory Analysis : Exploratory data analysis (EDA) focuses on exploring data to discover patterns, relationships, and trends. EDA techniques involve visualizing data, identifying outliers, and generating hypotheses for further investigation. Exploratory analysis is particularly useful in the early stages of research when the goal is to gain insights and generate hypotheses rather than confirm specific hypotheses.

- Confirmatory Analysis : Confirmatory data analysis involves testing predefined hypotheses or theories based on prior knowledge or assumptions. Confirmatory analysis follows a structured approach, where hypotheses are tested using appropriate statistical methods. Confirmatory analysis is common in hypothesis-driven research, where the goal is to validate or refute specific hypotheses using empirical evidence. Techniques such as hypothesis testing, regression analysis, and experimental design are often employed in confirmatory analysis.

Methods of Statistical Analysis

Statistical analysis employs various methods to extract insights from data and make informed decisions. Let's explore some of the key methods used in statistical analysis and their applications.

Hypothesis Testing

Hypothesis testing is a fundamental concept in statistics, allowing researchers to make decisions about population parameters based on sample data. The process involves formulating null and alternative hypotheses, selecting an appropriate test statistic, determining the significance level, and interpreting the results. Standard hypothesis tests include:

- t-tests : Used to compare means between two groups.

- ANOVA (Analysis of Variance) : Extends the t-test to compare means across multiple groups.

- Chi-square test : Used to assess the association between categorical variables.
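The steps described above (state hypotheses, compute a test statistic, compare against a significance level) can be sketched for a chi-square test of independence on a 2x2 table. The function name and the counts are hypothetical, and no continuity correction is applied. For one degree of freedom the p-value can be obtained exactly from the complementary error function, so no external library is needed.

```python
import math

def chi_square_2x2(table):
    """Chi-square test of independence for a 2x2 table of counts."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were independent (H0).
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value

# Hypothetical counts: rows are two groups, columns are outcome yes / no.
table = [[30, 10], [20, 20]]
chi2, p_value = chi_square_2x2(table)
alpha = 0.05  # significance level chosen before looking at the data
print(f"chi-square = {chi2:.3f}, p = {p_value:.4f}")
print("reject H0" if p_value < alpha else "fail to reject H0")
```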

Regression Analysis

Regression analysis explores the relationship between one or more independent variables and a dependent variable. It is widely used in predictive modeling and understanding the impact of variables on outcomes. Key types of regression analysis include:

- Simple Linear Regression : Examines the linear relationship between one independent variable and a dependent variable.

- Multiple Linear Regression : Extends simple linear regression to analyze the relationship between multiple independent variables and a dependent variable.

- Logistic Regression : Used for predicting binary outcomes or modeling probabilities.
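As an illustration of simple linear regression, the sketch below fits a line by ordinary least squares using the textbook closed-form formulas. The function name `fit_line` and the data points are invented for the example.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Slope = covariance of x and y divided by the variance of x.
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data lying exactly on the line y = 1 + 2x.
x = [1, 2, 3, 4, 5]
y = [3, 5, 7, 9, 11]

slope, intercept = fit_line(x, y)
prediction = intercept + slope * 6  # predict the dependent variable at x = 6
print(slope, intercept, prediction)
```

Real data rarely fall exactly on a line, in which case the fitted line minimizes the sum of squared vertical distances to the points.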

Analysis of Variance (ANOVA)

ANOVA is a statistical technique used to compare means across two or more groups. It partitions the total variability in the data into components attributable to different sources, such as between-group differences and within-group variability. ANOVA is commonly used in experimental design and hypothesis testing scenarios.
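The partition into between-group and within-group variability can be sketched as a one-way ANOVA F statistic. This is a minimal illustration with made-up groups; it computes only the F ratio, and turning that into a p-value would require the F distribution, which is not included here.

```python
def one_way_anova_f(groups):
    """F statistic for a one-way ANOVA on a list of groups."""
    all_values = [v for group in groups for v in group]
    n = len(all_values)          # total number of observations
    k = len(groups)              # number of groups
    grand_mean = sum(all_values) / n
    group_means = [sum(group) / len(group) for group in groups]

    # Between-group variability: group means around the grand mean.
    ss_between = sum(
        len(group) * (mean - grand_mean) ** 2
        for group, mean in zip(groups, group_means)
    )
    # Within-group variability: observations around their own group mean.
    ss_within = sum(
        (v - mean) ** 2
        for group, mean in zip(groups, group_means)
        for v in group
    )
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within

# Hypothetical measurements for three groups.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_statistic = one_way_anova_f(groups)
print(f"F = {f_statistic:.2f}")
```

A large F value means the group means differ by more than the within-group scatter would suggest.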

Time Series Analysis

Time series analysis deals with analyzing data collected or recorded at successive time intervals. It helps identify patterns, trends, and seasonality in the data. Time series analysis techniques include:

- Trend Analysis : Identifying long-term trends or patterns in the data.

- Seasonal Decomposition : Separating the data into seasonal, trend, and residual components.

- Forecasting : Predicting future values based on historical data.
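A very simple way to illustrate trend estimation and forecasting is a moving average. The sketch below is deliberately naive: it smooths the series with a fixed window and uses the most recent window's average as the next-period forecast. The series and the window size are invented, and real forecasting methods are considerably more sophisticated.

```python
def moving_average(series, window):
    """Average of each run of `window` consecutive values."""
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]

# Hypothetical monthly values with a steady upward trend.
series = [10, 12, 14, 16, 18, 20]

trend = moving_average(series, window=3)
naive_forecast = trend[-1]  # carry the latest smoothed level forward
print(trend, naive_forecast)
```

Because this naive forecast ignores the upward slope, it will lag behind a trending series; that limitation is one reason dedicated forecasting models exist.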

Survival Analysis

Survival analysis is used to analyze time-to-event data, such as time until death, failure, or occurrence of an event of interest. It is widely used in medical research, engineering, and social sciences to analyze survival probabilities and hazard rates over time.
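One common estimator of survival probabilities is the Kaplan-Meier estimator, which is named here as an example rather than taken from the text above. The sketch below is a bare-bones version with invented data: each subject has an observed time and a flag saying whether the event occurred (1) or the observation was censored (0).

```python
def kaplan_meier(times, events):
    """Kaplan-Meier survival curve as a list of (time, survival) pairs.

    times:  observed duration for each subject
    events: 1 if the event occurred at that time, 0 if censored
    """
    survival = 1.0
    at_risk = len(times)
    curve = []
    for t in sorted(set(times)):
        occurred = sum(1 for ti, e in zip(times, events) if ti == t and e == 1)
        leaving = sum(1 for ti in times if ti == t)  # events plus censored
        if occurred:
            # Multiply by the fraction of at-risk subjects surviving time t.
            survival *= 1 - occurred / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve

# Hypothetical follow-up times (e.g. months) and event indicators.
times = [2, 3, 3, 5, 8]
events = [1, 1, 0, 1, 0]

curve = kaplan_meier(times, events)
for t, s in curve:
    print(f"t = {t}: estimated survival = {s:.2f}")
```

Censored subjects still count as "at risk" up to the time they leave the study, which is what lets the estimator use incomplete follow-up.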

Factor Analysis

Factor analysis is a statistical method used to identify underlying factors or latent variables that explain patterns of correlations among observed variables. It is commonly used in psychology, sociology, and market research to uncover underlying dimensions or constructs.

Cluster Analysis

Cluster analysis is a multivariate technique that groups similar objects or observations into clusters or segments based on their characteristics. It is widely used in market segmentation, image processing, and biological classification.
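As one illustration of clustering, the sketch below runs a basic k-means procedure on one-dimensional data. K-means is only one of many clustering algorithms; the function name, the starting centroids, and the values are all assumptions made for the example.

```python
def k_means_1d(values, centroids, iterations=10):
    """Basic k-means on 1-D data, starting from the given centroids."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical values forming two obvious groups.
values = [1, 2, 3, 10, 11, 12]
centroids, clusters = k_means_1d(values, centroids=[1, 12])
print(centroids, clusters)
```

With real data the result can depend on the starting centroids, so implementations typically try several initializations.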

Principal Component Analysis (PCA)

PCA is a dimensionality reduction technique used to transform high-dimensional data into a lower-dimensional space while preserving most of the variability in the data. It identifies orthogonal axes (principal components), ordered so that each successive component captures as much of the remaining variance as possible. PCA is useful for data visualization, feature extraction, and data compression.
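For two variables, the principal components can be worked out by hand from the 2x2 covariance matrix, whose eigenvalues have a closed form. The sketch below reports only the share of variance captured by each component; the function name and the data are invented, and practical PCA on many variables would use a linear algebra library instead.

```python
import math
import statistics

def pca_2d_explained_variance(x, y):
    """Share of total variance captured by each principal component (2-D)."""
    var_x = statistics.variance(x)
    var_y = statistics.variance(y)
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (len(x) - 1)

    # Eigenvalues of the covariance matrix [[var_x, cov_xy], [cov_xy, var_y]].
    centre = (var_x + var_y) / 2
    spread = math.sqrt(((var_x - var_y) / 2) ** 2 + cov_xy**2)
    eigenvalues = (centre + spread, centre - spread)

    total_variance = var_x + var_y
    return [value / total_variance for value in eigenvalues]

# Hypothetical, strongly correlated measurements.
x = [1, 2, 3, 4, 5]
y = [1.1, 1.9, 3.2, 3.8, 5.0]

ratios = pca_2d_explained_variance(x, y)
print([round(r, 4) for r in ratios])
```

When the first ratio is close to 1, a single component summarizes almost all of the variability, so the second dimension can be dropped with little loss.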

How to Choose the Right Statistical Analysis Method?

Selecting the appropriate statistical method is crucial for obtaining accurate and meaningful results from your data analysis.

Understanding Data Types and Distribution

Before choosing a statistical method, it's essential to understand the types of data you're working with and their distribution. Different statistical methods are suitable for different types of data:

- Continuous vs. Categorical Data : Determine whether your data are continuous (e.g., height, weight) or categorical (e.g., gender, race). Parametric methods such as t-tests and regression are typically used for continuous data, while non-parametric methods like chi-square tests are suitable for categorical data.

- Normality : Assess whether your data follow a normal distribution. Parametric methods often assume normality, so if your data are not normally distributed, non-parametric methods may be more appropriate.

Assessing Assumptions

Many statistical methods rely on certain assumptions about the data. Before applying a method, it's essential to assess whether these assumptions are met:

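- Independence : Ensure that observations are independent of each other. Violations of the independence assumption (for example, repeated measurements on the same subject treated as separate observations) can distort standard errors and lead to misleading conclusions.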

- Homogeneity of Variance : Verify that variances are approximately equal across groups, especially in ANOVA and regression analyses. Levene's test or Bartlett's test can be used to assess homogeneity of variance.

- Linearity : Check for linear relationships between variables, particularly in regression analysis. Residual plots can help diagnose violations of linearity assumptions.

Considering Research Objectives

Your research objectives should guide the selection of the appropriate statistical method.

- What are you trying to achieve with your analysis? : Determine whether you're interested in comparing groups, predicting outcomes, exploring relationships, or identifying patterns.

- What type of data are you analyzing? : Choose methods that are suitable for your data type and research questions.

- Are you testing specific hypotheses or exploring data for insights? : Confirmatory analyses involve testing predefined hypotheses, while exploratory analyses focus on discovering patterns or relationships in the data.

Consulting Statistical Experts

If you're unsure about the most appropriate statistical method for your analysis, don't hesitate to seek advice from statistical experts or consultants:

- Collaborate with Statisticians : Statisticians can provide valuable insights into the strengths and limitations of different statistical methods and help you select the most appropriate approach.

- Utilize Resources : Take advantage of online resources, forums, and statistical software documentation to learn about different methods and their applications.

- Peer Review : Consider seeking feedback from colleagues or peers familiar with statistical analysis to validate your approach and ensure rigor in your analysis.

By carefully considering these factors and consulting with experts when needed, you can confidently choose a suitable statistical method to address your research questions and obtain reliable results.

Statistical Analysis Software

Choosing the right software for statistical analysis is crucial for efficiently processing and interpreting your data. In addition to statistical analysis software, it's essential to consider tools for data collection, which lay the foundation for meaningful analysis.

What is Statistical Analysis Software?