- Research article

- Open access

- Published: 16 March 2013

Overview of data-synthesis in systematic reviews of studies on outcome prediction models

- Tobias van den Berg 1 ,

- Martijn W Heymans 1 ,

- Stephanie S Leone 2 ,

- David Vergouw 2 ,

- Jill A Hayden 3 ,

- Arianne P Verhagen 4 &

- Henrica CW de Vet 1

BMC Medical Research Methodology volume 13, Article number: 42 (2013)


Background

Many prognostic models have been developed. Different types of prognosis studies, i.e. prognostic factor studies and outcome prediction studies, serve different purposes, which should be reflected in how their results are summarized in reviews. We therefore set out to investigate how authors of reviews synthesize and report the results of primary outcome prediction studies.

Methods

Outcome prediction reviews published in MEDLINE between October 2005 and March 2011 were eligible, and 127 systematic reviews written in English that aimed to summarize outcome prediction studies were identified for inclusion.

Characteristics of the reviews and of the included primary studies were independently assessed by two review authors using standardized forms.

Results

After consensus meetings, a total of 50 systematic reviews that met the inclusion criteria were included. The type of primary studies included (prognostic factor or outcome prediction) was unclear in two thirds of the reviews. A minority of the reviews reported univariable or multivariable point estimates and measures of dispersion from the primary studies. Moreover, the variables considered for outcome prediction model development were often not reported or were unclear. Most reviews provided no information about model performance. Quantitative analysis was performed in 10 reviews, and 49 reviews assessed the primary studies qualitatively. In both types of analysis, a range of different methods was used to present the results of the outcome prediction studies.

Conclusions

Different methods are applied to synthesize primary study results, but quantitative analysis is rarely performed. The description of review objectives and of the primary studies is suboptimal, and performance parameters of the outcome prediction models are rarely mentioned. The poor reporting and the wide variety of data-synthesis strategies are liable to influence the conclusions of outcome prediction reviews. There is therefore much room for improvement in reviews of outcome prediction studies.


Background

The methodology for prognosis research is still under development. Although there is abundant literature to help researchers perform this type of research [1–5], there is still no widely agreed approach to building a multivariable prediction model [6]. An important distinction in prognosis is made between prognostic factor models, also called explanatory models, and outcome prediction models [7, 8]. Prognostic factor studies investigate causal relationships or pathways between a single (prognostic) factor and an outcome, and focus on the effect size (e.g. relative risk) of this prognostic factor, which ideally is adjusted for potential confounders. Outcome prediction studies, on the other hand, combine multiple factors (e.g. clinical and non-clinical patient characteristics) in order to predict future events in individuals, and therefore focus on absolute risks, e.g. the predicted probabilities from a logistic regression model. Methods for summarizing data from prognostic factor studies in a meta-analysis can easily be found in the literature [9, 10], but this is not the case for outcome prediction studies. Therefore, in the present study we focus on how authors of published reviews have synthesized outcome prediction models. Because the nomenclature for the various types of prognosis research is not standardized, we use ‘prognosis research’ as an umbrella term for all research that might explain or predict a future outcome, and ‘prognostic factor’ and ‘outcome prediction’ as specific types of prognosis studies.
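To make the contrast between relative effects and absolute risks concrete, here is a minimal Python sketch of a hypothetical logistic model. The intercept, coefficients, and predictor names are invented for illustration and do not come from any reviewed study. A prognostic factor study would report a relative effect such as the odds ratio for one predictor; an outcome prediction model combines all coefficients with the intercept to give an absolute risk for an individual.

```python
import math

# Hypothetical logistic model: intercept and log odds ratios are invented values.
INTERCEPT = -3.0
COEFFICIENTS = {"age_per_10_years": 0.4, "smoker": 0.7, "previous_episode": 1.1}


def odds_ratio(predictor: str) -> float:
    """Relative effect of a single predictor (the prognostic factor view)."""
    return math.exp(COEFFICIENTS[predictor])


def predicted_risk(patient: dict) -> float:
    """Absolute risk for one individual (the outcome prediction view)."""
    linear_predictor = INTERCEPT + sum(
        beta * patient[name] for name, beta in COEFFICIENTS.items()
    )
    return 1.0 / (1.0 + math.exp(-linear_predictor))  # inverse logit


low_profile = {"age_per_10_years": 0, "smoker": 0, "previous_episode": 0}
high_profile = {"age_per_10_years": 2, "smoker": 1, "previous_episode": 1}

print(f"Odds ratio for smoking: {odds_ratio('smoker'):.2f}")
print(f"Predicted risk, low profile:  {predicted_risk(low_profile):.3f}")
print(f"Predicted risk, high profile: {predicted_risk(high_profile):.3f}")
```

In a model without interaction terms, the same odds ratio for smoking applies to both profiles, yet their absolute risks differ greatly. That absolute risk is what an outcome prediction model is built to provide.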

In 2006, Hayden et al. showed that systematic reviews of prognosis studies use different methods to assess the quality of primary studies [11]. Moreover, when quality is assessed, the quality scores are not necessarily integrated into the synthesis of the review. For reviews of outcome prediction models, additional characteristics are important in the synthesis of models because they reflect choices made in the primary studies, such as which variables are included in the statistical models and how this selection was made. These choices also bear on the internal and external validity of a model and influence its predictive performance. In systematic reviews, researchers synthesize results across primary outcome prediction studies that include different variables and show methodological diversity. Moreover, relevant information is not always available because of poor reporting in the primary studies. For example, several researchers have found that items for which recommendations exist, such as the number of events per variable and the coding and selection of variables, are not always reported in primary outcome prediction research [12–14]. Although the primary studies themselves need to improve, reviews that summarize outcome prediction evidence must take the current methodological diversity of primary studies into account.
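The number of events per variable (EPV) mentioned above is simple arithmetic, yet it is rarely reported. The following minimal Python sketch assumes EPV is computed as the size of the smaller outcome group divided by the number of candidate predictors considered, not only those retained in the final model. The study counts are hypothetical, and the threshold of 10 is a frequently cited rule of thumb rather than a requirement stated in this article.

```python
def events_per_variable(n_events: int, n_non_events: int, n_candidate_predictors: int) -> float:
    """Events per variable: the smaller outcome group divided by the number of
    candidate predictors considered during model development."""
    if n_candidate_predictors < 1:
        raise ValueError("at least one candidate predictor is required")
    return min(n_events, n_non_events) / n_candidate_predictors


# Hypothetical primary study: 60 events among 400 patients, 12 candidate predictors.
epv = events_per_variable(n_events=60, n_non_events=340, n_candidate_predictors=12)
flag = "below" if epv < 10 else "at or above"
print(f"EPV = {epv:.1f} ({flag} the rule-of-thumb value of 10)")
```

Counting candidate predictors rather than only the final ones matters because variable selection itself consumes information from the data.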

In this meta-review we focus on reviews of outcome prediction studies and on how they summarize the design and analysis characteristics and the results of primary studies. As there is neither a guideline nor agreement on how primary outcome prediction models in medical research and epidemiology should be summarized in systematic reviews, an overview of current methods can help researchers improve and develop these methods. Moreover, the methods currently used in outcome prediction reviews are not known to the research community. Therefore, the aim of this review was to provide an overview of how published reviews of outcome prediction studies describe and summarize the characteristics of the analyses in primary studies, and how the data are synthesized.

Methods

Literature search and selection of studies

Systematic reviews and meta-analyses of outcome prediction models published between October 2005 and March 2011 were searched. We were only interested in reviews that included multivariable outcome prediction studies. In collaboration with a medical information specialist, we developed a search strategy in MEDLINE that extended the strategy used by Hayden et al. [11] with other recommended search terms for predictive and prognostic research [15, 16]. The full search strategy is presented in Appendix 1.

Based on title and abstract, potentially eligible reviews were selected by one author (TvdB), who included a review whenever there was any doubt. A second author (MH) checked the set of potentially eligible reviews, and ineligible reviews were excluded after consensus between both authors. The full texts of the included reviews were then read, and a third review author (HdV) was consulted if there was any doubt about eligibility. Reviews were included if the study design was a systematic review with or without a meta-analysis, multiple variables were studied in an outcome prediction model, and the review was written in English. Reviews were excluded if they were based on individual patient data only or if the topic was genetic profiling.
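The inclusion and exclusion criteria above can be read as a single decision rule. The sketch below encodes them in Python purely for illustration. The `ScreenedReview` record and its field names are hypothetical. In the study itself these judgements were made by reviewers reading titles, abstracts, and full texts, not by code.

```python
from dataclasses import dataclass


@dataclass
class ScreenedReview:
    """Hypothetical record of one screened review (field names are illustrative)."""
    is_systematic_review: bool       # with or without a meta-analysis
    multivariable_prediction: bool   # multiple variables in an outcome prediction model
    language: str
    individual_patient_data_only: bool
    genetic_profiling: bool


def is_eligible(review: ScreenedReview) -> bool:
    """All inclusion criteria must hold and no exclusion criterion may apply."""
    meets_inclusion = (
        review.is_systematic_review
        and review.multivariable_prediction
        and review.language == "English"
    )
    meets_exclusion = review.individual_patient_data_only or review.genetic_profiling
    return meets_inclusion and not meets_exclusion


example = ScreenedReview(True, True, "English", False, False)
print(is_eligible(example))
```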

Data-extraction

A data-extraction form was developed, based on items important in prognosis research [1, 2, 12, 13, 17], to assess the characteristics of the reviews and primary studies; it is available from the first author on request. The items on this form are listed in Appendix 2. Before the form was finalized, it was pilot-tested by all review authors, and minor adjustments were made after discussing differences in scores. One review author (TvdB) scored all reviews, and the other review authors (MH, AV, DV, and SL) together provided a second, independent scoring of every review. Consensus meetings were held within 2 weeks after a review had been scored to resolve disagreements. If consensus was not reached, a third reviewer (MH or HdV) was consulted to make a final decision.
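The consensus step starts from the items on which the two raters' scores differ. The following minimal Python sketch shows that comparison. It assumes each rater's scores for one review are stored as a dictionary mapping item to answer. The item names and scores are hypothetical.

```python
def disagreements(first_rater: dict, second_rater: dict) -> list:
    """List (item, first score, second score) for every item scored differently.
    An item missing from one rater's form shows up with the score None."""
    items = sorted(set(first_rater) | set(second_rater))
    return [
        (item, first_rater.get(item), second_rater.get(item))
        for item in items
        if first_rater.get(item) != second_rater.get(item)
    ]


# Hypothetical scores of two raters for one review.
rater_1 = {"outcome described": "yes", "type of studies": "unclear", "EPV reported": "no"}
rater_2 = {"outcome described": "yes", "type of studies": "outcome prediction", "EPV reported": "no"}

for item, score_1, score_2 in disagreements(rater_1, rater_2):
    print(f"To discuss at consensus meeting: {item!r} ({score_1} vs {score_2})")
```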

An item was scored ‘yes’ if positive information was found about that specific methodological item, e.g. if it was clear that sensitivity analyses were conducted. If it was clear that a specific methodological requirement was not fulfilled, ‘no’ was scored, e.g. if no sensitivity analyses were conducted. In case of doubt or uncertainty, ‘unclear’ was scored. Some items could also be scored as ‘not applicable’. For each item, we report the number and the proportion of reviews in each answer category.
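The following minimal Python sketch shows how the number and proportion per answer category could be tallied for one item. The four category labels follow the text. The ten scores below are hypothetical and do not reproduce any result from this study.

```python
from collections import Counter

CATEGORIES = ("yes", "no", "unclear", "not applicable")


def tally(scores: list) -> dict:
    """Number and proportion of reviews per answer category for one item."""
    if not scores:
        raise ValueError("no scores given")
    counts = Counter(scores)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unexpected answer categories: {sorted(unknown)}")
    return {c: (counts[c], counts[c] / len(scores)) for c in CATEGORIES}


# Hypothetical consensus scores of one item across ten reviews.
item_scores = ["yes", "no", "no", "unclear", "yes", "no", "not applicable", "no", "unclear", "no"]
for category, (n, proportion) in tally(item_scores).items():
    print(f"{category:>15}: {n:2d} ({proportion:.0%})")
```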

Results

Literature search and selection process

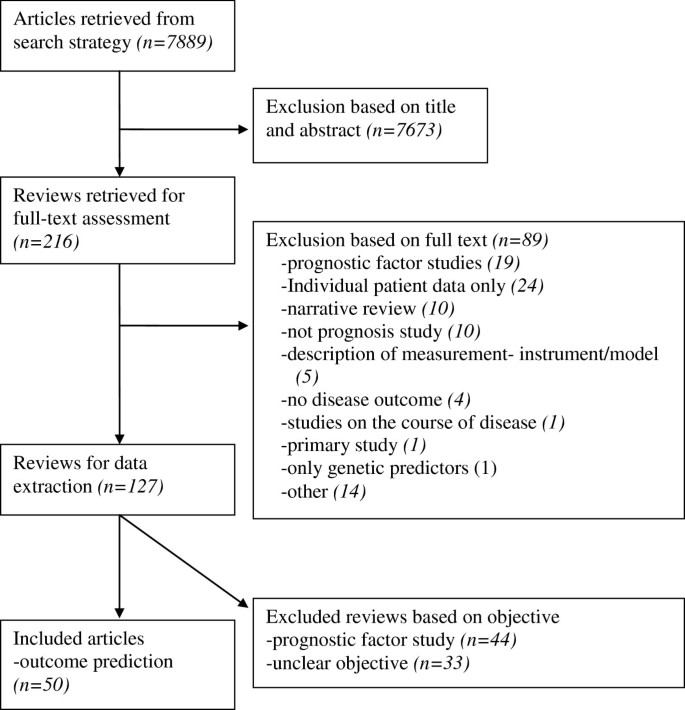

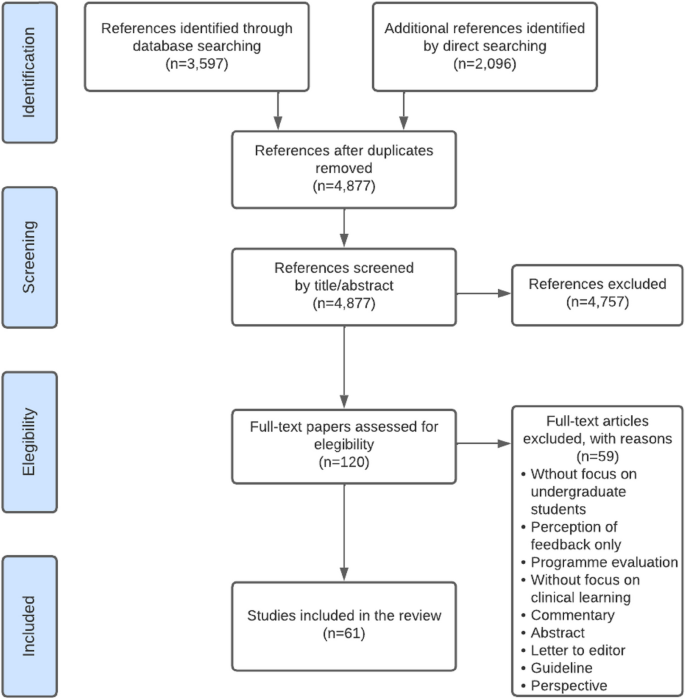

The search strategy yielded 7889 references, and based on title and abstract, 216 were selected to be read in full text (see the flowchart in Figure 1). Of these reviews, 89 were excluded and 127 remained. Exclusions after full-text reading were mainly because the research focused on the association of a single variable with an outcome (prognostic factor study), the analysis was based on individual patient data only, or the study design was a narrative overview. After data extraction, the objectives and methods of 44 reviews indicated that they summarized prognostic factor studies, and 33 reviews had an unclear approach. Therefore, a total of 50 reviews of outcome prediction studies were analyzed [18–67].

Figure 1. Flowchart of the search and selection process.
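The counts in the selection process can be checked for internal consistency with simple arithmetic. The following minimal Python sketch uses only the numbers reported in the text.

```python
# Counts as reported in the text for the search and selection process.
records_identified = 7889
selected_for_full_text = 216
excluded_after_full_text = 89
prognostic_factor_reviews = 44
unclear_approach_reviews = 33

included_after_full_text = selected_for_full_text - excluded_after_full_text
analyzed = included_after_full_text - prognostic_factor_reviews - unclear_approach_reviews

print(f"Selected for full text: {selected_for_full_text / records_identified:.1%} of records")
print(f"Included after full text: {included_after_full_text}")  # reported as 127
print(f"Outcome prediction reviews analyzed: {analyzed}")       # reported as 50
```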

After the data-extraction form had been completed for all included reviews, most disagreements between review authors concerned items on the review objectives, the type of primary studies included, and the method of qualitative data-synthesis. Unclear reporting and, to a lesser degree, reading errors contributed to these disagreements. After the consensus meetings, only a small proportion of items needed to be discussed with a third reviewer.

Objective and design of the review

Table 1, section 1, shows the items concerning information about the reviews. Of the 50 reviews rated as summaries of outcome prediction studies, fewer than one third included only outcome prediction studies [23, 27, 28, 32, 35, 39, 44, 48, 50, 52, 55, 58, 60, 66]. In about two thirds, the type of primary studies included was unclear, and the remaining reviews included a combination of prognostic factor and outcome prediction studies. Most reviews clearly described their outcome of interest. Most reviews also provided information about the assessment of the methodological quality of the primary studies, i.e. risk of bias. Of those that did, two thirds described the basic design of the primary studies in addition to a list of methodological criteria (defined in our study as a list of at least four quality items). Some reviews used or adapted an established criteria list, whereas others developed a new one. Of the reviews that assessed methodological quality, fewer than half actually used this information to account for differences in study quality, mainly by performing a ‘levels of evidence’ analysis, subgroup analyses, or sensitivity analyses.

Information about the design and results of the primary studies

Table 1, section 2, shows the information provided about the included primary studies. Most reviews reported the outcome measures used in the included studies. Only 2 reviews [28, 52] described the statistical methods that the primary studies used to select variables for inclusion in the final prediction model, e.g. forward or backward selection procedures, and 6 other reviews described whether and how patients were treated.

A minority of reviews [23, 24, 27, 28] described, for all primary studies, the variables that were considered for inclusion in the outcome prediction model, and only 5 reviews [36, 37, 39, 48, 55] reported univariable point estimates (e.g. regression coefficients or odds ratios) and estimates of dispersion (e.g. standard errors) for all studies. Similarly, multivariable point estimates and estimates of dispersion were reported in 11 and 10 reviews, respectively [21, 26, 27, 31, 33, 37, 44, 52, 55, 64, 65].

With regard to the presentation of univariable and multivariable point estimates, 2 reviews presented both types of results [37, 55], 31 did not report any estimates, and 17 were unclear or reported only univariable or only multivariable results (not shown in the table). Lastly, model performance and the number of events per variable were reported in 7 reviews [32, 39, 41, 60, 61, 65, 66] and 4 reviews [40, 48, 58, 61], respectively.

Data-analysis and synthesis in the reviews

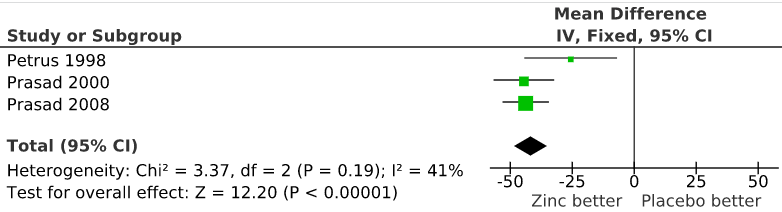

Table 1, section 3, illustrates how the results of primary studies were summarized in the reviews. Heterogeneity was described in almost all reviews by reporting differences in study design and in the characteristics of the study populations. All but one review [57] summarized the results of the included studies qualitatively, mainly by counting the number of statistically significant results, assessing the consistency of findings, or a combination of these. Quantitative analysis, i.e. statistical pooling, was performed in 10 of the 50 reviews [25, 28, 31, 36, 37, 44, 45, 57–59]. The quantitative methods included random effects and fixed effects models of regression coefficients, odds ratios, or hazard ratios. Of these quantitative summaries, 40% assessed statistical heterogeneity using I², χ², or the Q statistic. In two reviews [25, 59], statistical heterogeneity was found, and subgroup analysis was performed to determine its source (results not shown). Eight reviews presented the results graphically, most frequently as a forest plot per single predictor [25, 28, 36–38, 52, 59]; other reviews used a bar plot [57] or a scatterplot [38]. In 6 reviews [25, 26, 32, 43, 46, 58], a sensitivity analysis was performed to test the robustness of choices made, such as changing the cut-off value for a high- or low-quality primary study.
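For readers less familiar with these pooling methods, the following minimal Python sketch shows what such a review does for a single predictor. It computes inverse-variance fixed-effect pooling of log odds ratios, Cochran's Q, I², and a DerSimonian-Laird random-effects estimate. Choosing DerSimonian-Laird as the random-effects estimator is our assumption, since the reviews may have used others. The input values are hypothetical. Note that the function pools one predictor at a time; it does not pool a multivariable prediction model.

```python
import math


def pool_single_predictor(log_odds_ratios, standard_errors, z=1.96):
    """Pool one predictor's log odds ratios across studies.

    Returns fixed-effect (inverse-variance) and DerSimonian-Laird random-effects
    odds ratios, the random-effects 95% CI, Cochran's Q, I^2 and tau^2.
    """
    if len(log_odds_ratios) != len(standard_errors) or len(log_odds_ratios) < 2:
        raise ValueError("need matching inputs from at least two studies")
    w = [1.0 / se ** 2 for se in standard_errors]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, log_odds_ratios)) / sum_w

    # Cochran's Q and I^2 quantify between-study (statistical) heterogeneity.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, log_odds_ratios))
    df = len(log_odds_ratios) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    # DerSimonian-Laird estimate of the between-study variance tau^2.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    w_re = [1.0 / (se ** 2 + tau2) for se in standard_errors]
    random_effects = sum(wi * yi for wi, yi in zip(w_re, log_odds_ratios)) / sum(w_re)
    se_re = math.sqrt(1.0 / sum(w_re))

    return {
        "fixed_or": math.exp(fixed),
        "random_or": math.exp(random_effects),
        "random_ci": (math.exp(random_effects - z * se_re), math.exp(random_effects + z * se_re)),
        "Q": q,
        "I2": i2,
        "tau2": tau2,
    }


# Hypothetical odds ratios and standard errors (log scale) for one predictor in three studies.
r = pool_single_predictor([math.log(1.8), math.log(2.6), math.log(1.2)], [0.25, 0.30, 0.20])
print(f"Fixed-effect OR {r['fixed_or']:.2f}, random-effects OR {r['random_or']:.2f}, "
      f"I^2 = {r['I2']:.0%}")
```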

Discussion

We provided an overview of how systematic reviews summarize and report the results of primary outcome prediction studies. Specifically, we extracted information on how data-synthesis was performed in the reviews, because outcome prediction models may consider different candidate predictors, include different sets of variables in the final prediction model, and use a variety of statistical methods to derive the model.

Currently, prognosis research distinguishes between outcome prediction models and prognostic factor models. The methodology of data-synthesis in reviews of prognostic factor studies is comparable to that of aetiological reviews. For that reason, in the present study we focused only on reviews of outcome prediction studies. Nonetheless, we found it difficult to distinguish between the two review types. Fewer than half of the reviews that we initially selected for data-extraction actually seemed to serve an outcome prediction purpose; the other reviews appeared to summarize prognostic factor studies only, or their objective was unclear. In particular, when prognostic factor reviews investigated more than one variable and also had non-specific objectives, it was difficult to determine their purpose. As a consequence, we might have misclassified some of the 44 excluded reviews rated as prognostic factor reviews. The objective of a review should also state the type of primary studies included, in this case outcome prediction studies. However, among the reviews aimed at outcome prediction, the type of primary study was unclear in two thirds. For example, one review stated that its purpose was “to identify preoperative predictive factors for acute post-operative pain and analgesic consumption”, although the review authors included any study that identified one or more potential risk factors or predictive factors. The problem with combining both types of studies, i.e. risk factor or prognostic factor studies and predictive factor studies, is that in the former, covariables are included on the basis of the change they cause in the regression coefficient of the risk factor, whereas in the latter, candidate predictors are included on the basis of their ability to predict the outcome.
Ignoring this distinction may lead to 1) biased results in a meta-analysis or other form of evidence synthesis, because a risk factor is not always predictive of an outcome, and 2) biased regression coefficients, because risk factor studies, if adjusted for potential confounders at all, use a somewhat different method to obtain a multivariable model than outcome prediction studies. The distinction between prognostic factor and outcome prediction studies was emphasized as early as 1983 by Copas [68], who stated that “a method for achieving a good predictor may be quite inappropriate for other questions in regression analysis such as the interpretation of individual regression coefficients”. In other words, the methodology of outcome prediction modelling differs from that of prognostic factor modelling, and combining both types of research in one review to reflect current evidence should therefore be discouraged. Hemingway et al. [2] called for a standard nomenclature in prognosis research, and the results of our study underline their plea. Authors of reviews and primary studies should clarify their type of research, for example by using the terms ‘prognostic factor modelling’ and ‘outcome prediction modelling’ applied by Hayden et al. [8], and give a clear description of their objective.

Studies included in outcome prediction reviews are rarely similar in design and methodology, and this is often neglected when the evidence is summarized. Differences, for instance in the variables studied and in the method of variable selection, might explain heterogeneity in results and should therefore be reported and reflected on in order to summarize the evidence in the most appropriate way. The methodological quality of primary studies included in reviews is clearly related to bias [69, 70], and it is therefore important to assess it [11, 69, 70]. Assessment of dissemination bias addresses whether publication bias is likely to be present, how it is handled, and what is done to correct for it [71]. To our knowledge, dissemination bias, and especially its consequences, in reviews of outcome prediction models has not yet been studied. Testimation bias [5], i.e. bias related to the predictors considered and the number of predictors relative to the effective sample size, most likely influences results more than publication bias does. Therefore, we did not study dissemination bias at the review level.

With regard to the reporting of primary study characteristics in the systematic reviews, there is much room for improvement. We found that the methods of model development in the primary studies (e.g. the variables considered and the variable selection methods used) were not reported, or only vaguely reported, in the included reviews. These methods are nevertheless important, because variable selection procedures can affect the composition of the multivariable model through estimation bias, or may increase model uncertainty [72–74]. Furthermore, these methods can bias the predictive performance of the model [74]. We also found that only 5 of the reviews reported what kind of treatment the patients received in the primary studies. Although prescribed treatment is often not considered a candidate predictor, it is likely to have a considerable impact on prognosis. Moreover, treatment may vary in relation to predictive variables [75], and whereas patients in randomized controlled trials receive similar treatment strategies, this is often not the case in cohort studies, the design most often seen in prognosis research. Despite the difficulty of defining groups that receive the same treatment, it is imperative to consider treatment in outcome prediction models. To ensure a correct data-synthesis, the primary studies should provide point estimates and estimates of dispersion for all included variables, including those with non-significant findings. Whereas positive or favourable findings are more often reported [75–78], the effects of predictive factors that do not reach statistical significance also need to be compared and summarized in a review. Suppose a variable is statistically significant in one article but not reported in others because it was non-significant. This single significant result may well be a spurious finding, or the other studies may have been underpowered.
Without information about the non-significant findings in other studies, biased or even incorrect conclusions might be drawn. Reporting the evidence from primary studies should therefore include the results of both univariable and multivariable associations, regardless of their level of significance. Confidence intervals or other estimates of dispersion are also needed in the review; unfortunately, these were not presented in most of the reviews in our study. Some reviews considered differences between unadjusted and adjusted results, and one review sensibly stratified its results according to univariable and multivariable effects [38]. Other reviews reported only multivariable results [31], or reported univariable results only when multivariable results were unavailable [58]. In addition to the multivariable results of a final prediction model, the predictive performance of the model is important for assessing its clinical usefulness [79]: the model coefficients by themselves do not indicate how much of the variance in outcome is explained by the included variables. Unfortunately, in addition to the non-reporting of several primary study characteristics, model performance was rarely reported in the reviews included in our overview.
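Reviews could extract or report two performance measures alongside the model coefficients: the C-statistic, which measures discrimination, and the Brier score, which measures the overall accuracy of predicted probabilities. These two measures are our own choice, not a recommendation from the article, and the predicted risks and outcomes below are hypothetical. A minimal Python sketch:

```python
def c_statistic(predicted_risks, outcomes):
    """Concordance statistic for a binary outcome: the share of event/non-event
    pairs in which the event has the higher predicted risk (ties count 1/2).
    This equals the area under the ROC curve; the pairwise loop is O(n^2),
    which is fine for illustration but slow for large data sets."""
    events = [p for p, y in zip(predicted_risks, outcomes) if y == 1]
    non_events = [p for p, y in zip(predicted_risks, outcomes) if y == 0]
    if not events or not non_events:
        raise ValueError("need at least one event and one non-event")
    concordance = 0.0
    for p_event in events:
        for p_non_event in non_events:
            if p_event > p_non_event:
                concordance += 1.0
            elif p_event == p_non_event:
                concordance += 0.5
    return concordance / (len(events) * len(non_events))


def brier_score(predicted_risks, outcomes):
    """Mean squared difference between predicted risk and observed outcome (0 or 1)."""
    if not outcomes:
        raise ValueError("no observations")
    return sum((p - y) ** 2 for p, y in zip(predicted_risks, outcomes)) / len(outcomes)


# Hypothetical predicted risks from a model and observed outcomes (1 = event).
predicted = [0.10, 0.20, 0.35, 0.40, 0.70, 0.80]
observed = [0, 0, 1, 0, 1, 1]
print(f"C-statistic: {c_statistic(predicted, observed):.3f}")
print(f"Brier score: {brier_score(predicted, observed):.3f}")
```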

Different stages can be distinguished in outcome prediction research [80]. Most outcome prediction models evaluated in the systematic reviews appeared to be in a developmental phase. Before implementation in daily practice, the results need to be confirmed in other studies. As such validation studies become available, future reviews should distinguish between externally validated models and models from development studies, and analyze them separately.
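Keeping development and validation results apart can be as simple as grouping extracted results by stage before summarizing them. The following minimal Python sketch illustrates this. The model names, C-statistics, and the use of the median as a summary are hypothetical choices for illustration only.

```python
from collections import defaultdict
from statistics import median

# Hypothetical extraction: (model, stage of research, reported C-statistic).
extracted = [
    ("model A", "development", 0.81),
    ("model A", "external validation", 0.72),
    ("model B", "development", 0.78),
    ("model C", "external validation", 0.69),
]


def summarize_by_stage(rows):
    """Keep development and external-validation results apart instead of pooling them."""
    by_stage = defaultdict(list)
    for _model, stage, c_stat in rows:
        by_stage[stage].append(c_stat)
    return {stage: (len(values), median(values)) for stage, values in by_stage.items()}


for stage, (n, med) in summarize_by_stage(extracted).items():
    print(f"{stage}: {n} model(s), median C-statistic {med:.3f}")
```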

In systematic reviews, data can be combined quantitatively, i.e. a meta-analysis can be performed. This was done in 10 of the reviews. All of them combined point estimates (mostly odds ratios, but also a mix of odds ratios, hazard ratios, and relative risks) and confidence intervals for single outcome prediction variables, which made it possible to calculate a pooled point estimate, often complemented with a confidence interval [81]. However, in outcome prediction research we are interested in the estimates of a combination of predictive factors, which make it possible to calculate absolute risks or probabilities to predict an outcome in individuals [82]. Even if the relative risk of a variable is statistically significant, it does not indicate the extent to which this variable is predictive of a particular outcome. The distribution of predictor values, the outcome prevalence, and the correlations between variables also influence the predictive value of variables within a model [83]. Nor do effect sizes provide information about the amount of variation in outcomes that is explained. In summary, the current quantitative methods seem to summarize the available evidence in an explanatory way rather than quantitatively summarizing complete outcome prediction models.
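The gap between a relative effect and predictive value can be shown with a single binary predictor. The following minimal Python sketch computes both the odds ratio and the C-statistic (area under the ROC curve) from a 2×2 table. The cohort counts are hypothetical.

```python
def binary_predictor_summary(exposed_events, n_events, exposed_non_events, n_non_events):
    """Odds ratio and C-statistic (AUC) of a single binary predictor from a 2x2 table."""
    sensitivity = exposed_events / n_events               # true positive rate
    false_positive_rate = exposed_non_events / n_non_events
    odds_ratio = (exposed_events * (n_non_events - exposed_non_events)) / (
        (n_events - exposed_events) * exposed_non_events
    )
    # With one binary predictor, ties count one half, so AUC = (1 + TPR - FPR) / 2.
    auc = 0.5 * (1.0 + sensitivity - false_positive_rate)
    return odds_ratio, auc


# Hypothetical cohort: 100 events (20 exposed) and 1000 non-events (80 exposed).
odds_ratio, auc = binary_predictor_summary(20, 100, 80, 1000)
print(f"Odds ratio {odds_ratio:.2f}, C-statistic {auc:.2f}")
```

Here an odds ratio of almost 3 coexists with a C-statistic of about 0.56, barely above the 0.5 of a coin toss, because the predictor is uncommon among both events and non-events.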

MEDLINE was the only database searched for relevant reviews. Our intention was to provide an overview of recently published reviews, not to include all relevant outcome prediction reviews. Within MEDLINE, some eligible reviews may have been missed if their titles and abstracts did not include relevant terms and information. However, an extensive search strategy was applied, and abstracts were screened thoroughly and discussed in case of disagreement. Data-extraction was performed in pairs to prevent reading and interpretation errors. Disagreements mainly occurred when deciding on the objective of a review and the type of primary studies included, owing to poor reporting in most of the reviews. This indicates a lack of clarity, explanation, and reporting within reviews. Therefore, screening in pairs is a necessity, and standardized criteria should be developed and applied in future studies of such reviews. Consistency in rating on the data-extraction form was enhanced by having one review author rate all reviews, with one of the other review authors as second rater. Several items were scored as ‘no’, but we did not know whether this was a true negative (i.e. leading to bias) or whether no information was reported about that item. For review authors, it is especially difficult to summarize information about primary studies because that information may be lacking in the studies themselves [13, 14, 84].

Implications

There is still no methodological procedure available for a meta-analysis of the regression coefficients of multivariable outcome prediction models. Some authors, such as Riley et al. and Altman [81, 84], are of the opinion that such a meta-analysis remains practically impossible because of poor reporting, publication bias, and heterogeneity across studies. However, a considerable number of outcome prediction studies have been published, and it would be useful to integrate this body of evidence into one summary result. Moreover, the number of published reviews is increasing. There is therefore a need to find the best strategy for integrating the results of primary outcome prediction studies. Until a method to quantitatively synthesize results has been developed, a sensible qualitative data-synthesis that takes methodological differences between primary studies into account is indicated. In summarizing the evidence, differences in methodological items and model-building strategies should be described and taken into account when assessing the overall evidence for outcome prediction. For example, univariable and multivariable results should be described separately, or subgroup analyses should be performed when they are combined. Other items that, in our opinion, should be taken into consideration in the data-synthesis are study quality, the variables used for model development, the statistical methods used for variable selection, model performance, and a sufficient number of cases and non-cases to guarantee adequate study power. Regardless of whether these items are taken into consideration in the data-synthesis, we strongly recommend that reviews describe them for all included primary studies so that readers can also take them into account.
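Many reviews used a qualitative approach that counts statistically significant results. That approach can at least keep univariable and multivariable findings apart, as recommended above. The following minimal Python sketch uses hypothetical findings. Such vote counting ignores effect sizes and study power, which is why the other items listed above also need to be described.

```python
from collections import defaultdict

# Hypothetical extracted findings: (predictor, type of analysis, statistically significant?)
findings = [
    ("age", "univariable", True),
    ("age", "multivariable", True),
    ("age", "multivariable", False),
    ("pain intensity", "univariable", True),
    ("pain intensity", "univariable", False),
]


def count_by_predictor_and_analysis(rows):
    """Per (predictor, analysis type): number of significant findings and total findings.
    Univariable and multivariable results are kept separate rather than combined."""
    table = defaultdict(lambda: [0, 0])
    for predictor, analysis, significant in rows:
        table[(predictor, analysis)][0] += int(significant)
        table[(predictor, analysis)][1] += 1
    return {key: tuple(counts) for key, counts in table.items()}


for (predictor, analysis), (n_sig, n_total) in sorted(count_by_predictor_and_analysis(findings).items()):
    print(f"{predictor:15} {analysis:13} {n_sig}/{n_total} significant")
```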

In conclusion, poor reporting of relevant information and differences in methodology occur in primary outcome prediction research. Even the predictive ability of the models was rarely reported. This, together with our current inability to pool multivariable outcome prediction models, challenges review authors to write informative reviews of outcome prediction models.

Appendix 1

Search strategy: 01-03-2011

Database: MEDLINE

((“systematic review”[tiab] OR “systematic reviews”[tiab] OR “Meta-Analysis as Topic”[Mesh] OR meta-analysis[tiab] OR “Meta-Analysis”[Publication Type]) AND (“2005/11/01”[EDat] : “3000”[EDat]) AND ((“Incidence”[Mesh] OR “Models, Statistical”[Mesh] OR “Mortality”[Mesh] OR “mortality ”[Subheading] OR “Follow-Up Studies”[Mesh] OR “Prognosis”[Mesh:noexp] OR “Disease-Free Survival”[Mesh] OR “Disease Progression”[Mesh:noexp] OR “Natural History”[Mesh] OR “Prospective Studies”[Mesh]) OR ((cohort*[tw] OR course*[tw] OR first episode*[tw] OR predict*[tw] OR predictor*[tw] OR prognos*[tw] OR follow-up stud*[tw] OR inciden*[tw]) NOT medline[sb]))) NOT ((“addresses”[Publication Type] OR “biography”[Publication Type] OR “case reports”[Publication Type] OR “comment”[Publication Type] OR “directory”[Publication Type] OR “editorial”[Publication Type] OR “festschrift”[Publication Type] OR “interview”[Publication Type] OR “lectures”[Publication Type] OR “legal cases”[Publication Type] OR “legislation”[Publication Type] OR “letter”[Publication Type] OR “news”[Publication Type] OR “newspaper article”[Publication Type] OR “patient education handout”[Publication Type] OR “popular works”[Publication Type] OR “congresses”[Publication Type] OR “consensus development conference”[Publication Type] OR “consensus development conference, nih”[Publication Type] OR “practice guideline”[Publication Type]) OR (“Animals”[Mesh] NOT (“Animals”[Mesh] AND “Humans”[Mesh]))).

Appendix 2

Items used to assess the characteristics of analyses in outcome prediction primary studies and reviews:

Information about the review:

What type of studies are included?

Is(/are) the outcome(s) of interest clearly described?

Is information about the quality assessment method provided?

What method was used?

Did the review account for quality?

Information about the analysis of the primary studies:

Are the outcome measures clearly described?

Is the statistical method used for variable selection described?

Is a description of the treatments received provided?

Information about the results of the primary studies:

Are crude univariable associations and estimates of dispersion for all the variables of the primary studies presented?

Are all variables that were used for model development described?

Are the multivariable associations and estimates of dispersion presented?

Is model performance assessed and reported?

Is the number of predictors relative to the number of outcome events described?

Data-analysis and synthesis of the review:

Is the heterogeneity of primary studies described?

Is a qualitative synthesis presented?

Are methods for quantitative analysis described?

Is the statistical heterogeneity assessed?

What method is used to assess statistical heterogeneity?

If statistical heterogeneity exists, are sources of the heterogeneity investigated?

What method is used to investigate potential sources of heterogeneity?

Is a graphical presentation of the results provided?

Are sensitivity analyses performed?

On which level?

Harrell FEJ, Lee KL, Mark DB: Multivariable prognostic models: issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Stat Med. 1996, 15: 361-387. 10.1002/(SICI)1097-0258(19960229)15:4<361::AID-SIM168>3.0.CO;2-4.

Article PubMed Google Scholar

Hemingway H, Riley RD, Altman DG: Ten steps towards improving prognosis research. BMJ. 2009, 339: b4184-10.1136/bmj.b4184.

Moons KGM, Donders AR, Steyerberg EW, Harrell FE: Penalized maximum likelihood estimation to directly adjust diagnostic and prognostic prediction models for overoptimism: a clinical example. J Clin Epidemiol. 2004, 57: 1262-1270. 10.1016/j.jclinepi.2004.01.020.

Article CAS PubMed Google Scholar

Royston P, Altman DG, Sauerbrei W: Dichotomizing continuous predictors in multiple regression: a bad idea. Stat Med. 2006, 25: 127-141. 10.1002/sim.2331.

Steyerberg EW: Clinical Prediction Models: A Practical Approach to Development, Validation, and Updating. 2009, New York: Springer


Royston P, Moons KGM, Altman DG, Vergouwe Y: Prognosis and prognostic research: Developing a prognostic model. BMJ. 2009, 338: b604-10.1136/bmj.b604.

Moons KGM, Royston P, Vergouwe Y, Grobbee DE, Altman DG: Prognosis and prognostic research: what, why, and how?. BMJ. 2009, 338: b375-10.1136/bmj.b375.

Hayden JA, Dunn KM, van der Windt DA, Shaw WS: What is the prognosis of back pain?. Best Pract Res Clin Rheumatol. 2010, 24: 167-179. 10.1016/j.berh.2009.12.005.

Hayden JA, Chou R, Hogg-Johnson S, Bombardier C: Systematic reviews of low back pain prognosis had variable methods and results: guidance for future prognosis reviews. J Clin Epidemiol. 2009, 62: 781-796. 10.1016/j.jclinepi.2008.09.004.

Krasopoulos G, Brister SJ, Beattie WS, Buchanan MR: Aspirin “resistance” and risk of cardiovascular morbidity: systematic review and meta-analysis. BMJ. 2008, 336: 195-198. 10.1136/bmj.39430.529549.BE.


Hayden JA, Cote P, Bombardier C: Evaluation of the quality of prognosis studies in systematic reviews. Ann Intern Med. 2006, 144: 427-437. 10.7326/0003-4819-144-6-200603210-00010.

Mallett S, Timmer A, Sauerbrei W, Altman DG: Reporting of prognostic studies of tumour markers: a review of published articles in relation to REMARK guidelines. Br J Cancer. 2010, 102: 173-180. 10.1038/sj.bjc.6605462.

Mallett S, Royston P, Waters R, Dutton S, Altman DG: Reporting performance of prognostic models in cancer: a review. BMC Med. 2010, 8: 21-10.1186/1741-7015-8-21.

Mallett S, Royston P, Dutton S, Waters R, Altman DG: Reporting methods in studies developing prognostic models in cancer: a review. BMC Med. 2010, 8: 20-10.1186/1741-7015-8-20.

Ingui BJ, Rogers MA: Searching for clinical prediction rules in MEDLINE. J Am Med Inform Assoc. 2001, 8: 391-397. 10.1136/jamia.2001.0080391.


Wilczynski NL: Natural History and Prognosis. PDQ, Evidence-Based Principles and Practice. Edited by: McKibbon KA, Wilczynski NL, Eady A, Marks S. 2009, Shelton, Connecticut: People’s Medical Publishing House


Austin PC, Tu JV: Automated variable selection methods for logistic regression produced unstable models for predicting acute myocardial infarction mortality. J Clin Epidemiol. 2004, 57: 1138-1146. 10.1016/j.jclinepi.2004.04.003.

Lee M, Chodosh J: Dementia and life expectancy: what do we know?. J Am Med Dir Assoc. 2009, 10: 466-471. 10.1016/j.jamda.2009.03.014.

Gravante G, Garcea G, Ong S: Prediction of Mortality in Acute Pancreatitis: A Systematic Review of the Published Evidence. Pancreatology. 2009, 9: 601-614. 10.1159/000212097.

Celestin J, Edwards RR, Jamison RN: Pretreatment psychosocial variables as predictors of outcomes following lumbar surgery and spinal cord stimulation: a systematic review and literature synthesis. Pain Med. 2009, 10: 639-653. 10.1111/j.1526-4637.2009.00632.x.

Wright AA, Cook C, Abbott JH: Variables associated with the progression of hip osteoarthritis: a systematic review. Arthritis Rheum. 2009, 61: 925-936. 10.1002/art.24641.

Heitz C, Hilfiker R, Bachmann L: Comparison of risk factors predicting return to work between patients with subacute and chronic non-specific low back pain: systematic review. Eur Spine J. 2009, 18: 1829-35. 10.1007/s00586-009-1083-9.

Sansam K, Neumann V, O’Connor R, Bhakta B: Predicting walking ability following lower limb amputation: a systematic review of the literature. J Rehabil Med. 2009, 41: 593-603. 10.2340/16501977-0393.

Detaille SI, Heerkens YF, Engels JA, van der Gulden JWJ, van Dijk FJH: Common prognostic factors of work disability among employees with a chronic somatic disease: a systematic review of cohort studies. Scand J Work Environ Health. 2009, 35: 261-281. 10.5271/sjweh.1337.

Walton DM, Pretty J, MacDermid JC, Teasell RW: Risk factors for persistent problems following whiplash injury: results of a systematic review and meta-analysis. J Orthop Sports Phys Ther. 2009, 39: 334-350.

van Velzen JM, van Bennekom CAM, Edelaar MJA, Sluiter JK, Frings-Dresen MHW: Prognostic factors of return to work after acquired brain injury: a systematic review. Brain Inj. 2009, 23: 385-395. 10.1080/02699050902838165.

Borghuis MS, Lucassen PLBJ, van de Laar FA, Speckens AE, van Weel C, olde Hartman TC: Medically unexplained symptoms, somatisation disorder and hypochondriasis: course and prognosis. A systematic review. J Psychosom Res. 2009, 66: 363-377. 10.1016/j.jpsychores.2008.09.018.

Bramer JAM, van Linge JH, Grimer RJ, Scholten RJPM: Prognostic factors in localized extremity osteosarcoma: a systematic review. Eur J Surg Oncol. 2009, 35: 1030-1036. 10.1016/j.ejso.2009.01.011.

Tandon P, Garcia-Tsao G: Prognostic indicators in hepatocellular carcinoma: a systematic review of 72 studies. Liver Int. 2009, 29: 502-510. 10.1111/j.1478-3231.2008.01957.x.

Santaguida PL, Hawker GA, Hudak PL: Patient characteristics affecting the prognosis of total hip and knee joint arthroplasty: a systematic review. Can J Surg. 2008, 51: 428-436.


Elmunzer BJ, Young SD, Inadomi JM, Schoenfeld P, Laine L: Systematic review of the predictors of recurrent hemorrhage after endoscopic hemostatic therapy for bleeding peptic ulcers. Am J Gastroenterol. 2008, 103: 2625-2632. 10.1111/j.1572-0241.2008.02070.x.

Adamson SJ, Sellman JD, Frampton CMA: Patient predictors of alcohol treatment outcome: a systematic review. J Subst Abuse Treat. 2009, 36: 75-86. 10.1016/j.jsat.2008.05.007.

Paez JIG, Costa SF: Risk factors associated with mortality of infections caused by Stenotrophomonas maltophilia: a systematic review. J Hosp Infect. 2008, 70: 101-108. 10.1016/j.jhin.2008.05.020.

Johnson SR, Swiston JR, Granton JT: Prognostic factors for survival in scleroderma associated pulmonary arterial hypertension. J Rheumatol. 2008, 35: 1584-1590.


Clarke SA, Eiser C, Skinner R: Health-related quality of life in survivors of BMT for paediatric malignancy: a systematic review of the literature. Bone Marrow Transplant. 2008, 42: 73-82. 10.1038/bmt.2008.156.

Kok M, Cnossen J, Gravendeel L, van der Post J, Opmeer B, Mol BW: Clinical factors to predict the outcome of external cephalic version: a metaanalysis. Am J Obstet Gynecol. 2008, 199: 630-637.

Stuart-Harris R, Caldas C, Pinder SE, Pharoah P: Proliferation markers and survival in early breast cancer: a systematic review and meta-analysis of 85 studies in 32,825 patients. Breast. 2008, 17: 323-334. 10.1016/j.breast.2008.02.002.

Kamper SJ, Rebbeck TJ, Maher CG, McAuley JH, Sterling M: Course and prognostic factors of whiplash: a systematic review and meta-analysis. Pain. 2008, 138: 617-629. 10.1016/j.pain.2008.02.019.

Nijrolder I, van der Horst H, van der Windt D: Prognosis of fatigue. A systematic review. J Psychosom Res. 2008, 64: 335-349. 10.1016/j.jpsychores.2007.11.001.

Williams M, Williamson E, Gates S, Lamb S, Cooke M: A systematic literature review of physical prognostic factors for the development of Late Whiplash Syndrome. Spine (Phila Pa 1976). 2007, 32: E764-E780. 10.1097/BRS.0b013e31815b6565.


Willemse-van Son AHP, Ribbers GM, Verhagen AP, Stam HJ: Prognostic factors of long-term functioning and productivity after traumatic brain injury: a systematic review of prospective cohort studies. Clin Rehabil. 2007, 21: 1024-1037. 10.1177/0269215507077603.

Alvarez J, Wilkinson J, Lipshultz S: Outcome Predictors for Pediatric Dilated Cardiomyopathy: A Systematic Review. Prog Pediatr Cardiol. 2007, 23: 25-32. 10.1016/j.ppedcard.2007.05.009.

Mallen CD, Peat G, Thomas E, Dunn KM, Croft PR: Prognostic factors for musculoskeletal pain in primary care: a systematic review. Br J Gen Pract. 2007, 57: 655-661.

Stroke Risk in Atrial Fibrillation Working Group: Independent predictors of stroke in patients with atrial fibrillation: a systematic review. Neurology. 2007, 69: 546-554.

Kent PM, Keating JL: Can we predict poor recovery from recent-onset nonspecific low back pain? A systematic review. Man Ther. 2008, 13: 12-28. 10.1016/j.math.2007.05.009.

Tjang YS, van Hees Y, Korfer R, Grobbee DE, van der Heijden GJMG: Predictors of mortality after aortic valve replacement. Eur J Cardiothorac Surg. 2007, 32: 469-474. 10.1016/j.ejcts.2007.06.012.

Pfannschmidt J, Dienemann H, Hoffmann H: Surgical resection of pulmonary metastases from colorectal cancer: a systematic review of published series. Ann Thorac Surg. 2007, 84: 324-338. 10.1016/j.athoracsur.2007.02.093.

Williamson E, Williams M, Gates S, Lamb SE: A systematic literature review of psychological factors and the development of late whiplash syndrome. Pain. 2008, 135: 20-30. 10.1016/j.pain.2007.04.035.

Tas U, Verhagen AP, Bierma-Zeinstra SMA, Odding E, Koes BW: Prognostic factors of disability in older people: a systematic review. Br J Gen Pract. 2007, 57: 319-323.

Rassi AJ, Rassi A, Rassi SG: Predictors of mortality in chronic Chagas disease: a systematic review of observational studies. Circulation. 2007, 115: 1101-1108. 10.1161/CIRCULATIONAHA.106.627265.

Belo JN, Berger MY, Reijman M, Koes BW, Bierma-Zeinstra SMA: Prognostic factors of progression of osteoarthritis of the knee: a systematic review of observational studies. Arthritis Rheum. 2007, 57: 13-26. 10.1002/art.22475.

Langer-Gould A, Popat RA, Huang SM: Clinical and demographic predictors of long-term disability in patients with relapsing-remitting multiple sclerosis: a systematic review. Arch Neurol. 2006, 63: 1686-1691. 10.1001/archneur.63.12.1686.

Lamme B, Mahler CW, van Ruler O, Gouma DJ, Reitsma JB, Boermeester MA: Clinical predictors of ongoing infection in secondary peritonitis: systematic review. World J Surg. 2006, 30: 2170-2181. 10.1007/s00268-005-0333-1.

van Dijk GM, Dekker J, Veenhof C, van den Ende CHM: Course of functional status and pain in osteoarthritis of the hip or knee: a systematic review of the literature. Arthritis Rheum. 2006, 55: 779-785. 10.1002/art.22244.

Aalto TJ, Malmivaara A, Kovacs F: Preoperative predictors for postoperative clinical outcome in lumbar spinal stenosis: systematic review. Spine (Phila Pa 1976). 2006, 31: E648-E663. 10.1097/01.brs.0000231727.88477.da.

Hauser CA, Stockler MR, Tattersall MHN: Prognostic factors in patients with recently diagnosed incurable cancer: a systematic review. Support Care Cancer. 2006, 14: 999-1011. 10.1007/s00520-006-0079-9.

Bollen CW, Uiterwaal CSPM, van Vught AJ: Systematic review of determinants of mortality in high frequency oscillatory ventilation in acute respiratory distress syndrome. Crit Care. 2006, 10: R34-10.1186/cc4824.

Steenstra IA, Verbeek JH, Heymans MW, Bongers PM: Prognostic factors for duration of sick leave in patients sick listed with acute low back pain: a systematic review of the literature. Occup Environ Med. 2005, 62: 851-860. 10.1136/oem.2004.015842.

Bai M, Qi X, Yang Z: Predictors of hepatic encephalopathy after transjugular intrahepatic portosystemic shunt in cirrhotic patients: a systematic review. J Gastroenterol Hepatol. 2011, 26: 943-51. 10.1111/j.1440-1746.2011.06663.x.

Monteiro-Soares M, Boyko E, Ribeiro J, Ribeiro I, Dinis-Ribeiro M: Risk stratification systems for diabetic foot ulcers: a systematic review. Diabetologia. 2011, 54: 1190-1199. 10.1007/s00125-010-2030-3.

Lichtman JH, Leifheit-Limson EC, Jones SB: Predictors of hospital readmission after stroke: a systematic review. Stroke. 2010, 41: 2525-2533. 10.1161/STROKEAHA.110.599159.

Ronden RA, Houben AJ, Kessels AG, Stehouwer CD, de Leeuw PW, Kroon AA: Predictors of clinical outcome after stent placement in atherosclerotic renal artery stenosis: a systematic review and meta-analysis of prospective studies. J Hypertens. 2010, 28: 2370-2377.

de Jonge RCJ, van Furth AM, Wassenaar M, Gemke RJBJ, Terwee CB: Predicting sequelae and death after bacterial meningitis in childhood: a systematic review of prognostic studies. BMC Infect Dis. 2010, 10: 232-10.1186/1471-2334-10-232.

Colohan SM: Predicting prognosis in thermal burns with associated inhalational injury: a systematic review of prognostic factors in adult burn victims. J Burn Care Res. 2010, 31: 529-539. 10.1097/BCR.0b013e3181e4d680.

Clay FJ, Newstead SV, McClure RJ: A systematic review of early prognostic factors for return to work following acute orthopaedic trauma. Injury. 2010, 41: 787-803. 10.1016/j.injury.2010.04.005.

Brabrand M, Folkestad L, Clausen NG, Knudsen T, Hallas J: Risk scoring systems for adults admitted to the emergency department: a systematic review. Scand J Trauma Resusc Emerg Med. 2010, 18: 8-10.1186/1757-7241-18-8.

Montazeri A: Quality of life data as prognostic indicators of survival in cancer patients: an overview of the literature from 1982 to 2008. Health Qual Life Outcomes. 2009, 7: 102-10.1186/1477-7525-7-102.

Copas JB: Prediction and Shrinkage. J R Stat Soc Ser B (methodological). 1983, 45: 311-354.

Atkins D, Best D, Briss PA: Grading quality of evidence and strength of recommendations. BMJ. 2004, 328: 1490-

Deeks JJ, Dinnes J, D’Amico R: Evaluating non-randomised intervention studies. Health Technol Assess. 2003, 7: iii-173-

Parekh-Bhurke S, Kwok CS, Pang C: Uptake of methods to deal with publication bias in systematic reviews has increased over time, but there is still much scope for improvement. J Clin Epidemiol. 2011, 64: 349-57. 10.1016/j.jclinepi.2010.04.022.

Steyerberg EW: Selection of main effects. Clinical Prediction Models: A Practical Approach to Development, Validation, and Updating. 2009, New York: Springer

Chatfield C: Model Uncertainty, Data Mining and Statistical Inference. J R Stat Soc Ser A. 1995, 158: 419-466. 10.2307/2983440.

Steyerberg EW, Eijkemans MJ, Habbema JD: Stepwise selection in small data sets: a simulation study of bias in logistic regression analysis. J Clin Epidemiol. 1999, 52: 935-942. 10.1016/S0895-4356(99)00103-1.

Altman DG: Systematic reviews of evaluations of prognostic variables. BMJ. 2001, 323: 224-228. 10.1136/bmj.323.7306.224.

Kyzas PA, Ioannidis JPA, Denaxa-Kyza D: Quality of reporting of cancer prognostic marker studies: association with reported prognostic effect. J Natl Cancer Inst. 2007, 99: 236-243. 10.1093/jnci/djk032.

Kyzas PA, Ioannidis JPA, Denaxa-Kyza D: Almost all articles on cancer prognostic markers report statistically significant results. Eur J Cancer. 2007, 43: 2559-2579. 10.1016/j.ejca.2007.08.030.

Rifai N, Altman DG, Bossuyt PM: Reporting bias in diagnostic and prognostic studies: time for action. Clin Chem. 2008, 54: 1101-1103. 10.1373/clinchem.2008.108993.

Vergouwe Y, Steyerberg EW, Eijkemans MJC, Habbema JD: Validity of prognostic models: when is a model clinically useful?. Semin Urol Oncol. 2002, 20: 96-107. 10.1053/suro.2002.32521.

Altman DG, Vergouwe Y, Royston P, Moons KGM: Prognosis and prognostic research: validating a prognostic model. BMJ. 2009, 338: b605-10.1136/bmj.b605.

Altman DG: Systematic reviews of evaluations of prognostic variables. Systematic Reviews in Health Care. Edited by: Egger M, Smith GD, Altman DG. 2001, London: BMJ Publishing Group, 228-47.


Ware JH: The limitations of risk factors as prognostic tools. N Engl J Med. 2006, 355: 2615-2617. 10.1056/NEJMp068249.

Harrell FE: Multivariable modeling strategies. Regression modeling strategies with applications to linear models, logistic regression, and survival analysis. 2001, New York: Springer

Riley RD, Abrams KR, Sutton AJ: Reporting of prognostic markers: current problems and development of guidelines for evidence-based practice in the future. Br J Cancer. 2003, 88: 1191-1198. 10.1038/sj.bjc.6600886.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/13/42/prepub


Acknowledgment

We thank Ilse Jansma, MSc, for her contributions as a medical information specialist regarding the Medline search strategy. No compensation was received for her contribution.

No external funding was received for this study.

Author information

Authors and affiliations

Department of Epidemiology and Biostatistics and the EMGO Institute for Health and Care Research, VU University Medical Centre, Amsterdam, The Netherlands

Tobias van den Berg, Martijn W Heymans & Henrica CW de Vet

Department of General Practice and the EMGO Institute for Health and Care Research, VU University Medical Centre, Amsterdam, The Netherlands

Stephanie S Leone & David Vergouw

Department of Community Health and Epidemiology, Dalhousie University, Halifax, Nova Scotia, Canada

Jill A Hayden

Department of General Practice, Erasmus Medical Centre, Rotterdam, The Netherlands

Arianne P Verhagen


Corresponding author

Correspondence to Tobias van den Berg.

Additional information

Competing interests

All authors report no conflicts of interest.

Authors’ contributions

TvdB had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. Study concept and design: TvdB, MH, JH, AV, HdV. Acquisition of data: TvdB, MH, SL, DV, AV, HdV. Analysis and interpretation of data: TvdB, MH, HdV. Drafting of the manuscript: TvdB, MH, HdV. Critical revision of the manuscript for important intellectual content: TvdB, MH, SL, DV, JH, AV, HdV. Statistical analysis: TvdB. Study supervision: MH, HdV. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


About this article

Cite this article

van den Berg, T., Heymans, M.W., Leone, S.S. et al. Overview of data-synthesis in systematic reviews of studies on outcome prediction models. BMC Med Res Methodol 13, 42 (2013). https://doi.org/10.1186/1471-2288-13-42


Received : 26 September 2012

Accepted : 04 March 2013

Published : 16 March 2013

DOI : https://doi.org/10.1186/1471-2288-13-42


- Meta-analysis

- Forecasting

BMC Medical Research Methodology

ISSN: 1471-2288


Should I do a synthesis (i.e. literature review)?

- Questions & Quandaries

- Published: 18 April 2024


- H. Carrie Chen 1 ,

- Ayelet Kuper 2 , 3 , 4 ,

- Jennifer Cleland 5 &

- Patricia O’Sullivan 6

257 Accesses

1 Altmetric


This column is intended to address the kinds of knotty problems and dilemmas with which many scholars grapple in studying health professions education. In this article, the authors address the question of whether one should conduct a literature review or knowledge synthesis, considering the why, when, and how, as well as its potential pitfalls. The goal is to guide supervisors and students who are considering whether to embark on a literature review in education research.


Two junior colleagues come to you to ask your advice about carrying out a literature review on a particular topic. “Should they?” immediately pops into your mind, followed closely by, if yes, what kind of literature review is appropriate? Our experience is that colleagues often propose a literature review to “kick-start” their research (indeed, some academic programs require one as part of degree requirements) without fully understanding the work involved, the different types of literature review, and which type might best suit their research question. In this Questions and Quandaries, we address the question of literature reviews in education research, considering the why, when, and how, as well as potential pitfalls.

First, what is meant by a literature review? The term literature review has been used to refer to both a review of the literature and a knowledge synthesis (Maggio et al., 2018; Siddaway et al., 2019). For our purposes, we employ the term as commonly used to refer to a knowledge synthesis, which is a formal, comprehensive review of the existing body of literature on a topic. It is a research approach that critically integrates and synthesizes available evidence from multiple studies to provide insight and allow the drawing of conclusions. It is an example of Boyer’s scholarship of integration (Boyer, 1990). In contrast, a review of the literature is a relatively casual and expedient method for attaining a general overview of the state of knowledge on a given topic to make the argument that a new study is needed. In this interpretation, a literature review serves as a key starting point for anyone conducting research by identifying gaps in the literature, informing the study question, and situating one’s study in the field.

Whether a formal knowledge synthesis should be done depends on whether a review is needed and what the rationale for it is. The first question to consider is whether a literature review already exists. If not, is there enough published literature on the topic to warrant a review? If so, does the previous review need updating? How long has it been since the last review, and has the literature expanded enough, or have important new studies appeared, to justify an updated review? Or were there flaws in the previous review that one intends to address with a new review? Or does one intend to address a different question from the focus of the previous review?

If a knowledge synthesis is to be done, it should be driven by a research question. What is the research question? Can it be answered by a review? What is the purpose of the synthesis? There are two main purposes for knowledge synthesis: knowledge support and decision support. Knowledge support summarizes the evidence, while decision support takes additional analytical steps to allow for decision-making in particular contexts (Mays et al., 2005).

If the purpose is to provide knowledge support, then the question is how, or what, the knowledge synthesis will add to the literature. Will it establish the state of knowledge in an area, identify gaps in the literature or knowledge base, and/or map opportunities for future research? Cornett et al. performed a scoping review of the literature on professional identity, focusing on how professional identity is described, why the studies were done, and what constructs of identity were used. Their findings advanced understanding of the state of knowledge by indicating that professional identity studies were driven primarily by the desire to examine the impact of political, social, and healthcare reforms and advances, and that the various constructs of professional identity across the literature could be categorized into five themes (Cornett et al., 2023).

If, on the other hand, the purpose of the knowledge synthesis is to provide decision support, for whom will the synthesis be relevant and how will it improve practice? Will the synthesis result in tools such as guidelines or recommendations for practitioners and policymakers? An example of a knowledge synthesis for decision support is a systematic review conducted by Spencer and colleagues to examine the validity evidence for use of the Ottawa Surgical Competency Operating Room Evaluation (OSCORE) assessment tool. The authors summarized their findings with recommendations for educational practice, namely supporting the use of the OSCORE for in-the-moment entrustment decisions by frontline supervisors in surgical fields, but cautioning that there is limited evidence to support its use in summative promotion decisions or non-surgical contexts (Spencer et al., 2022).

If a knowledge synthesis is indeed appropriate, its methodology should be informed by its research question and purpose. We do not have the space to discuss the various types of knowledge synthesis except to say that several types have been described in the literature. The five most common types in health professions education are narrative reviews, systematic reviews, umbrella reviews (meta-syntheses), scoping reviews, and realist reviews (Maggio et al., 2018). These represent different epistemologies, serve different review purposes, use different methods, and result in different review outcomes (Gordon, 2016).

Each type of review lends itself best to answering a certain type of research question. For instance, narrative reviews generally describe what is known about a topic without necessarily answering a specific empirical question (Maggio et al., 2018). A recent example of a narrative review focused on schoolwide wellbeing programs, describing what is known about the key characteristics and mediating factors that influence student support and identifying critical tensions around confidentiality that could make or break programs (Tan et al., 2023). Umbrella reviews, on the other hand, synthesize evidence from multiple reviews or meta-analyses and can illuminate agreement, inconsistencies, or evolution of evidence on a topic. For example, an umbrella review on problem-based learning highlighted the shift in research focus over time from “does it work?” to “how does it work?” to “how does it work in different contexts?”, and pointed to directions for new research (Hung et al., 2019).

Practical questions for those considering a literature review include whether one has the time and an appropriate team to conduct a high-quality knowledge synthesis. Regardless of the type of knowledge synthesis and whether quantitative or qualitative methods are used, all require rigorous and clear methods that allow for reproducibility. This can take considerable time, up to 12–18 months. A high-quality knowledge synthesis also requires a team whose members have expertise not only in the content matter but also in knowledge synthesis methodology and in literature searching (i.e., a librarian). A team of multiple reviewers with a variety of perspectives can also help manage the volume of large reviews, minimize potential biases, and strengthen the critical analysis.

Finally, a pitfall one should be careful to avoid is merely summarizing everything in the literature without critical evaluation and integration of the information. A knowledge synthesis that merely bean counts or presents a collection of unconnected information that has not been reflected upon or critically analyzed does not truly advance knowledge or decision-making. Rather, it leads us back to our original question of whether it should have been done in the first place.

Boyer, E. L. (1990). Scholarship reconsidered: Priorities of the professoriate (pp. 18–21). Princeton University Press.

Cornett, M., Palermo, C., & Ash, S. (2023). Professional identity research in the health professions—a scoping review. Advances in Health Sciences Education, 28(2), 589–642.


Gordon, M. (2016). Are we talking the same paradigm? Considering methodological choices in health education systematic review. Medical Teacher, 38(7), 746–750.

Hung, W., Dolmans, D. H. J. M., & van Merrienboer, J. J. G. (2019). A review to identify key perspectives in PBL meta-analyses and reviews: Trends, gaps and future research directions. Advances in Health Sciences Education, 24, 943–957.

Maggio, L. A., Thomas, A., & Durning, S. J. (2018). Knowledge synthesis. In T. Swanwick, K. Forrest, & B. C. O’Brien (Eds.), Understanding Medical Education: Evidence, theory, and practice (pp. 457–469). Wiley.

Mays, N., Pope, C., & Popay, J. (2005). Systematically reviewing qualitative and quantitative evidence to inform management and policy-making in the health field. Journal of Health Services Research & Policy, 10(1_suppl), 6–20.

Siddaway, A. P., Wood, A. M., & Hedges, L. V. (2019). How to do a systematic review: A best practice guide for conducting and reporting narrative reviews, meta-analyses, and meta-syntheses. Annual Review of Psychology, 70, 747–770.

Spencer, M., Sherbino, J., & Hatala, R. (2022). Examining the validity argument for the Ottawa Surgical Competency operating room evaluation (OSCORE): A systematic review and narrative synthesis. Advances in Health Sciences Education, 27, 659–689.

Tan, E., Frambach, J., Driessen, E., & Cleland, J. (2023). Opening the black box of school-wide student wellbeing programmes: A critical narrative review informed by activity theory. Advances in Health Sciences Education. https://doi.org/10.1007/s10459-023-10261-8. Epub ahead of print 02 July 2023.


Author information

Authors and affiliations

Georgetown University School of Medicine, Washington, DC, USA

H. Carrie Chen

Wilson Centre for Research in Education, University Health Network, University of Toronto, Toronto, Canada

Ayelet Kuper

Division of General Internal Medicine, Sunnybrook Health Sciences Center, Toronto, Canada

Department of Medicine, University of Toronto, Toronto, Canada

Lee Kong Chian School of Medicine, Nanyang Technological University Singapore, Singapore, Singapore

Jennifer Cleland

University of California San Francisco School of Medicine, San Francisco, CA, USA

Patricia O’Sullivan


Corresponding author

Correspondence to H. Carrie Chen.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions


About this article

Chen, H.C., Kuper, A., Cleland, J. et al. Should I do a synthesis (i.e. literature review)?. Adv in Health Sci Educ (2024). https://doi.org/10.1007/s10459-024-10335-1


Published : 18 April 2024

DOI : https://doi.org/10.1007/s10459-024-10335-1



Strategy for Data Synthesis in Systematic Review


The purpose of a data extraction table within a systematic review becomes apparent during synthesis, when reviewers collate the data gathered and evaluate what it means. Synthesis means that reviewers use the information recorded in their data extraction template to create coherent bodies of data that can be analyzed to gain a deeper understanding of the information conveyed.

Reviewers should have a clear strategy showing how they will approach data synthesis to expedite and verify outcomes, including whether their specific review question requires a meta-analysis or another form of quantitative synthesis.

The Importance of a Data Synthesis Strategy

Because numerous synthesis methodologies are available, it is important to have a defined data extraction process, tailored to the systematic review, that describes how a reviewer will categorize and interpret data and use that evaluation to reach conclusions.

Synthesis approaches fall into broad categories, such as conventional, qualitative, quantitative, and emerging syntheses. Each has its own characteristics, context, assumptions, units of analysis, strengths, and limitations, which determine which technique is best suited to the systematic review in question.

The right synthesis approach therefore depends on these factors and on the outcomes and theories that the review seeks to support or refute.

Alternative Data Synthesis Approaches

Below, we examine these four categories of data synthesis and show how each applies depending on the types of data available.

Conventional Synthesis

Conventional synthesis produces charts, diagrams, maps, and tables that present conceptual frameworks or theories. It draws on data types such as quantitative studies, literature, policy documentation, and qualitative research.

Its downsides include less critique and less systematic evaluation. This makes it better suited to reassessing existing topics or to preliminary conceptualization for new research.

Qualitative Synthesis

Our next data synthesis approach involves collating or integrating multiple data sets comprising qualitative research findings and theoretical literature. Outcomes involve conceptual frameworks or maps, definitions, and narrative summaries of the subject matter.

Quantitative Synthesis

This category of synthesis is similar to qualitative synthesis, but it uses quantitative studies to produce generalizable statements, narrative summaries, and mathematical scoring evaluations.

Emerging Synthesis

Finally, emerging synthesis is a newer approach that incorporates literature and metrics from a broad spectrum of data types, including diverse subject groups.

Selected data sources might include quantitative and qualitative studies, editorials, policies, evaluations, commentaries, and theoretical work. A systematic review that adopts an emerging synthesis approach can produce conceptual maps and decision-making reports. It can also produce quantitative outputs such as charts, graphs, diagrams, and scores.


Why Does Systematic Review Require a Data Synthesis Strategy?

Synthesized data are the results derived from the included studies, analyzed in relation to the question or theory the systematic review sets out to address. The synthesis technique dictates which data are used and what outcomes the review can produce. Reviewers must therefore choose the approach carefully, weighing the strengths and drawbacks of each and how the synthesis adds value to the review.

The right strategy can make a considerable difference to the integrity of the outcomes and effects found, and to the credibility of the review's final conclusions.


Good review practice: a researcher guide to systematic review methodology in the sciences of food and health


Data synthesis and summary


Data synthesis involves combining the findings of primary studies and, where possible and appropriate, some form of statistical analysis of numerical data. Synthesis methods vary depending on the nature of the evidence (e.g., quantitative, qualitative, or mixed), the aim of the review, and the types and designs of the included studies. Reviewers should decide on and preselect a method of analysis, based on the review question, at the protocol development stage.

Synthesis Methods

Narrative summary: a summary of the review results, used when meta-analysis is not possible. Narrative summaries describe the results of the review, but some take a more interpretive approach to summarising them. [8] These are known as "evidence statements". They can include the results of quality appraisal and weighting processes and provide ratings of the studies.

Meta-analysis: a quantitative synthesis of the results from included studies, using statistical methods that are extensions of those used in primary studies. [9] By combining the studies and accounting for the uncertainty in each study's result, meta-analysis can provide a more precise estimate of the outcome. However, statistical pooling is not always feasible. Reasons include inadequate data, heterogeneous data, poor quality of the included studies, and the level of complexity. [10]
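To make the idea of weighting by uncertainty concrete, the sketch below pools hypothetical study results with the inverse-variance fixed-effect method, one common meta-analysis model. The guide itself does not prescribe a model, and the function name `fixed_effect_pool` and the example numbers are illustrative assumptions. Each study is weighted by the inverse of its squared standard error, so more precise studies contribute more to the pooled estimate.

```python
import math


def fixed_effect_pool(estimates, std_errors, z=1.96):
    """Pool study estimates with inverse-variance (fixed-effect) weights.

    Hypothetical helper for illustration. Each study is weighted by 1 / SE^2,
    so more precise studies count more. Returns the pooled estimate, its
    standard error and a confidence interval (95% with the default z = 1.96).
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per study estimate")
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    # The pooled variance is 1 / (sum of weights), so uncertainty shrinks as studies are added
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se, (pooled - z * pooled_se, pooled + z * pooled_se)


# Hypothetical log odds ratios and standard errors from three studies
log_or = [0.40, 0.10, 0.25]
se = [0.20, 0.15, 0.10]
pooled, pooled_se, ci = fixed_effect_pool(log_or, se)
print(f"pooled log OR = {pooled:.3f} (SE {pooled_se:.3f}), 95% CI {ci[0]:.3f} to {ci[1]:.3f}")
```

Because the weights add up, the pooled standard error is smaller than that of any single study, which is the sense in which meta-analysis gives a more precise estimate. A fixed-effect model assumes that every study estimates the same underlying effect. When results are heterogeneous, a random-effects model is often used instead.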

Qualitative Data Synthesis (QDS): a method of identifying common themes across qualitative studies that achieves a greater degree of conceptual development than narrative reviews. Key concepts are identified through layered interpretation. The primary findings begin as participants' interpretations reported to researchers. The researchers then interpret these in a second-order interpretation of their meaning. Finally, reviewers interpret those into explanations and new hypotheses. [11]

Mixed methods synthesis: an advanced method of data synthesis developed by the EPPI-Centre. To better understand the meaning of quantitative studies, it conducts a review of user evaluations in parallel with a traditional systematic review. It then combines the findings of the two syntheses to provide clear directions for practice. [11]


- Last Updated: Sep 18, 2023 1:16 PM

- URL: https://ifis.libguides.com/systematic_reviews

- University of Texas Libraries


Systematic Reviews & Evidence Synthesis Methods


Once you have completed your analysis, you will want to both summarize and synthesize those results. You may have a qualitative synthesis, a quantitative synthesis, or both.

Qualitative Synthesis

In a qualitative synthesis, you describe for readers how the pieces of your work fit together. You will summarize, compare, and contrast the characteristics and findings, exploring the relationships between them. Further, you will discuss the relevance and applicability of the evidence to your research question. You will also analyze the strengths and weaknesses of the body of evidence. Focus on where the gaps are in the evidence and provide recommendations for further research.

Quantitative Synthesis

Whether or not your systematic review includes a full meta-analysis, there is typically some element of data analysis. A quantitative synthesis combines and analyzes the evidence using statistical techniques. This includes comparing methodological similarities and differences between studies and, potentially, the quality of the studies conducted.
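A quantitative synthesis can combine studies statistically while also quantifying how much they differ. The sketch below assumes a DerSimonian-Laird random-effects meta-analysis, one widely used option that this guide does not itself specify; other estimators of between-study variance exist. It reports Cochran's Q and I² alongside the pooled estimate. The function name `dersimonian_laird` and the data are hypothetical.

```python
import math


def dersimonian_laird(estimates, variances, z=1.96):
    """Hypothetical helper: random-effects pooling with the DerSimonian-Laird tau^2.

    Also returns Cochran's Q and I^2, which describe how much the study results
    vary beyond what within-study sampling error alone would explain.
    """
    k = len(estimates)
    if k < 2 or len(variances) != k:
        raise ValueError("need at least two studies and one variance per study")
    # Step 1: fixed-effect (inverse-variance) weights and pooled estimate
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    # Step 2: Cochran's Q, the weighted spread of studies around the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = k - 1
    # Step 3: moment estimate of the between-study variance tau^2, truncated at zero
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # I^2: percentage of total variation attributed to heterogeneity rather than chance
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0
    # Step 4: re-weight each study by 1 / (within-study variance + tau^2)
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {"pooled": pooled, "se": se, "ci": (pooled - z * se, pooled + z * se),
            "Q": q, "df": df, "tau2": tau2, "I2": i2}


# Hypothetical effect estimates (e.g. standardized mean differences) and their variances
result = dersimonian_laird([0.10, 0.30, 0.80, 0.20], [0.01, 0.02, 0.015, 0.01])
print(f"Q = {result['Q']:.2f} on {result['df']} df, I^2 = {result['I2']:.0f}%, tau^2 = {result['tau2']:.3f}")
print(f"pooled = {result['pooled']:.3f}, 95% CI {result['ci'][0]:.3f} to {result['ci'][1]:.3f}")
```

With these made-up numbers, one study sits well above the others, so Q far exceeds its degrees of freedom and I² is high. A review would then look for explanations, such as the methodological differences mentioned above, rather than report the pooled value alone.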

Summarizing vs. Synthesizing

In a systematic review, researchers do more than summarize findings from identified articles. You will synthesize the information you want to include.

While a summary concisely relates important themes and elements from a larger work or works in condensed form, a synthesis takes information from a variety of works and combines it to create something new.

Synthesis:

"The goal of a systematic synthesis of qualitative research is to integrate or compare the results across studies in order to increase understanding of a particular phenomenon, not to add studies together. Typically the aim is to identify broader themes or new theories – qualitative syntheses usually result in a narrative summary of cross-cutting or emerging themes or constructs, and/or conceptual models."

Denner, J., Marsh, E. & Campe, S. (2017). Approaches to reviewing research in education. In D. Wyse, N. Selwyn, & E. Smith (Eds.), The BERA/SAGE Handbook of educational research (Vol. 2, pp. 143-164). doi: 10.4135/9781473983953.n7

- Approaches to Reviewing Research in Education from Sage Knowledge

Data synthesis (Collaboration for Environmental Evidence Guidebook)

Interpreting findings and reporting conduct (Collaboration for Environmental Evidence Guidebook)

Interpreting results and drawing conclusions (Cochrane Handbook, Chapter 15)

Guidance on the conduct of narrative synthesis in systematic reviews (ESRC Methods Programme)

- Last Updated: Apr 9, 2024 8:57 PM

- URL: https://guides.lib.utexas.edu/systematicreviews


Data synthesis

Data synthesis overview.

Now that you have extracted your data, the next step is to synthesise the data.


Forest plot example

If you have conducted a meta-analysis, you can present a summary of each study and your overall findings in a forest plot.
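A forest plot shows, for each study, a point estimate with its confidence interval and the study's weight, followed by a summary row for the pooled result. The sketch below computes those rows and draws a crude text version. Several things are assumptions: the data are hypothetical, the summary row uses fixed-effect inverse-variance pooling, and the line of no effect is placed at 0, which suits log ratios or mean differences. The helper names `forest_rows` and `draw` are illustrative. In practice reviewers would draw the plot with dedicated meta-analysis software.

```python
import math


def forest_rows(labels, estimates, std_errors, z=1.96):
    """Hypothetical helper: rows of a forest plot (label, estimate, CI low, CI high, % weight)."""
    weights = [1.0 / se ** 2 for se in std_errors]  # inverse-variance weights
    total = sum(weights)
    rows = [(lab, y, y - z * se, y + z * se, 100.0 * w / total)
            for lab, y, se, w in zip(labels, estimates, std_errors, weights)]
    # Summary row (drawn as a diamond in a real forest plot), here fixed-effect pooled
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    half_width = z * math.sqrt(1.0 / total)
    rows.append(("Pooled (fixed effect)", pooled, pooled - half_width, pooled + half_width, 100.0))
    return rows


def draw(rows, lo=-0.5, hi=1.0, width=41, null=0.0):
    """Hypothetical helper: render rows as a crude text forest plot on the axis range [lo, hi]."""
    def col(x):
        # Map an effect value to a character column, clamped to the plot area
        return max(0, min(width - 1, round((x - lo) / (hi - lo) * (width - 1))))

    lines = []
    for label, y, ci_lo, ci_hi, weight in rows:
        cells = [" "] * width
        for i in range(col(ci_lo), col(ci_hi) + 1):
            cells[i] = "-"      # confidence interval
        cells[col(null)] = "|"  # line of no effect
        cells[col(y)] = "o"     # point estimate
        lines.append(f"{label:<22}{''.join(cells)}  {y:5.2f} [{ci_lo:5.2f}, {ci_hi:5.2f}]  {weight:5.1f}%")
    return lines


rows = forest_rows(["Study A", "Study B", "Study C"], [0.40, 0.10, 0.25], [0.20, 0.15, 0.10])
for line in draw(rows):
    print(line)
```

The pooled row has the narrowest interval because it combines the weight of all three studies. The larger a study's weight, the more it pulls the pooled estimate toward its own result.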


Guidelines and standards

- Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) website

Items 13a to 13f of the PRISMA 2020 Checklist address synthesis methods and are described further in the Explanation and Elaboration document.

Other standards

- Overview of systematic reviews See the overview page (of this guide) for additional guidelines and standards.

- Interpreting and understanding meta-analysis graphs: a practical guide (2006) Provides a practical guide for appraising systematic reviews for relevance to clinical practice and interpreting meta-analysis graphs.

- What is a meta-analysis? (CEBI, University of Oxford) Provides additional information about meta-analyses.

- How to read a forest plot (CEBI, University of Oxford) Additional detailed information about how to read a forest plot.

Additional readings:

- Modern meta-analysis review and update of methodologies (2017) Comprehensive details about conducting meta-analyses.

- Meta-synthesis and evidence-based health care - a method for systematic review (2012) This article describes the process of systematic review of qualitative studies.

- Lessons from comparing narrative synthesis and meta-analysis in a systematic review (2015). Investigates the contribution and implications of narrative synthesis and meta-analysis in a systematic review.

- Speech-language pathologist interventions for communication in moderate-severe dementia: a systematic review (2018) An example of a systematic review without a meta-analysis.


- Last Updated: Apr 22, 2024 9:44 AM

- URL: https://guides.library.unisa.edu.au/SystematicReviews


Systematic reviews & evidence synthesis methods.


Data Extraction