2.1 How Humans Produce Speech

Phonetics studies human speech. Speech is produced by bringing air from the lungs to the larynx (respiration), where the vocal folds may be held open to allow the air to pass through or may vibrate to make a sound (phonation). The airflow from the lungs is then shaped by the articulators in the mouth and nose (articulation).

Video script.

The field of phonetics studies the sounds of human speech. When we study speech sounds we can consider them from two angles. Acoustic phonetics, in addition to being part of linguistics, is also a branch of physics. It’s concerned with the physical, acoustic properties of the sound waves that we produce. We’ll talk some about the acoustics of speech sounds, but we’re primarily interested in articulatory phonetics, that is, how we humans use our bodies to produce speech sounds. Producing speech needs three mechanisms.

The first is a source of energy. Anything that makes a sound needs a source of energy. For human speech sounds, the air flowing from our lungs provides energy.

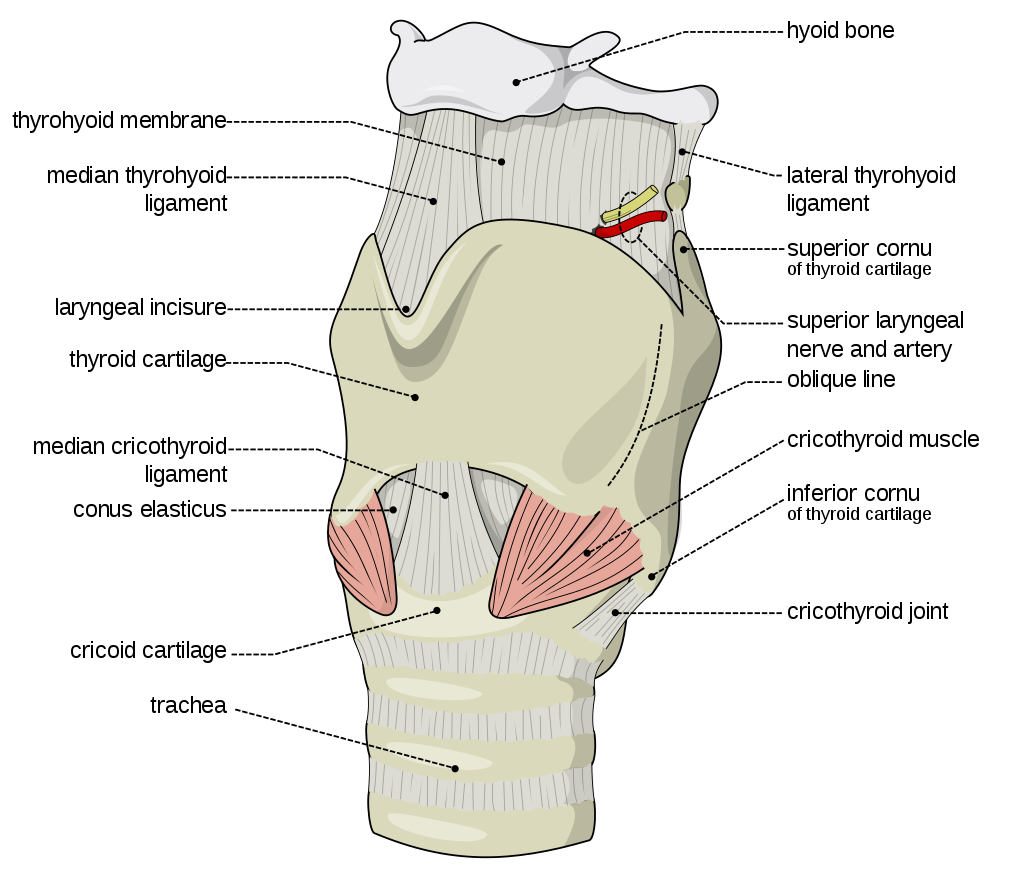

The second is a source of the sound: air flowing from the lungs arrives at the larynx. Put your hand on the front of your throat and gently feel the bony part under your skin. That’s the front of your larynx. It’s not actually made of bone; it’s cartilage and muscle. This picture shows what the larynx looks like from the front.

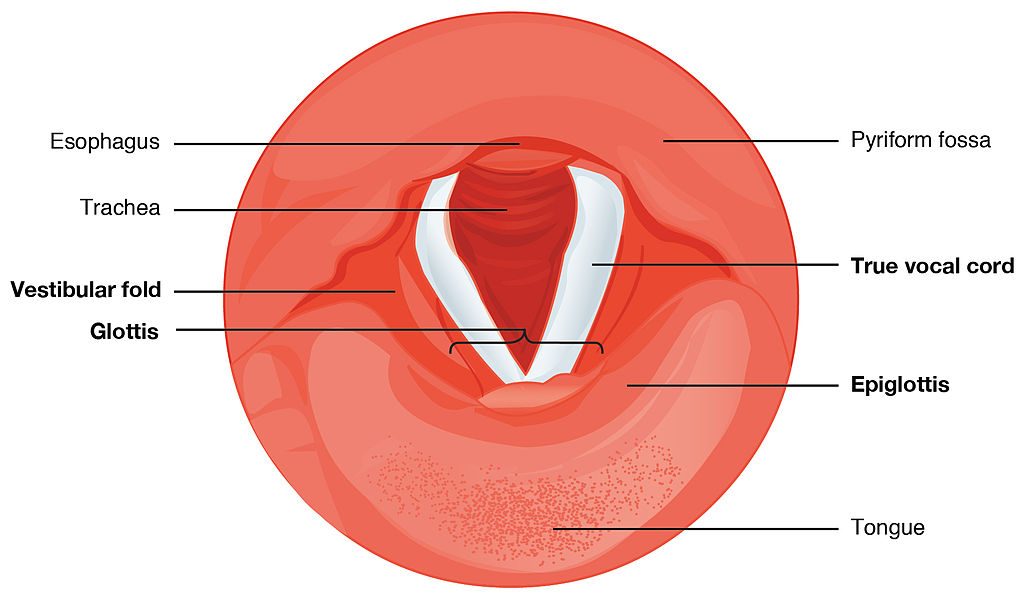

This next picture is a view down a person’s throat.

What you see here is that the opening of the larynx can be covered by two triangle-shaped folds of tissue. These are often called “vocal cords” but they’re not really like cords or strings. A better name for them is vocal folds.

The opening between the vocal folds is called the glottis.

We can control our vocal folds to make a sound. I want you to try this out so take a moment and close your door or make sure there’s no one around that you might disturb.

First I want you to say the word “uh-oh”. Now say it again, but stop half-way through, “Uh-”. When you do that, you’ve closed your vocal folds by bringing them together. This stops the air flowing through your vocal tract. That little silence in the middle of “uh-oh” is called a glottal stop because the air is stopped completely when the vocal folds close off the glottis.

Now I want you to open your mouth and breathe out quietly, “haaaaaaah”. When you do this, your vocal folds are open and the air is passing freely through the glottis.

Now breathe out again and say “aaah”, as if the doctor is looking down your throat. To make that “aaaah” sound, you’re holding your vocal folds close together and vibrating them rapidly.

When we speak, we make some sounds with vocal folds open, and some with vocal folds vibrating. Put your hand on the front of your larynx again and make a long “SSSSS” sound. Now switch and make a “ZZZZZ” sound. You can feel your larynx vibrate on “ZZZZZ” but not on “SSSSS”. That’s because [s] is a voiceless sound, made with the vocal folds held open, and [z] is a voiced sound, where we vibrate the vocal folds. Do it again and feel the difference between voiced and voiceless.

Now take your hand off your larynx and plug your ears and make the two sounds again with your ears plugged. You can hear the difference between voiceless and voiced sounds inside your head.

I said at the beginning that there are three crucial mechanisms involved in producing speech, and so far we’ve looked at only two:

- Energy comes from the air supplied by the lungs.

- The vocal folds produce sound at the larynx.

- The sound is then filtered, or shaped, by the articulators.

The oral cavity is the space in your mouth. The nasal cavity, obviously, is the space inside and behind your nose. And of course, we use our tongues, lips, teeth and jaws to articulate speech as well. In the next unit, we’ll look in more detail at how we use our articulators.

So to sum up, the three mechanisms that we use to produce speech are:

- respiration at the lungs,

- phonation at the larynx, and

- articulation in the mouth.
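As a rough illustration, the three mechanisms can be sketched in Python as a simple source-and-filter chain: an energy level stands in for respiration, a train of pulses for the vibrating vocal folds, and a pair of resonances for the shaping done by the articulators. This is only a sketch under stated assumptions, not a model of real anatomy. The sample rate, the 120 Hz pitch, the resonance frequencies and bandwidths, and every function name are illustrative choices that do not come from the text.

```python
import math

SAMPLE_RATE = 16000  # samples per second (assumed for this sketch)


def glottal_source(f0_hz, duration_s, energy):
    """Phonation: one pulse per vocal-fold cycle, scaled by the breath energy."""
    n = round(SAMPLE_RATE * duration_s)
    period = round(SAMPLE_RATE / f0_hz)  # samples between pulses
    return [energy if i % period == 0 else 0.0 for i in range(n)]


def resonator(signal, centre_hz, bandwidth_hz):
    """Articulation: a two-pole resonance standing in for one vocal-tract cavity."""
    r = math.exp(-math.pi * bandwidth_hz / SAMPLE_RATE)  # pole radius, below 1 so the filter is stable
    a1 = 2.0 * r * math.cos(2.0 * math.pi * centre_hz / SAMPLE_RATE)
    a2 = -r * r
    out = []
    y1 = y2 = 0.0  # previous two output samples
    for x in signal:
        y = x + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def synthesize_vowel(energy=1.0, f0_hz=120.0,
                     resonances=((700.0, 100.0), (1200.0, 120.0)),
                     duration_s=0.1):
    """Respiration -> phonation -> articulation, in that order."""
    signal = glottal_source(f0_hz, duration_s, energy)
    for centre_hz, bandwidth_hz in resonances:
        signal = resonator(signal, centre_hz, bandwidth_hz)
    return signal


samples = synthesize_vowel()
print(len(samples))
```

With `energy=0.0` the output is silent, which mirrors the point that nothing makes a sound without a source of energy. Changing `f0_hz` changes only the source, and changing `resonances` changes only the filter.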

Essentials of Linguistics Copyright © 2018 by Catherine Anderson is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License, except where otherwise noted.

Speech Production

- Reference work entry

- First Online: 01 January 2015

- pp 1493–1498

- Laura Docio-Fernandez 3 &

- Carmen García Mateo 4

Sound generation; Speech system

Speech production is the process of uttering articulated sounds or words, i.e., how humans generate meaningful speech. It is a complex feedback process in which hearing, perception, and information processing in the nervous system and the brain are also involved.

Speaking is in essence the by-product of a necessary bodily process, the expulsion from the lungs of air charged with carbon dioxide after it has fulfilled its function in respiration. Most of the time, one breathes out silently; but it is possible, by contracting and relaxing the vocal tract, to change the characteristics of the air expelled from the lungs.

Introduction

Speech is one of the most natural forms of communication for human beings. Researchers in speech technology are working on developing systems with the ability to understand speech and speak with a human being.

Human-computer interaction is a discipline concerned with the design, evaluation, and implementation...

Author information

Authors and affiliations.

Department of Signal Theory and Communications, University of Vigo, Vigo, Spain

Laura Docio-Fernandez

Atlantic Research Center for Information and Communication Technologies, University of Vigo, Pontevedra, Spain

Carmen García Mateo

Editor information

Editors and affiliations.

Center for Biometrics and Security, Research & National Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences, Beijing, China

Departments of Computer Science and Engineering, Michigan State University, East Lansing, MI, USA

Anil K. Jain

Copyright information

© 2015 Springer Science+Business Media New York

About this entry

Cite this entry.

Docio-Fernandez, L., García Mateo, C. (2015). Speech Production. In: Li, S.Z., Jain, A.K. (eds) Encyclopedia of Biometrics. Springer, Boston, MA. https://doi.org/10.1007/978-1-4899-7488-4_199

DOI : https://doi.org/10.1007/978-1-4899-7488-4_199

Published : 03 July 2015

Publisher Name : Springer, Boston, MA

Print ISBN : 978-1-4899-7487-7

Online ISBN : 978-1-4899-7488-4

eBook Packages : Computer Science Reference Module Computer Science and Engineering

Speech Production

by Eryk Walczak

LAST REVIEWED: 22 February 2018

LAST MODIFIED: 22 February 2018

DOI: 10.1093/obo/9780199772810-0217

Introduction

Speech production is one of the most complex human activities. It involves coordinating numerous muscles and complex cognitive processes. The area of speech production is related to Articulatory Phonetics, Acoustic Phonetics, and Speech Perception, all of which study various elements of language and are part of the broader field of Linguistics. Because of the interdisciplinary nature of the topic, it is usually studied on several levels: neurological, acoustic, motor, evolutionary, and developmental. Each of these levels has its own literature, but most of the speech production literature touches on all of them. The large body of relevant literature is covered in the Speech Perception entry, on which this bibliography builds. This entry covers general speech production mechanisms and speech disorders. However, speech production in second language learners or bilinguals has special features, which are described in a separate bibliography on Cross-Language Speech Perception and Production. Speech produces sounds, and sounds are a topic of study for Phonology.

As mentioned in the introduction, speech production tends to be described in relation to acoustics, speech perception, neuroscience, and linguistics. Because of this interdisciplinarity, there are not many published textbooks focusing exclusively on speech production. Guenther 2016 and Levelt 1993 are the exceptions. The former has a stronger focus on the neuroscientific underpinnings of speech. Auditory neuroscience is also extensively covered by Schnupp, et al. 2011 and in the extensive textbook Hickok and Small 2015. Rosen and Howell 2011 is a textbook focusing on signal processing and acoustics, which any speech scientist needs to understand. A historical approach to psycholinguistics that also covers speech research is Levelt 2013.

Guenther, F. H. 2016. Neural control of speech. Cambridge, MA: MIT.

This textbook provides an overview of neural processes responsible for speech production. Large sections describe speech motor control, especially the DIVA model (co-authored by Guenther). It includes extensive coverage of behavioral and neuroimaging studies of speech as well as speech disorders and ties them together with a unifying theoretical framework.

Hickok, G., and S. L. Small. 2015. Neurobiology of language. London: Academic Press.

This voluminous textbook edited by Hickok and Small covers a wide range of topics related to the neurobiology of language. It includes a section devoted to speaking, which covers the neurobiology of speech production, the motor control perspective, neuroimaging studies, and aphasia.

Levelt, W. J. M. 1993. Speaking: From intention to articulation. Cambridge, MA: MIT.

A seminal textbook, Speaking is worth reading particularly for its detailed explanation of the author’s speech model, which is part of the author’s language model. The book is slightly dated, as it was released in 1993, but chapters 8–12 are especially relevant to readers interested in phonetic plans, articulating, and self-monitoring.

Levelt, W. J. M. 2013. A history of psycholinguistics: The pre-Chomskyan era. Oxford: Oxford University Press.

Levelt published another important book detailing the development of psycholinguistics. As its title suggests, it focuses on the early history of the discipline, so readers interested in historical research on speech can find an abundance of speech-related research in that book. It covers a wide range of psycholinguistic specializations.

Rosen, S., and P. Howell. 2011. Signals and Systems for Speech and Hearing. 2d ed. Bingley, UK: Emerald.

Rosen and Howell provide a low-level explanation of speech signals and systems. The book includes informative charts explaining the basic acoustic and signal processing concepts useful for understanding speech science.

Schnupp, J., I. Nelken, and A. King. 2011. Auditory neuroscience: Making sense of sound. Cambridge, MA: MIT.

A general introduction to speech concepts with a main focus on neuroscience. The textbook is linked with a website that provides demonstrations of the described phenomena.

- Open access

- Published: 31 January 2024

Single-neuronal elements of speech production in humans

- Arjun R. Khanna ORCID: orcid.org/0000-0003-0677-5598,

- William Muñoz ORCID: orcid.org/0000-0002-1354-3472,

- Young Joon Kim,

- Yoav Kfir,

- Angelique C. Paulk ORCID: orcid.org/0000-0002-4413-3417,

- Mohsen Jamali ORCID: orcid.org/0000-0002-1750-7591,

- Jing Cai ORCID: orcid.org/0000-0002-2970-0567,

- Martina L. Mustroph,

- Irene Caprara,

- Richard Hardstone ORCID: orcid.org/0000-0002-7502-9145,

- Mackenna Mejdell,

- Domokos Meszéna ORCID: orcid.org/0000-0003-4042-2542,

- Abigail Zuckerman,

- Jeffrey Schweitzer ORCID: orcid.org/0000-0003-4079-0791,

- Sydney Cash ORCID: orcid.org/0000-0002-4557-6391 &

- Ziv M. Williams ORCID: orcid.org/0000-0002-0017-0048

Nature volume 626, pages 603–610 (2024)

- Extracellular recording

Humans are capable of generating extraordinarily diverse articulatory movement combinations to produce meaningful speech. This ability to orchestrate specific phonetic sequences, and their syllabification and inflection over subsecond timescales allows us to produce thousands of word sounds and is a core component of language 1,2. The fundamental cellular units and constructs by which we plan and produce words during speech, however, remain largely unknown. Here, using acute ultrahigh-density Neuropixels recordings capable of sampling across the cortical column in humans, we discover neurons in the language-dominant prefrontal cortex that encoded detailed information about the phonetic arrangement and composition of planned words during the production of natural speech. These neurons represented the specific order and structure of articulatory events before utterance and reflected the segmentation of phonetic sequences into distinct syllables. They also accurately predicted the phonetic, syllabic and morphological components of upcoming words and showed a temporally ordered dynamic. Collectively, we show how these mixtures of cells are broadly organized along the cortical column and how their activity patterns transition from articulation planning to production. We also demonstrate how these cells reliably track the detailed composition of consonant and vowel sounds during perception and how they distinguish processes specifically related to speaking from those related to listening. Together, these findings reveal a remarkably structured organization and encoding cascade of phonetic representations by prefrontal neurons in humans and demonstrate a cellular process that can support the production of speech.

Humans can produce a remarkably wide array of word sounds to convey specific meanings. To produce fluent speech, linguistic analyses suggest a structured succession of processes involved in planning the arrangement and structure of phonemes in individual words 1,2. These processes are thought to occur rapidly during natural speech and to recruit prefrontal regions in parts of the broader language network known to be involved in word planning 3,4,5,6,7,8,9,10,11,12 and sentence construction 13,14,15,16 and which widely connect with downstream areas that play a role in their motor production 17,18,19. Cortical surface recordings have also demonstrated that phonetic features may be regionally organized 20 and that they can be decoded from local-field activities across posterior prefrontal and premotor areas 21,22,23, suggesting an underlying cortical structure. Understanding the basic cellular elements by which we plan and produce words during speech, however, has remained a significant challenge.

Although previous studies in animal models 24,25,26 and more recent investigation in humans 27,28 have offered an important understanding of how cells in primary motor areas relate to vocalization movements and the production of sound sequences such as song, they do not reveal the neuronal process by which humans construct individual words and by which we produce natural speech 29. Further, although linguistic theory based on behavioural observations has suggested tightly coupled sublexical processes necessary for the coordination of articulators during word planning 30, how specific phonetic sequences, their syllabification or inflection are precisely coded for by individual neurons remains undefined. Finally, whereas previous studies have revealed a large regional overlap in areas involved in articulation planning and production 31,32,33,34,35, little is known about whether and how these linguistic processes may be uniquely represented at a cellular scale 36, what their cortical organization may be or how mechanisms specifically related to speech production and perception may differ.

Single-neuronal recordings have the potential to begin revealing some of the basic functional building blocks by which humans plan and produce words during speech and study these processes at spatiotemporal scales that have largely remained inaccessible 37,38,39,40,41,42,43,44,45. Here, we used an opportunity to combine recently developed ultrahigh-density microelectrode arrays for acute intraoperative neuronal recordings, speech tracking and modelling approaches to begin addressing these questions.

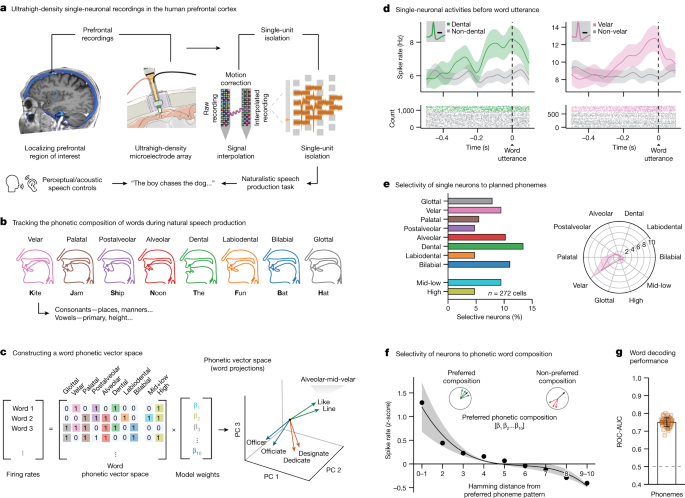

Neuronal recordings during natural speech

Single-neuronal recordings were obtained from the language-dominant (left) prefrontal cortex in participants undergoing planned intraoperative neurophysiology (Fig. 1a; section on ‘Acute intraoperative single-neuronal recordings’). These recordings were obtained from the posterior middle frontal gyrus 10,46,47,48,49,50 in a region known to be broadly involved in word planning 3,4,5,6,7,8,9,10,11,12 and sentence construction 13,14,15,16 and to connect with neighbouring motor areas shown to play a role in articulation 17,18,19 and lexical processing 51,52,53 (Extended Data Fig. 1a). This region was traversed during recordings as part of planned neurosurgical care and roughly ranged in distribution from alongside anterior area 55b to 8a, with sites varying by approximately 10 mm (s.d.) across subjects (Extended Data Fig. 1b; section on ‘Anatomical localization of recordings’). Moreover, the participants undergoing recordings were awake and thus able to perform language-based tasks (section on ‘Study participants’), together providing an extraordinarily rare opportunity to study the action potential (AP) dynamics of neurons during the production of natural speech.

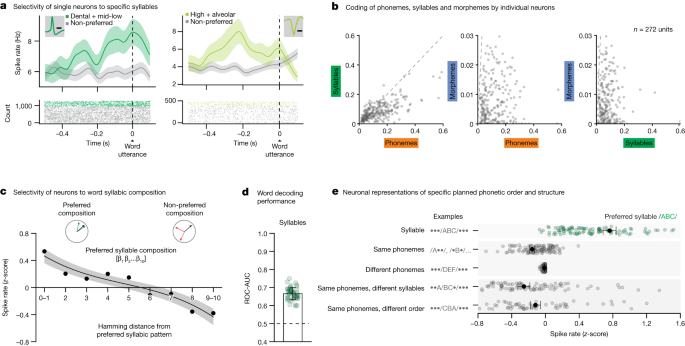

a, Left, single-neuronal recordings were confirmed to localize to the posterior middle frontal gyrus of language-dominant prefrontal cortex in a region known to be involved in word planning and production (Extended Data Fig. 1a,b); right, acute single-neuronal recordings were made using Neuropixels arrays (Extended Data Fig. 1c,d); bottom, speech production task and controls (Extended Data Fig. 2a). b, Example of phonetic groupings based on the planned places of articulation (Extended Data Table 1). c, A ten-dimensional feature space was constructed to provide a compositional representation of all phonemes per word. d, Peri-event time histograms were constructed by aligning the APs of each neuron to word onset at millisecond resolution. Data are presented as mean (line) values ± s.e.m. (shade). Inset, spike waveform morphology and scale bar (0.5 ms). e, Left, proportions of modulated neurons that selectively changed their activities to specific planned phonemes; right, tuning curve for a cell that was preferentially tuned to velar consonants. f, Average z-scored firing rates as a function of the Hamming distance between the preferred phonetic composition of the neuron (that producing largest change in activity) and all other phonetic combinations. Here, a Hamming distance of 0 indicates that the words had the same phonetic compositions, whereas a Hamming distance of 1 indicates that they differed by a single phoneme. Data are presented as mean (line) values ± s.e.m. (shade). g, Decoding performance for planned phonemes. The orange points provide the sampled distribution for the classifier’s ROC-AUC; n = 50 random test/train splits; P = 7.1 × 10^−18, two-sided Mann–Whitney U-test. Data are presented as mean ± s.d.
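The Hamming distance used in panel f can be illustrated with a short Python sketch. It assumes, purely for illustration, that each word's phonetic composition is encoded as a ten-element binary vector; the example vectors and the function name are hypothetical and are not taken from the study's data or code.

```python
def hamming_distance(a, b):
    """Count the positions at which two equal-length feature vectors differ."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum(x != y for x, y in zip(a, b))


# Hypothetical ten-dimensional compositions (1 = feature present in the word).
preferred = (1, 0, 0, 1, 0, 0, 0, 1, 0, 0)
same_composition = (1, 0, 0, 1, 0, 0, 0, 1, 0, 0)
one_difference = (1, 0, 0, 1, 0, 0, 0, 0, 0, 0)

print(hamming_distance(preferred, same_composition))  # identical compositions
print(hamming_distance(preferred, one_difference))    # a single differing position
```

Under this encoding, a distance of 0 corresponds to identical compositions and each additional differing position adds 1, which is the quantity plotted on the horizontal axis in panel f.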

To obtain acute recordings from individual cortical neurons and to reliably track their AP activities across the cortical column, we used ultrahigh-density, fully integrated linear silicon Neuropixels arrays that allowed for high throughput recordings from single cortical units 54,55. To further obtain stable recordings, we developed custom-made software that registered and motion-corrected the AP activity of each unit and kept track of their position across the cortical column (Fig. 1a, right) 56. Only well-isolated single units, with low relative neighbour noise and stable waveform morphologies consistent with that of neocortical neurons were used (Extended Data Fig. 1c,d; section on ‘Acute intraoperative single-neuronal recordings’). Altogether, we obtained recordings from 272 putative neurons across five participants for an average of 54 ± 34 (s.d.) single units per participant (range 16–115 units).

Next, to study neuronal activities during the production of natural speech and to track their per word modulation, the participants performed a naturalistic speech production task that required them to articulate broadly varied words in a replicable manner (Extended Data Fig. 2a ) 57 . Here, the task required the participants to produce words that varied in phonetic, syllabic and morphosyntactic content and to provide them in a structured and reproducible format. It also required them to articulate the words independently of explicit phonetic cues (for example, from simply hearing and then repeating the same words) and to construct them de novo during natural speech. Extra controls were further used to evaluate for preceding word-related responses, sensory–perceptual effects and phonetic–acoustic properties as well as to evaluate the robustness and generalizability of neuronal activities (section on ‘Speech production task’).

Together, the participants produced 4,263 words for an average of 852.6 ± 273.5 (s.d.) words per participant (range 406–1,252 words). The words were transcribed using a semi-automated platform and aligned to AP activity at millisecond resolution (section on ‘Audio recordings and task synchronization’) 51 . All participants were English speakers and showed comparable word-production performances (Extended Data Fig. 2b ).

Representations of phonemes by neurons

To first examine the relation between single-neuronal activities and the specific speech organs involved 58 , 59 , we focused our initial analyses on the primary places of articulation 60 . The places of articulation describe the points where constrictions are made between an active and a passive articulator and are what largely give consonants their distinctive sounds. Thus, for example, whereas bilabial consonants (/p/ and /b/) involve the obstruction of airflow at the lips, velar consonants are articulated with the dorsum of the tongue placed against the soft palate (/k/ and /g/; Fig. 1b ). To further examine sounds produced without constriction, we also focused our initial analyses on vowels in relation to the relative height of the tongue (mid-low and high vowels). More phonetic groupings based on the manners of articulation (configuration and interaction of articulators) and primary cardinal vowels (combined positions of the tongue and lips) are described in Extended Data Table 1 .

Next, to provide a compositional phonetic representation of each word, we constructed a feature space on the basis of the constituent phonemes of each word (Fig. 1c , left). For instance, the words ‘like’ and ‘bike’ would be represented uniquely in vector space because they differ by a single phoneme (‘like’ contains alveolar /l/ whereas ‘bike’ contains bilabial /b/; Fig. 1c , right). The presence of a particular phoneme was therefore represented by a unitary value for its respective vector component, together yielding a vectoral representation of the constituent phonemes of each word (section on ‘Constructing a word feature space’). Generalized linear models (GLMs) were then used to quantify the degree to which variations in neuronal activity during planning could be explained by individual phonemes across all possible combinations of phonemes per word (section on ‘Single-neuronal analysis’).
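
The construction described above can be illustrated with a minimal sketch. The category list and the phoneme-to-category lookup below are hypothetical and much smaller than the ten-dimensional space of Fig. 1c; the actual groupings are those given in Extended Data Table 1 and the section on ‘Constructing a word feature space’.

```python
# Hypothetical categories for illustration only; the study's actual
# feature space has ten dimensions (Fig. 1c, Extended Data Table 1).
CATEGORIES = ["bilabial", "alveolar", "velar", "low vowel", "high vowel"]

# Illustrative lookup using ARPAbet-style labels (an assumption).
# The diphthong "ay" is grouped by its onset purely for simplicity.
PHONEME_CATEGORY = {
    "b": "bilabial",
    "p": "bilabial",
    "l": "alveolar",
    "d": "alveolar",
    "k": "velar",
    "g": "velar",
    "ay": "low vowel",
    "iy": "high vowel",
}


def word_vector(phonemes):
    """Return a binary vector marking which categories occur in the word."""
    present = {PHONEME_CATEGORY[p] for p in phonemes}
    return [1 if c in present else 0 for c in CATEGORIES]


like = word_vector(["l", "ay", "k"])
bike = word_vector(["b", "ay", "k"])
print(like)  # [0, 1, 1, 1, 0]
print(bike)  # [1, 0, 1, 1, 0]
```

In this toy encoding the two words share their velar and vowel components and differ only in the components for the initial consonant, which is what allows them to be represented uniquely.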

Overall, we find that the firing activities of many of the neurons (46.7%, n = 127 of 272 units) were explained by the constituent phonemes of the word before utterance (−500 to 0 ms; GLM likelihood ratio test, P < 0.01), meaning that their activity patterns were informative of the phonetic content of the word. Among these, the activities of 56 neurons (20.6% of the 272 units recorded) were further selectively tuned to the planned production of specific phonemes (two-sided Wald test for each GLM coefficient, P < 0.01, Bonferroni-corrected across all phoneme categories; Fig. 1d,e and Extended Data Figs. 2 and 3 ). Thus, for example, whereas certain neurons changed their firing rate when the upcoming words contained bilabial consonants (for example, /p/ or /b/), others changed their firing rate when they contained velar consonants. Of these neurons, most encoded information both about the planned places and manners of articulation ( n = 37 or 66% overlap, two-sided hypergeometric test, P < 0.0001) or planned places of articulation and vowels ( n = 27 or 48% overlap, two-sided hypergeometric test, P < 0.0001; Extended Data Fig. 4 ). Most also reflected the spectral properties of the articulated words on a phoneme-by-phoneme basis (64%, n = 36 of 56; two-sided hypergeometric test, P = 1.1 × 10 −10 ; Extended Data Fig. 5a,b ); together providing detailed information about the upcoming phonemes before utterance.
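
The logic of a likelihood ratio test can be sketched in a few lines. The example below is a deliberate simplification: it assumes a Poisson model with one binary predictor (whether the upcoming word contains a given phoneme class), so the fitted rates are simply group means. The spike counts are made up, and the GLM actually used is described in the section on ‘Single-neuronal analysis’.

```python
import math


def poisson_loglik(counts, rate):
    """Poisson log-likelihood of spike counts at a fixed rate (rate > 0)."""
    return sum(k * math.log(rate) - rate - math.lgamma(k + 1) for k in counts)


def likelihood_ratio_test(counts_with, counts_without):
    """Compare a one-rate null model with a two-rate model (1 d.f.)."""
    pooled = counts_with + counts_without
    ll_null = poisson_loglik(pooled, sum(pooled) / len(pooled))
    ll_full = poisson_loglik(
        counts_with, sum(counts_with) / len(counts_with)
    ) + poisson_loglik(
        counts_without, sum(counts_without) / len(counts_without)
    )
    stat = 2.0 * (ll_full - ll_null)
    # Survival function of a chi-square distribution with 1 d.f.
    p_value = math.erfc(math.sqrt(max(stat, 0.0) / 2.0))
    return stat, p_value


# Made-up spike counts in the -500 to 0 ms window, for illustration only.
velar_words = [9, 11, 8, 12, 10, 9, 13, 11]
other_words = [4, 5, 3, 6, 5, 4, 6, 5]
stat, p = likelihood_ratio_test(velar_words, other_words)
print(stat, p)
```

A small P value here indicates that allowing the firing rate to depend on the phoneme class explains the counts better than a single shared rate.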

Because we had a complete representation of the upcoming phonemes for each word, we could also quantify the degree to which neuronal activities reflected their specific combinations. For example, we could ask whether the activities of certain neurons not only reflected planned words with velar consonants but also words that contained the specific combination of both velar and labial consonants. By aligning the activity of each neuron to its preferred phonetic composition (that is, the specific combination of phonemes to which the neuron most strongly responded) and by calculating the Hamming distance between this and all other possible phonetic compositions across words (Fig. 1c , right; section on ‘Single-neuronal analysis’), we find that the relation between the vectoral distances across words and neuronal activity was significant (two-sided Spearman’s ρ = −0.97, P = 5.14 × 10 −7 ; Fig. 1f ). These neurons therefore seemed not only to encode specific planned phonemes but also their specific composition with upcoming words.
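
The two quantities used in this analysis can be sketched as follows. The binary compositions and z-scored firing rates are made up for illustration, and the rank correlation is written out in plain Python rather than taken from the authors' code.

```python
def hamming(u, v):
    """Number of positions at which two equal-length vectors differ."""
    if len(u) != len(v):
        raise ValueError("vectors must have equal length")
    return sum(a != b for a, b in zip(u, v))


def ranks(values):
    """Average ranks (1-based); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            out[order[k]] = mean_rank
        i = j + 1
    return out


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical preferred composition and four other compositions.
preferred = [1, 0, 1, 1, 0]
compositions = [[1, 0, 1, 1, 0], [1, 0, 1, 0, 0], [0, 0, 1, 0, 0], [0, 1, 0, 0, 1]]
# Made-up z-scored firing rates, one per composition.
z_rates = [1.2, 0.7, 0.3, -0.4]

distances = [hamming(preferred, c) for c in compositions]
rho = spearman(distances, z_rates)
print(distances)  # [0, 1, 2, 5]
print(rho)        # close to -1 for these made-up values
```

A strongly negative ρ, as reported above, means that firing rates fall off as the phonetic composition of a word moves further from the composition the neuron prefers.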

Finally, we asked whether the constituent phonemes of the word could be robustly decoded from the activity patterns of the neuronal population. Using multilabel decoders to classify the upcoming phonemes of words not used for model training (section on ‘Population modelling’), we find that the composition of phonemes could be predicted from neuronal activity with significant accuracy (receiver operating characteristic area under the curve; ROC-AUC = 0.75 ± 0.03 mean ± s.d. observed versus 0.48 ± 0.02 chance, P < 0.001, two-sided Mann–Whitney U -test; Fig. 1g ). Similar findings were also made when examining the planned manners of articulation (AUC = 0.77 ± 0.03, P < 0.001, two-sided Mann–Whitney U -test), primary cardinal vowels (AUC = 0.79 ± 0.04, P < 0.001, two-sided Mann–Whitney U -test) and their spectral properties (AUC = 0.75 ± 0.03, P < 0.001, two-sided Mann–Whitney U -test; Extended Data Fig. 5a , right). Taken together, these neurons therefore seemed to reliably predict the phonetic composition of the upcoming words before utterance.
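
For readers less familiar with the metric, ROC-AUC for a single label can be computed directly as the probability that a word containing the phoneme receives a higher decoder score than a word that does not. The sketch below uses made-up labels and scores. For a multilabel decoder, one such value would be computed per label and then summarized, although the exact averaging used here is described in the section on ‘Population modelling’ and is not assumed below.

```python
def roc_auc(labels, scores):
    """ROC-AUC as the probability that a positive outranks a negative.

    A tie between a positive and a negative score counts as one half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up held-out words: 1 = word contains the phoneme class, 0 = it does not.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.6, 0.4, 0.5, 0.3, 0.2]
auc = roc_auc(labels, scores)
print(auc)
```

A value of 0.5 corresponds to chance ranking and 1.0 to perfect separation, which is why the observed values are compared against a chance distribution near 0.5.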

Motoric and perceptual processes

Neurons that reflected the phonetic composition of the words during planning were largely distinct from those that reflected their composition during perception. It is possible, for instance, that similar response patterns could have been observed when simply hearing the words. Therefore, to test for this, we performed an extra ‘perception’ control in three of the participants whereby they listened to, rather than produced, the words ( n = 126 recorded units; section on ‘Speech production task’). Here, we find that 29.3% ( n = 37) of the neurons showed phonetic selectivity during listening (Extended Data Fig. 6a ) and that their activities could be used to accurately predict the phonemes being heard (AUC = 0.70 ± 0.03 observed versus 0.48 ± 0.02 chance, P < 0.001, two-sided Mann–Whitney U -test; Extended Data Fig. 6b ). We also find, however, that these cells were largely distinct from those that showed phonetic selectivity during planning ( n = 10; 7.9% overlap) and that their activities were uninformative of the phonemic content of the words being planned (AUC = 0.48 ± 0.01, P = 0.99, two-sided Mann–Whitney U -test; Extended Data Fig. 6b ). Similar findings were also made when replaying the participants’ own voices to them (‘playback’ control; 0% overlap in neurons); together suggesting that speaking and listening engaged largely distinct but complementary sets of cells in the neural population.

Given the above observations, we also examined whether the activities of the neurons could have been explained by the acoustic–phonetic properties of the preceding spoken words. For example, it is possible that the activities of the neuron may have partly reflected the phonetic composition of the previous articulated word or their motoric components. Thus, to test for this, we repeated our analyses but now excluded words in which the preceding articulated word contained the phoneme being decoded (section on ‘Single-neuronal analysis’) and find that decoding performance remained significant (AUC = 0.72 ± 0.1, P < 0.001, two-sided Mann–Whitney U -test). We also find that decoding performance remained significant when narrowing the analysis window (−400 to 0 ms window instead of −500 to 0 ms; AUC = 0.72 ± 0.1, P < 0.001, two-sided Mann–Whitney U -test) or shifting it closer to utterance (−300 to +200 ms window results in AUC = 0.76 ± 0.1, P < 0.001, two-sided Mann–Whitney U -test); indicating that these neurons coded for the phonetic composition of the upcoming words.

Syllabic and morphological features

To transform sets of consonants and vowels into words, the planned phonemes must also be arranged and segmented into distinct syllables 61 . For example, even though the words ‘casting’ and ‘stacking’ possess the same constituent phonemes, they are distinguished by their specific syllabic structure and order. Therefore, to examine whether neurons in the population may further reflect these sublexical features, we created an extra vector space based on the specific order and segmentation of phonemes (section on ‘Constructing a word feature space’). Here, focusing on the most common syllables to allow for tractable neuronal analysis (Extended Data Table 1 ), we find that the activities of 25.0% ( n = 68 of 272) of the neurons reflected the presence of specific planned syllables (two-sided Wald test for each GLM coefficient, P < 0.01, Bonferroni-corrected across all syllable categories; Fig. 2a,b ). Thus, whereas certain neurons may respond selectively to a velar-low-alveolar syllable, other neurons may respond selectively to an alveolar-low-velar syllable. Together, the neurons responded preferentially to specific syllables when tested across words (two-sided Spearman’s ρ = −0.96, P = 1.85 × 10 −6 ; Fig. 2c ) and accurately predicted their content (AUC = 0.67 ± 0.03 observed versus 0.50 ± 0.02 chance, P < 0.001, two-sided Mann–Whitney U -test; Fig. 2d ); suggesting that these subsets of neurons encoded information about the syllables.
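
The distinction between phonetic content and syllabic structure can be made concrete with the ‘casting’ and ‘stacking’ example. The ARPAbet-style transcriptions and the syllable boundaries below are illustrative assumptions, not the transcriptions used in the study.

```python
# Illustrative transcriptions; each word is a list of syllables and each
# syllable is a tuple of phonemes (boundaries are assumed for this sketch).
casting = [("k", "ae", "s"), ("t", "ih", "ng")]
stacking = [("s", "t", "ae", "k"), ("ih", "ng")]


def phoneme_set(word):
    """Unordered phoneme content, ignoring order and syllable boundaries."""
    return frozenset(p for syllable in word for p in syllable)


def syllable_sequence(word):
    """Ordered, segmented representation that keeps syllable boundaries."""
    return tuple(word)


same_phonemes = phoneme_set(casting) == phoneme_set(stacking)
same_syllables = syllable_sequence(casting) == syllable_sequence(stacking)
print(same_phonemes)   # True
print(same_syllables)  # False
```

A representation based only on constituent phonemes cannot separate the two words, whereas one that preserves order and segmentation can, which motivates the extra vector space.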

a , Peri-event time histograms were constructed by aligning the APs of each neuron to word onset. Data are presented as mean (line) values ± s.e.m. (shade). Examples of two representative neurons which selectively changed their activity to specific planned syllables. Inset, spike waveform morphology and scale bar (0.5 ms). b , Scatter plots of D 2 values (the degree to which specific features explained neuronal response, n = 272 units) in relation to planned phonemes, syllables and morphemes. c , Average z -scored firing rates as a function of the Hamming distance between the preferred syllabic composition and all other compositions of the neuron. Data are presented as mean (line) values ± s.e.m. (shade). d , Decoding performance for planned syllables. The orange points provide the sampled distribution for the classifier’s ROC-AUC values ( n = 50 random test/train splits; P = 7.1 × 10 −18 two-sided Mann–Whitney U -test). Data are presented as mean ± s.d. e , To evaluate the selectivity of neurons to specific syllables, their activities were further compared for words that contained the preferred syllable of each neuron (that is, the syllable to which they responded most strongly; green) to (i) words that contained one or more of same individual phonemes but not necessarily their preferred syllable, (ii) words that contained different phonemes and syllables, (iii) words that contained the same phonemes but divided across different syllables and (iv) words that contained the same phonemes in a syllable but in different order (grey). Neuronal activities across all comparisons (to green points) were significant ( n = 113; P = 6.2 × 10 −20 , 8.8 × 10 −20 , 4.2 × 10 −20 and 1.4 × 10 −20 , for the comparisons above, respectively; two-sided Wilcoxon signed-rank test). Data are presented as mean (dot) values ± s.e.m.

Next, to confirm that these neurons were selectively tuned to specific syllables, we compared their activities for words that contained the preferred syllable of each neuron (for example, /d-iy/) to words that simply contained their constituent phonemes (for example, d or iy). Thus, for example, if these neurons reflected individual phonemes irrespective of their specific order, then we would observe no difference in response. On the basis of these comparisons, however, we find that the responses of the neurons to their preferred syllables were significantly greater than those to their individual constituent phonemes ( z -score difference 0.92 ± 0.04; two-sided Wilcoxon signed-rank test, P < 0.0001; Fig. 2e ). We also tested words containing syllables with the same constituent phonemes but in which the phonemes were simply in a different order (for example, /g-ah-d/ versus /d-ah-g/) but again find that the neurons were preferentially tuned to specific syllables ( z -score difference 0.99 ± 0.06; two-sided Wilcoxon signed-rank test, P < 1.0 × 10 −6 ; Fig. 2e ). Then, we examined words that contained the same arrangements of phonemes but in which the phonemes themselves belonged to different syllables (for example, /r-oh-b/ versus r-oh/b-; accounting for prosodic emphasis) and similarly find that the neurons were preferentially tuned to specific syllables ( z -score difference 1.01 ± 0.06; two-sided Wilcoxon signed-rank test, P < 0.0001; Fig. 2e ). Therefore, rather than simply reflecting the phonetic composition of the upcoming words, these subsets of neurons encoded their specific segmentation and order in individual syllables.

Finally, we asked whether certain neurons may code for the inclusion of morphemes. Unlike phonemes, bound morphemes such as ‘–ed’ in ‘directed’ or ‘re–’ in ‘retry’ are capable of carrying specific meanings and are thus thought to be subserved by distinct neural mechanisms 62 , 63 . Therefore, to test for this, we also parsed each word on the basis of whether it contained a suffix or prefix (controlling for word length) and find that the activities of 11.4% ( n = 31 of 272) of the neurons selectively changed for words that contained morphemes compared to those that did not (two-sided Wald test for each GLM coefficient, P < 0.01, Bonferroni-corrected across morpheme categories; Extended Data Fig. 5c ). Moreover, neural activity across the population could be used to reliably predict the inclusion of morphemes before utterance (AUC = 0.76 ± 0.05 observed versus 0.52 ± 0.01 for shuffled data, P < 0.001, two-sided Mann–Whitney U -test; Extended Data Fig. 5c ), together suggesting that the neurons coded for this sublexical feature.

Spatial distribution of neurons

Neurons that encoded information about the sublexical components of the upcoming words were broadly distributed across the cortex and cortical column depth. By tracking the location of each neuron in relation to the Neuropixels arrays, we find that there was a slightly higher preponderance of neurons that were tuned to phonemes (one-sided χ 2 test (2) = 0.7 and 5.2, P > 0.05, for places and manners of articulation, respectively), syllables (one-sided χ 2 test (2) = 3.6, P > 0.05) and morphemes (one-sided χ 2 test (2) = 4.9, P > 0.05) at lower cortical depths, but that this difference was non-significant, suggesting a broad distribution (Extended Data Fig. 7 ). We also find, however, that the proportion of neurons that showed selectivity for phonemes increased as recordings were acquired more posteriorly along the rostral–caudal axis of the cortex (one-sided χ 2 test (4) = 45.9 and 52.2, P < 0.01, for places and manners of articulation, respectively). Similar findings were also made for syllables and morphemes (one-sided χ 2 test (4) = 31.4 and 49.8, P < 0.01, respectively; Extended Data Fig. 7 ); together suggesting a gradation of cellular representations, with caudal areas showing progressively higher proportions of selective neurons.

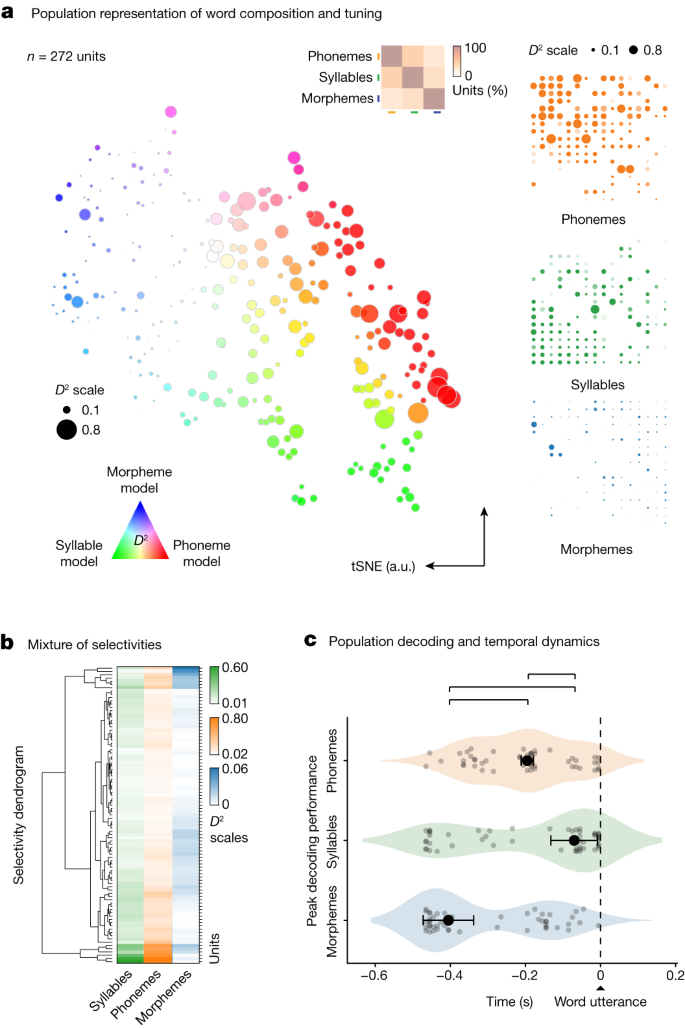

Collectively, the activities of these cell ensembles provided richly detailed information about the phonetic, syllabic and morphological components of upcoming words. Of the neurons that showed selectivity to any sublexical feature, 51% ( n = 46 of 90 units) were significantly informative of more than one feature. Moreover, the selectivity of these neurons lay along a continuum and was closely correlated across features (two-sided test of Pearson’s correlation in D 2 across all sublexical feature comparisons, r = 0.80, 0.51 and 0.37 for phonemes versus syllables, phonemes versus morphemes and syllables versus morphemes, respectively, all P < 0.001; Fig. 2b ), with most cells exhibiting a mixture of representations for specific phonetic, syllabic or morphological features (two-sided Wilcoxon signed-rank test, P < 0.0001). Figure 3a further illustrates this mixture of representations (Fig. 3a , left; t -distributed stochastic neighbour embedding (tSNE)) and their hierarchical structure (Fig. 3a , right; D 2 distribution), together revealing a detailed characterization of the phonetic, syllabic and morphological components of upcoming words at the level of the cell population.

a , Left, response selectivity of neurons to specific word features (phonemes, syllables and morphemes) is visualized across the population using a tSNE procedure (that is, neurons with similar response characteristics were plotted in closer proximity). The hue of each point reflects the degree of selectivity to a particular sublexical feature whereas the size of each point reflects the degree to which those features explained neuronal response. Inset, the relative proportions of neurons showing selectivity and their overlap. Right, the D 2 metric (the degree to which specific features explained neuronal response) for each cell shown individually per feature. b , The relative degree to which the activities of the neurons were explained by the phonetic, syllabic and morphological features of the words ( D 2 metric) and their hierarchical structure (agglomerative hierarchical clustering). c , Distribution of peak decoding performances for phonemes, syllables and morphemes aligned to word utterance onset. Significant differences in peak decoding timings across sample distribution are labelled in brackets above ( n = 50 random test/train splits; P = 0.024, 0.002 and 0.002; pairwise, two-sided permutation tests of differences in medians for phonemes versus syllables, syllables versus morphemes and phonemes versus morphemes, respectively; Methods ). Data are presented as median (dot) values ± bootstrapped standard error of the median.

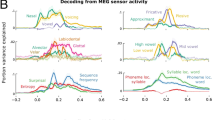

Temporal organization of representations

Given the above observations, we examined the temporal dynamic of neuronal activities during the production of speech. By tracking peak decoding in the period leading up to utterance onset (peak AUC; 50 model testing/training splits) 64 , we find these neural populations showed a consistent morphological–phonetic–syllabic dynamic in which decoding performance first peaked for morphemes. Peak decoding then followed for phonemes and syllables (Fig. 3b and Extended Data Fig. 8a,b ; section on ‘Population modelling’). Overall, decoding performance peaked for the morphological properties of words at −405 ± 67 ms before utterance, followed by peak decoding for phonemes at −195 ± 16 ms and syllables at −70 ± 62 ms (s.e.m.; Fig. 3b ). This temporal dynamic was highly unlikely to have been observed by chance (two-sided Kruskal–Wallis test, H = 13.28, P < 0.01) and was largely distinct from that observed during listening (two-sided Kruskal–Wallis test, H = 14.75, P < 0.001; Extended Data Fig. 6c ). The activities of these neurons therefore seemed to follow a consistent, temporally ordered morphological–phonetic–syllabic dynamic before utterance.
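
The idea of comparing peak decoding times can be sketched as below. The time axis and AUC curves are made up and chosen only to mimic the reported ordering. The actual procedure, including the 50 testing/training splits, is described in the section on ‘Population modelling’.

```python
def peak_time(times_ms, auc_values):
    """Time point at which decoding performance is highest (first if tied)."""
    best = max(range(len(auc_values)), key=lambda i: auc_values[i])
    return times_ms[best]


# Hypothetical time axis relative to utterance onset (ms).
times_ms = [-500, -400, -300, -200, -100, 0]
# Made-up AUC curves for one testing/training split.
curves = {
    "morphemes": [0.55, 0.74, 0.66, 0.60, 0.57, 0.55],
    "phonemes": [0.52, 0.58, 0.66, 0.75, 0.70, 0.62],
    "syllables": [0.50, 0.53, 0.57, 0.61, 0.67, 0.64],
}

peaks = {name: peak_time(times_ms, auc) for name, auc in curves.items()}
order = sorted(peaks, key=peaks.get)
print(peaks)  # {'morphemes': -400, 'phonemes': -200, 'syllables': -100}
print(order)  # ['morphemes', 'phonemes', 'syllables']
```

Repeating this over many splits yields a distribution of peak times per feature, which is what the reported statistical comparisons operate on.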

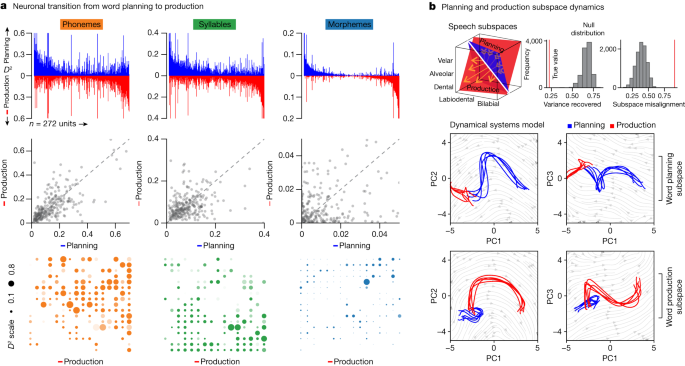

The activities of these neurons also followed a temporally structured transition from articulation planning to production. When comparing their activities before utterance onset (−500:0 ms) to those after (0:500 ms), we find that neurons which encoded information about the upcoming phonemes during planning encoded similar information during production ( P < 0.001, Mann–Whitney U -test for phonemes and syllables; Fig. 4a ). Moreover, when using models that were originally trained on words before utterance onset to decode the properties of the articulated words during production (model-switch approach), we find that decoding accuracy for the phonetic, syllabic and morphological properties of the words all remained significant (AUC = 0.76 ± 0.02 versus 0.48 ± 0.03 chance, 0.65 ± 0.03 versus 0.51 ± 0.04 chance, 0.74 ± 0.06 versus 0.44 ± 0.07 chance, for phonemes, syllables and morphemes, respectively; P < 0.001 for all, two-sided Mann–Whitney U -tests; Extended Data Fig. 8c ). Information about the sublexical features of words was therefore reliably represented during articulation planning and execution by the neuronal population.

a , Top, the D 2 value of neuronal activity (the degree to which specific features explained neuronal response, n = 272 units) during word planning (green) and production (orange) sorted across all population neurons. Middle, relationship between explanatory power ( D 2 ) of neuronal activity ( n = 272 units) for phonemes (Spearman’s ρ = 0.69), syllables (Spearman’s ρ = 0.40) and morphemes (Spearman’s ρ = 0.08) during planning and production ( P = 1.3 × 10 −39 , P = 6.6 × 10 −12 , P = 0.18, respectively, two-sided test of Spearman rank-order correlation). Bottom, the D 2 metric for each cell during production per feature ( n = 272 units). b , Top left, schematic illustration of speech planning (blue plane) and production (red plane) subspaces as traversed by a neuron for different phonemes (yellow arrows; Extended Data Fig. 9 ). Top right, subspace misalignment quantified by an alignment index (red) or Grassmannian chordal distance (red) compared to that expected from chance (grey), demonstrating that the subspaces occupied by the neural population ( n = 272 units) during planning and production were distinct. Bottom, projection of neural population activity ( n = 272 units) during word planning (blue) and production (red) onto the first three PCs for the planning (upper row) and production (lower row) subspaces.

Utilizing a dynamical systems approach to further allow for the unsupervised identification of functional subspaces (that is, wherein neural activity is embedded into a high-dimensional vector space; Fig. 4b , left; section on ‘Dynamical system and subspace analysis’) 31 , 34 , 65 , 66 , we find that the activities of the population were mostly low-dimensional, with more than 90% of the variance in neuronal activity being captured by its first four principal components (Fig. 4b , right). However, when tracking the dimensions in which neural populations evolved over time, we also find that the subspaces which defined neural activity during articulation planning and production were largely distinct. In particular, whereas the first five subspaces captured 98.4% of variance in the trajectory of the population during planning, they captured only 11.9% of variance in the trajectory during articulation (two-sided permutation test, P < 0.0001; Fig. 4b , bottom and Extended Data Fig. 9 ). Together, these cell ensembles therefore seemed to occupy largely separate preparatory and motoric subspaces while also allowing for information about the phonetic, syllabic and morphological contents of the words to be stably represented during the production of speech.
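
One way to express this comparison is through an alignment index of the kind commonly used in the motor planning literature. The form below is an assumption about the general approach rather than the authors' exact formulation, which is given in the section on ‘Dynamical system and subspace analysis’. The symbols $D_{\mathrm{plan}}$, $C_{\mathrm{prod}}$ and $\sigma_{\mathrm{prod}}$ are introduced here for illustration.

```latex
% D_plan : N x d matrix whose columns are the top d principal components
%          of population activity during planning
% C_prod : N x N covariance matrix of population activity during production
% sigma_prod(i) : i-th largest eigenvalue of C_prod
A \;=\; \frac{\operatorname{Tr}\!\left( D_{\mathrm{plan}}^{\top}\, C_{\mathrm{prod}}\, D_{\mathrm{plan}} \right)}
             {\sum_{i=1}^{d} \sigma_{\mathrm{prod}}(i)},
\qquad 0 \le A \le 1 .
```

Under this definition, $A$ is the production-period variance captured by the planning subspace, normalized by the most variance that any $d$-dimensional subspace could capture. Values near 0 indicate nearly orthogonal subspaces and values near 1 indicate aligned ones.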

Using Neuropixels probes to obtain acute, fine-scaled recordings from single neurons in the language-dominant prefrontal cortex 3 , 4 , 5 , 6 —in a region proposed to be involved in word planning 3 , 4 , 5 , 6 , 7 , 8 , 9 , 10 , 11 , 12 and production 13 , 14 , 15 , 16 —we find a strikingly detailed organization of phonetic representations at a cellular level. In particular, we find that the activities of many of the neurons closely mirrored the way in which the word sounds were produced, meaning that they reflected how individual planned phonemes were generated through specific articulators 58 , 59 . Moreover, rather than simply representing phonemes independently of their order or structure, many of the neurons coded for their composition in the upcoming words. They also reliably predicted the arrangement and segmentation of phonemes into distinct syllables, together suggesting a process that could allow the structure and order of articulatory events to be encoded at a cellular level.

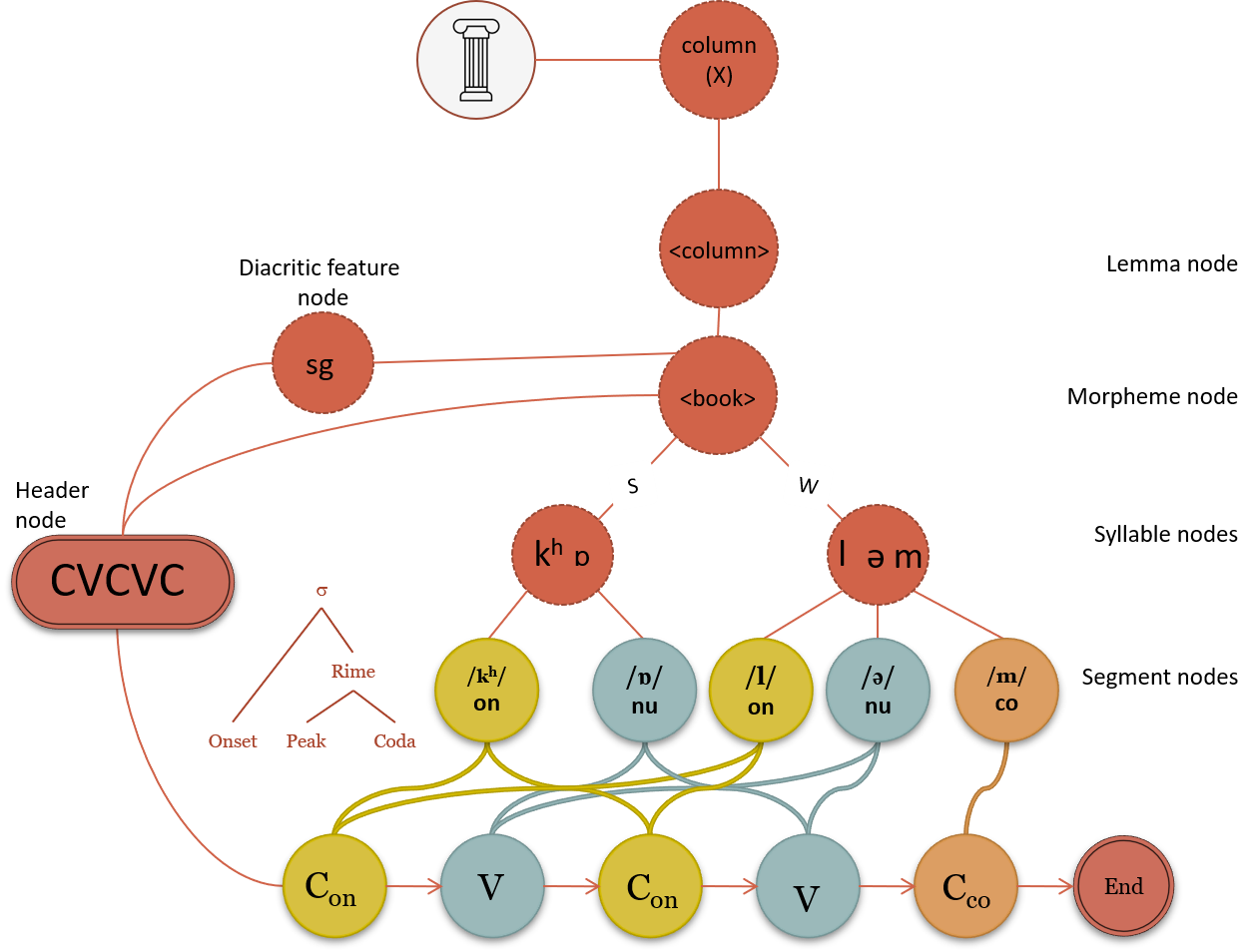

Collectively, this putative mechanism supports the existence of context-general representations of classes of speech sounds that speakers use to construct different word forms. In contrast, coding of sequences of phonemes as syllables may represent a context-specific representation of these speech sounds in a particular segmental context. This combination of context-general and context-specific representation of speech sound classes, in turn, is supportive of many speech production models which suggest that speakers hold abstract representations of discrete phonological units in a context-general way and that, as part of speech planning, these units are organized into prosodic structures that are context-specific 1 , 30 . Although the present study does not reveal whether these representations may be stored in and retrieved from a mental syllabary 1 or are constructed from abstract phonology ad hoc, it lays a groundwork from which to begin exploring these possibilities at a cellular scale. It also expands on previous observations in animal models such as marmosets 67 , 68 , singing mice 69 and canaries 70 on the syllabic structure and sequence of vocalization processes, providing us with some of the earliest lines of evidence for the neuronal coding of vocal-motor plans.

Another interesting finding from these studies is the diversity of phonetic feature representations and their organization across cortical depth. Although our recordings sampled locally from relatively small columnar populations, most phonetic features could be reliably decoded from their collective activities. Such findings suggest that phonetic information necessary for constructing words may be potentially fully represented in certain regions along the cortical column 10 , 46 , 47 , 48 , 49 , 50 . They also place these populations at a putative intersection for the shared coding of places and manners of articulation and demonstrate how these representations may be locally distributed. Such redundancy and accessibility of information in local cortical populations is consistent with that observed from animal models 31 , 32 , 33 , 34 , 35 and could serve to allow for the rapid orchestration of neuronal processes necessary for the real-time construction of words; especially during the production of natural speech. Our findings are also supportive of a putative ‘mirror’ system that could allow for the shared representation of phonetic features within the population when speaking and listening and for the real-time feedback of phonetic information by neurons during perception 23 , 71 .

A final notable observation from these studies is the temporal succession of neuronal encoding events. In particular, our findings are supportive of previous neurolinguistic theories suggesting closely coupled processes for coordinating the planned articulatory events that ultimately produce words. These models, for example, suggest that the morphology of a word is probably retrieved before its phonologic code, as the exact phonology depends on the morphemes in the word form 1 . They also suggest the later syllabification of planned phonemes which would enable them to be sequentially arranged in specific order (although different temporal orders have been suggested as well) 72 . Here, our findings provide tentative support for a structured sublexical coding succession that could allow for the discretization of such information during articulation. Our findings also suggest (through dynamical systems modelling) a mechanism that, consistent with previous observations on motor planning and execution 31 , 34 , 65 , 66 , could enable information to occupy distinct functional subspaces 34 , 73 and therefore allow for the rapid separation of neural processes necessary for the construction and articulation of words.

Taken together, these findings reveal a set of processes and a framework in the language-dominant prefrontal cortex by which to begin understanding how words may be constructed during natural speech at a single-neuronal level and through which to start defining their fine-scale spatial and temporal dynamics. Given their robust decoding performances (especially in the absence of natural language processing-based predictions), it is interesting to speculate whether such prefrontal recordings could also be used for synthetic speech prostheses or for the augmentation of other emerging approaches 21 , 22 , 74 used in brain–machine interfaces. It is important to note, however, that the production of words also involves more complex processes, including semantic retrieval, the arrangement of words in sentences, and prosody, which were not tested here. Moreover, future experiments will be required to investigate eloquent areas such as ventral premotor and superior posterior temporal areas not accessible with our present techniques. Here, this study provides a prospective platform by which to begin addressing these questions using a combination of ultrahigh-density microelectrode recordings, naturalistic speech tracking and acute real-time intraoperative neurophysiology to study human language at cellular scale.

Study participants

All aspects of the study were carried out in strict accordance with and were approved by the Massachusetts General Brigham Institutional Review Board. Right-handed native English speakers undergoing awake microelectrode recording-guided deep brain stimulator implantation were screened for enrolment. Clinical consideration for surgery was made by a multidisciplinary team of neurosurgeons, neurologists and neuropsychologists. Operative planning was made independently by the surgical team and without consideration of study participation. Participants were only enrolled if: (1) the surgical plan was for awake microelectrode recording-guided placement, (2) the patient was at least 18 years of age, (3) they had intact language function with English fluency and (4) they were able to provide informed consent for study participation. Participation in the study was voluntary and all participants were informed that they were free to withdraw from the study at any time.

Acute intraoperative single-neuronal recordings

Single-neuronal prefrontal recordings using Neuropixels probes

As part of deep brain stimulator implantation at our institution, participants are often awake and microelectrode recordings are used to optimize anatomical targeting of the deep brain structures 46 . During these cases, the electrodes often traverse part of the posterior language-dominant prefrontal cortex 3 , 4 , 5 , 6 in an area previously shown to be involved in word planning 3 , 4 , 5 , 6 , 7 , 8 , 9 , 10 , 11 , 12 and sentence construction 13 , 14 , 15 , 16 and which broadly connects with premotor areas involved in their articulation 51 , 52 , 53 and lexical processing 17 , 18 , 19 by imaging studies (Extended Data Fig. 1a,b ). All microelectrode entry points and placements were based purely on planned clinical targeting and were made independently of any study consideration.

Sterile Neuropixels probes (v.1.0-S, IMEC, ethylene oxide sterilized by BioSeal 54 ) together with a 3B2 IMEC headstage were attached to a cannula and a manipulator connected to a ROSA ONE Brain (Zimmer Biomet) robotic arm. Here, the probes were inserted into the cortical ribbon under direct robot navigational guidance through the implanted burr hole (Fig. 1a ). The probes (width 70 µm; length 10 mm; thickness 100 µm) consisted of a total of 960 contact sites (384 preselected recording channels) laid out in a chequerboard pattern with approximately 25 µm centre-to-centre nearest-neighbour site spacing. The IMEC headstage was connected through a multiplexed cable to a PXIe acquisition module card (IMEC), installed into a PXIe Chassis (PXIe-1071 chassis, National Instruments). Neuropixels recordings were performed using SpikeGLX (v.20201103 and v.20221012-phase30; http://billkarsh.github.io/SpikeGLX/ ) or OpenEphys (v.0.5.3.1 and v.0.6.0; https://open-ephys.org/ ) on a computer connected to the PXIe acquisition module recording the action potential band (AP, band-pass filtered from 0.3 to 10 kHz) sampled at 30 kHz and a local-field potential band (LFP, band-pass filtered from 0.5 to 500 Hz), sampled at 2,500 Hz. Once putative units were identified, the Neuropixels probe was briefly held in position to confirm signal stability (we did not screen putative neurons for speech responsiveness). Further description of this recording approach can be found in refs. 54 , 55 . After single-neuronal recordings from the cortex were completed, the Neuropixels probe was removed and subcortical neuronal recordings and deep brain stimulator placement proceeded as planned.

Single-unit isolation

Single-neuronal recordings were processed in two main steps. First, to track the activities of putative neurons at high spatiotemporal resolution and to account for intraoperative cortical motion, we used the Decentralized Registration of Electrophysiology Data software (DREDge; https://github.com/evarol/DREDge ) and an interpolation approach ( https://github.com/williamunoz/InterpolationAfterDREDge ). Briefly, and as previously described 54 , 55 , 56 , an automated protocol was used to track LFP voltages using a decentralized correlation technique that re-aligned the recording channels in relation to brain movements (Fig. 1a , right). Following this step, we interpolated the AP band continuous voltage data using the DREDge motion estimate to allow the activities of the putative neurons to be stably tracked over time. Next, single units were isolated from the motion-corrected interpolated signal using Kilosort (v.1.0; https://github.com/cortex-lab/KiloSort ) followed by Phy for cluster curation (v.2.0a1; https://github.com/cortex-lab/phy ; Extended Data Fig. 1c,d ). Here, units were selected on the basis of their waveform morphologies and separability in principal component space, their interspike interval profiles and similarity of waveforms across contacts. Only well-isolated single units with mean firing rates ≥0.1 Hz were included. The range of units obtained from these recordings was 16–115 units per participant.
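The firing-rate inclusion criterion can be illustrated with a minimal sketch. It assumes that spike times for each curated unit are available in seconds and that the mean rate is computed over the whole recording duration; the function names, unit identifiers and example spike trains are hypothetical and do not reproduce the curation pipeline used in the study.

```python
def mean_firing_rate(spike_times_s, duration_s):
    """Mean firing rate in Hz: spike count divided by recording duration."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return len(spike_times_s) / duration_s


def select_units(units, duration_s, min_rate_hz=0.1):
    """Return the IDs of units whose mean rate meets the threshold (>= 0.1 Hz)."""
    return [
        unit_id
        for unit_id, spikes in units.items()
        if mean_firing_rate(spikes, duration_s) >= min_rate_hz
    ]


# Hypothetical example: three curated units from a 600 s recording.
example_units = {
    "unit_a": [i * 0.5 for i in range(1200)],  # 1200 spikes -> 2.0 Hz
    "unit_b": [i * 10.0 for i in range(60)],   # 60 spikes -> 0.1 Hz (kept)
    "unit_c": [12.0, 340.5, 590.1],            # 3 spikes -> 0.005 Hz (dropped)
}
kept_units = select_units(example_units, duration_s=600.0)
```

The threshold is inclusive, matching the "≥0.1 Hz" wording above; waveform and interspike-interval criteria would be applied separately during manual curation.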

Audio recordings and task synchronization

For task synchronization, we used the TTL output and audio output to send the synchronization trigger through the SMA input to the IMEC PXIe acquisition module card. To provide additional synchronization, triggers were also recorded on an extra breakout analogue and digital input/output board (BNC2110, National Instruments) connected through a PXIe board (PXIe-6341 module, National Instruments).

Audio recordings were obtained at a 44 kHz sampling frequency using a recorder with an audio input (TASCAM DR-40X 4-Channel/4-Track Portable Audio Recorder and USB Interface with Adjustable Microphone). These recordings were then sent to a NIDAQ board analogue input in the same PXIe acquisition module containing the IMEC PXIe board for high-fidelity temporal alignment with neuronal data. Synchronization of neuronal activity with behavioural events was performed using TTL triggers sent through a parallel port to both the IMEC PXIe board (the sync channel) and the analogue NIDAQ input, as well as the parallel audio input into the analogue input channels on the NIDAQ board.

Audio recordings were annotated in a semi-automated fashion (Audacity; v.2.3). Recorded audio for each word and sentence by the participants was analysed in Praat 75 and Audacity (v.2.3). Exact word and phoneme onsets and offsets were identified using the Montreal Forced Aligner (v.2.2; https://github.com/MontrealCorpusTools/Montreal-Forced-Aligner ) 76 and confirmed with manual review of all annotated recordings. Together, these measures allowed for the millisecond-level alignment of neuronal activity with each produced word and phoneme.
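One common way to bring two recording clocks onto a shared timeline from matched TTL triggers is to fit a line between the trigger times seen by each system. The sketch below illustrates that general idea only; the linear clock model, the function names and the example timestamps are assumptions, and the text above does not specify how the alignment was actually computed.

```python
def fit_clock_map(audio_ttl_s, neural_ttl_s):
    """Least-squares line mapping audio-clock times onto neural-clock times."""
    n = len(audio_ttl_s)
    if n < 2 or n != len(neural_ttl_s):
        raise ValueError("need at least two matched trigger pairs")
    mean_x = sum(audio_ttl_s) / n
    mean_y = sum(neural_ttl_s) / n
    sxx = sum((x - mean_x) ** 2 for x in audio_ttl_s)
    sxy = sum(
        (x - mean_x) * (y - mean_y) for x, y in zip(audio_ttl_s, neural_ttl_s)
    )
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def to_neural_time(t_audio_s, slope, intercept):
    """Convert an audio-clock time (for example, a word onset) to neural time."""
    return slope * t_audio_s + intercept


def spikes_in_window(spike_times_s, onset_s, pre_s, post_s):
    """Spike times relative to onset, within [onset - pre, onset + post)."""
    return [
        t - onset_s
        for t in spike_times_s
        if onset_s - pre_s <= t < onset_s + post_s
    ]


# Hypothetical triggers: the neural clock starts 1.5 s later and runs 0.01% fast.
audio_ttl = [0.0, 10.0, 20.0, 30.0]
neural_ttl = [1.5, 11.501, 21.502, 31.503]
slope, intercept = fit_clock_map(audio_ttl, neural_ttl)

# A word onset annotated at 12.0 s on the audio clock, mapped to neural time.
onset_neural = to_neural_time(12.0, slope, intercept)
aligned = spikes_in_window([13.4, 13.6, 14.2], onset_neural, pre_s=0.5, post_s=0.5)
```

With the forced-aligner onsets converted in this way, spikes can be expressed relative to each produced word or phoneme.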

Anatomical localization of recordings

Pre-operative high-resolution magnetic resonance imaging and postoperative head computerized tomography scans were coregistered using a combination of ROSA software (Zimmer Biomet; v.3.1.6.276), Mango (v.4.1; https://mangoviewer.com/download.html ) and FreeSurfer (v.7.4.1; https://surfer.nmr.mgh.harvard.edu/fswiki/DownloadAndInstall ) to reconstruct the cortical surface and identify the cortical location from which Neuropixels recordings were obtained 77 , 78 , 79 , 80 , 81 . This registration allowed localization of the surgical areas that underlay the cortical sites of recording (Fig. 1a and Extended Data Fig. 1a ) 54 , 55 , 56 . These coordinates were then transformed into MNI space using the FieldTrip toolbox (v.20230602; https://www.fieldtriptoolbox.org/ ; Extended Data Fig. 1b ) 82 .

For depth calculation, we estimated the pial boundary of recordings according to the sharp change in signal observed between channels that were implanted in the brain parenchyma and those outside the brain. We then referenced the recording depth of each single unit (based on its maximum waveform amplitude channel) to this estimated pial boundary. Here, all units were classified on the basis of their depths relative to the pial boundary as superficial, middle or deep (Extended Data Fig. 7 ).
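The depth referencing described above can be sketched as follows. This is an illustration under stated assumptions, not the procedure used in the study: the boundary is taken as the largest change in per-channel signal amplitude between adjacent channels, positions are measured in micrometres from the probe tip, and the bin edges separating superficial, middle and deep units are hypothetical placeholders because they are not given in the text.

```python
def estimate_pial_index(channel_rms):
    """Index of the first channel after the largest adjacent change in RMS.

    Channels are assumed to be ordered from the probe tip (in the brain)
    towards the base (outside the brain).
    """
    if len(channel_rms) < 2:
        raise ValueError("need at least two channels")
    jumps = [
        abs(channel_rms[i + 1] - channel_rms[i])
        for i in range(len(channel_rms) - 1)
    ]
    return jumps.index(max(jumps)) + 1


def depth_below_pia_um(unit_position_um, pial_position_um):
    """Depth of a unit's peak-amplitude channel below the estimated pia."""
    return pial_position_um - unit_position_um


def depth_label(depth_um, edges_um=(1000.0, 2000.0)):
    """Assign a depth class; the edges are hypothetical placeholders."""
    if depth_um < edges_um[0]:
        return "superficial"
    if depth_um < edges_um[1]:
        return "middle"
    return "deep"


# Hypothetical per-channel RMS values: high in the brain, low outside it.
example_rms = [12.0, 11.0, 13.0, 12.0, 3.0, 2.0, 3.0]
pial_index = estimate_pial_index(example_rms)

# Hypothetical unit positions relative to a pial boundary at 3000 µm from the tip.
labels = [
    depth_label(depth_below_pia_um(pos, 3000.0)) for pos in (2500.0, 1500.0, 500.0)
]
```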

Speech production task

The participants performed a priming-based naturalistic speech production task 57 in which they were given a scene on a screen that consisted of a scenario that had to be described in a specific order and format. Thus, for example, the participant may be given a scene of a boy and a girl playing with a balloon or they may be given a scene of a dog chasing a cat. These scenes, together, required the participants to produce words that varied in phonetic, syllabic and morphosyntactic content. They were also highlighted in a way that required them to produce the words in a structured format. Thus, for example, a scene may be highlighted in a way that required the participants to produce the sentence “The mouse was being chased by the cat” or in a way that required them to produce the sentence “The cat was chasing the mouse” (Extended Data Fig. 2a ). Because the sentences had to be constructed de novo, the task also required the participants to produce the words without being given explicit phonetic cues (for example, from hearing and then repeating the word ‘cat’). Taken together, this task therefore allowed neuronal activity to be examined while words (for example, ‘cat’), rather than independent phonetic sounds (for example, /k/), were articulated and while the words were produced during natural speech (for example, constructing the sentence “the dog chased the cat”) rather than simply repeated (for example, hearing and then repeating the word ‘cat’).

Finally, to account for the potential contribution of sensory–perceptual responses, three of the participants also performed a ‘perception’ control in which they listened to words spoken to them. One of these participants further performed an auditory ‘playback’ control in which they listened to their own recorded voice. For this control, all words spoken by the participant were recorded using a high-fidelity microphone (Zoom ZUM-2 USM microphone) and then played back to them on a word-by-word level in randomized separate blocks.

Constructing a word feature space

To allow for single-neuronal analysis and to provide a compositional representation for each word, we grouped the constituent phonemes on the basis of the relative positions of articulatory organs associated with their production 60 . Here, for our primary analyses, we selected the places of articulation for consonants (for example, bilabial consonants) on the basis of established IPA categories defining the primary articulators involved in speech production. For consonants, phonemes were grouped on the basis of their places of articulation into glottal, velar, palatal, postalveolar, alveolar, dental, labiodental and bilabial. For vowels, we grouped phonemes on the basis of the relative height of the tongue, with high vowels being produced with the tongue in a relatively high position and mid-low (that is, mid+low) vowels being produced with it in a lower position. Here, this grouping of phonemes is broadly referred to as ‘places of articulation’, together reflecting the main positions of articulatory organs and their combinations used to produce the words 58 , 59 . Finally, to allow for comparison and to test their generalizability, we examined the manners of articulation for consonants (stop, fricative, affricate, nasal, liquid and glide), which describe the nature of airflow restriction by various parts of the mouth and tongue. For vowels, we also evaluated the primary cardinal vowels i, e, ɛ, a, ɑ, ɔ, o and u, which are described, in combination, by the position of the tongue relative to the roof of the mouth, how far forward or back it lies and the relative positions of the lips 83 , 84 . A detailed summary of these phonetic groupings can be found in Extended Data Table 1 .

Phoneme feature space

To further evaluate the relationship between neuronal activity and the presence of specific constituent phonemes per word, the phonemes in each word were parsed according to their precise pronunciation provided by the English Lexicon Project (or the Longman Pronunciation Dictionary for American English where necessary) as described previously 85 . Thus, for example, the word ‘like’ (l-aɪ-k) would be parsed into a sequence of alveolar-mid-low-velar phonemes, whereas the word ‘bike’ (b-aɪ-k) would be parsed into a sequence of bilabial-mid-low-velar phonemes.

These constituent phonemes were then used to represent each word as a ten-dimensional vector in which the value in each position reflected the presence of each type of phoneme (Fig. 1c ). For example, the word ‘like’, containing a sequence of alveolar-mid-low-velar phonemes, was represented by the vector [0 0 0 1 0 0 1 0 0 1], with each entry representing the number of the respective type of phoneme in the word. Together, such vectors representing all words defined a phonetic ‘vector space’. Analyses of the precise arrangement of phonemes per word, together with the goodness-of-fit and selectivity metrics used to evaluate single-neuronal responses to these phonemes and their specific combination in words, are described further below.
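The construction of such a vector can be sketched in a few lines. The eight consonant places and two vowel heights are taken from the groupings described above, but the order of the dimensions used here is an assumption (it simply follows the order in which the categories are listed in the text), so the output does not necessarily match the ordering of the vector shown for ‘like’. The phoneme-to-category table covers only the two example words and is likewise illustrative.

```python
# Ten phoneme categories: eight consonant places plus two vowel heights.
# The ordering of dimensions is an assumption made for illustration.
CATEGORIES = [
    "glottal", "velar", "palatal", "postalveolar", "alveolar",
    "dental", "labiodental", "bilabial", "high", "mid-low",
]

# Minimal lookup covering only the example words 'like' and 'bike'.
PHONEME_TO_CATEGORY = {
    "l": "alveolar",
    "b": "bilabial",
    "k": "velar",
    "aɪ": "mid-low",
}


def word_vector(phonemes):
    """Count how many phonemes of each category occur in a word."""
    vector = [0] * len(CATEGORIES)
    for phoneme in phonemes:
        category = PHONEME_TO_CATEGORY[phoneme]
        vector[CATEGORIES.index(category)] += 1
    return vector


like_vec = word_vector(["l", "aɪ", "k"])  # alveolar, mid-low, velar
bike_vec = word_vector(["b", "aɪ", "k"])  # bilabial, mid-low, velar
```

Under this assumed ordering, ‘like’ and ‘bike’ differ only in the alveolar and bilabial dimensions, which is the property that lets neuronal responses be related to individual phoneme categories.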

Syllabic feature space

Next, to evaluate the relationship between neuronal activity and the specific arrangement of phonemes in syllables, we parsed the constituent syllables for each word using American pronunciations provided in ref. 85 . Thus, for example, ‘back’ would be defined as a labial-low-velar sequence. Here, to allow for neuronal analysis and to limit the combination of all possible syllables, we selected the ten most common syllable types. High and mid-low vowels were considered as syllables here only if they reflected syllables in themselves and were unbound from a consonant (for example, /ih/ in ‘hesitate’ or /ah-/ in ‘adore’). Similar to the phoneme space, the syllables were then transformed into an n -dimensional binary vector in which the value in each dimension reflected the presence of specific syllables. Thus, for the n -dimensional representation of each word in this syllabic feature space, the value in each dimension could also be interpreted in relation to neuronal activity.
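A minimal sketch of this syllabic encoding is given below, assuming that each word has already been parsed into a list of syllable-type labels. Only the ‘back’ label comes from the text; the other words and labels (`word_b`, `type_2`, `type_3`) are hypothetical placeholders, and the vocabulary size is reduced from ten to two to keep the example small.

```python
from collections import Counter


def top_syllable_types(words_to_syllables, n=10):
    """Return the n most frequent syllable types across all words."""
    counts = Counter(
        syllable
        for syllables in words_to_syllables.values()
        for syllable in syllables
    )
    return [syllable for syllable, _ in counts.most_common(n)]


def syllable_vector(syllables, vocabulary):
    """Binary vector: 1 if a syllable type occurs in the word, else 0."""
    present = set(syllables)
    return [1 if syllable in present else 0 for syllable in vocabulary]


# Hypothetical parsed lexicon; only 'back' uses a label taken from the text.
parsed_words = {
    "back": ["labial-low-velar"],
    "word_b": ["labial-low-velar", "type_2"],
    "word_c": ["type_2", "type_3"],
    "word_d": ["labial-low-velar"],
}

vocabulary = top_syllable_types(parsed_words, n=2)
back_vec = syllable_vector(parsed_words["back"], vocabulary)
word_c_vec = syllable_vector(parsed_words["word_c"], vocabulary)
```

Syllable types that fall outside the selected vocabulary (here `type_3`) are simply not represented, which mirrors the restriction to the most common syllable types.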