NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

WormBook: The Online Review of C. elegans Biology [Internet]. Pasadena (CA): WormBook; 2005-2018.

A biologist's guide to statistical thinking and analysis *.

David S. Fay and Ken Gerow

The proper understanding and use of statistical tools are essential to the scientific enterprise. This is true both at the level of designing one's own experiments as well as for critically evaluating studies carried out by others. Unfortunately, many researchers who are otherwise rigorous and thoughtful in their scientific approach lack sufficient knowledge of this field. This methods chapter is written with such individuals in mind. Although the majority of examples are drawn from the field of Caenorhabditis elegans biology, the concepts and practical applications are also relevant to those who work in the disciplines of molecular genetics and cell and developmental biology. Our intent has been to limit theoretical considerations to a necessary minimum and to use common examples as illustrations for statistical analysis. Our chapter includes a description of basic terms and central concepts and also contains in-depth discussions on the analysis of means, proportions, ratios, probabilities, and correlations. We also address issues related to sample size, normality, outliers, and non-parametric approaches.

1. The basics

1.1. Introduction

At the first group meeting that I attended as a new worm postdoc (1997, D.S.F.), I heard the following opinion expressed by a senior scientist in the field: “If I need to rely on statistics to prove my point, then I'm not doing the right experiment.” In fact, reading this statement today, many of us might well identify with this point of view. Our field has historically gravitated toward experiments that provide clear-cut “yes” or “no” types of answers. Yes, mutant X has a phenotype. No, mutant Y does not genetically complement mutant Z . We are perhaps even a bit suspicious of other kinds of data, which we perceive as requiring excessive hand waving. However, the realities of biological complexity, the sometimes-necessary intrusion of sophisticated experimental design, and the need for quantifying results may preclude black-and-white conclusions. Oversimplified statements can also be misleading or at least overlook important and interesting subtleties. Finally, more and more of our experimental approaches rely on large multi-faceted datasets. These types of situations may not lend themselves to straightforward interpretations or facile models. Statistics may be required.

The intent of these sections will be to provide C. elegans researchers with a practical guide to the application of statistics using examples that are relevant to our field. Namely, which common situations require statistical approaches and what are some of the appropriate methods (i.e., tests or estimation procedures) to carry out? Our intent is therefore to aid worm researchers in applying statistics to their own work, including considerations that may inform experimental design. In addition, we hope to provide reviewers and critical readers of the worm scientific literature with some criteria by which to interpret and evaluate statistical analyses carried out by others. At various points we suggest some general guidelines, which may lead to somewhat more uniformity in how our field conducts and presents statistical findings. Finally, we provide some suggestions for additional readings for those interested in a more systematic and in-depth coverage of the topics introduced ( Appendix A ).

1.2. Quantifying variation in population or sample data

There are numerous ways to describe and present the variation that is inherent to most data sets. Range (defined as the largest value minus the smallest) is one common measure and has the advantage of being simple and intuitive. Range, however, can be misleading because of the presence of outliers, and it tends to be larger for larger sample sizes even without unusual data values. Standard deviation (SD) is the most common way to present variation in biological data. It has the advantage that nearly everyone is familiar with the term and that its units are identical to the units of the sample measurement. Its disadvantage is that few people can recall what it actually means.

Figure 1 depicts density curves of brood sizes in two different populations of self-fertilizing hermaphrodites. Both have identical average brood sizes of 300. However, the population in Figure 1B displays considerably more inherent variation than the population in Figure 1A . Looking at the density curves, we would predict that 10 randomly selected values from the population depicted in Figure 1B would tend to show a wider range than an equivalent set from the more tightly distributed population in Figure 1A . We might also note from the shape and symmetry of the density curves that both populations are Normally 1 distributed (this is also referred to as a Gaussian distribution ). In reality, most biological data do not conform to a perfect bell-shaped curve, and, in some cases, they may profoundly deviate from this ideal. Nevertheless, in many instances, the distribution of various types of data can be roughly approximated by a normal distribution. Furthermore, the normal distribution is a particularly useful concept in classical statistics (more on this later) and in this example is helpful for illustrative purposes.

Two normal distributions.

The vertical red lines in Figure 1A and 1B indicate one SD to either side of the mean. From this, we can see that the population in Figure 1A has an SD of 20, whereas the population in Figure 1B has an SD of 50. A useful rule of thumb is that roughly 68% of the values within a normally distributed population will reside within one SD to either side of the mean. Correspondingly, about 95% of values reside within two SDs, and more than 99% reside within three SDs to either side of the mean. Thus, for the population in Figure 1A, we can predict that about 95% of hermaphrodites produce brood sizes between 260 and 340, whereas for the population in Figure 1B, about 95% of hermaphrodites produce brood sizes between 200 and 400.
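
The rule of thumb above can be checked numerically. The following is a minimal sketch, assuming an exactly normal population; the function names `fraction_within` and `central_range` are illustrative and not part of the original text.

```python
import math

def fraction_within(k_sd: float) -> float:
    """Fraction of a normal population lying within k_sd SDs of the mean."""
    return math.erf(k_sd / math.sqrt(2))

def central_range(mean: float, sd: float, k_sd: float = 2.0) -> tuple[float, float]:
    """Interval spanning k_sd SDs to either side of the mean."""
    return (mean - k_sd * sd, mean + k_sd * sd)

# Coverage for one, two, and three SDs.
for k in (1, 2, 3):
    print(f"within {k} SD: {fraction_within(k):.4f}")

# The two brood-size populations described for Figure 1 (mean 300, SD 20 or 50).
print(central_range(300, 20))
print(central_range(300, 50))
```

Two SDs cover slightly more than 95% of a normal population, which is why the 95% figure is best read as approximate.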

Often we can never really know the true mean or SD of a population because we cannot usually observe the entire population. Instead, we must use a sample to make an educated guess. In the case of experimental laboratory science, there is often no limit to the number of animals that we could theoretically test or the number of experimental repeats that we could perform. Admittedly, use of the term “populations” in this context can sound rather forced. It's awkward for us to think of a theoretical collection of bands on a western blot or a series of cycle numbers from a qRT-PCR experiment as a population, but from the standpoint of statistics, that's exactly what they are. Thus, our populations tend to be mythical in nature as well as infinite. Moreover, even the most sadistic advisor can only expect a finite number of biological or technical repeats to be carried out. The data that we ultimately analyze are therefore always just a tiny proportion of the population, real or theoretical, from whence they came.

It is important to note that increasing our sample size will not predictably increase or decrease the amount of variation that we are ultimately likely to record. What can be stated is that a larger sample size will tend to give a sample SD that is a more accurate estimate of the population SD. In the same vein, a larger sample size will also provide a more accurate estimation of other parameters , such as the population mean.
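
A small simulation can make this point concrete. The sketch below assumes a normal population with a mean of 300 and an SD of 50; the sample sizes (6 and 60), the number of trials, and the helper name `sd_estimates` are arbitrary illustrative choices.

```python
import random
import statistics

def sd_estimates(n: int, trials: int, rng: random.Random,
                 pop_mean: float = 300.0, pop_sd: float = 50.0) -> list[float]:
    """Sample SDs from repeated random samples of size n."""
    return [
        statistics.stdev([rng.gauss(pop_mean, pop_sd) for _ in range(n)])
        for _ in range(trials)
    ]

rng = random.Random(1)  # fixed seed so the run is repeatable
small = sd_estimates(6, 2000, rng)
large = sd_estimates(60, 2000, rng)

# How much the sample SD itself bounces around from sample to sample.
spread_small = statistics.stdev(small)
spread_large = statistics.stdev(large)

print(f"n=6:  typical sample SD {statistics.mean(small):.1f}, spread {spread_small:.1f}")
print(f"n=60: typical sample SD {statistics.mean(large):.1f}, spread {spread_large:.1f}")
```

The larger samples do not yield systematically larger or smaller SDs; they yield SDs that cluster more tightly around the population value.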

In some cases, standard numerical summaries (e.g., mean and SD) may not be sufficient to fully or accurately describe the data. In particular, these measures usually 3 tell you nothing about the shape of the underlying distribution. Figure 2 illustrates this point; Panels A and B show the duration (in seconds) of vulval muscle cell contractions in two populations of C. elegans . The data from both panels have nearly identical means and SDs, but the data from panel A are clearly bimodal, whereas the data from Panel B conform more to a normal distribution 4 . One way to present this observation would be to show the actual histograms (in a figure or supplemental figure). Alternatively, a somewhat more concise depiction, which still gets the basic point across, is shown by the individual data plot in Panel C. In any case, presenting these data simply as a mean and SD without highlighting the difference in distributions would be potentially quite misleading, as the populations would appear to be identical.
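
To illustrate how two data sets can share a mean and SD yet differ in shape, here is a sketch using simulated values. The numbers (modes at 3 and 7 seconds, a center of 5 seconds) are hypothetical and are not the data behind Figure 2.

```python
import random
import statistics

rng = random.Random(7)
n = 5000

# Bimodal: an equal mixture of two normal clusters centered at 3 and 7 (SD 1 each).
bimodal = [rng.gauss(rng.choice((3.0, 7.0)), 1.0) for _ in range(n)]
# Unimodal: one normal cluster with a matching mean (5) and SD (sqrt(1 + 2**2)).
unimodal = [rng.gauss(5.0, 5 ** 0.5) for _ in range(n)]

def near_center(data: list[float], center: float = 5.0, half_width: float = 1.0) -> float:
    """Fraction of values lying within half_width of the center."""
    return sum(abs(x - center) <= half_width for x in data) / len(data)

for name, data in (("bimodal", bimodal), ("unimodal", unimodal)):
    print(name,
          f"mean={statistics.mean(data):.2f}",
          f"SD={statistics.stdev(data):.2f}",
          f"near center={near_center(data):.2f}")
```

The means and SDs come out nearly identical, but far fewer bimodal values sit near the mean, which is exactly the kind of difference a histogram or individual data plot reveals.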

Two distributions with similar means and SDs. Panels A and B show histograms of simulated data of vulval muscle cell contraction durations derived from underlying populations with distributions that are either bimodal (A) or normal (B). Note that both (more...)

1.3. Quantifying statistical uncertainty

Before you become distressed about what the title of this section actually means, let's be clear about something. Statistics, in its broadest sense, effectively does two things for us—more or less simultaneously. (1) Statistics provides us with useful quantitative descriptors for summarizing our data. This includes fairly simple stuff such as means and proportions. It also includes more complex statistics such as the correlation between related measurements, the slope of a linear regression, and the odds ratio for mortality under differing conditions. These can all be useful for interpreting our data, making informed conclusions, and constructing hypotheses for future studies. However, statistics gives us something else, too. (2) Statistics also informs us about the accuracy of the very estimates that we've made. What a deal! Not only can we obtain predictions for the population mean and other parameters, we also estimate how accurate those predictions really are. How this comes about is part of the “magic” of statistics, which as stated shouldn't be taken literally, even if it appears to be that way at times.

In the preceding section we discussed the importance of SD as a measure for describing natural variation within an entire population of worms. We also touched upon the idea that we can calculate statistics, such as SD, from a sample that is drawn from a larger population. Intuition also tells us that these two values, one corresponding to the population, the other to the sample, ought to generally be similar in magnitude, if the sample size is large. Finally, we understand that the larger the sample size, the closer our sample statistic will tend to be to the true population value. This is true not only for the SD but for many other statistics as well.

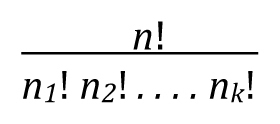

It is now time to discuss SD in another context that is central to the understanding of statistics. We do this with a thought experiment. Imagine that we determine the brood size for six animals that are randomly selected from a larger population. We could then use these data to calculate a sample mean, as well as a sample SD, which would be based on a sample size of n = 6. Not being satisfied with our efforts, we repeat this approach every day for 10 days, each day obtaining a new mean and new SD ( Table 1 ). At the end of 10 days, having obtained ten different means, we can now use each sample mean as though it were a single data point to calculate a new mean, which we can call the mean of the means . In addition, we can calculate the SD of these ten mean values, which we can refer to for now as the SD of the means . We can then pose the following question: will the SD calculated using the ten means generally turn out to be a larger or smaller value (on average) than the SD calculated from each sample of six random individuals? This is not merely an idiosyncratic question posed for intellectual curiosity. The notion of the SD of the mean is critical to statistical inference. Read on.

Table 1. Ten random samples (trials) of brood sizes.

Thinking about this, we may realize that the ten mean values, being averages of six worms, will tend to show less total variation than measurements from individual worms. In other words, the variation between means should be less than the variation between individual data values. Moreover, the average of these means will generally be closer to the true population mean than would a mean obtained from just six random individuals. In fact, this idea is borne out in Table 1, which used random sampling from a theoretical population (with a mean of 300 and SD of 50) to generate the sample values. We can therefore conclude that sample means will generally exhibit less variation than that seen among individual measurements. Furthermore, we can consider what might happen if we were to take daily samples of 20 worms instead of 6. Namely, the larger sample size would result in an even tighter cluster of mean values. This in turn would produce an even smaller SD of the means than from the experiment where only six worms were analyzed each day. Thus, increasing sample size will consistently lead to a smaller SD of the means. Note, however, that as discussed above, increasing sample size will not predictably lead to a smaller or larger SD for any given sample.
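
The thought experiment can be run as a simulation. This sketch assumes the same theoretical population (mean 300, SD 50) and, to get stable numbers, uses 2,000 simulated "days" rather than ten; the function name `daily_means` is illustrative.

```python
import random
import statistics

def daily_means(n_per_day: int, days: int, rng: random.Random,
                pop_mean: float = 300.0, pop_sd: float = 50.0) -> list[float]:
    """One sample mean per day, each based on n_per_day randomly drawn worms."""
    return [
        statistics.mean([rng.gauss(pop_mean, pop_sd) for _ in range(n_per_day)])
        for _ in range(days)
    ]

rng = random.Random(2)
means_6 = daily_means(6, 2000, rng)
means_20 = daily_means(20, 2000, rng)

sd_of_means_6 = statistics.stdev(means_6)
sd_of_means_20 = statistics.stdev(means_20)

print("population SD: 50")
print(f"SD of the means, n=6 per day:  {sd_of_means_6:.1f}")
print(f"SD of the means, n=20 per day: {sd_of_means_20:.1f}")
```

With only ten days, as in Table 1, the same pattern holds on average but any single run will be noisier.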

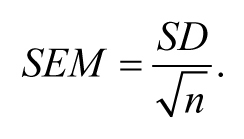

It turns out that this concept of calculating the SD of multiple means (or other statistical parameters) is a very important one. The good news is that rather than having to actually collect samples for ten or more days, statistical theory gives us a short cut that allows us to estimate this value based on only a single day's effort. What a deal! Rather than calling this value the “SD of the means”, as might make sense, the field has historically chosen to call this value the “ standard error of the mean ” (SEM). In fact, whenever a SD is calculated for a statistic (e.g., the slope from a regression or a proportion), it is called the standard error (SE) of that statistic. SD is a term generally reserved for describing variation within a sample or population only. Although we will largely avoid the use of formulas in this review, it is worth knowing that we can estimate the SEM from a single sample of n animals using the following equation:

$$\mathrm{SEM} = \frac{\mathrm{SD}}{\sqrt{n}}$$

From this relatively simple formula 5 , we can see that the greater the SD of the sample, the greater the SEM will be. Conversely, the larger our sample size, the smaller the SEM will be. Looking back at Table 1 , we can also see that the SEM estimate for each daily sample is reasonably 6 close, on average, to what we obtained by calculating the observed SD of the means from 10 days. This is not an accident. Rather, chalk one up for statistical theory.
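
As a worked example, the SEM can be estimated from a single sample as the sample SD divided by the square root of the sample size. The six brood sizes below are hypothetical values chosen for easy arithmetic, not data from Table 1.

```python
import math
import statistics

def sem(sample: list[float]) -> float:
    """Standard error of the mean: sample SD divided by sqrt(n)."""
    return statistics.stdev(sample) / math.sqrt(len(sample))

# Hypothetical brood sizes for one day's sample of six animals.
brood_sizes = [290, 310, 300, 280, 320, 300]

print(f"mean = {statistics.mean(brood_sizes):.1f}")
print(f"SD   = {statistics.stdev(brood_sizes):.2f}")
print(f"SEM  = {sem(brood_sizes):.2f}")
```

Here `statistics.stdev` uses the n - 1 denominator, which is the usual choice when the SD is estimated from a sample.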

Obviously, having a smaller SEM value reflects more precise estimates of the population mean. For that reason, scientists are typically motivated to keep SEM values as low as possible. In the case of experimental biology, variation within our samples may be due to inherent biological variation or to technical issues related to the methods we use. The former we probably can't control very much. The latter we may be able to control to some extent, but probably not completely. This leaves increasing sample size as a direct route to decreasing SE estimates and thus to improving the precision of the parameter estimates. However, increasing sample size in some instances may not be a practical or efficient use of our time. Furthermore, because the denominator in SE equations typically involves the square root of sample size, increasing sample size will have diminishing returns. In general, a quadrupling of sample size is required to yield a halving of the SEM. Moreover, as discussed elsewhere in this chapter, supporting very small differences with very high sample sizes might lead us to make convincing-sounding statistical statements regarding biological effects of no real importance, which is not something we should aspire to do.
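
The diminishing returns follow directly from the square root in the denominator. A minimal sketch, assuming the SD stays fixed at 50 while the sample size is quadrupled twice (the helper `sem_from_sd` is illustrative):

```python
import math

def sem_from_sd(sd: float, n: int) -> float:
    """SEM for a given SD and sample size."""
    return sd / math.sqrt(n)

# Each quadrupling of n halves the SEM.
for n in (6, 24, 96):
    print(f"n={n:3d}  SEM={sem_from_sd(50, n):.2f}")
```

Going from 6 to 24 animals buys as much precision as going from 24 to 96, even though the second step costs four times as many additional animals.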

1.4. Confidence intervals

Although SDs and SEs are all well and good, what we typically want to know is the accuracy of our parameter estimates. It turns out that SEs are the key to calculating a more directly useful measure, a confidence interval (CI). Although the transformation of SEs into CIs isn't necessarily that complex, we will generally want to let computers or calculators perform this conversion for us. That said, for sample means derived from sample sizes greater than about ten, a 95% CI will usually span about two SEMs above and below the mean. When pressed for a definition, most people might say that with a 95% CI, we are 95% certain that the true value of the mean or slope (or whatever parameter we are estimating) is between the upper and lower limits of the given CI. Proper statistical semantics would more accurately state that a 95% CI procedure is such that 95% of properly calculated intervals from appropriately random samples will contain the true value of the parameter. If you can discern the difference, fine. If not, don't worry about it.
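
The "95% of intervals" interpretation can be illustrated by simulation. The sketch below uses the rough rule of mean ± 2 SEM rather than an exact t-based interval, and assumes a normal population (mean 300, SD 50) with 20 animals per sample; all of these choices, and the function names, are illustrative.

```python
import math
import random
import statistics

def approx_ci95(sample: list[float]) -> tuple[float, float]:
    """Rough 95% CI: sample mean plus or minus two SEMs."""
    m = statistics.mean(sample)
    half_width = 2 * statistics.stdev(sample) / math.sqrt(len(sample))
    return (m - half_width, m + half_width)

def coverage(n: int, trials: int, rng: random.Random,
             pop_mean: float = 300.0, pop_sd: float = 50.0) -> float:
    """Fraction of simulated intervals that contain the true population mean."""
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(pop_mean, pop_sd) for _ in range(n)]
        lo, hi = approx_ci95(sample)
        hits += lo <= pop_mean <= hi
    return hits / trials

rng = random.Random(3)
cov = coverage(20, 5000, rng)
print(f"fraction of intervals containing the true mean: {cov:.3f}")
```

The coverage lands close to, though slightly below, 95% because the two-SEM rule is an approximation; statistical software would use a slightly larger multiplier for a sample of this size.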

One thing to keep in mind about CIs is that, for a given sample, a higher confidence level (e.g., 99%) will invoke intervals that are wider than those created with a lower confidence level (e.g., 90%). Think about it this way. With a given amount of information (i.e., data), if you wish to be more confident that your interval contains the parameter, then you need to make the interval wider. Thus, less confidence corresponds to a narrower interval, whereas higher confidence requires a wider interval. Generally for CIs to be useful, the range shouldn't be too great. Another thing to realize is that there is really only one way to narrow the range associated with a given confidence level, and that is to increase the sample size. As discussed above, however, diminishing returns, as well as basic questions related to biological importance of the data, should figure foremost in any decision regarding sample size.
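
The trade-off between confidence level and interval width can be sketched with a normal approximation. This is an assumption made for simplicity: for small samples, software would normally use the t distribution, which gives somewhat wider intervals. The SEM of 10 is a hypothetical value.

```python
from statistics import NormalDist

def z_multiplier(confidence: float) -> float:
    """Number of SEs to either side of the estimate (normal approximation)."""
    return NormalDist().inv_cdf(0.5 + confidence / 2)

def ci_half_width(sem: float, confidence: float) -> float:
    """Half-width of the CI for a given SEM and confidence level."""
    return z_multiplier(confidence) * sem

sem = 10.0  # hypothetical SEM, held fixed across confidence levels
for level in (0.90, 0.95, 0.99):
    print(f"{level:.0%} CI: mean +/- {ci_half_width(sem, level):.1f}")
```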

1.5. What is the best way to report variation in data?

Of course, the answer to this will depend on what you are trying to show and which measures of variation are most relevant to your experiment. Nevertheless, here is an important news flash: with respect to means, the SEM is often not the most informative parameter to display. This should be pretty obvious by now. SD is a good way to go if we are trying to show how much variation there is within a population or sample. CIs are highly informative if we are trying to make a statement regarding the accuracy of the estimated population mean. In contrast, SEM does neither of these things directly, yet remains very popular and is often used inappropriately (Nagele, 2003). Some statisticians have pointed out that because SEM gives the smallest of the error bars, authors may often choose SEMs for aesthetic reasons, namely to make their data appear less variable or to convince readers of a difference between values that might not otherwise appear to be very different. In fairness, SEM is a perfectly legitimate descriptor of variation. In contrast to CIs, the size of the SEM is not an artifact of the chosen confidence level. Furthermore, unlike the CI, the validity of the SEM does not require assumptions that relate to statistical normality. However, because the SEM is often less directly informative to readers, presenting either SDs or CIs can be strongly recommended for most data. Furthermore, if the intent of a figure is to compare means for differences or a lack thereof, CIs are the clear choice.

1.6. A quick guide to interpreting different indicators of variation

Figure 3 shows a bar graph containing identical (artificial) data plotted with the SD, SEM, and CI to indicate variation. Note that the SD is the largest value, followed by the CI and SEM. This will be the case for all but very small sample sizes (in which case the CI could be wider than two SDs). Remember: SD is variation among individuals, SE is the variation for a theoretical collection of sample means (acquired in an identical manner to the real sample), and CI is a rescaling of the SE so as to be able to impute confidence regarding the value of the population mean. With larger sample sizes, the SE and CI will shrink, but there is no such tendency for the SD, which tends to remain the same but can also increase or decrease somewhat in a manner that is not predictable.

Illustration of SD, SE, and CI as measures of variability.

Figure 4 shows two different situations for two artificial means: one in which bars do not overlap (Figure 4A), and one in which they do, albeit slightly (Figure 4B). The following are some general guidelines for interpreting error bars, which are summarized in Table 2. (1) With respect to SD, neither overlapping bars nor an absence of overlapping bars can be used to infer whether or not two sample means are significantly different. This again is because SD reflects individual variation, and you simply cannot infer anything about significance of differences for the means. End of story. (2) With respect to SEM, overlapping bars (Figure 4B) can be used to infer that the sample means are not significantly different. However, the absence of overlapping bars (Figure 4A) cannot be used to infer that the sample means are different. (3) With respect to CIs, the absence of overlapping bars (Figure 4A) can be used to infer that the sample means are statistically different. If the CI bars do overlap (Figure 4B), however, the answer is “maybe”. Here is why. The correct measure for comparing two means is in fact the SE of the difference between the means. In the case of equal SEMs, as illustrated in Figure 4, the SE of the difference is ∼1.4 times the SEM. To be significantly different, then, two means need to be separated by about twice the SE of the difference (2.8 SEMs). In contrast, visual separation using the CI bars requires a difference of four times the SEM (remember that CI ∼ 2 × SEM above and below the mean), which is larger than necessary to infer a difference between means. Therefore, a slight overlap can be present even when two means differ significantly. If they overlap a lot (e.g., the CI for Mean 1 includes Mean 2), then the two means are for sure not significantly different. If there is any uncertainty (i.e., there is some slight overlap), determination of significance is not possible by eye; the test needs to be formally carried out.
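
The arithmetic behind the "maybe" can be written out. This sketch assumes two independent samples, so that the SE of the difference is the square root of the sum of the squared SEMs; the SEM is set to 1 so that results read directly in units of SEM.

```python
import math

def se_of_difference(sem1: float, sem2: float) -> float:
    """SE of the difference between two independent sample means."""
    return math.sqrt(sem1 ** 2 + sem2 ** 2)

sem = 1.0  # work in units of one SEM; both groups assumed to have equal SEMs
sed = se_of_difference(sem, sem)

# Separation needed for significance: about two SEs of the difference.
needed_for_significance = 2 * sed
# Separation needed for 95% CI bars (about 2 SEMs each way) not to touch.
needed_for_ci_separation = 2 * (2 * sem)

print(f"SE of difference:            {sed:.2f} SEM")
print(f"needed for significance:     {needed_for_significance:.2f} SEM")
print(f"needed for non-overlap of CI: {needed_for_ci_separation:.2f} SEM")
```

Any separation between roughly 2.8 and 4 SEMs therefore produces overlapping CI bars despite a significant difference.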

Comparing means using visual measures of precision.

Table 2. General guidelines for interpreting error bars.

1.7. The coefficient of variation

1.8. P-values

Most statistical tests culminate in a statement regarding the P -value, without which reviewers or readers may feel shortchanged. The P -value is commonly defined as the probability of obtaining a result (more formally a test statistic ) that is at least as extreme as the one observed, assuming that the null hypothesis is true. Here, the specific null hypothesis will depend on the nature of the experiment. In general, the null hypothesis is the statistical equivalent of the “innocent until proven guilty” convention of the judicial system. For example, we may be testing a mutant that we suspect changes the ratio of male-to-hermaphrodite cross-progeny following mating. In this case, the null hypothesis is that the mutant does not differ from wild type, where the sex ratio is established to be 1:1. More directly, the null hypothesis is that the sex ratio in mutants is 1:1. Furthermore, the complement of the null hypothesis, known as the experimental or alternative hypothesis , would be that the sex ratio in mutants is different than that in wild type or is something other than 1:1. For this experiment, showing that the ratio in mutants is significantly different than 1:1 would constitute a finding of interest. Here, use of the term “significantly” is short-hand for a particular technical meaning, namely that the result is statistically significant , which in turn implies only that the observed difference appears to be real and is not due only to random chance in the sample(s). Whether or not a result that is statistically significant is also biologically significant is another question . Moreover, the term significant is not an ideal one, but because of long-standing convention, we are stuck with it. Statistically plausible or statistically supported may in fact be better terms.

Getting back to P-values, let's imagine that in an experiment with mutants, 40% of cross-progeny are observed to be males, whereas 60% are hermaphrodites. A statistical significance test then informs us that for this experiment, P = 0.25. We interpret this to mean that even if there were no actual difference between the mutant and wild type with respect to their sex ratios, we would still expect to see deviations as great as, or greater than, a 6:4 ratio in 25% of our experiments. Put another way, if the null hypothesis were true and we were to replicate this experiment 100 times, random chance would lead to ratios at least as extreme as 6:4 in about 25 of those experiments. Of course, you may well wonder how it is possible to extrapolate from one experiment to make conclusions about what (approximately) the next 99 experiments will look like. (Short answer: There is well-established statistical theory behind this extrapolation that is similar in nature to our discussion on the SEM.) In any case, a large P-value, such as 0.25, is a red flag and leaves us unconvinced of a difference. It is, however, possible that a true difference exists but that our experiment failed to detect it (because of a small sample size, for instance). In contrast, suppose we found a sex ratio of 6:4, but with a corresponding P-value of 0.001 (this experiment likely had a much larger sample size than did the first). In this case, the likelihood that pure chance has conspired to produce a deviation from the 1:1 ratio as great as or greater than 6:4 is very small, 1 in 1,000 to be exact. Because this is very unlikely, we would conclude that the null hypothesis is not supported and that mutants really do differ in their sex ratio from wild type. Such a finding would therefore be described as statistically significant on the basis of the associated low P-value.
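
To see how the same 6:4 ratio can give very different P-values, here is a sketch of an exact two-sided binomial test against a 1:1 ratio. The text does not state the sample sizes or which test was used, so the counts (16 vs. 24 and 120 vs. 180) and the function name are hypothetical.

```python
from math import comb

def two_sided_p_equal_ratio(males: int, hermaphrodites: int) -> float:
    """Exact probability, under a true 1:1 ratio, of a split at least as
    lopsided (in either direction) as the one observed."""
    n = males + hermaphrodites
    observed_dev = abs(males - n / 2)
    extreme = sum(comb(n, k) for k in range(n + 1)
                  if abs(k - n / 2) >= observed_dev)
    return extreme / 2 ** n

# Same 40% males in both cases; only the number of progeny scored differs.
p_small = two_sided_p_equal_ratio(16, 24)     # 40 progeny
p_large = two_sided_p_equal_ratio(120, 180)   # 300 progeny

print(f"n=40:  P = {p_small:.3f}")
print(f"n=300: P = {p_large:.5f}")
```

The observed ratio is identical in both cases; the difference in P-values comes entirely from the amount of data supporting it.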

1.9. Why 0.05?

There is a long-standing convention in biology that P-values that are ≤0.05 are considered to be significant, whereas P-values that are >0.05 are not significant. Of course, common sense would dictate that there is no rational reason for anointing any specific number as a universal cutoff, below or above which results must either be celebrated or condemned. Can anyone imagine a convincing argument by someone stating that they will believe a finding if the P-value is 0.04 but not if it is 0.06? Even a P-value of 0.10 suggests a finding for which there is some chance that it is real.

So why impose “cutoffs”, which are often referred to as the chosen α level , of any kind? Well, for one thing, it makes life simpler for reviewers and readers who may not want to agonize over personal judgments regarding every P -value in every experiment. It could also be argued that, much like speed limits, there needs to be an agreed-upon cutoff. Even if driving at 76 mph isn't much more dangerous than driving at 75 mph, one does have to consider public safety. In the case of science, the apparent danger is that too many false-positive findings may enter the literature and become dogma. Noting that the imposition of a reasonable, if arbitrary, cutoff is likely to do little to prevent the publication of dubious findings is probably irrelevant at this point.

The key is not to change the chosen cutoff; we have no better suggestion than 0.05. The key is for readers to understand that there is nothing special about 0.05 and, most importantly, to look beyond P-values to determine whether or not the experiments are well controlled and the results are of biological interest. It is also often more informative to include actual P-values rather than simply stating P ≤ 0.05; a result where P = 0.049 is roughly three times more likely to have occurred by chance than when P = 0.016, yet both are typically reported as P ≤ 0.05. Moreover, reporting the results of statistical tests as P ≤ 0.05 (or any number) is a holdover from the days when computing exact P-values was much more difficult. Finally, if a finding is of interest and the experiment is technically sound, reviewers need not skewer a result or insist on authors discarding the data just because P = 0.07. Judgment and common sense should always take precedence over an arbitrary number.

2. Comparing two means

2.1. Introduction

Many studies in our field boil down to generating means and comparing them to each other. In doing so, we try to answer questions such as, “Is the average brood size of mutant x different from that of wild type?” or “Is the expression of gene y in embryos greater at 25 °C than at 20 °C?” Of course we will never really obtain identical values from any two experiments. This is true even if the data are acquired from a single population; the sample means will always be different from each other, even if only slightly. The pertinent question that statistics can address is whether or not the differences we inevitably observe reflect a real difference in the populations from which the samples were acquired. Put another way, are the differences detected by our experiments, which are necessarily based on a limited sample size, likely or not to result from chance effects of sampling (i.e., chance sampling ). If chance sampling can account for the observed differences, then our results will not be deemed statistically significant 13 . In contrast, if the observed differences are unlikely to have occurred by chance, then our results may be considered significant in so much as statistics are concerned. Whether or not such differences are biologically significant is a separate question reserved for the judgment of biologists.

Most biologists, even those leery of statistics, are generally aware that the venerable t-test (a.k.a., Student's t-test) is the standard method used to address questions related to differences between two sample means. Several factors influence the power of the t-test to detect significant differences. These include the size of the sample and the amount of variation present within the sample. If these sound familiar, they should. They were both factors that influence the size of the SEM, discussed in the preceding section. This is not a coincidence, as the heart of a t-test resides in estimating the standard error of the difference between two means (SEDM). Greater variance in the sample data increases the size of the SEDM, whereas higher sample sizes reduce it. Thus, lower variance and larger samples make it easier to detect differences. If the size of the SEDM is small relative to the absolute difference in means, then the finding will likely hold up as being statistically significant.
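
The relationship between the SEDM and the test can be sketched as follows. The code computes the SEDM without assuming equal variances (the form used in Welch's version of the t-test, chosen here for simplicity) and reports the ratio of the difference in means to the SEDM; converting that ratio to a P-value is left to statistical software. The brood sizes are hypothetical.

```python
import math
import statistics

def sedm(a: list[float], b: list[float]) -> float:
    """Standard error of the difference between two independent sample means."""
    return math.sqrt(statistics.variance(a) / len(a)
                     + statistics.variance(b) / len(b))

def t_statistic(a: list[float], b: list[float]) -> float:
    """Difference in means expressed in units of the SEDM."""
    return (statistics.mean(a) - statistics.mean(b)) / sedm(a, b)

# Hypothetical brood sizes for wild type and a mutant.
wild_type = [300, 310, 290, 305, 295]
mutant = [280, 270, 290, 275, 285]

print(f"difference in means = {statistics.mean(wild_type) - statistics.mean(mutant):.1f}")
print(f"SEDM                = {sedm(wild_type, mutant):.2f}")
print(f"t                   = {t_statistic(wild_type, mutant):.2f}")
```

More variable data would inflate the SEDM and shrink t, whereas more animals per group would do the opposite.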

In fact, it is not necessary to deal directly with the SEDM to be perfectly proficient at interpreting results from a t -test. We will therefore focus primarily on aspects of the t -test that are most relevant to experimentalists. These include choices of carrying out tests that are either one- or two-tailed and are either paired or unpaired, assumptions of equal variance or not, and issues related to sample sizes and normality. We would also note, in passing, that alternatives to the t -test do exist. These tests, which include the computationally intensive bootstrap (see Section 6.7 ), typically require somewhat fewer assumptions than the t -test and will generally yield similar or superior results. For reasonably large sample sizes, a t -test will provide virtually the same answer and is currently more straightforward to carry out using available software and websites. It is also the method most familiar to reviewers, who may be skeptical of approaches that are less commonly used.

2.2. Understanding the t-test: a brief foray into some statistical theory

To aid in understanding the logic behind the t -test, as well as the basic requirements for the t -test to be valid, we need to introduce a few more statistical concepts. We will do this through an example. Imagine that we are interested in knowing whether or not the expression of gene a is altered in comma-stage embryos when gene b has been inactivated by a mutation. To look for an effect, we take total fluorescence intensity measurements 15 of an integrated a ::GFP reporter in comma-stage embryos in both wild-type (Control, Figure 5A ) and b mutant (Test, Figure 5B ) strains. For each condition, we analyze 55 embryos. Expression of gene a appears to be greater in the control setting; the difference between the two sample means is 11.3 billion fluorescence units (henceforth simply referred to as “11.3 units”).

Summary of GFP-reporter expression data for a control and a test group.

Along with the familiar mean and SD, Figure 5 shows some additional information about the two data sets. Recall that in Section 1.2 , we described what a data set looks like that is normally distributed ( Figure 1 ). What we didn't mention is that distribution of the data 16 can have a strong impact, at least indirectly, on whether or not a given statistical test will be valid. Such is the case for the t -test. Looking at Figure 5 , we can see that the datasets are in fact a bit lopsided, having somewhat longer tails on the right. In technical terms, these distributions would be categorized as skewed right. Furthermore, because our sample size was sufficiently large (n=55), we can conclude that the populations from whence the data came are also skewed right. Although not critical to our present discussion, several parameters are typically used to quantify the shape of the data including the extent to which the data deviate from normality (e.g., skewness 17 , kurtosis 18 and A-squared 19 ). In any case, an obvious question now becomes, how can you know whether your data are distributed normally (or at least normally enough), to run a t -test?

Before addressing this question, we must first grapple with a bit of statistical theory. The Gaussian curve shown in Figure 6A represents a theoretical distribution of differences between sample means for our experiment. Put another way, this is the distribution of differences that we would expect to obtain if we were to repeat our experiment an infinite number of times. Remember that for any given population, when we randomly “choose” a sample, each repetition will generate a slightly different sample mean. Thus, if we carried out such sampling repetitions with our two populations ad infinitum, the bell-shaped distribution of differences between the two means would be generated ( Figure 6A ). Note that this theoretical distribution of differences is based on our actual sample means and SDs, as well as on the assumption that our original data sets were derived from populations that are normal, which is something we already know isn't true. So what to do?

Theoretical and simulated sampling distribution of differences between two means. The distributions are from the gene expression example. The mean and SE (SEDM) of the theoretical (A) and simulated (B) distributions are both approximately 11.3 and 1.4 (more...)

As it happens, this lack of normality in the distribution of the populations from which we derive our samples does not often pose a problem. The reason is that the distribution of sample means, as well as the distribution of differences between two independent sample means (along with many 20 other conventionally used statistics), is often normal enough for the statistics to still be valid. This follows from the Central Limit Theorem, a “statistical law of gravity” that states (in its simplest form 21 ) that the distribution of a sample mean will be approximately normal provided the sample size is sufficiently large. How large is large enough? That depends on the distribution of the data values in the population from which the sample came. The more non-normal it is (usually, that means the more skewed), the larger the sample size requirement. Assessing this is a matter of judgment 22 . Figure 7 was derived using a computational sampling approach to illustrate the effect of sample size on the distribution of the sample mean. In this case, the sample was derived from a population that is sharply skewed right, a common feature of many biological systems where negative values are not encountered ( Figure 7A ). As can be seen, with a sample size of only 15 ( Figure 7B ), the distribution of the mean is still skewed right, although much less so than the original population. By the time we have sample sizes of 30 or 60 ( Figure 7C, D ), however, the distribution of the mean is indeed very close to being symmetrical (i.e., normal).

Illustration of the Central Limit Theorem for binomial proportions. Panels A–D show results from a computational sampling experiment where the proportion of successes in the population is 0.25. The x axes indicate the proportions obtained from (more...)

Illustration of Central Limit Theorem for a skewed population of values. Panel A shows the population (highly skewed right and truncated at zero); Panels B, C, and D show distributions of the mean for sample sizes of 15, 30, and 60, respectively, as obtained (more...)
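The kind of computational sampling experiment summarized in Figure 7 can be sketched as follows. This is not the authors' simulation: the exponential population, the helper names (`skewness`, `skew_of_sample_means`), the number of repetitions, and the seed are all assumptions made for illustration. The sketch draws many samples of a given size from a right-skewed population with no negative values and reports how skewed the resulting sample means are.

```python
import random
import statistics

def skewness(values):
    """Sample skewness: mean cubed deviation divided by the SD cubed."""
    m = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum((v - m) ** 3 for v in values) / (len(values) * sd ** 3)

def skew_of_sample_means(n, reps=10000, seed=1):
    """Draw `reps` samples of size n from an exponential population
    (an assumed stand-in for a right-skewed population truncated at zero)
    and return the skewness of the resulting sample means."""
    rng = random.Random(seed)
    means = [statistics.fmean(rng.expovariate(1.0) for _ in range(n))
             for _ in range(reps)]
    return skewness(means)

# n = 1 corresponds to the raw population values themselves.
skews = {n: skew_of_sample_means(n) for n in (1, 15, 30, 60)}
for n, s in skews.items():
    print(f"n = {n:2d}: skewness of the sample mean = {s:.2f}")
```

A skewness near zero indicates a roughly symmetrical distribution; the values should shrink toward zero as the sample size grows, mirroring the progression from Panel A to Panel D.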

The Central Limit Theorem having come to our rescue, we can now set aside the caveat that the populations shown in Figure 5 are non-normal and proceed with our analysis. From Figure 6 we can see that the center of the theoretical distribution (black line) is 11.29, which is the actual difference we observed in our experiment. Furthermore, we can see that on either side of this center point, there is a decreasing likelihood that substantially higher or lower values will be observed. The vertical blue lines show the positions of one and two SDs from the apex of the curve, which in this case could also be referred to as SEDMs. As with other SDs, roughly 95% of the area under the curve is contained within two SDs. This means that in 95 out of 100 experiments, we would expect to obtain differences of means that were between “8.5” and “14.0” fluorescence units. In fact, this statement amounts to a 95% CI for the difference between the means, which is a useful measure and amenable to straightforward interpretation. Moreover, because the 95% CI of the difference in means does not include zero, this implies that the P-value for the difference must be less than 0.05 (i.e., that the null hypothesis of no difference in means can be rejected at the conventional threshold). Conversely, had the 95% CI included zero, then we would already know that the P-value will not support conclusions of a difference based on the conventional cutoff (assuming application of the two-tailed t-test; see below).
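The interval quoted above can be reproduced with a few lines of arithmetic. The sketch below assumes a normal approximation (a multiplier of about 1.96 rather than exactly 2), using the difference of 11.29 and the SEDM of approximately 1.4 reported for Figure 6; an interval based on the t distribution would be marginally wider. The variable names are illustrative.

```python
from statistics import NormalDist

diff = 11.29   # observed difference in means (from the text)
sedm = 1.4     # approximate SEDM reported for Figure 6

z = NormalDist().inv_cdf(0.975)   # about 1.96 for a two-sided 95% interval
ci_low, ci_high = diff - z * sedm, diff + z * sedm

print(f"95% CI: {ci_low:.1f} to {ci_high:.1f}")   # prints "95% CI: 8.5 to 14.0"

# An interval that excludes zero corresponds to a two-tailed P-value below 0.05.
excludes_zero = not (ci_low <= 0.0 <= ci_high)
print(excludes_zero)
```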

The key is to understand that the t -test is based on the theoretical distribution shown in Figure 6A , as are many other statistical parameters including 95% CIs of the mean. Thus, for the t -test to be valid, the shape of the actual differences in sample means must come reasonably close to approximating a normal curve. But how can we know what this distribution would look like without repeating our experiment hundreds or thousands of times? To address this question, we have generated a complementary distribution shown in Figure 6B . In contrast to Figure 6A , Figure 6B was generated using a computational re-sampling method known as bootstrapping (discussed in Section 6.7 ). It shows a histogram of the differences in means obtained by carrying out 1,000 in silico repeats of our experiment. Importantly, because this histogram was generated using our actual sample data, it automatically takes skewing effects into account. Notice that the data from this histogram closely approximate a normal curve and that the values obtained for the mean and SDs are virtually identical to those obtained using the theoretical distribution in Figure 6A . What this tells us is that even though the sample data were indeed somewhat skewed, a t -test will still give a legitimate result. Moreover, from this exercise we can see that with a sufficient sample size, the t -test is quite robust to some degree of non-normality in the underlying population distributions. Issues related to normality are also discussed further below.
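For readers curious about what the in silico repeats involve, the following is a minimal bootstrap sketch. It is not the authors' code, and the raw fluorescence values are not given in the text, so the two data sets here are hypothetical right-skewed (log-normal) stand-ins with 55 values each; the function name `bootstrap_diffs`, the seed, and the number of resamples are likewise assumptions.

```python
import math
import random
import statistics

rng = random.Random(42)

# Hypothetical right-skewed data standing in for the two groups of 55 embryos.
control = [rng.lognormvariate(3.0, 0.4) for _ in range(55)]
test = [rng.lognormvariate(2.6, 0.4) for _ in range(55)]

def bootstrap_diffs(a, b, reps=2000):
    """Resample each group with replacement and record the difference in means."""
    diffs = []
    for _ in range(reps):
        resampled_a = rng.choices(a, k=len(a))
        resampled_b = rng.choices(b, k=len(b))
        diffs.append(statistics.fmean(resampled_a) - statistics.fmean(resampled_b))
    return diffs

diffs = bootstrap_diffs(control, test)
observed = statistics.fmean(control) - statistics.fmean(test)
boot_se = statistics.stdev(diffs)

# Formula-based SEDM for comparison with the bootstrap estimate.
formula_se = math.sqrt(statistics.variance(control) / len(control)
                       + statistics.variance(test) / len(test))

print(f"observed difference: {observed:.2f}")
print(f"bootstrap SE: {boot_se:.2f}, formula SEDM: {formula_se:.2f}")
```

When the two standard errors agree closely and a histogram of `diffs` looks bell-shaped, the situation resembles the one described for Figure 6: the theoretical and re-sampled distributions tell the same story despite the skew in the raw data.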

2.3. One- versus two-sample tests

Although t -tests always evaluate differences between two means, in some cases only one of the two mean values may be derived from an experimental sample. For example, we may wish to compare the number of vulval cell fates induced in wild-type hermaphrodites versus mutant m . Because it is broadly accepted that wild type induces (on average) three progenitor vulval cells, we could theoretically dispense with re-measuring this established value and instead measure it only in the mutant m background ( Sulston and Horvitz, 1977 ). In such cases, we would be obliged to run a one-sample t -test to determine if the mean value of mutant m is different from that of wild type.

There is, however, a problem in using the one-sample approach, which is not statistical but experimental. Namely, there is always the possibility that something about the growth conditions, experimental execution, or alignment of the planets, could result in a value for wild type that is different from that of the established norm. If so, these effects would likely conspire to produce a value for mutant m that is different from the traditional wild-type value, even if no real difference exists. This could then lead to a false conclusion of a difference between wild type and mutant m . In other words, the statistical test, though valid, would be carried out using flawed data. For this reason, one doesn't often see one-sample t -tests in the worm literature. Rather, researchers tend to carry out parallel experiments on both populations to avoid being misled. Typically, this is only a minor inconvenience and provides much greater assurance that any conclusions will be legitimate. Along these lines, historical controls, including those carried out by the same lab but at different times, should typically be avoided.

2.4. One versus two tails

One aspect of the t-test that tends to agitate users is the obligation to choose either the one- or two-tailed version of the test. That the term “tails” is not particularly informative only exacerbates the matter. The key difference between the one- and two-tailed versions comes down to the formal statistical question being posed; that is, the difference lies in the wording of the research question. To illustrate this point, we will start by applying a two-tailed t-test to our example of embryonic GFP expression. In this situation, our typical goal as scientists would be to detect a difference between the two means. This aspiration can be more formally stated in the form of a research or alternative hypothesis, namely, that the average expression levels of a::GFP in wild type and in mutant b are different. The null hypothesis must convey the opposite sentiment. For the two-tailed t-test, the null hypothesis is simply that the expression of a::GFP in wild type and mutant b backgrounds is the same. Alternatively, one could state that the difference in expression levels between wild type and mutant b is zero.

It turns out that our example, while real and useful for illustrating the idea that the sampling distribution of the mean can be approximately normal even if the distribution of the data is not (and indeed should be if a t-test is to be carried out), is not so useful for illustrating P-value concepts. Hence, we will continue this discussion with a contrived variation: suppose the SEDM was 5.0, reflecting a very large amount of variation in the gene expression data. This would lead to the distribution shown in Figure 8A , which is analogous to the one from Figure 6A . You can see how the increase in the SEDM affects the values that are contained in the resulting 95% CI. The mean is still 11.3, but now there is some probability (albeit a small one) of obtaining a difference of zero, our null hypothesis. Figure 8B shows the same curve and SEDMs. This time, however, we have shifted the values of the x axis to consider the condition under which the null hypothesis is true. Thus the postulated difference in this scenario is zero (at the peak of the curve).

Graphical representation of a two-tailed t -test. (A) The same theoretical sampling distribution shown in Figure 6A in which the SEDM has been changed to 5.0 units (instead of 1.4). The numerical values on the x -axis represent differences from the mean (more...)

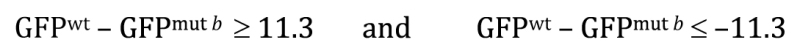

Now recall that the P-value answers the following question: If the null hypothesis is true, what is the probability that chance sampling could have resulted in a difference in sample means at least as extreme as the one obtained? In our experiment, the difference in sample means was 11.3, where a::GFP showed lower expression in the mutant b background. However, to derive the P-value for the two-tailed t-test, we would need to include the following two possibilities, represented here in equation form:

GFP wt − GFP mut b ≥ 11.3

GFP mut b − GFP wt ≥ 11.3

Most notably, with a two-tailed t -test we impose no bias as to the direction of the difference when carrying out the statistical analysis. Looking at Figure 8B , we can begin to see how the P -value is calculated. Depicted is a normal curve , with the observed difference represented by the vertical blue line located at 11.3 units (∼2.3 SEs). In addition, a dashed vertical blue line at −11.3 is also included. Red lines are located at about 2 SEs to either side of the apex. Based on our understanding of the normal curve, we know that about 95% of the total area under the curve resides between the two red lines, leaving the remaining 5% to be split between the two areas outside of the red lines in the tail regions. Furthermore, the proportion of the area under the curve that is to the outside of each individual blue line is 1.3%, for a total of 2.6%. This directly corresponds to the calculated two-tailed P -value of 0.026. Thus, the probability of having observed an effect this large by mere chance is only 2.6%, and we can conclude that the observed difference of 11.3 is statistically significant.

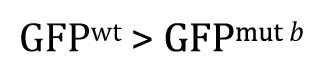

Once you understand the idea behind the two-tailed t-test, the one-tailed type is fairly straightforward. For the one-tailed t-test, however, there will always be two distinct versions, each with a different research hypothesis and corresponding null hypothesis. For example, if there is sufficient a priori 23 reason to believe that GFP wt will be greater than GFP mut b , then the research hypothesis could be written as

GFP wt > GFP mut b

This means that the null hypothesis would be written as

GFP wt ≤ GFP mut b

Most importantly, the P-value for this test will answer the question: If the null hypothesis is true, what is the probability that the following result could have occurred by chance sampling?

GFP wt − GFP mut b ≥ 11.3

Looking at Figure 8B , we can see that the answer is just the proportion of the area under the curve that lies to the right of positive 11.3 (solid vertical blue line). Because the graph is perfectly symmetrical, the P -value for this right-tailed test will be exactly half the value that we determined for the two-tailed test, or 0.013. Thus in cases where the direction of the difference coincides with a directional research hypothesis, the P -value of the one-tailed test will always be half that of the two-tailed test. This is a useful piece of information. Anytime you see a P -value from a one-tailed t -test and want to know what the two-tailed value would be, simply multiply by two.

Alternatively, had there been sufficient reason to posit a priori that GFP mut b will be greater than GFP wt , then the research hypothesis could be written as

GFP mut b > GFP wt

Of course, our experimental result that GFP wt was greater than GFP mut b clearly fails to support this research hypothesis. In such cases, there would be no reason to proceed further with a t-test, as the P-value in such situations is guaranteed to be >0.5. Nevertheless, for the sake of completeness, we can write out the null hypothesis as

GFP mut b ≤ GFP wt

And the P-value will answer the question: If the null hypothesis is true, what is the probability that the following result could have occurred by chance sampling?

GFP mut b − GFP wt ≥ −11.3

Or, written slightly differently to keep things consistent with Figure 8B ,

GFP wt − GFP mut b ≤ 11.3

This one-tailed test yields a P -value of 0.987, meaning that the observed lower mean of a ::GFP in mut b embryos is entirely consistent with a null hypothesis of GFP mut b ≤ GFP wt .
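The relationship among the three P-values can be checked with a short calculation. The sketch below is an approximation under a stated assumption: it uses the normal curve rather than the t distribution, so its tail areas come out slightly smaller than the 0.026, 0.013, and 0.987 quoted in the text. The variable names are illustrative.

```python
from statistics import NormalDist

diff = 11.3   # observed GFP wt minus GFP mut b (from the text)
sedm = 5.0    # contrived SEDM used for the Figure 8 variation

z = diff / sedm                        # about 2.26 SEs away from zero
upper_tail = 1 - NormalDist().cdf(z)   # area to the right of +11.3 under the null

p_right = upper_tail       # research hypothesis: GFP wt > GFP mut b
p_two = 2 * upper_tail     # research hypothesis: the two means differ
p_left = 1 - upper_tail    # research hypothesis: GFP mut b > GFP wt

print(f"two-tailed {p_two:.3f}, right-tailed {p_right:.3f}, left-tailed {p_left:.3f}")
```

Whatever distribution is used, the structure is the same: the two-tailed value is twice the smaller one-tailed value, and the two one-tailed values sum to one.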

Interestingly, there is considerable debate, even among statisticians, regarding the appropriate use of one- versus two-tailed t-tests. Some argue that, because in reality no two population means are ever identical, all tests should be one-tailed, as one mean must in fact be larger (or smaller) than the other ( Jones and Tukey, 2000 ). Put another way, the null hypothesis of a two-tailed test is always a false premise. Others encourage standard use of the two-tailed test largely on the basis of its being more conservative. Namely, the P-value will always be higher, and therefore fewer false-positive results will be reported. In addition, two-tailed tests impose no preconceived bias as to the direction of the change, which in some cases could be arbitrary or based on a misconception. A universally held rule is that one should never make the choice of a one-tailed t-test post hoc 24 after determining which direction is suggested by your data. In other words, if you are hoping to see a difference and your two-tailed P-value is 0.06, don't then decide that you really intended to do a one-tailed test to reduce the P-value to 0.03. Alternatively, if you were hoping for no significant difference, choosing the one-tailed test that happens to give you the highest P-value is an equally unacceptable practice.

Generally speaking, one-tailed tests are often reserved for situations where a clear directional outcome is anticipated or where changes in only one direction are relevant to the goals of the study. Examples of the latter are perhaps more often encountered in industry settings, such as testing a drug for the alleviation of symptoms. In this case, there is no reason to be interested in proving that a drug worsens symptoms, only that it improves them. In such situations, a one-tailed test may be suitable. Another example would be tracing the population of an endangered species over time, where the anticipated direction is clear and where the cost of being too conservative in the interpretation of data could lead to extinction. Notably, for the field of C. elegans experimental biology, these circumstances rarely, if ever, arise. In part for this reason, two-tailed tests are more common and further serve to dispel any suggestion that one has manipulated the test to obtain a desired outcome.

2.5. Equal or non-equal variances

It is common to read in textbooks that one of the underlying assumptions of the t -test is that both samples should be derived from populations of equal variance. Obviously this will often not be the case. Furthermore, when using the t -test, we are typically not asking whether or not the samples were derived from identical populations, as we already know they are not. Rather, we want to know if the two independent populations from which they were derived have different means. In fact, the original version of the t -test, which does not formally take into account unequal sample variances, is nevertheless quite robust for small or even moderate differences in variance. Nevertheless, it is now standard to use a modified version of the t -test that directly adjusts for unequal variances. In most statistical programs, this may simply require checking or unchecking a box. The end result is that for samples that do have similar variances, effectively no differences in P -values will be observed between the two methods. For samples that do differ considerably in their variances, P -values will be higher using the version that takes unequal variances into account. This method therefore provides a slightly more conservative and accurate estimate for P -values and can generally be recommended.
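The modified version referred to here is commonly known as Welch's t-test. As a sketch of how the two versions differ, the code below computes the test statistic and degrees of freedom both ways. The function names and the measurements are hypothetical, and converting t and the degrees of freedom into a P-value is left to a statistics package.

```python
import math
import statistics

def pooled_t(a, b):
    """Classic two-sample t statistic and degrees of freedom (equal variances assumed)."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled_var = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.fmean(a) - statistics.fmean(b)) / se, na + nb - 2

def welch_t(a, b):
    """Welch's t statistic with Welch-Satterthwaite degrees of freedom."""
    na, nb = len(a), len(b)
    qa, qb = statistics.variance(a) / na, statistics.variance(b) / nb
    se = math.sqrt(qa + qb)
    df = (qa + qb) ** 2 / (qa ** 2 / (na - 1) + qb ** 2 / (nb - 1))
    return (statistics.fmean(a) - statistics.fmean(b)) / se, df

# Hypothetical measurements: group_b is far more variable than group_a.
group_a = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2]
group_b = [12.0, 7.5, 15.1, 9.2, 13.8, 11.4]

t_pooled, df_pooled = pooled_t(group_a, group_b)
t_welch, df_welch = welch_t(group_a, group_b)
print(f"pooled: t = {t_pooled:.3f}, df = {df_pooled}")
print(f"Welch:  t = {t_welch:.3f}, df = {df_welch:.1f}")
```

With equal group sizes, as here, the two t statistics coincide and the adjustment acts entirely through the reduced degrees of freedom, which yields a larger P-value for the same t.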

Also, just to reinforce a point raised earlier, greater variance in the sample data will lead to higher P -values because of the effect of sample variance on the SEDM. This will make it more difficult to detect differences between sample means using the t -test. Even without any technical explanation, this makes intuitive sense given that greater scatter in the data will create a level of background noise that could obscure potential differences. This is particularly true if the differences in means are small relative to the amount of scatter. This can be compensated for to some extent by increasing the sample size. This, however, may not be practical in some cases, and there can be downsides associated with accumulating data solely for the purpose of obtaining low P -values (see Section 6.3 ).

2.6. Are the data normal enough?

Technically speaking, we know that for t -tests to be valid, the distribution of the differences in means must be close to normal (discussed above). For this to be the case, the populations from which the samples are derived must also be sufficiently close to normal. Of course we seldom know the true nature of populations and can only infer this from our sample data. Thus in practical terms the question often boils down to whether or not the sample data suggest that the underlying population is normal or normal enough . The good news is that in cases where the sample size is not too small, the distribution of the sample will reasonably reflect the population from which it was derived (as mentioned above). The bad news is that with small sample sizes (say below 20), we may not be able to tell much about the population distribution. This creates a considerable conundrum when dealing with small samples from unknown populations. For example, for certain types of populations, such as a theoretical collection of bands on a western blot, we may have no way of knowing if the underlying population is normal or skewed and probably can't collect sufficient data to make an informed judgment. In these situations, you would admittedly use a t -test at your own risk 25 .

Textbooks will tell you that using highly skewed data for t -tests can lead to unreliable P -values. Furthermore, the reliability of certain other statistics, such as CIs, can also be affected by the distribution of data. In the case of the t -test, we know that the ultimate issue isn't whether the data or populations are skewed but whether the theoretical population of differences between the two means is skewed. In the examples shown earlier ( Figures 6 and 8 ), the shapes of the distributions were normal, and thus the t -tests were valid, even though our original data were skewed ( Figure 5 ). A basic rule of thumb is that if the data are normal or only slightly skewed, then the test statistic will be normal and the t -test results will be valid, even for small sample sizes. Conversely, if one or both samples (or populations) are strongly skewed, this can result in a skewed test statistic and thus invalid statistical conclusions.

Interestingly, although increasing the sample size will not change the underlying distribution of the population, it can often go a long way toward correcting for skewness in the test statistic 26 . Thus, the t -test often becomes valid, even with fairly skewed data, if the sample size is large enough. In fact, using data from Figure 5 , we did a simulation study and determined that the sampling distribution for the difference in means is indeed approximately normal with a sample size of 30 (data not shown). In that case, the histogram of that sampling distribution looked very much like that in Figure 6B , with the exception that the SD 27 of the distribution was ∼1.9 rather than 1.4. In contrast, carrying out a simulation with a sample size of only 15 did not yield a normal distribution of the test statistic and thus the t -test would not have been valid.
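The change in the SD of the sampling distribution from 1.4 to about 1.9 is what one would expect from the sample size alone. As a quick check, assuming both groups are reduced from 55 to 30 and the per-embryo SDs stay the same, the SEDM scales with one over the square root of the sample size:

```python
import math

sedm_n55 = 1.4             # SD of the sampling distribution with 55 embryos per group
n_full, n_reduced = 55, 30

# With unchanged per-embryo SDs, the SEDM is proportional to 1 / sqrt(n).
sedm_n30 = sedm_n55 * math.sqrt(n_full / n_reduced)
print(f"expected SEDM with n = 30: {sedm_n30:.1f}")   # prints 1.9
```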

Unfortunately, there is no simple rule for choosing a minimum sample size to protect against skewed data, although some textbooks recommend 30. Even a sample size of 30, however, may not be sufficient to correct for skewness or kurtosis in the test statistic if the sample data (i.e., populations) are severely non-normal to begin with 28 . The bottom line is that there are unfortunately no hard and fast rules to rely on. Thus, if you have reason to suspect that your samples or the populations from which they are derived are strongly skewed, consider consulting your nearest statistician for advice on how to proceed. In the end, given a sufficient sample size, you may be cleared for the t-test. Alternatively, several classes of nonparametric tests can potentially be used ( Section 6.5 ). Although these tests tend to be less powerful than the t-test at detecting differences, the statistical conclusions drawn from these approaches will be much more valid. Furthermore, the computationally intensive bootstrapping method retains the power of the t-test but doesn't require a normal distribution of the test statistic to yield valid results.

In some cases, it may also be reasonable to assume that the population distributions are normal enough. Normality, or something quite close to it, is typically found in situations where many small factors serve to contribute to the ultimate distribution of the population. Because such effects are frequently encountered in biological systems, many natural distributions may be normal enough with respect to the t -test. Another way around this conundrum is to simply ask a different question, one that doesn't require the t -test approach. In fact, the western blot example is one where many of us would intuitively look toward analyzing the ratios of band intensities within individual blots (discussed in Section 6.5 ).

2.7. Is there a minimum acceptable sample size?

You may be surprised to learn that nothing can stop you from running a t-test with sample sizes of two. Of course, you may find it difficult to convince anyone of the validity of your conclusion, but run it you may! Another problem is that very low sample sizes will render any test much less powerful. What this means in practical terms is that to detect a statistically significant difference with small sample sizes, the difference between the two means must be quite large. In cases where the inherent difference is not large enough to compensate for a low sample size, the P-value will likely be above the critical threshold. In this event, you might state that there is insufficient evidence to indicate a difference between the populations, although there could be a difference that the experiment failed to detect. Alternatively, it may be tempting to continue gathering samples to push the P-value below the traditionally acceptable threshold of 0.05. Whether this is a scientifically appropriate course of action is a matter of some debate, although in some circumstances it may be acceptable. However, this general tactic does have some grave pitfalls, which are addressed in later sections (e.g., Section 6.3 ).

One good thing about working with C. elegans , however, is that for many kinds of experiments, we can obtain fairly large sample sizes without much trouble or expense. The same cannot be said for many other biological or experimental systems. This advantage should theoretically allow us to determine if our data are normal enough or to simply not care about normality since our sample sizes are high. In any event, we should always strive to take advantage of this aspect of our system and not short-change our experiments. Of course, no matter what your experimental system might be, issues such as convenience and expense should not be principal driving forces behind the determination of sample size. Rather, these decisions should be based on logical, pragmatic, and statistically informed arguments (see Section 6.2 on power analysis).

Nevertheless, there are certain kinds of common experiments, such as qRT-PCR, where a sample size of three is quite typical. Of course, by three we do not mean three worms. For each sample in a qRT-PCR experiment, many thousands of worms may have been used to generate a single mRNA extract. Here, three refers to the number of biological replicates . In such cases, it is generally understood that worms for the three extracts may have been grown in parallel but were processed for mRNA isolation and cDNA synthesis separately. Better yet, the templates for each biological replicate may have been grown and processed at different times. In addition, qRT-PCR experiments typically require technical replicates . Here, three or more equal-sized aliquots of cDNA from the same biological replicate are used as the template in individual PCR reactions. Of course, the data from technical replicates will nearly always show less variation than data from true biological replicates. Importantly, technical replicates should never be confused with biological replicates. In the case of qRT-PCR, the former are only informative as to the variation introduced by the pipetting or amplification process. As such, technical replicates should be averaged, and this value treated as a single data point.
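The bookkeeping described above can be sketched as follows. The Ct values, the replicate labels, and the variable names are hypothetical; the point is only that technical replicates are collapsed first, so that each biological replicate contributes a single value to any downstream test.

```python
import statistics

# Hypothetical Ct values: three biological replicates, each with three technical replicates.
ct_values = {
    "bio_rep_1": [24.1, 24.3, 24.2],
    "bio_rep_2": [25.0, 24.8, 24.9],
    "bio_rep_3": [23.6, 23.7, 23.8],
}

# Collapse technical replicates so each biological replicate yields one data point.
data_points = [statistics.fmean(tech) for tech in ct_values.values()]

n = len(data_points)                          # sample size for the test is 3, not 9
between_sd = statistics.stdev(data_points)    # variation among biological replicates
within_sd = max(statistics.stdev(tech) for tech in ct_values.values())

print(n, round(between_sd, 2), round(within_sd, 2))
```

Treating all nine values as independent observations would overstate the sample size and understate the true biological variability.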

In this case, suppose for the sake of discussion that each replicate contains extracts from 5,000 worms. If all 15,000 worms can be considered to be from a single population (at least with respect to the mRNA of interest), then each observed value is akin to a mean from a sample of 5,000. In that case, one could likely argue that the three values do come from a normal population (the Central Limit Theorem having done its magic on each), and so a t-test using the mean of those three values would be acceptable from a statistical standpoint. It might possibly still suffer from a lack of power, but the test itself would be valid. Similarly, western blot quantitation values, which average proteins from many thousands of worms, could also be argued to fall under this umbrella.

2.8. Paired versus unpaired tests

The paired t -test is a powerful way to detect differences in two sample means, provided that your experiment has been designed to take advantage of this approach. In our example of embryonic GFP expression, the two samples were independent in that the expression within any individual embryo was not linked to the expression in any other embryo. For situations involving independent samples, the paired t -test is not applicable; we carried out an unpaired t -test instead. For the paired method to be valid, data points must be linked in a meaningful way. If you remember from our first example, worms that have a mutation in b show lower expression of the a ::GFP reporter. In this example of a paired t -test, consider a strain that carries a construct encoding a hairpin dsRNA corresponding to gene b . Using a specific promoter and the appropriate genetic background, the dsRNA will be expressed only in the rightmost cell of one particular neuronal pair, where it is expected to inhibit the expression of gene b via the RNAi response. In contrast, the neuron on the left should be unaffected. In addition, this strain carries the same a ::GFP reporter described above, and it is known that this reporter is expressed in both the left and right neurons at identical levels in wild type. The experimental hypothesis is therefore that, analogous to what was observed in embryos, fluorescence of the a ::GFP reporter will be weaker in the right neuron, where gene b has been inhibited.

In this scenario, the data are meaningfully paired in that we are measuring GFP levels in two distinct cells, but within a single worm. We then collect fluorescence data from 14 wild-type worms and 14 b(RNAi) worms. A visual display of the data suggests that expression of a ::GFP is perhaps slightly decreased in the right cell where gene b has been inhibited, but the difference between the control and experimental dataset is not very impressive ( Figure 9A, B ). Furthermore, whereas the means of GFP expression in the left neurons in wild-type and b(RNAi) worms are nearly identical, the mean of GFP expression in the right neurons in wild type is a bit higher than that in the right neurons of b(RNAi) worms. For our t -test analysis, one option would be to ignore the natural pairing in the data and treat left and right cells of individual animals as independent. In doing so, however, we would hinder our ability to detect real differences. The reason is as follows. We already know that GFP expression in some worms will happen to be weaker or stronger (resulting in a dimmer or brighter signal) than in other worms. This variability, along with a relatively small mean difference in expression, may preclude our ability to support differences statistically. In fact, a two-tailed t -test using the (hypothetical) data for right cells from wild-type and b(RNAi) strains ( Figure 9B ) turns out to give a P > 0.05.

Figure 9. Representation of paired data.

Figure 9C, D , in contrast, shows a slightly different arrangement of the same GFP data. Here the wild-type and b(RNAi) strains have been separated, and we are specifically comparing expression in the left and right neurons for each genotype. In addition, lines have been drawn between left and right data points from the same animal. Two things are quite striking. One is that worms that are bright in one cell tend to be bright in the other. Second, looking at b(RNAi) worms, we can see that within individuals, there is a strong tendency to have reduced expression in the right neuron as compared with its left counterpart ( Figure 9D ). However, because of the inherent variability between worms, this difference was largely obscured when we failed to make use of the paired nature of the experiment. This wasn't a problem in the embryonic analysis, because the difference between wild type and b mutants was large enough relative to the variability between embryos. In the case of neurons (and the use of RNAi), the difference was, however, much smaller and thus fell below the level necessary for statistical validation. Using a paired two-tailed t -test for this dataset gives a P < 0.01.

The rationale behind using the paired t -test is that it takes meaningfully linked data into account when calculating the P -value. The paired t -test works by first calculating the difference between each individual pair. Then a mean and variance are calculated for all the differences among the pairs. Finally, a one-sample t -test is carried out where the null hypothesis is that the mean of the differences is equal to zero. Furthermore, the paired t -test can be one- or two-tailed, and arguments for either are similar to those for two independent means. Of course, standard programs will do all of this for you, so the inner workings are effectively invisible. Given the enhanced power of the paired t -test to detect differences, it is often worth considering how the statistical analysis will be carried out at the stage when you are developing your experimental design. Then, if it's feasible, you can design the experiment to take advantage of the paired t -test method.
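The three steps just described can be sketched in a few lines of Python. This is a minimal illustration rather than a substitute for a statistics package: the intensity values are invented for the example (they are not the data behind Figure 9), the function names are our own, and Welch's formula is used for the unpaired comparison simply as one common choice. Only the t statistics are computed; converting them to P -values is left to standard software.

```python
import math
import statistics

# Hypothetical GFP intensities (arbitrary units) for the left and right
# neurons of the same six worms. These numbers are invented for illustration.
left = [100.0, 150.0, 200.0, 250.0, 300.0, 350.0]
right = [95.0, 141.0, 193.0, 240.0, 294.0, 339.0]


def paired_t(x, y):
    """One-sample t statistic testing whether the mean pairwise difference is 0."""
    diffs = [a - b for a, b in zip(x, y)]   # step 1: difference within each pair
    n = len(diffs)
    mean_d = statistics.mean(diffs)         # step 2: mean of the differences
    sd_d = statistics.stdev(diffs)          # step 2: sample SD (n - 1 denominator)
    t = mean_d / (sd_d / math.sqrt(n))      # step 3: one-sample t against zero
    return t, n - 1                         # statistic and degrees of freedom


def unpaired_t(x, y):
    """Welch's two-sample t statistic, which ignores the pairing."""
    se = math.sqrt(statistics.variance(x) / len(x) + statistics.variance(y) / len(y))
    return (statistics.mean(x) - statistics.mean(y)) / se


t_paired, df = paired_t(left, right)
t_unpaired = unpaired_t(left, right)
print(f"paired t = {t_paired:.2f} (df = {df}); unpaired t = {t_unpaired:.2f}")
```

With these made-up numbers the worm-to-worm variability is large relative to the left-right difference, so the unpaired statistic is small, whereas the paired statistic, which depends only on the within-worm differences, is much larger.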

2.9. The critical value approach

Some textbooks, particularly older ones, present a method known as the critical value approach in conjunction with the t -test. This method, which traditionally involves looking up t -values in lengthy appendices, was developed long before computer software was available to calculate precise P -values. Part of the reason this method may persist, at least in some textbooks, is that it provides authors with a vehicle to explain the basis of hypothesis testing along with certain technical aspects of the t -test. As a modern method for analyzing data, however, it has long since gone the way of the dinosaur. Feel no obligation to learn this.

3. Comparisons of more than two means

3.1. Introduction

The two-sample t -test works well in situations where we need to determine if differences exist between two populations for which we have sample means. But what happens when our analyses involve comparisons between three or more separate populations? Here things can get a bit tricky. Such scenarios tend to arise in one of several ways. Most common to our field is that we have obtained a collection of sample means that we want to compare with a single standard. For example, we might measure the body lengths of young adult-stage worms grown on RNAi-feeding plates, each targeting one of 100 different collagen genes. In this case, we would want to compare mean lengths from animals grown on each plate with a control RNAi that is known to have no effect on body length. On the surface, the statistical analysis might seem simple: just carry out 100 two-sample t -tests where the average length from each collagen RNAi plate is compared with the same control. The problem with this approach is the unavoidable presence of false-positive findings (also known as Type I errors ). The more t -tests you run, the greater the chance of obtaining a statistically significant result through chance sampling. Even if all the populations were identical in their lengths, 100 t -tests would result on average in five RNAi clones showing differences supported by P- values of <0.05, including one clone with a P -value of <0.01. This type of multiple comparisons problem is common in many of our studies and is a particularly prevalent issue in high-throughput experiments such as microarrays, which typically involve many thousands of comparisons.

Taking a slightly different angle, we can calculate the probability of incurring at least one false significance in situations of multiple comparisons. For example, with just two t -tests and a significance threshold of 0.05, there would be an ∼10% chance that we would obtain at least one P -value that was <0.05 just by chance [$1 - (0.95)^{2} = 0.0975$]. With just fourteen comparisons, that probability leaps to >50% [$1 - (0.95)^{14} = 0.512$]. With 100 comparisons, there is a 99% chance of obtaining at least one statistically significant result by chance. Using probability calculators available on the web (also see Section 4.10 ), we can determine that for 100 tests there is a 56.4% chance of obtaining five or more false positives and a 2.8% chance of obtaining ten or more. Thinking about it this way, we might be justifiably concerned that our studies may be riddled with incorrect conclusions! Furthermore, reducing the chosen significance threshold to 0.01 will only help so much. In this case, with 50 comparisons, there is still an ∼40% probability that at least one comparison will sneak below the cutoff by chance. Moreover, by reducing our threshold, we run the risk of discarding results that are both statistically and biologically significant.
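For readers who prefer to check these figures themselves rather than use a web calculator, the following sketch reproduces them with the Python standard library. It assumes that the tests are independent and that no real differences exist, so that the number of false positives follows a binomial distribution; the function names are ours.

```python
from math import comb


def prob_at_least_one(n_tests, alpha):
    """Chance of one or more false positives among n independent null tests."""
    return 1 - (1 - alpha) ** n_tests


def prob_at_least_k(n_tests, alpha, k):
    """Chance of k or more false positives (binomial upper tail)."""
    fewer_than_k = sum(
        comb(n_tests, i) * alpha**i * (1 - alpha) ** (n_tests - i)
        for i in range(k)
    )
    return 1 - fewer_than_k


for n in (2, 14, 100):
    print(f"{n} tests at 0.05: {prob_at_least_one(n, 0.05):.4f}")
print(f"50 tests at 0.01: {prob_at_least_one(50, 0.01):.3f}")
print(f"5 or more of 100: {prob_at_least_k(100, 0.05, 5):.3f}")
print(f"10 or more of 100: {prob_at_least_k(100, 0.05, 10):.3f}")
```

If the tests are not independent (for example, when every comparison shares the same control), these probabilities will differ somewhat, but the qualitative message is unchanged.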

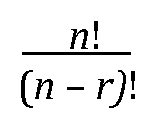

A related but distinct situation occurs when we have a collection of sample means, but rather than comparing each of them to a single standard, we want to compare all of them to each other. As in the case of multiple comparisons to a single control, the problem lies in the sheer number of tests required. With only five different sample means, we would need to carry out 10 individual t -tests to analyze all possible pair-wise comparisons [5(5 − 1)/2 = 10]. With 100 sample means, that number skyrockets to 4,950 [100(100 − 1)/2 = 4,950]. Based on a significance threshold of 0.05, this would lead to about 248 statistically significant results occurring by mere chance! Obviously, both common sense and specialized statistical methods will come into play when dealing with these kinds of scenarios. In the sections below, we discuss some of the underlying concepts and describe several practical approaches for handling the analysis of multiple means.
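The arithmetic behind these counts is simple enough to script. The short sketch below (function names are ours) counts the pair-wise comparisons among a set of sample means and the number of false positives expected if none of the populations actually differ.

```python
from math import comb


def pairwise_tests(n_means):
    """Number of distinct pair-wise comparisons, n(n - 1)/2."""
    return comb(n_means, 2)


def expected_false_positives(n_tests, alpha):
    """Average number of chance 'hits' when no real differences exist."""
    return n_tests * alpha


print(pairwise_tests(5))    # 10
print(pairwise_tests(100))  # 4950
print(round(expected_false_positives(pairwise_tests(100), 0.05), 1))  # 247.5
```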

3.2. Safety through repetition

Before delving into some of the common approaches used to cope with multiple comparisons, it is worth considering an experimental scenario that would likely not require specialized statistical methods. Specifically, we may consider the case of a large-scale “functional genomics screen”. In C. elegans , these would typically be carried out using RNAi-feeding libraries ( Kamath et al., 2003 ) or chemical genetics ( Carroll et al., 2003 ) and may involve many thousands of comparisons. For example, if 36 RNAi clones are ultimately identified that lead to resistance to a particular bacterial pathogen from a set of 17,000 clones tested, how does one analyze this result? No worries: the methodology is not composed of 17,000 statistical tests (each with some chance of failing). That's because the final reported tally, 36 clones, was presumably not the result of a single round of screening. In the first round (the primary screen), a larger number (say, 200 clones) might initially be identified as possibly resistant (with some “false significances” therein). A second or third round of screening would effectively eliminate virtually all of the false positives, reducing the number of clones that show a consistent biological effect to 36. In other words, secondary and tertiary screening would reduce to near zero the chance that any of the clones on the final list are in error because the chance of getting the same false positives repeatedly would be very slim. This idea of “safety through independent experimental repeats” is also addressed in Section 4.10 in the context of proportion data. Perhaps more than anything else, carrying out independent repeats is often the best way to solidify results and avoid the presence of false positives within a dataset.
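To get a rough feel for how quickly repeated screening removes false positives, consider the following back-of-the-envelope sketch. It rests on assumptions that are ours and not part of the example above: every round is treated as an independent test, each round has the same hypothetical per-clone false-positive rate (0.01 here), all 17,000 clones are taken to have no real effect (a worst case), and only clones that score positive in every round are retained.

```python
def false_positives_surviving(n_clones, alpha, rounds):
    """Expected number of no-effect clones scoring positive in every round.

    Assumes independent rounds, each with per-clone false-positive rate alpha.
    """
    return n_clones * alpha**rounds


N_CLONES = 17_000  # library size from the example in the text
ALPHA = 0.01       # hypothetical per-round false-positive rate (our assumption)

for r in (1, 2, 3):
    survivors = false_positives_surviving(N_CLONES, ALPHA, r)
    print(f"after round {r}: {survivors:.3f} expected false positives")
```

Under these assumptions, the expected number of false positives shrinks by a factor of 100 with each additional round, which is why the final list is very unlikely to contain clones that passed repeatedly by chance alone.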

3.3. The family-wise error rate

To help grapple with the problems inherent to multiple comparisons, statisticians came up with something called the family-wise error rate . This is also sometimes referred to as the family-wide error rate , which may provide a better description of the underlying intent. The basic idea is that rather than just considering each comparison in isolation, a statistical cutoff is applied that takes into account the entire collection of comparisons. Recall that in the case of individual comparisons (sometimes called the per-comparison or comparison-wise error rate ), a P -value of <0.05 tells us that for that particular comparison, there is less than a 5% chance of having obtained a difference at least as large as the one observed by chance. Put another way, in the absence of any real difference between two populations, there is a 95% chance that we will not render a false conclusion of statistical significance. In the family-wise error rate approach, the criterion used to judge the statistical significance of any individual comparison is made more stringent as a way to compensate for the total number of comparisons being made. This is generally done by lowering the P -value cutoff (α level) for individual t -tests. When all is said and done, a P -value of <0.05 will mean that there is less than a 5% chance that the entire collection of declared positive findings contains any false positives.

We can use our example of the collagen experiment to further illustrate the meaning of the family-wise error rate. Suppose we test 100 genes and apply a family-wise error rate cutoff of 0.05. Perhaps this leaves us with a list of 12 genes that lead to changes in body size that are deemed statistically significant . This means that there is only a 5% chance that one or more of the 12 genes identified is a false positive. This also means that if none of the 100 genes really controlled body size, then 95% of the time our experiment would lead to no positive findings. Without this kind of adjustment, and using an α level of 0.05 for individual tests, the presence of one or more false positives in a data set based on 100 comparisons could be expected to happen >99% of the time. Several techniques for applying the family-wise error rate are described below.

3.4. Bonferroni-type corrections

The Bonferroni method , along with several related techniques, is conceptually straightforward and provides conservative family-wise error rates. To use the Bonferroni method, one simply divides the chosen family-wise error rate (e.g., 0.05) by the number of comparisons to obtain a Bonferroni-adjusted P -value cutoff. Going back to our example of the collagen genes, if the desired family-wise error rate is 0.05 and the number of comparisons is 100, the adjusted per-comparison significance threshold would be reduced to 0.05/100 = 0.0005. Thus, individual t -tests yielding P -values as low as 0.0006 would be declared insignificant. This may sound rather severe. In fact, a real problem with the Bonferroni method is that for large numbers of comparisons, the significance threshold may be so low that one may fail to detect a substantial proportion of true positives within a data set. For this reason, the Bonferroni method is widely considered to be too conservative in situations with large numbers of comparisons.
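As a minimal sketch of the procedure, the code below computes the Bonferroni-adjusted cutoff and applies it to a list of P -values. The function names and the example P -values are hypothetical. The final line computes the family-wise error rate that results from the adjusted cutoff under the additional assumption that the 100 tests are independent; the Bonferroni guarantee itself does not require independence.

```python
def bonferroni_cutoff(family_alpha, n_comparisons):
    """Per-comparison threshold: family-wise error rate / number of tests."""
    return family_alpha / n_comparisons


def bonferroni_significant(p_values, family_alpha=0.05):
    """Flag each P-value as significant (True) or not under Bonferroni."""
    cutoff = bonferroni_cutoff(family_alpha, len(p_values))
    return [p < cutoff for p in p_values]


# Hypothetical P-values for 100 collagen RNAi clones compared with control.
p_values = [0.0004, 0.0006] + [0.5] * 98

cutoff = bonferroni_cutoff(0.05, len(p_values))
flags = bonferroni_significant(p_values)
print(f"cutoff = {cutoff:.4f}; first two calls: {flags[:2]}")

# Family-wise error rate actually achieved if all 100 tests are independent
# and no real differences exist: slightly below the nominal 0.05.
fwer = 1 - (1 - cutoff) ** len(p_values)
print(f"achieved family-wise error rate = {fwer:.4f}")
```

Note that the clone with P = 0.0006 is not declared significant, whereas the one with P = 0.0004 is, and that the achieved family-wise rate falls just under the nominal 0.05, reflecting the conservative nature of the method.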

Another variation on the Bonferroni method is to apply significance thresholds for each comparison in a non-uniform manner. For example, with a family-wise error rate of 0.05 and 10 comparisons, a uniform cutoff would require any given t -test to have an associated P -value of <0.005 to be declared significant. Another way to think about this is that the sum of the 10 individual cutoffs must add up to 0.05. Interestingly, the integrity of the family-wise error rate is not compromised if one were to apply a 0.04 significance threshold for one comparison, and a 0.00111 (0.01/9) significance threshold for the remaining nine. This is because 0.04 + 9(0.00111) ≈ 0.05. The rub, however, is that the decision to apply non-uniform significance cutoffs cannot be made post hoc based on how the numbers shake out! For this method to be properly implemented, researchers must first prioritize comparisons based on the perceived importance of specific tests, such as if a negative health or environmental consequence could result from failing to detect a particular difference. For example, it may be more important to detect a correlation between industrial emissions and childhood cancer rates than to detect effects on the rate of tomato ripening. This may all sound rather arbitrary, but it is nonetheless statistically valid.
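The bookkeeping for non-uniform cutoffs can be sketched as follows. The P -values are hypothetical, the first comparison is assumed to be the one prioritized in advance, and the function name is ours.

```python
# Cutoffs fixed before seeing the data: 0.04 for the prioritized comparison
# and 0.01/9 for each of the remaining nine.
weighted_cutoffs = [0.04] + [0.01 / 9] * 9
uniform_cutoffs = [0.05 / 10] * 10

# Both schemes spend the same total family-wise error rate of 0.05.
total_weighted = sum(weighted_cutoffs)
total_uniform = sum(uniform_cutoffs)


def significant(p_values, cutoffs):
    """Compare each P-value with its own pre-assigned cutoff."""
    return [p < c for p, c in zip(p_values, cutoffs)]


# Hypothetical P-values; the first belongs to the prioritized comparison.
p_values = [0.03, 0.002, 0.001] + [0.2] * 7

weighted_calls = significant(p_values, weighted_cutoffs)
uniform_calls = significant(p_values, uniform_cutoffs)
print("weighted:", weighted_calls[:3])
print("uniform: ", uniform_calls[:3])
```

In this made-up example, the prioritized comparison (P = 0.03) is declared significant only under the weighted scheme, whereas the second comparison (P = 0.002) is declared significant only under the uniform scheme. The trade-off is thus explicit: power gained for one test is paid for by the others.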

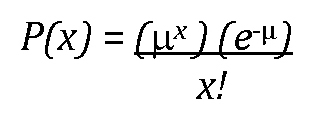

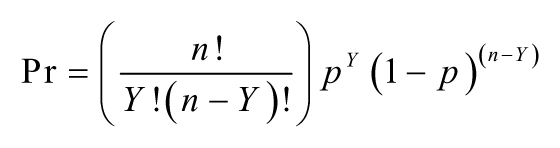

3.5. False discovery rates