- Skip to main content

- Skip to primary sidebar

- Skip to footer

- QuestionPro

- Solutions Industries Gaming Automotive Sports and events Education Government Travel & Hospitality Financial Services Healthcare Cannabis Technology Use Case NPS+ Communities Audience Contactless surveys Mobile LivePolls Member Experience GDPR Positive People Science 360 Feedback Surveys

- Resources Blog eBooks Survey Templates Case Studies Training Help center

Home Market Research

Data Analysis in Research: Types & Methods

Content Index

Why analyze data in research?

Types of data in research, finding patterns in the qualitative data, methods used for data analysis in qualitative research, preparing data for analysis, methods used for data analysis in quantitative research, considerations in research data analysis, what is data analysis in research.

Definition of research in data analysis: According to LeCompte and Schensul, research data analysis is a process used by researchers to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments, which makes sense.

Three essential things occur during the data analysis process — the first is data organization . Summarization and categorization together contribute to becoming the second known method used for data reduction. It helps find patterns and themes in the data for easy identification and linking. The third and last way is data analysis – researchers do it in both top-down and bottom-up fashion.

LEARN ABOUT: Research Process Steps

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Researchers rely heavily on data as they have a story to tell or research problems to solve. It starts with a question, and data is nothing but an answer to that question. But, what if there is no question to ask? Well! It is possible to explore data even without a problem – we call it ‘Data Mining’, which often reveals some interesting patterns within the data that are worth exploring.

Irrelevant to the type of data researchers explore, their mission and audiences’ vision guide them to find the patterns to shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember, sometimes, data analysis tells the most unforeseen yet exciting stories that were not expected when initiating data analysis. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Create a Free Account

Every kind of data has a rare quality of describing things after assigning a specific value to it. For analysis, you need to organize these values, processed and presented in a given context, to make it useful. Data can be in different forms; here are the primary data types.

- Qualitative data: When the data presented has words and descriptions, then we call it qualitative data . Although you can observe this data, it is subjective and harder to analyze data in research, especially for comparison. Example: Quality data represents everything describing taste, experience, texture, or an opinion that is considered quality data. This type of data is usually collected through focus groups, personal qualitative interviews , qualitative observation or using open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers of numerical figures are called quantitative data . This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: questions such as age, rank, cost, length, weight, scores, etc. everything comes under this type of data. You can present such data in graphical format, charts, or apply statistical analysis methods to this data. The (Outcomes Measurement Systems) OMS questionnaires in surveys are a significant source of collecting numeric data.

- Categorical data: It is data presented in groups. However, an item included in the categorical data cannot belong to more than one group. Example: A person responding to a survey by telling his living style, marital status, smoking habit, or drinking habit comes under the categorical data. A chi-square test is a standard method used to analyze this data.

Learn More : Examples of Qualitative Data in Education

Data analysis in qualitative research

Data analysis and qualitative data research work a little differently from the numerical data as the quality data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is a complicated process. Hence it is typically used for exploratory research and data analysis .

Although there are several ways to find patterns in the textual information, a word-based method is the most relied and widely used global technique for research and data analysis. Notably, the data analysis process in qualitative research is manual. Here the researchers usually read the available data and find repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.

LEARN ABOUT: Level of Analysis

The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example , researchers conducting research and data analysis for studying the concept of ‘diabetes’ amongst respondents might analyze the context of when and how the respondent has used or referred to the word ‘diabetes.’

The scrutiny-based technique is also one of the highly recommended text analysis methods used to identify a quality data pattern. Compare and contrast is the widely used method under this technique to differentiate how a specific text is similar or different from each other.

For example: To find out the “importance of resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method that can be used to analyze the polls having single-answer questions types .

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.

LEARN ABOUT: Qualitative Research Questions and Questionnaires

There are several techniques to analyze the data in qualitative research, but here are some commonly used methods,

- Content Analysis: It is widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze the documented information from text, images, and sometimes from the physical items. It depends on the research questions to predict when and where to use this method.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys . The majority of times, stories, or opinions shared by people are focused on finding answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze the interactions with people. Nevertheless, this particular method considers the social context under which or within which the communication between the researcher and respondent takes place. In addition to that, discourse analysis also focuses on the lifestyle and day-to-day environment while deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, then using grounded theory for analyzing quality data is the best resort. Grounded theory is applied to study data about the host of similar cases occurring in different settings. When researchers are using this method, they might alter explanations or produce new ones until they arrive at some conclusion.

LEARN ABOUT: 12 Best Tools for Researchers

Data analysis in quantitative research

The first stage in research and data analysis is to make it for the analysis so that the nominal data can be converted into something meaningful. Data preparation consists of the below phases.

Phase I: Data Validation

Data validation is done to understand if the collected data sample is per the pre-set standards, or it is a biased data sample again divided into four different stages

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey. Else, the interviewer had asked all the questions devised in the questionnaire.

Phase II: Data Editing

More often, an extensive research data sample comes loaded with errors. Respondents sometimes fill in some fields incorrectly or sometimes skip them accidentally. Data editing is a process wherein the researchers have to confirm that the provided data is free of such errors. They need to conduct necessary checks and outlier checks to edit the raw edit and make it ready for analysis.

Phase III: Data Coding

Out of all three, this is the most critical phase of data preparation associated with grouping and assigning values to the survey responses . If a survey is completed with a 1000 sample size, the researcher will create an age bracket to distinguish the respondents based on their age. Thus, it becomes easier to analyze small data buckets rather than deal with the massive data pile.

LEARN ABOUT: Steps in Qualitative Research

After the data is prepared for analysis, researchers are open to using different research and data analysis methods to derive meaningful insights. For sure, statistical analysis plans are the most favored to analyze numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. The method is again classified into two groups. First, ‘Descriptive Statistics’ used to describe data. Second, ‘Inferential statistics’ that helps in comparing the data .

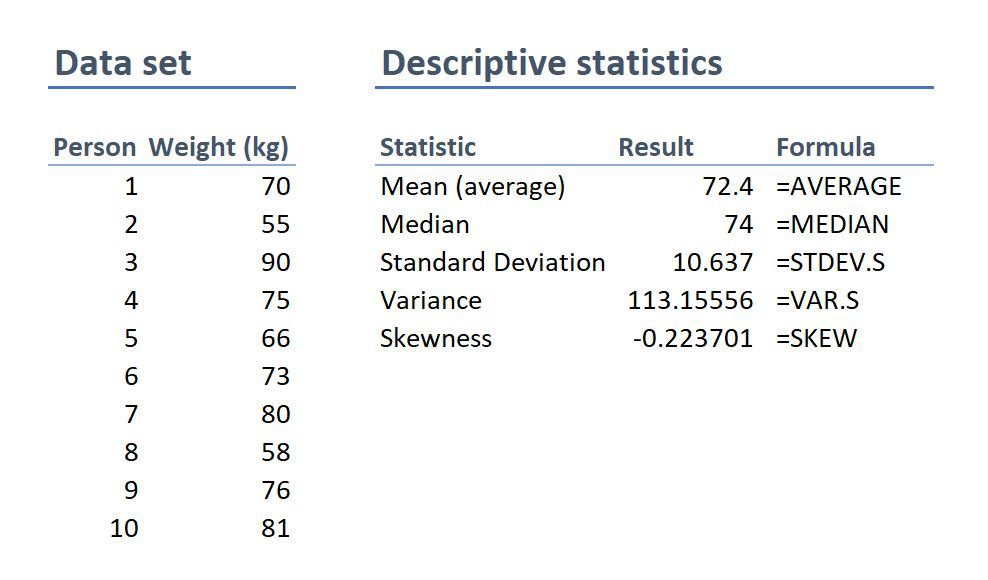

Descriptive statistics

This method is used to describe the basic features of versatile types of data in research. It presents the data in such a meaningful way that pattern in the data starts making sense. Nevertheless, the descriptive analysis does not go beyond making conclusions. The conclusions are again based on the hypothesis researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote home often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.

Measures of Central Tendency

- Mean, Median, Mode

- The method is widely used to demonstrate distribution by various points.

- Researchers use this method when they want to showcase the most commonly or averagely indicated response.

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Here the field equals high/low points.

- Variance standard deviation = difference between the observed score and mean

- It is used to identify the spread of scores by stating intervals.

- Researchers use this method to showcase data spread out. It helps them identify the depth until which the data is spread out that it directly affects the mean.

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores helping researchers to identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average count.

For quantitative research use of descriptive analysis often give absolute numbers, but the in-depth analysis is never sufficient to demonstrate the rationale behind those numbers. Nevertheless, it is necessary to think of the best method for research and data analysis suiting your survey questionnaire and what story researchers want to tell. For example, the mean is the best way to demonstrate the students’ average scores in schools. It is better to rely on the descriptive statistics when the researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare average voting done in two different cities, differential statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after research and data analysis of the representing population’s collected sample. For example, you can ask some odd 100 audiences at a movie theater if they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: I t’s about sampling research data to answer the survey research questions. For example, researchers might be interested to understand if the new shade of lipstick recently launched is good or not, or if the multivitamin capsules help children to perform better at games.

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. It is often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

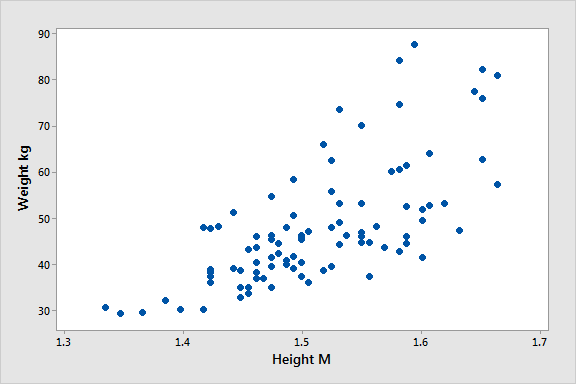

- Correlation: When researchers are not conducting experimental research or quasi-experimental research wherein the researchers are interested to understand the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose provided data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation helps for seamless data analysis and research by showing the number of males and females in each age category.

- Regression analysis: For understanding the strong relationship between two variables, researchers do not look beyond the primary and commonly used regression analysis method, which is also a type of predictive analysis used. In this method, you have an essential factor called the dependent variable. You also have multiple independent variables in regression analysis. You undertake efforts to find out the impact of independent variables on the dependent variable. The values of both independent and dependent variables are assumed as being ascertained in an error-free random manner.

- Frequency tables: The statistical procedure is used for testing the degree to which two or more vary or differ in an experiment. A considerable degree of variation means research findings were significant. In many contexts, ANOVA testing and variance analysis are similar.

- Analysis of variance: The statistical procedure is used for testing the degree to which two or more vary or differ in an experiment. A considerable degree of variation means research findings were significant. In many contexts, ANOVA testing and variance analysis are similar.

- Researchers must have the necessary research skills to analyze and manipulation the data , Getting trained to demonstrate a high standard of research practice. Ideally, researchers must possess more than a basic understanding of the rationale of selecting one statistical method over the other to obtain better data insights.

- Usually, research and data analytics projects differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps design a survey questionnaire, select data collection methods, and choose samples.

LEARN ABOUT: Best Data Collection Tools

- The primary aim of data research and analysis is to derive ultimate insights that are unbiased. Any mistake in or keeping a biased mind to collect data, selecting an analysis method, or choosing audience sample il to draw a biased inference.

- Irrelevant to the sophistication used in research data and analysis is enough to rectify the poorly defined objective outcome measurements. It does not matter if the design is at fault or intentions are not clear, but lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining , or developing graphical representation.

LEARN MORE: Descriptive Research vs Correlational Research The sheer amount of data generated daily is frightening. Especially when data analysis has taken center stage. in 2018. In last year, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that the enterprises willing to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to the new market needs.

LEARN ABOUT: Average Order Value

QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.

MORE LIKE THIS

Top 13 A/B Testing Software for Optimizing Your Website

Apr 12, 2024

21 Best Contact Center Experience Software in 2024

Government Customer Experience: Impact on Government Service

Apr 11, 2024

Employee Engagement App: Top 11 For Workforce Improvement

Apr 10, 2024

Other categories

- Academic Research

- Artificial Intelligence

- Assessments

- Brand Awareness

- Case Studies

- Communities

- Consumer Insights

- Customer effort score

- Customer Engagement

- Customer Experience

- Customer Loyalty

- Customer Research

- Customer Satisfaction

- Employee Benefits

- Employee Engagement

- Employee Retention

- Friday Five

- General Data Protection Regulation

- Insights Hub

- Life@QuestionPro

- Market Research

- Mobile diaries

- Mobile Surveys

- New Features

- Online Communities

- Question Types

- Questionnaire

- QuestionPro Products

- Release Notes

- Research Tools and Apps

- Revenue at Risk

- Survey Templates

- Training Tips

- Uncategorized

- Video Learning Series

- What’s Coming Up

- Workforce Intelligence

Your Modern Business Guide To Data Analysis Methods And Techniques

Table of Contents

1) What Is Data Analysis?

2) Why Is Data Analysis Important?

3) What Is The Data Analysis Process?

4) Types Of Data Analysis Methods

5) Top Data Analysis Techniques To Apply

6) Quality Criteria For Data Analysis

7) Data Analysis Limitations & Barriers

8) Data Analysis Skills

9) Data Analysis In The Big Data Environment

In our data-rich age, understanding how to analyze and extract true meaning from our business’s digital insights is one of the primary drivers of success.

Despite the colossal volume of data we create every day, a mere 0.5% is actually analyzed and used for data discovery , improvement, and intelligence. While that may not seem like much, considering the amount of digital information we have at our fingertips, half a percent still accounts for a vast amount of data.

With so much data and so little time, knowing how to collect, curate, organize, and make sense of all of this potentially business-boosting information can be a minefield – but online data analysis is the solution.

In science, data analysis uses a more complex approach with advanced techniques to explore and experiment with data. On the other hand, in a business context, data is used to make data-driven decisions that will enable the company to improve its overall performance. In this post, we will cover the analysis of data from an organizational point of view while still going through the scientific and statistical foundations that are fundamental to understanding the basics of data analysis.

To put all of that into perspective, we will answer a host of important analytical questions, explore analytical methods and techniques, while demonstrating how to perform analysis in the real world with a 17-step blueprint for success.

What Is Data Analysis?

Data analysis is the process of collecting, modeling, and analyzing data using various statistical and logical methods and techniques. Businesses rely on analytics processes and tools to extract insights that support strategic and operational decision-making.

All these various methods are largely based on two core areas: quantitative and qualitative research.

To explain the key differences between qualitative and quantitative research, here’s a video for your viewing pleasure:

Gaining a better understanding of different techniques and methods in quantitative research as well as qualitative insights will give your analyzing efforts a more clearly defined direction, so it’s worth taking the time to allow this particular knowledge to sink in. Additionally, you will be able to create a comprehensive analytical report that will skyrocket your analysis.

Apart from qualitative and quantitative categories, there are also other types of data that you should be aware of before dividing into complex data analysis processes. These categories include:

- Big data: Refers to massive data sets that need to be analyzed using advanced software to reveal patterns and trends. It is considered to be one of the best analytical assets as it provides larger volumes of data at a faster rate.

- Metadata: Putting it simply, metadata is data that provides insights about other data. It summarizes key information about specific data that makes it easier to find and reuse for later purposes.

- Real time data: As its name suggests, real time data is presented as soon as it is acquired. From an organizational perspective, this is the most valuable data as it can help you make important decisions based on the latest developments. Our guide on real time analytics will tell you more about the topic.

- Machine data: This is more complex data that is generated solely by a machine such as phones, computers, or even websites and embedded systems, without previous human interaction.

Why Is Data Analysis Important?

Before we go into detail about the categories of analysis along with its methods and techniques, you must understand the potential that analyzing data can bring to your organization.

- Informed decision-making : From a management perspective, you can benefit from analyzing your data as it helps you make decisions based on facts and not simple intuition. For instance, you can understand where to invest your capital, detect growth opportunities, predict your income, or tackle uncommon situations before they become problems. Through this, you can extract relevant insights from all areas in your organization, and with the help of dashboard software , present the data in a professional and interactive way to different stakeholders.

- Reduce costs : Another great benefit is to reduce costs. With the help of advanced technologies such as predictive analytics, businesses can spot improvement opportunities, trends, and patterns in their data and plan their strategies accordingly. In time, this will help you save money and resources on implementing the wrong strategies. And not just that, by predicting different scenarios such as sales and demand you can also anticipate production and supply.

- Target customers better : Customers are arguably the most crucial element in any business. By using analytics to get a 360° vision of all aspects related to your customers, you can understand which channels they use to communicate with you, their demographics, interests, habits, purchasing behaviors, and more. In the long run, it will drive success to your marketing strategies, allow you to identify new potential customers, and avoid wasting resources on targeting the wrong people or sending the wrong message. You can also track customer satisfaction by analyzing your client’s reviews or your customer service department’s performance.

What Is The Data Analysis Process?

When we talk about analyzing data there is an order to follow in order to extract the needed conclusions. The analysis process consists of 5 key stages. We will cover each of them more in detail later in the post, but to start providing the needed context to understand what is coming next, here is a rundown of the 5 essential steps of data analysis.

- Identify: Before you get your hands dirty with data, you first need to identify why you need it in the first place. The identification is the stage in which you establish the questions you will need to answer. For example, what is the customer's perception of our brand? Or what type of packaging is more engaging to our potential customers? Once the questions are outlined you are ready for the next step.

- Collect: As its name suggests, this is the stage where you start collecting the needed data. Here, you define which sources of data you will use and how you will use them. The collection of data can come in different forms such as internal or external sources, surveys, interviews, questionnaires, and focus groups, among others. An important note here is that the way you collect the data will be different in a quantitative and qualitative scenario.

- Clean: Once you have the necessary data it is time to clean it and leave it ready for analysis. Not all the data you collect will be useful, when collecting big amounts of data in different formats it is very likely that you will find yourself with duplicate or badly formatted data. To avoid this, before you start working with your data you need to make sure to erase any white spaces, duplicate records, or formatting errors. This way you avoid hurting your analysis with bad-quality data.

- Analyze : With the help of various techniques such as statistical analysis, regressions, neural networks, text analysis, and more, you can start analyzing and manipulating your data to extract relevant conclusions. At this stage, you find trends, correlations, variations, and patterns that can help you answer the questions you first thought of in the identify stage. Various technologies in the market assist researchers and average users with the management of their data. Some of them include business intelligence and visualization software, predictive analytics, and data mining, among others.

- Interpret: Last but not least you have one of the most important steps: it is time to interpret your results. This stage is where the researcher comes up with courses of action based on the findings. For example, here you would understand if your clients prefer packaging that is red or green, plastic or paper, etc. Additionally, at this stage, you can also find some limitations and work on them.

Now that you have a basic understanding of the key data analysis steps, let’s look at the top 17 essential methods.

17 Essential Types Of Data Analysis Methods

Before diving into the 17 essential types of methods, it is important that we go over really fast through the main analysis categories. Starting with the category of descriptive up to prescriptive analysis, the complexity and effort of data evaluation increases, but also the added value for the company.

a) Descriptive analysis - What happened.

The descriptive analysis method is the starting point for any analytic reflection, and it aims to answer the question of what happened? It does this by ordering, manipulating, and interpreting raw data from various sources to turn it into valuable insights for your organization.

Performing descriptive analysis is essential, as it enables us to present our insights in a meaningful way. Although it is relevant to mention that this analysis on its own will not allow you to predict future outcomes or tell you the answer to questions like why something happened, it will leave your data organized and ready to conduct further investigations.

b) Exploratory analysis - How to explore data relationships.

As its name suggests, the main aim of the exploratory analysis is to explore. Prior to it, there is still no notion of the relationship between the data and the variables. Once the data is investigated, exploratory analysis helps you to find connections and generate hypotheses and solutions for specific problems. A typical area of application for it is data mining.

c) Diagnostic analysis - Why it happened.

Diagnostic data analytics empowers analysts and executives by helping them gain a firm contextual understanding of why something happened. If you know why something happened as well as how it happened, you will be able to pinpoint the exact ways of tackling the issue or challenge.

Designed to provide direct and actionable answers to specific questions, this is one of the world’s most important methods in research, among its other key organizational functions such as retail analytics , e.g.

c) Predictive analysis - What will happen.

The predictive method allows you to look into the future to answer the question: what will happen? In order to do this, it uses the results of the previously mentioned descriptive, exploratory, and diagnostic analysis, in addition to machine learning (ML) and artificial intelligence (AI). Through this, you can uncover future trends, potential problems or inefficiencies, connections, and casualties in your data.

With predictive analysis, you can unfold and develop initiatives that will not only enhance your various operational processes but also help you gain an all-important edge over the competition. If you understand why a trend, pattern, or event happened through data, you will be able to develop an informed projection of how things may unfold in particular areas of the business.

e) Prescriptive analysis - How will it happen.

Another of the most effective types of analysis methods in research. Prescriptive data techniques cross over from predictive analysis in the way that it revolves around using patterns or trends to develop responsive, practical business strategies.

By drilling down into prescriptive analysis, you will play an active role in the data consumption process by taking well-arranged sets of visual data and using it as a powerful fix to emerging issues in a number of key areas, including marketing, sales, customer experience, HR, fulfillment, finance, logistics analytics , and others.

As mentioned at the beginning of the post, data analysis methods can be divided into two big categories: quantitative and qualitative. Each of these categories holds a powerful analytical value that changes depending on the scenario and type of data you are working with. Below, we will discuss 17 methods that are divided into qualitative and quantitative approaches.

Without further ado, here are the 17 essential types of data analysis methods with some use cases in the business world:

A. Quantitative Methods

To put it simply, quantitative analysis refers to all methods that use numerical data or data that can be turned into numbers (e.g. category variables like gender, age, etc.) to extract valuable insights. It is used to extract valuable conclusions about relationships, differences, and test hypotheses. Below we discuss some of the key quantitative methods.

1. Cluster analysis

The action of grouping a set of data elements in a way that said elements are more similar (in a particular sense) to each other than to those in other groups – hence the term ‘cluster.’ Since there is no target variable when clustering, the method is often used to find hidden patterns in the data. The approach is also used to provide additional context to a trend or dataset.

Let's look at it from an organizational perspective. In a perfect world, marketers would be able to analyze each customer separately and give them the best-personalized service, but let's face it, with a large customer base, it is timely impossible to do that. That's where clustering comes in. By grouping customers into clusters based on demographics, purchasing behaviors, monetary value, or any other factor that might be relevant for your company, you will be able to immediately optimize your efforts and give your customers the best experience based on their needs.

2. Cohort analysis

This type of data analysis approach uses historical data to examine and compare a determined segment of users' behavior, which can then be grouped with others with similar characteristics. By using this methodology, it's possible to gain a wealth of insight into consumer needs or a firm understanding of a broader target group.

Cohort analysis can be really useful for performing analysis in marketing as it will allow you to understand the impact of your campaigns on specific groups of customers. To exemplify, imagine you send an email campaign encouraging customers to sign up for your site. For this, you create two versions of the campaign with different designs, CTAs, and ad content. Later on, you can use cohort analysis to track the performance of the campaign for a longer period of time and understand which type of content is driving your customers to sign up, repurchase, or engage in other ways.

A useful tool to start performing cohort analysis method is Google Analytics. You can learn more about the benefits and limitations of using cohorts in GA in this useful guide . In the bottom image, you see an example of how you visualize a cohort in this tool. The segments (devices traffic) are divided into date cohorts (usage of devices) and then analyzed week by week to extract insights into performance.

3. Regression analysis

Regression uses historical data to understand how a dependent variable's value is affected when one (linear regression) or more independent variables (multiple regression) change or stay the same. By understanding each variable's relationship and how it developed in the past, you can anticipate possible outcomes and make better decisions in the future.

Let's bring it down with an example. Imagine you did a regression analysis of your sales in 2019 and discovered that variables like product quality, store design, customer service, marketing campaigns, and sales channels affected the overall result. Now you want to use regression to analyze which of these variables changed or if any new ones appeared during 2020. For example, you couldn’t sell as much in your physical store due to COVID lockdowns. Therefore, your sales could’ve either dropped in general or increased in your online channels. Through this, you can understand which independent variables affected the overall performance of your dependent variable, annual sales.

If you want to go deeper into this type of analysis, check out this article and learn more about how you can benefit from regression.

4. Neural networks

The neural network forms the basis for the intelligent algorithms of machine learning. It is a form of analytics that attempts, with minimal intervention, to understand how the human brain would generate insights and predict values. Neural networks learn from each and every data transaction, meaning that they evolve and advance over time.

A typical area of application for neural networks is predictive analytics. There are BI reporting tools that have this feature implemented within them, such as the Predictive Analytics Tool from datapine. This tool enables users to quickly and easily generate all kinds of predictions. All you have to do is select the data to be processed based on your KPIs, and the software automatically calculates forecasts based on historical and current data. Thanks to its user-friendly interface, anyone in your organization can manage it; there’s no need to be an advanced scientist.

Here is an example of how you can use the predictive analysis tool from datapine:

**click to enlarge**

5. Factor analysis

The factor analysis also called “dimension reduction” is a type of data analysis used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. The aim here is to uncover independent latent variables, an ideal method for streamlining specific segments.

A good way to understand this data analysis method is a customer evaluation of a product. The initial assessment is based on different variables like color, shape, wearability, current trends, materials, comfort, the place where they bought the product, and frequency of usage. Like this, the list can be endless, depending on what you want to track. In this case, factor analysis comes into the picture by summarizing all of these variables into homogenous groups, for example, by grouping the variables color, materials, quality, and trends into a brother latent variable of design.

If you want to start analyzing data using factor analysis we recommend you take a look at this practical guide from UCLA.

6. Data mining

A method of data analysis that is the umbrella term for engineering metrics and insights for additional value, direction, and context. By using exploratory statistical evaluation, data mining aims to identify dependencies, relations, patterns, and trends to generate advanced knowledge. When considering how to analyze data, adopting a data mining mindset is essential to success - as such, it’s an area that is worth exploring in greater detail.

An excellent use case of data mining is datapine intelligent data alerts . With the help of artificial intelligence and machine learning, they provide automated signals based on particular commands or occurrences within a dataset. For example, if you’re monitoring supply chain KPIs , you could set an intelligent alarm to trigger when invalid or low-quality data appears. By doing so, you will be able to drill down deep into the issue and fix it swiftly and effectively.

In the following picture, you can see how the intelligent alarms from datapine work. By setting up ranges on daily orders, sessions, and revenues, the alarms will notify you if the goal was not completed or if it exceeded expectations.

7. Time series analysis

As its name suggests, time series analysis is used to analyze a set of data points collected over a specified period of time. Although analysts use this method to monitor the data points in a specific interval of time rather than just monitoring them intermittently, the time series analysis is not uniquely used for the purpose of collecting data over time. Instead, it allows researchers to understand if variables changed during the duration of the study, how the different variables are dependent, and how did it reach the end result.

In a business context, this method is used to understand the causes of different trends and patterns to extract valuable insights. Another way of using this method is with the help of time series forecasting. Powered by predictive technologies, businesses can analyze various data sets over a period of time and forecast different future events.

A great use case to put time series analysis into perspective is seasonality effects on sales. By using time series forecasting to analyze sales data of a specific product over time, you can understand if sales rise over a specific period of time (e.g. swimwear during summertime, or candy during Halloween). These insights allow you to predict demand and prepare production accordingly.

8. Decision Trees

The decision tree analysis aims to act as a support tool to make smart and strategic decisions. By visually displaying potential outcomes, consequences, and costs in a tree-like model, researchers and company users can easily evaluate all factors involved and choose the best course of action. Decision trees are helpful to analyze quantitative data and they allow for an improved decision-making process by helping you spot improvement opportunities, reduce costs, and enhance operational efficiency and production.

But how does a decision tree actually works? This method works like a flowchart that starts with the main decision that you need to make and branches out based on the different outcomes and consequences of each decision. Each outcome will outline its own consequences, costs, and gains and, at the end of the analysis, you can compare each of them and make the smartest decision.

Businesses can use them to understand which project is more cost-effective and will bring more earnings in the long run. For example, imagine you need to decide if you want to update your software app or build a new app entirely. Here you would compare the total costs, the time needed to be invested, potential revenue, and any other factor that might affect your decision. In the end, you would be able to see which of these two options is more realistic and attainable for your company or research.

9. Conjoint analysis

Last but not least, we have the conjoint analysis. This approach is usually used in surveys to understand how individuals value different attributes of a product or service and it is one of the most effective methods to extract consumer preferences. When it comes to purchasing, some clients might be more price-focused, others more features-focused, and others might have a sustainable focus. Whatever your customer's preferences are, you can find them with conjoint analysis. Through this, companies can define pricing strategies, packaging options, subscription packages, and more.

A great example of conjoint analysis is in marketing and sales. For instance, a cupcake brand might use conjoint analysis and find that its clients prefer gluten-free options and cupcakes with healthier toppings over super sugary ones. Thus, the cupcake brand can turn these insights into advertisements and promotions to increase sales of this particular type of product. And not just that, conjoint analysis can also help businesses segment their customers based on their interests. This allows them to send different messaging that will bring value to each of the segments.

10. Correspondence Analysis

Also known as reciprocal averaging, correspondence analysis is a method used to analyze the relationship between categorical variables presented within a contingency table. A contingency table is a table that displays two (simple correspondence analysis) or more (multiple correspondence analysis) categorical variables across rows and columns that show the distribution of the data, which is usually answers to a survey or questionnaire on a specific topic.

This method starts by calculating an “expected value” which is done by multiplying row and column averages and dividing it by the overall original value of the specific table cell. The “expected value” is then subtracted from the original value resulting in a “residual number” which is what allows you to extract conclusions about relationships and distribution. The results of this analysis are later displayed using a map that represents the relationship between the different values. The closest two values are in the map, the bigger the relationship. Let’s put it into perspective with an example.

Imagine you are carrying out a market research analysis about outdoor clothing brands and how they are perceived by the public. For this analysis, you ask a group of people to match each brand with a certain attribute which can be durability, innovation, quality materials, etc. When calculating the residual numbers, you can see that brand A has a positive residual for innovation but a negative one for durability. This means that brand A is not positioned as a durable brand in the market, something that competitors could take advantage of.

11. Multidimensional Scaling (MDS)

MDS is a method used to observe the similarities or disparities between objects which can be colors, brands, people, geographical coordinates, and more. The objects are plotted using an “MDS map” that positions similar objects together and disparate ones far apart. The (dis) similarities between objects are represented using one or more dimensions that can be observed using a numerical scale. For example, if you want to know how people feel about the COVID-19 vaccine, you can use 1 for “don’t believe in the vaccine at all” and 10 for “firmly believe in the vaccine” and a scale of 2 to 9 for in between responses. When analyzing an MDS map the only thing that matters is the distance between the objects, the orientation of the dimensions is arbitrary and has no meaning at all.

Multidimensional scaling is a valuable technique for market research, especially when it comes to evaluating product or brand positioning. For instance, if a cupcake brand wants to know how they are positioned compared to competitors, it can define 2-3 dimensions such as taste, ingredients, shopping experience, or more, and do a multidimensional scaling analysis to find improvement opportunities as well as areas in which competitors are currently leading.

Another business example is in procurement when deciding on different suppliers. Decision makers can generate an MDS map to see how the different prices, delivery times, technical services, and more of the different suppliers differ and pick the one that suits their needs the best.

A final example proposed by a research paper on "An Improved Study of Multilevel Semantic Network Visualization for Analyzing Sentiment Word of Movie Review Data". Researchers picked a two-dimensional MDS map to display the distances and relationships between different sentiments in movie reviews. They used 36 sentiment words and distributed them based on their emotional distance as we can see in the image below where the words "outraged" and "sweet" are on opposite sides of the map, marking the distance between the two emotions very clearly.

Aside from being a valuable technique to analyze dissimilarities, MDS also serves as a dimension-reduction technique for large dimensional data.

B. Qualitative Methods

Qualitative data analysis methods are defined as the observation of non-numerical data that is gathered and produced using methods of observation such as interviews, focus groups, questionnaires, and more. As opposed to quantitative methods, qualitative data is more subjective and highly valuable in analyzing customer retention and product development.

12. Text analysis

Text analysis, also known in the industry as text mining, works by taking large sets of textual data and arranging them in a way that makes it easier to manage. By working through this cleansing process in stringent detail, you will be able to extract the data that is truly relevant to your organization and use it to develop actionable insights that will propel you forward.

Modern software accelerate the application of text analytics. Thanks to the combination of machine learning and intelligent algorithms, you can perform advanced analytical processes such as sentiment analysis. This technique allows you to understand the intentions and emotions of a text, for example, if it's positive, negative, or neutral, and then give it a score depending on certain factors and categories that are relevant to your brand. Sentiment analysis is often used to monitor brand and product reputation and to understand how successful your customer experience is. To learn more about the topic check out this insightful article .

By analyzing data from various word-based sources, including product reviews, articles, social media communications, and survey responses, you will gain invaluable insights into your audience, as well as their needs, preferences, and pain points. This will allow you to create campaigns, services, and communications that meet your prospects’ needs on a personal level, growing your audience while boosting customer retention. There are various other “sub-methods” that are an extension of text analysis. Each of them serves a more specific purpose and we will look at them in detail next.

13. Content Analysis

This is a straightforward and very popular method that examines the presence and frequency of certain words, concepts, and subjects in different content formats such as text, image, audio, or video. For example, the number of times the name of a celebrity is mentioned on social media or online tabloids. It does this by coding text data that is later categorized and tabulated in a way that can provide valuable insights, making it the perfect mix of quantitative and qualitative analysis.

There are two types of content analysis. The first one is the conceptual analysis which focuses on explicit data, for instance, the number of times a concept or word is mentioned in a piece of content. The second one is relational analysis, which focuses on the relationship between different concepts or words and how they are connected within a specific context.

Content analysis is often used by marketers to measure brand reputation and customer behavior. For example, by analyzing customer reviews. It can also be used to analyze customer interviews and find directions for new product development. It is also important to note, that in order to extract the maximum potential out of this analysis method, it is necessary to have a clearly defined research question.

14. Thematic Analysis

Very similar to content analysis, thematic analysis also helps in identifying and interpreting patterns in qualitative data with the main difference being that the first one can also be applied to quantitative analysis. The thematic method analyzes large pieces of text data such as focus group transcripts or interviews and groups them into themes or categories that come up frequently within the text. It is a great method when trying to figure out peoples view’s and opinions about a certain topic. For example, if you are a brand that cares about sustainability, you can do a survey of your customers to analyze their views and opinions about sustainability and how they apply it to their lives. You can also analyze customer service calls transcripts to find common issues and improve your service.

Thematic analysis is a very subjective technique that relies on the researcher’s judgment. Therefore, to avoid biases, it has 6 steps that include familiarization, coding, generating themes, reviewing themes, defining and naming themes, and writing up. It is also important to note that, because it is a flexible approach, the data can be interpreted in multiple ways and it can be hard to select what data is more important to emphasize.

15. Narrative Analysis

A bit more complex in nature than the two previous ones, narrative analysis is used to explore the meaning behind the stories that people tell and most importantly, how they tell them. By looking into the words that people use to describe a situation you can extract valuable conclusions about their perspective on a specific topic. Common sources for narrative data include autobiographies, family stories, opinion pieces, and testimonials, among others.

From a business perspective, narrative analysis can be useful to analyze customer behaviors and feelings towards a specific product, service, feature, or others. It provides unique and deep insights that can be extremely valuable. However, it has some drawbacks.

The biggest weakness of this method is that the sample sizes are usually very small due to the complexity and time-consuming nature of the collection of narrative data. Plus, the way a subject tells a story will be significantly influenced by his or her specific experiences, making it very hard to replicate in a subsequent study.

16. Discourse Analysis

Discourse analysis is used to understand the meaning behind any type of written, verbal, or symbolic discourse based on its political, social, or cultural context. It mixes the analysis of languages and situations together. This means that the way the content is constructed and the meaning behind it is significantly influenced by the culture and society it takes place in. For example, if you are analyzing political speeches you need to consider different context elements such as the politician's background, the current political context of the country, the audience to which the speech is directed, and so on.

From a business point of view, discourse analysis is a great market research tool. It allows marketers to understand how the norms and ideas of the specific market work and how their customers relate to those ideas. It can be very useful to build a brand mission or develop a unique tone of voice.

17. Grounded Theory Analysis

Traditionally, researchers decide on a method and hypothesis and start to collect the data to prove that hypothesis. The grounded theory is the only method that doesn’t require an initial research question or hypothesis as its value lies in the generation of new theories. With the grounded theory method, you can go into the analysis process with an open mind and explore the data to generate new theories through tests and revisions. In fact, it is not necessary to collect the data and then start to analyze it. Researchers usually start to find valuable insights as they are gathering the data.

All of these elements make grounded theory a very valuable method as theories are fully backed by data instead of initial assumptions. It is a great technique to analyze poorly researched topics or find the causes behind specific company outcomes. For example, product managers and marketers might use the grounded theory to find the causes of high levels of customer churn and look into customer surveys and reviews to develop new theories about the causes.

How To Analyze Data? Top 17 Data Analysis Techniques To Apply

Now that we’ve answered the questions “what is data analysis’”, why is it important, and covered the different data analysis types, it’s time to dig deeper into how to perform your analysis by working through these 17 essential techniques.

1. Collaborate your needs

Before you begin analyzing or drilling down into any techniques, it’s crucial to sit down collaboratively with all key stakeholders within your organization, decide on your primary campaign or strategic goals, and gain a fundamental understanding of the types of insights that will best benefit your progress or provide you with the level of vision you need to evolve your organization.

2. Establish your questions

Once you’ve outlined your core objectives, you should consider which questions will need answering to help you achieve your mission. This is one of the most important techniques as it will shape the very foundations of your success.

To help you ask the right things and ensure your data works for you, you have to ask the right data analysis questions .

3. Data democratization

After giving your data analytics methodology some real direction, and knowing which questions need answering to extract optimum value from the information available to your organization, you should continue with democratization.

Data democratization is an action that aims to connect data from various sources efficiently and quickly so that anyone in your organization can access it at any given moment. You can extract data in text, images, videos, numbers, or any other format. And then perform cross-database analysis to achieve more advanced insights to share with the rest of the company interactively.

Once you have decided on your most valuable sources, you need to take all of this into a structured format to start collecting your insights. For this purpose, datapine offers an easy all-in-one data connectors feature to integrate all your internal and external sources and manage them at your will. Additionally, datapine’s end-to-end solution automatically updates your data, allowing you to save time and focus on performing the right analysis to grow your company.

4. Think of governance

When collecting data in a business or research context you always need to think about security and privacy. With data breaches becoming a topic of concern for businesses, the need to protect your client's or subject’s sensitive information becomes critical.

To ensure that all this is taken care of, you need to think of a data governance strategy. According to Gartner , this concept refers to “ the specification of decision rights and an accountability framework to ensure the appropriate behavior in the valuation, creation, consumption, and control of data and analytics .” In simpler words, data governance is a collection of processes, roles, and policies, that ensure the efficient use of data while still achieving the main company goals. It ensures that clear roles are in place for who can access the information and how they can access it. In time, this not only ensures that sensitive information is protected but also allows for an efficient analysis as a whole.

5. Clean your data

After harvesting from so many sources you will be left with a vast amount of information that can be overwhelming to deal with. At the same time, you can be faced with incorrect data that can be misleading to your analysis. The smartest thing you can do to avoid dealing with this in the future is to clean the data. This is fundamental before visualizing it, as it will ensure that the insights you extract from it are correct.

There are many things that you need to look for in the cleaning process. The most important one is to eliminate any duplicate observations; this usually appears when using multiple internal and external sources of information. You can also add any missing codes, fix empty fields, and eliminate incorrectly formatted data.

Another usual form of cleaning is done with text data. As we mentioned earlier, most companies today analyze customer reviews, social media comments, questionnaires, and several other text inputs. In order for algorithms to detect patterns, text data needs to be revised to avoid invalid characters or any syntax or spelling errors.

Most importantly, the aim of cleaning is to prevent you from arriving at false conclusions that can damage your company in the long run. By using clean data, you will also help BI solutions to interact better with your information and create better reports for your organization.

6. Set your KPIs

Once you’ve set your sources, cleaned your data, and established clear-cut questions you want your insights to answer, you need to set a host of key performance indicators (KPIs) that will help you track, measure, and shape your progress in a number of key areas.

KPIs are critical to both qualitative and quantitative analysis research. This is one of the primary methods of data analysis you certainly shouldn’t overlook.

To help you set the best possible KPIs for your initiatives and activities, here is an example of a relevant logistics KPI : transportation-related costs. If you want to see more go explore our collection of key performance indicator examples .

7. Omit useless data

Having bestowed your data analysis tools and techniques with true purpose and defined your mission, you should explore the raw data you’ve collected from all sources and use your KPIs as a reference for chopping out any information you deem to be useless.

Trimming the informational fat is one of the most crucial methods of analysis as it will allow you to focus your analytical efforts and squeeze every drop of value from the remaining ‘lean’ information.

Any stats, facts, figures, or metrics that don’t align with your business goals or fit with your KPI management strategies should be eliminated from the equation.

8. Build a data management roadmap

While, at this point, this particular step is optional (you will have already gained a wealth of insight and formed a fairly sound strategy by now), creating a data governance roadmap will help your data analysis methods and techniques become successful on a more sustainable basis. These roadmaps, if developed properly, are also built so they can be tweaked and scaled over time.

Invest ample time in developing a roadmap that will help you store, manage, and handle your data internally, and you will make your analysis techniques all the more fluid and functional – one of the most powerful types of data analysis methods available today.

9. Integrate technology

There are many ways to analyze data, but one of the most vital aspects of analytical success in a business context is integrating the right decision support software and technology.

Robust analysis platforms will not only allow you to pull critical data from your most valuable sources while working with dynamic KPIs that will offer you actionable insights; it will also present them in a digestible, visual, interactive format from one central, live dashboard . A data methodology you can count on.

By integrating the right technology within your data analysis methodology, you’ll avoid fragmenting your insights, saving you time and effort while allowing you to enjoy the maximum value from your business’s most valuable insights.

For a look at the power of software for the purpose of analysis and to enhance your methods of analyzing, glance over our selection of dashboard examples .

10. Answer your questions

By considering each of the above efforts, working with the right technology, and fostering a cohesive internal culture where everyone buys into the different ways to analyze data as well as the power of digital intelligence, you will swiftly start to answer your most burning business questions. Arguably, the best way to make your data concepts accessible across the organization is through data visualization.

11. Visualize your data

Online data visualization is a powerful tool as it lets you tell a story with your metrics, allowing users across the organization to extract meaningful insights that aid business evolution – and it covers all the different ways to analyze data.

The purpose of analyzing is to make your entire organization more informed and intelligent, and with the right platform or dashboard, this is simpler than you think, as demonstrated by our marketing dashboard .

This visual, dynamic, and interactive online dashboard is a data analysis example designed to give Chief Marketing Officers (CMO) an overview of relevant metrics to help them understand if they achieved their monthly goals.

In detail, this example generated with a modern dashboard creator displays interactive charts for monthly revenues, costs, net income, and net income per customer; all of them are compared with the previous month so that you can understand how the data fluctuated. In addition, it shows a detailed summary of the number of users, customers, SQLs, and MQLs per month to visualize the whole picture and extract relevant insights or trends for your marketing reports .

The CMO dashboard is perfect for c-level management as it can help them monitor the strategic outcome of their marketing efforts and make data-driven decisions that can benefit the company exponentially.

12. Be careful with the interpretation

We already dedicated an entire post to data interpretation as it is a fundamental part of the process of data analysis. It gives meaning to the analytical information and aims to drive a concise conclusion from the analysis results. Since most of the time companies are dealing with data from many different sources, the interpretation stage needs to be done carefully and properly in order to avoid misinterpretations.

To help you through the process, here we list three common practices that you need to avoid at all costs when looking at your data:

- Correlation vs. causation: The human brain is formatted to find patterns. This behavior leads to one of the most common mistakes when performing interpretation: confusing correlation with causation. Although these two aspects can exist simultaneously, it is not correct to assume that because two things happened together, one provoked the other. A piece of advice to avoid falling into this mistake is never to trust just intuition, trust the data. If there is no objective evidence of causation, then always stick to correlation.

- Confirmation bias: This phenomenon describes the tendency to select and interpret only the data necessary to prove one hypothesis, often ignoring the elements that might disprove it. Even if it's not done on purpose, confirmation bias can represent a real problem, as excluding relevant information can lead to false conclusions and, therefore, bad business decisions. To avoid it, always try to disprove your hypothesis instead of proving it, share your analysis with other team members, and avoid drawing any conclusions before the entire analytical project is finalized.

- Statistical significance: To put it in short words, statistical significance helps analysts understand if a result is actually accurate or if it happened because of a sampling error or pure chance. The level of statistical significance needed might depend on the sample size and the industry being analyzed. In any case, ignoring the significance of a result when it might influence decision-making can be a huge mistake.

13. Build a narrative

Now, we’re going to look at how you can bring all of these elements together in a way that will benefit your business - starting with a little something called data storytelling.

The human brain responds incredibly well to strong stories or narratives. Once you’ve cleansed, shaped, and visualized your most invaluable data using various BI dashboard tools , you should strive to tell a story - one with a clear-cut beginning, middle, and end.

By doing so, you will make your analytical efforts more accessible, digestible, and universal, empowering more people within your organization to use your discoveries to their actionable advantage.

14. Consider autonomous technology

Autonomous technologies, such as artificial intelligence (AI) and machine learning (ML), play a significant role in the advancement of understanding how to analyze data more effectively.

Gartner predicts that by the end of this year, 80% of emerging technologies will be developed with AI foundations. This is a testament to the ever-growing power and value of autonomous technologies.

At the moment, these technologies are revolutionizing the analysis industry. Some examples that we mentioned earlier are neural networks, intelligent alarms, and sentiment analysis.

15. Share the load

If you work with the right tools and dashboards, you will be able to present your metrics in a digestible, value-driven format, allowing almost everyone in the organization to connect with and use relevant data to their advantage.

Modern dashboards consolidate data from various sources, providing access to a wealth of insights in one centralized location, no matter if you need to monitor recruitment metrics or generate reports that need to be sent across numerous departments. Moreover, these cutting-edge tools offer access to dashboards from a multitude of devices, meaning that everyone within the business can connect with practical insights remotely - and share the load.

Once everyone is able to work with a data-driven mindset, you will catalyze the success of your business in ways you never thought possible. And when it comes to knowing how to analyze data, this kind of collaborative approach is essential.

16. Data analysis tools

In order to perform high-quality analysis of data, it is fundamental to use tools and software that will ensure the best results. Here we leave you a small summary of four fundamental categories of data analysis tools for your organization.

- Business Intelligence: BI tools allow you to process significant amounts of data from several sources in any format. Through this, you can not only analyze and monitor your data to extract relevant insights but also create interactive reports and dashboards to visualize your KPIs and use them for your company's good. datapine is an amazing online BI software that is focused on delivering powerful online analysis features that are accessible to beginner and advanced users. Like this, it offers a full-service solution that includes cutting-edge analysis of data, KPIs visualization, live dashboards, reporting, and artificial intelligence technologies to predict trends and minimize risk.

- Statistical analysis: These tools are usually designed for scientists, statisticians, market researchers, and mathematicians, as they allow them to perform complex statistical analyses with methods like regression analysis, predictive analysis, and statistical modeling. A good tool to perform this type of analysis is R-Studio as it offers a powerful data modeling and hypothesis testing feature that can cover both academic and general data analysis. This tool is one of the favorite ones in the industry, due to its capability for data cleaning, data reduction, and performing advanced analysis with several statistical methods. Another relevant tool to mention is SPSS from IBM. The software offers advanced statistical analysis for users of all skill levels. Thanks to a vast library of machine learning algorithms, text analysis, and a hypothesis testing approach it can help your company find relevant insights to drive better decisions. SPSS also works as a cloud service that enables you to run it anywhere.

- SQL Consoles: SQL is a programming language often used to handle structured data in relational databases. Tools like these are popular among data scientists as they are extremely effective in unlocking these databases' value. Undoubtedly, one of the most used SQL software in the market is MySQL Workbench . This tool offers several features such as a visual tool for database modeling and monitoring, complete SQL optimization, administration tools, and visual performance dashboards to keep track of KPIs.

- Data Visualization: These tools are used to represent your data through charts, graphs, and maps that allow you to find patterns and trends in the data. datapine's already mentioned BI platform also offers a wealth of powerful online data visualization tools with several benefits. Some of them include: delivering compelling data-driven presentations to share with your entire company, the ability to see your data online with any device wherever you are, an interactive dashboard design feature that enables you to showcase your results in an interactive and understandable way, and to perform online self-service reports that can be used simultaneously with several other people to enhance team productivity.

17. Refine your process constantly

Last is a step that might seem obvious to some people, but it can be easily ignored if you think you are done. Once you have extracted the needed results, you should always take a retrospective look at your project and think about what you can improve. As you saw throughout this long list of techniques, data analysis is a complex process that requires constant refinement. For this reason, you should always go one step further and keep improving.

Quality Criteria For Data Analysis

So far we’ve covered a list of methods and techniques that should help you perform efficient data analysis. But how do you measure the quality and validity of your results? This is done with the help of some science quality criteria. Here we will go into a more theoretical area that is critical to understanding the fundamentals of statistical analysis in science. However, you should also be aware of these steps in a business context, as they will allow you to assess the quality of your results in the correct way. Let’s dig in.

- Internal validity: The results of a survey are internally valid if they measure what they are supposed to measure and thus provide credible results. In other words , internal validity measures the trustworthiness of the results and how they can be affected by factors such as the research design, operational definitions, how the variables are measured, and more. For instance, imagine you are doing an interview to ask people if they brush their teeth two times a day. While most of them will answer yes, you can still notice that their answers correspond to what is socially acceptable, which is to brush your teeth at least twice a day. In this case, you can’t be 100% sure if respondents actually brush their teeth twice a day or if they just say that they do, therefore, the internal validity of this interview is very low.

- External validity: Essentially, external validity refers to the extent to which the results of your research can be applied to a broader context. It basically aims to prove that the findings of a study can be applied in the real world. If the research can be applied to other settings, individuals, and times, then the external validity is high.

- Reliability : If your research is reliable, it means that it can be reproduced. If your measurement were repeated under the same conditions, it would produce similar results. This means that your measuring instrument consistently produces reliable results. For example, imagine a doctor building a symptoms questionnaire to detect a specific disease in a patient. Then, various other doctors use this questionnaire but end up diagnosing the same patient with a different condition. This means the questionnaire is not reliable in detecting the initial disease. Another important note here is that in order for your research to be reliable, it also needs to be objective. If the results of a study are the same, independent of who assesses them or interprets them, the study can be considered reliable. Let’s see the objectivity criteria in more detail now.

- Objectivity: In data science, objectivity means that the researcher needs to stay fully objective when it comes to its analysis. The results of a study need to be affected by objective criteria and not by the beliefs, personality, or values of the researcher. Objectivity needs to be ensured when you are gathering the data, for example, when interviewing individuals, the questions need to be asked in a way that doesn't influence the results. Paired with this, objectivity also needs to be thought of when interpreting the data. If different researchers reach the same conclusions, then the study is objective. For this last point, you can set predefined criteria to interpret the results to ensure all researchers follow the same steps.

The discussed quality criteria cover mostly potential influences in a quantitative context. Analysis in qualitative research has by default additional subjective influences that must be controlled in a different way. Therefore, there are other quality criteria for this kind of research such as credibility, transferability, dependability, and confirmability. You can see each of them more in detail on this resource .

Data Analysis Limitations & Barriers

Analyzing data is not an easy task. As you’ve seen throughout this post, there are many steps and techniques that you need to apply in order to extract useful information from your research. While a well-performed analysis can bring various benefits to your organization it doesn't come without limitations. In this section, we will discuss some of the main barriers you might encounter when conducting an analysis. Let’s see them more in detail.

- Lack of clear goals: No matter how good your data or analysis might be if you don’t have clear goals or a hypothesis the process might be worthless. While we mentioned some methods that don’t require a predefined hypothesis, it is always better to enter the analytical process with some clear guidelines of what you are expecting to get out of it, especially in a business context in which data is utilized to support important strategic decisions.

- Objectivity: Arguably one of the biggest barriers when it comes to data analysis in research is to stay objective. When trying to prove a hypothesis, researchers might find themselves, intentionally or unintentionally, directing the results toward an outcome that they want. To avoid this, always question your assumptions and avoid confusing facts with opinions. You can also show your findings to a research partner or external person to confirm that your results are objective.

- Data representation: A fundamental part of the analytical procedure is the way you represent your data. You can use various graphs and charts to represent your findings, but not all of them will work for all purposes. Choosing the wrong visual can not only damage your analysis but can mislead your audience, therefore, it is important to understand when to use each type of data depending on your analytical goals. Our complete guide on the types of graphs and charts lists 20 different visuals with examples of when to use them.