- Open access

- Published: 23 March 2020

Deep-learning-based image segmentation integrated with optical microscopy for automatically searching for two-dimensional materials

- Satoru Masubuchi ORCID: orcid.org/0000-0001-7039-6694 1 ,

- Eisuke Watanabe 1 ,

- Yuta Seo 1 ,

- Shota Okazaki 2 ,

- Takao Sasagawa 2 ,

- Kenji Watanabe ORCID: orcid.org/0000-0003-3701-8119 3 ,

- Takashi Taniguchi 1 , 3 &

- Tomoki Machida 1

npj 2D Materials and Applications volume 4, Article number: 3 (2020)

- Materials science

- Nanoscience and technology

Deep-learning algorithms enable precise image recognition based on high-dimensional hierarchical image features. Here, we report the development and implementation of a deep-learning-based image segmentation algorithm in an autonomous robotic system to search for two-dimensional (2D) materials. We trained a neural network based on Mask-RCNN on annotated optical microscope images of 2D materials (graphene, hBN, MoS₂, and WTe₂). The inference algorithm processes a 1024 × 1024 px² optical microscope image in 200 ms, enabling the real-time detection of 2D materials. The detection process is robust against changes in the microscopy conditions, such as illumination and color balance, which obviates the parameter-tuning process required for conventional rule-based detection algorithms. Integrating the algorithm with a motorized optical microscope enables the automated searching and cataloging of 2D materials. This development will allow researchers to utilize a large number of 2D materials simply by exfoliating and running the automated searching process. To facilitate research, we make the training code, dataset, and model weights publicly available.

Introduction

The recent advances in deep-learning technologies based on neural networks have led to the emergence of high-performance algorithms for interpreting images, such as object detection 1 , 2 , 3 , 4 , 5 , semantic segmentation 4 , 6 , 7 , 8 , 9 , 10 , instance segmentation 11 , and image generation 12 . As neural networks can learn the high-dimensional hierarchical features of objects from large sets of training data 13 , deep-learning algorithms can acquire a high generalization ability to recognize images, i.e., they can interpret images that they have not been shown before, which is one of the traits of artificial intelligence 14 . Soon after the success of deep-learning algorithms in general scene recognition challenges 15 , attempts at automation began for imaging tasks that are conducted by human experts, such as medical diagnosis 16 and biological image analysis 17 , 18 . However, despite significant advances in image recognition algorithms, the implementation of these tools for practical applications remains challenging 18 because of the unique requirements for developing deep-learning algorithms that necessitate the joint development of hardware, datasets, and software 18 , 19 .

In the field of two-dimensional (2D) materials 20, 21, 22, the recent advent of autonomous robotic assembly systems has enabled high-throughput searching for exfoliated 2D materials and their subsequent assembly into van der Waals heterostructures 23. These developments were bolstered by an image recognition algorithm for detecting 2D materials on SiO₂/Si substrates 23, 24; however, current implementations are built on conventional rule-based image processing 25, 26, which uses traditional handcrafted image features, such as color contrast, edges, and entropy 23, 24. Although these algorithms are computationally inexpensive, the detection parameters need to be adjusted by experts, with retuning required whenever the microscopy conditions change. To tune the parameters of a conventional rule-based algorithm, one has to manually find at least one sample flake on the SiO₂/Si substrate every time 2D flakes are exfoliated. Since the exfoliated flakes are sparsely distributed on the substrate, e.g., 3–10 thin flakes on a 1 × 1 cm² SiO₂/Si substrate for MoS₂ 23, manually finding a flake and tuning the parameters requires at least 30 min. During this time, some 2D materials, such as Bi₂Sr₂CaCu₂O₈₊δ 27, degrade even in a glovebox enclosure.

In contrast, deep-learning algorithms for detecting 2D materials are expected to be robust against changes in optical microscopy conditions, and the development of such an algorithm would provide a generalized 2D material detector that does not require fine-tuning of the parameters. In general, deep-learning algorithms for interpreting images are grouped into two categories 28 . Fully convolutional approaches employ an encoder–decoder architecture, such as SegNet 7 , U-Net 8 , and SharpMask 29 . In contrast, region-based approaches employ feature extraction by a stack of convolutional neural networks (CNNs), such as Mask-RCNN 11 , PSP Net 30 , and DeepLab 10 . In general, the region-based approaches outperform the fully convolutional approaches for most image segmentation tasks when the networks are trained on a sufficiently large number of annotated datasets 11 .

In this work, we implemented and integrated deep-learning algorithms with an automated optical microscope to search for 2D materials on SiO 2 /Si substrates. The neural network architecture based on Mask-RCNN enabled the detection of exfoliated 2D materials while generating a segmentation mask for each object. Transfer learning from the network trained on the Microsoft common objects in context (COCO) dataset 31 enabled the development of a neural network from a relatively small (~2000 optical microscope images) dataset of 2D materials. Owing to the generalization ability of the neural network, the detection process is robust against changes in the microscopy conditions. These properties could not be realized using conventional rule-based image recognition algorithms. To facilitate further research, we make the source codes for network training, the model weights, the training dataset, and the optical microscope drivers publicly available. Our implementation can be deployed on optical microscopes other than the instrument utilized in this study.

System architectures and functionalities

A schematic diagram of our deep-learning-assisted optical microscopy system is shown in Fig. 1a , with photographs shown in Fig. 1b, c . The system comprises three components: (i) an autofocus microscope with a motorized XY scanning stage (Chuo Precision); (ii) a customized software pipeline to capture the optical microscope image, run deep-learning algorithms, display results, and record the results in a database; (iii) a set of trained deep-learning algorithms for detecting 2D materials (graphene, hBN, MoS 2 , and WTe 2 ). By combining these components, the system can automatically search for 2D materials exfoliated on SiO 2 /Si substrates (Supplementary Movie 1 and 2 ). When 2D flakes are detected, their positions and shapes are stored in a database (sample record is presented in supplementary information), which can be browsed and utilized to assemble van der Waals heterostructures with a robotic system 23 . The key component developed in this study was the set of trained deep-learning algorithms for detecting 2D materials in the optical microscope images. Algorithm development required three steps, namely, preparation of a large dataset of annotated optical microscope images, training of the deep-learning algorithm on the dataset, and deploying the algorithm to run inference on optical microscope images.

a Schematic of the deep-learning-assisted optical microscope system. The optical microscope acquires an image of exfoliated 2D crystals on a SiO 2 /Si substrate. The images are input into the deep-learning inference algorithm. The Mask-RCNN architecture generates a segmentation mask, bounding boxes, and class labels. The inference data and images are stored in a cloud database, which forms a searchable database. The customized computer-assisted-design (CAD) software enables browsing of 2D crystals, and designing of van der Waals heterostructures. b , c Photographs of ( b ) the optical microscope and ( c ) the computer screen for deep-learning-assisted automated searching. d – k Segmentation of 2D crystals. Optical microscope images of ( d ) graphene, ( e ) hBN, ( f ) WTe 2 , and ( g ) MoS 2 on SiO 2 (290 nm)/Si. h – k Inference results for the optical microscope images in d – g , respectively. The segmentation masks and bounding boxes are indicated by polygons and dashed squares, respectively. In addition, the class labels and confidences are displayed. The contaminating objects, such as scotch tape residue, particles, and corrugated 2D flakes, are indicated by the white arrows in e , f , i , and j . The scale bars correspond to 10 µm.

The deep-learning model we employed was Mask-RCNN 11 (Fig. 1a), which predicts objects, bounding boxes, and segmentation masks in images. When an image is input into the network, the deep convolutional network ResNet101 32 extracts the position-aware high-dimensional features. These features are passed to the region proposal network (RPN) and the region of interest alignment network (ROI Align), which propose candidate regions where the targeted objects are located. The fully connected network performs classification (Class) and bounding-box regression (BBox) for the detected objects. Finally, the convolutional network generates segmentation masks for the objects using the output of the ROI Align layer. This model was developed on the Keras/TensorFlow framework 33, 34, 35.

To train the Mask-RCNN model, we prepared annotated images and trained networks as follows. In general, the performance of a deep-learning network is known to scale with the size of the dataset 36. To collect a large set of optical microscope images containing 2D materials, we exfoliated graphene (covalent material), MoS₂ (2D semiconductors), WTe₂, and hBN crystals onto SiO₂/Si substrates. Using the automated optical microscope, we collected ~2100 optical microscope images containing graphene, MoS₂, WTe₂, and hBN flakes. The images were annotated manually using a web-based labeling tool 37. The training was performed by the stochastic gradient descent method described later in this paper.

We show the inference results for optical microscope images containing 2D materials. Figure 1d–g shows optical microscope images of graphene, hBN, WTe₂, and MoS₂ flakes, which were input into the neural network. The inference results shown in Fig. 1h–k consist of bounding boxes (colored squares), class labels (text), confidences (numbers), and masks (colored polygons). For the layer thickness classification, we defined three categories: “mono” (1 layer), “few” (2–10 layers), and “thick” (10–40 layers). Note that this categorization is sufficient for practical use in the first screening process because final verification of the layer thickness can be conducted either by manual inspection or by computational post-processing, such as color contrast analysis 24, 38, 39, 40, 41, 42, which could be interfaced with the deep-learning algorithms in future work. As indicated in Fig. 1h–k, the 2D flakes are detected by the Mask-RCNN, and the segmentation masks exhibit good overlap with the 2D flakes. The layer thickness was also correctly classified, with monolayer graphene classified as “mono”. The detection process is robust against contaminating objects, such as scotch tape residue, particles, and corrugated 2D flakes (white arrows, Fig. 1e, f, i, j).
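The three thickness categories can be written as a small lookup. This is only a sketch: the function name `thickness_category` is hypothetical, and because the text lists 10 layers in both the "few" and "thick" ranges, the code assumes that exactly 10 layers is labeled "few" and that anything above 40 layers falls outside the scheme.

```python
def thickness_category(num_layers: int) -> str:
    """Map a layer count to the coarse thickness label used for first screening."""
    if num_layers < 1:
        raise ValueError("layer count must be at least 1")
    if num_layers == 1:
        return "mono"
    if num_layers <= 10:
        # Assumption: 10 layers counts as "few"; the text lists 10 in both ranges.
        return "few"
    if num_layers <= 40:
        return "thick"
    # Assumption: flakes above 40 layers are outside the three categories.
    return "out_of_range"


labels = [thickness_category(n) for n in (1, 2, 10, 11, 40)]
print(labels)
```

A coarse label of this kind is only a first filter; the exact layer number would still be verified afterwards, as described above.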

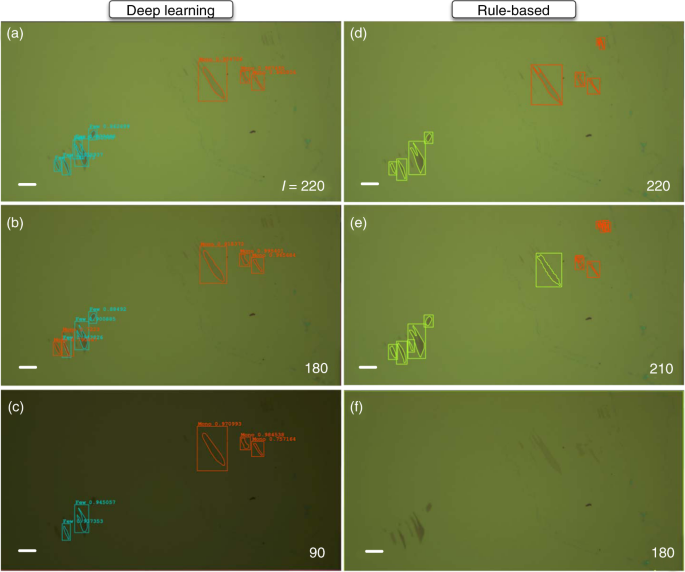

As the neural network locates 2D crystals using the high-dimensional hierarchical features of the image, the detection results were unchanged when the illumination conditions were varied (Supplementary Movie 3 ). Figure 2a–c shows the deep-learning detection of graphene flakes under differing illumination intensities ( I ). For comparison, the results obtained using conventional rule-based detection are presented in Fig. 2d–f . For the deep-learning case, the results were not affected by changing the illumination intensity from I = 220 (a) to 180 (b) or 90 (c) (red, blue, and green curves, Fig. 2 ). In contrast, with rule-based detection, a slight decrease in the light intensity from I = 220 (d) to 200 (e) affected the results significantly, and the graphene flakes became undetectable. Further decreasing the illumination intensity to I = 180 (f) resulted in no objects being detected. These results demonstrate the robustness of the deep-learning algorithms over conventional rule-based image processing for detecting 2D flakes.

Input image and inference results under illumination intensities of I = a 220, b 180, and c 90 (arb. unit) for deep-learning detection, and I = d 220, e 210, and f 180 (arb. unit) for rule-based detection. The scale bars correspond to 10 µm.

The deep-learning model was integrated with a motorized optical microscope by developing a customized software pipeline using C++ and Python. We employed a server/client architecture to integrate the deep-learning inference algorithms with the conventional optical microscope (Supplementary Fig. 1). The image captured by the optical microscope is sent to the inference server, and the inference results are sent back to the client computer. The deep-learning model runs on a graphics-processing unit (NVIDIA Tesla V100) in 200 ms per image. Including the overheads for capturing images, transferring image data, and displaying inference results, frame rates of ~1 fps were achieved. To investigate the applicability of the deep-learning inference to searching for 2D crystals, we selected WTe₂ crystals as a testbed because the exfoliation yields of transition metal dichalcogenides are significantly lower than that of graphene. We exfoliated WTe₂ crystals onto 1 × 1 cm² SiO₂/Si substrates, and then conducted searching, which was completed in 1 h using a ×50 objective lens. Searching identified ~25 WTe₂ flakes on 1 × 1 cm² SiO₂/Si with various thicknesses (1–10 layers; Supplementary Fig. 2).
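The timing figures above imply a rough throughput budget, worked through below. The inputs are the reported values (200 ms inference, ~1 fps, 1 h per 1 × 1 cm² substrate); the assumption that frames tile the substrate without overlap or gaps is ours, so the resulting field-of-view size is an order-of-magnitude estimate rather than a measured quantity.

```python
import math

INFERENCE_S = 0.200            # reported GPU inference time per image
FRAME_RATE_FPS = 1.0           # reported end-to-end frame rate (approximate)
SEARCH_TIME_S = 3600.0         # reported search time for one substrate
SUBSTRATE_SIDE_UM = 10_000.0   # 1 cm expressed in micrometres

# Time per frame left for capture, data transfer, and display.
overhead_s = 1.0 / FRAME_RATE_FPS - INFERENCE_S

# Number of frames acquired during one search.
num_frames = SEARCH_TIME_S * FRAME_RATE_FPS

# Assumption: frames tile the substrate with no overlap and no gaps.
area_per_frame_um2 = SUBSTRATE_SIDE_UM ** 2 / num_frames
frame_side_um = math.sqrt(area_per_frame_um2)

print(f"overhead per frame: {overhead_s:.1f} s")
print(f"frames per substrate: {num_frames:.0f}")
print(f"implied frame side: {frame_side_um:.0f} um")
```

Under these assumptions, inference accounts for about a fifth of each frame period, so the non-inference overheads dominate the search time.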

To quantify the performance of the Mask-RCNN detection process, we manually checked over 2300 optical microscope images, and the detection metrics are summarized in Supplementary Table 1. Here, we defined true- and false-positive detections (TP and FP) as whether the optical microscope image contained at least one correctly detected 2D crystal or not (examples are presented in Supplementary Figs 2–7). An image in which the 2D crystal was not correctly detected was considered a false negative (FN). Based on these definitions, the precision was TP/(TP + FP) ~0.53, which implies that over half of the optical microscope images with positive detection contained WTe₂ crystals. Notably, the recall (TP/(TP + FN) ~0.93) was high. In addition, the examples of false-negative detection contain only small fractured WTe₂ crystals, which cannot be utilized for assembling van der Waals heterostructures. These results imply that the deep-learning-based detection process does not miss usable 2D crystals. This property is favorable for the practical application of deep-learning algorithms to searching for 2D crystals, as exfoliated 2D crystals are usually sparsely distributed over SiO₂/Si substrates. In this case, false-positive detection is less problematic than missing 2D crystals (false negative). The screening of the results can be performed by a human operator without significant intervention 43. In the case of graphene (Supplementary Table 1), both the precision and recall were high (~0.95 and ~0.97, respectively), which implies excellent performance of the deep-learning algorithm for detecting 2D crystals. We speculate that the difference in precision stems from the difference between the exfoliation yields of graphene and WTe₂, because the mean average precision at an intersection over union (IoU) threshold of 50% (mAP@IoU50%) with respect to the annotated dataset (see preparation methods below) does not differ significantly between the materials (0.49 for graphene and 0.52 for WTe₂). As demonstrated above, these values are sufficiently high for searches for 2D crystals. These results indicate that the deep-learning inference can be practically utilized to search for 2D crystals.
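The image-level precision and recall defined above, and the IoU that underlies mAP@IoU50%, can be computed as in the following sketch. The counts are hypothetical, chosen only so that the ratios land near the reported WTe₂ values; they are not taken from Supplementary Table 1. The helper names are likewise illustrative.

```python
from typing import Set, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (precision, recall) from image-level TP, FP, and FN counts."""
    if tp + fp == 0 or tp + fn == 0:
        raise ValueError("precision or recall is undefined for these counts")
    return tp / (tp + fp), tp / (tp + fn)


def mask_iou(mask_a: Set[Tuple[int, int]], mask_b: Set[Tuple[int, int]]) -> float:
    """Intersection over union of two masks given as sets of (row, col) pixels."""
    union = mask_a | mask_b
    if not union:
        raise ValueError("IoU is undefined for two empty masks")
    return len(mask_a & mask_b) / len(union)


# Hypothetical counts (not from the paper's tables).
precision, recall = precision_recall(tp=53, fp=47, fn=4)
print(f"precision = {precision:.2f}, recall = {recall:.2f}")

# A predicted mask counts as a match at IoU50% when its IoU is at least 0.5.
iou = mask_iou({(0, 0), (0, 1)}, {(0, 1), (1, 1)})
print(f"IoU = {iou:.2f}, match at 50%: {iou >= 0.5}")
```

With sparse flakes, a search of this kind favors recall: a false positive costs a brief manual check, whereas a false negative loses a usable crystal.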

Model training

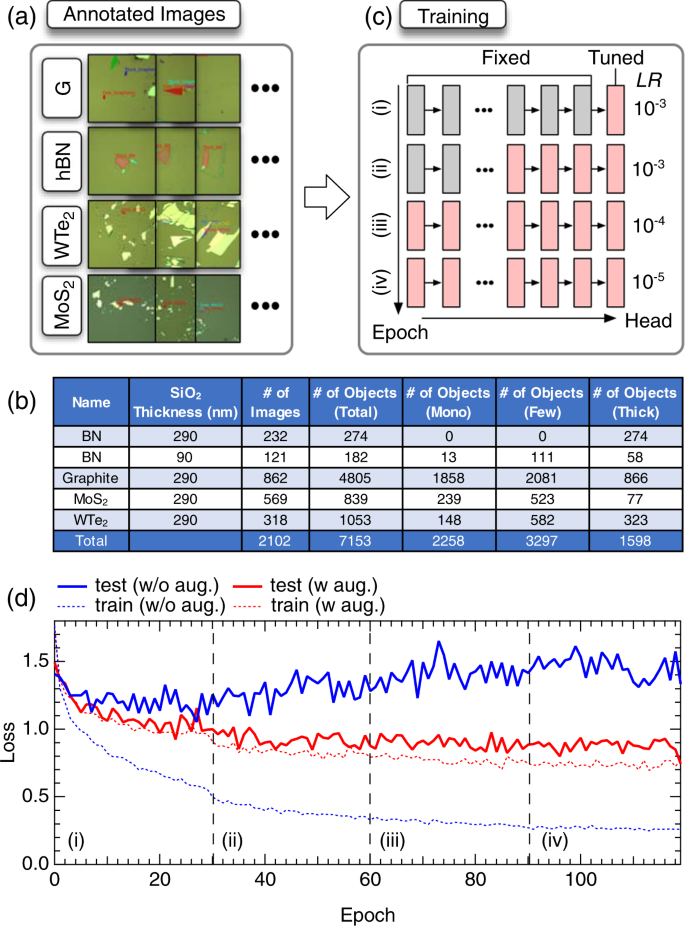

The Mask-RCNN model was trained on the annotated dataset; Fig. 3a shows representative annotated images, and Fig. 3b shows the annotation metrics. The dataset comprises 353 (hBN), 862 (graphene), 569 (MoS₂), and 318 (WTe₂) images. The numbers of annotated objects were 456 (hBN), 4805 (graphene), 839 (MoS₂), and 1053 (WTe₂). The annotations were converted to the JSON format compatible with the Microsoft COCO dataset using our customized scripts written in Python. Finally, the annotated dataset was randomly divided into training and test datasets in an 8:2 ratio. To train the model on the annotated dataset, we utilized the multitask loss function defined in refs 11, 33:

\[L = \alpha L_{\mathrm{cls}} + \beta L_{\mathrm{box}} + \gamma L_{\mathrm{mask}},\]

where \(L_{\mathrm{cls}}\), \(L_{\mathrm{box}}\), and \(L_{\mathrm{mask}}\) are the classification, localization, and segmentation mask losses, respectively, and \(\alpha\), \(\beta\), and \(\gamma\) are control parameters that tune the balance between the losses, set as \((\alpha, \beta, \gamma) = (0.6, 1.0, 1.0)\). The class loss was

\[L_{\mathrm{cls}}(p, u) = -\log p_u,\]

where \(p = (p_0, \ldots, p_k)\) is the probability distribution over classes for each region of interest, and \(u\) is the class label of that region. The bounding box loss \(L_{\mathrm{box}}\) is defined as

\[L_{\mathrm{box}}(t^u, v) = \sum_{i \in \{x, y, w, h\}} {\mathrm{smooth}}_{L_1}\left( t_i^u - v_i \right),\]

where \(t^u\) and \(v\) are the predicted and ground-truth bounding-box offsets for class \(u\), and \({\mathrm{smooth}}_{L_1}\left( x \right) = \left\{ {\begin{array}{l} {0.5x^2,\,\left| x \right| < 1} \\ {\left| x \right| - 0.5,{\mathrm{otherwise}}} \end{array}} \right.\) is the smooth \(L_1\) loss. The mask loss \(L_{\mathrm{mask}}\) was defined as the average binary cross-entropy loss:

\[L_{\mathrm{mask}} = - \frac{1}{m^2}\sum_{1 \le i,j \le m} \left[ y_{ij}\log \hat y_{ij}^k + \left( 1 - y_{ij} \right)\log \left( 1 - \hat y_{ij}^k \right) \right],\]

where \(y_{ij}\) is the binary mask at \((i, j)\) from an ROI of \((m \times m)\) size on the ground truth mask of class \(k\), and \(\hat y_{ij}^k\) is the predicted value of the same cell.
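The loss terms described above can be illustrated in plain Python. This is a minimal sketch assuming the standard Fast R-CNN and Mask R-CNN forms (negative log-likelihood for the class, smooth L1 summed over the four box offsets, and mean binary cross-entropy over an m × m mask); the function names and the clipping constant `eps` are our own, and the actual training code operates on batched tensors rather than Python lists.

```python
import math
from typing import Sequence


def smooth_l1(x: float) -> float:
    """Quadratic near zero, linear elsewhere."""
    return 0.5 * x * x if abs(x) < 1 else abs(x) - 0.5


def class_loss(probs: Sequence[float], true_class: int) -> float:
    """Negative log-probability assigned to the true class."""
    return -math.log(probs[true_class])


def box_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Smooth L1 summed over the (x, y, w, h) box offsets."""
    return sum(smooth_l1(p - t) for p, t in zip(pred, target))


def mask_loss(truth: Sequence[Sequence[int]],
              pred: Sequence[Sequence[float]],
              eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over a square m x m mask."""
    m = len(truth)  # assumes a square mask, as in the m x m ROI above
    total = 0.0
    for row_t, row_p in zip(truth, pred):
        for y, y_hat in zip(row_t, row_p):
            y_hat = min(max(y_hat, eps), 1 - eps)  # avoid log(0)
            total += y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat)
    return -total / (m * m)


def total_loss(l_cls: float, l_box: float, l_mask: float,
               alpha: float = 0.6, beta: float = 1.0, gamma: float = 1.0) -> float:
    """Weighted sum with the (alpha, beta, gamma) values quoted in the text."""
    return alpha * l_cls + beta * l_box + gamma * l_mask


l_cls = class_loss([0.1, 0.7, 0.2], true_class=1)
l_box = box_loss([0.1, 0.2, 0.0, 0.0], [0.0, 0.0, 0.5, 2.0])
l_mask = mask_loss([[1, 0], [0, 1]], [[0.9, 0.2], [0.1, 0.8]])
print(total_loss(l_cls, l_box, l_mask))
```

Setting α below 1 down-weights the classification term relative to the localization and mask terms.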

a Examples of annotated datasets for graphene (G), hBN, WTe 2 , and MoS 2 . b Training data metrics. c Schematic of the training procedure. d Learning curves for training on the dataset. The network weights were initialized by the model weights pretrained on the MS-COCO dataset. Solid (dotted) curves are test (train) losses. Training was performed either with (red curve) or without (blue curve) augmentation.
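The dataset composition and the random 8:2 split can be sketched as follows. The per-material image counts are those quoted in the text; the function `split_dataset`, the fixed seed, and the use of integer image IDs are illustrative assumptions rather than the authors' script.

```python
import random
from typing import Iterable, List, Tuple

# Image counts per material, as quoted in the text.
IMAGES_PER_MATERIAL = {"hBN": 353, "graphene": 862, "MoS2": 569, "WTe2": 318}


def split_dataset(image_ids: Iterable[int],
                  train_fraction: float = 0.8,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle the IDs reproducibly and split them into train and test lists."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)  # seed is an arbitrary choice for reproducibility
    n_train = round(len(ids) * train_fraction)
    return ids[:n_train], ids[n_train:]


total_images = sum(IMAGES_PER_MATERIAL.values())
train_ids, test_ids = split_dataset(range(total_images))
print(total_images, len(train_ids), len(test_ids))
```

The counts sum to 2102 images, consistent with the ~2100 images mentioned earlier.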

Instead of training the model from scratch, the model weights, except for the network heads, were initialized using those obtained by pretraining on a large-scale object segmentation dataset in general scenes, i.e., the MS-COCO dataset 31. The remaining parts of the network weights were initialized using random values. The optimization was conducted using stochastic gradient descent with a momentum of 0.9 and a weight decay of 0.0001. Each training epoch consisted of 500 iterations. The training comprised four stages, each lasting for 30 epochs (Fig. 3c). For the first two training stages, the learning rate was set to 10⁻³. The learning rate was decreased to 10⁻⁴ and 10⁻⁵ for the last two stages. In the first stage, only the network heads were trained (top row, Fig. 3c). Next, the parts of the backbone starting at layer 4 were optimized (second row, Fig. 3c). In the third and fourth stages, the entire model (backbone and heads) was trained (third and fourth rows, Fig. 3c). The training took 12 h using four GPUs (NVIDIA Tesla V100 with 32-GB memory). To increase the effective size of the training dataset, we used data augmentation techniques, including color channel multiplication, rotation, horizontal/vertical flips, and horizontal/vertical shifts. These operations were applied to the training data online with a random probability to reduce disk usage (examples of the augmented data are presented in Supplementary Figs 8 and 9). Before being fed to the Mask-RCNN model, each image was resized to 1024 × 1024 px² while preserving the aspect ratio, with any remaining space zero padded.
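The four-stage schedule can be summarized as a small table in code. The stage contents, learning rates, and epoch counts follow the description above; the `Stage` class, the stage labels, and the helper `learning_rate_at` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Stage:
    trainable: str         # which part of the network is optimized
    learning_rate: float
    epochs: int = 30


SCHEDULE: List[Stage] = [
    Stage("heads", 1e-3),
    Stage("backbone_from_layer_4", 1e-3),
    Stage("all", 1e-4),
    Stage("all", 1e-5),
]
ITERATIONS_PER_EPOCH = 500


def learning_rate_at(epoch: int) -> float:
    """Return the learning rate used at a 1-indexed global epoch."""
    if epoch < 1:
        raise ValueError("epoch is 1-indexed")
    remaining = epoch
    for stage in SCHEDULE:
        if remaining <= stage.epochs:
            return stage.learning_rate
        remaining -= stage.epochs
    raise ValueError("epoch is beyond the end of the schedule")


total_epochs = sum(stage.epochs for stage in SCHEDULE)
total_iterations = total_epochs * ITERATIONS_PER_EPOCH
print(total_epochs, total_iterations, learning_rate_at(61))
```

The schedule therefore spans 120 epochs, or 60,000 iterations, with the learning rate dropping at epochs 61 and 91.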

To improve the generalization ability of the network, we organized the training of the Mask-RCNN model into two steps. First, the model was trained on mixed datasets consisting of multiple 2D materials (graphene, hBN, MoS₂, and WTe₂). At this stage, the model was trained to perform segmentation and classification, both on material identity and layer thickness. Then, we used the trained weights as a source and performed transfer learning on each material subset to achieve layer thickness classification. By employing this strategy, the feature values that are common to 2D materials behind the network heads were optimized and shared between the different materials. As shown below, the sharing of the backbone network contributed to faster convergence of the network weights and a smaller test loss.

Training curve

Figure 3d shows the value of the loss function as a function of the epoch count. The solid (dotted) curves represent the test (training) loss. The training was conducted either with (red curves) or without (blue curves) data augmentation. Without augmentation, the training loss decreased to zero, while the test loss increased. The gap between the test and training losses widened with training, which indicates that the generalization error increased and the model overfitted the training data 13. When data augmentation was applied, both the training and test losses decreased monotonically with training, and the difference between them was small. These results indicate that when ~2000 optical microscope images are prepared and augmented, the Mask-RCNN model can be trained on 2D materials without overfitting.
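The overfitting signature described above, a test loss that pulls away from the training loss, can be checked numerically. The loss values below are hypothetical and only mimic the qualitative shapes of the two cases; the helper names and the three-epoch window are arbitrary choices for illustration.

```python
from typing import List, Sequence


def generalization_gap(train_losses: Sequence[float],
                       test_losses: Sequence[float]) -> List[float]:
    """Per-epoch difference between test and training loss."""
    return [test - train for train, test in zip(train_losses, test_losses)]


def gap_is_widening(gaps: Sequence[float], window: int = 3) -> bool:
    """True if the gap grew in each of the last `window` epoch-to-epoch steps."""
    if len(gaps) < window + 1:
        raise ValueError("need at least window + 1 epochs")
    recent = gaps[-(window + 1):]
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))


# Hypothetical curves: without augmentation the test loss rises again.
no_aug = generalization_gap([1.0, 0.6, 0.3, 0.1, 0.05], [1.1, 0.9, 0.95, 1.05, 1.2])
# Hypothetical curves: with augmentation both losses fall together.
with_aug = generalization_gap([1.0, 0.8, 0.65, 0.55, 0.5], [1.1, 0.9, 0.74, 0.63, 0.57])

print(gap_is_widening(no_aug), gap_is_widening(with_aug))
```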

Transfer learning

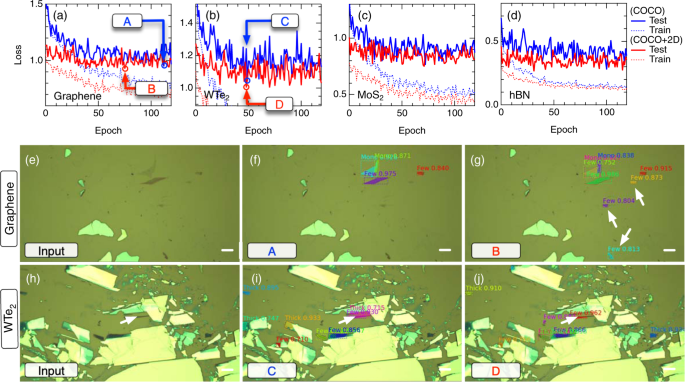

After training on multiple material categories, we applied transfer learning to the model using each sub-dataset. Figure 4a–d shows the learning curves for training the networks on the graphene, WTe₂, MoS₂, and hBN subsets of the annotated data, respectively. The solid (dotted) curves represent the test (training) loss. The network weights were initialized using those at epoch 120 obtained by training on multiple material classes (Fig. 3d) (red curves, Fig. 4a–d). For reference, we also trained the model by initializing the network weights using those obtained by pretraining only on the MS-COCO dataset (blue curves, Fig. 4a–d). Notably, in all cases, the test loss decreased faster for the models pretrained on the 2D crystals and MS-COCO than for those pretrained on MS-COCO only. The loss value after 30 epochs of training from the 2D crystals and MS-COCO weights was of almost the same order as that obtained after 80 epochs of training from the MS-COCO weights only. In addition, the minimum loss value achieved in the case of pretraining on 2D crystals and MS-COCO was smaller than that achieved with MS-COCO only. These results indicate that the feature values that are common to 2D materials are learnt in the backbone network. In particular, the trained backbone network weights contribute to improving the model performance on each material.

a – d Test (solid curves) and training (dotted curves) losses as a function of epoch count for training on a graphene, b WTe 2 , c MoS 2 , and d hBN. Each epoch consists of 500 training steps. The model weights were initialized using those pretrained on (blue) MS-COCO and (red) MS-COCO and 2D material datasets. The optical microscope image of graphene (WTe 2 ) and the inference results for these images are shown in e – g ( h – j ). The scale bars correspond to 10 µm.

To investigate the improvement of the model accuracy, we compared the inference results for the optical microscope images using the network weights from each training set. Figure 4e, h shows the optical microscope images of graphene and WTe₂, respectively, input into the network. We employed the model weights where the loss value was minimum (indicated by the red/blue arrows). The inference results in the cases of transferring only from MS-COCO, and from both MS-COCO and 2D materials, are shown in Fig. 4f, g for graphene, and Fig. 4i, j for WTe₂. For graphene, the model transferred from MS-COCO only failed to detect some thick graphite flakes, as indicated by the white arrows in Fig. 4f, whereas the model trained on MS-COCO and 2D crystals detected these flakes, as indicated by the white arrows in Fig. 4g. Similarly, for WTe₂, when the inference process was performed using the model transferred from MS-COCO only, the surface of the SiO₂/Si substrate surrounded by thick WTe₂ crystals was misclassified as WTe₂, as indicated by the white arrow in Fig. 4i. In contrast, when learning was transferred from the model pretrained on MS-COCO and 2D materials (red arrow, Fig. 4b), this region was not recognized as WTe₂. These results indicate that pretraining on multiple material classes contributes to improving model accuracy because the common properties of 2D crystals are learnt in the backbone network. The inference results presented in Fig. 1 were obtained by utilizing the model weights at epoch 120 for each material.
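Selecting the weights at the minimum test loss amounts to an argmin over the recorded learning curve. The sketch below assumes one test-loss value per epoch and uses made-up numbers; `best_epoch` is a hypothetical helper, not part of the released code.

```python
from typing import Sequence


def best_epoch(test_losses: Sequence[float]) -> int:
    """Return the 1-indexed epoch with the smallest test loss (earliest on ties)."""
    if not test_losses:
        raise ValueError("no losses recorded")
    best_index = min(range(len(test_losses)), key=lambda i: test_losses[i])
    return best_index + 1


# Hypothetical test-loss curve for illustration only.
hypothetical_test_losses = [1.20, 0.95, 0.80, 0.78, 0.83, 0.90]
print(best_epoch(hypothetical_test_losses))  # prints 4
```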

Generalization ability

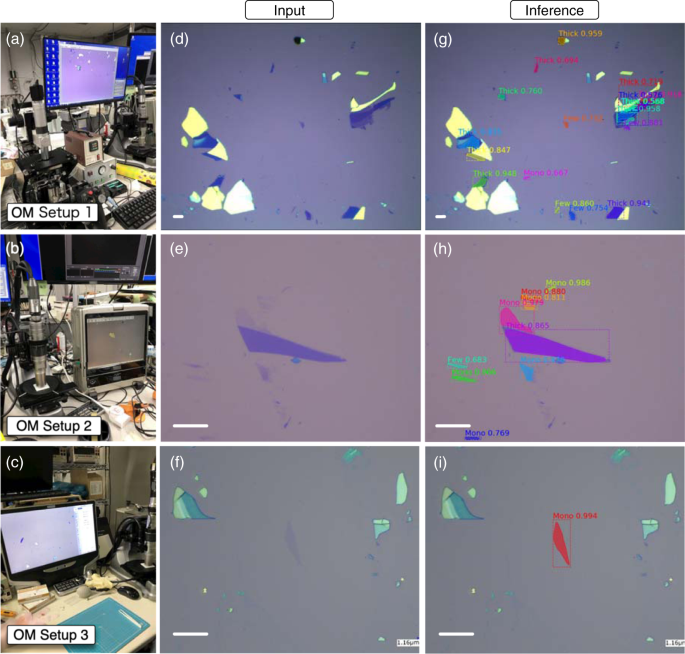

Finally, we investigated the generalization ability of the neural network for detecting graphene flakes in images obtained using different optical microscope setups (Asahikogaku AZ10-T/E, Keyence VHX-900, and Keyence VHX-5000 as shown in Fig. 5a–c, respectively). Figure 5d–f shows the optical microscope images of exfoliated graphene captured by each instrument. Across these instruments, there are significant variations in the white balance, magnification, resolution, illumination intensity, and illumination inhomogeneity (Fig. 5d–f). The model weights from training epoch 120 on the graphene dataset were employed (red arrow, Fig. 4a). Even though no optical microscope images recorded by these instruments were utilized for training, as shown by the inference results in Fig. 5g–i, the deep-learning model successfully detected the regions of exfoliated graphene. These results indicate that our trained neural network captured the latent general features of graphene flakes, and thus constitutes a general-purpose graphene detector that works irrespective of the optical microscope setup. These properties cannot be realized by utilizing the conventional rule-based detection algorithms for 2D crystals, where the detection parameters must be retuned whenever the optical conditions are altered.

a–c Optical microscope setups used for capturing images of exfoliated graphene (Asahikogaku AZ10-T/E, Keyence VHX-900, and Keyence VHX-5000, respectively). d–f Optical microscope images recorded using instruments ( a–c ), respectively. g–i Inference results for the optical microscope images in d–f , respectively. The segmentation masks are shown in color, and the category and confidences are also indicated. The scale bars correspond to 10 µm.

To train the neural network for 2D crystals that have different appearances, such as ZrSe₃, the model weights trained on both MS-COCO and 2D crystals obtained in this study can be used as source weights to start training. In our experience, the Mask-RCNN trained on a small dataset of ~80 images from the MS-COCO pretrained model can produce rough segmentation masks on graphene. Therefore, providing <80 annotated images would be sufficient for developing a classification algorithm that detects other 2D materials when our trained weights are used as a source. Our work can be utilized as a starting point for developing neural network models that work for various 2D materials.

Moreover, the trained neural networks can be utilized for searching for materials other than those used for training. As a demonstration, we exfoliated WSe₂ and MoSe₂ flakes on SiO₂/Si substrates and conducted searching with the model trained on WTe₂. As shown in Supplementary Figs 10 and 11, thin WSe₂ and MoSe₂ flakes are correctly detected even without training on these materials. This result indicates that the differences in appearance between WSe₂ or MoSe₂ and WTe₂ are covered by the generalization ability of the neural network.

Finally, our deep-learning inference process can run on the remote server/client architecture. This architecture is suitable for researchers with an occasional need for deep learning, as it provides a cloud-based setup that does not require a local GPU. Conventional optical microscope instruments that were not covered in this study can also be modified to support deep-learning inference by implementing client software that captures an image, sends it to the server, and receives and displays the inference results. The distribution of the deep-learning inference system will benefit the research community by saving the time needed for optical microscopy-based searching of 2D materials.
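The client duties listed above (capture, send, receive, display) reduce to a request/response exchange. The sketch below shows one possible message format using JSON and base64; the field names, the function names, and the stand-in `fake_inference` are all hypothetical and do not describe the protocol used by the released drivers. Network transport is omitted so the example stays self-contained.

```python
import base64
import json
from typing import Any, Dict, List, Tuple


def encode_request(image_bytes: bytes, material: str) -> str:
    """Client side: wrap the captured image and target material in a JSON message."""
    return json.dumps({
        "material": material,
        "image_b64": base64.b64encode(image_bytes).decode("ascii"),
    })


def decode_request(payload: str) -> Tuple[str, bytes]:
    """Server side: recover the material name and raw image bytes."""
    message = json.loads(payload)
    return message["material"], base64.b64decode(message["image_b64"])


def fake_inference(image_bytes: bytes, material: str) -> List[Dict[str, Any]]:
    """Stand-in for the Mask-RCNN model; returns one made-up detection."""
    if not image_bytes:
        return []
    return [{"label": "mono", "confidence": 0.9, "bbox": [10, 20, 110, 140]}]


def handle_request(payload: str) -> str:
    """Server side: run inference and send the detections back as JSON."""
    material, image_bytes = decode_request(payload)
    detections = fake_inference(image_bytes, material)
    return json.dumps({"material": material, "detections": detections})


request = encode_request(b"\x89PNG placeholder bytes", "WTe2")
response = json.loads(handle_request(request))
print(response["detections"][0]["label"])
```

Keeping the model behind a message boundary like this is what lets a microscope without a local GPU use the detector.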

In this work, we developed a deep-learning-assisted automated optical microscope to search for 2D crystals on SiO₂/Si substrates. A neural network with Mask-RCNN architecture trained on 2D materials enabled the efficient detection of various exfoliated 2D crystals, including graphene, hBN, and transition metal dichalcogenides (WTe₂ and MoS₂), while simultaneously generating a segmentation mask for each object. This work, along with other recent attempts to utilize deep-learning algorithms 44, 45, 46, should free researchers from the repetitive tasks of optical microscopy, and constitutes a fundamental step toward realizing fully automated fabrication systems for van der Waals heterostructures. To facilitate such research, we make the source code for training, the model weights, the training dataset, and the optical microscope drivers publicly available.

Optical microscope drivers

The automated optical microscope drivers were written in C++ and Python. The software stack was built on a robotic operating system 47 and the HALCON image-processing library (MVTec Software GmbH).

Preparation of the training dataset

To train the Mask-RCNN model to segment 2D crystals, we employed a semiautomatic annotation workflow. First, we trained the Mask-RCNN with a small dataset consisting of ~80 images of graphene. Then, we ran predictions on optical microscope images of graphene. The prediction labels generated using the Mask-RCNN were stored in LabelBox using its API. These labels were then manually corrected by a human annotator. This procedure greatly enhanced the annotation efficiency, allowing each image to be labeled in 20–30 s.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The source code, the trained network weights, and the training data are available at https://github.com/tdmms/ .

Zhao, Z.-Q., Zheng, P., Xu, S.-t. & Wu, X. Object detection with deep learning: a review. IEEE Transactions on Neural Networks and Learning Systems 30 , 3212–3232 (2019).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Advances in Neural Information Processing Systems , 91–99 (Neural Information Processing Systems Foundation, 2015).

Girshick, R. Fast R-CNN. Proceedings of the IEEE International Conference on Computer Vision , 1440–1448 (IEEE, 2015).

Girshick, R., Donahue, J., Darrell, T. & Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition , 580–587 (IEEE, 2014).

Liu, W. et al. SSD: Single shot multibox detector. European Conference on Computer Vision , 21–37 (Springer, 2016).

Garcia-Garcia, A., Orts-Escolano, S., Oprea, S. O., Villena-Martinez, V. & Garcia-Rodriguez, J. A review on deep learning techniques applied to semantic segmentation. Preprint at https://arxiv.org/abs/1704.06857 (2017).

Badrinarayanan, V., Kendall, A. & Cipolla, R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39 , 2481–2495 (2017).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: convolutional networks for biomedical image segmentation. International Conference on Medical Image Computing and Computer-assisted Intervention , 234–241 (Springer, 2015).

Long, J., Shelhamer, E. & Darrell, T. Fully convolutional networks for semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition , 3431–3440 (IEEE, 2015).

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K. & Yuille, A. L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 40 , 834–848 (2017).

He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask R-CNN. Proceedings of the IEEE International Conference on Computer Vision , 2961–2969 (IEEE, 2017).

Goodfellow, I. et al. Generative adversarial nets. Advances in Neural Information Processing Systems , 2672–2680 (Neural Information Processing Systems Foundation, 2014).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning . (MIT Press, 2016).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521 , 436–444 (2015).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems , 1097–1105 (Neural Information Processing Systems Foundation, 2012).

Litjens, G. et al. A survey on deep learning in medical image analysis. Med. Image Anal. 42 , 60–88 (2017).

Falk, T. et al. U-Net: deep learning for cell counting, detection, and morphometry. Nat. Methods 16 , 67–70 (2019).

Moen, E. et al. Deep learning for cellular image analysis. Nat. Methods https://doi.org/10.1038/s41592-019-0403-1 (2019).

Karpathy, A. Software 2.0 . https://medium.com/@karpathy/software-2-0-a64152b37c35 (2017).

Novoselov, K. S., Mishchenko, A., Carvalho, A. & Castro Neto, A. H. 2D materials and van der Waals heterostructures. Science 353 , aac9439 (2016).

Novoselov, K. S. et al. Two-dimensional atomic crystals. Proc. Natl Acad. Sci. USA 102 , 10451–10453 (2005).

Novoselov, K. S. et al. Electric field effect in atomically thin carbon films. Science 306 , 666–669 (2004).

Masubuchi, S. et al. Autonomous robotic searching and assembly of two-dimensional crystals to build van der Waals superlattices. Nat. Commun. 9 , 1413 (2018).

Masubuchi, S. & Machida, T. Classifying optical microscope images of exfoliated graphene flakes by data-driven machine learning. npj 2D Mater. Appl. 3 , 4 (2019).

Nixon, M. S. & Aguado, A. S. Feature Extraction & Image Processing for Computer Vision (Academic Press, 2012).

Szeliski, R. Computer Vision: Algorithms and Applications . (Springer Science & Business Media, 2010).

Yu, Y. et al. High-temperature superconductivity in monolayer Bi 2 Sr 2 CaCu 2 O 8+δ . Nature 575 , 156–163 (2019).

Ghosh, S., Das, N., Das, I. & Maulik, U. Understanding deep learning techniques for image segmentation. Preprint at https://arxiv.org/abs/1907.06119 (2019).

Pinheiro, P. O., Lin, T.-Y., Collobert, R. & Dollár, P. Learning to refine object segments. European Conference on Computer Vision , 75–91 (Springer, 2016).

Zhao, H., Shi, J., Qi, X., Wang, X. & Jia, J. Pyramid scene parsing network. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition , 2881–2890 (IEEE, 2017).

Lin, T.-Y. et al. Microsoft COCO: common objects in context. European Conference on Computer Vision , 740–755 (Springer, 2014).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition , 770–778 (IEEE, 2016).

Abdulla, W. Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow https://github.com/matterport/Mask_RCNN (2017).

Chollet, F. Keras: Deep learning for humans https://github.com/keras-team/keras (2015).

Abadi, M. et al. Tensorflow: a system for large-scale machine learning. 12th USENIX Symposium on Operating Systems Design and Implementation , 265–283 (USENIX Association, 2016).

Hestness, J. et al. Deep learning scaling is predictable, empirically. Preprint at https://arxiv.org/abs/1712.00409 (2017).

Labelbox. Labelbox https://labelbox.com (2019).

Lin, X. et al. Intelligent identification of two-dimensional nanostructures by machine-learning optical microscopy. Nano Res. 11 , 6316–6324 (2018).

Li, H. et al. Rapid and reliable thickness identification of two-dimensional nanosheets using optical microscopy. ACS Nano 7 , 10344–10353 (2013).

Ni, Z. H. et al. Graphene thickness determination using reflection and contrast spectroscopy. Nano Lett. 7 , 2758–2763 (2007).

Nolen, C. M., Denina, G., Teweldebrhan, D., Bhanu, B. & Balandin, A. A. High-throughput large-area automated identification and quality control of graphene and few-layer graphene films. ACS Nano 5 , 914–922 (2011).

Taghavi, N. S. et al. Thickness determination of MoS 2 , MoSe 2 , WS 2 and WSe 2 on transparent stamps used for deterministic transfer of 2D materials. Nano Res. 12 , 1691–1695 (2019).

Zhang, P., Zhong, Y., Deng, Y., Tang, X. & Li, X. A survey on deep learning of small sample in biomedical image analysis. Preprint at https://arxiv.org/abs/1908.00473 (2019).

Saito, Y. et al. Deep-learning-based quality filtering of mechanically exfoliated 2D crystals. npj Computational Materials 5 , 1–6 (2019).

Han, B. et al. Deep learning enabled fast optical characterization of two-dimensional materials. Preprint at https://arxiv.org/abs/1906.11220 (2019).

Greplova, E. et al. Fully automated identification of 2D material samples. Preprint at https://arxiv.org/abs/1911.00066 (2019).

Quigley, M. et al. ROS: an open-source Robot Operating System. ICRA Workshop on Open Source Software (Open Robotics, 2009).

Acknowledgements

This work was supported by CREST, Japan Science and Technology Agency Grant Numbers JPMJCR15F3 and JPMJCR16F2, and by JSPS KAKENHI under Grant No. JP19H01820.

Author information

Authors and affiliations.

Institute of Industrial Science, University of Tokyo, 4-6-1 Komaba, Meguro-ku, Tokyo, 153-8505, Japan

Satoru Masubuchi, Eisuke Watanabe, Yuta Seo, Takashi Taniguchi & Tomoki Machida

Laboratory for Materials and Structures, Tokyo Institute of Technology, 4259 Nagatsuta, Midori-ku, Yokohama, 226-8503, Japan

Shota Okazaki & Takao Sasagawa

National Institute for Materials Science, 1-1 Namiki, Tsukuba, Ibaraki, 305-0044, Japan

Kenji Watanabe & Takashi Taniguchi

Contributions

S.M. conceived the scheme, implemented the software, trained the neural network, and wrote the paper. E.W. and Y.S. exfoliated the 2D materials and tested the system. S.O. and T.S. synthesized the WTe 2 and WSe 2 crystals. K.W. and T.T. synthesized the hBN crystals. T.M. supervised the research program.

Corresponding authors

Correspondence to Satoru Masubuchi or Tomoki Machida .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

- Supplementary information pdf

- supplementary movie1_compressed.mp4

- supplementary movie2_compressed.mp4

- supplementary movie3_compressed.mp4

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Masubuchi, S., Watanabe, E., Seo, Y. et al. Deep-learning-based image segmentation integrated with optical microscopy for automatically searching for two-dimensional materials. npj 2D Mater Appl 4 , 3 (2020). https://doi.org/10.1038/s41699-020-0137-z

Received : 20 October 2019

Accepted : 24 February 2020

Published : 23 March 2020

DOI : https://doi.org/10.1038/s41699-020-0137-z

Editorial: Current Trends in Image Processing and Pattern Recognition

- PAMI Research Lab, Computer Science, University of South Dakota, Vermillion, SD, United States

Editorial on the Research Topic Current Trends in Image Processing and Pattern Recognition

Technological advancements in computing have opened multiple opportunities in a wide variety of fields, ranging from document analysis (Santosh, 2018), biomedical and healthcare informatics (Santosh et al., 2019; Santosh et al., 2021; Santosh and Gaur, 2021; Santosh and Joshi, 2021), and biometrics to intelligent language processing. These applications primarily leverage AI tools and/or techniques, drawing on topics such as image processing, signal and pattern recognition, machine learning, and computer vision.

With this theme, we opened a call for papers on Current Trends in Image Processing & Pattern Recognition that directly followed the third International Conference on Recent Trends in Image Processing & Pattern Recognition (RTIP2R), 2020 (URL: http://rtip2r-conference.org ). Our call was not limited to RTIP2R 2020; it was open to all. Altogether, 12 papers were submitted, and seven of them were accepted for publication.

In Deshpande et al., the authors addressed the use of global fingerprint features (e.g., ridge flow, frequency, and other interest/key points) for matching. With a Convolutional Neural Network (CNN) matching model, which they called “Combination of Nearest-Neighbor Arrangement Indexing (CNNAI),” they achieved a highest rank-I identification rate of 84.5% on the FVC2004 and NIST SD27 datasets. The authors claimed that their results are comparable with state-of-the-art algorithms and that their approach is robust to rotation and scale. Similarly, in Deshpande et al., using the exact same datasets, the same set of authors addressed the importance of minutiae extraction and matching by taking low-quality latent fingerprint images into account. Their minutiae extraction technique showed remarkable improvement in their results. As claimed by the authors, their results were comparable to state-of-the-art systems.

In Gornale et al., the authors extracted distinguishing features that were geometrically distorted or transformed by taking Hu’s Invariant Moments into account. With this, the authors focused on the early detection and grading of knee osteoarthritis, and they claimed that their results were validated by orthopedic surgeons and rheumatologists.

In Tamilmathi and Chithra, the authors introduced a new deep-learned quantization-based coding for 3D airborne LiDAR point cloud images. In their experimental results, the authors showed that their model compressed an image into a constant 16 bits of data and decompressed it with a PSNR of approximately 160 dB, an execution time of 174.46 s, and an execution speed of 0.6 s per instruction. The authors claimed that their method is comparable with previous algorithms/techniques in terms of space and time.

In Tamilmathi and Chithra, the authors carefully inspected possible signs of plant leaf diseases. They employed the concept of feature learning and observed the correlation and/or similarity between disease-related symptoms, which makes disease identification possible.

In Das Chagas Silva Araujo et al., the authors proposed a benchmark environment to compare multiple algorithms for depth reconstruction from two event-based sensors. In their evaluation, a stereo matching algorithm was implemented, and multiple experiments were conducted with multiple camera settings as well as parameters. The authors claimed that this work could serve as a benchmark for the robust evaluation of the multitude of new techniques in event-based stereo vision.

In Steffen et al.; Gornale et al., the authors employed handwritten signatures to better understand this behavioral biometric trait for the authentication/verification of documents such as letters, contracts, and wills. They used handcrafted features such as LBP and HOG to extract features from 4,790 signatures so that shallow learning can be applied efficiently. Using k-NN, decision tree, and support vector machine classifiers, they reported promising performance.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Santosh, KC, Antani, S., Guru, D. S., and Dey, N. (2019). Medical Imaging Artificial Intelligence, Image Recognition, and Machine Learning Techniques . United States: CRC Press . ISBN: 9780429029417. doi:10.1201/9780429029417

Santosh, KC, Das, N., and Ghosh, S. (2021). Deep Learning Models for Medical Imaging, Primers in Biomedical Imaging Devices and Systems . United States: Elsevier . eBook ISBN: 9780128236505.

Santosh, KC (2018). Document Image Analysis - Current Trends and Challenges in Graphics Recognition . United States: Springer . ISBN 978-981-13-2338-6. doi:10.1007/978-981-13-2339-3

Santosh, KC, and Gaur, L. (2021). Artificial Intelligence and Machine Learning in Public Healthcare: Opportunities and Societal Impact . Spain: SpringerBriefs in Computational Intelligence Series . ISBN: 978-981-16-6768-8. doi:10.1007/978-981-16-6768-8

Santosh, KC, and Joshi, A. (2021). COVID-19: Prediction, Decision-Making, and its Impacts, Book Series in Lecture Notes on Data Engineering and Communications Technologies . United States: Springer Nature . ISBN: 978-981-15-9682-7. doi:10.1007/978-981-15-9682-7

Keywords: artificial intelligence, computer vision, machine learning, image processing, signal processing, pattern recognition

Citation: Santosh KC (2021) Editorial: Current Trends in Image Processing and Pattern Recognition. Front. Robot. AI 8:785075. doi: 10.3389/frobt.2021.785075

Received: 28 September 2021; Accepted: 06 October 2021; Published: 09 December 2021.

Edited and reviewed by:

Copyright © 2021 Santosh. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: KC Santosh, [email protected]

This article is part of the Research Topic

Current Trends in Image Processing and Pattern Recognition

- Open access

- Published: 11 February 2019

Research on image classification model based on deep convolution neural network

- Mingyuan Xin 1 &

- Yong Wang 2

EURASIP Journal on Image and Video Processing volume 2019, Article number: 40 (2019)

46k Accesses

130 Citations

Based on an analysis of the error backpropagation algorithm, we propose an innovative training criterion for deep neural networks, the maximum-margin minimum classification error (M3CE). At the same time, the cross-entropy and M3CE criteria are analyzed and combined to obtain better results. Finally, we tested the proposed M3CE-CEc on two standard deep learning databases, MNIST and CIFAR-10. The experimental results show that M3CE can enhance the cross-entropy and is an effective supplement to the cross-entropy criterion. M3CE-CEc obtained good results on both databases.

1 Introduction

Traditional machine learning methods (such as multilayer perceptrons and support vector machines) mostly use shallow structures to deal with a limited number of samples and computing units. When the target objects have rich meanings, their performance and generalization ability on complex classification problems are clearly insufficient. The convolutional neural network (CNN), developed in recent years, has been widely used in image processing because it is good at image classification and recognition problems, and it has greatly improved the accuracy of many machine learning tasks. It has become a powerful and universal deep learning model.

The convolutional neural network (CNN) is a multilayer neural network and the most classical and widely used deep learning framework. Newman et al. [1] proposed a new reconstruction algorithm based on convolutional neural networks and demonstrated its advantages in speed and performance. Wang et al. [2] surveyed three classes of methods for instance retrieval: CNN models with pretraining, CNN models with fine-tuning, and hybrid methods. In the first two, an image is passed through the network once, while the last uses a patch-based feature extraction scheme. Their survey traces the milestones of modern instance retrieval, reviews a wide selection of previous work in each category, and provides insights into the link between SIFT-based and CNN-based approaches. After analyzing and comparing the retrieval performance of the different categories on several data sets, they discuss new directions for generic and specific instance retrieval.

CNNs have attracted great interest in machine learning and perform excellently in hyperspectral image classification. Al-Saffar et al. [3] proposed a classification framework called region-based pluralistic CNN, which can encode semantic context-aware representations to obtain promising features. By combining a set of different discriminant appearance factors, the CNN-based representation exhibits the spatial-spectral contextual sensitivity that is essential for accurate pixel classification. Learning contextual interaction features from various region-based inputs is expected to yield more discriminative power. The combined representation, which contains rich spectral and spatial information, is then fed to a fully connected network, and the label of each pixel vector is predicted by a softmax layer. Experimental results on widely used hyperspectral image data sets show that the proposed method outperforms traditional classifiers, deep-learning-based classifiers, and other advanced classifiers.

The context-based CNN with a deep structure and the pixel-based multilayer perceptron (MLP) with a shallow structure are well-established neural network algorithms, representing state-of-the-art deep learning and classical machine learning approaches, respectively. The two algorithms, which behave very differently, are integrated in a concise and efficient manner, and a rule-based decision fusion method is used to classify very fine spatial resolution (VFSR) remote sensing images. The decision fusion rules, designed mainly on the basis of the CNN classification confidence, reflect the generally complementary patterns of the individual classifiers. The ensemble classifier MLP-CNN proposed by Said et al. [4] therefore harvests the complementary results obtained from the CNN, based on deep spatial feature representation, and from the MLP, based on spectral discrimination. At the same time, the limitations of the CNN that result from the use of convolution filters, such as the uncertainty in object boundary partitioning and the loss of useful fine spatial resolution detail, are compensated. The effectiveness of the ensemble MLP-CNN classifier was tested in urban and rural areas using aerial photography together with an additional satellite sensor data set. The MLP-CNN classifier achieved promising performance and was consistently superior to the pixel-based MLP, the spectral- and texture-based MLP, and the context-based CNN in classification accuracy. This research paves the way toward effectively solving the complex problem of VFSR image classification.

Periodic inspection of nuclear power plant components is important to ensure safe operation. However, current practice is time-consuming, tedious, and subjective, as it involves human technicians examining videos and identifying reactor cracks. Some vision-based crack detection methods have been developed for metal surfaces, but they generally perform poorly when used to analyze nuclear inspection videos. Detecting these cracks is challenging because of their small size and the presence of noise patterns on the component surfaces. Huang et al. [5] proposed a deep learning framework based on a CNN and a Naive Bayes data fusion scheme (called NB-CNN), which analyzes individual video frames for crack detection. A new data fusion scheme aggregates the information extracted from each video frame to enhance the overall performance and robustness of the system. In this framework, a CNN detects cracks in each video frame, the data fusion scheme maintains the temporal and spatial coherence of the cracks across the video, and the Naive Bayes decision effectively discards false positives. The framework achieves a hit rate of 98.3% at 0.1 false positives per frame, which is significantly higher than that of the state-of-the-art methods it was compared with.

The prediction of visual attention data from any type of media is valuable to content creators and can be used to drive coding algorithms efficiently. With the current trend in virtual reality (VR), the adaptation of known techniques to this new medium is beginning to gain momentum. Gupta and Bhavsar [6] proposed an extension to the architecture of any CNN to fine-tune traditional 2D saliency prediction for omnidirectional images (ODIs). In an end-to-end manner, they show that each step in their pipeline makes the generated saliency map more accurate with respect to the ground truth data.

The CNN is a deep machine learning method derived from the artificial neural network (ANN), and it has achieved great success in image recognition in recent years. Neural networks are trained with the error backpropagation (BP) algorithm, which is based on gradient descent. However, as the number of network layers increases, the number of weight parameters grows sharply, which slows the convergence of the BP algorithm and makes the training time too long. The CNN training algorithm is a variant of the BP algorithm. Through local connections and weight sharing, the network structure becomes more similar to a biological neural network, which not only preserves the deep structure of the network but also greatly reduces the number of network parameters, so that the model generalizes well and is easier to train. This advantage is more obvious when the network input is a multidimensional image: the image can be used directly as the network input, avoiding the complex feature extraction and data reconstruction steps of traditional recognition algorithms. Convolutional neural networks can therefore also be interpreted as multilayer perceptrons designed to recognize two-dimensional shapes, and they are highly invariant to translation, scaling, tilting, and other forms of deformation [7, 8, 9, 10, 11, 12, 13, 14, 15].
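To make the effect of local connections and weight sharing concrete, the following sketch counts the trainable parameters of a fully connected layer and of a convolutional layer that produce the same output size. The layer shapes are illustrative assumptions (a 32 × 32 RGB input, the size of a CIFAR-10 image, 16 output feature maps, and a 5 × 5 kernel); they are not taken from the network used in this paper.

```python
def dense_params(in_h, in_w, in_c, out_units):
    """Weights plus biases of a fully connected layer applied to a flattened image."""
    return in_h * in_w * in_c * out_units + out_units


def conv_params(kernel, in_c, out_c):
    """Weights plus biases of a conv layer: one kernel x kernel x in_c filter per output map."""
    return kernel * kernel * in_c * out_c + out_c


# Assumed shapes: 32 x 32 RGB input, 16 output feature maps of the same spatial size.
dense = dense_params(32, 32, 3, 32 * 32 * 16)  # every output unit sees every input pixel
conv = conv_params(5, 3, 16)                   # each filter is shared across all positions

print(f"fully connected: {dense:,} parameters")
print(f"convolutional:   {conv:,} parameters")
```

Under these assumptions the fully connected layer needs 50,348,032 parameters, whereas the convolutional layer needs 1,216, because its filter weights are reused at every image position.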

With the rapid development of mobile Internet technology, more and more image information is stored on the Internet, and images have become another important carrier of network information after text. Against this background, it is very important to use computers to classify and recognize these images intelligently so that they serve people better. In the early stages of image classification and recognition, the technology was mainly used to meet auxiliary needs: for example, Baidu’s celebrity-face function helps users find the most similar celebrity, and OCR technology extracts text and information from images.

For graph-based semi-supervised learning methods, it is very important to construct good graphs that capture the intrinsic data structure. Such methods are widely used in hyperspectral image (HSI) classification with a small number of labeled samples. Among existing graph construction methods, those based on sparse representation (SR) show impressive performance in semi-supervised HSI classification tasks. However, most SR-based algorithms fail to consider the rich spatial information of HSIs, which has been shown to benefit classification. Yan et al. [16] proposed a space and class structure regularized sparse representation (SCSSR) graph for semi-supervised HSI classification. Specifically, spatial information is incorporated into the SR model through graph Laplacian regularization, which assumes that spatial neighbors should have similar representation coefficients, so that the resulting coefficient matrix reflects the similarity between samples more accurately. In addition, they incorporate the probabilistic class structure (the probabilistic relationship between each sample and each class) into the SR model to further improve the discriminability of the graph. Experiments on Hyperion and AVIRIS hyperspectral data show that their method outperforms state-of-the-art methods.

Extracting invariances, such as within-class invariance and rotation invariance, is very important for object detection and classification applications. Current research focuses on specific invariances of features, such as rotation invariance. Zhang et al. [17] proposed a new multichannel convolutional neural network (mCNN) to extract invariant features for object classification. Multichannel convolutions sharing the same weights are used to reduce the feature variance of sample pairs with different rotations in the same class. As a result, within-class invariance and rotation invariance are achieved simultaneously, which improves the invariance of the features. More importantly, the proposed mCNN is particularly effective for small training sets. Experimental results on two benchmark data sets for handwriting recognition show that the mCNN is very effective at extracting invariant features from a small number of training samples.

In the era of big data, CNNs with more hidden layers have more complex network structures and stronger feature learning and feature expression abilities than traditional machine learning methods. Since the introduction of CNN models trained by deep learning algorithms, significant achievements have been made in many large-scale recognition tasks in computer vision. Chaib et al. [18] first introduced the rise and development of deep learning and CNNs and summarized the basic model structure, convolutional feature extraction, and pooling operations of CNNs. They then reviewed the research status and development trends of deep-learning-based CNN models in image classification and introduced typical network structures, training methods, and their performance. Finally, they briefly summarized and discussed some problems in current research and predicted new directions for future development.

Computer-aided diagnosis technology has played an important role in medical diagnosis from its beginnings to the present. In particular, image classification technology, from initial theoretical research to clinical diagnosis, has provided effective assistance in diagnosing various diseases. More generally, an image is the concrete picture formed in the human brain by objective things in the natural environment, and it is an important source of information through which humans obtain knowledge of the external world. With the continuous development of computer technology, general object recognition in natural scenes is increasingly applied in daily life. From simple bar code recognition to text recognition (such as handwritten character recognition and optical character recognition, OCR) and biometric recognition (such as fingerprint, voice, iris, face, gesture, and emotion recognition), there are many successful applications. Image recognition, especially object category recognition in natural scenes, is a distinctive human skill. In a complex natural environment, people can identify at a glance a concrete object (such as a teacup or a swallow) or a specific category of objects (household goods, birds, etc.). However, many questions remain about how humans do this and how to transfer the related abilities to computers so that they acquire human-like intelligence. Therefore, image recognition algorithms remain an active research topic in machine vision, machine learning, deep learning, and artificial intelligence [19, 20, 21, 22, 23, 24].

Therefore, this paper applies the advantages of deep convolutional neural networks to image classification, tests the loss function constructed by M³CE on two standard deep learning databases, MNIST and CIFAR-10, and points to a new direction for image classification research.

2 Proposed method

Image classification is one of the active research directions in computer vision, and it is also a basic component of other image application fields. An image classification system is usually divided into three important parts: image preprocessing, image feature extraction, and the classifier.

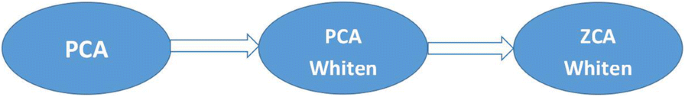

2.1 The ZCA process is shown as below

In this process, we first use PCA to zero the mean value. In this paper, we assume that X represents the image vector [ 25 ]: \( \mu =\frac{1}{m}\sum \limits_{j=1}^m{x}_j \)

Next, the covariance matrix for the entire data is calculated, with the following formulas:

where Σ represents the covariance matrix. Σ is decomposed by SVD [ 26 ], and its eigenvalues and corresponding eigenvectors are obtained.

Here, U is the eigenvector matrix of Σ, and S is the eigenvalue matrix of Σ. Based on this, x can be whitened by PCA, and the formula is:

So \( X_{\mathrm{ZCAwhiten}} \) can be expressed as
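
For reference, the whitening steps described in this subsection correspond to the following standard formulation. This is a sketch under stated assumptions: the samples \( x_j \) are column vectors, the covariance is normalized by \( m \), and a small constant \( \varepsilon \) (not mentioned in the text) is added for numerical stability. The symbol \( \tilde{x} \) for the centered sample is introduced here for illustration only.

```latex
\begin{aligned}
\mu &= \frac{1}{m}\sum_{j=1}^{m} x_j, \qquad \tilde{x}_j = x_j - \mu,\\
\Sigma &= \frac{1}{m}\sum_{j=1}^{m} \tilde{x}_j \tilde{x}_j^{\top},\\
\Sigma &= U S U^{\top} \quad \text{(SVD of the symmetric matrix } \Sigma\text{)},\\
x_{\mathrm{PCAwhiten}} &= (S + \varepsilon I)^{-1/2}\, U^{\top} \tilde{x},\\
X_{\mathrm{ZCAwhiten}} &= U\, x_{\mathrm{PCAwhiten}}.
\end{aligned}
```

Rotating back with \( U \) in the last step is what distinguishes ZCA from PCA whitening: the whitened data stays as close as possible to the original pixel space.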

For the data set in this paper, because the training samples and the test samples are not clearly separated in advance [ 27 ], the split is generated randomly to avoid the subjective bias of a manual division.

2.2 Image feature extraction based on time-frequency composite weighting

Feature extraction is a concept in computer vision and image processing. It refers to the use of a computer to extract image information and determine whether each point of an image belongs to an image feature. The purpose of feature extraction is to divide the points of the image into different subsets, which are often isolated points, continuous curves, or regions. There are usually many kinds of features to describe an image. These features can be classified according to different criteria, for example into point features, line features, and regional features according to how the features are represented in the image data. According to the size of the region from which features are extracted, they can be divided into two categories: global features and local features [ 24 ]. The image features used by the feature extraction methods in this paper include color features, texture features, corner features, and edge features.

The time-frequency composite weighting algorithm for multi-frame blurred images processes blurred image data in the frequency domain and the time domain simultaneously. Based on the weighting characteristics of the algorithm and the feature extraction of the target image in the time domain and the frequency domain, the technique applies time-frequency composite weighting to night images to extract the target information from the depth image. The main steps of the time-frequency composite weighted feature extraction method are as follows:

Step 1: Construct a time-frequency composite weighted signal model for multiple blurred images, as the following expression shows:

where f ( t ) is the original signal and S = ( c − v )/( c + v ) is called the image scale factor, or scale for short. It represents the scaling of the signal relative to the original image in the time-frequency composite weighting algorithm. \( \sqrt{S} \) is the normalization factor of the image time-frequency composite weighting algorithm.

Step 2: Map the one-dimensional function to the two-dimensional function y ( t ) of the time scale a and the time shift b , and perform a time-frequency composite weighted transform on the continuous nighttime image using a square-integrable function, as shown below:

where the factor \( 1/\sqrt{\mid a\mid } \) ensures the energy normalization of the unitary transformation, and ψ a , b is obtained from ψ ( t ) by the transformation U ( a , b ) of the affine group, as shown by the following expression:

Step 3: Substitute a = 1/ s and b = τ for the variables of the original image f ( t ) and rewrite the expression to obtain:

Step 4: Build a multi-frame fuzzy image time-frequency composite weighted signal form.

where rect( t ) = 1 for ∣ t ∣ ≤ 1/2 and 0 otherwise.

Step 5: The frequency modulation law of the time-frequency composite weighted signal of multi-thread fuzzy image is a hyperbolic function;

where \( K = T f_{\max} f_{\min} / B \), \( t_0 = f_0 T / B \), \( f_0 \) is the arithmetic center frequency, and \( f_{\max} \) and \( f_{\min} \) are the maximum and minimum frequencies, respectively.

Step 6: Use the image transformation formula of the multi-detector fuzzy image time-frequency composite weighted signal to apply the time-frequency composite weighting to the image. The image transformation is defined by the following formula:

where \( b_a = \left(1-a\right)\left(\frac{1}{a f_{\max}}-\frac{T}{2}\right) \), and Ei (•) represents the exponential integral.

The final output is the time-frequency composite weighted image signal \( W_{uu}(a, b) \). Therefore, compared with traditional time-domain methods, the extraction of image features can be better realized by the time-frequency composite weighting algorithm.
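
The constants defined in Step 5 can be checked numerically. The sketch below assumes the standard hyperbolic frequency modulation law \( f_i(t) = K/(t_0 - t) \) on \( |t| \le T/2 \), with bandwidth \( B = f_{\max} - f_{\min} \) and \( f_0 = (f_{\max} + f_{\min})/2 \). The function name and the example values are hypothetical and are not taken from the paper.

```python
import numpy as np

def hfm_instantaneous_frequency(t, f_min, f_max, T):
    """Hyperbolic FM law f_i(t) = K / (t0 - t) for |t| <= T/2 (assumed form)."""
    B = f_max - f_min              # bandwidth (assumption: B = f_max - f_min)
    f0 = 0.5 * (f_max + f_min)     # arithmetic center frequency
    K = T * f_max * f_min / B      # K as defined in Step 5
    t0 = f0 * T / B                # t0 as defined in Step 5
    return K / (t0 - np.asarray(t, dtype=float))

# Illustrative values only
T, f_min, f_max = 1.0, 10.0, 30.0
t = np.linspace(-T / 2, T / 2, 5)
f_inst = hfm_instantaneous_frequency(t, f_min, f_max, T)
```

Under these assumptions the instantaneous frequency rises monotonically from \( f_{\min} \) at \( t = -T/2 \) to \( f_{\max} \) at \( t = T/2 \), which is consistent with the definitions of \( K \) and \( t_0 \).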

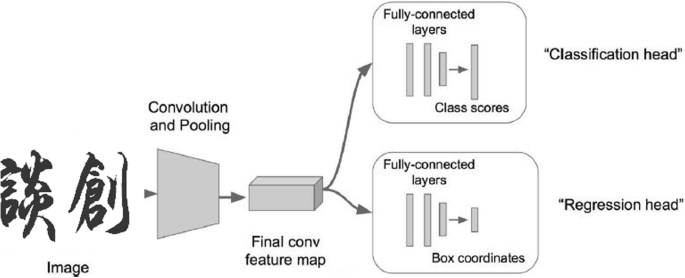

2.3 Application of deep convolution neural network in image classification

After obtaining the feature vectors from the image, the image can be described as a vector of fixed length, and then a classifier is needed to classify the feature vectors.

In general, a common convolutional neural network consists, from input to output, of an input layer, convolutional layers, activation layers, pooling layers, fully connected layers, and a final output layer. The convolutional layers establish the relationships between different computational nodes and transfer the input information layer by layer, and the repeated convolution-pooling structure decodes, deduces, converges, and maps the feature signals of the original data to the hidden-layer feature space [ 28 ]. The following fully connected layer classifies and outputs according to the extracted features.

2.3.1 Convolution neural network

Convolution is an important analytical operation in mathematics. It is a mathematical operator that generates a third function from two functions f and g , representing the area of overlap between f and a flipped and translated copy of g . Its calculation is usually defined by the following formula:

Its integral form is the following:
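
In standard notation, the discrete and integral forms of the convolution of \( f \) and \( g \) are usually written as follows. The index names \( n \), \( t \), and \( \tau \) are an assumption, since the text does not fix them.

```latex
(f * g)(n) = \sum_{\tau=-\infty}^{\infty} f(\tau)\, g(n - \tau),
\qquad
(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau .
```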

In image processing, a digital image can be regarded as a discrete function of a two-dimensional space, denoted as f ( x , y ). Assuming the existence of a two-dimensional convolution function g ( x , y ), the output image z ( x , y ) can be represented by the following formula:

In this way, the convolution operation can be used to extract image features. Similarly, in deep learning applications, when the input is a color image with three RGB channels, the input is a high-dimensional array of size 3 × image width × image length; accordingly, the kernel (called the “convolution kernel” in convolutional neural networks), which is defined in the learning algorithm as a computational parameter, is also a high-dimensional array. Then, when two-dimensional images are input, the corresponding convolution operation can be expressed by the following formula:

The integral form is the following:

If a convolution kernel of m × n is given, there is

where f represents the input image, g the convolution kernel, and m and n the size of the kernel. In a computer, convolution is usually implemented as a matrix product. Suppose the size of an image is M × M and the size of the convolution kernel is n × n . In computation, the convolution kernel is multiplied with each n × n region of the image, which is equivalent to extracting that region and expressing it as a column vector of length n × n . With no zero padding and a stride of 1, a total of ( M − n + 1) × ( M − n + 1) results are obtained; when these small image regions are each represented as a column vector of length n × n , the original image can be represented by a matrix of size [ n × n ] × [( M − n + 1) × ( M − n + 1)]. Assuming that the number of convolution kernels is K , the output obtained from the original image by the above convolution operation has size K × ( M − n + 1) × ( M − n + 1), that is, the number of convolution kernels × the image width after convolution × the image length after convolution.
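
The matrix-product view of convolution described above can be sketched with NumPy. This is a minimal illustration assuming a single-channel M × M image, K kernels of size n × n, no padding, and a stride of 1. The helper names `im2col` and `conv2d_matmul` are hypothetical, and, as in most deep learning frameworks, the kernel is applied without flipping (cross-correlation).

```python
import numpy as np

def im2col(image, n):
    """Stack every n x n patch of a square image as a column of length n*n."""
    M = image.shape[0]
    out = M - n + 1                          # output size: no padding, stride 1
    cols = np.empty((n * n, out * out), dtype=image.dtype)
    for i in range(out):
        for j in range(out):
            cols[:, i * out + j] = image[i:i + n, j:j + n].ravel()
    return cols

def conv2d_matmul(image, kernels):
    """Apply K kernels of shape (K, n, n) with a single matrix product."""
    K, n, _ = kernels.shape
    out = image.shape[0] - n + 1
    cols = im2col(image, n)                  # shape (n*n, out*out)
    weights = kernels.reshape(K, n * n)      # shape (K, n*n)
    return (weights @ cols).reshape(K, out, out)

rng = np.random.default_rng(0)
image = rng.standard_normal((6, 6))          # M = 6
kernels = rng.standard_normal((4, 3, 3))     # K = 4, n = 3
features = conv2d_matmul(image, kernels)     # shape (K, M-n+1, M-n+1)
```

With M = 6, n = 3, and K = 4, the patch matrix has 9 rows and 16 columns, and the output has shape 4 × 4 × 4, matching the K × ( M − n + 1) × ( M − n + 1) count given in the text.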

2.3.2 M³CE constructed loss function

In the process of neural network training, the loss function is the evaluation standard of the whole network model. It not only represents the current state of the network parameters, but also provides the gradient of the parameters in the gradient descent method, so the loss function is an important part of deep learning training. In this paper, we introduce the loss function constructed by M³CE. Finally, a loss function combining M³CE and cross-entropy is obtained through gradient analysis.

According to the definition of MCE, we use the output of the Softmax function as the discriminant function. Then, the misclassification measure is redefined as

\( d_k(z) = -P_k + P_q \)

where k is the label of the sample and \( q = \arg\max_{l \ne k} P_l \) is the most confusing class among the outputs of the Softmax function. If we use the logistic loss function, we can find the gradient of the loss function with respect to z .

This gradient is used in the backpropagation algorithm to obtain the gradient of the entire network. It is worth noting that if z is misclassified, \( \ell_k \) will be infinitely close to 1, and \( a\,\ell_k (1 - \ell_k) \) will be close to 0. The gradient will then be close to 0, so almost no gradient is propagated back to the previous layer, which hinders the completion of the training process [ 29 ].
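
The saturation effect can be seen numerically. The sketch below assumes the usual MCE logistic loss \( \ell_k = 1/(1 + e^{-a d_k}) \), whose derivative with respect to \( d_k \) is \( a\,\ell_k(1 - \ell_k) \). The function names and the slope value a = 10 are illustrative choices, not values from the paper.

```python
import numpy as np

def logistic_loss(d, a=10.0):
    """Logistic loss of the misclassification measure d (assumed form)."""
    return 1.0 / (1.0 + np.exp(-a * d))

def logistic_loss_grad(d, a=10.0):
    """Derivative of the logistic loss with respect to d: a * l * (1 - l)."""
    l = logistic_loss(d, a)
    return a * l * (1.0 - l)

# d = -1: confidently correct, d = 0: borderline, d = 1: badly misclassified
d_values = np.array([-1.0, 0.0, 1.0])
losses = logistic_loss(d_values)
grads = logistic_loss_grad(d_values)
```

For the badly misclassified sample the loss is close to 1 while its gradient is several thousand times smaller than at the decision boundary, so the samples that most need correction contribute almost nothing to the update.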

The sigmoid function is the activation function used in traditional neural networks, and it shows the same problem during training. Its gradient formula shows that when the activation value is high, the backpropagated gradient is very small; this is called saturation. In shallow neural networks the effect was not very large, but as the number of network layers increases, saturation affects the learning of the whole network. In particular, if a saturated sigmoid function sits at a higher layer, it affects the gradients of all the lower layers before it. Therefore, present deep neural networks replace the sigmoid function with a non-saturating activation function, the rectified linear unit (ReLU). When the input value is positive, the gradient of the ReLU is 1, so the gradient of the upper layer can be propagated back to the lower layer without attenuation. The literature shows that ReLUs can accelerate the training process and mitigate vanishing gradients.
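
A small numerical illustration of this argument follows. It ignores weight matrices and assumes the same pre-activation value at every layer, a simplification made only for this sketch; the function names are hypothetical.

```python
import numpy as np

def sigmoid_grad(x):
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = 1.0 / (1.0 + np.exp(-x))
    return s * (1.0 - s)

def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return (x > 0).astype(float)

x = np.array([0.5, 5.0, 10.0])       # positive pre-activations
sig_g = sigmoid_grad(x)
relu_g = relu_grad(x)

# Product of local gradients through 10 stacked layers at x = 5
depth = 10
sig_chain = sig_g[1] ** depth
relu_chain = relu_g[1] ** depth
```

The sigmoid gradient shrinks as the activation grows and collapses when multiplied across layers, while the ReLU gradient stays at 1 for positive inputs.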

Just as a saturating activation function in the middle of the network is not conducive to the training of a deep network, a saturating function in the top-level loss function also has a great influence on the deep neural network.

We call it the max-margin loss, where the margin is defined as \( \epsilon_k = -d_k(z) = P_k - P_q \).

Since \( P_k \) is a probability, that is, \( P_k \in [0, 1] \), we have \( d_k \in [-1, 1] \). When a sample gradually changes from correctly classified to misclassified, \( d_k \) increases from −1 to 1. Compared with the original logistic loss function, even if the sample is seriously misclassified, the loss function still takes its largest loss value. Because \( 1 + d_k \ge 0 \), it can be simplified.

When we need to give a larger loss value to misclassified samples, the above formula can be extended to

where γ is a positive integer. If γ = 2 is set, we get the squared max-margin loss function. If the function is to be applied to training deep neural networks, its gradient needs to be calculated according to the chain rule.
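
The max-margin loss discussed above can be sketched as follows. The code assumes the form \( \ell_k = (1 + d_k)^{\gamma} = (1 + P_q - P_k)^{\gamma} \), which is what the definitions of \( d_k \), \( q \), and \( \gamma \) in the text imply; the function names are hypothetical.

```python
import numpy as np

def softmax(z):
    """Softmax with the maximum subtracted for numerical stability."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def max_margin_loss(z, k, gamma=1):
    """(1 + P_q - P_k) ** gamma, with q the most confusing class (assumed form)."""
    p = softmax(np.asarray(z, dtype=float))
    p_q = np.delete(p, k).max()      # largest probability among classes l != k
    d_k = p_q - p[k]                 # misclassification measure in [-1, 1]
    return (1.0 + d_k) ** gamma

# Label k = 0 in both cases; only the logits differ
loss_correct = max_margin_loss([8.0, 0.0, 0.0], k=0)
loss_wrong = max_margin_loss([0.0, 8.0, 0.0], k=0)
loss_wrong_sq = max_margin_loss([0.0, 8.0, 0.0], k=0, gamma=2)
```

A confidently correct sample gives a loss near 0, a confidently wrong one gives a loss near 2, and setting γ = 2 squares the penalty so that misclassified samples weigh more heavily.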

Here, three cases need to be discussed: (1) the dimension corresponds to the sample label, (2) the dimension corresponds to the confused category label, and (3) the dimension corresponds to neither the sample label nor the confused category label. The following conclusions have been drawn:

3 Experimental results

3.1 Experimental platform and data preprocessing

MNIST (Modified National Institute of Standards and Technology) database is a standard database in machine learning. It consists of grayscale images of handwritten digits in ten classes, with 60,000 training images and 10,000 test images at a resolution of 28 × 28.

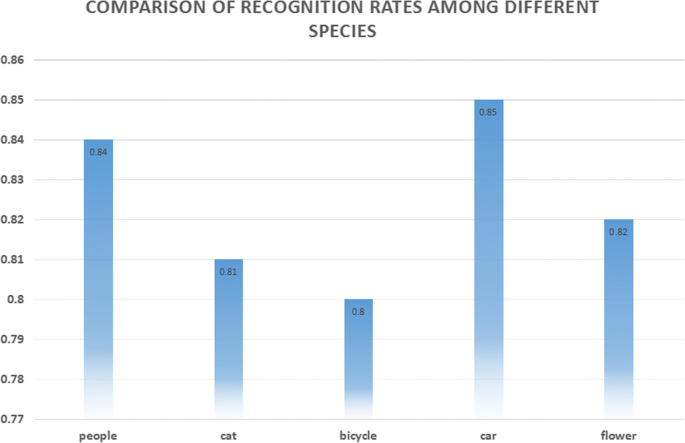

In this paper, we mainly use ZCA whitening to process the image data, after reading the data into an array and reshaping it to the size we need (Figs. 1 , 2 , 3 , 4 , and 5 ). The images of the data set are normalized and then whitened. This gives all pixels the same mean value and variance, eliminates the white noise problem in the image, and eliminates the correlation between pixels.
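
A minimal NumPy sketch of this preprocessing step is given below. It assumes the images are flattened into the rows of an array `X` and adds a small regularizer `eps` that the text does not mention; the function name `zca_whiten` and the toy data are hypothetical and stand in for real image arrays.

```python
import numpy as np

def zca_whiten(X, eps=1e-5):
    """ZCA-whiten X of shape (m, d), one flattened image per row.

    eps is an assumed regularizer that avoids division by tiny eigenvalues.
    """
    mu = X.mean(axis=0)
    Xc = X - mu                                  # zero-mean data
    sigma = Xc.T @ Xc / X.shape[0]               # covariance matrix, (d, d)
    U, S, _ = np.linalg.svd(sigma)               # eigenvectors U, eigenvalues S
    W = U @ np.diag(1.0 / np.sqrt(S + eps)) @ U.T
    return Xc @ W

# Toy data with correlated "pixels" (illustrative, not MNIST)
rng = np.random.default_rng(0)
mixing = np.array([[2.0, 1.0, 0.0, 0.0],
                   [0.0, 1.0, 1.0, 0.0],
                   [0.0, 0.0, 1.0, 0.5],
                   [0.0, 0.0, 0.0, 1.0]])
X = rng.standard_normal((2000, 4)) @ mixing
X_white = zca_whiten(X)
cov_white = X_white.T @ X_white / X_white.shape[0]
```

After whitening, the sample covariance is close to the identity matrix, which is the sense in which the pixels share the same variance and become uncorrelated.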

Fig. 1 ZCA whitening flow chart

Fig. 2 Sample selection of different fonts and different colors

Fig. 3 Comparison of image feature extraction

Fig. 4 Image classification and modeling based on deep convolution neural network

Fig. 5 Comparison of recognition rates among different species

At the same time, a common way to improve training results is to randomly distort, crop, or sharpen the training inputs. This has the advantage of extending the effective size of the training data, because many variations of the same image become available, and it helps the network learn to handle the distortions that occur in real use of the classifier. Therefore, when the training results are abnormal, the images are deformed randomly to prevent individual abnormal images from strongly interfering with the whole model.
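
As an illustration of this kind of augmentation, the sketch below applies only random cropping, one of the operations named above. The function name, the 24 × 24 crop size, and the synthetic image are assumptions for the example and are not settings reported in the paper.

```python
import numpy as np

def random_crop(image, crop, rng):
    """Return a random (crop, crop) window of a 2D image."""
    H, W = image.shape
    top = rng.integers(0, H - crop + 1)      # upper bound is exclusive
    left = rng.integers(0, W - crop + 1)
    return image[top:top + crop, left:left + crop]

rng = np.random.default_rng(0)
image = np.arange(28 * 28, dtype=float).reshape(28, 28)   # stand-in for a 28 x 28 digit
batch = np.stack([random_crop(image, 24, rng) for _ in range(16)])
```

Each call yields a slightly shifted view of the same image, so one training sample produces many distinct inputs.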

3.2 Build a training network