When you choose to publish with PLOS, your research makes an impact. Make your work accessible to all, without restrictions, and accelerate scientific discovery with options like preprints and published peer review that make your work more Open.

How to Write a Peer Review

When you write a peer review for a manuscript, what should you include in your comments? What should you leave out? And how should the review be formatted?

This guide provides quick tips for writing and organizing your reviewer report.

Review Outline

Use an outline for your reviewer report so it’s easy for the editors and author to follow. This will also help you keep your comments organized.

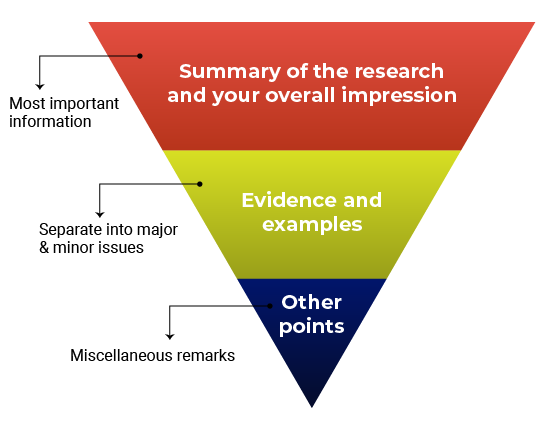

Think about structuring your review like an inverted pyramid. Put the most important information at the top, followed by details and examples in the center, and any additional points at the very bottom.

Here’s how your outline might look:

1. Summary of the research and your overall impression

In your own words, summarize what the manuscript claims to report. This shows the editor how you interpreted the manuscript and will highlight any major differences in perspective between you and the other reviewers. Give an overview of the manuscript’s strengths and weaknesses. Think about this as your “take-home” message for the editors. End this section with your recommended course of action.

2. Discussion of specific areas for improvement

It’s helpful to divide this section into two parts: one for major issues and one for minor issues. Within each section, you can talk about the biggest issues first or go systematically figure-by-figure or claim-by-claim. Number each item so that your points are easy to follow (this will also make it easier for the authors to respond to each point). Refer to specific lines, pages, sections, or figure and table numbers so the authors (and editors) know exactly what you’re talking about.

Major vs. minor issues

What’s the difference between a major and minor issue? Major issues should consist of the essential points the authors need to address before the manuscript can proceed. Make sure you focus on what is fundamental for the current study. In other words, it’s not helpful to recommend additional work that would be considered the “next step” in the study. Minor issues are still important but typically will not affect the overall conclusions of the manuscript. Here are some examples of what might go in the “minor” category:

- Missing references (but depending on what is missing, this could also be a major issue)

- Technical clarifications (e.g., the authors should clarify how a reagent works)

- Data presentation (e.g., the authors should present p-values differently)

- Typos, spelling, grammar, and phrasing issues

3. Any other points

Confidential comments for the editors.

Some journals have a space for reviewers to enter confidential comments about the manuscript. Use this space to mention concerns about the submission that you’d want the editors to consider before sharing your feedback with the authors, such as concerns about ethical guidelines or language quality. Any serious issues should be raised directly and immediately with the journal as well.

This section is also where you will disclose any potentially competing interests, and mention whether you’re willing to look at a revised version of the manuscript.

Do not use this space to critique the manuscript, since comments entered here will not be passed along to the authors. If you’re not sure what should go in the confidential comments, read the reviewer instructions or check with the journal first before submitting your review. If you are reviewing for a journal that does not offer a space for confidential comments, consider writing to the editorial office directly with your concerns.

Giving Feedback

Giving feedback is hard. Giving effective feedback can be even more challenging. Remember that your ultimate goal is to discuss what the authors would need to do in order to qualify for publication. The point is not to nitpick every piece of the manuscript. Your focus should be on providing constructive and critical feedback that the authors can use to improve their study.

If you’ve ever had your own work reviewed, you already know that it’s not always easy to receive feedback. Follow the golden rule: Write the type of review you’d want to receive if you were the author. Even if you decide not to identify yourself in the review, you should write comments that you would be comfortable signing your name to.

In your comments, use phrases like “the authors’ discussion of X” instead of “your discussion of X.” This will depersonalize the feedback and keep the focus on the manuscript instead of the authors.

General guidelines for effective feedback

- Justify your recommendation with concrete evidence and specific examples.

- Be specific so the authors know what they need to do to improve.

- Be thorough. This might be the only time you read the manuscript.

- Be professional and respectful. The authors will be reading these comments too.

- Remember to say what you liked about the manuscript!

Don’t

- Recommend additional experiments or unnecessary elements that are out of scope for the study or for the journal criteria.

- Tell the authors exactly how to revise their manuscript—you don’t need to do their work for them.

- Use the review to promote your own research or hypotheses.

- Focus on typos and grammar. If the manuscript needs significant editing for language and writing quality, just mention this in your comments.

- Submit your review without proofreading it and checking everything one more time.

Before and After: Sample Reviewer Comments

Keeping in mind the guidelines above, how do you put your thoughts into words? Here are some sample “before” and “after” reviewer comments:

✗ Before

“The authors appear to have no idea what they are talking about. I don’t think they have read any of the literature on this topic.”

✓ After

“The study fails to address how the findings relate to previous research in this area. The authors should rewrite their Introduction and Discussion to reference the related literature, especially recently published work such as Darwin et al.”

✗ Before

“The writing is so bad, it is practically unreadable. I could barely bring myself to finish it.”

✓ After

“While the study appears to be sound, the language is unclear, making it difficult to follow. I advise the authors to work with a writing coach or copyeditor to improve the flow and readability of the text.”

✗ Before

“It’s obvious that this type of experiment should have been included. I have no idea why the authors didn’t use it. This is a big mistake.”

✓ After

“The authors are off to a good start; however, this study requires additional experiments, particularly [type of experiment]. Alternatively, the authors should include more information that clarifies and justifies their choice of methods.”

Suggested Language for Tricky Situations

You might find yourself in a situation where you’re not sure how to explain the problem or provide feedback in a constructive and respectful way. Here is some suggested language for common issues you might experience.

What you think: The manuscript is fatally flawed. What you could say: “The study does not appear to be sound” or “The authors have missed something crucial.”

What you think: You don’t completely understand the manuscript. What you could say: “The authors should clarify the following sections to avoid confusion…”

What you think: The technical details don’t make sense. What you could say: “The technical details should be expanded and clarified to ensure that readers understand exactly what the researchers studied.”

What you think: The writing is terrible. What you could say: “The authors should revise the language to improve readability.”

What you think: The authors have over-interpreted the findings. What you could say: “The authors aim to demonstrate [XYZ]; however, the data do not fully support this conclusion. Specifically…”

What does a good review look like?

Check out the peer review examples at F1000 Research to see how other reviewers write up their reports and give constructive feedback to authors.

Time to Submit the Review!

Be sure you turn in your report on time. Need an extension? Tell the journal so that they know what to expect. If you need a lot of extra time, the journal might need to contact other reviewers or notify the author about the delay.

Tip: Building a relationship with an editor

You’ll be more likely to be asked to review again if you provide high-quality feedback and if you turn in the review on time. Especially if it’s your first review for a journal, it’s important to show that you are reliable. Prove yourself once and you’ll get asked to review again!

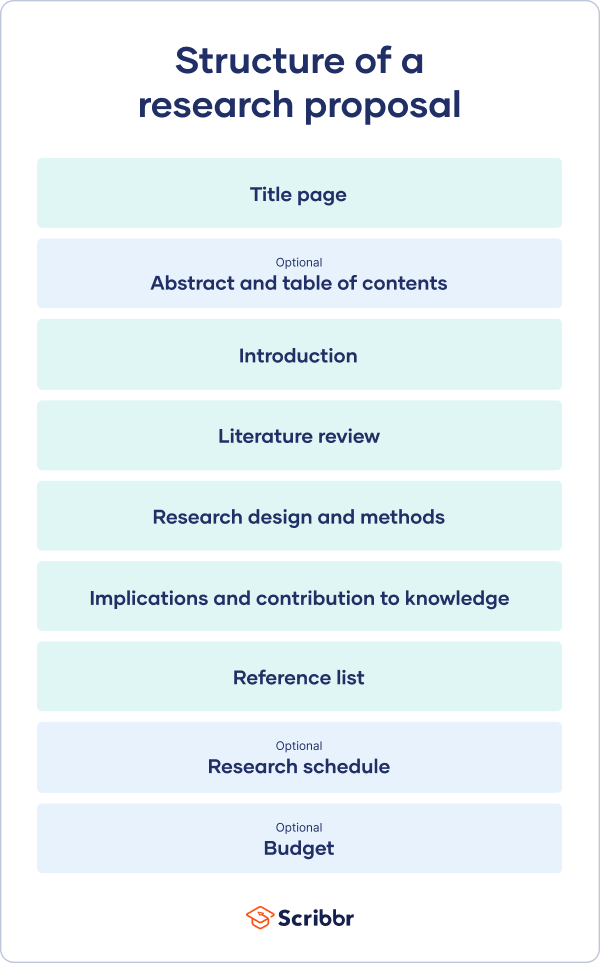

Research Proposal Peer Review

As a writer . . .

Step 1: Include answers to the following two questions at the top of your draft:

- What questions do you have for your reviewer?

- List two concerns you have about your research proposal.

Step 2: When you receive your peer's feedback, read and consider it carefully.

- Remember: you are not bound to accept everything your reader suggests. If you believe that a response stems from a misunderstanding of your intentions, make sure those intentions are clear in your revision. The problem can be either with the reader or the writer!

As a reviewer . . .

As you begin writing your peer review, remember that your peers benefit more from constructive criticism than vague praise. A comment like "I got confused here" or "I saw your point clearly here" is more useful than "It looks okay to me." Point out ways your classmates can improve their work.

Step 1: Read your peer’s draft twice.

- Read the draft once to get an overview of the paper, and a second time to provide constructive criticism for the author to use when revising the draft.

Step 2: Answer the following questions:

- Does the draft include an introduction that establishes the purpose of the paper and provides a thoughtful explanation of the project's significance by communicating why the project is important and how it will contribute to the existing field of knowledge?

- Does the research review section include at least five credible sources on the topic?

- In the research review section, has the writer explained the sources' relevance to the topic and discussed the significant commonalities and conflicts between the sources?

- In the methodology section, has the writer discussed how they will proceed with the proposed project and addressed questions that still need to be answered about the topic? Is it clear why those questions are significant?

- In the methodology section, has the writer discussed potential challenges (e.g., language and/or cultural barriers, potential safety concerns, time constraints, etc.) and how they plan to overcome them?

- In the conclusion section, has the writer reminded the reader of the potential benefits of the proposed research by discussing who will potentially benefit from it and what it will contribute to knowledge and understanding of the topic?

- What did you find most interesting about this draft?

Step 3: Address your peer's questions and concerns included at the top of the draft.

Step 4: Write a short paragraph about what the writer does especially well.

Step 5: Write a short paragraph about what you think the writer should do to improve the draft.

Your suggestions will be the most useful part of peer review for your classmates, so focus more of your time on these paragraphs; they will count for more of your peer review grade than the yes or no responses.

Hints for peer review:

- Point out the strengths in the draft.

- Address the larger issues first.

- Make specific suggestions for improvement.

- Be tactful but be candid and direct.

- Don't be afraid to disagree with another reviewer.

- Make and receive comments in a useful way.

- Remember peer review is not an editing service.

This material was developed by the COMPSS team and is licensed under a Creative Commons Attribution 4.0 International License. All materials created by the COMPSS team are free to use and can be adopted, remixed, shared at will as long as the materials are attributed.

© 2024 Kennesaw State University. All Rights Reserved.

Top Tips: Reviewing a Research Proposal

The peer review process is invaluable in assisting research panels to make decisions about funding. Independent experts scrutinise the importance, potential and cost-effectiveness of the research being proposed.

Check the funder’s website for guidance. Ensure you are clear about what type of proposal you are being asked to review, and read the assessment criteria and scoring matrix as a priority. Many funding councils have prepared comprehensive guidance for reviewers that is freely available online. For example, EPSRC and ESRC guidance can be accessed here:

EPSRC: https://www.epsrc.ac.uk/funding/assessmentprocess/review/formsandguidancenotes/standardcalls/

ESRC: http://www.esrc.ac.uk/funding/guidance-for-peer-reviewers/

Be objective and professional. Provide clear and concise comments and objective criticism when identifying strengths and weaknesses in the proposal. Whether or not there are major flaws or ethical concerns, provide justification and references for your comments and for the score you give. Remain anonymous by avoiding references to your own work or to any personal information. Don’t allow your review to be influenced by bias towards your own field of research, and be mindful of unconscious bias and the impact it could have on your review. See: https://implicit.harvard.edu/implicit/takeatest.html.

Be concise but clear. Many submission systems have character limits for the review sections, so you will need to be concise. However, not everyone reading your review comments will be a specialist in your field, so use accessible language throughout.

Remember to praise a good proposal. If you find that the proposal you’re reviewing is good, say so and explain why.

Take your time. Finally, allow enough time to read the proposal thoroughly before writing and submitting your review. If you need more time to complete your review, contact the funder to request a deadline extension. Most funders would prefer that you request an extension and provide a more comprehensive review rather than submit something brief and uninformative because you had too little time to consider the proposal in detail.

Evaluation of research proposals by peer review panels: broader panels for broader assessments?

Rebecca Abma-Schouten, Joey Gijbels, Wendy Reijmerink, Ingeborg Meijer, Evaluation of research proposals by peer review panels: broader panels for broader assessments?, Science and Public Policy, Volume 50, Issue 4, August 2023, Pages 619–632, https://doi.org/10.1093/scipol/scad009

Panel peer review is widely used to decide which research proposals receive funding. Through this exploratory observational study at two large biomedical and health research funders in the Netherlands, we gain insight into how scientific quality and societal relevance are discussed in panel meetings. We explore, in ten review panel meetings of biomedical and health funding programmes, how panel composition and formal assessment criteria affect the arguments used. We observe that more scientific arguments are used than arguments related to societal relevance and expected impact. Also, more diverse panels result in a wider range of arguments, largely for the benefit of arguments related to societal relevance and impact. We discuss how funders can contribute to the quality of peer review by creating a shared conceptual framework that better defines research quality and societal relevance. We also contribute to a further understanding of the role of diverse peer review panels.

Scientific biomedical and health research is often supported by project or programme grants from public funding agencies such as governmental research funders and charities. Research funders primarily rely on peer review, often a combination of independent written review and discussion in a peer review panel, to inform their funding decisions. Peer review panels have the difficult task of integrating and balancing the various assessment criteria to select and rank the eligible proposals. With the increasing emphasis on societal benefit and being responsive to societal needs, the assessment of research proposals ought to include broader assessment criteria, including both scientific quality and societal relevance, and a broader perspective on relevant peers. This results in new practices of including non-scientific peers in review panels (Del Carmen Calatrava Moreno et al. 2019; Den Oudendammer et al. 2019; Van den Brink et al. 2016). Relevant peers, in the context of biomedical and health research, include, for example, health-care professionals, (healthcare) policymakers, and patients as the (end-)users of research.

Currently, in scientific and grey literature, much attention is paid to what legitimate criteria are and to deficiencies in the peer review process, for example, focusing on the role of chance and the difficulty of assessing interdisciplinary or ‘blue sky’ research (Langfeldt 2006; Roumbanis 2021a). Our research primarily builds upon the work of Lamont (2009), Huutoniemi (2012), and Kolarz et al. (2016). Their work articulates how the discourse in peer review panels can be understood by giving insight into disciplinary assessment cultures and social dynamics, as well as how panel members define and value concepts such as scientific excellence, interdisciplinarity, and societal impact. At the same time, there is little empirical work on what actually is discussed in peer review meetings and to what extent this is related to the specific objectives of the research funding programme. Such observational work is especially lacking in the biomedical and health domain.

The aim of our exploratory study is to learn what arguments panel members use in a review meeting when assessing research proposals in biomedical and health research programmes. We explore how arguments used in peer review panels are affected by (1) the formal assessment criteria and (2) the inclusion of non-scientific peers in review panels, also called (end-)users of research, societal stakeholders, or societal actors. We add to the existing literature by focusing on the actual arguments used in peer review assessment in practice.

To this end, we observed ten panel meetings across eight biomedical and health research programmes at two large research funders in the Netherlands: the governmental research funder The Netherlands Organisation for Health Research and Development (ZonMw) and the charitable research funder the Dutch Heart Foundation (DHF). Our first research question focuses on what arguments panel members use when assessing research proposals in a review meeting. The second examines to what extent these arguments correspond with the formal criteria on scientific quality and societal impact creation, as described in the programme brochure and assessment form. The third question focuses on how the arguments used differ between panel members with different perspectives.

2.1 Relation between science and society

To understand the dual focus of scientific quality and societal relevance in research funding, a theoretical understanding and a practical operationalisation of the relation between science and society are needed. The conceptualisation of this relationship affects both who are perceived as relevant peers in the review process and the criteria by which research proposals are assessed.

The relationship between science and society is neither constant over time nor static, and it is much debated. Scientific knowledge can have a huge impact on societies, whether intended or unintended. Conversely, the social environment and structure in which science takes place influence the rate of development, the topics of interest, and the content of science. However, this second direction of the interrelation between science and society generally receives less attention (Merton 1968; Weingart 1999).

From a historical perspective, scientific and technological progress contributed to the view that science was valuable on its own account and that science and the scientist stood independent of society. While this protected science from unwarranted political influence, societal disengagement from science reduced the authority of science and prompted debate about its contribution to society. This interdependence and mutual influence contributed to a modern view of science in which knowledge development is valued both on its own merit and for its impact on, and interaction with, society. As such, societal factors and problems are important drivers of scientific research. Consequently, the relations and boundaries between science, society, and politics need to be organised and constantly reinforced and reiterated (Merton 1968; Shapin 2008; Weingart 1999).

Glerup and Horst (2014) conceptualise the value of science to society and the role of society in science in four rationalities that reflect different justifications for their relation and thus also for who is responsible for (assessing) the societal value of science. The rationalities are arranged along two axes: one is related to the internal or external regulation of science and the other is related to either the process or the outcome of science as the object of steering. The first two rationalities, Reflexivity and Demarcation, focus on internal regulation in the scientific community. Reflexivity focuses on the outcome. The central idea is that science, and thus scientists, should learn from societal problems and provide solutions. Demarcation focuses on the process: science should continuously question its own motives and methods. The latter two rationalities, Contribution and Integration, focus on external regulation. The core of the outcome-oriented Contribution rationality is that scientists do not necessarily see themselves as ‘working for the public good’. Science should thus be regulated by society to ensure that outcomes are useful. The central idea of the process-oriented Integration rationality is that societal actors should be involved in science in order to influence the direction of research.

Research funders can be seen as external or societal regulators of science. They can focus on organising the process of science (Integration) or on scientific outcomes that function as solutions to societal challenges (Contribution). In the Contribution perspective, a funder could enhance outside (societal) involvement in science to ensure that scientists take responsibility for delivering results that are needed and used by society. The Integration perspective holds that actors from science and society need to work together in order to produce the best results. In this perspective, there is a lack of integration between science and society, and more collaboration and dialogue are needed to develop a new kind of integrative responsibility (Glerup and Horst 2014). This argues for the inclusion of other types of evaluators in research assessment. In practice, these rationalities are neither mutually exclusive nor strictly separated. As a consequence, multiple rationalities can be recognised in the reasoning of scientists and in the policies of research funders today.

2.2 Criteria for research quality and societal relevance

The rationalities of Glerup and Horst have consequences for the language used to discuss societal relevance and impact in research proposals. Even though the main ingredients are quite similar, the coexisting rationalities in science mean that societal aspects can be defined and operationalised in different ways (Alla et al. 2017). Reed's definition of societal impact emphasises the outcome: the contribution to society. It includes the significance for society, the size of the potential impact, and the reach, that is, the number of people or organisations benefiting from the expected outcomes (Reed et al. 2021). Other models and definitions focus more on the process of science and its interaction with society. Spaapen and Van Drooge introduced productive interactions into the assessment of societal impact, highlighting direct contact between researchers and other actors. A key idea is that interaction in different domains leads to impact in different domains (Meijer 2012; Spaapen and Van Drooge 2011). Definitions that focus on the process often refer to societal impact as (1) something that can take place in distinguishable societal domains, (2) something that needs to be actively pursued, and (3) something that requires interactions with societal stakeholders (or users of research) (Hughes and Kitson 2012; Spaapen and Van Drooge 2011).

Glerup and Horst show that process- and outcome-oriented aspects can be combined in the operationalisation of criteria for assessing the societal aspects of research proposals. The funders participating in this study likewise include both the outcome (the value created in different domains) and the process (productive interactions with stakeholders) in their formal assessment criteria for societal relevance and impact. Different labels are used for these criteria, such as societal relevance, societal quality, and societal impact ( Abma-Schouten 2017 ; Reijmerink and Oortwijn 2017 ). In this paper, we use societal relevance or societal relevance and impact.

Scientific quality in research assessment frequently refers to all aspects and activities in a study that contribute to the validity and reliability of the research results and to the integrity and quality of the research process itself. The criteria commonly include the relevance of the proposal for the funding programme, scientific relevance, originality, innovativeness, methodology, and feasibility ( Abdoul et al. 2012 ). Several studies have shown that quality is regarded as a rich and complex concept in which excellence and innovativeness, methodological aspects, stakeholder engagement, multidisciplinary collaboration, and societal relevance all play a role ( Geurts 2016 ; Roumbanis 2019 ; Scholten et al. 2018 ). Another study proposed a comprehensive definition of ‘good’ science that includes creativity, reproducibility, perseverance, intellectual courage, and personal integrity. It showed that ‘good’ science involves not only scientific excellence but also personal values and ethics, and engagement with society ( Van den Brink et al. 2016 ). Notably, these studies connect societal relevance with scientific quality.

In summary, the criteria for scientific quality and societal relevance are conceptualised in different ways, and perspectives on the role of societal value creation and the involvement of societal actors vary strongly. Research funders therefore need to attend to what the criteria mean to the panel members they recruit, and to navigate and negotiate how the criteria are applied in assessing research proposals. To do so, more insight is needed into which elements of scientific quality and societal relevance peer review panels discuss in practice.

2.3 Role of funders and societal actors in peer review

National governments and charities are important funders of biomedical and health research. How this funding is distributed varies by country. Project funding is frequently allocated through research programming by specialised public funding organisations, such as the Dutch Research Council in the Netherlands and ZonMw for health research. The DHF, the second-largest private non-profit research funder in the Netherlands, provides project funding ( Private Non-Profit Financiering 2020 ). Funders, as so-called boundary organisations, can act as key intermediaries between government, science, and society ( Jasanoff 2011 ). Their responsibility is to develop effective research policies that connect societal demands with scientific ‘supply’. This includes setting up and executing fair and balanced assessment procedures ( Sarewitz and Pielke 2007 ). In this context, the role of societal stakeholders is receiving increasing attention ( Benedictus et al. 2016 ; De Rijcke et al. 2016 ; Dijstelbloem et al. 2013 ; Scholten et al. 2018 ).

In the last decade, all charitable health research funders in the Netherlands have included patients at different stages of the funding process, including the assessment of research proposals ( Den Oudendammer et al. 2019 ). To help research funders involve patients in assessing research proposals, the federation of Dutch patient organisations set up an independent reviewer panel of (at-risk) patients and direct caregivers ( Patiëntenfederatie Nederland, n.d.). Other foundations have set up societal advisory panels that include a wider range of societal actors than patients alone. The Committee Societal Quality (CSQ) of the DHF includes, for example, (at-risk) patients and a wide range of cardiovascular health-care professionals who are not active as academic researchers. This model is also applied by the Diabetes Foundation and the Princess Beatrix Muscle Foundation in the Netherlands ( Diabetesfonds, n.d.; Prinses Beatrix Spierfonds, n.d.).

In 2014, the Lancet presented a series of five papers on biomedical and health research known as the ‘increasing value, reducing waste’ series ( Macleod et al. 2014 ). The authors addressed several issues as well as potential solutions that funders can implement. Among other things, they highlighted the importance of improving the societal relevance of research questions and of including the burden of disease in research assessment in order to increase the value of biomedical and health science for society. A better understanding of, and a larger role for, users of research are also among the proposed solutions ( Chalmers et al. 2014 ; Van den Brink et al. 2016 ). This is also in line with the recommendations of the 2013 Declaration on Research Assessment (DORA) ( DORA 2013 ). These recommendations influence how research funders operationalise their criteria in research assessment, how they balance the judgement of scientific and societal aspects, and how they involve societal stakeholders in peer review.

2.4 Panel peer review of research proposals

To assess research proposals, funders rely on peer experts to review the thousands or perhaps millions of research proposals seeking funding each year. While often associated with scholarly publishing, peer review also includes the ex ante assessment of research grant and fellowship applications ( Abdoul et al. 2012 ). Peer review of proposals often combines a written assessment of each proposal by an anonymous peer with a panel meeting that selects the proposals eligible for funding. Peer review is an established component of professional academic practice, is deeply embedded in research culture, and essentially consists of experts in a given domain appraising the professional performance, creativity, and/or quality of scientific work produced by others in their field of competence ( Demicheli and Di Pietrantonj 2007 ). The history of peer review as the default approach to scientific evaluation and accountability is, however, relatively short. While the term was unheard of in the 1960s, by 1970 it had become the standard. Since then, peer review has become increasingly diverse and formalised, resulting in more public accountability ( Reinhart and Schendzielorz 2021 ).

While many studies have examined peer review in scholarly publishing, peer review in grant allocation has received less attention ( Demicheli and Di Pietrantonj 2007 ). The most extensive work on this topic has been conducted by Lamont (2009) , who studied peer review panels in five American research funding organisations, including direct observation of three panels. Other examples include Roumbanis’s ethnographic observations of ten review panels at the Swedish Research Council in the natural and engineering sciences ( Roumbanis 2017 , 2021a ). Huutoniemi studied, but did not observe, four panels on environmental studies and social sciences of the Academy of Finland ( Huutoniemi 2012 ). Additionally, Van Arensbergen and Van den Besselaar (2012) analysed peer review in a talent funding programme through interviews and by analysing the scores and outcomes at different stages of the process. Of particular interest is the study by Luo and colleagues of 164 written panel review reports, which showed that reviews from panels that included non-scientific peers described broader and more concrete impact topics. Mixed panels also more often connected research processes and characteristics of applicants with impact creation ( Luo et al. 2021 ).

Although these studies focused primarily on peer review panels in other disciplinary domains, or relied on interviews or reports rather than direct observation, we believe that many of their findings are relevant to the functioning of panels in biomedical and health research. This literature teaches us to have realistic expectations of peer review. It is inherently difficult to predict which research projects will yield the most important findings or breakthroughs ( Lee et al. 2013 ; Pier et al. 2018 ; Roumbanis 2021a , 2021b ). At the same time, these limitations do not necessarily justify replacing peer review with another assessment approach ( Wessely 1998 ). Many topics addressed in the literature are interrelated and relevant to our study, such as disciplinary differences and interdisciplinarity, social dynamics and their consequences for consistency and bias, and suggestions for improving panel peer review ( Lamont and Huutoniemi 2011 ; Lee et al. 2013 ; Pier et al. 2018 ; Roumbanis 2021a , b ; Wessely 1998 ).

Different scientific disciplines hold different preferences and beliefs about how to build knowledge and thus have different perceptions of excellence. However, panellists are willing to respect and acknowledge other standards of excellence ( Lamont 2009 ). Evaluation cultures also differ between scientific fields. Compared with panellists from the social sciences and humanities, panels in science, technology, engineering, and mathematics may be more concerned with the consistency of assessment across panels and therefore with clear definitions and uses of assessment criteria ( Lamont and Huutoniemi 2011 ). However, much remains to be learned about how panellists’ cognitive affiliations with particular disciplines unfold in the evaluation process. Improving the assessment of interdisciplinary research is therefore more complex than refining the criteria or procedure, because less explicit repertoires would also need to change ( Huutoniemi 2012 ).

Social dynamics play a role, as panellists may differ in their motivation to engage in allocation processes, which could create bias ( Lee et al. 2013 ). Emphasising established standards or thoroughness in peer review may favour uncontroversial and safe projects, especially when strong competition pressures experts to reach a consensus ( Langfeldt 2001 , 2006 ). Personal interest and cognitive similarity may also contribute to conservative bias, which could negatively affect controversial or frontier science ( Luukkonen 2012 ; Roumbanis 2021a ; Travis and Collins 1991 ). Central to this strand of the literature is the idea that panel conclusions are the outcome of, and are influenced by, group interaction ( Van Arensbergen et al. 2014a ). Differences in, for example, the status and expertise of panel members can play an important role in group dynamics. Insights from social psychology on group dynamics can help in understanding and avoiding bias in peer review panels ( Olbrecht and Bornmann 2010 ). For example, group performance research shows that more diverse groups with complementary skills make better decisions than homogeneous groups. Yet heterogeneity can also increase conflict within the group ( Forsyth 1999 ). It is therefore important to pay attention to power dynamics and to maintain team spirit and good communication ( Van Arensbergen et al. 2014a ), especially in meetings that include both scientific and non-scientific peers.

The literature also provides funders with starting points to improve the peer review process. For example, the explicitness of review procedures positively influences the decision-making processes ( Langfeldt 2001 ). Strategic voting and decision-making appear to be less frequent in panels that rate than in panels that rank proposals. Also, an advisory instead of a decisional role may improve the quality of the panel assessment ( Lamont and Huutoniemi 2011 ).

Despite different disciplinary evaluative cultures, formal procedures, and criteria, panel members with different backgrounds develop shared customary rules of deliberation that facilitate agreement and help avoid situations of conflict ( Huutoniemi 2012 ; Lamont 2009 ). This is a necessary prerequisite for opening up peer review panels to include non-academic experts. When doing so, it is important to realise that panel review is a social, emotional, and interactional process. It is therefore important to also take these non-cognitive aspects into account when studying cognitive aspects ( Lamont and Guetzkow 2016 ), as we do in this study.

In summary, the literature shows that (1) the specific criteria used to operationalise the scientific quality and societal relevance of research are important, (2) the rationalities of Glerup and Horst predict that not everyone values societal aspects, or the involvement of non-scientists in peer review, to the same extent and in the same way, (3) this may affect how peer review panels discuss these aspects, and (4) peer review is a challenging group process that could accommodate other rationalities in order to prevent bias towards specific scientific criteria. To disentangle these aspects, we carried out an observational study of a diverse range of peer review panel sessions using a fixed set of criteria focusing on scientific quality and societal relevance.

3.1 Research assessment at ZonMw and the DHF

The peer review approach and the criteria used by the DHF and ZonMw are largely comparable. Funding programmes at both organisations start with a brochure describing the purposes, goals, and conditions for research applications, as well as the assessment procedure and criteria. Both organisations apply a two-stage process. In the first phase, reviewers are asked to write a peer review. In the second phase, a panel reviews the application based on the written reviews and the applicants’ rebuttal. The panels advise the board on which proposals are eligible for funding, including a ranking of these proposals.

There are also differences between the two organisations. At ZonMw, the criteria for societal relevance and quality are operationalised in the ZonMw Framework Fostering Responsible Research Practices ( Reijmerink and Oortwijn 2017 ). This contributes to a common operationalisation of both quality and societal relevance at the level of individual funding programmes. Important elements of the criteria for societal relevance are, for instance, stakeholder participation, (applying) holistic health concepts, and the added value of knowledge in practice, policy, and education. The framework was developed to optimise the funding process from the perspective of knowledge utilisation and includes concepts such as productive interactions and Open Science. It is part of the ZonMw Impact Assessment Framework, which aims to guide the planning, monitoring, and evaluation of funding programmes ( Reijmerink et al. 2020 ). At ZonMw, a dedicated interdisciplinary panel is set up for each funding programme. These panels include academics from a wide range of disciplines and often non-academic peers, such as policymakers, health-care professionals, and patients.

At the DHF, the criteria for scientific quality and societal relevance (which the DHF calls societal impact) originate from the strategy report of the advisory committee CardioVascular Research Netherlands ( Reneman et al. 2010 ). This report forms the basis of the DHF research policy, which focuses on scientific and societal impact by creating national collaborations in thematic, interdisciplinary research programmes (the so-called consortia) that connect preclinical and clinical expertise in one concerted effort. An International Scientific Advisory Committee (ISAC) was established to assess these thematic consortia. This panel consists of international scientists, primarily with expertise in the broad cardiovascular research field. The DHF criteria for societal impact were redeveloped in 2013 in collaboration with its CSQ, which assesses and advises on the societal aspects of proposed studies. The societal impact criteria include the relevance of the health-care problem, the expected contribution to a solution, attention to the next step in science and towards implementation in practice, and the involvement of and interaction with (end-)users of research (R.Y. Abma-Schouten and I.M. Meijer, unpublished data). Peer review panels for consortium funding are generally composed of members of the ISAC, members of the CSQ, and ad hoc panel members relevant to the specific programme. CSQ members often hold a pre-meeting before the final panel meeting to prepare and empower the CSQ representatives participating in the peer review panel.

3.2 Selection of funding programmes

To compare and evaluate observations between the two organisations, we selected funding programmes that were relatively comparable in scope and aims. The criteria were (1) a translational and/or clinical objective and (2) a selection procedure in which review panels were responsible for the (final) relevance and quality assessment of grant applications. In total, we selected eight programmes, four at each organisation. At the DHF, two programmes in which the CSQ did not participate were chosen to better disentangle the role of panel composition. For each programme, we observed the selection process, which ranged from a single session on one day (lasting 2–8 h) to multiple sessions over several days. Ten sessions were observed in total: eight final peer review panel meetings and two CSQ meetings preparing for the panel meeting.

After management approval for the study in both organisations, we asked the programme managers and panel chairpersons of the selected programmes for consent to observe; none refused. Through a passive consent procedure, panel members were informed about the planned observation and anonymous analyses.

To ensure the independence of this evaluation, the selection of the grant programmes and peer review panels to be observed was at the discretion of the project team of this study. The observations and the supervision of the analyses were performed by the senior author, who is not affiliated with the funders.

3.3 Observation matrix

Given the lack of a common operationalisation of scientific quality and societal relevance, we used an observation matrix with a fixed set of detailed aspects as a gold standard for scoring the brochures, the assessment forms, and the arguments used in panel meetings. The matrix was based on, and adapted from, a ‘grant committee observation matrix’ developed by Van Arensbergen. The original matrix informed a literature review on the selection of talent through peer review and on the social dynamics in grant review committees ( van Arensbergen et al. 2014b ). The matrix groups aspects into four categories: societal relevance, scientific quality, committee, and applicant (see Table 1 ). The aspects of scientific quality and societal relevance were adapted to fit the operationalisations used by the participating organisations: the societal relevance aspects were derived from the CSQ criteria, and the scientific quality aspects were based on the scientific criteria of the first panel observed. The four committee-related argument types were kept unchanged. This category covers statements related to the personal experience or preference of a panel member, which can be seen as signals of bias, as well as statements that compare a project with another project without further substantiation. The three applicant-related arguments in the original matrix were extended with a fourth on social skills in communication with society. We added health technology assessment (HTA) because one programme focused specifically on this aspect. We tested our version of the observation matrix in pilot observations.

Table 1. Aspects included in the observation matrix and examples of arguments.

3.4 Observations

Data were primarily collected through observations. Our observations of review panel meetings were non-participatory: the observer and goal of the observation were introduced at the start of the meeting, without further interactions during the meeting. To aid in the processing of observations, some meetings were audiotaped (sound only). Presentations or responses of applicants were not noted and were not part of the analysis. The observer made notes on the ongoing discussion and scored the arguments while listening. One meeting was not attended in person and only observed and scored by listening to the audiotape recording. Because this made identification of the panel members unreliable, this panel meeting was excluded from the analysis of the third research question on how arguments used differ between panel members with different perspectives.

3.5 Grant programmes and the assessment criteria

To answer our second research question on the correspondence between the arguments used and the formal criteria, we gathered and analysed all brochures and assessment forms used by the review panels. Several programmes consisted of multiple grant calls; in those cases, the specific call brochure was gathered and analysed rather than the overall programme brochure. Additional documentation (e.g. instructional presentations at the start of the panel meeting) was not included in the document analysis. All included documents were marked using the observation matrix described above. The committee-related arguments were not used, because this category reflects the personal arguments of panel members, which are not part of brochures or instructions. To limit differences in scoring, two of the authors each independently scored half of the documents, after which each checked and validated the other's scoring. Differences were discussed until consensus was reached.

3.6 Panel composition

To answer the third research question, background information on panel members was collected. We categorised the panel members into five common types: scientific, clinical scientific, health-care professional/clinical, patient, and policy. First, a list of all panel members was compiled, including their scientific and professional backgrounds and affiliations. The categorisation was guided by the theoretical notion that reviewers represent different types of users of research and therefore different potential impact domains (academic, social, economic, and cultural) ( Meijer 2012 ; Spaapen and Van Drooge 2011 ). Because clinical researchers play a dual role, both advancing research as fellow academics and using research output in health-care practice, we divided the academic members into two categories: non-clinical and clinical researchers. Multiple types of professional actors participated in each review panel. These were divided into two groups for the analysis: health-care professionals (without current academic activity) and policymakers in the health-care sector. No representatives of the private sector participated in the observed review panels. From the public domain, (at-risk) patients and patient representatives were part of several review panels. Only publicly available information was used to classify the panel members. Each member was assigned to one category only, based on the specific role and expertise for which they were appointed to the panel.

In two of the four DHF programmes, the assessment procedure included the CSQ. In these two programmes, representatives of the CSQ participated in the scientific panel to convey the findings of the CSQ meeting during the final assessment meeting. Two grant programmes were assessed by a review panel consisting solely of (clinical) scientific members.

3.7 Analysis

Data were processed using ATLAS.ti 8 and Microsoft Excel 2010 to produce descriptive statistics. All observed arguments were coded and linked to a randomised identification code for the panel member who used that argument. The number of times an argument type was observed was used as an indicator of the relative importance of that argument in the appraisal of proposals. With this approach, we developed a practical and reproducible method that research funders can use to evaluate the effect of policy changes on peer review. If codes or notes were unclear, codes were validated after the observation on the basis of the observation matrix notes. Arguments that were noted by the observer but could not be matched with an existing code were first assigned a ‘non-existing’ code; these were resolved by listening back to the audiotapes. Arguments that could not be attributed to a panel member were assigned a ‘missing panel member’ code. In total, 4.7 per cent of all codes were assigned a ‘missing panel member’ code.
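The tallying step can be illustrated with a short sketch. The authors worked in ATLAS.ti and Excel; the Python below is only a hypothetical reimplementation of the counting logic, assuming each coded argument is stored as a record with a meeting identifier, a matrix category, an aspect, and a randomised panel-member code (or `None` when the argument could not be attributed). The record type, function names, meeting and member codes, and example records are all illustrative assumptions, not study data.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CodedArgument:
    """Hypothetical record for one observed argument (not the authors' data format)."""
    meeting: str            # hypothetical meeting identifier
    category: str           # matrix category, e.g. "scientific" or "societal"
    aspect: str             # matrix aspect, e.g. "feasibility of the aims"
    member: Optional[str]   # randomised member code; None = 'missing panel member'


def tally_by_aspect(args):
    """Count each (category, aspect) pair; frequency serves as a proxy for importance."""
    return Counter((a.category, a.aspect) for a in args)


def tally_by_category(args):
    """Count arguments per matrix category, e.g. to compare scientific and societal use."""
    return Counter(a.category for a in args)


def share_missing_member(args):
    """Percentage of codes that could not be attributed to a panel member."""
    if not args:
        return 0.0
    missing = sum(1 for a in args if a.member is None)
    return 100.0 * missing / len(args)


# Illustrative records only (hypothetical, not taken from the study).
coded = [
    CodedArgument("M1", "scientific", "feasibility of the aims", "P07"),
    CodedArgument("M1", "scientific", "feasibility of the aims", "P02"),
    CodedArgument("M1", "societal", "contribution to a solution", "P11"),
    CodedArgument("M1", "scientific", "plan of work", None),
]

print(tally_by_category(coded))                # Counter({'scientific': 3, 'societal': 1})
print(round(share_missing_member(coded), 1))  # 25.0
```

Keeping the member code optional, rather than dropping unattributable arguments, means such arguments still count towards the category and aspect totals while remaining excluded from any per-member-type breakdown.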

After the analyses, two meetings were held to reflect on the results: one with the CSQ and the other with the programme coordinators of both organisations. The goal of these meetings was to improve our interpretation of the findings, disseminate the results derived from this project, and identify topics for further analyses or future studies.

3.8 Limitations

Our study focuses on the final phase of the peer review process of research applications in a real-life setting. Our design, non-participant observation of peer review panels, also introduced several challenges ( Liu and Maitlis 2010 ).

First, the independent review phase or pre-application phase was not part of our study. We therefore could not assess to what extent attention to certain aspects of scientific quality or societal relevance and impact in the review phase influenced the topics discussed during the meeting.

Second, the most important challenge of overt non-participant observation is the observer effect: the danger of causing reactivity in those under study. We believe that the consequences of this effect for our conclusions were limited, because panellists are used to external observers in the meetings of these two funders. The observer briefly explained the goal of the study in general terms during the panel's introductory round, sat as unobtrusively as possible, and avoided reacting to discussions. As in previous observations of panels, we found that the observer's presence faded into the background during a meeting ( Roumbanis 2021a ). However, a limited observer effect can never be entirely excluded.

Third, our decision to score only the arguments raised, and not the applicants' responses or information on the content of the proposals, has both advantages and disadvantages. This approach allowed us to ensure the anonymity of the grant procedures reviewed, the applicants and proposals, the panels, and individual panellists, which was an important condition for the funders involved. We used the frequency with which arguments were raised as a proxy for their relative importance in decision-making, which undeniably has its caveats. Our data collection approach also limits more in-depth reflection on which arguments were decisive and on group dynamics during the interaction with the applicants, because non-verbal and non-content-related comments were not captured in this study.

Fourth, although this is one of the largest observational studies of the peer review assessment of grant applications, with ten panels observed in eight grant programmes, many variables, both within and beyond our view, might explain differences in the arguments used. Examples of ‘confounding’ variables are the many variations in panel composition, the differences in programme objectives, and the range of funding programmes. Our study should therefore be seen as exploratory, and its conclusions should be drawn with caution.

4.1 Overview of observational data

The grant programmes included in this study reflected a broad range of biomedical and health funding programmes, ranging from fellowship grants to translational research and applied health research. All formal documents available to the applicants and to the review panels were retrieved for both ZonMw and the DHF. In total, eighteen documents corresponding to the eight grant programmes were studied. The number of proposals assessed per programme varied from three to thirty-three. The duration of the panel meetings varied between 2 h and two consecutive days. Together, this resulted in a large spread in the total number of arguments used in an individual meeting and in a grant programme as a whole. In the shortest meeting, 49 arguments were observed, versus 254 in the longest, with a mean of 126 arguments per meeting and an average of 15 arguments per proposal.

Overall, we found that the criteria were operationalised consistently in the grant programmes’ brochures and in the review panels’ assessment forms. At the same time, because the number of elements in the observation matrix is limited, there was considerable diversity in the arguments falling within each aspect (see examples in Table 1 ). Some of these differences could be explained by differences in the language used and the level of detail in the observation matrix, the brochure, and the panel’s instructions. This was especially the case for the applicant-related aspects, for which the observation matrix was more detailed than the text in the brochures and assessment forms.

In interpreting our findings, it is important to take into account that, even though our data were largely complete and the observation matrix matched the description of the criteria in the brochures and assessment forms well, there was large diversity in the type and number of arguments used and in the number of proposals assessed in the grant programmes included in our study.

4.2 Wide range of arguments used by panels: scientific arguments used most

For our first research question, we explored the number and type of arguments used in the panel meetings. Figure 1 provides an overview of the arguments used. Scientific quality was discussed most. The feasibility of the aims was discussed far more often than any other argument. The match between the science and the problem studied, and the plan of work, were also frequently discussed aspects of scientific quality. International competitiveness of the proposal was the least discussed of the five scientific arguments.

Figure 1. The number of arguments used in panel meetings.

Attention was paid to societal relevance and impact in the panel meetings of both organisations. Yet, the language used differed somewhat between organisations. The contribution to a solution and the next step in science were the most often used societal arguments. At ZonMw, the impact of the health-care problem studied and the activities towards partners were less frequently discussed than the other three societal arguments. At the DHF, the five societal arguments were used equally often.

Except in the fellowship programme meeting, applicant-related arguments were rarely used. The fellowship panel used arguments related to the applicant and to scientific quality about equally often. Committee-related arguments were also rarely used in the majority of the eight grant programmes observed. In three of the ten panel meetings, one or two arguments related to personal experience with the applicant or their direct network were observed. In seven of the ten meetings, statements were observed that were unsubstantiated or were explicitly announced as reflecting a personal preference. Their frequency varied between one and seven statements per meeting (sixteen in total), which is low compared with the other arguments used (see Fig. 1 for examples).

4.3 Use of arguments varied strongly per panel meeting

The balance in the use of scientific and societal arguments varied strongly per grant programme, panel, and organisation. At ZonMw, two meetings had an approximately equal balance of societal and scientific arguments. In the other two meetings, scientific arguments were used two to four times as often as societal arguments. At the DHF, three types of panels were observed, and each showed a different pattern in the relative use of societal and scientific arguments. In the two CSQ-only meetings, societal arguments were used approximately twice as often as scientific arguments. In the two meetings of the scientific panels, societal arguments were used infrequently (between zero and four times per argument category). In the combined societal and scientific panel meetings, the use of societal and scientific arguments was more balanced.

4.4 Match of arguments used by panels with the assessment criteria

To answer our second research question, we examined how the arguments used related to the formal criteria. We observed that a broader range of arguments was often used than the criteria described in the brochure and assessment instruction. However, arguments related to aspects that were consistently included in the brochure and instruction seemed to be discussed more frequently in those programmes than in programmes where those aspects were not consistently included or were not included at all. Although the match of the science with the health-care problem and the background and reputation of the applicant were not always made explicit in the brochure or instructions, they were discussed in many panel meetings. Supplementary Fig. S1 visualises how the arguments used differ between programmes in which those aspects were, or were not, consistently included in the brochure and instruction forms.

4.5 Two-thirds of the assessment was driven by scientific panel members

To answer our third question, we examined the differences in arguments used between panel members representing a scientific, clinical scientific, professional, policy, or patient perspective. Across the panels, the largest group of panellists had a scientific background (n = 35), thirty-four members had a clinical scientific background, twenty had a health professional/clinical background, eight members represented a policy perspective, and fifteen represented a patient perspective. Of the total of 1,097 arguments, two-thirds were made by members with a scientific or clinical scientific perspective. Members with a scientific background engaged most actively in the discussion, with a mean of twelve arguments per member. Clinical scientists and health-care professionals followed with a mean of nine arguments each, and members with a policy or patient perspective put forward the fewest arguments on average (seven and eight, respectively). Figure 2 provides a complete overview of the total and mean number of arguments used by the different disciplines in the various panels.
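As a rough consistency check on the two-thirds figure, the subgroup sizes can be multiplied by the mean number of arguments per member reported above. This is only a sketch: the published means are rounded to whole numbers, so the reconstructed total approximates rather than reproduces the reported 1,097 arguments, and the variable and function names are illustrative, not taken from the study.

```python
# Rough consistency check of the "two-thirds" share, using the subgroup sizes
# and the rounded mean number of arguments per member reported in the text.
# Because the means are rounded, the reconstructed total only approximates
# the reported total of 1,097 arguments.

REPORTED_TOTAL = 1097

# background: (number of panel members, mean arguments per member)
subgroups = {
    "scientific": (35, 12),
    "clinical scientific": (34, 9),
    "health professional": (20, 9),
    "policy": (8, 7),
    "patient": (15, 8),
}


def approximate_totals(groups):
    """Return the approximate total number of arguments per background (members x mean)."""
    return {name: members * mean for name, (members, mean) in groups.items()}


totals = approximate_totals(subgroups)
reconstructed_total = sum(totals.values())
scientific_share = (
    totals["scientific"] + totals["clinical scientific"]
) / reconstructed_total

print(f"Reconstructed total: {reconstructed_total} (reported: {REPORTED_TOTAL})")
print(f"Share from scientific + clinical scientific members: {scientific_share:.2f}")
```

Running it gives a reconstructed total of 1,082 against the reported 1,097 and a share of about 0.67, which is consistent with the statement that two-thirds of the arguments came from members with a scientific or clinical scientific perspective.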

Figure 2. The total and mean number of arguments per subgroup of panel members.

4.6 Diverse use of arguments by panellists, but background matters

In meetings of both organisations, we observed a diverse use of arguments by the panel members. Yet, the use of arguments varied depending on the background of the panel member (see Fig. 3). Those with a scientific or clinical scientific perspective used primarily scientific arguments. As could be expected, health-care professionals and patients used societal arguments more often.

Figure 3. The use of arguments differentiated by panel member background.

A further breakdown of arguments across backgrounds showed clear differences in the use of scientific arguments between the disciplines of panellists. Scientists and clinical scientists discussed the feasibility of the aims more than twice as often as the second most frequently used element of scientific quality, the match between the science and the problem studied. Patients and members with a policy or health professional background put forward fewer but more varied scientific arguments.

Patients and health-care professionals accounted for approximately half of the societal arguments used, despite being a much smaller part of the panels' overall composition. In other words, members with a scientific perspective were less likely to use societal arguments. The relevance of the health-care problem studied, activities towards partners, and arguments related to participation and diversity were not used often by this group. Patients often used arguments related to patient participation and diversity and to activities towards partners, although the frequency of the latter differed per organisation.

The majority of the applicant-related arguments were put forward by scientists, including clinical scientists. Committee-related arguments were very rare and are therefore not differentiated by panel member background, except for comments comparing an application with other applications. These comparisons were mainly put forward by panel members with a scientific background. HTA-related arguments were often used by panel members with a scientific perspective, whereas panel members with other perspectives rarely used this argument (see Supplementary Figs S2–S4 for a visual presentation of the differences between panel members on all aspects included in the matrix).

5.1 Explanations for arguments used in panels

Our observations show that most arguments for scientific quality were used often. However, except for feasibility, the frequency of the arguments used varied strongly between meetings and between the individual proposals that were discussed. That most arguments were not consistently used is not surprising, given previous studies that showed heterogeneity in grant application assessments and low consistency in comments and scores by independent reviewers (Abdoul et al. 2012; Pier et al. 2018). In an analysis of written assessments on nine observed dimensions, no dimension was used in more than 45 per cent of the reviews (Hartmann and Neidhardt 1990).

There are several possible explanations for this heterogeneity. Roumbanis (2021a) described how being responsive to the different challenges in the proposals and to the points of attention arising from the written assessments influenced discussion in panels. Moreover, more time is spent on discussion when disagreement arises (Roumbanis 2021a). One could infer that unambiguous, and thus undebated, aspects might have remained largely undetected in our study. We believe, however, that the main points relevant to the assessment will not have gone entirely unmentioned, because most panels in our study started the discussion with a short summary of the proposal, the written assessment, and the rebuttal. Lamont (2009) points out that opening statements serve more goals than decision-making alone: they can also increase the credibility of the panellist by demonstrating their comprehension and balanced assessment of an application. We therefore cannot entirely disentangle whether the arguments observed most often were also considered the most important or decisive, or whether they were simply the topics that led to the most disagreement.

An interesting difference from Roumbanis' study was the discussion time available per proposal. In our study, most panels handled a limited number of proposals, allowing for longer discussions than the time frame of often 2 min that Roumbanis (2021b) described, which potentially contributed to a wider range of arguments being discussed. Limited time per proposal might also limit the number of panellists contributing to the discussion of each proposal (De Bont 2014).

5.2 Reducing heterogeneity by improving operationalisation and the consistent use of assessment criteria

We found that the language used to operationalise the assessment criteria in the programme brochures and in the observation matrix was much more detailed than in the instruction for the panel, which was often very concise. This comparison also illustrated that many terms were used interchangeably.

This was especially true for the applicant-related aspects. Several panels discussed how talent should be assessed. This confusion is understandable considering the changing values in research and its assessment (Moher et al. 2018) and the fact that the funders' instruction was very concise. For example, it was not made explicit whether the individual or the team should be assessed. Arensbergen et al. (2014b) described how, in grant allocation processes, talent is generally assessed using a limited set of characteristics. More objective and quantifiable outputs often prevailed at the expense of recognising and rewarding a broad variety of skills and traits combining professional, social, and individual capital (DORA 2013).

In addition, committee-related arguments, like personal experiences with the applicant or their institute, were rarely used in our study. Comparisons between proposals were sometimes made without further argumentation, mainly by scientific panel members. This was especially pronounced in one (fellowship) grant programme with a high number of proposals. In this programme, the panel meeting concentrated on quickly comparing the quality of the applicants and of the proposals based on the reviewers' judgements, rather than on a more in-depth discussion of the different aspects of the proposals. Because the review phase was not part of this study, the question of which aspects were used to assess the proposals in this panel remains partially unanswered. However, weighing and comparing proposals on different aspects and with different inputs is a core element of scientific peer review, both in the review of papers and in the review of grants (Hirschauer 2010). The large role of scientific panel members in comparing proposals is therefore not surprising.

One could anticipate that more consistent language in operationalising the criteria may lead to more clarity for both applicants and panellists and to more consistency in the assessment of research proposals. The trend in our observations was that arguments were used less often when the related criteria were not included, or were not consistently included, in the brochure and panel instruction. It remains, however, challenging to disentangle the influence of the formal definitions of criteria on the arguments used. Previous studies also encountered difficulties in studying the role of the formal instruction in peer review but concluded that this role is relatively limited (Langfeldt 2001; Reinhart 2010).

The lack of a clear operationalisation of criteria can contribute to heterogeneity in peer review, as many scholars have found that assessors differ in their conceptualisation of good science and in the importance they attach to various aspects of research quality and societal relevance (Abdoul et al. 2012; Geurts 2016; Scholten et al. 2018; Van den Brink et al. 2016). The large variation in, and absence of a gold standard for, the interpretation of scientific quality and societal relevance affect the consistency of peer review. As a consequence, it is challenging to systematically evaluate and improve peer review in order to fund the research that contributes most to science and society. To contribute to responsible research and innovation, it is therefore important that funders invest in a more consistent and conscientious peer review process (Curry et al. 2020; DORA 2013).