Boosting Discriminative Visual Representation Learning with Scenario-Agnostic Mixup

Mixup is a well-known data-dependent augmentation technique for DNNs, consisting of two sub-tasks: mixup generation and classification. However, the recent dominant online training method confines mixup to supervised learning (SL), and the objective of the generation sub-task is limited to selected sample pairs instead of the whole data manifold, which might cause trivial solutions. To overcome such limitations, we comprehensively study the objective of mixup generation and propose Scenario-Agnostic Mixup (SAMix) for both SL and Self-supervised Learning (SSL) scenarios. Specifically, we hypothesize and verify the objective function of mixup generation as optimizing local smoothness between two mixed classes subject to global discrimination from other classes. Accordingly, we propose an $\eta$-balanced mixup loss for complementary learning of the two sub-objectives. Meanwhile, a label-free generation sub-network is designed, which effectively provides non-trivial mixup samples and improves transferable abilities. Moreover, to reduce the computational cost of online training, we further introduce a pre-trained version, SAMix$^{\mathcal{P}}$, achieving more favorable efficiency and generalizability. Extensive experiments on nine SL and SSL benchmarks demonstrate the consistent superiority and versatility of SAMix compared with existing methods.

1 Introduction

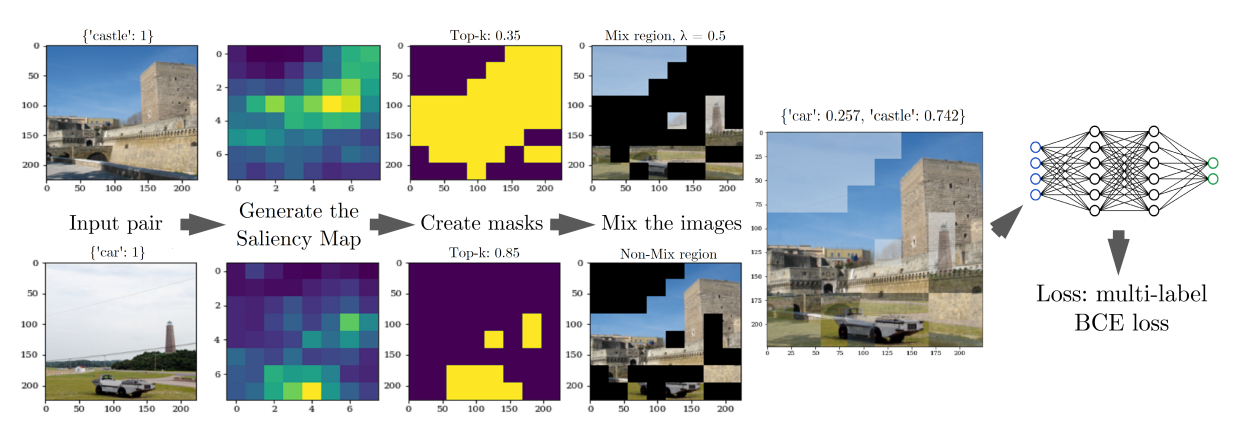

One of the fundamental problems in machine learning is how to learn a proper low-dimensional representation that captures intrinsic data structures and facilitates downstream tasks in an efficient manner [1, 2, 3, 4]. Data mixing, as a means of generating symmetric mixed data and labels, has largely improved the efficiency of deep neural networks (DNNs) in learning discriminative representations across various scenarios [5, 6, 7], especially for Vision Transformers (ViTs) [8, 9]. Despite its general applicability, the sample generation policy in data mixing requires an explicit design (e.g., linear interpolation or random local patch replacement). In contrast, optimizable data mixing algorithms utilize labels to localize task-relevant targets (e.g., Grad-CAM [10]) and thereby generate semantic mixed samples, for instance by maximizing the saliency information of related samples offline [6, 11, 12]. These handcrafted mixing policies are shown in the red box of Figure 1. Additionally, [13] learns a mixup generator by supervised adversarial training.

Most current works that combine mixup with contrastive learning directly transfer linear mixup methods into contrastive learning [7, 14, 15]. The recently proposed AutoMix [16] provides a novel perspective by parameterizing the mixup policy so that it can be trained online. Although these optimizable methods have attained significant gains in supervised learning (SL) tasks, they still do not exploit the underlying structure of the whole observed data manifold. Without the guidance of labels, this results in trivial solutions, so they cannot be applied in self-supervised learning (SSL) scenarios (discussed in Section 2). The question then naturally arises as to whether it is possible to design a more general, trainable mixup policy that can be applied to both SL and SSL scenarios. To achieve this goal, two open challenges remain: (i) how to avoid trivial solutions in online training approaches; (ii) how to design a proper generation objective that makes the algorithm generalizable to both SL and SSL.

In this paper, we propose Scenario-Agnostic Mixup (SAMix), a framework shown in Figure 1 that employs an $\eta$-balanced mixup loss (Section 3.1) to treat mixup generation and classification differently from a local and a global perspective. At the same time, a specially designed Mixer (Section 3.2) generates mixed samples adaptively at either the instance level or the cluster level to tackle trivial solutions effectively. Furthermore, to mitigate the poor versatility and computational overhead of SAMix optimization, we propose a pre-trained setting, SAMix$^{\mathcal{P}}$, which employs a pre-trained Mixer to generate high-quality mixed samples, balancing performance and speed for various downstream applications. Notably, SAMix$^{\mathcal{P}}$ can achieve competitive or slightly higher performance than SAMix in classification tasks. Comprehensive experiments demonstrate the effectiveness and transferability of SAMix. Our contributions are summarized as follows:

- We decompose mixup learning objectives into local and global terms and further analyze the corresponding properties (local smoothness and global discrimination) of mixup generation, then design an $\eta$-balanced loss targeted at boosting mixup generation performance.

- We build a label-free mixup generator, Mixer, with mixing attention and non-linear content modeling, which effectively tackles the trivial solution problem in existing learnable methods and thus makes it more adaptive and transferable across varied scenarios.

- Combining the above $\eta$-balanced loss and the specially tailored label-free Mixer, we propose a unified scenario-agnostic mixup training framework, SAMix, that supports online and pre-trained pipelines for both SL and SSL tasks.

- Built on the SAMix framework, a pre-trained version named SAMix$^{\mathcal{P}}$ is provided, which gives SAMix more favorable performance-efficiency trade-offs and generalizability across a wide range of visual downstream tasks.

2 Preliminaries

Given a finite set of i.i.d. samples, $X=[x_{i}]_{i=1}^{n}\in\mathbb{R}^{D\times n}$, each data point $x_{i}\in\mathbb{R}^{D}$ is drawn from a mixture of, say, $C$ distributions $\mathcal{D}=\{\mathcal{D}_{c}\}_{c=1}^{C}$. Our basic assumption for discriminative representations is that each component distribution $\mathcal{D}_{c}$ has a relatively low-dimensional intrinsic structure, i.e., the distribution $\mathcal{D}_{c}$ is constrained to a sub-manifold, say $\mathcal{M}_{c}$, with dimension $d_{c}\ll D$. The distribution $\mathcal{D}$ of $X$ consists of these sub-manifolds, $\mathcal{M}=\cup_{c=1}^{C}\mathcal{M}_{c}$. We seek a low-dimensional representation $z_{i}\in\mathcal{M}$ of $x_{i}$ by learning a continuous mapping with a network encoder, $f_{\theta}(x):x\longmapsto z$ with parameters $\theta\in\Theta$, which captures the intrinsic structures of $\mathcal{M}$ and facilitates discriminative tasks.

2.1 Discriminative Representation Learning

2.2 Mixup for Discriminative Representation

We further incorporate mixup as a generation task into discriminative representation learning to form a closed-loop framework. This yields two mutually beneficial sub-tasks: (a) mixed data generation and (b) classification. For sub-task (a), we define two functions, $h(\cdot)$ and $v(\cdot)$, to generate mixed samples and labels with a mixing ratio $\lambda\sim Beta(\alpha,\alpha)$. Given the mixed data, sub-task (b) defines a mixup training objective to optimize the representation space between instances or classes.
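As a minimal illustration of sub-task (a), the sketch below instantiates $h(\cdot)$ and $v(\cdot)$ with plain linear interpolation, as in MixUp [5]. This is only one handcrafted choice, not the learned Mixer proposed in this paper; the function names `sample_lambda`, `h_linear`, and `v_linear` and the toy vectors are hypothetical.

```python
import random

def sample_lambda(alpha, rng=random):
    """Draw the mixing ratio lambda ~ Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)

def h_linear(x_i, x_j, lam):
    """Illustrative h(.): element-wise linear interpolation of two samples."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]

def v_linear(y_i, y_j, lam):
    """Illustrative v(.): the same interpolation applied to one-hot labels."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(y_i, y_j)]

rng = random.Random(0)              # fixed seed so the sketch is repeatable
lam = sample_lambda(2.0, rng)       # alpha = 2 is an illustrative value

x_i, x_j = [0.0, 1.0, 2.0, 3.0], [4.0, 5.0, 6.0, 7.0]   # toy "images"
y_i, y_j = [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]             # one-hot labels

x_m = h_linear(x_i, x_j, lam)       # mixed sample
y_m = v_linear(y_i, y_j, lam)       # mixed (soft) label, still sums to 1
```

With a learned generator, only `h_linear` would change; the label function keeps the same $\lambda$-weighted form so that the mixed label stays a valid distribution.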

where $z_{m}$, $z_{i}$ and $z_{j}$ denote the corresponding representations. The major difference from Eq. 3 is that $z_{m}$ is generated from an augmentation view different from the one that produces $z_{i}$ and $z_{j}$ in the objective function, which is effective in retaining task-relevant information (details in A.5.1).

$\mathcal{L}_{\phi}=\mathcal{L}_{\phi}^{cls}+\mathcal{L}_{\phi}^{mask}$, and the final learning objective is,

3 SAMix for Discriminative Representations

3.1 Learning Objective for Mixup Generation

Typically, the objective function $\mathcal{L}_{\phi}$ for mixup generation is the same as the classification objective in parametric training (e.g., $\ell^{CE}$). In this paper, we argue that mixup generation aims to optimize the local term subject to the global term. The local term focuses on the classes of the sample pair to be mixed, while the global term introduces the constraints of other classes. For example, $\ell^{CE}$ is a global term in which each class has an equivalent effect on the final prediction, without focusing on the relevant classes of the current sample pair. At the class level, to emphasize the local term, we introduce a parametric binary cross-entropy (pBCE) loss for the generation task. Formally, assuming $y_{i}$ and $y_{j}$ belong to class $a$ and class $b$, pBCE can be summarized as:

$\ell_{+}$ and $\ell_{-}$ to represent the local and global terms, and $\ell_{-}$ for the parametric loss refers to $\ell^{CE}$. Symmetrically, we have the non-parametric binary cross-entropy mixup loss (BCE) for CL:

$p_{m,i}=\frac{\exp(z_{m}z_{i}/t)}{\exp(z_{m}z_{i}/t)+\exp(z_{m}z_{j}/t)}$ and its $\ell_{-}$ refers to $\ell^{NCE}$.
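To make the two-way probability $p_{m,i}$ concrete, the following sketch computes it for toy embeddings and plugs it into a $\lambda$-weighted binary cross-entropy. The weighting $-\lambda\log p_{m,i}-(1-\lambda)\log p_{m,j}$, the temperature $t=0.2$, and the helper names are assumptions made for illustration, not the exact formulation of this paper.

```python
import math

def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def p_pair(z_m, z_a, z_b, t=0.2):
    """Two-way softmax: probability of z_a against z_b only (no other negatives)."""
    s_a = dot(z_m, z_a) / t
    s_b = dot(z_m, z_b) / t
    top = max(s_a, s_b)                      # subtract the max for stability
    e_a, e_b = math.exp(s_a - top), math.exp(s_b - top)
    return e_a / (e_a + e_b)

def local_bce(z_m, z_i, z_j, lam, t=0.2):
    """Assumed local term: lambda-weighted BCE over the mixed pair only."""
    p_mi = p_pair(z_m, z_i, z_j, t)
    p_mj = p_pair(z_m, z_j, z_i, t)          # equals 1 - p_mi
    return -lam * math.log(p_mi) - (1.0 - lam) * math.log(p_mj)

# Toy unit-norm embeddings: z_m lies closer to z_j than to z_i.
z_i, z_j, z_m = [1.0, 0.0], [0.0, 1.0], [0.6, 0.8]
p_mi = p_pair(z_m, z_i, z_j)
p_mj = p_pair(z_m, z_j, z_i)
loss = local_bce(z_m, z_i, z_j, lam=0.4)
```

Because the denominator contains only the two mixed samples, this term is unaffected by any other instance in the batch, which is what makes it local.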

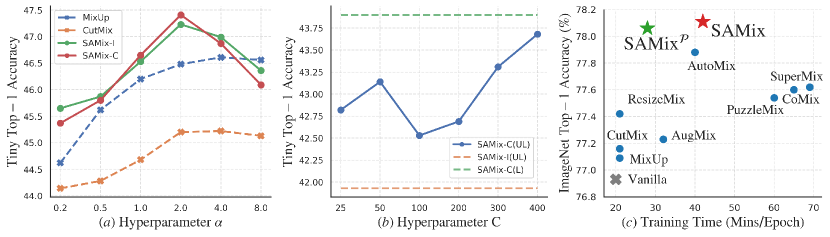

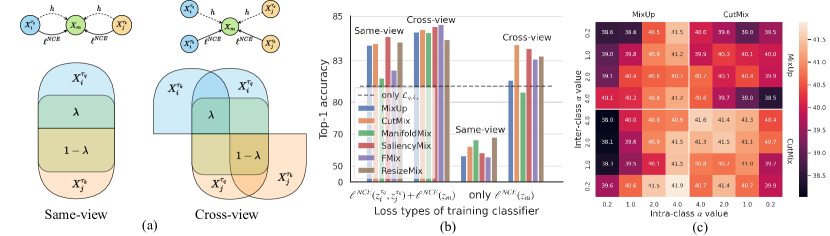

$\ell_{+}$ determines the generation performance, (ii) the global term $\ell_{-}$ improves global discrimination but is sensitive to class information. To verify these properties, we design an empirical experiment based on the proposed Mixer on Tiny (see A.5). The main difference between the mixup CE and infoNCE is whether parametric class centroids are adopted. Therefore, we compare the intensity of class information among unlabeled (UL), pseudo labels (PL), and ground-truth labels (L). Note that PL is generated by ODC [21] with the cluster number $C$. Class supervision can be introduced into the mixup infoNCE loss by filtering out negative samples with PL or L as in [22], denoted as infoNCE (PL) and infoNCE (L), respectively. As shown in Figure 2 (left), our hypotheses are verified in the SL task (as the performance decreases from CE(L) to pBCE(L) and CE(PL) losses), but the opposite result appears in the CL task. The performance increases from InfoNCE(UL) to InfoNCE(L) as the false negative samples are removed [23, 22], while trivial solutions occur using BCE(UL) (in Figure 6). Therefore, we propose that it is better to explicitly introduce class information as PL for instance-level mixup to generate "strong" inter-class mixed samples while preserving intra-class compactness.

SAMix with $\eta$-balanced generation objectives. Practically, we provide two versions of the learning objective: the mixup CE loss with PL as the class-level version (SAMix-C), and the mixup infoNCE loss as the instance-level version (SAMix-I). Then, we hypothesize that the best-performing mixed samples will be close to the sweet spot: achieving $\lambda$ smoothness locally between two classes or neighborhood systems while globally discriminating from other classes or instances. We propose an $\eta$-balanced mixup loss as the objective of mixup generation,

$\mathcal{L}_{\phi}^{cls}\triangleq\ell^{NCE}_{+}+\eta\,\ell^{NCE}_{-}$ for SAMix-I (details in A.2).
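The instance-level combination $\ell^{NCE}_{+}+\eta\,\ell^{NCE}_{-}$ can be sketched as follows. The exact definitions are deferred to A.2, so the forms used here are assumptions: the local term normalizes over the mixed pair only, the global term is a $\lambda$-weighted infoNCE that also normalizes over negatives, and the function names, $t=0.2$, and the toy vectors are hypothetical.

```python
import math

def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def _logsumexp(xs):
    top = max(xs)
    return top + math.log(sum(math.exp(x - top) for x in xs))

def eta_balanced_nce(z_m, z_i, z_j, negatives, lam, eta=0.5, t=0.2):
    """Return (total, local, global) with total = local + eta * global."""
    s_i = _dot(z_m, z_i) / t
    s_j = _dot(z_m, z_j) / t
    s_neg = [_dot(z_m, z_k) / t for z_k in negatives]

    lse_pair = _logsumexp([s_i, s_j])          # normalizer over the mixed pair
    lse_all = _logsumexp([s_i, s_j] + s_neg)   # normalizer including negatives

    # Local term: lambda-smoothness between the two mixed instances only.
    local = -lam * (s_i - lse_pair) - (1.0 - lam) * (s_j - lse_pair)
    # Global term: the same targets, but discriminated against all negatives.
    global_term = -lam * (s_i - lse_all) - (1.0 - lam) * (s_j - lse_all)
    return local + eta * global_term, local, global_term

z_i, z_j, z_m = [1.0, 0.0], [0.0, 1.0], [0.6, 0.8]
negatives = [[-1.0, 0.0], [0.0, -1.0]]
total, local, glob = eta_balanced_nce(z_m, z_i, z_j, negatives, lam=0.4)
total0, local0, glob0 = eta_balanced_nce(z_m, z_i, z_j, [], lam=0.4)
```

Under these assumed forms, the two terms differ only in their normalizer, so the global term is never smaller than the local one and the two coincide when there are no negatives. Setting `eta=0` recovers the purely local objective.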

3.2 De Novo Mixer for Mixup Generation

Although AutoMix proposes the MixBlock, which learns adaptive mixup generation policies online, it has three drawbacks in practice: (a) it fails to encode the mixing ratio $\lambda$ on small datasets; (b) it falls into trivial solutions when performing CL tasks; (c) its online training pipeline incurs at least twice the computational cost of MixUp. Our Mixer $\mathcal{M}_{\phi}$ addresses each of these problems.

Adaptive $\lambda$ encoding and mixing attention. Since a randomly sampled $\lambda$ should directly guide mixup generation, the predicted mask $s$ should be semantically proportional to $\lambda$. The previous typical design regards $\lambda$ as prior knowledge and concatenates $\lambda$ to the input feature maps, which might be unable to encode $\lambda$ properly (see the analysis in A.6.1). We propose an adaptive $\lambda$ encoding as,

Pre-trained pipeline vs. Online pipeline. Even though online-optimized mixup methods [16] outperform their handcrafted counterparts by a substantial margin, their extra computational cost is prohibitive, especially for large datasets. On large-scale benchmarks, we observe that the mixed samples generated by SAMix vary little between early and late training periods or across different CNN encoders. Consequently, we hypothesize that, similar to knowledge distillation [24], we can replace the online-trained Mixer in SAMix with a pre-trained one. From this perspective, we propose the pre-trained SAMix pipeline, denoted as SAMix$^{\mathcal{P}}$, in Figure 5. We can conclude: (i) SAMix$^{\mathcal{P}}$ pre-trained on large-scale datasets achieves performance similar to or better than that of its online training version on current or relevant datasets with less computational cost. (ii) SAMix$^{\mathcal{P}}$ with light or medium CNN encoders (e.g., ResNet-18) yields better performance than heavy ones (e.g., ResNet-101). (iii) SAMix$^{\mathcal{P}}$ has better transferability than AutoMix$^{\mathcal{P}}$. (iv) The online training pipeline is still irreplaceable on small datasets (e.g., CIFAR and CUB).

Prior knowledge of mixup. Moreover, we summarize some commonly adopted prior knowledge [6, 24] for mixup in two aspects: (a) adjusting the mean of $s_{i}$ to correlate with $\lambda$, and (b) balancing the smoothness of local image patches while maintaining the discrimination of $x_{m}$. Based on them, we introduce $\lambda$ adjusting and modify the mask loss $\mathcal{L}_{\phi}^{mask}$ (details in A.2).
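Prior (a) can be illustrated with a small sketch that rescales a predicted mask so that its mean matches $\lambda$ before mixing. Both the mask-based mixing form $x_{m}=s\odot x_{i}+(1-s)\odot x_{j}$ and the rescaling rule below are assumptions chosen for illustration; the actual $\lambda$ adjusting is described in A.2, and the function names are hypothetical.

```python
def mix_with_mask(x_i, x_j, s):
    """Assumed mixing form: x_m = s * x_i + (1 - s) * x_j, element-wise."""
    return [m * a + (1.0 - m) * b for m, a, b in zip(s, x_i, x_j)]

def adjust_mask_mean(s, lam):
    """Rescale a mask in [0, 1] so its mean equals lam, keeping values in [0, 1]."""
    mu = sum(s) / len(s)
    if mu >= lam:
        if mu == 0.0:                 # only possible when lam == 0 as well
            return list(s)
        # Shrink towards 0; the factor lam / mu is at most 1.
        return [m * lam / mu for m in s]
    # Otherwise grow towards 1 by shrinking the complement (1 - s).
    scale = (1.0 - lam) / (1.0 - mu)  # mu < lam <= 1, so 1 - mu > 0
    return [1.0 - (1.0 - m) * scale for m in s]

s = [0.9, 0.7, 0.2, 0.2]              # toy predicted mask with mean 0.5
s_low = adjust_mask_mean(s, 0.3)      # mean pulled down to 0.3
s_high = adjust_mask_mean(s, 0.8)     # mean pushed up to 0.8

x_i, x_j = [1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]
x_m = mix_with_mask(x_i, x_j, s_low)  # with these inputs x_m equals the mask
```

Rescaling either the mask or its complement preserves the ordering of mask values, so the spatial pattern predicted by the generator is kept while the overall mixing proportion follows $\lambda$.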

3.3 Discussion and Visualization of SAMix

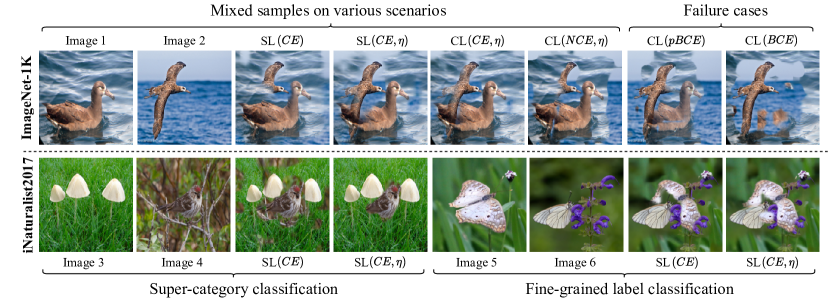

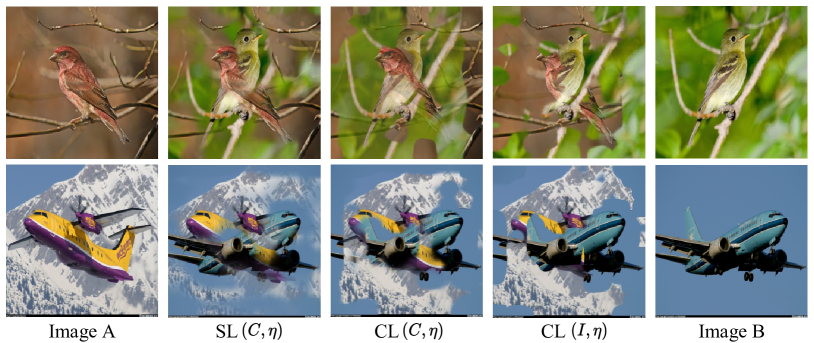

To show the influence of local and global constraints on mixup generation, we visualize mixed samples generated by the Mixer in various scenarios in Figure 6. SAMix effectively captures robust underlying data structure at both the class and instance levels, and thus avoids trivial solutions and becomes more applicable to SSL.

Class-level. In the supervised task, the global constraint localizes key features by discriminating against other classes, while the local term tends to preserve more information related to the current two samples and classes. For example, comparing the mixed results with and without the $\eta$-balanced mixup loss shows that the Mixer attends to pixels of the foreground target. When the global constraint is balanced ($\eta=0.5$), the foreground target is retained more completely. Importantly, our designed Mixer remains invariant to the background for the more challenging fine-grained classification and preserves discriminative features.

Instance-level. Since no label supervision is available for SSL, the global and local terms are transformed from class to instance. Similar results are shown in the top row, and the only difference is that SAMix-C has a more precise target correspondence compared to SAMix-I via introducing class information by PL, which further indicates the importance of the information of classes. If we only focus on local relationships, Mixer can only generate mixed samples with fixed patterns (the last two results in the top row). These failure cases imply the importance of global constraints.

4 Experiments

We first evaluate SAMix for supervised learning (SL) in Sec. 4.1 and self-supervised learning (SSL) in Sec. 4.2, and then perform ablation studies in Sec. 4.3. Nine benchmarks are used for evaluation: CIFAR-100 [25], Tiny-ImageNet (Tiny) [26], ImageNet-1k (IN-1k) [27], STL-10 [28], CUB-200 [29], FGVC-Aircraft (Aircraft) [30], iNaturalist2017/2018 (iNat2017/2018) [31], and Places205 [32]. All experiments are conducted with PyTorch, and we report the mean of 3 trials. SAMix uses $\alpha=2$ and the feature layer $l=3$, while SAMix$^{\mathcal{P}}$ is pre-trained for 100 epochs with ResNet-18 (SL tasks) or ResNet-50 (SSL tasks) on IN-1k. The median validation top-1 Acc of the last 10 epochs is recorded.

4.1 Evaluation on Supervised Image Classification

CNNs and ViTs are used as backbone networks, including ResNet (R), Wide-ResNet (WRN) [33], ResNeXt-32x4d (RX) [34], DeiT [9], and Swin Transformer [35]. We use PyTorch training procedures [36] by default: an SGD optimizer with a cosine scheduler [37]. As a special case, in Table 4 the RSB A3 recipe in timm [39] (using the LAMB optimizer [38] for R-50) and the DeiT training recipe (using the AdamW optimizer [40] for DeiT-S and Swin-T) are fully adopted on IN-1k. MCE and MBCE denote mixup cross-entropy and mixup binary cross-entropy in RSB A3. For a fair comparison, grid search is performed over the hyper-parameter $\alpha\in\{0.1,0.2,0.5,1,2,4\}$ for all mixup variants. We follow the hyper-parameters in the original papers by default. $*$ denotes arXiv preprint works, $\dagger$ and $\ddagger$ denote results reproduced with official code and originally reported results, respectively; the rest are reproduced (see A.3 and A.4).

Comparison and discussion. Table 3 shows results on small-scale and fine-grained classification tasks. With the improved Mixer, SAMix consistently improves classification performance over the previous best algorithm, AutoMix. Notably, SAMix improves performance on CUB-200 and Aircraft by 1.24% and 0.78% with ResNet-18, and extends its lead on Tiny with 1.23% and 1.40% improvements on ResNet-18 and ResNeXt-50. For the large-scale classification task, we benchmark popular mixup methods in Tables 1, 2, and 4, where SAMix and SAMix$^{\mathcal{P}}$ outperform all existing methods on IN-1k, iNat2017/2018, and Places205. In particular, SAMix$^{\mathcal{P}}$ yields similar or better performance than SAMix with less computational cost.

4.2 Evaluation on Self-supervised Learning

Then, we evaluate SAMix on SSL tasks with pre-training on STL-10, Tiny, and IN-1k. We adopt all hyper-parameter configurations from MoCo.V2 unless otherwise stated. We compare SAMix along three dimensions in CL: (i) comparison with other mixup variants, based on our proposed cross-view pipeline, with or without predefined cluster information (denoted by C), as shown in Table 5; (ii) longitudinal comparison with CL methods that utilize mixup variants or Mixup+ latent space mixup strategies in Table 6, including MoCHi [14], i-Mix [7], Un-Mix [15], and WBSIM [41], where all compared methods are based on MoCo.V2 except SwAV [42]; (iii) extension of SAMix$^{\mathcal{P}}$ and mixup variants to various CL baselines based on ResNet-50 and ViT-S in Table 7 and Table 8, compared with DACL [43] and SDMP [44] (using three mixup augmentations). In these tables, $\star$ denotes our modified methods (PuzzleMix$^{\star}$ uses PL and Inter-Intra$^{\star}$ combines inter-class CutMix with intra-class Mixup), and $n$-Mix denotes the types of mixup variants used in the SSL method.

Linear Classification. Following the linear classification protocol proposed in MoCo, we train a linear classifier on top of frozen backbone features with the supervised train set. We train for 100 epochs using SGD with a batch size of 256. The initial learning rate is set to $0.1$ for Tiny and STL-10 and $30$ for IN-1k, and decays by a factor of $0.1$ at epochs 30 and 60. As shown in Table 5, SAMix-I outperforms all the linear mixup methods by a large margin, while SAMix-C surpasses the saliency-based PuzzleMix when PL is available. SAMix-I has both global and local properties through the infoNCE and BCE losses. Meanwhile, Table 6 demonstrates that both SAMix-I and SAMix-C surpass other CL methods combined with predefined mixup. Overall, SAMix-C yields the best performance in CL tasks, indicating that it provides task-relevant information with the help of PL. Table 7 and Table 8 verify the generalizability of SAMix$^{\mathcal{P}}$ variants on popular CL baselines, which achieve performance comparable to recently proposed algorithms that combine $n$-Mix with CL.
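The step schedule of this linear evaluation protocol can be written down directly. The sketch below assumes 0-indexed epochs with the decay taking effect from the milestone epoch onward; the function name `linear_probe_lr` and the `BASE_LR` table are hypothetical helpers that simply restate the values given above.

```python
def linear_probe_lr(epoch, base_lr, milestones=(30, 60), gamma=0.1):
    """Step schedule: scale base_lr by gamma once for every milestone reached."""
    n_decays = sum(1 for m in milestones if epoch >= m)
    return base_lr * (gamma ** n_decays)

# Initial learning rates stated in the text, keyed by dataset.
BASE_LR = {"Tiny": 0.1, "STL-10": 0.1, "IN-1k": 30.0}

TOTAL_EPOCHS = 100
schedule_in1k = [linear_probe_lr(e, BASE_LR["IN-1k"]) for e in range(TOTAL_EPOCHS)]
schedule_tiny = [linear_probe_lr(e, BASE_LR["Tiny"]) for e in range(TOTAL_EPOCHS)]
```

Under this assumption the IN-1k rate stays at 30 for epochs 0 to 29, is about 3 for epochs 30 to 59, and about 0.3 for the remaining epochs.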

Downstream Tasks. Following the protocol in MoCo, we evaluate the transferability of the learned representations of the compared methods on object detection with PASCAL VOC [45] and COCO [46] in Detectron2 [47]. We fine-tune Faster R-CNN [48] with pre-trained models on VOC trainval07+12 and evaluate on the VOC test2007 set. Similarly, Mask R-CNN [49] is fine-tuned ($2\times$ schedule) on COCO train2017 and evaluated on COCO val2017. SAMix still achieves comparable performance among state-of-the-art mixup methods for CL. See A.5.5 for results.

4.3 Ablation Study

We conduct ablation studies in four aspects: (i) Mixer: Table 10 verifies the effectiveness of each proposed module in both SL and CL tasks on Tiny. The first three modules enable the Mixer to model the non-linear mixup relationship, while the next two modules enhance the Mixer, especially in CL tasks. (ii) Learning objectives: We compare the proposed $\ell_{\eta}$ with other losses, as shown in Table 9. Using $\ell_{\eta}$ for the mixup CE and infoNCE consistently improves the performance of the CL task on both STL-10 and Tiny. (iii) Time complexity analysis: Figure 7 (c) shows the computational analysis conducted on the SL task on IN-1k using PyTorch 100-epoch settings. It shows that the overall accuracy vs. time efficiency trade-off of SAMix and SAMix$^{\mathcal{P}}$ is superior to that of other methods. (iv) Hyper-parameters: Figure 7 (a) and (b) show ablation results for the hyper-parameter $\alpha$ and the clustering number $C$ for SAMix-C. We empirically choose $\alpha=2.0$ and $C=200$ as defaults.

5 Related Work

Class-level Mixup for SL. There are four types of sample mixing policies for class-level mixup: linear mixup in the input space [5, 19, 50, 51, 52] and latent space [53, 54], saliency-based [12, 6, 11], generation-based [13, 55], and learning mixup generation and classification end-to-end [16, 24]. More recently, mixup designed for ViTs optimizes mixing policies with self-attention maps [56, 57]. SAMix belongs to the fourth type and learns both class- and instance-level mixup relationships, and its pre-trained variant SAMix$^{\mathcal{P}}$ eliminates the high time cost of this type of method. Additionally, some researchers [58, 56, 59] improve class mixing policies upon linear mixup. See A.7 for details.

Instance-level Mixup for SSL. A complementary method to learn better instance-level representations is to apply mixup in SSL scenarios [7]. However, most approaches are limited to linear mixup variants, such as applying MixUp and CutMix in the input space or latent space mixup [14, 41, 43, 44], for SSL without ground-truth labels. SAMix improves SSL tasks by learning mixup policies online.

6 Limitations and Conclusions

In this work, we first study and decompose objectives for mixup generation into local-emphasized and global-constrained terms in order to learn adaptive mixup policies at both the class and instance levels. SAMix provides a unified mixup framework with both online and pre-trained pipelines to boost discriminative representation learning based on the improved $\eta$-balanced loss and Mixer. Moreover, a more applicable pre-trained SAMix$^{\mathcal{P}}$ is provided. As a limitation, the Mixer only takes two samples as input, and conflicts arise when the task-relevant information overlaps. In future work, we expect that $k$-mixup ($k\geq 2$) or a conflict-aware Mixer could be promising avenues to improve mixup.

Acknowledgements

This work was supported by National Key R&D Program of China (No. 2022ZD0115100), National Natural Science Foundation of China Project (No. U21A20427), and Project (No. WU2022A009) from the Center of Synthetic Biology and Integrated Bioengineering of Westlake University. This work was done when Zedong Wang and Zhiyuan Chen interned at Westlake University. We thank the AI Station of Westlake University for the support of GPUs.

- [1] Yoshua Bengio, Aaron Courville, and Pascal Vincent. Representation learning: A review and new perspectives. IEEE transactions on pattern analysis and machine intelligence , 35(8):1798–1828, 2013.

- [2] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 , 2018.

- [3] Bruno Korbar, Du Tran, and Lorenzo Torresani. Cooperative learning of audio and video models from self-supervised synchronization. arXiv preprint arXiv:1807.00230 , 2018.

- [4] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning (ICML) , 2020.

- [5] Hongyi Zhang, Moustapha Cisse, Yann N Dauphin, and David Lopez-Paz. mixup: Beyond empirical risk minimization. In International Conference on Learning Representations (ICLR) , 2018.

- [6] Jang-Hyun Kim, Wonho Choo, and Hyun Oh Song. Puzzle mix: Exploiting saliency and local statistics for optimal mixup. In International Conference on Machine Learning (ICML) , pages 5275–5285. PMLR, 2020.

- [7] Kibok Lee, Yian Zhu, Kihyuk Sohn, Chun-Liang Li, Jinwoo Shin, and Honglak Lee. I-mix: A domain-agnostic strategy for contrastive representation learning. In International Conference on Learning Representations (ICLR) , 2021.

- [8] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby. An image is worth 16x16 words: Transformers for image recognition at scale. In International Conference on Learning Representations (ICLR) , 2021.

- [9] Hugo Touvron, M. Cord, M. Douze, F. Massa, Alexandre Sablayrolles, and H. Jégou. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning (ICML) , 2021.

- [10] Ramprasaath R. Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-cam: Visual explanations from deep networks via gradient-based localization. arXiv preprint arXiv:1610.02391 , 2019.

- [11] Jang-Hyun Kim, Wonho Choo, Hosan Jeong, and Hyun Oh Song. Co-mixup: Saliency guided joint mixup with supermodular diversity. In International Conference on Learning Representations (ICLR) , 2021.

- [12] AFM Uddin, Mst Monira, Wheemyung Shin, TaeChoong Chung, Sung-Ho Bae, et al. Saliencymix: A saliency guided data augmentation strategy for better regularization. In International Conference on Learning Representations (ICLR) , 2021.

- [13] Jianchao Zhu, Liangliang Shi, Junchi Yan, and Hongyuan Zha. Automix: Mixup networks for sample interpolation via cooperative barycenter learning. In European Conference on Computer Vision (ECCV) . Springer, 2020.

- [14] Yannis Kalantidis, Mert Bulent Sariyildiz, Noe Pion, Philippe Weinzaepfel, and Diane Larlus. Hard negative mixing for contrastive learning. In Advances in Neural Information Processing Systems (NeurIPS) , 2020.

- [15] Zhiqiang Shen, Zechun Liu, Zhuang Liu, Marios Savvides, Trevor Darrell, and Eric Xing. Un-mix: Rethinking image mixtures for unsupervised visual representation learning. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI) , 2021.

- [16] Zicheng Liu, Siyuan Li, Di Wu, Zhiyuan Chen, Lirong Wu, Jianzhu Guo, and Stan Z Li. Automix: Unveiling the power of mixup. In Proceedings of the European Conference on Computer Vision (ECCV) . Springer, 2022.

- [17] Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , pages 9729–9738, 2020.

- [18] Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748 , 2019.

- [19] Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, and Youngjoon Yoo. Cutmix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) , pages 6023–6032, 2019.

- [20] Jean-Bastien Grill, Florian Strub, Florent Altché, Corentin Tallec, Pierre H Richemond, Elena Buchatskaya, Carl Doersch, Bernardo Avila Pires, Zhaohan Daniel Guo, Mohammad Gheshlaghi Azar, et al. Bootstrap your own latent: A new approach to self-supervised learning. In Advances in Neural Information Processing Systems (NeurIPS) , 2020.

- [21] Xiaohang Zhan, Jiahao Xie, Ziwei Liu, Yew Soon Ong, and Chen Change Loy. Online deep clustering for unsupervised representation learning. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR) , 2020.

- [22] Prannay Khosla, Piotr Teterwak, Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, and Dilip Krishnan. Supervised contrastive learning. In Advances in Neural Information Processing Systems (NeurIPS) , 2020.

- [23] Joshua David Robinson, Ching-Yao Chuang, Suvrit Sra, and Stefanie Jegelka. Contrastive learning with hard negative samples. In International Conference on Learning Representations , 2021.

- [24] Ali Dabouei, Sobhan Soleymani, Fariborz Taherkhani, and Nasser M Nasrabadi. Supermix: Supervising the mixing data augmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , pages 13794–13803, 2021.

- [25] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- [26] Patryk Chrabaszcz, Ilya Loshchilov, and Frank Hutter. A downsampled variant of imagenet as an alternative to the cifar datasets. arXiv preprint arXiv:1707.08819 , 2017.

- [27] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. Imagenet large scale visual recognition challenge. International journal of computer vision (IJCV) , pages 211–252, 2015.

- [28] Adam Coates, Andrew Ng, and Honglak Lee. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the fourteenth international conference on artificial intelligence and statistics , pages 215–223. JMLR Workshop and Conference Proceedings, 2011.

- [29] Catherine Wah, Steve Branson, Peter Welinder, Pietro Perona, and Serge Belongie. The caltech-ucsd birds-200-2011 dataset. California Institute of Technology, 2011.

- [30] Subhransu Maji, Esa Rahtu, Juho Kannala, Matthew Blaschko, and Andrea Vedaldi. Fine-grained visual classification of aircraft. arXiv preprint arXiv:1306.5151 , 2013.

- [31] Grant Van Horn, Oisin Mac Aodha, Yang Song, Yin Cui, Chen Sun, Alex Shepard, Hartwig Adam, Pietro Perona, and Serge Belongie. The inaturalist species classification and detection dataset. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR) , 2018.

- [32] Bolei Zhou, Agata Lapedriza, Jianxiong Xiao, Antonio Torralba, and Aude Oliva. Learning deep features for scene recognition using places database. In Advances in Neural Information Processing Systems (NeurIPS) , pages 487–495, 2014.

- [33] Sergey Zagoruyko and Nikos Komodakis. Wide residual networks. In Proceedings of the British Machine Vision Conference (BMVC) , 2016.

- [34] Saining Xie, Ross Girshick, Piotr Dollár, Zhuowen Tu, and Kaiming He. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR) , pages 1492–1500, 2017.

- [35] Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In IEEE/CVF International Conference on Computer Vision (ICCV) , 2021.

- [36] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Köpf, Edward Yang, Zach DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. Pytorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems (NeurIPS) , 2019.

- [37] Ilya Loshchilov and Frank Hutter. Sgdr: Stochastic gradient descent with warm restarts. arXiv preprint arXiv:1608.03983 , 2016.

- [38] Yang You, Jing Li, Sashank Reddi, Jonathan Hseu, Sanjiv Kumar, Srinadh Bhojanapalli, Xiaodan Song, James Demmel, Kurt Keutzer, and Cho-Jui Hsieh. Large batch optimization for deep learning: Training BERT in 76 minutes. In International Conference on Learning Representations (ICLR) , 2020.

- [39] Ross Wightman, Hugo Touvron, and Hervé Jégou. Resnet strikes back: An improved training procedure in timm. arXiv preprint arXiv:2110.00476 , 2021.

- [40] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. In International Conference on Learning Representations (ICLR) , 2019.

- [41] Xiangxiang Chu, Xiaohang Zhan, and Xiaolin Wei. A unified mixture-view framework for unsupervised representation learning. In Proceedings of the British Machine Vision Conference (BMVC) , 2022.

- [42] Mathilde Caron, Ishan Misra, Julien Mairal, Priya Goyal, Piotr Bojanowski, and Armand Joulin. Unsupervised learning of visual features by contrasting cluster assignments. In Advances in Neural Information Processing Systems (NeurIPS) , 2020.

- [43] Vikas Verma, Minh-Thang Luong, Kenji Kawaguchi, Hieu Pham, and Quoc V. Le. Towards domain-agnostic contrastive learning. In International Conference on Machine Learning (ICML) , 2021.

- [44] Sucheng Ren, Huiyu Wang, Zhengqi Gao, Shengfeng He, Alan Loddon Yuille, Yuyin Zhou, and Cihang Xie. A simple data mixing prior for improving self-supervised learning. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR) , pages 14575–14584, 2022.

- [45] Mark Everingham, Luc Van Gool, Christopher KI Williams, John Winn, and Andrew Zisserman. The pascal visual object classes (voc) challenge. International journal of computer vision (IJCV) , 88(2):303–338, 2010.

- [46] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision (ECCV) , 2014.

- [47] Yuxin Wu, Alexander Kirillov, Francisco Massa, Wan-Yen Lo, and Ross Girshick. Detectron2. https://github.com/facebookresearch/detectron2 , 2019.

- [48] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster r-cnn: Towards real-time object detection with region proposal networks. arXiv preprint arXiv:1506.01497 , 2015.

- [49] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask r-cnn. In Proceedings of the International Conference on Computer Vision (ICCV) , 2017.

- [50] Dan Hendrycks, Norman Mu, Ekin D Cubuk, Barret Zoph, Justin Gilmer, and Balaji Lakshminarayanan. Augmix: A simple data processing method to improve robustness and uncertainty. In International Conference on Learning Representations (ICLR) , 2020.

- [51] Ethan Harris, Antonia Marcu, Matthew Painter, Mahesan Niranjan, Adam Prügel-Bennett, and Jonathon Hare. Fmix: Enhancing mixed sample data augmentation. arXiv preprint arXiv:2002.12047 , 2020.

- [52] Jie Qin, Jiemin Fang, Qian Zhang, Wenyu Liu, Xingang Wang, and Xinggang Wang. Resizemix: Mixing data with preserved object information and true labels. arXiv preprint arXiv:2012.11101 , 2020.

- [53] Vikas Verma, Alex Lamb, Christopher Beckham, Amir Najafi, Ioannis Mitliagkas, David Lopez-Paz, and Yoshua Bengio. Manifold mixup: Better representations by interpolating hidden states. In International Conference on Machine Learning (ICML) , pages 6438–6447. PMLR, 2019.

- [54] Mojtaba Faramarzi, Mohammad Amini, Akilesh Badrinaaraayanan, Vikas Verma, and Sarath Chandar. Patchup: A regularization technique for convolutional neural networks. arXiv preprint arXiv:2006.07794 , 2020.

- [55] Shashanka Venkataramanan, Ewa Kijak, Laurent Amsaleg, and Yannis Avrithis. Alignmixup: Improving representations by interpolating aligned features. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , 2022.

- [56] Jie-Neng Chen, Shuyang Sun, Ju He, Philip Torr, Alan Yuille, and Song Bai. Transmix: Attend to mix for vision transformers. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR) , 2022.

- [57] Jihao Liu, B. Liu, Hang Zhou, Hongsheng Li, and Yu Liu. Tokenmix: Rethinking image mixing for data augmentation in vision transformers. In Proceedings of the European Conference on Computer Vision (ECCV) , 2022.

- [58] Joonhyung Park, June Yong Yang, Jinwoo Shin, Sung Ju Hwang, and Eunho Yang. Saliency grafting: Innocuous attribution-guided mixup with calibrated label mixing. In AAAI Conference on Artificial Intelligence (AAAI) , 2022.

- [59] Zicheng Liu, Siyuan Li, Ge Wang, Cheng Tan, Lirong Wu, and Stan Z. Li. Decoupled mixup for data-efficient learning, 2022.

- [60] Siyuan Li, Zedong Wang, Zicheng Liu, Di Wu, and Stan Z. Li. Openmixup: Open mixup toolbox and benchmark for visual representation learning. https://github.com/Westlake-AI/openmixup , 2022.

- [61] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems (NeurIPS) , pages 1097–1105, 2012.

- [62] Xinlei Chen, Haoqi Fan, Ross Girshick, and Kaiming He. Improved baselines with momentum contrastive learning. arXiv preprint arXiv:2003.04297 , 2020.

- [63] Mathilde Caron, Piotr Bojanowski, Armand Joulin, and Matthijs Douze. Deep clustering for unsupervised learning of visual features. In Proceedings of the European Conference on Computer Vision (ECCV) , 2018.

- [64] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR) , pages 770–778, 2016.

- [65] Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , pages 9729–9738, 2020.

- [66] Xinlei Chen and Kaiming He. Exploring simple siamese representation learning. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR) , 2021.

- [67] Xinlei Chen, Saining Xie, and Kaiming He. An empirical study of training self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) , pages 9640–9649, 2021.

- [68] Yonglong Tian, Chen Sun, Ben Poole, Dilip Krishnan, Cordelia Schmid, and Phillip Isola. What makes for good views for contrastive learning? In Advances in Neural Information Processing Systems (NeurIPS) , 2020.

- [69] Yao-Hung Hubert Tsai, Yue Wu, Ruslan Salakhutdinov, and Louis-Philippe Morency. Self-supervised learning from a multi-view perspective. In International Conference on Learning Representations (ICLR) , 2021.

- [70] Yonglong Tian, Dilip Krishnan, and Phillip Isola. Contrastive multiview coding. In Proceedings of the European Conference on Computer Vision (ECCV) , 2020.

- [71] Mohamed Ishmael Belghazi, Aristide Baratin, Sai Rajeswar, Sherjil Ozair, Yoshua Bengio, Aaron Courville, and R Devon Hjelm. Mine: Mutual information neural estimation. In Proceedings of the International Conference on Machine Learning (ICML) , 2018.

- [72] Jure Zbontar, Li Jing, Ishan Misra, Yann LeCun, and Stéphane Deny. Barlow twins: Self-supervised learning via redundancy reduction. In International Conference on Machine Learning (ICML) , pages 12310–12320. PMLR, 2021.

- [73] Mathilde Caron, Hugo Touvron, Ishan Misra, Hervé Jégou, Julien Mairal, Piotr Bojanowski, and Armand Joulin. Emerging properties in self-supervised vision transformers. In Proceedings of the International Conference on Computer Vision (ICCV) , 2021.

Appendix A Appendix

We first introduce dataset information in A.1 and provide implementation details for supervised (SL) and self-supervised learning (SSL) tasks in A.2 , A.3 , and A.4 . Then, we provide settings and results of analysis experiments for Sec. 3 in A.5 . Moreover, we visualize mixed samples in A.6 , and provide detailed related work in A.7 .

A.1 Basic Settings

Reproduction details.

We use OpenMixup [ 60 ] implemented in PyTorch [ 36 ] as our code-base for both supervised image classification and contrastive learning (CL) tasks. Except for results marked by $\dagger$ and $\ddagger$, we reproduce most experiment results of compared methods, including Mixup [ 5 ] , CutMix [ 19 ] , ManifoldMix [ 53 ] , SaliencyMix [ 12 ] , FMix [ 51 ] , and ResizeMix [ 52 ] .

Dataset information.

We briefly introduce image datasets used in Sec. 4 : (1) CIFAR-100 [ 25 ] contains 50k training images and 10k test images of 100 classes. (2) ImageNet-1k (IN-1k) [ 61 ] contains 1.28 million training images and 50k validation images of 1000 classes. (3) Tiny-ImageNet (Tiny) [ 26 ] is a rescaled version of ImageNet-1k, which has 100k training images and 10k validation images of 200 classes. (4) STL-10 [ 28 ] benchmark is designed for semi- or unsupervised learning, which consists of 5k labeled training images for 10 classes, 100k unlabeled training images, and a test set of 8k images. (5) CUB-200-2011 (CUB) [ 29 ] contains over 11.8k images from 200 wild bird species for fine-grained classification. (6) FGVC-Aircraft (Aircraft) [ 30 ] contains 10k images of 100 classes of aircraft. (7) iNaturalist2017 (iNat2017) [ 31 ] is a large-scale fine-grained classification benchmark consisting of 579.2k images for training and 96k images for validation from over 5k different wild species. (8) PASCAL VOC [ 45 ] is a classical object detection and segmentation dataset containing 16.5k images for 20 classes. (9) COCO [ 46 ] is an object detection and segmentation benchmark containing 118k scenic images with many objects for 80 classes.

A.2 Implementation of SAMix

Online training pipeline.

The training process of SAMix is summarized as four steps: (1) using the momentum encoder to generate the feature maps $Z^{l}$ for Mixer $\mathcal{M}_{\phi}$; (2) generating $X_{mix}^{q}$ and $X_{mix}^{k}$ by Mixer for the online networks and Mixer; (3) training the online networks by Eq. 12 and the Mixer by Eq. 13 separately; (4) updating the momentum networks by Eq. 14 .
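Step (4) can be illustrated with a minimal sketch. It assumes that Eq. 14 is the usual exponential-moving-average (EMA) update of MoCo-style momentum networks, which this appendix does not restate; the function name `ema_update` and the momentum value used in the demo are illustrative choices, not taken from the paper.

```python
def ema_update(momentum_params, online_params, m=0.999):
    """One EMA step: p_k <- m * p_k + (1 - m) * p_q for every parameter.

    Assumption: this mirrors the standard MoCo-style momentum update;
    the value of m is a placeholder, not the paper's setting.
    """
    return [m * pk + (1.0 - m) * pq
            for pk, pq in zip(momentum_params, online_params)]


# Toy example with two scalar "parameters".
online = [1.0, -2.0]
momentum = [0.0, 0.0]
momentum = ema_update(momentum, online, m=0.9)
```

With a momentum coefficient close to 1, the momentum network changes slowly, so the feature maps fed to the Mixer stay stable between iterations.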

Prior knowledge of mixup.

$\mathcal{L}_{\phi}^{mask}=\beta(\ell_{\mu}+\ell_{\sigma})$, where $\beta$ is a balancing weight that is initialized to $0.1$ and linearly decreases to $0$ during training.
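The linear decay of the balancing weight can be sketched as follows. Whether the decay is applied per iteration or per epoch is not stated here, so the `step`/`total_steps` granularity and the function name `mask_loss_weight` are assumptions made for illustration.

```python
def mask_loss_weight(step, total_steps, beta_init=0.1):
    """Linearly decay the balancing weight from beta_init to 0.

    Assumption: the schedule is indexed by a generic training step;
    the source only states the start value (0.1) and the end value (0).
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    progress = min(max(step / total_steps, 0.0), 1.0)
    return beta_init * (1.0 - progress)


beta_start = mask_loss_weight(0, 100)
beta_mid = mask_loss_weight(50, 100)
beta_end = mask_loss_weight(100, 100)
```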

A.3 Supervised Image Classification

Hyper-parameter settings.

A.4 Contrastive Learning

Implementation of SAMix-C and SAMix-I.

As for Table 5 and Table 6 , all compared CL methods except SwAV [ 42 ] use the MoCo.V2 pre-training settings, which adopt ResNet-50 [ 64 ] as the encoder $f_{\theta}$ with a two-layer MLP projector $g_{\omega}$, optimized by the SGD optimizer and a cosine scheduler with an initial learning rate of $0.03$ and a batch size of $256$. The length of the momentum dictionary is 65536 for IN-1k and 16384 for STL-10 and Tiny datasets. The data augmentation strategy is based on IN-1k in MoCo.V2 as follows: Geometric augmentation is RandomResizedCrop with the scale in $[0.2,1.0]$ and RandomHorizontalFlip . Color augmentation is ColorJitter with {brightness, contrast, saturation, hue} strength of $\{0.4,0.4,0.4,0.1\}$ with a probability of $0.8$, and RandomGrayscale with a probability of $0.2$. Blurring augmentation uses a square Gaussian kernel of size $23\times 23$ with a std uniformly sampled in $[0.1,2.0]$. We use $224\times 224$ resolutions for IN-1k and $96\times 96$ resolutions for STL-10 and Tiny datasets.

As for Table 7 and Table 8 , we follow the original setups of these CL baselines (SimCLR [ 4 ] , MoCo.V1 [ 65 ] , MoCo.V2 [ 62 ] , BYOL [ 20 ] , SwAV [ 42 ] , SimSiam [ 66 ] , and MoCo.V3 [ 67 ] ) using OpenMixup [ 60 ] implementations. Notice that MoCo.V3 is specially designed for Vision Transformers [ 8 ] while the other CL baselines are originally proposed with CNN architectures. Meanwhile, we employ the contrastive learning objectives with mixing augmentations for BYOL and SimSiam proposed in BSIM [ 41 ] because these CL baselines adopt the MSE loss between positive sample pairs instead of the infoNCE loss (Eq. 2 ).

CL methods with Mixup augmentations.

In Sec. 4.2 , we compare the proposed SAMix variants with general Mixup approaches proposed in SL and well-designed CL methods with Mixups. As for the general Mixup variants implemented with CL baselines, Mixup [ 5 ] , ManifoldMix [ 53 ] , CutMix [ 19 ] , SaliencyMix [ 12 ] , FMix [ 51 ] , ResizeMix [ 52 ] , PuzzleMix [ 6 ] , and our proposed SAMix only use a single Mixup augmentation. As for the CL methods applying Mixup augmentations, DACL [ 43 ] employs the vanilla Mixup; MoCHi [ 14 ] , i-Mix [ 7 ] , UnMix [ 15 ] , and WBSIM [ 41 ] use two types of Mixup strategies in the input image and the latent space of the encoder; and SDMP [ 44 ] randomly applies three types of input space Mixups (Mixup, CutMix, and ResizeMix). Therefore, SDMP can achieve performances competitive with the SAMix variants in Table 7 and Table 8 .

Evaluation protocols.

We evaluate the SSL representation with a linear classification protocol proposed in MoCo [ 17 ] and MoCo.V3 [ 67 ] for ResNet and ViT variants, which trains a linear classifier on top of the frozen representation on the training set. For ResNet variants, the linear classifier is trained for 100 epochs by an SGD optimizer with the SGD momentum of $0.9$ and the weight decay of $0$. We set the initial learning rate of $30$ for IN-1k as MoCo, and $0.1$ for STL-10 and Tiny with a batch size of 256. The learning rate decays by $0.1$ at epochs 60 and 80. For ViT-S, the linear classifier is trained for 90 epochs by the SGD optimizer with a batch size of 1024 and a basic learning rate of 12. Moreover, we adopt the object detection task to evaluate transfer learning abilities following MoCo, which uses the $4$-th layer feature maps of ResNet (ResNet-C4) to fine-tune Faster R-CNN [ 48 ] with 24k iterations on the trainval07+12 set and Mask R-CNN [ 49 ] with the $2\times$ training schedule (24-epoch) on the train2017 set.
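The step decay used for the ResNet linear probe can be written out as a small sketch. The function name `linear_probe_lr` is hypothetical, and it assumes 0-indexed epochs with the decay taking effect from epochs 60 and 80 onward, which the text does not specify.

```python
def linear_probe_lr(epoch, base_lr=30.0, milestones=(60, 80), gamma=0.1):
    """Step-decay learning rate for the linear classifier.

    Assumption: epochs are 0-indexed and the rate is multiplied by
    gamma once for every milestone that has been reached.
    """
    n_decays = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** n_decays


# IN-1k setting from the text: initial learning rate 30, 100 epochs.
lr_schedule = [linear_probe_lr(e) for e in range(100)]
```

For STL-10 and Tiny, the same function would be called with `base_lr=0.1`.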

A.5 Empirical Experiments

A.5.1 Cross-view Training Pipeline for Instance-level Mixup

$\ell^{NCE}(z_{i}^{\tau_{q}},z_{i}^{\tau_{k}})+\ell^{NCE}(z_{m})$, surpasses using only one of them, which indicates that mixup enables $f_{\theta}$ to learn the relationship between local neighborhood systems.

A.5.2 Analysis of Instance-level Mixup

As we discussed in Sec. A.5.1 , we propose the cross-view training pipeline for instance-level mixup classification. We then discuss inter- and intra-class properties of instance-level mixup. As shown in Figure 8 (c), we adopt inter-cluster and intra-cluster mixup from {Mixup, CutMix} with $\alpha\in\{0.2,1,2,4\}$ to verify that instance-level mixup should treat inter- and intra-class mixup differently. Empirically, mixed samples provided by Mixup preserve global information of both source samples (smoother), while samples generated by CutMix preserve local patches (more discriminative). We introduce pseudo labels (PL) to indicate different clusters by the clustering method ODC [ 21 ] with the class (cluster) number $C$. Based on the experiment results, we can conclude that inter-class mixup requires discriminative mixed samples with strong intensity while intra-class mixup needs smooth samples with low intensity. Moreover, we provide two cluster-based instance-level mixup methods in Table 5 and 6 (denoted by $*$): (a) Inter-Intra$^{*}$. We use CutMix with $\alpha\geq 2$ as inter-cluster mixup and Mixup with $\alpha=0.2$ as intra-cluster mixup. (b) PuzzleMix$^{*}$. We introduce saliency-based mixup methods to SSL tasks by introducing PL and a parametric cluster classifier $g_{\psi}^{C}$ after the encoder. This classifier $g_{\psi}^{C}$ and the encoder $f_{\theta}$ are optimized online like AutoMix and SAMix mentioned in A.2 . Based on Grad-CAM [ 10 ] calculated from the classifier, PuzzleMix can be adopted on SSL tasks.
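To make the role of $\alpha$ concrete, the following sketch draws a mixing ratio and applies vanilla Mixup. It assumes the common convention that the ratio is sampled as $\lambda\sim\mathrm{Beta}(\alpha,\alpha)$, which is not restated in this section; the helper names `sample_lambda` and `mixup` are illustrative.

```python
import random


def sample_lambda(alpha, rng):
    """Draw a mixing ratio; assumes lambda ~ Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)


def mixup(x_i, x_j, lam):
    """Element-wise linear interpolation of two flattened samples."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]


rng = random.Random(0)
lam = sample_lambda(0.2, rng)                # intra-cluster setting in (a)
x_mix = mixup([1.0, 1.0], [0.0, 0.0], lam)   # toy two-pixel "images"
```

Under this convention, a small value such as $\alpha=0.2$ concentrates $\lambda$ near 0 or 1, so one source sample dominates the mixture, while larger values such as $\alpha\geq 2$ concentrate $\lambda$ around 0.5.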

A.5.3 Analysis of Mixup Generation Objectives

In Sec. 3.1 , we design experiments to analyze various losses for mixup generation in Figure 2 (left) and the proposed $\eta$-balanced loss in Figure 2 (right) for both SL and SSL tasks with ResNet-18 on STL-10 and Tiny. Basically, we assume both STL-10 and Tiny datasets have 200 classes on their 100k images. Since STL-10 does not provide ground truth labels (L) for 100k unlabeled data, we introduce PL generated by a supervised pre-trained classifier on Tiny as the "ground truth" for its 100k training set. Notice that L denotes ground truth labels and PL denotes pseudo labels generated by ODC [ 21 ] with $C=200$.

As for the SL task, we use the labeled training set for mixup classification (100k on Tiny vs. 5k on STL-10). Notice that SL results are worse than using SSL settings on STL-10, since the SL task only trains a randomly initialized classifier on 5k labeled data. Because the infoNCE and BCE losses require cross-view augmentation (or they will produce trivial solutions), we adopt MoCo.V2 augmentation settings for these two losses when performing the SL task. Compared to CE (L), we corrupt the global term in CE as CE (PL) or directly remove it as pBCE (L) to show that pBCE is vital to optimizing mixed samples. Similarly, we show that the global term is used as the global constraint by comparing BCE (UL) with infoNCE (UL), infoNCE (PL), and infoNCE (L).

As for the SSL task, we verify the conclusions drawn from the SL task and conclude that (a) the local term optimizes mixup generation directly, corresponding to the smoothness property, and (b) the global term serves as the global constraint corresponding to the discriminative property. Moreover, we verify that using the $\eta$-balanced loss as $\mathcal{L}_{\phi}^{cls}$ yields the best performance on SL and SSL tasks. Notice that we use $\eta=0.5$ on small-scale datasets and $\eta=0.1$ on large-scale datasets for SL tasks, and use $\eta=0.5$ for all SSL tasks.

A.5.4 Analysis of Mutual Information for Mixup

Since mutual information (MI) is usually adopted to analyze contrastive-based augmentations [ 70 , 68 ] , we estimate MI between $x_{m}$ of various methods and $x_{i}$ by MINE [ 71 ] with 100k images in $64\times 64$ resolutions on Tiny-ImageNet. We sample $\lambda$ from 0 to 1 with the step of $0.125$ and plot results in Figure 7 (d). Here we see that SAMix-C and SAMix-I, which have more MI when $\lambda\approx 0.5$, perform better.
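For reference, the sampling grid described above contains nine mixing ratios. The short sketch below only enumerates them; the variable name `lambdas` is illustrative and the MI estimation itself is not shown.

```python
# Mixing ratios from 0 to 1 inclusive with a step of 0.125.
step = 0.125
lambdas = [i * step for i in range(int(1 / step) + 1)]
```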

A.5.5 Results of Downstream tasks

In Sec. 4.2 , we evaluate the transferable abilities of the representations learned by self-supervised methods on the object detection task on PASCAL VOC [ 45 ] and COCO [ 46 ] . In Table 11 , the online SAMix-C and the pre-trained SAMix-I$^{\mathcal{P}}$ achieve the best detection performances among the compared methods and significantly improve the baseline MoCo.V2 ( e.g., SAMix-C gains +0.9% AP and +0.7% AP$^{b}$ over MoCo.V2). Notice that MoCHi, i-Mix, and UnMix introduce mixup augmentations in both the input and latent spaces, while our proposed SAMix only generates mixed samples in the input space.

A.6 Visualization of SAMix

A.6.1 Mixing Attention and Content in Mixer

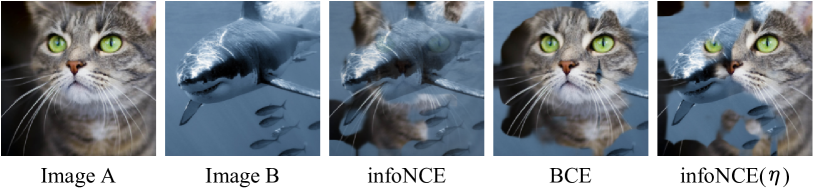

A.6.2 Effects of Mixup Generation Loss

In addition to Sec. 3.3 , we further provide visualization of mixed samples using the infoNCE (Eq. 4 ), BCE (Eq. 7 ), and $\eta$-balanced infoNCE loss (Eq. 8 ) for Mixer. As shown in Figure 9 , we find that mixed samples using the infoNCE mixup loss prefer instance-specific and fine-grained features. On the contrary, mixed samples of the BCE loss seem only to consider discrimination between two corresponding neighborhood systems, and the BCE loss is more inclined to maintain the continuity of the whole object relative to infoNCE. Thus, combining both characteristics, the $\eta$-balanced infoNCE loss yields mixed samples that retain both instance-specific features and global discrimination.

A.6.3 Visualization of Mixed Samples in SAMix

SAMix in various scenarios.

In addition to Sec. 3.3 , we visualize the mixed samples of SAMix in various scenarios to show the relationship between mixed samples and class (cluster) information. Since IN-1k contains some samples in CUB and Aircraft, we choose the overlapped samples to visualize SAMix trained for the fine-grained SL task (CUB and Aircraft) and SSL tasks (SAMix-I and SAMix-C). As shown in Figure 10 , mixed samples reflect the granularity of class information adopted in mixup training. Specifically, we find that mixed samples using the infoNCE mixup loss (Eq. 4 ) are closer to those of the fine-grained SL task because both settings have many fine-grained centroids.

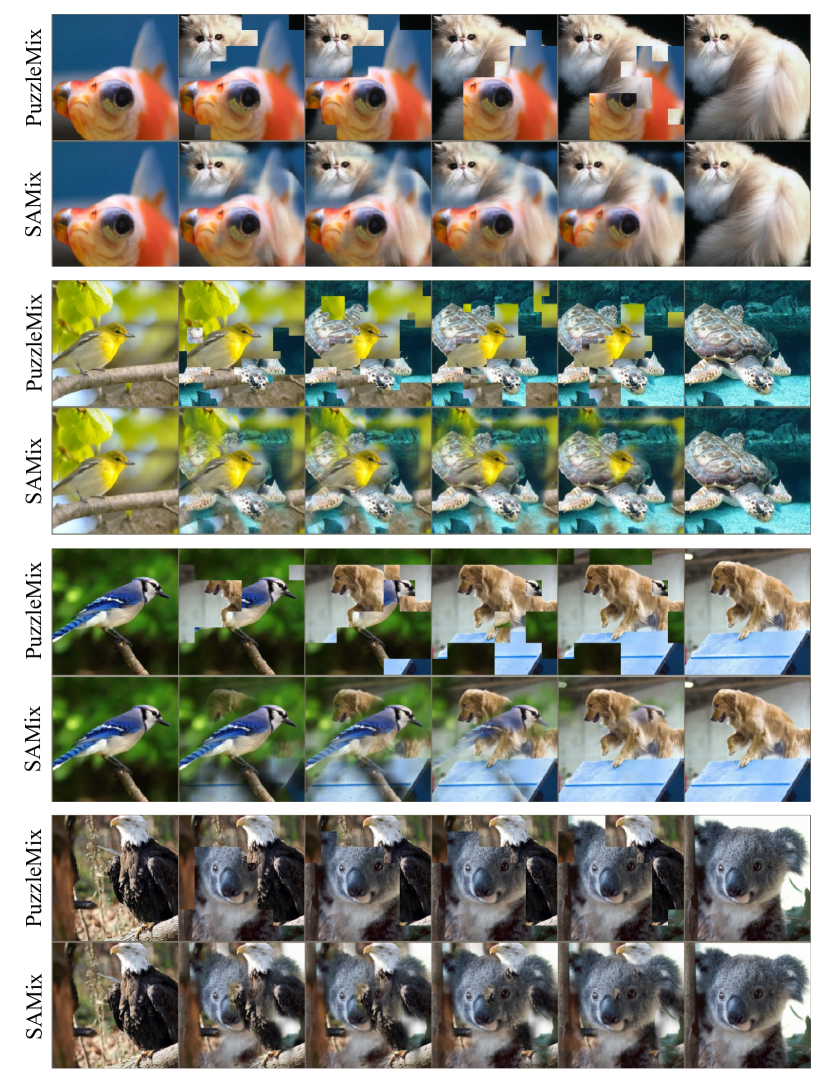

Comparison with PuzzleMix in SL tasks.

To highlight the accurate mixup relationship modeling in SAMix compared to PuzzleMix (standing for saliency-based methods), we visualize the results of mixed samples from these two methods in the supervised case in Figure 11 . There are three main differences: (a) the bilinear upsampling strategy in SAMix makes the mixed samples smoother in local patches; (b) adaptive $\lambda$ encoding and mixing attention enhance the correspondence between mixed samples and the $\lambda$ value; (c) the $\eta$-balanced mixup loss enables SAMix to balance global discriminative and fine-grained features.

Comparison of SAMix-I and SAMix-C in SSL tasks.

As shown in Figure 12 , we provide more mixed samples of SAMix-I and SAMix-C in the SSL tasks to show that introducing class information by PL can help Mixer generate mixed samples that retain both the fine-grained features (instance discrimination) and whole targets.

A.7 Detailed related work

Contrastive learning.

CL amplifies the potential of SSL by achieving significant improvements on classification [ 4 , 17 , 42 ] ; it maximizes similarities of positive pairs while minimizing similarities of negative pairs. To provide a global view of CL, MoCo [ 17 ] proposes a memory-based framework with a large number of negative samples and model differentiation using the exponential moving average. SimCLR [ 4 ] demonstrates a simple memory-free approach with large batch size and strong data augmentations that is also competitive in performance to memory-based methods. BYOL [ 20 ] and its variants [ 66 , 67 ] do not require negative pairs or a large batch size for the proposed pretext task, which tries to estimate latent representations from the same instance. Besides pairwise contrasting, SwAV [ 42 ] performs online clustering while enforcing consistency between multiple views of the same image. Barlow Twins [ 72 ] avoids representation collapse by learning the cross-correlation matrix of distorted views of the same sample. Moreover, MoCo.V3 [ 67 ] and DINO [ 73 ] are proposed to tackle the instability and degraded performance of CL based on popular Vision Transformers [ 8 ] .

MixUp [ 5 ] , which forms convex interpolations of any two samples and their one-hot labels, was presented as the first mixing-based data augmentation approach for regularising the training of networks. ManifoldMix [ 53 ] and PatchUp [ 54 ] extend it to the hidden space. CutMix [ 19 ] suggests a mixing strategy based on image patches, i.e. , randomly replacing a local rectangular section in images. Based on CutMix, ResizeMix [ 52 ] inserts a whole image into a local rectangular area of another image after scaling down. FMix [ 51 ] converts the image to Fourier space (spectrum domain) to create binary masks. To generate more semantic virtual samples, offline optimization algorithms are introduced for the saliency regions. SaliencyMix [ 12 ] obtains the saliency using a universal saliency detector. With optimal transport, PuzzleMix [ 6 ] and Co-Mixup [ 11 ] present more precise methods for finding appropriate mixup masks based on saliency statistics. SuperMix [ 24 ] combines mixup with knowledge distillation, which learns a pixel-wise sample mixing policy via a teacher-student framework. More recently, TransMix [ 56 ] and TokenMix [ 57 ] are proposed as Mixup augmentations specially designed for Vision Transformers [ 8 ] . Differing from previous methods, AutoMix [ 16 ] can learn the mixup generation by a sub-network end-to-end, which generates mixed samples via feature maps and the mixing ratio. Orthogonal to the sample mixing strategies, some researchers [ 58 , 56 , 59 ] improve the label mixing policies upon linear mixup.
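The rectangular patch replacement of CutMix described above can be sketched as follows. This is a simplified illustration on nested lists rather than a reference implementation: it assumes the commonly used formulation in which the box side scales with $\sqrt{1-\lambda}$ and the mixing ratio is recomputed from the clipped box area, and the helper names `cutmix_box` and `cutmix` are hypothetical.

```python
import math
import random


def cutmix_box(height, width, lam, rng):
    """Sample a box covering roughly (1 - lam) of the image area."""
    cut_ratio = math.sqrt(1.0 - lam)
    cut_h, cut_w = int(height * cut_ratio), int(width * cut_ratio)
    cy, cx = rng.randrange(height), rng.randrange(width)  # box centre
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, height)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, width)
    return top, bottom, left, right


def cutmix(img_a, img_b, lam, rng):
    """Paste a box from img_b into a copy of img_a (2D lists).

    Returns the mixed image and the ratio adjusted to the actual box
    area, since clipping at the border can shrink the box.
    """
    h, w = len(img_a), len(img_a[0])
    top, bottom, left, right = cutmix_box(h, w, lam, rng)
    mixed = [row[:] for row in img_a]
    for r in range(top, bottom):
        mixed[r][left:right] = img_b[r][left:right]
    lam_adj = 1.0 - (bottom - top) * (right - left) / (h * w)
    return mixed, lam_adj


img_a = [[0.0] * 8 for _ in range(8)]
img_b = [[1.0] * 8 for _ in range(8)]
mixed, lam_adj = cutmix(img_a, img_b, 0.5, random.Random(0))
```

The adjusted ratio `lam_adj` is what would be used to mix the labels, so that the label weights match the pixel proportions actually present in the mixed sample.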

Mixup for contrastive learning.

A complementary method for better instance-level representation learning is to use mixup on CL [ 14 , 15 ] . When used in collaboration with CE loss, Mixup and its several variants provide highly efficient data augmentation for SL by establishing a relationship between samples. Without ground-truth labels, most approaches are limited to linear mixup methods. For example, UnMix [ 15 ] attempts to use MixUp in the input space for self-supervised learning, whereas the developers of MoCHi [ 14 ] propose mixing the negative samples in the embedding space to increase the number of hard negatives but at the expense of classification accuracy. i-Mix [ 7 ] , DACL [ 43 ] , BSIM [ 41 ] and SDMP [ 44 ] demonstrated how to regularize contrastive learning by mixing instances in the input or latent spaces. We introduce an automatic mixup for SSL tasks, which adaptively learns the instance relationship based on inter- and intra-cluster properties online.


Boosting Discriminative Visual Representation Learning with Scenario-Agnostic Mixup

Siyuan Li, Zicheng Liu, Di Wu, Lei Shang, Baigui Sun, Xuansong Xie, Stan Z. Li


Awesome List of Mixup Augmentation Papers for Visual Representation Learning

Westlake-AI/Awesome-Mixup


- Introduction

We summarize awesome mixup data augmentation methods for visual representation learning in various scenarios.

The list of awesome mixup augmentation methods is summarized in chronological order and is being updated. The main branch is modified according to Awesome-Mixup in OpenMixup , and we will add more papers according to Awesome-Mix . We first summarize fundamental mixup methods from two aspects: sample mixup policy and label mixup policy . Then, we summarize mixup techniques used in downstream tasks.

- To find related papers and their relationships, check out Connected Papers , which visualizes the academic field in a graph representation.

- To export BibTeX citations of papers, check out ArXiv or Semantic Scholar of the paper for professional reference formats.

- Table of Contents

Pre-defined Policies

Adaptive Policies, Label Mixup Methods, Mixup for Self-supervised Learning, Mixup for Semi-supervised Learning, Mixup for Regression, Mixup for Robustness, Mixup for Multi-modality, Analysis of Mixup, Natural Language Processing, Graph Representation Learning, Contribution, Acknowledgement, Related Project, Fundamental Methods, Sample Mixup Methods.

mixup: Beyond Empirical Risk Minimization Hongyi Zhang, Moustapha Cisse, Yann N. Dauphin, David Lopez-Paz ICLR'2018 [ Paper ] [ Code ]

Between-class Learning for Image Classification Yuji Tokozume, Yoshitaka Ushiku, Tatsuya Harada CVPR'2018 [ Paper ] [ Code ]

MixUp as Locally Linear Out-Of-Manifold Regularization Hongyu Guo, Yongyi Mao, Richong Zhang AAAI'2019 [ Paper ]

CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, Youngjoon Yoo ICCV'2019 [ Paper ] [ Code ]

Manifold Mixup: Better Representations by Interpolating Hidden States Vikas Verma, Alex Lamb, Christopher Beckham, Amir Najafi, Ioannis Mitliagkas, David Lopez-Paz, Yoshua Bengio ICML'2019 [ Paper ] [ Code ]

Improved Mixed-Example Data Augmentation Cecilia Summers, Michael J. Dinneen WACV'2019 [ Paper ] [ Code ]

FMix: Enhancing Mixed Sample Data Augmentation Ethan Harris, Antonia Marcu, Matthew Painter, Mahesan Niranjan, Adam Prügel-Bennett, Jonathon Hare arXiv'2020 [ Paper ] [ Code ]

SmoothMix: a Simple Yet Effective Data Augmentation to Train Robust Classifiers Jin-Ha Lee, Muhammad Zaigham Zaheer, Marcella Astrid, Seung-Ik Lee CVPRW'2020 [ Paper ] [ Code ]

PatchUp: A Regularization Technique for Convolutional Neural Networks Mojtaba Faramarzi, Mohammad Amini, Akilesh Badrinaaraayanan, Vikas Verma, Sarath Chandar Arxiv'2020 [ Paper ] [ Code ]

GridMix: Strong regularization through local context mapping Kyungjune Baek, Duhyeon Bang, Hyunjung Shim Pattern Recognition'2021 [ Paper ] [ Code ]

ResizeMix: Mixing Data with Preserved Object Information and True Labels Jie Qin, Jiemin Fang, Qian Zhang, Wenyu Liu, Xingang Wang, Xinggang Wang arXiv'2020 [ Paper ] [ Code ]

Where to Cut and Paste: Data Regularization with Selective Features Jiyeon Kim, Ik-Hee Shin, Jong-Ryul Lee, Yong-Ju Lee ICTC'2020 [ Paper ] [ Code ]

AugMix: A Simple Data Processing Method to Improve Robustness and Uncertainty Dan Hendrycks, Norman Mu, Ekin D. Cubuk, Barret Zoph, Justin Gilmer, Balaji Lakshminarayanan ICLR'2020 [ Paper ] [ Code ]

DJMix: Unsupervised Task-agnostic Augmentation for Improving Robustness Ryuichiro Hataya, Hideki Nakayama Arxiv'2021 [ Paper ]

PixMix: Dreamlike Pictures Comprehensively Improve Safety Measures Dan Hendrycks, Andy Zou, Mantas Mazeika, Leonard Tang, Bo Li, Dawn Song, Jacob Steinhardt Arxiv'2021 [ Paper ] [ Code ]

StyleMix: Separating Content and Style for Enhanced Data Augmentation Minui Hong, Jinwoo Choi, Gunhee Kim CVPR'2021 [ Paper ] [ Code ]

Domain Generalization with MixStyle Kaiyang Zhou, Yongxin Yang, Yu Qiao, Tao Xiang ICLR'2021 [ Paper ] [ Code ]

On Feature Normalization and Data Augmentation Boyi Li, Felix Wu, Ser-Nam Lim, Serge Belongie, Kilian Q. Weinberger CVPR'2021 [ Paper ] [ Code ]

Guided Interpolation for Adversarial Training Chen Chen, Jingfeng Zhang, Xilie Xu, Tianlei Hu, Gang Niu, Gang Chen, Masashi Sugiyama ArXiv'2021 [ Paper ]

Observations on K-image Expansion of Image-Mixing Augmentation for Classification Joonhyun Jeong, Sungmin Cha, Youngjoon Yoo, Sangdoo Yun, Taesup Moon, Jongwon Choi IEEE Access'2021 [ Paper ] [ Code ]

Noisy Feature Mixup Soon Hoe Lim, N. Benjamin Erichson, Francisco Utrera, Winnie Xu, Michael W. Mahoney ICLR'2022 [ Paper ] [ Code ]

Preventing Manifold Intrusion with Locality: Local Mixup Raphael Baena, Lucas Drumetz, Vincent Gripon EUSIPCO'2022 [ Paper ] [ Code ]

RandomMix: A mixed sample data augmentation method with multiple mixed modes Xiaoliang Liu, Furao Shen, Jian Zhao, Changhai Nie ArXiv'2022 [ Paper ]

SuperpixelGridCut, SuperpixelGridMean and SuperpixelGridMix Data Augmentation Karim Hammoudi, Adnane Cabani, Bouthaina Slika, Halim Benhabiles, Fadi Dornaika, Mahmoud Melkemi ArXiv'2022 [ Paper ] [ Code ]

AugRmixAT: A Data Processing and Training Method for Improving Multiple Robustness and Generalization Performance Xiaoliang Liu, Furao Shen, Jian Zhao, Changhai Nie ICME'2022 [ Paper ]

A Unified Analysis of Mixed Sample Data Augmentation: A Loss Function Perspective Chanwoo Park, Sangdoo Yun, Sanghyuk Chun NIPS'2022 [ Paper ] [ Code ]

RegMixup: Mixup as a Regularizer Can Surprisingly Improve Accuracy and Out Distribution Robustness Francesco Pinto, Harry Yang, Ser-Nam Lim, Philip H.S. Torr, Puneet K. Dokania NIPS'2022 [ Paper ] [ Code ]

ContextMix: A context-aware data augmentation method for industrial visual inspection systems Hyungmin Kim, Donghun Kim, Pyunghwan Ahn, Sungho Suh, Hansang Cho, Junmo Kim EAAI'2024 [ Paper ]


SaliencyMix: A Saliency Guided Data Augmentation Strategy for Better Regularization A F M Shahab Uddin, Mst. Sirazam Monira, Wheemyung Shin, TaeChoong Chung, Sung-Ho Bae ICLR'2021 [ Paper ] [ Code ]

Attentive CutMix: An Enhanced Data Augmentation Approach for Deep Learning Based Image Classification Devesh Walawalkar, Zhiqiang Shen, Zechun Liu, Marios Savvides ICASSP'2020 [ Paper ] [ Code ]

SnapMix: Semantically Proportional Mixing for Augmenting Fine-grained Data Shaoli Huang, Xinchao Wang, Dacheng Tao AAAI'2021 [ Paper ] [ Code ]

Attribute Mix: Semantic Data Augmentation for Fine Grained Recognition Hao Li, Xiaopeng Zhang, Hongkai Xiong, Qi Tian VCIP'2020 [ Paper ]

On Adversarial Mixup Resynthesis Christopher Beckham, Sina Honari, Vikas Verma, Alex Lamb, Farnoosh Ghadiri, R Devon Hjelm, Yoshua Bengio, Christopher Pal NIPS'2019 [ Paper ] [ Code ]

Patch-level Neighborhood Interpolation: A General and Effective Graph-based Regularization Strategy Ke Sun, Bing Yu, Zhouchen Lin, Zhanxing Zhu ArXiv'2019 [ Paper ]

AutoMix: Mixup Networks for Sample Interpolation via Cooperative Barycenter Learning Jianchao Zhu, Liangliang Shi, Junchi Yan, Hongyuan Zha ECCV'2020 [ Paper ]

PuzzleMix: Exploiting Saliency and Local Statistics for Optimal Mixup Jang-Hyun Kim, Wonho Choo, Hyun Oh Song ICML'2020 [ Paper ] [ Code ]

Co-Mixup: Saliency Guided Joint Mixup with Supermodular Diversity Jang-Hyun Kim, Wonho Choo, Hosan Jeong, Hyun Oh Song ICLR'2021 [ Paper ] [ Code ]

SuperMix: Supervising the Mixing Data Augmentation Ali Dabouei, Sobhan Soleymani, Fariborz Taherkhani, Nasser M. Nasrabadi CVPR'2021 [ Paper ] [ Code ]

Evolving Image Compositions for Feature Representation Learning Paola Cascante-Bonilla, Arshdeep Sekhon, Yanjun Qi, Vicente Ordonez BMVC'2021 [ Paper ]

StackMix: A complementary Mix algorithm John Chen, Samarth Sinha, Anastasios Kyrillidis UAI'2022 [ Paper ]

SalfMix: A Novel Single Image-Based Data Augmentation Technique Using a Saliency Map Jaehyeop Choi, Chaehyeon Lee, Donggyu Lee, Heechul Jung Sensors'2021 [ Paper ]

k-Mixup Regularization for Deep Learning via Optimal Transport Kristjan Greenewald, Anming Gu, Mikhail Yurochkin, Justin Solomon, Edward Chien ArXiv'2021 [ Paper ]

AlignMix: Improving representation by interpolating aligned features Shashanka Venkataramanan, Ewa Kijak, Laurent Amsaleg, Yannis Avrithis CVPR'2022 [ Paper ] [ Code ]

AutoMix: Unveiling the Power of Mixup for Stronger Classifiers Zicheng Liu, Siyuan Li, Di Wu, Zihan Liu, Zhiyuan Chen, Lirong Wu, Stan Z. Li ECCV'2022 [ Paper ] [ Code ]

Boosting Discriminative Visual Representation Learning with Scenario-Agnostic Mixup Siyuan Li, Zicheng Liu, Di Wu, Zihan Liu, Stan Z. Li Arxiv'2021 [ Paper ] [ Code ]

ScoreNet: Learning Non-Uniform Attention and Augmentation for Transformer-Based Histopathological Image Classification Thomas Stegmüller, Behzad Bozorgtabar, Antoine Spahr, Jean-Philippe Thiran Arxiv'2022 [ Paper ]

RecursiveMix: Mixed Learning with History Lingfeng Yang, Xiang Li, Borui Zhao, Renjie Song, Jian Yang NIPS'2022 [ Paper ] [ Code ]

Swapping Semantic Contents for Mixing Images Remy Sun, Clement Masson, Gilles Henaff, Nicolas Thome, Matthieu Cord ICPR'2022 [ Paper ]

TransformMix: Learning Transformation and Mixing Strategies for Sample-mixing Data Augmentation Tsz-Him Cheung, Dit-Yan Yeung OpenReview'2023 [ Paper ]

GuidedMixup: An Efficient Mixup Strategy Guided by Saliency Maps Minsoo Kang, Suhyun Kim AAAI'2023 [ Paper ]

MixPro: Data Augmentation with MaskMix and Progressive Attention Labeling for Vision Transformer Qihao Zhao, Yangyu Huang, Wei Hu, Fan Zhang, Jun Liu ICLR'2023 [ Paper ] [ Code ]

Expeditious Saliency-guided Mix-up through Random Gradient Thresholding Minh-Long Luu, Zeyi Huang, Eric P. Xing, Yong Jae Lee, Haohan Wang 2nd Practical-DL Workshop @ AAAI'23 [ Paper ] [ Code ]

SMMix: Self-Motivated Image Mixing for Vision Transformers Mengzhao Chen, Mingbao Lin, ZhiHang Lin, Yuxin Zhang, Fei Chao, Rongrong Ji ICCV'2023 [ Paper ] [ Code ]

Embedding Space Interpolation Beyond Mini-Batch, Beyond Pairs and Beyond Examples Shashanka Venkataramanan, Ewa Kijak, Laurent Amsaleg, Yannis Avrithis NeurIPS'2023 [ Paper ]

GradSalMix: Gradient Saliency-Based Mix for Image Data Augmentation Tao Hong, Ya Wang, Xingwu Sun, Fengzong Lian, Zhanhui Kang, Jinwen Ma ICME'2023 [ Paper ]

LGCOAMix: Local and Global Context-and-Object-Part-Aware Superpixel-Based Data Augmentation for Deep Visual Recognition Fadi Dornaika, Danyang Sun TIP'2023 [ Paper ] [ Code ]

Catch-Up Mix: Catch-Up Class for Struggling Filters in CNN Minsoo Kang, Minkoo Kang, Suhyun Kim AAAI'2024 [ Paper ]

Adversarial AutoMixup Huafeng Qin, Xin Jin, Yun Jiang, Mounim A. El-Yacoubi, Xinbo Gao ICLR'2024 [ Paper ] [ Code ]

Metamixup: Learning adaptive interpolation policy of mixup with metalearning Zhijun Mai, Guosheng Hu, Dexiong Chen, Fumin Shen, Heng Tao Shen TNNLS'2021 [ Paper ]

Mixup Without Hesitation Hao Yu, Huanyu Wang, Jianxin Wu ICIG'2022 [ Paper ] [ Code ]

Combining Ensembles and Data Augmentation can Harm your Calibration Yeming Wen, Ghassen Jerfel, Rafael Muller, Michael W. Dusenberry, Jasper Snoek, Balaji Lakshminarayanan, Dustin Tran ICLR'2021 [ Paper ] [ Code ]

All Tokens Matter: Token Labeling for Training Better Vision Transformers Zihang Jiang, Qibin Hou, Li Yuan, Daquan Zhou, Yujun Shi, Xiaojie Jin, Anran Wang, Jiashi Feng NIPS'2021 [ Paper ] [ Code ]

Saliency Grafting: Innocuous Attribution-Guided Mixup with Calibrated Label Mixing Joonhyung Park, June Yong Yang, Jinwoo Shin, Sung Ju Hwang, Eunho Yang AAAI'2022 [ Paper ]

TransMix: Attend to Mix for Vision Transformers Jie-Neng Chen, Shuyang Sun, Ju He, Philip Torr, Alan Yuille, Song Bai CVPR'2022 [ Paper ] [ Code ]

GenLabel: Mixup Relabeling using Generative Models Jy-yong Sohn, Liang Shang, Hongxu Chen, Jaekyun Moon, Dimitris Papailiopoulos, Kangwook Lee ArXiv'2022 [ Paper ]

Harnessing Hard Mixed Samples with Decoupled Regularizer Zicheng Liu, Siyuan Li, Ge Wang, Cheng Tan, Lirong Wu, Stan Z. Li NIPS'2023 [ Paper ] [ Code ]

TokenMix: Rethinking Image Mixing for Data Augmentation in Vision Transformers Jihao Liu, Boxiao Liu, Hang Zhou, Hongsheng Li, Yu Liu ECCV'2022 [ Paper ] [ Code ]

Optimizing Random Mixup with Gaussian Differential Privacy Donghao Li, Yang Cao, Yuan Yao arXiv'2022 [ Paper ]

TokenMixup: Efficient Attention-guided Token-level Data Augmentation for Transformers Hyeong Kyu Choi, Joonmyung Choi, Hyunwoo J. Kim NIPS'2022 [ Paper ] [ Code ]

Token-Label Alignment for Vision Transformers Han Xiao, Wenzhao Zheng, Zheng Zhu, Jie Zhou, Jiwen Lu arXiv'2022 [ Paper ] [ Code ]

LUMix: Improving Mixup by Better Modelling Label Uncertainty Shuyang Sun, Jie-Neng Chen, Ruifei He, Alan Yuille, Philip Torr, Song Bai arXiv'2022 [ Paper ] [ Code ]

MixupE: Understanding and Improving Mixup from Directional Derivative Perspective Vikas Verma, Sarthak Mittal, Wai Hoh Tang, Hieu Pham, Juho Kannala, Yoshua Bengio, Arno Solin, Kenji Kawaguchi UAI'2023 [ Paper ] [ Code ]

Infinite Class Mixup Thomas Mensink, Pascal Mettes arXiv'2023 [ Paper ]

Semantic Equivariant Mixup Zongbo Han, Tianchi Xie, Bingzhe Wu, Qinghua Hu, Changqing Zhang arXiv'2023 [ Paper ]

RankMixup: Ranking-Based Mixup Training for Network Calibration Jongyoun Noh, Hyekang Park, Junghyup Lee, Bumsub Ham ICCV'2023 [ Paper ] [ Code ]

G-Mix: A Generalized Mixup Learning Framework Towards Flat Minima Xingyu Li, Bo Tang arXiv'2023 [ Paper ]

MixCo: Mix-up Contrastive Learning for Visual Representation Sungnyun Kim, Gihun Lee, Sangmin Bae, Se-Young Yun NIPSW'2020 [ Paper ] [ Code ]

Hard Negative Mixing for Contrastive Learning Yannis Kalantidis, Mert Bulent Sariyildiz, Noe Pion, Philippe Weinzaepfel, Diane Larlus NIPS'2020 [ Paper ] [ Code ]

i-Mix: A Domain-Agnostic Strategy for Contrastive Representation Learning Kibok Lee, Yian Zhu, Kihyuk Sohn, Chun-Liang Li, Jinwoo Shin, Honglak Lee ICLR'2021 [ Paper ] [ Code ]

Un-Mix: Rethinking Image Mixtures for Unsupervised Visual Representation Zhiqiang Shen, Zechun Liu, Zhuang Liu, Marios Savvides, Trevor Darrell, Eric Xing AAAI'2022 [ Paper ] [ Code ]

Beyond Single Instance Multi-view Unsupervised Representation Learning Xiangxiang Chu, Xiaohang Zhan, Xiaolin Wei BMVC'2022 [ Paper ]

Improving Contrastive Learning by Visualizing Feature Transformation Rui Zhu, Bingchen Zhao, Jingen Liu, Zhenglong Sun, Chang Wen Chen ICCV'2021 [ Paper ] [ Code ]

Piecing and Chipping: An effective solution for the information-erasing view generation in Self-supervised Learning Jingwei Liu, Yi Gu, Shentong Mo, Zhun Sun, Shumin Han, Jiafeng Guo, Xueqi Cheng OpenReview'2021 [ Paper ]

Contrast and Mix: Temporal Contrastive Video Domain Adaptation with Background Mixing Aadarsh Sahoo, Rutav Shah, Rameswar Panda, Kate Saenko, Abir Das NIPS'2021 [ Paper ] [ Code ]

MixSiam: A Mixture-based Approach to Self-supervised Representation Learning Xiaoyang Guo, Tianhao Zhao, Yutian Lin, Bo Du OpenReview'2021 [ Paper ]

Mix-up Self-Supervised Learning for Contrast-agnostic Applications Yichen Zhang, Yifang Yin, Ying Zhang, Roger Zimmermann ICME'2021 [ Paper ]

Towards Domain-Agnostic Contrastive Learning Vikas Verma, Minh-Thang Luong, Kenji Kawaguchi, Hieu Pham, Quoc V. Le ICML'2021 [ Paper ]

Center-wise Local Image Mixture For Contrastive Representation Learning Hao Li, Xiaopeng Zhang, Hongkai Xiong BMVC'2021 [ Paper ]

Contrastive-mixup Learning for Improved Speaker Verification Xin Zhang, Minho Jin, Roger Cheng, Ruirui Li, Eunjung Han, Andreas Stolcke ICASSP'2022 [ Paper ]

ProGCL: Rethinking Hard Negative Mining in Graph Contrastive Learning Jun Xia, Lirong Wu, Ge Wang, Jintao Chen, Stan Z. Li ICML'2022 [ Paper ] [ Code ]

M-Mix: Generating Hard Negatives via Multi-sample Mixing for Contrastive Learning Shaofeng Zhang, Meng Liu, Junchi Yan, Hengrui Zhang, Lingxiao Huang, Pinyan Lu, Xiaokang Yang KDD'2022 [ Paper ] [ Code ]

A Simple Data Mixing Prior for Improving Self-Supervised Learning Sucheng Ren, Huiyu Wang, Zhengqi Gao, Shengfeng He, Alan Yuille, Yuyin Zhou, Cihang Xie CVPR'2022 [ Paper ] [ Code ]

On the Importance of Asymmetry for Siamese Representation Learning Xiao Wang, Haoqi Fan, Yuandong Tian, Daisuke Kihara, Xinlei Chen CVPR'2022 [ Paper ] [ Code ]

VLMixer: Unpaired Vision-Language Pre-training via Cross-Modal CutMix Teng Wang, Wenhao Jiang, Zhichao Lu, Feng Zheng, Ran Cheng, Chengguo Yin, Ping Luo ICML'2022 [ Paper ]

CropMix: Sampling a Rich Input Distribution via Multi-Scale Cropping Junlin Han, Lars Petersson, Hongdong Li, Ian Reid ArXiv'2022 [ Paper ] [ Code ]

i-MAE: Are Latent Representations in Masked Autoencoders Linearly Separable? Kevin Zhang, Zhiqiang Shen ArXiv'2022 [ Paper ] [ Code ]

MixMAE: Mixed and Masked Autoencoder for Efficient Pretraining of Hierarchical Vision Transformers Jihao Liu, Xin Huang, Jinliang Zheng, Yu Liu, Hongsheng Li CVPR'2023 [ Paper ] [ Code ]

Mixed Autoencoder for Self-supervised Visual Representation Learning Kai Chen, Zhili Liu, Lanqing Hong, Hang Xu, Zhenguo Li, Dit-Yan Yeung CVPR'2023 [ Paper ]