
The role of teachers in continuous assessment: a model for primary schools in Windhoek

Related Papers

Paulina Hamukonda

Teklebrhan Berhe

The purpose of the study is to assess the prospects for implementing continuous assessment (CA) in higher education. Data were collected through a structured questionnaire from instructors and students of Adigrat University, as well as Mekelle and Aksum Universities for comparison purposes. Both quantitative and qualitative analyses were carried out. The results indicated that instructors were not continuously collecting information about student progress, that only a small number of assessments were used in courses, and that few instructors gave feedback at all. A significant number of instructors and students had poor knowledge of, and negative attitudes towards, CA. Based on the results, it is concluded and recommended that instructors use the results from CA as a means of identifying students’ progress and thereby providing support. Accordingly, departments need to have strong documentation and reporting systems, the maximum and minimum numbers of students in a class need to be put at a st...

FIGHTWELL MUTAMBO

The purpose of this study was to determine the perceptions that students and teachers have of Continuous Assessment in the two secondary schools of Chiwala and Masala on the Copperbelt Province of the Republic of Zambia. Perception is considered to be of prime importance in the engagement of an individual in any activity. If the perception is low, then the individual’s commitment to the activity will most likely be low. The methods employed to determine perception were both quantitative and qualitative: scaled questionnaires were used, as well as focus group discussions and guided interviews. A total of 99 respondents were involved: 70 grade 12 pupils out of a population of 1,200 possible students, and 29 teachers out of a possible 130. Percentages were used as the main method of analysis, while qualitative data were analysed qualitatively. The results indicated that Continuous Assessment was perceived to be important by all stakeholders, and to a large extent both pupils and teachers indicated that parents were well aware of the existence of Continuous Assessment in the two schools. However, the degree to which parents were interested and participated in its implementation needed evaluation. Practical subjects such as Home Economics were said to be difficult to assess regularly because of the cost of buying materials for assessment. This was also found to be true for science-related subjects, and it caused the schools to schedule serious assessment of practicals at mock examination time only. This was not enough, as it implies that the practical subjects are taught theoretically. The practice of continuous assessment in the two schools has the goodwill of the administrators; however, there is room for tremendous improvement to ensure the participation of all.
The guidance, careers and placement teachers, who were the main coordinators of continuous assessment, need to be motivated in some way, as the task before them was quite large. The maintenance and processing of Continuous Assessment records required time, and therefore their teaching loads should be lower. The school governance boards and administration should consider mobilising resources directed at continuous assessment so that practical subjects that are meant to equip pupils with skills are effectively taught. To reduce the physical space needed for storage of Continuous Assessment records, the schools should consider investing in electronic data facilities. The words pupils and students are used interchangeably in this study. Keywords: Perception; Challenges; Implementing; Continuous Assessment; Feedback.

Ethiopian Journal of Education and Sciences

Abiy Filate

Irene Vilakazi

temitope opaleye

ABSTRACT Evaluation of student performance in accordance with behavioural objectives is ascertained through continuous assessment. Hence, this study sought to determine the perception of teachers towards the use of continuous assessment in senior secondary schools. It was a descriptive survey, and a simple stratified random sampling technique was used to select a sample of one hundred and eighty (n=180) participants for the study. An instrument titled Teachers’ Perception Towards the Use of Continuous Assessment (TPTUCA) was used for data collection. Frequency counts, percentages and chi-square were used to analyze the data. Teachers have a positive attitude towards the use of continuous assessment, but it is demanding and requires a great deal of time. There were significant and meaningful associations between some continuous assessment strategies, such as essay, rating scale, practical demonstration and homework, but not for observation and multiple-choice tests. Also, essay tests and sociometrics in the area of testing previous knowledge were found to be significant in assessing achievement of the behavioural objective(s) by each student. The choice of assessment strategies covers the three domains of learning, i.e., cognitive, affective and psychomotor. Recommendations were made on the need for in-service training and refresher courses for knowledge update, and for an educational policy on evaluating aggregate student performance across cognitive, affective and psychomotor skills. Effective and efficient supervision of classroom teachers should be in place for checks and balances.

Imamudin Husen

getinet seifu walde

This paper examines the status of the implementation of continuous assessment (CA) at Mettu University. A stratified random sampling method was used to select 309 students and 29 instructors, and a purposive method was used to select quality assurance and faculty deans. Questionnaires, focus group discussions, interviews and documents were used for data collection. Quantitative data were analyzed using descriptive statistics, whereas qualitative data were analyzed qualitatively. The findings of the study revealed that instructors considered CA to be continuous testing, while students perceived it as a method of assessment used to increase their academic results. The major challenges were: lack of clear manuals and guidelines; lack of continuous and adequate training, awareness and skills on the part of instructors; large class size; lack of infrastructure and instructional materials; and poor communication of staff with concerned bodies. Based on the results, recommendations were forwarded.

Introduction

Assessment is the process of making judgments about a student's performance on a particular task (Harlan, 1994). Arends (1997) also defined assessment as the full range of information gathered and synthesized by teachers for making decisions about their students. According to Gipps (1994), it is a wide range of methods for evaluating pupil performance and attainment, including formal testing and examinations, practical and oral assessment, classroom-based assessment carried out by teachers, and portfolios. These definitions suggest that educational assessment is a broad term that includes many procedures used to obtain information about student achievement and learning progress. As correctly pointed out by Cone and Foster (1991), good measurement resulting in accurate data is the foundation of sound decision making. According to them, there is little doubt among educational practitioners about the special value of assessment as a basic condition for effective learning.
This is because traditional ways of testing can deal with only a fraction of what somebody wants to evaluate. Alausa (2004) therefore indicated that the major problems of assessing learners have been in the approaches or methods of assessment. To solve these problems, experts and educational policy makers came up with the concept of continuous assessment (CA). Many educational systems all over the world have adopted this approach in assessing learners' achievement in many subject areas. This is because CA approaches can help to rectify the problem of mismatches between tests and classroom activities. According to Bolyard (2003), CA is a strategy used by teachers to support the attainment of goals and skills by learners over a period of time. It occurs as part of the daily interaction between teachers and

Dereje Mathewos

Dereje Mathewos , Mesfin Aberra

ABSTRACT The major objective of this research was to examine the practices and challenges in implementing continuous assessment while teaching English. To achieve this objective, the research used a descriptive survey design. The participants of the study were 26 grade 11 English teachers from Alamura and Tabor secondary and preparatory schools in Hawassa City Administration. Because these schools were the researcher's workplace, they were selected using a purposive sampling technique. The data were collected through archival document analysis, semi-structured interviews and a questionnaire, and were analyzed using frequency, percentage and narrative analysis. The results indicated that teachers differed in how they implemented continuous assessment, even though they were given a similar record sheet. They gave more emphasis to grammar skills in assessing students’ performance. Tests, quizzes and final examinations were the dominant assessment tools implemented by teachers. With regard to challenges, multiple factors hindered the effective implementation of continuous assessment, such as teachers being overloaded with teaching duties that consume most of their time, large class size, the bulky textbook whose lessons must be completed within the prescribed academic year, lack of cooperation from other subject teachers during invigilation, and the like. As a result, continuous assessment was not fully implemented as desired. It was recommended that teachers have a clear plan to implement continuous assessment meaningfully and balance all language skills during assessment. Key words: Practice, Challenge, Assessment, Continuous assessment, Alternative Assessment, document analysis


3.7 Pupils’ role in continuous assessment

In Ghana, the basic school continuous assessment guide, as described earlier in Sections 3.2.1 and 3.2.2, requires the teacher to plan, set learning objectives, design activities, and mark and record pupils’ scores (MoE, 2004). The only role pupils play in the continuous assessment process is performing tasks assigned to them by the teacher.

The situation in Ghana reflects Gersch’s (1992) observation that although the process and purpose of assessment may vary from professional to professional, and indeed there are different emphases on tests, observation and other techniques, pupils themselves are conventionally ascribed a subservient role in the whole assessment process. They are often expected to carry out specified tasks, answer specific questions, undertake written activities or follow a set of procedures. The child is generally seen as a relatively ‘passive object’, and assessment is viewed as something which is ‘done to the child’ rather than something that involves the child actively (p. 25). According to Gersch, if a child joins in too actively, or becomes too questioning or challenging, he or she might be regarded as interfering. Perhaps, historically, the idea of ‘children knowing their place’ and ‘being seen and not heard’ has left its mark when it comes to pupil assessment (p. 25).

For their part, Tilstone, Lacey, Porter and Robertson (2000) suggest that pupils themselves have little role to play in the traditional perspective on assessment. It is something done for them. However, in a dynamic view of assessment, pupils have a central part to play. They are involved in setting their own targets and monitoring their own progress. There are several frameworks that can support pupil involvement, such as records of attainment. Currently, in Ghana, there is no provision for pupil involvement in their own assessment. Because there are no frameworks to support such involvement, it will be impossible for basic school pupils to play any meaningful role in their assessments.

As discussed earlier (Section 3.2.2), in Ghana, the continuous assessment model seems to apply principles from behaviourist learning theory. For example, the teachers assess and reinforce pupils’ responses (James, 2006) and make records on the basis of new assessments; the pupils’ progress is measured against performance criteria which are teacher-defined (Sebba, Byers and Rose, 1993). The literature (for example, MoE, 2004) shows that in Ghana pupils’ role in continuous assessment is limited to answering questions and working on tasks designed by teachers. If pupils’ involvement is to be fostered, then in addition to principles drawing on behaviourist theory, the continuous assessment programme in Ghana has to adopt some principles from the cognitive, constructivist theories of learning (see Chapter 1). This, however, requires radical changes in teachers’ beliefs, competencies and their conceptualisation of continuous assessment; these shifts may not occur easily.

3.7.1 Self- and peer-assessment

The literature shows that self- and peer-assessment are largely adopted in assessment practice that applies principles from constructivist learning theory (Pollard et al., 2005). Self- and peer-assessment, when applied in classrooms, can foster improvement for all pupils, including those who record lower attainments in class. Black and Wiliam (1998) point out that assessment that involves pupils in their own self-evaluation is a key element in improving learning. This is succinctly expressed by the Assessment Reform Group (2002), cited by Clarke (2005), as follows:

Independent learners have the ability to seek out and gain new skills, new knowledge and new understandings. They are able to engage in self-reflection and to identify the next steps in their learning. Teachers should equip learners with the desire and the capacity to take charge of their learning through developing the skills of self-assessment (p. 109).

Clarke (2005) suggests that one reason peer-assessment is so valuable is that pupils often give and receive criticism of their work more freely than in the traditional teacher/pupil interchange. Another advantage is that the language pupils use with each other is the language they would naturally use, rather than school language. Further, peer-assessment can involve just a few minutes of pupils helping each other to improve their work.

However, Rose, McNamara and O’Neil (1996) point out that in considering approaches to the greater involvement of pupils in self-assessment and the planning process, it is necessary to be clear about the purpose to be served by such an approach, and the practicalities of its implementation. Further, greater involvement of pupils in the management of their assessment and learning is dependent upon the development of teachers’ confidence in their own abilities to maintain effective classroom management.

Self-assessment does not occur automatically; Rose, McNamara and O’Neil (1996), in considering the involvement of pupils in self-assessment, identify the importance of providing pupils with a range of skills before they can take more responsibility for their own learning. They list the ability to recall, to summarise, to organise evidence, to reflect and to evaluate as prerequisites for effective self-evaluation. Rose, McNamara and O’Neil (1996) also describe the skills of attending, completing tasks, and joint goal setting as essential components of ‘learning to learn’, and provide examples of ways in which pupils with learning difficulties have been encouraged to move towards achieving these requirements.

Since teacher education in Ghana does not emphasise assessment for learning in its programmes, teachers may lack the competence, knowledge, skills and confidence to foster self- and peer-assessment in classrooms (see Chapter 2).

3.8 Summary of the chapter

The review has revealed the nature of continuous assessment in Ghana in relation to international perspectives on teacher assessment. Unlike teacher assessments done elsewhere, continuous assessment in Ghana comprises three distinctive activities: classroom exercises, tests and homework. These activities are designed specifically to measure attainment in order to obtain marks to fill pupils’ records. Pupils’ aggregated continuous assessment is added to the external examination (BECE) for grading and certification.

However, literature from the UK and USA reveals that classroom assessments that focus more on informing teaching and learning (formative assessment) support lower attaining pupils to improve. These countries have relevant policies, support and resources to enhance teachers’ practices. The materials from Ghana, the UK and the USA will facilitate the discussion of the data from the fieldwork in Chapters 5, 6 and 7. This will enable me to draw conclusions as to whether teachers’ continuous assessment practices support and enhance lower attaining pupils’ learning in classrooms.



Perspect Med Educ. 2018 Feb; 7(1).

Writing an effective literature review

Lorelei Lingard

Schulich School of Medicine & Dentistry, Health Sciences Addition, Western University, London, Ontario Canada

In the Writer’s Craft section we offer simple tips to improve your writing in one of three areas: Energy, Clarity and Persuasiveness. Each entry focuses on a key writing feature or strategy, illustrates how it commonly goes wrong, teaches the grammatical underpinnings necessary to understand it and offers suggestions to wield it effectively. We encourage readers to share comments on or suggestions for this section on Twitter, using the hashtag: #how’syourwriting?

This Writer’s Craft instalment is the first in a two-part series that offers strategies for effectively presenting the literature review section of a research manuscript. This piece alerts writers to the importance of not only summarizing what is known but also identifying precisely what is not, in order to explicitly signal the relevance of their research. In this instalment, I will introduce readers to the mapping the gap metaphor, the knowledge claims heuristic, and the need to characterize the gap.

Mapping the gap

The purpose of the literature review section of a manuscript is not to report what is known about your topic. The purpose is to identify what remains unknown— what academic writing scholar Janet Giltrow has called the ‘knowledge deficit’ — thus establishing the need for your research study [ 1 ]. In an earlier Writer’s Craft instalment, the Problem-Gap-Hook heuristic was introduced as a way of opening your paper with a clear statement of the problem that your work grapples with, the gap in our current knowledge about that problem, and the reason the gap matters [ 2 ]. This article explains how to use the literature review section of your paper to build and characterize the Gap claim in your Problem-Gap-Hook. The metaphor of ‘mapping the gap’ is a way of thinking about how to select and arrange your review of the existing literature so that readers can recognize why your research needed to be done, and why its results constitute a meaningful advance on what was already known about the topic.

Many writers have learned that the literature review should describe what is known. The trouble with this approach is that it can produce a laundry list of facts-in-the-world that does not persuade the reader that the current study is a necessary next step. Instead, think of your literature review as painting in a map of your research domain: as you review existing knowledge, you are painting in sections of the map, but your goal is not to end with the whole map fully painted. That would mean there is nothing more we need to know about the topic, and that leaves no room for your research. What you want to end up with is a map in which painted sections surround and emphasize a white space, a gap in what is known that matters. Conceptualizing your literature review this way helps to ensure that it achieves its dual goal: of presenting what is known and pointing out what is not—the latter of these goals is necessary for your literature review to establish the necessity and importance of the research you are about to describe in the methods section which will immediately follow the literature review.

To a novice researcher or graduate student, this may seem counterintuitive. Hopefully you have invested significant time in reading the existing literature, and you are understandably keen to demonstrate that you’ve read everything ever published about your topic! Be careful, though, not to use the literature review section to regurgitate all of your reading in manuscript form. For one thing, it creates a laundry list of facts that makes for horrible reading. But there are three other reasons for avoiding this approach. First, you don’t have the space. In published medical education research papers, the literature review is quite short, ranging from a few paragraphs to a few pages, so you can’t summarize everything you’ve read. Second, you’re preaching to the converted. If you approach your paper as a contribution to an ongoing scholarly conversation [ 2 ], then your literature review should summarize just the aspects of that conversation that are required to situate your conversational turn as informed and relevant. Third, the key to relevance is to point to a gap in what is known. To do so, you summarize what is known for the express purpose of identifying what is not known. Seen this way, the literature review should exert a gravitational pull on the reader, leading them inexorably to the white space on the map of knowledge you’ve painted for them. That white space is the space that your research fills.

Knowledge claims

To help writers move beyond the laundry list, the notion of ‘knowledge claims’ can be useful. A knowledge claim is a way of presenting the growing understanding of the community of researchers who have been exploring your topic. These are not disembodied facts, but rather incremental insights that some in the field may agree with and some may not, depending on their different methodological and disciplinary approaches to the topic. Treating the literature review as a story of the knowledge claims being made by researchers in the field can help writers with one of the most sophisticated aspects of a literature review—locating the knowledge being reviewed. Where does it come from? What is debated? How do different methodologies influence the knowledge being accumulated? And so on.

Consider this example of the knowledge claims (KC), Gap and Hook for the literature review section of a research paper on distributed healthcare teamwork:

- KC: We know that poor team communication can cause errors.

- KC: And we know that team training can be effective in improving team communication.

- KC: This knowledge has prompted a push to incorporate teamwork training principles into health professions education curricula.

- KC: However, most of what we know about team training research has come from research with co-located teams, i.e., teams whose members work together in time and space.

- Gap: Little is known about how teamwork training principles would apply in distributed teams, whose members work asynchronously and are spread across different locations.

- Hook: Given that much healthcare teamwork is distributed rather than co-located, our curricula will be severely lacking until we create refined teamwork training principles that reflect distributed as well as co-located work contexts.

The ‘We know that …’ structure illustrated in this example is a template for helping you draft and organize. In your final version, your knowledge claims will be expressed with more sophistication. For instance, ‘We know that poor team communication can cause errors’ will become something like ‘Over a decade of patient safety research has demonstrated that poor team communication is the dominant cause of medical errors.’ This simple template of knowledge claims, though, provides an outline for the paragraphs in your literature review, each of which will provide detailed evidence to illustrate a knowledge claim. Using this approach, the order of the paragraphs in the literature review is strategic and persuasive, leading the reader to the gap claim that positions the relevance of the current study. To expand your vocabulary for creating such knowledge claims, linking them logically and positioning yourself amid them, I highly recommend Graff and Birkenstein’s little handbook of ‘templates’ [ 3 ].

As you organize your knowledge claims, you will also want to consider whether you are trying to map the gap in a well-studied field, or a relatively understudied one. The rhetorical challenge is different in each case. In a well-studied field, like professionalism in medical education, you must make a strong, explicit case for the existence of a gap. Readers may come to your paper tired of hearing about this topic and tempted to think we can’t possibly need more knowledge about it. Listing the knowledge claims can help you organize them most effectively and determine which pieces of knowledge may be unnecessary to map the white space your research attempts to fill. This does not mean that you leave out relevant information: your literature review must still be accurate. But, since you will not be able to include everything, selecting carefully among the possible knowledge claims is essential to producing a coherent, well-argued literature review.

Characterizing the gap

Once you’ve identified the gap, your literature review must characterize it. What kind of gap have you found? There are many ways to characterize a gap, but some of the more common include:

- a pure knowledge deficit—‘no one has looked at the relationship between longitudinal integrated clerkships and medical student abuse’

- a shortcoming in the scholarship, often due to philosophical or methodological tendencies and oversights—‘scholars have interpreted x from a cognitivist perspective, but ignored the humanist perspective’ or ‘to date, we have surveyed the frequency of medical errors committed by residents, but we have not explored their subjective experience of such errors’

- a controversy—‘scholars disagree on the definition of professionalism in medicine …’

- a pervasive and unproven assumption—‘the theme of technological heroism—technology will solve what ails teamwork—is ubiquitous in the literature, but what is that belief based on?’

To characterize the kind of gap, you need to know the literature thoroughly. That means more than understanding each paper individually; you also need to be placing each paper in relation to others. This may require changing your note-taking technique while you’re reading; take notes on what each paper contributes to knowledge, but also on how it relates to other papers you’ve read, and what it suggests about the kind of gap that is emerging.

In summary, think of your literature review as mapping the gap rather than simply summarizing the known. And pay attention to characterizing the kind of gap you’ve mapped. This strategy can help to make your literature review into a compelling argument rather than a list of facts. It can remind you of the danger of describing so fully what is known that the reader is left with the sense that there is no pressing need to know more. And it can help you to establish a coherence between the kind of gap you’ve identified and the study methodology you will use to fill it.

Acknowledgements

Thanks to Mark Goldszmidt for his feedback on an early version of this manuscript.

Lorelei Lingard, PhD, is director of the Centre for Education Research & Innovation at Schulich School of Medicine & Dentistry, and professor for the Department of Medicine at Western University in London, Ontario, Canada.

- Open access

- Published: 23 April 2024

Designing feedback processes in the workplace-based learning of undergraduate health professions education: a scoping review

- Javiera Fuentes-Cimma

- Dominique Sluijsmans

- Arnoldo Riquelme

- Ignacio Villagran (ORCID: orcid.org/0000-0003-3130-8326)

- Lorena Isbej (ORCID: orcid.org/0000-0002-4272-8484)

- María Teresa Olivares-Labbe

- Sylvia Heeneman

BMC Medical Education volume 24, Article number: 440 (2024)


Background

Feedback processes are crucial for learning, guiding improvement, and enhancing performance. In workplace-based learning settings, diverse teaching and assessment activities are advocated to be designed and implemented, generating feedback that students use, with proper guidance, to close the gap between current and desired performance levels. Since productive feedback processes rely on observed information regarding a student's performance, it is imperative to establish structured feedback activities within undergraduate workplace-based learning settings. However, these settings are characterized by their unpredictable nature, which can either promote learning or present challenges in offering structured learning opportunities for students. This scoping review maps literature on how feedback processes are organised in undergraduate clinical workplace-based learning settings, providing insight into the design and use of feedback.

Methods

A scoping review was conducted. Studies were identified from seven databases and ten relevant journals in medical education. The screening process was performed independently in duplicate with the support of the StArt program. Data were organized in a data chart and analyzed using thematic analysis. The feedback loop with a sociocultural perspective was used as a theoretical framework.

Results

The search yielded 4,877 papers, and 61 were included in the review. Two themes were identified in the qualitative analysis: (1) The organization of the feedback processes in workplace-based learning settings, and (2) Sociocultural factors influencing the organization of feedback processes. The literature describes multiple teaching and assessment activities that generate feedback information. Most papers described experiences and perceptions of diverse teaching and assessment feedback activities. Few studies described how feedback processes improve performance. Sociocultural factors such as establishing a feedback culture, enabling stable and trustworthy relationships, and enhancing student feedback agency are crucial for productive feedback processes.

Conclusions

This review identified concrete ideas regarding how feedback could be organized within the clinical workplace to promote feedback processes. The feedback encounter should be organized to allow follow-up of the feedback, i.e., working on required learning and performance goals at the next occasion. Educational programs should design feedback processes by appropriately planning subsequent tasks and activities. More insight is needed into designing a full-loop feedback process, with specific attention to effective feedforward practices.

The design of effective feedback processes in higher education has been important for educators and researchers and has prompted numerous publications discussing potential mechanisms, theoretical frameworks, and best practice examples over the past few decades. Initially, research on feedback focused primarily on teachers and feedback delivery, and students were depicted as passive feedback recipients [ 1 , 2 , 3 ]. The feedback conversation has recently evolved to a more dynamic emphasis on interaction, sense-making, outcomes in actions, and engagement with learners [ 2 ]. This shift aligns with utilizing the feedback process as a form of social interaction or dialogue to enhance performance [ 4 ]. Henderson et al. (2019) defined feedback processes as "where the learner makes sense of performance-relevant information to promote their learning" (p. 17). When a student grasps the information concerning their performance in connection to the desired learning outcome and subsequently takes suitable action, a feedback loop is closed, so the process can be regarded as successful [ 5 , 6 ].

Hattie and Timperley (2007) proposed a comprehensive perspective on feedback, the so-called feedback loop, to answer three key questions: “Where am I going?”, “How am I going?”, and “Where to next?” [ 7 ]. Each question represents a key dimension of the feedback loop. The first is the feed-up, which consists of setting learning goals and sharing clear objectives of learners' performance expectations. While the concept of the feed-up might not be consistently included in the literature, it is considered to be related to principles of effective feedback and goal setting within educational contexts [ 7 , 8 ]. Goal setting allows students to focus on tasks and learning, and teachers to have clear intended learning outcomes to enable the design of aligned activities and tasks in which feedback processes can be embedded [ 9 ]. Teachers can improve the feed-up dimension by proposing clear, challenging, but achievable goals [ 7 ]. The second dimension of the feedback loop focuses on feedback and aims to answer the second question by obtaining information about students' current performance. Different teaching and assessment activities can be used to obtain feedback information, and it can be provided by a teacher or tutor, a peer, oneself, a patient, or another coworker. The last dimension of the feedback loop is the feedforward, which is specifically associated with using feedback to improve performance or change behaviors [ 10 ]. Feedforward is crucial in closing the loop because it refers to those specific actions students must take to reduce the gap between current and desired performance [ 7 ].

From a sociocultural perspective, feedback processes involve a social practice consisting of intricate relationships within a learning context [ 11 ]. The main feature of this approach is that students learn from feedback only when the feedback encounter includes generating, making sense of, and acting upon the information given [ 11 ]. In the context of workplace-based learning (WBL), actionable feedback plays a crucial role in enabling learners to leverage specific feedback to enhance their performance, skills, and conceptual understandings. The WBL environment provides students with a valuable opportunity to gain hands-on experience in authentic clinical settings, in which students work more independently on real-world tasks, allowing them to develop and exhibit their competencies [ 3 ]. However, WBL settings are characterized by their unpredictable nature, which can either promote self-directed learning or present challenges in offering structured learning opportunities for students [ 12 ]. Consequently, designing purposive feedback opportunities within WBL settings is a significant challenge for clinical teachers and faculty.

In undergraduate clinical education, feedback opportunities are often constrained due to the emphasis on clinical work and the absence of dedicated time for teaching [ 13 ]. Students are expected to perform autonomously under supervision, ideally achieved by giving them space to practice progressively and providing continuous instances of constructive feedback [ 14 ]. However, the hierarchy often present in clinical settings places undergraduate students in a dependent position, below residents and specialists [ 15 ]. Undergraduate or junior students may have different approaches to receiving and using feedback. If their priority is meeting the minimum standards given pass-fail consequences and acting merely as feedback recipients, other incentives may be needed to engage with the feedback processes because they will need more learning support [ 16 , 17 ]. Adequate supervision and feedback have been recognized as vital educational support in encouraging students to adopt a constructive learning approach [ 18 ]. Given that productive feedback processes rely on observed information regarding a student's performance, it is imperative to establish structured teaching and learning feedback activities within undergraduate WBL settings.

Despite the extensive research on feedback, a significant proportion of published studies involve residents or postgraduate students [ 19 , 20 ]. Recent reviews focusing on feedback interventions within medical education have clearly distinguished between undergraduate medical students and residents or fellows [ 21 ]. To gain a comprehensive understanding of initiatives related to actionable feedback in the WBL environment for undergraduate health professions, a scoping review of the existing literature could provide insight into how feedback processes are designed in that context. Accordingly, the present scoping review aims to answer the following research question: How are the feedback processes designed in the undergraduate health professions' workplace-based learning environments?

A scoping review was conducted using the five-step methodological framework proposed by Arksey and O'Malley (2005) [ 22 ], intertwined with the PRISMA checklist extension for scoping reviews to provide reporting guidance for this specific type of knowledge synthesis [ 23 ]. Scoping reviews allow us to study the literature without restricting the methodological quality of the studies found, systematically and comprehensively map the literature, and identify gaps [ 24 ]. Furthermore, a scoping review was used because this topic is not suitable for a systematic review due to the varied approaches described and the large difference in the methodologies used [ 21 ].

Search strategy

With the collaboration of a medical librarian, the authors used the research question to guide the search strategy. An initial meeting was held to define keywords and search resources. The proposed search strategy was reviewed by the research team, and then the study selection was conducted in two steps:

An online database search included Medline/PubMed, Web of Science, CINAHL, Cochrane Library, Embase, ERIC, and PsycINFO.

A directed search of ten relevant journals in the health sciences education field (Academic Medicine, Medical Education, Advances in Health Sciences Education, Medical Teacher, Teaching and Learning in Medicine, Journal of Surgical Education, BMC Medical Education, Medical Education Online, Perspectives on Medical Education and The Clinical Teacher) was performed.

The research team conducted a pilot search before the full search to determine whether the topic was amenable to a scoping review. The full search was conducted in November 2022. One team member (MO) identified the papers in the databases. JF searched in the selected journals. For feasibility reasons, the authors included only studies written in English, with no time span limitation. After eliminating duplicates, two research team members (JF and IV) independently reviewed all the titles and abstracts using the inclusion and exclusion criteria described in Table 1 and with the support of the screening application StArt [ 25 ]. A third team member (AR) reviewed the titles and abstracts when the first two disagreed. The reviewer team met again at a midpoint and final stage to discuss the challenges related to study selection. Articles included for full-text review were exported to Mendeley. JF independently screened all full-text papers, and AR verified 10% for inclusion. The authors did not analyze study quality or risk of bias during study selection, which is consistent with conducting a scoping review.
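The title and abstract screening rule described above (two independent reviewers, with a third reviewer consulted only on disagreement) can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' tooling: the function name `screen_record` and its boolean inputs are hypothetical, and the actual screening was supported by a dedicated application.

```python
from typing import Optional


def screen_record(reviewer_a: bool, reviewer_b: bool,
                  tiebreaker: Optional[bool] = None) -> bool:
    """Return True if a record advances to full-text review.

    reviewer_a / reviewer_b: independent include (True) or exclude (False)
    decisions. tiebreaker: decision of a third reviewer, used only when the
    first two disagree. All names here are hypothetical.
    """
    if reviewer_a == reviewer_b:
        # Agreement: the shared decision stands.
        return reviewer_a
    if tiebreaker is None:
        # Disagreement without a third opinion cannot be resolved.
        raise ValueError("Reviewers disagree; a third reviewer's decision is required.")
    return tiebreaker


# Example: the two reviewers disagree and the third reviewer excludes the record.
decision = screen_record(True, False, tiebreaker=False)
```

Making the tiebreaker optional keeps the common case (agreement) free of a third decision, while a disagreement without one fails loudly instead of defaulting silently.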

The analysis of the results incorporated a descriptive summary and a thematic analysis, which was carried out to clarify and give consistency to the results' reporting [ 22 , 24 , 26 ]. Quantitative data were analyzed to report the characteristics of the studies, populations, settings, methods, and outcomes. Qualitative data were labeled, coded, and categorized into themes by three team members (JF, SH, and DS). The feedback loop framework with a sociocultural perspective was used as the theoretical framework to analyze the results.

The keywords used for the search strategies were as follows:

Clinical clerkship; feedback; formative feedback; health professions; undergraduate medical education; workplace.

Definitions of the keywords used for the present review are available in Appendix 1 .

As an example, we included the search strategy that we used in the Medline/PubMed database when conducting the full search:

("Formative Feedback"[Mesh] OR feedback) AND ("Workplace"[Mesh] OR workplace OR "Clinical Clerkship"[Mesh] OR clerkship) AND (("Education, Medical, Undergraduate"[Mesh] OR undergraduate health profession*) OR (learner* medical education)).
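The structure of this search string (three concept blocks joined with AND, each built from synonyms joined with OR) can be made explicit with a short Python sketch. The helper `or_group` and the variable names are assumptions introduced for illustration; the sketch only assembles the query text shown above and does not call any PubMed service.

```python
def or_group(terms):
    """Join alternative terms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


# Concept 1: feedback
feedback = or_group(['"Formative Feedback"[Mesh]', "feedback"])

# Concept 2: workplace or clerkship setting
setting = or_group([
    '"Workplace"[Mesh]', "workplace",
    '"Clinical Clerkship"[Mesh]', "clerkship",
])

# Concept 3: undergraduate health professions learners
population = or_group([
    or_group(['"Education, Medical, Undergraduate"[Mesh]',
              "undergraduate health profession*"]),
    "(learner* medical education)",
])

# The three concepts are combined with AND.
query = " AND ".join([feedback, setting, population])
print(query)
```

Keeping each concept in its own list makes it easier to adapt the same strategy to other databases, where the controlled vocabulary tags differ but the AND/OR structure stays the same.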

Inclusion and exclusion criteria

The following inclusion and exclusion criteria were used (Table 1 ):

Data extraction

The research group developed a data-charting form to organize the information obtained from the studies. The process was iterative, as the data chart was continuously reviewed and improved as necessary. In addition, following Levac et al.'s recommendation (2010), the three members involved in the charting process (JF, LI, and IV) independently reviewed the first five selected studies to determine whether the data extraction was consistent with the objectives of this scoping review. Then, the team met using web-conferencing software (Zoom; CA, USA) to review the results and adjust any details in the chart. The same three members extracted data independently from all the selected studies, with two members reviewing each paper [ 26 ]. A third team member was consulted if any conflict occurred when extracting data. The data chart identified demographic patterns and facilitated the data synthesis. To organize data, we used a shared Excel spreadsheet with the following headings: title, author(s), year of publication, journal/source, country/origin, aim of the study, research question (if any), population/sample size, participants, discipline, setting, methodology, study design, data collection, data analysis, intervention, outcomes, outcomes measure, key findings, and relation of findings to research question.

Additionally, all the included papers were uploaded to ATLAS.ti v19 to facilitate the qualitative analysis. Three team members (JF, SH, and DS) independently coded the first six papers to create a list of codes to ensure consistency and rigor. The group met several times to discuss and refine the list of codes. Then, one member of the team (JF) used the code list to code the remaining papers. Once all papers were coded, the team organized codes into descriptive themes aligned with the research question.

Preliminary results were shared with a number of stakeholders (six clinical teachers, ten students, six medical educators) to elicit their opinions as an opportunity to build on the evidence and offer a greater level of meaning, content expertise, and perspective to the preliminary findings [ 26 ]. No quality appraisal of the studies was conducted for this scoping review, which aligns with the frameworks for guiding scoping reviews [ 27 ].

The datasets analyzed during the current study are available from the corresponding author upon request.

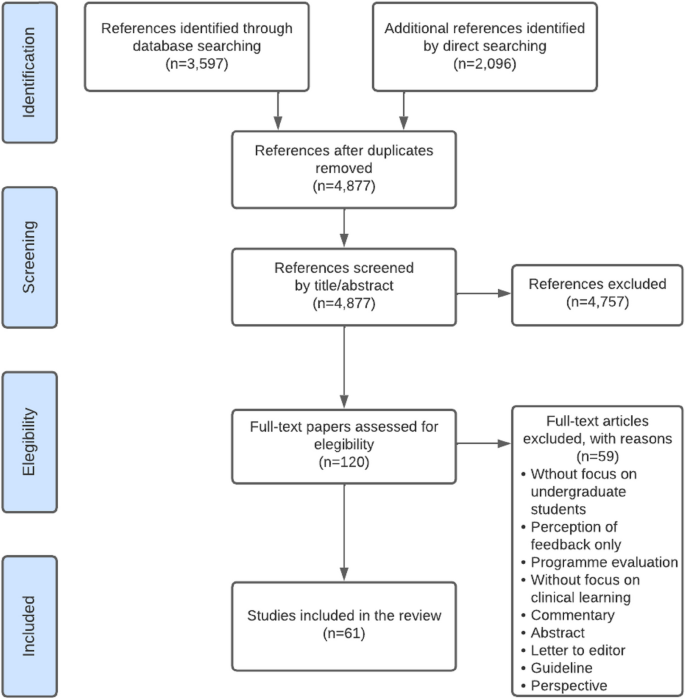

A database search resulted in 3,597 papers, and the directed search of the most relevant journals in the health sciences education field yielded 2,096 titles. An example of the results of one database is available in Appendix 2 . Of the titles obtained, 816 duplicates were eliminated, and the team reviewed the titles and abstracts of 4,877 papers. Of these, 120 were selected for full-text review. Finally, 61 papers were included in this scoping review (Fig. 1 ), as listed in Table 2 .
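The counts reported in this paragraph can be checked with simple arithmetic. The sketch below uses only the figures stated in the text; the two exclusion totals are derived here by subtraction and are not reported in the text itself, and the variable names are illustrative.

```python
# Figures stated in the text
database_hits = 3597       # records from the database search
journal_hits = 2096        # records from the directed journal search
duplicates = 816           # duplicates removed
full_text_reviewed = 120   # papers selected for full-text review
included = 61              # papers included in the review

# Derived values (computed here, not reported in the text)
identified = database_hits + journal_hits                # 5693
screened = identified - duplicates                       # 4877, matches the text
excluded_at_screening = screened - full_text_reviewed    # 4757
excluded_at_full_text = full_text_reviewed - included    # 59

print(identified, screened, excluded_at_screening, excluded_at_full_text)
```

The derived screening total (4,877) agrees with the number of titles and abstracts the team reports having reviewed.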

Fig. 1. PRISMA flow diagram for included studies, incorporating records identified through the database and directed searches

The selected studies were published between 1986 and 2022, and seventy-five percent (46) were published during the last decade. Of all the articles included in this review, 13% (8) were literature reviews: one integrative review [ 28 ] and four scoping reviews [ 29 , 30 , 31 , 32 ]. Finally, fifty-three (87%) original or empirical papers were included (i.e., studies that answered a research question or achieved a research purpose through qualitative or quantitative methodologies) [ 15 , 33 , 34 , 35 , 36 , 37 , 38 , 39 , 40 , 41 , 42 , 43 , 44 , 45 , 46 , 47 , 48 , 49 , 50 , 51 , 52 , 53 , 54 , 55 , 56 , 57 , 58 , 59 , 60 , 61 , 62 , 63 , 64 , 65 , 66 , 67 , 68 , 69 , 70 , 71 , 72 , 73 , 74 , 75 , 76 , 77 , 78 , 79 , 80 , 81 , 82 , 83 , 84 , 85 ].
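As a quick consistency check, the percentages in this paragraph can be recomputed from the counts, assuming each was rounded to the nearest whole number against the 61 included papers. The labels and variable names below are illustrative.

```python
total = 61  # papers included in the review

counts = {
    "published in the last decade": 46,
    "literature reviews": 8,
    "original or empirical papers": 53,
}

# Percentage of the 61 included papers, rounded to the nearest whole number
percentages = {label: round(100 * n / total) for label, n in counts.items()}

for label, pct in percentages.items():
    print(f"{label}: {counts[label]} of {total} ({pct}%)")

# Reviews and empirical papers together account for every included paper.
assert counts["literature reviews"] + counts["original or empirical papers"] == total
```

Under that rounding assumption, the recomputed values (75%, 13%, and 87%) match those reported.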

Table 2 summarizes the papers included in the present scoping review, and Table 3 describes the characteristics of the included studies.

The thematic analysis resulted in two themes: (1) the organization of feedback processes in WBL settings, and (2) sociocultural factors influencing the organization of feedback processes. Table 4 gives a summary of the themes and subthemes.

Organization of feedback processes in WBL settings.

Setting learning goals (i.e., feed-up dimension).

Feedback that focuses on students' learning needs and is based on known performance standards enhances student response and setting learning goals [ 30 ]. Discussing goals and agreements before starting clinical practice enhances students' feedback-seeking behavior [ 39 ] and responsiveness to feedback [ 83 ]. Farrell et al. (2017) found that teacher-learner co-constructed learning goals enhance feedback interactions and help establish educational alliances, improving the learning experience [ 50 ]. However, Kiger (2020) found that sharing individualized learning plans with teachers aligned feedback with learning goals but did not improve students' perceived use of feedback [ 64 ].

Two papers of this set pointed out the importance of goal-oriented feedback, a dynamic process that depends on discussion of goal setting between teachers and students [ 50 ] and influences how individuals experience, approach, and respond to upcoming learning activities [ 34 ]. Goal-oriented feedback should be embedded in the learning experience of the clinical workplace, as it can enhance students' engagement in safe feedback dialogues [ 50 ]. Ideally, each feedback encounter in the WBL context should conclude, in addition to setting a plan of action to achieve the desired goal, with a reflection on the next goal [ 50 ].

Feedback strategies within the WBL environment (i.e., feedback dimension).

In undergraduate WBL environments, several tasks and feedback opportunities can be organized to enable feedback processes:

Questions from clinical teachers to students are a feedback strategy [ 74 ]. There are different types of questions that the teacher can use, either to clarify concepts, to reach the correct answer, or to facilitate self-correction [ 74 ]. Usually, questions can be used in conjunction with other communication strategies, such as pauses, which enable self-correction by the student [ 74 ]. Students can also ask questions to obtain feedback on their performance [ 54 ]. However, question-and-answer as a feedback strategy usually provides information on either correct or incorrect answers and fewer suggestions for improvement, rendering it less constructive as a feedback strategy [ 82 ].

Direct observation of performance is needed to provide the information used as input in the feedback process [ 33 , 46 , 49 , 86 ]. In the process of observation, teachers can include clarification of objectives (i.e., feed-up dimension) and suggestions for an action plan (i.e., feedforward) [ 50 ]. Accordingly, Schopper et al. (2016) showed that students valued being observed while interviewing patients, as they received feedback that helped them become more efficient and effective as interviewers and communicators [ 33 ]. Moreover, it is widely described that direct observation improves feedback credibility [ 33 , 40 , 84 ]. Ideally, observation should be deliberate [ 33 , 83 ], informal or spontaneous [ 33 ], and conducted by a (clinical) expert [ 46 , 86 ]. Feedback should be provided immediately after the observation, and the clinical teacher should, if possible, schedule or stay alert to follow-up observations to promote closing the gap between current and desired performance [ 46 ].

Workplace-based assessments (WBAs), by definition, entail direct observation of performance during authentic task demonstration [ 39 , 46 , 56 , 87 ]. WBAs can significantly impact behavioral change in medical students [ 55 ]. Organizing and designing formative WBAs and embedding these in a feedback dialogue is essential for effective learning [ 31 ].

Summative organization of WBAs is a well described barrier for feedback uptake in the clinical workplace [ 35 , 46 ]. If feedback is perceived as summative, or organized as a pass-fail decision, students may be less inclined to use the feedback for future learning [ 52 ]. According to Schopper et al. (2016), using a scale within a WBA makes students shift their focus during the clinical interaction and see it as an assessment with consequences [ 33 ]. Harrison et al. (2016) pointed out that an environment that only contains assessments with a summative purpose will not lead to a culture of learning and improving performance [ 56 ]. The recommendation is to separate the formative and summative WBAs, as feedback in summative instances is often not recognized as a learning opportunity or an instance to seek feedback [ 54 ]. In terms of the design, an organizational format is needed to clarify to students how formative assessments can promote learning from feedback [ 56 ]. Harrison et al. (2016) identified that enabling students to have more control over their assessments, designing authentic assessments, and facilitating long-term mentoring could improve receptivity to formative assessment feedback [ 56 ].

Multiple WBA instruments and systems are reported in the literature. Sox et al. (2014) used a detailed evaluation form to help students improve their clinical case presentation skills. They found that feedback on oral presentations provided by supervisors using a detailed evaluation form improved clerkship students’ oral presentation skills [ 78 ]. Daelmans et al. (2006) suggested that a formal in-training assessment programme composed of 19 assessments that provided structured feedback could promote observation and verbal feedback opportunities through frequent assessments [ 43 ]. However, in this setting, limited student-staff interactions still hindered feedback follow-up [ 43 ]. Designing frequent WBA improves feedback credibility [ 28 ]. Long et al. (2021) emphasized that students' responsiveness to assessment feedback hinges on its perceived credibility, underlining the importance of credibility for students to effectively engage and improve their performance [ 31 ].

The mini-CEX is one of the most widely described WBA instruments in the literature. Students perceive that the mini-CEX allows them to be observed and encourages the development of interviewing skills [ 33 ]. The mini-CEX can provide feedback that improves students' clinical skills [ 58 , 60 ], as it incorporates a structure for discussing the student's strengths and weaknesses and the design of a written action plan [ 39 , 80 ]. When mini-CEXs are incorporated as part of a system of WBA, such as programmatic assessment, students feel confident in seeking feedback after observation, and being systematic allows for follow-up [ 39 ]. Students suggested separating grading from observation and using the mini-CEX in more informal situations [ 33 ].

Clinical encounter cards allow students to receive weekly feedback and lead them to request more feedback as the clerkship progresses [ 65 ]. Moreover, encounter cards prompt supervisors to give feedback, and students are more satisfied with the feedback process [ 72 ]. With encounter card feedback, students are responsible for asking a supervisor for feedback before a clinical encounter, and supervisors give students written and verbal comments about their performance after the encounter [ 42 , 72 ]. Encounter cards enhance the use of feedback and add approximately one minute to the length of the clinical encounter, so they are well accepted by students and supervisors [ 72 ]. Bennett (2006) identified that Instant Feedback Cards (IFC) facilitated mid-rotation feedback [ 38 ]. Feedback encounter card comments must be discussed between students and supervisors; otherwise, students may perceive them as impersonal, static, formulaic, and incomplete [ 59 ].

Self-assessments can change students' feedback orientation, transforming them into coproducers of learning [ 68 ]. Self-assessments promote the feedback process [ 68 ]. Some articles emphasize the importance of organizing self-assessments before receiving feedback from supervisors, for example, by having students discuss their appraisal with the supervisor [ 46 , 52 ]. When designing a feedback encounter, it is recommended to start with a self-assessment as feed-up, discuss it with the supervisor, and identify areas for improvement as part of the feedback dialogue [ 68 ].

Peer feedback as an organized activity allows students to develop strategies to observe and give feedback to other peers [ 61 ]. Students can act as the feedback provider or receiver, fostering understanding of critical comments and promoting evaluative judgment for their clinical practice [ 61 ]. Within clerkships, enabling the sharing of feedback information among peers allows for a better understanding and acceptance of feedback [ 52 ]. However, students can find it challenging to take on the peer assessor/feedback provider role, as they prefer to avoid social conflicts [ 28 , 61 ]. Moreover, it has been described that they do not trust the judgment of their peers because they are not experts, although they know the procedures, tasks, and steps well and empathize with their peer status in the learning process [ 61 ].

Bedside-teaching encounters (BTEs) provide timely feedback and are an opportunity for verbal feedback during performance [ 74 ]. Rizan et al. (2014) explored timely feedback delivered within BTEs and determined that it promotes interaction that constructively enhances learner development through various corrective strategies (e.g., question and answers, pauses, etc.). However, if the feedback given during the BTEs was general, unspecific, or open-ended, it could go unnoticed [ 74 ]. Torre et al. (2005) investigated which integrated feedback activities and clinical tasks occurred on clerkship rotations and assessed students' perceived quality in each teaching encounter [ 81 ]. The feedback activities reported were feedback on written clinical history, physical examination, differential diagnosis, oral case presentation, a daily progress note, and bedside feedback. Students considered all these feedback activities high-quality learning opportunities, but they were more likely to receive feedback when teaching was at the bedside than at other teaching locations [ 81 ].

Case presentations are an opportunity for feedback within WBL contexts [ 67 , 73 ]. However, both students and supervisors struggled to identify them as feedback moments, and they often did not regard questions and clarifications around case presentations as feedback [ 73 ]. Joshi (2017) identified case presentations as a way for students to ask for informal or spontaneous supervisor feedback [ 63 ].

Organization of follow-up feedback and action plans (i.e., feedforward dimension).

Feedback that generates use and response from students is characterized by two-way communication and embedded in a dialogue [ 30 ]. Feedback must be future-focused [ 29 ], and a feedback encounter should be followed by planning the next observation [ 46 , 87 ]. Follow-up feedback could be organized as a future self-assessment, reflective practice by the student, and/or a discussion with the supervisor or coach [ 68 ]. The literature describes that a lack of student interaction with teachers makes follow-up difficult [ 43 ]. According to Haffling et al. (2011), follow-up feedback sessions improve students' satisfaction with feedback compared to students who do not have follow-up sessions. In addition, these same authors reported that a second follow-up session allows verification of improved performances or confirmation that the skill was acquired [ 55 ].

Although feedback encounter forms are a recognized way of obtaining information about performance (i.e., feedback dimension), the literature does not provide many clear examples of how they may impact the feedforward phase. For example, Joshi et al. (2016) consider a feedback form with four fields (i.e., what did you do well, advise the student on what could be done to improve performance, indicate the level of proficiency, and personal details of the tutor). In this case, the supervisor highlighted what the student could improve but not how, which is the missing phase of the co-constructed action plan [ 63 ]. Whichever WBA instrument is used in clerkships to provide feedback, it should include a "next steps" box [ 44 ], and it is recommended to organize a long-term use of the WBA instrument so that those involved get used to it and improve interaction and feedback uptake [ 55 ]. RIME-based feedback (Reporting, Interpreting, Managing, Educating) is considered an interesting example, as it is perceived as helpful to students in knowing what they need to improve in their performance [ 44 ]. Hochberg (2017) implemented formative mid-clerkship assessments to enhance face-to-face feedback conversations and co-create an improvement plan [ 59 ]. Apps for structuring and storing feedback improve the amount of verbal and written feedback. In the study of Joshi et al. (2016), a reasonable proportion of students (64%) perceived that these app tools help them improve their performance during rotations [ 63 ].

Several studies indicate that an action plan as part of the follow-up feedback is essential for performance improvement and learning [ 46 , 55 , 60 ]. An action plan corresponds to an agreed-upon strategy for improving, confirming, or correcting performance. Bing-You et al. (2017) determined that only 12% of the articles included in their scoping review incorporated an action plan for learners [ 32 ]. Holmboe et al. (2004) reported that only 11% of the feedback sessions following a mini-CEX included an action plan [ 60 ]. Suhoyo et al. (2017) also reported that only 55% of mini-CEX encounters contained an action plan [ 80 ]. Other authors reported that action plans are not commonly offered during feedback encounters [ 77 ]. Sokol-Hessner et al. (2010) implemented feedback card comments with a space to provide written feedback and a specific action plan. In their results, 96% contained positive comments, and only 5% contained constructive comments [ 77 ]. In summary, although the recommendation is to include a “next step” box in the feedback instruments, evidence shows these items are not often used for constructive comments or action plans.

Sociocultural factors influencing the organization of feedback processes.

Multiple sociocultural factors influence interaction in feedback encounters, promoting or hampering the productivity of the feedback processes.

Clinical learning culture

Context impacts feedback processes [ 30 , 82 ], and there are barriers to incorporating actionable feedback in the clinical learning context. The clinical learning culture is partly determined by the clinical context, which can be unpredictable [ 29 , 46 , 68 ], as the available patients determine learning opportunities. Supervisors are occupied by a high workload, which results in limited time or priority for teaching [ 35 , 46 , 48 , 55 , 68 , 83 ], hindering students’ feedback-seeking behavior [ 54 ], and creating a challenge for the balance between patient care and student mentoring [ 35 ].

Clinical workplace culture does not always purposefully prioritize instances for feedback processes [ 83 , 84 ]. This often leads to limited direct observation [ 55 , 68 ] and the provision of poorly informed feedback. It is also evident that this affects trust between clinical teachers and students [ 52 ]. Supervisors consider feedback a low priority in clinical contexts [ 35 ] due to low compensation and lack of protected time [ 83 ]. In particular, lack of time appears to be the most significant and well-known barrier to frequent observation and workplace feedback [ 35 , 43 , 48 , 62 , 67 , 83 ].

The clinical environment is hierarchical [ 68 , 80 ] and can make students not consider themselves part of the team and feel like a burden to their supervisor [ 68 ]. This hierarchical learning environment can lead to unidirectional feedback, limit dialogue during feedback processes, and hinder the seeking, uptake, and use of feedback [ 67 , 68 ]. In a learning culture where feedback is not supported, learners are less likely to want to seek it and feel motivated and engaged in their learning [ 83 ]. Furthermore, it has been identified that clinical supervisors lack the motivation to teach [ 48 ] and the intention to observe or reobserve performance [ 86 ].

In summary, the clinical context and WBL culture do not fully use the potential of a feedback process aimed at closing learning gaps. However, concrete actions shown in the literature can be taken to improve the effectiveness of feedback by organizing the learning context. For example, McGinness et al. (2022) identified that students felt more receptive to feedback when working in a safe, nonjudgmental environment [ 67 ]. Moreover, supervisors and trainees identified the learning culture as key to establishing an open feedback dialogue [ 73 ]. Students who perceive culture as supportive and formative can feel more comfortable performing tasks and more willing to receive feedback [ 73 ].

Relationships

There is a consensus in the literature that trusting and long-term relationships improve the chances of actionable feedback. However, relationships between supervisors and students in the clinical workplace are often brief and not organized longitudinally [ 68 , 83 ], leaving little time to establish a trusting relationship [ 68 ]. Supervisors change continuously, resulting in short interactions that limit the creation of lasting relationships [ 50 , 68 , 83 ]. In some contexts, it is common for a student to have several supervisors, each with their own standards for observing performance [ 46 , 56 , 68 , 83 ]. A lack of stable relationships results in students having little engagement in feedback [ 68 ]. Furthermore, in the case of summative assessment programmes, the dual role of supervisors (i.e., assessing and giving feedback) causes feedback interactions to be perceived as summative and can complicate the relationship [ 83 ].

The articles considered in this review repeatedly describe that long-term and stable relationships enable the development of trust and respect [ 35 , 62 ] and foster feedback-seeking behavior [ 35 , 67 ] and feedback-giving behavior [ 39 ]. Moreover, constructive and positive relationships enhance students' use of and response to feedback [ 30 ]. For example, Longitudinal Integrated Clerkships (LICs) promote stable relationships, thus enhancing the impact of feedback [ 83 ]. In a long-term trusting relationship, feedback can be straightforward and credible [ 87 ], there are more opportunities for student observation, and the likelihood of follow-up and actionable feedback improves [ 83 ]. Johnson et al. (2020) pointed out that within a clinical teacher-student relationship, the focus must be on establishing psychological safety; in this way, feedback conversations might be transformed [ 62 ].

Stable relationships enhance feedback dialogues, which offer an opportunity to co-construct learning and propose and negotiate aspects of the design of learning strategies [ 62 ].

Students as active agents in the feedback processes

The feedback response learners generate depends on the type of feedback information they receive, how credible the source of feedback information is, the relationship between the receiver and the giver, and the relevance of the information delivered [ 49 ]. Garino (2020) noted that students who are most successful in using feedback are those who do not take criticism personally, who understand what they need to improve and know they can do so, who value and find meaning in criticism, who are not surprised to receive it, and who are motivated to seek new feedback and use effective learning strategies [ 52 ]. Successful users of feedback ask others for help, are intentional about their learning, know what resources to use and when to use them, listen to and understand a message, value advice, and use effective learning strategies. They regulate their emotions, find meaning in the message, and are willing to change [ 52 ].

Student self-efficacy influences the understanding and use of feedback in the clinical workplace. McGinness et al. (2022) described various positive examples of self-efficacy regarding feedback processes: planning feedback meetings with teachers, fostering good relationships with the clinical team, demonstrating interest in assigned tasks, persisting in seeking feedback despite the patient workload, and taking advantage of opportunities for feedback, e.g., case presentations [ 67 ].

When students are encouraged to seek feedback aligned with their own learning objectives, they elicit feedback information specific to what they want to learn and improve, which enhances the use of feedback [ 53 ]. McGinness et al. (2022) identified that the perceived relevance of feedback information influenced the use of feedback because students were more likely to ask for feedback if they perceived that the information was useful to them. For example, if students feel part of the clinical team and participate in patient care, they are more likely to seek feedback [ 17 ].

Learning-oriented students aim to seek feedback to achieve clinical competence at the expected level [ 75 ]; they focus on improving their knowledge and skills and on professional development [ 17 ]. Performance-oriented students aim not to fail and to avoid negative feedback [ 17 , 75 ].

For effective feedback processes, including feed-up, feedback, and feedforward, the student must be feedback-oriented, i.e., actively seeking, listening to, interpreting, and acting on feedback [ 68 ]. The literature shows that feedback-oriented students are coproducers of learning [ 68 ] and are more involved in the feedback process [ 51 ]. Additionally, students who are metacognitively aware of their learning process are more likely to use feedback to reduce gaps in learning and performance [ 52 ]. For this, students must recognize feedback when it occurs and understand it when they receive it. Thus, it is important to organize training and promote feedback literacy so that students understand what feedback is, act on it, and improve the quality of feedback and their learning plans [ 68 ].

Table 5 summarizes the feedback tasks, activities, and key features of organizational aspects that enable each phase of the feedback loop, based on the literature review.

The present scoping review identified 61 papers that mapped the literature on feedback processes in the WBL environments of undergraduate health professions. This review explored how feedback processes are organized in these learning contexts using the feedback loop framework. Given the specific characteristics of feedback processes in undergraduate clinical learning, three main findings were identified on how feedback processes are being conducted in the clinical environment and how these processes could be organized to support feedback processes.

First, the literature lacks a balance between the three dimensions of the feedback loop. In this regard, most of the articles in this review focused on reporting experiences or strategies for delivering feedback information (i.e., feedback dimension). Credible and objective feedback information is based on direct observation [ 46 ] and occurs within an interaction or a dialogue [ 62 , 88 ]. However, only having credible and objective information does not ensure that it will be considered, understood, used, and put into practice by the student [ 89 ].

Feedback-supporting actions aligned with goals and priorities facilitate effective feedback processes [ 89 ] because goal-oriented feedback focuses on students' learning needs [ 7 ]. In contrast, this review showed that only a minority of the studies highlighted the importance of aligning learning objectives and feedback (i.e., the feed-up dimension). To overcome this, supervisors and students must establish goals and agreements before starting clinical practice, as this allows students to measure themselves on a defined basis [ 90 , 91 ] and enhances students' feedback-seeking behavior [ 39 , 92 ] and responsiveness to feedback [ 83 ]. In addition, learning goals should be shared and co-constructed through dialogue [ 50 , 88 , 90 , 92 ]. In fact, relationship-based feedback models emphasize setting shared goals and plans as part of the feedback process [ 68 ].

Many of the studies acknowledge the importance of establishing an action plan and promoting the use of feedback (i.e., feedforward). However, there is still limited insight into how best to implement strategies that support the use of action plans, improve performance, and close learning gaps. In this regard, it is described that delivering feedback without perceiving changes results in no effect or impact on learning [ 88 ]. To determine if a feedback loop is closed, observing a change in the student's response is necessary. In other words, feedback does not work without repeating the same task [ 68 ], so teachers need to observe subsequent tasks to notice changes [ 88 ]. While feedforward is fundamental to long-term performance, more research is needed to determine effective actions to be implemented in the WBL environment to close feedback loops.

Second, there is a need for more knowledge about designing feedback activities in the WBL environment that will generate constructive feedback for learning. WBA is the most frequently reported feedback activity in clinical workplace contexts [ 39 , 46 , 56 , 87 ]. Despite the efforts of some authors to use WBAs as a formative assessment and feedback opportunity, in several studies, a summative component of the WBA was presented as a barrier to actionable feedback [ 33 , 56 ]. Students suggest separating grading from observation and using, for example, the mini-CEX in informal situations [ 33 ]. Several authors also recommend disconnecting the summative components of WBAs to avoid generating emotions that can limit the uptake and use of feedback [ 28 , 93 ]. Other literature recommends purposefully designing a system of assessment using low-stakes data points for feedback and learning. Accordingly, programmatic assessment is a framework that combines both the learning and the decision-making function of assessment [ 94 , 95 ]. Programmatic assessment is a practical approach for implementing low-stakes assessment as a continuum, giving opportunities to close the gap between current and desired performance and having the student as an active agent [ 96 ]. This approach enables the incorporation of low-stakes data points that target student learning [ 93 ] and provide performance-relevant information (i.e., meaningful feedback) based on direct observations during authentic professional activities [ 46 ]. Using low-stakes data points, learners make sense of information about their performance and use it to enhance the quality of their work or performance [ 96 , 97 , 98 ]. Implementing multiple instances of feedback is more effective than providing it once because it promotes closing feedback loops by giving the student opportunities to understand the feedback, make changes, and see if those changes were effective [ 89 ].

Third, the support provided by the teacher is fundamental and should be built into a reliable and long-term relationship, where the teacher must take the role of coach rather than assessor, and students should develop feedback agency and be active in seeking and using feedback to improve performance. Although it is recognized that institutional efforts over the past decades have focused on training teachers to deliver feedback, clinical supervisors' lack of teaching skills is still identified as a barrier to workplace feedback [ 99 ]. In particular, research indicates that clinical teachers lack the skills to transform the information obtained from an observation into constructive feedback [ 100 ]. Students are more likely to use feedback if they consider it credible and constructive [ 93 ] and based on stable relationships [ 93 , 99 , 101 ]. In trusting relationships, feedback can be straightforward and credible, and the likelihood of follow-up and actionable feedback improves [ 83 , 88 ]. Coaching strategies can be enhanced by teachers building an educational alliance that allows for trustworthy relationships or having supervisors with an exclusive coaching role [ 14 , 93 , 102 ].

Last, from a sociocultural perspective, individuals are the main actors in the learning process. Therefore, feedback impacts learning only if students engage and interact with it [ 11 ]. Thus, feedback design and student agency appear to be the main features of effective feedback processes. Accordingly, the present review identified that feedback design is a key feature for effective learning in complex environments such as WBL. Feedback in the workplace must ideally be organized and implemented to align learning outcomes, learning activities, and assessments, allowing learners to learn, practice, and close feedback loops [ 88 ]. To guide students toward performances that reflect long-term learning, an intensive formative learning phase is needed, in which multiple feedback processes are included that shape students' further learning [ 103 ]. This design would promote student uptake of feedback for subsequent performance [ 1 ].

Strengths and limitations

This study has three strengths. (1) We used an established framework, the Arksey and O'Malley framework [ 22 ], and included the step of socializing the results with stakeholders, which allowed the team to better understand the results from another perspective and offer a realistic look. (2) Using the feedback loop as a theoretical framework strengthened the results and gave a more thorough explanation of the literature regarding feedback processes in the WBL context. (3) Our team was diverse and included researchers from different disciplines as well as a librarian.

The present scoping review has several limitations. Although we adhered to the recommended protocols and methodologies, some relevant papers may have been omitted. The research team decided to select only original studies and reviews of the literature for the present scoping review. As a result, some articles, such as guidelines, perspectives, and narrative papers, were excluded from the current study.