Azure Research – Systems

Cloud systems innovation at the core of Azure

Publications

Publications by year

Managing Memory Tiers with CXL in Virtualized Environments

Yuhong Zhong, Daniel S. Berger, Carl Waldspurger, Ishwar Agarwal, Rajat Agarwal, Frank Hady, Karthik Kumar, Mark D. Hill, Mosharaf Chowdhury, Asaf Cidon

USENIX OSDI | July 2024

Designing Cloud Servers for Lower Carbon

Jaylen Wang, Daniel S. Berger, Fiodar Kazhamiaka, Celine Irvene, Chaojie Zhang, Esha Choukse, Kali Frost, Rodrigo Fonseca, Brijesh Warrier, Chetan Bansal, Jonathan Stern, Ricardo Bianchini, Akshitha Sriraman

ISCA | June 2024

DyLeCT: Achieving Huge-page-like Translation Performance For Hardware-compressed Memory

Gagan Panwar, Muhammad Laghari, Esha Choukse, Xun Jian

SmartOClock: Workload- and Risk-Aware Overclocking in the Cloud

Jovan Stojkovic, Pulkit Misra, Íñigo Goiri, Sam Whitlock, Esha Choukse, Mayukh Das, Chetan Bansal, Jason Lee, Zoey Sun, Haoran Qiu, Reed Zimmermann, Savyasachi Samal, Brijesh Warrier, Ashish Raniwala, Ricardo Bianchini

Splitwise: Efficient generative LLM inference using phase splitting

Pratyush Patel, Esha Choukse, Chaojie Zhang, Aashaka Shah, Íñigo Goiri, Saeed Maleki, Ricardo Bianchini

Characterizing Power Management Opportunities for LLMs in the Cloud

Pratyush Patel, Esha Choukse, Chaojie Zhang, Íñigo Goiri, Brijesh Warrier, Nithish Mahalingam, Ricardo Bianchini

ASPLOS | April 2024

Intelligent Overclocking for Improved Cloud Efficiency

Aditya Soni, Mayukh Das, Pulkit Misra, Chetan Bansal

AIOps ’24 workshop @ ASPLOS [5th International Workshop on Cloud Intelligence / AIOps] | April 2024

Making Kernel Bypass Practical for the Cloud with Junction

Joshua Fried, Gohar Irfan Chaudhry, Enrique Saurez, Esha Choukse, Íñigo Goiri, Sameh Elnikety, Rodrigo Fonseca, Adam Belay

NSDI | April 2024

Baleen: ML Admission & Prefetching for Flash Caches

Daniel Lin-Kit Wong, Hao Wu, Carson Molder, S. Gunasekar, Jimmy Lu, Snehal Khandkar, Abhinav Sharma, Daniel S. Berger, Nathan Beckmann, G. R. Ganger

USENIX FAST | February 2024

POLCA: Power Oversubscription in LLM Cloud Providers

arXiv | August 2023, Vol abs/2308.12908

Enso: A Streaming Interface for NIC-Application Communication

Hugo Sadok, Nirav Atre, Zhipeng Zhao, Daniel S. Berger, James C. Hoe, Aurojit Panda, Justine Sherry, Ren Wang

Proceedings of the 17th Symposium on Operating Systems Design and Implementation (OSDI) | July 2023

OSDI Best Paper Award

Hyrax: Fail-in-Place Server Operation in Cloud Platforms

Jialun Lyu, Marisa You, Celine Irvene, Mark Jung, Tyler Narmore, Jacob Shapiro, Luke Marshall, Savyasachi Samal, Ioannis Manousakis, Lisa Hsu, Preetha Subbarayalu, Ashish Raniwala, Brijesh Warrier, Ricardo Bianchini, Bianca Schroeder, Daniel S. Berger

Myths and Misconceptions Around Reducing Carbon Embedded in Cloud Platforms

Jialun Lyu, Jaylen Wang, Kali Frost, Chaojie Zhang, Celine Irvene, Esha Choukse, Rodrigo Fonseca, Ricardo Bianchini, Fiodar Kazhamiaka, Daniel S. Berger

2nd Workshop on Sustainable Computer Systems (HotCarbon’23) | July 2023

Towards Improved Power Management in Cloud GPUs

Pratyush Patel, Zibo Gong, S. Rizvi, Esha Choukse, Pulkit A. Misra, T. Anderson, Akshitha Sriraman

IEEE Computer Architecture Letters | June 2023, Vol 22: pp. 141-144

How Different are the Cloud Workloads? Characterizing Large-Scale Private and Public Cloud Workloads

Xiaoting Qin, Minghua Ma, Yueng Zhao, Jue Zhang, Chao Du, Yudong Liu, A. Parayil, Chetan Bansal, Saravan Rajmohan, Íñigo Goiri, Eli Cortez, Si Qin, Qingwei Lin 林庆维, Dongmei Zhang

DSN | June 2023

An Introduction to the Compute Express Link (CXL) Interconnect

Debendra Das Sharma, R. Blankenship, Daniel S. Berger

Palette Load Balancing: Locality Hints for Serverless Functions

Mania Abdi, Sam Ginzburg, Charles Lin, Jose M Faleiro, Íñigo Goiri, Gohar Irfan Chaudhry, Ricardo Bianchini, Daniel S. Berger, Rodrigo Fonseca

EuroSys | May 2023

With Great Freedom Comes Great Opportunity: Rethinking Resource Allocation for Serverless Functions

Muhammad Bilal, Marco Canini, Rodrigo Fonseca, Rodrigo Rodrigues

Unlocking unallocated cloud capacity for long, uninterruptible workloads

Anup Agarwal, Shadi Noghabi, Íñigo Goiri, Srinivasan Seshan, Anirudh Badam

NSDI | April 2023

SelfTune: Tuning Cluster Managers

Ajaykrishna Karthikeyan, Nagarajan Natarajan, Gagan Somashekar, Lei Zhao, Ranjita Bhagwan, Rodrigo Fonseca, Tatiana Racheva, Yogesh Bansal

2023 Networked Systems Design and Implementation | April 2023

Snape: Reliable and Low-Cost Computing with Mixture of Spot and On-Demand VMs

Fangkai Yang, Lu Wang, Zhenyu Xu, Jue Zhang, Liqun Li, Bo Qiao, Camille Couturier, Chetan Bansal, Soumya Ram, Si Qin, Zhen Ma, Íñigo Goiri, Eli Cortez, Terry Yang, Victor Ruehle, Saravan Rajmohan, Qingwei Lin 林庆维, Dongmei Zhang

Proceedings of the International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS) | March 2023

Pond: CXL-Based Memory Pooling Systems for Cloud Platforms

Huaicheng Li, Daniel S. Berger, Stanko Novakovic, Lisa Hsu, Dan Ernst, Pantea Zardoshti, Monish Shah, Samir Rajadnya, Scott Lee, Ishwar Agarwal, Mark D. Hill, Marcus Fontoura, Ricardo Bianchini

Distinguished Paper Award

Research Avenues Towards Net-Zero Cloud Platforms

Daniel S. Berger, Fiodar Kazhamiaka, Esha Choukse, Íñigo Goiri, Celine Irvene, Pulkit Misra, Alok Kumbhare, Rodrigo Fonseca, Ricardo Bianchini

NetZero 2023: 1st Workshop on NetZero Carbon Computing | February 2023

Design Tradeoffs in CXL-Based Memory Pools for Public Cloud Platforms

Daniel S. Berger, Huaicheng Li, Pantea Zardoshti, Monish Shah, Samir Rajadnya, Scott Lee, Lisa Hsu, Ishwar Agarwal, Mark D. Hill, Ricardo Bianchini

IEEE Micro | February 2023, Vol 43(2): pp. 30-38

TIPSY: Predicting Where Traffic Will Ingress a WAN

Michael Markovitch, Sharad Agarwal, Rodrigo Fonseca, Ryan Beckett, Chuanji Zhang, Irena Atov, Somesh Chaturmohta

ACM SIGCOMM | August 2022

Overclocking in Immersion-Cooled Datacenters

Pulkit Misra, Ioannis Manousakis, Esha Choukse, Majid Jalili, Íñigo Goiri, Ashish Raniwala, Brijesh Warrier, Husam Alissa, Bharath Ramakrishnan, Phillip Tuma, Christian Belady, Marcus Fontoura, Ricardo Bianchini

IEEE Micro Top Picks from the 2021 Computer Architecture Conferences | July 2022

C2DN: How to Harness Erasure Codes at the Edge for Efficient Content Delivery

Juncheng Yang, Anirudh Sabnis, Daniel S. Berger, K. V. Rashmi, Ramesh K. Sitaraman

Networked Systems Design and Implementation (NSDI) | April 2022

Memory-Harvesting VMs in Cloud Platforms

Alex Fuerst, Stanko Novakovic, Íñigo Goiri, Gohar Irfan Chaudhry, Prateek Sharma, Kapil Arya, Kevin Broas, Eugene Bak, Mehmet Iyigun, Ricardo Bianchini

Proceedings of the International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS) | March 2022

SOL: Safe On-Node Learning in Cloud Platforms

Yawen Wang, Dan Crankshaw, Neeraja J. Yadwadkar, Daniel S. Berger, Christos Kozyrakis, Ricardo Bianchini

Faa$T: A Transparent Auto-Scaling Cache for Serverless Applications

Francisco Romero, Gohar Irfan Chaudhry, Íñigo Goiri, Pragna Gopa, Paul Batum, Neeraja J. Yadwadkar, Rodrigo Fonseca, Christos Kozyrakis, Ricardo Bianchini

Proceedings of the Symposium on Cloud Computing (SoCC) | November 2021

Provisioning Differentiated Last-Level Cache Allocations to VMs in Public Clouds

Mohammad Shahrad, Sameh Elnikety, Ricardo Bianchini

Faster and Cheaper Serverless Computing on Harvested Resources

Yanqi Zhang, Íñigo Goiri, Gohar Irfan Chaudhry, Rodrigo Fonseca, Sameh Elnikety, Christina Delimitrou, Ricardo Bianchini

Proceedings of the International Symposium on Operating Systems Principles (SOSP) | October 2021

Prediction-Based Power Oversubscription in Cloud Platforms

Alok Kumbhare, Reza Azimi, Ioannis Manousakis, Anand Bonde, Felipe Vieira Frujeri, Nithish Mahalingam, Pulkit Misra, Seyyed Ahmad Javadi, Bianca Schroeder, Marcus Fontoura, Ricardo Bianchini

Proceedings of the USENIX Annual Technical Conference (ATC) | July 2021

Earlier version published as arXiv:2010.15388, October 2020

Cost-Efficient Overclocking in Immersion-Cooled Datacenters

Majid Jalili, Ioannis Manousakis, Íñigo Goiri, Pulkit Misra, Ashish Raniwala, Husam Alissa, Bharath Ramakrishnan, Phillip Tuma, Christian Belady, Marcus Fontoura, Ricardo Bianchini

Proceedings of the International Symposium on Computer Architecture (ISCA) | June 2021

Flex: High-Availability Datacenters With Zero Reserved Power

Chaojie Zhang, Alok Kumbhare, Ioannis Manousakis, Deli Zhang, Pulkit Misra, Rod Assis, Kyle Woolcock, Nithish Mahalingam, Brijesh Warrier, David Gauthier, Lalu Kunnath, Steve Solomon, Osvaldo Morales, Marcus Fontoura, Ricardo Bianchini

SmartHarvest: Harvesting Idle CPUs Safely and Efficiently in the Cloud

Yawen Wang, Kapil Arya, Marios Kogias, Manohar Vanga, Aditya Bhandari, Neeraja J. Yadwadkar, Siddhartha Sen, Sameh Elnikety, Christos Kozyrakis, Ricardo Bianchini

Proceedings of the 16th European Conference on Computer Systems (EuroSys) | April 2021

Providing SLOs for Resource-Harvesting VMs in Cloud Platforms

Pradeep Ambati, Íñigo Goiri, Felipe Vieira Frujeri, Alper Gun, Ke Wang, Brian Dolan, Brian Corell, Sekhar Pasupuleti, Thomas Moscibroda, Sameh Elnikety, Marcus Fontoura, Ricardo Bianchini

Proceedings of the Symposium on Operating Systems Design and Implementation (OSDI) | November 2020

Serverless in the Wild: Characterizing and Optimizing the Serverless Workload at a Large Cloud Provider

Mohammad Shahrad, Rodrigo Fonseca, Íñigo Goiri, Gohar Irfan Chaudhry, Paul Batum, Jason Cooke, Eduardo Laureano, Colby Tresness, Mark Russinovich, Ricardo Bianchini

Proceedings of the USENIX Annual Technical Conference (ATC) | July 2020

Community Award

Buddy compression: enabling larger memory for deep learning and HPC workloads on GPUs

Esha Choukse, Michael B. Sullivan, Mike O’Connor, Mattan Erez, Jeff Pool, David Nellans, Stephen W. Keckler

2020 International Symposium on Computer Architecture | May 2020

LeapIO: Efficient and Portable Virtual NVMe Storage on ARM SoCs

Huaicheng Li, Mingzhe Hao, Stanko Novakovic, Vaibhav Gogte, Sriram Govindan, Dan R. K. Ports, Irene Zhang, Ricardo Bianchini, Haryadi S. Gunawi, Anirudh Badam

Proceedings of the International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS) | March 2020

Toward ML-Centric Cloud Platforms: Opportunities, Designs, and Experience with Microsoft Azure

Ricardo Bianchini, Marcus Fontoura, Eli Cortez, Anand Bonde, Alexandre Muzio, Ana-Maria Constantin, Thomas Moscibroda, Gabriel Magalhaes, Girish Bablani, Mark Russinovich

Communications of the ACM | February 2020, Vol 63(2)

PruneTrain: fast neural network training by dynamic sparse model reconfiguration

Sangkug Lym, Esha Choukse, Siavash Zangeneh, Wei Wen, Sujay Sanghavi, Mattan Erez

2019 IEEE International Conference on High Performance Computing, Data, and Analytics | November 2019

Managing Tail Latency in Datacenter-Scale File Systems Under Production Constraints

Pulkit Misra, María F. Borge, Íñigo Goiri, Alvin R. Lebeck, Willy Zwaenepoel, Ricardo Bianchini

Proceedings of the 14th European Conference on Computer Systems (EuroSys) | March 2019

Uncertainty Propagation in Data Processing Systems

Ioannis Manousakis, Íñigo Goiri, Ricardo Bianchini, Sandro Rigo, Thu D. Nguyen

Proceedings of the Symposium on Cloud Computing (SoCC) | November 2018

Resource Central: Understanding and Predicting Workloads for Improved Resource Management in Large Cloud Platforms

Eli Cortez, Anand Bonde, Alexandre Muzio, Mark Russinovich, Marcus Fontoura, Ricardo Bianchini

Proceedings of the International Symposium on Operating Systems Principles (SOSP) | October 2017

Exploiting Heterogeneity for Tail Latency and Energy Efficiency

Md E. Haque, Yuxiong He, Sameh Elnikety, Thu D. Nguyen, Ricardo Bianchini, Kathryn McKinley

Proceedings of the International Symposium on Microarchitecture (MICRO) | October 2017

Scaling Distributed File Systems in Resource-Harvesting Datacenters

Pulkit Misra, Íñigo Goiri, Jason Kace, Ricardo Bianchini

Proceedings of USENIX Annual Technical Conference (ATC) | July 2017

History-Based Harvesting of Spare Cycles and Storage in Large-Scale Datacenters

Yunqi Zhang, George Prekas, Giovanni Matteo Fumarola, Marcus Fontoura, Íñigo Goiri, Ricardo Bianchini

Proceedings of the International Symposium on Operating Systems Design and Implementation (OSDI) | November 2016

Environmental Conditions and Disk Reliability in Free-Cooled Datacenters

Ioannis Manousakis, Sriram Sankar, Gregg McKnight, Thu D. Nguyen, Ricardo Bianchini

Proceedings of the USENIX Conference on File and Storage Technologies (FAST) | February 2016

Best Paper Award

CoolProvision: Underprovisioning Datacenter Cooling

Ioannis Manousakis, Íñigo Goiri, Sriram Sankar, Thu D. Nguyen, Ricardo Bianchini

Proceedings of the International Symposium on Cloud Computing (SoCC) | August 2015

ApproxHadoop: Bringing Approximations to MapReduce Frameworks

Íñigo Goiri, Ricardo Bianchini, Santosh Nagarakatte, Thu D. Nguyen

Proceedings of the ACM International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS) | March 2015

CoolAir: Temperature- and Variation-Aware Management for Free-Cooled Datacenters

Íñigo Goiri, Thu D. Nguyen, Ricardo Bianchini

Few-to-Many: Incremental Parallelism for Reducing Tail Latency in Interactive Services

E. Haque, Yong hun Eom, Yuxiong He, Sameh Elnikety, Ricardo Bianchini, Kathryn S McKinley

A Detailed Study of Azure Platform & Its Cognitive Services

Increasing research and development productivity with Copilot in Azure Quantum Elements

Dr. Chi Chen

Senior Quantum Architect

Yousif Almulla

Machine Learning Engineer

At Microsoft, we see opportunities to accelerate research and development (R&D) productivity and usher in a new era of scientific discovery by harnessing the power of today’s new generation of AI. In pursuit of this goal, we recently released Copilot in Azure Quantum Elements, which enables scientists to use natural language to reason through, and orchestrate solutions to, some of the most complex chemistry and materials science problems, from developing safer and more sustainable products to accelerating drug discovery. With Copilot, researchers no longer need to manually sift through countless research papers in search of relevant data. Furthermore, scientists can turn a few words of natural language into chemistry simulation code, and students can use interactive exercises to learn quantum computing concepts.

Helping researchers learn and do more with Copilot

We are living through a time of rapid technological change, which will transform nearly every aspect of work and life. As one potent example, today’s new generation of AI and large language models are allowing us to tap into our universal user interface—natural language—to make tasks more efficient.

In a recent post, we highlighted how we combined AI models that predict the properties of new materials with HPC calculations to screen candidates digitally. That work showed how AI can accelerate a digital discovery pipeline. With the addition of large language models, AI can provide even more opportunities to increase research productivity.

The launch of Copilot—providing free capabilities online and deeper integration within Azure Quantum Elements—was driven by the desire to increase research productivity and prioritize the needs of innovators, equipping them with a seamless user experience that can make complex simulations more manageable. Copilot in Azure Quantum is built on Azure OpenAI services and grounded on chemistry and materials science data from over 300,000 open-source documents, publications, textbooks, and manuals. This means users can get technical and guided answers, query and visualize data, and initiate simulations—in turn reducing the barriers to configuring and launching workflows. Furthermore, Copilot can help innovators prepare for a quantum future with interactive exercises for learning about quantum computing and writing code for today’s quantum computers.

Lowering the entry barrier for chemistry simulations: code generation for scientific workflows

Computational workflows can be complex, and usually require a deep understanding of a variety of software packages and codes. With Copilot integrated into Azure Quantum Elements, scientists can now run simulations without having to write all of the code themselves, due to Copilot’s ability to generate code for both new and existing workflows within a Jupyter notebook.

For instance, Copilot can generate code to model the electronic structure of a molecule with density functional theory (DFT) calculations. DFT is one of the most popular computational chemistry methods because it balances efficiency and accuracy across a wide range of quantum mechanical properties, supporting electronic structure visualization and many other property-evaluation applications.

Furthermore, Copilot can describe the computational approach for addressing a scientific problem and instruct the system to configure both the underlying software needed and the hardware on which to run it. This greatly lowers the barriers to running chemistry and materials science workflows. The example below highlights Copilot’s ability to use the Quantum ESPRESSO package and write code for calculating the band structure of indium arsenide, a semiconductor, using the AiiDA workflow manager.
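
A band-structure calculation like the indium arsenide example evaluates electronic energies along a path of high-symmetry k-points in the Brillouin zone. The Python sketch below covers only that path-construction step. It assumes the textbook high-symmetry points of the face-centered cubic (FCC) lattice that zinc-blende InAs adopts, in Cartesian units of 2π/a, and an illustrative sampling density; the `kpath` helper is hypothetical and is not code produced by Copilot, AiiDA, or Quantum ESPRESSO.

```python
# Minimal sketch: sample a band-structure k-point path for an FCC (zinc-blende) crystal.
# Coordinates are Cartesian, in units of 2*pi/a (a = lattice constant).
# The path and density are illustrative choices, not AiiDA or Quantum ESPRESSO defaults.
import math

# Textbook high-symmetry points of the FCC Brillouin zone (units of 2*pi/a).
FCC_POINTS = {
    "G": (0.0, 0.0, 0.0),   # Gamma, the zone center
    "X": (0.0, 1.0, 0.0),
    "W": (0.5, 1.0, 0.0),
    "L": (0.5, 0.5, 0.5),
    "K": (0.75, 0.75, 0.0),
}

def kpath(labels, points_per_unit=20):
    """Linearly interpolate between consecutive high-symmetry points.

    Returns a list of (k, label) tuples; label is non-empty only at corner points.
    Samples per segment scale with segment length, so spacing is roughly uniform.
    """
    path = [(FCC_POINTS[labels[0]], labels[0])]
    for start, end in zip(labels, labels[1:]):
        a, b = FCC_POINTS[start], FCC_POINTS[end]
        n = max(1, round(math.dist(a, b) * points_per_unit))
        for step in range(1, n + 1):
            t = step / n
            k = tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))
            path.append((k, end if step == n else ""))
    return path
```

For example, `kpath(["G", "X", "W", "L", "G", "K"])` returns the Γ–X–W–L–Γ–K route commonly plotted for zinc-blende semiconductors; a DFT code would then compute band energies at each returned k-point.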

Tuned for scientific research: Retrieval Augmented Generation capabilities

While Copilot’s integrated coding features are currently only available for Azure Quantum Elements customers, Copilot also offers free capabilities to help streamline research. Using these features, scientists can save valuable time by using Copilot to find and summarize relevant studies and articles quickly. This is possible because Copilot can reference publicly available scientific research papers that are acceptable for commercial use.

This referencing capability is possible via grounding, a technique in which AI models draw relevant information from supplemental data sets so that their answers are based on that information. Grounding is based on Retrieval Augmented Generation (RAG), which helps large language models extract insights from large amounts of supplemental and business-specific data without retraining the models on new information, a very expensive task. With RAG, the system searches a supplemental dataset, matches the user’s prompt to the most relevant chunks within it, and passes those chunks to the large language model, which summarizes them into an answer. You can learn more about grounding data in a recent Microsoft Research article.
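
The retrieval step of RAG can be illustrated with a small, self-contained Python sketch. It is not how Copilot is implemented: it assumes a bag-of-words TF-IDF score with cosine similarity as a stand-in for the embedding-based search a production system would typically use, and the `chunk`, `retrieve`, and `build_prompt` helpers and the prompt template are hypothetical. The call to the language model itself is omitted.

```python
# Minimal RAG retrieval sketch. TF-IDF + cosine similarity stand in for embedding search;
# helper names and the prompt template are hypothetical. The LLM call is omitted.
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def chunk(text, size=40):
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def tfidf_vectors(chunks):
    """Return one sparse TF-IDF vector (dict) per chunk, plus the IDF table."""
    docs = [Counter(tokenize(c)) for c in chunks]
    n = len(docs)
    df = Counter(term for d in docs for term in d)
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1 for t in df}
    return [{t: tf * idf[t] for t, tf in d.items()} for d in docs], idf

def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    vectors, idf = tfidf_vectors(chunks)
    # Query terms unseen in the corpus get weight 0.
    q = {t: tf * idf.get(t, 0.0) for t, tf in Counter(tokenize(query)).items()}
    ranked = sorted(range(len(chunks)), key=lambda i: cosine(q, vectors[i]), reverse=True)
    return [chunks[i] for i in ranked[:k]]

def build_prompt(query, context_chunks):
    """Ground the model by placing the retrieved text ahead of the question."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only the sources below.\nSources:\n{context}\nQuestion: {query}"
```

Because only the retrieved chunks are sent with the prompt, new documents can be added to the searchable corpus without retraining the model, which is the property the paragraph above describes.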

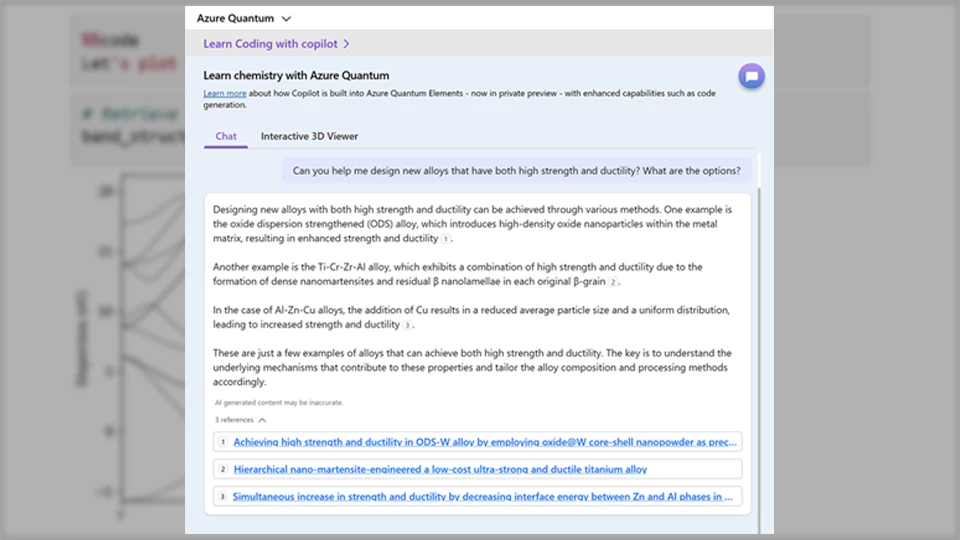

With these capabilities in Copilot, researchers can obtain technical answers to a wide variety of scientific questions. As an example, the following illustration shows Copilot citing recent research articles to answer the question, “Can you help me design new alloys that have both high strength and ductility? What are the options?”

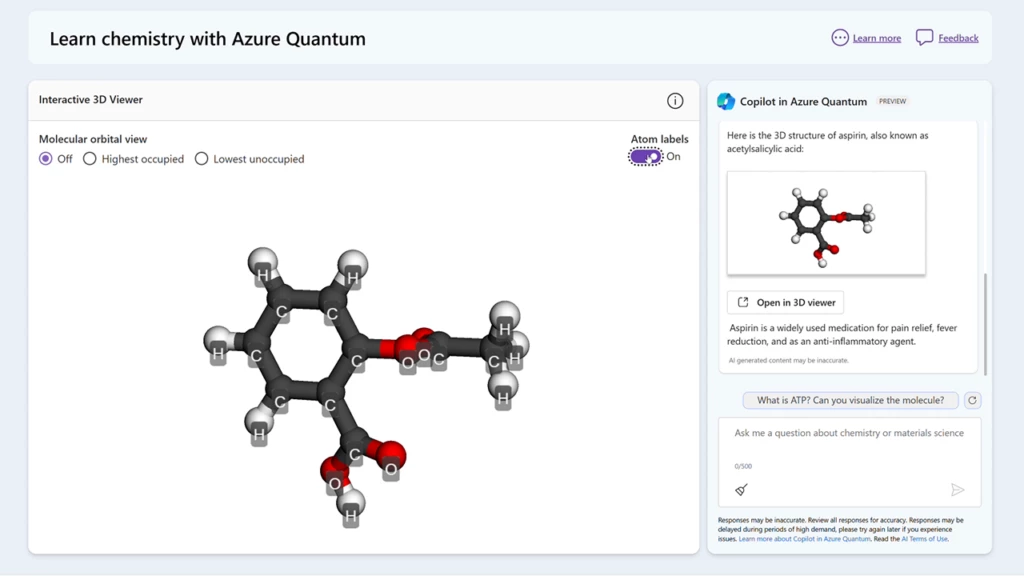

Grounded with additional chemistry and materials science data: molecular visualization and proprietary datasets

In addition to being grounded on publicly available research papers for text queries, Copilot in Azure Quantum is also augmented with molecular structure data. This means that researchers can visualize molecules, drawn from millions of possibilities, and calculate their orbitals¹. For example, Copilot can visualize acetylsalicylic acid, the active ingredient in aspirin. Whether the text input is “aspirin” or “acetylsalicylic acid,” Copilot displays the same molecule.
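
One way to get the same molecule from different names is to map every synonym to a single canonical record before rendering. The Python sketch below is hypothetical and does not describe Copilot’s internals: the synonym table, the record fields, and the `resolve` and `molar_mass` helpers are illustrative assumptions, with a SMILES string standing in for the structure a viewer would draw.

```python
# Hypothetical sketch: resolve different names for one compound to a single canonical record.
# The tables below are illustrative, not Copilot's data.
import re

COMPOUNDS = {
    "acetylsalicylic acid": {
        "smiles": "CC(=O)OC1=CC=CC=C1C(=O)O",   # structure string a viewer could render
        "formula": "C9H8O4",
    },
}
SYNONYMS = {
    "aspirin": "acetylsalicylic acid",
    "2-acetoxybenzoic acid": "acetylsalicylic acid",
}
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}  # g/mol

def resolve(name):
    """Normalize case and whitespace, map synonyms, and return (canonical name, record)."""
    key = " ".join(name.lower().split())
    key = SYNONYMS.get(key, key)
    return key, COMPOUNDS[key]

def molar_mass(formula):
    """Molar mass in g/mol for a simple formula such as 'C9H8O4' (no parentheses)."""
    total = 0.0
    for element, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_MASS[element] * (int(count) if count else 1)
    return total
```

Both `resolve("Aspirin")` and `resolve("acetylsalicylic acid")` return the same record, so any downstream visualization or property calculation sees one molecule (about 180.16 g/mol for C9H8O4).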

Copilot’s molecular visualization capabilities are available for free. Furthermore, our Azure Quantum team is hard at work pioneering new Copilot capabilities, for future integration within Azure Quantum Elements, that would enable enterprise customers to create a customized Copilot experience grounded on their own proprietary data. More information about the private preview of Azure Quantum Elements is available here.

Learning about quantum computing with the Azure Quantum Katas

Copilot is fueling scientific discovery and also nurturing a robust ecosystem of quantum innovators. To help prepare for a quantum future, we recently released the Azure Quantum Katas, which are free, self-paced, Copilot-assisted programming exercises that teach the elements of quantum computing and the Q# programming language. Learners can start exploring the hands-on exercises with no installation or subscription required.
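
The Katas teach their material in Q#. As a language-neutral illustration of superposition, one of the first ideas exercises like these typically cover, here is a minimal Python sketch that simulates a single qubit as a vector of two complex amplitudes. It is not Kata code and uses no Q# or Azure Quantum APIs; the `apply` and `probabilities` helpers are hypothetical.

```python
# Minimal single-qubit state-vector sketch (plain Python, not Q#).
# A state is a pair of complex amplitudes for |0> and |1>.
import math

def apply(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    (a, b), (c, d) = gate
    s0, s1 = state
    return (a * s0 + b * s1, c * s0 + d * s1)

def probabilities(state):
    """Born rule: measurement probabilities are squared amplitude magnitudes."""
    return tuple(abs(amp) ** 2 for amp in state)

R = 1 / math.sqrt(2)
H = ((R, R), (R, -R))     # Hadamard gate
X = ((0, 1), (1, 0))      # bit-flip (NOT) gate

zero = (1 + 0j, 0j)       # the |0> state
plus = apply(H, zero)     # equal superposition: each outcome has probability 1/2
back = apply(H, plus)     # H is its own inverse, so this returns (approximately) |0>
```

Measuring `plus` gives 0 or 1 with equal probability, while applying the Hadamard gate a second time interferes the amplitudes back into `|0>`, a small example of behavior with no classical-bit analogue.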

Learn more about Azure Quantum Elements

Interested in learning more about how the power of the Azure cloud can help you? Here are additional resources:

- Try Copilot for chemistry and Azure Quantum Q# coding.

- Sign up to learn more about the private preview of Azure Quantum Elements.

- Read our recent paper in collaboration with AI4Science: The Impact of Large Language Models on Scientific Discovery: a Preliminary Study using GPT-4.

- Visit the Azure Quantum Elements website and check out the Microsoft Quantum Innovator Series webinars.

¹ Calculations performed using an xTB container running in Azure K8S.

Azure Cosmos DB conceptual whitepapers

Whitepapers allow you to explore Azure Cosmos DB concepts at a deeper level. This article provides you with a list of available whitepapers for Azure Cosmos DB.

- Learn about common Azure Cosmos DB use cases

- Today, we’re introducing Meta Llama 3, the next generation of our state-of-the-art open source large language model.

- Llama 3 models will soon be available on AWS, Databricks, Google Cloud, Hugging Face, Kaggle, IBM WatsonX, Microsoft Azure, NVIDIA NIM, and Snowflake, and with support from hardware platforms offered by AMD, AWS, Dell, Intel, NVIDIA, and Qualcomm.

- We’re dedicated to developing Llama 3 in a responsible way, and we’re offering various resources to help others use it responsibly as well. This includes introducing new trust and safety tools with Llama Guard 2, Code Shield, and CyberSec Eval 2.

- In the coming months, we expect to introduce new capabilities, longer context windows, additional model sizes, and enhanced performance, and we’ll share the Llama 3 research paper.

- Meta AI, built with Llama 3 technology, is now one of the world’s leading AI assistants that can boost your intelligence and lighten your load, helping you learn, get things done, create content, and connect to make the most out of every moment. You can try Meta AI here.

Today, we’re excited to share the first two models of the next generation of Llama, Meta Llama 3, available for broad use. This release features pretrained and instruction-fine-tuned language models with 8B and 70B parameters that can support a broad range of use cases. This next generation of Llama demonstrates state-of-the-art performance on a wide range of industry benchmarks and offers new capabilities, including improved reasoning. We believe these are the best open source models of their class, period. In support of our longstanding open approach, we’re putting Llama 3 in the hands of the community. We want to kickstart the next wave of innovation in AI across the stack—from applications to developer tools to evals to inference optimizations and more. We can’t wait to see what you build and look forward to your feedback.

Our goals for Llama 3

With Llama 3, we set out to build the best open models that are on par with the best proprietary models available today. We wanted to address developer feedback to increase the overall helpfulness of Llama 3 and are doing so while continuing to play a leading role on responsible use and deployment of LLMs. We are embracing the open source ethos of releasing early and often to enable the community to get access to these models while they are still in development. The text-based models we are releasing today are the first in the Llama 3 collection of models. Our goal in the near future is to make Llama 3 multilingual and multimodal, have longer context, and continue to improve overall performance across core LLM capabilities such as reasoning and coding.

State-of-the-art performance

Our new 8B and 70B parameter Llama 3 models are a major leap over Llama 2 and establish a new state of the art for LLMs at those scales. Thanks to improvements in pretraining and post-training, our pretrained and instruction-fine-tuned models are the best models existing today at the 8B and 70B parameter scale. Improvements in our post-training procedures substantially reduced false refusal rates, improved alignment, and increased diversity in model responses. We also saw greatly improved capabilities like reasoning, code generation, and instruction following, making Llama 3 more steerable.

*Please see the evaluation details for the settings and parameters with which these evaluations are calculated.

In the development of Llama 3, we looked at model performance on standard benchmarks and also sought to optimize for performance in real-world scenarios. To this end, we developed a new high-quality human evaluation set. This evaluation set contains 1,800 prompts that cover 12 key use cases: asking for advice, brainstorming, classification, closed question answering, coding, creative writing, extraction, inhabiting a character/persona, open question answering, reasoning, rewriting, and summarization. To prevent accidental overfitting of our models on this evaluation set, even our own modeling teams do not have access to it. The chart below shows aggregated results of our human evaluations across these categories and prompts against Claude Sonnet, Mistral Medium, and GPT-3.5.

Preference rankings by human annotators based on this evaluation set highlight the strong performance of our 70B instruction-following model compared to competing models of comparable size in real-world scenarios.
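
Pairwise human preferences like these are commonly summarized as win, tie, and loss rates against each competing model. The post does not describe its exact aggregation, so the following is only a hypothetical Python sketch: the `preference_rates` helper and the `(opponent, outcome)` judgment format are illustrative assumptions.

```python
# Hypothetical sketch: aggregate pairwise human judgments into win/tie/loss rates
# per opponent. The record format is an assumption; the post does not specify one.
from collections import Counter, defaultdict

OUTCOMES = ("win", "tie", "loss")

def preference_rates(judgments):
    """judgments: iterable of (opponent, outcome), outcome in OUTCOMES, scored from
    the perspective of the model under test. Returns {opponent: {outcome: rate}}."""
    counts = defaultdict(Counter)
    for opponent, outcome in judgments:
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome}")
        counts[opponent][outcome] += 1
    rates = {}
    for opponent, c in counts.items():
        total = sum(c.values())
        rates[opponent] = {k: c[k] / total for k in OUTCOMES}
    return rates
```

Keeping ties as a separate outcome, rather than splitting them between win and loss, makes it visible when annotators could not distinguish two responses.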

Our pretrained model also establishes a new state of the art for LLMs at those scales.

To develop a great language model, we believe it’s important to innovate, scale, and optimize for simplicity. We adopted this design philosophy throughout the Llama 3 project with a focus on four key ingredients: the model architecture, the pretraining data, scaling up pretraining, and instruction fine-tuning.

Model architecture

In line with our design philosophy, we opted for a relatively standard decoder-only transformer architecture in Llama 3. Compared to Llama 2, we made several key improvements. Llama 3 uses a tokenizer with a vocabulary of 128K tokens that encodes language much more efficiently, which leads to substantially improved model performance. To improve the inference efficiency of Llama 3 models, we’ve adopted grouped query attention (GQA) across both the 8B and 70B sizes. We trained the models on sequences of 8,192 tokens, using a mask to ensure self-attention does not cross document boundaries.
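
The two attention details above can be sketched together in plain Python. The first is a causal mask that also blocks attention across document boundaries when several documents are packed into one training sequence. The second is grouped query attention, in which each group of query heads shares a single key/value head. Shapes, head counts, and helper names such as `gqa_attention` are illustrative assumptions, not Llama 3’s implementation, which would use batched tensor operations.

```python
# Minimal sketch (plain Python, no tensor library) of:
#  1. a causal mask that also blocks attention across packed-document boundaries, and
#  2. grouped query attention (GQA): each group of query heads shares one K/V head.
# Shapes and head counts are illustrative assumptions, not Llama 3's code.
import math

def doc_causal_mask(doc_ids):
    """mask[i][j] is True if token i may attend to token j: j must not lie in
    the future and must belong to the same document as token i."""
    n = len(doc_ids)
    return [[j <= i and doc_ids[j] == doc_ids[i] for j in range(n)] for i in range(n)]

def softmax(xs):
    m = max(xs)                          # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def gqa_attention(q, k, v, mask):
    """q: [n_q_heads][seq][d]; k, v: [n_kv_heads][seq][d].
    Query head h reads K/V head h // (n_q_heads // n_kv_heads)."""
    n_q, n_kv = len(q), len(k)
    if n_q % n_kv:
        raise ValueError("query heads must split evenly into KV groups")
    group = n_q // n_kv
    out = []
    for h in range(n_q):
        kv = h // group                  # shared K/V head for this query head
        keys, values = k[kv], v[kv]
        head_out = []
        for i, qi in enumerate(q[h]):
            # Positions token i may attend to; always includes i itself.
            allowed = [j for j in range(len(keys)) if mask[i][j]]
            scale = math.sqrt(len(qi))
            scores = [sum(a * b for a, b in zip(qi, keys[j])) / scale for j in allowed]
            weights = softmax(scores)
            head_out.append([sum(w * values[j][c] for w, j in zip(weights, allowed))
                             for c in range(len(values[0]))])
        out.append(head_out)
    return out
```

Because only the K/V heads are cached during generation, a hypothetical configuration with 32 query heads sharing 8 K/V heads would store a quarter of the keys and values that standard multi-head attention needs, which is the inference-efficiency gain GQA targets.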

Training data

To train the best language model, the curation of a large, high-quality training dataset is paramount. In line with our design principles, we invested heavily in pretraining data. Llama 3 is pretrained on over 15T tokens that were all collected from publicly available sources. Our training dataset is seven times larger than that used for Llama 2, and it includes four times more code. To prepare for upcoming multilingual use cases, over 5% of the Llama 3 pretraining dataset consists of high-quality non-English data that covers over 30 languages. However, we do not expect the same level of performance in these languages as in English.

To ensure Llama 3 is trained on data of the highest quality, we developed a series of data-filtering pipelines. These pipelines include using heuristic filters, NSFW filters, semantic deduplication approaches, and text classifiers to predict data quality. We found that previous generations of Llama are surprisingly good at identifying high-quality data, hence we used Llama 2 to generate the training data for the text-quality classifiers that are powering Llama 3.
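The stages named above (heuristic filters, deduplication, a quality classifier) compose naturally into a single pass over the corpus. The sketch below is illustrative only and rests on several assumptions: the thresholds in the hypothetical `heuristic_ok` are made up, exact hash-based deduplication stands in for the semantic (embedding-based) deduplication the post describes, and `quality_score` is any callable standing in for a trained text-quality classifier. NSFW filtering is omitted.

```python
import hashlib
import re
from typing import Callable, Iterable, List


def heuristic_ok(text: str, min_words: int = 5, max_symbol_ratio: float = 0.3) -> bool:
    """Cheap rule-based filter: drop very short or symbol-heavy documents."""
    if len(text.split()) < min_words:
        return False
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    return symbols / max(len(text), 1) <= max_symbol_ratio


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def filter_corpus(docs: Iterable[str],
                  quality_score: Callable[[str], float],
                  threshold: float = 0.5) -> List[str]:
    seen = set()
    kept = []
    for doc in docs:
        if not heuristic_ok(doc):
            continue
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:  # exact duplicate after normalization
            continue
        seen.add(digest)
        if quality_score(doc) >= threshold:  # stand-in for a learned classifier
            kept.append(doc)
    return kept
```

Ordering the cheap checks first means the (expensive, in practice model-based) quality scorer only runs on documents that survive the heuristics and deduplication.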

We also performed extensive experiments to evaluate the best ways of mixing data from different sources in our final pretraining dataset. These experiments enabled us to select a data mix that ensures that Llama 3 performs well across use cases including trivia questions, STEM, coding, historical knowledge, etc.
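Choosing a data mix ultimately means deciding what share of the token budget each source receives, which also fixes how many times each source is repeated. The post does not publish its mix, so everything below is a hypothetical illustration: the function `allocate_tokens`, the source names, the 7:2:1 weights, and the sizes are all made up.

```python
from typing import Dict


def allocate_tokens(total_tokens: float,
                    weights: Dict[str, float],
                    available_tokens: Dict[str, float]) -> Dict[str, Dict[str, float]]:
    """Split a pretraining token budget across sources by relative mixture weight.

    Returns, per source, the tokens drawn and how many epochs that implies
    (values above 1.0 mean the source is repeated).
    """
    total_weight = sum(weights.values())
    plan = {}
    for source, weight in weights.items():
        tokens = total_tokens * weight / total_weight
        plan[source] = {"tokens": tokens, "epochs": tokens / available_tokens[source]}
    return plan


if __name__ == "__main__":
    # Made-up numbers: a 1,000-unit budget mixed 7:2:1 across three sources.
    plan = allocate_tokens(1000, {"web": 7, "code": 2, "math": 1},
                           {"web": 2000, "code": 100, "math": 50})
    for source, row in plan.items():
        print(f"{source}: {row['tokens']:.0f} tokens, {row['epochs']:.2f} epochs")
```

Upweighting a small source such as code quickly pushes it past one epoch, which is one of the trade-offs mixing experiments have to weigh.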

Scaling up pretraining

To effectively leverage our pretraining data in Llama 3 models, we put substantial effort into scaling up pretraining. Specifically, we have developed a series of detailed scaling laws for downstream benchmark evaluations. These scaling laws enable us to select an optimal data mix and to make informed decisions on how to best use our training compute. Importantly, scaling laws allow us to predict the performance of our largest models on key tasks (for example, code generation as evaluated on the HumanEval benchmark; see above) before we actually train the models. This helps us ensure strong performance of our final models across a variety of use cases and capabilities.
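The core idea behind using a scaling law for prediction is to fit a simple functional form on cheap, small runs and extrapolate it to a compute budget that has not been spent yet. The sketch below assumes a pure power law, loss = A · C^(−α), fitted by least squares in log-log space; it uses loss as a stand-in for the downstream benchmark metrics the post mentions, and the data are synthetic (generated from A = 50, α = 0.05), not Llama 3 results. The helper names are hypothetical.

```python
import math
from typing import List, Tuple


def fit_power_law(compute: List[float], loss: List[float]) -> Tuple[float, float]:
    """Fit loss = A * compute**(-alpha) by least squares on log(compute), log(loss)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return math.exp(intercept), -slope  # (A, alpha)


def predict(A: float, alpha: float, compute: float) -> float:
    return A * compute ** (-alpha)


# Synthetic "small runs" generated from loss = 50 * C**-0.05 (made-up law).
small_compute = [1e18, 1e19, 1e20, 1e21]
small_loss = [50 * c ** -0.05 for c in small_compute]
A, alpha = fit_power_law(small_compute, small_loss)
print(f"A={A:.2f} alpha={alpha:.3f} "
      f"predicted loss at 1e24 FLOPs: {predict(A, alpha, 1e24):.3f}")
```

Real scaling-law work has to contend with noisy measurements and with benchmark metrics that are not simple power laws of compute, so a production fit would be more involved than this two-parameter line.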

We made several new observations on scaling behavior during the development of Llama 3. For example, while the Chinchilla-optimal amount of training compute for an 8B parameter model corresponds to ~200B tokens, we found that model performance continues to improve even after the model is trained on two orders of magnitude more data. Both our 8B and 70B parameter models continued to improve log-linearly after we trained them on up to 15T tokens. Larger models can match the performance of these smaller models with less training compute, but smaller models are generally preferred because they are much more efficient during inference.
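The arithmetic behind "two orders of magnitude" can be checked directly. This sketch assumes the common Chinchilla rule of thumb of about 20 training tokens per parameter and the standard approximation of about 6·N·D FLOPs to train a dense transformer with N parameters on D tokens; neither constant comes from the post. The rule of thumb gives roughly 160B tokens for 8B parameters, the same order as the ~200B cited, and 15T tokens is then about 75 times that amount.

```python
TOKENS_PER_PARAM = 20  # Chinchilla rule of thumb (an approximation, assumed here)


def chinchilla_tokens(params: float) -> float:
    """Approximate compute-optimal number of training tokens."""
    return TOKENS_PER_PARAM * params


def train_flops(params: float, tokens: float) -> float:
    """Common ~6 * N * D estimate of dense-transformer training FLOPs."""
    return 6 * params * tokens


params_8b = 8e9
actual_tokens = 15e12
print(f"Chinchilla-optimal tokens: {chinchilla_tokens(params_8b):.2e}")
print(f"Overtraining factor vs ~200B: {actual_tokens / 200e9:.0f}x")
print(f"Approx. training FLOPs, 8B on 15T tokens: {train_flops(params_8b, actual_tokens):.1e}")
```

Training far past the compute-optimal point costs more FLOPs per unit of quality, but the resulting small model is cheaper at every inference call, which is the trade-off the paragraph describes.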

To train our largest Llama 3 models, we combined three types of parallelization: data parallelization, model parallelization, and pipeline parallelization. Our most efficient implementation achieves a compute utilization of over 400 TFLOPS per GPU when trained on 16K GPUs simultaneously. We performed training runs on two custom-built 24K-GPU clusters. To maximize GPU uptime, we developed an advanced new training stack that automates error detection, handling, and maintenance. We also greatly improved our hardware reliability and detection mechanisms for silent data corruption, and we developed new scalable storage systems that reduce the overheads of checkpointing and rollback. These improvements resulted in an overall effective training time of more than 95%. Combined, they increased the efficiency of Llama 3 training by roughly three times compared to Llama 2.
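Combining the three kinds of parallelism means every GPU gets a coordinate in a 3D grid. The sketch below shows one conventional way to map a global rank to (data, pipeline, tensor) indices. The post does not describe its layout, so two things here are assumptions: "model parallelization" is read as tensor parallelism, and the 8 × 16 × 128 factorization of 16,384 GPUs is hypothetical, as is the `ParallelLayout` class.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ParallelLayout:
    tp: int  # tensor (model) parallel group size
    pp: int  # number of pipeline stages
    dp: int  # number of data-parallel replicas

    @property
    def world_size(self) -> int:
        return self.tp * self.pp * self.dp

    def coords(self, rank: int) -> Tuple[int, int, int]:
        """Map a global rank to (dp, pp, tp) indices, with tp varying fastest."""
        if not 0 <= rank < self.world_size:
            raise ValueError(f"rank {rank} outside world of size {self.world_size}")
        tp_idx = rank % self.tp
        pp_idx = (rank // self.tp) % self.pp
        dp_idx = rank // (self.tp * self.pp)
        return dp_idx, pp_idx, tp_idx


# Hypothetical factorization: 8-way TP x 16 pipeline stages x 128 replicas.
layout = ParallelLayout(tp=8, pp=16, dp=128)
print(layout.world_size)      # 16384
print(layout.coords(0))       # (0, 0, 0)
print(layout.coords(8 * 16))  # first rank of the second replica: (1, 0, 0)
```

Letting the tensor-parallel index vary fastest places each tensor-parallel group on consecutive ranks, which typically means the same node, where the very frequent communication that tensor parallelism needs is cheapest.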

Instruction fine-tuning

To fully unlock the potential of our pretrained models in chat use cases, we innovated on our approach to instruction-tuning as well. Our approach to post-training is a combination of supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO). The quality of the prompts that are used in SFT and the preference rankings that are used in PPO and DPO has an outsized influence on the performance of aligned models. Some of our biggest improvements in model quality came from carefully curating this data and performing multiple rounds of quality assurance on annotations provided by human annotators.

Learning from preference rankings via PPO and DPO also greatly improved the performance of Llama 3 on reasoning and coding tasks. We found that if you ask a model a reasoning question that it struggles to answer, the model will sometimes produce the right reasoning trace: The model knows how to produce the right answer, but it does not know how to select it. Training on preference rankings enables the model to learn how to select it.
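The "knows how to produce but not how to select" observation is also what rejection sampling exploits: sample several answers, score them, and keep the best. The sketch below is a toy illustration under stated assumptions. `generate` stands in for the model, `score` stands in for a reward model or answer checker, and the helper `rank_samples` is hypothetical. The top- and bottom-ranked samples can also serve as a (chosen, rejected) preference pair.

```python
from typing import Callable, List


def rank_samples(prompt: str,
                 generate: Callable[[str], str],
                 score: Callable[[str, str], float],
                 n: int = 4) -> List[str]:
    """Draw n samples for one prompt and return them sorted best-first by score."""
    samples = [generate(prompt) for _ in range(n)]
    # sorted() is stable, so equally scored samples keep their sampling order.
    return sorted(samples, key=lambda s: score(prompt, s), reverse=True)


if __name__ == "__main__":
    # Toy stand-ins: a "model" that only sometimes reasons correctly, and a checker.
    traces = iter(["2+2=5", "2+2=4", "2+2=22", "2+2=4"])
    ranked = rank_samples("What is 2+2?", lambda p: next(traces),
                          lambda p, s: 1.0 if s.endswith("=4") else 0.0)
    chosen, rejected = ranked[0], ranked[-1]
    print(chosen, rejected)  # 2+2=4 2+2=22
```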

Building with Llama 3

Our vision is to enable developers to customize Llama 3 to support relevant use cases, to make it easier to adopt best practices, and to improve the open ecosystem. With this release, we’re providing new trust and safety tools, including updated components with both Llama Guard 2 and CyberSecEval 2, and introducing Code Shield, an inference-time guardrail for filtering insecure code produced by LLMs.

We’ve also co-developed Llama 3 with torchtune, the new PyTorch-native library for easily authoring, fine-tuning, and experimenting with LLMs. torchtune provides memory-efficient and hackable training recipes written entirely in PyTorch. The library is integrated with popular platforms such as Hugging Face, Weights & Biases, and EleutherAI, and even supports ExecuTorch for running efficient inference on a wide variety of mobile and edge devices. For everything from prompt engineering to using Llama 3 with LangChain, we have a comprehensive getting started guide that takes you from downloading Llama 3 all the way to deployment at scale within your generative AI application.

A system-level approach to responsibility

We have designed Llama 3 models to be maximally helpful while ensuring an industry-leading approach to responsibly deploying them. To achieve this, we have adopted a new, system-level approach to the responsible development and deployment of Llama. We envision Llama models as part of a broader system that puts the developer in the driver’s seat. Llama models will serve as a foundational piece of a system that developers design with their unique end goals in mind.

Instruction fine-tuning also plays a major role in ensuring the safety of our models. Our instruction-fine-tuned models have been red-teamed (tested) for safety through internal and external efforts. Our red-teaming approach leverages human experts and automation methods to generate adversarial prompts that try to elicit problematic responses. For instance, we apply comprehensive testing to assess risks of misuse related to chemical, biological, cybersecurity, and other risk areas. All of these efforts are iterative and are used to inform safety fine-tuning of the models being released. You can read more about our efforts in the model card.

Llama Guard models are meant to be a foundation for prompt and response safety and can easily be fine-tuned to create a new taxonomy depending on application needs. As a starting point, the new Llama Guard 2 uses the recently announced MLCommons taxonomy, in an effort to support the emergence of industry standards in this important area. Additionally, CyberSecEval 2 expands on its predecessor by adding measures of an LLM’s propensity to allow for abuse of its code interpreter, offensive cybersecurity capabilities, and susceptibility to prompt injection attacks (learn more in our technical paper). Finally, we’re introducing Code Shield, which adds support for inference-time filtering of insecure code produced by LLMs. This offers mitigation of risks around insecure code suggestions, code interpreter abuse prevention, and secure command execution.

With the speed at which the generative AI space is moving, we believe an open approach is an important way to bring the ecosystem together and mitigate these potential harms. As part of that, we’re updating our Responsible Use Guide (RUG) that provides a comprehensive guide to responsible development with LLMs. As we outlined in the RUG, we recommend that all inputs and outputs be checked and filtered in accordance with content guidelines appropriate to the application. Additionally, many cloud service providers offer content moderation APIs and other tools for responsible deployment, and we encourage developers to also consider using these options.
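The recommendation to check and filter both inputs and outputs amounts to wrapping every model call in two checks. The sketch below shows only that control flow. The function `guarded_generate` and the keyword blocklist are hypothetical toy stand-ins; in a real deployment the checks would be a safety classifier such as Llama Guard or a cloud provider's moderation API, whose actual interfaces are not reproduced here.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that."


def guarded_generate(prompt: str,
                     generate: Callable[[str], str],
                     input_ok: Callable[[str], bool],
                     output_ok: Callable[[str], bool]) -> str:
    """Filter the user input, call the model, then filter the model output."""
    if not input_ok(prompt):
        return REFUSAL  # blocked before the model ever sees the prompt
    response = generate(prompt)
    if not output_ok(response):
        return REFUSAL  # the model answered, but the answer violates policy
    return response


if __name__ == "__main__":
    # Toy policy: a keyword blocklist standing in for a real safety classifier.
    blocklist = ("forbidden",)
    ok = lambda text: not any(word in text.lower() for word in blocklist)
    print(guarded_generate("Say hello", lambda p: "Hello!", ok, ok))
```

Keeping the input and output checks as separate parameters lets an application apply different content guidelines to what users send and what the model returns.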

Deploying Llama 3 at scale

Llama 3 will soon be available on all major platforms, including cloud providers, model API providers, and much more. Llama 3 will be everywhere.

Our benchmarks show the tokenizer offers improved token efficiency, yielding up to 15% fewer tokens compared to Llama 2. Also, grouped query attention (GQA) has now been added to Llama 3 8B as well. As a result, we observed that despite the model having 1B more parameters than Llama 2 7B, the improved tokenizer efficiency and GQA help keep inference efficiency on par with Llama 2 7B.
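Both effects can be quantified with simple arithmetic. "Up to 15% fewer tokens" means a prompt that took 1,000 tokens under Llama 2 could need as few as about 850, and the KV cache grows linearly with token count. GQA shrinks the cache further because several query heads share one key/value head. The sketch below assumes a Llama-3-8B-like shape (32 layers, 32 query heads, 8 KV heads, head dimension 128) and 16-bit values; these figures are assumptions, not numbers from this post, and the helper names are hypothetical.

```python
# Assumed model shape and precision (not stated in the post).
LAYERS, Q_HEADS, KV_HEADS, HEAD_DIM = 32, 32, 8, 128
SEQ_LEN = 8192
BYTES_PER_VALUE = 2  # fp16 / bf16


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int, seq_len: int,
                   bytes_per_value: int = BYTES_PER_VALUE) -> int:
    """Size of the key and value caches for one sequence (factor 2 = K and V)."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


def kv_head_for(q_head: int, q_heads: int = Q_HEADS, kv_heads: int = KV_HEADS) -> int:
    """Under GQA, consecutive groups of query heads share one KV head."""
    group = q_heads // kv_heads  # query heads per KV head (4 with these numbers)
    return q_head // group


mha = kv_cache_bytes(LAYERS, Q_HEADS, HEAD_DIM, SEQ_LEN)   # one KV head per query head
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)  # 8 shared KV heads
print(f"MHA: {mha / 2**30:.1f} GiB, GQA: {gqa / 2**30:.1f} GiB, ratio {mha // gqa}x")
# MHA: 4.0 GiB, GQA: 1.0 GiB, ratio 4x
```

A smaller cache per sequence leaves room for larger batches or longer contexts on the same GPU, which is how GQA offsets part of the cost of the extra parameters.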

For examples of how to leverage all of these capabilities, check out Llama Recipes which contains all of our open source code that can be leveraged for everything from fine-tuning to deployment to model evaluation.

What’s next for Llama 3?

The Llama 3 8B and 70B models mark the beginning of what we plan to release for Llama 3. And there’s a lot more to come.

Our largest models are over 400B parameters and, while these models are still training, our team is excited about how they’re trending. Over the coming months, we’ll release multiple models with new capabilities including multimodality, the ability to converse in multiple languages, a much longer context window, and stronger overall capabilities. We will also publish a detailed research paper once we are done training Llama 3.

To give you a sneak preview of where these models are today as they continue training, we’re sharing some snapshots of how our largest model is trending. Please note that this data is based on an early checkpoint of Llama 3 that is still training, and these capabilities are not supported as part of the models released today.

We’re committed to the continued growth and development of an open AI ecosystem for releasing our models responsibly. We have long believed that openness leads to better, safer products, faster innovation, and a healthier overall market. This is good for Meta, and it is good for society. We’re taking a community-first approach with Llama 3, and starting today, these models are available on the leading cloud, hosting, and hardware platforms with many more to come.

Try Meta Llama 3 today

We’ve integrated our latest models into Meta AI, which we believe is the world’s leading AI assistant. It’s now built with Llama 3 technology and it’s available in more countries across our apps.

You can use Meta AI on Facebook, Instagram, WhatsApp, Messenger, and the web to get things done, learn, create, and connect with the things that matter to you. You can read more about the Meta AI experience here.

Visit the Llama 3 website to download the models and reference the Getting Started Guide for the latest list of all available platforms.

You’ll also soon be able to test multimodal Meta AI on our Ray-Ban Meta smart glasses.

As always, we look forward to seeing all the amazing products and experiences you will build with Meta Llama 3.


Meta © 2024

Microsoft's AI lead puts Amazon cloud dominance on watch


GOOGLE AI GAINS TO TAKE LONGER


Reporting by Yuvraj Malik in Bengaluru; Editing by Anil D'Silva

Our Standards: The Thomson Reuters Trust Principles.


AI + Machine Learning , Announcements , Azure IoT , Digital Twins , Events , Internet of Things

Azure IoT’s industrial transformation strategy on display at Hannover Messe 2024

By Adam Bogobowicz, Director of IoT and Digital Operations Marketing

Posted on April 17, 2024 4 min read

- Tag: Generative AI

Running and transforming a successful enterprise is like being the coach of a championship-winning sports team. To win the trophy, you need a strategy, game plans, and the ability to bring all the players together. In the early days of training, coaches relied on basic drills, manual strategies, and simple equipment. But as technology advanced, so did the art of coaching. Today, coaches use data-driven training programs, performance tracking technology, and sophisticated game strategies to achieve unimaginable performance and secure victories.

We see a similar change happening in industrial production management and performance and we are excited to showcase how we are innovating with our products and services to help you succeed in the modern era. Microsoft recently launched two accelerators for industrial transformation:

- Azure’s adaptive cloud approach—a new strategy

- Azure IoT Operations (preview)—a new product

Our adaptive cloud approach connects teams, systems, and sites through consistent management tools, development patterns, and insight generation. Putting the adaptive cloud approach into practice, IoT Operations leverages open standards and works with Microsoft Fabric to create a common data foundation for IT and operational technology (OT) collaboration.


We will be demonstrating these accelerators in the Microsoft booth at Hannover Messe 2024, presenting the new approach on the Microsoft stage, and sharing exciting partnership announcements that enable interoperability in the industry.

Here’s a preview of what you can look forward to from Azure IoT at the event.

Experience the future of automation with IoT Operations

Using our adaptive cloud approach, we’ve built a robotic assembly line demonstration that assembles car battery parts for event attendees. This production line is partner-enabled and features a standard OT environment, including solutions from Rockwell Automation and PTC. IoT Operations was used to build a monitoring solution for the robots because it embraces industry standards, like Open Platform Communications Unified Architecture (OPC UA), and integrates with existing infrastructure to connect data from an array of OT devices and systems and route it to the right places and people. IoT Operations processes data at the edge for local use by multiple applications and sends insights to the cloud for use by applications there too, reducing data fragmentation.

For those attending Hannover Messe 2024, head to the center of the Microsoft booth and look for the station “Achieve industrial transformation across the value chain.”

Watch this video to see how IoT Operations and the adaptive cloud approach build a common data foundation for an industrial equipment manufacturer.

Consult with Azure experts on IT and OT collaboration tools

Find out how Microsoft Azure’s open and standardized strategy, an adaptive cloud approach, can help you reach the next stage of industrial transformation. Our experts will help your team collect data from assets and systems on the shop floor, compute at the edge, integrate that data into multiple solutions, and create production analytics on a global scale. Whether you’re just starting to connect and digitize your operations, or you’re ready to analyze and reason with your data, make predictions, and apply AI, we’re here to assist.

For those attending Hannover Messe 2024, these experts are located at the demonstration called “Scale solutions and interoperate with IoT, edge, and cloud innovation.”

Check out Jumpstart to get your collaboration environment up and running. In May 2024, Jumpstart will have a comprehensive scenario designed for manufacturing.

Attend a presentation on modernizing the shop floor

We will share the results of a survey on the latest trends, technologies, and priorities for manufacturing companies wanting to efficiently manage their data to prepare for AI and accelerate industrial transformation. 73% of manufacturers agreed that a scalable technology stack is an important paradigm for the future of factories.[1] To make that a reality, manufacturers are making changes to modernize, such as adopting containerization, shifting to central management of devices, and emphasizing IT and OT collaboration tools. These modernization trends can maximize the ROI of existing infrastructure and solutions, enhance security, and apply AI at the edge.

The presentation “How manufacturers prepare shopfloors for a future with AI” will take place in the Microsoft theater at our booth in Hall 17 on Monday, April 22, 2024, at 2:00 PM CEST at Hannover Messe 2024.

For those who cannot attend, you can sign up to receive a notification when the full report is out.

Learn about actions and initiatives driving interoperability

Microsoft is strengthening and supporting the industrial ecosystem to enable transformation at scale and interoperable solutions. Our adaptive cloud approach both incorporates existing investments in partner technology and builds a foundation for consistent, repeatable deployment patterns at scale.

Our ecosystem of partners

Microsoft is building an ecosystem of connectivity partners to modernize industrial systems and devices. These partners provide data translation and normalization services across heterogeneous environments for a seamless and secure data flow on the shop floor, and from the shop floor to the cloud. We leverage open standards and provide consistent control and management capabilities for OT and IT assets. To date, we have established integrations with Advantech, Softing, and PTC.

Siemens and Microsoft have announced the convergence of the Digital Twin Definition Language (DTDL) with the W3C Web of Things standard. This convergence will help consolidate digital twin definitions for assets in the industry and enable new technology innovation like automatic asset onboarding with the help of generative AI technologies.

Microsoft embraces open standards and interoperability, and our adaptive cloud approach is based on those principles. We are thrilled to join Project Margo, a new ecosystem-led initiative that will help industrial customers achieve their digital transformation goals with greater speed and efficiency. Margo will define how edge applications, edge devices, and edge orchestration software interoperate with each other with increased flexibility. Read more about this important initiative.

Discover solutions with Microsoft

Visit our booth and speak with our experts to reach new heights of industrial transformation and prepare the shop floor for AI. Together, we will maximize your existing investments and drive scale in the industry. We look forward to working with you.

- Hannover Messe 2024

1 IoT Analytics, “Accelerate industrial transformation: How manufacturers prepare shopfloor for AI”, May 2023.


Cloud systems innovation at the core of Azure. Azure Research - Systems is a research group in Azure Core that brings forward-looking, world-class systems research directly into Azure. The group was seeded from the Cloud Efficiency team, which migrated from the Systems Research Group at Microsoft Research for closer integration with Azure. Our group's […]

Microsoft Azure is a popular cloud computing platform that enables users to deploy and operate resources with speed and flexibility. Our main work in this paper addresses face detection and face verification using the "Face API", where we use our own code, for example, to detect and recognize ...

Cloud4NFICA-Nearness Factor-Based Incremental Clustering Algorithm Using Microsoft Azure for the Analysis of Intelligent Meter Data. 10.4018/978-1-6684-3666-.ch020. 2022. pp. 423-442. Author(s): Archana Yashodip Chaudhari, Preeti Mulay. Keyword(s): Smart Grids.

A Microsoft Azure account was created to manage the database that is stored in the cloud. ... This paper aims at supporting research in this area by providing a survey of the state of the art of ...

In addition to being grounded on publicly available research papers for text queries, Copilot in Azure Quantum is also augmented with molecular structure data. This means that researchers can visualize molecules, derived from millions of possibilities, as well as calculate their respective orbitals 1. As an example, Copilot can visualize the ...


In this paper, we perform a thorough case study of Microsoft Azure, a popular cloud computing platform. We study various key concepts and features provided by this platform. This study ...

This paper provides guidance for Azure Cosmos DB customers managing a cloud-based database, an on-premises database, or both. This paper is ideal for customers who want to learn more about the rules related to how we handle and protect their personal data in their database systems.

This paper describes the cloud-based application service deployment process, benefits, and plans provided by Microsoft Azure.

We recently published a paper describing the internal details of Windows Azure Storage at the 23rd ACM Symposium on Operating Systems Principles (SOSP). The paper can be found here. The conference also posted a video of the talk here.

In this paper, the researchers give brief details of Amazon EC2 and Microsoft Azure Cloud. Because it is difficult to choose a single cloud service provider from among all of them, the paper compares Amazon EC2 and Microsoft Azure to help in deciding on a cloud provider.

Comparative study of Amazon EC2 and Microsoft Azure cloud architecture. Prof Vaibhav A Gandhi. Research Scholar, Dept of Computer Science, Sau Uni, & Associate Professor, Dept of MCA, B H Gardi ...


A Review on Amazon Web Service (AWS), Microsoft Azure & Google Cloud Platform (GCP) Services. Tabish Mufti, Pooja Mittal, Bulbul Gupta. Department of ...

Today, we're introducing Meta Llama 3, the next generation of our state-of-the-art open source large language model. Llama 3 models will soon be available on AWS, Databricks, Google Cloud, Hugging Face, Kaggle, IBM WatsonX, Microsoft Azure, NVIDIA NIM, and Snowflake, and with support from hardware platforms offered by AMD, AWS, Dell, Intel, NVIDIA, and Qualcomm.

Azure, part of the Intelligent cloud unit at Microsoft, is expected to have grown 28.9% in the January-to-March period, according to estimates from Visible Alpha.
