The top list of academic search engines


Academic search engines have become the number one resource to turn to in order to find research papers and other scholarly sources. While classic academic databases like Web of Science and Scopus are locked behind paywalls, Google Scholar and others can be accessed free of charge. In order to help you get your research done fast, we have compiled the top list of free academic search engines.

Google Scholar is the clear number one when it comes to academic search engines. It's the power of Google searches applied to research papers and patents. It not only lets you find research papers for all academic disciplines for free but also often provides links to full-text PDF files.

- Coverage: approx. 200 million articles

- Abstracts: only a snippet of the abstract is available

- Related articles: ✔

- References: ✔

- Cited by: ✔

- Links to full text: ✔

- Export formats: APA, MLA, Chicago, Harvard, Vancouver, RIS, BibTeX

BASE is hosted at Bielefeld University in Germany. That is also where its name stems from (Bielefeld Academic Search Engine).

- Coverage: approx. 136 million articles (contains duplicates)

- Abstracts: ✔

- Related articles: ✘

- References: ✘

- Cited by: ✘

- Export formats: RIS, BibTeX

CORE is an academic search engine dedicated to open-access research papers. For each search result, a link to the full-text PDF or full-text web page is provided.

- Coverage: approx. 136 million articles

- Links to full text: ✔ (all articles in CORE are open access)

- Export formats: BibTeX

Science.gov is a fantastic resource, as it bundles and offers free access to search results from more than 15 U.S. federal agencies. There is no longer any need to query all those resources separately!

- Coverage: approx. 200 million articles and reports

- Links to full text: ✔ (available for some databases)

- Export formats: APA, MLA, RIS, BibTeX (available for some databases)

Semantic Scholar is the new kid on the block. Its mission is to provide more relevant and impactful search results using AI-powered algorithms that find hidden connections and links between research topics.

- Coverage: approx. 40 million articles

- Export formats: APA, MLA, Chicago, BibTeX

Although Baidu Scholar's interface is in Chinese, its index contains research papers in English as well as Chinese.

- Coverage: no detailed statistics available, approx. 100 million articles

- Abstracts: only snippets of the abstract are available

- Export formats: APA, MLA, RIS, BibTeX

RefSeek searches more than one billion documents from academic and organizational websites. Its clean interface makes it especially easy to use for students and new researchers.

- Coverage: no detailed statistics available, approx. 1 billion documents

- Abstracts: only snippets of the article are available

- Export formats: not available

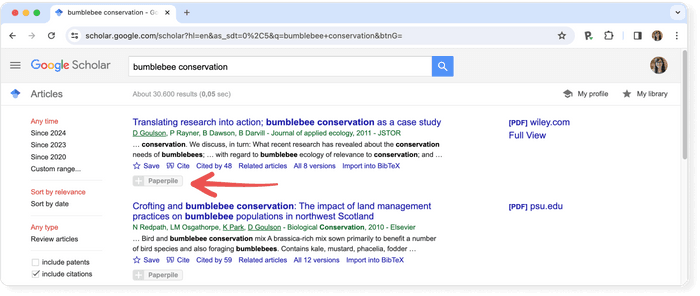

Consider using a reference manager like Paperpile to save, organize, and cite your references. Paperpile integrates with Google Scholar and many popular databases, so you can save references and PDFs directly to your library using the Paperpile buttons.

Google Scholar is the clear number one when it comes to academic search engines. It's the power of Google searches applied to research papers and patents. It not only lets you find research papers for all academic disciplines for free, but also often provides links to full-text PDF files.

Semantic Scholar is a free, AI-powered research tool for scientific literature developed at the Allen Institute for AI. Semantic Scholar was publicly released in 2015 and uses advances in natural language processing to provide summaries for scholarly papers.

BASE, as its name suggests, is an academic search engine. It is hosted at Bielefeld University in Germany, and that's where its name stems from (Bielefeld Academic Search Engine).

CORE is an academic search engine dedicated to open-access research papers. For each search result, a link to the full-text PDF or full-text web page is provided.

Science.gov is a fantastic resource, as it bundles and offers free access to search results from more than 15 U.S. federal agencies. There is no longer any need to query all those resources separately!

28 Best Academic Search Engines That Make Your Research Easier


If you’re a researcher or scholar, you know that conducting effective online research is a critical part of your job. And if you’re like most people, you’re always on the lookout for new and better ways to do it.

I’m sure you are familiar with some research databases. But top researchers keep an open mind and are always looking for inspiration in unexpected places.

This article aims to give you an edge over researchers who rely mainly on Google for their entire research process.

Our list of 28 academic search engines starts with the more familiar ones and moves on to the less familiar.


#1. Google Scholar

Google Scholar is an academic search engine that indexes the full text or metadata of scholarly literature across an array of publishing formats and disciplines.

Google Scholar is well suited to academic research: you can use it to find articles from academic journals, conference proceedings, theses, and dissertations. Its results are typically more relevant and reliable for scholarly work than those from regular search engines like Google.


- Scholarly results are typically more relevant and reliable than those from regular search engines like Google.

- You can restrict your results to peer-reviewed articles only by clicking on the “Scholarly” tab.

- Google Scholar’s coverage is extensive, with approx. 200 million articles indexed.

- Abstracts are available for most articles.

- Related articles are shown, as well as the number of times an article has been cited.

- Links to full text are available for many articles.

- Abstracts are only a snippet of the full article, so you might need to do additional searching to get the full information you need.

- Not all articles are available in full text.

Google Scholar is completely free.

#2. ERIC (Education Resources Information Center)

ERIC (short for Education Resources Information Center) is a great academic search engine that focuses on education-related literature. It is sponsored by the U.S. Department of Education and produced by the Institute of Education Sciences.

ERIC indexes over a million articles, reports, conference papers, and other resources on all aspects of education from early childhood to higher education, so its search results are especially relevant to education topics.

- Extensive coverage: ERIC indexes over a million articles, reports, and other resources on all aspects of education from early childhood to higher education.

- You can limit your results to peer-reviewed journals by clicking on the “Peer-Reviewed” tab.

- Great search engine for educators, as abstracts are available for most articles.

ERIC is a free online database of education-related literature.


#3. Wolfram Alpha

Wolfram Alpha is a “computational knowledge engine” that can answer factual questions posed in natural language. It can be a useful search tool.

Type in a question like “What is the square root of 64?” or “What is the boiling point of water?” and Wolfram Alpha will give you an answer.

Wolfram Alpha can also be used to find academic articles. Just type in your keywords and Wolfram Alpha will generate a list of academic articles that match your query.

Tip: You can restrict your results to peer-reviewed journals by clicking on the “Scholarly” tab.

- Can answer factual questions posed in natural language.

- Can be used to find academic articles.

- Results are ranked by relevance.

- Results can be overwhelming, so it’s important to narrow down your search criteria as much as possible.

- The experience feels more structured, but it can also be somewhat restrictive.

Wolfram Alpha offers a few pricing options, including a “Pro” subscription that gives you access to additional features, such as the ability to create custom reports. You can also purchase individual articles or download them for offline use.

Pro costs $5.49 and Pro Premium costs $9.99

#4. iSEEK Education


iSEEK is a search engine targeting students, teachers, administrators, and caregivers. It’s designed to be safe, with editor-reviewed content.

iSEEK Education also includes a “Cited by” feature which shows you how often an article has been cited by other researchers.

- Editor-reviewed content.

- “Cited by” feature shows how often an article has been cited by other researchers.

- Limited to academic content.

- Doesn’t have the breadth of coverage that some of the other academic search engines have.

iSEEK Education is free to use.

#5. BASE (Bielefeld Academic Search Engine)

BASE is hosted at Bielefeld University in Germany, and that’s where its name stems from (Bielefeld Academic Search Engine).

Known as “one of the most comprehensive academic web search engines,” it contains over 100 million documents from 4,000 different sources.

Users can narrow their search using the advanced search option, so regardless of whether you need a book, a review, a lecture, a video or a thesis, BASE has what you need.

BASE indexes academic articles from a variety of disciplines, including the arts, humanities, social sciences, and natural sciences.

- One of the world’s most voluminous search engines.

- Indexes academic web resources from a variety of disciplines.

- Includes an “Advanced Search” feature that lets you restrict your results to peer-reviewed journals.

- Doesn’t include abstracts for most articles.

- Doesn’t offer related articles, references, or “cited by” links.

BASE is free to use.


#6. CORE

CORE is an academic search engine that focuses on open access research papers. A link to the full-text PDF or complete-text web page is supplied for each search result.

- Focused on open access research papers.

- Links to full text PDF or complete text web page are supplied for each search result.

- Export formats include BibTeX, Endnote, RefWorks, Zotero.

- Coverage is limited to open access research papers.

- No abstracts are available for most articles.

- No related articles, references, or cited by features.

CORE is free to use.


#7. Science.gov

Science.gov is a search engine developed and managed by the United States government. It includes results from a variety of scientific databases, including NASA, EPA, USGS, and NIST.

US students are more likely to have early exposure to this tool for scholarly research.

- Coverage from a variety of scientific databases (200 million articles and reports).

- Links to full text are available for some articles.

Science.gov is free to use.


#8. Semantic Scholar

Semantic Scholar is a recent entrant to the field. Its goal is to provide more relevant and effective search results via artificial intelligence-powered methods that detect hidden relationships and connections between research topics.

- Powered by artificial intelligence, which enhances search results.

- Covers a large number of academic articles (approx. 40 million).

- Related articles, references, and cited by features are all included.

- Links to full text are available for most articles.

Semantic Scholar is free to use.


#9. RefSeek

RefSeek searches more than five billion documents, including web pages, books, encyclopedias, journals, and newspapers.

This is one of the free search engines that feels like Yahoo, with a massive directory. It can be good when you are just looking for research ideas from unexpected angles, and it may lead you to other databases you might not know, such as the CIA's World Factbook, which is a great reference tool.

- Searches more than five billion documents.

- The Documents tab is very focused on research papers and easy to use.

- Results can be filtered by date, type of document, and language.

- Good source for free academic articles, open access journals, and technical reports.

- The navigation and user experience feel very dated, even to millennials.

- It takes more than three clicks to dig up interesting references (which is how it can lead you to something beyond the first page of Google).

- The top part of the results page is all ads (well, it is free to use).

RefSeek is free to use.

#10. ResearchGate

ResearchGate is a mixture of social networking site, forum, and content database where researchers can build their profiles, share research papers, and interact with one another.

Although it is not an academic search engine that goes outside of its own site, ResearchGate’s library of works offers an excellent choice for any curious scholar.

There are more than 100 million publications available on the site from over 11 million researchers. It is possible to search by publication, data, and author, as well as to ask the researchers questions.

- A great place to find research papers and researchers.

- Can follow other researchers and get updates when they share new papers or make changes to their profile.

- The network effect can be helpful in finding people who have expertise in a particular topic.

- The interface is not very user-friendly.

- Can be overwhelming when trying to find relevant papers.

- Some papers are behind a paywall.

ResearchGate is free to use.


#11. DataONE Search (formerly CiteULike)

A social networking site for academics who want to share and discover academic articles and papers.

- A great place to find academic papers that have been shared by other academics.

- Some papers are behind a paywall

CiteULike is free to use.

#12. DataElixir

DataElixir is designed to help you find, understand, and use data. It includes a curated list of the best open datasets, tools, and resources for data science.

- Dedicated resource for finding open data sets, tools, and resources for data science.

- The website is easy to navigate.

- The content is updated regularly

- The resources are grouped by category.

- Not all of the resources are applicable to academic research.

- Some of the content is outdated.

DataElixir is free to use.

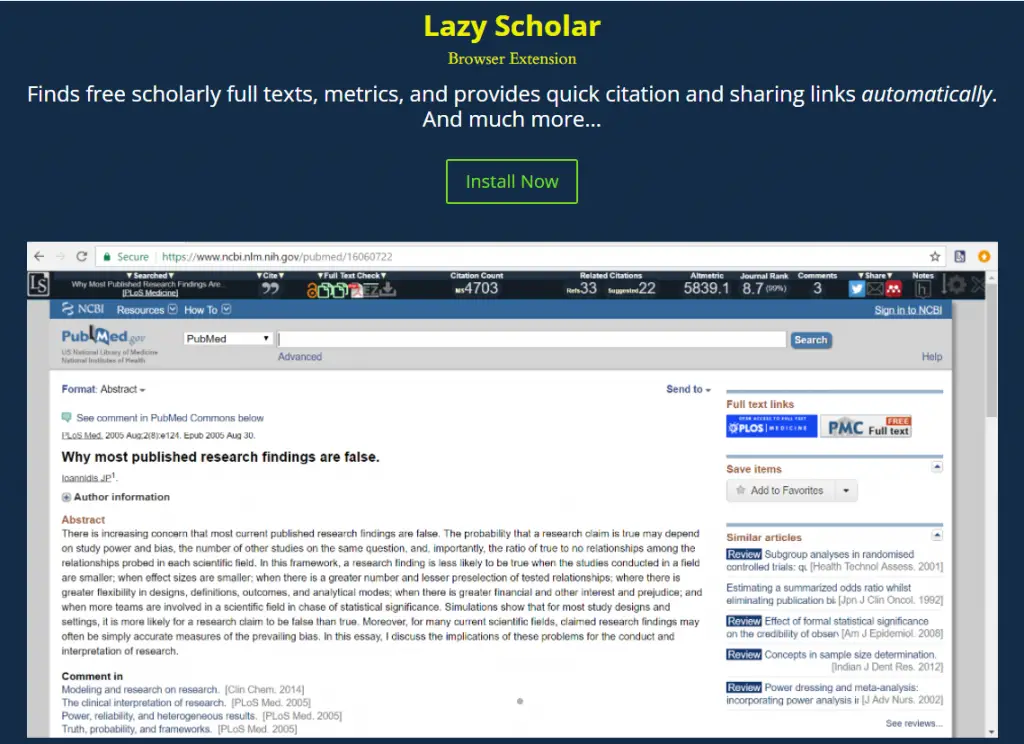

#13. LazyScholar – browser extension

LazyScholar is a free browser plugin that helps you discover free academic full texts, metrics, and instant citation and sharing links. LazyScholar was created by Colby Vorland, a postdoctoral fellow at Indiana University.

- It can integrate with your library to find full texts even when you’re off-campus.

- Saves your history and provides an interface to find it.

- A pre-formed citation is available in over 900 citation styles.

- Can recommend topics and scan new PubMed listings to suggest new papers.

- Results can be a bit hit or miss

LazyScholar is free to use.

#14. CiteSeerX – digital library from Penn State

CiteSeerX is a digital library that stores and indexes research articles in computer science and related fields. The site has a robust search engine that lets you filter results by date and author.

- Searches a large number of academic papers.

- Results can be filtered by date, author, and topic.

- The website is easy to use.

- You can create an account and save your searches for future reference.

CiteSeerX is free to use.


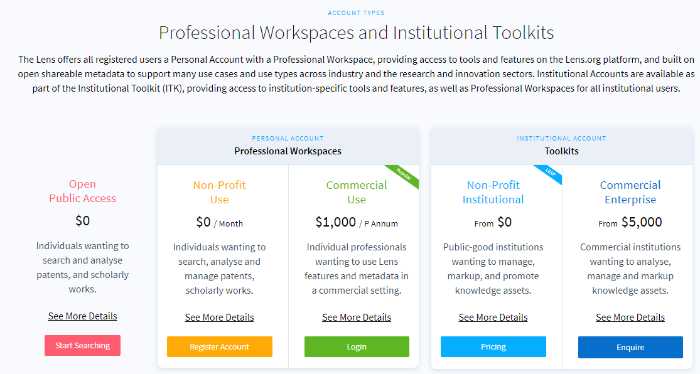

#15. The Lens – patents search

The Lens or the Patent Lens is an online patent and scholarly literature search facility, provided by Cambia, an Australia-based non-profit organization.

- Searches for a large number of academic papers.

Pricing ranges from free for non-profit use to $5,000 for commercial enterprises.

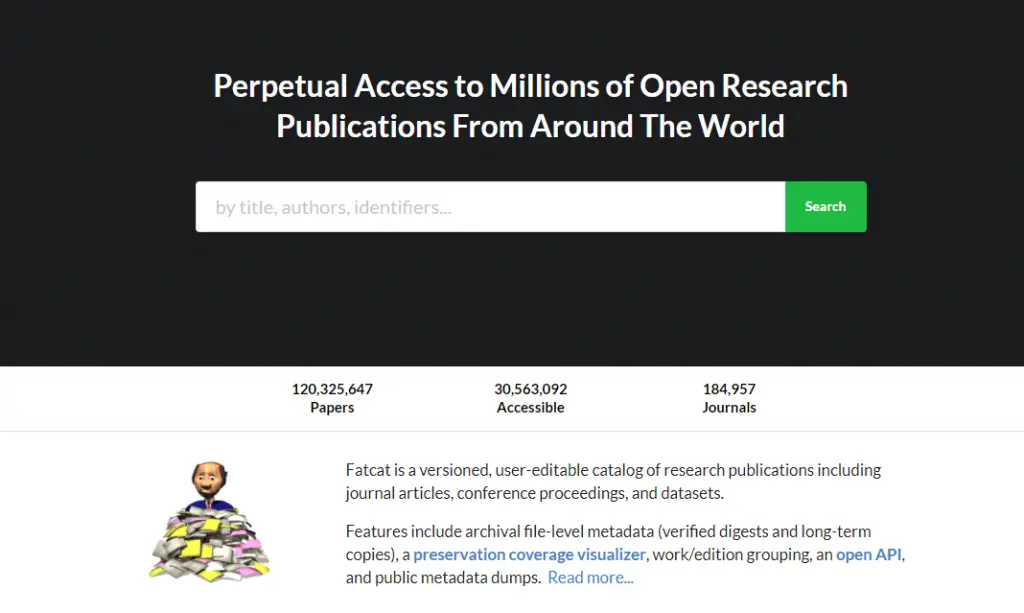

#16. Fatcat – wiki for bibliographic catalog

Fatcat is an open bibliographic catalog of written works. The scope of works is somewhat flexible, with a focus on published research outputs like journal articles, pre-prints, and conference proceedings. Records are collaboratively editable, versioned, available in bulk form, and include URL-agnostic file-level metadata.

- Open source and collaborative

- You can be part of the community that is very focused on its mission

- The archival file-level metadata (verified digests and long-term copies) is a great feature.

- Could prove to be another rabbit hole

- People either love or hate the text-only interface

#17. Lexis Web – Legal database

Are you researching legal topics? You can turn to Lexis Web for any law-related questions you may have. The results are drawn from legal sites and can be filtered based on criteria such as news, blogs, government, and commercial. Additionally, users can filter results by jurisdiction, practice area, source and file format.

- Results are drawn from legal sites.

- Filters are available based on criteria such as news, blogs, government, and commercial.

- Users can filter results by jurisdiction, practice area, source and file format.

- Not all law-related questions will be answered by this search engine.

- Coverage is limited to legal sites only.

Lexis Web is free for up to three searches per day. After that, a subscription is required.

#18. Infotopia – part of the VLRC family

Infotopia touts itself as an “alternative to Google safe search.” Scholarly book results are curated by librarians, teachers, and other educational workers. Users can select from a range of topics such as art, health, and science and technology, and then see a list of resources pertaining to the topic.

Consequently, if you aren’t able to find what you are looking for within Infotopia’s pages, you will probably find it on one of its many suggested websites.

#19. Virtual Learning Resources Center

Virtual Learning Resources Center (VLRC) is an academic search engine that features thousands of academic sites chosen by educators and librarians worldwide. Using an index generated from a research portal, university, and library internet subject guides, students and instructors can find current, authoritative information for school.

- Indexes thousands of academic information websites. A custom Google search returns more refined results, which speeds up your research.

- Many people consider VLRC one of the best free search engines to start looking for research material.

- TeachThought rated the Virtual LRC #3 in its list of 100 Search Engines For Academic Research.

- More relevant to education

- More relevant to students

#20. Jurn

Powered by Google Custom Search Engine (CSE), Jurn is a free online search engine for accessing and downloading free full-text scholarly papers. It was launched by David Haden as a public open beta in February 2009, initially for locating open access electronic journal articles in the arts and humanities.

After the indexing process was completed, a website containing additional public directories of web links to indexed publications was introduced in mid-2009. The Jurn search service and directory has been regularly modified and cleaned since then.

- A great resource for finding academic papers that are behind paywalls.

- The content is updated regularly.

Jurn is free to use.

#21. WorldWideScience

The Office of Scientific and Technical Information, a branch of the Office of Science within the U.S. Department of Energy, hosts the portal WorldWideScience, which has dubbed itself “The Global Science Gateway.”

Over 70 countries’ databases are used on the website. When a user enters a query, it contacts databases from all across the world and shows results in both English and translated journals and academic resources.

- Results can be filtered by language and type of resource

- Interface is easy to use

- Contains both academic journal articles and translated academic resources

- The website can be difficult to navigate.

WorldWideScience is free to use.

#22. Google Books

A user can browse thousands of books on Google Books, from popular titles to old titles, to find pages that include their search terms. You can look through pages, read online reviews, and find out where to buy a hard copy once you find the book you are interested in.

#23. DOAJ (Directory of Open Access Journals)

DOAJ is a free search engine for scientific and scholarly materials. It is a searchable directory of over 8,000 peer-reviewed journals, organized by subject and covering a variety of themes, which makes it one of the most comprehensive libraries of scientific and scholarly resources.

#24. Baidu Scholar

Baidu Xueshu (Academic) is the Chinese counterpart of Google Scholar. Baidu Scholar indexes academic papers from a variety of disciplines in both Chinese and English.

- Articles are available in full text PDF.

- Covers a variety of academic disciplines.

- No abstracts are available for most articles, but summaries are provided for some.

- A great portal that takes you to different specialized research platforms.

- You need to be able to read Chinese to use the site.

- Since 2021, there has been a growing focus on China and the Chinese Communist Party.

Baidu Scholar is free to use.

#25. PubMed Central

PubMed is a free search engine that provides references and abstracts for medical, life sciences, and biomedical topics.

If you’re studying anything related to healthcare or science, this site is perfect. PubMed Central is operated by the National Center for Biotechnology Information, a division of the U.S. National Library of Medicine. It contains more than 3 million full-text journal articles.

It’s similar to PubMed Health, which focuses on health-related research and includes abstracts and citations to over 26 million articles.

#26. MEDLINE®

MEDLINE® is a paid subscription database for life sciences and biomedicine that includes more than 28 million citations to journal articles. For finding reliable, carefully chosen health information, MedlinePlus provides a powerful search tool and even a dictionary.

- A great database for life sciences and biomedicine.

- Contains more than 28 million references to journal articles.

- References can be filtered by date, type of document, and language.

- The database is expensive to access.

- Some people find it difficult to navigate and find what they are looking for.

MEDLINE is not free to use.

Defunct Academic Search Engines

#27. Microsoft Academic

Microsoft Academic Search seemed to be a failure from the beginning. It ended in 2012, then relaunched in 2016 as Microsoft Academic. It gave researchers the opportunity to search academic publications.

Microsoft Academic used to be the second-largest academic search engine after Google Scholar. It provided a wealth of data for free, but Microsoft announced that it would shut Microsoft Academic down by 2022.

#28. Scizzle

Scizzle was designed to help researchers stay on top of the literature by setting up email alerts, based on key terms, for new papers.

Unfortunately, academic search engines come and go. These are two that are no longer available.

Final Thoughts

There are many academic search engines that can help researchers and scholars find the information they need. This list provides a variety of options, starting with more familiar engines and moving on to less well-known ones.

Keeping an open mind and exploring different sources is essential for conducting effective online research. With so much information at our fingertips, it’s important to make sure we’re using the best tools available to us.

Tell us in the comments below: Which academic search engines had you not heard of? Which database do you think we should add? What database do your professional societies use? What are the most useful academic websites for research in your opinion?


2012-2024 © scijournal.org


What Is Semantic Scholar?

Semantic Scholar is a free, AI-powered research tool for scientific literature, based at the Allen Institute for AI.


Literature Review

How to search effectively.


The Literature searching interactive tutorial includes self-paced, guided activities to assist you in developing effective search skills.

1. Identify search words

Analyse your research topic or question.

- What are the main ideas?

- What concepts or theories have you already covered?

- Write down your main ideas, synonyms, related words and phrases.

- If you're looking for specific types of research, use these suggested terms: qualitative, quantitative, methodology, review, survey, test, trend (and more).

- Be aware of UK and US spelling variations. E.g. organisation OR organization, ageing OR aging.

- Interactive Keyword Builder

- Identifying effective keywords

2. Connect your search words

Find results with one or more search words.

Use OR between words that mean the same thing.

E.g. adolescent OR teenager

This search will find results with either (or both) of the search words.

Find results with two search words

Use AND between words which represent the main ideas in the question.

E.g. adolescent AND “physical activity”

This will find results with both of the search words.

Exclude search words

Use NOT to exclude words that you don’t want in your search results.

E.g. (adolescent OR teenager) NOT “young adult”
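To see how OR, AND, and NOT fit together in one search string, here is a minimal Python sketch. The helper names `quote` and `build_query` are invented for this illustration and are not part of any database's tooling. The operator syntax a particular database accepts may differ, so treat the output as a starting point and check the 'Help' section of the database you are searching.

```python
from typing import List, Sequence


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as a phrase."""
    return f'"{term}"' if " " in term else term


def build_query(concepts: Sequence[Sequence[str]], exclude: Sequence[str] = ()) -> str:
    """Join synonyms with OR, join main ideas with AND, then append NOT terms."""
    groups: List[str] = []
    for synonyms in concepts:
        joined = " OR ".join(quote(term) for term in synonyms)
        # Brackets keep a group of synonyms together when it is combined with AND/NOT.
        groups.append(f"({joined})" if len(synonyms) > 1 else joined)
    query = " AND ".join(groups)
    for term in exclude:
        query += f" NOT {quote(term)}"
    return query


query = build_query(
    concepts=[["adolescent", "teenager"], ["physical activity"]],
    exclude=["young adult"],
)
print(query)
# (adolescent OR teenager) AND "physical activity" NOT "young adult"
```

Each inner list holds words that mean the same thing, and each separate list is one main idea from your question.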

3. Use search tricks

Search for different word endings.

Truncation *

The asterisk symbol * will help you search for different word endings.

E.g. teen* will find results with the words: teen, teens, teenager, teenagers

Specific truncation symbols will vary. Check the 'Help' section of the database you are searching.

Search for common phrases

Phrase searching “...........”

Double quotation marks help you search for common phrases and make your results more relevant.

E.g. “physical activity” will find results with the words physical activity together as a phrase.

Search for spelling variations within related terms

Wildcards ?

Wildcard symbols allow you to search for spelling variations within the same or related terms.

E.g. wom?n will find results with women OR woman

Specific wildcard symbols will vary. Check the 'Help' section of the database you are searching.
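To see which words a truncation or wildcard pattern would pick up, the behaviour can be imitated with Python's standard `fnmatch` module, where `*` matches any run of characters and `?` matches exactly one character. This is an approximation, not how any particular database works: some databases treat `?` as zero or one character, and the word list below is made up for the example.

```python
from fnmatch import fnmatchcase


def matching_words(pattern, vocabulary):
    """Return the words from vocabulary that match a * / ? pattern."""
    return [word for word in vocabulary if fnmatchcase(word, pattern)]


# Hypothetical word list used only to illustrate the two symbols.
vocabulary = ["teen", "teens", "teenager", "teenagers", "ten",
              "woman", "women", "wombat"]

truncated = matching_words("teen*", vocabulary)  # different word endings
wildcard = matching_words("wom?n", vocabulary)   # one-letter spelling variation

print(truncated)
print(wildcard)
```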

Search terms within specific ranges of each other

Proximity w/#

Proximity searching allows you to specify where your search terms will appear in relation to each other.

E.g. pain w/10 morphine will search for pain within ten words of morphine

Specific proximity symbols will vary. Check the 'Help' section of the database you are searching.
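A proximity search can be pictured as a check on word positions. The sketch below is a simplified assumption of how `w/10` behaves: it splits text into words and tests whether the two terms occur at most `max_distance` word positions apart, in either order. Real databases may count words differently or require a fixed order; the function name `within` is hypothetical.

```python
import re


def within(text, term_a, term_b, max_distance):
    """True if term_a and term_b occur at most max_distance word positions apart."""
    words = re.findall(r"\w+", text.lower())
    positions_a = [i for i, w in enumerate(words) if w == term_a.lower()]
    positions_b = [i for i, w in enumerate(words) if w == term_b.lower()]
    return any(abs(i - j) <= max_distance
               for i in positions_a for j in positions_b)


near = within("Morphine was given to relieve severe pain after surgery",
              "pain", "morphine", 10)
far = within("pain " + "filler " * 15 + "morphine", "pain", "morphine", 10)

print(near, far)
```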

4. Improve your search results

All library databases are different and you can't always search and refine in the same way. Try to be consistent when transferring your search between the library databases you have chosen.

Narrow and refine your search results by:

- year of publication or date range (for recent or historical research)

- document or source type (e.g. article, review or book)

- subject or keyword (for relevance). Try repeating your search using the 'subject' headings or 'keywords' field to focus your search

- searching in particular fields, i.e. citation and abstract. Explore the available dropdown menus to change the fields to be searched.

When searching, remember to:

Adapt your search and keep trying.

Searching for information is a process and you won't always get it right the first time. Improve your results by changing your search and trying again until you're happy with what you have found.

Keep track of your searches

Keeping track of searches saves time as you can rerun them, store references, and set up regular alerts for new research relevant to your topic.

Most library databases allow you to register with a personal account. Look for a 'log in', 'sign in' or 'register' button to get started.

- Literature review search tracker (Excel spreadsheet)

Manage your references

There are free and subscription reference management programs available on the web or to download on your computer.

- EndNote - The University has a license for EndNote. It is available for all students and staff, although it is recommended for postgraduates and academic staff.

- Zotero - Free software recommended for undergraduate students.


- Last Updated: Mar 13, 2024 8:37 AM

- URL: https://uow.libguides.com/literaturereview


- Open access

- Published: 06 December 2017

Optimal database combinations for literature searches in systematic reviews: a prospective exploratory study

Wichor M. Bramer, Melissa L. Rethlefsen, Jos Kleijnen & Oscar H. Franco

Systematic Reviews volume 6, Article number: 245 (2017)


Within systematic reviews, when searching for relevant references, it is advisable to use multiple databases. However, searching databases is laborious and time-consuming, as the syntax of search strategies is database specific. We aimed to determine the optimal combination of databases needed to conduct efficient searches in systematic reviews and whether the current practice in published reviews is appropriate. While previous studies determined the coverage of databases, we analyzed the actual retrieval from the original searches for systematic reviews.

Since May 2013, the first author prospectively recorded results from systematic review searches that he performed at his institution. PubMed was used to identify systematic reviews published using our search strategy results. For each published systematic review, we extracted the references of the included studies. Using the prospectively recorded results and the studies included in the publications, we calculated recall, precision, and number needed to read for single databases and databases in combination. We assessed the frequency at which databases and combinations would achieve varying levels of recall (e.g., 95%). For a sample of 200 recently published systematic reviews, we calculated how many had used enough databases to ensure 95% recall.

A total of 58 published systematic reviews were included, totaling 1746 relevant references identified by our database searches, while 84 included references had been retrieved by other search methods. Sixteen percent of the included references (291 articles) were only found in a single database; Embase produced the most unique references (n = 132). The combination of Embase, MEDLINE, Web of Science Core Collection, and Google Scholar performed best, achieving an overall recall of 98.3% and 100% recall in 72% of systematic reviews. We estimate that 60% of published systematic reviews do not retrieve 95% of all available relevant references as many fail to search important databases. Other specialized databases, such as CINAHL or PsycINFO, add unique references to some reviews where the topic of the review is related to the focus of the database.

Conclusions

Optimal searches in systematic reviews should search at least Embase, MEDLINE, Web of Science, and Google Scholar as a minimum requirement to guarantee adequate and efficient coverage.


Investigators and information specialists searching for relevant references for a systematic review (SR) are generally advised to search multiple databases and to use additional methods to be able to adequately identify all literature related to the topic of interest [ 1 , 2 , 3 , 4 , 5 , 6 ]. The Cochrane Handbook, for example, recommends the use of at least MEDLINE and Cochrane Central and, when available, Embase for identifying reports of randomized controlled trials [ 7 ]. There are disadvantages to using multiple databases. It is laborious for searchers to translate a search strategy into multiple interfaces and search syntaxes, as field codes and proximity operators differ between interfaces. Differences in thesaurus terms between databases add another significant burden for translation. Furthermore, it is time-consuming for reviewers who have to screen more, and likely irrelevant, titles and abstracts. Lastly, access to databases is often limited and only available on a subscription basis.

Previous studies have investigated the added value of different databases on different topics [ 8 , 9 , 10 , 11 , 12 , 13 , 14 , 15 ]. Some concluded that searching only one database can be sufficient as searching other databases has no effect on the outcome [ 16 , 17 ]. Nevertheless, others have concluded that a single database is not sufficient to retrieve all references for systematic reviews [ 18 , 19 ]. Most articles on this topic draw their conclusions based on the coverage of databases [ 14 ]. A recent paper tried to find an acceptable number needed to read for adding an additional database; sadly, however, no true conclusion could be drawn [ 20 ]. However, whether an article is present in a database may not translate to being found by a search in that database. Because of this major limitation, the question of which databases are necessary to retrieve all relevant references for a systematic review remains unanswered. Therefore, we research the probability that single or various combinations of databases retrieve the most relevant references in a systematic review by studying actual retrieval in various databases.

The aim of our research is to determine the combination of databases needed for systematic review searches to provide efficient results (i.e., to minimize the burden for the investigators without reducing the validity of the research by missing relevant references). A secondary aim is to investigate the current practice of databases searched for published reviews. Are included references being missed because the review authors failed to search a certain database?

Development of search strategies

At Erasmus MC, search strategies for systematic reviews are often designed via a librarian-mediated search service. The information specialists of Erasmus MC developed an efficient method that helps them perform searches in many databases in a much shorter time than other methods. This method of literature searching and a pragmatic evaluation thereof are published in separate journal articles [ 21 , 22 ]. In short, the method consists of an efficient way to combine thesaurus terms and title/abstract terms into a single line search strategy. This search is then optimized. Articles that are indexed with a set of identified thesaurus terms, but do not contain the current search terms in title or abstract, are screened to discover potential new terms. New candidate terms are added to the basic search and evaluated. Once optimal recall is achieved, macros are used to translate the search syntaxes between databases, though manual adaptation of the thesaurus terms is still necessary.

Review projects at Erasmus MC cover a wide range of medical topics, from therapeutic effectiveness and diagnostic accuracy to ethics and public health. In general, searches are developed in MEDLINE in Ovid (Ovid MEDLINE® In-Process & Other Non-Indexed Citations, Ovid MEDLINE® Daily and Ovid MEDLINE®, from 1946); Embase.com (searching both Embase and MEDLINE records, with full coverage including Embase Classic); the Cochrane Central Register of Controlled Trials (CENTRAL) via the Wiley Interface; Web of Science Core Collection (hereafter called Web of Science); PubMed restricting to records in the subset “as supplied by publisher” to find references that are not yet indexed in MEDLINE (using the syntax publisher [sb]); and Google Scholar. In general, we use the first 200 references as sorted in the relevance ranking of Google Scholar. When the number of references from other databases was low, we expected the total number of potential relevant references to be low. In this case, the number of hits from Google Scholar was limited to 100. When the overall number of hits was low, we additionally searched Scopus, and when appropriate for the topic, we included CINAHL (EBSCOhost), PsycINFO (Ovid), and SportDiscus (EBSCOhost) in our search.

Beginning in May 2013, the number of records retrieved from each search for each database was recorded at the moment of searching. The complete results from all databases used for each of the systematic reviews were imported into a unique EndNote library upon search completion and saved without deduplication for this research. The researchers that requested the search received a deduplicated EndNote file from which they selected the references relevant for inclusion in their systematic review. All searches in this study were developed and executed by W.M.B.

Determining relevant references of published reviews

We searched PubMed in July 2016 for all reviews published since 2014 where first authors were affiliated to Erasmus MC, Rotterdam, the Netherlands, and matched those with search registrations performed by the medical library of Erasmus MC. This search was used in earlier research [ 21 ]. Published reviews were included if the search strategies and results had been documented at the time of the last update and if, at minimum, the databases Embase, MEDLINE, Cochrane CENTRAL, Web of Science, and Google Scholar had been used in the review. From the published journal article, we extracted the list of final included references. We documented the department of the first author. To categorize the types of patient/population and intervention, we identified broad MeSH terms relating to the most important disease and intervention discussed in the article. We copied from the MeSH tree the top MeSH term directly below the disease category or, in the case of the intervention, directly below the therapeutics MeSH term. We selected the domain from a pre-defined set of broad domains, including therapy, etiology, epidemiology, diagnosis, management, and prognosis. Lastly, we checked whether the reviews described limiting their included references to a particular study design.

To identify whether our searches had found the included references, and if so, from which database(s) that citation was retrieved, each included reference was located in the original corresponding EndNote library using the first author name combined with the publication year as a search term for each specific relevant publication. If this resulted in extraneous results, the search was subsequently limited using a distinct part of the title or a second author name. Based on the record numbers of the search results in EndNote, we determined from which database these references came. If an included reference was not found in the EndNote file, we presumed the authors used an alternative method of identifying the reference (e.g., examining cited references, contacting prominent authors, or searching gray literature), and we did not include it in our analysis.

Data analysis

We determined the databases that contributed most to the reviews by the number of unique references retrieved by each database used in the reviews. Unique references were included articles that had been found by only one database search. Those databases that contributed the most unique included references were then considered candidate databases to determine the most optimal combination of databases in the further analyses.
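As an illustration of this step, the sketch below counts, for each database, the included references that no other database retrieved, and then ranks the databases by that count. The study's own calculations were done in Excel; the data structure, the function name `unique_references` and the reference IDs here are hypothetical.

```python
from collections import Counter


def unique_references(retrieved_by_db):
    """Map each database to the included references that only it retrieved."""
    counts = Counter(ref for refs in retrieved_by_db.values() for ref in refs)
    return {db: {ref for ref in refs if counts[ref] == 1}
            for db, refs in retrieved_by_db.items()}


# Hypothetical included references (IDs) retrieved per database for one review.
retrieved = {
    "Embase": {"r1", "r2", "r3", "r6"},
    "MEDLINE": {"r2", "r4"},
    "Google Scholar": {"r3", "r5"},
}

unique = unique_references(retrieved)
# Databases with the most unique included references come first.
ranking = sorted(unique, key=lambda db: len(unique[db]), reverse=True)

print(unique)
print(ranking)
```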

In Excel, we calculated the performance of each individual database and various combinations. Performance was measured using recall, precision, and number needed to read. See Table 1 for definitions of these measures. These values were calculated both for all reviews combined and per individual review.

Performance of a search can be expressed in different ways. Depending on the goal of the search, different measures may be optimized. In the case of a clinical question, precision is most important, as a practicing clinician does not have a lot of time to read through many articles in a clinical setting. When searching for a systematic review, recall is the most important aspect, as the researcher does not want to miss any relevant references. As our research is performed on systematic reviews, the main performance measure is recall.
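The three measures can be written out as follows. Table 1 is not reproduced in this text, so the formulas below are the commonly used definitions and should be read as an assumption about what the table contains. The recall example uses figures reported in this article (1746 of 1830 included references were retrieved by the database searches); the precision and number needed to read example uses made-up counts.

```python
def recall(included_retrieved, included_total):
    """Share of all included references that the search retrieved."""
    return included_retrieved / included_total


def precision(included_retrieved, total_retrieved):
    """Share of retrieved records that ended up as included references."""
    return included_retrieved / total_retrieved


def number_needed_to_read(included_retrieved, total_retrieved):
    """Records screened per included reference found (the inverse of precision)."""
    return total_retrieved / included_retrieved


# Figures from the article: 1746 of 1830 included references were retrieved.
overall_recall = recall(1746, 1830)

# Hypothetical search: 1000 records retrieved, 20 of them included.
example_precision = precision(20, 1000)
example_nnr = number_needed_to_read(20, 1000)

print(round(overall_recall, 3), example_precision, example_nnr)
```

Because number needed to read is the inverse of precision, adding a database that contributes many records but few included references raises it, which is the trade-off against recall described above.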

We identified all included references that were uniquely identified by a single database. For the databases that retrieved the most unique included references, we calculated the number of references retrieved (after deduplication) and the number of included references that had been retrieved by all possible combinations of these databases, in total and per review. For all individual reviews, we determined the median recall, the minimum recall, and the percentage of reviews for which each single database or combination retrieved 100% recall.

For each review that we investigated, we determined what the recall was for all possible different database combinations of the most important databases. Based on these, we determined the percentage of reviews where that database combination had achieved 100% recall, more than 95%, more than 90%, and more than 80%. Based on the number of results per database both before and after deduplication as recorded at the time of searching, we calculated the ratio between the total number of results and the number of results for each database and combination.
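The per-review calculation described above can be sketched with `itertools.combinations`. This is an illustrative reconstruction under stated assumptions, not the authors' Excel workbook: each review is a dictionary mapping a database to the set of included references it retrieved, recall is measured against the references retrieved by any database, and the two example reviews are invented.

```python
from itertools import combinations
from statistics import median


def recall_by_combination(review, databases):
    """Recall of every database combination for one review."""
    all_included = set().union(*review.values())
    result = {}
    for size in range(1, len(databases) + 1):
        for combo in combinations(databases, size):
            found = set().union(*(review.get(db, set()) for db in combo))
            result[combo] = len(found) / len(all_included)
    return result


# Hypothetical reviews; EM = Embase, ML = MEDLINE, GS = Google Scholar.
reviews = [
    {"EM": {"a", "b", "c"}, "ML": {"a", "b"}, "GS": {"d"}},
    {"EM": {"x"}, "ML": {"x", "y"}, "GS": {"x"}},
]
databases = ["EM", "ML", "GS"]

per_review = [recall_by_combination(r, databases) for r in reviews]
combo = ("EM", "ML")
recalls = [r[combo] for r in per_review]

share_100 = sum(v == 1.0 for v in recalls) / len(recalls)  # reviews with 100% recall
share_95 = sum(v > 0.95 for v in recalls) / len(recalls)   # reviews above 95% recall

print(recalls, median(recalls), min(recalls), share_100, share_95)
```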

Improvement of precision was calculated as the ratio between the original precision from the searches in all databases and the precision for each database and combination.

To compare our practice of database usage in systematic reviews against current practice as evidenced in the literature, we analyzed a set of 200 recent systematic reviews from PubMed. On 5 January 2017, we searched PubMed for articles with the phrase “systematic review” in the title. Starting with the most recent articles, we determined the databases searched either from the abstract or from the full text until we had data for 200 reviews. For the individual databases and combinations that were used in those reviews, we multiplied the frequency of occurrence in that set of 200 with the probability that the database or combination would lead to an acceptable recall (which we defined at 95%) that we had measured in our own data.

Our earlier research had resulted in 206 systematic reviews published between 2014 and July 2016, in which the first author was affiliated with Erasmus MC [ 21 ]. In 73 of these, the searches and results had been documented by the first author of this article at the time of the last search. Of those, 15 could not be included in this research, since they had not searched all databases we investigated here. Therefore, for this research, a total of 58 systematic reviews were analyzed. The references to these reviews can be found in Additional file 1 . An overview of the broad topical categories covered in these reviews is given in Table 2 . Many of the reviews were initiated by members of the departments of surgery and epidemiology. The reviews covered a wide variety of diseases, none of which was present in more than 12% of the reviews. The interventions were mostly from the chemicals and drugs category, or surgical procedures. Over a third of the reviews were therapeutic, while slightly under a quarter answered an etiological question. Most reviews did not limit to certain study designs, 9% limited to RCTs only, and another 9% limited to other study types.

Together, these reviews included a total of 1830 references. Of these, 84 references (4.6%) had not been retrieved by our database searches and were not included in our analysis, leaving in total 1746 references. In our analyses, we combined the results from MEDLINE in Ovid and PubMed (the subset as supplied by publisher) into one database labeled MEDLINE.

Unique references per database

A total of 292 (17%) references were found by only one database. Table 3 displays the number of unique results retrieved for each single database. Embase retrieved the most unique included references, followed by MEDLINE, Web of Science, and Google Scholar. Cochrane CENTRAL is absent from the table, as for the five reviews limited to randomized trials, it did not add any unique included references. Subject-specific databases such as CINAHL, PsycINFO, and SportDiscus only retrieved additional included references when the topic of the review was directly related to their special content, respectively nursing, psychiatry, and sports medicine.

Overall performance

The four databases that had retrieved the most unique references (Embase, MEDLINE, Web of Science, and Google Scholar) were investigated individually and in all possible combinations (see Table 4 ). Of the individual databases, Embase had the highest overall recall (85.9%). Of the combinations of two databases, Embase and MEDLINE had the best results (92.8%). Embase and MEDLINE combined with either Google Scholar or Web of Science scored similarly well on overall recall (95.9%). However, the combination with Google Scholar had a higher precision and higher median recall, a higher minimum recall, and a higher proportion of reviews that retrieved all included references. Using both Web of Science and Google Scholar in addition to MEDLINE and Embase increased the overall recall to 98.3%. The higher recall from adding extra databases came at a cost in number needed to read (NNR). Searching only Embase produced an NNR of 57 on average, whereas, for the optimal combination of four databases, the NNR was 73.

Probability of appropriate recall

We calculated the recall for individual databases and databases in all possible combinations for all reviews included in the research. Figure 1 shows the percentages of reviews where a certain database combination led to a certain recall. For example, in 48% of all systematic reviews, the combination of Embase and MEDLINE (with or without Cochrane CENTRAL; Cochrane CENTRAL did not add unique relevant references) reaches a recall of at least 95%. In 72% of studied systematic reviews, the combination of Embase, MEDLINE, Web of Science, and Google Scholar retrieved all included references. In the top bar, we present the results of the complete database searches relative to the total number of included references. This shows that many database searches missed relevant references.

Percentage of systematic reviews for which a certain database combination reached a certain recall. The x-axis represents the percentage of reviews for which a specific combination of databases, as shown on the y-axis, reached a certain recall (represented with bar colors). Abbreviations: EM Embase, ML MEDLINE, WoS Web of Science, GS Google Scholar. Asterisk indicates that the recall of all databases has been calculated over all included references. The recall of the database combinations was calculated over all included references retrieved by any database

Differences between domains of reviews

We analyzed whether the added value of Web of Science and Google Scholar was dependent on the domain of the review. For 55 reviews, we determined the domain. See Fig. 2 for the comparison of the recall of Embase, MEDLINE, and Cochrane CENTRAL per review for all identified domains. For all but one domain, the traditional combination of Embase, MEDLINE, and Cochrane CENTRAL did not retrieve enough included references. For four out of five systematic reviews that limited to randomized controlled trials (RCTs) only, the traditional combination retrieved 100% of all included references. However, for one review of this domain, the recall was 82%. Of the 11 references included in this review, one was found only in Google Scholar and one only in Web of Science.

Percentage of systematic reviews of a certain domain for which the combination Embase, MEDLINE and Cochrane CENTRAL reached a certain recall

Reduction in number of results

We calculated the ratio between the number of results found when searching all databases, including databases not included in our analyses, such as Scopus, PsycINFO, and CINAHL, and the number of results found searching a selection of databases. See Fig. 3 for the legend of the plots in Figs. 4 and 5 . Figure 4 shows the distribution of this value for individual reviews. The database combinations with the highest recall did not reduce the total number of results by large margins. Moreover, in combinations where the number of results was greatly reduced, the recall of included references was lower.

Legend of Figs. 4 and 5

The ratio between number of results per database combination and the total number of results for all databases

The ratio between precision per database combination and the total precision for all databases

Improvement of precision

To determine how searching multiple databases affected precision, we calculated for each combination the ratio between the original precision, observed when all databases were searched, and the precision calculated for different database combinations. Figure 5 shows the improvement of precision for 15 databases and database combinations. Because precision is defined as the number of relevant references divided by the number of total results, we see a strong correlation with the total number of results.

Status of current practice of database selection

From a set of 200 recent SRs identified via PubMed, we analyzed the databases that had been searched. Almost all reviews (97%) reported a search in MEDLINE. Other databases that we identified as essential for good recall were searched much less frequently; Embase was searched in 61% and Web of Science in 35%, and Google Scholar was only used in 10% of all reviews. For all individual databases or combinations of the four important databases from our research (MEDLINE, Embase, Web of Science, and Google Scholar), we multiplied the frequency of occurrence of that combination in the random set, with the probability we found in our research that this combination would lead to an acceptable recall of 95%. The calculation is shown in Table 5 . For example, around a third of the reviews (37%) relied on the combination of MEDLINE and Embase. Based on our findings, this combination achieves acceptable recall about half the time (47%). This implies that 17% of the reviews in the PubMed sample would have achieved an acceptable recall of 95%. The sum of all these values is the total probability of acceptable recall in the random sample. Based on these calculations, we estimate that the probability that this random set of reviews retrieved more than 95% of all possible included references was 40%. Using similar calculations, also shown in Table 5 , we estimated the probability that 100% of relevant references were retrieved is 23%.
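The estimate works like a weighted sum: each combination contributes its frequency of use multiplied by its probability of reaching acceptable recall. The sketch below reproduces only the one row quoted in the text (MEDLINE and Embase, used in 37% of reviews, reaching 95% recall 47% of the time); the remaining rows of Table 5 are not reproduced here, so the total printed is deliberately partial. The variable and function names are hypothetical.

```python
def contribution(frequency, probability):
    """Share of sampled reviews expected to reach acceptable recall via one combination."""
    return frequency * probability


# (frequency of use in the PubMed sample, probability of >= 95% recall).
# Only the row quoted in the text is filled in; other Table 5 rows would be added here.
rows = {
    ("MEDLINE", "Embase"): (0.37, 0.47),
}

contributions = {combo: contribution(freq, prob)
                 for combo, (freq, prob) in rows.items()}
partial_total = sum(contributions.values())

print(round(contributions[("MEDLINE", "Embase")], 2))
```

With every row of Table 5 entered, the sum of the contributions would give the overall probability that the authors report.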

Our study shows that, to reach maximum recall, searches in systematic reviews ought to include a combination of databases. To ensure adequate performance in searches (i.e., recall, precision, and number needed to read), we find that literature searches for a systematic review should, at minimum, be performed in the combination of the following four databases: Embase, MEDLINE (including Epub ahead of print), Web of Science Core Collection, and Google Scholar. Using that combination, 93% of the systematic reviews in our study obtained levels of recall that could be considered acceptable (> 95%). Unique results from specialized databases that closely match systematic review topics, such as PsycINFO for reviews in the fields of behavioral sciences and mental health or CINAHL for reviews on the topics of nursing or allied health, indicate that specialized databases should be used additionally when appropriate.

We find that Embase is critical for acceptable recall in a review and should always be searched for medically oriented systematic reviews. However, Embase is only accessible via a paid subscription, which generally makes it challenging for review teams not affiliated with academic medical centers to access. The highest scoring database combination without Embase is a combination of MEDLINE, Web of Science, and Google Scholar, but that reaches satisfactory recall for only 39% of all investigated systematic reviews, while still requiring a paid subscription to Web of Science. Of the five reviews that included only RCTs, four reached 100% recall if MEDLINE, Web of Science, and Google Scholar combined were complemented with Cochrane CENTRAL.

The Cochrane Handbook recommends searching MEDLINE, Cochrane CENTRAL, and Embase for systematic reviews of RCTs. For reviews in our study that included RCTs only, indeed, this recommendation was sufficient for four (80%) of the reviews. The one review where it was insufficient was about alternative medicine, specifically meditation and relaxation therapy, where one of the missed studies was published in the Indian Journal of Positive Psychology. The other study from the Journal of Advanced Nursing is indexed in MEDLINE and Embase but was only retrieved because of the addition of KeyWords Plus in Web of Science. We estimate more than 50% of reviews that include more study types than RCTs would miss more than 5% of included references if only the traditional combination of MEDLINE, Embase, and Cochrane CENTRAL is searched.

We are aware that the Cochrane Handbook [ 7 ] recommends more than only these databases, but further recommendations focus on regional and specialized databases. Though we occasionally used the regional databases LILACS and SciELO in our reviews, they did not provide unique references in our study. Subject-specific databases like PsycINFO only added unique references to a small percentage of systematic reviews when they had been used for the search. The third key database we identified in this research, Web of Science, is only mentioned as a citation index in the Cochrane Handbook, not as a bibliographic database. To our surprise, Cochrane CENTRAL did not identify any unique included studies that had not been retrieved by the other databases, not even for the five reviews focusing entirely on RCTs. If Erasmus MC authors had conducted more reviews that included only RCTs, Cochrane CENTRAL might have added more unique references.

MEDLINE did find unique references that had not been found in Embase, although our searches in Embase included all MEDLINE records. This is likely caused by differences in the thesaurus terms that were added, but further analysis would be required to determine reasons for not finding the MEDLINE records in Embase. Although Embase covers MEDLINE, it apparently does not index every article from MEDLINE. Thirty-seven references were found in MEDLINE (Ovid) but were not available in Embase.com. These are mostly unique PubMed references, which are not assigned MeSH terms, and are often freely available via PubMed Central.

Google Scholar adds relevant articles not found in the other databases, possibly because it indexes the full text of all articles. It therefore finds articles in which the topic of research is not mentioned in title, abstract, or thesaurus terms, but where the concepts are only discussed in the full text. Searching Google Scholar is challenging as it lacks basic functionality of traditional bibliographic databases, such as truncation (word stemming), proximity operators, the use of parentheses, and a search history. Additionally, search strategies are limited to a maximum of 256 characters, which means that creating a thorough search strategy can be laborious.

Whether Embase and Web of Science can be replaced by Scopus remains uncertain. We have not yet gathered enough data to be able to make a full comparison between Embase and Scopus. In 23 reviews included in this research, Scopus was searched. In 12 reviews (52%), Scopus retrieved 100% of all included references retrieved by Embase or Web of Science. In the other 48%, the recall by Scopus was suboptimal, on one occasion as low as 38%.

Unique references were found in 6% of the reviews in which we searched CINAHL and in 9% of the reviews in which we searched PsycINFO. For CINAHL and PsycINFO, in one case each, unique relevant references were found. In both these reviews, the topic was highly related to the topic of the database. Although we did not use these special topic databases in all of our reviews, given the low number of reviews where these databases added relevant references, and observing the special topics of those reviews, we suggest that these subject databases will only add value if the topic is related to the topic of the database.

Many articles written on this topic have calculated overall recall of several reviews, instead of the effects on all individual reviews. Researchers planning a systematic review generally perform one review, and they need to estimate the probability that they may miss relevant articles in their search. When looking at the overall recall, the combination of Embase and MEDLINE and either Google Scholar or Web of Science could be regarded sufficient with 96% recall. This number however is not an answer to the question of a researcher performing a systematic review, regarding which databases should be searched. A researcher wants to be able to estimate the chances that his or her current project will miss a relevant reference. However, when looking at individual reviews, the probability of missing more than 5% of included references found through database searching is 33% when Google Scholar is used together with Embase and MEDLINE and 30% for the Web of Science, Embase, and MEDLINE combination. What is considered acceptable recall for systematic review searches is open for debate and can differ between individuals and groups. Some reviewers might accept a potential loss of 5% of relevant references; others would want to pursue 100% recall, no matter what cost. Using the results in this research, review teams can decide, based on their idea of acceptable recall and the desired probability, which databases to include in their searches.

Strengths and limitations

We did not investigate whether the loss of certain references had resulted in changes to the conclusions of the reviews. Of course, the loss of a minor non-randomized included study that follows the systematic review's conclusions would not be as problematic as losing a major included randomized controlled trial with contradictory results. However, the wide range of scope, topic, and criteria between systematic reviews and their related review types makes it very hard to answer this question.

We found that two databases previously not recommended as essential for systematic review searching, Web of Science and Google Scholar, were key to improving recall in the reviews we investigated. Because this is a novel finding, we cannot conclude whether it is due to our dataset or to a generalizable principle. It is likely that topical differences in systematic reviews may impact whether databases such as Web of Science and Google Scholar add value to the review. One explanation for our finding may be that if the research question is very specific, the topic of research might not always be mentioned in the title and/or abstract. In that case, Google Scholar might add value by searching the full text of articles. If the research question is more interdisciplinary, a broader science database such as Web of Science is likely to add value. The topics of the reviews studied here may simply have fallen into those categories, though the diversity of the included reviews may point to a more universal applicability.

Although we searched PubMed "as supplied by publisher" separately from MEDLINE in Ovid, we combined the included references of these databases into one measurement in our analysis. Until 2016, the most complete MEDLINE selection in Ovid still lacked the electronic publications that were already available in PubMed. These could be retrieved by searching PubMed with the subset "as supplied by publisher". Since the introduction of the more complete MEDLINE collection "Epub Ahead of Print, In-Process & Other Non-Indexed Citations, and Ovid MEDLINE®", the need to separately search PubMed "as supplied by publisher" has disappeared. According to our data, PubMed's "as supplied by publisher" subset retrieved 12 unique included references, and it was the most important addition in terms of relevant references to the four major databases. It is therefore important to search MEDLINE including the "Epub Ahead of Print, In-Process, and Other Non-Indexed Citations" references.

These results may not be generalizable to other studies for other reasons. The skills and experience of the searcher are among the most important factors in the effectiveness of systematic review search strategies [23, 24, 25]. The searcher in the case of all 58 systematic reviews is an experienced biomedical information specialist. Though we suspect that searchers who are not information specialists or librarians would be more likely to construct weaker searches with lower recall, even highly trained searchers differ in their approaches to searching. For this study, we searched to achieve as high a recall as possible, though our search strategies, like any other search strategy, still missed some relevant references because relevant terms had not been used in the search. We are not implying that a combined search of the four recommended databases will never result in relevant references being missed, but rather that failure to search any one of these four databases will likely lead to relevant references being missed. Our experience in this study shows that additional efforts, such as hand searching, reference checking, and contacting key players, should be made to retrieve extra possible includes.

Based on our calculations made by looking at random systematic reviews in PubMed, we estimate that 60% of these reviews are likely to have missed more than 5% of relevant references solely because of the combinations of databases that were used. That is with the generous assumption that the searches in those databases had been designed sensitively enough. Even when taking into account that many searchers consider Scopus a replacement for Embase, and that Scopus and Web of Science overlap to a large extent, this estimate remains similar. Also, while the Scopus and Web of Science assumptions we made might be true for coverage, they are likely very different when looking at recall, as Scopus does not allow the use of the full features of a thesaurus. We see that reviewers rarely use Web of Science and especially Google Scholar in their searches, though these databases retrieved a great many unique references in our reviews. Systematic review searchers should consider using these databases if they are available to them, and if their institution lacks access, they should ask other institutes to cooperate on their systematic review searches.

The major strength of our paper is that it is the first large-scale study we know of to assess database performance for systematic reviews using prospectively collected data. Prior research on database importance for systematic reviews has looked primarily at whether included references could have theoretically been found in a certain database, but most have been unable to ascertain whether the researchers actually found the articles in those databases [10, 12, 16, 17, 26]. Whether a reference is available in a database is important, but whether the article can be found in a precise search with reasonable recall is not only impacted by the database's coverage. Our experience has shown us that it is also impacted by the ability of the searcher, the accuracy of indexing of the database, and the complexity of terminology in a particular field. Because these studies based on retrospective analysis of database coverage do not account for the searchers' abilities, the actual findings from the searches performed, and the indexing for particular articles, their conclusions lack immediate translatability into practice. This research goes beyond retrospectively assessed coverage to investigate real search performance in databases. Many of the articles reporting on previous research concluded that one database was able to retrieve most included references. Halladay et al. [10] and van Enst et al. [16] concluded that databases other than MEDLINE/PubMed did not change the outcomes of the review, while Rice et al. [17] found the added value of other databases only for newer, non-indexed references. In addition, Michaleff et al. [26] found that Cochrane CENTRAL included 95% of all RCTs included in the reviews investigated. Our conclusion that Web of Science and Google Scholar are needed for completeness has not been shared by previous research. Most of the previous studies did not include these two databases in their research.
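The distinction between coverage (a reference is indexed in a database) and recall (a real search in that database actually retrieved it) can be made concrete with a small Python sketch. All identifiers and numbers are invented for illustration, and the helper names `coverage` and `search_recall` are hypothetical.

```python
from typing import Set


def coverage(indexed: Set[str], included: Set[str]) -> float:
    """Share of a review's included references that exist in the database."""
    return len(indexed & included) / len(included)


def search_recall(retrieved: Set[str], included: Set[str]) -> float:
    """Share of a review's included references that the search retrieved."""
    return len(retrieved & included) / len(included)


# Hypothetical review with 10 included references.
included = {f"ref{i}" for i in range(10)}

# Assumption: the database indexes 9 of them, plus many unrelated records.
indexed = {f"ref{i}" for i in range(9)} | {f"other{i}" for i in range(1000)}

# Assumption: the search strategy, limited by its terms and by the indexing,
# retrieves only 6 of the included references, plus some irrelevant records.
retrieved = {f"ref{i}" for i in range(6)} | {f"other{i}" for i in range(200)}

print(f"coverage: {coverage(indexed, included):.0%}")
print(f"recall:   {search_recall(retrieved, included):.0%}")
```

A retrospective coverage check would report the first number, whereas a prospective study of actual searches measures the second, which can be considerably lower.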

We recommend that, regardless of their topic, searches for biomedical systematic reviews should combine Embase, MEDLINE (including electronic publications ahead of print), Web of Science (Core Collection), and Google Scholar (the first 200 relevant references) at minimum. Special topics databases such as CINAHL and PsycINFO should be added if the topic of the review directly touches the primary focus of a specialized subject database, like CINAHL for focus on nursing and allied health or PsycINFO for behavioral sciences and mental health. For reviews where RCTs are the desired study design, Cochrane CENTRAL may be similarly useful. Ignoring one or more of the databases that we identified as the four key databases will result in more precise searches with a lower number of results, but the researchers should decide whether that is worth the increased probability of losing relevant references. This study also highlights once more that searching databases alone is, nevertheless, not enough to retrieve all relevant references.

Future research should continue to investigate recall of actual searches beyond coverage of databases and should consider focusing on optimal database combinations rather than on single databases.

Levay P, Raynor M, Tuvey D. The contributions of MEDLINE, other bibliographic databases and various search techniques to NICE public health guidance. Evid Based Libr Inf Pract. 2015;10:50–68.

Stevinson C, Lawlor DA. Searching multiple databases for systematic reviews: added value or diminishing returns? Complement Ther Med. 2004;12:228–32.

Lawrence DW. What is lost when searching only one literature database for articles relevant to injury prevention and safety promotion? Inj Prev. 2008;14:401–4.

Lemeshow AR, Blum RE, Berlin JA, Stoto MA, Colditz GA. Searching one or two databases was insufficient for meta-analysis of observational studies. J Clin Epidemiol. 2005;58:867–73.

Zheng MH, Zhang X, Ye Q, Chen YP. Searching additional databases except PubMed are necessary for a systematic review. Stroke. 2008;39:e139. author reply e140

Beyer FR, Wright K. Can we prioritise which databases to search? A case study using a systematic review of frozen shoulder management. Health Inf Libr J. 2013;30:49–58.

Higgins JPT, Green S. Cochrane handbook for systematic reviews of interventions: The Cochrane Collaboration, London, United Kingdom. 2011.

Wright K, Golder S, Lewis-Light K. What value is the CINAHL database when searching for systematic reviews of qualitative studies? Syst Rev. 2015;4:104.

Wilkins T, Gillies RA, Davies K. EMBASE versus MEDLINE for family medicine searches: can MEDLINE searches find the forest or a tree? Can Fam Physician. 2005;51:848–9.

Halladay CW, Trikalinos TA, Schmid IT, Schmid CH, Dahabreh IJ. Using data sources beyond PubMed has a modest impact on the results of systematic reviews of therapeutic interventions. J Clin Epidemiol. 2015;68:1076–84.

Ahmadi M, Ershad-Sarabi R, Jamshidiorak R, Bahaodini K. Comparison of bibliographic databases in retrieving information on telemedicine. J Kerman Univ Med Sci. 2014;21:343–54.

Lorenzetti DL, Topfer L-A, Dennett L, Clement F. Value of databases other than MEDLINE for rapid health technology assessments. Int J Technol Assess Health Care. 2014;30:173–8.

Beckles Z, Glover S, Ashe J, Stockton S, Boynton J, Lai R, Alderson P. Searching CINAHL did not add value to clinical questions posed in NICE guidelines. J Clin Epidemiol. 2013;66:1051–7.

Hartling L, Featherstone R, Nuspl M, Shave K, Dryden DM, Vandermeer B. The contribution of databases to the results of systematic reviews: a cross-sectional study. BMC Med Res Methodol. 2016;16:1–13.

Aagaard T, Lund H, Juhl C. Optimizing literature search in systematic reviews—are MEDLINE, EMBASE and CENTRAL enough for identifying effect studies within the area of musculoskeletal disorders? BMC Med Res Methodol. 2016;16:161.

van Enst WA, Scholten RJ, Whiting P, Zwinderman AH, Hooft L. Meta-epidemiologic analysis indicates that MEDLINE searches are sufficient for diagnostic test accuracy systematic reviews. J Clin Epidemiol. 2014;67:1192–9.

Rice DB, Kloda LA, Levis B, Qi B, Kingsland E, Thombs BD. Are MEDLINE searches sufficient for systematic reviews and meta-analyses of the diagnostic accuracy of depression screening tools? A review of meta-analyses. J Psychosom Res. 2016;87:7–13.

Bramer WM, Giustini D, Kramer BM, Anderson PF. The comparative recall of Google Scholar versus PubMed in identical searches for biomedical systematic reviews: a review of searches used in systematic reviews. Syst Rev. 2013;2:115.

Bramer WM, Giustini D, Kramer BMR. Comparing the coverage, recall, and precision of searches for 120 systematic reviews in Embase, MEDLINE, and Google Scholar: a prospective study. Syst Rev. 2016;5:39.

Ross-White A, Godfrey C. Is there an optimum number needed to retrieve to justify inclusion of a database in a systematic review search? Health Inf Libr J. 2017;33:217–24.

Bramer WM, Rethlefsen ML, Mast F, Kleijnen J. A pragmatic evaluation of a new method for librarian-mediated literature searches for systematic reviews. Res Synth Methods. 2017. doi: 10.1002/jrsm.1279 .

Bramer WM, de Jonge GB, Rethlefsen ML, Mast F, Kleijnen J. A systematic approach to searching: how to perform high quality literature searches more efficiently. J Med Libr Assoc. 2018.