Dissertations / Theses on the topic 'Computer virtualization'

Consult the top 50 dissertations / theses for your research on the topic 'Computer virtualization.'

Southern, Gabriel. "Symmetric multiprocessing virtualization." Fairfax, VA : George Mason University, 2008. http://hdl.handle.net/1920/3225.

Pelletingeas, Christophe. "Performance evaluation of virtualization with cloud computing." Thesis, Edinburgh Napier University, 2010. http://researchrepository.napier.ac.uk/Output/4010.

Koppe, Jason. "Differential virtualization for large-scale system modeling." Online version of thesis, 2008. http://hdl.handle.net/1850/7543.

Pham, Duy M. "Performance comparison between x86 virtualization technologies." Thesis, California State University, Long Beach, 2014. http://pqdtopen.proquest.com/#viewpdf?dispub=1528024.

In computing, virtualization provides the capability to service users with different resource requirements and operating system platform needs on a single host computer system. The potential benefits of virtualization include efficient resource utilization, flexible service offering, as well as scalable system planning and expansion, all desirable whether it is for enterprise level data centers, personal computing, or anything in between. These benefits, however, involve certain costs of performance degradation. This thesis compares the performance costs between two of the most popular and widely-used x86 CPU-based virtualization technologies today in personal computing. The results should be useful for users when determining which virtualization technology to adopt for their particular computing needs.

Jensen, Deron Eugene. "System-wide Performance Analysis for Virtualization." PDXScholar, 2014. https://pdxscholar.library.pdx.edu/open_access_etds/1789.

Narayanan, Sivaramakrishnan. "Efficient Virtualization of Scientific Data." The Ohio State University, 2008. http://rave.ohiolink.edu/etdc/view?acc_num=osu1221079391.

Johansson, Marcus, and Lukas Olsson. "Comparative evaluation of virtualization technologies in the cloud." Thesis, Mälardalens högskola, Akademin för innovation, design och teknik, 2017. http://urn.kb.se/resolve?urn=urn:nbn:se:mdh:diva-49242.

Athreya, Manoj B. "Subverting Linux on-the-fly using hardware virtualization technology." Thesis, Georgia Institute of Technology, 2010. http://hdl.handle.net/1853/34844.

Chen, Wei. "Light-Weight Virtualization Driven Runtimes for Big Data Applications." Thesis, University of Colorado Colorado Springs, 2019. http://pqdtopen.proquest.com/#viewpdf?dispub=13862451.

Datacenters are evolving to host heterogeneous Big Data workloads on shared clusters to reduce operational cost and achieve higher resource utilization. However, it is challenging to schedule heterogeneous workloads with diverse resource requirements and QoS constraints. For example, when latency-critical jobs and best-effort batch jobs are consolidated in the same cluster, latency-critical jobs may suffer long queuing delays if their resource requests cannot be met immediately, while best-effort jobs suffer killing overhead when preempted. Moreover, resource contention may harm the performance of tasks running on worker nodes. Because the resource requirements of diverse applications are heterogeneous and not known before task execution, either the cluster manager has to over-provision resources for all incoming applications, resulting in low cluster utilization, or applications may experience performance slowdown or even failure due to insufficient resources. Existing approaches focus on either application awareness or system awareness and fail to address the semantic gap between the application layer and the system layer (e.g., OS scheduling mechanisms or cloud resource allocators).

To address these issues, we propose to attack these problems from a different angle: applications and underlying systems should cooperate synergistically. In this way, the resource demands of applications can be exposed to the system. At the same time, application schedulers can be given more runtime information from the system layer and perform more dedicated scheduling. However, co-designing the system and the application is challenging. First, the real resource demands of an application are hard to predict because its requirements vary during its lifetime. Second, the system layers (e.g., OS process schedulers or hardware counters) generate large amounts of information, and it is hard to associate this information with a specific task. Fortunately, with the help of lightweight virtualization, applications can run in isolated containers so that system-level runtime information can be collected at the container level. The rich APIs of container-based virtualization also enable more advanced scheduling.

In this thesis, we focus on efficient and scalable techniques in datacenter scheduling by leveraging lightweight virtualization. Our thesis is twofold. First, we focus on profiling and optimizing the performance of Big Data applications. To this end, we built a tool to trace the scheduling delay for low-latency online data analytics workloads. We further built a map execution engine to address the performance heterogeneity for MapReduce. Second, we focus on leveraging OS containers to build advanced cluster scheduling mechanisms. Here, we built a preemptive cluster scheduler, an elastic memory manager and an OOM killer for Big Data applications. We also conducted supplementary research on tracing the performance of Big Data training on TensorFlow.

We conducted extensive evaluations of the proposed projects in a real-world cluster. The experimental results demonstrate the effectiveness of proposed approaches in terms of improving performance and utilization of Big Data clusters.

WENG, LI. "Automatic and efficient data virtualization system for scientific datasets." The Ohio State University, 2006. http://rave.ohiolink.edu/etdc/view?acc_num=osu1154717945.

Le, Duy. "Understanding and Leveraging Virtualization Technology in Commodity Computing Systems." W&M ScholarWorks, 2012. https://scholarworks.wm.edu/etd/1539623603.

Semnanian, Amir Ali. "A study on virtualization technology and its impact on computer hardware." Thesis, California State University, Long Beach, 2013. http://pqdtopen.proquest.com/#viewpdf?dispub=1523065.

Underutilization of hardware is one of the challenges that large organizations have been trying to overcome. Most of today's computer hardware is designed and architected for hosting a single operating system and application. Virtualization is the primary solution for this problem. Virtualization is the capability of a system to host multiple virtual computers while running on a single hardware platform. This has both advantages and disadvantages. This thesis concentrates on introducing virtualization technology and comparing different techniques through which virtualization is achieved. It examines how computer hardware can be virtualized and the impact virtualization has on different parts of the system. The study evaluates the changes necessary to hardware architectures when virtualization is used. It analyzes the benefits this technology brings to the computer industry and the disadvantages that accompany it. Finally, the future of virtualization technology and how it can affect the infrastructure of an organization are evaluated.

Isenstierna, Tobias, and Stefan Popovic. "Computer systems in airborne radar : Virtualization and load balancing of nodes." Thesis, Blekinge Tekniska Högskola, Institutionen för datavetenskap, 2019. http://urn.kb.se/resolve?urn=urn:nbn:se:bth-18300.

Wei, Junyi. "QoS-aware joint power and subchannel allocation algorithms for wireless network virtualization." Thesis, University of Essex, 2017. http://repository.essex.ac.uk/20142/.

Saleh, Mehdi. "Virtualization and self-organization for utility computing." Master's thesis, University of Central Florida, 2011. http://digital.library.ucf.edu/cdm/ref/collection/ETD/id/5026.

Köhler, Fredrik. "Network Virtualization in Multi-hop Heterogeneous Architecture." Thesis, Mälardalens högskola, Akademin för innovation, design och teknik, 2018. http://urn.kb.se/resolve?urn=urn:nbn:se:mdh:diva-38696.

McAdams, Sean. "Virtualization Components of the Modern Hypervisor." UNF Digital Commons, 2015. http://digitalcommons.unf.edu/etd/599.

Ghodke, Ninad Hari. "Virtualization techniques to enable transparent access to peripheral devices across networks." [Gainesville, Fla.] : University of Florida, 2004. http://purl.fcla.edu/fcla/etd/UFE0005684.

Raj, Himanshu. "Virtualization services: scalable methods for virtualizing multicore systems." Diss., Atlanta, Ga. : Georgia Institute of Technology, 2008. http://hdl.handle.net/1853/22677.

Coogan, Kevin Patrick. "Deobfuscation of Packed and Virtualization-Obfuscation Protected Binaries." Diss., The University of Arizona, 2011. http://hdl.handle.net/10150/202716.

Oljira, Dejene Boru. "Telecom Networks Virtualization : Overcoming the Latency Challenge." Licentiate thesis, Karlstads universitet, Institutionen för matematik och datavetenskap (from 2013), 2018. http://urn.kb.se/resolve?urn=urn:nbn:se:kau:diva-67243.

Oliveira, Diogo. "Multi-Objective Resource Provisioning in Network Function Virtualization Infrastructures." Scholar Commons, 2018. http://scholarcommons.usf.edu/etd/7206.

Paladi, Nicolae. "Trusted Computing and Secure Virtualization in Cloud Computing." Thesis, Security Lab, 2012. http://urn.kb.se/resolve?urn=urn:nbn:se:ri:diva-24035.

Zahedi, Saed. "Virtualization Security Threat Forensic and Environment Safeguarding." Thesis, Linnéuniversitetet, Institutionen för datavetenskap (DV), 2014. http://urn.kb.se/resolve?urn=urn:nbn:se:lnu:diva-32144.

Nimgaonkar, Satyajeet. "Secure and Energy Efficient Execution Frameworks Using Virtualization and Light-weight Cryptographic Components." Thesis, University of North Texas, 2014. https://digital.library.unt.edu/ark:/67531/metadc699986/.

Chen, Yu-hsin. "Dynamic binary translation from x86-32 code to x86-64 code for virtualization." Thesis, Massachusetts Institute of Technology, 2009. http://hdl.handle.net/1721.1/53095.

Oprescu, Mihaela Iuniana. "Virtualization and distribution of the BGP control plane." Phd thesis, Institut National Polytechnique de Toulouse - INPT, 2012. http://tel.archives-ouvertes.fr/tel-00785007.

Nemati, Hamed. "Secure System Virtualization : End-to-End Verification of Memory Isolation." Doctoral thesis, KTH, Teoretisk datalogi, TCS, 2017. http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-213030.

Young, Bobby Dalton. "MPI WITHIN A GPU." UKnowledge, 2009. http://uknowledge.uky.edu/gradschool_theses/614.

Anwer, Muhammad Bilal. "Enhancing capabilities of the network data plane using network virtualization and software defined networking." Diss., Georgia Institute of Technology, 2015. http://hdl.handle.net/1853/54422.

Svärd, Petter. "Live VM Migration : Principles and Performance." Licentiate thesis, Umeå universitet, Institutionen för datavetenskap, 2012. http://urn.kb.se/resolve?urn=urn:nbn:se:umu:diva-87246.

Yousif, Wael K. "EXAMINING ENGINEERING & TECHNOLOGY STUDENTS ACCEPTANCE OF NETWORK VIRTUALIZATION TECHNOLOGY USING THE TECHNOLOGY ACCEPTANCE MODE." Doctoral diss., University of Central Florida, 2010. http://digital.library.ucf.edu/cdm/ref/collection/ETD/id/3039.

Färlind, Filip, and Kim Ottosson. "Servervirtualisering idag : En undersökning om servervirtualisering hos offentliga verksamheter i Sverige." Thesis, Linnéuniversitetet, Institutionen för datavetenskap (DV), 2014. http://urn.kb.se/resolve?urn=urn:nbn:se:lnu:diva-37032.

Vellanki, Mohit. "Performance Evaluation of Cassandra in a Virtualized Environment." Thesis, Blekinge Tekniska Högskola, Institutionen för datalogi och datorsystemteknik, 2017. http://urn.kb.se/resolve?urn=urn:nbn:se:bth-14032.

Wilcox, David Luke. "Packing Virtual Machines onto Servers." BYU ScholarsArchive, 2010. https://scholarsarchive.byu.edu/etd/2798.

Kotikela, Srujan D. "Secure and Trusted Execution Framework for Virtualized Workloads." Thesis, University of North Texas, 2018. https://digital.library.unt.edu/ark:/67531/metadc1248514/.

Pham, Khoi Minh. "NEURAL NETWORK ON VIRTUALIZATION SYSTEM, AS A WAY TO MANAGE FAILURE EVENTS OCCURRENCE ON CLOUD COMPUTING." CSUSB ScholarWorks, 2018. https://scholarworks.lib.csusb.edu/etd/670.

Su, Yu. "Big Data Management Framework based on Virtualization and Bitmap Data Summarization." The Ohio State University, 2015. http://rave.ohiolink.edu/etdc/view?acc_num=osu1420738636.

Klemperer, Peter Friedrich. "Efficient Hypervisor Based Malware Detection." Research Showcase @ CMU, 2014. http://repository.cmu.edu/dissertations/466.

Al, burhan Mohammad. "Differences between Dockerized Containers and Virtual Machines : A performance analysis for hosting web-applications in a virtualized environment." Thesis, Blekinge Tekniska Högskola, Institutionen för programvaruteknik, 2020. http://urn.kb.se/resolve?urn=urn:nbn:se:bth-19816.

Kieu, Le Truong Van. "Container Based Virtualization Techniques on Lightweight Internet of Things Devices : Evaluating Docker container effectiveness on Raspberry Pi computers." Thesis, Mittuniversitetet, Institutionen för informationssystem och –teknologi, 2021. http://urn.kb.se/resolve?urn=urn:nbn:se:miun:diva-42745.

Lee, Min. "Memory region: a system abstraction for managing the complex memory structures of multicore platforms." Diss., Georgia Institute of Technology, 2013. http://hdl.handle.net/1853/50398.

Indukuri, Pavan Sutha Varma. "Performance comparison of Linux containers(LXC) and OpenVZ during live migration : An experiment." Thesis, Blekinge Tekniska Högskola, Institutionen för datalogi och datorsystemteknik, 2016. http://urn.kb.se/resolve?urn=urn:nbn:se:bth-13540.

Hudzina, John Stephen. "An Enhanced MapReduce Workload Allocation Tool for Spot Market Resources." NSUWorks, 2015. http://nsuworks.nova.edu/gscis_etd/34.

Arvidsson, Jonas. "Utvärdering av containerbaserad virtualisering för telekomsignalering." Thesis, Karlstads universitet, Institutionen för matematik och datavetenskap (from 2013), 2018. http://urn.kb.se/resolve?urn=urn:nbn:se:kau:diva-67721.

Craig, Kyle. "Exploration and Integration of File Systems in LlamaOS." University of Cincinnati / OhioLINK, 2014. http://rave.ohiolink.edu/etdc/view?acc_num=ucin1418910310.

Eriksson, Magnus, and Staffan Palmroos. "Comparative Study of Containment Strategies in Solaris and Security Enhanced Linux." Thesis, Linköping University, Department of Computer and Information Science, 2007. http://urn.kb.se/resolve?urn=urn:nbn:se:liu:diva-9078.

To minimize the damage in the event of a security breach, it is desirable to limit the privileges of remotely available services to the bare minimum and to isolate the individual services from the rest of the operating system. To achieve this, there are a number of different containment strategies and process privilege security models that may be used. Two of these mechanisms are Solaris Containers (a.k.a. Solaris Zones) and Type Enforcement, as implemented in the Fedora distribution of Security Enhanced Linux (SELinux). This thesis compares how these technologies can be used to isolate a single service in the operating system.

As these two technologies differ significantly we have examined how the isolation effect can be achieved in two separate experiments. In the Solaris experiments we show how the footprint of the installed zone can be reduced and how to minimize the runtime overhead associated with the zone. To demonstrate SELinux we create a deliberately flawed network daemon and show how this can be isolated by writing a SELinux policy.

We demonstrate how both technologies can be used to achieve isolation for a single service. Differences between the two technologies become apparent when trying to run multiple instances of the same service where the SELinux implementation suffers from lack of namespace isolation. When using zones the administration work is the same regardless of the services running in the zone whereas SELinux requires a separate policy for each service. If a policy is not available from the operating system vendor the administrator needs to be familiar with the SELinux policy framework and create the policy from scratch. The overhead of the technologies is small and is not a critical factor for the scalability of a system using them.

Modig, Dennis. "Assessing performance and security in virtualized home residential gateways." Thesis, Högskolan i Skövde, Institutionen för informationsteknologi, 2014. http://urn.kb.se/resolve?urn=urn:nbn:se:his:diva-9966.

Struhar, Vaclav. "Improving Soft Real-time Performance of Fog Computing." Licentiate thesis, Mälardalens högskola, Inbyggda system, 2021. http://urn.kb.se/resolve?urn=urn:nbn:se:mdh:diva-55679.

Svantesson, Björn. "Software Defined Networking : Virtual Router Performance." Thesis, Högskolan i Skövde, Institutionen för informationsteknologi, 2016. http://urn.kb.se/resolve?urn=urn:nbn:se:his:diva-13417.

Completed Bachelor and Master Theses

Here, we show an excerpt of bachelor and master theses that have been completed since 2017.

Master Thesis: Be the teacher! - Viewport Sharing in Collaborative Virtual Environments [in progress]

Have you ever tried to show somebody a star in the night sky by pointing towards it? Conveying viewport relative information to the people surrounding us is always challenging, which often leads to difficulties and misunderstandings when trying to teach content to others. This issue also occurs in collaborative virtual environments, especially when used in an educational setting. However, virtual reality allows us to manipulate the otherwise constrained space to perceive the viewports of collaborators in a more direct manner. The goal of this thesis is to develop, analyze, implement and evaluate techniques on how students in a collaborative virtual environment can better perceive the instructor’s viewport. Considerations such as cybersickness, personal space, and performance need to be weighed and compared. The thesis should be implemented in Unity (Unreal can be discussed), and you should be interested in working with networked collaborative environments. Further details will be discussed in a meeting, but experience with Unity or more general Netcode would be very helpful.

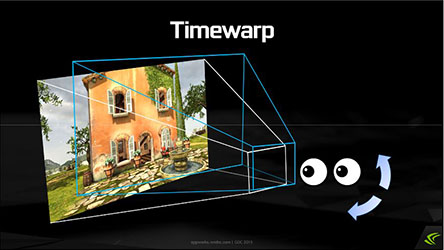

Master Thesis: Impact of Framerate in Virtual Reality [in progress]

When talking about rendering in virtual reality, high framerates and low latency are said to be crucial. While there is a lot of research regarding the impact of latency on the user, this master thesis aims to focus on the impact of the framerate. The goal of the thesis is to design and evaluate a VR application that measures the influence of the framerate on the user. The solution should be evaluated in an expert study. Further details will be discussed in a meeting. Contact: Marcel Krüger, M.Sc., Simon Oehrl, M.Sc.

Bachelor Thesis: Interaction with Biological Neural Networks in the Context of Brain Simulation [in progress]

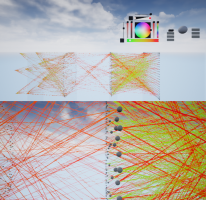

The ability to explore and analyze data generated by brain simulations can give various new insights about the inner workings of neural networks. One of the biggest challenges is to find interaction techniques and user interfaces that allow scientists to easily explore these types of data. Immersive technology can aid in this task and support the user in finding relevant information in large-scale neural networks simulations. The goal of this thesis is to explore techniques to view and interact with biological neural networks from a brain simulation in Unreal Engine 4. The solution should provide easy-to-use and intuitive abilities to explore the network and access neuron-specific properties/data. A strong background in C++ is needed, experience with UE4 would be very helpful. Since this thesis focuses on the visualization of such networks, an interest in immersive visualization is needed, knowledge about neural networks or brain simulation is not necessary. Contact: Marcel Krüger, M.Sc.

Bachelor/Master Thesis: Exploring Immersive Visualization of Artificial Neural Networks with the ANNtoNIA Framework [in progress]

Bachelor Thesis: Group Navigation with Virtual Agents [in progress]

Bachelor Thesis: Walking and Talking Side-by-Side with a Virtual Agent [in progress]

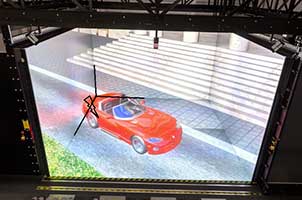

- Common head-mounted displays (HMDs) only provide a small field of view, thus limiting the peripheral view of the user. To this end, seeing an agent in a side-by-side alignment is either hampered or not possible at all without constantly turning one’s head. For room-mounted displays such as CAVEs with at least three projection screens, the alignment itself is possible.

- Which interaction partner aligns with the other? Influencing aspects here are, e.g., is the goal of the joint locomotion known by both walkers, or just by one?

- For the fine-grained alignment, the agent’s animation or the user’s navigation strategy needs to allow many nuances, trajectory- and speed-wise.

Master Thesis: Exploring a Virtual City with an Accompanying Guide [in progress]

Master Thesis: Unaided Scene Exploration while being Guided by Pedestrians-as-Cues [in progress]

Bachelor Thesis: Teacher Training System to Experience how own Behavior Influences Student Behavior [in progress]

Bachelor/Master Thesis: Immersive Node Link Visualization of Invertible Neural Networks

Neural networks have the ability to approximate arbitrary functions. For example, neural networks can model manufacturing processes, i.e., given the machine parameters, a neural network can predict the properties of the resulting work piece. In practice, however, we are more interested in the inverse problem, i.e., given the desired work piece properties, generate the optimal machine parameters. Invertible neural networks (INNs) have been shown to be well suited to address this challenge. However, like almost all kinds of neural networks, they are opaque models. This means that humans cannot easily interpret the inner workings of INNs. To gain insights into the underlying process and the reasons for the model’s decisions, an immersive visualization should be developed in this thesis. The visualization should make use of the ANNtoNIA framework (developed at VCI), which is based on Python and Unreal Engine 4. Requirements are a basic understanding of Machine Learning and Neural Networks as well as good Python programming skills. Understanding of C++ and Unreal Engine 4 is a bonus but not necessary. Contact: Martin Bellgardt, M.Sc.

Master Thesis: Automatic Gazing Behavior for Virtual Agents Based on the Visible Scene Content [in progress]

Master Thesis: Active Bezel Correction to Reduce the Transparency Illusion of Visible Bezels Behind Opaque Virtual Objects

Bachelor/Master Thesis: Augmented Reality for Process Documentation in Textile Engineering [in progress]

Bachelor Thesis: Fast Body Avatar Calibration Based on Limited Sensor Input

Bachelor Thesis: Benchmarking Interactive Crowd Simulations for Virtual Environments in HMD and CAVE Settings

Bachelor Thesis: Investigating the effect of incorrect lighting on the user

Master Thesis: Frame extrapolation to enhance rendering framerate

Bachelor Thesis: The grid processing library

Scalar, vector, tensor and higher-order fields are commonly used to represent scientific data in various disciplines including geology, physics and medicine. Although standards for storage of such data exist (e.g. HDF5), almost every application has its custom in-memory format. The core idea of this engineering-oriented work is to develop a library that standardizes the in-memory representation of such fields and provides functionality for (parallel) per-cell and per-region operations on them (e.g. computation of gradients/Jacobian/Hessian). Contact: Ali Can Demiralp, M.Sc.
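As a rough illustration of what a per-cell operation on such a field could look like, the following sketch computes a finite-difference gradient on a regular 2D scalar grid. The function name `gradient_2d`, the nested-list layout, and the uniform `spacing` parameter are assumptions made for this example; they do not describe the API of the library proposed above.

```python
def gradient_2d(field, spacing=1.0):
    """Per-cell gradient of a regular 2D scalar field (hypothetical helper).

    field is a list of rows; every axis needs at least two samples.
    Returns a grid of (d/d_row, d/d_col) tuples.
    """
    rows, cols = len(field), len(field[0])

    def diff(sample, i, n):
        # Central difference in the interior, one-sided at the boundary.
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        return (sample(hi) - sample(lo)) / ((hi - lo) * spacing)

    grad = []
    for r in range(rows):
        grad_row = []
        for c in range(cols):
            d_row = diff(lambda k: field[k][c], r, rows)
            d_col = diff(lambda k: field[r][k], c, cols)
            grad_row.append((d_row, d_col))
        grad.append(grad_row)
    return grad


# Usage: a linear field f(r, c) = 2r + 3c has a constant gradient.
field = [[2.0 * r + 3.0 * c for c in range(4)] for r in range(3)]
print(gradient_2d(field)[1][2])
```

Each output cell depends only on its immediate neighbours, which is why operations of this kind lend themselves to the parallel per-cell execution mentioned above.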

Bachelor Thesis: Scalar and vector field compression for GPUs based on ASTC texture compression [in progress]

Scalar and vector fields are N-dimensional, potentially non-regular, grids commonly used to store scientific data. Adaptive Scalable Texture Compression (ASTC) is a lossy block-based texture compression method, which covers the features of all texture compression approaches to date and more. The limited memory space of GPUs poses a challenge to interactive compute and visualization on large datasets. The core idea of this work is to explore the potential uses of ASTC for compression of large 2D/3D scalar and vector fields, attempting to minimize and bound the errors introduced by lossiness. Contact: Ali Can Demiralp, M.Sc.

Master Thesis: The multi-device ray tracing library

There are various solutions for ray tracing on CPUs and GPUs today: Intel Embree for shared parallelism on the CPU, Intel OSPRay for distributed parallelism on the CPU, NVIDIA OptiX for shared and distributed parallelism on the GPU. Each of these libraries has its pros and cons. Intel OSPRay scales to distributed settings for large data visualization, but is bound by the performance of the CPU, which is subpar to the GPU for the embarrassingly parallel problem of ray tracing. NVIDIA OptiX provides a powerful programmable pipeline similar to OpenGL but is bound by the memory limitations of the GPU. The core idea of this engineering-oriented work is to develop a library (a) enabling development of ray tracing algorithms without explicit knowledge of the device the algorithm will run on, (b) bringing the ease of use of Intel OSPRay and the functional programming concepts of NVIDIA OptiX together. Contact: Ali Can Demiralp, M.Sc.

Master Thesis: Numerical relativity library

Numerical relativity is one of the branches of general relativity that uses numerical methods to analyze problems. The primary goal of numerical relativity is to study spacetimes whose exact form is not known. Within this context the geodesic equation generalizes the notion of a straight line to curved spacetime. The core idea of this work is to develop a library for solving the geodesic equation, which in turn enables 4-dimensional spacetime ray tracing. The implementation should at least provide the Schwarzschild and Kerr solutions to the Einstein Field Equations, providing visualizations of non-rotating and rotating uncharged black holes. Contact: Ali Can Demiralp, M. Sc.
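For reference, the geodesic equation mentioned above can be written in its standard textbook form, together with the Schwarzschild line element as one of the two required solutions. The notation is an assumption chosen for this note (affine parameter $\lambda$, metric $g_{\mu\nu}$ with signature $(-,+,+,+)$, geometric units $G = c = 1$, mass $M$) and is not taken from the thesis description.

```latex
% Geodesic equation and the Christoffel symbols of the metric g
\frac{d^2 x^\mu}{d\lambda^2}
  + \Gamma^\mu_{\alpha\beta}\,
    \frac{dx^\alpha}{d\lambda}\,\frac{dx^\beta}{d\lambda} = 0,
\qquad
\Gamma^\mu_{\alpha\beta}
  = \tfrac{1}{2}\, g^{\mu\nu}
    \left( \partial_\alpha g_{\nu\beta}
         + \partial_\beta g_{\nu\alpha}
         - \partial_\nu g_{\alpha\beta} \right)

% Schwarzschild line element (non-rotating, uncharged black hole)
ds^2 = -\left(1 - \frac{2M}{r}\right) dt^2
       + \left(1 - \frac{2M}{r}\right)^{-1} dr^2
       + r^2 \left( d\theta^2 + \sin^2\theta \, d\varphi^2 \right)
```

Light rays correspond to null geodesics ($ds^2 = 0$ along the curve), so a spacetime ray tracer would integrate this second-order system numerically for each ray.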

Master Thesis: Mean curvature flow for truncated spherical harmonics expansions

Curvature flows produce successively smoother approximations of a given piece of geometry, by reducing a fairing energy. Within this context, mean curvature flow is a curvature flow defined for hypersurfaces in a Riemannian manifold (e.g. smooth 3D surfaces in Euclidean space), which emphasizes regions of higher frequency and converges to a sphere. Truncated spherical harmonics expansions are commonly used to represent scientific data as well as arbitrary geometric shapes. The core idea of this work is to establish the mathematical concept of mean curvature flow within the spherical harmonics basis, which is empirically done through interpolation of the harmonic coefficients to the coefficient 0,0. Contact: Ali Can Demiralp, M. Sc.
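One simple way to read "interpolation of the harmonic coefficients to the coefficient 0,0" is a linear blend that scales every coefficient except $(l, m) = (0, 0)$ towards zero, so that only the constant, spherical term remains at the end. The sketch below implements that reading only. The dictionary layout, the function name `flow_towards_sphere`, and the uniform linear blend are assumptions; an actual mean curvature flow would be expected to damp higher degrees faster than lower ones.

```python
def flow_towards_sphere(coeffs, t):
    """Blend spherical harmonics coefficients towards the (0, 0) term.

    coeffs maps (l, m) to a real coefficient; t in [0, 1] is the blend
    parameter (t = 0 leaves the shape unchanged, t = 1 yields a sphere).
    Hypothetical helper illustrating a linear interpolation only.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    return {
        (l, m): value if (l, m) == (0, 0) else (1.0 - t) * value
        for (l, m), value in coeffs.items()
    }


# Usage: halfway through the blend, all non-constant terms are halved.
shape = {(0, 0): 2.0, (1, 0): 1.0, (2, -1): -4.0}
print(flow_towards_sphere(shape, 0.5))
```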

Master Thesis: Orientation distribution function topology

Topological data analysis methods have been applied extensively to scalar and vector fields for revealing features such as critical and saddle points. There is recent effort on generalizing these approaches to tensor fields, although limited to 2D. Orientation distribution functions, which are the spherical analogue to a tensor, are often represented using truncated spherical harmonics expansions and are commonly used in visualization of medical and chemistry datasets. The core idea of this work is to establish the mathematical framework for extraction of topological skeletons from an orientation distribution function field. Contact: Ali Can Demiralp, M. Sc.

Master Thesis: Variational inference tractography

Tractography is a method for estimation of nerve tracts from discrete brain data, often obtained through Magnetic Resonance Imaging. The family of Markov Chain Monte Carlo (MCMC) methods forms the current standard for (global) tractography, and has been extensively researched to date. Yet, Variational Inference (VI) methods originating in Machine Learning provide a quicker alternative for statistical inference. Stein Variational Gradient Descent (SVGD) is one such method which not only extracts minima/maxima but is able to estimate the complete distribution. The core idea of this work is to apply SVGD to tractography, working with both Magnetic Resonance and 3D-Polarized Light Imaging data. Contact: Ali Can Demiralp, M.Sc.
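To make the idea behind SVGD more concrete, here is a minimal one-dimensional sketch of its particle update. Each particle is pulled towards high-density regions by the kernel-weighted score of the target and pushed away from its neighbours by the kernel gradient, so the particle set spreads over the distribution instead of collapsing onto a single mode. The fixed RBF bandwidth, the step size, the Gaussian toy target, and the name `svgd_1d` are all assumptions for illustration and have nothing to do with tractography data.

```python
import math


def svgd_1d(particles, grad_log_p, step=0.1, bandwidth=1.0, iterations=500):
    """Toy 1D Stein Variational Gradient Descent with a fixed RBF kernel."""
    xs = list(particles)
    n = len(xs)
    for _ in range(iterations):
        updates = []
        for xi in xs:
            phi = 0.0
            for xj in xs:
                k = math.exp(-((xj - xi) ** 2) / (2.0 * bandwidth ** 2))
                # Attraction: kernel-weighted score of the target at xj.
                phi += k * grad_log_p(xj)
                # Repulsion: gradient of the kernel with respect to xj.
                phi += k * (xi - xj) / bandwidth ** 2
            updates.append(phi / n)
        xs = [x + step * u for x, u in zip(xs, updates)]
    return xs


# Usage: approximate a unit-variance Gaussian centred at 2.0,
# starting from particles placed far away from the target.
start = [-3.0 + 0.2 * i for i in range(10)]
result = svgd_1d(start, lambda x: -(x - 2.0))
print(sum(result) / len(result))
```

Unlike a plain gradient ascent on the log-density, which would drive every particle to the mode, the repulsion term keeps the particles apart, which is what allows the final set to represent the whole distribution.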

Master Thesis: Block connectivity matrices

Connectivity matrices are square matrices for describing structural and functional connections between distinct brain regions. Traditionally, connectivity matrices are computed for segmented brain data, describing the connectivity e.g. among Brodmann areas in order to provide context to the neuroscientist. The core idea in this work is to take an alternative approach, dividing the data into a regular grid and computing the connectivity between each pair of blocks, in a hierarchical manner. The presentation of such data as a matrix is non-trivial, since the blocks are in 3D and the matrix is bound to 2D; hence it is necessary to (a) reorder the data using space-filling curves so that the spatial relationship between the blocks is preserved, and (b) seek alternative visualization techniques to replace the matrix (e.g. volume rendering). Contact: Ali Can Demiralp, M.Sc.
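The reordering in step (a) can be sketched with a Morton (Z-order) curve, one common space-filling curve; the thesis description does not say which curve is intended, so this choice is an assumption. The helpers `morton3d` and `reorder_connectivity` are hypothetical names, and block coordinates are assumed to be non-negative integers that fit in `bits` bits.

```python
def morton3d(x, y, z, bits=10):
    """Interleave the bits of x, y and z into a single Z-order index."""
    code = 0
    for b in range(bits):
        code |= ((x >> b) & 1) << (3 * b)
        code |= ((y >> b) & 1) << (3 * b + 1)
        code |= ((z >> b) & 1) << (3 * b + 2)
    return code


def reorder_connectivity(matrix, coords):
    """Permute rows and columns so that blocks follow the Z-order curve.

    matrix[i][j] is the connectivity between block i and block j;
    coords[i] is the (x, y, z) grid position of block i.
    """
    order = sorted(range(len(coords)), key=lambda i: morton3d(*coords[i]))
    reordered = [[matrix[r][c] for c in order] for r in order]
    return order, reordered


# Usage: four blocks in the z = 0 plane of a 2 x 2 x 1 grid.
coords = [(1, 1, 0), (0, 0, 0), (0, 1, 0), (1, 0, 0)]
matrix = [[10 * i + j for j in range(4)] for i in range(4)]
order, reordered = reorder_connectivity(matrix, coords)
print(order)  # [1, 3, 2, 0]
```

Blocks that are close on the curve tend to be close in 3D, so neighbouring rows and columns of the reordered matrix mostly describe spatially neighbouring blocks. A Hilbert curve would preserve locality better at the cost of a more involved index computation.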

Bachelor Thesis: Lip Sync in Unreal Engine 4 [in progress]

Computer-controlled, embodied, intelligent virtual agents are increasingly often embedded in various applications to enliven virtual scenes. Among these, conversational virtual agents are of prime importance. To this end, adequate facial expressions and lip sync are required to show realistic and plausible talking characters. The goal of this bachelor thesis is to enable an effective yet easy-to-integrate lip sync in our Unreal projects for text-to-speech input as well as recorded speech. Contact: Jonathan Ehret, M.Sc.

Master Thesis: Meaningful and Self-Reliant Spare Time Activities of Virtual Agents

Bachelor Thesis: Joining Social Groups of Conversational Virtual Agents

Bachelor Thesis: Integrating Human Users into Crowd Simulations

Bachelor Thesis: Supporting Scene Exploration in the Realm of Social Virtual Reality

Master Thesis: Efficient Terrain Rendering for Virtual Reality Applications with Level of Detail via Progressive Meshes

Terrain rendering is a major and widely researched field. Its applications range from software that lets the user interactively explore a surface, like NASA World Wind or Google Earth, through flight simulators to computer games. It is not surprising that terrain rendering is also interesting for virtual reality applications, as virtual reality can support the solution of difficult problems by providing natural ways of interaction. Combined, virtual reality and terrain rendering can reinforce each other's problem-solving potential. In this work, the LOD structure representing the terrain is a Progressive Mesh (PM) constructed from an unconnected point cloud. A preprocessing step first connects the point cloud so that it forms a triangle mesh which approximates the underlying surface. The mesh can then be decimated to reduce the data volume of the PM if needed. Once the mesh has the desired complexity, the actual Progressive Mesh structure is built. Afterwards, real-time rendering just needs to read the PM structure from a file and can perform a selective refinement on it to match the current viewer position and direction. Contact: Prof. Dr. Tom Vierjahn

Master Thesis: Voxel-based Transparency: Comparing Rasterization and Volume Ray Casting

This thesis deals with a performance visualization scheme, which represents the load factor of single threads on a high performance computer through colored voxels. The voxels are arranged in a three-dimensional grid so only the shell of the grid is initially visible. The aim of this thesis is to introduce transparent voxels to the visualization in order to let the user look also into the inside of the grid and thereby display more information at once. First, an intuitive user interface for assigning transparencies to each voxel is presented. For the actual rendering of transparent voxels, two different approaches are then examined: rasterization and volume ray casting. Efficient implementations of both transparency rendering techniques are realized by exploiting the special structure of the voxel grid. The resulting algorithms are able to render even very large grids of transparent voxels in real-time. A more detailed comparison of both approaches eventually points out the better suited of the two methods and shows to what extent the transparency rendering enhances the performance visualization. Contact: Prof. Dr. Tom Vierjahn
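As a rough illustration of what rendering transparent voxels along a ray involves, the following sketch shows standard front-to-back alpha compositing with early ray termination. It is a generic textbook formulation, not the algorithm from the thesis; the function name `composite_front_to_back` and the `opacity_cutoff` parameter are hypothetical.

```python
def composite_front_to_back(samples, opacity_cutoff=0.99):
    """Blend (color, alpha) samples along one ray, nearest sample first.

    Each color is an (r, g, b) tuple with components in [0, 1].
    """
    acc_color = [0.0, 0.0, 0.0]
    acc_alpha = 0.0
    for color, alpha in samples:
        # Only the light not yet blocked by nearer voxels can contribute.
        weight = (1.0 - acc_alpha) * alpha
        for c in range(3):
            acc_color[c] += weight * color[c]
        acc_alpha += weight
        if acc_alpha >= opacity_cutoff:
            break  # early ray termination: farther voxels are effectively hidden
    return tuple(acc_color), acc_alpha


# A half-transparent red voxel in front of an opaque blue voxel.
color, alpha = composite_front_to_back(
    [((1.0, 0.0, 0.0), 0.5), ((0.0, 0.0, 1.0), 1.0)]
)
```

A rasterization approach has to produce the same blend by drawing voxels in depth order, which is one reason the two techniques can differ in cost on large grids.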

Bachelor Thesis: Designing and Implementing Data Structures and Graphical Tools for Data Flow Networks Controlling Virtual Environments

In this bachelor thesis a software tool for editing graphs is designed and implemented. There exist some interactive tools but this new tool is specifically aimed at creating, editing and working with graphs representing data flow networks like they appear in virtual environments. This thesis compares existing tools and documents the implementation process of VistaViz (the application). In the current stage of development, VistaViz is able to create new graphs, load existing ones and make them editable interactively. Contact: Prof. Dr. Tom Vierjahn

Master Thesis: Raycasting of Hybrid Scenes for Interactive Virtual Environments

Scientific virtual reality applications often make use of both geometry and volume data. For example in medical applications, a three dimensional scan of the patient such as a CT scan results in a volume dataset. Ray casting could make the algorithms needed to handle these hybrid scenes significantly simpler than the more traditional rasterizing algorithms. It is a very flexible and powerful way of generating images of virtual environments. Also there are many effects that can be easily realized using ray-based algorithms such as shadows and ambient occlusion. This thesis describes a ray casting renderer that was implemented in order to measure how well a ray casting based renderer performs and if it is feasible to use it to visualize interactive virtual environments. Having a performance baseline for an implementation of a modern ray caster has multiple advantages. The renderer itself could be used to measure how different techniques could improve the performance of the ray casting. Also with such a renderer it is possible to test hardware. This helps to estimate how much the available hardware would have to improve in order to make ray casting a sensible choice for rendering virtual environments. Contact: Prof. Dr. Tom Vierjahn

Master Thesis: CPU Ray-Tracing in ViSTA

Scientific data visualization is an integral tool in modern science. Virtual reality (VR) is one of the areas where data visualization constitutes an indispensable part. Current advances in VR, as well as the growing ubiquity of VR tools, bring the need to visualize large data volumes in real time to the forefront. However, this also presents new challenges to the visualization software used in high performance computer clusters. CPU-based real-time rendering algorithms can be used in such visualization tasks, but they have only recently started to achieve real-time performance, mostly due to progress in hardware development. Currently, ray tracing is one of the most promising algorithms for CPU-based real-time rendering. This work aims at studying the possibility of using CPU-based ray tracing in VR scenarios. In particular, we consider the CPU-based rendering algorithm implemented in the Intel OSPRay framework. For VR tasks, the ViSTA Virtual Reality Toolkit, developed at the Virtual Reality and Immersive Visualization Group at RWTH Aachen University, is used. Contact: Prof. Dr. Tom Vierjahn

Master Thesis: Streaming Interactive 3D-Applications to Web Environments

This thesis develops a framework for streaming interactive 3D-applications to web environments. The framework uses a classical client-server architecture where the client is implemented as a web application. The framework aims at providing a flexible and scalable solution for streaming an application inside a local network as well as remotely over the internet. It supports streaming to multiple clients simultaneously and provides solutions for handling the input of multiple users as well as streaming at multiple resolutions. Its main focus lies on reducing the latency perceived by the user. The thesis evaluates the image-based compression standards JPEG and ETC as well as the video-based compression standards H.264 and H.265 for use in the framework. The communication between the client and the server was implemented using standardized web technologies such as WebSockets and WebRTC. The framework was integrated into a real-world application and was able to stream it locally and remotely achieving satisfying latencies. Contact: Prof. Dr. Tom Vierjahn

Master Thesis: Benchmarking interactive Rendering Frameworks for virtual Environments

In recent years virtual reality applications that utilize head mounted displays have become more popular due to the release of head mounted displays such as the Oculus Rift and the HTC Vive. Applications that utilize head mounted displays, however, require very fast rendering algorithms. Traditionally, the most common way to achieve real time rendering is triangle rasterization; another approach is ray tracing. In order to provide insight into the performance behavior of rasterization and raytracing, in this Master thesis a toolkit for benchmarking the performance of different rendering frameworks under different conditions was implemented. It was used to benchmark the performance of CPU-ray-tracing-based, GPU-ray-tracing-based and rasterization-based rendering in order to identify the influence of different factors on the rendering time for different rendering schemes. Contact: Prof. Dr. Tom Vierjahn

Master Thesis: Generating co-verbal Gestures for a Virtual Human using Recurrent Neural Networks [in progress]

Master Thesis: Extraction and Interactive Rendering of Dissipation Element Geometry – A ParaView Plugin

After approximate Dissipation Elements (DE) were introduced by Vierjahn et al., the goal of this work is to make their results available to users in the field of fluid mechanics in the form of a plugin for ParaView, a standard software package in this field. It allows users to convert DE trajectories into the approximated, tube-like form and render it via ray tracing and classic OpenGL rendering. The results suggest that the plugin is ready to be tested by engineers working with ParaView, as interactive frame rates and fast loading times are achieved. By using approximated DEs instead of DE trajectories, significant amounts of data storage can be saved. Contact: Prof. Dr. Tom Vierjahn

Bachelor Thesis: Comparison and Evaluation of two Frameworks for in situ Visualization

On the road to exa-scale computing, the widening gap between I/O-capabilities and compute power of today’s compute clusters encourages the use of in situ methods. This thesis evaluates two frameworks designed to simplify in situ coupling, namely SENSEI and Conduit, and compares them in terms of runtime overhead, memory footprint, and implementation complexity. The frameworks were used to implement in situ pipelines between a proxy simulation and an analysis based on the OSPRay ray tracing framework. The frameworks facilitate a low-complexity integration of in situ analysis methods, providing considerable speedups with an acceptable memory footprint. The use of general-purpose in situ coupling frameworks allows for an easy integration of simulations with analysis methods, providing the advantages of in situ methods with little effort. Contact: Prof. Dr. Tom Vierjahn

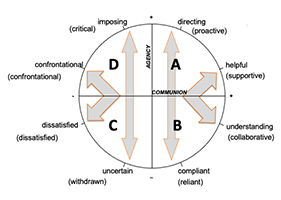

Master Thesis: An Intelligent Recommendation System for an Efficient and Effective Control of Virtual Agents in a Wizard-of-Oz paradigm

In this work, techniques were studied to control virtual agents embedded as interaction partners in immersive virtual environments. A graphical user interface (GUI) for a Wizard-of-Oz paradigm was implemented, allowing individual virtual agents to be selected and controlled manually. The key component of the GUI is an intelligent recommendation system that predicts, based on the user’s actions, which virtual agents are most likely to be the next interaction partners, in order to allow efficient and effective control. Published as a poster at VRST 2017. Contact: Andrea Bönsch, M. Sc.

Master Thesis: Automatic Virtual Tour Generation for Immersive Virtual Environments based on Viewpoint Quality

The exploration of a virtual environment is often the first and one of the most important actions a user performs when experiencing it for the first time, as knowledge of the scene and a cognitive map of the environment are prerequisites for many other tasks. However, as the user does not know the environment, their exploration path is likely to be flawed, taking longer than necessary, missing important parts of the scene and visiting other parts multiple times by accident. This can be remedied by virtual tours that provide an efficient path through the environment that visits all important places. However, for most virtual environments, manually created virtual tours are not available. Furthermore, most scenes are not provided with the information of where the most important locations are, such that automatic generation of good virtual tours is challenging. However, the informativeness of a position in a virtual environment can be computed automatically using viewpoint quality estimation techniques. In addition to providing interesting places as waypoints, this concept also allows the evaluation of the quality of the tour between waypoints. Therefore, in this thesis, an automatic method to compute efficient and informative virtual tours through a virtual scenery is designed and developed, based on an evolutionary approach that aims at maximizing the quality of the viewpoints encountered during the tour. Contact: Dr. Sebastian Freitag

Master Thesis: Fluid Sketching - 3D Sketching Based on Fluid Flow in Immersive Virtual Environments

Bachelor Thesis: Conformal Mapping of the Cortical Surface

The cerebral cortex holds most of the cerebrum’s functional processing ability. To visualize functional areas on the cortical surface, the cortical surface is usually mapped to a representation which makes the convoluted areas of the brain visible. This work focuses on mapping the surface into the 2D domain. For this purpose, two parameterization algorithms have been implemented: Linear Angle Based Parameterization (Zayer et al., 2007) and Least Squares Conformal Maps (Lévy et al., 2002). The results of the two algorithms are then compared to the iterative flattening approach by Fischl et al. regarding computational time and introduced distortions. Contact: Dr. Claudia Hänel

CUNY Academic Works

Data Analysis & Visualization Master’s Theses and Capstone Projects

Dissertations/Theses/Capstones from 2024

The Charge Forward: An Assessment of Electric Vehicle Charging Infrastructure in New York City, Christopher S. Cali

Visualizing a Life, Uprooted: An Interactive, Web-Map and Scroll-Driven Exploration of the Oral History of my Great-Grandfather – from Ottoman Cilicia to Lebanon and Beyond, Alyssa Campbell

Examining the Health Risks of Particulate Matter 2.5 in New York City: How it Affects Marginalized Groups and the Steps Needed to Reduce Air Pollution, Freddy Castro

Clustering of Patients with Heart Disease, Mukadder Cinar

Modeling of COVID-19 Clinical Outcomes in Mexico: An Analysis of Demographic, Clinical, and Chronic Disease Factors, Livia Clarete

Invisible Hand of Socioeconomic Factors in Rising Trend of Maternal Mortality Rates in the U.S., Disha Kanada

Multi-Perspective Analysis for Derivative Financial Product Prediction with Stacked Recurrent Neural Networks, Natural Language Processing and Large Language Model, Ethan Lo

What Does One Billion Dollars Look Like?: Visualizing Extreme Wealth, William Mahoney Luckman

Making Sense of Making Parole in New York, Alexandra McGlinchy

Employment Outcomes in Higher Education, Yunxia Wei

Dissertations/Theses/Capstones from 2023

Phantom Shootings, Allan Ambris

Naming Venus: An Exploration of Goddesses, Heroines, and Famous Women, Kavya Beheraj

Social Impacts of Robotics on the Labor and Employment Market, Kelvin Espinal

Fighting the Invisibility of Domestic Violence, Yesenny Fernandez

Navigating Through World’s Military Spending Data with Scroll-Event Driven Visualization, Hong Beom Hur

Evocative Visualization of Void and Fluidity, Tomiko Karino

Analyzing Relationships with Machine Learning, Oscar Ko

Analyzing ‘Fight the Power’ Part 1: Music and Longevity Across Evolving Marketing Eras, Shokolatte Tachikawa

Stand-up Comedy Visualized, Berna Yenidogan

Dissertations/Theses/Capstones from 2022

El Ritmo del Westside: Exploring the Musical Landscape of San Antonio’s Historic Westside, Valeria Alderete

A Comparison of Machine Learning Techniques for Validating Students’ Proficiency in Mathematics, Alexander Avdeev

A Machine Learning Approach to Predicting the Onset of Type II Diabetes in a Sample of Pima Indian Women, Meriem Benarbia

Disrepair, Displacement and Distress: Finding Housing Stories Through Data Visualizations, Jennifer Cheng

Blockchain: Key Principles, Nadezda Chikurova

Data for Power: A Visual Tool for Organizing Unions, Shay Culpepper

Happiness From a Different Perspective, Suparna Das

Happiness and Policy Implications: A Sociological View, Sarah M. Kahl

Heating Fire Incidents in New York City, Merissa K. Lissade

NYC vs. Covid-19: The Human and Financial Resources Deployed to Fight the Most Expensive Health Emergency in History in NYC during the Year 2020, Elmer A. Maldonado Ramirez

Slices of the Big Apple: A Visual Explanation and Analysis of the New York City Budget, Joanne Ramadani

The Value of NFTs, Angelina Tham

Air Pollution, Climate Change, and Our Health, Kathia Vargas Feliz

Peru's Fishmeal Industry: Its Societal and Environmental Impact, Angel Vizurraga

Why, New York City? Gauging the Quality of Life Through the Thoughts of Tweeters, Sheryl Williams

Dissertations/Theses/Capstones from 2021

Data Analysis and Visualization to Dismantle Gender Discrimination in the Field of Technology, Quinn Bolewicki

Remaking Cinema: Black Hollywood Films, Filmmakers, and Finances, Kiana A. Carrington

Detecting Stance on Covid-19 Vaccine in a Polarized Media, Rodica Ceslov

Dota 2 Hero Selection Analysis, Zhan Gong

An Analysis of Machine Learning Techniques for Economic Recession Prediction, Sheridan Kamal

Black Women in Romance, Vianny C. Lugo Aracena

The Public Innovations Explorer: A Geo-Spatial & Linked-Data Visualization Platform For Publicly Funded Innovation Research In The United States, Seth Schimmel

Making Space for Unquantifiable Data: Hand-drawn Data Visualization, Eva Sibinga

Who Pays? New York State Political Donor Matching with Machine Learning, Annalisa Wilde

SEAS master's project, thesis address life cycle and carbon impact of maple syrup production

Image caption: Maple sap being transformed into maple syrup at a sugar shack.

In Fall 2022, the Center for Sustainable Systems (CSS) at the University of Michigan School for Environment and Sustainability (SEAS) received a $500,000 research grant through the United States Department of Agriculture’s (USDA) Acer Access and Development Program to conduct a life cycle assessment (LCA) for maple syrup production. As a result of the grant, SEAS students worked on a master’s project and thesis centered on maple syrup production. Both of these projects were advised by CSS researcher and SEAS adjunct lecturer Geoffrey Lewis.

SEAS students Jenna Weinstein, Zhu Zhu, Yuan-Chi Li and Thu Rain Yi Win were part of the master’s project team, which involved collaborating with maple syrup producers to produce a life cycle assessment model of maple syrup retail distribution.

SEAS student Spencer Checkoway worked in tandem with the master’s project team on his own thesis, which is focused on the carbon footprint of maple syrup production.

According to Checkoway, a public life cycle assessment on maple syrup production had never been conducted prior to the LCA initiated by this grant funding.

Zhu, who like his fellow master’s project colleagues is focused on the sustainable systems track at SEAS, was interested in the master’s project because of the skills he could develop working on an LCA.

“I was very interested in understanding how to conduct a life cycle assessment and how to decarbonize the production cycle,” he said.

Likewise, Weinstein felt like it was a great way to apply what she had learned in her sustainable systems classes to a real-world project.

“Sustainable system courses focused on long-term thinking about the system impacts,” she said. “We are not just thinking about production in the scope of glass versus plastic, but we are thinking about the distribution impacts throughout each decision of the life cycle process.”

Before looking at the impacts of maple syrup production or conducting an LCA, Weinstein noted, the team first had to understand what maple syrup production entails by focusing on stakeholder engagement.

Weinstein said the interviews with maple syrup producers were important because they allowed the students to shape their master’s project to the needs of the producers and to the skills they wanted to develop.

“I have realized that even the most quantitative projects should still begin with stakeholder engagement to give context to whatever quantitative model you are creating in order to create an output that meets community needs,” she said. “If all an LCA is telling us is to use reverse osmosis, we are not getting at the deeper issue.”

Zhu saw that it was important for the team to understand each component of maple syrup from the producers so they could figure out what variables to include in the quantitative analysis component of the project.

“We did interviews with maple syrup producers to get data about packing materials, from the weight of materials to where they got materials,” he said. “We also wanted to know about their transportation methods.”

The team used the original survey data they collected through interviews with maple syrup producers to model the LCA, Weinstein said.

“Based on the themes that we saw in the interviews, some people [on the team] focused on the theme of package distribution and addressing the central question of what are life cycle impacts of different package types,” she said.

According to Weinstein, this model allows producers to input the decisions they are making in their sugar bush about their fuel sources, where it is located and the materials they are using. The model outputs an analysis of the life cycle and carbon impacts of the production.

Lewis said a life cycle assessment is an important component of the project because it will help to produce an online carbon footprint calculator tool, which is a deliverable the CSS promised in the grant application.

“In order to do [the online calculator tool] we have to understand the process of maple syrup production, and that varies by size of the producer,” he said. “We had to make sure the calculator can accommodate all variables, from how much wood a producer is using to how much tubing was installed, so anyone who makes syrup can go into the calculator with their parameters and equipment to determine the emissions per gallon of syrup they are producing.”

While the master’s project focused on building a maple syrup distribution model, Checkoway focused on the carbon footprint of maple syrup production for his thesis.

Like the master’s project team, Checkoway began by meeting with maple syrup producers at maple syrup conferences in Michigan. He noted that the maple syrup producer community is an extremely collaborative and open community.

“I met with producers to build data around what sugar makers are doing and what practices they are performing,” he said. “These conferences are like trade shows, specific to maple syrup technology. There is a real exchange of information on topics from reverse osmosis to how to yield more sap or increase the production rates.”

Checkoway collected data focusing on carbon footprints and accounting.

“Producers submitted each step of their production, as well as what equipment and fuel they were using,” he said. “They also submitted the water and solid waste they produced.”

With these data, Checkoway has worked on a carbon accounting model and published two reports for his thesis. According to Checkoway, this model will help to inform the online calculator tool.

“I built submodels that producers can access and use for specific processes to account for their carbon and energy emissions,” he said.

Checkoway highlighted that the work funded by the grant, to aid in decarbonizing maple syrup production, is not complete.

“The grant is funding three years of research,” he said. “The goal is to collect data from producers over three full seasons and produce an LCA to see how things are changing over those three years.”

Lewis hopes that the models created by the master’s project and thesis can be used to compare maple syrup production to the production of other sweeteners.

“Down the line, we could use these models to make comparisons with other sweeteners like corn syrup,” he said.

Checkoway said his thesis and the master’s project can create a collective body of work that allows maple syrup producers to make rational decisions about the sustainability of their product.

“I hope that producers can look at [the models and calculator] and become aware of the climate impact of maple syrup to understand how they can mitigate those impacts,” he said.

Weinstein views this project as an opportunity to empower maple syrup producers to make informed decisions with easily accessible information.

“We are trying to make it as easy as possible to give [producers] access to the best information possible,” she said. “This is a tool for them to take on the work of reducing their impacts to whatever extent they are willing to.”

Lewis would like this project to raise the profile of maple syrup production, what is involved in making syrup, and how production can be done in a more sustainable way.

“It takes a lot of energy to make maple syrup,” he said. “But what has been great about doing this project is that people always smile when they hear us talk about maple syrup.”

Closing Reception: 2024 MFA Thesis Exhibition

Meet this year's MFA students and glimpse the future of visual art!

Join the University of Arizona Museum of Art and School of Art in celebrating the 2024 Master of Fine Arts Thesis Exhibition. The reception is free and open to the public, and refreshments will be served.

This exhibition is the culmination of the Master of Fine Arts Studio Degree and is presented during a graduate student’s final semester in the program. During the last year of their coursework, graduates work closely with faculty to develop a body of original art to present to the public in lieu of a written thesis.

The end result offers visitors the opportunity to see new, cutting-edge art in a variety of mediums and styles.

To ask about access or to request disability-related accommodations at this event, such as ASL interpreting, closed-captioning, wheelchair access, or electronic text, please contact Visitor & Member Services Lead Myriam Sandoval, 520-626-2087.

Related Exhibition

April 13, 2024 through May 11, 2024

2024 MFA Thesis

A taxonomy of virtualization technologies Master’s thesis

Delft University of Technology Faculty of Systems Engineering Policy Analysis & Management

August, 2010 Paulus Kampert 1175998

Preface

This thesis has been written as part of the Master Thesis Project (spm5910). This is the final course of the master programme Systems Engineering, Policy Analysis & Management (SEPAM), taught at Delft University of Technology at the Faculty of Technology, Policy and Management. This thesis is the product of six months of intensive work in order to obtain the Master degree. This research project provides an overview of the different types of virtualization technologies and virtualization trends. A taxonomy model has been developed that demonstrates the various layers of a virtual server architecture and virtualization domains in a structured way.

Special thanks go to my supervisors. My effort would be useless without their priceless support. First, I would like to thank my professor Jan van den Berg for his advice, support, time and effort in pushing me in the right direction. I am truly grateful. I would also like to thank my first supervisor Sietse Overbeek for frequently directing and commenting on my research. His advice and feedback were very constructive. Many thanks go to my external supervisor Joris Haverkort for his tremendous support and efforts. He made me feel right at home at Atos Origin and helped me a lot through the course of this research project. In particular, his lectures on virtualization technologies and his positive feedback gave me a better understanding of virtualization. Thanks also go to Jeroen Klompenhouwer (operations manager at Atos Origin), who introduced me to Joris Haverkort and gave me the opportunity to do my graduation project at Atos Origin. I would also like to thank Stephan Lukosch for his feedback, which helped me to be more precise.

Furthermore, I would like to thank the persons interviewed from Atos Origin: Mick Symonds, Jacco van Hoorn, Gerard Scheuierman and Joris Haverkort. Likewise, my gratitude goes to the persons from the virtualization vendors: Michel Roth (Quest Software), Jan Willem Lammers (VMware), Robert-Jan Ponsen (Citrix) and Robert Bakker (Microsoft). Orchestrating a meeting with the interviewees was not always easy, and special thanks go to my supervisor Joris Haverkort from Atos Origin, who assisted me during the whole interview process and helped me find the right persons for the interviews. It was a pleasure working on this topic, although not always easy, because virtualization is a very broad concept. Through the course of the research I picked up so much information on the topic of virtualization that it was sometimes difficult to determine what to include in the report and in how much detail.

Paulus Kampert August, 2010

Graduation committee

Professor: Dr. ir. J. van den Berg (Section of ICT)
First supervisor: Dr. S. Overbeek (Section of ICT)
Second supervisor: Dr. S.G. Lukosch (Section Systems Engineering)
External supervisor: Dhr. J. Haverkort (Product manager at Atos Origin)

Management summary

Over the past decade, virtualization technologies have emerged in the IT world. In this period, many IT companies shifted their attention towards virtualization. As competition increased, many IT companies started to develop their own virtualization technologies, which has led to many different types of virtualization technologies. Contemporary organizations have access to a more rapidly expanding selection of computing, storage and networking technologies than ever before. However, the increasing number of virtualization technologies from virtualization vendors has made it difficult to keep track of all the types of virtualization technologies. Furthermore, the current body of literature contains a lot of technical information about specific virtualization technologies, but there is no clear overview of all the different types. Also, for virtualization service provider Atos Origin, it is imperative to keep track of the latest developments in virtualization technologies to stay competitive. Due to the many developments by virtualization vendors, Atos Origin wants to have an overview of the virtualization domains and trends. In this report, the following research question is answered:

Which trends in virtualization technologies can be identified and how can they be structured?

The goal of this research project is to design a structured overview of virtualization technologies and at the same time identify the virtualization trends. To answer the main research question, a taxonomy model has been made that provides a structured overview of the virtualization domains. The research methods that have been used can be characterized as Design Science Research (DSR). The data collection consisted of literature papers, documents from Atos Origin, presentations from events and interviews with experts from Atos Origin and the virtualization vendors VMware, Citrix, Microsoft and Quest Software. Also, during the course of the research project, the case study research method has been used to evaluate the taxonomy model and to identify the virtualization trends. In the analysis phase of the research, it became apparent that there are many types of virtualization technologies that can be categorized into several virtualization domains. Also, many virtualization technologies still have to mature and are accompanied by many challenges, in particular in management and security. At this point of the research, a taxonomy model was made to structure the different types of virtualization technologies. Subsequently, the taxonomy model was evaluated using the case study research method, and at the same time trends in virtualization technologies were identified. The case study provided a lot of constructive remarks on the taxonomy model and information about the main virtualization trends. The overall findings on the virtualization trends indicated that desktop virtualization and management technologies are becoming very popular and have received much attention from virtualization vendors. Reasons for their popularity are the need of organizations for new, flexible and easier methods of offering work places, and the limited functionality of traditional management tools for virtual environments, which has hindered successful management.
The case study showed that there are many issues regarding the management of virtual environments, such as configuration management and capacity management. It also showed that the main business reason for virtualization is cost savings. New management tools are being developed that must tackle many of the virtualization challenges, thereby reducing the complexity of controlling virtual environments, which can also lead to additional cost savings. Furthermore, security has become more critical to address, because virtualization has brought new security challenges. Security technologies for virtualization are currently receiving more attention from virtualization vendors, and better virtualization-aware security technologies are expected to appear very soon. However, the remarks made by the virtualization experts on the taxonomy model led to a revision of the first taxonomy model. While the first taxonomy did provide an overview of the different types of virtualization technologies, it failed to show the relations and dependencies between the virtualization domains. Also, not all virtualization domains were shown clearly. Therefore, a new taxonomy model was designed using a layered approach that structures the virtualization domains in such a way that it illustrates their relations as well as their dependencies. The taxonomy model demonstrates the various layers of a virtual server architecture and the virtualization domains in a structured way.

Finally, for Atos Origin the following recommendations were made based on the findings of this research project:

Desktop virtualization is expected to continue growing significantly in the years to come. However, combining desktop virtualization with application virtualization and user state virtualization holds interesting business opportunities. Application virtualization is currently offered as a separate service. Combining this service with desktop virtualization and user state virtualization allows for a better desktop service offering. This combination is also called the three-layered approach and is different from standard VDI solutions.

Interesting developments are happening in virtualization security technologies. Keep an eye out for new virtual security appliances, as they can help provide better security solutions to the customer.

New management tools for virtualization tackle a lot of management issues and hold interesting business opportunities. These management tools can offer clients better control of their virtual IT environment and can be provided via a new virtualization management service.

Table of contents

1. Introduction

1.1 Research problem

1.2 Research questions

1.3 Research methodology

2. Conceptualization

2.1 Definition of virtualization

2.2 Role of virtualization in data centers

2.3 Summary and outlook

3. Analysis: Part I

3.1 Virtualization domains

3.2 Virtualization domains of Atos Origin

3.3 Summary and outlook

4. Analysis: Part II

4.1 Virtualization journey

4.2 Virtualization challenges: two examples

4.3 Current developments

4.4 Summary and outlook

5. Taxonomy model of virtualization technologies

5.1 Modeling language

5.2 Taxonomy model

5.3 Summary and outlook

6. Evaluation using case study method

6.1 Case study

6.2 Case 1: internal group

6.3 Case 2: external group

6.4 Conclusion of case studies

6.5 Quality of case study

6.6 Summary and outlook

7. Revised taxonomy model of virtualization domains

7.1 Layers of the taxonomy model

7.2 Conclusions

8. Reflection

9. Conclusions & Recommendations

9.1 Conclusions

9.2 Recommendations for Atos Origin

Literature

Appendix A: History of virtualization

Appendix B: Terminology

Appendix C: Benefits and challenges of virtualization

Appendix D: Case study interviews

1. Introduction

Contemporary organizations have access to massive amounts of computing technologies. A remarkable recent trend is the advent of virtualization technologies. While the origins of virtualization go back to the era of large mainframes, virtualization has been reintroduced to servers, desktop computers and many other IT devices of today [1]. The reintroduction of virtualization received much interest, and many IT companies shifted their attention towards virtualization [1]. As competition increased, IT companies eagerly developed new virtualization technologies, resulting in many different types of virtualization technologies [2]. In general, virtualization attempts to reduce complexity by separating different layers of software and hardware. This enables an organization to interact with its IT resources more efficiently and allows for much higher utilization. Therefore, virtualization technologies have rapidly become a standard part of deployments in many IT organizations.

An example of virtualization is depicted in figure 1. On the left a traditional IT infrastructure is depicted, and on the right a new IT infrastructure that uses virtualization. An IT infrastructure can be seen as everything that supports the flow and processing of information, such as the physical hardware that interconnects computers and users, plus the software that enables sending, receiving and management of information [3].

Figure 1 Traditional and new IT infrastructure

In figure 1, both IT infrastructures consist of a storage, network, server, operating system and application layer. In the traditional setting, all the components of one layer are connected to a single component of another layer. Each server comprises one operating system, a set of applications, a network and a storage device. The network connects the different servers and storage systems together and can also connect the server to public IT infrastructures such as the internet or to private IT infrastructures of other organizations. On the left, the vertical blue pillars point out a specific configuration of applications, server, network and storage system. When a component in one of the layers fails, the whole pillar is affected. For example, when there is a hardware failure in a server, the whole blue pillar becomes non-functional. Until the hardware failure is repaired, the operating system and applications on that particular server are down. On the right, the horizontal blue pillars display the new configuration of servers, network and storage, which now function as a pool of resources. The software on top of the servers, marked by orange pillars, shows that it is not tied to a specific server or hardware configuration. This means that if one server becomes non-operational, the applications can continue to function on another server in the resource pool.

The example of figure 1 shows a small portion of the many features of virtualization technologies [4]. There are many different virtualization technologies that concentrate on particular layers of the IT infrastructure and that together enable the transformation of a traditional IT infrastructure into a virtual IT infrastructure. Currently, there are many virtualization vendors that offer the same kind of virtualization technologies, but use a different approach and implementation method underneath. Atos Origin, which is a virtualization service provider, uses virtualization technologies of different virtualization providers to provide suitable IT solutions according to the wishes of its clients, which are often large enterprises.

In the following sections the research of this master’s thesis is described. In section 1.1, the research problem is explained, which leads to the research questions in section 1.2. The research method for answering the research questions is described in section 1.3.

1.1 Research problem

As was mentioned in the introduction, many IT companies entered the virtualization market. Furthermore, the growing number of virtualization vendors has led to the development of many different virtualization technologies [2]. The possibilities of virtualization seem to be endless, seeing that new virtualization technologies keep appearing [4]. This has made it difficult to keep track of all the types of virtualization technologies that are available. The current body of literature offers a lot of technical information about specific virtualization technologies, but there is no clear overview of virtualization technologies [5, 6, 7, 8, 9, 10]. Furthermore, for Atos Origin it is imperative to keep track of the latest developments in virtualization technologies to be competitive as a virtualization service provider [11]. Due to the many developments by virtualization vendors, Atos Origin wants to have an overview of the virtualization domains and trends. At the moment Atos Origin offers five types of virtualization services: server, desktop, application, storage and disaster recovery virtualization. However, other types of virtualization technologies might hold interesting business opportunities for Atos Origin.

The goal of this research is to design a model that illustrates the different virtualization domains. To do this, a taxonomy model is designed and evaluated in a valid way; it provides a taxonomical classification of the virtualization technologies and also explicates the relations between the technologies. By taxonomy we mean “A model for naming and organizing things [...] into groups which share similar qualities” [12]. A taxonomy model is used because it allows an overview of virtualization technologies to be presented in a visual and logical way. It can be used as a reference model to show the different types and layers of virtualization technologies, but also to indicate which virtualization technologies have become important, based on current trends.

1.2 Research questions

The research problem made it clear that one of the latest IT trends is the emergence of virtualization technologies. Much literature can be found about individual virtualization technologies, but there is no clear overview of the different virtualization technologies. Also, Atos Origin considers it very important to keep track of the latest trends, because new virtualization technologies can hold new business opportunities. Following the problem statement, the main research question can be formulated as: