An official website of the United States government

The .gov means it's official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you're on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

- Browse Titles

NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

StatPearls [Internet].

Case control studies.

Steven Tenny ; Connor C. Kerndt ; Mary R. Hoffman .

Affiliations

Last Update: March 27, 2023 .

- Introduction

A case-control study is a type of observational study commonly used to look at factors associated with diseases or outcomes. [1] The case-control study starts with a group of cases, which are the individuals who have the outcome of interest. The researcher then tries to construct a second group of individuals called the controls, who are similar to the case individuals but do not have the outcome of interest. The researcher then looks at historical factors to identify if some exposure(s) is/are found more commonly in the cases than the controls. If the exposure is found more commonly in the cases than in the controls, the researcher can hypothesize that the exposure may be linked to the outcome of interest.

For example, a researcher may want to look at the rare cancer Kaposi's sarcoma. The researcher would find a group of individuals with Kaposi's sarcoma (the cases) and compare them to a group of patients who are similar to the cases in most ways but do not have Kaposi's sarcoma (controls). The researcher could then ask about various exposures to see if any exposure is more common in those with Kaposi's sarcoma (the cases) than those without Kaposi's sarcoma (the controls). The researcher might find that those with Kaposi's sarcoma are more likely to have HIV, and thus conclude that HIV may be a risk factor for the development of Kaposi's sarcoma.

There are many advantages to case-control studies. First, the case-control approach allows for the study of rare diseases. If a disease occurs very infrequently, one would have to follow a large group of people for a long period of time to accrue enough incident cases to study. Such use of resources may be impractical, so a case-control study can be useful for identifying current cases and evaluating historical associated factors. For example, if a disease developed in 1 in 1000 people per year (0.001/year) then in ten years one would expect about 10 cases of a disease to exist in a group of 1000 people. If the disease is much rarer, say 1 in 1,000,0000 per year (0.0000001/year) this would require either having to follow 1,000,0000 people for ten years or 1000 people for 1000 years to accrue ten total cases. As it may be impractical to follow 1,000,000 for ten years or to wait 1000 years for recruitment, a case-control study allows for a more feasible approach.

Second, the case-control study design makes it possible to look at multiple risk factors at once. In the example above about Kaposi's sarcoma, the researcher could ask both the cases and controls about exposures to HIV, asbestos, smoking, lead, sunburns, aniline dye, alcohol, herpes, human papillomavirus, or any number of possible exposures to identify those most likely associated with Kaposi's sarcoma.

Case-control studies can also be very helpful when disease outbreaks occur, and potential links and exposures need to be identified. This study mechanism can be commonly seen in food-related disease outbreaks associated with contaminated products, or when rare diseases start to increase in frequency, as has been seen with measles in recent years.

Because of these advantages, case-control studies are commonly used as one of the first studies to build evidence of an association between exposure and an event or disease.

In a case-control study, the investigator can include unequal numbers of cases with controls such as 2:1 or 4:1 to increase the power of the study.

Disadvantages and Limitations

The most commonly cited disadvantage in case-control studies is the potential for recall bias. [2] Recall bias in a case-control study is the increased likelihood that those with the outcome will recall and report exposures compared to those without the outcome. In other words, even if both groups had exactly the same exposures, the participants in the cases group may report the exposure more often than the controls do. Recall bias may lead to concluding that there are associations between exposure and disease that do not, in fact, exist. It is due to subjects' imperfect memories of past exposures. If people with Kaposi's sarcoma are asked about exposure and history (e.g., HIV, asbestos, smoking, lead, sunburn, aniline dye, alcohol, herpes, human papillomavirus), the individuals with the disease are more likely to think harder about these exposures and recall having some of the exposures that the healthy controls.

Case-control studies, due to their typically retrospective nature, can be used to establish a correlation between exposures and outcomes, but cannot establish causation . These studies simply attempt to find correlations between past events and the current state.

When designing a case-control study, the researcher must find an appropriate control group. Ideally, the case group (those with the outcome) and the control group (those without the outcome) will have almost the same characteristics, such as age, gender, overall health status, and other factors. The two groups should have similar histories and live in similar environments. If, for example, our cases of Kaposi's sarcoma came from across the country but our controls were only chosen from a small community in northern latitudes where people rarely go outside or get sunburns, asking about sunburn may not be a valid exposure to investigate. Similarly, if all of the cases of Kaposi's sarcoma were found to come from a small community outside a battery factory with high levels of lead in the environment, then controls from across the country with minimal lead exposure would not provide an appropriate control group. The investigator must put a great deal of effort into creating a proper control group to bolster the strength of the case-control study as well as enhance their ability to find true and valid potential correlations between exposures and disease states.

Similarly, the researcher must recognize the potential for failing to identify confounding variables or exposures, introducing the possibility of confounding bias, which occurs when a variable that is not being accounted for that has a relationship with both the exposure and outcome. This can cause us to accidentally be studying something we are not accounting for but that may be systematically different between the groups.

The major method for analyzing results in case-control studies is the odds ratio (OR). The odds ratio is the odds of having a disease (or outcome) with the exposure versus the odds of having the disease without the exposure. The most straightforward way to calculate the odds ratio is with a 2 by 2 table divided by exposure and disease status (see below). Mathematically we can write the odds ratio as follows.

Odds ratio = [(Number exposed with disease)/(Number exposed without disease) ]/[(Number not exposed to disease)/(Number not exposed without disease) ]

This can be rewritten as:

Odds ratio = [ (Number exposed with disease) x (Number not exposed without disease) ] / [ (Number exposed without disease ) x (Number not exposed with disease) ]

The odds ratio tells us how strongly the exposure is related to the disease state. An odds ratio of greater than one implies the disease is more likely with exposure. An odds ratio of less than one implies the disease is less likely with exposure and thus the exposure may be protective. For example, a patient with a prior heart attack taking a daily aspirin has a decreased odds of having another heart attack (odds ratio less than one). An odds ratio of one implies there is no relation between the exposure and the disease process.

Odds ratios are often confused with Relative Risk (RR), which is a measure of the probability of the disease or outcome in the exposed vs unexposed groups. For very rare conditions, the OR and RR may be very similar, but they are measuring different aspects of the association between outcome and exposure. The OR is used in case-control studies because RR cannot be estimated; whereas in randomized clinical trials, a direct measurement of the development of events in the exposed and unexposed groups can be seen. RR is also used to compare risk in other prospective study designs.

- Issues of Concern

The main issues of concern with a case-control study are recall bias, its retrospective nature, the need for a careful collection of measured variables, and the selection of an appropriate control group. [3] These are discussed above in the disadvantages section.

- Clinical Significance

A case-control study is a good tool for exploring risk factors for rare diseases or when other study types are not feasible. Many times an investigator will hypothesize a list of possible risk factors for a disease process and will then use a case-control study to see if there are any possible associations between the risk factors and the disease process. The investigator can then use the data from the case-control study to focus on a few of the most likely causative factors and develop additional hypotheses or questions. Then through further exploration, often using other study types (such as cohort studies or randomized clinical studies) the researcher may be able to develop further support for the evidence of the possible association between the exposure and the outcome.

- Enhancing Healthcare Team Outcomes

Case-control studies are prevalent in all fields of medicine from nursing and pharmacy to use in public health and surgical patients. Case-control studies are important for each member of the health care team to not only understand their common occurrence in research but because each part of the health care team has parts to contribute to such studies. One of the most important things each party provides is helping identify correct controls for the cases. Matching the controls across a spectrum of factors outside of the elements of interest take input from nurses, pharmacists, social workers, physicians, demographers, and more. Failure for adequate selection of controls can lead to invalid study conclusions and invalidate the entire study.

- Review Questions

- Access free multiple choice questions on this topic.

- Comment on this article.

2x2 table with calculations for the odds ratio and 95% confidence interval for the odds ratio Contributed by Steven Tenny MD, MPH, MBA

Disclosure: Steven Tenny declares no relevant financial relationships with ineligible companies.

Disclosure: Connor Kerndt declares no relevant financial relationships with ineligible companies.

Disclosure: Mary Hoffman declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Tenny S, Kerndt CC, Hoffman MR. Case Control Studies. [Updated 2023 Mar 27]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

In this Page

Bulk download.

- Bulk download StatPearls data from FTP

Related information

- PMC PubMed Central citations

- PubMed Links to PubMed

Similar articles in PubMed

- Suicidal Ideation. [StatPearls. 2024] Suicidal Ideation. Harmer B, Lee S, Duong TVH, Saadabadi A. StatPearls. 2024 Jan

- Qualitative Study. [StatPearls. 2024] Qualitative Study. Tenny S, Brannan JM, Brannan GD. StatPearls. 2024 Jan

- Folic acid supplementation and malaria susceptibility and severity among people taking antifolate antimalarial drugs in endemic areas. [Cochrane Database Syst Rev. 2022] Folic acid supplementation and malaria susceptibility and severity among people taking antifolate antimalarial drugs in endemic areas. Crider K, Williams J, Qi YP, Gutman J, Yeung L, Mai C, Finkelstain J, Mehta S, Pons-Duran C, Menéndez C, et al. Cochrane Database Syst Rev. 2022 Feb 1; 2(2022). Epub 2022 Feb 1.

- Review The epidemiology of classic, African, and immunosuppressed Kaposi's sarcoma. [Epidemiol Rev. 1991] Review The epidemiology of classic, African, and immunosuppressed Kaposi's sarcoma. Wahman A, Melnick SL, Rhame FS, Potter JD. Epidemiol Rev. 1991; 13:178-99.

- Review Epidemiology of Kaposi's sarcoma. [Cancer Surv. 1991] Review Epidemiology of Kaposi's sarcoma. Beral V. Cancer Surv. 1991; 10:5-22.

Recent Activity

- Case Control Studies - StatPearls Case Control Studies - StatPearls

Your browsing activity is empty.

Activity recording is turned off.

Turn recording back on

Connect with NLM

National Library of Medicine 8600 Rockville Pike Bethesda, MD 20894

Web Policies FOIA HHS Vulnerability Disclosure

Help Accessibility Careers

- En español – ExME

- Em português – EME

Case-control and Cohort studies: A brief overview

Posted on 6th December 2017 by Saul Crandon

Introduction

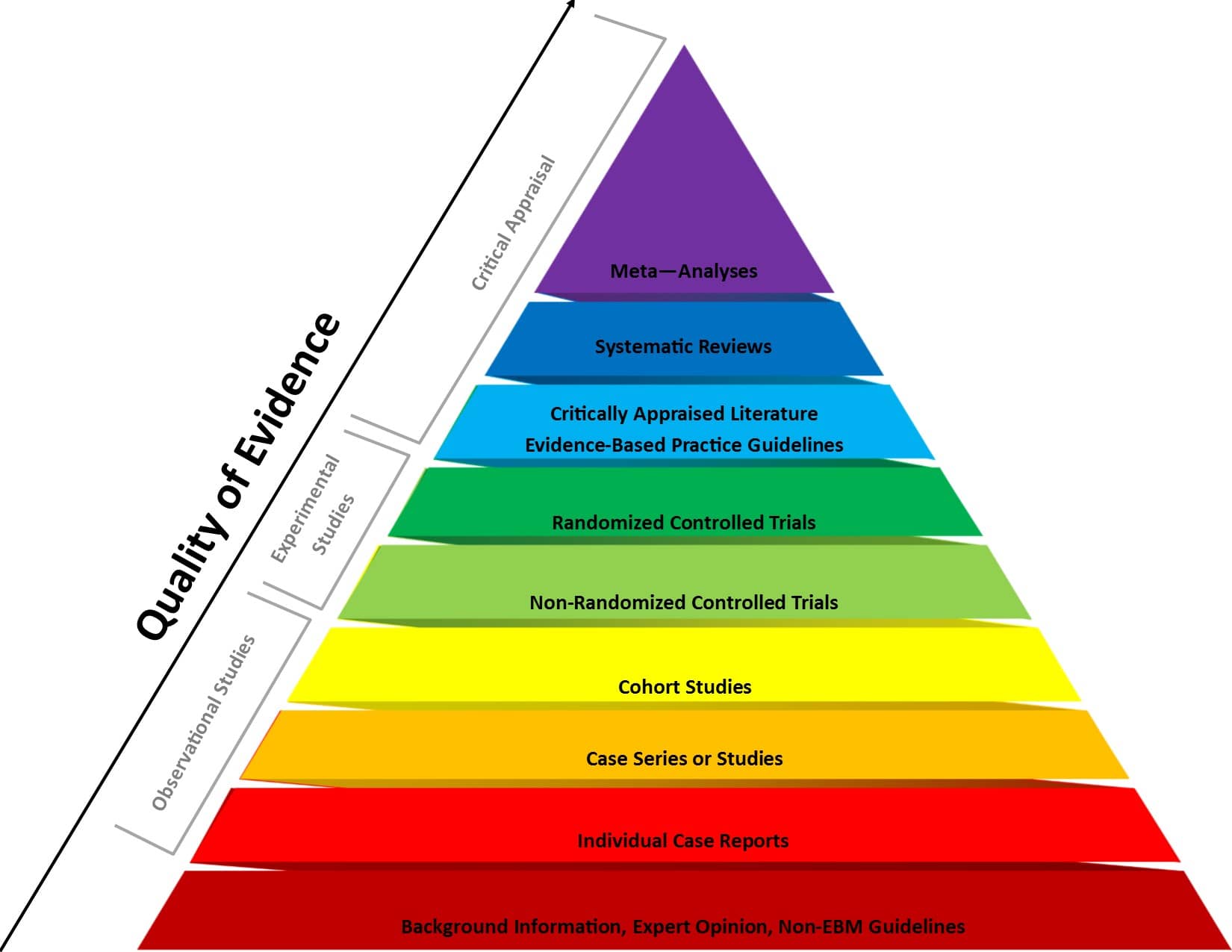

Case-control and cohort studies are observational studies that lie near the middle of the hierarchy of evidence . These types of studies, along with randomised controlled trials, constitute analytical studies, whereas case reports and case series define descriptive studies (1). Although these studies are not ranked as highly as randomised controlled trials, they can provide strong evidence if designed appropriately.

Case-control studies

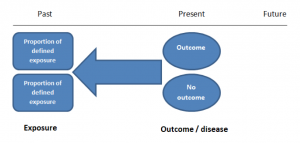

Case-control studies are retrospective. They clearly define two groups at the start: one with the outcome/disease and one without the outcome/disease. They look back to assess whether there is a statistically significant difference in the rates of exposure to a defined risk factor between the groups. See Figure 1 for a pictorial representation of a case-control study design. This can suggest associations between the risk factor and development of the disease in question, although no definitive causality can be drawn. The main outcome measure in case-control studies is odds ratio (OR) .

Figure 1. Case-control study design.

Cases should be selected based on objective inclusion and exclusion criteria from a reliable source such as a disease registry. An inherent issue with selecting cases is that a certain proportion of those with the disease would not have a formal diagnosis, may not present for medical care, may be misdiagnosed or may have died before getting a diagnosis. Regardless of how the cases are selected, they should be representative of the broader disease population that you are investigating to ensure generalisability.

Case-control studies should include two groups that are identical EXCEPT for their outcome / disease status.

As such, controls should also be selected carefully. It is possible to match controls to the cases selected on the basis of various factors (e.g. age, sex) to ensure these do not confound the study results. It may even increase statistical power and study precision by choosing up to three or four controls per case (2).

Case-controls can provide fast results and they are cheaper to perform than most other studies. The fact that the analysis is retrospective, allows rare diseases or diseases with long latency periods to be investigated. Furthermore, you can assess multiple exposures to get a better understanding of possible risk factors for the defined outcome / disease.

Nevertheless, as case-controls are retrospective, they are more prone to bias. One of the main examples is recall bias. Often case-control studies require the participants to self-report their exposure to a certain factor. Recall bias is the systematic difference in how the two groups may recall past events e.g. in a study investigating stillbirth, a mother who experienced this may recall the possible contributing factors a lot more vividly than a mother who had a healthy birth.

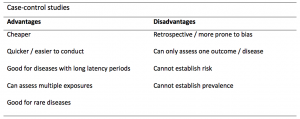

A summary of the pros and cons of case-control studies are provided in Table 1.

Table 1. Advantages and disadvantages of case-control studies.

Cohort studies

Cohort studies can be retrospective or prospective. Retrospective cohort studies are NOT the same as case-control studies.

In retrospective cohort studies, the exposure and outcomes have already happened. They are usually conducted on data that already exists (from prospective studies) and the exposures are defined before looking at the existing outcome data to see whether exposure to a risk factor is associated with a statistically significant difference in the outcome development rate.

Prospective cohort studies are more common. People are recruited into cohort studies regardless of their exposure or outcome status. This is one of their important strengths. People are often recruited because of their geographical area or occupation, for example, and researchers can then measure and analyse a range of exposures and outcomes.

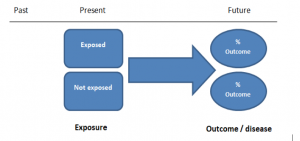

The study then follows these participants for a defined period to assess the proportion that develop the outcome/disease of interest. See Figure 2 for a pictorial representation of a cohort study design. Therefore, cohort studies are good for assessing prognosis, risk factors and harm. The outcome measure in cohort studies is usually a risk ratio / relative risk (RR).

Figure 2. Cohort study design.

Cohort studies should include two groups that are identical EXCEPT for their exposure status.

As a result, both exposed and unexposed groups should be recruited from the same source population. Another important consideration is attrition. If a significant number of participants are not followed up (lost, death, dropped out) then this may impact the validity of the study. Not only does it decrease the study’s power, but there may be attrition bias – a significant difference between the groups of those that did not complete the study.

Cohort studies can assess a range of outcomes allowing an exposure to be rigorously assessed for its impact in developing disease. Additionally, they are good for rare exposures, e.g. contact with a chemical radiation blast.

Whilst cohort studies are useful, they can be expensive and time-consuming, especially if a long follow-up period is chosen or the disease itself is rare or has a long latency.

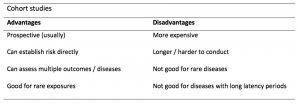

A summary of the pros and cons of cohort studies are provided in Table 2.

The Strengthening of Reporting of Observational Studies in Epidemiology Statement (STROBE)

STROBE provides a checklist of important steps for conducting these types of studies, as well as acting as best-practice reporting guidelines (3). Both case-control and cohort studies are observational, with varying advantages and disadvantages. However, the most important factor to the quality of evidence these studies provide, is their methodological quality.

- Song, J. and Chung, K. Observational Studies: Cohort and Case-Control Studies . Plastic and Reconstructive Surgery.  2010 Dec;126(6):2234-2242.

- Ury HK. Efficiency of case-control studies with multiple controls per case: Continuous or dichotomous data . Biometrics . 1975 Sep;31(3):643–649.

- von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP; STROBE Initiative. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies.  Lancet 2007 Oct;370(9596):1453-14577. PMID: 18064739.

Saul Crandon

Leave a reply cancel reply.

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

No Comments on Case-control and Cohort studies: A brief overview

Very well presented, excellent clarifications. Has put me right back into class, literally!

Very clear and informative! Thank you.

very informative article.

Thank you for the easy to understand blog in cohort studies. I want to follow a group of people with and without a disease to see what health outcomes occurs to them in future such as hospitalisations, diagnoses, procedures etc, as I have many health outcomes to consider, my questions is how to make sure these outcomes has not occurred before the “exposure disease”. As, in cohort studies we are looking at incidence (new) cases, so if an outcome have occurred before the exposure, I can leave them out of the analysis. But because I am not looking at a single outcome which can be checked easily and if happened before exposure can be left out. I have EHR data, so all the exposure and outcome have occurred. my aim is to check the rates of different health outcomes between the exposed)dementia) and unexposed(non-dementia) individuals.

Very helpful information

Thanks for making this subject student friendly and easier to understand. A great help.

Thanks a lot. It really helped me to understand the topic. I am taking epidemiology class this winter, and your paper really saved me.

Happy new year.

Wow its amazing n simple way of briefing ,which i was enjoyed to learn this.its very easy n quick to pick ideas .. Thanks n stay connected

Saul you absolute melt! Really good work man

am a student of public health. This information is simple and well presented to the point. Thank you so much.

very helpful information provided here

really thanks for wonderful information because i doing my bachelor degree research by survival model

Quite informative thank you so much for the info please continue posting. An mph student with Africa university Zimbabwe.

Thank you this was so helpful amazing

Apreciated the information provided above.

So clear and perfect. The language is simple and superb.I am recommending this to all budding epidemiology students. Thanks a lot.

Great to hear, thank you AJ!

I have recently completed an investigational study where evidence of phlebitis was determined in a control cohort by data mining from electronic medical records. We then introduced an intervention in an attempt to reduce incidence of phlebitis in a second cohort. Again, results were determined by data mining. This was an expedited study, so there subjects were enrolled in a specific cohort based on date(s) of the drug infused. How do I define this study? Thanks so much.

thanks for the information and knowledge about observational studies. am a masters student in public health/epidemilogy of the faculty of medicines and pharmaceutical sciences , University of Dschang. this information is very explicit and straight to the point

Very much helpful

Subscribe to our newsletter

You will receive our monthly newsletter and free access to Trip Premium.

Related Articles

Cluster Randomized Trials: Concepts

This blog summarizes the concepts of cluster randomization, and the logistical and statistical considerations while designing a cluster randomized controlled trial.

Expertise-based Randomized Controlled Trials

This blog summarizes the concepts of Expertise-based randomized controlled trials with a focus on the advantages and challenges associated with this type of study.

An introduction to different types of study design

Conducting successful research requires choosing the appropriate study design. This article describes the most common types of designs conducted by researchers.

Randomized Trials and Case–Control Matching Techniques

- First Online: 14 December 2022

Cite this chapter

- Emanuele Russo 34 ,

- Annalaura Montalti 35 ,

- Domenico Pietro Santonastaso 34 &

- Giuliano Bolondi 34

Part of the book series: Hot Topics in Acute Care Surgery and Trauma ((HTACST))

310 Accesses

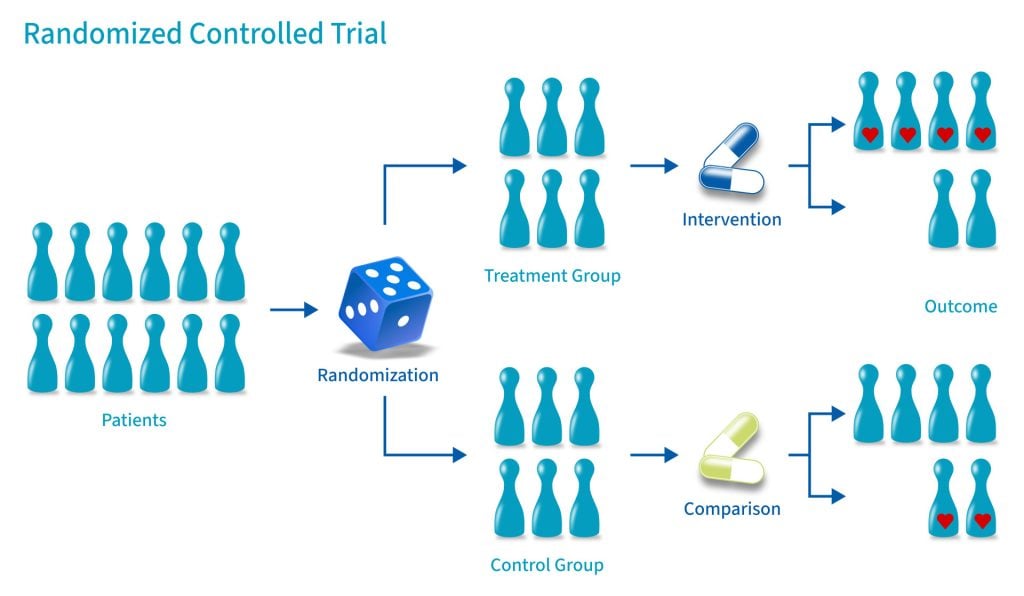

Randomized control trials (RCTs) are deemed to be among the most powerful and rigorous clinical research instruments. The main application is to evaluate the effectiveness and safety of new treatment or clinical approach. Researchers employ several strategies to reduce bias and increase the strength of results such as “blinding,” multicenter enrollment, and different randomization designs. Finding’s interpretation needs meticulous reporting of each phase of the trial. RCTs are not appropriate for the validation of screening tests and for the study of rare outcomes.

Case–control studies are a sub-type of retrospective observational studies. The main goal of case–control studies is to investigate the risk factors that led to the development of the disease. This type of design allows relative risk to be estimated by means of odds ratios and it is deemed to be an efficient means of studying rare diseases with a long-term latency period.

In case–control studies, matching techniques are often employed. Pairing techniques allow to control some confounding factors and increase statistical power in studies with small populations. Patient matching is increasingly performed by complex techniques such as propensity score and inverse probability.

This is a preview of subscription content, log in via an institution to check access.

Access this chapter

- Available as PDF

- Read on any device

- Instant download

- Own it forever

- Available as EPUB and PDF

- Compact, lightweight edition

- Dispatched in 3 to 5 business days

- Free shipping worldwide - see info

- Durable hardcover edition

Tax calculation will be finalised at checkout

Purchases are for personal use only

Institutional subscriptions

Holy Bible Book of Daniel (1; 1–21).

Google Scholar

Amberson JB, McMahon BT, Pinner M. A clinical trial of sanocrysin in pulmonary tuberculosis. Am Rev Tuberc. 1931;24:401–35.

Streptomycin treatment of pulmonary tuberculosis. Br Med J. 1948;2(4582):769–82.

Article Google Scholar

Stolberg HO, Norman G, Trop I. Randomized controlled trials. Fundamentals of clinical research for radiologists. Am J Roentgenol. 2004;183:1539–44. https://doi.org/10.2214/ajr.183.6.01831539 .

De Angelis C, Drazen JM, Frizelle FA, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. N Engl J Med. 2004;351(12):1250–1.

Pocock SJ, Assmann SE, Enos LE, Kasten LE. Subgroup analysis, covariate adjustment and baseline comparisons in clinical trial reporting: current practice and problems. Stat Med. 2002;21(19):2917–30. https://doi.org/10.1002/sim.1296 .

Horton R. From star signs to trial guidelines. Lancet. 2000;355(9209):1033–4. https://doi.org/10.1016/S0140-6736(00)02031-6 .

Article CAS Google Scholar

World Medical Association. World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. JAMA. 2013;310(20):2191–4. https://doi.org/10.1001/jama.2013.281053 .

Hannan EL. Randomized clinical trials and observational studies: guidelines for assessing respective strengths and limitations. JACC Cardiovasc Interv. 2008;1(3):211–7. https://doi.org/10.1016/j.jcin.2008.01.008 .

Benson K, Hartz AJ. A comparison of observational studies and randomized, controlled trials. N Engl J Med. 2000;342(25):1878–86. https://doi.org/10.1056/NEJM200006223422506 .

Feys F, et al. Do randomized clinical trials with inadequate blinding report enhanced placebo effects for intervention groups and nocebo effects for placebo groups? Syst Rev. 2014;3:14.

Lee CS, Lee AY. How artificial intelligence can transform randomized controlled trials. Transl Vis Sci Technol. 2020;9(2):9. https://doi.org/10.1167/tvst.9.2.9 .

Banerjee A, Chitnis UB, Jadhav SL, Bhawalkar JS, Chaudhury S. Hypothesis testing, type I and type II errors. Ind Psychiatry J. 2009;18(2):127–31. https://doi.org/10.4103/0972-6748.62274 .

Moher D, Schulz KF, Altman DG, CONSORT GROUP (Consolidated Standards of Reporting Trials). The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. Ann Intern Med. 2001;134(8):657–62. https://doi.org/10.7326/0003-4819-134-8-200104170-00011 .

Begg C, Cho M, Eastwood S, Horton R, Moher D, Olkin I, et al. Improving the quality of reporting of randomized controlled trials. The CONSORT Statement. JAMA. 1996;276(8):637–9. https://doi.org/10.1001/jama.276.8.637 .

Piaggio G, Elbourne DR, Altman DG, Pocock SJ, SJW E, CONSORT Group FT. Reporting of noninferiority and equivalence randomized trials: an extension of the CONSORT Statement. JAMA. 2006;295(10):1152–60. https://doi.org/10.1001/jama.295.10.1152 .

Ioannidis JPA, Dixon DO, McIntosh M, Albert JM, Bozzette SA, Schnittman SN. Relationship between event rates and treatment effects in clinical site differences within multicenter trials: an example from primary Pneumocystis carinii Prophylaxi. Control Clin Trials. 1999;20:253–66.

CRASH-2 Collaborators, Roberts I, Shakur H, Afolabi A, Brohi K, Coats T, Dewan Y, Gando S, Guyatt G, Hunt BJ, Morales C, Perel P, Prieto-Merino D, Woolley T. The importance of early treatment with tranexamic acid in bleeding trauma patients: an exploratory analysis of the CRASH-2 randomised controlled trial. Lancet. 2011;377(9771):1096–101, 1101.e1–2. https://doi.org/10.1016/S0140-6736(11)60278-X .

Mitra B, Mazur S, Cameron PA, Bernard S, Burns B, Smith A, Rashford S, Fitzgerald M, Smith K, Gruen RL. Tranexamic acid for trauma: filling the GAP in evidence. Emerg Med Australas. 2014;26:194–7.

Hróbjartsson A, Boutron I. Blinding in randomized clinical trials: imposed impartiality. Clin Pharmacol Ther. 2011;90(5):732–6. https://doi.org/10.1038/clpt.2011.207 .

Karanicolas PJ, Farrokhyar F, Bhandari M. Practical tips for surgical research: blinding: who, what, when, why, how? Can J Surg. 2010;53(5):345–8.

Kao LS, Tyson JE, Blakely ML, Lally KP. Clinical research methodology I: introduction to randomized trials. J Am Coll Surg. 2008;206(2):361–9.

Suresh KP. An overview of randomization techniques: an unbiased assessment of outcome in clinical research. J Hum Reprod Sci. 2011;4:8–11.

Hopewell S, Dutton S, Yu LM, Chan AW, Altman DG. The quality of reports of randomised trials in 2000 and 2006: comparative study of articles indexed in PubMed. BMJ. 2010;340:c723. https://doi.org/10.1136/bmj.c723 .

Deaton A, Cartwright N. Understanding and misunderstanding randomized controlled trials. Soc Sci Med. 2018;210:2–21. https://doi.org/10.1016/j.socscimed.2017.12.005 .

Sibbald B, Roland M. Why are randomized controlled trials important? BMJ. 1998;316:201.

Hein S, Weeland J. Introduction to the special issue. Randomized control trials (RCTs) in clinical and community settings: challenges, alternatives and supplementary designs. New Dir Child Adolesc Dev. 2019;2019(167):7–15. https://doi.org/10.1002/cad.20312 .

Thompson D. Understanding financial conflicts of interest. N Engl J Med. 1993;329:573–6.

Bekelman JE, Li Y, Gross CP. Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA. 2003;289(4):454–65. https://doi.org/10.1001/jama.289.4.454 .

Bhandari M, Busse JW, Jackowski D, Montori VM, Schünemann H, Sprague S, Mears D, Schemitsch EH, Heels-Ansdell D, Devereaux PJ. Association between industry funding and statistically significant pro-industry findings in medical and surgical randomized trials. CMAJ. 2004;170(4):477–80.

Sason-Fisher RW, Bonevski B, Green LW, D’Este C. Limitations of the randomized controlled trial in evaluation population-based Health intervention. Am J Prev Med. 2007;33(2):155–61.

Kraemer HC, Robinson TN. Are certain multicenter randomized clinical trial structures misleading clinical and policy decisions? Contemp Clin Trials. 2005;26(5):518–29. https://doi.org/10.1016/j.cct.2005.05.002 .

Harris PNA, Tambyah PA, Lye DC, et al. MERINO Trial Investigators and the Australasian Society for Infectious Disease Clinical Research Network (ASID-CRN). Effect of Piperacillin-Tazobactam vs Meropenem on 30-day mortality for patients with E coli or Klebsiella pneumoniae bloodstream infection and ceftriaxone resistance: a randomized clinical trial. JAMA. 2018;320(10):984–94. [Erratum in: JAMA. 2019 Jun 18;321(23):2370]. https://doi.org/10.1001/jama.2018.12163 .

Rodríguez-Baño J, Gutiérrez-Gutiérrez B, Kahlmeter G. Antibiotics for ceftriaxone-resistant gram-negative bacterial bloodstream infections. JAMA. 2019;321(6):612–3. https://doi.org/10.1001/jama.2018.19345 .

Missing information on sample size. JAMA. 2019;321(23):2370. [Erratum for: JAMA. 2018;320(10):984–994]. https://doi.org/10.1001/jama.2019.6706 .

Pearce N. Analysis of matched case-control studies. BMJ. 2016;352:i969. https://doi.org/10.1136/bmj.i969 .

Wachoider S, Silverman DT, McLaughlin JK, Mandel JS. Selection of controls in case-control studies. Am J Epidemiol. 1992;135(9):1042–50.

Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70(1):41–55.

Chesnaye NC, Stel VS, Tripepi G, Dekker FW, Fu EL, Zoccali C, Jager KJ. An introduction to inverse probability of treatment weighting in observational research. Clin Kidney J. 2021;15(1):14–20. https://doi.org/10.1093/ckj/sfab158 .

Schulte PJ, Mascha EJ. Propensity score methods: theory and practice for anesthesia research. Anesth Analg. 2018;127(4):1074–84. https://doi.org/10.1213/ANE.0000000000002920 .

Rodríguez-Pardo J, Plaza Herráiz A, Lobato-Pérez L, Ramírez-Torres M, De Lorenzo I, Alonso de Leciñana M, Díez-Tejedor E, Fuentes B. Influence of oral anticoagulation on stroke severity and outcomes: a propensity score matching case-control study. J Neurol Sci. 2020;410:116685. https://doi.org/10.1016/j.jns.2020.116685 .

Austin PC, Stuart EA. Moving towards best practice when using inverse probability of treatment weighting (IPTW) using the propensity score to estimate causal treatment effects in observational studies. Stat Med. 2015;34(28):3661–79. https://doi.org/10.1002/sim.6607 .

Download references

Author information

Authors and affiliations.

Anesthesia and Intensive Care Unit, AUSL Romagna, Maurizio Bufalini Hospital, Cesena FC, Italy

Emanuele Russo, Domenico Pietro Santonastaso & Giuliano Bolondi

Risk and Compliance, Healthcare, KPMG Advisory S.p.A., Milan, Italy

Annalaura Montalti

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Emanuele Russo .

Editor information

Editors and affiliations.

General and Emergency Surgery Department, School of Medicine and Surgery, Milano-Bicocca University, Monza, Italy

Marco Ceresoli

Department of Surgery, College of Medicine and Health Science, United Arab Emirates University, Abu Dhabi, United Arab Emirates

Fikri M. Abu-Zidan

Department of Surgery, Stanford University, Stanford, CA, USA

Kristan L. Staudenmayer

General and Emergency Surgery Department, Bufalini Hospital, Cesena, Italy

Fausto Catena

Department of General, Emergency and Trauma Surgery, Pisa University Hospital, Pisa, Pisa, Italy

Federico Coccolini

Rights and permissions

Reprints and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Russo, E., Montalti, A., Santonastaso, D.P., Bolondi, G. (2022). Randomized Trials and Case–Control Matching Techniques. In: Ceresoli, M., Abu-Zidan, F.M., Staudenmayer, K.L., Catena, F., Coccolini, F. (eds) Statistics and Research Methods for Acute Care and General Surgeons. Hot Topics in Acute Care Surgery and Trauma. Springer, Cham. https://doi.org/10.1007/978-3-031-13818-8_10

Download citation

DOI : https://doi.org/10.1007/978-3-031-13818-8_10

Published : 14 December 2022

Publisher Name : Springer, Cham

Print ISBN : 978-3-031-13817-1

Online ISBN : 978-3-031-13818-8

eBook Packages : Medicine Medicine (R0)

Share this chapter

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Publish with us

Policies and ethics

- Find a journal

- Track your research

- Research article

- Open access

- Published: 12 May 2020

A mixed methods case study investigating how randomised controlled trials (RCTs) are reported, understood and interpreted in practice

- Ben E. Byrne ORCID: orcid.org/0000-0002-2183-8166 1 ,

- Leila Rooshenas 1 ,

- Helen S. Lambert 2 &

- Jane M. Blazeby 1 , 3 , 4

BMC Medical Research Methodology volume 20 , Article number: 112 ( 2020 ) Cite this article

3143 Accesses

2 Citations

10 Altmetric

Metrics details

While randomised controlled trials (RCTs) provide high-quality evidence to guide practice, much routine care is not based upon available RCTs. This disconnect between evidence and practice is not sufficiently well understood. This case study explores this relationship using a novel approach. Better understanding may improve trial design, conduct, reporting and implementation, helping patients benefit from the best available evidence.

We employed a case-study approach, comprising mixed methods to examine the case of interest: the primary outcome paper of a surgical RCT (the TIME trial). Letters and editorials citing the TIME trial’s primary report underwent qualitative thematic analysis, and the RCT was critically appraised using validated tools. These analyses were compared to provide insight into how the TIME trial findings were interpreted and appraised by the clinical community.

23 letters and editorials were studied. Most authorship included at least one academic (20/23) and one surgeon (21/23). Authors identified wide-ranging issues including confounding variables or outcome selection. Clear descriptions of bias or generalisability were lacking. Structured appraisal identified risks of bias. Non-RCT evidence was less critically appraised. Authors reached varying conclusions about the trial without consistent justification. Authors discussed aspects of internal and external validity covered by appraisal tools but did not use these methodological terms in their articles.

Conclusions

This novel method for examining interpretation of an RCT in the clinical community showed that published responses identified limited issues with trial design. Responses did not provide coherent rationales for accepting (or not) trial results. Findings may suggest that authors lacked skills in appraisal of RCT design and conduct. Multiple case studies with cross-case analysis of other trials are needed.

Peer Review reports

It is widely recognised that clinical practice is often not in line with the best available evidence. This is the so-called ‘gap’ between research and practice [ 1 , 2 ]. Best evidence predominantly comes from well designed and conducted randomised controlled trials (RCTs) [ 3 ]. However, RCTs are often complex and challenging. Surgical RCTs present specific issues with recruitment, blinding of patients and surgeons, and intervention standardisation [ 4 ]. Many of these issues have been clarified with methodological research [ 5 , 6 , 7 , 8 , 9 , 10 ]. Such work has led to improvements in trial quality over time [ 11 , 12 ]. However, the gap between trials and implementation of their results in practice persists [ 13 ], potentially compromising patient care and wasting resources. Reasons for the disconnect are myriad.

Trial findings that report putative evidence for a change in clinical practice may not be implemented because of poor conduct and reporting [ 14 ], limitations in generalisation and applicability [ 15 ], cost, and unacceptability of new interventions. Clinical culture may emphasise the importance of experience over evidence [ 16 ], and some clinicians may have limited numeracy skills required to understand and apply quantitative results from trials [ 17 ]. Appropriate understanding of RCTs is critical to implementation and of vital importance to clinicians, researchers and funders. We have previously described a novel approach to explore understanding and interpretation of RCT evidence, by examining writings about individual surgical trials [ 18 ]. The present study aims to apply this new method to a single case study: the TIME (Traditional Invasive versus Minimally invasive Esophagectomy) RCT [ 19 ]. The purpose is to better understand how this trial has been interpreted and to illustrate the potential of this novel approach.

The methodology used in this study has been described in detail elsewhere [ 18 ] and will be summarised here. The approach represents a form of case-study research, comprising mixed methods analysis of documentary evidence relating to a published RCT [ 20 ]. Case-study approaches have been defined in various ways and used across numerous disciplines. Their central tenet is to explore an event or phenomenon in depth and in its natural context [ 21 ]. The ‘real-world context’ in this study was the landscape of published articles that interpreted, appraised and discussed implementation of the TIME trial’s findings. Our approach aligned with Stake’s ‘instrumental case-study’ [ 22 ], using a particular case (the TIME RCT’s outcomes paper) to gain a broader appreciation of the issue or phenomenon of interest (in this case, interpretation and appraisal of RCTs in the clinical community, and implications for implementation). We conducted qualitative analysis of selected published articles citing this RCT’s primary report and compared this with structured critical appraisal of the RCT using established tools. We also sought to demonstrate the utility of this novel approach, which we intend to apply in future case studies.

Identify and analyse articles citing a trial

Purposefully select a major surgical rct.

An index RCT was identified and summarised as the case of interest. We sought a highly cited trial report, published in a high-impact journal within the last 10 years. The TIME trial [ 19 ], comparing open and minimally invasive surgical access for removal of oesophageal cancer, was selected as it met these criteria and was within our area of expertise.

Identify and systematically sample articles citing the RCT

All articles citing this RCT were identified using Web of Science and Scopus citation tracking tools. Letter, editorial and discussion article types were included. On-line comments were identified using the Altmetric.com bookmarklet. Non-English language articles were excluded. Searches were conducted in October 2017.

Undertake in-depth qualitative analysis and identify relevant themes

Included articles were thematically analysed using the constant comparison technique, adopted from grounded theory [ 23 , 24 ]. Articles were read in detail, with no a priori coding framework. Text was considered against the research topic, which focused on understanding how the authors interpreted, appraised and/or applied the findings of the trial. New findings or interpretations were continuously related to existing findings to develop the data set as a whole (i.e. the constant comparison technique). Coding was not constrained by pre-defined boundaries defining relevance. Rather, this was guided by the content of the articles being analysed. During analysis, it transpired that understanding authors’ interpretations of the RCT required examination of their discussion of evidence from other studies. Therefore, other articles cited by the authors were sought to determine the types of evidence being referenced. The designs of these additional studies were ascertained based on the descriptions in those articles (rather than our assessment).

Analysis was performed by BEB and LR. BEB is a senior surgical trainee and postdoctoral researcher with previous experience of qualitative research. LR is a Lecturer in Qualitative Health Science with an interest in trial recruitment issues, implementation of trial evidence, and experience of working on multiple surgical RCTs. Both researchers work within a department with expertise in trials methodology and have detailed knowledge in this field which is likely to have influenced their identification and coding of relevant themes.

Two rounds of double coding of five articles were performed by BEB and LR. Further coding was conducted by BEB and reviewed among the team to revise coded themes. Descriptive data on authorship and origins of the articles were collected.

Summarise validity and reporting of the RCT

The RCT was assessed by BEB using a range of critical appraisal tools commonly used to appraise RCTs. These included two of the most commonly used tools to assess RCTs: one examining trial reporting in a broad sense (Consolidated Standards of Reporting Trials for Non-Pharmacological Treatments (CONSORT-NPT) [ 5 ]), and another focusing on internal validity as commonly assessed in systematic reviews of trials (the updated Cochrane Risk of Bias Tool (ROBT 2.0) [ 7 ]). In addition, the Pragmatic Explanatory Continuum Indicator Scale (PRECIS-2) tool [ 8 ] was included, to examine domains associated with the broad applicability and utility of the trial, and the Context and Implementation of Complex Interventions (CICI) framework [ 25 ] was included on an exploratory basis to identify broader contextual factors that could be relevant. JMB contributed to assessment during piloting of the tools and in discussion with BEB where there was uncertainty.

Broad comparison of all results to develop deeper understanding of how trials are understood and relationship with trial quality

The results of both qualitative analysis and structured critical appraisal were considered side-by-side, with the overall aim of better understanding how other authors’ interpretations of the TIME trial compared with the critical appraisal guided by the above tools. The qualitative analysis of the authors’ interpretations was conducted before the structured critical appraisal to ensure the coding/themes were grounded in authors’ writings, rather than our experience of conducting the structured appraisals. The final step aimed to draw together both analyses, to see whether authors discussing the trial raised concerns across similar domains to the areas covered by the critical appraisal tools, or whether their topics of discussion addressed other considerations.

Ethical considerations

This study involved secondary use of publicly available written material and did not require ethical review.

Patient and public involvement

Patients and members of the public were not involved in any aspect of the design of this study.

Summary of index RCT

The TIME trial was a two-group, multicentre randomised trial comparing a minimally invasive approach to the surgical removal of oesophageal cancer with an open approach to the abdomen and chest. It was conducted in five centres across four European countries from 2009 to 2011 and is summarised in Table 1 .

Characteristics of articles

Searches identified 26 articles, and 23 were included (exclusions: an incorrectly classified case report and two articles in German). Summary characteristics are provided in Table 2 . Most articles (18/23, 78%) originated from Europe or the United States. The majority (20/23, 87%) included at least one author holding an academic position; 18/23 (78%) included at least one professor or associate professor (as defined within their own institution). Nearly all included at least one consultant or trainee surgeon (21/23, 91%).

Altmetric.com identified several references to the TIME trial, detailed in Table 3 . Only one, part of the British Medical Journal blog series, included text discussing the trial, rather than simply restating its results or directing readers to the study report.

Themes identified

Qualitative analysis resulted in description of three key themes: identification of wide-ranging issues with the RCT; limited appraisal of non-RCT studies; and variable recommendations for future practice and research. Codes linking quotes to articles and bibliographic data are provided in supplementary Table 1 .

Identification of wide-ranging issues with the RCT

Authors extensively discussed and critiqued several features of trial design and conduct. These included the population, intervention and outcomes of the trial.

If the author’s primary outcome was focused on pulmonary infection, perhaps other patient associated inclusion / exclusion criteria may have been of value. These would include patients with poor pulmonary function parameters … patients with major organ disease … and recent history of prior malignancy. (E2).

In the present [TIME] trial, the difference between minimally invasive and open oesophagectomy was maximised with a purely thoracoscopic (prone position) and laparoscopic technique. (E1).

The primary outcome … was pulmonary infection within the first 2 weeks after surgery and during the whole stay in hospital. This cannot be considered as the relevant primary outcome with reference to the decision problem outline by the authors … (E5).

Beyond these basic trial design parameters, authors of the citing articles also highlighted important confounding variables.

Many non-studied variables, including malnutrition, previous and current smoking, pulmonary comorbidities, functional status, and clinical TNM (tumour, node, metastasis) staging, have all been shown to strongly affect the primary endpoint of this trial – postoperative pulmonary infection. (L2).

Several correspondents suggest that lower rates of respiratory infection might have been achieved by use of alternative strategies for preoperative preparation, patient positioning, ventilator settings, anaesthetic agents, or postoperative care. (L6).

The articles also covered other potential problems with the trial, such as sample size and learning curve effects.

The sample size for sufficient statistical power for major morbidity, survival, total morbidity and other similarly important outcomes may actually be larger. (E2).

The inclusion criteria for participating surgeons appears to have the performance of a minimum of only 10 MIOs and this low level of experience may be reflected in relatively high conversion rate of 13%. (E4).

Only one article (E2) made clear statements praising aspects of the trial:

‘…The protocols for the RCT appear sound with randomization, intention to treat, PICO … and bias elimination.’

The next sentence of this article balanced these positive comments with discussion of limits due to the lack of blinding and other potential confounding variables.

Limited appraisal of non-RCT studies

Authors often cited other types of evidence in the same field to support their views without discussing their methodological limitations. Types of evidence included single-surgeon series, non-randomised comparative studies, systematic reviews (SRs) and meta-analyses (MAs).

Luketich et al. , one of the earlier pioneers of MIE, reported their extensive experience of 1033 consecutive patients undergoing MIE with acceptable lymph node resection, postoperative outcomes, and a 1.7% mortality rate. (L8).

In a population-based national study, … the incidence of pneumonia was 18.6% after open oesophagectomy and 19.9% after minimally invasive oesophagectomy … (L3).

Although systematic reviews and a large comparative study of minimally invasive oesophagectomy have not shown this technique to be beneficial as compared with open oesophagectomy, some meta-analyses have suggested specific advantages. (E1).

The existing SRs and MAs were discussed in relation to the intervention and its outcomes, without directly relating them to the TIME trial itself. The implications for authors’ impressions of the TIME trial findings were generally unclear.

There was limited appraisal of these SRs and MAs, especially when contrasted with discussion of the TIME trial. Several authors referred to the large, single-surgeon series of MIO by Luketich, but only one author described limits of this single-institution non-comparative study.

We must not rely on the limitation of single-institution studies and historical data. This procedure must be broadly applicable and not the domain of a few experts for it to become the new gold standard. (E12).

A few others highlighted the limits of other study designs, but there was a striking disparity in the level of critique, when compared with that of the TIME trial.

In their systematic review … Uttley et al. correctly conclude that due to factors such as selection bias, sufficient evidence does not exist to suggest the MIO is either equivalent to or superior to open surgery. (E6).

All these studies however, concede that due to a lack of feasible evidence by way of prospective randomized controlled trials (RCT), no definitive statement of MIE ‘superiority’ over standard open techniques can be made. (E2).

Although several authors referred to the existing SRs and MAs, none reported the design of the included primary studies, which were largely retrospective and non-randomised.

Variable recommendations for future practice and research

The authors had differing interpretations and recommendations for implementation based on the TIME trial. Some articles discussed issues with the trial and did not make recommendations for future practice, in some cases asking for additional information to better understand or interpret the trial.(L1, L3–5) For example, one simply wrote that the authors ‘have several concerns’, before reporting differences in outcomes between TIME and other studies, and describing practice in their own institution. (L1) Others reported that more work was required, such as further analysis of long-term results of patients included in TIME, or called for further trials in different patient populations.

However, the main issue which this study [TIME] does not address is that of long-term survival. … If the authors can indeed demonstrate at least equivalent long-term oncological outcome for MIO and open oesophagectomy, then this paper should provide an impetus for driving forward the widespread adoption of MIO. (E4).

Of interest will be whether similar results can be repeated in patients in Asia, with mainly squamous cell cancers that are proximally located. … The substantial benefit shown in this trial [TIME] … might encourage investigators to do further randomised studies at other centres. If these results can be confirmed in other settings, minimally invasive oesophagectomy could truly become the standard of care. (E1).

One article (E6) considered the evidence for MIO, discussed this against methodological aspects of a colorectal trial evaluating a minimally invasive approach, before restating the findings of TIME, opining that:

‘This study confirms that RCT [sic] for open versus MIO is indeed possible, but further larger trials are required.’

Later in that article, the authors suggested extensive control of wide-ranging aspects of perioperative care would be important for future trials.

Authors of three articles (E7, E9, E11) suggested that the available evidence was enough for increasing adoption of MIO.

…The available evidence increasingly favors a prominent role for minimally invasive approaches in the management of esophageal cancer. Endoscopic therapies and minimally invasive approaches offer at least equivalent oncologic outcomes, with reduced complications and improved quality of life compared with maximal surgery. (E11).

We are close to a situation in which one can argue that MIE is ready for prime time in the curative treatment of invasive esophageal cancer. If we critically analyse the level and grading of evidence, the current situation concerning MIE and hybrid MIE is far better than was the case when laparoscopic cholecystectomy, anti-reflux surgery, and bariatric surgery were introduced into clinical practice. (E9).

No authors called for the cessation of MIO, although one referred to some centres stopping ‘their MIE [minimally invasive esophagectomy] program due to safety reasons’. (E13).

Assessment of RCT using validated tools

The TIME trial results and protocol papers [ 19 , 26 ] were examined to assess the trial and its reporting. Assessment using CONSORT-NPT demonstrated reporting shortfalls in several areas (full notes in supplementary Table 2 ). These included: lack of information on adherence of care providers and patients to the treatment protocol; discrepancies between the primary outcomes proposed in the protocol (3 pulmonary outcomes) and the trial report (one pulmonary result); no information on interim analyses or stopping criteria; a lack of information regarding statistical analysis to allow for clustering of patients by centre; and absence of discussion of the trial limitations or generalisability.

Risk of bias was assessed as shown in Table 4 . Overall, the TIME trial was considered at high risk of bias.

Assessment using the PRECIS-2 tool is shown in Table 5 . Overall, TIME had features in keeping with a more pragmatic rather than explanatory trial. This suggested a reasonable degree of applicability and usefulness to wider clinical practice.

Application of the CICI framework highlighted several higher-level considerations relevant to the applicability of the TIME trial not described in the protocol or study report (see Table 6 ). These included lack of detail on the setting, as well as epidemiological and socio-economic information.

Overall, these tools suggested that TIME had several limitations. These included issues with standardisation and monitoring of intervention adherence, lack of blinding, failure to use hierarchical analysis and a lack of information on provider volume. The risk of bias was high, limiting confidence attributing outcomes to the allocated interventions. Broad applicability was considered reasonable, though study utility was compromised by a short-term clinical outcome, rather than longer term or patient-reported outcomes. While TIME may have provided early evidence for benefit of MIO to reduce pulmonary infection within 2 weeks of surgery, the appraisal suggested more evidence was needed before considering wider adoption of MIO.

Broad comparison of all results to develop deeper understanding

We considered the findings from the qualitative analysis in relation to those of the critical appraisal. In doing so, broad domains of internal and external validity seemed a useful system to bring together results of both analyses. While the ROBT was described by its creators as focused on internal validity, the PRECIS-2 and CICI tools were not described in terms of validity. Rather, their authors referred to applicability and reproducibility in other settings, which may also be described as external validity. CONSORT-NPT is a tool focused on reporting of trials, and its authors referred to both domains, with some duplication of factors covered in the other tools. However, authors of the articles included in the qualitative analysis did not adopt such methodological terminology when expressing concerns about these aspects of the index RCT’s conduct or reporting.

Robust internal validity allows confident attribution of treatment effects to the experimental intervention. The ROBT identified high risk of bias in the TIME trial. Qualitative analysis revealed discussion of various aspects relevant to internal validity. For example, several authors discussed differences in patient positioning and anaesthetic techniques. These confounding variables may have introduced systematic differences in care between groups, aside from the allocated intervention, resulting in bias. However, the article authors did not articulate the implications of their concerns in such terms and did not consider whether these problems rendered the trial fatally biased.

Sound external validity suggests similar treatment effects may be achieved by other clinicians in other settings for other patients. Pragmatic trials have broad applicability, with wide inclusion criteria, and patient-centred outcomes. The PRECIS-2 describes domains relevant to this applicability. TIME had several features of a pragmatic trial, suggesting relatively broad applicability. The qualitative analysis showed authors were concerned about these issues. For example, several discussed the appropriateness and utility of 2-week and in-hospital pulmonary infection rates as the primary outcome measure. However, authors did not directly relate such concerns to external validity or generalisability, to reach a conclusion about whether the trial should influence practice.

While many authors identified issues relevant to internal and external validity, the lack of clear explanation of their implications meant it was difficult to determine whether they thought the trial justified a change in practice. This contrasts with the structured assessments, which defined clear problems with the trial and limits to its usefulness.

This study presents the first application and results of a new method to generate insights into how evidence from a trial was understood, contextualised and related to practice. Qualitative analysis of letters and editorials, largely written by academic surgeons, documented extensive discussion of problems with the trial, but without clear formulation of the implications of these concerns for its internal or external validity and applicability. These authors reached a variety of conclusions about the implications of the trial for surgical practice. A separate assessment using structured tools defined specific weaknesses in trial methodology. Whilst this new approach yielded useful findings in this single case study, the method should be further tested using multiple trials and cross-case analyses. The initial findings based on this single case study suggest a need to clarify standards against which a trial may be assessed to guide decisions about its role in changing practice, and potentially also to guide efforts to influence practitioners to implement change if appropriate. Within this, our findings suggest a need to focus efforts on educating surgeons about trial design and quality, which may contribute to implementation science-based efforts to inform clinical decision-making and implementation of trial results.

This study contributes to the wider literature showing that evidence does not speak for itself. New evidence is often considered alongside competing bodies of existing evidence that may support different ideas, theories or interventions [ 27 , 28 ]. When a study is published, this new evidence is assimilated into the wider scientific context. Its strengths, weaknesses and overall contribution are debated and disputed. Through the lens of Latour’s actor-network theory [ 29 , 30 ], the new trial can be considered a novel actor within the wider network of actors that includes other trials and studies of the intervention, as well as the consumers of this evidence. Those commenting on the trial have an important role in how different features of the trial are identified, discussed and debated, and how its findings are framed. This agency may be influenced by their own clinical experience, education, skill set, work environment and colleagues, amongst other factors. Given these complexities, it is not surprising to find that different authors reached different conclusions about the TIME trial.

The way authors of the included articles used and appraised different types of study raises questions about how the hierarchy of evidence, and the primacy of the RCT, is applied to routine clinical practice. We found extensive criticism of the TIME trial. Article authors described several limitations relating to its population, intervention, associated co-interventions and confounding variables, as well as the outcomes selected. Certainly, the authors presented valid criticisms that limited the trial’s validity, as identified by structured critical appraisal. Over recent years, trials methodologists have worked to better understand and optimise many such aspects of trial conduct. The development of the CONSORT reporting standards promotes detailed description of key methods, such as random sequence generation and allocation concealment, that allow critical judgements about internal validity to be made [ 5 ]. The growth of pragmatic trials, featuring wide inclusion criteria, conducted across multiple sites, with clinically meaningful outcomes, reflects a concerted effort to improve applicability or external validity of RCTs [ 8 , 31 ]. It may never be possible to conduct a ‘perfect’ trial, but improvements in the rigor and transparency of design hopefully ensure that RCTs can provide sufficiently robust evidence that is useful to the broad population of patients and clinicians within a healthcare system. Whether these developments, designed to address valid criticisms of RCTs, are widely understood outside the sphere of trials methodologists is unclear.

Conversely, the authors of the included articles were far less critical of non-RCT evidence. For example, several authors referred to the single-surgeon case-series of Luketich [ 32 ]. Only one author discussed its limitations for generalisation. Surgical skill and performance vary [ 33 ]; what is possible for a single surgeon cannot be generalised to what is usual for most. Similarly, authors cited systematic reviews and meta-analyses without clear description of the original study designs. Evidence synthesis cannot eliminate biases in retrospective, non-randomised studies using statistical techniques. Failure to clearly articulate limitations of these different studies may support our contention that the authors lacked appropriate appraisal skills. Alternatively, it may suggest bias in favour of the intervention, such that the authors understood, but did not want to articulate its limitations.

While RCTs have not been toppled from their position at the top of the hierarchy of evidence about the efficacy of interventions, developments in other areas have seen increasingly sophisticated use of observational data to better understand the effects of treatments. Researchers have taken advantage of increasing availability of vast quantities of genetic data. In epidemiology, the concept of Mendelian randomisation has been used to try and unpick causal relationships from non-causative correlations [ 34 ]. At the patient level, genetic testing of different types of cancer has allowed targeting of treatments according to cellular sensitivities [ 35 ]. The development of such markers by which to tailor treatment have led to proposals of an idealised future whereby individual treatments are entirely personalised according to a panel of markers that accurately predict treatment response and prognosis. These different research approaches are inevitably competing for resources and intellectual priority. However, as has been argued by Backmann, for these other study types to take priority, “what needs to be shown is not only that RCTs might be problematic …, but that other methods such as cohort studies actually have better external validity.” [ 36 ]

Evidence-based medicine aims to apply the best available evidence to individual patients [ 37 ]. This aim, by its very nature, creates a disconnect between evidence from RCTs, which are aggregated studies of groups of patients to determine average effects, and clinical decision-making at the individual level [ 38 ]. This could be considered to represent an insurmountable ‘get-out’ clause, whereby a clinician may always justify deviation from ‘the evidence’ due to differences between the patient in front of them and those included in the relevant study. It may also prove very difficult to allow the theory-based weight of a journal article to over-ride an individual clinician’s personal lived experience of different interventions and their efficacy. This may be particularly problematic in surgical practice [ 16 ] where the practitioner is usually physically connected with the intervention. This may increase the importance attached to experience, even if that experience is at odds with large-scale studies. We do not disagree that clinicians must treat individual patients according to their specific condition and their wishes. However, it may be considered that aggregate practice, across a surgeon’s cases or across a department, should fall roughly in line with an appropriate body of suitably valid and relevant evidence.

Implementation science research has illuminated many factors affecting implementation beyond knowledge of the evidence. Damschroder et al. described the Consolidated Framework for Implementation Research (CFIR) to identify real-world constructs influencing implementation, relating to the intervention, individuals, organisations and systems [ 39 ]. These included ‘evidence strength and quality’ as well as ‘knowledge and beliefs about the intervention’, constructs readily identified within the present study. Their framework also highlights many other important factors such as cost, patient needs and resources, peer pressure, external policies and incentives, and organisational culture. Surgical research has demonstrated wide variation in practice, even in the presence of high quality evidence [ 40 ], and the broad range of factors affecting implementation of interventions, such as Enhanced Recovery After Surgery [ 41 ]. Our approach may contribute as another tool to understand barriers and facilitators to evidence implementation. It may prove particularly useful in conjunction with other methods such as interviews and observations, informed by a relevant framework, such as the Theoretical Domains Framework [ 42 , 43 ].

The early promise of our new method needs further work to conduct multiple case studies of different RCTs to allow cross-case analyses and a more thorough understanding of how RCTs are interpreted and appraised in the landscape of written commentaries. Examination of further case-studies may also inform refinements to the methods. For example, further analyses may indicate recurring themes across case-studies, which may in turn contribute towards a priori coding criteria and more efficient approaches to analyses (e.g. framework analysis [ 44 ]). It will also be important to include assessment of how each trial is situated in the wider context of relevant evidence, across study types. For individual trials, combined qualitative and structured analyses may determine the extent to which that RCT is flawed and requires further evaluation in a more methodologically sound study. Alternatively, it may demonstrate that the problem in bridging the gap between evidence and practice resides in the competition between different bodies of evidence, comprised of different types of study, and appropriate understanding of their strengths and weaknesses, as well as their applicability to practice. Work should also be undertaken to investigate how contemporary practice may have changed alongside publication of such articles, to investigate the relationship between what is written about the trial, and clinical practice as delivered.

While this study has shown the potential of this new method, its strengths and limitations must be considered. Rigorous analysis using robust qualitative methods and double coding by experienced researchers was undertaken. The articles examined were written without knowledge that they would be analysed in this manner, limiting bias this could introduce. The use of multiple tools to assess the index RCT created a broad overview of its strengths and weaknesses. The most important study limitation was that we did not directly explore authors’ understandings and interpretations, so underlying understanding of the key issues was inferred, rather than directly scrutinised. Failure to articulate is not the same as a lack of understanding. Further, we did not ask authors their motivations to publish their articles, an activity with its own significance. In addition, this study attempted to provide insights into the authors understanding and interpretation of the trial, and it does not purport to be an assessment of practice itself, which would benefit from other approaches to investigation (e.g. qualitative observations, interviews, quantitative procedure rate analyses). This study applied our new method to a single, surgical RCT. The issues identified may be particular to that intervention, specialty, or trial design; further case studies are required to determine broader relevance.

This study has successfully applied a new method to better understand how clinicians and academics understand evidence from a surgical RCT - the TIME trial. It identified discussion of many issues with the trial, but the authors who cited the trial did not specifically articulate the implications of these issues in terms of its internal and external validity. The authors reached a wide range of conclusions, ranging from further evaluation of the intervention, to widespread adoption. Structured appraisal of TIME suggested that the trial was at high risk of bias with limited generalisability. Further application of this method to multiple trials will allow cross-case analyses to determine whether the issues identified are similar across other trials and yield information to better understand how this type of evidence is interpreted and related to practice. This approach may be complemented by other data, such as in-depth interviews. This may reveal genuine flaws in trial design that limit application, or that other issues such as poor understanding or competing non-clinical factors impede the translation of evidence into practice. We hope that this work may help existing efforts to close the research-practice gap, and help ensure that patients receive the best care, based upon the highest level of evidence.

Availability of data and materials

The dataset upon which this work is based consists of articles already available within the published literature.

Abbreviations

- Randomised controlled trial

Traditional Invasive versus Minimally invasive Esophagectomy

CONsolidated Standards Of Reporting Trials for Non-Pharmacological Treatments

PRagmatic Explanatory Continuum Indicator Scale

Context and Implementation of Complex Interventions

Risk Of Bias Tool

Minimally Invasive Oesophagectomy

Systematic Review

Meta-Analysis

Bero LA, Grilli R, Grimshaw JM, Harvey E, Oxman AD, Thomson MA, et al. Closing the gap between research and practice: an overview of systematic reviews of interventions to promote the implementation of research findings. Br Med J. 1998;317:465–8 Available from: http://www.bmj.com/cgi/doi/10.1136/bmj.317.7156.465 .

Article CAS Google Scholar

Grol R, Grimshaw J. From best evidence to best practice: effective implementation of change in patients’ care. Lancet. 2003;362:1225–30.

Article Google Scholar

Oxford Centre for Evidence-Based Medicine. Levels of evidence. 2009 [cited 2018 Sep 25]. Available from: http://www.cebm.net/oxford-centre-evidence-based-medicine-levels-evidence-march-2009/ .

Google Scholar

Ergina PL, Cook JA, Blazeby JM, Boutron I, Clavien P-A, Reeves BC, et al. Challenges in evaluating surgical innovation. Lancet. 2009;374:1097–104. Available from. https://doi.org/10.1016/S0140-6736(09)61086-2 .

Article PubMed PubMed Central Google Scholar

Boutron I, Moher D, Altman DG, Schulz KF, Ravaud P. Methods and processes of the CONSORT group: example of an extension for trials assessing nonpharmacologic treatments. Ann Intern Med. 2008;148:295–309. Available from. https://doi.org/10.7326/0003-4819-148-4-200802190-00008 .

Article PubMed Google Scholar

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. Br Med J. 2011;343:d5928 Available from: http://www.bmj.com/cgi/doi/10.1136/bmj.d5928 .

Higgins JPT, Sterne JAC, Savović J, Page MJ, Hróbjartsson A, Boutron I, et al. A revised tool for assessing risk of bias in randomized trials. Cochrane Database Syst Rev. 2016;10:CD201601.

Loudon K, Treweek S, Sullivan F, Donnan P, Thorpe KE, Zwarenstein M. The PRECIS-2 tool: designing trials that are fit for purpose. Br Med J. 2015;350:h2147 Available from: http://www.bmj.com/cgi/doi/10.1136/bmj.h2147 .

McDonald AM, Knight RC, Campbell MK, Entwistle VA, Grant AM, Cook JA, et al. What influences recruitment to randomised controlled trials? A review of trials funded by two UK funding agencies. Trials. 2006;7:1–8.

Donovan JL, Rooshenas L, Jepson M, Elliott D, Wade J, Avery K, et al. Optimising recruitment and informed consent in randomised controlled trials: the development and implementation of the quintet recruitment intervention (QRI). Trials. 2016;17:1–11. Available from. https://doi.org/10.1186/s13063-016-1391-4 .

Antoniou SA, Andreou A, Antoniou GA, Koch OO, Köhler G, Luketina RR, et al. Volume and methodological quality of randomized controlled trials in laparoscopic surgery: assessment over a 10-year period. Am J Surg. 2015;210:922–9.

Ali UA, van der Sluis PC, Issa Y, Habaga IA, Gooszen HG, Flum DR, et al. Trends in worldwide volume and methodological quality of surgical randomized controlled trials. Ann Surg. 2013;258:199–207.

Kristensen N, Nymann C, Konradsen H. Implementing research results in clinical practice - the experiences of healthcare professionals. BMC Health Serv Res. 2016;16:48. Available from. https://doi.org/10.1186/s12913-016-1292-y .

Blencowe NS, Boddy AP, Harris A, Hanna T, Whiting P, Cook JA, et al. Systematic review of intervention design and delivery in pragmatic and explanatory surgical randomized clinical trials. Br J Surg. 2015;102:1037–47.