How to Write a Strong Hypothesis | Steps & Examples

Published on May 6, 2022 by Shona McCombes. Revised on November 20, 2023.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Example: Hypothesis

Daily apple consumption leads to fewer doctor’s visits.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more types of variables.

- An independent variable is something the researcher changes or controls.

- A dependent variable is something the researcher observes and measures.

If there are any control variables, extraneous variables, or confounding variables, be sure to jot those down as you go to minimize the chances that research bias will affect your results.

For example, in a hypothesis that exposure to the sun increases happiness, the independent variable is exposure to the sun (the assumed cause) and the dependent variable is the level of happiness (the assumed effect).

Step 1. Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2. Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to ensure that you’re embarking on a relevant topic. This can also help you identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalize more complex constructs.

Step 3. Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4. Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5. Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if…then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.
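The three phrasings can be sketched as string templates filled from the same parts. This is only an illustration: the class name `HypothesisDraft`, its fields, and the sentence templates are hypothetical, and the example values borrow the lecture-attendance hypothesis used in this guide's null-hypothesis step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HypothesisDraft:
    """Hypothetical container for the parts a refined hypothesis should name."""
    population: str   # the specific group being studied
    independent: str  # the variable the researcher changes or compares
    dependent: str    # the variable the researcher measures
    positive: bool    # True if the predicted effect is an increase

    def if_then(self) -> str:
        # If...then form: independent variable first, dependent variable second.
        change = "rise" if self.positive else "fall"
        return (f"If {self.population} increase their {self.independent}, "
                f"then their {self.dependent} will {change}.")

    def effect(self) -> str:
        # Effect form: state the predicted relationship directly.
        sign = "positive" if self.positive else "negative"
        return (f"Among {self.population}, {self.independent} has a "
                f"{sign} effect on {self.dependent}.")

    def group_difference(self) -> str:
        # Comparison form: state the expected difference between two groups.
        relation = "higher" if self.positive else "lower"
        return (f"{self.population.capitalize()} with high {self.independent} "
                f"have {relation} {self.dependent} than those with low "
                f"{self.independent}.")


draft = HypothesisDraft(
    population="first-year students",
    independent="lecture attendance",
    dependent="final exam scores",
    positive=True,
)
for sentence in (draft.if_then(), draft.effect(), draft.group_difference()):
    print(sentence)
```

Real hypotheses rarely fit a fixed template this neatly, so treat the output as a first draft to be reworded rather than a finished statement.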

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is H1 or Ha.

- H0: The number of lectures attended by first-year students has no effect on their final exam scores.

- H1: The number of lectures attended by first-year students has a positive effect on their final exam scores.

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Cite this Scribbr article

McCombes, S. (2023, November 20). How to Write a Strong Hypothesis | Steps & Examples. Scribbr. Retrieved April 9, 2024, from https://www.scribbr.com/methodology/hypothesis/

The Craft of Writing a Strong Hypothesis

Writing a hypothesis is one of the essential elements of a scientific research paper. It needs to be to the point, clearly communicating what your research is trying to accomplish. A vague, drawn-out, or convoluted hypothesis can confuse your readers or, worse, the editor and peer reviewers.

A captivating hypothesis is not too intricate. This blog will take you through the process so that, by the end of it, you have a better idea of how to convey your research paper's intent in just one sentence.

What is a Hypothesis?

The first step in your scientific endeavor, a hypothesis, is a strong, concise statement that forms the basis of your research. It is not the same as a thesis statement, which is a brief summary of your research paper.

The sole purpose of a hypothesis is to predict your paper's findings, data, and conclusion. It comes from a place of curiosity and intuition. When you write a hypothesis, you're essentially making an educated guess based on prior scientific knowledge and evidence, which is then supported or refuted through the scientific method.

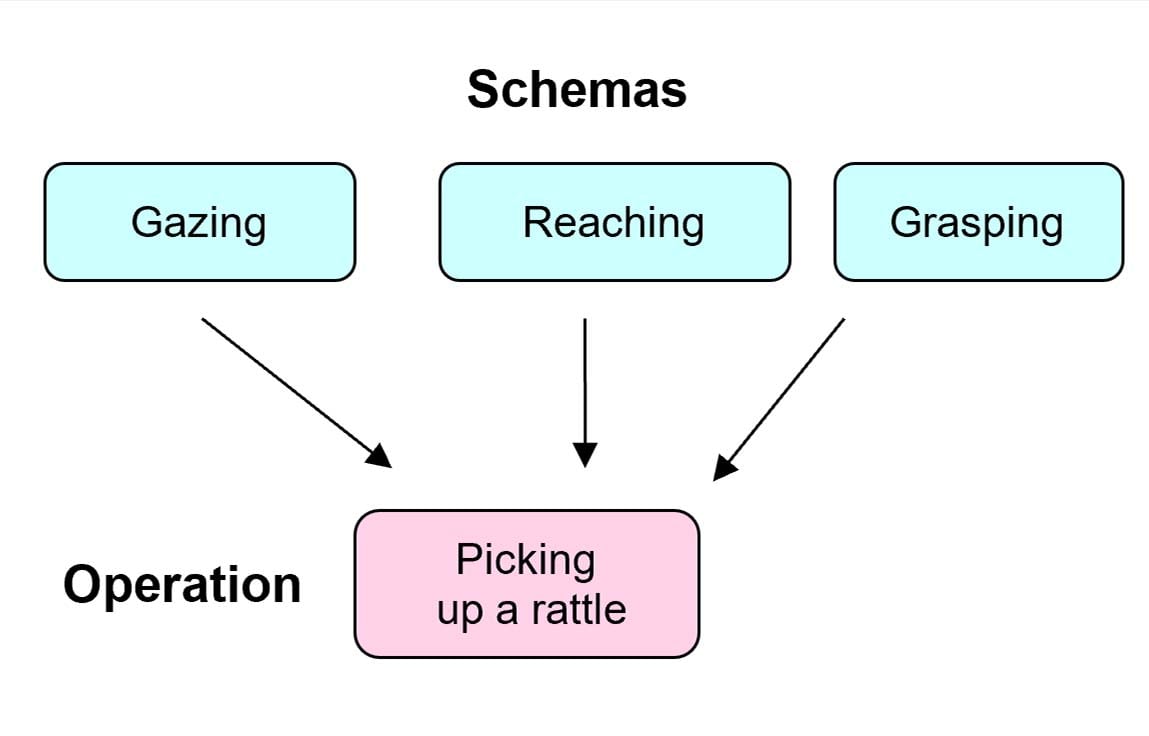

The reason for undertaking research is to observe a specific phenomenon. A hypothesis, therefore, lays out what that phenomenon is. It does so through two variables: an independent variable and a dependent variable.

The independent variable is the cause behind the observation, while the dependent variable is the effect of the cause. A good example of this is “mixing red and blue forms purple.” In this hypothesis, mixing red and blue is the independent variable as you're combining the two colors at your own will. The formation of purple is the dependent variable as, in this case, it is conditional to the independent variable.

Different Types of Hypotheses

Some would stand by the notion that there are only two types of hypotheses: a null hypothesis and an alternative hypothesis. While that may have some truth to it, it is better to distinguish the most common forms, because these terms come up often and can be confusing if you don't know them.

Apart from null and alternative, there are complex, simple, directional, non-directional, statistical, and associative and causal hypotheses. They are not necessarily mutually exclusive, as one hypothesis can tick many boxes, but knowing the distinctions between them will make it easier for you to construct your own.

1. Null hypothesis

A null hypothesis proposes no relationship between two variables. Denoted by H0, it is a negative statement like “Attending physiotherapy sessions does not affect athletes' on-field performance.” Here, the author claims physiotherapy sessions have no effect on on-field performance. Any effect that is observed is attributed to chance.

2. Alternative hypothesis

Considered the opposite of a null hypothesis, an alternative hypothesis is denoted as H1 or Ha. It explicitly states that the independent variable affects the dependent variable. Good examples of alternative hypotheses are “Attending physiotherapy sessions improves athletes' on-field performance” and “Water boils at 100 °C at standard atmospheric pressure.” The alternative hypothesis further branches into directional and non-directional.

- Directional hypothesis: A hypothesis that states whether the result will be positive or negative is called a directional hypothesis. It accompanies H1 with either the ‘<' or ‘>' sign.

- Non-directional hypothesis: A non-directional hypothesis only claims an effect on the dependent variable. It does not specify whether the result will be positive or negative. The sign for a non-directional hypothesis is ‘≠'.
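The choice between a directional and a non-directional alternative changes how a p-value is computed from the same test statistic. The sketch below assumes a test whose statistic `z` follows a standard normal distribution under the null hypothesis. The function name `p_values_from_z` and the value 1.8 are hypothetical.

```python
from statistics import NormalDist


def p_values_from_z(z: float) -> dict:
    """Return p-values for the three usual forms of alternative hypothesis."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    lower_tail = std_normal.cdf(z)
    upper_tail = 1.0 - lower_tail
    return {
        "greater": upper_tail,                       # directional, H1 uses '>'
        "less": lower_tail,                          # directional, H1 uses '<'
        "two_sided": 2.0 * min(upper_tail, lower_tail),  # non-directional, '≠'
    }


p = p_values_from_z(1.8)
for alternative, value in p.items():
    print(f"{alternative}: p = {value:.4f}")
```

With z = 1.8, the directional ‘>' alternative gives a p-value of about 0.036, while the non-directional alternative gives about 0.072. The same data can therefore fall on different sides of a 0.05 threshold, which is why the direction should be fixed before the data are examined.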

3. Simple hypothesis

A simple hypothesis is a statement that reflects the relationship between exactly two variables: one independent and one dependent. Consider the example, “Smoking is a prominent cause of lung cancer.” The dependent variable, lung cancer, depends on the independent variable, smoking.

4. Complex hypothesis

In contrast to a simple hypothesis, a complex hypothesis implies a relationship between multiple independent and dependent variables. For instance, “Individuals who eat more fruits tend to have higher immunity, lower cholesterol, and higher metabolism.” The independent variable is eating more fruits, while the dependent variables are higher immunity, lower cholesterol, and higher metabolism.

5. Associative and causal hypothesis

Associative and causal hypotheses do not specify how many variables there are. Instead, they define the relationship between the variables. In an associative hypothesis, a change in one variable, dependent or independent, is accompanied by a change in the others. In a causal hypothesis, the independent variable directly affects the dependent variable.

6. Empirical hypothesis

Also referred to as the working hypothesis, an empirical hypothesis claims a theory's validation via experiments and observation. This way, the statement appears justifiable and different from a wild guess.

Say the hypothesis is “Women who take iron tablets face a lower risk of anemia than those who take vitamin B12.” This is an example of an empirical hypothesis, where the researcher formulates the statement after assessing a group of women who take iron tablets and charting the findings.

7. Statistical hypothesis

The point of a statistical hypothesis is to test an already existing hypothesis by studying a population sample. A hypothesis like “44% of the Indian population belong to the age group of 22-27” is checked against evidence from the sample, which either supports or contradicts the statement.
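A claim about a population proportion like the one above is commonly checked with a one-sample z-test for a proportion. The sketch below is an illustration only: the 44% figure is taken from the example statement as given, while the sample (480 of 1,000 respondents in the age group) and the function name `proportion_z_test` are invented.

```python
from math import sqrt
from statistics import NormalDist


def proportion_z_test(successes: int, n: int, p0: float):
    """Two-sided z-test of H0: the population proportion equals p0.

    Uses the normal approximation, which is reasonable when both
    n * p0 and n * (1 - p0) are large.
    """
    p_hat = successes / n
    standard_error = sqrt(p0 * (1.0 - p0) / n)  # computed under H0
    z = (p_hat - p0) / standard_error
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical sample: 480 of 1,000 respondents fall in the age group.
z, p_value = proportion_z_test(successes=480, n=1000, p0=0.44)
print(f"z = {z:.2f}, two-sided p = {p_value:.4f}")
```

If the p-value falls below the significance level chosen in advance, the sample is taken as evidence against the stated proportion. Otherwise, the sample is simply consistent with it.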

Characteristics of a Good Hypothesis

Writing a hypothesis is essential as it can make or break your research for you. That includes your chances of getting published in a journal. So when you're designing one, keep an eye out for these pointers:

- A research hypothesis has to be simple yet clear enough to look justifiable.

- It has to be testable: your research would be rendered pointless if the hypothesis is too far removed from reality or cannot be tested with available technology.

- It has to be precise about the results: what you are trying to do and achieve through it should come out in your hypothesis.

- A research hypothesis should be self-explanatory, leaving no doubt in the reader's mind.

- If you are developing a relational hypothesis, you need to include the variables and establish an appropriate relationship among them.

- A hypothesis should leave scope for further investigations and experiments.

Separating a Hypothesis from a Prediction

Outside of academia, hypothesis and prediction are often used interchangeably. In research writing, this is not only confusing but also incorrect. And although a hypothesis and prediction are guesses at their core, there are many differences between them.

A hypothesis is an educated guess or even a testable prediction validated through research. It aims to analyze the gathered evidence and facts to define a relationship between variables and put forth a logical explanation behind the nature of events.

Predictions are assumptions or expected outcomes made without any backing evidence. They are more fictionally inclined regardless of where they originate from.

For this reason, a hypothesis holds much more weight than a prediction. It sticks to the scientific method rather than pure guesswork. "Planets revolve around the Sun." is an example of a hypothesis, as it is based on previous knowledge and observed trends. Additionally, we can test it through the scientific method.

By contrast, "COVID-19 will be eradicated by 2030." is a prediction. Even though it results from past trends, we can't test it today. The only way to validate it is to wait and see whether COVID-19 cases end by 2030.

Finally, How to Write a Hypothesis

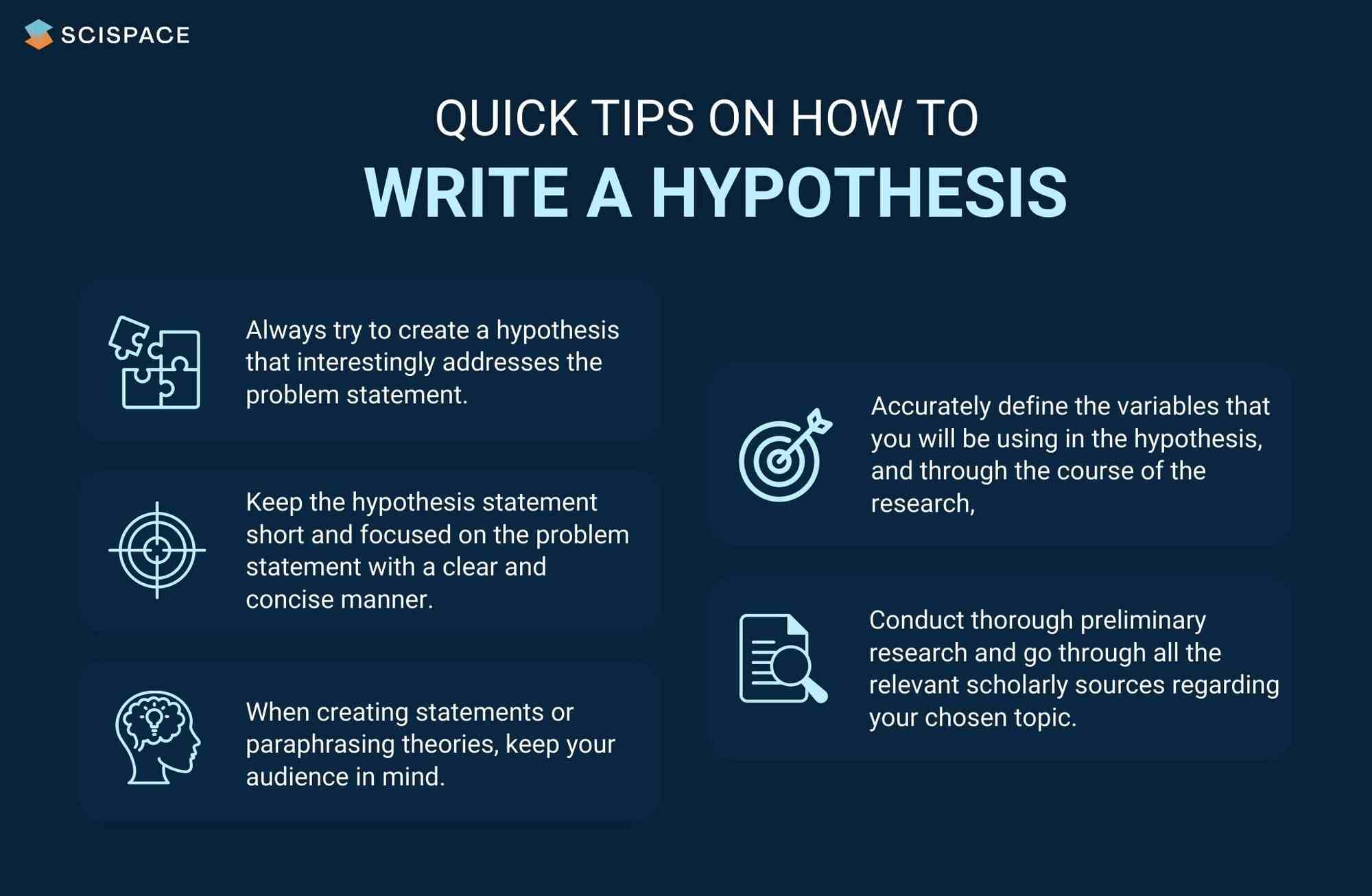

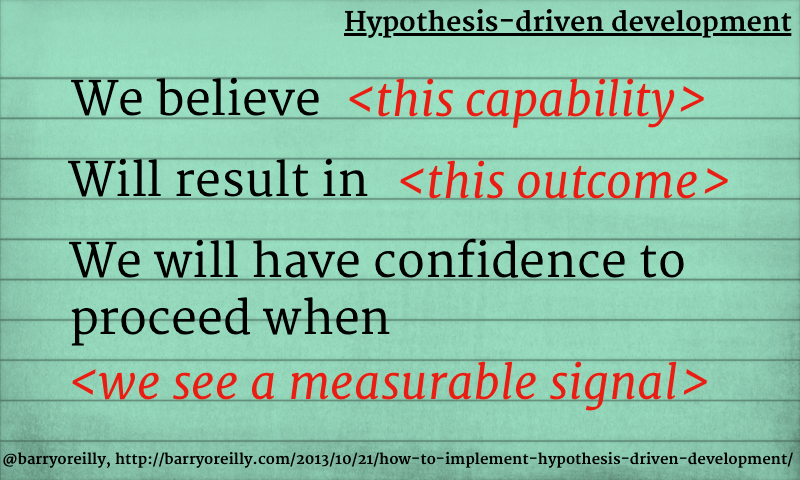

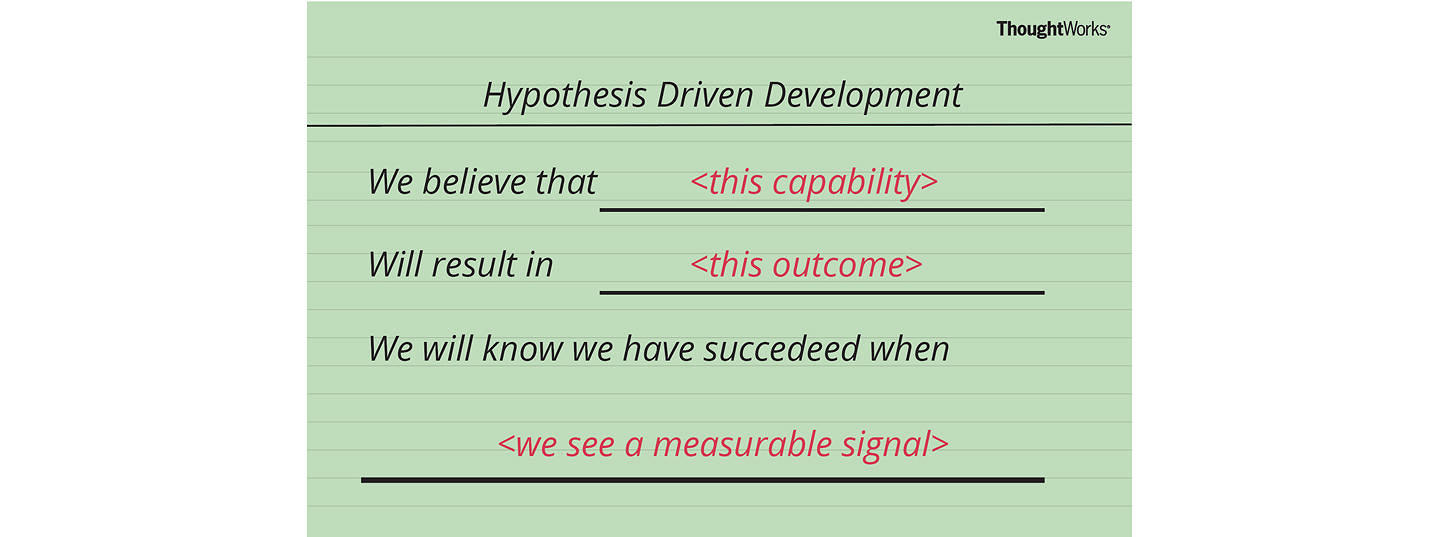

Quick tips on writing a hypothesis

1. Be clear about your research question

A hypothesis should instantly address the research question or the problem statement. To do so, you need to ask a question. Understand the constraints of your undertaken research topic and then formulate a simple and topic-centric problem. Only after that can you develop a hypothesis and further test for evidence.

2. Carry out a recce

Once you have your research's foundation laid out, it would be best to conduct preliminary research. Go through previous theories, academic papers, data, and experiments before you start curating your research hypothesis. It will give you an idea of your hypothesis's viability or originality.

Referring to relevant research papers helps you draft a good research hypothesis. SciSpace Discover offers a repository of over 270 million research papers that you can browse to gain a deeper understanding of related studies on a topic. You can also use SciSpace Copilot, an AI research assistant, to read a lengthy paper and get a summarized version of it; a hypothesis can be formed after evaluating many such summaries. Copilot also explains theories and equations, restates a paper in simpler terms, and lets you highlight text or clip math equations and tables for explanation. This can improve the hypothesis by helping you identify potential research gaps.

3. Create a 3-dimensional hypothesis

Variables are an essential part of any reasonable hypothesis. So, identify your independent and dependent variable(s) and form a correlation between them. The ideal way to do this is to write the hypothetical assumption in the ‘if-then' form. If you use this form, make sure that you state the predefined relationship between the variables.

In another way, you can choose to present your hypothesis as a comparison between two variables. Here, you must specify the difference you expect to observe in the results.

4. Write the first draft

Now that everything is in place, it's time to write your hypothesis. For starters, create the first draft. In this version, write what you expect to find from your research.

Clearly separate your independent and dependent variables and the link between them. Don't fixate on syntax at this stage. The goal is to ensure your hypothesis addresses the issue.

5. Proof your hypothesis

After preparing the first draft of your hypothesis, you need to inspect it thoroughly. It should tick all the boxes, like being concise, straightforward, relevant, and accurate. Your final hypothesis has to be well-structured as well.

Research projects are an exciting and crucial part of being a scholar. And once you have your research question, you need a great hypothesis to begin conducting research. Thus, knowing how to write a hypothesis is very important.

Now that you have a firmer grasp on what a good hypothesis constitutes, the different kinds there are, and what process to follow, you will find it much easier to write your hypothesis, which ultimately helps your research.

Frequently Asked Questions (FAQs)

1. What is the definition of hypothesis?

According to the Oxford dictionary, a hypothesis is defined as “An idea or explanation of something that is based on a few known facts, but that has not yet been proved to be true or correct”.

2. What is an example of hypothesis?

A hypothesis is a statement that proposes a relationship between two or more variables. An example: "If we increase the number of new users who join our platform by 25%, then we will see an increase in revenue."

3. What is an example of null hypothesis?

A null hypothesis is a statement that there is no relationship between two variables. The null hypothesis is written as H0. The null hypothesis states that there is no effect. For example, if you're studying whether or not a particular type of exercise increases strength, your null hypothesis will be "there is no difference in strength between people who exercise and people who don't."

4. What are the types of research?

• Fundamental research

• Applied research

• Qualitative research

• Quantitative research

• Mixed research

• Exploratory research

• Longitudinal research

• Cross-sectional research

• Field research

• Laboratory research

• Fixed research

• Flexible research

• Action research

• Policy research

• Classification research

• Comparative research

• Causal research

• Inductive research

• Deductive research

5. How to write a hypothesis?

• Your hypothesis should be able to predict the relationship and outcome.

• Avoid wordiness by keeping it simple and brief.

• Your hypothesis should contain observable and testable outcomes.

• Your hypothesis should be relevant to the research question.

6. What are the 2 types of hypothesis?

• Null hypotheses are used to test the claim that "there is no difference between two groups of data".

• Alternative hypotheses test the claim that "there is a difference between two data groups".

7. Difference between research question and research hypothesis?

A research question is a broad, open-ended question you will try to answer through your research. A hypothesis is a statement based on prior research or theory that you expect to be true due to your study. Example - Research question: What are the factors that influence the adoption of the new technology? Research hypothesis: There is a positive relationship between age, education and income level with the adoption of the new technology.

8. What is plural for hypothesis?

The plural of hypothesis is hypotheses. Here's an example of how it would be used in a statement, "Numerous well-considered hypotheses are presented in this part, and they are supported by tables and figures that are well-illustrated."

9. What is the red queen hypothesis?

The red queen hypothesis in evolutionary biology states that species must constantly evolve to avoid extinction because if they don't, they will be outcompeted by other species that are evolving. Leigh Van Valen first proposed it in 1973; since then, it has been tested and substantiated many times.

10. Who is known as the father of null hypothesis?

Sir Ronald Fisher is generally regarded as the father of the null hypothesis. He popularized significance testing in his 1925 book Statistical Methods for Research Workers and introduced the term “null hypothesis” in The Design of Experiments (1935).

11. When to reject null hypothesis?

To reject the null hypothesis, you need to find a statistically significant difference between your two populations. You can determine that by running statistical tests such as an independent-samples t-test or a dependent-samples (paired) t-test. You reject the null hypothesis if the p-value is less than your chosen significance level, which is conventionally set at 0.05.
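The decision rule can be sketched in a few lines. Because Python's standard library has no Student's t distribution, this example swaps in a permutation test on the difference in group means rather than a t-test; with SciPy installed, `scipy.stats.ttest_ind` would be the usual route for independent samples. The strength scores and the function name `two_sided_permutation_test` are hypothetical.

```python
import random
from statistics import mean

# Hypothetical strength scores in arbitrary units (not from the article).
exercise = [52, 57, 61, 58, 63, 60, 55, 59]
no_exercise = [50, 49, 54, 51, 53, 47, 52, 50]


def two_sided_permutation_test(a, b, n_permutations=10000, seed=0):
    """Estimate a two-sided p-value for H0: both groups share one distribution."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        # Under H0 the group labels are arbitrary, so reassign them at random.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # Add 1 to numerator and denominator so the estimate is never exactly 0.
    return (extreme + 1) / (n_permutations + 1)


ALPHA = 0.05  # significance level, chosen before looking at the data
p_value = two_sided_permutation_test(exercise, no_exercise)
decision = "reject H0" if p_value < ALPHA else "fail to reject H0"
print(f"p = {p_value:.4f}: {decision}")
```

Note the wording of the second outcome: a large p-value means you fail to reject the null hypothesis, not that the null hypothesis has been shown to be true.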

What is a Hypothesis – Types, Examples and Writing Guide

Definition:

A hypothesis is an educated guess or proposed explanation for a phenomenon, based on some initial observations or data. It is a tentative statement that can be tested and potentially supported or refuted through further investigation and experimentation.

Hypotheses are often used in scientific research to guide the design of experiments and the collection and analysis of data. They are an essential element of the scientific method, as they allow researchers to make predictions about the outcome of their experiments and to test those predictions to determine their accuracy.

Types of Hypothesis

Types of Hypothesis are as follows:

Research Hypothesis

A research hypothesis is a statement that predicts a relationship between variables. It is usually formulated as a specific statement that can be tested through research, and it is often used in scientific research to guide the design of experiments.

Null Hypothesis

The null hypothesis is a statement that assumes there is no significant difference or relationship between variables. It is often used as a starting point for testing the research hypothesis, and if the results of the study reject the null hypothesis, it suggests that there is a significant difference or relationship between variables.

Alternative Hypothesis

An alternative hypothesis is a statement that assumes there is a significant difference or relationship between variables. It is often used as an alternative to the null hypothesis and is tested against the null hypothesis to determine which statement is more accurate.

Directional Hypothesis

A directional hypothesis is a statement that predicts the direction of the relationship between variables. For example, a researcher might predict that increasing the amount of exercise will result in a decrease in body weight.

Non-directional Hypothesis

A non-directional hypothesis is a statement that predicts the relationship between variables but does not specify the direction. For example, a researcher might predict that there is a relationship between the amount of exercise and body weight, but they do not specify whether increasing or decreasing exercise will affect body weight.

Statistical Hypothesis

A statistical hypothesis is a statement that assumes a particular statistical model or distribution for the data. It is often used in statistical analysis to test the significance of a particular result.

Composite Hypothesis

A composite hypothesis is a statement that assumes more than one condition or outcome. It can be divided into several sub-hypotheses, each of which represents a different possible outcome.

Empirical Hypothesis

An empirical hypothesis is a statement that is based on observed phenomena or data. It is often used in scientific research to develop theories or models that explain the observed phenomena.

Simple Hypothesis

A simple hypothesis is a statement that assumes only one outcome or condition. It is often used in scientific research to test a single variable or factor.

Complex Hypothesis

A complex hypothesis is a statement that assumes multiple outcomes or conditions. It is often used in scientific research to test the effects of multiple variables or factors on a particular outcome.

Applications of Hypothesis

Hypotheses are used in various fields to guide research and make predictions about the outcomes of experiments or observations. Here are some examples of how hypotheses are applied in different fields:

- Science: In scientific research, hypotheses are used to test the validity of theories and models that explain natural phenomena. For example, a hypothesis might be formulated to test the effects of a particular variable on a natural system, such as the effects of climate change on an ecosystem.

- Medicine: In medical research, hypotheses are used to test the effectiveness of treatments and therapies for specific conditions. For example, a hypothesis might be formulated to test the effects of a new drug on a particular disease.

- Psychology: In psychology, hypotheses are used to test theories and models of human behavior and cognition. For example, a hypothesis might be formulated to test the effects of a particular stimulus on the brain or behavior.

- Sociology: In sociology, hypotheses are used to test theories and models of social phenomena, such as the effects of social structures or institutions on human behavior. For example, a hypothesis might be formulated to test the effects of income inequality on crime rates.

- Business: In business research, hypotheses are used to test the validity of theories and models that explain business phenomena, such as consumer behavior or market trends. For example, a hypothesis might be formulated to test the effects of a new marketing campaign on consumer buying behavior.

- Engineering: In engineering, hypotheses are used to test the effectiveness of new technologies or designs. For example, a hypothesis might be formulated to test the efficiency of a new solar panel design.

How to write a Hypothesis

Here are the steps to follow when writing a hypothesis:

Identify the Research Question

The first step is to identify the research question that you want to answer through your study. This question should be clear, specific, and focused. It should be something that can be investigated empirically and that has some relevance or significance in the field.

Conduct a Literature Review

Before writing your hypothesis, it’s essential to conduct a thorough literature review to understand what is already known about the topic. This will help you to identify the research gap and formulate a hypothesis that builds on existing knowledge.

Determine the Variables

The next step is to identify the variables involved in the research question. A variable is any characteristic or factor that can vary or change. There are two types of variables: independent and dependent. The independent variable is the one that is manipulated or changed by the researcher, while the dependent variable is the one that is measured or observed as a result of the independent variable.

Formulate the Hypothesis

Based on the research question and the variables involved, you can now formulate your hypothesis. A hypothesis should be a clear and concise statement that predicts the relationship between the variables. It should be testable through empirical research and based on existing theory or evidence.

Write the Null Hypothesis

The null hypothesis is the opposite of the alternative hypothesis, which is the hypothesis that you are testing. The null hypothesis states that there is no significant difference or relationship between the variables. It is important to write the null hypothesis because it allows you to compare your results with what would be expected by chance.

Refine the Hypothesis

After formulating the hypothesis, it’s important to refine it and make it more precise. This may involve clarifying the variables, specifying the direction of the relationship, or making the hypothesis more testable.

Examples of Hypothesis

Here are a few examples of hypotheses in different fields:

- Psychology: “Increased exposure to violent video games leads to increased aggressive behavior in adolescents.”

- Biology: “Higher levels of carbon dioxide in the atmosphere will lead to increased plant growth.”

- Sociology: “Individuals who grow up in households with higher socioeconomic status will have higher levels of education and income as adults.”

- Education: “Implementing a new teaching method will result in higher student achievement scores.”

- Marketing: “Customers who receive a personalized email will be more likely to make a purchase than those who receive a generic email.”

- Physics: “An increase in temperature will cause an increase in the volume of a gas, assuming all other variables remain constant.”

- Medicine: “Consuming a diet high in saturated fats will increase the risk of developing heart disease.”

Purpose of Hypothesis

The purpose of a hypothesis is to provide a testable explanation for an observed phenomenon or a prediction of a future outcome based on existing knowledge or theories. A hypothesis is an essential part of the scientific method and helps to guide the research process by providing a clear focus for investigation. It enables scientists to design experiments or studies to gather evidence and data that can support or refute the proposed explanation or prediction.

The formulation of a hypothesis is based on existing knowledge, observations, and theories, and it should be specific, testable, and falsifiable. A specific hypothesis helps to define the research question, which is important in the research process as it guides the selection of an appropriate research design and methodology. Testability of the hypothesis means that it can be proven or disproven through empirical data collection and analysis. Falsifiability means that the hypothesis should be formulated in such a way that it can be proven wrong if it is incorrect.

In addition to guiding the research process, the testing of hypotheses can lead to new discoveries and advancements in scientific knowledge. When a hypothesis is supported by the data, it can be used to develop new theories or models to explain the observed phenomenon. When a hypothesis is not supported by the data, it can help to refine existing theories or prompt the development of new hypotheses to explain the phenomenon.

When to use Hypothesis

Here are some common situations in which hypotheses are used:

- In scientific research , hypotheses are used to guide the design of experiments and to help researchers make predictions about the outcomes of those experiments.

- In social science research , hypotheses are used to test theories about human behavior, social relationships, and other phenomena.

- In business , hypotheses can be used to guide decisions about marketing, product development, and other areas. For example, a hypothesis might be that a new product will sell well in a particular market, and this hypothesis can be tested through market research.

Characteristics of Hypothesis

Here are some common characteristics of a hypothesis:

- Testable : A hypothesis must be able to be tested through observation or experimentation. This means that it must be possible to collect data that will either support or refute the hypothesis.

- Falsifiable : A hypothesis must be able to be proven false if it is not supported by the data. If a hypothesis cannot be falsified, then it is not a scientific hypothesis.

- Clear and concise : A hypothesis should be stated in a clear and concise manner so that it can be easily understood and tested.

- Based on existing knowledge : A hypothesis should be based on existing knowledge and research in the field. It should not be based on personal beliefs or opinions.

- Specific : A hypothesis should be specific in terms of the variables being tested and the predicted outcome. This will help to ensure that the research is focused and well-designed.

- Tentative: A hypothesis is a tentative statement or assumption that requires further testing and evidence to be confirmed or refuted. It is not a final conclusion or assertion.

- Relevant : A hypothesis should be relevant to the research question or problem being studied. It should address a gap in knowledge or provide a new perspective on the issue.

Advantages of Hypothesis

Hypotheses have several advantages in scientific research and experimentation:

- Guides research: A hypothesis provides a clear and specific direction for research. It helps to focus the research question, select appropriate methods and variables, and interpret the results.

- Predictive power: A hypothesis makes predictions about the outcome of research, which can be tested through experimentation. This allows researchers to evaluate the validity of the hypothesis and make new discoveries.

- Facilitates communication: A hypothesis provides a common language and framework for scientists to communicate with one another about their research. This helps to facilitate the exchange of ideas and promotes collaboration.

- Efficient use of resources: A hypothesis helps researchers to use their time, resources, and funding efficiently by directing them towards specific research questions and methods that are most likely to yield results.

- Provides a basis for further research: A hypothesis that is supported by data provides a basis for further research and exploration. It can lead to new hypotheses, theories, and discoveries.

- Increases objectivity: A hypothesis can help to increase objectivity in research by providing a clear and specific framework for testing and interpreting results. This can reduce bias and increase the reliability of research findings.

Limitations of Hypothesis

Some limitations of hypotheses are as follows:

- Limited to observable phenomena: Hypotheses are limited to observable phenomena and cannot account for unobservable or intangible factors. This means that some research questions may not be amenable to hypothesis testing.

- May be inaccurate or incomplete: Hypotheses are based on existing knowledge and research, which may be incomplete or inaccurate. This can lead to flawed hypotheses and erroneous conclusions.

- May be biased: Hypotheses may be biased by the researcher’s own beliefs, values, or assumptions. This can lead to selective interpretation of data and a lack of objectivity in research.

- Cannot prove causation: Support for a hypothesis from observational data shows only a correlation between variables; it does not by itself prove causation. Establishing causation requires further experimentation and analysis.

- Limited to specific contexts: Hypotheses are limited to specific contexts and may not be generalizable to other situations or populations. This means that results may not be applicable in other contexts or may require further testing.

- May be affected by chance : Hypotheses may be affected by chance or random variation, which can obscure or distort the true relationship between variables.
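The last limitation, the role of chance, can be made concrete with a small simulation. The sketch below is illustrative only: the group size, the number of simulated studies, and the 0.5 threshold are arbitrary assumptions, and both groups are drawn from the same distribution, so any difference between their sample means is produced by random variation alone.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed so the sketch is repeatable


def simulated_difference(n_per_group=20):
    """Difference in sample means when both groups share one distribution."""
    group_a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    group_b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    return mean(group_a) - mean(group_b)


# Simulate 1,000 hypothetical studies in which there is no true effect.
differences = [simulated_difference() for _ in range(1_000)]

largest = max(abs(d) for d in differences)
share_large = sum(abs(d) > 0.5 for d in differences) / len(differences)

print(f"largest chance difference: {largest:.2f}")
print(f"share of studies with |difference| > 0.5: {share_large:.1%}")
```

Even with no real relationship, some simulated studies show a noticeable difference, which is why a single result that agrees with a hypothesis is usually treated with caution.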

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


2.4 Developing a Hypothesis

Learning Objectives

- Distinguish between a theory and a hypothesis.

- Discover how theories are used to generate hypotheses and how the results of studies can be used to further inform theories.

- Understand the characteristics of a good hypothesis.

Theories and Hypotheses

Before describing how to develop a hypothesis, it is important to distinguish between a theory and a hypothesis. A theory is a coherent explanation or interpretation of one or more phenomena. Although theories can take a variety of forms, one thing they have in common is that they go beyond the phenomena they explain by including variables, structures, processes, functions, or organizing principles that have not been observed directly. Consider, for example, Zajonc’s theory of social facilitation and social inhibition. He proposed that being watched by others while performing a task creates a general state of physiological arousal, which increases the likelihood of the dominant (most likely) response. So for highly practiced tasks, being watched increases the tendency to make correct responses, but for relatively unpracticed tasks, being watched increases the tendency to make incorrect responses. Notice that this theory, which has come to be called drive theory, provides an explanation of both social facilitation and social inhibition that goes beyond the phenomena themselves by including concepts such as “arousal” and “dominant response,” along with processes such as the effect of arousal on the dominant response.

Outside of science, referring to an idea as a theory often implies that it is untested—perhaps no more than a wild guess. In science, however, the term theory has no such implication. A theory is simply an explanation or interpretation of a set of phenomena. It can be untested, but it can also be extensively tested, well supported, and accepted as an accurate description of the world by the scientific community. The theory of evolution by natural selection, for example, is a theory because it is an explanation of the diversity of life on earth—not because it is untested or unsupported by scientific research. On the contrary, the evidence for this theory is overwhelmingly positive and nearly all scientists accept its basic assumptions as accurate. Similarly, the “germ theory” of disease is a theory because it is an explanation of the origin of various diseases, not because there is any doubt that many diseases are caused by microorganisms that infect the body.

A hypothesis , on the other hand, is a specific prediction about a new phenomenon that should be observed if a particular theory is accurate. It is an explanation that relies on just a few key concepts. Hypotheses are often specific predictions about what will happen in a particular study. They are developed by considering existing evidence and using reasoning to infer what will happen in the specific context of interest. Hypotheses are often but not always derived from theories. So a hypothesis is often a prediction based on a theory, but some hypotheses are atheoretical, and a theory is developed only after a set of observations has been made. This is because theories are broad in nature and explain larger bodies of data. So if our research question is really original, then we may need to collect some data and make some observations before we can develop a broader theory.

Theories and hypotheses always have this if-then relationship. “ If drive theory is correct, then cockroaches should run through a straight runway faster, and a branching runway more slowly, when other cockroaches are present.” Although hypotheses are usually expressed as statements, they can always be rephrased as questions. “Do cockroaches run through a straight runway faster when other cockroaches are present?” Thus deriving hypotheses from theories is an excellent way of generating interesting research questions.

But how do researchers derive hypotheses from theories? One way is to generate a research question using the techniques discussed in this chapter and then ask whether any theory implies an answer to that question. For example, you might wonder whether expressive writing about positive experiences improves health as much as expressive writing about traumatic experiences. Although this question is an interesting one on its own, you might then ask whether the habituation theory—the idea that expressive writing causes people to habituate to negative thoughts and feelings—implies an answer. In this case, it seems clear that if the habituation theory is correct, then expressive writing about positive experiences should not be effective because it would not cause people to habituate to negative thoughts and feelings. A second way to derive hypotheses from theories is to focus on some component of the theory that has not yet been directly observed. For example, a researcher could focus on the process of habituation—perhaps hypothesizing that people should show fewer signs of emotional distress with each new writing session.

Among the very best hypotheses are those that distinguish between competing theories. For example, Norbert Schwarz and his colleagues considered two theories of how people make judgments about themselves, such as how assertive they are (Schwarz et al., 1991) [1] . Both theories held that such judgments are based on relevant examples that people bring to mind. However, one theory was that people base their judgments on the number of examples they bring to mind and the other was that people base their judgments on how easily they bring those examples to mind. To test these theories, the researchers asked people to recall either six times when they were assertive (which is easy for most people) or 12 times (which is difficult for most people). Then they asked them to judge their own assertiveness. Note that the number-of-examples theory implies that people who recalled 12 examples should judge themselves to be more assertive because they recalled more examples, but the ease-of-retrieval theory implies that participants who recalled six examples should judge themselves as more assertive because recalling the examples was easier. Thus the two theories made opposite predictions so that only one of the predictions could be confirmed. The surprising result was that participants who recalled fewer examples judged themselves to be more assertive, providing particularly convincing evidence in favor of the ease-of-retrieval theory over the number-of-examples theory.

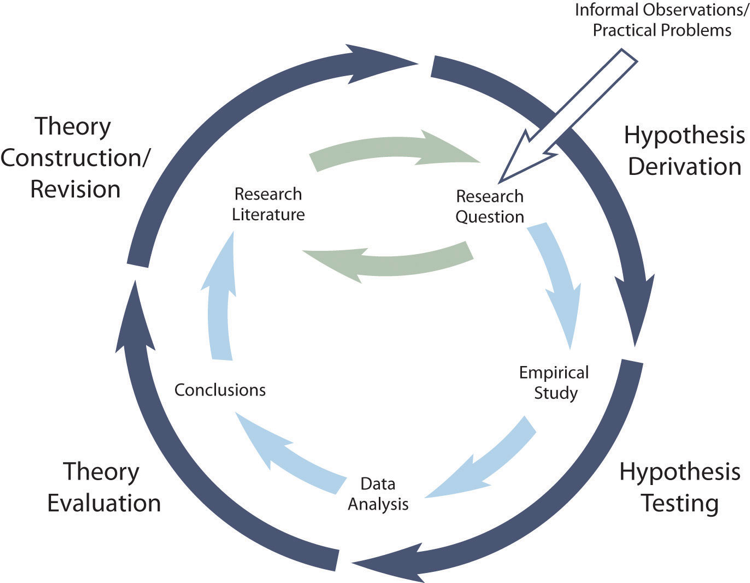

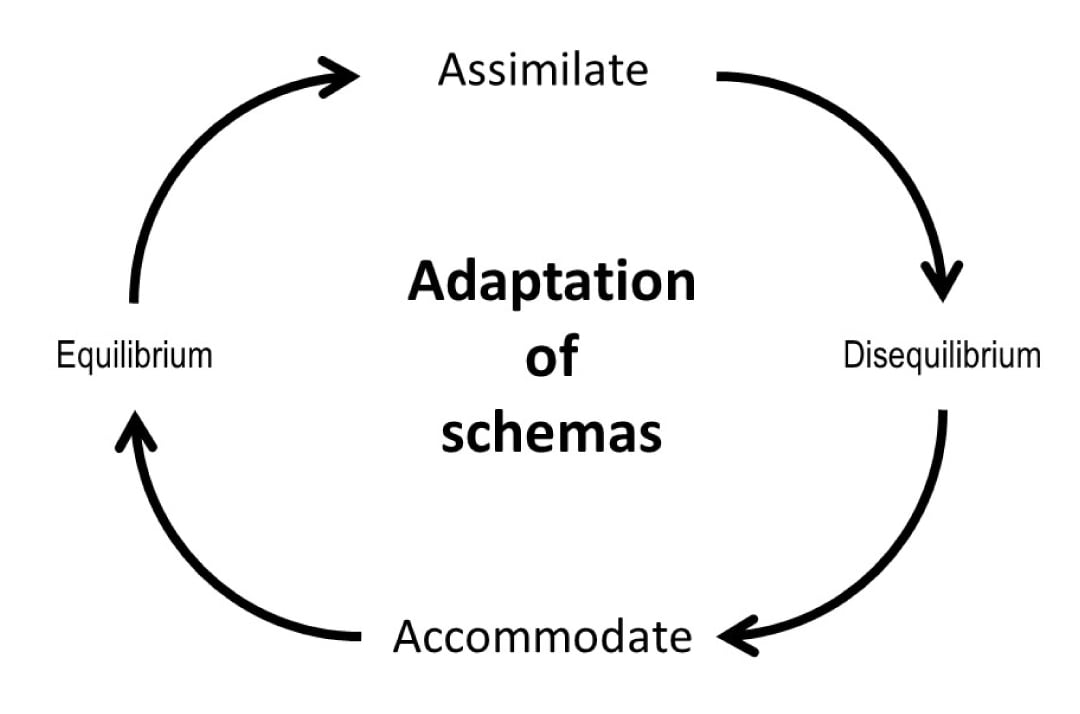

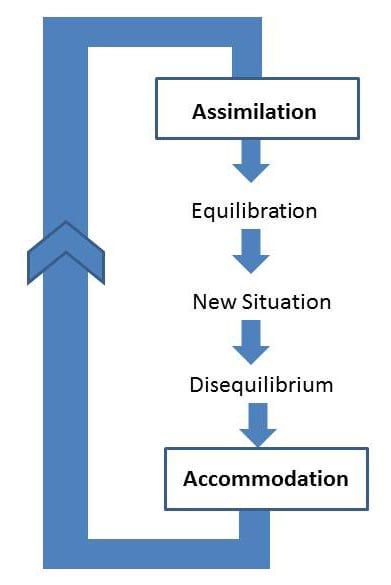

Theory Testing

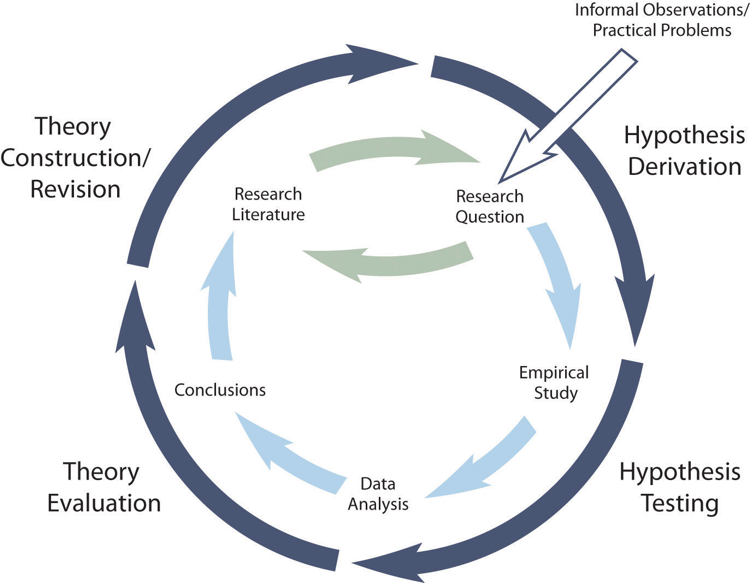

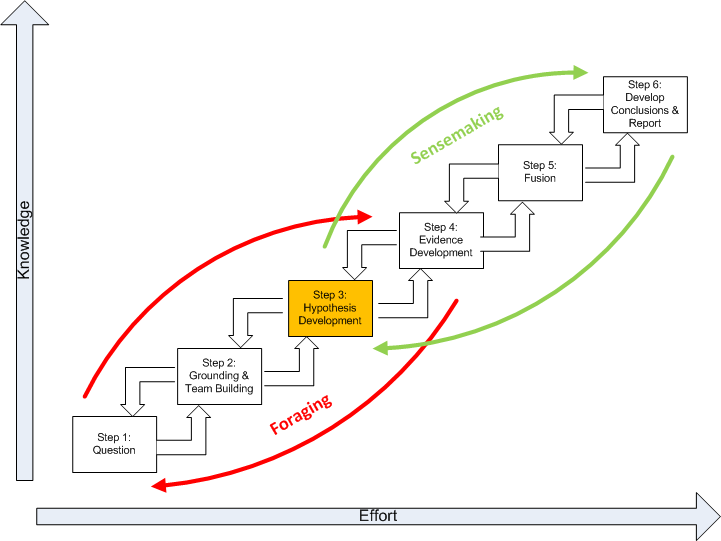

The primary way that scientific researchers use theories is sometimes called the hypothetico-deductive method (although this term is much more likely to be used by philosophers of science than by scientists themselves). A researcher begins with a set of phenomena and either constructs a theory to explain or interpret them or chooses an existing theory to work with. He or she then makes a prediction about some new phenomenon that should be observed if the theory is correct. Again, this prediction is called a hypothesis. The researcher then conducts an empirical study to test the hypothesis. Finally, he or she reevaluates the theory in light of the new results and revises it if necessary. This process is usually conceptualized as a cycle because the researcher can then derive a new hypothesis from the revised theory, conduct a new empirical study to test the hypothesis, and so on. As Figure 2.2 shows, this approach meshes nicely with the model of scientific research in psychology presented earlier in the textbook—creating a more detailed model of “theoretically motivated” or “theory-driven” research.

Figure 2.2 Hypothetico-Deductive Method Combined With the General Model of Scientific Research in Psychology. Together they form a model of theoretically motivated research.

As an example, let us consider Zajonc’s research on social facilitation and inhibition. He started with a somewhat contradictory pattern of results from the research literature. He then constructed his drive theory, according to which being watched by others while performing a task causes physiological arousal, which increases an organism’s tendency to make the dominant response. This theory predicts social facilitation for well-learned tasks and social inhibition for poorly learned tasks. He now had a theory that organized previous results in a meaningful way, but he still needed to test it. He hypothesized that if his theory was correct, he should observe that the presence of others improves performance in a simple laboratory task but inhibits performance in a difficult version of the very same laboratory task. To test this hypothesis, one of the studies he conducted used cockroaches as subjects (Zajonc, Heingartner, & Herman, 1969) [2] . The cockroaches ran either down a straight runway (an easy task for a cockroach) or through a cross-shaped maze (a difficult task for a cockroach) to escape into a dark chamber when a light was shined on them. They did this either while alone or in the presence of other cockroaches in clear plastic “audience boxes.” Zajonc found that cockroaches in the straight runway reached their goal more quickly in the presence of other cockroaches, but cockroaches in the cross-shaped maze reached their goal more slowly when they were in the presence of other cockroaches. Thus he confirmed his hypothesis and provided support for his drive theory. (Zajonc also showed in many other studies that drive theory applies to humans; see, for example, Zajonc & Sales, 1966 [3] .)

Incorporating Theory into Your Research

When you write your research report or plan your presentation, be aware that there are two basic ways that researchers usually include theory. The first is to raise a research question, answer that question by conducting a new study, and then offer one or more theories (usually more) to explain or interpret the results. This format works well for applied research questions and for research questions that existing theories do not address. The second way is to describe one or more existing theories, derive a hypothesis from one of those theories, test the hypothesis in a new study, and finally reevaluate the theory. This format works well when there is an existing theory that addresses the research question—especially if the resulting hypothesis is surprising or conflicts with a hypothesis derived from a different theory.

Using theories in your research will not only give you guidance in coming up with experiment ideas and possible projects, but will also lend legitimacy to your work. Psychologists have been interested in a variety of human behaviors and have developed many theories along the way. Using established theories will help you break new ground as a researcher, not limit you from developing your own ideas.

Characteristics of a Good Hypothesis

There are three general characteristics of a good hypothesis. First, a good hypothesis must be testable and falsifiable . We must be able to test the hypothesis using the methods of science and if you’ll recall Popper’s falsifiability criterion, it must be possible to gather evidence that will disconfirm the hypothesis if it is indeed false. Second, a good hypothesis must be logical. As described above, hypotheses are more than just a random guess. Hypotheses should be informed by previous theories or observations and logical reasoning. Typically, we begin with a broad and general theory and use deductive reasoning to generate a more specific hypothesis to test based on that theory. Occasionally, however, when there is no theory to inform our hypothesis, we use inductive reasoning which involves using specific observations or research findings to form a more general hypothesis. Finally, the hypothesis should be positive. That is, the hypothesis should make a positive statement about the existence of a relationship or effect, rather than a statement that a relationship or effect does not exist. As scientists, we don’t set out to show that relationships do not exist or that effects do not occur so our hypotheses should not be worded in a way to suggest that an effect or relationship does not exist. The nature of science is to assume that something does not exist and then seek to find evidence to prove this wrong, to show that really it does exist. That may seem backward to you but that is the nature of the scientific method. The underlying reason for this is beyond the scope of this chapter but it has to do with statistical theory.

Key Takeaways

- A theory is broad in nature and explains larger bodies of data. A hypothesis is more specific and makes a prediction about the outcome of a particular study.

- Working with theories is not “icing on the cake.” It is a basic ingredient of psychological research.

- Like other scientists, psychologists use the hypothetico-deductive method. They construct theories to explain or interpret phenomena (or work with existing theories), derive hypotheses from their theories, test the hypotheses, and then reevaluate the theories in light of the new results.

- Practice: Find a recent empirical research report in a professional journal. Read the introduction and highlight in different colors descriptions of theories and hypotheses.

- Schwarz, N., Bless, H., Strack, F., Klumpp, G., Rittenauer-Schatka, H., & Simons, A. (1991). Ease of retrieval as information: Another look at the availability heuristic. Journal of Personality and Social Psychology, 61, 195–202.

- Zajonc, R. B., Heingartner, A., & Herman, E. M. (1969). Social enhancement and impairment of performance in the cockroach. Journal of Personality and Social Psychology, 13, 83–92.

- Zajonc, R. B., & Sales, S. M. (1966). Social facilitation of dominant and subordinate responses. Journal of Experimental Social Psychology, 2, 160–168.


How to Develop a Good Research Hypothesis

The story of a research study begins by asking a question. Researchers all around the globe are asking curious questions and formulating research hypotheses. However, whether the research study provides an effective conclusion depends on how well one develops a good research hypothesis. Research hypothesis examples could help researchers get an idea as to how to write a good research hypothesis.

This blog will help you understand what a research hypothesis is, what its characteristics are, and how to formulate one.


What is Hypothesis?

A hypothesis is an assumption or an idea proposed for the sake of argument so that it can be tested. It is a precise, testable statement of what the researchers predict will be the outcome of the study. A hypothesis usually involves proposing a relationship between two variables: the independent variable (what the researchers change) and the dependent variable (what the researchers measure).

What is a Research Hypothesis?

A research hypothesis is a statement that introduces a research question and proposes an expected result. It is an integral part of the scientific method that forms the basis of scientific experiments. Therefore, you need to be careful and thorough when building your research hypothesis. A minor flaw in the construction of your hypothesis could have an adverse effect on your experiment. In research, there is a convention that the hypothesis is written in two forms: the null hypothesis and the alternative hypothesis (called the experimental hypothesis when the method of investigation is an experiment).

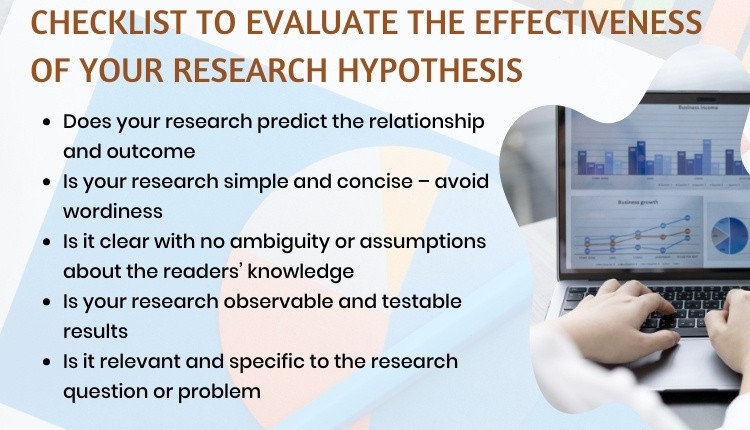

Characteristics of a Good Research Hypothesis

Because a hypothesis is specific, it offers a testable prediction about what you expect to happen in a study. You may consider drawing your hypothesis from previously published research based on the theory.

A good research hypothesis involves more effort than just a guess. In particular, your hypothesis may begin with a question that could be further explored through background research.

To help you formulate a promising research hypothesis, you should ask yourself the following questions:

- Is the language clear and focused?

- What is the relationship between your hypothesis and your research topic?

- Is your hypothesis testable? If yes, then how?

- What are the possible explanations that you might want to explore?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate your variables without violating ethical standards?

- Does your hypothesis predict the relationship and outcome?

- Is your hypothesis simple and concise (does it avoid wordiness)?

- Is it clear, with no ambiguity or assumptions about the readers’ knowledge?

- Does your hypothesis lead to observable and testable results?

- Is it relevant and specific to the research question or problem?

The questions listed above can be used as a checklist to make sure your hypothesis is based on a solid foundation. Furthermore, it can help you identify weaknesses in your hypothesis and revise it if necessary.

Source: Educational Hub

How to Formulate a Research Hypothesis

A testable hypothesis is not a simple statement. Rather, it is an intricate statement that needs to offer a clear introduction to a scientific experiment, its intentions, and the possible outcomes. There are some important things to consider when building a compelling hypothesis.

1. State the problem that you are trying to solve.

Make sure that the hypothesis clearly defines the topic and the focus of the experiment.

2. Try to write the hypothesis as an if-then statement.

Follow this template: If a specific action is taken, then a certain outcome is expected.

3. Define the variables

Independent variables are the ones that are manipulated, controlled, or changed. Independent variables are isolated from other factors of the study.

Dependent variables , as the name suggests, are dependent on other factors of the study. They are influenced by changes in the independent variable.

4. Scrutinize the hypothesis

Evaluate assumptions, predictions, and evidence rigorously to refine your understanding.
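The four steps above can be sketched as a small data structure. This is only an illustrative sketch: the `Hypothesis` class and its field names are hypothetical, invented here to show how naming the variables and using the if-then template fit together. The example values reuse the coal plant hypothesis from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Hypothetical container for the parts of an if-then hypothesis."""

    independent_variable: str  # what the researcher manipulates or changes
    dependent_variable: str    # what the researcher observes and measures
    action: str                # the specific change to the independent variable
    expected_outcome: str      # the predicted change in the dependent variable

    def as_if_then(self) -> str:
        """Render the hypothesis with the if-then template."""
        return f"If {self.action}, then {self.expected_outcome}."


example = Hypothesis(
    independent_variable="number of coal plants in a region",
    dependent_variable="amount of water pollution",
    action="more coal plants are built in a region",
    expected_outcome="water pollution in that region will increase",
)

print(example.as_if_then())
```

Writing the variables down separately from the statement makes it easier to check that the hypothesis names both an independent and a dependent variable before it is scrutinized further.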

Types of Research Hypothesis

The types of research hypothesis are stated below:

1. Simple Hypothesis

It predicts the relationship between a single dependent variable and a single independent variable.

2. Complex Hypothesis

It predicts the relationship between two or more independent and dependent variables.

3. Directional Hypothesis

It specifies the expected direction of the relationship between variables and is derived from theory. Furthermore, it implies the researcher’s intellectual commitment to a particular outcome.

4. Non-directional Hypothesis

It does not predict the exact direction or nature of the relationship between the two variables. The non-directional hypothesis is used when there is no theory involved or when findings contradict previous research.

5. Associative and Causal Hypothesis

The associative hypothesis proposes interdependency between variables: a change in one variable is accompanied by a change in the other variable. On the other hand, the causal hypothesis proposes an effect on the dependent variable due to manipulation of the independent variable.

6. Null Hypothesis

The null hypothesis states that there is no relationship between the two variables: there will be no change in the dependent variable due to the manipulation of the independent variable. Furthermore, it states that results are due to chance and are not significant in terms of supporting the idea being investigated.

7. Alternative Hypothesis

It states that there is a relationship between the two variables of the study and that the results are significant to the research topic. An experimental hypothesis predicts what changes will take place in the dependent variable when the independent variable is manipulated. Also, it states that the results are not due to chance and that they are significant in terms of supporting the theory being investigated.
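To make the contrast between the null and alternative hypotheses concrete, here is a minimal sketch of a permutation test written with the Python standard library. It is one possible way to weigh the two hypotheses, not a method prescribed by this article. The function name, the measurements, and the number of permutations are all assumptions invented for illustration.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    H0 (null): group labels are unrelated to the outcome, so shuffled
    labels should often give a difference as large as the observed one.
    H1 (alternative): the group means differ.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Hypothetical measurements, invented purely for illustration.
treatment = [5.1, 5.6, 6.0, 5.8, 6.3, 5.9]
control = [4.8, 5.0, 5.2, 4.9, 5.3, 5.1]

observed_diff, p = permutation_test(treatment, control)
print(f"observed difference in means: {observed_diff:.2f}")
print(f"estimated p-value: {p:.4f}")
```

A small p-value means that a difference this large would rarely arise from shuffled labels, which counts as evidence against the null hypothesis. It does not prove that the alternative hypothesis is true.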

Research Hypothesis Examples of Independent and Dependent Variables

Research Hypothesis Example 1: A greater number of coal plants in a region (independent variable) increases water pollution (dependent variable). If you change the independent variable (building more coal plants), it will change the dependent variable (amount of water pollution).

Research Hypothesis Example 2: What is the effect of diet or regular soda (independent variable) on blood sugar levels (dependent variable)? If you change the independent variable (the type of soda you consume), it will change the dependent variable (blood sugar levels).

You should not ignore the importance of the above steps. The validity of your experiment and its results relies on a robust, testable hypothesis. Developing a strong testable hypothesis has a few advantages: it compels us to think intensely and specifically about the outcomes of a study. Consequently, it enables us to understand the implication of the question and the different variables involved in the study. Furthermore, it helps us to make precise predictions based on prior research. Hence, forming a hypothesis would be of great value to the research.

More importantly, you need to build a robust testable research hypothesis for your scientific experiments. A testable hypothesis is a hypothesis that can be proved or disproved as a result of experimentation.

Importance of a Testable Hypothesis

To devise and perform an experiment using the scientific method, you need to make sure that your hypothesis is testable. To be considered testable, some essential criteria must be met:

- There must be a possibility to prove that the hypothesis is true.

- There must be a possibility to prove that the hypothesis is false.

- The results of the hypothesis must be reproducible.

Without these criteria, the hypothesis and the results will be vague. As a result, the experiment will not prove or disprove anything significant.


Frequently Asked Questions

The steps to write a research hypothesis are:

1. Stating the problem: Ensure that the hypothesis defines the research problem.

2. Writing the hypothesis as an 'if-then' statement: Include the action and the expected outcome of your study by following an 'if-then' structure.

3. Defining the variables: Define the variables as dependent or independent based on their dependency on other factors.

4. Scrutinizing the hypothesis: Identify the type of your hypothesis.

Hypothesis testing is a statistical tool that uses sample data to make inferences about a population and to draw conclusions about a particular hypothesis.

A hypothesis in statistics is a formal statement about the nature of a population within the structured framework of a statistical model. It is tested by studying data from that population.

A research hypothesis is a statement that introduces a research question and proposes an expected result. It forms the basis of scientific experiments.

The different types of hypothesis in research are:

- Null hypothesis: States that there is no relationship between the two variables.

- Alternate hypothesis: Predicts a relationship between the two variables of the study.

- Directional hypothesis: Specifies the expected direction of the relationship between variables.

- Non-directional hypothesis: Does not predict the exact direction or nature of the relationship between the two variables.

- Simple hypothesis: Predicts the relationship between a single dependent variable and a single independent variable.

- Complex hypothesis: Predicts the relationship between two or more independent and dependent variables.

- Associative and causal hypothesis: The associative hypothesis proposes interdependency between variables, while the causal hypothesis proposes an effect on the dependent variable due to manipulation of the independent variable.

- Empirical hypothesis: Can be tested via experiments and observation.

- Statistical hypothesis: Utilizes statistical models to draw conclusions about broader populations.



Developing a Hypothesis

Rajiv S. Jhangiani; I-Chant A. Chiang; Carrie Cuttler; and Dana C. Leighton

Learning Objectives

- Distinguish between a theory and a hypothesis.

- Discover how theories are used to generate hypotheses and how the results of studies can be used to further inform theories.

- Understand the characteristics of a good hypothesis.

Theories and Hypotheses

Before describing how to develop a hypothesis, it is important to distinguish between a theory and a hypothesis. A theory is a coherent explanation or interpretation of one or more phenomena. Although theories can take a variety of forms, one thing they have in common is that they go beyond the phenomena they explain by including variables, structures, processes, functions, or organizing principles that have not been observed directly. Consider, for example, Zajonc’s theory of social facilitation and social inhibition (1965) [1]. He proposed that being watched by others while performing a task creates a general state of physiological arousal, which increases the likelihood of the dominant (most likely) response. So for highly practiced tasks, being watched increases the tendency to make correct responses, but for relatively unpracticed tasks, being watched increases the tendency to make incorrect responses. Notice that this theory, which has come to be called drive theory, provides an explanation of both social facilitation and social inhibition that goes beyond the phenomena themselves by including concepts such as “arousal” and “dominant response,” along with processes such as the effect of arousal on the dominant response.

Outside of science, referring to an idea as a theory often implies that it is untested—perhaps no more than a wild guess. In science, however, the term theory has no such implication. A theory is simply an explanation or interpretation of a set of phenomena. It can be untested, but it can also be extensively tested, well supported, and accepted as an accurate description of the world by the scientific community. The theory of evolution by natural selection, for example, is a theory because it is an explanation of the diversity of life on earth—not because it is untested or unsupported by scientific research. On the contrary, the evidence for this theory is overwhelmingly positive and nearly all scientists accept its basic assumptions as accurate. Similarly, the “germ theory” of disease is a theory because it is an explanation of the origin of various diseases, not because there is any doubt that many diseases are caused by microorganisms that infect the body.

A hypothesis, on the other hand, is a specific prediction about a new phenomenon that should be observed if a particular theory is accurate. It is an explanation that relies on just a few key concepts. Hypotheses are often specific predictions about what will happen in a particular study. They are developed by considering existing evidence and using reasoning to infer what will happen in the specific context of interest. Hypotheses are often but not always derived from theories. So a hypothesis is often a prediction based on a theory, but some hypotheses are atheoretical, and a theory is developed only after a set of observations has been made. This is because theories are broad in nature and explain larger bodies of data. So if our research question is really original, we may need to collect some data and make some observations before we can develop a broader theory.

Theories and hypotheses always have an if-then relationship. “ If drive theory is correct, then cockroaches should run through a straight runway faster, and a branching runway more slowly, when other cockroaches are present.” Although hypotheses are usually expressed as statements, they can always be rephrased as questions. “Do cockroaches run through a straight runway faster when other cockroaches are present?” Thus deriving hypotheses from theories is an excellent way of generating interesting research questions.

But how do researchers derive hypotheses from theories? One way is to generate a research question using the techniques discussed in this chapter and then ask whether any theory implies an answer to that question. For example, you might wonder whether expressive writing about positive experiences improves health as much as expressive writing about traumatic experiences. Although this question is an interesting one on its own, you might then ask whether the habituation theory—the idea that expressive writing causes people to habituate to negative thoughts and feelings—implies an answer. In this case, it seems clear that if the habituation theory is correct, then expressive writing about positive experiences should not be effective because it would not cause people to habituate to negative thoughts and feelings. A second way to derive hypotheses from theories is to focus on some component of the theory that has not yet been directly observed. For example, a researcher could focus on the process of habituation—perhaps hypothesizing that people should show fewer signs of emotional distress with each new writing session.

Among the very best hypotheses are those that distinguish between competing theories. For example, Norbert Schwarz and his colleagues considered two theories of how people make judgments about themselves, such as how assertive they are (Schwarz et al., 1991) [2]. Both theories held that such judgments are based on relevant examples that people bring to mind. However, one theory was that people base their judgments on the number of examples they bring to mind and the other was that people base their judgments on how easily they bring those examples to mind. To test these theories, the researchers asked people to recall either six times when they were assertive (which is easy for most people) or 12 times (which is difficult for most people). Then they asked them to judge their own assertiveness. Note that the number-of-examples theory implies that people who recalled 12 examples should judge themselves to be more assertive because they recalled more examples, but the ease-of-retrieval theory implies that participants who recalled six examples should judge themselves as more assertive because recalling the examples was easier. Thus the two theories made opposite predictions, so that only one of the predictions could be confirmed. The surprising result was that participants who recalled fewer examples judged themselves to be more assertive, providing particularly convincing evidence in favor of the ease-of-retrieval theory over the number-of-examples theory.
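The logic of pitting two theories against each other can be sketched in a few lines of Python. This is only an illustration of the reasoning, not part of the original study: the `Condition` class, the two prediction functions, and the labels are hypothetical names, and the only "result" encoded is the direction of the finding described above. The ease-of-retrieval function assumes that exactly one of the two conditions is easy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    name: str
    examples_recalled: int  # how many examples participants were asked to recall
    recall_is_easy: bool    # whether recalling that many is easy for most people


def number_of_examples_prediction(a: Condition, b: Condition) -> str:
    """The condition that recalled MORE examples should rate itself more assertive."""
    return a.name if a.examples_recalled > b.examples_recalled else b.name


def ease_of_retrieval_prediction(a: Condition, b: Condition) -> str:
    """The condition for which recall was EASY should rate itself more assertive.

    Assumes exactly one of the two conditions is easy.
    """
    return a.name if a.recall_is_easy else b.name


six = Condition("six examples", examples_recalled=6, recall_is_easy=True)
twelve = Condition("twelve examples", examples_recalled=12, recall_is_easy=False)

predictions = {
    "number-of-examples": number_of_examples_prediction(six, twelve),
    "ease-of-retrieval": ease_of_retrieval_prediction(six, twelve),
}

# Direction of the finding as described in the text: the group that recalled
# fewer examples judged itself to be more assertive.
observed_higher = "six examples"

supported = [theory for theory, p in predictions.items() if p == observed_higher]
print(predictions)
print("Supported by the observed direction:", supported)
```

Because the two functions return different conditions for the same design, a single observed direction can be consistent with only one of them, which is what makes this kind of hypothesis so informative.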

Theory Testing

The primary way that scientific researchers use theories is sometimes called the hypothetico-deductive method (although this term is much more likely to be used by philosophers of science than by scientists themselves). Researchers begin with a set of phenomena and either construct a theory to explain or interpret them or choose an existing theory to work with. They then make a prediction about some new phenomenon that should be observed if the theory is correct. Again, this prediction is called a hypothesis. The researchers then conduct an empirical study to test the hypothesis. Finally, they reevaluate the theory in light of the new results and revise it if necessary. This process is usually conceptualized as a cycle because the researchers can then derive a new hypothesis from the revised theory, conduct a new empirical study to test the hypothesis, and so on. As Figure 2.3 shows, this approach meshes nicely with the model of scientific research in psychology presented earlier in the textbook—creating a more detailed model of “theoretically motivated” or “theory-driven” research.
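The cycle described above can be written as a small loop. The following is a toy sketch under loud assumptions: the function `hypothetico_deductive_cycle`, its helper functions, and the swan-color "theory" with its three observations are all invented for illustration and do not come from any study in this chapter.

```python
def hypothetico_deductive_cycle(theory, derive_hypothesis, run_study, revise, rounds):
    """Repeat: derive a hypothesis from the theory, test it, reevaluate the theory."""
    history = []
    for _ in range(rounds):
        hypothesis = derive_hypothesis(theory)        # prediction implied by the theory
        supported, evidence = run_study(hypothesis)   # empirical test of the prediction
        history.append(supported)
        theory = revise(theory, supported, evidence)  # revise only if necessary
    return theory, history


# Toy stand-ins (all hypothetical): the "theory" is the set of colors swans can have.
observations = iter(["white", "white", "black"])


def derive_hypothesis(theory):
    # Prediction: the next swan observed will have a color the theory allows.
    return frozenset(theory)


def run_study(hypothesis):
    observed = next(observations)
    return observed in hypothesis, observed


def revise(theory, supported, evidence):
    # Keep the theory if the prediction held; otherwise extend it to cover the new case.
    return theory if supported else theory | {evidence}


final_theory, history = hypothetico_deductive_cycle(
    frozenset({"white"}), derive_hypothesis, run_study, revise, rounds=3
)
print(sorted(final_theory), history)
```

In this toy run the first two predictions are supported and the theory is left alone; the third is disconfirmed, so the theory is revised before any further hypothesis would be derived from it.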

As an example, let us consider Zajonc’s research on social facilitation and inhibition. He started with a somewhat contradictory pattern of results from the research literature. He then constructed his drive theory, according to which being watched by others while performing a task causes physiological arousal, which increases an organism’s tendency to make the dominant response. This theory predicts social facilitation for well-learned tasks and social inhibition for poorly learned tasks. He now had a theory that organized previous results in a meaningful way, but he still needed to test it. He hypothesized that if his theory was correct, he should observe that the presence of others improves performance in a simple laboratory task but inhibits performance in a difficult version of the very same laboratory task. To test this hypothesis, one of the studies he conducted used cockroaches as subjects (Zajonc, Heingartner, & Herman, 1969) [3]. The cockroaches ran either down a straight runway (an easy task for a cockroach) or through a cross-shaped maze (a difficult task for a cockroach) to escape into a dark chamber when a light was shined on them. They did this either while alone or in the presence of other cockroaches in clear plastic “audience boxes.” Zajonc found that cockroaches in the straight runway reached their goal more quickly in the presence of other cockroaches, but cockroaches in the cross-shaped maze reached their goal more slowly when they were in the presence of other cockroaches. Thus he confirmed his hypothesis and provided support for his drive theory. (In many other studies, Zajonc also showed that drive theory applies to humans [Zajonc & Sales, 1966] [4].)

Incorporating Theory into Your Research

When you write your research report or plan your presentation, be aware that there are two basic ways that researchers usually include theory. The first is to raise a research question, answer that question by conducting a new study, and then offer one or more theories (usually more) to explain or interpret the results. This format works well for applied research questions and for research questions that existing theories do not address. The second way is to describe one or more existing theories, derive a hypothesis from one of those theories, test the hypothesis in a new study, and finally reevaluate the theory. This format works well when there is an existing theory that addresses the research question—especially if the resulting hypothesis is surprising or conflicts with a hypothesis derived from a different theory.

Using theories in your research not only gives you guidance in coming up with experiment ideas and possible projects, but also lends legitimacy to your work. Psychologists have been interested in a variety of human behaviors and have developed many theories along the way. Using established theories will help you break new ground as a researcher, not limit you from developing your own ideas.

Characteristics of a Good Hypothesis

There are three general characteristics of a good hypothesis. First, a good hypothesis must be testable and falsifiable. We must be able to test the hypothesis using the methods of science and, if you recall Popper’s falsifiability criterion, it must be possible to gather evidence that will disconfirm the hypothesis if it is indeed false. Second, a good hypothesis must be logical. As described above, hypotheses are more than just random guesses. They should be informed by previous theories or observations and by logical reasoning. Typically, we begin with a broad and general theory and use deductive reasoning to generate a more specific hypothesis to test based on that theory. Occasionally, however, when there is no theory to inform our hypothesis, we use inductive reasoning, which involves using specific observations or research findings to form a more general hypothesis. Finally, the hypothesis should be positive. That is, the hypothesis should make a positive statement about the existence of a relationship or effect, rather than a statement that a relationship or effect does not exist. As scientists, we don’t set out to show that relationships do not exist or that effects do not occur, so our hypotheses should not be worded in a way that suggests an effect or relationship does not exist. The nature of science is to assume that something does not exist and then seek evidence to prove this wrong, to show that it really does exist. That may seem backward to you but that is the nature of the scientific method. The underlying reason for this is beyond the scope of this chapter but it has to do with statistical theory.
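The "assume it does not exist, then look for evidence against that assumption" logic can be made concrete with a permutation test. This is a minimal sketch, not a method described in this chapter: the function name `permutation_p_value`, the scores, and the random seed are all invented for illustration and come from no real study. The positive hypothesis here is that scores are higher with an audience; the test starts from the opposite assumption that the group labels make no difference.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=5000, seed=0):
    """One-sided permutation test for the hypothesis mean(group_a) > mean(group_b).

    Starts from the assumption of no effect: if group labels do not matter,
    shuffling them should often give a difference as large as the observed one.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_large += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return (at_least_as_large + 1) / (n_permutations + 1)


# Made-up scores, for illustration only.
alone = [12, 14, 11, 13, 12, 15]
audience = [17, 19, 16, 18, 20, 17]

p = permutation_p_value(audience, alone)
print(f"p = {p:.4f}")
```

A small p-value means the observed difference would be rare if there were really no effect, which counts as evidence for the positive hypothesis. A large p-value does not show that the effect is absent; it only means the no-effect assumption could not be rejected.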

- Zajonc, R. B. (1965). Social facilitation. Science, 149, 269–274.

- Schwarz, N., Bless, H., Strack, F., Klumpp, G., Rittenauer-Schatka, H., & Simons, A. (1991). Ease of retrieval as information: Another look at the availability heuristic. Journal of Personality and Social Psychology, 61, 195–202.

- Zajonc, R. B., Heingartner, A., & Herman, E. M. (1969). Social enhancement and impairment of performance in the cockroach. Journal of Personality and Social Psychology, 13, 83–92.

- Zajonc, R. B., & Sales, S. M. (1966). Social facilitation of dominant and subordinate responses. Journal of Experimental Social Psychology, 2, 160–168.

Theory: A coherent explanation or interpretation of one or more phenomena.

Hypothesis: A specific prediction about a new phenomenon that should be observed if a particular theory is accurate.

Hypothetico-deductive method: A cyclical process of theory development, starting with an observed phenomenon, then developing or using a theory to make a specific prediction of what should happen if that theory is correct, testing that prediction, refining the theory in light of the findings, and using that refined theory to develop new hypotheses, and so on.