177 Great Artificial Intelligence Research Paper Topics to Use

In this post, we will look at the definition of artificial intelligence, its applications, and writing tips on how to come up with AI topics. Finally, we shall look at top artificial intelligence research topics for your inspiration.

What Is Artificial Intelligence?

Artificial intelligence refers to intelligence demonstrated by machines, as opposed to the natural intelligence that animals and humans display, which involves emotionality and consciousness. The field of AI has grown rapidly in recent years, with many scientists investing their time and effort in research.

How To Develop Topics in Artificial Intelligence

Developing AI topics is a critical thinking process that also incorporates a lot of creativity. Due to the ever-dynamic nature of the discipline, most students find it hard to develop impressive topics in artificial intelligence. However, here are some general rules to get you started:

- Read widely on the subject of artificial intelligence

- Have an interest in news and other current updates about AI

- Consult your supervisor

Once you are ready with these steps, nothing is holding you back from developing top-rated topics in artificial intelligence. Now let’s look at what the pros have in store for you.

Artificial Intelligence Research Paper Topics

- The role of artificial intelligence in evolving the workforce

- Are there tasks that require uniquely human abilities that machines lack?

- The transformative economic impact of artificial intelligence

- Managing a global autonomous arms race in the face of AI

- The legal and ethical boundaries of artificial intelligence

- Is the destructive role of AI more than its constructive role in society?

- How to build AI algorithms to achieve the far-reaching goals of humans

- How privacy gets compromised with the everyday collection of data

- How businesses and governments can suffer at the hands of AI

- Is it possible for AI to devolve into social oppression?

- Augmentation of the work humans do through artificial intelligence

- The role of AI in monitoring and diagnosing capabilities

Artificial Intelligence Topics For Presentation

- How AI helps to uncover criminal activity and solve serial crimes

- The place of facial recognition technologies in security systems

- How to use AI without crossing an individual’s privacy

- What are the disadvantages of using a computer-controlled robot in performing tasks?

- How to develop systems endowed with intellectual processes

- The challenge of programming computers to perform complex tasks

- Discuss some of the mathematical theorems for artificial intelligence systems

- The role of computer processing speed and memory capacity in AI

- Can computer machines achieve the performance levels of human experts?

- Discuss the application of artificial intelligence in handwriting recognition

- A case study of the key people involved in developing AI systems

- Computational aesthetics when developing artificial intelligence systems

Topics in AI For Tip-Top Grades

- Describe the requirements for an artificial intelligence programming language

- The impact of American companies possessing about 2/3 of investments in AI

- The relationship between human neural networks and AI

- The role of psychologists in developing human intelligence

- How to apply past experiences to analogous new situations

- How machine learning helps in achieving artificial intelligence

- The role of discernment and human intelligence in developing AI systems

- Discuss the various methods and goals in artificial intelligence

- What is the relationship between applied AI, strong AI, and cognitive simulation?

- Discuss the implications of the first AI programs

- Logical reasoning and problem-solving in artificial intelligence

- Challenges involved in controlled learning environments

AI Research Topics For High School Students

- How quantum computing is affecting artificial intelligence

- The role of the Internet of Things in advancing artificial intelligence

- Using artificial intelligence to enable machines to perform programming tasks

- Why do machines learn automatically without human hand-holding?

- Implementing decisions based on data processing in the human mind

- Describe the web-like structure of artificial neural networks

- Machine learning algorithms for optimal functions through trial and error

- A case study of Google’s AlphaGo computer program

- How robots solve problems in an intelligent manner

- Evaluate the significant role of M.I.T.’s artificial intelligence lab

- A case study of Robonaut developed by NASA to work with astronauts in space

- Discuss natural language processing where machines analyze language and speech

Argument Debate Topics on AI

- How chatbots use ML and N.L.P. to interact with the users

- How do computers use and understand images?

- The impact of genetic engineering on the life of man

- Why are micro-chips not recommended in human body systems?

- Can humans work alongside robots in a workplace system?

- Have computers contributed to the intrusion of privacy for many?

- Why artificial intelligence systems should not be made accessible to children

- How artificial intelligence systems are contributing to healthcare problems

- Does artificial intelligence alleviate human problems or add to them?

- Why governments should put more stringent measures for AI inventions

- How artificial intelligence is affecting the character traits of children born

- Is virtual reality taking people out of the real-world situation?

Quality AI Topics For Research Paper

- The use of recommender systems in choosing movies and series

- Collaborative filtering in designing systems

- How do developers arrive at a content-based recommendation?

- Creation of systems that can emulate human tasks

- How IoT devices generate a lot of data

- How artificial intelligence algorithms convert data into useful, actionable results

- How AI is progressing rapidly with the 5G technology

- How to develop robots with human-like characteristics

- Developing Google search algorithms

- The role of artificial intelligence in developing autonomous weapons

- Discuss the long-term goal of artificial intelligence

- Will artificial intelligence outperform humans at every cognitive task?

Computer Science AI Topics

- Computational intelligence magazine in computer science

- Swarm and evolutionary computation procedures for college students

- Discuss computational transactions on intelligent transportation systems

- The structure and function of knowledge-based systems

- A review of the artificial intelligence systems in developing systems

- Conduct a review of the expert systems with applications

- Critique the various foundations and trends in information retrieval

- The role of specialized systems in transactions on knowledge and data engineering

- An analysis of a journal on ambient intelligence and humanized computing

- Discuss the various computer transactions on cognitive communications and networking

- What is the role of artificial intelligence in medicine?

- Computer engineering applications of artificial intelligence

AI Ethics Topics

- How the automation of jobs is going to make many jobless

- Discuss inequality challenges in distributing wealth created by machines

- The impact of machines on human behavior and interactions

- How artificial intelligence is going to affect how we act

- The process of eliminating bias in artificial intelligence: A case of racist robots

- Measures that can keep artificial intelligence safe from adversaries

- Protecting artificial intelligence discoveries from unintended consequences

- How a man can stay in control despite the complex, intelligent systems

- Robot rights: A case of how man is mistreating and misusing robots

- The balance between mitigating suffering and interfering with set ethics

- The role of artificial intelligence in negative outcomes: Is it worth it?

- How to ethically use artificial intelligence for bettering lives

Advanced AI Topics

- Discuss how long it will take until machines greatly supersede human intelligence

- Is it possible to achieve superhuman artificial intelligence in this century?

- The impact of techno-skeptic prediction on the performance of AI

- The role of quarks and electrons in the human brain

- The impact of artificial intelligence safety research institutes

- Will robots be disastrous for humanity in the near future?

- Robots: A concern about consciousness and evil

- Discuss whether a self-driving car has a subjective experience or not

- Should humans worry about machines turning evil in the end?

- Discuss how machines exhibit goal-oriented behavior in their functions

- Should man continue to develop lethal autonomous weapons?

- What is the implication of machine-produced wealth?

AI Essay Topics Technology

- Discuss the implication of the fourth technological revolution in cloud computing

- Big database technologies used in sensors

- The combination of technologies typical of the technological revolution

- Key determinants of the civilization process of industry 4.0

- Discuss some of the concepts of technological management

- Evaluate the creation of internet-based companies in the U.S.

- The most dominant scientific research in the field of artificial intelligence

- Discuss the application of artificial intelligence in the literature

- How enterprises use artificial intelligence in blockchain business operations

- Discuss the various immersive experiences as a result of digital AI

- Elaborate on various enterprise architects and technology innovations

- Mega-trends that are future impacts on business operations

Interesting Topics in AI

- The role of the industrial revolution of the 18th century in AI

- The electricity era of the late 19th century and its contribution to the development of robots

- How the widespread use of the internet contributes to the AI revolution

- The short-term economic crisis as a result of artificial intelligence business technologies

- Designing and creating artificial intelligence production processes

- Analyzing large collections of information for technological solutions

- How biotechnology is transforming the field of agriculture

- Innovative business projects that work using artificial intelligence systems

- Process and marketing innovations in the 21st century

- Medical intelligence in the era of smart cities

- Advanced data processing technologies in developed nations

- Discuss the development of stelliform technologies

Good Research Topics For AI

- Development of new technological solutions in IT

- Innovative organizational solutions that develop machine learning

- How to develop branches of a knowledge-based economy

- Discuss the implications of advanced computerized neural network systems

- How to solve complex problems with the help of algorithms

- Why artificial intelligence systems are predominating over their creator

- How to determine artificial emotional intelligence

- Discuss the negative and positive aspects of technological advancement

- How internet technology companies like Facebook are managing large social media portals

- The application of analytical business intelligence systems

- How artificial intelligence improves business management systems

- Strategic and ongoing management of artificial intelligence systems

Graduate AI NLP Research Topics

- Morphological segmentation in artificial intelligence

- Sentiment analysis and breaking machine language

- Discuss input utterance for language interpretation

- Festival speech synthesis system for natural language processing

- Discuss the role of the Google language translator

- Evaluate the various analysis methodologies in N.L.P.

- Native language identification procedure for deep analytics

- Modular audio recognition framework

- Deep linguistic processing techniques

- Fact recognition and extraction techniques

- Dialogue and text-based applications

- Speaker verification and identification systems

Controversial Topics in AI

- Ethical implication of AI in movies: A case study of The Terminator

- Will machines take over the world and enslave humanity?

- Does human intelligence paint a dark future for humanity?

- Ethical and practical issues of artificial intelligence

- The impact of mimicking human cognitive functions

- Why the integration of AI technologies into society should be limited

- Should robots get paid hourly?

- What if AI is a mistake?

- Why did Microsoft shut down chatbots immediately?

- Should there be AI systems for killing?

- Should machines be created to do what they want?

- Is the computerized gun ethical?

Hot AI Topics

- Why predator drones should not exist

- Do U.S. laws restrict meaningful innovations in AI?

- Why did the campaign to stop killer robots fail in the end?

- Fully autonomous weapons and human safety

- How to deal with rogue artificial intelligence systems in the United States

- Is it okay to have a monopoly and control over artificial intelligence innovations?

- Should robots have human rights or citizenship?

- Biases when detecting people’s gender using artificial intelligence

- Considerations for the adoption of a particular artificial intelligence technology

Are you a university student seeking research paper writing services or dissertation proposal help ? We offer custom help for college students in any field of artificial intelligence.

Leave a Reply Cancel reply

- Onsite training

3,000,000+ delegates

15,000+ clients

1,000+ locations

- KnowledgePass

- Log a ticket

01344203999 Available 24/7

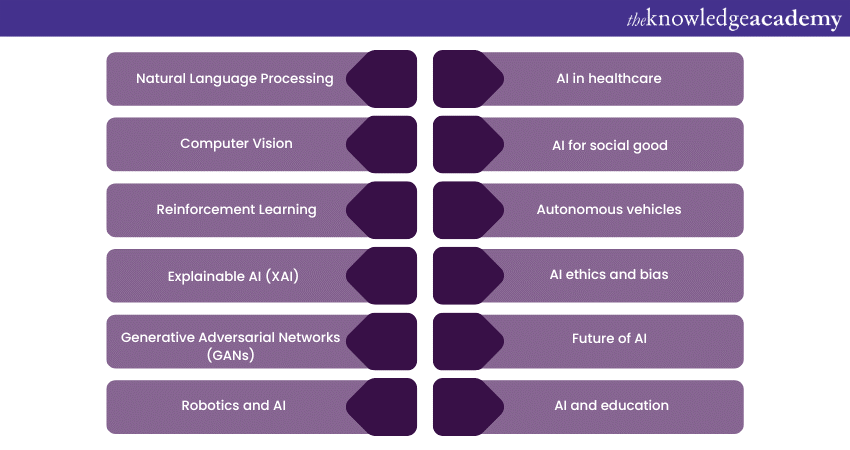

12 Best Artificial Intelligence Topics for Research in 2024

Explore the "12 Best Artificial Intelligence Topics for Research in 2024." Dive into the top AI research areas, including Natural Language Processing, Computer Vision, Reinforcement Learning, Explainable AI (XAI), AI in Healthcare, Autonomous Vehicles, and AI Ethics and Bias. Stay ahead of the curve and make informed choices for your AI research endeavours.

Table of Contents

1) Top Artificial Intelligence Topics for Research

a) Natural Language Processing

b) Computer vision

c) Reinforcement Learning

d) Explainable AI (XAI)

e) Generative Adversarial Networks (GANs)

f) Robotics and AI

g) AI in healthcare

h) AI for social good

i) Autonomous vehicles

j) AI ethics and bias

k) Future of AI

l) AI and education

2) Conclusion

Top Artificial Intelligence Topics for Research

This section of the blog will expand on some of the best Artificial Intelligence Topics for research.

Natural Language Processing

Natural Language Processing (NLP) is centred around empowering machines to comprehend, interpret, and even generate human language. Within this domain, three distinctive research avenues beckon:

1) Sentiment analysis: This entails the study of methodologies to decipher and discern emotions encapsulated within textual content. Understanding sentiments is pivotal in applications ranging from brand perception analysis to social media insights.

2) Language generation: Generating coherent and contextually apt text is an ongoing pursuit. Investigating mechanisms that allow machines to produce human-like narratives and responses holds immense potential across sectors.

3) Question answering systems: Constructing systems that can grasp the nuances of natural language questions and provide accurate, coherent responses is a cornerstone of NLP research. This facet has implications for knowledge dissemination, customer support, and more.
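
To make the idea of sentiment analysis concrete, here is a minimal lexicon-based sketch in Python. It is only an illustration: the word list `LEXICON`, the negation rule, and the function names are hypothetical choices for this example, and production systems typically rely on much larger resources or learned models.

```python
import re

# Hypothetical toy lexicon (an assumption for illustration only).
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
NEGATIONS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Sum word polarities, flipping the sign of a word that directly follows a negation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    return score


def classify(text: str) -> str:
    """Map the numeric score to a coarse sentiment label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

For instance, `classify("This is not good")` yields `"negative"` because the negation flips the polarity of "good". A rule this simple misses sarcasm and longer-range context, which is part of why sentiment analysis remains a research topic.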

Computer Vision

Computer Vision, a discipline that bestows machines with the ability to interpret visual data, is replete with intriguing avenues for research:

1) Object detection and tracking: The development of algorithms capable of identifying and tracking objects within images and videos finds relevance in surveillance, automotive safety, and beyond.

2) Image captioning: Bridging the gap between visual and textual comprehension, this research area focuses on generating descriptive captions for images, catering to visually impaired individuals and enhancing multimedia indexing.

3) Facial recognition: Advancements in facial recognition technology hold implications for security, personalisation, and accessibility, necessitating ongoing research into accuracy and ethical considerations.
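
Object detection and tracking work is commonly evaluated by how well a predicted bounding box overlaps a reference box. The sketch below computes intersection over union (IoU) for two axis-aligned boxes; the `(x_min, y_min, x_max, y_max)` box layout and the function name are assumptions made for this example.

```python
from typing import Tuple

# Assumed box layout for this sketch: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 when they do not overlap)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0
```

Two 2×2 boxes offset by one unit in each direction share an area of 1 out of a union of 7, so their IoU is 1/7. A tracker might, for example, match detections across frames by picking the pair with the highest IoU.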

Reinforcement Learning

Reinforcement Learning revolves around training agents to make sequential decisions in order to maximise rewards. Within this realm, three prominent Artificial Intelligence Topics emerge:

1) Autonomous agents: Crafting AI agents that exhibit decision-making prowess in dynamic environments paves the way for applications like autonomous robotics and adaptive systems.

2) Deep Q-Networks (DQN): Deep Q-Networks, a class of reinforcement learning algorithms, remain under active research for refining value-based decision-making in complex scenarios.

3) Policy gradient methods: These methods, aiming to optimise policies directly, play a crucial role in fine-tuning decision-making processes across domains like gaming, finance, and robotics.
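
The value-based idea behind Deep Q-Networks can be illustrated without a neural network. The sketch below applies the Q-learning update to a table on a tiny, made-up four-state chain, sweeping every state-action pair deterministically instead of sampling experience. A DQN would replace the table with a network and learn from sampled transitions. The environment, constants, and function names are all assumptions for this example.

```python
N_STATES = 4        # states 0..3; state 3 is terminal (hypothetical toy environment)
ACTIONS = (0, 1)    # 0 = move left, 1 = move right
GAMMA = 0.9         # discount factor
ALPHA = 0.5         # learning rate


def step(state, action):
    """Deterministic chain: reward 1.0 only when the move enters the terminal state."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(sweeps=200):
    """Repeatedly apply the Q-learning update to every non-terminal state-action pair."""
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(sweeps):
        for s in range(N_STATES - 1):
            for a in ACTIONS:
                s2, r = step(s, a)
                target = r + GAMMA * max(q[s2])   # terminal row stays at zero
                q[s][a] += ALPHA * (target - q[s][a])
    return q


def greedy_policy(q):
    """Pick the highest-valued action in each non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
```

After training, the greedy policy moves right in every state, and the learned values decay by the discount factor with distance from the goal (about 1.0, 0.9, and 0.81). Policy gradient methods take a different route: they adjust the policy's parameters directly and do not derive the policy from a value table.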

Explainable AI (XAI)

The pursuit of Explainable AI seeks to demystify the decision-making processes of AI systems. This area comprises Artificial Intelligence Topics such as:

1) Model interpretability: Unravelling the inner workings of complex models to elucidate the factors influencing their outputs, thus fostering transparency and accountability.

2) Visualising neural networks: Transforming abstract neural network structures into visual representations aids in comprehending their functionality and behaviour.

3) Rule-based systems: Augmenting AI decision-making with interpretable, rule-based systems holds promise in domains requiring logical explanations for actions taken.
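
One widely used, model-agnostic way to probe interpretability is permutation importance: shuffle one input feature and measure how much the prediction error grows. The sketch below is a minimal illustration. The stand-in `model`, the synthetic data, and the helper names are assumptions for this example and not part of any particular library.

```python
import random


def model(row):
    """Stand-in 'black box' for the demo: it depends on feature 0 only."""
    return 3.0 * row[0]


def mean_squared_error(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature, seed=0):
    """Increase in error after shuffling one feature column across rows."""
    rng = random.Random(seed)
    baseline = mean_squared_error(rows, targets)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    return mean_squared_error(shuffled, targets) - baseline


# Synthetic data: two features per row, targets produced by the model itself.
rows = [[float(i), float((7 * i) % 50)] for i in range(50)]
targets = [model(r) for r in rows]
```

Here shuffling feature 1 leaves the error unchanged (importance 0.0), while shuffling feature 0 increases it, which matches how the stand-in model was built. On a real model the same procedure gives a rough ranking of which inputs drive the outputs.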

Generative Adversarial Networks (GANs)

The captivating world of Generative Adversarial Networks (GANs) unfolds through the interplay of generator and discriminator networks, birthing remarkable research avenues:

1) Image generation: Crafting realistic images from random noise showcases the creative potential of GANs, with applications spanning art, design, and data augmentation.

2) Style transfer: Enabling the transfer of artistic styles between images, merging creativity and technology to yield visually captivating results.

3) Anomaly detection: GANs find utility in identifying anomalies within datasets, bolstering fraud detection, quality control, and anomaly-sensitive industries.
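
The interplay between generator and discriminator is usually expressed through two loss functions. The sketch below computes them from discriminator output probabilities, using the standard binary cross-entropy discriminator loss and the commonly used non-saturating generator loss. The function names and the plain-list inputs are assumptions for this illustration; a real GAN would compute these on tensors inside a training loop.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score near 1, generated ones near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)
```

When the discriminator cannot tell real from generated samples and outputs 0.5 everywhere, its loss equals 2·ln 2 and the generator's loss equals ln 2. The generator's loss drops as the discriminator assigns higher probabilities to generated samples.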

Robotics and AI

The synergy between Robotics and AI is a fertile ground for exploration, with Artificial Intelligence Topics such as:

1) Human-robot collaboration: Research in this arena strives to establish harmonious collaboration between humans and robots, augmenting industry productivity and efficiency.

2) Robot learning: By enabling robots to learn and adapt from their experiences, Researchers foster robots' autonomy and the ability to handle diverse tasks.

3) Ethical considerations: Delving into the ethical implications surrounding AI-powered robots helps establish responsible guidelines for their deployment.

AI in healthcare

AI presents a transformative potential within healthcare, spurring research into:

1) Medical diagnosis: AI aids in accurately diagnosing medical conditions, revolutionising early detection and patient care.

2) Drug discovery: Leveraging AI for drug discovery expedites the identification of potential candidates, accelerating the development of new treatments.

3) Personalised treatment: Tailoring medical interventions to individual patient profiles enhances treatment outcomes and patient well-being.

AI for social good

Harnessing the prowess of AI for Social Good entails addressing pressing global challenges:

1) Environmental monitoring: AI-powered solutions facilitate real-time monitoring of ecological changes, supporting conservation and sustainable practices.

2) Disaster response: Research in this area bolsters disaster response efforts by employing AI to analyse data and optimise resource allocation.

3) Poverty alleviation: Researchers contribute to humanitarian efforts and socioeconomic equality by devising AI solutions to tackle poverty.

Autonomous vehicles

Autonomous Vehicles represent a realm brimming with potential and complexities, necessitating research in Artificial Intelligence Topics such as:

1) Sensor fusion: Integrating data from diverse sensors enhances perception accuracy, which is essential for safe autonomous navigation.

2) Path planning: Developing advanced algorithms for path planning ensures optimal routes while adhering to safety protocols.

3) Safety and ethics: Ethical considerations, such as programming vehicles to make difficult decisions in potential accident scenarios, require meticulous research and deliberation.
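
As a small illustration of sensor fusion, the sketch below combines two noisy estimates of the same quantity (say, distance to an obstacle from two different sensors) by inverse-variance weighting, which is the core step of a one-dimensional Kalman-style update. The function name and the assumption of independent Gaussian noise are choices made for this example, not a description of any specific vehicle stack.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Fuse two independent estimates; the less noisy sensor gets the larger weight."""
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_var = 1.0 / (weight_a + weight_b)
    fused_mean = fused_var * (weight_a * mean_a + weight_b * mean_b)
    return fused_mean, fused_var
```

With two equally noisy readings of 10.0 and 12.0 (variance 4.0 each), `fuse` returns a mean of 11.0 and a variance of 2.0. The fused variance is lower than either input variance, which is how fusing sensors can improve perception accuracy.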

AI ethics and bias

Ethical underpinnings in AI drive research efforts in these directions:

1) Fairness in AI: Ensuring AI systems remain impartial and unbiased across diverse demographic groups.

2) Bias detection and mitigation: Identifying and rectifying biases present within AI models guarantees equitable outcomes.

3) Ethical decision-making: Developing frameworks that imbue AI with ethical decision-making capabilities aligns technology with societal values.
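
One simple starting point for bias detection is to compare how often a model gives a positive outcome to each demographic group. The sketch below computes per-group selection rates and their spread, often called the demographic parity difference. The function names and the toy data in the usage note are assumptions for illustration, and this is only one of several fairness metrics.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

For predictions `[1, 1, 1, 0, 1, 0, 0, 0]` with the first four rows in group "a" and the rest in group "b", the selection rates are 0.75 and 0.25, giving a difference of 0.5. A large gap is a signal to investigate and does not by itself prove unfairness.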

Future of AI

The vanguard of AI beckons Researchers to explore these horizons:

1) Artificial General Intelligence (AGI): Speculating on the potential emergence of AI systems capable of emulating human-like intelligence opens dialogues on the implications and challenges.

2) AI and creativity: Probing the interface between AI and creative domains, such as art and music, unveils the coalescence of human ingenuity and technological prowess.

3) Ethical and regulatory challenges: Researching the ethical dilemmas and regulatory frameworks underpinning AI's evolution fortifies responsible innovation.

AI and education

The intersection of AI and Education opens doors to innovative learning paradigms:

1) Personalised learning: Developing AI systems that adapt educational content to individual learning styles and paces.

2) Intelligent tutoring systems: Creating AI-driven tutoring systems that provide targeted support to students.

3) Educational data mining: Applying AI to analyse educational data for insights into learning patterns and trends.

Conclusion

The domain of AI is ever-expanding, rich with intriguing topics about Artificial Intelligence that beckon Researchers to explore, question, and innovate. Through the pursuit of these twelve diverse Artificial Intelligence Topics, we pave the way for not only technological advancement but also a deeper understanding of the societal impact of AI. By delving into these realms, Researchers stand poised to shape the trajectory of AI, ensuring it remains a force for progress, empowerment, and positive transformation in our world.

Research Topics & Ideas

Artificial Intelligence (AI) and Machine Learning (ML)

If you’re just starting out exploring AI-related research topics for your dissertation, thesis or research project, you’ve come to the right place. In this post, we’ll help kickstart your research topic ideation process by providing a hearty list of research topics and ideas, including examples from past studies.

PS – This is just the start…

We know it’s exciting to run through a list of research topics, but please keep in mind that this list is just a starting point. To develop a suitable research topic, you’ll need to identify a clear and convincing research gap, and a viable plan to fill that gap.

AI-Related Research Topics & Ideas

Below you’ll find a list of AI and machine learning-related research topic ideas. These are intentionally broad and generic, so keep in mind that you will need to refine them a little. Nevertheless, they should inspire some ideas for your project.

- Developing AI algorithms for early detection of chronic diseases using patient data.

- The use of deep learning in enhancing the accuracy of weather prediction models.

- Machine learning techniques for real-time language translation in social media platforms.

- AI-driven approaches to improve cybersecurity in financial transactions.

- The role of AI in optimizing supply chain logistics for e-commerce.

- Investigating the impact of machine learning in personalized education systems.

- The use of AI in predictive maintenance for industrial machinery.

- Developing ethical frameworks for AI decision-making in healthcare.

- The application of ML algorithms in autonomous vehicle navigation systems.

- AI in agricultural technology: Optimizing crop yield predictions.

- Machine learning techniques for enhancing image recognition in security systems.

- AI-powered chatbots: Improving customer service efficiency in retail.

- The impact of AI on enhancing energy efficiency in smart buildings.

- Deep learning in drug discovery and pharmaceutical research.

- The use of AI in detecting and combating online misinformation.

- Machine learning models for real-time traffic prediction and management.

- AI applications in facial recognition: Privacy and ethical considerations.

- The effectiveness of ML in financial market prediction and analysis.

- Developing AI tools for real-time monitoring of environmental pollution.

- Machine learning for automated content moderation on social platforms.

- The role of AI in enhancing the accuracy of medical diagnostics.

- AI in space exploration: Automated data analysis and interpretation.

- Machine learning techniques in identifying genetic markers for diseases.

- AI-driven personal finance management tools.

- The use of AI in developing adaptive learning technologies for disabled students.

AI & ML Research Topic Ideas (Continued)

- Machine learning in cybersecurity threat detection and response.

- AI applications in virtual reality and augmented reality experiences.

- Developing ethical AI systems for recruitment and hiring processes.

- Machine learning for sentiment analysis in customer feedback.

- AI in sports analytics for performance enhancement and injury prevention.

- The role of AI in improving urban planning and smart city initiatives.

- Machine learning models for predicting consumer behaviour trends.

- AI and ML in artistic creation: Music, visual arts, and literature.

- The use of AI in automated drone navigation for delivery services.

- Developing AI algorithms for effective waste management and recycling.

- Machine learning in seismology for earthquake prediction.

- AI-powered tools for enhancing online privacy and data protection.

- The application of ML in enhancing speech recognition technologies.

- Investigating the role of AI in mental health assessment and therapy.

- Machine learning for optimization of renewable energy systems.

- AI in fashion: Predicting trends and personalizing customer experiences.

- The impact of AI on legal research and case analysis.

- Developing AI systems for real-time language interpretation for the deaf and hard of hearing.

- Machine learning in genomic data analysis for personalized medicine.

- AI-driven algorithms for credit scoring in microfinance.

- The use of AI in enhancing public safety and emergency response systems.

- Machine learning for improving water quality monitoring and management.

- AI applications in wildlife conservation and habitat monitoring.

- The role of AI in streamlining manufacturing processes.

- Investigating the use of AI in enhancing the accessibility of digital content for visually impaired users.

Recent AI & ML-Related Studies

While the ideas we’ve presented above are a decent starting point for finding a research topic in AI, they are fairly generic and non-specific. So, it helps to look at actual studies in the AI and machine learning space to see how this all comes together in practice.

Below, we’ve included a selection of AI-related studies to help refine your thinking. These are actual studies, so they can provide some useful insight as to what a research topic looks like in practice.

- An overview of artificial intelligence in diabetic retinopathy and other ocular diseases (Sheng et al., 2022)

- How Does Artificial Intelligence Help Astronomy? A Review (Patel, 2022)

- Editorial: Artificial Intelligence in Bioinformatics and Drug Repurposing: Methods and Applications (Zheng et al., 2022)

- Review of Artificial Intelligence and Machine Learning Technologies: Classification, Restrictions, Opportunities, and Challenges (Mukhamediev et al., 2022)

- Will digitization, big data, and artificial intelligence – and deep learning–based algorithm govern the practice of medicine? (Goh, 2022)

- Flower Classifier Web App Using Ml & Flask Web Framework (Singh et al., 2022)

- Object-based Classification of Natural Scenes Using Machine Learning Methods (Jasim & Younis, 2023)

- Automated Training Data Construction using Measurements for High-Level Learning-Based FPGA Power Modeling (Richa et al., 2022)

- Artificial Intelligence (AI) and Internet of Medical Things (IoMT) Assisted Biomedical Systems for Intelligent Healthcare (Manickam et al., 2022)

- Critical Review of Air Quality Prediction using Machine Learning Techniques (Sharma et al., 2022)

- Artificial Intelligence: New Frontiers in Real–Time Inverse Scattering and Electromagnetic Imaging (Salucci et al., 2022)

- Machine learning alternative to systems biology should not solely depend on data (Yeo & Selvarajoo, 2022)

- Measurement-While-Drilling Based Estimation of Dynamic Penetrometer Values Using Decision Trees and Random Forests (García et al., 2022)

- Artificial Intelligence in the Diagnosis of Oral Diseases: Applications and Pitfalls (Patil et al., 2022)

- Automated Machine Learning on High Dimensional Big Data for Prediction Tasks (Jayanthi & Devi, 2022)

- Breakdown of Machine Learning Algorithms (Meena & Sehrawat, 2022)

- Technology-Enabled, Evidence-Driven, and Patient-Centered: The Way Forward for Regulating Software as a Medical Device (Carolan et al., 2021)

- Machine Learning in Tourism (Rugge, 2022)

- Towards a training data model for artificial intelligence in earth observation (Yue et al., 2022)

- Classification of Music Generality using ANN, CNN and RNN-LSTM (Tripathy & Patel, 2022)

As you can see, these research topics are far more focused than the generic topic ideas we presented earlier. To develop a high-quality research topic, you’ll need to narrow in on a specific context with specific variables of interest.

Get 1-On-1 Help

If you’re still unsure about how to find a quality research topic, check out our Research Topic Kickstarter service, which is the perfect starting point for developing a unique, well-justified research topic.

Artificial Intelligence

Since the 1950s, scientists and engineers have designed computers to "think" by making decisions and finding patterns like humans do. In recent years, artificial intelligence has become increasingly powerful, propelling discovery across scientific fields and enabling researchers to delve into problems previously too complex to solve. Outside of science, artificial intelligence is built into devices all around us, and billions of people across the globe rely on it every day. Stories of artificial intelligence—from friendly humanoid robots to SkyNet—have been incorporated into some of the most iconic movies and books.

But where is the line between what AI can do and what is make-believe? How is that line blurring, and what is the future of artificial intelligence? At Caltech, scientists and scholars are working at the leading edge of AI research, expanding the boundaries of its capabilities and exploring its impacts on society. Discover what defines artificial intelligence, how it is developed and deployed, and what the field holds for the future.

What Is AI?

Artificial intelligence is transforming scientific research as well as everyday life, from communications to transportation to health care and more. Explore what defines AI, how it has evolved since the Turing Test, and the future of artificial intelligence.

What Is the Difference Between "Artificial Intelligence" and "Machine Learning"?

The term "artificial intelligence" is older and broader than "machine learning." Learn how the terms relate to each other and to the concepts of "neural networks" and "deep learning."

How Do Computers Learn?

Machine learning applications power many features of modern life, including search engines, social media, and self-driving cars. Discover how computers learn to make decisions and predictions in this illustration of two key machine learning models.

How Is AI Applied in Everyday Life?

While scientists and engineers explore AI's potential to advance discovery and technology, smart technologies also directly influence our daily lives. Explore the sometimes surprising examples of AI applications.

What Is Big Data?

The increase in available data has fueled the rise of artificial intelligence. Find out what characterizes big data, where big data comes from, and how it is used.

Will Machines Become More Intelligent Than Humans?

Whether or not artificial intelligence will be able to outperform human intelligence—and how soon that could happen—is a common question fueled by depictions of AI in movies and other forms of popular culture. Learn the definition of "singularity" and see a timeline of advances in AI over the past 75 years.

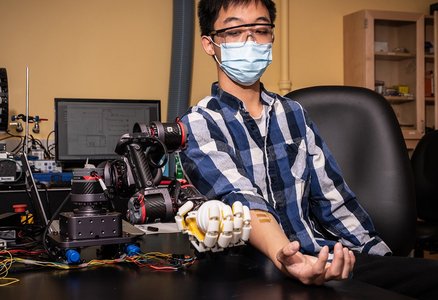

How Does AI Drive Autonomous Systems?

Learn the difference between automation and autonomy, and hear from Caltech faculty who are pushing the limits of AI to create autonomous technology, from self-driving cars to ambulance drones to prosthetic devices.

Can We Trust AI?

As AI is further incorporated into everyday life, more scholars, industries, and ordinary users are examining its effects on society. The Caltech Science Exchange spoke with AI researchers at Caltech about what it might take to trust current and future technologies.

What is Generative AI?

Generative AI applications such as ChatGPT, a chatbot that answers questions with detailed written responses, and DALL-E, which creates realistic images and art based on text prompts, became widely popular beginning in 2022, when companies released versions of their applications that members of the public, not just experts, could easily use.

Ask a Caltech Expert

Where can you find machine learning in finance? Could AI help nature conservation efforts? How is AI transforming astronomy, biology, and other fields? What does an autonomous underwater vehicle have to do with sustainability? Find answers from Caltech researchers.

Terms to Know

Algorithm: A set of instructions or sequence of steps that tells a computer how to perform a task or calculation. In some AI applications, algorithms tell computers how to adapt and refine processes in response to data, without a human supplying new instructions.

Artificial intelligence: Artificial intelligence describes an application or machine that mimics human intelligence.

Automation: A system in which machines execute repeated tasks based on a fixed set of human-supplied instructions.

Autonomy: A system in which a machine makes independent, real-time decisions based on human-supplied rules and goals.

Big data: The massive amounts of data that are coming in quickly and from a variety of sources, such as internet-connected devices, sensors, and social platforms. In some cases, using or learning from big data requires AI methods. Big data also can enhance the ability to create new AI applications.

Chatbot: An AI system that mimics human conversation. While some simple chatbots rely on pre-programmed text, more sophisticated systems, trained on large data sets, are able to convincingly replicate human interaction.

Deep learning: A subset of machine learning. Deep learning uses machine learning algorithms but structures the algorithms in layers to create "artificial neural networks." These networks are modeled after the human brain and are most likely to provide the experience of interacting with a real human.

Human in the loop: An approach that includes human feedback and oversight in machine learning systems. Including humans in the loop may improve accuracy and guard against bias and unintended outcomes of AI.

Model: A computer-generated simplification of something that exists in the real world, such as climate change, disease spread, or earthquakes. Machine learning systems develop models by analyzing patterns in large data sets. Models can be used to simulate natural processes and make predictions.

Neural networks: Interconnected sets of processing units, or nodes, modeled on the human brain, that are used in deep learning to identify patterns in data and, on the basis of those patterns, make predictions in response to new data. Neural networks are used in facial recognition systems, digital marketing, and other applications.

Singularity: A hypothetical scenario in which an AI system develops agency and grows beyond human ability to control it.

Training data: The data used to "teach" a machine learning system to recognize patterns and features. Typically, continual training results in more accurate machine learning systems. Likewise, biased or incomplete datasets can lead to imprecise or unintended outcomes.

Turing Test: An interview-based method proposed by computer pioneer Alan Turing to assess whether a machine can think.

Dive Deeper

Artificial Skin Gives Robots Sense of Touch and Beyond

Artificial Intelligence: The Good, the Bad, and the Ugly

The AI Researcher Giving Her Field Its Bitter Medicine

More Caltech Computer and Information Sciences Research Coverage

The present and future of AI

Finale Doshi-Velez on how AI is shaping our lives and how we can shape AI

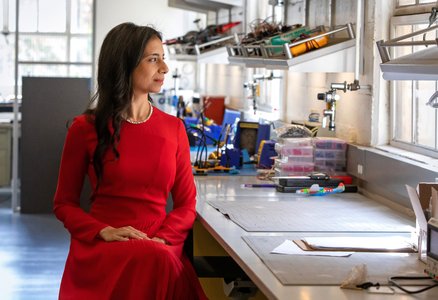

Finale Doshi-Velez, the John L. Loeb Professor of Engineering and Applied Sciences. (Photo courtesy of Eliza Grinnell/Harvard SEAS)

How has artificial intelligence changed and shaped our world over the last five years? How will AI continue to impact our lives in the coming years? Those were the questions addressed in the most recent report from the One Hundred Year Study on Artificial Intelligence (AI100), an ongoing project hosted at Stanford University that will study the status of AI technology and its impacts on the world over the next 100 years.

The 2021 report is the second in a series that will be released every five years until 2116. Titled “Gathering Strength, Gathering Storms,” the report explores the various ways AI is increasingly touching people’s lives in settings that range from movie recommendations and voice assistants to autonomous driving and automated medical diagnoses.

Barbara Grosz, the Higgins Research Professor of Natural Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), is a member of the standing committee overseeing the AI100 project, and Finale Doshi-Velez, Gordon McKay Professor of Computer Science, is part of the panel of interdisciplinary researchers who wrote this year’s report.

We spoke with Doshi-Velez about the report, what it says about the role AI is currently playing in our lives, and how it will change in the future.

Q: Let's start with a snapshot: What is the current state of AI and its potential?

Doshi-Velez: Some of the biggest changes in the last five years have been how well AIs now perform in large data regimes on specific types of tasks. We've seen [DeepMind’s] AlphaZero become the best Go player entirely through self-play, and everyday uses of AI such as grammar checks and autocomplete, automatic personal photo organization and search, and speech recognition become commonplace for large numbers of people.

In terms of potential, I'm most excited about AIs that might augment and assist people. They can be used to drive insights in drug discovery, help with decision making such as identifying a menu of likely treatment options for patients, and provide basic assistance, such as lane keeping while driving or text-to-speech based on images from a phone for the visually impaired. In many situations, people and AIs have complementary strengths. I think we're getting closer to unlocking the potential of people and AI teams.

Q: Over the course of 100 years, these reports will tell the story of AI and its evolving role in society. Even though there have only been two reports, what's the story so far?

There's actually a lot of change even in five years. The first report is fairly rosy. For example, it mentions how algorithmic risk assessments may mitigate the human biases of judges. The second has a much more mixed view. I think this comes from the fact that as AI tools have come into the mainstream, both in higher-stakes and everyday settings, we are appropriately much less willing to tolerate flaws, especially discriminatory ones. There have also been questions of information and disinformation control as people get their news, social media, and entertainment via searches and rankings personalized to them. So, there's a much greater recognition that we should not be waiting for AI tools to become mainstream before making sure they are ethical.

Q: What is the responsibility of institutes of higher education in preparing students and the next generation of computer scientists for the future of AI and its impact on society?

First, I'll say that the need to understand the basics of AI and data science starts much earlier than higher education! Children are being exposed to AIs as soon as they click on videos on YouTube or browse photo albums. They need to understand aspects of AI such as how their actions affect future recommendations.

But for computer science students in college, I think a key thing that future engineers need to realize is when to demand input and how to talk across disciplinary boundaries to get at often difficult-to-quantify notions of safety, equity, fairness, etc. I'm really excited that Harvard has the Embedded EthiCS program to provide some of this education. Of course, this is an addition to standard good engineering practices like building robust models, validating them, and so forth, which is all a bit harder with AI.

Q: Your work focuses on machine learning with applications to healthcare, which is also an area of focus of this report. What is the state of AI in healthcare?

A lot of AI in healthcare has been on the business end, used for optimizing billing, scheduling surgeries, that sort of thing. When it comes to AI for better patient care, which is what we usually think about, there are few legal, regulatory, and financial incentives to do so, and many disincentives. Still, there's been slow but steady integration of AI-based tools, often in the form of risk scoring and alert systems.

In the near future, two applications that I'm really excited about are triage in low-resource settings — having AIs do initial reads of pathology slides, for example, if there are not enough pathologists, or get an initial check of whether a mole looks suspicious — and ways in which AIs can help identify promising treatment options for discussion with a clinician team and patient.

Q: Any predictions for the next report?

I'll be keen to see where currently nascent AI regulation initiatives have gotten to. Accountability is such a difficult question in AI; it's tricky to nurture both innovation and basic protections. Perhaps the most important innovation will be in approaches for AI accountability.

AI Research Trends

Until the turn of the millennium, AI’s appeal lay largely in its promise to deliver, but in the last fifteen years, much of that promise has been redeemed. [15] AI already pervades our lives. And as it becomes a central force in society, the field is now shifting from simply building systems that are intelligent to building intelligent systems that are human-aware and trustworthy.

Several factors have fueled the AI revolution. Foremost among them is the maturing of machine learning, supported in part by cloud computing resources and widespread, web-based data gathering. Machine learning has been propelled dramatically forward by “deep learning,” a form of adaptive artificial neural networks trained using a method called backpropagation. [16] This leap in the performance of information processing algorithms has been accompanied by significant progress in hardware technology for basic operations such as sensing, perception, and object recognition. New platforms and markets for data-driven products, and the economic incentives to find new products and markets, have also contributed to the advent of AI-driven technology.

All these trends drive the “hot” areas of research described below. This compilation is meant simply to reflect the areas that, by one metric or another, currently receive greater attention than others. They are not necessarily more important or valuable than other ones. Indeed, some of the currently “hot” areas were less popular in past years, and it is likely that other areas will similarly re-emerge in the future.

Large-scale machine learning

Many of the basic problems in machine learning (such as supervised and unsupervised learning) are well-understood. A major focus of current efforts is to scale existing algorithms to work with extremely large data sets. For example, whereas traditional methods could afford to make several passes over the data set, modern ones are designed to make only a single pass; in some cases, only sublinear methods (those that only look at a fraction of the data) can be admitted.
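To make the single-pass idea concrete, here is a minimal sketch that is not taken from the report. It uses Welford's online algorithm, a standard technique for computing a mean and variance while reading each value exactly once, so the data never has to fit in memory. The function name `streaming_mean_variance` is illustrative only.

```python
from typing import Iterable, Tuple


def streaming_mean_variance(values: Iterable[float]) -> Tuple[int, float, float]:
    """Return (count, mean, sample variance) after one pass over `values`."""
    count = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations from the current mean
    for x in values:
        count += 1
        delta = x - mean
        mean += delta / count
        m2 += delta * (x - mean)
    variance = m2 / (count - 1) if count > 1 else 0.0
    return count, mean, variance


if __name__ == "__main__":
    # A generator stands in for a data set too large to hold in memory.
    stream = (float(i) for i in range(1, 6))
    print(streaming_mean_variance(stream))
```

A two-pass method would first compute the mean and then revisit every value to compute deviations; the update above folds both steps into one loop at the cost of keeping three running numbers.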

Deep learning

The ability to successfully train convolutional neural networks has most benefited the field of computer vision, with applications such as object recognition, video labeling, activity recognition, and several variants thereof. Deep learning is also making significant inroads into other areas of perception, such as audio, speech, and natural language processing.

Reinforcement learning

Whereas traditional machine learning has mostly focused on pattern mining, reinforcement learning shifts the focus to decision making, and is a technology that will help AI to advance more deeply into the realm of learning about and executing actions in the real world. It has existed for several decades as a framework for experience-driven sequential decision-making, but the methods have not found great success in practice, mainly owing to issues of representation and scaling. However, the advent of deep learning has provided reinforcement learning with a “shot in the arm.” The recent success of AlphaGo, a computer program developed by Google DeepMind that beat the human Go champion in a five-game match, was due in large part to reinforcement learning. AlphaGo was trained by initializing an automated agent with a human expert database, but was subsequently refined by playing a large number of games against itself and applying reinforcement learning.
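For readers who want to see experience-driven sequential decision-making in miniature, the sketch below applies tabular Q-learning to a made-up five-cell corridor. It is an illustration only, not the method used by AlphaGo: the environment, the function names, and the parameter values (`alpha`, `gamma`, `epsilon`) are all assumptions chosen for the example.

```python
import random
from typing import List, Tuple

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
LEFT, RIGHT = 0, 1


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Toy environment: move one cell; reaching the last cell pays 1 and ends."""
    next_state = max(0, state - 1) if action == LEFT else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train_q_table(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
                  epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    """Learn action values from experience with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(1000):  # step cap keeps every episode finite
            if rng.random() < epsilon:
                action = rng.choice([LEFT, RIGHT])  # explore
            else:
                best = max(q[state])  # exploit, breaking ties at random
                action = rng.choice([a for a in (LEFT, RIGHT) if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Q-learning target: immediate reward plus discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


if __name__ == "__main__":
    q_table = train_q_table()
    policy = ["left" if row[LEFT] > row[RIGHT] else "right" for row in q_table[:-1]]
    print(policy)
```

The agent is never told that moving right is correct; it only receives a reward at the goal and propagates that signal backwards through the table, which is the sense in which the learning is driven by experience rather than labeled examples.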

Robotics

Robotic navigation, at least in static environments, is largely solved. Current efforts consider how to train a robot to interact with the world around it in generalizable and predictable ways. A natural requirement that arises in interactive environments is manipulation, another topic of current interest. The deep learning revolution is only beginning to influence robotics, in large part because it is far more difficult to acquire the large labeled data sets that have driven other learning-based areas of AI. Reinforcement learning (see above), which obviates the requirement of labeled data, may help bridge this gap but requires systems to be able to safely explore a policy space without committing errors that harm the system itself or others. Advances in reliable machine perception, including computer vision, force, and tactile perception, much of which will be driven by machine learning, will continue to be key enablers to advancing the capabilities of robotics.

Computer vision

Computer vision is currently the most prominent form of machine perception. It has been the sub-area of AI most transformed by the rise of deep learning. Until just a few years ago, support vector machines were the method of choice for most visual classification tasks. But the confluence of large-scale computing, especially on GPUs, the availability of large datasets, especially via the internet, and refinements of neural network algorithms has led to dramatic improvements in performance on benchmark tasks (e.g., classification on ImageNet [17] ). For the first time, computers are able to perform some (narrowly defined) visual classification tasks better than people. Much current research is focused on automatic image and video captioning.

Natural Language Processing

Often coupled with automatic speech recognition, Natural Language Processing is another very active area of machine perception. It is quickly becoming a commodity for mainstream languages with large data sets. Google announced that 20% of current mobile queries are done by voice, [18] and recent demonstrations have proven the possibility of real-time translation. Research is now shifting towards developing refined and capable systems that are able to interact with people through dialog, not just react to stylized requests.

Collaborative systems

Research on collaborative systems investigates models and algorithms to help develop autonomous systems that can work collaboratively with other systems and with humans. This research relies on developing formal models of collaboration, and studies the capabilities needed for systems to become effective partners. There is growing interest in applications that can utilize the complementary strengths of humans and machines—for humans to help AI systems to overcome their limitations, and for agents to augment human abilities and activities.

Crowdsourcing and human computation

Since human abilities are superior to automated methods for accomplishing many tasks, research on crowdsourcing and human computation investigates methods to augment computer systems by utilizing human intelligence to solve problems that computers alone cannot solve well. Introduced only about fifteen years ago, this research now has an established presence in AI. The best-known example of crowdsourcing is Wikipedia, a knowledge repository that is maintained and updated by netizens and that far exceeds traditionally-compiled information sources, such as encyclopedias and dictionaries, in scale and depth. Crowdsourcing focuses on devising innovative ways to harness human intelligence. Citizen science platforms energize volunteers to solve scientific problems, while paid crowdsourcing platforms such as Amazon Mechanical Turk provide automated access to human intelligence on demand. Work in this area has facilitated advances in other subfields of AI, including computer vision and NLP, by enabling large amounts of labeled training data and/or human interaction data to be collected in a short amount of time. Current research efforts explore ideal divisions of tasks between humans and machines based on their differing capabilities and costs.

Algorithmic game theory and computational social choice

New attention is being drawn to the economic and social computing dimensions of AI, including incentive structures. Distributed AI and multi-agent systems have been studied since the early 1980s, gained prominence starting in the late 1990s, and were accelerated by the internet. A natural requirement is that systems handle potentially misaligned incentives, including self-interested human participants or firms, as well as automated AI-based agents representing them. Topics receiving attention include computational mechanism design (an economic theory of incentive design, seeking incentive-compatible systems where inputs are truthfully reported), computational social choice (a theory for how to aggregate rank orders on alternatives), incentive aligned information elicitation (prediction markets, scoring rules, peer prediction) and algorithmic game theory (the equilibria of markets, network games, and parlor games such as Poker—a game where significant advances have been made in recent years through abstraction techniques and no-regret learning).
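As a small illustration of what aggregating rank orders can look like, the sketch below implements the Borda count, one classical voting rule. The report does not name a specific rule, so this choice, the function names, and the sample ballots are assumptions made for the example.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def borda_scores(ballots: Sequence[Sequence[str]]) -> Dict[str, int]:
    """With m alternatives on a ballot, the one at 0-based rank i earns m - 1 - i points."""
    scores: Dict[str, int] = defaultdict(int)
    for ballot in ballots:
        m = len(ballot)
        for position, alternative in enumerate(ballot):
            scores[alternative] += m - 1 - position
    return dict(scores)


def borda_ranking(ballots: Sequence[Sequence[str]]) -> List[str]:
    """Aggregate ranking: highest total first, ties broken alphabetically."""
    scores = borda_scores(ballots)
    return sorted(scores, key=lambda alt: (-scores[alt], alt))


if __name__ == "__main__":
    # Three voters each rank the alternatives A, B, and C from best to worst.
    ballots = [["A", "B", "C"], ["B", "C", "A"], ["B", "A", "C"]]
    print(borda_scores(ballots))
    print(borda_ranking(ballots))
```

Different rules can produce different winners from the same ballots, which is part of why the choice of aggregation method is itself a research question.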

Internet of Things (IoT)

A growing body of research is devoted to the idea that a wide array of devices can be interconnected to collect and share their sensory information. Such devices can include appliances, vehicles, buildings, cameras, and other things. While it's a matter of technology and wireless networking to connect the devices, AI can process and use the resulting huge amounts of data for intelligent and useful purposes. Currently, these devices use a bewildering array of incompatible communication protocols. AI could help tame this Tower of Babel.

Neuromorphic Computing

Traditional computers implement the von Neumann model of computing, which separates the modules for input/output, instruction-processing, and memory. With the success of deep neural networks on a wide array of tasks, manufacturers are actively pursuing alternative models of computing—especially those that are inspired by what is known about biological neural networks—with the aim of improving the hardware efficiency and robustness of computing systems. At the moment, such “neuromorphic” computers have not yet clearly demonstrated big wins, and are just beginning to become commercially viable. But it is possible that they will become commonplace (even if only as additions to their von Neumann cousins) in the near future. Deep neural networks have already created a splash in the application landscape. A larger wave may hit when these networks can be trained and executed on dedicated neuromorphic hardware, as opposed to simulated on standard von Neumann architectures, as they are today.

[15] Appendix I offers a short history of AI, including a description of some of the traditionally core areas of research, which have shifted over the past six decades.

[16] Backpropagation is an abbreviation for “backward propagation of errors,” a common method of training artificial neural networks used in conjunction with an optimization method such as gradient descent. The method calculates the gradient of a loss function with respect to all the weights in the network.

[17] ImageNet, Stanford Vision Lab, Stanford University, Princeton University, 2016, accessed August 1, 2016, www.image-net.org/.

[18] Greg Sterling, "Google says 20% of mobile queries are voice searches," Search Engine Land, May 18, 2016, accessed August 1, 2016, http://searchengineland.com/google-reveals-20-percent-queries-voice-queries-249917.

Cite This Report

Peter Stone, Rodney Brooks, Erik Brynjolfsson, Ryan Calo, Oren Etzioni, Greg Hager, Julia Hirschberg, Shivaram Kalyanakrishnan, Ece Kamar, Sarit Kraus, Kevin Leyton-Brown, David Parkes, William Press, AnnaLee Saxenian, Julie Shah, Milind Tambe, and Astro Teller. "Artificial Intelligence and Life in 2030." One Hundred Year Study on Artificial Intelligence: Report of the 2015-2016 Study Panel, Stanford University, Stanford, CA, September 2016. Doc: http://ai100.stanford.edu/2016-report . Accessed: September 6, 2016.

© 2016 by Stanford University. Artificial Intelligence and Life in 2030 is made available under a Creative Commons Attribution-NoDerivatives 4.0 License (International): https://creativecommons.org/licenses/by-nd/4.0/ .

Tackling the most challenging problems in computer science

Our teams aspire to make discoveries that positively impact society. Core to our approach is sharing our research and tools to fuel progress in the field, to help more people more quickly. We regularly publish in academic journals, release projects as open source, and apply research to Google products to benefit users at scale.

Featured research developments

Mitigating aviation’s climate impact with Project Contrails

Consensus and subjectivity of skin tone annotation for ML fairness

A toolkit for transparency in AI dataset documentation

Building better pangenomes to improve the equity of genomics

A set of methods, best practices, and examples for designing with AI

Learn more from our research

Researchers across Google are innovating across many domains. We challenge conventions and reimagine technology so that everyone can benefit.

Publications

Google publishes over 1,000 papers annually. Publishing our work enables us to collaborate and share ideas with, as well as learn from, the broader scientific community.

Research areas

From conducting fundamental research to influencing product development, our research teams have the opportunity to impact technology used by billions of people every day.

Tools and datasets

We make tools and datasets available to the broader research community with the goal of building a more collaborative ecosystem.

Meet the people behind our innovations

Our teams collaborate with the research and academic communities across the world

Mobile Navigation

Research index, filter and sort, filter selections, sort options, research papers.

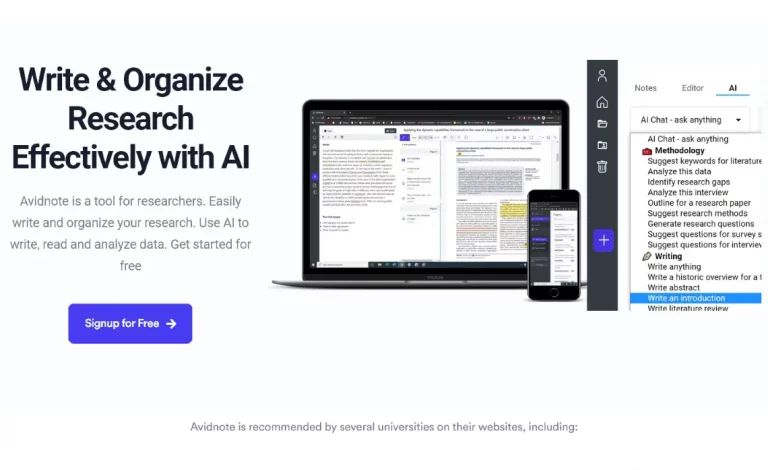

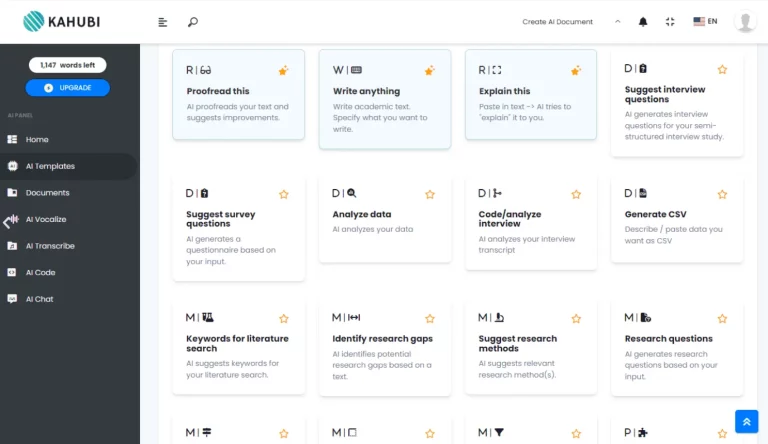

The best AI tools for research papers and academic research (Literature review, grants, PDFs and more)

As our collective understanding and application of artificial intelligence (AI) continues to evolve, so too does the realm of academic research. Some people are scared by it while others are openly embracing the change.

Make no mistake, AI is here to stay!

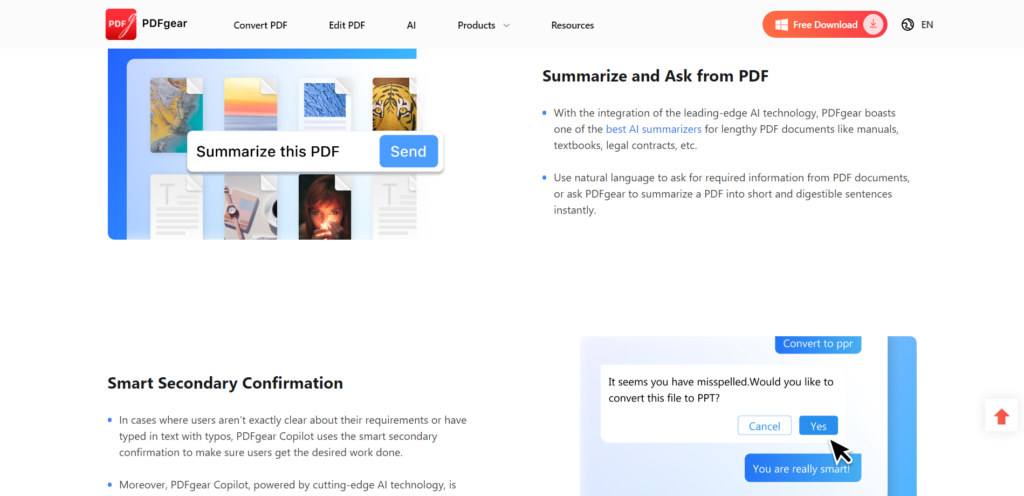

Instead of tirelessly scrolling through hundreds of PDFs, a powerful AI tool comes to your rescue, summarizing key information in your research papers. Instead of manually combing through citations and conducting literature reviews, an AI research assistant proficiently handles these tasks.

These aren’t futuristic dreams, but today’s reality. Welcome to the transformative world of AI-powered research tools!

The influence of AI in scientific and academic research is an exciting development, opening the doors to more efficient, comprehensive, and rigorous exploration.

This blog post will dive deeper into these tools, providing a detailed review of how AI is revolutionizing academic research. We’ll look at the tools that can make your literature review process less tedious, your search for relevant papers more precise, and your overall research process more efficient and fruitful.

I wish these had been around during my time in academia. It can be quite confronting trying to work out which ones you should and shouldn’t use. A new one seems to come out every day!

Here is everything you need to know about AI for academic research, including the tools I have personally trialed on my YouTube channel.

Best ChatGPT interface – Chat with PDFs/websites and more

I get more out of ChatGPT with HeyGPT. It can do things that ChatGPT cannot, which makes it really valuable for researchers.

- Use your own OpenAI API key (here)

- No login required

- Access ChatGPT anytime, including peak periods

- Faster response time

- Unlock advanced functionalities with HeyGPT Ultra for a one-time lifetime subscription

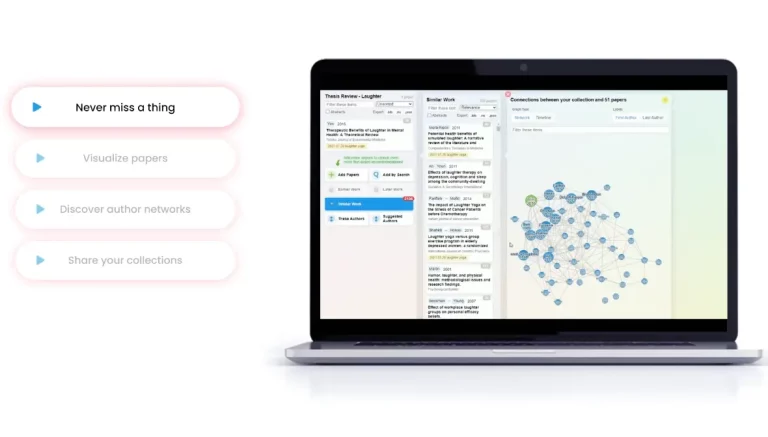

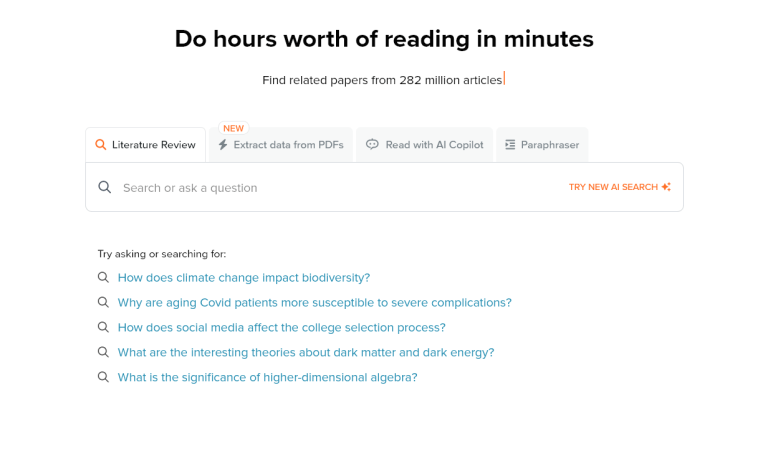

AI literature search and mapping – best AI tools for a literature review – Elicit and more

Harnessing AI tools for literature reviews and mapping brings a new level of efficiency and precision to academic research. No longer do you have to spend hours looking in obscure research databases to find what you need!

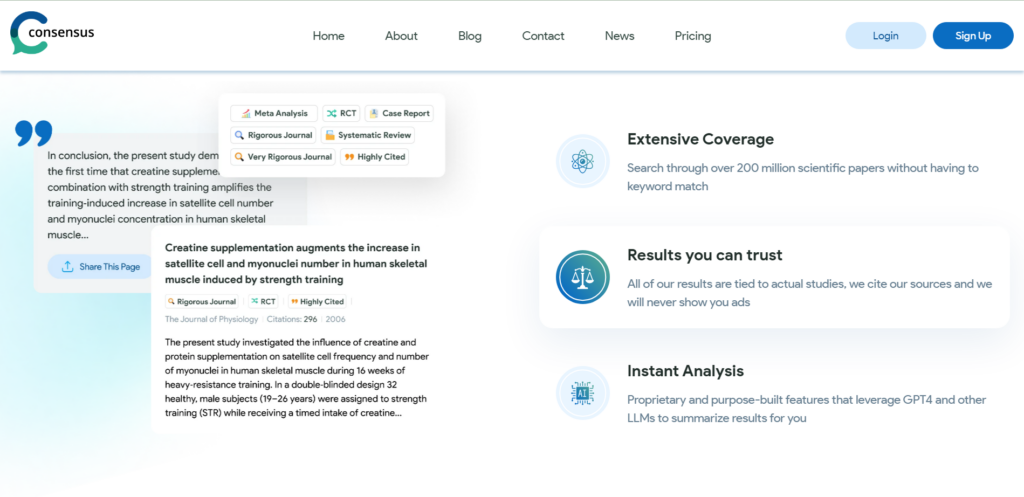

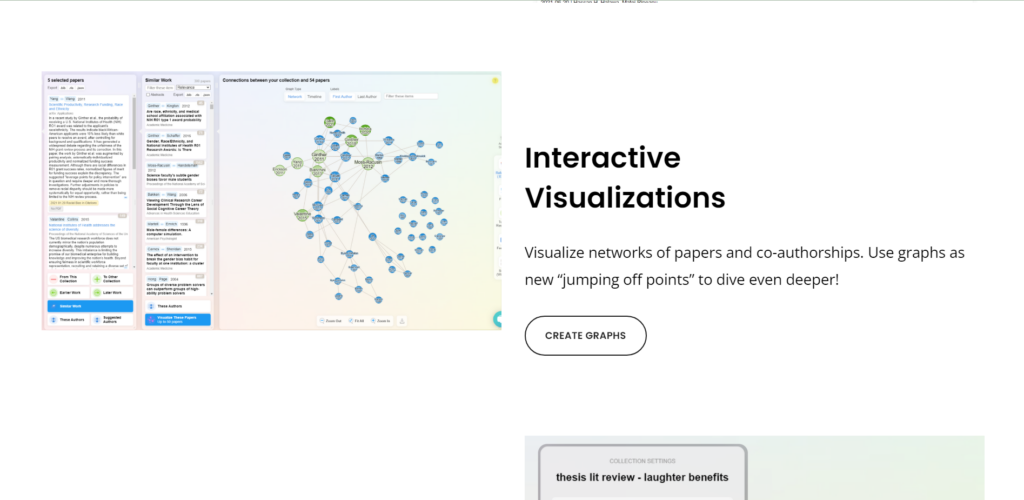

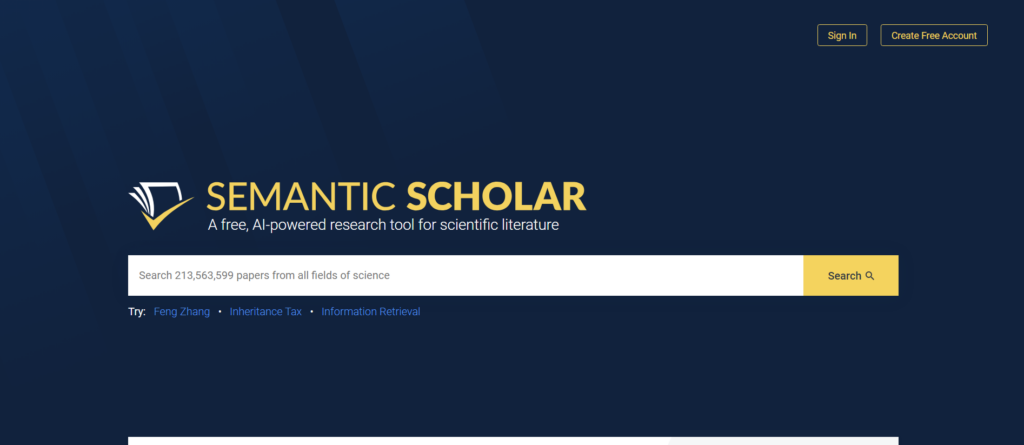

AI-powered tools like Semantic Scholar and elicit.org use sophisticated search engines to quickly identify relevant papers.

They can mine key information from countless PDFs, drastically reducing research time. You can even search by asking questions in natural language rather than relying on keywords.

With AI as your research assistant, you can navigate the vast sea of scientific research with ease, uncovering citations and focusing on academic writing. It’s a revolutionary way to take on literature reviews.

- Elicit – https://elicit.org

- Supersymmetry.ai – https://www.supersymmetry.ai

- Semantic Scholar – https://www.semanticscholar.org

- Connected Papers – https://www.connectedpapers.com/

- Research Rabbit – https://www.researchrabbit.ai/

- Laser AI – https://laser.ai/

- Litmaps – https://www.litmaps.com

- Inciteful – https://inciteful.xyz/

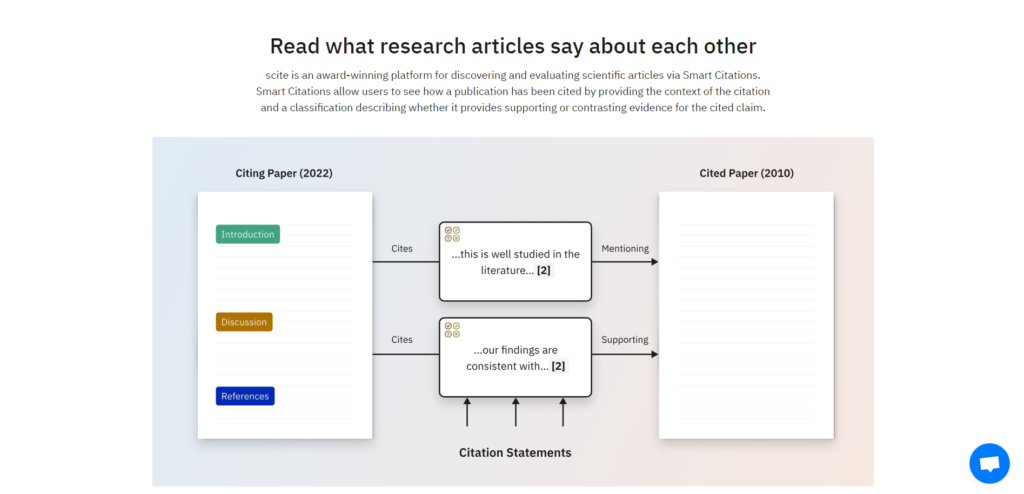

- Scite – https://scite.ai/

- System – https://www.system.com

If you like AI tools you may want to check out this article:

- How to get ChatGPT to write an essay [The prompts you need]

AI-powered research tools and AI for academic research

AI research tools, like Consensus, offer immense benefits in scientific research. Here are the general-purpose AI-powered tools for academic research.

These AI-powered tools can efficiently summarize PDFs, extract key information, perform AI-powered searches, and much more. Some are even working towards letting you add your own database of files and ask questions of it.

Tools like Scite even analyze citations in depth, while AI models like ChatGPT elicit new perspectives.

The result? The research process, previously a grueling endeavor, becomes significantly streamlined, offering you time for deeper exploration and understanding. Say goodbye to traditional struggles, and hello to your new AI research assistant!

- Bit AI – https://bit.ai/

- Consensus – https://consensus.app/

- Exper AI – https://www.experai.com/

- Hey Science (in development) – https://www.heyscience.ai/

- Iris AI – https://iris.ai/

- PapersGPT (currently in development) – https://jessezhang.org/llmdemo

- Research Buddy – https://researchbuddy.app/

- Mirror Think – https://mirrorthink.ai

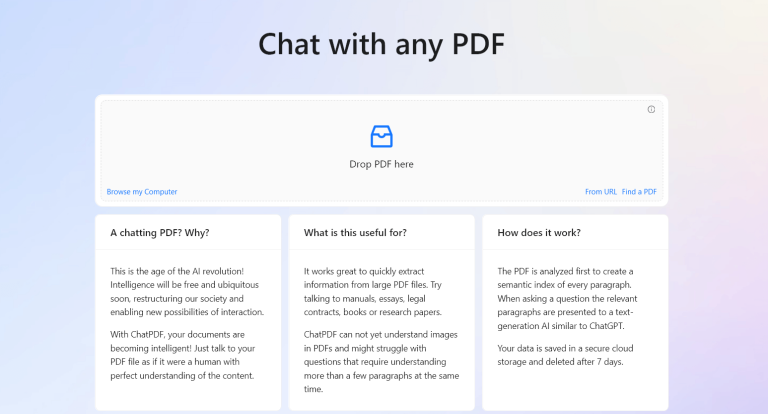

AI for reading peer-reviewed papers easily

Using AI tools like Explain Paper and Humata can significantly enhance your engagement with peer-reviewed papers. I always used to skip over the details of papers because I had reached saturation point with the information coming in.

These AI-powered research tools provide succinct summaries, saving you from sifting through extensive PDFs – no more boring nights trying to figure out which papers are the most important ones for you to read!

They not only facilitate efficient literature reviews by presenting key information, but also find overlooked insights.

With AI, deciphering complex citations and accelerating research has never been easier.

- Open Read – https://www.openread.academy

- Chat PDF – https://www.chatpdf.com

- Explain Paper – https://www.explainpaper.com

- Humata – https://www.humata.ai/

- Lateral AI – https://www.lateral.io/

- Paper Brain – https://www.paperbrain.study/

- Scholarcy – https://www.scholarcy.com/

- SciSpace Copilot – https://typeset.io/

- Unriddle – https://www.unriddle.ai/

- Sharly.ai – https://www.sharly.ai/

AI for scientific writing and research papers

In the ever-evolving realm of academic research, AI tools are increasingly taking center stage.

Enter Paper Wizard, Jenni AI, and Wisio – these groundbreaking platforms are set to revolutionize the way we approach scientific writing.

Together, these AI tools are pioneering a new era of efficient, streamlined scientific writing.

- Paper Wizard – https://paperwizard.ai/

- Jenni AI – https://jenni.ai/ (20% off with code ANDY20)

- Wisio – https://www.wisio.app

AI academic editing tools

In the realm of scientific writing and editing, artificial intelligence (AI) tools are making a world of difference, offering precision and efficiency like never before. Consider tools such as Paper Pal, Writefull, and Trinka.

Together, these tools usher in a new era of scientific writing, where AI is your dedicated partner in the quest for impeccable composition.

- Paper Pal – https://paperpal.com/

- Writefull – https://www.writefull.com/

- Trinka – https://www.trinka.ai/

AI tools for grant writing

In the challenging realm of science grant writing, two innovative AI tools are making waves: Granted AI and Grantable.

These platforms are game-changers, leveraging the power of artificial intelligence to streamline and enhance the grant application process.

Granted AI uses AI algorithms to simplify the process of finding, applying for, and managing grants. Meanwhile, Grantable offers a platform that automates and organizes grant application processes, making it easier to secure funding.

Together, these tools are transforming the way we approach grant writing, using the power of AI to turn a complex, often arduous task into a more manageable, efficient, and successful endeavor.

- Granted AI – https://grantedai.com/

- Grantable – https://grantable.co/

Free AI research tools

Many online tools are emerging to help researchers streamline their research processes. Convenience doesn’t need to come at a massive cost or break the bank.

The best free ones at time of writing are:

- Elicit – https://elicit.org

- Connected Papers – https://www.connectedpapers.com/

- Litmaps – https://www.litmaps.com (10% off Pro subscription using the code “STAPLETON”)

- Consensus – https://consensus.app/

Wrapping up

The integration of artificial intelligence in the world of academic research is nothing short of revolutionary.

With the array of AI tools we’ve explored today – for literature search and mapping, general research, reading peer-reviewed papers, scientific writing, academic editing, and grant writing – the landscape of research is being significantly transformed.

The advantages that AI-powered research tools bring to the table – efficiency, precision, time saving, and a more streamlined process – cannot be overstated.

These AI research tools aren’t just about convenience; they are transforming the way we conduct and comprehend research.

They liberate researchers from the clutches of tedium and overwhelm, allowing for more space for deep exploration, innovative thinking, and in-depth comprehension.

Whether you’re an experienced academic researcher or a student just starting out, these tools provide indispensable aid in your research journey.

And with a suite of free AI tools also available, there is no reason not to explore and embrace this AI revolution in academic research.

We are on the precipice of a new era of academic research, one where AI and human ingenuity work in tandem for richer, more profound scientific exploration. The future of research is here, and it is smart, efficient, and AI-powered.

Before we get too excited, however, let us remember that AI tools are meant to be our assistants, not our masters. As we engage with these advanced technologies, let’s not lose sight of the human intellect, intuition, and imagination that form the heart of all meaningful research. Happy researching!

Thank you to Ivan Aguilar – Ph.D. Student at SFU (Simon Fraser University), for starting this list for me!

Dr Andrew Stapleton has a Masters and PhD in Chemistry from the UK and Australia. He has many years of research experience and has worked as a Postdoctoral Fellow and Associate at a number of universities. Although he secured funding for his own research, he left academia to help others with his YouTube channel, which is all about the inner workings of academia and how to make it work for you.

Thank you for visiting Academia Insider.

We are here to help you navigate Academia as painlessly as possible. We are supported by our readers and by visiting you are helping us earn a small amount through ads and affiliate revenue - Thank you!

2024 © Academia Insider

AI-Driven Hypotheses: Real-world examples exploring the potential and challenges of AI-generated hypotheses in science

Artificial intelligence (AI) is no longer confined to mere automation; it is now an active participant in the pursuit of knowledge and understanding. In scientific research, the integration of AI marks a significant paradigm shift, ushering in an era where machines and humans actively collaborate to formulate research hypotheses and questions. While AI systems have traditionally served as powerful tools for data analysis, their evolution now allows them to go beyond analysis and generate hypotheses, prompting researchers to explore uncharted domains of research.

Let’s take a closer look at this transformative capability of AI and the challenges it raises for research hypothesis formation, with an emphasis on the crucial role of human intervention throughout the AI integration process.

Potential of AI-Generated Research Hypothesis: Is it enough?

The discerning ability of AI, particularly through machine learning algorithms, has demonstrated a unique capacity to identify patterns across vast datasets. This has given rise to AI systems not only proficient in analyzing existing data but also in formulating hypotheses based on patterns that may elude human observation alone. The synergy between machine-driven hypothesis generation and human expertise represents a promising frontier for scientific discovery, underscoring the importance of human oversight and interpretation.
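As a rough illustration of pattern-based hypothesis generation with human oversight, the sketch below scans a small table for strongly correlated pairs of variables and lists them as candidates for a researcher to review. It is a deliberately simplified stand-in, not how any particular AI system works: the variable names, the toy numbers, and the 0.8 threshold are all hypothetical, and a strong correlation only suggests a hypothesis worth testing rather than establishing a causal link.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / sqrt(var_x * var_y)


def candidate_hypotheses(table, threshold=0.8):
    """Return (var_a, var_b, r) for pairs with |r| >= threshold, strongest first."""
    found = []
    for a, b in combinations(sorted(table), 2):
        r = pearson(table[a], table[b])
        if abs(r) >= threshold:
            found.append((a, b, r))
    return sorted(found, key=lambda item: abs(item[2]), reverse=True)


# Hypothetical toy measurements, invented purely for illustration.
toy_data = {
    "dose_mg": [1, 2, 3, 4, 5],
    "response": [2.1, 3.9, 6.2, 8.1, 9.8],
    "room_temp_c": [21, 19, 22, 20, 21],
}

for a, b, r in candidate_hypotheses(toy_data):
    print(f"Candidate for human review: '{a}' and '{b}' move together (r = {r:.2f})")
```

With this toy table, only the dose and response columns clear the threshold. The final step is left to a person on purpose: the code can flag a pattern, but deciding whether it is meaningful, spurious, or already known still requires domain expertise.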

The capability of AI to generate hypotheses raises thought-provoking questions about the nature of creativity in the research process. Although AI can identify patterns within data, the question remains: can it exhibit true creativity in proposing hypotheses, or is it limited to recognizing patterns within existing data?

Furthermore, the intersection of AI and research transcends the generation of hypotheses to include the formulation of research questions. By actively engaging with data and recognizing gaps in knowledge, AI systems can propose insightful questions that guide researchers toward unexplored avenues. This collaborative approach between machines and researchers enhances the scope and depth of scientific inquiry, emphasizing the indispensable role of human insight in shaping the research agenda.

Challenges in AI-Driven Hypothesis Formation