
What is causal research design?

Last updated: 14 May 2023

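Causal research design investigates cause-and-effect relationships between variables: how a change in one variable produces a change in another. Examining these relationships gives researchers valuable insights into the mechanisms that drive the phenomena they are investigating.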

Organizations primarily use causal research design to identify, determine, and explore the impact of changes within an organization and the market. You can use a causal research design to evaluate the effects of certain changes on existing procedures, norms, and more.

This article explores causal research design, including its elements, advantages, and disadvantages.

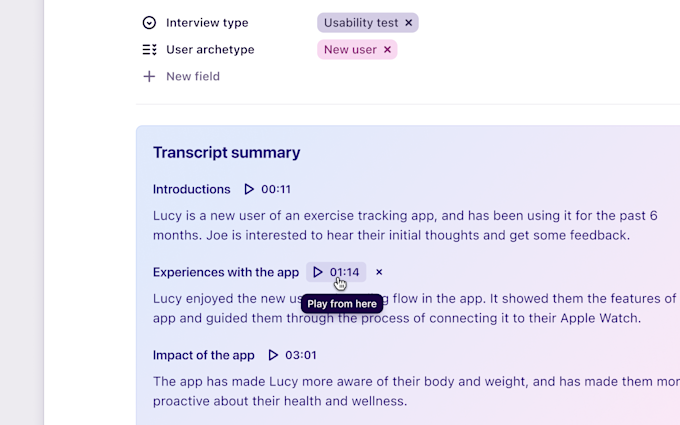

Analyze your causal research

Dovetail streamlines causal research analysis to help you uncover and share actionable insights

- Components of causal research

You can demonstrate the existence of cause-and-effect relationships between two factors or variables using specific causal information, allowing you to produce more meaningful results and research implications.

These are the key inputs for causal research:

The timeline of events

Ideally, the cause must occur before the effect. Before developing a hypothesis, review the timeline of two or more separate events to distinguish the independent variable (the cause) from the dependent variable (the effect).

If the cause occurs before the effect, you can link the two and develop a hypothesis.

For instance, an organization may notice a sales increase. Determining the cause would help them reproduce these results.

Upon review, the business realizes that the sales boost occurred right after an advertising campaign. The business can leverage this time-based data to determine whether the advertising campaign is the independent variable that caused a change in sales.
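The timeline check in this example can be sketched in a few lines of Python. The weekly figures, the launch date, and the function names below are hypothetical, chosen only to illustrate the idea. A rise after the campaign is consistent with the campaign being the cause, but on its own it does not prove it.

```python
from datetime import date
from statistics import mean

# Hypothetical weekly sales (week start date, units sold); illustrative only.
weekly_sales = [
    (date(2023, 1, 2), 100),
    (date(2023, 1, 9), 104),
    (date(2023, 1, 16), 98),
    (date(2023, 1, 23), 102),
    (date(2023, 1, 30), 121),
    (date(2023, 2, 6), 125),
    (date(2023, 2, 13), 118),
    (date(2023, 2, 20), 124),
]
campaign_start = date(2023, 1, 30)  # assumed launch date of the campaign


def split_by_event(series, event_date):
    """Split observations into those before and those on or after the event."""
    before = [value for day, value in series if day < event_date]
    after = [value for day, value in series if day >= event_date]
    return before, after


def before_after_summary(series, event_date):
    """Return the mean before, the mean after, and the relative change."""
    before, after = split_by_event(series, event_date)
    mean_before, mean_after = mean(before), mean(after)
    return mean_before, mean_after, (mean_after - mean_before) / mean_before


mean_before, mean_after, change = before_after_summary(weekly_sales, campaign_start)
print(f"before={mean_before:.1f} after={mean_after:.1f} change={change:.1%}")
```

Because the suspected cause (the campaign) starts before the change in sales, the timeline supports treating it as a candidate independent variable. The remaining inputs still need to be checked.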

Evaluation of confounding variables

When using a causal research design, you need to pinpoint exactly which variables make up the cause-and-effect relationship. Doing so leads to a more accurate conclusion.

The covariation between cause and effect must be genuine: a third factor (a confounding variable) shouldn't be related to both the cause and the effect, because it could then produce the apparent link on its own.
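One simple way to look for a confounding variable is to compare outcomes within each level of the suspected third factor. The sketch below uses made-up records in which the holiday season influences both ad exposure and purchases. The data, field order, and function names are assumptions for illustration, not a prescribed method.

```python
from statistics import mean

# Hypothetical records: (saw_ad, holiday_season, purchases). Illustrative only.
records = [
    (True, True, 20), (True, True, 22), (True, True, 21), (False, True, 21),
    (True, False, 10), (False, False, 10), (False, False, 9), (False, False, 11),
]


def difference_in_means(rows):
    """Mean purchases of customers who saw the ad minus those who did not."""
    exposed = [purchases for saw_ad, _, purchases in rows if saw_ad]
    unexposed = [purchases for saw_ad, _, purchases in rows if not saw_ad]
    return mean(exposed) - mean(unexposed)


def stratified_differences(rows):
    """Compute the same difference separately within each season."""
    strata = {}
    for row in rows:
        strata.setdefault(row[1], []).append(row)
    return {season: difference_in_means(group) for season, group in strata.items()}


naive = difference_in_means(records)
by_season = stratified_differences(records)
print("naive difference:", naive)
print("difference within each season:", by_season)
```

In this toy data the naive comparison suggests the ad raises purchases, yet the difference disappears once customers are compared within the same season. That pattern is what a confounding variable looks like.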

Observing changes

Variation links between two variables must be clear. A quantitative change in effect must happen solely due to a quantitative change in the cause.

You can test whether the independent variable changes the dependent variable to evaluate the validity of a cause-and-effect relationship. A steady change between the two variables must occur to back up your hypothesis of a genuine causal effect.
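As a rough illustration of checking for a consistent change between two variables, the following sketch fits a least-squares line and computes Pearson's correlation by hand. The spend and sales values are hypothetical, and a strong correlation alone does not establish causation.

```python
from statistics import mean

# Hypothetical paired observations; illustrative values only.
ad_spend = [1, 2, 3, 4, 5]       # independent variable
sales = [12, 14, 17, 19, 22]     # dependent variable


def covariation(x, y):
    """Return (slope, pearson_r) for paired observations x and y."""
    x_bar, y_bar = mean(x), mean(y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_yy = sum((yi - y_bar) ** 2 for yi in y)
    slope = s_xy / s_xx               # change in y per unit change in x
    r = s_xy / (s_xx * s_yy) ** 0.5   # strength of the linear relationship
    return slope, r


slope, r = covariation(ad_spend, sales)
print(f"slope={slope:.2f} r={r:.3f}")
```

A slope that stays roughly constant across the range of the independent variable, together with a correlation close to 1 or -1, is the kind of steady covariation the hypothesis needs.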

- Why is causal research useful?

Causal research allows market researchers to predict hypothetical occurrences and outcomes while enhancing existing strategies. Organizations can use this concept to develop beneficial plans.

Causal research is also useful as market researchers can immediately deduce the effect of the variables on each other under real-world conditions.

Once researchers complete their first experiment, they can use their findings. Applying them to alternative scenarios or repeating the experiment to confirm its validity can produce further insights.

Businesses widely use causal research to identify and comprehend the effect of strategic changes on their profits.

- How does causal research compare with other research types?

Other research types that identify relationships between variables include exploratory and descriptive research.

Here’s how they compare and differ from causal research designs:

Exploratory research

An exploratory research design evaluates situations where a problem or opportunity's boundaries are unclear. You can use this research type to test various hypotheses and assumptions to establish facts and understand a situation more clearly.

You can also use exploratory research design to navigate a topic and discover the relevant variables. This research type allows flexibility and adaptability as the experiment progresses, particularly since no area is off-limits.

It’s worth noting that exploratory research is unstructured and typically involves collecting qualitative data. This provides the freedom to tweak and amend the research approach according to your ongoing thoughts and assessments.

Unfortunately, this exposes the findings to the risk of bias and may limit the extent to which a researcher can explore a topic.

This table compares the key characteristics of causal and exploratory research:

Descriptive research

This research design involves capturing and describing the traits of a population, situation, or phenomenon. Descriptive research focuses more on the "what" of the research subject and less on the "why."

Since descriptive research typically happens in a real-world setting, variables can cross-contaminate others. This increases the challenge of isolating cause-and-effect relationships.

You may require further research if you need more causal links.

This table compares the key characteristics of causal and descriptive research.

Causal research examines a research question’s variables and how they interact. It’s easier to pinpoint cause and effect since the experiment often happens in a controlled setting.

Researchers can conduct causal research at any stage, but they typically use it once they know more about the topic.

Compared with exploratory and descriptive research, causal research tends to be more structured, and you can combine it with both to help you attain your research goals.

- How can you use causal research effectively?

Here are common ways that market researchers leverage causal research effectively:

Market and advertising research

Do you want to know if your new marketing campaign is affecting your organization positively? You can use causal research to determine the variables causing negative or positive impacts on your campaign.

Improving customer experiences and loyalty levels

Consumers generally enjoy purchasing from brands aligned with their values. They’re more likely to purchase from such brands and positively represent them to others.

You can use causal research to identify the variables contributing to increased or reduced customer acquisition and retention rates.

Could the cause of increased customer retention rates be streamlined checkout?

Perhaps you introduced a new solution geared towards directly solving their immediate problem.

Whatever the reason, causal research can help you identify the cause-and-effect relationship. You can use this to enhance your customer experiences and loyalty levels.

Improving problematic employee turnover rates

Is your organization experiencing skyrocketing attrition rates?

You can leverage the features and benefits of causal research to narrow down the possible explanations or variables with significant effects on employees quitting.

This way, you can prioritize interventions, focusing on the highest priority causal influences, and begin to tackle high employee turnover rates.

- Advantages of causal research

The main benefits of causal research include the following:

Effectively test new ideas

If causal research can pinpoint the precise outcome produced by combinations of different variables, researchers can test new ideas in the same manner to build viable proofs of concept.

Achieve more objective results

Market researchers typically use random sampling techniques to choose experiment participants or subjects in causal research. This reduces the possibility of exterior, sample, or demography-based influences, generating more objective results.
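A minimal sketch of random sampling followed by random assignment, using Python's standard `random` module. The customer IDs, group sizes, and the `sample_and_assign` helper are hypothetical. The seed is only there to make the illustration repeatable.

```python
import random


def sample_and_assign(population, sample_size, seed=None):
    """Draw a random sample, then split it into treatment and control groups."""
    rng = random.Random(seed)
    # sample() returns the chosen items in random order, so splitting the
    # result in half also assigns participants to groups at random.
    sample = rng.sample(list(population), sample_size)
    midpoint = sample_size // 2
    return sample[:midpoint], sample[midpoint:]


# Hypothetical customer list; illustrative only.
customers = [f"customer_{i:03d}" for i in range(200)]
treatment, control = sample_and_assign(customers, sample_size=40, seed=42)
print(len(treatment), len(control))
```

Because chance alone decides who lands in each group, differences in demographics or prior behavior should balance out on average rather than bias the comparison.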

Improved business processes

Causal research helps businesses understand which variables positively impact target variables, such as customer loyalty or sales revenues. This helps them improve their processes, ROI, and customer and employee experiences.

Guarantee reliable and accurate results

Upon identifying the correct variables, researchers can replicate cause and effect effortlessly. This creates reliable data and results to draw insights from.

Internal organization improvements

Businesses that conduct causal research can make informed decisions about improving their internal operations and enhancing employee experiences.

- Disadvantages of causal research

Like any other research method, causal research has its drawbacks, which include:

Extra research to ensure validity

Researchers can't simply rely on the outcomes of causal research since it isn't always accurate. There may be a need to conduct other research types alongside it to ensure accurate output.

Coincidence

Coincidence tends to be the most significant error in causal research. Researchers often misinterpret a coincidental link between a cause and effect as a direct causal link.

Administration challenges

Causal research can be challenging to administer since it's rarely possible to fully control the impact of extraneous variables.

Giving away your competitive advantage

If you intend to publish your research, it exposes your information to the competition.

Competitors may use your research outcomes to identify your plans and strategies to enter the market before you.

- Causal research examples

Multiple fields can use causal research, so it serves different purposes, such as:

Customer loyalty research

Organizations and employees can use causal research to determine the best customer attraction and retention approaches.

They monitor interactions between customers and employees to identify cause-and-effect patterns. That could be a product demonstration technique resulting in higher or lower sales from the same customers.

Example: Business X introduces a new individual marketing strategy for a small customer group and notices a measurable increase in monthly subscriptions.

Upon getting identical results from different groups, the business concludes that the individual marketing strategy resulted in the intended causal relationship.

Advertising research

Businesses can also use causal research to implement and assess advertising campaigns.

Example: Business X notices a 7% increase in sales revenue a few months after it introduces a new advertisement in a certain region. The business can run the same ad in random regions to compare sales data over the same period.

This will help the company determine whether the ad caused the sales increase. If sales increase in these randomly selected regions, the business could conclude that advertising campaigns and sales share a cause-and-effect relationship.
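The design in this example can be sketched as follows. Everything here is an assumption made for illustration: the region names, the baseline sales, and in particular the simulated post-campaign figures, which build in a 7% effect purely so the comparison has something to detect. In a real study the post-campaign figures would come from observed sales.

```python
import random
from statistics import mean


def choose_ad_regions(regions, k, seed=None):
    """Pick k regions at random to receive the ad."""
    return set(random.Random(seed).sample(sorted(regions), k))


def average_lift(before, after, regions):
    """Average relative change in sales across the given regions."""
    return mean([after[r] / before[r] - 1 for r in regions])


# Hypothetical baseline sales per region; illustrative only.
before = {"north": 200.0, "south": 180.0, "east": 220.0,
          "west": 210.0, "central": 190.0, "coastal": 205.0}

ad_regions = choose_ad_regions(before, k=3, seed=7)
comparison_regions = set(before) - ad_regions

# Simulated outcome with an assumed 7% effect in the ad regions only.
ASSUMED_EFFECT = 0.07
after = {r: sales * (1 + ASSUMED_EFFECT) if r in ad_regions else sales
         for r, sales in before.items()}

ad_lift = average_lift(before, after, ad_regions)
comparison_lift = average_lift(before, after, comparison_regions)
print(f"ad regions: {ad_lift:.1%}, comparison regions: {comparison_lift:.1%}")
```

Comparing the randomly chosen ad regions against the remaining regions over the same period is what separates the ad's effect from market-wide trends that would lift sales everywhere.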

Educational research

Academics, teachers, and learners can use causal research to explore the impact of politics on learners and pinpoint learner behavior trends.

Example: College X notices that the dropout rate among IT students is 8% higher in the second year of the program than in any other year.

The college administration can interview a random group of IT students to identify factors leading to this situation, including personal factors and influences.

With the help of in-depth statistical analysis, the institution's researchers can uncover the main factors causing dropout. They can create immediate solutions to address the problem.

Is a causal variable dependent or independent?

When two variables have a cause-and-effect relationship, the cause is often called the independent variable. As such, the effect variable is dependent, i.e., it depends on the independent causal variable. An independent variable is only causal under experimental conditions.

What are the three criteria for causality?

The three conditions for causality are:

Temporality/temporal precedence: The cause must precede the effect.

Rationality: One event predicts the other with an explanation, and the effect must vary in proportion to changes in the cause.

Control for extraneous variables: The covariables must not result from other variables.

Is causal research experimental?

Causal research is mostly explanatory. Causal studies focus on analyzing a situation to explore and explain the patterns of relationships between variables.

Further, experiments are the primary data collection methods in studies with causal research design. However, as a research design, causal research isn't entirely experimental.

What is the difference between experimental and causal research design?

One of the main differences between causal and experimental research is that in causal research, the research subjects are already in groups since the event has already happened.

On the other hand, researchers randomly choose subjects in experimental research before manipulating the variables.


Chapter 10 Rhetorical Modes

10.8 Cause and Effect

Learning Objectives

- Determine the purpose and structure of cause and effect in writing.

- Understand how to write a cause-and-effect essay.

The Purpose of Cause and Effect in Writing

It is often considered human nature to ask, “why?” and “how?” We want to know how our child got sick so we can better prevent it from happening in the future, or why our colleague received a pay raise because we want one as well. We want to know how much money we will save over the long term if we buy a hybrid car. These examples identify only a few of the relationships we think about in our lives, but each shows the importance of understanding cause and effect.

A cause is something that produces an event or condition; an effect is what results from an event or condition. The purpose of the cause-and-effect essay is to determine how various phenomena relate in terms of origins and results. Sometimes the connection between cause and effect is clear, but often determining the exact relationship between the two is very difficult. For example, the following effects of a cold may be easily identifiable: a sore throat, runny nose, and a cough. But determining the cause of the sickness can be far more difficult. A number of causes are possible, and to complicate matters, these possible causes could have combined to cause the sickness. That is, more than one cause may be responsible for any given effect. Therefore, cause-and-effect discussions are often complicated and frequently lead to debates and arguments.

Use the complex nature of cause and effect to your advantage. Often it is not necessary, or even possible, to find the exact cause of an event or to name the exact effect. So, when formulating a thesis, you can claim one of a number of causes or effects to be the primary, or main, cause or effect. As soon as you claim that one cause or one effect is more crucial than the others, you have developed a thesis.

Consider the causes and effects in the following thesis statements. List a cause and effect for each one on your own sheet of paper.

- The growing childhood obesity epidemic is a result of technology.

- Much of the wildlife is dying because of the oil spill.

- The town continued programs that it could no longer afford, so it went bankrupt.

- More young people became politically active as use of the Internet spread throughout society.

- While many experts believed the rise in violence was due to the poor economy, it was really due to the summer-long heat wave.

Write three cause-and-effect thesis statements of your own for each of the following five broad topics.

- Health and nutrition

The Structure of a Cause-and-Effect Essay

The cause-and-effect essay opens with a general introduction to the topic, which then leads to a thesis that states the main cause, main effect, or various causes and effects of a condition or event.

The cause-and-effect essay can be organized in one of the following two primary ways:

- Start with the cause and then talk about the effects.

- Start with the effect and then talk about the causes.

For example, if your essay were on childhood obesity, you could start by talking about the effect of childhood obesity and then discuss the cause or you could start the same essay by talking about the cause of childhood obesity and then move to the effect.

Regardless of which structure you choose, be sure to explain each element of the essay fully and completely. Explaining complex relationships requires the full use of evidence, such as scientific studies, expert testimony, statistics, and anecdotes.

Because cause-and-effect essays determine how phenomena are linked, they make frequent use of certain words and phrases that denote such linkage. See Table 10.4 “Phrases of Causation” for examples of such terms.

Table 10.4 Phrases of Causation

The conclusion should wrap up the discussion and reinforce the thesis, leaving the reader with a clear understanding of the relationship that was analyzed.

Be careful of resorting to empty speculation. In writing, speculation amounts to unsubstantiated guessing. Writers are particularly prone to such trappings in cause-and-effect arguments due to the complex nature of finding links between phenomena. Be sure to have clear evidence to support the claims that you make.

Look at some of the cause-and-effect relationships from Note 10.83 “Exercise 2”. Outline the links you listed. Outline one using a cause-then-effect structure. Outline the other using the effect-then-cause structure.

Writing a Cause-and-Effect Essay

Choose an event or condition that you think has an interesting cause-and-effect relationship. Introduce your topic in an engaging way. End your introduction with a thesis that states the main cause, the main effect, or both.

Organize your essay by starting with either the cause-then-effect structure or the effect-then-cause structure. Within each section, you should clearly explain and support the causes and effects using a full range of evidence. If you are writing about multiple causes or multiple effects, you may choose to sequence either in terms of order of importance. In other words, order the causes from least to most important (or vice versa), or order the effects from least important to most important (or vice versa).

Use the phrases of causation when trying to forge connections between various events or conditions. This will help organize your ideas and orient the reader. End your essay with a conclusion that summarizes your main points and reinforces your thesis. See Chapter 15 “Readings: Examples of Essays” to read a sample cause-and-effect essay.

Choose one of the ideas you outlined in Note 10.85 “Exercise 3” and write a full cause-and-effect essay. Be sure to include an engaging introduction, a clear thesis, strong evidence and examples, and a thoughtful conclusion.

Key Takeaways

- The purpose of the cause-and-effect essay is to determine how various phenomena are related.

- The thesis states what the writer sees as the main cause, main effect, or various causes and effects of a condition or event.

- The cause-and-effect essay can be organized in one of two primary ways:

  - Start with the cause and then talk about the effect.

  - Start with the effect and then talk about the cause.

- Strong evidence is particularly important in the cause-and-effect essay due to the complexity of determining connections between phenomena.

- Phrases of causation are helpful in signaling links between various elements in the essay.

- Successful Writing. Authored by: Anonymous. Provided by: Anonymous. Located at: http://2012books.lardbucket.org/books/successful-writing/. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike


How to Write a Great Hypothesis

Hypothesis Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.


A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study.

For example, a study designed to look at the relationship between sleep deprivation and test performance might have a hypothesis that states: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. It is only at this point that researchers begin to develop a testable hypothesis. Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore a number of factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of folk wisdom that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes mistake falsifiability for the idea that a claim is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in a number of different ways. One of the basic principles of any type of scientific research is that the results must be replicable. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.
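One way to make an operational definition explicit is to write it as a function that turns raw observations into the measured value. The sketch below does this for the two example variables. The 1-to-5 rating scale, the session format, and the function names are assumptions made for illustration.

```python
from statistics import mean


def anxiety_score(item_ratings, scale_min=1, scale_max=5):
    """Operational definition of test anxiety: the mean rating across the
    items of a self-report questionnaire (assumed 1-5 scale)."""
    for rating in item_ratings:
        if not scale_min <= rating <= scale_max:
            raise ValueError(f"rating {rating} is outside the scale")
    return mean(item_ratings)


def study_minutes(sessions):
    """Operational definition of study habits: total minutes studied,
    given (start_minute, end_minute) pairs for each session."""
    return sum(end - start for start, end in sessions)


# Hypothetical participant data; illustrative only.
print(anxiety_score([4, 5, 3, 4]))
print(study_minutes([(0, 30), (60, 105)]))
```

Writing the definition down this precisely is what lets another researcher measure the same variable in the same way when repeating the study.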

Some variables are more difficult than others to define. How would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

In order to measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming other people. In this situation, the researcher might utilize a simulated task to measure aggressiveness.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests that there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type of hypothesis suggests a relationship between three or more variables, such as two independent variables and a dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative sample of the population and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."
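The basic format can be captured as a small template. This is only an illustrative sketch. The `Hypothesis` class and its field names are hypothetical, and the example wording is adapted to fit the template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Holds the two halves of an 'If ..., then ...' hypothesis."""
    independent_change: str          # what is changed or differs between groups
    expected_dependent_change: str   # what the researcher expects to observe

    def statement(self) -> str:
        return (f"If {self.independent_change}, "
                f"then we will observe {self.expected_dependent_change}.")


breakfast = Hypothesis(
    independent_change="students eat breakfast before a math exam",
    expected_dependent_change="higher exam scores than among students who skip breakfast",
)
print(breakfast.statement())
```

Filling in both fields forces the hypothesis to name an independent variable and a dependent variable, which is one of the checks a good hypothesis has to pass.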

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "Children who receive a new reading intervention will have scores no different from those of students who do not receive the intervention."

- "There will be no difference in scores on a memory recall task between children and adults."

Examples of an alternative hypothesis:

- "Children who receive a new reading intervention will perform better than students who did not receive the intervention."

- "Adults will perform better on a memory task than children."
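To show how a null hypothesis and an alternative hypothesis are weighed against data, here is a minimal sketch of an exact one-sided permutation test for the memory-task example. The scores are made up, and the permutation test is one reasonable choice among several. It is used here as an illustration only.

```python
from itertools import combinations
from statistics import mean


def one_sided_permutation_p_value(group_a, group_b):
    """Share of all ways to relabel the pooled scores in which group A's
    mean advantage is at least as large as the one actually observed."""
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    observed = mean(group_a) - mean(group_b)
    total = sum(pooled)
    as_extreme = 0
    n_splits = 0
    for indices in combinations(range(len(pooled)), n_a):
        a_sum = sum(pooled[i] for i in indices)
        diff = a_sum / n_a - (total - a_sum) / n_b
        n_splits += 1
        if diff >= observed - 1e-12:  # small tolerance for rounding error
            as_extreme += 1
    return as_extreme / n_splits


# Hypothetical memory-task scores; illustrative only.
adults = [14, 15, 13, 16]
children = [10, 11, 12, 9]

# Null: no difference between the groups. Alternative: adults score higher.
p_value = one_sided_permutation_p_value(adults, children)
print(f"p = {p_value:.4f}")
```

A small p-value means the observed advantage would rarely arise if the null hypothesis were true, which counts as evidence for the alternative. A large p-value means the data give no reason to reject the null.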

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when it would be impossible or difficult to conduct an experiment. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can then be used to look at how the variables are related. This type of research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

A Word From Verywell

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.

Some examples of how to write a hypothesis include:

- "Staying up late will lead to worse test performance the next day."

- "People who consume one apple each day will visit the doctor fewer times each year."

- "Breaking study sessions up into three 20-minute sessions will lead to better test results than a single 60-minute study session."

The four parts of a hypothesis are:

- The research question

- The independent variable (IV)

- The dependent variable (DV)

- The proposed relationship between the IV and DV


By Kendra Cherry, MSEd. Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


10.8 Cause and Effect

Learning Objectives

- Determine the purpose and structure of cause and effect in writing.

- Understand how to write a cause-and-effect essay.

The Purpose of Cause and Effect in Writing

It is often considered human nature to ask, “why?” and “how?” We want to know how our child got sick so we can better prevent it from happening in the future, or why our colleague received a pay raise because we want one as well. We want to know how much money we will save over the long term if we buy a hybrid car. These examples identify only a few of the relationships we think about in our lives, but each shows the importance of understanding cause and effect.

A cause is something that produces an event or condition; an effect is what results from an event or condition. The purpose of the cause-and-effect essay is to determine how various phenomena relate in terms of origins and results. Sometimes the connection between cause and effect is clear, but often determining the exact relationship between the two is very difficult. For example, the following effects of a cold may be easily identifiable: a sore throat, runny nose, and a cough. But determining the cause of the sickness can be far more difficult. A number of causes are possible, and to complicate matters, these possible causes could have combined to cause the sickness. That is, more than one cause may be responsible for any given effect. Therefore, cause-and-effect discussions are often complicated and frequently lead to debates and arguments.

Use the complex nature of cause and effect to your advantage. Often it is not necessary, or even possible, to find the exact cause of an event or to name the exact effect. So, when formulating a thesis, you can claim one of a number of causes or effects to be the primary, or main, cause or effect. As soon as you claim that one cause or one effect is more crucial than the others, you have developed a thesis.

Consider the causes and effects in the following thesis statements. List a cause and effect for each one on your own sheet of paper.

- The growing childhood obesity epidemic is a result of technology.

- Much of the wildlife is dying because of the oil spill.

- The town continued programs that it could no longer afford, so it went bankrupt.

- More young people became politically active as use of the Internet spread throughout society.

- While many experts believed the rise in violence was due to the poor economy, it was really due to the summer-long heat wave.

Write three cause-and-effect thesis statements of your own for each of the following five broad topics.

- Health and nutrition

The Structure of a Cause-and-Effect Essay

The cause-and-effect essay opens with a general introduction to the topic, which then leads to a thesis that states the main cause, main effect, or various causes and effects of a condition or event.

The cause-and-effect essay can be organized in one of the following two primary ways:

- Start with the cause and then talk about the effects.

- Start with the effect and then talk about the causes.

For example, if your essay were on childhood obesity, you could start by talking about the effect of childhood obesity and then discuss the cause, or you could start the same essay by talking about the cause of childhood obesity and then move to the effect.

Regardless of which structure you choose, be sure to explain each element of the essay fully and completely. Explaining complex relationships requires the full use of evidence, such as scientific studies, expert testimony, statistics, and anecdotes.

Because cause-and-effect essays determine how phenomena are linked, they make frequent use of certain words and phrases that denote such linkage. See Table 10.4 “Phrases of Causation” for examples of such terms.

Table 10.4 Phrases of Causation

The conclusion should wrap up the discussion and reinforce the thesis, leaving the reader with a clear understanding of the relationship that was analyzed.

Be careful of resorting to empty speculation. In writing, speculation amounts to unsubstantiated guessing. Writers are particularly prone to such trappings in cause-and-effect arguments due to the complex nature of finding links between phenomena. Be sure to have clear evidence to support the claims that you make.

Look at some of the cause-and-effect relationships from Note 10.83 “Exercise 2” . Outline the links you listed. Outline one using a cause-then-effect structure. Outline the other using the effect-then-cause structure.

Writing a Cause-and-Effect Essay

Choose an event or condition that you think has an interesting cause-and-effect relationship. Introduce your topic in an engaging way. End your introduction with a thesis that states the main cause, the main effect, or both.

Organize your essay by starting with either the cause-then-effect structure or the effect-then-cause structure. Within each section, you should clearly explain and support the causes and effects using a full range of evidence. If you are writing about multiple causes or multiple effects, you may choose to sequence either in terms of order of importance. In other words, order the causes from least to most important (or vice versa), or order the effects from least important to most important (or vice versa).

Use the phrases of causation when trying to forge connections between various events or conditions. This will help organize your ideas and orient the reader. End your essay with a conclusion that summarizes your main points and reinforces your thesis. See Chapter 15 “Readings: Examples of Essays” to read a sample cause-and-effect essay.

Choose one of the ideas you outlined in Note 10.85 “Exercise 3” and write a full cause-and-effect essay. Be sure to include an engaging introduction, a clear thesis, strong evidence and examples, and a thoughtful conclusion.

Key Takeaways

- The purpose of the cause-and-effect essay is to determine how various phenomena are related.

- The thesis states what the writer sees as the main cause, main effect, or various causes and effects of a condition or event.

The cause-and-effect essay can be organized in one of these two primary ways:

- Start with the cause and then talk about the effect.

- Start with the effect and then talk about the cause.

- Strong evidence is particularly important in the cause-and-effect essay due to the complexity of determining connections between phenomena.

- Phrases of causation are helpful in signaling links between various elements in the essay.

Writing for Success Copyright © 2015 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

- cognitive sophistication

- tolerance of diversity

- exposure to higher levels of math or science

- age (which is currently related to educational level in many countries)

- social class and other variables.

- For example, suppose you designed a treatment to help people stop smoking. Because you are really dedicated, you assigned the same individuals simultaneously to (1) a "stop smoking" nicotine patch; (2) a "quit buddy"; and (3) a discussion support group. Compared with a group in which no intervention at all occurred, your experimental group now smokes 10 fewer cigarettes per day.

- There is no relationship among two or more variables (EXAMPLE: the correlation between educational level and income is zero)

- Or that two or more populations or subpopulations are essentially the same (EXAMPLE: women and men have the same average science knowledge scores.)

- the difference between two and three children = one child.

- the difference between eight and nine children also = one child.

- the difference between completing ninth grade and tenth grade is one year of school

- the difference between completing junior and senior year of college is one year of school

- In addition to all the properties of nominal, ordinal, and interval variables, ratio variables also have a fixed/non-arbitrary zero point. Non arbitrary means that it is impossible to go below a score of zero for that variable. For example, any bottom score on IQ or aptitude tests is created by human beings and not nature. On the other hand, scientists believe they have isolated an "absolute zero." You can't get colder than that.

How to Write a Hypothesis

If I [do something], then [this] will happen.

This basic statement/formula should be pretty familiar to all of you as it is the starting point of almost every scientific project or paper. It is a hypothesis – a statement that showcases what you “think” will happen during an experiment. This assumption is made based on the knowledge, facts, and data you already have.

How do you write a hypothesis? If you have a clear understanding of the proper structure of a hypothesis, you should not find it too hard to create one. However, if you have never written a hypothesis before, you might find it a bit frustrating. In this article from EssayPro, we are going to tell you everything you need to know about hypotheses, their types, and practical tips for writing them.

Hypothesis Definition

According to the definition, a hypothesis is an assumption one makes based on existing knowledge. To elaborate, it is a statement that translates the initial research question into a logical prediction shaped on the basis of available facts and evidence. To solve a specific problem, one first needs to identify the research problem (research question), conduct initial research, and set out to answer the given question by performing experiments and observing their outcomes. However, before moving to the experimental part of the research, one should first identify what results one expects to see. At this stage, a scientist makes an educated guess and writes a hypothesis that they are going to prove or refute in the course of their study.


A hypothesis can also be seen as a form of development of knowledge. It is a well-grounded assumption put forward to clarify the properties and causes of the phenomena being studied.

As a rule, a hypothesis is formed based on a number of observations and examples that support it. This way, it looks plausible because it is backed up by known information. The hypothesis is subsequently either proved, turning it into an established fact, or refuted (for example, by pointing out a counterexample), which places it in the category of false statements.

As a student, you may be asked to create a hypothesis statement as a part of your academic papers. Hypothesis-based approaches are commonly used among scientific academic works, including but not limited to research papers, theses, and dissertations.

Note that in some disciplines, a hypothesis statement is called a thesis statement. However, its essence and purpose remain unchanged – this statement aims to make an assumption regarding the outcomes of the investigation that will either be proved or refuted.

Characteristics and Sources of a Hypothesis

Now that you know what a hypothesis is in a nutshell, let’s look at the key characteristics that define it:

- It has to be clear and accurate in order to look reliable.

- It has to be specific.

- There should be scope for further investigation and experiments.

- A hypothesis should be explained in simple language—while retaining its significance.

- If you are making a relational hypothesis, two essential elements you have to include are variables and the relationship between them.

The main sources of a hypothesis are:

- Scientific theories.

- Observations from previous studies and current experiences.

- The resemblance among different phenomena.

- General patterns that affect people’s thinking process.

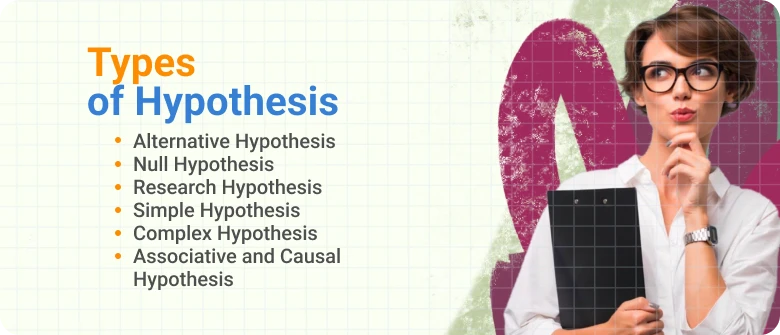

Types of Hypothesis

Basically, there are two major types of scientific hypothesis: alternative and null.

- Alternative Hypothesis

This type of hypothesis is generally denoted as H1. This statement is used to identify the expected outcome of your research. According to the alternative hypothesis definition, this type of hypothesis can be further divided into two subcategories:

- Directional — a statement that explains the direction of the expected outcomes. Sometimes this type of hypothesis is used to study the relationship between variables rather than comparing between the groups.

- Non-directional — unlike the directional alternative hypothesis, a non-directional one does not imply a specific direction of the expected outcomes.

Now, let’s see an alternative hypothesis example for each type:

Directional: Attending more lectures will result in improved test scores among students.

Non-directional: Lecture attendance will influence test scores among students.

Notice how in the directional hypothesis we specified that attending more lectures will boost students’ performance on tests, whereas in the non-directional hypothesis we only stated that there is a relationship between the two variables (i.e. lecture attendance and students’ test scores) but did not specify whether the performance will improve or decrease.

- Null Hypothesis

This type of hypothesis is generally denoted as H0. This statement is the complete opposite of what you expect or predict will happen throughout the course of your study—meaning it is the opposite of your alternative hypothesis. Simply put, a null hypothesis claims that there is no exact or actual correlation between the variables defined in the hypothesis.

To give you a better idea of how to write a null hypothesis, here is a clear example: Lecture attendance has no effect on students’ test scores.

Both of these types of hypotheses provide specific clarifications and restatements of the research problem. The main difference between these hypotheses and a research problem is that the latter is just a question that can’t be tested, whereas hypotheses can.
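To illustrate how a null hypothesis such as "lecture attendance has no effect on test scores" can be checked against directional and non-directional alternatives, here is a minimal sketch of an exact permutation test. The scores and the function name `permutation_p_values` are invented for illustration; a real study would use much larger samples and would typically rely on a statistics library.

```python
from itertools import combinations
from statistics import mean


def permutation_p_values(group_a, group_b):
    """Exact permutation test on the difference in means (group_a - group_b).

    Under the null hypothesis the group labels are interchangeable, so we
    enumerate every way of relabeling the pooled scores and count how often
    the relabeled difference is at least as large as the observed one.
    Returns (one_sided_p, two_sided_p).
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    n_b = len(pooled) - n_a
    total = sum(pooled)
    observed = mean(group_a) - mean(group_b)

    at_least_as_large = 0    # supports the directional alternative
    at_least_as_extreme = 0  # supports the non-directional alternative
    n_splits = 0
    for idx in combinations(range(len(pooled)), n_a):
        a_sum = sum(pooled[i] for i in idx)
        diff = a_sum / n_a - (total - a_sum) / n_b
        n_splits += 1
        if diff >= observed - 1e-12:
            at_least_as_large += 1
        if abs(diff) >= abs(observed) - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_large / n_splits, at_least_as_extreme / n_splits


# Hypothetical test scores, for illustration only.
high_attendance = [78, 85, 90, 88]
low_attendance = [70, 65, 80, 72]

one_sided, two_sided = permutation_p_values(high_attendance, low_attendance)
print(f"Directional p-value:     {one_sided:.4f}")
print(f"Non-directional p-value: {two_sided:.4f}")
```

A small p-value means the observed difference would be unusual if the null hypothesis were true. The directional p-value is smaller here because it only counts relabelings that favor the high-attendance group.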

Based on the alternative and null hypothesis examples provided earlier, we can conclude that these hypotheses describe the subject matter in rough terms and, above all, give an investigator a specific guess that can be directly tested in a study. Simply put, a hypothesis outlines the framework, scope, and direction for the study. Although null and alternative hypotheses are the major types, there are also a few more to keep in mind:

Research Hypothesis — a statement that is used to test the correlation between two or more variables.

For example: Eating vitamin-rich foods affects human health.

Simple Hypothesis — a statement used to indicate the correlation between one independent and one dependent variable.

For example: Eating more vegetables leads to better immunity.

Complex Hypothesis — a statement used to indicate the correlation between two or more independent variables and two or more dependent variables.

For example: Eating more fruits and vegetables leads to better immunity, weight loss, and lower risk of diseases.

Associative and Causal Hypothesis — an associative hypothesis is a statement used to indicate the correlation between variables under the scenario when a change in one variable inevitably changes the other variable. A causal hypothesis is a statement that highlights the cause and effect relationship between variables.


Hypothesis vs Prediction

When speaking of hypotheses, another term that comes to mind is prediction. These two terms are often used interchangeably, which can be rather confusing. Although both a hypothesis and prediction can generally be defined as “guesses” and can be easy to confuse, these terms are different. The main difference between a hypothesis and a prediction is that the first is predominantly used in science, while the latter is most often used outside of science.

Simply put, a hypothesis is an intelligent assumption. It is a guess made regarding the nature of the unknown (or less known) phenomena based on existing knowledge, studies, and/or series of experiments, and is otherwise grounded by valid facts. The main purpose of a hypothesis is to use available facts to create a logical relationship between variables in order to provide a more precise scientific explanation. Additionally, hypotheses are statements that can be tested with further experiments. It is an assumption you make regarding the flow and outcome(s) of your research study.

A prediction, on the contrary, is a guess that often lacks grounding. Although, in theory, a prediction can be scientific, in most cases it is rather fictional—i.e. a pure guess that is not based on current knowledge and/or facts. As a rule, predictions are linked to foretelling events that may or may not occur in the future. Often, a person who makes predictions has little or no actual knowledge of the subject matter he or she makes the assumption about.

Another big difference between these terms is in the methodology used to prove each of them. A prediction can only be proven once. You can determine whether it is right or wrong only upon the occurrence or non-occurrence of the predicted event. A hypothesis, on the other hand, offers scope for further testing and experiments. Additionally, a hypothesis can be proven in multiple stages. This basically means that a single hypothesis can be proven or refuted numerous times by different scientists who use different scientific tools and methods.

To give you a better idea of how a hypothesis is different from a prediction, let’s look at the following examples:

Hypothesis: If I eat more vegetables and fruits, then I will lose weight faster.

This is a hypothesis because it is based on generally available knowledge (i.e. fruits and vegetables contain fewer calories than many other foods) and past experiences (i.e. people who prefer healthier foods like fruits and vegetables tend to lose weight more easily). It is still a guess, but it is based on facts and can be tested with an experiment.

Prediction: The end of the world will occur in 2023.

This is a prediction because it foretells future events. However, this assumption is fictional as it doesn’t have any actual grounded evidence supported by facts.

Based on everything that was said earlier and our examples, we can highlight the following key takeaways:

- A hypothesis, unlike a prediction, is a more intelligent assumption based on facts.

- Hypotheses define existing variables and analyze the relationship(s) between them.

- Predictions are most often fictional and lack grounding.

- A prediction is most often used to foretell events in the future.

- A prediction can only be proven once – when the predicted event occurs or doesn’t occur.

- A hypothesis can remain a hypothesis even if one scientist has already proven or disproven it. Other scientists in the future can obtain a different result using other methods and tools.


Now that you know what a hypothesis is, what types exist, and how it differs from a prediction, you are probably wondering how to state a hypothesis. In this section, we will guide you through the main stages of writing a good hypothesis and provide handy tips and examples to help you overcome this challenge:

1. Define Your Research Question

Here is one thing to keep in mind – regardless of the paper or project you are working on, the process should always start with asking the right research question. A perfect research question should be specific, clear, focused (meaning not too broad), and manageable.

Example: How does eating fruits and vegetables affect human health?

2. Conduct Your Basic Initial Research

As you already know, a hypothesis is an educated guess of the expected results and outcomes of an investigation. Thus, it is vital to collect some information before you can make this assumption.

At this stage, you should find an answer to your research question based on what has already been discovered. Search for facts, past studies, theories, etc. Based on the collected information, you should be able to make a logical and intelligent guess.

3. Formulate a Hypothesis

Based on the initial research, you should have a certain idea of what you may find throughout the course of your research. Use this knowledge to shape a clear and concise hypothesis.

Based on the type of project you are working on, and the type of hypothesis you are planning to use, you can restate your hypothesis in several different ways:

Non-directional: Eating fruits and vegetables will affect one’s physical health.

Directional: Eating fruits and vegetables will positively affect one’s physical health.

Null: Eating fruits and vegetables will have no effect on one’s physical health.
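One possible way to make these three phrasings precise is to write them in terms of group averages. The notation below is an assumption made for illustration: it supposes that physical health is summarized by a mean score, with $\mu_{FV}$ for people who eat fruits and vegetables and $\mu_{C}$ for a comparison group who do not.

```latex
\begin{align*}
\text{Null: }            & H_0 : \mu_{FV} = \mu_{C} \\
\text{Non-directional: } & H_1 : \mu_{FV} \neq \mu_{C} \\
\text{Directional: }     & H_1 : \mu_{FV} > \mu_{C}
\end{align*}
```

Written this way, it is easy to see that the null hypothesis is the complement of the non-directional alternative, while the directional alternative commits to one side of the difference.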

4. Refine Your Hypothesis

Finally, the last stage of creating a good hypothesis is refining what you’ve got. During this step, you need to define whether your hypothesis:

- Has clear and relevant variables;

- Identifies the relationship between its variables;

- Is specific and testable;

- Suggests a predicted result of the investigation or experiment.


Hypothesis Examples

Following the step-by-step guide and tips above, you should be able to create good hypotheses with ease. To give you a starting point, we have also compiled a list of different research questions with one hypothesis and one null hypothesis example for each:



12.1: The Purpose of Cause and Effect in Writing


- Amber Kinonen, Jennifer McCann, Todd McCann, & Erica Mead

- Bay College Library

It is often considered human nature to ask, “why?” and “how?” We want to know how our child got sick so we can better prevent it from happening in the future, or why our colleague received a pay raise because we want one as well. We want to know how much money we will save over the long term if we buy a hybrid car. These examples identify only a few of the relationships we think about in our lives, but each shows the importance of understanding cause and effect.

A cause is something that produces an event or condition; an effect is what results from an event or condition. The purpose of the cause-and-effect essay is to determine how various phenomena relate in terms of origins and results. Sometimes the connection between cause and effect is clear, but often determining the exact relationship between the two is very difficult. For example, the following effects of a cold may be easily identifiable: a sore throat, runny nose, and a cough. However, determining the cause of the sickness can be far more difficult. A number of causes are possible, and to complicate matters, these possible causes could have combined to cause the sickness. That is, more than one cause may be responsible for any given effect. Therefore, cause-and-effect discussions are often complicated and frequently lead to debates and arguments.

Use the complex nature of cause and effect to your advantage. Often it is not necessary, or even possible, to find the exact cause of an event or to name the exact effect. So, when formulating a thesis, you can claim one of a number of causes or effects to be the primary, or main, cause or effect. As soon as you claim that one cause or one effect is more significant than the others, you have developed a thesis.

Writing a Strong Hypothesis Statement

All good theses begin with a good thesis question. However, all great theses begin with a great hypothesis statement. One of the most important steps in writing a thesis is to create a strong hypothesis statement.

What is a hypothesis statement?


Simply put, a hypothesis statement posits the relationship between two or more variables. It is a prediction of what you think will happen in a research study. A hypothesis statement must be testable. If it cannot be tested, then there is no research to be done. If your thesis question is whether wildfires have effects on the weather, “wildfires create tornadoes” would be your hypothesis. However, a hypothesis needs to have several key elements in order to meet the criteria for a good hypothesis.

In this article, we will learn about what distinguishes a weak hypothesis from a strong one. We will also learn how to phrase your thesis question and frame your variables so that you are able to write a strong hypothesis statement and great thesis.

What is a hypothesis?


As we mentioned above, a hypothesis statement posits or considers a relationship between two variables. In our hypothesis statement example above, the two variables are wildfires and tornadoes, and our assumed relationship between the two is a causal one (wildfires cause tornadoes). It is clear from our example above what we will be investigating: the relationship between wildfires and tornadoes.

A strong hypothesis statement should be:

- A prediction of the relationship between two or more variables

A hypothesis is not just a blind guess. It should build upon existing theories and knowledge. Tornadoes are often observed near wildfires once the fires reach a certain size. In addition, tornadoes are not a normal weather event in many areas; they have been spotted together with wildfires. This existing knowledge has informed the formulation of our hypothesis.

Depending on the thesis question, your research paper might have multiple hypothesis statements. What is important is that your hypothesis statement or statements are testable through data analysis, observation, experiments, or other methodologies.

Formulating your hypothesis


Now that we know what a hypothesis statement is, let’s walk through how to formulate a strong one. First, you will need a thesis question. Your thesis question should be narrow in scope, answerable, and focused. Once you have your thesis question, it is time to start thinking about your hypothesis statement. You will need to clearly identify the variables involved before you can begin thinking about their relationship.

One of the best ways to form a hypothesis is to think about “if...then” statements. This can also help you easily identify the variables you are working with and refine your hypothesis statement. Let’s take a few examples.

If teenagers are given comprehensive sex education, there will be fewer teen pregnancies.

In this example, the independent variable is whether or not teenagers receive comprehensive sex education (the cause), and the dependent variable is the number of teen pregnancies (the effect).

If a cat is fed a vegan diet, it will die.

Here, our independent variable is the diet of the cat (the cause), and the dependent variable is the cat’s health (the thing impacted by the cause).

If children drink 8oz of milk per day, they will grow taller than children who do not drink any milk.

What are the variables in this hypothesis? If you identified drinking milk as the independent variable and growth as the dependent variable, you are correct. This is because we are guessing that drinking milk causes increased growth in the height of children.
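The "if...then" pattern and its two variables can also be written down as a small data structure, which some people find helpful for checking that each piece is present before refining the wording. This is only a sketch; the class name `IfThenHypothesis` and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IfThenHypothesis:
    condition: str             # the "if" clause (the proposed cause)
    prediction: str            # the "then" clause (the expected effect)
    independent_variable: str  # what the researcher varies or compares
    dependent_variable: str    # what the researcher measures

    def statement(self) -> str:
        """Render the hypothesis as a single if-then sentence."""
        return f"If {self.condition}, then {self.prediction}."


milk = IfThenHypothesis(
    condition="children drink 8oz of milk per day",
    prediction="they will grow taller than children who do not drink any milk",
    independent_variable="daily milk consumption",
    dependent_variable="growth in height",
)

print(milk.statement())
print("Independent variable:", milk.independent_variable)
print("Dependent variable:", milk.dependent_variable)
```

Filling in the four fields forces you to name the cause and the effect separately, which is the same exercise the examples above walk through in prose.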

Refining your hypothesis


Do not be afraid to refine your hypothesis throughout the process of formulation. A strong hypothesis statement is clear, testable, and involves a prediction. While “testable” means verifiable or falsifiable, it also means that you are able to perform the necessary experiments without violating any ethical standards. Perhaps once you think about the ethics of possibly harming some cats by testing a vegan diet on them you might abandon the idea of that experiment altogether. However, if you think it is really important to research the relationship between a cat’s diet and a cat’s health, perhaps you could refine your hypothesis to something like this:

If 50% of a cat’s meals are vegan, the cat will not be able to meet its nutritional needs.

Another feature of a strong hypothesis statement is that it can easily be tested with the resources that you have readily available. While it might not be feasible to measure the growth of a cohort of children throughout their whole lives, you may be able to do so for a year. Then, you can adjust your hypothesis to something like this:

If children aged 8 drink 8oz of milk per day for one year, they will grow taller during that year than children who do not drink any milk.

As you work to narrow down and refine your hypothesis to reflect a realistic potential research scope, don’t be afraid to talk to your supervisor about any concerns or questions you might have about what is truly possible to research.

What makes a hypothesis weak?

We noted above that a strong hypothesis statement is clear, predicts a relationship between two or more variables, and is testable. We also clarified that statements that are too general or too specific are not strong hypotheses. We have looked at some examples of hypotheses that meet the criteria for a strong hypothesis, but before we go any further, let’s look at some weak or bad hypothesis statements so that you can really see the difference.

Bad hypothesis 1: Diabetes is caused by witchcraft.

While this is fun to think about, it cannot be tested or proven one way or the other with clear evidence, data analysis, or experiments. This bad hypothesis fails to meet the testability requirement.

Bad hypothesis 2: If I change the amount of food I eat, my energy levels will change.

This is quite vague. Am I increasing or decreasing my food intake? What do I expect exactly will happen to my energy levels and why? How am I defining energy level? This bad hypothesis statement fails the clarity requirement.

Bad hypothesis 3: Japanese food is disgusting because Japanese people don’t like tourists.

This hypothesis is unclear about the posited relationship between variables. Are we positing a relationship between the deliciousness of Japanese food and the desire for tourists to visit? Or a relationship between the deliciousness of Japanese food and how much Japanese people like tourists? There is also the problematic subjectivity of the assessment that Japanese food is “disgusting.” The problems are numerous.

The null hypothesis and the alternative hypothesis

What is the null hypothesis?

The hypothesis posits a relationship between two or more variables. The null hypothesis, quite simply, posits that there is no relationship between the variables. It is often indicated as H₀, which is read as “h-oh” or “h-null.” The alternative hypothesis is the opposite of the null hypothesis as it posits that there is some relationship between the variables. The alternative hypothesis is written as Hₐ or H₁.

Let’s take one of the hypothesis statement examples discussed at the start and look at its corresponding null hypothesis.

Hₐ: If teenagers are given comprehensive sex education, there will be fewer teen pregnancies.

H₀: If teenagers are given comprehensive sex education, there will be no change in the number of teen pregnancies.

The null hypothesis assumes that comprehensive sex education will not affect how many teenagers get pregnant. Note carefully that the null hypothesis is not simply the reverse of the alternative hypothesis. For example, consider the reverse of the prediction above:

If teenagers are given comprehensive sex education, there will be more teen pregnancies.

These are opposing statements that assume an opposite relationship between the variables: comprehensive sex education increases or decreases the number of teen pregnancies. In fact, these are both alternative hypotheses. This is because they both still assume that there is a relationship between the variables. In other words, both hypothesis statements assume that there is some kind of relationship between sex education and teen pregnancy rates. The alternative hypothesis is also the researcher’s actual predicted outcome, which is why calling it “alternative” can be confusing! However, you can think of it this way: our default assumption is the null hypothesis, and so any possible relationship is an alternative to the default.
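One way to see the null hypothesis acting as the default assumption is a permutation test, sketched below. This is only one of many possible statistical tests and is not a method prescribed by this article. The idea: if the null hypothesis is true, the group labels are interchangeable, so we shuffle them many times and count how often a difference at least as large as the observed one arises by chance. The function name `permutation_p_value` and the pregnancy rates are hypothetical, invented for illustration.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """One-sided permutation test.

    Estimates how often, under the null hypothesis of no relationship,
    mean(group_a) - mean(group_b) is at least as large as the observed value.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # under H0 the group labels are interchangeable
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_large += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (at_least_as_large + 1) / (n_permutations + 1)


# Hypothetical teen pregnancies per 1,000 teenagers in six districts each (invented numbers).
without_program = [31, 28, 35, 30, 33, 29]
with_program = [27, 25, 30, 24, 29, 26]

p_value = permutation_p_value(without_program, with_program)
print(f"Estimated p-value under the null hypothesis: {p_value:.4f}")
```

A small p-value means the observed difference would be unusual if the null hypothesis were true, which counts as evidence for the alternative that sex education is associated with fewer pregnancies. The test is one-sided because that alternative predicts a direction. Testing the opposite alternative (more pregnancies) would mean swapping the two arguments.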

Step-by-step sample hypothesis statements

Now that we’ve covered what makes a hypothesis statement strong, how to go about formulating a hypothesis statement, refining your hypothesis statement, and the null hypothesis, let’s put it all together with some examples. The table below shows a breakdown of how we can take a thesis question, identify the variables, create a null hypothesis, and finally create a strong alternative hypothesis.

Once you have formulated a solid thesis question and written a strong hypothesis statement, you are ready to begin your thesis in earnest.

Review Checklist

Start with a clear thesis question

Think about “if-then” statements to identify your variables and the relationship between them

Create a null hypothesis

Formulate an alternative hypothesis using the variables you have identified

Make sure your hypothesis clearly posits a relationship between variables

Make sure your hypothesis is testable considering your available time and resources

What makes a hypothesis strong?

A hypothesis is strong when it is testable, clear, and identifies a potential relationship between two or more variables.

What makes a hypothesis weak?

A hypothesis is weak when it is too specific or too general, or does not identify a clear relationship between two or more variables.

What is the null hypothesis?

The null hypothesis posits that the variables you have identified have no relationship.

Cause and Effect, Hypothesis Testing and Estimation