Research on Social Work Practice - Impact Score, Ranking, SJR, h-index, Citescore, Rating, Publisher, ISSN, and Other Important Details

Published By: SAGE Publications Inc.

Abbreviation: Res. Soc. Work Pract.

Impact Score

The impact score (IS), or journal impact score (JIS), is comparable to the impact factor. The impact factor (IF), or journal impact factor (JIF), of an academic journal is a scientometric index calculated by Clarivate that reflects the yearly mean number of citations of articles published in the last two years in a given journal, as indexed by Clarivate's Web of Science. The impact score, by contrast, is based on Scopus data.

Important Details about Research on Social Work Practice

Research on Social Work Practice is a journal published by SAGE Publications Inc. It covers areas related to Social Sciences (miscellaneous), Sociology and Political Science, Psychology (miscellaneous), Social Work, etc. The coverage history of this journal is 1991-2022. The rank of this journal is 8809. Its impact score, h-index, and SJR are 1.99, 70, and 0.586, respectively. The ISSNs of this journal are 10497315 and 15527581. The best quartile of Research on Social Work Practice is Q1. This journal received a total of 581 citations during the last three years (preceding 2022).

Research on Social Work Practice Impact Score 2022-2023

The impact score (IS), also denoted as the Journal impact score (JIS), of an academic journal is a measure of the yearly average number of citations to recent articles published in that journal. It is based on Scopus data.

Prediction of Research on Social Work Practice Impact Score 2023

The 2022 impact score of Research on Social Work Practice is 1.99. If a similar upward trend continues, the IS may increase in 2023 as well.

Impact Score Graph

Check below the impact score trends of Research on Social Work Practice. This is based on Scopus data.

Research on Social Work Practice h-index

The h-index of Research on Social Work Practice is 70. By definition of the h-index, this journal has published at least 70 articles that have each received at least 70 citations.

What is h-index?

The h-index (also known as the Hirsch index or Hirsch number) is a scientometric parameter used to evaluate the scientific impact of publications and journals. It is defined as the maximum value of h such that the given journal has published at least h papers that have each received at least h citations.
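The definition above can be turned into a short calculation. The following is a minimal sketch, not the procedure used by Scopus or SCImago; the function name `h_index` and the sample citation counts are hypothetical and chosen only for illustration.

```python
def h_index(citations):
    """Return the largest h such that at least h papers have >= h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for position, cites in enumerate(ranked, start=1):
        if cites >= position:
            # The top `position` papers all have at least `position` citations.
            h = position
        else:
            break
    return h


# Hypothetical per-paper citation counts (not data for any real journal).
sample_citations = [10, 8, 5, 4, 3]
print(h_index(sample_citations))  # 4
```

With these sample counts, four papers have at least four citations each, but there are not five papers with at least five citations, so the h-index is 4.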

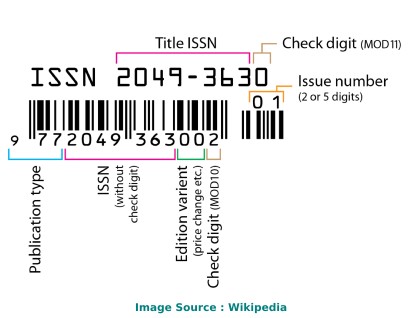

Research on Social Work Practice ISSN

The International Standard Serial Numbers (ISSNs) of Research on Social Work Practice are as follows: 10497315, 15527581.

The ISSN is a unique 8-digit identifier for a specific serial publication such as a magazine or journal. It is used in the publishing world and in library catalogues to identify journals, magazines, newsletters, and similar serials. The ISSN identifies the publication as a whole, not an individual article, so it is the number you use to locate the journal that contains your article within library catalogues.

The ISSN code (also called the "ISSN structure" or "ISSN syntax") can be expressed as NNNN-NNNC, where N is a digit character in the set {0, 1, 2, ..., 9} and C is in {0, 1, 2, ..., 9, X}.
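The NNNN-NNNC structure can be checked programmatically. The sketch below is illustrative only: the helper names `issn_check_digit` and `is_valid_issn` are hypothetical, and the check-digit rule (weights 8 down to 2 on the first seven digits, modulo 11, with X standing for 10) comes from the ISSN standard rather than from this page.

```python
import re

# Accepts "NNNN-NNNC" or "NNNNNNNC"; C may be a digit or X.
ISSN_PATTERN = re.compile(r"([0-9]{4})-?([0-9]{3})([0-9X])")


def issn_check_digit(first_seven):
    """Compute the check character C from the first seven digits."""
    # Weights run 8, 7, 6, 5, 4, 3, 2 across the seven digits.
    total = sum(int(d) * w for d, w in zip(first_seven, range(8, 1, -1)))
    value = (11 - total % 11) % 11
    return "X" if value == 10 else str(value)


def is_valid_issn(text):
    """Return True if text matches the ISSN syntax and its check character."""
    match = ISSN_PATTERN.fullmatch(text.strip().upper())
    if match is None:
        return False
    body = match.group(1) + match.group(2)
    return issn_check_digit(body) == match.group(3)


# The two ISSNs listed above for Research on Social Work Practice.
print(is_valid_issn("10497315"))   # True
print(is_valid_issn("1552-7581"))  # True
```

Both ISSNs listed for this journal pass the check, whether written with or without the hyphen.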

Research on Social Work Practice Ranking and SCImago Journal Rank (SJR)

SCImago Journal Rank (SJR) is an indicator that measures the scientific influence of journals. It considers both the number of citations received by a journal and the importance of the journals from which those citations come.

Research on Social Work Practice Publisher

The publisher of Research on Social Work Practice is SAGE Publications Inc. The publishing house of this journal is located in the United States. Its coverage history is as follows: 1991-2022.

Call For Papers (CFPs)

Please check the official website of this journal to find out the complete details and Call For Papers (CFPs).

Abbreviation

The International Organization for Standardization 4 (ISO 4) abbreviation of Research on Social Work Practice is Res. Soc. Work Pract. ISO 4 is an international standard that defines a uniform and consistent system for abbreviating the titles of serial publications, that is, publications issued regularly. The primary use of ISO 4 is to abbreviate the names of scientific journals using the List of Title Word Abbreviations (LTWA).

As ISO 4 is an international standard, the abbreviation ('Res. Soc. Work Pract.') can be used for citing, indexing, abstracting, and referencing purposes.

How to publish in Research on Social Work Practice

If your area of research or discipline is related to Social Sciences (miscellaneous), Sociology and Political Science, Psychology (miscellaneous), Social Work, etc., please check the journal's official website to understand the complete publication process.

Acceptance Rate

The acceptance rate of a journal or conference depends on factors such as:

- Interest/demand of researchers/scientists for publishing in a specific journal/conference.

- The complexity of the peer review process and timeline.

- Time taken from draft submission to final publication.

- Number of submissions received and acceptance slots

- And Many More.

The simplest way to find out the acceptance rate or rejection rate of a Journal/Conference is to check with the journal's/conference's editorial team through emails or through the official website.

Frequently Asked Questions (FAQ)

What is the impact score of Research on Social Work Practice?

The latest impact score of Research on Social Work Practice is 1.99. It is computed in the year 2023.

What is the h-index of Research on Social Work Practice?

The latest h-index of Research on Social Work Practice is 70. It is evaluated in the year 2023.

What is the SCImago Journal Rank (SJR) of Research on Social Work Practice?

The latest SCImago Journal Rank (SJR) of Research on Social Work Practice is 0.586. It is calculated in the year 2023.

What is the ranking of Research on Social Work Practice?

The latest ranking of Research on Social Work Practice is 8809. This ranking is among 27955 Journals, Conferences, and Book Series. It is computed in the year 2023.

Who is the publisher of Research on Social Work Practice?

Research on Social Work Practice is published by SAGE Publications Inc. The publication country of this journal is the United States.

What is the abbreviation of Research on Social Work Practice?

The standard abbreviation of Research on Social Work Practice is Res. Soc. Work Pract.

Is "Research on Social Work Practice" a Journal, Conference or Book Series?

Research on Social Work Practice is a journal published by SAGE Publications Inc.

What is the scope of Research on Social Work Practice?

- Social Sciences (miscellaneous)

- Sociology and Political Science

- Psychology (miscellaneous)

- Social Work

For detailed scope of Research on Social Work Practice, check the official website of this journal.

What is the ISSN of Research on Social Work Practice?

The International Standard Serial Number (ISSN) of Research on Social Work Practice is/are as follows: 10497315, 15527581.

What is the best quartile for Research on Social Work Practice?

The best quartile for Research on Social Work Practice is Q1.

What is the coverage history of Research on Social Work Practice?

The coverage history of Research on Social Work Practice is as follows: 1991-2022.

Credits and Sources

- Scimago Journal & Country Rank (SJR), https://www.scimagojr.com/

- Journal Impact Factor, https://clarivate.com/

- Issn.org, https://www.issn.org/

- Scopus, https://www.scopus.com/

Note: The impact score shown here is the average number of times documents published in a journal/conference in the past two years have been cited in the current year (i.e., Cites / Doc. (2 years)). It is based on Scopus data and can differ from, and is often a little higher than, the impact factor (IF) reported in the Journal Citation Reports. Please refer to the Web of Science data source to check the exact Journal Impact Factor™ (Clarivate) metric.
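The Cites / Doc. (2 years) ratio described in the note can be written out as a small calculation. This is a sketch under stated assumptions: the function name `impact_score` is hypothetical, and the counts in the example are made up for illustration, not the actual Scopus counts for this journal.

```python
def impact_score(citations_this_year, documents_prior_two_years):
    """Cites / Doc. (2 years): citations received in the current year by
    documents published in the previous two years, divided by the number
    of those documents."""
    if documents_prior_two_years <= 0:
        raise ValueError("documents_prior_two_years must be positive")
    return citations_this_year / documents_prior_two_years


# Hypothetical counts: 150 citations this year to 100 documents
# published during the previous two years.
print(impact_score(150, 100))  # 1.5
```

A score of 1.5 in this made-up example means that each document from the previous two years was cited 1.5 times on average during the current year.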

Research On Social Work Practice impact factor, indexing, ranking (2024)

Aim and Scope

Research on Social Work Practice is a research journal that publishes research related to Psychology and Social Sciences. This journal is published by SAGE Publications Inc. The ISSNs of this journal are 10497315 and 15527581. Based on Scopus data, the SCImago Journal Rank (SJR) of Research on Social Work Practice is 0.586.

Research On Social Work Practice Ranking

The Impact Factor of Research On Social Work Practice is 1.984.

The impact factor (IF) is a measure of the frequency with which the average article in a journal has been cited in a particular year. It is used to measure the importance or rank of a journal by calculating the times its articles are cited.

The impact factor was devised by Eugene Garfield, the founder of the Institute for Scientific Information (ISI) in Philadelphia. Impact factors have been calculated yearly since 1975 for journals listed in the Journal Citation Reports (JCR). ISI was acquired by Thomson Scientific & Healthcare in 1992 and became known as Thomson ISI. In 2016, Thomson Reuters spun off and sold ISI to Onex Corporation and Baring Private Equity Asia. They founded a new corporation, Clarivate, which is now the publisher of the JCR.

Important Metrics

Research on Social Work Practice Indexing

Research on Social Work Practice is indexed in:

- Web of Science (SSCI)

An indexed journal means that the journal has gone through and passed a review process of certain requirements done by a journal indexer.

The Web of Science Core Collection includes the Science Citation Index Expanded (SCIE), Social Sciences Citation Index (SSCI), Arts & Humanities Citation Index (AHCI), and Emerging Sources Citation Index (ESCI).

Research On Social Work Practice Impact Factor 2024

The latest impact factor of Research on Social Work Practice is 1.984.

Note: Every year, Clarivate releases the Journal Citation Reports (JCR). The JCR provides information about academic journals, including their impact factors. The latest JCR was released in June 2023. The JCR 2024 will be released in June 2024.

Research On Social Work Practice Quartile

The latest quartile of Research on Social Work Practice is Q1.

Each subject category of journals is divided into four quartiles: Q1, Q2, Q3, Q4. Q1 is occupied by the top 25% of journals in the list; Q2 is occupied by journals in the 25 to 50% group; Q3 is occupied by journals in the 50 to 75% group and Q4 is occupied by journals in the 75 to 100% group.
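The quartile bands above can be expressed as a simple mapping from a journal's rank within its subject category to a quartile label. This is a minimal sketch: the function name `quartile` is hypothetical, rank 1 is assumed to be the best-ranked journal, and placing a journal that sits exactly on a boundary in the better quartile is an assumption, not a documented rule of any ranking provider.

```python
def quartile(rank, total):
    """Map a rank within a subject category (1 = best) to Q1..Q4."""
    if not 1 <= rank <= total:
        raise ValueError("rank must be between 1 and total")
    # Integer ceiling of 4 * rank / total: the top 25% map to 1, the next 25% to 2, etc.
    band = (4 * rank + total - 1) // total
    return "Q" + str(band)


# Hypothetical category containing 100 journals.
print(quartile(25, 100))  # Q1
print(quartile(26, 100))  # Q2
print(quartile(100, 100))  # Q4
```

Because quartiles are assigned per subject category, the same journal can fall into different quartiles in different categories, which is why the page reports a "best quartile".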

Call for Papers

Visit the official website of the journal/conference to check the details about the call for papers.

How to publish in Research On Social Work Practice?

If your research is related to Psychology or Social Sciences, visit the official website of Research on Social Work Practice and submit your manuscript.

Tips for publishing in Research On Social Work Practice:

- Selection of research problem.

- Presenting a solution.

- Designing the paper.

- Make your manuscript publication worthy.

- Write an effective results section.

- Mind your references.

Acceptance Rate

Journal Publication Time

The publication time may vary depending on factors such as the complexity of the research and the current workload of the editorial team. Journals typically request reviewers to submit their reviews within 3-4 weeks. However, some journals lack mechanisms to enforce this deadline, making it difficult to predict the duration of the peer review process.

The review time also depends upon the quality of the research paper.

Final Summary

- The impact factor of Research on Social Work Practice is 1.984.

- Research on Social Work Practice is a reputed research journal.

- It is published by SAGE Publications Inc.

- The journal is indexed in UGC CARE, Scopus, and SSCI.

- The SCImago Journal Rank (SJR) is 0.586.

SIMILAR JOURNALS

JOURNAL OF LATINX PSYCHOLOGY

INTERNATIONAL JOURNAL OF TRANSGENDER HEALTH

DEATH STUDIES

INTERNATIONAL JOURNAL OF INTERCULTURAL RELATIONS

JOURNAL OF HEALTH AND SOCIAL BEHAVIOR

NETWORK SCIENCE

HISTORICAL SOCIAL RESEARCH-HISTORISCHE SOZIALFORSCHUNG

QUALITATIVE HEALTH RESEARCH

ANALYSES OF SOCIAL ISSUES AND PUBLIC POLICY

CHILD ABUSE & NEGLECT

The Pursuit of Quality for Social Work Practice: Three Generations and Counting

Enola Proctor

Shanti K. Khinduka Distinguished Professor and director of the Center for Mental Health Services Research at Washington University in St. Louis

Social work addresses some of the most complex and intractable human and social problems: poverty, mental illness, addiction, homelessness, and child abuse. Our field may be distinct among professions for its efforts to ameliorate the toughest societal problems, experienced by society’s most vulnerable, while working from under-resourced institutions and settings. Members of our profession are underpaid, and most of our agencies lack the data infrastructure required for rigorous assessment and evaluation.

Moreover, social work confronts these challenges as it is ethically bound to deliver high-quality services. Policy and regulatory requirements increasingly demand that social work deliver and document the effectiveness of highest quality interventions and restrict reimbursement to those services that are documented as evidence based. Social work’s future, its very survival, depends on our ability to deliver services with a solid base of evidence and to document their effectiveness. In the words of the American Academy of Social Work and Social Welfare (AASWSW; n.d.) , social work seeks to “champion social progress powered by science.” The research community needs to support practice through innovative and rigorous science that advances the evidence for interventions to address social work’s grand challenges.

My work seeks to improve the quality of social work practice by pursuing answers to three questions:

- What interventions and services are most effective and thus should be delivered in social work practice?

- How do we measure the impact of those interventions and services? (That is, what outcomes do our interventions achieve?)

- How do we implement the highest quality interventions?

This paper describes this work, demonstrates the substantive and methodological progression across the three questions, assesses what we have learned, and forecasts a research agenda for what we still need to learn. Given Aaron Rosen’s role as my PhD mentor and our many years of collaboration, the paper also addresses the role of research mentoring in advancing our profession’s knowledge base.

What Interventions and Services Are Most Effective?

Answering the question “What services are effective?” requires rigorous testing of clearly specified interventions. The first paper I coauthored with Aaron Rosen—“Specifying the Treatment Process: The Basis for Effectiveness Research” ( Rosen & Proctor, 1978 )—provided a framework for evaluating intervention effectiveness. At that time, process and outcomes were jumbled and intertwined concepts. Social work interventions were rarely specified beyond theoretical orientation or level of focus: casework (or direct practice); group work; and macro practice, which included community, agency-level, and policy-focused practice. Moreover, interventions were not named, nor were their components clearly identified. We recognized that gross descriptions of interventions obstruct professional training, preclude fidelity assessment, and prevent accurate tests of effectiveness. Thus, in a series of papers, Rosen and I advocated that social work interventions be specified, clearly labeled, and operationally defined, measured, and tested.

Specifying Interventions

Such specification of interventions is essential to two professional responsibilities: professional education and demonstrating the effectiveness of the field’s interventions. Without specification, interventions cannot be taught. Social work education is all about equipping students with skills to deliver interventions, programs, services, administrative practices, and policies. Teaching interventions requires an ability to name, define, see them in action, measure their presence (or absence), assess the fidelity with which they are delivered, and give feedback to students on how to increase or refine the associated skills.

To advance testing the effectiveness of social work interventions, we drew distinctions between interventions and outcomes and proposed these two constructs as the foci for effectiveness research. We defined interventions as practitioner behaviors that can be volitionally manipulated by practitioners (used or not, varied in intensity and timing), that are defined in detail, can be reliably measured, and can be linked to specific identified outcomes ( Rosen & Proctor, 1978 ; Rosen & Proctor, 1981 ). This definition foreshadowed the development of treatment manuals, lists of specific evidence-based practices, and calls for monitoring intervention fidelity. Recognizing the variety of intervention types, and to advance their more precise definition and measurement, we proposed that interventions be distinguished in terms of their complexity. Interventive responses comprise discrete or single responses, such as affirmation, expression of empathy, or positive reinforcement. Interventive strategies comprise several different actions that are, together, linked to a designated outcome, such as motivational interviewing. Most complex are interventive programs , which are a variety of intervention actions organized and integrated as a total treatment package; collaborative care for depression or community assertive treatment are examples. To strengthen the professional knowledge base, we also called for social work effectiveness research to begin testing the optimal dose and sequencing of intervention components in relation to attainment of desired outcomes.

Advancing Intervention Effectiveness Research

Our “specifying paper” also was motivated by the paucity of literature at that time on actual social work interventions. Our literature review of 13 major social work journals over 5 years of published research revealed that only 15% of published social work research addressed interventions. About a third of studies described social problems, and about half explored factors associated with the problem ( Rosen, Proctor, & Staudt, 2003 ). Most troubling was our finding that only 3% of articles described the intervention or its components in sufficient detail for replication in either research or practice. Later, Fraser (2004) found intervention research to comprise only about one fourth of empirical studies in social work. Fortunately, our situation has improved. Intervention research is more frequent in social work publications, thanks largely to the publication policies of the Journal of the Society for Social Work and Research and Research on Social Work Practice .

Research Priorities

Social work faces important and formidable challenges as it advances research on intervention effectiveness. The practitioner who searches the literature or various intervention lists can find more than 500 practices that are named or that are shown to have evidence from rigorous trials that passes a bar to qualify as evidence-based practices. However, our profession still lacks any organized compendium or taxonomy of interventions that are employed in or found to be effective for social work practice. Existing lists of evidence-based practices, although necessary, are insufficient for social work for several reasons. First, as a 2015 National Academies Institute of Medicine (IOM) report—“Psychosocial Interventions for Mental and Substance Use Disorders: A Framework for Establishing Evidence-Based Standards” ( IOM, 2015 )—concluded, too few evidence-based practices have been found to be appropriate for low-resource settings or acceptable to minority groups. Second, existing interventions do not adequately reflect the breadth of social work practice. We have too few evidence-based interventions that can inform effective community organization, case management, referral practice, resource development, administrative practice, or policy. Noting that there is far less literature on evidence-based practices relevant to organizational, community, and policy practice, a social work task force responding to the 2015 IOM report recommended that this gap be a target of our educational and research efforts ( National Task Force on Evidence-Based Practice in Social Work, 2016 ). And finally, our field—along with other professions that deliver psychosocial interventions—lacks the kinds of procedure codes that can identify the specific interventions we deliver. Documenting social work activities in agency records is increasingly essential for quality assurance and third-party reimbursement.

Future Directions: Research to Advance Evidence on Interventions

Social work has critically important research needs. Our field needs to advance the evidence base on what interventions work for social work populations, practices, and settings. Responding to the 2015 IOM report, the National Task Force on Evidence-Based Practice in Social Work (2016) identified as a social work priority the development and testing of evidence-based practices relevant to organizational, community, and policy practice. As we advance our intervention effectiveness research, we must respond to the challenge of determining the key mechanisms of change ( National Institute of Mental Health, 2016 ) and identify key modifiable components of packaged interventions ( Rosen & Proctor, 1978 ). We need to explore the optimal dosage, ordering, or adapted bundling of intervention elements and advance robust, feasible ways to measure and increase fidelity ( Jaccard, 2016 ). We also need to conduct research on which interventions are most appropriate, acceptable, and effective with various client groups ( Zayas, 2003 ; Videka, 2003 ).

Documenting the Impact of Interventions: Specifying and Measuring Outcomes

Outcomes are key to documenting the impact of social work interventions. My 1978 “specifying” paper with Rosen emphasized that the effectiveness of social work practice could not be adequately evaluated without clear specification and measurement of various types of outcomes. In that paper, we argued that the profession cannot rely only on an assertion of effectiveness. The field must also calibrate, calculate, and communicate its impact.

The nursing profession’s highly successful campaign, based on outcomes research, positioned that field to claim that “nurses save lives.” Nurse staffing ratios were associated with in-hospital and 30-day mortality, independent of patient characteristics, hospital characteristics, or medical treatment ( Person et al., 2004 ). In contrast, social work has often described—sometimes advertised—itself as the low-cost profession. The claim of “cheapest service” may have some strategic advantage in turf competition with other professions. But social work can do better. Our research base can and should demonstrate the value of our work by naming and quantifying the outcomes—the added value of social work interventions.

As a start to this work—a beginning step in compiling evidence about the impact of social work interventions—our team set out to identify the outcomes associated with social work practice. We felt that identifying and naming outcomes is essential for conveying what social work is about. Moreover, outcomes should serve as the focus for evaluating the effectiveness of social work interventions.

We produced two taxonomies of outcomes reflected in published evaluations of social work interventions ( Proctor, Rosen, & Rhee, 2002 ; Rosen, Proctor, & Staudt, 2003 ). They included such outcomes as change in clients’ social functioning, resource procurement, problem or symptom reduction, and safety. They exemplify the importance of naming and measuring what our profession can contribute to society. Although social work’s growing body of effectiveness research typically reports outcomes of the interventions being tested, the literature has not, in the intervening 20 years, addressed the collective set of outcomes for our field.

Fortunately, the Grand Challenges for Social Work (AASWSW, n.d.) now provide a framework for communicating social work’s goals. They reflect social work’s added value: improving individual and family well-being, strengthening social fabric, and helping to create a more just society. The Grand Challenges for Social Work include ensuring healthy development for all youth, closing the health gap, stopping family violence, advancing long and productive lives, eradicating social isolation, ending homelessness, creating social responses to a changing environment, harnessing technology for social good, promoting smart decarceration, reducing extreme economic inequality, building financial capability for all, and achieving equal opportunity and justice ( AASWSW, n.d. ).

These important goals appropriately reflect much of what we are all about in social work, and our entire field has been galvanized—energized by the power of these grand challenges. However, the grand challenges require setting specific benchmarks—targets that reflect how far our professional actions can expect to take us, or in some areas, how far we have come in meeting the challenge.

For the past decade, care delivery systems and payment reforms have required measures for tracking performance. Quality measures have become critical tools for all service providers and organizations (IOM, 2015). The IOM defines quality of care as “the degree to which … services for individuals and populations increase the likelihood of desired … outcomes and are consistent with current professional knowledge” (Lohr, 1990, p. 21). Quality measures are important at multiple levels of service delivery: at the client level, at the practitioner level, at the organization level, and at the policy level. The National Quality Forum has established five criteria for quality measures: They should address (a) the most important, (b) the most scientifically valid, (c) the most feasible or least burdensome, (d) the most usable, and (e) the most harmonious set of measures (IOM, 2015). Quality measures have been advanced by accrediting groups (e.g., the Joint Commission of the National Committee for Quality Assurance), professional societies, and federal agencies, including the U.S. Department of Health and Human Services. However, quality measures are lacking for key areas of social work practice, including mental health and substance-use treatment. And of the 55 nationally endorsed measures related to mental health and substance use, only two address a psychosocial intervention. Measures used for accreditation and certification purposes often reflect structural capabilities of organizations and their resource use, not the infrastructure required to deliver high-quality services (IOM, 2015). I am not aware of any quality measure developed by our own professional societies or agreed upon across our field.

Future Directions: Research on Quality Monitoring and Measure Development

Although social work as a field lacks a strong tradition of measuring and assessing quality ( Megivern et al., 2007 ; McMillen et al., 2005 ; Proctor, Powell, & McMillen, 2012 ), social work’s role in the quality workforce is becoming better understood ( McMillen & Raffol, 2016 ). The small number of established and endorsed quality measures reflects both limitations in the evidence for effective interventions and challenges in obtaining the detailed information necessary to support quality measurement ( IOM, 2015 ). According to the National Task Force on Evidence-Based Practice in Social Work (2016) , developing quality measures to capture use of evidence-based interventions is essential for the survival of social work practice in many settings. The task force recommends that social work organizations develop relevant and viable quality measures and that social workers actively influence the implementation of quality measures in their practice settings.

How to Implement Evidence-Based Care

A third and more recent focus of my work addresses this question: How do we implement evidence-based care in agencies and communities? Despite our progress in developing proven interventions, most clients—whether served by social workers or other providers—do not receive evidence-based care. A growing number of studies are assessing the extent to which clients—in specific settings or communities—receive evidence-based interventions. Kohl, Schurer, and Bellamy (2009) examined quality in a core area of social work: training for parents at risk for child maltreatment. The team examined the parent services and their level of empirical support in community agencies, staffed largely by master’s-level social workers. Of 35 identified treatment programs offered to families, only 11% were “well-established empirically supported interventions,” with another 20% containing some hallmarks of empirically supported interventions ( Kohl et al., 2009 ). This study reveals a sizable implementation gap, with most of the programs delivered lacking scientific validation.

Similar quality gaps are apparent in other settings where social workers deliver services. Studies show that only 19.3% of school mental health professionals and 36.8% of community mental health professionals working in Virginia’s schools and community mental health centers report using any evidence-based substance-abuse prevention programs ( Evans, Koch, Brady, Meszaros, & Sadler, 2013 ). In mental health, where social workers have long delivered the bulk of services, only 40% to 50% of people with mental disorders receive any treatment ( Kessler, Chiu, Demler, Merikangas, & Walters, 2005 ; Merikangas et al., 2011 ), and of those receiving treatment, a fraction receive what could be considered “quality” treatment ( Wang, Demler, & Kessler, 2002 ; Wang et al., 2005 ). These and other studies indicate that, despite progress in developing proven interventions, most clients do not receive evidence-based care. In light of the growth of evidence-based practice, this fact is troubling evidence that testing interventions and publishing the findings is not sufficient to improve quality.

So, how do we get these interventions in place? What is needed to enable social workers to deliver, and clients to receive, high-quality care? In addition to developing and testing evidence-based interventions, what else is needed to improve the quality of social work practice? My work has focused on advancing quality of services through two paths.

Making Effective Interventions Accessible to Providers: Intervention Reviews and Taxonomies

First, we have advocated that research evidence be synthesized and made available to front-line practitioners. In a research-active field where new knowledge is constantly produced, practitioners should not be expected to rely on journal publications alone for information about effective approaches to achieve desired outcomes. Mastering a rapidly expanding professional evidence base has been characterized as a nearly unachievable challenge for practitioners ( Greenfield, 2017 ). Reviews should critique and clarify the intervention’s effectiveness as tested in specific settings, populations, and contexts, answering the question, “What works where, and with whom?” Even more valuable are studies of comparative effectiveness—those that answer, “Which intervention approach works better, where, and when?”

Taxonomies of clearly and consistently labeled interventions will enhance their accessibility and the usefulness of research reports and systematic reviews. A prerequisite is the consistent naming of interventions. A persistent challenge is the wide variation in names or labels for interventive procedures and programs. Our professional activities are the basis for our societal sanction, and they must be capable of being accurately labeled and documented if we are to describe what our profession “does” to advance social welfare. Increasingly, and in short order, that documentation will be in electronic records that are scrutinized by third parties for purposes of reimbursement and assessment of value toward outcome attainment.

How should intervention research and reviews be organized? Currently, several websites provide lists of evidence-based practices, some with links, citations, or information about dissemination and implementation organizations that provide training and facilitation to adopters. Practitioners and administrators find such lists helpful but often note the challenge in determining which are most appropriate for their needs. In the words of one agency leader, “The drug companies are great at presenting [intervention information] in a very easy form to use. We don’t have people coming and saying, ‘Ah, let me tell you about the best evidence-based practice for cognitive behavioral therapy for depression’” (Proctor et al., 2007, p. 483). We have called for the field to devise decision aids for practitioners to enhance access to the best available empirical knowledge about interventions (Proctor et al., 2002; Proctor & Rosen, 2008; Rosen et al., 2003). We proposed that intervention taxonomies be organized around outcomes pursued in social work practice, and we developed such a taxonomy based on eight domains of outcomes, those most frequently tested in social work journals. Given the field’s progress in identifying its grand challenges, the outcomes associated with those challenges could well serve as the organizing focus, with research-tested interventions listed for each challenge. Compiling the interventions, programs, and services that are shown through research to help address one of the challenges would surely advance our field.

We further urged profession-wide efforts to develop social work practice guidelines from intervention taxonomies ( Rosen et al., 2003 ). Practice guidelines are systematically compiled, critiqued, and organized statements about the effectiveness of interventions that are organized in a way to help practitioners select and use the most effective and appropriate approaches for addressing client problems and pursuing desired outcomes.

At that time, we proposed that our published taxonomy of social work interventions could provide a beginning architecture for social work guidelines (Rosen et al., 2003). In 2000, we organized a conference for thought leaders in social work practice. This talented group wrestled with and formulated recommendations for tackling the professional, research, and training requisites to developing social work practice guidelines to enable researchers to access and apply the best available knowledge about interventions (Rosen et al., 2003). Fifteen years later, however, the need remains for social work to synthesize its intervention research. Psychology and psychiatry, along with most fields of medical practice, have developed practice guidelines. Although acceptance of and adherence to guidelines are fraught with challenges, guidelines make evidence more accessible and enable quality monitoring. Yet, guidelines still do not exist for social work.

The 2015 IOM report, “Psychosocial Interventions for Mental and Substance Use Disorders: A Framework for Establishing Evidence-Based Standards,” includes a conclusion that information on the effectiveness of psychosocial interventions is not routinely available to service consumers, providers, and payers, nor is it synthesized. That 2015 IOM report called for systematic reviews to inform clinical guidelines for psychosocial interventions. This report defined psychosocial interventions broadly, encompassing “interpersonal or informational activities, techniques, or strategies that target biological, behavioral, cognitive, emotional, interpersonal, social, or environmental factors with the aim of reducing symptoms and improving functioning or well-being” (IOM, 2015, p. 5). These interventions are social work’s domain; they are delivered in the very settings where social workers dominate (behavioral health, schools, criminal justice, child welfare, and immigrant services); and they encompass populations across the entire lifespan within all sociodemographic groups and vulnerable populations. Accordingly, the National Task Force on Evidence-Based Practice in Social Work (2016) has recommended the conduct of more systematic reviews of the evidence supporting social work interventions.

If systematic reviews are to lead to guidelines for evidence-based psychosocial interventions, social work needs to be at the table, and social work research must provide the foundation. Whether social work develops its own guidelines or helps lead the development of profession-independent guidelines as recommended by the IOM committee, guidelines need to be detailed enough to guide practice. That is, they need to be accompanied by treatment manuals and informed by research that details the effect of moderator variables and contextual factors reflecting diverse clientele, social determinants of health, and setting resource challenges. The IOM report “Clinical Practice Guidelines We Can Trust” sets criteria for guideline development processes ( IOM, 2011 ). Moreover, social work systematic reviews of research and any associated evidence-based guidelines need to be organized around meaningful taxonomies.

Advancing the Science of Implementation

As a second path to ensuring the delivery of high-quality care, my research has focused on advancing the science of implementation. Implementation research seeks to inform how to deliver evidence-based interventions, programs, and policies into real-world settings so their benefits can be realized and sustained. The ultimate aim of implementation research is building a base of evidence about the most effective processes and strategies for improving service delivery. Implementation research builds upon effectiveness research, then seeks to discover how to use specific implementation strategies to move those interventions into specific settings, extending their availability, reach, and benefits to clients and communities. Accordingly, implementation strategies must address the challenges of the service system (e.g., specialty mental health, schools, criminal justice system, health settings) and practice settings (e.g., community agency, national employee assistance programs, office-based practice), and the human capital challenge of staff training and support.

In an approach that echoes themes in an early paper, “Specifying the Treatment Process—The Basis for Effectiveness Research” ( Rosen & Proctor, 1978 ), my work once again tackled the challenge of specifying a heretofore vague process—this time, not the intervention process, but the implementation process. As a first step, our team developed a taxonomy of implementation outcomes ( Proctor et al., 2011 ), which enable a direct test of whether or not a given intervention is adopted and delivered. Although it is overlooked in other types of research, implementation science focuses on this distinct type of outcome. Explicit examination of implementation outcomes is key to an important research distinction. Often, evaluations yield disappointing results about an intervention, showing that the expected and desired outcomes are not attained. This might mean that the intervention was not effective. However, just as likely, it could mean that the intervention was not actually delivered, or it was not delivered with fidelity. Implementation outcomes help identify the roadblocks on the way to intervention adoption and delivery.

Our 2011 taxonomy of implementation outcomes (Proctor et al., 2011) became the framework for two national repositories of measures for implementation research: the Seattle Implementation Research Collaborative (Lewis et al., 2015) and the National Institutes of Health GEM measures database (Rabin et al., 2012). These repositories of implementation outcomes seek to harmonize and increase the rigor of measurement in implementation science.

We also have developed taxonomies of implementation strategies (Powell et al., 2012; Powell et al., 2015; Waltz et al., 2014, 2015). Implementation strategies are interventions for system change: how organizations, communities, and providers can learn to deliver new and more effective practices (Powell et al., 2012).

A conversation with a key practice leader stimulated my interest in implementation strategies. Shortly after our school endorsed an MSW curriculum emphasizing evidence-based practices, a pioneering CEO of a major social service agency in St. Louis met with me and asked,

Enola Proctor, I get the importance of delivering evidence based practices. My organization delivers over 20 programs and interventions, and I believe only a handful of them are really evidence based. I want to decrease our provision of ineffective care, and increase our delivery of evidence-based practices. But how? What are the evidence-based ways I, as an agency director, can transform my agency so that we can deliver evidence-based practices?

That agency director was asking a question of how. He was asking for evidence-based implementation strategies. Moving effective programs and practices into routine care settings requires the skillful use of implementation strategies, defined as systematic “methods or techniques used to enhance the adoption, implementation, and sustainability of a clinical program or practice into routine service” (Proctor et al., 2013, p. 2).

This question has shaped my work for the past 15 years, as well as the research priorities of several funding agencies, including the National Institutes of Health, the Agency for Healthcare Research and Quality, the Patient-Centered Outcomes Research Institute, and the World Health Organization. Indeed, a National Institutes of Health program announcement—Dissemination and Implementation Research in Health ( National Institutes of Health, 2016 )—identified the discovery of effective implementation strategies as a primary purpose of implementation science. To date, the implementation science literature cannot yet answer that important question, but we are making progress.

To identify implementation strategies, our teams first turned to the literature—a literature that we found to be scattered across a wide range of journals and disciplines. Most articles were not empirical, and most articles used widely differing terms to characterize implementation strategies. We conducted a structured literature review to generate common nomenclature and a taxonomy of implementation strategies. That review yielded 63 distinct implementation strategies, which fell into six groupings: planning, educating, financing, restructuring, managing quality, and attending to policy context ( Powell et al., 2012 ).

Our team refined that compilation, using Delphi techniques and concept mapping to develop conceptually distinct categories of implementation strategies ( Powell et al., 2015 ; Waltz et al., 2014 ). The refined compilation of 73 discrete implementation strategies was then further organized into nine clusters:

- changing agency infrastructure,

- using financial strategies,

- supporting clinicians,

- providing interactive assistance,

- training and educating stakeholders,

- adapting and tailoring interventions to context,

- developing stakeholder relationships,

- using evaluative and iterative strategies, and

- engaging consumers.

These taxonomies of implementation strategies position the field for more robust research on implementation processes. The language used to describe implementation strategies has not yet “gelled” and has been described as a “Tower of Babel” (McKibbon et al., 2010). Therefore, we also developed guidelines for reporting the components of strategies (Proctor et al., 2013) so researchers and implementers would have more behaviorally specific information about what a strategy is, who does it, when, and for how long. The value of such reporting guidelines is illustrated in the work of Gold and colleagues (2016).
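The reporting idea described above can be pictured as a simple record. The following is only a minimal sketch: the class name, field names, and example values are hypothetical, drawn loosely from the phrase "what a strategy is, who does it, when, and for how long," and do not reproduce the published reporting guidelines.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class StrategyReport:
    """Hypothetical record describing one implementation strategy."""
    name: str                       # label for the strategy
    description: str                # what the strategy is
    actor: Optional[str] = None     # who does it
    timing: Optional[str] = None    # when it is used
    duration: Optional[str] = None  # for how long


def missing_fields(report: StrategyReport) -> List[str]:
    """Return the names of fields that were left empty (None or blank)."""
    return [f.name for f in fields(report)
            if not (getattr(report, f.name) or "").strip()]


# Illustrative values only; they are assumptions, not data from a study.
report = StrategyReport(
    name="audit and feedback",
    description="Summarize performance data and return it to the team.",
    actor="quality improvement lead",
)
print(missing_fields(report))
```

A check of this kind would flag a report that names a strategy but omits when it was used or for how long, which is the sort of gap that behaviorally specific reporting is meant to close.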

What have we learned, through our own program of research on implementation strategies—the “how to” of improving practice? First, we have been able to identify from practice-based evidence the implementation strategies used most often. Using novel activity logs to track implementation strategies, Bunger and colleagues (2017) found that strategies such as quality improvement tools, using data experts, providing supervision, and sending clinical reminders were frequently used to facilitate delivery of behavioral health interventions within a child-welfare setting and were perceived by agency leadership as contributing to project success.

Second, reflecting the complexity of quality improvement processes, we have learned that there is no magic bullet (Powell, Proctor, & Glass, 2013). Our study of U.S. Department of Veterans Affairs clinics working to implement evidence-based hepatitis C virus (HCV) treatment found that implementers used an average of 25 (plus or minus 14) different implementation strategies (Rogal et al., 2017). Moreover, the number of implementation strategies used was positively associated with the number of new treatment starts. These findings suggest that implementing new interventions requires considerable effort and resources.

To advance our understanding of the effectiveness of implementation strategies, our teams have conducted a systematic review (Powell et al., 2013), tested specific strategies, and captured practice-based evidence from on-the-ground implementers. Testing the effectiveness of implementation strategies has been identified as a top research priority by the IOM (2009). In work with Charles Glisson in St. Louis, our 15-agency-based randomized clinical trial found that an organizational-focused intervention, the Availability, Responsiveness, and Continuity (ARC) model, improved agency culture and climate, stimulated more clinicians to enroll in evidence-based-practice training, and boosted clinical effect sizes of various evidence-based practices (Glisson, Williams, Hemmelgarn, Proctor, & Green, 2016a, 2016b). And in a hospital critical care unit, the implementation strategies of developing a team, selecting and using champions, provider education sessions, and audit and feedback helped increase team adherence to phlebotomy guidelines (Steffen et al., in press).

We are also learning about the value of different strategies. Experts in implementation science and implementation practice identified as most important the strategies of “use evaluative and iterative approaches” and “train and educate stakeholders.” Reported as less helpful were such strategies as “access new funding streams” and “remind clinicians of practices to use” (Waltz et al., 2015). Successful implementers in Veterans Affairs clinics relied more heavily on such strategies as “change physical structures and equipment” and “facilitate relay of clinical data to providers” than did less successful implementers (Rogal et al., 2017).

Many strategies have yet to be investigated empirically, as has the role of dissemination and implementation organizations, which function to promote, provide information about, provide training in, and scale up specific treatments. Most evidence-based practices used in behavioral health, including most listed on the Substance Abuse and Mental Health Services Administration National Registry of Evidence-based Programs and Practices, are disseminated and distributed by dissemination and implementation organizations. Unlike drugs and devices, psychosocial interventions have no Food and Drug Administration-like delivery system. Kreuter and Casey (2012) urge better understanding and use of the intervention “delivery system,” or mechanisms to bring treatment discoveries to the attention of practitioners and into use in practice settings.

Implementation strategies have been shown to boost clinical effectiveness (Glisson et al., 2010), reduce staff turnover (Aarons, Sommerfield, Hect, Silvosky, & Chaffin, 2009), and help reduce disparities in care (Balicer et al., 2015).

Future directions: Research on implementation strategies

My work in implementation science has helped build intellectual capital for the rapidly growing field of dissemination and implementation science, leading teams to distinguish, clearly define, develop taxonomies, and stimulate more systematic work to advance the conceptual, linguistic, and methodological clarity in the field. Yet, we continue to lack understanding of many issues. What strategies are used in usual implementation practice, by whom, for which empirically supported interventions? What strategies are effective in which organizational and policy contexts? Which strategies are effective in attaining which specific implementation outcomes? For example, are the strategies that are effective for initial adoption also effective for scale up, spread, and sustained use of interventions? Social workers have the skill set for roles as implementation facilitators, and refining packages of implementation strategies that are effective in social service and behavioral health settings could boost the visibility, scale, and impact of our work.

The Third Generation and Counting

Social work faces grand, often daunting challenges. We need to develop a more robust base of evidence about the effectiveness of interventions and make that evidence more relevant, accessible, and applicable to social work practitioners, whether they work in communities, agencies, policy arenas, or a host of novel settings. We need to advance measurement-based care so our value as a field is recognized. We need to know how to bring proven interventions to scale for population-level impact. We need to discover ways to build capacity of social service agencies and the communities in which they reside. And we need to learn how to sustain advances in care once we achieve them ( Proctor et al., 2015 ). Our challenges are indeed grand, far outstripping our resources.

So how dare we speak of a quality quest? Does it not seem audacious to seek the highest standards in caring for the most vulnerable, especially in an era when we face a new political climate that threatens vulnerable groups and promises to strip resources from health and social services? Members of our profession are underpaid, and most of our agencies lack the data infrastructure required for assessment and evaluation. Quality may be an audacious goal, but as social workers we can pursue no less. By virtue of our code of ethics, our commitment to equity, and our skills in intervening on multiple levels of systems and communities, social workers are ideally suited for advancing quality.

Who will conduct the needed research? Who will pioneer its translation to improving practice? Social work practice can be only as strong as its research base; the responsibility for developing that base, and hence improving practice, is lodged within social work research.

If my greatest challenge is pursuing this quest, my greatest joy is in mentoring the next generation for this work. My research mentoring has always been guided by the view that the ultimate purpose of research in the helping professions is the production and systemization of knowledge for use by practitioners (Rosen & Proctor, 1978). For 27 years, the National Institute of Mental Health has supported training in mental health services research based in the Center for Mental Health Services Research (Hasche, Perron, & Proctor, 2009; Proctor & McMillen, 2008). And, with colleague John Landsverk, I am launching my sixth year leading the Implementation Research Institute, a training program for implementation science supported by the National Institute of Mental Health (Proctor et al., 2013). We have trained more than 50 social work, psychology, anthropology, and physician researchers in implementation science for mental health. With three more cohorts to go, we are working to assess what works in research training for implementation science. Using bibliometric analysis, we have learned that intensive training and mentoring increase research productivity in the form of published papers and grants that address how to implement evidence-based care in mental health and addictions. And, through use of social network analysis, we have learned that every “dose” of mentoring increases scholarly collaboration when measured two years later (Luke, Baumann, Carothers, Landsverk, & Proctor, 2016).

As his student, I was privileged to learn lessons in mentoring from Aaron Rosen. He treated his students as colleagues, he invited them in to work on the most challenging of questions, and he pursued his work with joy. When he treated me as a colleague, I felt empowered. When he invited me to work with him on the field’s most vexing challenges, I felt inspired. And as he worked with joy, I learned that work pursued with joy doesn’t feel like work at all. And now the third, fourth, and fifth generations of social work researchers are pursuing tough challenges and the quality quest for social work practice. May seasoned and junior researchers work collegially and with joy, tackling the profession’s toughest research challenges, including the quest for high-quality social work services.

Acknowledgments

Preparation of this paper was supported by IRI (5R25MH0809160), Washington University ICTS (2UL1 TR000448-08), Center for Mental Health Services Research, Washington University in St. Louis, and the Center for Dissemination and Implementation, Institute for Public Health, Washington University in St. Louis.

This invited article is based on the 2017 Aaron Rosen Lecture presented by Enola Proctor at the Society for Social Work and Research 21st Annual Conference—“Ensure Healthy Development for All Youth”—held January 11–15, 2017, in New Orleans, LA. The annual Aaron Rosen Lecture features distinguished scholars who have accumulated a body of significant and innovative scholarship relevant to practice, the research base for practice, or effective utilization of research in practice.

- Aarons GA, Sommerfield DH, Hect DB, Silvosky JF, Chaffin MJ. The impact of evidence-based practice implementation and fidelity monitoring on staff turnover: Evidence for a protective effect. Journal of Consulting and Clinical Psychology. 2009;77(2):270–280. https://doi.org/10.1037/a0013223

- American Academy of Social Work and Social Welfare (AASWSW). Grand challenges for social work (n.d.). Retrieved from http://aaswsw.org/grand-challenges-initiative/

- Balicer RD, Hoshen M, Cohen-Stavi C, Shohat-Spitzer S, Kay C, Bitterman H, Shadmi E. Sustained reduction in health disparities achieved through targeted quality improvement: One-year follow-up on a three-year intervention. Health Services Research. 2015;50:1891–1909. http://dx.doi.org/10.1111/1475-6773.12300

- Bunger AC, Powell BJ, Robertson HA, MacDowell H, Birken SA, Shea C. Tracking implementation strategies: A description of a practical approach and early findings. Health Research Policy and Systems. 2017;15(15):1–12. https://doi.org/10.1186/s12961-017-0175-y

- Evans SW, Koch JR, Brady C, Meszaros P, Sadler J. Community and school mental health professionals’ knowledge and use of evidence based substance use prevention programs. Administration and Policy in Mental Health and Mental Health Services Research. 2013;40(4):319–330. https://doi.org/10.1007/s10488-012-0422-z

- Fraser MW. Intervention research in social work: Recent advances and continuing challenges. Research on Social Work Practice. 2004;14(3):210–222. https://doi.org/10.1177/1049731503262150

- Glisson C, Schoenwald SK, Hemmelgarn A, Green P, Dukes D, Armstrong KS, Chapman JE. Randomized trial of MST and ARC in a two-level evidence-based treatment implementation strategy. Journal of Consulting and Clinical Psychology. 2010;78(4):537–550. https://doi.org/10.1037/a0019160

- Glisson C, Williams NJ, Hemmelgarn A, Proctor EK, Green P. Increasing clinicians’ EBT exploration and preparation behavior in youth mental health services by changing organizational culture with ARC. Behaviour Research and Therapy. 2016a;76:40–46. https://doi.org/10.1016/j.brat.2015.11.008

- Glisson C, Williams NJ, Hemmelgarn A, Proctor EK, Green P. Aligning organizational priorities with ARC to improve youth mental health service outcomes. Journal of Consulting and Clinical Psychology. 2016b;84(8):713–725. https://doi.org/10.1037/ccp0000107

- Gold R, Bunce AE, Cohen DJ, Hollombe C, Nelson CA, Proctor EK, DeVoe JE. Reporting on the strategies needed to implement proven interventions: An example from a “real-world” cross-setting implementation study. Mayo Clinic Proceedings. 2016;91(8):1074–1083. https://doi.org/10.1016/j.mayocp.2016.03.014

- Greenfield S. Clinical practice guidelines: Expanded use and misuse. Journal of the American Medical Association. 2017;317(6):594–595. doi: 10.1001/jama.2016.19969

- Hasche L, Perron B, Proctor E. Making time for dissertation grants: Strategies for social work students and educators. Research on Social Work Practice. 2009;19(3):340–350. https://doi.org/10.1177/1049731508321559

- Institute of Medicine (IOM), Committee on Comparative Effectiveness Research Prioritization. Initial national priorities for comparative effectiveness research. Washington, DC: The National Academies Press; 2009.

- Institute of Medicine (IOM). Clinical practice guidelines we can trust. Washington, DC: The National Academies Press; 2011.

- Institute of Medicine (IOM). Psychosocial interventions for mental and substance use disorders: A framework for establishing evidence-based standards. Washington, DC: The National Academies Press; 2015. https://doi.org/10.17226/19013

- Jaccard J. The prevention of problem behaviors in adolescents and young adults: Perspectives on theory and practice. Journal of the Society for Social Work and Research. 2016;7(4):585–613. https://doi.org/10.1086/689354

- Kessler RC, Chiu WT, Demler O, Merikangas KR, Walters EE. Prevalence, severity, and comorbidity of 12-month DSM-IV disorders in the National Comorbidity Survey Replication. Archives of General Psychiatry. 2005;62(6):617–627. https://doi.org/10.1001/archpsyc.62.6.617

- Kohl PL, Schurer J, Bellamy JL. The state of parent training: Program offerings and empirical support. Families in Society: The Journal of Contemporary Social Services. 2009;90(3):248–254. http://dx.doi.org/10.1606/1044-3894.3894

- Kreuter MW, Casey CM. Enhancing dissemination through marketing and distribution systems: A vision for public health. In: Brownson R, Colditz G, Proctor E, editors. Dissemination and implementation research in health: Translating science to practice. New York, NY: Oxford University Press; 2012.

- Lewis CC, Stanick CF, Martinez RG, Weiner BJ, Kim M, Barwick M, Comtois KA. The Society for Implementation Research collaboration instrument review project: A methodology to promote rigorous evaluation. Implementation Science. 2015;10(2):1–18. https://doi.org/10.1186/s13012-014-0193-x

- Lohr KN. Medicare: A strategy for quality assurance. I. Washington, DC: National Academies Press; 1990.

- Luke D, Baumann A, Carothers B, Landsverk J, Proctor EK. Forging a link between mentoring and collaboration: A new training model for implementation science. Implementation Science. 2016;11(137):1–12. http://dx.doi.org/10.1186/s13012-016-0499-y

- McKibbon KA, Lokker C, Wilczynski NL, Ciliska D, Dobbins M, Davis DA, Straus SS. A cross-sectional study of the number and frequency of terms used to refer to knowledge translation in a body of health literature in 2006: A tower of Babel? Implementation Science. 2010;5(16). doi: 10.1186/1748-5908-5-16

- McMillen JC, Proctor EK, Megivern D, Striley CW, Cabasa LJ, Munson MR, Dickey B. Quality of care in the social services: Research agenda and methods. Social Work Research. 2005;29(3):181–191. https://doi.org/10.1093/swr/29.3.181

- McMillen JC, Raffol M. Characterizing the quality workforce in private U.S. child and family behavioral health agencies. Administration and Policy in Mental Health and Mental Health Services Research. 2016;43(5):750–759. doi: 10.1007/s10488-0150-0667-4

- Megivern DA, McMillen JC, Proctor EK, Striley CW, Cabassa LJ, Munson MR. Quality of care: Expanding the social work dialogue. Social Work. 2007;52(2):115–124. https://dx.doi.org/10.1093/sw/52.2.115

- Merikangas KR, He J, Burstein M, Swendsen J, Avenevoli S, Case B, Olfson M. Service utilization for lifetime mental disorders in U.S. adolescents: Results of the National Comorbidity Survey Adolescent Supplement (NCS-A). Journal of the American Academy of Child and Adolescent Psychiatry. 2011;50(1):32–45. https://doi.org/10.1016/j.jaac.2010.10.006

- National Institute of Mental Health. Psychosocial research at NIMH: A primer. 2016. Retrieved from https://www.nimh.nih.gov/research-priorities/psychosocial-research-at-nimh-a-primer.shtml

- National Institutes of Health. Dissemination and implementation research in health (R01). 2016 Sep 14. Retrieved from https://archives.nih.gov/asites/grants/09-14-2016/grants/guide/pa-files/PAR-16-238.html

- National Task Force on Evidence-Based Practice in Social Work. Unpublished recommendations to the Social Work Leadership Roundtable. 2016.

- Person SD, Allison JJ, Kiefe CI, Weaver MT, Williams OD, Centor RM, Weissman NW. Nurse staffing and mortality for Medicare patients with acute myocardial infarction. Medical Care. 2004;42(1):4–12. https://doi.org/10.1097/01.mlr.0000102369.67404.b0

- Powell BJ, McMillen C, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, York JL. A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review. 2012;69(2):123–157. https://dx.doi.org/10.1177/1077558711430690

- Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Research on Social Work Practice. 2013;24(2):192–212. https://doi.org/10.1177/1049731513505778

- Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Kirchner JE. A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science. 2015;10(21):1–14. https://doi.org/10.1186/s13012-015-0209-1

- Proctor EK, Knudsen KJ, Fedoravicius N, Hovmand P, Rosen A, Perron B. Implementation of evidence-based practice in community behavioral health: Agency director perspectives. Administration and Policy in Mental Health and Mental Health Services Research. 2007;34(5):479–488. https://doi.org/10.1007/s10488-007-0129-8

- Proctor EK, Landsverk J, Baumann AA, Mittman BS, Aarons GA, Brownson RC, Chambers D. The Implementation Research Institute: Training mental health implementation researchers in the United States. Implementation Science. 2013;8(105):1–12. https://doi.org/10.1186/1748-5908-8-105

- Proctor EK, Luke D, Calhoun A, McMillen C, Brownson R, McCrary S, Padek M. Sustainability of evidence-based healthcare: Research agenda, methodological advances, and infrastructure support. Implementation Science. 2015;10(88):1–13. https://doi.org/10.1186/s13012-015-0274-5

- Proctor EK, McMillen JC. Quality of care. In: Mizrahi T, Davis L, editors. Encyclopedia of Social Work. 20. Washington, DC, and New York, NY: NASW Press and Oxford University Press; 2008. http://dx.doi.org/10.1093/acrefore/9780199975839.013.33 . [ Google Scholar ]

- Proctor EK, Powell BJ, McMillen CJ. Implementation strategies: Recommendations for specifying and reporting. Implementation Science. 2012; 8 (139):1–11. https://doi.org/10.1186/1748-5908-8-139 . [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Proctor EK, Rosen A. From knowledge production to implementation: Research challenges and imperatives. Research on Social Work Practice. 2008; 18 (4):285–291. https://doi.org/10.1177/1049731507302263 . [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Proctor EK, Rosen A, Rhee C. Outcomes in social work practice. Social Work Research & Evaluation. 2002; 3 (2):109–125. [ Google Scholar ]

- Proctor EK, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Hensley M. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research. 2011; 38 (2):65–76. https://doi.org/10.1007/s10488-010-0319-7 . [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Rabin BA, Purcell P, Naveed S, Moser RP, Henton MD, Proctor EK, Glasgow RE. Advancing the application, quality and harmonization of implementation science measures. Implementation Science. 2012; 7 (119):1–11. https://doi.org/10.1186/1748-5908-7-119 . [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Rogal SS, Yakovchenko V, Waltz TJ, Powell BJ, Kirchner JE, Proctor EK, Chinman MJ. The association between implementation strategy use and the uptake of hepatitis C treatment in a national sample. Implementation Science. 2017; 12 (60) http://doi.org/10.1186/s13012-017-0588-6 . [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Rosen A, Proctor EK. Specifying the treatment process: The basis for effectiveness research. Journal of Social Service Research. 1978; 2 (1):25–43. https://doi.org/10.1300/J079v02n01_04 . [ Google Scholar ]

- Rosen A, Proctor EK. Distinctions between treatment outcomes and their implications for treatment evaluation. Journal of Consulting and Clinical Psychology. 1981; 49 (3):418–425. http://dx.doi.org/10.1037/0022-006X.49.3.418 . [ PubMed ] [ Google Scholar ]

- Rosen A, Proctor EK, Staudt M. Targets of change and interventions in social work: An empirically-based prototype for developing practice guidelines. Research on Social Work Practice. 2003; 13 (2):208–233. https://dx.doi.org/10.1177/1049731502250496 . [ Google Scholar ]

- Steffen K, Doctor A, Hoerr J, Gill J, Markham C, Riley S, Spinella P. Controlling phlebotomy volume diminishes PICU transfusion: Implementation processes and impact. Pediatrics (in press) [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Videka L. Accounting for variability in client, population, and setting characteristics: Moderators of intervention effectiveness. In: Rosen A, Proctor EK, editors. Developing practice guidelines for social work intervention: Issues, methods, and research agenda. New York, NY: Columbia University Press; 2003. pp. 169–192. [ Google Scholar ]

- Waltz TJ, Powell BJ, Chinman MJ, Smith JL, Matthieu MM, Proctor EK, Kirchner JE. Expert Recommendations for Implementing Change (ERIC): Protocol for a mixed methods study. Implementation Science. 2014; 9 (39):1–12. https://doi.org/10.1186/1748-5908-9-39 . [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, Kirchner JE. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: Results from the Expert Recommendations for Implementing Change (ERIC) study. Implementation Science. 2015; 10 (109):1–8. https://doi.org/10.1186/s13012-015-0295-0 . [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Wang PS, Lane M, Olfson M, Pincus HA, Wells KB, Kessler RC. Twelvemonth use of mental health services in the United States: Results from the National Comorbidity Survey Replication. Archives of General Psychiatry. 2005; 62 (6):629–640. doi: 10.1001/archpsyc.62.6.629. [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Wang PS, Demler O, Kessler RC. Adequacy of treatment for serious mental illness in the United States. American Journal of Public Health. 2002; 92 (1):92–98. http://ajph.aphapublications.org/doi/abs/10.2105/AJPH.92.1.92 . [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Zayas L. Service delivery factors in the development of practice guidelines. In: Rosen A, Proctor EK, editors. Developing practice guidelines for social work intervention: Issues, methods, and research agenda. New York, NY: Columbia University Press; 2003. pp. 169–192. https://doi.org/10.7312/rose12310-010 . [ Google Scholar ]

Impact Factor Articles

Thomson Reuters has released the latest Journal Citation Reports® and Impact Factors, and Oxford Journals is pleased to give access to a selection of highly cited articles from recent years. These articles are just a sample of the impressive collection of research published in Social Work.

- Balancing Micro and Macro Practice: A Challenge for Social Work. Jack Rothman; Terry Mizrahi. Volume 59, Issue 1.

- Service Needs among Latino Immigrant Families: Implications for Social Work Practice. Cecilia Ayón. Volume 59, Issue 1.

- Witness to Suffering: Mindfulness and Compassion Fatigue among Traumatic Bereavement Volunteers and Professionals. Kara Thieleman; Joanne Cacciatore. Volume 59, Issue 1.

- The Role of Empathy in Burnout, Compassion Satisfaction, and Secondary Traumatic Stress among Social Workers. M. Alex Wagaman; Jennifer M. Geiger; Clara Shockley; Elizabeth A. Segal. Volume 60, Issue 3.

- Reproductive Health in the United States: A Review of the Recent Social Work Literature. Rachel L. Wright; Melissa Bird; Caren J. Frost. Volume 60, Issue 4.

Affiliations

- Online ISSN 1545-6846

- Print ISSN 0037-8046

- Copyright © 2024 National Association of Social Workers
