How to Generate and Validate Product Hypotheses

What is a product hypothesis?

A hypothesis is a testable statement that predicts the relationship between two or more variables. In product development, we generate hypotheses to validate assumptions about customer behavior, market needs, or the potential impact of product changes. These experimental efforts help us refine the user experience and get closer to finding a product-market fit.

Product hypotheses are a key element of data-driven product development and decision-making. Testing them enables us to solve problems more efficiently and remove our own biases from the solutions we put forward.

Here’s an example: ‘If we improve the page load speed on our website (variable 1), then we will increase the number of signups by 15% (variable 2).’ So if we improve the page load speed, and the number of signups increases, then our hypothesis has been proven. If the number did not increase significantly (or not at all), then our hypothesis has been disproven.

In general, product managers are constantly creating and testing hypotheses. But in the context of new product development, hypothesis generation and testing occur during the validation stage, right after idea screening.

Now before we go any further, let’s get one thing straight: What’s the difference between an idea and a hypothesis?

Idea vs hypothesis

Innovation expert Michael Schrage makes this distinction between hypotheses and ideas – unlike an idea, a hypothesis comes with built-in accountability. “But what’s the accountability for a good idea?” Schrage asks. “The fact that a lot of people think it’s a good idea? That’s a popularity contest.” So, not only should a hypothesis be tested, but by its very nature, it can be tested.

At Railsware, we’ve built our product development services on the careful selection, prioritization, and validation of ideas. Here’s how we distinguish between ideas and hypotheses:

Idea: A creative suggestion about how we might exploit a gap in the market, add value to an existing product, or bring attention to our product. Crucially, an idea is just a thought. It can form the basis of a hypothesis but it is not necessarily expected to be proven or disproven.

- We should get an interview with the CEO of our company published on TechCrunch.

- Why don’t we redesign our website?

- The Coupler.io team should create video tutorials on how to export data from different apps, and publish them on YouTube.

- Why not add a new ‘email templates’ feature to our Mailtrap product?

Hypothesis: A way of framing an idea or assumption so that it is testable, specific, and aligns with our wider product/team/organizational goals.

Examples:

- If we add a new ‘email templates’ feature to Mailtrap, we’ll see an increase in active usage of our email-sending API.

- Creating relevant video tutorials and uploading them to YouTube will lead to an increase in Coupler.io signups.

- If we publish an interview with our CEO on TechCrunch, 500 people will visit our website and 10 of them will install our product.

Now, it’s worth mentioning that not all hypotheses require testing. Sometimes, the process of creating hypotheses is just an exercise in critical thinking, and the simple act of analyzing your statement tells you whether you should run an experiment or not. Remember: testing isn’t mandatory, but your hypotheses should always be inherently testable.

Let’s consider the TechCrunch article example again. In that hypothesis, we expect 500 readers to visit our product website, and a 2% conversion rate of those unique visitors to product users i.e. 10 people. But is that marginal increase worth all the effort? Conducting an interview with our CEO, creating the content, and collaborating with the TechCrunch content team – all of these tasks take time (and money) to execute. And by formulating that hypothesis, we can clearly see that in this case, the drawbacks (efforts) outweigh the benefits. So, no need to test it.
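The arithmetic behind that call can be sketched in a few lines of Python. The visitor count and conversion rate come from the hypothesis above; the effort cost and value per install are made-up placeholders that only illustrate the comparison, not real figures.

```python
def expected_installs(visitors: int, conversion_rate: float) -> float:
    """Expected number of installs from a given number of visitors."""
    return visitors * conversion_rate


visitors = 500          # readers we expect to reach the website
conversion_rate = 0.02  # 2% of unique visitors become product users

installs = expected_installs(visitors, conversion_rate)

# Hypothetical figures, for illustration only.
effort_cost = 4000.0      # assumed cost of the interview and content work
value_per_install = 50.0  # assumed value of one new install

worth_testing = installs * value_per_install > effort_cost
print(f"expected installs: {installs:.0f}, worth testing: {worth_testing}")
```

With these placeholder numbers the expected return is far below the effort, which matches the conclusion above: the hypothesis is testable, but not worth testing.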

In a similar vein, a hypothesis statement can be a tool to prioritize your activities based on impact. We typically use the following criteria:

- The quality of impact

- The size of the impact

- The probability of impact

This lets us organize our efforts according to their potential outcomes – not the coolness of the idea, its popularity among the team, etc.
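One way to make these criteria concrete is a simple score. The sketch below is an illustration only: the 1-5 rating scales, the multiplication, and the example ratings are all assumptions, not a formula described in this article.

```python
from dataclasses import dataclass


@dataclass
class ScoredHypothesis:
    statement: str
    quality: int        # assumed 1-5 rating: how well the impact fits our goals
    size: int           # assumed 1-5 rating: how large the impact could be
    probability: float  # assumed 0-1 estimate that the impact happens

    @property
    def score(self) -> float:
        # Multiplying means a low value on any criterion drags the score down.
        return self.quality * self.size * self.probability


backlog = [
    ScoredHypothesis("Add email templates to Mailtrap", quality=5, size=4, probability=0.6),
    ScoredHypothesis("Publish CEO interview on TechCrunch", quality=2, size=1, probability=0.5),
    ScoredHypothesis("Publish Coupler.io video tutorials", quality=4, size=3, probability=0.7),
]

# Highest expected impact first.
ranked = sorted(backlog, key=lambda h: h.score, reverse=True)
for h in ranked:
    print(f"{h.score:5.1f}  {h.statement}")
```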

Now that we’ve established what a product hypothesis is, let’s discuss how to create one.

Start with a problem statement

Before you jump into product hypothesis generation, we highly recommend formulating a problem statement. This is a short, concise description of the issue you are trying to solve. It helps teams stay on track as they formalize the hypothesis and design the product experiments. It can also be shared with stakeholders to ensure that everyone is on the same page.

The statement can be worded however you like, as long as it’s actionable, specific, and based on data-driven insights or research. It should clearly outline the problem or opportunity you want to address.

Here’s an example: Our bounce rate is high (more than 90%) and we are struggling to convert website visitors into actual users. How might we improve site performance to boost our conversion rate?

How to generate product hypotheses

Now let’s explore some common, everyday scenarios that lead to product hypothesis generation. For our teams here at Railsware, it’s when:

- There’s a problem with an unclear root cause e.g. a sudden drop in one part of the onboarding funnel. We identify these issues by checking our product metrics or reviewing customer complaints.

- We are running ideation sessions on how to reach our goals (increase MRR, increase the number of users invited to an account, etc.)

- We are exploring growth opportunities e.g. changing a pricing plan, making product improvements, breaking into a new market.

- We receive customer feedback. For example, some users have complained about difficulties setting up a workspace within the product. So, we build a hypothesis on how to help them with the setup.

BRIDGeS framework for ideation

When we are tackling a complex problem or looking for ways to grow the product, our teams use BRIDGeS – a robust decision-making and ideation framework. BRIDGeS makes our product discovery sessions more efficient. It lets us dive deep into the context of our problem so that we can develop targeted solutions worthy of testing.

Between 2 and 8 stakeholders take part in a BRIDGeS session. The ideation sessions are usually led by a product manager and can include other subject matter experts such as developers, designers, data analysts, or marketing specialists. You can use a virtual whiteboard such as FigJam or Miro (see our Figma template) to record each colored note.

In the first half of a BRIDGeS session, participants examine the Benefits, Risks, Issues, and Goals of their subject in the ‘Problem Space.’ A subject is anything that is being described or dealt with; for instance, Coupler.io’s growth opportunities. Benefits are the value that a future solution can bring, Risks are potential issues the subject might face, Issues are the subject’s existing problems, and Goals are what the subject hopes to gain from the future solution. Each descriptor should have a designated color.

After we have broken down the problem using each of these descriptors, we move into the Solution Space. This is where we develop solution variations based on all of the benefits/risks/issues identified in the Problem Space (see the Uber case study for an in-depth example).

In the Solution Space, we start prioritizing those solutions and deciding which ones are worthy of further exploration outside of the framework – via product hypothesis formulation and testing, for example. At the very least, after the session, we will have a list of epics and nested tasks ready to add to our product roadmap.

How to write a product hypothesis statement

Across organizations, product hypothesis statements might vary in their subject, tone, and precise wording. But some elements never change. As we mentioned earlier, a hypothesis statement must always have two or more variables and a connecting factor.

1. Identify variables

Since these components form the bulk of a hypothesis statement, let’s start with a brief definition.

First of all, variables in a hypothesis statement can be split into two camps: dependent and independent. Without getting too theoretical, we can describe the independent variable as the cause, and the dependent variable as the effect. So in the Mailtrap example we mentioned earlier, the ‘add email templates feature’ is the cause i.e. the element we want to manipulate. Meanwhile, ‘increased usage of email sending API’ is the effect i.e. the element we will observe.

Independent variables can be any change you plan to make to your product. For example, tweaking some landing page copy, adding a chatbot to the homepage, or enhancing the search bar filter functionality.

Dependent variables are usually metrics. Here are a few that we often test in product development:

- Number of sign-ups

- Number of purchases

- Activation rate (activation signals differ from product to product)

- Number of specific plans purchased

- Feature usage (API activation, for example)

- Number of active users

Bear in mind that your concept or desired change can be measured with different metrics. Make sure that your variables are well-defined, and be deliberate in how you measure your concepts so that there’s no room for misinterpretation or ambiguity.

For example, in the hypothesis ‘Users drop off because they find it hard to set up a project,’ the variables are poorly defined. Phrases like ‘drop off’ and ‘hard to set up’ are too vague. A much better way of saying it would be: ‘If project automation rules are pre-defined (an email sequence to the responsible person, scheduled ticket creation), we’ll see a decrease in churn.’ In this example, it’s clear which dependent variable has been chosen and why.

And remember, when product managers focus on delighting users and building something of value, it’s easier to market and monetize it. That’s why at Railsware, our product hypotheses often focus on how to increase the usage of a feature or product. If users love our product(s) and know how to leverage its benefits, we can spend less time worrying about how to improve conversion rates or actively grow our revenue, and more time enhancing the user experience and nurturing our audience.

2. Make the connection

The relationship between variables should be clear and logical. If it’s not, then it doesn’t matter how well-chosen your variables are – your test results won’t be reliable.

To demonstrate this point, let’s explore a previous example again: page load speed and signups.

Through prior research, you might already know that conversion rates are 3x higher for sites that load in 1 second compared to sites that take 5 seconds to load. Since there appears to be a strong connection between load speed and signups in general, you might want to see if this is also true for your product.

Here are some common pitfalls to avoid when defining the relationship between two or more variables:

Relationship is weak. Let’s say you hypothesize that an increase in website traffic will lead to an increase in sign-ups. This is a weak connection since website visitors aren’t necessarily motivated to use your product; there are more steps involved. A better example is ‘If we change the CTA on the pricing page, then the number of signups will increase.’ This connection is much stronger and more direct.

Relationship is far-fetched. This often happens when one of the variables is founded on a vanity metric. For example, increasing the number of social media subscribers will lead to an increase in sign-ups. However, there’s no particular reason why a social media follower would be interested in using your product. Oftentimes, it’s simply your social media content that appeals to them (and your audience isn’t interested in a product).

Variables are co-dependent. Variables should always be isolated from one another. Let’s say we removed the option “Register with Google” from our app. In this case, we can expect fewer users with Google Workspace accounts to register. That outcome is obvious because there’s a direct dependency between the variables (no registration with Google → no users with Google Workspace accounts), so testing it would teach us nothing.

3. Set validation criteria

First, build some confirmation criteria into your statement. Think in terms of percentages (e.g. increase/decrease by 5%) and choose a relevant product metric to track e.g. activation rate if your hypothesis relates to onboarding. Consider that you don’t always have to hit the bullseye for your hypothesis to be considered valid. Perhaps a 3% increase is just as acceptable as a 5% one. And it still shows that a connection between your variables exists.

Secondly, you should also make sure that your hypothesis statement is realistic. Let’s say you have a hypothesis that ‘If we show users a banner with our new feature, then feature usage will increase by 10%.’ A few questions to ask yourself are: Is 10% a reasonable increase, based on your current feature usage data? Do you have the resources to create the tests (experimenting with multiple variations, distributing on different channels: in-app, emails, blog posts)?
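To show how confirmation criteria might be written down before a test starts, here is a minimal sketch. The class name, field names, onboarding example, and rates are hypothetical; the 5% target and 3% acceptable lift mirror the percentages mentioned above.

```python
from dataclasses import dataclass


@dataclass
class HypothesisCheck:
    change: str             # independent variable: what we manipulate
    metric: str             # dependent variable: what we observe
    target_lift: float      # lift stated in the hypothesis, e.g. 0.05 for 5%
    acceptable_lift: float  # smallest lift we would still count as valid

    def evaluate(self, baseline: float, observed: float) -> str:
        """Compare the relative change in the metric with the agreed criteria."""
        lift = (observed - baseline) / baseline
        if lift >= self.target_lift:
            return "target met"
        if lift >= self.acceptable_lift:
            return "acceptable"
        return "not validated"


check = HypothesisCheck(
    change="add an onboarding checklist",  # hypothetical change
    metric="activation rate",
    target_lift=0.05,
    acceptable_lift=0.03,
)

# Hypothetical activation rates before and after the change.
print(check.evaluate(baseline=0.40, observed=0.416))
```

Agreeing on both thresholds up front makes it harder to move the goalposts once the results come in.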

Null hypothesis and alternative hypothesis

In statistical research, there are two ways of stating a hypothesis: null or alternative. But this scientific method has its place in hypothesis-driven development too…

Alternative hypothesis: A statement that you intend to prove as being true by running an experiment and analyzing the results. Hint: it’s the same as the other hypothesis examples we’ve described so far.

Example: If we change the landing page copy, then the number of signups will increase.

Null hypothesis: A statement you want to disprove by running an experiment and analyzing the results. It predicts that your new feature or change to the user experience will not have the desired effect.

Example: The number of signups will not increase if we make a change to the landing page copy.

What’s the point? Well, let’s consider the phrase ‘innocent until proven guilty’ as a version of a null hypothesis. We don’t assume that there is any relationship between the ‘defendant’ and the ‘crime’ until we have proof. So, we run a test, gather data, and analyze our findings — which gives us enough proof to reject the null hypothesis and validate the alternative. All of this helps us to have more confidence in our results.
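As a rough illustration of what ‘enough proof to reject the null hypothesis’ can look like in practice, the sketch below runs a one-sided two-proportion z-test on signup counts. The choice of test, the 0.05 significance level, and all of the counts are assumptions made for the example; your analytics setup may call for a different method.

```python
from math import sqrt
from statistics import NormalDist


def one_sided_p_value(control_signups: int, control_visitors: int,
                      variant_signups: int, variant_visitors: int) -> float:
    """P-value for 'the variant converts better', under the null of no difference."""
    p_control = control_signups / control_visitors
    p_variant = variant_signups / variant_visitors
    # Pooled rate: the best estimate of the shared rate if the null is true.
    pooled = (control_signups + variant_signups) / (control_visitors + variant_visitors)
    std_error = sqrt(pooled * (1 - pooled) * (1 / control_visitors + 1 / variant_visitors))
    z = (p_variant - p_control) / std_error
    return 1 - NormalDist().cdf(z)


# Hypothetical results: old landing page copy vs. new copy.
p_value = one_sided_p_value(200, 5000, 260, 5000)
alpha = 0.05  # assumed significance level, a common convention

reject_null = p_value < alpha
print(f"p-value: {p_value:.4f}, reject null hypothesis: {reject_null}")
```

If `reject_null` is false, the data simply isn’t strong enough to rule out ‘no effect.’ That is not the same as proving the change does nothing.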

Now that you have generated your hypotheses, and created statements, it’s time to prepare your list for testing.

Prioritizing hypotheses for testing

Not all hypotheses are created equal. Some will be essential to your immediate goal of growing the product e.g. adding a new data destination for Coupler.io. Others will be based on nice-to-haves or small fixes e.g. updating graphics on the website homepage.

Prioritization helps us focus on the most impactful solutions as we are building a product roadmap or narrowing down the backlog. To determine which hypotheses are the most critical, we use the MoSCoW framework. It allows us to assign a level of urgency and importance to each product hypothesis so we can filter the best 3-5 for testing.

MoSCoW is an acronym for Must-have, Should-have, Could-have, and Won’t-have. Here’s a breakdown:

- Must-have – hypotheses that must be tested, because they are strongly linked to our immediate project goals.

- Should-have – hypotheses that are closely related to our immediate project goals, but aren’t the top priority.

- Could-have – hypotheses of nice-to-haves that can wait until later for testing.

- Won’t-have – low-priority hypotheses that we may or may not test later on when we have more time.
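The filtering step can be sketched as a sort over labeled hypotheses. The example statements and the priorities assigned to them are illustrative assumptions, as is the choice to leave won’t-haves out of the shortlist entirely.

```python
from enum import IntEnum


class MoSCoW(IntEnum):
    MUST_HAVE = 1
    SHOULD_HAVE = 2
    COULD_HAVE = 3
    WONT_HAVE = 4


# (hypothesis, priority) pairs; the priorities are illustrative assumptions.
backlog = [
    ("Updating homepage graphics will increase signups", MoSCoW.COULD_HAVE),
    ("Adding a new Coupler.io data destination will increase active users", MoSCoW.MUST_HAVE),
    ("Renaming a menu item will reduce support tickets", MoSCoW.WONT_HAVE),
    ("Changing the pricing page CTA will increase signups", MoSCoW.SHOULD_HAVE),
]


def shortlist(items, limit=5):
    """Return up to `limit` hypotheses, most urgent first, skipping won't-haves."""
    testable = [item for item in items if item[1] != MoSCoW.WONT_HAVE]
    return sorted(testable, key=lambda item: item[1])[:limit]


for statement, priority in shortlist(backlog):
    print(f"{priority.name:<12} {statement}")
```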

How to test product hypotheses

Once you have selected a hypothesis, it’s time to test it. This will involve running one or more product experiments in order to check the validity of your claim.

The tricky part is deciding what type of experiment to run, and how many. Ultimately, this all depends on the subject of your hypothesis – whether it’s a simple copy change or a whole new feature. For instance, it’s not necessary to create a clickable prototype for a landing page redesign. In that case, a user-wide update would do.

On that note, here are some of the approaches we take to hypothesis testing at Railsware:

A/B testing

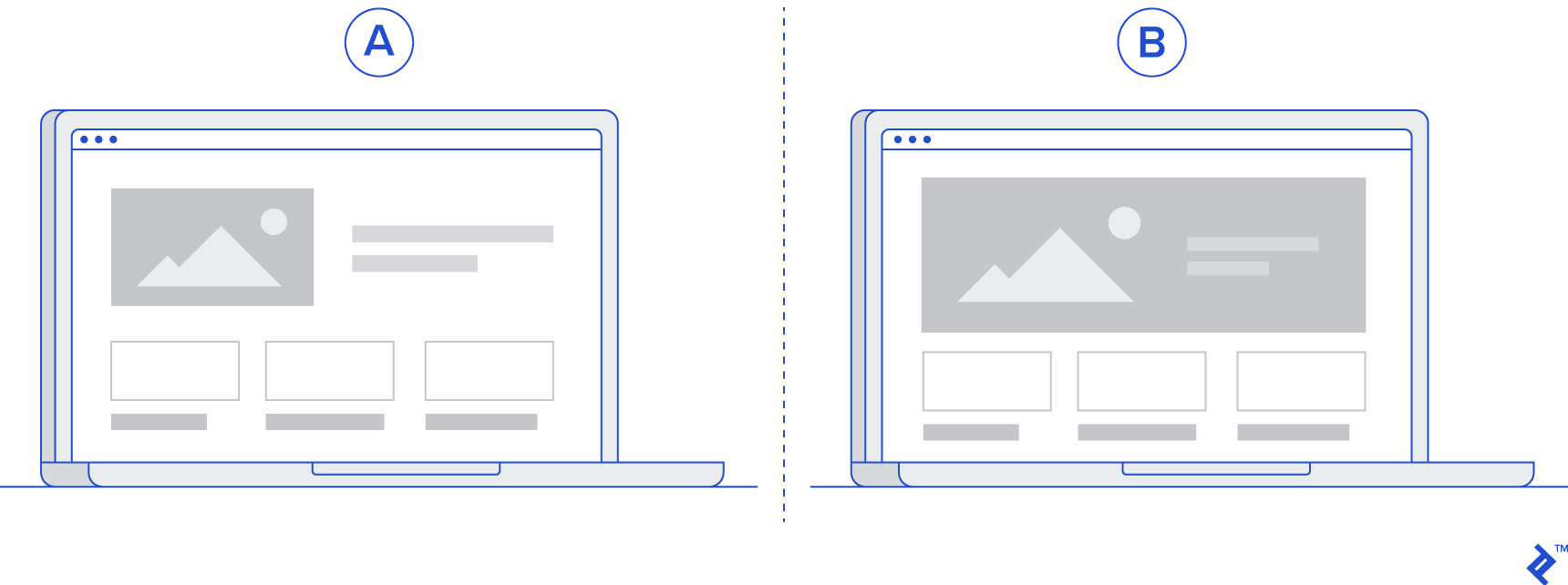

A/B or split testing involves creating two or more different versions of a webpage/feature/functionality and collecting information about how users respond to them.

Let’s say you wanted to validate a hypothesis about the placement of a search bar on your application homepage. You could design an A/B test that shows two different versions of that search bar’s placement to your users (who have been split equally into two camps: a control group and a variant group). Then, you would choose the best option based on user data. A/B tests are suitable for testing responses to user experience changes, especially if you have more than one solution to test.
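Splitting users into the two camps is often done by hashing a user ID, so that each user always sees the same version. The sketch below assumes string user IDs and a hypothetical experiment name; dedicated A/B testing tools handle this for you.

```python
import hashlib


def assign_group(user_id: str, experiment: str) -> str:
    """Deterministically place a user in 'control' or 'variant'."""
    # Including the experiment name keeps splits independent across experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "control" if int(digest, 16) % 2 == 0 else "variant"


# Hypothetical user IDs and experiment name.
users = [f"user-{i}" for i in range(10_000)]
groups = [assign_group(user, "search-bar-placement") for user in users]

print("control:", groups.count("control"), "variant:", groups.count("variant"))
```

Because the assignment depends only on the ID and the experiment name, a returning user never flips between versions mid-test.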

Prototyping

When it comes to testing a new product design, prototyping is the method of choice for many Lean startups and organizations. It’s a fast, cost-effective way of collecting feedback from users, and it’s possible to create prototypes of individual features too. You may take this approach to hypothesis testing if you are working on rolling out a significant new change e.g. adding a brand-new feature, redesigning some aspect of the user flow, etc. To control costs at this point in the new product development process, choose the right tools: think Figma for clickable walkthroughs or no-code platforms like Bubble.

Deliveroo feature prototype example

Let’s look at how feature prototyping worked for the food delivery app, Deliveroo, when their product team wanted to ‘explore personalized recommendations, better filtering and improved search’ in 2018. To begin, they created a prototype of the customer discovery feature using the web design application Framer.

One of the most important aspects of this feature prototype was that it contained live data — real restaurants, real locations. For test users, this made the hypothetical feature feel more authentic. They were seeing listings and recommendations for real restaurants in their area, which helped immerse them in the user experience, and generate more honest and specific feedback. Deliveroo was then able to implement this feedback in subsequent iterations.

Asking your users

Interviewing customers is an excellent way to validate product hypotheses. It’s a form of qualitative testing that, in our experience, produces better insights than user surveys or general user research. Sessions are typically run by product managers and involve asking in-depth interview questions to one customer at a time. They can be conducted in person or online (through a virtual call center, for instance) and last anywhere between 30 minutes and 1 hour.

Although CustDev interviews may require more effort to execute than other tests (the process of finding participants, devising questions, organizing interviews, and honing interview skills can be time-consuming), it’s still a highly rewarding approach. You can quickly validate assumptions by asking customers about their pain points, concerns, habits, processes they follow, and analyzing how your solution fits into all of that.

Wizard of Oz

The Wizard of Oz approach is suitable for gauging user interest in new features or functionalities. It’s done by creating a prototype of a fake or future feature and monitoring how your customers or test users interact with it.

For example, you might have a hypothesis that your number of active users will increase by 15% if you introduce a new feature. So, you design a new bare-bones page or simple button that invites users to access it. But when they click on the button, a pop-up appears with a message such as ‘coming soon.’

By measuring the frequency of those clicks, you could learn a lot about the demand for this new feature/functionality. However, while these tests can deliver fast results, they carry the risk of backfiring. Some customers may find fake features misleading, making them less likely to engage with your product in the future.

User-wide updates

One of the speediest ways to test your hypothesis is by rolling out an update for all users. It can take less time and effort to set up than other tests (depending on how big of an update it is). But due to the risk involved, you should stick to only performing these kinds of tests on small-scale hypotheses. Our teams only take this approach when we are almost certain that our hypothesis is valid.

For example, we once had an assumption that the name of one of Mailtrap’s entities was the root cause of a low activation rate. Being an active Mailtrap customer meant that you were regularly sending test emails to a place called ‘Demo Inbox.’ We hypothesized that the name was confusing (the word ‘demo’ implied it was not the main inbox) and this was preventing new users from engaging with their accounts. So, we updated the page, changed the name to ‘My Inbox’ and added some ‘to-do’ steps for new users. We saw an increase in our activation rate almost immediately, validating our hypothesis.

Feature flags

Creating feature flags involves only releasing a new feature to a particular subset or small percentage of users. These features come with a built-in kill switch; a piece of code that can be executed or skipped, depending on who’s interacting with your product.

Since you are only showing this new feature to a selected group, feature flags are an especially low-risk method of testing your product hypothesis (compared to Wizard of Oz, for example, where you have much less control). However, they are also a little more complex to execute than the other methods. For starters, you will need an actual coded product, as well as some technical knowledge, in order to add the modifiers (only when…) to your new coded feature.
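A percentage rollout with a kill switch can be sketched in a few lines. This is a simplified illustration, not how any particular feature flag service works; the flag name, configuration shape, and 10% rollout are assumptions.

```python
import hashlib

# Hypothetical flag configuration; real setups usually load this from a service.
FLAGS = {
    "email-templates": {"enabled": True, "rollout_percent": 10},
}


def is_enabled(flag: str, user_id: str, flags: dict = FLAGS) -> bool:
    """Return True only when the flag is on and the user falls in the rollout."""
    config = flags.get(flag)
    if config is None or not config["enabled"]:
        return False  # kill switch: nobody sees the feature
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket from 0 to 99 per user
    return bucket < config["rollout_percent"]


exposed = sum(is_enabled("email-templates", f"user-{i}") for i in range(1000))
print(f"{exposed} of 1000 users see the new feature")
```

Setting `enabled` to `False` hides the feature from everyone without a new deployment, which is what makes this approach low-risk.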

Let’s revisit the landing page copy example, this time in the context of testing.

So, for the hypothesis ‘If we change the landing page copy, then the number of signups will increase,’ there are several options for experimentation. We could share the copy with a small sample of our users, or even release a user-wide update. But A/B testing is probably the best fit for this task. Depending on our budget and goal, we could test several different pieces of copy, such as:

- The current landing page copy

- Copy that we paid a marketing agency 10 grand for

- Generic copy we wrote ourselves, or removing most of the original copy – just to see how making even a small change might affect our numbers.

Remember, every hypothesis test must have a reasonable endpoint. The exact length of the test will depend on the type of feature/functionality you are testing, the size of your user base, and how much data you need to gather. Just make sure that the experiment running time matches the hypothesis scope. For instance, there is no need to spend 8 weeks experimenting with a piece of landing page copy. That timeline is more appropriate for say, a Wizard of Oz feature.
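For quantitative tests, one way to pick a reasonable endpoint is to estimate how many users each group needs and divide by your traffic. The sketch below uses a standard approximation for comparing two conversion rates; the baseline and expected rates, the 5% significance level, the 80% power, and the daily traffic are all assumptions for illustration.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline: float, expected: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per group to detect baseline -> expected."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    return ceil((z_alpha + z_beta) ** 2 * variance / (expected - baseline) ** 2)


def days_needed(per_group: int, daily_visitors: int, groups: int = 2) -> int:
    """Days of traffic required to fill every group."""
    return ceil(per_group * groups / daily_visitors)


# Hypothetical: 4% signup rate today, hoping for 5%, 1,000 visitors a day.
n = sample_size_per_group(baseline=0.04, expected=0.05)
print(f"{n} users per group, about {days_needed(n, daily_visitors=1000)} days")
```

The smaller the change you hope to detect, the more users (and time) the test needs, which is one reason to match the experiment length to the scope of the hypothesis.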

Recording hypothesis statements and test results

Finally, it’s time to talk about where you will write down and keep track of your hypotheses. Creating a single source of truth will enable you to track all aspects of hypothesis generation and testing with ease.

At Railsware, our product managers create a document for each individual hypothesis, using tools such as Coda or Google Sheets. In that document, we record the hypothesis statement, as well as our plans, process, results, screenshots, product metrics, and assumptions.

We share this document with our team and stakeholders, to ensure transparency and invite feedback. It’s also a resource we can refer back to when we are discussing a new hypothesis — a place where we can quickly access information relating to a previous test.

Understanding test results and taking action

The other half of validating product hypotheses involves evaluating data and drawing reasonable conclusions based on what you find. We do so by analyzing our chosen product metric(s) and deciding whether there is enough data available to make a solid decision. If not, we may extend the test’s duration or run another one. Otherwise, we move forward. An experimental feature becomes a real feature, a chatbot gets implemented on the customer support page, and so on.

Something to keep in mind: the integrity of your data is tied to how well the test was executed, so here are a few points to consider when you are testing and analyzing results:

Gather and analyze data carefully. Ensure that your data is clean and up-to-date when running quantitative tests and tracking responses via analytics dashboards. If you are doing customer interviews, make sure to record the meetings (with consent) so that your notes will be as accurate as possible.

Conduct the right amount of product experiments. It can take more than one test to determine whether your hypothesis is valid or invalid. However, don’t waste too much time experimenting in the hopes of getting the result you want. Know when to accept the evidence and move on.

Choose the right audience segment. Don’t cast your net too wide. Be specific about who you want to collect data from prior to running the test. Otherwise, your test results will be misleading and you won’t learn anything new.

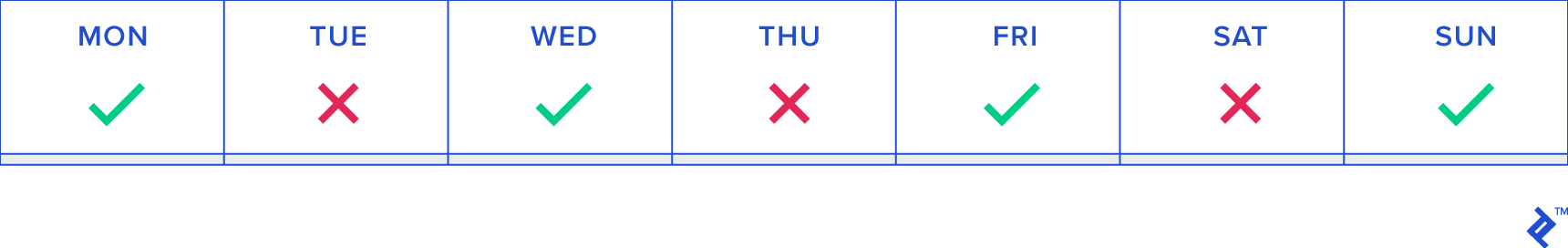

Watch out for bias. Avoid confirmation bias at all costs. Don’t make the mistake of including irrelevant data just because it bolsters your results. For example, if you are gathering data about how users are interacting with your product Monday-Friday, don’t include weekend data just because doing so would alter the data and ‘validate’ your hypothesis.

- Not all failed hypotheses should be treated as losses. Even if you didn’t get the outcome you were hoping for, you may still have improved your product. Let’s say you implemented SSO authentication for premium users, but unfortunately, your free users didn’t end up switching to premium plans. In this case, you still added value to the product by streamlining the login process for paying users.

- Yes, taking a hypothesis-driven approach to product development is important. But remember, you don’t have to test everything. Use common sense first. For example, if your website copy is confusing and doesn’t portray the value of the product, then you should still strive to replace it with better copy – regardless of how this affects your numbers in the short term.

Wrapping Up

The process of generating and validating product hypotheses is actually pretty straightforward once you’ve got the hang of it. All you need is a valid question or problem, a testable statement, and a method of validation. Sure, hypothesis-driven development requires more of a time commitment than just ‘giving it a go.’ But ultimately, it will help you tune the product to the wants and needs of your customers.

If you share our data-driven approach to product development and engineering, check out our services page to learn more about how we work with our clients!

How to write an effective hypothesis

Hypothesis validation is the bread and butter of product discovery. Understanding what should be prioritized and why is the most important task of a product manager. It doesn’t matter how well you validate your findings if you’re trying to answer the wrong question.

A question is only as good as the answer it can provide. If your hypothesis is well written, but you can’t interpret its conclusion, it’s a bad hypothesis. Alternatively, if your hypothesis has embedded bias and answers itself, it’s also not going to help you.

There are several different tools available to build hypotheses, and it would be exhaustive to list them all. Apart from being superficial, focusing on the frameworks alone shifts the attention away from the hypothesis itself.

In this article, you will learn what a hypothesis is, the fundamental aspects of a good hypothesis, and what you should expect to get out of one.

The 4 product risks

Mitigating the four product risks is the reason why product managers exist in the first place and it’s where good hypothesis crafting starts.

The four product risks are assessments of everything that could go wrong with your delivery. Our natural thought process is to focus on the happy path at the expense of unknown traps. The risks are a constant reminder that knowing why something won’t work is probably more important than knowing why something might work.

These are the fundamental questions that should fuel your hypothesis creation:

Is it viable for the business?

Is this hypothesis the best one to validate now? Is this the most cost-effective initiative we can take? Will this answer help us achieve our goals? How much money can we make from it?

Is it relevant for the user?

Has the user shown interest in this solution? Will they be able to use it? Does it solve our users’ challenges? Is it aesthetically pleasing? Is it vital for the user, or just a luxury?

Can we build it?

Do we have the resources and know-how to deliver it? Can we scale this solution? How much will it cost? Will it depreciate fast? Is it the most cost-effective solution? Will it deliver on what the user needs?

Is it ethical to deliver?

Is this solution safe both for the user and for the business? Is it inclusive enough? Is there a risk of public backlash? Is our solution enabling wrongdoers? Are we jeopardizing some to privilege others?

There is an infinite number of questions that can surface from these risks, and most of those will be context dependent. Your industry, company, marketplace, team composition, and even the type of product you handle will impose different questions, but the risks remain the same.

How to decide whether your hypothesis is worthy of validation

Assuming you came up with a hefty batch of risks to validate, you must now address them. To address a risk, you could do one of three things: collect concrete evidence that you can mitigate that risk, infer possible ways you can mitigate it, or dive deep into that risk because you’re not sure about its repercussions.

This three-way road can be illustrated by a CSD matrix:

Certainties

Everything you’re sure can help you mitigate a given risk. An example would be, on the “can we build it” risk, assessing if your engineering team is capable of integrating with a certain API. If your team has done it tens of times in the past, it’s not something worth validating. You can assume it is true and mark this particular risk as solved.

Suppositions

To put it simply, a supposition is something that you think you know, but you’re not sure. This is the most fertile ground to explore hypotheses, since this is the precise type of answer that needs validation. The most common usage of supposition is addressing the “is it relevant for the user” risk. You presume that clients will enjoy a new feature, but before you talk to them, you can’t say you are sure.

Doubts

Doubts are different from suppositions because they have no answer whatsoever. A doubt is an open question about a risk which you have no clue how to solve. A product manager who tries to mitigate the “is it ethical to deliver” risk in an industry that they have absolutely no familiarity with is poised to generate a lot of doubts, but no suppositions or certainties. Doubts are not good hypothesis sources, since you have no idea how to validate them.

A hypothesis worth validating comes from a place of uncertainty, not confidence or doubt. If you are sure about a risk mitigation, coming up with a hypothesis to validate it is just a waste of time and resources. Conversely, trying to come up with a risk assessment for a problem you are clueless about will probably generate hypotheses disconnected from the problem itself.

That said, it’s important to make it clear that suppositions are different from hypotheses. A supposition is merely a mental exercise, creativity executed. A hypothesis is a measurable, cartesian instrument to transform suppositions into certainties, therefore making sure you can mitigate a risk.

How to craft a hypothesis

A good hypothesis comes from a supposed solution to a specific product risk. That alone is good enough to build half of a good hypothesis, but you also need to have measurable confidence.


You’ll rarely transform a supposition into a certainty without an objective. Returning to the API example we gave when talking about certainties, you know the “can we build it” risk doesn’t need validation because your team has made tens of API integrations before. The “tens” is the quantifiable, measurable indication that gives you the confidence to be sure about mitigating a risk.

What you need from your hypothesis is exactly this quantifiable evidence, the number or hard fact able to give you enough confidence to treat your supposition as a certainty. To achieve that goal, you must come up with a target when creating the hypothesis. A hypothesis without a target can’t be validated, and therefore it’s useless.

Imagine you’re the product manager for an ecommerce app. Your users are predominantly mobile users, and your objective is to increase sales conversions. After some research, you come across the one-click checkout experience, made famous by Amazon but now broadly used by ecommerce sites everywhere.

You know you can build it, but it’s a huge endeavor for your team. You’d better make sure your bet on one-click checkout will work out; otherwise you’ll waste a lot of time and resources on something that won’t influence the sales conversion KPI.

You identify your first risk then: is it valuable to the business?

Literature is abundant on the topic, so you are almost sure that it will bear results, but you’re not sure enough. You can only suppose that implementing the one-click functionality will increase sales conversion.

During case study and data exploration, you have reasons to believe that a 30 percent increase of sales conversion is a reasonable target to be achieved. To make sure one click check-out is valuable to the business then, you would have a hypothesis such as this:

We believe that if we implement a one-click checkout on our ecommerce, we can grow our sales conversion by 30 percent

This hypothesis can be played with in all sorts of ways. If you’re trying to improve user-experience, for example, you could make it look something like this:

We believe that if we implement a one-click checkout on our ecommerce, we can reduce the time to conversion by 10 percent

You can also validate different solutions against the same criteria, building an opportunity tree to explore a multitude of hypotheses and find the best one:

We believe that if we implement a user review section on the listing page, we can grow our sales conversion by 30 percent

Sometimes you’re clueless about impact, or maybe any win is a good enough win. In that case, your criteria of validation can be a fact rather than a metric:

We believe that if we implement a one-click checkout on our ecommerce, we can reduce the time to conversion

As long as you are sure of the risk you’re mitigating, the supposition you want to transform into a certainty, and the criteria you’ll use to make that decision, you don’t need to worry so much about “right” or “wrong” when it comes to hypothesis formatting.
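The three ingredients named above (the risk, the supposition, and the validation criterion) can be written down as a small record. The following is only an illustrative sketch: the `Hypothesis` class and its field names are assumptions made for this example, not part of any framework. An empty target stands for the “any win is good enough” case.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Hypothesis:
    """Hypothetical container for the ingredients of a product hypothesis."""
    risk: str                                # the risk being mitigated
    change: str                              # the supposed solution
    outcome: str                             # the effect we expect to see
    target_percent: Optional[float] = None   # None means "any win is good enough"

    def statement(self) -> str:
        """Render the hypothesis in the 'We believe that if...' form."""
        base = f"We believe that if we {self.change}, we can {self.outcome}"
        if self.target_percent is None:
            return base
        return f"{base} by {self.target_percent:g} percent"


conversion = Hypothesis(
    risk="Is it valuable to the business?",
    change="implement a one-click checkout on our ecommerce",
    outcome="grow our sales conversion",
    target_percent=30,
)
print(conversion.statement())
```

Keeping the risk next to the statement makes it harder to lose track of why the hypothesis exists in the first place.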

That’s why I avoided prescribing frameworks in this article. You can apply a neat hypothesis design to your product thinking, but if you’re not sure why you’re doing it, you’ll extract nothing out of it.

What comes after a good hypothesis?

The final piece of this puzzle comes after the hypothesis crafting. A hypothesis is only as good as the validation it provides, and that means you have to test it.

If you were to test the first hypothesis we crafted, “we believe that if we implement a one-click checkout on our ecommerce, we can grow our sales conversion by 30 percent,” you could come up with a testing roadmap to build up evidence that would eventually confirm or refute your hypothesis. Some examples of tests are:

A/B testing — Launch a quick and dirty one-click checkout MVP for a treatment group of users and compare their sales conversion rate against a control group that keeps the existing checkout. This will provide direct evidence of the feature’s effect on sales conversions

Customer support feedback — Track any inquiries or complaints related to the checkout process. You can use organic user complaints as an indirect measure of latent demand for one-click checkout feature

User survey — Ask why carts were abandoned for a cohort of shoppers that left the checkout step close to completion. Their reasons might indicate the possible success of your hypothesis
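For the A/B test, the check against the 30 percent target is simple arithmetic on the two conversion rates. The sketch below uses made-up visitor and order counts, and the function names are hypothetical. It compares the observed lift with the target only, and does not tell you whether the difference could be down to chance.

```python
def conversion_rate(orders: int, visitors: int) -> float:
    """Share of visitors who placed an order."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return orders / visitors


def relative_lift(control_rate: float, treatment_rate: float) -> float:
    """Relative change of treatment over control, in percent."""
    if control_rate <= 0:
        raise ValueError("control rate must be positive")
    return (treatment_rate - control_rate) / control_rate * 100


# Made-up numbers for illustration only.
control = conversion_rate(orders=200, visitors=10_000)    # existing checkout
treatment = conversion_rate(orders=270, visitors=10_000)  # one-click checkout MVP
lift = relative_lift(control, treatment)
target_met = lift >= 30  # the target stated in the hypothesis
print(f"lift: {lift:.1f}%  target met: {target_met}")
```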

Effective hypothesis crafting is at the center of product management. It’s the link between dealing with risks and coming up with solutions that are both viable and valuable. However, it’s important to recognize that the formulation of a hypothesis is just the first step.

The real value of a hypothesis is made possible by rigorous testing. It’s through systematic validation that product managers can transform suppositions into certainties, ensuring the right product decisions are made. Without validation, even the most well-thought-out hypothesis remains unverified.

Featured image source: IconScout


Product hypothesis - a guide to create meaningful hypotheses.

13 December, 2023

Growth Manager

Data-driven development is no different than a scientific experiment. You repeatedly form hypotheses, test them, and either implement (or reject) them based on the results. It’s a proven system that leads to better apps and happier users.

Let’s get started.

What is a product hypothesis?

A product hypothesis is an educated guess about how a change to a product will impact important metrics like revenue or user engagement. It's a testable statement that needs to be validated to determine its accuracy.

The most common format for product hypotheses is “If… then…”:

“If we increase the font size on our homepage, then more customers will convert.”

“If we reduce form fields from 5 to 3, then more users will complete the signup process.”

At UXCam, we believe in a data-driven approach to developing product features. Hypotheses provide an effective way to structure development and measure results so you can make informed decisions about how your product evolves over time.

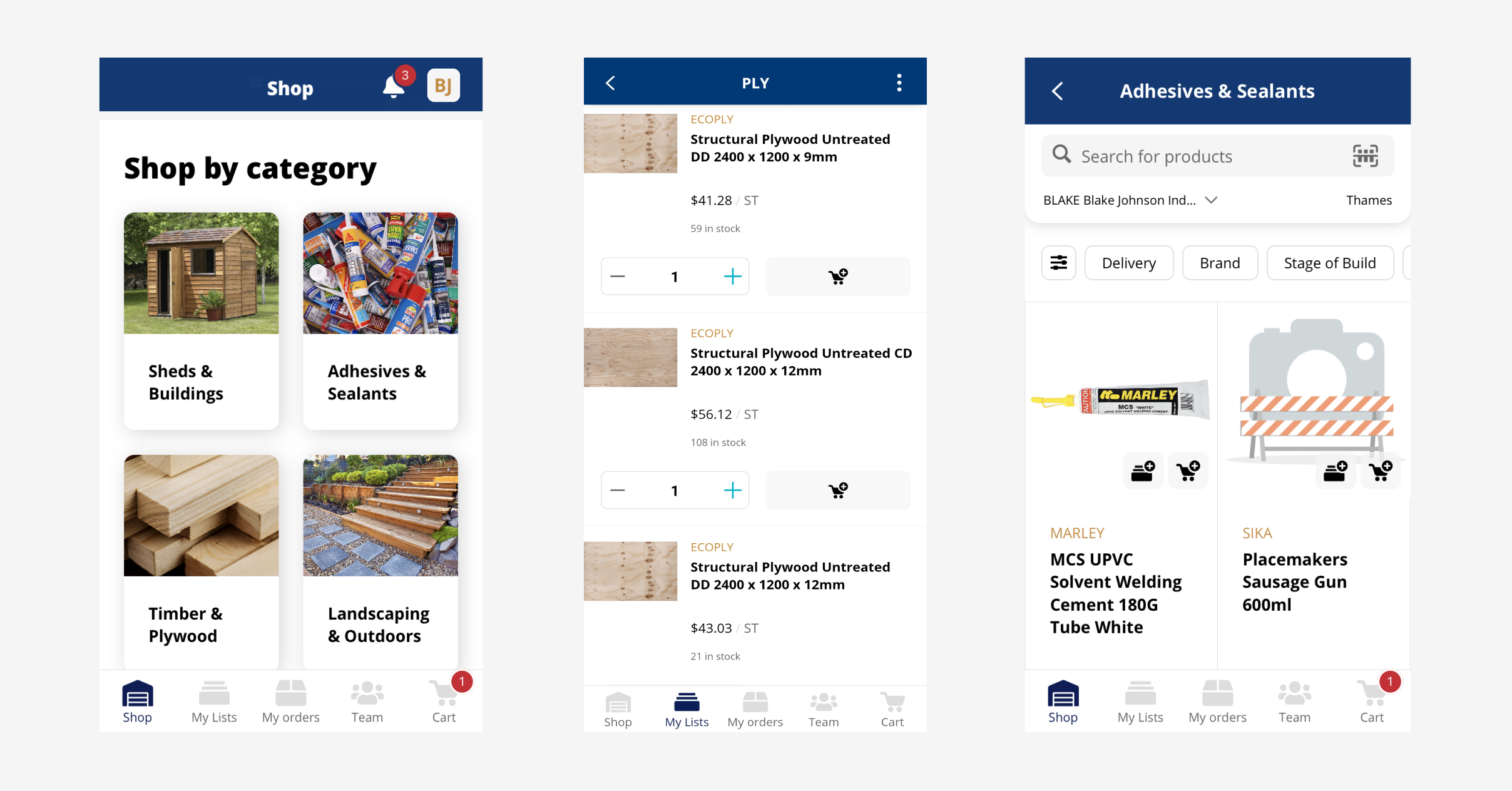

Take PlaceMakers , for example.

PlaceMakers faced challenges with their app during the COVID-19 pandemic. Due to supply chain shortages, stock levels were not being updated in real-time, causing customers to add unavailable products to their baskets. The team added a “Constrained Product” label, but this caused sales to plummet.

The team then turned to UXCam’s session replays and heatmaps to investigate, and hypothesized that their messaging for constrained products was too strong. The team redesigned the messaging with a more positive approach, and sales didn’t just recover—they doubled.

Types of product hypothesis

1. Counter-hypothesis

A counter-hypothesis is an alternative proposition that challenges the initial hypothesis. It’s used to test the robustness of the original hypothesis and make sure that the product development process considers all possible scenarios.

For instance, if the original hypothesis is “Reducing the sign-up steps from 3 to 1 will increase sign-ups by 25% for new visitors after 1,000 visits to the sign-up page,” a counter-hypothesis could be “Reducing the sign-up steps will not significantly affect the sign-up rate.”

2. Alternative hypothesis

An alternative hypothesis predicts an effect in the population. It’s the opposite of the null hypothesis, which states there’s no effect.

For example, if the null hypothesis is “improving the page load speed on our mobile app will not affect the number of sign-ups,” the alternative hypothesis could be “improving the page load speed on our mobile app will increase the number of sign-ups by 15%.”
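One common way to weigh a null hypothesis against an alternative is a one-sided, pooled two-proportion z-test. The sketch below uses only the Python standard library. The sign-up counts are invented and the 0.05 threshold is a conventional assumption rather than something the article prescribes. It tests for any increase in the sign-up rate, not for the specific 15% figure.

```python
from math import sqrt
from statistics import NormalDist


def one_sided_p_value(control_conv: int, control_n: int,
                      variant_conv: int, variant_n: int) -> float:
    """p-value for H1: variant rate > control rate (pooled two-proportion z-test)."""
    p_control = control_conv / control_n
    p_variant = variant_conv / variant_n
    pooled = (control_conv + variant_conv) / (control_n + variant_n)
    std_err = sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / variant_n))
    if std_err == 0:
        raise ValueError("cannot test: pooled rate is 0 or 1")
    z = (p_variant - p_control) / std_err
    return 1 - NormalDist().cdf(z)


# Made-up sign-up counts for illustration only.
p_value = one_sided_p_value(control_conv=400, control_n=5_000,
                            variant_conv=460, variant_n=5_000)
reject_null = p_value < 0.05  # assumed significance threshold
print(f"p-value: {p_value:.4f}  reject null: {reject_null}")
```

A small p-value suggests the data would be surprising if the null hypothesis were true. It does not by itself show that the effect is as large as the alternative hypothesis claims.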

3. Second-order hypothesis

Second-order hypotheses are derived from the initial hypothesis and provide more specific predictions.

For instance, if the initial hypothesis is “Improving the page load speed on our mobile app will increase the number of sign-ups,” a second-order hypothesis could be “Improving the page load speed on our mobile app will increase the number of sign-ups.”

Why is a product hypothesis important?

Guided product development

A product hypothesis serves as a guiding light in the product development process. In the case of PlaceMakers, the product owner’s hypothesis that users would benefit from knowing the availability of items upfront before adding them to the basket helped their team focus on the most critical aspects of the product. It ensured that their efforts were directed towards features and improvements that have the potential to deliver the most value.

Improved efficiency

Product hypotheses enable teams to solve problems more efficiently and remove biases from the solutions they put forward. By testing the hypothesis, PlaceMakers aimed to improve efficiency by addressing the issue of stock levels not being updated in real-time and customers adding unavailable products to their baskets.

Risk mitigation

By validating assumptions before building the product, teams can significantly reduce the risk of failure. This is particularly important in today’s fast-paced, highly competitive business environment, where the cost of failure can be high.

Validating assumptions through the hypothesis helped mitigate the risk of failure for PlaceMakers, as they were able to identify and solve the issue within a three-day period.

Data-driven decision-making

Product hypotheses are a key element of data-driven product development and decision-making. They provide a solid foundation for making informed, data-driven decisions, which can lead to more effective and successful product development strategies.

The use of UXCam's Session Replay and Heatmaps features provided valuable data for data-driven decision-making, allowing PlaceMakers to quickly identify the problem and revise their messaging approach, leading to a doubling of sales.

How to create a great product hypothesis

Map important user flows

Identify any bottlenecks

Look for interesting behavior patterns

Turn patterns into hypotheses

Step 1 - Map important user flows

A good product hypothesis starts with an understanding of how users move around your product: what paths they take, what features they use, how often they return, and so on. Before you can begin hypothesizing, it’s important to map out key user flows and journey maps that will help inform your hypothesis.

To do that, you’ll need to use a monitoring tool like UXCam .

UXCam integrates with your app through a lightweight SDK and automatically tracks every user interaction using tagless autocapture. That leads to tons of data on user behavior that you can use to form hypotheses.

At this stage, there are two specific visualizations that are especially helpful:

Funnels : Funnels are great for identifying drop off points and understanding which steps in a process, transition or journey lead to success.

In other words, you’re using these two tools to define key in-app flows and to measure the effectiveness of these flows (in that order).


Step 2 - Identify any bottlenecks

Once you’ve set up monitoring and have started collecting data, you’ll start looking for bottlenecks: points along a key app flow that are tripping users up. There are going to be dropoffs at every stage in a funnel, but too many dropoffs can be a sign of a problem.

UXCam makes it easy to spot dropoffs by displaying them visually in every funnel. While there’s no benchmark for when you should be concerned, anything above a 10% dropoff could mean that further investigation is needed.
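If you export funnel counts from your analytics tool, the same rule of thumb can be applied in a few lines. The sketch below is not UXCam’s API: the function name, the funnel steps, and the counts are all made up for illustration. It simply flags every step-to-step transition whose dropoff exceeds 10%.

```python
from typing import List, Tuple

DROPOFF_THRESHOLD = 0.10  # the rough 10% rule of thumb mentioned above


def flag_dropoffs(funnel: List[Tuple[str, int]],
                  threshold: float = DROPOFF_THRESHOLD) -> List[Tuple[str, str, float]]:
    """Return (from_step, to_step, dropoff) for transitions above the threshold."""
    flagged = []
    for (step, users), (next_step, next_users) in zip(funnel, funnel[1:]):
        if users == 0:
            continue  # nobody reached this step, so there is no dropoff to measure
        dropoff = 1 - next_users / users
        if dropoff > threshold:
            flagged.append((step, next_step, round(dropoff, 3)))
    return flagged


# Made-up funnel counts for illustration only.
checkout_funnel = [
    ("View cart", 1000),
    ("Enter shipping", 950),
    ("Enter payment", 700),
    ("Confirm order", 665),
]
for step, next_step, dropoff in flag_dropoffs(checkout_funnel):
    print(f"{step} -> {next_step}: {dropoff:.1%} dropoff")
```

With these numbers only the transition from shipping to payment is flagged, which is where you would zoom in next.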

How do you investigate? By zooming in.

Step 3 - Look for interesting behavior patterns

At this stage, you’ve noticed a concerning trend and are zooming in on individual user experiences to humanize the trend and add important context.

The best way to do this is with session replay tools and event analytics. With a tool like UXCam, you can segment app data to isolate sessions that fit the trend. You can then investigate real user sessions by watching videos of their experience or by looking into their event logs. This helps you see exactly what caused the behavior you’re investigating.

For example, let’s say you notice that 20% of users who add an item to their cart leave the app about 5 minutes later. You can use session replay to look for the behavioral patterns that lead up to users leaving—such as how long they linger on a certain page or if they get stuck in the checkout process.

Step 4 - Turn patterns into hypotheses

Once you’ve checked out a number of user sessions, you can start to craft a product hypothesis.

This usually takes the form of an “If… then…” statement, like:

“If we optimize the checkout process for mobile users, then more customers will complete their purchase.”

These hypotheses can be tested using A/B testing and other user research tools to help you understand if your changes are having an impact on user behavior.

Hypothesis-driven development depends on formulating clear, testable hypotheses. A well-defined hypothesis guides the product development process, aligns stakeholders, and minimizes uncertainty.

UXCam arms product teams with all the tools they need to form meaningful hypotheses that drive development in a positive direction. Put your app’s data to work and start optimizing today— sign up for a free account .


Hypothesis-driven product management

Starting from 490 per person


Course Reviews

I attended the Hypothesis-Driven Workshop with my team. Thanks to the mix of theory and practical exercise, I significantly strengthen my knowledge on how to structure hypotheses: from ideation, prioritisation and execution of the hypothesis with the highest value for the business. I recommend every product team to take part on this workshop if you wish to elevate your overall skills. After this training, we have required every team to start anything from hypothesis and

I took part in a workshop with Tanja on "Hypothesis-Driven Product Management". The workshop was engaging and I learned a lot. Thank you very much! :)

I came to Tanja and the Product Academy through a recommendation and took part in a one-day workshop. I was very enthusiastic about the workshop and learned a lot. Tanja has a very holistic view of product management and brings in many aspects of change management. Besides the course content, the exchange with the other participants was also very valuable, and thanks to her strong network Tanja keeps connecting her participants afterwards as well, which creates enormous added value. (Head of Product at Alasco)

Top workshops, very enthusiastic, good mix of teaching and interactive sessions! (Product Manager Alasco)

A handy framework on how-to Manage your Products. Tanja coaches you in a super interesting and sympathetic way through an exciting day. The tools we used during the workshop weren't new. However, at least to me, they seem much more approachable after the workshop. Thank you, Product Academy. Thank you, Tanja.

Such condense, high quality materials! Tanja is a great sparring partner for the art of product management, and she has practical knowledge & experience to keep our feet on the ground. I learned a lot in just one day about how to develop my product with scientific approach that is hypothesis-driven product management. I would not hesitate to book another training with her!

The workshop by Product Academy was a genuine experience with high business value. Tanja Lau is a lovely facilitator for digital business and a very passionate ambassador for product team topics. It was very inspiring and in the end I went home inspired with a bunch of good, practical examples. Thank you! (Product Manager at hd digital)

Where & When?

- August 19, 2024 - 9.00 - 16.30 CET

Who is it for?

Key takeaways

- how to write useful hypotheses

- how to prioritize hypotheses by dealing with risk and assessing opportunities

- introduction to dual-track discovery and delivery

- how to choose the right experiment for your hypothesis

What we will achieve

- Acquire a systematic framework for working with hypothesis-driven product management

- Heighten your awareness of the importance of asking the right questions in the right way

- Learn how to improve your team setup

- Discuss ways to work towards building a learning organization

Hands-on exercises

- including one homework assignment and pre-reading material

- writing hypotheses and setting up a hypothesis backlog

- working on real examples from your company

- choosing experiments based on hypotheses

Individual Follow-up Coaching Option

Certificate

Course language

Our main teachers

Founder of Product Academy

At Product Academy, Tanja is combining her passion for continuous learning with her professional background as product leader. As founding partner of start-ups in Munich, Madrid and Zurich, she has gained valuable entrepreneurial experience which she passes on in her classes and as public speaker on various occasions. She was listed among the top 30 […]


Hypothesis Driven Product Management

- Post author By admin

- Post date September 23, 2020


What is Lean Hypothesis Testing?

“The first principle is that you must not fool yourself and you are the easiest person to fool.” – Richard P. Feynman

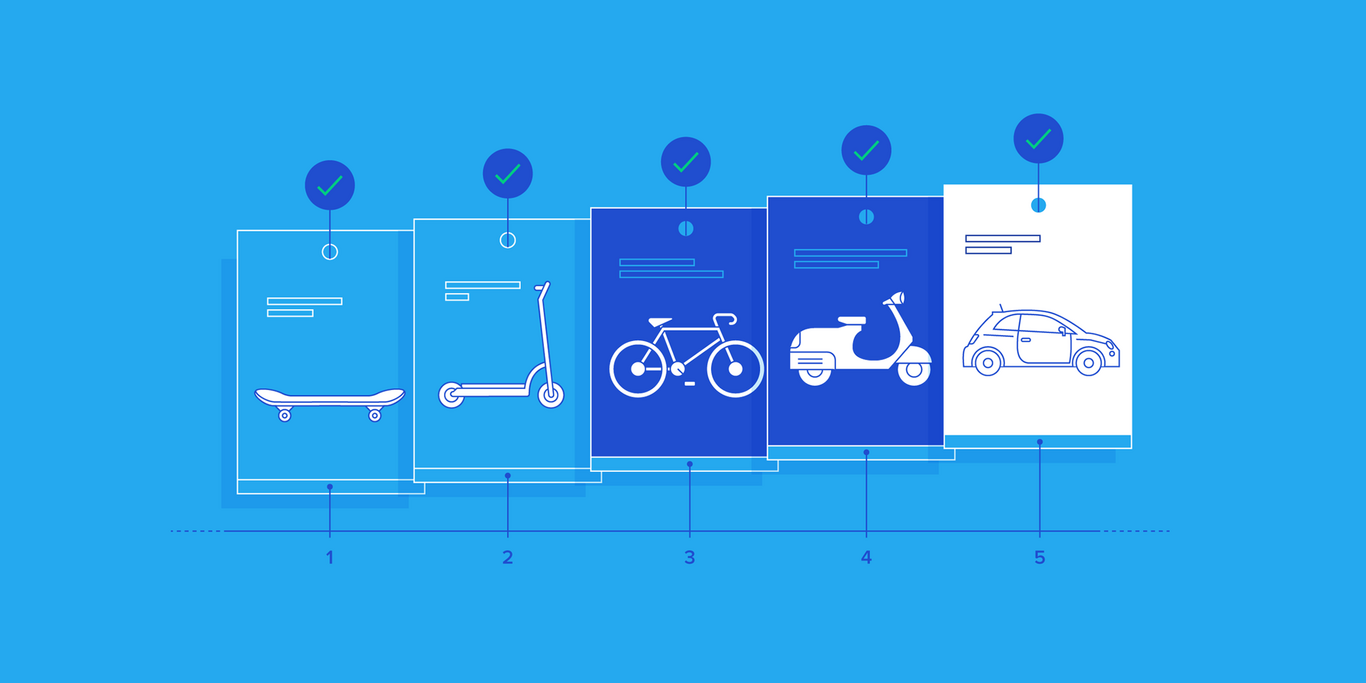

Lean hypothesis testing is an approach to agile product development that’s designed to minimize risk, increase the speed of development, and hone business outcomes by building and iterating on a minimum viable product (MVP).

The minimum viable product is a concept famously championed by Eric Ries as part of the lean startup methodology. At its core, the concept of the MVP is about creating a cycle of learning. Rather than devoting long development timelines to building a fully polished end product, teams working through lean product development build, in short, iterative cycles. Each cycle is devoted to shipping an MVP, defined as a product that’s built with the least amount of work possible for the purpose of testing and validating that product with users.

In lean hypothesis testing, the MVP itself can be framed as a hypothesis. A well-designed hypothesis breaks down an issue into a problem, solution, and result.

When defining a good hypothesis, start with a meaningful problem: an issue or pain point that you’d like to solve for your users. Teams often use multiple qualitative and quantitative sources to scope and describe this problem.

How do you get started?

Two core practices underlie lean:

- Use of the scientific method and

- Use of small batches.

Science has brought us many wonderful things. I personally prefer to expand the Build-Measure-Learn loop into the classic view of the scientific method because I find it’s more robust. You can see that process to the right, and we’ll step through the components in the balance of this section.

The use of small batches is critical. It gives you more shots at a successful outcome, particularly valuable when you’re in a high risk, high uncertainty environment.

A great example from Eric Ries’ book is the envelope folding experiment: If you had to stuff 100 envelopes with letters, how would you do it? Would you fold all the sheets of paper and then stuff the envelopes? Or would you fold one sheet of paper, stuff one envelope, and repeat? It turns out that doing them one by one is vastly more efficient, and that’s just on an operational basis. If you don’t actually know whether the envelopes will fit or whether anyone wants them (more analogous to a startup), you’re obviously much better off with the one-by-one approach.

So, how do you do it? In 6 simple (in principle) steps:

- Start with a strong idea, one where you’ve gone out and done strong customer discovery that is packaged into testable personas and problem scenarios. If you’re familiar with design thinking, it’s very much about doing good work in this area.

- Structure your idea(s) in a testable format (as hypotheses).

- Figure out how you’ll prove or disprove these hypotheses with a minimum of time and effort.

- Get focused on testing your hypotheses and collecting whatever metrics you’ll use to make a conclusion.

- Conclude and decide ; did you prove out this idea and is it time to throw more resources at it? Or do you need to reformulate and re-test?

- Pivot or persevere ; If you’re pivoting and revising, the key is to make sure you have a strong foundation in customer discovery so you can pivot in a smart way based on your understanding of the customer/user.

By using a hypothesis-driven development process you:

- Articulate your thinking

- Provide others with an understanding of your thinking

- Create a framework to test your designs against

- Develop a standard way of documenting your work

- Make better stuff

Free Template: Lean Hypothesis template

Eric Ries: Test & experiment, turn your feeling into a hypothesis

5 case studies on experimentation:

- Adobe takes a customer-centric approach to innovating Photoshop

- Test Paper prototypes to save time and money: the Mozilla case study

- Walmart.ca increases on-site conversions by 13%

- Icons8 web app. Redesign based on usability testing.

- Experiments at Airbnb


Product development through hypotheses: formulating hypotheses

16. February 2018

Product development faces the constant challenge of supplying customers with a product that exactly meets their needs. In our new blog series, etventure’s product managers provide an insight into their work and approach. The focus is on hypothesis-driven product development. In the first part of the series, we show why and how to define a verifiable hypothesis as the starting point for an experiment.

For the development of new products, features and services as well as the development of start-ups, we at etventure rely on a hypothesis-driven method that is strongly oriented towards the “Lean Startup” 1 philosophy. Having already revealed our remedy for successful product development last week, we now want to take a closer look at the first step of an experiment – the formulation of the hypothesis.

“Done is better than perfect.” – Sheryl Sandberg

Where do hypotheses come from?

Scientists observe nature and ask many questions that lead to hypotheses. Product teams can also be inspired by observations, personal opinions, previous experiences or the discovery of patterns and outliers in data. These observations are often associated with a number of problems and open questions.

- Who is our target group?

- Why does X do this and not that?

- How can person X be motivated to take action Y?

- How can we encourage potential users to sign up for our service?

First of all, it is important that the team meets for brainstorming and becomes creative. Subsequently, those ideas are selected that are “true” from the team’s point of view and are therefore referred to as hypotheses.

What makes a good hypothesis?

Unlike science, we cannot afford to spend too much time on a hypothesis. Nevertheless, one of the key qualifications of every product developer is to recognize a well-formulated hypothesis. The following checklist serves as a basis for this:

A good hypothesis…

- is something we believe to be true, but we don’t know for sure yet

- is a prediction we expect to come true

- can be easily tested

- may be true or false

- includes the target group

- is clear and measurable

Assumption ≠ Fact

An assumption may be true, but it may also be false. A fact is always true and can be proven by evidence. Therefore, an assumption always offers an opportunity to learn something. If we already have strong evidence of what we believe in, we don’t need to test it again – there is nothing new to learn. However, we never accept anything as a fact until it has been validated. Awareness of this difference is essential for our product decisions. That’s why we keep asking ourselves questions: Do we have proof of our assumptions, are they facts, or does it end with assumption? In other words: Is it objectively measurable?

Human behaviour is often “predictably irrational”. 2 This is because our brain uses shortcuts when processing information to save time and energy. 3 This is also true in product development: We often tend to ignore evidence that our assumption might be wrong. Instead, we feel confirmed in existing beliefs. The good news is that these distortions are consistent and well known, so we can design systems to correct them. In order to avoid misinterpretations of the test results, it helps, for example, to make the following prediction: What would happen if my assumption was confirmed?

In order for hypotheses to be validated, it must be possible to test them in at least one, but preferably in several, scenarios. Since both time and money are usually very limited, hypotheses must always be testable as easily as possible and with justifiable effort.

Testability and falsification

Learning means finding answers to questions. In product development, we want to know whether our assumption is true or not. When testing our ideas, we have to assume that either could happen. What is important is that both results are valid: both mean progress. This concept is derived from science 4 and helps to avoid an always-applicable hypothesis such as “Tomorrow it will either rain or not”.

Target group

Product development should mainly focus on the customer’s needs. Therefore, the target group must be included in the formulation of the hypothesis. This prevents distortion and makes the hypotheses more specific. During development, hypotheses can be refined or the target audience can be adapted.

Clarity and measurability

And last but not least, a hypothesis must always be clear and measurable. Complex hypotheses are not uncommon in science, but in practice it must be immediately clear what is at stake. Product developers should be able to explain their hypotheses within 30 seconds to someone who has never heard of the subject.

Why formulate hypotheses?

Product teams benefit in many ways if they take the time to formulate a hypothesis.

- Impartial decisions: Hypotheses reduce the influence of prejudices on our decision-making.

- Team orientation: Similar to a common vision, a hypothesis strengthens team thinking and prevents conflicts in the experimental phase.

- Focus: Testing without hypothesis is like sailing without a goal. A hypothesis helps to focus and control the experimental design.

How can good hypotheses be formulated?

Various blogs and articles provide a series of templates that help to formulate hypotheses quickly and easily. Most of them differ only slightly from each other. Product teams can freely decide which format they like – as long as the final hypothesis meets the above criteria. We have put together a selection of the most important templates:

- We believe that [this ability] will lead to [this result]. We will know that we have succeeded when [we see a measurable sign].

- I believe that [target group] will [execute this repeatable action/use this solution], which for [this reason] will lead to [an expected measurable result].

- If [cause], then [effect], because [reason].

- If [I do], then [thing] will happen.

- We believe that with [activity] for [these people] [this result / this effect] will happen.
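To show how such a template can be applied consistently, here is a minimal Python sketch based on the “If [cause], then [effect], because [reason]” format. The function name `render_hypothesis`, the slot names, and the example values are assumptions made for illustration; they do not come from any particular tool.

```python
import string

# Hypothetical template, modeled on "If [cause], then [effect], because [reason]."
TEMPLATE = "If {cause}, then {effect}, because {reason}."


def render_hypothesis(template: str, **parts: str) -> str:
    """Fill a hypothesis template and fail loudly if any slot is missing or blank."""
    # Collect the slot names that the template expects.
    slots = [name for _, name, _, _ in string.Formatter().parse(template) if name]
    missing = [slot for slot in slots if not parts.get(slot, "").strip()]
    if missing:
        raise ValueError(f"Unfilled template slots: {', '.join(missing)}")
    return template.format(**parts)


# Illustrative values only.
hypothesis = render_hypothesis(
    TEMPLATE,
    cause="we reduce the registration steps from 3 to 1",
    effect="the registration rate for new visitors will increase by 25%",
    reason="a shorter form means fewer drop-offs",
)
print(hypothesis)
```

Rejecting blank slots is a small guard against half-formulated hypotheses: a statement without a cause, an effect, or a reason cannot be rendered at all.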

We have actually used the following hypotheses in recent weeks and months. During the test phase, some were validated and others were rejected.

- After 1,000 visits to the registration page, the reduction of registration steps from 3 to 1 increases the registration rate for new visitors by 25%.

- This subject line increases the opening rates for newsletter subscribers by 15% after 3 days.

- If we offer online training to our customers, the number of training sessions will increase by 35% within the next 2 weeks.

- We believe that the sale of a machine-optimized packaging material to our customers will lead to a higher demand for our packaging material. We will know that we have been successful if we have sold 50% more packaging material within the next 4 weeks.

How to turn hypotheses into experiments?

Formulating good hypotheses is essential for successful product development. And yet it is only the first step in a multi-step development and testing process. In our next article you will learn how hypotheses become experiments.

Further links:

1 Eric Ries: The Lean Startup

2 Dan Ariely: Predictably Irrational: The Hidden Forces That Shape Our Decisions

3 Cognitive Bias Cheat Sheet

4 Karl Popper

Author: Kristopher Berks

Product Manager at etventure

How and why to use hypotheses in Product Management?

Looking to reduce risks and pinpoint the right challenges? Explore the world of product hypotheses for effective decision-making!

Do you want to develop your product but aren’t sure which direction to take? Use product hypotheses to test and analyze assumptions that will help you develop a successful product. Here’s how.

The key word for a Product Manager: de-risk

Launching a product or new features can be time-consuming and costly. It is therefore necessary to de-risk your decisions as much as possible so that you develop impactful and truly useful features for your users.

In the case of launching a new product, you must make sure you are dealing with the right problem before thinking about the best solution. For example: “Is there a problem around online plane booking?”. If you answer yes to this based solely on your own perception, there is a risk that your answer will be biased and therefore incorrect.

Verifying that a problem is real, or that your product provides the right answer, can be time-consuming. It is essential to be methodical to limit the cost and time you spend. The Minimum Viable Product (MVP) is a good tool for putting an imperfect first version of your product in front of users and testing your hypotheses in the field, under the most realistic conditions possible.

📚 Read our article on the Minimum Viable Product to learn more about the method.

How to set up effective product hypotheses?

In this article, let's imagine that our product is a newsletter and that we need to boost the number of subscriptions.

The challenge is to find the answers to your questions in order to improve your performance. For example: “Why are my newsletter subscriptions so low?”

To begin, you will always need to take a step back and map out everything you know before thinking about a solution.

This is what we will call: the formalization of hypotheses.

Step 1 - Formalizing the hypotheses

At Hubvisory, we use hypotheses to test hunches, user needs, and preferences.

“Our question is…” is the heart of your problem. This is the problem you are looking to solve. You have identified this problem because it blocks your objectives and therefore demands a solution.

You can also present this problem in the form of a statement. For example: “We want to double the rate of users who subscribe to the newsletter”.

Formalizing the problem is important because, like the objective in an OKR, it makes your goal explicit.

Then, the formalization of your hypotheses goes as follows:

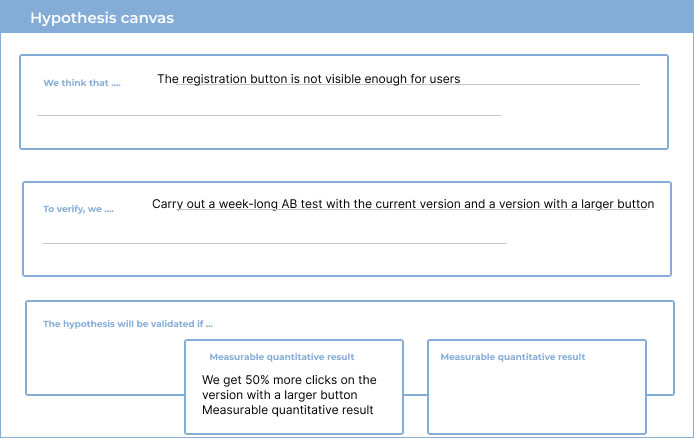

Example of a Product Hypothesis Canvas. Source: Hubvisory

“We think…” represents your intuition. To obtain a list of hypotheses, you can organize a workshop with the stakeholders affected by your problem.

For the issue of subscriptions to the newsletter, we could invite the person responsible for writing the newsletters, or a loyalty officer. Our advice at Hubvisory is to always make sure that someone carries the voice of the user, or that users themselves take part in improving the product.

To make assumptions, it is important to know your market as well as your users. This will increase your ability to find relevant hypotheses and stay in line with the expectations of your customers.

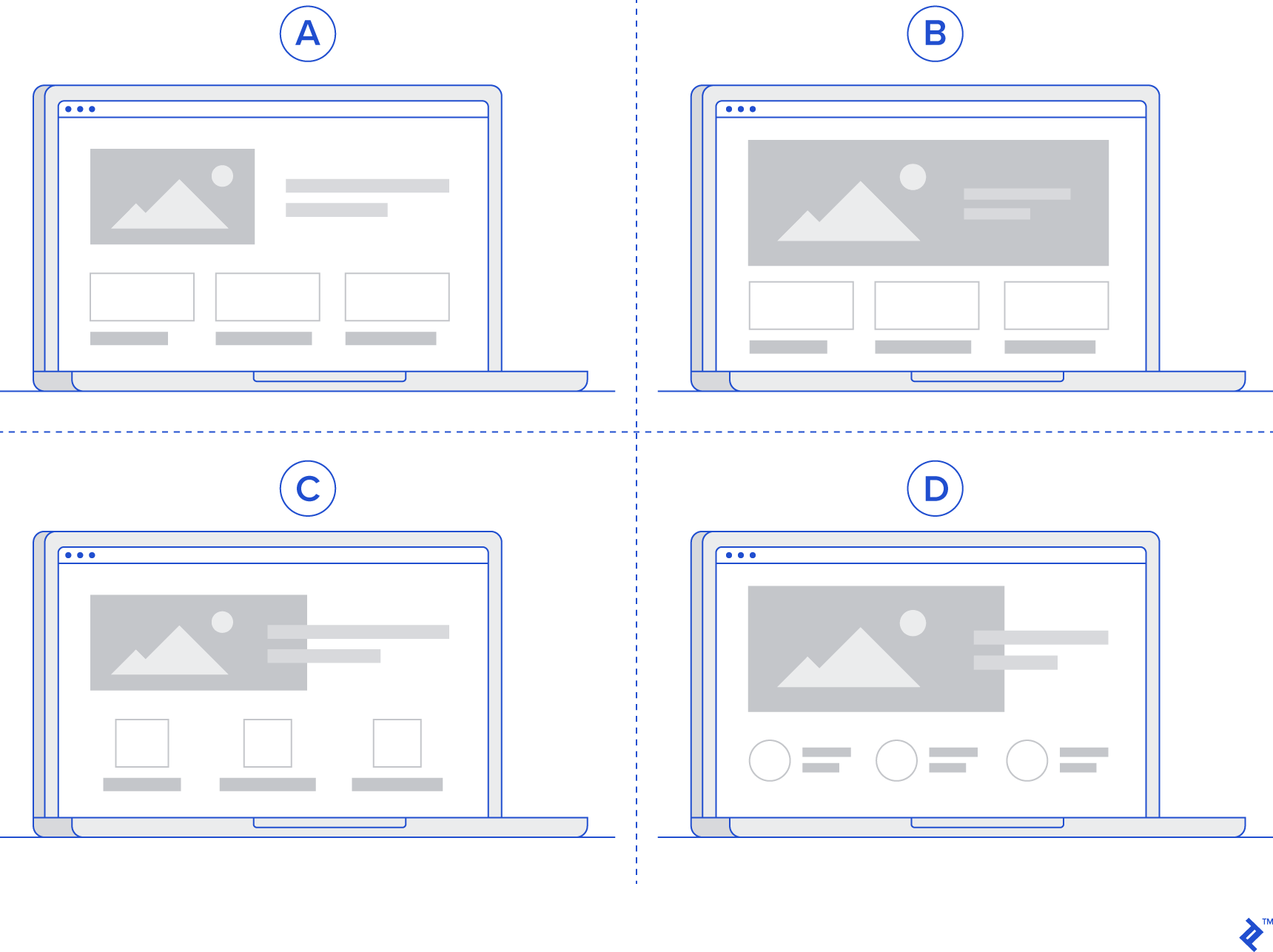

“To verify, we…” is the formalization of the method. You must explain the test you are going to perform. For example, indicate that you are going to run an A/B test on a percentage of the population with version A (a white button) and version B (a red button). I also recommend that you state how long the experiment will run.

“The hypothesis will be validated if…” is the hardest part of formulating hypotheses. The objective is to determine which criterion (a measurable result) will allow you to say whether the hypothesis is confirmed or invalidated. Like any choice of KPI, it must be SMART (Specific, Measurable, Acceptable, Realistic and Time-bound).

"SMART", what does it mean?

- Specific: simple and understandable by all

- Measurable: the result can be read easily

- Acceptable: shared and accepted by the whole team

- Realistic: the chosen metric must be achievable

- Time-bound: a hypothesis must be validated by a set time T; if you need to extend the deadline, it is because the experiment is not conclusive.

This metric can be qualitative (response from our users following an interview, etc.) or quantitative (factual data supported by figures).

In our example, we'll know the hypothesis is confirmed if the version with a more conspicuous button gets 50% more clicks in a week-long A/B test.
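The validation criterion from this example (50% more clicks) can be checked with a few lines of arithmetic. The Python sketch below is only an illustration: it assumes that “more clicks” is measured as click rate per visitor, and the function names and visitor numbers are made up.

```python
def relative_uplift(control_clicks: int, control_visitors: int,
                    variant_clicks: int, variant_visitors: int) -> float:
    """Return the relative change in click rate of the variant versus the control."""
    if control_visitors <= 0 or variant_visitors <= 0:
        raise ValueError("Both groups need at least one visitor")
    control_rate = control_clicks / control_visitors
    variant_rate = variant_clicks / variant_visitors
    if control_rate == 0:
        raise ValueError("Control click rate is zero; relative uplift is undefined")
    return (variant_rate - control_rate) / control_rate


def is_validated(uplift: float, threshold: float = 0.50) -> bool:
    """The hypothesis counts as validated when the uplift reaches the agreed threshold."""
    return uplift >= threshold


# Made-up numbers for a week-long test, for illustration only.
uplift = relative_uplift(control_clicks=40, control_visitors=1000,
                         variant_clicks=66, variant_visitors=1000)
print(f"uplift: {uplift:.0%}, validated: {is_validated(uplift)}")
```

This sketch only compares the observed uplift with the threshold. It does not check statistical significance, so with small samples the result should be read with caution.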

Step 2 - Experimentation

Once your hypotheses have been formalized, you can move on to the experimentation phase. The aim is then to test your hypothesis and turn your intuition into a conviction.

Treat your list of hypotheses as a backlog to prioritize and work through. Ordering it by priority lets you define an experimentation schedule.

A clear, prioritized backlog of experiments makes follow-up easier, especially when several people are involved in the experiments.

Your experiment sheets list the tests you are going to carry out and the results you have obtained.
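As a rough illustration, a backlog of experiment sheets could be modeled as follows in Python. The `ExperimentSheet` structure, its field names, and the sample entries are assumptions made for this sketch, not a Hubvisory format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExperimentSheet:
    """One entry in the experiment backlog (all field names are hypothetical)."""
    hypothesis: str
    test: str
    success_criterion: str
    priority: int                  # 1 = run first
    owner: str
    result: Optional[str] = None   # filled in once the experiment has run


def schedule(backlog: list[ExperimentSheet]) -> list[ExperimentSheet]:
    """Return the experiments that still need to run, highest priority first."""
    pending = [sheet for sheet in backlog if sheet.result is None]
    return sorted(pending, key=lambda sheet: sheet.priority)


# Illustrative entries only.
backlog = [
    ExperimentSheet("A shorter signup form increases subscriptions",
                    "A/B test over two weeks", "+20% subscriptions",
                    priority=2, owner="loyalty officer"),
    ExperimentSheet("A more conspicuous button increases clicks",
                    "A/B test over one week", "+50% clicks",
                    priority=1, owner="newsletter editor"),
    ExperimentSheet("A weekly digest increases subscriptions",
                    "User interviews", "8 of 10 users prefer the digest",
                    priority=3, owner="newsletter editor", result="rejected"),
]

for sheet in schedule(backlog):
    print(sheet.priority, sheet.owner, sheet.hypothesis)
```

Keeping the result on the same sheet as the test means the backlog doubles as a record of what has already been learned.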

Step 3 - The hypothesis is validated

Once you have completed your experiment, you will have answers to the questions you set out to test. This will allow you to embark on the design of a relevant solution with more confidence.

Step 3b - I made a mistake

Did your experiment not lead where you wanted? It's still a victory. As we have seen, experimentation is there to save you time and money in your product development.

Whether the result of your experiment is positive or negative, it will help you know your product and your users better. You now have all the keys in hand to work on your problems and make your product a high-performance product!

What are the advantages of working on product hypotheses?

This method is popular because its many advantages improve the quality of your deliverables and, as a result, your daily life as a Product Manager:

- You avoid relying solely on intuition and you make it more likely that the solutions you put in place have an impact. The objective is not to completely eliminate your intuition, which is essential in the daily life of a Product Manager, but to support it with evidence and tangible results.

- You avoid incurring unnecessary costs for the development of a feature or product. We know that launching a product or developing a feature requires time and money that, once spent, cannot be recovered if you have made the wrong choices.

- You have a problem-based approach, not a solution-based one. The product hypothesis method allows you to question the health of your product and the state of your market. By identifying the right pain points and the right opportunities, you will be able to work more efficiently on the right solution to put in place.

- You can estimate the ROI of your solution through experimentation. An A/B test or any other experimentation method can give you an ROI trend. If one version of your homepage brings 10% more sessions during the experiment, you can reasonably expect a similar trend when you roll that homepage out on a larger scale.

In conclusion

The product hypothesis is a well-suited tool for the Product Manager to de-risk decisions and limit the costs that could be incurred on low-value features. In a few steps, it allows the Product Manager to build a product whose evolutions have been tested and analyzed, which improves the chances of creating a successful digital product.

Product Talk

Make better product decisions.

The 5 Components of a Good Hypothesis

November 12, 2014 by Teresa Torres

Update: I’ve since revised this hypothesis format. You can find the most current version in this article:

- How to Improve Your Experiment Design (And Build Trust in Your Product Experiments)

“My hypothesis is …”

These words are becoming more common every day. Product teams are starting to talk like scientists. Are you?

The internet industry is going through a mindset shift. Instead of assuming we have all the right answers, we are starting to acknowledge that building products is hard. We are accepting the reality that our ideas are going to fail more often than they are going to succeed.

Rather than waiting to find out which ideas are which after engineers build them, smart product teams are starting to integrate experimentation into their product discovery process. They are asking themselves, how can we test this idea before we invest in it?

This process starts with formulating a good hypothesis.

These Are Not the Hypotheses You Are Looking For

When we are new to hypothesis testing, we tend to start with hypotheses like these:

- Fixing the hard-to-use comment form will increase user engagement.

- A redesign will improve site usability.

- Reducing prices will make customers happy.

There’s only one problem. These aren’t testable hypotheses. They aren’t specific enough.

A good hypothesis can be clearly refuted or supported by an experiment.

To make sure that your hypotheses can be supported or refuted by an experiment, you will want to include each of these elements:

- the change that you are testing

- what impact you expect the change to have

- who you expect it to impact

- by how much

- after how long
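One way to keep these five elements in view is to write each hypothesis as a small record and check that nothing was left blank. The Python sketch below is illustrative only; the `Hypothesis` class, its field names, and the example values are assumptions, not part of the original format.

```python
from dataclasses import dataclass, fields


@dataclass
class Hypothesis:
    change: str     # the change you are testing
    impact: str     # what impact you expect the change to have
    audience: str   # who you expect it to impact
    magnitude: str  # by how much
    timeframe: str  # after how long


def missing_components(h: Hypothesis) -> list[str]:
    """List the components that were left blank, which makes the hypothesis hard to test."""
    return [f.name for f in fields(h) if not getattr(h, f.name).strip()]


# A vague hypothesis like "A redesign will improve site usability".
vague = Hypothesis(change="A redesign", impact="improve site usability",
                   audience="", magnitude="", timeframe="")

# A more specific version, with made-up values.
specific = Hypothesis(change="Replacing the comment form with a single text box",
                      impact="increase the number of comments posted",
                      audience="logged-in readers",
                      magnitude="10%",
                      timeframe="two weeks")

print(missing_components(vague))     # ['audience', 'magnitude', 'timeframe']
print(missing_components(specific))  # []
```

A check like this only confirms that every element is present. Whether each one is specific enough still requires judgment.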

The Change: This is the change that you are introducing to your product. You are testing a new design, you are adding new copy to a landing page, or you are rolling out a new feature.

Be sure to get specific. Fixing a hard-to-use comment form is not specific enough. How will you fix it? Some solutions might work. Others might not. Each is a hypothesis in its own right.

Design changes can be particularly challenging. Your hypothesis should cover a specific design, not the idea of a redesign.

In other words, use this:

- This specific design will increase conversions.

Not this:

- Redesigning the landing page will increase conversions.

The former can be supported or refuted by an experiment. The latter can encompass dozens of design solutions, where some might work and others might not.

The Expected Impact: The expected impact should clearly define what you expect to see as a result of making the change.

How will you know if your change is successful? Will it reduce response times, increase conversions, or grow your audience?

The expected impact needs to be specific and measurable.