An overview of methodological approaches in systematic reviews

Prabhakar Veginadu

1 Department of Rural Clinical Sciences, La Trobe Rural Health School, La Trobe University, Bendigo Victoria, Australia

Hanny Calache

2 Lincoln International Institute for Rural Health, University of Lincoln, Brayford Pool, Lincoln UK

Akshaya Pandian

3 Department of Orthodontics, Saveetha Dental College, Chennai Tamil Nadu, India

Mohd Masood

Associated data

APPENDIX B: List of excluded studies with detailed reasons for exclusion

APPENDIX C: Quality assessment of included reviews using AMSTAR 2

The aim of this overview is to identify and collate evidence from existing published systematic review (SR) articles evaluating various methodological approaches used at each stage of an SR.

The search was conducted in five electronic databases from inception to November 2020 and updated in February 2022: MEDLINE, Embase, Web of Science Core Collection, Cochrane Database of Systematic Reviews, and APA PsycINFO. Title and abstract screening were performed in two stages by one reviewer, supported by a second reviewer. Full‐text screening, data extraction, and quality appraisal were performed by two reviewers independently. The quality of the included SRs was assessed using the AMSTAR 2 checklist.

The search retrieved 41,556 unique citations, of which 9 SRs were deemed eligible for inclusion in the final synthesis. Included SRs evaluated 24 unique methodological approaches used for defining the review scope and eligibility, literature search, screening, data extraction, and quality appraisal in the SR process. Limited evidence supports the following: (a) searching multiple resources (electronic databases, handsearching, and reference lists) to identify relevant literature; (b) excluding non‐English, gray, and unpublished literature; and (c) using text‐mining approaches during title and abstract screening.

The overview identified limited SR‐level evidence on various methodological approaches currently employed during five of the seven fundamental steps in the SR process, as well as some methodological modifications currently used in expedited SRs. Overall, findings of this overview highlight the dearth of published SRs focused on SR methodologies and this warrants future work in this area.

1. INTRODUCTION

Evidence synthesis is a prerequisite for knowledge translation. 1 A well‐conducted systematic review (SR), often in conjunction with meta‐analyses (MA) when appropriate, is considered the “gold standard” of methods for synthesizing evidence related to a topic of interest. 2 The central strength of an SR is the transparency of the methods used to systematically search, appraise, and synthesize the available evidence. 3 Several guidelines, developed by various organizations, are available for the conduct of an SR; 4 , 5 , 6 , 7 among these, Cochrane is considered a pioneer in developing rigorous and highly structured methodology for the conduct of SRs. 8 The guidelines developed by these organizations outline seven fundamental steps required in the SR process: defining the scope of the review and eligibility criteria, literature searching and retrieval, selecting eligible studies, extracting relevant data, assessing risk of bias (RoB) in included studies, synthesizing results, and assessing certainty of evidence (CoE) and presenting findings. 4 , 5 , 6 , 7

The methodological rigor involved in an SR can require a significant amount of time and resources, which may not always be available. 9 As a result, there has been a proliferation of modifications made to the traditional SR process, such as refining, shortening, bypassing, or omitting one or more steps, 10 , 11 for example, limits on the number and type of databases searched, limits on publication date, language, and types of studies included, and limiting to one reviewer for screening and selection of studies, as opposed to two or more reviewers. 10 , 11 These methodological modifications are made to accommodate the needs and resource constraints of the reviewers and stakeholders (e.g., organizations, policymakers, health care professionals, and other knowledge users). While such modifications are considered time and resource efficient, they may introduce bias into the review process, reducing the usefulness of the resulting reviews. 5

Substantial research has been conducted examining various approaches used in the standardized SR methodology and their impact on the validity of SR results. There are a number of published reviews examining the approaches or modifications corresponding to single 12 , 13 or multiple steps 14 involved in an SR. However, there is yet to be a comprehensive summary of the SR‐level evidence for all the seven fundamental steps in an SR. Such a holistic evidence synthesis will provide an empirical basis to confirm the validity of current accepted practices in the conduct of SRs. Furthermore, sometimes there is a balance that needs to be achieved between the resource availability and the need to synthesize the evidence in the best way possible, given the constraints. This evidence base will also inform the choice of modifications to be made to the SR methods, as well as the potential impact of these modifications on the SR results. An overview is considered the choice of approach for summarizing existing evidence on a broad topic, directing the reader to evidence, or highlighting the gaps in evidence, where the evidence is derived exclusively from SRs. 15 Therefore, for this review, an overview approach was used to (a) identify and collate evidence from existing published SR articles evaluating various methodological approaches employed in each of the seven fundamental steps of an SR and (b) highlight both the gaps in the current research and the potential areas for future research on the methods employed in SRs.

2. METHODS

An a priori protocol was developed for this overview but was not registered with the International Prospective Register of Systematic Reviews (PROSPERO), as the review was primarily methodological in nature and did not meet PROSPERO eligibility criteria for registration. The protocol is available from the corresponding author upon reasonable request. This overview was conducted based on the guidelines for the conduct of overviews as outlined in The Cochrane Handbook. 15 Reporting followed the Preferred Reporting Items for Systematic reviews and Meta‐analyses (PRISMA) statement. 3

2.1. Eligibility criteria

Only published SRs, with or without associated MA, were included in this overview. We adopted the defining characteristics of SRs from The Cochrane Handbook. 5 According to The Cochrane Handbook, a review was considered systematic if it satisfied the following criteria: (a) clearly states the objectives and eligibility criteria for study inclusion; (b) provides reproducible methodology; (c) includes a systematic search to identify all eligible studies; (d) reports assessment of validity of findings of included studies (e.g., RoB assessment of the included studies); and (e) systematically presents all the characteristics or findings of the included studies. 5 Reviews that did not meet all of the above criteria were not considered SRs for this study and were excluded. MA‐only articles were included if it was mentioned that the MA was based on an SR.

SRs and/or MA of primary studies evaluating methodological approaches used in defining review scope and study eligibility, literature search, study selection, data extraction, RoB assessment, data synthesis, and CoE assessment and reporting were included. The methodological approaches examined in these SRs and/or MA can also be related to the substeps or elements of these steps; for example, applying limits on date or type of publication is an element of the literature search. Included SRs examined or compared various aspects of a method or methods, and the associated factors, including but not limited to: precision or effectiveness; accuracy or reliability; impact on the SR and/or MA results; reproducibility of SR steps or bias introduced; and time and/or resource efficiency. SRs assessing the methodological quality of SRs (e.g., adherence to reporting guidelines), evaluating techniques for building search strategies or the use of specific database filters (e.g., use of Boolean operators or search filters for randomized controlled trials), examining various tools used for RoB or CoE assessment (e.g., ROBINS vs. Cochrane RoB tool), or evaluating statistical techniques used in meta‐analyses were excluded. 14

2.2. Search

The search for published SRs was performed in the following databases, initially from inception to the third week of November 2020 and updated in the last week of February 2022: MEDLINE (via Ovid), Embase (via Ovid), Web of Science Core Collection, Cochrane Database of Systematic Reviews, and American Psychological Association (APA) PsycINFO. The search was restricted to English language publications. In line with the objectives of this study, study design filters within databases were used to restrict the search to SRs and MA, where available. The reference lists of included SRs were also searched for potentially relevant publications.

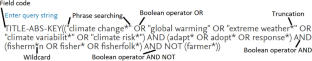

The search terms included keywords, truncations, and subject headings for the key concepts in the review question: SRs and/or MA, methods, and evaluation. Some of the terms were adopted from the search strategy used in a previous review by Robson et al., which reviewed primary studies on methodological approaches used in study selection, data extraction, and quality appraisal steps of SR process. 14 Individual search strategies were developed for respective databases by combining the search terms using appropriate proximity and Boolean operators, along with the related subject headings in order to identify SRs and/or MA. 16 , 17 A senior librarian was consulted in the design of the search terms and strategy. Appendix A presents the detailed search strategies for all five databases.
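As a rough illustration of how concept blocks are combined with Boolean operators, the sketch below joins synonyms within a concept using OR and joins the concepts using AND. The terms shown are hypothetical placeholders, not the strategy in Appendix A, and real database interfaces (for example, Ovid) add their own field tags, proximity operators, and subject-heading syntax that this sketch does not attempt to reproduce.

```python
def build_query(concept_blocks):
    """Combine concept blocks into one Boolean query string.

    Terms inside a block are alternatives (OR); blocks are all required (AND).
    Multi-word terms are wrapped in double quotes so they are read as phrases.
    """
    groups = []
    for terms in concept_blocks:
        quoted = [f'"{term}"' if " " in term else term for term in terms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Hypothetical terms for the three key concepts named in the text.
example_blocks = [
    ["systematic review", "meta-analysis"],  # concept 1: SRs and/or MA
    ["method*"],                             # concept 2: methods
    ["evaluat*"],                            # concept 3: evaluation
]
example_query = build_query(example_blocks)
```

Keeping each concept as its own list makes it straightforward to adapt the same blocks to each database's syntax separately.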

2.3. Study selection and data extraction

Title and abstract screening of references was performed in three steps. First, one reviewer (PV) screened all the titles and excluded obviously irrelevant citations, for example, articles on topics not related to SRs and non‐SR publications (such as randomized controlled trials, observational studies, and scoping reviews). Next, from the remaining citations, a random sample of 200 titles and abstracts was screened against the predefined eligibility criteria by two reviewers (PV and MM), independently, in duplicate. Discrepancies were discussed and resolved by consensus. This step ensured that the responses of the two reviewers were calibrated for consistency in the application of the eligibility criteria in the screening process. Finally, all the remaining titles and abstracts were reviewed by a single “calibrated” reviewer (PV) to identify potential full‐text records. Full‐text screening was performed by at least two authors independently (PV screened all the records, and duplicate assessment was conducted by MM, HC, or MG), with discrepancies resolved via discussions or by consulting a third reviewer.
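The calibration step can be sketched in code as drawing a reproducible random sample and then comparing the two reviewers' decisions. The overview does not report which agreement statistic, if any, was used, so the percent agreement and Cohen's kappa below are assumptions added for illustration; the function names and the seed are hypothetical.

```python
import random


def draw_calibration_sample(citation_ids, sample_size=200, seed=0):
    """Return a reproducible random sample of citation IDs for duplicate screening."""
    rng = random.Random(seed)
    pool = list(citation_ids)
    return rng.sample(pool, min(sample_size, len(pool)))


def agreement_stats(decisions_a, decisions_b):
    """Return (percent agreement as a fraction, Cohen's kappa) for two reviewers."""
    if len(decisions_a) != len(decisions_b) or not decisions_a:
        raise ValueError("decision lists must be non-empty and of equal length")
    n = len(decisions_a)
    observed = sum(a == b for a, b in zip(decisions_a, decisions_b)) / n
    # Chance agreement: product of the reviewers' marginal rates, summed over labels.
    labels = set(decisions_a) | set(decisions_b)
    expected = sum(
        (decisions_a.count(label) / n) * (decisions_b.count(label) / n)
        for label in labels
    )
    # If both reviewers used one identical label throughout, kappa is undefined;
    # it is reported as 1.0 here by convention (an assumption of this sketch).
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    return observed, kappa
```

Records on which the two decision lists differ would then go to the consensus discussion described above.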

Data related to review characteristics, results, key findings, and conclusions were extracted by at least two reviewers independently (PV performed data extraction for all the reviews and duplicate extraction was performed by AP, HC, or MG).

2.4. Quality assessment of included reviews

The quality assessment of the included SRs was performed using the AMSTAR 2 (A MeaSurement Tool to Assess systematic Reviews). The tool consists of a 16‐item checklist addressing critical and noncritical domains. 18 For the purpose of this study, the domain related to MA was reclassified from critical to noncritical, as SRs with and without MA were included. The other six critical domains were used according to the tool guidelines. 18 Two reviewers (PV and AP) independently responded to each of the 16 items in the checklist with either “yes,” “partial yes,” or “no.” Based on the interpretations of the critical and noncritical domains, the overall quality of the review was rated as high, moderate, low, or critically low. 18 Disagreements were resolved through discussion or by consulting a third reviewer.
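The overall rating logic can be sketched as follows. This is a minimal illustration under stated assumptions: the rating rules follow the published AMSTAR 2 guidance (no or one noncritical weakness is high; more than one noncritical weakness is moderate; one critical flaw is low; more than one critical flaw is critically low), the item numbers for the critical domains come from that guidance rather than from this overview, item 11 (meta‐analysis methods) is treated as noncritical to mirror the reclassification described above, and only a "no" response is counted as a weakness.

```python
# Critical items per the AMSTAR 2 guidance, minus item 11 (meta-analysis methods),
# which this overview reclassified as noncritical. The item numbers are an
# assumption taken from the published tool, not from the overview's text.
CRITICAL_ITEMS = frozenset({2, 4, 7, 9, 13, 15})


def amstar2_overall(responses, critical_items=CRITICAL_ITEMS):
    """Map {item_number: 'yes' | 'partial yes' | 'no'} to an overall rating."""
    # Assumption: only "no" counts as a weakness; "partial yes" does not.
    weak_items = {item for item, answer in responses.items() if answer == "no"}
    critical_flaws = len(weak_items & set(critical_items))
    noncritical_weaknesses = len(weak_items - set(critical_items))
    if critical_flaws > 1:
        return "critically low"
    if critical_flaws == 1:
        return "low"
    if noncritical_weaknesses > 1:
        return "moderate"
    return "high"
```

How "partial yes" responses should count is a judgment call for the review team, which is why it is exposed here as an explicit assumption rather than hidden in the logic.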

2.5. Data synthesis

To provide an understandable summary of existing evidence syntheses, characteristics of the methods evaluated in the included SRs were examined and key findings were categorized and presented based on the corresponding step in the SR process. The categories of key elements within each step were discussed and agreed by the authors. Results of the included reviews were tabulated and summarized descriptively, along with a discussion on any overlap in the primary studies. 15 No quantitative analyses of the data were performed.

3. RESULTS

From 41,556 unique citations identified through literature search, 50 full‐text records were reviewed, and nine systematic reviews 14 , 19 , 20 , 21 , 22 , 23 , 24 , 25 , 26 were deemed eligible for inclusion. The flow of studies through the screening process is presented in Figure 1. A list of excluded studies with reasons can be found in Appendix B.

Figure 1. Study selection flowchart

3.1. Characteristics of included reviews

Table 1 summarizes the characteristics of included SRs. The majority of the included reviews (six of nine) were published after 2010. 14 , 22 , 23 , 24 , 25 , 26 Four of the nine included SRs were Cochrane reviews. 20 , 21 , 22 , 23 The number of databases searched in the reviews ranged from 2 to 14, 2 reviews searched gray literature sources, 24 , 25 and 7 reviews included a supplementary search strategy to identify relevant literature. 14 , 19 , 20 , 21 , 22 , 23 , 26 Three of the included SRs (all Cochrane reviews) included an integrated MA. 20 , 21 , 23

Table 1. Characteristics of included studies

SR = systematic review; MA = meta‐analysis; RCT = randomized controlled trial; CCT = controlled clinical trial; N/R = not reported.

The included SRs evaluated 24 unique methodological approaches (26 in total) used across five steps in the SR process; 8 SRs evaluated 6 approaches, 19 , 20 , 21 , 22 , 23 , 24 , 25 , 26 while 1 review evaluated 18 approaches. 14 Exclusion of gray or unpublished literature 21 , 26 and blinding of reviewers for RoB assessment 14 , 23 were evaluated in two reviews each. Included SRs evaluated methods used in five different steps in the SR process, including methods used in defining the scope of review ( n = 3), literature search ( n = 3), study selection ( n = 2), data extraction ( n = 1), and RoB assessment ( n = 2) (Table 2 ).

Table 2. Summary of findings from reviews evaluating systematic review methods

There was some overlap in the primary studies evaluated in the included SRs on the same topics: Schmucker et al. 26 and Hopewell et al. 21 ( n = 4), Hopewell et al. 20 and Crumley et al. 19 ( n = 30), and Robson et al. 14 and Morissette et al. 23 ( n = 4). There were no conflicting results between any of the identified SRs on the same topic.
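Overlap of this kind can be checked by intersecting the lists of primary studies included in each pair of reviews. The sketch below also computes the corrected covered area (CCA), a commonly used summary of overlap in overviews; the CCA was not reported in this overview and is included here only as an assumption about how overlap might be quantified. Study identifiers in any usage are hypothetical.

```python
def shared_primary_studies(studies_a, studies_b):
    """Return the primary studies included in both reviews."""
    return set(studies_a) & set(studies_b)


def corrected_covered_area(reviews):
    """Compute CCA = (N - r) / (r * c - r).

    reviews: mapping of review name -> iterable of primary study IDs.
    N = total inclusions counted across reviews (with double counting),
    r = number of unique primary studies, c = number of reviews.
    """
    study_sets = [set(studies) for studies in reviews.values()]
    c = len(study_sets)
    r = len(set().union(*study_sets))
    n = sum(len(studies) for studies in study_sets)
    if c < 2 or r == 0:
        raise ValueError("need at least two reviews and one primary study")
    return (n - r) / (r * c - r)
```

A CCA of 0 means no primary study appears in more than one review, and a CCA of 1 means every review includes exactly the same primary studies.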

3.2. Methodological quality of included reviews

Overall, the quality of the included reviews was assessed as moderate at best (Table 2 ). The most common critical weakness in the reviews was failure to provide justification for excluding individual studies (four reviews). Detailed quality assessment is provided in Appendix C .

3.3. Evidence on systematic review methods

3.3.1. Methods for defining review scope and eligibility

Two SRs investigated the effect of excluding data obtained from gray or unpublished sources on the pooled effect estimates of MA. 21 , 26 Hopewell et al. 21 reviewed five studies that compared the impact of gray literature on the results of a cohort of MA of RCTs in health care interventions. Gray literature was defined as information published in “print or electronic sources not controlled by commercial or academic publishers.” Findings showed an overall greater treatment effect for published trials than trials reported in gray literature. In a more recent review, Schmucker et al. 26 addressed similar objectives by investigating gray and unpublished data in medicine. In addition to gray literature, defined similarly to the previous review by Hopewell et al., the authors also evaluated unpublished data, defined as “supplemental unpublished data related to published trials, data obtained from the Food and Drug Administration or other regulatory websites or postmarketing analyses hidden from the public.” The review found that in the majority of the MA, excluding gray literature had little or no effect on the pooled effect estimates. The evidence was too limited to conclude whether the data from gray and unpublished literature had an impact on the conclusions of MA. 26

Morrison et al. 24 examined five studies measuring the effect of excluding non‐English language RCTs on the summary treatment effects of SR‐based MA in various fields of conventional medicine. Although none of the included studies reported major difference in the treatment effect estimates between English only and non‐English inclusive MA, the review found inconsistent evidence regarding the methodological and reporting quality of English and non‐English trials. 24 As such, there might be a risk of introducing “language bias” when excluding non‐English language RCTs. The authors also noted that the numbers of non‐English trials vary across medical specialties, as does the impact of these trials on MA results. Based on these findings, Morrison et al. 24 conclude that literature searches must include non‐English studies when resources and time are available to minimize the risk of introducing “language bias.”

3.3.2. Methods for searching studies

Crumley et al. 19 analyzed recall (also referred to as “sensitivity” by some researchers; defined as “percentage of relevant studies identified by the search”) and precision (defined as “percentage of studies identified by the search that were relevant”) when searching a single resource to identify randomized controlled trials and controlled clinical trials, as opposed to searching multiple resources. The studies included in their review frequently compared a MEDLINE only search with the search involving a combination of other resources. The review found low median recall estimates (median values between 24% and 92%) and very low median precisions (median values between 0% and 49%) for most of the electronic databases when searched singularly. 19 A between‐database comparison, based on the type of search strategy used, showed better recall and precision for complex and Cochrane Highly Sensitive search strategies (CHSSS). In conclusion, the authors emphasize that literature searches for trials in SRs must include multiple sources. 19
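The two measures defined above can be written as a short calculation. This is a minimal sketch using the definitions quoted in the text; the record identifiers and counts in the usage example are hypothetical and are not data from Crumley et al.

```python
def recall_precision(retrieved, relevant):
    """Return (recall %, precision %) for one search.

    recall    = relevant records found / all relevant records
    precision = relevant records found / all records retrieved
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = retrieved & relevant
    recall = 100 * len(hits) / len(relevant) if relevant else float("nan")
    precision = 100 * len(hits) / len(retrieved) if retrieved else float("nan")
    return recall, precision


# Hypothetical example: 10 relevant trials exist in total.
relevant_trials = set(range(1, 11))
# A single database returns 40 records, 6 of them relevant.
single_database = set(range(1, 7)) | set(range(100, 134))
# Adding a second resource returns 50 more records, 3 of them relevant.
second_resource = set(range(7, 10)) | set(range(200, 247))

single_result = recall_precision(single_database, relevant_trials)
combined_result = recall_precision(single_database | second_resource, relevant_trials)
```

In this made-up example, combining resources raises recall from 60% to 90% while precision falls from 15% to 10%, which is the usual trade-off when a search is broadened.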

In an SR comparing handsearching and electronic database searching, Hopewell et al. 20 found that handsearching retrieved more relevant RCTs (retrieval rate of 92%−100%) than searching in a single electronic database (retrieval rates of 67% for PsycINFO/PsycLIT, 55% for MEDLINE, and 49% for Embase). The retrieval rates varied depending on the quality of handsearching, type of electronic search strategy used (e.g., simple, complex or CHSSS), and type of trial reports searched (e.g., full reports, conference abstracts, etc.). The authors concluded that handsearching was particularly important in identifying full trials published in nonindexed journals and in languages other than English, as well as those published as abstracts and letters. 20

The effectiveness of checking reference lists to retrieve additional relevant studies for an SR was investigated by Horsley et al. 22 The review reported that checking reference lists yielded 2.5%–40% more studies depending on the quality and comprehensiveness of the electronic search used. The authors conclude that there is some evidence, although from poor quality studies, to support use of checking reference lists to supplement database searching. 22

3.3.3. Methods for selecting studies

Three approaches relevant to reviewer characteristics, including number, experience, and blinding of reviewers involved in the screening process were highlighted in an SR by Robson et al. 14 Based on the retrieved evidence, the authors recommended that two independent, experienced, and unblinded reviewers be involved in study selection. 14 A modified approach has also been suggested by the review authors, where one reviewer screens and the other reviewer verifies the list of excluded studies, when the resources are limited. It should be noted however this suggestion is likely based on the authors’ opinion, as there was no evidence related to this from the studies included in the review.

Robson et al. 14 also reported two methods describing the use of technology for screening studies: use of Google Translate for translating languages (for example, German language articles to English) to facilitate screening was considered a viable method, while using two computer monitors for screening did not increase the screening efficiency in SR. Title‐first screening was found to be more efficient than simultaneous screening of titles and abstracts, although the gain in time with the former method was lesser than the latter. Therefore, considering that the search results are routinely exported as titles and abstracts, Robson et al. 14 recommend screening titles and abstracts simultaneously. However, the authors note that these conclusions were based on very limited number (in most instances one study per method) of low‐quality studies. 14

3.3.4. Methods for data extraction

Robson et al. 14 examined three approaches for data extraction relevant to reviewer characteristics, including number, experience, and blinding of reviewers (similar to the study selection step). Although based on limited evidence from a small number of studies, the authors recommended use of two experienced and unblinded reviewers for data extraction. The experience of the reviewers was suggested to be especially important when extracting continuous outcomes (or quantitative) data. However, when the resources are limited, data extraction by one reviewer and a verification of the outcomes data by a second reviewer was recommended.

As for the methods involving use of technology, Robson et al. 14 identified limited evidence on the use of two monitors to improve the data extraction efficiency and computer‐assisted programs for graphical data extraction. However, use of Google Translate for data extraction in non‐English articles was not considered to be viable. 14 In the same review, Robson et al. 14 identified evidence supporting contacting authors for obtaining additional relevant data.

3.3.5. Methods for RoB assessment

Two SRs examined the impact of blinding of reviewers for RoB assessments. 14 , 23 Morissette et al. 23 investigated the mean differences between the blinded and unblinded RoB assessment scores and found inconsistent differences among the included studies providing no definitive conclusions. Similar conclusions were drawn in a more recent review by Robson et al., 14 which included four studies on reviewer blinding for RoB assessment that completely overlapped with Morissette et al. 23

Use of experienced reviewers and provision of additional guidance for RoB assessment were examined by Robson et al. 14 The review concluded that providing intensive training and guidance on assessing studies reporting insufficient data to the reviewers improves RoB assessments. 14 Obtaining additional data related to quality assessment by contacting study authors was also found to help the RoB assessments, although based on limited evidence. When assessing the qualitative or mixed method reviews, Robson et al. 14 recommend the use of a structured RoB tool as opposed to an unstructured tool. No SRs were identified on the data synthesis or the CoE assessment and reporting steps.

4. DISCUSSION

4.1. Summary of findings

Nine SRs examining 24 unique methods used across five steps in the SR process were identified in this overview. The collective evidence supports some current traditional and modified SR practices, while challenging other approaches. However, the quality of the included reviews was assessed to be moderate at best and in the majority of the included SRs, evidence related to the evaluated methods was obtained from very limited numbers of primary studies. As such, the interpretations from these SRs should be made cautiously.

The evidence gathered from the included SRs corroborates a few current SR approaches. 5 For example, it is important to search multiple resources for identifying relevant trials (RCTs and/or CCTs). The resources must include a combination of electronic database searching, handsearching, and reference lists of retrieved articles. 5 However, no SRs have been identified that evaluated the impact of the number of electronic databases searched. A recent study by Halladay et al. 27 found that articles on therapeutic intervention, retrieved by searching databases other than PubMed (including Embase), contributed only a small amount of information to the MA and also had a minimal impact on the MA results. The authors concluded that when resources are limited and a large number of studies is expected to be retrieved for the SR or MA, a PubMed‐only search can yield reliable results. 27

Findings from the included SRs also reiterate some methodological modifications currently employed to “expedite” the SR process. 10 , 11 For example, excluding non‐English language trials and gray/unpublished trials from MA has been shown to have minimal or no impact on the results of MA. 24 , 26 However, the efficiency of these SR methods, in terms of time and the resources used, has not been evaluated in the included SRs. 24 , 26 Of the SRs included, only two have focused on the aspect of efficiency 14 , 25 ; O'Mara‐Eves et al. 25 report some evidence to support the use of text‐mining approaches for title and abstract screening in order to increase the rate of screening. Moreover, only one included SR 14 considered primary studies that evaluated reliability (inter‐ or intra‐reviewer consistency) and accuracy (validity when compared against a “gold standard” method) of the SR methods. This can be attributed to the limited number of primary studies that evaluated these outcomes when evaluating the SR methods. 14 The lack of outcome measures related to reliability, accuracy, and efficiency precludes making definitive recommendations on the use of these methods/modifications. Future research studies must focus on these outcomes.
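To make the idea of text‐mining for screening concrete, the toy sketch below ranks unscreened records by TF‐IDF cosine similarity to records already judged relevant, so that likely includes are read first. It is an illustration only and is not one of the approaches evaluated by O'Mara‐Eves et al.; the tokenizer, the smoothing of the IDF weights, and the function names are all assumptions, and production tools typically use trained classifiers.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def rank_for_screening(unscreened, known_relevant):
    """Order record IDs from most to least similar to the known relevant texts.

    unscreened: mapping of record ID -> title/abstract text.
    known_relevant: list of texts already judged relevant.
    """
    docs = {rid: Counter(tokenize(text)) for rid, text in unscreened.items()}
    seed = Counter()
    for text in known_relevant:
        seed.update(tokenize(text))

    # Smoothed inverse document frequency over the records plus the seed profile.
    all_docs = list(docs.values()) + [seed]
    n_docs = len(all_docs)
    doc_freq = Counter(term for doc in all_docs for term in doc)
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}

    def weight(counts):
        return {term: count * idf[term] for term, count in counts.items()}

    def cosine(u, v):
        dot = sum(w * v.get(term, 0.0) for term, w in u.items())
        norm_u = math.sqrt(sum(w * w for w in u.values()))
        norm_v = math.sqrt(sum(w * w for w in v.values()))
        return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

    seed_vector = weight(seed)
    scores = {rid: cosine(weight(counts), seed_vector) for rid, counts in docs.items()}
    return sorted(scores, key=scores.get, reverse=True)
```

A ranking like this changes only the order in which records are screened; whether screening can safely stop early is a separate question that the ranking itself does not answer.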

Some evaluated methods may be relevant to multiple steps; for example, exclusions based on publication status (gray/unpublished literature) and language of publication (non‐English language studies) can be outlined in the a priori eligibility criteria or can be incorporated as search limits in the search strategy. SRs included in this overview focused on the effect of study exclusions on pooled treatment effect estimates or MA conclusions. Excluding studies from the search results, after conducting a comprehensive search, based on different eligibility criteria may yield different results when compared to the results obtained when limiting the search itself. 28 Further studies are required to examine this aspect.

Although we acknowledge the lack of standardized quality assessment tools for methodological study designs, we adhered to the Cochrane criteria for identifying SRs in this overview. This was done to ensure consistency in the quality of the included evidence. As a result, we excluded three reviews that did not provide any form of discussion on the quality of the included studies. The methods investigated in these reviews concern supplementary search, 29 data extraction, 12 and screening. 13 However, methods reported in two of these three reviews, by Mathes et al. 12 and Waffenschmidt et al., 13 have also been examined in the SR by Robson et al., 14 which was included in this overview; in most instances (with the exception of one study included in Mathes et al. 12 and Waffenschmidt et al. 13 each), the studies examined in these excluded reviews overlapped with those in the SR by Robson et al. 14

One of the key gaps in the knowledge observed in this overview was the dearth of SRs on the methods used in the data synthesis component of SR. Narrative and quantitative syntheses are the two most commonly used approaches for synthesizing data in evidence synthesis. 5 There are some published studies on the proposed indications and implications of these two approaches. 30 , 31 These studies found that both data synthesis methods produced comparable results and have their own advantages, suggesting that the choice of the method must be based on the purpose of the review. 31 With an increasing number of “expedited” SR approaches (so‐called “rapid reviews”) avoiding MA, 10 , 11 further research studies are warranted in this area to determine the impact of the type of data synthesis on the results of the SR.

4.2. Implications for future research

The findings of this overview highlight several areas of paucity in primary research and evidence synthesis on SR methods. First, no SRs were identified on methods used in two important components of the SR process, namely data synthesis and CoE assessment and reporting. As for the included SRs, a limited number of evaluation studies have been identified for several methods. This indicates that further research is required to corroborate many of the methods recommended in current SR guidelines. 4 , 5 , 6 , 7 Second, some SRs evaluated the impact of methods on the results of quantitative synthesis and MA conclusions. Future research studies must also focus on the interpretations of SR results. 28 , 32 Finally, most of the included SRs were conducted on specific topics related to the field of health care, limiting the generalizability of the findings to other areas. It is important that future research studies evaluating evidence syntheses broaden the objectives and include studies on different topics within the field of health care.

4.3. Strengths and limitations

To our knowledge, this is the first overview summarizing current evidence from SRs and MA on different methodological approaches used in several fundamental steps in SR conduct. The overview methodology followed well established guidelines and strict criteria defined for the inclusion of SRs.

There are several limitations related to the nature of the included reviews. Evidence for most of the methods investigated in the included reviews was derived from a limited number of primary studies. Also, the majority of the included SRs may be considered outdated as they were published (or last updated) more than 5 years ago 33 ; only three of the nine SRs have been published in the last 5 years. 14 , 25 , 26 Therefore, important and recent evidence related to these topics may not have been included. Substantial numbers of included SRs were conducted in the field of health, which may limit the generalizability of the findings. Some method evaluations in the included SRs focused on quantitative analyses components and MA conclusions only. As such, the applicability of these findings to SR more broadly is still unclear. 28 Considering the methodological nature of our overview, limiting the inclusion of SRs according to the Cochrane criteria might have resulted in missing some relevant evidence from those reviews without a quality assessment component. 12 , 13 , 29 Although the included SRs performed some form of quality appraisal of the included studies, most of them did not use a standardized RoB tool, which may impact the confidence in their conclusions. Due to the type of outcome measures used for the method evaluations in the primary studies and the included SRs, some of the identified methods have not been validated against a reference standard.

Some limitations in the overview process must be noted. While our literature search was exhaustive, covering five bibliographic databases and a supplementary search of reference lists, no gray sources or other evidence resources were searched. Also, the search was primarily conducted in health databases, which might have resulted in missing SRs published in other fields. Moreover, only English-language SRs were included for feasibility. As the literature search retrieved a large number of citations (i.e., 41,556), the title and abstract screening was performed by a single reviewer, calibrated for consistency in the screening process by another reviewer, owing to time and resource limitations. These factors might have resulted in some errors when retrieving and selecting relevant SRs. The SR methods were grouped based on key elements of each recommended SR step, as agreed by the authors. This categorization pertains to the identified set of methods and should be considered subjective.

5. CONCLUSIONS

This overview identified limited SR‐level evidence on various methodological approaches currently employed during five of the seven fundamental steps in the SR process. Limited evidence was also identified on some methodological modifications currently used to expedite the SR process. Overall, findings highlight the dearth of SRs on SR methodologies, warranting further work to confirm several current recommendations on conventional and expedited SR processes.

CONFLICT OF INTEREST

The authors declare no conflicts of interest.

Supporting information

APPENDIX A: Detailed search strategies

ACKNOWLEDGMENTS

The first author is supported by a La Trobe University Full Fee Research Scholarship and a Graduate Research Scholarship.

Open Access Funding provided by La Trobe University.

Veginadu P, Calache H, Gussy M, Pandian A, Masood M. An overview of methodological approaches in systematic reviews. J Evid Based Med. 2022;15:39–54. 10.1111/jebm.12468


The PRISMA 2020 statement: an updated guideline for reporting systematic reviews


- Matthew J Page, senior research fellow 1

- Joanne E McKenzie, associate professor 1

- Patrick M Bossuyt, professor 2

- Isabelle Boutron, professor 3

- Tammy C Hoffmann, professor 4

- Cynthia D Mulrow, professor 5

- Larissa Shamseer, doctoral student 6

- Jennifer M Tetzlaff, research product specialist 7

- Elie A Akl, professor 8

- Sue E Brennan, senior research fellow 1

- Roger Chou, professor 9

- Julie Glanville, associate director 10

- Jeremy M Grimshaw, professor 11

- Asbjørn Hróbjartsson, professor 12

- Manoj M Lalu, associate scientist and assistant professor 13

- Tianjing Li, associate professor 14

- Elizabeth W Loder, professor 15

- Evan Mayo-Wilson, associate professor 16

- Steve McDonald, senior research fellow 1

- Luke A McGuinness, research associate 17

- Lesley A Stewart, professor and director 18

- James Thomas, professor 19

- Andrea C Tricco, scientist and associate professor 20

- Vivian A Welch, associate professor 21

- Penny Whiting, associate professor 17

- David Moher, director and professor 22

- 1 School of Public Health and Preventive Medicine, Monash University, Melbourne, Australia

- 2 Department of Clinical Epidemiology, Biostatistics and Bioinformatics, Amsterdam University Medical Centres, University of Amsterdam, Amsterdam, Netherlands

- 3 Université de Paris, Centre of Epidemiology and Statistics (CRESS), Inserm, F 75004 Paris, France

- 4 Institute for Evidence-Based Healthcare, Faculty of Health Sciences and Medicine, Bond University, Gold Coast, Australia

- 5 University of Texas Health Science Center at San Antonio, San Antonio, Texas, USA; Annals of Internal Medicine

- 6 Knowledge Translation Program, Li Ka Shing Knowledge Institute, Toronto, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- 7 Evidence Partners, Ottawa, Canada

- 8 Clinical Research Institute, American University of Beirut, Beirut, Lebanon; Department of Health Research Methods, Evidence, and Impact, McMaster University, Hamilton, Ontario, Canada

- 9 Department of Medical Informatics and Clinical Epidemiology, Oregon Health & Science University, Portland, Oregon, USA

- 10 York Health Economics Consortium (YHEC Ltd), University of York, York, UK

- 11 Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada; School of Epidemiology and Public Health, University of Ottawa, Ottawa, Canada; Department of Medicine, University of Ottawa, Ottawa, Canada

- 12 Centre for Evidence-Based Medicine Odense (CEBMO) and Cochrane Denmark, Department of Clinical Research, University of Southern Denmark, Odense, Denmark; Open Patient data Exploratory Network (OPEN), Odense University Hospital, Odense, Denmark

- 13 Department of Anesthesiology and Pain Medicine, The Ottawa Hospital, Ottawa, Canada; Clinical Epidemiology Program, Blueprint Translational Research Group, Ottawa Hospital Research Institute, Ottawa, Canada; Regenerative Medicine Program, Ottawa Hospital Research Institute, Ottawa, Canada

- 14 Department of Ophthalmology, School of Medicine, University of Colorado Denver, Denver, Colorado, United States; Department of Epidemiology, Johns Hopkins Bloomberg School of Public Health, Baltimore, Maryland, USA

- 15 Division of Headache, Department of Neurology, Brigham and Women's Hospital, Harvard Medical School, Boston, Massachusetts, USA; Head of Research, The BMJ , London, UK

- 16 Department of Epidemiology and Biostatistics, Indiana University School of Public Health-Bloomington, Bloomington, Indiana, USA

- 17 Population Health Sciences, Bristol Medical School, University of Bristol, Bristol, UK

- 18 Centre for Reviews and Dissemination, University of York, York, UK

- 19 EPPI-Centre, UCL Social Research Institute, University College London, London, UK

- 20 Li Ka Shing Knowledge Institute of St. Michael's Hospital, Unity Health Toronto, Toronto, Canada; Epidemiology Division of the Dalla Lana School of Public Health and the Institute of Health Management, Policy, and Evaluation, University of Toronto, Toronto, Canada; Queen's Collaboration for Health Care Quality Joanna Briggs Institute Centre of Excellence, Queen's University, Kingston, Canada

- 21 Methods Centre, Bruyère Research Institute, Ottawa, Ontario, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- 22 Centre for Journalology, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- Correspondence to: M J Page matthew.page{at}monash.edu

- Accepted 4 January 2021

The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement, published in 2009, was designed to help systematic reviewers transparently report why the review was done, what the authors did, and what they found. Over the past decade, advances in systematic review methodology and terminology have necessitated an update to the guideline. The PRISMA 2020 statement replaces the 2009 statement and includes new reporting guidance that reflects advances in methods to identify, select, appraise, and synthesise studies. The structure and presentation of the items have been modified to facilitate implementation. In this article, we present the PRISMA 2020 27-item checklist, an expanded checklist that details reporting recommendations for each item, the PRISMA 2020 abstract checklist, and the revised flow diagrams for original and updated reviews.

Systematic reviews serve many critical roles. They can provide syntheses of the state of knowledge in a field, from which future research priorities can be identified; they can address questions that otherwise could not be answered by individual studies; they can identify problems in primary research that should be rectified in future studies; and they can generate or evaluate theories about how or why phenomena occur. Systematic reviews therefore generate various types of knowledge for different users of reviews (such as patients, healthcare providers, researchers, and policy makers). 1 2 To ensure a systematic review is valuable to users, authors should prepare a transparent, complete, and accurate account of why the review was done, what they did (such as how studies were identified and selected) and what they found (such as characteristics of contributing studies and results of meta-analyses). Up-to-date reporting guidance facilitates authors achieving this. 3

The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement published in 2009 (hereafter referred to as PRISMA 2009) 4 5 6 7 8 9 10 is a reporting guideline designed to address poor reporting of systematic reviews. 11 The PRISMA 2009 statement comprised a checklist of 27 items recommended for reporting in systematic reviews and an “explanation and elaboration” paper 12 13 14 15 16 providing additional reporting guidance for each item, along with exemplars of reporting. The statement has been widely endorsed and adopted, as evidenced by its co-publication in multiple journals, citation in over 60 000 reports (Scopus, August 2020), endorsement from almost 200 journals and systematic review organisations, and adoption in various disciplines. Evidence from observational studies suggests that use of the PRISMA 2009 statement is associated with more complete reporting of systematic reviews, 17 18 19 20 although more could be done to improve adherence to the guideline. 21

Many innovations in the conduct of systematic reviews have occurred since publication of the PRISMA 2009 statement. For example, technological advances have enabled the use of natural language processing and machine learning to identify relevant evidence, 22 23 24 methods have been proposed to synthesise and present findings when meta-analysis is not possible or appropriate, 25 26 27 and new methods have been developed to assess the risk of bias in results of included studies. 28 29 Evidence on sources of bias in systematic reviews has accrued, culminating in the development of new tools to appraise the conduct of systematic reviews. 30 31 Terminology used to describe particular review processes has also evolved, as in the shift from assessing “quality” to assessing “certainty” in the body of evidence. 32 In addition, the publishing landscape has transformed, with multiple avenues now available for registering and disseminating systematic review protocols, 33 34 disseminating reports of systematic reviews, and sharing data and materials, such as preprint servers and publicly accessible repositories. Capturing these advances in the reporting of systematic reviews necessitated an update to the PRISMA 2009 statement.

Summary points

To ensure a systematic review is valuable to users, authors should prepare a transparent, complete, and accurate account of why the review was done, what they did, and what they found

The PRISMA 2020 statement provides updated reporting guidance for systematic reviews that reflects advances in methods to identify, select, appraise, and synthesise studies

The PRISMA 2020 statement consists of a 27-item checklist, an expanded checklist that details reporting recommendations for each item, the PRISMA 2020 abstract checklist, and revised flow diagrams for original and updated reviews

We anticipate that the PRISMA 2020 statement will benefit authors, editors, and peer reviewers of systematic reviews, and different users of reviews, including guideline developers, policy makers, healthcare providers, patients, and other stakeholders

Development of PRISMA 2020

A complete description of the methods used to develop PRISMA 2020 is available elsewhere. 35 We identified PRISMA 2009 items that were often reported incompletely by examining the results of studies investigating the transparency of reporting of published reviews. 17 21 36 37 We identified possible modifications to the PRISMA 2009 statement by reviewing 60 documents providing reporting guidance for systematic reviews (including reporting guidelines, handbooks, tools, and meta-research studies). 38 These reviews of the literature were used to inform the content of a survey with suggested possible modifications to the 27 items in PRISMA 2009 and possible additional items. Respondents were asked whether they believed we should keep each PRISMA 2009 item as is, modify it, or remove it, and whether we should add each additional item. Systematic review methodologists and journal editors were invited to complete the online survey (110 of 220 invited responded). We discussed proposed content and wording of the PRISMA 2020 statement, as informed by the review and survey results, at a 21-member, two-day, in-person meeting in September 2018 in Edinburgh, Scotland. Throughout 2019 and 2020, we circulated an initial draft and five revisions of the checklist and explanation and elaboration paper to co-authors for feedback. In April 2020, we invited 22 systematic reviewers who had expressed interest in providing feedback on the PRISMA 2020 checklist to share their views (via an online survey) on the layout and terminology used in a preliminary version of the checklist. Feedback was received from 15 individuals and considered by the first author, and any revisions deemed necessary were incorporated before the final version was approved and endorsed by all co-authors.

The PRISMA 2020 statement

Scope of the guideline.

The PRISMA 2020 statement has been designed primarily for systematic reviews of studies that evaluate the effects of health interventions, irrespective of the design of the included studies. However, the checklist items are applicable to reports of systematic reviews evaluating other interventions (such as social or educational interventions), and many items are applicable to systematic reviews with objectives other than evaluating interventions (such as evaluating aetiology, prevalence, or prognosis). PRISMA 2020 is intended for use in systematic reviews that include synthesis (such as pairwise meta-analysis or other statistical synthesis methods) or do not include synthesis (for example, because only one eligible study is identified). The PRISMA 2020 items are relevant for mixed-methods systematic reviews (which include quantitative and qualitative studies), but reporting guidelines addressing the presentation and synthesis of qualitative data should also be consulted. 39 40 PRISMA 2020 can be used for original systematic reviews, updated systematic reviews, or continually updated (“living”) systematic reviews. However, for updated and living systematic reviews, there may be some additional considerations that need to be addressed. Where there is relevant content from other reporting guidelines, we reference these guidelines within the items in the explanation and elaboration paper 41 (such as PRISMA-Search 42 in items 6 and 7, and the Synthesis without meta-analysis (SWiM) reporting guideline 27 in item 13d). Box 1 includes a glossary of terms used throughout the PRISMA 2020 statement.

Box 1: Glossary of terms

Systematic review —A review that uses explicit, systematic methods to collate and synthesise findings of studies that address a clearly formulated question 43

Statistical synthesis —The combination of quantitative results of two or more studies. This encompasses meta-analysis of effect estimates (described below) and other methods, such as combining P values, calculating the range and distribution of observed effects, and vote counting based on the direction of effect (see McKenzie and Brennan 25 for a description of each method)

Meta-analysis of effect estimates —A statistical technique used to synthesise results when study effect estimates and their variances are available, yielding a quantitative summary of results 25

Outcome —An event or measurement collected for participants in a study (such as quality of life, mortality)

Result —The combination of a point estimate (such as a mean difference, risk ratio, or proportion) and a measure of its precision (such as a confidence/credible interval) for a particular outcome

Report —A document (paper or electronic) supplying information about a particular study. It could be a journal article, preprint, conference abstract, study register entry, clinical study report, dissertation, unpublished manuscript, government report, or any other document providing relevant information

Record —The title or abstract (or both) of a report indexed in a database or website (such as a title or abstract for an article indexed in Medline). Records that refer to the same report (such as the same journal article) are “duplicates”; however, records that refer to reports that are merely similar (such as a similar abstract submitted to two different conferences) should be considered unique.

Study —An investigation, such as a clinical trial, that includes a defined group of participants and one or more interventions and outcomes. A “study” might have multiple reports. For example, reports could include the protocol, statistical analysis plan, baseline characteristics, results for the primary outcome, results for harms, results for secondary outcomes, and results for additional mediator and moderator analyses
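The glossary definitions of a "result" and of "meta-analysis of effect estimates" can be illustrated with a minimal sketch. It assumes a fixed-effect (common-effect) inverse-variance model, which is only one of several possible synthesis methods and is not prescribed by PRISMA 2020. The `Result` type, the function name, and the input values are hypothetical.

```python
import math
from typing import NamedTuple


class Result(NamedTuple):
    """A point estimate plus a measure of its precision (here a 95% CI)."""
    estimate: float
    ci_low: float
    ci_high: float


def fixed_effect_meta_analysis(estimates, variances, z=1.96):
    """Pool study effect estimates using inverse-variance weights.

    Assumption: a fixed-effect model; each study's weight is 1 / variance.
    """
    if len(estimates) != len(variances) or len(estimates) < 2:
        raise ValueError("need estimates and variances for two or more studies")
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)
    return Result(pooled, pooled - z * standard_error, pooled + z * standard_error)


# Hypothetical mean differences and their variances from three studies.
summary = fixed_effect_meta_analysis([0.2, 0.4, 0.3], [0.04, 0.04, 0.02])
print(f"{summary.estimate:.2f} ({summary.ci_low:.2f} to {summary.ci_high:.2f})")
```

The returned value pairs the pooled point estimate with its confidence interval, matching the glossary's sense of a "result" for one outcome.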

PRISMA 2020 is not intended to guide systematic review conduct, for which comprehensive resources are available. 43 44 45 46 However, familiarity with PRISMA 2020 is useful when planning and conducting systematic reviews to ensure that all recommended information is captured. PRISMA 2020 should not be used to assess the conduct or methodological quality of systematic reviews; other tools exist for this purpose. 30 31 Furthermore, PRISMA 2020 is not intended to inform the reporting of systematic review protocols, for which a separate statement is available (PRISMA for Protocols (PRISMA-P) 2015 statement 47 48 ). Finally, extensions to the PRISMA 2009 statement have been developed to guide reporting of network meta-analyses, 49 meta-analyses of individual participant data, 50 systematic reviews of harms, 51 systematic reviews of diagnostic test accuracy studies, 52 and scoping reviews 53 ; for these types of reviews we recommend authors report their review in accordance with the recommendations in PRISMA 2020 along with the guidance specific to the extension.

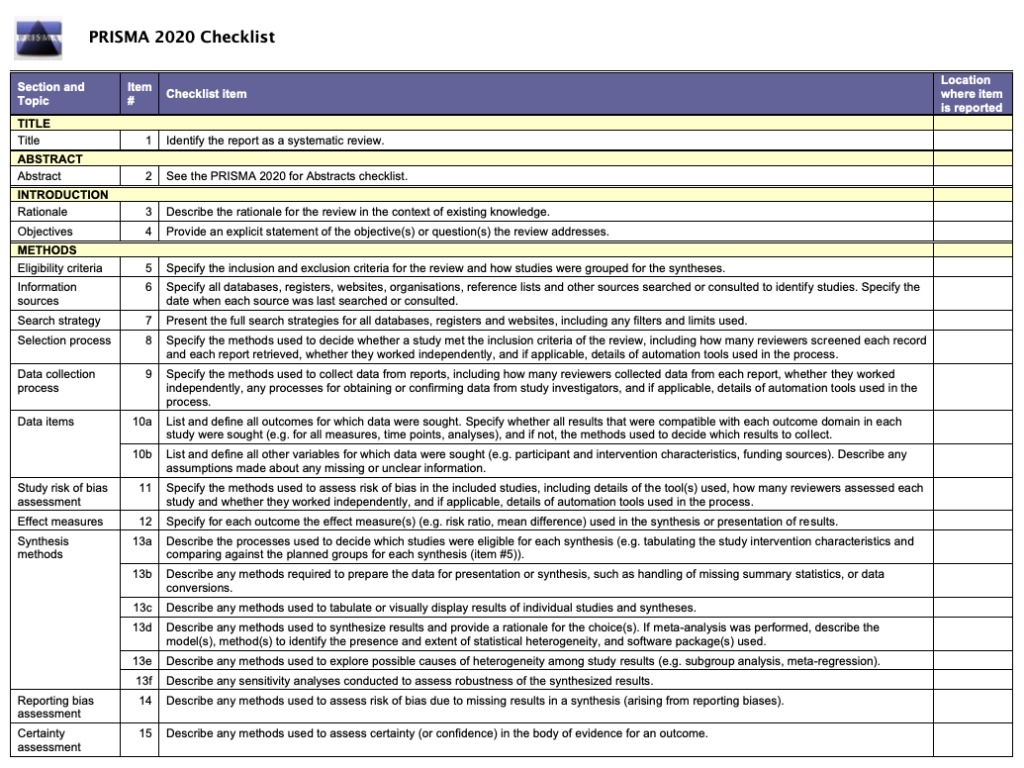

How to use PRISMA 2020

The PRISMA 2020 statement (including the checklists, explanation and elaboration, and flow diagram) replaces the PRISMA 2009 statement, which should no longer be used. Box 2 summarises noteworthy changes from the PRISMA 2009 statement. The PRISMA 2020 checklist includes seven sections with 27 items, some of which include sub-items ( table 1 ). A checklist for journal and conference abstracts for systematic reviews is included in PRISMA 2020. This abstract checklist is an update of the 2013 PRISMA for Abstracts statement, 54 reflecting new and modified content in PRISMA 2020 ( table 2 ). A template PRISMA flow diagram is provided, which can be modified depending on whether the systematic review is original or updated ( fig 1 ).

Box 2: Noteworthy changes to the PRISMA 2009 statement

Inclusion of the abstract reporting checklist within PRISMA 2020 (see item #2 and table 2 ).

Movement of the ‘Protocol and registration’ item from the start of the Methods section of the checklist to a new Other section, with addition of a sub-item recommending authors describe amendments to information provided at registration or in the protocol (see item #24a-24c).

Modification of the ‘Search’ item to recommend authors present full search strategies for all databases, registers and websites searched, not just at least one database (see item #7).

Modification of the ‘Study selection’ item in the Methods section to emphasise the reporting of how many reviewers screened each record and each report retrieved, whether they worked independently, and if applicable, details of automation tools used in the process (see item #8).

Addition of a sub-item to the ‘Data items’ item recommending authors report how outcomes were defined, which results were sought, and methods for selecting a subset of results from included studies (see item #10a).

Splitting of the ‘Synthesis of results’ item in the Methods section into six sub-items recommending authors describe: the processes used to decide which studies were eligible for each synthesis; any methods required to prepare the data for synthesis; any methods used to tabulate or visually display results of individual studies and syntheses; any methods used to synthesise results; any methods used to explore possible causes of heterogeneity among study results (such as subgroup analysis, meta-regression); and any sensitivity analyses used to assess robustness of the synthesised results (see item #13a-13f).

Addition of a sub-item to the ‘Study selection’ item in the Results section recommending authors cite studies that might appear to meet the inclusion criteria, but which were excluded, and explain why they were excluded (see item #16b).

Splitting of the ‘Synthesis of results’ item in the Results section into four sub-items recommending authors: briefly summarise the characteristics and risk of bias among studies contributing to the synthesis; present results of all statistical syntheses conducted; present results of any investigations of possible causes of heterogeneity among study results; and present results of any sensitivity analyses (see item #20a-20d).

Addition of new items recommending authors report methods for and results of an assessment of certainty (or confidence) in the body of evidence for an outcome (see items #15 and #22).

Addition of a new item recommending authors declare any competing interests (see item #26).

Addition of a new item recommending authors indicate whether data, analytic code and other materials used in the review are publicly available and if so, where they can be found (see item #27).

Table 1: PRISMA 2020 item checklist


Table 2: PRISMA 2020 for Abstracts checklist*

Fig 1: PRISMA 2020 flow diagram template for systematic reviews. The new design is adapted from flow diagrams proposed by Boers, 55 Mayo-Wilson et al. 56 and Stovold et al. 57 The boxes in grey should only be completed if applicable; otherwise they should be removed from the flow diagram. Note that a “report” could be a journal article, preprint, conference abstract, study register entry, clinical study report, dissertation, unpublished manuscript, government report or any other document providing relevant information.
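As a rough illustration of how the counts in a flow diagram relate to one another, the sketch below removes duplicate records (records that refer to the same report, as defined in the glossary) and derives the number remaining at each later stage. The `Record` fields, the use of a report identifier as the matching key, and the dictionary keys are assumptions made for this example; they are not the wording of the official template, which should be consulted for the actual box labels.

```python
from typing import NamedTuple


class Record(NamedTuple):
    source: str     # database or register the record was retrieved from
    report_id: str  # hypothetical identifier of the underlying report
    title: str


def remove_duplicates(records):
    """Keep the first record seen for each report; later ones are duplicates."""
    seen = set()
    unique = []
    for record in records:
        if record.report_id not in seen:
            seen.add(record.report_id)
            unique.append(record)
    return unique


def flow_counts(records, excluded_at_screening, reports_not_retrieved, reports_excluded):
    """Derive the count at each successive stage of the selection process."""
    screened = len(remove_duplicates(records))
    sought = screened - excluded_at_screening
    assessed = sought - reports_not_retrieved
    included = assessed - reports_excluded
    if min(sought, assessed, included) < 0:
        raise ValueError("exclusions exceed the records or reports available")
    return {
        "records_identified": len(records),
        "duplicates_removed": len(records) - screened,
        "records_screened": screened,
        "reports_sought": sought,
        "reports_assessed": assessed,
        "reports_included": included,
    }


# Hypothetical records: the first two refer to the same report (duplicates);
# the third is a similar but distinct report, so it stays unique.
records = [
    Record("Medline", "report-a", "Trial A"),
    Record("Embase", "report-a", "Trial A"),
    Record("Embase", "report-b", "Trial A (conference abstract)"),
    Record("Medline", "report-c", "Trial C"),
]
counts = flow_counts(records, excluded_at_screening=1,
                     reports_not_retrieved=0, reports_excluded=1)
print(counts)
```

Deriving each count from the previous one makes arithmetic inconsistencies between boxes easy to catch before the diagram is drawn.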


We recommend authors refer to PRISMA 2020 early in the writing process, because prospective consideration of the items may help to ensure that all the items are addressed. To help keep track of which items have been reported, the PRISMA statement website ( http://www.prisma-statement.org/ ) includes fillable templates of the checklists to download and complete (also available in the data supplement on bmj.com). We have also created a web application that allows users to complete the checklist via a user-friendly interface 58 (available at https://prisma.shinyapps.io/checklist/ and adapted from the Transparency Checklist app 59 ). The completed checklist can be exported to Word or PDF. Editable templates of the flow diagram can also be downloaded from the PRISMA statement website.
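For teams that prefer to track progress in a script rather than in the fillable templates, a minimal sketch is shown below. It is not part of the PRISMA tools. The item numbers are those cited in Box 2, the short labels are paraphrases rather than official item wording, and the function and variable names are hypothetical.

```python
# Hypothetical subset of checklist items (numbers as cited in Box 2;
# labels are paraphrased, not the official item wording).
ITEMS = {
    "7": "Search strategy",
    "8": "Study selection",
    "26": "Competing interests",
    "27": "Availability of data, code and other materials",
}


def unreported_items(items, locations):
    """Return item numbers that have no recorded location in the manuscript."""
    return [number for number in items if not locations.get(number)]


# Hypothetical record of where each item is reported so far.
locations = {
    "7": "Supplementary file 1",
    "8": "Methods, paragraph 3",
    "26": "",
}
print(unreported_items(ITEMS, locations))
```

Recording a location for each item mirrors the practice, mentioned later in the text, of journals asking authors to indicate where in the manuscript each reporting item is addressed.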

We have prepared an updated explanation and elaboration paper, in which we explain why reporting of each item is recommended and present bullet points that detail the reporting recommendations (which we refer to as elements). 41 The bullet-point structure is new to PRISMA 2020 and has been adopted to facilitate implementation of the guidance. 60 61 An expanded checklist, which comprises an abridged version of the elements presented in the explanation and elaboration paper, with references and some examples removed, is available in the data supplement on bmj.com. Consulting the explanation and elaboration paper is recommended if further clarity or information is required.

Journals and publishers might impose word and section limits, and limits on the number of tables and figures allowed in the main report. In such cases, if the relevant information for some items already appears in a publicly accessible review protocol, referring to the protocol may suffice. Alternatively, placing detailed descriptions of the methods used or additional results (such as for less critical outcomes) in supplementary files is recommended. Ideally, supplementary files should be deposited to a general-purpose or institutional open-access repository that provides free and permanent access to the material (such as Open Science Framework, Dryad, figshare). A reference or link to the additional information should be included in the main report. Finally, although PRISMA 2020 provides a template for where information might be located, the suggested location should not be seen as prescriptive; the guiding principle is to ensure the information is reported.

Use of PRISMA 2020 has the potential to benefit many stakeholders. Complete reporting allows readers to assess the appropriateness of the methods, and therefore the trustworthiness of the findings. Presenting and summarising characteristics of studies contributing to a synthesis allows healthcare providers and policy makers to evaluate the applicability of the findings to their setting. Describing the certainty in the body of evidence for an outcome and the implications of findings should help policy makers, managers, and other decision makers formulate appropriate recommendations for practice or policy. Complete reporting of all PRISMA 2020 items also facilitates replication and review updates, as well as inclusion of systematic reviews in overviews (of systematic reviews) and guidelines, so teams can leverage work that is already done and decrease research waste. 36 62 63

We updated the PRISMA 2009 statement by adapting the EQUATOR Network’s guidance for developing health research reporting guidelines. 64 We evaluated the reporting completeness of published systematic reviews, 17 21 36 37 reviewed the items included in other documents providing guidance for systematic reviews, 38 surveyed systematic review methodologists and journal editors for their views on how to revise the original PRISMA statement, 35 discussed the findings at an in-person meeting, and prepared this document through an iterative process. Our recommendations are informed by the reviews and survey conducted before the in-person meeting, theoretical considerations about which items facilitate replication and help users assess the risk of bias and applicability of systematic reviews, and co-authors’ experience with authoring and using systematic reviews.

Various strategies to increase the use of reporting guidelines and improve reporting have been proposed. They include educators introducing reporting guidelines into graduate curricula to promote good reporting habits of early career scientists 65 ; journal editors and regulators endorsing use of reporting guidelines 18 ; peer reviewers evaluating adherence to reporting guidelines 61 66 ; journals requiring authors to indicate where in their manuscript they have adhered to each reporting item 67 ; and authors using online writing tools that prompt complete reporting at the writing stage. 60 Multi-pronged interventions, where more than one of these strategies is combined, may be more effective (such as completion of checklists coupled with editorial checks). 68 However, of 31 interventions proposed to increase adherence to reporting guidelines, the effects of only 11 have been evaluated, mostly in observational studies at high risk of bias due to confounding. 69 It is therefore unclear which strategies should be used. Future research might explore barriers and facilitators to the use of PRISMA 2020 by authors, editors, and peer reviewers, design interventions that address the identified barriers, and evaluate those interventions using randomised trials. To inform possible revisions to the guideline, it would also be valuable to conduct think-aloud studies 70 to understand how systematic reviewers interpret the items, and reliability studies to identify items that are interpreted inconsistently.

We encourage readers to submit evidence that informs any of the recommendations in PRISMA 2020 (via the PRISMA statement website: http://www.prisma-statement.org/ ). To enhance accessibility of PRISMA 2020, several translations of the guideline are under way (see available translations at the PRISMA statement website). We encourage journal editors and publishers to raise awareness of PRISMA 2020 (for example, by referring to it in journal “Instructions to authors”), endorse its use, advise editors and peer reviewers to evaluate submitted systematic reviews against the PRISMA 2020 checklists, and make changes to journal policies to accommodate the new reporting recommendations. We recommend existing PRISMA extensions 47 49 50 51 52 53 71 72 be updated to reflect PRISMA 2020 and advise developers of new PRISMA extensions to use PRISMA 2020 as the foundation document.

We anticipate that the PRISMA 2020 statement will benefit authors, editors, and peer reviewers of systematic reviews, and different users of reviews, including guideline developers, policy makers, healthcare providers, patients, and other stakeholders. Ultimately, we hope that uptake of the guideline will lead to more transparent, complete, and accurate reporting of systematic reviews, thus facilitating evidence based decision making.

Acknowledgments

We dedicate this paper to the late Douglas G Altman and Alessandro Liberati, whose contributions were fundamental to the development and implementation of the original PRISMA statement.

We thank the following contributors who completed the survey to inform discussions at the development meeting: Xavier Armoiry, Edoardo Aromataris, Ana Patricia Ayala, Ethan M Balk, Virginia Barbour, Elaine Beller, Jesse A Berlin, Lisa Bero, Zhao-Xiang Bian, Jean Joel Bigna, Ferrán Catalá-López, Anna Chaimani, Mike Clarke, Tammy Clifford, Ioana A Cristea, Miranda Cumpston, Sofia Dias, Corinna Dressler, Ivan D Florez, Joel J Gagnier, Chantelle Garritty, Long Ge, Davina Ghersi, Sean Grant, Gordon Guyatt, Neal R Haddaway, Julian PT Higgins, Sally Hopewell, Brian Hutton, Jamie J Kirkham, Jos Kleijnen, Julia Koricheva, Joey SW Kwong, Toby J Lasserson, Julia H Littell, Yoon K Loke, Malcolm R Macleod, Chris G Maher, Ana Marušic, Dimitris Mavridis, Jessie McGowan, Matthew DF McInnes, Philippa Middleton, Karel G Moons, Zachary Munn, Jane Noyes, Barbara Nußbaumer-Streit, Donald L Patrick, Tatiana Pereira-Cenci, Ba’ Pham, Bob Phillips, Dawid Pieper, Michelle Pollock, Daniel S Quintana, Drummond Rennie, Melissa L Rethlefsen, Hannah R Rothstein, Maroeska M Rovers, Rebecca Ryan, Georgia Salanti, Ian J Saldanha, Margaret Sampson, Nancy Santesso, Rafael Sarkis-Onofre, Jelena Savović, Christopher H Schmid, Kenneth F Schulz, Guido Schwarzer, Beverley J Shea, Paul G Shekelle, Farhad Shokraneh, Mark Simmonds, Nicole Skoetz, Sharon E Straus, Anneliese Synnot, Emily E Tanner-Smith, Brett D Thombs, Hilary Thomson, Alexander Tsertsvadze, Peter Tugwell, Tari Turner, Lesley Uttley, Jeffrey C Valentine, Matt Vassar, Areti Angeliki Veroniki, Meera Viswanathan, Cole Wayant, Paul Whaley, and Kehu Yang. We thank the following contributors who provided feedback on a preliminary version of the PRISMA 2020 checklist: Jo Abbott, Fionn Büttner, Patricia Correia-Santos, Victoria Freeman, Emily A Hennessy, Rakibul Islam, Amalia (Emily) Karahalios, Kasper Krommes, Andreas Lundh, Dafne Port Nascimento, Davina Robson, Catherine Schenck-Yglesias, Mary M Scott, Sarah Tanveer and Pavel Zhelnov. 
We thank Abigail H Goben, Melissa L Rethlefsen, Tanja Rombey, Anna Scott, and Farhad Shokraneh for their helpful comments on the preprints of the PRISMA 2020 papers. We thank Edoardo Aromataris, Stephanie Chang, Toby Lasserson and David Schriger for their helpful peer review comments on the PRISMA 2020 papers.

Contributors: JEM and DM are joint senior authors. MJP, JEM, PMB, IB, TCH, CDM, LS, and DM conceived this paper and designed the literature review and survey conducted to inform the guideline content. MJP conducted the literature review, administered the survey and analysed the data for both. MJP prepared all materials for the development meeting. MJP and JEM presented proposals at the development meeting. All authors except for TCH, JMT, EAA, SEB, and LAM attended the development meeting. MJP and JEM took and consolidated notes from the development meeting. MJP and JEM led the drafting and editing of the article. JEM, PMB, IB, TCH, LS, JMT, EAA, SEB, RC, JG, AH, TL, EMW, SM, LAM, LAS, JT, ACT, PW, and DM drafted particular sections of the article. All authors were involved in revising the article critically for important intellectual content. All authors approved the final version of the article. MJP is the guarantor of this work. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Funding: There was no direct funding for this research. MJP is supported by an Australian Research Council Discovery Early Career Researcher Award (DE200101618) and was previously supported by an Australian National Health and Medical Research Council (NHMRC) Early Career Fellowship (1088535) during the conduct of this research. JEM is supported by an Australian NHMRC Career Development Fellowship (1143429). TCH is supported by an Australian NHMRC Senior Research Fellowship (1154607). JMT is supported by Evidence Partners Inc. JMG is supported by a Tier 1 Canada Research Chair in Health Knowledge Transfer and Uptake. MML is supported by The Ottawa Hospital Anaesthesia Alternate Funds Association and a Faculty of Medicine Junior Research Chair. TL is supported by funding from the National Eye Institute (UG1EY020522), National Institutes of Health, United States. LAM is supported by a National Institute for Health Research Doctoral Research Fellowship (DRF-2018-11-ST2-048). ACT is supported by a Tier 2 Canada Research Chair in Knowledge Synthesis. DM is supported in part by a University Research Chair, University of Ottawa. The funders had no role in considering the study design or in the collection, analysis, interpretation of data, writing of the report, or decision to submit the article for publication.

Competing interests: All authors have completed the ICMJE uniform disclosure form at http://www.icmje.org/conflicts-of-interest/ and declare: EL is head of research for the BMJ; MJP is an editorial board member for PLOS Medicine; ACT is an associate editor and MJP, TL, EMW, and DM are editorial board members for the Journal of Clinical Epidemiology; DM and LAS were editors in chief, LS, JMT, and ACT are associate editors, and JG is an editorial board member for Systematic Reviews. None of these authors were involved in the peer review process or decision to publish. TCH has received personal fees from Elsevier outside the submitted work. EMW has received personal fees from the American Journal for Public Health, for which he is the editor for systematic reviews. VW is editor in chief of the Campbell Collaboration, which produces systematic reviews, and co-convenor of the Campbell and Cochrane equity methods group. DM is chair of the EQUATOR Network, IB is adjunct director of the French EQUATOR Centre and TCH is co-director of the Australasian EQUATOR Centre, which advocates for the use of reporting guidelines to improve the quality of reporting in research articles. JMT received salary from Evidence Partners, creator of DistillerSR software for systematic reviews; Evidence Partners was not involved in the design or outcomes of the statement, and the views expressed solely represent those of the author.

Provenance and peer review: Not commissioned; externally peer reviewed.

Patient and public involvement: Patients and the public were not involved in this methodological research. We plan to disseminate the research widely, including to community participants in evidence synthesis organisations.

This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: http://creativecommons.org/licenses/by/4.0/.

Which review is that? A guide to review types.

Methodological Review

"A methodological review is a type of systematic secondary research (i.e., research synthesis) which focuses on summarising the state-of-the-art methodological practices of research in a substantive field or topic" (Chong & Reinders, 2021).

Methodological reviews "can be performed to examine any methodological issues relating to the design, conduct and review of research studies and also evidence syntheses" (Munn et al, 2018).

Further Reading/Resources

Clarke, M., Oxman, A. D., Paulsen, E., Higgins, J. P. T., & Green, S. (2011). Appendix A: Guide to the contents of a Cochrane Methodology protocol and review. Cochrane Handbook for systematic reviews of interventions.

Aguinis, H., Ramani, R. S., & Alabduljader, N. (2023). Best-Practice Recommendations for Producers, Evaluators, and Users of Methodological Literature Reviews. Organizational Research Methods, 26(1), 46-76. https://doi.org/10.1177/1094428120943281

Jha, C. K., & Kolekar, M. H. (2021). Electrocardiogram data compression techniques for cardiac healthcare systems: A methodological review. IRBM.

References

Munn, Z., Stern, C., Aromataris, E., Lockwood, C., & Jordan, Z. (2018). What kind of systematic review should I conduct? A proposed typology and guidance for systematic reviewers in the medical and health sciences. BMC medical research methodology, 18(1), 1-9.

Chong, S. W., & Reinders, H. (2021). A methodological review of qualitative research syntheses in CALL: The state-of-the-art. System, 103, 102646.

- Last Updated: Apr 17, 2024 12:42 PM

- URL: https://unimelb.libguides.com/whichreview

The ABC of systematic literature review: the basic methodological guidance for beginners

- Published: 23 October 2020

- Volume 55, pages 1319–1346 (2021)

- Hayrol Azril Mohamed Shaffril, Samsul Farid Samsuddin & Asnarulkhadi Abu Samah

More methodology-focused articles on the systematic literature review (SLR) are needed for non-health researchers, who face a shortage of methodological references and find existing guidance poorly suited to their fields. This study therefore presents a beginner's guide to the basic methodological steps and key points of performing an SLR, especially for researchers from non-health-related backgrounds. A total of 75 articles that met the minimum quality threshold were retrieved using systematic searching strategies. Seven main points of SLR are discussed: (1) the development and validation of the review protocol/publication standard/reporting standard/guidelines, (2) the formulation of research questions, (3) systematic searching strategies, (4) quality appraisal, (5) data extraction, (6) data synthesis, and (7) data demonstration.

Athukorala, K., Głowacka, D., Jacucci, G., Oulasvirta, A., Vreeken, J.: Is exploratory search different?: A comparison of information search behavior for exploratory and lookup tasks. J. Assoc. Inf. Sci. Technol. 67 (11), 2635–2651 (2016). https://doi.org/10.1002/asi.23617

Barnett-Page, E., Thomas, J.: Methods for the synthesis of qualitative research: a critical review. BMC Med. Res. Methodol. (2009). https://doi.org/10.1186/1471-2288-9-59

Bates, J., Best, P., McQuilkin, J., Taylor, B.: Will web search engines replace bibliographic databases in the systematic identification of research? J. Acad. Librariansh. 43 (1), 8–17 (2017)

Berrang-Ford, L., Pearce, T., Ford, J.D.: Systematic review approaches for climate change adaptation research. Reg. Environ. Change 15 (5), 755–769 (2015). https://doi.org/10.1007/s10113-014-0708-7

Braun, V., Clarke, V.: Using thematic analysis in psychology. Qual. Res. Psychol. 3 (2), 77–101 (2006). https://doi.org/10.1191/1478088706qp063oa

Burgers, C., Brugman, B.C., Boeynaems, A.: Systematic literature reviews: four applications for interdisciplinary research. J. Pragmat. 145 , 102–109 (2019)

Brunton, G., Oliver, S., Oliver, K., Lorenc, T.: A Synthesis of Research Addressing Children’s, Young People’s and Parents’ Views of Walking and Cycling for Transport. EPPI-Centre, Social Science Research Unit, Institute of Education, University of London, London (2006)